Implementation method and system of a visual-inertial odometer

An implementation method and system for a visual-inertial odometer, applied in the field of computer vision, intended to address the problems of high power consumption, poor real-time performance, and excessive demands on processing power.


Examples

Embodiment 1

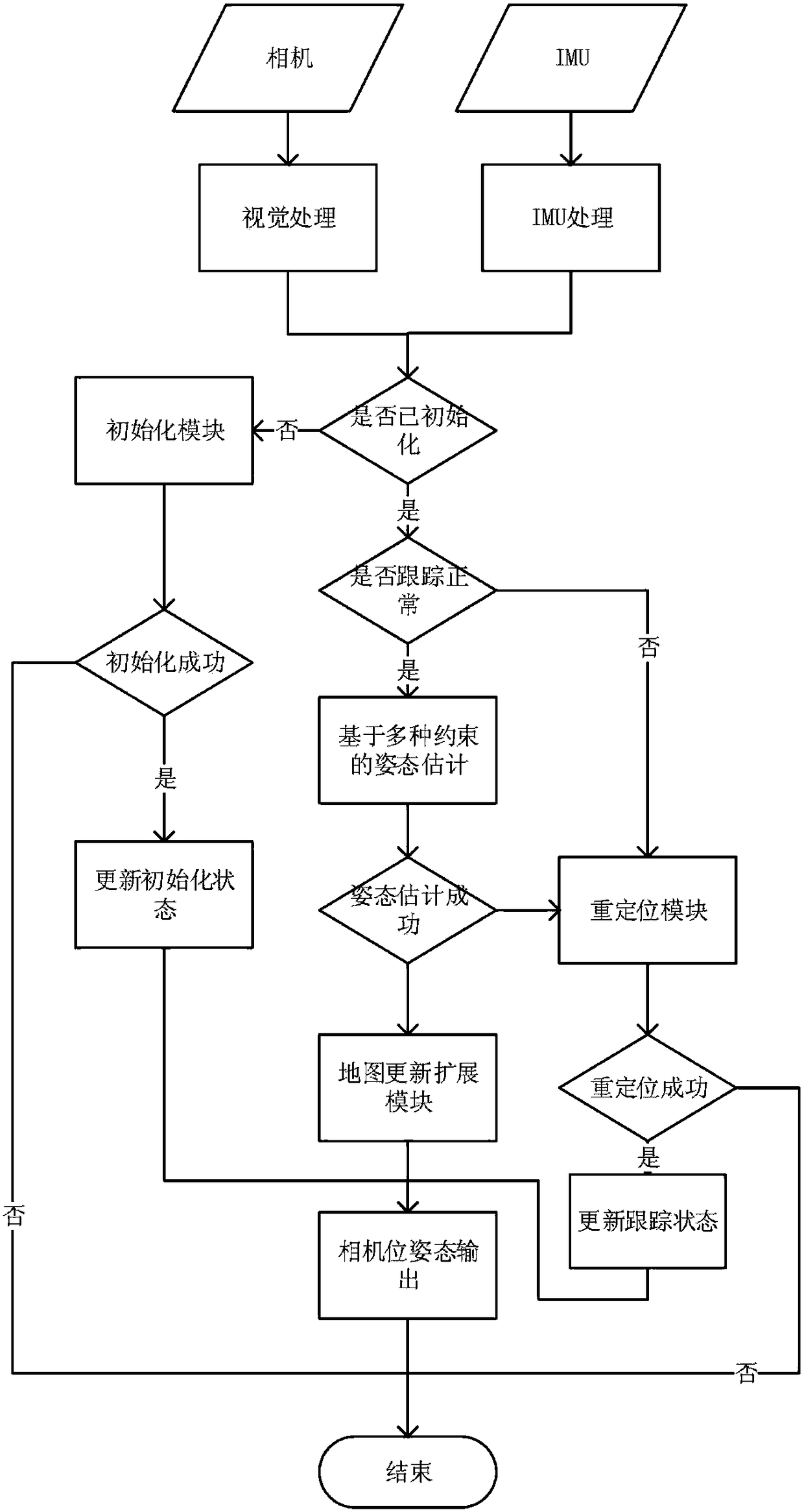

[0068] As shown in Figure 1, the implementation method of the visual-inertial odometer in the embodiment of the present invention includes the following steps:

[0069] In the first step (S1), the device collects image data in real time through the camera, and collects its angular velocity and acceleration data in real time through an inertial measurement unit (IMU). The IMU includes a gyroscope and an accelerometer; the angular velocity and acceleration data it collects are referred to for short as the IMU acquisition data;
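Because the camera and the IMU sample at different rates, an implementation typically has to group the IMU acquisition data that falls between consecutive image frames before using it. The following Python sketch shows one way to do this; the `ImuSample` type, the `imu_between_frames` helper, the half-open interval convention and the 200 Hz IMU rate in the usage example are illustrative assumptions, not details taken from the patent.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ImuSample:
    t: float                           # timestamp in seconds
    gyro: Tuple[float, float, float]   # angular velocity from the gyroscope (rad/s)
    accel: Tuple[float, float, float]  # acceleration from the accelerometer (m/s^2)


def imu_between_frames(samples: List[ImuSample], t_prev: float,
                       t_curr: float) -> List[ImuSample]:
    """Return the samples with t_prev < t <= t_curr.

    Hypothetical helper; assumes `samples` is sorted by timestamp.
    """
    times = [s.t for s in samples]
    start = bisect_right(times, t_prev)  # first sample strictly after t_prev
    end = bisect_right(times, t_curr)    # one past the last sample at or before t_curr
    return samples[start:end]


if __name__ == "__main__":
    # Hypothetical rates: 200 Hz IMU, 30 Hz camera.
    imu = [ImuSample(k / 200.0, (0.0, 0.0, 0.1), (0.0, 0.0, 9.81)) for k in range(20)]
    batch = imu_between_frames(imu, 0.0, 1.0 / 30.0)
    print(len(batch), batch[0].t, batch[-1].t)
```

Using half-open intervals guarantees that each IMU sample is assigned to exactly one frame interval, so no measurement is counted twice.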

[0070] In the second step (S2), the scene initialization module establishes the initial spatial 3D map of the visual-inertial odometry system based on the image data collected in real time by the camera, the angular velocity data of the device collected by the IMU's gyroscope, and the acceleration data collected by the IMU's accelerometer;

[0071] Preferably, the second step (S2) may includ...
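The visible text does not say how the initial 3D map is built. A common building block for this kind of scene initialization is triangulating a feature point observed in two frames whose camera poses are known. The Python sketch below uses the midpoint method, a generic technique swapped in for illustration rather than the patent's own procedure; it assumes the camera centers and the world-frame viewing-ray directions are already available (for example, from back-projecting matched pixels with known intrinsics and rotations), and the function name `triangulate_midpoint` is hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3,
                         eps: float = 1e-12) -> Vec3:
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2.

    c1, c2: camera centers in the world frame (assumed known).
    d1, d2: viewing-ray directions of the matched feature in the world frame.
    """
    w0 = [p - q for p, q in zip(c1, c2)]
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < eps:
        # Parallel rays give no parallax, so depth cannot be recovered.
        raise ValueError("rays are parallel; cannot triangulate")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return tuple((x + y) / 2.0 for x, y in zip(p1, p2))
```

With noise-free rays the two closest points coincide and the function returns the exact 3D point; with noisy observations the rays do not intersect and the midpoint is a simple compromise. Linear (DLT) triangulation followed by reprojection-error refinement is a common alternative.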

Embodiment 2

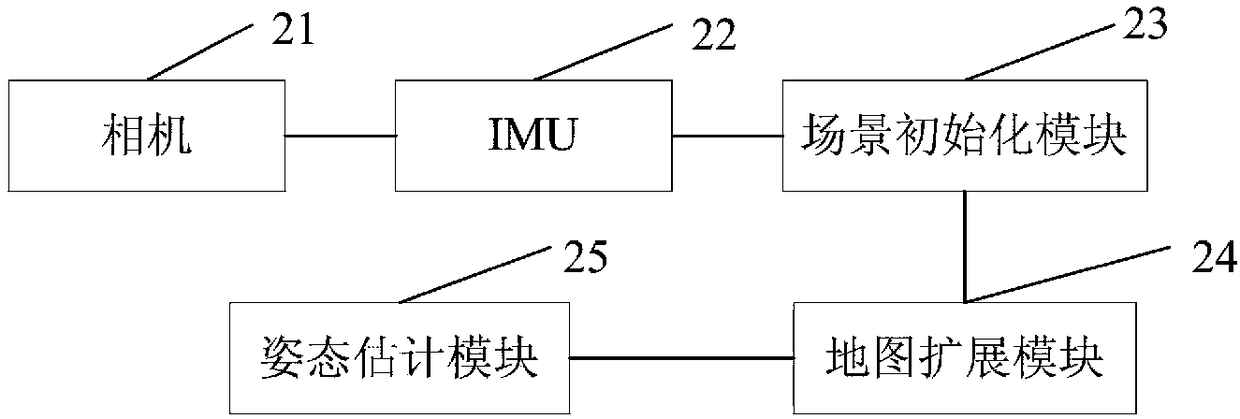

[0088] As shown in Figure 2, the embodiment of the present invention also provides a visual-inertial odometry system, including a camera 21, an IMU 22, a scene initialization module 23, a map extension module 24 and a pose estimation module 25, wherein:

[0089] Camera 21, for collecting image data in real time;

[0090] IMU 22, for collecting angular velocity and acceleration data of the device; the IMU includes a gyroscope and an accelerometer;

[0091] Scene initialization module 23, for establishing the initial spatial three-dimensional map of the visual-inertial odometry system;

[0092] Map extension module 24, for updating in real time the spatial three-dimensional map established by the scene initialization module 23;

[0093] Pose estimation module 25, which uses the spatial constraint relationship between the current image feature points and the three-dimensional map points maintained by the map extension module, the feature matching constrain...
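The constraint between a current image feature point and its matched three-dimensional map point is commonly expressed as a reprojection error under a pinhole camera model. The following Python sketch computes that cost for a candidate pose; the pinhole model, the world-to-camera pose convention (R, t) and the function names are assumptions for illustration, and the feature-matching and IMU constraints mentioned in the truncated paragraph are not modeled.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def project(R: Mat3, t: Vec3, X: Vec3,
            fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Project world point X into the image for world-to-camera pose (R, t)."""
    # Transform the map point into the camera frame: x_c = R * X + t.
    xc = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0.0:
        raise ValueError("map point is behind the camera")
    # Pinhole projection with focal lengths (fx, fy) and principal point (cx, cy).
    return fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy


def reprojection_cost(R: Mat3, t: Vec3,
                      map_points: Sequence[Vec3],
                      observations: Sequence[Tuple[float, float]],
                      fx: float, fy: float, cx: float, cy: float) -> float:
    """Sum of squared pixel residuals over matched (map point, feature) pairs."""
    cost = 0.0
    for X, (u_obs, v_obs) in zip(map_points, observations):
        u, v = project(R, t, X, fx, fy, cx, cy)
        cost += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return cost
```

A pose estimator would then search for the R and t that minimize this cost (for example with Gauss-Newton or Levenberg-Marquardt); in a visual-inertial system it is typically combined with a term built from the IMU data.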

Embodiment 3

[0110] This embodiment illustrates the technical solution of the present invention by taking as an example a smartphone that uses the visual-inertial odometer implementation method provided by the embodiment of the present invention to perform real-time positioning and tracking of itself in an unknown indoor environment:

[0111] 301. Use a smartphone equipped with a camera, a gyroscope and an accelerometer, on which the visual-inertial odometer system provided by the embodiment of the present invention is integrated. In this embodiment, the smartphone can be regarded as the device. The visual-inertial odometer system obtains the camera's two-dimensional image input and the IMU input in real time. The camera collects images at a fixed frame rate, such as 30 Hz, and the image size can be set according to the computing power of the actual phone, such as 720p; the frequency of the gyrosc...
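The example parameters above translate directly into a real-time budget: at 30 Hz the whole pipeline has roughly 33 ms per frame, and a 720p image (assumed here to be 1280 × 720) has 921,600 pixels. The small Python sketch below makes that arithmetic explicit; the `CameraConfig` class and the 640 × 360 low-power setting are hypothetical, and the IMU rate is deliberately left out because the text is truncated before it is given.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraConfig:
    """Hypothetical camera settings for a phone-based visual-inertial odometer."""
    frame_rate_hz: float = 30.0  # example frame rate from the embodiment
    width: int = 1280            # 720p, assumed to mean 1280 x 720
    height: int = 720

    def frame_budget_ms(self) -> float:
        """Time available per frame if processing must keep pace with capture."""
        return 1000.0 / self.frame_rate_hz

    def pixels_per_second(self) -> int:
        """Raw pixel throughput the front end must handle."""
        return int(self.width * self.height * self.frame_rate_hz)


if __name__ == "__main__":
    default = CameraConfig()
    low_power = CameraConfig(width=640, height=360)  # smaller size for weaker phones
    print(f"{default.frame_budget_ms():.1f} ms/frame, {default.pixels_per_second()} px/s")
    print(f"{low_power.pixels_per_second()} px/s")
```

Halving both image dimensions cuts the raw pixel throughput fourfold (27,648,000 to 6,912,000 pixels per second at 30 Hz), one way the image size can be matched to the phone's computing power.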
