A video emotion recognition method integrating facial expression recognition and voice emotion recognition

Relates to facial expression recognition and speech emotion recognition technology, applied in speech analysis, character and pattern recognition, and acquisition/recognition of facial features. It addresses problems such as the small number of facial expression frames and the neglect of the intrinsic connection between facial features and voice features.

Examples

Embodiment 1

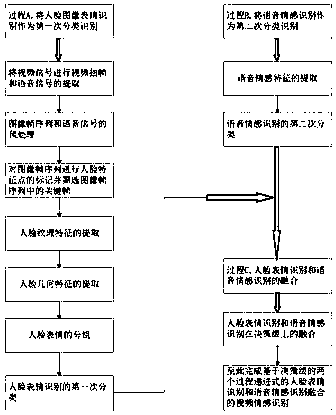

[0125] The video emotion recognition method of this embodiment, which fuses facial expression recognition and voice emotion recognition, is a decision-level, two-process progressive audio-visual emotion recognition method. The specific steps are as follows:
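The decision-level, two-process progressive idea can be illustrated with a minimal sketch. It assumes, for illustration only, that Process A selects a coarse expression group from the face and that a second process scores each emotion from the voice; the final label is the highest-scoring voice emotion inside the group the face selected. The function name `progressive_decision`, the group layout and the score values are hypothetical and are not the patent's actual decision rule.

```python
from typing import Dict, List


def progressive_decision(
    face_group: str,
    groups: Dict[str, List[str]],
    voice_scores: Dict[str, float],
) -> str:
    """Hypothetical two-process, decision-level fusion.

    Process A (face) has already chosen `face_group`; the second process
    (voice) only chooses among the emotions that belong to that group.
    """
    candidates = groups[face_group]
    # Highest voice score wins; emotions the voice classifier did not
    # score are treated as 0.0.
    return max(candidates, key=lambda emotion: voice_scores.get(emotion, 0.0))


# Hypothetical grouping and voice scores, for illustration only.
groups = {
    "positive": ["happy", "surprise"],
    "negative": ["angry", "sad", "fear", "disgust"],
    "neutral": ["neutral"],
}
voice = {"happy": 0.1, "surprise": 0.05, "angry": 0.3,
         "sad": 0.4, "fear": 0.1, "disgust": 0.05}
print(progressive_decision("negative", groups, voice))  # sad
```

In this sketch, restricting the voice decision to the face-selected group is what makes the scheme progressive: the voice refines the label within the group but cannot move it to another group.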

[0126] Process A: facial image expression recognition as the first classification:

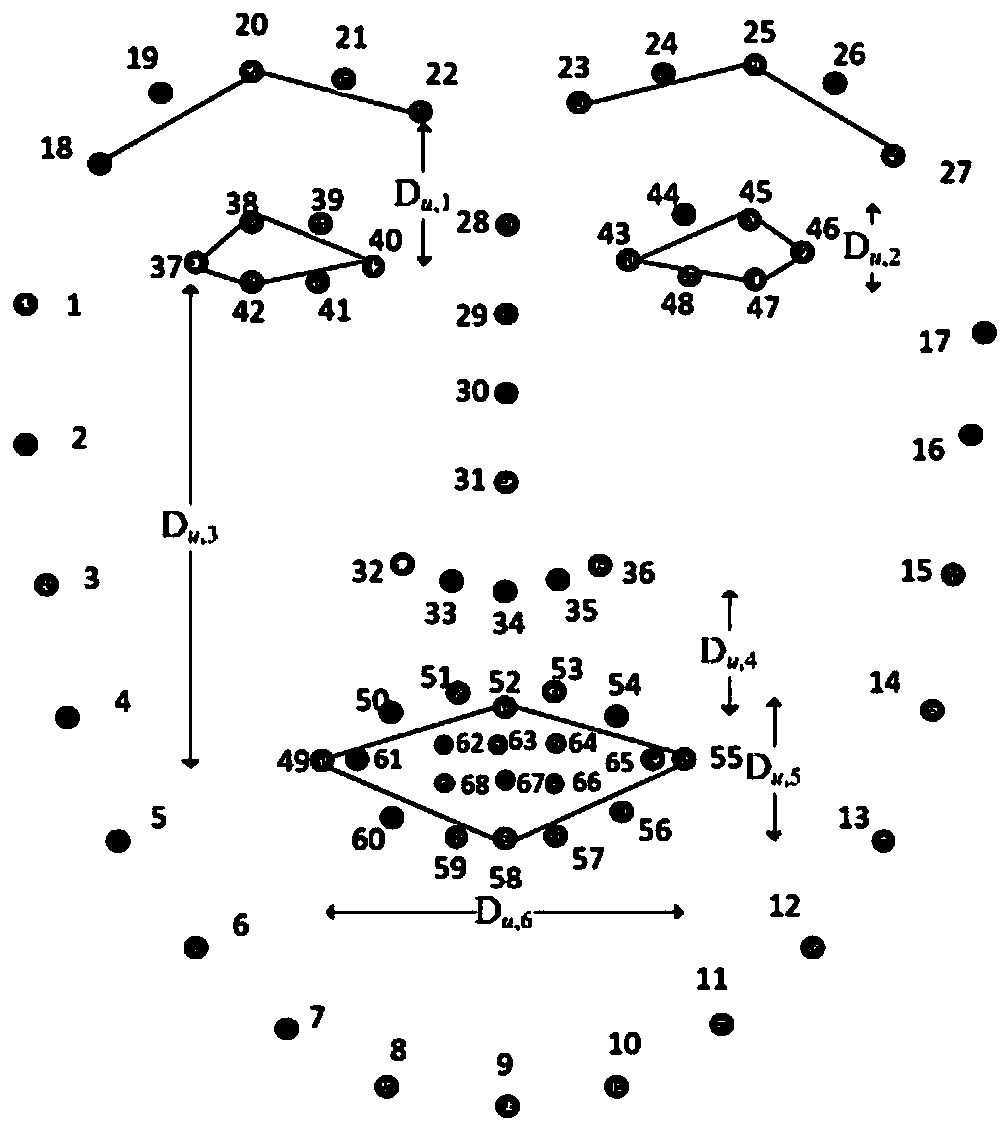

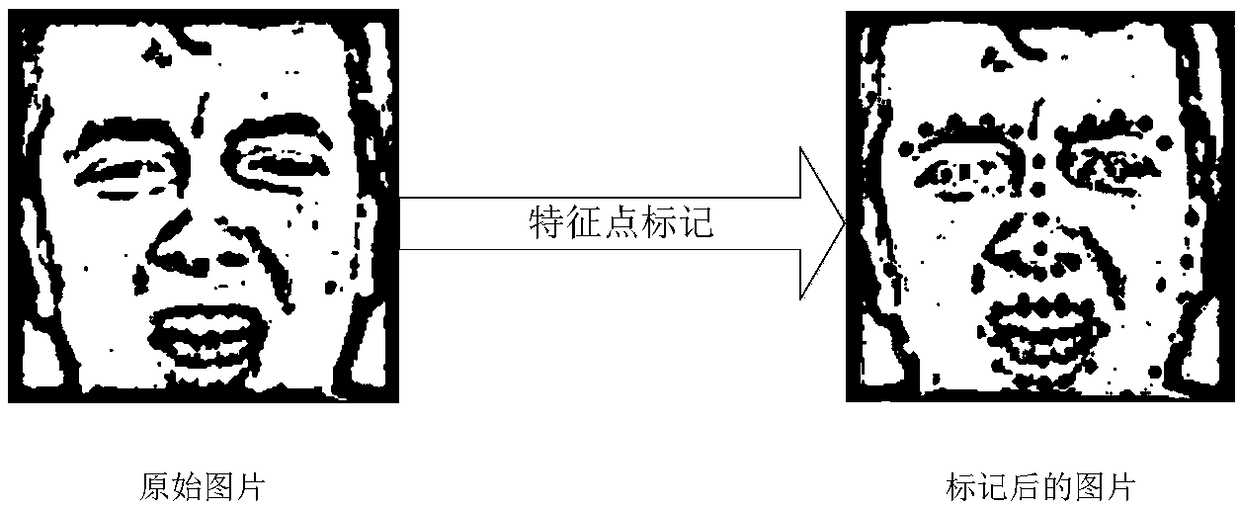

[0127] Process A comprises facial expression feature extraction, facial expression grouping, and the first classification of facial expression recognition. Its steps are as follows:
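The grouping and first classification can be sketched as follows, under assumptions not stated in the source: each video frame already has a probability vector over expression labels, frame scores are averaged into one video-level vector, and a group's score is the sum of its members' averaged probabilities. The function `first_classification`, the label set and the groups are hypothetical illustrations, not the patent's grouping.

```python
from typing import Dict, List, Sequence


def first_classification(
    frame_probs: Sequence[Sequence[float]],
    labels: Sequence[str],
    groups: Dict[str, List[str]],
) -> str:
    """Hypothetical first classification over expression groups.

    `frame_probs` holds one probability vector per frame, ordered like `labels`.
    """
    if not frame_probs:
        raise ValueError("need at least one frame")
    n = len(frame_probs)
    # Average per-frame probabilities into one score per expression label.
    mean = {label: sum(frame[i] for frame in frame_probs) / n
            for i, label in enumerate(labels)}
    # Score each group as the sum of its members' averaged scores.
    group_scores = {g: sum(mean[e] for e in members)
                    for g, members in groups.items()}
    return max(group_scores, key=group_scores.get)


labels = ["happy", "surprise", "angry", "sad", "neutral"]
groups = {"positive": ["happy", "surprise"],
          "negative": ["angry", "sad"],
          "neutral": ["neutral"]}
frames = [[0.1, 0.1, 0.3, 0.3, 0.2],
          [0.2, 0.0, 0.2, 0.4, 0.2]]
print(first_classification(frames, labels, groups))  # negative
```

Averaging over frames is one simple way to turn a frame sequence into a single video-level decision; other pooling rules (for example majority voting over frames) would fit the same interface.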

[0128] Step 1: extract the video frames and the voice signal from the video signal:

[0129] Decompose each video in the database into a sequence of image frames: use the open source FormatFactory software to extract the video frames, then extract the voice signal from the video and save it in MP3 format;
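The source performs this step with FormatFactory, a GUI application. As a scriptable alternative (a swapped-in tool, not the one the patent uses), the same two outputs can be produced with the ffmpeg command-line program. The helper names, the 25 fps sampling rate, the PNG frame naming pattern and the MP3 quality setting are assumptions chosen for illustration.

```python
import subprocess
from pathlib import Path
from typing import List


def frame_command(video: str, out_dir: str, fps: int = 25) -> List[str]:
    """Build an ffmpeg command that writes the video as numbered PNG frames."""
    pattern = str(Path(out_dir) / "frame_%05d.png")
    # The fps filter resamples the video to a fixed frame rate (assumed 25).
    return ["ffmpeg", "-y", "-i", video, "-vf", f"fps={fps}", pattern]


def audio_command(video: str, out_mp3: str) -> List[str]:
    """Build an ffmpeg command that drops the video and encodes audio as MP3."""
    # -vn disables video; libmp3lame with -q:a 2 is a common VBR MP3 setting.
    return ["ffmpeg", "-y", "-i", video, "-vn",
            "-acodec", "libmp3lame", "-q:a", "2", out_mp3]


def extract(video: str, out_dir: str, out_mp3: str, fps: int = 25) -> None:
    """Run both commands; needs ffmpeg on PATH and creates out_dir if missing."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(frame_command(video, out_dir, fps), check=True)
    subprocess.run(audio_command(video, out_mp3), check=True)
```

A call such as `extract("clip.mp4", "frames/clip", "clip.mp3")` would produce the frame sequence and the MP3 track for one hypothetical video; keeping command construction separate from execution makes the commands easy to inspect before running them over a whole database.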

[0130] Step 2 is the preprocessing of the image frame sequence and speech...
