A method for removing station logos and subtitles from an image based on a deep neural network

Deep neural network and image-in-image technology, applied to removing station logos and subtitles from images, addresses problems such as limited application scenarios, poor handling of complex scenes, and a large amount of computation, and achieves realistic visual effects, strong fitting ability, and good restoration results.

Examples

Embodiment 1

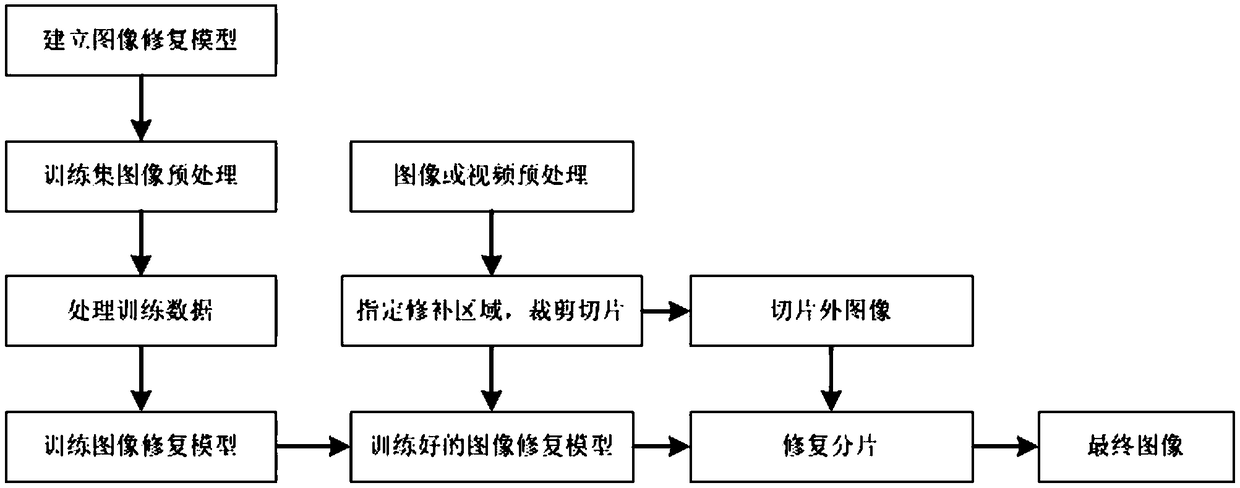

[0050] As shown in Figure 1, this embodiment provides a method for removing the station logo and subtitles from an image based on a deep neural network, comprising the following steps:

[0051] S1. Establish an image repair model: the image repair model consists of a "U-net" network and a GAN, with the "U-net" network serving as the generator of the GAN;

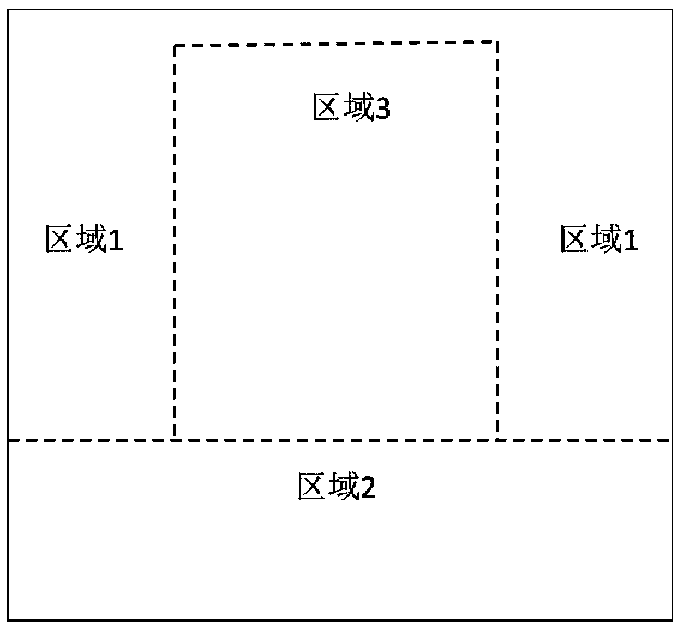

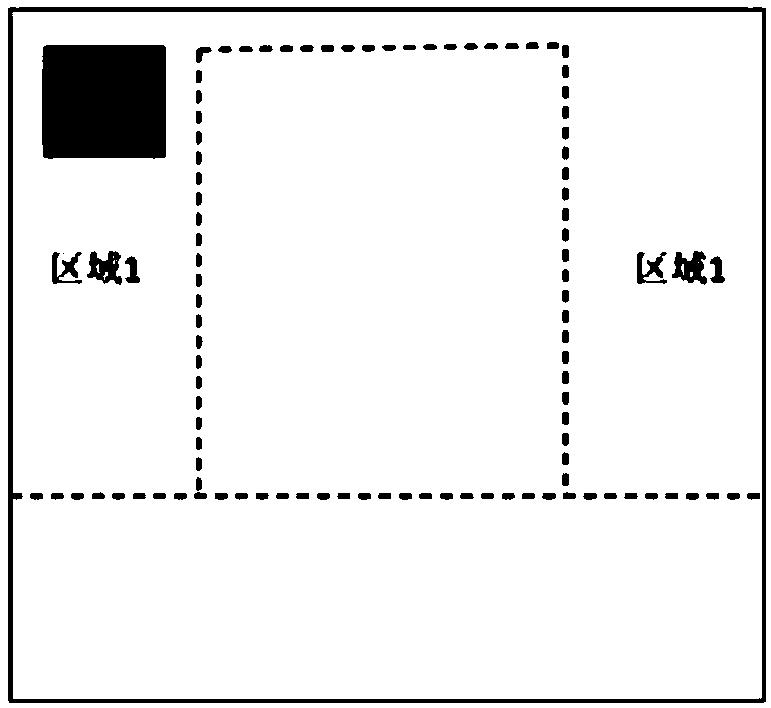

[0052] S2. Preprocess the training-set images: crop or scale the images in the training set to a limited size to obtain the training images. In this embodiment, the length and width of the training image are limited to 512 × 512 mm. According to the areas where the station logo and subtitles are usually located, the training images are logically partitioned into area 1, area 2 and area 3 as shown in Figure 2, where area 1 is the area where the station logo is normally located and area 2 is the area where subtitles are normally located, and corresponding Mask1 and Mask2 are generated in area 1 and are...
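The mask generation described in S2 can be illustrated with a minimal sketch. The text is cut off before it says how Mask1 and Mask2 are placed, so this assumes one rectangular binary mask per region. The helper `make_rect_mask` and every coordinate below are hypothetical placeholders, not values from the patent; only the 512 × 512 size comes from the text.

```python
def make_rect_mask(height, width, top, left, bottom, right):
    """Return a height x width binary mask as nested lists.

    Pixels inside the half-open rectangle [top, bottom) x [left, right)
    are 1 (region to be repaired); all other pixels are 0.
    """
    if not (0 <= top <= bottom <= height and 0 <= left <= right <= width):
        raise ValueError("rectangle must lie inside the image")
    return [
        [1 if top <= y < bottom and left <= x < right else 0
         for x in range(width)]
        for y in range(height)
    ]


SIZE = 512  # training image side length, as stated in this embodiment

# Hypothetical placement: a logo box in the top-left corner (area 1)
# and a subtitle band along the bottom edge (area 2).
mask1 = make_rect_mask(SIZE, SIZE, top=0, left=0, bottom=128, right=192)
mask2 = make_rect_mask(SIZE, SIZE, top=400, left=0, bottom=512, right=512)

# Number of masked pixels in each mask.
covered1 = sum(map(sum, mask1))
covered2 = sum(map(sum, mask2))
```

Keeping the masks as separate arrays makes it possible to apply them to the training image independently, which would suit a scheme that produces more than one masked training image from a single source image.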

Embodiment 2

[0071] This embodiment is a further optimization of Embodiment 1, specifically:

[0072] The U-net-like network in S4 is composed of convolutional layers and deconvolution layers. Its processing of training image P1 and training image P2 includes a downsampling process and an upsampling process: in the downsampling process, the feature size is reduced by convolution kernels with a stride of 2, and in the upsampling process, the feature size is enlarged by convolution kernels with a stride of 1/2 (that is, fractionally strided, or transposed, convolutions). When the U-net-like network processes training image P1 and training image P2, each convolution and deconvolution operation is followed by a ReLU activation function.
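The effect of the stride-2 and stride-1/2 layers on feature size can be checked with a short arithmetic sketch. It uses the standard output-size formulas for a convolution and a transposed convolution. The kernel size (4), padding (1) and network depth (4 levels) are assumptions chosen for illustration, since the text specifies only the strides.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    """Output side length of a strided convolution (downsampling)."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel=4, stride=2, padding=1):
    """Output side length of a transposed convolution.

    A transposed convolution with stride 2 is what the text calls a
    convolution with a stride of 1/2: it doubles the feature size.
    """
    return (size - 1) * stride - 2 * padding + kernel


def trace_sizes(size=512, depth=4):
    """Trace feature sizes down and back up a U-net-like network.

    depth is an assumed number of levels, not a value from the patent.
    """
    down = [size]
    for _ in range(depth):
        down.append(conv_out(down[-1]))
    up = [down[-1]]
    for _ in range(depth):
        up.append(deconv_out(up[-1]))
    return down, up


down, up = trace_sizes()
print(down)  # [512, 256, 128, 64, 32]
print(up)    # [32, 64, 128, 256, 512]
```

Under these assumed settings each downsampling step halves the feature size and each upsampling step doubles it, so the output returns to the 512 × 512 input size.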
