Real-time monocular video depth estimation method

A depth estimation and video technology, applied in computing, computer components, image analysis, and related fields. It addresses the problems that limit the practicality of depth estimation, and achieves good temporal consistency and fast operation speed, which improves practicality.



Embodiment Construction

[0041] The present invention is further described below with reference to the embodiments and the accompanying drawings:

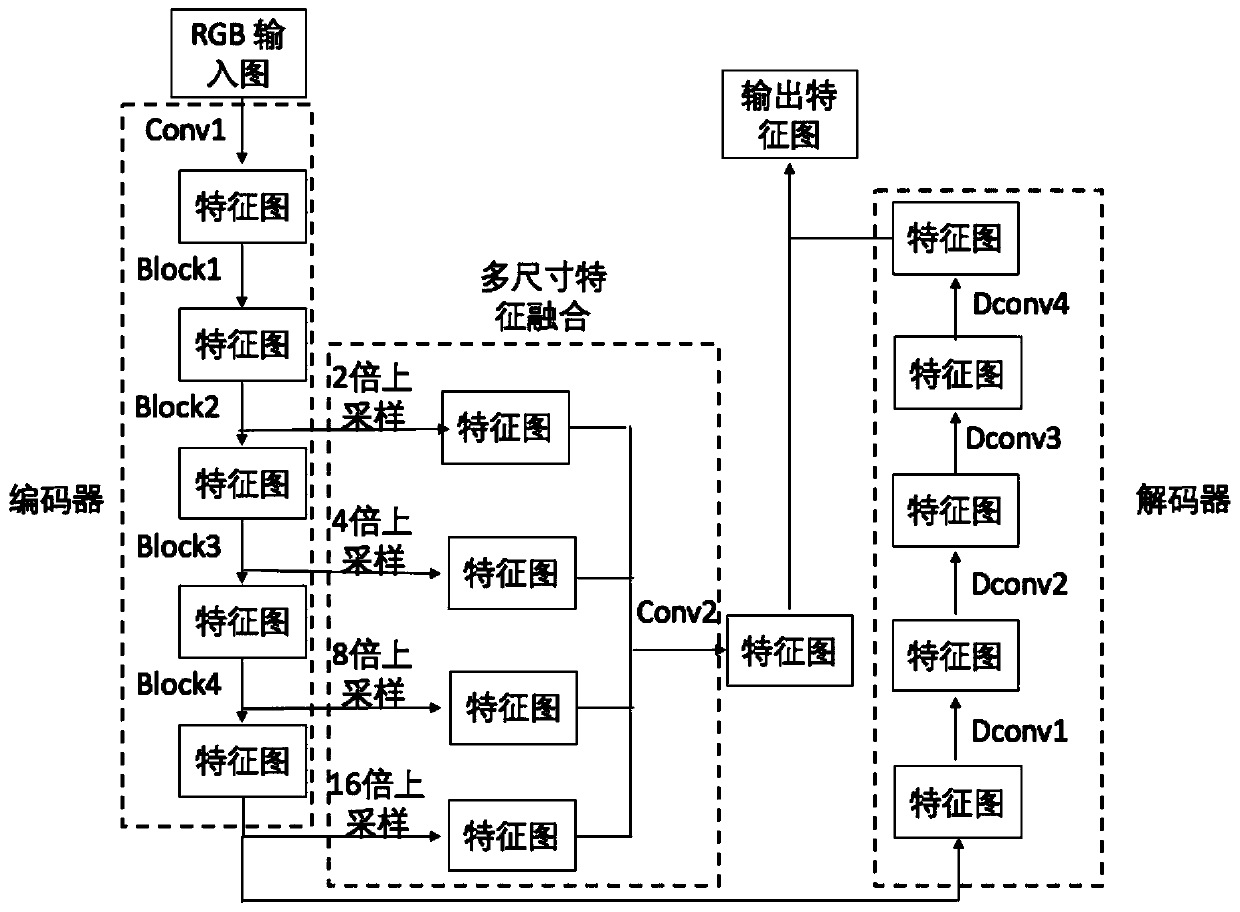

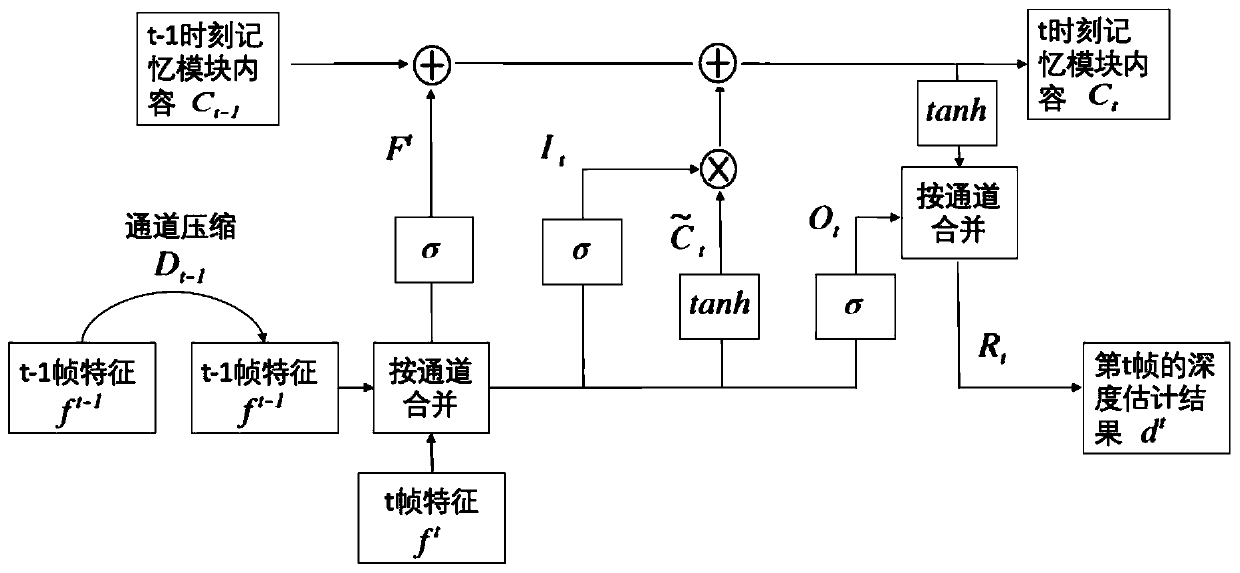

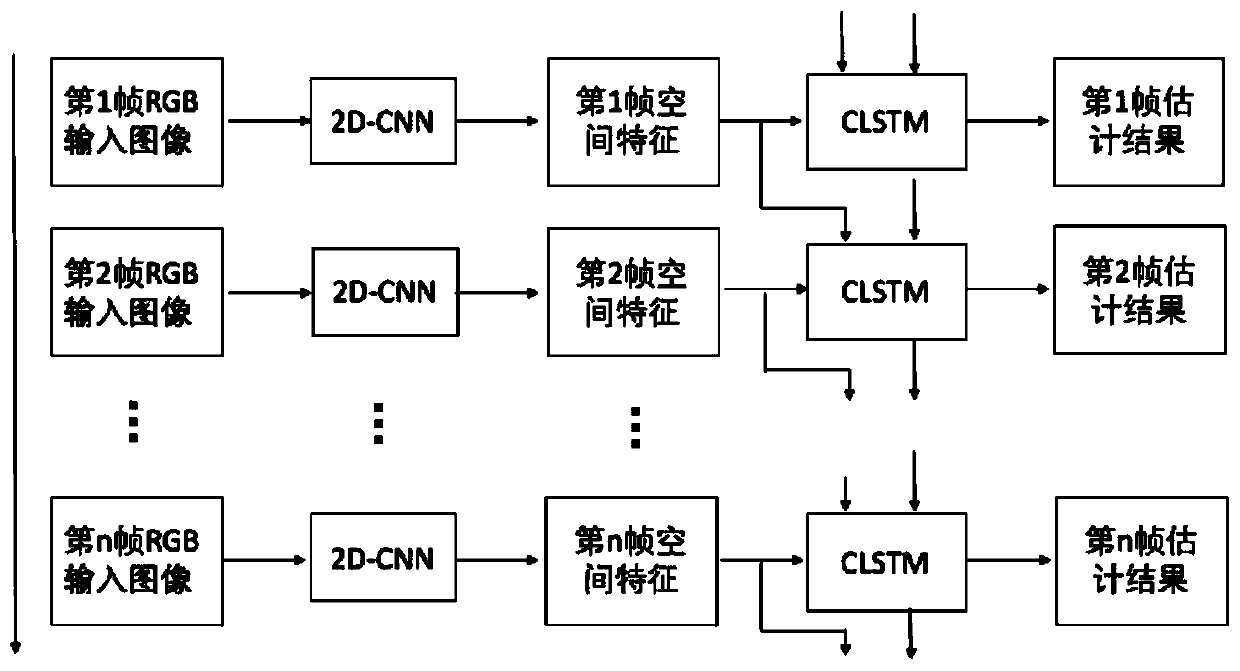

[0042] The technical solution of the present invention combines a two-dimensional convolutional neural network (2D-CNN) with a convolutional long short-term memory network (CLSTM) to construct a model that can use spatial and temporal information simultaneously for real-time depth estimation from monocular video data. In addition, a generative adversarial network (GAN) is used to constrain the estimated results so that they satisfy temporal consistency.
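As an illustration of the temporal half of such a model, the following is a minimal NumPy sketch of a single convolutional LSTM cell run over a sequence of per-frame feature maps. It is not the patented network: the gate layout, the absence of bias terms, the kernel size, and all names (`conv2d_same`, `clstm_step`, `run_sequence`) are assumptions made for the example, and the input feature maps are assumed to come from some 2D-CNN that is not shown. The GAN constraint is also omitted.

```python
import numpy as np

def conv2d_same(x, w):
    """Cross-correlate x (C_in, H, W) with w (C_out, C_in, k, k), odd k,
    using zero padding so the output keeps the spatial size (C_out, H, W)."""
    k = w.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    # windows has shape (C_in, H, W, k, k)
    windows = np.lib.stride_tricks.sliding_window_view(xp, (k, k), axis=(1, 2))
    return np.einsum("chwij,ocij->ohw", windows, w)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def clstm_step(x, h, c, w):
    """One ConvLSTM step. x: (C_in, H, W); h, c: (hidden, H, W);
    w: (4 * hidden, C_in + hidden, k, k). Bias terms are left out."""
    z = conv2d_same(np.concatenate([x, h], axis=0), w)
    i, f, o, g = np.split(z, 4, axis=0)  # input, forget, output gates, candidate
    c_next = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
    h_next = sigmoid(o) * np.tanh(c_next)
    return h_next, c_next

def run_sequence(features, w, hidden):
    """features: (T, C_in, H, W) per-frame feature maps (assumed 2D-CNN output).
    Returns the hidden state for every frame, shape (T, hidden, H, W)."""
    _, _, height, width = features.shape
    h = np.zeros((hidden, height, width))
    c = np.zeros_like(h)
    outputs = []
    for frame in features:
        h, c = clstm_step(frame, h, c, w)
        outputs.append(h)
    return np.stack(outputs)
```

Because the hidden and cell states are carried from frame to frame, each output depends on all earlier frames, which is one way a model can use temporal information while processing frames one at a time.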

[0043] The specific steps of this technical solution are as follows:

[0044] Step 1: Data preprocessing. Data preprocessing includes RGB video normalization, depth map normalization and sample extraction.

[0045] Step 2: Split the samples into a training set and a validation set. A small number of samples are extracted as a validation set, and all remaining samples are used...
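Steps 1 and 2 might be sketched as follows. The source does not give the normalization formulas, the clip length, the stride, or the validation ratio, so everything here is an assumption for illustration: RGB frames are scaled to [0, 1] by dividing by 255, depth is divided by a caller-supplied `max_depth`, samples are fixed-length clips taken with a sliding window, and `val_fraction` is a hypothetical parameter.

```python
import random
import numpy as np

def normalize_rgb(video):
    """video: uint8 array (T, H, W, 3). Returns float32 values in [0, 1]."""
    return video.astype(np.float32) / 255.0

def normalize_depth(depth, max_depth):
    """depth: array (T, H, W) in sensor units. Returns float32 values in [0, 1].
    max_depth is an assumed dataset-specific upper bound."""
    return np.clip(depth.astype(np.float32) / max_depth, 0.0, 1.0)

def extract_samples(rgb, depth, clip_len, stride):
    """Cut aligned (rgb_clip, depth_clip) pairs of clip_len frames each."""
    starts = range(0, len(rgb) - clip_len + 1, stride)
    return [(rgb[s:s + clip_len], depth[s:s + clip_len]) for s in starts]

def split_samples(samples, val_fraction=0.1, seed=0):
    """Hold out a small random subset for validation; return (train, val)."""
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)
    n_val = max(1, int(len(samples) * val_fraction))
    val = [samples[i] for i in indices[:n_val]]
    train = [samples[i] for i in indices[n_val:]]
    return train, val
```

Fixing the shuffle seed keeps the split reproducible between runs, so validation scores from different training runs stay comparable.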
