A deep learning-based multimodal perception and analysis system for patient behavior

The invention applies deep learning to a multimodal perception and analysis system for patient behavior. It addresses problems such as gradient problems that are hard to resolve and the difficulty of expressing temporal cues and texture information. The stated aims are to perceive needs accurately, improve the efficiency and quality of diagnosis and treatment, and assess patient behavior accurately.

Embodiment 1

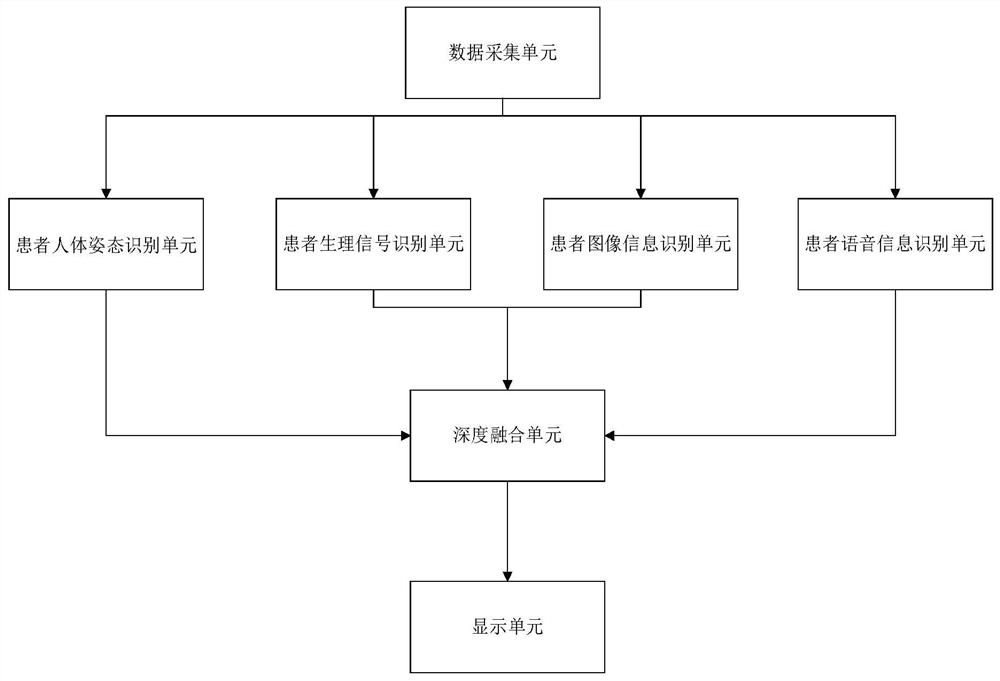

[0043] As shown in Figure 1, this embodiment provides a deep learning-based multimodal perception and analysis system for patient behavior. The system includes a data acquisition unit, a patient body posture recognition unit, a patient physiological signal recognition unit, a patient image information recognition unit, a patient voice information recognition unit, a deep fusion unit, and a display module. The data acquisition unit acquires multimodal patient data and is connected to each of the four recognition units: the patient body posture recognition unit, the patient physiological signal recognition unit, the patient image information recognition unit, and the patient voice information recognition unit. The deep fusion unit is connected to each of these four recognition units and to the display module.
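The connection topology described in [0043] can be illustrated with a minimal Python sketch. This is only an illustration of the wiring between units, not the patented implementation: the `PatientData` field names, the placeholder `mean_feature` recognizer, and the concatenation-based `fuse` function are all assumptions made for this example, since the text available here does not specify the models or the fusion method.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Features = List[float]
MODALITIES = ("posture", "physiological", "image", "voice")


@dataclass
class PatientData:
    """Output of the data acquisition unit (field names are hypothetical)."""
    posture: List[float]
    physiological: List[float]
    image: List[float]
    voice: List[float]


def mean_feature(samples: List[float]) -> Features:
    """Placeholder recognizer: reduces one modality to its mean value.

    A real recognition unit would be a trained deep model; this stand-in
    only keeps the sketch runnable.
    """
    return [sum(samples) / len(samples)] if samples else [0.0]


# One recognition unit per modality, each fed by the data acquisition unit.
RECOGNIZERS: Dict[str, Callable[[List[float]], Features]] = {
    name: mean_feature for name in MODALITIES
}


def fuse(per_modality: Dict[str, Features]) -> Features:
    """Assumed fusion step: concatenate unit outputs in a fixed order."""
    fused: Features = []
    for name in MODALITIES:
        fused.extend(per_modality[name])
    return fused


def display(fused: Features) -> str:
    """Stand-in for the display module: formats the fused features."""
    return "fused features: " + ", ".join(f"{v:.2f}" for v in fused)


def run_pipeline(data: PatientData) -> str:
    """Acquisition -> four recognition units -> deep fusion -> display."""
    outputs = {name: RECOGNIZERS[name](getattr(data, name)) for name in MODALITIES}
    return display(fuse(outputs))


if __name__ == "__main__":
    sample = PatientData(
        posture=[1.0, 3.0],
        physiological=[60.0, 80.0],
        image=[0.5],
        voice=[],
    )
    print(run_pipeline(sample))
```

Keeping the recognizers in a dictionary keyed by modality mirrors the one-to-four fan-out from the data acquisition unit and the four-to-one fan-in at the deep fusion unit, so any single recognizer can be swapped out without touching the rest of the pipeline.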

[0044] The...
