Radar-assisted camera calibration method based on deep learning

A camera calibration technique based on deep learning, applicable to neural learning methods, computer components, image data processing, and related fields. It aims to avoid explicit mathematical models and complicated calculations, reduce the number of parameters and the amount of computation, and avoid gradient explosion.

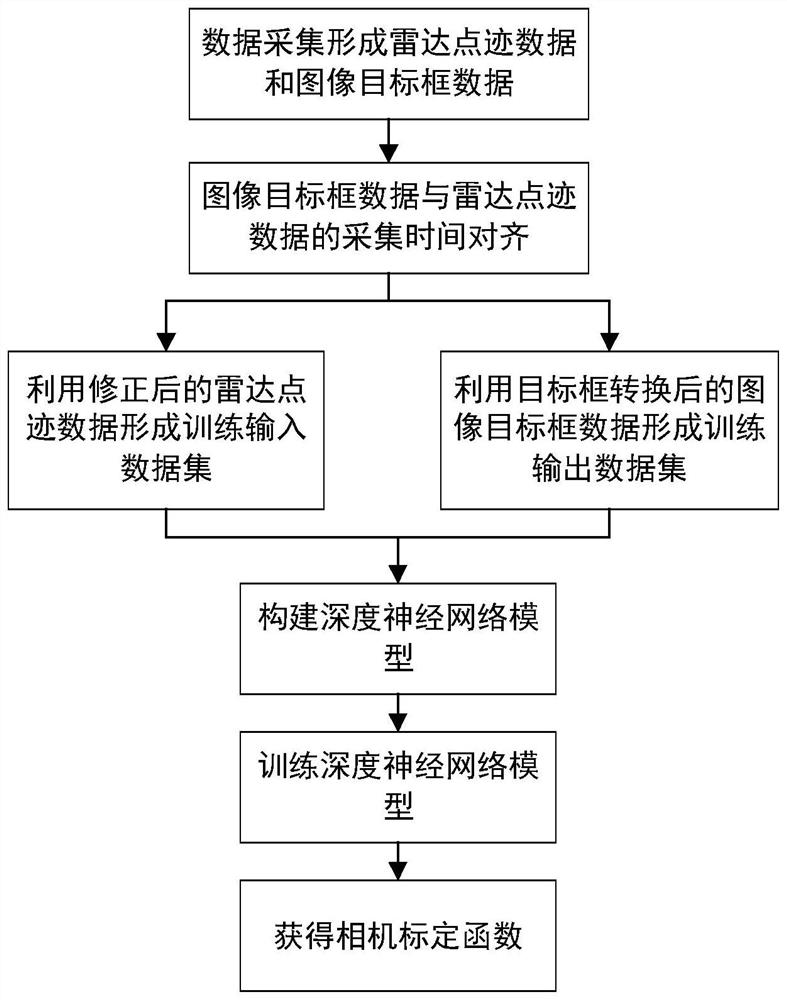

Examples

Embodiment 1

[0035] With the rapid development of new technologies such as autonomous driving and unmanned monitoring, and because any single sensor has inherent limitations, industry often adopts multi-sensor fusion solutions. Cameras and radars are currently the main ranging sensors and are widely used in multi-sensor data fusion. Since the camera and the radar are installed at different locations, their coordinate systems differ spatially, so the camera and the radar need to be spatially calibrated.

[0036] Traditional camera calibration methods require specific auxiliary calibration boards and calibration equipment. They obtain the internal and external parameters of the camera model by establishing correspondences between points with known coordinates on the calibration object and their image points; examples include Zhang Zhengyou's calibration method, the pseudo-inverse method, and least-squares fitting. These traditional came...

Embodiment 2

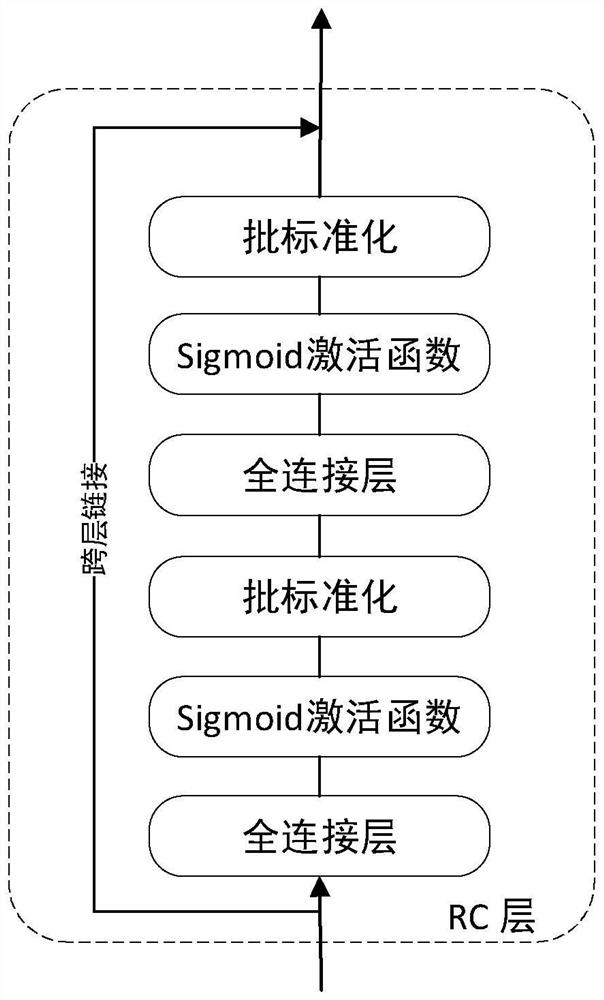

[0051] The radar-assisted camera calibration method based on deep learning is the same as in Embodiment 1; constructing the deep neural network model in step 5 includes the following steps:

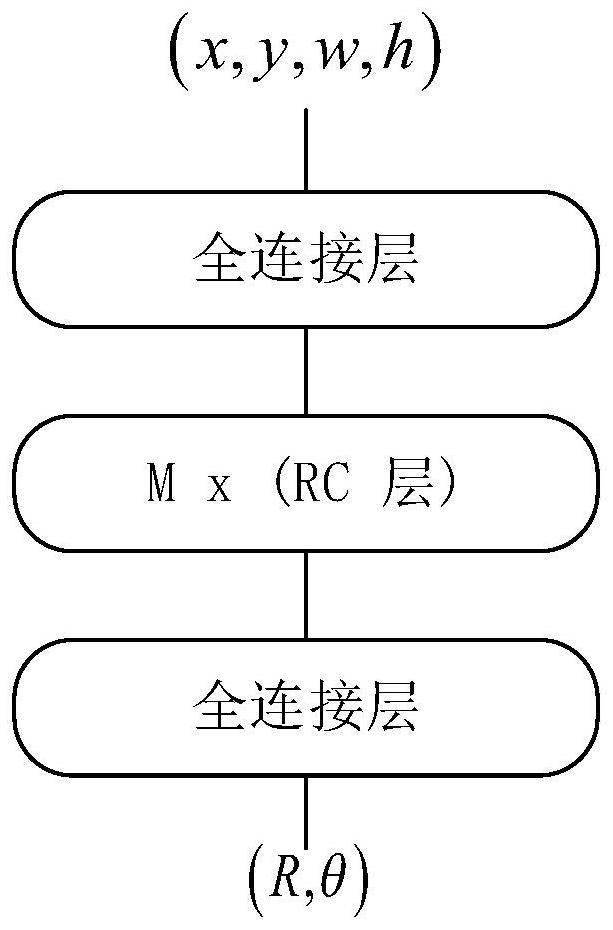

[0052] 5.1: Construct the overall framework of the deep neural network model. The input of the model is the training input data set constructed from the radar traces. The input data set is fed into a fully connected layer with input dimension 2 and output dimension p to obtain a p-dimensional vector. This vector is passed through K consecutive RC layers, and the output of the last RC layer is fed into a fully connected layer with input dimension p and output dimension 4. The input dimension of the RC layer satisfies p ≥ 2 and the number of RC layers satisfies K ≥ 1; p and K are integers chosen according to the amount of input data.
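The data flow of step 5.1 (2 → p, K layers of p → p, then p → 4) can be illustrated with a minimal pure-Python sketch. It is not the patented implementation: `placeholder_rc_layer` is a hypothetical stand-in that only preserves the p-dimensional shape, because the actual RC layer is described in step 5.2. The function names, the random initialization, and the example values of p and K are all assumptions made for illustration.

```python
import random


def make_linear(n_in, n_out, rng):
    """Create (weights, bias) for a fully connected layer n_in -> n_out."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    bias = [0.0] * n_out
    return weights, bias


def linear(x, layer):
    """Apply a fully connected layer to a single input vector x."""
    weights, bias = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def placeholder_rc_layer(x, layer):
    """Hypothetical stand-in for an RC layer (assumption, not the real design).

    It only maps a p-vector to a p-vector so the overall shapes can be checked.
    """
    return [max(0.0, v) for v in linear(x, layer)]


def build_model(p, K, seed=0):
    """Assemble the 2 -> p -> (K x RC) -> 4 framework of step 5.1."""
    if p < 2 or K < 1:
        raise ValueError("step 5.1 requires p >= 2 and K >= 1")
    rng = random.Random(seed)
    return {
        "fc_in": make_linear(2, p, rng),
        "rc": [make_linear(p, p, rng) for _ in range(K)],
        "fc_out": make_linear(p, 4, rng),
    }


def forward(model, radar_point):
    """Run one 2-dimensional radar input through the framework."""
    hidden = linear(radar_point, model["fc_in"])   # 2 -> p
    for layer in model["rc"]:                      # K consecutive p -> p layers
        hidden = placeholder_rc_layer(hidden, layer)
    return linear(hidden, model["fc_out"])         # p -> 4


model = build_model(p=8, K=3)            # example sizes, chosen arbitrarily
output = forward(model, [12.5, -3.0])    # hypothetical radar input
print(len(output))                       # 4
```

Larger values of p and K give the model more capacity, which matches the statement that both are chosen according to the amount of input data.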

[0053] 5.2: Construct the RC layer in the deep neural network model: the input of the RC layer in the deep neural network model is a ...

Embodiment 3

[0056] The radar-assisted camera calibration method based on deep learning is the same as in Embodiments 1-2; training the deep neural network model in step 6 includes the following steps:

[0057] 6.1: Forward propagation of the deep neural network model. The training input data set constructed from the radar traces is used as the input of the deep neural network model, and the output of the model is obtained through the neural network described in step 5. The output has four components: the horizontal-axis coordinate of the bottom center of the image target box, the vertical-axis coordinate of the bottom center of the image target box, the height of the image target box, and the width of the image target box.
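To make the four output components of step 6.1 concrete, the short sketch below converts them into the corner coordinates of the image target box. It assumes the usual image convention in which the vertical axis points downward, so the box extends from its bottom edge toward smaller vertical coordinates; the text does not state this convention. The function name and the sample values are hypothetical.

```python
def box_corners(x_bottom, y_bottom, height, width):
    """Convert (bottom-center x, bottom-center y, height, width) to corners.

    Assumes the image y axis points downward (assumption, not from the text).
    Returns (left, top, right, bottom).
    """
    left = x_bottom - width / 2.0
    right = x_bottom + width / 2.0
    top = y_bottom - height
    bottom = y_bottom
    return left, top, right, bottom


# Hypothetical network output in the order given in step 6.1:
# bottom-center x, bottom-center y, height, width.
network_output = [320.0, 400.0, 120.0, 60.0]
print(box_corners(*network_output))  # (290.0, 280.0, 350.0, 400.0)
```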

[0058] 6.2: Construct a point-to-frame IOA loss function: In the deep neural network model, construct a point-to-frame IOA loss function IOA, an...
