Run length coding accelerator and method for sparse CNN neural network model

A run-length coding technique for sparse neural network models, applied in the fields of digital signal processing and hardware-accelerated neural network algorithms. It addresses the problem of high memory energy consumption. Its effects are a reduced data scale, savings in power consumption and computing power, and high energy efficiency.


Embodiment Construction

[0030] The present invention is described in detail below with reference to the accompanying drawings.

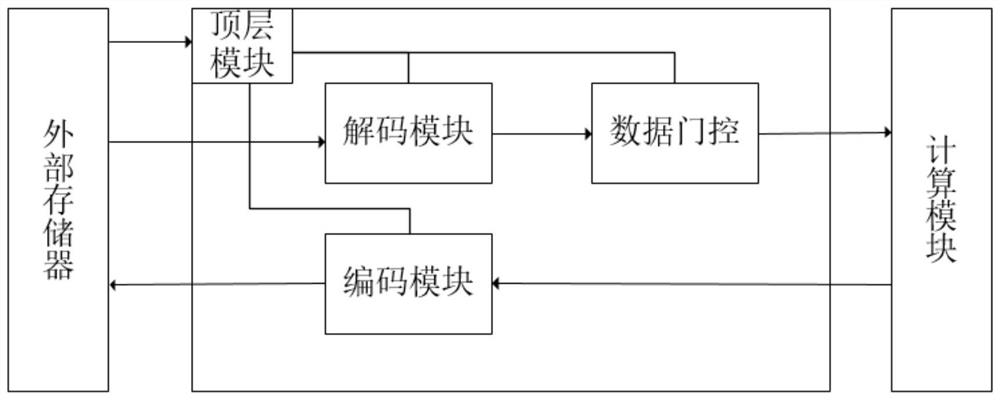

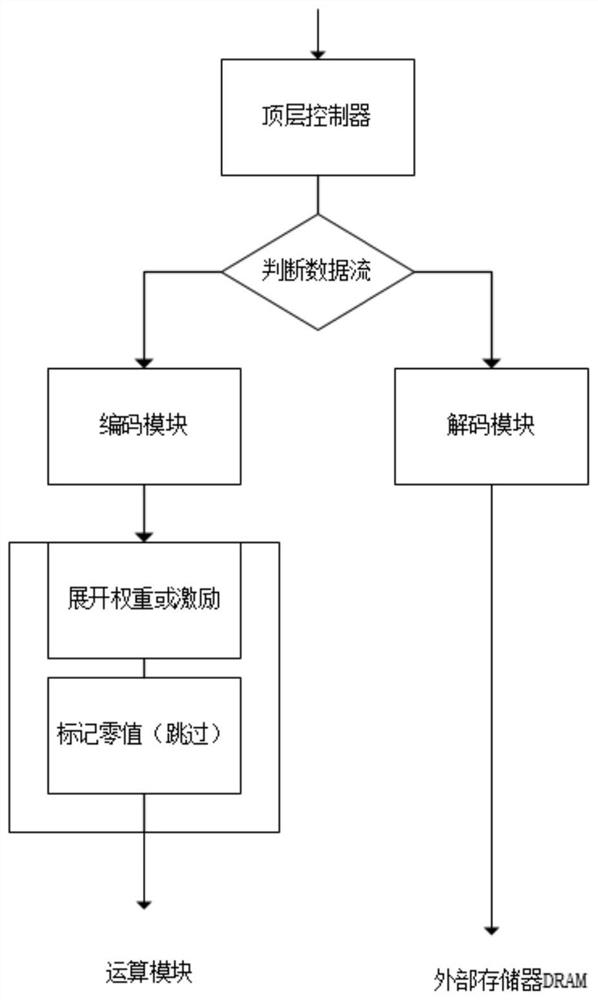

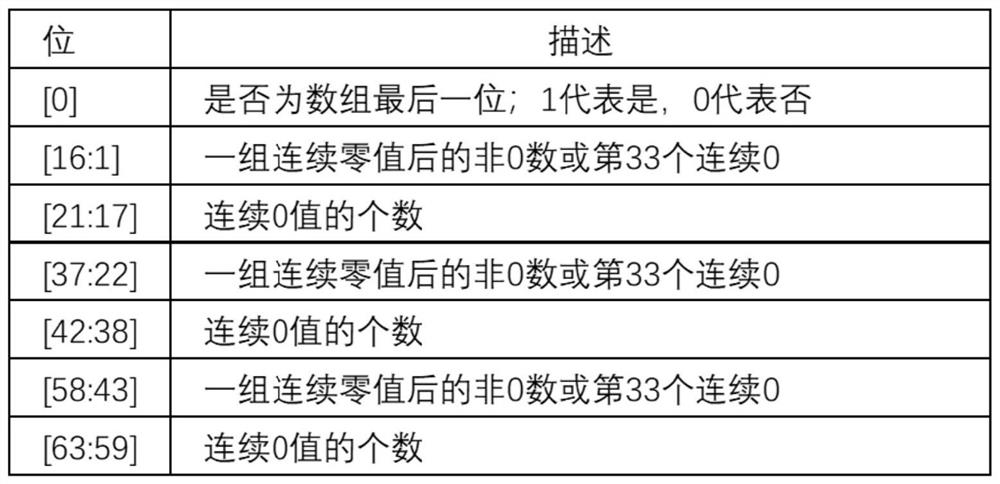

[0031] Figure 1 shows a structural block diagram of a run-length coding accelerator for a sparse CNN model. The accelerator includes: a top-level controller, which identifies input and output data types, performs group processing, and passes inputs such as weight data and excitation data to the run-length encoding module; a run-length encoding module, which compresses the result data output by the computing module and transmits the compressed, encoded results to external memory (DRAM); a run-length decoding module, which decompresses the data read from external memory and transmits it to the data gating module; and a data gating module, which identifies zero values in the decoded input excitation data and weight data, records the positions of the zero values when the data is expanded into the vector array, and skips the multiplication and ad...
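The data path described above can be illustrated in software. The following is a minimal Python sketch, not the patented hardware design. The `(zero_run, value)` pair format, the function names `rle_encode`, `rle_decode`, and `gated_dot`, and the assumption that the skipped operation is a multiply-accumulate are all illustrative choices that the text does not specify.

```python
from typing import List, Tuple


def rle_encode(values: List[int]) -> List[Tuple[int, int]]:
    """Compress a sparse vector into (zero_run, value) pairs.

    Each pair means "zero_run zeros followed by one nonzero value".
    A trailing run of zeros is stored as (zero_run, 0).
    This pair format is an assumption made for illustration.
    """
    pairs: List[Tuple[int, int]] = []
    run = 0
    for v in values:
        if v == 0:
            run += 1
        else:
            pairs.append((run, v))
            run = 0
    if run:
        pairs.append((run, 0))
    return pairs


def rle_decode(pairs: List[Tuple[int, int]]) -> List[int]:
    """Expand (zero_run, value) pairs back into the original vector."""
    out: List[int] = []
    for run, v in pairs:
        out.extend([0] * run)
        if v != 0:
            out.append(v)
    return out


def gated_dot(activations: List[int], weights: List[int]) -> Tuple[int, int, List[int]]:
    """Dot product that skips positions where either operand is zero.

    Returns the accumulated sum, the number of multiply-accumulate
    operations actually performed, and the recorded zero positions.
    """
    zero_positions = [
        i for i, (a, w) in enumerate(zip(activations, weights))
        if a == 0 or w == 0
    ]
    skip = set(zero_positions)
    acc = 0
    macs = 0
    for i, (a, w) in enumerate(zip(activations, weights)):
        if i in skip:
            continue  # gated: no multiply or add is issued for this position
        acc += a * w
        macs += 1
    return acc, macs, zero_positions


if __name__ == "__main__":
    activations = [0, 0, 3, 0, 5, 0, 0, 0]
    weights = [1, 2, 0, 4, 2, 0, 1, 0]
    encoded = rle_encode(activations)          # what would be written to DRAM
    decoded = rle_decode(encoded)              # what the decoder hands to gating
    print(encoded, decoded, gated_dot(decoded, weights))
```

In this sketch the encoder stands in for the run-length encoding module, the decoder for the run-length decoding module, and `gated_dot` for the data gating stage feeding the computing module. The sparser the data, the fewer pairs are stored and the fewer multiply-accumulate operations are issued.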
