Method for blind source separation of convolutively mixed speech signals

A blind source separation (BSS) technique for speech signals, applicable to speech analysis, speech recognition, instruments, etc., which addresses problems such as the uncertainties inherent in BSS.


Detailed Description of Embodiments

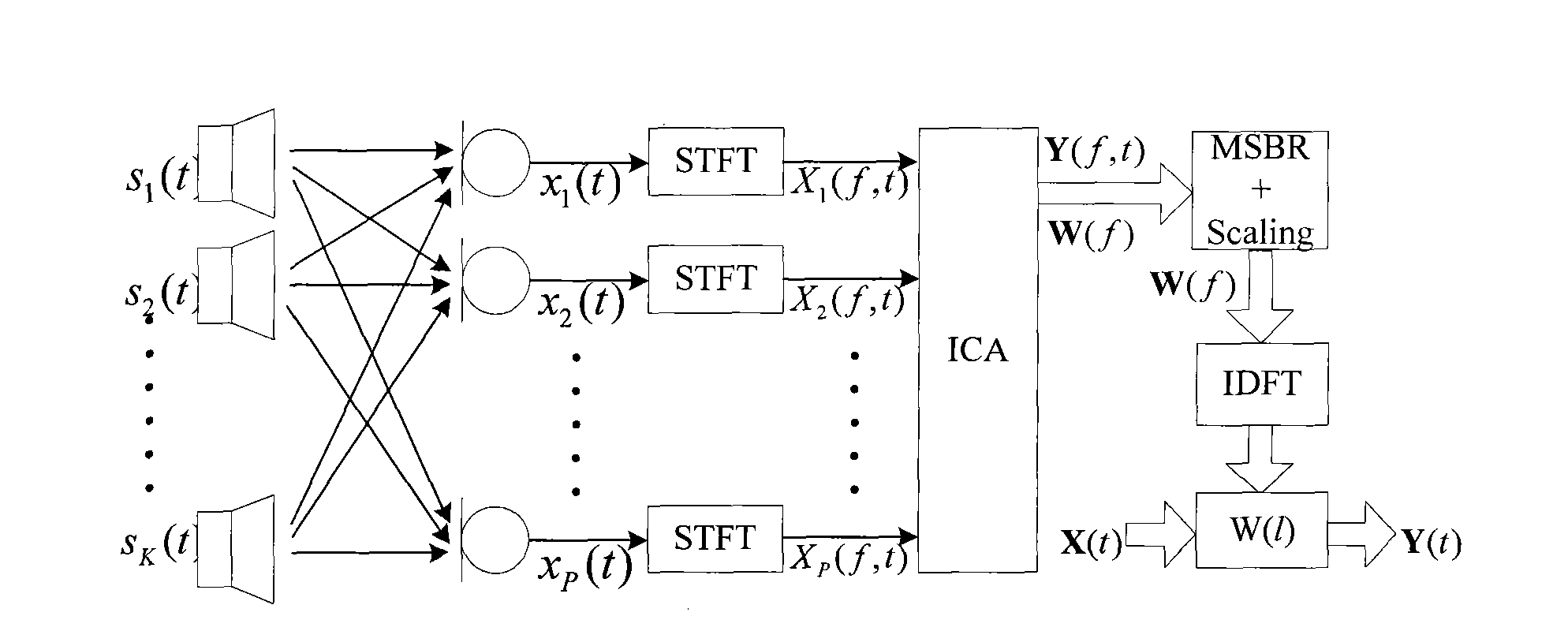

[0083] Figure 1 shows the system block diagram of the present invention for BSS of convolutively mixed speech signals: K sound sources are convolutively mixed and picked up by P sensors. The basic process of the BSS algorithm is as follows. First, the sensor signals are transformed into the frequency domain by the STFT and separated by ICA in each frequency bin. The ICA outputs are then reordered with the MSBR algorithm to resolve the order (permutation) uncertainty, and their amplitudes are adjusted. Next, the frequency-domain separation matrix W(f) is transformed to the time domain by the IDFT, yielding the time-domain separation matrix W(t). Finally, the sensor signals are convolved with W(t) to obtain estimates of the original signals.
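For reference, the pipeline in [0083] can be written in the standard frequency-domain BSS notation. This is a sketch under assumptions: apart from K, P, W(f) and W(t), the symbols below are assumed notation, not taken from the source. They are the mixing filters H(l) of length L, the source vector s, the STFT frame index τ, the permutation matrix Π(f) produced by the reordering step, and the diagonal amplitude-adjustment matrix D(f).

$$
\mathbf{x}(t)=\sum_{l=0}^{L-1}\mathbf{H}(l)\,\mathbf{s}(t-l),\qquad \mathbf{s}(t)\in\mathbb{R}^{K},\quad \mathbf{x}(t)\in\mathbb{R}^{P}
$$

$$
\mathbf{X}(f,\tau)\approx\mathbf{H}(f)\,\mathbf{S}(f,\tau),\qquad \mathbf{Y}(f,\tau)=\mathbf{W}(f)\,\mathbf{X}(f,\tau)
$$

$$
\mathbf{W}(f)\leftarrow\mathbf{D}(f)\,\boldsymbol{\Pi}(f)\,\mathbf{W}(f),\qquad \mathbf{W}(t)=\mathrm{IDFT}\{\mathbf{W}(f)\},\qquad \mathbf{y}(t)=\sum_{l}\mathbf{W}(l)\,\mathbf{x}(t-l)
$$

The approximation in the second line holds when the STFT frame is long compared with the mixing filters. The permutation and amplitude uncertainties arise because per-bin ICA can only determine W(f) up to Π(f) and D(f) in each bin independently.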

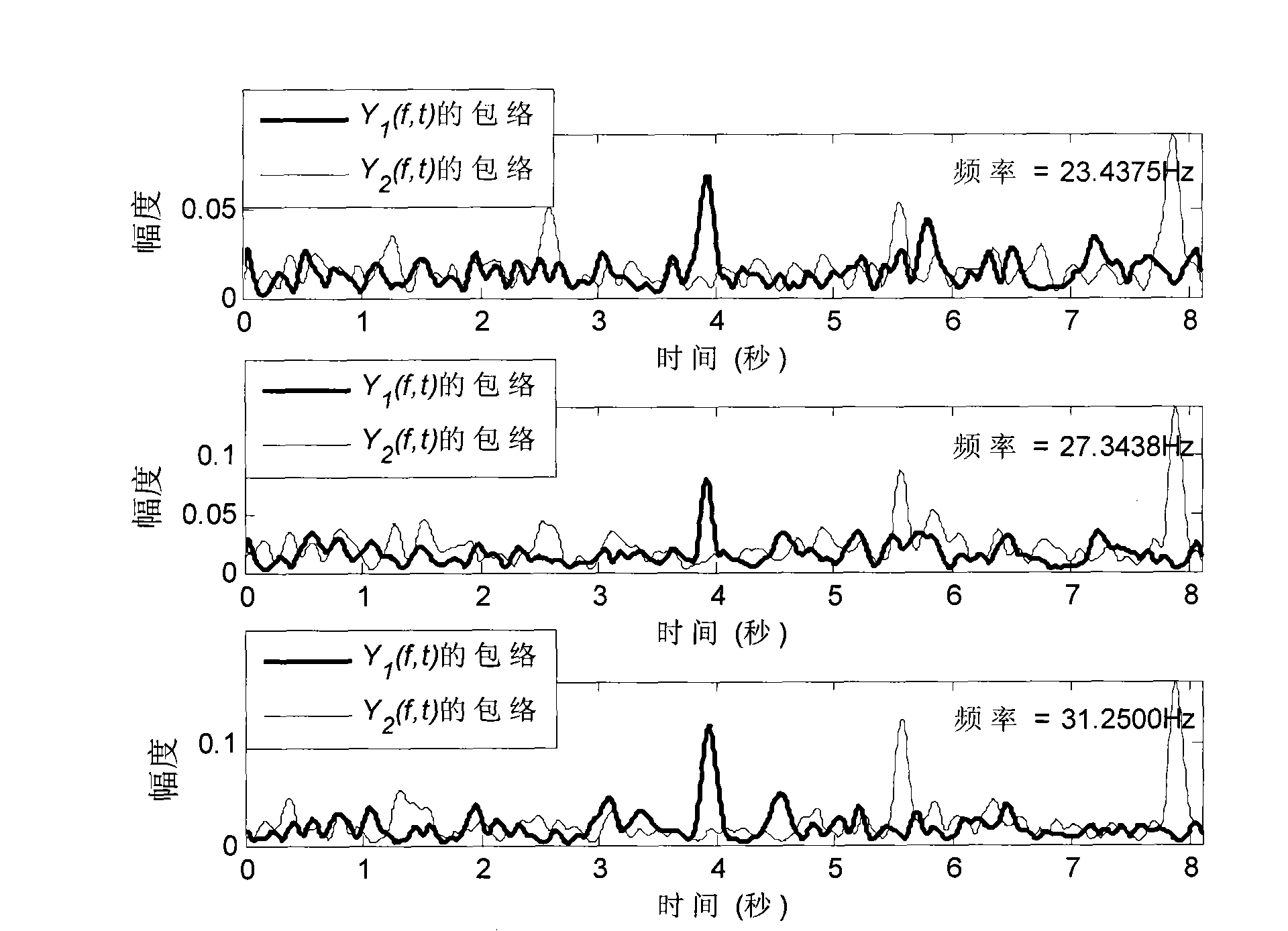

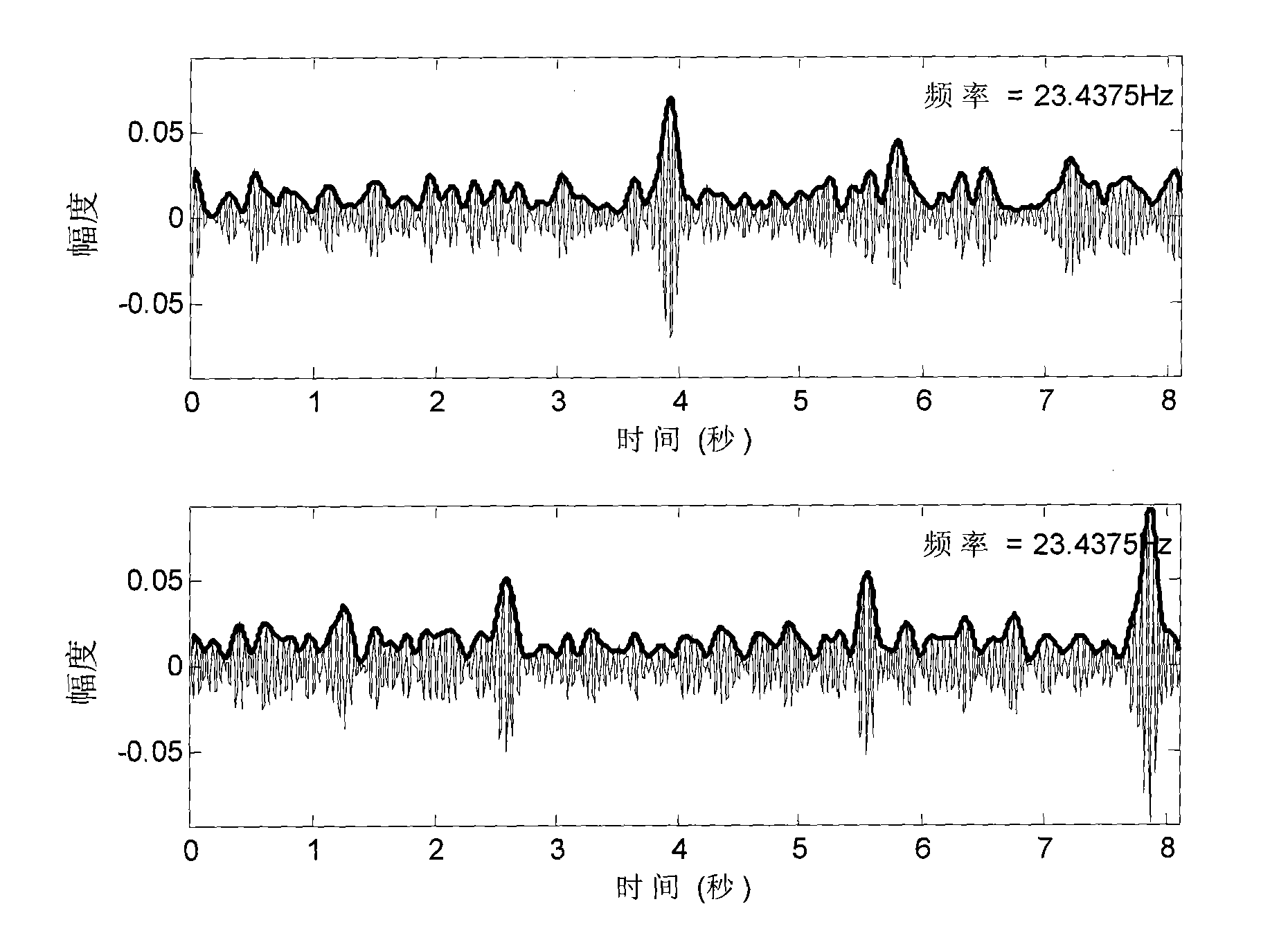
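The last three steps of [0083] can be sketched in NumPy as below. These are amplitude adjustment, the IDFT of W(f) to W(t), and convolution of the sensor signals with W(t). This is a minimal illustration, not the patented implementation. The source does not say how amplitudes are adjusted, so the minimal distortion principle, W(f) ← diag(W(f)⁻¹) W(f), is swapped in as one common choice. The one-sided (rfft) bin layout, the circular shift used to make the filters causal, and all function names are assumptions. The MSBR reordering is not reproduced, so W(f) is assumed to be already permutation-aligned.

```python
import numpy as np


def fix_scaling_mdp(W_f: np.ndarray) -> np.ndarray:
    """Amplitude adjustment via the minimal distortion principle (assumed choice).

    W_f: complex array (F, K, P) with K == P, one separation matrix per bin.
    Returns diag(W(f)^-1) @ W(f) for every bin f.
    """
    W_inv = np.linalg.inv(W_f)                   # batched inverse, (F, K, K)
    d = np.diagonal(W_inv, axis1=1, axis2=2)     # diagonal of each inverse, (F, K)
    return d[:, :, None] * W_f                   # scale row k of W(f) by d_k


def separation_filters_time(W_f: np.ndarray, n_fft: int) -> np.ndarray:
    """IDFT of W(f) to W(t), assuming W_f holds the n_fft//2 + 1 one-sided bins.

    Returns W_t of shape (n_fft, K, P): the filter taps for each (k, p) pair.
    """
    W_t = np.fft.irfft(W_f, n=n_fft, axis=0)     # real-valued time-domain filters
    # Hypothetical choice: circularly shift by n_fft/2 so the non-causal part
    # of each filter comes first (adds an n_fft/2-sample delay to the output).
    return np.roll(W_t, n_fft // 2, axis=0)


def apply_separation(W_t: np.ndarray, x: np.ndarray) -> np.ndarray:
    """y_k(t) = sum_p (w_kp * x_p)(t): convolve the sensor signals with W(t).

    W_t: (L, K, P) filter taps; x: (P, T) sensor signals.
    Returns y: (K, T + L - 1) estimates of the original signals.
    """
    L, K, P = W_t.shape
    y = np.zeros((K, x.shape[1] + L - 1))
    for k in range(K):
        for p in range(P):
            y[k] += np.convolve(W_t[:, k, p], x[p])  # full linear convolution
    return y


# Demo: an identity W(f) in every bin stands in for a real per-bin ICA result.
rng = np.random.default_rng(0)
n_fft, K = 8, 2
W_f = np.tile(np.eye(K, dtype=complex), (n_fft // 2 + 1, 1, 1))  # (5, 2, 2)
W_t = separation_filters_time(fix_scaling_mdp(W_f), n_fft)       # (8, 2, 2)
x = rng.standard_normal((K, 100))                                 # 2 sensors, 100 samples
y = apply_separation(W_t, x)                                      # (2, 107)
```

With an identity W(f), W(t) reduces to a unit impulse delayed by n_fft/2 samples, so y is simply x shifted by 4 samples. In practice W(f) would come from per-bin ICA followed by the reordering step.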

[0084] Simulation experiments verify the method of the present invention in the following respects: the performance of the ICA algorithm, the impulse response of the global filter, and the quality of speech recovery. The mixing filters have 300 tap coefficients (such as ...
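The global filter referred to in [0084] is the cascade of the mixing and separation filters, g_kj(t) = Σ_p (w_kp * h_pj)(t). When separation succeeds, each output row is dominated by a single source path, up to order, amplitude and delay. The sketch below computes this cascade with NumPy. The function names, array layouts and crosstalk ratio are illustrative assumptions, not the evaluation metric used in the source. The ratio compares the energy of the dominant path with the remaining energy for each output. The random 300-tap mixing filters only mirror the tap count stated above.

```python
import numpy as np


def global_filter(W_t: np.ndarray, H_t: np.ndarray) -> np.ndarray:
    """Global filter g_kj(t) = sum_p (w_kp * h_pj)(t).

    W_t: (Lw, K, P) separation filter taps (K outputs, P sensors).
    H_t: (Lh, P, K) mixing filter taps (P sensors, K sources).
    Returns G_t of shape (Lw + Lh - 1, K, K).
    """
    Lw, K, P = W_t.shape
    Lh, P_h, K_src = H_t.shape
    if P != P_h:
        raise ValueError("sensor count of W_t and H_t must match")
    G_t = np.zeros((Lw + Lh - 1, K, K_src))
    for k in range(K):
        for j in range(K_src):
            for p in range(P):
                G_t[:, k, j] += np.convolve(W_t[:, k, p], H_t[:, p, j])
    return G_t


def crosstalk_ratio_db(G_t: np.ndarray) -> np.ndarray:
    """Hypothetical metric: per output k, dominant-path energy over the rest (dB)."""
    energy = np.sum(G_t ** 2, axis=0)            # (K, K) energy of each g_kj
    main = energy.max(axis=1)                    # strongest source path per output
    rest = energy.sum(axis=1) - main             # all other paths (crosstalk)
    return 10.0 * np.log10(main / np.maximum(rest, 1e-300))


# Demo: random 300-tap mixing filters (P = K = 2) and a trivial one-tap separator.
rng = np.random.default_rng(1)
H_t = rng.standard_normal((300, 2, 2))
W_identity = np.eye(2)[None, :, :]               # shape (1, 2, 2): a unit tap per channel
G_t = global_filter(W_identity, H_t)             # equals H_t, since W does nothing
```

Because the demo separator is a single unit tap, the global filter equals the mixing filters. A W(t) produced by the separation pipeline would take the place of W_identity, and a good result would push the crosstalk ratio of every output well above 0 dB.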
