Image Feature Representation and Human Movement Tracking Method Based on the Second Generation Striplet Transform

A human motion tracking technique based on the striplet transform, applied in image analysis, image data processing, instrumentation, and related fields. It addresses ambiguity in motion tracking and recovery, the inability of existing representations to capture the orientation of geometric textures effectively, and blurring of gray-level discontinuities along closed boundaries.



Examples

Embodiment 1

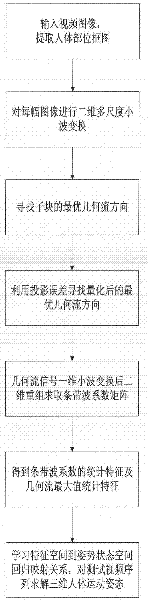

[0049] The present invention is an image feature representation and human body motion tracking method based on the second-generation striplet transform. Referring to Figure 1, the specific implementation process of the present invention is as follows:

[0050] (1) Input the training and test video image sets to be processed and convert them into sequences of individual frames. Based on the image content, identify the main human target to be recognized, extract the rectangular frame containing the human body, and uniformly resize each image to an initial image of approximately 64 × 192 pixels, proportional to the human figure; this image serves as a training sample for subsequent processing. Because the present invention does not need to remove the background from the video images, it saves computing resources and reduces time complexity.
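The cropping and resizing in step (1) can be sketched as follows. This is a minimal illustration, assuming grayscale frames stored as NumPy arrays, a bounding box for the main human target that is already known (the detector is not specified in the text), that "64 × 192" means width × height, and that nearest-neighbour resampling is acceptable. The function name `crop_and_resize` is hypothetical. As in the text, the whole rectangle, background included, is kept.

```python
import numpy as np

def crop_and_resize(frame, box, out_w=64, out_h=192):
    """Crop box=(x, y, w, h) from a 2-D frame (hypothetical helper) and
    resample it to out_h rows by out_w columns, nearest-neighbour."""
    x, y, w, h = box
    patch = frame[y:y + h, x:x + w]
    # Map each output row/column back to the nearest source row/column.
    rows = (np.arange(out_h) * patch.shape[0] / out_h).astype(int)
    cols = (np.arange(out_w) * patch.shape[1] / out_w).astype(int)
    return patch[np.ix_(rows, cols)]

# Example: a synthetic 640x480 frame (480 rows, 640 columns).
frame = np.random.default_rng(0).integers(0, 256, size=(480, 640), dtype=np.uint8)
sample = crop_and_resize(frame, box=(300, 100, 80, 240))
print(sample.shape)  # (192, 64)
```

A production pipeline would more likely use an interpolating resize (bilinear or area averaging); nearest-neighbour is used here only to keep the sketch dependency-free.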

[0051] (2) Apply a two-dimensional discrete orthogonal wavelet transform to each training sample image, and the number of layers of wav...
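A two-dimensional discrete orthogonal wavelet transform of the kind named in step (2) can be sketched with the Haar wavelet, the simplest orthogonal wavelet. The choice of Haar and the function names `haar_dwt2` and `wavedec2` are assumptions: the text does not say which orthogonal wavelet is used, and the number of decomposition levels is cut off in the source, so it is left as a parameter. In practice a library such as PyWavelets (`pywt.wavedec2`) would typically be used instead.

```python
import numpy as np

def haar_dwt2(img):
    """One level of the orthonormal 2-D Haar transform (assumed wavelet).
    Returns (LL, (LH, HL, HH)); both image sides must be even."""
    a = np.asarray(img, dtype=float)
    s = 1 / np.sqrt(2)
    # Low-pass / high-pass filter and downsample along the rows axis.
    lo = (a[0::2, :] + a[1::2, :]) * s
    hi = (a[0::2, :] - a[1::2, :]) * s
    # Repeat along the columns axis to obtain the four sub-bands.
    ll = (lo[:, 0::2] + lo[:, 1::2]) * s
    lh = (lo[:, 0::2] - lo[:, 1::2]) * s
    hl = (hi[:, 0::2] + hi[:, 1::2]) * s
    hh = (hi[:, 0::2] - hi[:, 1::2]) * s
    return ll, (lh, hl, hh)

def wavedec2(img, levels):
    """Multi-level decomposition: repeatedly split the approximation band.
    Detail tuples are returned coarsest level first."""
    details = []
    approx = np.asarray(img, dtype=float)
    for _ in range(levels):
        approx, d = haar_dwt2(approx)
        details.append(d)
    return approx, details[::-1]
```

For example, `wavedec2(np.zeros((192, 64)), levels=3)` yields a 24 × 8 approximation band. Because the transform is orthonormal, the total energy of all sub-bands equals the energy of the input image, which is what allows later distortion measurements to be made directly on coefficients.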

Embodiment 2

[0076] The image feature representation and human body motion tracking method based on the second-generation striplet transform is as described in Embodiment 1, wherein in step (3), in the Lagrangian function used to obtain the optimal value, $\lambda$ is a penalty scaling factor with value $2/35$; $T = 20$ is the quantization threshold; $R_g$ is the number of bits required to entropy-code the optimal geometric flow parameter $d$, obtained by calculation; and $R_b$ is the number of bits required to quantize and encode $\{Q(t)\}$, likewise determined by calculation.
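The text does not write out the Lagrangian itself. Assuming it takes the usual second-generation bandelet form $\mathcal{L}(d) = \|f - f_R\|^2 + \lambda T^2 (R_g + R_b)$, where $f_R$ is the reconstruction after quantization, the selection of the optimal geometric flow $d$ can be sketched as below with $\lambda = 2/35$ and $T = 20$ from the text. The uniform quantizer of step $T$, the Elias-gamma bit count standing in for the entropy coder, and all function names are hypothetical choices for illustration only.

```python
import numpy as np

LAMBDA = 2 / 35  # penalty scaling factor stated in the text
T = 20.0         # quantization threshold stated in the text

def quantized_bits(q):
    """Hypothetical stand-in for the entropy coder that yields R_b:
    1 bit per zero coefficient; for a nonzero q, a flag bit, a sign bit
    and an Elias-gamma code of |q| (2*floor(log2|q|) + 1 bits)."""
    q = np.abs(np.asarray(q).astype(int))
    nz = q[q > 0]
    zeros = q.size - nz.size
    return int(zeros + np.sum(3 + 2 * np.floor(np.log2(nz))))

def lagrangian(coeffs, r_g):
    """Assumed cost L = ||f - f_R||^2 + lambda * T^2 * (R_g + R_b).
    With an orthogonal transform the distortion can be measured
    directly on the coefficients."""
    q = np.round(coeffs / T)  # uniform quantizer with step T (assumption)
    distortion = float(np.sum((coeffs - q * T) ** 2))
    return distortion + LAMBDA * T ** 2 * (r_g + quantized_bits(q))

def best_direction(candidates):
    """candidates maps a flow direction d to (coefficients, R_g);
    returns the d with the smallest Lagrangian cost."""
    return min(candidates, key=lambda d: lagrangian(*candidates[d]))
```

The design intent is the classic rate-distortion trade-off: a direction that concentrates a block's energy into few coefficients costs fewer bits at the same distortion, so it wins even if its geometry parameter costs as many bits as the alternatives.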

[0077] In addition, the regression learning method used in step (8) is implemented with a double (twin) Gaussian process. Feature extraction with clear edges, broadly consistent with Embodiment 1, is likewise obtained, and the features in particular capture texture information in the motion state.
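The details of the double Gaussian process regression are not given here. As a simpler, clearly different stand-in, the sketch below shows ordinary multi-output Gaussian process regression from feature vectors to pose vectors using a squared-exponential kernel; the kernel, its length scale, the noise level and the names `rbf_kernel` and `GPRegressor` are all assumptions. A twin Gaussian process, by contrast, also places a kernel on the outputs and predicts by minimizing a divergence between the input-side and output-side Gaussian processes.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0):
    """Squared-exponential kernel k(x, y) = exp(-||x - y||^2 / (2 l^2))."""
    sq = np.sum(a ** 2, 1)[:, None] + np.sum(b ** 2, 1)[None, :] - 2 * a @ b.T
    return np.exp(-np.maximum(sq, 0.0) / (2 * length_scale ** 2))

class GPRegressor:
    """Plain GP regression, posterior mean only (hypothetical stand-in)."""

    def __init__(self, length_scale=1.0, noise=1e-2):
        self.length_scale = length_scale
        self.noise = noise  # observation-noise variance added to the diagonal

    def fit(self, X, Y):
        self.X = np.asarray(X, dtype=float)
        K = rbf_kernel(self.X, self.X, self.length_scale)
        K[np.diag_indices_from(K)] += self.noise
        # Solve (K + noise*I) alpha = Y through its Cholesky factor L L^T.
        L = np.linalg.cholesky(K)
        z = np.linalg.solve(L, np.asarray(Y, dtype=float))
        self.alpha = np.linalg.solve(L.T, z)
        return self

    def predict(self, Xs):
        Ks = rbf_kernel(np.asarray(Xs, dtype=float), self.X, self.length_scale)
        return Ks @ self.alpha  # one output column per pose dimension
```

Usage: `GPRegressor(length_scale=1.0).fit(train_features, train_poses).predict(test_features)`, where each row of `train_features` is a feature vector and each row of `train_poses` is the corresponding pose vector (both hypothetical names).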

Embodiment 3

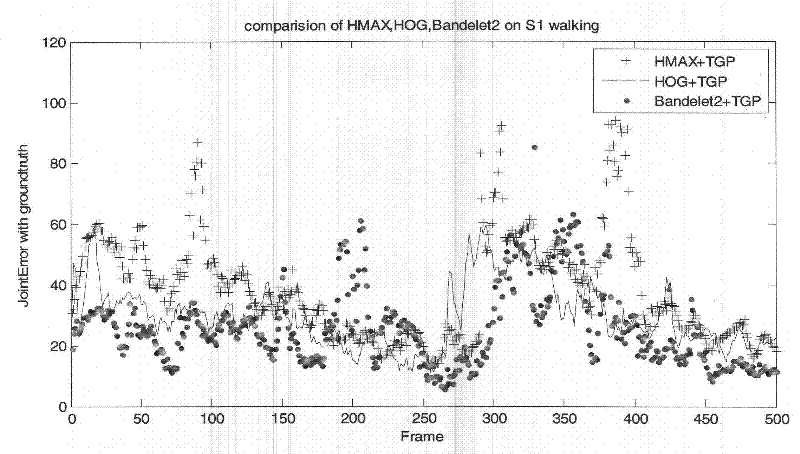

[0079] The image feature representation and human body motion tracking method based on the second-generation striplet transform is the same as in Embodiments 1 and 2; here the present invention is verified by simulation.

[0080] (1) Experimental condition setting

[0081] In the present invention, the motion category of the images is taken as "walking", and verification is carried out on different subcategories of widely recognized motion video sequence databases. The simulation is programmed in the MATLAB environment.

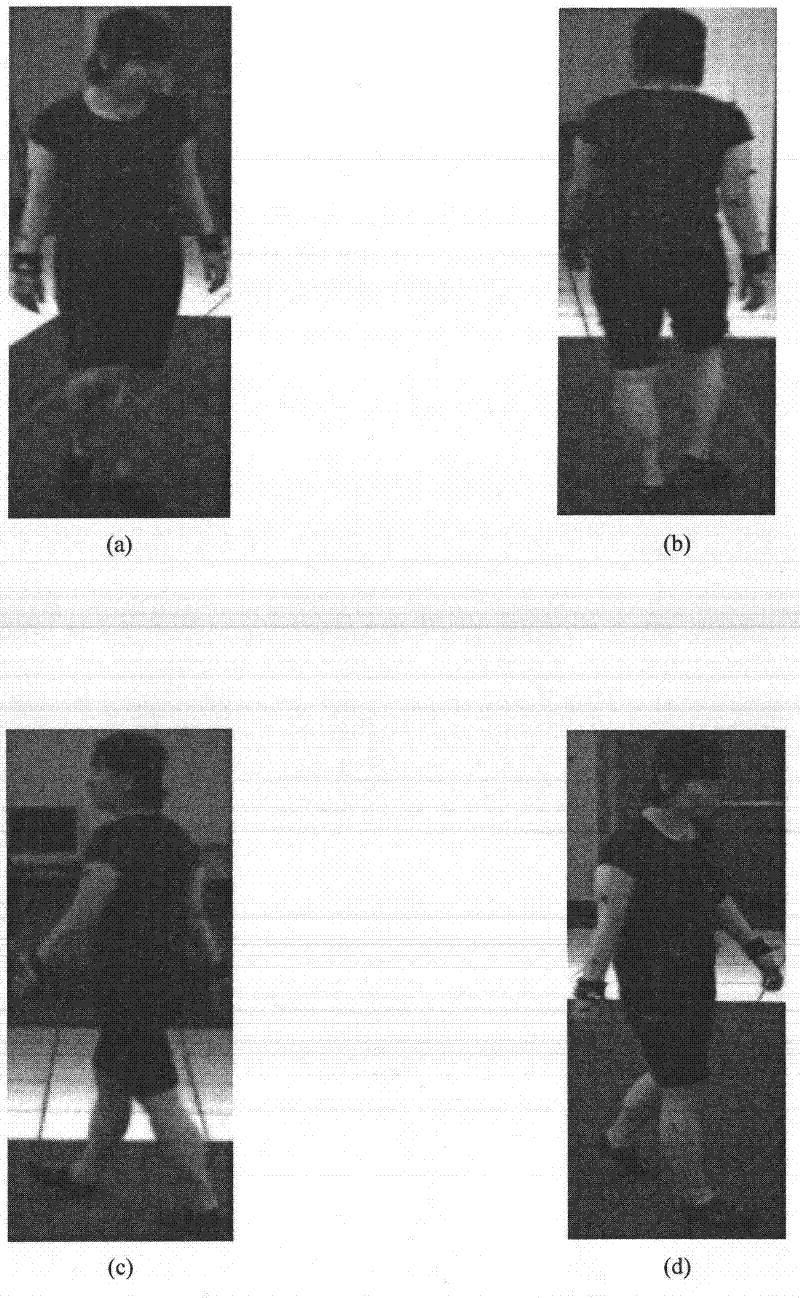

[0082] As shown in Figure 2(a)-2(d), the "walking" video sequence shows a woman walking along a circular path on a red carpet, parallel to the camera's viewing direction. The original frame size is 640×480; after the processing of step (1), each human body image is 64×192, and the sequence includes segments in which the subject faces toward the camera and segments in which she faces away from it. In Figure 2, (a) is the first frame of the sequence,...
