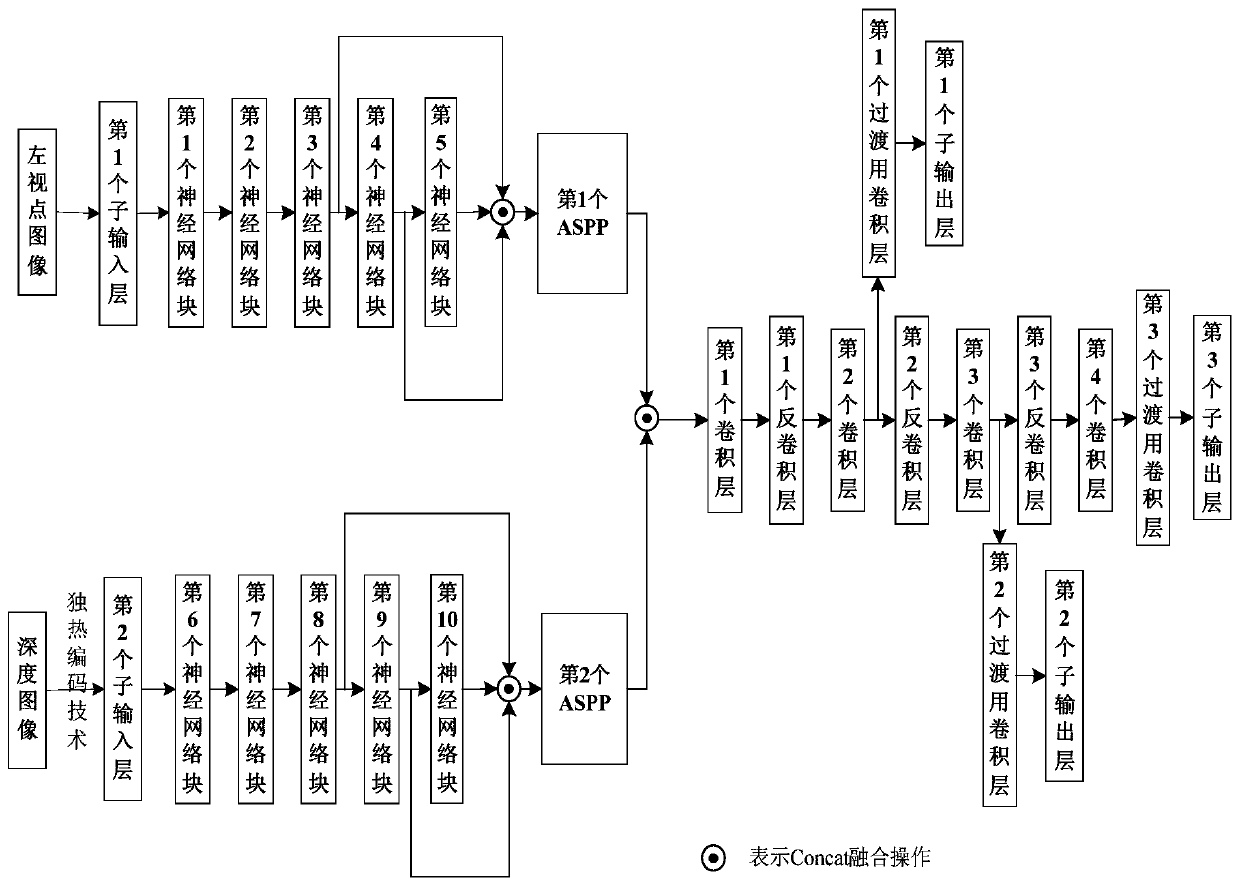

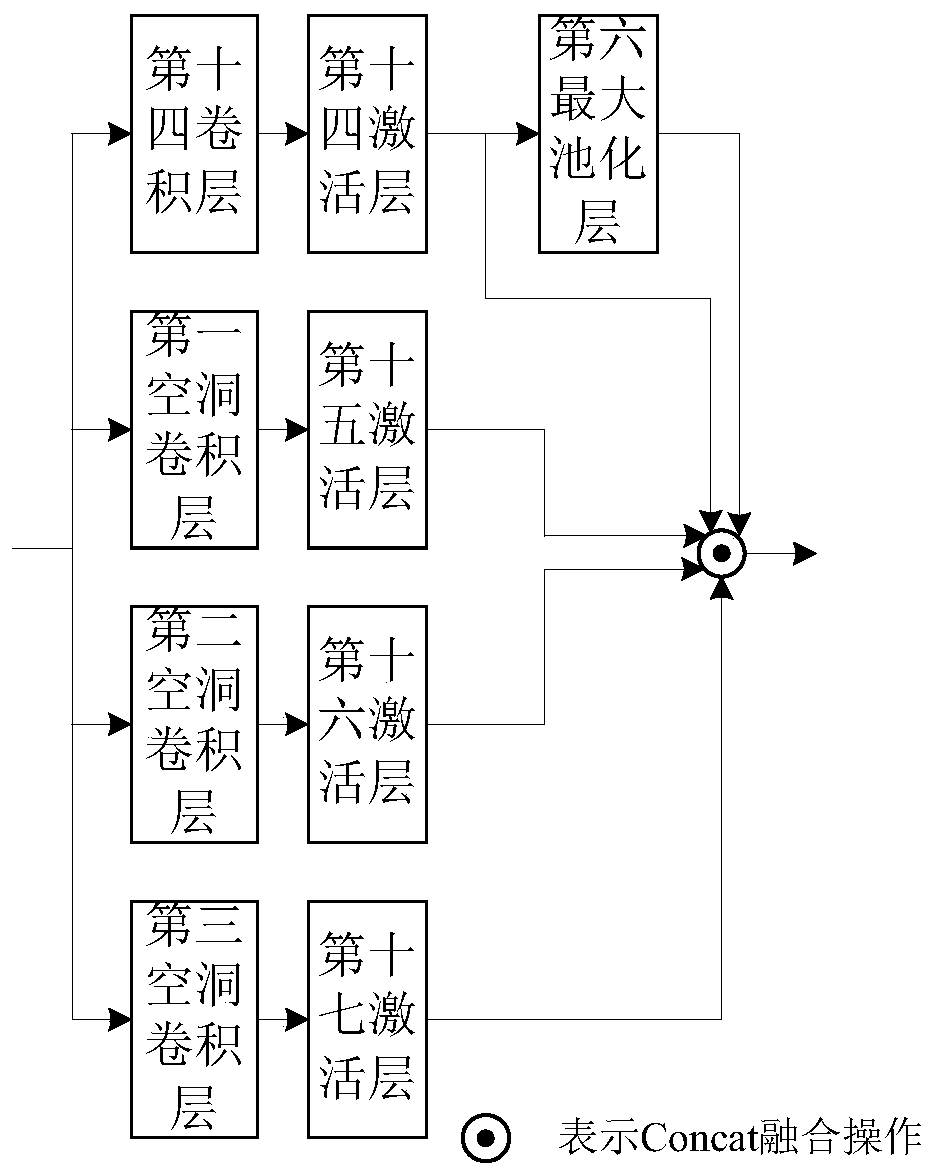

Multi-modal fusion saliency detection method based on spatial pyramid pool

A technology of spatial pyramids and detection methods, applied in neural learning methods, character and pattern recognition, biological neural network models, etc.

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

[0040] The present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments.

[0041] A kind of multimodal fusion saliency detection method based on spatial pyramid pool proposed by the present invention, it includes two processes of training phase and testing phase;

[0042] The specific steps of the described training phase process are:

[0043] Step 1_1: Select the left viewpoint image, depth image and real human eye gaze image of M original stereoscopic images, and form a training set, and use the left viewpoint image, depth image and real human gaze of the i-th original stereoscopic image in the training set The corresponding eye-gaze pattern is denoted as {D i (x,y)} and {Y i (x, y)}; then use the existing one-hot encoding technique (HHA) to process the depth image of each original stereo image in the training set to have the same R channel component, G channel component and B channel component; wherein, M is ...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com