CNN acceleration calculation method and system based on low-precision floating-point number data representation form

A data representation and calculation technology, applied in the field of deep convolutional neural network quantization, that addresses problems such as low acceleration performance.



Examples

Embodiment 1

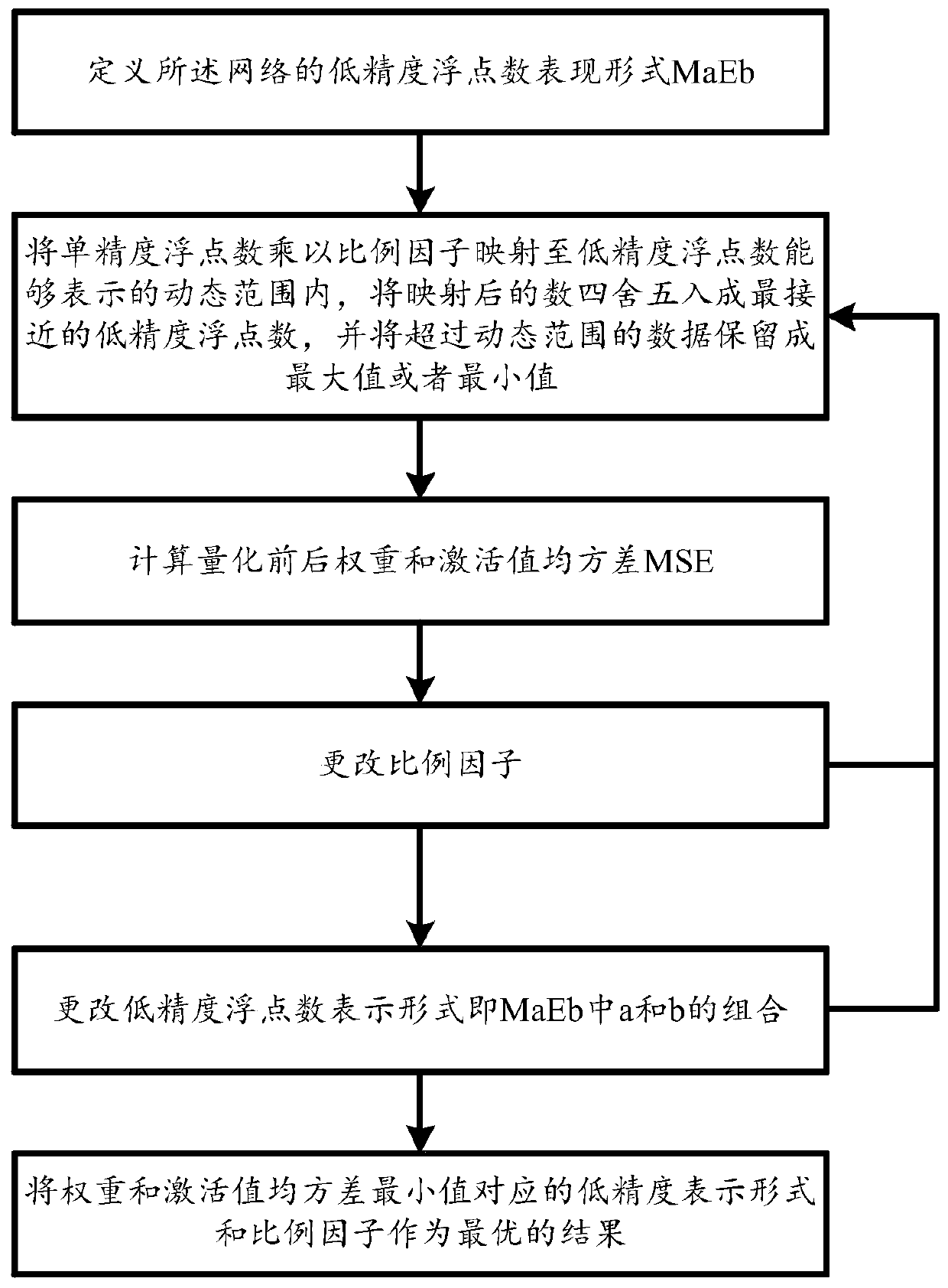

[0059] This embodiment provides a CNN acceleration calculation method and system based on a low-precision floating-point data representation. It uses the low-precision floating-point representation MaEb to preserve the accuracy of the quantized convolutional neural network without retraining, performs low-precision floating-point multiplication on MaEb floating-point numbers, and implements N_m MaEb floating-point multipliers through a DSP, thereby improving the acceleration performance of custom or non-custom circuits. Details are as follows:
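The MaEb idea (a mantissa bits, b exponent bits) and why a low-precision floating-point multiplier is cheap can be sketched as follows. This is a minimal illustration, not the patent's implementation: the bit layout is an assumption (one sign bit, a b-bit exponent with bias 2^(b-1)-1, an a-bit mantissa with an implicit leading 1, round-to-nearest-even, saturation on overflow, flush-to-zero on underflow, no subnormals, infinities or NaNs), any per-layer scaling the method may use is not modeled, and the names `MaEb`, `quantize`, `decompose` and `maeb_multiply` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class MaEb:
    """Hypothetical MaEb layout (assumed): sign bit, b exponent bits, a mantissa bits."""
    a: int  # mantissa (fraction) bits
    b: int  # exponent bits

    def __post_init__(self):
        # Constraint stated in the text: 0 < a + b <= 31, a and b positive integers.
        if not (self.a > 0 and self.b > 0 and self.a + self.b <= 31):
            raise ValueError("require positive a, b with a + b <= 31")

    @property
    def bias(self) -> int:
        return 2 ** (self.b - 1) - 1

    @property
    def e_min(self) -> int:
        # Smallest normal exponent; biased code 0 is reserved for zero (assumption).
        return 1 - self.bias

    @property
    def e_max(self) -> int:
        # Largest exponent; every nonzero code is a finite value (assumption).
        return (2 ** self.b - 1) - self.bias

    def quantize(self, x: float) -> float:
        """Round x to the nearest representable MaEb value."""
        if x == 0.0:
            return 0.0
        sign = -1.0 if x < 0 else 1.0
        m, e = math.frexp(abs(x))   # abs(x) = m * 2**e with 0.5 <= m < 1
        m, e = m * 2.0, e - 1       # rewrite as 1 <= m < 2
        # Keep a fraction bits; Python's round() rounds half to even.
        steps = round((m - 1.0) * 2 ** self.a)
        if steps == 2 ** self.a:    # rounding carried into the next power of two
            steps, e = 0, e + 1
        if e < self.e_min:
            return 0.0              # flush underflow to zero (assumption)
        if e > self.e_max:
            steps, e = 2 ** self.a - 1, self.e_max  # saturate (assumption)
        return sign * (1.0 + steps / 2 ** self.a) * 2.0 ** e

    def decompose(self, q: float):
        """Split a nonzero quantized value into (sign, (a+1)-bit integer significand, exponent)."""
        m, e = math.frexp(abs(q))
        sig = int(m * 2 ** (self.a + 1))  # includes the hidden leading 1
        return (-1 if q < 0 else 1), sig, e - (self.a + 1)


def maeb_multiply(fmt: MaEb, x: float, y: float) -> float:
    """Multiply two values after MaEb quantization, keeping the full-width product."""
    qx, qy = fmt.quantize(x), fmt.quantize(y)
    if qx == 0.0 or qy == 0.0:
        return 0.0
    sx, mx, ex = fmt.decompose(qx)
    sy, my, ey = fmt.decompose(qy)
    # The only real multiplication is a narrow (a+1) x (a+1)-bit integer product;
    # the exponents are simply added. Narrow products like this are what make it
    # plausible to fit several such multipliers into one DSP block.
    return sx * sy * math.ldexp(mx * my, ex + ey)
```

Usage with an 8-bit M4E3 format (1 sign + 3 exponent + 4 mantissa bits under the layout assumed above):

```python
fmt = MaEb(a=4, b=3)
print(fmt.quantize(0.3))              # 0.296875
print(maeb_multiply(fmt, 1.5, -2.5))  # -3.75
```

The product is returned unrounded, as a wider accumulator would receive it; for large a (roughly a > 25) the conversion inside `math.ldexp` can lose precision, which this sketch does not handle.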

[0060] A CNN acceleration calculation method based on low-precision floating-point number data representation, including the following steps:

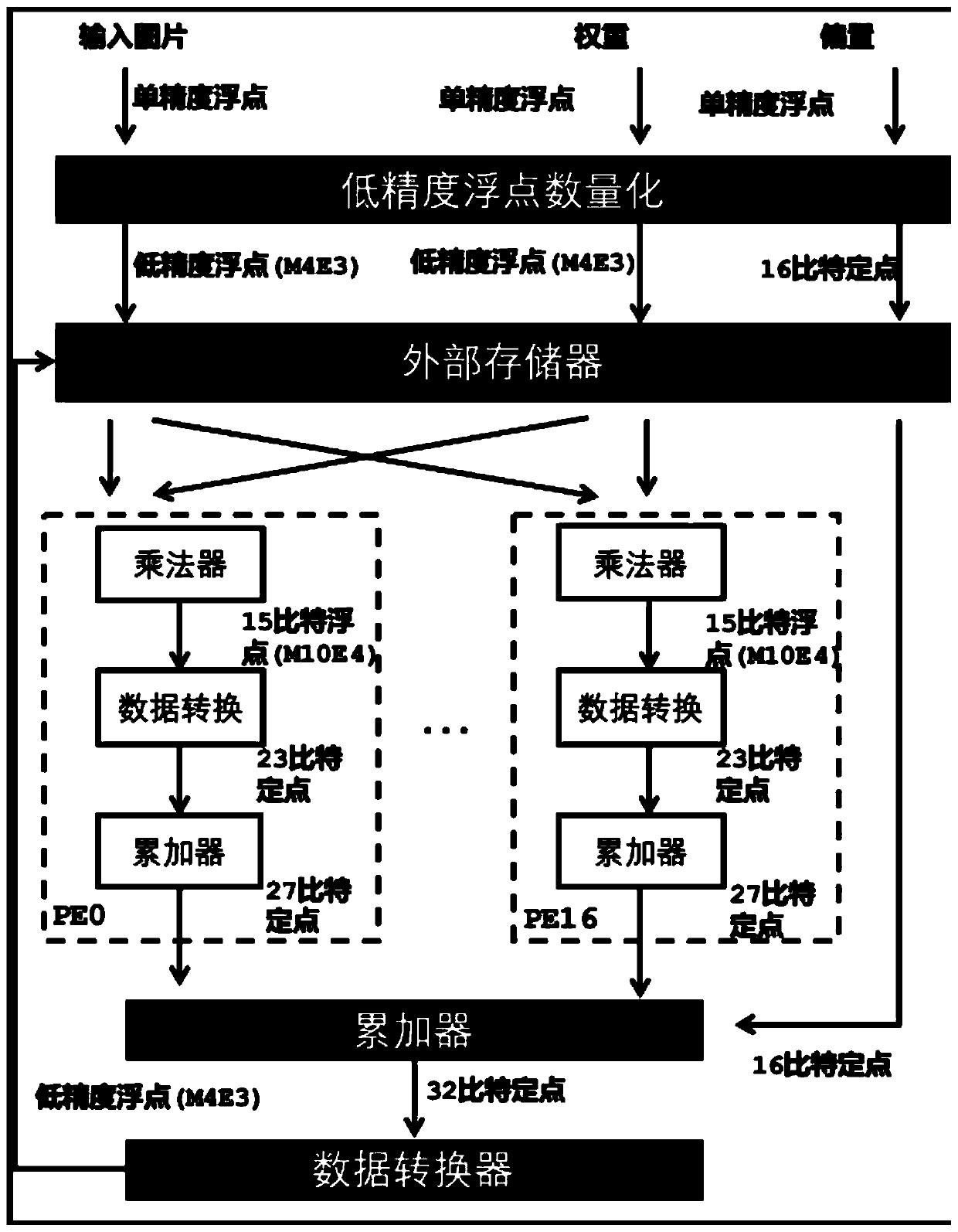

[0061] The central control module generates control signals to arbitrate between the floating-point function module and the storage system;

[0062] The floating-point function module receives the input activation value and weight from the storage system according to the control signal, and distributes the...

Embodiment 2

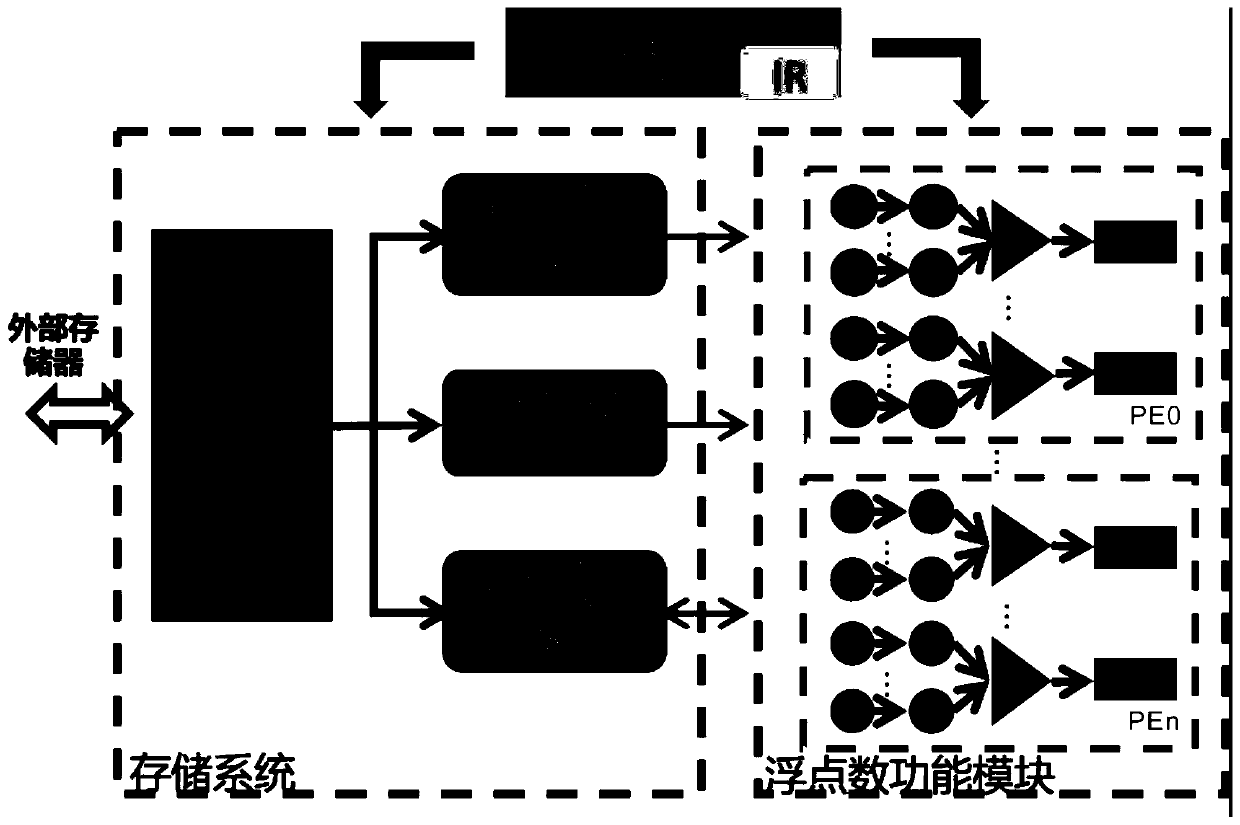

[0117] Building on Embodiment 1, a system comprises a customized or non-customized circuit; customized circuits include ASICs or SoCs, and non-customized circuits include FPGAs. As shown in Figure 1, the customized or non-customized circuit includes a floating-point function module, which receives input activation values and weights from the storage system according to the control signals and distributes them to different processing units (PEs). The PEs compute in parallel the convolutions that have been quantized to MaEb floating-point numbers through the low-precision floating-point representation, where 0 < a+b ≤ 31 and a and b are both positive integers;
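The distribution of MaEb-quantized activations and weights across parallel PEs can be modeled in software roughly as below. This is a sketch under stated assumptions, not the circuit described here: the MaEb layout (sign bit, biased b-bit exponent, a-bit mantissa with implicit 1, saturation, flush-to-zero) is assumed, work is split by output channel, the convolution is a stride-1 "valid" convolution, accumulation is done in full precision, and the names `quantize_maeb`, `conv2d_channel`, `pe_parallel_conv` and `num_pe` are hypothetical. Python threads stand in for hardware PEs, so this models the work split, not the speed-up.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def quantize_maeb(x, a, b):
    """Round x to an assumed MaEb value (round-to-nearest-even, saturate, flush-to-zero)."""
    if not (a > 0 and b > 0 and a + b <= 31):
        raise ValueError("need positive a, b with a + b <= 31")
    if x == 0.0:
        return 0.0
    bias = 2 ** (b - 1) - 1
    e_min, e_max = 1 - bias, (2 ** b - 1) - bias
    m, e = math.frexp(abs(x))
    m, e = m * 2.0, e - 1                # 1 <= m < 2
    steps = round((m - 1.0) * 2 ** a)    # keep a fraction bits
    if steps == 2 ** a:                  # carry into the next power of two
        steps, e = 0, e + 1
    if e < e_min:
        return 0.0
    if e > e_max:
        steps, e = 2 ** a - 1, e_max
    return math.copysign((1.0 + steps / 2 ** a) * 2.0 ** e, x)


def conv2d_channel(act, kernels):
    """Work of one PE: a stride-1 valid convolution producing one output channel.

    act has shape C x H x W; kernels has shape C x K x K.
    """
    C, H, W = len(act), len(act[0]), len(act[0][0])
    K = len(kernels[0])
    out = [[0.0] * (W - K + 1) for _ in range(H - K + 1)]
    for y in range(H - K + 1):
        for x in range(W - K + 1):
            acc = 0.0  # full-precision accumulator (assumption)
            for c in range(C):
                for i in range(K):
                    for j in range(K):
                        acc += act[c][y + i][x + j] * kernels[c][i][j]
            out[y][x] = acc
    return out


def pe_parallel_conv(act, weights, a, b, num_pe=4):
    """Quantize to MaEb, then hand each output channel to one of num_pe workers."""
    qa = [[[quantize_maeb(v, a, b) for v in row] for row in ch] for ch in act]
    qw = [[[[quantize_maeb(v, a, b) for v in row] for row in ch] for ch in oc]
          for oc in weights]
    with ThreadPoolExecutor(max_workers=num_pe) as pool:
        return list(pool.map(lambda k: conv2d_channel(qa, k), qw))
```

For example, a single 3 x 3 input channel of ones convolved with two 2 x 2 kernels filled with 0.5 and 0.3 yields outputs of 2.0 and 1.1875 respectively under M4E3, because 0.3 quantizes to 0.296875. Splitting by output channel is only one possible partitioning; the text does not say how work is divided among PEs.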

[0118] The storage system is used for caching input feature maps, weights and output feature maps;

[0119] The central control module is used to decode instructions into control signals and then arbitrate between the floating-point function module and the storage system;

[0120] The floa...

