Quantization calculation method and system for convolutional neural network

A convolutional neural network and calculation method technology, applied in the field of hardware implementation of neural network algorithms, which can solve problems such as low accuracy, high array power consumption, and insufficient computing power, thereby improving speed, reducing computational power consumption, and increasing throughput.
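The method mixes two kinds of layer quantization: binarization and "high-precision" quantization. The summary does not define either precisely, so the sketch below is only an illustration under stated assumptions. Binarization maps each value to +1 or -1 by sign (treating 0 as +1 is a convention chosen here), and high-precision quantization is modelled as symmetric uniform quantization to signed 8-bit integers. The bit width, the scaling rule, and all function names are hypothetical.

```python
# Illustrative sketch only. ASSUMPTIONS: binarization = sign to +1/-1 (0 -> +1);
# "high-precision" quantization = symmetric uniform 8-bit quantization.
# Neither the bit width nor the scaling scheme is given in the source.

def binarize(values):
    """Map each value to +1 (if >= 0) or -1 (if < 0)."""
    return [1 if v >= 0 else -1 for v in values]


def quantize_symmetric(values, bits=8):
    """Quantize to integer codes in [-(2**(bits-1) - 1), 2**(bits-1) - 1].

    Returns (codes, scale), where each value is approximately code * scale.
    """
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    # Avoid division by zero for an all-zero input.
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # round() uses round-half-to-even; clamp guards against overflow.
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate real values."""
    return [c * scale for c in codes]


weights = [0.42, -0.13, 0.0, -0.91, 0.27]
print(binarize(weights))  # [1, -1, 1, -1, 1]
codes, scale = quantize_symmetric(weights)
print(codes, scale)
```

With this choice, a binarized value costs one bit, while a high-precision value costs eight bits and keeps a per-tensor scale. That gap is the basic trade-off behind deciding, layer by layer, which mode to use.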

AI Technical Summary

Problems solved by technology

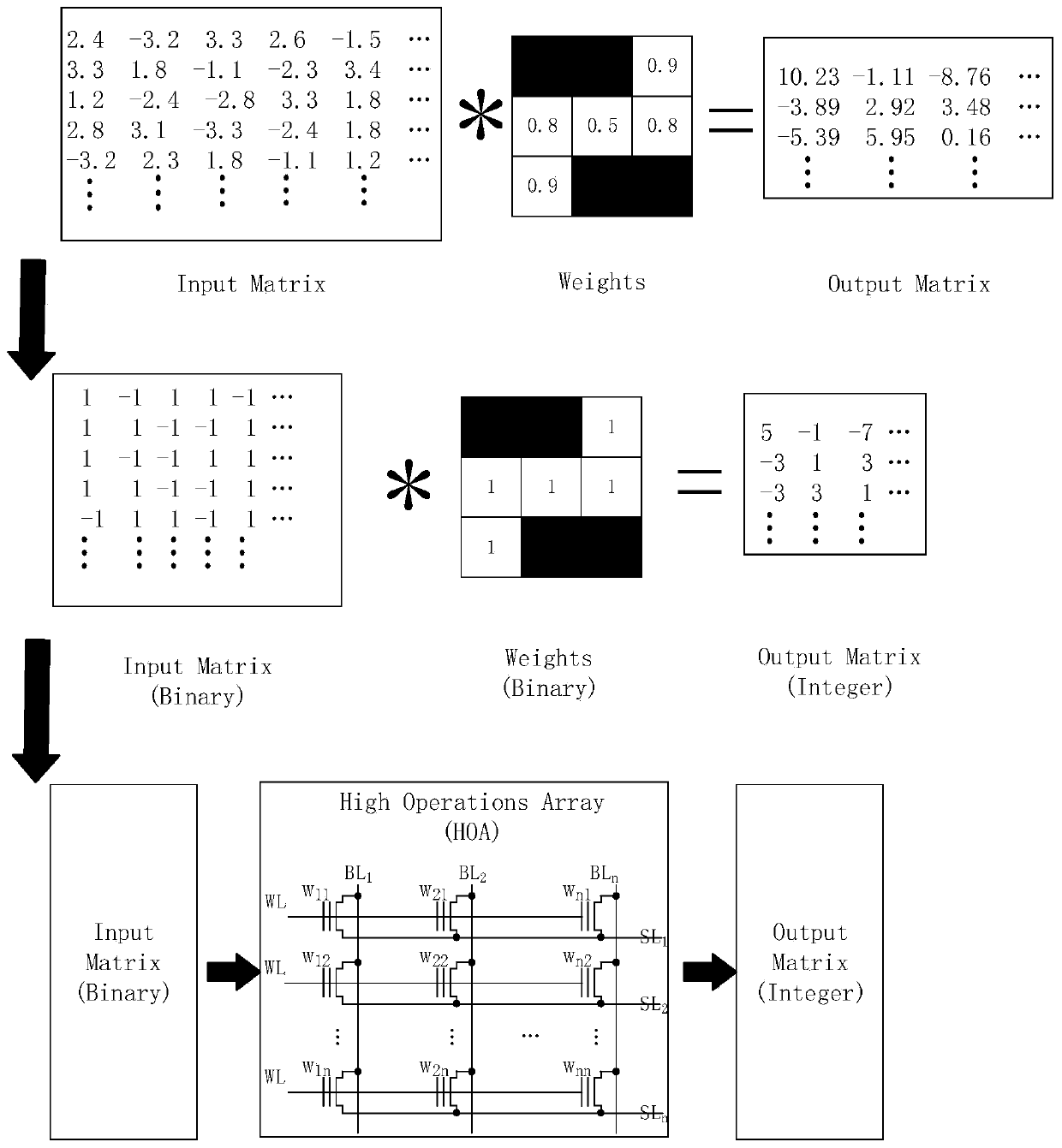

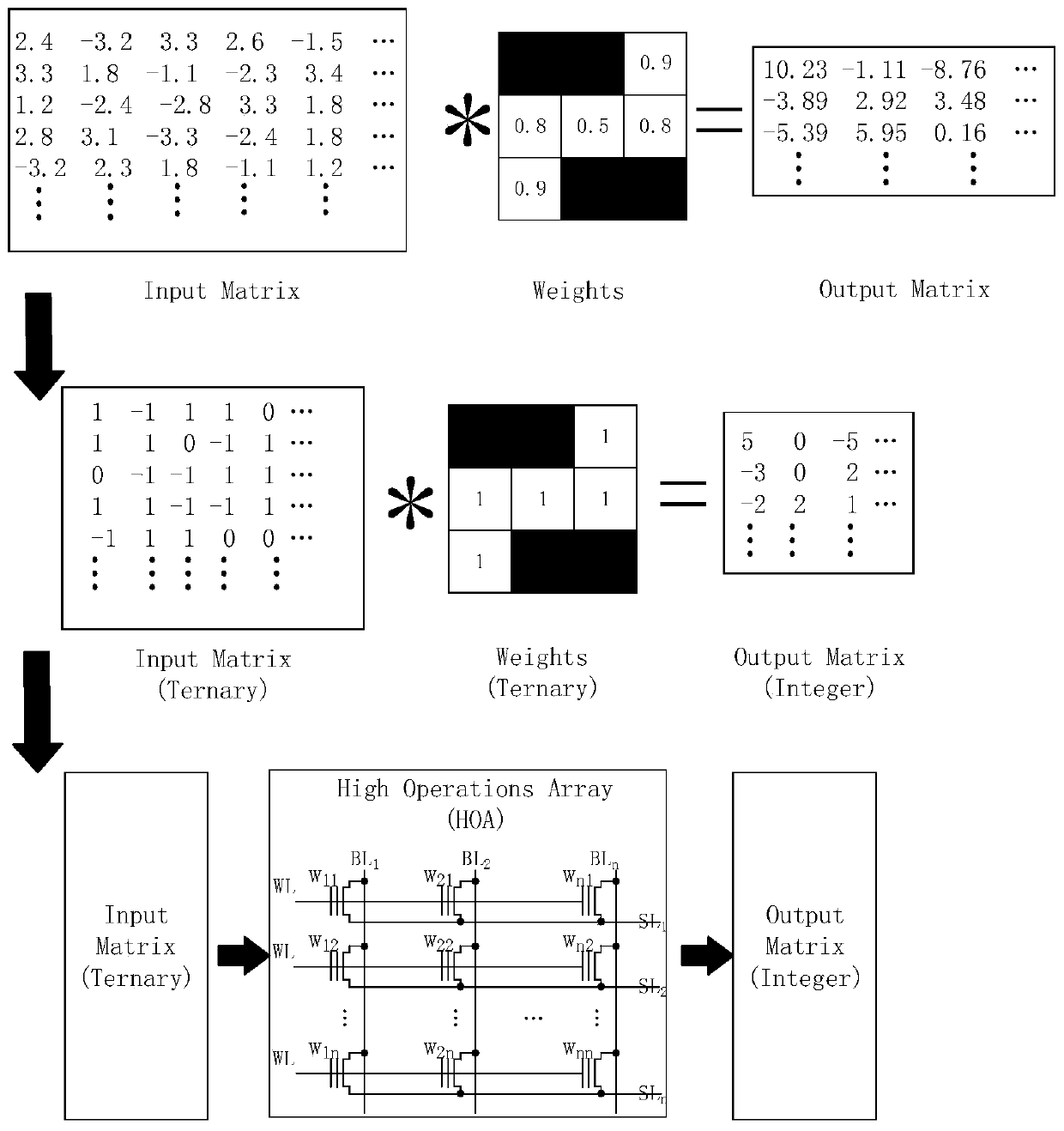

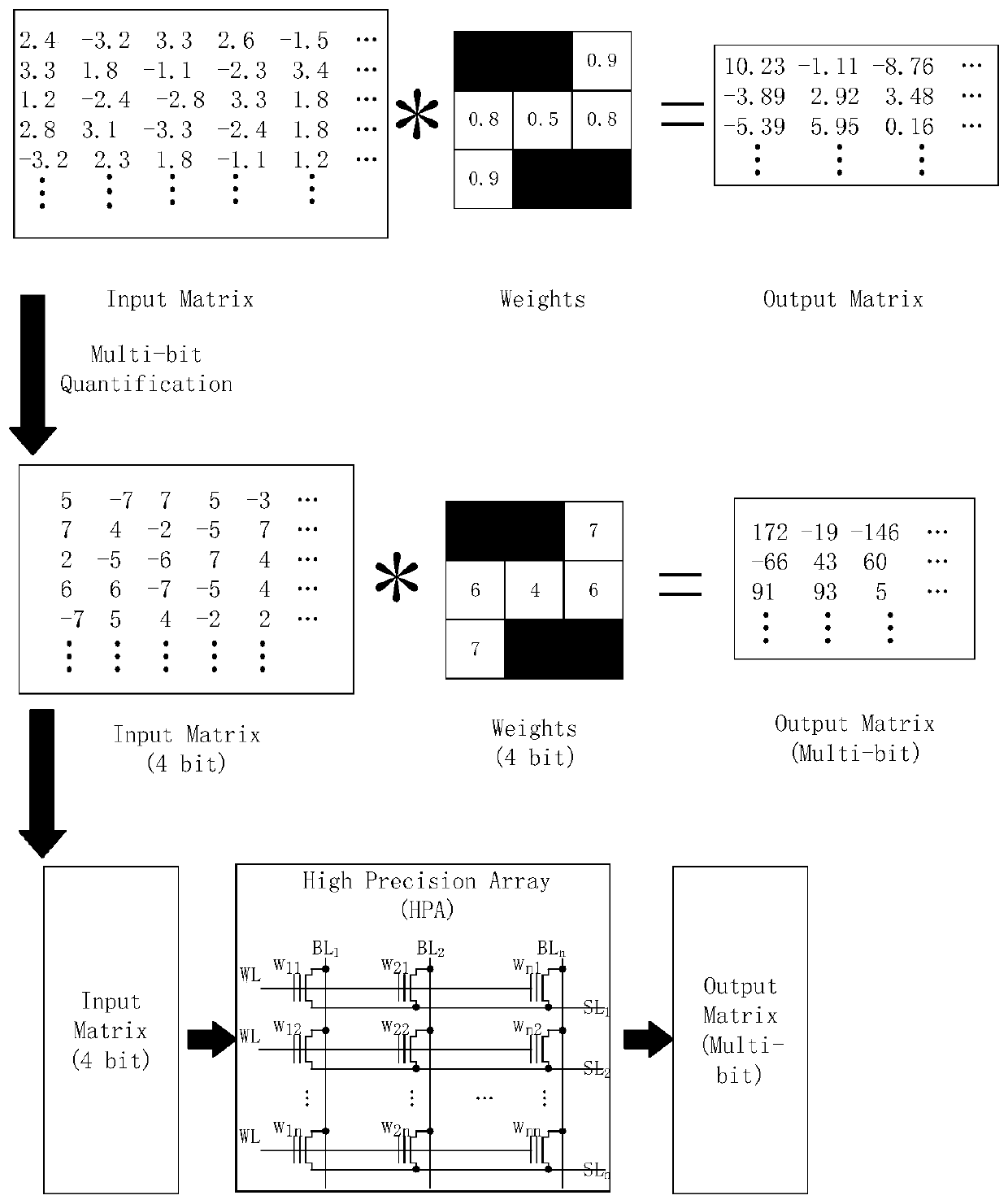

Examples

Embodiment 1

[0085] As shown in Figure 7, this embodiment takes the AlexNet network as an example to further illustrate the convolutional neural network quantization calculation method of the present invention:

[0086] The AlexNet network has 8 layers in total: the first 5 are convolutional layers and the last 3 are fully connected layers. Accelerating the network with the convolutional neural network quantization calculation method is divided into three steps:

[0087] The first step is to apply high-precision quantization to the first convolutional layer (Conv1), the second convolutional layer (Conv2), the penultimate fully connected layer (Fc7), and the last fully connected layer (Fc8), and to binarize the third, fourth, and fifth convolutional layers (Conv3, Conv4, Conv5) and the sixth layer, which is fully connected (Fc6). A software simulation is then run to check whether the resulting accuracy meets the requirements, for example, whether the accuracy o...
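The first AlexNet step can be written down as a per-layer precision plan plus an accuracy gate. The layer names and the high-precision/binary assignment below come from the text. `simulate_accuracy` and `required_accuracy` are hypothetical stand-ins for the software simulation and the target accuracy, which the truncated excerpt does not specify. The excerpt also does not say what happens when the check fails, so the sketch only reports pass or fail.

```python
# Hypothetical sketch of step 1 for AlexNet. The plan follows the text;
# the simulator callback and threshold are illustrative assumptions.
from typing import Callable, Dict

HIGH = "high_precision"
BINARY = "binary"

ALEXNET_LAYERS = ["Conv1", "Conv2", "Conv3", "Conv4", "Conv5", "Fc6", "Fc7", "Fc8"]

# Precision assignment stated for the AlexNet embodiment.
ALEXNET_PLAN: Dict[str, str] = {
    "Conv1": HIGH, "Conv2": HIGH,
    "Conv3": BINARY, "Conv4": BINARY, "Conv5": BINARY,
    "Fc6": BINARY,
    "Fc7": HIGH, "Fc8": HIGH,
}


def plan_meets_requirement(
    plan: Dict[str, str],
    simulate_accuracy: Callable[[Dict[str, str]], float],
    required_accuracy: float,
) -> bool:
    """Run the (hypothetical) software simulation for `plan` and report
    whether the simulated accuracy reaches `required_accuracy`."""
    unknown = set(plan.values()) - {HIGH, BINARY}
    if unknown:
        raise ValueError(f"unknown quantization modes: {unknown}")
    return simulate_accuracy(plan) >= required_accuracy
```

In a real flow, `simulate_accuracy` would run the quantized network on a validation set. Passing it in as a callback keeps the plan separate from whichever simulator is used.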

Embodiment 2

[0094] As shown in Figure 8, this embodiment takes the LeNet network as an example to further explain the convolutional neural network quantization calculation method of the present invention. The LeNet network is simple, with only 7 layers, comprising convolutional layers (Conv), pooling layers (pool), and fully connected layers (Fc). Accelerating the network with the convolutional neural network quantization calculation method includes three steps:

[0095] The first step is to split the computation-heavy layers (convolutional and fully connected layers) between binary quantization and high-precision quantization. In general, the first convolutional layer (Conv1) and the last fully connected layer (Fc2) are quantized with high precision, and the second convolutional layer (Conv2) and the first fully connected layer (Fc1) are binarized. After the software simulation runs, whether the accuracy rate me...
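The "in general" rule stated for LeNet can be expressed as a small function. Among the computation-heavy layers (Conv and Fc), the first and the last get high precision, and the rest are binarized. Pooling layers receive no quantization mode in this sketch. The text does not list LeNet's seven layers in order, so the layer sequence below is an assumption that includes only what the illustration needs. The function name `default_plan` is hypothetical.

```python
# Sketch of the general first/last-high-precision rule described for LeNet.
# ASSUMPTION: the layer order below (pooling after each convolution) is
# illustrative; the source does not enumerate all 7 layers.

def default_plan(layers):
    """Assign 'high_precision' to the first and last Conv/Fc layers and
    'binary' to every other Conv/Fc layer; skip all other layers."""
    heavy = [name for name in layers if name.startswith(("Conv", "Fc"))]
    plan = {}
    for i, name in enumerate(heavy):
        plan[name] = "high_precision" if i in (0, len(heavy) - 1) else "binary"
    return plan


LENET_LAYERS = ["Conv1", "Pool1", "Conv2", "Pool2", "Fc1", "Fc2"]
print(default_plan(LENET_LAYERS))
# {'Conv1': 'high_precision', 'Conv2': 'binary', 'Fc1': 'binary', 'Fc2': 'high_precision'}
```

This default does not reproduce the AlexNet embodiment, where Conv2 and Fc7 are also kept at high precision. It should therefore be read as a starting point that the simulation check may refine, not as a fixed rule.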

Embodiment 3

[0102] As shown in Figure 9, this embodiment takes the DeepID1 network as an example to further illustrate the convolutional neural network quantization calculation method of the present invention:

[0103] The DeepID1 neural network model, used to extract facial features in face recognition algorithms, is mainly composed of convolutional layers (Conv), pooling layers (pool), and fully connected layers (Fc). Accelerating this network with the convolutional neural network quantization calculation method consists of three steps:

[0104] The first step is to split the computation-heavy layers (convolutional and fully connected layers) between binary quantization and high-precision quantization. In general, the first convolutional layer (Conv1) and the last fully connected layer (Fc) are quantized with high precision, and the second convolutional layer (Conv2), the third convolutional layer (Conv3), and the fourth layer of convolut...
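In each embodiment, the middle convolutional layers are binarized. The excerpt does not say how the hardware evaluates binarized layers. One widely used approach in binarized-network accelerators, assumed here purely for illustration, is to pack the ±1 values into bits and replace multiply-accumulate with XNOR and a population count. The identity is dot = 2 · popcount(XNOR(a, b)) − n for vectors of length n. All names below are hypothetical.

```python
# Sketch of a +/-1 dot product via XNOR and popcount. This is an ASSUMED
# hardware-friendly technique; the patent excerpt does not describe its circuit.
# Encoding: +1 -> bit 1, -1 -> bit 0.

def pack_bits(signs):
    """Pack a list of +1/-1 values into an int; element i -> bit i."""
    word = 0
    for i, s in enumerate(signs):
        if s == 1:
            word |= 1 << i
    return word


def binary_dot(a_bits, b_bits, n):
    """Dot product of two packed +/-1 vectors of length n."""
    mask = (1 << n) - 1
    xnor = ~(a_bits ^ b_bits) & mask  # bit is 1 where the signs agree
    matches = bin(xnor).count("1")    # popcount
    return 2 * matches - n            # agreements minus disagreements


a = [1, -1, -1, 1, 1]
b = [1, 1, -1, -1, 1]
print(binary_dot(pack_bits(a), pack_bits(b), len(a)))  # 1
print(sum(x * y for x, y in zip(a, b)))                # 1
```

Replacing multipliers with bitwise logic is one plausible reason that binarizing the computation-heavy middle layers could lower power consumption and raise throughput, as the summary claims. The patent text shown here does not confirm this specific mechanism.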
