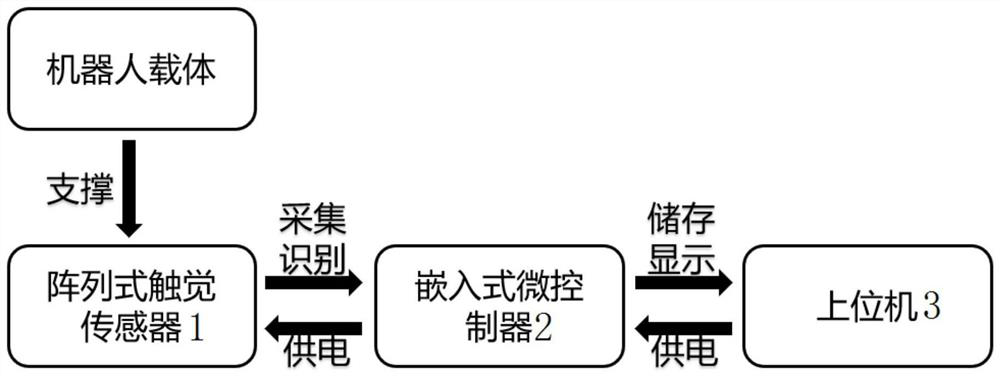

A robot tactile action recognition system and recognition method

A technology in the fields of motion recognition and robotics, applied to manipulators, program-controlled manipulators, manufacturing tools and similar equipment. It addresses problems such as capturing too little information, handling too much information, and heavy use of computer resources. It achieves improved accuracy, strong robustness and good fault tolerance.


Examples

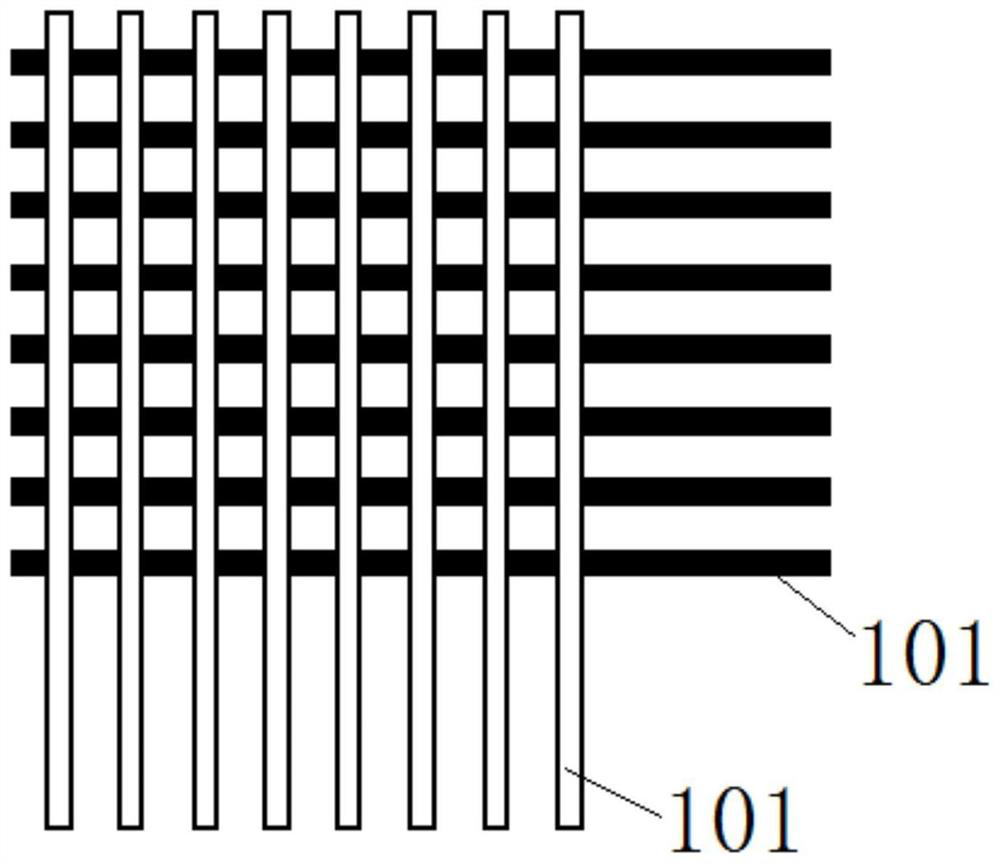

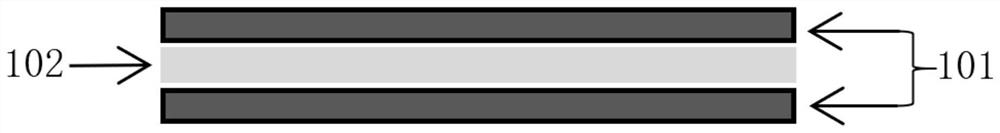

Embodiment 1

[0066] In this embodiment, in (1) of the first step, the carrier is the forearm of the robot, with m=32, n=32, x=100, M=25, R=12, N=40 (20 times with the right hand and 20 times with the left hand), P=12000 and u=32. The 12 characteristic actions are pull, pinch, push, grasp, grasp, poke, pull, hit, stroke, scratch, pat and slide. The embedded microcontroller 2 is connected to the host computer 3 via a USB cable.
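The symbols in [0066] are defined earlier in the patent description, which is not included here. One reading consistent with the numbers is that P counts the collected samples as P = M × R × N = 25 × 12 × 40 = 12000, with M participants, R action classes and N repetitions per action (20 right-hand plus 20 left-hand). The sketch below assumes that reading; the meanings given to M, x, m and n and the helper names `dataset_size` and `sample_labels` are hypothetical.

```python
# Hypothetical reading of the Embodiment 1 symbols (an assumption, not from the source):
#   m x n : rows x columns of the tactile sensor array on the forearm
#   x     : frames recorded per action sample
#   M     : participants, R : action classes, N : repetitions per action
M, R, N = 25, 12, 40
m, n, x = 32, 32, 100
RIGHT_HAND_REPS, LEFT_HAND_REPS = 20, 20  # N = 20 + 20, as stated in [0066]

# The 12 actions as translated; two names repeat, so classes are tracked
# by index rather than by name.
ACTIONS = ["pull", "pinch", "push", "grasp", "grasp", "poke",
           "pull", "hit", "stroke", "scratch", "pat", "slide"]


def dataset_size(participants: int, classes: int, repetitions: int) -> int:
    """Total labelled samples under the assumption P = M * R * N."""
    return participants * classes * repetitions


def sample_labels(participants: int, classes: int, repetitions: int) -> list[int]:
    """One class index (0..R-1) per recorded sample, grouped by participant."""
    return [label
            for _ in range(participants)
            for label in range(classes)
            for _ in range(repetitions)]


P = dataset_size(M, R, N)
labels = sample_labels(M, R, N)
print(P)            # 12000, the value of P given in the embodiment
print((x, m, n))    # (100, 32, 32): assumed shape of one sample
```

If this reading is right, each of the 12 classes contributes M × N = 1000 samples, so the dataset is balanced across actions.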

[0067] In (2) of the first step, the file i_j, which may be stored in any program-readable format, is saved as i_j.txt, i_j.xls or i_j.xlsx.
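A minimal sketch of reading one sample file named i_j. It assumes that i and j are integer indices (for example action and repetition) and that a .txt file stores one frame per line as m×n row-major readings separated by commas or whitespace; neither layout is specified in this excerpt. For .xls/.xlsx files, `pandas.read_excel` would be one way to obtain the same values. All function names here are hypothetical.

```python
import re
from pathlib import Path

# Hypothetical naming scheme: i and j are integer indices, e.g. "3_7.txt".
NAME_PATTERN = re.compile(r"(\d+)_(\d+)\.(txt|xls|xlsx)")


def parse_sample_name(path: str) -> tuple[int, int]:
    """Split an 'i_j.txt', 'i_j.xls' or 'i_j.xlsx' file name into (i, j)."""
    match = NAME_PATTERN.fullmatch(Path(path).name)
    if match is None:
        raise ValueError(f"not an i_j.txt/.xls/.xlsx file name: {path}")
    return int(match.group(1)), int(match.group(2))


def parse_txt_frames(text: str, m: int = 32, n: int = 32) -> list[list[list[float]]]:
    """Parse text holding one frame per line into a list of m x n grids.

    Assumed layout: each non-empty line carries m*n readings in row-major
    order, separated by commas and/or whitespace.
    """
    frames = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        values = [float(v) for v in re.split(r"[,\s]+", line)]
        if len(values) != m * n:
            raise ValueError(f"expected {m * n} values per frame, got {len(values)}")
        frames.append([values[r * n:(r + 1) * n] for r in range(m)])
    return frames


def load_txt_sample(path: str, m: int = 32, n: int = 32) -> list[list[list[float]]]:
    """Read an i_j.txt file from disk and parse it with parse_txt_frames."""
    return parse_txt_frames(Path(path).read_text(), m, n)
```

Keeping parsing separate from file access lets the same frame logic be reused if the readings arrive from a spreadsheet or directly from the microcontroller stream.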

[0068] In (3) of the first step, the PyTorch deep learning framework is used. With a=80, the highest accuracy on the validation set is 94.57%. The convolutional neural network model is saved in .tflite format.
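Paragraph [0068] reports the highest validation accuracy reached during training. The sketch below assumes that a is the number of training epochs (this excerpt does not define it) and shows how per-epoch validation accuracy and the best epoch could be computed. In a PyTorch loop one would typically save the weights, for example with `torch.save(model.state_dict(), ...)`, whenever the best value improves. The function names are hypothetical.

```python
def accuracy(predictions: list[int], targets: list[int]) -> float:
    """Fraction of validation samples whose predicted class matches the label."""
    if not targets or len(predictions) != len(targets):
        raise ValueError("predictions and targets must be non-empty and equal length")
    correct = sum(p == t for p, t in zip(predictions, targets))
    return correct / len(targets)


def best_epoch(val_accuracies: list[float]) -> tuple[int, float]:
    """Return (1-based epoch, accuracy) of the highest validation accuracy.

    max() returns the first maximal element, so ties resolve to the
    earliest epoch.
    """
    if not val_accuracies:
        raise ValueError("no epochs recorded")
    best_idx = max(range(len(val_accuracies)), key=lambda i: val_accuracies[i])
    return best_idx + 1, val_accuracies[best_idx]


# With a = 80 (assumed to be epochs), history would hold 80 values;
# a short made-up history illustrates the call.
history = [0.5, 0.8, 0.9, 0.85]
print(best_epoch(history))  # (3, 0.9)
```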

[0069] As can be seen from Figure 4, the convolutional neural network architecture consists of seven convolutional layers and a pooling layer, where c represents t...
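To make a seven-convolution-plus-pooling structure concrete, this sketch traces tensor shapes (channels, height, width) through such a stack using the standard output-size formula floor((size + 2p - k) / s) + 1. Because Figure 4 is not reproduced here, several choices are hypothetical: the channel widths, 3×3 kernels with padding 1, a single 2×2 pooling layer placed after the convolutions, and feeding the x=100 frames of a 32×32 sample in as input channels. In PyTorch these layers would correspond to `torch.nn.Conv2d` and a pooling module such as `torch.nn.MaxPool2d`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Conv2d:
    """Shape-only description of a 2-D convolution layer."""
    out_channels: int
    kernel: int = 3
    stride: int = 1
    padding: int = 1


@dataclass(frozen=True)
class Pool2d:
    """Shape-only description of a 2-D pooling layer (channels unchanged)."""
    kernel: int = 2
    stride: int = 2


def out_size(size: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Standard output-length formula: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_shapes(in_shape: tuple[int, int, int], layers: list) -> list[tuple[int, int, int]]:
    """Propagate a (channels, height, width) shape through the layer list."""
    c, h, w = in_shape
    shapes = [(c, h, w)]
    for layer in layers:
        if isinstance(layer, Conv2d):
            c = layer.out_channels
            h = out_size(h, layer.kernel, layer.stride, layer.padding)
            w = out_size(w, layer.kernel, layer.stride, layer.padding)
        elif isinstance(layer, Pool2d):
            h = out_size(h, layer.kernel, layer.stride)
            w = out_size(w, layer.kernel, layer.stride)
        else:
            raise TypeError(f"unsupported layer: {layer!r}")
        shapes.append((c, h, w))
    return shapes


# Hypothetical configuration: 7 convolutional layers followed by 1 pooling layer.
WIDTHS = [32, 32, 64, 64, 128, 128, 128]
LAYERS = [Conv2d(width) for width in WIDTHS] + [Pool2d()]

shapes = trace_shapes((100, 32, 32), LAYERS)
for shape in shapes:
    print(shape)
```

Under these assumptions the seven padded 3×3 convolutions preserve the 32×32 sensor grid, and the pooling layer halves it to 16×16 with 128 channels.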

