Kinect-based body shape adaptive three-dimensional virtual human body model building method and animation system

This invention relates to human body modeling and three-dimensional virtual technology, with applications in animation production, image data processing, and instruments. It addresses the low reuse rate of animations, complicated operation, and the difficulty of getting started, enhancing realism and fun and making animation production easy.


Examples

Embodiment 1

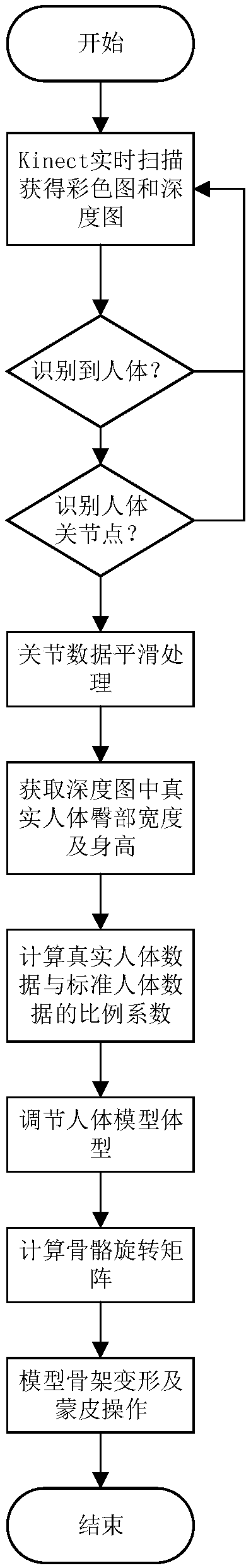

[0070] Referring to Figure 1, a Kinect-based adaptive three-dimensional virtual human body model construction method comprises the following steps:

[0071] S1. Scan in real time with Kinect to obtain a color image and a depth image of the human body;

[0072] S2. Identify the human body using the acquired color image and depth image;

[0073] When identifying the human body, if no human body is recognized, the color image and depth image are acquired again; if a human body is recognized, step S3 is performed;

[0074] S3. Obtain human body joint point data from the identified human body. When identifying the joint points, if not all relevant joint points are obtained, re-acquire the color image and depth image; if all relevant joint points of the human body are obtained, perform step S4;

[0075] S4. Smooth the human body joint point data;

[0076] S5. Acquire the hip width data and height data of the human body;

[0077] S6. Calculate the ratio coefficient...
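The S1 to S6 flow can be sketched in Python as follows. This is a minimal sketch, not the patented implementation: `Frame`, `REQUIRED_JOINTS`, `acquire_joints`, `JointSmoother` and `ratio_coefficients` are hypothetical names; no Kinect SDK call is shown (each `Frame` stands in for one color/depth capture with body and joint detection already done); the exponential moving average used for S4 is an assumed smoothing method, since the source does not name one; and the S6 ratio is assumed to be the real value divided by the standard value. S5 is left out because the source does not describe how hip width and height are measured from the depth image.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Optional, Tuple

Point = Tuple[float, float, float]

# Hypothetical set of joints the later steps need (the source does not list them).
REQUIRED_JOINTS = ("head", "hip_center", "foot_left", "foot_right")


@dataclass
class Frame:
    """Hypothetical stand-in for one Kinect capture after body/joint detection."""
    body_found: bool
    joints: Dict[str, Point] = field(default_factory=dict)


def acquire_joints(frames: Iterable[Frame]) -> Optional[Dict[str, Point]]:
    """S1-S3: keep re-acquiring until a body and all required joints are present."""
    for frame in frames:                      # S1: each item is a fresh capture
        if not frame.body_found:              # S2: no body -> acquire again
            continue
        if not all(j in frame.joints for j in REQUIRED_JOINTS):
            continue                          # S3: missing joints -> acquire again
        return frame.joints                   # all joints found -> go on to S4
    return None                               # frame source exhausted


class JointSmoother:
    """S4 (assumed method): exponential moving average per joint coordinate."""

    def __init__(self, alpha: float = 0.5):
        self.alpha = alpha                    # weight given to the newest sample
        self.state: Dict[str, Point] = {}

    def update(self, joints: Dict[str, Point]) -> Dict[str, Point]:
        out: Dict[str, Point] = {}
        for name, p in joints.items():
            prev = self.state.get(name, p)    # first sample passes through unchanged
            out[name] = tuple(self.alpha * c + (1 - self.alpha) * q
                              for c, q in zip(p, prev))
        self.state.update(out)
        return out


def ratio_coefficients(real_hip: float, real_height: float,
                       std_hip: float, std_height: float) -> Tuple[float, float]:
    """S6 (assumed form): real-to-standard ratios for hip width and height."""
    return real_hip / std_hip, real_height / std_height
```

A smaller `alpha` suppresses more joint jitter at the cost of lag; a value closer to 1 tracks the raw joints more tightly.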

Embodiment 2

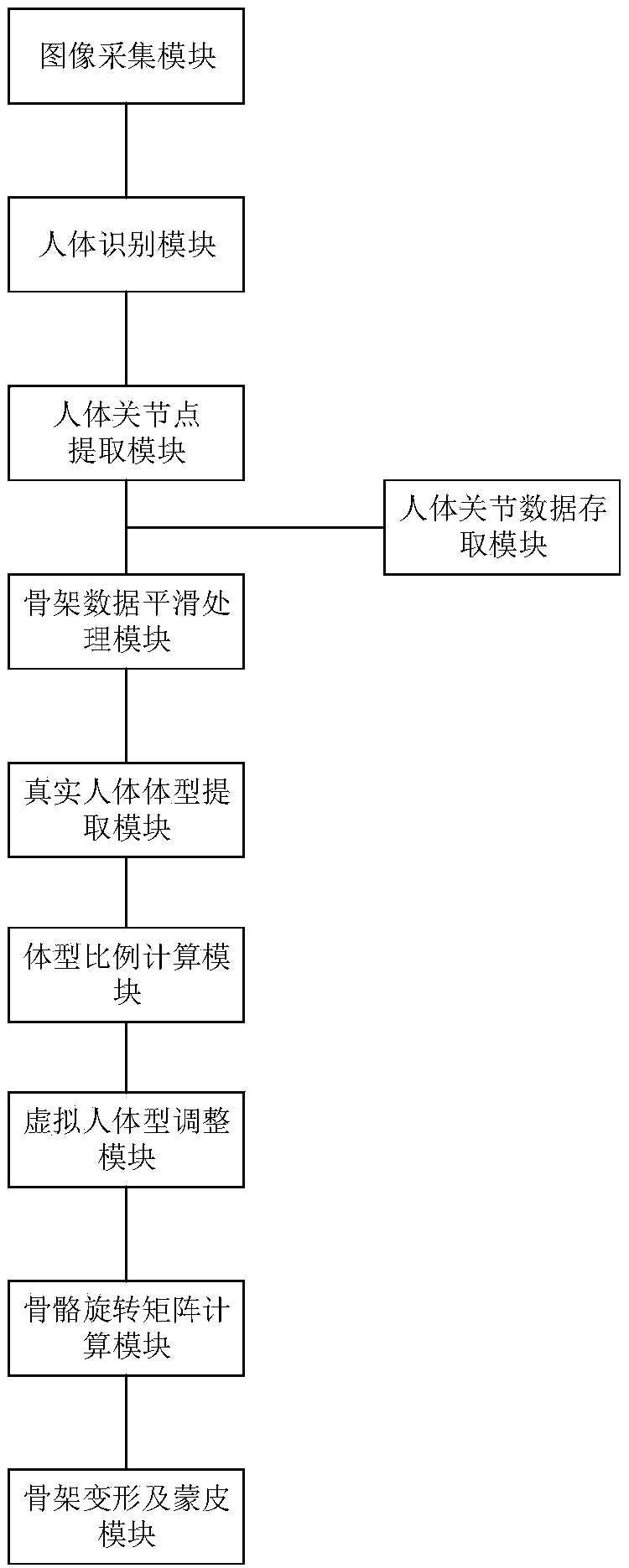

[0095] Referring to Figure 2, a Kinect-based adaptive three-dimensional virtual human body animation system comprises:

[0096] An image acquisition module, configured to obtain a color image and a depth image using Kinect;

[0097] A human body recognition module, configured to identify a human body using the acquired color image and depth image;

[0098] A human body joint point extraction module, configured to obtain human body joint point data from the identified human body;

[0099] A skeleton data smoothing processing module, configured to smooth the human body joint point data;

[0100] A real human body shape extraction module, configured to extract the hip width data and height data of the human body from the depth image;

[0101] A body proportion calculation module, configured to calculate the proportion coefficients between the real human body shape data and the standard human body shape data;

[0102] A virtual human body shape adjustment module, configured to adjust the fatness/thinness coefficient and the height...
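One way to read the shape adjustment module is as an axis-wise scaling of a standard body mesh. The sketch below rests on assumptions not stated in the source: the fatness/thinness coefficient scales the horizontal x and z axes, the height coefficient scales the vertical y axis, and both are applied about a pivot on the floor so the feet stay grounded. `adjust_shape` is a hypothetical helper; the coefficients would presumably come from the body proportion calculation module.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]


def adjust_shape(vertices: List[Vertex], fat_coeff: float, height_coeff: float,
                 pivot: Vertex = (0.0, 0.0, 0.0)) -> List[Vertex]:
    """Scale a standard model: x/z (girth) by fat_coeff, y (height) by height_coeff.

    Assumes y is the vertical axis and the pivot lies on the floor under the
    model, so scaling about it keeps the feet on the ground.
    """
    px, py, pz = pivot
    return [
        (px + (x - px) * fat_coeff,        # widen/narrow left-right
         py + (y - py) * height_coeff,     # stretch/shrink vertically
         pz + (z - pz) * fat_coeff)        # widen/narrow front-back
        for x, y, z in vertices
    ]
```

Scaling about a pivot rather than the world origin lets the same function handle models that are not centered at (0, 0, 0).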

Embodiment 3

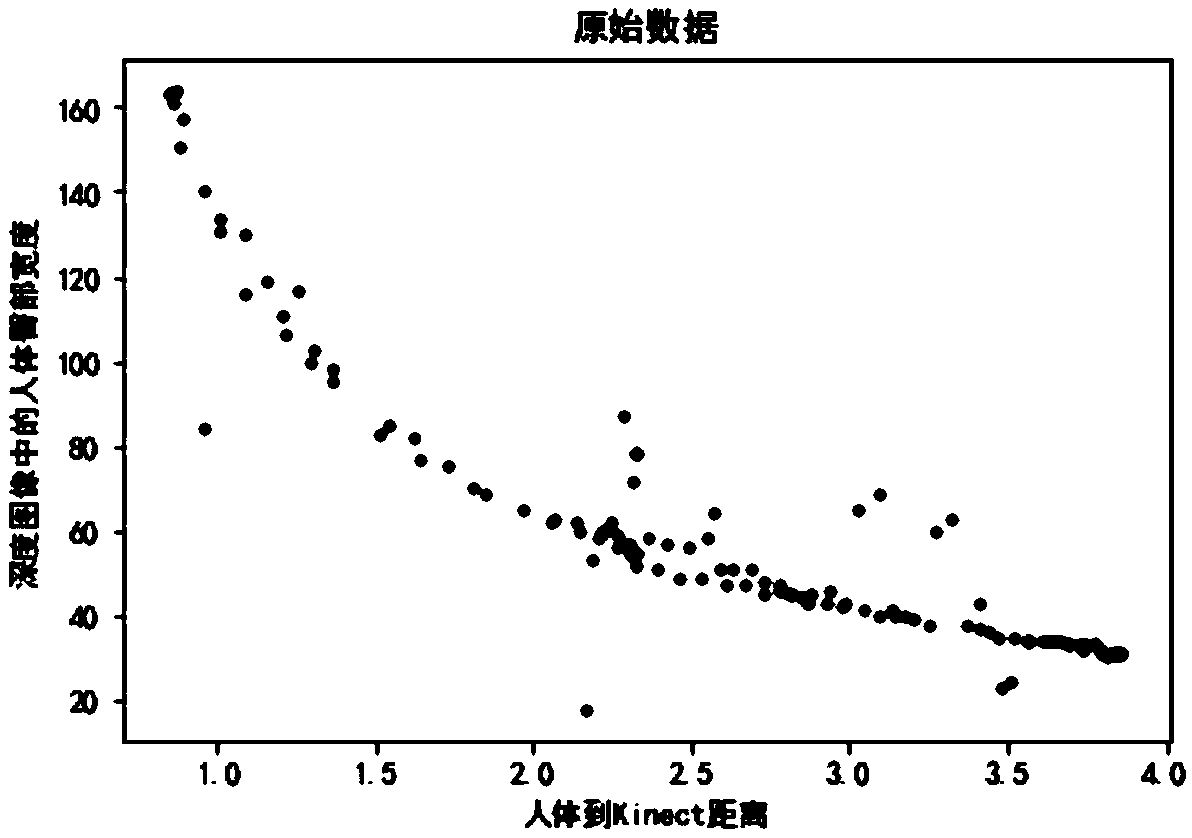

[0119] Referring to Figures 3.1 to 3.3, the standard human hip width data are fitted in the following steps:

[0120] a) Select a standard human body;

[0121] b) Acquire the hip width in the depth image with the standard human body placed at different distances from the Kinect, as shown in Figure 3.1;

[0122] c) Take the reciprocal of each distance from the human body to the Kinect, as shown in Figure 3.2;

[0123] d) Obtain the relationship between the reciprocal distance and the hip width in the depth image by least-squares curve fitting, as shown in Figure 3.3.
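Steps a) to d) can be sketched as an ordinary least-squares fit against the reciprocal distance. The sketch assumes the fitted curve is a straight line, `w = a * (1/d) + b`, which matches pinhole-camera projection, where apparent width falls off as 1/d; the source only says "least square curve fitting" and does not give the curve form. `fit_hip_width` and `standard_hip_width` are hypothetical names, and the sample numbers in the usage line are synthetic, not measurements from the patent.

```python
from typing import Sequence, Tuple


def fit_hip_width(distances: Sequence[float],
                  hip_widths: Sequence[float]) -> Tuple[float, float]:
    """Fit w = a * (1/d) + b by ordinary least squares; returns (a, b)."""
    if len(distances) != len(hip_widths) or len(distances) < 2:
        raise ValueError("need at least two paired samples")
    u = [1.0 / d for d in distances]          # step c): take reciprocals of distances
    n = len(u)
    mean_u = sum(u) / n
    mean_w = sum(hip_widths) / n
    sxx = sum((ui - mean_u) ** 2 for ui in u)
    sxy = sum((ui - mean_u) * (wi - mean_w) for ui, wi in zip(u, hip_widths))
    if sxx == 0.0:
        raise ValueError("distances must not all be equal")
    a = sxy / sxx                             # slope of w against 1/d
    b = mean_w - a * mean_u                   # intercept
    return a, b


def standard_hip_width(distance: float, a: float, b: float) -> float:
    """Predicted standard-body hip width (depth-image units) at a given distance."""
    return a / distance + b
```

For example, `fit_hip_width([1.0, 2.0, 4.0], [205.0, 105.0, 55.0])` uses synthetic points on `w = 200/d + 5` and recovers a ≈ 200 and b ≈ 5. At run time, evaluating the fit at the subject's measured distance would presumably give the standard hip width to compare against the real one.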
