Mobile robot positioning method based on RGB-D camera and IMU information fusion

This technology concerns a mobile robot and a method for positioning it, applicable to instruments, surveying and navigation, measuring devices, and related fields. It addresses three problems: pose divergence, positioning accuracy limited to the decimeter level, and large equipment volume. It achieves good dynamic characteristics and real-time performance.

Embodiment

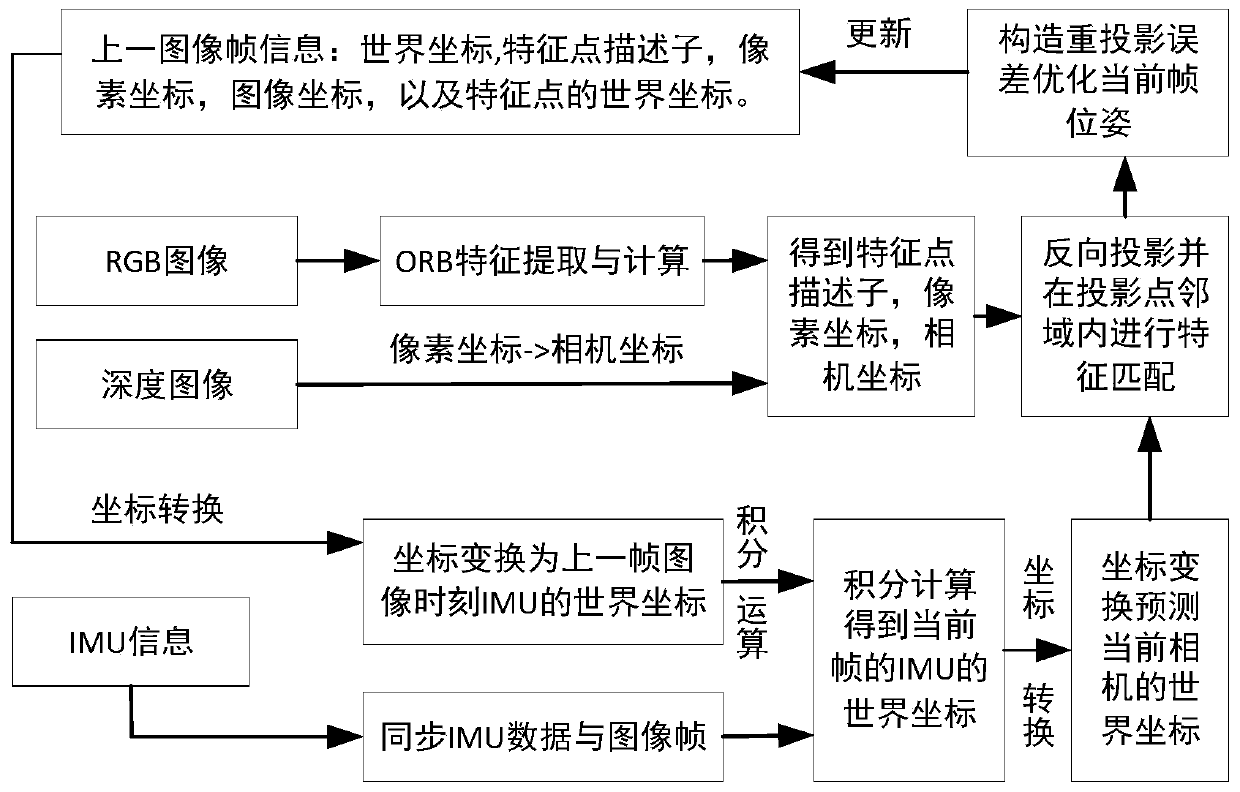

[0048] As shown in Figure 1, the mobile robot positioning method of this embodiment, based on the fusion of RGB-D camera and IMU information, is carried out in the following steps:

[0049] Step (1): Establish a pinhole camera model.

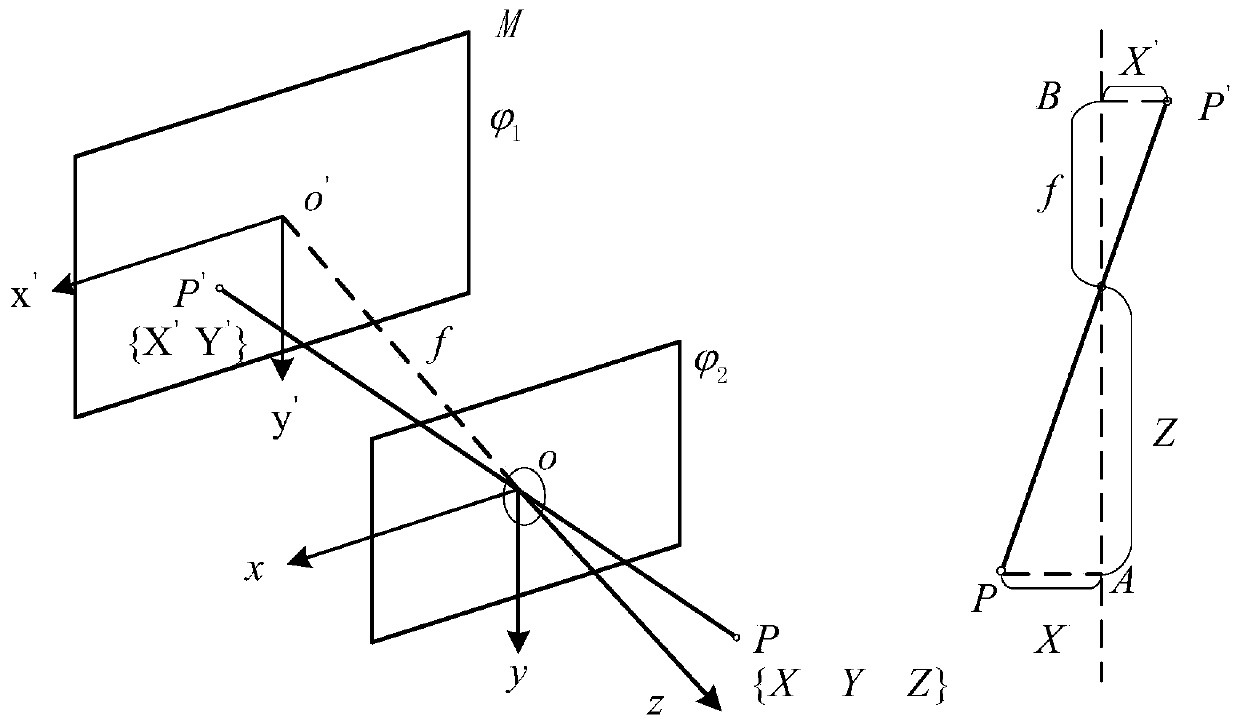

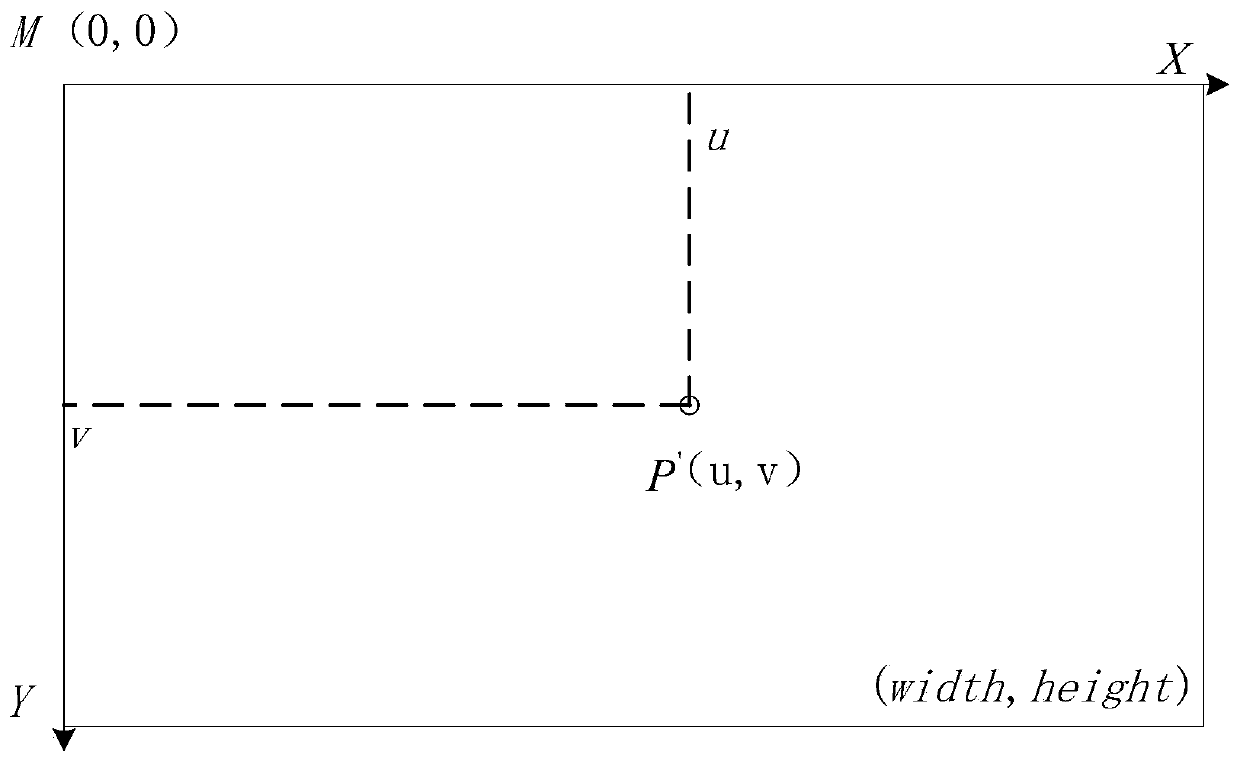

[0050] Consider the pinhole camera model shown in Figure 2, in which plane α is the imaging plane and the other plane is the camera plane. Here xyz is the camera coordinate system: the z axis is perpendicular to the camera plane, and the x and y axes are parallel to the two edges of the camera and form a right-handed coordinate system with the z axis. The axes of the imaging-plane coordinate system x'y' are parallel to the x and y axes, respectively. o is the optical center of the camera, and o' is the point where the line through the optical center parallel to the z axis intersects the imaging plane α. The distance oo' is the focal length, of magnitude f. Assuming that the coordinates of a point P in the three-dimensional spac...
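The excerpt breaks off before the projection equations. The sketch below illustrates the relations a pinhole model of this kind usually yields. It assumes the common non-inverted (virtual) imaging plane placed at distance f in front of the optical center. By similar triangles, a point P = (X, Y, Z) in camera coordinates maps to x' = fX/Z and y' = fY/Z on the imaging plane. Conversely, a depth reading Z, such as an RGB-D camera provides, lets P be recovered from (x', y'). The class `PinholeCamera` and its method names are hypothetical, not taken from the patent. Pixel-level intrinsics (principal point, pixel scaling) are omitted because the excerpt does not define them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PinholeCamera:
    """Hypothetical ideal pinhole camera; f is the focal length (distance oo')."""
    f: float

    def project(self, X: float, Y: float, Z: float) -> tuple[float, float]:
        """Map P = (X, Y, Z) in camera coordinates to (x', y') on the imaging plane."""
        if Z <= 0:
            raise ValueError("point must lie in front of the camera (Z > 0)")
        # Similar triangles: x'/f = X/Z, y'/f = Y/Z.
        # Assumption: non-inverted (virtual) imaging plane, so no sign flip.
        return self.f * X / Z, self.f * Y / Z

    def back_project(self, x_img: float, y_img: float, depth: float) -> tuple[float, float, float]:
        """Recover P from imaging-plane coordinates plus a measured depth Z."""
        if depth <= 0:
            raise ValueError("depth must be positive")
        # Invert the projection: X = x' * Z / f, Y = y' * Z / f.
        return x_img * depth / self.f, y_img * depth / self.f, depth


if __name__ == "__main__":
    cam = PinholeCamera(f=2.0)
    x_img, y_img = cam.project(1.0, 0.5, 4.0)
    print(x_img, y_img)                              # 0.5 0.25
    print(cam.back_project(x_img, y_img, 4.0))       # (1.0, 0.5, 4.0)
```

The round trip shows why the depth channel matters. Projection alone discards Z, so a single color image cannot recover P. The depth value supplied by an RGB-D sensor closes that gap.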
