High-precision farmland vegetation information extraction method

A high-precision information extraction method applied in the field of agricultural remote sensing. It addresses the problems of uneven image intensity, low precision, and conditions unfavorable to target feature extraction and monitoring. The method reduces unevenness, improves accuracy, and produces accurate crop information extraction results.


Embodiment

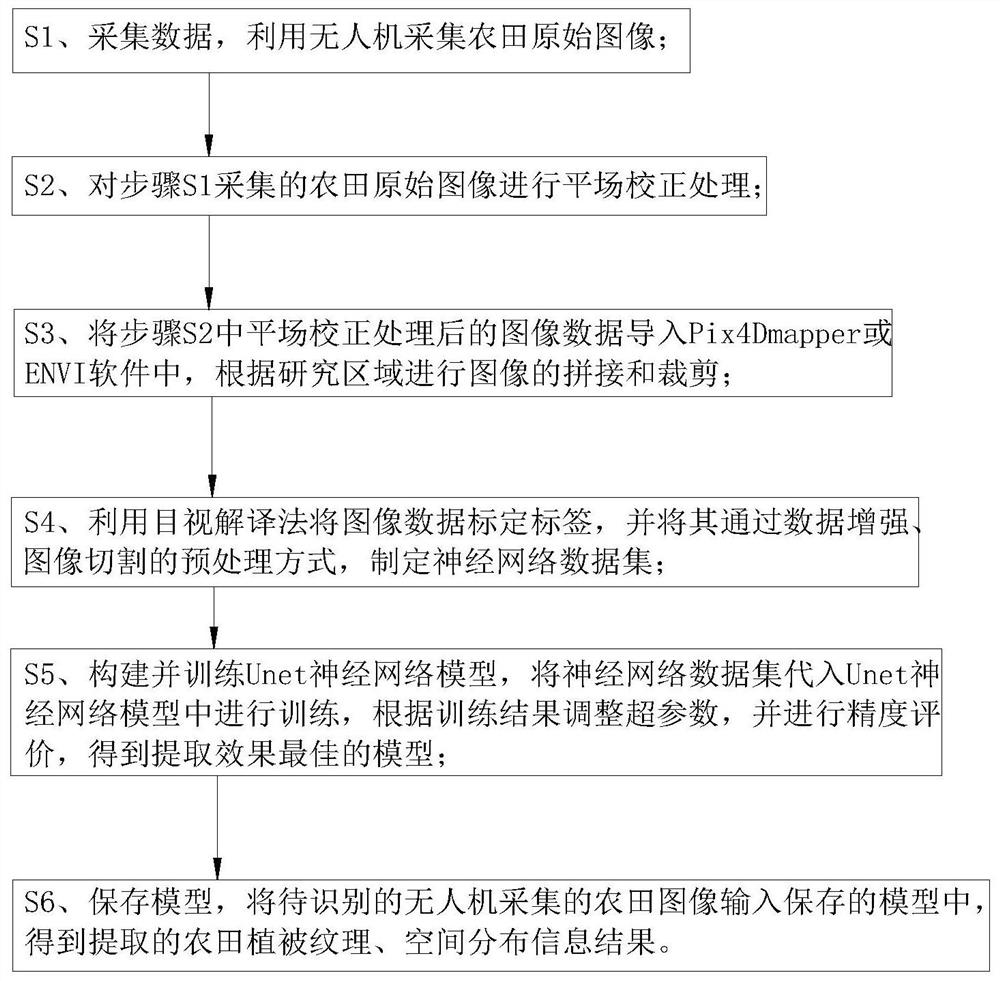

[0036] Embodiment: a high-precision farmland vegetation information extraction method which, as shown in Figure 1, comprises the following steps:

[0037] S1. Collect data: use drones to capture original images of the farmland.

[0038] S2. Apply flat-field correction to the original farmland images collected in step S1.
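The text does not state which flat-field formula step S2 uses. As a minimal sketch, assuming the common form corrected = (raw - dark) * mean(flat - dark) / (flat - dark), the correction could look like the following in NumPy. The function name `flat_field_correct` and the `flat`, `dark`, and `eps` parameters are illustrative assumptions and do not come from the patent.

```python
import numpy as np


def flat_field_correct(raw, flat, dark=None, eps=1e-6):
    """Remove uneven illumination from `raw` using a flat-field reference.

    raw  : image of the scene (any shape)
    flat : image of a uniformly lit target, same shape as `raw` (assumed available)
    dark : optional dark frame (sensor offset); treated as zero when omitted
    eps  : lower bound on the gain, to avoid division by zero
    """
    raw = np.asarray(raw, dtype=np.float64)
    flat = np.asarray(flat, dtype=np.float64)
    if dark is None:
        dark = np.zeros_like(raw)
    else:
        dark = np.asarray(dark, dtype=np.float64)

    gain = flat - dark            # per-pixel response to uniform light
    gain_mean = gain.mean()       # rescale so overall brightness is preserved
    return (raw - dark) * gain_mean / np.maximum(gain, eps)
```

In this sketch, a scene of uniform brightness that has been darkened toward the image corners comes back as a constant image, which is the "reduced unevenness" the method aims for.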

[0039] S3. Import the flat-field-corrected image data from step S2 into Pix4Dmapper or ENVI software, then stitch the images and crop them to the study area.

[0040] S4. Label the image data by visual interpretation, then preprocess it with data augmentation and image tiling to build a neural network dataset.
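The preprocessing in step S4 can be illustrated with a short NumPy sketch. It assumes fixed-size square tiles and the eight rotation and flip variants of each tile as the augmentation; the patent text does not specify the tile size or the augmentation types, so `tile_pairs`, `augment_pair`, and the default of 256 pixels are hypothetical choices.

```python
import numpy as np


def tile_pairs(image, mask, tile=256, stride=256):
    """Cut an image and its label mask into aligned square tiles.

    Tiles that would extend past the image border are skipped.
    """
    height, width = mask.shape[:2]
    pairs = []
    for top in range(0, height - tile + 1, stride):
        for left in range(0, width - tile + 1, stride):
            pairs.append((
                image[top:top + tile, left:left + tile],
                mask[top:top + tile, left:left + tile],
            ))
    return pairs


def augment_pair(image, mask):
    """Return 8 variants (4 rotations, each with and without a horizontal flip).

    The same transform is applied to the image and the mask so that every
    pixel keeps its label.
    """
    variants = []
    for k in range(4):
        img_rot = np.rot90(image, k, axes=(0, 1))
        msk_rot = np.rot90(mask, k, axes=(0, 1))
        variants.append((img_rot, msk_rot))
        variants.append((np.flip(img_rot, axis=1), np.flip(msk_rot, axis=1)))
    return variants
```

Applying identical geometric transforms to image and mask is the key design point: augmenting only the image would misalign the labels produced by visual interpretation.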

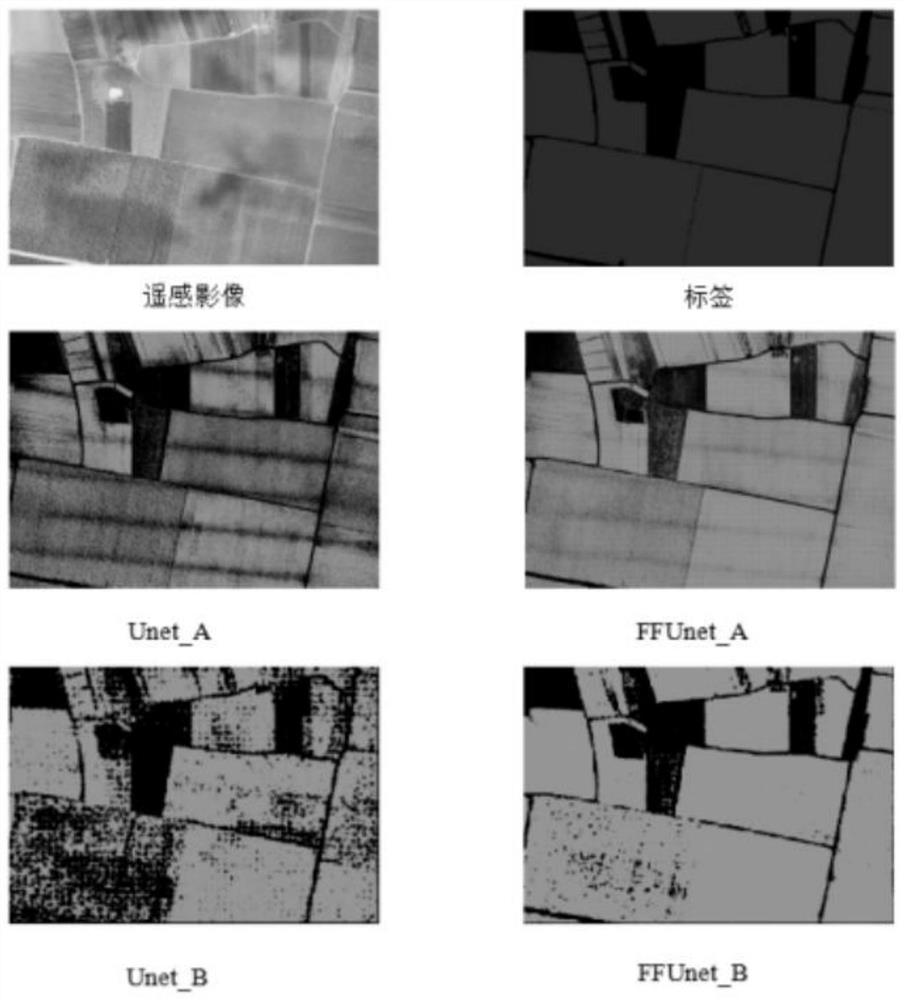

[0041] S5. Construct and train the Unet neural network model: feed the neural network dataset into the Unet model for training, adjust the hyperparameters according to the training results, and perform accuracy evaluation to ob...
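The available text is cut off before it names the metrics used for accuracy evaluation. As a hedged illustration only, two metrics commonly used for binary segmentation (pixel accuracy and intersection over union for the vegetation class) can be computed as follows. The function name `segmentation_scores` and the choice of metrics are assumptions, not the patent's stated procedure.

```python
import numpy as np


def segmentation_scores(pred, truth):
    """Compare a predicted binary vegetation mask against a labeled mask.

    Non-zero pixels are treated as vegetation. Returns pixel accuracy and
    the intersection over union (IoU) of the vegetation class.
    """
    pred = np.asarray(pred).astype(bool)
    truth = np.asarray(truth).astype(bool)

    intersection = np.logical_and(pred, truth).sum()
    union = np.logical_or(pred, truth).sum()

    pixel_accuracy = float((pred == truth).mean())
    # If neither mask contains vegetation, the masks agree perfectly.
    iou = float(intersection / union) if union > 0 else 1.0
    return {"pixel_accuracy": pixel_accuracy, "iou": iou}


scores = segmentation_scores([[1, 1], [0, 0]], [[1, 0], [0, 0]])
print(scores)  # {'pixel_accuracy': 0.75, 'iou': 0.5}
```

Scores like these, computed on held-out tiles, give a concrete signal for the hyperparameter adjustment described in the step.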
