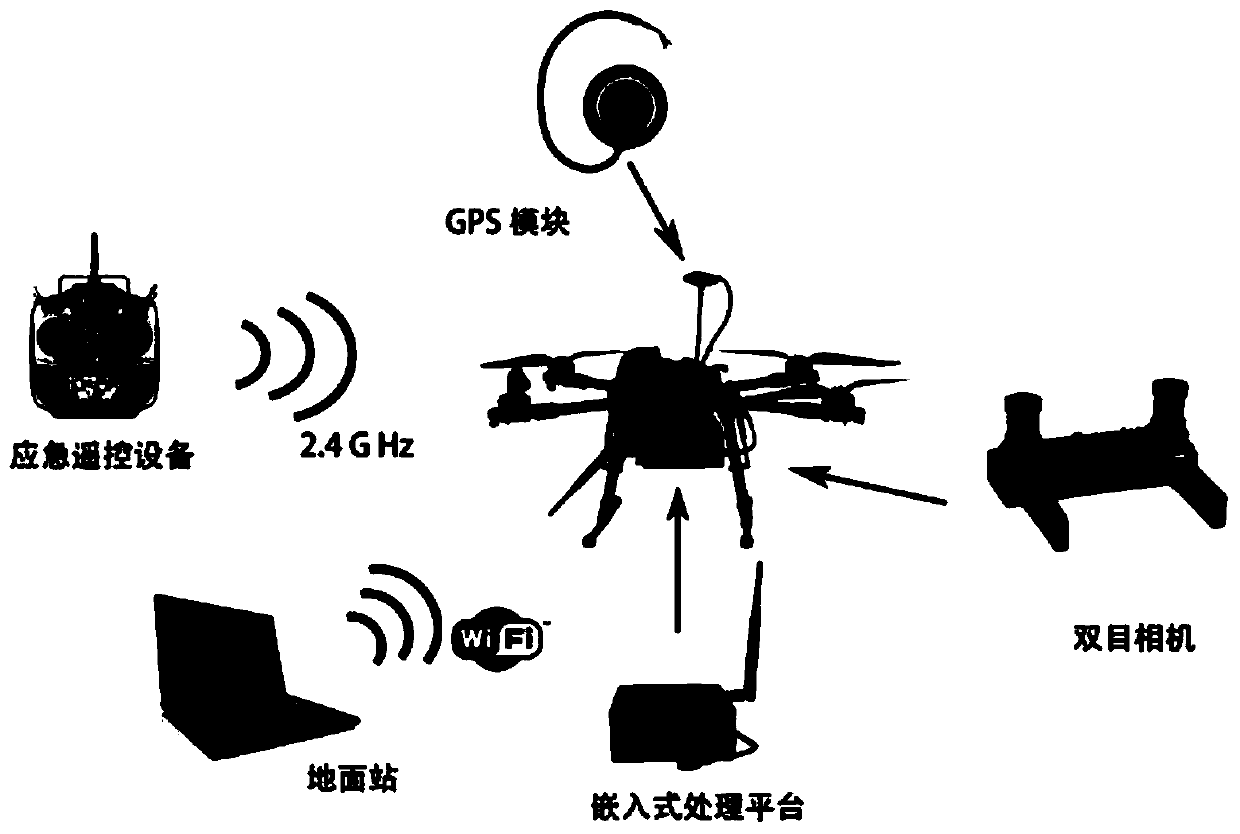

A UAV human-computer interaction method based on binocular vision and deep learning

The method combines deep learning with binocular vision and is classified under neural learning methods, user/computer interaction input/output, mechanical mode conversion, etc. It addresses the high computational complexity, lack of acceleration algorithms, and slow recognition speed of existing approaches. Its stated benefits are a wide working range, robustness to camera drift and complex background interference, and high accuracy.


Embodiment Construction

[0032] The present invention is described in further detail below with reference to the accompanying drawings and specific embodiments. The following embodiments are descriptive only, not restrictive, and do not limit the protection scope of the present invention.

[0033] The specific steps of the human-computer interaction method for drones, based on binocular vision and deep learning, are as follows:

[0034] 1) When the system starts, the navigator is box-selected, based on the camera output, in the single view displayed on the ground station. A fast tracking algorithm then tracks the navigator, yielding a low-resolution video sequence centered on the navigator.
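As an illustration of step 1, the sketch below cuts a reduced-resolution view out of a frame around a tracked bounding box. It is only a minimal sketch under assumptions: the function name `centered_low_res_crop`, the `(x, y, w, h)` box format, grayscale frames, and downsampling by block averaging are all hypothetical choices, since the text does not say how the tracker reports its result or how the low-resolution sequence is produced.

```python
import numpy as np

def centered_low_res_crop(frame, box, scale=4):
    """Crop a grayscale frame to a tracked box and downsample it.

    frame: 2-D array (grayscale image).
    box:   (x, y, w, h) in pixels; an assumed tracker output format.
    scale: assumed downsampling factor (block averaging).
    """
    x, y, w, h = box
    height, width = frame.shape[:2]

    # Clamp the box to the frame so slicing never goes out of range.
    x0, y0 = max(0, x), max(0, y)
    x1, y1 = min(width, x + w), min(height, y + h)
    crop = frame[y0:y1, x0:x1].astype(np.float32)

    # Trim so both dimensions are divisible by the scale factor.
    ch = (crop.shape[0] // scale) * scale
    cw = (crop.shape[1] // scale) * scale
    crop = crop[:ch, :cw]

    # Average every scale x scale block to get the low-resolution view.
    return crop.reshape(ch // scale, scale, cw // scale, scale).mean(axis=(1, 3))
```

Calling this once per frame with the tracker's latest box would give a sequence of small images that stay centered on the tracked person.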

[0035] 2) A block-matching stereo algorithm performs stereo matching on the low-resolution part, and this matching is accelerated by the graphics processor. At the same time, the parameters of this step provide the maximum and minimum paralla...
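To make the idea of block matching in step 2 concrete, here is a minimal CPU sketch in NumPy. It is not the patent's implementation: the sum-of-absolute-differences cost, the function names `box_sum` and `block_match_disparity`, and the parameters `min_disp`, `max_disp`, and `block` are assumptions chosen for illustration, and the GPU acceleration mentioned in the text is not reproduced. The inputs are assumed to be rectified grayscale images with `0 <= min_disp <= max_disp < width`.

```python
import numpy as np

def box_sum(img, half):
    """Sum of img over a (2*half+1) square window, using an integral image."""
    padded = np.pad(img, half, mode="edge")
    integral = np.pad(padded, ((1, 0), (1, 0))).cumsum(0).cumsum(1)
    k = 2 * half + 1
    return (integral[k:, k:] - integral[:-k, k:]
            - integral[k:, :-k] + integral[:-k, :-k])

def block_match_disparity(left, right, min_disp=0, max_disp=16, block=5):
    """Brute-force block matching with a SAD cost (assumed cost function).

    For each pixel of the left image, pick the disparity d in
    [min_disp, max_disp] whose block in the right image, shifted by d,
    has the lowest sum of absolute differences.
    """
    left = left.astype(np.float64)
    right = right.astype(np.float64)
    h, w = left.shape
    half = block // 2

    best_cost = np.full((h, w), np.inf)
    disparity = np.zeros((h, w), dtype=np.int32)

    for d in range(min_disp, max_disp + 1):
        # Left pixel (y, x) is compared with right pixel (y, x - d).
        shifted = np.empty_like(right)
        if d > 0:
            shifted[:, d:] = right[:, :w - d]
            shifted[:, :d] = right[:, :1]  # replicate the left border
        else:
            shifted[:] = right

        cost = box_sum(np.abs(left - shifted), half)

        # Keep the disparity with the lowest cost seen so far.
        better = cost < best_cost
        best_cost[better] = cost[better]
        disparity[better] = d

    return disparity
```

Narrowing `min_disp` and `max_disp` shrinks the loop, which is one reason a coarse low-resolution pass that bounds the disparity range can make a later pass cheaper.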
