Vision-lidar fusion method and system based on deep canonical correlation analysis

A canonical correlation analysis and lidar technology, applied in the field of vision-lidar fusion, that addresses problems such as the weaknesses of individual sensors and detection system failures, and improves detection accuracy.


Examples

Embodiment 1

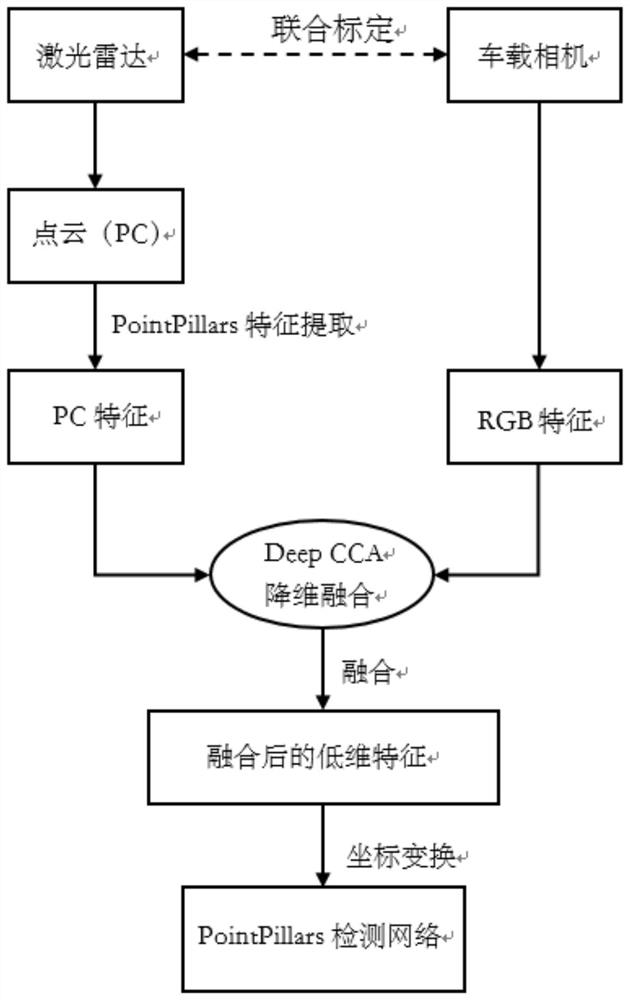

[0059] As shown in Figure 1, Embodiment 1 of the present invention provides a vision-lidar fusion method based on deep canonical correlation analysis, including the following steps:

[0060] Step 1. Collect the lidar point cloud and camera images in the autonomous driving scene. Calibration and alignment of the two sensors are assumed to have been completed. So that the algorithm can be verified openly, the relevant experiments are carried out on the public KITTI dataset.

[0061] Step 2. Fuse the lidar point cloud data with the RGB data.

[0062] 1) The original lidar data is a point cloud. A point cloud in KITTI can be expressed as a matrix of shape [N, 4], where N is the number of points in one frame of the scene and each point has four features [x, y, z, i]: the spatial coordinates x, y, z and the laser reflection intensity i;

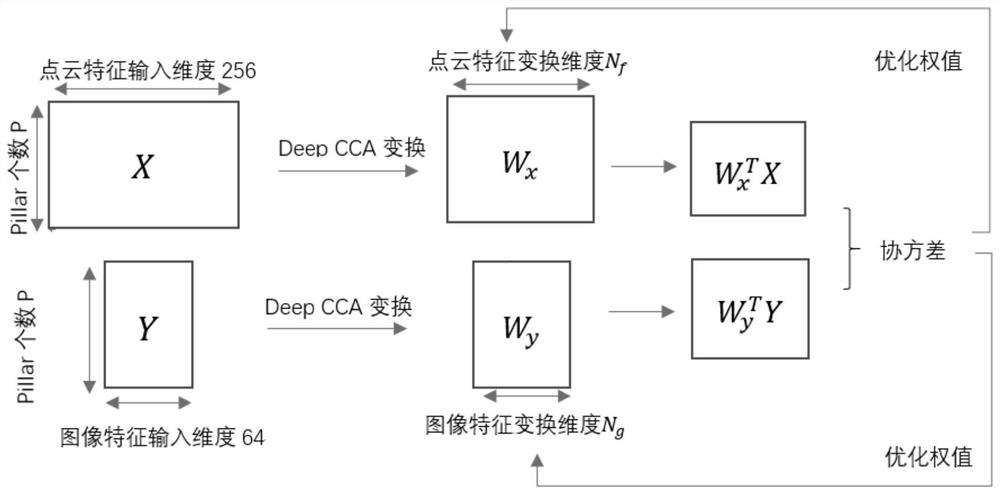

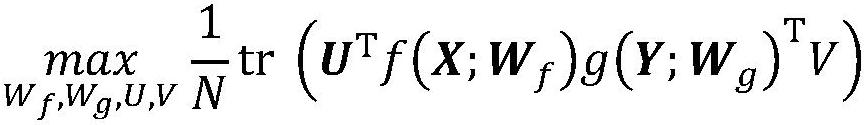

[0063] 2) Given 2 sets of N vectors: x represents image features, and y represents point cloud features. ...
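To make the pairing of the two feature sets concrete, the following is a minimal sketch of classical linear CCA in NumPy. It is not the deep canonical correlation analysis model of this embodiment. The function name `linear_cca`, the regularization term `reg`, and the assumption that x and y are row-aligned arrays of shape [N, dx] and [N, dy] are illustrative choices, not taken from the patent.

```python
import numpy as np


def linear_cca(x, y, reg=1e-6):
    """Classical linear CCA between row-aligned feature sets.

    x: [N, dx] array (e.g. image features), y: [N, dy] array (e.g. point
    cloud features). Returns the canonical correlations s (descending)
    and the projection matrices a (for x) and b (for y).
    """
    n = x.shape[0]
    xc = x - x.mean(axis=0)  # center each feature dimension
    yc = y - y.mean(axis=0)

    # Regularized covariance and cross-covariance estimates.
    sxx = xc.T @ xc / (n - 1) + reg * np.eye(x.shape[1])
    syy = yc.T @ yc / (n - 1) + reg * np.eye(y.shape[1])
    sxy = xc.T @ yc / (n - 1)

    def inv_sqrt(m):
        # Inverse square root of a symmetric positive-definite matrix.
        w, v = np.linalg.eigh(m)
        return v @ np.diag(w ** -0.5) @ v.T

    sxx_is, syy_is = inv_sqrt(sxx), inv_sqrt(syy)

    # Singular values of the whitened cross-covariance are the
    # canonical correlations.
    u, s, vt = np.linalg.svd(sxx_is @ sxy @ syy_is, full_matrices=False)
    a = sxx_is @ u
    b = syy_is @ vt.T
    return s, a, b
```

Deep CCA generally replaces these fixed linear projections with learned nonlinear networks while keeping the same objective of maximizing correlation between the two projected views.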

Embodiment 2

[0079] Embodiment 2 of the present invention provides a vision-lidar fusion system based on deep canonical correlation analysis. The system includes a pre-established and trained fusion model, an acquisition module, an RGB feature extraction module, a point cloud feature extraction module, a fusion output module, and a target detection module, where:

[0080] The acquisition module is used to synchronously collect RGB images and point cloud data of the road surface;

[0081] The RGB feature extraction module is used to perform feature extraction on RGB images to obtain RGB features;

[0082] The point cloud feature extraction module is used to sequentially perform coordinate system conversion and rasterization processing on the point cloud data, and then perform feature extraction to obtain point cloud features;

[0083] The fusion output module is used to input point cloud features and RGB features into a pre-established and trained fusion model at the same time, and output f...
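The rasterization step performed by the point cloud feature extraction module can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the function name `rasterize_bev`, the grid ranges, the cell size, and the two per-cell channels (point count and maximum intensity) are all hypothetical, and the points are assumed to be an [N, 4] array of [x, y, z, i] that has already been converted to the target coordinate system.

```python
import numpy as np


def rasterize_bev(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0), cell=0.5):
    """Rasterize an [N, 4] point cloud (x, y, z, i) onto a 2D grid.

    Returns an [H, W, 2] array holding, per cell, the number of points
    and the maximum reflection intensity. Ranges and cell size are in
    the same units as the point coordinates.
    """
    x, y, inten = points[:, 0], points[:, 1], points[:, 3]

    # Discard points that fall outside the grid.
    keep = (
        (x >= x_range[0]) & (x < x_range[1])
        & (y >= y_range[0]) & (y < y_range[1])
    )
    x, y, inten = x[keep], y[keep], inten[keep]

    h = int(round((x_range[1] - x_range[0]) / cell))
    w = int(round((y_range[1] - y_range[0]) / cell))

    # Map continuous coordinates to integer cell indices.
    rows = np.clip(((x - x_range[0]) / cell).astype(np.int64), 0, h - 1)
    cols = np.clip(((y - y_range[0]) / cell).astype(np.int64), 0, w - 1)

    count = np.zeros((h, w), dtype=np.float32)
    max_i = np.zeros((h, w), dtype=np.float32)
    np.add.at(count, (rows, cols), 1.0)        # unbuffered accumulation per cell
    np.maximum.at(max_i, (rows, cols), inten)  # running maximum per cell
    return np.stack([count, max_i], axis=-1)
```

A dense grid of this kind gives the point cloud a regular, image-like layout, so it can be passed to a convolutional feature extractor in the same way as the RGB image.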

Embodiment 3

[0086] A computer device includes a memory, a processor, and a computer program stored on the memory and operable on the processor, and the method of Embodiment 1 is implemented when the processor executes the computer program.
