Data annotation method and device, and data annotation model training method and device

A data labeling model and its training method, applied in the field of data processing. The technology addresses problems such as low labeling efficiency and the inability of manual labeling to achieve fine-grained labeling, with the effect of improving how closely the labeling fits the target and improving labeling accuracy and speed.

Examples

Embodiment 1

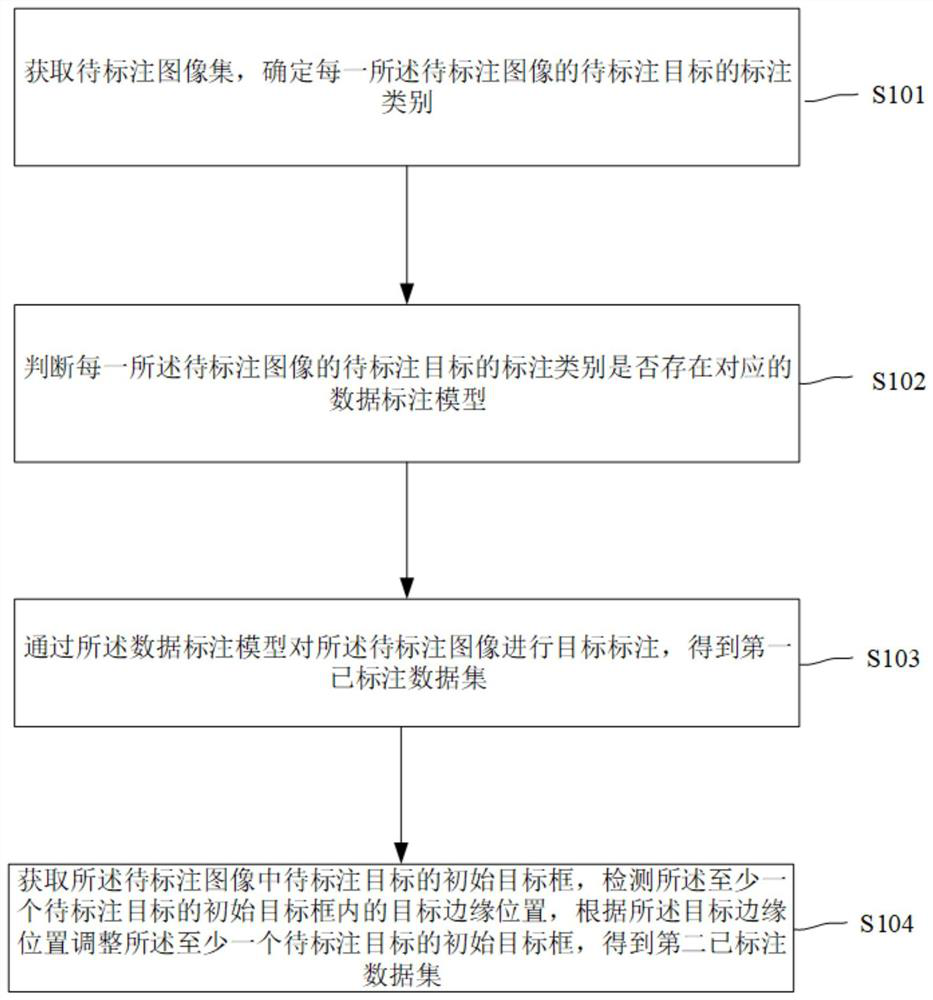

[0037] This embodiment of the application provides a data labeling method. Referring to Figure 1, the method includes the following steps S101 to S104:

[0038] Step S101, acquiring a set of images to be labeled, and determining the labeling category of the target to be labeled in each of the images to be labeled.

[0039] Step S102, judging whether a corresponding data labeling model exists for the labeling category of the target to be labeled in each image to be labeled.

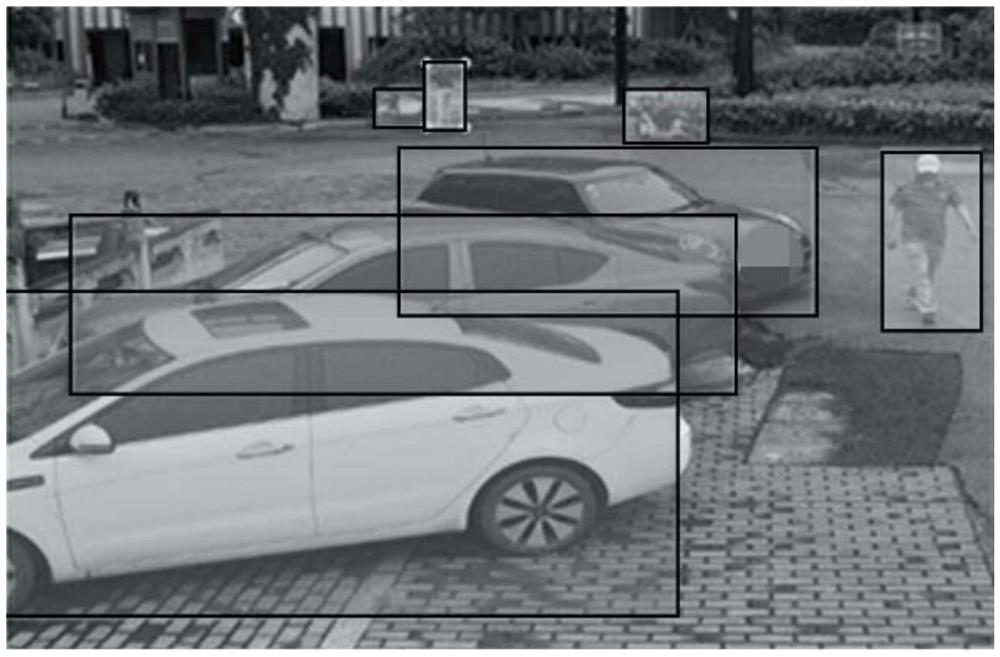

[0040] Step S103, if yes, perform object labeling on the image to be labeled by using the data labeling model to obtain a first labeled data set.

[0041] Step S104, if not, obtain the initial target frame of the target to be labeled in the image to be labeled, detect the target edge position within the initial target frame of the at least one target to be labeled, and adjust the initial target frame of the at least one target to be labeled according to the target edge position to obtain the secon...
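The frame adjustment in step S104 can be illustrated with a minimal sketch. The excerpt does not say how the target edge position is detected, so the code below assumes a simple intensity-step threshold on a grayscale image stored as nested lists. The function name `adjust_box`, the `threshold` parameter, and the half-open `(x_min, y_min, x_max, y_max)` frame convention are all hypothetical and are not taken from the application.

```python
from typing import List, Tuple

# Hypothetical convention: (x_min, y_min, x_max, y_max), max coordinates exclusive.
Box = Tuple[int, int, int, int]


def adjust_box(image: List[List[int]], initial_box: Box, threshold: int = 50) -> Box:
    """Shrink a loose initial frame to the edges found inside it.

    Assumption: an "edge" is any pixel whose intensity differs from its left
    or upper neighbour by more than `threshold`. A backward difference flags
    the first pixel on the far side of each step, so the extreme flagged
    coordinates already form a half-open frame around the target.
    """
    x0, y0, x1, y1 = initial_box
    edge_xs: List[int] = []
    edge_ys: List[int] = []
    for y in range(y0, y1):
        for x in range(x0, x1):
            # Only compare against neighbours that lie inside the initial frame.
            dx = abs(image[y][x] - image[y][x - 1]) if x > x0 else 0
            dy = abs(image[y][x] - image[y - 1][x]) if y > y0 else 0
            if max(dx, dy) > threshold:
                edge_xs.append(x)
                edge_ys.append(y)
    if not edge_xs:
        # No edge detected: keep the initial frame unchanged.
        return initial_box
    return (min(edge_xs), min(edge_ys), max(edge_xs), max(edge_ys))


# Synthetic 10x8 image: a bright 3x3 target on a dark background.
image = [[0] * 10 for _ in range(8)]
for row in range(2, 5):
    for col in range(3, 6):
        image[row][col] = 200

loose_box = (1, 1, 8, 7)          # initial frame with slack on every side
tight_box = adjust_box(image, loose_box)
print(tight_box)                  # (3, 2, 6, 5)
```

This sketch only behaves well when the initial frame leaves at least one pixel of margin around the target on every side; a production system would more likely use a dedicated edge detector and handle targets that touch the frame boundary.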

Embodiment 2

[0074] Based on the same idea, and referring to Figure 9, the application also proposes a data labeling device, including:

[0075] An acquisition module 901, configured to acquire a set of images to be labeled, and determine the labeling category of the target to be labeled for each of the images to be labeled;

[0076] A judging module 902, configured to judge whether a corresponding data labeling model exists for the labeling category of the target to be labeled in each image to be labeled;

[0077] A first labeling module 903, configured to perform target labeling on the image to be labeled through the data labeling model to obtain a first labeled data set;

[0078] A second labeling module 904, configured to obtain the initial target frame of the target to be labeled in the image to be labeled, detect the target edge position within the initial target frame of the at least one target to be labeled, and adjust, according to the target edge position, the initial target frame of the at least...
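The division of work among modules 901 to 904 mirrors steps S101 to S104 and can be sketched as a small dispatcher. This is an illustrative outline only: `ImageItem`, `label_images`, the `models` registry, and the `fallback` callable are hypothetical names, and the stand-in labelers return fixed frames rather than running a real model or edge detector.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Box = Tuple[int, int, int, int]


@dataclass
class ImageItem:
    """Hypothetical record produced by the acquisition module (901)."""
    name: str
    category: str  # labeling category of the target to be labeled


Labeler = Callable[[ImageItem], Box]
Labeled = List[Tuple[str, Box]]


def label_images(
    items: List[ImageItem],
    models: Dict[str, Labeler],
    fallback: Labeler,
) -> Tuple[Labeled, Labeled]:
    """Route each image to a category model if one exists, else to the fallback."""
    first_set: Labeled = []   # labeled by an existing data labeling model (903)
    second_set: Labeled = []  # labeled by initial frame plus edge adjustment (904)
    for item in items:
        model = models.get(item.category)  # judging module (902)
        if model is not None:
            first_set.append((item.name, model(item)))
        else:
            second_set.append((item.name, fallback(item)))
    return first_set, second_set


# Stand-in labelers that return fixed frames, for illustration only.
models = {"car": lambda item: (0, 0, 10, 10)}
fallback = lambda item: (1, 1, 5, 5)

items = [ImageItem("a.png", "car"), ImageItem("b.png", "bike")]
first_set, second_set = label_images(items, models, fallback)
print(first_set)   # [('a.png', (0, 0, 10, 10))]
print(second_set)  # [('b.png', (1, 1, 5, 5))]
```

Keeping the model lookup in a dictionary keyed by category means a newly trained model for a category can be registered without changing the routing logic.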

Embodiment 3

[0080] This embodiment also provides an electronic device. Referring to Figure 10, it includes a memory 1004 and a processor 1002; the memory 1004 stores a computer program, and the processor 1002 is configured to run the computer program to perform the steps in any one of the above method embodiments.

[0081] Specifically, the processor 1002 may include a central processing unit (CPU) or an application-specific integrated circuit (ASIC), or may be configured as one or more integrated circuits that implement the embodiments of the present application.

[0082] The memory 1004 may include mass storage for data or instructions. By way of example and not limitation, the memory 1004 may include a hard disk drive (HDD), a floppy disk drive, a solid-state drive (SSD), flash memory, an optical disk, a magneto-optical disk, magnetic tape, or Universal Seria...
