Moreover, the increased functionality and robustness of today's systems and applications, the continued demand for additional features and functionality, and the lack of uniform standards adopted and implemented by the divergent devices, applications, systems, and components that communicate in the operation of such systems and applications have led to significant deterioration in these critical performance factors: speed (end-to-end response time), reliability, and security.

Such solutions, however, have inherent limits on the performance gains they can deliver.

Most notably, the typical “bottlenecks” limiting data transport and processing speeds in computer networks and communication systems are not the hardware being used but the software and, more particularly, the software architecture that drives the transport and processing of data from end point to end point.

Traditional transport software implementations suffer from design flaws; a lack of standardization and compatibility across platforms, networks, and systems; and the use and transport of unnecessary overhead, such as control data and communication protocol layers.

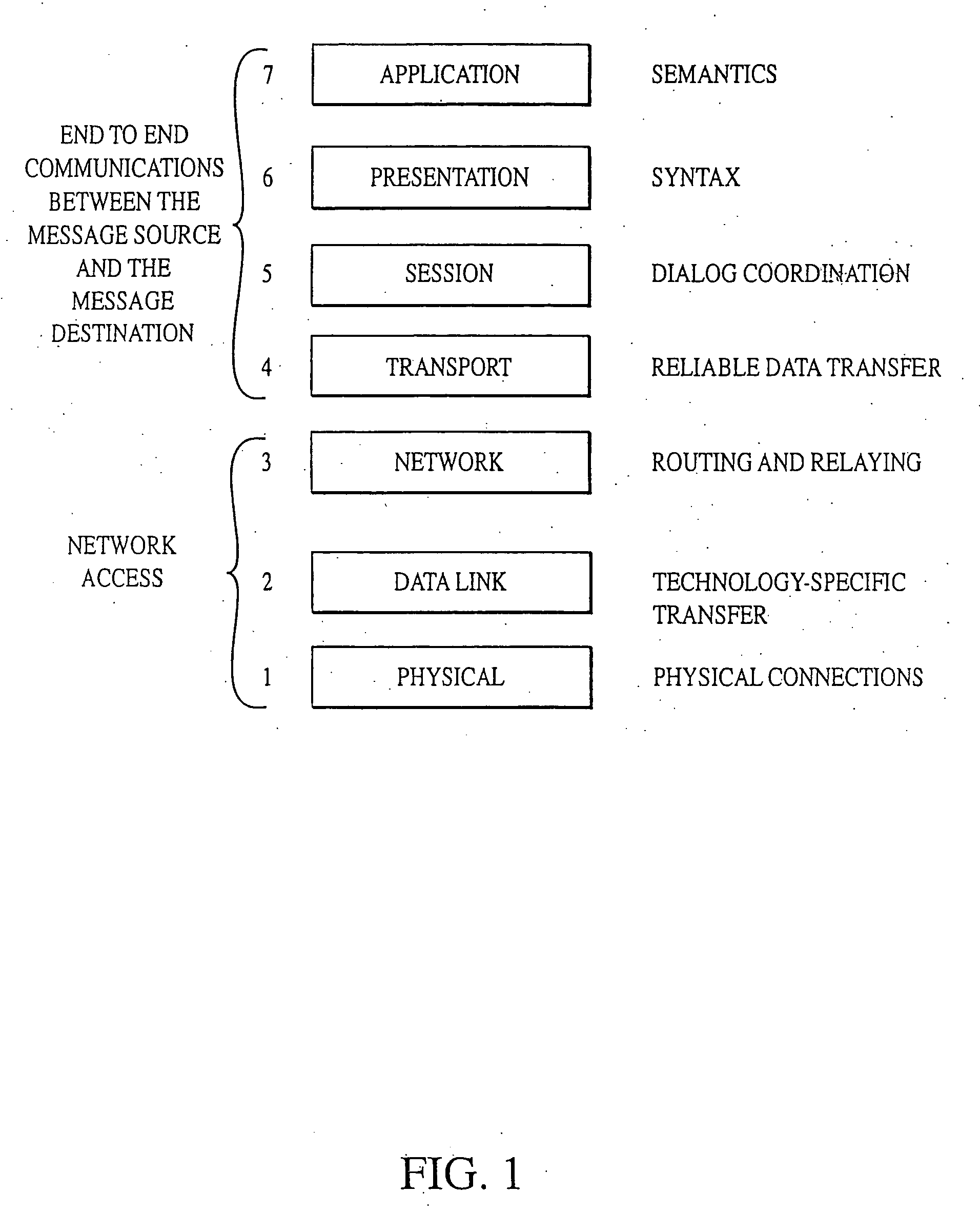

These drawbacks are due, in large part, to a lack of industry agreement on a universal protocol or language to be used in the overall process of transporting data between a message source and a message destination.

As a consequence of this lack of a universal protocol or language, numerous and varying protocols and languages have been, and continue to be, adopted and used, resulting in significant additional overhead, complexity, and a lack of standardization and compatibility across platforms, networks, and systems.

Moreover, this diversity of protocols and languages, and the lack of a universal language beyond the transport layer, forces the actual data being transported to be saddled with significant additional data that allows for translation as the data passes through the various layers of the communication stack.
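To make this layering overhead concrete, the following sketch models each protocol layer as adding a fixed-size header around whatever it carries and computes how much of the transmitted data is actual payload. It is a simplified illustration, not a model of any particular stack: 20 bytes is the minimum (option-free) header size for both TCP and IPv4, while the XML-envelope and HTTP-header sizes, and the `Layer`, `encapsulate`, and `payload_efficiency` names, are hypothetical. Link-layer framing is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    """One layer of a (hypothetical) protocol stack."""
    name: str
    header_bytes: int  # bytes this layer adds around whatever it carries


def encapsulate(payload_bytes: int, stack: list) -> int:
    """Total bytes on the wire after every layer in `stack` adds its header."""
    return payload_bytes + sum(layer.header_bytes for layer in stack)


def payload_efficiency(payload_bytes: int, stack: list) -> float:
    """Fraction of transmitted bytes that is actual application data."""
    return payload_bytes / encapsulate(payload_bytes, stack)


# The XML and HTTP sizes are assumptions for illustration only;
# 20 bytes is the minimum TCP header and the minimum IPv4 header.
VERBOSE_STACK = [
    Layer("XML envelope (assumed)", 300),
    Layer("HTTP headers (assumed)", 400),
    Layer("TCP (minimum header)", 20),
    Layer("IPv4 (minimum header)", 20),
]
LEAN_STACK = VERBOSE_STACK[2:]  # same transport, no extra translation layers

if __name__ == "__main__":
    payload = 64  # e.g. a short business record
    for label, stack in (("verbose", VERBOSE_STACK), ("lean", LEAN_STACK)):
        total = encapsulate(payload, stack)
        eff = payload_efficiency(payload, stack)
        print(f"{label}: {total} bytes on the wire, {eff:.1%} payload")
```

With these assumed sizes, a 64-byte record grows to 804 bytes (about 8% payload) on the verbose stack versus 104 bytes (about 62% payload) on the lean one; the ratio, not the specific numbers, is the point.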

The use of these numerous and varying protocols and languages, such as HTTP, WAP/WTP/WSP, XML, WML, HTML/SMTP/POP, COM, ADO, HL7, EDI, SOAP, Java, JDBC, ODBC, and OLE/DB, creates and, indeed, requires additional layers and additional data for translation and control. This adds overhead on top of the actual data being transported and complicates system design, deployment, operation, maintenance, and modification.

These deficiencies in traditional implementations lead to inefficient use of available bandwidth and processing capacity, and result in unsatisfactory response times. Even a significant hardware upgrade, such as greater processor power and speed or improved transport media and associated hardware, will provide little, if any, improvement in transport and processing speed, end-to-end response time, system reliability, or security.

With the explosion in the use of web-based protocols, yet another major deficiency has emerged in current implementations: transport/communication state processing is combined with application/presentation state processing.

This merging increases transport and application complexity, both in the amount of handshaking and in the amount of additional protocol data required.
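The cost of extra handshaking can be illustrated with a deliberately simple latency model in which every handshake or request/response exchange costs one network round trip and the transferred bytes cost their size divided by the bandwidth. The model, the `response_time_s` name, and all scenario numbers are assumptions for illustration; the model ignores server processing time, congestion control, and packet loss.

```python
def response_time_s(round_trips: int, rtt_s: float,
                    total_bytes: int, bandwidth_bps: float) -> float:
    """End-to-end time under a simplified model (an assumption):
    round trips x RTT, plus serialization time for all bytes sent."""
    return round_trips * rtt_s + (total_bytes * 8) / bandwidth_bps


RTT_S = 0.100  # assumed 100 ms round-trip time, e.g. a wireless link

# Hypothetical "chatty" exchange: several handshakes plus verbose protocol data.
chatty_1mbps = response_time_s(6, RTT_S, 20_000, 1_000_000)
chatty_2mbps = response_time_s(6, RTT_S, 20_000, 2_000_000)  # doubled bandwidth
# Hypothetical lean exchange: a single round trip carrying a compact message.
lean_1mbps = response_time_s(1, RTT_S, 2_000, 1_000_000)

print(f"chatty @ 1 Mbit/s: {chatty_1mbps:.3f} s")
print(f"chatty @ 2 Mbit/s: {chatty_2mbps:.3f} s")
print(f"lean   @ 1 Mbit/s: {lean_1mbps:.3f} s")
```

Under these assumptions, doubling the bandwidth trims the chatty exchange only from about 0.76 s to 0.68 s, because its six round trips (0.6 s) dominate, whereas reducing the handshaking and protocol data brings the same request down to about 0.12 s. This mirrors the argument that faster transport hardware alone does little when the software's exchange pattern is the bottleneck.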

As computer networks and communication systems continue to grow, with the addition of more devices, applications, interfaces, components, and systems, the transport and application complexity caused by merging transport/communication state processing with application/presentation state processing will grow to the point that all network and system resources are exhausted.

Another challenge for the industry's current momentum is adapting functionality to the emerging wireless communications industry.

The wireless devices used in this industry are small, with limited CPU capacity and limited onboard resources.

The wireless bandwidth currently available to these devices is also very limited and can be unstable, with a fluctuating signal.

The industry's future expansion cannot rely on software technologies that exhibit major inefficiency in either processing or bandwidth.

An example is the wireless industry's unsuccessful adoption of web-based technologies.

Early software projects in the wireless industry are producing unacceptable results and very low customer satisfaction.

This is because these technologies currently suffer from functional performance problems caused by their higher bandwidth requirements and substantially higher CPU requirements.

The use of these wireless solutions for internal business functions has been limited, in large part, by the absence of cost-effective, real-time wireless applications that function with 100% security and reliability.

The momentum of the wireless industry is failing to penetrate most of these markets.

Another challenge for the industry's current momentum is adapting functionality to legacy or mainframe systems.

Many current development efforts that apply these inefficient technologies, such as web-based technologies, to environments that require high efficiency are producing systems that do not provide adequate reliability or security for performing business-critical functions.

These systems are not fast enough to perform functions in real time because they add layers of processing that complicate and slow down the business functions.

Therefore, organizations are reluctant to apply these technologies to their mission-critical internal business functions.

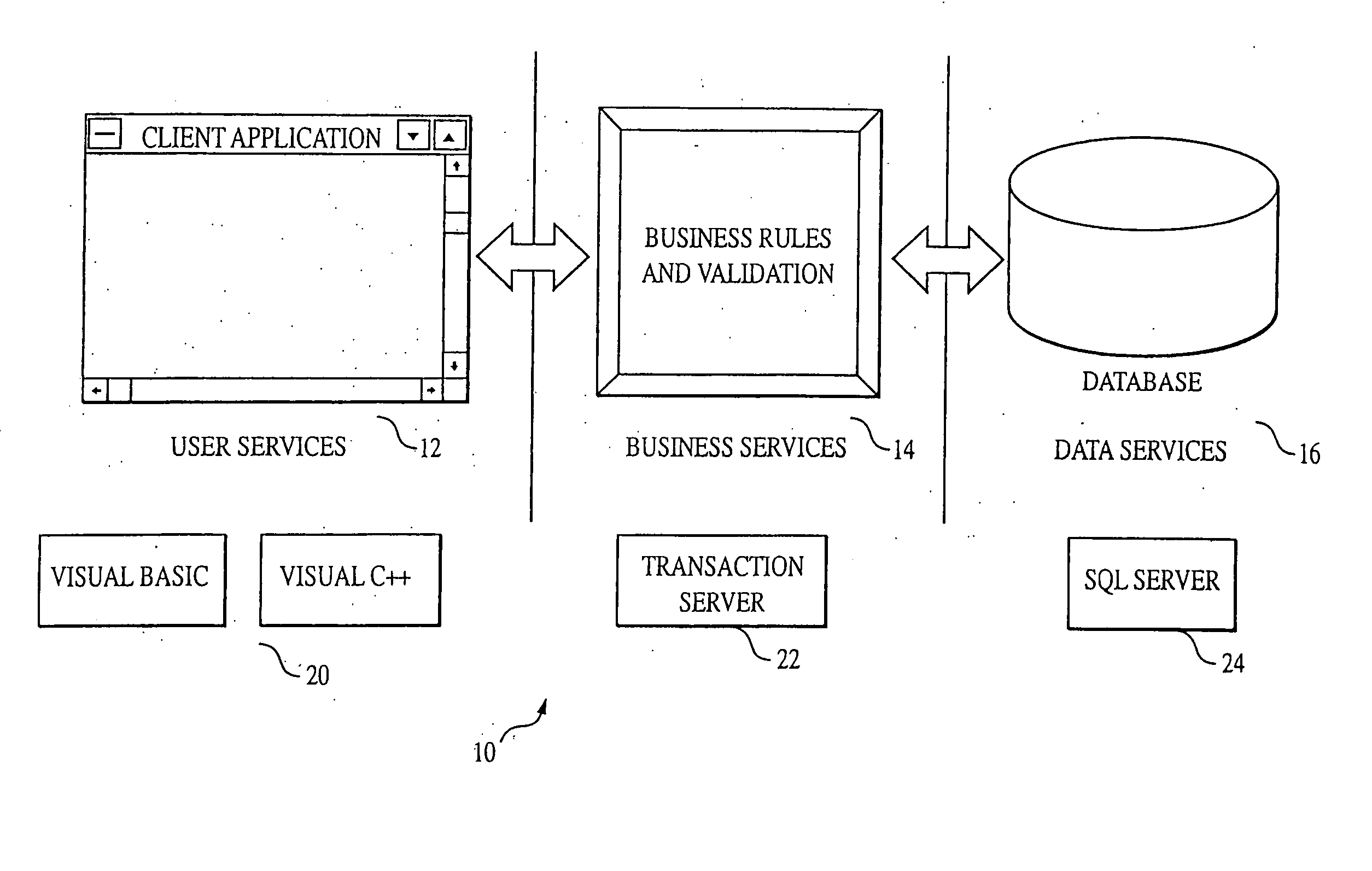

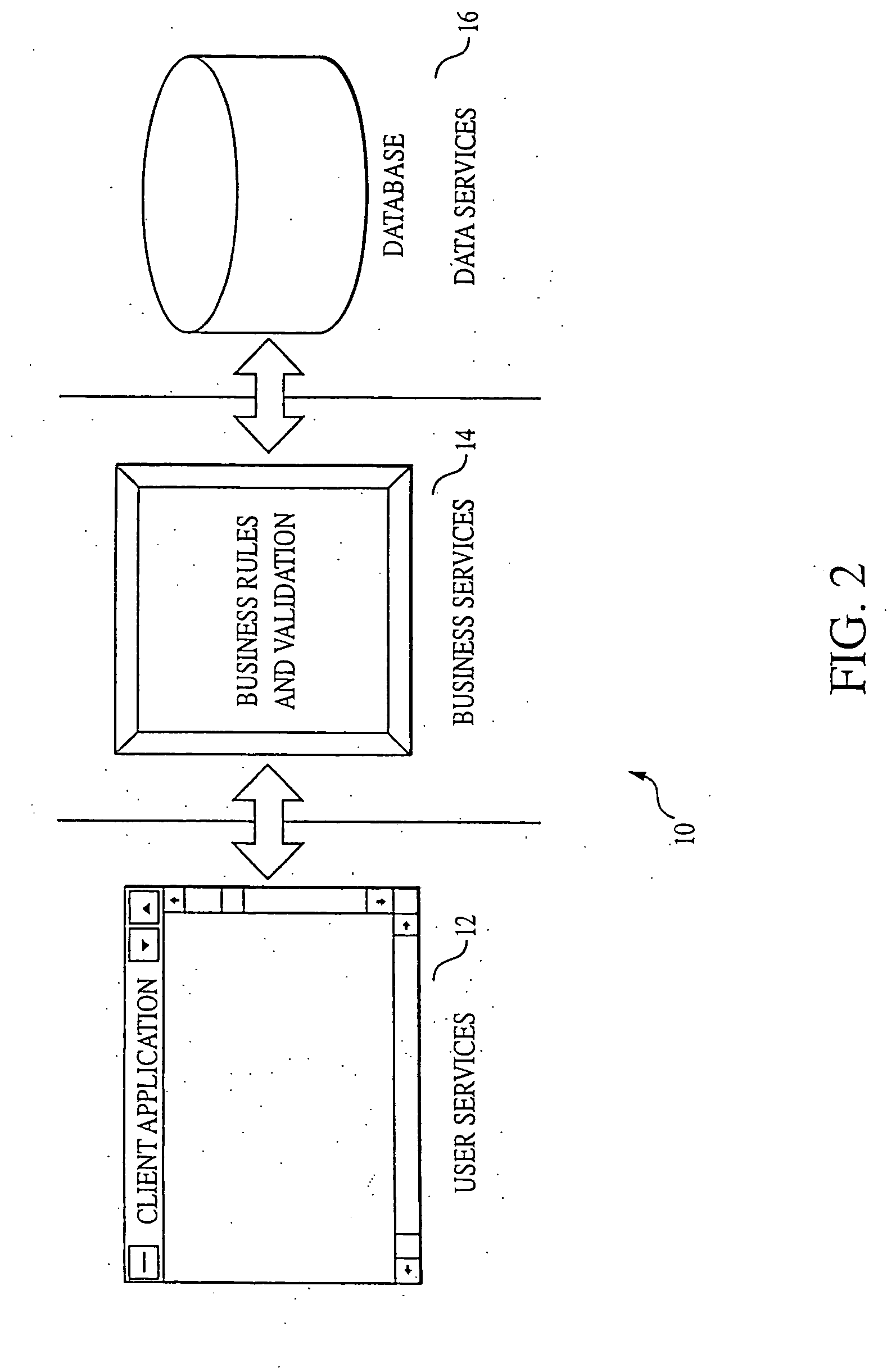

Three-tier client / server applications are rapidly displacing traditional two-tier applications, especially in large-scale systems involving complex distributed transactions.

The primary problem with the two-tier configuration is that the modules of the system that represent the business logic, by applying, for example, business rules, data validation, and other business semantics to the data (i.e., business services), must be implemented on either the client or the server.

When the server implements the modules that represent the business logic (i.e., business services, such as business rules implemented as stored procedures), it can become overloaded by having to process both database requests and the business rules.

This approach prevents the user from modifying the data beyond the constraints of the business, tightening the integrity of the system.
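The general three-tier idea can be sketched as follows: the client (presentation) tier calls a middle business tier that applies validation and business rules, and only that middle tier talks to the data tier, so neither the client nor the database server hosts the rules. This is a generic, minimal sketch assuming a single in-process call chain; the class names, the withdrawal rule, and the in-memory `DataTier` standing in for a database are hypothetical, and it does not represent MTS or any particular middleware.

```python
from dataclasses import dataclass, field


class ValidationError(Exception):
    """Raised when a request violates a business rule."""


@dataclass
class DataTier:
    """Stands in for the database server: stores and returns records only."""
    rows: dict[str, int] = field(default_factory=dict)

    def write(self, account: str, balance: int) -> None:
        self.rows[account] = balance

    def read(self, account: str) -> int:
        return self.rows.get(account, 0)


@dataclass
class BusinessTier:
    """Middle tier: enforces business rules before touching the data tier."""
    data: DataTier
    max_withdrawal: int = 500  # hypothetical per-transaction business rule

    def withdraw(self, account: str, amount: int) -> int:
        if amount <= 0:
            raise ValidationError("amount must be positive")
        if amount > self.max_withdrawal:
            raise ValidationError("amount exceeds per-transaction limit")
        balance = self.data.read(account)
        if amount > balance:
            raise ValidationError("insufficient funds")
        self.data.write(account, balance - amount)
        return balance - amount


# Client tier: presentation only; every request goes through the business tier.
db = DataTier({"alice": 1000})
service = BusinessTier(db)
print(service.withdraw("alice", 200))  # 800
try:
    service.withdraw("alice", 900)
except ValidationError as err:
    print("rejected:", err)  # rejected: amount exceeds per-transaction limit
```

Because the client can reach the data only through `BusinessTier`, a request that breaks a rule is rejected before the stored balance changes, while the data tier is left to handle storage alone.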

Such systems, designed using a three-tier architecture and implemented using middleware such as MTS (Microsoft Transaction Server), still suffer from the limitations and drawbacks associated with the software that drives the transport and processing of data from end point to end point: design flaws, increased complexity, a lack of standardization and compatibility across platforms, networks, and systems, and the use and transport of unnecessary overhead, such as control data and communication protocol layers, as discussed above.
