Video human action recognition method based on sparse subspace clustering

A recognition method based on clustering, applied in the fields of computer vision, pattern recognition, and video image processing. It addresses the high cost, algorithmic complexity, and unsatisfactory results of existing human behavior recognition approaches, with the stated aims of improving performance and accuracy and alleviating overfitting and gradient diffusion problems.

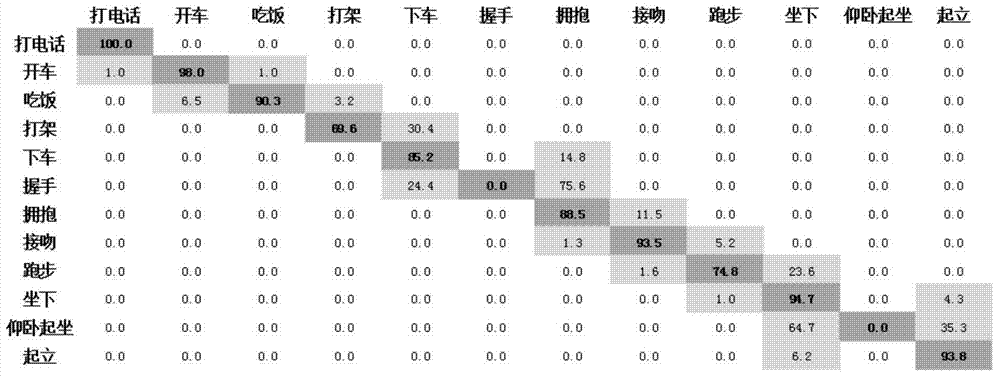


Embodiment Construction

[0032] The hardware configuration adopted by the present invention is: a Dell server with an 8-core 2.60 GHz CPU and 128 GB of memory. The software configuration is: the Windows Server 2003 operating system, the OpenCV open source computer vision library, the Microsoft Visual Studio 2010 development environment, the Matlab simulation environment, etc.

[0033] The implementation of the present invention comprises a training stage and a recognition stage. The specific implementation steps are as follows:

[0034] A. Establish a model for video human behavior recognition:

[0035] A1: Establish three-dimensional spatio-temporal subframe cubes: Divide each frame of the human behavior videos of the same category in the Hollywood2 human behavior database used for learning into subframes of the same size (16×16 pixels), and then use the time series length of a number of consecutive frames (10 frames) of the corresponding human behavior video as the thickness to establish a three-dimensional space-ti...
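As an illustration of step A1, the following is a minimal sketch of cutting a video into 16×16×10 spatio-temporal cubes. It is not the patent's implementation: the function name `extract_cubes`, the use of NumPy, the grayscale `(frames, height, width)` layout, the non-overlapping tiling, and the dropping of incomplete border regions are all assumptions made here for illustration, since the source text is truncated before it specifies them.

```python
import numpy as np


def extract_cubes(video, patch=16, depth=10):
    """Split a grayscale video into non-overlapping spatio-temporal cubes.

    video: array of shape (T, H, W). Hypothetical layout, assumed here.
    Returns an array of shape (num_cubes, depth, patch, patch).
    Frames or pixels that do not fill a whole cube are dropped (assumption).
    """
    t, h, w = video.shape
    nt, nh, nw = t // depth, h // patch, w // patch

    # Crop so that every axis is an exact multiple of the cube size.
    v = video[:nt * depth, :nh * patch, :nw * patch]

    # Split each axis into (number of blocks, block size).
    v = v.reshape(nt, depth, nh, patch, nw, patch)

    # Bring the three block indices to the front: (nt, nh, nw, depth, patch, patch).
    v = v.transpose(0, 2, 4, 1, 3, 5)

    # Flatten the block indices into a single cube index.
    return v.reshape(-1, depth, patch, patch)


# Synthetic stand-in for a decoded video: 20 frames of 32x48 pixels.
video = np.arange(20 * 32 * 48).reshape(20, 32, 48)
cubes = extract_cubes(video)
print(cubes.shape)
```

Each returned cube can then be flattened into a vector (here of length 10 × 16 × 16 = 2560) if a later step needs one feature vector per cube. In practice the frames would be decoded from the Hollywood2 video files, for example with OpenCV, rather than generated synthetically.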

