Hardware accelerator applied to binarized convolutional neural network and data processing method thereof

This technology relates to convolutional neural networks and hardware accelerators. It is applied in neural learning methods, electrical digital data processing, biological neural network models, and similar fields, and addresses problems such as increased computation, high resource consumption, and increased computing cycles.


Embodiment Construction

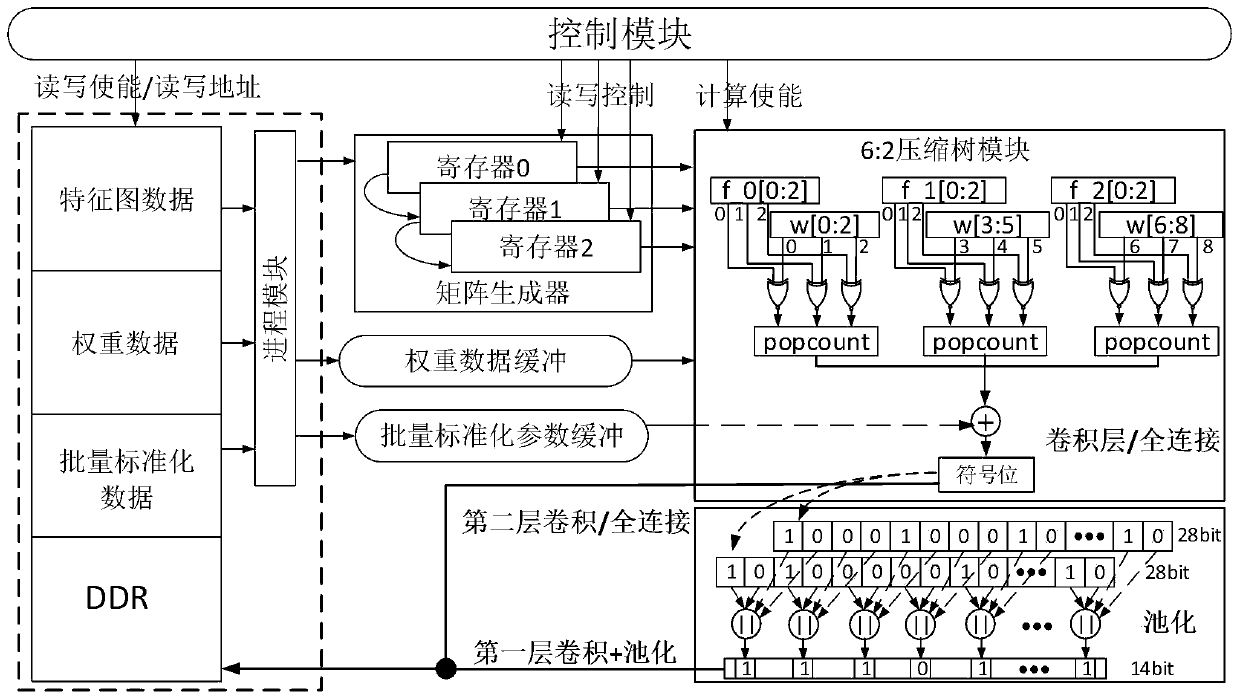

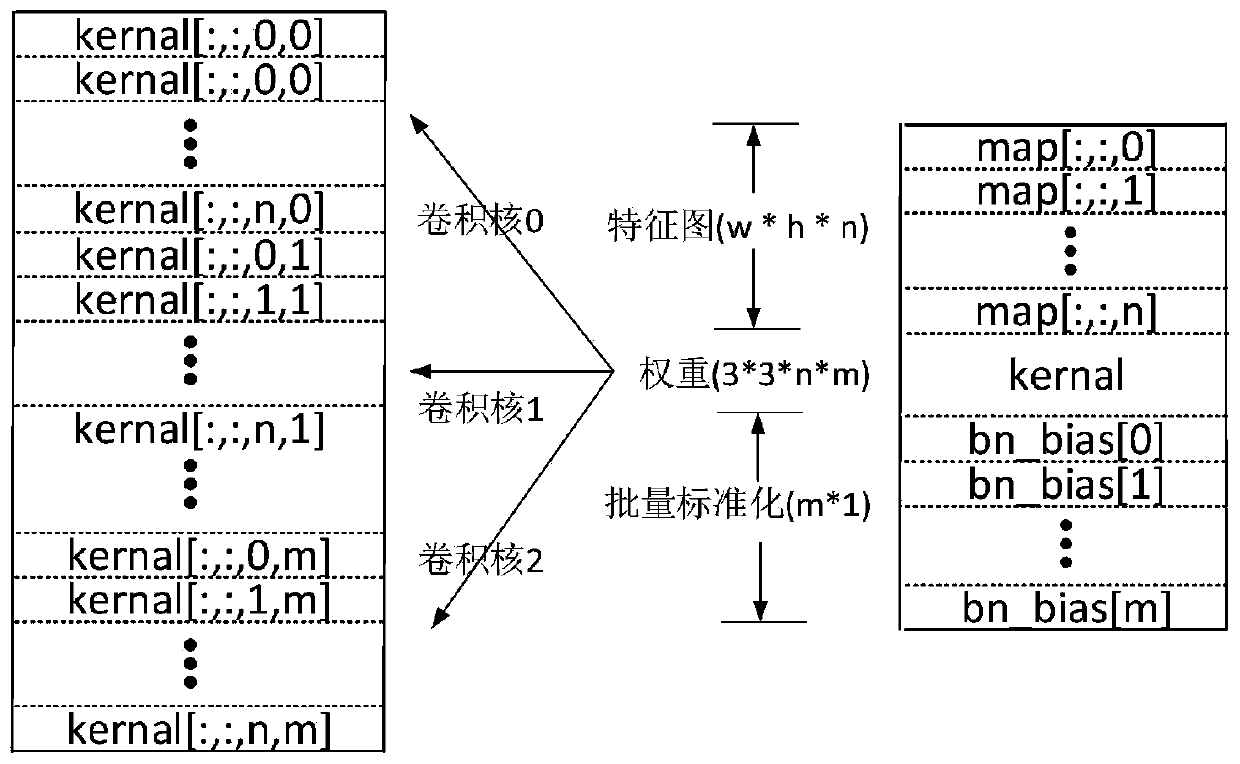

[0081] In this embodiment, the binarized convolutional neural network includes K binarized convolutional layers, K activation function layers, K batch normalization layers, K pooling layers, and a fully connected classification output layer. The training parameters of each batch normalization layer are combined into a single parameter.
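The paragraph does not state how the binarized convolution is evaluated or how the batch normalization parameters are merged. A minimal sketch, assuming the approach common in the binarized-network literature: weights and activations take values in {-1, +1} and are packed as bits, each dot product is computed with XNOR and popcount, and batch normalization followed by a sign activation is folded into one per-channel threshold. This is an illustration of that general technique, not the patent's circuit; all function names are hypothetical.

```python
import math


def pack_bits(values):
    """Pack a list of +1/-1 values into an int; bit i is 1 where values[i] == +1."""
    word = 0
    for i, v in enumerate(values):
        if v == 1:
            word |= 1 << i
    return word


def xnor_popcount_dot(a_bits, w_bits, n):
    """Dot product of two length-n {-1, +1} vectors packed as bits.

    XNOR sets a bit wherever the two signs agree (a +1 product). With p
    agreements the dot product is p - (n - p) = 2p - n.
    """
    mask = (1 << n) - 1
    matches = bin(~(a_bits ^ w_bits) & mask).count("1")
    return 2 * matches - n


def fold_bn_to_threshold(gamma, beta, mean, var, eps=1e-5):
    """Fold batch normalization plus sign activation into one threshold.

    sign(gamma * (x - mean) / sqrt(var + eps) + beta) >= 0 is equivalent to
    x >= tau when gamma > 0 and x <= tau when gamma < 0, where
    tau = mean - beta * sqrt(var + eps) / gamma. Assumes gamma != 0.
    """
    std = math.sqrt(var + eps)
    tau = mean - beta * std / gamma
    return tau, gamma > 0


def binary_neuron(x, tau, gamma_positive):
    """Binarized output (+1 or -1) from the integer pre-activation x."""
    if gamma_positive:
        return 1 if x >= tau else -1
    return 1 if x <= tau else -1


if __name__ == "__main__":
    # One 3x3 window of binarized activations and weights (hypothetical values).
    a = [1, -1, 1, 1, -1, -1, 1, -1, 1]
    w = [1, 1, -1, 1, -1, 1, 1, -1, -1]
    x = xnor_popcount_dot(pack_bits(a), pack_bits(w), len(a))
    print(x)  # → 1

    tau, positive = fold_bn_to_threshold(gamma=0.5, beta=-0.2, mean=0.3, var=4.0)
    print(binary_neuron(x, tau, positive))  # → -1
```

The design point is that the four batch normalization values (gamma, beta, mean, variance) collapse into one comparison against an integer popcount result, so the accelerator needs no multipliers or floating-point units at that stage.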

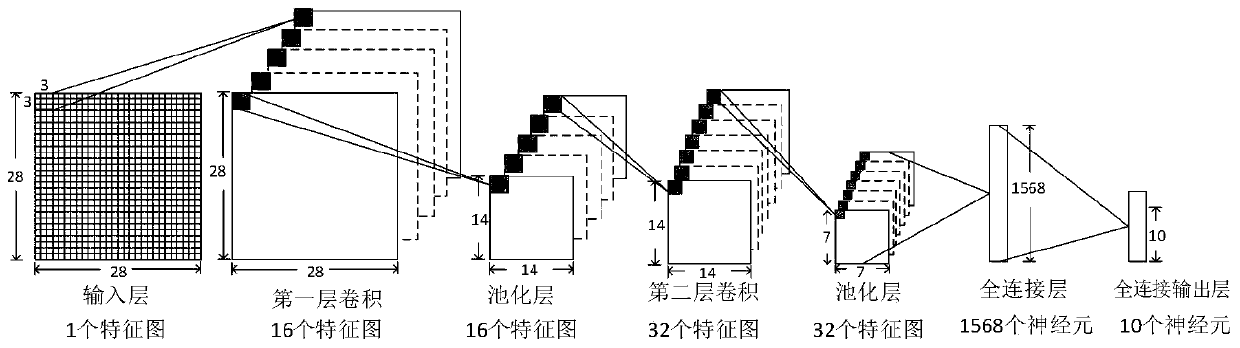

[0082] The convolutional neural network adopted in this embodiment is a handwritten digit recognition network whose structure is shown in Figure 1. It consists of an input layer, two convolutional layers, two pooling layers, and a fully connected layer. The first layer is a convolutional layer: its input is 784 neural nodes (a 28×28 image), the convolution kernel size is 3×3, the stride is 1, and it outputs 16 feature maps of 28×28, for a total of 12544 neural nodes. The second layer is a pooling layer whose input is the 16 28×28 feature maps output by...
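The node counts quoted for the first layer can be checked with the standard convolution output-size formula. A short sketch: the text gives only the kernel size and stride, so a padding of 1 (the value that keeps a 28×28 input at 28×28 under a 3×3 kernel with stride 1) is an assumption, as is the helper name `conv_output_size`.

```python
def conv_output_size(in_size, kernel, stride=1, padding=0):
    """Output size along one spatial axis of a convolution or pooling window."""
    return (in_size + 2 * padding - kernel) // stride + 1


input_side = 28  # 784 input nodes = 28 x 28
assert input_side * input_side == 784

# First layer: 3x3 kernel, stride 1; padding=1 is assumed so the output stays 28x28.
out_side = conv_output_size(input_side, kernel=3, stride=1, padding=1)
num_maps = 16
print(out_side, num_maps * out_side * out_side)  # → 28 12544
```

Without padding the same kernel would give 26×26 maps (16 × 676 = 10816 nodes), so the 12544 figure in the text implies some form of same-size padding.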
