Real-time pose estimation motion analysis method and system, computer device and storage medium

A motion analysis and pose estimation technology in the field of computer vision. It addresses problems such as joint connections that cannot be attributed to the correct person, the limited scope of existing human pose estimation, and the inability to analyze multiple people at the same time.


Examples

Embodiment 1

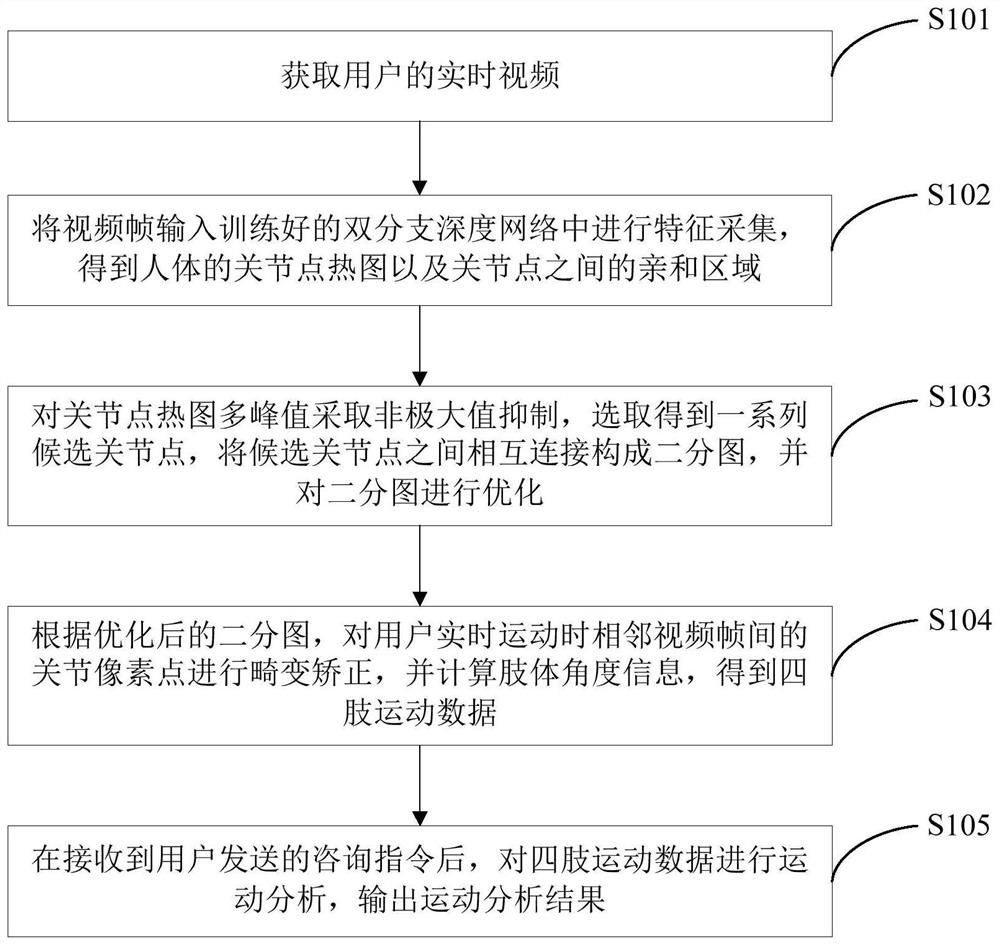

[0078] As shown in Figure 1, this embodiment provides a real-time pose estimation motion analysis method, which includes the following steps:

[0079] S101. Acquire a real-time video of a user.

[0080] In this embodiment, the real-time video of the user is acquired through a monocular camera.

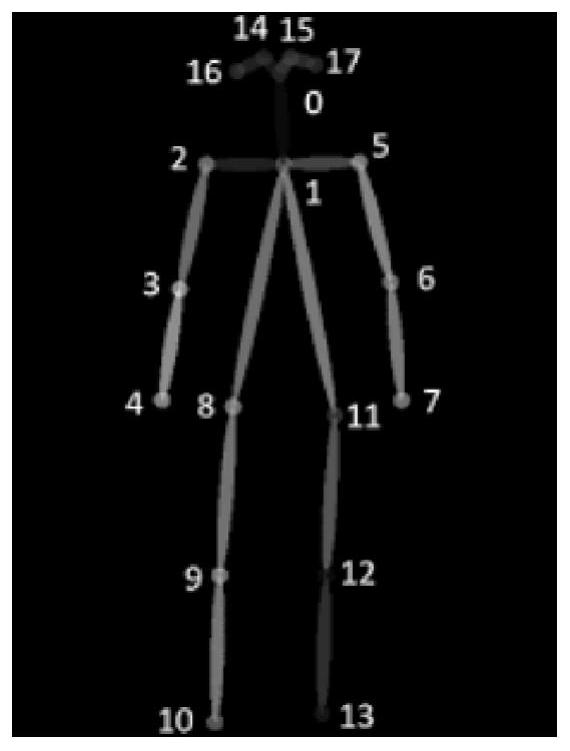

[0081] S102. Input each video frame into the trained dual-branch deep network for feature collection to obtain the heat maps of the human body's joint points and the affinity regions between the joint points.

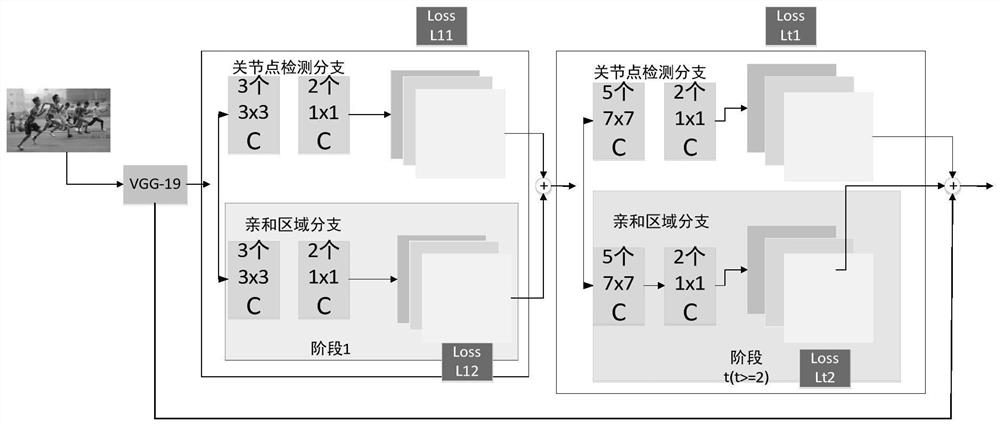

[0082] The dual-branch deep network in this embodiment is based on the VGG network, specifically VGG-19, with the structure shown in Figure 2. The upper branch of the VGG-19 network collects the positions of the human body's joint points, the lower branch collects the affinity areas between the joint points, and the prediction results of the previous stage are used for feature fusion of vid...
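The excerpt cuts off before the fusion rule is spelled out. Assuming the multi-stage, two-branch formulation popularized by OpenPose (part affinity fields), which this VGG-19 design closely resembles, the stage-wise fusion could be written as below. The symbols are assumptions, not taken from the source: $F$ is the VGG-19 feature map, $S^{t}$ and $L^{t}$ are the joint heat maps and affinity fields predicted at stage $t$, and $\rho^{t}$, $\phi^{t}$ are the upper and lower branches at that stage.

```latex
\begin{aligned}
S^{1} &= \rho^{1}(F), & L^{1} &= \phi^{1}(F), \\
S^{t} &= \rho^{t}\!\left(F,\, S^{t-1},\, L^{t-1}\right), &
L^{t} &= \phi^{t}\!\left(F,\, S^{t-1},\, L^{t-1}\right), \qquad t \ge 2.
\end{aligned}
```

Each later stage sees the backbone features together with both branches' previous predictions, which is one way the "prediction results of the previous stage" can feed the feature fusion.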

Embodiment 2

[0133] As shown in Figure 6, this embodiment provides a real-time pose estimation motion analysis system. The system includes a video acquisition module 601, a feature acquisition module 602, a limb connection module 603, a posture correction module 604 and a motion analysis module 605. The specific functions of each module are as follows:

[0134] The video acquisition module 601 is used to acquire the user's real-time video;

[0135] The feature acquisition module 602 is used to input each video frame into the trained dual-branch deep network for feature collection and obtain the heat maps of the human body's joint points and the affinity areas between the joint points;

[0136] The limb connection module 603 is used to apply non-maximum suppression to the multiple peaks in the joint point heat maps to select a set of candidate joint points, connect the candidate joint points to form a bipartite graph, and optimize the bipartite graph;
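The limb connection step can be sketched as follows. This is a minimal illustration, assuming an OpenPose-style approach rather than the patent's own (truncated) optimization: peaks are kept when they are local maxima in their 3x3 neighbourhood, each candidate limb is scored by sampling the affinity field along the segment between two joints, and the bipartite graph is resolved by greedy matching (a swapped-in simplification; a Hungarian-algorithm assignment is a common alternative). The names `find_peaks`, `paf_score` and `greedy_match`, the `threshold` and `samples` parameters, and the list-of-lists map layout are all hypothetical.

```python
import math


def find_peaks(heatmap, threshold=0.1):
    """Non-maximum suppression on one joint heat map: keep pixels that are
    at least `threshold` and not smaller than any of their 8 neighbours.
    Returns a list of (row, col, score) candidate joints."""
    rows, cols = len(heatmap), len(heatmap[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            v = heatmap[r][c]
            if v < threshold:
                continue
            neighbours = (
                heatmap[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
                if (rr, cc) != (r, c)
            )
            if all(v >= n for n in neighbours):
                peaks.append((r, c, v))
    return peaks


def paf_score(paf_x, paf_y, a, b, samples=10):
    """Average dot product between the affinity field and the unit vector
    from joint a to joint b, sampled at evenly spaced points on the segment.
    a and b are (row, col); paf_x / paf_y hold the column / row components."""
    (ra, ca), (rb, cb) = a, b
    dr, dc = rb - ra, cb - ca
    length = math.hypot(dr, dc)
    if length == 0:
        return 0.0
    ur, uc = dr / length, dc / length
    samples = max(samples, 2)  # need at least both endpoints
    total = 0.0
    for k in range(samples):
        t = k / (samples - 1)
        r, c = round(ra + t * dr), round(ca + t * dc)
        total += paf_x[r][c] * uc + paf_y[r][c] * ur
    return total / samples


def greedy_match(scores):
    """Greedy bipartite matching: accept edges (i, j) in descending score
    order, using each candidate of either joint type at most once."""
    used_a, used_b, matches = set(), set(), []
    for (i, j), s in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
        if s <= 0 or i in used_a or j in used_b:
            continue
        matches.append((i, j, s))
        used_a.add(i)
        used_b.add(j)
    return matches
```

In a full pipeline, `find_peaks` would run once per joint type, `paf_score` would fill the `scores` dictionary for every pair of candidates belonging to a limb (a pair of joint types), and `greedy_match` would keep the highest-affinity connections that do not reuse a joint.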

[0137] The posture correctio...
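The five modules form a sequential, per-frame pipeline. The sketch below shows only that wiring, under stated assumptions: every class, field and method name is hypothetical, each module is injected as a plain callable, and the motion analysis module is assumed to receive the sequence of corrected poses, since the excerpt truncates the descriptions of modules 604 and 605.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List


@dataclass
class MotionAnalysisSystem:
    """Hypothetical wiring of modules 601-605; each stage is a callable."""
    acquire_video: Callable[[], Iterable[Any]]    # 601: yields video frames
    collect_features: Callable[[Any], Any]        # 602: heat maps + affinity areas
    connect_limbs: Callable[[Any], Any]           # 603: NMS + bipartite graph
    correct_posture: Callable[[Any], Any]         # 604: details truncated in source
    analyse_motion: Callable[[List[Any]], Any]    # 605: assumed to take all poses

    def run(self) -> Any:
        poses = []
        for frame in self.acquire_video():
            features = self.collect_features(frame)
            skeletons = self.connect_limbs(features)
            poses.append(self.correct_posture(skeletons))
        return self.analyse_motion(poses)
```

Injecting the stages as callables keeps each module independently replaceable, for example swapping a stub feature extractor for the trained dual-branch network without touching the rest of the pipeline.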

Embodiment 3

[0141] This embodiment provides a computer device, which may be a computer. As shown in Figure 7, a processor 702, a memory, an input device 703, a display 704 and a network interface 705 are connected through a system bus 701. The processor provides computing and control capabilities. The memory includes a non-volatile storage medium 706 and an internal memory 707: the non-volatile storage medium 706 stores an operating system, a computer program and a database, and the internal memory 707 provides an environment for running the operating system and the computer program stored in the non-volatile storage medium. When the processor 702 executes the computer program, it implements the real-time pose estimation motion analysis method of Embodiment 1 above, as follows:

[0142] Obtain the user's real-time video;

[0143] Input the video frame into the trained dual-branch deep network for feature collection, and obtain the joint point heat map of the h...
