A Neural Network-Based Rotation Difference Correction Method for Multimodal Remote Sensing Images

This method applies neural networks to remote sensing images in the field of remote sensing image processing. It addresses problems such as inaccurate geographic information, unusable data, and failed algorithms, and it simplifies the network structure, improves generality, and eliminates correction errors.


Embodiment Construction

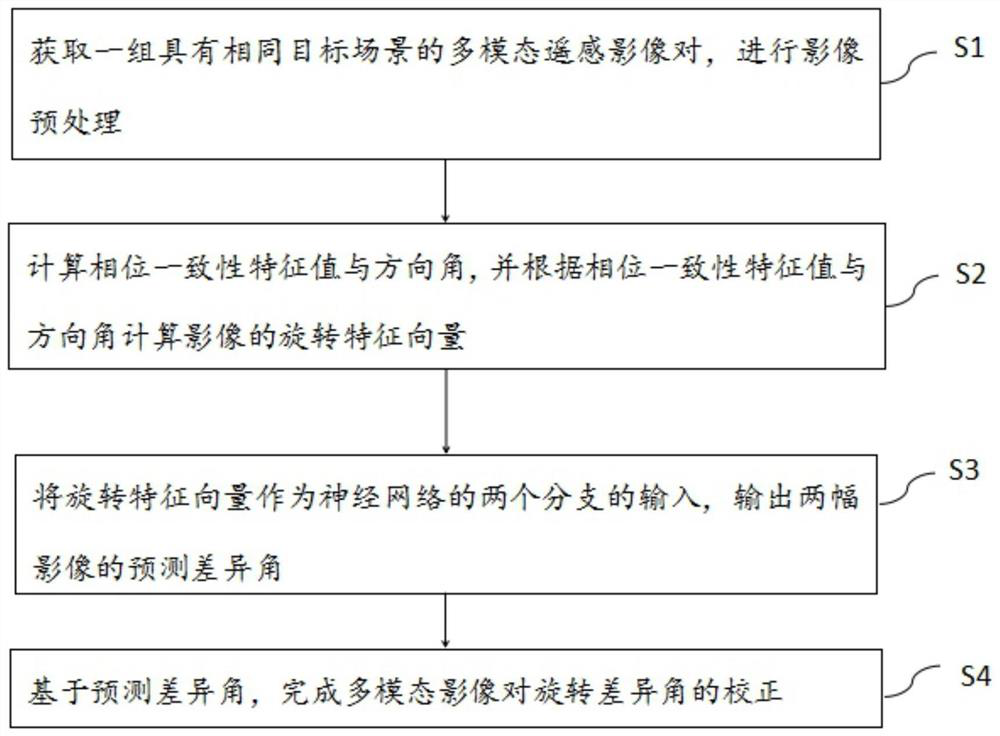

[0046] S4: Based on the predicted difference angle, correct the rotation difference between the multimodal remote sensing images.
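Step S4 does not say how the correction itself is carried out. The sketch below shows one minimal way to do it, assuming the predicted difference angle is in degrees, that it uses the same sign convention as the hypothetical `rotate_image` helper (positive means clockwise as displayed), and that correction simply means rotating the sensed image back by that angle about its centre with nearest-neighbour resampling. The function names, the zero fill, and the same-size output are illustrative choices, not details from the patent.

```python
import numpy as np


def rotate_image(image: np.ndarray, angle_deg: float, fill: float = 0.0) -> np.ndarray:
    """Rotate a 2-D image by angle_deg about its centre, keeping the same shape.

    Positive angles turn the content clockwise as displayed (row index grows
    downward). Uses inverse mapping with nearest-neighbour sampling; output
    pixels whose source falls outside the image are set to `fill`.
    """
    h, w = image.shape
    theta = np.deg2rad(angle_deg)
    cos_t, sin_t = np.cos(theta), np.sin(theta)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    rows, cols = np.indices((h, w), dtype=float)
    dx, dy = cols - cx, rows - cy
    # Inverse rotation: for each output pixel, find its source location.
    src_x = np.rint(cx + dx * cos_t + dy * sin_t).astype(int)
    src_y = np.rint(cy - dx * sin_t + dy * cos_t).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.full((h, w), fill, dtype=float)
    out[valid] = image[src_y[valid], src_x[valid]]
    return out


def correct_rotation(sensed: np.ndarray, predicted_angle_deg: float) -> np.ndarray:
    """Hypothetical step S4: undo the predicted rotation difference."""
    return rotate_image(sensed, -predicted_angle_deg)


if __name__ == "__main__":
    img = np.arange(16).reshape(4, 4)
    rotated = rotate_image(img, 90)
    print(correct_rotation(rotated, 90))
```

For arbitrary angles, bilinear interpolation and an enlarged output canvas would preserve more content; nearest-neighbour sampling is used here only to keep the sketch short.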

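[0061] $$[E_{s,o}(x, y),\ O_{s,o}(x, y)] = [I(x, y) * L^{\mathrm{even}}_{s,o}(x, y),\ I(x, y) * L^{\mathrm{odd}}_{s,o}(x, y)]$$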
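Paragraphs [0061] and [0062] appear to be fragments of the standard filter-response step of phase-congruency analysis. In that step, the image I(x, y) is convolved with a quadrature pair of even- and odd-symmetric filters, typically log-Gabor filters, at each scale and orientation. The excerpt does not show the filter definition, so the following is only a sketch under that assumption. It builds one log-Gabor filter in the frequency domain, concentrated on one side in orientation, so that the real part of the inverse FFT is the even response E and the imaginary part is the odd response O. The function name and the parameter values (`wavelength`, `sigma_onf`, `sigma_theta_deg`) are hypothetical.

```python
import numpy as np


def log_gabor_responses(image, wavelength=6.0, orient_deg=0.0,
                        sigma_onf=0.55, sigma_theta_deg=20.0):
    """Even (E) and odd (O) log-Gabor responses at one scale and orientation.

    wavelength: centre wavelength in pixels (stands in for the scale s).
    orient_deg: filter orientation (stands in for the orientation o).
    sigma_onf: ratio controlling radial bandwidth, in (0, 1).
    sigma_theta_deg: angular spread of the filter.
    """
    img = np.asarray(image, dtype=float)
    rows, cols = img.shape
    # Frequency coordinates in cycles/pixel, laid out like np.fft.fft2 output.
    fy = np.fft.fftfreq(rows)[:, None]
    fx = np.fft.fftfreq(cols)[None, :]
    radius = np.hypot(fx, fy)
    radius[0, 0] = 1.0  # placeholder so log() is defined at DC
    # Row index grows downward, so fy is negated to measure angles anticlockwise.
    theta = np.arctan2(-fy, fx)

    # Radial log-Gabor profile centred on f0 = 1 / wavelength, zero at DC.
    f0 = 1.0 / wavelength
    radial = np.exp(-np.log(radius / f0) ** 2 / (2.0 * np.log(sigma_onf) ** 2))
    radial[0, 0] = 0.0

    # Gaussian angular profile; wrapping keeps the angle difference in [-pi, pi].
    dtheta = np.angle(np.exp(1j * (theta - np.deg2rad(orient_deg))))
    angular = np.exp(-dtheta ** 2 / (2.0 * np.deg2rad(sigma_theta_deg) ** 2))

    # The filter is concentrated on one half of the frequency plane, so the
    # spatial response is complex: real part = even, imaginary part = odd.
    eo = np.fft.ifft2(np.fft.fft2(img) * radial * angular)
    return eo.real, eo.imag
```

Repeating this over several wavelengths and orientations gives the full set of E and O responses from which phase congruency and its orientation are usually derived.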

[0068] b=∑

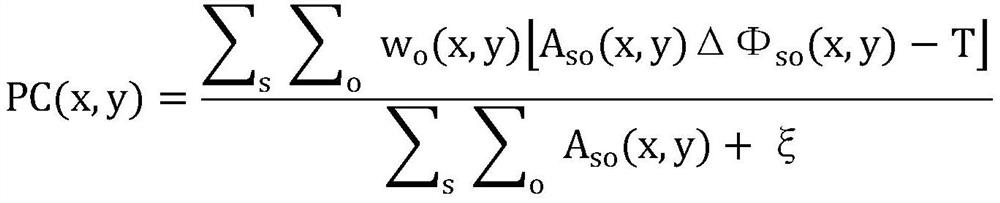

[0075] Traverse all pixels of the image in turn and, according to the value of each pixel's phase congruency orientation angle, take its feature value as the weight,

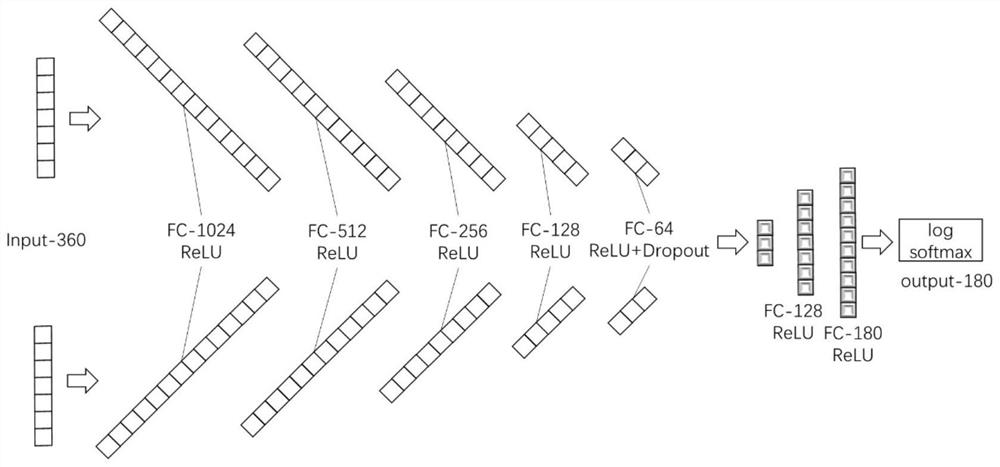

[0077] n

[0078] Finally, a rotation feature vector Rot of size 1 × 360 is obtained.
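Paragraphs [0075] to [0078] describe a weighted orientation histogram: every pixel votes its feature value into the bin given by its phase-congruency orientation angle, yielding Rot of size 1 × 360. The following is a minimal sketch, assuming one-degree bins over [0°, 360°) and that the orientation-angle map and feature-value map have already been computed. The function name is hypothetical.

```python
import numpy as np


def rotation_feature_vector(angle_deg, weight, bins: int = 360) -> np.ndarray:
    """Accumulate per-pixel weights into a 1 x `bins` orientation histogram.

    angle_deg: per-pixel orientation angle in degrees (wrapped to [0, 360)).
    weight: per-pixel feature value used as the vote weight (same shape).
    """
    angles = np.mod(np.asarray(angle_deg, dtype=float).ravel(), 360.0)
    # The final modulo guards against np.mod returning exactly 360.0 for tiny
    # negative inputs.
    idx = np.floor(angles * bins / 360.0).astype(int) % bins
    weights = np.asarray(weight, dtype=float).ravel()
    rot = np.bincount(idx, weights=weights, minlength=bins)
    return rot.reshape(1, bins)
```

Because the votes depend only on angle, adding a constant offset to every orientation angle circularly shifts Rot by the same number of bins. This is one plausible reason a fixed-length 1 × 360 vector suits angle prediction, although the excerpt does not state it.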

[0082] The embodiments in this specification are described progressively, and each embodiment focuses on its differences from the other embodiments.

[0083] The foregoing description of the disclosed embodiments enables any person skilled in the art to make or use the present invention.
