Capsule endoscopy image multi-focus detection method

This technology relates to capsule endoscopy and detection methods and is applied in image enhancement, image analysis, and image data processing. It addresses problems such as difficult feature extraction, low precision when multiple lesion types are present, and a limited number of training samples, with the effects of reducing the diagnostic burden, achieving high detection accuracy, and reducing the computational load.

Examples

Embodiment 1

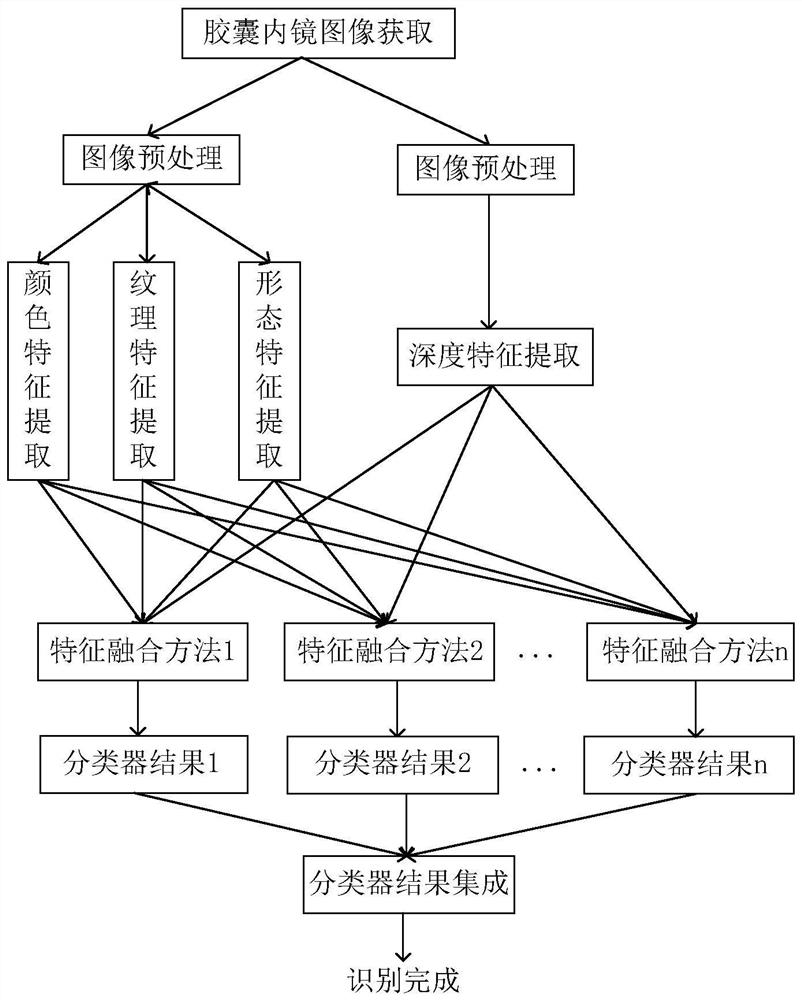

[0038] As shown in Figure 1, a multi-lesion detection method for capsule endoscopy images based on visual feature fusion comprises the following steps:

[0039] Capsule endoscopy image data collection: the capsule endoscopy images comprise normal images, bleeding images, ulcer images, polyp images, erosion images, vein exposure images, and edema images, and each class is stored in its own labeled folder.
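
A minimal sketch of indexing such a folder-per-class dataset in Python, assuming one sub-folder per class under a common root. The folder names in `CLASS_NAMES`, the accepted file suffixes, and the function `index_dataset` are hypothetical; the source does not specify the directory layout.

```python
from pathlib import Path

# Hypothetical folder names for the seven classes listed above.
CLASS_NAMES = ["normal", "bleeding", "ulcer", "polyp",
               "erosion", "vein_exposure", "edema"]
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp"}  # assumed file types


def index_dataset(root):
    """Return (path, label_index) pairs for images stored as root/<class>/<file>."""
    root = Path(root)
    samples = []
    for label, name in enumerate(CLASS_NAMES):
        class_dir = root / name
        if not class_dir.is_dir():
            continue  # skip classes that have no folder
        for path in sorted(class_dir.iterdir()):
            if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
                samples.append((path, label))
    return samples
```

The label is simply the class's position in `CLASS_NAMES`, so the folder order fixes the label encoding used by later classifiers.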

[0040] Image preprocessing: the acquired images are preprocessed by removing the image borders and deleting irrelevant image information, and the dataset is randomly shuffled before feature extraction.
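
The preprocessing step can be sketched as follows, under stated assumptions. The source does not say how borders are detected, so the hypothetical helper `crop_dark_border` simply crops to the bounding box of pixels brighter than an assumed `threshold`, treating the dark frame around many capsule images as the border; it does not remove other irrelevant content such as overlaid text. The hypothetical `shuffle_samples` shuffles with a fixed seed so the order is reproducible.

```python
import numpy as np


def crop_dark_border(image, threshold=10):
    """Crop an H x W x C image to the bounding box of its non-dark pixels.

    `threshold` is an assumed intensity cut-off; pixels whose brightest
    channel is at or below it are treated as border.
    """
    mask = image.max(axis=2) > threshold
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return image  # entirely dark: leave unchanged
    return image[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]


def shuffle_samples(samples, seed=0):
    """Return a randomly permuted copy of `samples` (reproducible via `seed`)."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(samples))
    return [samples[i] for i in order]
```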

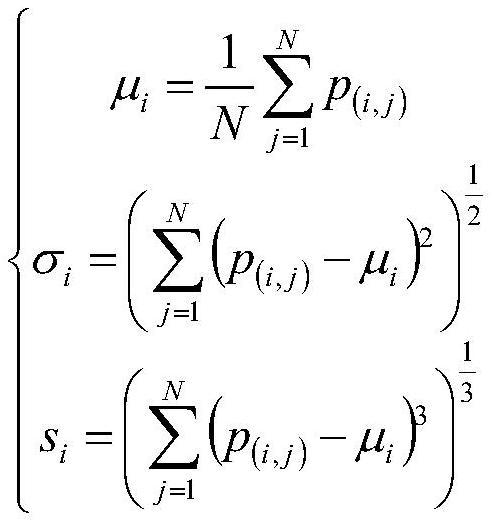

[0041] Color feature extraction: the third-order color moments of the image are extracted as the color feature in the HSV color space. The image is first converted to the HSV color space and then divided evenly into 25 non-overlapping sub-blocks:

[0042] The third-order color moments computed on each sub-block comprise the first-order moment (mean), the second-order moment (variance)...
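
A sketch of the block-wise HSV color moments, assuming 8-bit RGB input and HSV channels scaled to [0, 1]. Because the text above is truncated after the second-order moment, the third moment here is assumed to be the cube root of the third central moment (a common definition of color skewness). `rgb_to_hsv` and `block_color_moments` are hypothetical names. With a 5 × 5 grid, 3 channels and 3 moments, the resulting vector has 225 values.

```python
import numpy as np


def rgb_to_hsv(image):
    """Convert an 8-bit RGB array (..., 3) to HSV with every channel in [0, 1]."""
    rgb = np.asarray(image, dtype=np.float64) / 255.0
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    v = rgb.max(axis=-1)
    c = v - rgb.min(axis=-1)                      # chroma
    s = np.where(v > 0, c / np.where(v > 0, v, 1.0), 0.0)
    safe_c = np.where(c > 0, c, 1.0)              # avoid division by zero
    h = np.where(v == r, np.mod((g - b) / safe_c, 6.0),
                 np.where(v == g, (b - r) / safe_c + 2.0,
                          (r - g) / safe_c + 4.0))
    h = np.where(c > 0, h / 6.0, 0.0)             # hue undefined for greys: use 0
    return np.stack([h, s, v], axis=-1)


def block_color_moments(image, grid=5):
    """Mean, variance and cube-root third central moment per HSV channel, per block."""
    hsv = rgb_to_hsv(image)
    height, width = hsv.shape[:2]
    ys = np.linspace(0, height, grid + 1).astype(int)
    xs = np.linspace(0, width, grid + 1).astype(int)
    feats = []
    for i in range(grid):
        for j in range(grid):
            block = hsv[ys[i]:ys[i + 1], xs[j]:xs[j + 1]].reshape(-1, 3)
            mean = block.mean(axis=0)
            var = ((block - mean) ** 2).mean(axis=0)
            skew = np.cbrt(((block - mean) ** 3).mean(axis=0))
            feats.extend([mean, var, skew])
    return np.concatenate(feats)  # grid * grid * 9 values (225 for 5 x 5)
```

Using `np.linspace` for the block edges keeps the split even when the image side is not a multiple of 5.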

Embodiment 2

[0049] Texture feature extraction:

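[0050] As shown in Figure 1, the texture feature is the LBP texture histogram of the image, extracted in the RGB color space. The LBP is calculated as:

[0051]

$$\mathrm{LBP}_{8,R}=\sum_{n=0}^{7} s\left(g_n-g_c\right)2^{n}$$

[0052]

$$s(x)=\begin{cases}1, & x\ge 0\\ 0, & x<0\end{cases}$$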
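
As a worked check, assume the standard 8-neighbour LBP definition $\mathrm{LBP}=\sum_{n=0}^{7}s(g_n-g_c)\,2^{n}$ with $s(x)=1$ for $x\ge 0$ and $0$ otherwise, and number the neighbours clockwise from the top-left pixel (an ordering chosen here only for illustration). For the 3 × 3 gray-value patch below, the center is $g_c=6$:

```latex
\begin{aligned}
&\begin{bmatrix} 5 & 9 & 1 \\ 4 & 6 & 7 \\ 2 & 6 & 3 \end{bmatrix},
 \qquad g_c = 6,\qquad (g_0,\dots,g_7) = (5,9,1,7,3,6,2,4) \\
&\bigl(s(g_n-g_c)\bigr)_{n=0}^{7} = (0,1,0,1,0,1,0,0) \\
&\mathrm{LBP} = 2^1 + 2^3 + 2^5 = 2 + 8 + 32 = 42
\end{aligned}
```

Note that $g_5=g_c$ still gives a 1 because $s(0)=1$. Read circularly, the bit string has six 0/1 transitions, so under the usual "uniform" definition (at most two transitions) this code would fall into the single shared non-uniform bin of a 59-bin histogram.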

[0053] In the formula, $g_c$ is the gray value of the center pixel, $g_n$ is the gray value of the $n$-th pixel in the neighborhood, and $R$ is the neighborhood radius. Texture feature extraction takes the uniform local binary pattern (LBP) texture histogram of the image, computed in the RGB color space, as the texture feature. The image is first divided into 100 mutually non-overlapping sub-blocks, the texture histogram of each sub-block is extracted separately, and the sub-block features are then concatenated in order to form the texture feature of the whole image, which has 5900 (59 × 100) dimensions.
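
The texture descriptor can be sketched in NumPy as below. Assumptions, none of which come from the source: radius 1 with 8 neighbours (consistent with the 59-bin count, which is 58 uniform patterns plus one shared bin for all non-uniform codes), gray values from ITU-R BT.601 luma weights, no interpolation of neighbour positions, and per-block histogram normalisation. All function and constant names are hypothetical.

```python
import numpy as np

P = 8  # assumed: 8 neighbours at radius 1 (59 bins implies P = 8)


def _transitions(code):
    """Number of circular 0/1 transitions in a P-bit LBP code."""
    bits = [(code >> k) & 1 for k in range(P)]
    return sum(bits[k] != bits[(k + 1) % P] for k in range(P))


# 58 uniform codes (at most two transitions) get bins 0..57; all others share bin 58.
_UNIFORM = [c for c in range(2 ** P) if _transitions(c) <= 2]
UNIFORM_LUT = np.full(2 ** P, len(_UNIFORM), dtype=np.int64)
UNIFORM_LUT[_UNIFORM] = np.arange(len(_UNIFORM))
N_BINS = len(_UNIFORM) + 1  # 59

# Neighbour offsets (dy, dx), clockwise from the top-left pixel; bit n <- offset n.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_codes(gray):
    """Raw radius-1 LBP codes, sum of s(g_n - g_c) * 2**n, for interior pixels."""
    g = np.asarray(gray, dtype=np.int64)
    h, w = g.shape
    center = g[1:h - 1, 1:w - 1]
    codes = np.zeros_like(center)
    for n, (dy, dx) in enumerate(OFFSETS):
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbour >= center).astype(np.int64) << n
    return codes


def uniform_lbp_feature(image, grid=10):
    """Concatenated per-block 59-bin uniform LBP histograms (grid*grid*59 values)."""
    # Assumed gray conversion: ITU-R BT.601 luma weights on the RGB channels.
    gray = np.rint(image[..., :3].astype(np.float64) @ np.array([0.299, 0.587, 0.114]))
    bins = UNIFORM_LUT[lbp_codes(gray)]
    height, width = bins.shape
    ys = np.linspace(0, height, grid + 1).astype(int)
    xs = np.linspace(0, width, grid + 1).astype(int)
    hists = []
    for i in range(grid):
        for j in range(grid):
            block = bins[ys[i]:ys[i + 1], xs[j]:xs[j + 1]].ravel()
            hist = np.bincount(block, minlength=N_BINS).astype(np.float64)
            hists.append(hist / max(block.size, 1))  # assumed: normalise per block
    return np.concatenate(hists)
```

With the default 10 × 10 grid the output has 100 × 59 = 5900 values, matching the dimension stated above.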

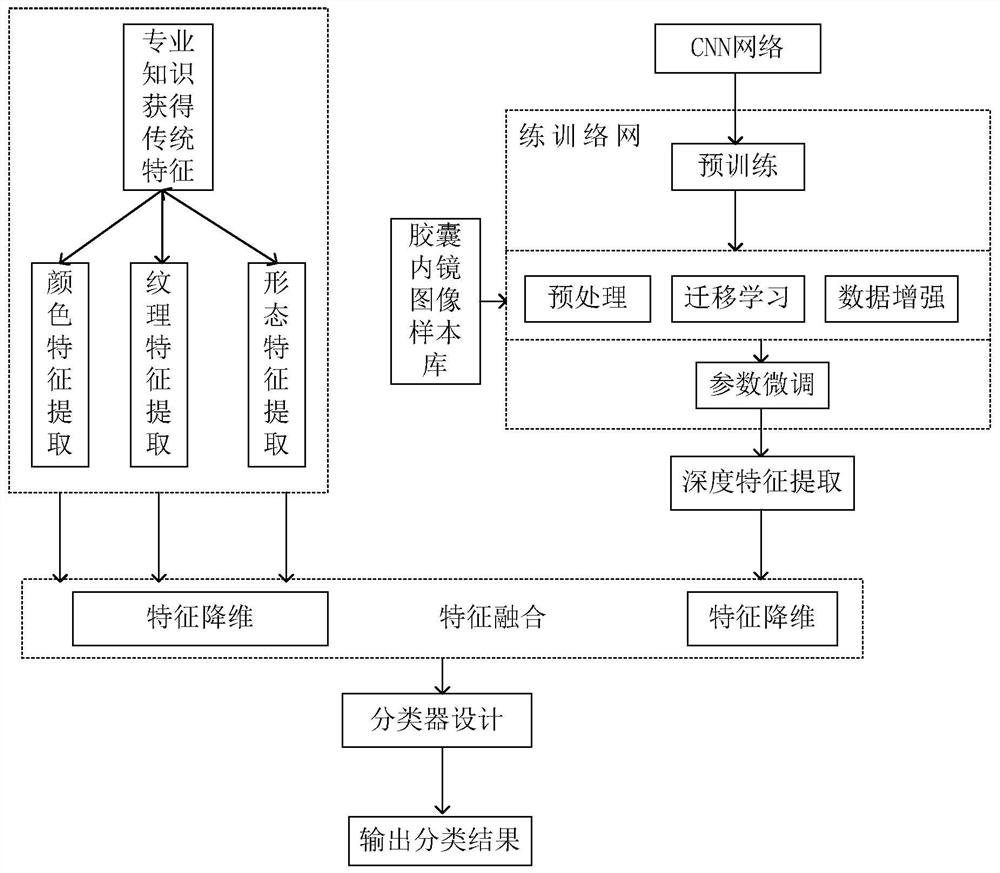

[0054] Convolutional neural network (CNN) feature extraction: There are two methods for ext...

Embodiment 3

[0061] CNN feature and traditional feature fusion:

[0062] As shown in Figure 1, the present invention adopts four feature fusion methods: concatenation fusion, concatenation fusion with PCA dimensionality reduction, canonical correlation analysis (CCA) fusion, and multilayer perceptron (MLP) fusion. Concatenation fusion joins the four features end to end into a single vector. Concatenation with PCA dimensionality reduction concatenates the four features and then reduces the dimensionality of the result by PCA. CCA fusion fuses two of the four features into one feature by canonical correlation analysis, then fuses that feature with one of the remaining features, and repeats until the final fused feature is formed. MLP fusion builds a three-layer multilayer perceptron network, and the 512-neuron fully connected layer immediately before the softmax layer is used as the feature ext...
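
The first three fusion schemes can be sketched in NumPy as follows. PCA is computed via SVD. The CCA variant shown concatenates the canonical variates of the two views, which is one common choice and an assumption here, as is the small ridge term `reg` that keeps the covariance matrices invertible. The MLP variant needs a trained network and is not sketched. All function names are hypothetical.

```python
import numpy as np


def concat_fusion(features):
    """Concatenation fusion: join per-sample feature matrices column-wise."""
    return np.hstack(features)


def pca_reduce(x, n_components):
    """Project mean-centred rows of x onto the top principal axes (via SVD)."""
    xc = x - x.mean(axis=0)
    _, _, vt = np.linalg.svd(xc, full_matrices=False)
    return xc @ vt[:n_components].T


def _inv_sqrt(m):
    """Inverse square root of a symmetric positive-definite matrix."""
    vals, vecs = np.linalg.eigh(m)
    return vecs @ np.diag(1.0 / np.sqrt(vals)) @ vecs.T


def cca_fuse(x, y, n_components, reg=1e-6):
    """Fuse two views by concatenating their first n_components canonical variates."""
    xc, yc = x - x.mean(axis=0), y - y.mean(axis=0)
    n = x.shape[0]
    sxx = xc.T @ xc / (n - 1) + reg * np.eye(x.shape[1])  # reg: assumed ridge term
    syy = yc.T @ yc / (n - 1) + reg * np.eye(y.shape[1])
    sxy = xc.T @ yc / (n - 1)
    wx, wy = _inv_sqrt(sxx), _inv_sqrt(syy)
    # SVD of the whitened cross-covariance: singular values are the canonical correlations.
    u, _, vt = np.linalg.svd(wx @ sxy @ wy, full_matrices=False)
    a = wx @ u[:, :n_components]       # canonical directions for x
    b = wy @ vt.T[:, :n_components]    # canonical directions for y
    return np.hstack([xc @ a, yc @ b])


def chained_cca_fusion(features, n_components):
    """Fuse the first two features, then fuse the result with the next, and so on."""
    fused = features[0]
    for nxt in features[1:]:
        k = min(n_components, fused.shape[1], nxt.shape[1])
        fused = cca_fuse(fused, nxt, k)
    return fused
```

Very high-dimensional inputs such as the 5900-dimensional texture vector usually need PCA or stronger regularisation before CCA, because the sample covariance matrices become singular when features outnumber samples.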
