Content-based information retrieval architecture

A content-based information retrieval architecture, applied in the field of fast information retrieval, addresses problems such as the complicated reconstruction of tables and offers convenient construction, manufacture, and hardware implementation.

Embodiment Construction

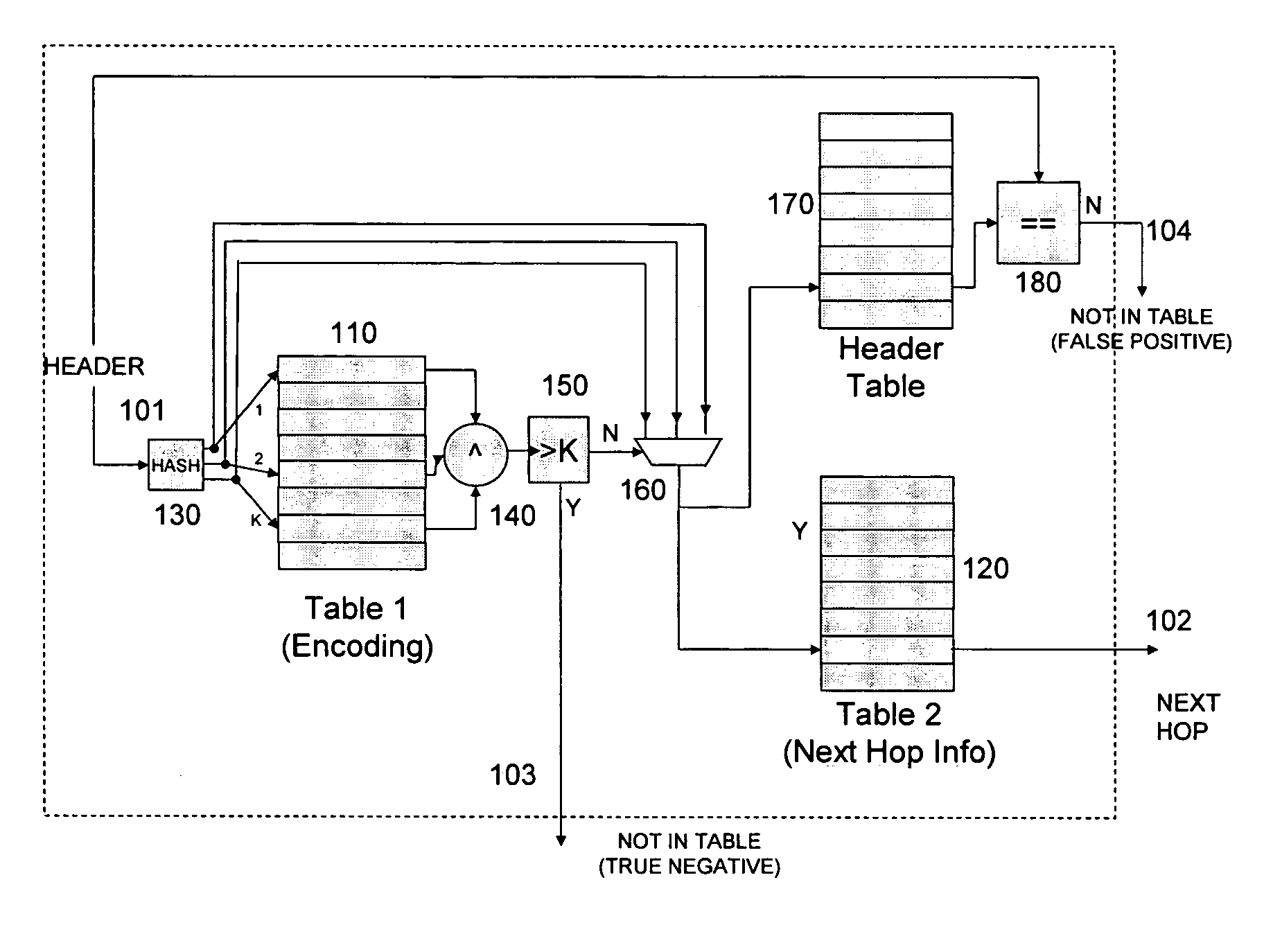

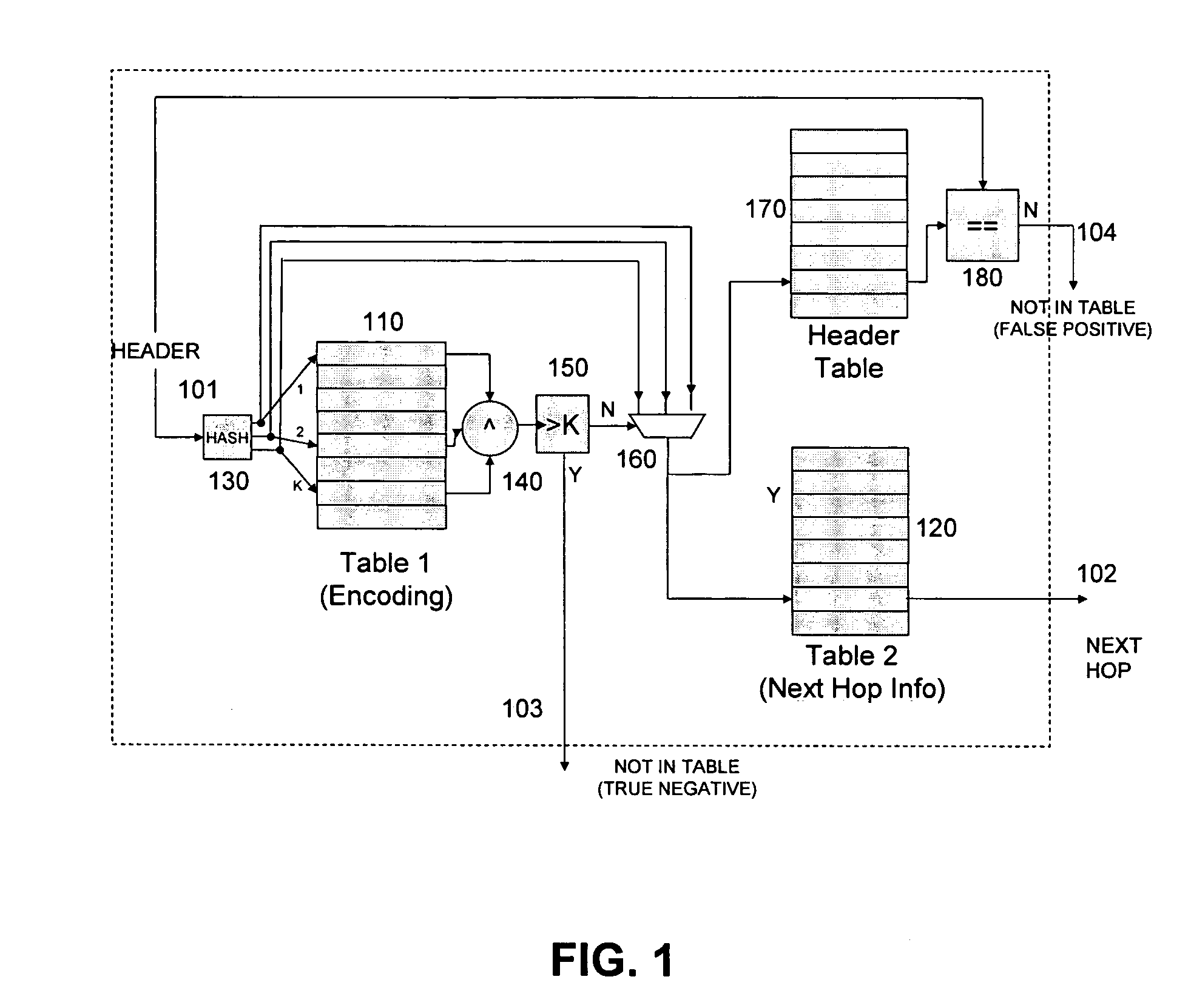

[0025] FIG. 1 is a diagram of a content-based information retrieval architecture, in accordance with an embodiment of the invention. As depicted in FIG. 1, an input value 101 is received and processed by the architecture. Based on that processing, the architecture outputs either a lookup value 102 retrieved from a table 120 or a signal 103, 104 indicating that the input value 101 is not a member of the lookup set.
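The input/output contract described above can be illustrated with a minimal Python sketch. It is not the patented mechanism: a plain dictionary stands in for the hardware tables, the function name `retrieve` and the sample keys are hypothetical, and the two signals 103, 104 are collapsed into a single boolean flag as a simplifying assumption.

```python
from typing import Dict, Optional, Tuple


def retrieve(lookup_set: Dict[str, str], input_value: str) -> Tuple[Optional[str], bool]:
    """Return (lookup_value, not_member) for one input value.

    Hypothetical stand-in for the architecture of FIG. 1: a dict replaces
    the tables, and one boolean replaces the not-a-member signals.
    """
    if input_value in lookup_set:
        # Member of the lookup set: emit the stored lookup value.
        return lookup_set[input_value], False
    # Not a member: no lookup value, raise the not-a-member flag.
    return None, True


# Illustrative data only; these keys and values are assumptions.
sample_set = {"key-a": "value-a", "key-b": "value-b"}

hit = retrieve(sample_set, "key-a")
miss = retrieve(sample_set, "key-z")
```

Here `hit` carries the stored value with the flag cleared, while `miss` carries no value with the flag set, mirroring the two possible outcomes named in the paragraph.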

[0026] FIG. 1 shows three tables 110, 120, 170, the construction and operation of which are described in further detail herein. Each table in FIG. 1 has the same number of entries, although, as further described herein and in accordance with another embodiment of the invention, the tables can have different numbers of entries. Table 120 is referred to herein as a lookup table and comprises a plurality of entries, each storing a lookup value. For example, in the case of a router application, the lookup values can be next hop information associated with different d...
