49 results about "Finite state transducer" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

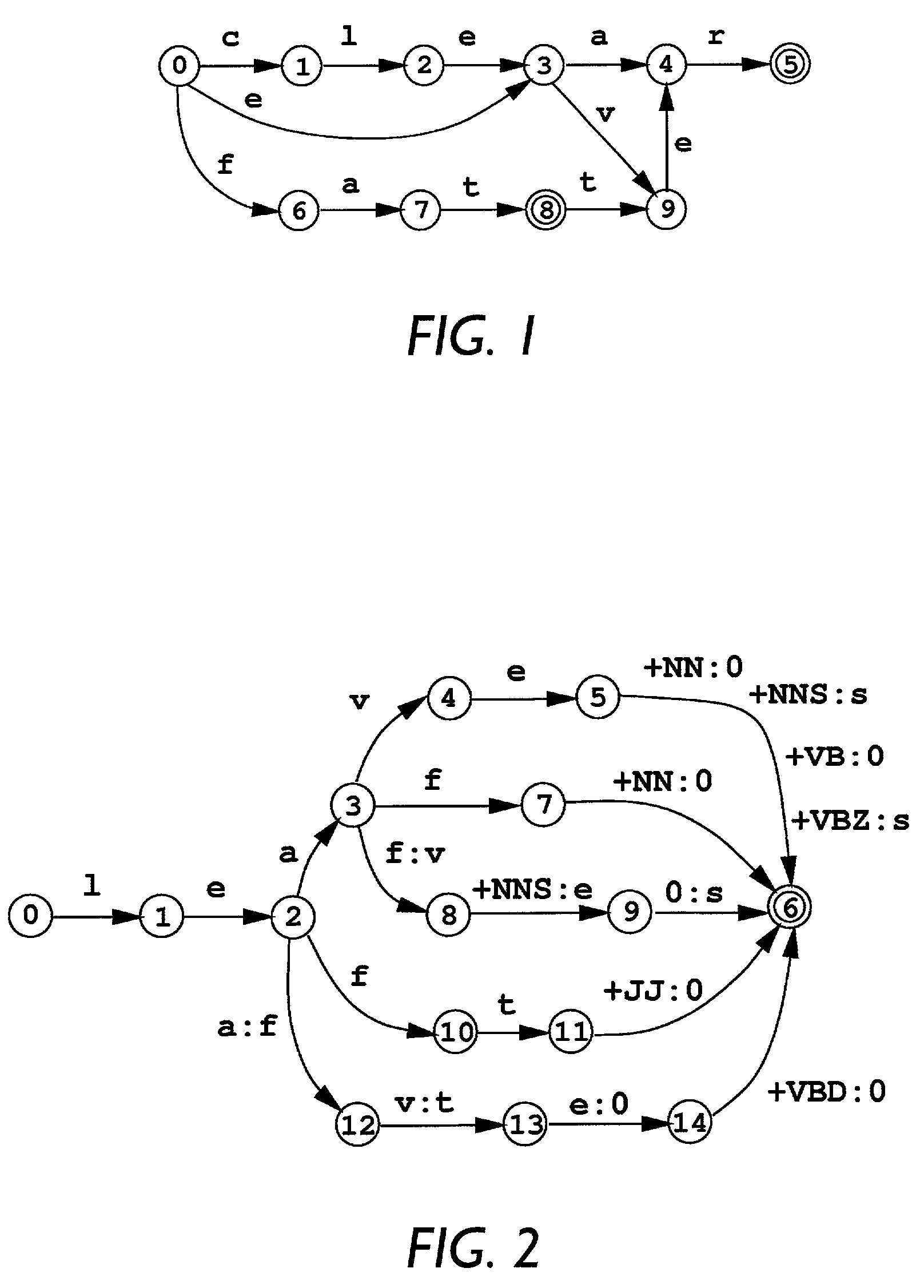

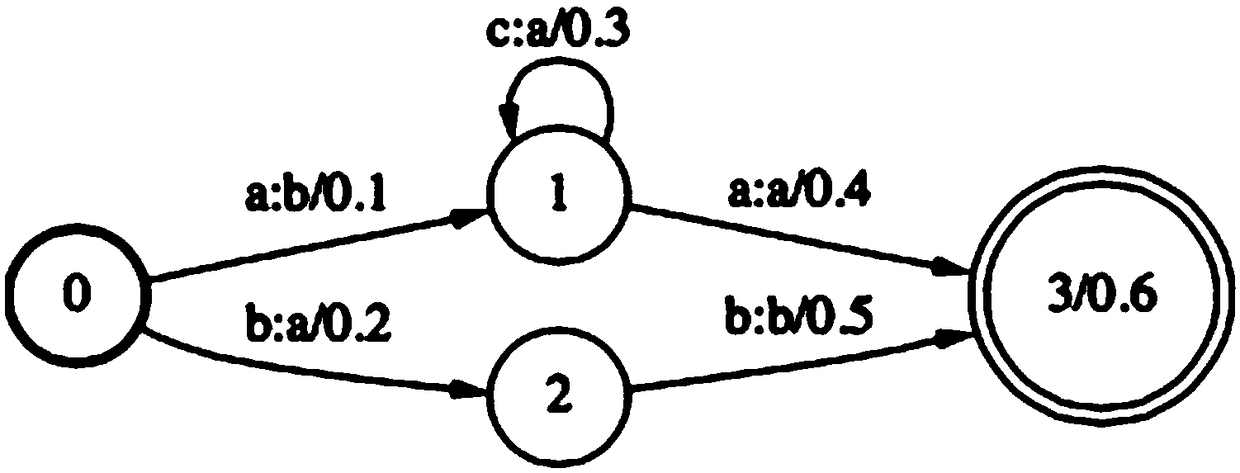

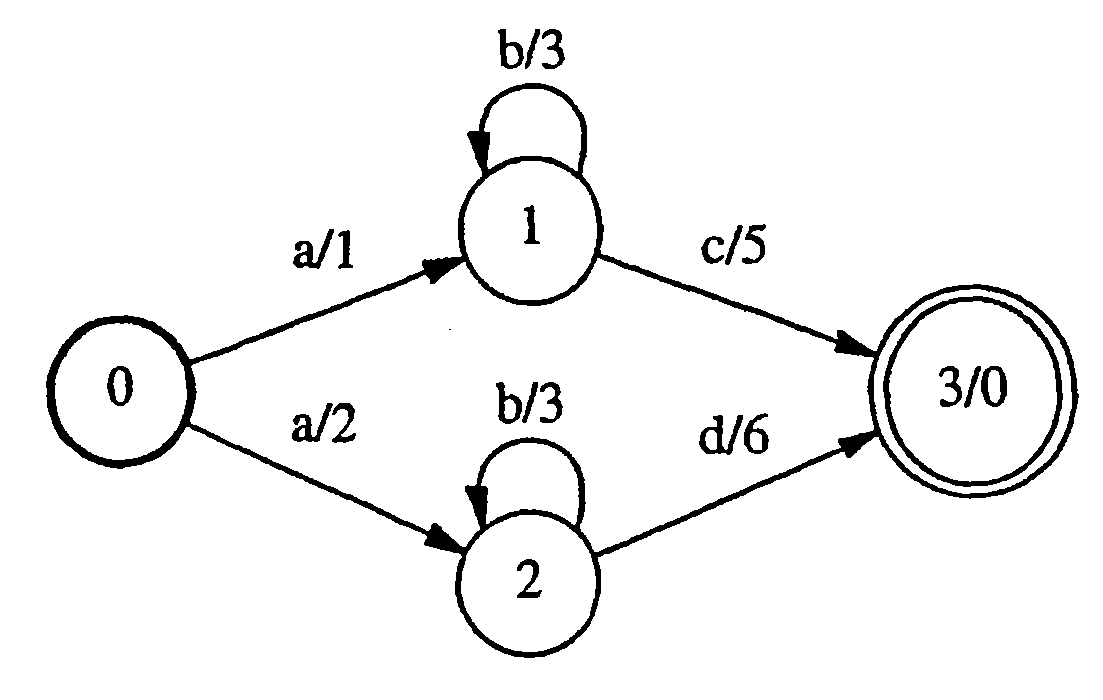

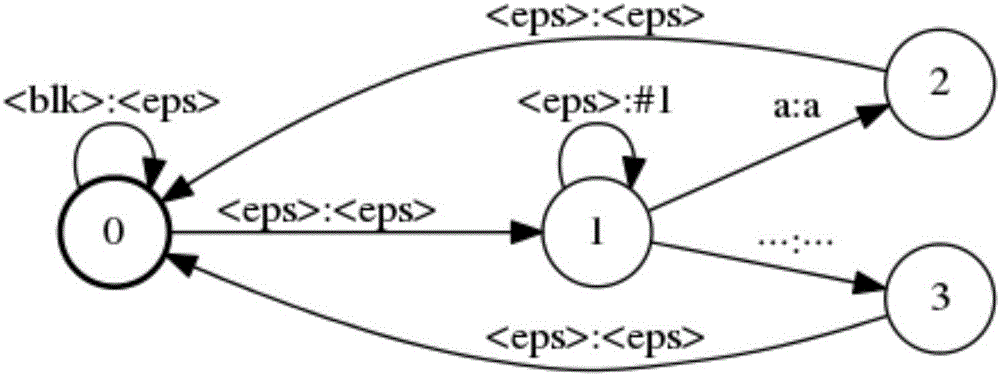

A finite-state transducer (FST) is a finite-state machine with two memory tapes, an input tape and an output tape, following the terminology used for Turing machines. An ordinary finite-state automaton (FSA), by contrast, has a single tape. An FSA defines a formal language as the set of strings it accepts, whereas an FST defines a relation between two sets of strings, mapping strings over an input alphabet to strings over an output alphabet. In this sense an FST is more general than an FSA.
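To make the two-tape idea concrete, here is a minimal sketch of a deterministic FST in Python. The class name `FST`, its fields, and the toy rewriting relation are illustrative assumptions, not taken from any of the patents listed on this page.

```python
from typing import Dict, Optional, Set, Tuple


class FST:
    """A minimal deterministic finite-state transducer (illustrative only)."""

    def __init__(self, start: int, finals: Set[int],
                 arcs: Dict[Tuple[int, str], Tuple[int, str]]):
        self.start = start
        self.finals = finals
        # (state, input symbol) -> (next state, output string)
        self.arcs = arcs

    def transduce(self, text: str) -> Optional[str]:
        """Read `text` from the input tape and return what is written
        to the output tape, or None if the input is not accepted."""
        state = self.start
        out = []
        for symbol in text:
            key = (state, symbol)
            if key not in self.arcs:
                return None
            state, emitted = self.arcs[key]
            out.append(emitted)
        return "".join(out) if state in self.finals else None


# Toy relation (hypothetical): rewrite every "a" as "b", copy "b" unchanged.
toy = FST(start=0, finals={0},
          arcs={(0, "a"): (0, "b"), (0, "b"): (0, "b")})

print(toy.transduce("abba"))  # bbbb
print(toy.transduce("abc"))   # None, because "c" has no arc
```

Ignoring the output strings turns the same structure into an acceptor: the FSA view only asks whether `transduce` returns something other than `None`.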

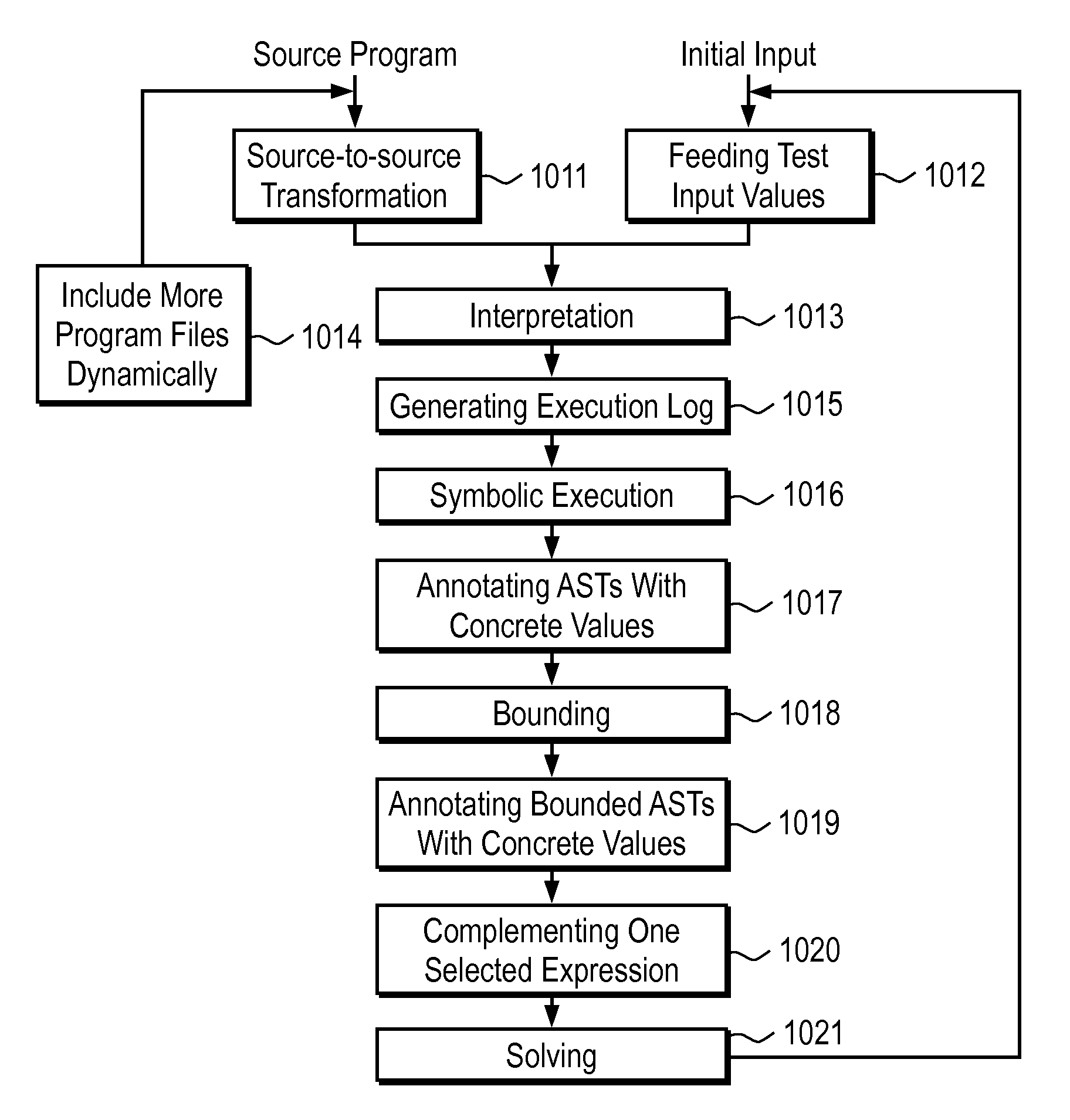

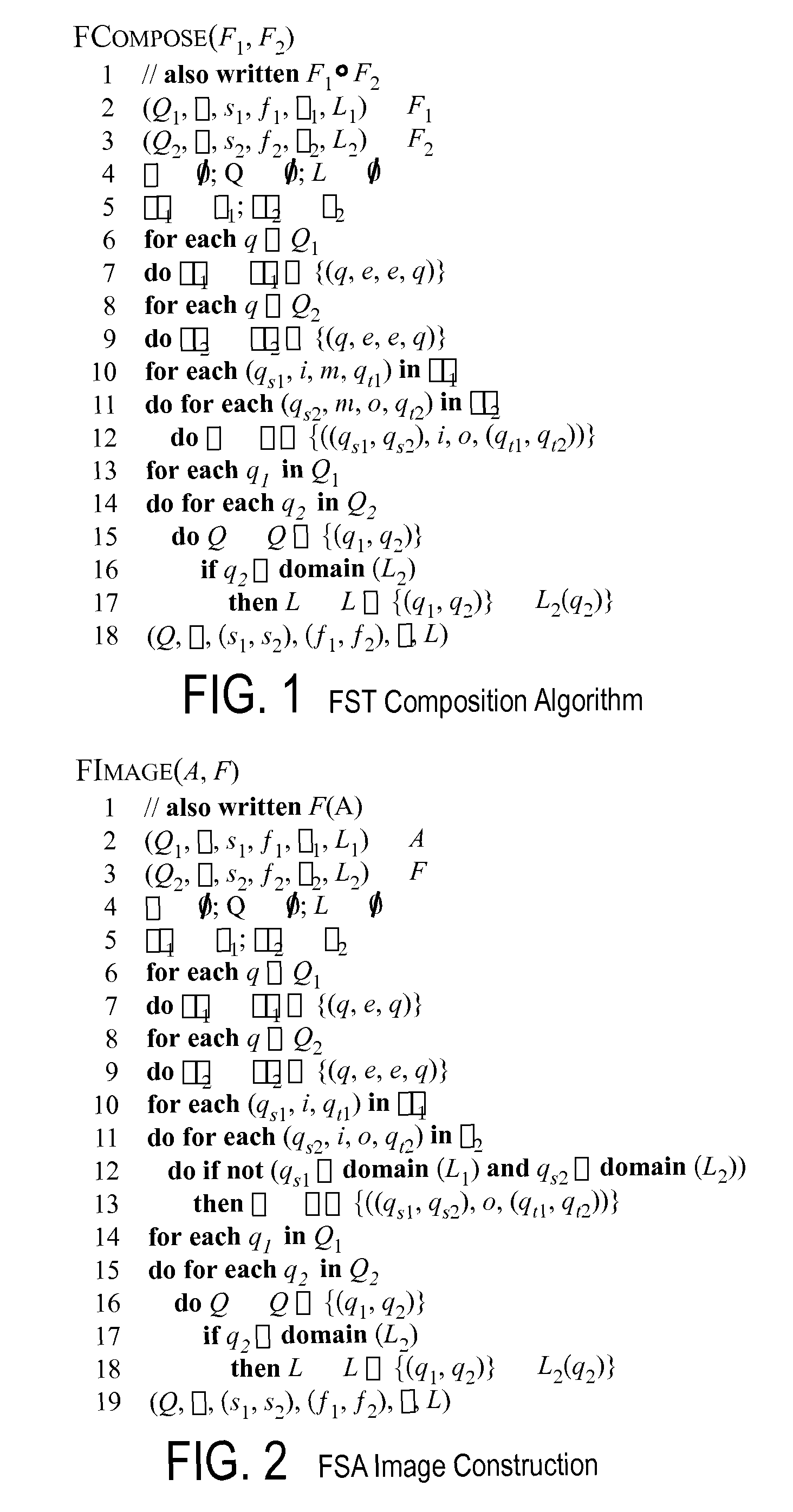

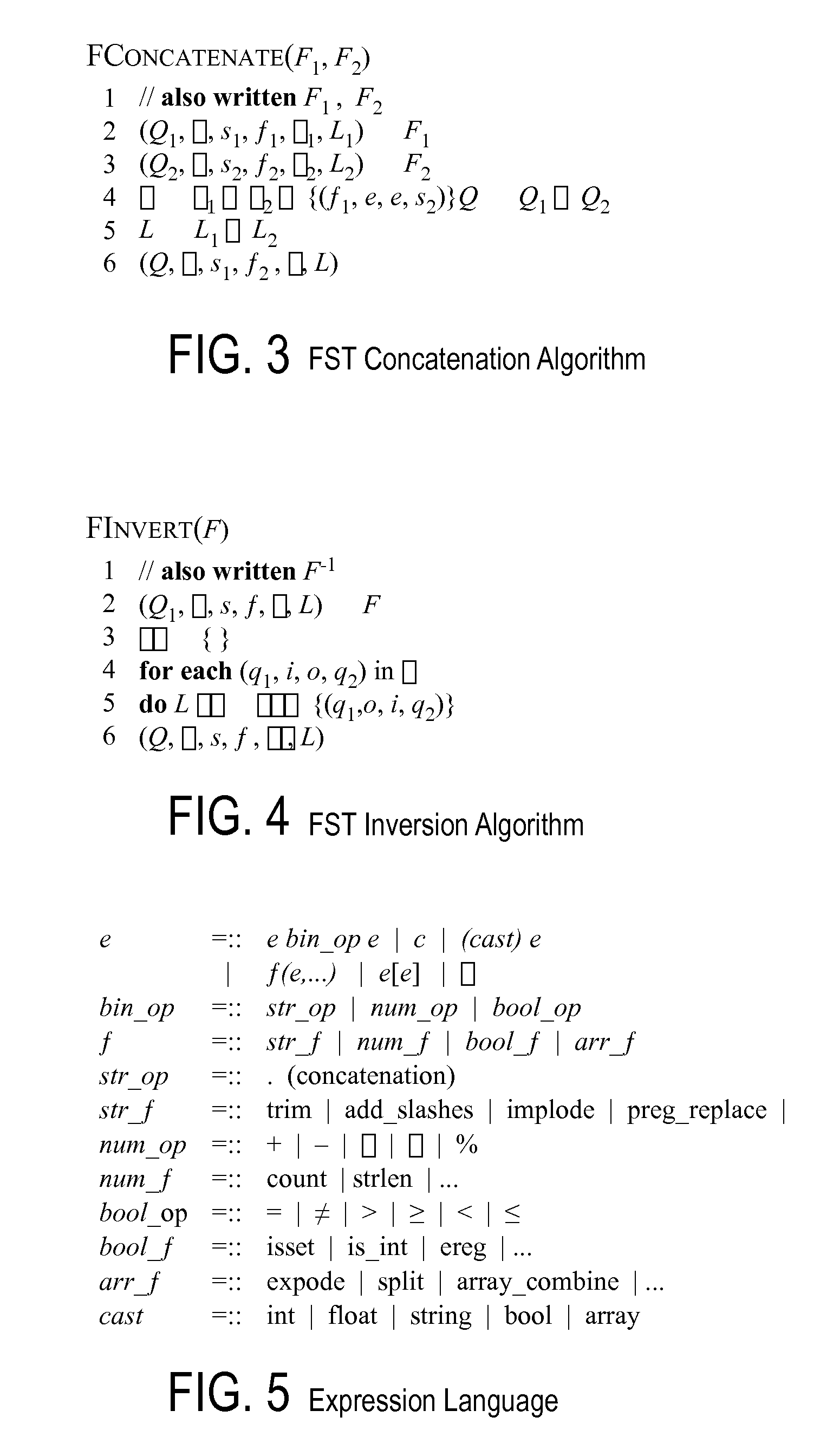

Automated test input generation for web applications

Active | US20090125976A1 | Error detection/correction | Software engineering | Source transformation | String operations

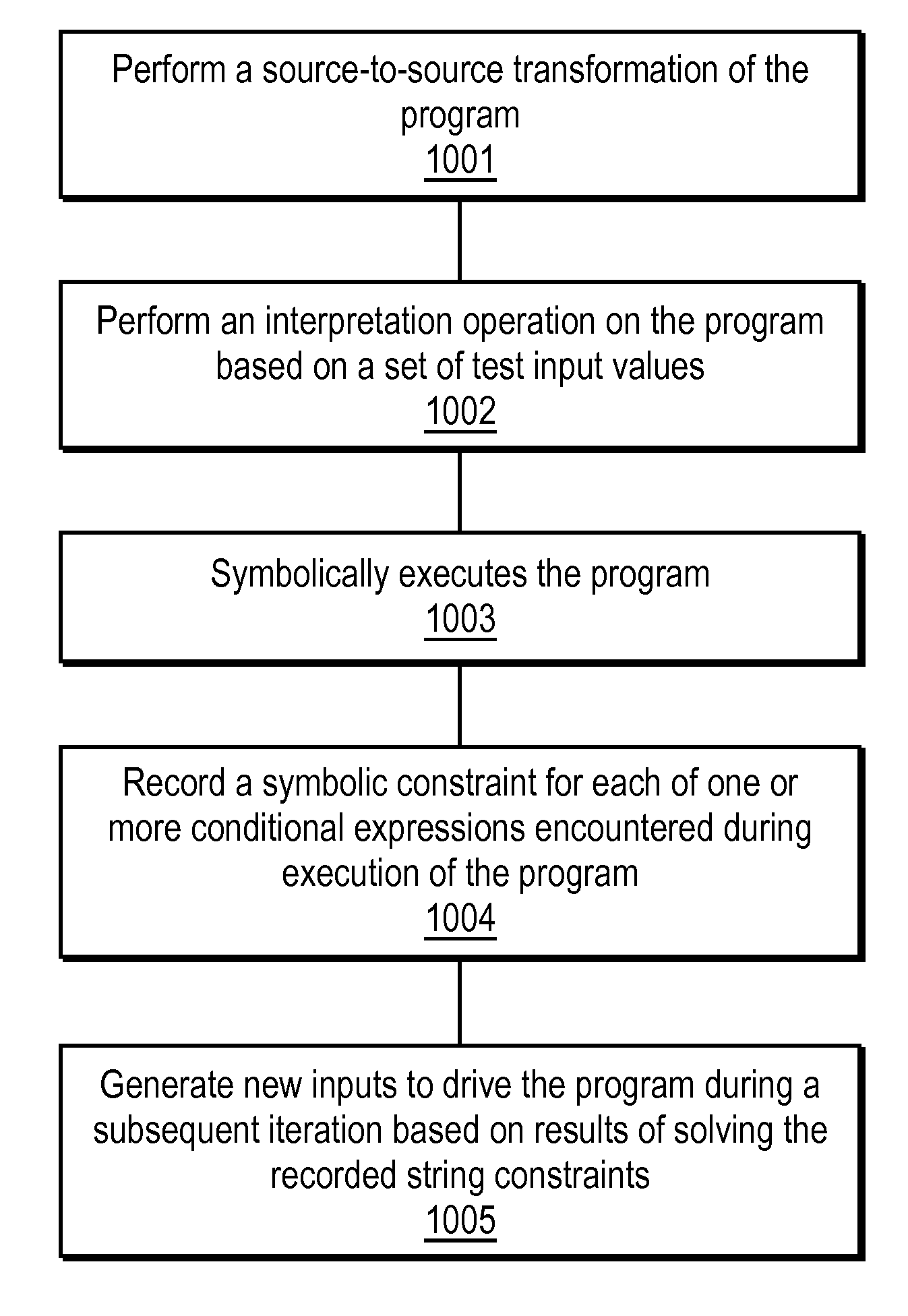

A method and apparatus is disclosed herein for automated test input generation for web applications. In one embodiment, the method comprises performing a source-to-source transformation of the program; performing interpretation on the program based on a set of test input values; symbolically executing the program; recording a symbolic constraint for each of one or more conditional expressions encountered during execution of the program, including analyzing a string operation in the program to identify one or more possible execution paths, and generating symbolic inputs representing values of variables in each of the conditional expressions as a numeric expression and a string constraint including generating constraints on string values by modeling string operations using finite state transducers (FSTs) and supplying values from the program's execution in place of intractable sub-expressions; and generating new inputs to drive the program during a subsequent iteration based on results of solving the recorded string constraints.

Owner:NTT DOCOMO INC

Systems and methods for generating weighted finite-state automata representing grammars

Inactive | US7181386B2 | Natural language analysis | Digital data processing details | Finite state transducer | Automaton

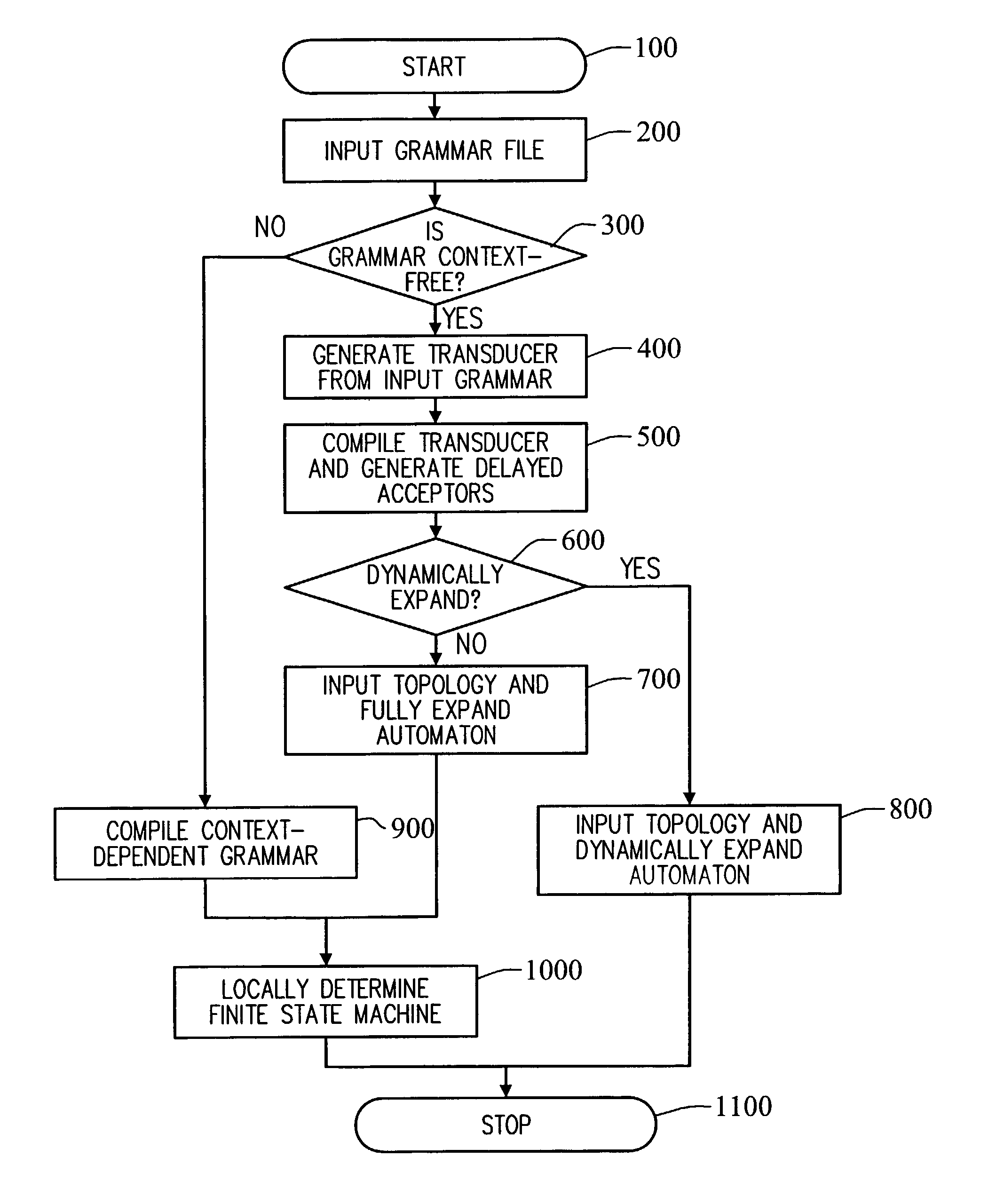

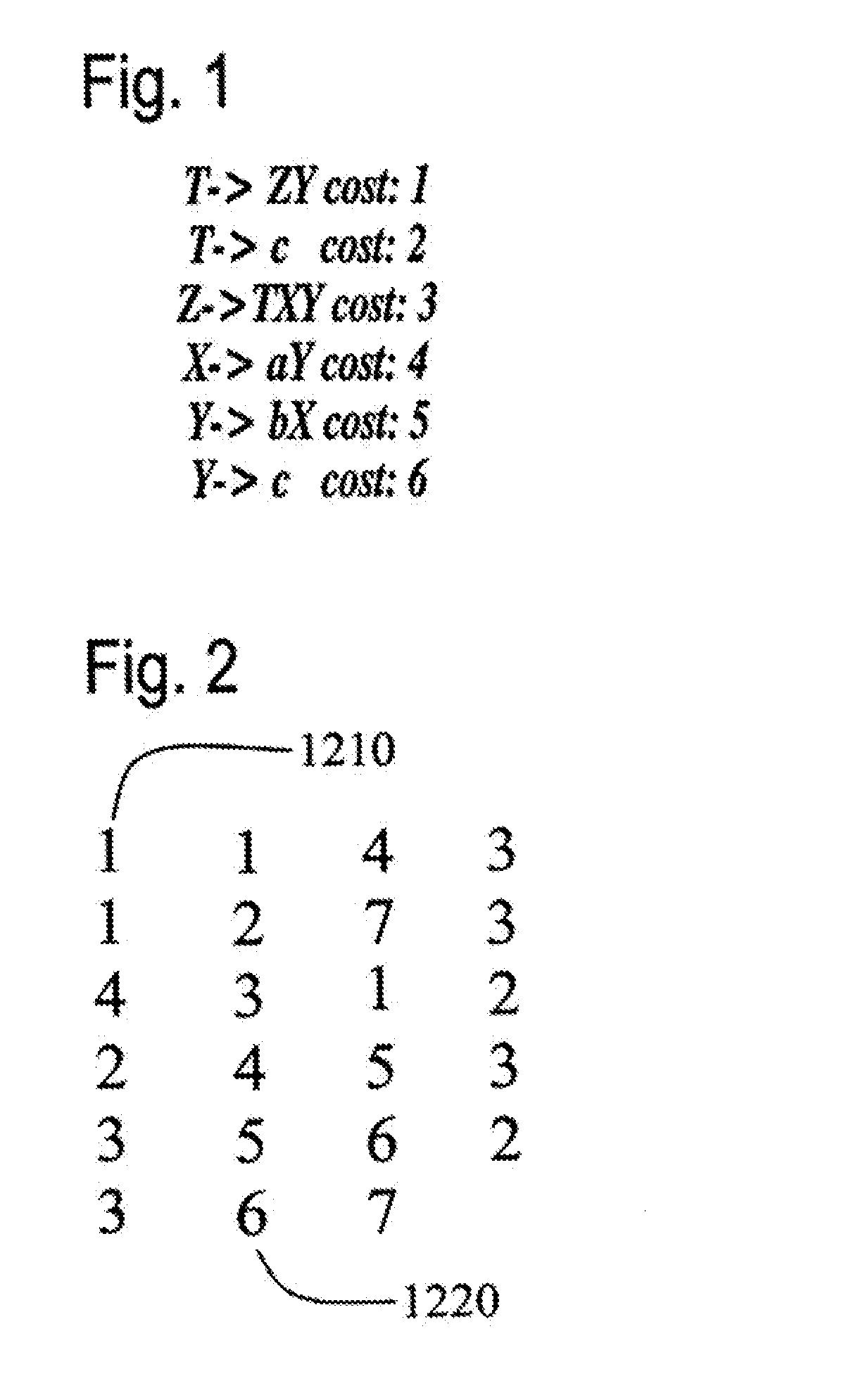

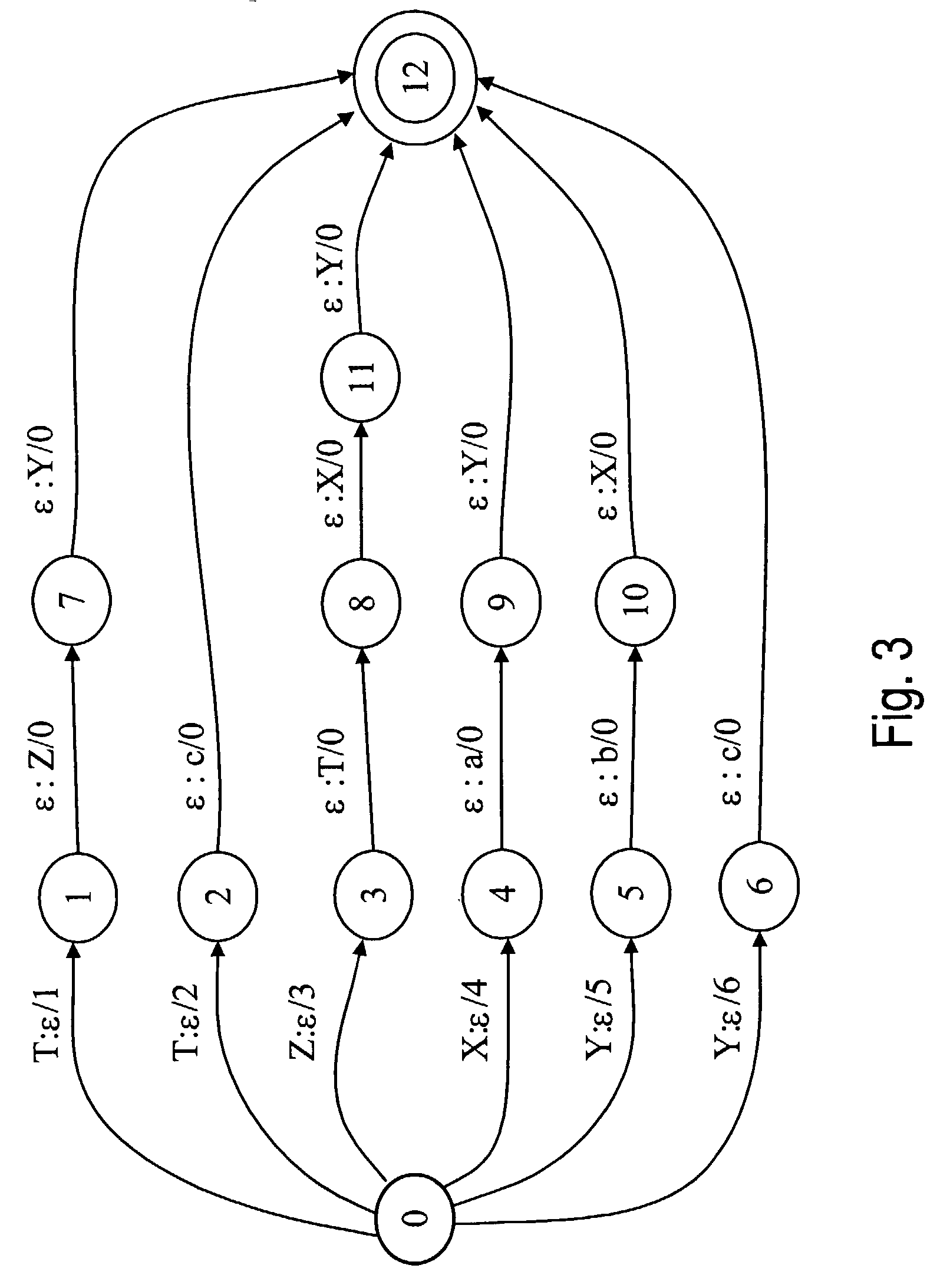

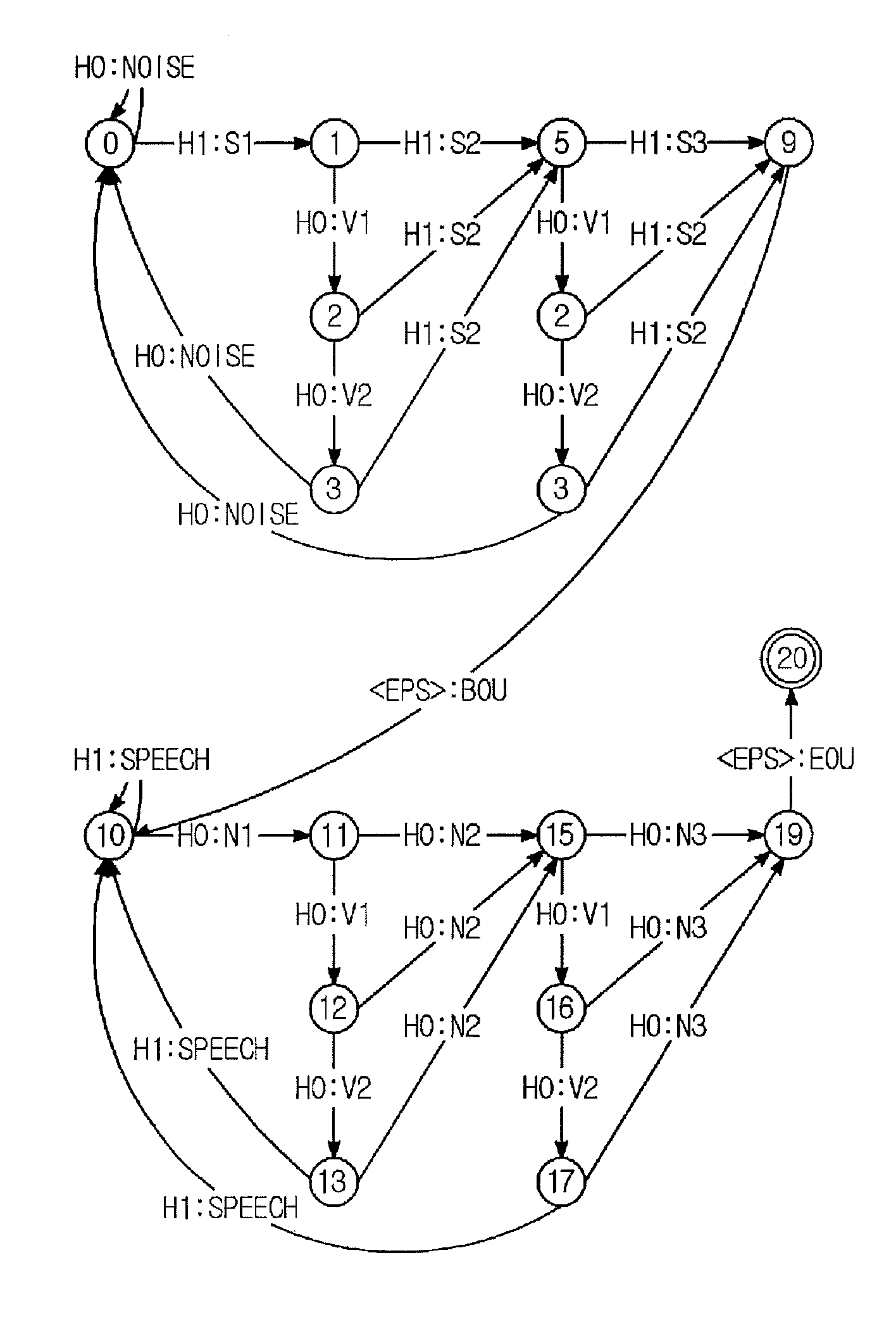

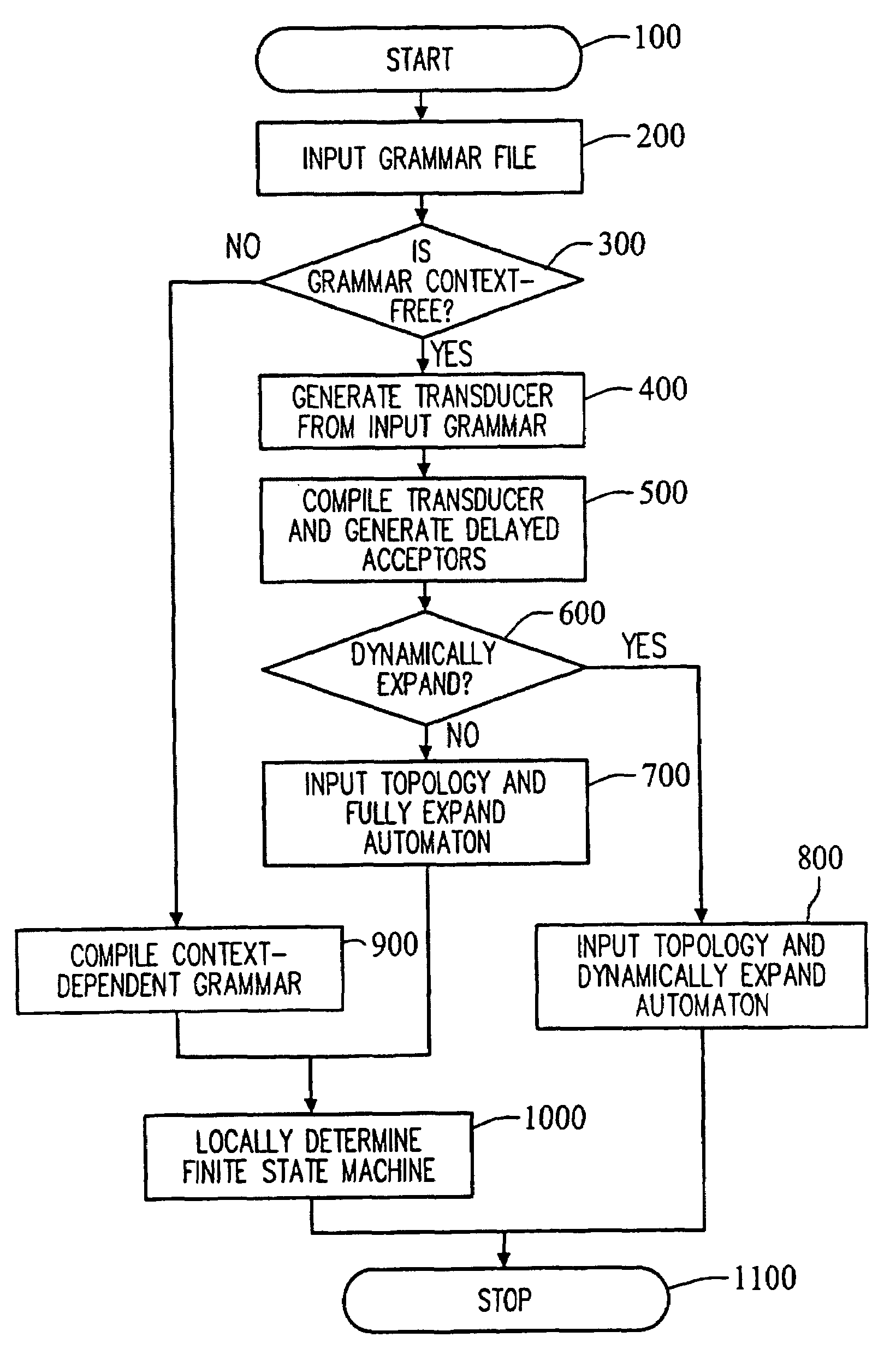

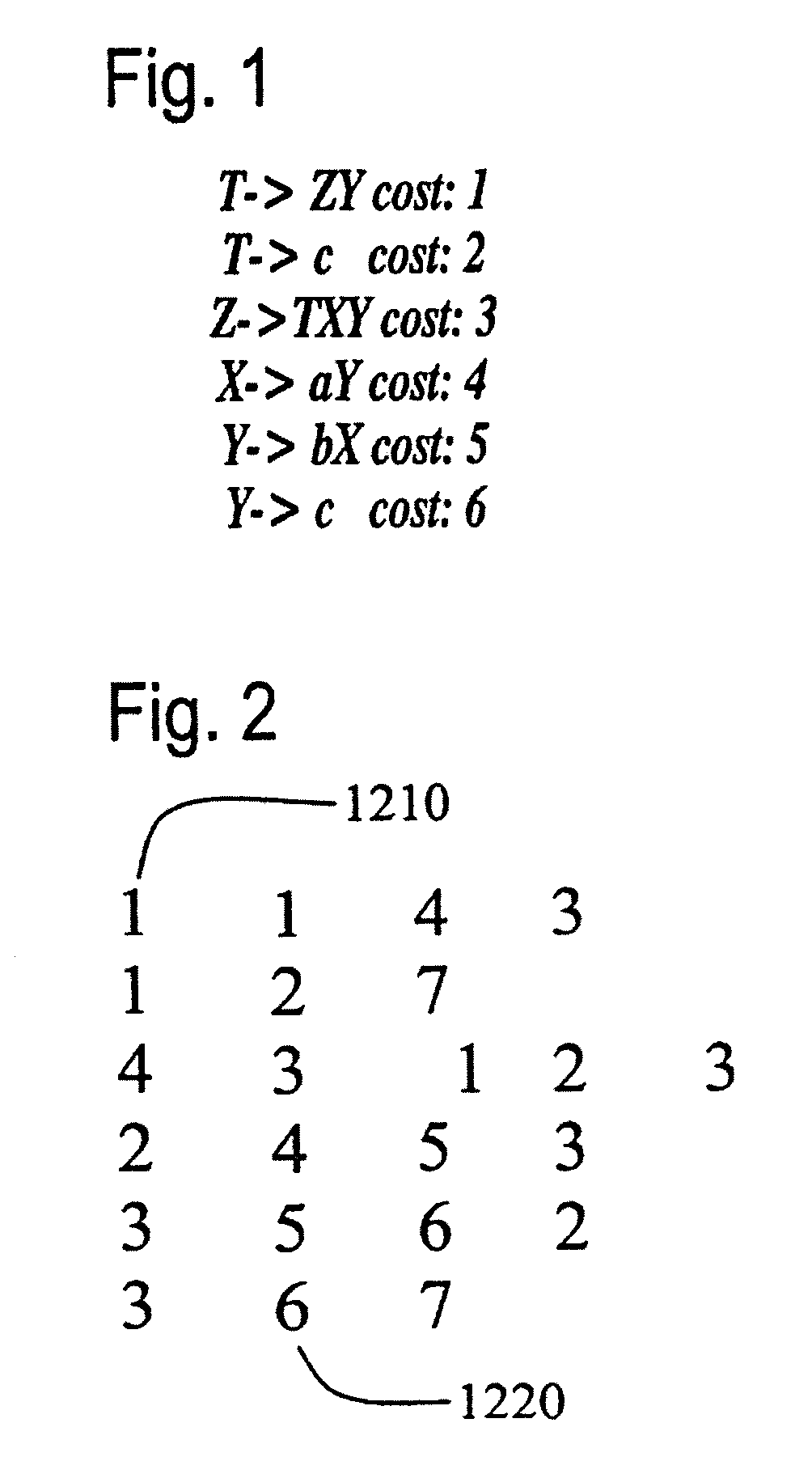

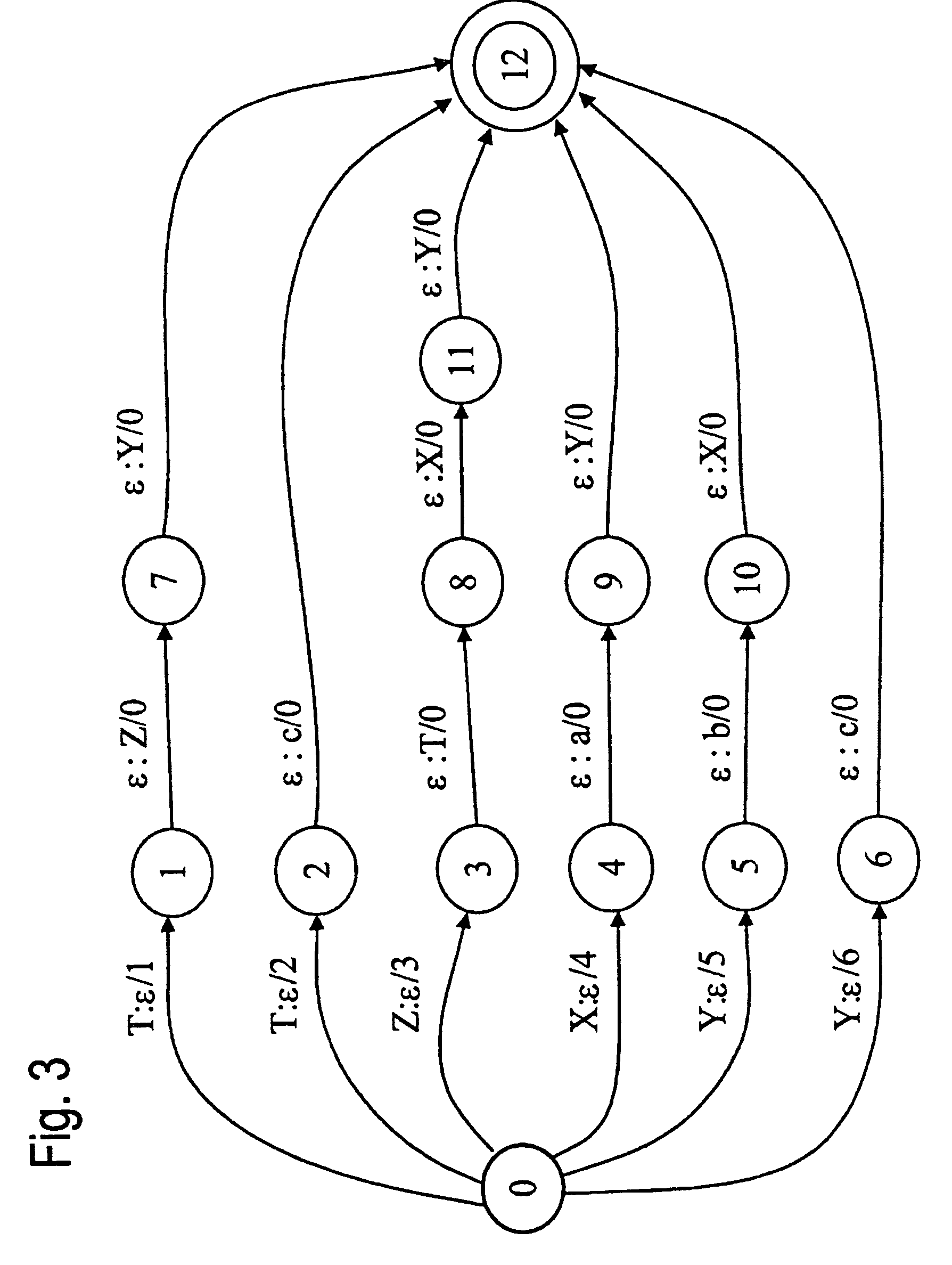

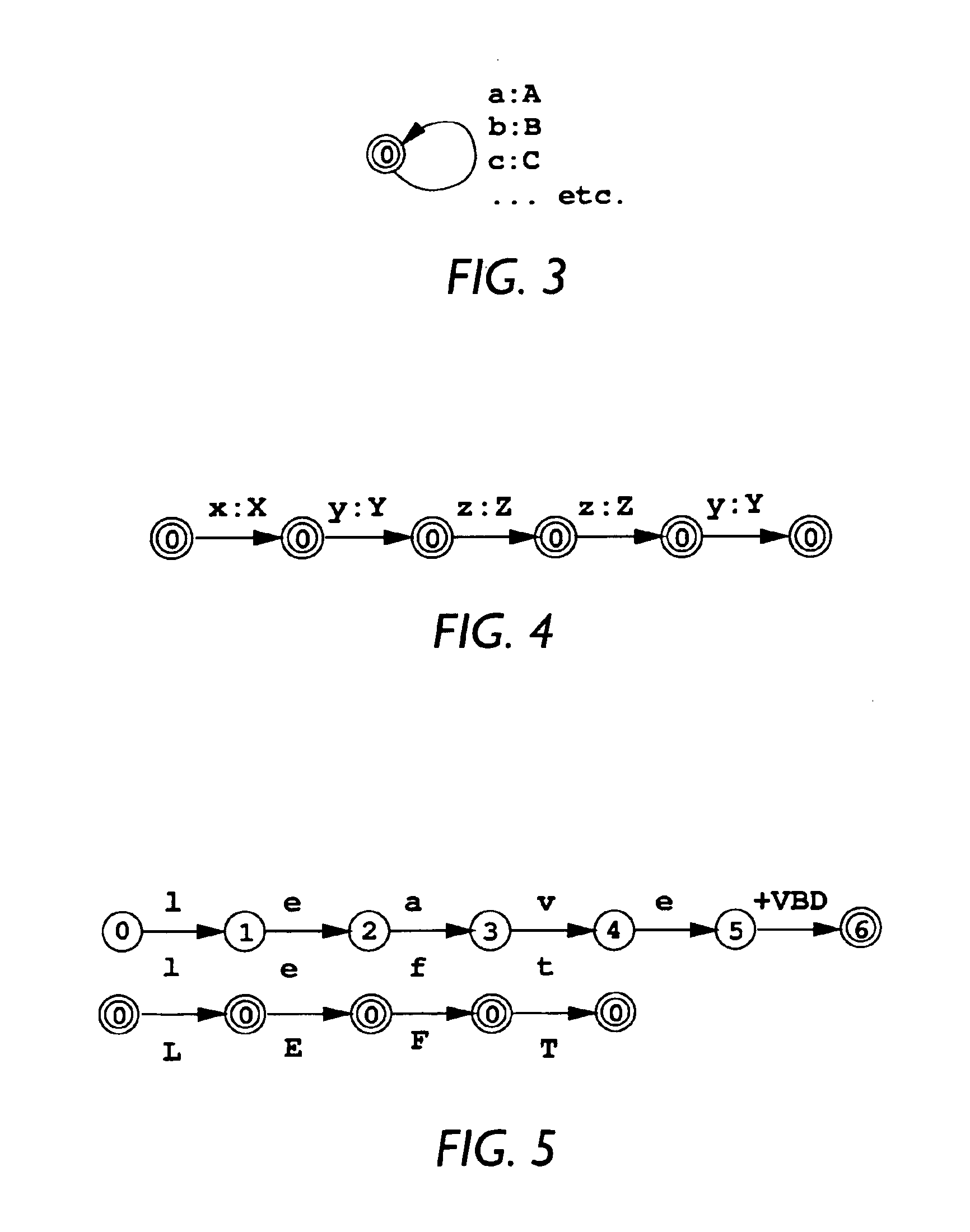

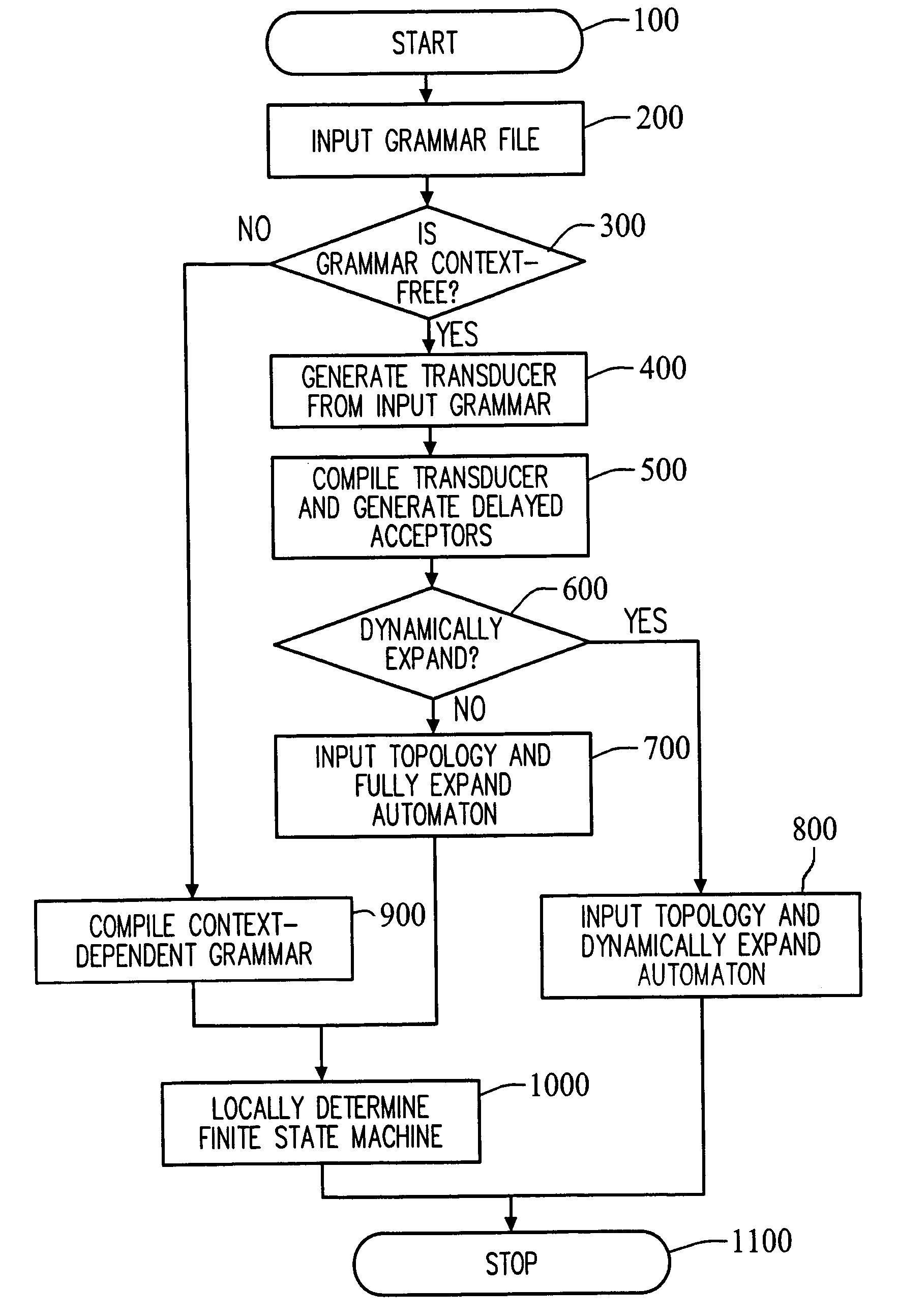

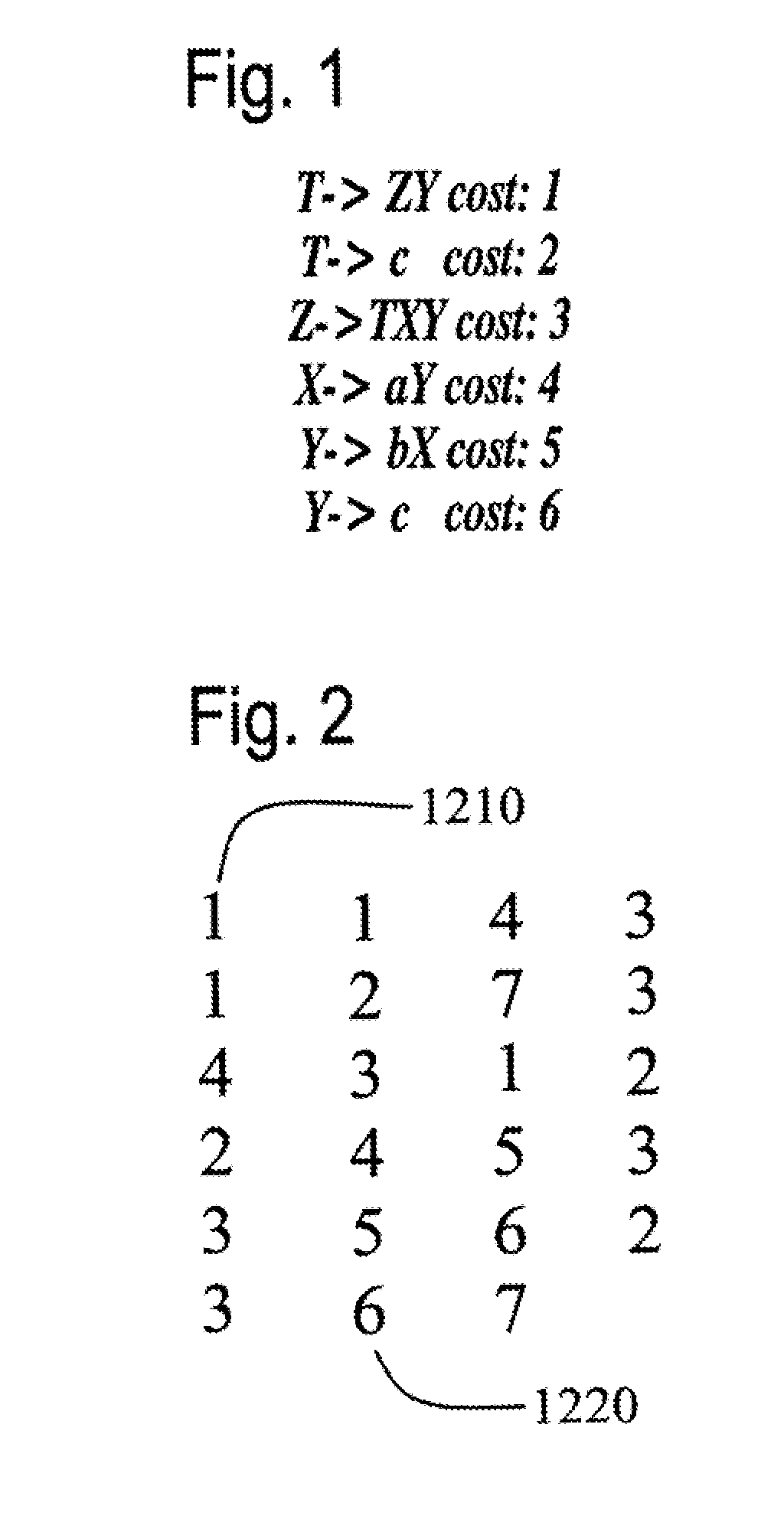

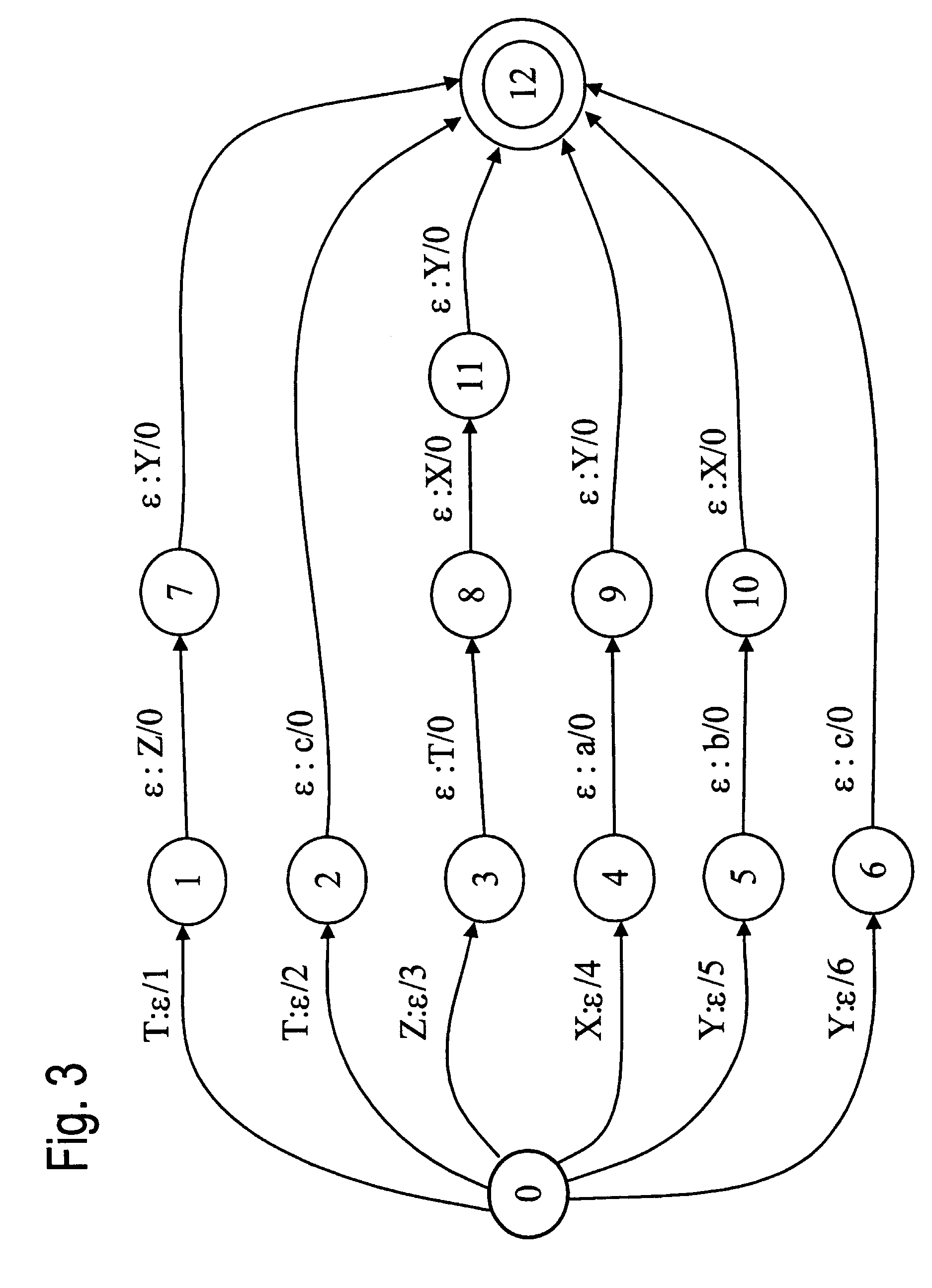

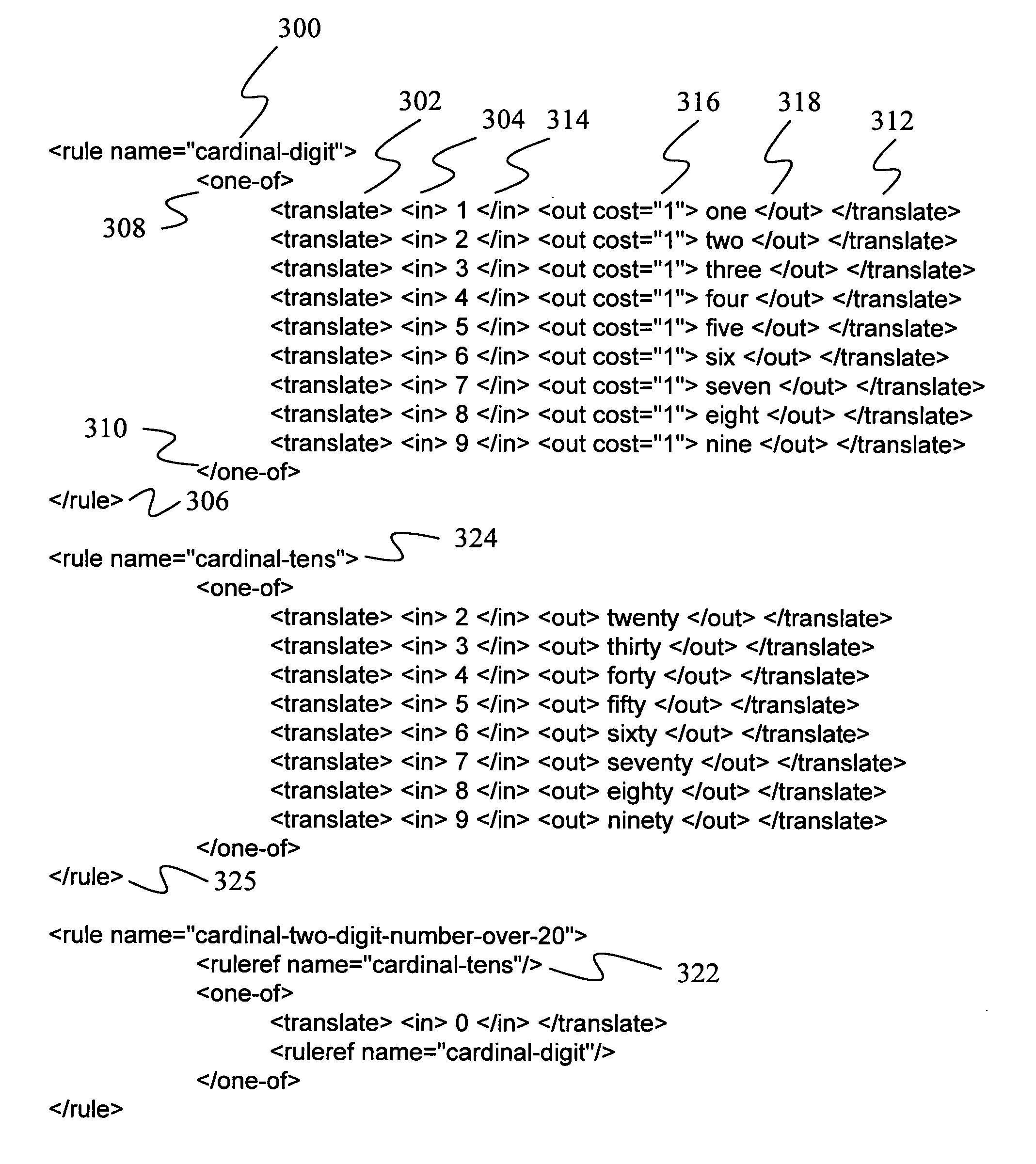

A context-free grammar can be represented by a weighted finite-state transducer. This representation can be used to efficiently compile that grammar into a weighted finite-state automaton that accepts the strings allowed by the grammar with the corresponding weights. The rules of a context-free grammar are input. A finite-state automaton is generated from the input rules. Strongly connected components of the finite-state automaton are identified. An automaton is generated for each strongly connected component. A topology that defines a number of states, and that uses active ones of the non-terminal symbols of the context-free grammar as the labels between those states, is defined. The topology is expanded by replacing a transition, and its beginning and end states, with the automaton that includes, as a state, the symbol used as the label on that transition. The topology can be fully expanded or dynamically expanded as required to recognize a particular input string.

Owner:NUANCE COMM INC
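The strongly-connected-component step in the abstract above can be illustrated with Tarjan's algorithm. This sketch runs on a dependency graph between non-terminals rather than on the automaton the patent builds, and the toy grammar and function name are assumptions made for illustration.

```python
def strongly_connected_components(graph):
    """Tarjan's algorithm. `graph` maps a node to the nodes it points to."""
    index, low = {}, {}
    stack, on_stack = [], set()
    components = []
    counter = [0]

    def visit(v):
        index[v] = low[v] = counter[0]
        counter[0] += 1
        stack.append(v)
        on_stack.add(v)
        for w in graph.get(v, ()):
            if w not in index:
                visit(w)
                low[v] = min(low[v], low[w])
            elif w in on_stack:
                low[v] = min(low[v], index[w])
        if low[v] == index[v]:
            # v is the root of a component: pop it off the stack
            component = set()
            while True:
                w = stack.pop()
                on_stack.discard(w)
                component.add(w)
                if w == v:
                    break
            components.append(component)

    for v in graph:
        if v not in index:
            visit(v)
    return components


# Hypothetical grammar: S -> A b, A -> B a, B -> A c | d
# An edge X -> Y means a rule for X mentions non-terminal Y.
dependencies = {"S": ["A"], "A": ["B"], "B": ["A"]}
sccs = strongly_connected_components(dependencies)
```

Here `A` and `B` are mutually recursive and land in the same component, while `S` forms a component of its own. In the abstract, each such component gets its own automaton.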

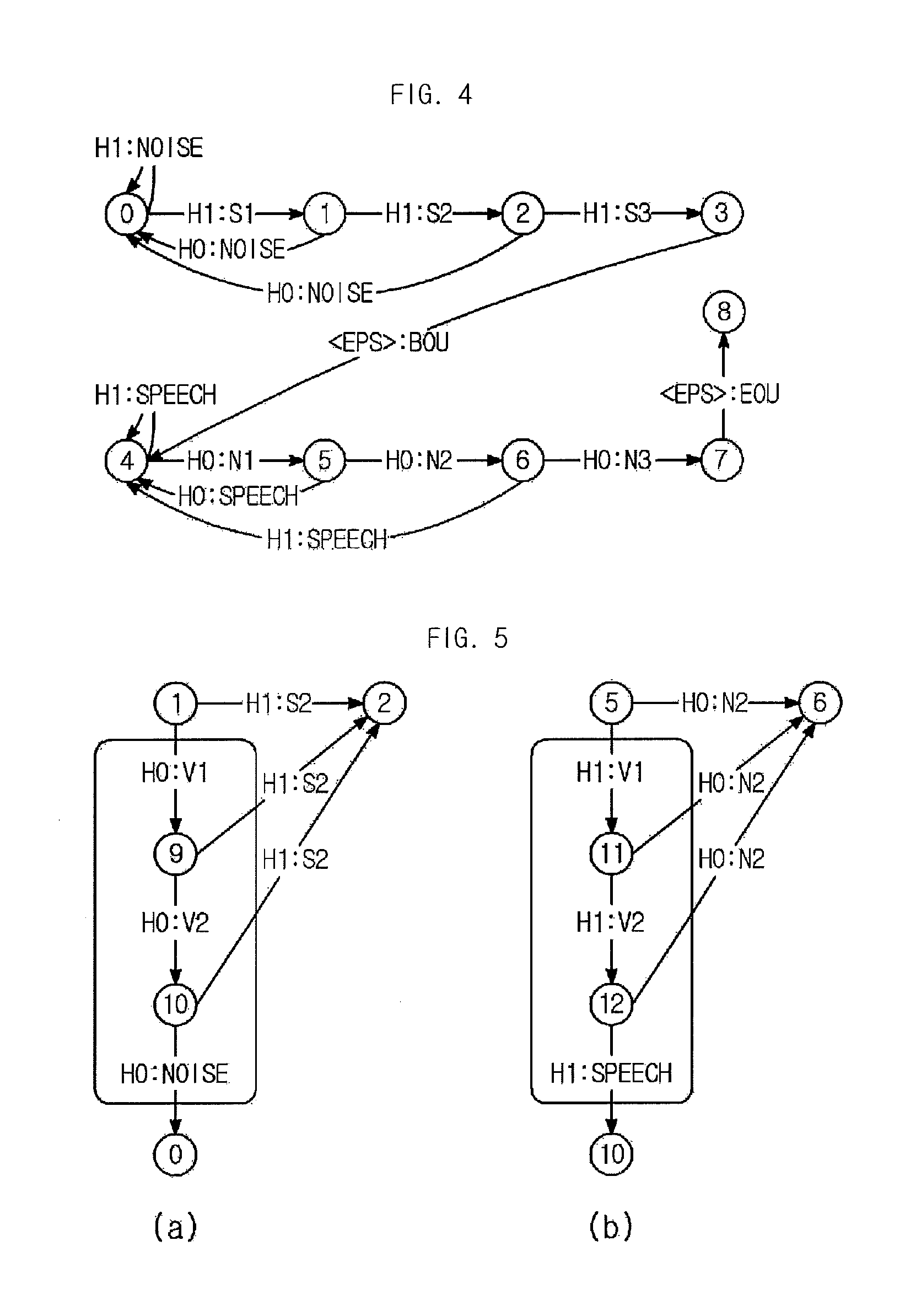

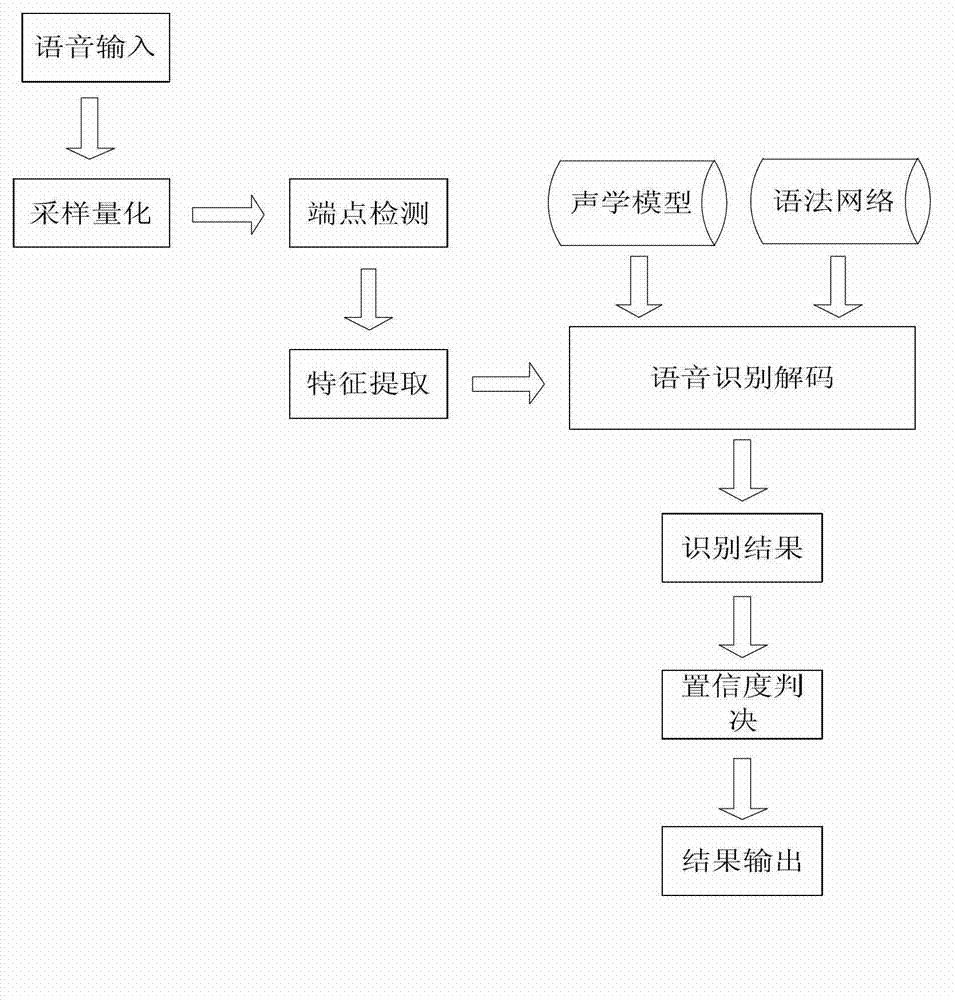

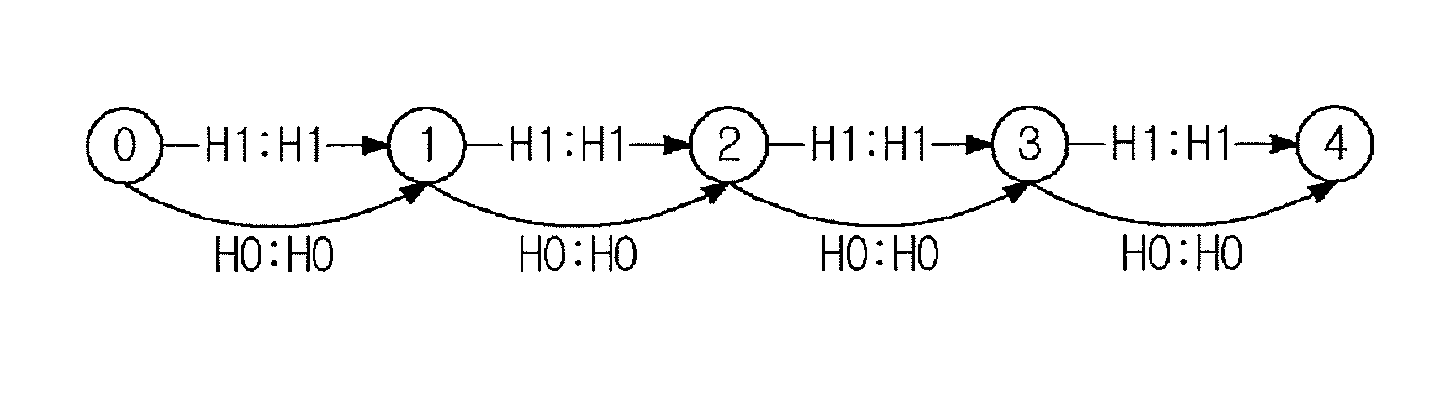

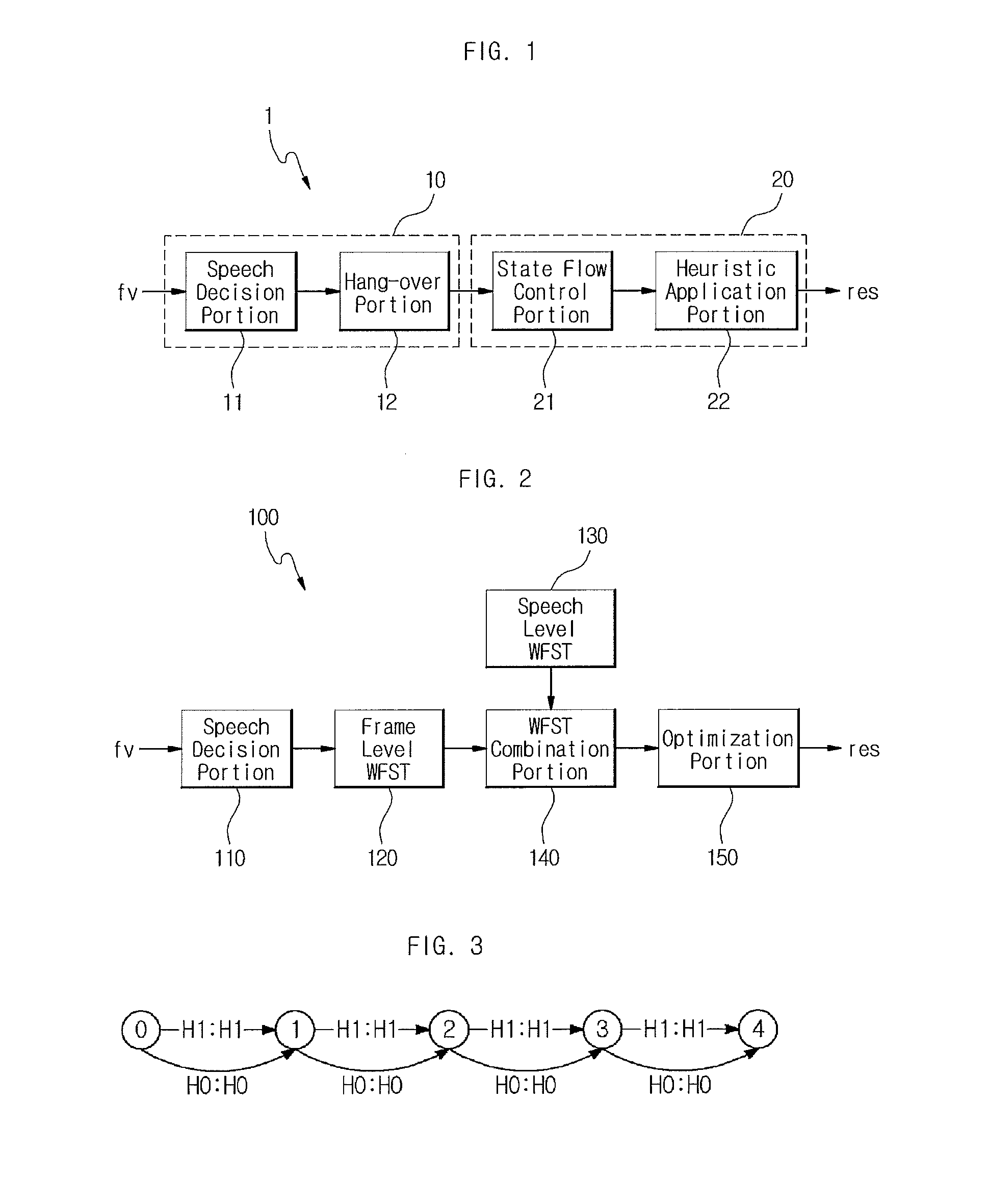

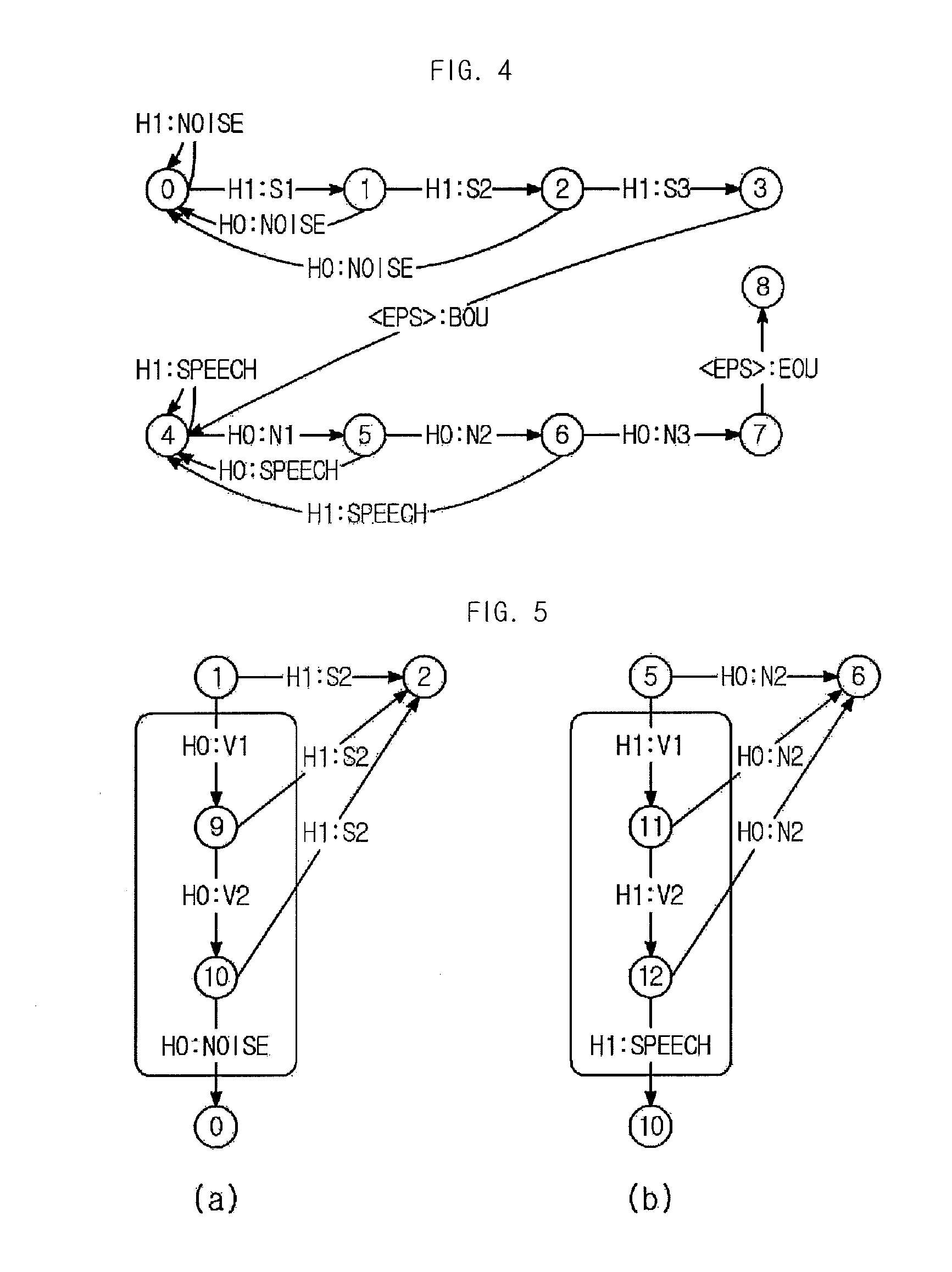

Method and apparatus for detecting speech endpoint using weighted finite state transducer

Inactive | US9396722B2 | Easy to add and delete | Avoid mistakes | Speech recognition | Feature vector | Finite state transducer

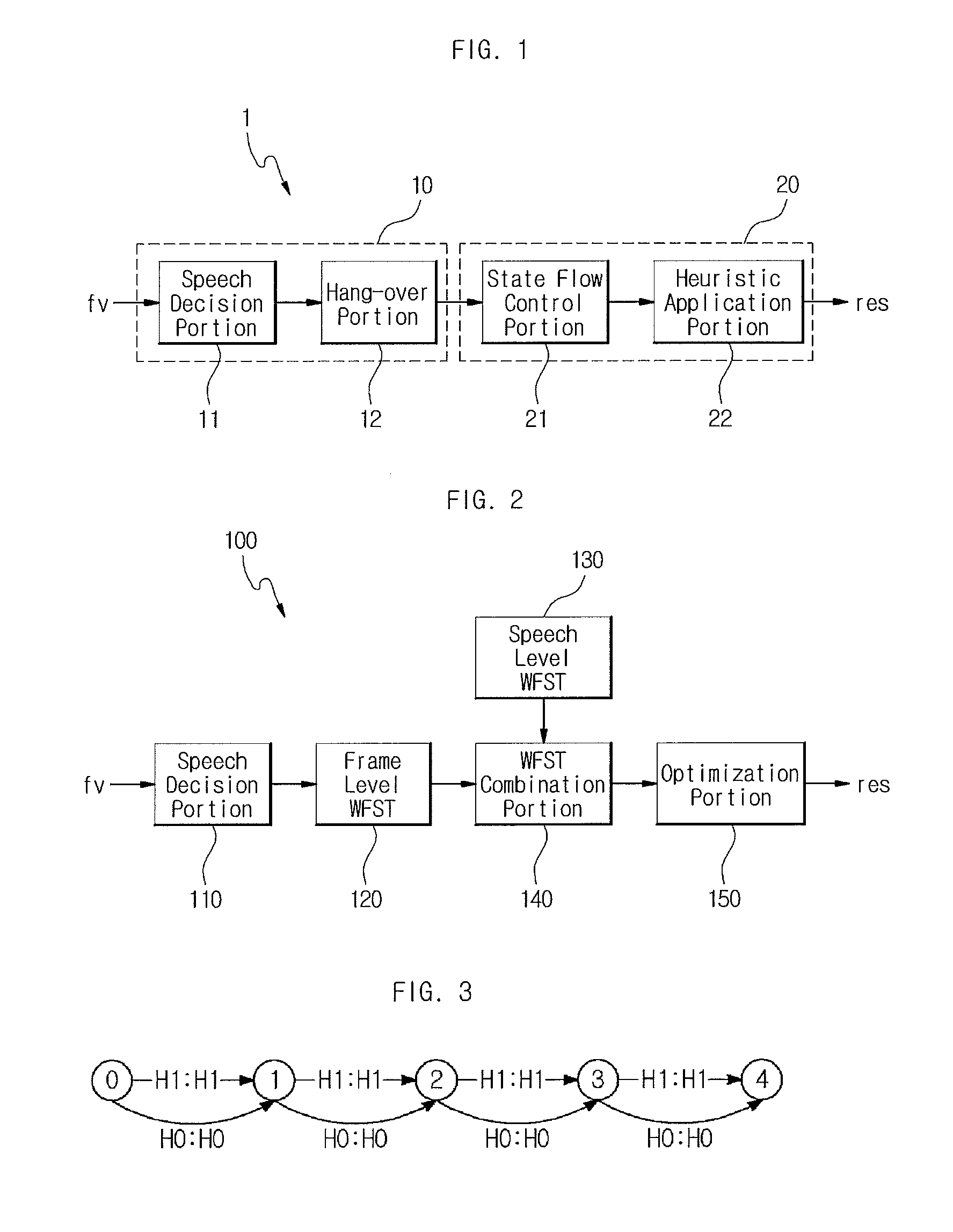

Disclosed are an apparatus and a method for detecting a speech endpoint using a WFST. The apparatus in accordance with an embodiment of the present invention includes: a speech decision portion configured to receive frame units of feature vector converted from a speech signal and to analyze and classify the received feature vector into a speech class or a noise class; a frame level WFST configured to receive the speech class and the noise class and to convert the speech class and the noise class to a WFST format; a speech level WFST configured to detect a speech endpoint by analyzing a relationship between the speech class and noise class and a preset state; a WFST combination portion configured to combine the frame level WFST with the speech level WFST; and an optimization portion configured to optimize the combined WFST having the frame level WFST and the speech level WFST combined therein to have a minimum route.

Owner:ELECTRONICS & TELECOMM RES INST

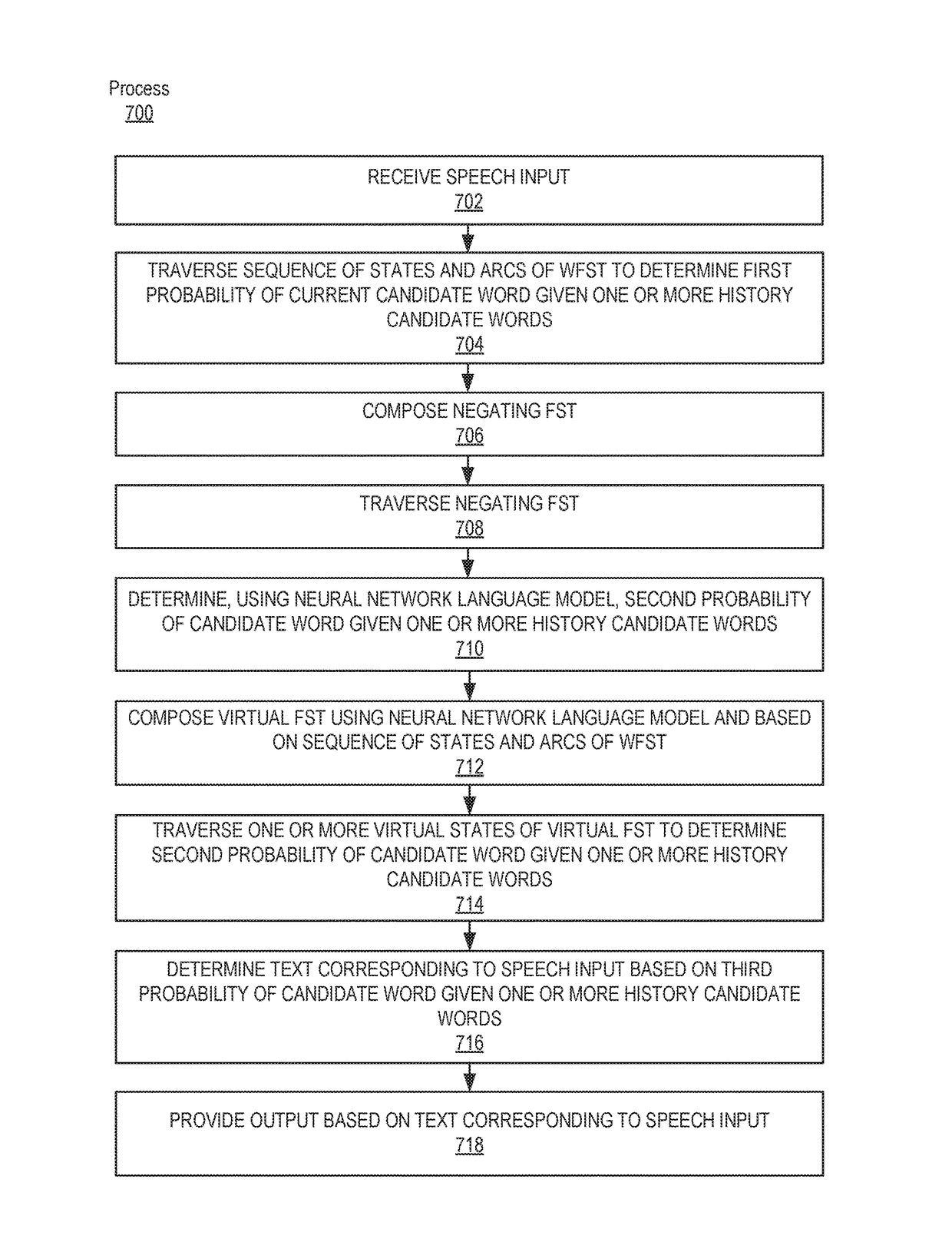

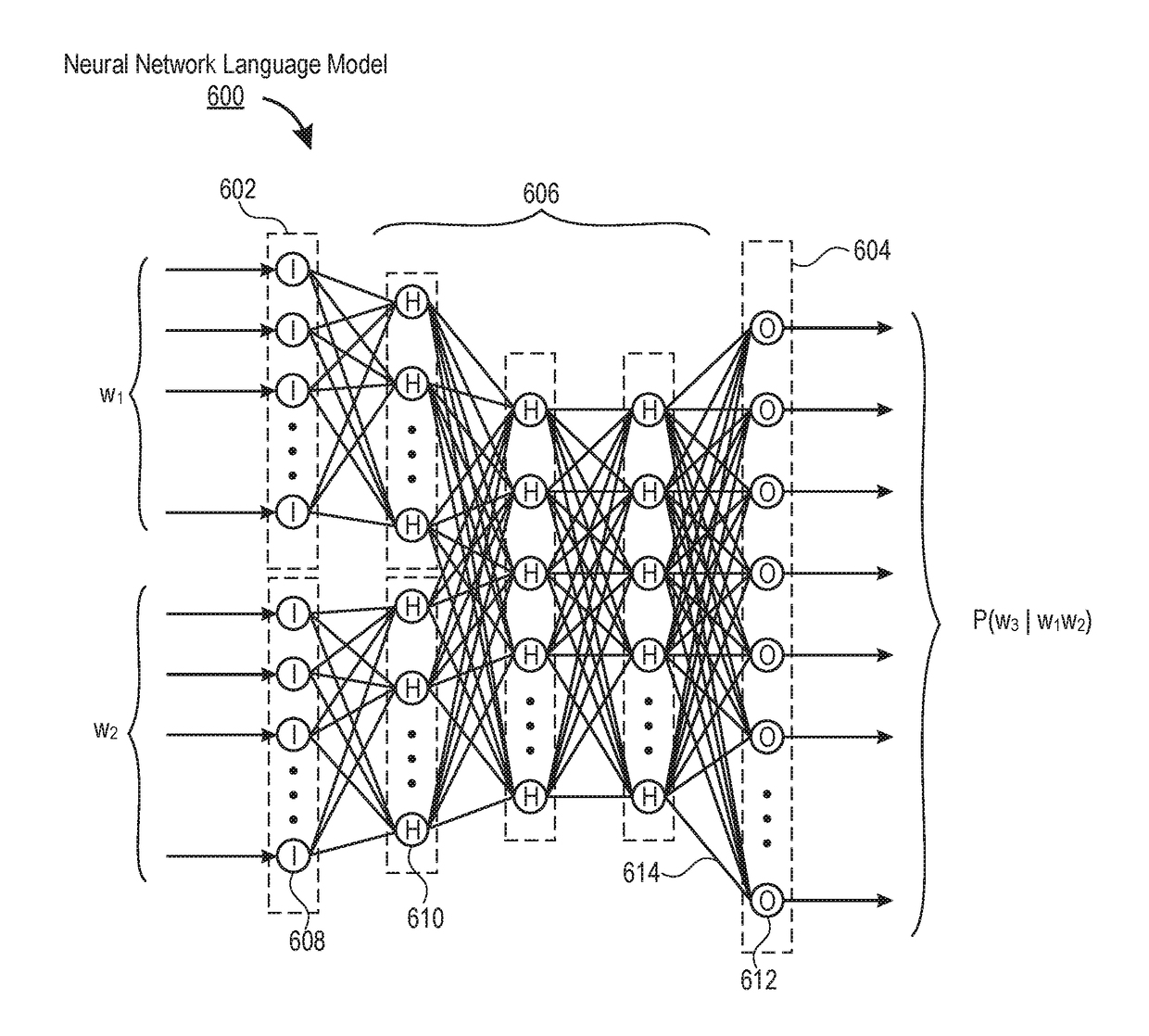

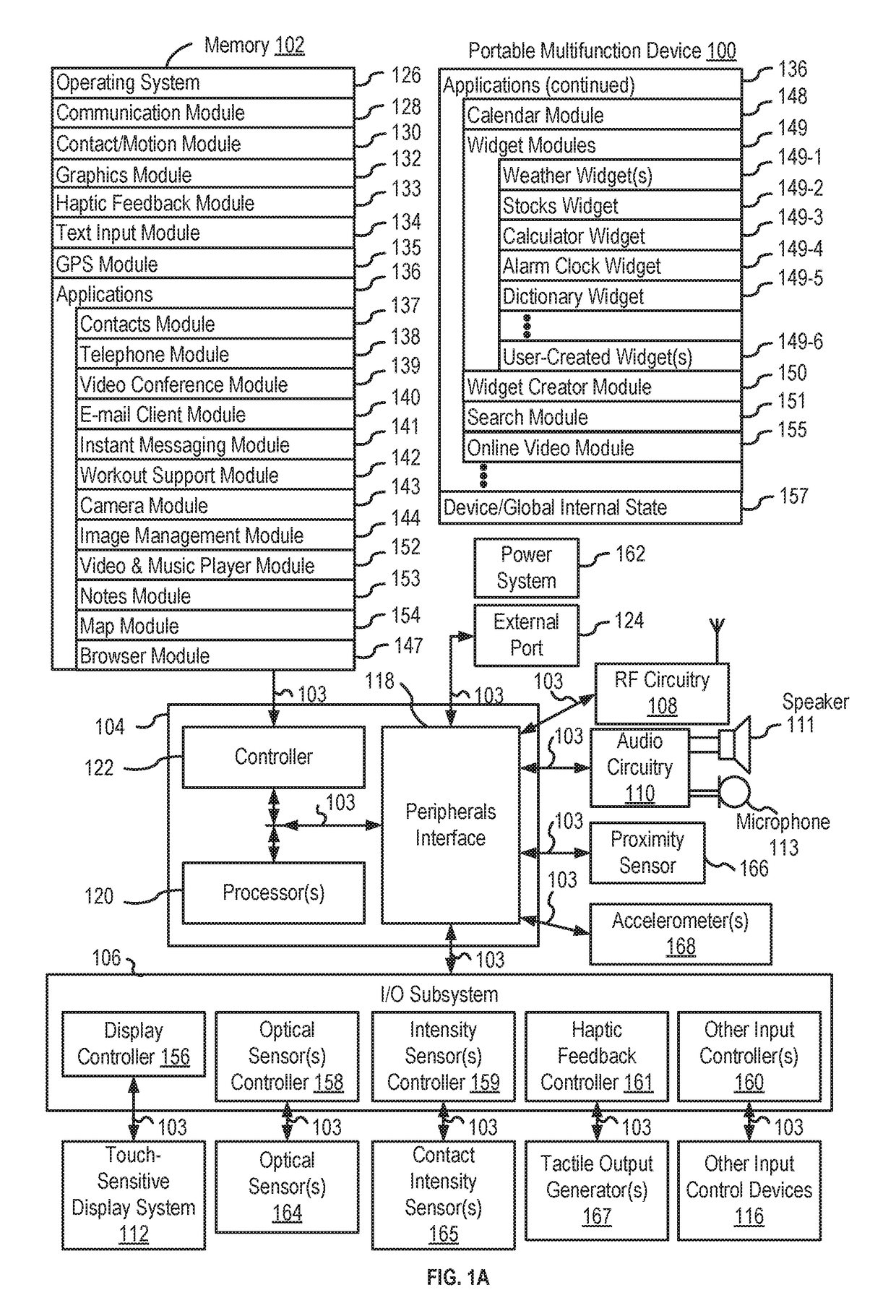

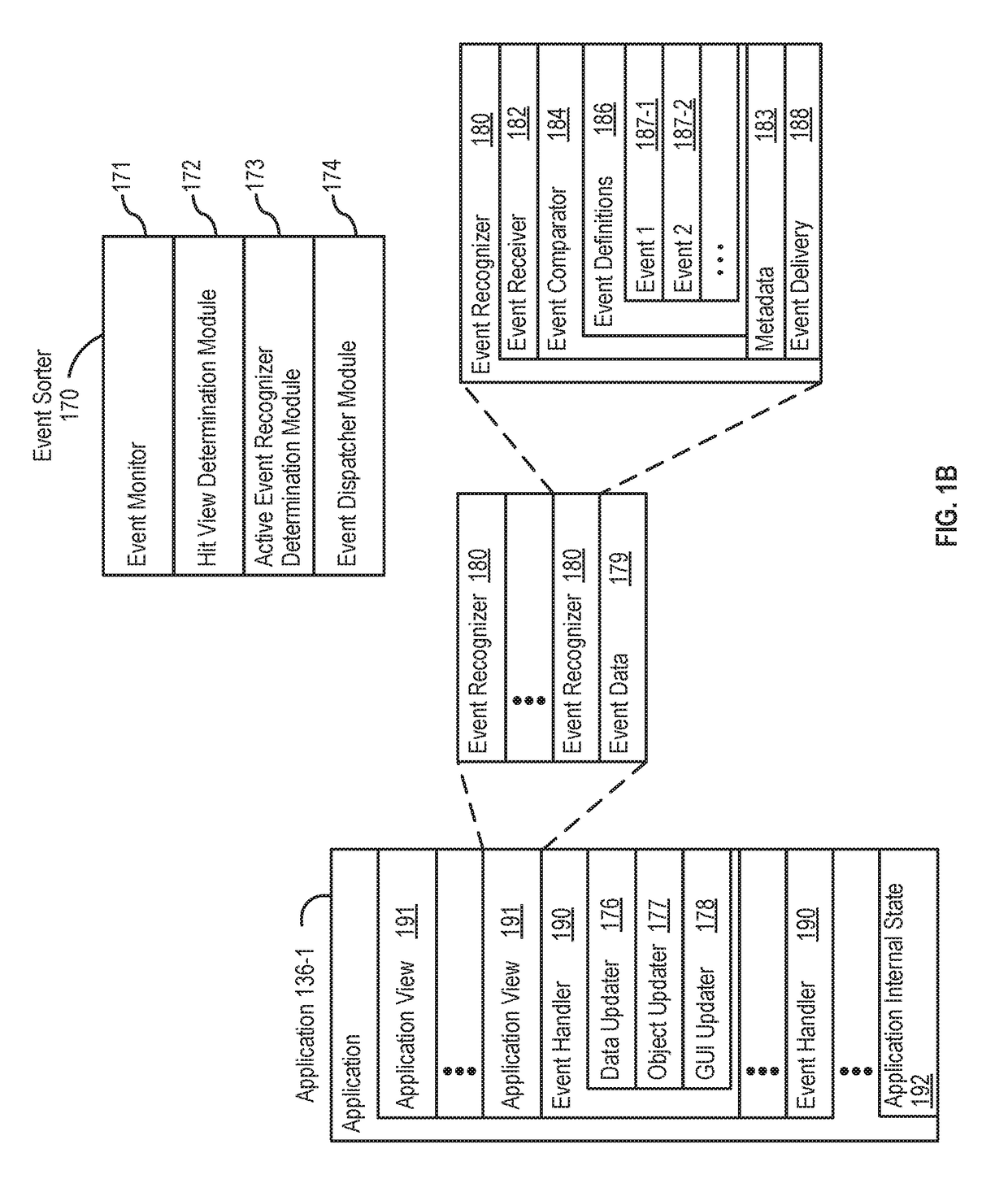

Applying neural network language models to weighted finite state transducers for automatic speech recognition

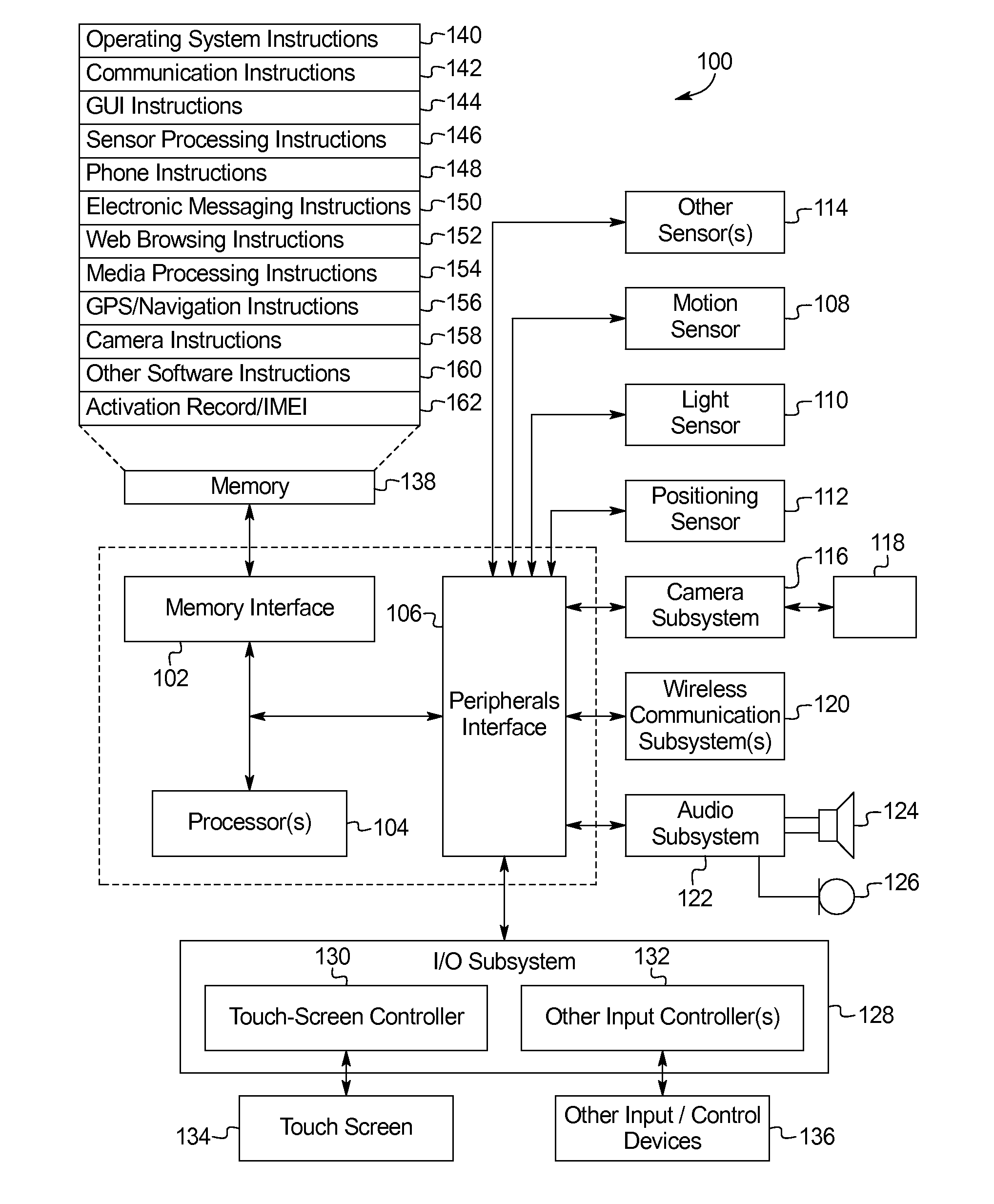

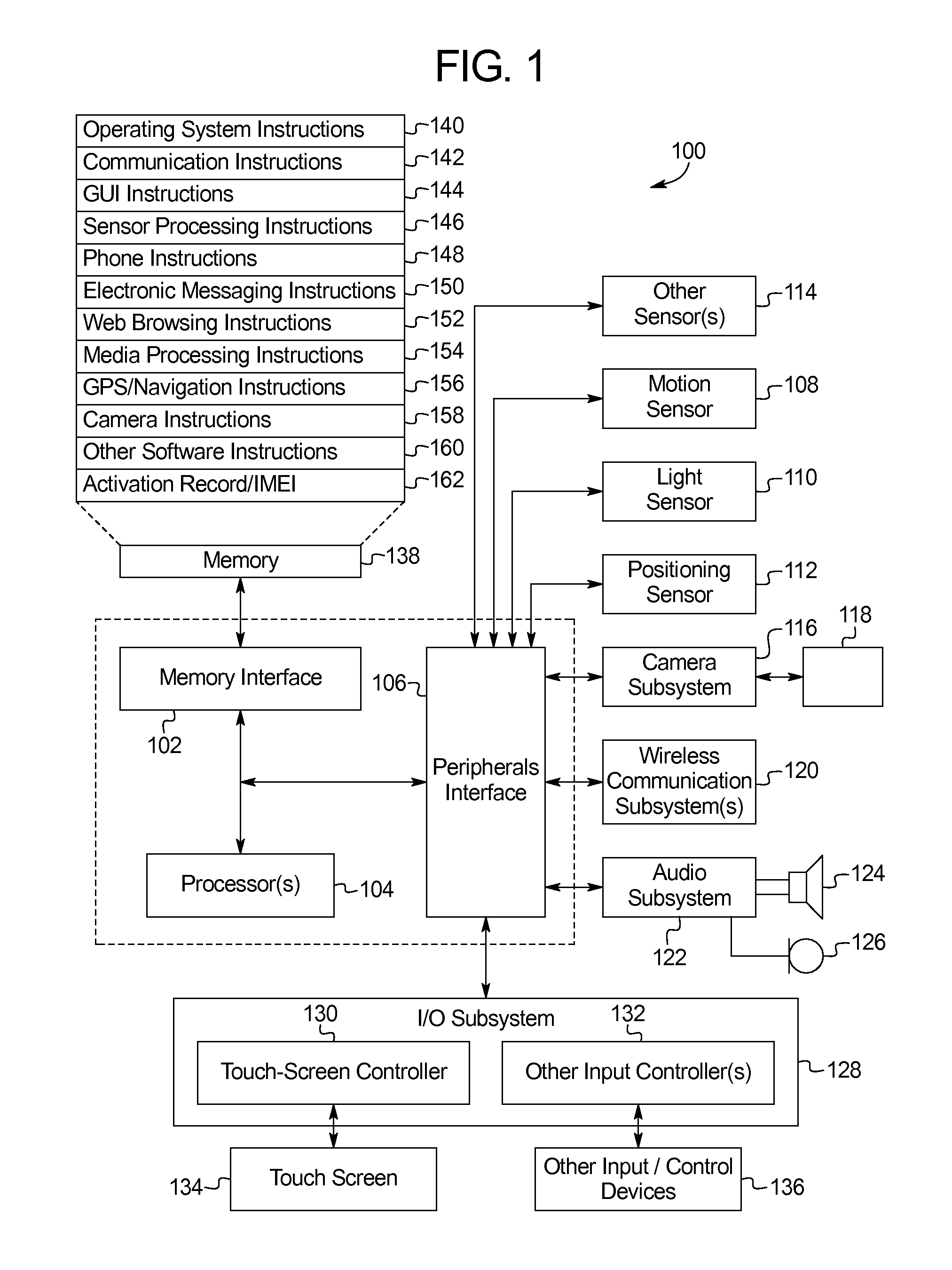

Systems and processes for converting speech-to-text are provided. In one example process, speech input can be received. A sequence of states and arcs of a weighted finite state transducer (WFST) can be traversed. A negating finite state transducer (FST) can be traversed. A virtual FST can be composed using a neural network language model and based on the sequence of states and arcs of the WFST. The one or more virtual states of the virtual FST can be traversed to determine a probability of a candidate word given one or more history candidate words. Text corresponding to the speech input can be determined based on the probability of the candidate word given the one or more history candidate words. An output can be provided based on the text corresponding to the speech input.

Owner:APPLE INC

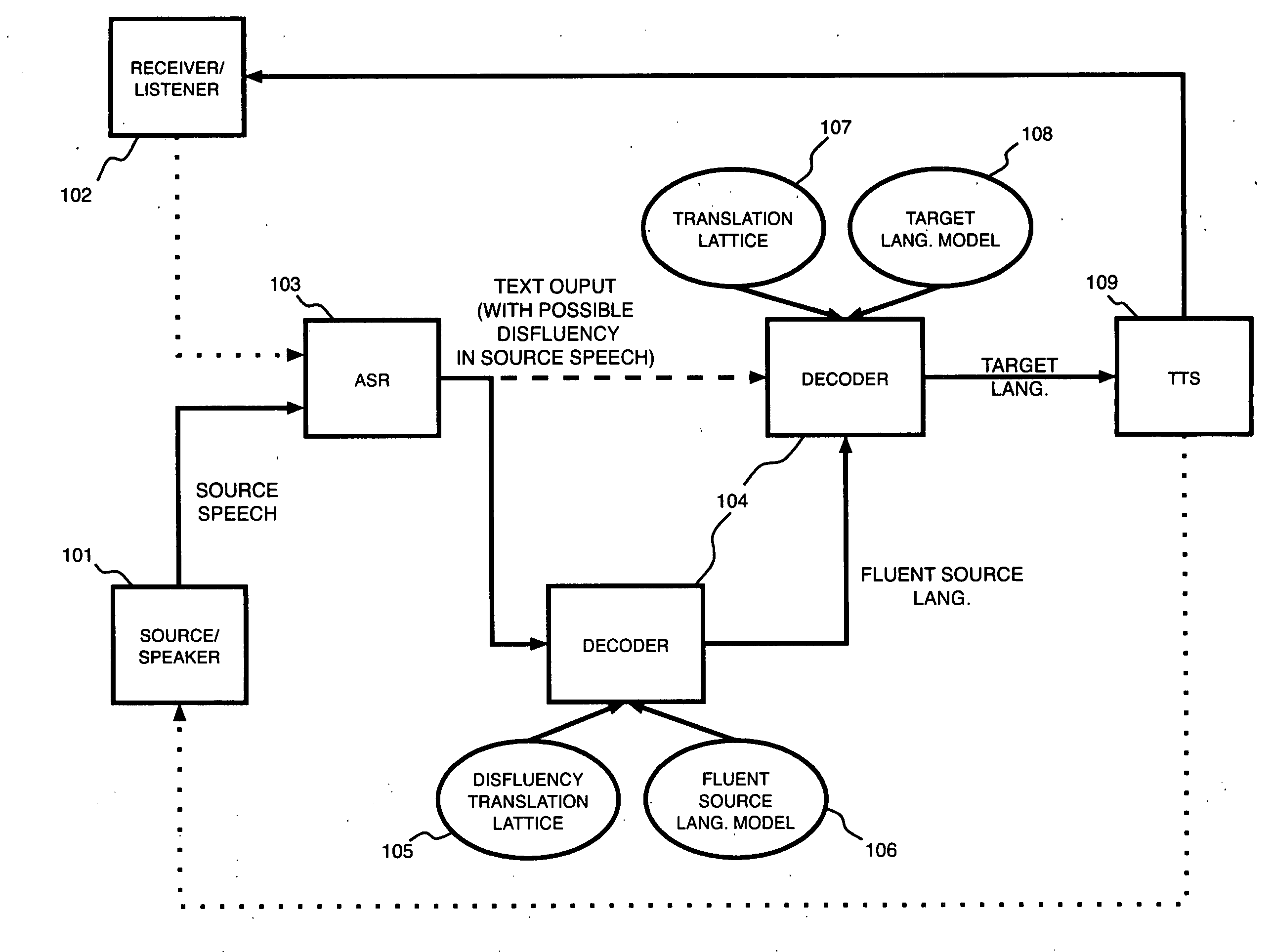

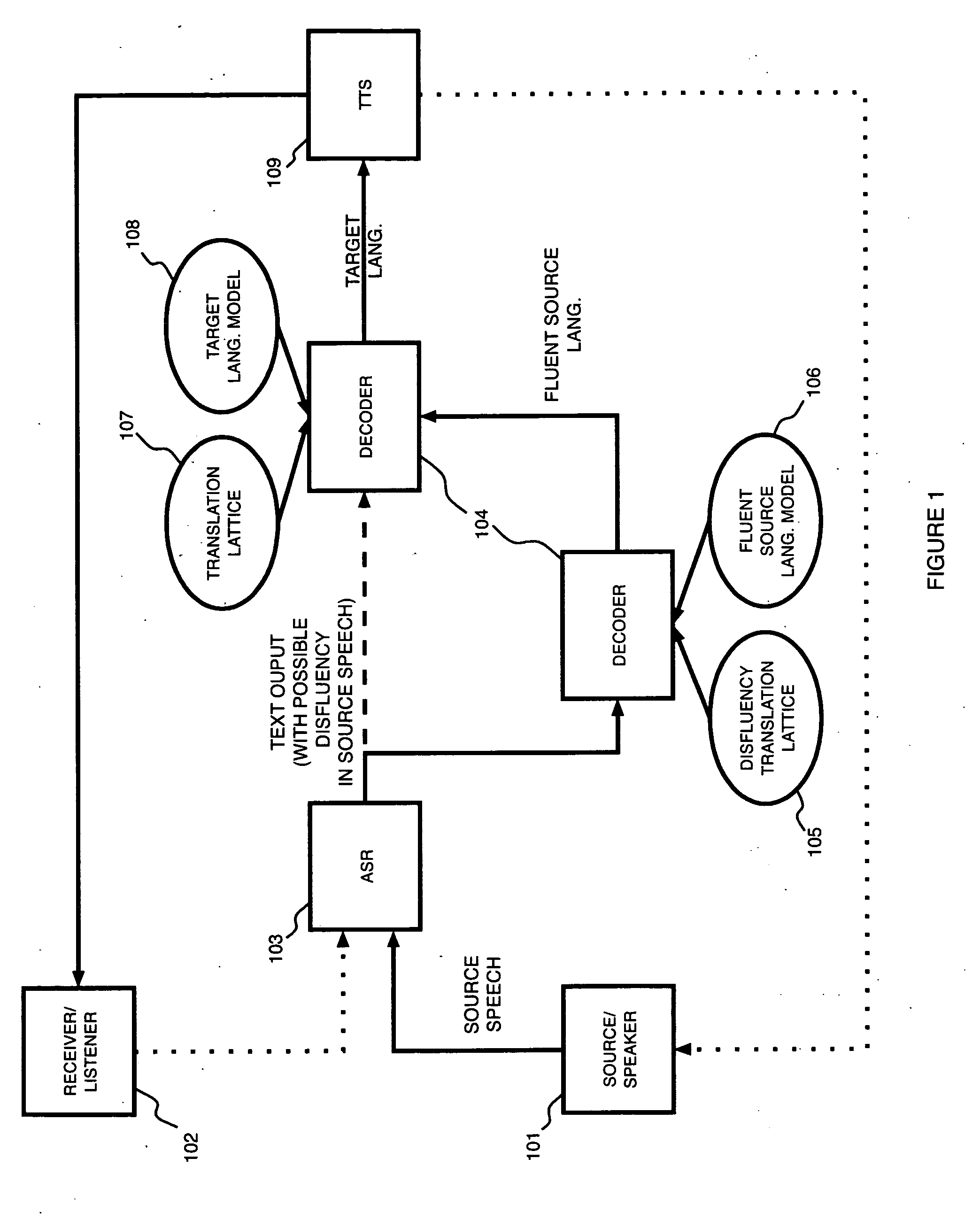

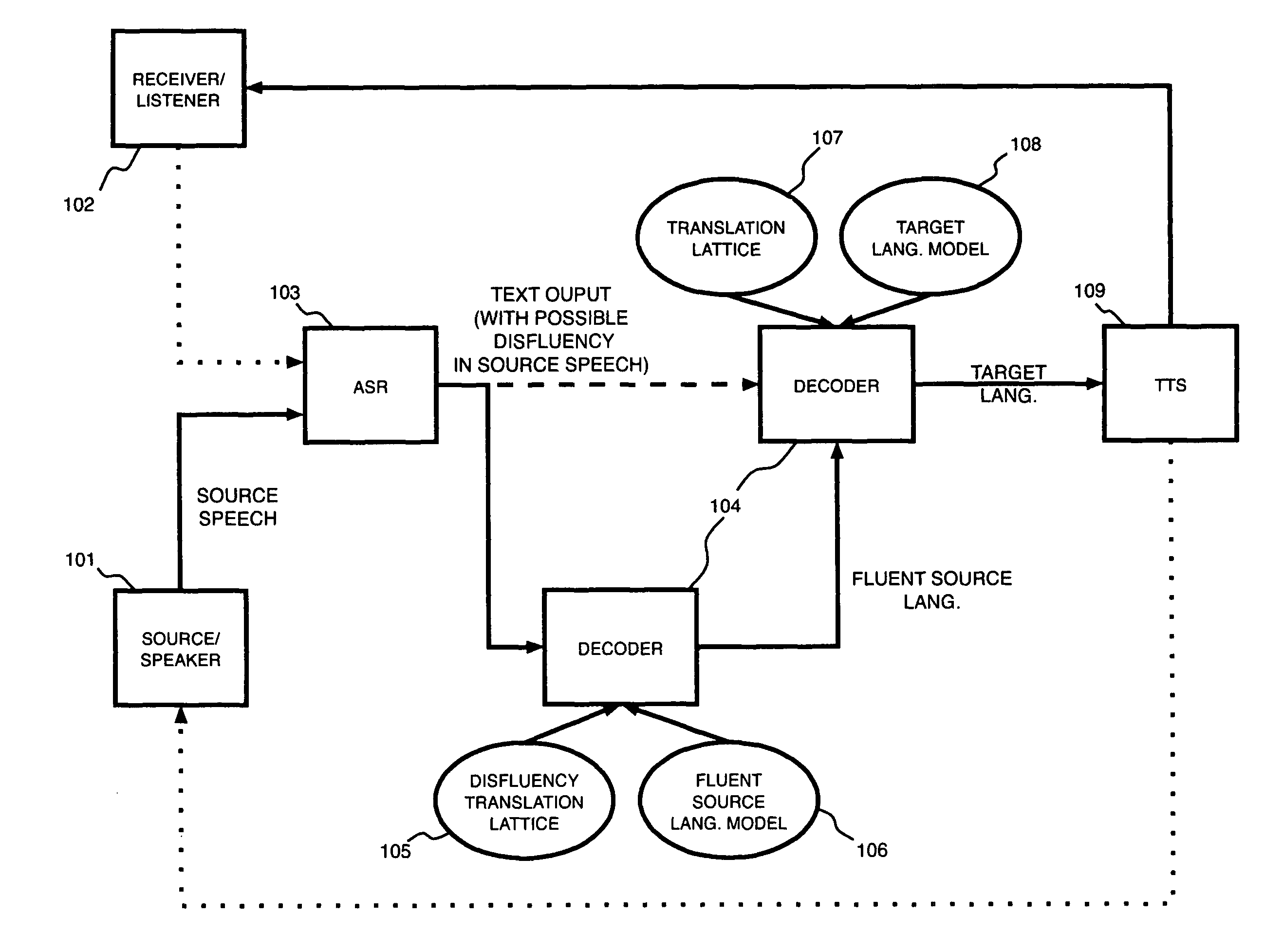

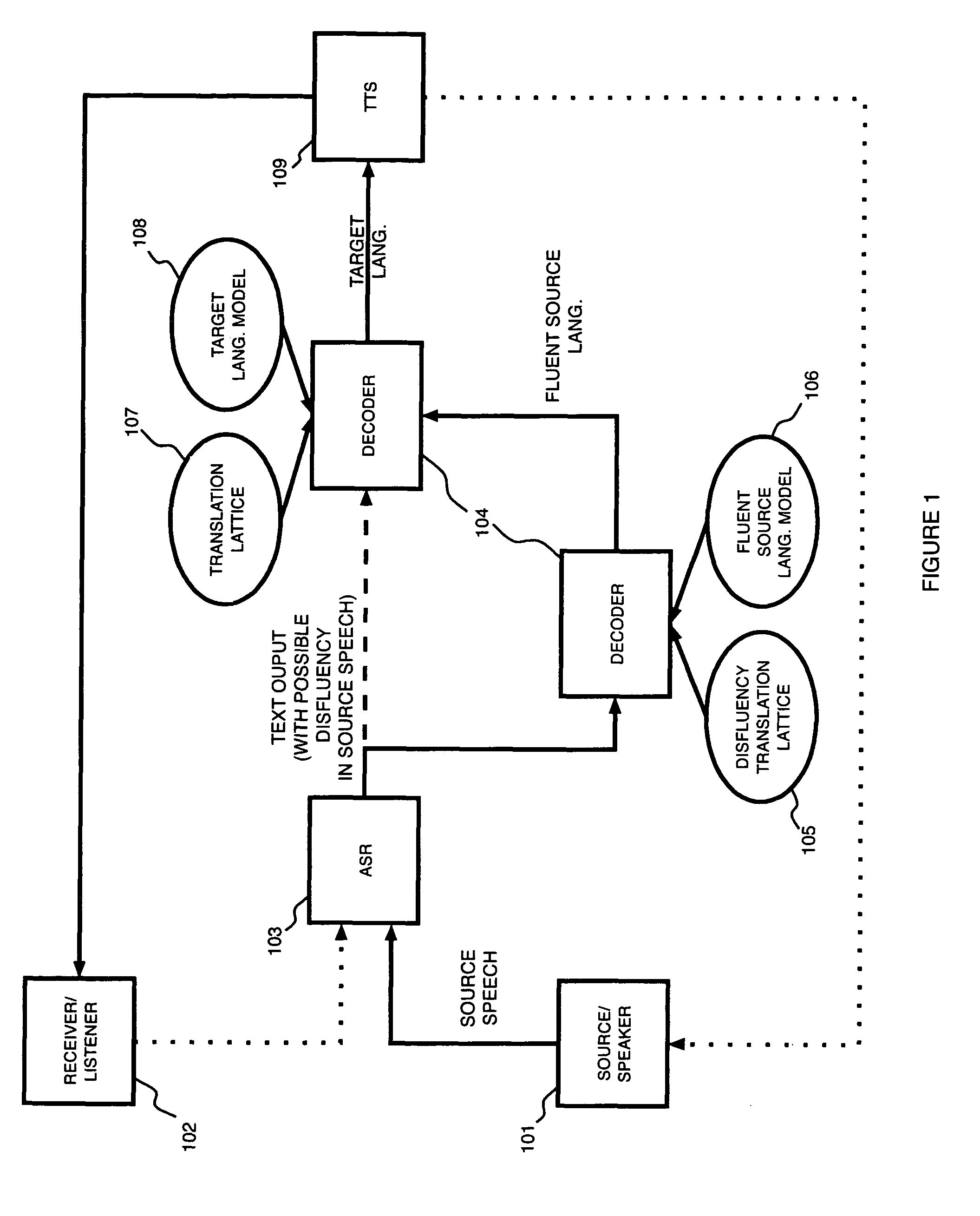

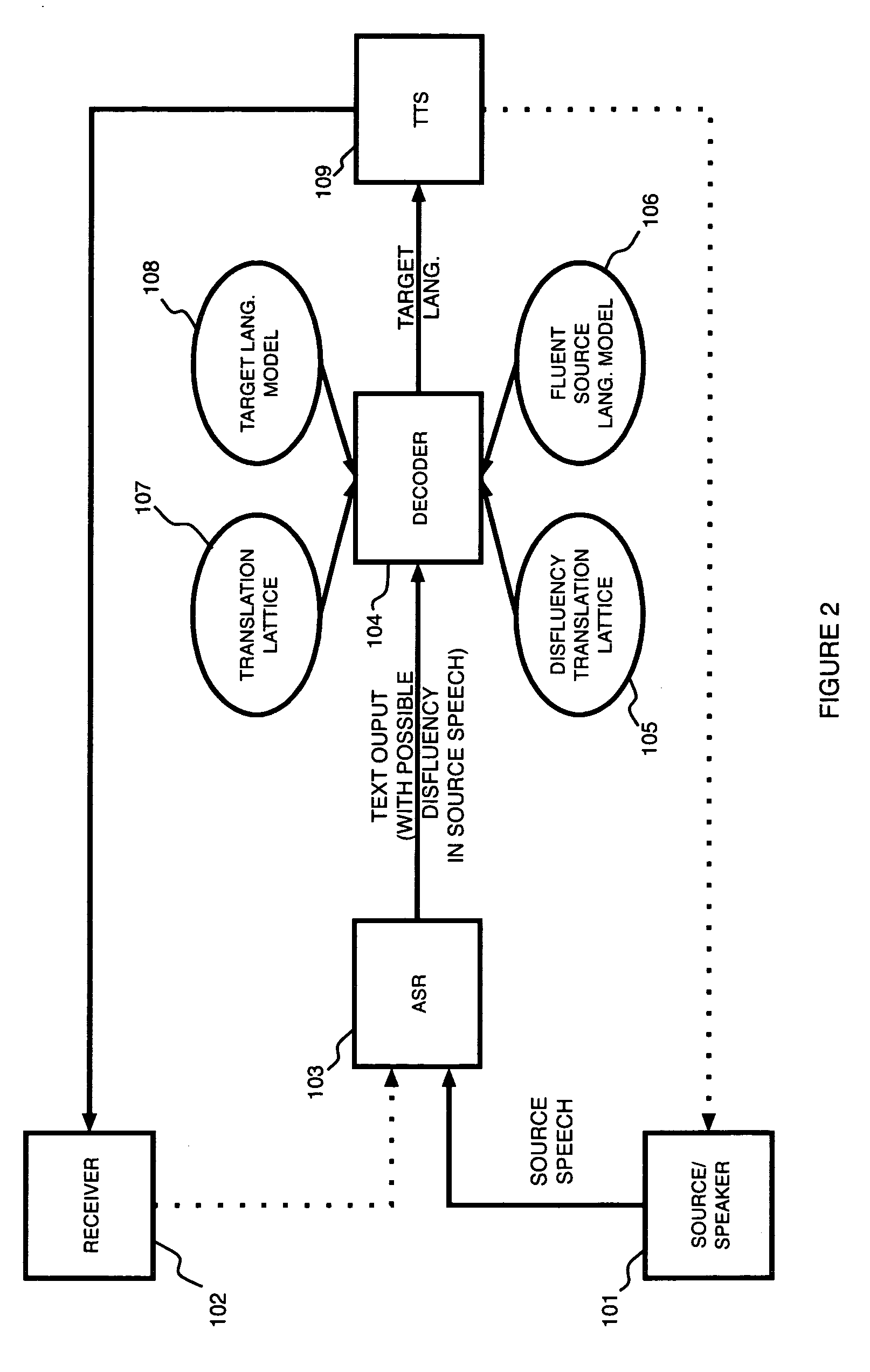

Disfluency detection for a speech-to-speech translation system using phrase-level machine translation with weighted finite state transducers

Inactive | US20080046229A1 | Natural language data processing | Speech recognition | Speech to speech translation | Finite state transducer

A computer-implemented method for creating a disfluency translation lattice includes providing a plurality of weighted finite state transducers including a translation model, a language model, and a phrase segmentation model as input, performing a cascaded composition of the weighted finite state transducers to create a disfluency translation lattice, and storing the disfluency translation lattice to a computer-readable media.

Owner:IBM CORP
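Cascaded composition, as mentioned in the abstract above, chains transducers so that the output of one feeds the input of the next. The sketch below composes epsilon-free weighted transducers whose weights add along a path (tropical-semiring style). The dictionary layout and the three toy transducers are assumptions for illustration and do not represent the translation, language, or segmentation models of the patent.

```python
from collections import deque
from functools import reduce


def compose(a, b):
    """Compose two epsilon-free WFSTs.

    Each WFST is a dict with "start", "finals" (a set) and "arcs",
    a list of (src, input, output, weight, dst) tuples.
    """
    start = (a["start"], b["start"])
    arcs, finals = [], set()
    queue, seen = deque([start]), {start}
    while queue:
        qa, qb = queue.popleft()
        if qa in a["finals"] and qb in b["finals"]:
            finals.add((qa, qb))
        for (sa, ia, oa, wa, da) in a["arcs"]:
            if sa != qa:
                continue
            for (sb, ib, ob, wb, db) in b["arcs"]:
                # The output of `a` must match the input of `b`.
                if sb != qb or ib != oa:
                    continue
                dst = (da, db)
                arcs.append(((qa, qb), ia, ob, wa + wb, dst))
                if dst not in seen:
                    seen.add(dst)
                    queue.append(dst)
    return {"start": start, "finals": finals, "arcs": arcs}


# Three hypothetical one-arc transducers: x -> y, y -> z, z -> w.
t1 = {"start": 0, "finals": {1}, "arcs": [(0, "x", "y", 1.0, 1)]}
t2 = {"start": 0, "finals": {1}, "arcs": [(0, "y", "z", 2.0, 1)]}
t3 = {"start": 0, "finals": {1}, "arcs": [(0, "z", "w", 0.5, 1)]}

cascade = reduce(compose, [t1, t2, t3])
```

The cascade contains a single arc that reads `x`, writes `w`, and carries the summed weight of the three stages. Production toolkits also handle epsilon labels and final weights, which this sketch leaves out.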

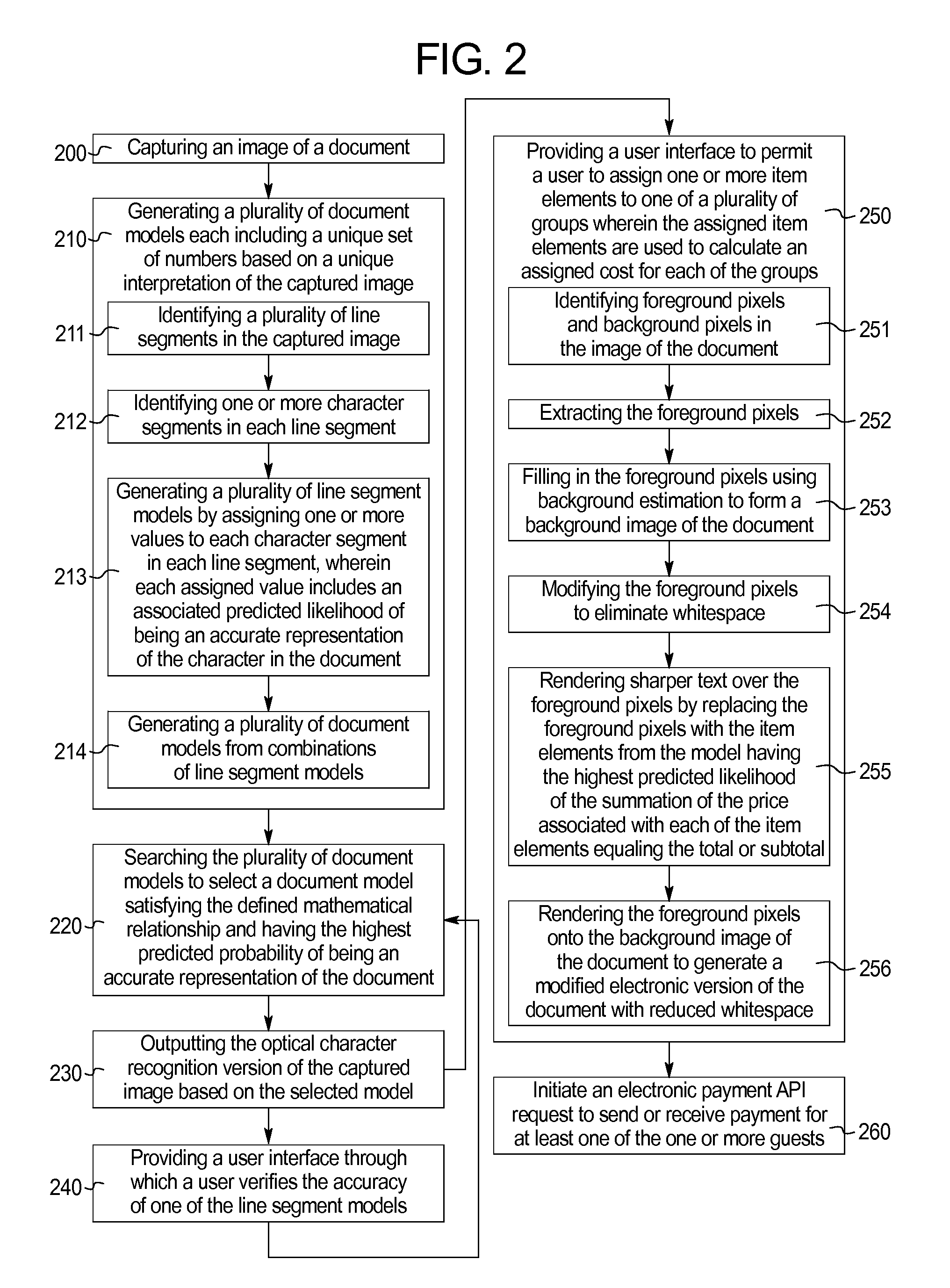

Systems and Methods for Processing Structured Data from a Document Image

Inactive | US20140067631A1 | Improving optical character recognition | Quickly error | Complete banking machines | Finance | Finite state transducer | Document model

Optical character recognition systems and methods including the steps of: capturing an image of a document including a set of numbers having a defined mathematical relationship; analyzing the image to determine line segments; analyzing each line segment to determine one or more character segments; analyzing each character segment to determine possible interpretations, each interpretation having an associated predicted probability of being accurate; forming a weighted finite state transducer for each interpretation, wherein the weights are based on the predicted probabilities; combining the weighted finite state transducer for each interpretation into a document model weighted finite state transducer that encodes the defined mathematical relationship; searching the document model weighted finite state transducer for the lowest weight path, which is an interpretation of the document that is most likely to accurately represent the document; and outputting an optical character recognition version of the captured image.

Owner:HELIX SYST
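The lowest-weight-path search described in the abstract above can be sketched with Dijkstra's algorithm, using the negative log of each predicted probability as the arc weight. The candidate characters and probabilities are invented for illustration, and the sketch does not encode the mathematical relationship between numbers that the patent's document model adds.

```python
import heapq
import math


def lowest_weight_path(arcs, start, final):
    """Dijkstra over arcs (src, label, weight, dst) with weights >= 0.
    Returns (label string, total weight) or None if `final` is unreachable."""
    settled = {}
    heap = [(0.0, start, "")]
    while heap:
        cost, state, text = heapq.heappop(heap)
        if state == final:
            return text, cost
        if state in settled and settled[state] <= cost:
            continue
        settled[state] = cost
        for (src, label, weight, dst) in arcs:
            if src == state:
                heapq.heappush(heap, (cost + weight, dst, text + label))
    return None


# Hypothetical OCR candidates per character position, with probabilities.
candidates = [[("1", 0.6), ("7", 0.4)],
              [("0", 0.9), ("8", 0.1)]]

# One arc per candidate: position i -> position i + 1, weight = -log(p).
arcs = [(i, ch, -math.log(p), i + 1)
        for i, options in enumerate(candidates)
        for ch, p in options]

text, cost = lowest_weight_path(arcs, 0, len(candidates))
```

With these numbers the search returns the reading `10`. Because weights are negative log probabilities, the lowest-weight path is the most probable interpretation.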

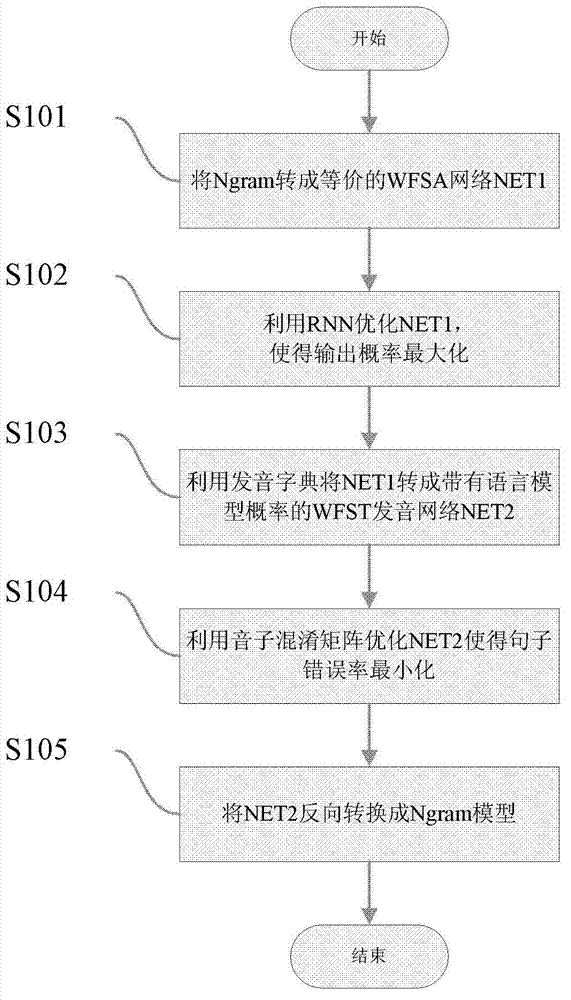

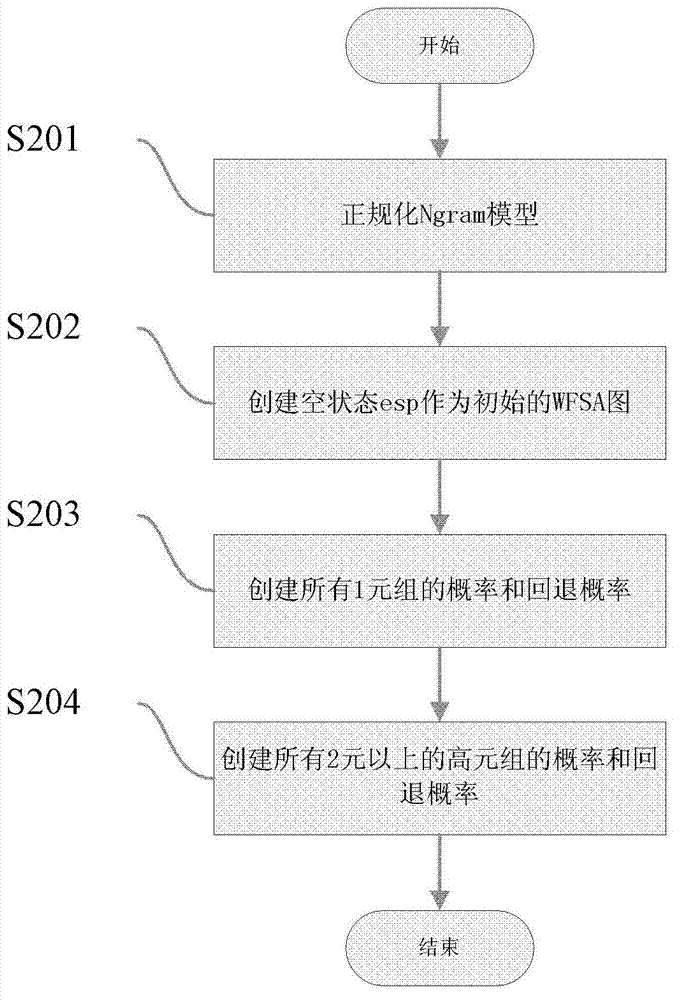

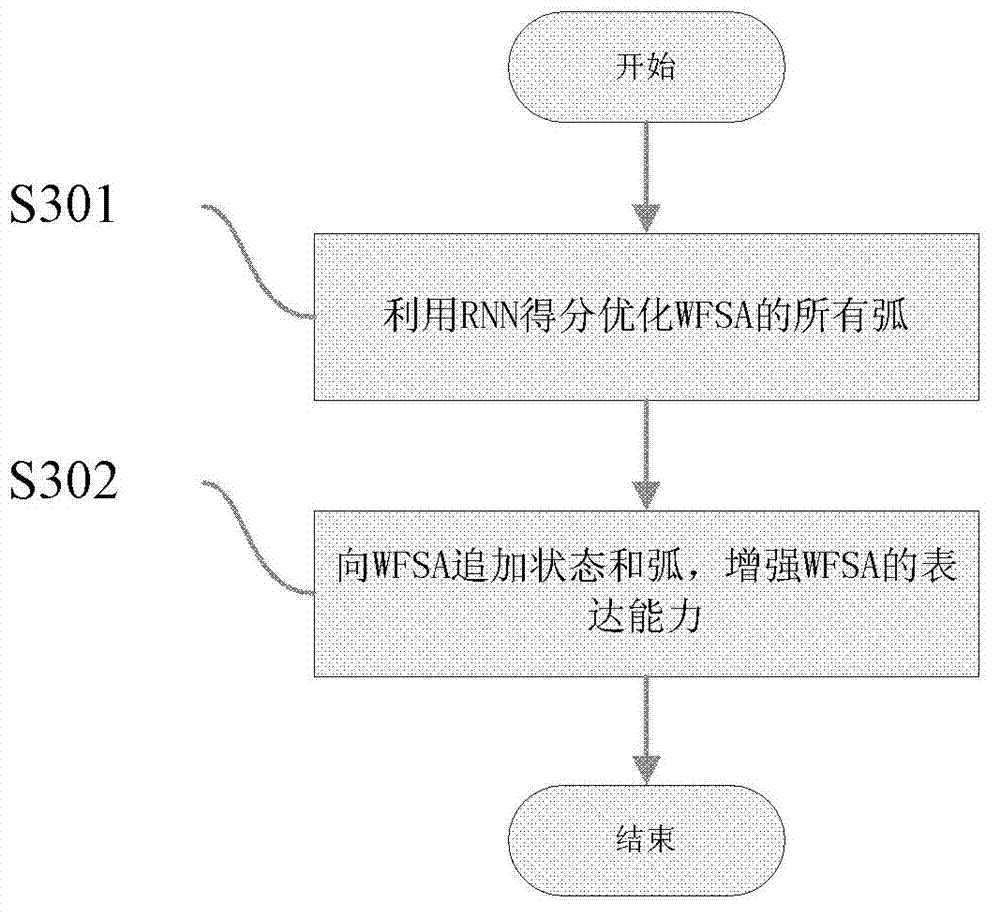

Improvement method of Ngram model for voice recognition

Active | CN102968989A | Excellent PPL performance | Improve recognition rate | Speech recognition | Finite state transducer | Speech identification

The invention discloses a method for improving an N-gram model for speech recognition, comprising the following steps: converting an original N-gram model for speech recognition into an equivalent WFSA (weighted finite-state automaton) network NET1; optimizing NET1 with an RNN (recurrent neural network) so that, when the training text is marked using NET1, the output probability of each sentence in the training text is maximized; converting NET1 into a WFST (weighted finite-state transducer) pronunciation network NET2 with speech model probabilities by using a pronunciation dictionary; optimizing the pronunciation network NET2 with a phoneme confusion matrix so that the sentence error rate is minimized; and converting the pronunciation network NET2 back into an improved N-gram model, which is then used for speech recognition.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

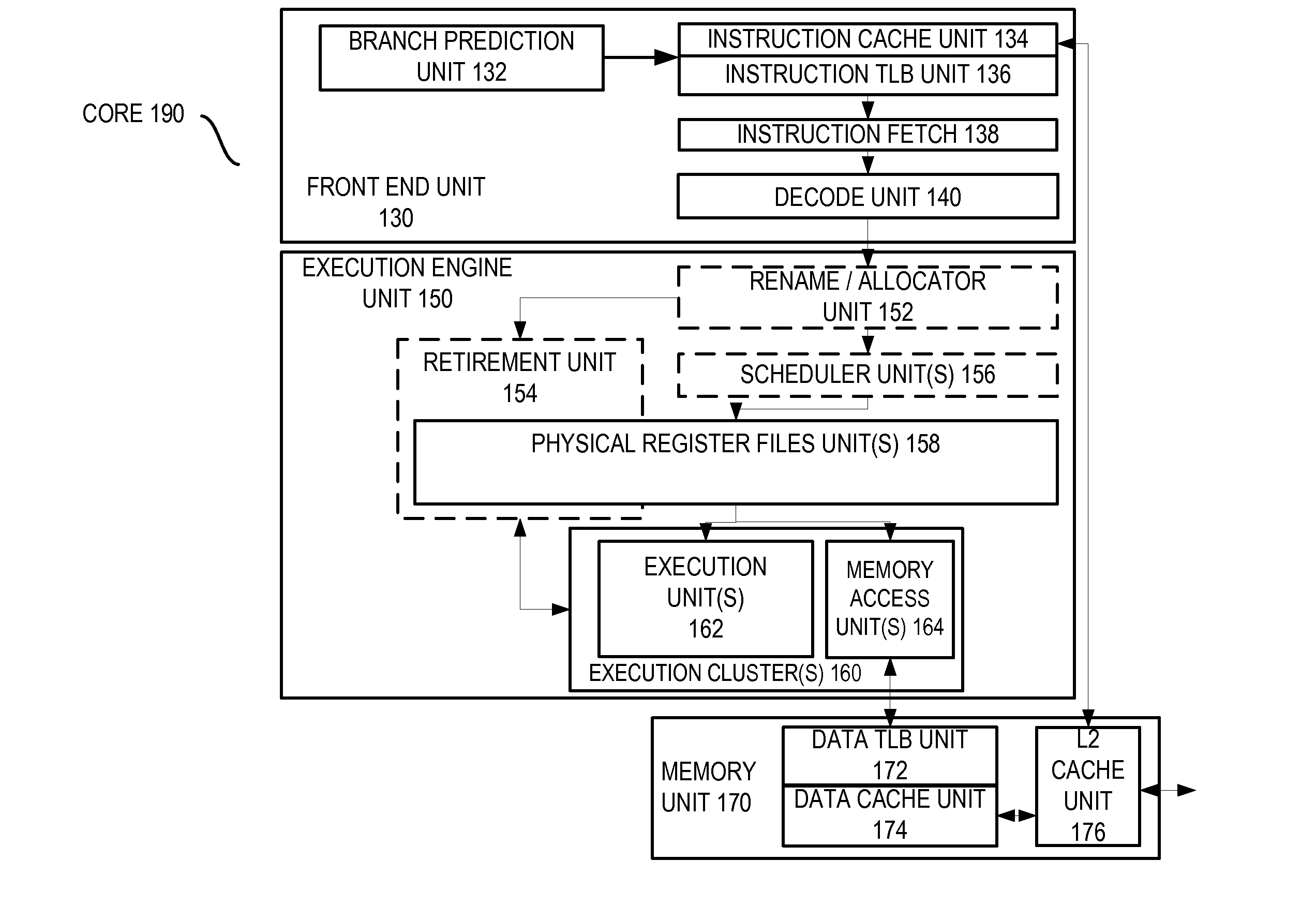

Method and apparatus for efficient, low power finite state transducer decoding

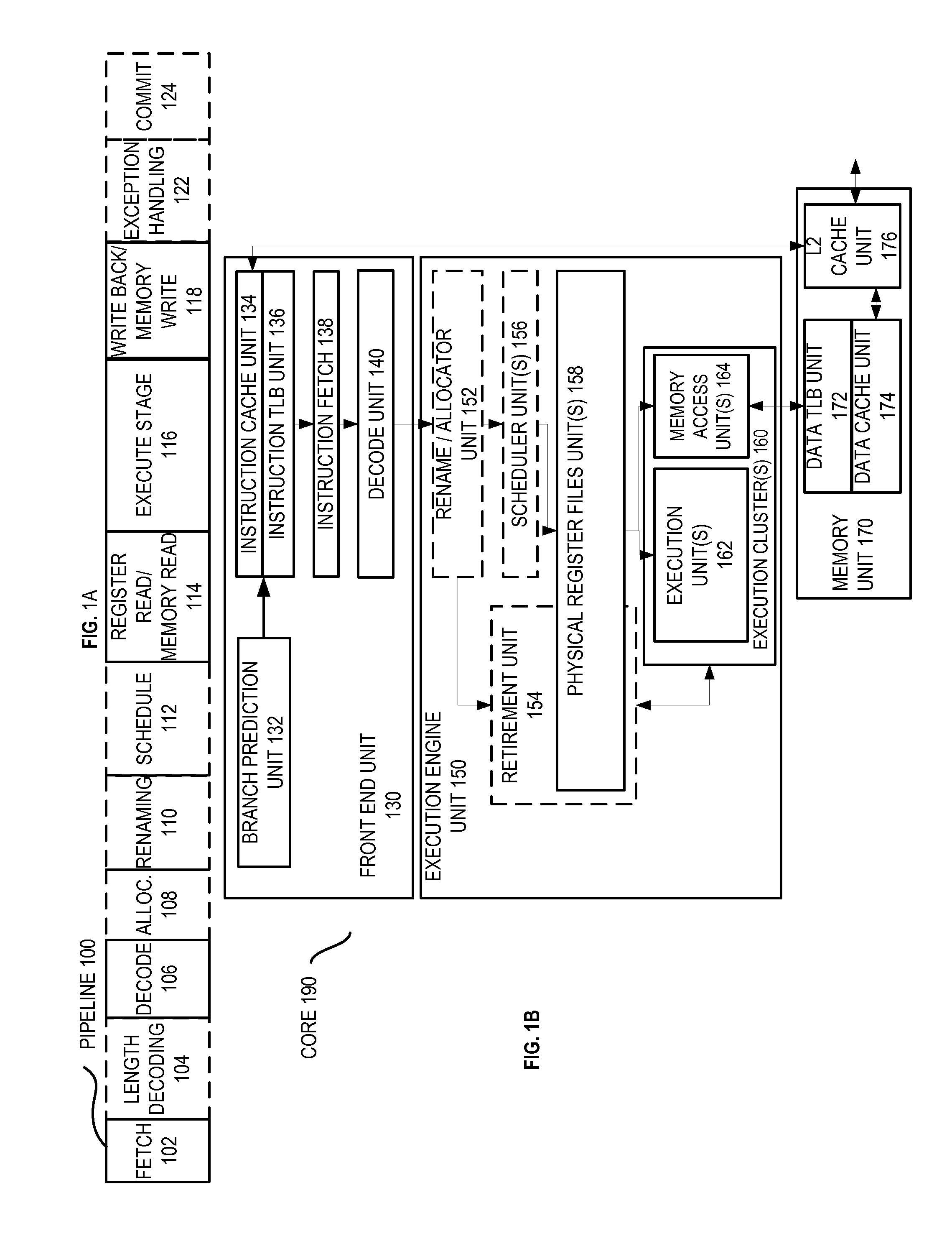

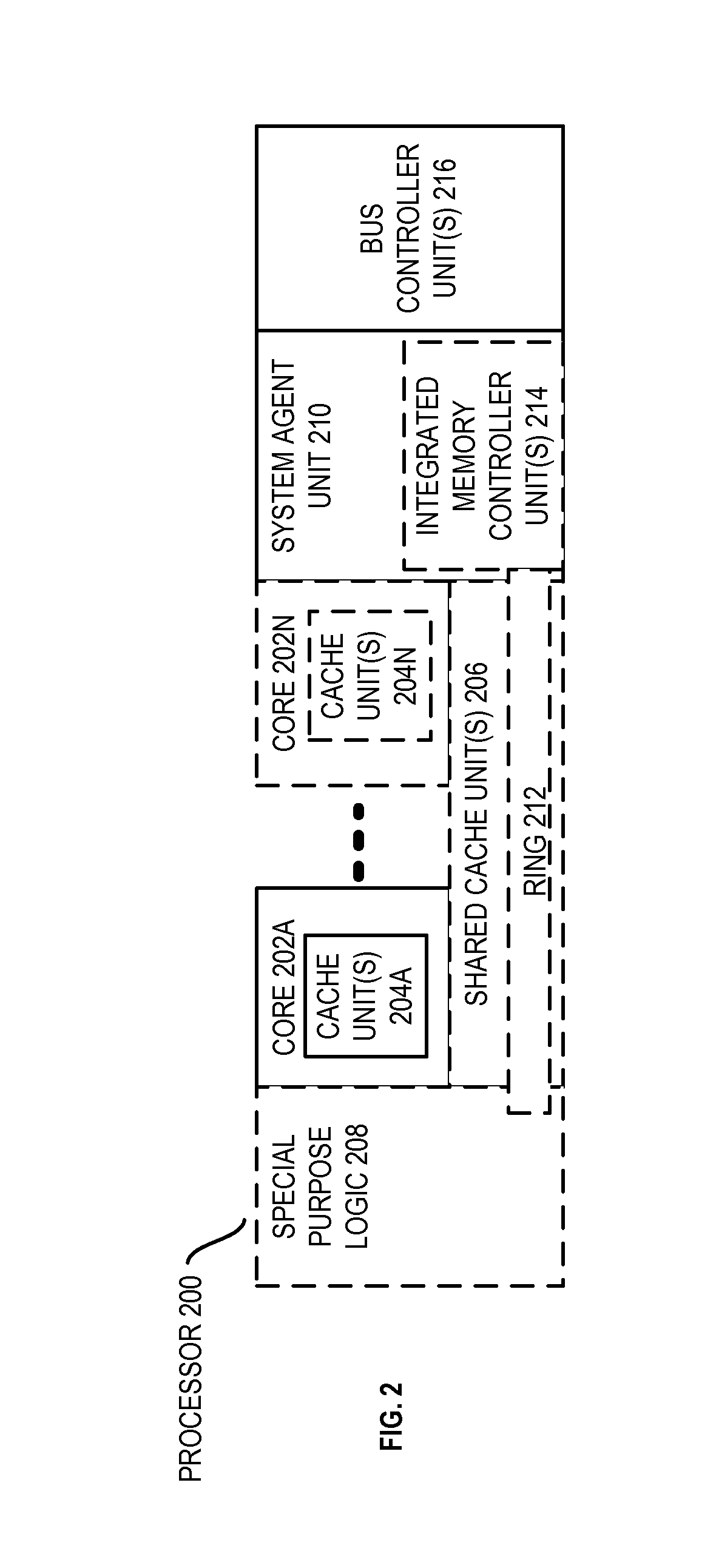

A system, apparatus and method for efficient, low power, finite state transducer decoding. For example, one embodiment of a system for performing speech recognition comprises: a processor to perform feature extraction on a plurality of digitally sampled speech frames and to responsively generate a feature vector; an acoustic model likelihood scoring unit communicatively coupled to the processor over a communication interconnect to compare the feature vector against a library of models of various known speech sounds and responsively generate a plurality of scores representing similarities between the feature vector and the models; and a weighted finite state transducer (WFST) decoder communicatively coupled to the processor and the acoustic model likelihood scoring unit over the communication interconnect to perform speech decoding by traversing a WFST graph using the plurality of scores provided by the acoustic model likelihood scoring unit.

Owner:INTEL CORP

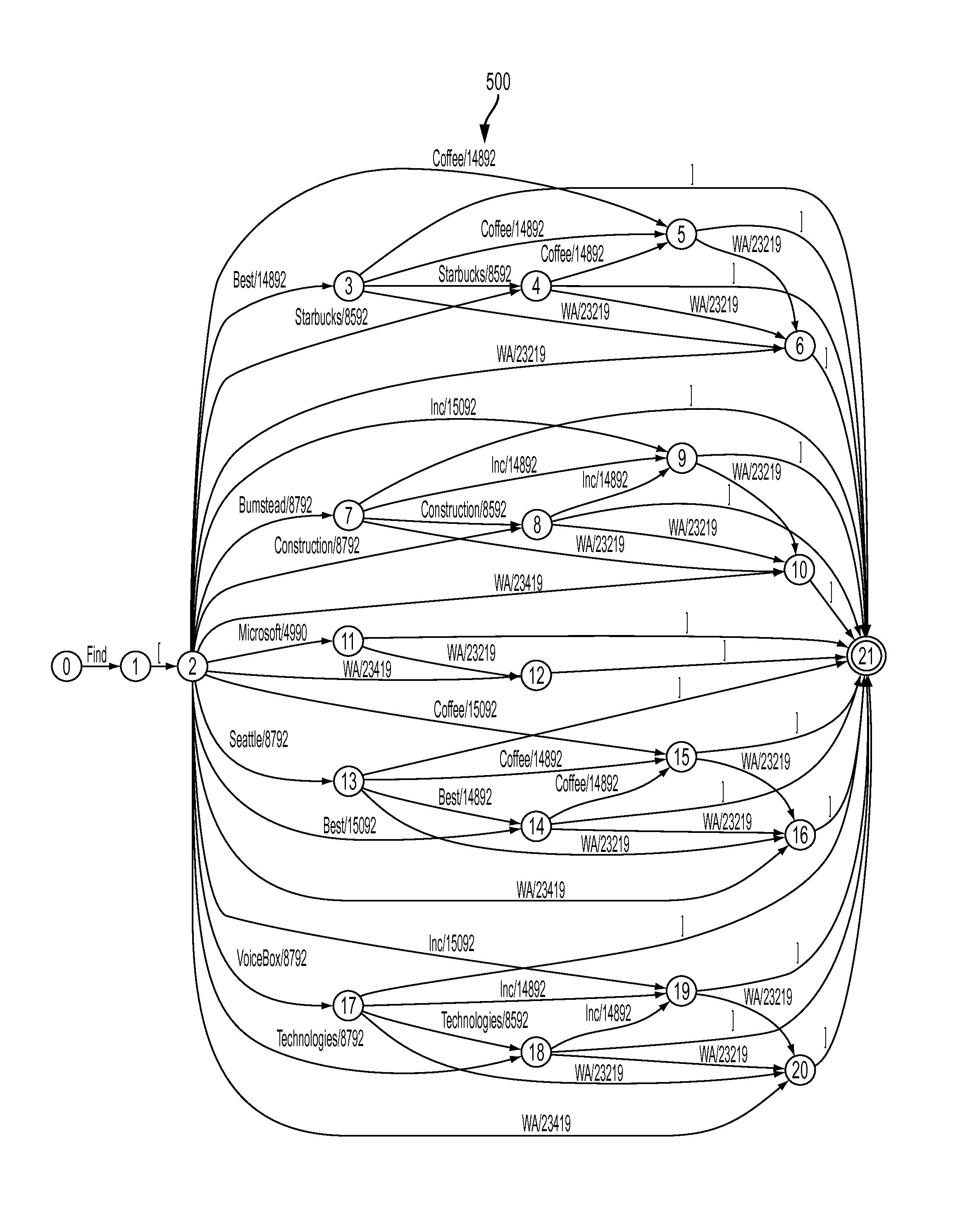

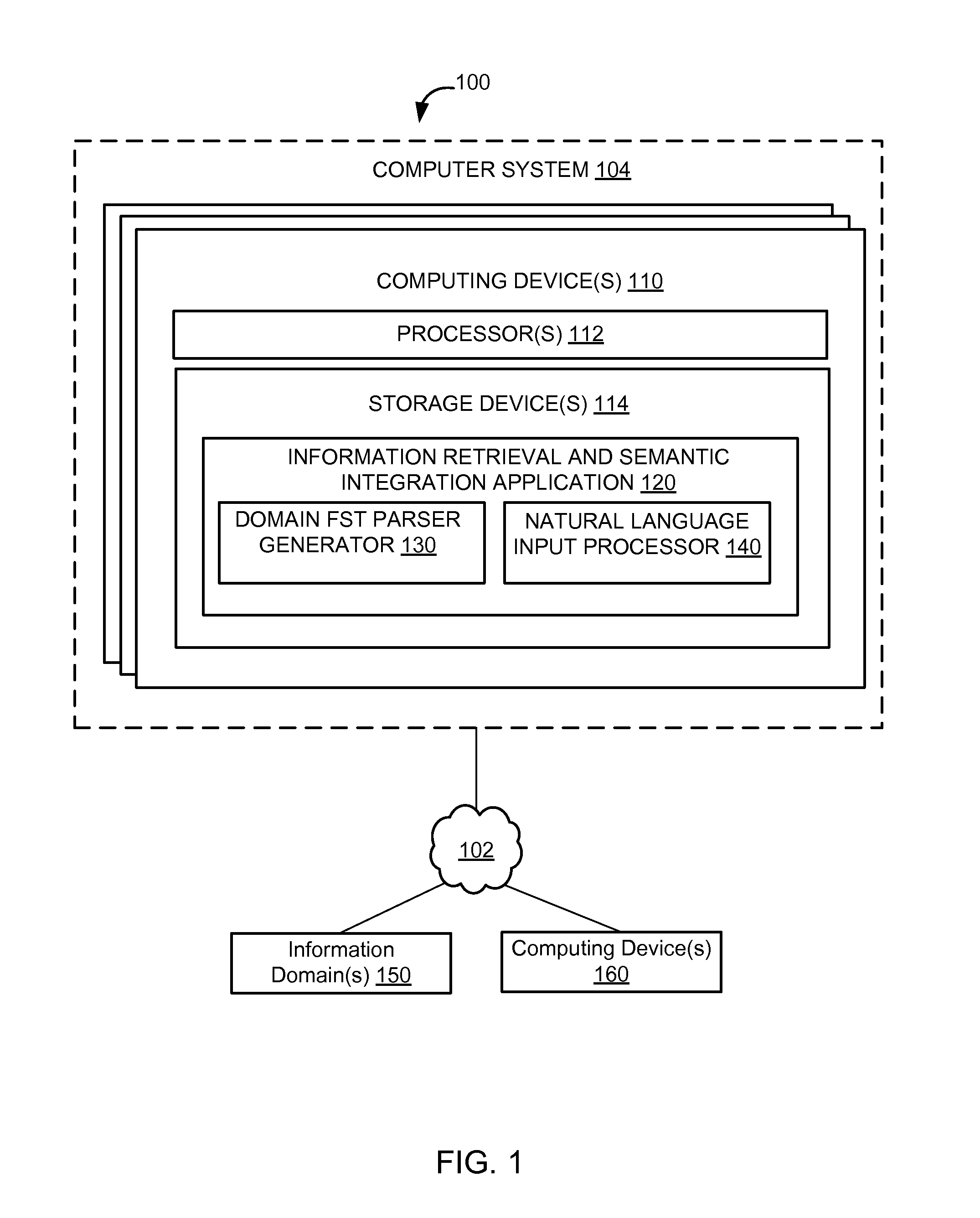

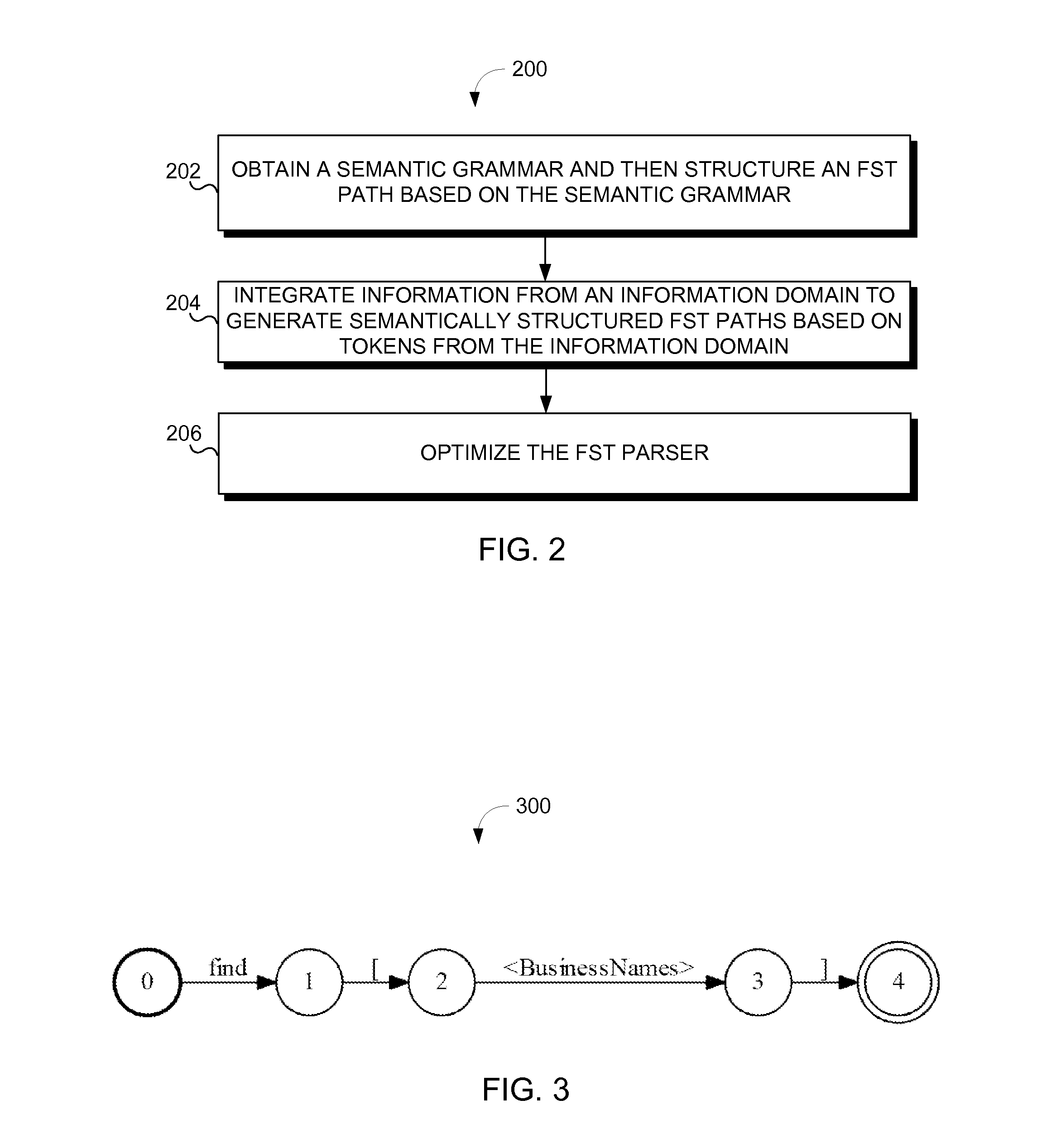

Integration of domain information into state transitions of a finite state transducer for natural language processing

Active | US20160188573A1 | Precise alignment | Efficiently generate robust meaning representation | Natural language translation | Semantic analysis | Finite state transducer | Application software

The invention relates to a system and method for integrating domain information into state transitions of a Finite State Transducer (“FST”) for natural language processing. A system may integrate semantic parsing and information retrieval from an information domain to generate an FST parser that represents the information domain. The FST parser may include a plurality of FST paths, at least one of which may be used to generate a meaning representation from a natural language input. As such, the system may perform domain-based semantic parsing of a natural language input, generating more robust meaning representations using domain information. The system may be applied to a wide range of natural language applications that use natural language input from a user such as, for example, natural language interfaces to computing systems, communication with robots in natural language, personalized digital assistants, question-answer query systems, and / or other natural language processing applications.

Owner:VOICEBOX TECH INC

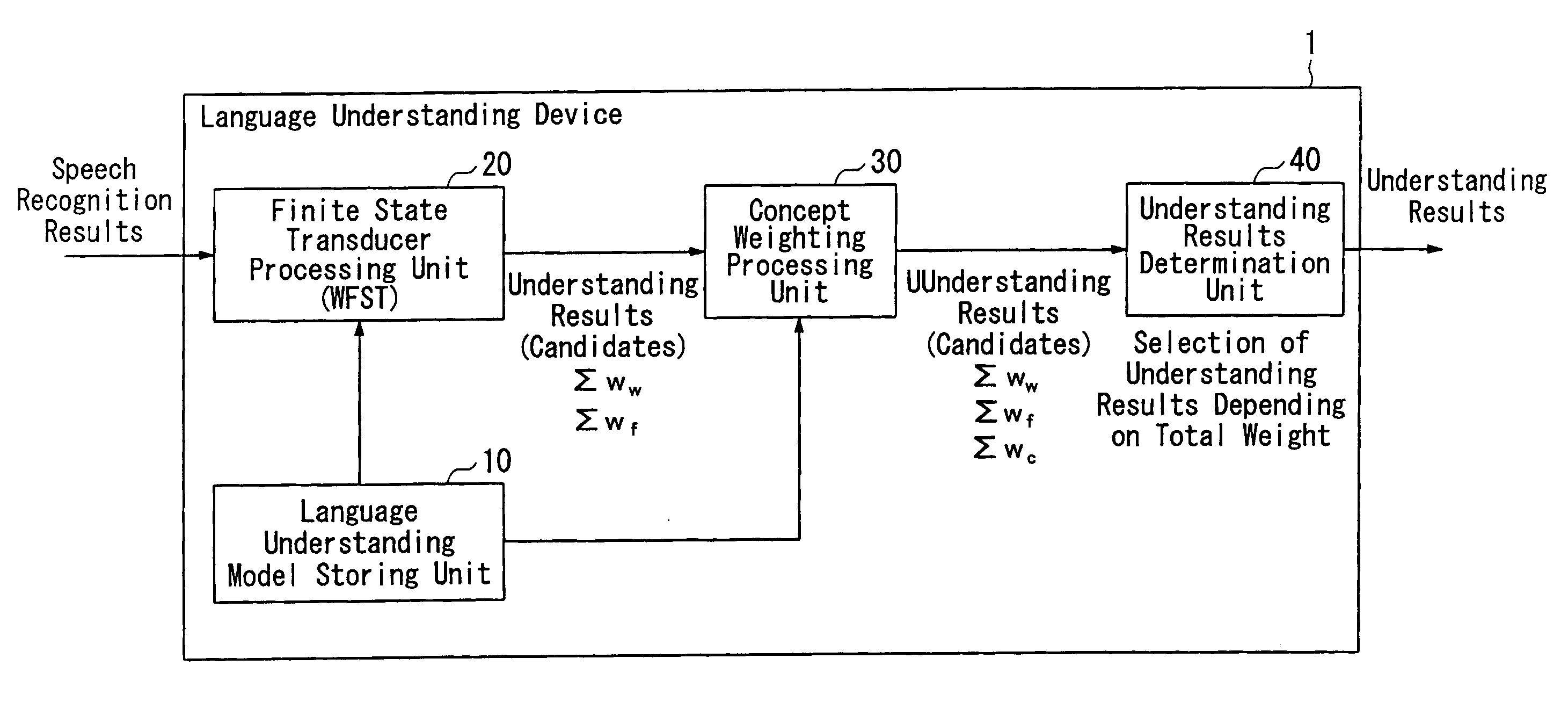

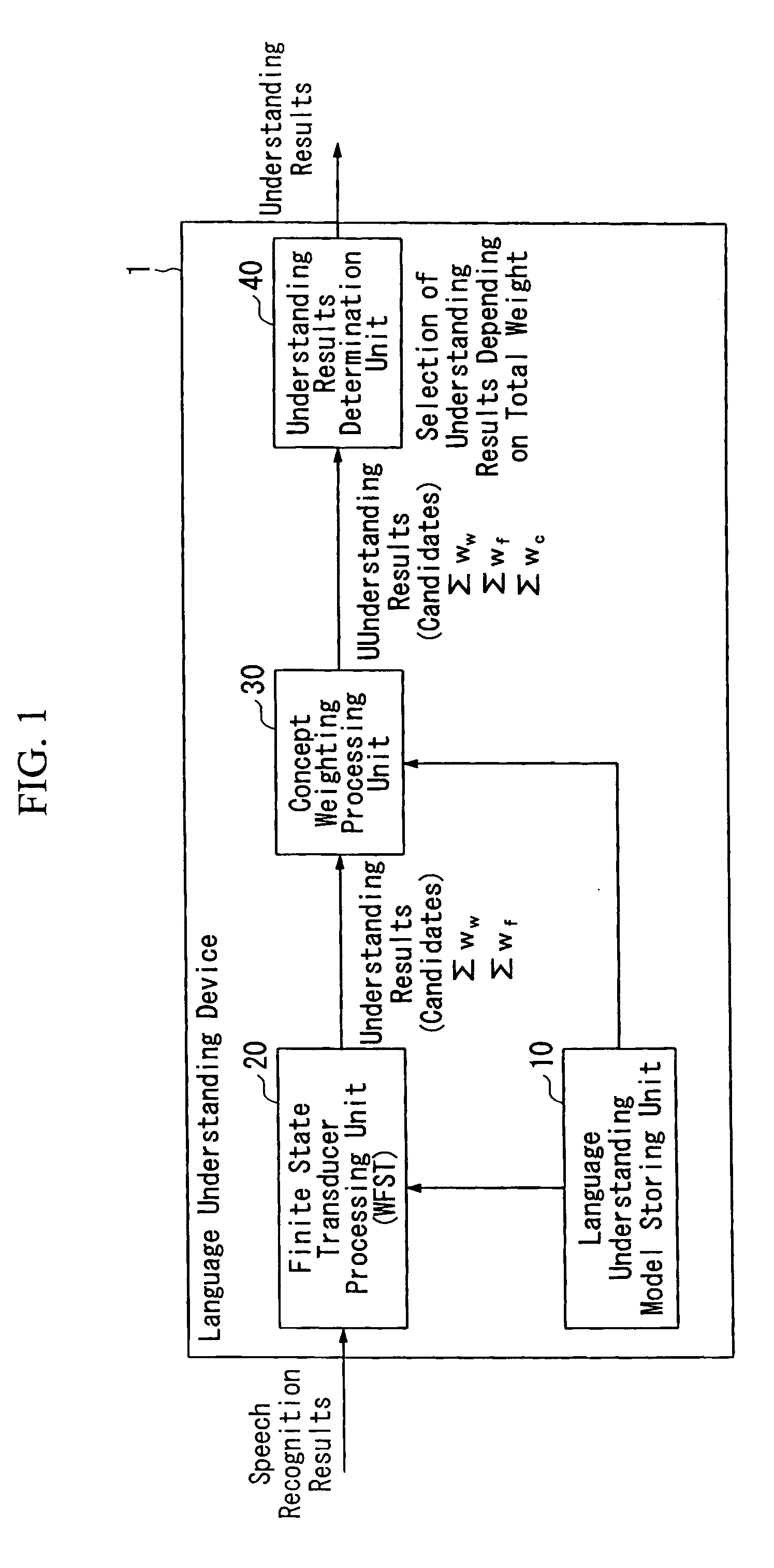

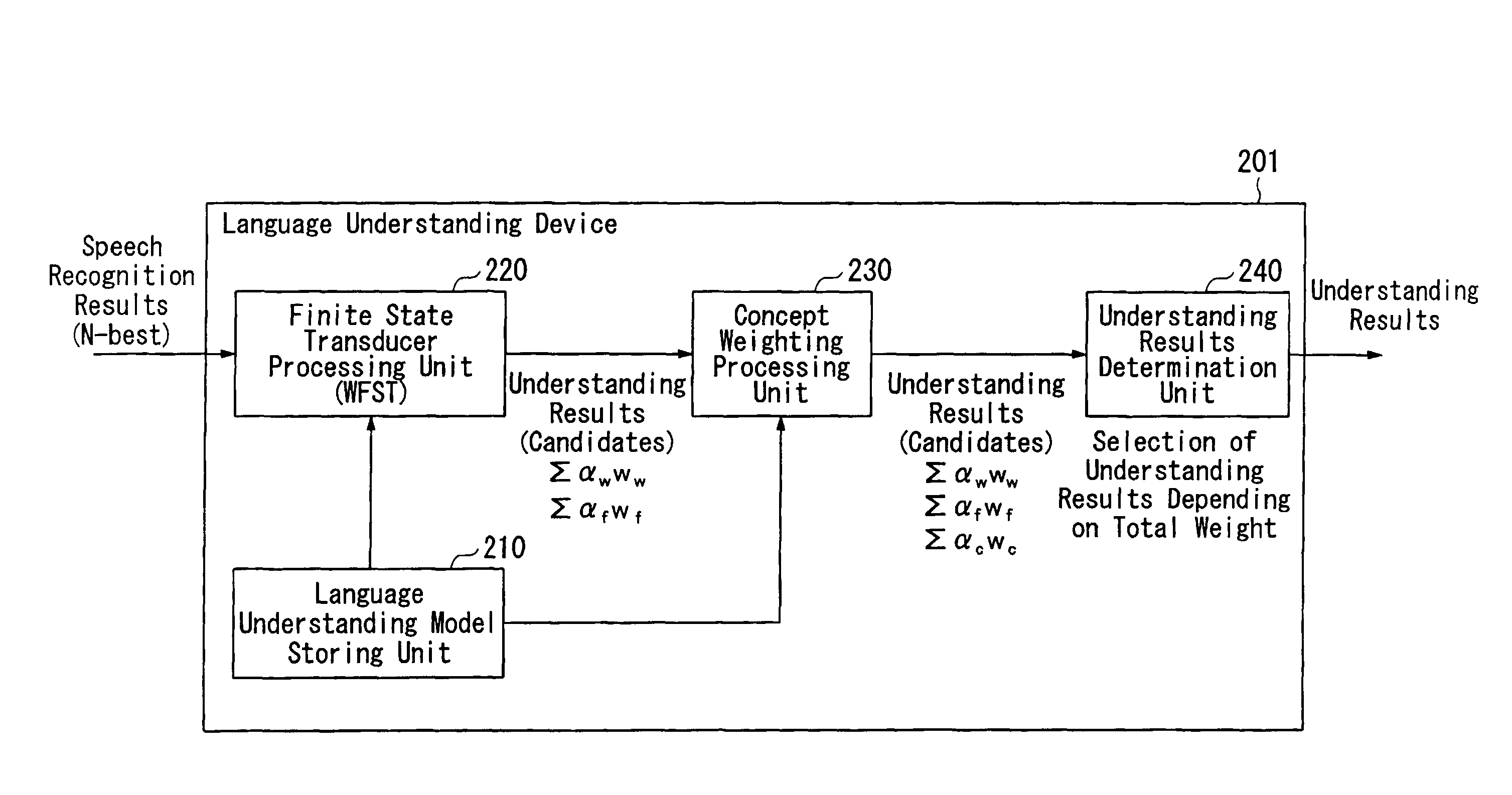

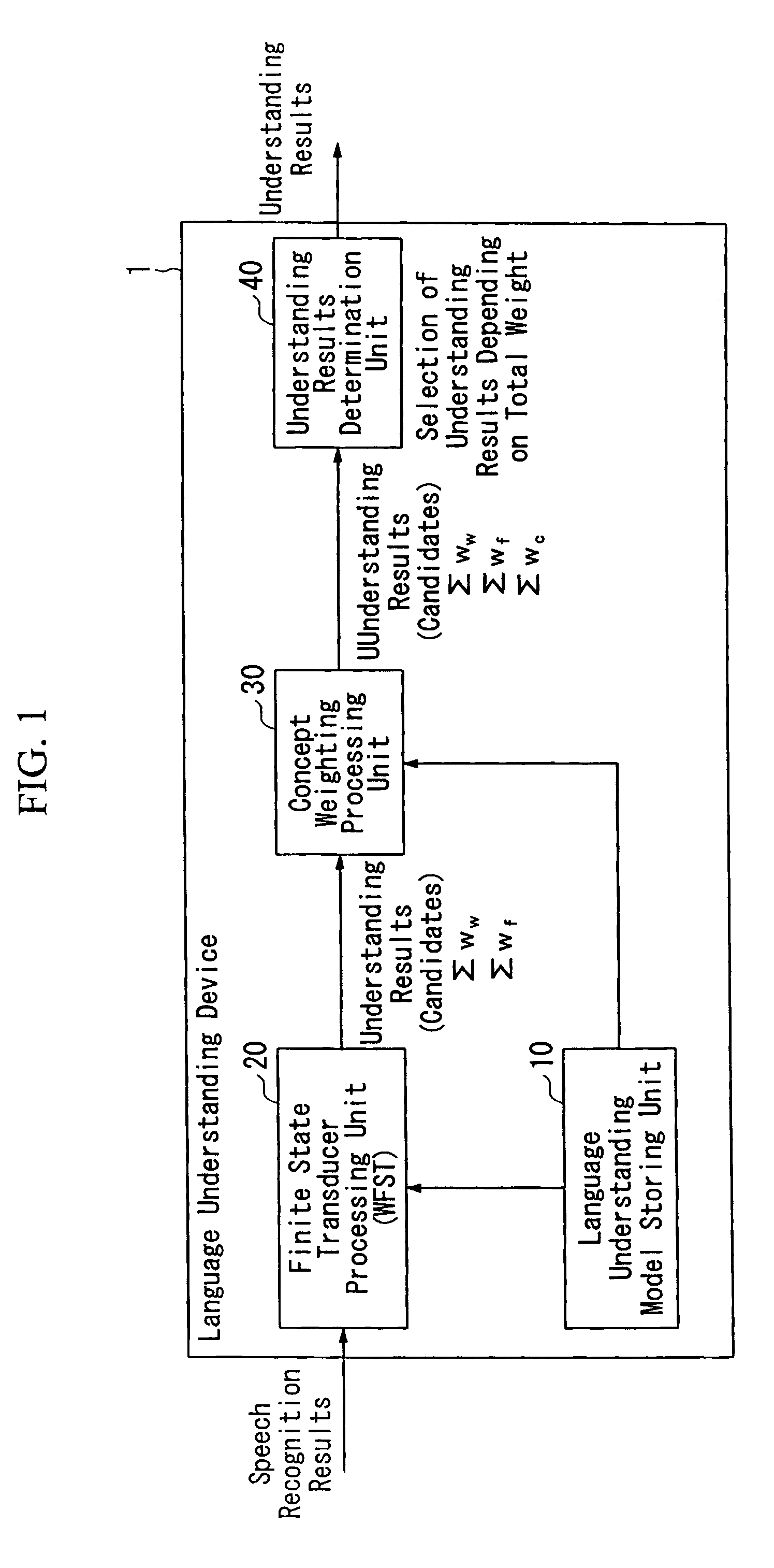

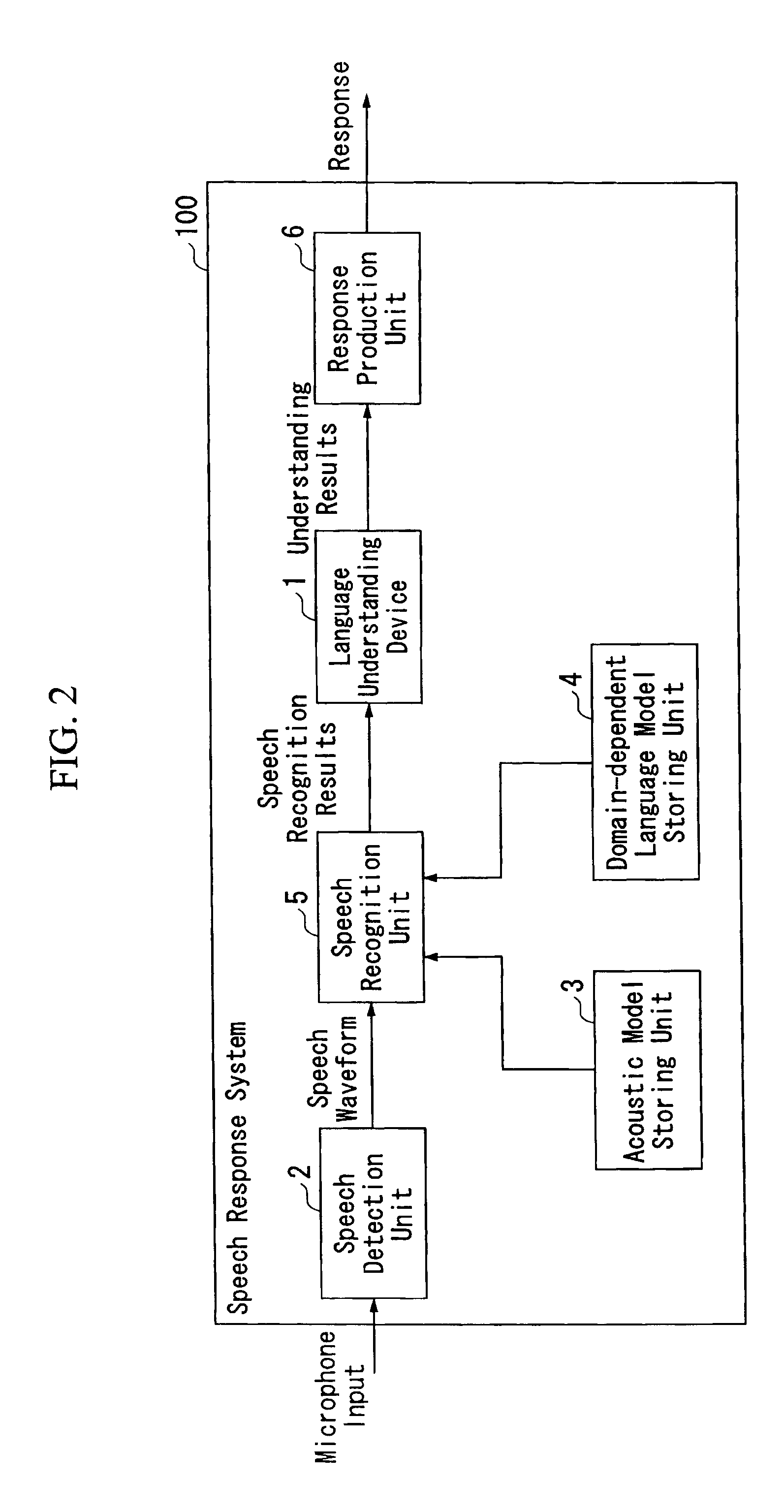

Language understanding device

Active | US20080294437A1 | Easy construction | Improve understanding accuracy | Natural language data processing | Speech recognition | Language understanding | Finite state transducer

A language understanding device includes: a language understanding model storing unit configured to store word transition data including pre-transition states, input words, predefined outputs corresponding to the input words, word weight information, and post-transition states, and concept weighting data including concepts obtained from language understanding results for at least one word, and concept weight information corresponding to the concepts; a finite state transducer processing unit configured to output understanding result candidates including the predefined outputs, to accumulate word weights so as to obtain a cumulative word weight, and to sequentially perform state transition operations; a concept weighting processing unit configured to accumulate concept weights so as to obtain a cumulative concept weight; and an understanding result determination unit configured to determine an understanding result from the understanding result candidates by referring to the cumulative word weight and the cumulative concept weight.

Owner:HONDA MOTOR CO LTD

Automated test input generation for web applications

Active | US8302080B2 | Software engineering | Error detection/correction | Source transformation | Finite state transducer

A method and apparatus is disclosed herein for automated test input generation for web applications. In one embodiment, the method comprises performing a source-to-source transformation of the program; performing interpretation on the program based on a set of test input values; symbolically executing the program; recording a symbolic constraint for each of one or more conditional expressions encountered during execution of the program, including analyzing a string operation in the program to identify one or more possible execution paths, and generating symbolic inputs representing values of variables in each of the conditional expressions as a numeric expression and a string constraint including generating constraints on string values by modeling string operations using finite state transducers (FSTs) and supplying values from the program's execution in place of intractable sub-expressions; and generating new inputs to drive the program during a subsequent iteration based on results of solving the recorded string constraints.

Owner:NTT DOCOMO INC

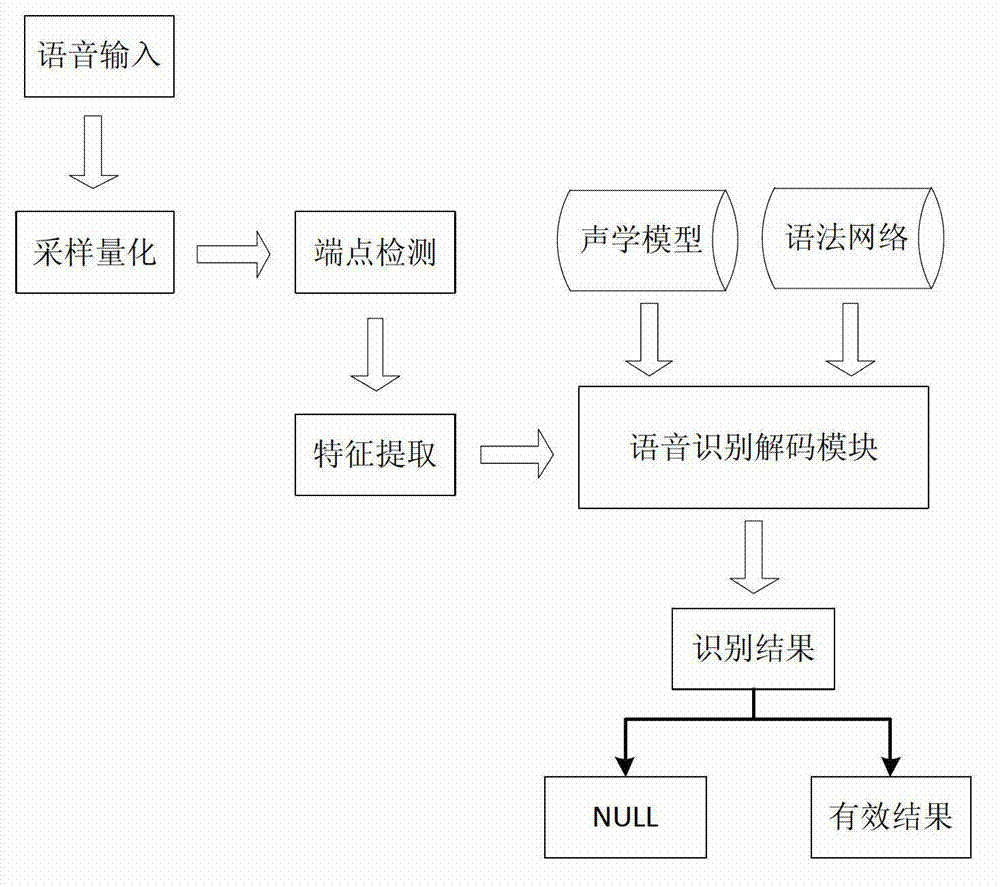

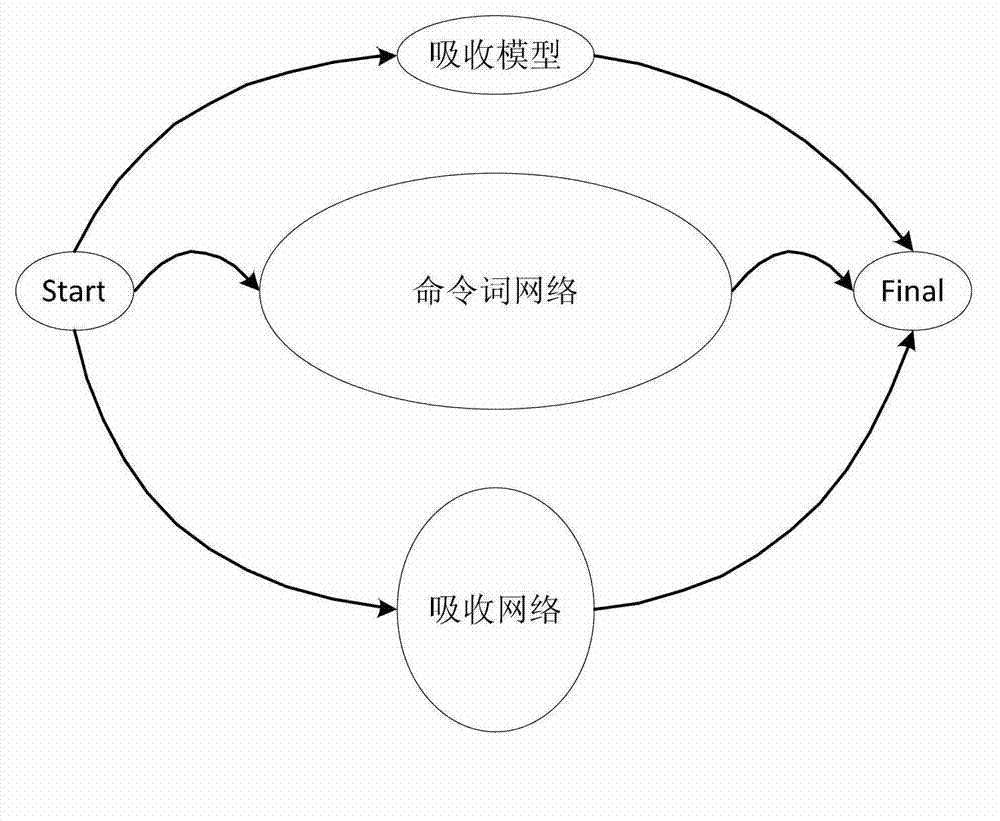

Method for improving rejection capability of speech recognition system

Active | CN103077708A | Effective denial | Improve the effect of rejection | Speech recognition | Feature vector | Feature extraction

The invention relates to a method for improving the rejection capability of a speech recognition system. The method comprises the following steps: collecting various types of noise data; classifying the data by noise type; training a GMM (Gaussian mixture model) for each type of noise; assembling the GMMs into an integrated absorption model; training a statistical language model on various relatively random texts, and then building a recognition network with WFST (weighted finite state transducer) techniques, called the absorption network; connecting the absorption network, the absorption model and the original decoding network in parallel to form a new decoding network; passing the input audio through endpoint detection and a feature extraction module to generate feature vectors; and letting the three parts of the decoding network compete on the feature vectors under the Viterbi algorithm to generate the final recognition result, effectively rejecting noise and out-of-vocabulary input. The method achieves good rejection of out-of-vocabulary and invalid input while maintaining recognition efficiency.

Owner:讯飞医疗科技股份有限公司

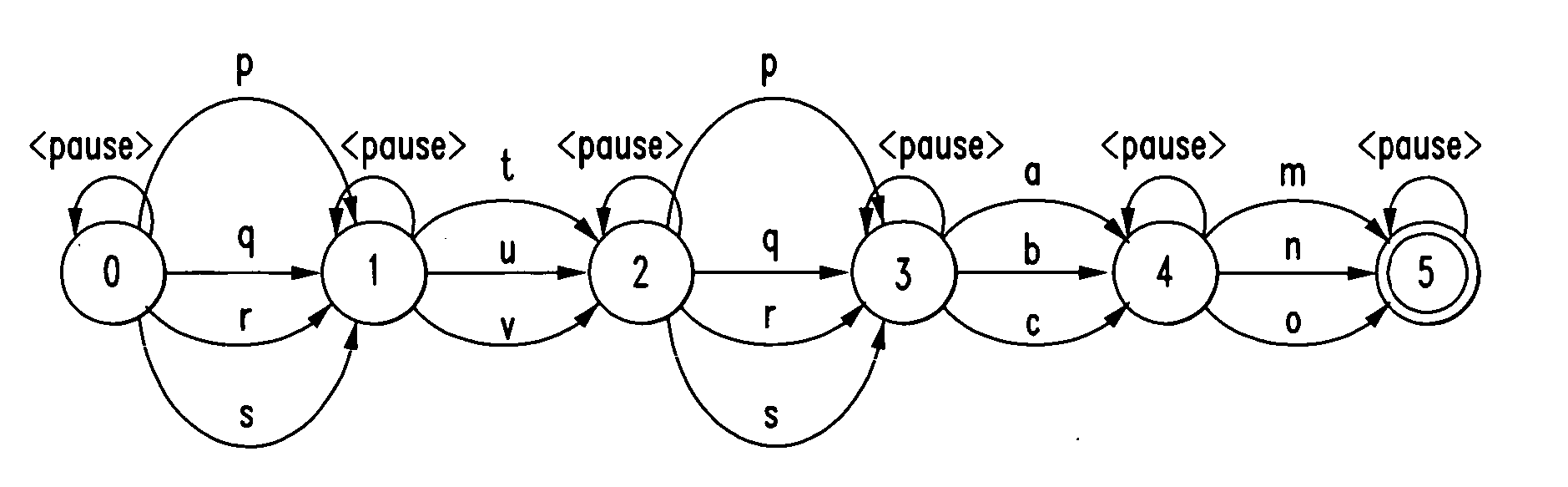

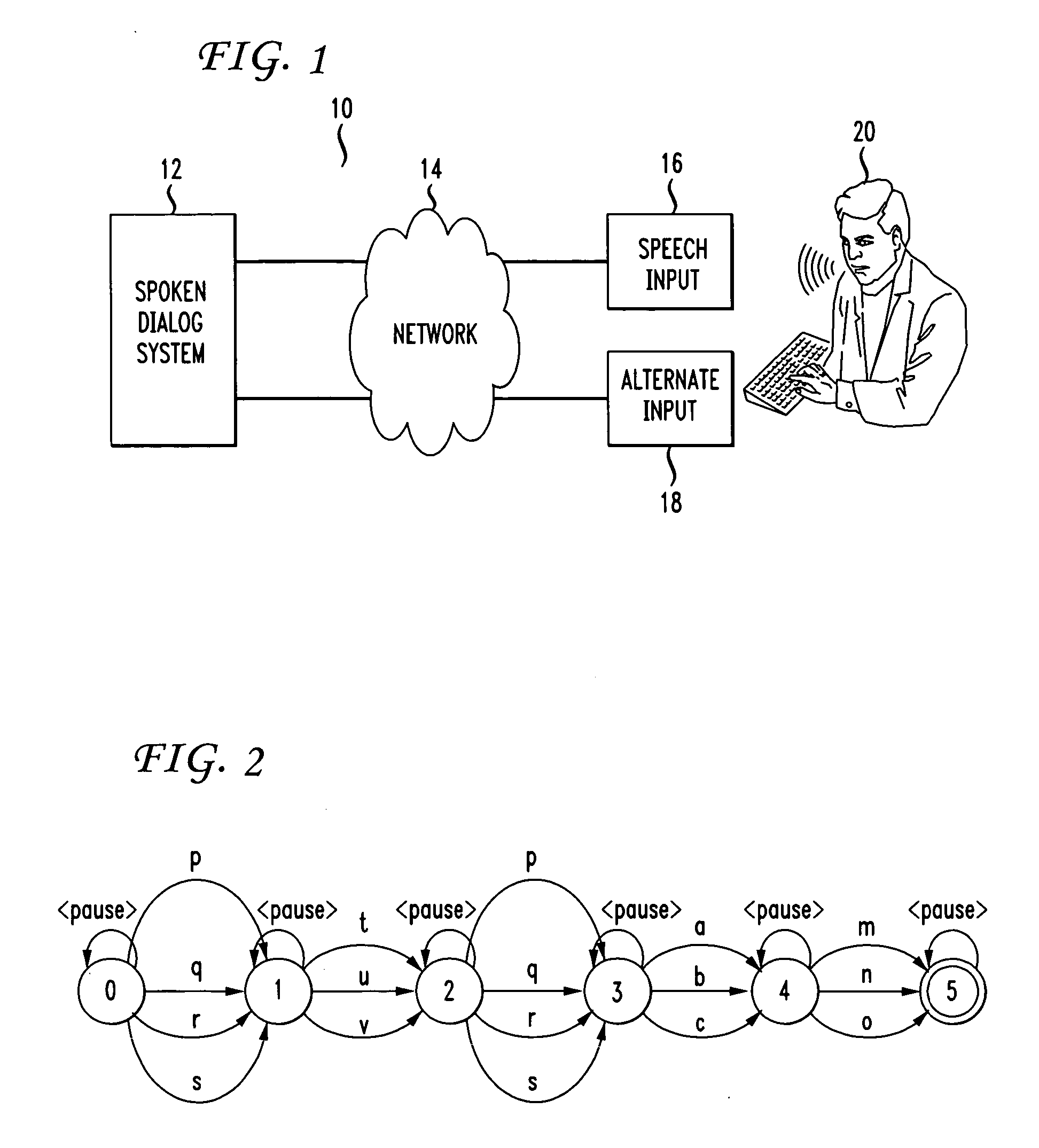

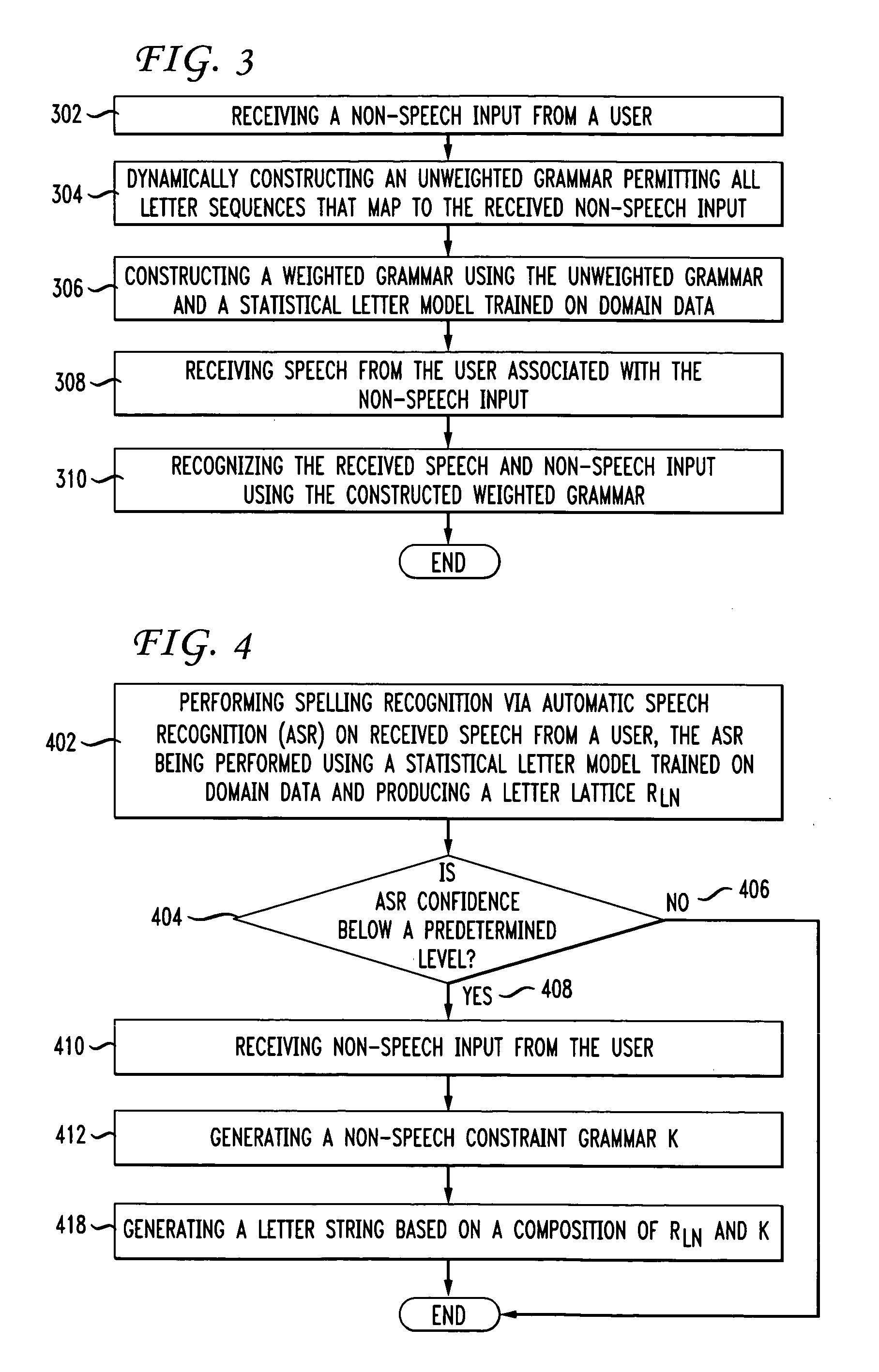

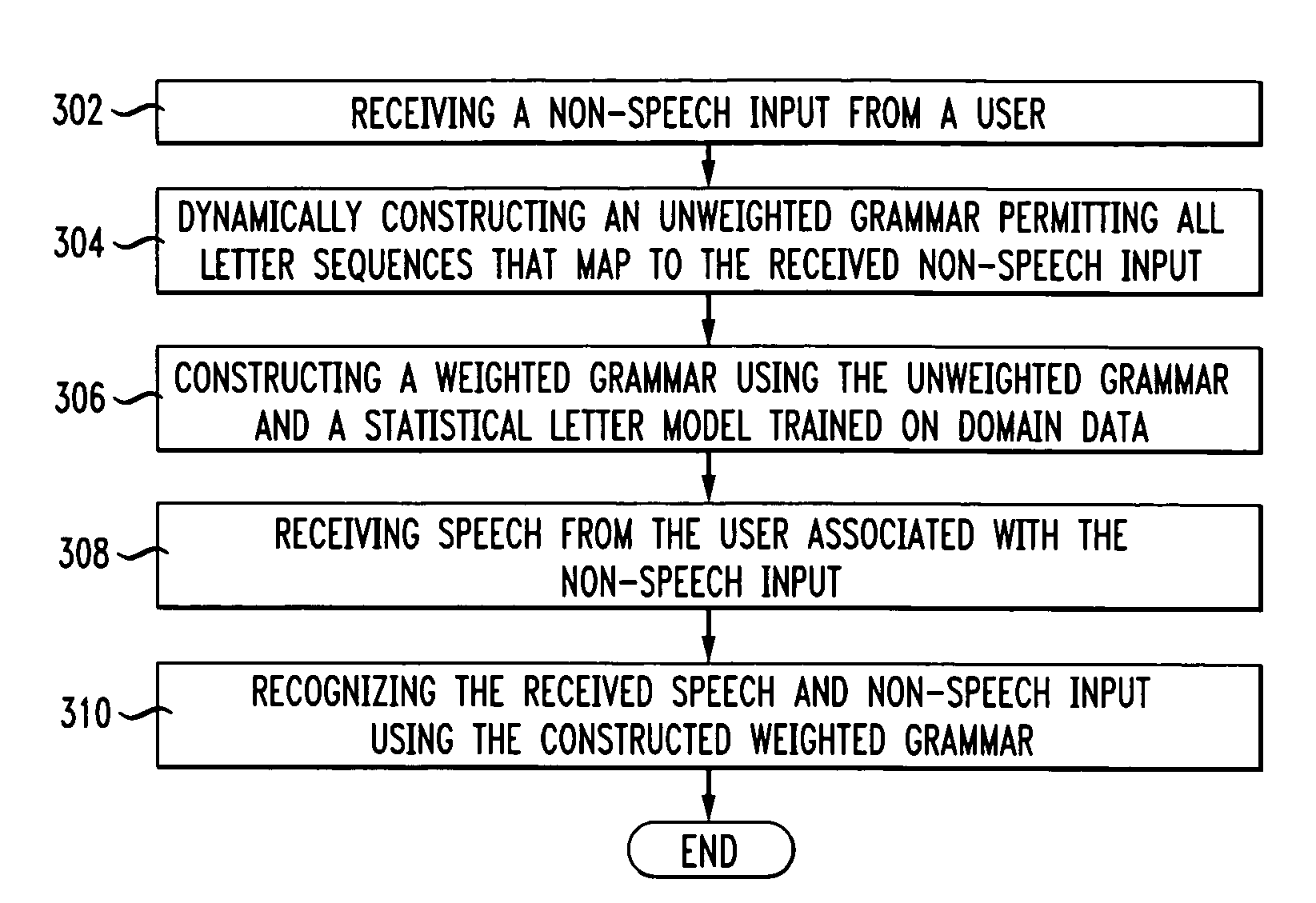

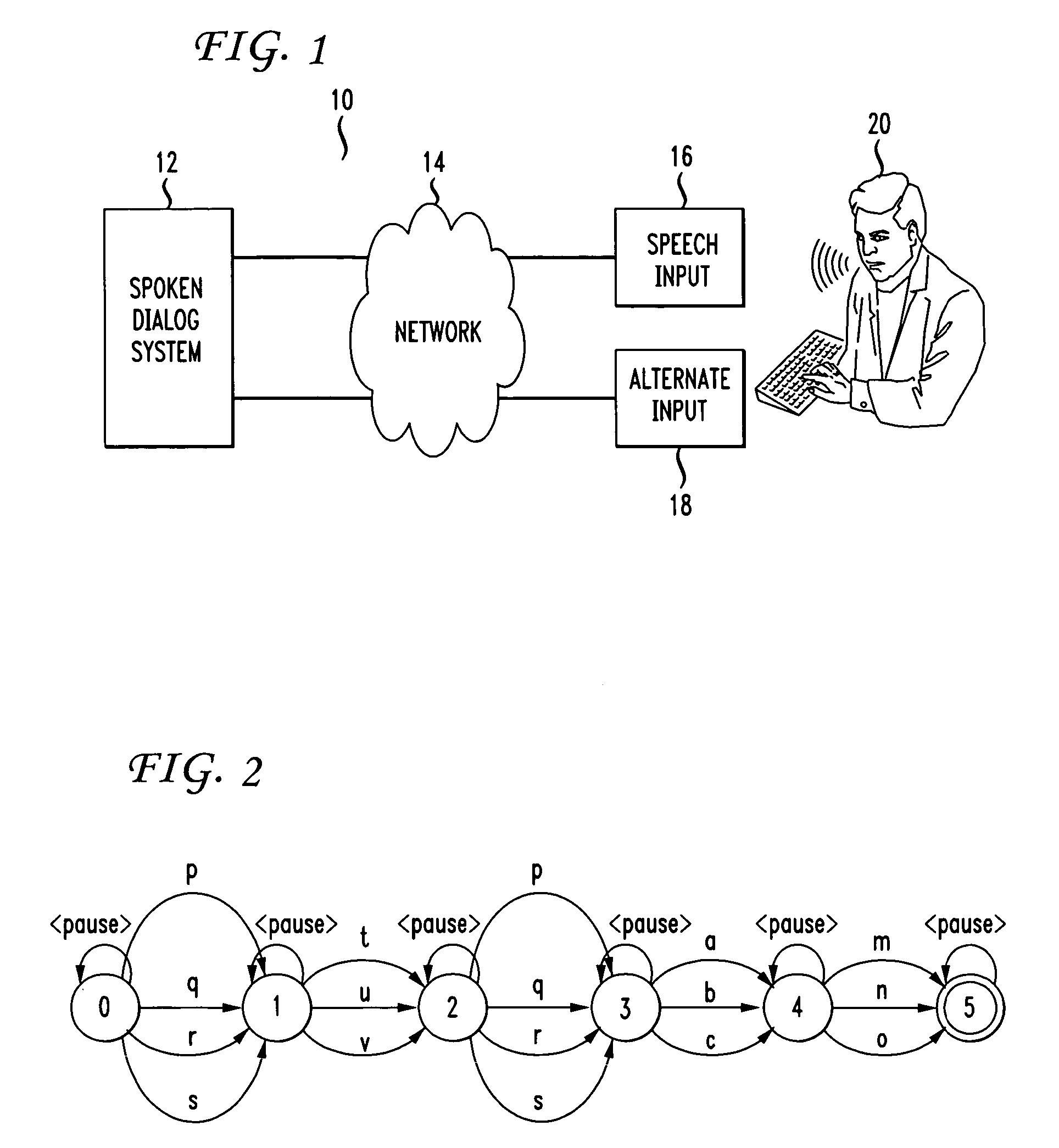

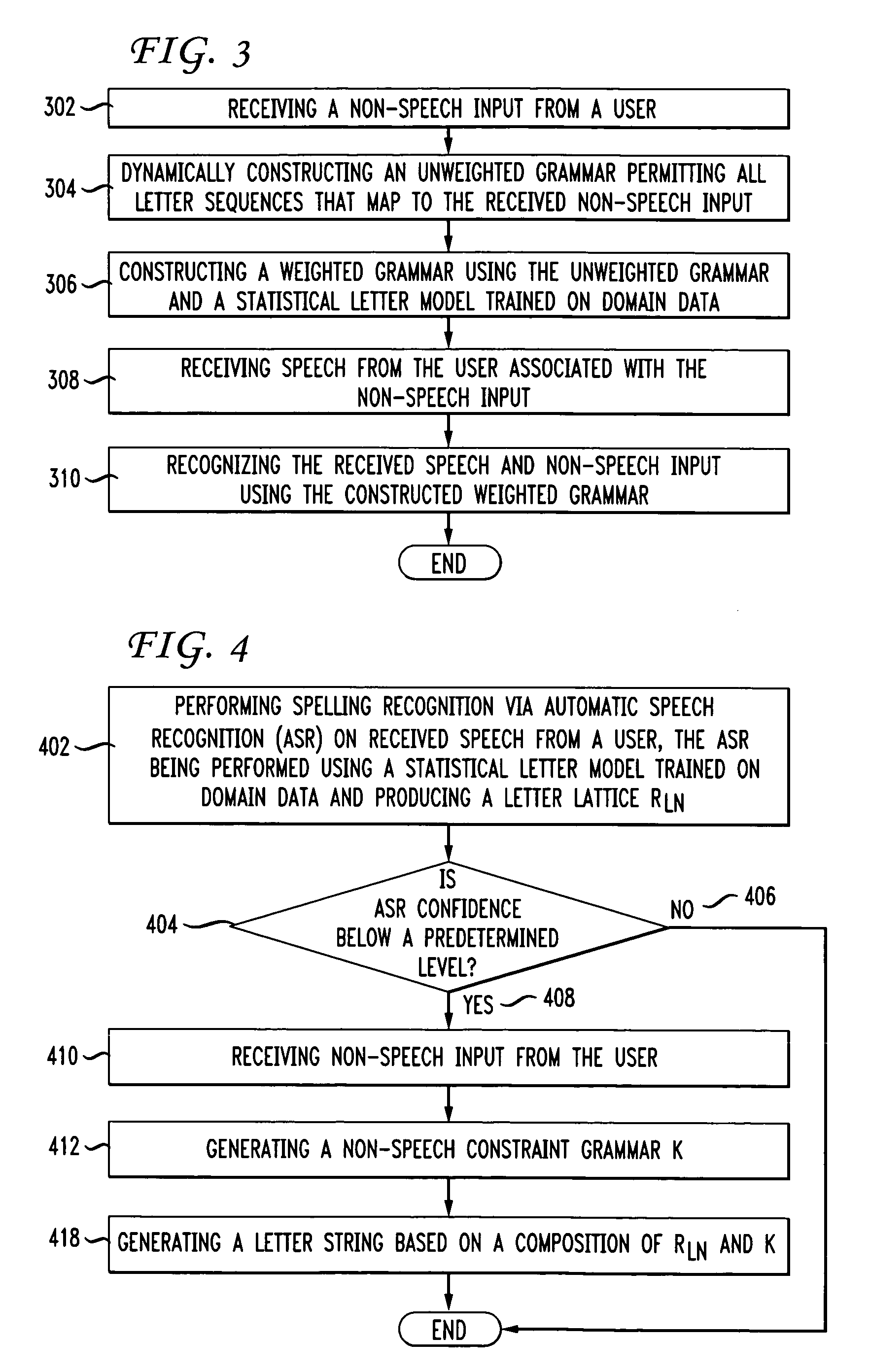

System and method for spelling recognition using speech and non-speech input

Active | US20060015336A1 | Reduce error rate | Accurate identification | Automatic call-answering/message-recording/conversation-recording | Speech recognition | Finite state transducer | Constraint Grammar

A system and method for non-speech input or keypad-aided word and spelling recognition is disclosed. The method comprises performing spelling recognition via automatic speech recognition (ASR) on received speech from a user, the ASR being performed using a statistical letter model trained on domain data and producing a letter lattice RLN. If an ASR confidence is below a predetermined level, then the method comprises receiving non-speech input from the user, generating a keypad constraint grammar K and generating a letter string based on a composition of finite state transducers RLN and K. Other variations of the invention include recognizing input by first receiving non-speech input, dynamically generating an unweighted grammar, generating a weighted grammar using domain data, and then performing speech, and thus spelling, recognition on input speech using the weighted grammar.

Owner:NUANCE COMM INC
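The effect of composing the letter lattice with a keypad constraint grammar can be approximated by filtering scored letter hypotheses against the pressed keys. The sketch below does that over a plain list instead of composing two transducers; the hypothesis list, the costs, and the helper names are assumptions, and only the standard telephone keypad layout is taken as given.

```python
# Standard telephone keypad letter groups.
KEYPAD = {"2": "abc", "3": "def", "4": "ghi", "5": "jkl",
          "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz"}


def keypad_accepts(word, digits):
    """True if each letter of `word` sits on the corresponding pressed key."""
    return len(word) == len(digits) and all(
        letter in KEYPAD.get(digit, "")
        for letter, digit in zip(word, digits))


def best_spelling(hypotheses, digits):
    """Pick the lowest-cost hypothesis consistent with the key presses.
    `hypotheses` is a list of (letter string, cost) pairs."""
    allowed = [(cost, word) for word, cost in hypotheses
               if keypad_accepts(word, digits)]
    return min(allowed)[1] if allowed else None


# Hypothetical ASR letter hypotheses with costs (lower is better).
hypotheses = [("bat", 1.2), ("cat", 1.5), ("pat", 0.9)]
choice = best_spelling(hypotheses, "228")
```

The cheapest hypothesis overall, `pat`, is ruled out because `p` is not on key 2, so the constrained result is `bat`. A real lattice holds exponentially many paths, which is why the patent composes transducers rather than enumerating strings.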

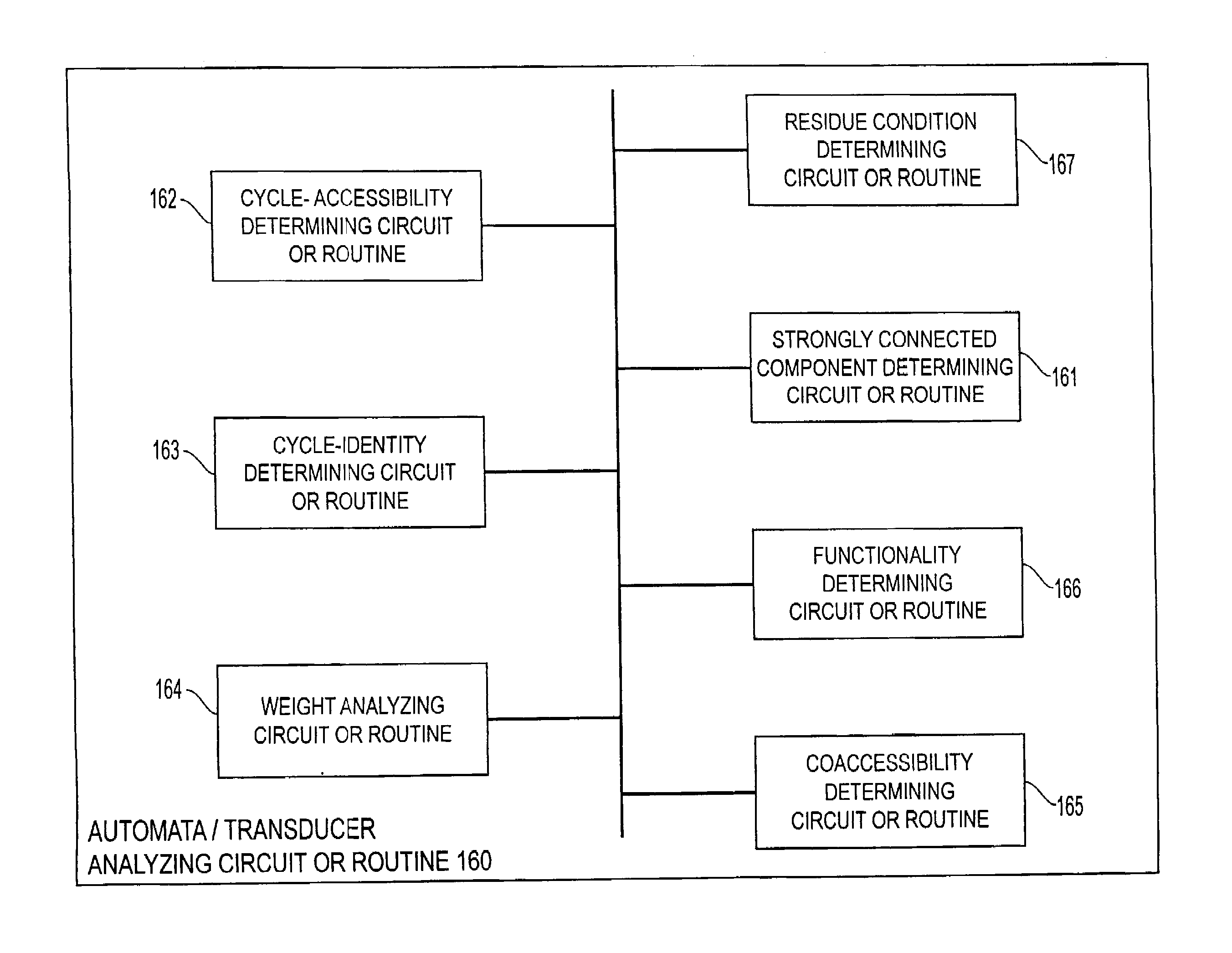

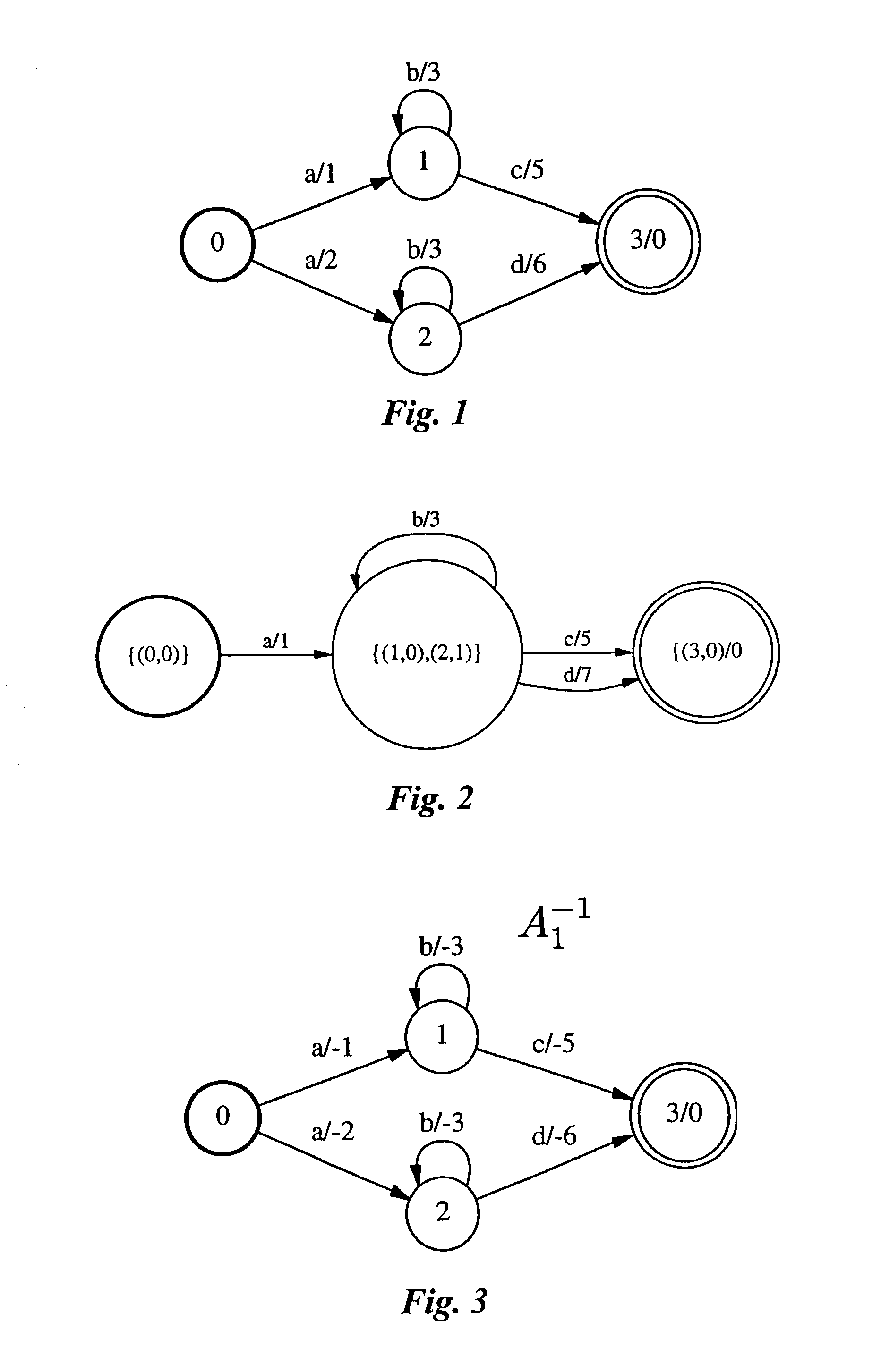

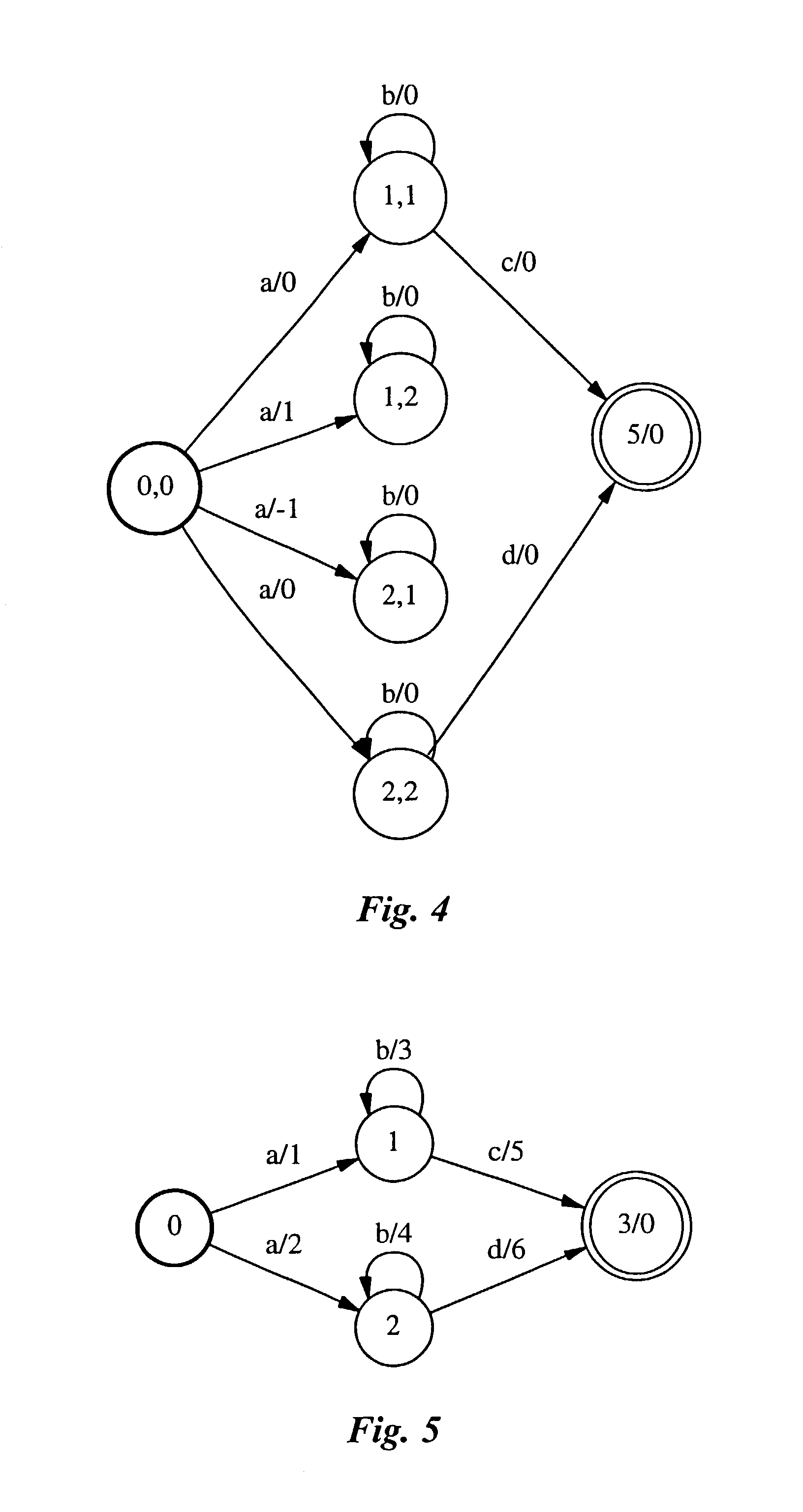

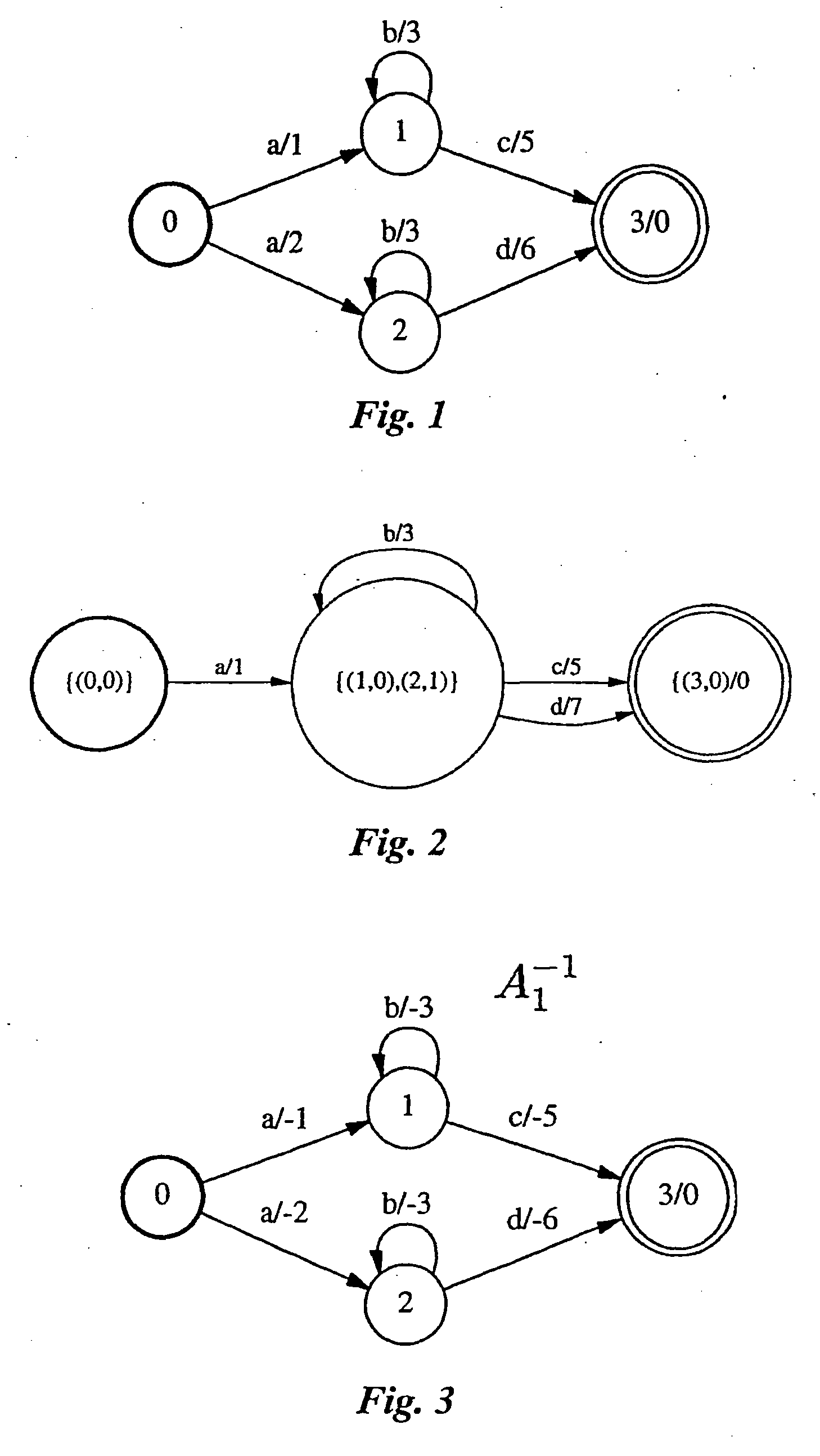

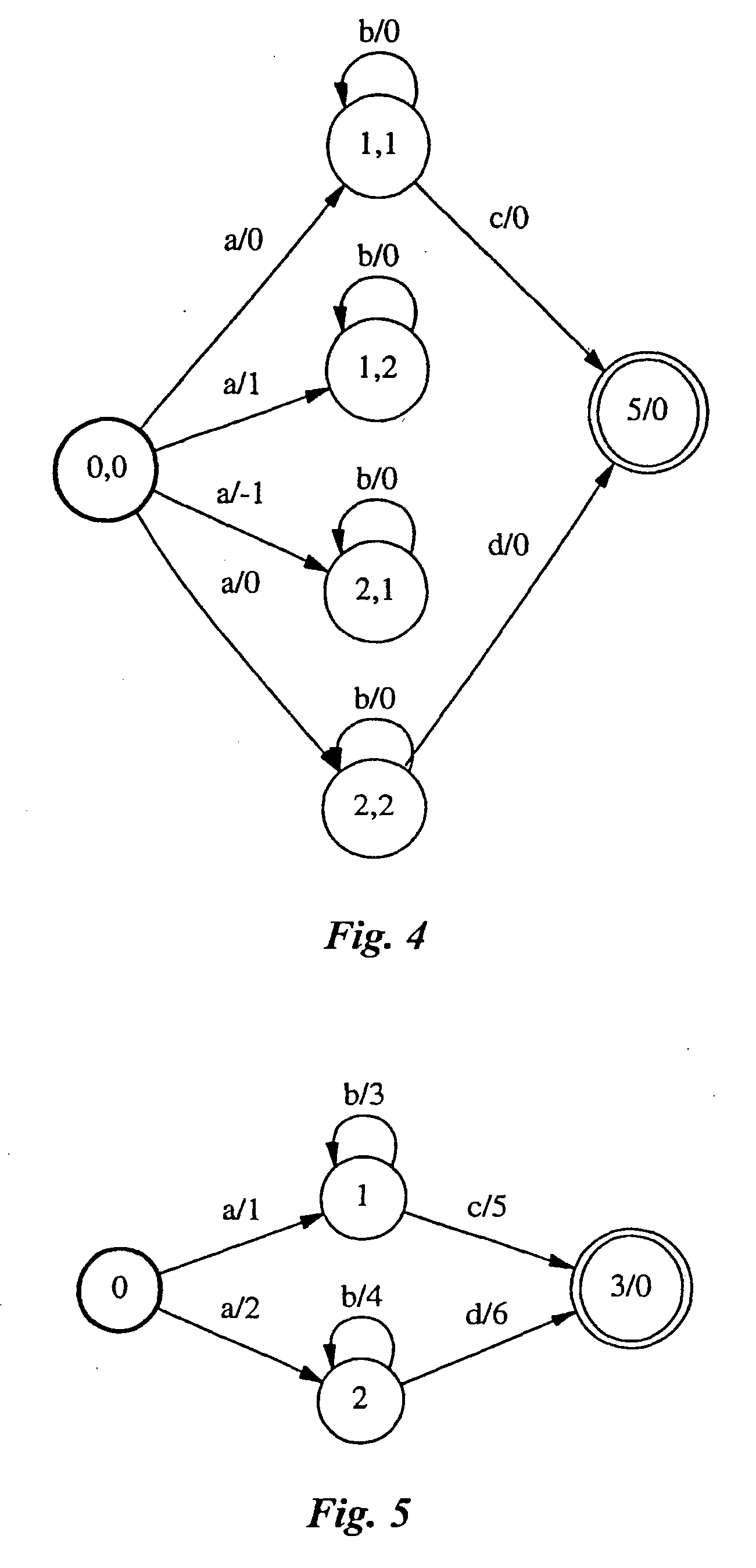

Systems and methods for determining the determinizability of finite-state automata and transducers

Inactive | US7240004B1 | High complexity | Natural language data processing | Speech recognition | Finite state transducer | Deterministic finite automaton

Finite-state transducers and weighted finite-state automata may not be determinizable. The twins property can be used to characterize the determinizability of such devices. For a weighted finite-state automaton or transducer, that weighted finite-state automaton or transducer and its inverse are intersected or composed, respectively. The resulting device is checked to determine if it has the cycle-identity property. If not, the original weighted finite-state automaton or transducer is not determinizable. For a weighted or unweighted finite-state transducer, that device is checked to determine if it is functional. If not, that device is not determinizable. That device is then composed with its inverse. The composed device is checked to determine if every edge in the composed device having a cycle-accessible end state meets at least one of a number of conditions. If so, the original device has the twins property. If the original device has the twins property, then it is determinizable.

Owner:AMERICAN TELEPHONE & TELEGRAPH CO

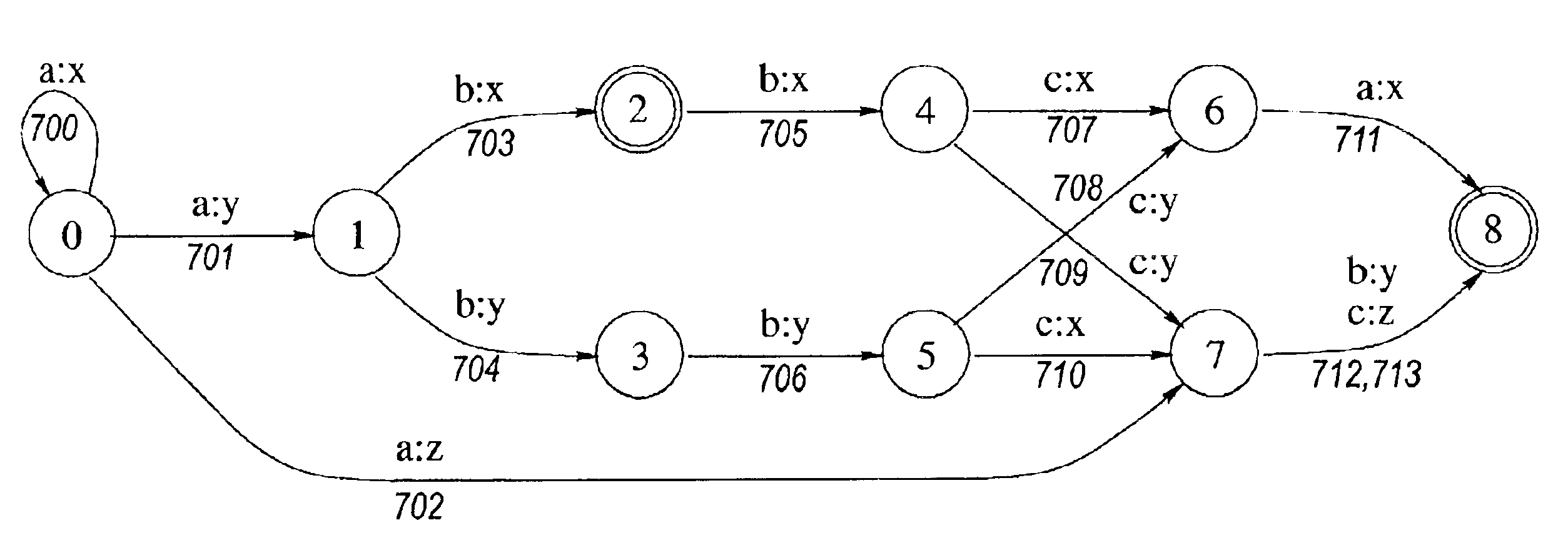

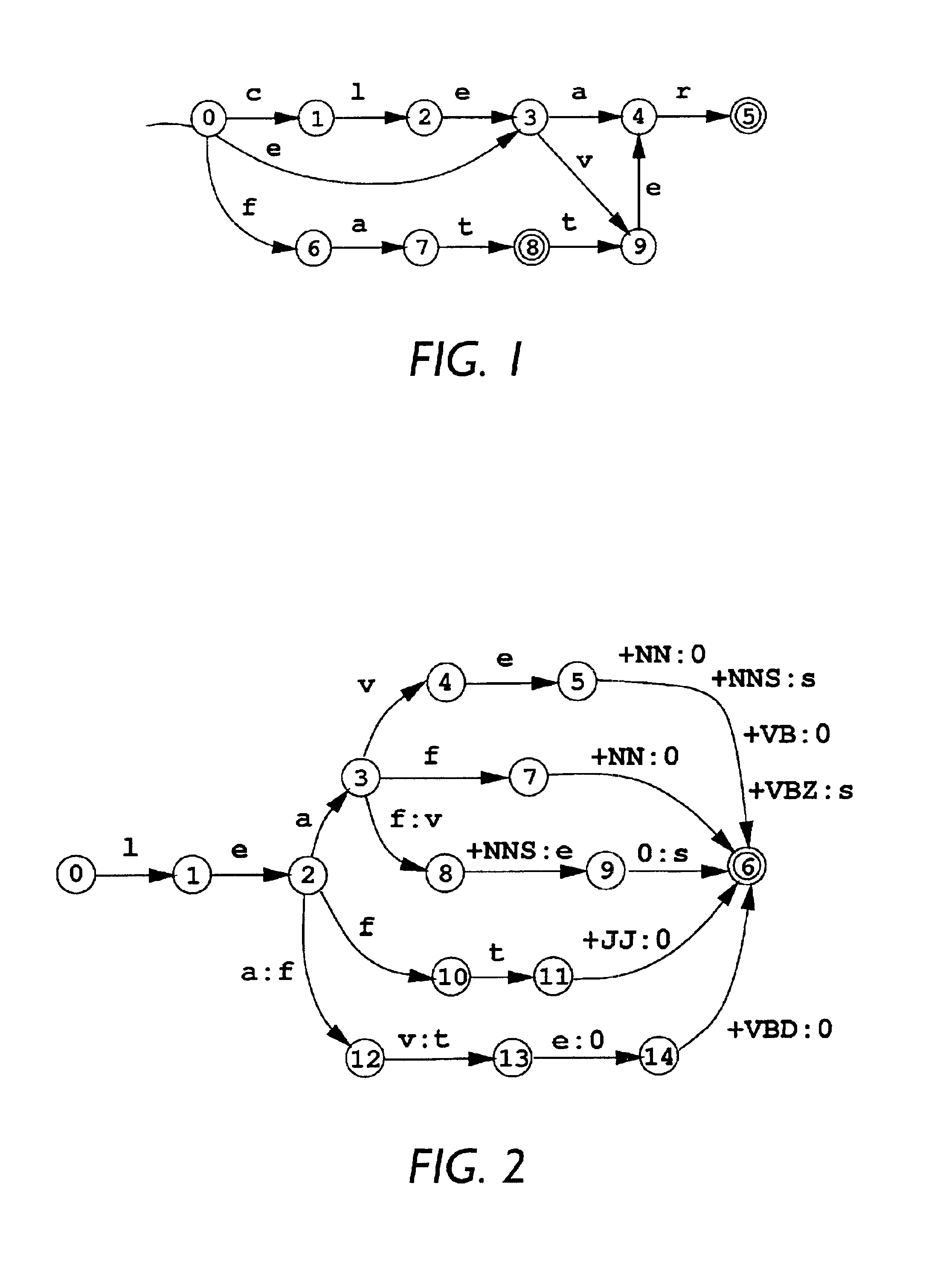

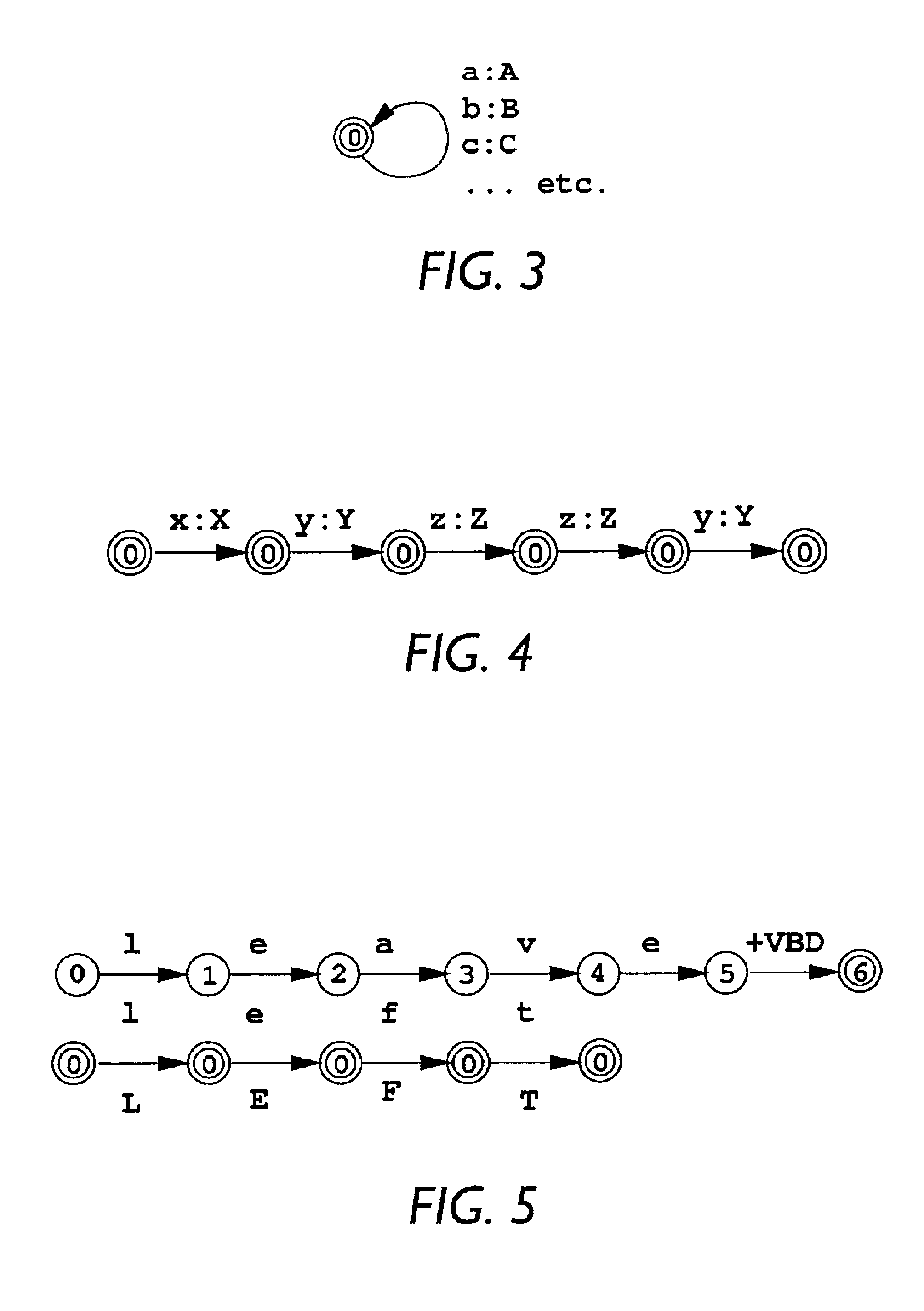

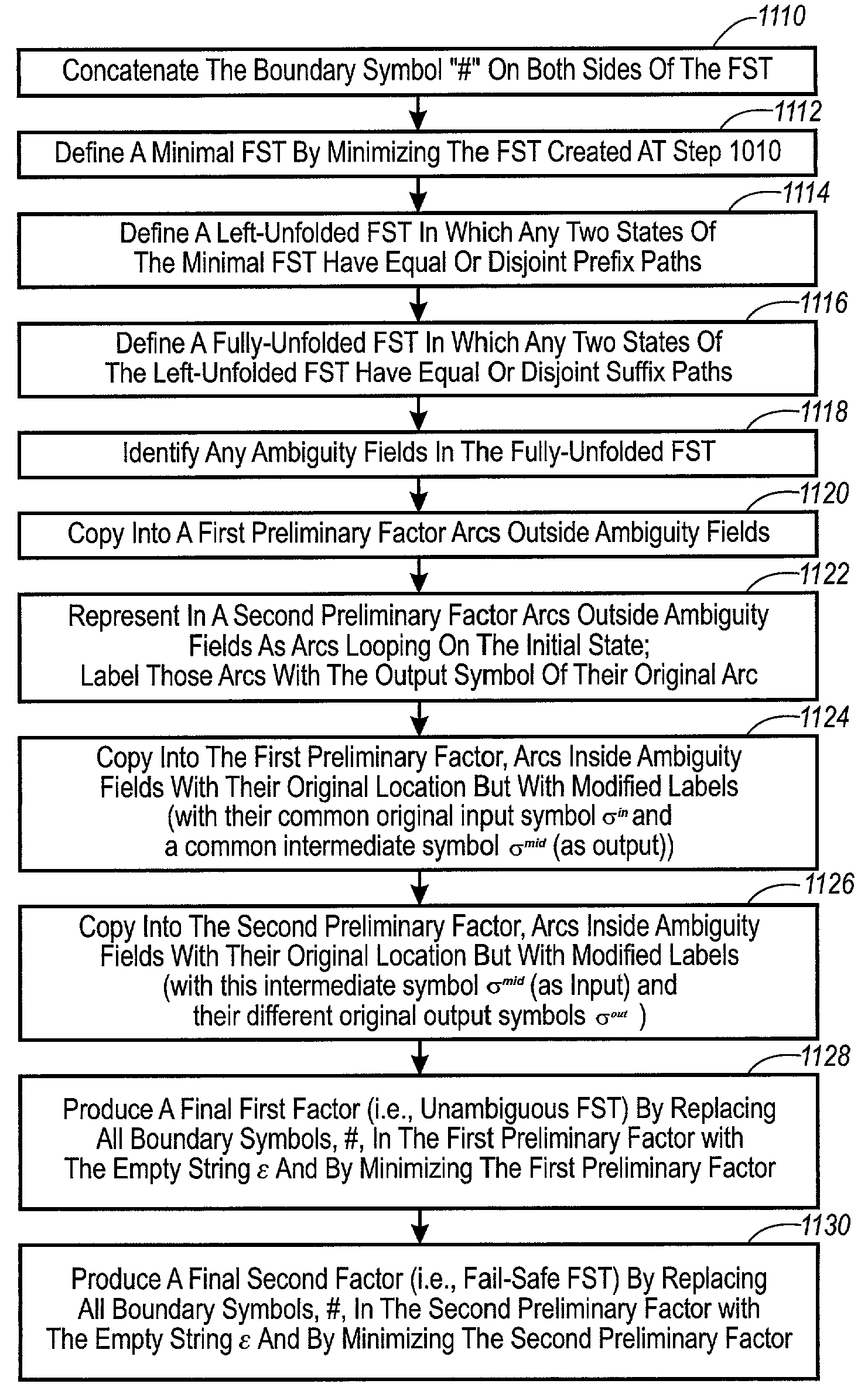

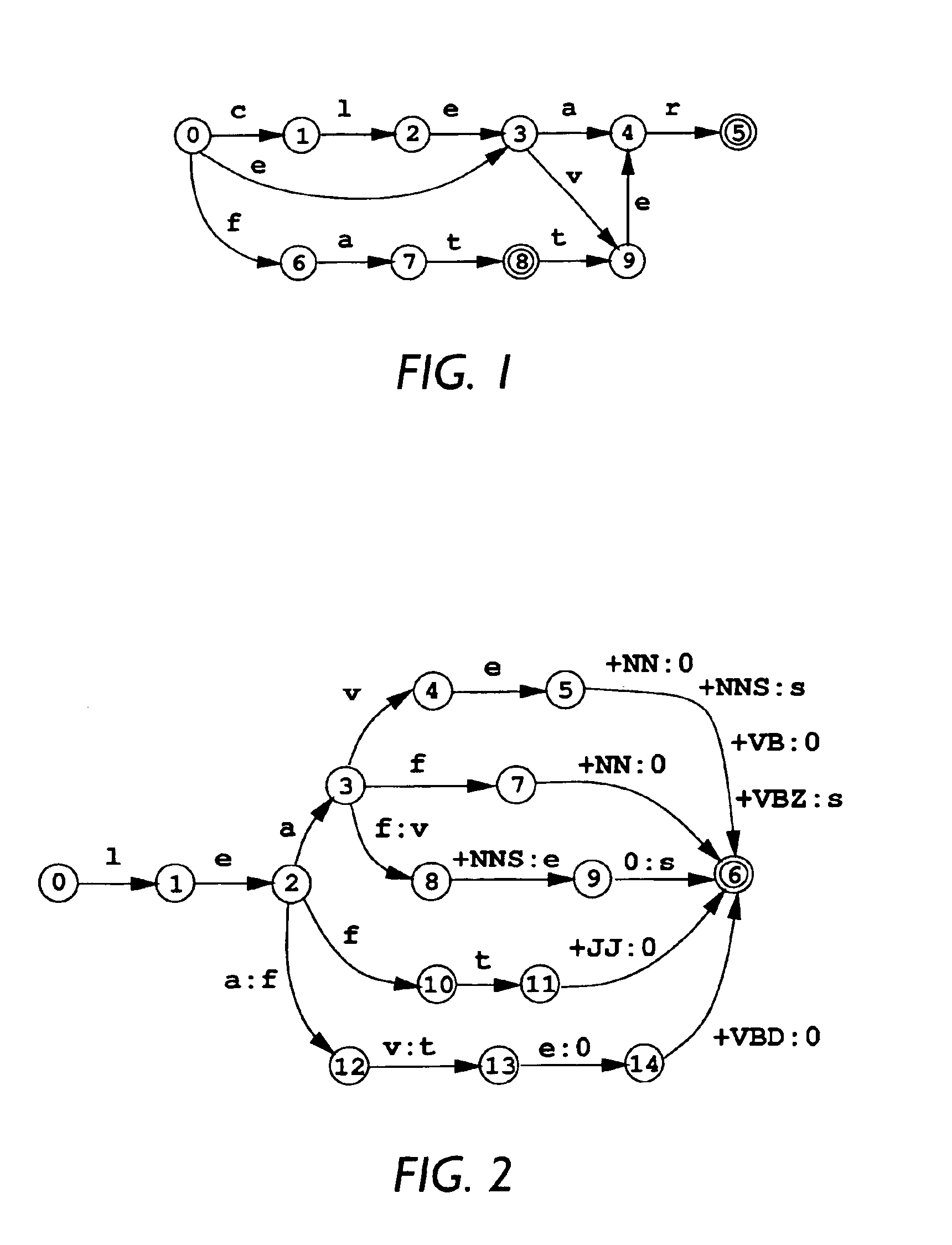

Method and apparatus for factoring unambiguous finite state transducers

Inactive | US6944588B2 | Reduce the number of lines | Semantic analysis | Speech recognition | Finite state transducer | Algorithm

A method factors a functional (i.e., unambiguous) finite state transducer (FST) into a bimachine with a reduced intermediate alphabet. Initially, the method determines an emission matrix corresponding to a factorization of the functional FST. Subsequently, the emission matrix is split into a plurality of emission sub-matrices equal in number to the number of input symbols to reduce the intermediate alphabet. Equal rows of each emission sub-matrix are assigned an identical index value in its corresponding factorization matrix before creating the bimachine.

Owner:XEROX CORP

Method and apparatus for aligning ambiguity in finite state transducers

Inactive | US7107205B2 | Natural language analysis | Digital computer details | Finite state transducer | Theoretical computer science

A method prepares a functional finite-state transducer (FST) with an epsilon or empty string on the input side for factorization into a bimachine. The method creates a left-deterministic input finite-state automaton (FSA) by extracting and left-determinizing the input side of the functional FST. Subsequently, the corresponding sub-paths in the FST are identified for each arc in the left-deterministic FSA and aligned.

Owner:XEROX CORP

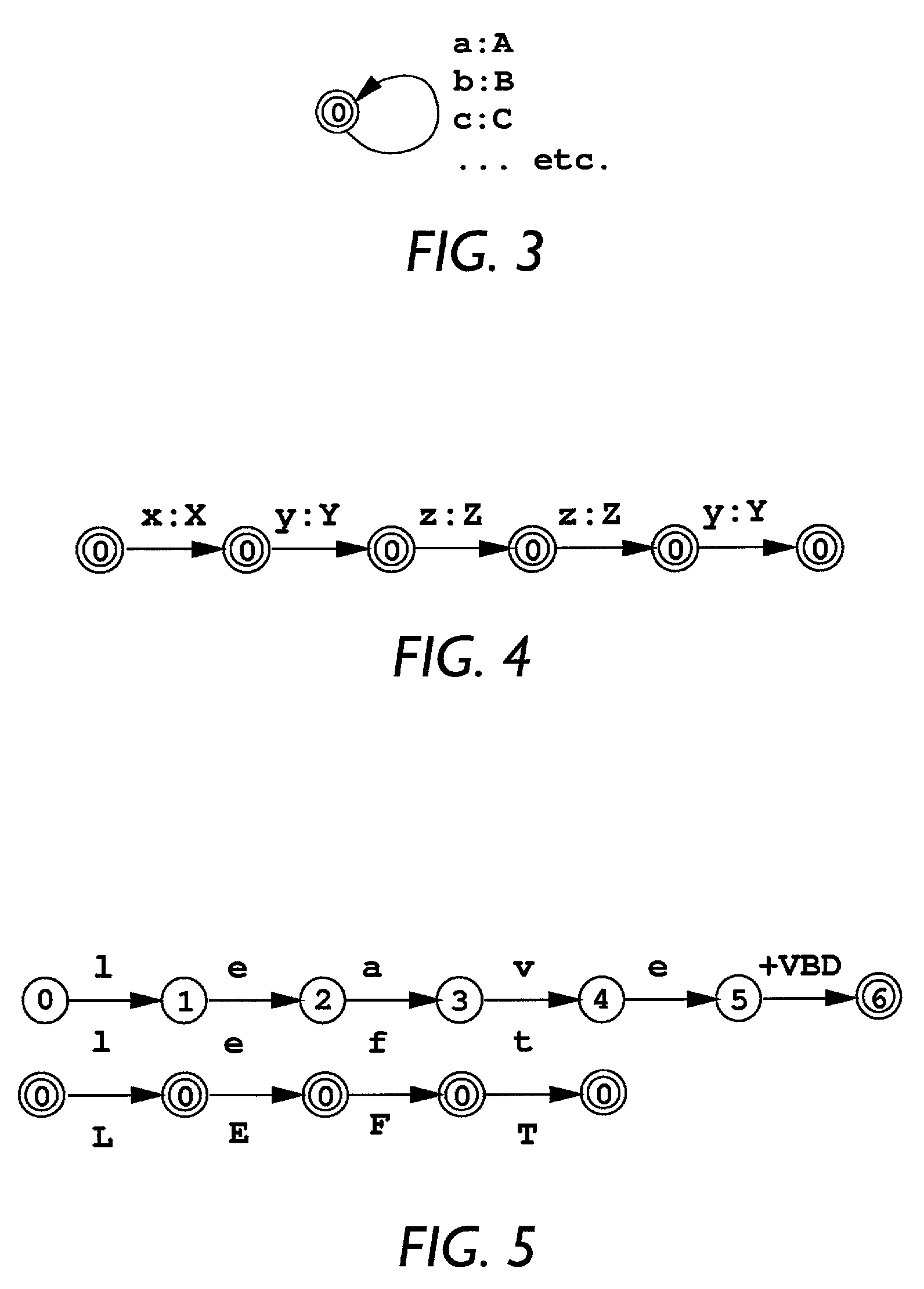

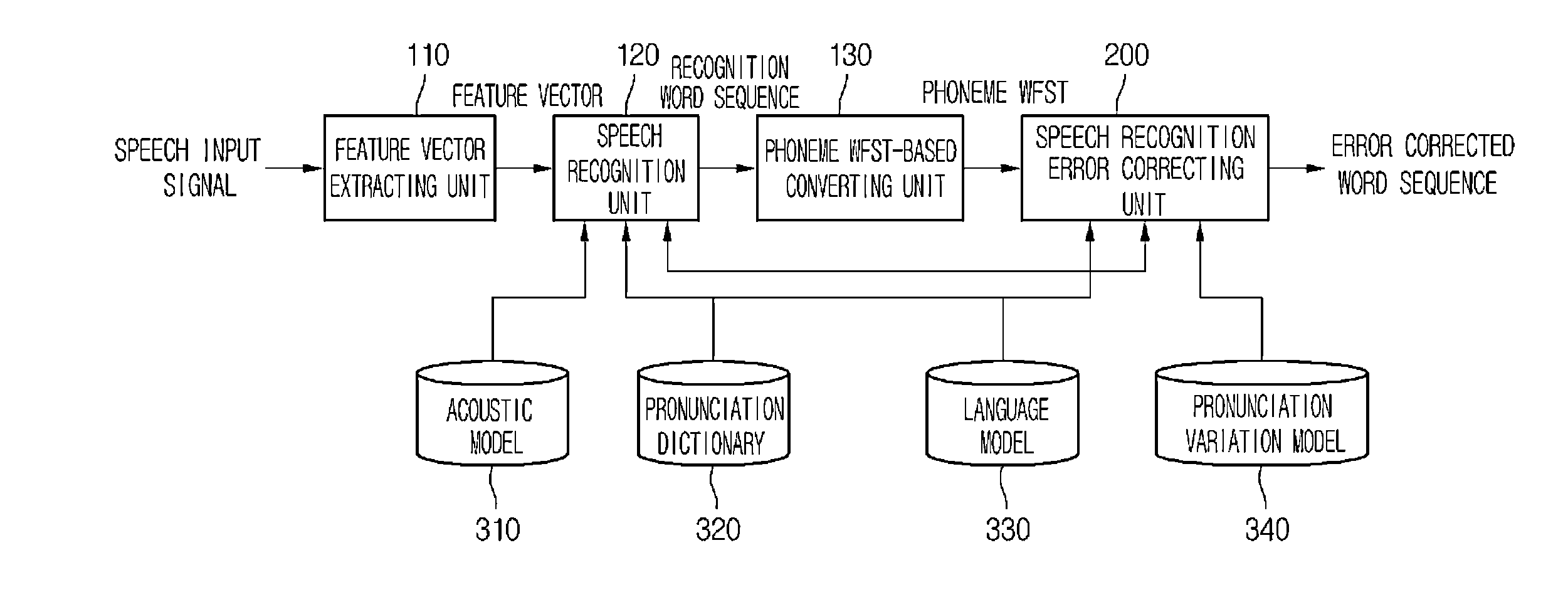

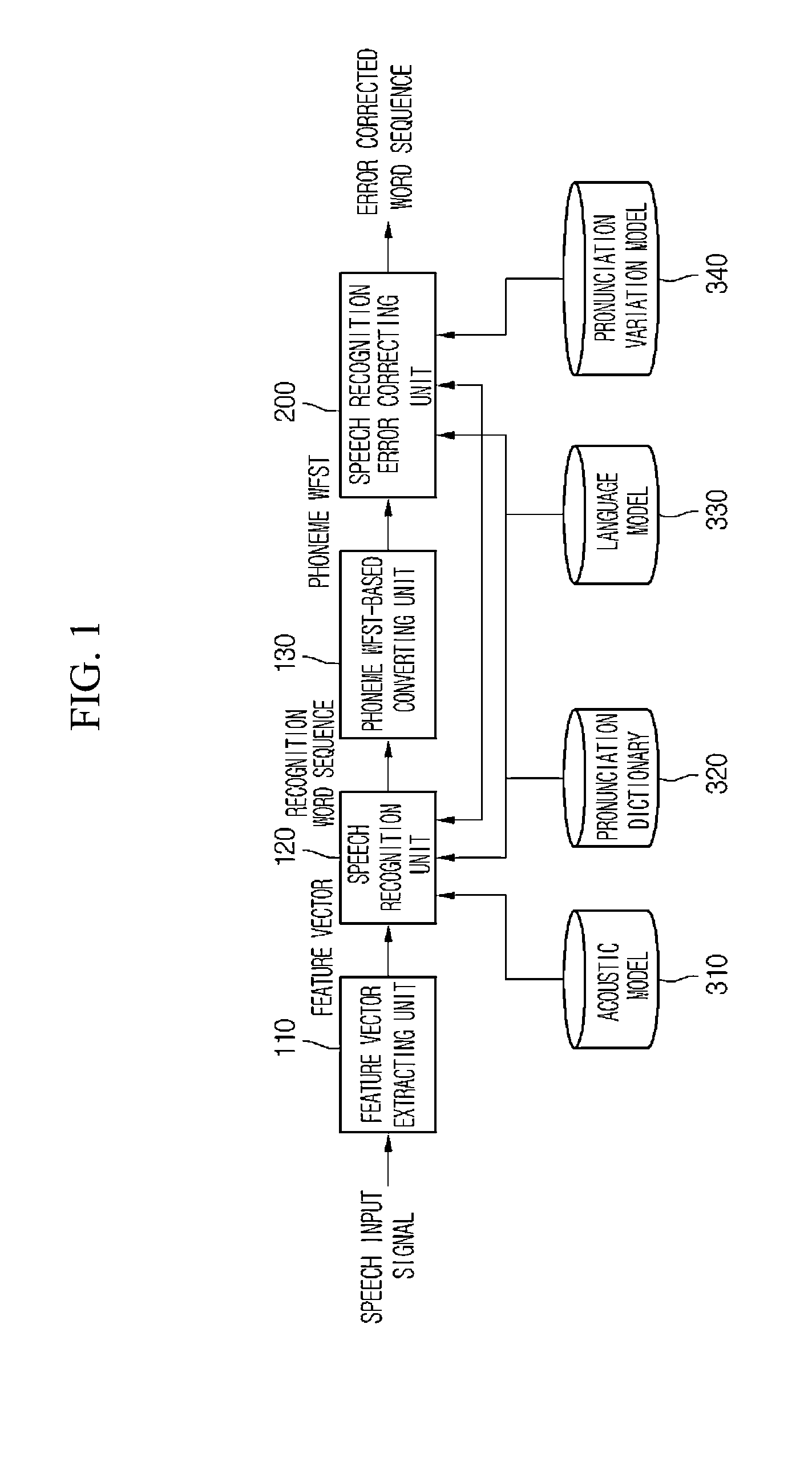

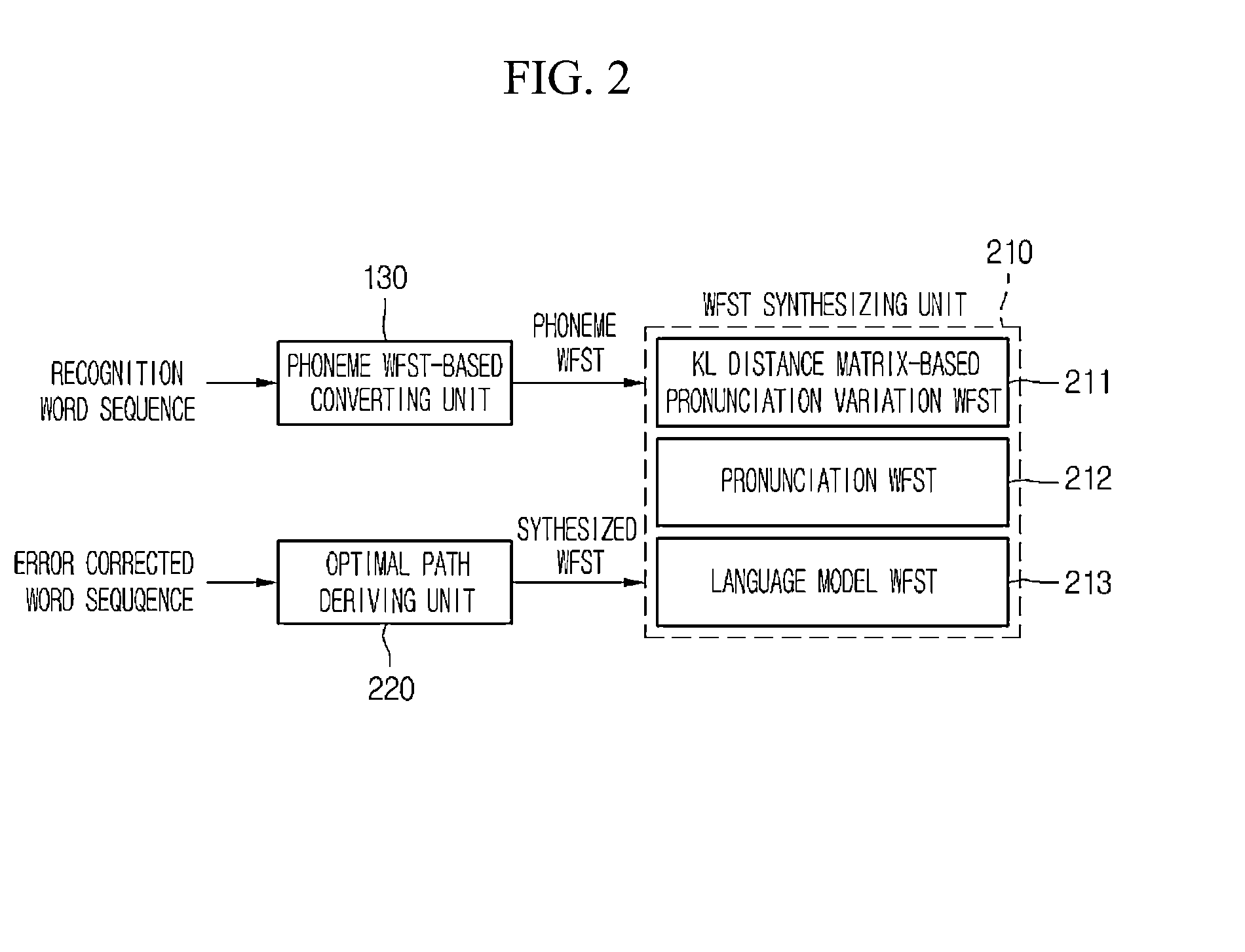

Apparatus for correcting error in speech recognition

An apparatus for correcting errors in speech recognition is provided. The apparatus includes a feature vector extracting unit extracting feature vectors from a received speech. A speech recognizing unit recognizes the received speech as a word sequence on the basis of the extracted feature vectors. A phoneme weighted finite state transducer (WFST)-based converting unit converts the recognized word sequence recognized by the speech recognizing unit into a phoneme WFST. A speech recognition error correcting unit corrects errors in the converted phoneme WFST. The speech recognition error correcting unit includes a WFST synthesizing unit modeling a phoneme WFST transferred from the phoneme WFST-based converting unit as pronunciation variation on the basis of a Kullback-Leibler (KL) distance matrix.

Owner:GWANGJU INST OF SCI & TECH

System and method for spelling recognition using speech and non-speech input

Active | US7574356B2 | Automatic call-answering/message-recording/conversation-recording | Speech recognition | Finite state transducer | Automatic speech

Owner:MICROSOFT TECH LICENSING LLC

Systems and methods for generating weighted finite-state automata representing grammars

Active | US20080243484A1 | Natural language data processing | Speech recognition | Finite state transducer | Symbol of a differential operator

A context-free grammar can be represented by a weighted finite-state transducer. This representation can be used to efficiently compile that grammar into a weighted finite-state automaton that accepts the strings allowed by the grammar with the corresponding weights. The rules of a context-free grammar are input. A finite-state automaton is generated from the input rules. Strongly connected components of the finite-state automaton are identified. An automaton is generated for each strongly connected component. A topology that defines a number of states, and that uses active ones of the non-terminal symbols of the context-free grammar as the labels between those states, is defined. The topology is expanded by replacing a transition, and its beginning and end states, with the automaton that includes, as a state, the symbol used as the label on that transition. The topology can be fully expanded or dynamically expanded as required to recognize a particular input string.

Owner:MICROSOFT TECH LICENSING LLC

Disfluency detection for a speech-to-speech translation system using phrase-level machine translation with weighted finite state transducers

Inactive | US7860719B2 | Natural language data processing | Speech recognition | Speech to speech translation | Finite state transducer

A computer-implemented method for creating a disfluency translation lattice includes providing a plurality of weighted finite state transducers including a translation model, a language model, and a phrase segmentation model as input, performing a cascaded composition of the weighted finite state transducers to create a disfluency translation lattice, and storing the disfluency translation lattice to a computer-readable media.

Owner:IBM CORP

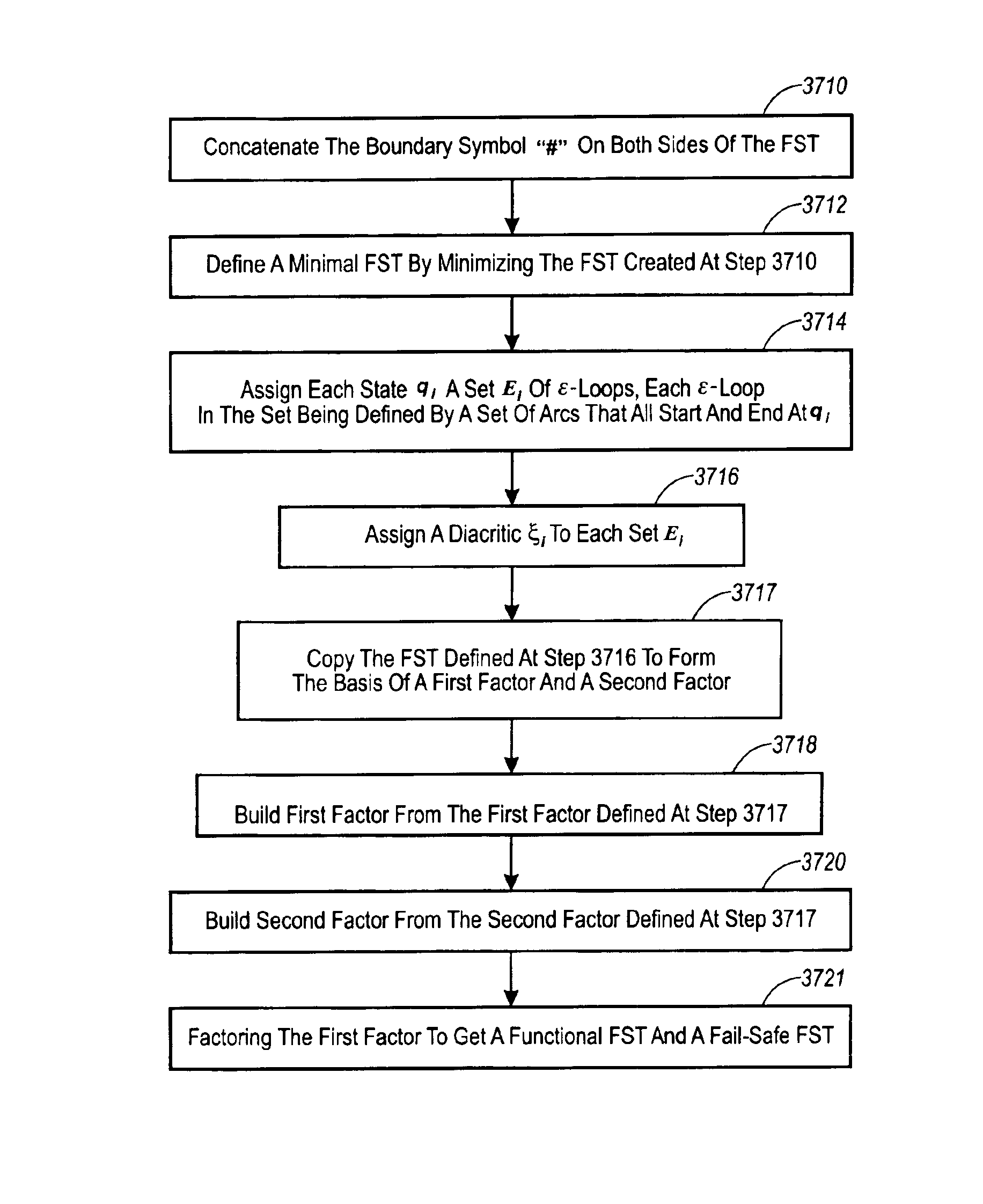

Method and apparatus for extracting infinite ambiguity when factoring finite state transducers

Inactive | US6952667B2 | Speech recognition | Special data processing applications | Finite state transducer | Algorithm

A method extracts all infinite ambiguity from an input finite-state transducer (FST). The input FST is factorized into a first factor and a second factor such that the first factor is finitely ambiguous, and the second factor retains all infinite ambiguity of the original FST. The first factor is defined so that it replaces every loop where the input symbol of every arc is an ε (i.e., epsilon, empty string) by a single arc with ε on the input side and a diacritic on the output side. The second factor is defined so that it maps every diacritic to one or more ε-loops.

Owner:XEROX CORP

Systems and methods for generating weighted finite-state automata representing grammars

Inactive | US7398197B1 | Digital data processing details | Natural language data processing | Finite state transducer | Automaton

A context-free grammar can be represented by a weighted finite-state transducer. This representation can be used to efficiently compile that grammar into a weighted finite-state automaton that accepts the strings allowed by the grammar with the corresponding weights. The rules of a context-free grammar are input. A finite-state automaton is generated from the input rules. Strongly connected components of the finite-state automaton are identified. An automaton is generated for each strongly connected component. A topology that defines a number of states, and that uses active ones of the non-terminal symbols of the context-free grammar as the labels between those states, is defined. The topology is expanded by replacing a transition, and its beginning and end states, with the automaton that includes, as a state, the symbol used as the label on that transition. The topology can be fully expanded or dynamically expanded as required to recognize a particular input string.

Owner:NUANCE COMM INC

Method and apparatus for transducer-based text normalization and inverse text normalization

Inactive | US7630892B2 | Natural language data processing | Speech recognition | Finite state transducer | Standardization

A method and apparatus are provided that perform text normalization and inverse text normalization using a single grammar. During text normalization, a finite state transducer identifies a second string of symbols from a first string of symbols it receives. During inverse text normalization, the context free transducer identifies the first string of symbols after receiving the second string of symbols.

Owner:MICROSOFT TECH LICENSING LLC
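Using one grammar in both directions, as the abstract above describes, corresponds to inverting a transducer: swap the input and output label on every arc. The sketch below shows this on a token-level toy relation. The arc list, the vocabulary, and the function names are assumptions for illustration, and the sketch uses a plain finite-state relation rather than the patent's grammar formalism.

```python
def invert(arcs):
    """Swap input and output labels on every arc (src, input, output, dst)."""
    return [(src, out, inp, dst) for (src, inp, out, dst) in arcs]


def apply(arcs, start, finals, tokens):
    """Return every output token sequence the transducer assigns to `tokens`."""
    results = []
    stack = [(start, 0, [])]
    while stack:
        state, pos, emitted = stack.pop()
        if pos == len(tokens):
            if state in finals:
                results.append(emitted)
            continue
        for (src, inp, out, dst) in arcs:
            if src == state and inp == tokens[pos]:
                stack.append((dst, pos + 1, emitted + [out]))
    return results


# Hypothetical one-state relation between written and spoken tokens.
written_to_spoken = [(0, "2", "two", 0),
                     (0, "%", "percent", 0),
                     (0, "+", "plus", 0)]

spoken = apply(written_to_spoken, 0, {0}, ["2", "%"])
written = apply(invert(written_to_spoken), 0, {0}, ["two", "percent"])
```

Running the original arcs maps `2 %` to `two percent`, and running the inverted arcs maps it back, so only one set of rules has to be maintained.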

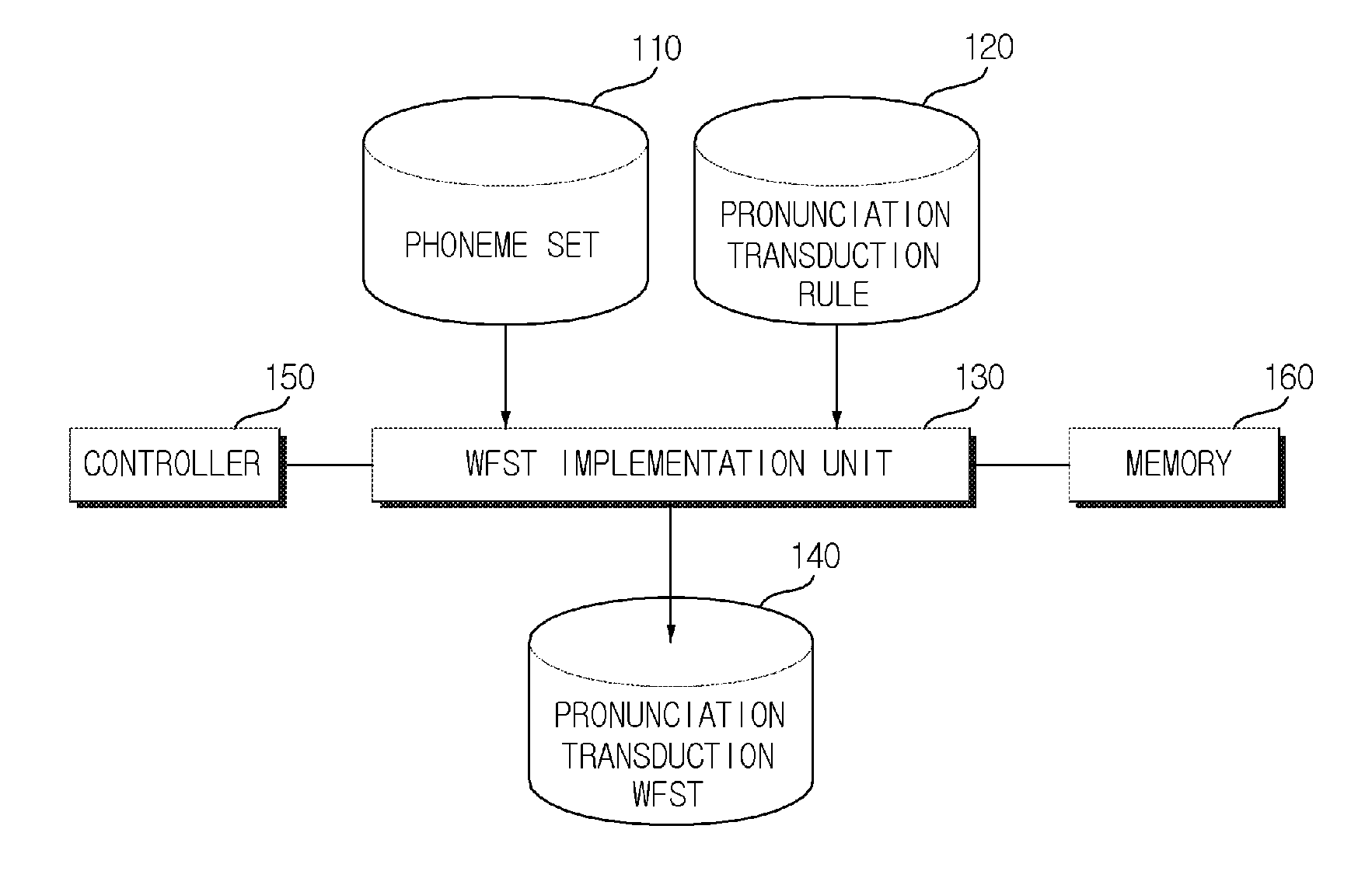

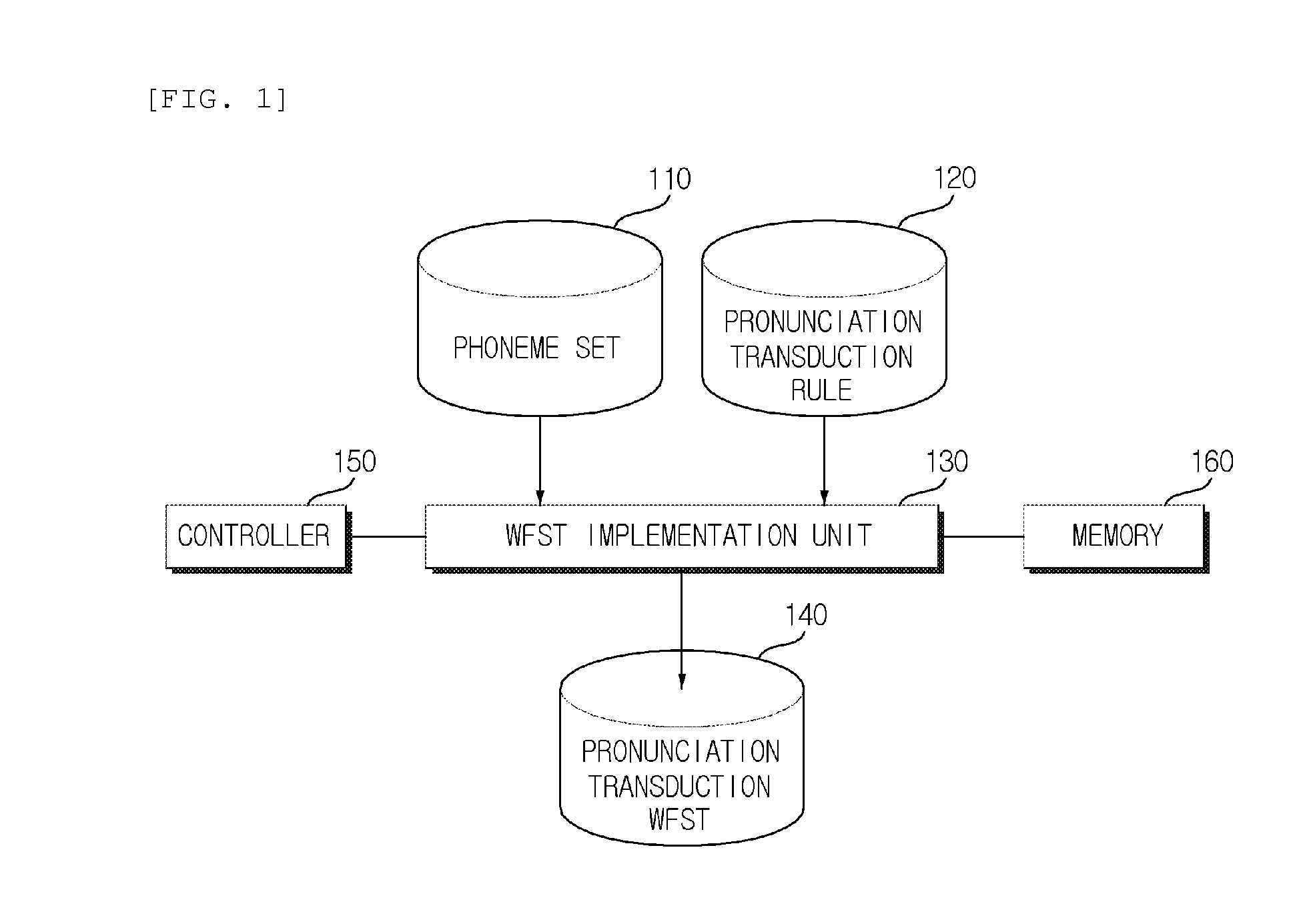

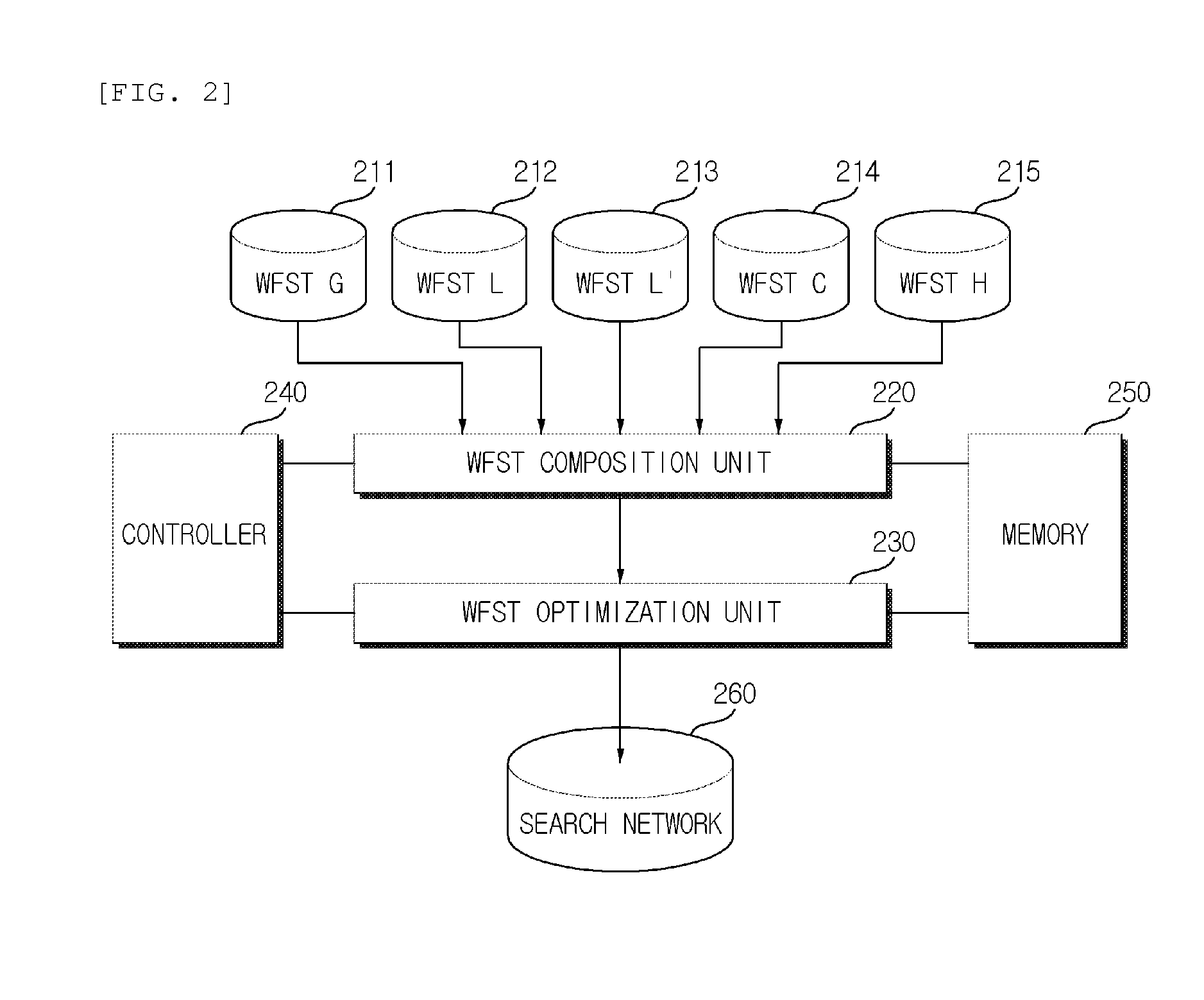

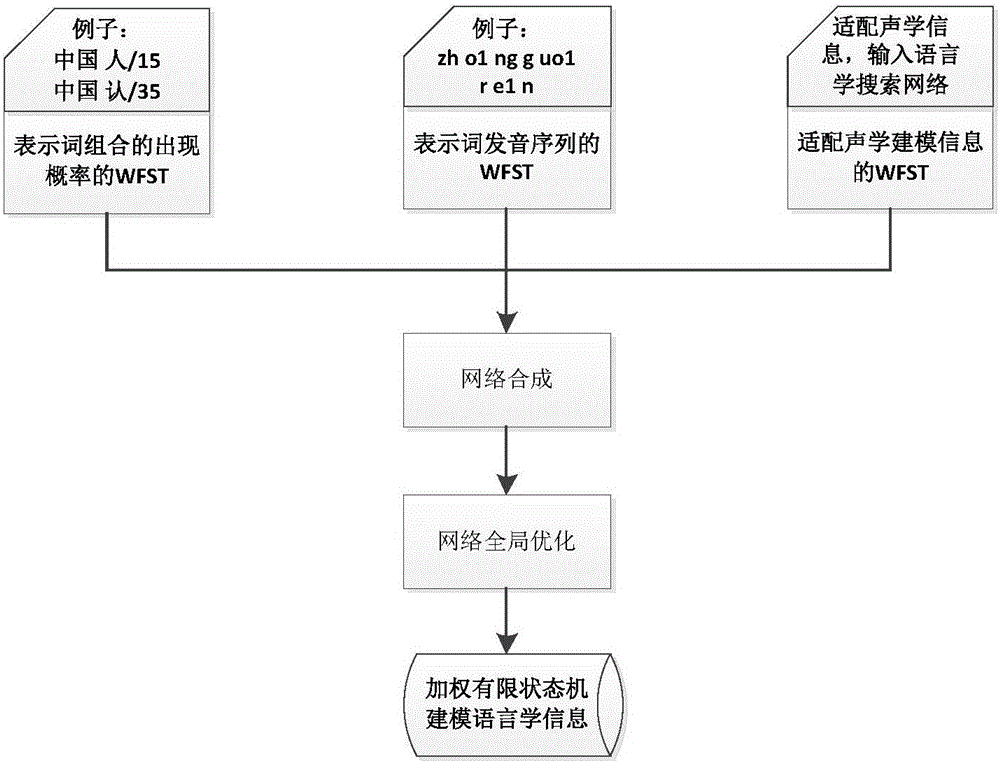

Method and system for generating search network for voice recognition

Inactive | US20130138441A1 | Improve accuracy | Increase reflection | Speech recognition | Finite state transducer | Speech sound

Disclosed is a method of generating a search network for voice recognition, the method including: generating a pronunciation transduction weighted finite state transducer by implementing a pronunciation transduction rule representing a phenomenon of pronunciation transduction between recognition units as a weighted finite state transducer; and composing the pronunciation transduction weighted finite state transducer and one or more weighted finite state transducers.

Owner:ELECTRONICS & TELECOMM RES INST

Method and apparatus for detecting speech endpoint using weighted finite state transducer

Inactive | US20140379345A1 | Easy to add | Easy to delete | Speech recognition | Feature vector | Finite state transducer

Disclosed are an apparatus and a method for detecting a speech endpoint using a WFST. The apparatus in accordance with an embodiment of the present invention includes: a speech decision portion configured to receive frame units of feature vector converted from a speech signal and to analyze and classify the received feature vector into a speech class or a noise class; a frame level WFST configured to receive the speech class and the noise class and to convert the speech class and the noise class to a WFST format; a speech level WFST configured to detect a speech endpoint by analyzing a relationship between the speech class and noise class and a preset state; a WFST combination portion configured to combine the frame level WFST with the speech level WFST; and an optimization portion configured to optimize the combined WFST having the frame level WFST and the speech level WFST combined therein to have a minimum route.

Owner:ELECTRONICS & TELECOMM RES INST

Language understanding device

Active | US8244522B2 | Easy construction | Improve understanding accuracy | Natural language data processing | Speech recognition | Language understanding | Finite state transducer

A language understanding device includes: a language understanding model storing unit configured to store word transition data including pre-transition states, input words, predefined outputs corresponding to the input words, word weight information, and post-transition states, and concept weighting data including concepts obtained from language understanding results for at least one word, and concept weight information corresponding to the concepts; a finite state transducer processing unit configured to output understanding result candidates including the predefined outputs, to accumulate word weights so as to obtain a cumulative word weight, and to sequentially perform state transition operations; a concept weighting processing unit configured to accumulate concept weights so as to obtain a cumulative concept weight; and an understanding result determination unit configured to determine an understanding result from the understanding result candidates by referring to the cumulative word weight and the cumulative concept weight.

Owner:HONDA MOTOR CO LTD

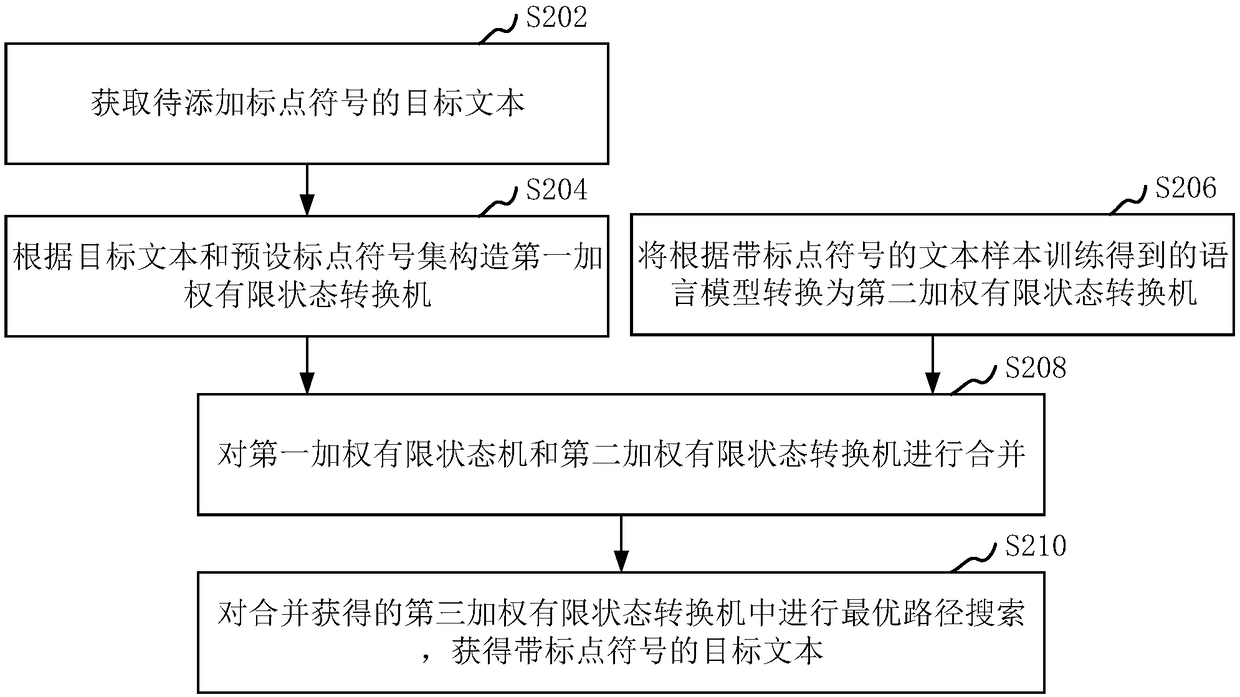

Punctuation adding method and device, computer device, and storage medium

Active | CN108597517A | Improve accuracy | Natural language data processing | Speech recognition | Finite state transducer | Target text

The application relates to a punctuation adding method and device, a computer device, and a storage medium. The method comprises the following steps: obtaining a to-be-punctuated target text; constructing a first weighted finite state transducer on the basis of the target text and a preset punctuation set; converting a language model obtained through training on the basis of a punctuated text sample into a second weighted finite state transducer; combining the first weighted finite state transducer and the second weighted finite state transducer; and searching for an optimal path in a third weighted finite state transducer obtained through combination and obtaining the target text with punctuation marks. The method can improve the accuracy of the punctuation marks added to the target text.

Owner:VOICEAI TECH CO LTD

Systems and methods for determining the determinizability of finite-state automata and transducers

Inactive | US20070299668A1 | Natural language data processing | Speech recognition | Finite state transducer | Deterministic finite automaton

Finite-state transducers and weighted finite-state automata may not be determinizable. The twins property can be used to characterize the determinizability of such devices. For a weighted finite-state automaton or transducer, that weighted finite-state automaton or transducer and its inverse are intersected or composed, respectively. The resulting device is checked to determine if it has the cycle-identity property. If not, the original weighted finite-state automaton or transducer is not determinizable. For a weighted or unweighted finite-state transducer, that device is checked to determine if it is functional. If not, that device is not determinizable. That device is then composed with its inverse. The composed device is checked to determine if every edge in the composed device having a cycle-accessible end state meets at least one of a number of conditions. If so, the original device has the twins property. If the original device has the twins property, then it is determinizable.

Owner:AT&T INTPROP II L P

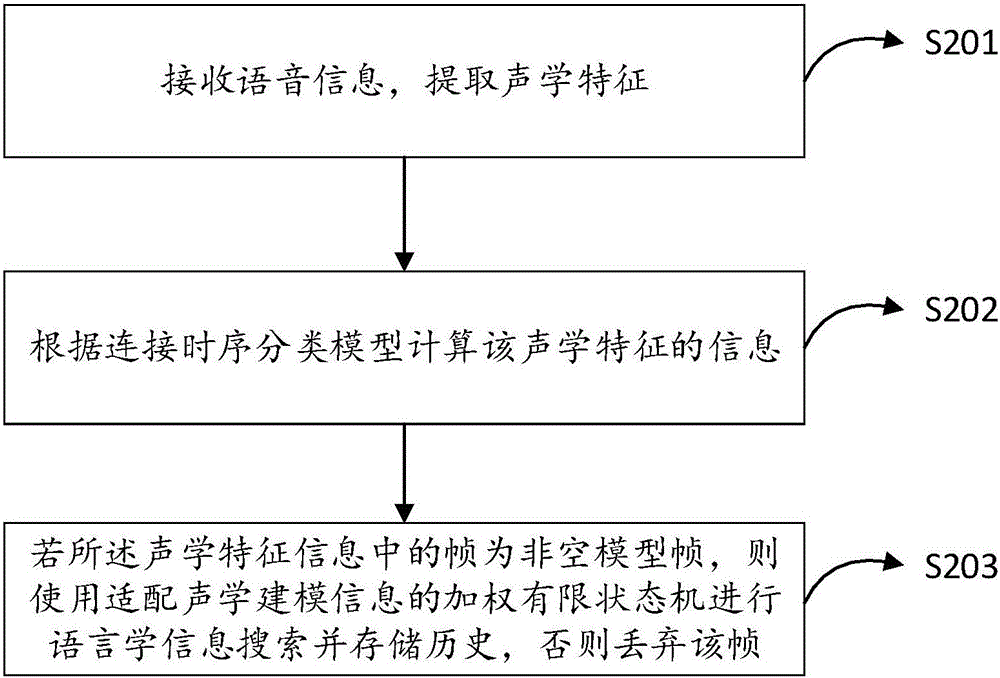

Speech recognition decoding method and speech recognition decoding device

Inactive | CN105895081A | Reduce the amount of calculation | Reduce the number of times | Speech recognition | Finite state transducer | Model representation

The invention discloses a speech recognition decoding method and a speech recognition decoding device, which belong to the field of speech processing. The method comprises the following steps: speech information is received and acoustic features are extracted; acoustic feature information is computed with a connectionist temporal classification (CTC) model; if a frame in the acoustic feature information is a non-blank frame, a weighted finite state transducer adapted to the acoustic modeling information is used for linguistic information search and the history data are stored; otherwise, the frame is discarded. Building the CTC model makes the acoustic modeling more accurate; using the weighted finite state transducer makes the model representation more efficient, reducing computation and memory consumption by nearly 50%; and using a phoneme-synchronous method during decoding effectively reduces the amount of computation and the number of model searches.

Owner:AISPEECH CO LTD +1
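Assuming the sequence classification model in the abstract above is a connectionist temporal classification (CTC) style model with a blank ("null") label, the frame-discarding step can be sketched as follows. Deciding blankness by the highest-scoring label, the label indices, and the toy posteriors are all assumptions; the abstract does not say how a frame is judged to be blank.

```python
def non_blank_frames(posteriors, blank=0):
    """Keep only frames whose best label is not the blank label.

    `posteriors` is a list of per-frame score lists; the result is a
    list of (frame index, best label) pairs to pass on to the search.
    """
    kept = []
    for t, frame in enumerate(posteriors):
        best = max(range(len(frame)), key=frame.__getitem__)
        if best != blank:
            kept.append((t, best))
    return kept


# Hypothetical posteriors over three labels: 0 = blank, 1 and 2 = phonemes.
posteriors = [[0.90, 0.05, 0.05],
              [0.10, 0.80, 0.10],
              [0.70, 0.20, 0.10],
              [0.20, 0.10, 0.70]]

frames_to_search = non_blank_frames(posteriors)
```

Only the second and fourth frames survive here, so the transducer search runs twice instead of four times. That saving is the kind of reduction in search steps the abstract refers to.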
