But lithographic errors have set limits on the complexity of circuitry that can be fabricated in one piece without fatal flaws.

Portable computers using single-chip processors can be built on single circuit boards today, but because lithographic errors limit the size and complexity of today's chips, each system still requires many separate chips.

Using separate chips also creates off-chip data flow bottlenecks because the chips are connected on a macroscopic rather than a microscopic scale, which severely limits the number of interconnections.

Furthermore, even single-board systems use separate devices external to that board for system input and output, further increasing system size and power consumption.

Chip size limits, however, prevent the amount of on-chip memory from exceeding a tiny fraction of the memory used in a whole system.

While such shared memory parallel systems do remove the von Neumann uniprocessor bottleneck, the funneling of memory access from all the processors through a single data path rapidly reduces the effectiveness of adding more processors.

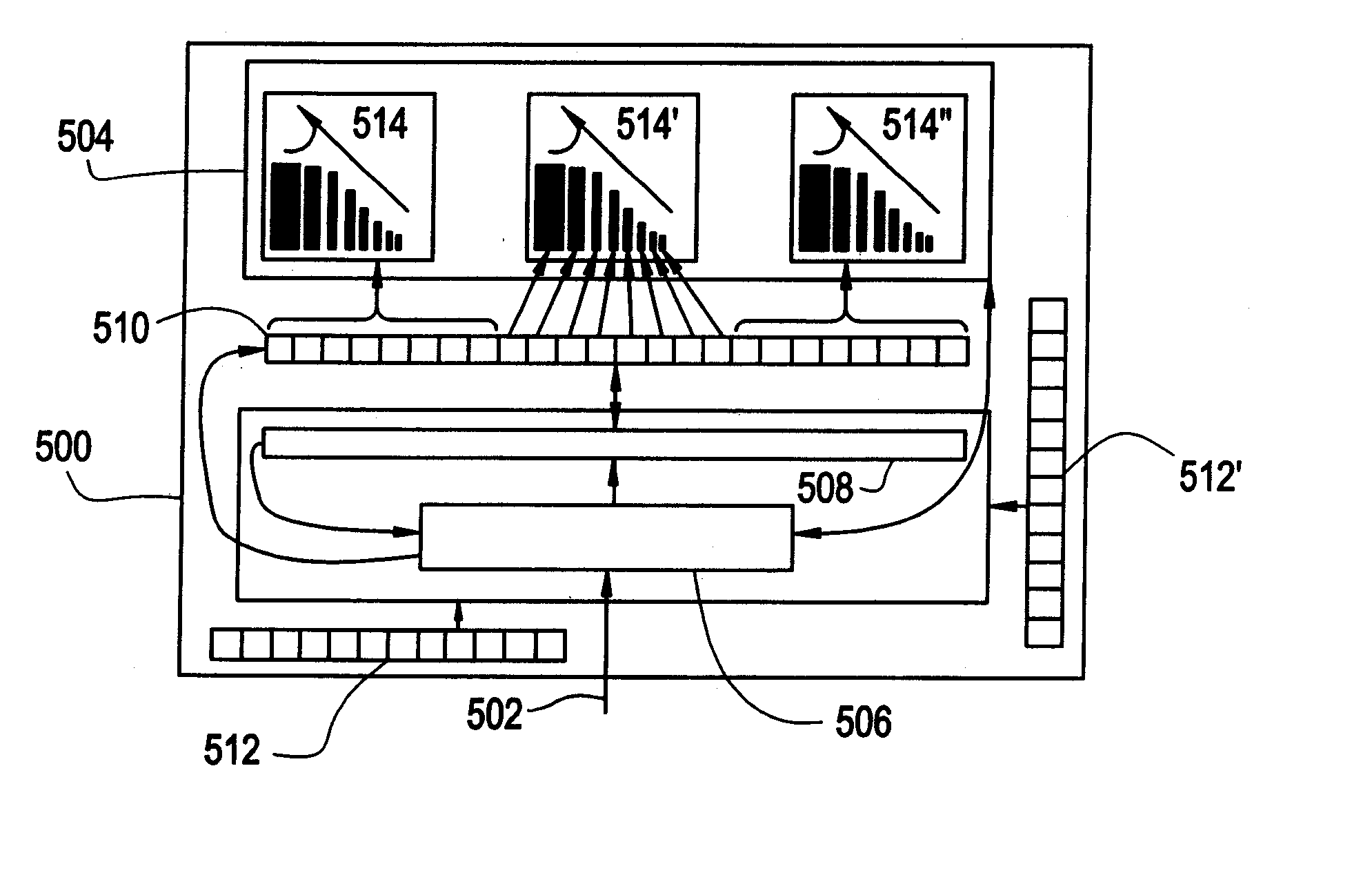

This requires complex macroscopic (and hence off-chip-bottleneck-limited) connections between the processors and external chips and devices, which rapidly increases the cost and complexity of the system as the number of processors is increased.

Having each processor set connected to an external I/O device also necessitates having a multitude of connections between the processor array and the external devices, thus greatly increasing the overall size, cost and complexity of the system.

The chip-size limit, however, forces a severe trade-off between number and size of processors in such architectures; the CM-1 chip used 1-bit processors instead of the 8-bit to 32-bit processors in common use at that time.

But even for massively parallel tasks, trading one 32-bit processor per chip for 32 one-bit processors per chip does not produce any performance gains except for those tasks where only a few bits at a time can be processed by a given processor.

Furthermore, these non-standard processors do not run standard software, requiring everything from operating systems to compilers to utilities to be re-written, greatly increasing the expense of programming such systems.

The sizes of these arrays are also limited by lithographic errors, so systems based on such arrays are subjected to the off-chip data flow bottleneck.

Since the output elements cannot add or subtract or edit-and-pass-on a data stream, such display elements can do no data decompression or other processing, so the output array requires a single uncompressed data stream, creating a bandwidth bottleneck as array size increases.

This necessity for perfection creates low yields and high costs for such displays.

But increased use of such links and increases in their range and data transfer rates are all increasing their demands for bandwidth.

Some electromagnetic frequency ranges are already crowded, making this transmission bottleneck increasingly a limiting factor.

Processors, however, have large numbers of circuits with unique functions (often referred to in the art as random logic circuits), and a spare circuit capable of replacing one kind of defective circuit cannot usually replace a different kind, making these general spare-circuit schemes impractical for processors.

Of these replication schemes, circuit duplication schemes, as exemplified by U.S. Pat. Nos. 4,798,976 and 5,111,060, use the least resources for redundancy, but provide the least protection against defects because two defective copies of a given circuit (or a defect in their joint output line) still create an uncorrectable defect.

This, however, leads to a dilemma: when the voting is done on the output of large blocks of circuitry, there is a significant chance that two out of the three copies will have defects, but when the voting is done on the output of small blocks of circuitry, many voting circuits are needed, increasing the likelihood of errors in the voting circuits themselves.

Ways to handle having two defective circuits out of three (which happens more frequently than the 2 defects out of 2 problem that the duplication schemes face) are also known.
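The parenthetical comparison above can be checked with a short calculation. The following is a minimal sketch only, assuming that each copy of a circuit is defective independently with the same probability `p` and that the voting circuits and joint output lines are themselves defect-free; the function names and the parameter `p` are illustrative and do not come from the cited patents.

```python
def duplication_failure(p: float) -> float:
    """Probability that both of two copies are defective (assumed independent)."""
    return p ** 2


def triplication_failure(p: float) -> float:
    """Probability that at least two of three copies are defective,
    so that a majority vote returns a wrong result."""
    exactly_two = 3 * p ** 2 * (1 - p)  # three ways to pick the one good copy
    all_three = p ** 3
    return exactly_two + all_three


if __name__ == "__main__":
    # Hypothetical per-copy defect probabilities, chosen for illustration.
    for p in (0.01, 0.05, 0.2):
        print(p, duplication_failure(p), triplication_failure(p))
```

Under these assumptions the two-of-three case exceeds the two-of-two case by 2p²(1 − p), which is positive for any p strictly between 0 and 1.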

Not only is a large N an inefficient use of space, but it increases the complexity of the voting circuits themselves, and therefore the likelihood of failures in them.

While this scheme can be applied to integrated circuits (although it predates them considerably), it requires four times as many gates, each with twice as many inputs, as equivalent non-redundant logic, increasing the circuit area and power requirements too much to be practical.

All these N-fold redundancy schemes also suffer from problems where if the replicates are physically far apart, gathering the signals requires extra wiring, creating propagation delays, while if the replicates are close together, a single large lithographic error can annihilate the replicates en masse, thus creating an unrecoverable fault.

The resulting one-dimensional chains, however, lack the direct addressability needed for fast memory arrays, the positional regularity of array cells needed for I/O arrays, and the two-dimensional or higher neighbor-to-neighbor communication needed to efficiently handle most parallel processing tasks.

This limits the usefulness of these arrangements to low- or medium-performance memory systems and to tasks dominated by one-dimensional or lower connectivity, such as sorting data.

Addressing cells through a global bus has significant drawbacks; it does not allow parallel access of multiple cells, and comparing the cell's address with an address on the bus introduces a delay in accessing the cell.

Furthermore, with large numbers of cells it is an inefficient user of power; in order for N cells to determine whether they are being addressed, each must check a minimum of log2(N) address bits (in binary systems), so an address signal requires enough power to drive N*log2(N) inputs.
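As a rough illustration of how the N*log2(N) load grows, the sketch below counts the gate inputs that one address signal must drive. It assumes a binary address rounded up to a whole number of bits; the function name is hypothetical and the cell counts are chosen only for illustration.

```python
def address_inputs_driven(n_cells: int) -> int:
    """Inputs driven by one address signal when every cell compares
    the full binary address on a global bus."""
    if n_cells < 2:
        raise ValueError("need at least two cells to require an address")
    # Smallest whole number of bits that can distinguish n_cells addresses.
    address_bits = (n_cells - 1).bit_length()
    return n_cells * address_bits


if __name__ == "__main__":
    for n in (1024, 65536, 1_000_000):
        print(n, address_inputs_driven(n))
```

For 1024 cells this gives 10 address bits and 10,240 driven inputs; for one million cells it gives 20 bits and 20,000,000 driven inputs.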

This is a high price in a system where all intercell signals are global.

Several considerations, however, diminish its applicability to large high-performance arrays at all but the lowest defect densities.

Thus while large cells create high defect rates, small cell sizes create significant delays in the propagation of signals across the array.

As cell size decreases, yields grow rapidly, but the propagation delays grow, too.
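The trade-off can be made concrete with a simple model. The sketch below is illustrative only: it assumes a Poisson defect model (per-cell yield of exp(-D·A) for defect density D and cell area A), a square array of fixed total area divided into equal cells, and a fixed number of gates traversed per cell. None of these parameter values come from the schemes discussed here.

```python
import math


def cell_yield(defect_density: float, array_area: float, cells_per_side: int) -> float:
    """Fraction of defect-free cells under an assumed Poisson defect model."""
    cell_area = array_area / cells_per_side ** 2
    return math.exp(-defect_density * cell_area)


def gates_across_array(cells_per_side: int, gates_per_cell: int = 2) -> int:
    """Gates a signal passes through when relayed cell by cell across one row."""
    return cells_per_side * gates_per_cell


if __name__ == "__main__":
    # Hypothetical values: 1 defect per unit area, array of 16 area units.
    for side in (2, 4, 8, 16):
        print(side, round(cell_yield(1.0, 16.0, side), 4), gates_across_array(side))
```

In this model, halving the cell edge length quarters the cell area and so raises per-cell yield sharply, while doubling the number of gates a signal must pass through to cross the array.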

But row-addressing signals propagated across the array would pass sequentially through up to 30 gates, creating far too long a delay for high-performance memory systems.

The cell bypassing scheme does support two-dimensional neighbor-to-neighbor connectivity, and could support a column-oriented bus for each column, but it cannot support a corresponding row-oriented bus without the 2-gate-per-cell delay.

This multi-cell shift also prevents this scheme from being useful in arrays where physical position of array cells is important, such as direct input or output cell arrays.
