44 results about "Weighted fair queueing" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

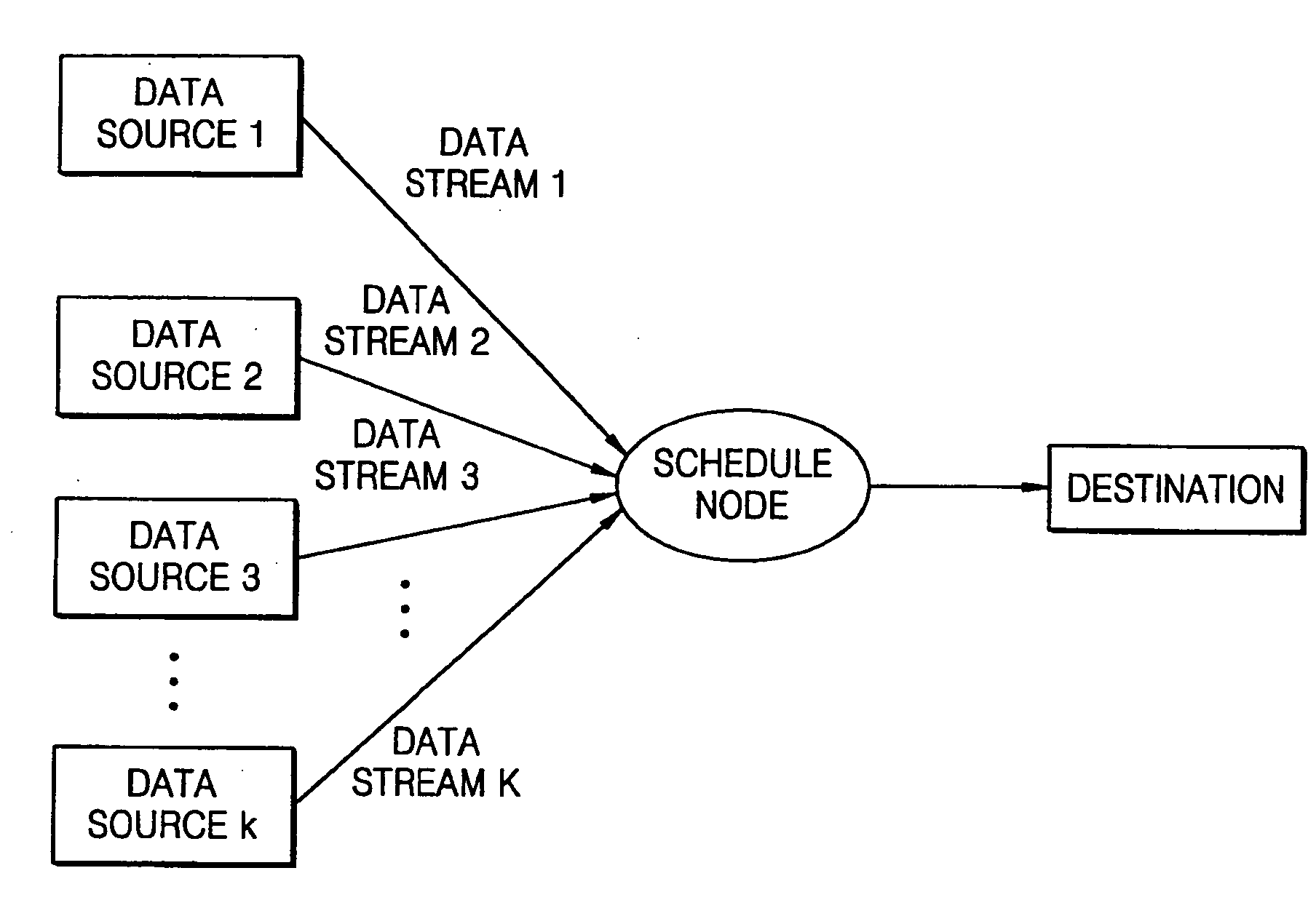

Weighted fair queueing (WFQ) is a network scheduling algorithm. WFQ is both a packet-based implementation of the generalized processor sharing (GPS) policy and a natural extension of fair queuing (FQ). Whereas FQ divides the link's capacity equally among flows, WFQ lets the scheduler assign each flow a weight that determines the fraction of the capacity it receives.
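
To make the weight idea concrete, here is a minimal Python sketch of self-clocked fair queueing (SCFQ), a common approximation of WFQ that stamps each packet with a virtual finish tag instead of simulating GPS exactly. The class and method names are hypothetical, and packet lengths are in arbitrary units.

```python
from collections import deque


class SCFQScheduler:
    """Self-clocked fair queueing (SCFQ), an approximation of WFQ.

    Each packet is stamped with a virtual finish tag
        F = max(V, F_prev_of_flow) + length / weight
    where V is the finish tag of the packet most recently sent.
    The packet with the smallest finish tag among flow heads goes next.
    """

    def __init__(self, weights):
        self.weights = dict(weights)                 # flow id -> weight
        self.queues = {f: deque() for f in self.weights}
        self.last_finish = {f: 0.0 for f in self.weights}
        self.virtual_time = 0.0                      # V: tag of last sent packet

    def enqueue(self, flow, length):
        start = max(self.virtual_time, self.last_finish[flow])
        finish = start + length / self.weights[flow]
        self.last_finish[flow] = finish
        self.queues[flow].append((finish, length))

    def dequeue(self):
        """Return the flow id of the next packet sent, or None if idle."""
        heads = [(q[0][0], f) for f, q in self.queues.items() if q]
        if not heads:
            return None
        finish, flow = min(heads)                    # ties broken by flow id
        self.queues[flow].popleft()
        self.virtual_time = finish
        return flow


s = SCFQScheduler({"A": 2, "B": 1})
for _ in range(6):
    s.enqueue("A", 100)
    s.enqueue("B", 100)
print([s.dequeue() for _ in range(6)])   # ['A', 'A', 'B', 'A', 'A', 'B']
```

With weights 2 and 1 and equal packet sizes, flow A is served about twice as often as flow B while both are backlogged.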

Method for providing integrated packet services over a shared-media network

Inactive | US6563829B1 | Tags: Good service, Error prevention, Transmission systems, QoS quality of service, Exchange network

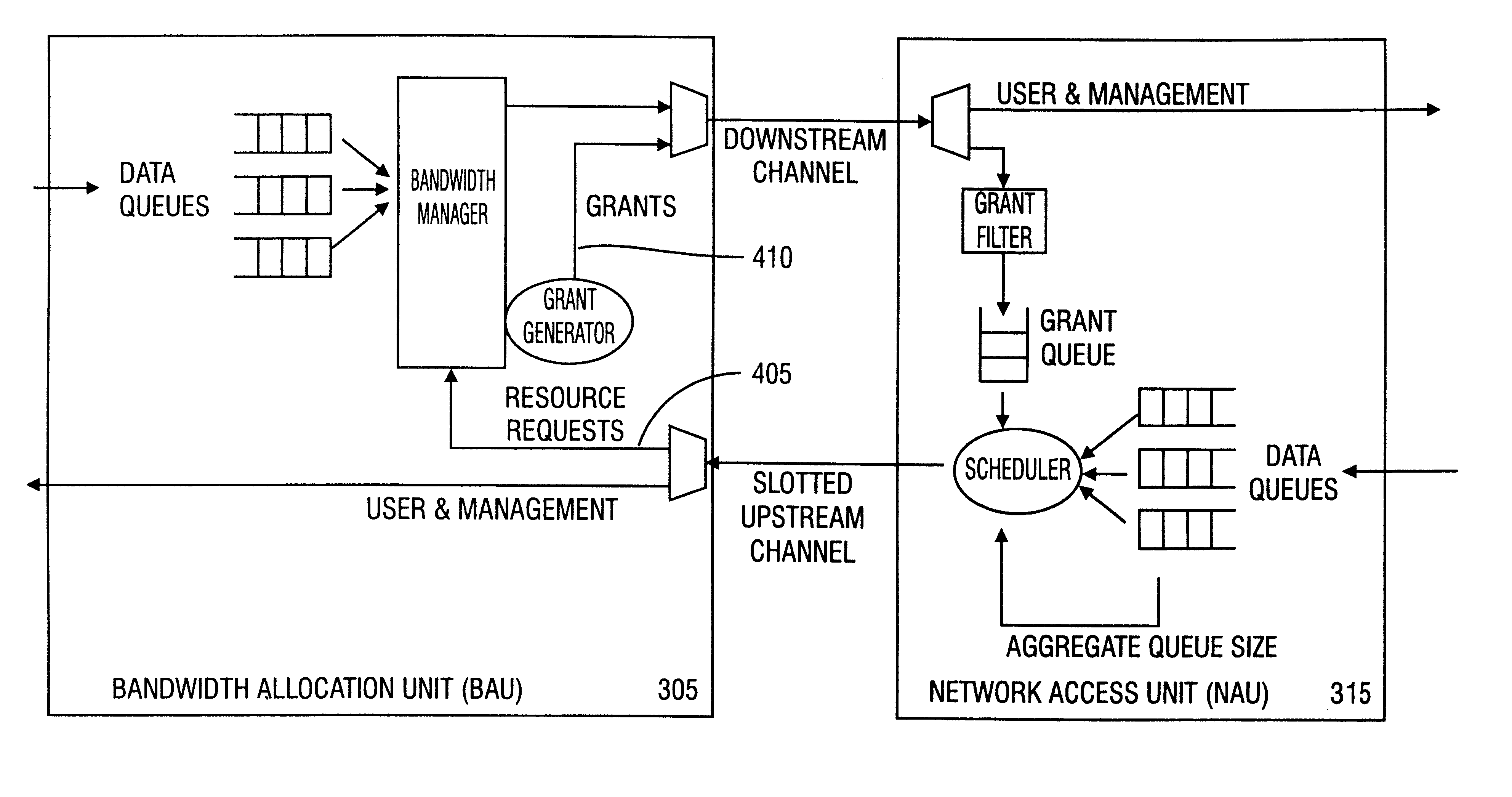

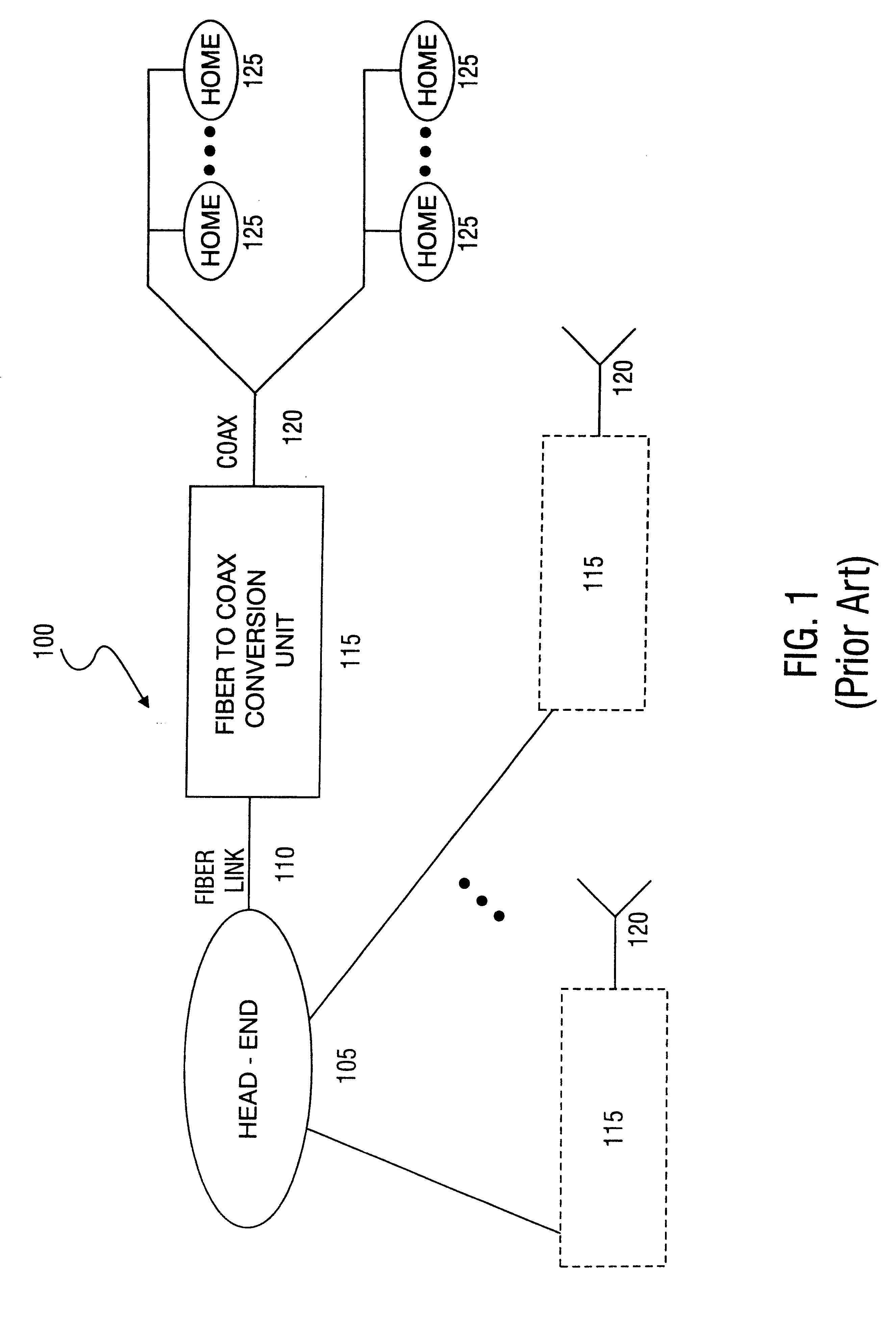

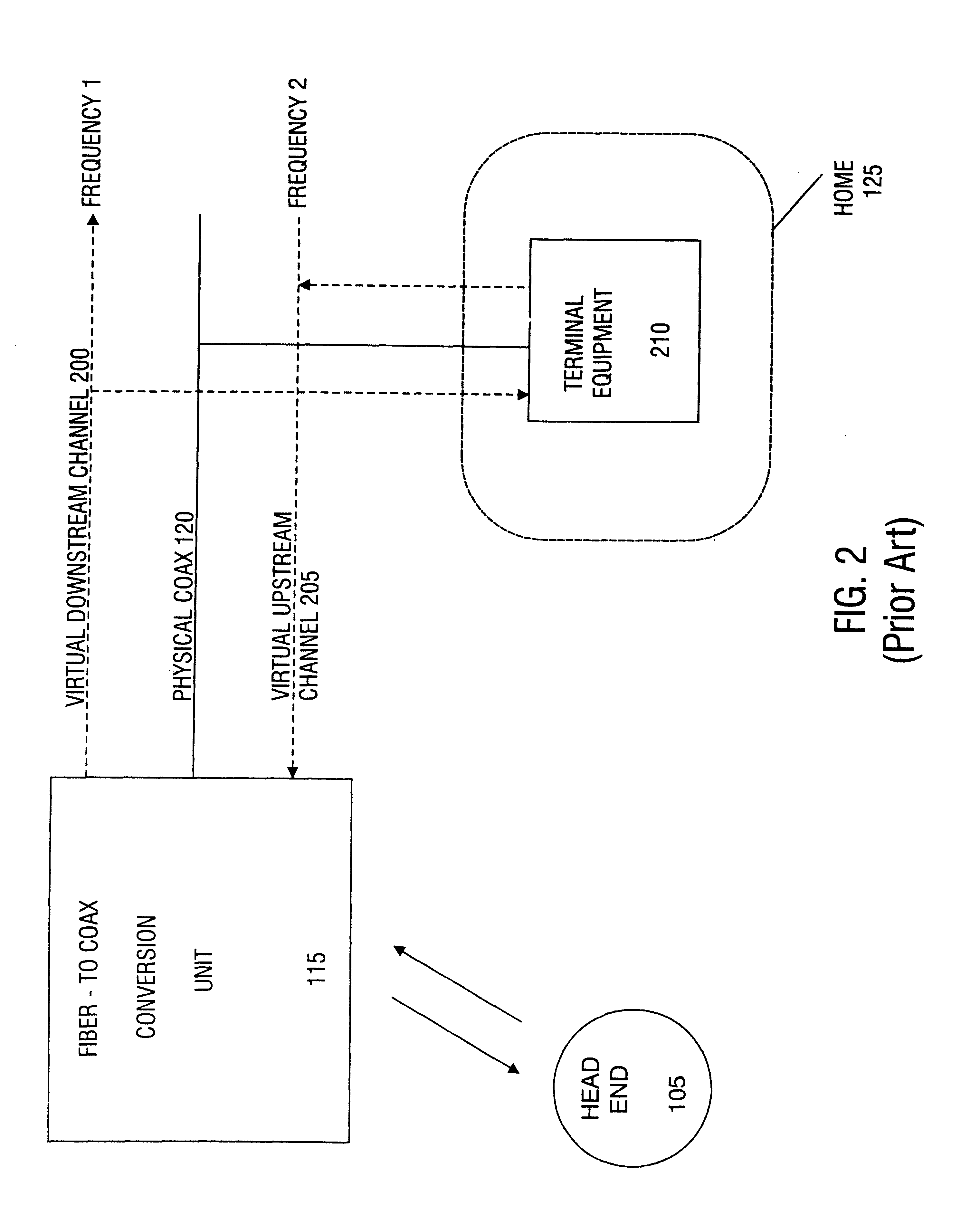

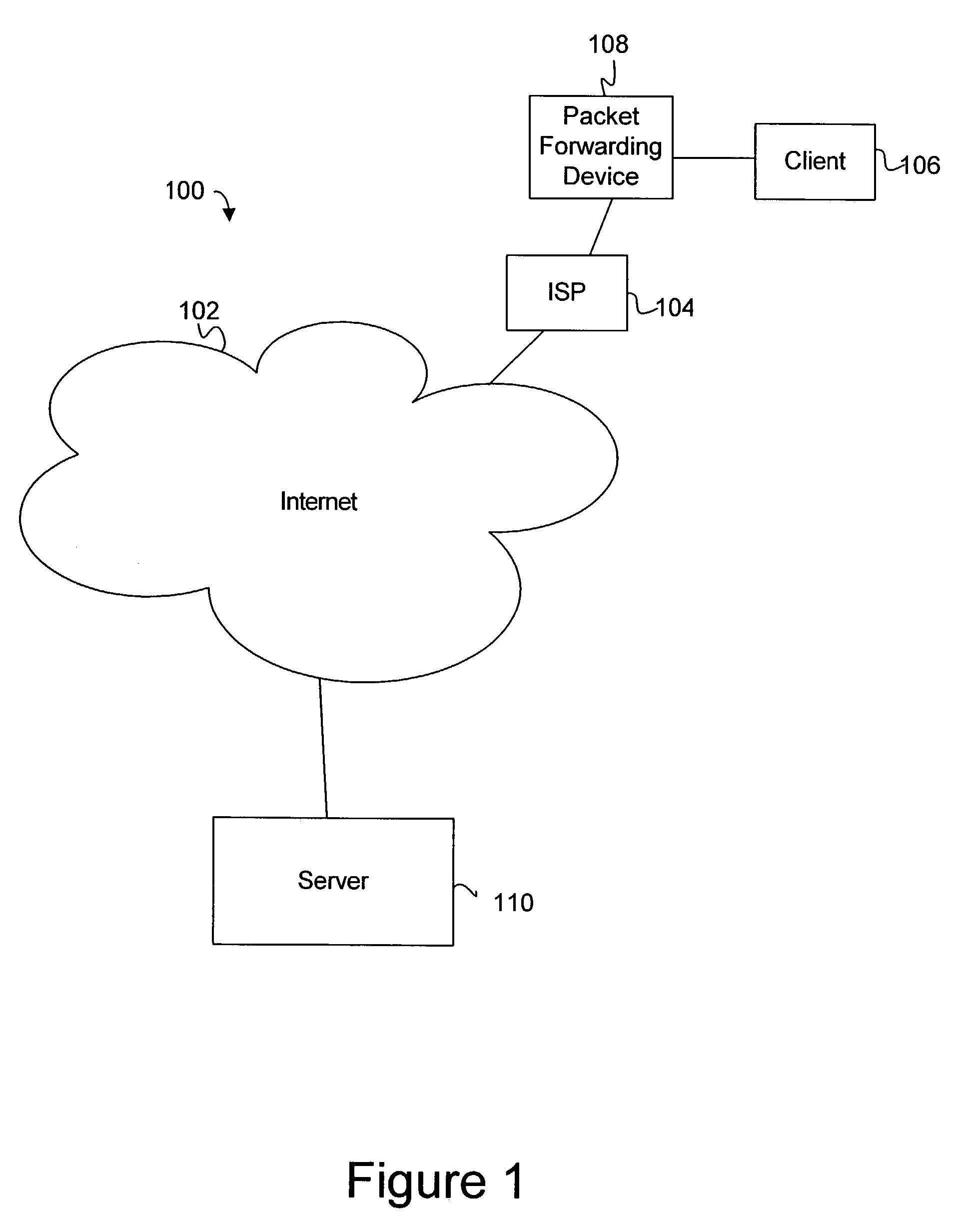

A method in accordance with the invention allocates bandwidth, fairly and dynamically, in a shared-media packet switched network to accommodate both elastic and inelastic applications. The method, executed by or in a head-end controller, allocates bandwidth transmission slots, converting requests for bandwidth into virtual scheduling times for granting access to the shared media. The method can use a weighted fair queuing algorithm or a virtual clock algorithm to generate a sequence of upstream slot / transmission assignment grants. The method supports multiple quality of service (QoS) classes via mechanisms which give highest priority to the service class with the most stringent QoS requirements.

Owner:AMERICAN CAPITAL FINANCIAL SERVICES
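
The head-end method above turns bandwidth requests into virtual scheduling times and names a virtual clock algorithm as one option. A minimal Python sketch of virtual-clock stamping follows, assuming each flow has a reserved rate and grants are simply issued in stamp order; the function name and its batch interface are hypothetical, not taken from the patent.

```python
import heapq


def virtual_clock_grants(requests, rates):
    """Hypothetical helper: order bandwidth requests by virtual clock stamps.

    requests: list of (arrival_time, flow_id, size) in arrival order
    rates:    flow_id -> reserved rate (size units per time unit)
    Each request is stamped VC = max(arrival_time, VC_prev) + size / rate,
    and grants are issued in increasing stamp order.
    """
    vc = {flow: 0.0 for flow in rates}
    heap = []
    for seq, (arrival, flow, size) in enumerate(requests):
        vc[flow] = max(arrival, vc[flow]) + size / rates[flow]
        heapq.heappush(heap, (vc[flow], seq, flow))   # seq breaks stamp ties
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


reqs = [(0, "data", 4), (0, "voice", 4), (0, "voice", 4), (0, "data", 4)]
print(virtual_clock_grants(reqs, {"voice": 4, "data": 1}))
# ['voice', 'voice', 'data', 'data']
```

The flow with the larger reserved rate accumulates virtual time more slowly, so its requests are granted earlier.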

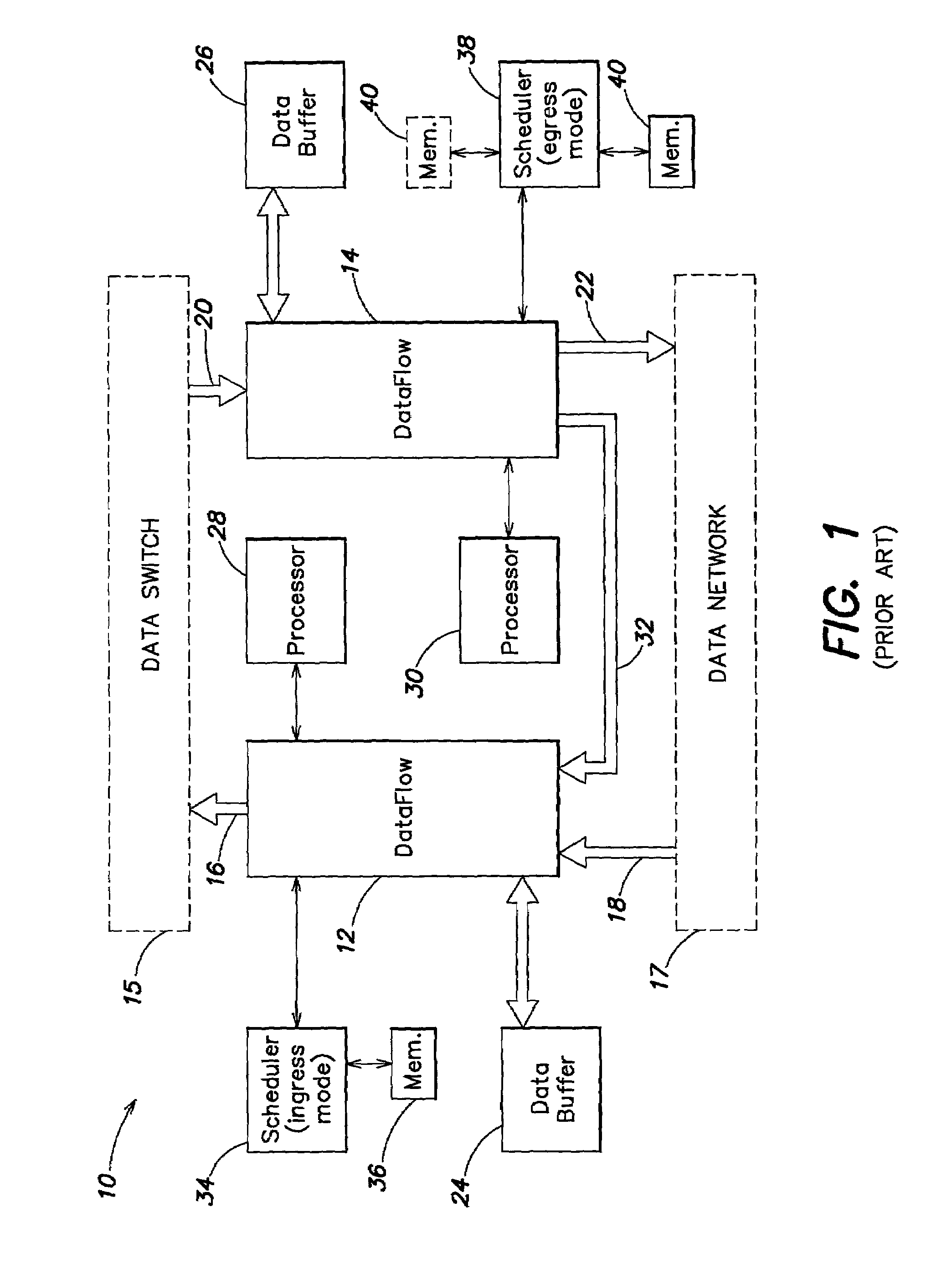

Method and system for network processor scheduling based on service levels

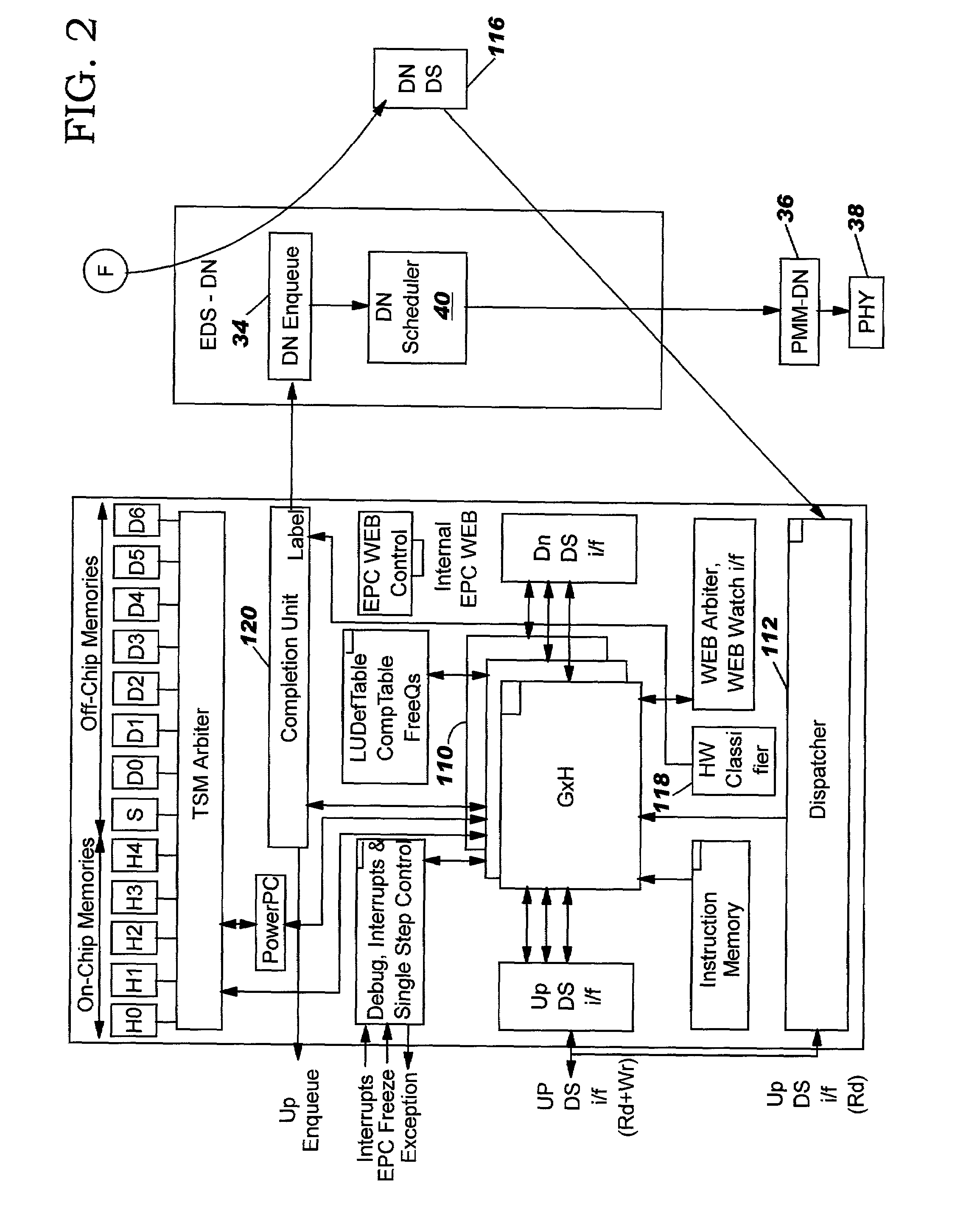

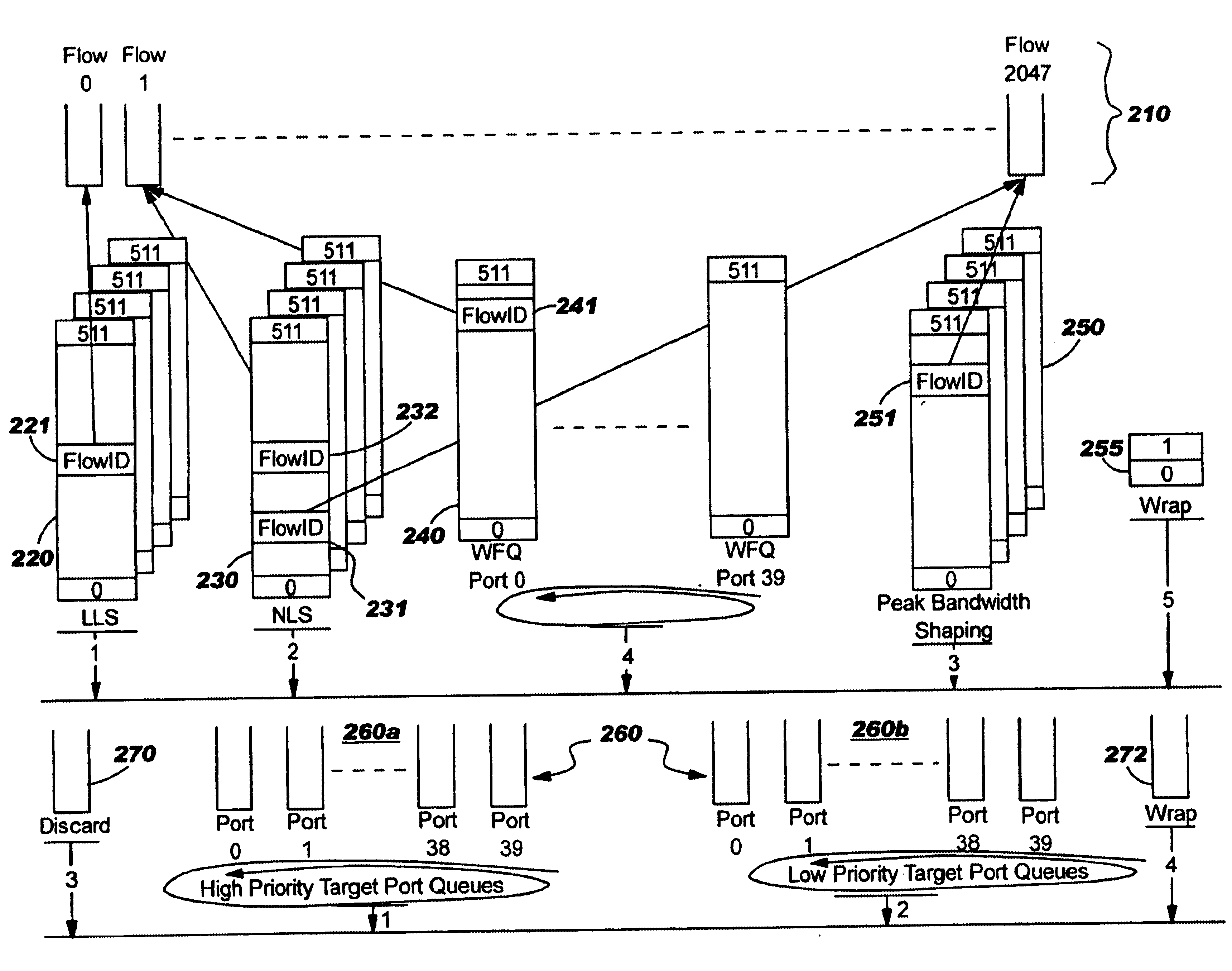

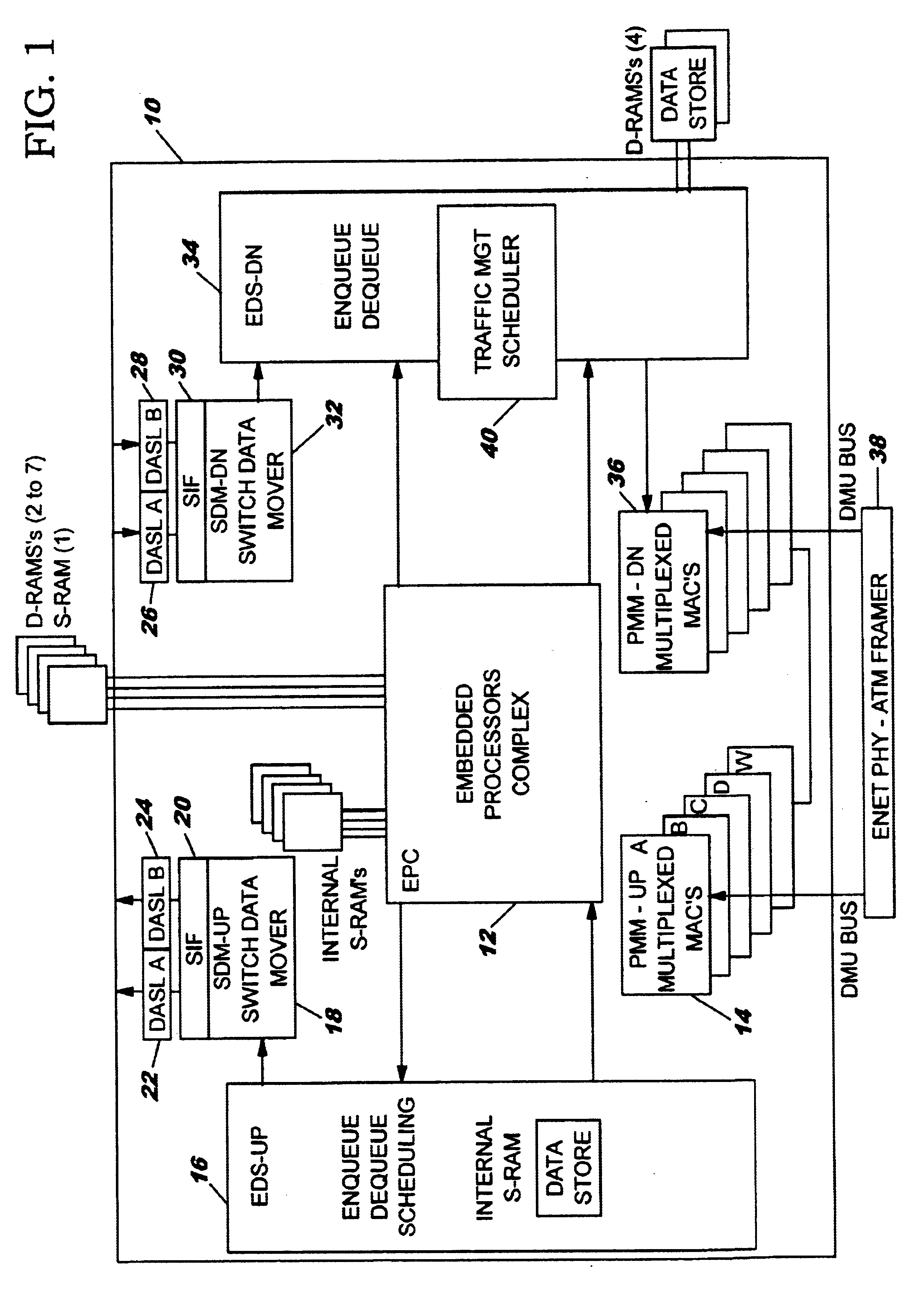

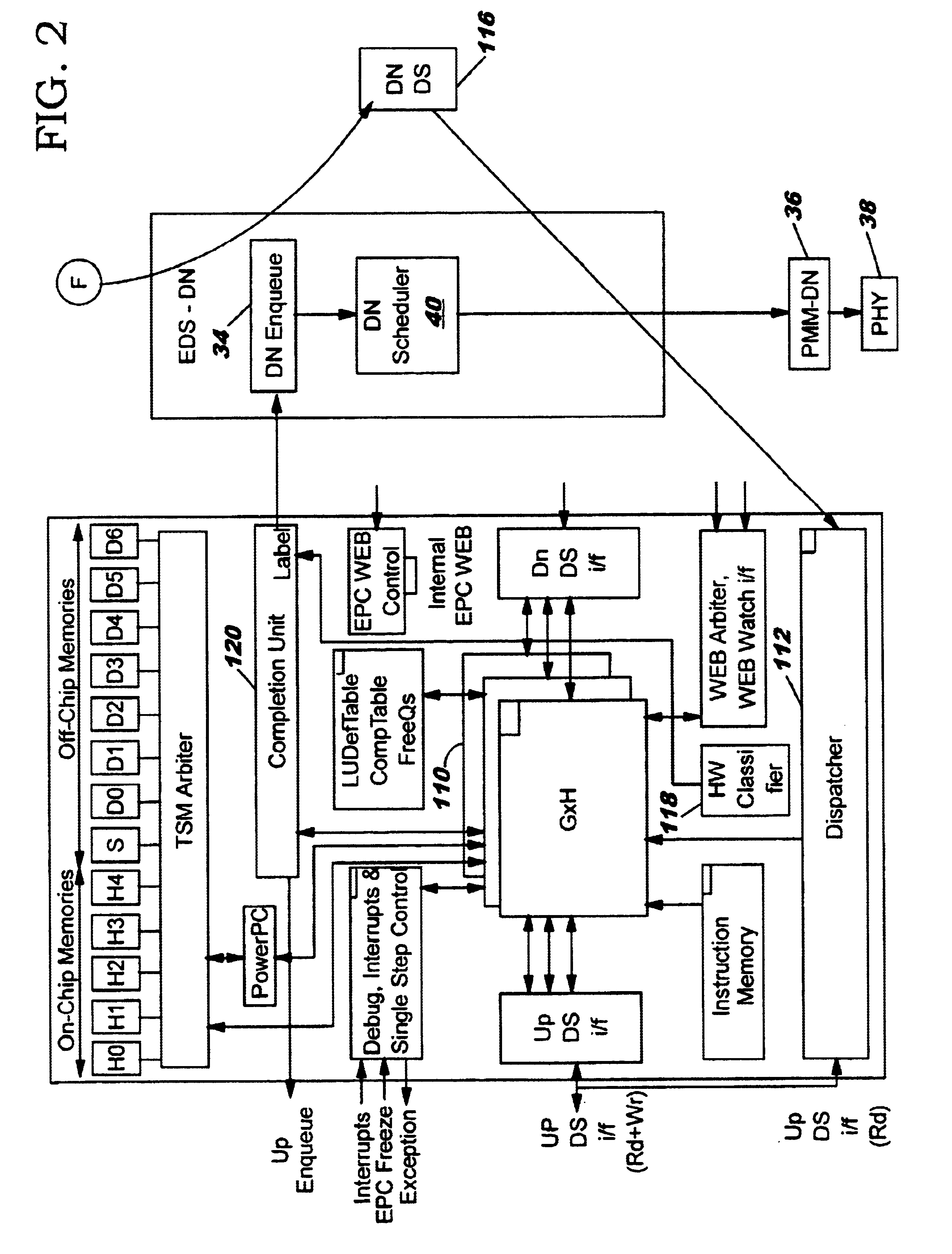

A system and method of moving information units from an output flow control toward a data transmission network in a prioritized sequence which accommodates several different levels of service. The present invention includes a method and system for scheduling the egress of processed information units (or frames) from a network processing unit according to service levels, using a weighted fair queue in which a flow's position in the queue is adjusted after each service based on a weight factor and the frame length. Interaction between different calendar types is used to provide minimum bandwidth, best effort bandwidth, weighted fair queuing service, best effort peak bandwidth, and maximum burst size specifications. The present invention permits different combinations of service that can be used to create different QoS specifications. The "base" services offered to a customer in the example described in this patent application are minimum bandwidth, best effort, peak and maximum burst size (MBS), which may be combined as desired. For example, a user could specify minimum bandwidth plus best effort additional bandwidth, and the system would provide this capability by putting the flow queue in both the NLS and WFQ calendars. When a flow queue is in multiple calendars, the system applies tests to determine when it must come out.

Owner:IBM CORP

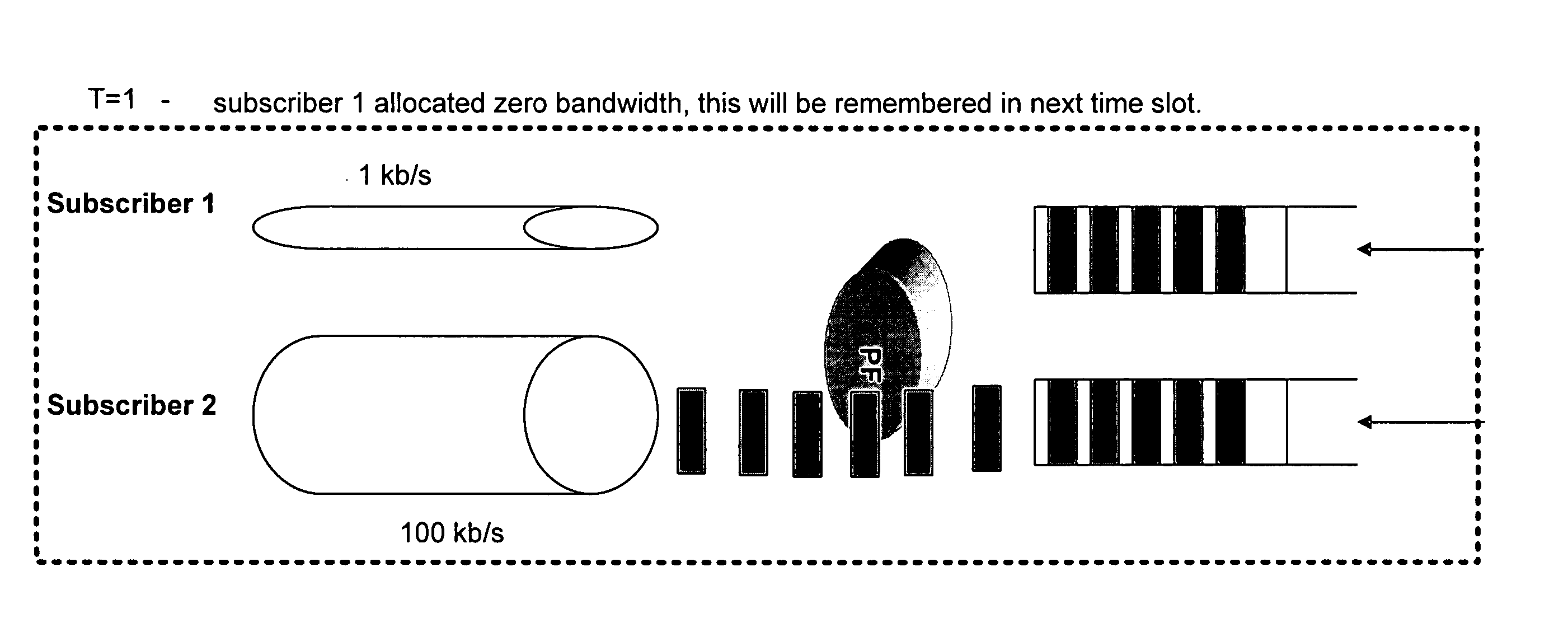

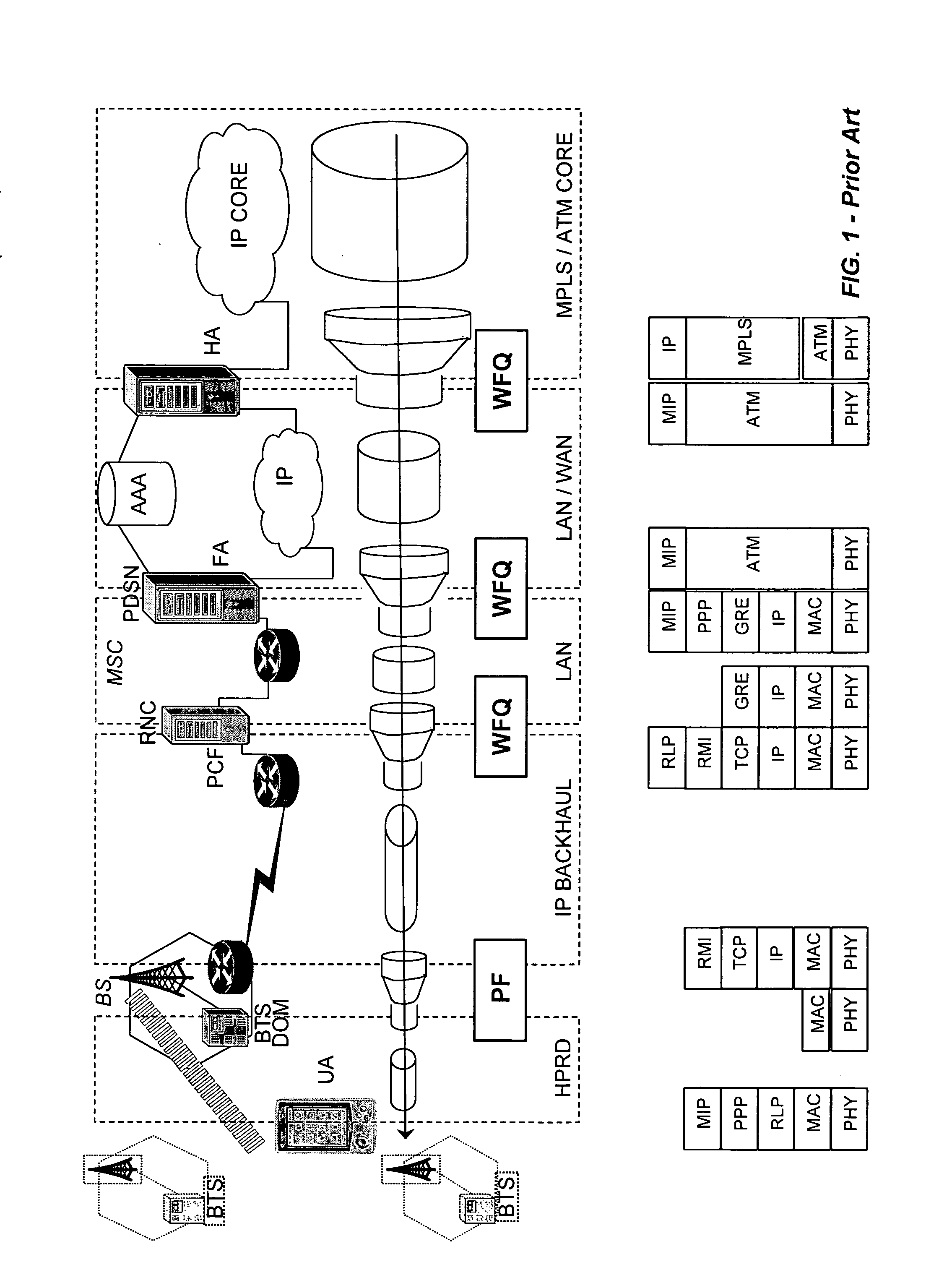

Integrated packet latency aware QoS scheduling using proportional fairness and weighted fair queuing for wireless integrated multimedia packet services

Active | US20070041364A1 | Tags: Network traffic/resource management, In VoIP networks, Packet communication, Packet scheduling

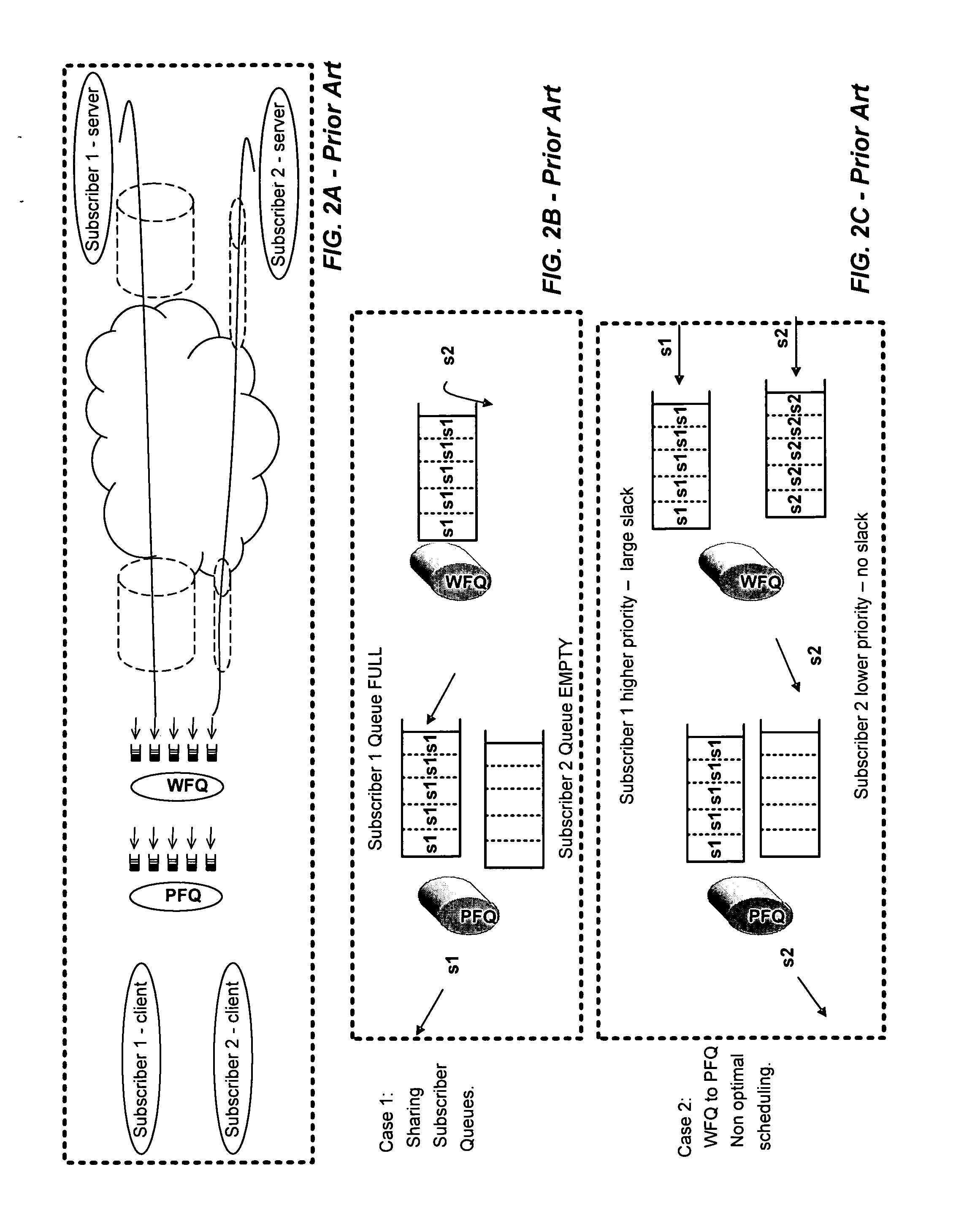

Packet communication networks for transmission to wireless subscriber devices utilize both wireline and wireless packet routing components. The routing elements of these two different types often implement different packet scheduling algorithms, typically a form of Weighted Fair Queuing (WFQ) in the wireline portion of the network and Proportional Fairness (PF) queuing in the wireless domain. To improve resource allocation, and thus end-to-end quality of service for time-sensitive communications such as integrated multimedia services, the present disclosure suggests adding the notion of slack time to one or both of the packet scheduling algorithms. By modifying one or more of these algorithms, e.g. to reorder or shuffle packets based on slack times, globally optimal resource allocations are possible, at least in certain cases.

Owner:CELLCO PARTNERSHIP INC

Weighted fair queuing-based methods and apparatus for protecting against overload conditions on nodes of a distributed network

Inactive | US7342929B2 | Tags: Data switching by path configuration, Securing communication, Computer network, Network on

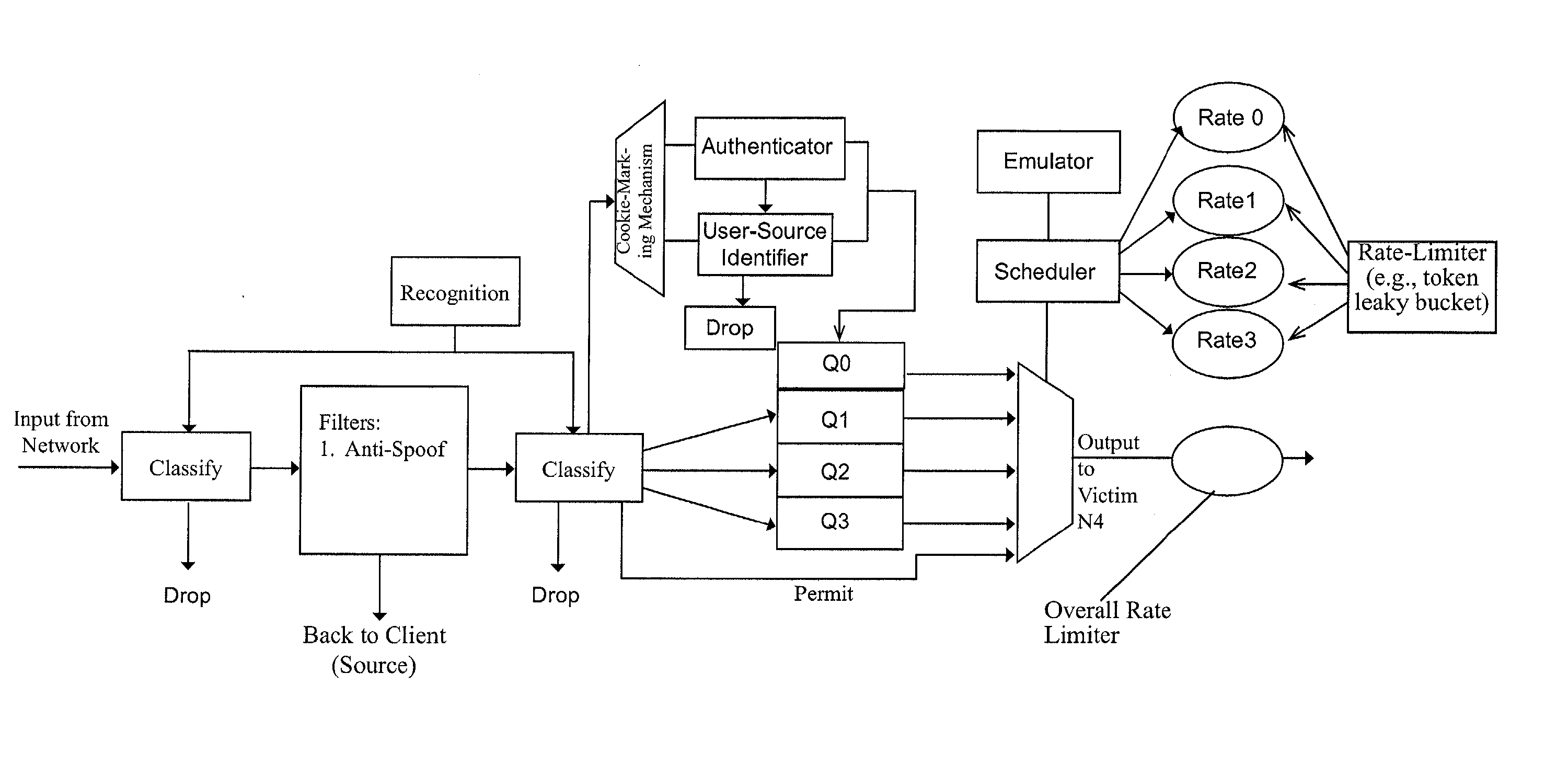

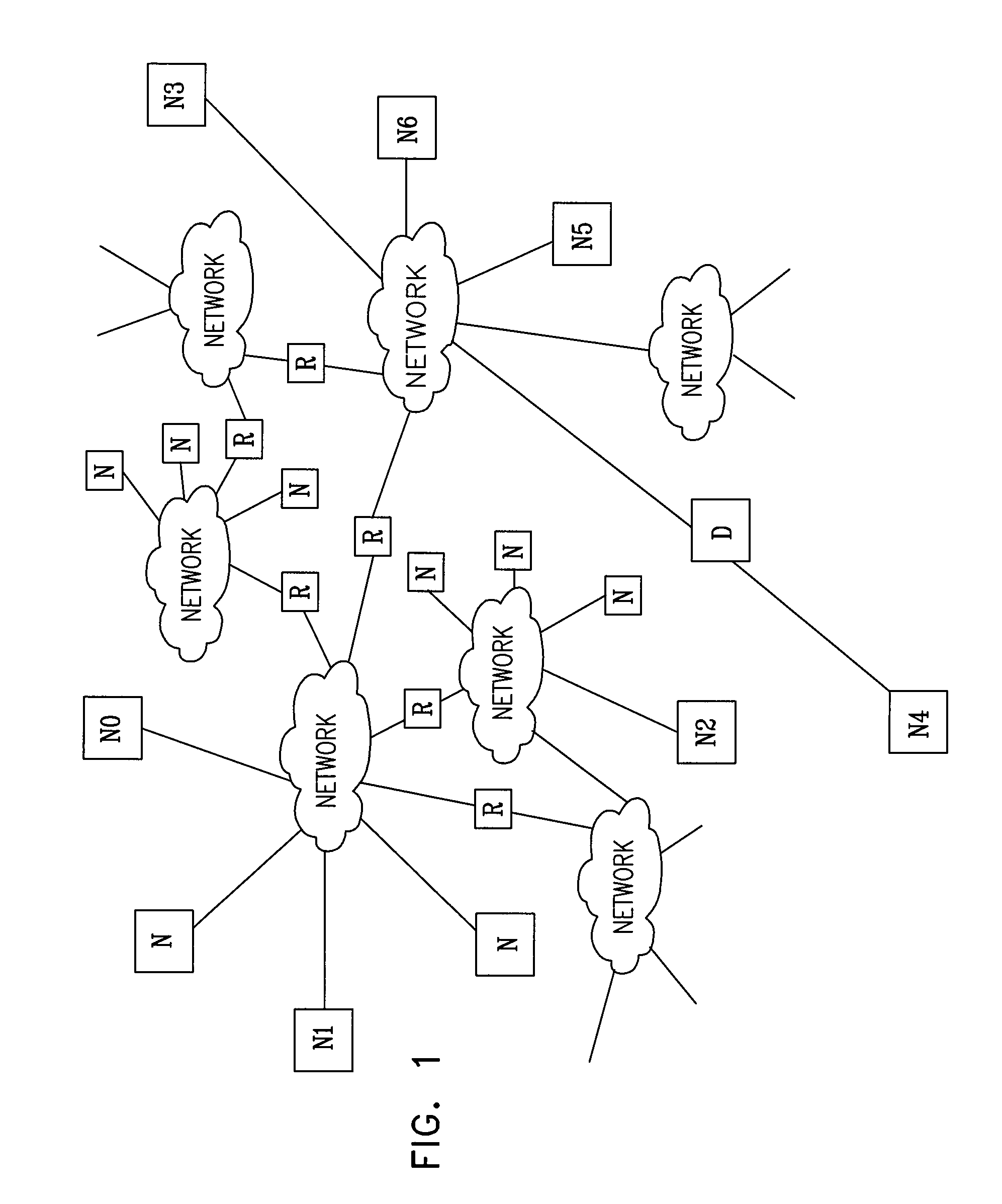

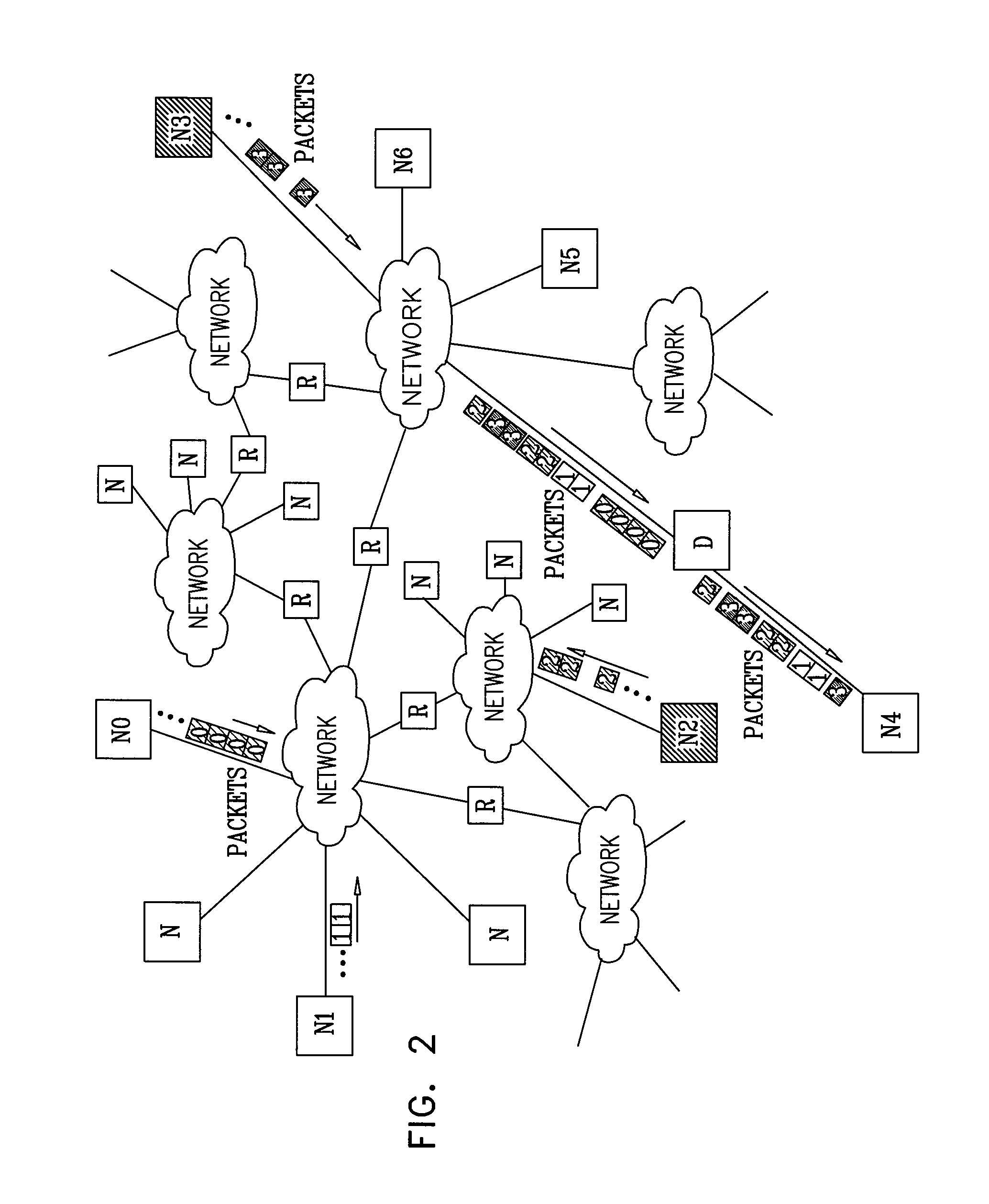

An improved network device controls the throughput of the packets it receives, e.g., to downstream devices or to downstream logic contained within the same network device. The network device comprises a scheduler that schedules one or more packets of a selected class for throughput as a function of a weight of that class and the weights of one or more other classes. The weight of at least the selected class is dynamic and is a function of the history of the volume of packets received by the network device in the selected class. An apparatus for protecting against overload conditions on a network, e.g., of the type caused by DDoS attacks, has a scheduler and a token bucket mechanism, e.g., as described above. Such apparatus can also include a plurality of queues into which packets of the respective classes are placed on receipt by the apparatus. Those packets are dequeued by the scheduler, e.g., in the manner described above, for transmittal to downstream devices (e.g., potential victim nodes) on the network.

Owner:CISCO TECH INC
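
The overload-protection apparatus above pairs a scheduler whose class weights depend on traffic history with a token bucket. The sketch below shows a standard token bucket plus one possible weight policy; the `dynamic_weight` rule (scaling a class's weight down in proportion to how far its recent average volume exceeds a baseline) is a hypothetical example, since the abstract does not give the patent's actual function.

```python
class TokenBucket:
    """Standard token bucket: a packet passes only if enough tokens remain."""

    def __init__(self, rate, burst):
        self.rate = rate        # tokens added per second
        self.burst = burst      # bucket depth (maximum stored tokens)
        self.tokens = burst
        self.last = 0.0         # time of the previous refill

    def allow(self, now, size):
        # Refill in proportion to elapsed time, capped at the bucket depth.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= size:
            self.tokens -= size
            return True
        return False


def dynamic_weight(base_weight, avg_volume, baseline_volume):
    """Hypothetical policy, not from the patent: shrink a class's weight in
    proportion to how far its recent average volume exceeds a baseline."""
    if avg_volume <= baseline_volume:
        return base_weight
    return base_weight * baseline_volume / avg_volume
```

For example, `dynamic_weight(10, 400, 100)` returns 2.5, so a class sending four times its usual volume (as during a flood) gets a quarter of its normal share.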

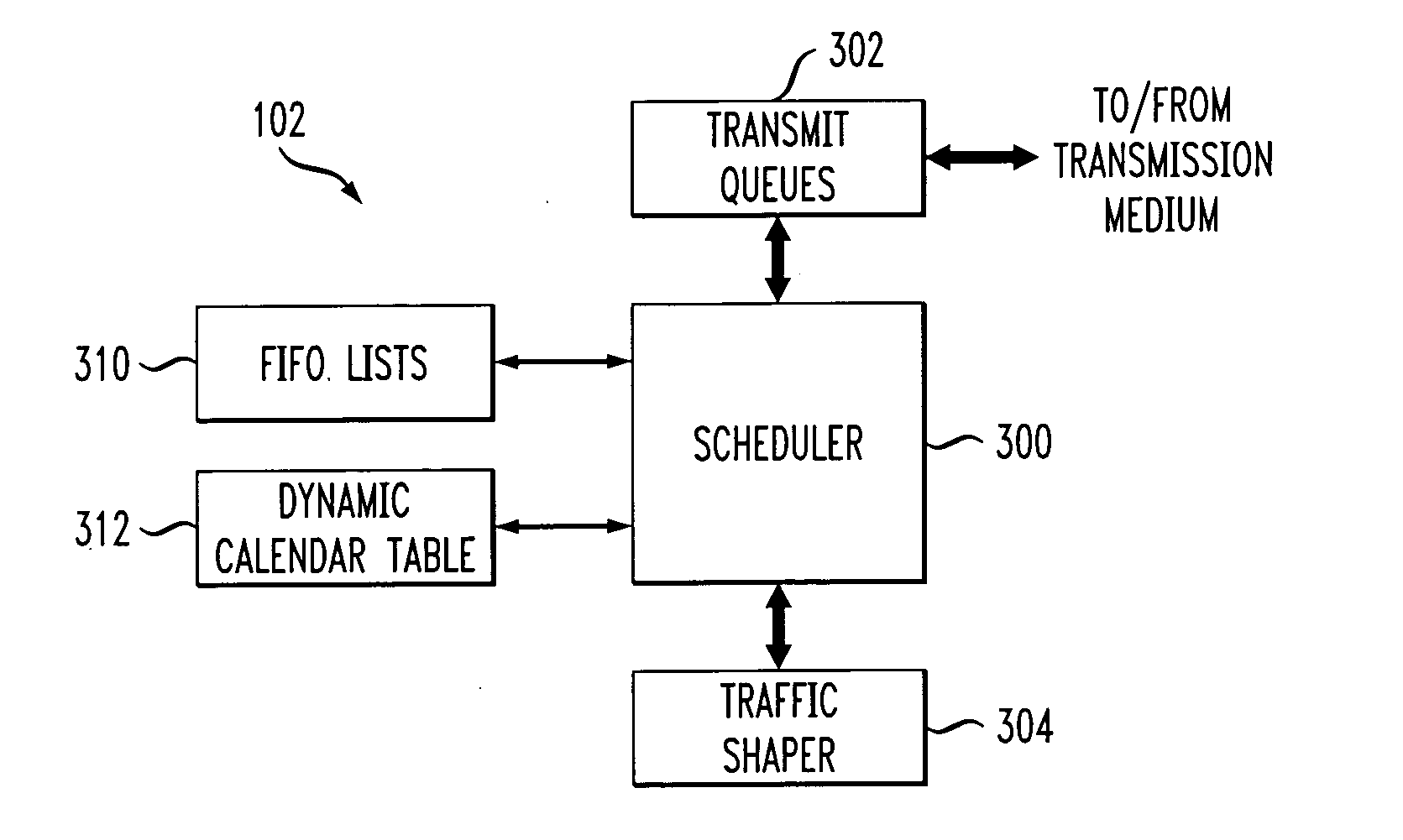

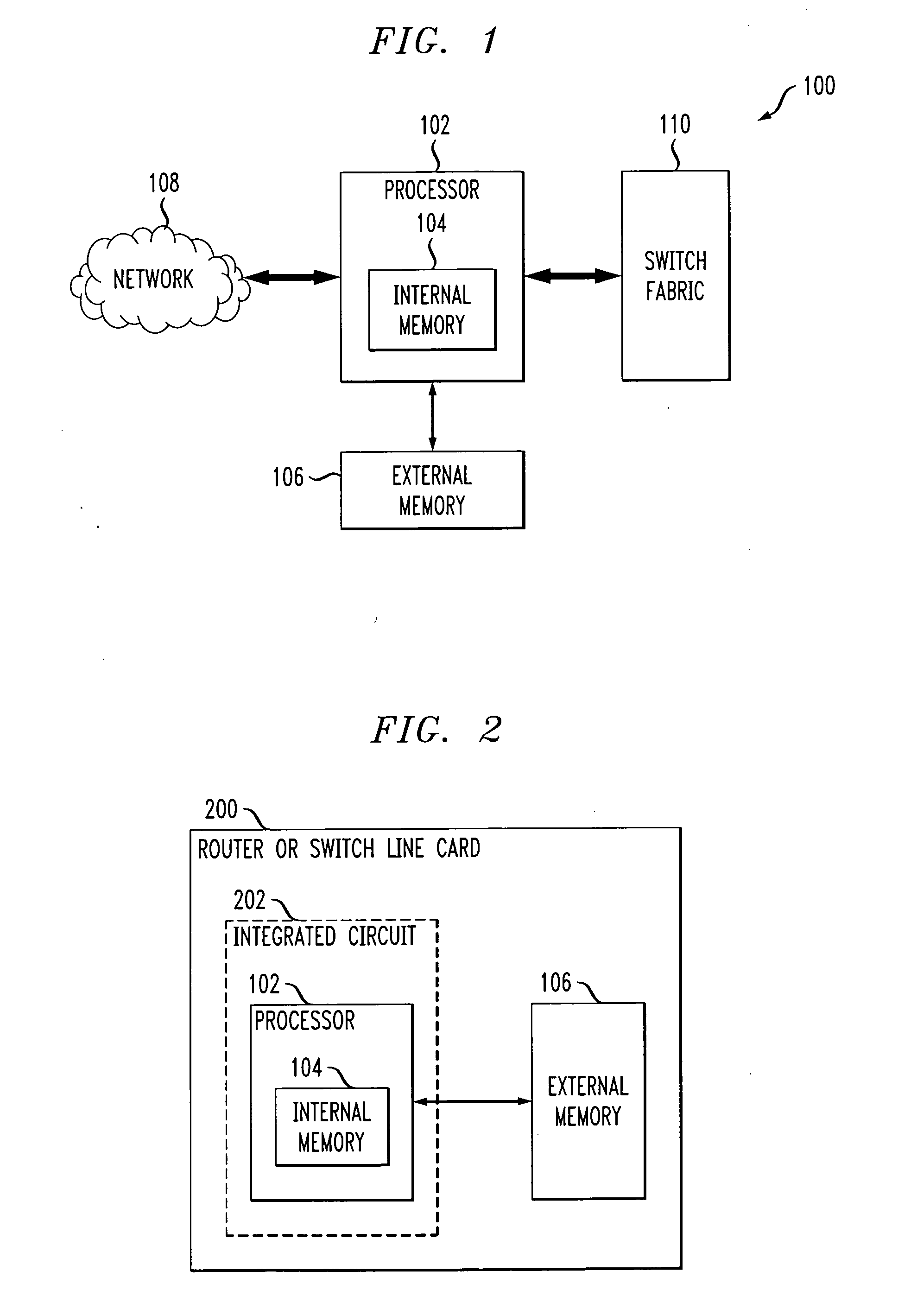

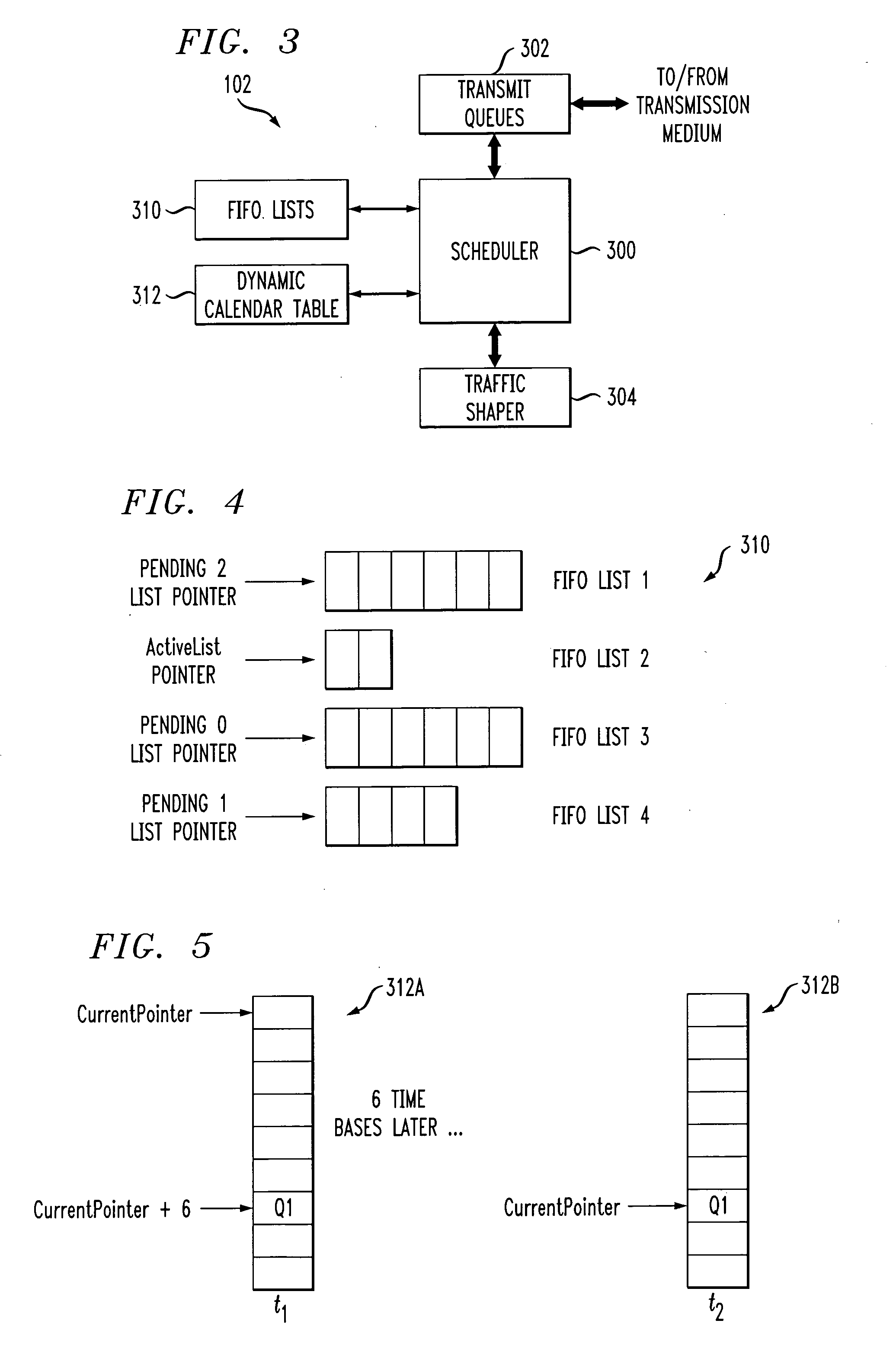

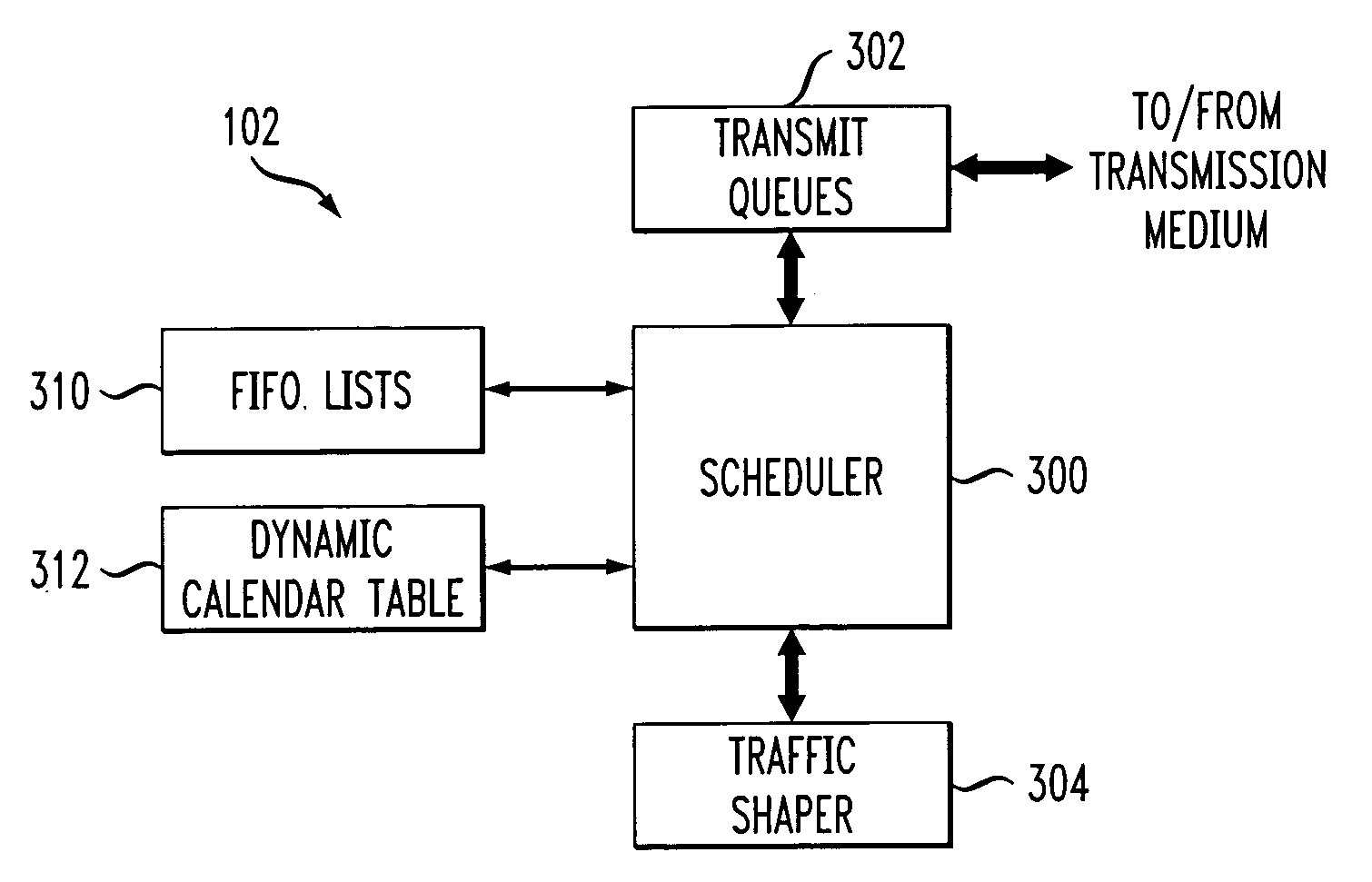

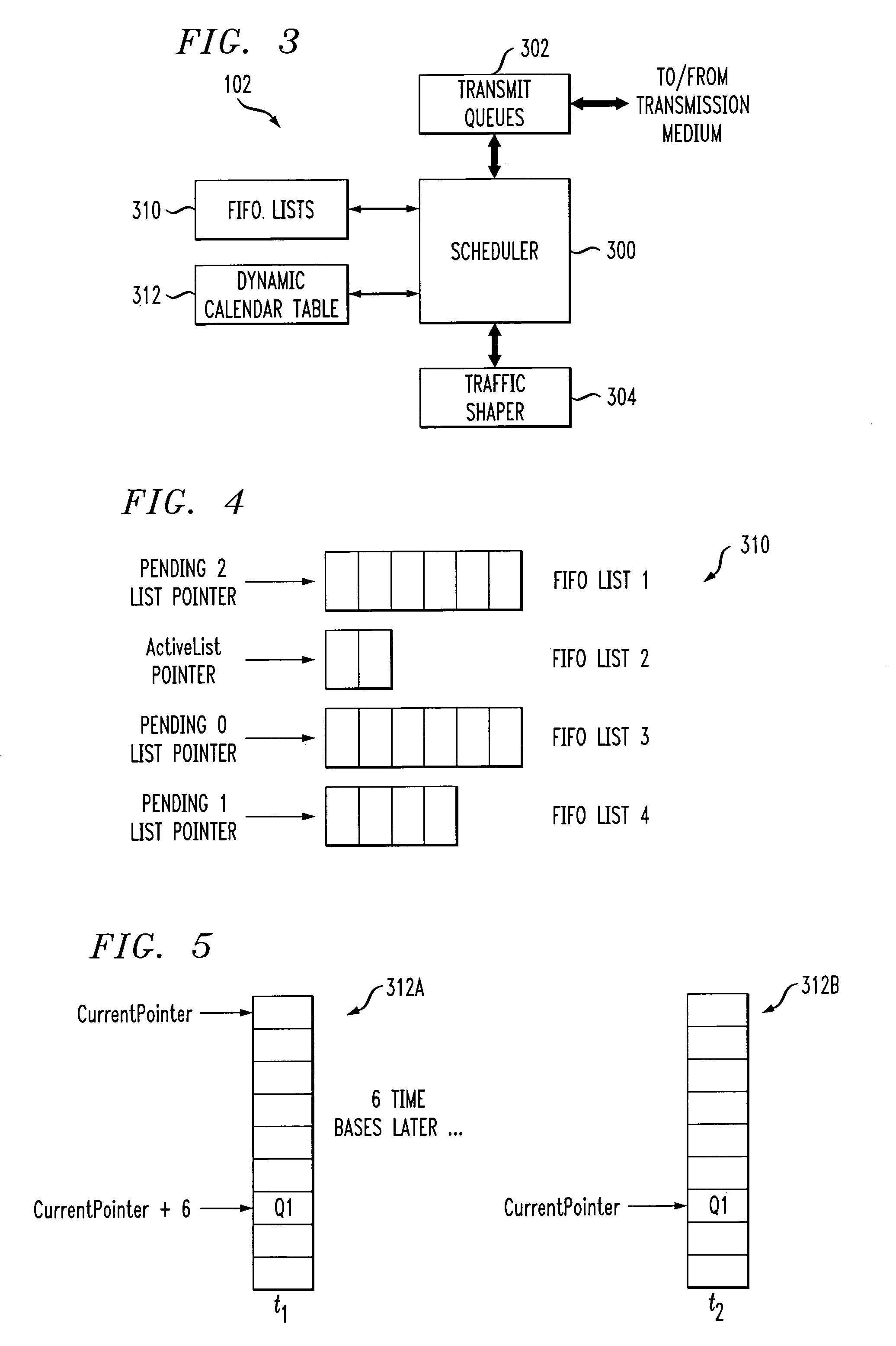

Processor with scheduler architecture supporting multiple distinct scheduling algorithms

Inactive | US20050111461A1 | Tags: Sufficient flexibility, Application of process, Data switching by path configuration, Radio transmission, Fair queuing, Weighted fair queueing

A processor includes a scheduler operative to schedule data blocks for transmission from a plurality of queues or other transmission elements, utilizing at least a first table and a second table. The first table may comprise at least first and second first-in first-out (FIFO) lists of entries corresponding to transmission elements for which data blocks are to be scheduled in accordance with a first scheduling algorithm, such as a weighted fair queuing scheduling algorithm. The scheduler maintains a first table pointer identifying at least one of the first and second lists of the first table as having priority over the other of the first and second lists of the first table. The second table includes a plurality of entries corresponding to transmission elements for which data blocks are to be scheduled in accordance with a second scheduling algorithm, such as a constant bit rate or variable bit rate scheduling algorithm. Association of a given one of the transmission elements with a particular one of the second table entries establishes a scheduling rate for that transmission element. The scheduler maintains a second table pointer identifying a current one of the second table entries that is eligible for transmission.

Owner:INTEL CORP

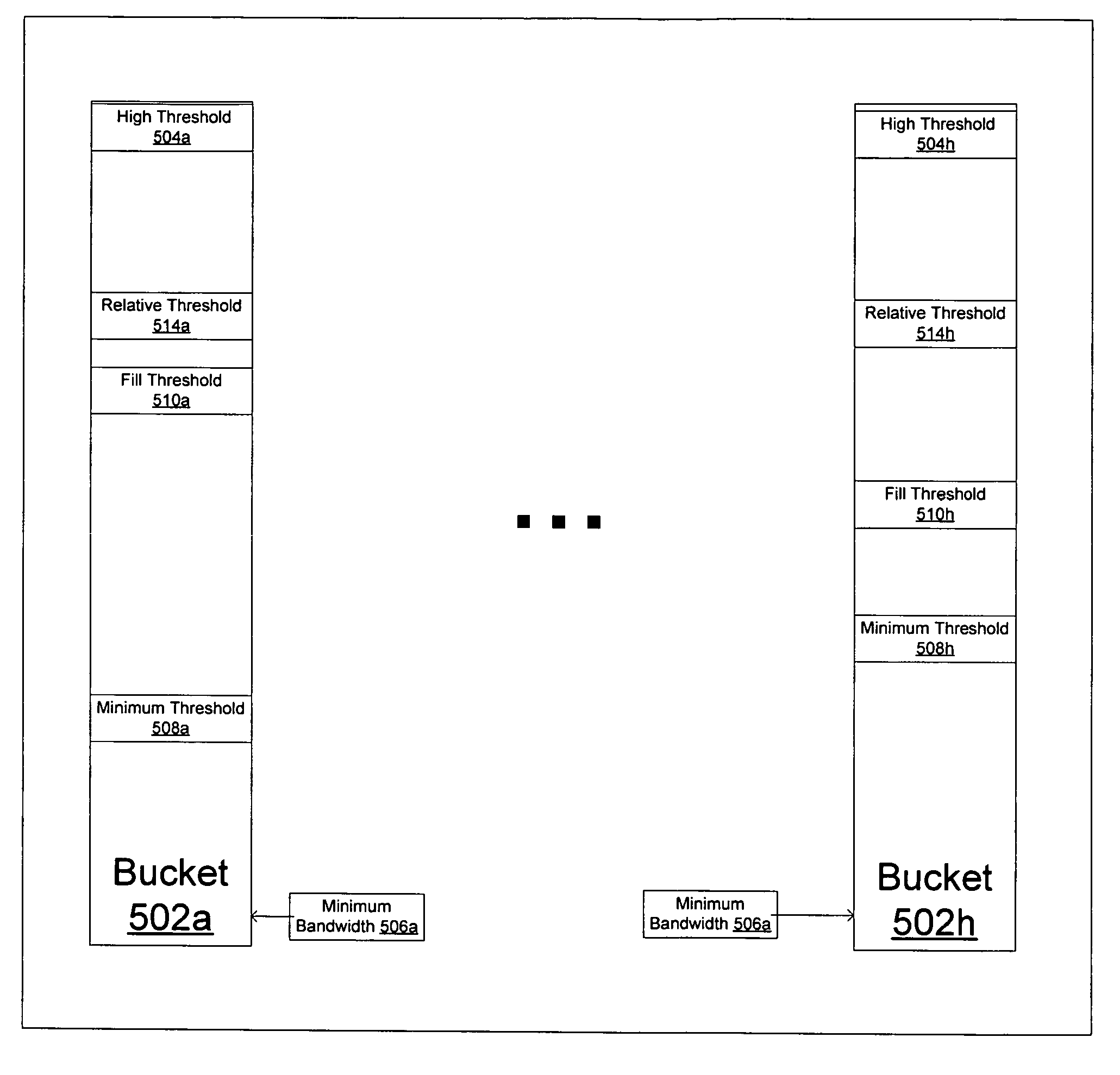

Weighted-fair-queuing relative bandwidth sharing

A network device for scheduling packets in a plurality of queues. The network device includes a plurality of configurable mechanisms, each of which is configured to process information in one of a plurality of queues based on a predefined bandwidth. A scheduler services an associated one of the plurality of queues based on the predefined bandwidth. The network device also includes means for tracking whether or not the plurality of queues has exceeded a predefined threshold. If the plurality of queues has exceeded the predefined threshold, a new bandwidth allocation is calculated for each of the plurality of queues. The new bandwidth allocation replaces the predefined bandwidth and is proportional to the predefined bandwidth for each of the plurality of queues.

Owner:AVAGO TECH INT SALES PTE LTD
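
The relative bandwidth sharing described above rescales each queue's allocation in proportion to its configured bandwidth once a threshold is exceeded. A minimal sketch, assuming the threshold is simply the total configured bandwidth exceeding the available capacity (the abstract does not define the threshold); the function name is hypothetical:

```python
def rescale_bandwidth(configured, capacity):
    """Scale per-queue bandwidths so their total fits `capacity`, keeping each
    queue's allocation proportional to its configured value."""
    total = sum(configured.values())
    if total <= capacity:
        return dict(configured)          # threshold not exceeded: keep as-is
    return {q: bw * capacity / total for q, bw in configured.items()}


print(rescale_bandwidth({"q0": 600, "q1": 300, "q2": 300}, 1000))
# {'q0': 500.0, 'q1': 250.0, 'q2': 250.0}
```

The 2:1:1 ratio between the queues is preserved; only the absolute amounts shrink.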

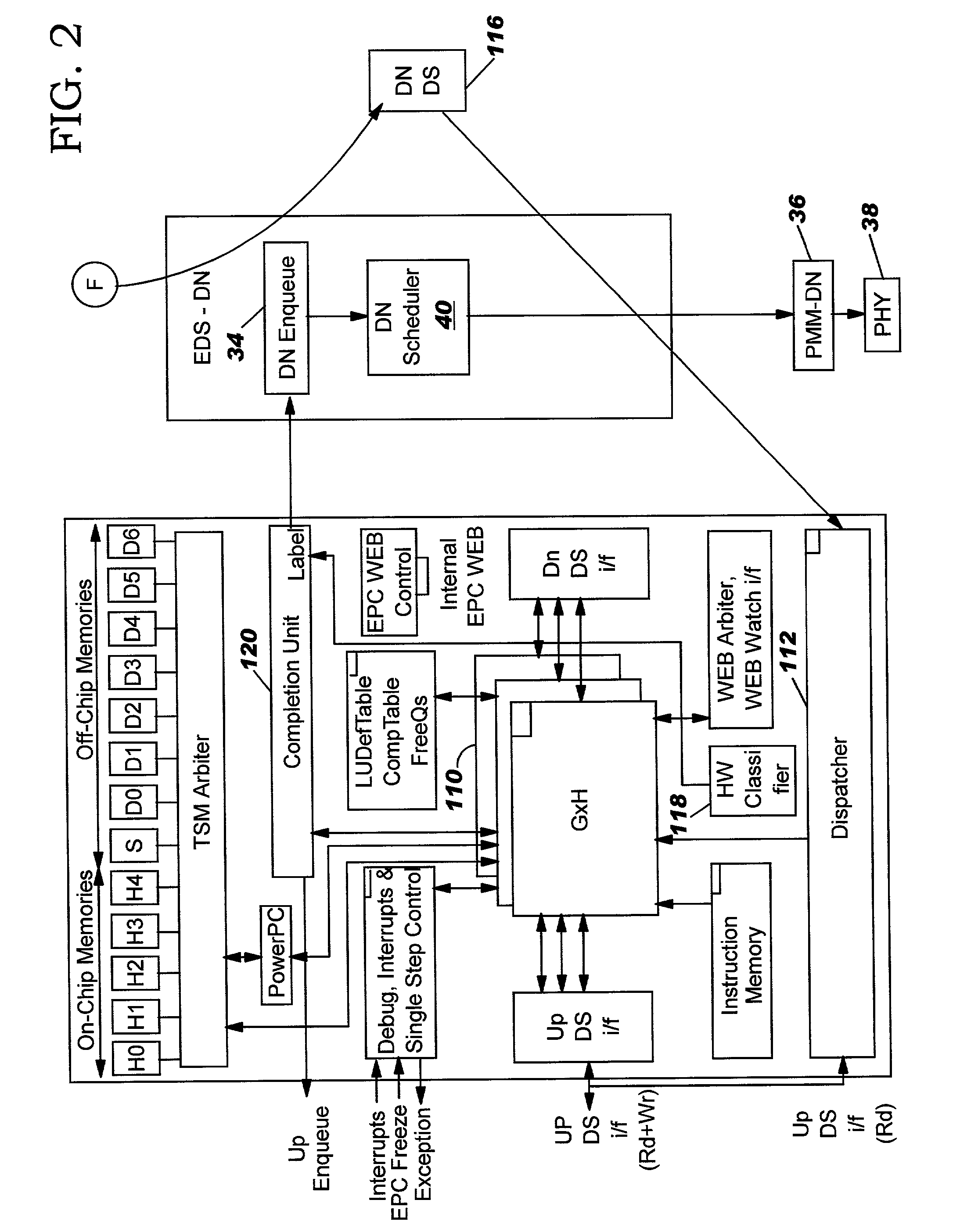

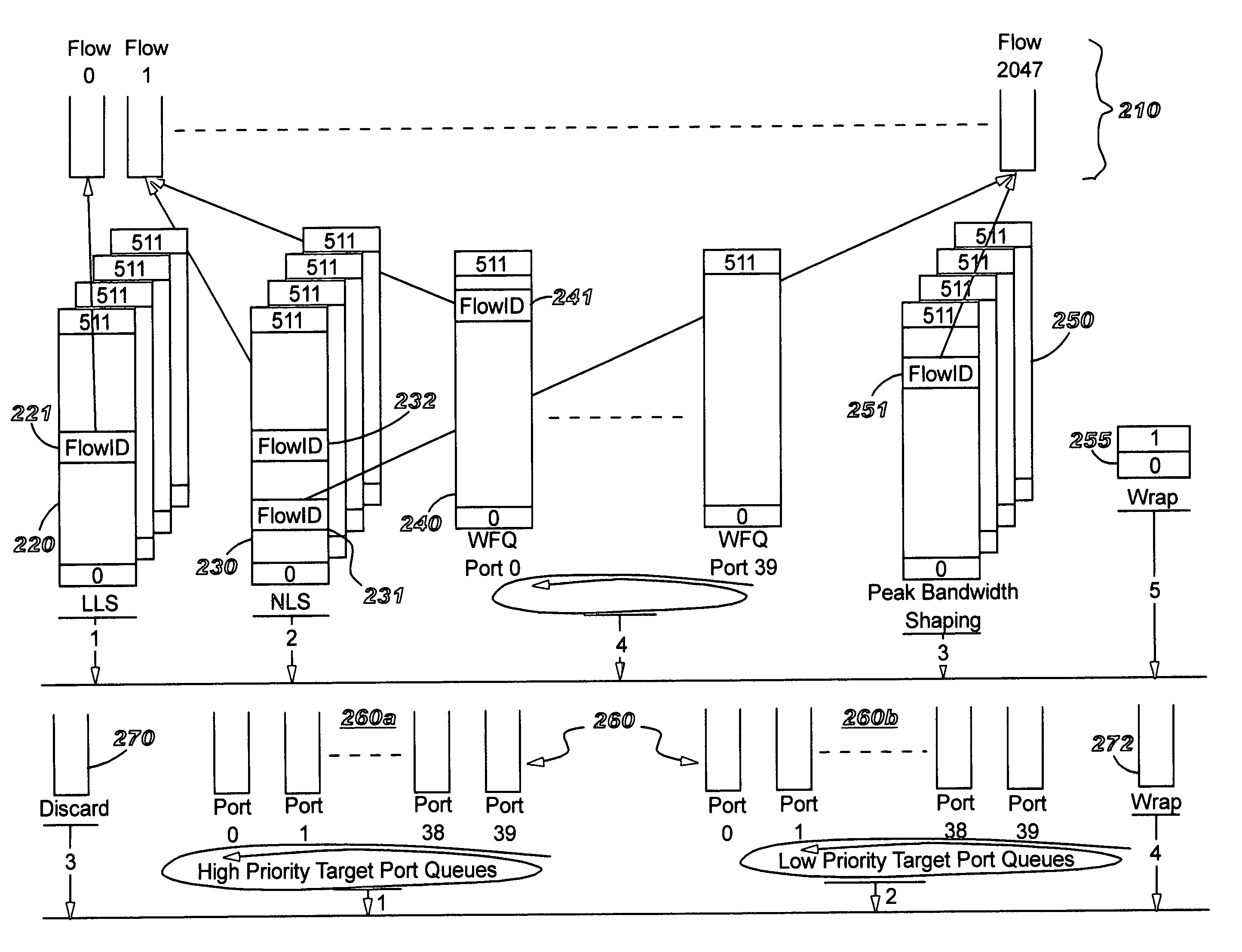

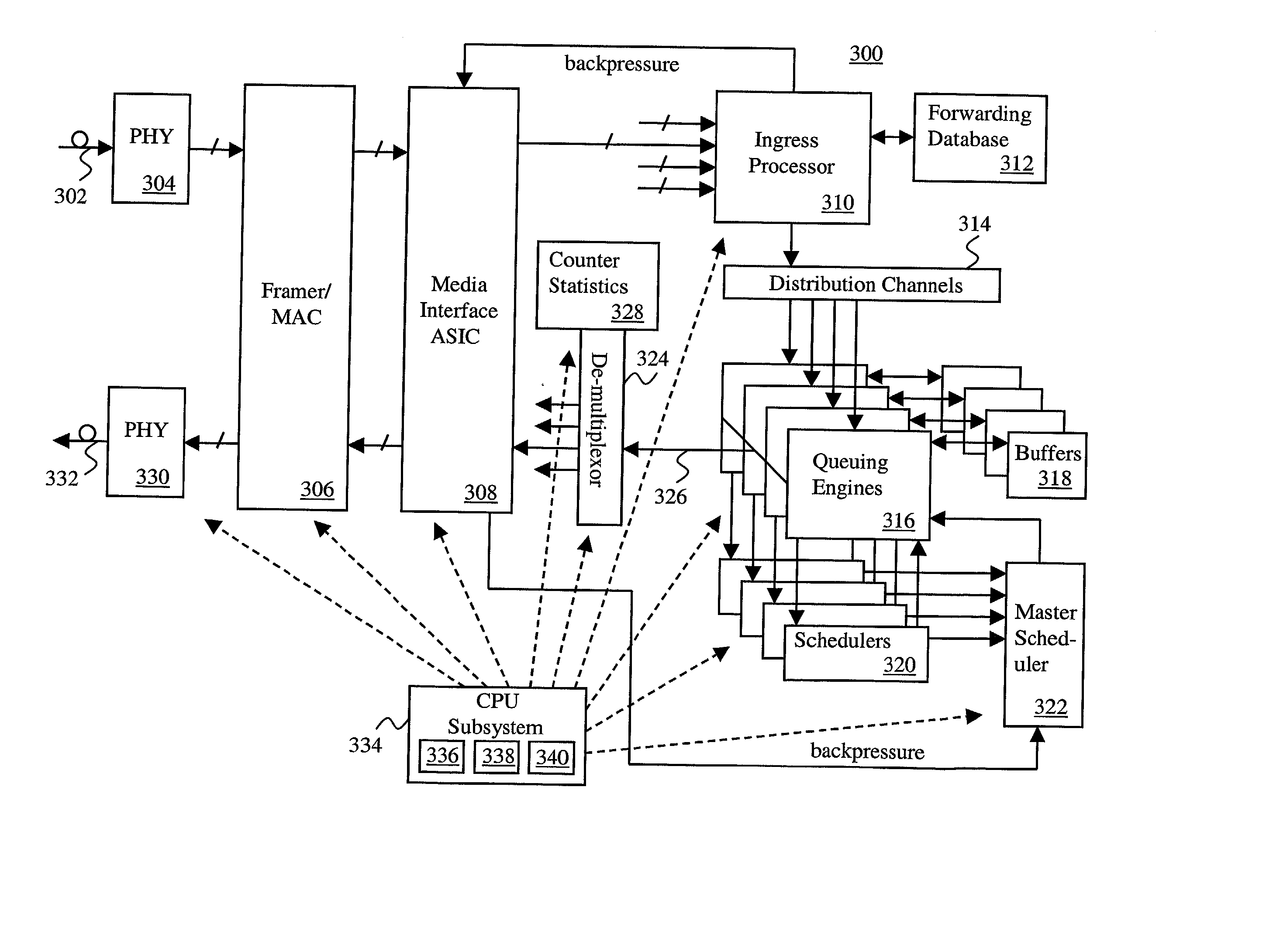

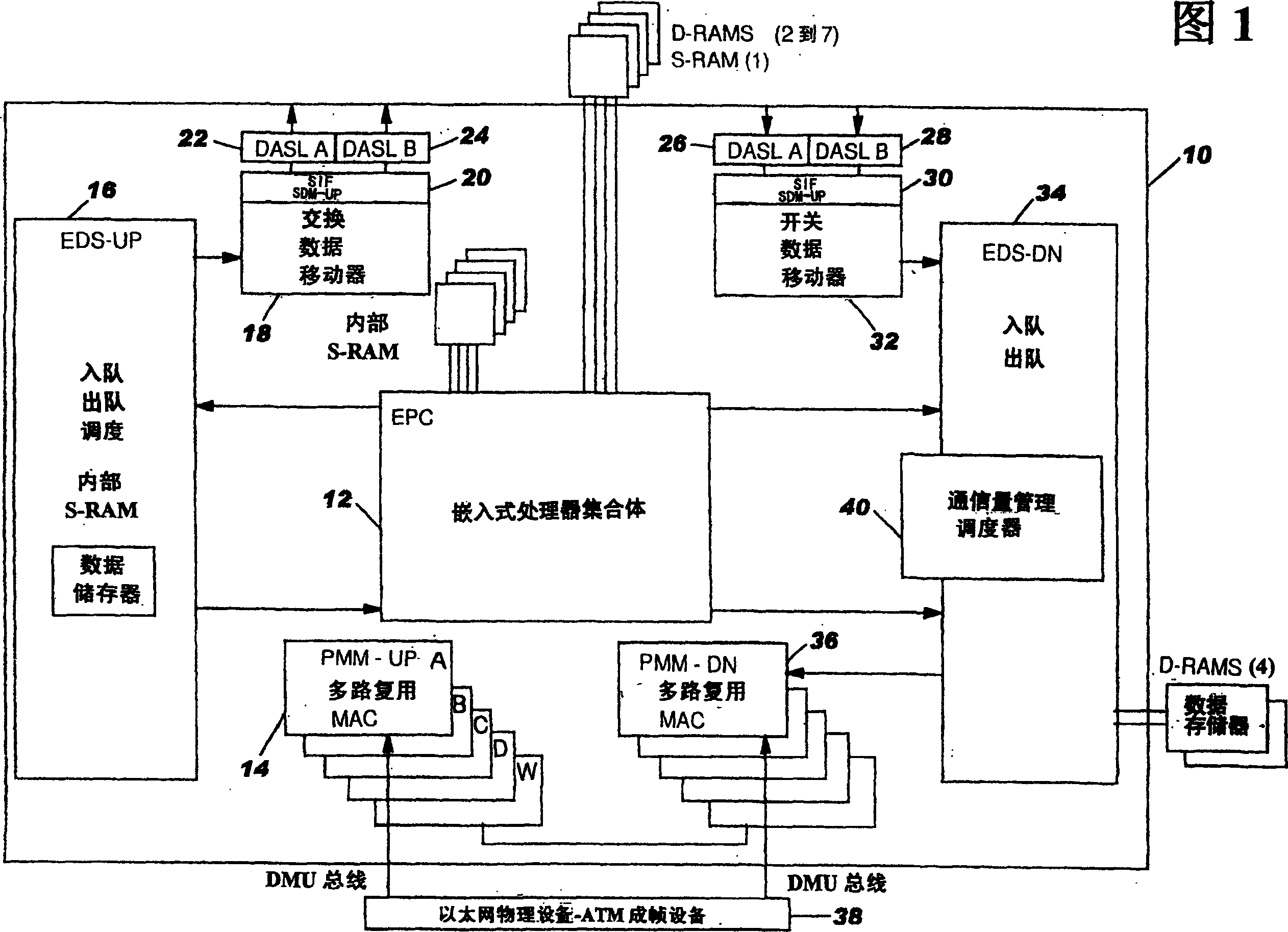

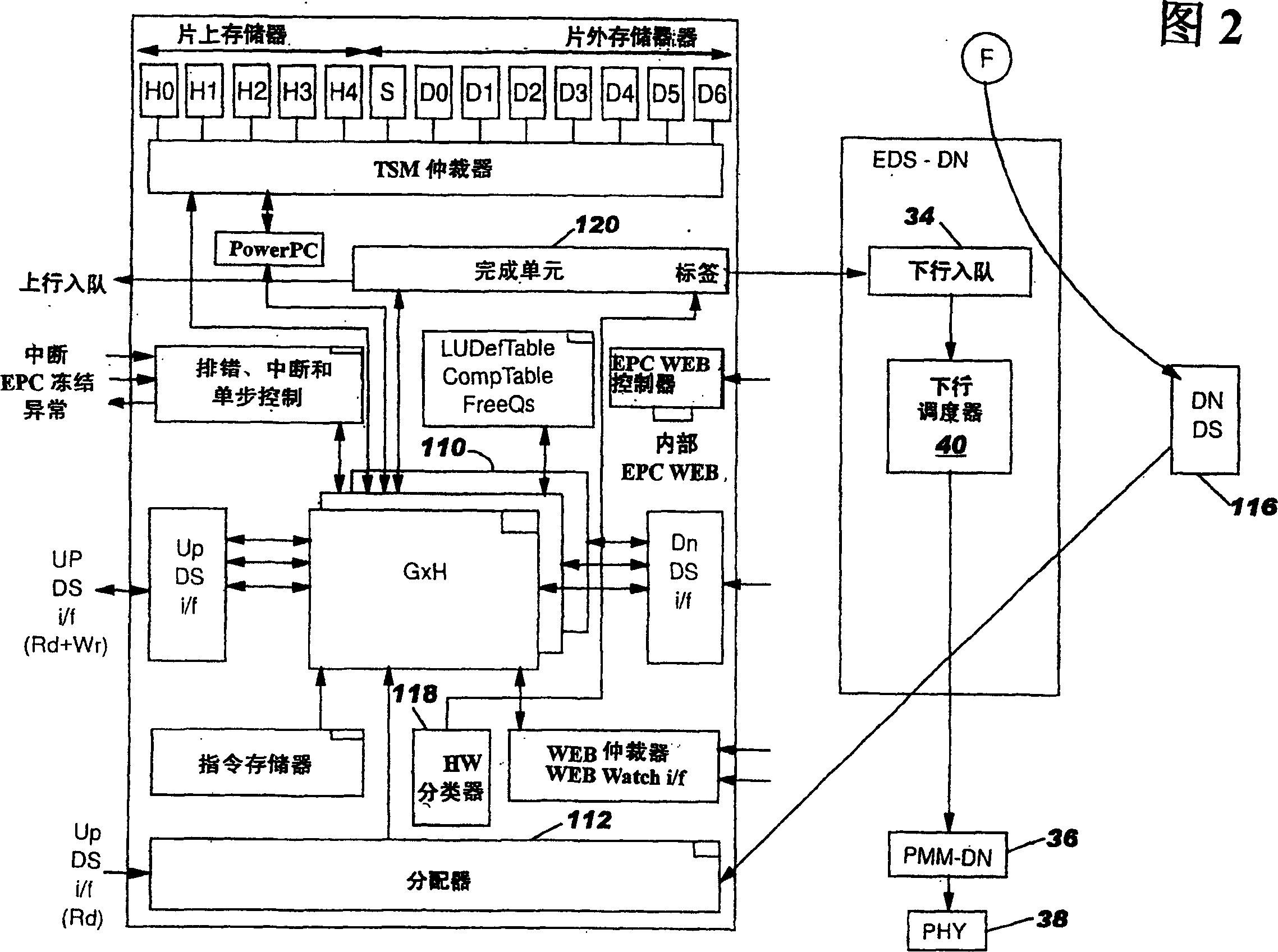

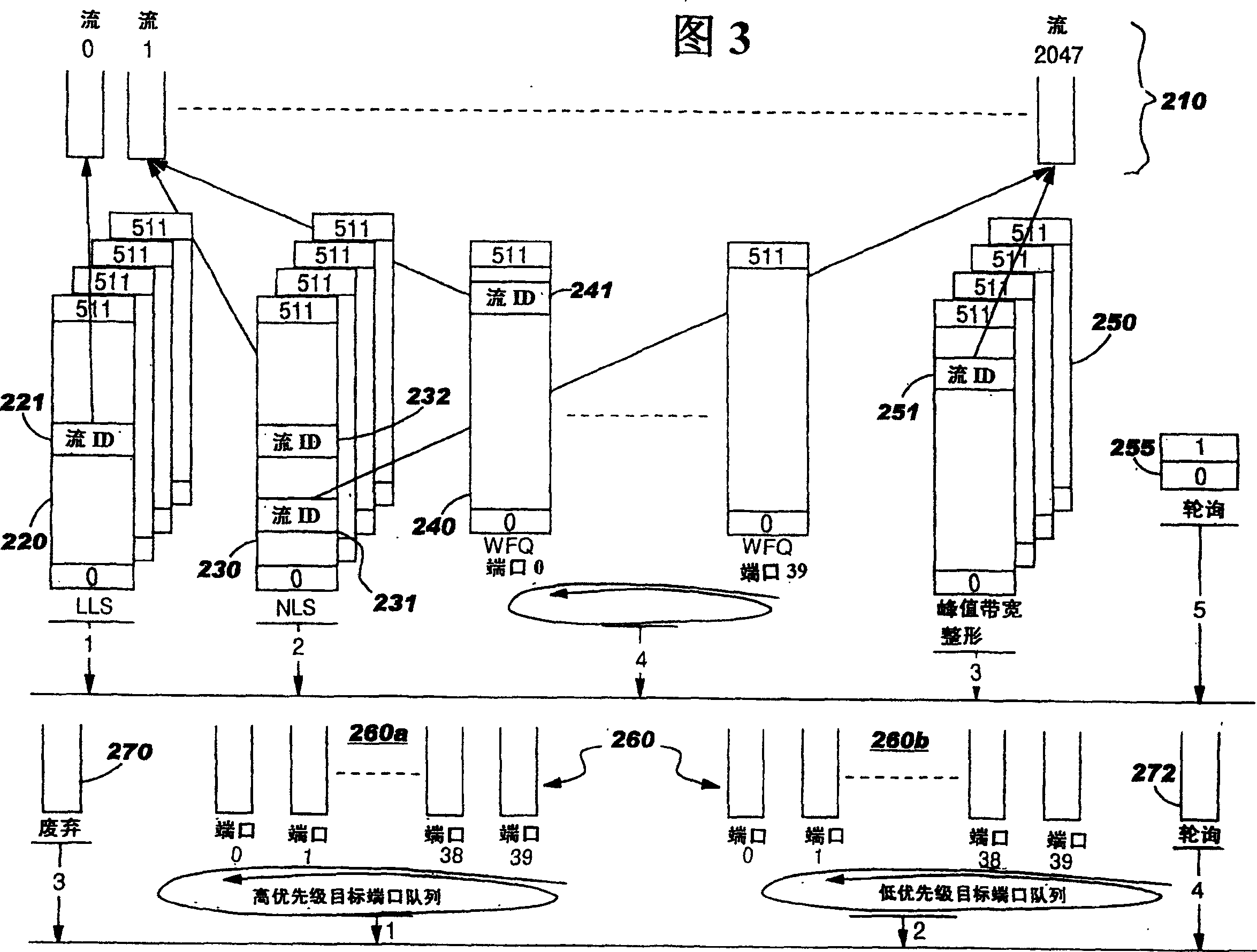

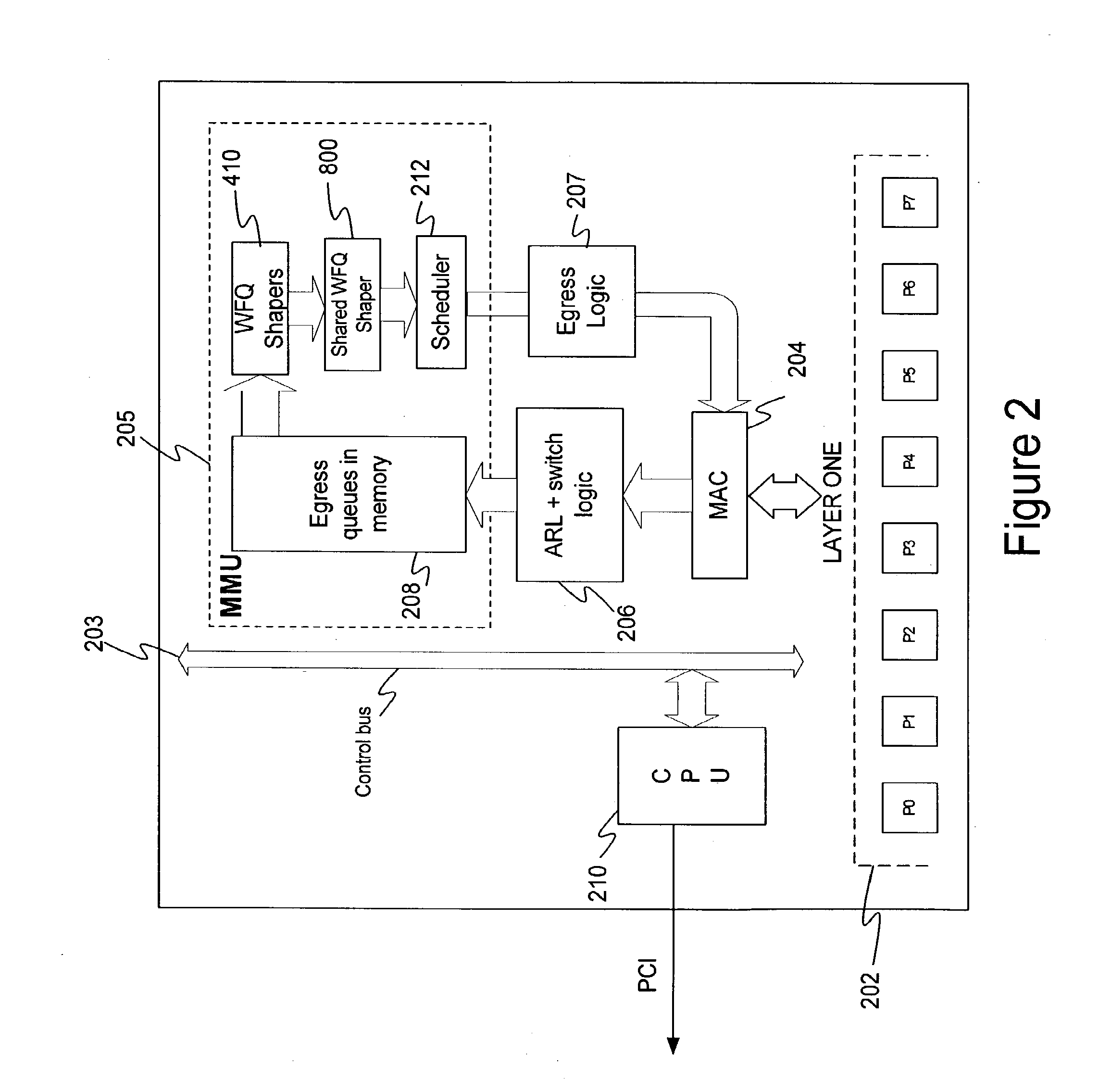

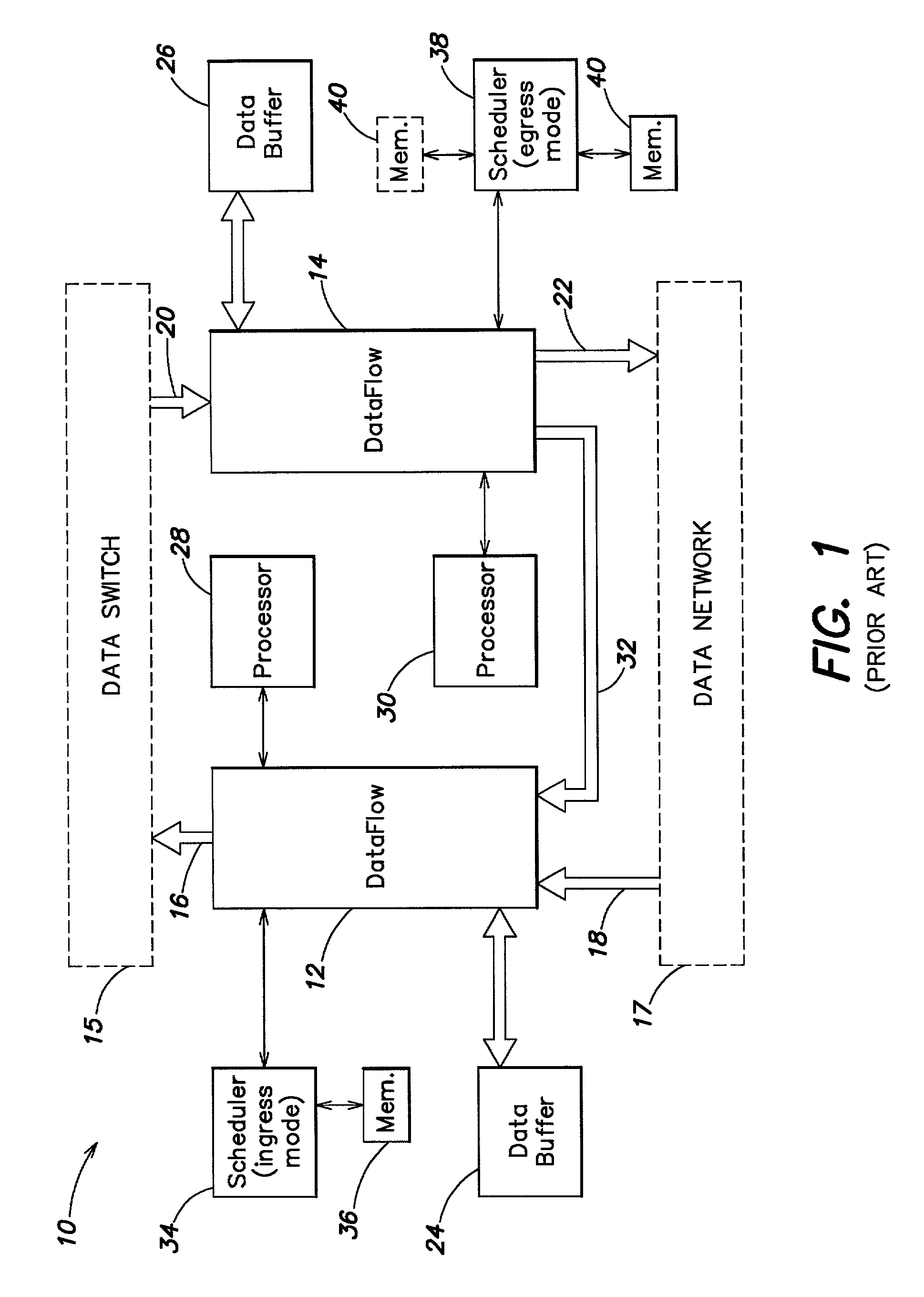

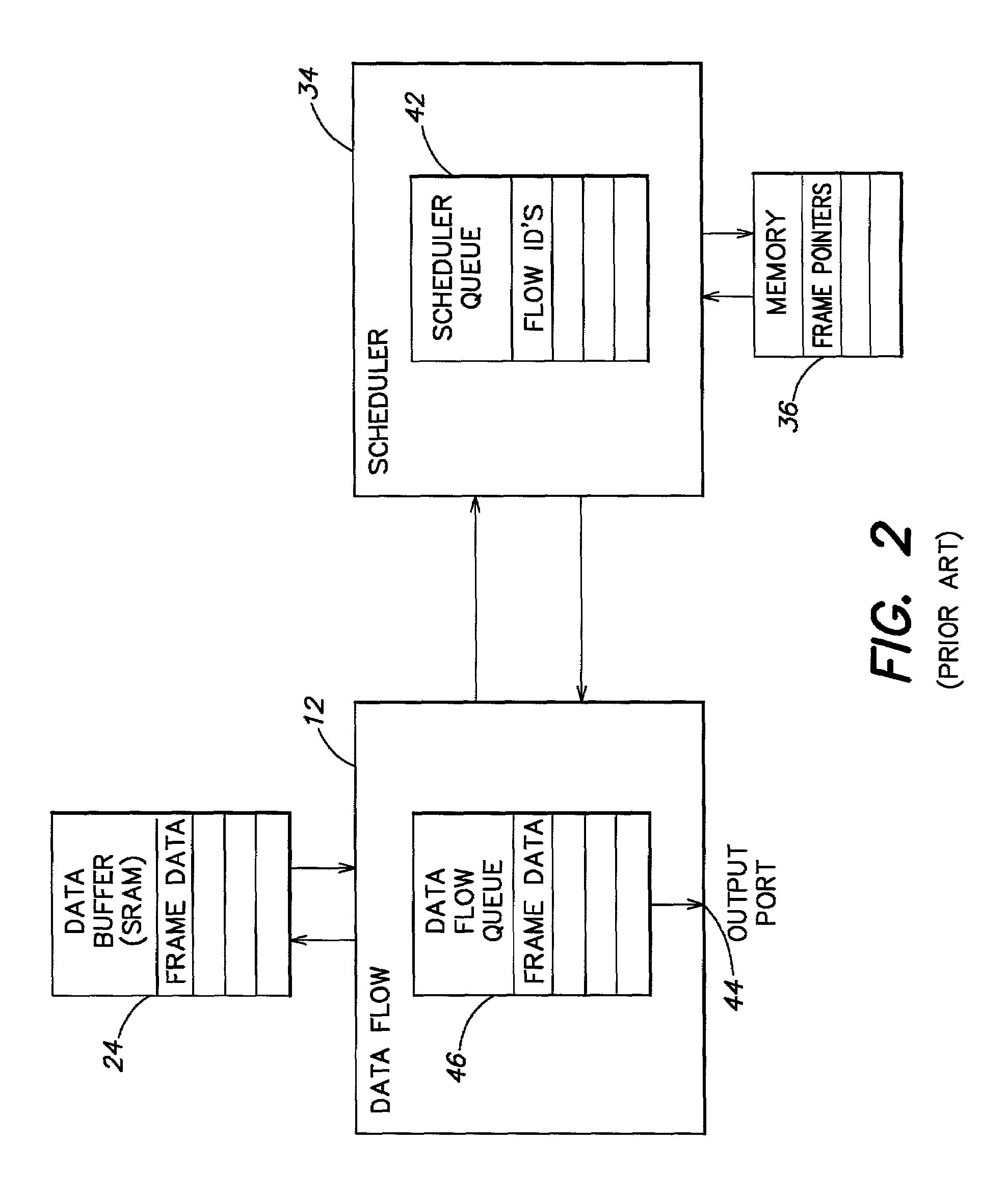

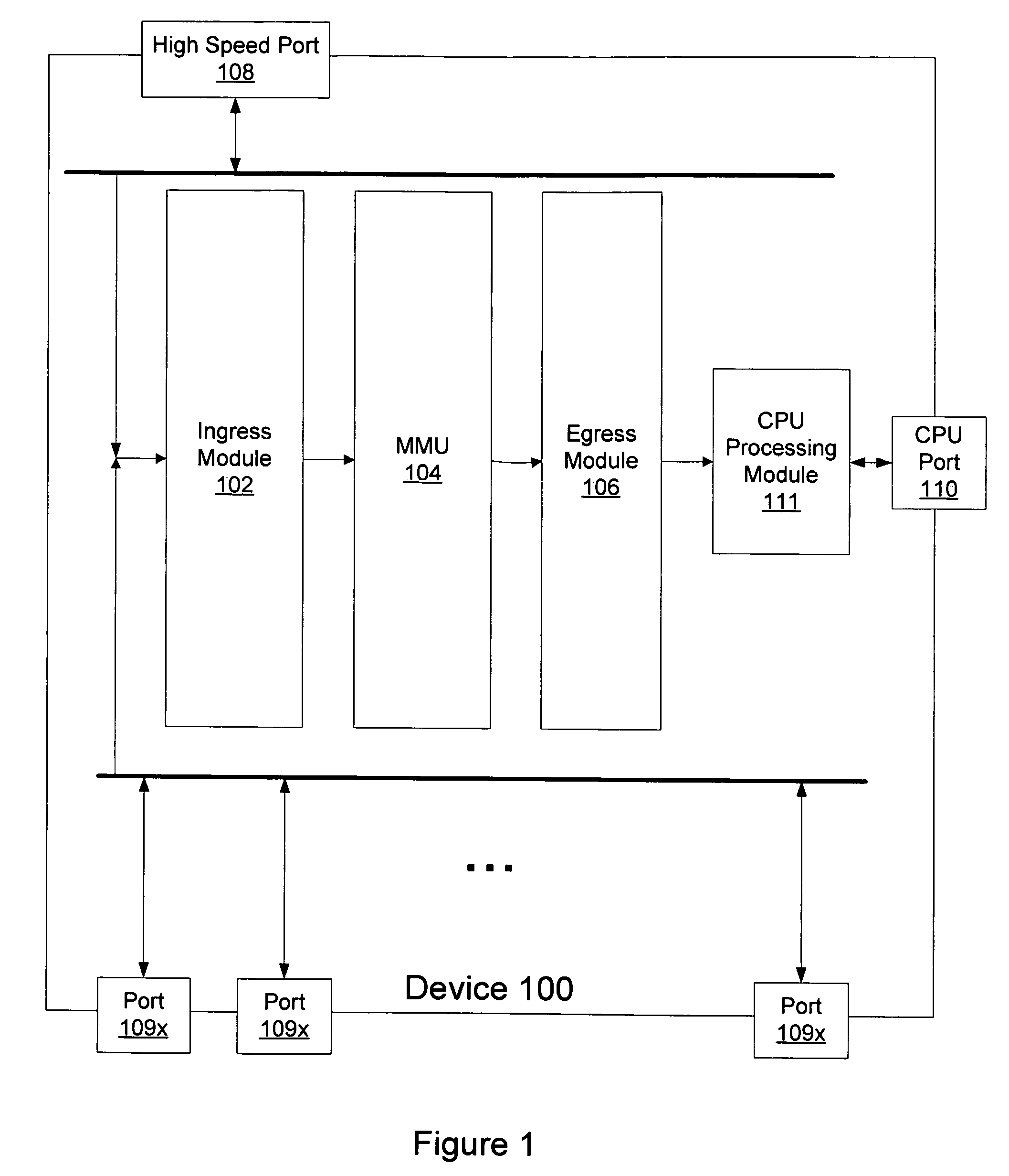

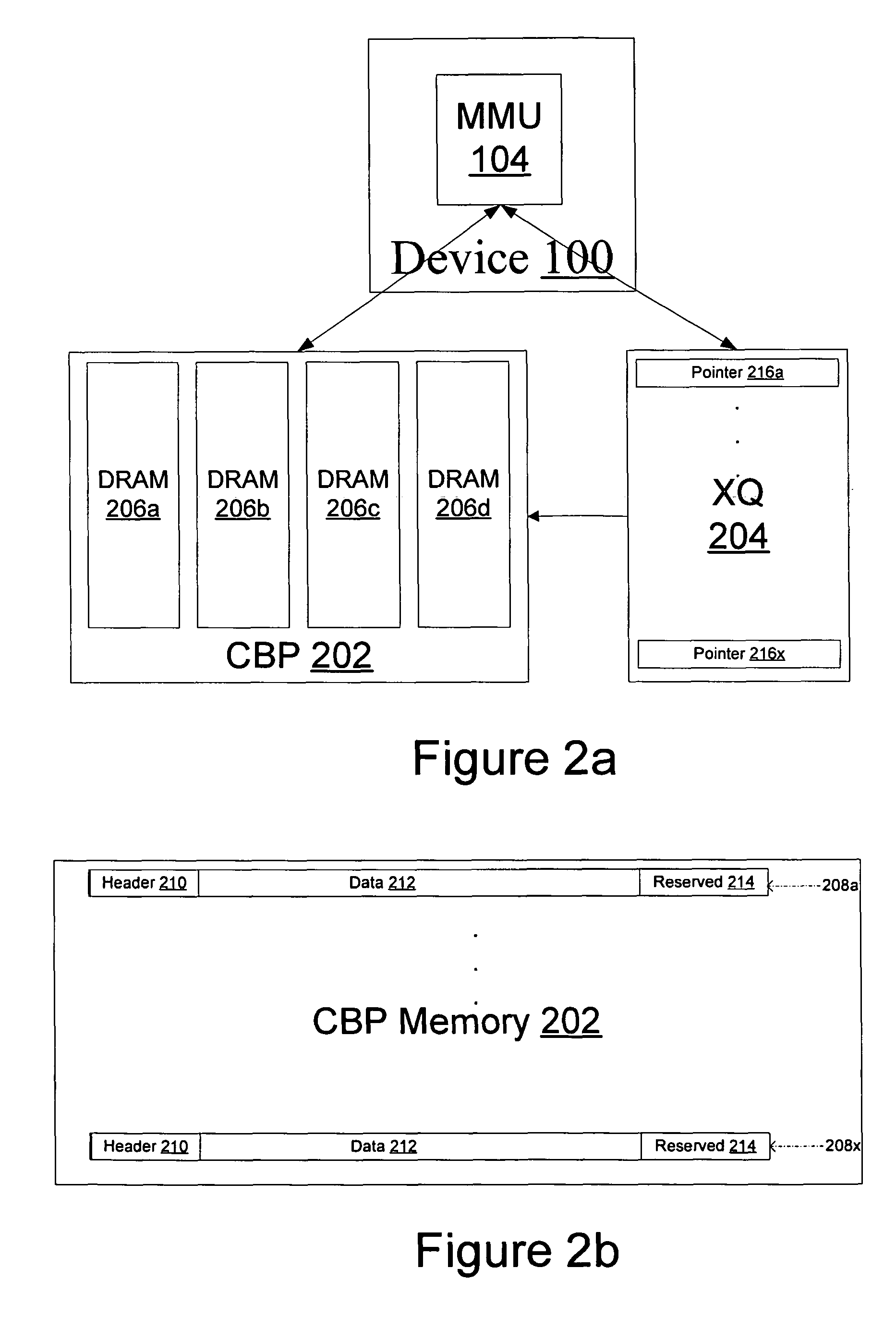

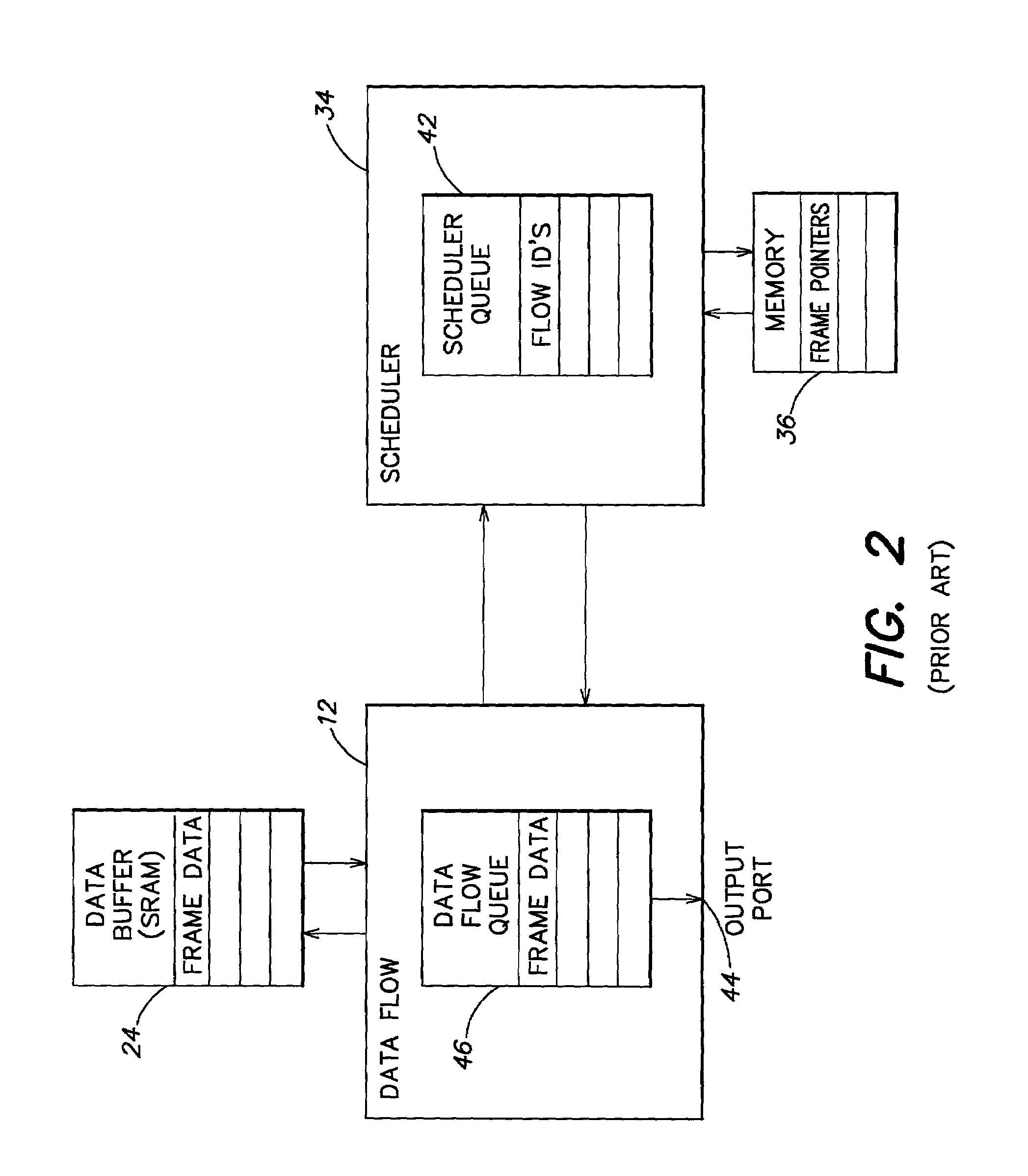

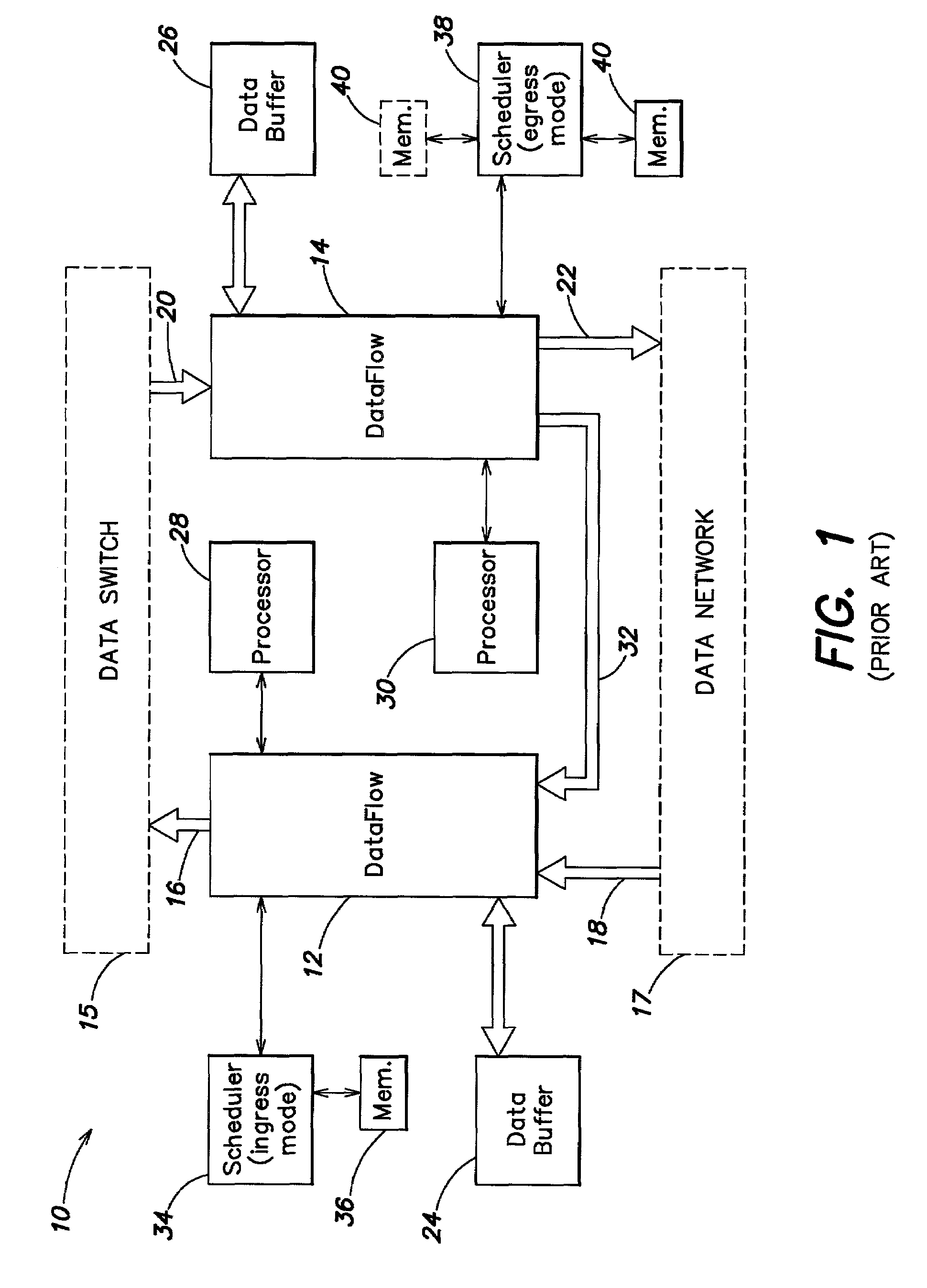

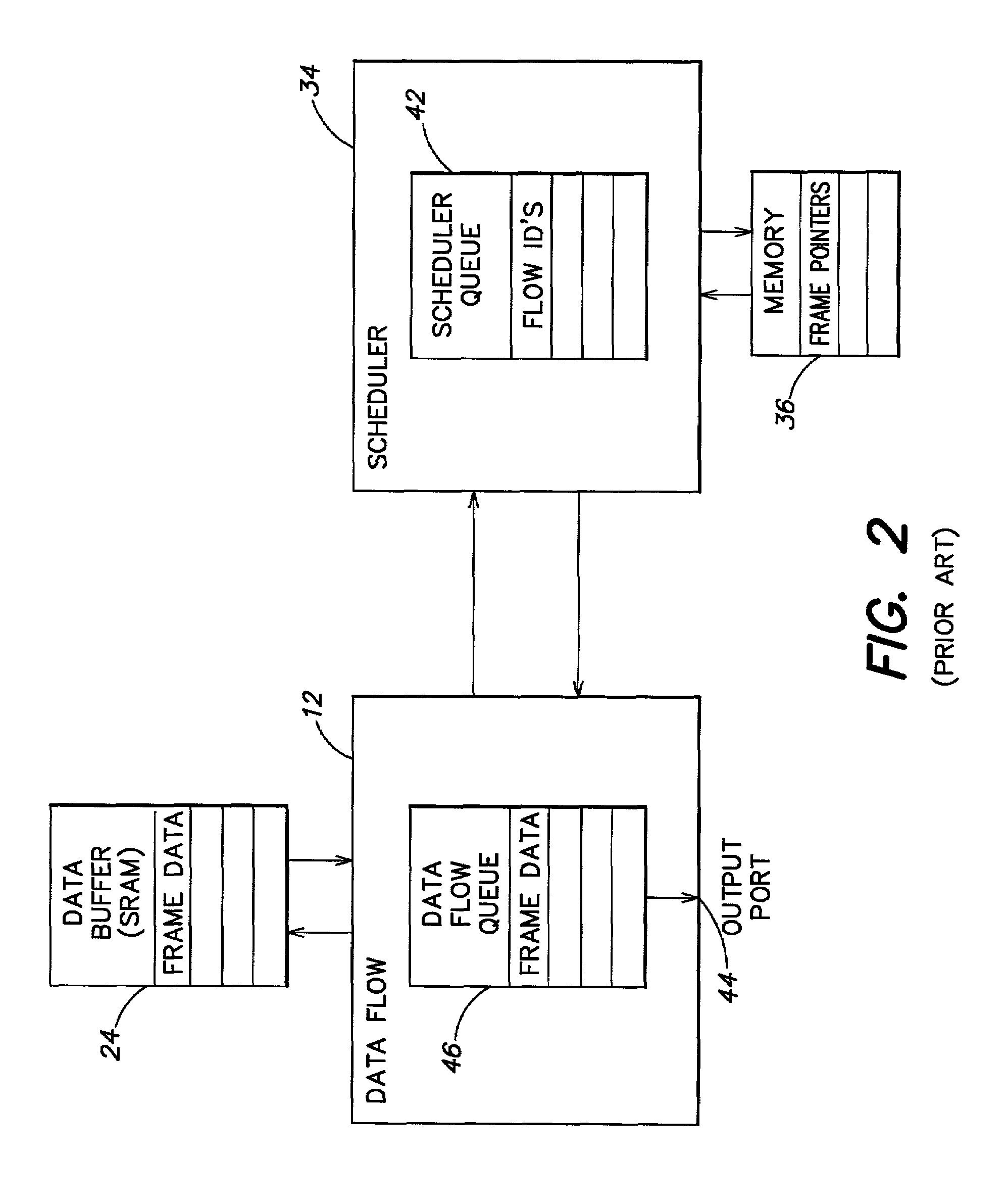

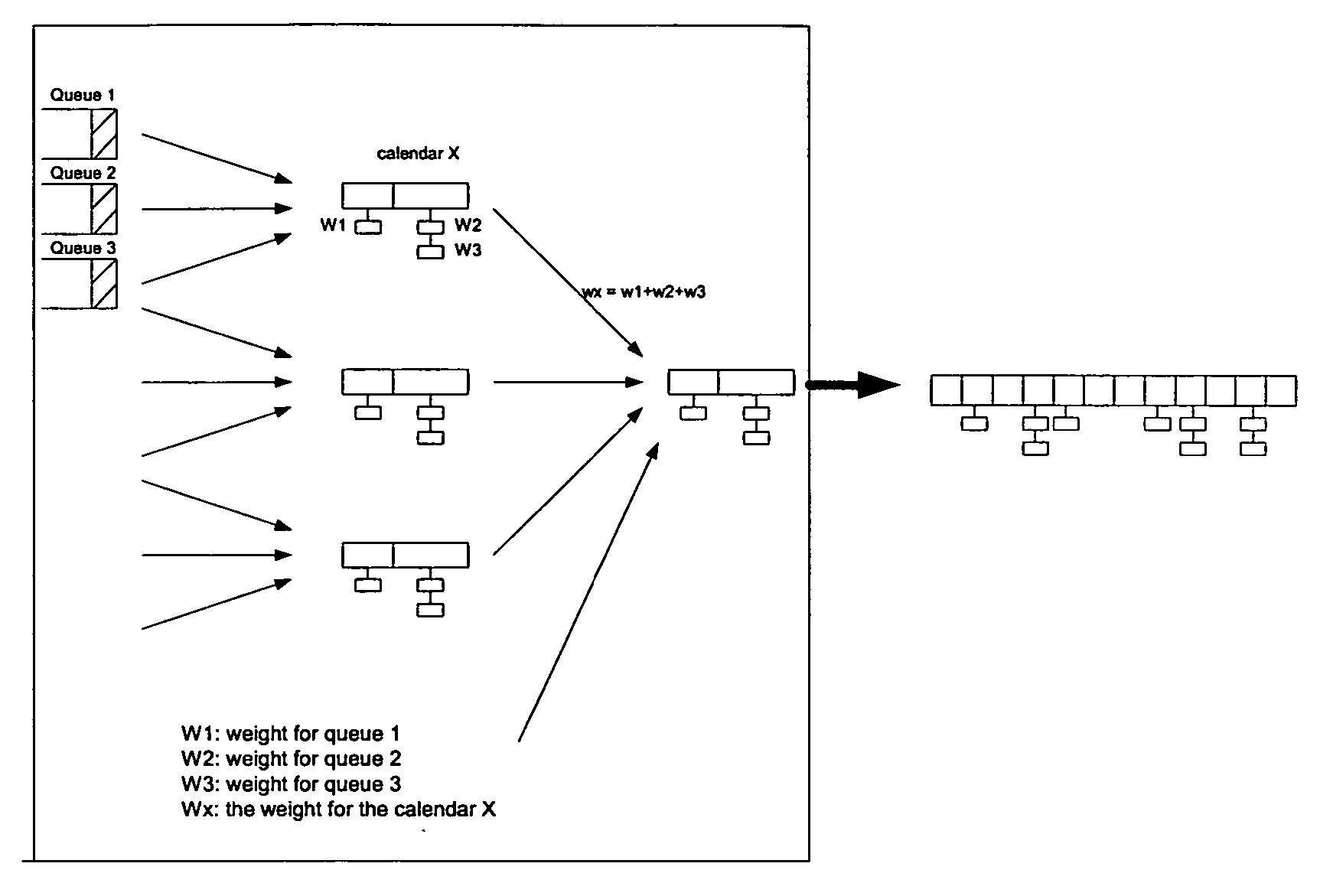

Method and system for network processor scheduling outputs using queueing

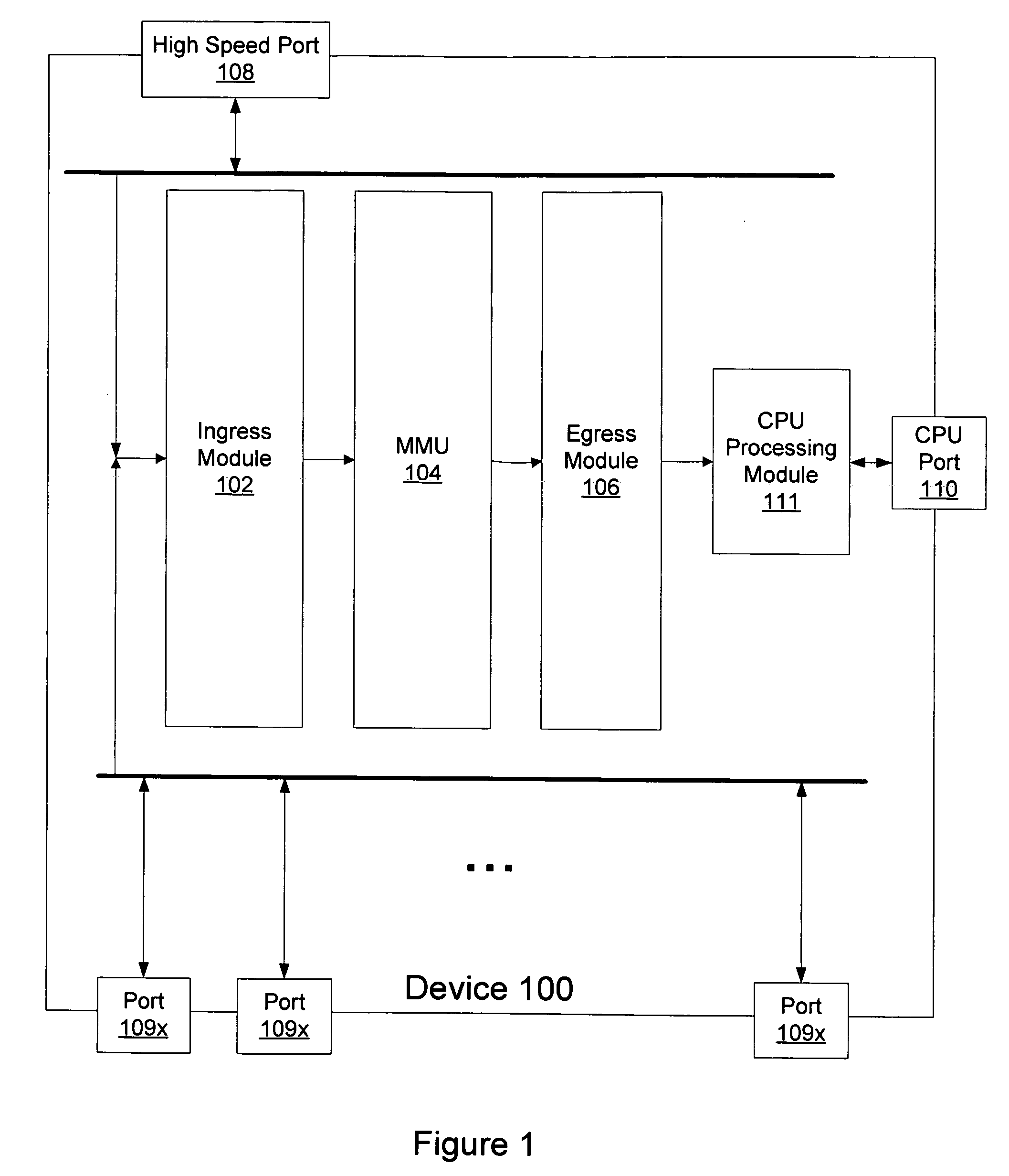

Inactive | US6952424B1 | Tags: Level of service, Guaranteed bandwidth, Data switching by path configuration, Store-and-forward switching systems, Network processing unit, Time segment

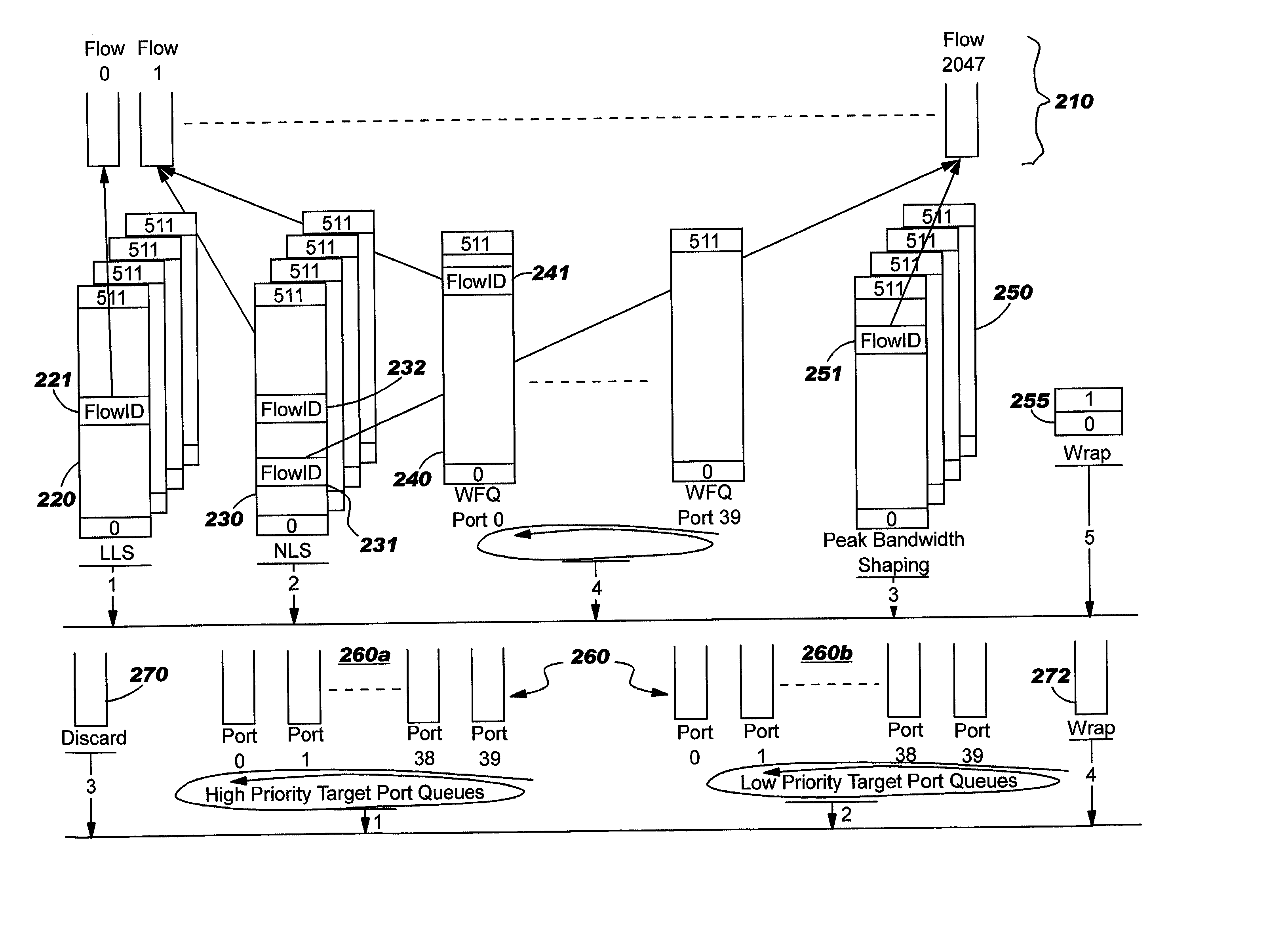

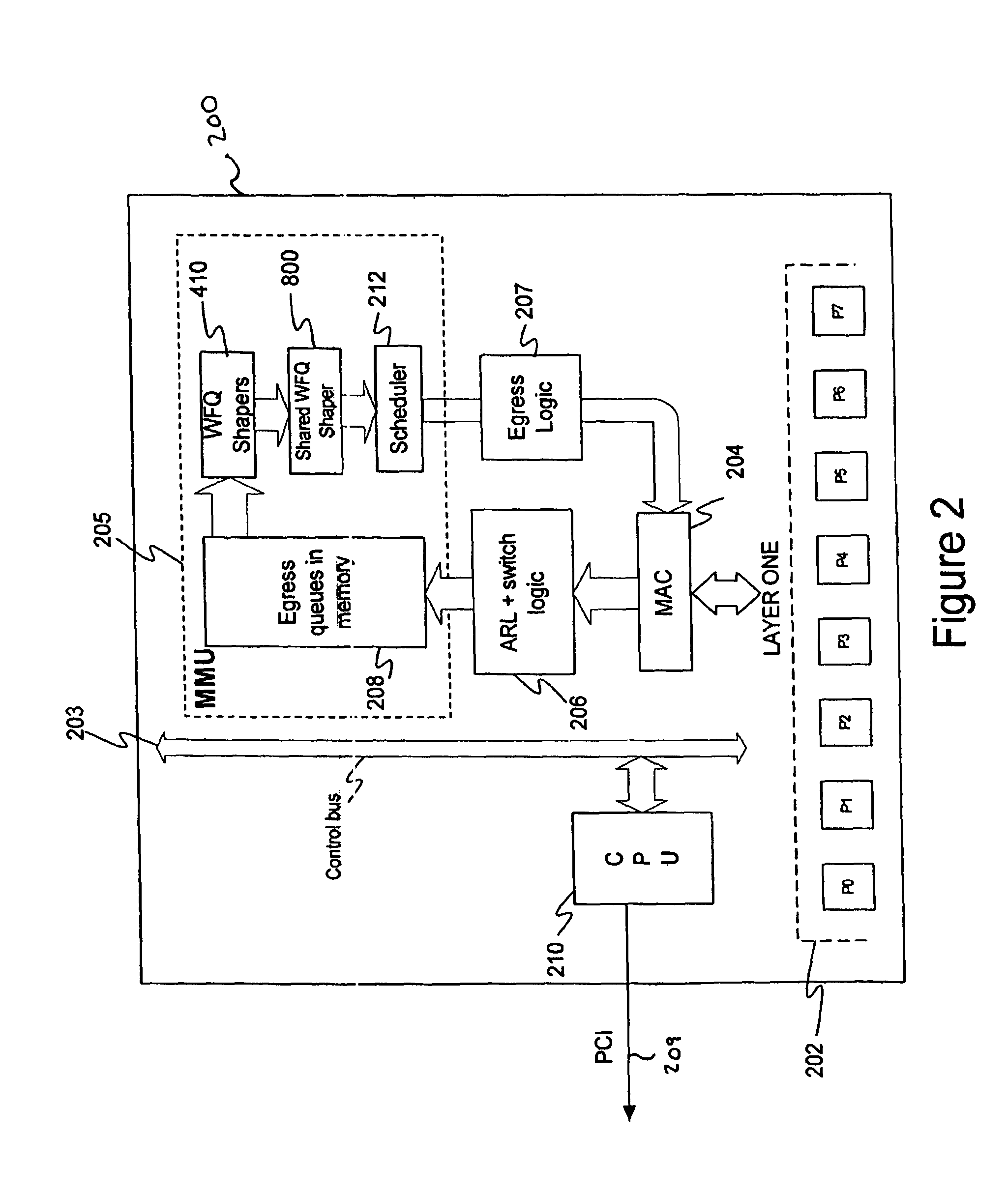

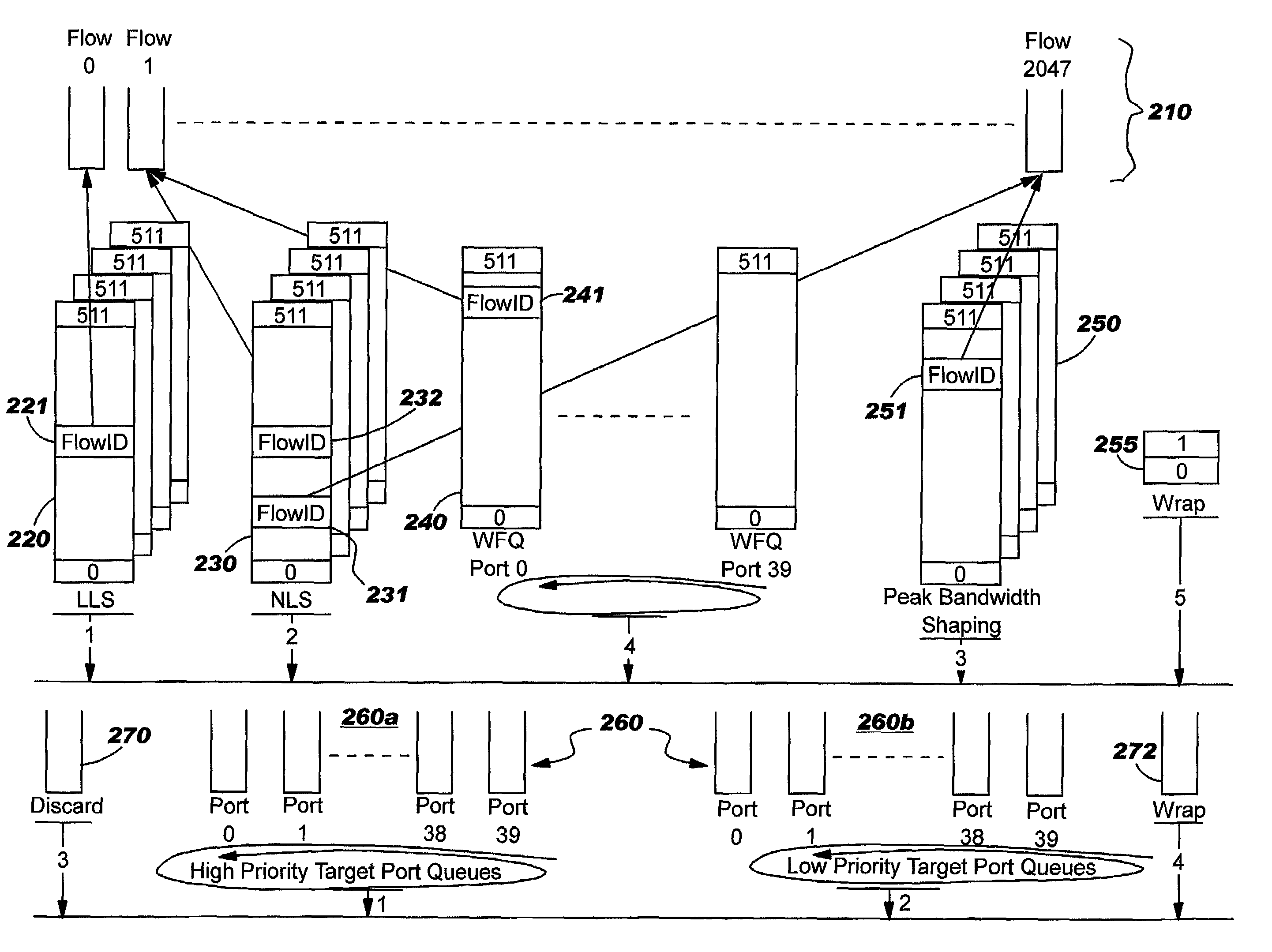

A system and method of moving information units from a network processor toward a data transmission network in a prioritized sequence which accommodates several different levels of service. The present invention includes a method and system for scheduling the egress of processed information units (or frames) from a network processing unit according to stored priorities associated with the various sources of the information units. The priorities in the preferred embodiment include low-latency service, minimum bandwidth, weighted fair queueing, and a mechanism for preventing a user from continuing to exceed his service levels over an extended period. The present invention includes a weighted fair queueing system in which the position of a flow's next service, within a best-effort system that uses bandwidth not consumed by committed bandwidth, is determined from the frame length and the weight of that flow. A “back pressure” system keeps a flow from being selected if its output cannot accept an additional frame because the current level of that port's queue exceeds a threshold.

Owner:IBM CORP

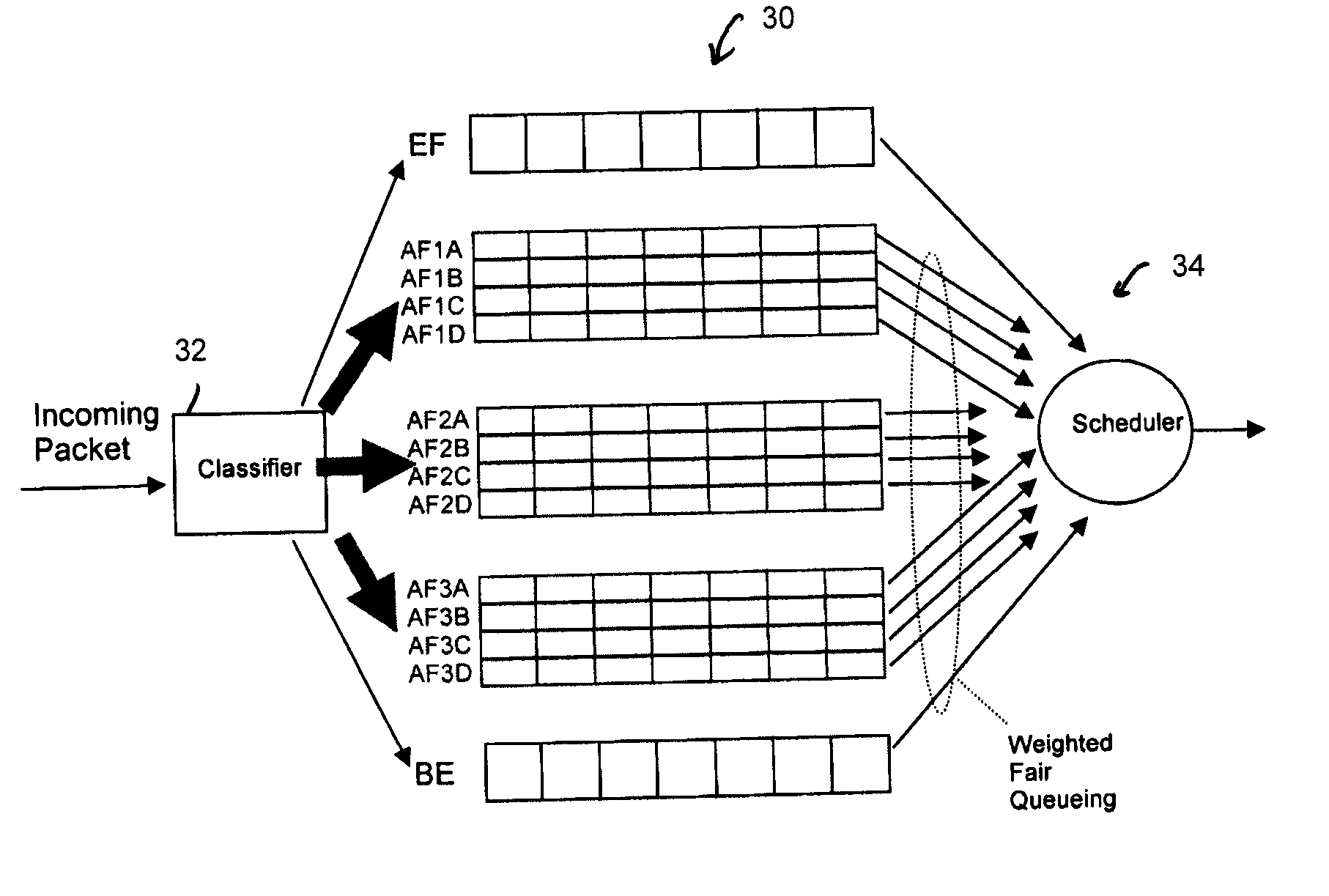

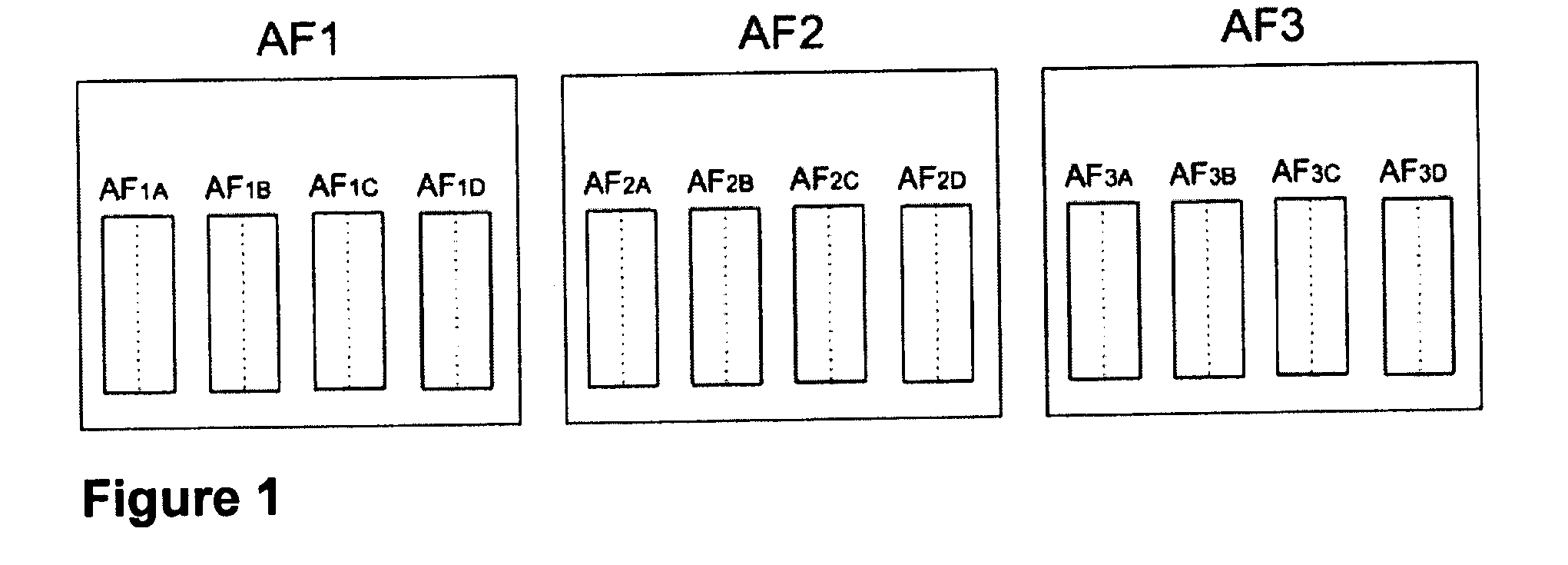

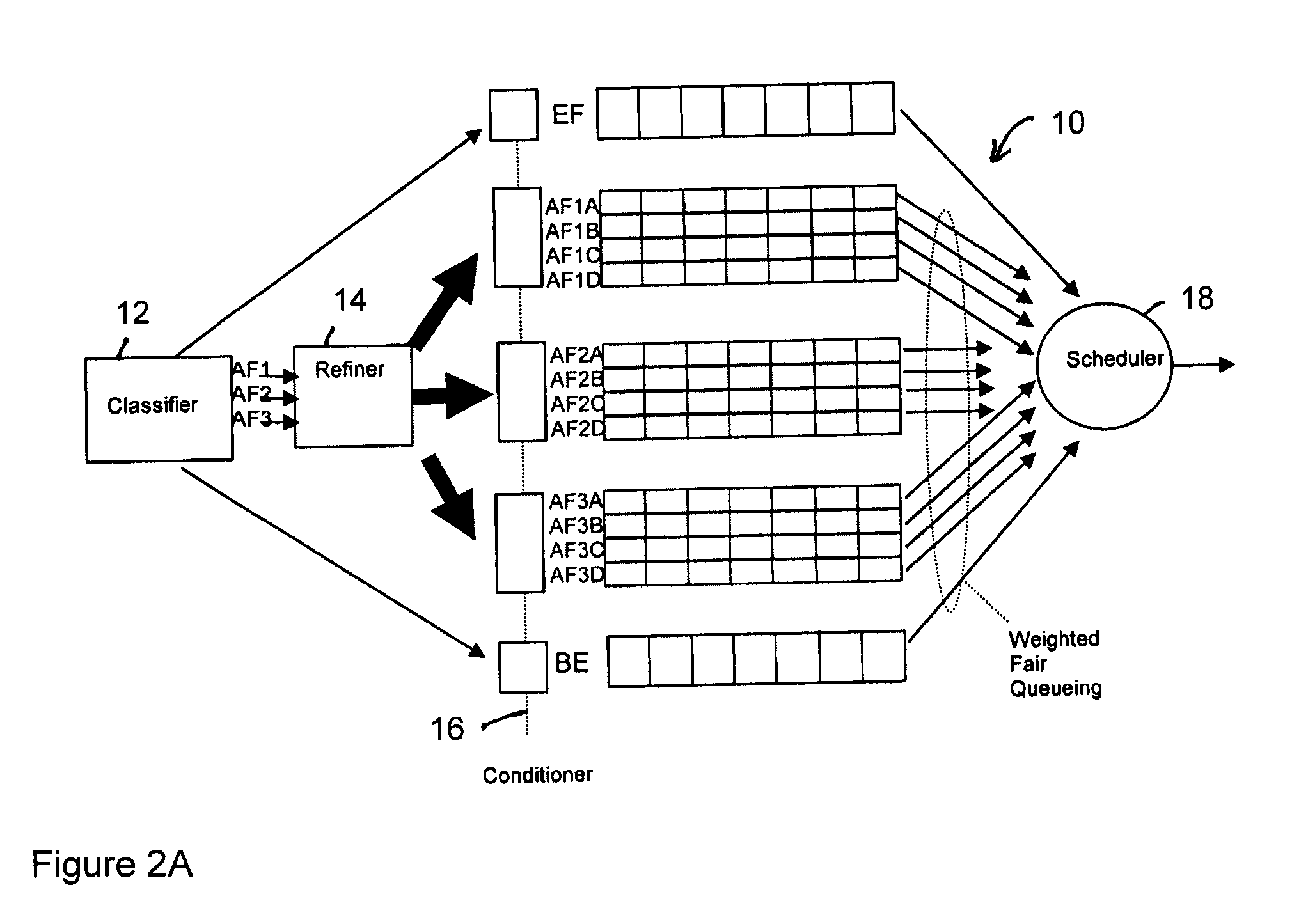

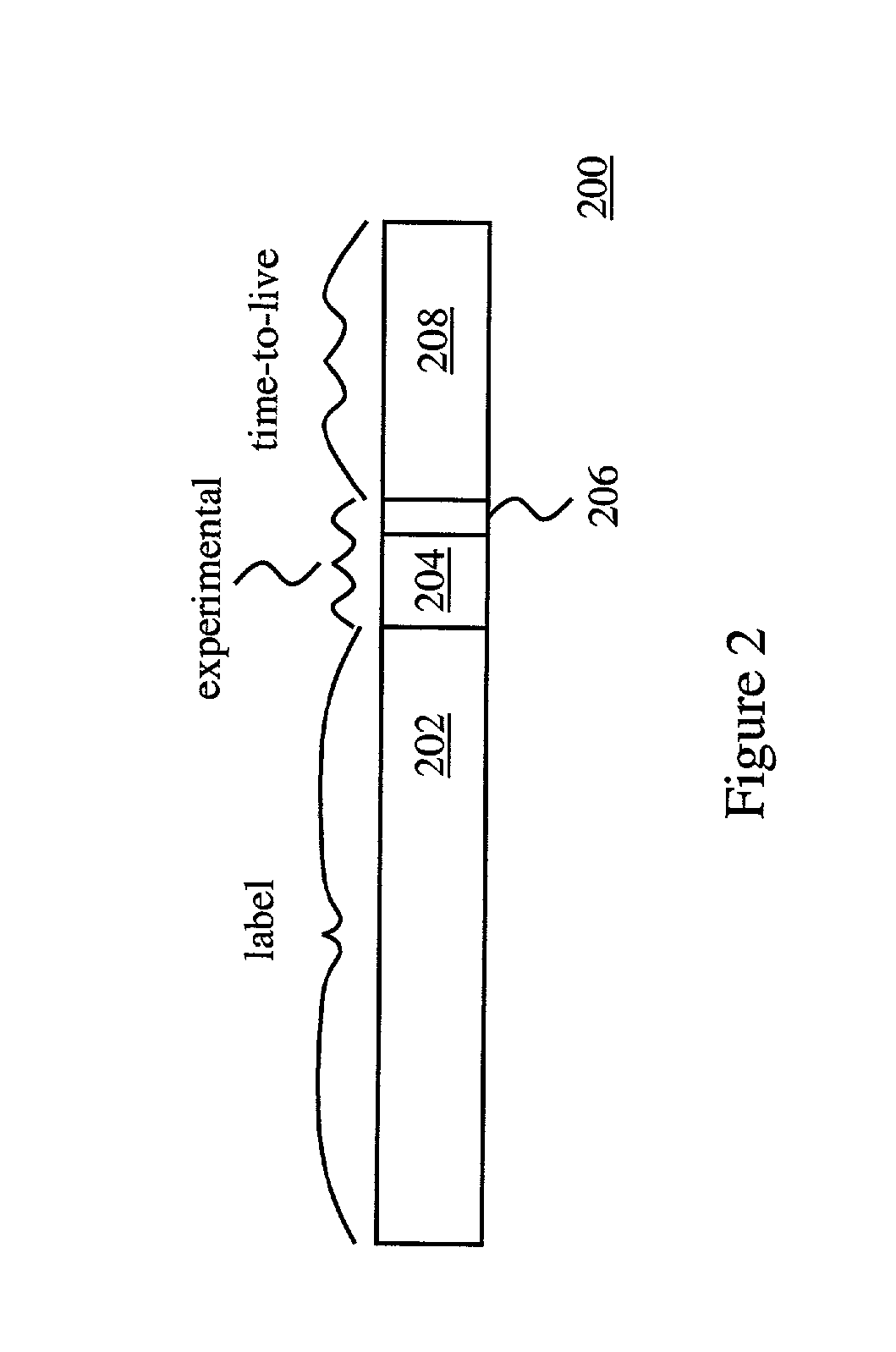

Refined Assured Forwarding Framework for Differentiated Services Architecture

Inactive | US20080080382A1 | Tags: Error prevention, Frequency-division multiplex details, Differentiated services, Traffic capacity

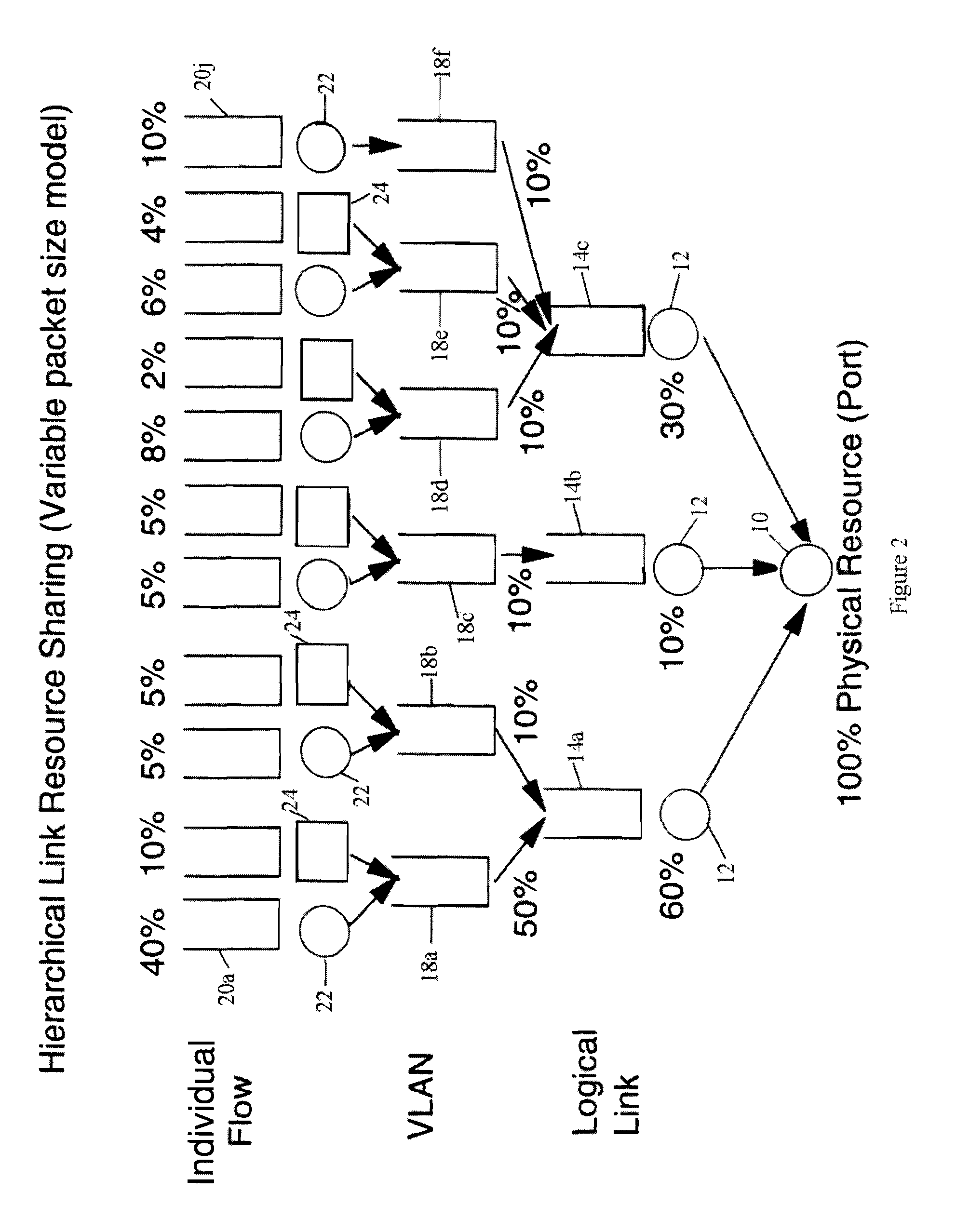

The DiffServ architecture is an increasingly preferred approach for providing varying levels of Quality of Service in an IP network. This invention presents a new framework for improving the performance of the DiffServ architecture when heterogeneous traffic flows share the same aggregate class. The new framework requires minimal modification to existing DiffServ routers: it adds a second layer of classification that groups flows by their average packet sizes and uses Weighted Fair Queueing for flow scheduling. The efficiency of the new framework is demonstrated by simulation results for delay, packet delivery, throughput, and packet loss under different traffic scenarios.

Owner:THE BOARD OF RGT UNIV OF OKLAHOMA

Packet transmission scheduling in a data communication network

Inactive | US20020126690A1 | Tags: Easy to handle, Radio transmission, Networks interconnection, Heap (data structure), Fair queuing

The present invention is directed toward methods and apparatus for packet transmission scheduling in a data communication network. In one aspect, received data packets are assigned to an appropriate one of a plurality of scheduling heap data structures. Each scheduling heap data structure is percolated to identify the most eligible data packet in that heap. The highest-priority one of these most-eligible data packets is then identified by prioritizing among them. This is useful because the scheduling tasks may be distributed among the hierarchy of schedulers to handle data packet scheduling efficiently. Another aspect provides a technique for combining priority schemes, such as strict priority and weighted fair queuing. This is useful because packets may have equal priorities or no priorities, as in the case of certain legacy equipment.

Owner:MAPLE OPTICAL SYST
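
The two-level selection in the abstract above, where each heap yields its most eligible packet and the scheduler then picks the highest-priority of those heads, can be sketched with Python's `heapq` module. The eligibility key (for example a WFQ finish time), the per-heap priority numbers, and the class name are assumptions for illustration.

```python
import heapq
import itertools


class HeapScheduler:
    """Hypothetical two-level scheduler: each heap orders its packets by an
    eligibility key; the top level picks among heap heads by heap priority
    (lower number = higher priority), then by eligibility."""

    def __init__(self, heap_priorities):
        self.priority = dict(heap_priorities)        # heap id -> priority
        self.heaps = {h: [] for h in self.priority}
        self._seq = itertools.count()                # tie-breaker for equal keys

    def push(self, heap_id, eligibility, packet):
        heapq.heappush(self.heaps[heap_id], (eligibility, next(self._seq), packet))

    def pop(self):
        # heap[0] is each heap's most eligible packet (the heap invariant).
        candidates = [(self.priority[h], heap[0][0], h)
                      for h, heap in self.heaps.items() if heap]
        if not candidates:
            return None
        _, _, h = min(candidates)
        return heapq.heappop(self.heaps[h])[2]


s = HeapScheduler({"voice": 0, "data": 1})
s.push("data", 5, "d1")
s.push("voice", 9, "v1")
s.push("data", 1, "d0")
print([s.pop() for _ in range(3)])   # ['v1', 'd0', 'd1']
```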

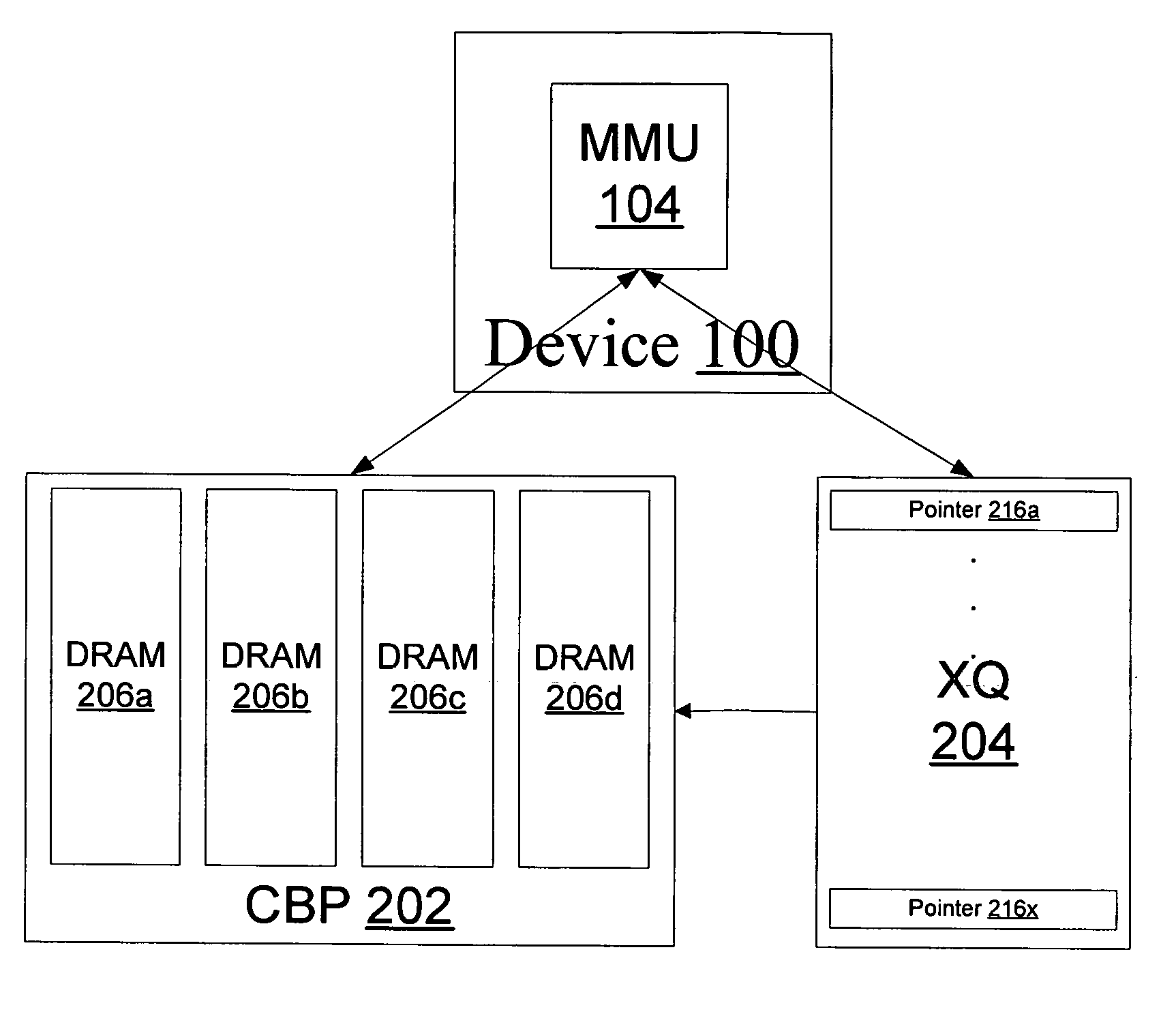

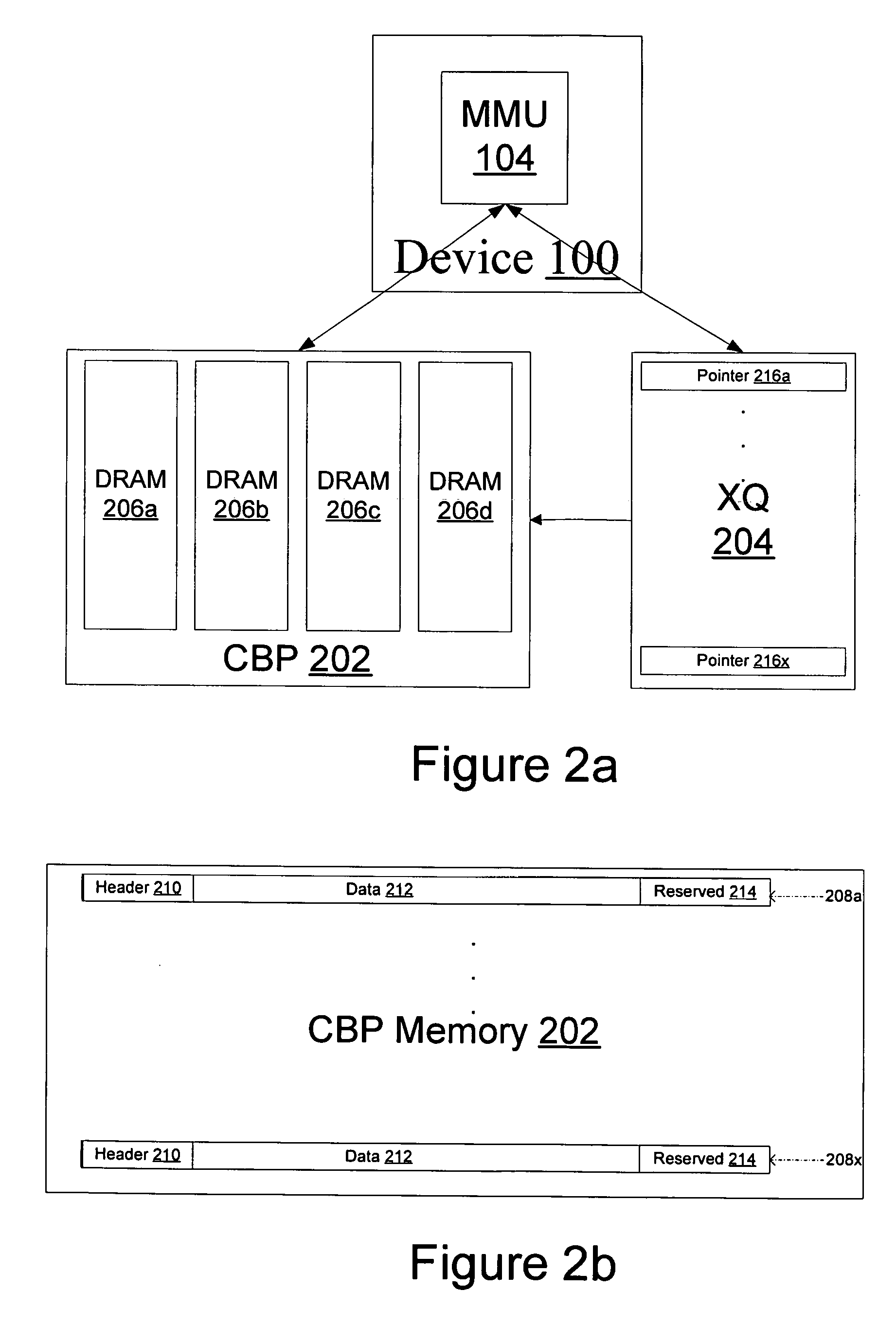

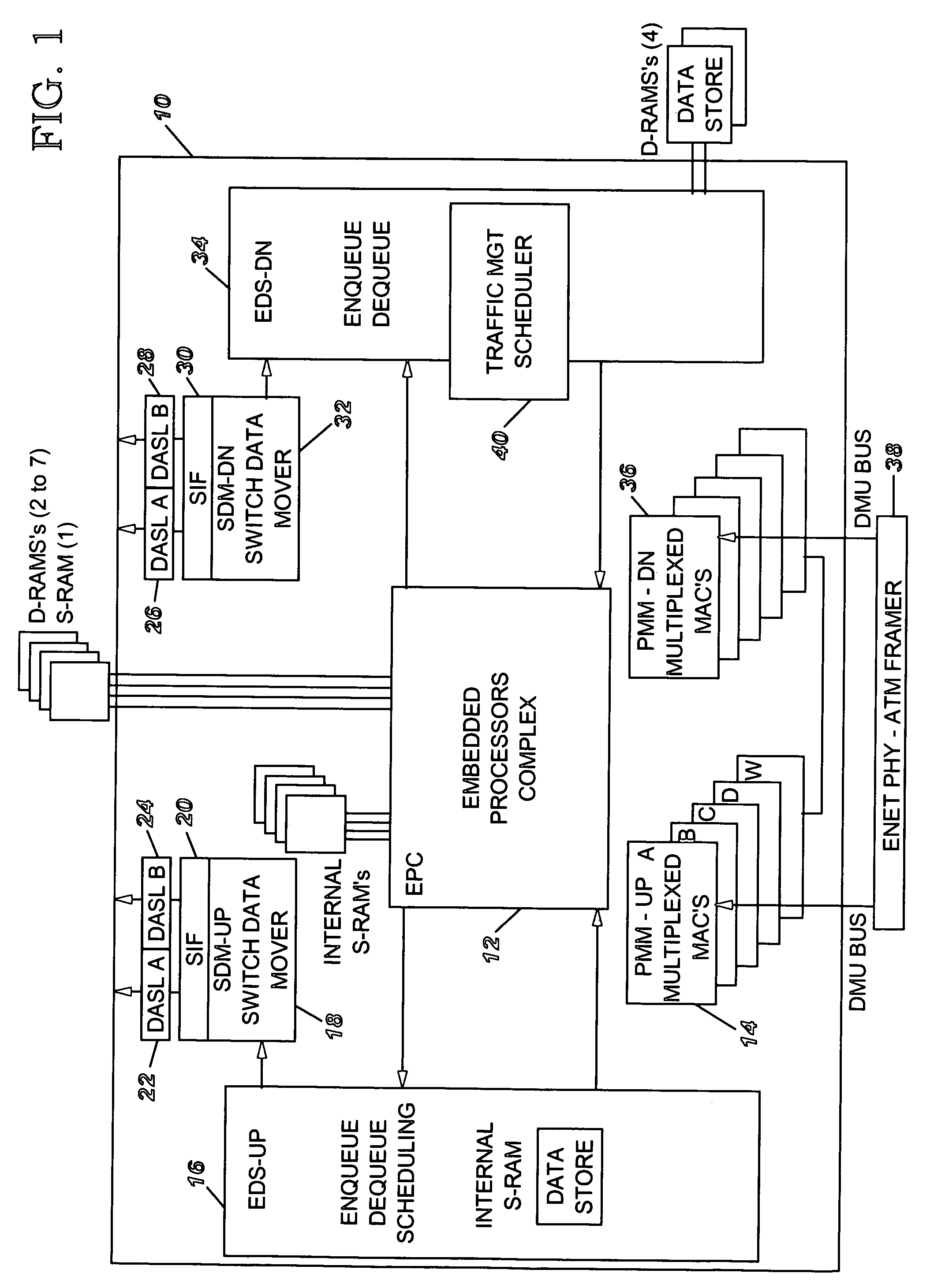

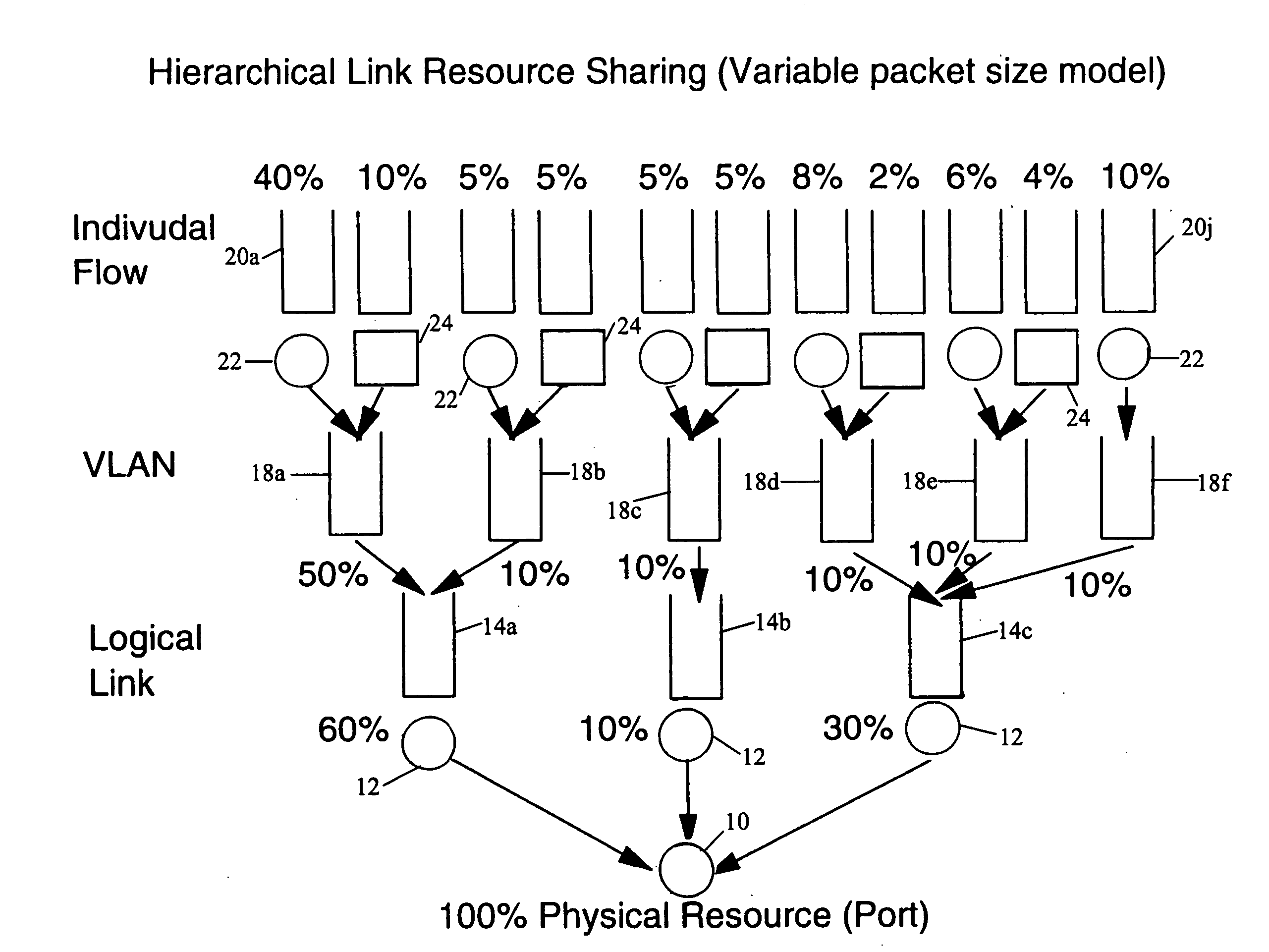

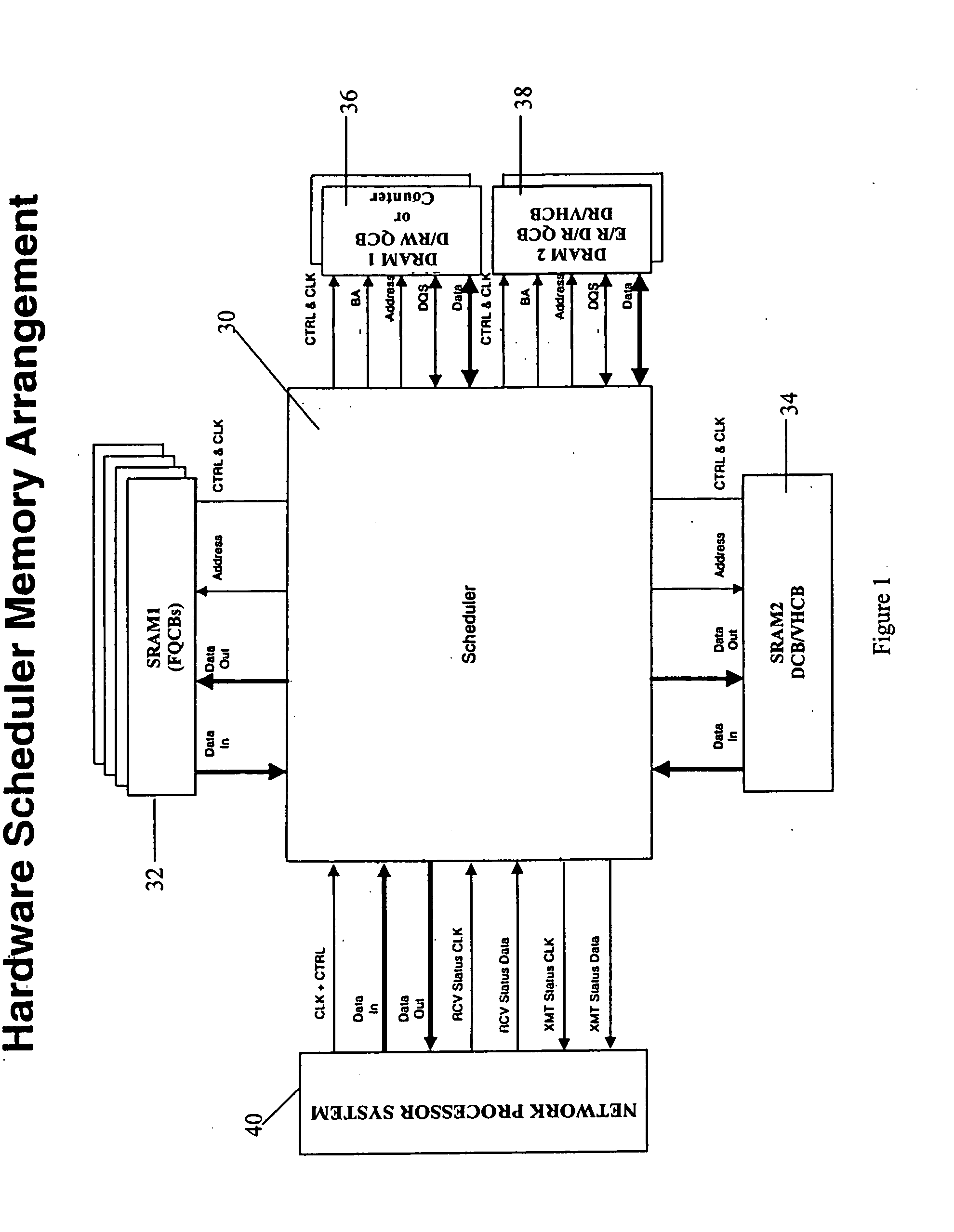

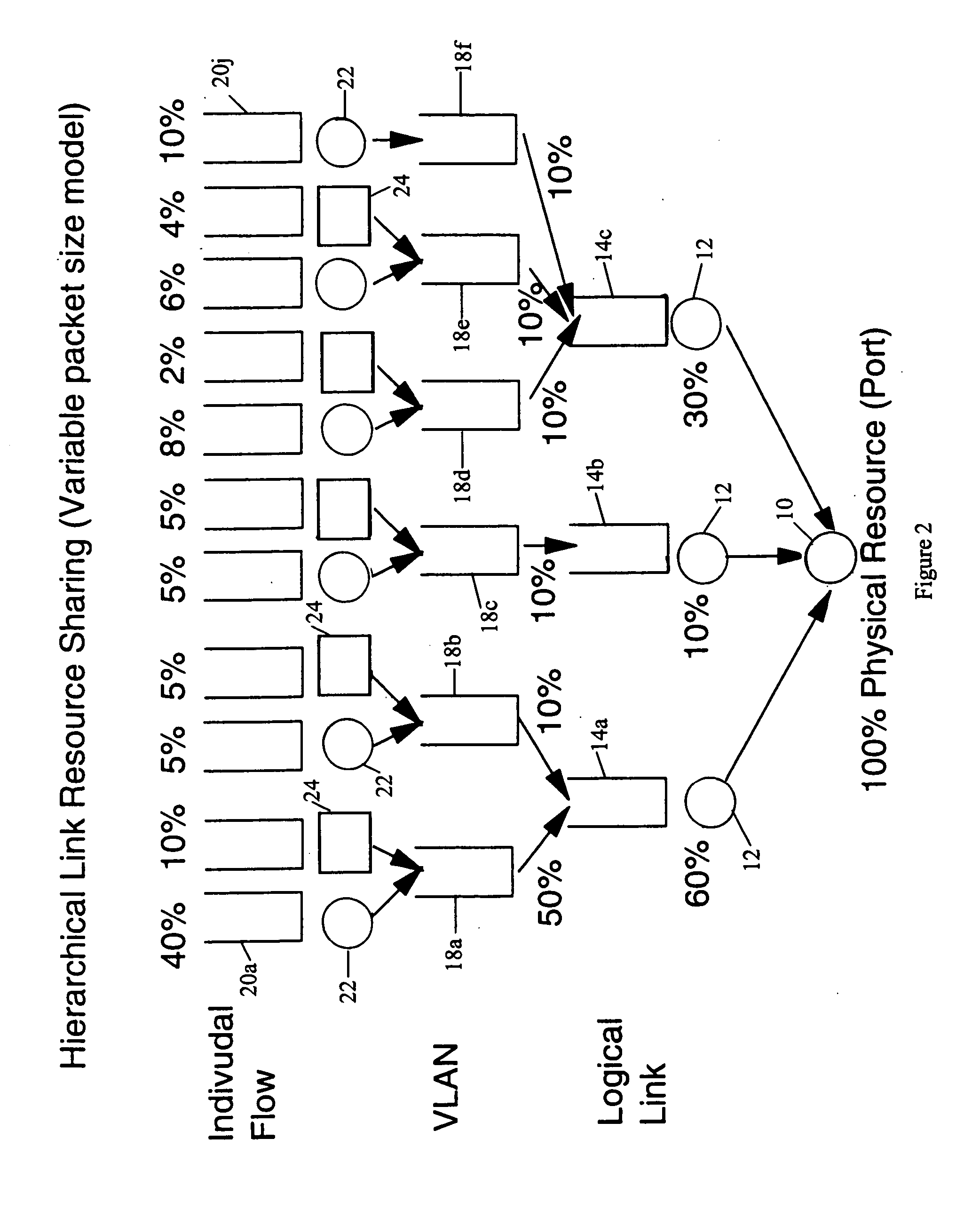

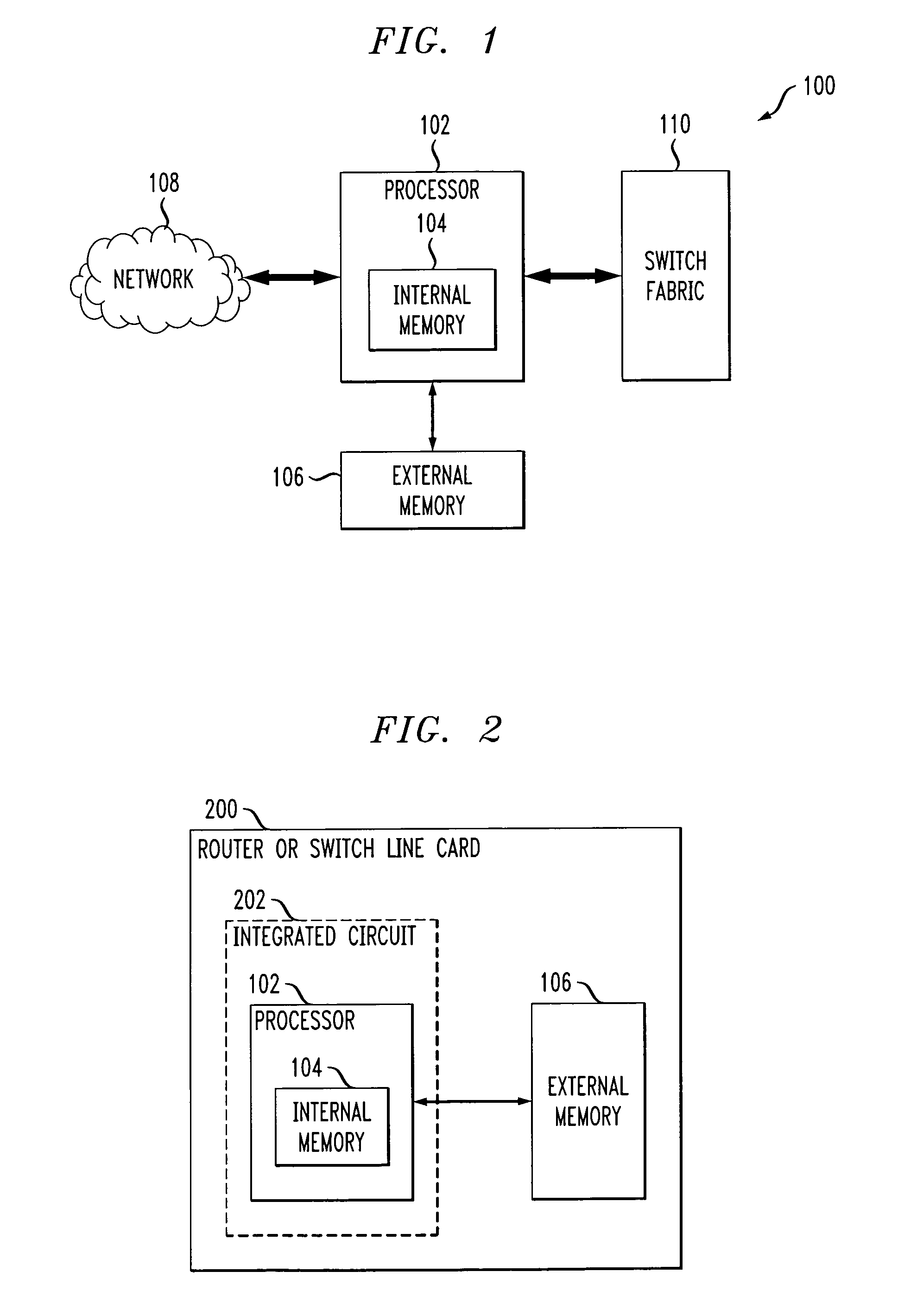

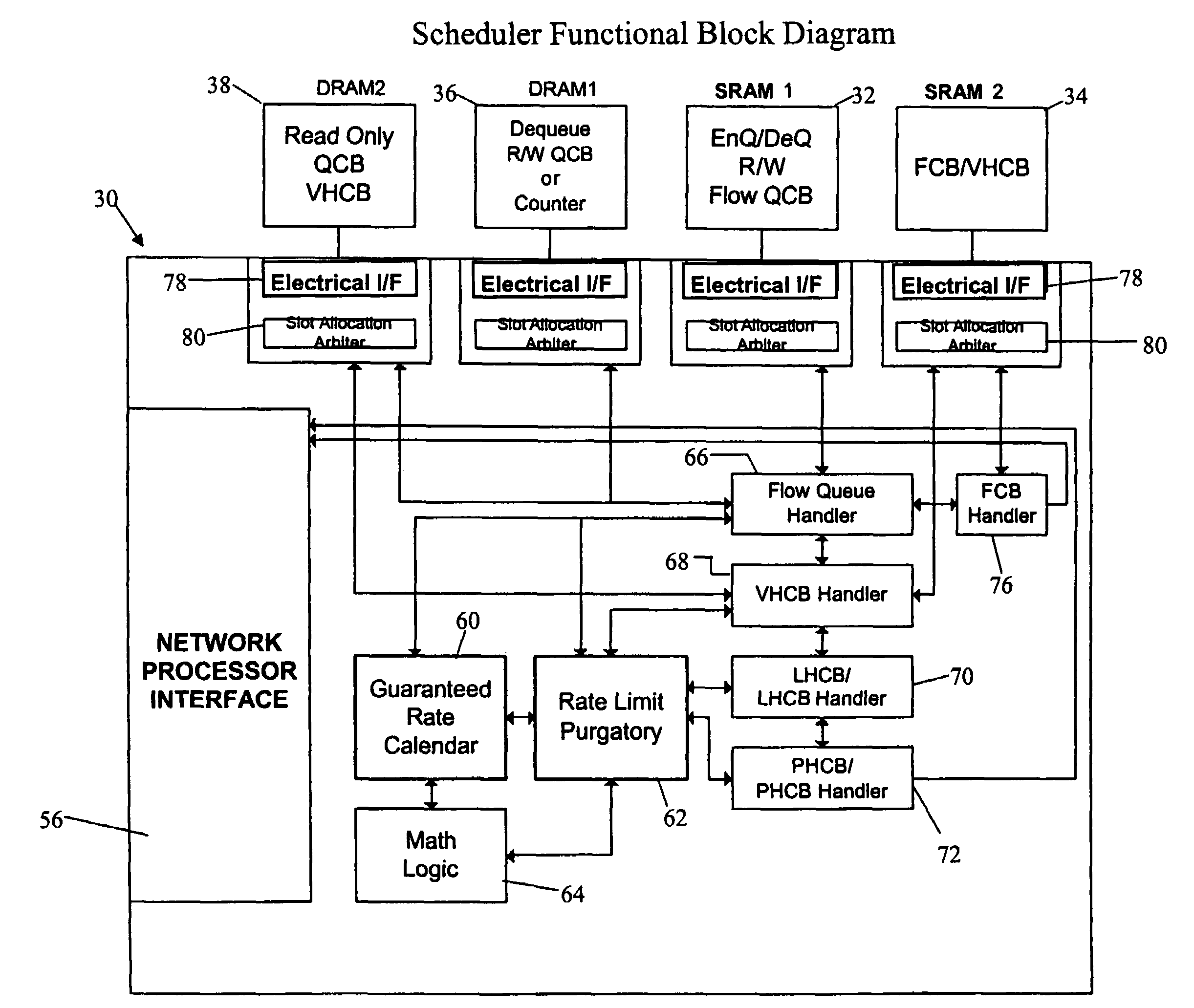

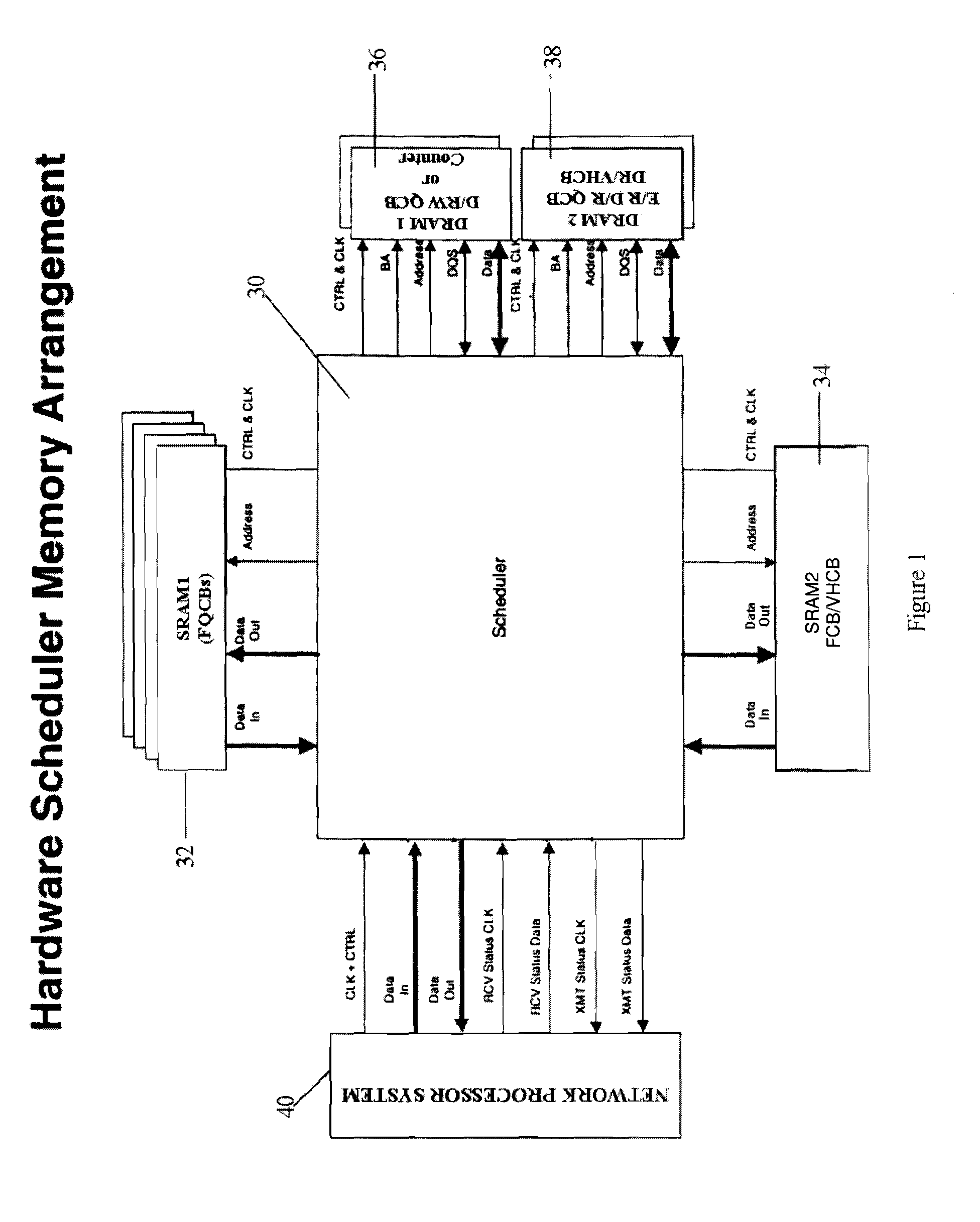

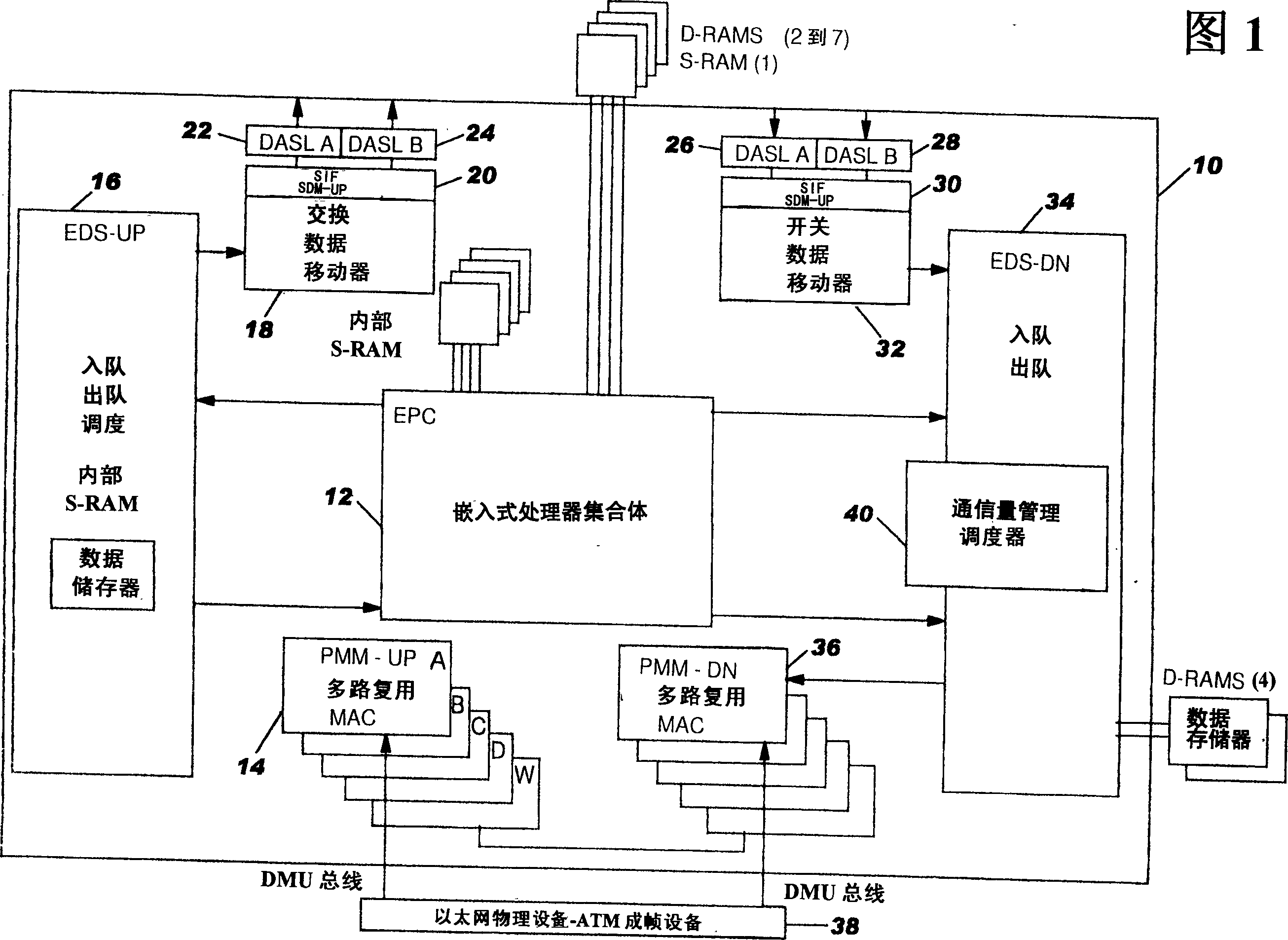

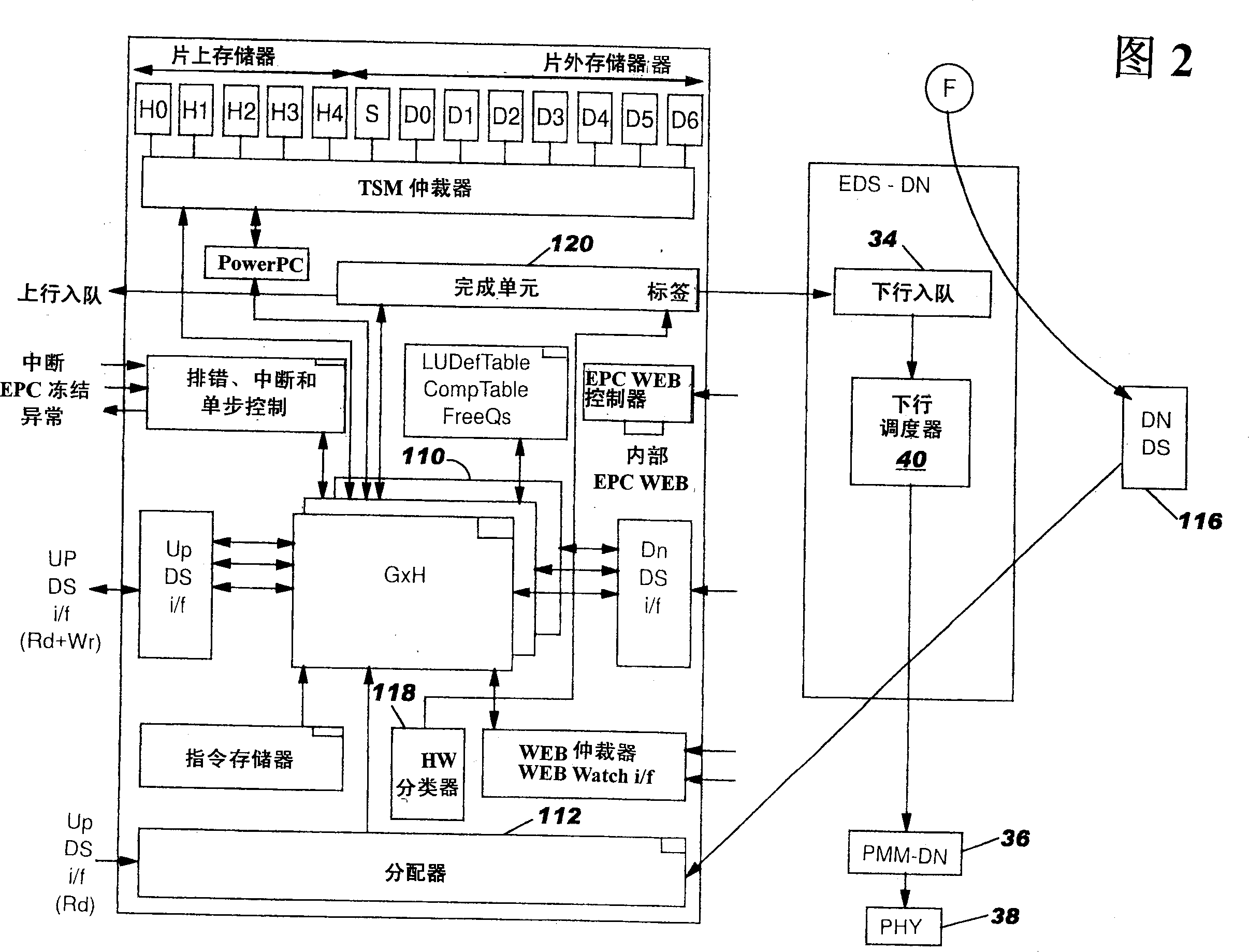

Structure and method for scheduler pipeline design for hierarchical link sharing

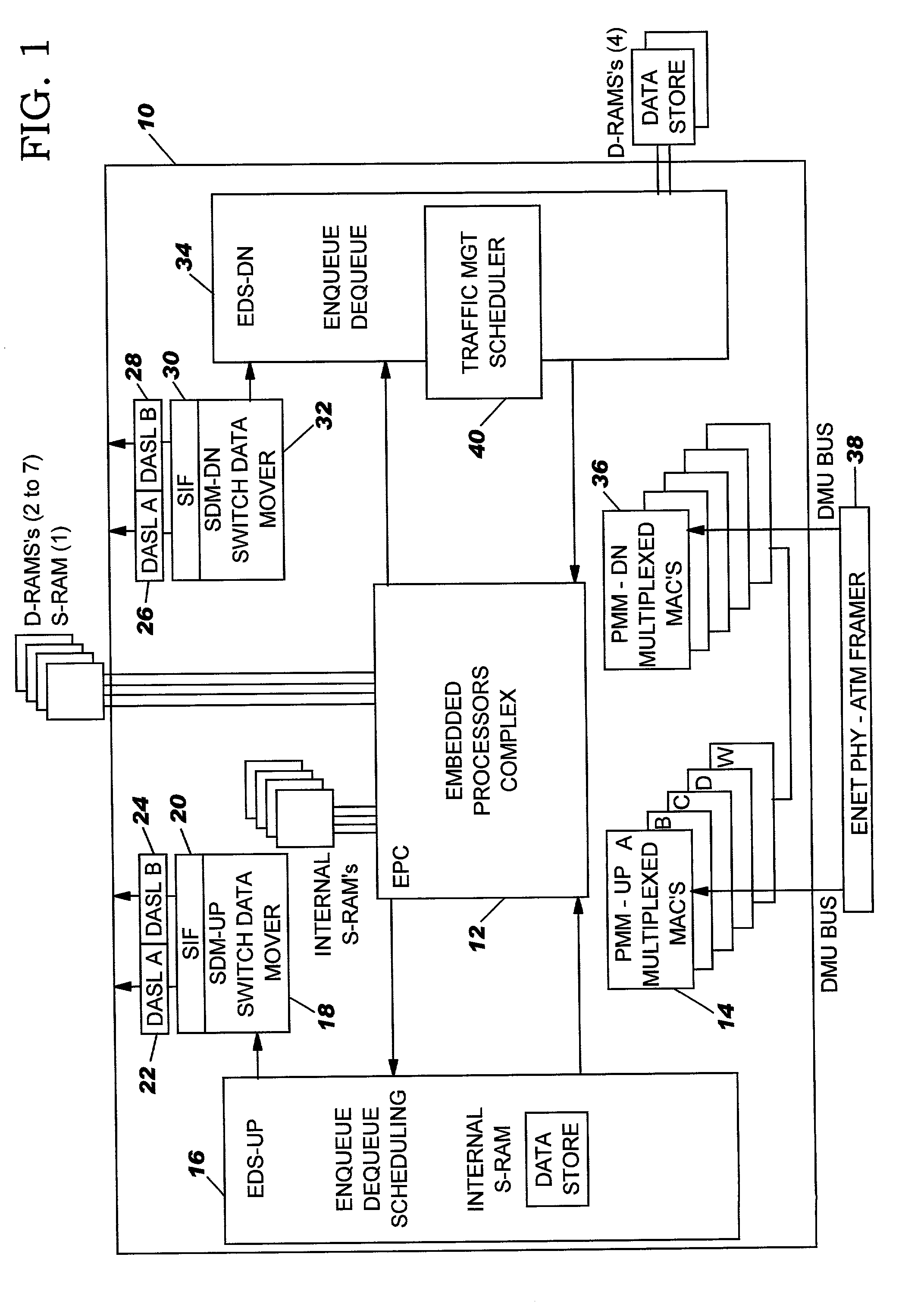

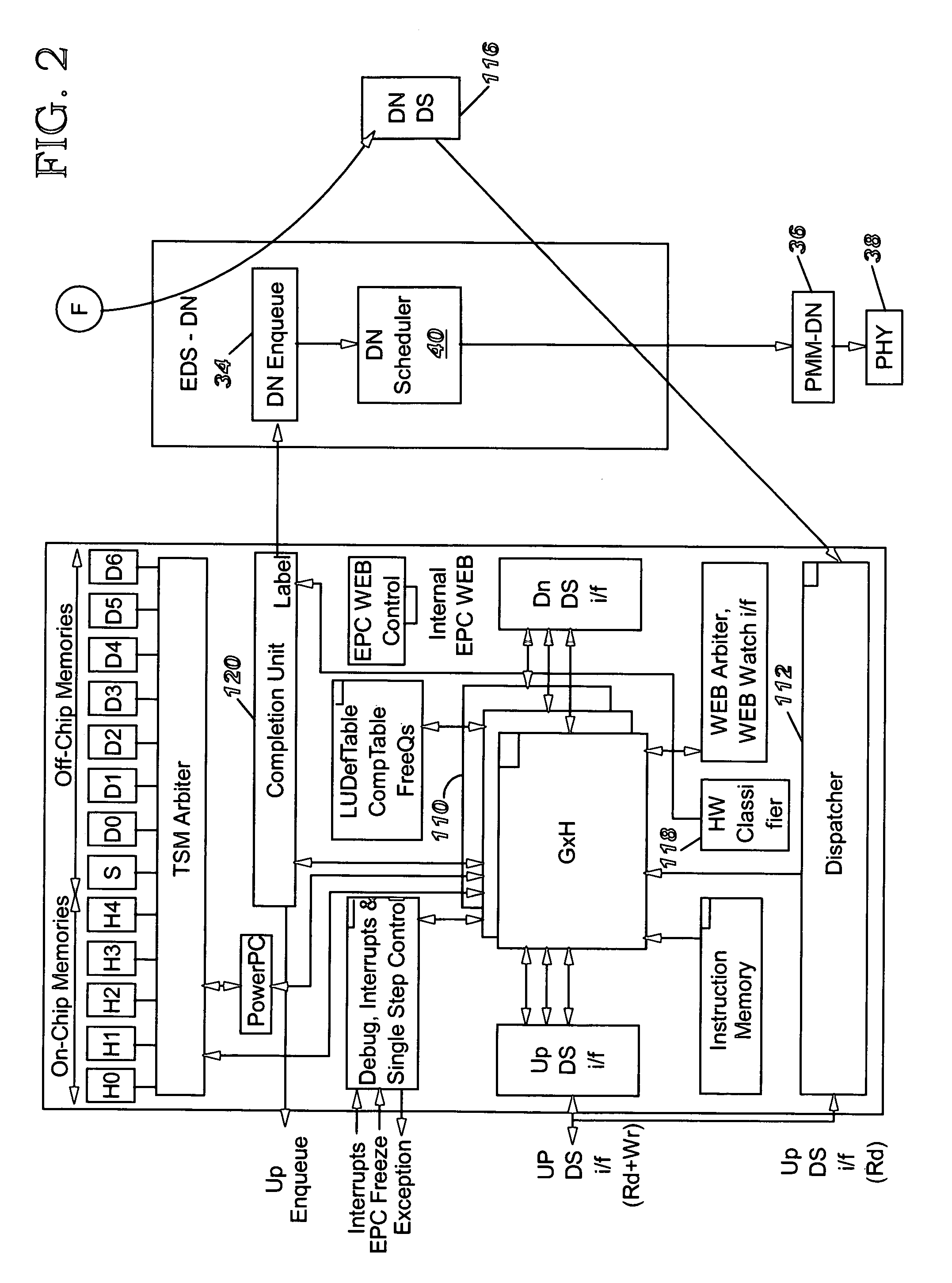

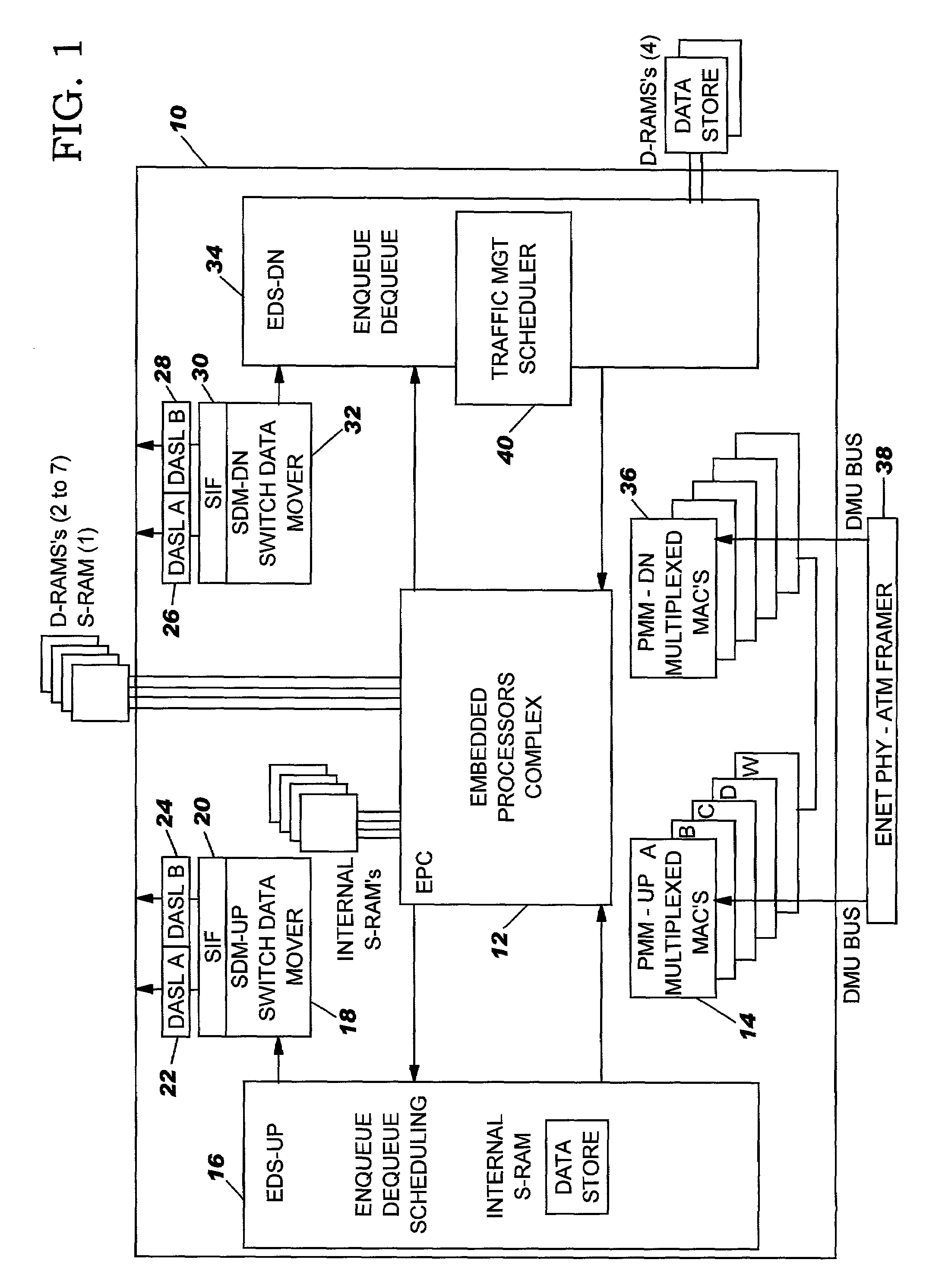

Inactive | US20050177644A1 | Tags: Reduce in quantity, Multiple digital computer combinations, Data switching networks, Traffic capacity, External storage

A pipeline configuration is described for use in network traffic management for the hardware scheduling of events arranged in a hierarchical linkage. The configuration reduces costs by minimizing the use of external SRAM memory devices. This results in some external memory devices being shared by different types of control blocks, such as flow queue control blocks, frame control blocks and hierarchy control blocks. Both SRAM and DRAM memory devices are used, depending on the content of the control block (Read-Modify-Write or ‘read’ only) at enqueue and dequeue, or Read-Modify-Write solely at dequeue. The scheduler utilizes time-based calendars and weighted fair queueing calendars in the egress calendar design. Control blocks that are accessed infrequently are stored in DRAM memory while those accessed frequently are stored in SRAM.

Owner:IBM CORP

Method and system for network processor scheduling outputs using disconnect/reconnect flow queues

Inactive | CN1642143A | Tags: Efficient use of, Fair use, Store-and-forward switching systems, Time-division multiplexing selection, High bandwidth, Network processing unit

A system and method of moving information units from a network processor toward a data transmission network in a prioritized sequence which accommodates several different levels of service. The present invention includes a method and system for scheduling the egress of processed information units (or frames) from a network processing unit according to stored priorities associated with the various sources of the information units. The priorities in the preferred embodiment include low-latency service, minimum bandwidth, weighted fair queueing, and a mechanism for preventing a user from continuing to exceed his service levels over an extended period. The present invention includes a plurality of calendars with different service rates, allowing a user to select the service rate he desires. If a customer has chosen a high bandwidth, the customer is placed in a calendar that is serviced more often than if the customer had chosen a lower bandwidth.

Owner:INT BUSINESS MASCH CORP

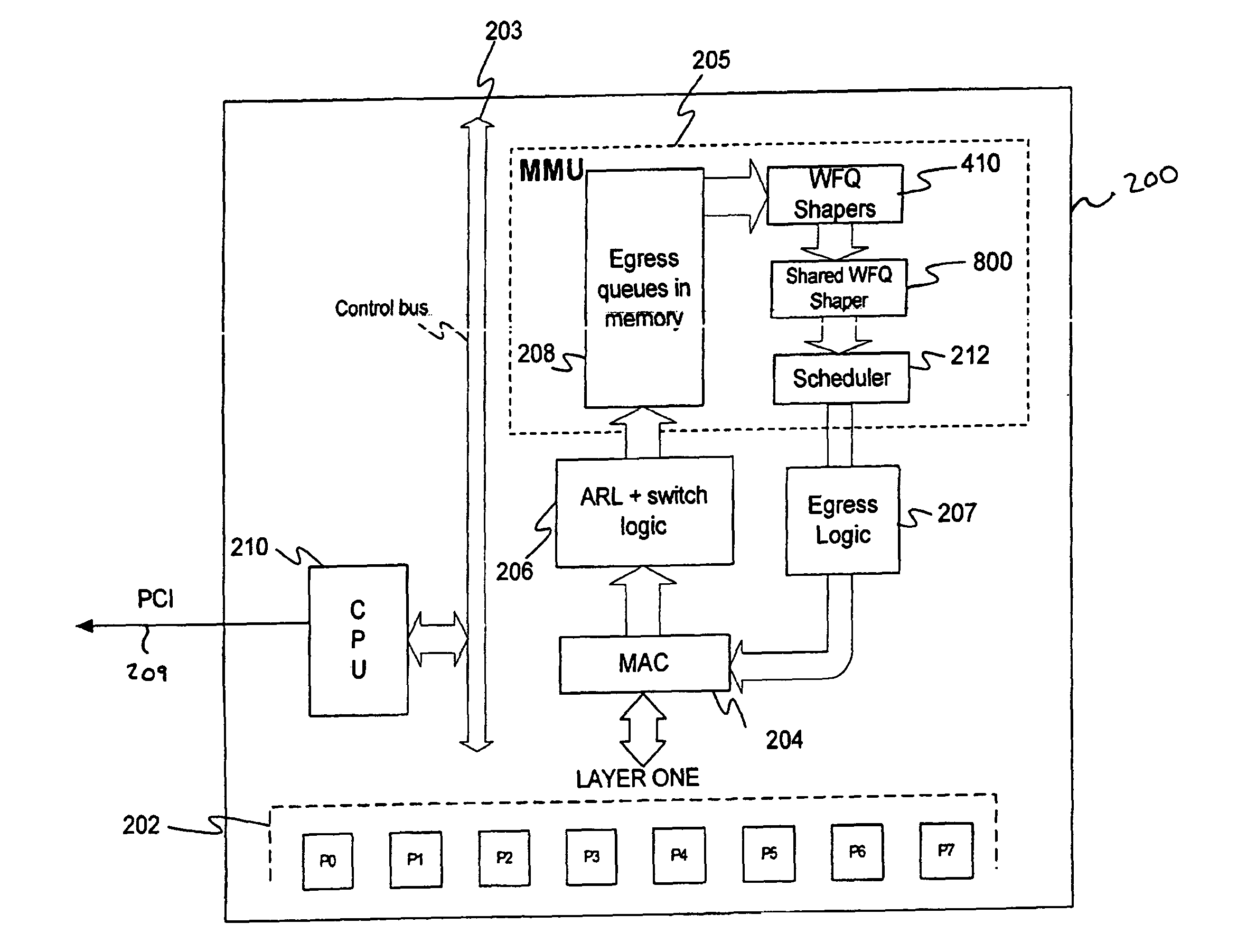

Shared weighted fair queuing (WFQ) shaper

Inactive | US20100302942A1 | Tags: Error prevention, Frequency-division multiplex details, Fair queuing, Weighted fair queueing

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

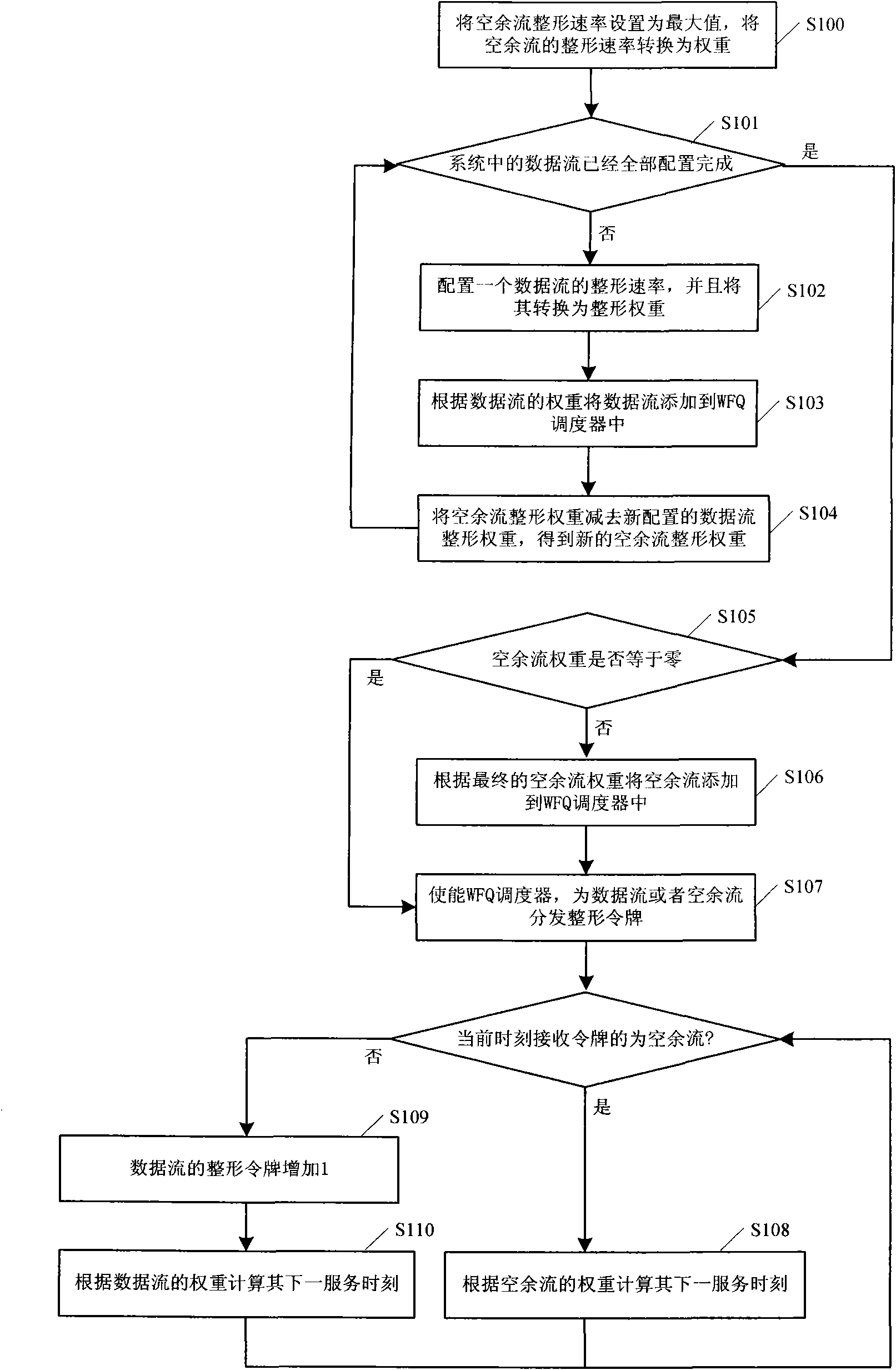

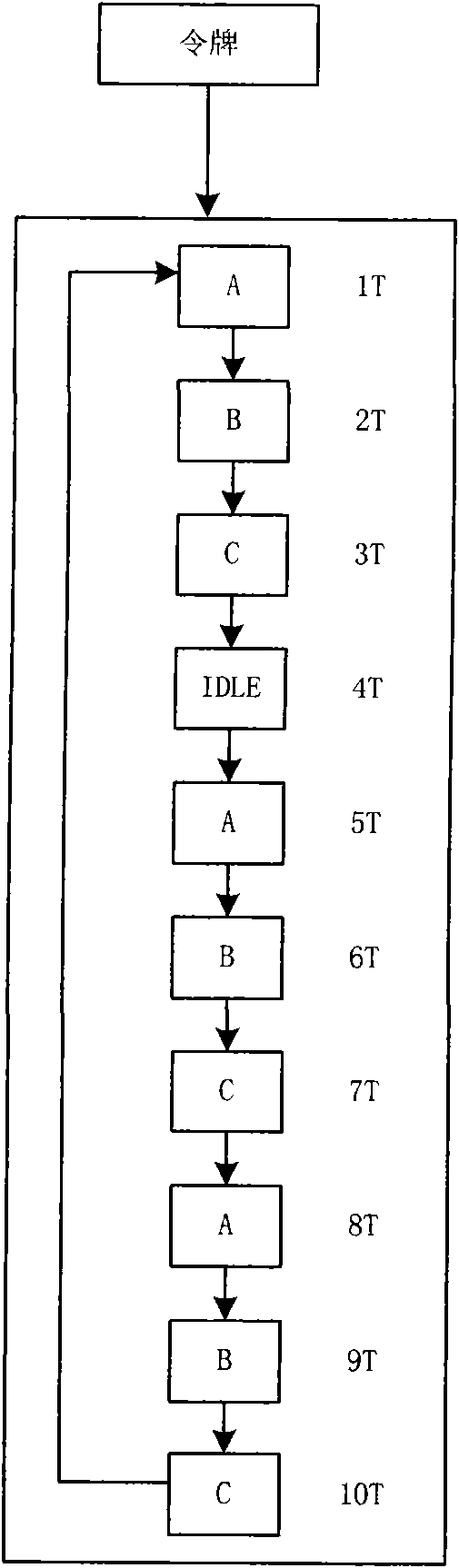

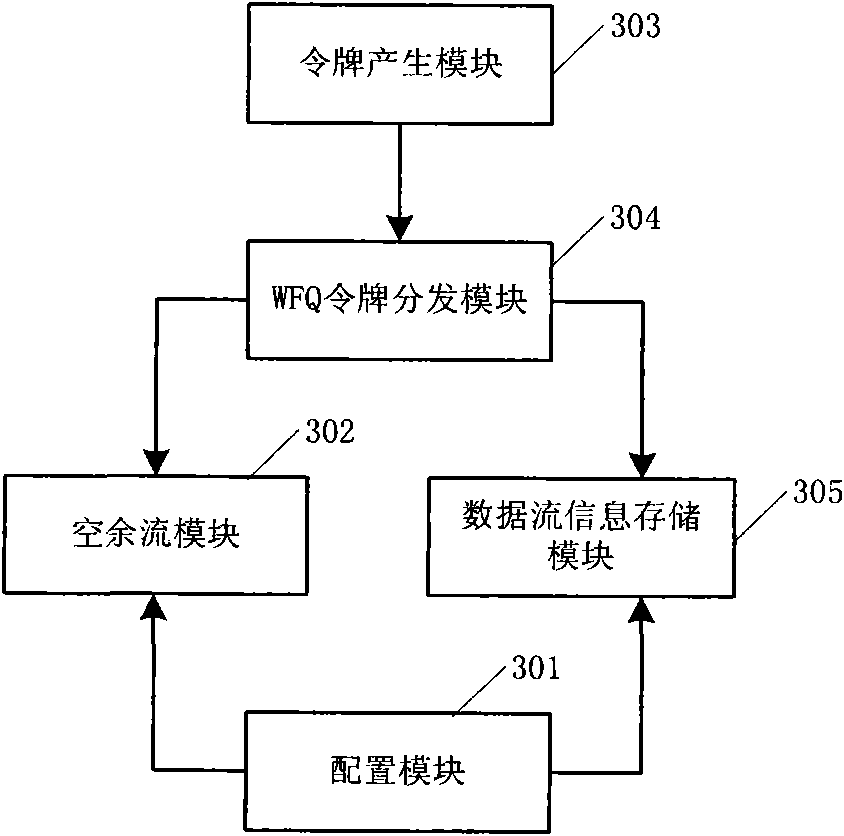

Method, device and system for realizing addition of traffic shaping token

The invention discloses a method for adding traffic shaping tokens, which comprises the following steps: A. allocating a shaping rate to each data stream, converting the shaping rate to a shaping weight, and adding each data stream to a weighted fair queuing (WFQ) token distribution module according to its shaping weight, while concurrently calculating the latest idle stream weight; B. adding the idle stream to the WFQ token distribution module according to the idle stream weight; and C. having the WFQ token distribution module add a token to each data stream or the idle stream at set intervals. The invention also discloses a corresponding device and a corresponding system. By allocating corresponding shaping rates and shaping weights to the different data streams and adding tokens to each data stream and the idle stream accordingly, the method, device, and system shape a variety of data streams effectively.

Owner:ZTE CORP
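
The token-distribution steps above can be illustrated with a WFQ-style loop that grants one token per tick to the stream with the earliest virtual finish time, including an idle stream that absorbs the unallocated rate. This sketch assumes the shaping weight is simply proportional to the shaping rate and the idle weight is the link rate minus the allocated rates; neither conversion is specified in the abstract, and the function name and the stream name `"idle"` are hypothetical.

```python
def distribute_tokens(shaping_rates, link_rate, ticks):
    """Hand out one token per tick using WFQ-style virtual finish times.

    shaping_rates: stream -> shaping rate (used directly as its weight).
    A hypothetical "idle" stream takes the unallocated link rate so that
    real streams are not sped up beyond their shaping rates.
    """
    weights = dict(shaping_rates)
    idle_rate = link_rate - sum(shaping_rates.values())
    if idle_rate > 0:
        weights["idle"] = idle_rate
    finish = {s: 1.0 / w for s, w in weights.items()}   # next token's finish time
    granted = {s: 0 for s in weights}
    for _ in range(ticks):
        s = min(finish, key=lambda k: (finish[k], k))   # earliest finish wins
        granted[s] += 1
        finish[s] += 1.0 / weights[s]
    return granted


print(distribute_tokens({"a": 2, "b": 1}, link_rate=4, ticks=8))
# {'a': 4, 'b': 2, 'idle': 2}
```

Over 8 ticks, stream `a` receives twice as many tokens as stream `b`, matching their shaping rates, and the idle stream takes the remaining share of the link.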

Shared weighted fair queuing (WFQ) shaper

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

Method and system for network processor scheduling based on service levels

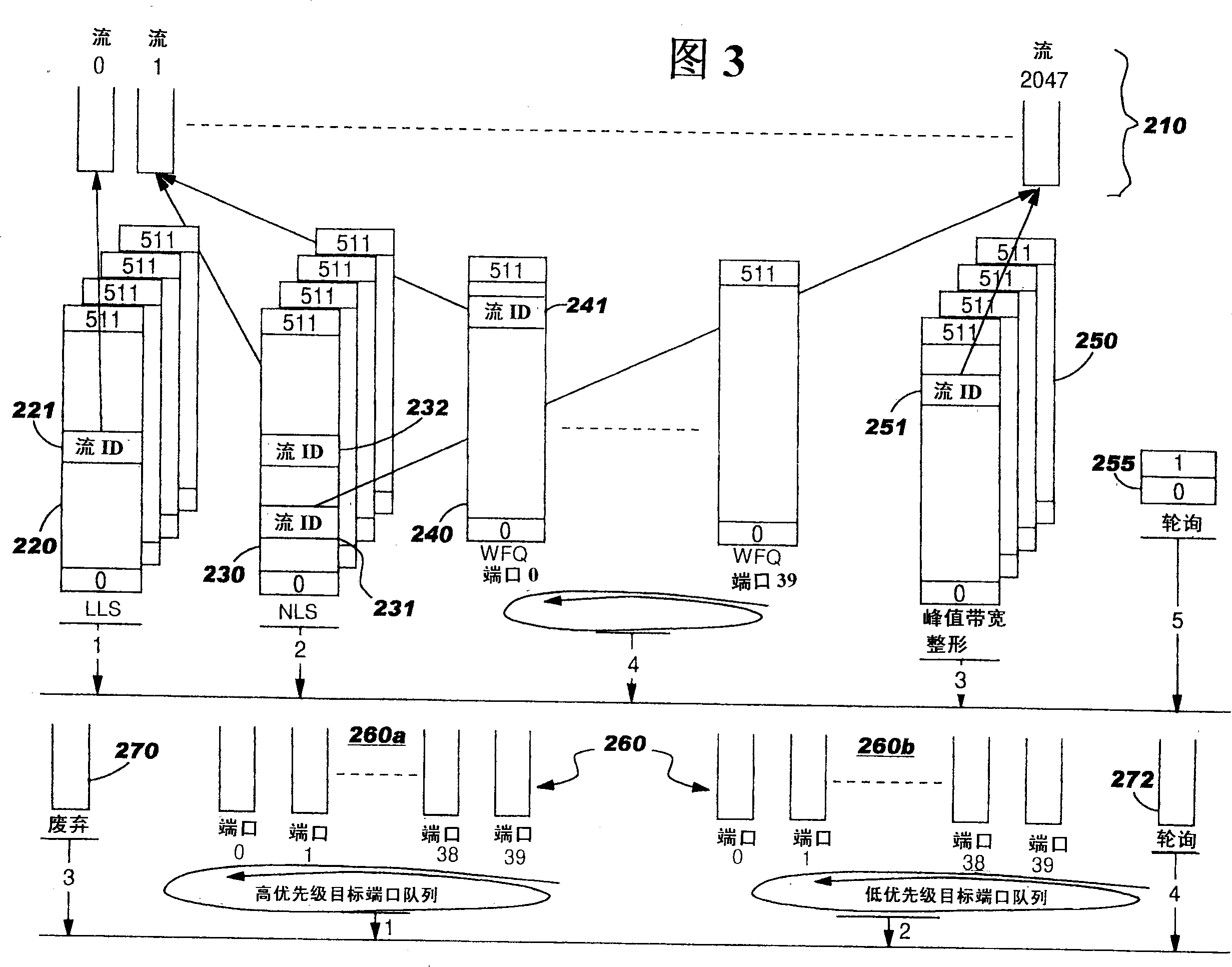

Inactive | US7123622B2 | Tags: Easy to use, Minimal overhead, Error prevention, Transmission systems, Maximum burst size, Coming out

A system and method of moving information units from an output flow control toward a data transmission network in a prioritized sequence which accommodates several different levels of service. The present invention includes a method and system for scheduling the egress of processed information units (or frames) from a network processing unit according to service levels, using a weighted fair queue in which a flow's position in the queue is adjusted after each service based on a weight factor and the frame length. Interaction between different calendar types is used to provide minimum bandwidth, best effort bandwidth, weighted fair queuing service, best effort peak bandwidth, and maximum burst size specifications. The present invention permits different combinations of service that can be used to create different QoS specifications. The “base” services offered to a customer in the example described in this patent application are minimum bandwidth, best effort, peak and maximum burst size (MBS), which may be combined as desired. For example, a user could specify minimum bandwidth plus best effort additional bandwidth, and the system would provide this capability by putting the flow queue in both the NLS and WFQ calendars. When a flow queue is in multiple calendars, the system applies tests to determine when it must come out.

Owner:IBM CORP

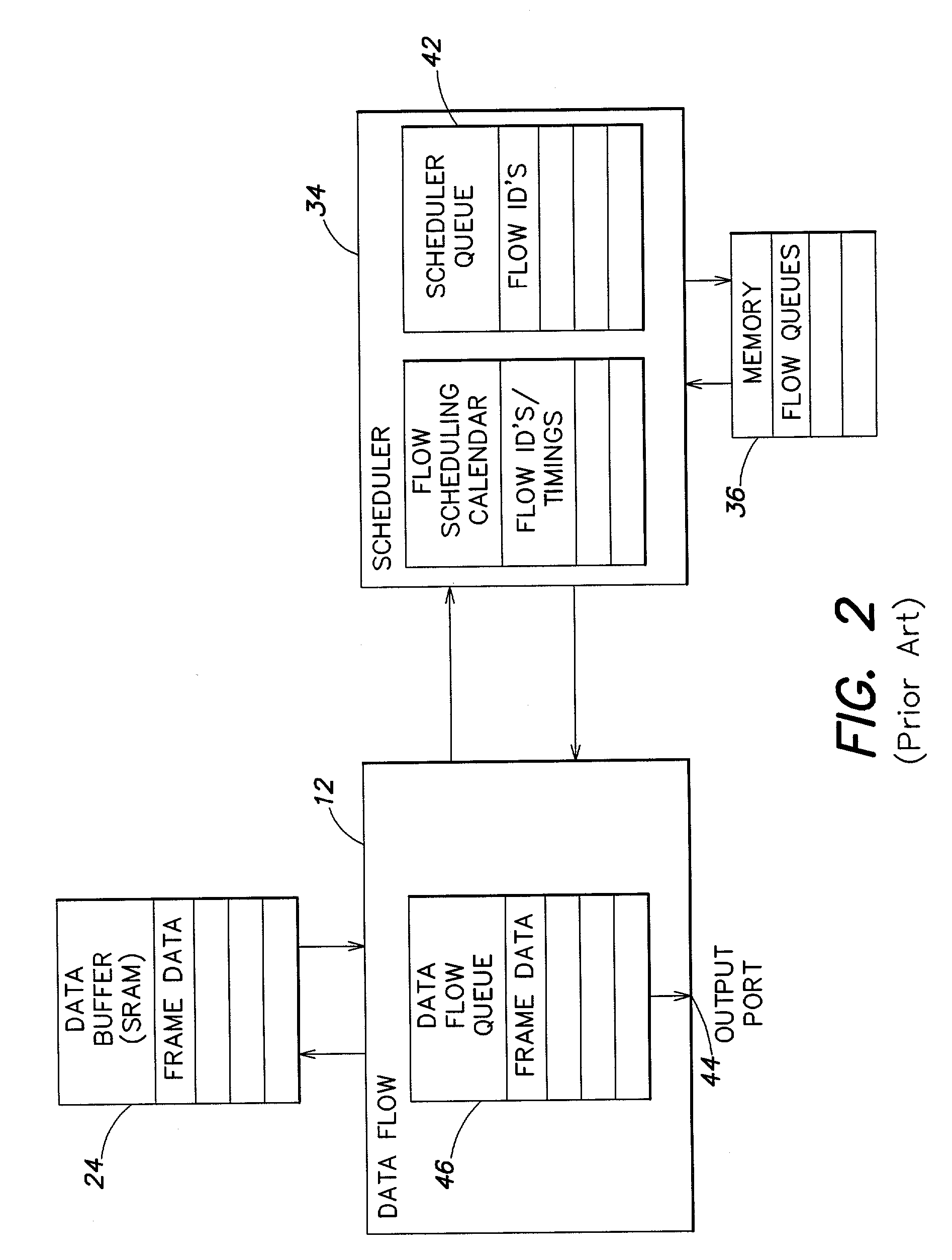

Method and system for network processor scheduling outputs based on multiple calendars

Inactive | US6862292B1 | Tags: Level of service, Easy to use, Error prevention, Transmission systems, High bandwidth, Network processing unit

A system and method of moving information units from a network processor toward a data transmission network in a prioritized sequence which accommodates several different levels of service. The present invention includes a method and system for scheduling the egress of processed information units (or frames) from a network processing unit according to stored priorities associated with the various sources of the information units. The priorities in the preferred embodiment include low-latency service, minimum bandwidth, weighted fair queueing, and a mechanism for preventing a user from continuing to exceed his service levels over an extended period. The present invention includes a plurality of calendars with different service rates, allowing a user to select the service rate he desires. If a customer has chosen a high bandwidth, the customer is placed in a calendar that is serviced more often than if the customer had chosen a lower bandwidth.

Owner:IBM CORP

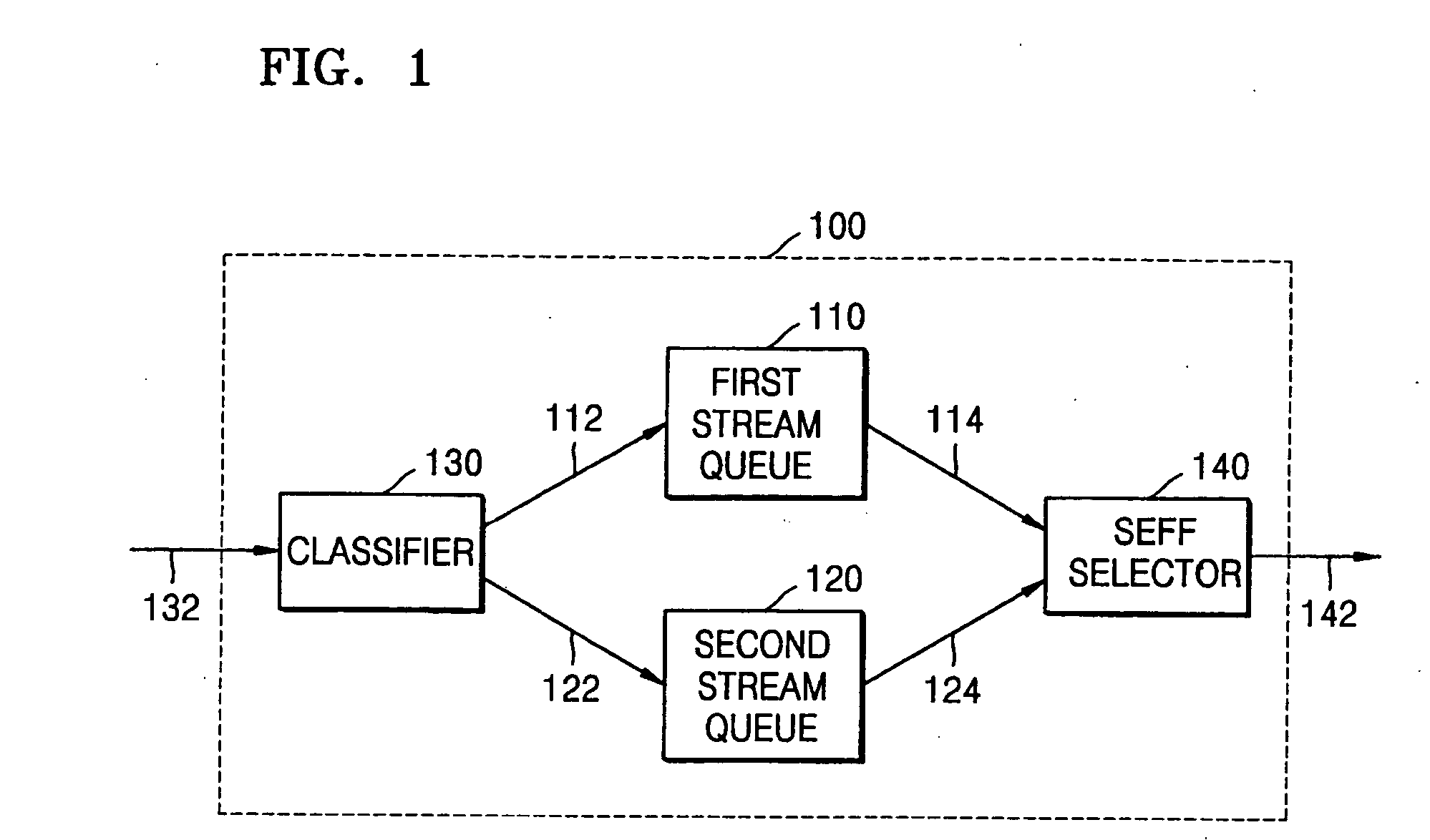

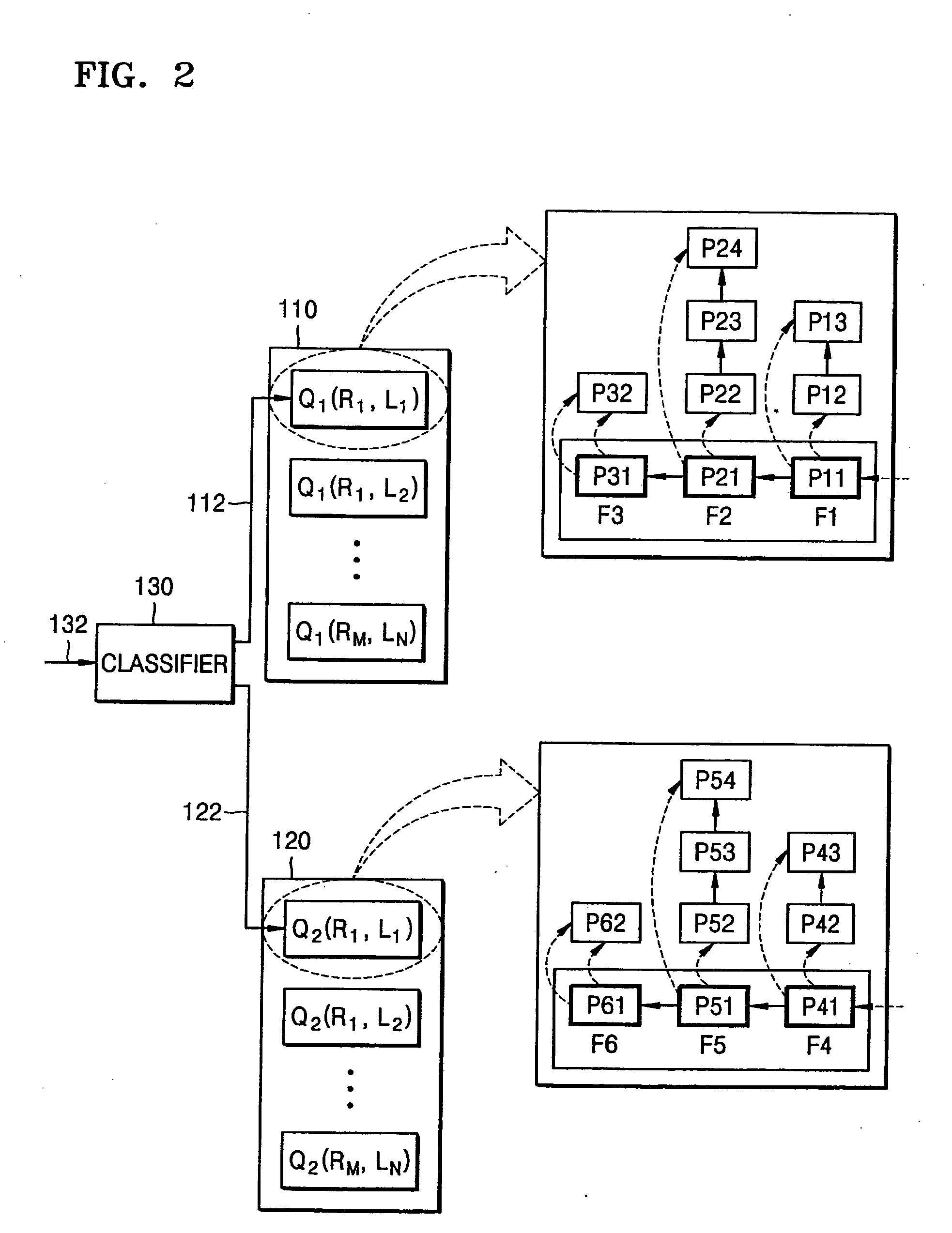

Packet scheduling method and apparatus

Inactive | US20050147103A1 | Tags: The process is simple and effective, Guaranteed performance, Data switching by path configuration, Data rate, Packet scheduling

A packet scheduling method and apparatus including (a) classifying a stream that enters a scheduler according to the data rate and/or length of its packets; (b) if a packet of the classified stream is the first packet, storing it in a first stream queue, and if it is a subsequent packet, storing it in a second stream queue; (c) computing the virtual start service time of the packet stored in the first stream queue according to a weighted fair queuing method; and (d) taking the virtual start service time of the previous packet as the virtual start service time of the packet stored in the second stream queue.

Owner:SAMSUNG ELECTRONICS CO LTD +1

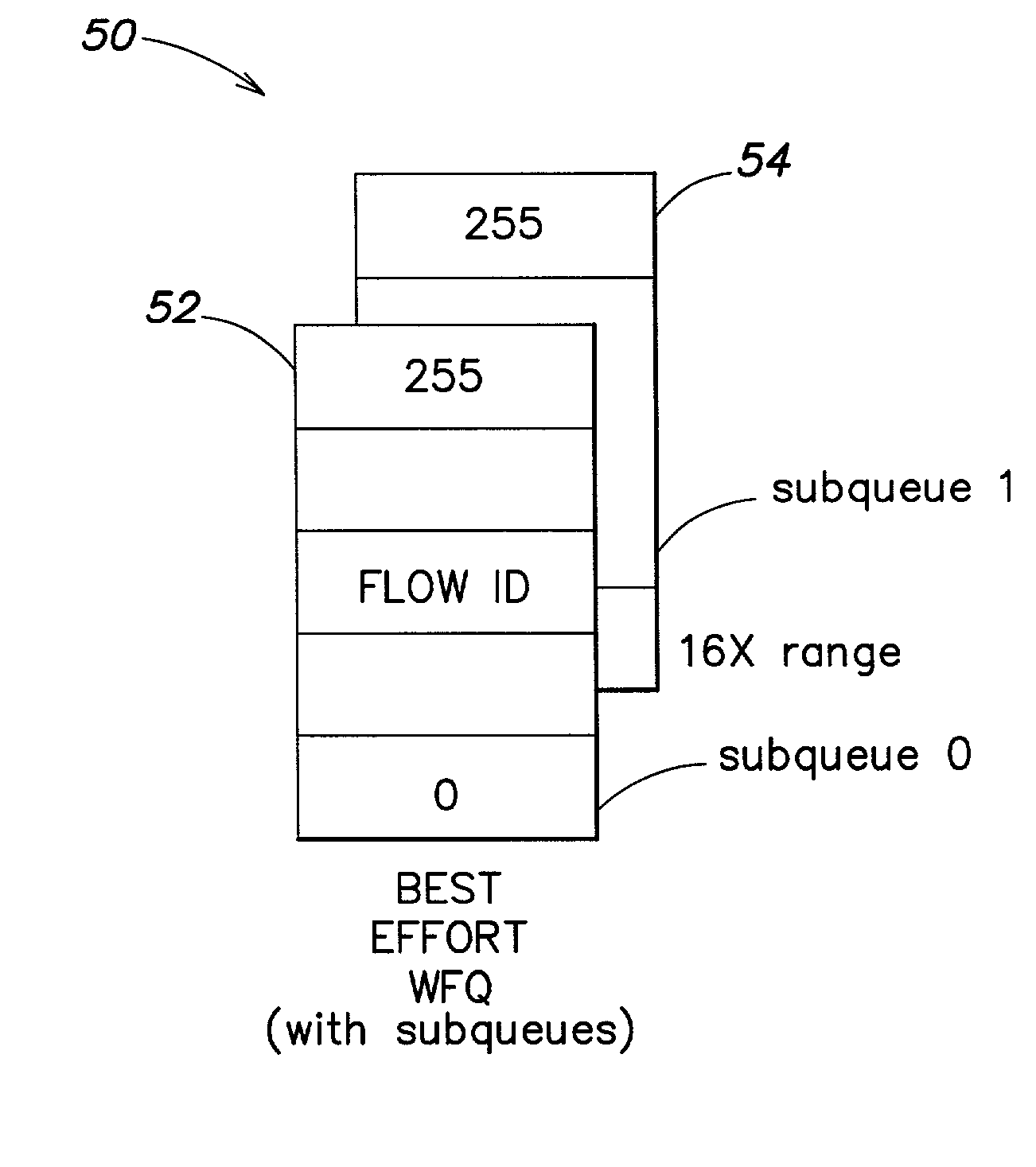

Weighted fair queue having extended effective range

Inactive | US7187684B2 | Tags: High resolution, Increase range, Error prevention, Frequency-division multiplex details, Extended coverage, Image resolution

A scheduler for a network processor includes a scheduling queue in which weighted fair queuing is applied to define a sequence in which flows are to be serviced. The scheduling queue includes at least a first subqueue and a second subqueue. The first subqueue has a first range and a first resolution, and the second subqueue has an extended range that is greater than the first range and a lower resolution that is less than the first resolution. Flows that are to be enqueued within the range of highest precision to the current pointer of the scheduling queue are attached to the first subqueue. Flows that are to be enqueued outside the range of highest precision from the current pointer of the scheduling queue are attached to the second subqueue. Numerous other aspects are provided.

Owner:IBM CORP
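
The range/resolution trade-off in the two-subqueue design above can be shown with a simple mapping from attach distance to a slot. The function name, the slot counts, and the coarse step of 16 are hypothetical parameters chosen for illustration, not values from the patent.

```python
def attach_slot(distance, fine_slots=64, coarse_slots=64, coarse_step=16):
    """Map a distance from the current pointer to (subqueue, slot).

    Hypothetical parameters: the first subqueue holds distances
    0..fine_slots-1 at resolution 1; the second indexes by
    distance // coarse_step, giving a range of coarse_slots * coarse_step
    at lower resolution. Distances past that range are clamped to the last
    slot. (Coarse slots below fine_slots // coarse_step go unused here.)
    """
    if distance < fine_slots:
        return ("fine", distance)
    return ("coarse", min(distance // coarse_step, coarse_slots - 1))


print(attach_slot(10), attach_slot(100), attach_slot(5000))
# ('fine', 10) ('coarse', 6) ('coarse', 63)
```

Distances below 64 keep full precision; larger ones share coarser slots, so a 64-slot second subqueue reaches 1024 positions at the cost of precision.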

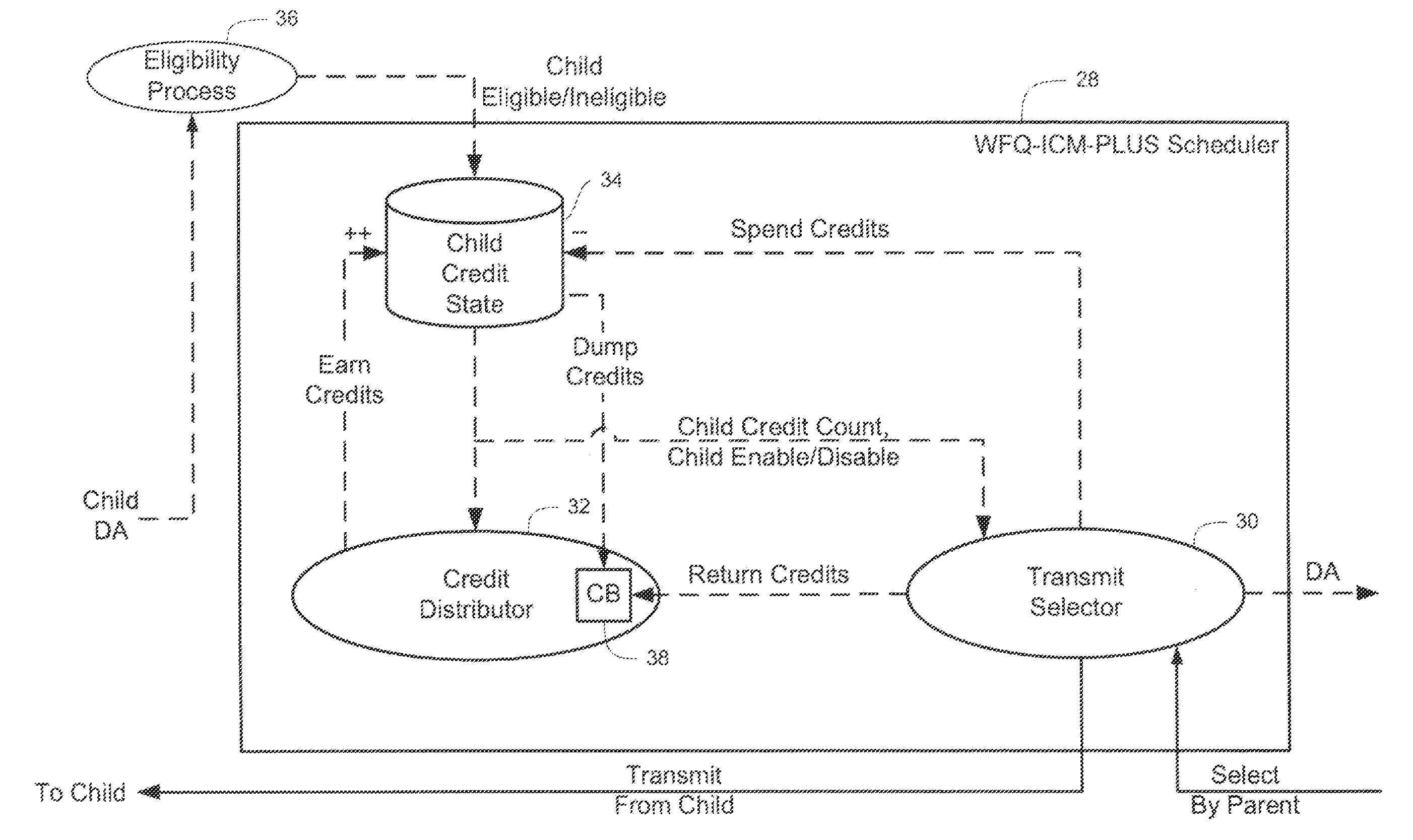

Method and system for weighted fair queuing

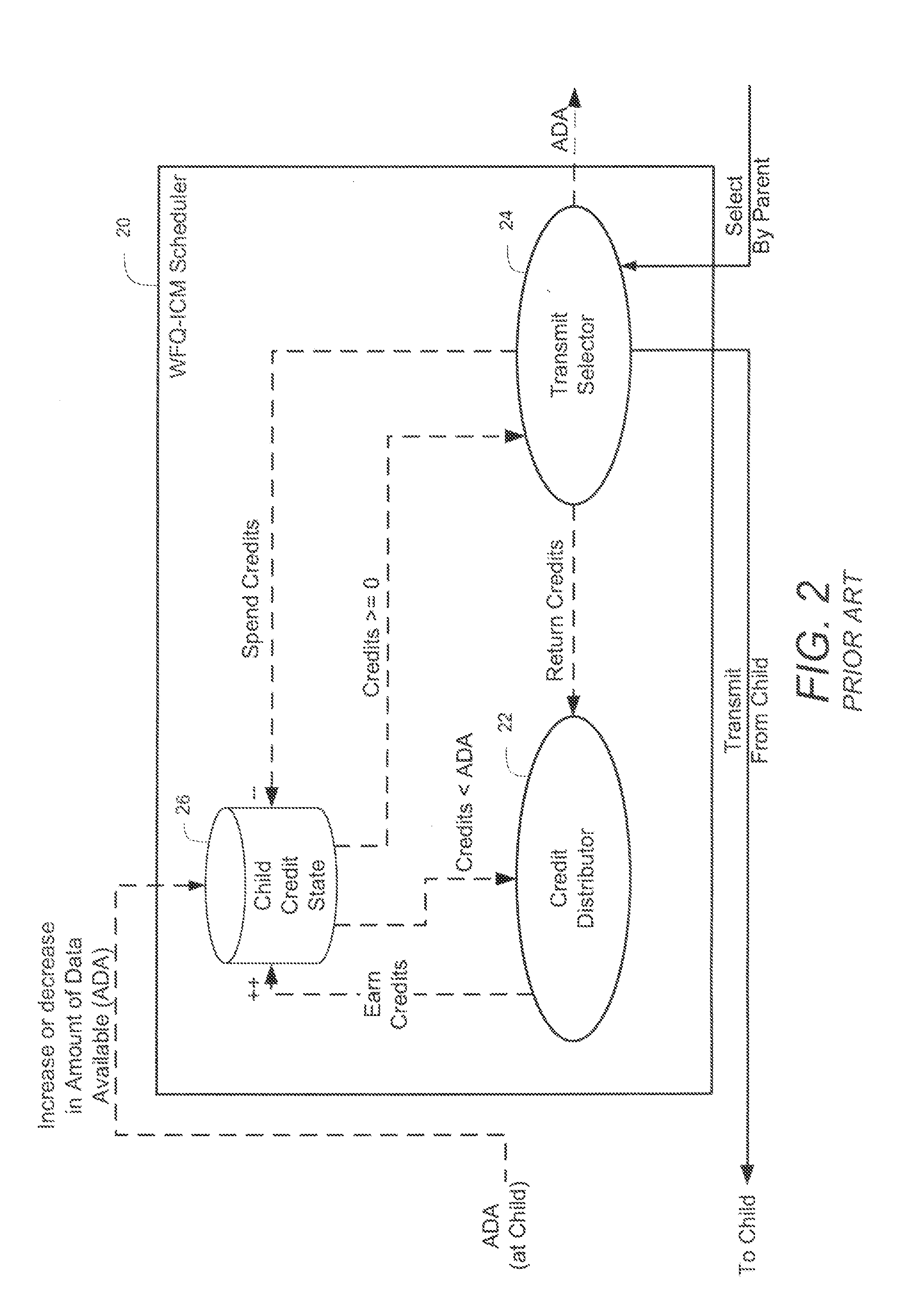

A system for scheduling data for transmission in a communication network includes a credit distributor and a transmit selector. The communication network includes a plurality of children. The transmit selector is communicatively coupled to the credit distributor. The credit distributor operates to grant credits to eligible children and/or children having a negative credit count. Each credit is redeemable for data transmission. The credit distributor further operates to effect fairness between children through the ratios of granted credits, to maintain a credit balance representing the total amount of undistributed credits available, and to deduct the granted credits from the credit balance. The transmit selector operates to select at least one eligible and enabled child for dequeuing, to bias the selection toward an eligible and enabled child with positive credits, and to add credits to the credit balance corresponding to the amount of data selected for dequeuing.

Owner:RPX CLEARINGHOUSE

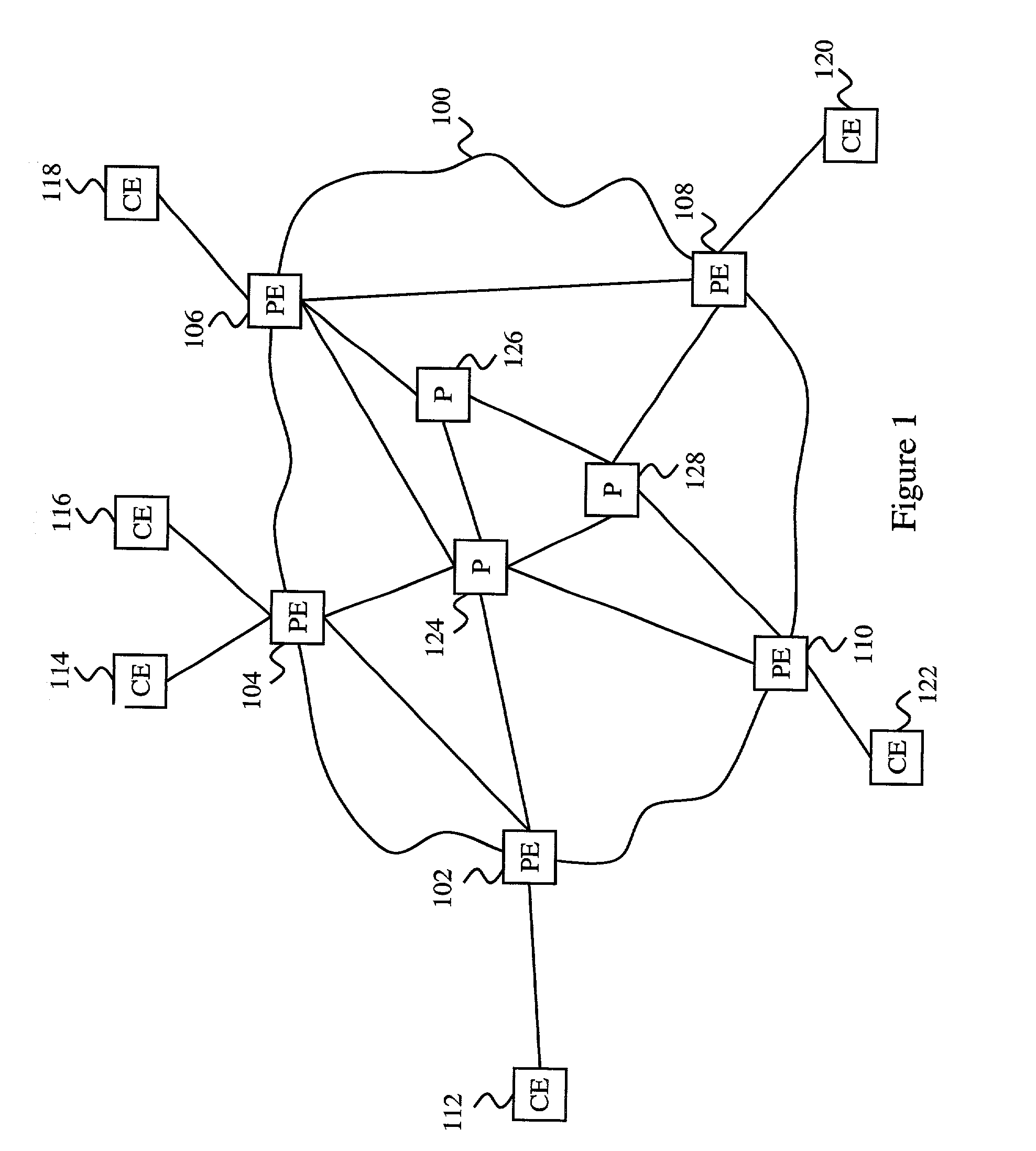

Queueing technique for multiple sources and multiple priorities

Active | US20060083226A1 | Tags: Multiplex system selection arrangements, Error prevention, Data pack, Class of service

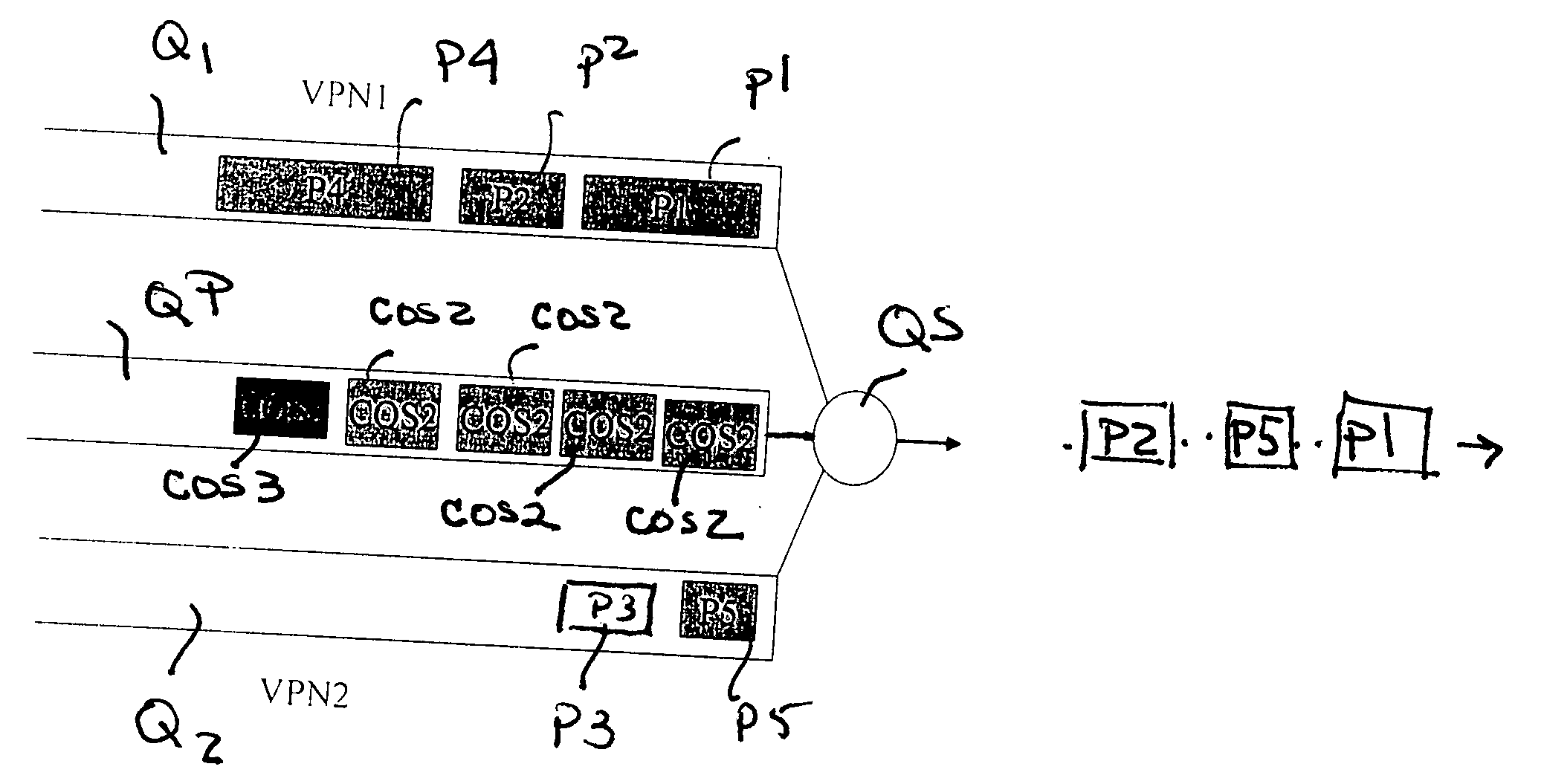

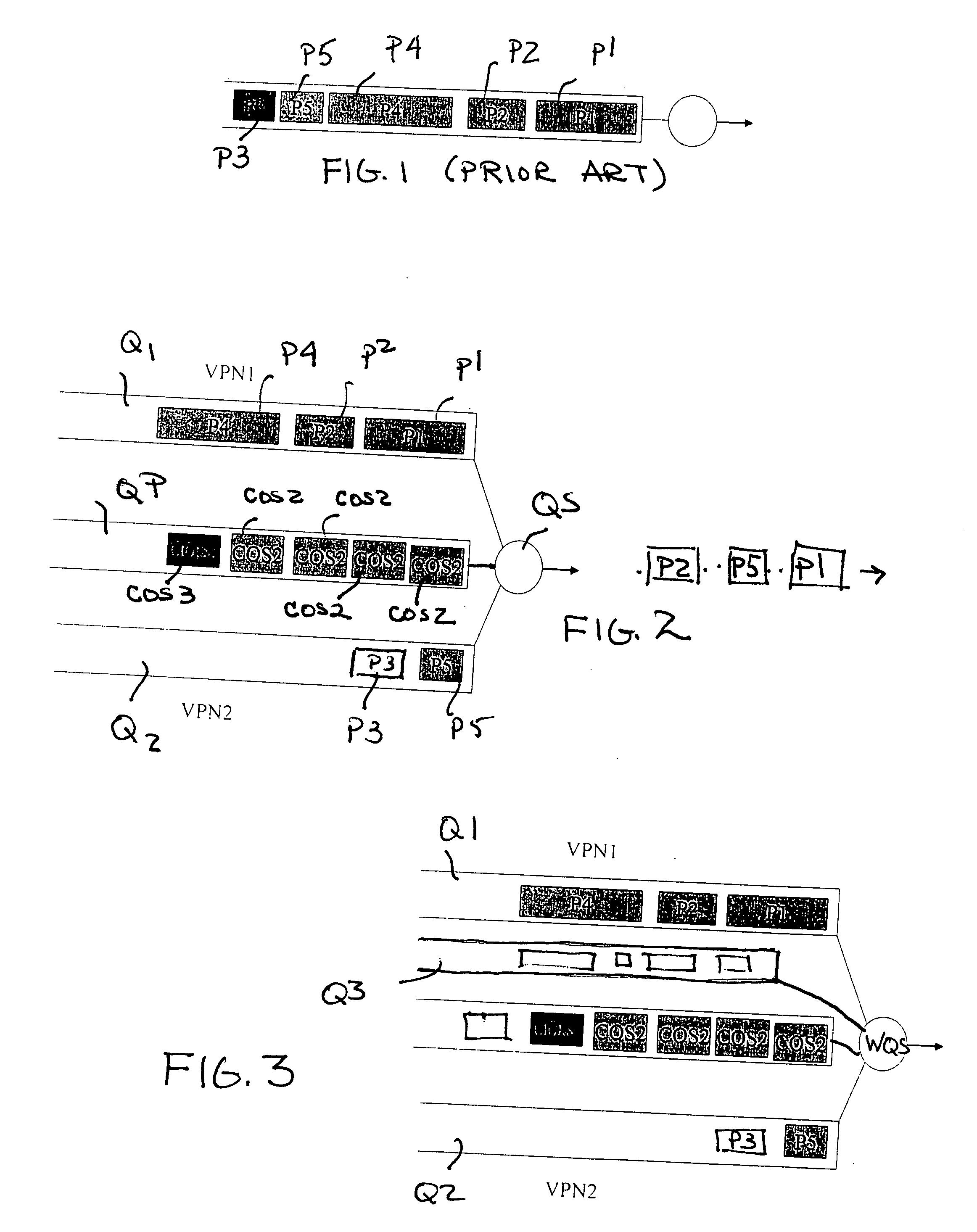

A method of dealing equitably with traffic from multiple VPNs feeding into a single router combines the prior-art weighted fair queueing (WFQ) technique with a “service level” queue, comprising a set of tokens that define the class of service (COS) of the next packet to be transmitted, and a queue selector that queries each queue in round-robin fashion. The service level queue ensures that higher-priority traffic is transmitted first, while the queue selector ensures that each VPN receives similar service. The queue selector may be “weighted”: if a particular VPN generates substantially more traffic than the other VPNs, that VPN is selected more than once during a round-robin cycle to transmit a packet (“weighted round robin” WFQ).

Owner:AMERICAN TELEPHONE & TELEGRAPH CO
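
The weighted queue selector described above can be sketched as a weighted round robin over per-VPN queues, where a VPN with weight k may send up to k packets per cycle and each VPN's highest-priority packet goes first. This is a simplification: the per-VPN `min()` stands in for the patent's service-level token queue, and the function name and data layout are hypothetical.

```python
def weighted_round_robin(queues, weights, cycles):
    """Serve per-VPN queues round robin; a VPN with weight k may send up to
    k packets per cycle. Each queue is a list of (priority, packet) pairs
    (lower number = higher priority) and is consumed in place."""
    order = []
    for _ in range(cycles):
        for vpn in sorted(queues):
            q = queues[vpn]
            for _ in range(weights.get(vpn, 1)):
                if not q:
                    break
                best = min(q)      # stand-in for a per-VPN service-level queue
                q.remove(best)
                order.append(best[1])
    return order


qs = {"vpn1": [(1, "a1"), (0, "a0"), (1, "a2")], "vpn2": [(0, "b0"), (1, "b1")]}
print(weighted_round_robin(qs, {"vpn1": 2, "vpn2": 1}, cycles=2))
# ['a0', 'a1', 'b0', 'a2', 'b1']
```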

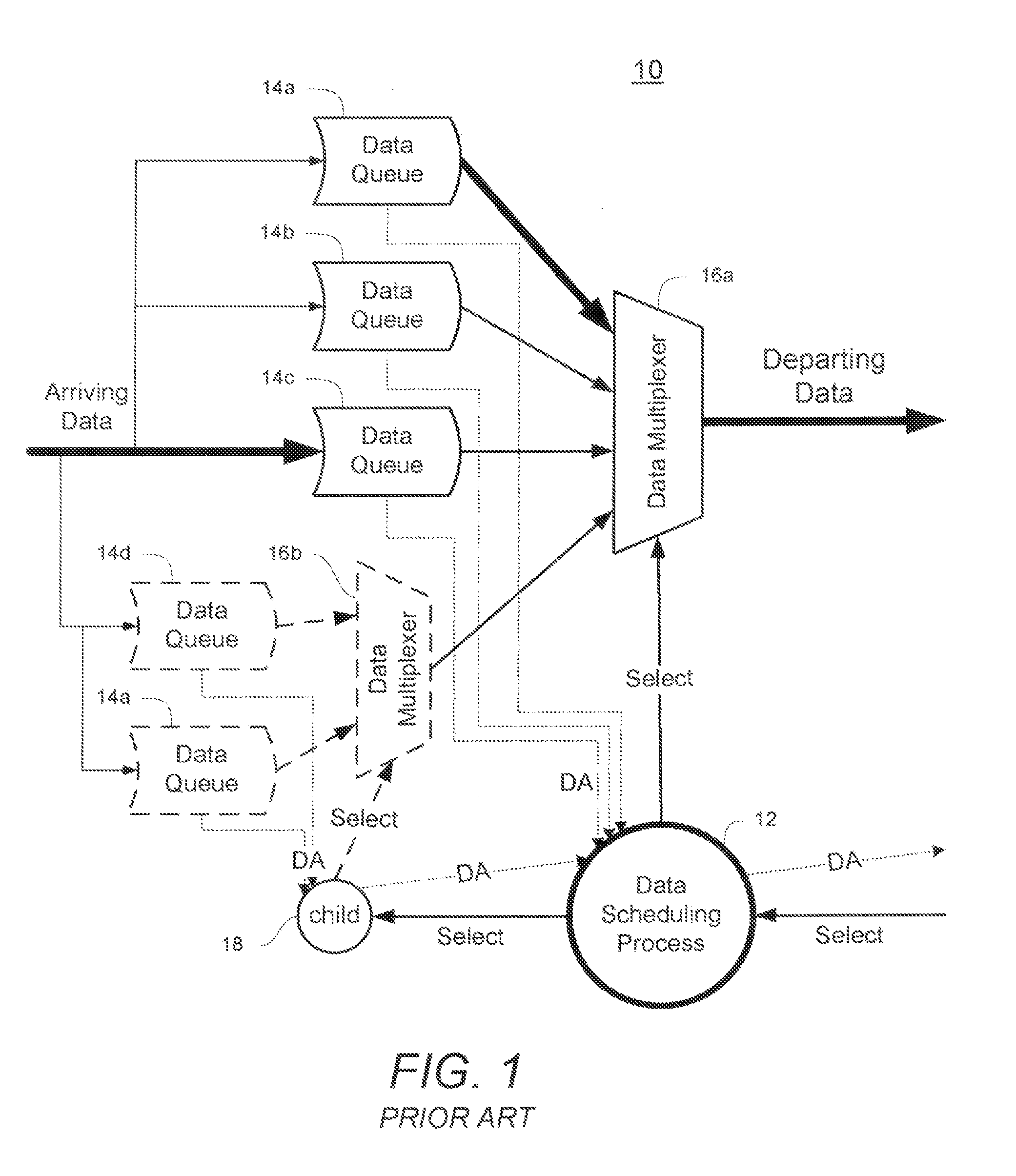

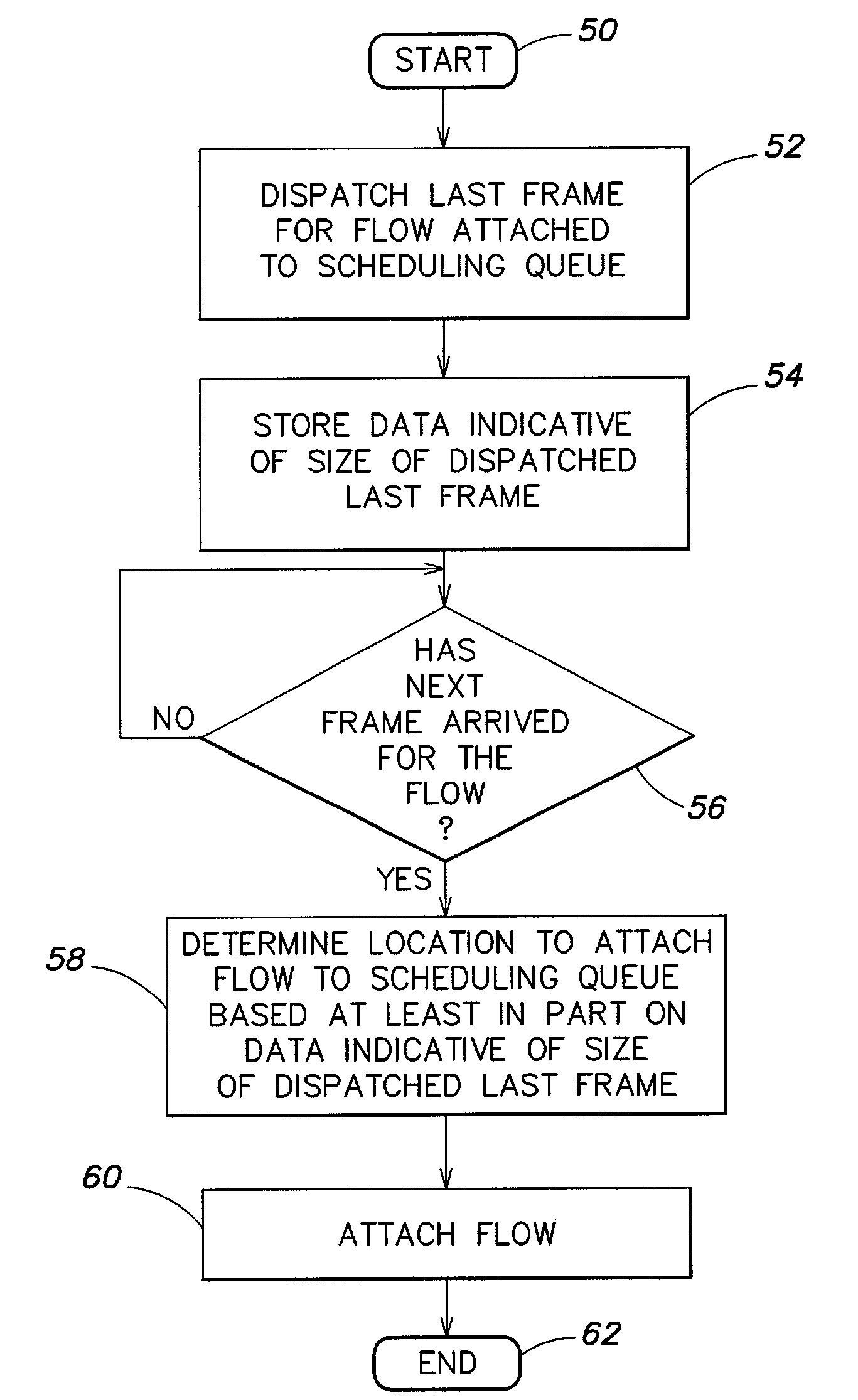

Method and apparatus for improving the fairness of new attaches to a weighted fair queue in a quality of service (QoS) scheduler

In a first aspect, a network processor includes a scheduler in which a scheduling queue is maintained. A last frame is dispatched from a flow queue maintained in the network processor, thereby emptying the flow queue. Data indicative of the size of the dispatched last frame is stored in association with the scheduler. A new frame corresponding to the emptied flow queue is received, and the flow corresponding to the emptied flow queue is attached to the scheduling queue. The flow is attached to the scheduling queue at a distance D from a current pointer for the scheduling queue. The distance D is determined based at least in part on the stored data indicative of the size of the dispatched last frame.

Owner:IBM CORP

Processor with scheduler architecture supporting multiple distinct scheduling algorithms

Inactive | US7477636B2 | Tags: Sufficient flexibility, Application of process, Data switching by path configuration, Radio transmission, Fair queuing, Weighted fair queueing

Owner:INTEL CORP

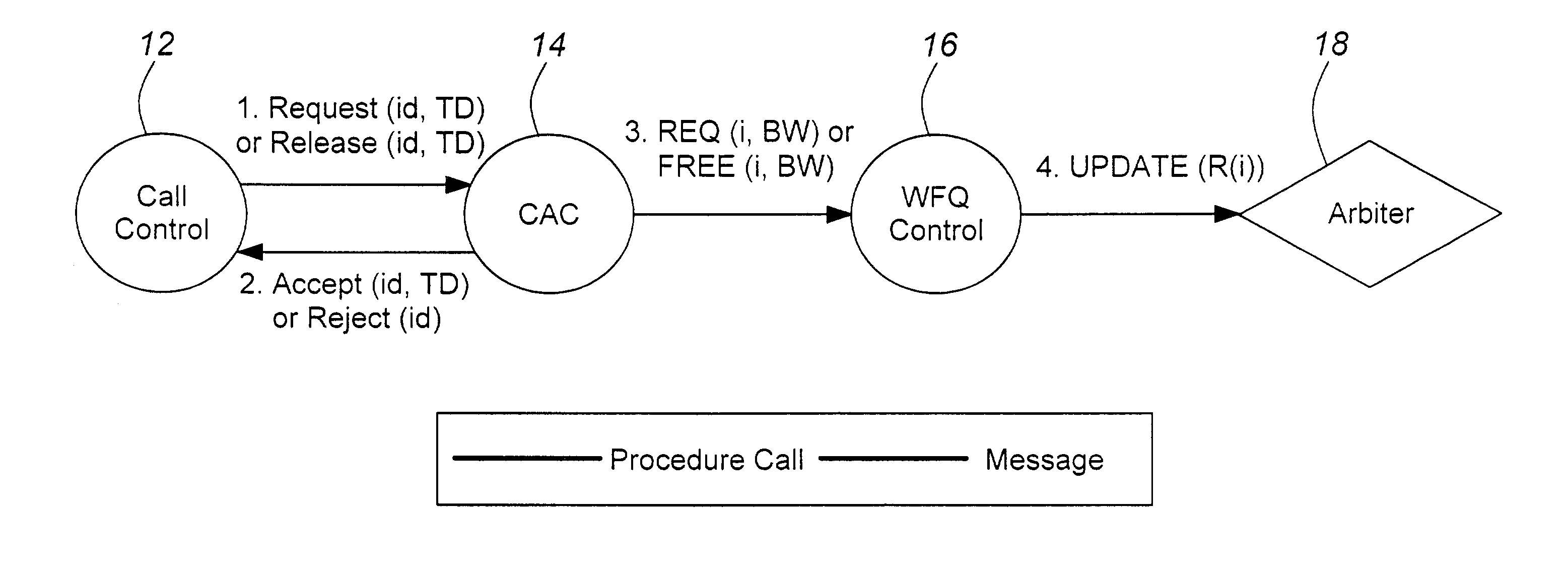

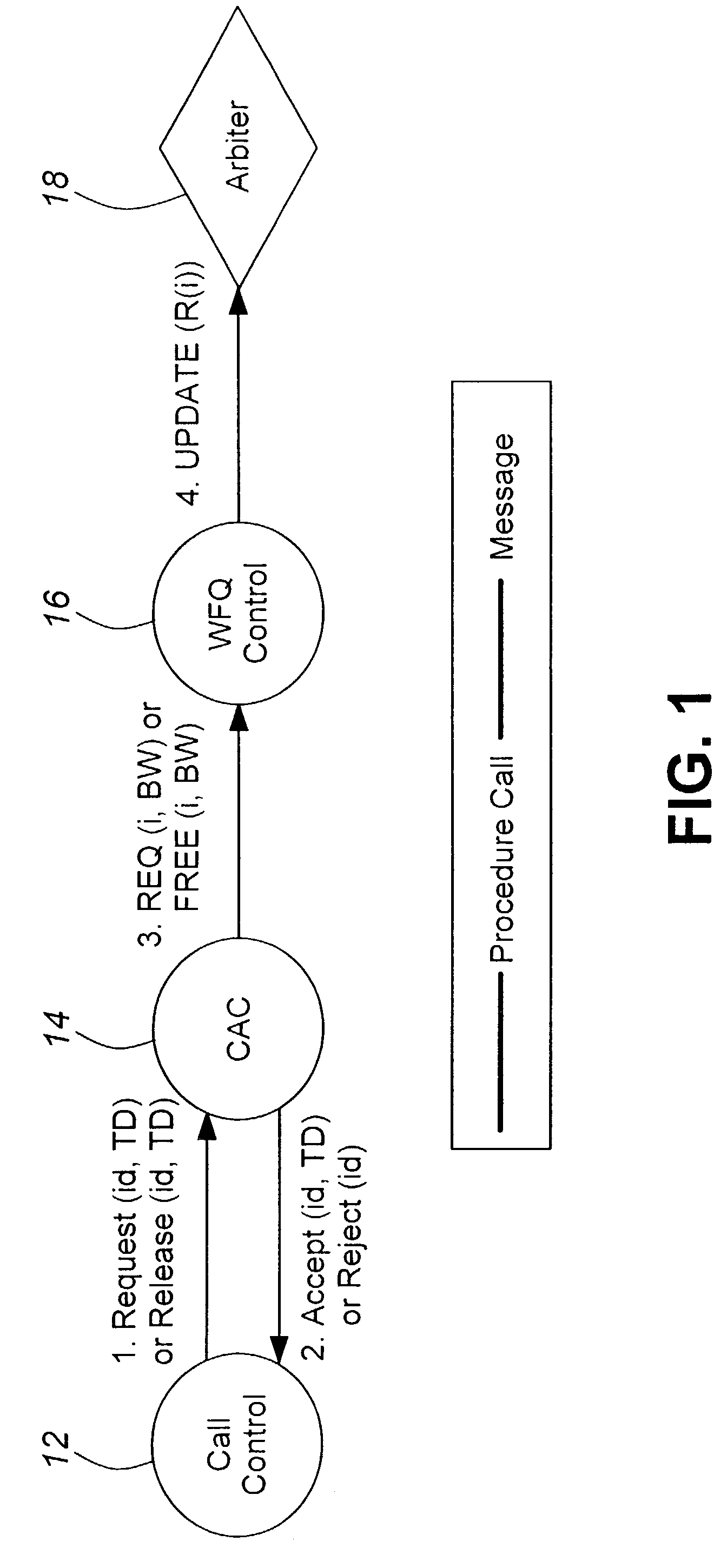

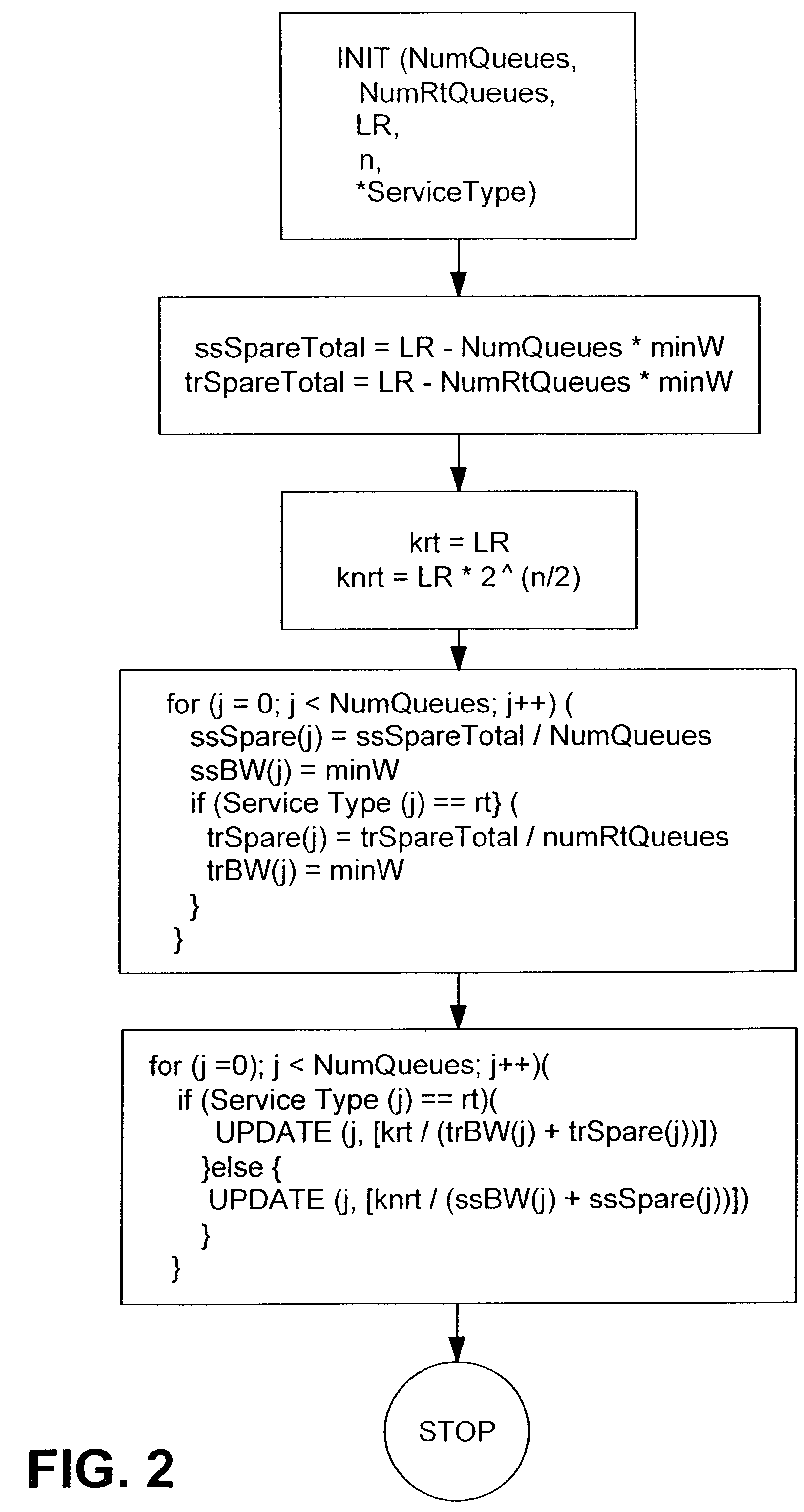

Adaptive service weight assignments for ATM scheduling

Inactive | US6993040B2 | Tags: Data switching by path configuration, Store-and-forward switching systems, Adaptive services, Fair queuing

A system and method for adaptively assigning queue service weights to an ATM traffic management controller in order to reduce service weight update calculations. The implementation of a weighted fair queuing scheme on a network is divided into two functions: queue arbitration and queue weight configuration. Queue arbitration is performed via a virtual time-stamped fair queuing algorithm. Queue weight configuration is performed via a reconfigurable weighted fair queuing controller in which bandwidth requirements are calculated in response to connection setup and release values.

Owner:ALCATEL CANADA
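
The abstract above separates per-cell arbitration from weight configuration, recomputing weights only on connection setup and release. A minimal sketch of the configuration side, assuming a queue's weight is its share of total reserved bandwidth (the abstract does not give the exact formula); the class and method names are hypothetical:

```python
class WeightConfigurator:
    """Recompute per-queue service weights only when connections are set up
    or released, not on every cell. Weight = queue's share of the total
    reserved bandwidth (an assumed formula)."""

    def __init__(self):
        self.reserved = {}                     # queue -> reserved bandwidth

    def setup(self, queue, bandwidth):
        self.reserved[queue] = self.reserved.get(queue, 0) + bandwidth
        return self.weights()

    def release(self, queue, bandwidth):
        self.reserved[queue] -= bandwidth
        if self.reserved[queue] <= 0:          # queue has no connections left
            del self.reserved[queue]
        return self.weights()

    def weights(self):
        total = sum(self.reserved.values())
        return {q: bw / total for q, bw in self.reserved.items()} if total else {}


c = WeightConfigurator()
c.setup("cbr", 30)
print(c.setup("vbr", 10))   # {'cbr': 0.75, 'vbr': 0.25}
```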

Weighted-fair-queuing relative bandwidth sharing

Active | US7948896B2 | Tags: Error prevention, Frequency-division multiplex details, Fair queuing, Weighted fair queueing

Owner:AVAGO TECH INT SALES PTE LTD

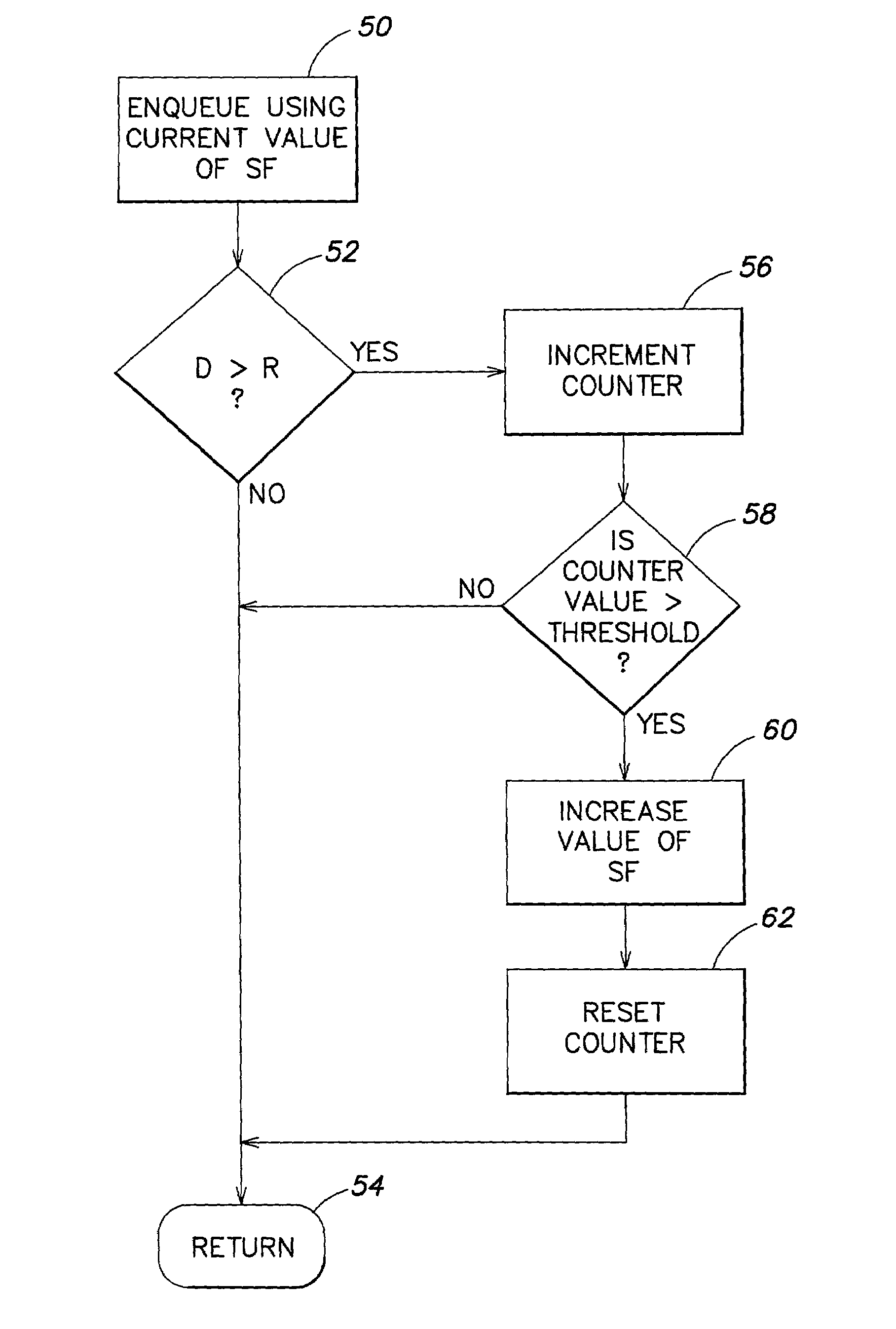

Weighted fair queue having adjustable scaling factor

Inactive | US7280474B2 | Tags: Error prevention, Frequency-division multiplex details, Fair queuing, Network processor

A scheduler for a network processor includes a scheduling queue in which weighted fair queuing is applied. The scheduling queue has a range R. Flows are attached to the scheduling queue at a distance D from a current pointer for the scheduling queue. The distance D is calculated for each flow as D = (WF × FS) / SF, where WF is a weighting factor applicable to the respective flow, FS is a frame size attributable to the respective flow, and SF is a scaling factor. The scaling factor SF is adjusted depending on a comparison of the distance D to the range R.

Owner:GOOGLE LLC
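
The attach-distance formula D = (WF × FS) / SF above can be sketched directly. The abstract only says SF is adjusted by comparing D with R; doubling SF until D fits, and using integer slot distances, are assumptions made for this illustration, and the function name is hypothetical.

```python
def attach_distance(weight_factor, frame_size, scaling_factor, queue_range):
    """Compute D = (WF * FS) // SF as an integer slot distance. If D does not
    fit within the range R, double SF until it does (an assumed policy).
    Requires scaling_factor > 0 and queue_range > 0. Returns (D, SF)."""
    sf = scaling_factor
    d = (weight_factor * frame_size) // sf
    while d >= queue_range:
        sf *= 2
        d = (weight_factor * frame_size) // sf
    return d, sf


print(attach_distance(4, 1500, 16, 512))   # (375, 16)
print(attach_distance(8, 1500, 16, 512))   # (375, 32)
```

A heavier weighting factor (8 instead of 4) pushes D past R = 512, so the scaling factor doubles to 32 and the flow attaches at distance 375.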

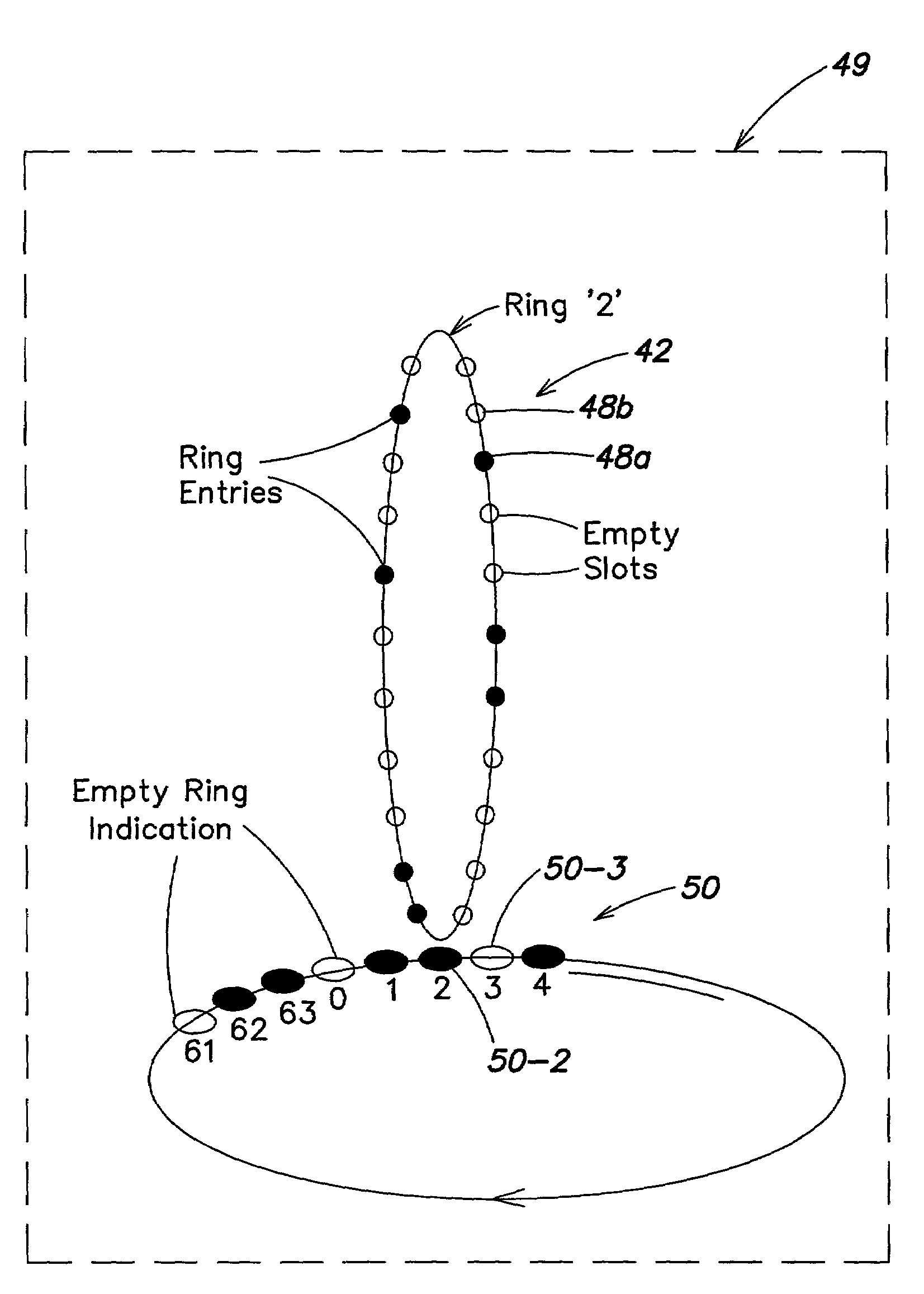

Empty indicators for weighted fair queues

Active | US7310345B2 | Tags: Reduce spending, Error prevention, Frequency-division multiplex details, Network processor, Weighted fair queueing

A scheduler for a network processor includes one or more scheduling queues. Each scheduling queue defines a respective sequence in which flows are to be serviced. A respective empty indicator is associated with each scheduling queue to indicate whether the respective scheduling queue is empty. By referring to the empty indicators, it is possible to avoid wasting operating cycles of the scheduler on searching scheduling queues that are empty.

Owner:GOOGLE LLC
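
One common way to realize per-queue empty indicators like those described above is a bitmap with one bit per scheduling queue, so the scheduler can find the first non-empty queue with a couple of bit operations instead of scanning. This is an illustrative sketch; the class name and bit layout are hypothetical.

```python
class EmptyIndicators:
    """Bit i set means scheduling queue i is non-empty."""

    def __init__(self):
        self.bits = 0

    def mark(self, queue, nonempty):
        if nonempty:
            self.bits |= 1 << queue
        else:
            self.bits &= ~(1 << queue)

    def first_nonempty(self):
        """Index of the lowest-numbered non-empty queue, or None."""
        if self.bits == 0:
            return None
        # bits & -bits isolates the lowest set bit; bit_length gives its index.
        return (self.bits & -self.bits).bit_length() - 1


e = EmptyIndicators()
e.mark(5, True)
e.mark(2, True)
print(e.first_nonempty())   # 2
```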

Structure for scheduler pipeline design for hierarchical link sharing

Inactive | US7457241B2 | Tags: Reduce in quantity, Error prevention, Transmission systems, Traffic capacity, External storage

A pipeline configuration is described for use in network traffic management for the hardware scheduling of events arranged in a hierarchical linkage. The configuration reduces costs by minimizing the use of external SRAM memory devices. This results in some external memory devices being shared by different types of control blocks, such as flow queue control blocks, frame control blocks and hierarchy control blocks. Both SRAM and DRAM memory devices are used, depending on the content of the control block (Read-Modify-Write or ‘read’ only) at enqueue and dequeue, or Read-Modify-Write solely at dequeue. The scheduler utilizes time-based calendars and weighted fair queueing calendars in the egress calendar design. Control blocks that are accessed infrequently are stored in DRAM memory while those accessed frequently are stored in SRAM.

Owner:IBM CORP

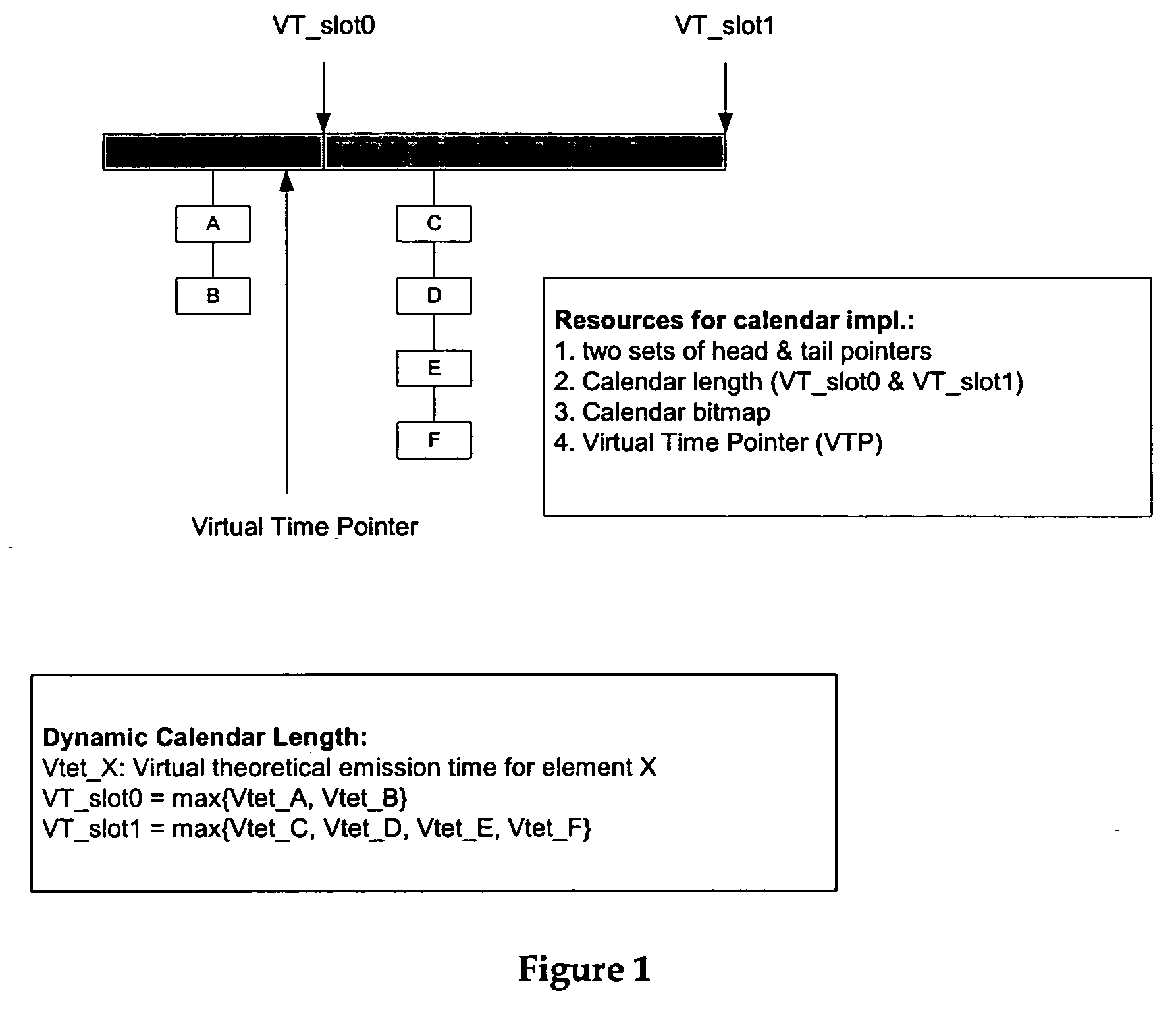

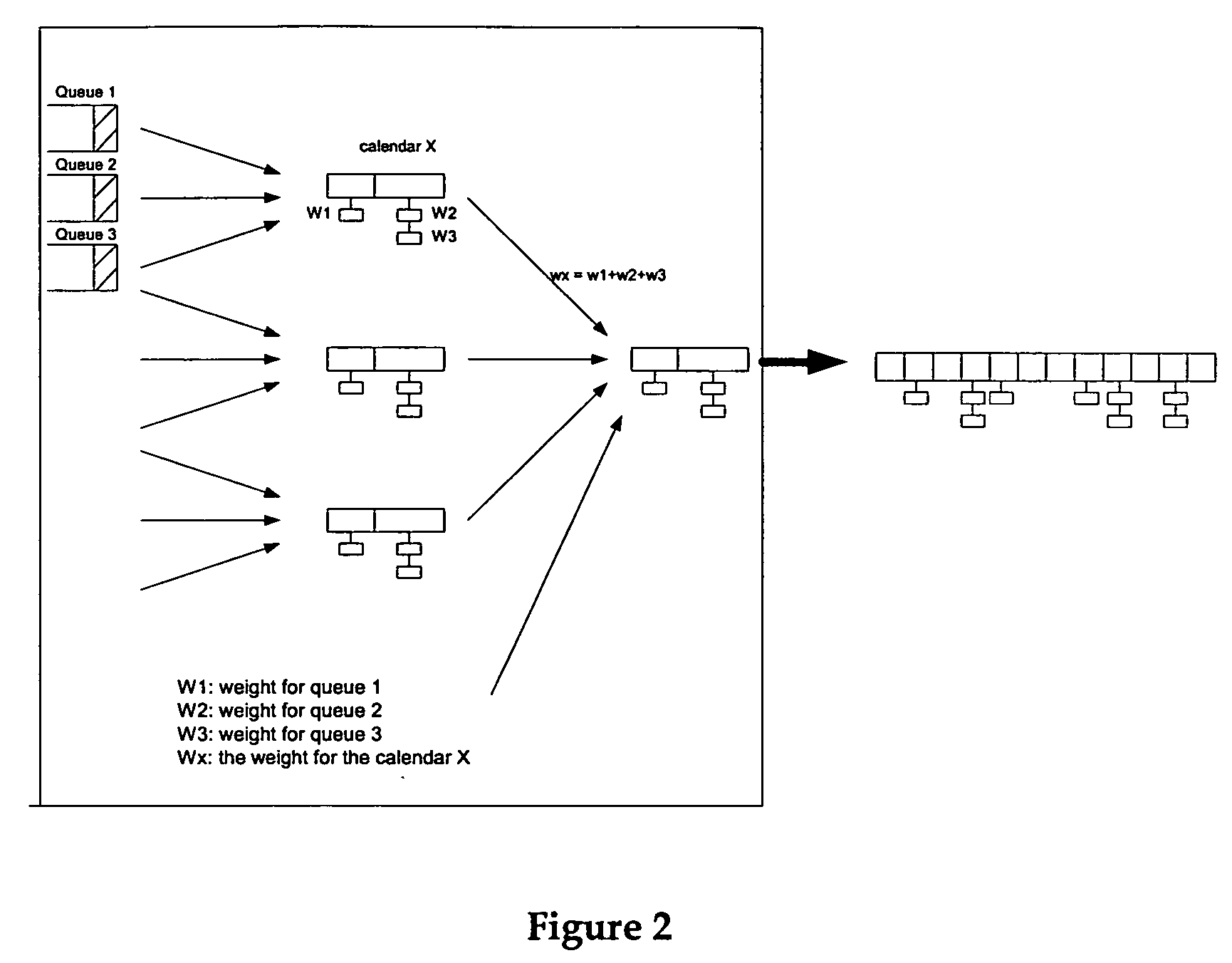

Two-slot dynamic length WFQ calendar

A system and method of scheduling and servicing events in a communications network are described. To improve efficiency while maintaining fairness to all traffic, a two-slot, dynamic-length Weighted Fair Queuing (WFQ) calendar is implemented. The two-slot calendar can be transformed to provide fine granularity using a hierarchical WFQ scheme.

Owner:ALCATEL LUCENT SAS

Method and system for scheduling information using disconnection/reconnection of network server

Inactive | CN1419767A | Tags: Service will not affect, Efficient use of, Store-and-forward switching systems, Time-division multiplexing selection, High bandwidth, Network processing unit

A system and method of moving information units from a network processor toward a data transmission network in a prioritized sequence which accommodates several different levels of service. The present invention includes a method and system for scheduling the egress of processed information units (or frames) from a network processing unit according to stored priorities associated with the various sources of the information units. The priorities in the preferred embodiment include low-latency service, minimum bandwidth, weighted fair queueing, and a mechanism for preventing a user from continuing to exceed his service levels over an extended period. The present invention includes a plurality of calendars with different service rates, allowing a user to select the service rate he desires. If a customer has chosen a high bandwidth, the customer is placed in a calendar that is serviced more often than if the customer had chosen a lower bandwidth.

Owner:IBM CORP

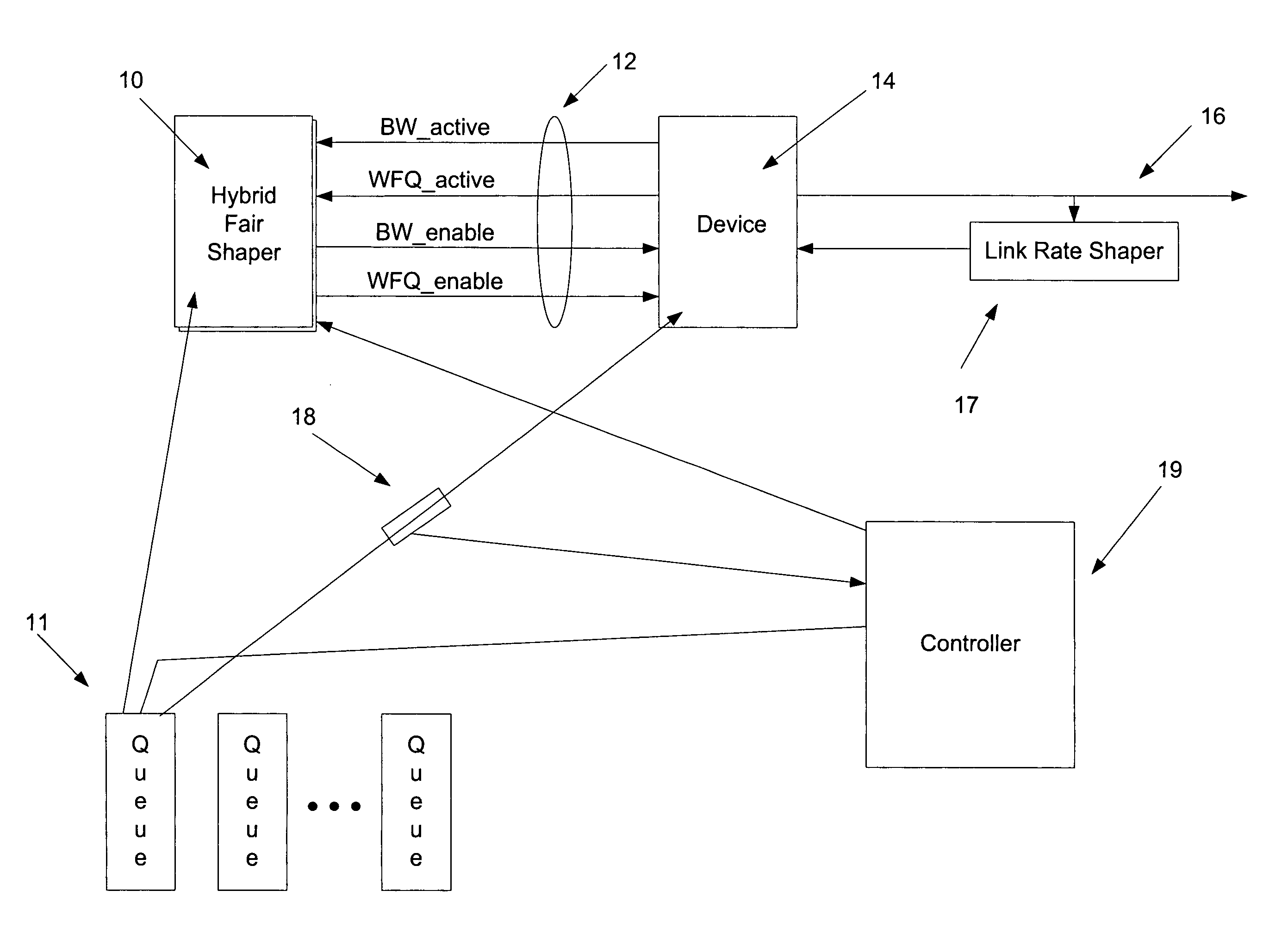

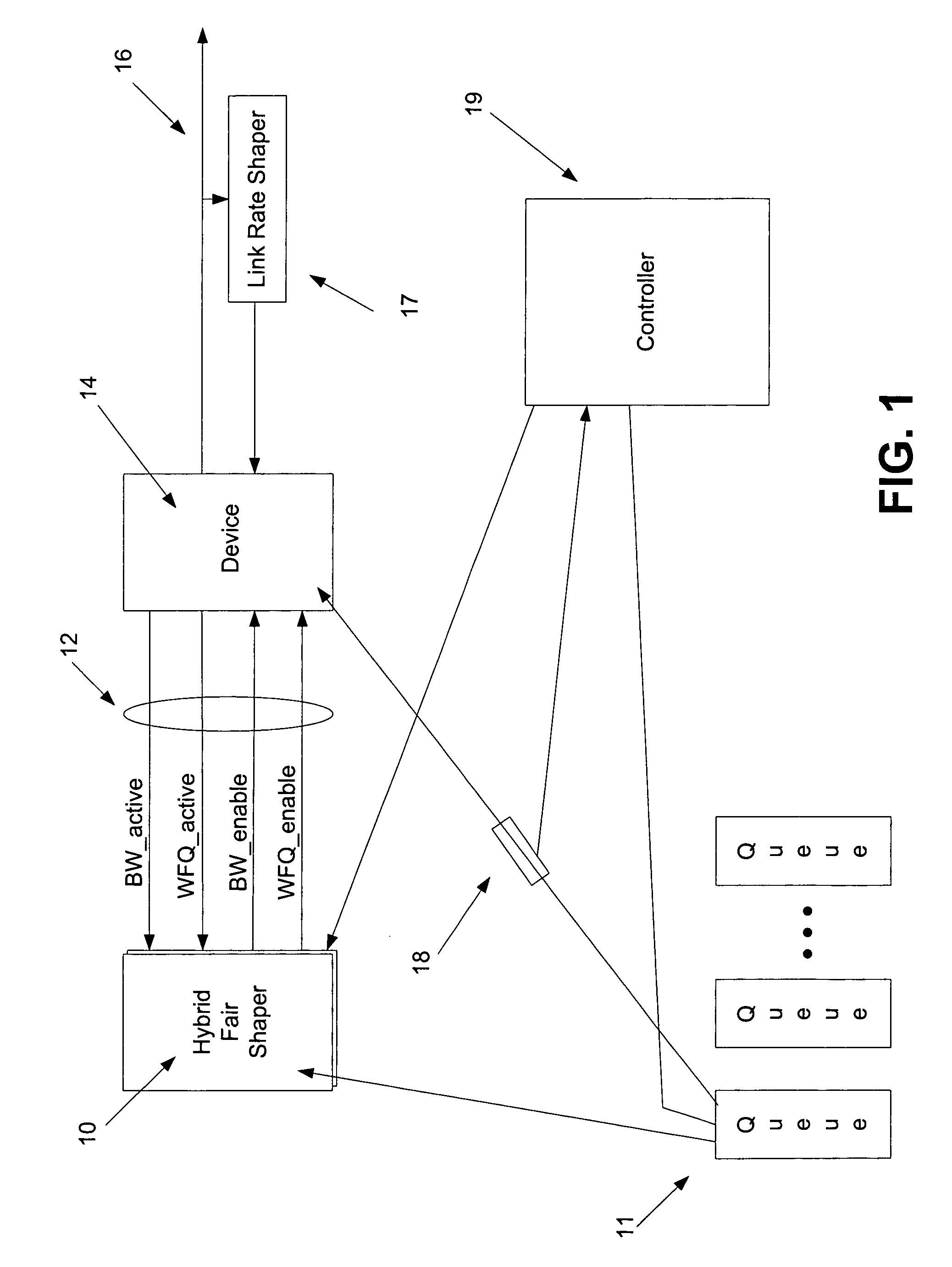

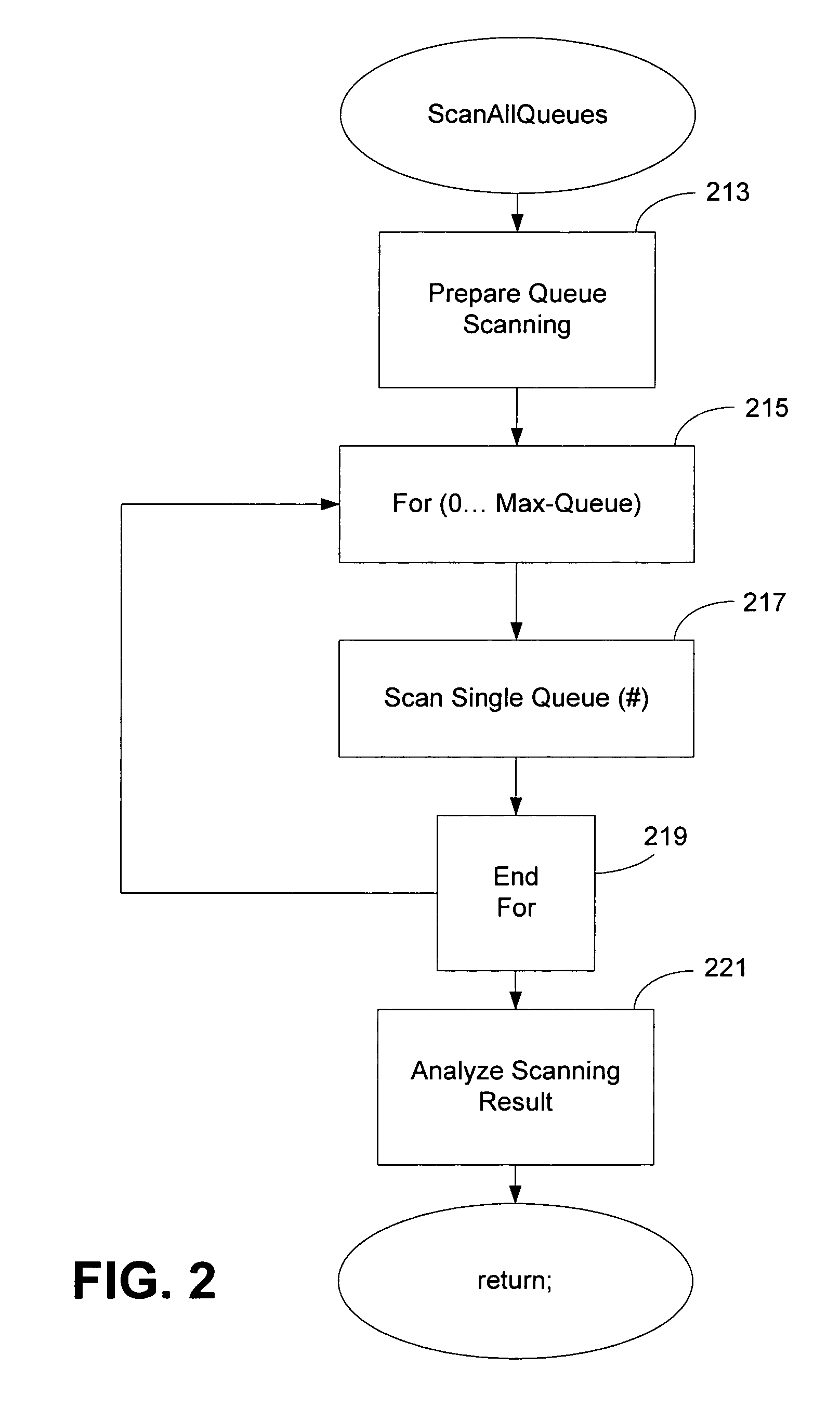

Method and apparatus for scheduling data on a medium

A bandwidth-guaranteeing (BW) process transmits data from a first group of queues to a medium. When none of the queues in the first group wishes to transmit data via the BW process, a weighted fair queuing process divides any excess bandwidth among a second group of queues. A mathematically simple WFQ process is described that may be interrupted by the BW process and later resumed with the same relative ordering among the queues.

Owner:MICROSEMI STORAGE SOLUTIONS
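
The abstract above describes serving a bandwidth-guaranteeing group first and splitting leftover capacity among a second group by WFQ, with the WFQ order surviving interruptions. The sketch below assumes a first-group queue "wishes to transmit" whenever it is non-empty and uses a simple per-packet finish-time counter as the WFQ process; both are simplifications, and the class name is hypothetical.

```python
class TwoStageScheduler:
    """Stage 1: bandwidth-guaranteed (BW) queues, served first.
    Stage 2: WFQ over the remaining queues, used only when no BW queue has data.

    WFQ state (a per-queue virtual finish counter) lives in the object, so
    when BW traffic interrupts, WFQ resumes with the same relative order.
    Simplification: an idle WFQ queue keeps its old counter when it returns.
    """

    def __init__(self, bw_queues, wfq_weights):
        self.bw_queues = bw_queues                     # list of packet lists
        self.weights = dict(wfq_weights)               # queue -> weight
        self.wfq = {q: [] for q in self.weights}       # queue -> packet list
        self.finish = {q: 0.0 for q in self.weights}   # virtual finish counters

    def next_packet(self):
        for q in self.bw_queues:                       # BW process has priority
            if q:
                return q.pop(0)
        ready = [q for q in self.wfq if self.wfq[q]]
        if not ready:
            return None
        # Pick the queue whose next packet would finish earliest.
        q = min(ready, key=lambda k: (self.finish[k] + 1.0 / self.weights[k], k))
        self.finish[q] += 1.0 / self.weights[q]
        return self.wfq[q].pop(0)


s = TwoStageScheduler([["g1"]], {"x": 2, "y": 1})
s.wfq["x"] += ["x1", "x2", "x3"]
s.wfq["y"] += ["y1", "y2"]
out = [s.next_packet() for _ in range(2)]   # ['g1', 'x1']
s.bw_queues[0].append("g2")                 # BW traffic interrupts WFQ
out += [s.next_packet() for _ in range(5)]
print(out)  # ['g1', 'x1', 'g2', 'x2', 'y1', 'x3', 'y2']
```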
