101 results about "Touch user interface" patented technology

A touch user interface (TUI) is a computer-pointing technology based on the sense of touch (haptics). Whereas a graphical user interface (GUI) relies on the sense of sight, a TUI not only uses the sense of touch to activate computer-based functions but also gives users, particularly those with visual impairments, an additional level of interaction based on tactile or Braille input.

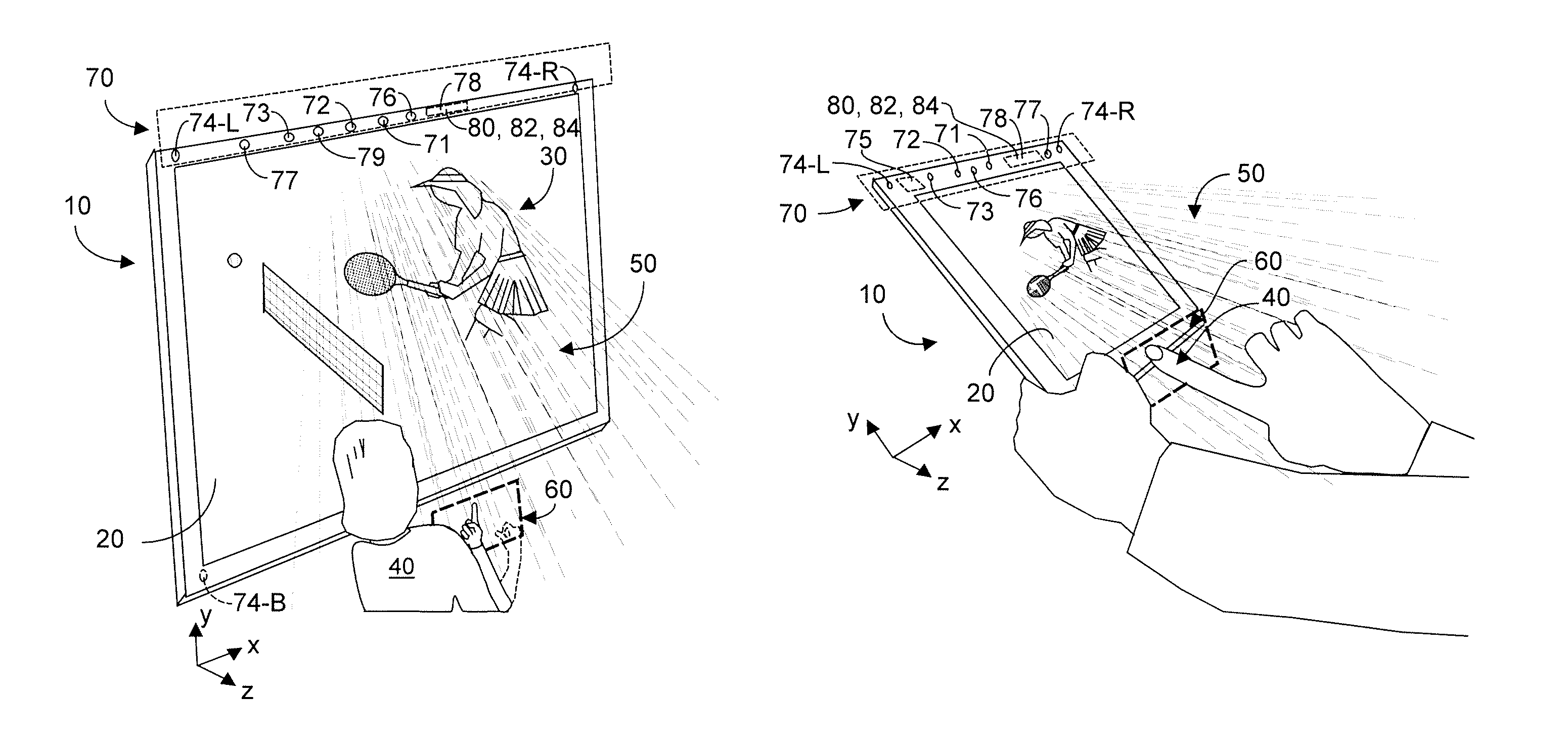

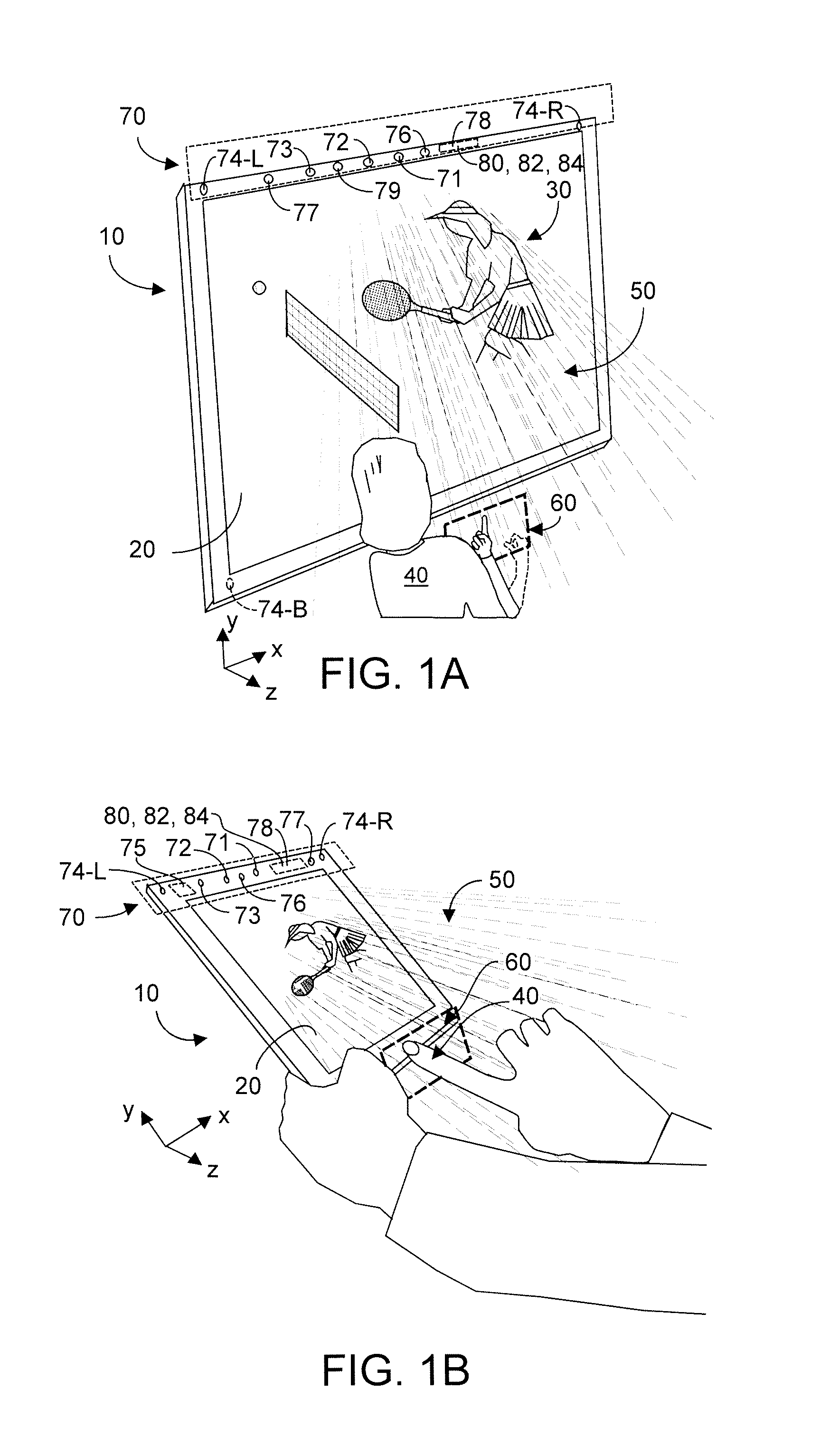

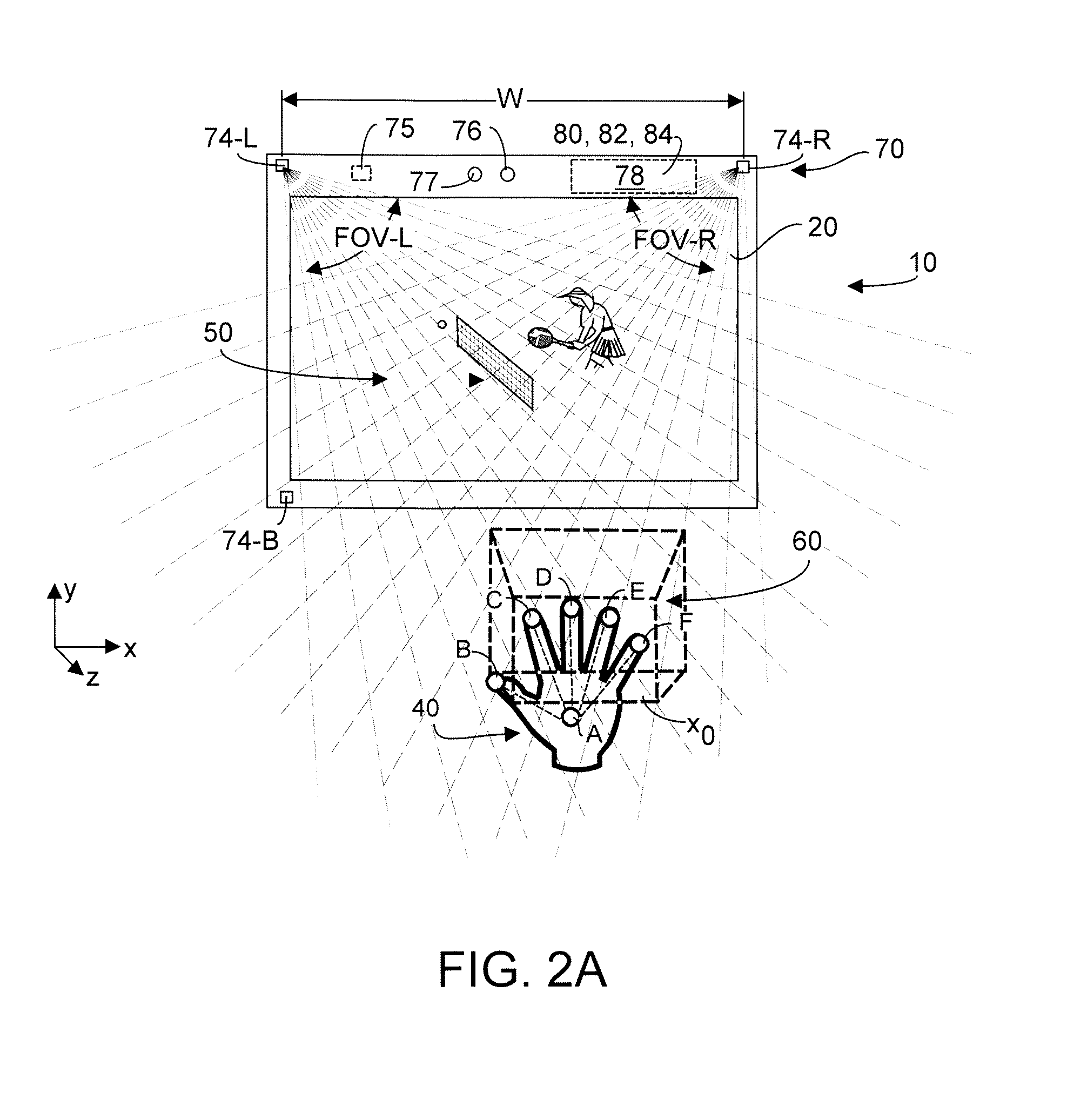

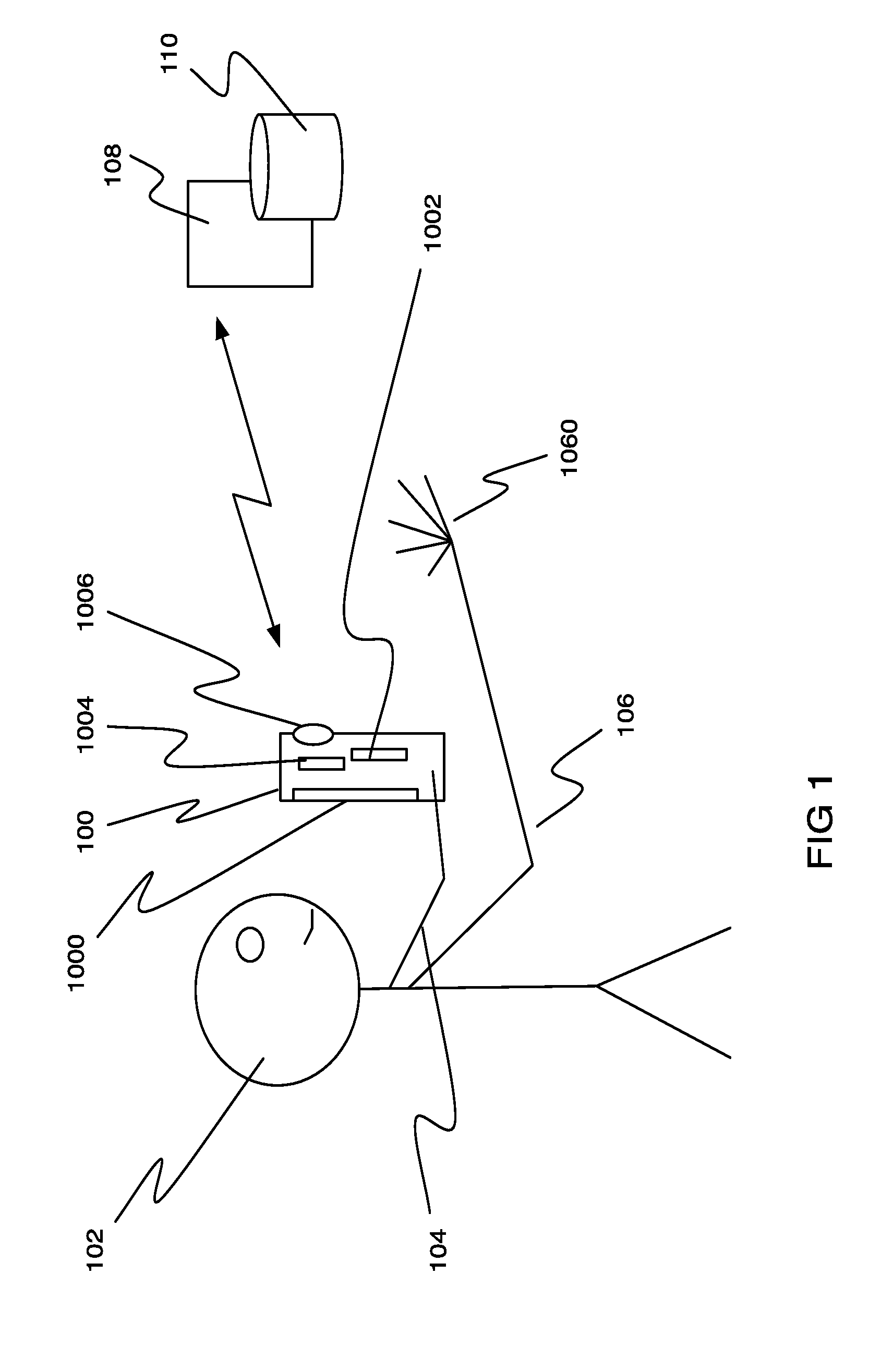

Method and system enabling natural user interface gestures with an electronic system

Active | US8854433B1 | Speed up the process; Reduce power consumption; Input/output for user-computer interaction; Closed circuit television systems; Electronic systems; Hand movements

An electronic device coupleable to a display screen includes a camera system that acquires optical data of a user comfortably gesturing in a user-customizable interaction zone having a z0 plane while controlling operation of the device. Subtle gestures include hand movements commenced in a dynamically resizable and relocatable interaction zone. Preferably, (x,y,z) locations in the interaction zone are mapped to two-dimensional display screen locations. Detected user hand movements can signal the device that an interaction is occurring in gesture mode. The device's response includes presenting a GUI on the display screen and creating user feedback, including haptic feedback. Three-dimensional user interaction can manipulate displayed virtual objects, including releasing such objects. Trajectory clues from the user's hand gestures enable the device to anticipate probable user intent and to update display screen renderings accordingly.

Owner:KAYA DYNAMICS LLC
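The Kaya Dynamics abstract says that (x,y,z) hand locations in a resizable interaction zone with a z0 plane are mapped to 2D screen locations. The Python below is a minimal sketch of one way such a mapping could work. It assumes a linear, clamped mapping and treats reaching the z0 plane as engagement. `InteractionZone`, `map_to_screen` and `crossed_z0` are hypothetical names and are not taken from the patent.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class InteractionZone:
    """Hypothetical axis-aligned interaction zone in camera coordinates (metres)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z0: float  # assumed engagement plane; smaller z means closer to the screen

    def resize(self, scale: float) -> None:
        """Scale the zone's x/y extent about its centre (a resizable zone)."""
        cx = (self.x_min + self.x_max) / 2
        cy = (self.y_min + self.y_max) / 2
        half_w = (self.x_max - self.x_min) * scale / 2
        half_h = (self.y_max - self.y_min) * scale / 2
        self.x_min, self.x_max = cx - half_w, cx + half_w
        self.y_min, self.y_max = cy - half_h, cy + half_h


def map_to_screen(zone: InteractionZone, x: float, y: float,
                  width_px: int, height_px: int) -> tuple[int, int]:
    """Linearly map an (x, y) hand position in the zone to pixel coordinates."""
    u = (x - zone.x_min) / (zone.x_max - zone.x_min)
    v = (y - zone.y_min) / (zone.y_max - zone.y_min)
    # Clamp so hands slightly outside the zone land on the screen edge.
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    # Assumes camera y grows upward while screen y grows downward.
    return round(u * (width_px - 1)), round((1.0 - v) * (height_px - 1))


def crossed_z0(zone: InteractionZone, z: float) -> bool:
    """Assumed rule: the hand is engaged once it reaches or passes the z0 plane."""
    return z <= zone.z0
```

A relocatable zone would only need its bounds shifted. The linear mapping is chosen here for simplicity; the patent may use a different transform.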

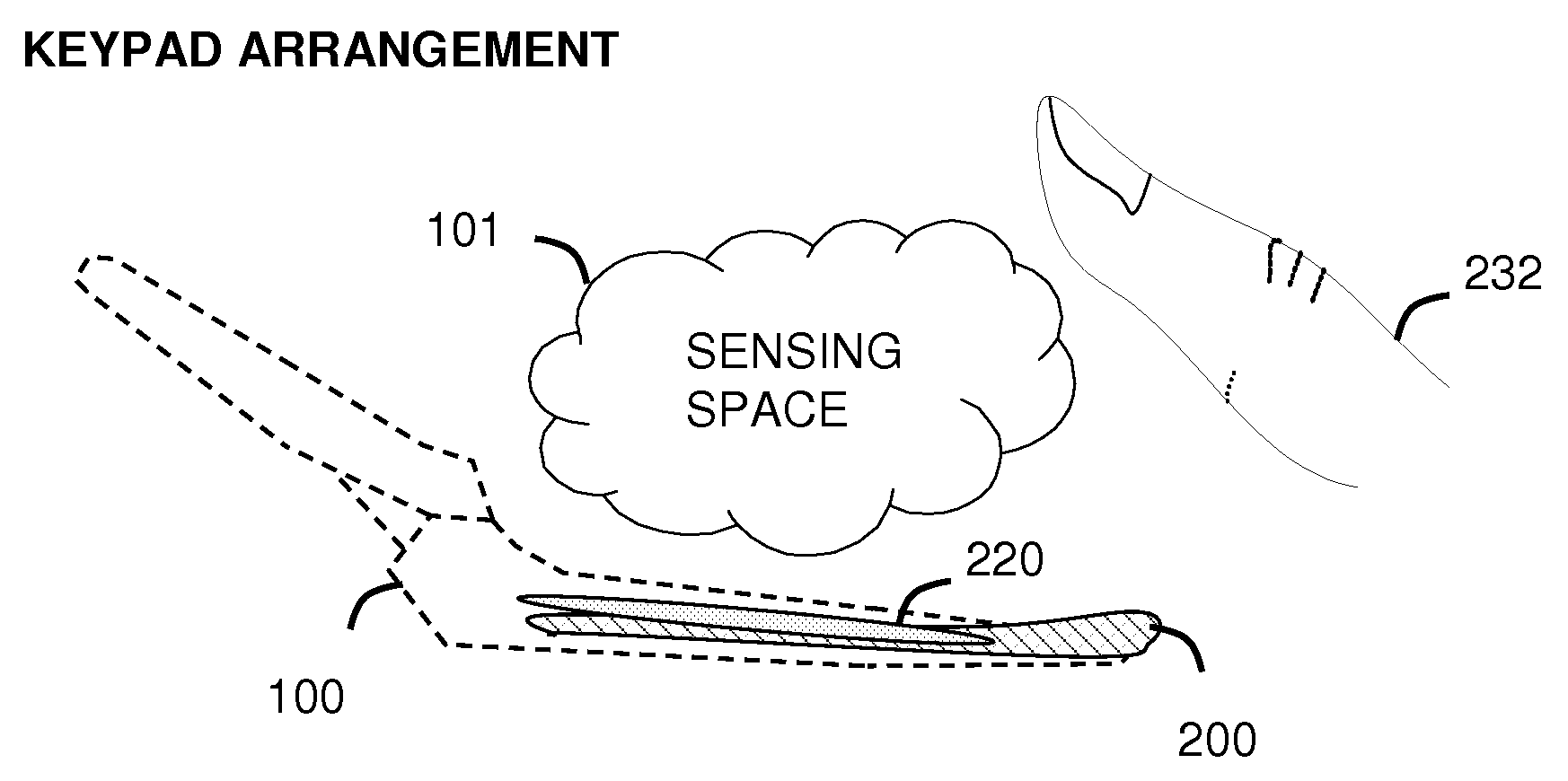

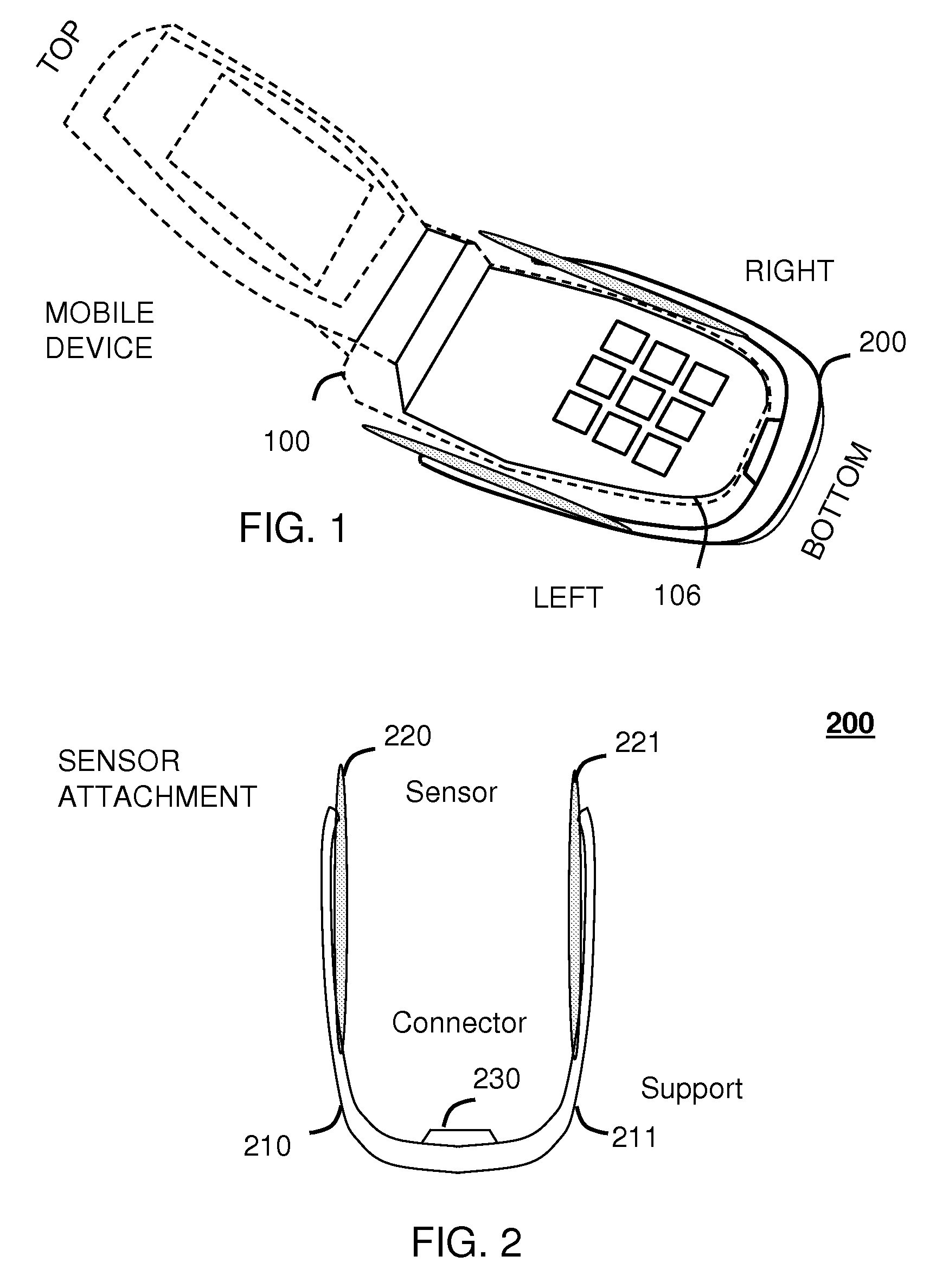

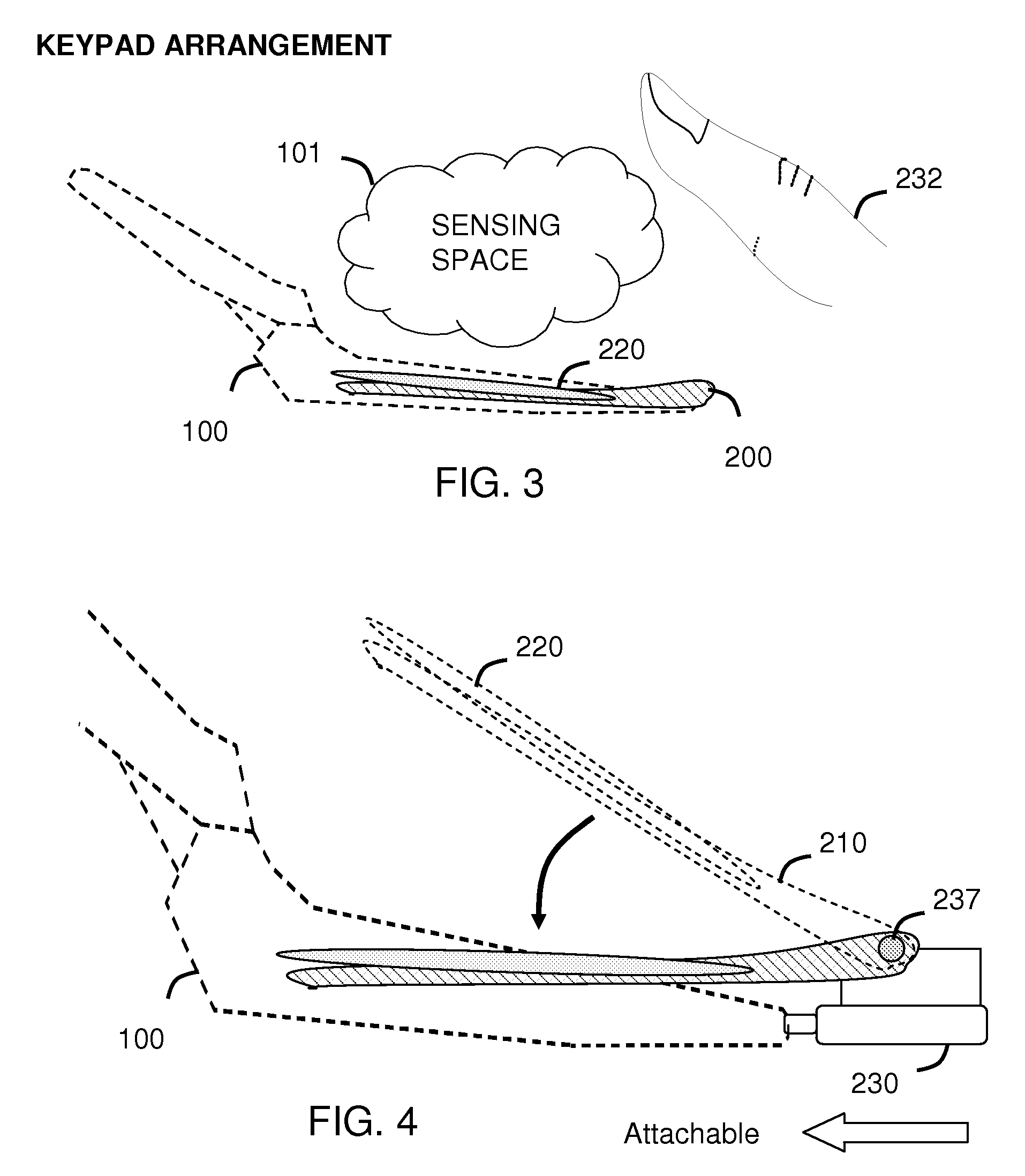

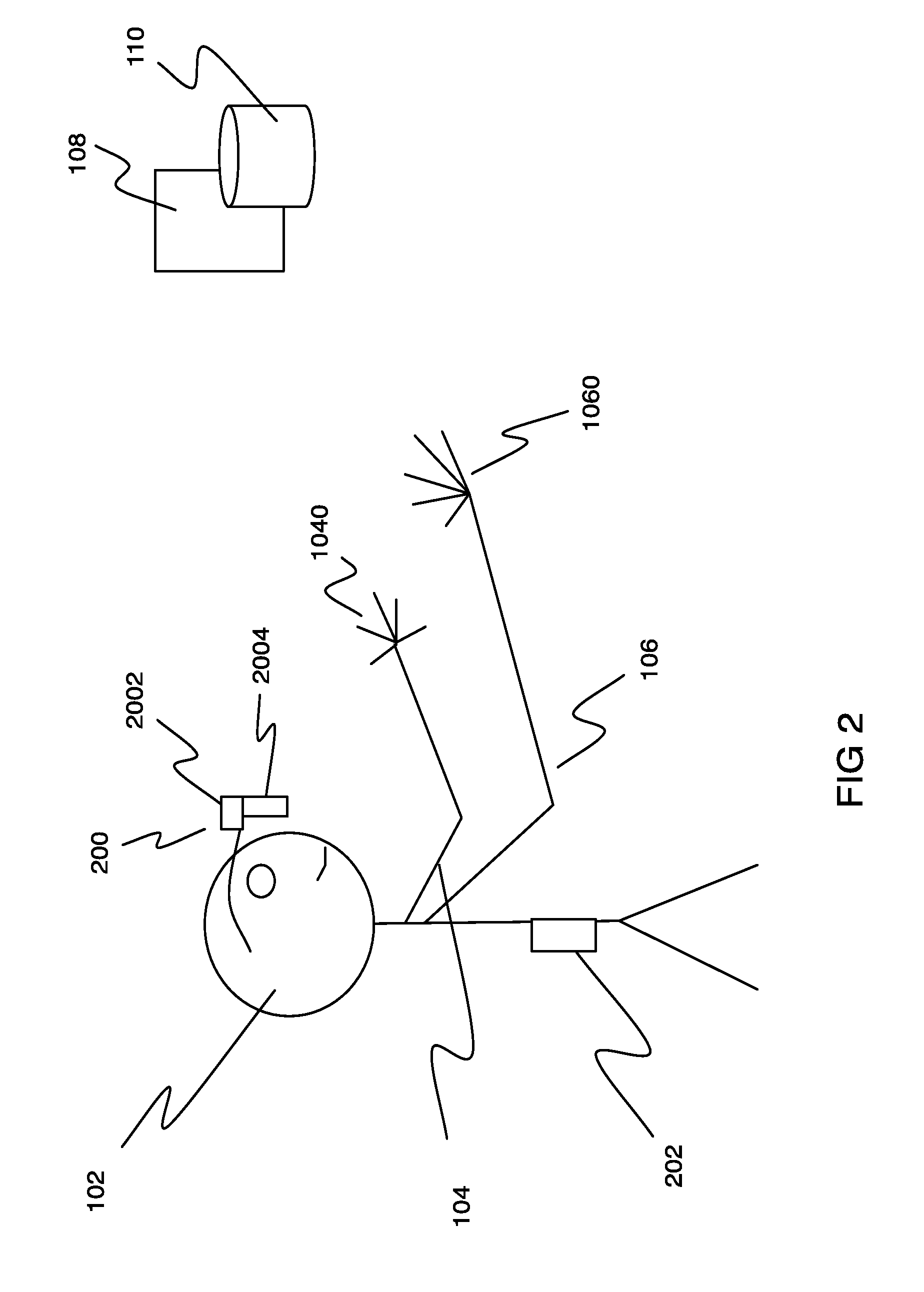

Touchless User Interface for a Mobile Device

Inactive | US20080100572A1 | Digital data processing details; Cathode-ray tube indicators; Display device; Human–computer interaction

A sensory device (200) for providing a touchless user interface to a mobile device (100) is provided. The sensory device can include at least one appendage (210) having at least one sensor (220) that senses a finger (232) within a touchless sensing space (101), and a connector (230) for communicating sensory signals received by the at least one sensor to the mobile device. The sensory device can attach external to the mobile device. A controller (240) can trace a movement of the finger, recognize a pattern from the movement, and send the pattern to the mobile device. The controller can recognize finger gestures and send control commands associated with the finger gestures to the mobile device. A user can perform touchless acquire and select actions on or above a display or removable face plate to interact with the mobile device.

Owner:NAVISENSE

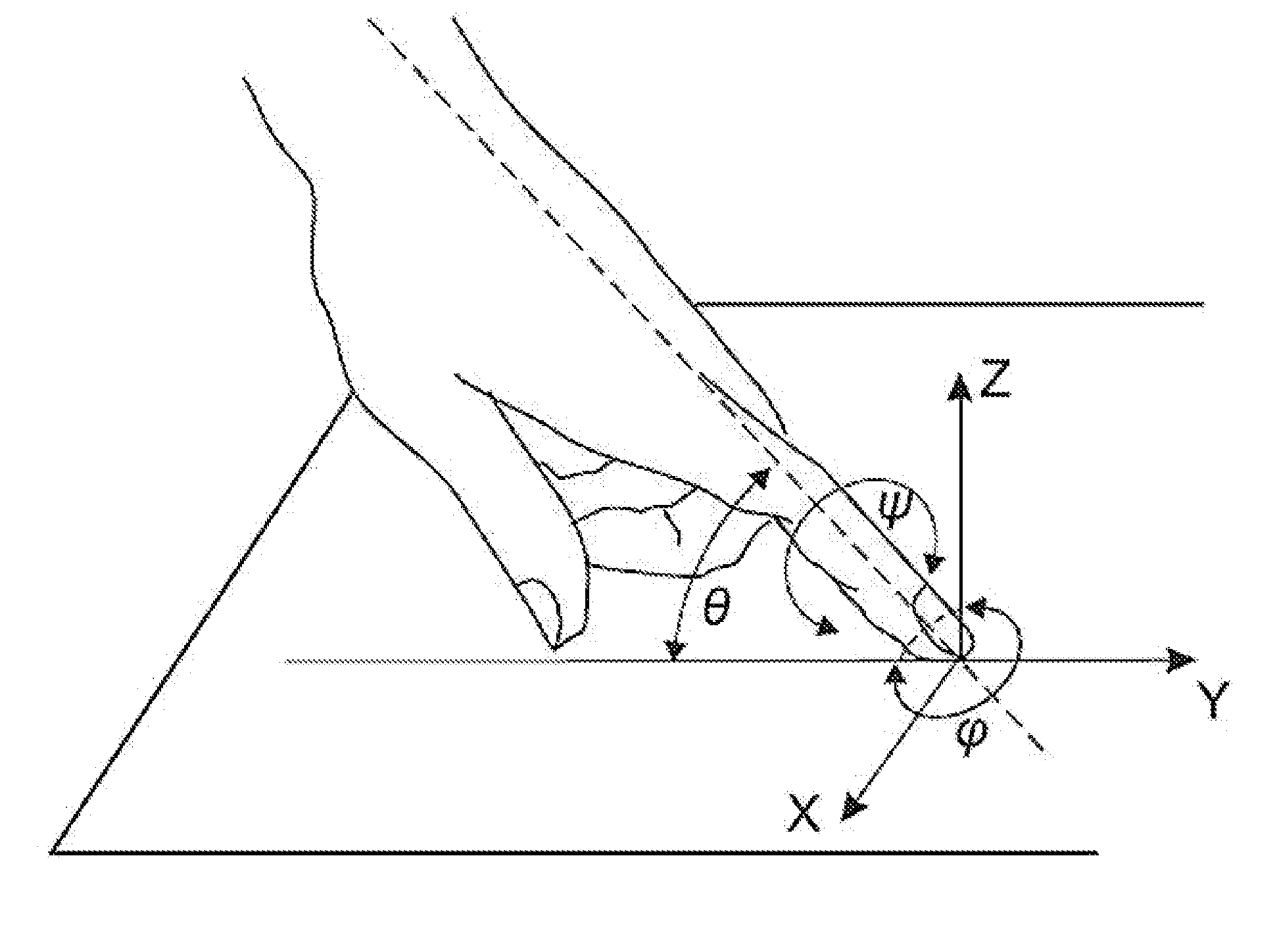

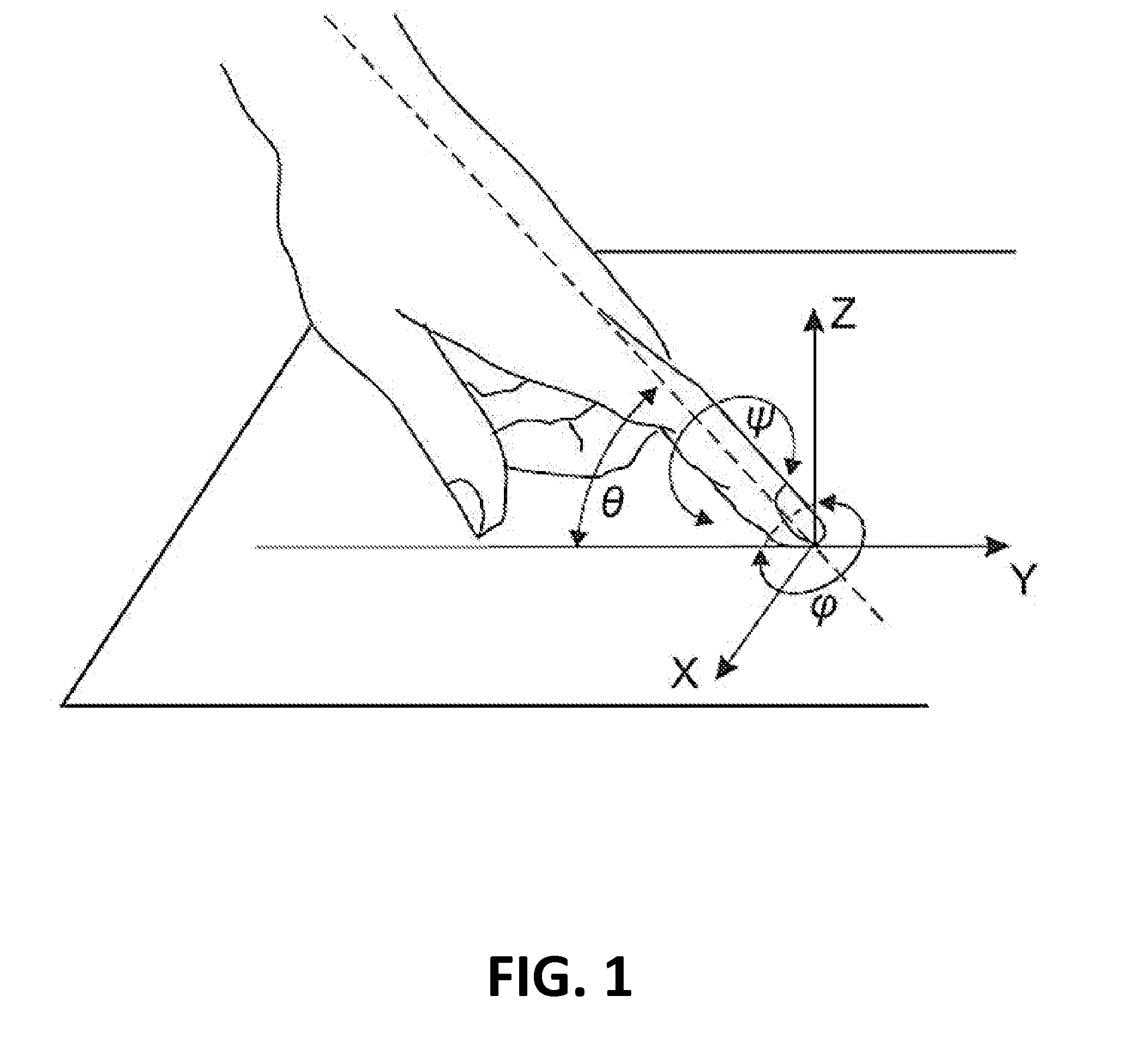

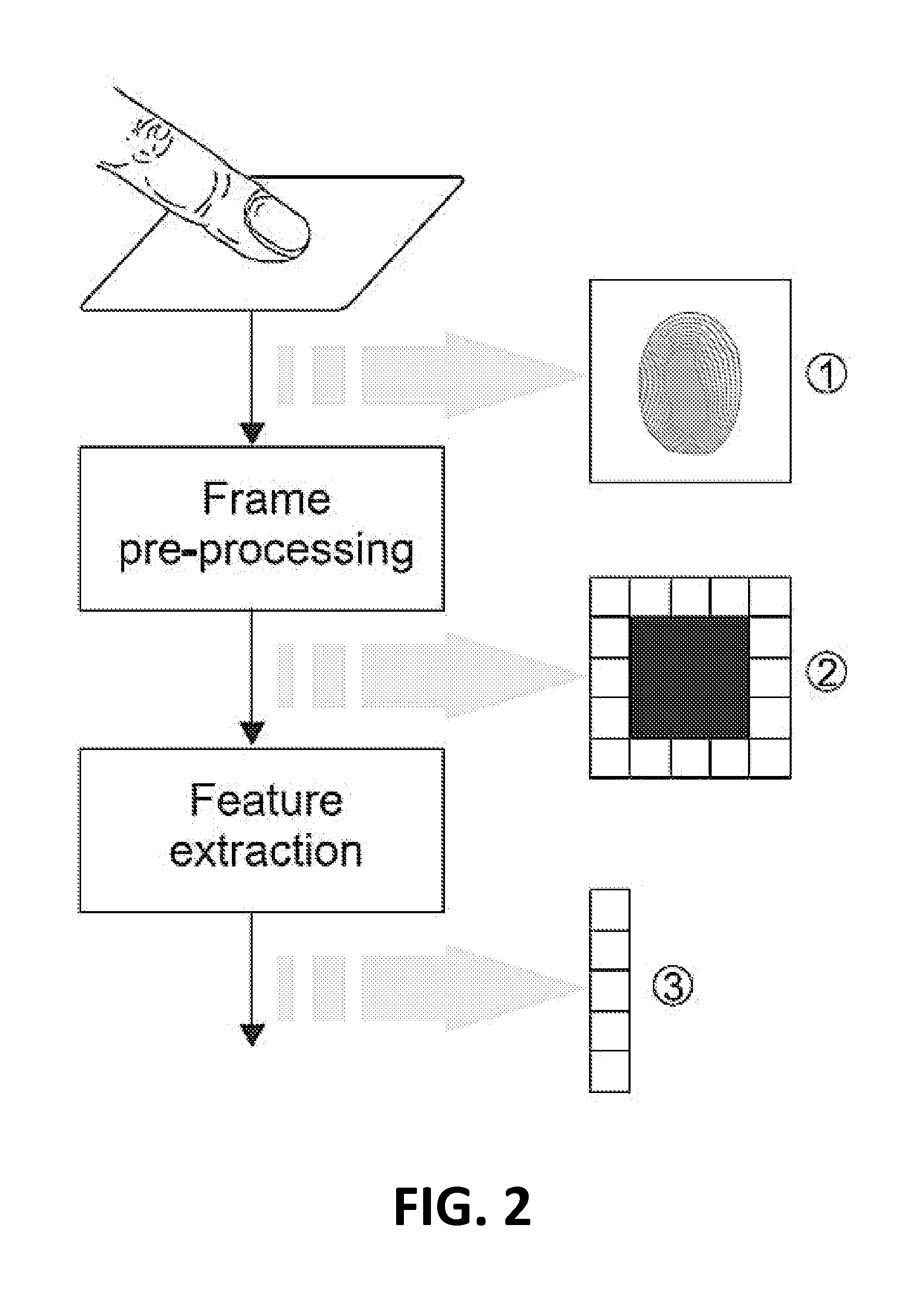

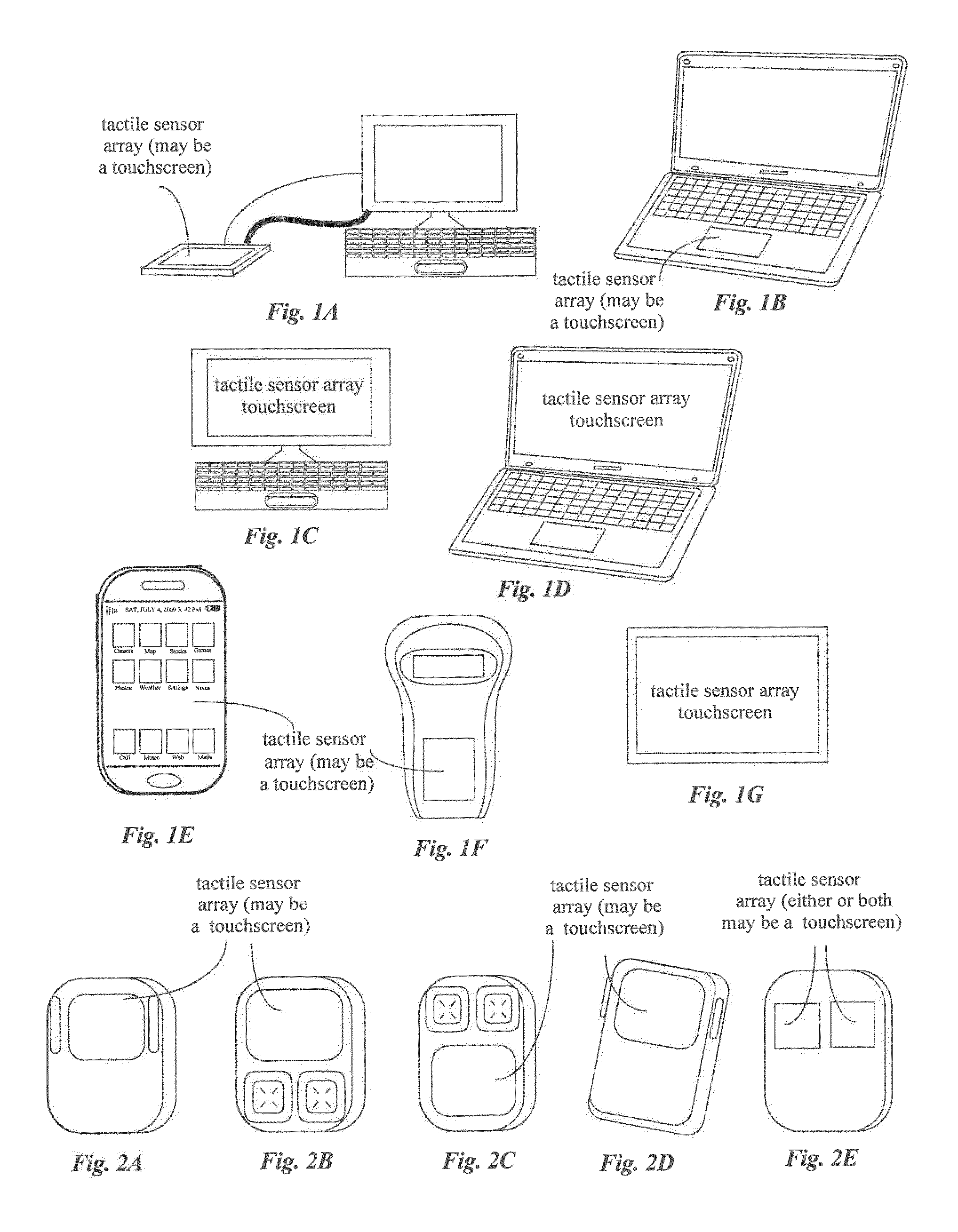

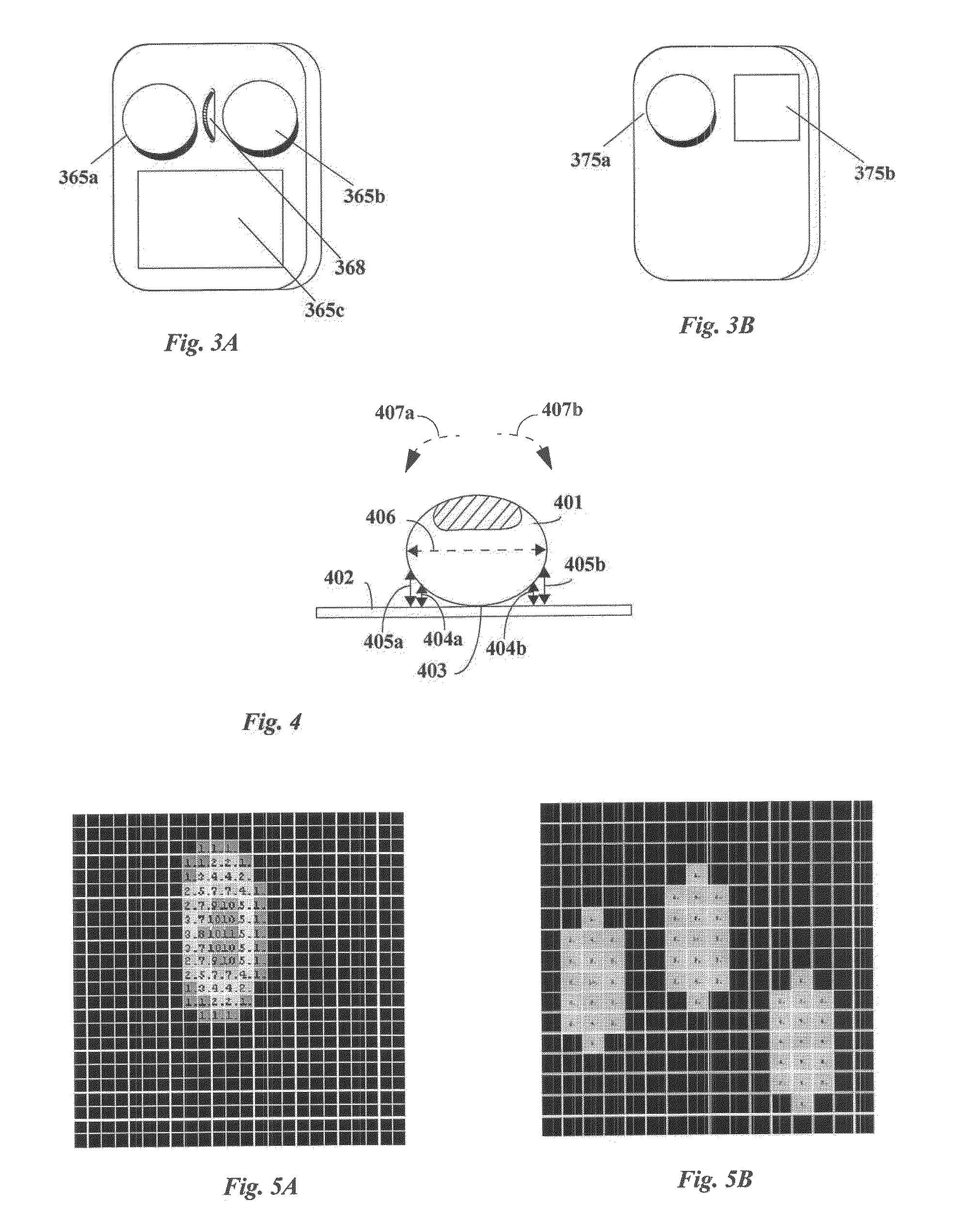

3D finger posture detection and gesture recognition on touch surfaces

Inactive | US20130009896A1 | Easily and inexpensively allows; Input/output processes for data processing; Feature vector; Sensor array

The invention provides 3D touch gesture recognition on touch surfaces that incorporates finger posture detection and includes a touch user interface device in communication with a processing device. The interface device includes a sensor array that senses spatial information about one or more regions of contact and provides finger contact information as a stream of frame data. Each frame read from the sensor array is subjected to thresholding, normalization, and feature extraction operations to produce a feature vector. A multi-dimensional gesture space is constructed from a desired set of features, each represented by one dimension of the space. A gesture trajectory is a sequence of transitions between pre-calculated clusters; when a specific gesture trajectory is detected, a control signal is generated.

Owner:NRI R&D PATENT LICENSING LLC
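The NRI abstract describes a pipeline of threshold, normalize and extract features per frame, followed by gesture trajectories as transitions between pre-calculated clusters. The Python below is a minimal sketch of that pipeline under stated assumptions. The feature vector is assumed to be (contact area, centroid x, centroid y, orientation from second moments), and clusters are matched by nearest centroid. The patent's actual features and clustering are not specified in the abstract.

```python
import math
from typing import List, Optional, Sequence, Tuple

Frame = List[List[float]]          # rows x columns of sensor readings
Features = Tuple[float, float, float, float]


def extract_features(frame: Frame, threshold: float) -> Optional[Features]:
    """Return (contact area, centroid x, centroid y, orientation) or None."""
    # 1. Thresholding: keep only cells at or above the noise threshold.
    cells = [(r, c, v) for r, row in enumerate(frame)
             for c, v in enumerate(row) if v >= threshold]
    if not cells:
        return None
    # 2. Normalization: scale readings so the contact weights sum to 1.
    total = sum(v for _, _, v in cells)
    weighted = [(r, c, v / total) for r, c, v in cells]
    # 3. Feature extraction: weighted centroid and second-moment orientation.
    cx = sum(c * w for _, c, w in weighted)
    cy = sum(r * w for r, _, w in weighted)
    mxx = sum((c - cx) ** 2 * w for _, c, w in weighted)
    myy = sum((r - cy) ** 2 * w for r, _, w in weighted)
    mxy = sum((c - cx) * (r - cy) * w for r, c, w in weighted)
    angle = 0.5 * math.atan2(2 * mxy, mxx - myy)
    return (float(len(cells)), cx, cy, angle)


def nearest_cluster(vec: Features, centroids: Sequence[Features]) -> int:
    """Index of the pre-calculated cluster centroid closest to vec (Euclidean)."""
    return min(range(len(centroids)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(vec, centroids[i])))


def trajectory(frames: Sequence[Frame], threshold: float,
               centroids: Sequence[Features]) -> List[int]:
    """Sequence of cluster transitions, with consecutive repeats collapsed."""
    seq: List[int] = []
    for frame in frames:
        vec = extract_features(frame, threshold)
        if vec is None:
            continue  # no contact in this frame
        k = nearest_cluster(vec, centroids)
        if not seq or seq[-1] != k:
            seq.append(k)
    return seq
```

A control signal could then be emitted when the returned sequence equals a stored gesture trajectory such as `[0, 1]`.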

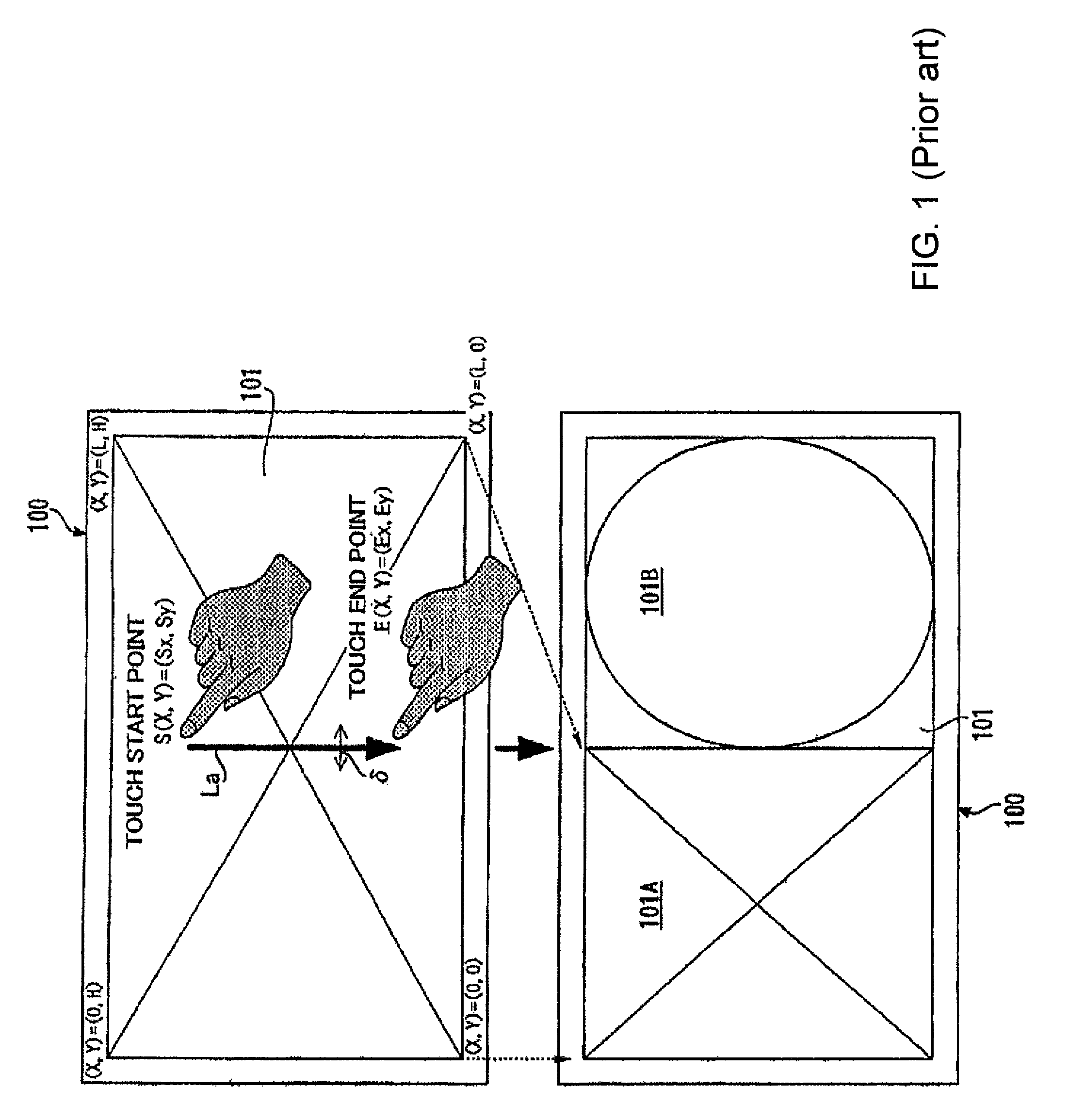

Relative Touch User Interface Enhancements

Active | US20120242581A1 | Minimize and eliminate; Strong correspondence; Input/output for user-computer interaction; Cathode-ray tube indicators; Vertical plane; Touchpad

Some embodiments provide a meta touch interface (MTI) with multiple position indicators, each operating as a separate pointing tool that can be activated (i) by taps on a touchpad or other touch-sensitive surface or (ii) by pressing certain keyboard keys. The MT pointer allows adjacent UI elements to be selected without repositioning the MT pointer for each selection or activation. Some embodiments provide a multi-device UI comprising at least two UIs: a first UI presented on an essentially horizontal plane aligned with the user's operational focus, and a second UI presented on an essentially vertical plane aligned with the user's visual focus. Some embodiments provide a precision pointer that includes an adjustable magnified region to better present underlying on-screen content, allowing the user to position the pointer more precisely.

Owner:INTELLITACT

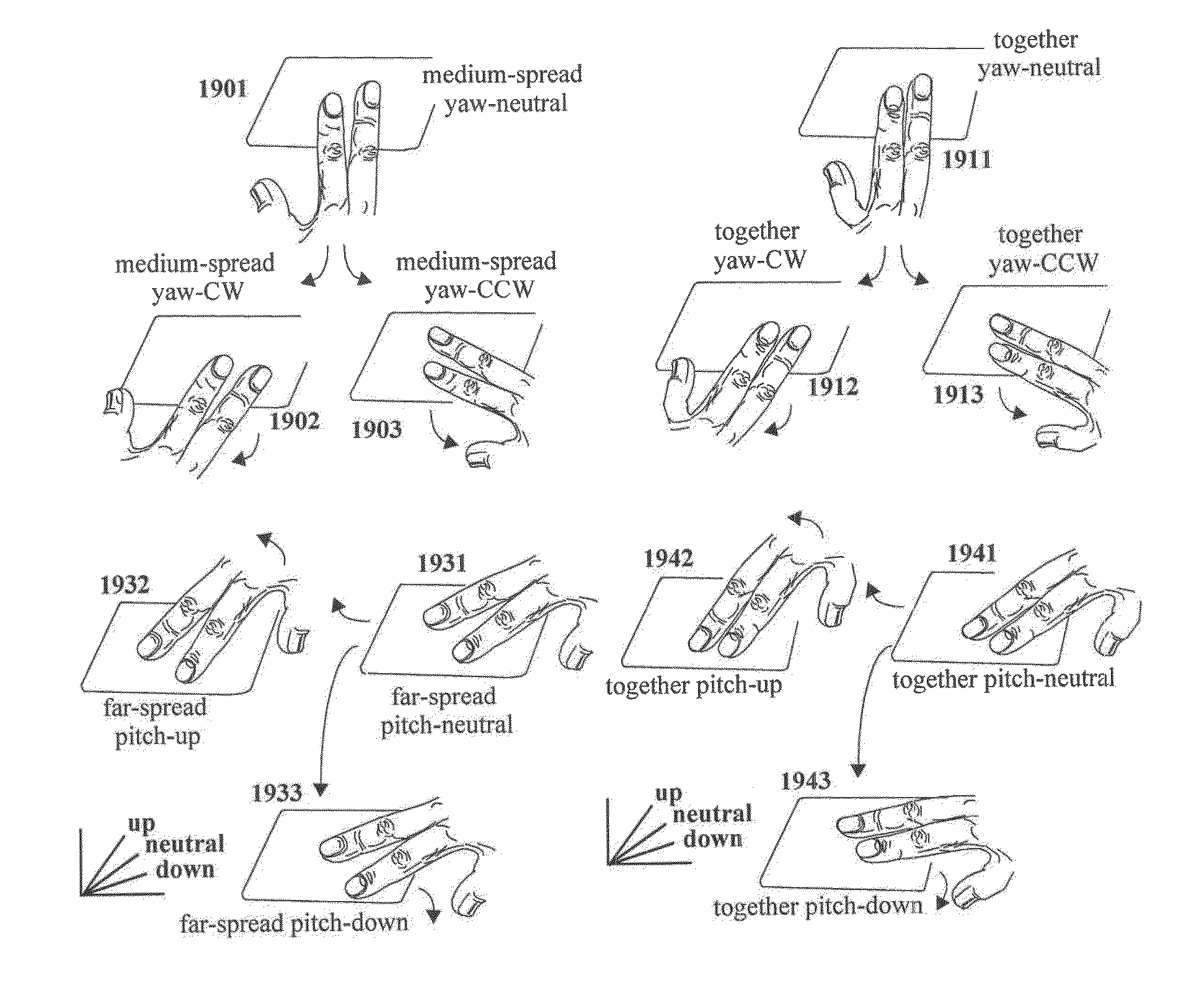

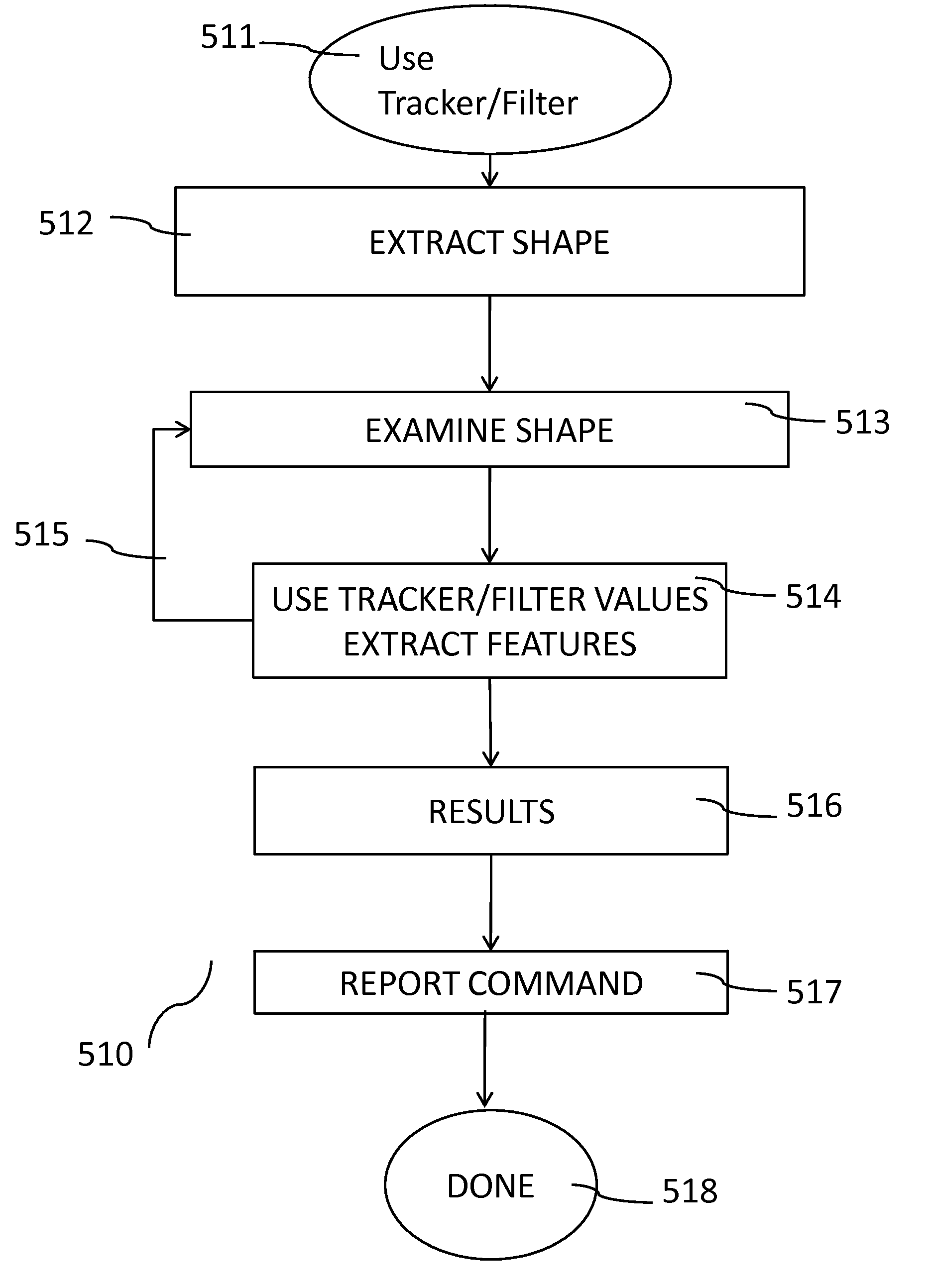

Gesteme (gesture primitive) recognition for advanced touch user interfaces

Active | US20130141375A1 | Input/output processes for data processing; High dimensional; Human–computer interaction

This invention relates to signal space architectures for generalized gesture capture. Embodiments of the invention include a gesture-primitive approach involving families of “gestemes” from which gestures can be constructed, recognized, and modulated via prosody operations. Gestemes can be associated with signals in a signal space. Prosody operations can include temporal execution modulation, shape modulation, and modulation of other aspects of gestures and gestemes. The approaches can be used for advanced touch user interfaces such as the High-Dimensional Touch Pad (HDTP) in touchpad and touchscreen forms, video camera hand-gesture user interfaces, eye-tracking user interfaces, etc.

Owner:NRI R&D PATENT LICENSING LLC

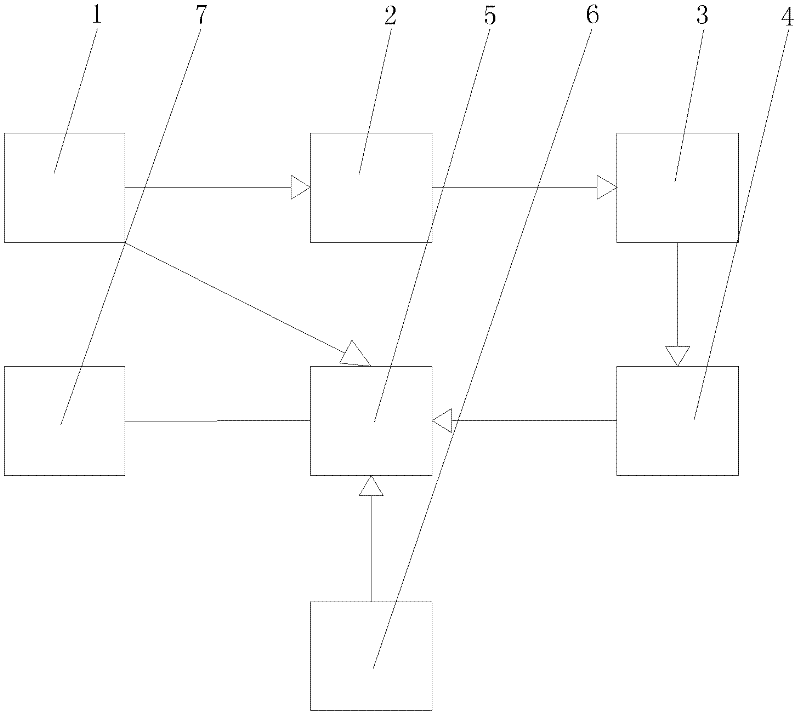

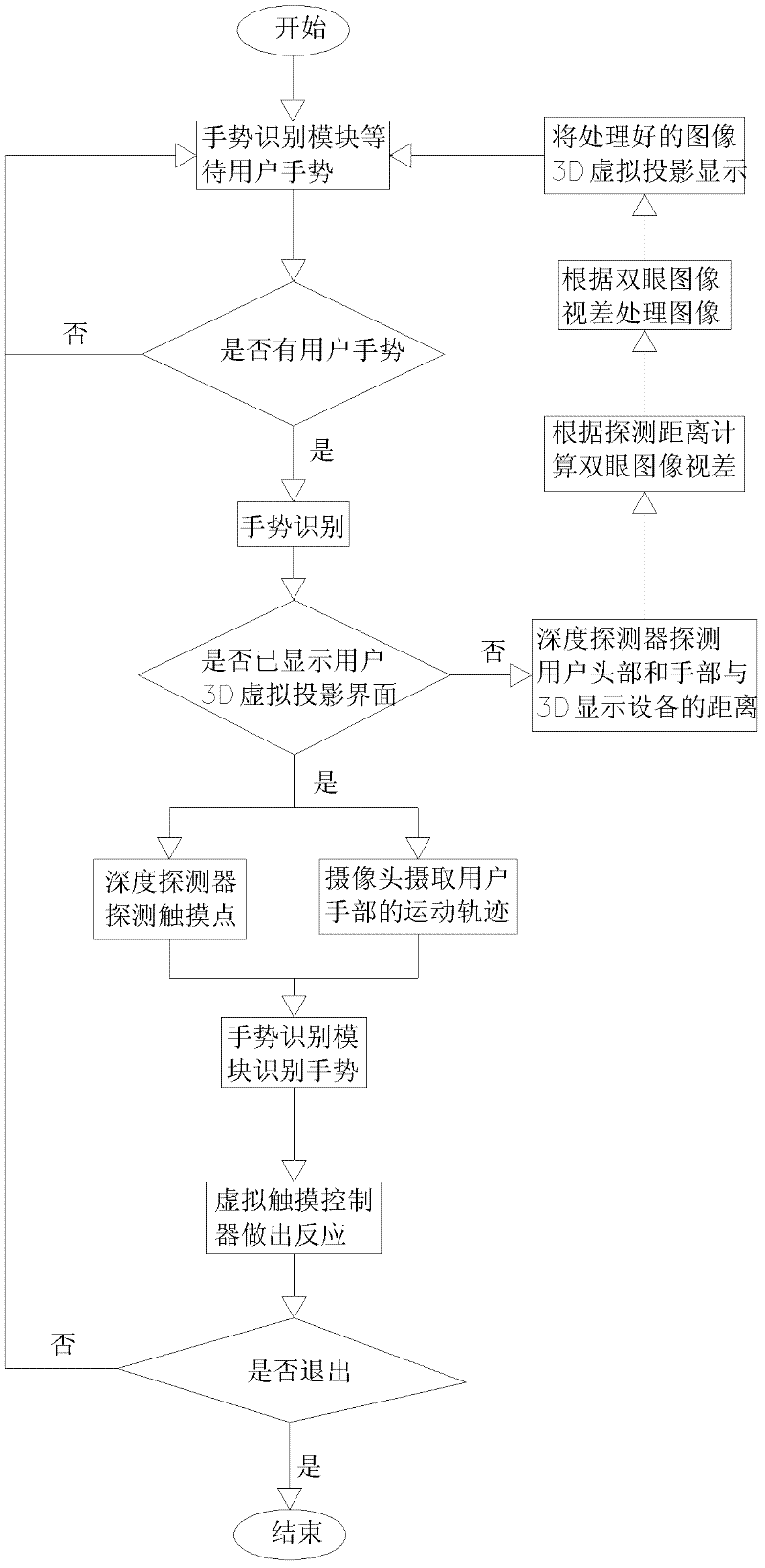

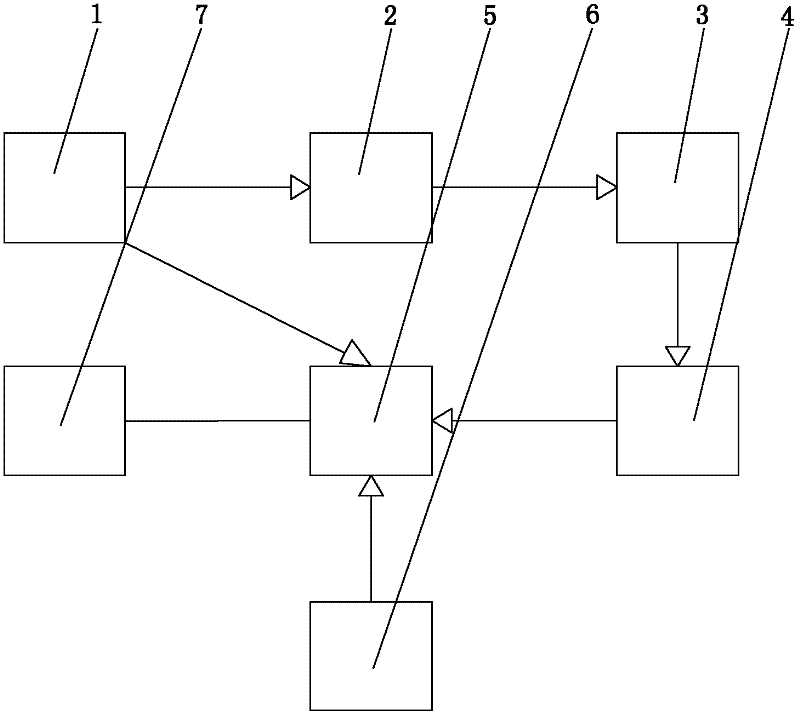

Three-dimensional (3D) virtual projection and virtual touch user interface and achieving method

Active | CN102508546A | Convenience and new interactive experience; Input/output for user-computer interaction; Graph reading; Parallax; Imaging processing

The invention discloses a three-dimensional (3D) virtual projection and virtual touch user interface and a method for implementing it. The interface comprises a depth detection device, a binocular image parallax calculation module, a binocular image processing module, a 3D display device, a gesture recognition module, a camera, and a virtual touch controller. The depth detection device obtains the distances from the user's head and hands to the 3D display device. The parallax calculation module calculates the binocular image parallax from this distance information. The image processing module processes an image according to that parallax and sends it to the 3D display device, which virtually projects it within arm's reach of the user. The gesture recognition module identifies the movement tracks of the user's fingers using the depth detection device and the camera, and the virtual touch controller responds according to the user's gestures and movement tracks. The interface thus provides 3D virtual projection and virtual touch with feedback, and gives the user a convenient and novel interactive experience.

Owner:TPV DISPLAY TECH (XIAMEN) CO LTD

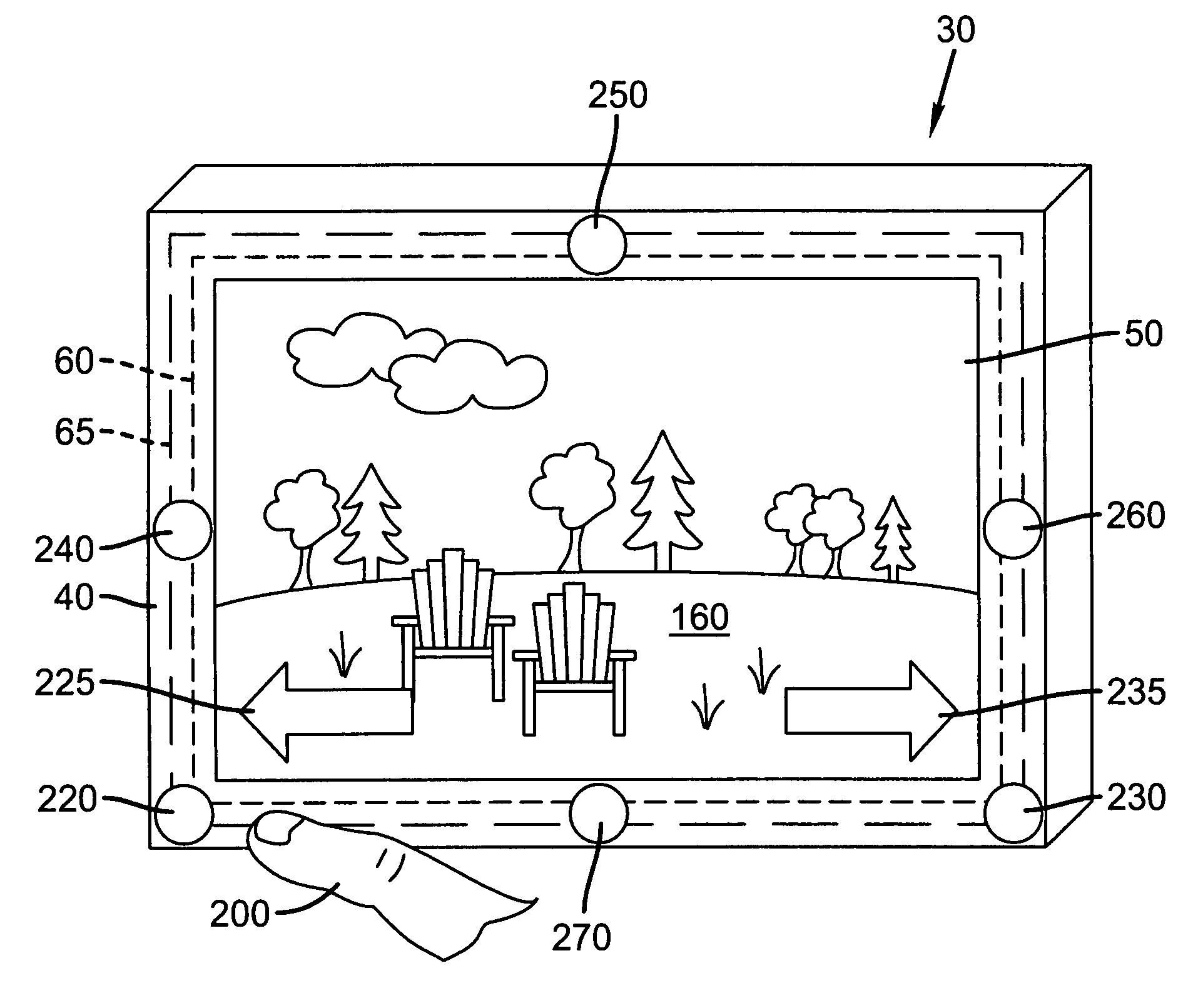

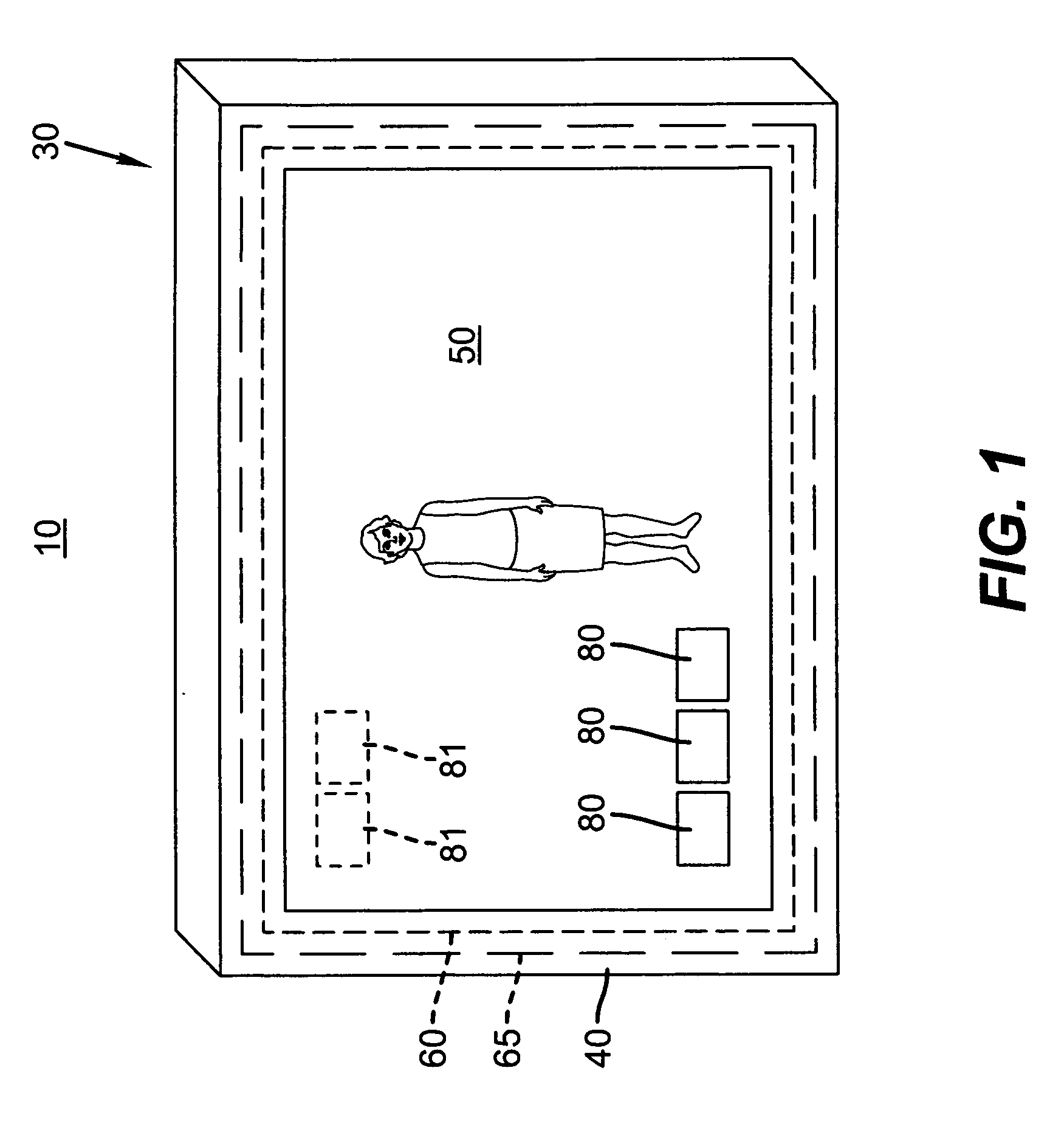

Digital picture frame having near-touch and true-touch

Inactive | US20100245263A1 | Simplify user experience; Intuitive experience; Input/output for user-computer interaction; Cathode-ray tube indicators; Digital pictures; Touch user interface

A digital picture frame includes a near-touch user interface component that senses when an object is within a predetermined spatial region of the digital picture frame; a true-touch user interface component that senses physical contact with the digital picture frame; and a processor that receives input signals from the near-touch user interface component and the true-touch user interface component and executes device controls based on inputs from both user interface components.

Owner:EASTMAN KODAK CO
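The Kodak abstract describes a processor that combines a near-touch component (an object within a predetermined region) with a true-touch component (physical contact). The Python below is a minimal sketch of such fusion. It assumes a 50 mm near-touch range and a hypothetical control policy: approaching reveals on-screen controls, and touching visible controls advances the slideshow. The abstract does not specify any of these behaviours.

```python
from dataclasses import dataclass, replace
from enum import Enum
from typing import Optional


class Sense(Enum):
    NONE = 0
    NEAR = 1    # object inside the predetermined spatial region, no contact
    TOUCH = 2   # physical contact with the frame


def classify(distance_mm: Optional[float], contact: bool,
             near_range_mm: float = 50.0) -> Sense:
    """Fuse both components; contact takes priority over proximity."""
    if contact:
        return Sense.TOUCH
    if distance_mm is not None and distance_mm <= near_range_mm:
        return Sense.NEAR
    return Sense.NONE


@dataclass(frozen=True)
class FrameState:
    controls_visible: bool = False
    slide: int = 0


def handle(state: FrameState, sense: Sense) -> FrameState:
    """Hypothetical policy: approach reveals controls, touch on visible controls advances."""
    if sense is Sense.NEAR:
        return replace(state, controls_visible=True)
    if sense is Sense.TOUCH:
        if state.controls_visible:
            return replace(state, slide=state.slide + 1)
        return replace(state, controls_visible=True)
    return replace(state, controls_visible=False)
```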

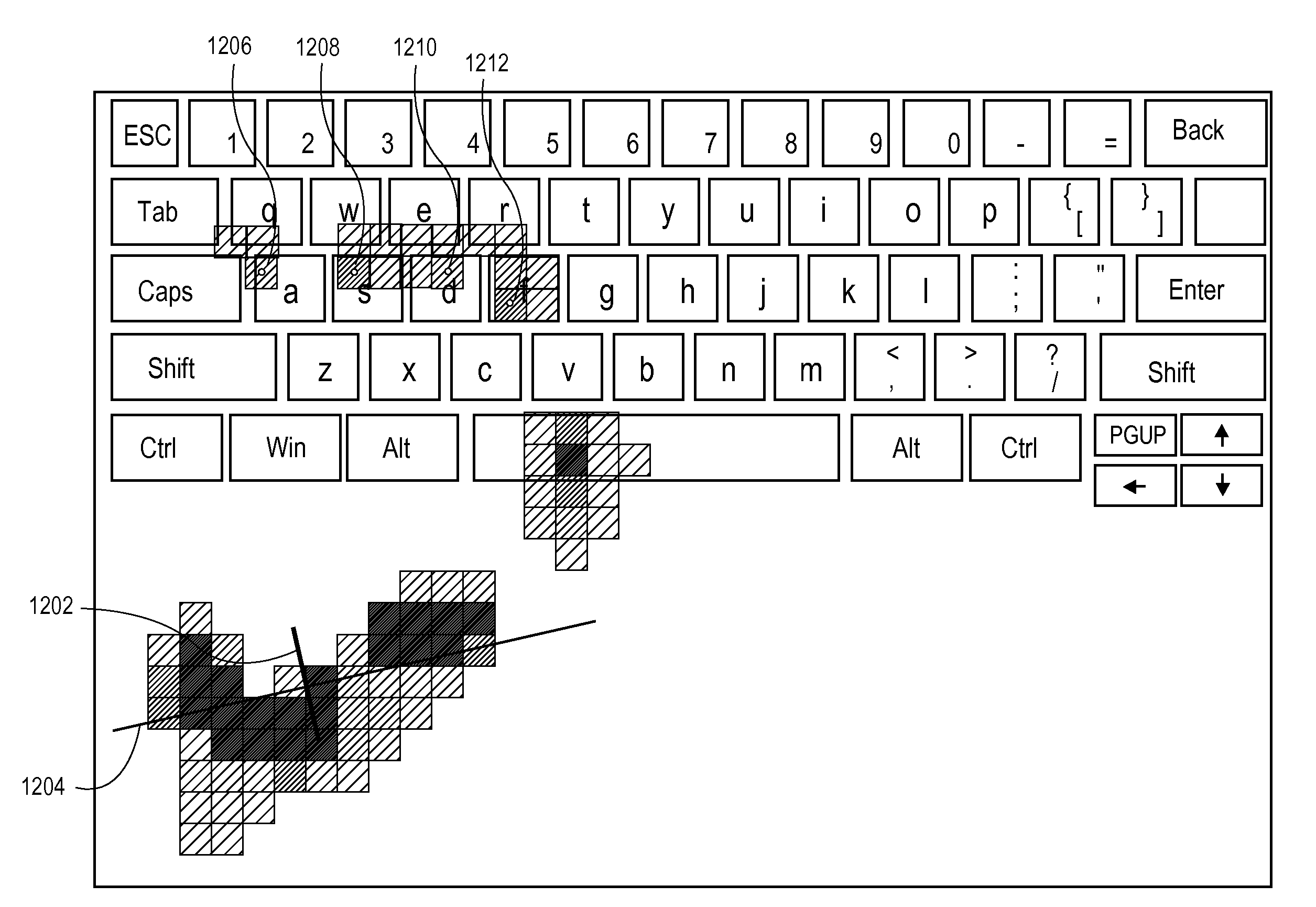

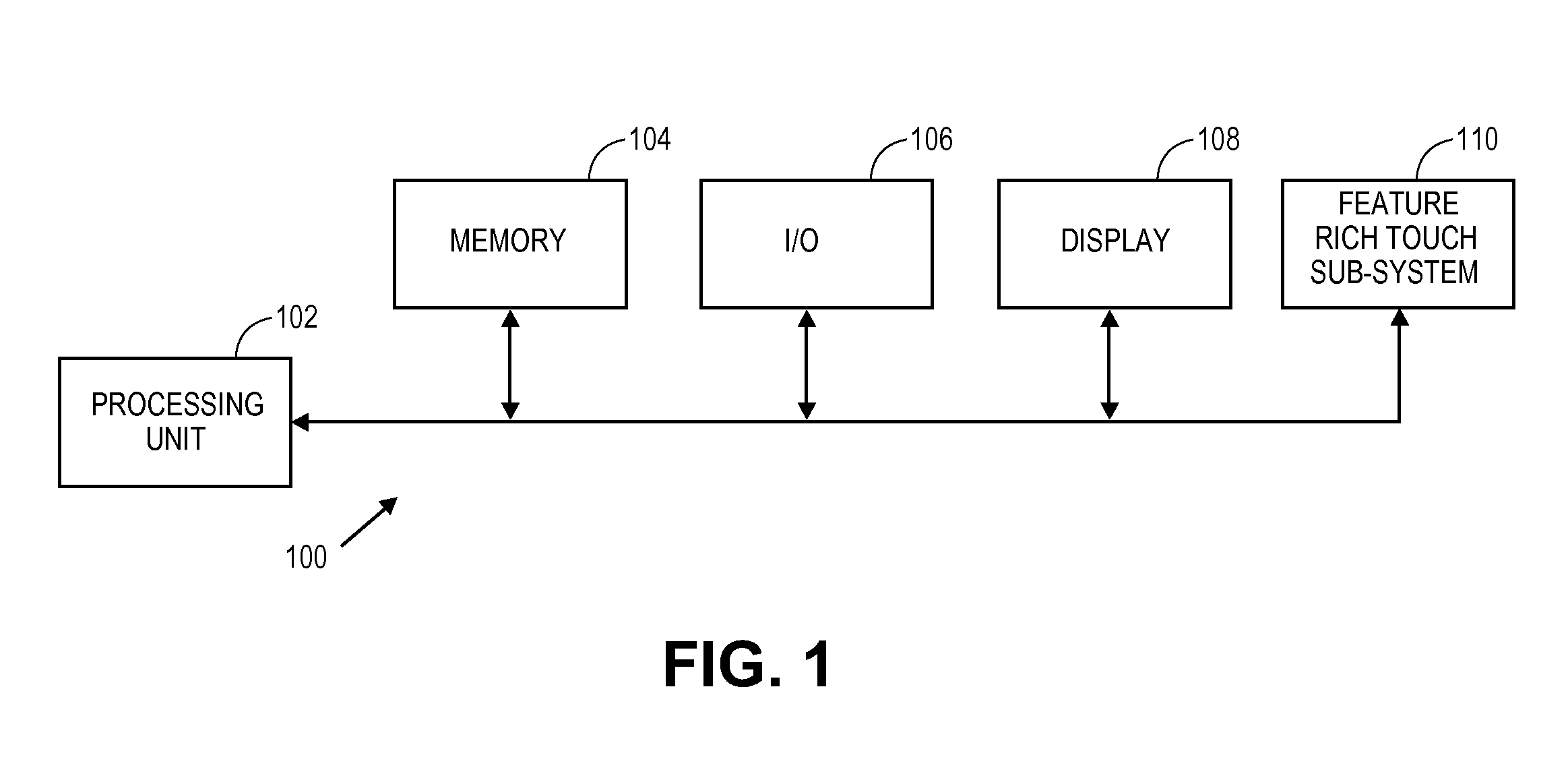

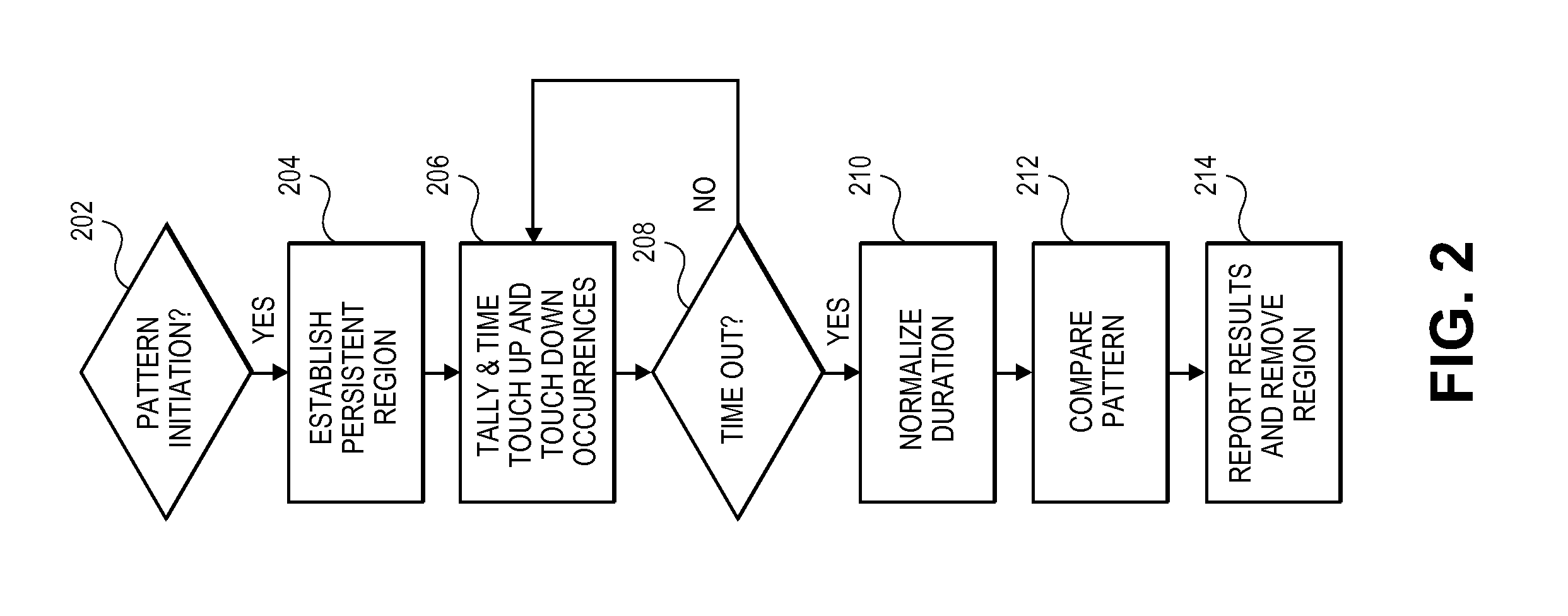

Multi-feature interactive touch user interface

Inactive | US20110148770A1 | Input/output processes for data processing; User input; Human–computer interaction

Briefly, a feature-rich touch subsystem is disclosed. It includes one or more novel user input capabilities that enhance the user experience.

Owner:INTEL CORP

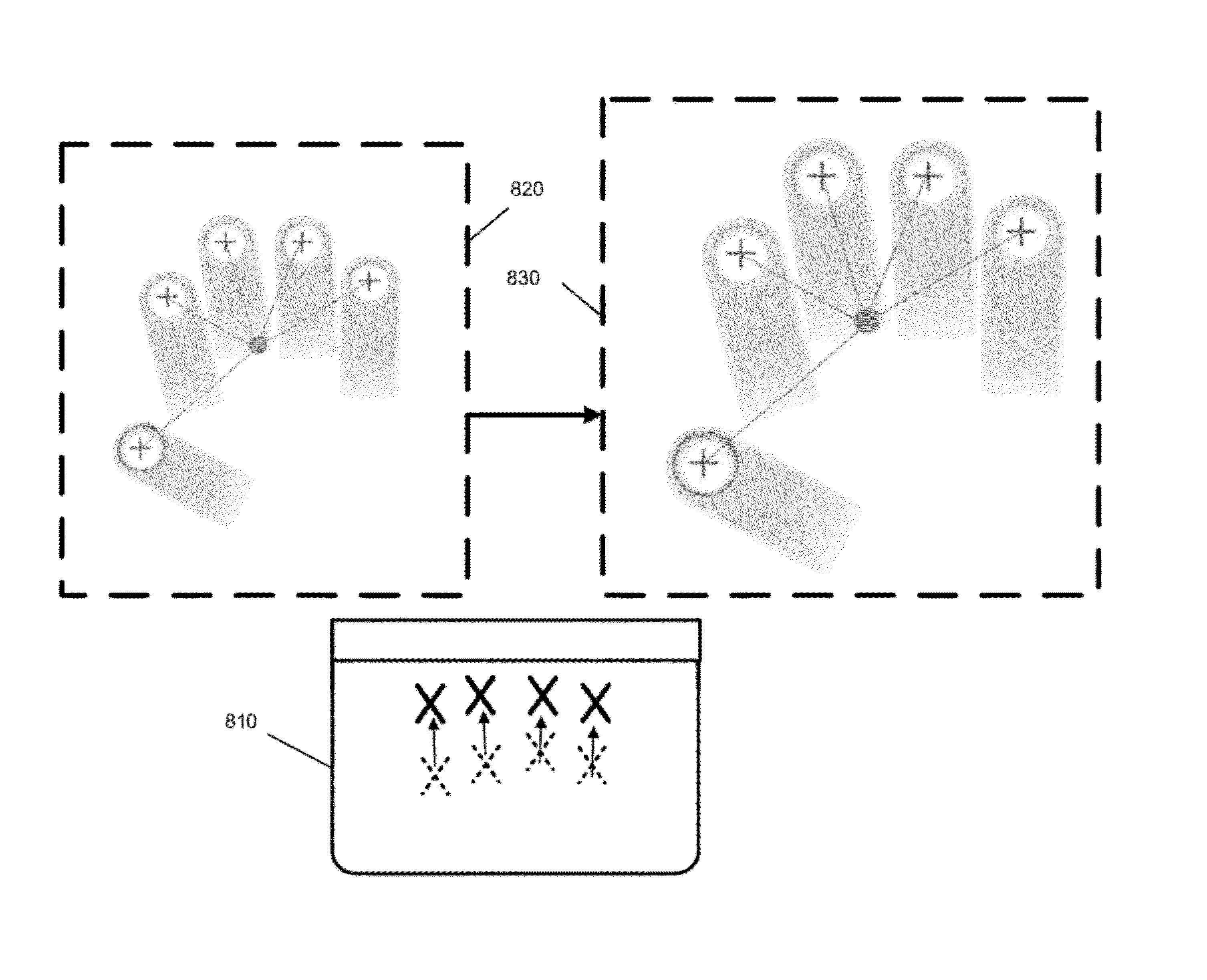

Gesture based user interface

Active | US20150009124A1 | Input/output for user-computer interaction; Character and pattern recognition; User input; Human–computer interaction

A system and method for recognizing hand gestures in computing devices. The system recognizes a user's hand by identifying a predefined first gesture and then collects visual information about the hand identified on the basis of that first gesture. This visual information is used to extract a second gesture (and all subsequent gestures) from the video or images captured by the camera, and the second gesture is then interpreted as user input to the computing device. The system enables gesture recognition in various lighting conditions and can be operated by different users' hands, including gloved hands.

Owner:AUGUMENTA

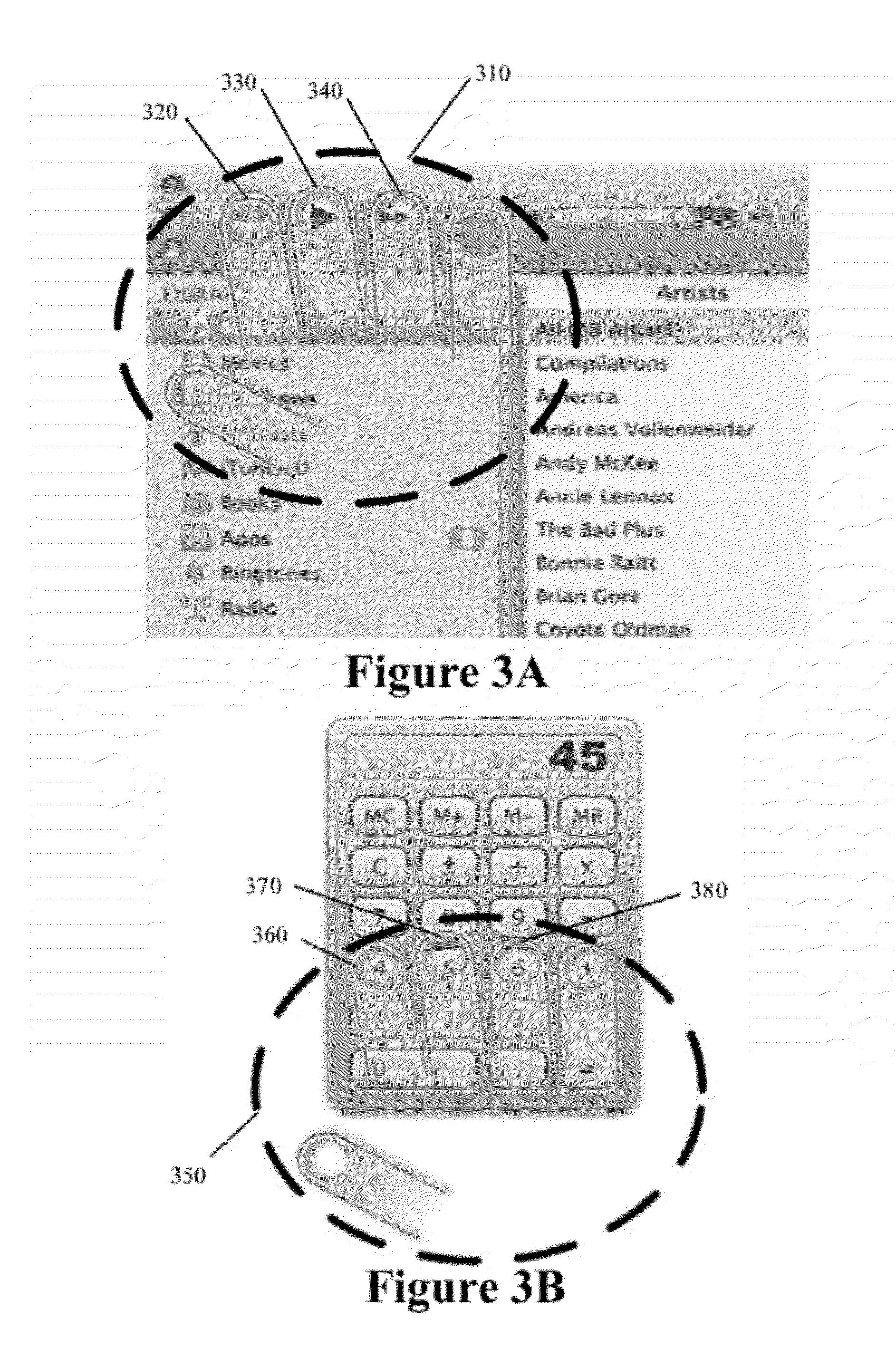

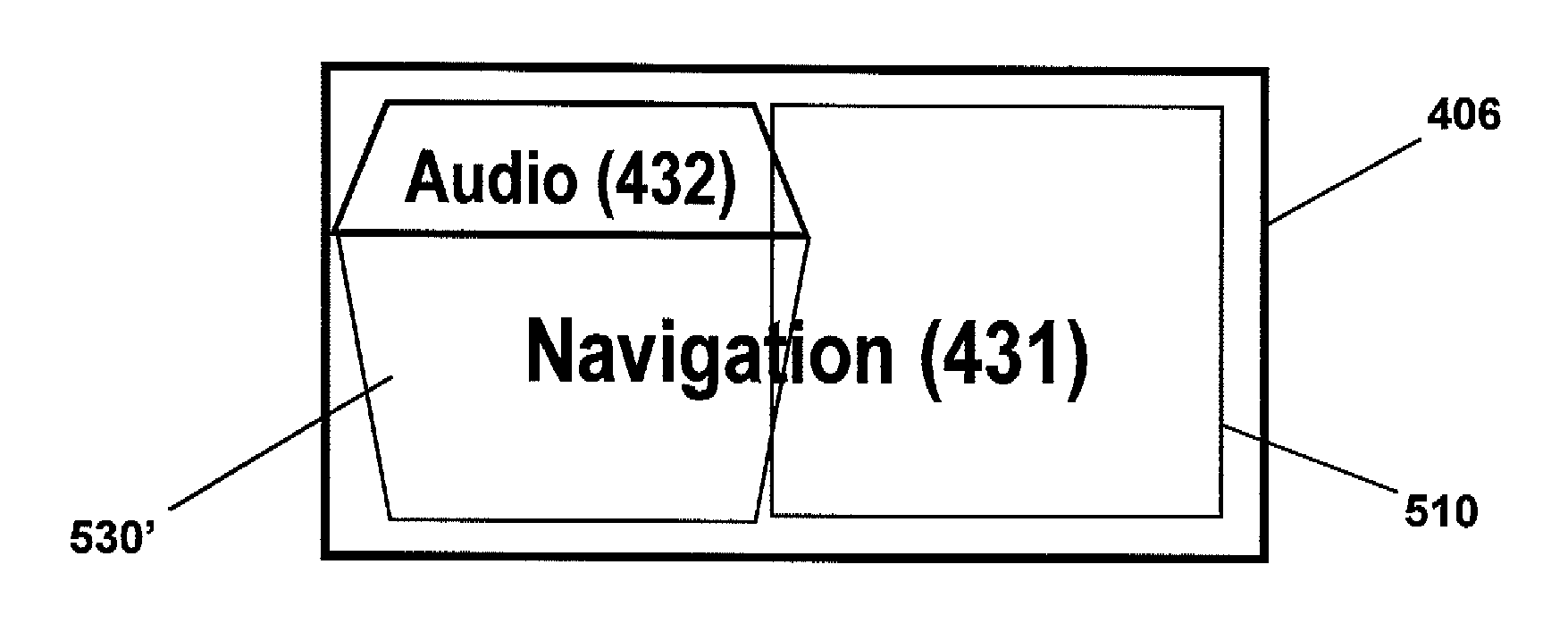

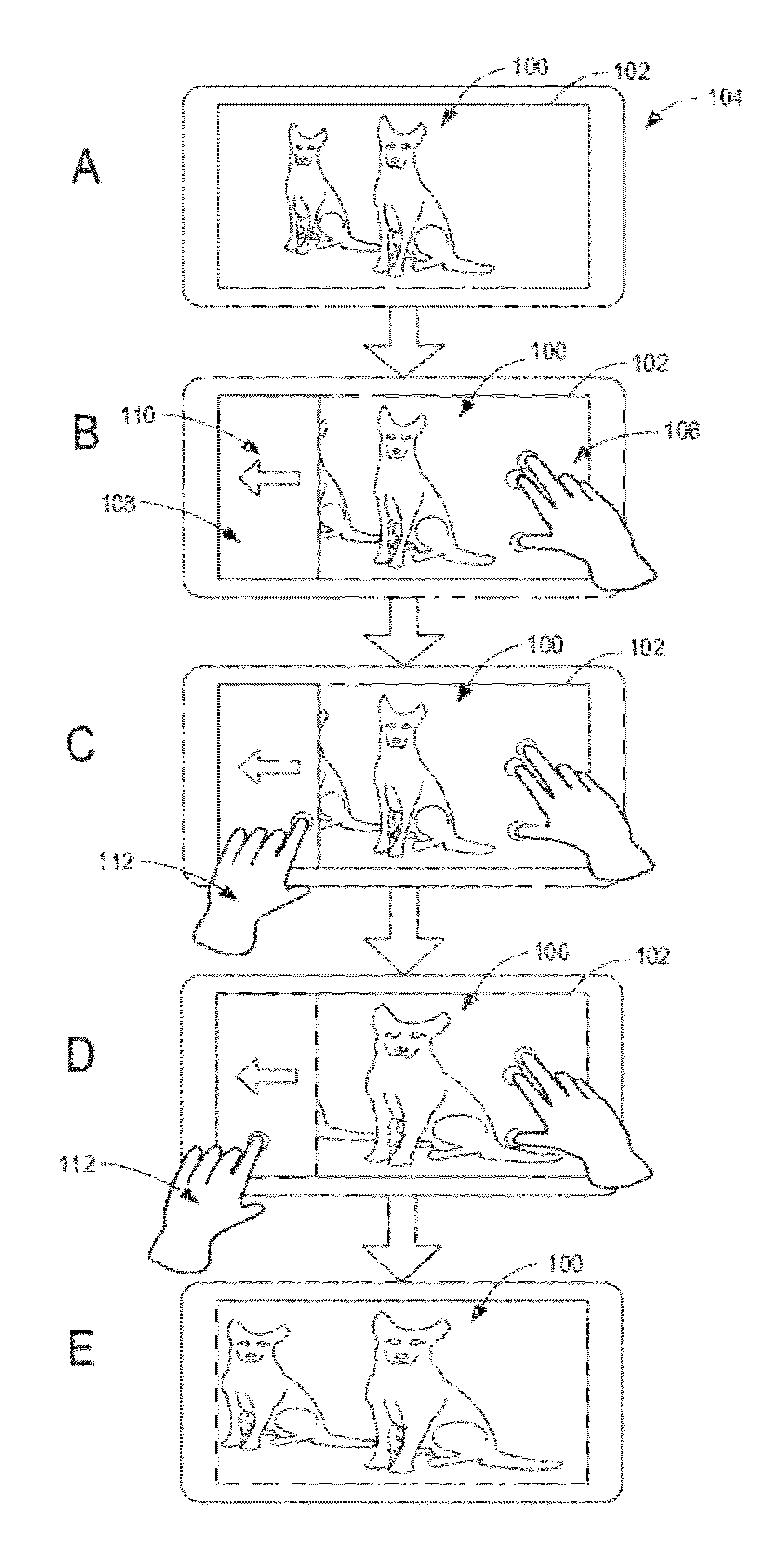

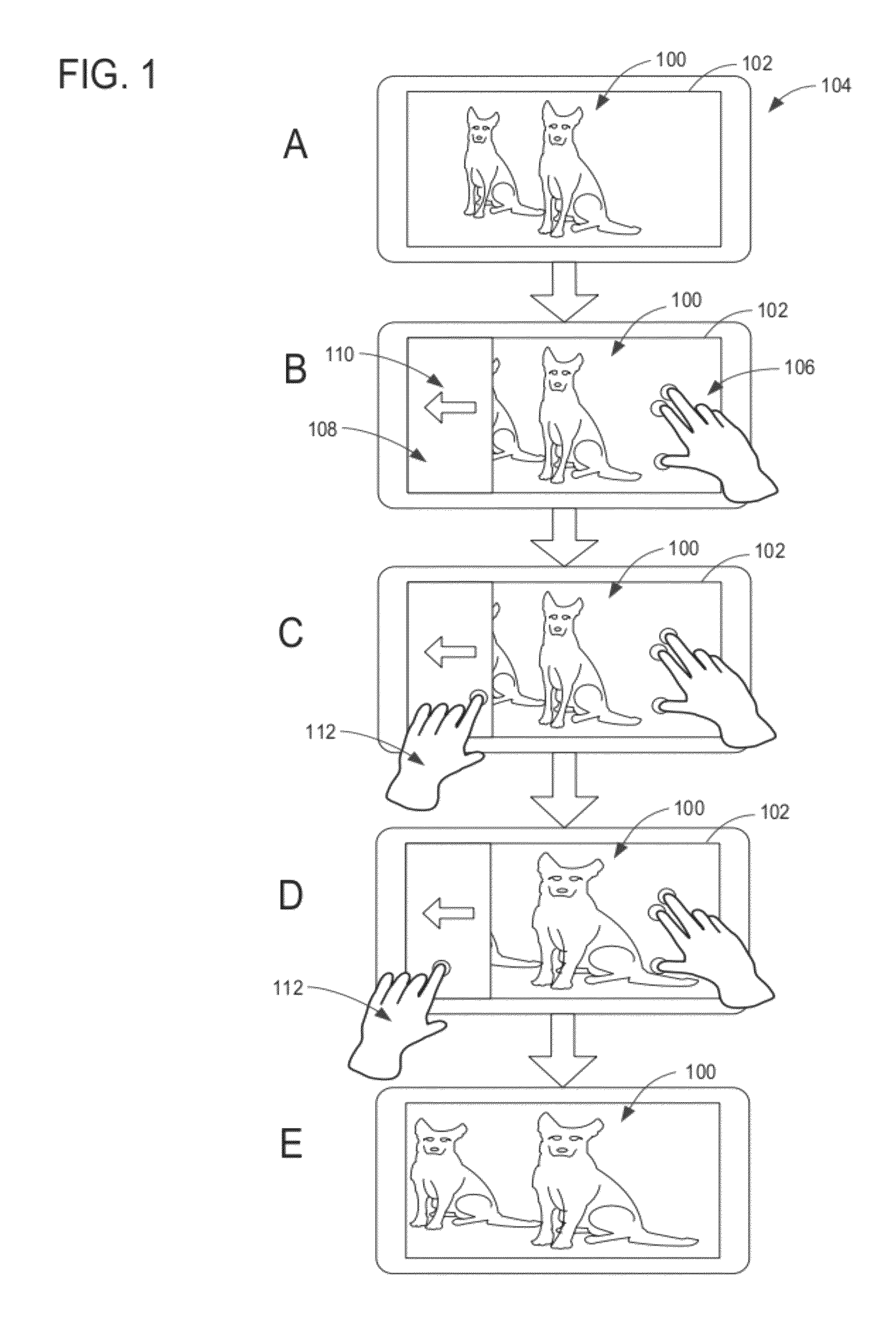

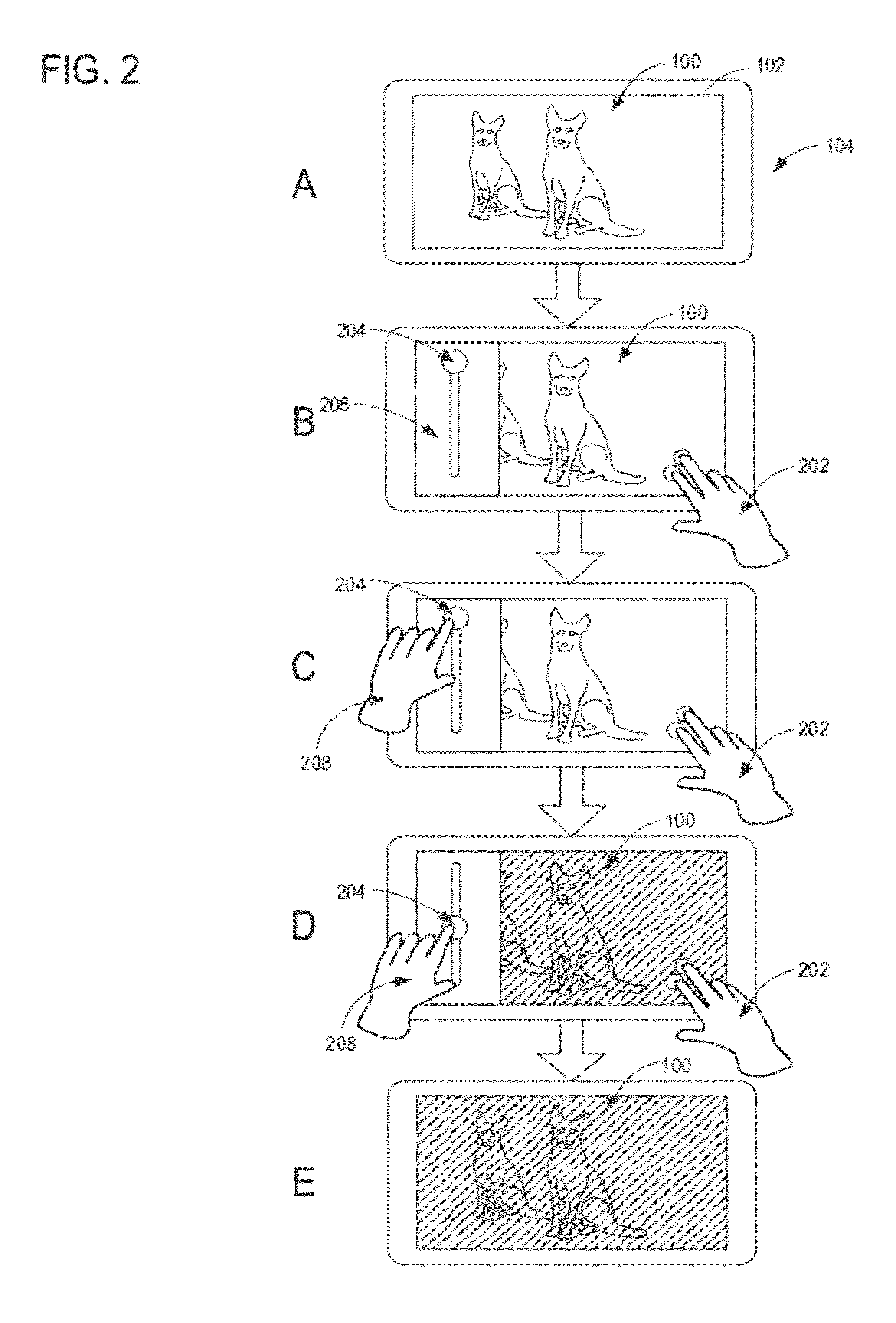

Method and apparatus for controlling and displaying contents in a user interface

Active | US8677284B2 | Easy to operate; Simple finger gestures; Input/output processes for data processing; Operability; Application software

A user interface with multiple applications that can be operated concurrently and/or independently using simple finger gestures allows a user to intuitively operate and control digital information, functions, applications, etc., improving operability. The user can navigate the user interface with finger gestures via a virtual spindle metaphor to select different applications. The user can also split the user interface into at least two display segments, which may contain different applications. Moreover, each display segment can be moved to a desired location on the user interface to provide a seamless operating environment.

Owner:ALPINE ELECTRONICS INC

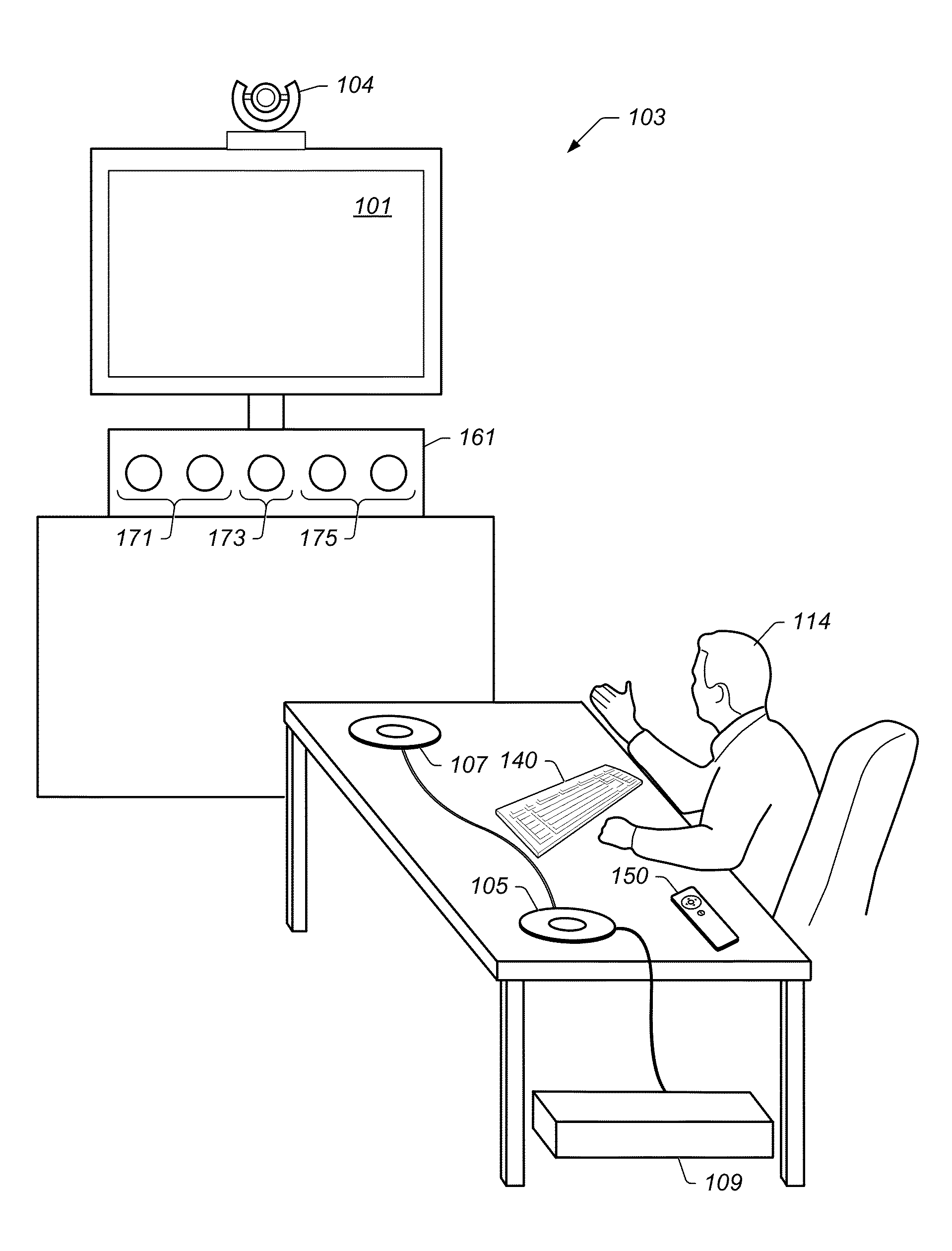

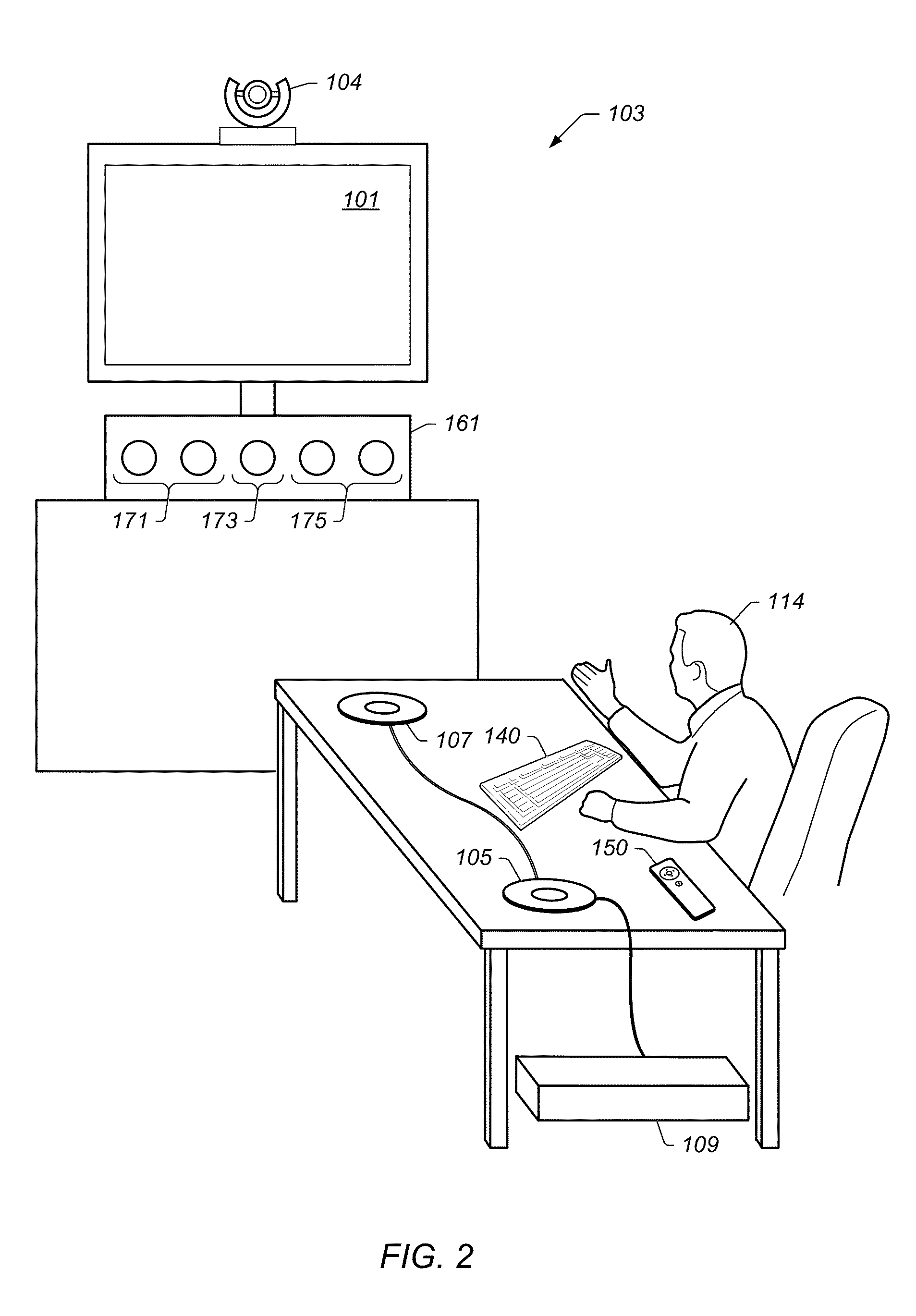

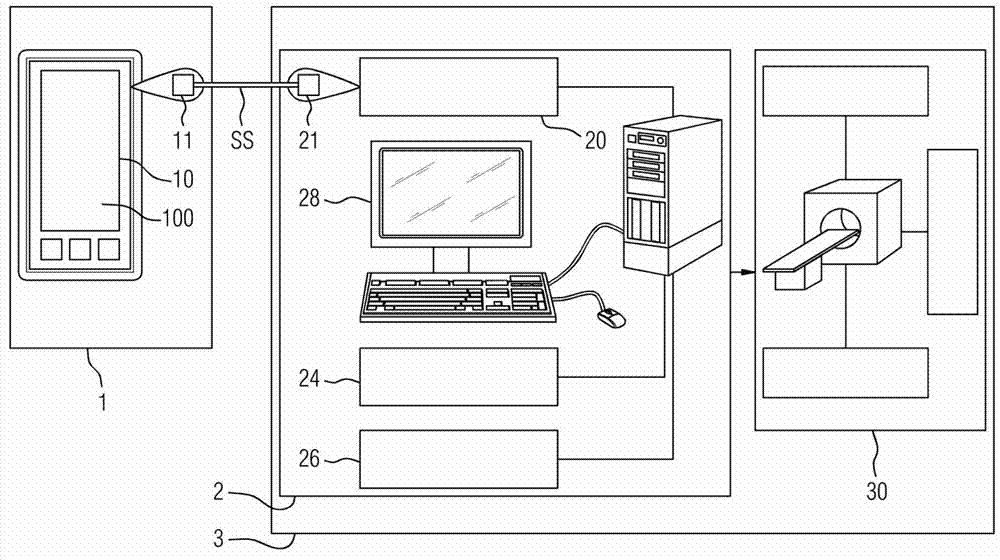

Videoconferencing System with Context Sensitive Wake Features

Active | US20130278710A1 | Quickly and intuitively interact; Television conference systems; Automatic exchanges; Remote control; Display device

System and method involving user interfaces and remote control devices. These user interfaces may be particularly useful for providing an intuitive and user-friendly interaction between a user and a device or application using a display, e.g., at a “10 foot” interaction level. The user interfaces may be specifically designed for interaction using a simple remote control device having a limited number of inputs. For example, the simple remote control may include directional inputs (e.g., up, down, left, right), a confirmation input (e.g., ok), and possibly a mute input. The user interface may be customized based on current user activity or other contexts (e.g., current or previous states), the user logging in (e.g., using a communication device), etc. Additionally, the user interface may allow the user to adjust cameras whose video is not currently displayed, rejoin previously left videoconferences, and/or perform any of a variety of other desirable actions.

Owner:LIFESIZE INC

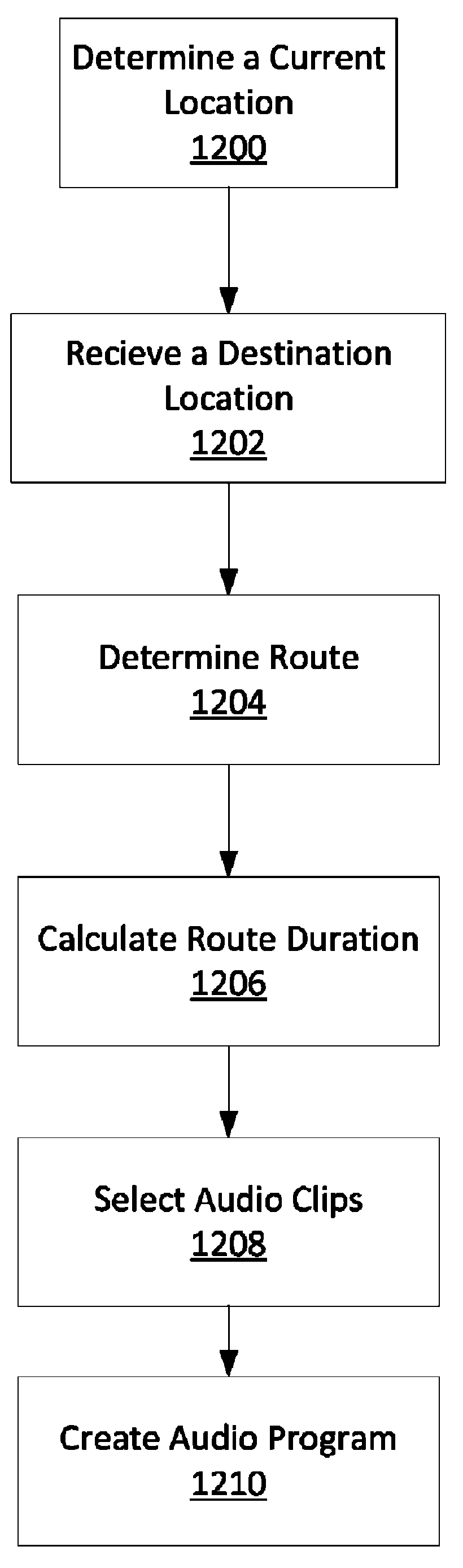

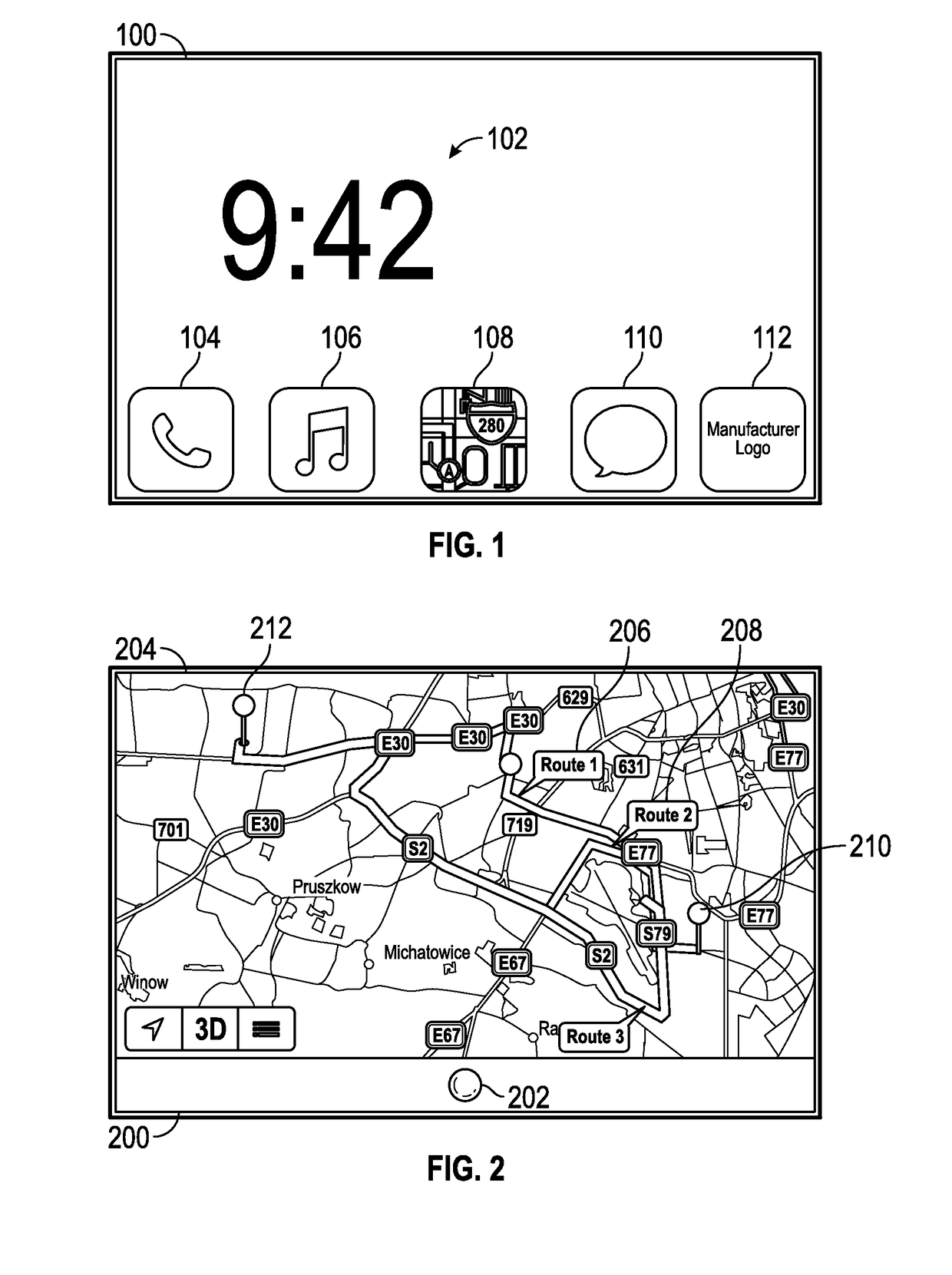

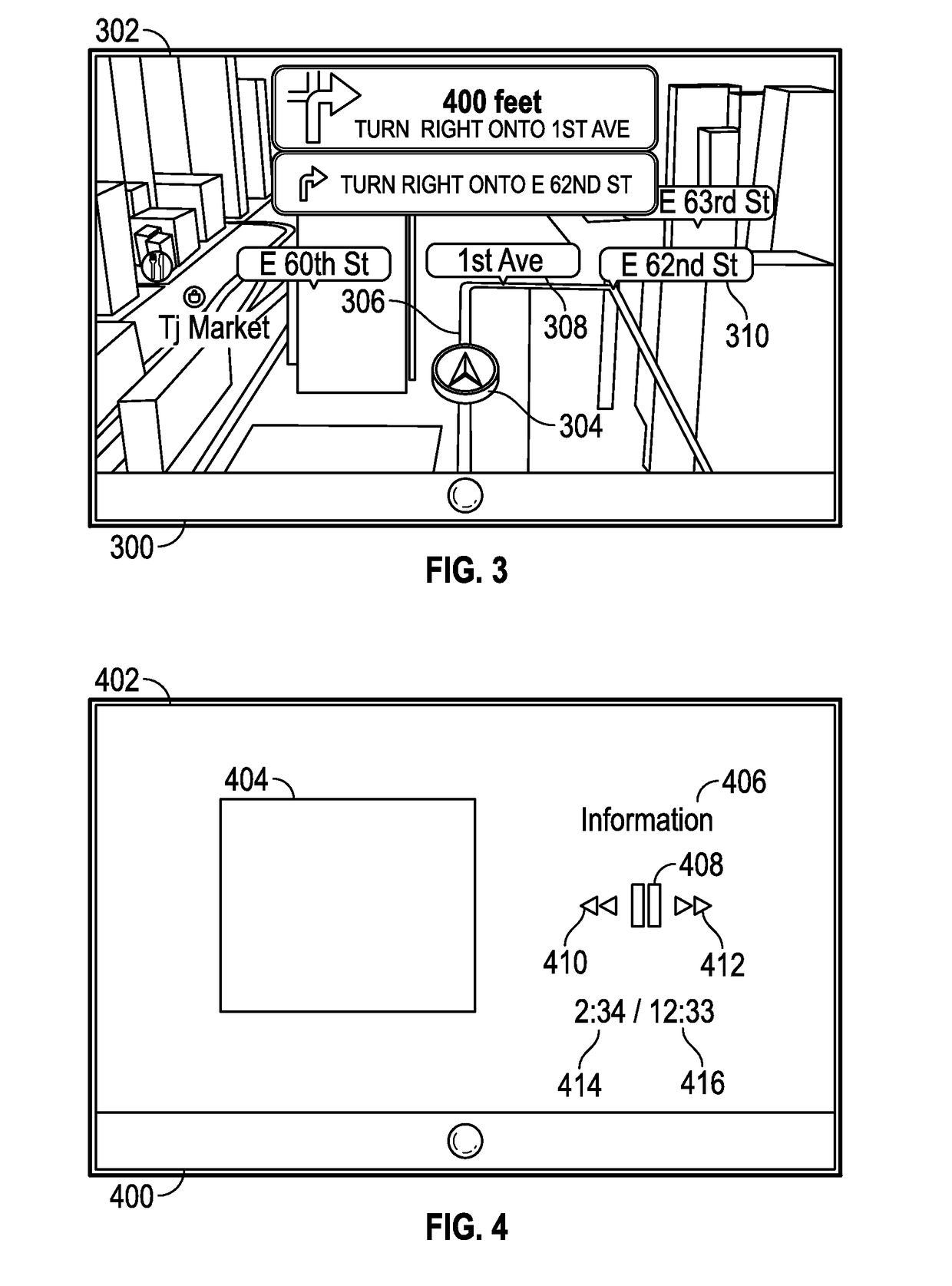

Voice and touch user interface

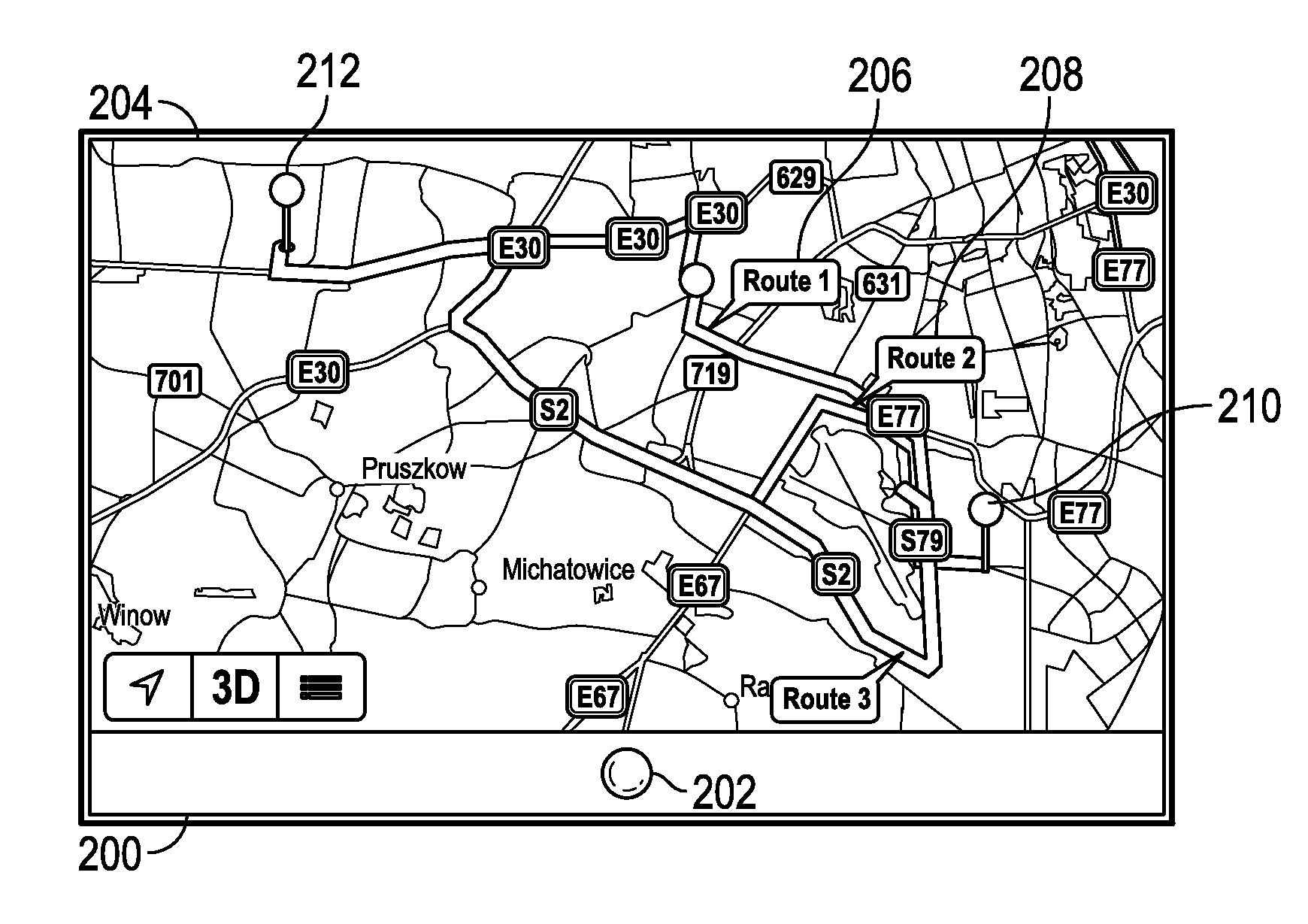

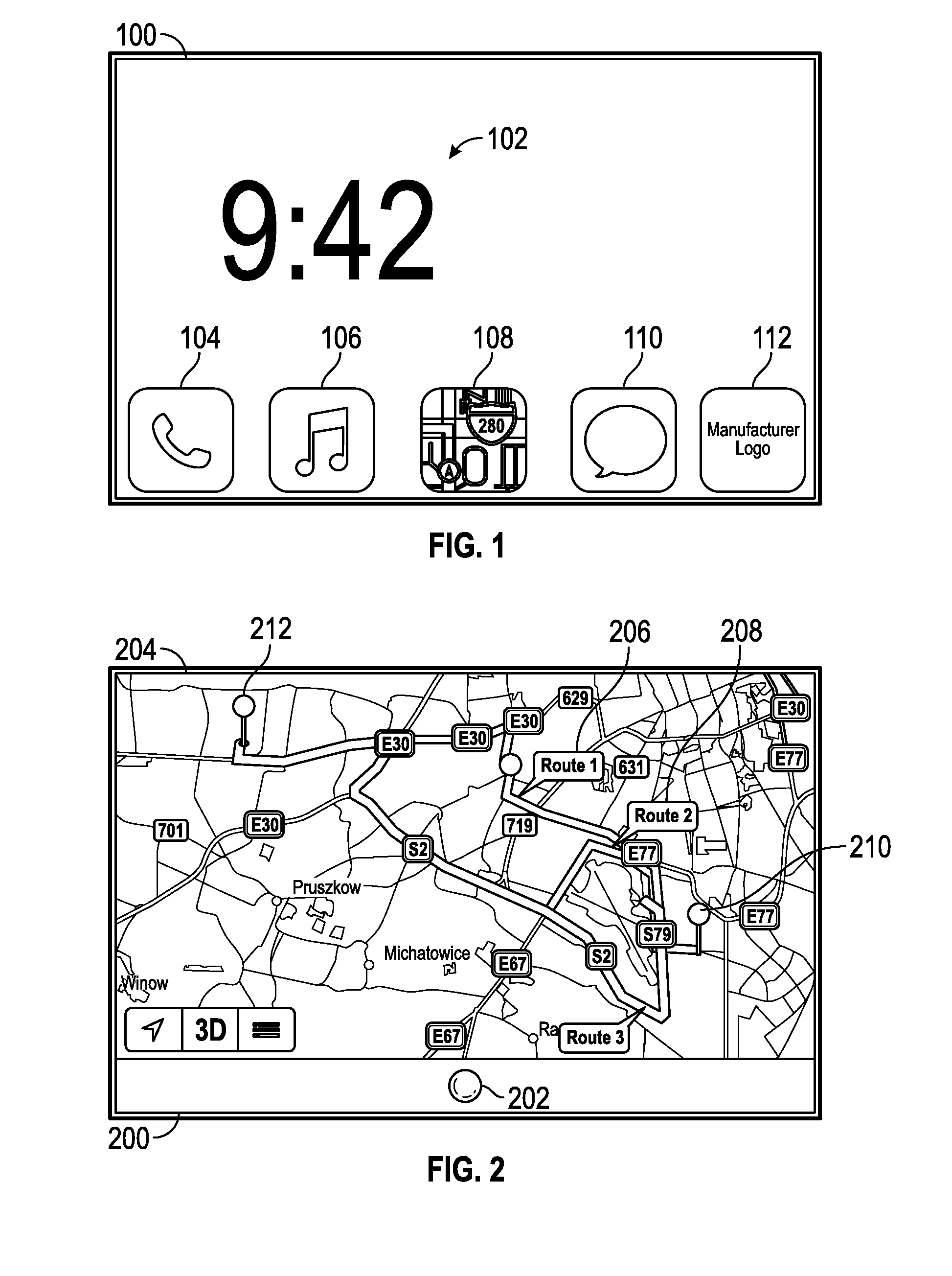

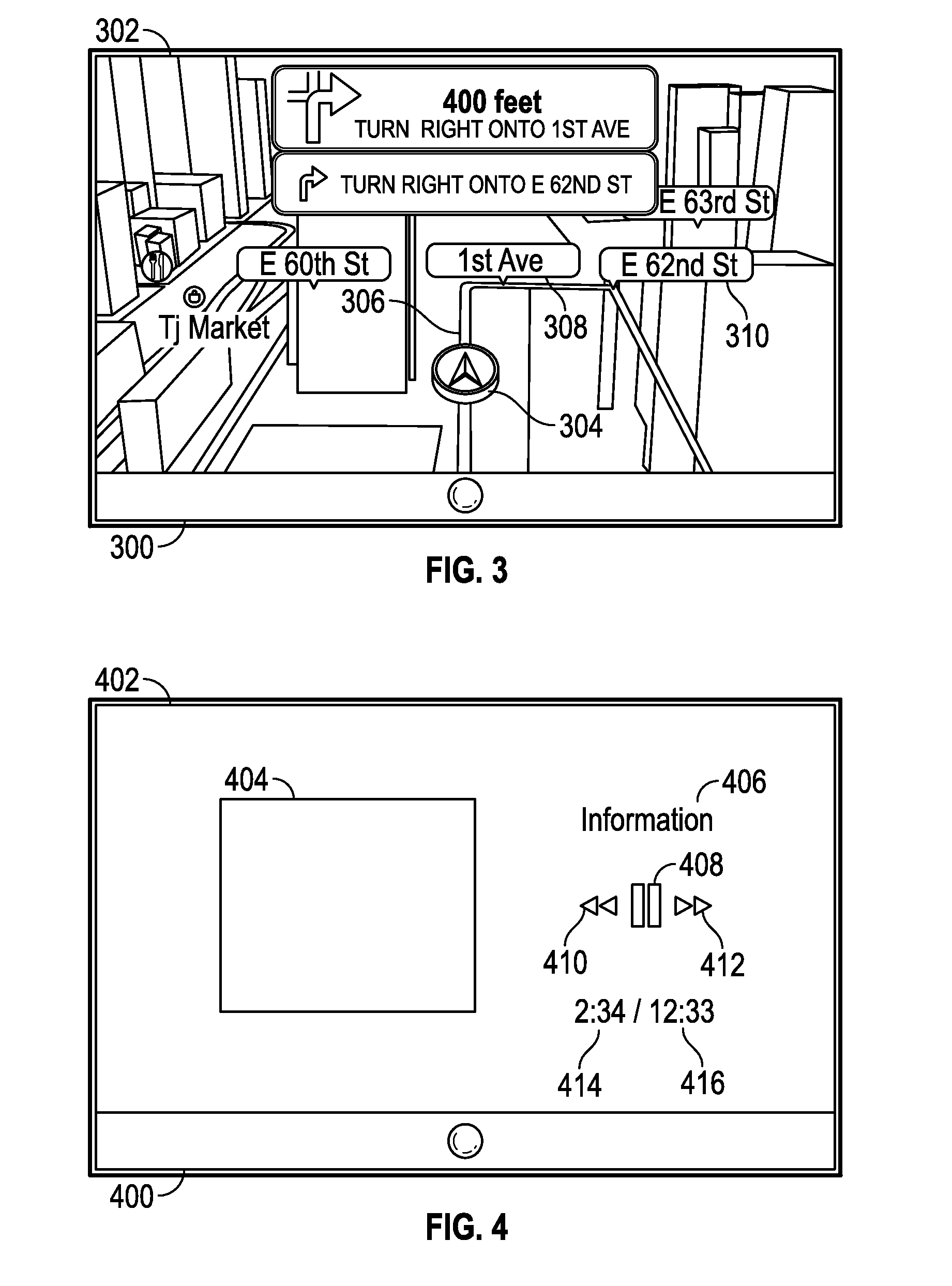

Active | US20140278072A1 | Safe driving experience; Minimizing user interaction; Instruments for road network navigation; Dashboard; Natural user interface

Owner:APPLE INC

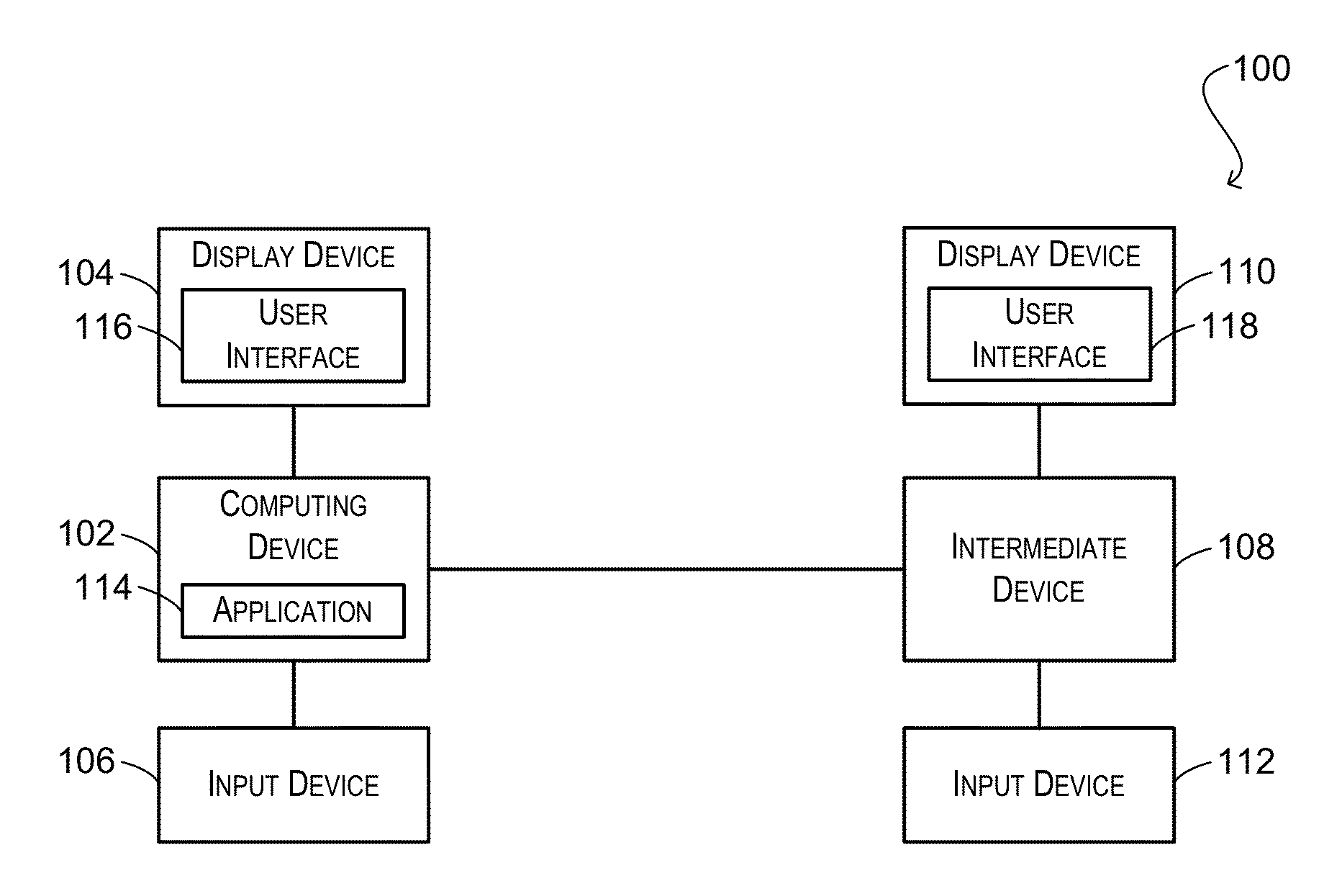

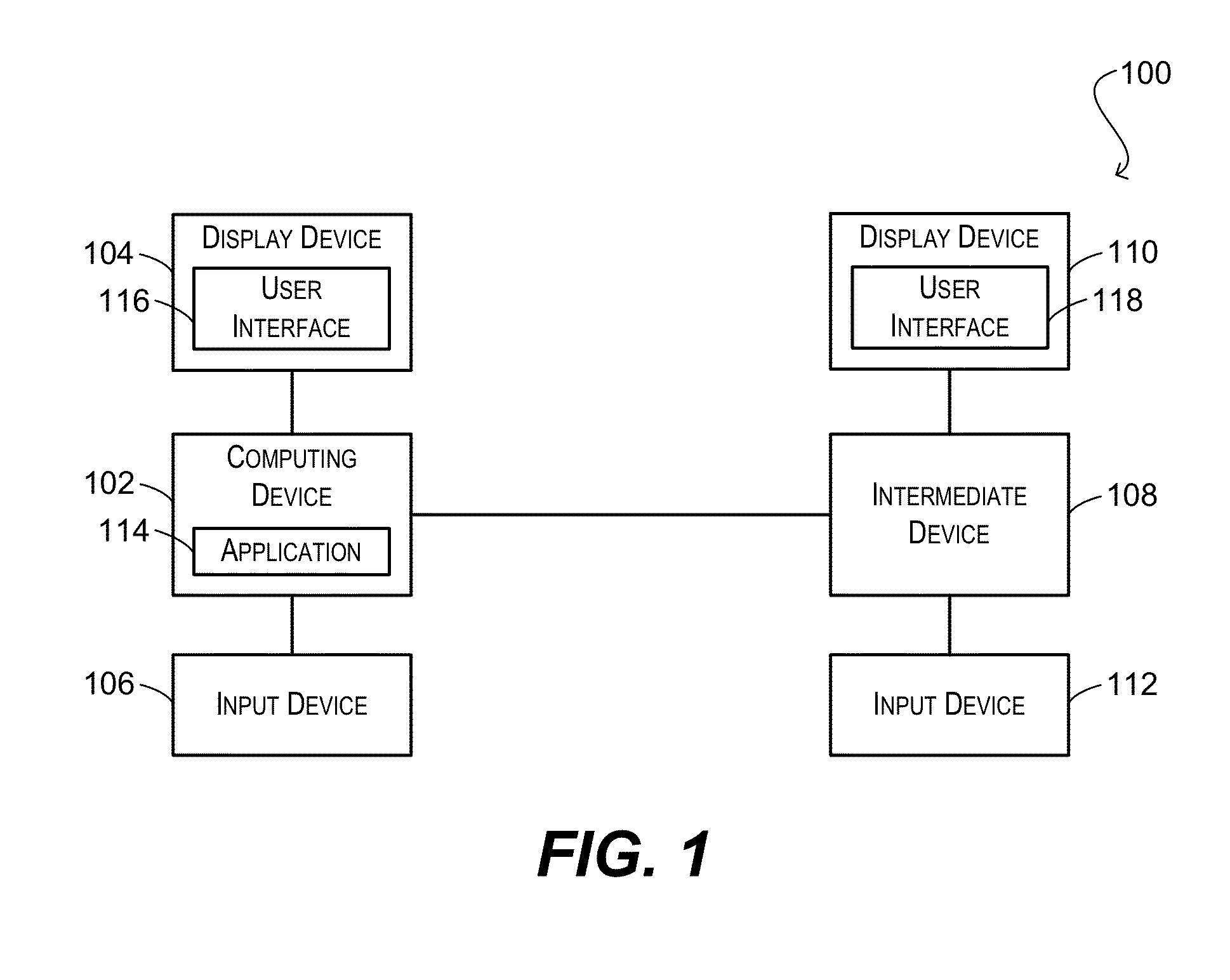

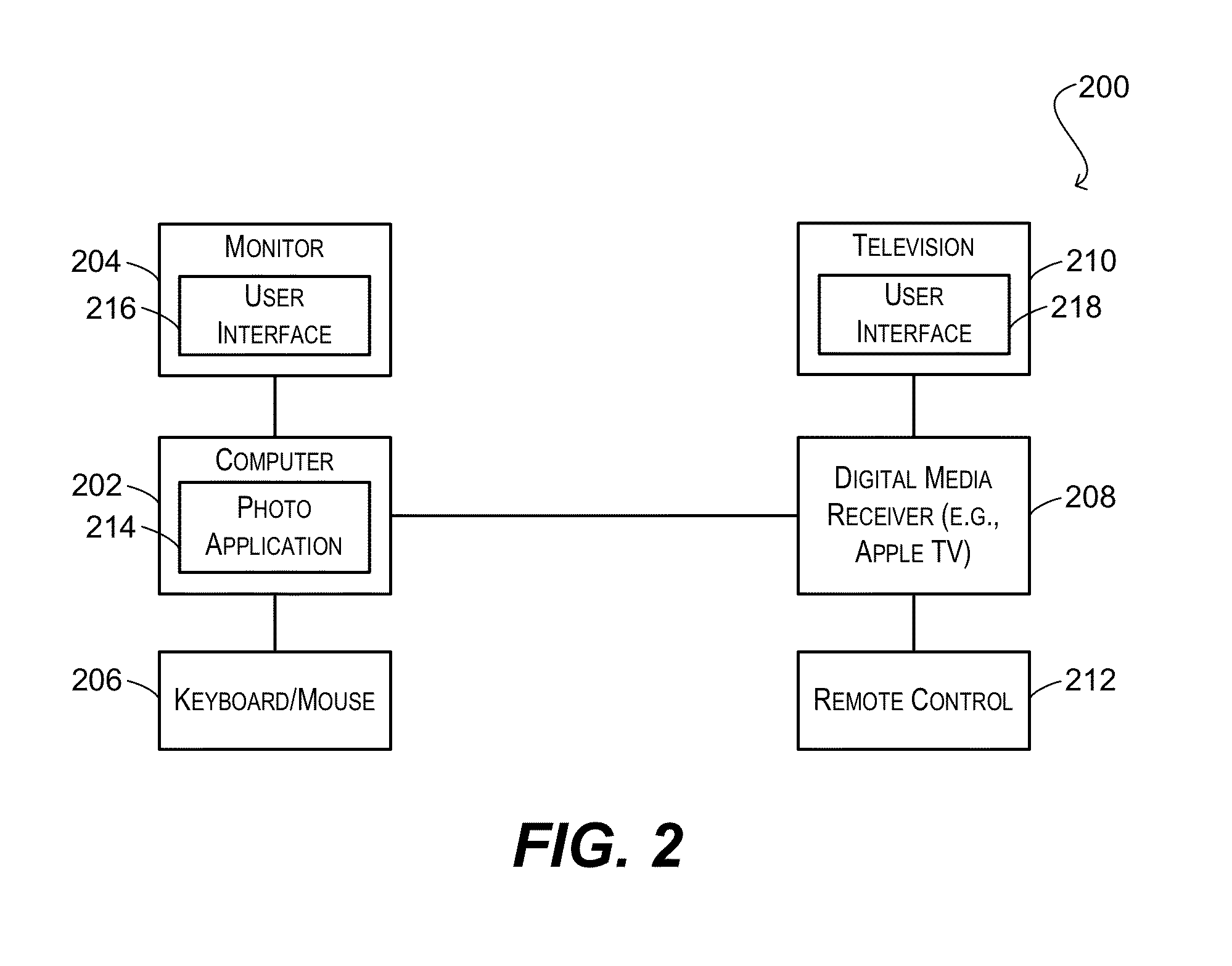

Application interaction via multiple user interfaces

Inactive | US20130132848A1 | Optimize layout; Complex layout; Input/output for user-computer interaction; Program control; User input; Display device

Techniques for concurrently presenting multiple, distinct user interfaces for a single software application on multiple display devices. Each of the user interfaces can be interactive, such that user input received with respect to any of the user interfaces (presented on any of the display devices) can change the state of the application and/or modify data associated with the application. Further, this state or data change can be reflected in all (or a subset) of the user interfaces.

Owner:APPLE INC

Device controller with connectable touch user interface

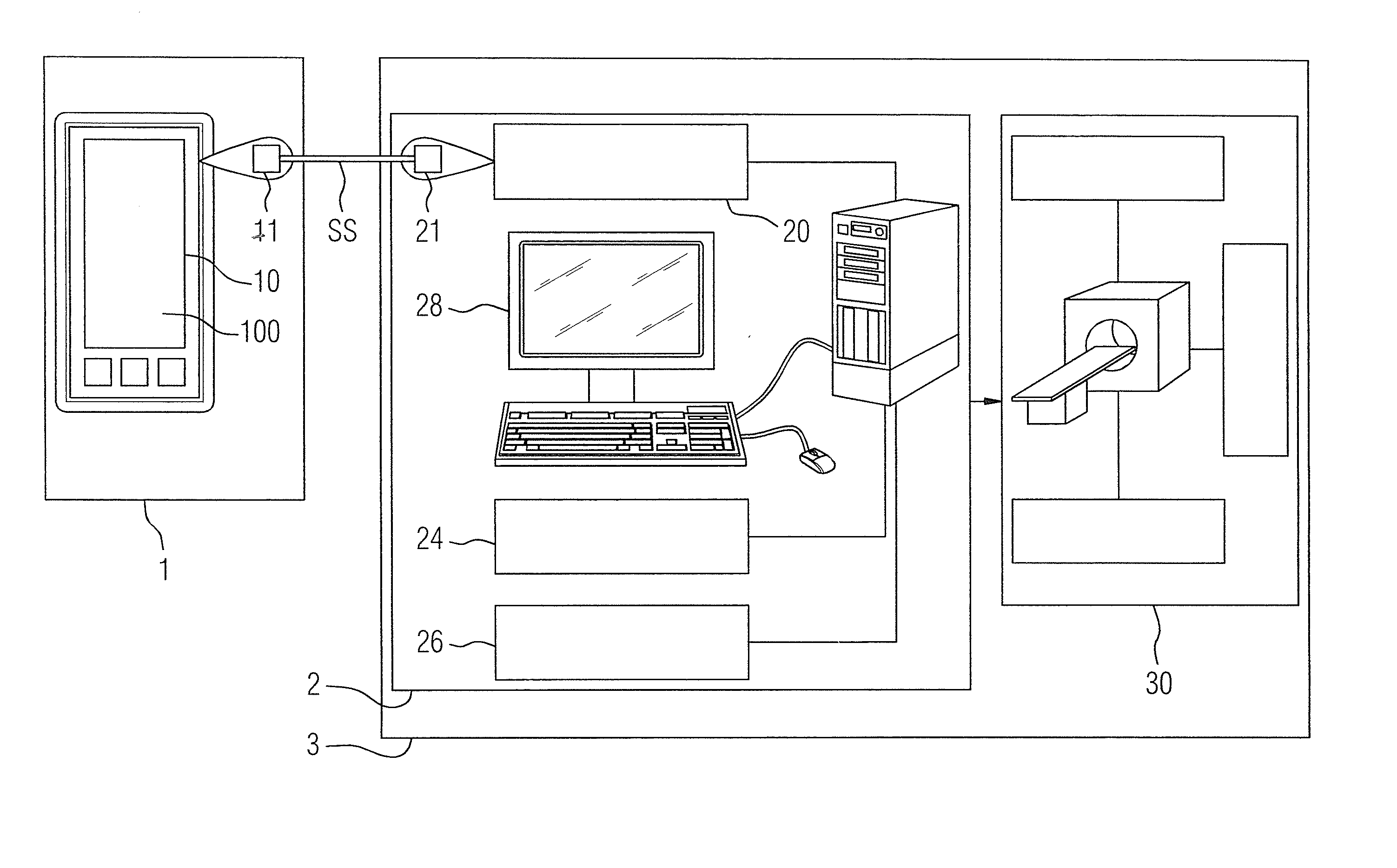

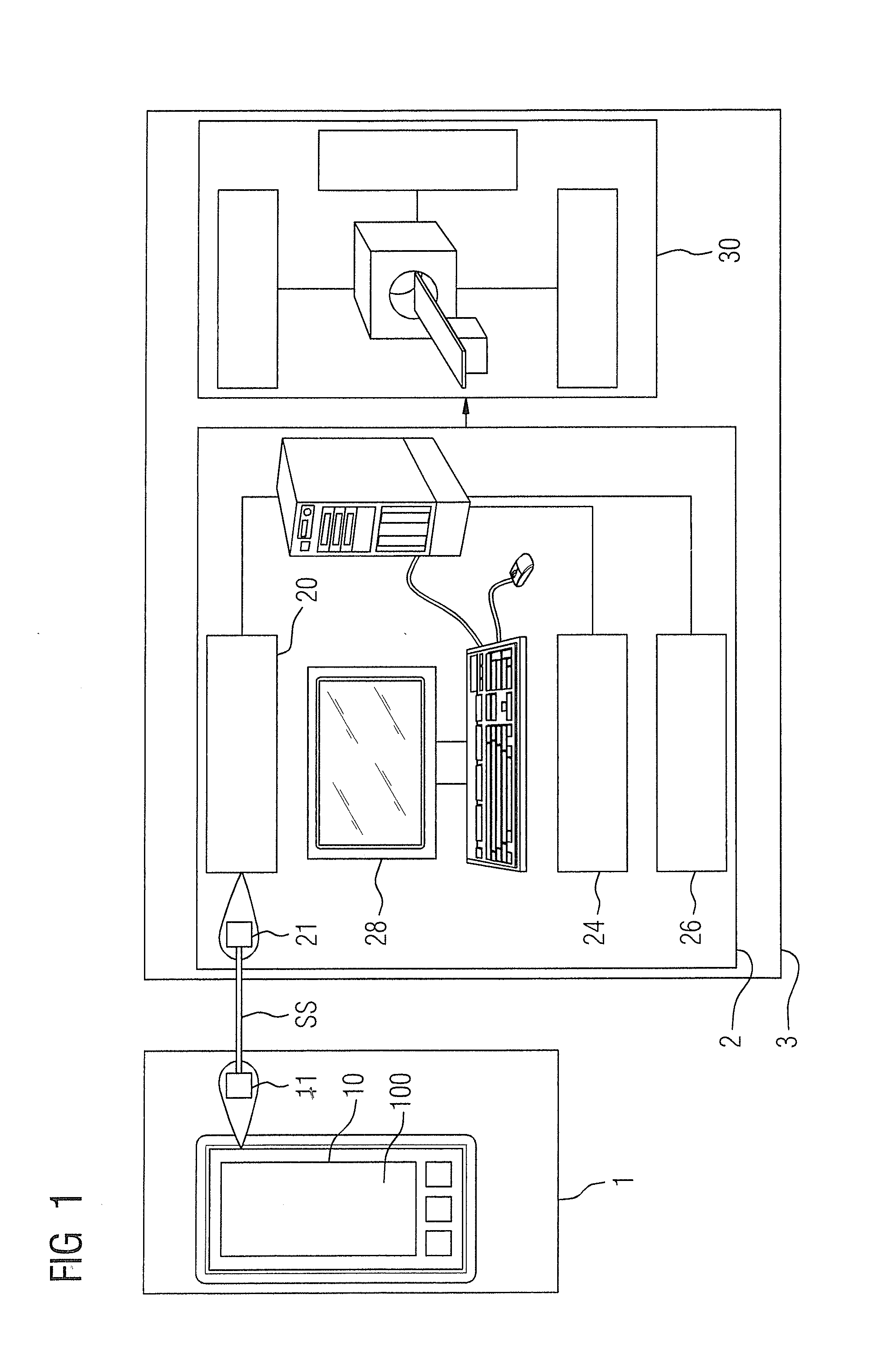

Inactive | US20130088452A1 | Easy to adapt; Avoid impermissible; Local control/monitoring; Surgical systems user interface; Control data; Computer module

In an electronic, context-sensitive controller system for a medical technology device, at least one external input and output device with a touchscreen user interface is provided with an adapter module. The medical technology device is operated and/or controlled via a computer-assisted application in order to exchange data with a control module. An interface between the adapter module and the control module is designed to exchange control data for controlling the medical technology device via the touchscreen user interface of the external input and output device.

Owner:SIEMENS AG

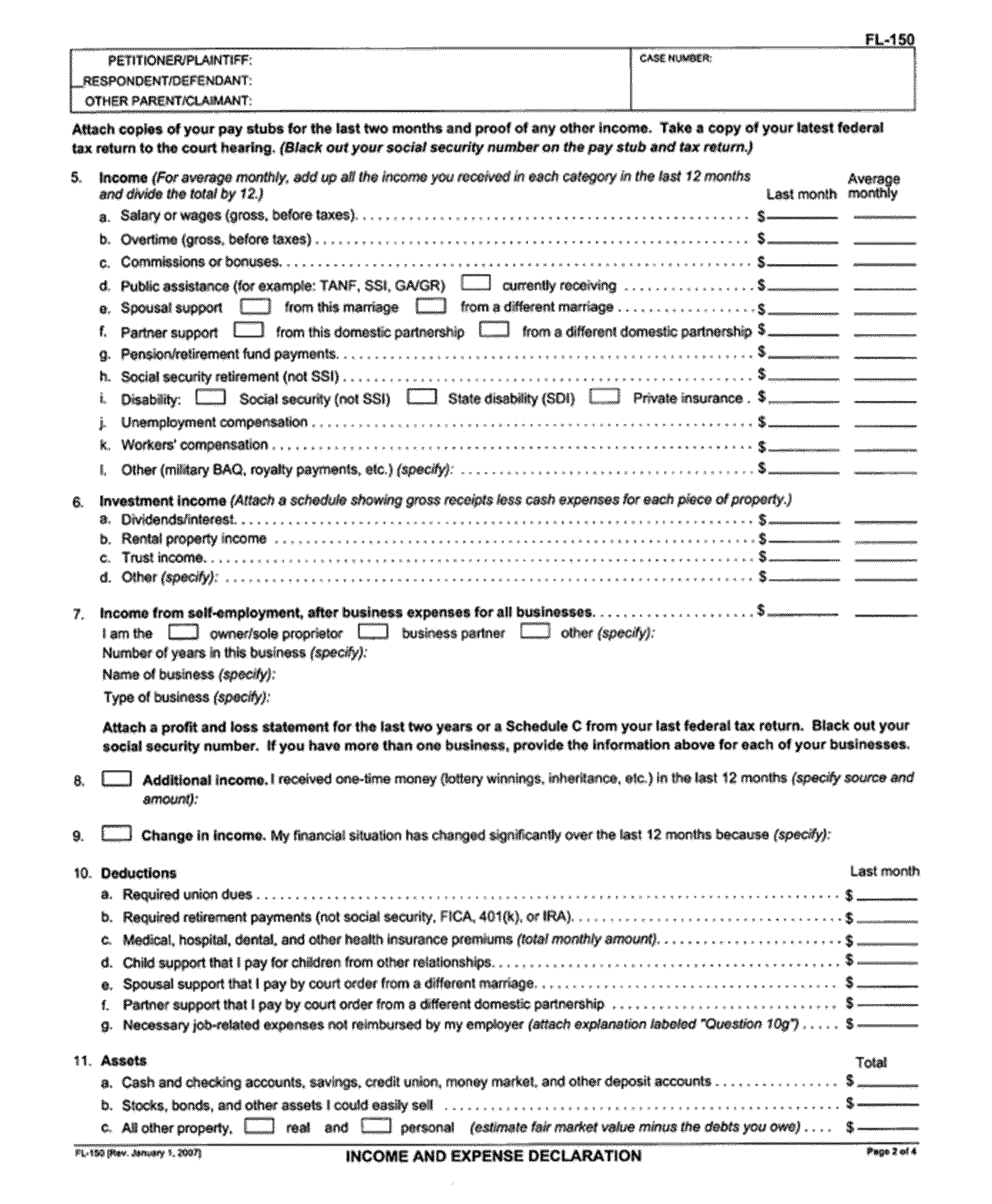

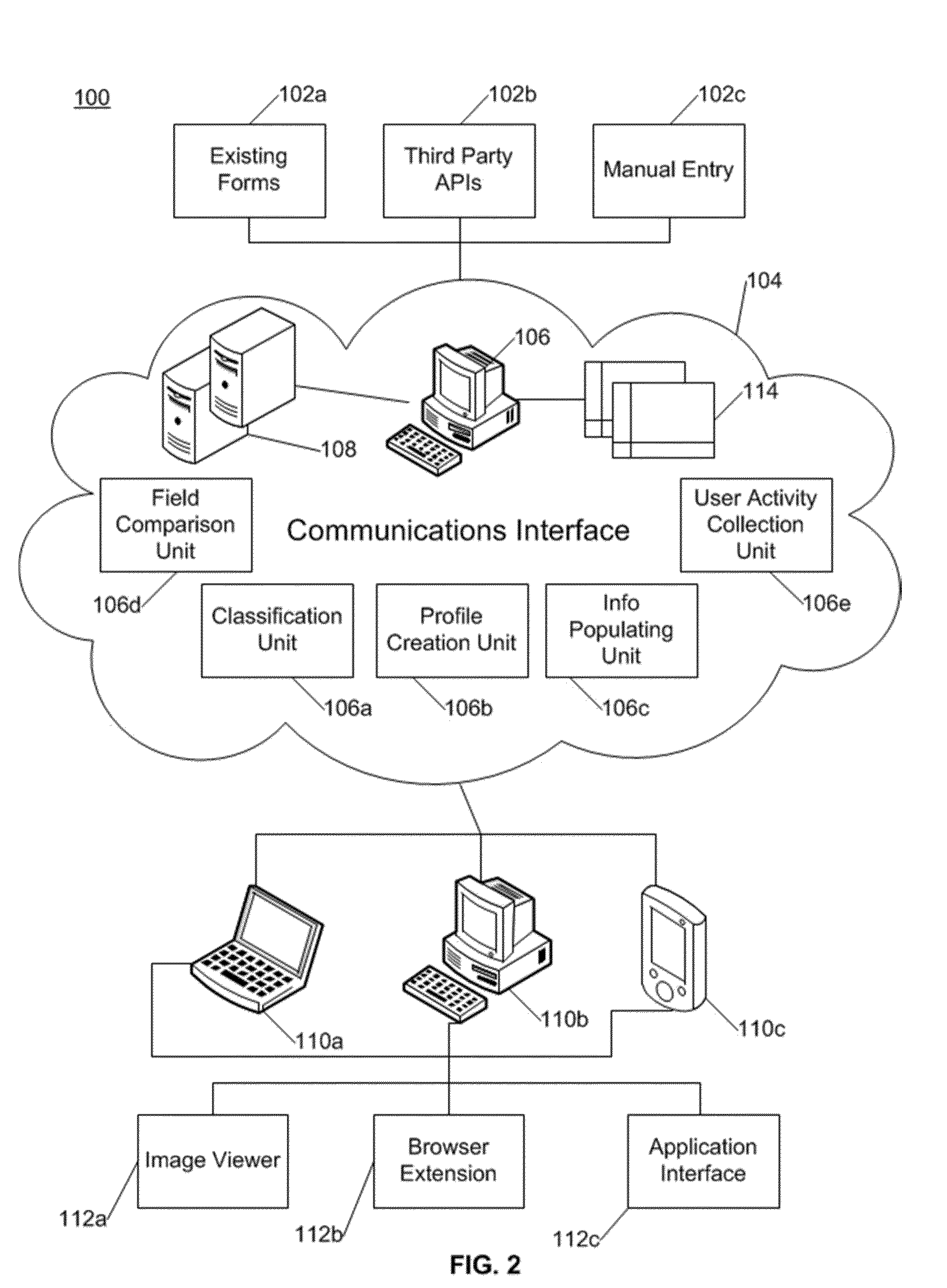

Human interactions for populating user information on electronic forms

Active | US20140123057A1 | Easy to complete; Market predictions; Relational databases; Human interaction; Electronic form

Systems and methods are provided for populating user information onto an electronic form using human interactions via touch, voice, gestures, or an input device. The electronic form is selected by the user for completion using a user profile of stored data. When a form field requires manual input, such as a form field with multiple potential values, the user is prompted to complete the field using one or more of the human interactions so that the field can be completed easily. These human interactions may include:

- touching the form field with a finger on a touchscreen user interface, speaking the form field name, or gesturing or selecting via the input device to open a window of potential values, and then
- touching, speaking, gesturing, or selecting via the input device the value that the user prefers.

Owner:FHOOSH

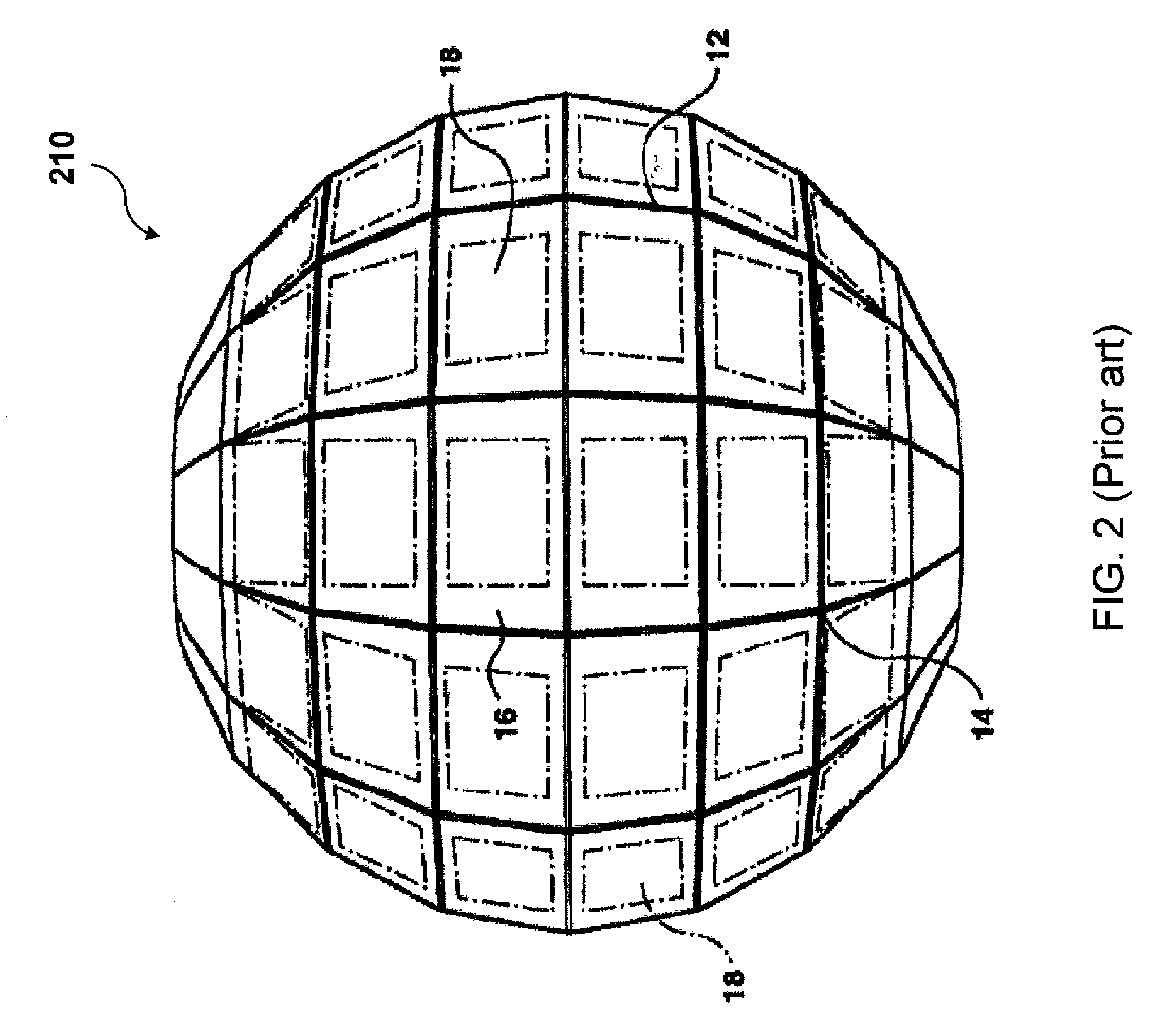

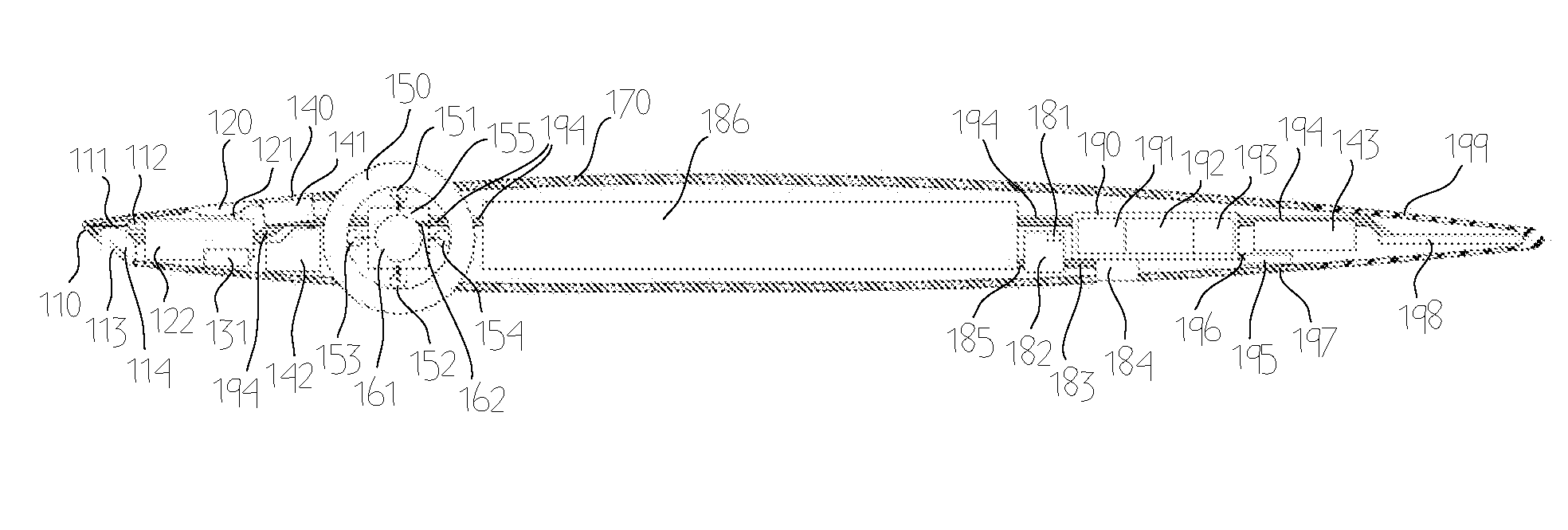

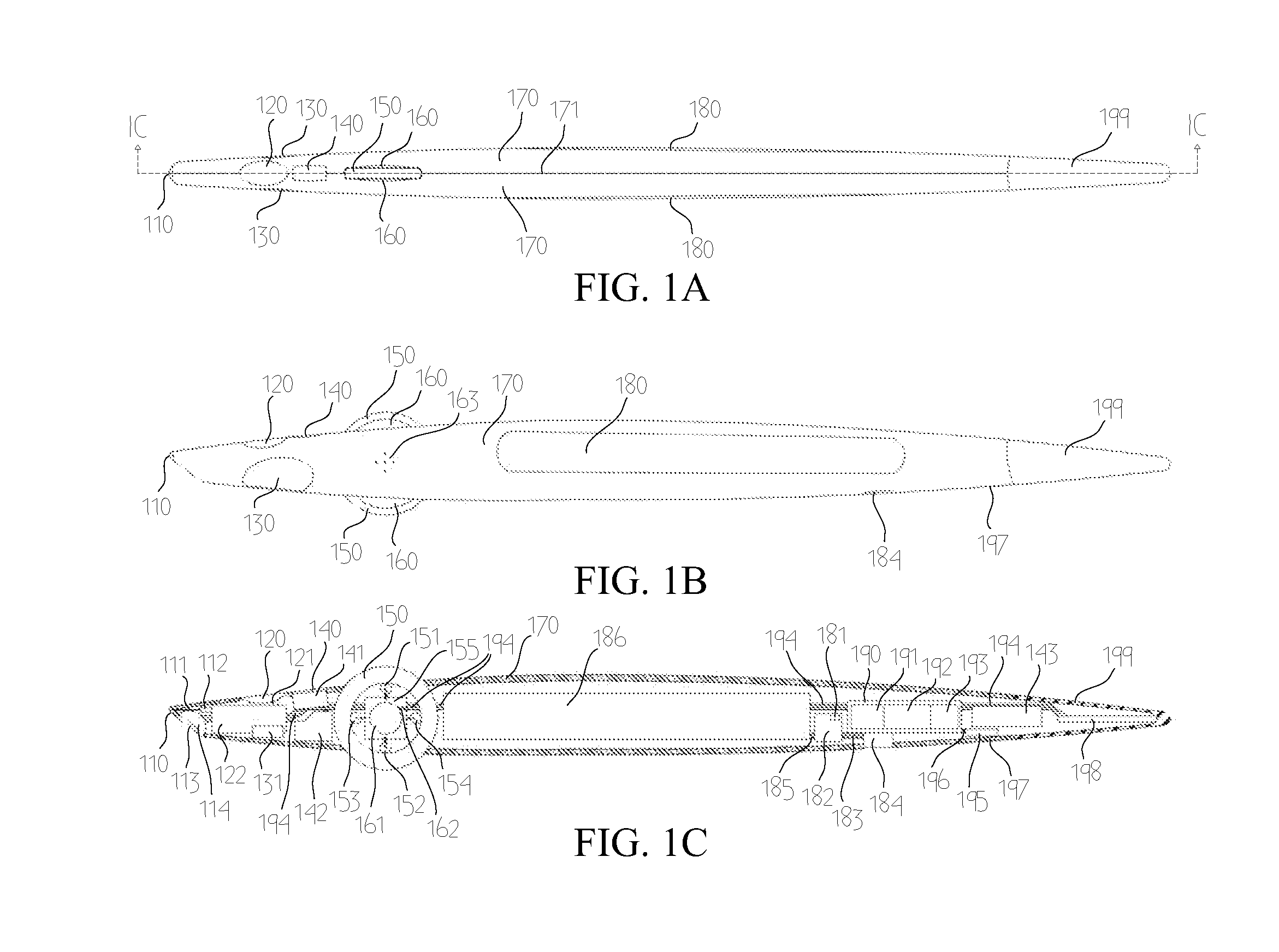

Device and user interface for visualizing, navigating, and manipulating hierarchically structured information on host electronic devices

Active | US20150205367A1 | Input/output for user-computer interaction; Cathode-ray tube indicators; Wireless transmission; Two-form

A defined class of input devices is disclosed, consisting of a graspable body in two forms. Both forms include a scroll wheel-style mechanism that functions as a button from multiple directions, both include an apparatus providing six degrees of gestural freedom in three dimensions, and both provide for the wireless transmission capacity of these inputs, user data, audio, and other communications for the purpose of carrying credentials among various host electronic devices, and for manipulating and navigating an associated user interface for hierarchy visualization of structured information to be displayed on those host devices.

Owner:BANDT HORN BENJAMIN D

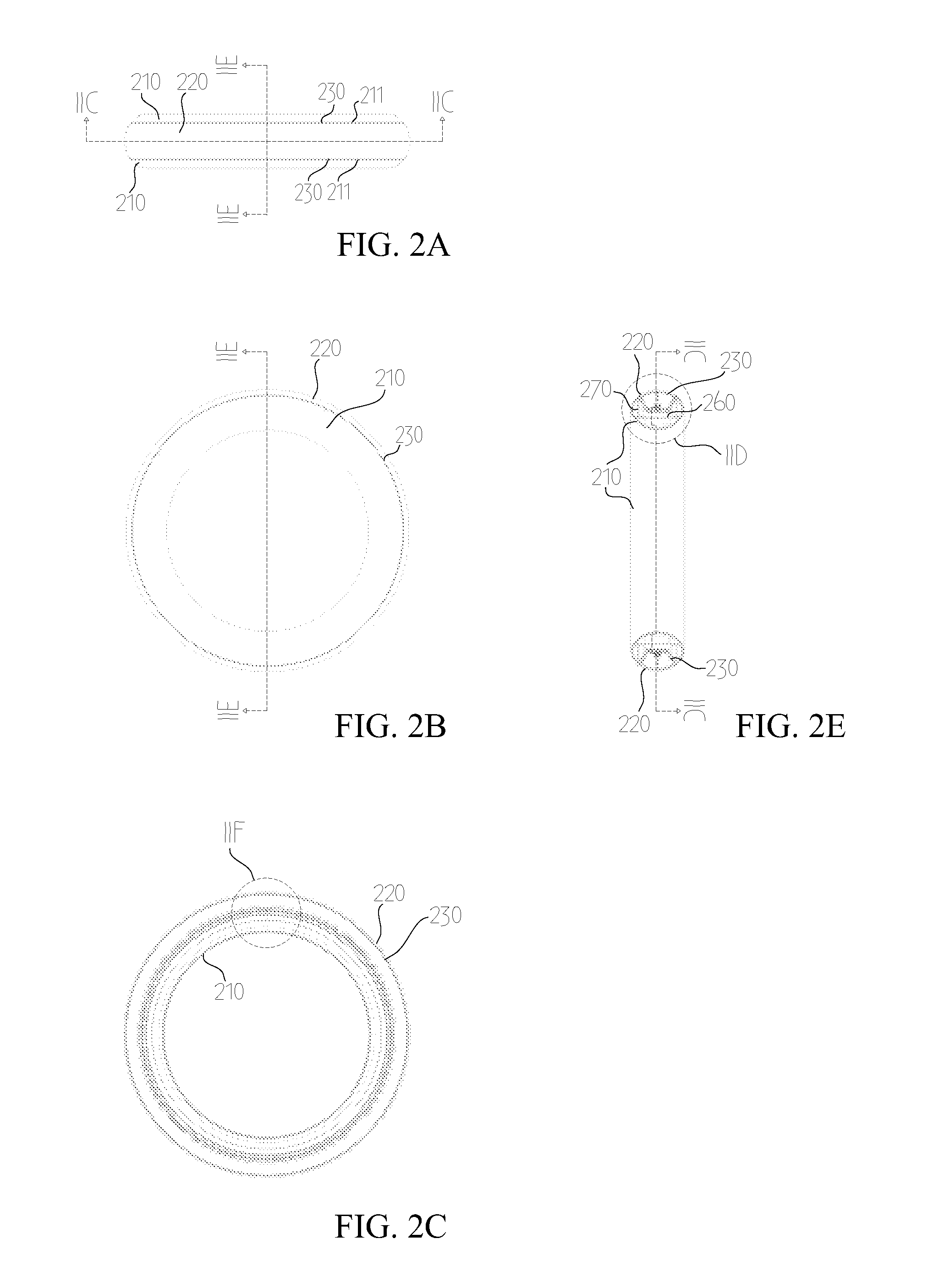

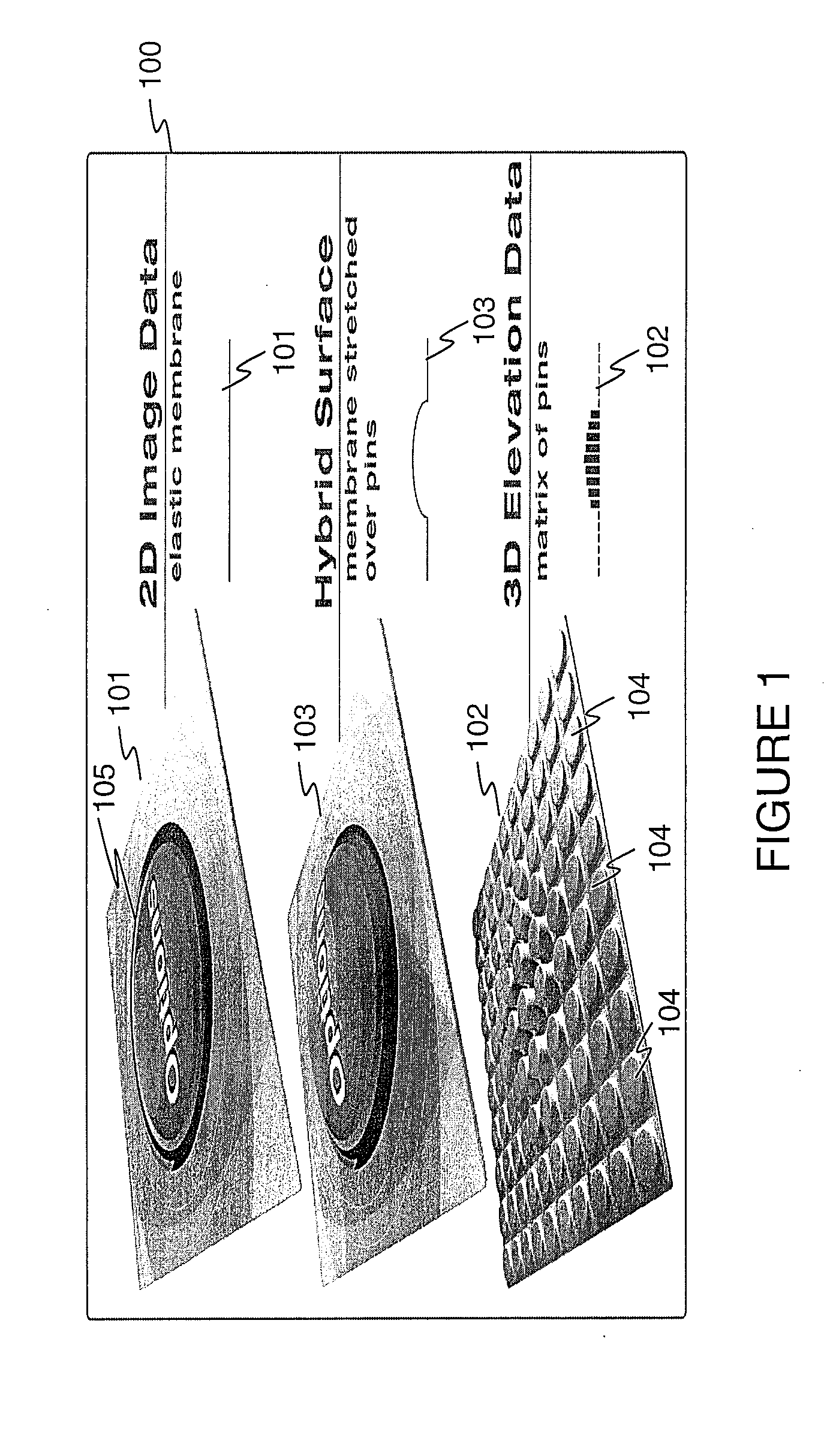

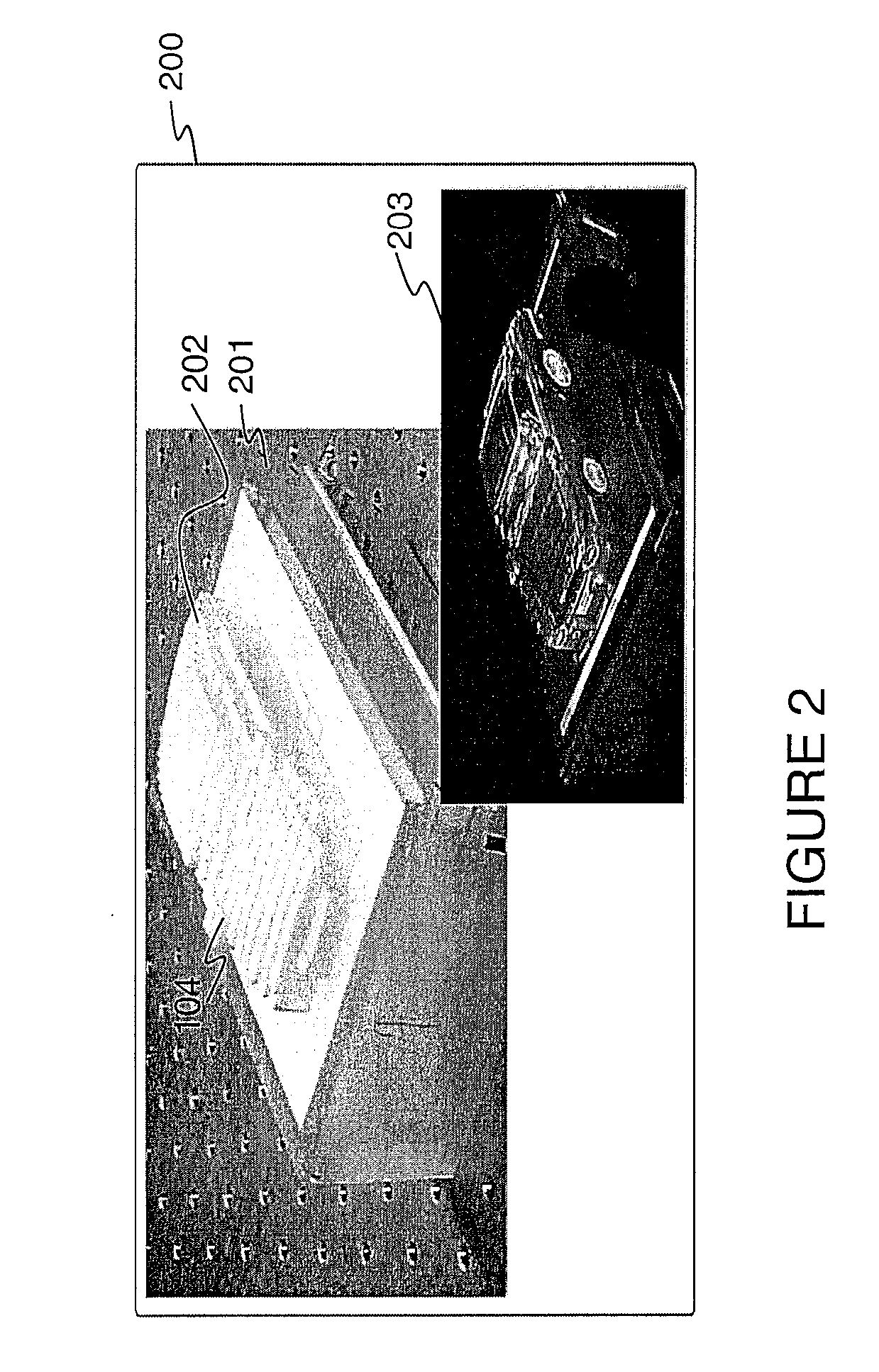

Low relief tactile interface with visual overlay

Inactive | US20080204420A1 | Stereoscopic systems; Input/output processes for data processing; Graphical user interface; Human–computer interaction

Described is a method and a system for providing user interaction with various devices that incorporates adaptable visual and haptic stimuli. The visual and tactile elements of this user interface are aligned with each other and animated so as to convey more information to the user than is possible with traditional user interfaces. An implementation of the inventive user interface device includes a flexible and/or stretchable two-dimensional (2D) display membrane covering a set of moving parts, forming a hybrid 2D and three-dimensional (3D) user interface. The flexible display membrane provides detailed imagery, while the moving parts provide low-relief tactile information to the user. The detailed imagery and the low-relief tactile information are synchronized in time to give the user a coordinated interface experience. Optionally, sound effects may also be provided in synchrony with the imagery and the tactile information.

Owner:FUJIFILM BUSINESS INNOVATION CORP

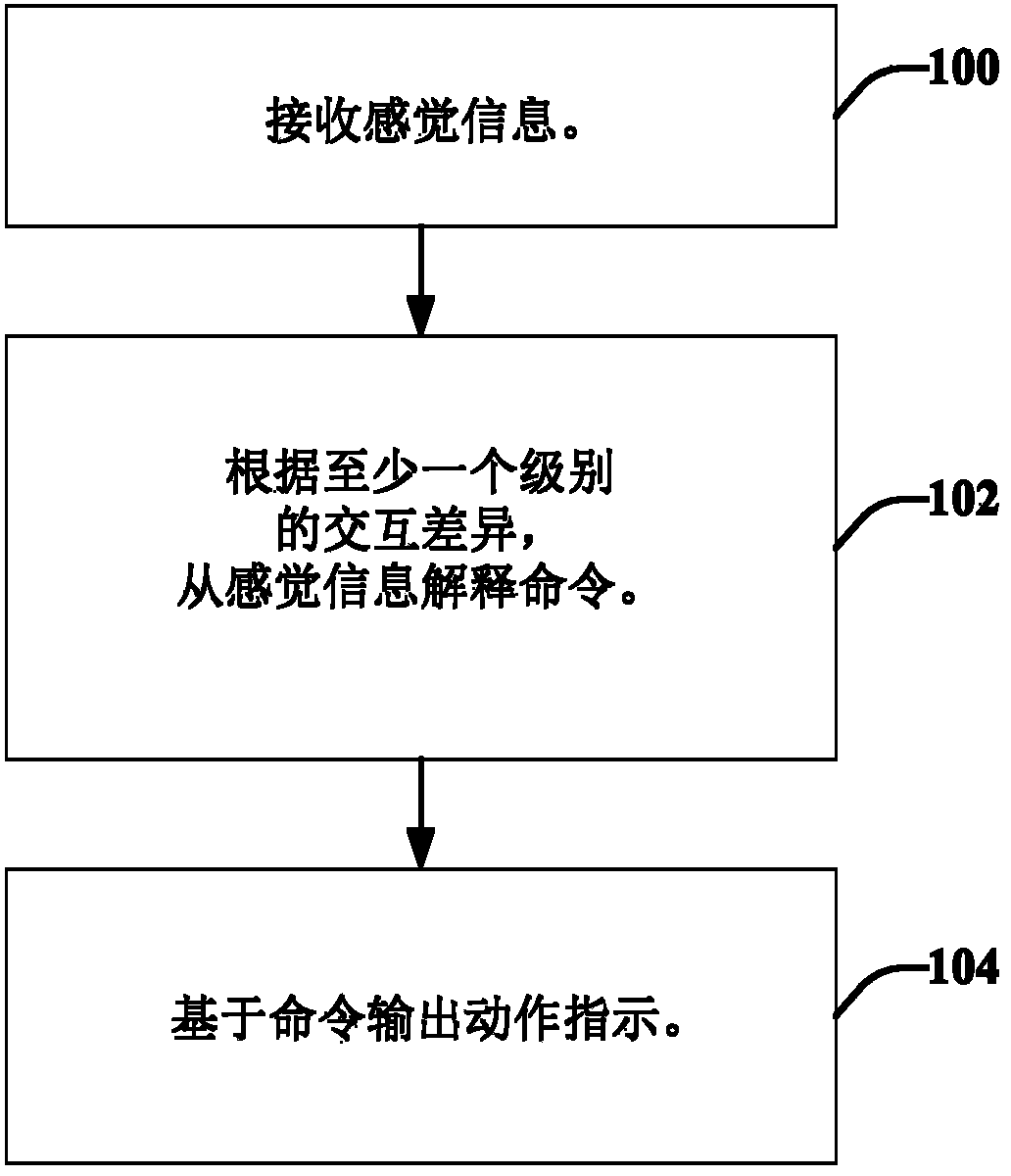

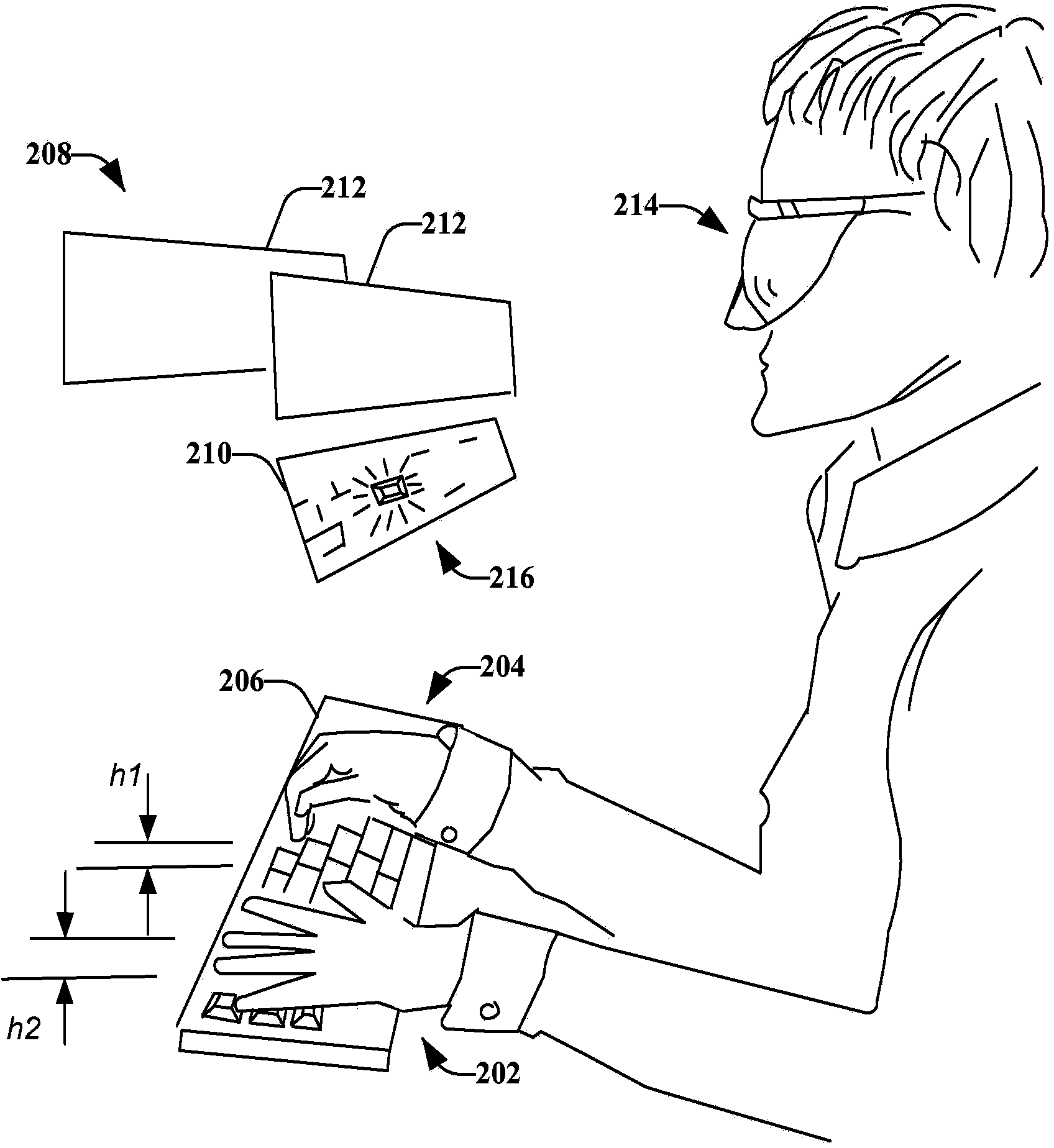

Gesture based user interface for augmented reality

Technologies are generally described for systems and methods effective to provide a gesture keyboard that can be utilized with a virtual display. In an example, the method includes receiving sensory information associated with an object in proximity to, or in contact with, an input device including receiving at least one level of interaction differentiation detected from at least three levels of interaction differentiation, interpreting a command from the sensory information as a function of the at least one level of interaction differentiation, and outputting an action indication based on the command.

Owner:EMPIRE TECH DEV LLC

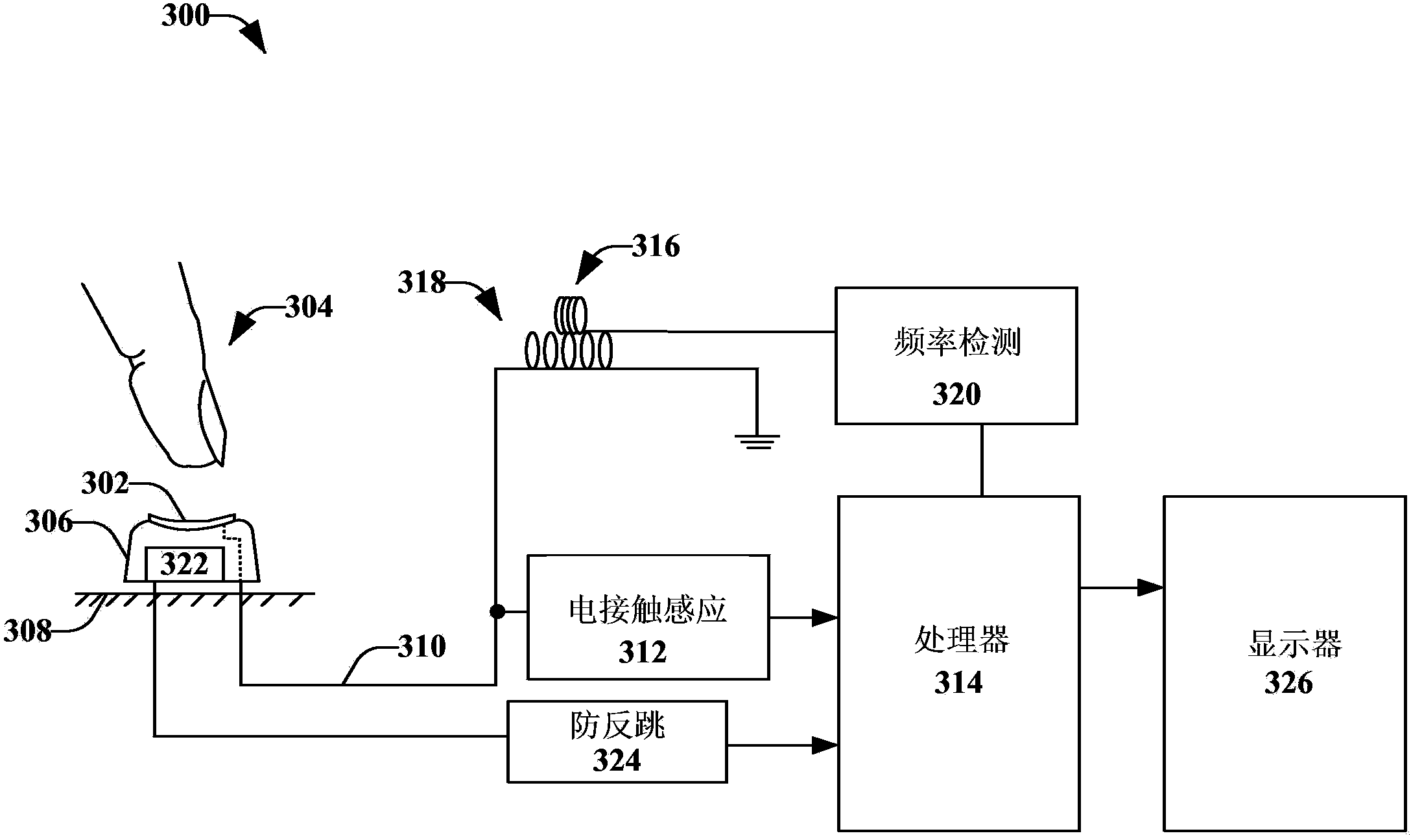

Touch sensitive user interface

Active | CN103294260A | Input/output for user-computer interaction; Electromagnetic wave reradiation; Multi touch interface; User input

A system and a method are disclosed for providing a touch interface for electronic devices. The touch interface can be any surface. As one example, a table top can be used as a touch sensitive interface. In one embodiment, the system determines a touch region of the surface, and correlates that touch region to a display of an electronic device for which input is provided. The system may have a 3D camera that identifies the relative position of a user's hands to the touch region to allow for user input. Note that the user's hands do not occlude the display. The system may render a representation of the user's hand on the display in order for the user to interact with elements on the display screen.

Owner:MICROSOFT TECH LICENSING LLC

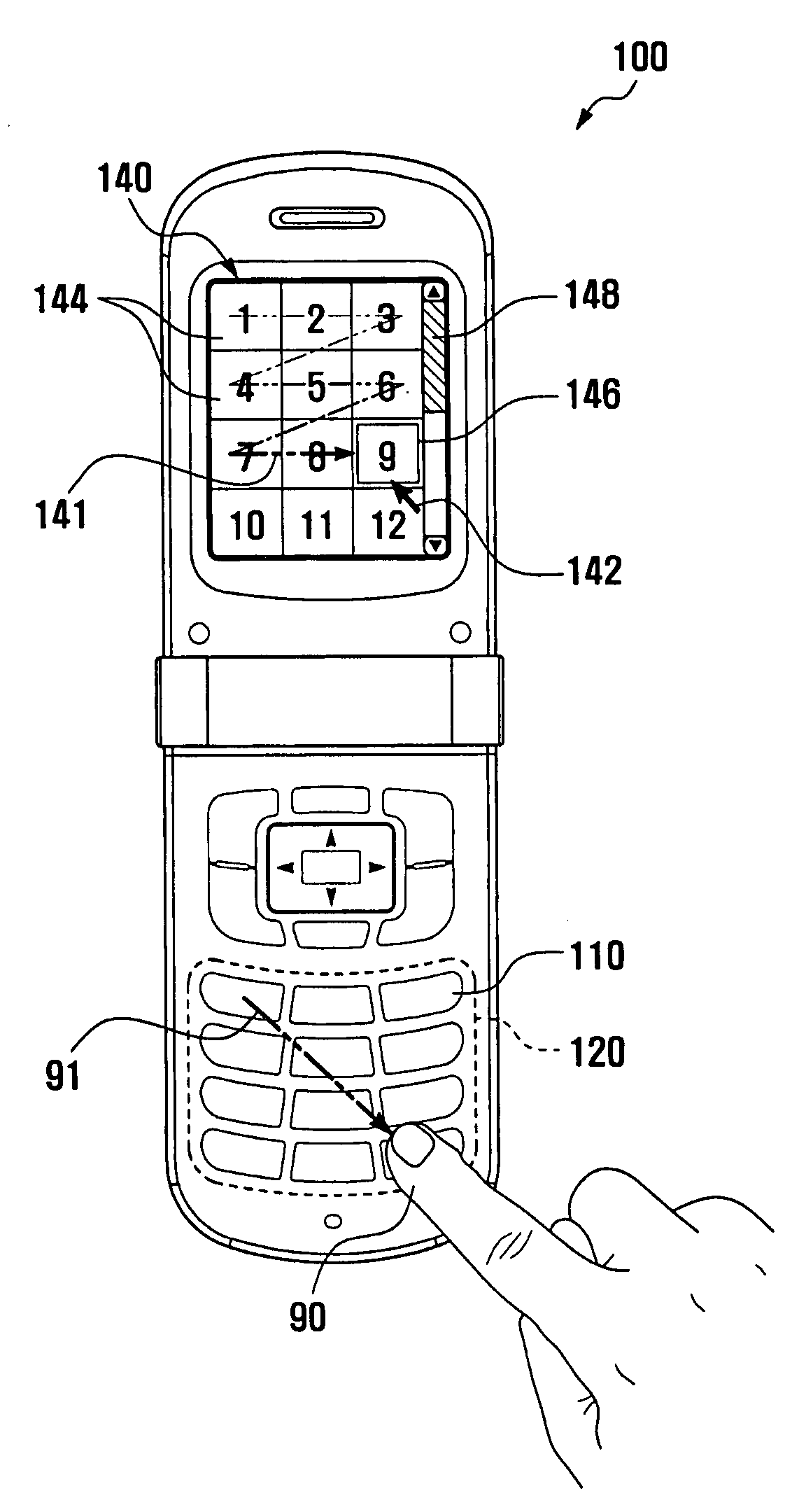

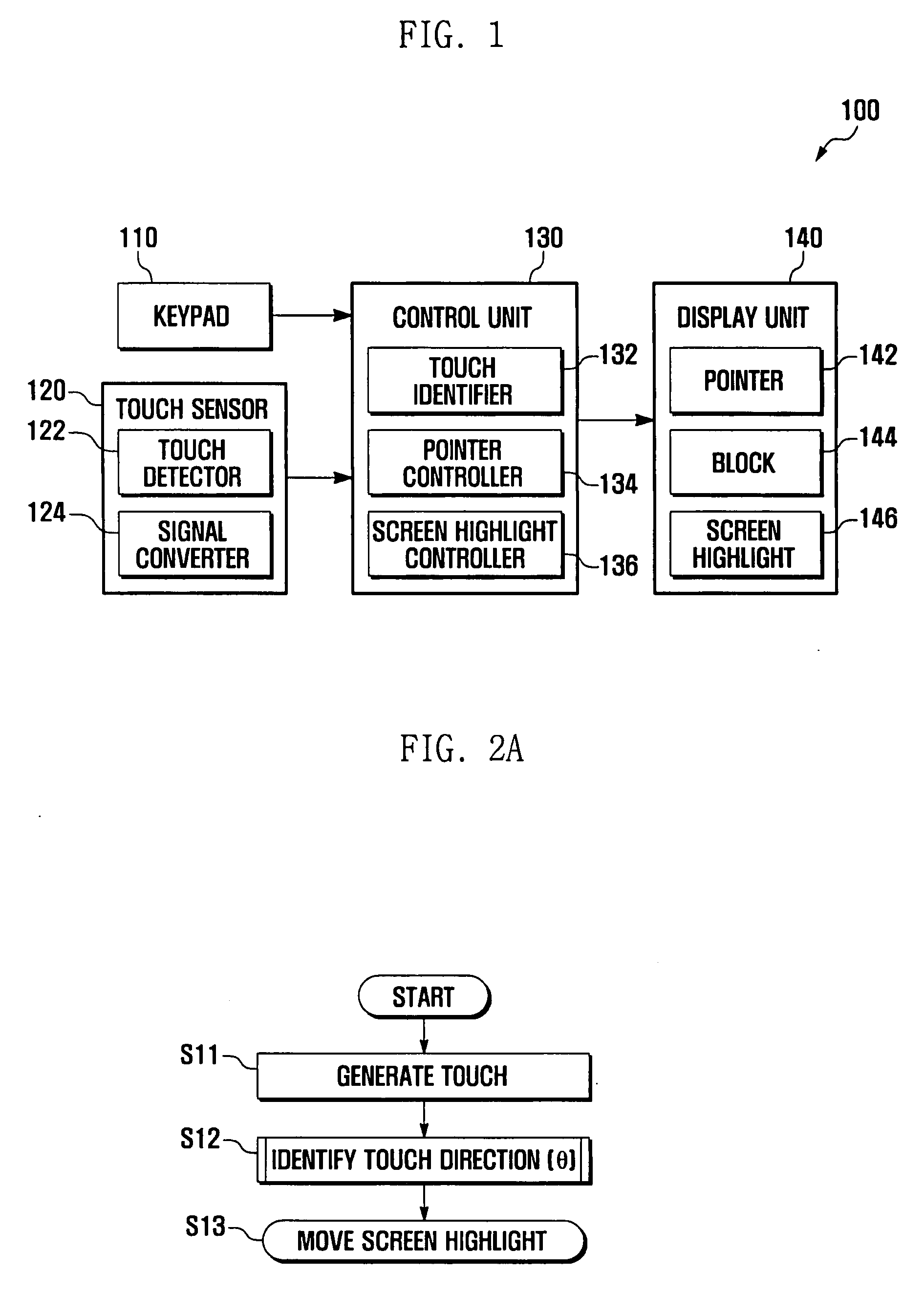

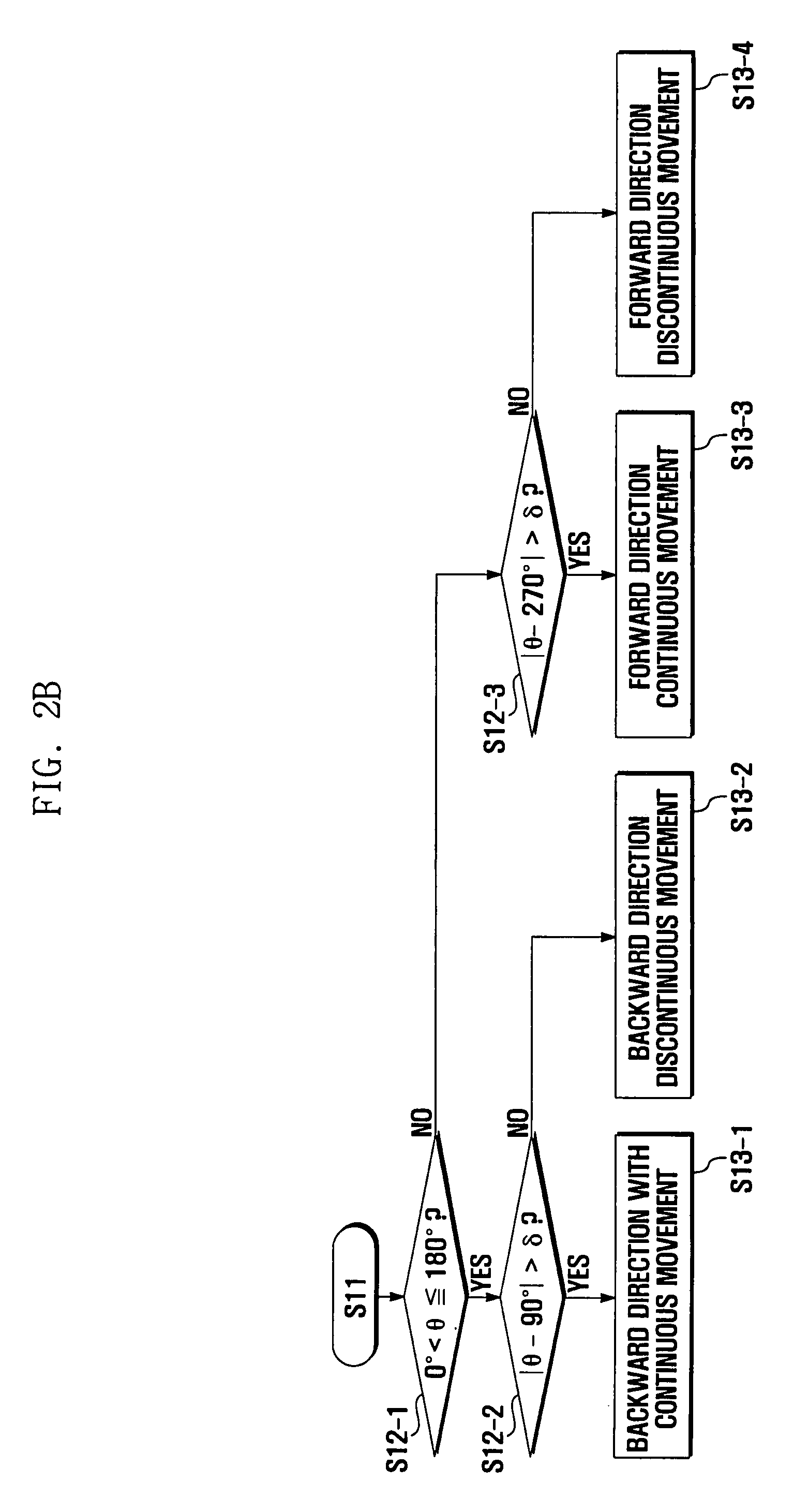

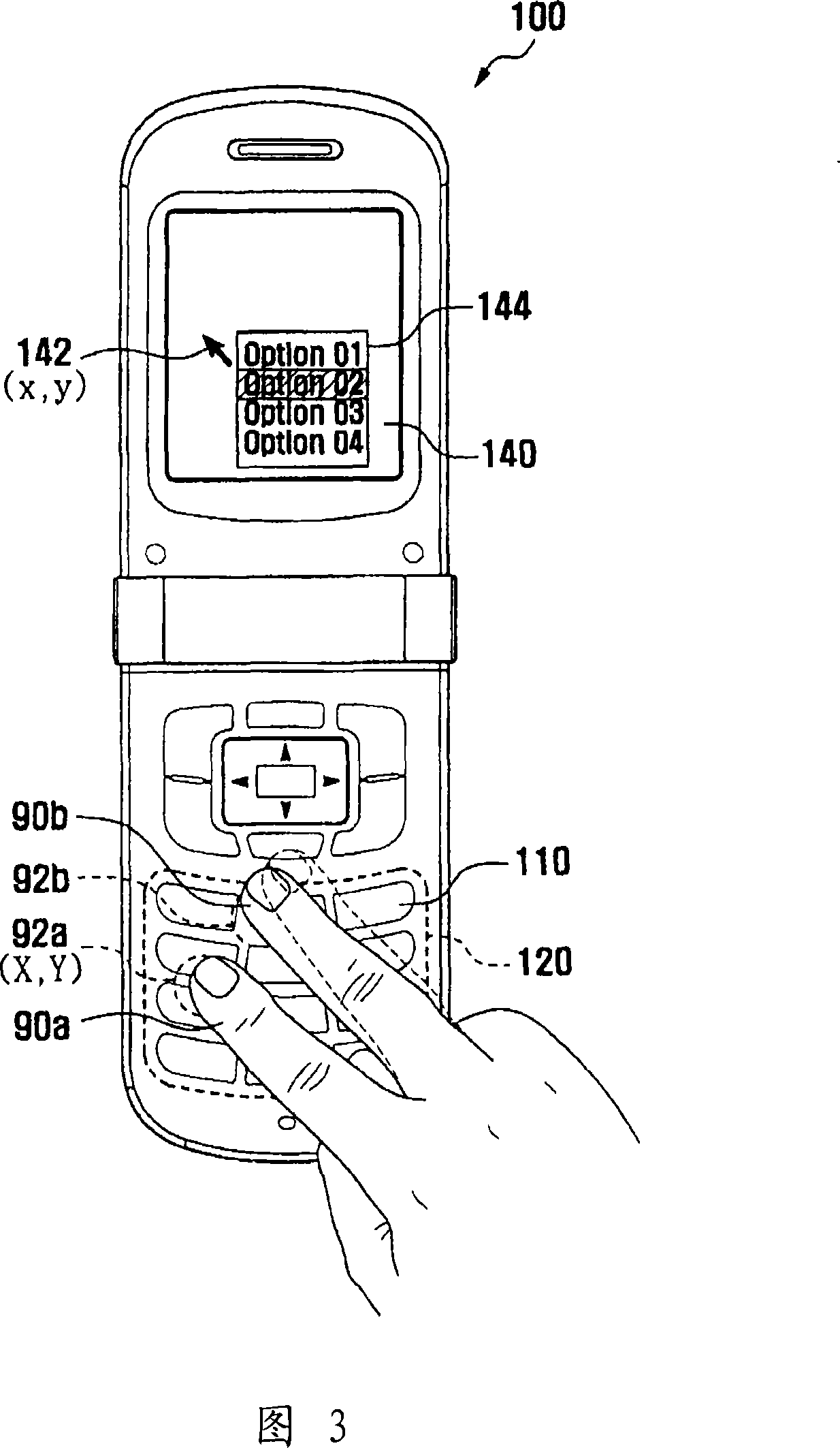

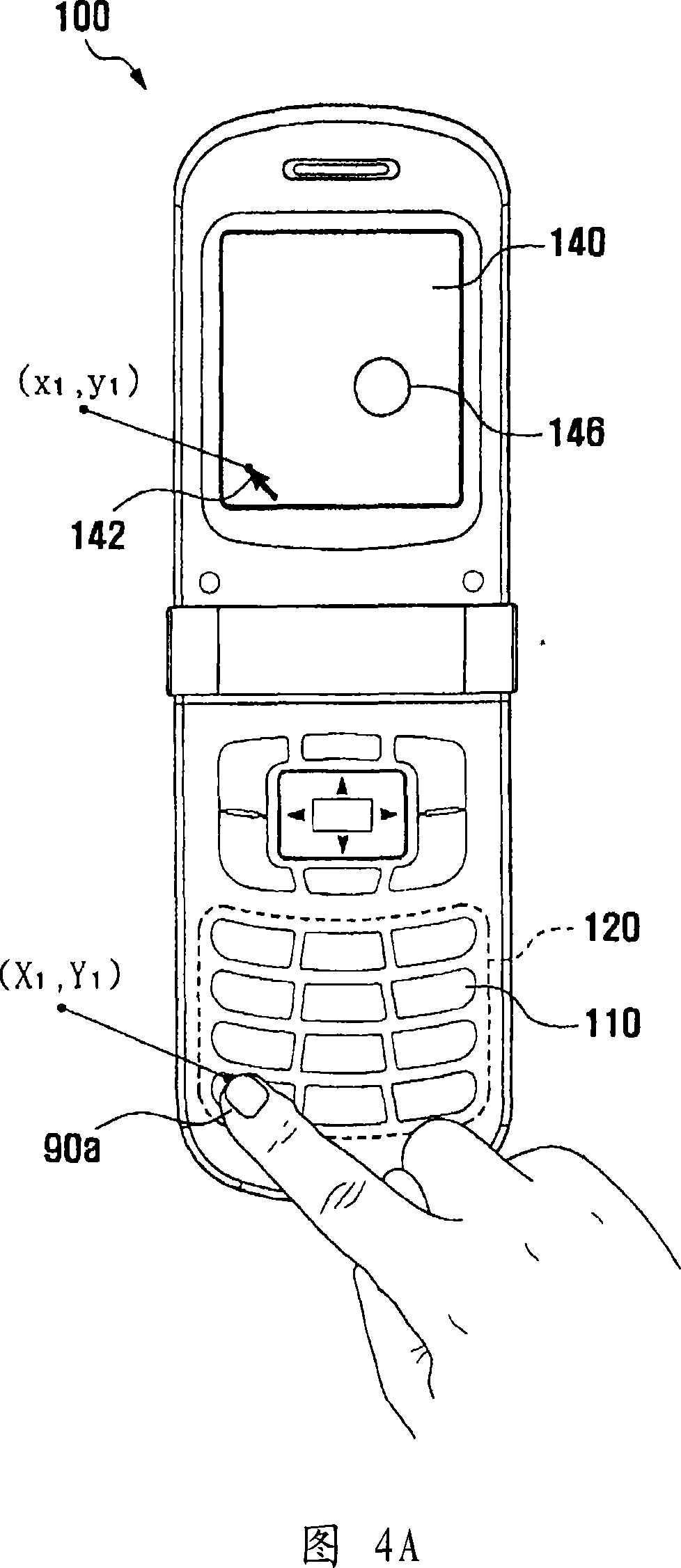

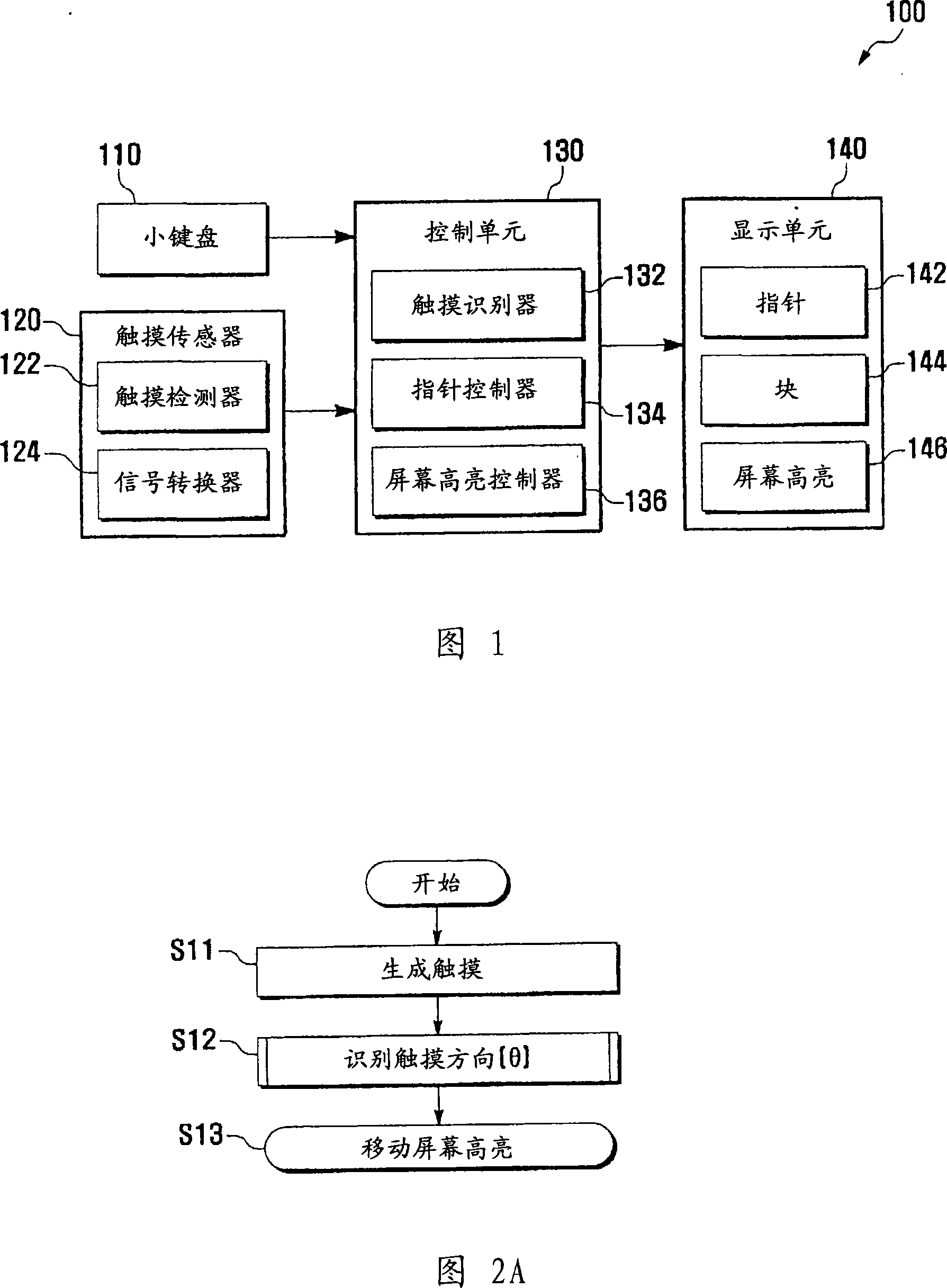

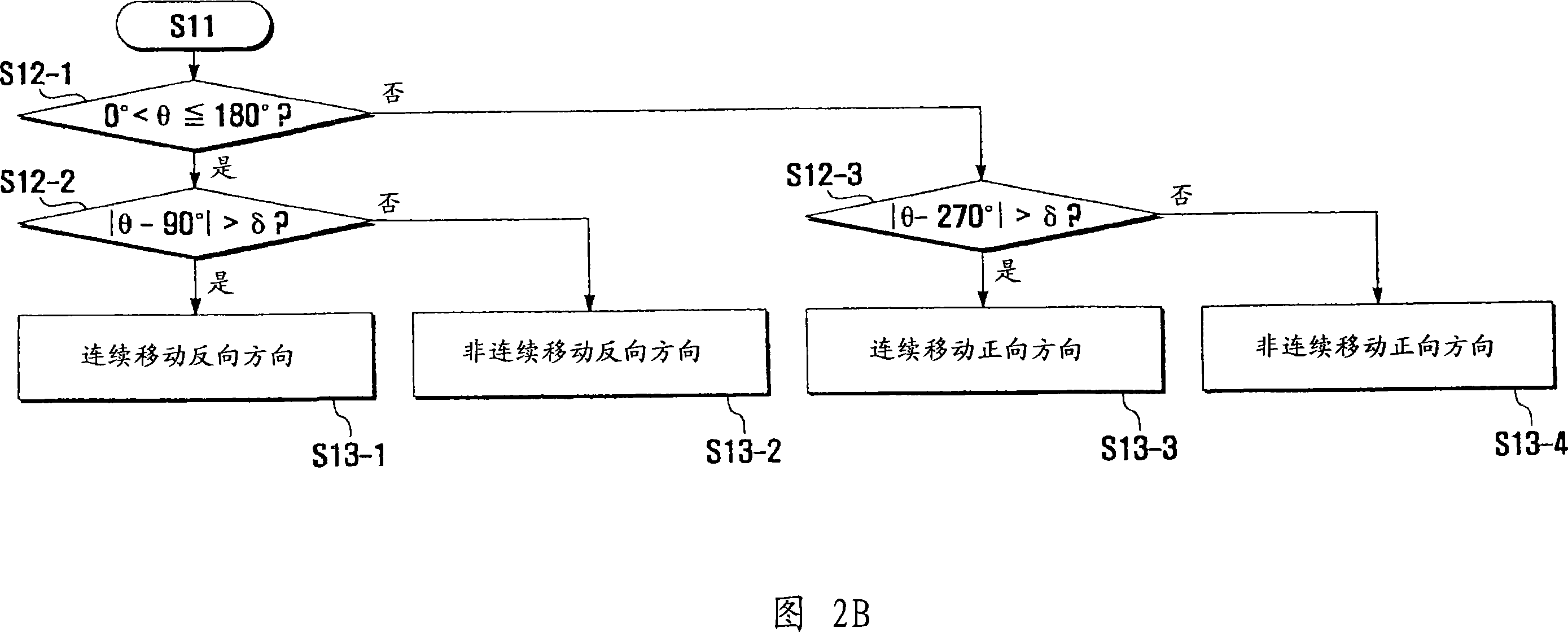

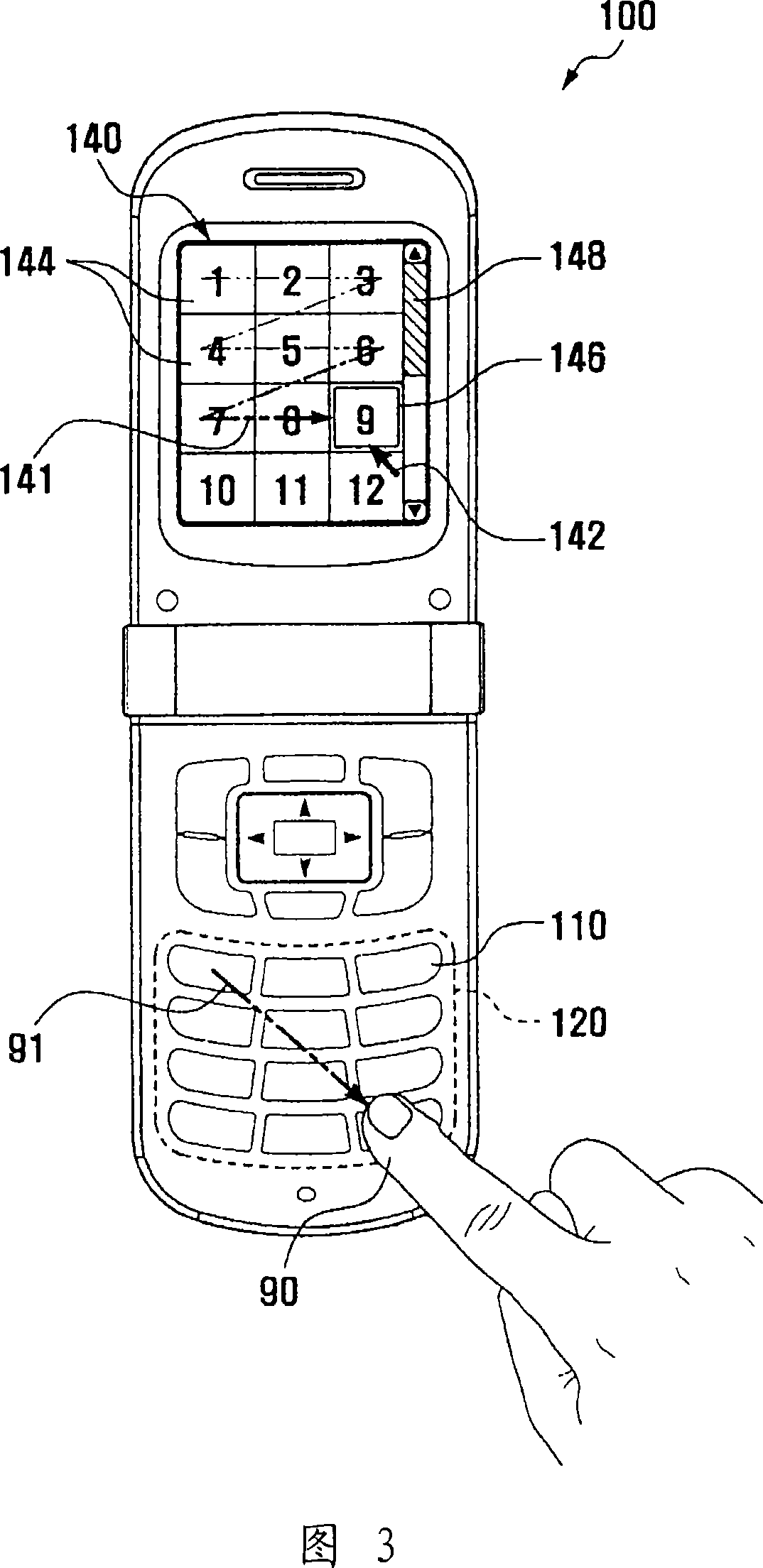

Keypad touch user interface method and a mobile terminal using the same

Inactive | US20070298849A1 | Improve convenience; Improved user accessibility; Input/output for user-computer interaction; Cathode-ray tube indicators; Human–computer interaction; Touch user interface

A user interface method and a mobile terminal are disclosed. When a finger touches and moves in a specific direction on a keypad having a touch sensor, the touch sensor detects the touch and a control unit identifies the type of touch direction according to the angle of the touch direction. The screen of a display unit is partitioned into a plurality of blocks, and a screen highlight is located at a specific block. The control unit moves the screen highlight on the display unit according to the type of touch direction. The path of the screen highlight is set to one of the following:

- a forward direction with continuous movement
- a forward direction with discontinuous movement
- a backward direction with continuous movement
- a backward direction with discontinuous movement

A user interface according to the present invention can include a pointer on the display unit, controlled by linking the pointer position to the touch position.

Owner:SAMSUNG ELECTRONICS CO LTD
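The Samsung keypad abstract classifies a drag into a direction type by its angle and then moves a highlight between screen blocks. The Python below is a minimal sketch of that idea. It assumes four 90-degree sectors and a rectangular grid of blocks in which the highlight stops at the edge. The four forward/backward and continuous/discontinuous path modes in the abstract are not modelled, and all names are hypothetical.

```python
import math
from typing import Tuple

DIRECTIONS = ("right", "up", "left", "down")
STEPS = {"right": (0, 1), "left": (0, -1), "up": (-1, 0), "down": (1, 0)}


def classify_direction(dx: float, dy: float) -> str:
    """Classify a drag on the keypad into one of four direction types by angle.

    Assumes keypad coordinates with y growing upward and 90-degree sectors
    centred on each axis.
    """
    angle = math.degrees(math.atan2(dy, dx)) % 360
    return DIRECTIONS[int(((angle + 45) % 360) // 90)]


def move_highlight(block: Tuple[int, int], direction: str,
                   rows: int, cols: int) -> Tuple[int, int]:
    """Move the highlighted (row, col) block one step, stopping at the grid edge."""
    dr, dc = STEPS[direction]
    r, c = block
    return (min(max(r + dr, 0), rows - 1), min(max(c + dc, 0), cols - 1))
```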

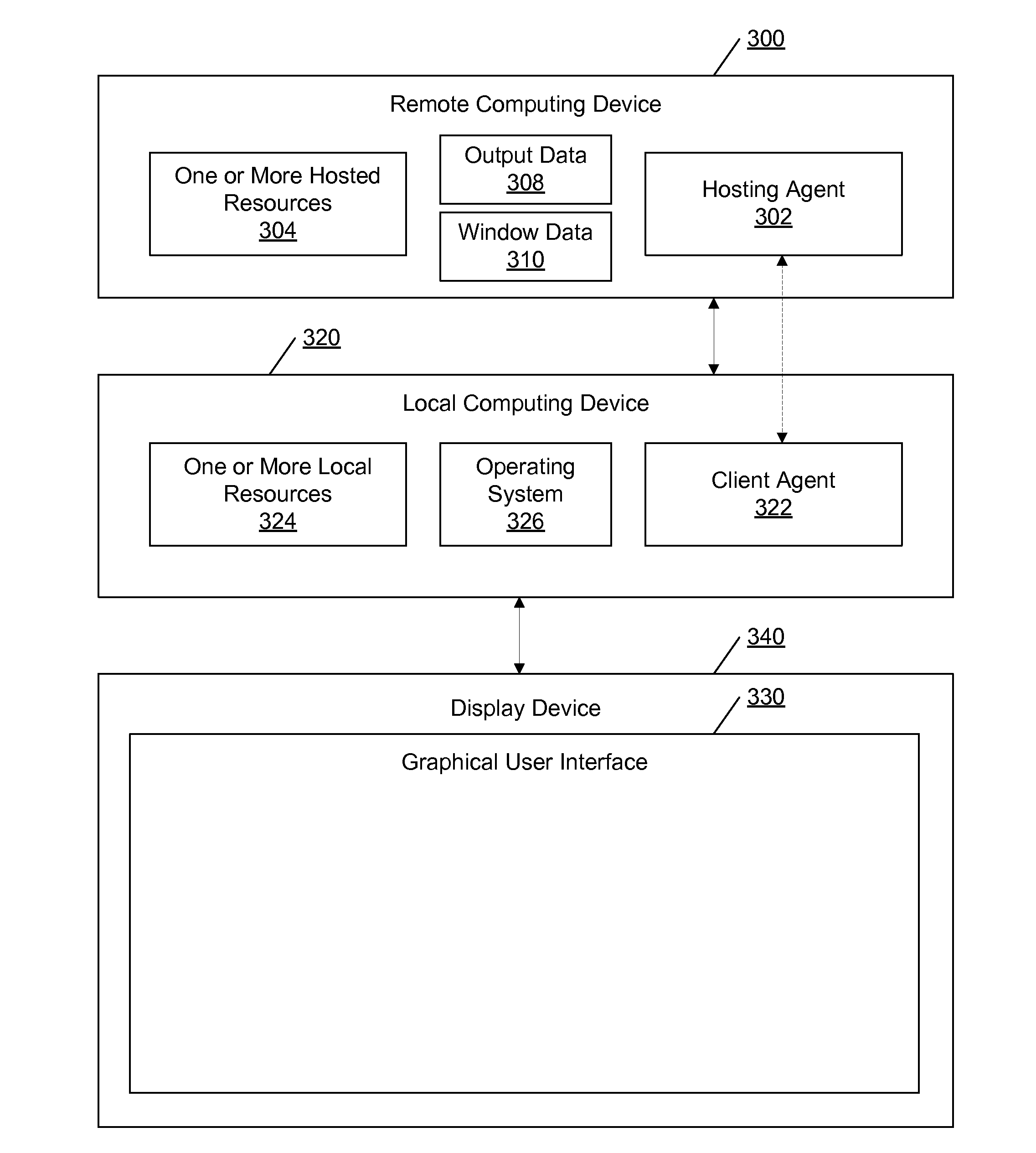

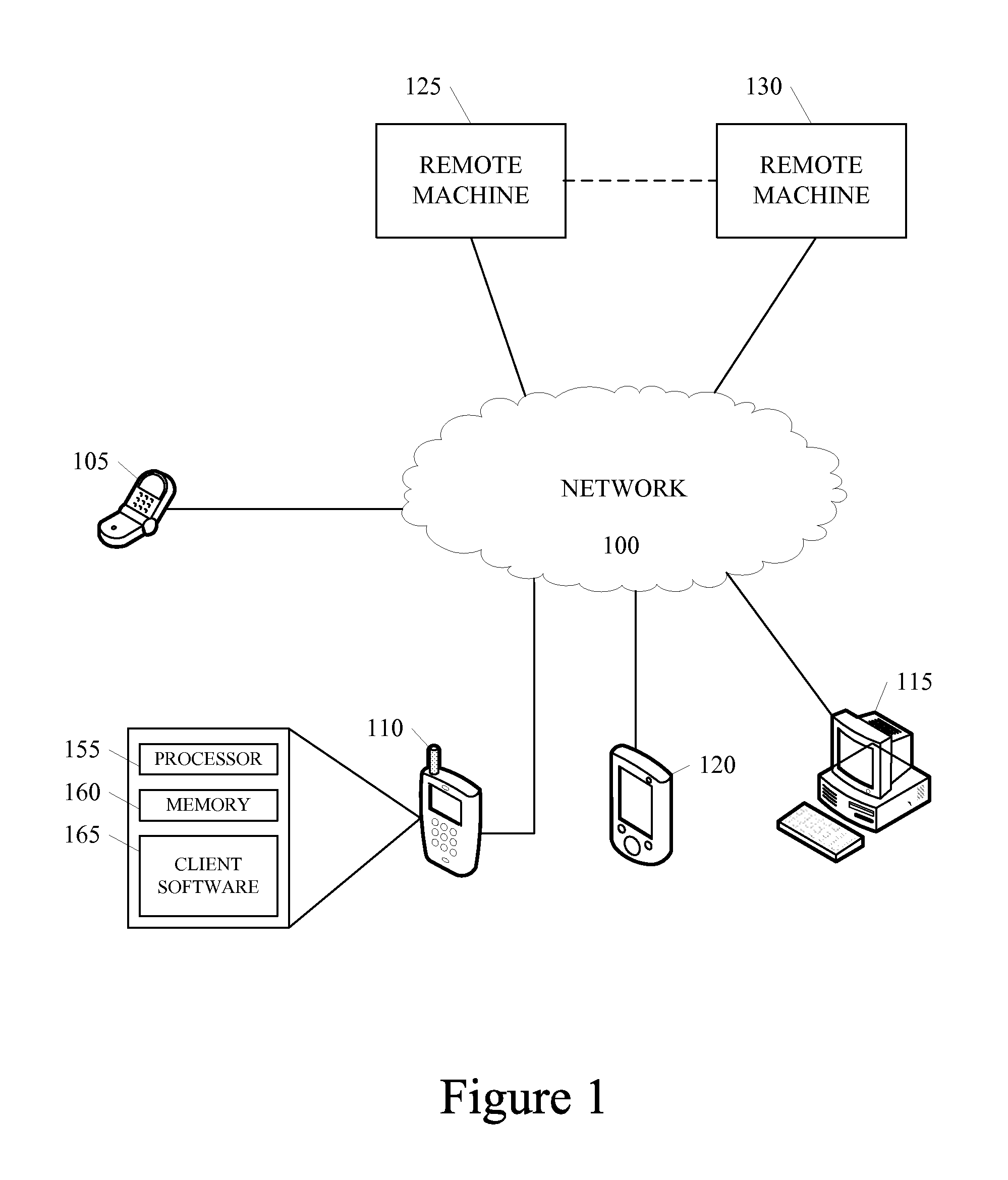

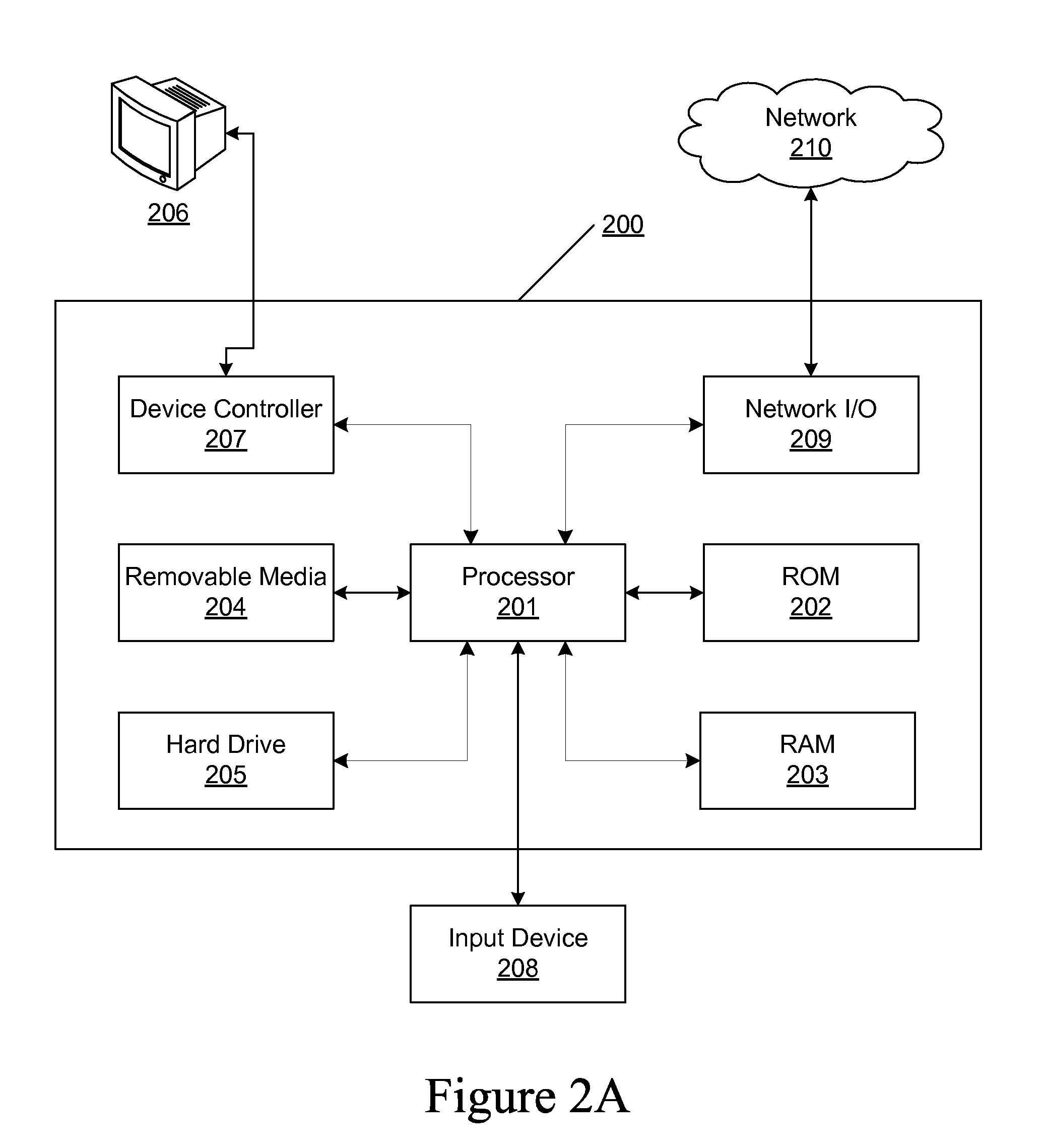

Integrating Native User Interface Components on a Mobile Device

Active | US20130139103A1 | Easy to complete; Execution for user interfaces; Special data processing applications; Remote desktop; Application software

By enabling mobile devices, such as smartphones and tablets, to leverage native user interface components, the methods and systems described herein give users a more seamless experience, in which the user may be unaware that the application is not executing locally on the mobile device. In some embodiments, a user interface is provided that the user uses to trigger the display of a native user interface component. In some embodiments, the systems and methods described herein automatically adjust the pan and zoom settings on the mobile device so that remote windows are presented in a way that makes it easier for the user to interact with the device. The systems and methods described herein either let the user switch to the newly focused window or display a visual cue indicating that a window has been created somewhere on the remote desktop.

Owner:CITRIX SYST INC

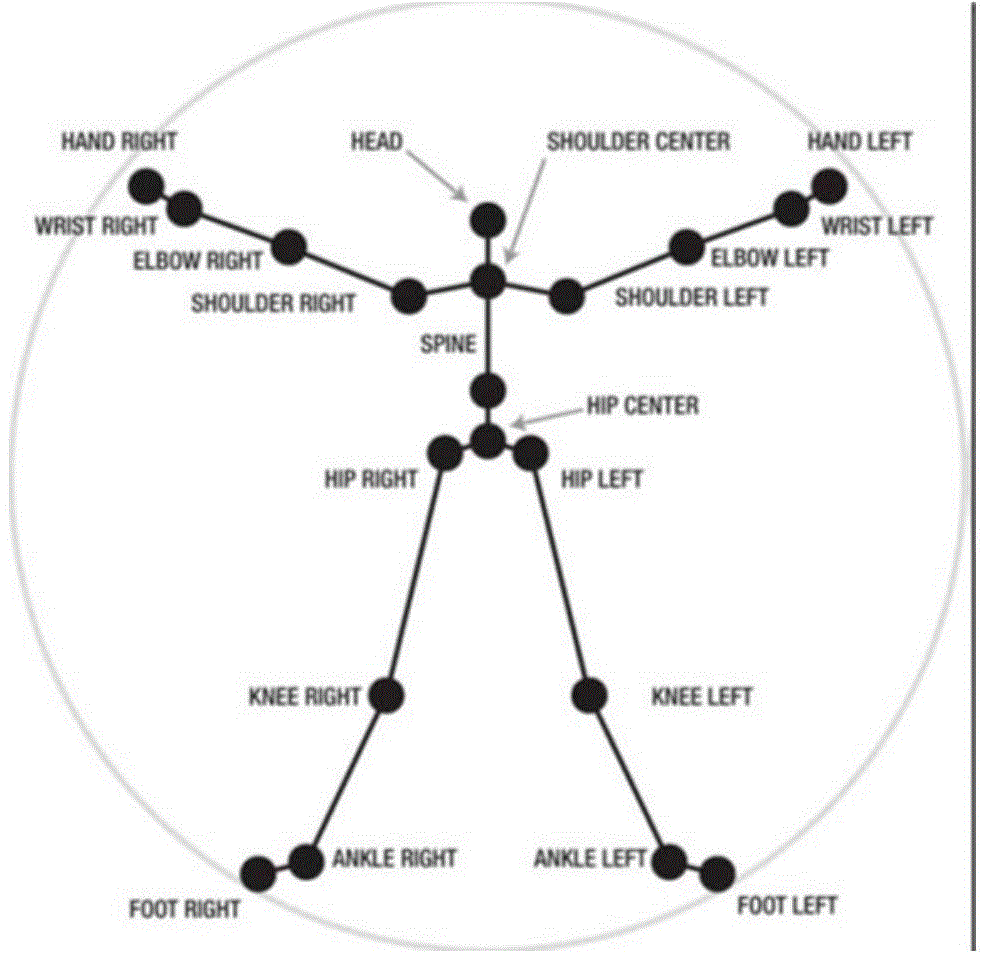

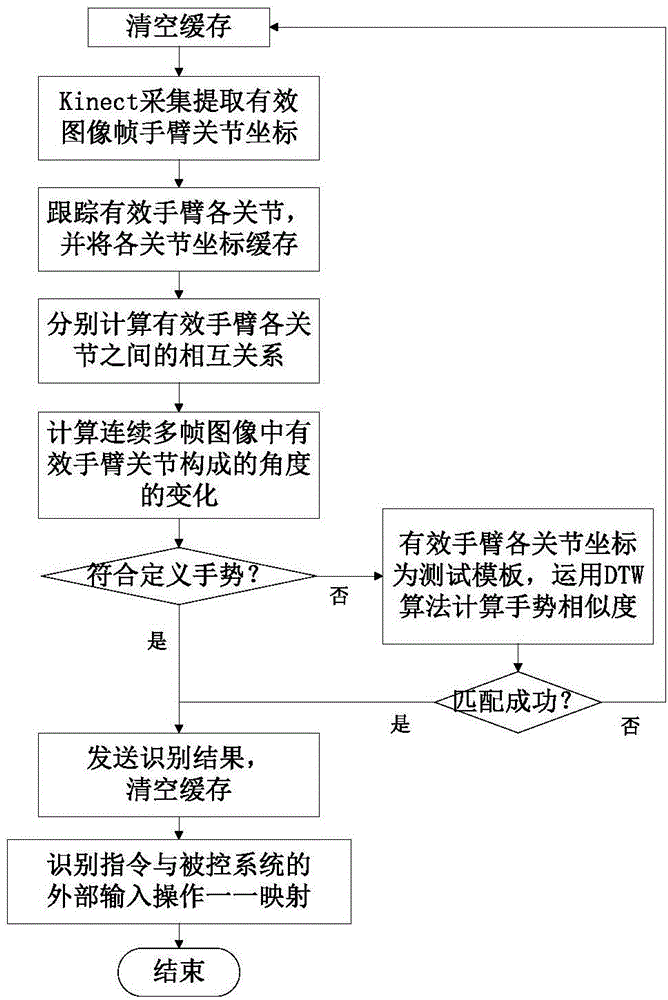

Method for controlling user interfaces through non-contact gestures

Active | CN104808788A | Improve experience; Intuitive and natural interaction; Input/output for user-computer interaction; Character and pattern recognition; Touchscreen; Touch user interface

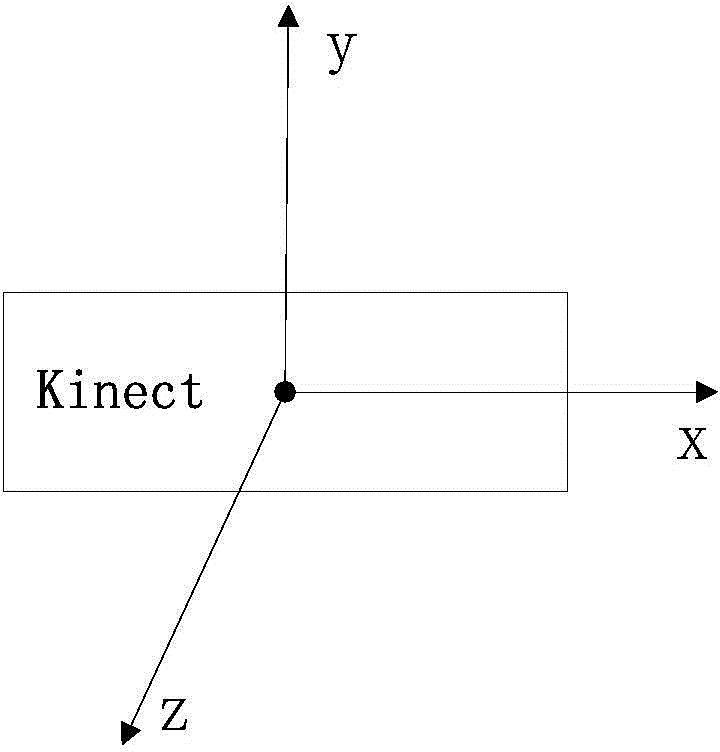

The invention relates to a method for controlling computers, touch screens, projectors, and other user interfaces through Kinect non-contact gestures. The main steps are:

1. acquiring the movements of the operator's two arms;
2. recognizing gestures with a gesture recognition algorithm;
3. using the recognized gestures to enable interaction between the operator and the computer, touch screen, projector, or other user interface.

The method covers six gestures, including single click, right click, double click, scrolling forward, scrolling backward, zooming in, zooming out, and dragging, which basically meet the interaction needs between the operator and these user interfaces. The method is a novel recognition method that can improve both the accuracy and the speed of gesture recognition. Combining the six gestures makes human-machine interaction convenient and flexible.

Owner:BEIJING UNIV OF TECH

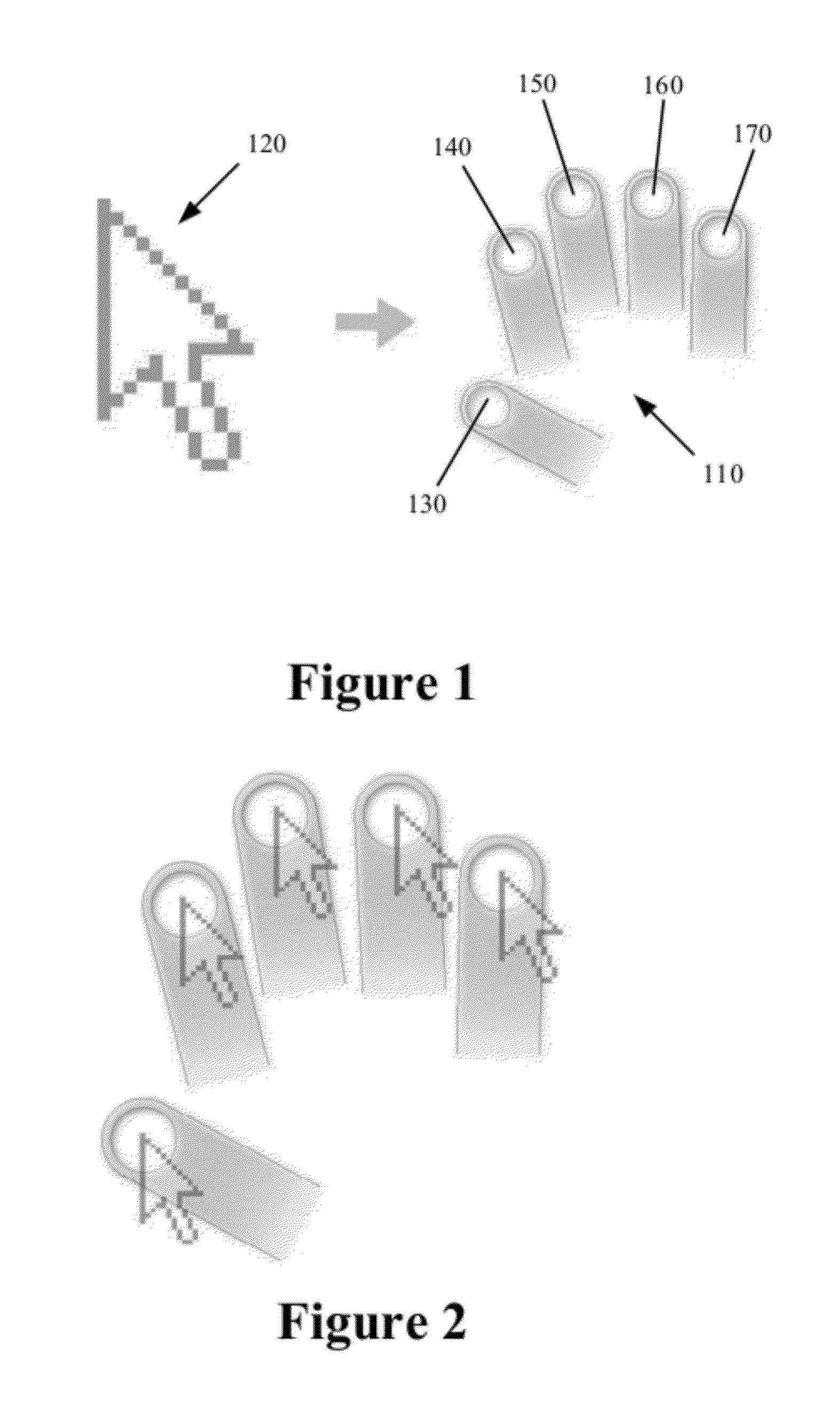

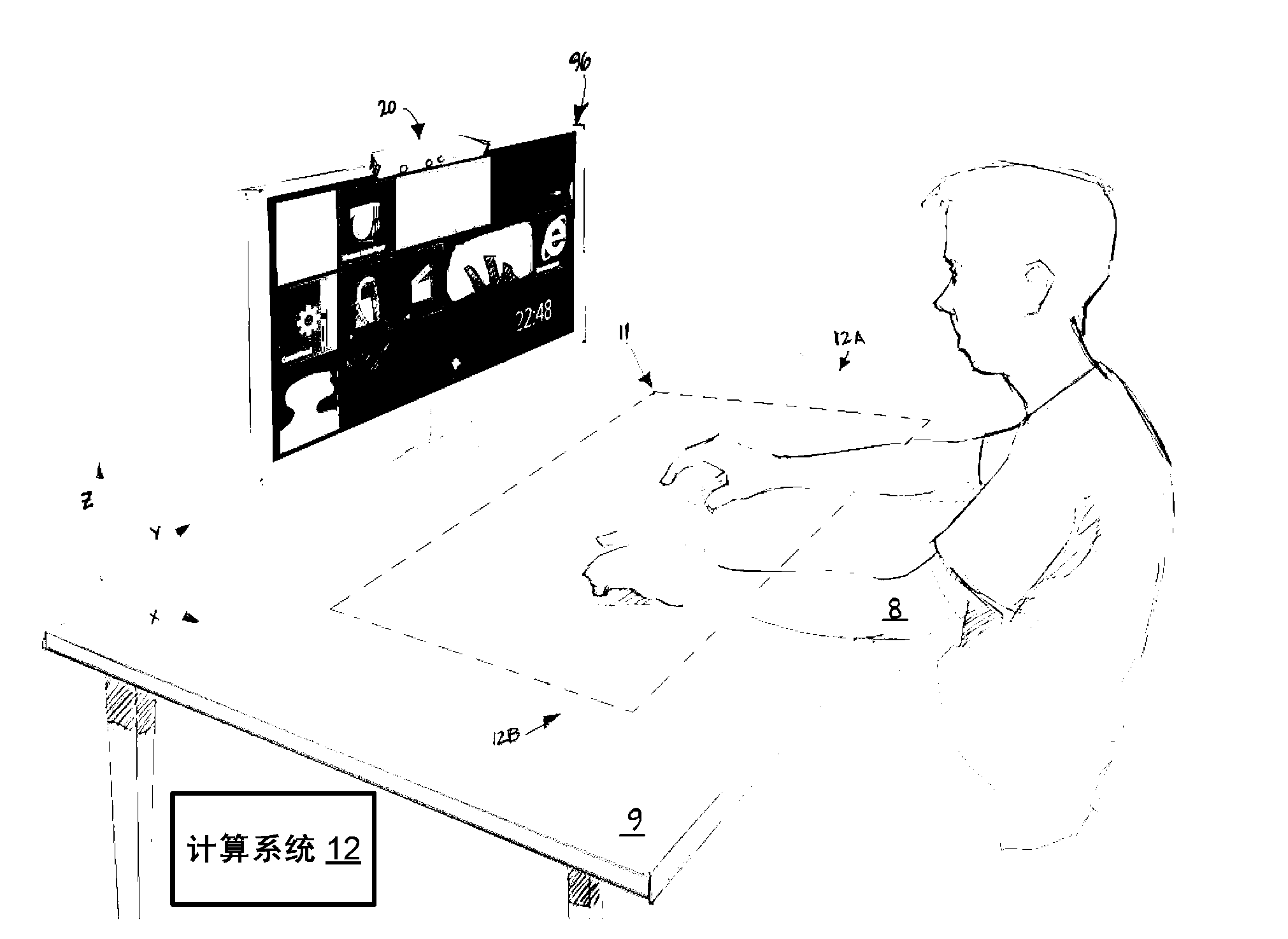

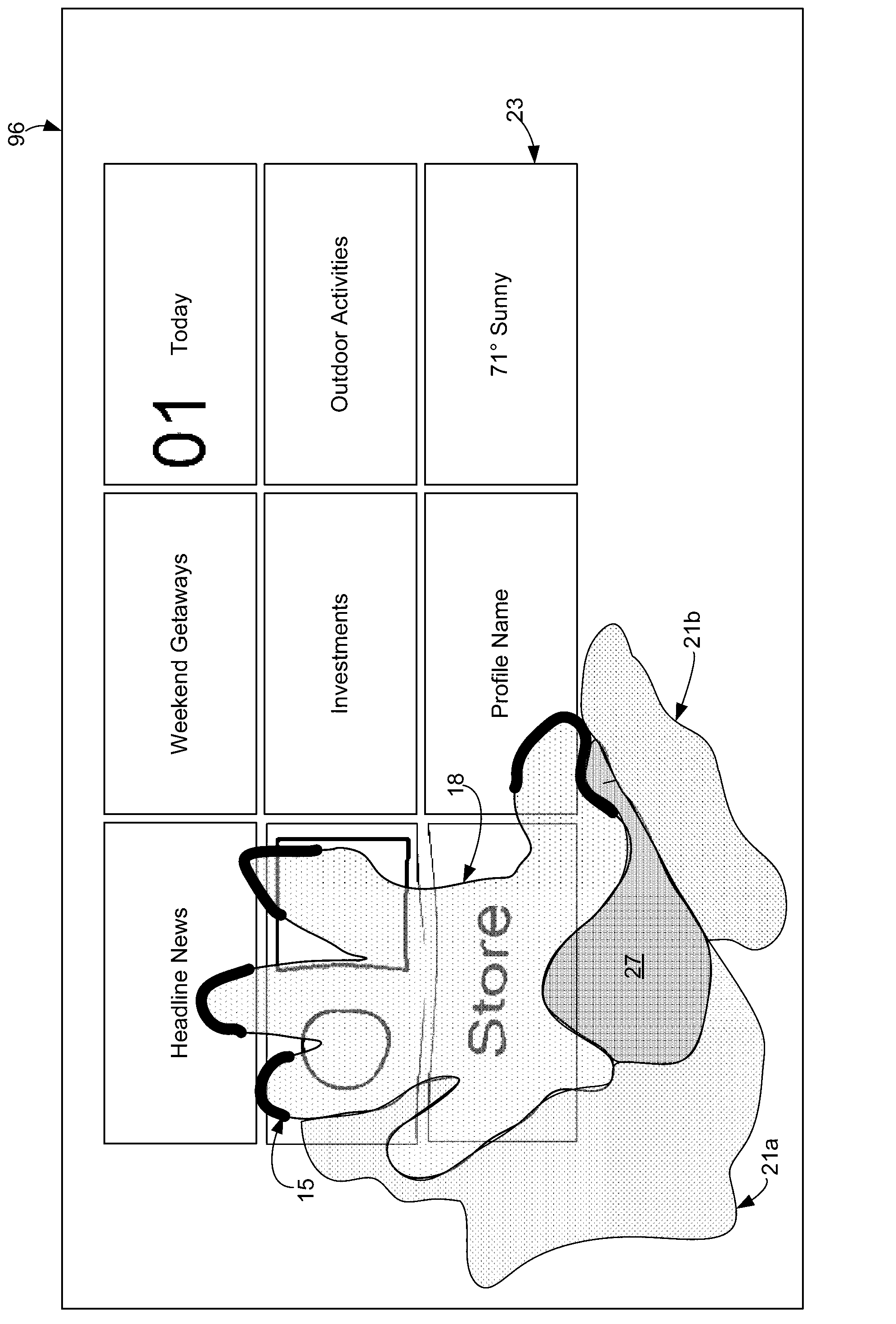

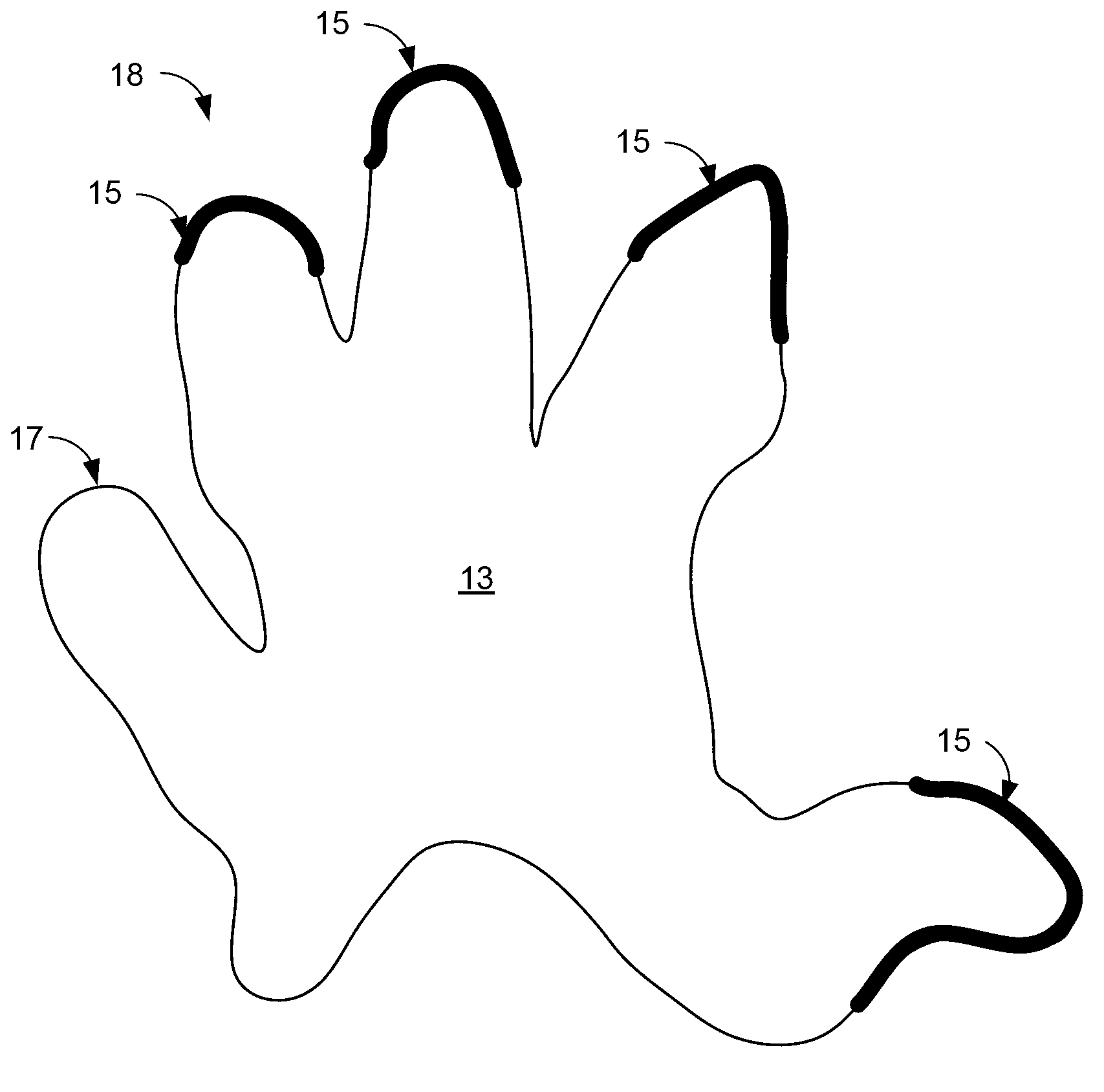

Displaying GUI elements on natural user interfaces

Active | US8261212B2 | Well formed; Input/output processes for data processing; Natural user interface; Human–computer interaction

A computing system for displaying a GUI element on a natural user interface is described herein. The computing system includes a display configured to display a natural user interface of a program executed on the computing system, and a gesture sensor configured to detect a gesture input directed at the natural user interface by a user. The computing system also includes a processor configured to execute a gesture-recognizing module for recognizing a registration phase, an operation phase, and a termination phase of the gesture input, and a gesture assist module configured to first display a GUI element overlaid upon the natural user interface in response to recognition of the registration phase. The GUI element includes a visual or audio operation cue to prompt the user to carry out the operation phase of the gesture input, and a selector manipulatable by the user via the operation phase of the gesture.

Owner:MICROSOFT TECH LICENSING LLC

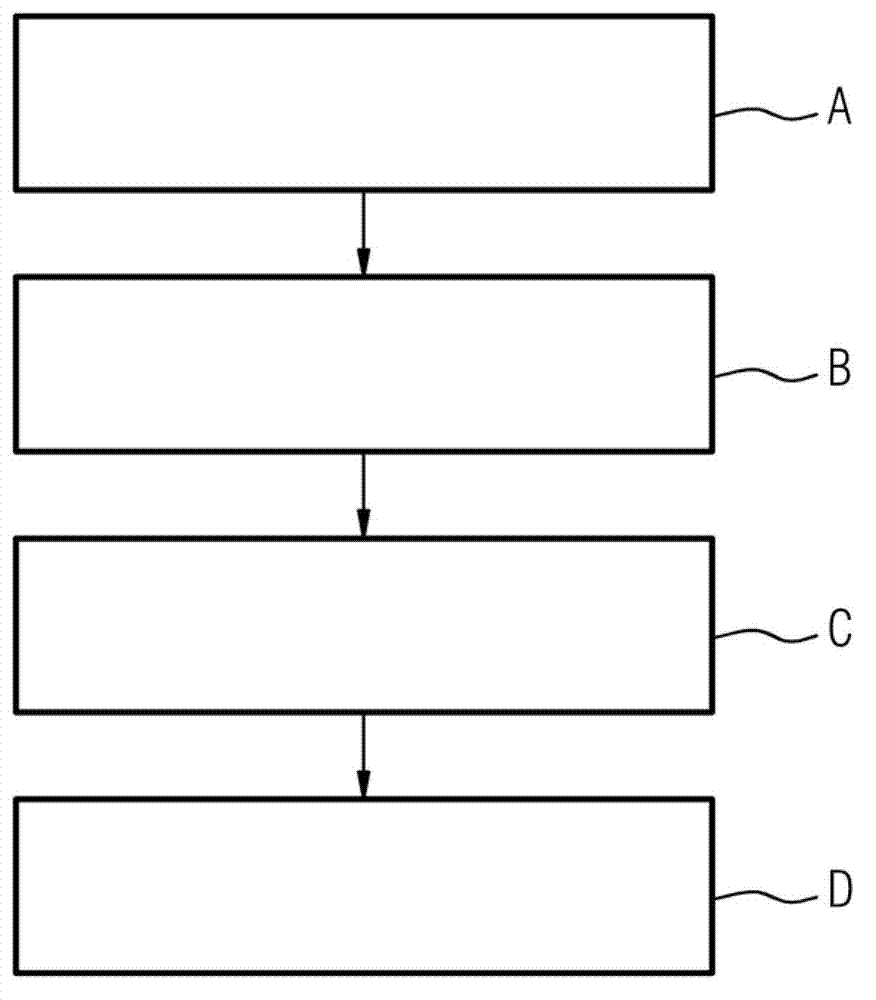

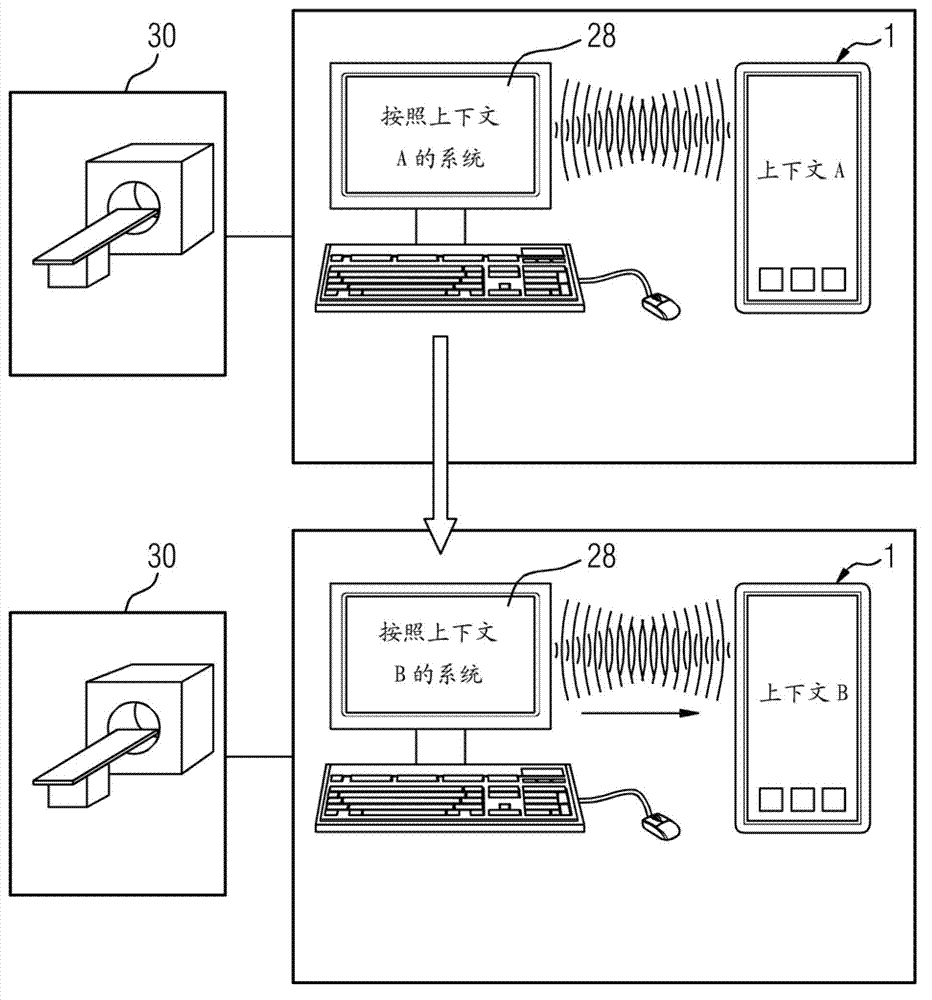

Device controller with connectable touch user interface

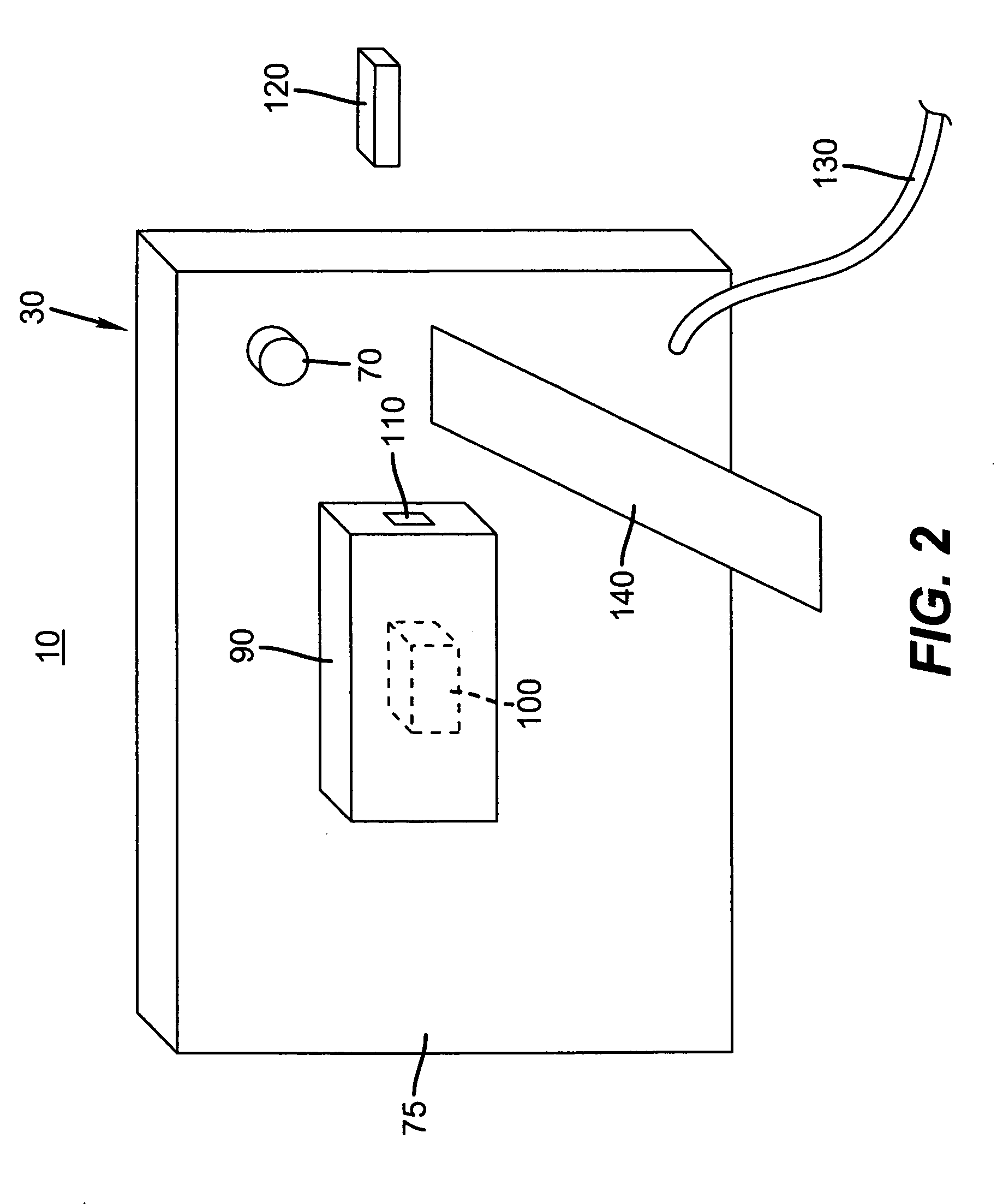

The invention provides a device controller with a connectable touch user interface and relates to a context-sensitive control system, method, and computer program product for controlling a medical technology device (30), preferably an imaging device. The imaging device is operated through an application (20). An external touch screen (100) is connected to the medical technology device system (3) and used for touch screen control. The touch screen (100) of a mobile wireless device (1) is extended by means of an adapter module, and the application (20) is extended with a control module (21). The control is context-sensitive and can identify context automatically.

Owner:SIEMENS HEALTHCARE GMBH

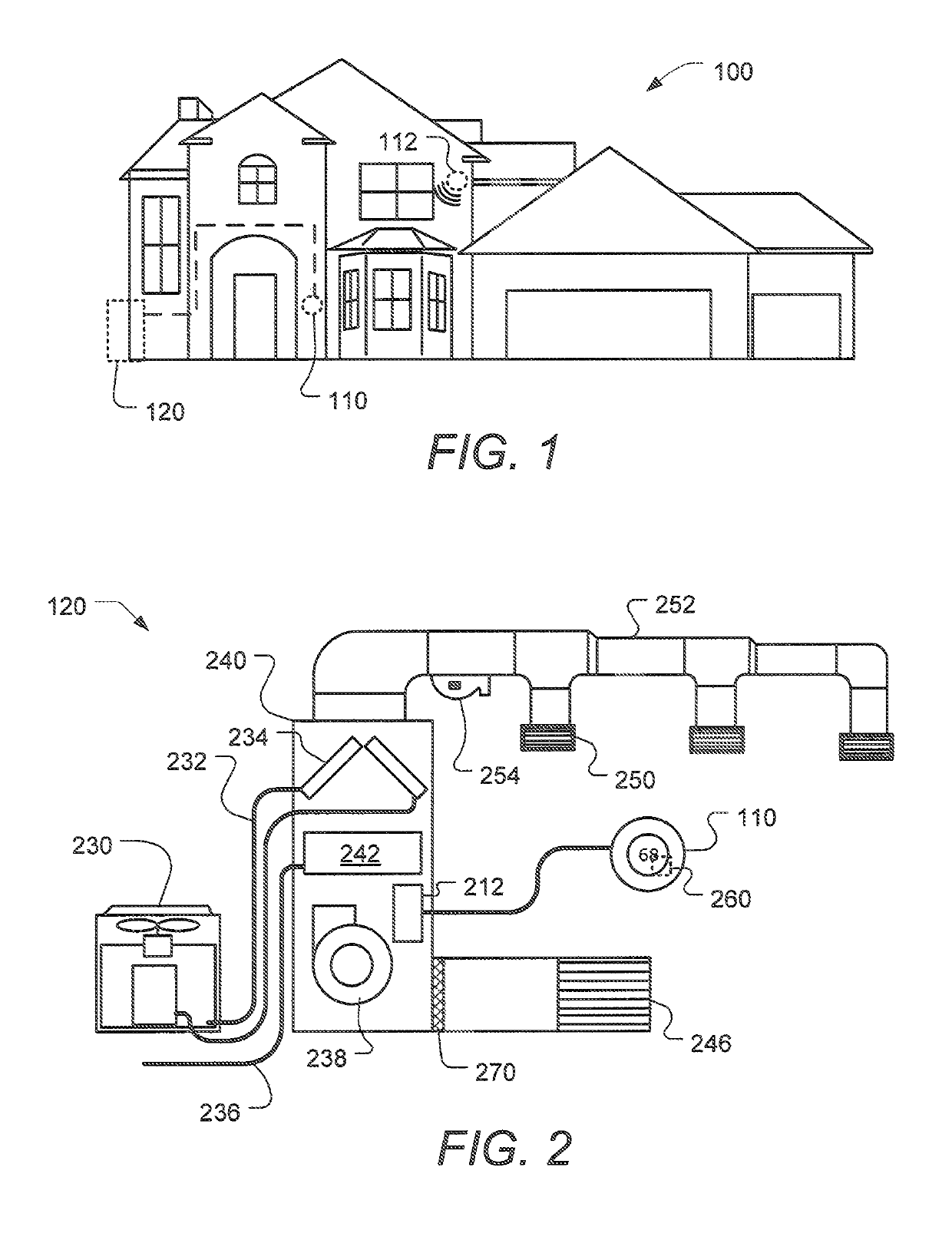

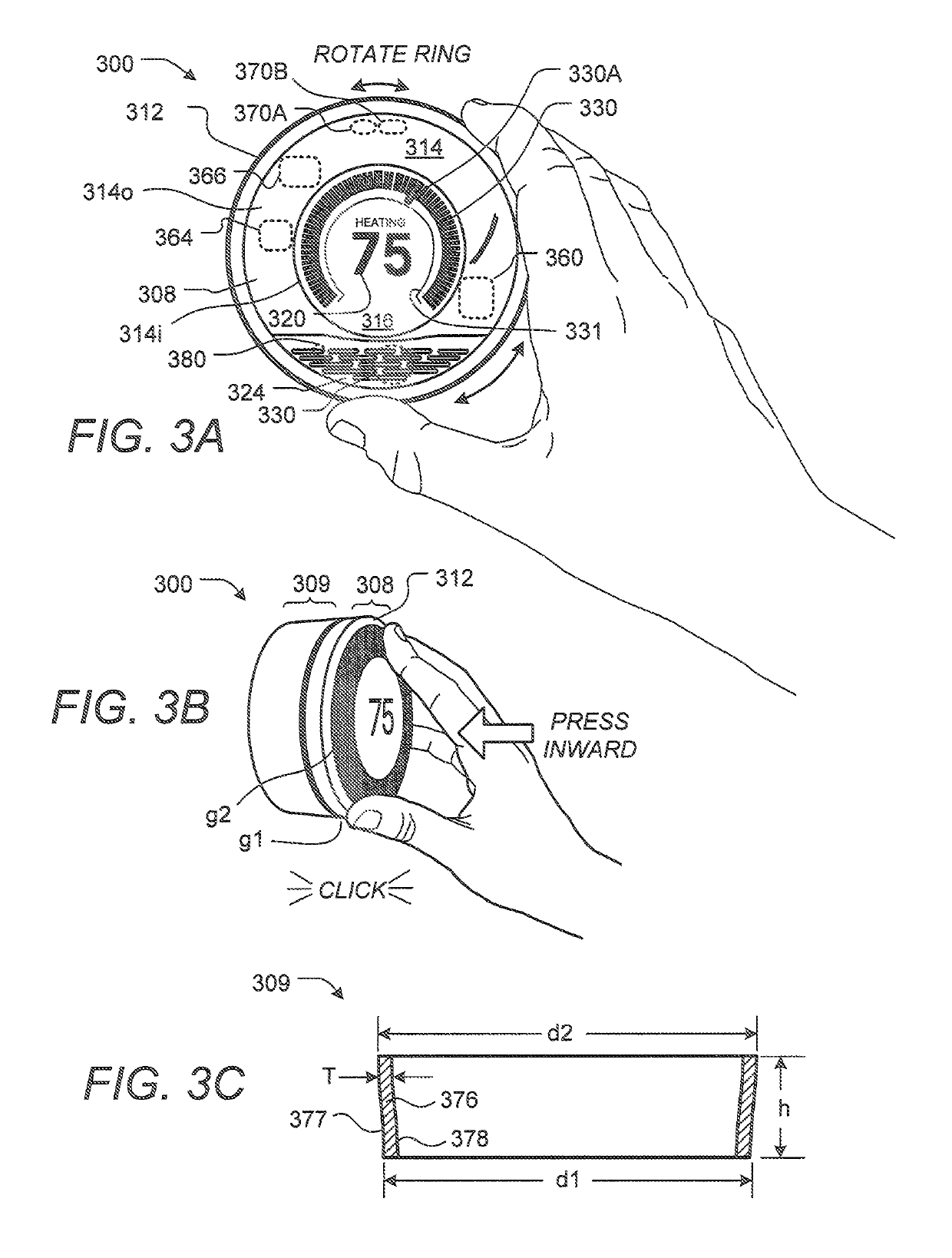

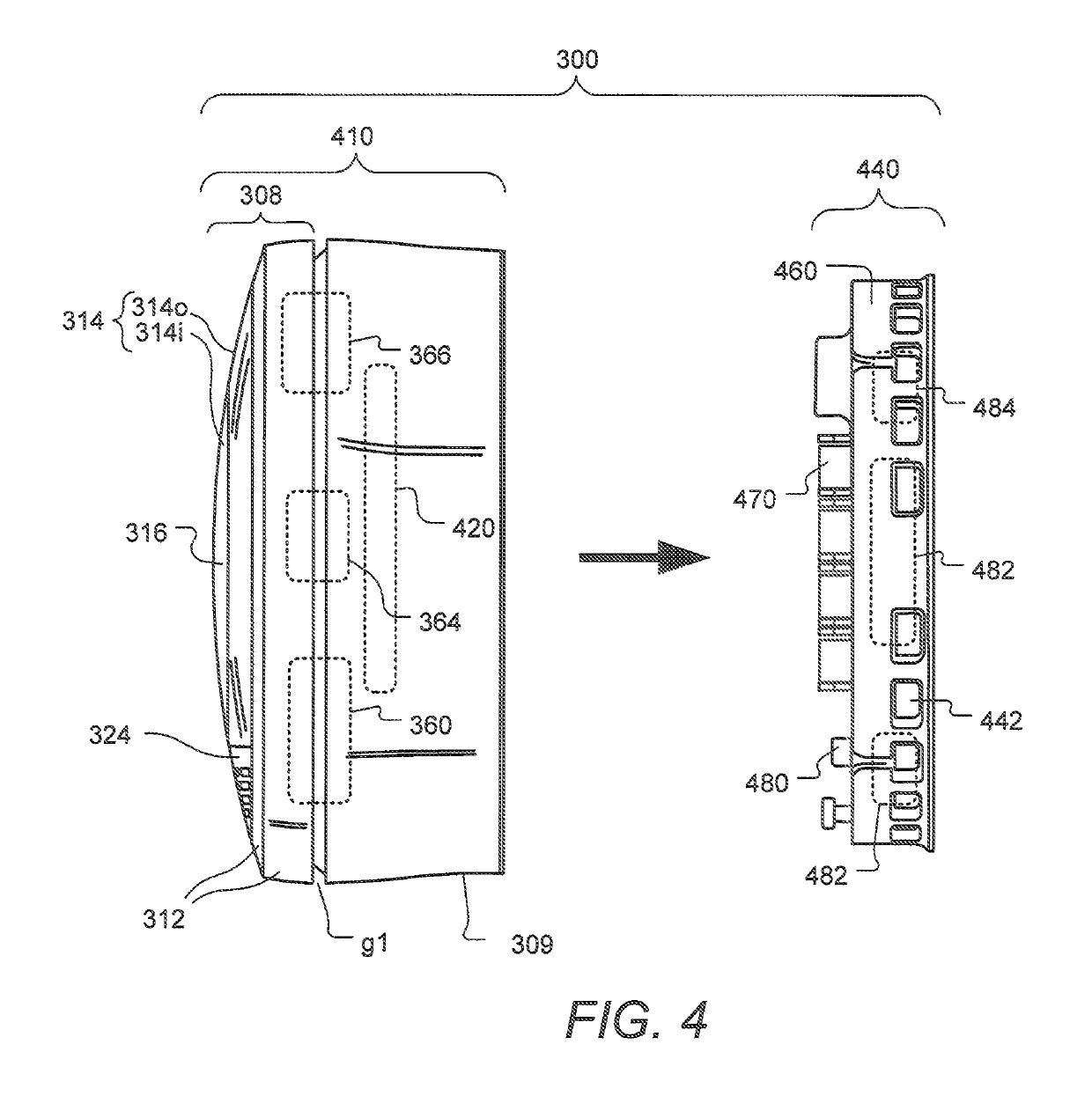

Thermostat graphical user interface

Active | US10241527B2 | Easy to understand; Improve understanding; Mechanical apparatus; Space heating and ventilation safety systems; Graphics; Graphical user interface

A thermostat for controlling an HVAC system is described, the thermostat having a user interface that is visually pleasing, approachable, and easy to use while also providing intuitive navigation within a menuing system.

In a first mode of operation, an electronic display of the thermostat displays a population of tick marks in an arcuate arrangement. These include a plurality of background tick marks, a setpoint tick mark representing a setpoint temperature, and an ambient temperature tick mark representing an ambient temperature. The setpoint temperature can be changed dynamically according to a tracked rotational input motion of a ring-shaped user interface component of the thermostat.

In a second mode, a plurality of user-selectable menu options is displayed in an arcuate arrangement along a menu option range area. Respective ones of these options are selectively highlighted according to the tracked rotational input motion of the ring-shaped user interface component.

Owner:GOOGLE LLC
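The thermostat abstract ties a setpoint tick on an arc, and a highlighted menu option, to tracked rotation of a ring. The Python below is a minimal sketch of those mappings. Every number in it is an illustrative assumption and not a value from the patent: a 10 to 32 degree range, 0.5-degree steps per 10 degrees of rotation, 45 ticks, and 30 degrees of rotation per menu option.

```python
def rotate_setpoint(setpoint: float, rotation_deg: float,
                    deg_per_step: float = 10.0, step: float = 0.5,
                    lo: float = 10.0, hi: float = 32.0) -> float:
    """First mode: turn tracked ring rotation into a clamped setpoint change."""
    steps = int(rotation_deg / deg_per_step)  # partial steps are ignored
    return min(max(setpoint + steps * step, lo), hi)


def tick_index(temp: float, lo: float = 10.0, hi: float = 32.0,
               n_ticks: int = 45) -> int:
    """Which of n_ticks marks on the arc corresponds to a temperature."""
    frac = (min(max(temp, lo), hi) - lo) / (hi - lo)
    return round(frac * (n_ticks - 1))


def highlight_menu(index: int, rotation_deg: float, n_options: int,
                   deg_per_option: float = 30.0) -> int:
    """Second mode: rotation moves the highlight through menu options, wrapping."""
    return (index + int(rotation_deg / deg_per_option)) % n_options
```

Drawing the setpoint tick at `tick_index(setpoint)` and the ambient tick at `tick_index(ambient)` would place both marks on the same arc.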

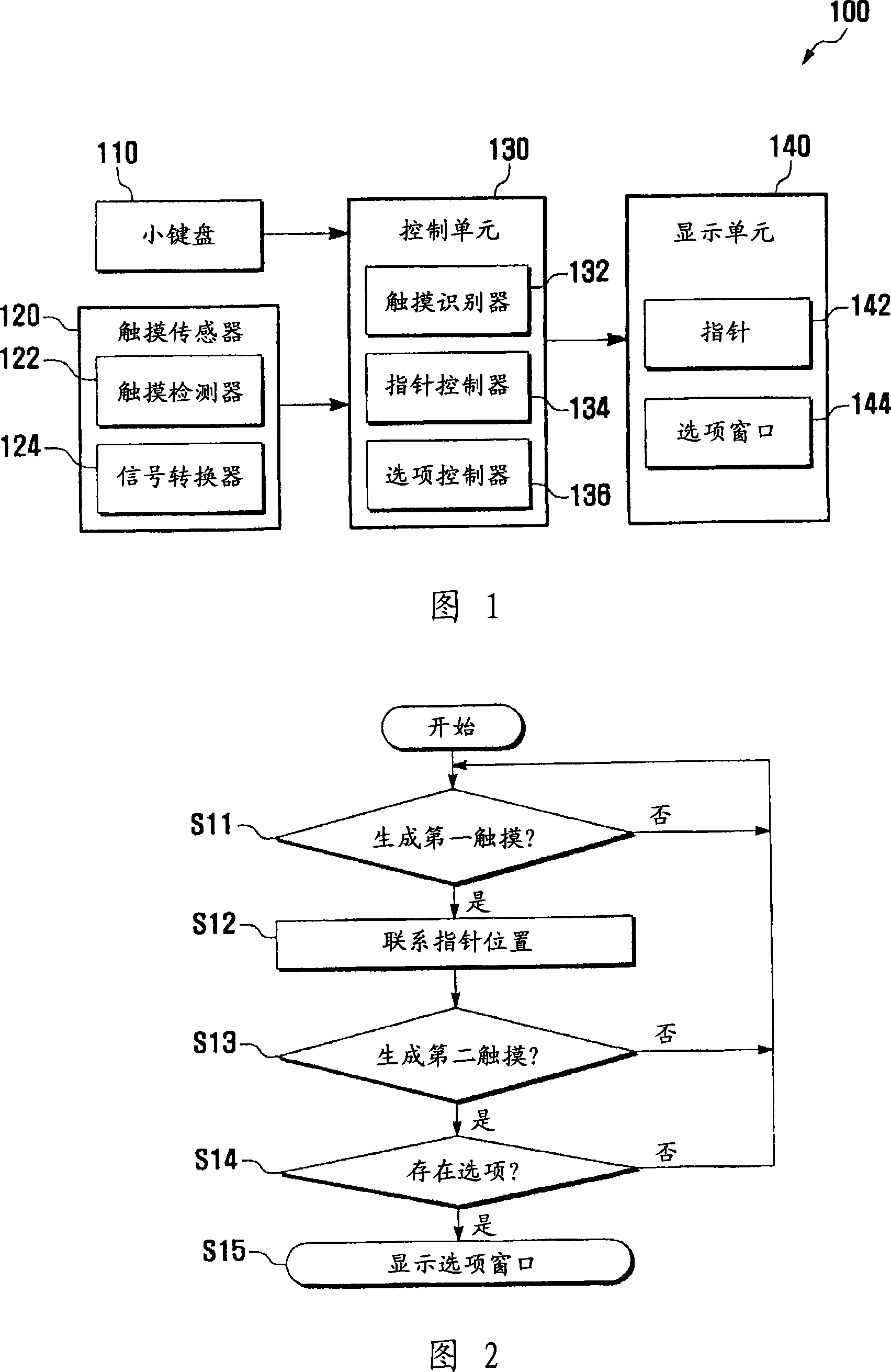

Keypad touch user interface method and mobile terminal using the same

Inactive | CN101098533A | Improve usability; Improve convenience; Details for portable computers; Radio/inductive link selection arrangements; Computer terminal; Human–computer interaction

A keypad touch user interface method and a mobile terminal using the same are disclosed. When a finger touches a keypad equipped with a touch sensor, the touch sensor detects the touch and a control unit identifies the type of touch. Depending on the touch type, the control unit either controls the display of a pointer on a display unit or displays an option window on the display unit. The option window is displayed when the identification shows that a new touch has been generated by one finger while another finger is touching a position on the keypad. This behaves like the right-click function of a mouse in a personal computing environment.

Owner:SAMSUNG ELECTRONICS CO LTD
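The CN101098533A abstract shows an option window, like a right-click, when a new touch arrives while another finger is already on the keypad. The Python below is a minimal sketch of that rule. `KeypadTouchTracker` and the action strings are hypothetical, and the sketch assumes each touch carries a unique identifier.

```python
from typing import Set


class KeypadTouchTracker:
    """Hypothetical tracker for finger touches on a touch-sensing keypad."""

    def __init__(self) -> None:
        self.active: Set[int] = set()

    def touch_down(self, touch_id: int) -> str:
        # A new touch while another finger is already down acts like a right-click.
        action = "show_option_window" if self.active else "move_pointer"
        self.active.add(touch_id)
        return action

    def touch_up(self, touch_id: int) -> None:
        self.active.discard(touch_id)
```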

Voice and touch user interface

Active | US9719797B2 | Minimizing user interaction; Safe driving experience; Instruments for road network navigation; Navigation by speed/acceleration measurements; Dashboard; Natural user interface

Owner:APPLE INC

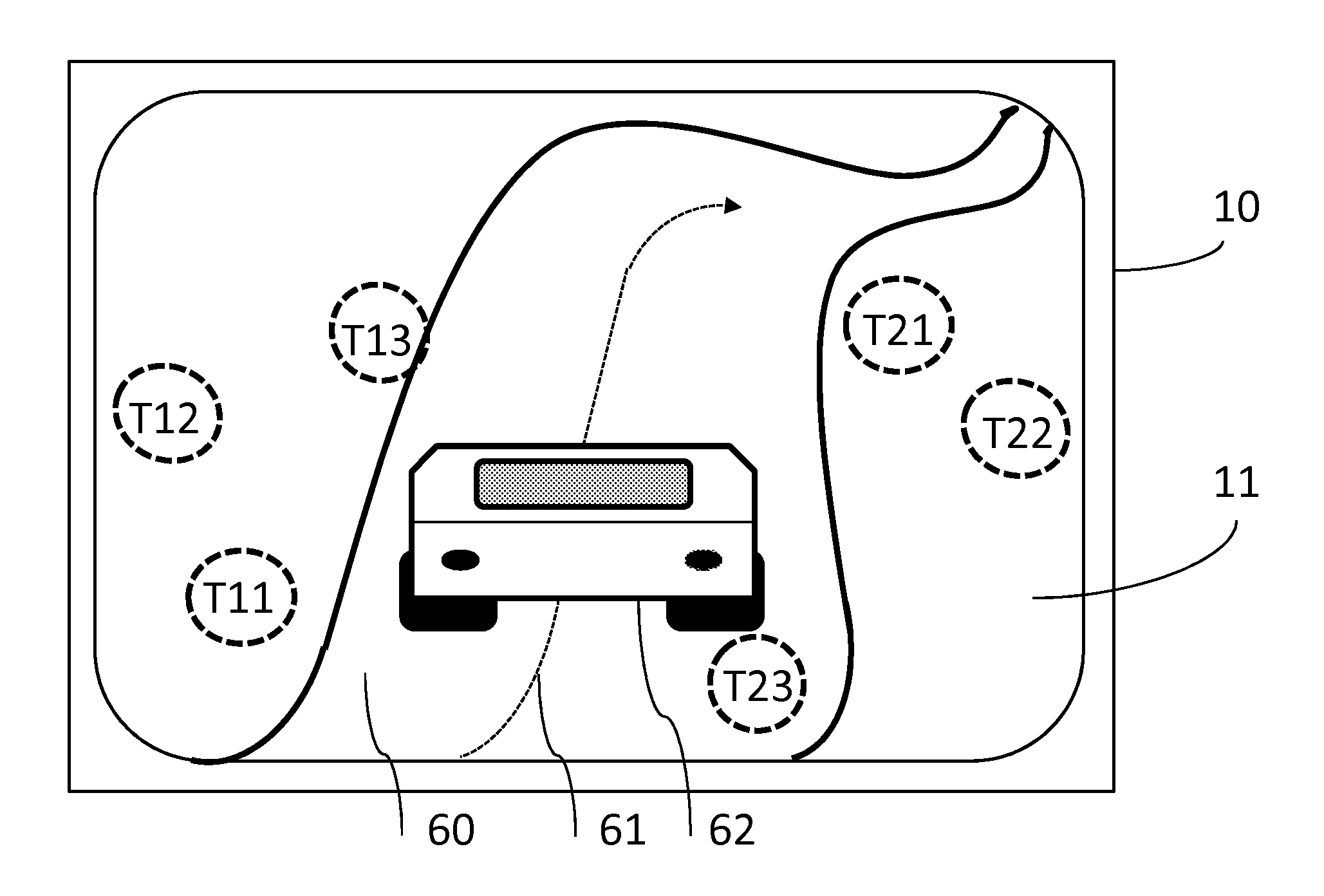

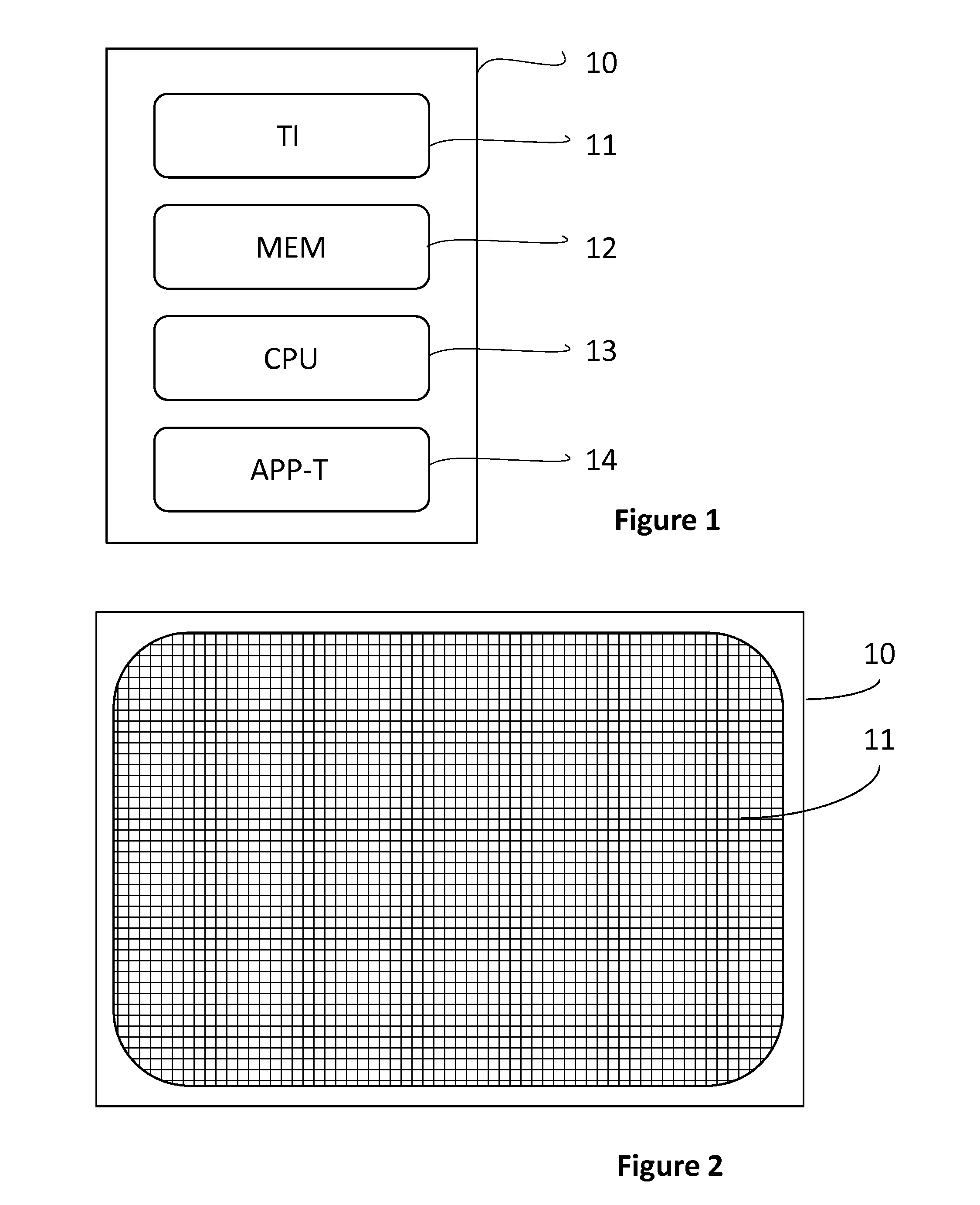

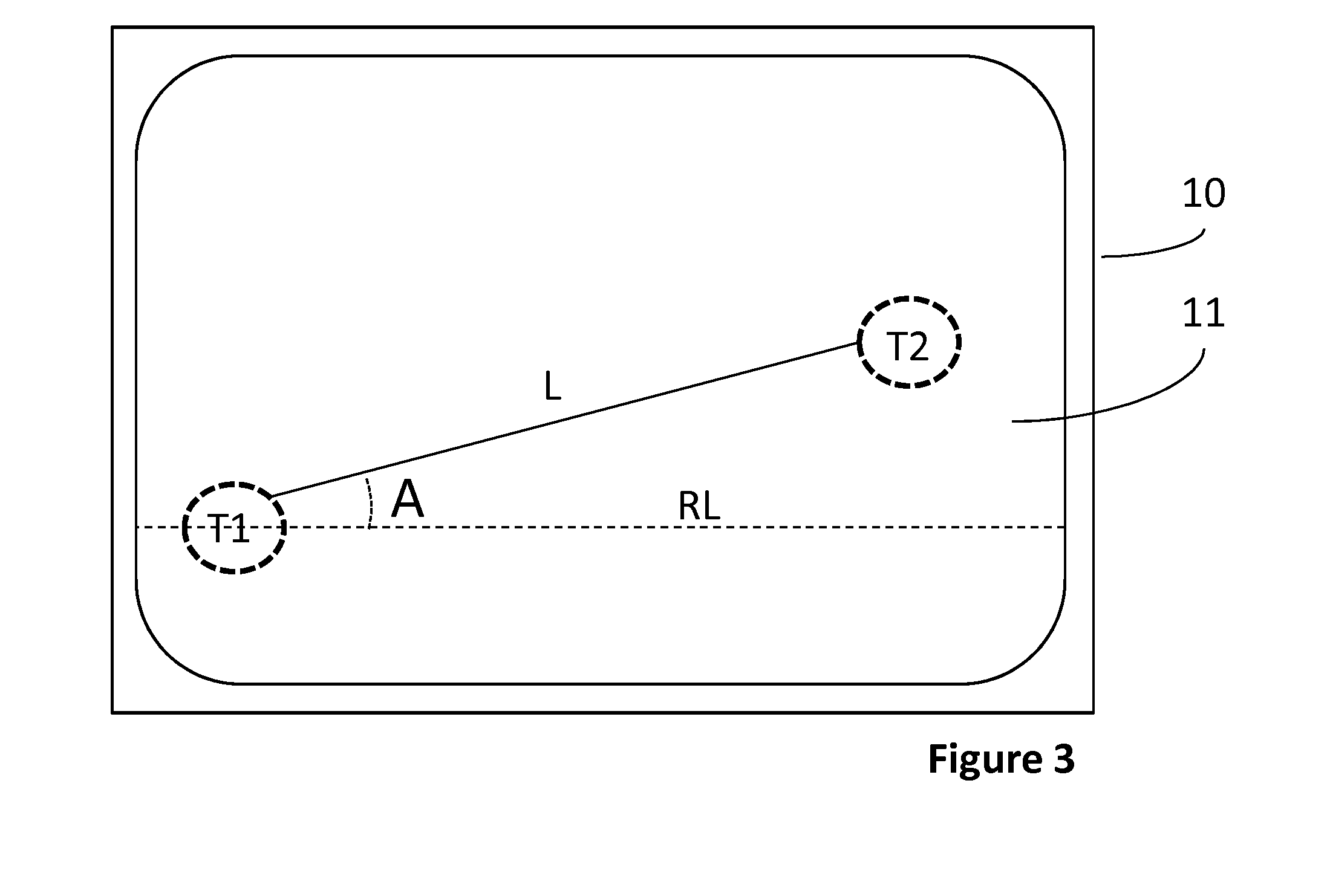

Advanced touch user interface

Inactive | US20160117075A1 | More freedom to design layout; Input/output processes for data processing; Virtual space; Control signal

The present invention is based on touch-based control of a user terminal. The touch-based control may comprise a touch screen, a touch pad, or another touch user interface enabling “multitouch”, in which a touch-sensing surface can recognize the presence of two or more touch points. Two detected touch points on the sensing surface define the end points of a line segment. The length of the line segment is determined and provides the basis for a first control signal. The angle of the line segment relative to a reference line is determined and provides the basis for a second control signal. These control signals are used to control a moving object in a virtual space.

Owner:ROVIO ENTERTAINMENT
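The Rovio abstract derives two control signals from two touch points: the length of the segment between them and its angle relative to a reference line. The Python below is a minimal sketch of that computation. The `move_object` mapping is a hypothetical illustration, in which length sets speed and angle sets heading. The abstract says only that the signals control a moving object in a virtual space.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def segment_controls(p1: Point, p2: Point,
                     reference_angle: float = 0.0) -> Tuple[float, float]:
    """Return (length, angle) for the segment between two touch points.

    The angle is in radians, measured from a reference line at reference_angle,
    and wrapped into [-pi, pi].
    """
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    length = math.hypot(dx, dy)                      # basis for the first signal
    angle = math.atan2(dy, dx) - reference_angle     # basis for the second signal
    angle = math.atan2(math.sin(angle), math.cos(angle))  # wrap the difference
    return length, angle


def move_object(pos: Point, p1: Point, p2: Point,
                gain: float = 0.1, dt: float = 1.0) -> Point:
    """Hypothetical mapping: segment length sets speed, segment angle sets heading."""
    length, angle = segment_controls(p1, p2)
    speed = gain * length
    return (pos[0] + speed * math.cos(angle) * dt,
            pos[1] + speed * math.sin(angle) * dt)
```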

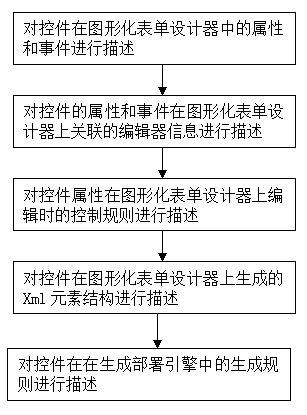

Interface UI (user interface) control configuration method based on description

Inactive | CN104267962A | Expandable and flexible; Meet flexible configuration needs; Specific program execution arrangements; Software engineering; Control table

The invention discloses a description-based configuration method for interface UI (user interface) controls. The method comprises five steps:

1. describing the attributes and events of controls in a graphical form designer;
2. describing the editor information associated with those attributes and events in the designer;
3. describing the rules that govern the controls' attributes during editing in the designer;
4. describing the XML element structures that the controls generate in the designer;
5. describing the rules by which deployment engines generate the controls.

The method allows the attributes of UI controls to be extended flexibly and supports combined configuration of controls of different editions and form types. It meets the need for flexible configuration when developing different types of forms.

Owner:INSPUR COMMON SOFTWARE
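The Inspur abstract lists steps for describing control attributes and events, their editors, their editing rules, and the XML each control produces. The Python below is a minimal sketch of steps one to four, built with the standard-library `xml.etree.ElementTree`. The class names, the `read_only` rule and the XML element names (`Control`, `Attribute`, `Event`) are hypothetical, and step five (generation by deployment engines) is not modelled.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AttributeSpec:
    name: str
    editor: str              # step two: which property editor the designer shows
    default: str = ""
    read_only: bool = False  # step three: an example editing rule


@dataclass
class ControlSpec:
    type_name: str
    attributes: List[AttributeSpec] = field(default_factory=list)
    events: List[str] = field(default_factory=list)  # step one: supported events


def set_value(spec: ControlSpec, values: Dict[str, str], name: str, value: str) -> None:
    """Step three: apply the editing rules before accepting a designer edit."""
    for attr in spec.attributes:
        if attr.name == name:
            if attr.read_only:
                raise PermissionError(f"{name} is read-only")
            values[name] = value
            return
    raise KeyError(name)


def to_xml(spec: ControlSpec, values: Dict[str, str]) -> ET.Element:
    """Step four: build the XML element the designer might store for one control."""
    elem = ET.Element("Control", {"type": spec.type_name})
    for attr in spec.attributes:
        ET.SubElement(elem, "Attribute",
                      {"name": attr.name, "value": values.get(attr.name, attr.default)})
    for event in spec.events:
        ET.SubElement(elem, "Event", {"name": event})
    return elem
```

Because each control is described by data, not hard-coded, a new control type would need only a new `ControlSpec`.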

A keypad touch user interface method and a mobile terminal using the same

Inactive | CN101098532A | Improve usability; Improve convenience; Details for portable computers; Radio/inductive link selection arrangements; Computer terminal; Human–computer interaction

A user interface method and a mobile terminal are disclosed. When a finger touches and moves in a specific direction on a keypad having a touch sensor, the touch sensor detects the touch and a control unit identifies the type of touch direction according to the angle of the touch direction. The screen of a display unit is partitioned into a plurality of blocks, and a screen highlight is located at a specific block. The control unit moves the screen highlight on the display unit according to the type of touch direction. The path of the screen highlight is set to one of the following:

- a forward direction with continuous movement
- a forward direction with discontinuous movement
- a backward direction with continuous movement
- a backward direction with discontinuous movement

A user interface according to the present invention can include a pointer on the display unit, controlled by linking the pointer position to the touch position.

Owner:SAMSUNG ELECTRONICS CO LTD

© 2025 PatSnap. All rights reserved.