30 results about "Auditory event" patented technology

Auditory events describe the subjective perception when listening to a given sound situation. The term was introduced by Jens Blauert (Ruhr-University Bochum) in 1966 to distinguish clearly between the physical sound field and the auditory perception of that sound.

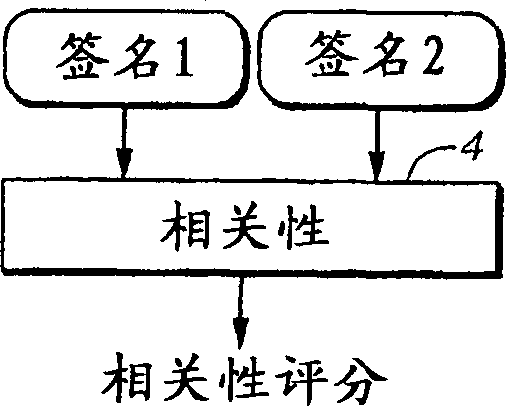

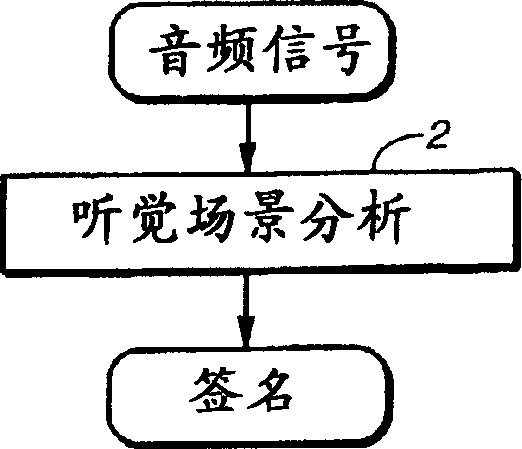

Comparing audio using characterizations based on auditory events

Inactive | US7283954B2 | Simple and computationally efficient; Reduce and eliminate effect; Television system details; Speech analysis; Time shifting; A domain

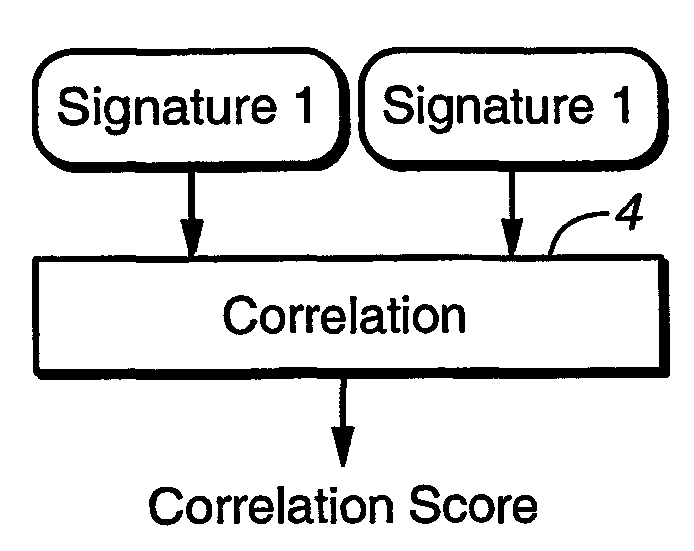

A method for determining whether one audio signal is derived from another audio signal, or whether two audio signals are derived from the same audio signal, compares reduced-information characterizations of the audio signals, where the characterizations are based on auditory scene analysis. The comparison removes from, or minimizes in, the characterizations the effect of temporal shift or delay on the audio signals (5-1), calculates a measure of similarity (5-2), and compares the measure of similarity against a threshold. In one alternative, the effect of temporal shift or delay is removed or minimized by cross-correlating the two characterizations. In another alternative, it is removed or minimized by transforming the characterizations into a domain that is independent of temporal delay effects, such as the frequency domain. In both cases, the measure of similarity is obtained by calculating a coefficient of correlation.

Owner:DOLBY LAB LICENSING CORP
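
The comparison described above can be sketched in Python. This is a minimal illustration, not Dolby's implementation: it assumes each characterization is a per-block sequence of auditory event boundary flags (1 at a boundary, 0 elsewhere), removes the delay by searching over lags (the cross-correlation alternative), and takes the correlation coefficient at the best lag as the similarity measure. The function names, `max_lag`, and the 0.9 threshold are hypothetical.

```python
import math
from typing import Sequence, Tuple


def correlation_coefficient(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0.0 or var_b == 0.0:
        return 0.0  # a constant sequence carries no timing information
    return cov / math.sqrt(var_a * var_b)


def best_lag_similarity(a: Sequence[float], b: Sequence[float],
                        max_lag: int) -> Tuple[int, float]:
    """Slide b against a and return (lag, r) for the best-matching lag.

    a[i] is compared with b[i + lag], so a positive lag means b is delayed.
    Searching over lags removes the effect of a time shift before the
    correlation coefficient is taken.
    """
    best_lag, best_r = 0, -1.0
    for lag in range(-max_lag, max_lag + 1):
        start = max(0, -lag)
        stop = min(len(a), len(b) - lag)
        if stop - start < 2:
            continue  # too little overlap to correlate
        x = [a[i] for i in range(start, stop)]
        y = [b[i + lag] for i in range(start, stop)]
        r = correlation_coefficient(x, y)
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r


def is_derived(a: Sequence[float], b: Sequence[float],
               max_lag: int = 8, threshold: float = 0.9) -> bool:
    """Hypothetical decision rule: same origin if the best coefficient reaches threshold."""
    return best_lag_similarity(a, b, max_lag)[1] >= threshold
```

With `a` an event-boundary sequence and `b` the same sequence delayed by three blocks, `best_lag_similarity(a, b, 4)` returns a lag of 3 with a coefficient of 1.0.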

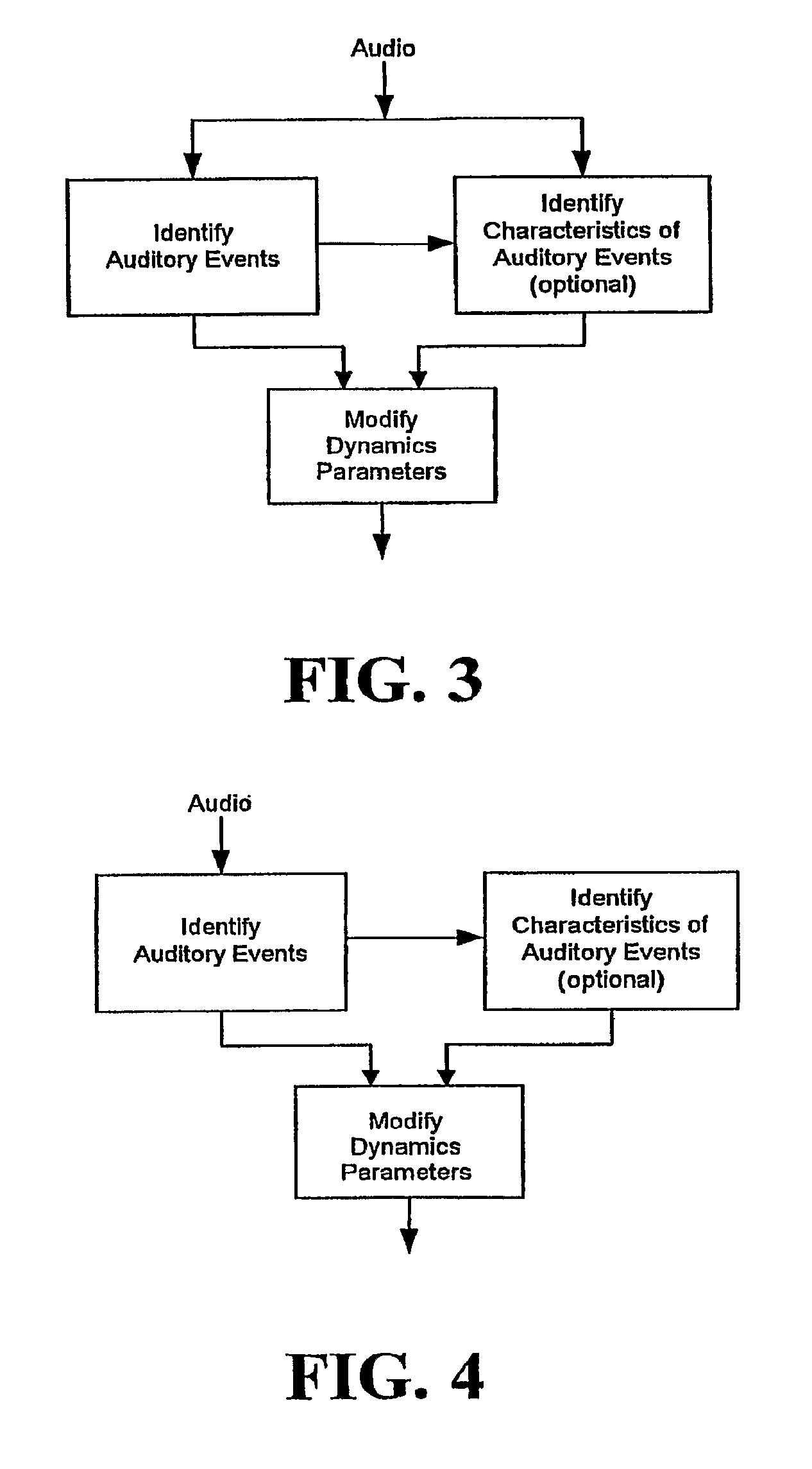

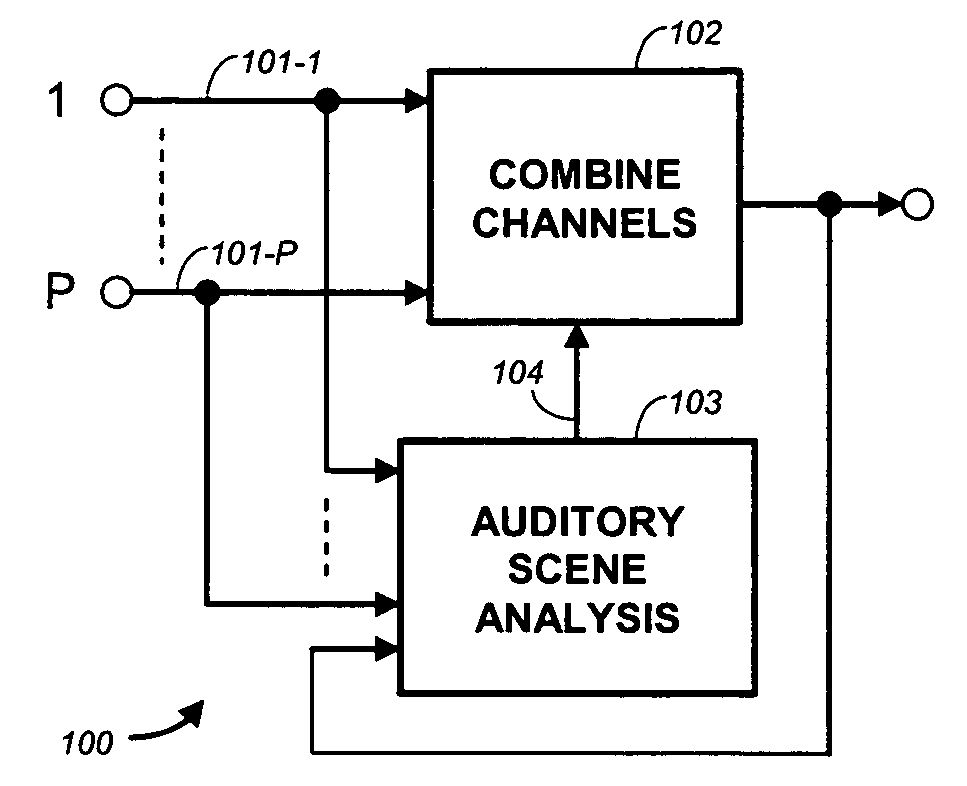

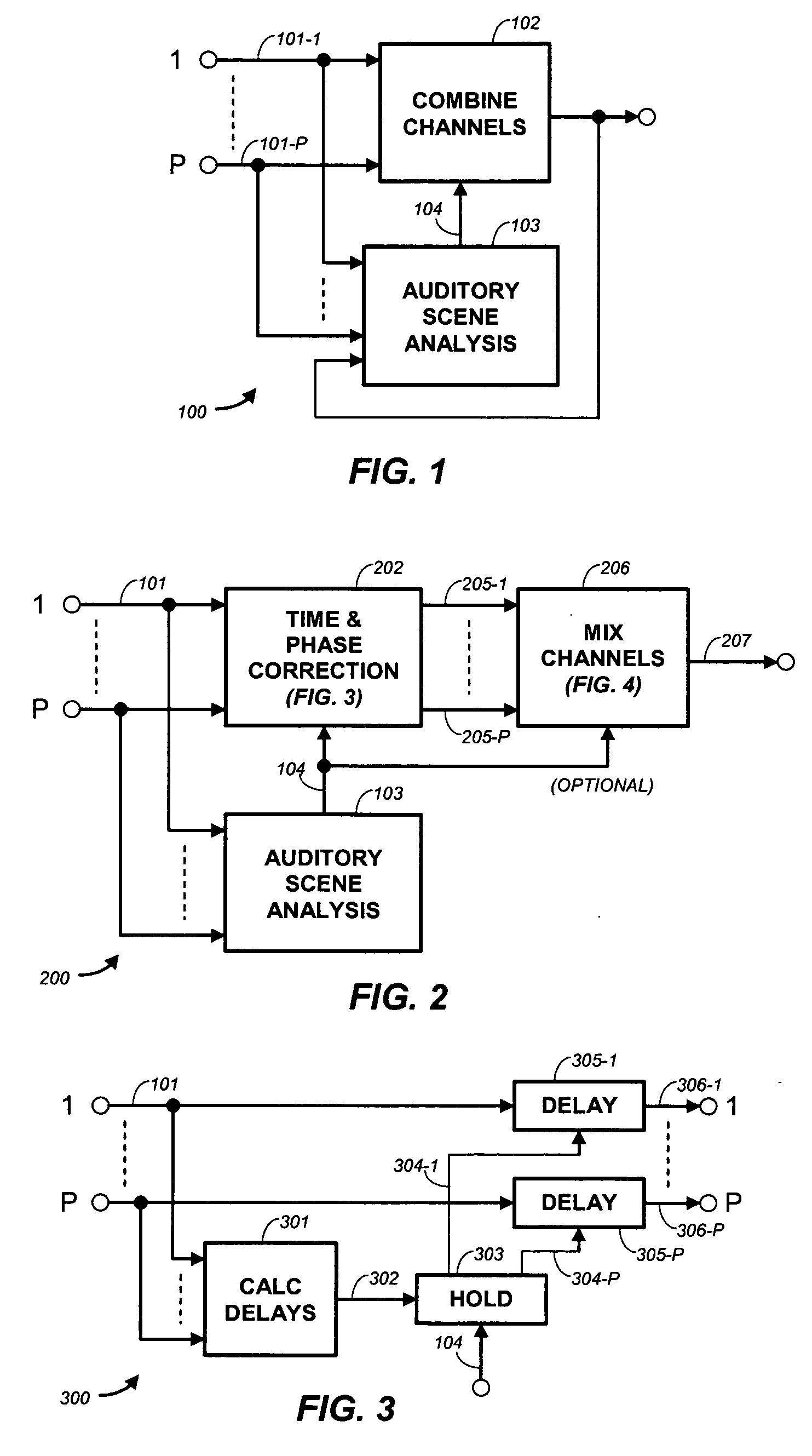

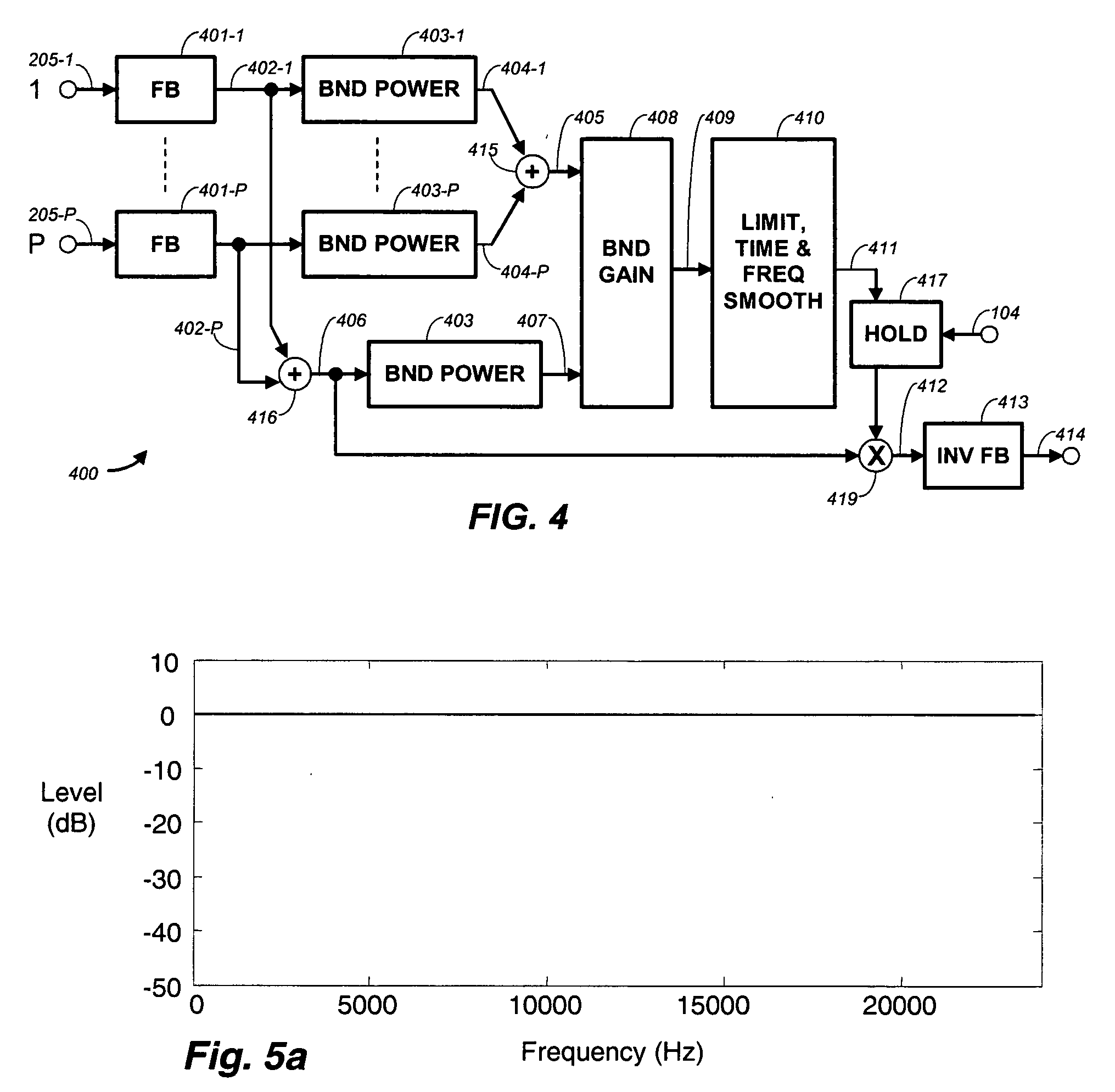

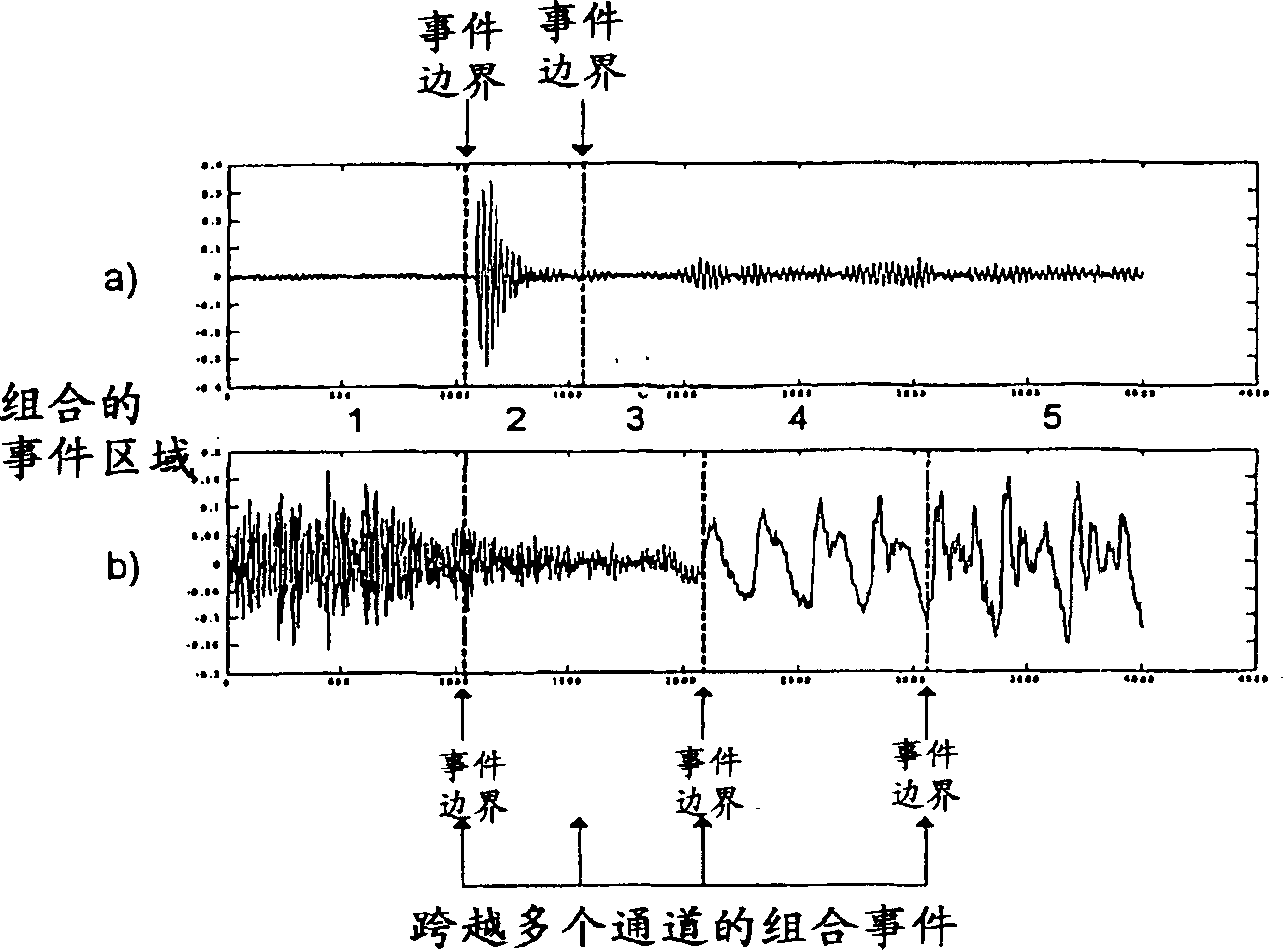

Method for combining audio signals using auditory scene analysis

Active | US7508947B2 | Improve sound quality; Transducer acoustic reaction prevention; Transmission; Computer hardware; Vocal tract

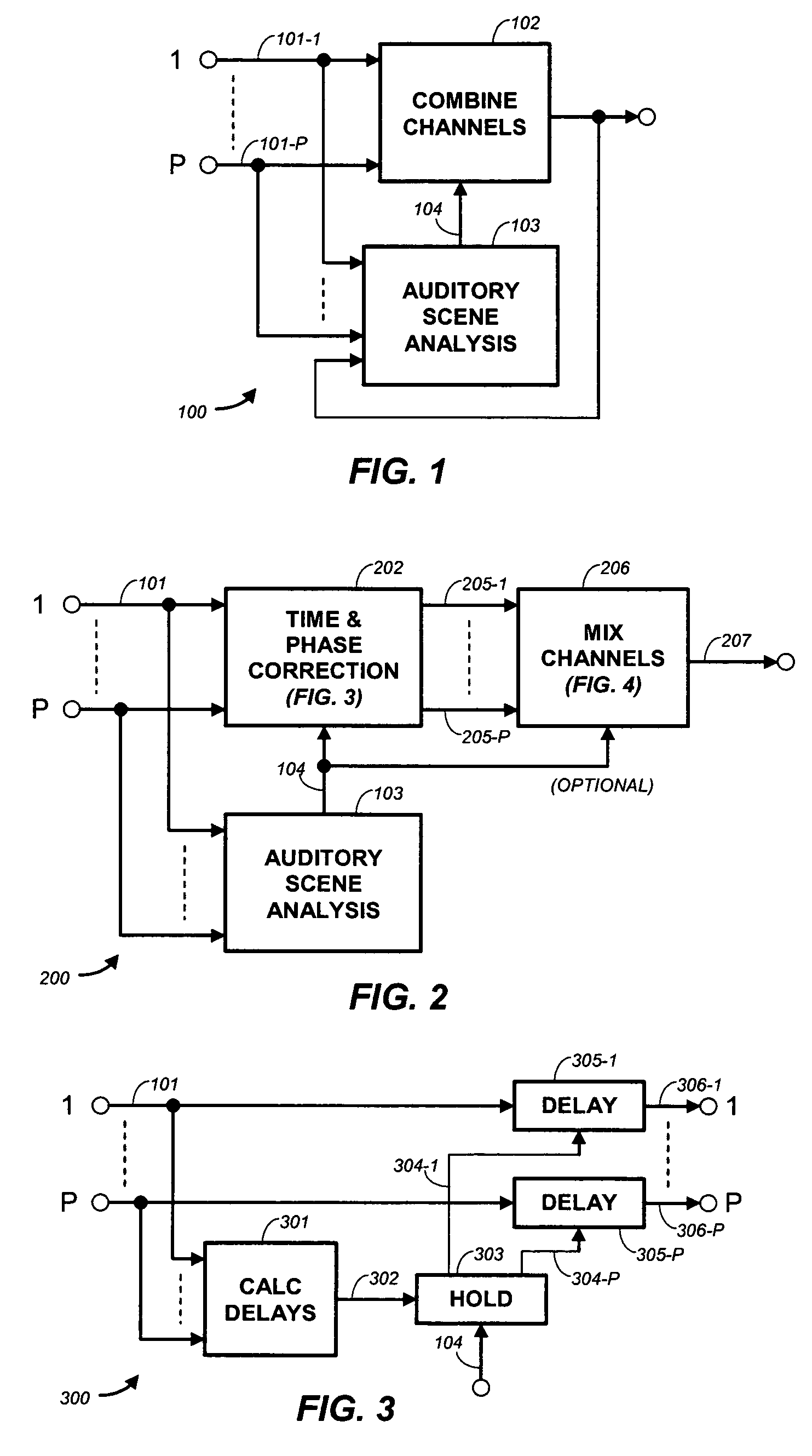

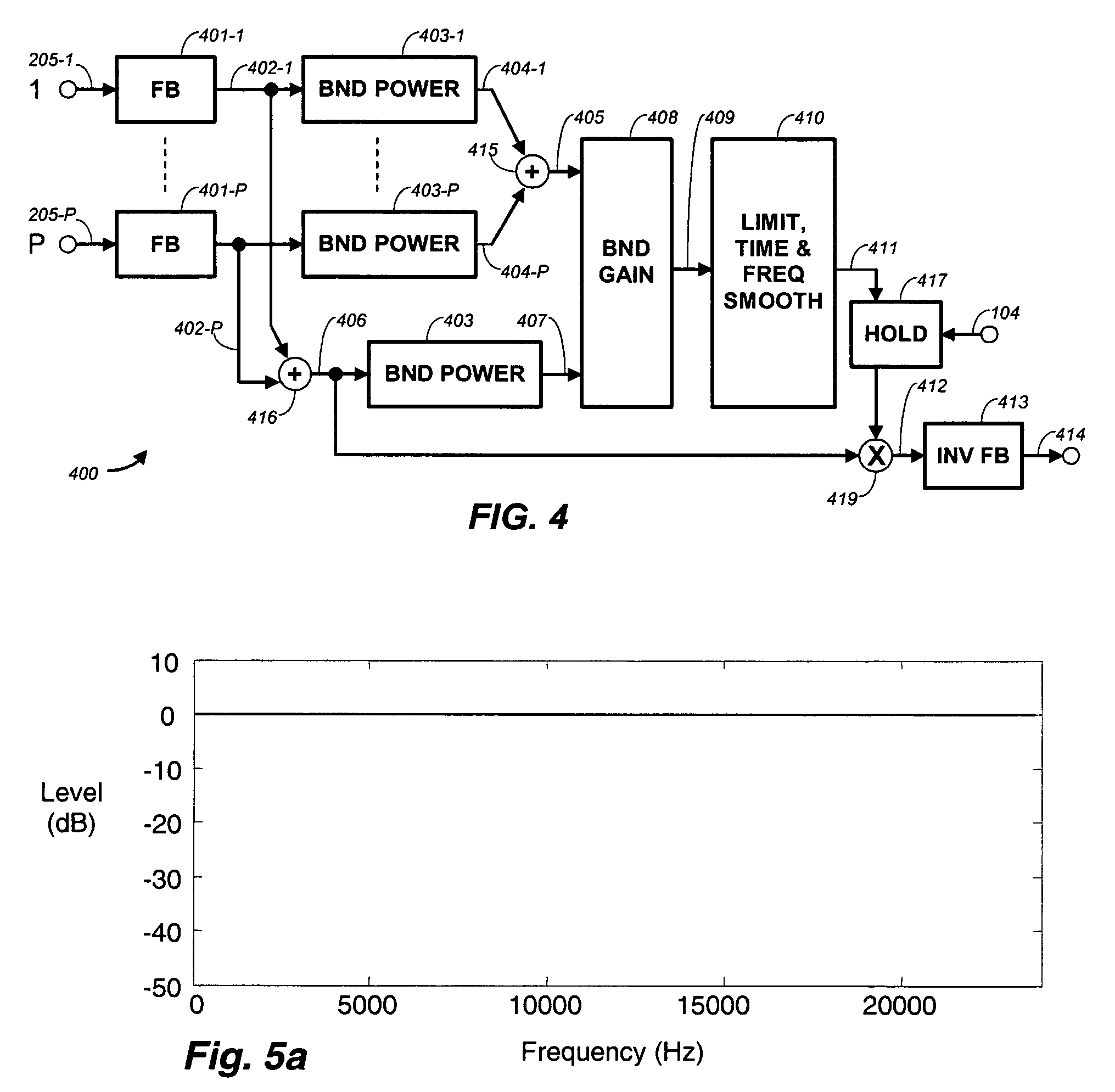

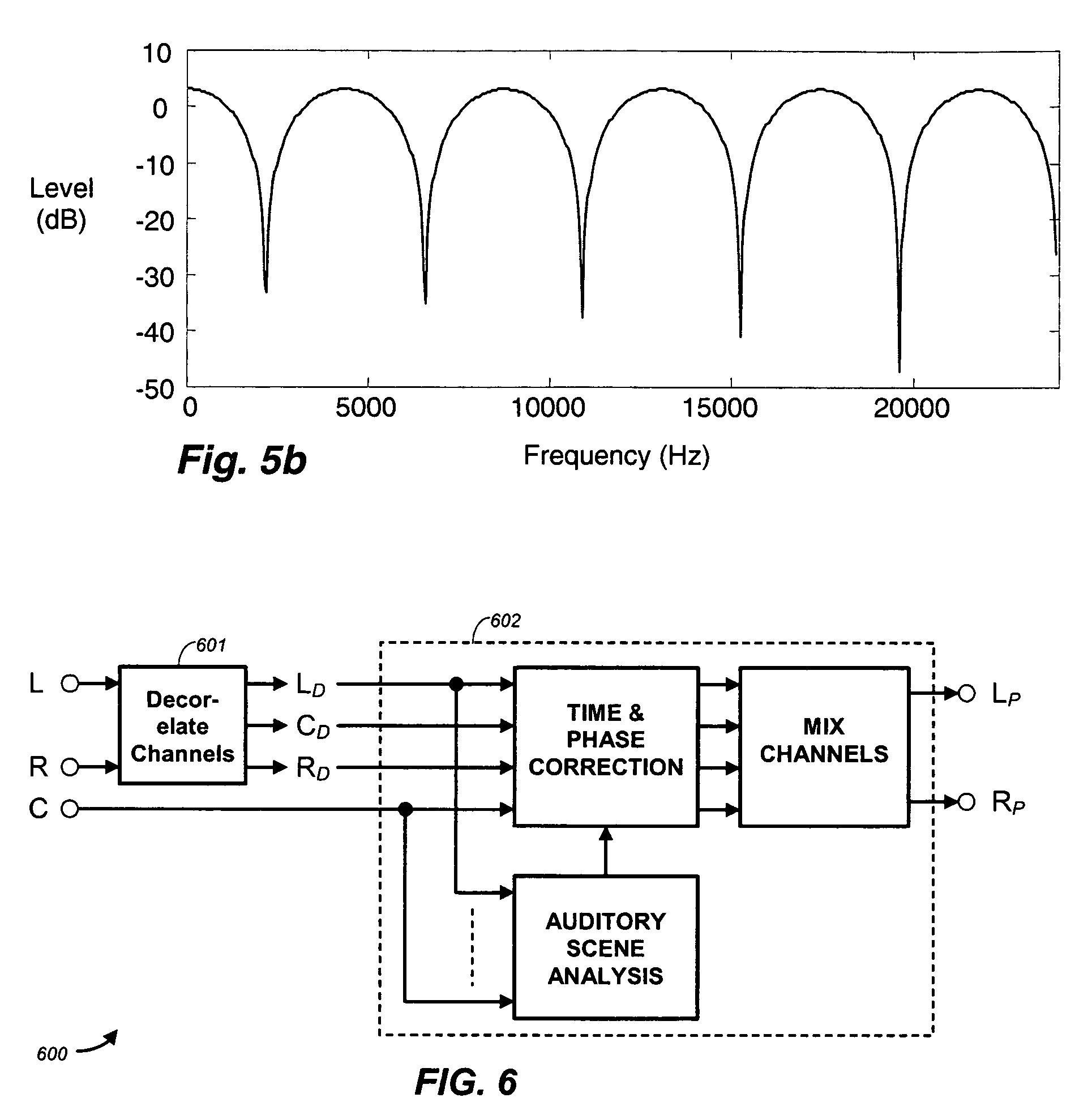

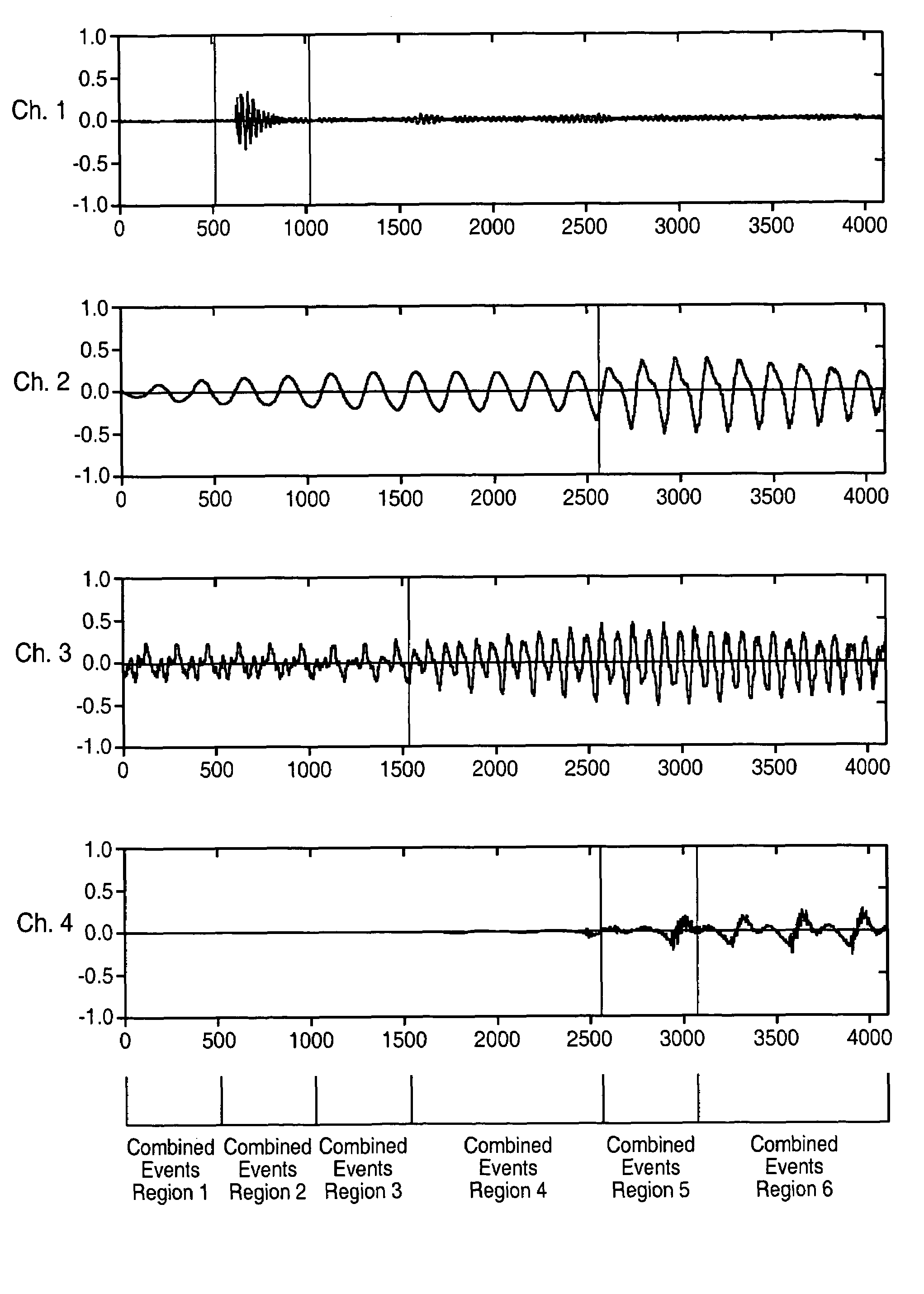

A process for combining audio channels combines the audio channels to produce a combined audio channel and dynamically applies one or more of time, phase, and amplitude or power adjustments to the channels, to the combined channel, or to both the channels and the combined channel. One or more of the adjustments are controlled at least in part by a measure of auditory events in one or more of the channels and/or the combined channel. Applications include the presentation of multichannel audio in cinemas and vehicles. Besides methods, corresponding computer program and apparatus implementations are also included.

Owner:DOLBY LAB LICENSING CORP

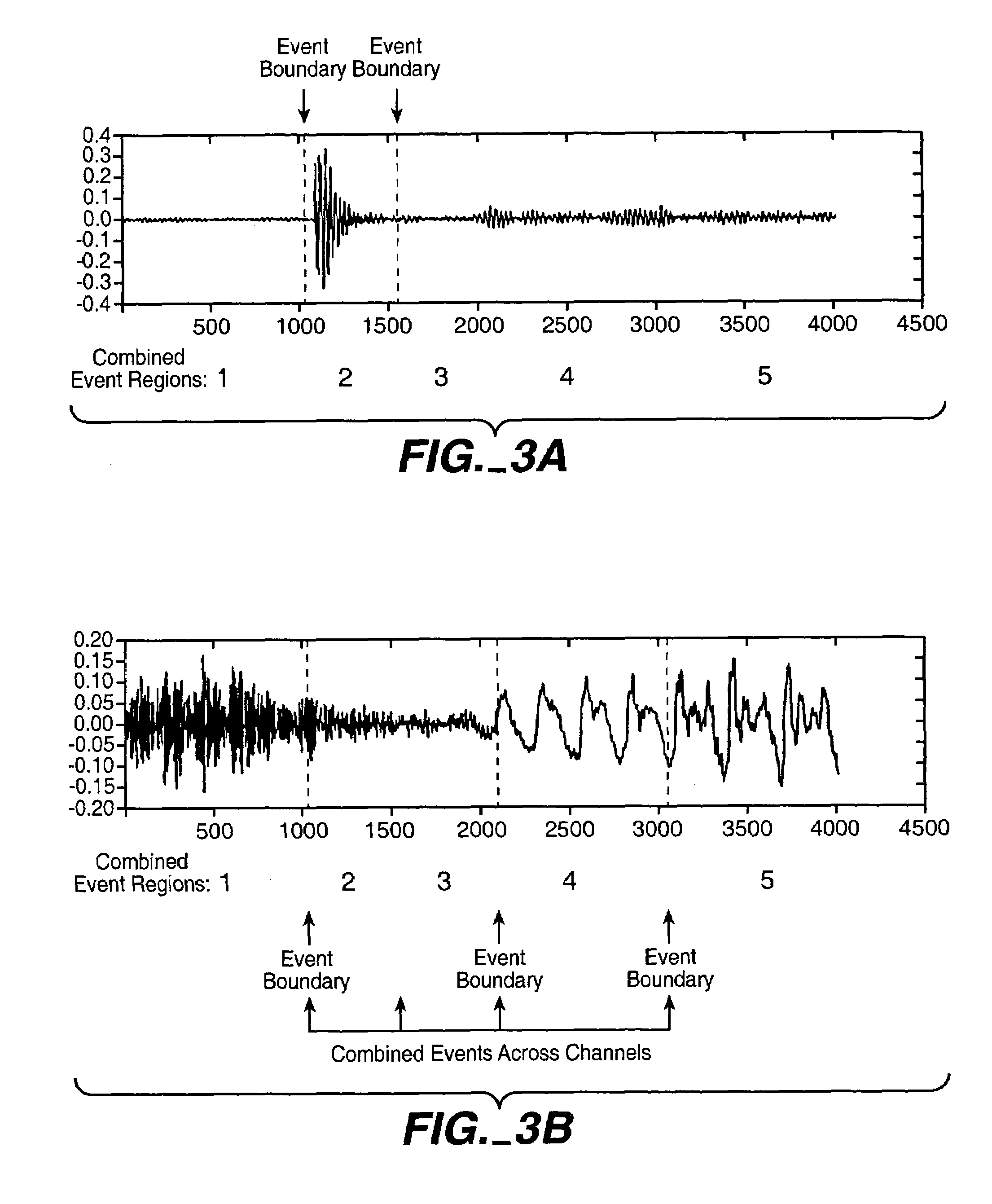

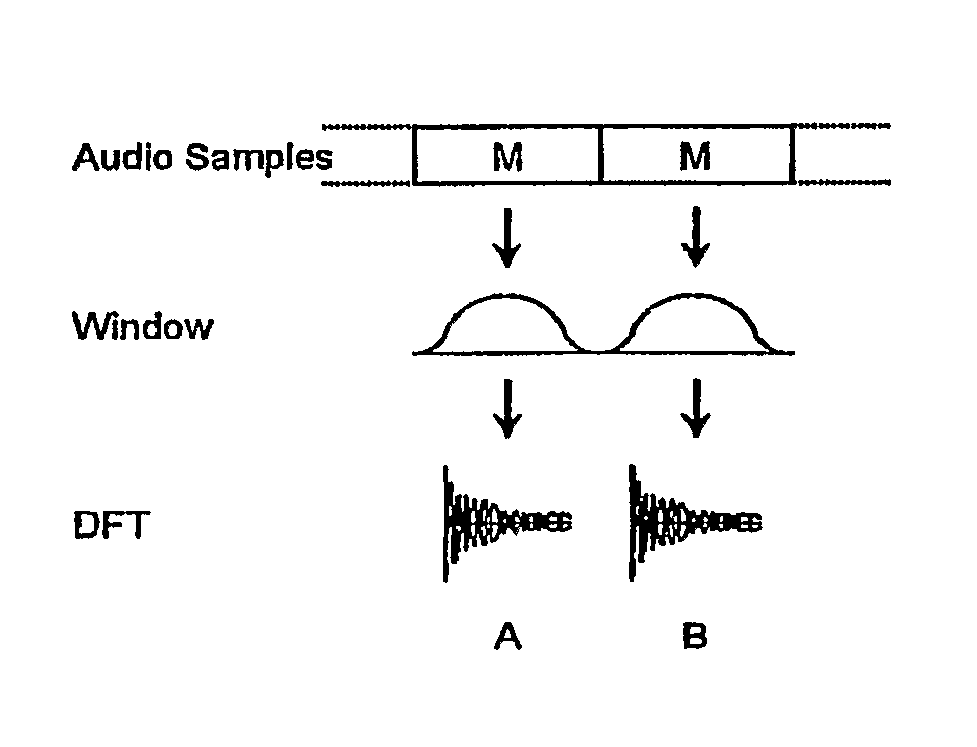

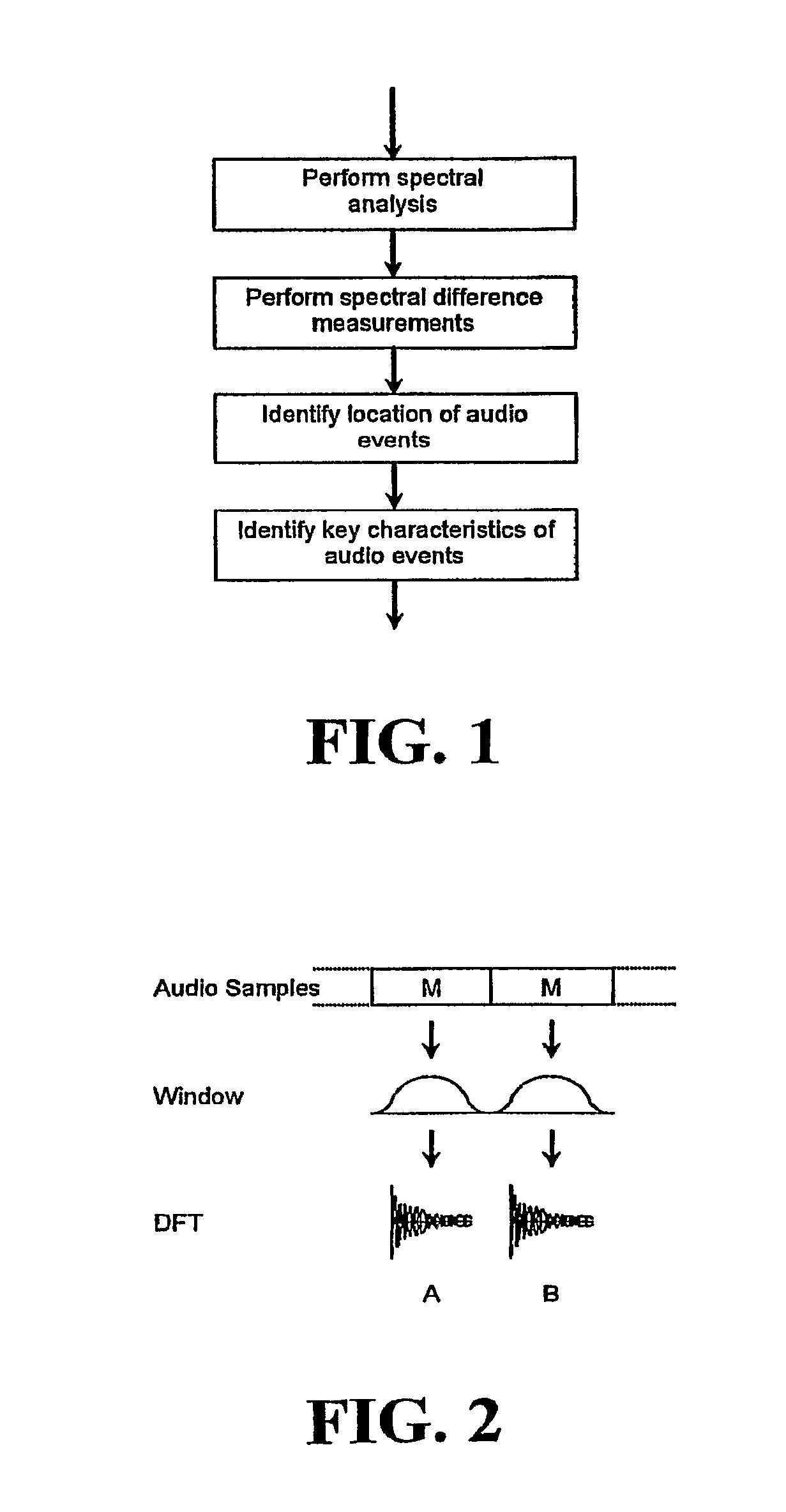

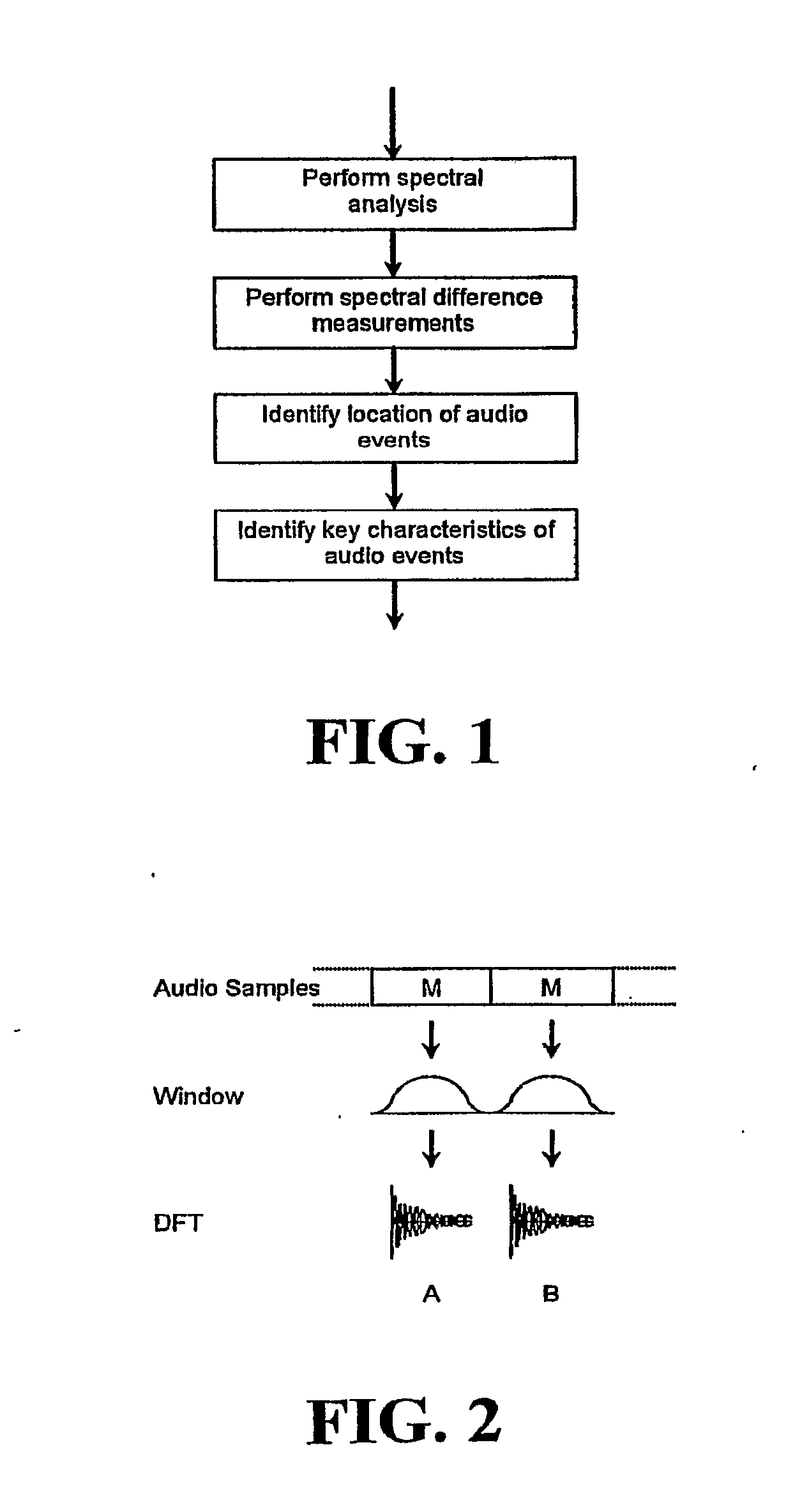

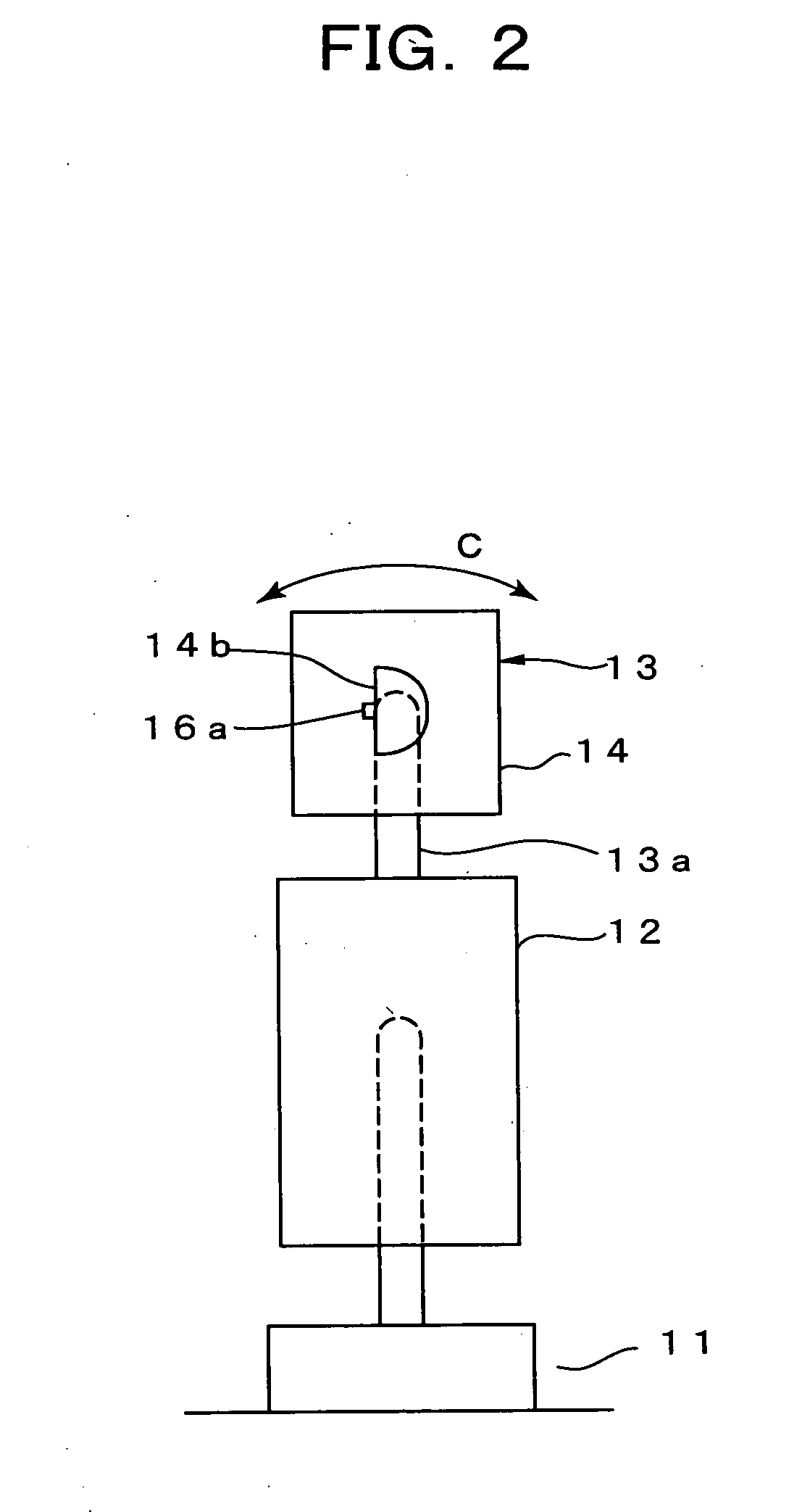

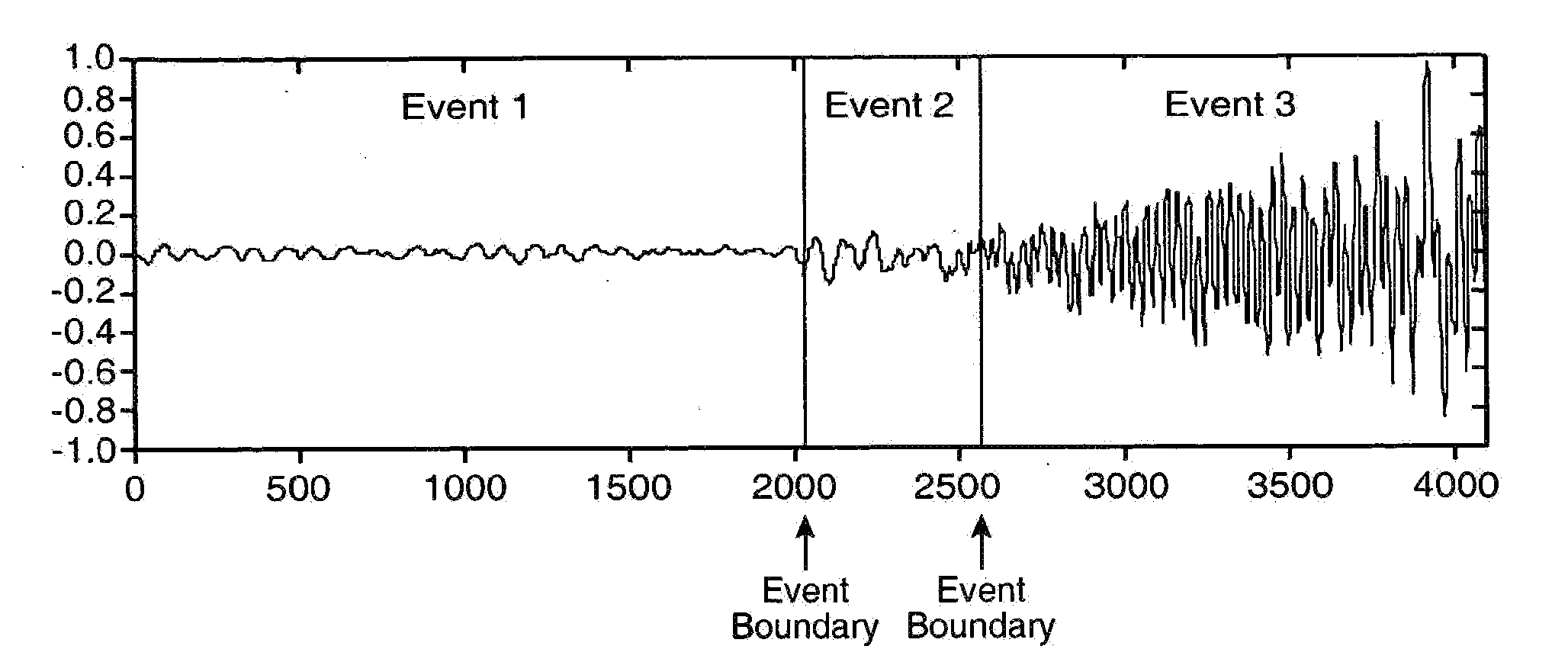

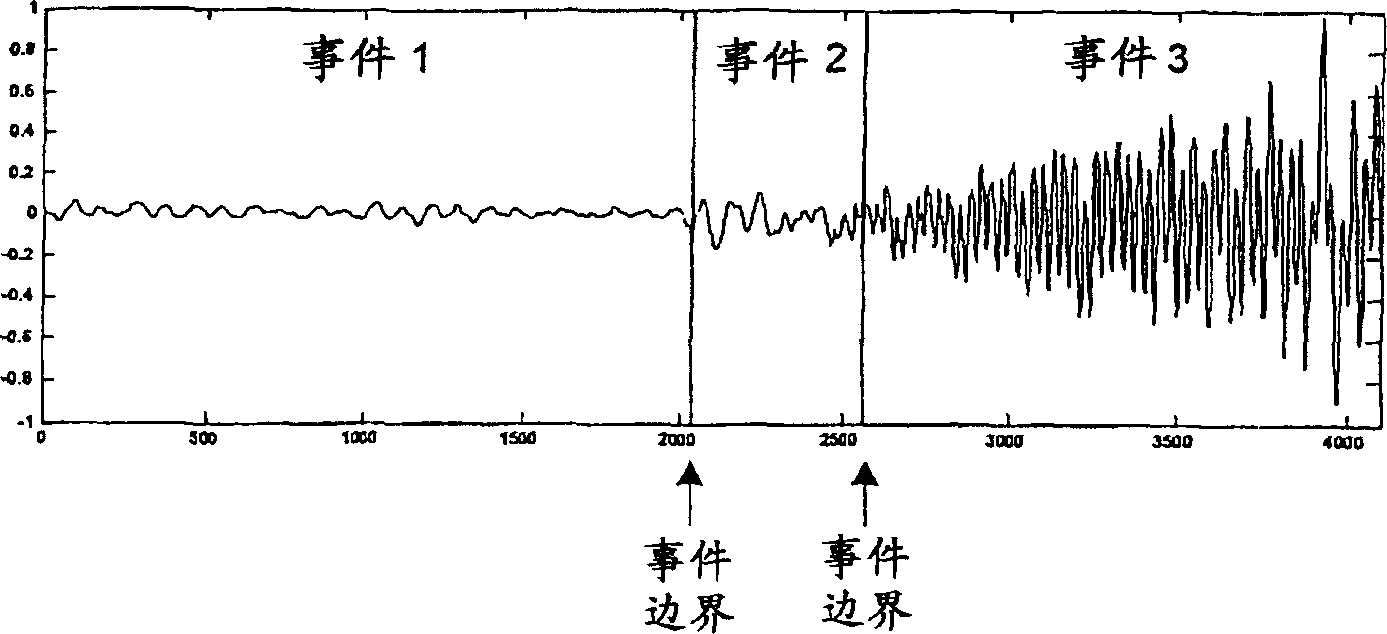

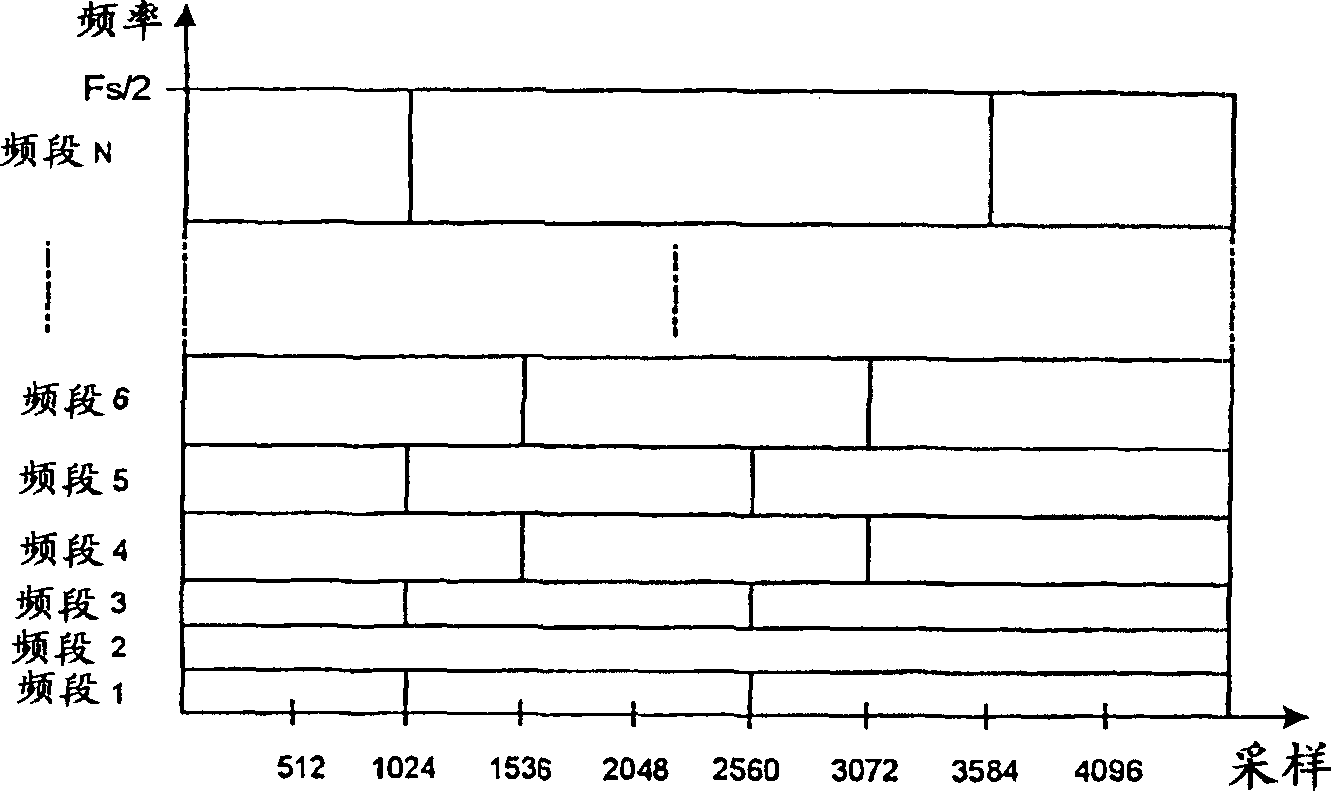

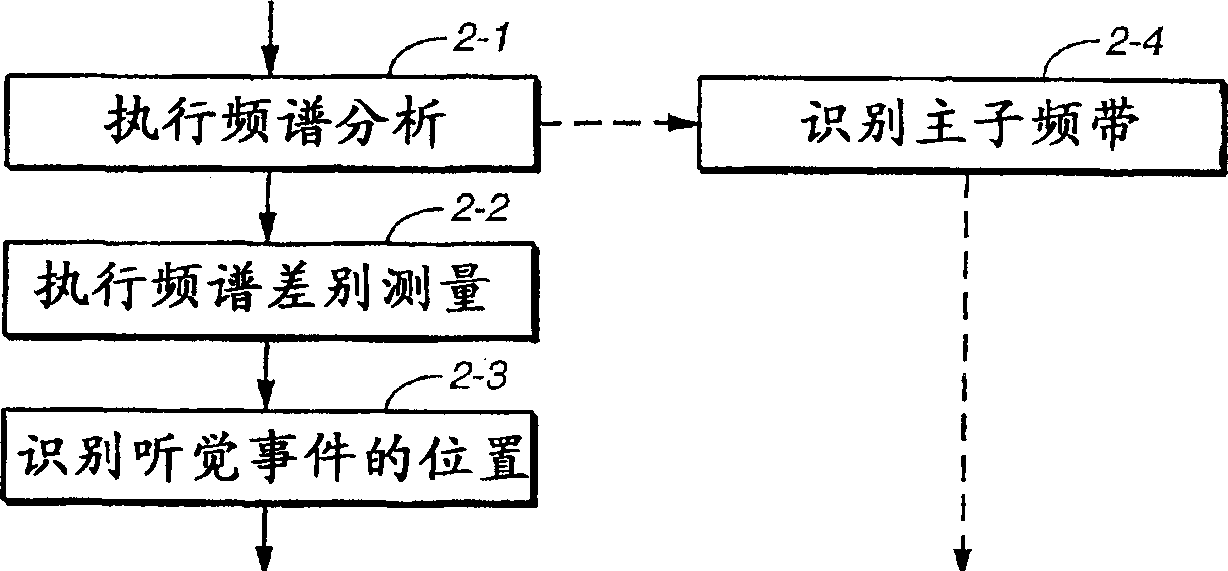

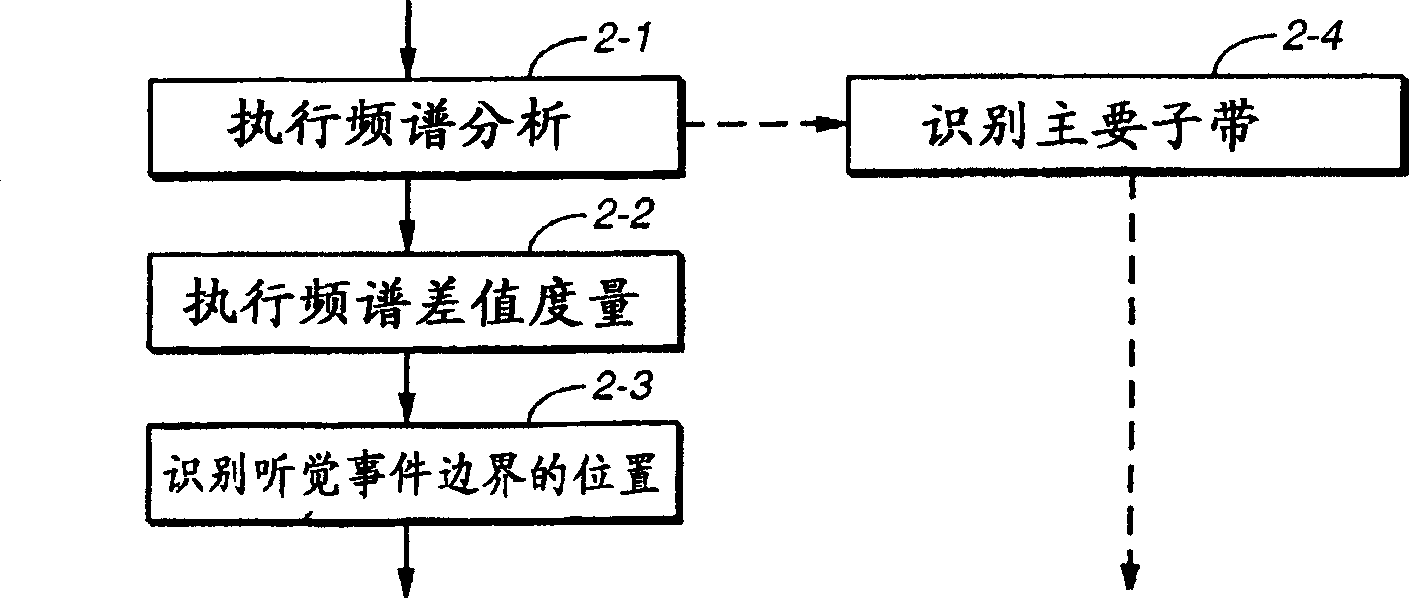

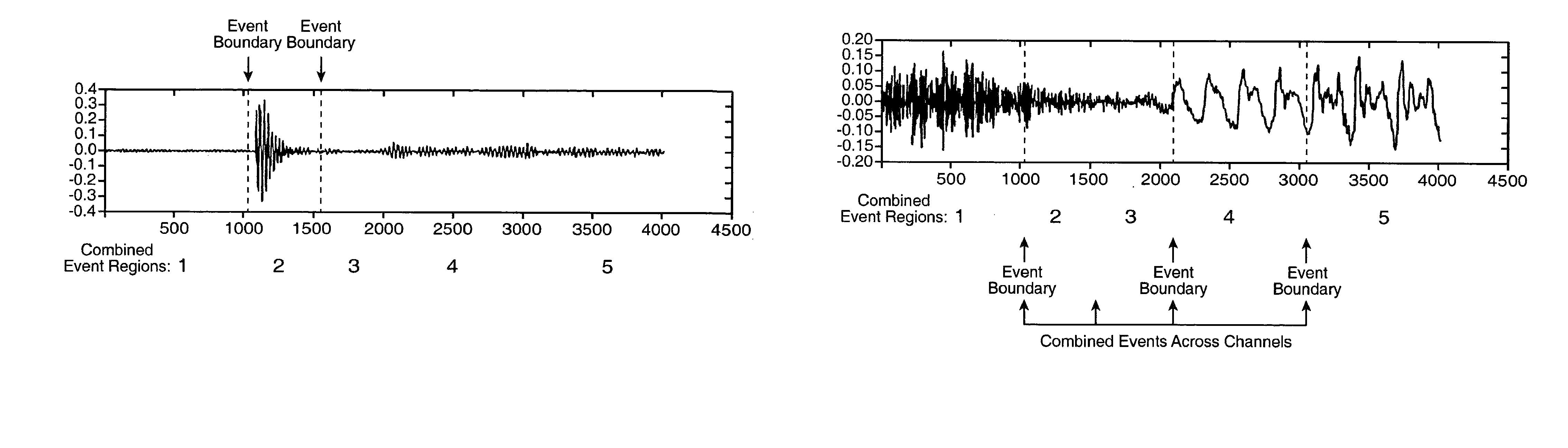

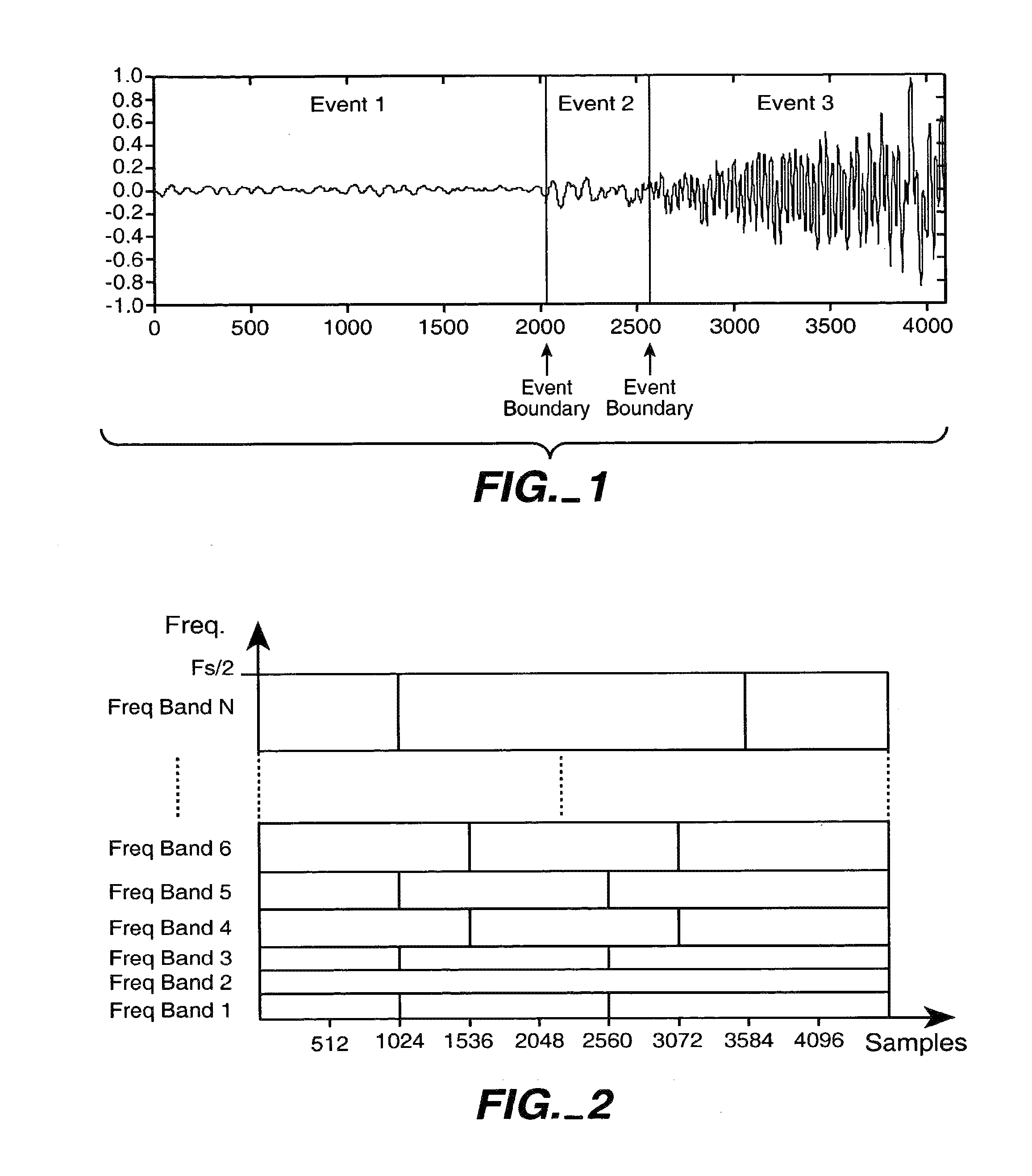

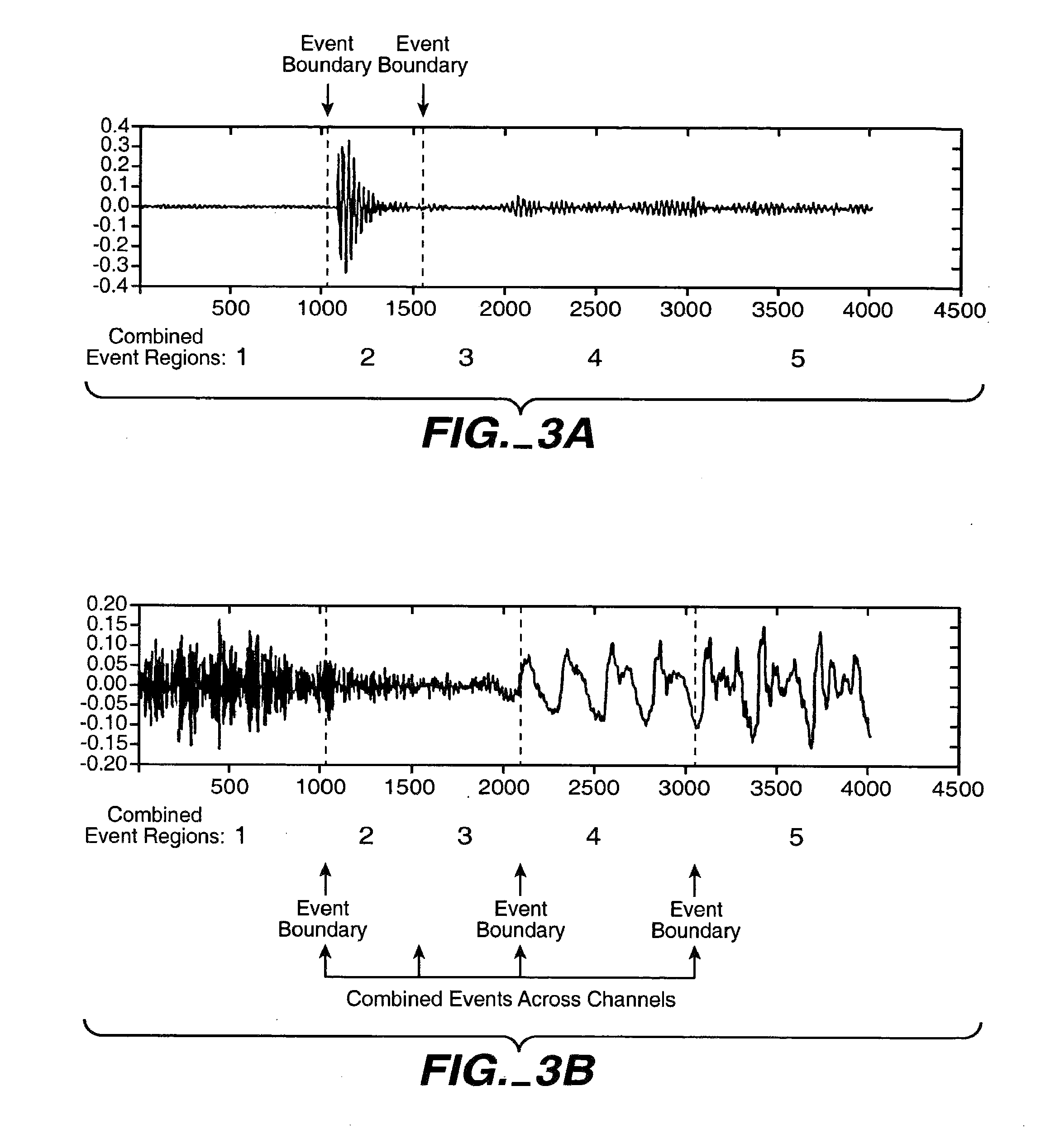

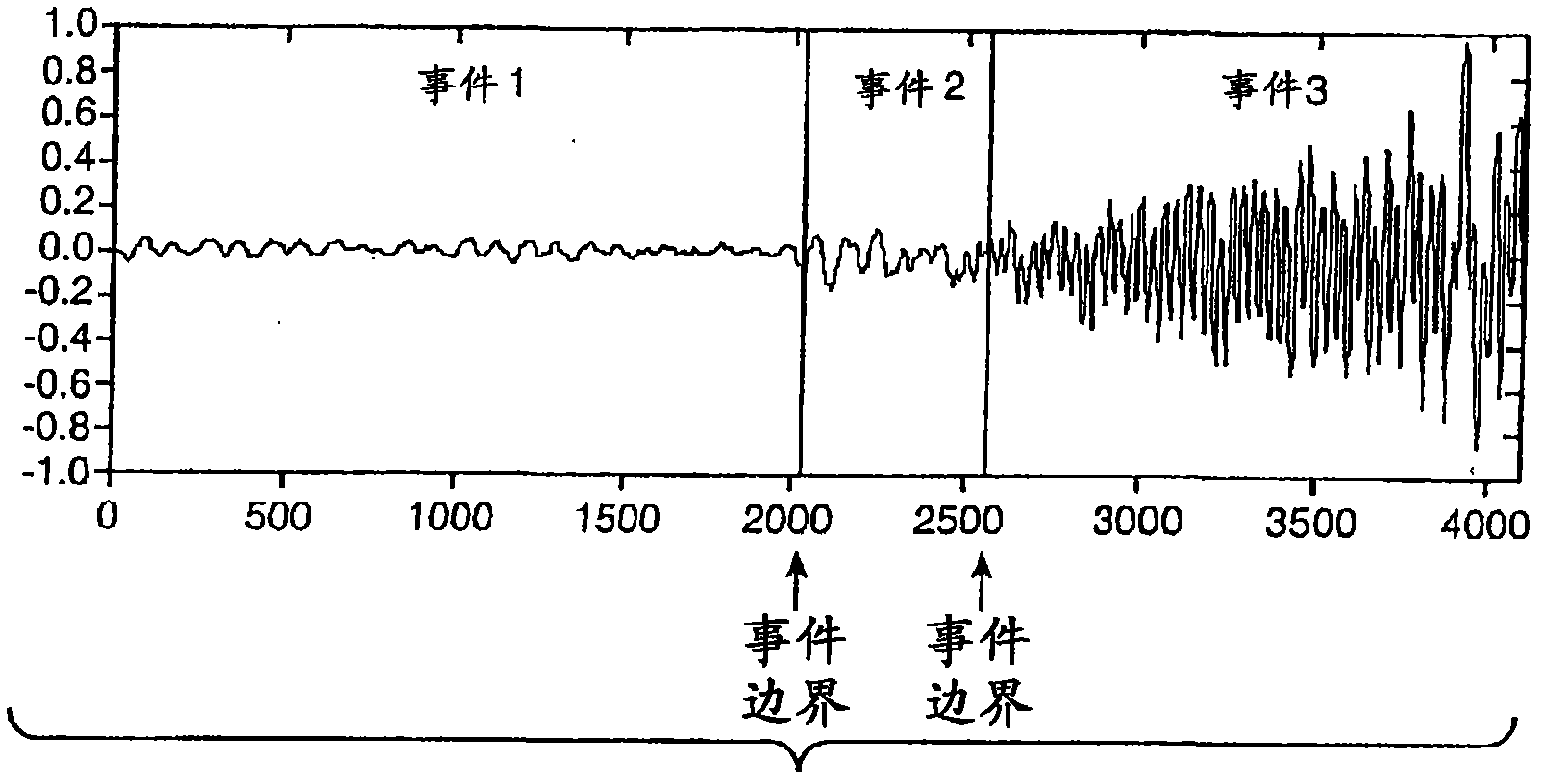

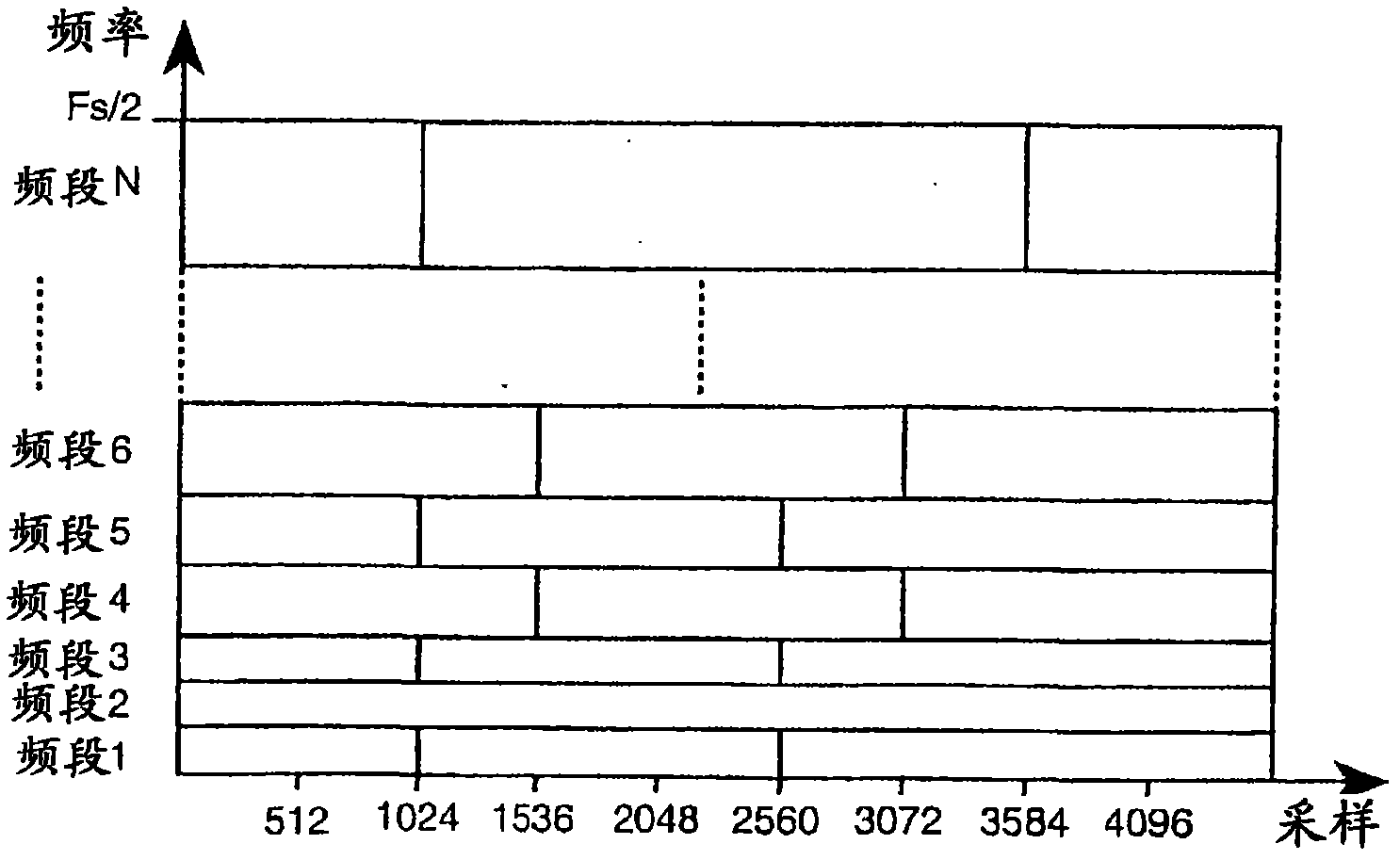

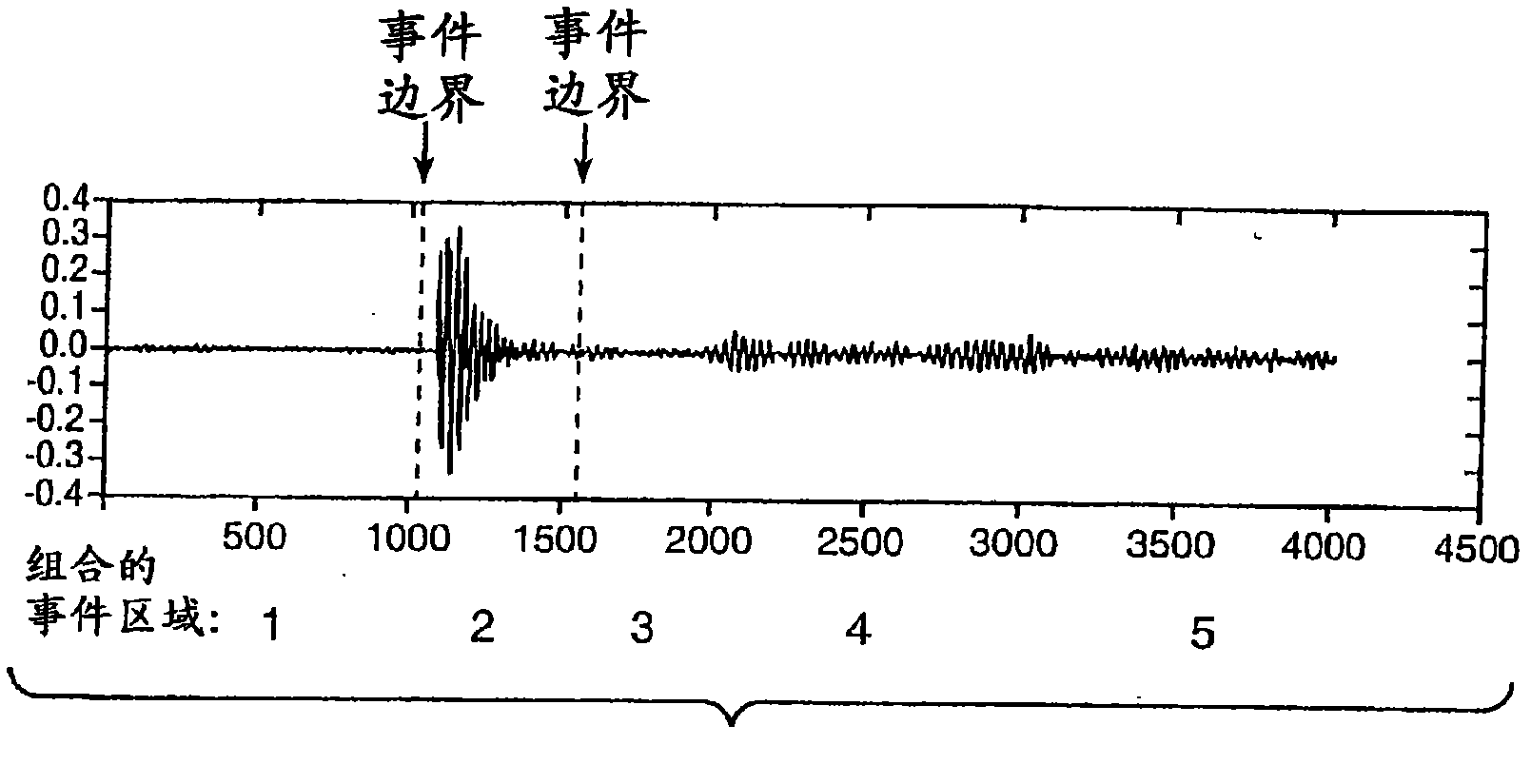

Segmenting audio signals into auditory events

Inactive | US7711123B2 | Valuable information; Great weight; Television system details; Color television details; Frequency spectrum; Information representation

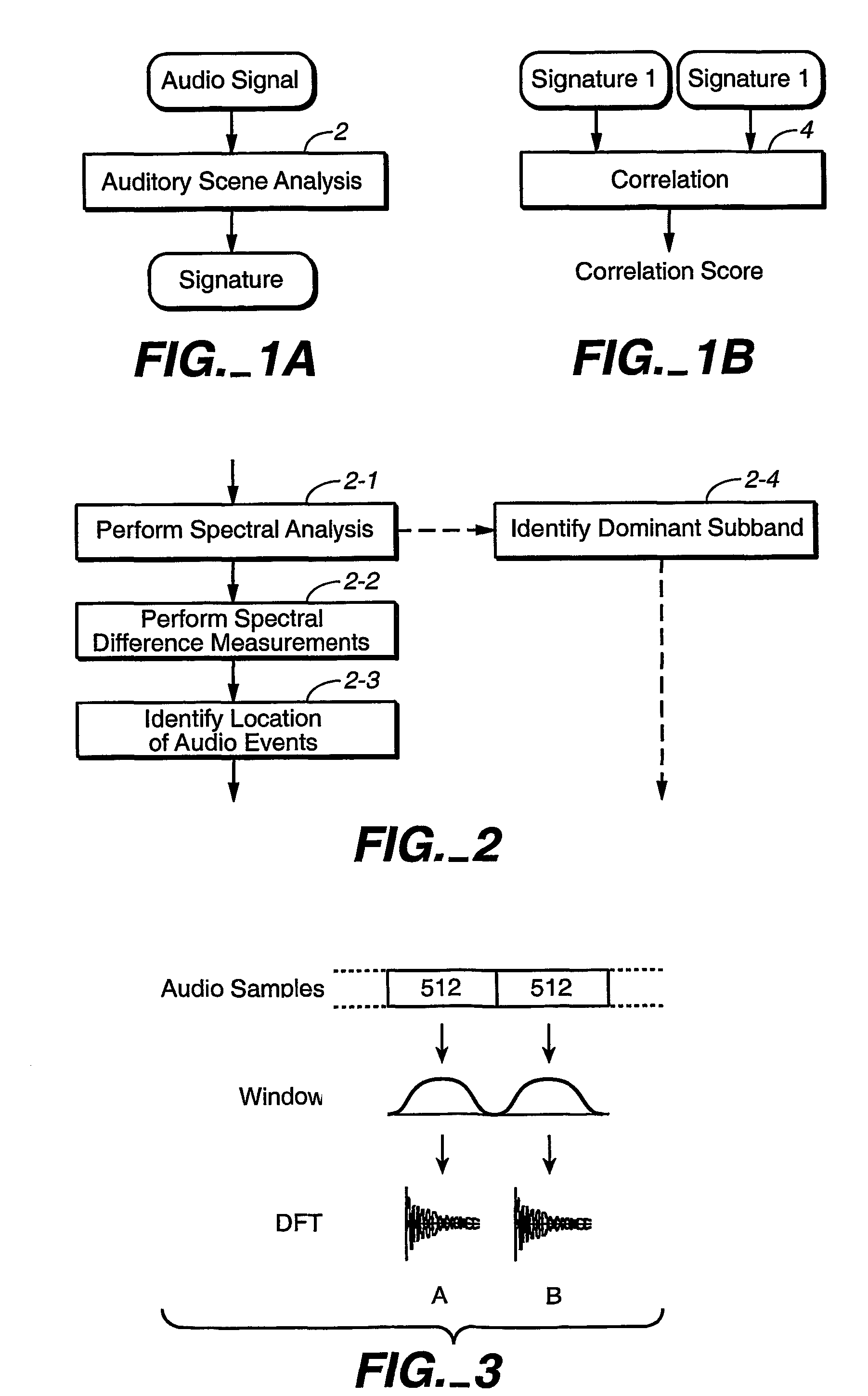

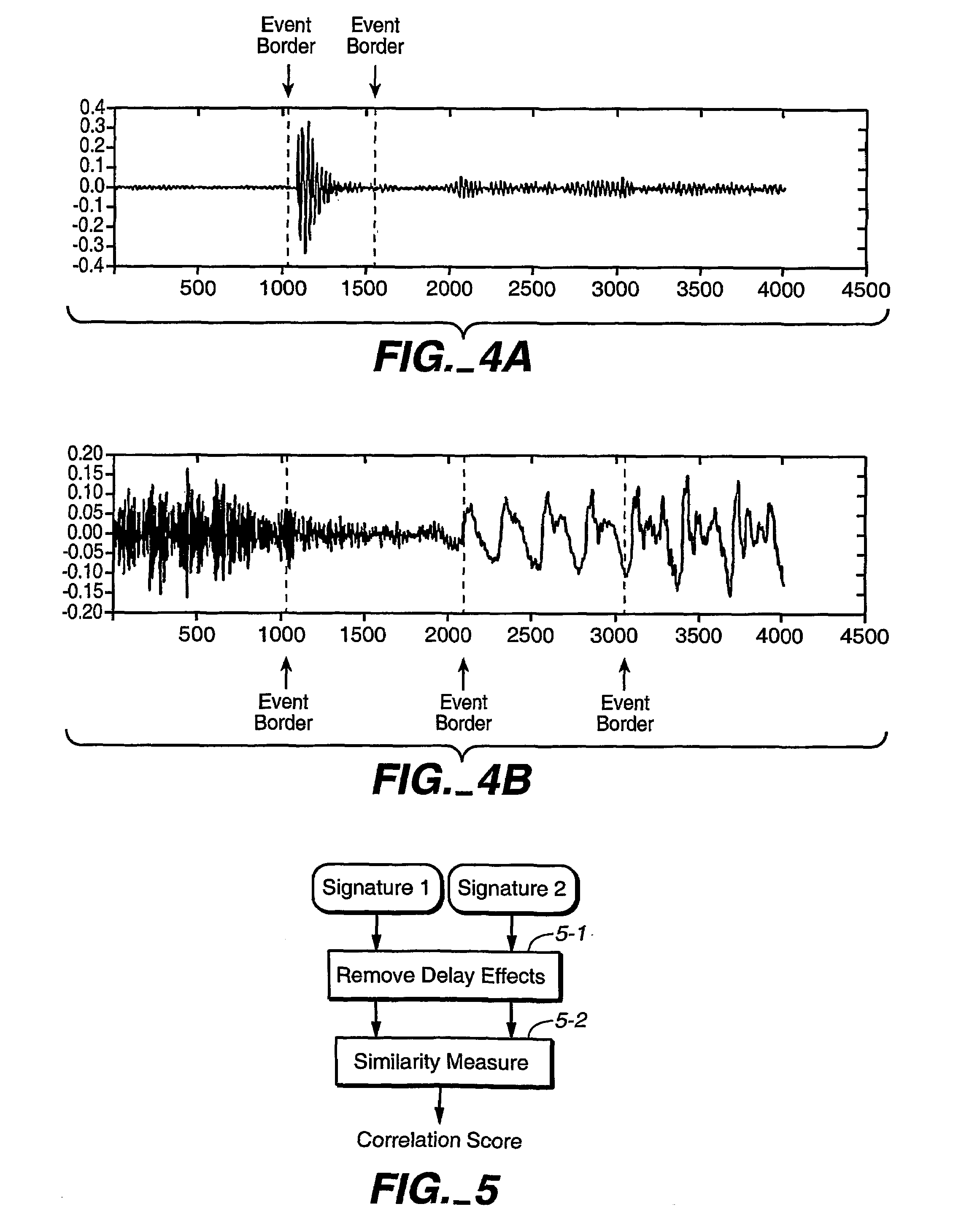

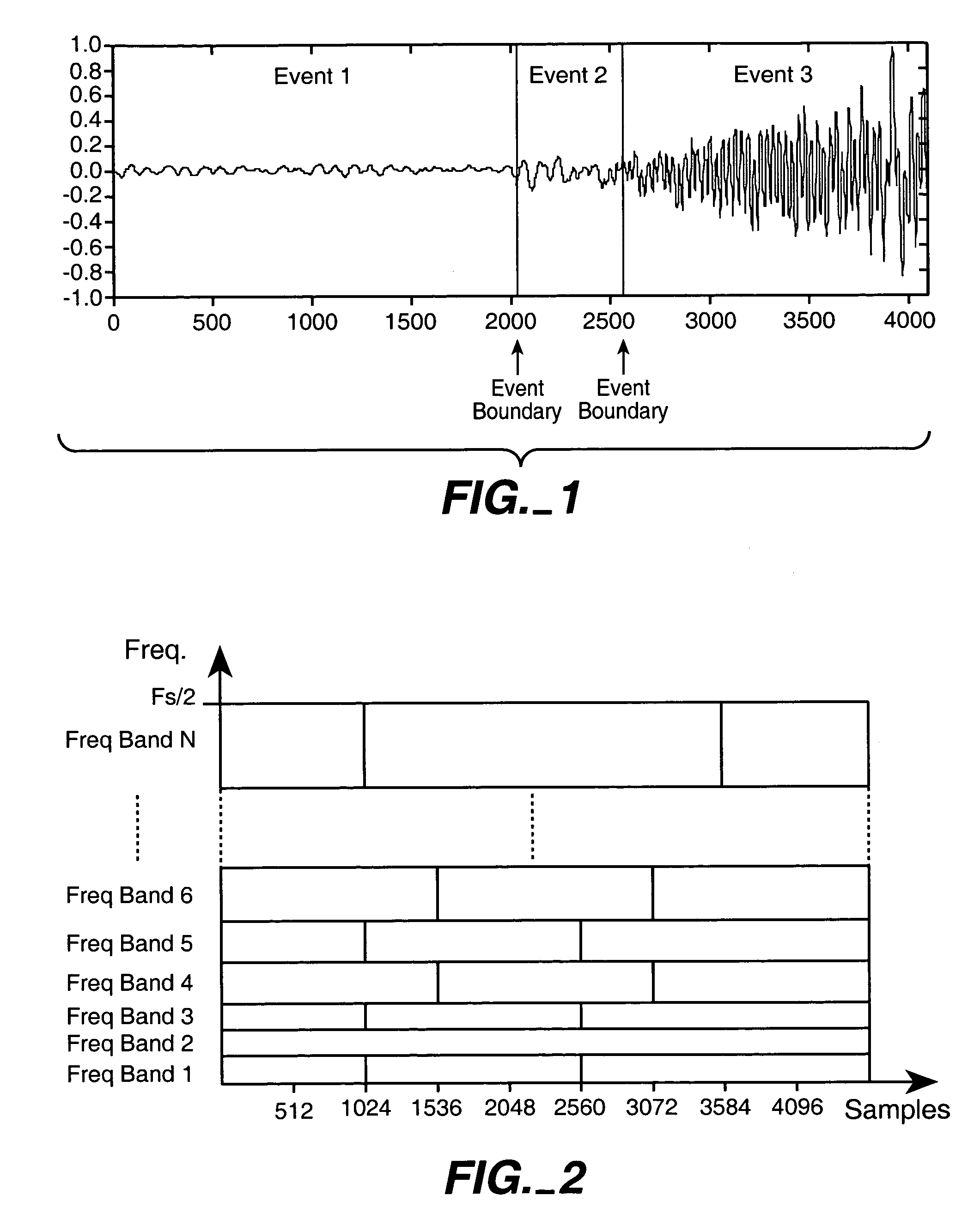

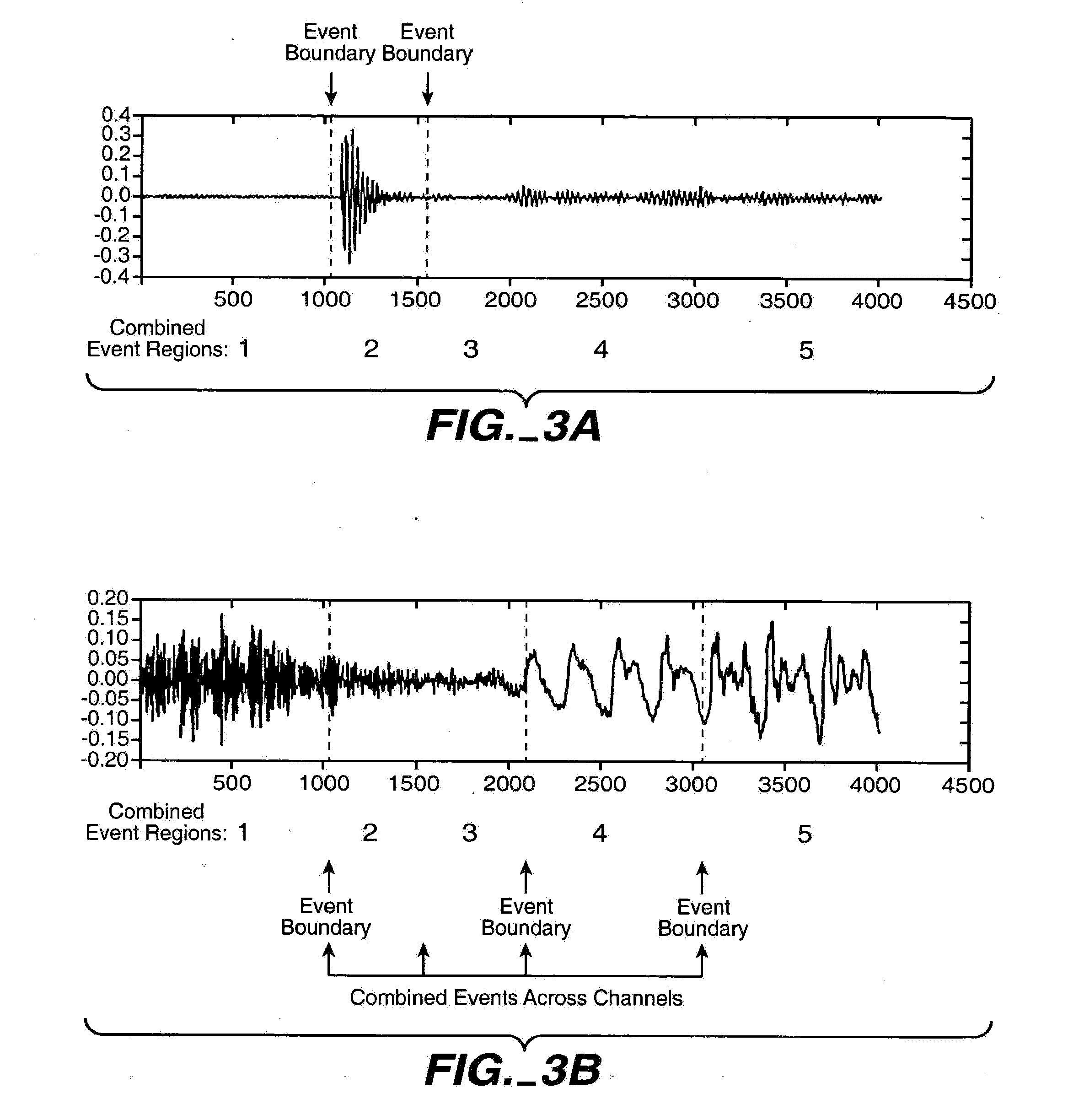

In one aspect, the invention divides an audio signal into auditory events, each of which tends to be perceived as separate and distinct, by calculating the spectral content of successive time blocks of the audio signal (5-1), calculating the difference in spectral content between successive time blocks of the audio signal (5-2), and identifying an auditory event boundary as the boundary between successive time blocks when the difference in the spectral content between such successive time blocks exceeds a threshold (5-3). In another aspect, the invention generates a reduced-information representation of an audio signal by dividing an audio signal into auditory events, each of which tends to be perceived as separate and distinct, and formatting and storing information relating to the auditory events (5-4). Optionally, the invention may also assign a characteristic to one or more of the auditory events (5-5).

Owner:DOLBY LAB LICENSING CORP
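
The first aspect (spectral content per block, difference between successive blocks, threshold) can be sketched as follows. This is an illustrative sketch under stated assumptions: it uses a naive DFT from the standard library instead of an FFT, normalizes each magnitude spectrum to its peak, and measures the difference as the sum of absolute bin differences. The block size, threshold, and function names are hypothetical, not values taken from the patent.

```python
import cmath
import math
from typing import List, Sequence


def magnitude_spectrum(block: Sequence[float]) -> List[float]:
    """Magnitudes of DFT bins 0..N/2, computed directly (O(N^2); fine for short blocks)."""
    n = len(block)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(block)))
        for k in range(n // 2 + 1)
    ]


def normalize(spectrum: Sequence[float]) -> List[float]:
    """Scale a spectrum so its largest bin is 1 (all-zero spectra stay zero)."""
    peak = max(spectrum)
    return [m / peak for m in spectrum] if peak > 0.0 else [0.0] * len(spectrum)


def event_boundaries(signal: Sequence[float], block_size: int = 64,
                     threshold: float = 1.0) -> List[int]:
    """Sample indices where the spectral difference between successive blocks exceeds threshold."""
    spectra = [
        normalize(magnitude_spectrum(signal[start:start + block_size]))
        for start in range(0, len(signal) - block_size + 1, block_size)
    ]
    boundaries: List[int] = []
    for b in range(1, len(spectra)):
        difference = sum(abs(p - q) for p, q in zip(spectra[b], spectra[b - 1]))
        if difference > threshold:
            boundaries.append(b * block_size)  # boundary at the start of block b
    return boundaries
```

For a tone at DFT bin 4 followed by a tone at bin 12 (four 64-sample blocks each), only the block where the tone changes differs enough to exceed the threshold, so `event_boundaries` reports a single boundary at sample 256.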

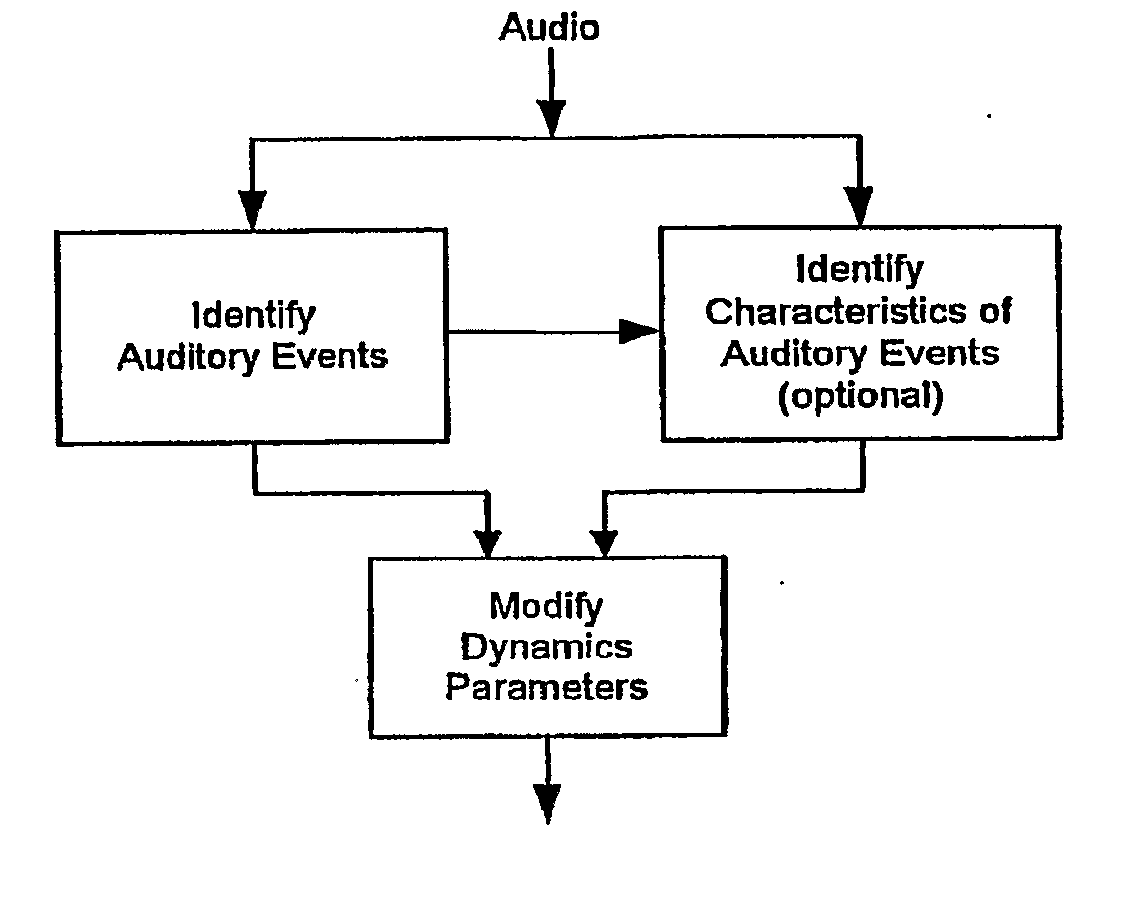

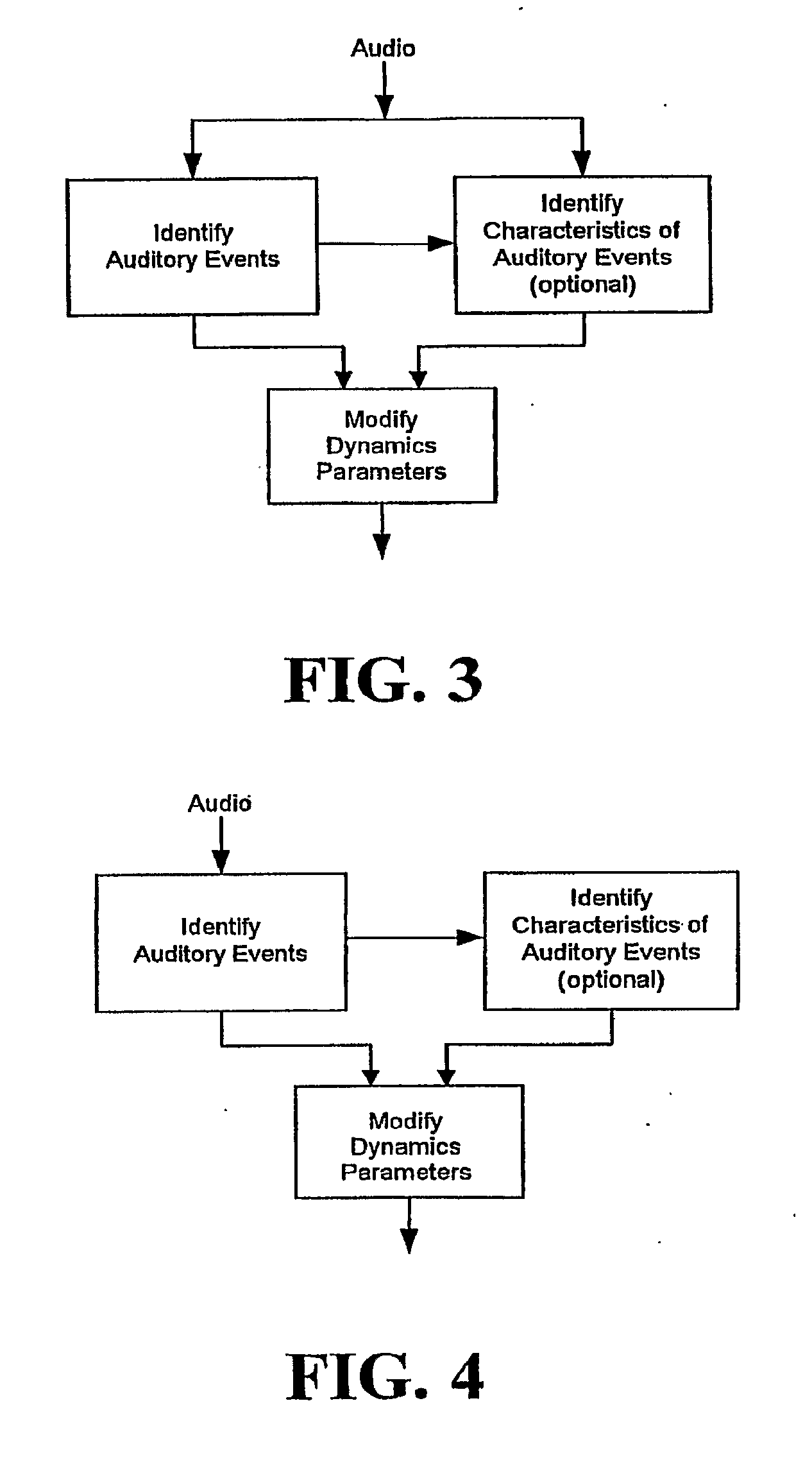

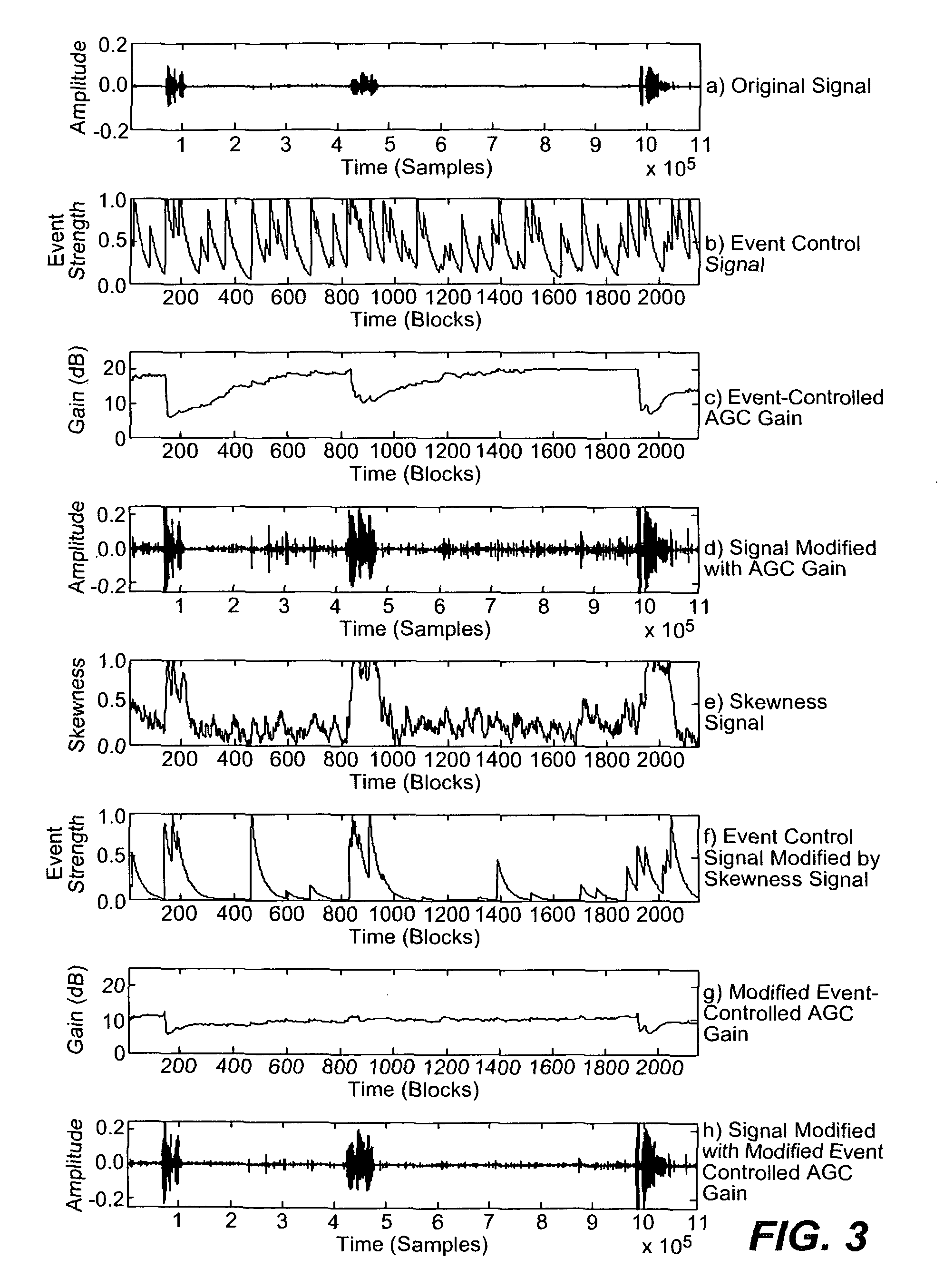

Audio gain control using specific-loudness-based auditory event detection

Active | US8144881B2 | Change damage; Slow down the rate of change of gain; Signal processing; Gain control; Loudness; Computer science

Owner:DOLBY LAB LICENSING CORP

Method for combining audio signals using auditory scene analysis

Active | US20060029239A1 | Improve sound quality; Transducer acoustic reaction prevention; Transmission; Computer hardware; Vocal tract

A process for combining audio channels combines the audio channels to produce a combined audio channel and dynamically applies one or more of time, phase, and amplitude or power adjustments to the channels, to the combined channel, or to both the channels and the combined channel. One or more of the adjustments are controlled at least in part by a measure of auditory events in one or more of the channels and/or the combined channel. Applications include the presentation of multichannel audio in cinemas and vehicles. Besides methods, corresponding computer program and apparatus implementations are also included.

Owner:DOLBY LAB LICENSING CORP

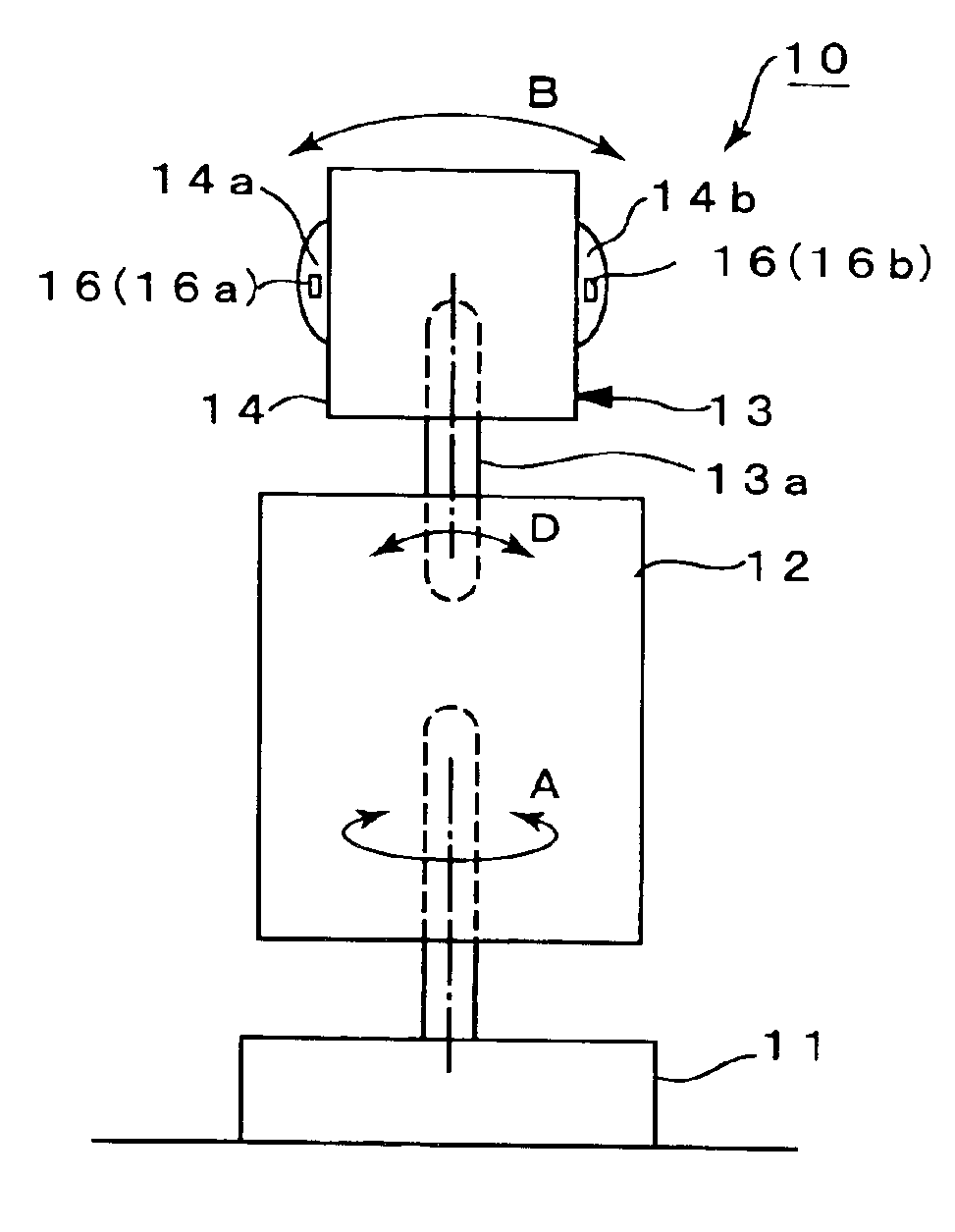

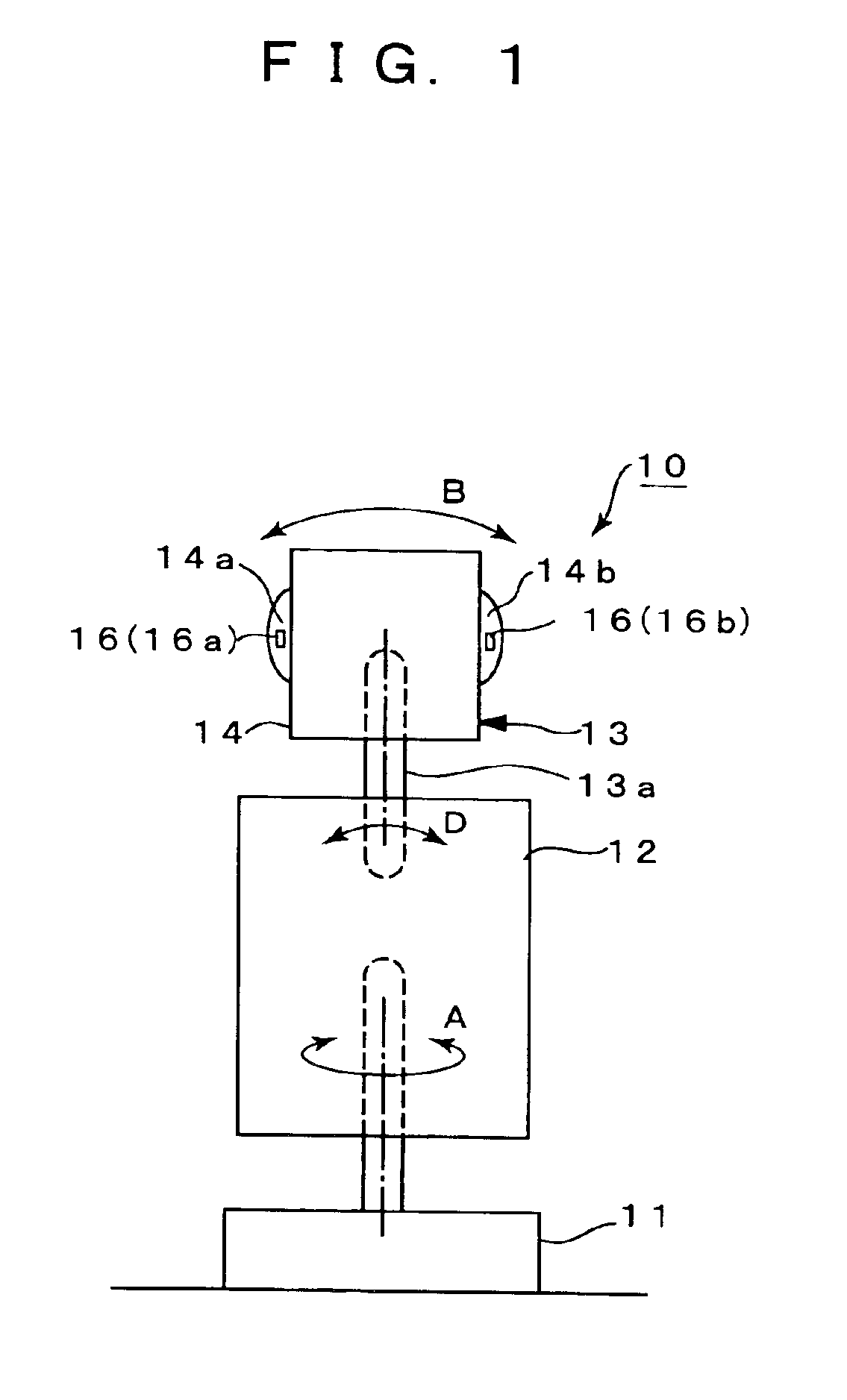

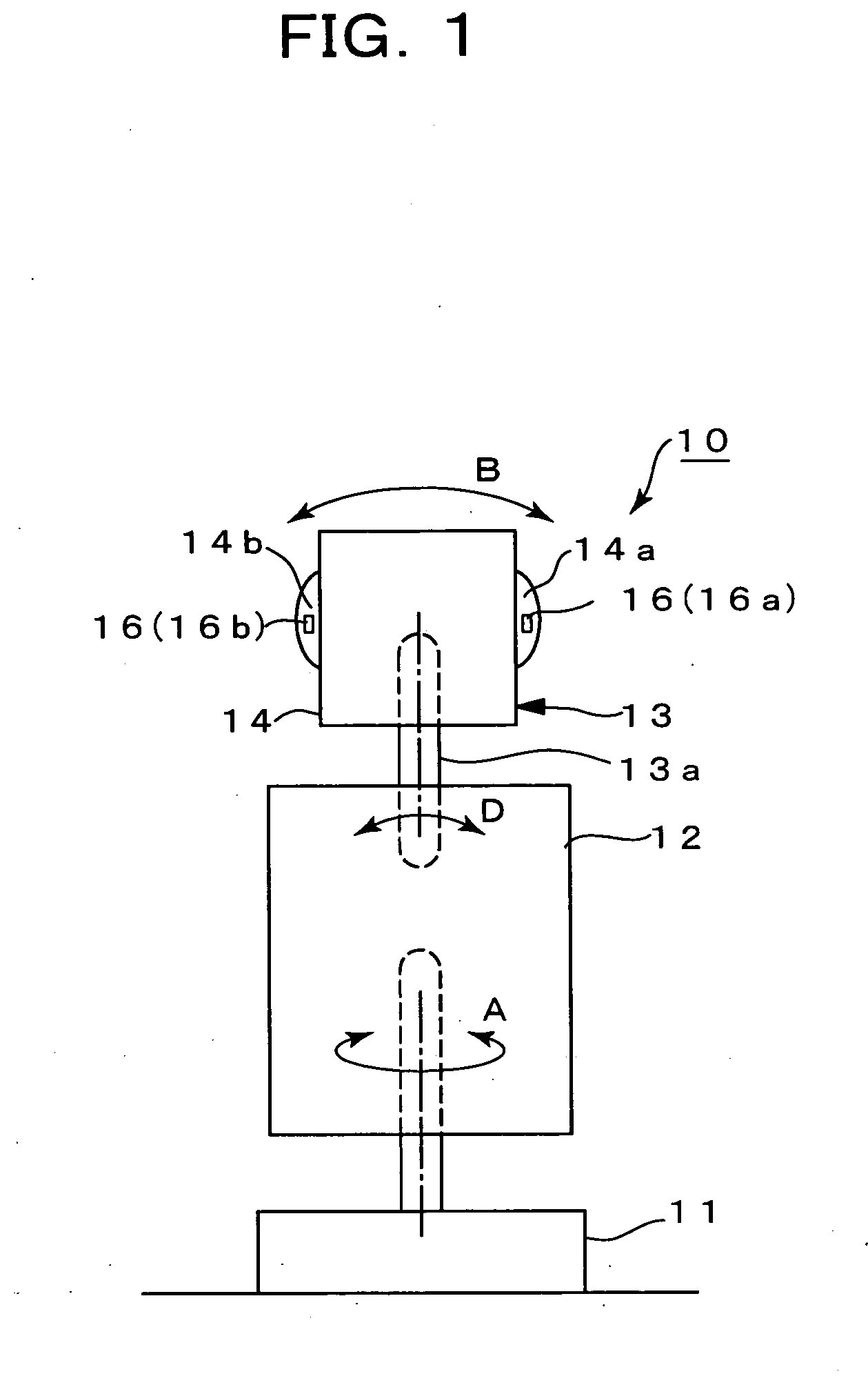

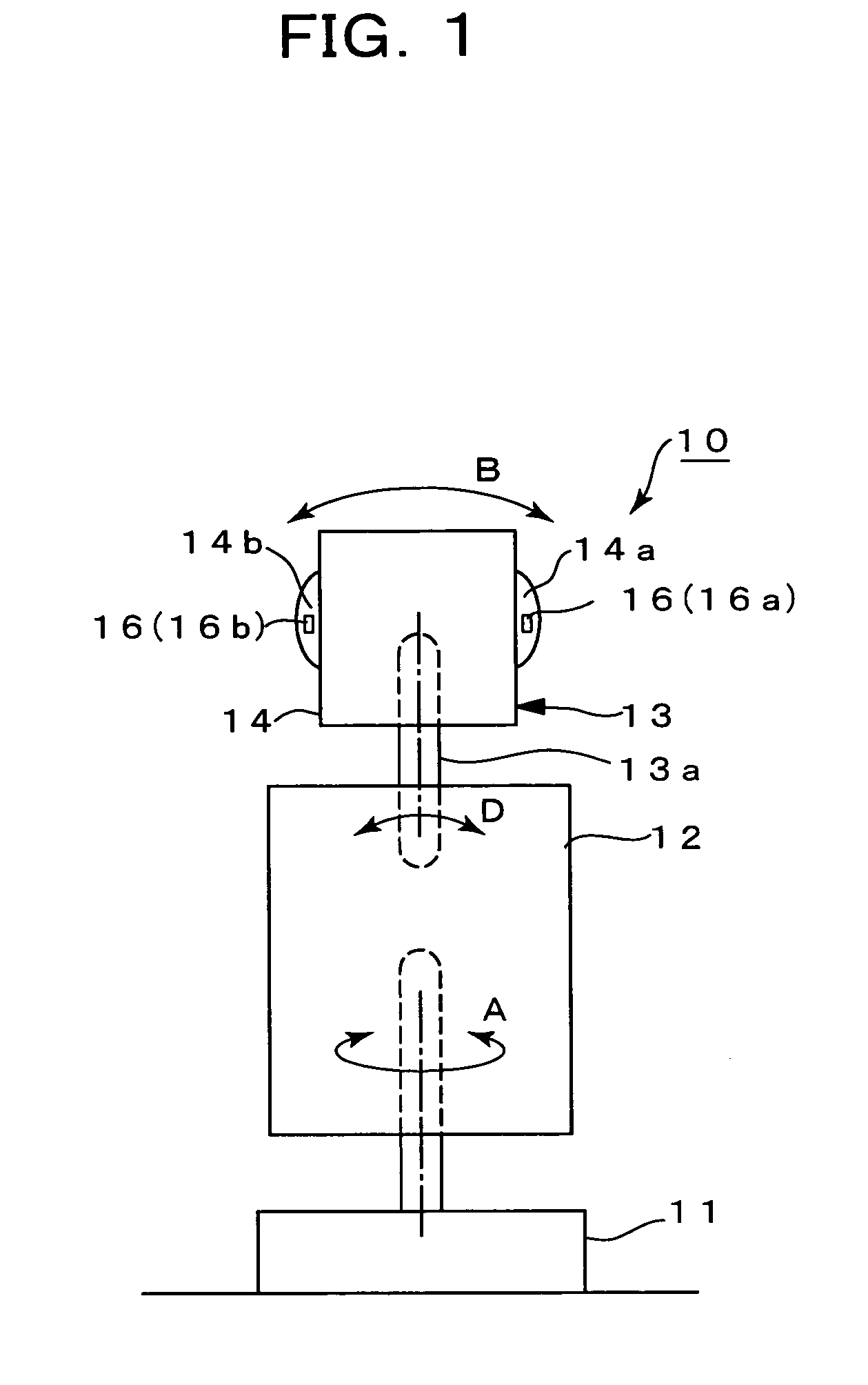

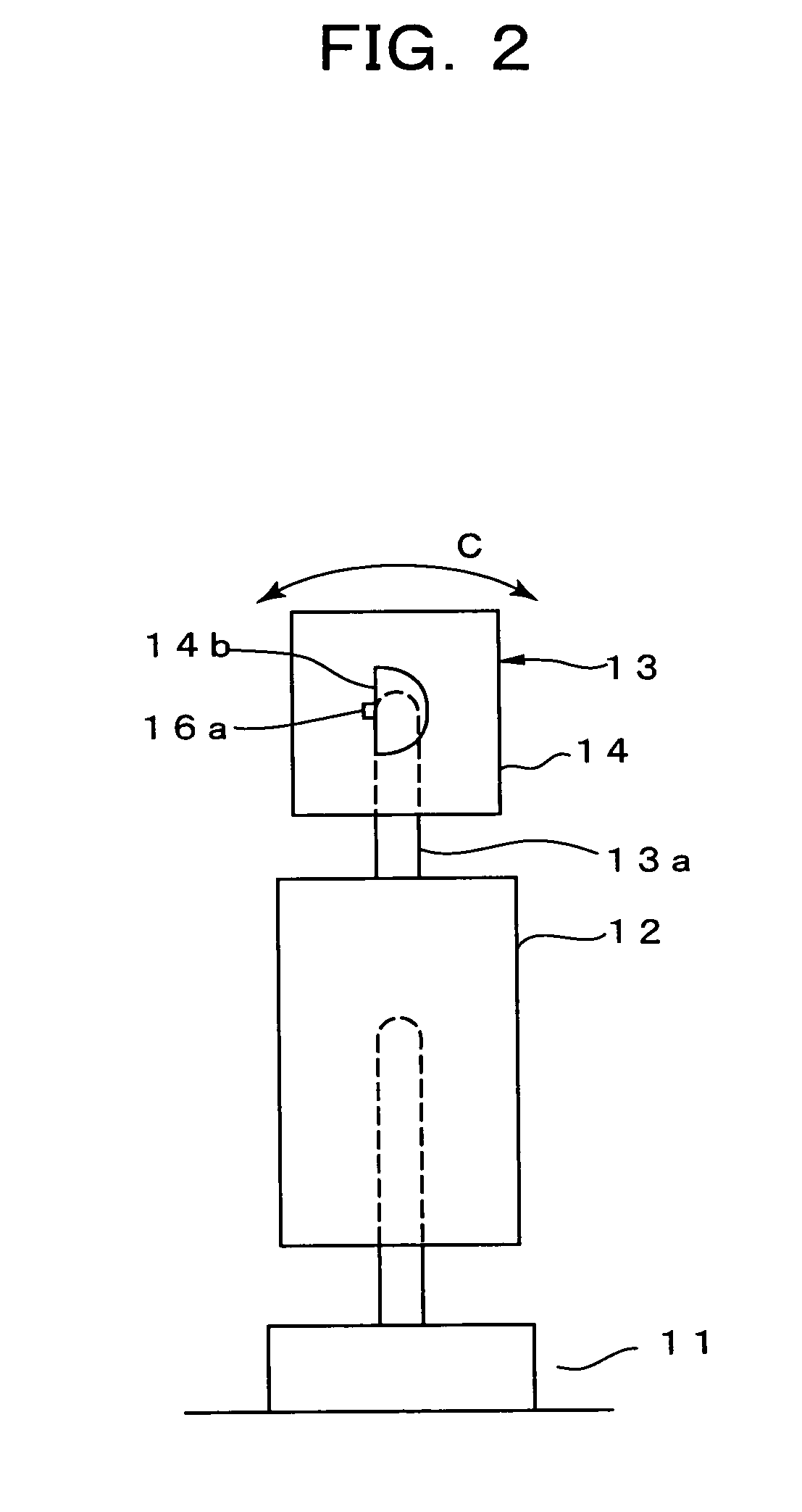

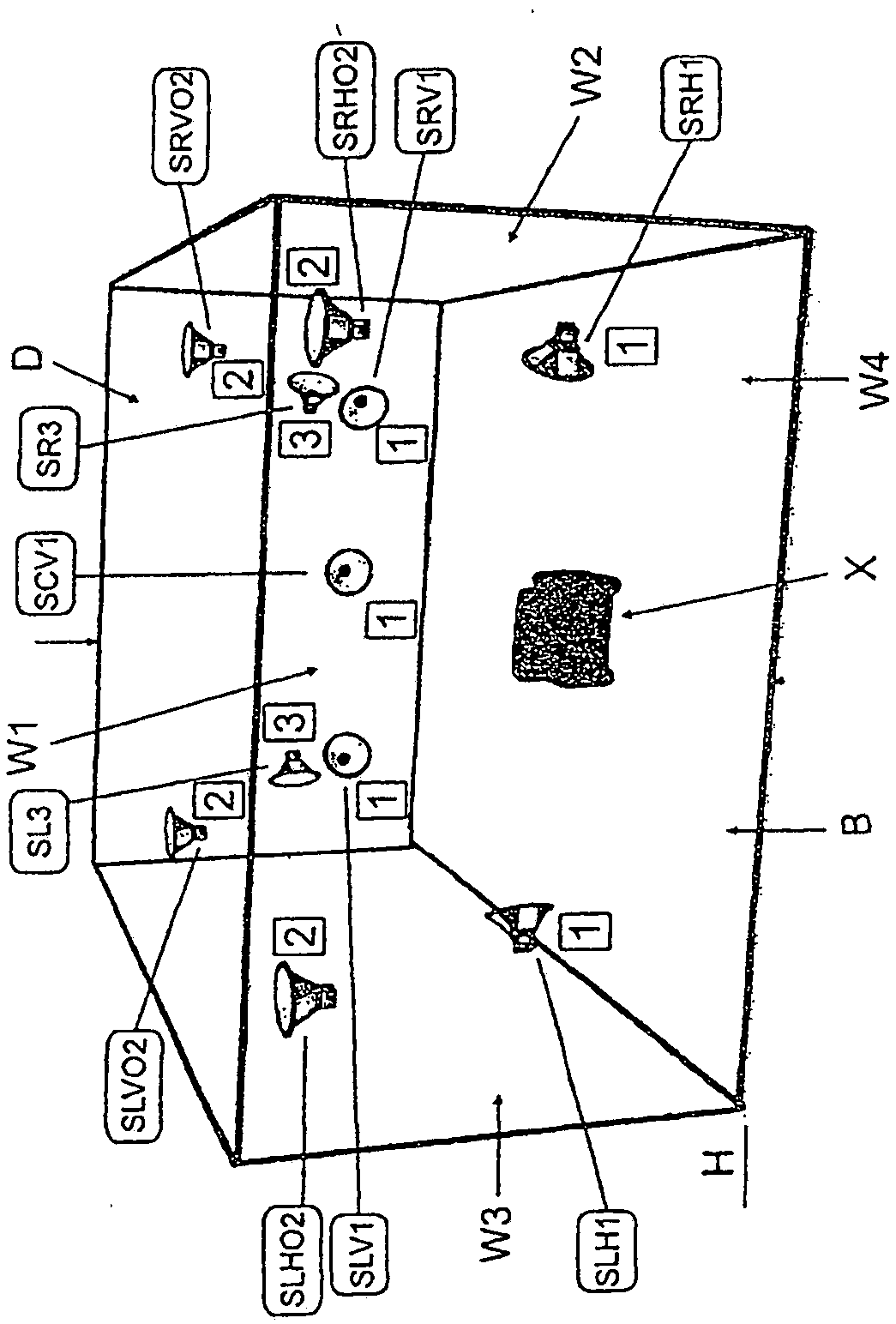

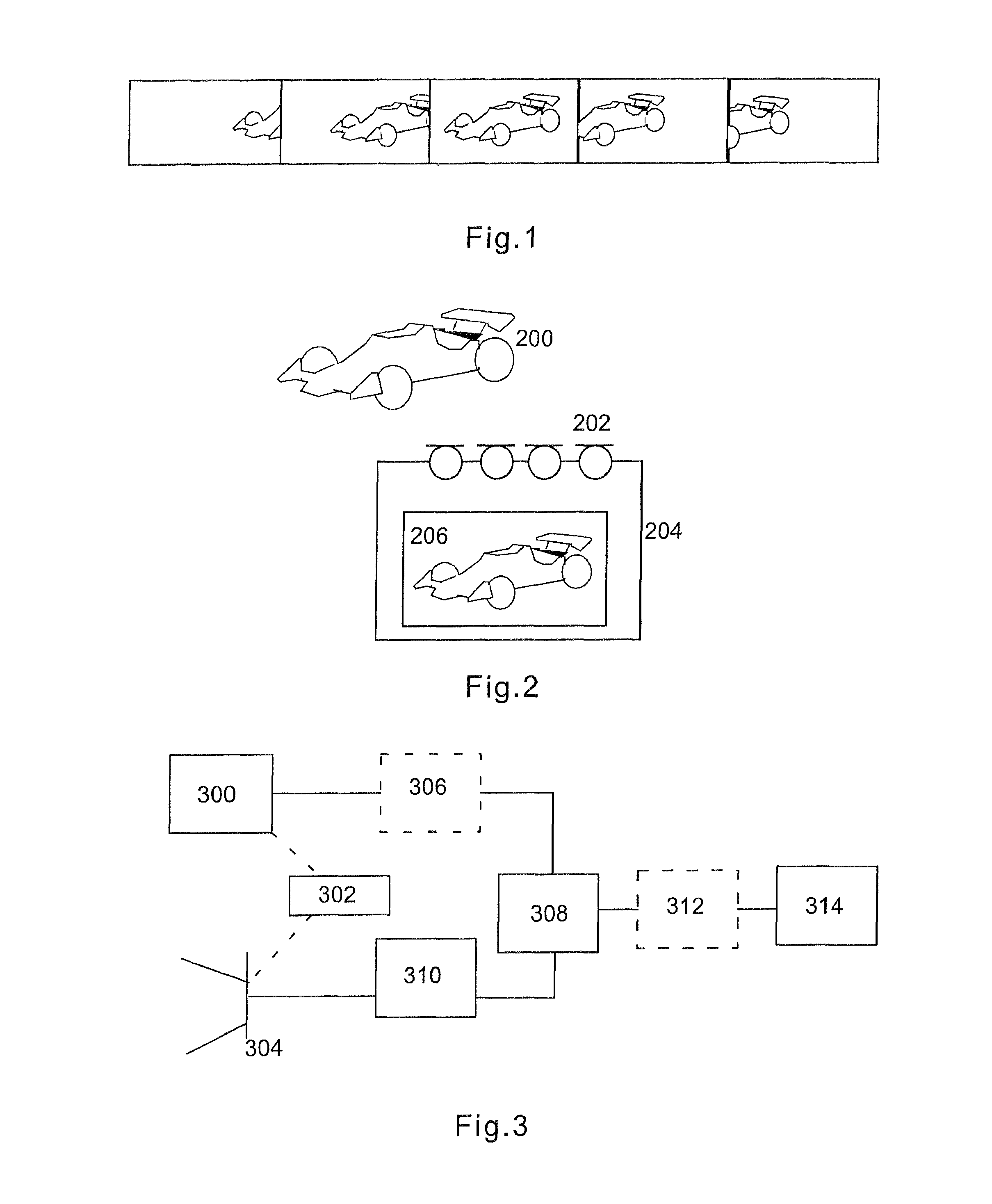

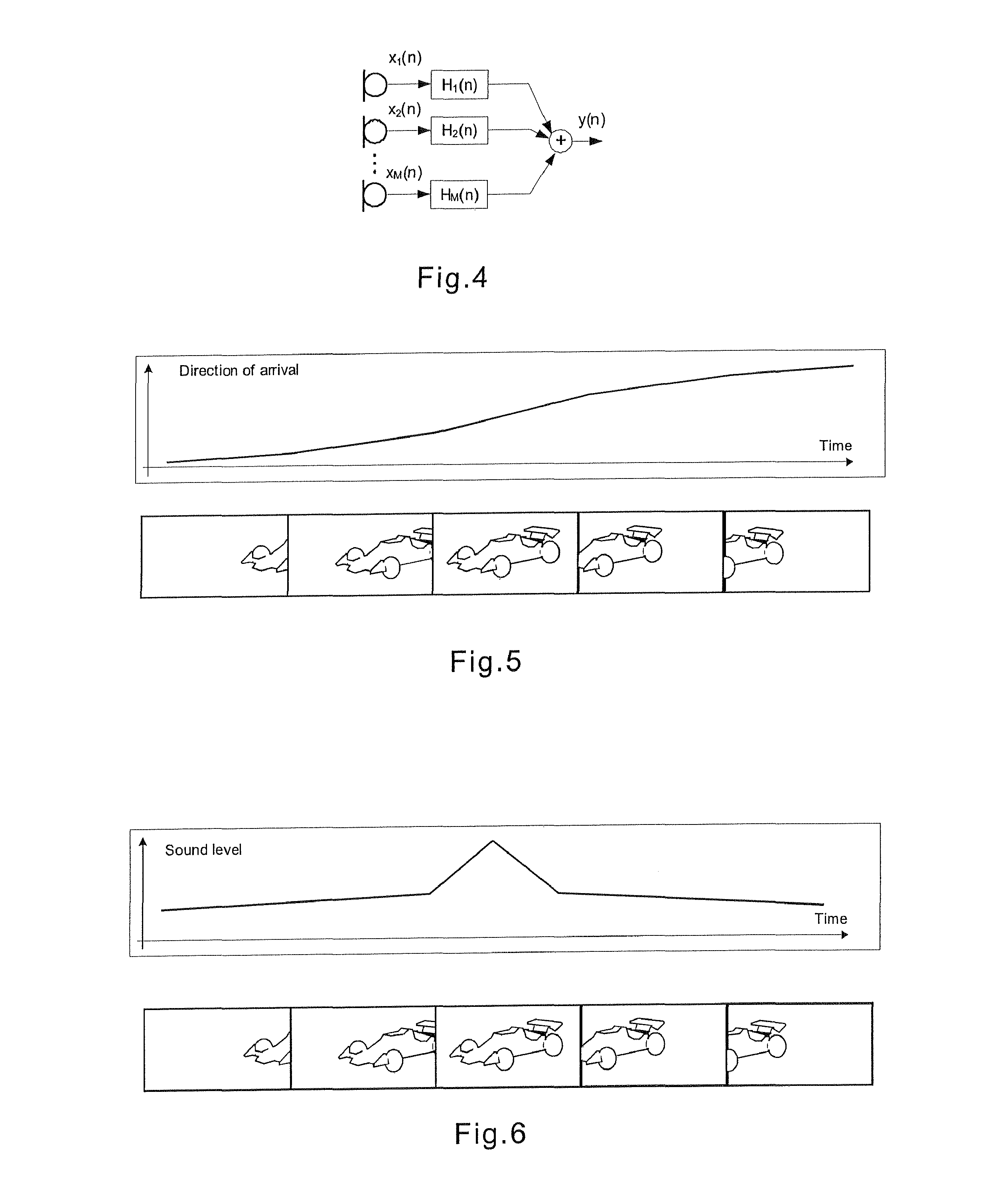

Robot audiovisual system

A robot visuoauditory system is disclosed that processes data in real time to track an object both visually and auditorily, integrates visual and auditory information about the object so that the object can be tracked reliably, and visualizes this real-time processing. In the system, the audition module (20) extracts pitches from the microphone sound signals, separates the sound sources from each other, and localizes them so as to identify each sound source as at least one speaker, thereby extracting an auditory event (28) for each speaker. The vision module (30) identifies and localizes each such speaker by face from a camera image, thereby extracting a visual event (39) for that speaker. The motor control module (40), which turns the robot horizontally, extracts a motor event (49) from the rotary position of the motor. The association module (60), which controls these modules, forms an auditory stream (65) and a visual stream (66) from the auditory, visual, and motor events and then associates these streams with each other to form an association stream (67). The attention control module (64) performs attention control by planning how to drive the motor, e.g., after the sound source for the auditory event and the face for the visual event have been localized, thereby determining the direction in which each speaker lies. The system also includes a display (27, 37, 48, 68) that shows at least part of the auditory, visual, and motor information. The attention control module (64) servo-controls the robot on the basis of the association stream or streams.

Owner:JAPAN SCI & TECH CORP

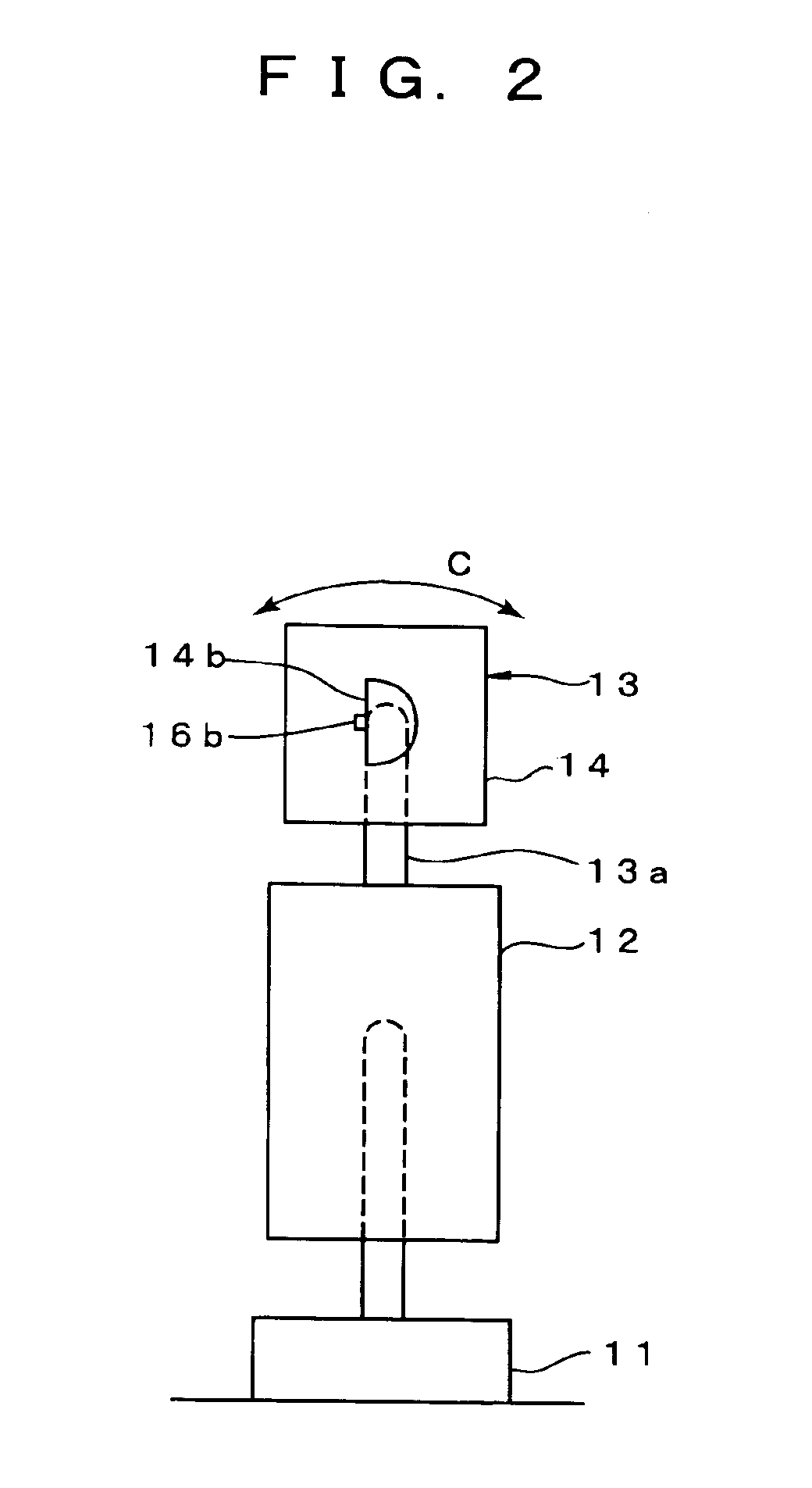

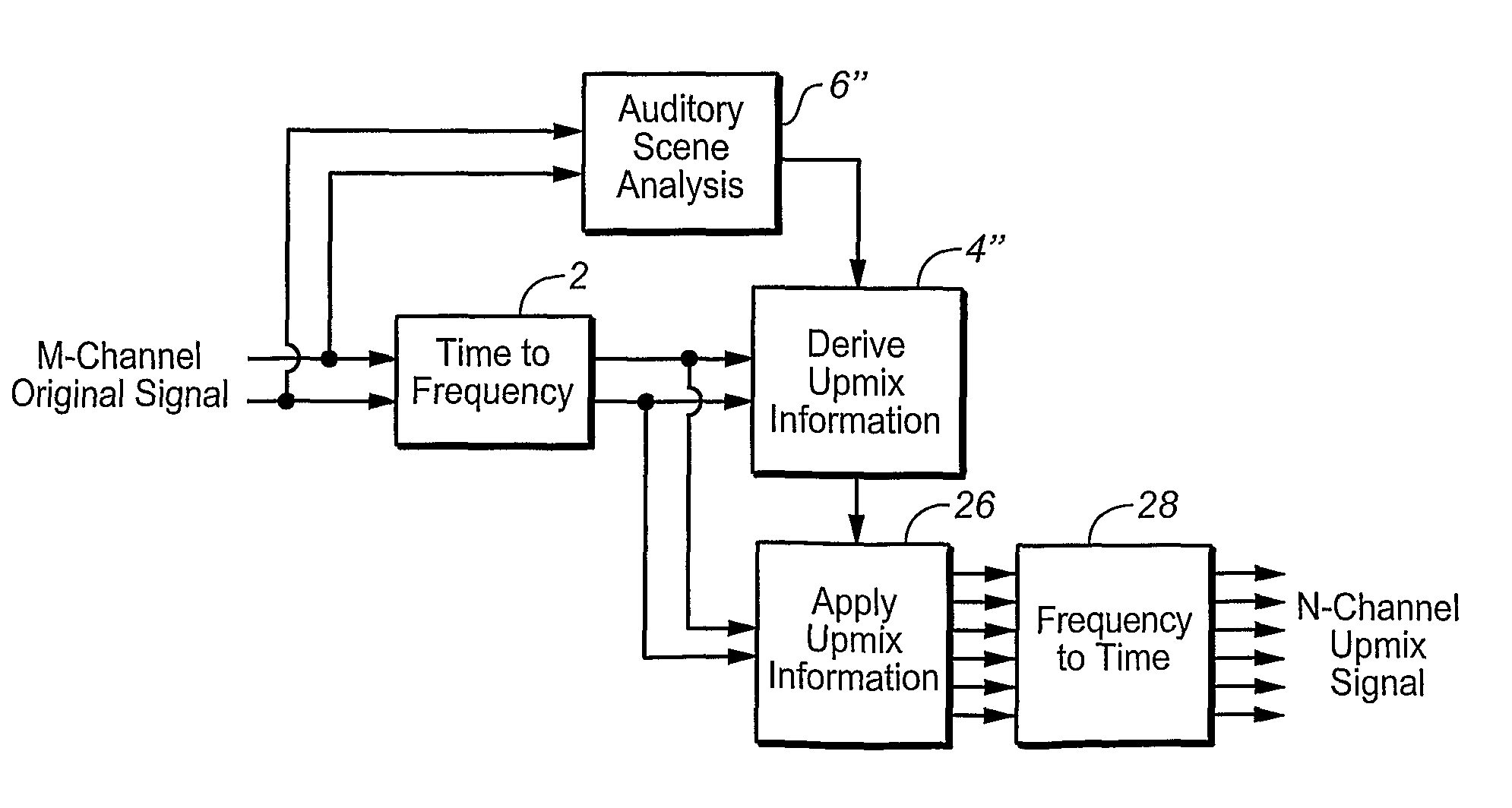

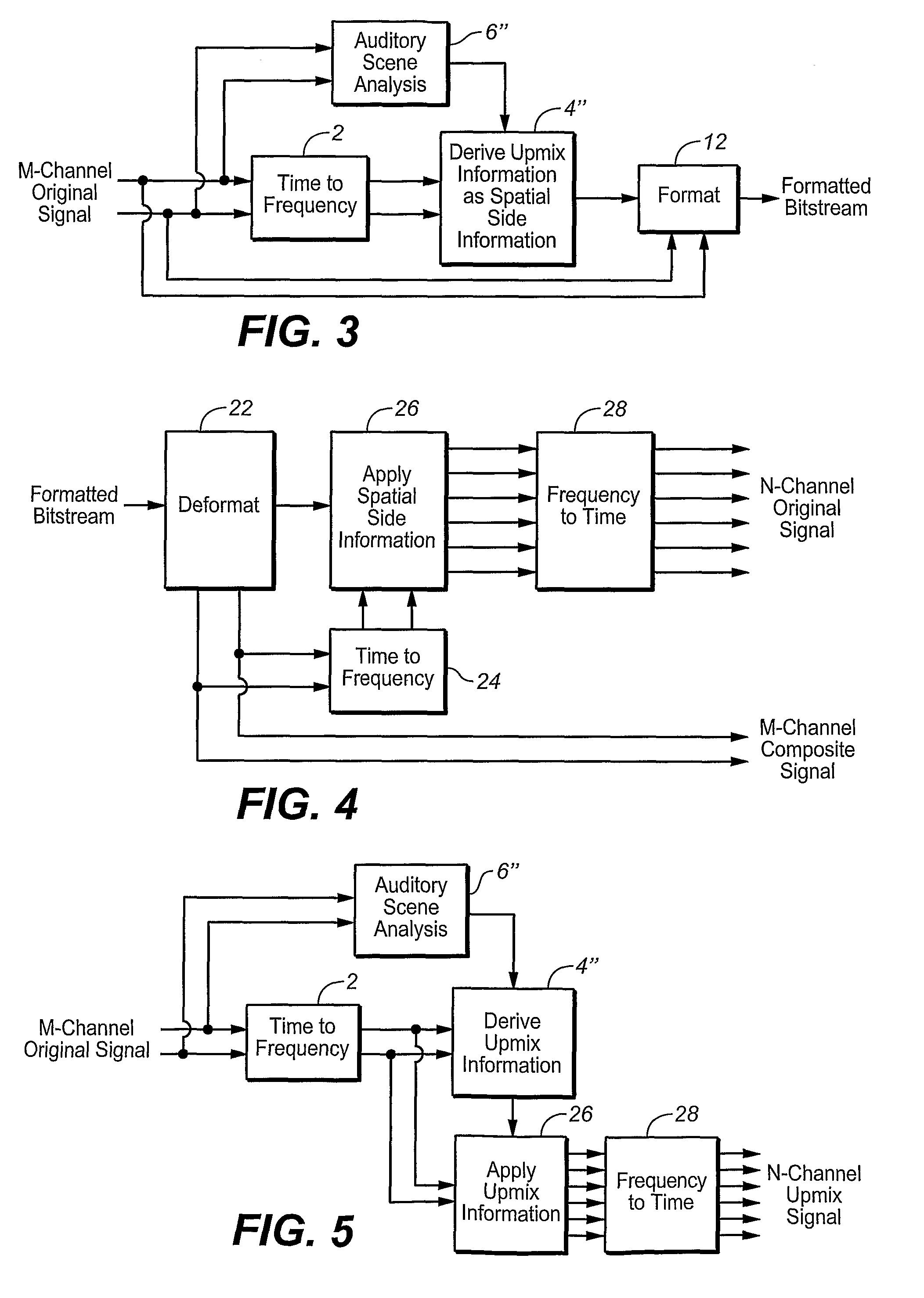

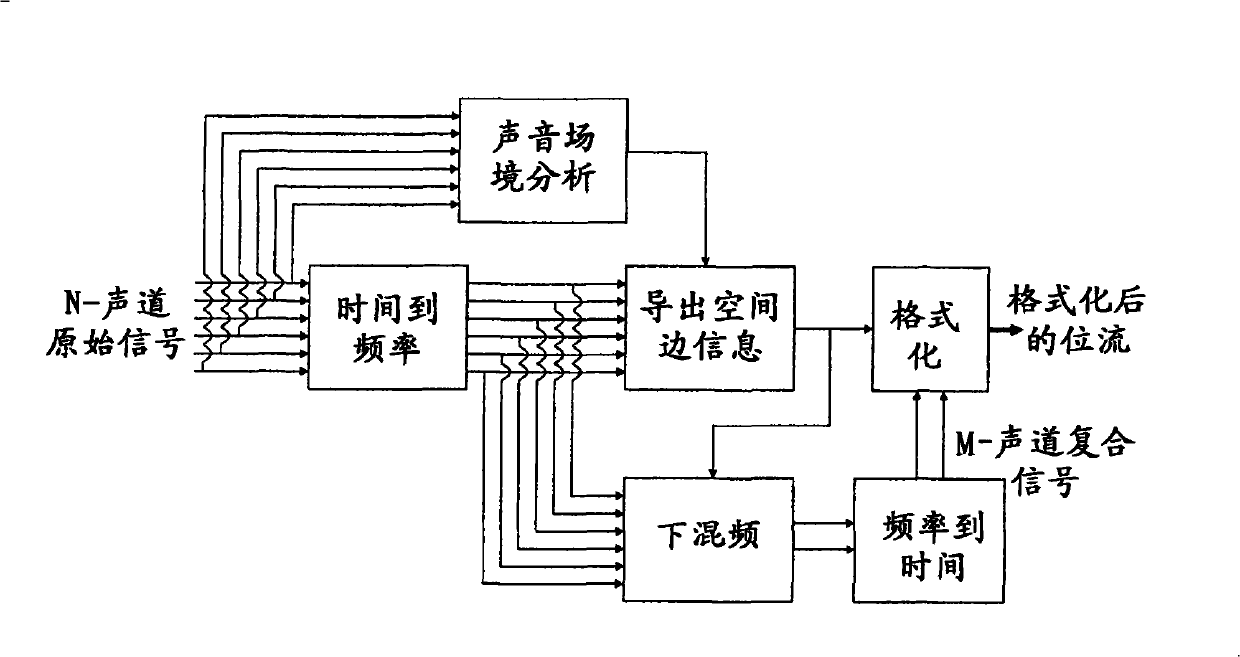

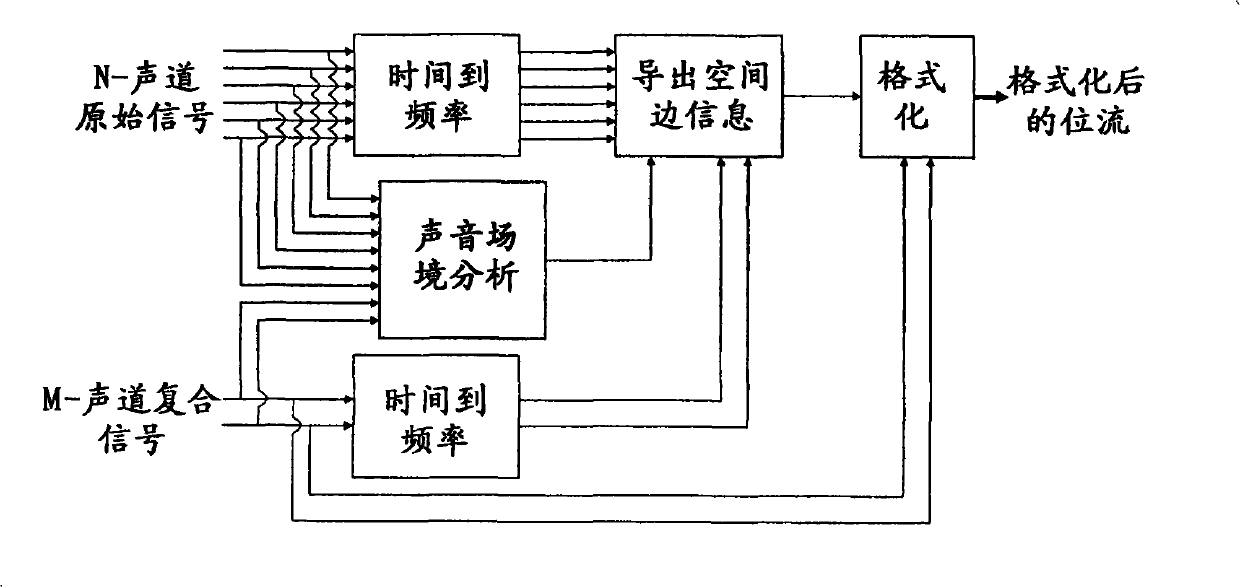

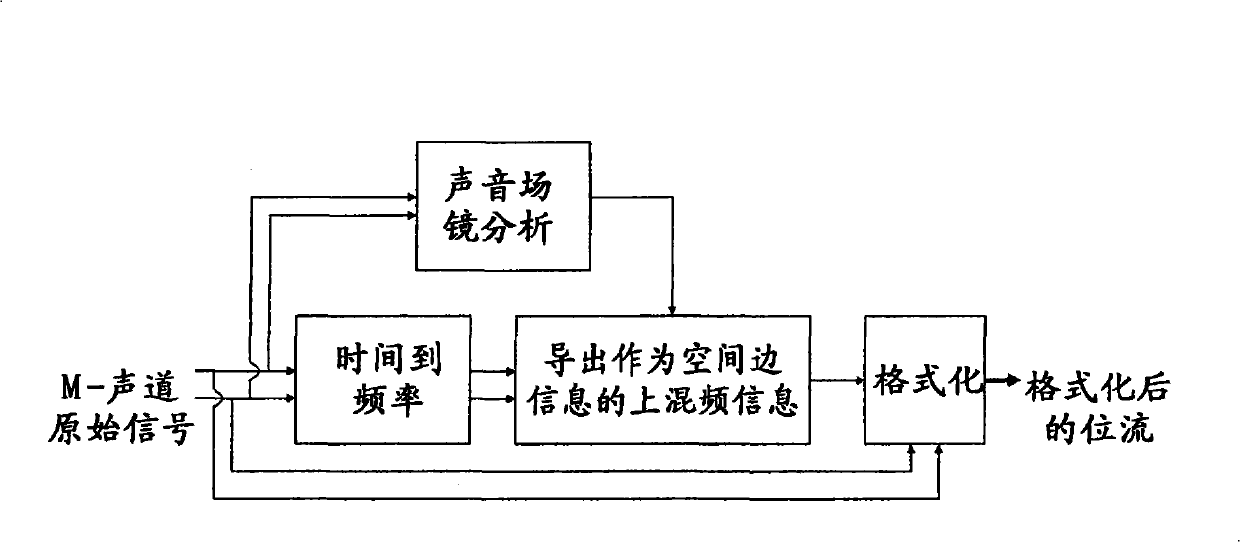

Controlling Spatial Audio Coding Parameters as a Function of Auditory Events

An audio encoder or encoding method receives a plurality of input channels and generates one or more audio output channels and one or more parameters describing desired spatial relationships among a plurality of audio channels that may be derived from the one or more audio output channels, by detecting changes in signal characteristics with respect to time in one or more of the plurality of audio input channels, identifying as auditory event boundaries changes in signal characteristics with respect to time in the one or more of the plurality of audio input channels, an audio segment between consecutive boundaries constituting an auditory event in the channel or channels, and generating all or some of the one or more parameters at least partly in response to auditory events and/or the degree of change in signal characteristics associated with the auditory event boundaries. An auditory-event-responsive audio upmixer or upmixing method is also disclosed.

Owner:DOLBY LAB LICENSING CORP
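
A minimal sketch of generating one spatial parameter as a function of auditory events, assuming the parameter is a two-channel level difference in dB and that the event start blocks are already known (for example, from a spectral-change boundary detector). Holding the parameter constant within each event, so that it changes only at event boundaries, is one plausible reading of the abstract rather than the patent's specified method; all names here are hypothetical.

```python
import math
from typing import List, Sequence


def block_power(block: Sequence[float]) -> float:
    """Mean-square value of one block."""
    return sum(x * x for x in block) / len(block)


def level_difference_db(left: Sequence[float], right: Sequence[float],
                        eps: float = 1e-12) -> float:
    """Inter-channel level difference in dB (eps avoids log of zero)."""
    return 10.0 * math.log10((block_power(left) + eps) / (block_power(right) + eps))


def event_held_parameters(left: Sequence[float], right: Sequence[float],
                          block_size: int, event_starts: Sequence[int]) -> List[float]:
    """One level-difference parameter per block, held constant within each auditory event.

    event_starts: block indices where auditory events begin (block 0 always starts one).
    Each event gets the mean of its per-block values, so the parameter only
    changes at event boundaries.
    """
    n_blocks = min(len(left), len(right)) // block_size
    if n_blocks == 0:
        return []
    per_block = [
        level_difference_db(left[b * block_size:(b + 1) * block_size],
                            right[b * block_size:(b + 1) * block_size])
        for b in range(n_blocks)
    ]
    starts = sorted({0, *(s for s in event_starts if 0 < s < n_blocks)})
    ends = starts[1:] + [n_blocks]
    params: List[float] = []
    for s, e in zip(starts, ends):
        mean = sum(per_block[s:e]) / (e - s)
        params.extend([mean] * (e - s))
    return params
```

With a left channel at twice the right channel's amplitude for two blocks and equal amplitude afterwards, an event start at block 2 yields about 6.02 dB for blocks 0 and 1 and 0 dB for blocks 2 and 3; without that event start, all four blocks share the averaged value of about 3.01 dB.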

Audio Gain Control Using Specific-Loudness-Based Auditory Event Detection

Active | US20090220109A1 | Reduction of audible artifact; Change damage; Signal processing; Gain control; Loudness; Computer science

In one disclosed aspect, dynamic gain modifications are applied to an audio signal at least partly in response to auditory events and/or the degree of change in signal characteristics associated with the auditory event boundaries. In another aspect, an audio signal is divided into auditory events by comparing the difference in specific loudness between successive time blocks of the audio signal.

Owner:DOLBY LAB LICENSING CORP
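
The gain-control idea (the tags mention slowing down the rate of change of gain) can be sketched as a one-pole gain smoother whose coefficient switches at auditory event boundaries. This is an assumption-laden illustration: the boundary flags are taken as given (the specific-loudness event detector itself is not shown), and the function name and the `slow`/`fast` coefficients are hypothetical.

```python
from typing import List, Sequence


def smooth_gains(target_gains: Sequence[float], boundary_flags: Sequence[bool],
                 slow: float = 0.99, fast: float = 0.5) -> List[float]:
    """One-pole smoothing of per-block gains, faster at auditory event boundaries.

    Inside an event the smoothed gain moves slowly (coefficient `slow`);
    in a block flagged as an event boundary the faster coefficient `fast`
    is used, so most of a gain change lands where a new event starts.
    """
    if not target_gains:
        return []
    gain = target_gains[0]
    out: List[float] = []
    for target, is_boundary in zip(target_gains, boundary_flags):
        a = fast if is_boundary else slow
        gain = a * gain + (1.0 - a) * target
        out.append(gain)
    return out
```

For a target gain that drops from 1.0 to 0.5 in block 3, the smoothed gain in that block is 0.995 without a boundary flag and 0.75 when block 3 is flagged as a boundary.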

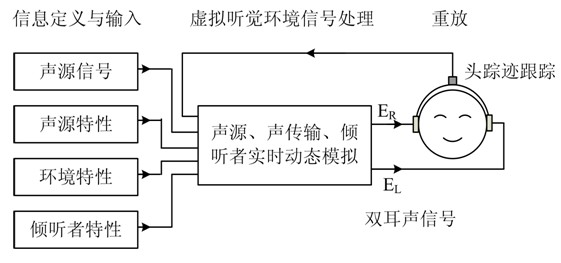

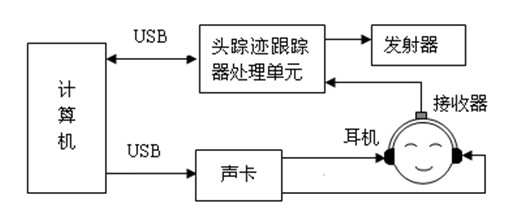

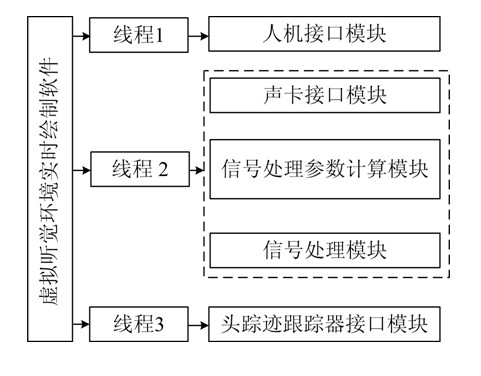

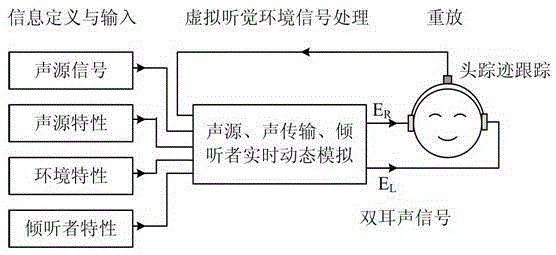

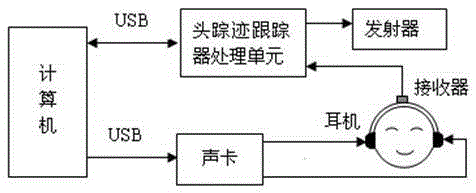

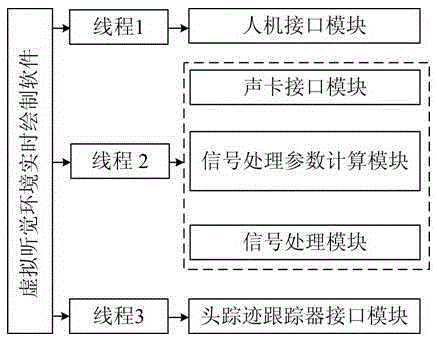

Real-time rendering method for virtual auditory environment

Active | CN102572676A | Implement dynamic processing; Reduce computation; Stereophonic systems; Sound sources; Headphones

The invention discloses a real-time rendering method for a virtual auditory environment. In the method, the initial information of the virtual auditory environment is set, and a head tracker detects the dynamic spatial position of the listener's head in six degrees of freedom in real time; based on these data, the sound sources, sound transmission, environmental reflections, radiation, and the receiver's binaural signal conversion, among other things, are simulated dynamically in real time. In the simulation of the binaural signal conversion, multiple virtual sound sources at different directions and distances are processed jointly using a shared filter, which improves signal-processing efficiency. The binaural signal is then equalized for the headphone-to-ear-canal transmission characteristic and fed to headphones for playback, so that a realistic spatial auditory event, or perception, can be produced.

Owner:SOUTH CHINA UNIV OF TECH

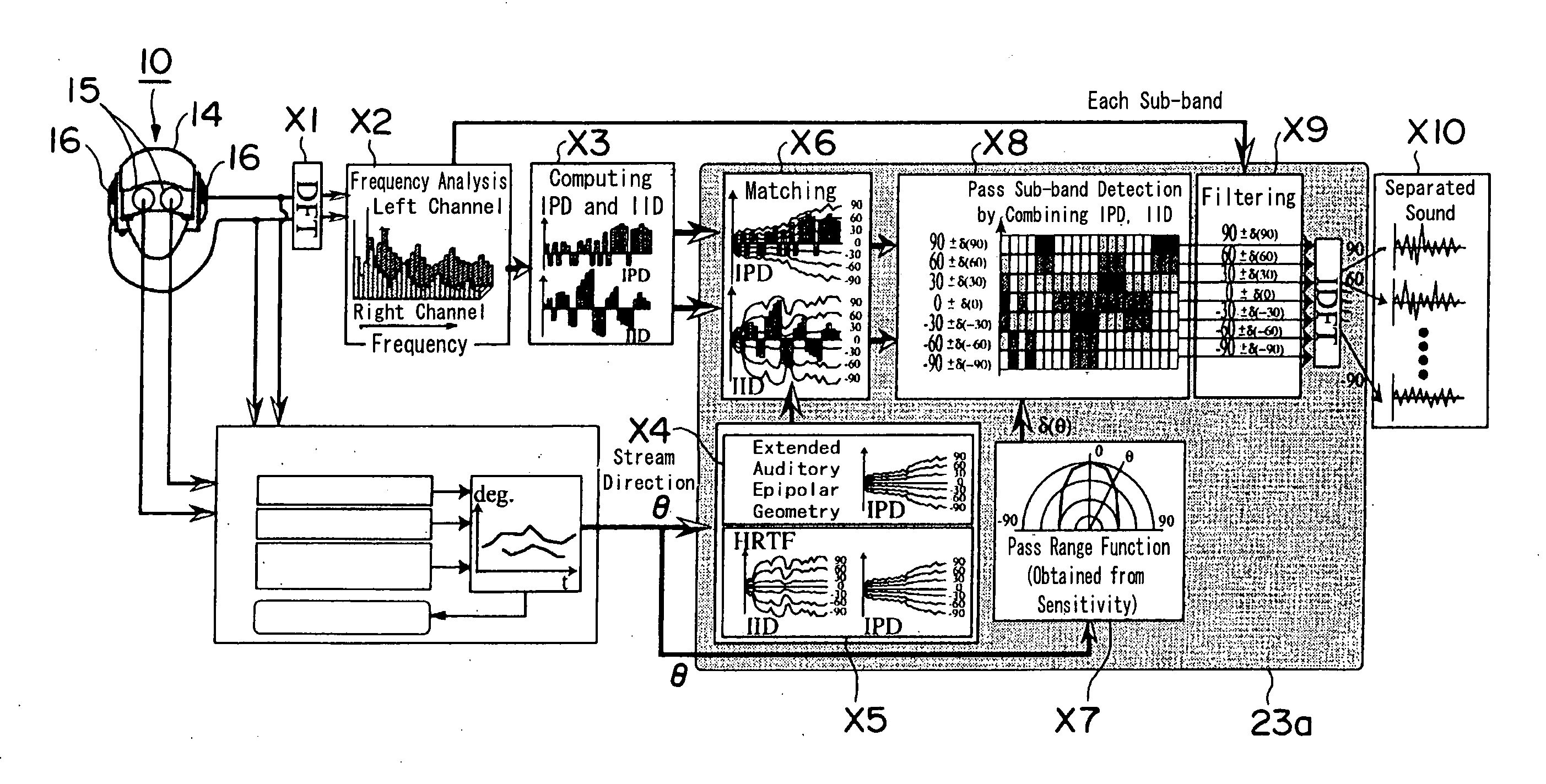

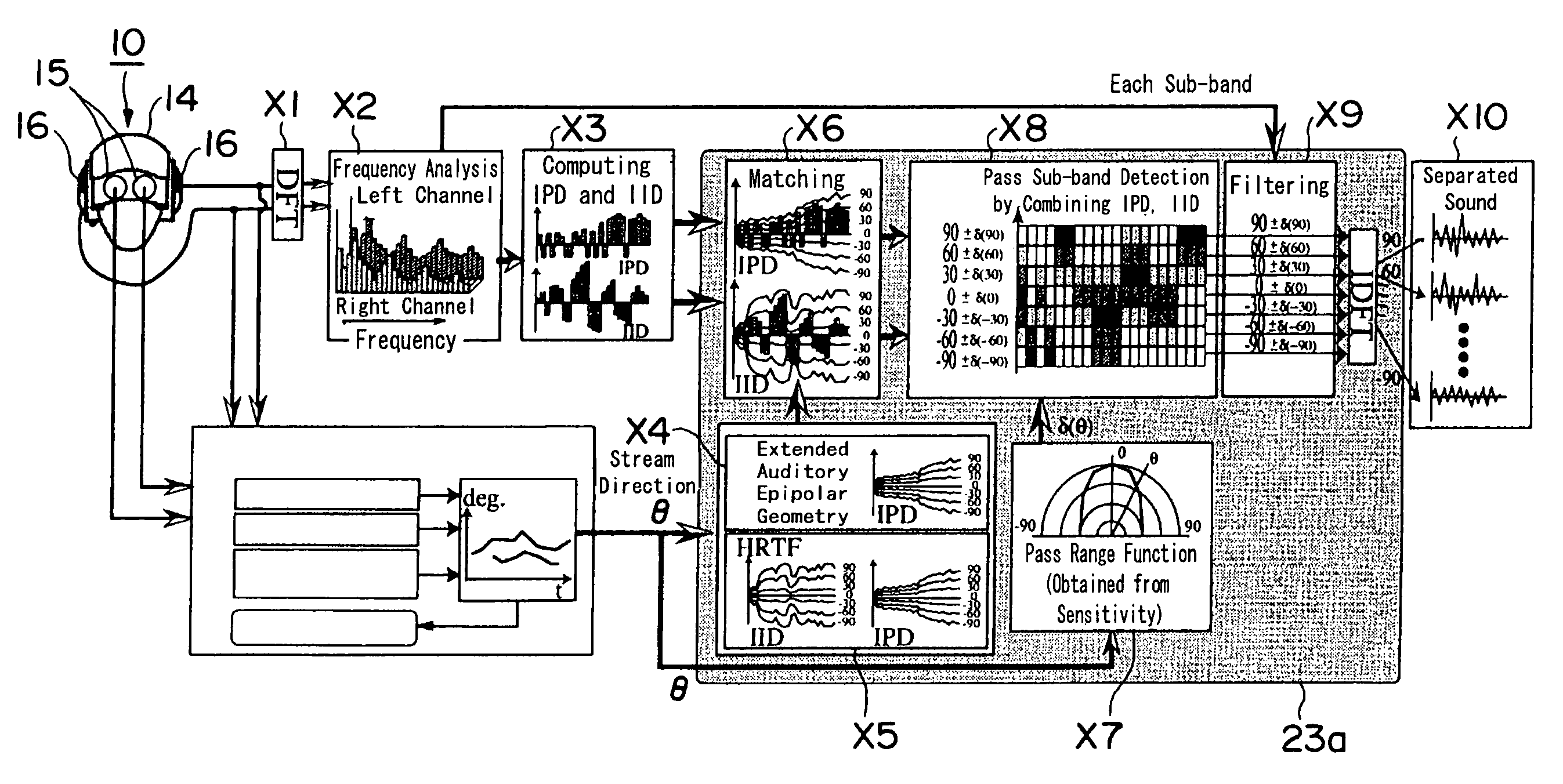

Robotics visual and auditory system

Inactive | US20060241808A1 | The effect is accurate; Improve robustness; Programme-controlled manipulator; Computer control; Sound source separation; Phase difference

A robotics visual and auditory system is provided that can accurately localize a target sound source by associating visual and auditory information about the target. It comprises an audition module (20), a face module (30), a stereo module (37), a motor control module (40), an association module (50) that generates streams by associating events from each of the modules (20, 30, 37, and 40), and an attention control module (57) that performs attention control based on the streams generated by the association module (50). The association module (50) generates an auditory stream (55) and a visual stream (56) from an auditory event (28) from the audition module (20), a face event (39) from the face module (30), a stereo event (39a) from the stereo module (37), and a motor event (48) from the motor control module (40), as well as an association stream (57) that associates these streams. Based on accurate sound source direction information from the association module (50), the audition module (20) collects the sub-bands whose interaural phase difference (IPD) or interaural intensity difference (IID) falls within a preset range, using an active direction pass filter (23a) whose pass range, in accordance with auditory characteristics, is narrowest in the frontal direction and widens as the angle moves to the left or right; it then performs sound source separation by reconstructing the waveform of the sound source.

Owner:HONDA MOTOR CO LTD

Segmenting audio signals into auditory events

Inactive | US20100185439A1 | Increased complexity; Increase in size; Television system details; Color television details; Frequency spectrum; Information representation

In one aspect, the invention divides an audio signal into auditory events, each of which tends to be perceived as separate and distinct, by calculating the spectral content of successive time blocks of the audio signal, calculating the difference in spectral content between successive time blocks of the audio signal, and identifying an auditory event boundary as the boundary between successive time blocks when the difference in the spectral content between such successive time blocks exceeds a threshold. In another aspect, the invention generates a reduced-information representation of an audio signal by dividing an audio signal into auditory events, each of which tends to be perceived as separate and distinct, and formatting and storing information relating to the auditory events. Optionally, the invention may also assign a characteristic to one or more of the auditory events. Auditory events may be determined according to the first aspect of the invention or by another method.

Owner:DOLBY LAB LICENSING CORP

Controlling spatial audio coding parameters as a function of auditory events

An audio encoder or encoding method receives a plurality of input channels and generates one or more audio output channels and one or more parameters describing desired spatial relationships among a plurality of audio channels that may be derived from the one or more audio output channels, by detecting changes in signal characteristics with respect to time in one or more of the plurality of audio input channels, identifying as auditory event boundaries changes in signal characteristics with respect to time in the one or more of the plurality of audio input channels, an audio segment between consecutive boundaries constituting an auditory event in the channel or channels, and generating all or some of the one or more parameters at least partly in response to auditory events and / or the degree of change in signal characteristics associated with the auditory event boundaries. An auditory-event-responsive audio upmixer or upmixing method is also disclosed.

Owner:DOLBY LAB LICENSING CORP

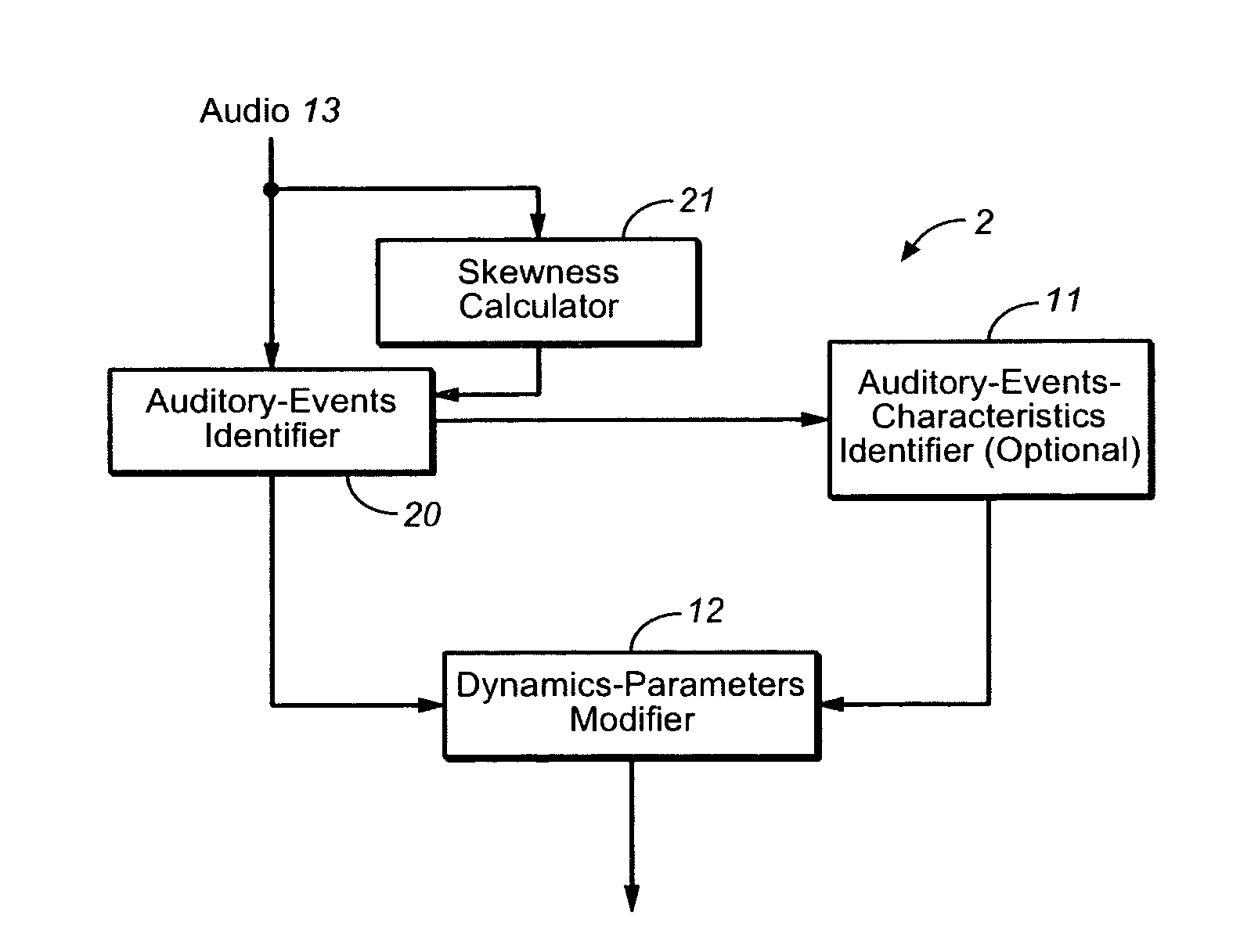

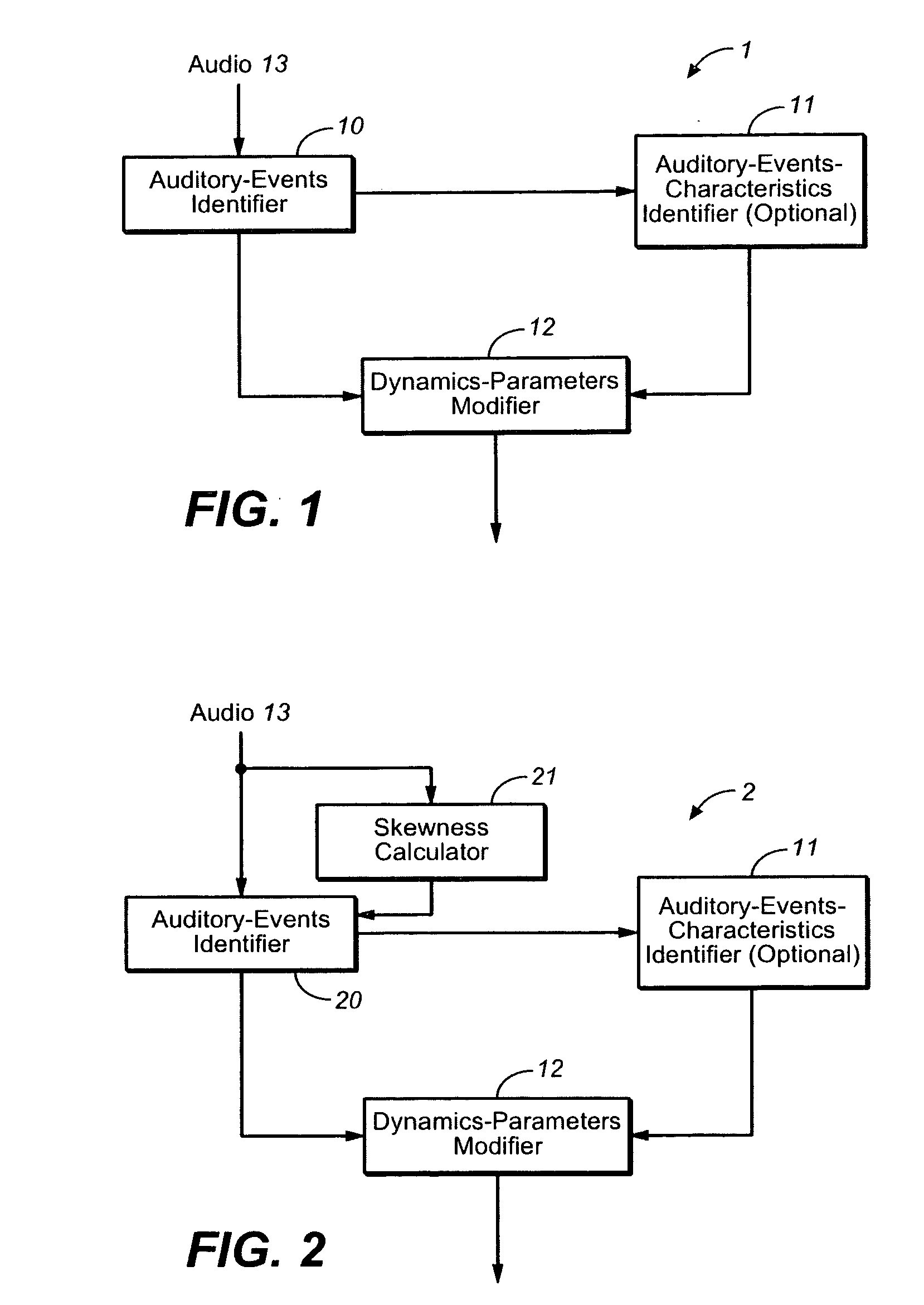

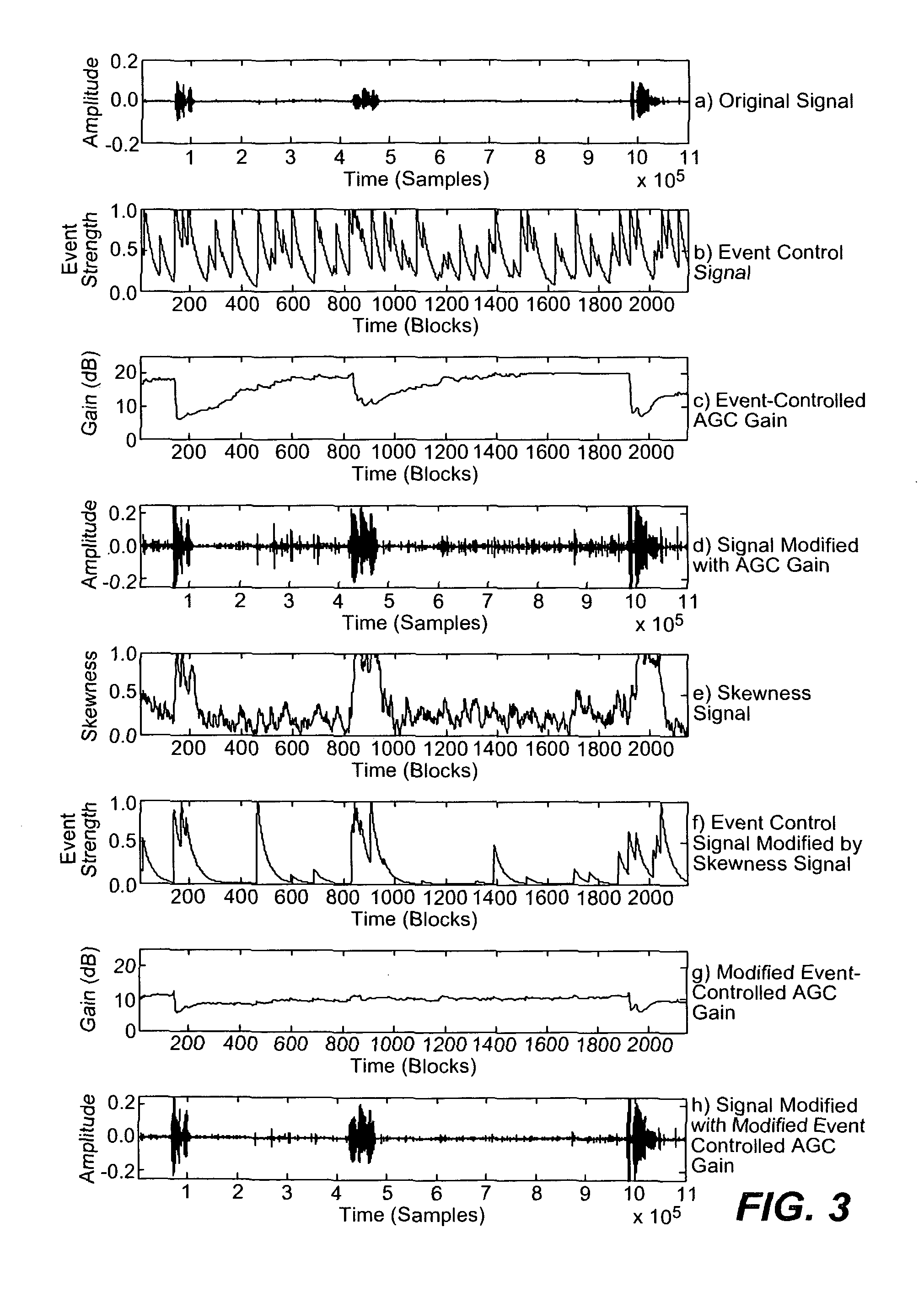

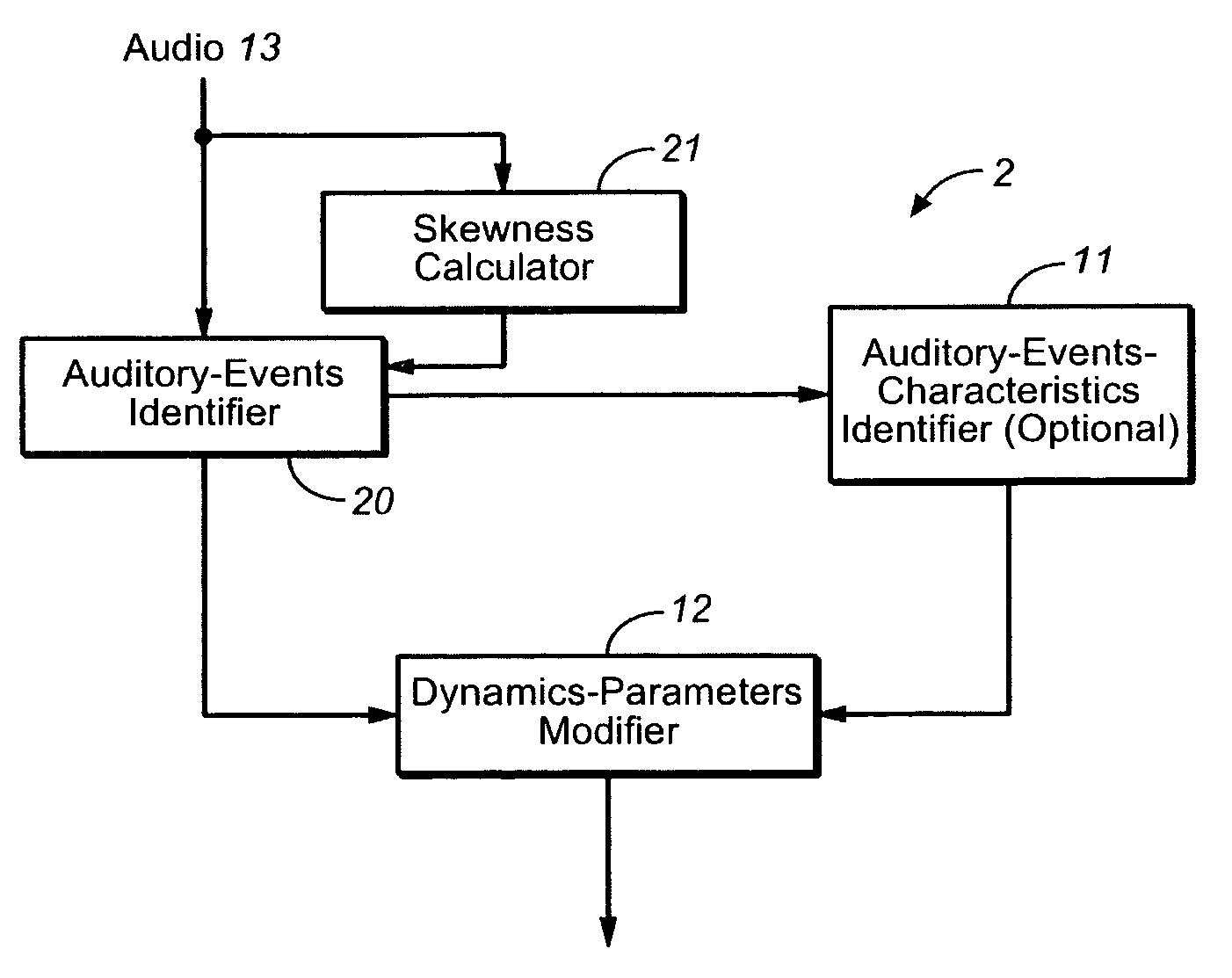

Audio Processing Using Auditory Scene Analysis and Spectral Skewness

Active | US20100198378A1 | Reduce swelling; Controlling loudness of the auditory events; Speech analysis; Digital/coded signal combination control; Frequency spectrum; Loudness

A method for controlling the loudness of auditory events in an audio signal. In one embodiment, the method weights the auditory events (each auditory event having a spectrum and a loudness) using skewness in the spectra, and controls the loudness of the auditory events using the weights. In various embodiments: the weighting is proportional to the measure of skewness in the spectra; the measure of skewness is a measure of smoothed skewness; the weighting is insensitive to the amplitude of the audio signal; the weighting is insensitive to power; the weighting is insensitive to loudness; any relationship between signal measure and absolute reproduction level is not known at the time of weighting; and the weighting includes weighting auditory-event-boundary importance using skewness in the spectra.

Owner:DOLBY LAB LICENSING CORP
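
The skewness weighting can be sketched as follows, assuming that "skewness in the spectra" means the third standardized moment of the per-block magnitude values, smoothed over time with a one-pole filter. The function names, the `reference` normalization, and the `alpha` smoothing constant are hypothetical. Because skewness does not change when all magnitudes are multiplied by the same positive factor, the resulting weights are insensitive to signal amplitude, which matches one of the listed embodiments.

```python
from typing import List, Optional, Sequence


def skewness(values: Sequence[float]) -> float:
    """Sample skewness (third standardized moment) of spectral magnitudes."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    if m2 == 0.0:
        return 0.0  # a perfectly flat spectrum has no skew
    m3 = sum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5


def event_weights(spectra: Sequence[Sequence[float]], alpha: float = 0.9,
                  reference: float = 5.0) -> List[float]:
    """Per-block weights proportional to smoothed spectral skewness, clipped to [0, 1]."""
    weights: List[float] = []
    smoothed: Optional[float] = None
    for spectrum in spectra:
        k = skewness(spectrum)
        smoothed = k if smoothed is None else alpha * smoothed + (1.0 - alpha) * k
        weights.append(min(max(smoothed / reference, 0.0), 1.0))
    return weights
```

Scaling every spectrum by 100 leaves `event_weights` unchanged, while a spectrum with one dominant bin, such as `[1, 1, 1, 10]`, has a skewness of about 1.155.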

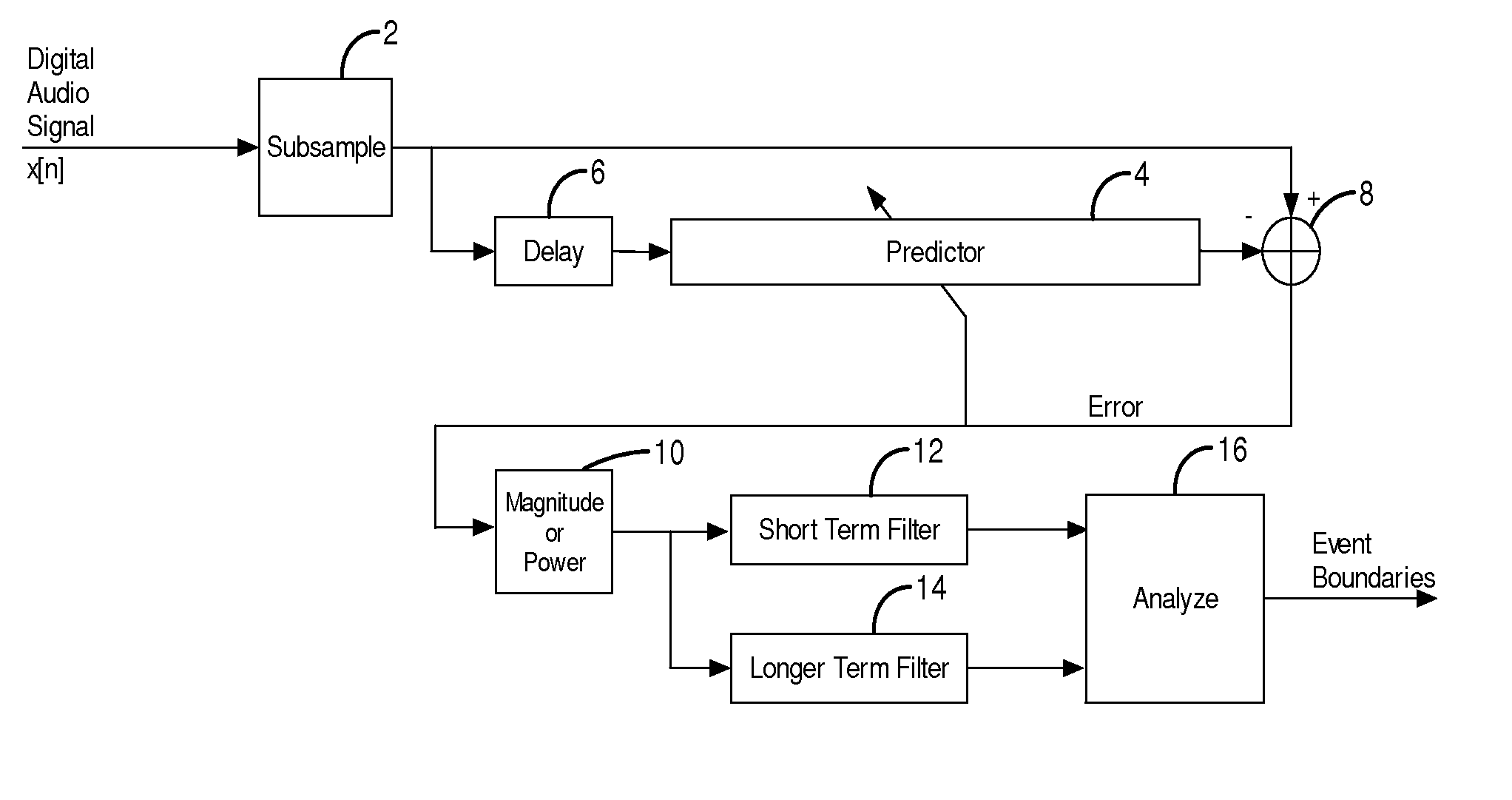

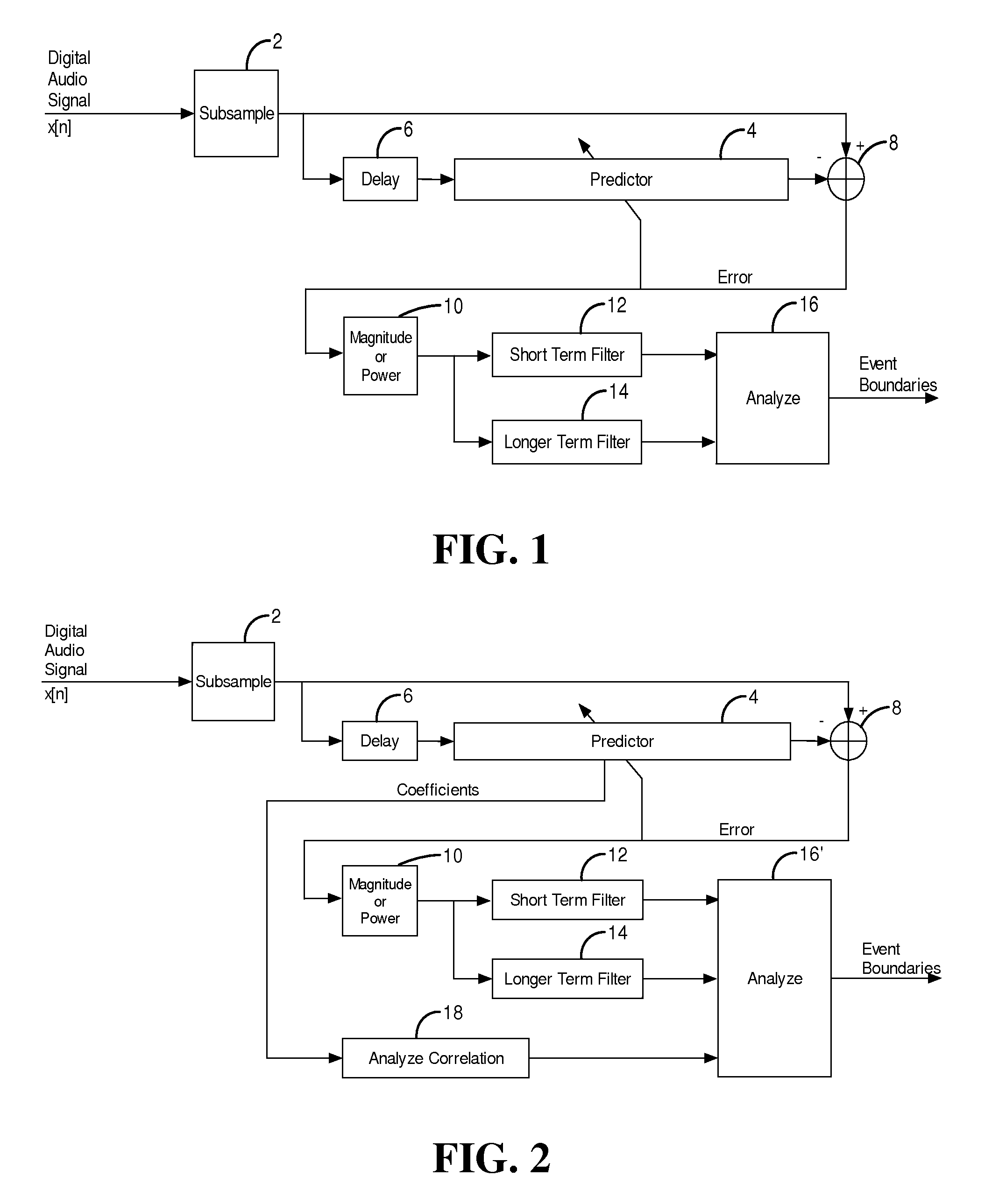

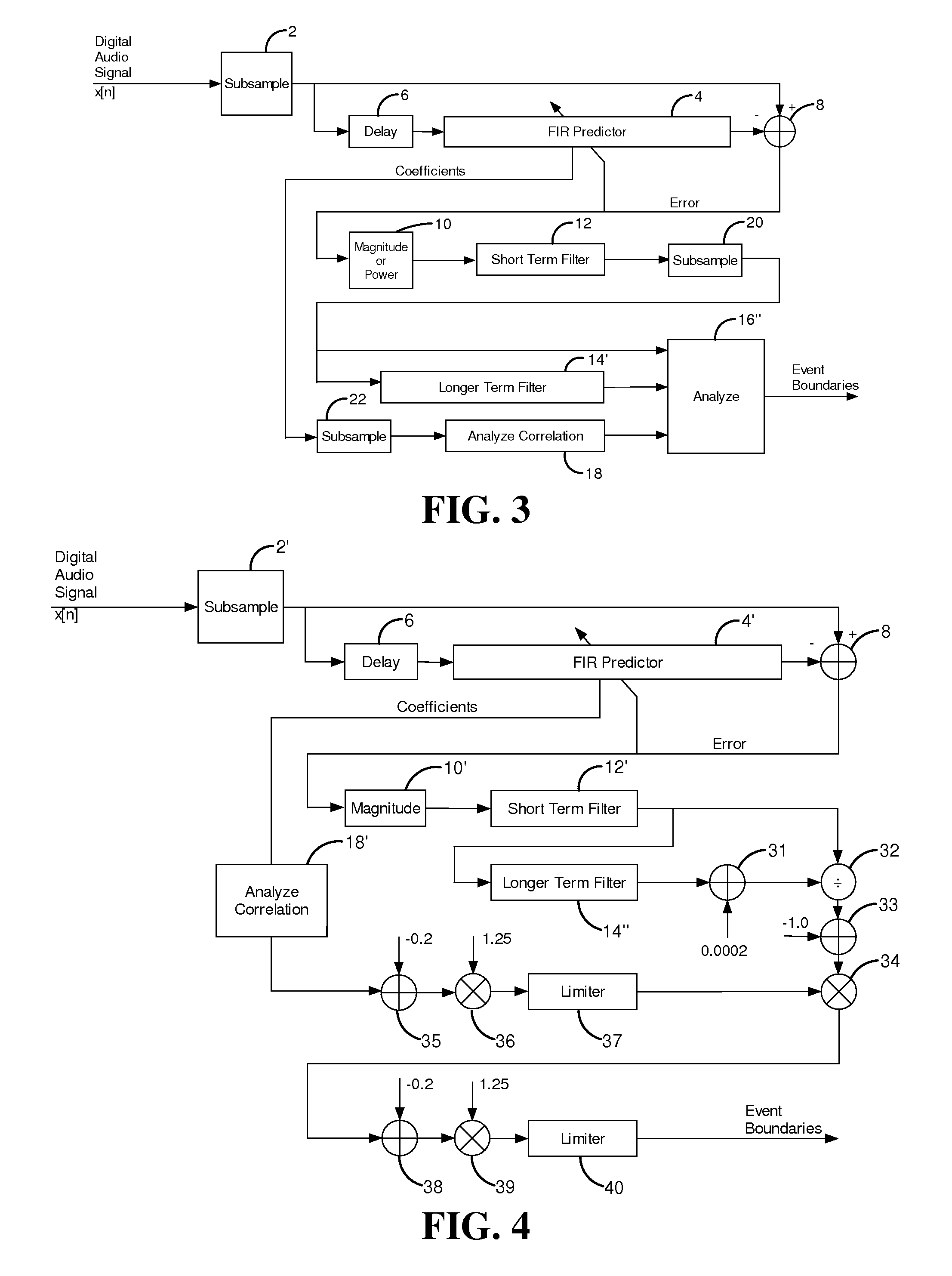

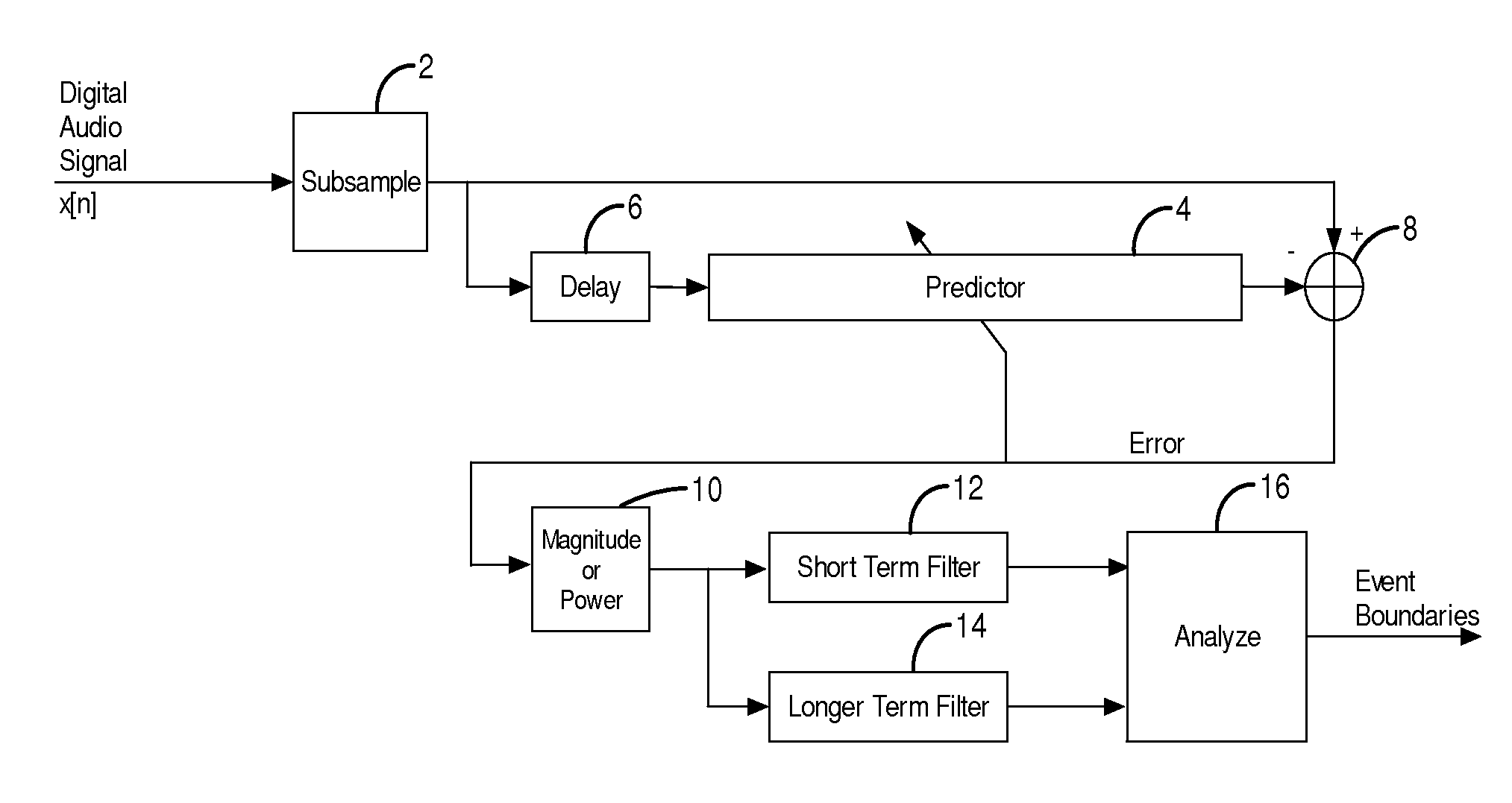

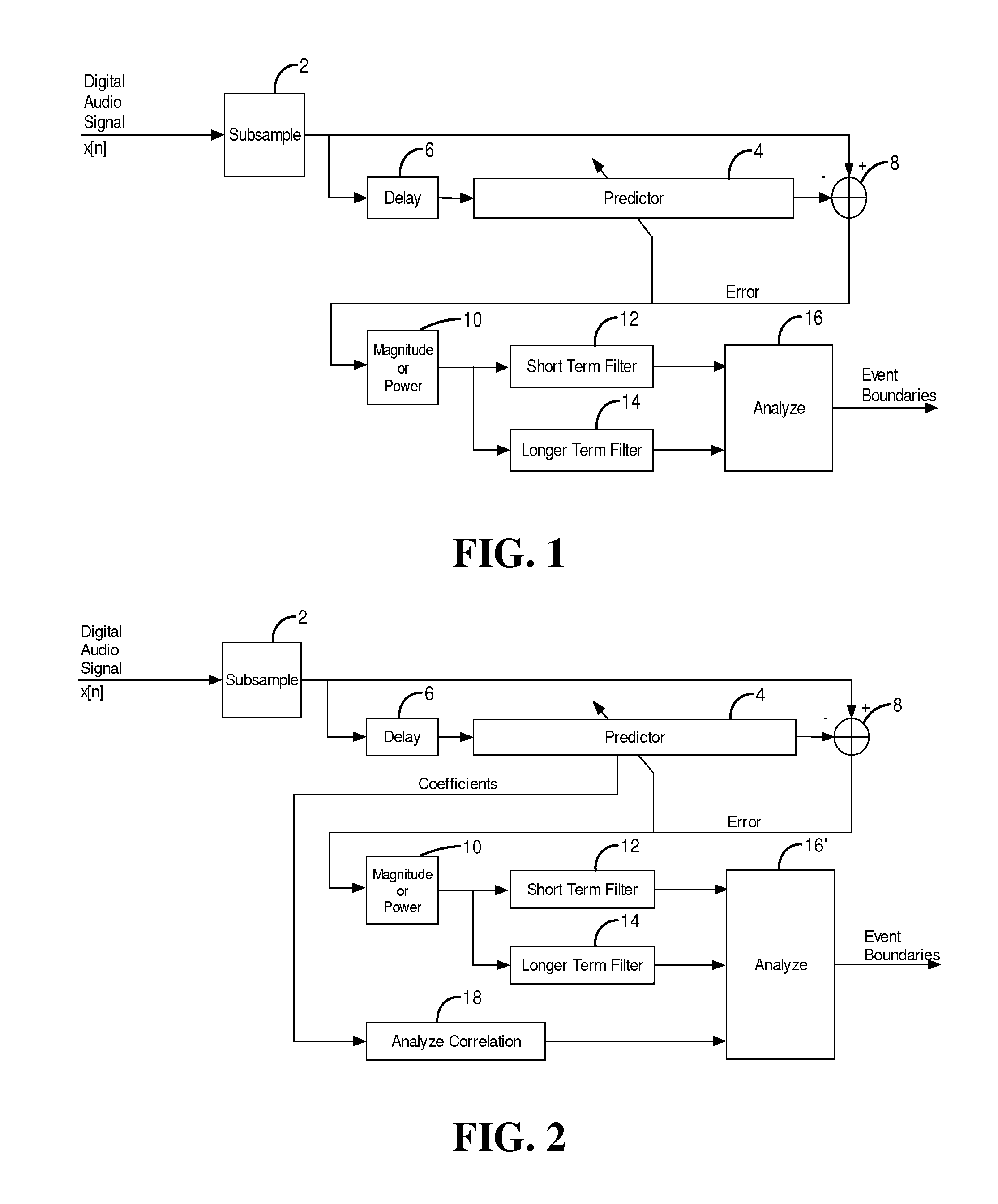

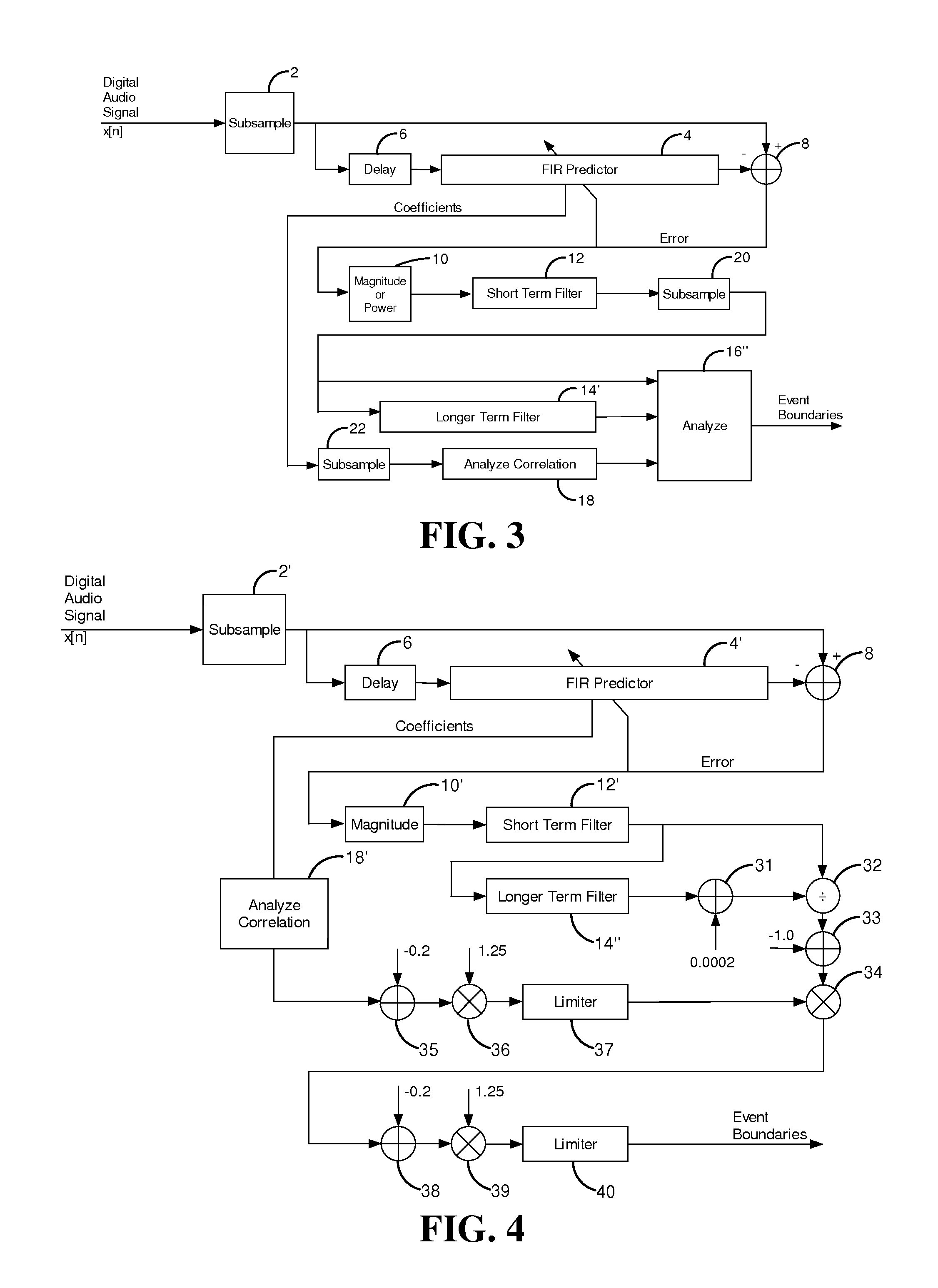

Low Complexity Auditory Event Boundary Detection

Active | US20120046772A1 | Reduce effective bandwidth; Useful spectral selectivity; Speech analysis; Special data processing applications; Frequency spectrum; Boundary detection

An auditory event boundary detector employs down-sampling of the input digital audio signal without an anti-aliasing filter, resulting in a narrower bandwidth intermediate signal with aliasing. Spectral changes of that intermediate signal, indicating event boundaries, may be detected using an adaptive filter to track a linear predictive model of the samples of the intermediate signal. Changes in the magnitude or power of the filter error correspond to changes in the spectrum of the input audio signal. The adaptive filter converges at a rate consistent with the duration of auditory events, so filter error magnitude or power changes indicate event boundaries. The detector is much less complex than methods employing time-to-frequency transforms for the full bandwidth of the audio signal.

Owner:DOLBY LAB LICENSING CORP
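
The detector's chain (decimation without an anti-aliasing filter, an adaptive linear predictor, and monitoring of the prediction error) can be sketched in Python. This sketch assumes a normalized LMS (NLMS) predictor of order 2, a decimation factor of 4, 64-sample blocks, and a simple rule that flags a block when its error power, normalized by its signal power, both exceeds a floor and rises tenfold over the previous block. These choices and the function names are illustrative, not the patented parameters.

```python
import math
from typing import List, Sequence


def decimate_without_filter(x: Sequence[float], factor: int) -> List[float]:
    """Keep every `factor`-th sample with no anti-aliasing filter (aliasing is accepted)."""
    return list(x[::factor])


def nlms_prediction_errors(x: Sequence[float], order: int = 2, mu: float = 1.0,
                           eps: float = 1e-9) -> List[float]:
    """A priori prediction errors of an NLMS adaptive linear predictor run over x."""
    w = [0.0] * order
    errors: List[float] = []
    for n in range(len(x)):
        # Most recent samples first: x[n-1], ..., x[n-order]; zeros before the start.
        u = [x[n - k] if n - k >= 0 else 0.0 for k in range(1, order + 1)]
        e = x[n] - sum(wi * ui for wi, ui in zip(w, u))
        norm = eps + sum(ui * ui for ui in u)
        w = [wi + mu * e * ui / norm for wi, ui in zip(w, u)]
        errors.append(e)
    return errors


def detect_boundaries(signal: Sequence[float], factor: int = 4, block: int = 64,
                      order: int = 2, rise: float = 10.0,
                      floor: float = 1e-3) -> List[int]:
    """Input-rate sample indices of blocks where normalized prediction-error power jumps."""
    d = decimate_without_filter(signal, factor)
    err = nlms_prediction_errors(d, order)
    ratios: List[float] = []
    for start in range(0, len(d) - block + 1, block):
        e_pow = sum(e * e for e in err[start:start + block])
        x_pow = sum(v * v for v in d[start:start + block])
        ratios.append(e_pow / x_pow if x_pow > 0.0 else 0.0)
    return [b * block * factor for b in range(1, len(ratios))
            if ratios[b] > floor and ratios[b] > rise * ratios[b - 1]]


if __name__ == "__main__":
    # Two tones: the predictor adapts to the first, then its error jumps at the switch.
    tone_a = [math.sin(2 * math.pi * 0.03 * t) for t in range(1024)]
    tone_b = [math.sin(2 * math.pi * 0.07 * t) for t in range(1024, 2048)]
    print(detect_boundaries(tone_a + tone_b))
```

Running the demo on a tone that switches frequency after 1024 samples reports a boundary at sample 1024: the predictor has converged on the first tone, and its error jumps only when the spectrum changes. Only one time-domain filter of order 2 runs, at a quarter of the input rate, which illustrates why such a detector can be cheaper than a full-bandwidth time-to-frequency transform.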

Robotics visual and auditory system

Inactive | US7526361B2 | The effect is accurate; Improve robustness; Programme-controlled manipulator; Computer control; Sound source separation; Phase difference

A robotics visual and auditory system is provided that can accurately localize a target sound source by associating visual and auditory information about the target. It comprises an audition module (20), a face module (30), a stereo module (37), a motor control module (40), an association module (50) that generates streams by associating events from each of the modules (20, 30, 37, and 40), and an attention control module (57) that performs attention control based on the streams generated by the association module (50). The association module (50) generates an auditory stream (55) and a visual stream (56) from an auditory event (28) from the audition module (20), a face event (39) from the face module (30), a stereo event (39a) from the stereo module (37), and a motor event (48) from the motor control module (40), as well as an association stream (57) that associates these streams. Based on accurate sound source direction information from the association module (50), the audition module (20) collects the sub-bands whose interaural phase difference (IPD) or interaural intensity difference (IID) falls within a preset range, using an active direction pass filter (23a) whose pass range, in accordance with auditory characteristics, is narrowest in the frontal direction and widens as the angle moves to the left or right; it then performs sound source separation by reconstructing the waveform of the sound source.

Owner:HONDA MOTOR CO LTD

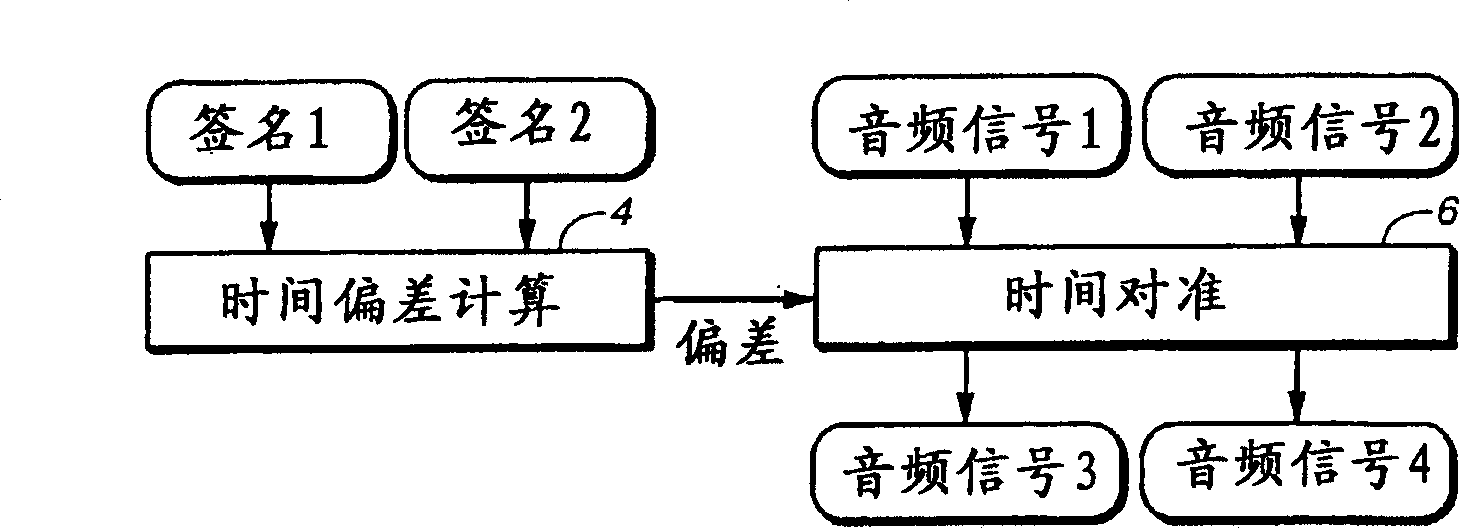

Segmenting audio signals into auditory events

Described is a method for time aligning an audio signal and another signal, comprising deriving a reduced-information characterization of the audio signal and embedding said characterization in the other signal when the audio signal and other signal are substantially in synchronism, wherein said characterization is based on auditory scene analysis; recovering the embedded characterization of said audio signal from said other signal and deriving a reduced-information characterization of said audio signal from said audio signal in the same way the embedded characterization of the audio signal was derived based on auditory scene analysis, after said audio signal and said other signal have been subjected to differential time offsets; calculating the time offset of one characterization with respect to the other characterization, and modifying the temporal relationship of the audio signal with respect to the other signal in response to said time offset such that the audio signal and other signal are in synchronism or nearly in synchronism with each other.

Owner:DOLBY LAB LICENSING CORP
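
The offset-calculation and realignment steps can be sketched with event-boundary sequences as the characterizations. That is an assumption: the abstract only requires a reduced-information characterization based on auditory scene analysis. Here the offset is taken as the shift that maximizes the raw cross-correlation of the two sequences; `estimate_offset` and `realign` are hypothetical names.

```python
from typing import List, Sequence


def estimate_offset(reference: Sequence[float], received: Sequence[float],
                    max_offset: int) -> int:
    """Shift (in blocks) that best aligns `received` with `reference`.

    A positive result means `received` lags `reference` by that many blocks.
    """
    best_shift, best_score = 0, float("-inf")
    for shift in range(-max_offset, max_offset + 1):
        # Raw cross-correlation: count coincident boundaries at this shift.
        score = 0.0
        for i, r in enumerate(reference):
            j = i + shift
            if 0 <= j < len(received):
                score += r * received[j]
        if score > best_score:
            best_shift, best_score = shift, score
    return best_shift


def realign(signal: Sequence[float], shift: int, fill: float = 0.0) -> List[float]:
    """Advance (shift > 0) or delay (shift < 0) a block sequence by `shift` blocks."""
    if shift >= 0:
        return list(signal[shift:]) + [fill] * shift
    return [fill] * (-shift) + list(signal[:shift])
```

If the received characterization lags the reference by two blocks, `estimate_offset` returns 2, and `realign(received, 2)` restores the original timing (padding the end with `fill`).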

Audio-Controlled Image Capturing

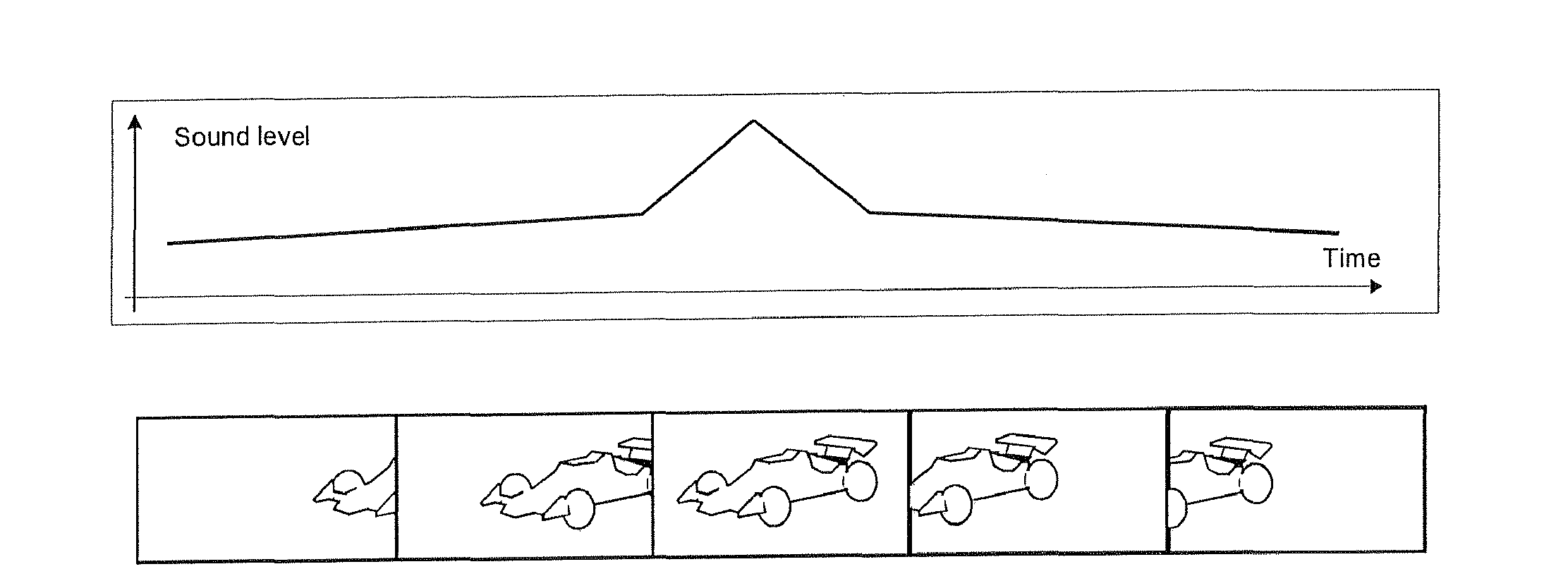

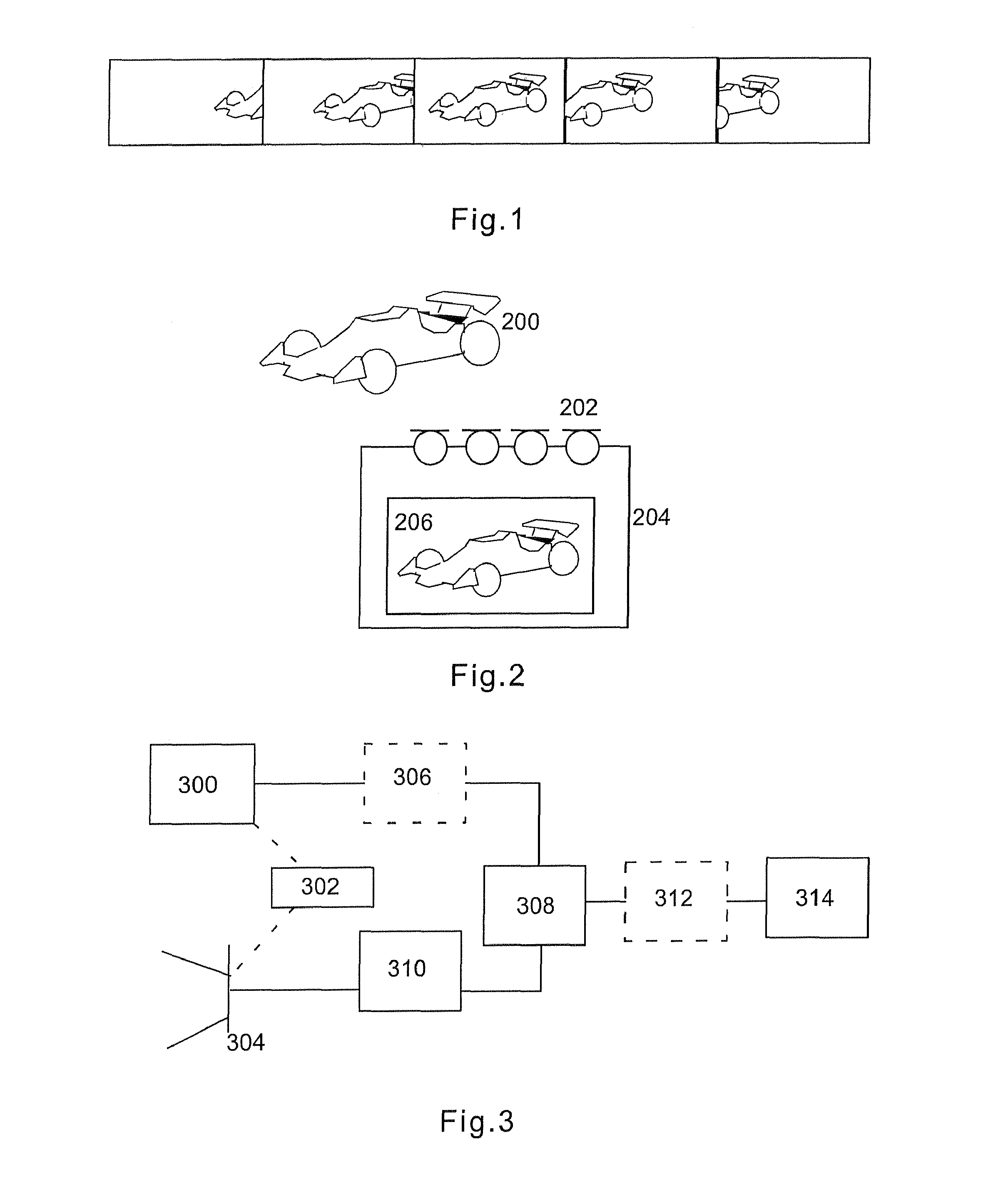

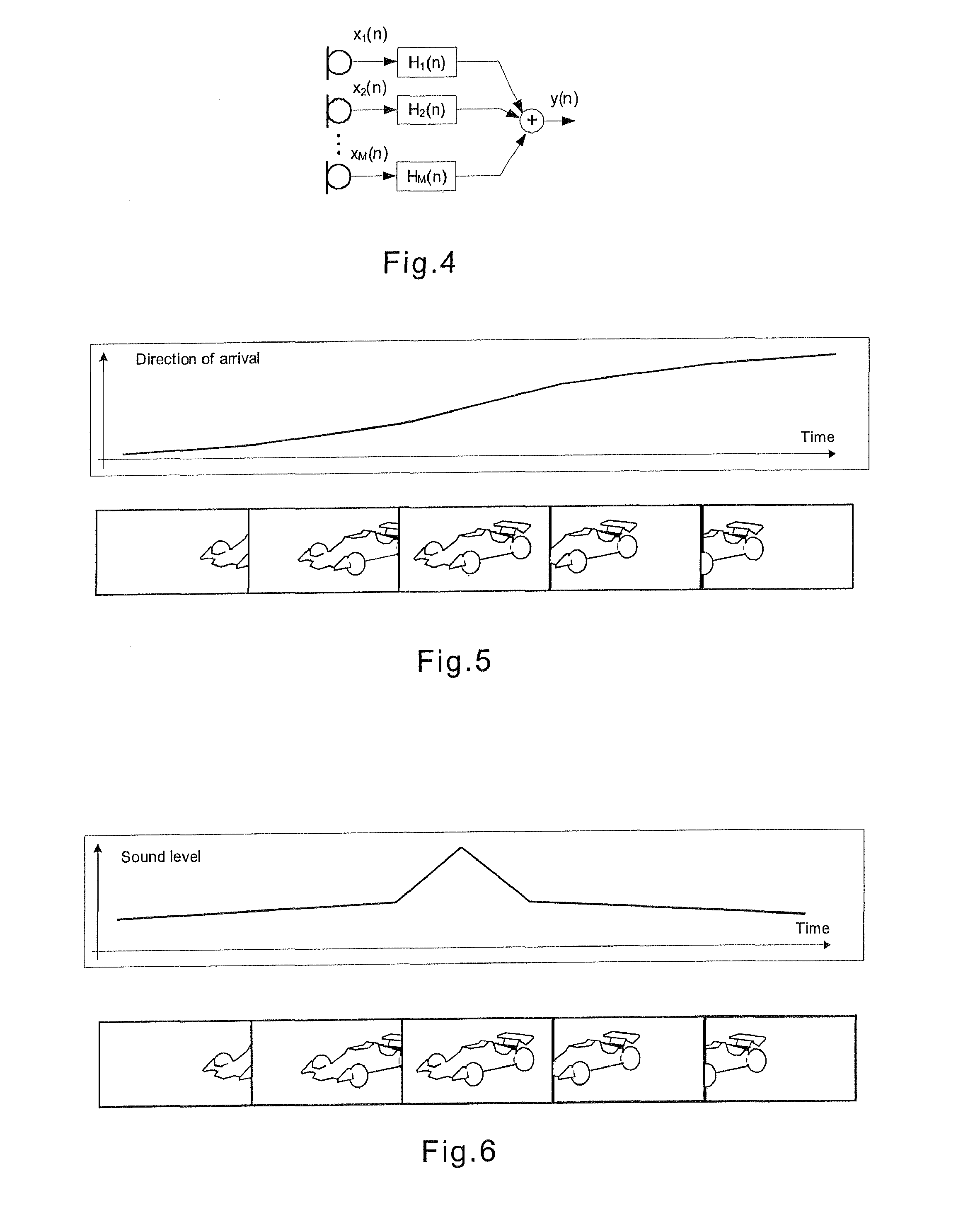

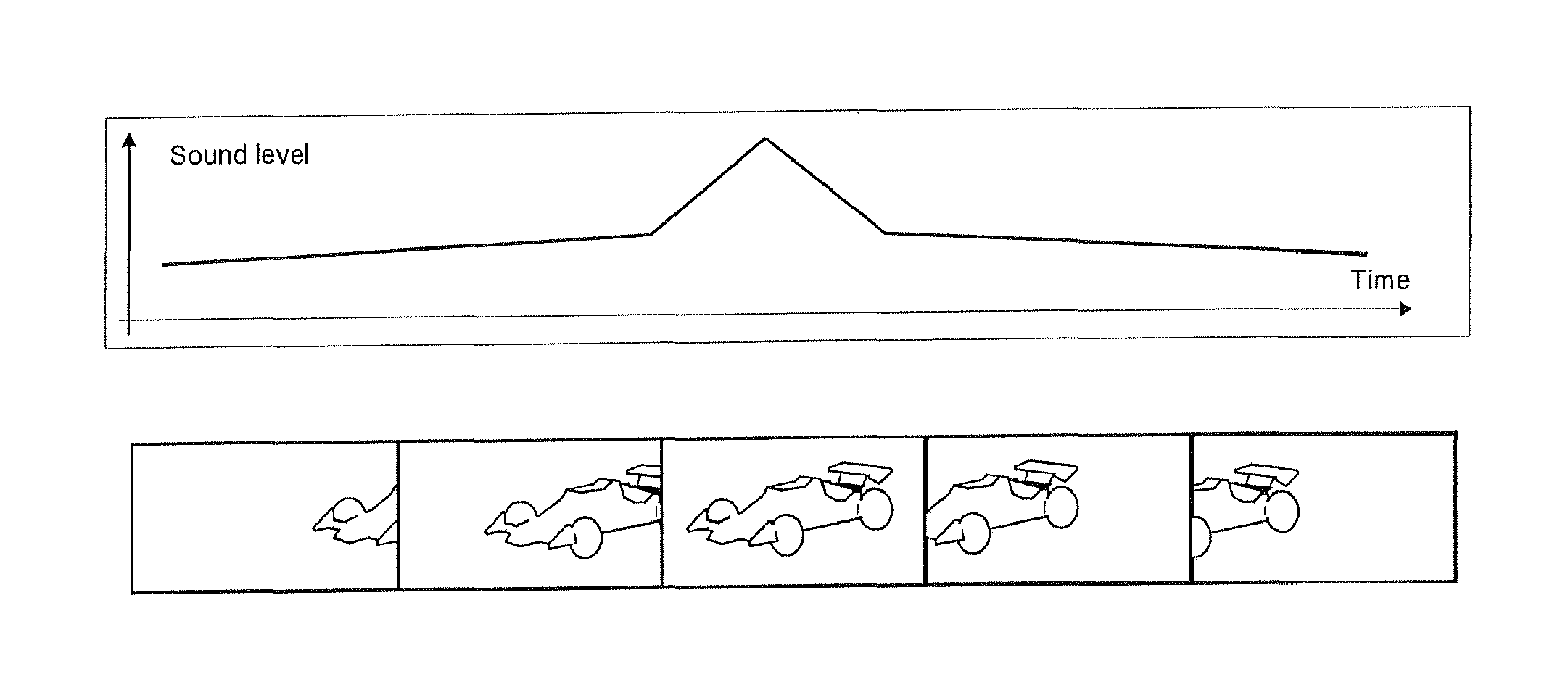

Active | US20120098983A1 | Quality improvement; Enhance the image; Television system details; Direction finders using ultrasonic/sonic/infrasonic waves; VIT signals; Audio signal

A method comprising: receiving a plurality of images corresponding to a time period covering the intended moment for releasing the shutter; receiving an audio signal associated with the plurality of images using audio capturing means; analyzing the received audio signal in order to determine an auditory event associated with a desired output image; and selecting at least one of the plurality of images on the basis of the analysis of the received audio signal for further processing in order to obtain the desired output image.

Owner:NOKIA TECHNOLOGIES OY
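
One way to picture the selection step is to timestamp the auditory event and keep the image captured closest to it. In this sketch the auditory event is assumed to be a sudden rise in short-term audio energy (for example, a clap or a shout); the block size, `rise` factor, `floor`, and function names are hypothetical and not taken from the patent.

```python
from typing import Optional, Sequence


def onset_time(audio: Sequence[float], sample_rate: int, block: int = 256,
               rise: float = 4.0, floor: float = 1e-6) -> Optional[float]:
    """Time in seconds of the first block whose energy jumps by `rise` over the previous block."""
    prev: Optional[float] = None
    for start in range(0, len(audio) - block + 1, block):
        energy = sum(x * x for x in audio[start:start + block]) / block
        if prev is not None and energy > floor and energy > rise * prev:
            return start / sample_rate
        prev = energy
    return None  # no auditory event found


def select_image(image_times: Sequence[float], event_time: float) -> int:
    """Index of the image captured closest in time to the auditory event."""
    return min(range(len(image_times)), key=lambda i: abs(image_times[i] - event_time))
```

With images captured every 0.1 s and a sound that starts at 0.512 s, `select_image` picks the frame taken at 0.5 s.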

Comparing audio using characterizations based on auditory events

Inactive | CN1620684A | Computationally efficient; Television system details; Code conversion; Audio frequency; Audio signal

In one aspect, the invention divides an audio signal into auditory events, each of which tends to be perceived as separate and distinct, by calculating the spectral content of successive time blocks of the audio signal, calculating the difference in spectral content between successive time blocks of the audio signal, and identifying an auditory event boundary as the boundary between successive time blocks when the difference in the spectral content between such successive time blocks exceeds a threshold. In another aspect, the invention generates a reduced-information representation of an audio signal by dividing an audio signal into auditory events, each of which tends to be perceived as separate and distinct, and formatting and storing information relating to the auditory events. Optionally, the invention may also assign a characteristic to one or more of the auditory events. Auditory events may be determined according to the first aspect of the invention or by another method.

Owner:DOLBY LAB LICENSING CORP

Method for comparing audio signal by characterisation based on auditory events

Inactive | CN1511311A | Time alignment change; Efficient identification; Television system details; Code conversion; Information representation; Audio signal flow

In one aspect, the invention divides an audio signal into auditory events, each of which tends to be perceived as separate and distinct, by calculating the spectral content of successive time blocks of the audio signal, calculating the difference in spectral content between successive time blocks of the audio signal, and identifying an auditory event boundary as the boundary between successive time blocks when the difference in the spectral content between such successive time blocks exceeds a threshold. In another aspect, the invention generates a reduced-information representation of an audio signal by dividing an audio signal into auditory events, each of which tends to be perceived as separate and distinct, and formatting and storing information relating to the auditory events. Optionally, the invention may also assign a characteristic to one or more of the auditory events. Auditory events may be determined according to the first aspect of the invention or by another method.

Owner:DOLBY LAB LICENSING CORP

Audio processing using auditory scene analysis and spectral skewness

Active | US8396574B2 | Reduce swelling; Controlling loudness of the auditory events; Speech analysis; Digital/coded signal combination control; Frequency spectrum; Loudness

A method for controlling the loudness of auditory events in an audio signal. In one embodiment, the method weights the auditory events (each auditory event having a spectrum and a loudness) using skewness in the spectra, and controls the loudness of the auditory events using the weights. In various embodiments: the weighting is proportional to the measure of skewness in the spectra; the measure of skewness is a measure of smoothed skewness; the weighting is insensitive to the amplitude of the audio signal; the weighting is insensitive to power; the weighting is insensitive to loudness; any relationship between signal measure and absolute reproduction level is not known at the time of weighting; and the weighting includes weighting auditory-event-boundary importance using skewness in the spectra.

Owner:DOLBY LAB LICENSING CORP

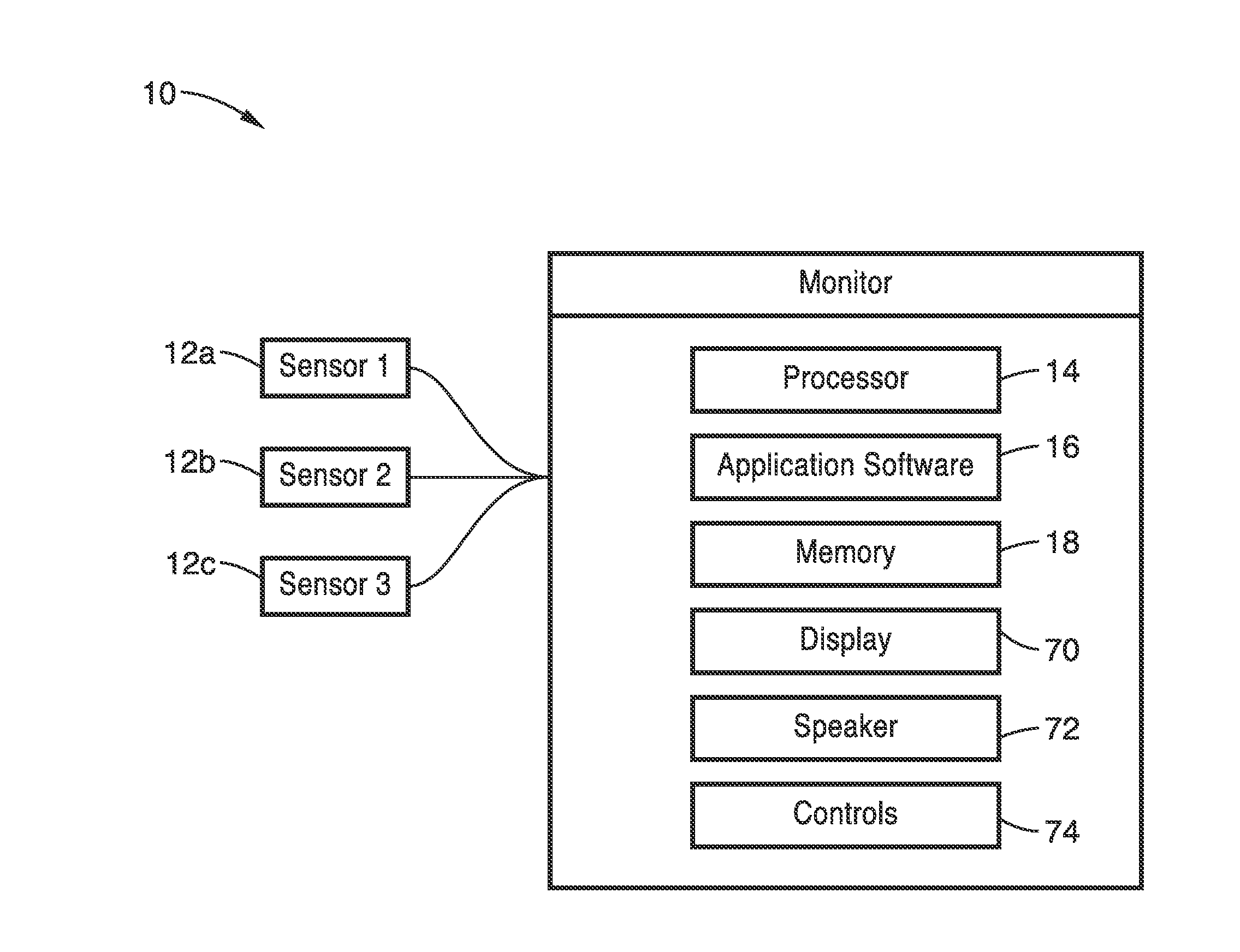

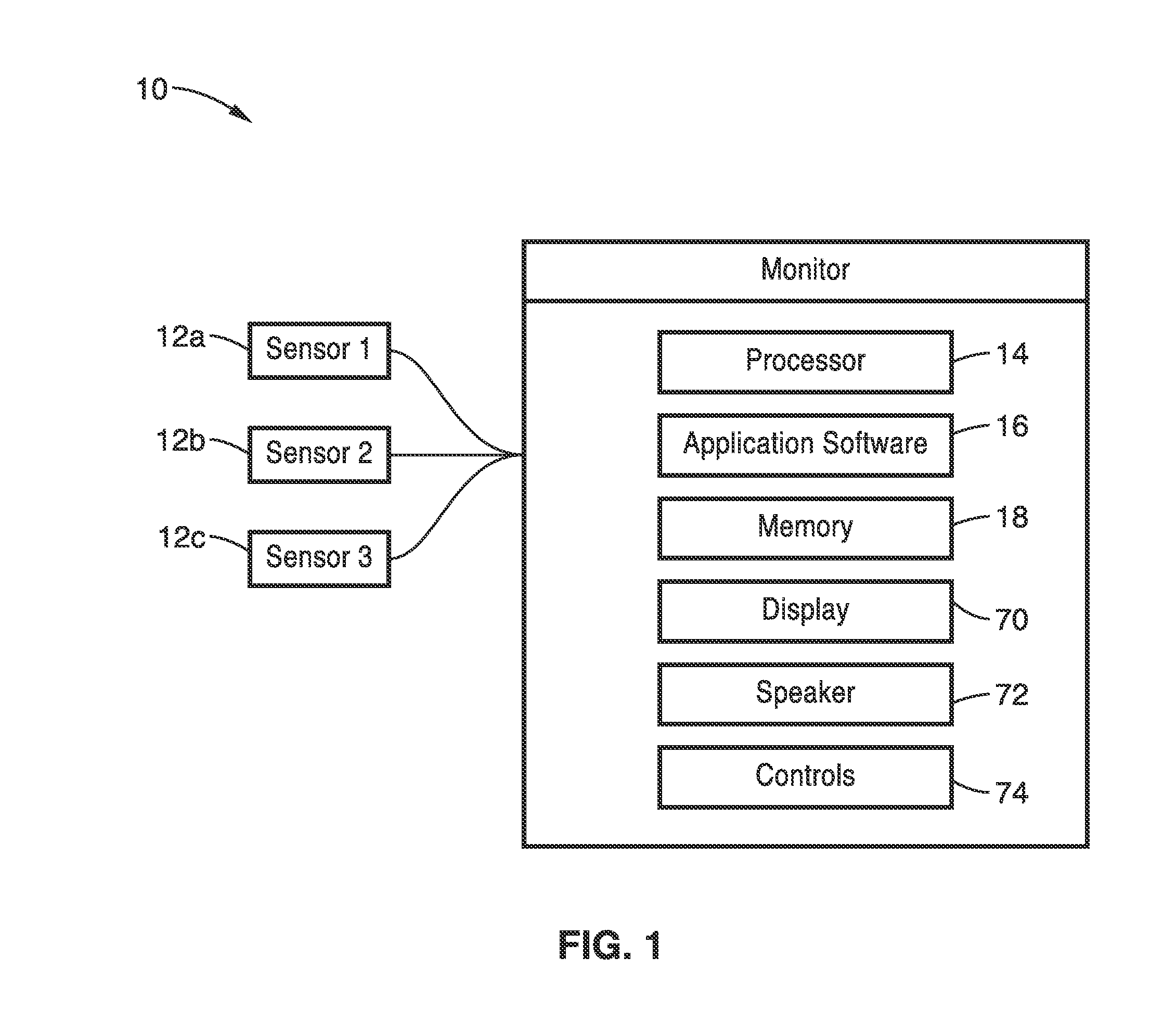

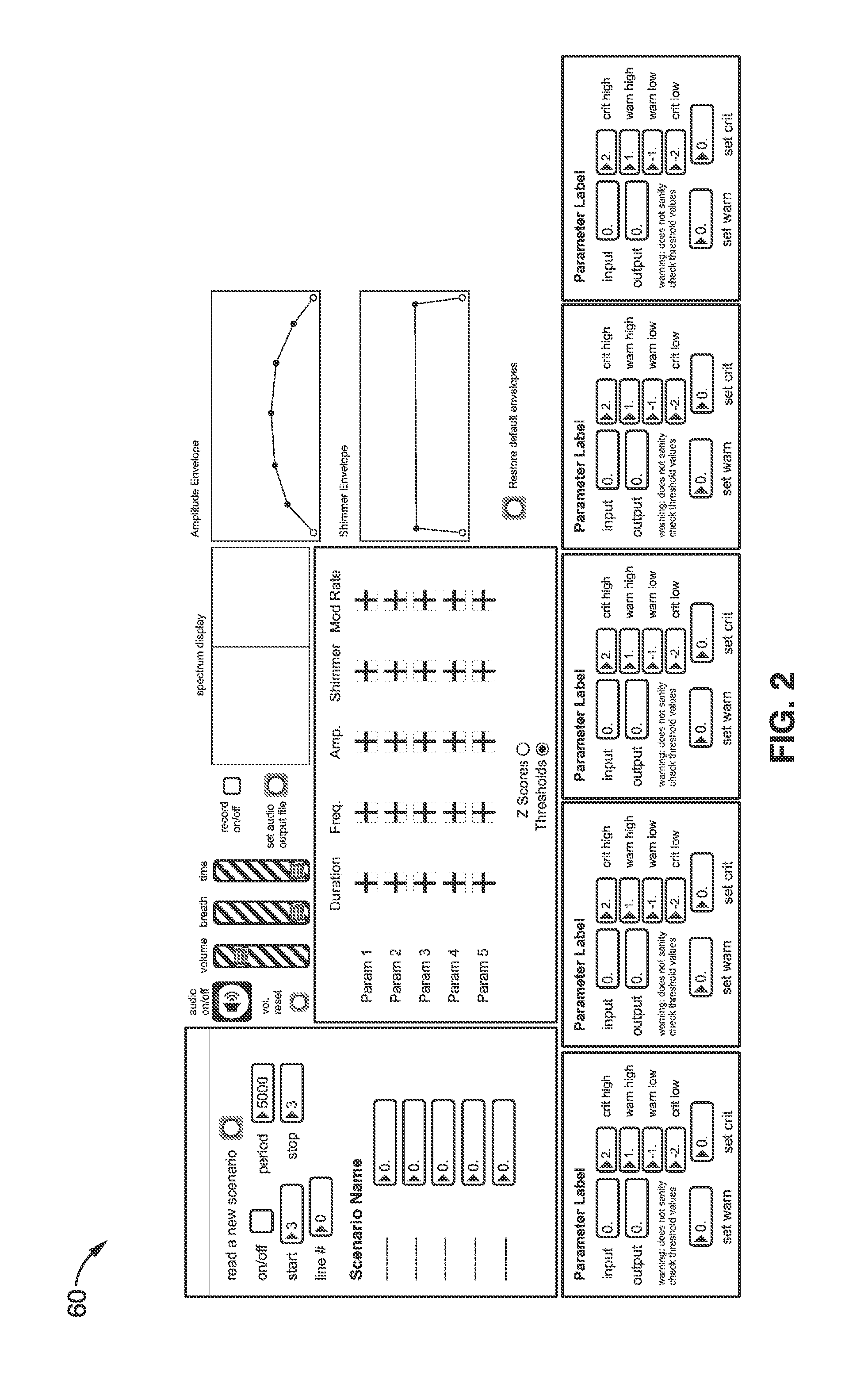

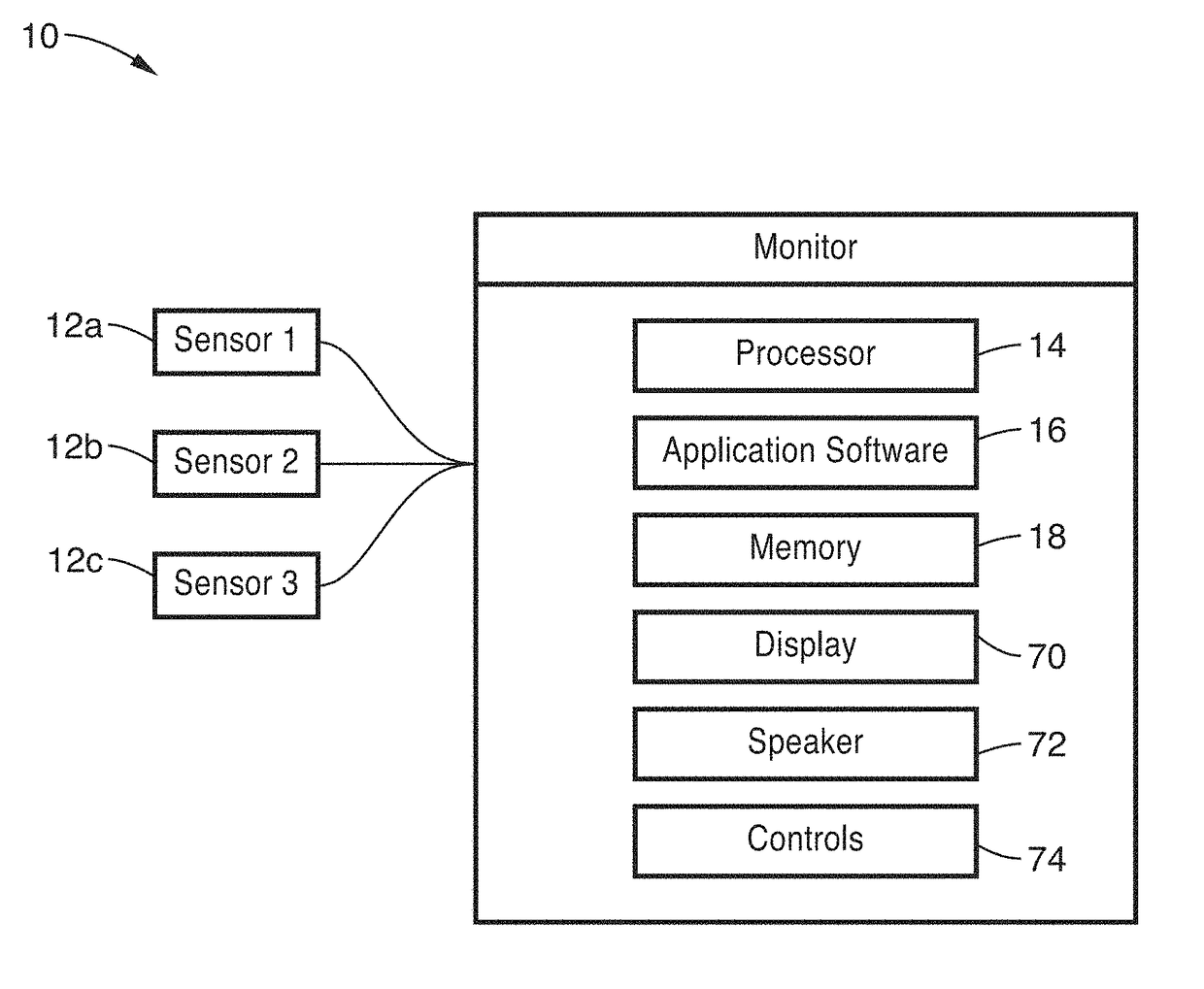

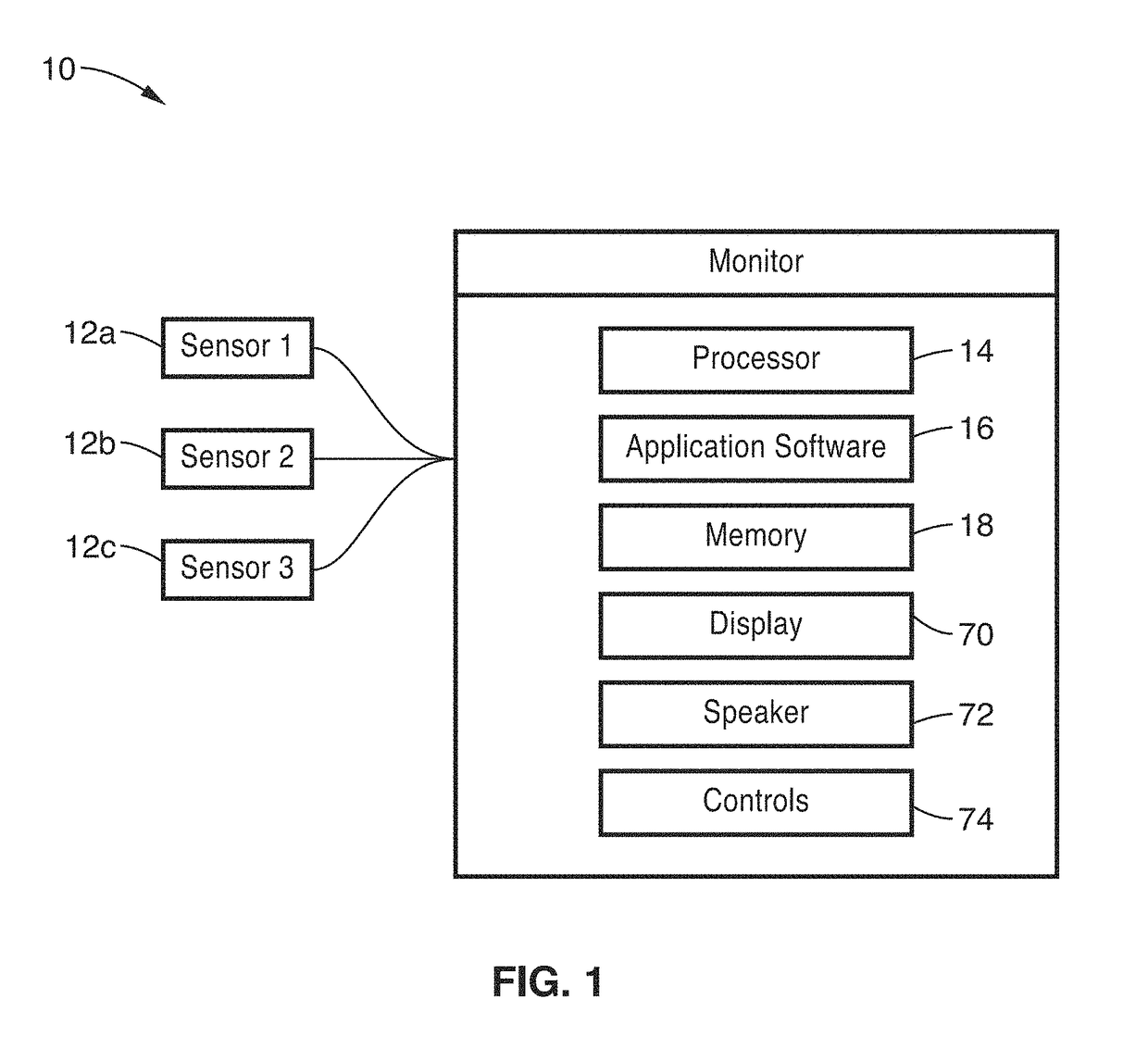

Sonification systems and methods for auditory display of physiological parameters

Active | US20150133749A1 | Easy to monitor; Limited utility; Sensors; Measuring/recording heart/pulse rate; Sonification; Medicine

Systems and methods for generating a sonification output for presenting information about physiological parameters such as oxygen saturation and heart rate parameters in discrete auditory events. In a preferred embodiment, each event comprises two sounds. The first is a reference sound that indicates the desired target state for the two parameters and the second indicates the actual state. Heart rate is preferably represented by amplitude modulation. Oxygen saturation is preferably represented by a timbral manipulation.

Owner:NEW TECH SOUNDINGS +2
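
A rough sketch of one two-sound event: a reference tone for the target state followed by a tone for the actual state, with heart rate mapped to amplitude-modulation rate and oxygen saturation mapped to timbre. The specific mappings (modulation rate in Hz equal to beats per minute divided by 60, and a second-harmonic mix that grows as saturation falls below 100%), the carrier frequency, the durations, and all function names are hypothetical choices made for illustration.

```python
import math
from typing import List


def heart_rate_to_am_hz(bpm: float) -> float:
    """Hypothetical mapping: one amplitude-modulation cycle per heartbeat."""
    return bpm / 60.0


def spo2_to_brightness(spo2_percent: float) -> float:
    """Hypothetical mapping: 100% -> pure tone, 80% or below -> full 2nd harmonic."""
    return min(max((100.0 - spo2_percent) / 20.0, 0.0), 1.0)


def tone(duration_s: float, sample_rate: int, carrier_hz: float,
         am_rate_hz: float, brightness: float) -> List[float]:
    """Amplitude-modulated tone whose harmonic mix (timbre) is set by `brightness`."""
    out: List[float] = []
    for i in range(int(duration_s * sample_rate)):
        t = i / sample_rate
        envelope = 0.5 * (1.0 + math.sin(2 * math.pi * am_rate_hz * t))  # in [0, 1]
        fundamental = math.sin(2 * math.pi * carrier_hz * t)
        harmonic = math.sin(2 * math.pi * 2 * carrier_hz * t)
        out.append(envelope * ((1.0 - brightness) * fundamental + brightness * harmonic))
    return out


def sonify_event(bpm: float, spo2: float, target_bpm: float, target_spo2: float,
                 sample_rate: int = 8000, carrier_hz: float = 440.0,
                 tone_s: float = 0.5, gap_s: float = 0.1) -> List[float]:
    """Reference sound (target state), short silence, then the actual-state sound."""
    reference = tone(tone_s, sample_rate, carrier_hz,
                     heart_rate_to_am_hz(target_bpm), spo2_to_brightness(target_spo2))
    gap = [0.0] * int(gap_s * sample_rate)
    actual = tone(tone_s, sample_rate, carrier_hz,
                  heart_rate_to_am_hz(bpm), spo2_to_brightness(spo2))
    return reference + gap + actual
```

With the defaults, `sonify_event(150, 88, 140, 95)` returns 8800 samples (two 0.5 s tones and a 0.1 s gap at 8 kHz) whose values stay within [-1, 1].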

Segmenting audio signals into auditory events

Inactive | US8488800B2 | Simple and computationally efficient; Increase in size; Television system details; Color television details; Frequency spectrum; Information representation

In one aspect, the invention divides an audio signal into auditory events, each of which tends to be perceived as separate and distinct, by calculating the spectral content of successive time blocks of the audio signal, calculating the difference in spectral content between successive time blocks of the audio signal, and identifying an auditory event boundary as the boundary between successive time blocks when the difference in the spectral content between such successive time blocks exceeds a threshold. In another aspect, the invention generates a reduced-information representation of an audio signal by dividing an audio signal into auditory events, each of which tends to be perceived as separate and distinct, and formatting and storing information relating to the auditory events. Optionally, the invention may also assign a characteristic to one or more of the auditory events. Auditory events may be determined according to the first aspect of the invention or by another method.

Owner:DOLBY LAB LICENSING CORP

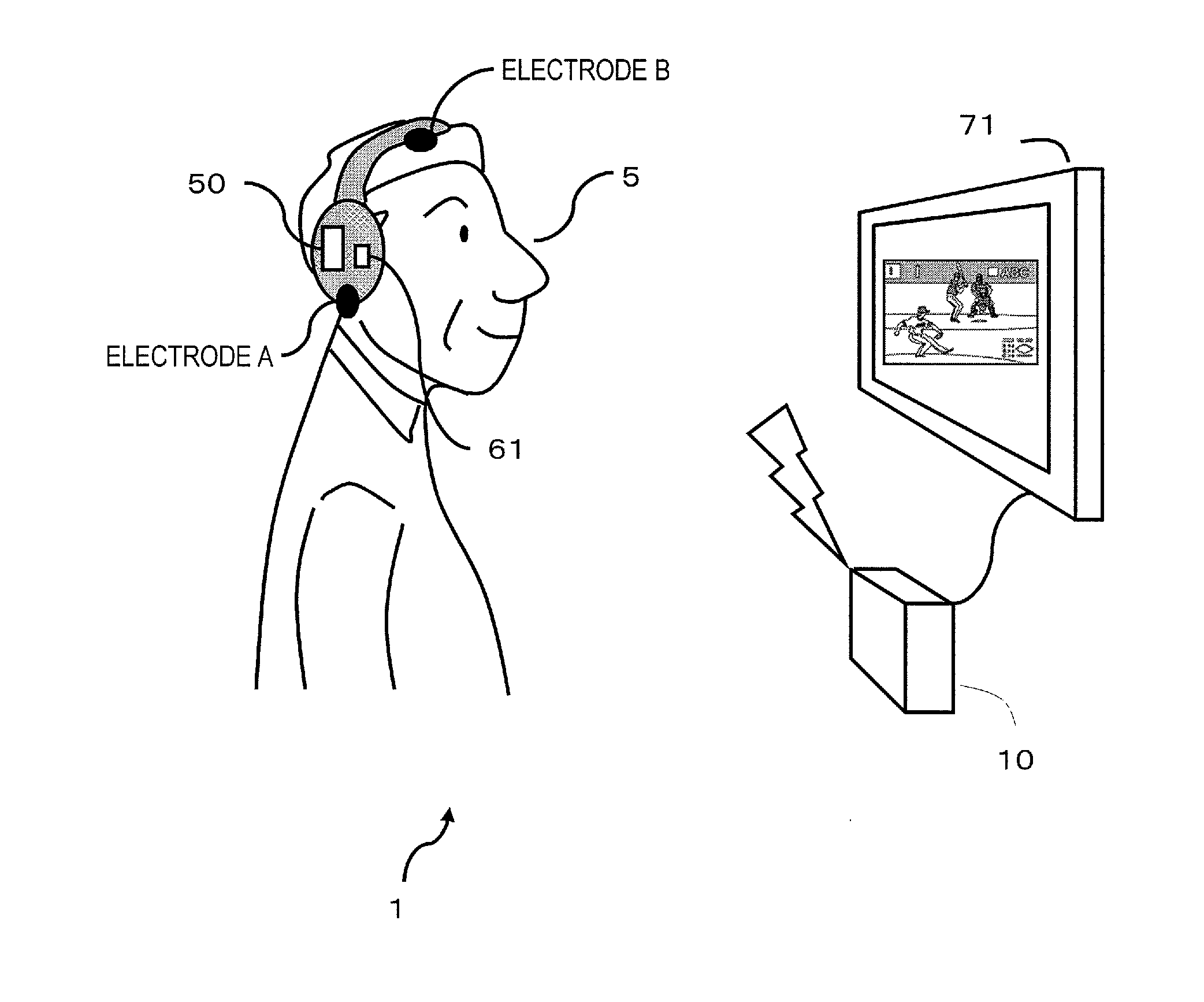

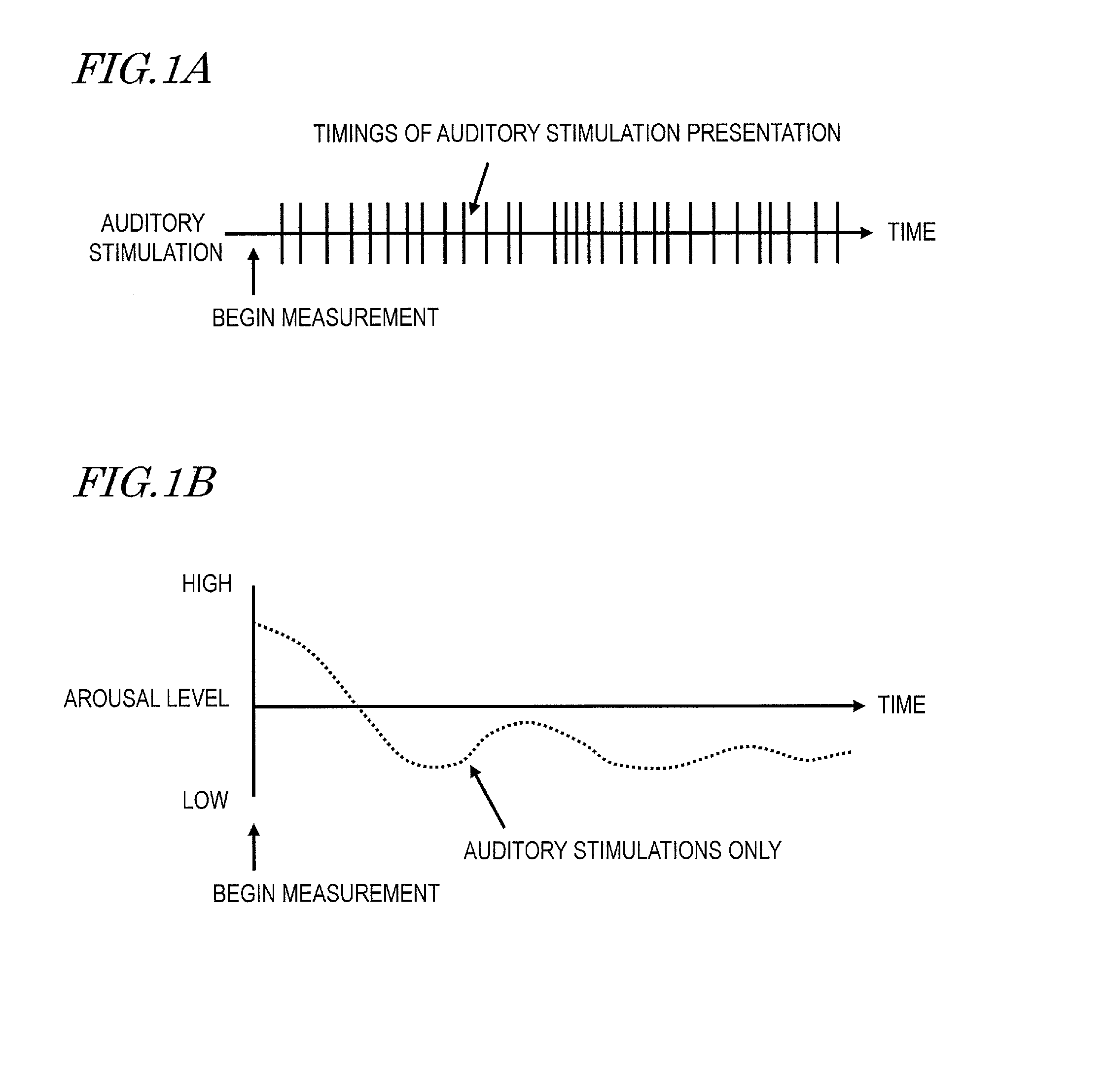

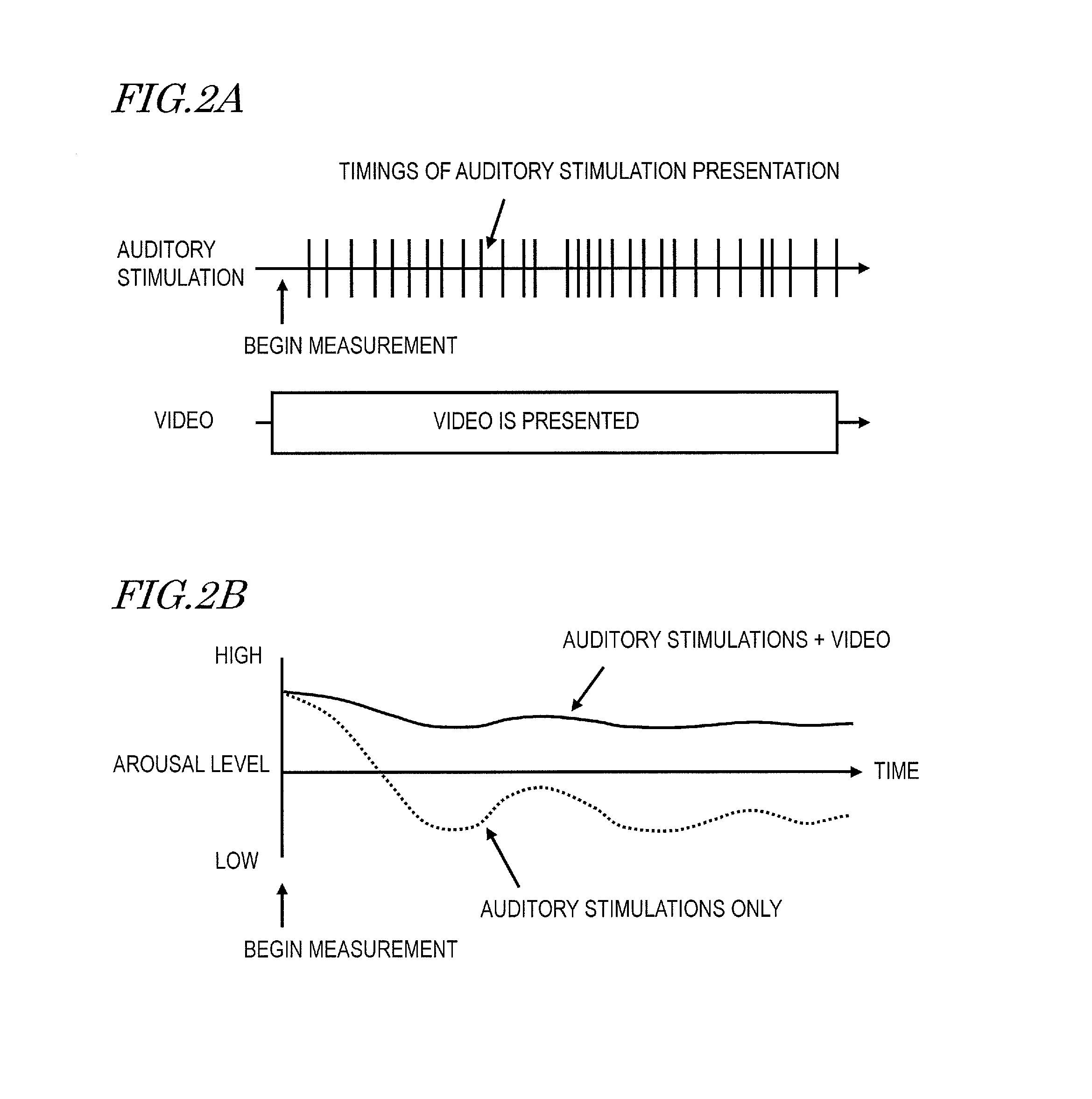

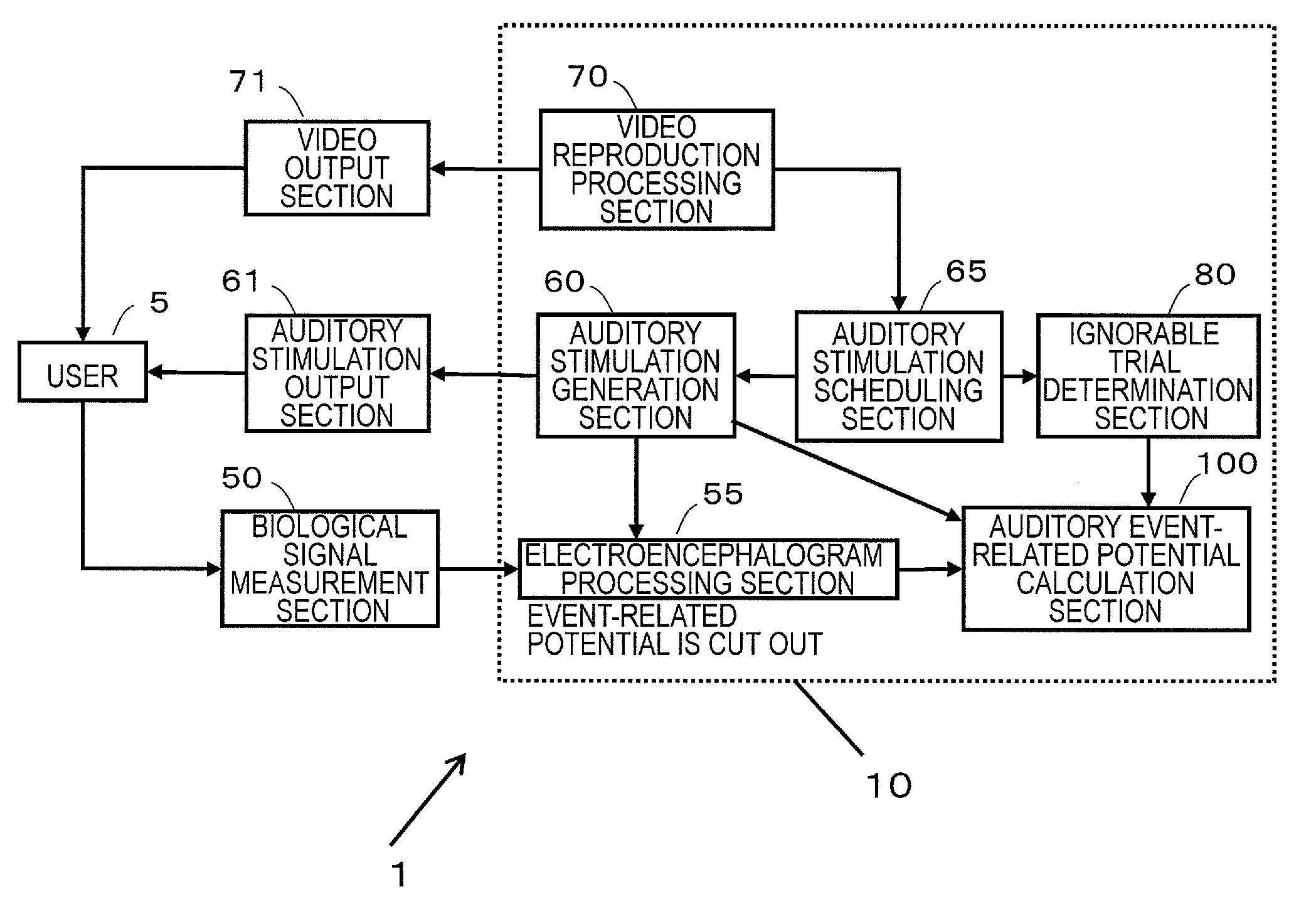

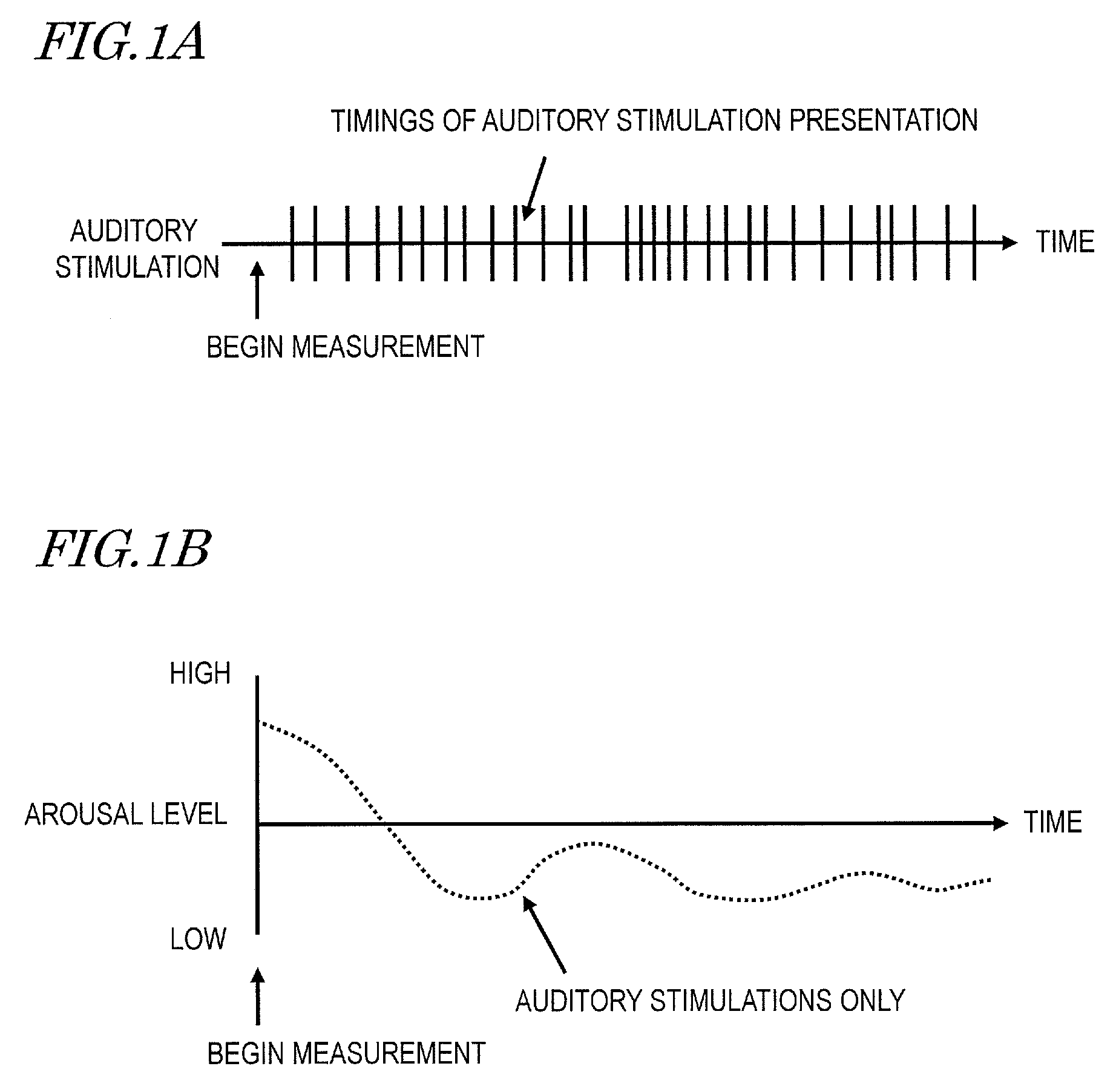

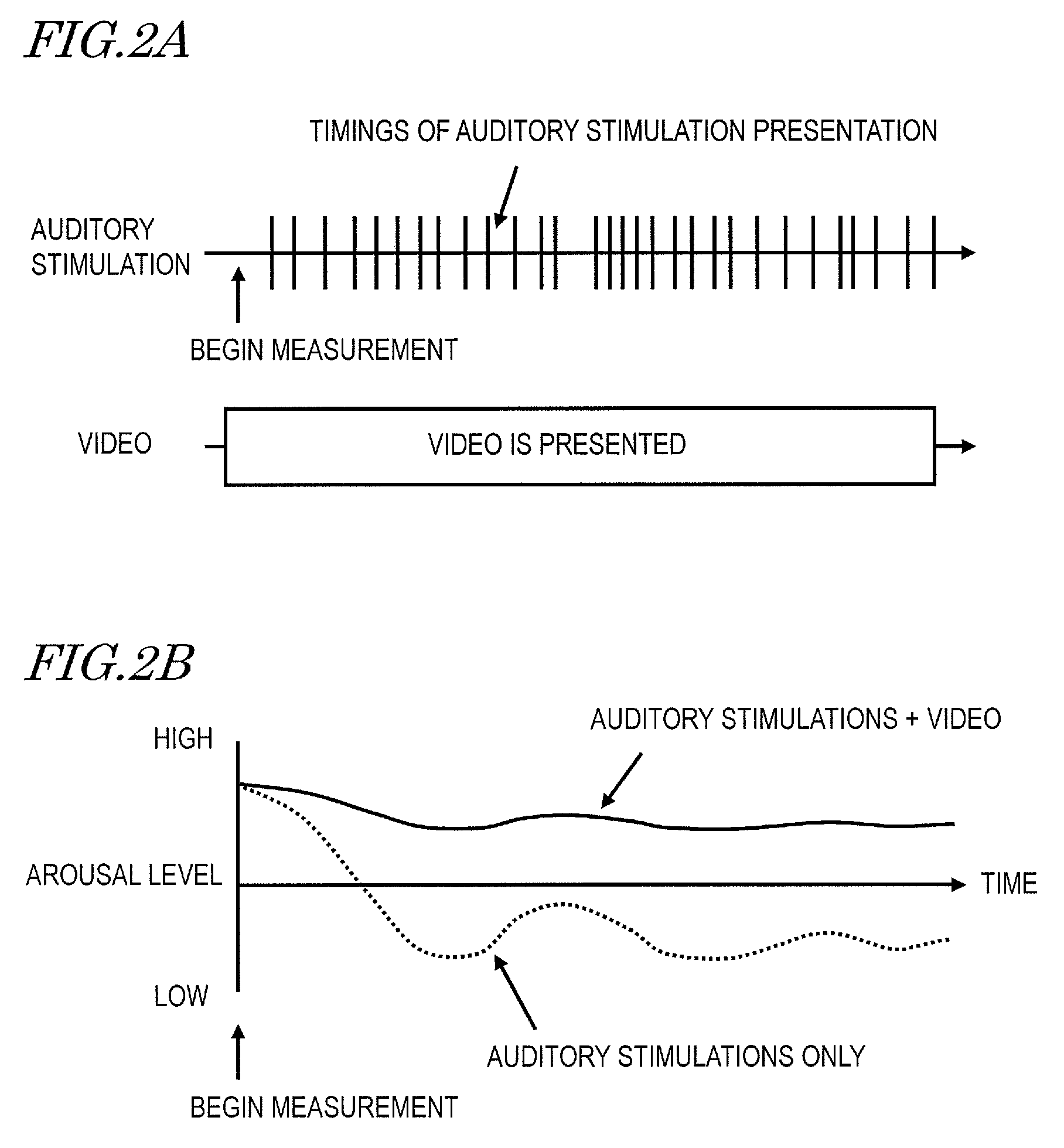

Auditory event-related potential measurement system, auditory event-related potential measurement method, and computer program thereof

Active | US20130296732A1 | Quicker electroencephalogram measurement; Accurate assessment; Electroencephalography; Sensors; Time range; Potential measurement

An auditory event-related potential measurement system includes: a video output section configured to present a video to a user; a measurement section configured to measure a user's electroencephalogram signal; a scheduling section configured to schedule a timing of presenting an auditory stimulation so that the auditory stimulation is presented during a period in which the video is being presented to the user; an auditory stimulation output section configured to present the auditory stimulation to the user at the scheduled timing; and a processing section configured to acquire, from the electroencephalogram signal, an event-related potential in a first time range as reckoned from a point in time at which the auditory stimulation is presented. When an amount of video luminance change exceeds a threshold value, the auditory stimulation is not presented during a second time range as reckoned from a point in time at which the threshold value is exceeded.

Owner:PANASONIC INTELLECTUAL PROPERTY MANAGEMENT CO LTD
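
The scheduling rule and the event-related potential window can be sketched as follows. Luminance is assumed to be sampled once per video frame, the "second time range" is modeled as a fixed blackout after each large luminance change, and the epoch function simply slices the EEG over the "first time range". All thresholds, window lengths, and function names are hypothetical.

```python
from typing import List, Sequence


def luminance_change_times(frame_times: Sequence[float], luminance: Sequence[float],
                           threshold: float) -> List[float]:
    """Frame times at which the frame-to-frame luminance change exceeds `threshold`."""
    return [frame_times[i] for i in range(1, len(luminance))
            if abs(luminance[i] - luminance[i - 1]) > threshold]


def schedule_stimuli(candidate_times: Sequence[float], change_times: Sequence[float],
                     blackout_s: float) -> List[float]:
    """Drop candidate stimulus times that fall within `blackout_s` after a large luminance change."""
    return [t for t in candidate_times
            if not any(0.0 <= t - c < blackout_s for c in change_times)]


def epoch(eeg: Sequence[float], sample_rate: float, stimulus_time: float,
          start_s: float, end_s: float) -> List[float]:
    """EEG samples in the window [stimulus + start_s, stimulus + end_s)."""
    a = int(round((stimulus_time + start_s) * sample_rate))
    b = int(round((stimulus_time + end_s) * sample_rate))
    return list(eeg[max(a, 0):max(b, 0)])
```

With a luminance jump at 1.0 s and a 1.0 s blackout, candidate stimuli at 0.8 s and 2.5 s are kept while those at 1.2 s and 1.9 s are suppressed.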

Method for sound reproduction in reflection environments, in particular in listening rooms

The invention relates to a method by which, primarily in listening rooms, the spatial sound of a third room, rather than that of the actual environment, can be made substantially enveloping in the listener's perception. The third room's spatial acoustics, perceived in place of the listening room's own, act in terms of hearing physiology in the same way as the spatial acoustics of natural rooms, namely as enveloping spatial sound (as distinct from direct sound). The listener can also incorporate this kind of spatial envelopment emotionally into the auditory event; it has no sweet-spot problem (there is a large preferred listening area instead of a single preferred listening spot), and it lets low frequencies take effect in such a way that subwoofers can generally be omitted.

Owner:SAALAKUSTIK DE GMBH

Auditory event-related potential measurement system, auditory event-related potential measurement method, and computer program thereof

Active | US9241652B2 | Suppress fluctuations; Improve accuracy; Electroencephalography; Audiometering; Potential measurement; Time range

An auditory event-related potential measurement system includes: a video output section configured to present a video to a user; a measurement section configured to measure a user's electroencephalogram signal; a scheduling section configured to schedule a timing of presenting an auditory stimulation so that the auditory stimulation is presented during a period in which the video is being presented to the user; an auditory stimulation output section configured to present the auditory stimulation to the user at the scheduled timing; and a processing section configured to acquire, from the electroencephalogram signal, an event-related potential in a first time range as reckoned from a point in time at which the auditory stimulation is presented. When an amount of video luminance change exceeds a threshold value, the auditory stimulation is not presented during a second time range as reckoned from a point in time at which the threshold value is exceeded.

Owner:PANASONIC INTELLECTUAL PROPERTY MANAGEMENT CO LTD

Audio-controlled image capturing

Active | US9007477B2 | Quality improvement; Enhance the image; Television system details; Projectors; Computer science; VIT signals

A method comprising: receiving a plurality of images corresponding to a time period covering the intended moment for releasing the shutter; receiving an audio signal associated with the plurality of images using audio capturing means; analyzing the received audio signal in order to determine an auditory event associated with a desired output image; and selecting at least one of the plurality of images on the basis of the analysis of the received audio signal for further processing in order to obtain the desired output image.

Owner:NOKIA TECH OY

Low complexity auditory event boundary detection

Active | US8938313B2 | Less complex; Reduce memory requirements; Speech analysis; Digital computer details; Frequency spectrum; Boundary detection

An auditory event boundary detector employs down-sampling of the input digital audio signal without an anti-aliasing filter, resulting in a narrower bandwidth intermediate signal with aliasing. Spectral changes of that intermediate signal, indicating event boundaries, may be detected using an adaptive filter to track a linear predictive model of the samples of the intermediate signal. Changes in the magnitude or power of the filter error correspond to changes in the spectrum of the input audio signal. The adaptive filter converges at a rate consistent with the duration of auditory events, so filter error magnitude or power changes indicate event boundaries. The detector is much less complex than methods employing time-to-frequency transforms for the full bandwidth of the audio signal.

Owner:DOLBY LAB LICENSING CORP
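The detector described above can be sketched in Python under stated assumptions. The abstract says only "an adaptive filter" tracking a linear predictive model; this sketch swaps in a normalized LMS (NLMS) predictor and flags a boundary when the mean error power of a block jumps by more than a fixed ratio over the previous block. The decimation factor, predictor order, step size, block length, ratio, and floor are all hypothetical values, and only increases in error power are treated as boundaries here.

```python
import math
from typing import List, Sequence


def decimate_no_aa(x: Sequence[float], factor: int) -> List[float]:
    """Keep every factor-th sample with no anti-aliasing filter, so aliasing is allowed."""
    return list(x[::factor])


def nlms_prediction_error(x: Sequence[float], order: int = 4, mu: float = 0.5,
                          eps: float = 1e-9) -> List[float]:
    """One-step prediction error of an NLMS adaptive linear predictor.

    errors[k] is the prediction error for sample x[k + order].
    """
    w = [0.0] * order
    errors = []
    for n in range(order, len(x)):
        past = list(x[n - order:n])[::-1]  # x[n-1], x[n-2], ..., x[n-order]
        e = x[n] - sum(wi * xi for wi, xi in zip(w, past))
        step = mu * e / (eps + sum(xi * xi for xi in past))
        w = [wi + step * xi for wi, xi in zip(w, past)]  # normalized LMS update
        errors.append(e)
    return errors


def boundaries_from_error(errors: Sequence[float], block: int = 200,
                          ratio: float = 4.0, floor: float = 1e-6) -> List[int]:
    """Indices of error blocks whose mean power exceeds `ratio` times the previous block's."""
    powers = [
        sum(e * e for e in errors[i:i + block]) / block
        for i in range(0, len(errors) - block + 1, block)
    ]
    # The floor stops tiny numerical fluctuations of a converged filter from counting.
    return [k for k in range(1, len(powers))
            if powers[k] > ratio * max(powers[k - 1], floor)]


if __name__ == "__main__":
    # Two tones; after 4x decimation they sit at 0.3*pi and 0.8*pi rad/sample.
    full = ([math.sin(0.075 * math.pi * n) for n in range(8000)]
            + [math.sin(0.2 * math.pi * n) for n in range(8000)])
    low = decimate_no_aa(full, 4)
    print(boundaries_from_error(nlms_prediction_error(low)))
```

With these settings the predictor converges on the first tone well before the switch, so the block containing the frequency change (block 9, about 2000 decimated samples in) should appear in the printed list. Because NLMS only needs a few multiply-adds per sample at the reduced rate, no full-band time-to-frequency transform is required, which is the complexity saving the abstract describes.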

Sonification systems and methods for auditory display of physiological parameters

Active · US9918679B2 · Easy to monitor · Limited utility · Sensors · Measuring/recording heart/pulse rate · Sonification · Auditory display

Systems and methods are disclosed for generating a sonification output that presents information about physiological parameters, such as oxygen saturation and heart rate, as discrete auditory events. In a preferred embodiment, each event comprises two sounds: the first is a reference sound indicating the desired target state of the two parameters, and the second indicates their actual state. Heart rate is preferably represented by amplitude modulation, and oxygen saturation by a timbral manipulation.

Owner:NEW TECH SOUNDINGS +2
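A two-sound auditory event of this kind can be sketched in Python. The abstract names only the carriers (amplitude modulation for heart rate, timbre for oxygen saturation), so the specific mappings here are hypothetical: the AM rate is one pulse per heartbeat (bpm / 60 Hz), and lower saturation adds more 2nd and 3rd harmonics to brighten the tone. The target values, pitch, and durations are also assumptions.

```python
import math
from typing import List


def spo2_to_brightness(spo2_pct: float) -> float:
    """Hypothetical timbre mapping: 100 % -> 0.0 (pure tone), 80 % or lower -> 1.0."""
    return min(max((100.0 - spo2_pct) / 20.0, 0.0), 1.0)


def tone(duration_s: float, fs: int, f0: float, am_rate_hz: float,
         am_depth: float, brightness: float) -> List[float]:
    """Sine tone with amplitude modulation and a brightness-controlled harmonic mix."""
    out = []
    norm = 1.0 + 0.75 * brightness  # worst-case carrier peak, keeps |sample| <= 1
    for i in range(int(duration_s * fs)):
        t = i / fs
        carrier = math.sin(2 * math.pi * f0 * t) + brightness * (
            0.5 * math.sin(2 * math.pi * 2 * f0 * t)
            + 0.25 * math.sin(2 * math.pi * 3 * f0 * t))
        # Envelope swings between 1 and (1 - am_depth) at am_rate_hz.
        env = 1.0 - am_depth * 0.5 * (1.0 - math.cos(2 * math.pi * am_rate_hz * t))
        out.append(env * carrier / norm)
    return out


def auditory_event(hr_bpm: float, spo2_pct: float, target_hr_bpm: float = 70.0,
                   target_spo2_pct: float = 97.0, fs: int = 8000, dur_s: float = 1.0,
                   gap_s: float = 0.15, f0: float = 440.0) -> List[float]:
    """Reference sound (target state), a short silence, then the actual-state sound."""
    ref = tone(dur_s, fs, f0, target_hr_bpm / 60.0, 0.6, spo2_to_brightness(target_spo2_pct))
    act = tone(dur_s, fs, f0, hr_bpm / 60.0, 0.6, spo2_to_brightness(spo2_pct))
    return ref + [0.0] * int(gap_s * fs) + act
```

Playing the reference first gives the listener an anchor: a faster pulse or a brighter timbre in the second sound signals a deviation from target without having to judge absolute values.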

A real-time rendering method for a virtual auditory environment

Active · CN102572676B · Implement dynamic processing · Reduce computation · Stereophonic systems · Sound sources · Headphones

The invention discloses a real-time rendering method for a virtual auditory environment. The initial parameters of the virtual auditory environment are set, and a head tracker detects the position and orientation of the listener's head in six degrees of freedom in real time. From these data, the sound sources, sound propagation, environmental reflections, and the radiation and binaural signal conversion at the receiver are dynamically simulated in real time. For the binaural conversion, multiple virtual sound sources in different directions and at different distances are processed jointly through a shared filter, which improves signal-processing efficiency. The binaural signal is then equalized for the headphone-to-ear-canal transfer characteristic and fed to the headphones for playback, producing a realistic spatial auditory event or perception.

Owner:SOUTH CHINA UNIV OF TECH
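The head tracker's role can be illustrated by the geometry it feeds: on every tracker update, each source's direction and distance must be re-expressed relative to the listener's head before binaural filtering. The Python sketch below assumes a right-handed frame (x forward, y left, z up), yaw-pitch-roll angles applied in ZYX order, and a simple 1/r direct-path model with a speed of sound of 343 m/s. None of these conventions or names come from the patent, and the shared-filter binaural stage itself is not sketched.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
SPEED_OF_SOUND = 343.0  # m/s, roughly air at 20 degrees C (assumed)


def rotation_zyx(yaw: float, pitch: float, roll: float) -> List[List[float]]:
    """Head-to-world rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def head_relative(source: Vec3, head_pos: Vec3, yaw: float, pitch: float,
                  roll: float) -> Tuple[float, float, float]:
    """Azimuth (rad, positive = left), elevation (rad, positive = up) and distance (m)."""
    r = rotation_zyx(yaw, pitch, roll)
    d = [s - p for s, p in zip(source, head_pos)]
    # World -> head coordinates: multiply the offset by R transpose.
    x, y, z = (sum(r[j][i] * d[j] for j in range(3)) for i in range(3))
    dist = math.sqrt(x * x + y * y + z * z)
    return math.atan2(y, x), math.atan2(z, math.hypot(x, y)), dist


def propagation(dist: float, min_dist: float = 0.1) -> Tuple[float, float]:
    """Direct-path delay (s) and 1/r gain, clamped close to the head."""
    return dist / SPEED_OF_SOUND, 1.0 / max(dist, min_dist)
```

The resulting azimuth and elevation would select (or interpolate) the binaural filters, while the delay and gain model the transmission path; recomputing them per tracker update is what keeps the virtual sources fixed in space as the head moves.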

Segmenting audio signals into auditory events

In one aspect, the invention divides an audio signal into auditory events, each of which tends to be perceived as separate and distinct, by calculating the spectral content of successive time blocks of the audio signal (5-1), calculating the difference in spectral content between successive time blocks of the audio signal (5-2), and identifying an auditory event boundary as the boundary between successive time blocks when the difference in the spectral content between such successive time blocks exceeds a threshold (5-3). In another aspect, the invention generates a reduced-information representation of an audio signal by dividing an audio signal into auditory events, each of which tends to be perceived as separate and distinct, and formatting and storing information relating to the auditory events (5-4). Optionally, the invention may also assign a characteristic to one or more of the auditory events (5-5).

Owner:DOLBY LAB LICENSING CORP
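The three numbered steps (5-1 to 5-3) map onto a short Python sketch. The abstract does not specify the spectral measure, so this sketch assumes a Hann window, a naive DFT, magnitudes normalized to the block peak in dB with a -60 dB floor, and a sum of absolute dB differences between successive blocks; the block length and threshold are hypothetical values to tune.

```python
import cmath
import math
from typing import List, Sequence


def block_spectrum(block: Sequence[float]) -> List[float]:
    """Step 5-1: magnitude spectrum of one Hann-windowed block (naive O(n^2) DFT)."""
    n = len(block)
    w = [0.5 - 0.5 * math.cos(2 * math.pi * k / n) for k in range(n)]
    return [
        abs(sum(w[k] * block[k] * cmath.exp(-2j * math.pi * f * k / n) for k in range(n)))
        for f in range(n // 2 + 1)
    ]


def normalized_log_spectrum(mags: Sequence[float], floor_db: float = -60.0) -> List[float]:
    """Level of each bin in dB relative to the block's peak, clamped at floor_db."""
    peak = max(mags)
    if peak == 0.0:
        return [floor_db] * len(mags)
    return [max(20.0 * math.log10(m / peak), floor_db) if m > 0.0 else floor_db
            for m in mags]


def event_boundaries(x: Sequence[float], block_len: int = 64,
                     threshold: float = 100.0) -> List[int]:
    """Steps 5-2 and 5-3: sample indices where successive spectra differ by more than threshold."""
    spectra = [
        normalized_log_spectrum(block_spectrum(x[i:i + block_len]))
        for i in range(0, len(x) - block_len + 1, block_len)
    ]
    bounds = []
    for k in range(1, len(spectra)):
        diff = sum(abs(a - b) for a, b in zip(spectra[k], spectra[k - 1]))
        if diff > threshold:
            bounds.append(k * block_len)  # boundary lies between blocks k-1 and k
    return bounds
```

The returned list of boundary positions is itself a compact, reduced-information description of the signal in the spirit of step 5-4. A practical version would replace the naive DFT with an FFT such as `numpy.fft.rfft`.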

© 2025 PatSnap. All rights reserved.