31 results for "Text independent" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

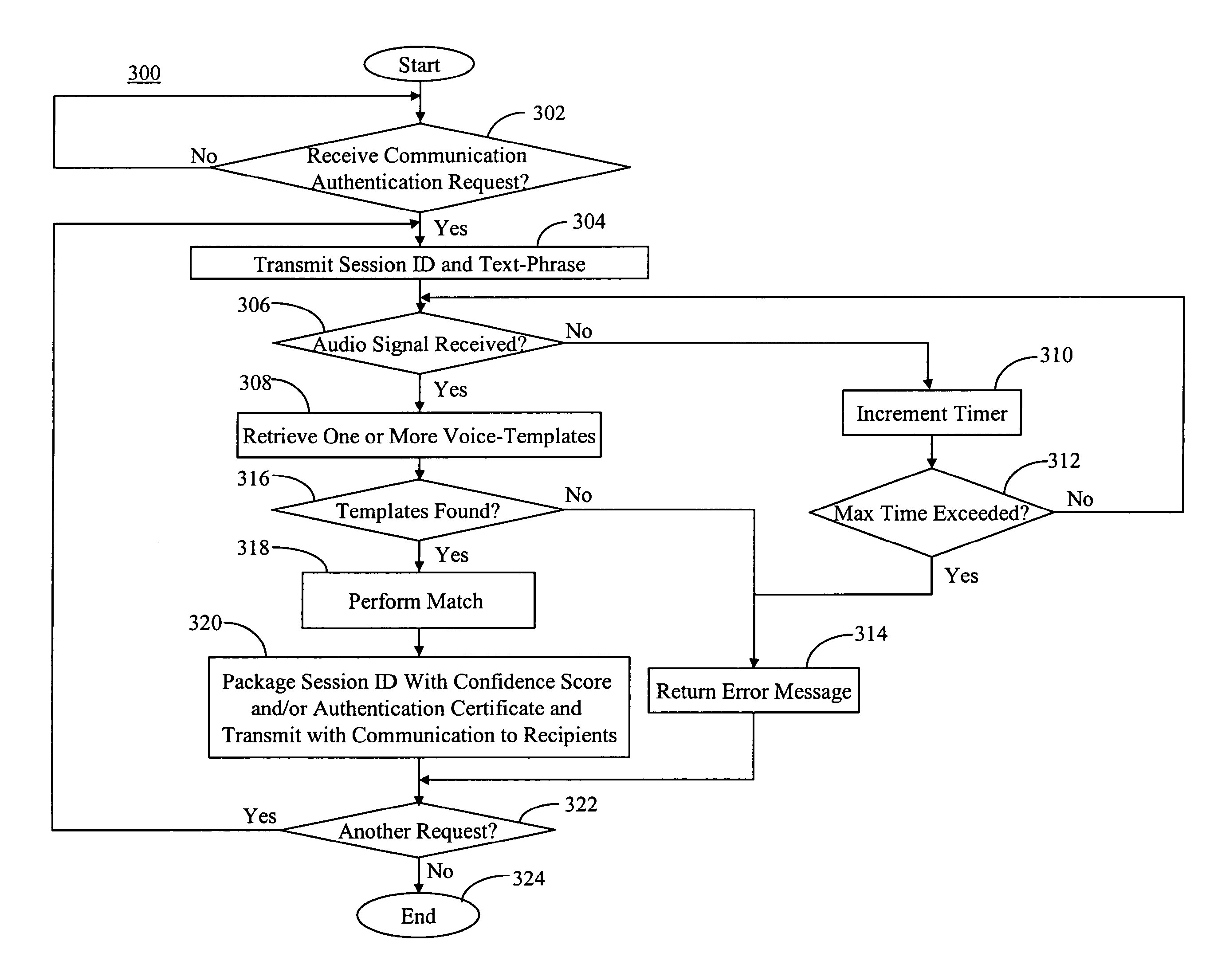

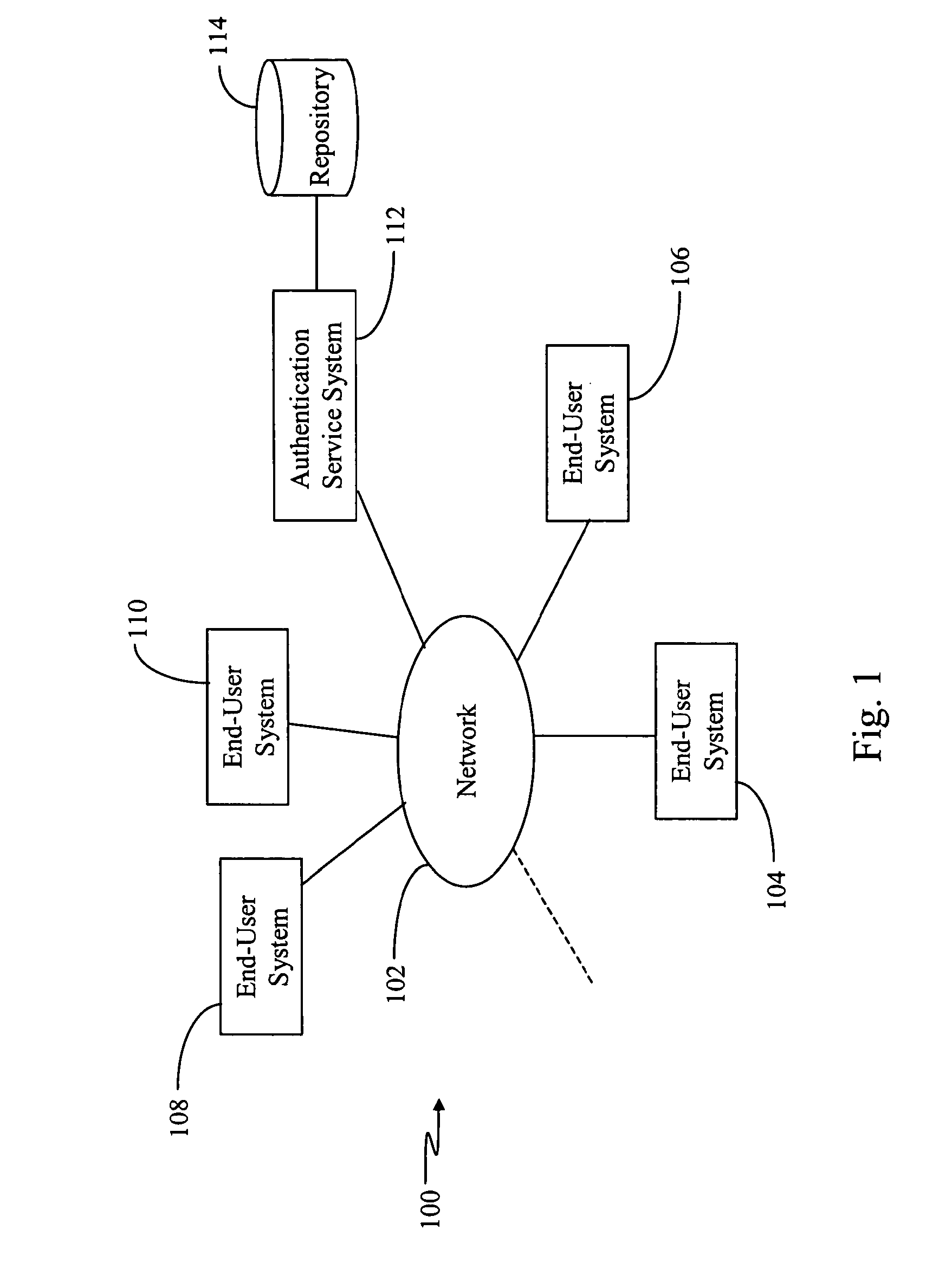

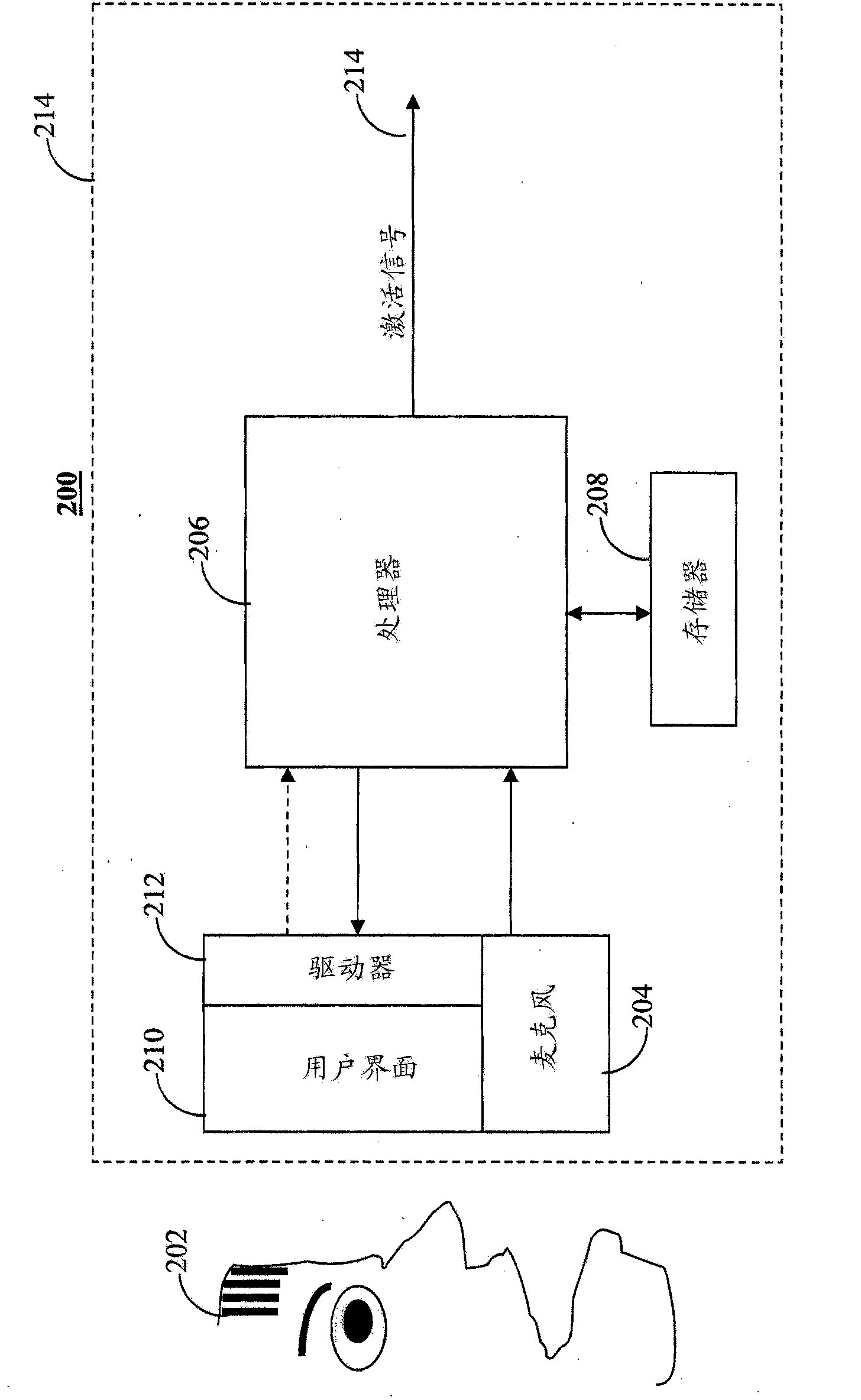

Digital Signatures for Communications Using Text-Independent Speaker Verification

Status: Active | Publication No.: US20120296649A1 | Topics: Improve speech recognition performance; Speech recognition; Transmission; Text independent; Digital signature

A speaker-verification digital signature system is disclosed that provides greater confidence in communications having digital signatures because a signing party may be prompted to speak a text-phrase that may be different for each digital signature, thus making it difficult for anyone other than the legitimate signing party to provide a valid signature.

Owner:NUANCE COMM INC

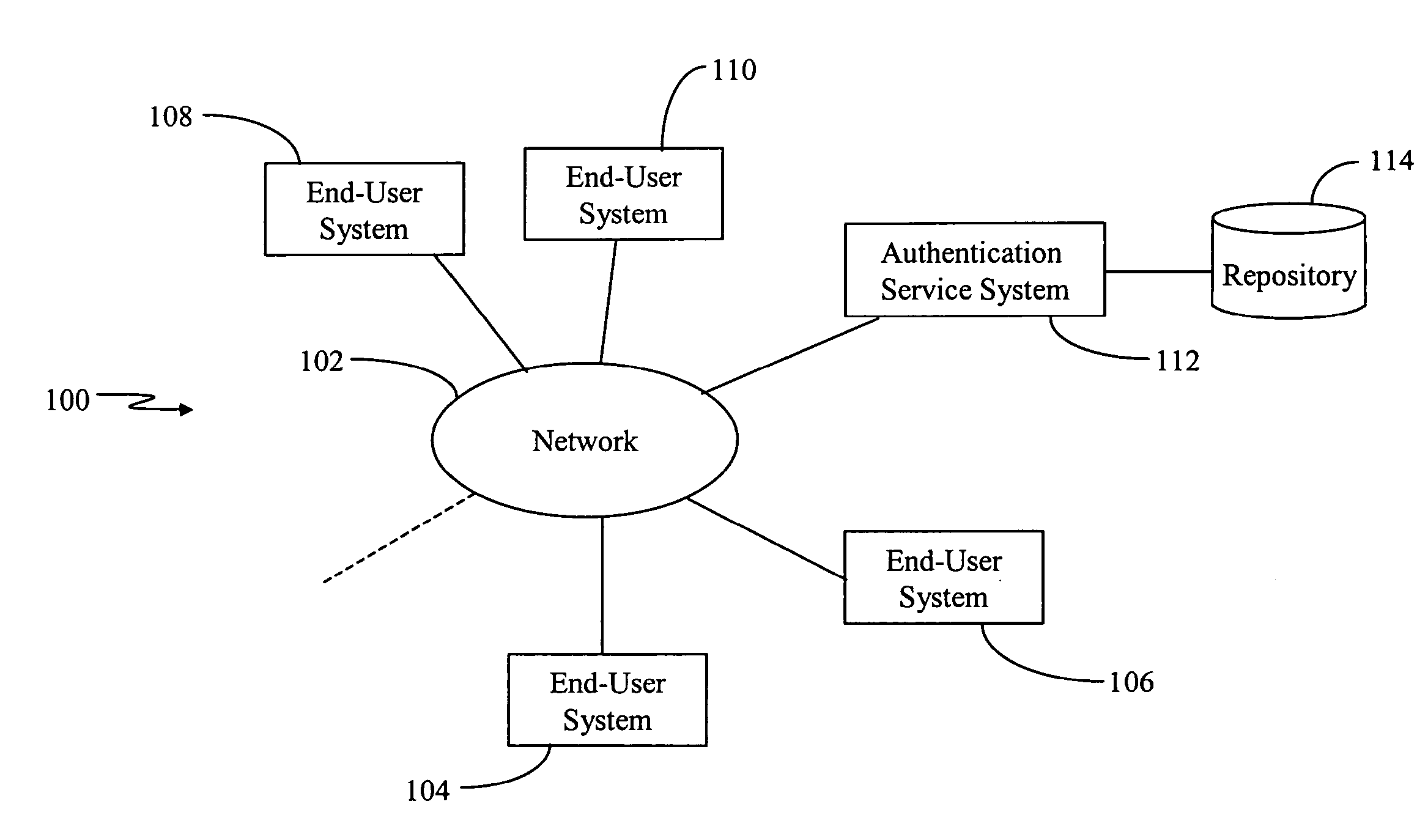

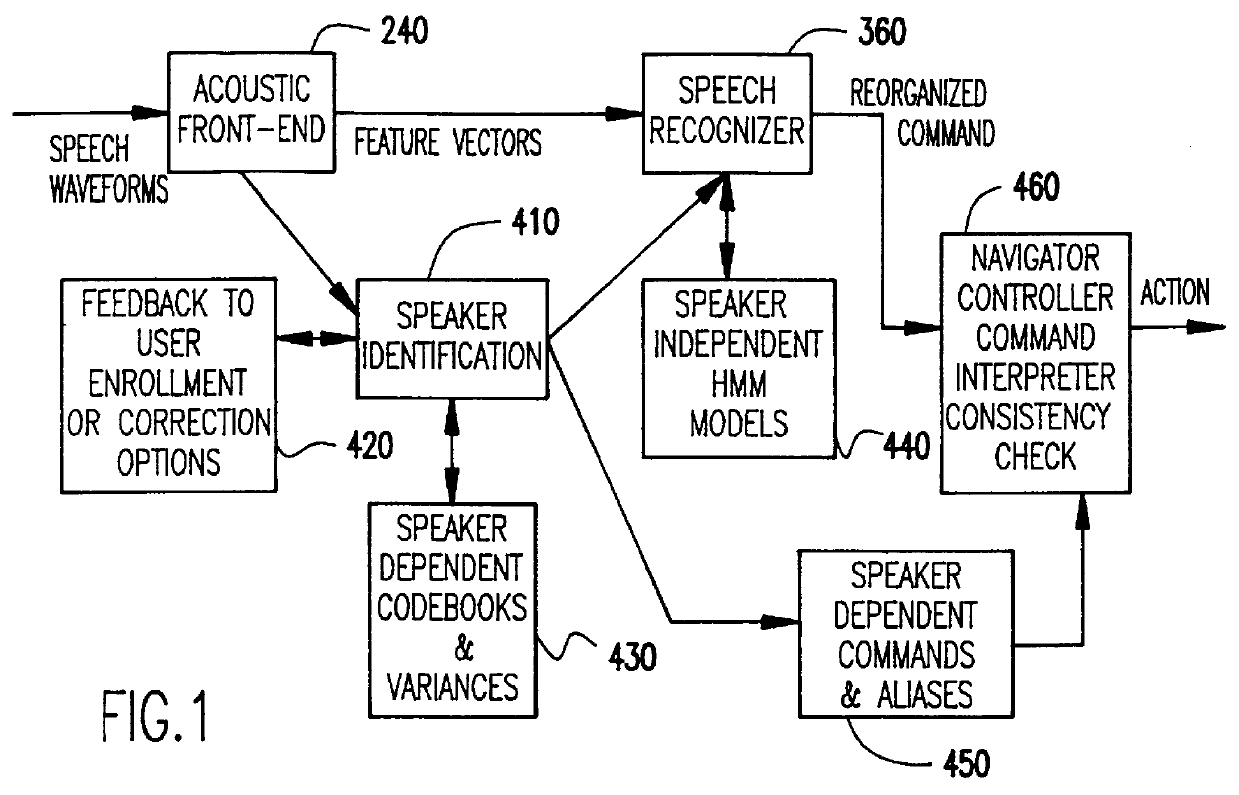

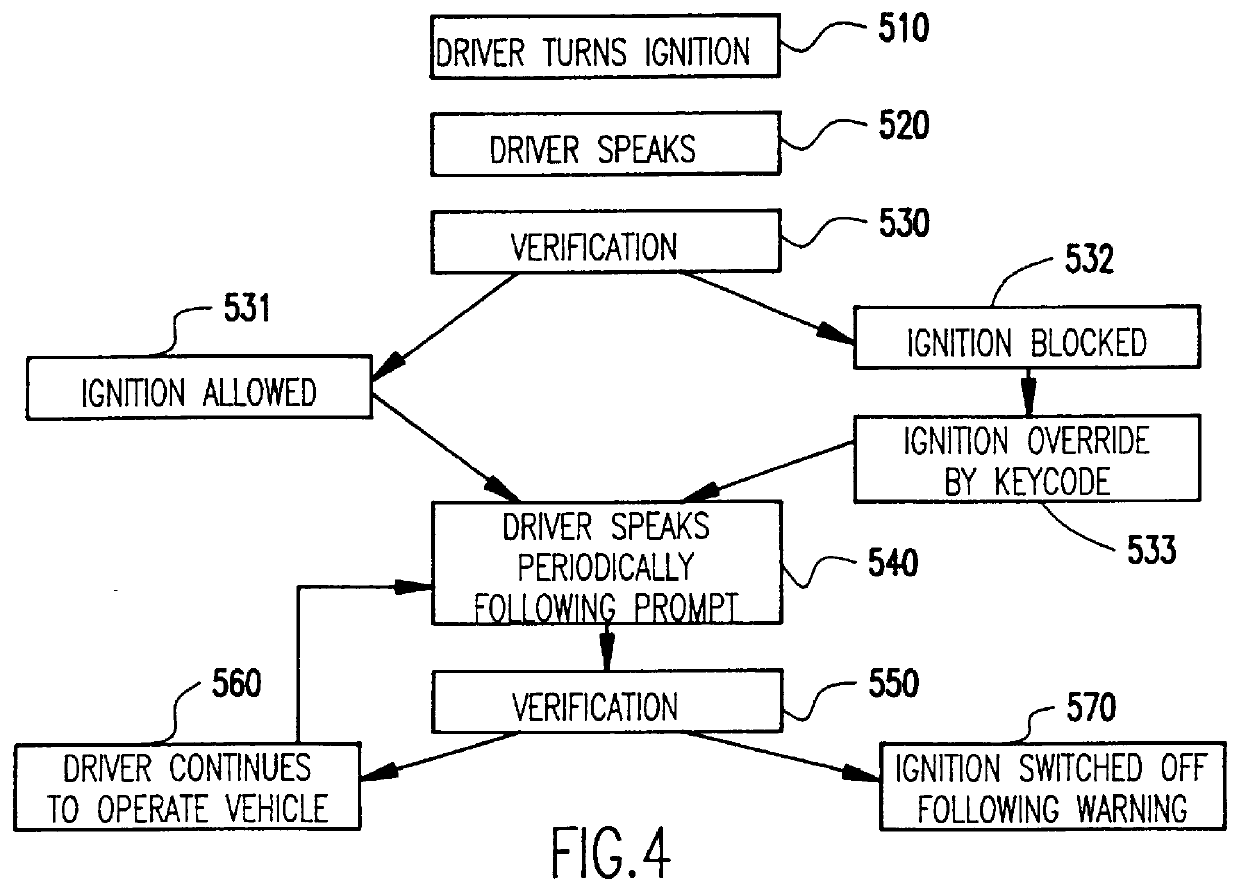

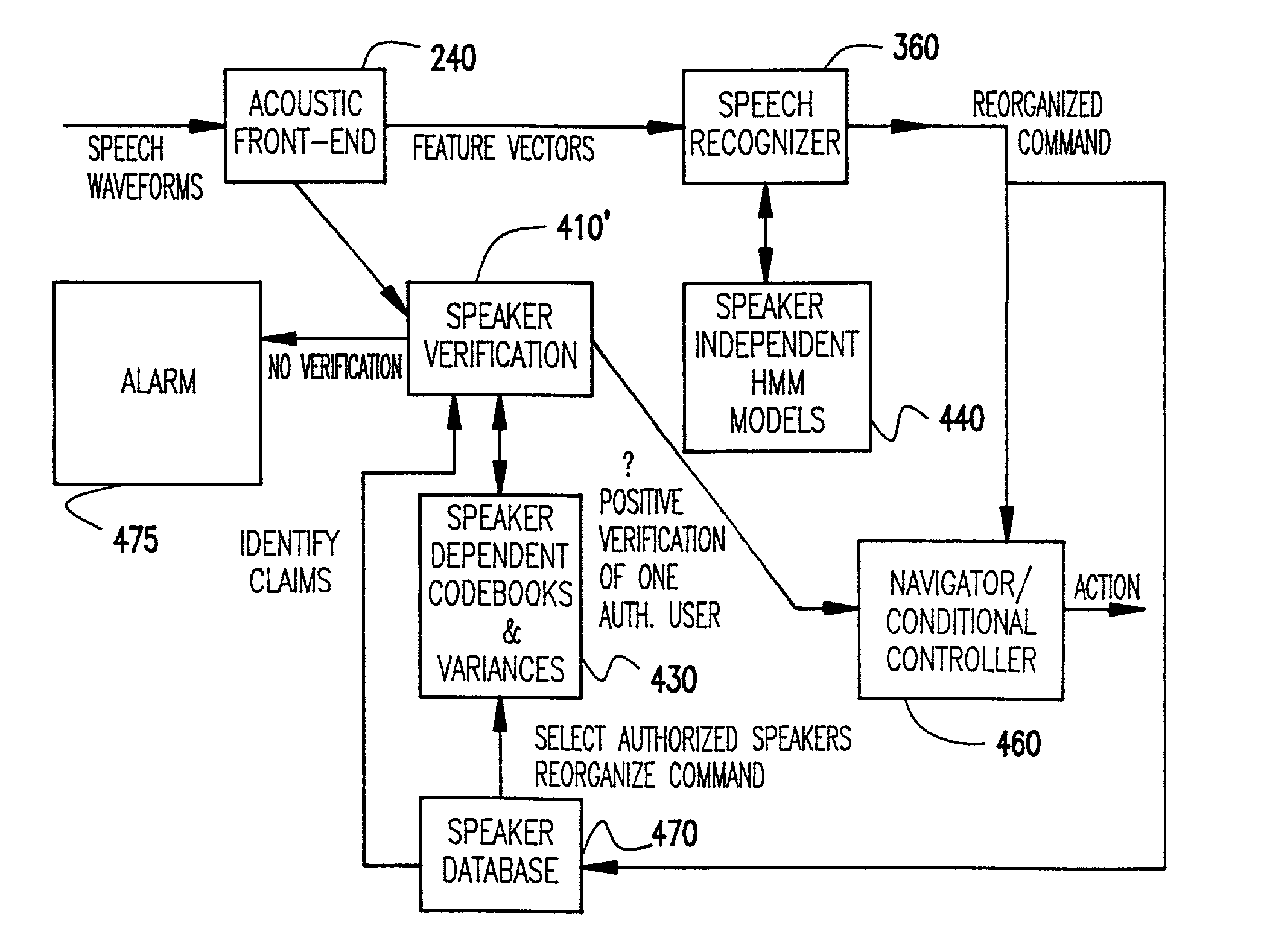

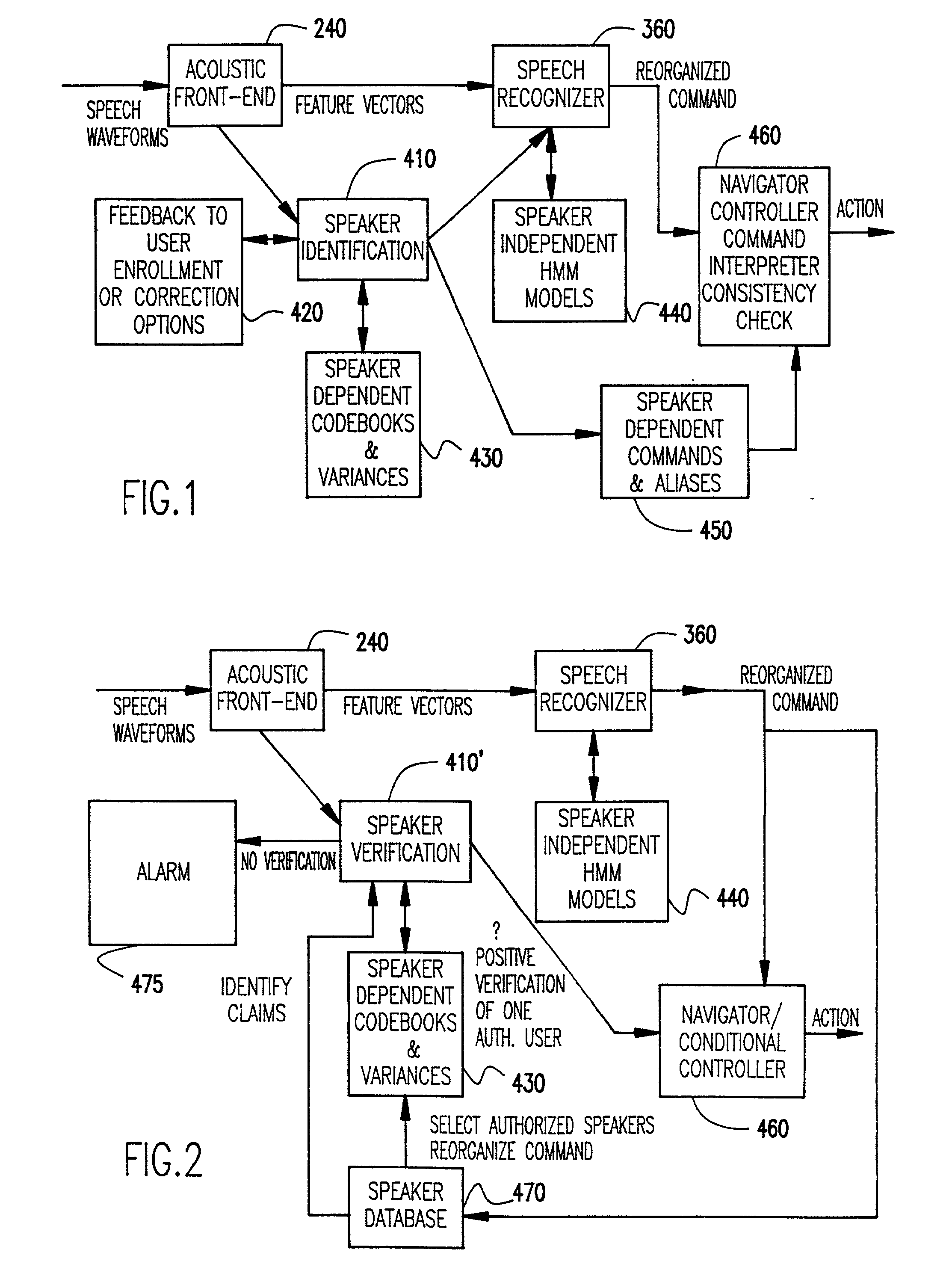

Text independent speaker recognition for transparent command ambiguity resolution and continuous access control

Feature vectors representing each of a plurality of overlapping frames of an arbitrary, text-independent speech signal are computed and compared with vector parameters and variances stored as codewords in one or more codebooks corresponding to each of one or more enrolled users, to provide speaker-dependent information for speech recognition and/or ambiguity resolution. Other information, such as each enrolled user's aliases and preferences, may also be enrolled and stored, for example in a database. The feature vectors may be ranked by how closely they correspond to a codeword entry, and the number of frames corresponding to each codebook is accumulated to identify a likely enrolled speaker. Differences between the feature-vector parameters and the codewords can be used to detect a new speaker and initiate an enrollment procedure. Continuous authorization and access control can be performed on any utterance, either by verifying that the speaker of a recognized command is authorized or by comparing the command with the commands authorized for the recognized speaker. Text independence also allows coherence checks on commands to validate the recognition process.

Owner:NUANCE COMM INC
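
The frame-voting identification step described above can be sketched in Python as follows. This is a minimal illustration, not the patented implementation: it assumes each enrolled user's codebook is stored as NumPy arrays of codeword means and variances, uses a variance-weighted squared distance, and the function name `identify_speaker` and the `new_speaker_threshold` rule are hypothetical.

```python
import numpy as np


def identify_speaker(frames, codebooks, new_speaker_threshold=None):
    """Vote-counting speaker identification over per-user VQ codebooks.

    frames: (T, D) array of feature vectors, one per overlapping frame.
    codebooks: dict mapping user name -> (means, variances), each (K, D).
    Returns (user name or None, per-user vote counts).
    """
    names = list(codebooks)
    best = []
    for name in names:
        means, variances = codebooks[name]
        # Variance-weighted squared distance from every frame to every codeword: (T, K)
        dist = (((frames[:, None, :] - means[None]) ** 2) / variances[None]).sum(axis=2)
        best.append(dist.min(axis=1))  # distance to the closest codeword, per frame
    best = np.stack(best)  # (num_users, T)
    # Each frame votes for the user whose codebook matched it most closely
    votes = np.bincount(best.argmin(axis=0), minlength=len(names))
    winner = int(votes.argmax())
    # Hypothetical new-speaker rule: even the winning codebook fits poorly on average
    if new_speaker_threshold is not None and best[winner].mean() > new_speaker_threshold:
        return None, votes
    return names[winner], votes
```

A `None` result is where an enrollment procedure could be started; the threshold would have to be tuned on real data.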

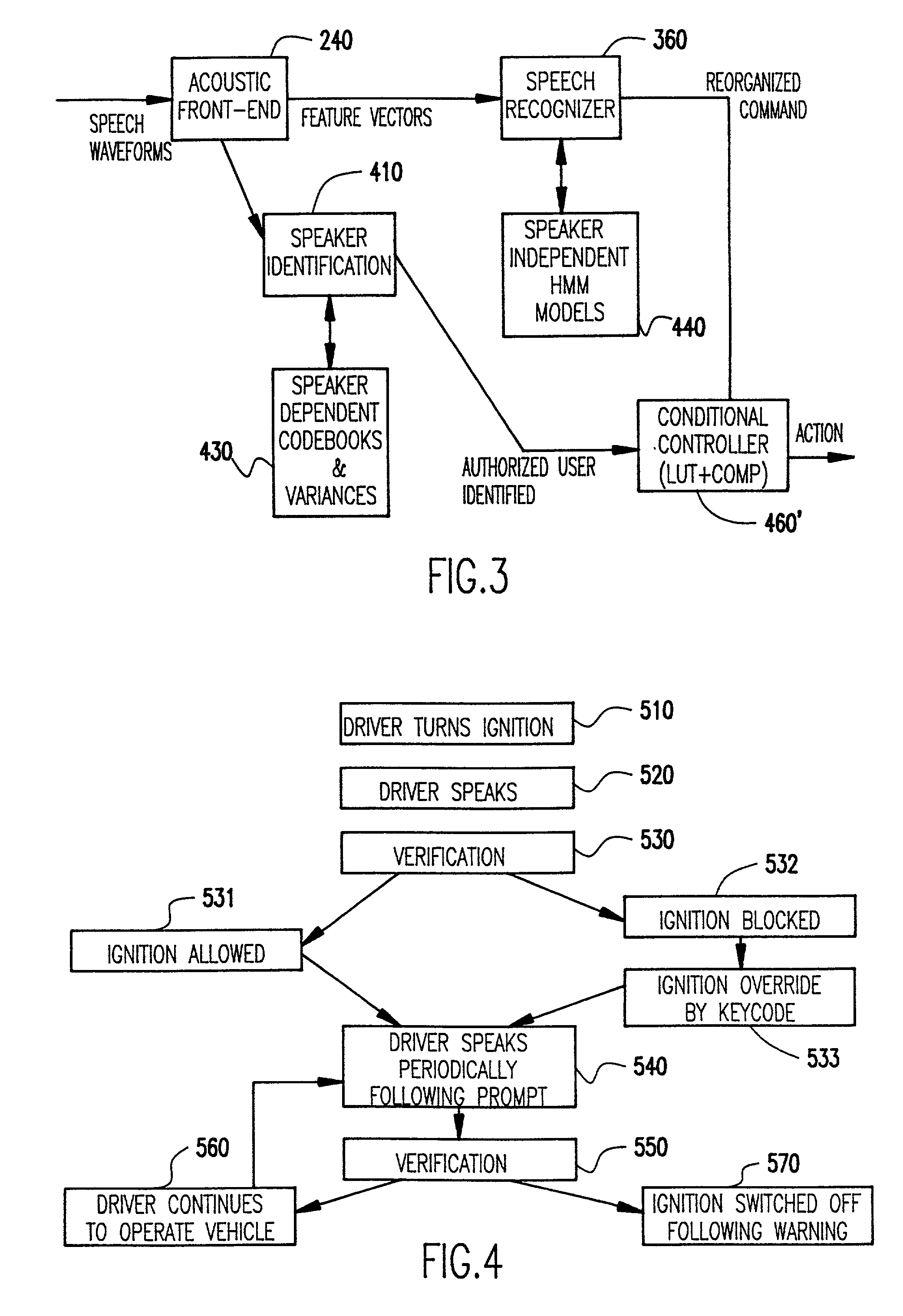

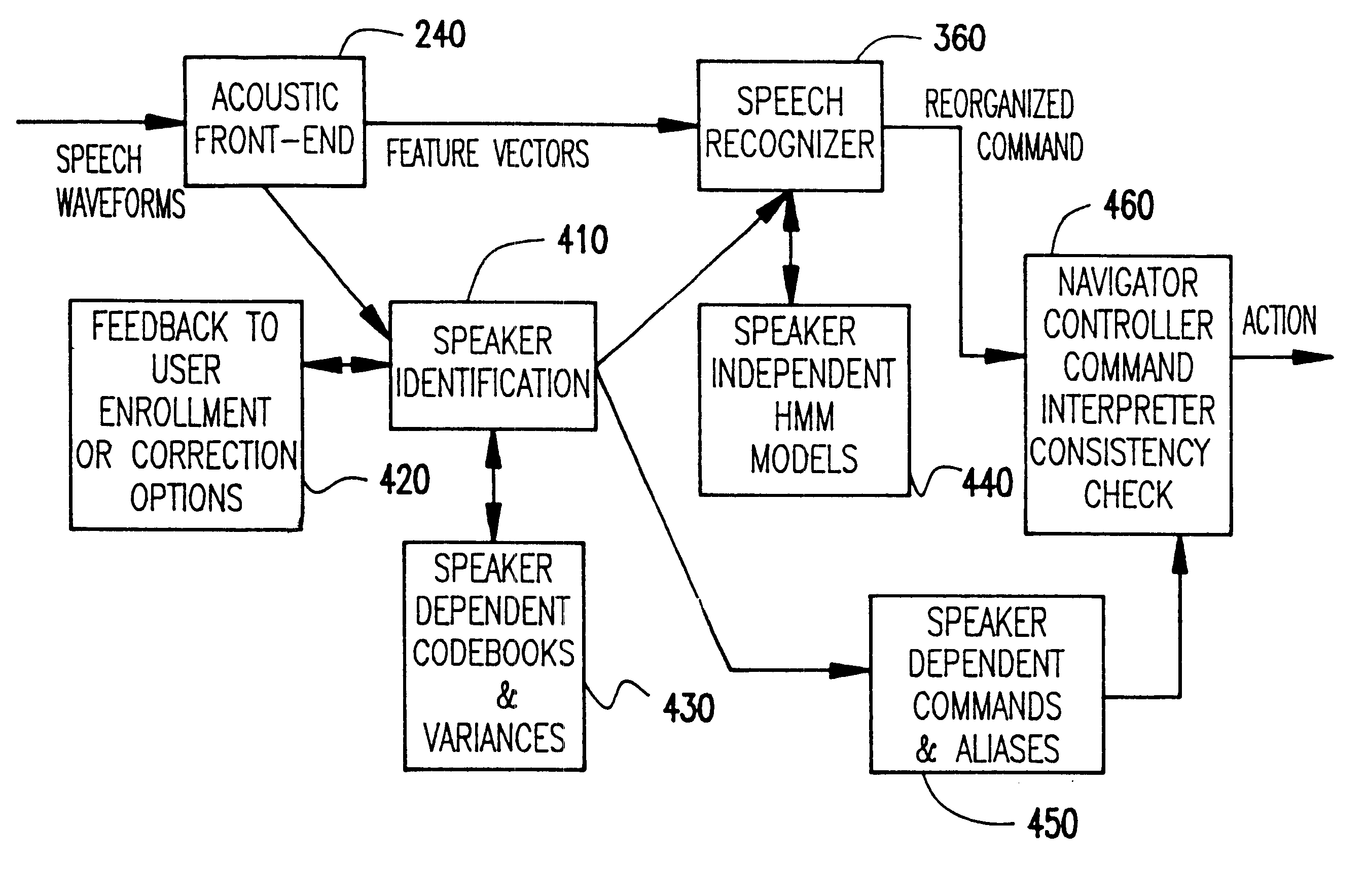

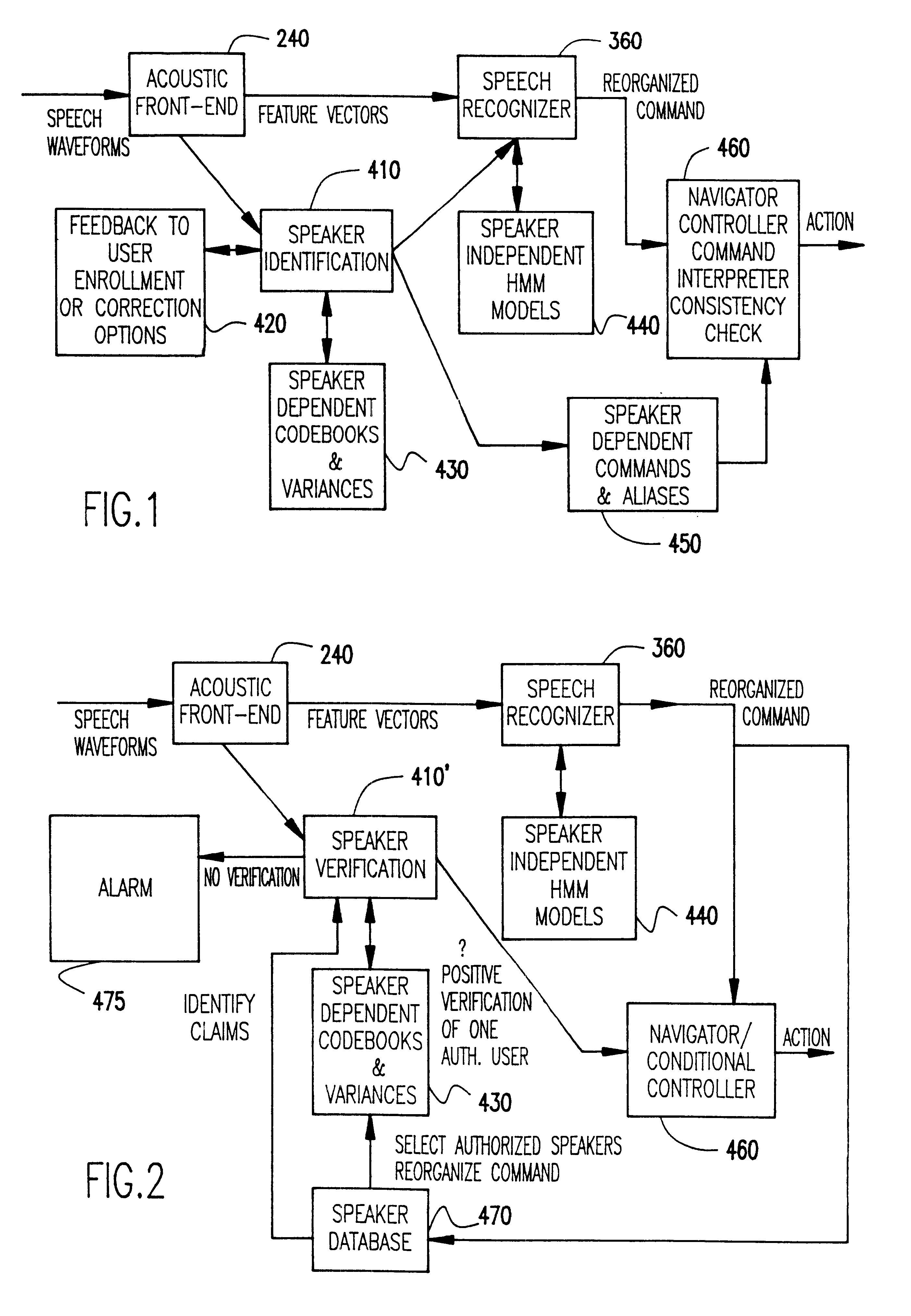

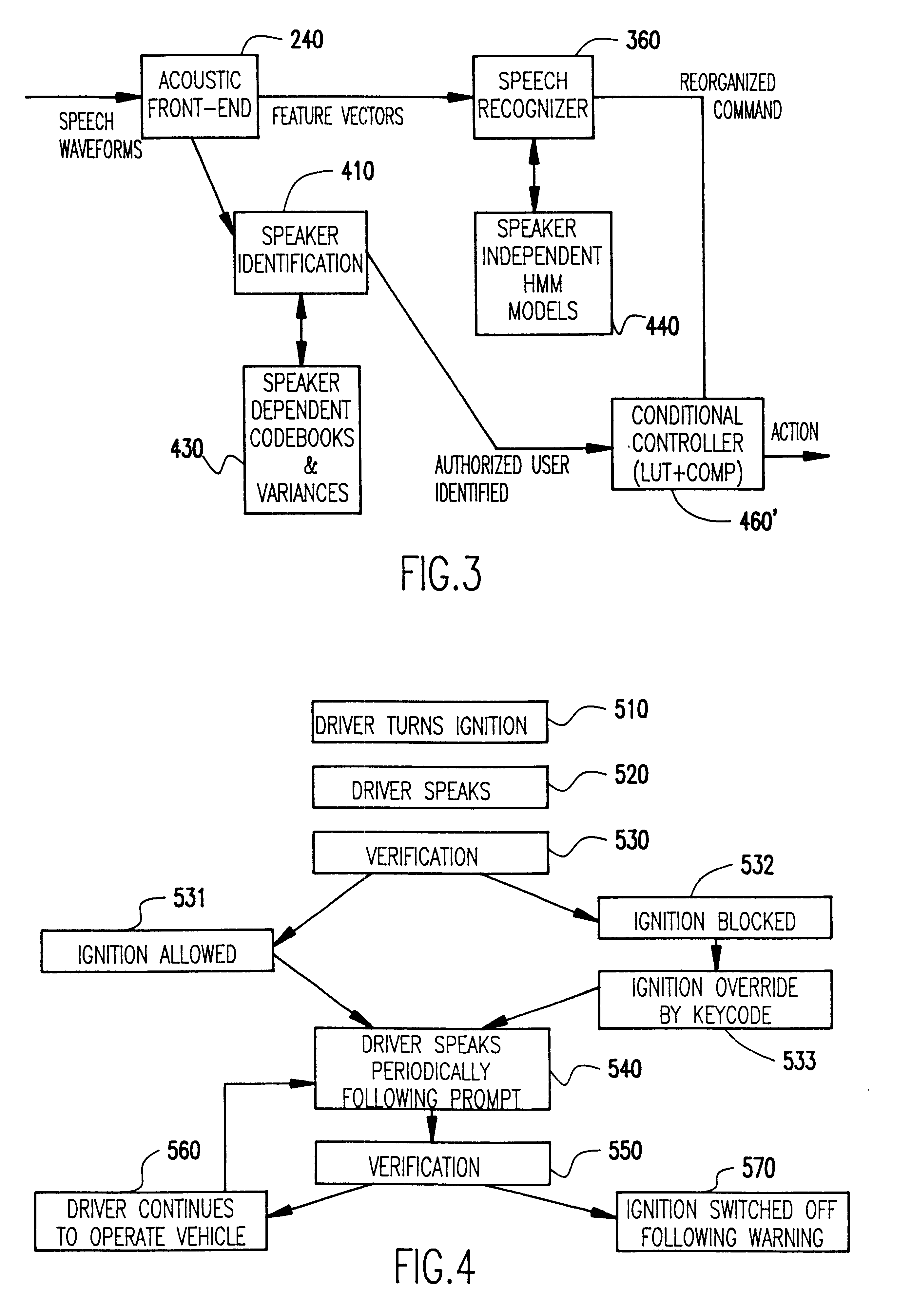

Text independent speaker recognition for transparent command ambiguity resolution and continuous access control

Status: Inactive | Publication No.: US20020002465A1 | Topics: Increases ambiguity; Easy access; Speech recognition; Feature vector; Text independent

Feature vectors representing each of a plurality of overlapping frames of an arbitrary, text-independent speech signal are computed and compared with vector parameters and variances stored as codewords in one or more codebooks corresponding to each of one or more enrolled users, to provide speaker-dependent information for speech recognition and/or ambiguity resolution. Other information, such as each enrolled user's aliases and preferences, may also be enrolled and stored, for example in a database. The feature vectors may be ranked by how closely they correspond to a codeword entry, and the number of frames corresponding to each codebook is accumulated to identify a likely enrolled speaker. Differences between the feature-vector parameters and the codewords can be used to detect a new speaker and initiate an enrollment procedure. Continuous authorization and access control can be performed on any utterance, either by verifying that the speaker of a recognized command is authorized or by comparing the command with the commands authorized for the recognized speaker. Text independence also allows coherence checks on commands to validate the recognition process.

Owner:NUANCE COMM INC

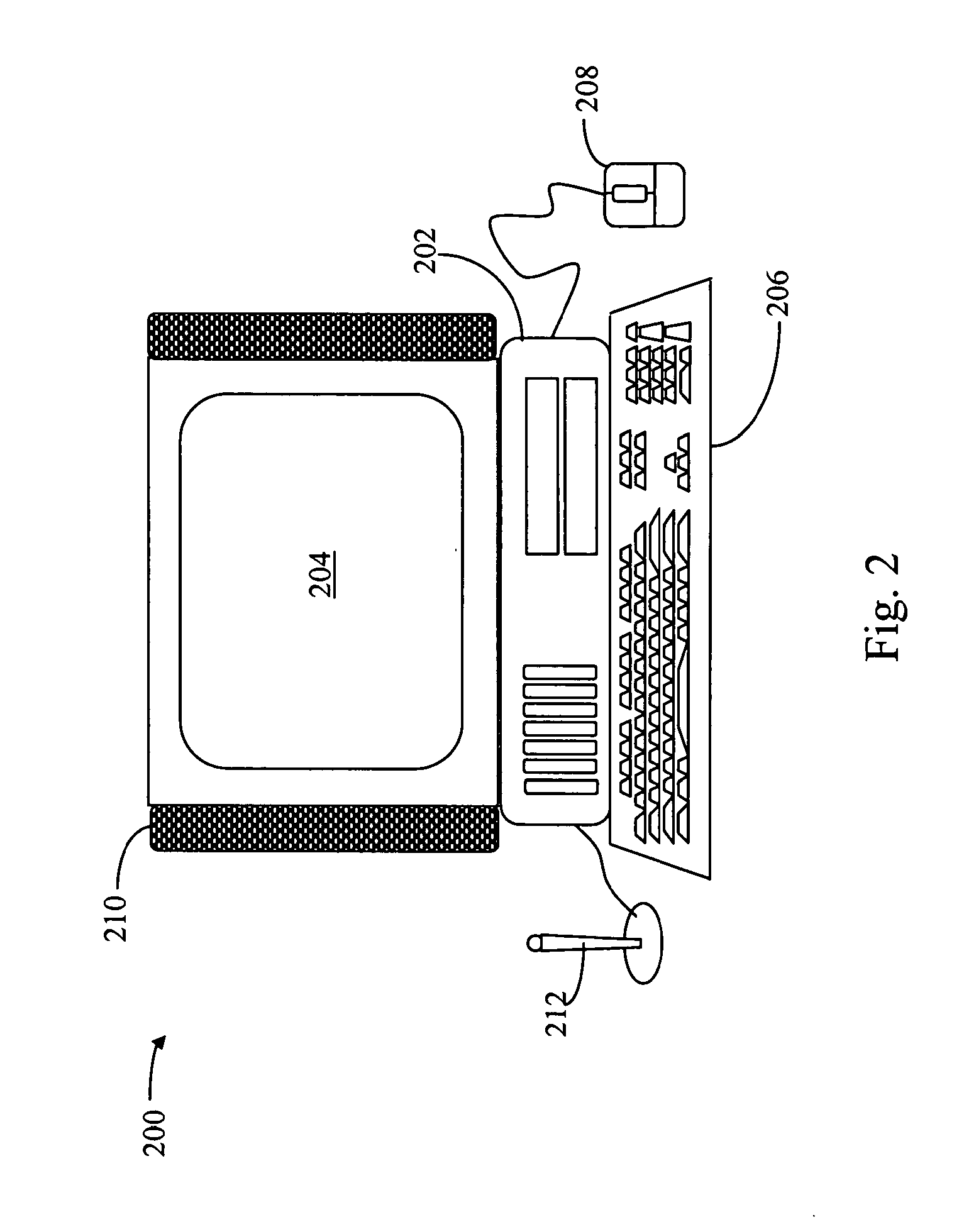

Text independent speaker recognition with simultaneous speech recognition for transparent command ambiguity resolution and continuous access control

Feature vectors representing each of a plurality of overlapping frames of an arbitrary, text-independent speech signal are computed and compared with vector parameters and variances stored as codewords in one or more codebooks corresponding to each of one or more enrolled users, to provide speaker-dependent information for speech recognition and/or ambiguity resolution. Other information, such as each enrolled user's aliases and preferences, may also be enrolled and stored, for example in a database. The feature vectors may be ranked by how closely they correspond to a codeword entry, and the number of frames corresponding to each codebook is accumulated to identify a likely enrolled speaker. Differences between the feature-vector parameters and the codewords can be used to detect a new speaker and initiate an enrollment procedure. Continuous authorization and access control can be performed on any utterance, either by verifying that the speaker of a recognized command is authorized or by comparing the command with the commands authorized for the recognized speaker. Text independence also allows coherence checks on commands to validate the recognition process.

Owner:NUANCE COMM INC

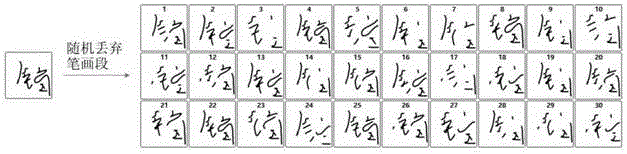

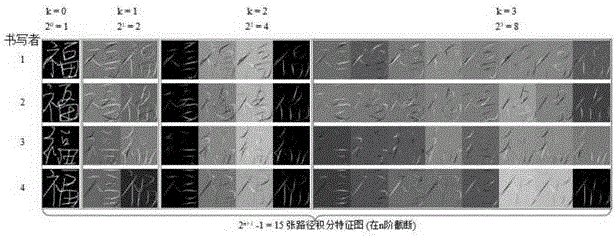

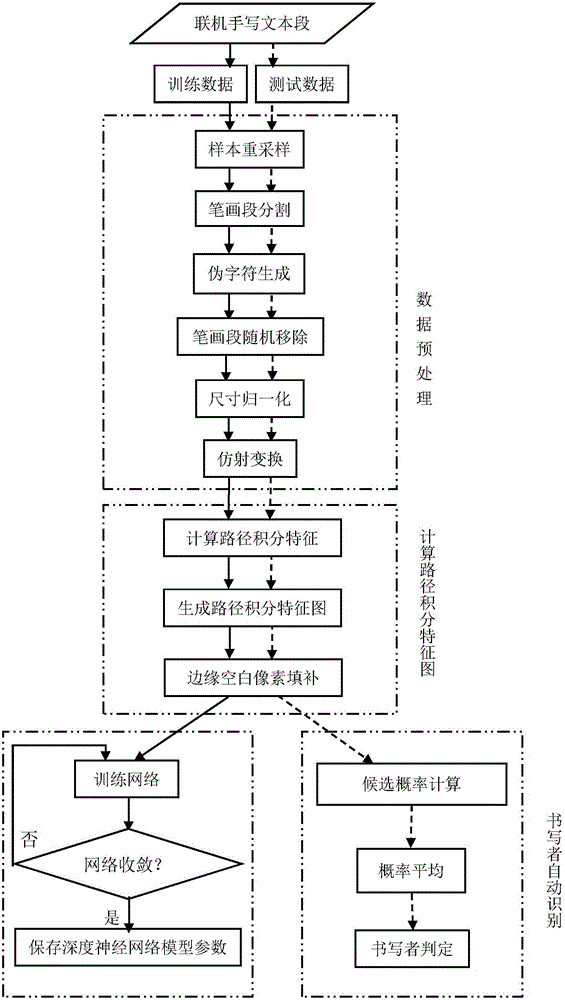

Text-independent end-to-end handwriting recognition method based on deep learning

Status: Active | Publication No.: CN105893968A | Topics: Improve generalization ability; Prevent overfitting; Character and pattern recognition; Neural learning methods; Pattern recognition; Handwriting

The invention provides a text-independent, end-to-end handwriting recognition method based on deep learning, comprising the following steps: A, preprocessing online handwritten text to generate pseudo-character samples; B, computing path-integral feature images of the pseudo-character samples; C, training a deep neural network model on samples from known writers; and D, using the model from step C to automatically recognize samples from unknown writers. The method processes online text lines automatically, requires no manual extraction of character features, and efficiently achieves text-independent online writer recognition.

Owner:CHONGQING AOXIONG INFORMATION TECH
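
Step B can be illustrated under one assumption: that "path-integral features" are the truncated path-signature features commonly used for online handwriting, i.e. iterated integrals of the pen trajectory. The sketch below computes the level-1 and level-2 signature terms of one piecewise-linear stroke with Chen's identity; the function name is hypothetical, and rendering these terms into feature images is not shown.

```python
import numpy as np


def path_signature_level2(points):
    """Truncated (level-2) path signature of a piecewise-linear pen trajectory.

    points: (N, d) array of sampled pen coordinates.
    Returns (s1, s2): s1 has shape (d,), s2 has shape (d, d).
    """
    points = np.asarray(points, dtype=float)
    d = points.shape[1]
    s1 = np.zeros(d)
    s2 = np.zeros((d, d))
    for delta in np.diff(points, axis=0):
        # Chen's identity: append one straight segment with increment `delta`
        s2 += np.outer(s1, delta) + 0.5 * np.outer(delta, delta)
        s1 += delta
    return s1, s2
```

For a counterclockwise unit square, `s1` is zero (the pen returns to its start) while the antisymmetric part `0.5 * (s2[0, 1] - s2[1, 0])`, the Lévy area, equals the enclosed area of 1, which is the kind of shape information these features capture.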

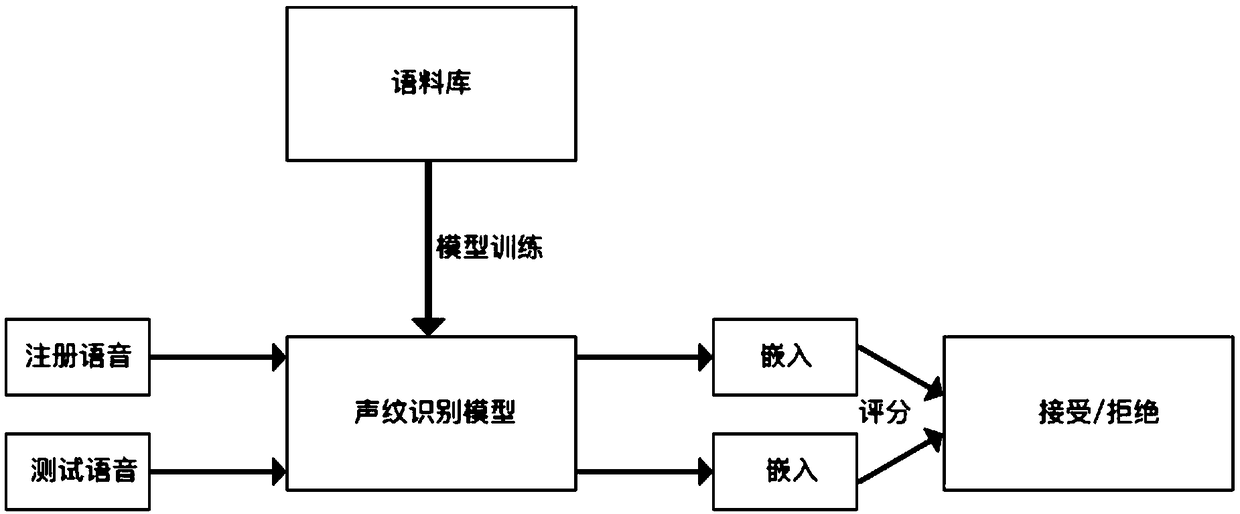

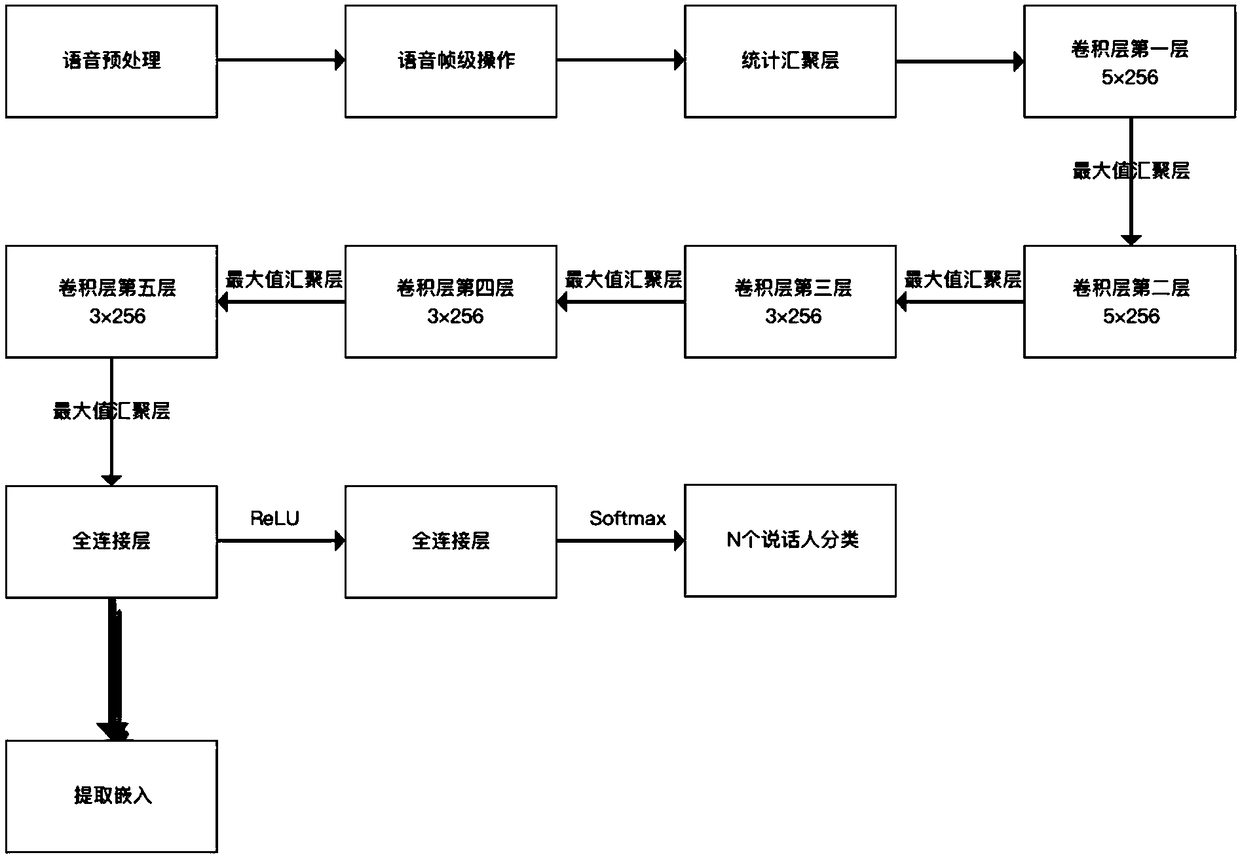

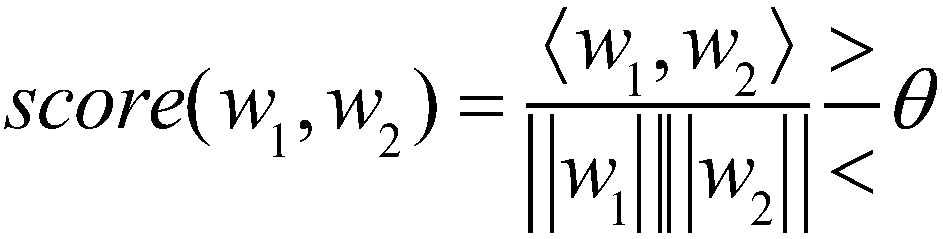

Text-independent voiceprint recognition method

Status: Inactive | Publication No.: CN108648759A | Topics: Few parameters; Improve robustness; Speech analysis; Text independent; Feature extraction

The invention discloses a text-independent voiceprint recognition method comprising three stages: voiceprint recognition model training, embedding extraction, and decision scoring. The model training stage comprises the steps of (1) speech signal preprocessing; (2) frame-level operations on the speech; (3) aggregating the frame-level outputs with a statistics pooling layer; (4) one-dimensional convolution; and (5) speaker classification at the output of the fully connected layers. After training, the embedding is extracted before the nonlinearity of the first fully connected layer. Finally, a cosine distance score is used to decide acceptance or rejection. The method combines neural-network embedding with a convolutional neural network: one-dimensional convolution and a max pooling layer are used for dimensionality reduction, and the number of convolutional layers is increased to extract deeper features, which improves model performance. Using the cosine distance as the scoring criterion also makes the process faster and simpler.

Owner:SOUTH CHINA UNIV OF TECH
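
The final decision-scoring stage can be sketched as below, assuming the enrollment and test embeddings are already extracted as vectors. Thresholding cosine similarity is equivalent to thresholding cosine distance (distance = 1 - similarity); the `0.7` threshold and the function names are hypothetical and would in practice be tuned on development data.

```python
import numpy as np


def cosine_score(enroll_embedding, test_embedding):
    """Cosine similarity between two speaker embeddings."""
    a = np.asarray(enroll_embedding, dtype=float)
    b = np.asarray(test_embedding, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def accept(enroll_embedding, test_embedding, threshold=0.7):
    """Accept the claimed identity when the score reaches the (hypothetical) threshold."""
    score = cosine_score(enroll_embedding, test_embedding)
    return score >= threshold, score
```

Because cosine scoring needs no trained back-end, this is the "quicker and simpler" option compared with, for example, PLDA scoring.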

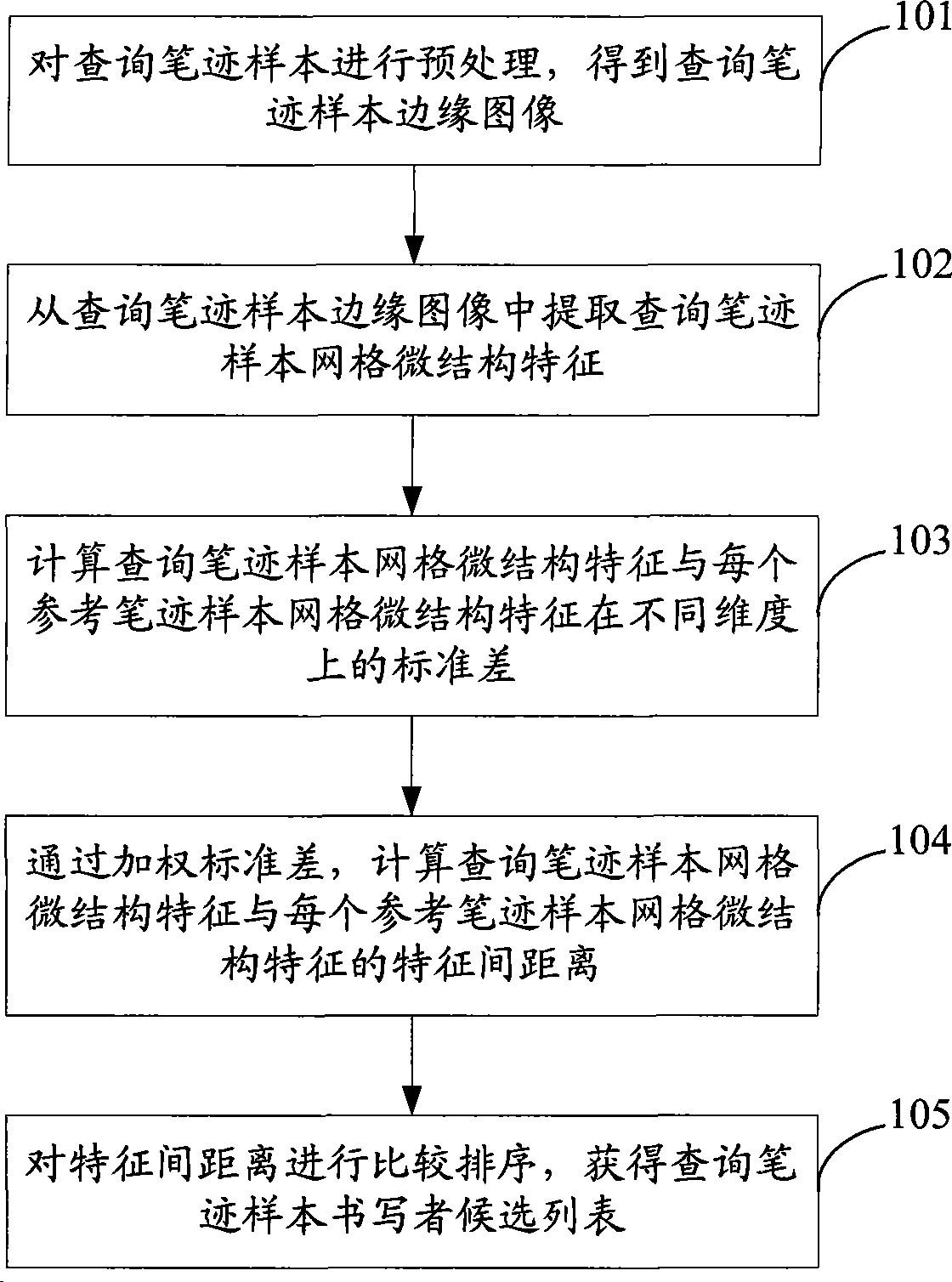

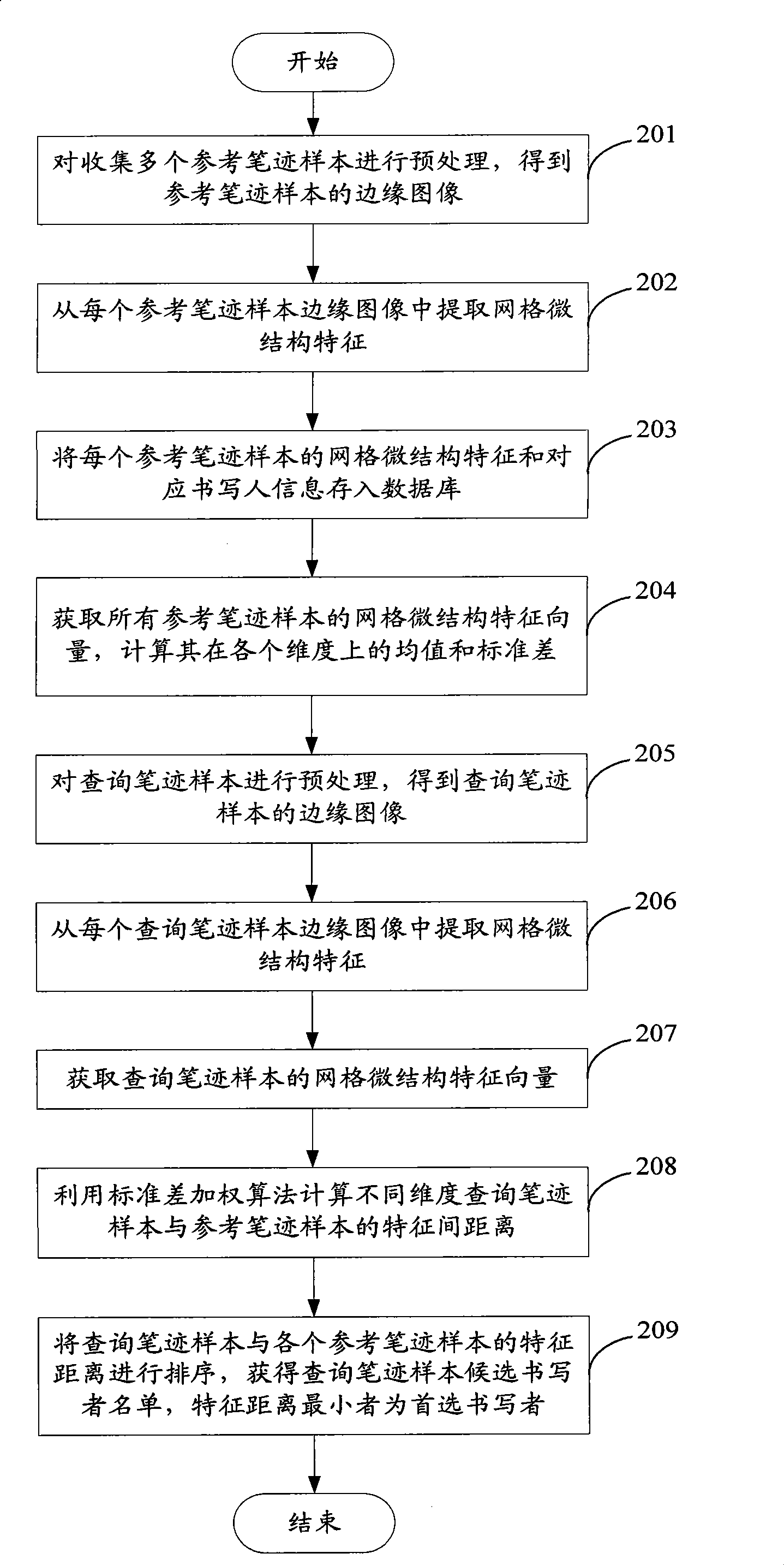

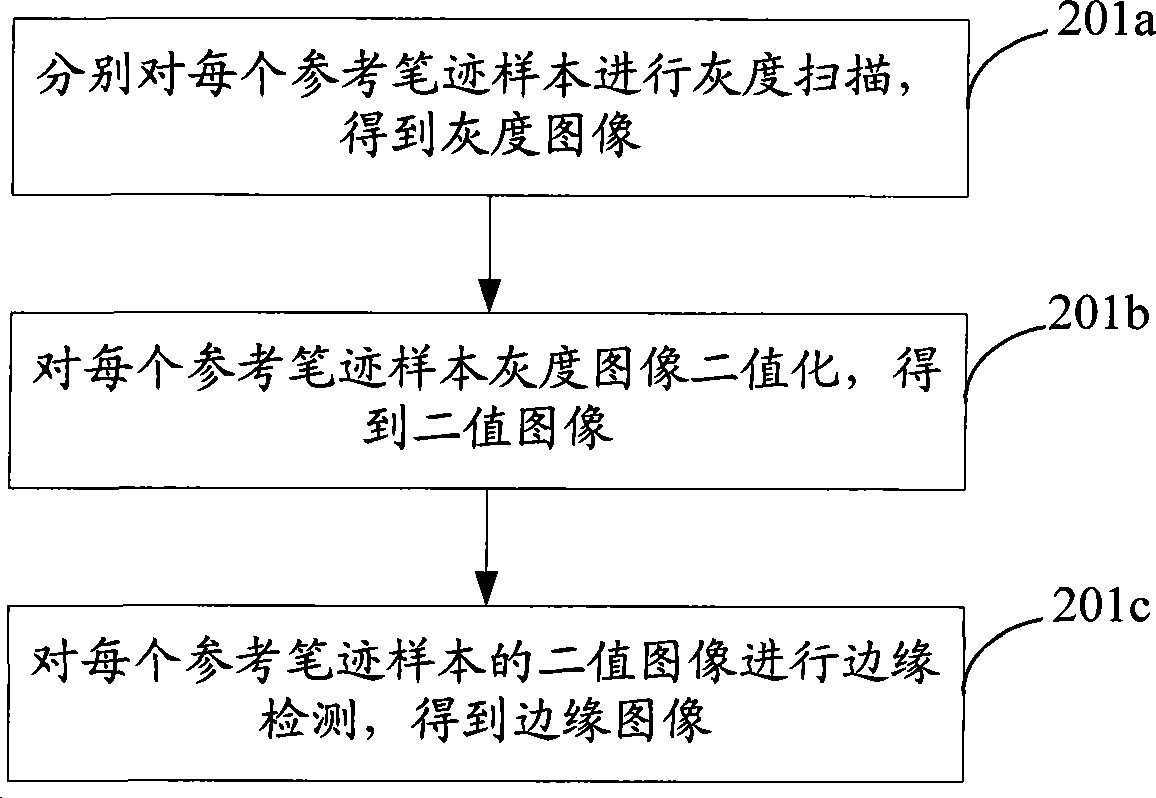

Text-independent handwriting identification method and device

Status: Active | Publication No.: CN101452532A | Topics: Improve accuracy; Easy to identify; Character and pattern recognition; Handwriting; Feature extraction

The invention discloses a method and device for text-independent writer identification in the field of computer vision. The method comprises: preprocessing a query handwriting sample to obtain its edge images; extracting grid microstructure features of the query sample from those edge images; computing the standard deviation of the grid microstructure features of the query sample and of each reference handwriting sample; computing, weighted by that standard deviation, the feature distance between the query sample and each reference sample; and comparing and sorting these distances to obtain a writer candidate list for the query sample. The device comprises a preprocessing module, a feature extraction module, a weight calculation module, a distance calculation module, and a comparison module. By comparing distances between grid microstructure features to obtain writer candidates, the method improves the accuracy and discriminative power of writer identification.

Owner:TSINGHUA UNIV
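
The weighting and ranking steps can be sketched as follows. The abstract does not give the exact formula, so this assumes that the standard deviation is taken per feature dimension over the query and all reference samples, and that the distance is an L1 distance with each dimension divided by that deviation; `rank_writers` is a hypothetical name, and feature extraction from edge images is not shown.

```python
import numpy as np


def rank_writers(query, references, eps=1e-8):
    """Rank reference writers by standard-deviation-weighted L1 distance to the query.

    query: (D,) feature vector; references: dict writer -> (D,) feature vector.
    Returns a list of (writer, distance), closest first.
    """
    query = np.asarray(query, dtype=float)
    names = list(references)
    ref = np.stack([np.asarray(references[n], dtype=float) for n in names])
    # Assumed weighting: per-dimension std over the query plus every reference sample
    sigma = np.vstack([query[None, :], ref]).std(axis=0) + eps
    distances = (np.abs(ref - query) / sigma).sum(axis=1)
    order = np.argsort(distances)
    return [(names[i], float(distances[i])) for i in order]
```

Dividing by the deviation keeps dimensions with naturally large spread from dominating the comparison; the head of the returned list is the writer candidate list.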

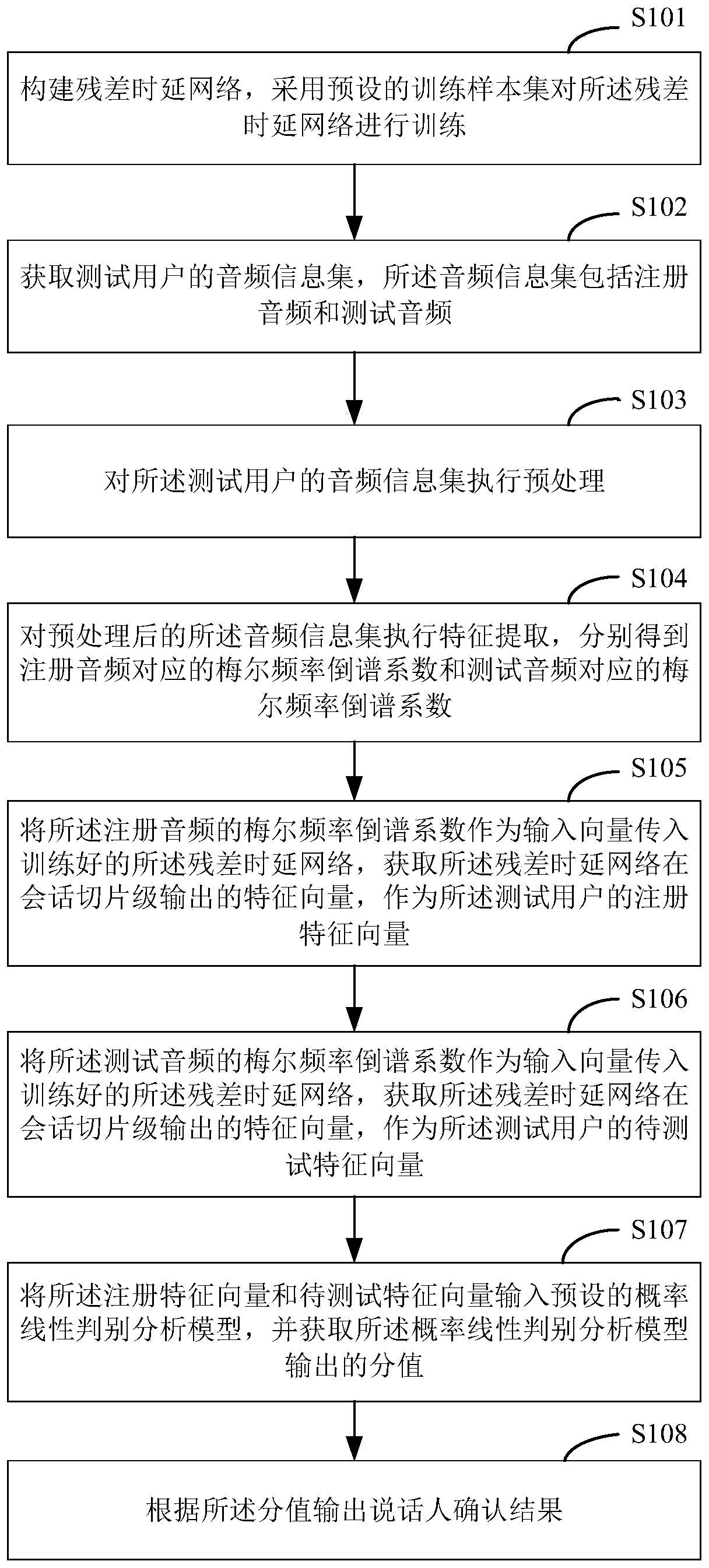

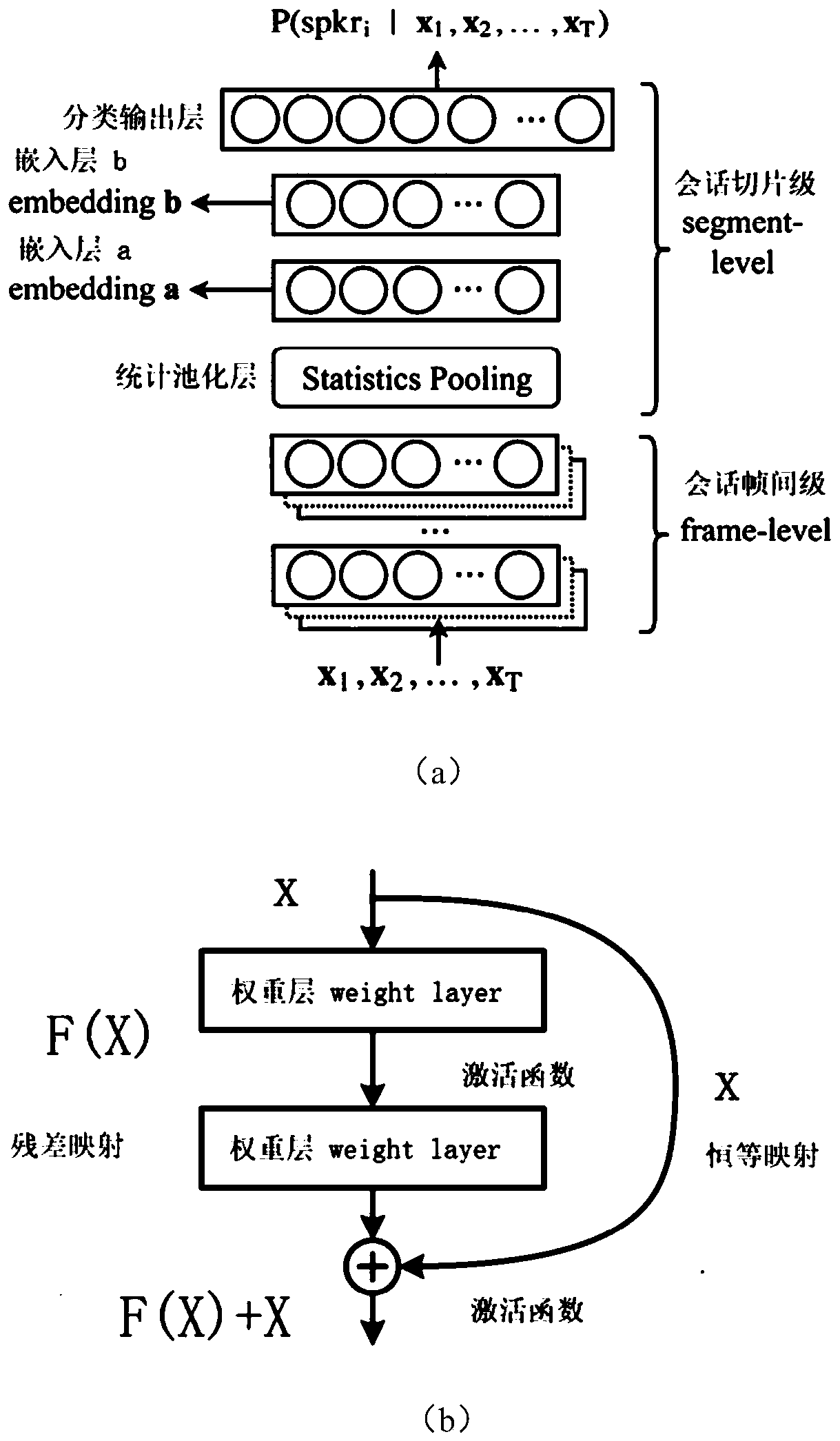

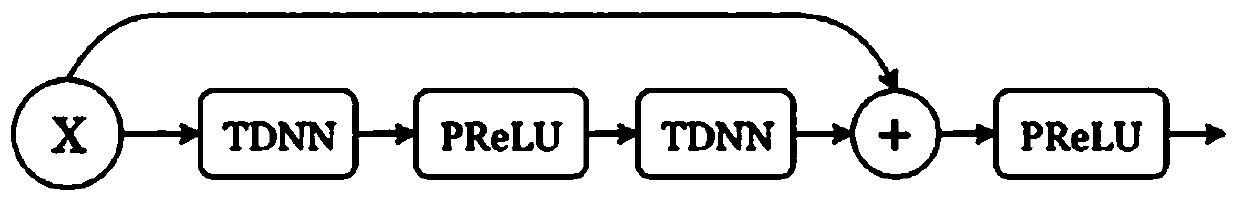

Speaker confirmation method and device based on residual time delay network, equipment and medium

The invention discloses a speaker confirmation method, device, equipment, and medium based on a residual time-delay network. The method comprises: constructing and training the residual time-delay network; acquiring enrollment audio and test audio of a test user; preprocessing both and extracting their Mel-frequency cepstral coefficients (MFCCs); feeding the MFCCs of the enrollment audio and of the test audio into the trained network and taking the segment-level output feature vectors as the enrollment feature vector and the test feature vector, respectively; and inputting both vectors into a probabilistic linear discriminant analysis (PLDA) model, whose output score determines the speaker confirmation result. This addresses the poor accuracy of existing text-independent speaker confirmation methods on short audio.

Owner:PING AN TECH (SHENZHEN) CO LTD
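
The final PLDA scoring step can be sketched with the two-covariance PLDA model, one common PLDA formulation; the abstract does not say which variant is used. The sketch assumes a trained mean, between-speaker covariance, and within-speaker covariance are already available, and `plda_llr` is a hypothetical name.

```python
import numpy as np


def _gaussian_logpdf(z, cov):
    """Log density of a zero-mean multivariate Gaussian at z."""
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (z.size * np.log(2 * np.pi) + logdet + z @ np.linalg.solve(cov, z))


def plda_llr(enroll_vec, test_vec, mean, between_cov, within_cov):
    """Two-covariance PLDA log-likelihood ratio: same speaker vs. different speakers.

    Model assumption: x = mean + y + e, with speaker factor y ~ N(0, between_cov)
    and residual e ~ N(0, within_cov).
    """
    a = np.asarray(enroll_vec, dtype=float) - mean
    b = np.asarray(test_vec, dtype=float) - mean
    total = between_cov + within_cov
    zeros = np.zeros_like(total)
    z = np.concatenate([a, b])
    # Same speaker: the two vectors share y, so they covary through between_cov
    same = np.block([[total, between_cov], [between_cov, total]])
    # Different speakers: the two vectors are independent
    diff = np.block([[total, zeros], [zeros, total]])
    return float(_gaussian_logpdf(z, same) - _gaussian_logpdf(z, diff))
```

Positive scores favour the same-speaker hypothesis; the accept/reject threshold on this score is a tuning choice.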

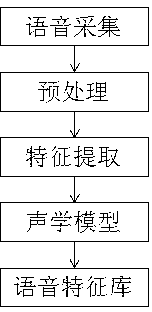

Method for recognizing text-independent voice prints

The invention relates to a method for recognizing text-independent voiceprints in the field of speech signal processing. The method comprises the following steps. First, acoustic models are created for the whole user set: speech from each speaker is collected with external audio capture equipment, an acoustic model is built for each speaker, and the models are stored in a speech feature library. Second, users to be recognized are matched: speech from the current speaker is collected with the same equipment, an acoustic model is built for that speaker and matched against all models in the library, and the number of the best-matching model is returned, thereby identifying the user. The method offers a high recognition rate and accurate results.

Owner:Zhenjiang Jiade Information Technology Co., Ltd. (镇江佳得信息技术有限公司)

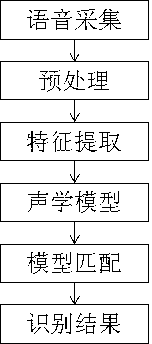

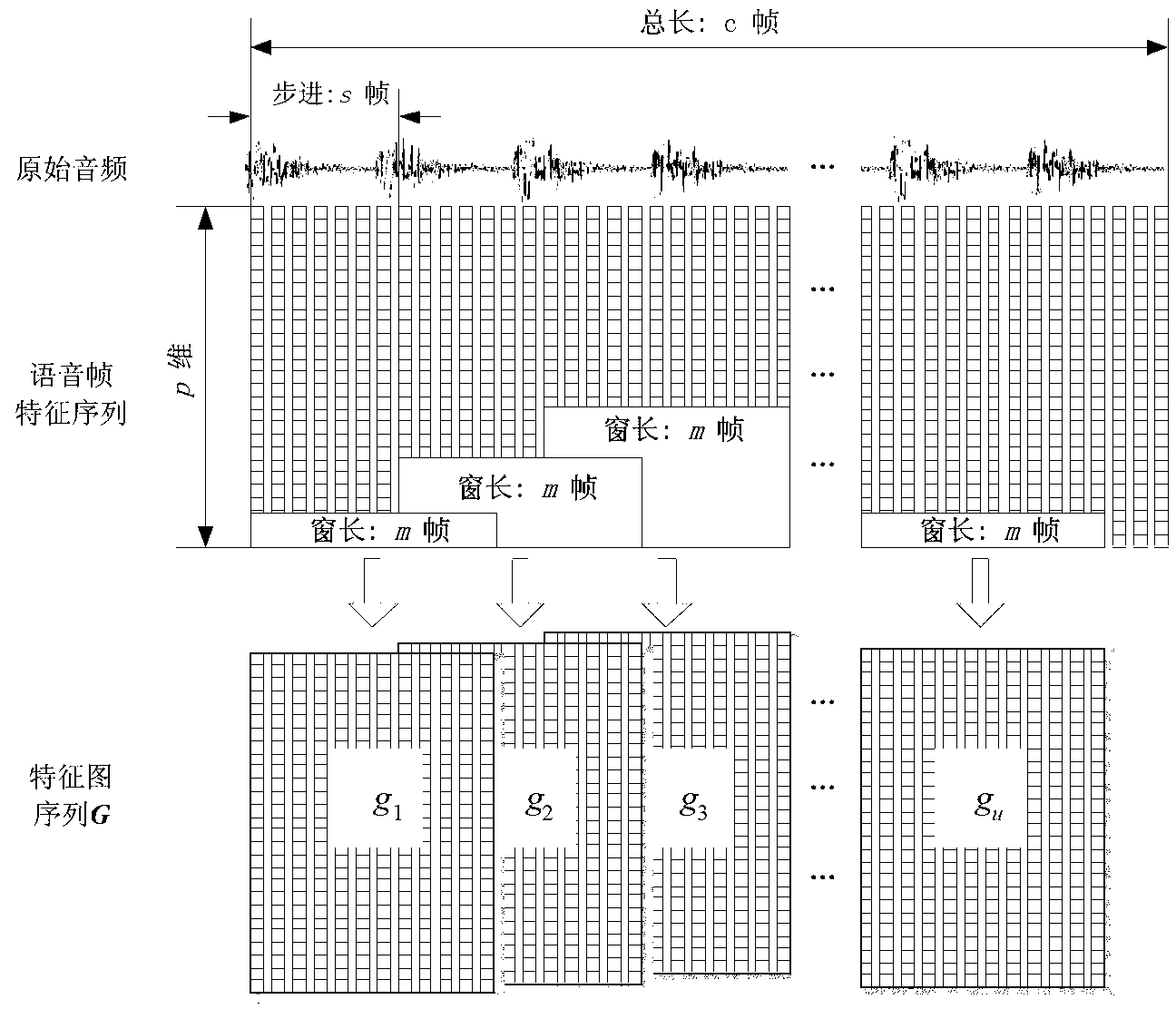

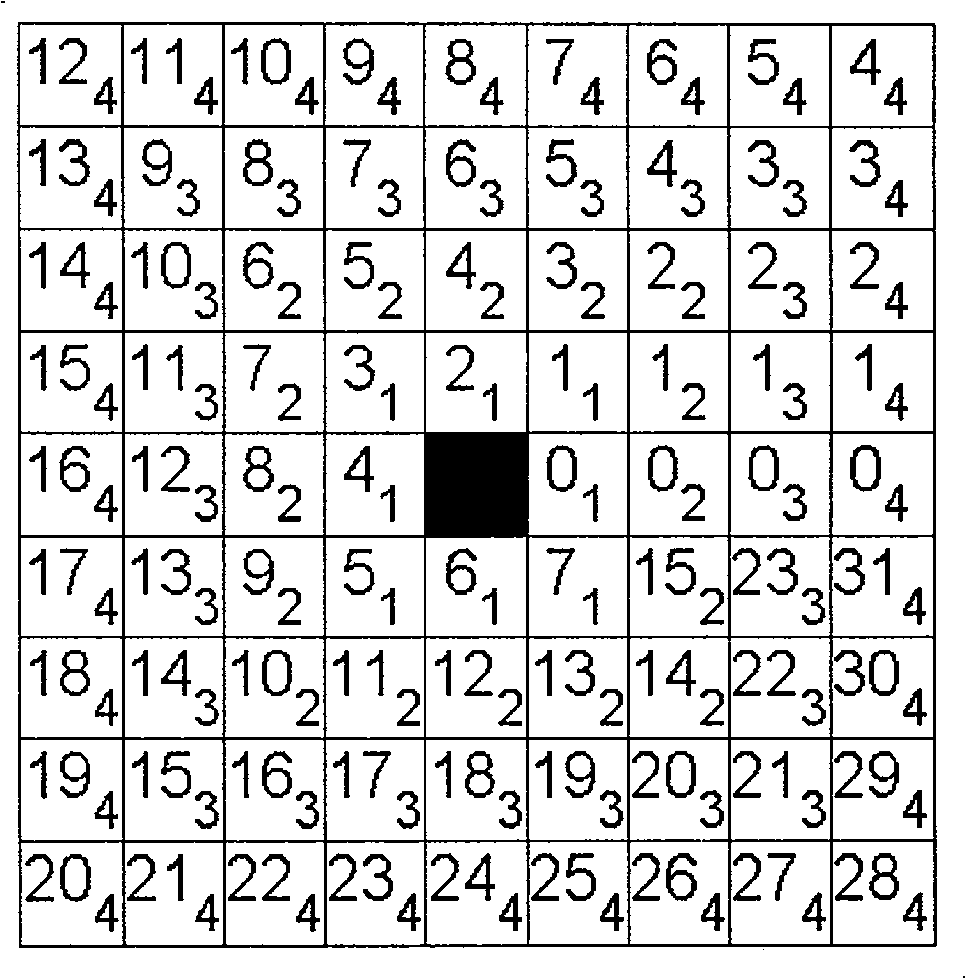

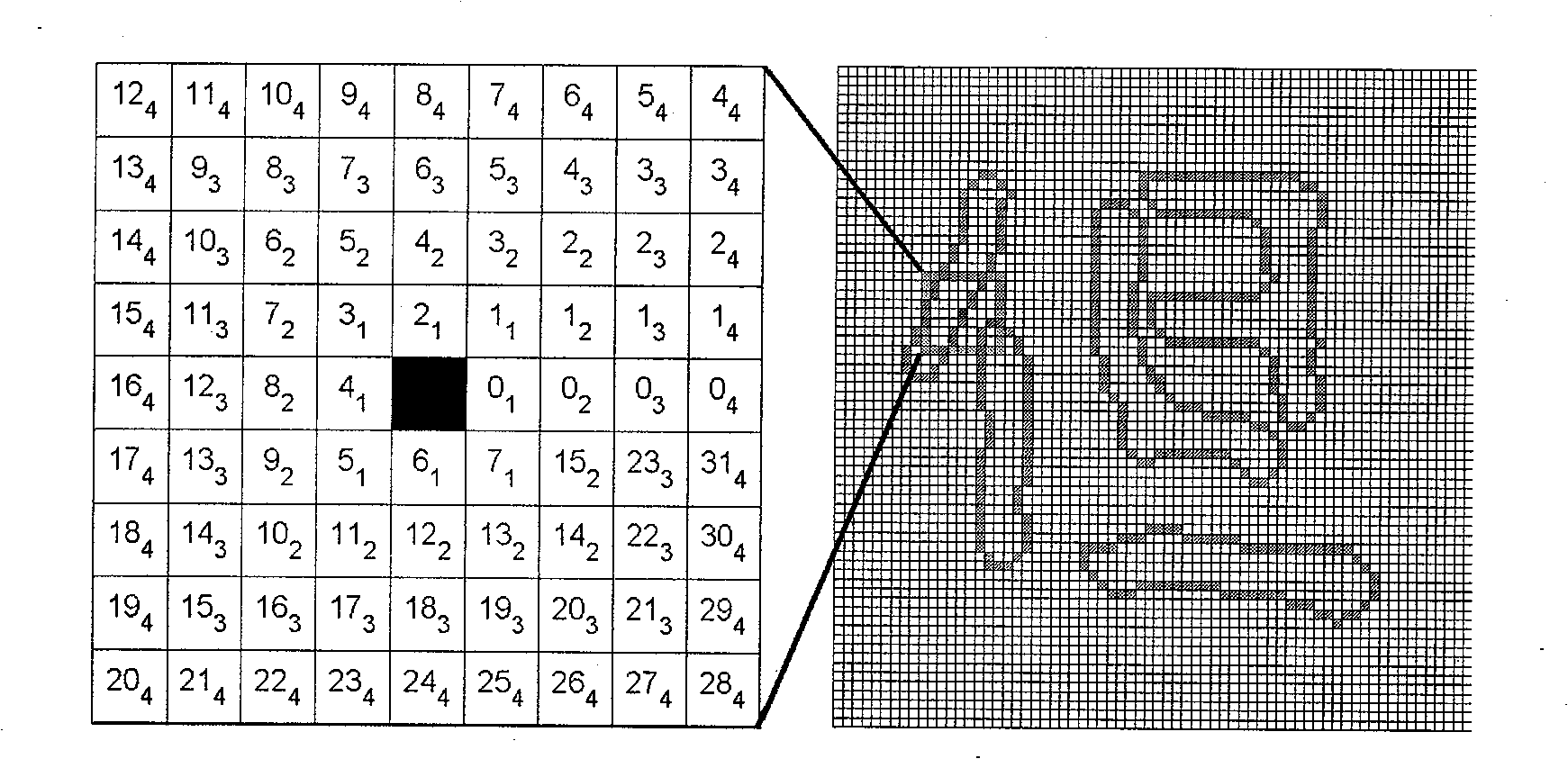

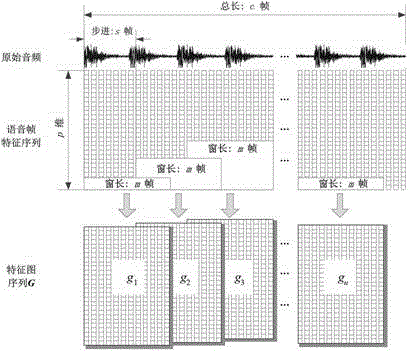

Large-scale speaker identification method

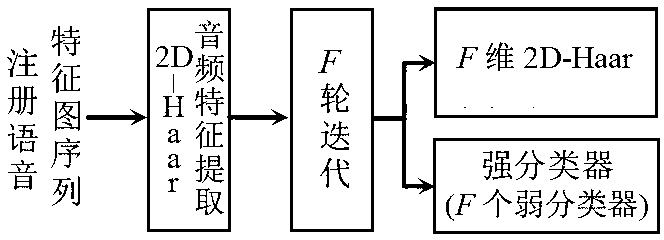

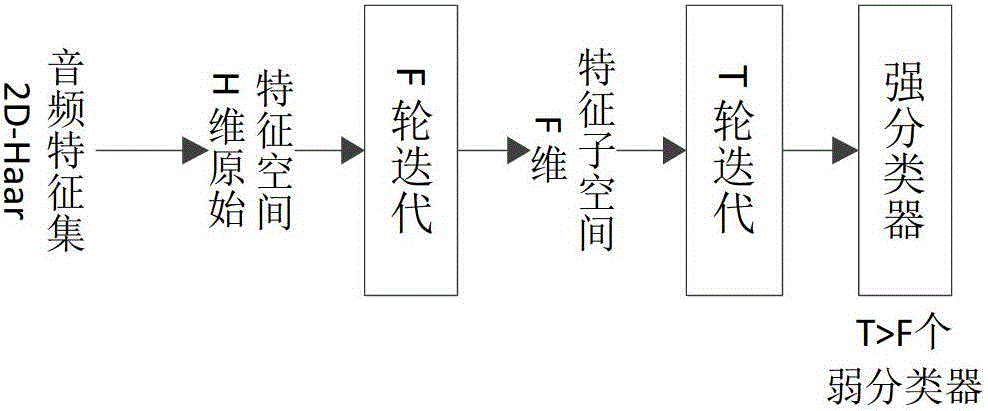

Status: Inactive | Publication No.: CN103258536A | Topics: Huge feature space; Lighten the computational burden; Speech analysis; Text independent; AdaBoost

The invention relates to a text-independent speaker identification method, based on 2D-Haar audio features, that is suitable for large speaker populations. The invention proposes the concept of 2D-Haar audio features and a method for computing them: basic audio features are first arranged into an audio feature map; 2D-Haar audio features are then extracted from the map; the AdaBoost.MH algorithm is then used both to select 2D-Haar features and to train a speaker classifier; finally, the trained classifier identifies speakers. Compared with the prior art, the method effectively limits the drop in identification accuracy as the number of speakers grows, and achieves high identification accuracy and speed. It can run not only on desktop computers but also on mobile computing platforms such as mobile phones and tablets.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
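
The feature-extraction step can be illustrated with the integral-image trick used for Haar-like features in computer vision. This sketch assumes the audio feature map is a 2D array (for example frames by basic-feature coefficients) and shows only one two-rectangle template; the patent's actual template set and the AdaBoost.MH selection stage are not shown, and all names are hypothetical.

```python
import numpy as np


def integral_image(feature_map):
    """Summed-area table with a zero row/column so rectangle sums need no edge cases."""
    ii = np.zeros((feature_map.shape[0] + 1, feature_map.shape[1] + 1))
    ii[1:, 1:] = feature_map.cumsum(axis=0).cumsum(axis=1)
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of feature_map[top:top+height, left:left+width] in O(1)."""
    bottom, right = top + height, left + width
    return ii[bottom, right] - ii[top, right] - ii[bottom, left] + ii[top, left]


def haar_two_rect(ii, top, left, height, width):
    """Two-rectangle Haar-like feature: upper half minus lower half of the window."""
    half = height // 2
    return rect_sum(ii, top, left, half, width) - rect_sum(ii, top + half, left, half, width)
```

Since every rectangle sum costs four lookups, a very large pool of candidate features can be evaluated cheaply, leaving the boosting stage to choose the discriminative ones.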

Digital signatures for communications using text-independent speaker verification

Status: Active | Publication No.: US8751233B2 | Topics: Improve speech recognition performance; Finance; Digital data processing details; Text independent; Digital signature

A speaker-verification digital signature system is disclosed that provides greater confidence in communications having digital signatures because a signing party may be prompted to speak a text-phrase that may be different for each digital signature, thus making it difficult for anyone other than the legitimate signing party to provide a valid signature.

Owner:MICROSOFT TECH LICENSING LLC

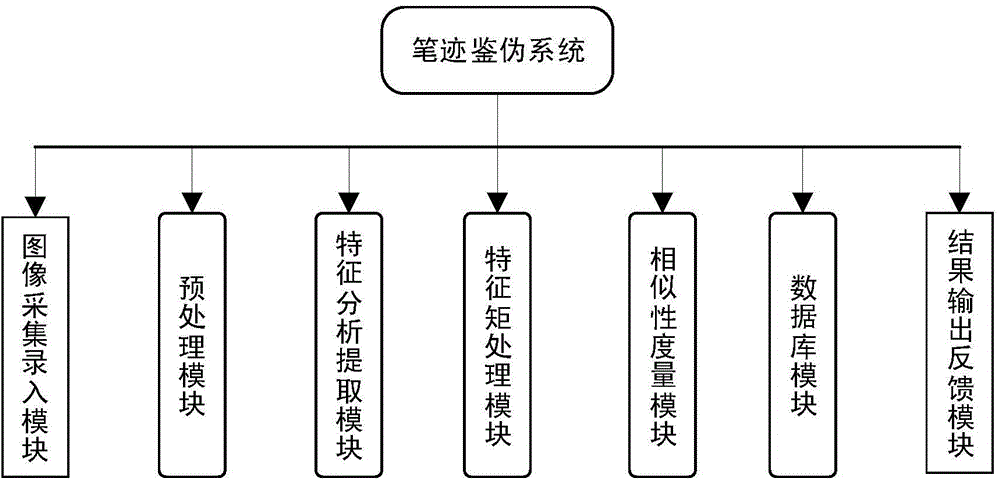

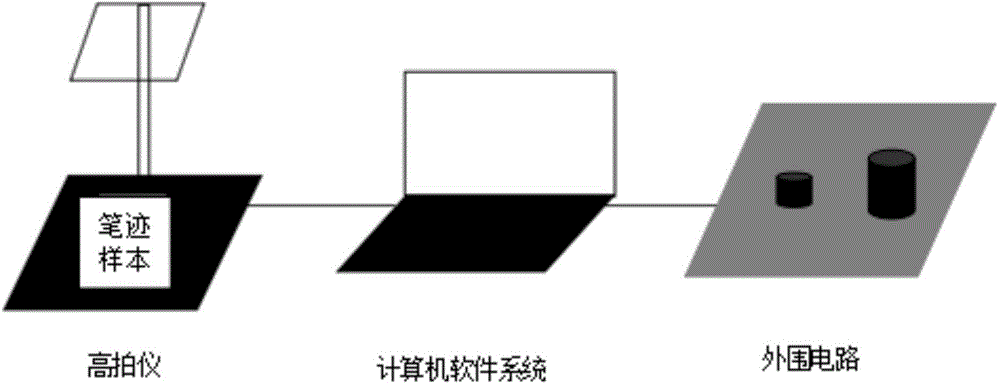

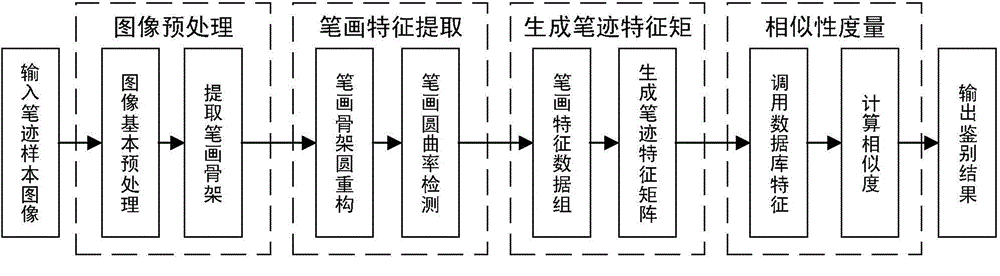

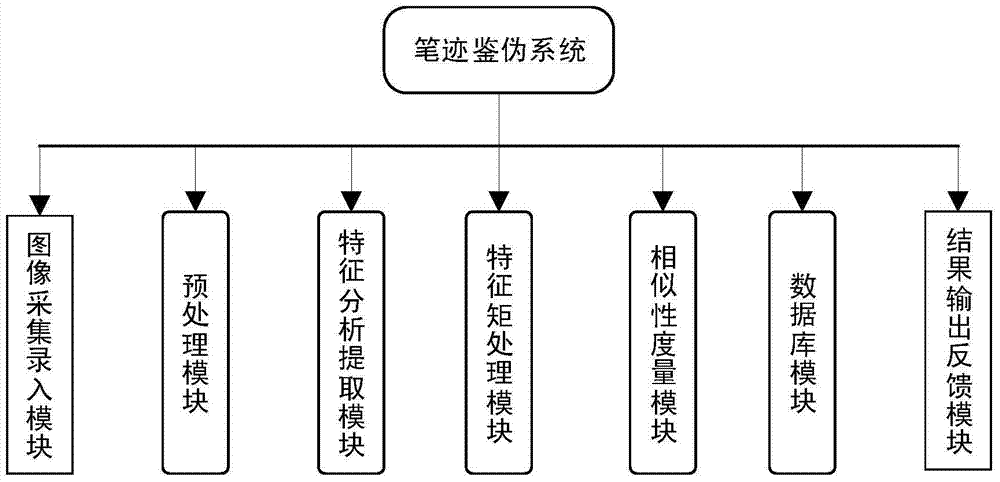

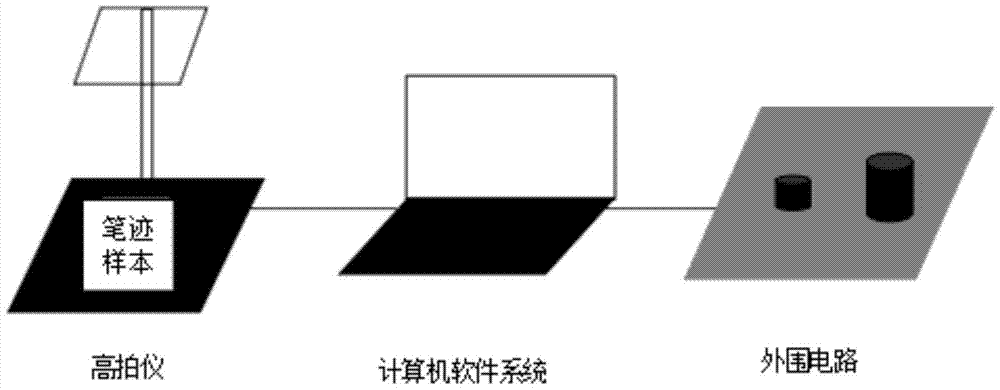

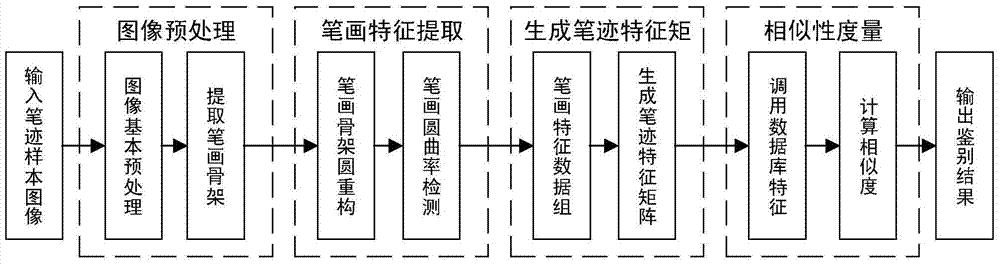

Handwriting authentication system based on stroke curvature detection

Status: Active | Publication No.: CN104809451A | Topics: Easy to operate; Improve real-time performance; Character recognition; Handwriting; Text independent

The invention discloses a handwriting authentication system based on stroke curvature detection. The system comprises a handwriting sample database module, an image acquisition and recording module, a preprocessing module, a feature analysis and extraction module, a feature matrix processing module, a similarity measurement module, and a result output and feedback module. During authentication, the preprocessing module preprocesses the image acquired in real time by the image acquisition module; the feature analysis and extraction module extracts stroke feature values in the horizontal, vertical, left-falling, and right-falling directions; the feature matrix processing module processes these values and generates a handwriting feature matrix; the similarity measurement module measures the similarity between the feature matrix to be authenticated and the feature matrices in the database using a vector-angle similarity measure; and the result output and feedback module outputs the corresponding result. The system performs text-independent handwriting authentication quickly, and the authentication result is stable and objective.

Owner:HOHAI UNIV CHANGZHOU

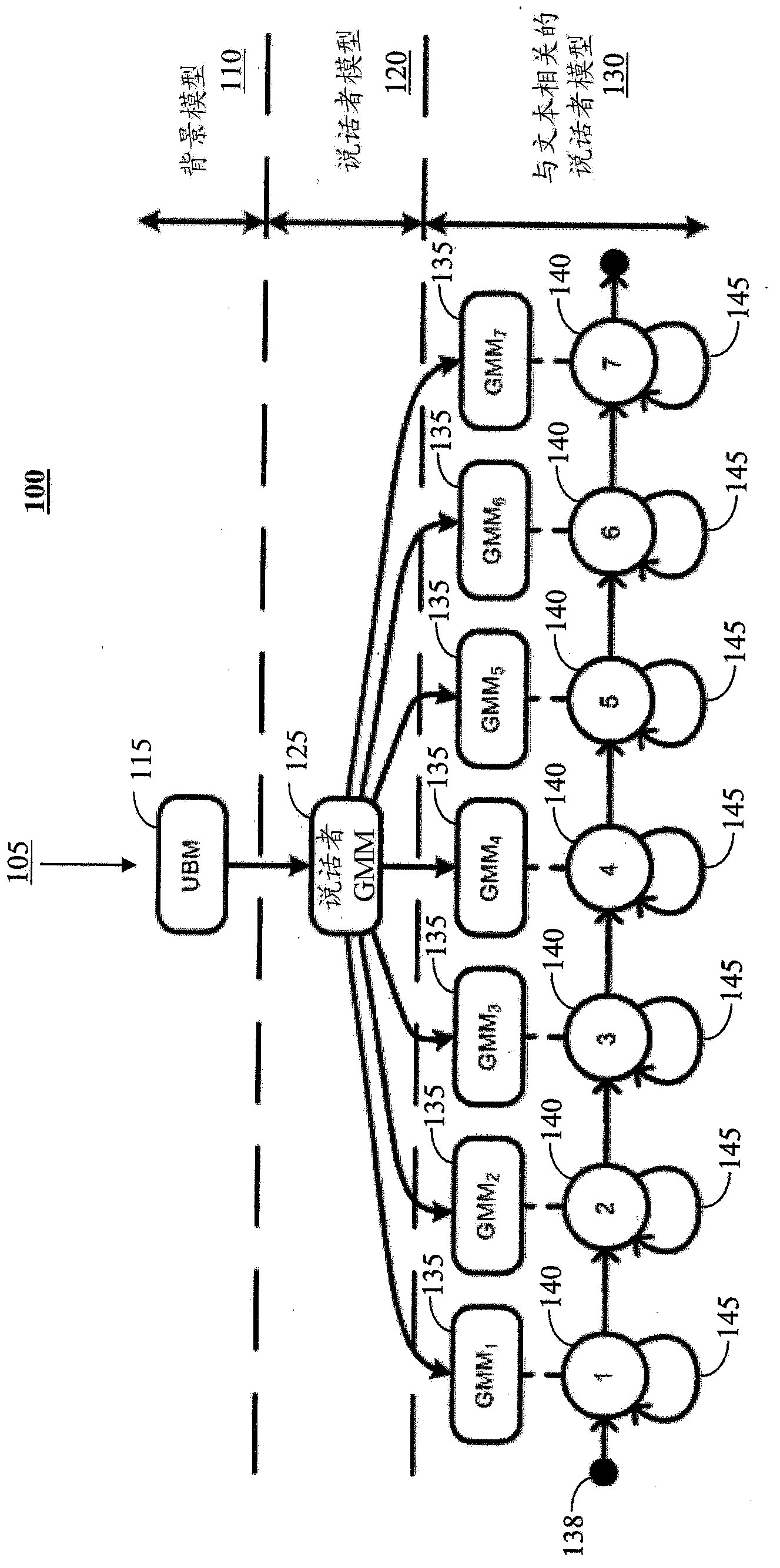

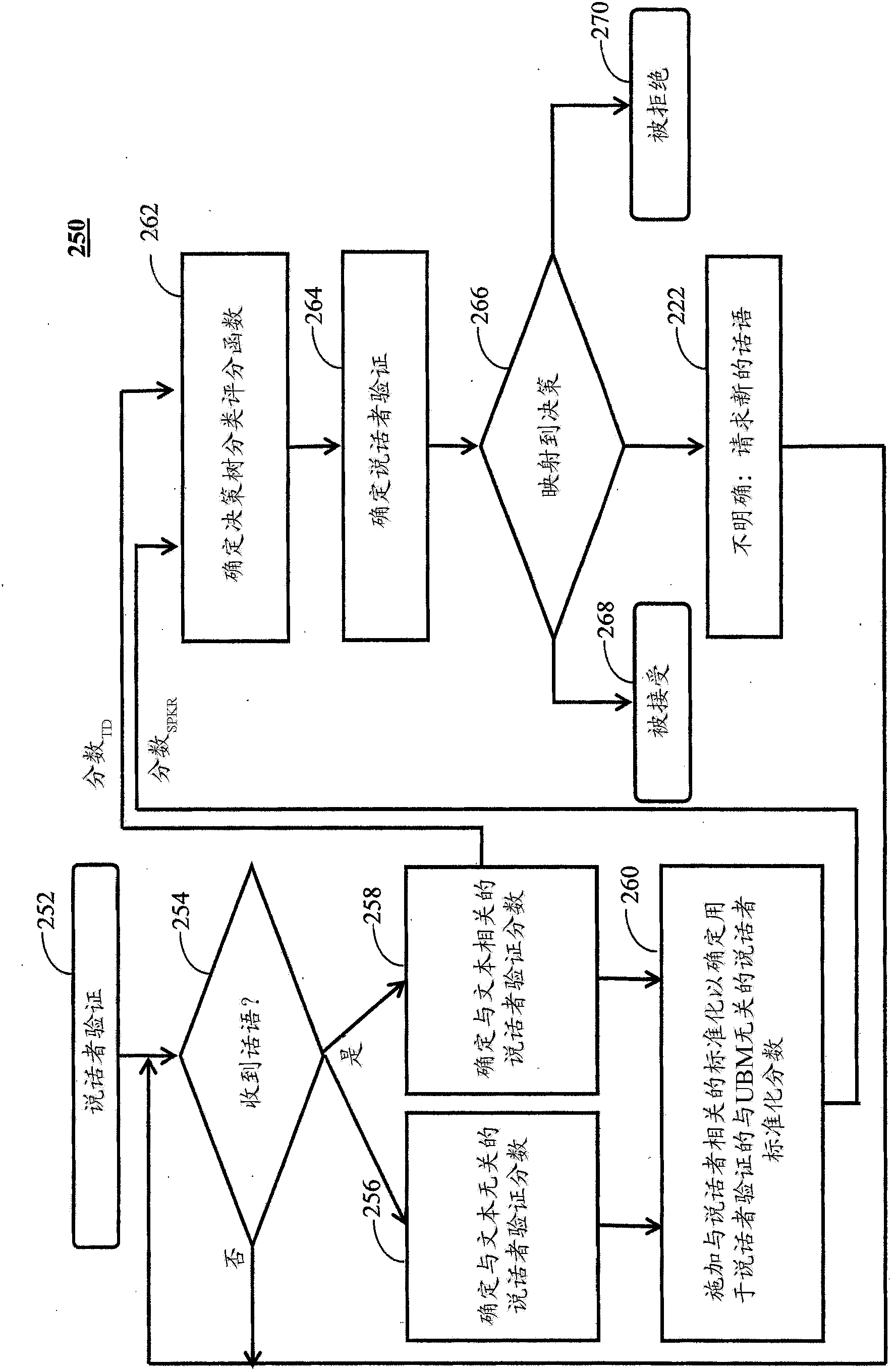

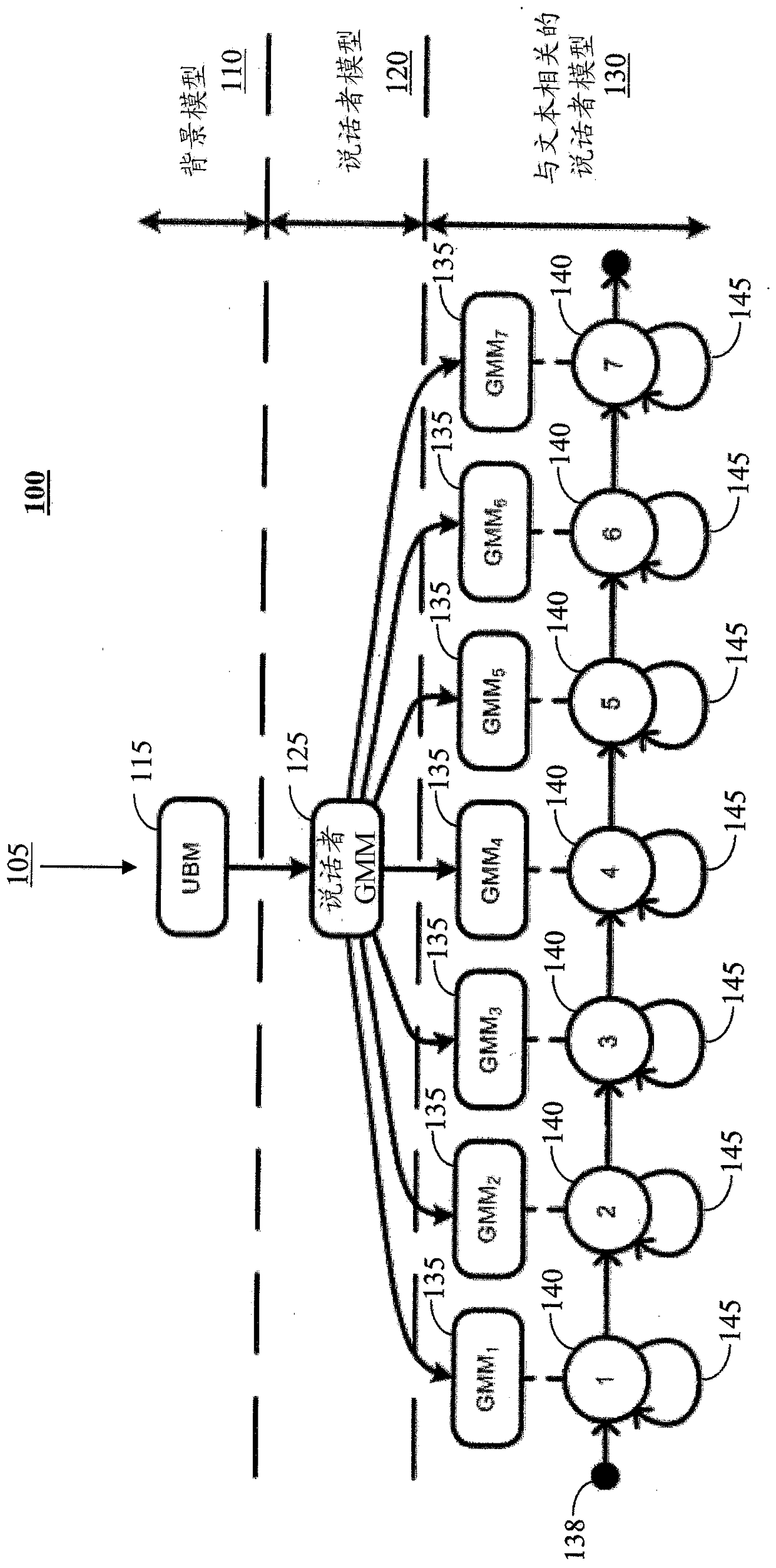

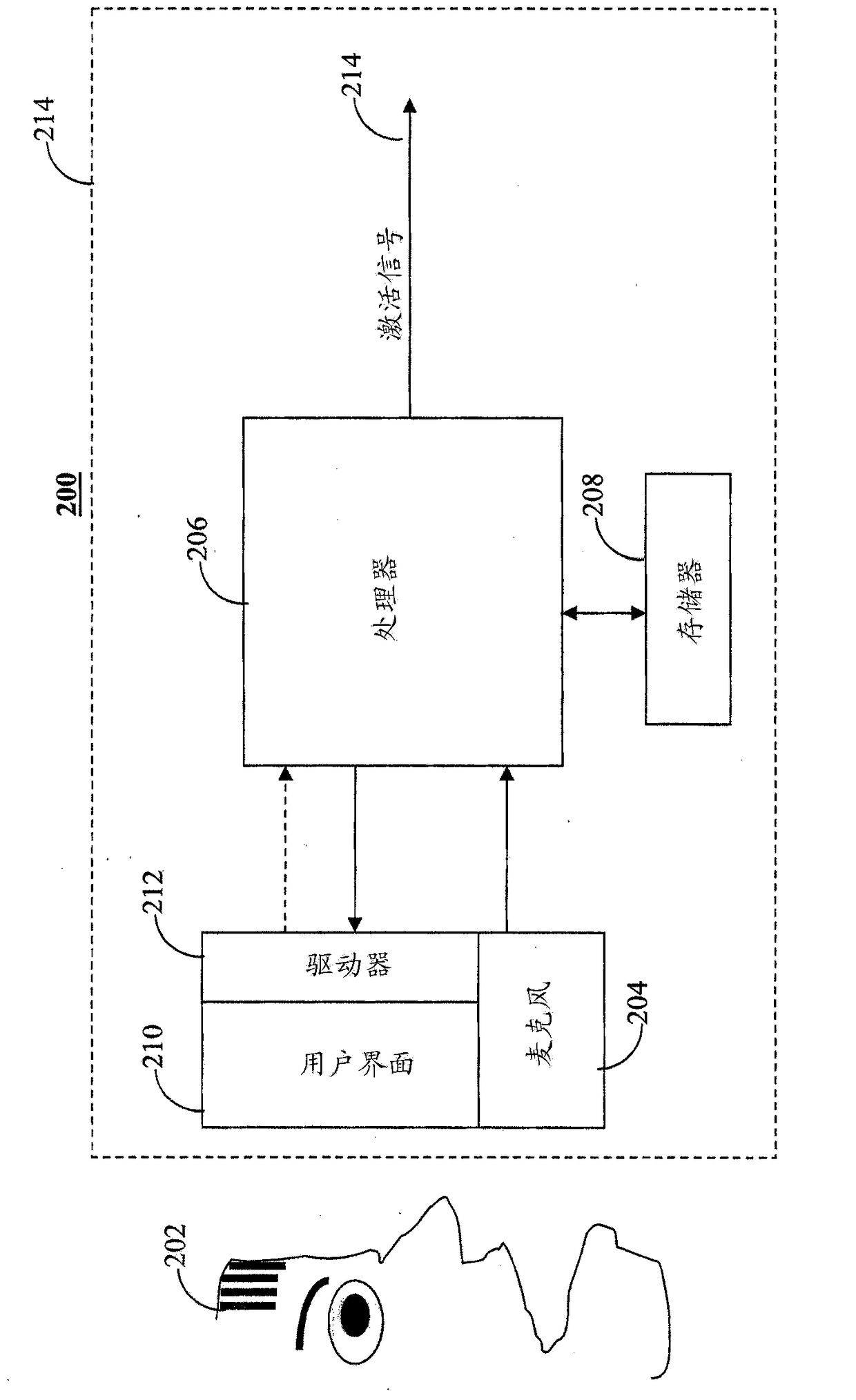

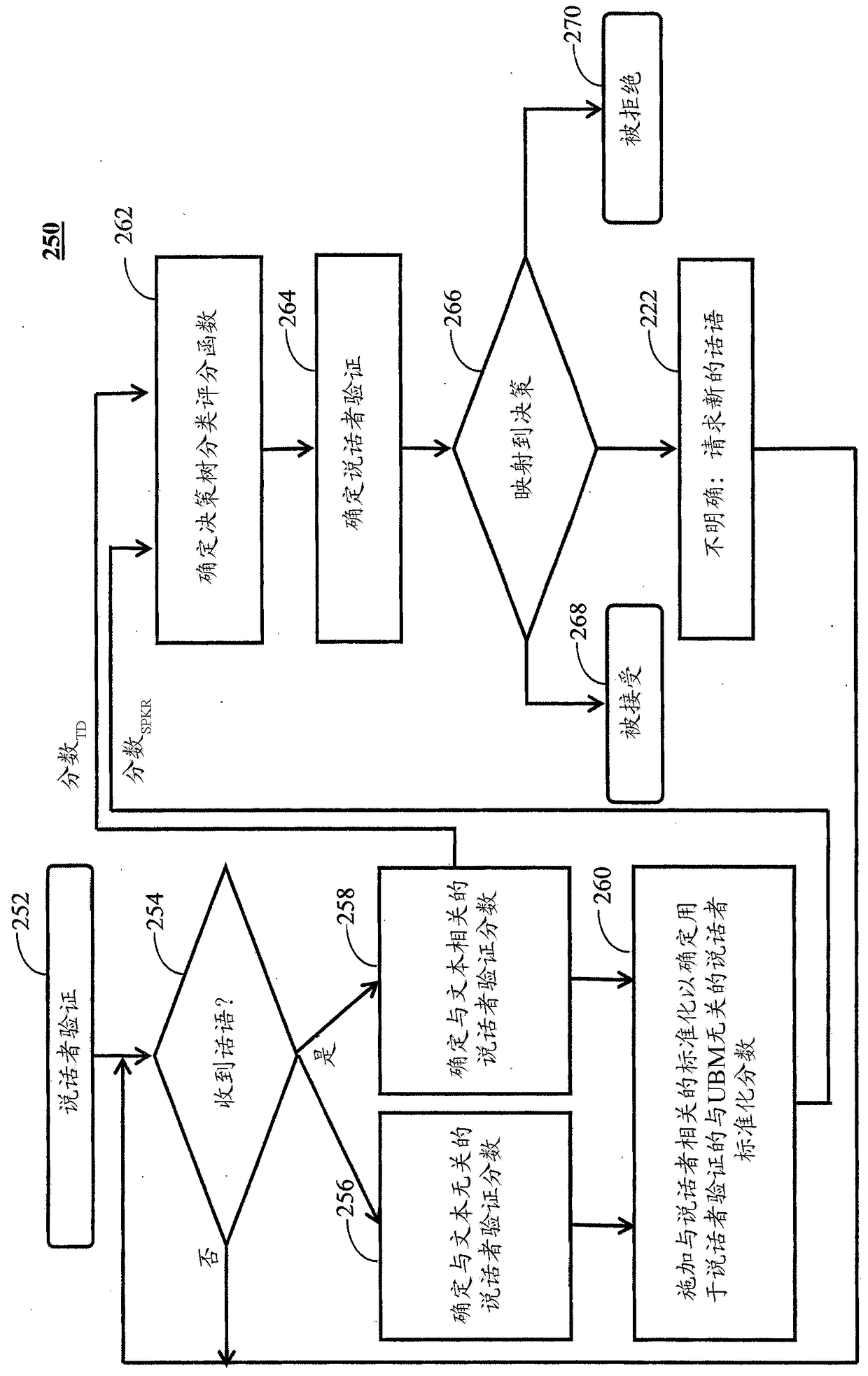

Method and system for dual scoring for text-dependent speaker verification

The invention provides a method and system for dual scoring for text-dependent speaker verification. Embodiments of systems and methods for speaker verification are provided. In various embodiments, a method includes receiving an utterance from a speaker and determining a text-independent speaker verification score and a text-dependent speaker verification score in response to the utterance. Various embodiments include a system for speaker verification, the system comprising an audio receiving device for receiving an utterance from a speaker and converting the utterance to an utterance signal, and a processor coupled to the audio receiving device for determining speaker verification in response to the utterance signal, wherein the processor determines speaker verification in response to a UBM-independent speaker-normalized score.

Owner:AGENCY FOR SCI TECH & RES
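
How the text-independent and text-dependent scores are combined is not specified in the abstract, and its UBM-independent normalization is not described. As a plainly swapped-in stand-in, the sketch below z-normalizes each score against impostor scores and fuses them with a weighted sum; the weight, threshold, and function names are all hypothetical.

```python
from statistics import mean, stdev


def z_norm(score, impostor_scores):
    """Express a raw score in standard deviations above the impostor mean (z-norm)."""
    return (score - mean(impostor_scores)) / stdev(impostor_scores)


def dual_score(ti_score, td_score, ti_impostors, td_impostors, weight=0.5, threshold=2.0):
    """Fuse normalized text-independent and text-dependent scores into one decision."""
    fused = weight * z_norm(ti_score, ti_impostors) + (1 - weight) * z_norm(td_score, td_impostors)
    return fused >= threshold, fused
```

Normalizing before fusing puts the two scores on a comparable scale, so the weight reflects how much each subsystem is trusted rather than differences in raw score ranges.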

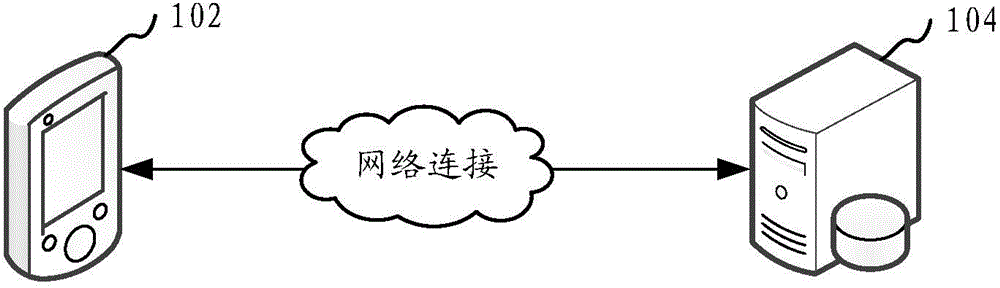

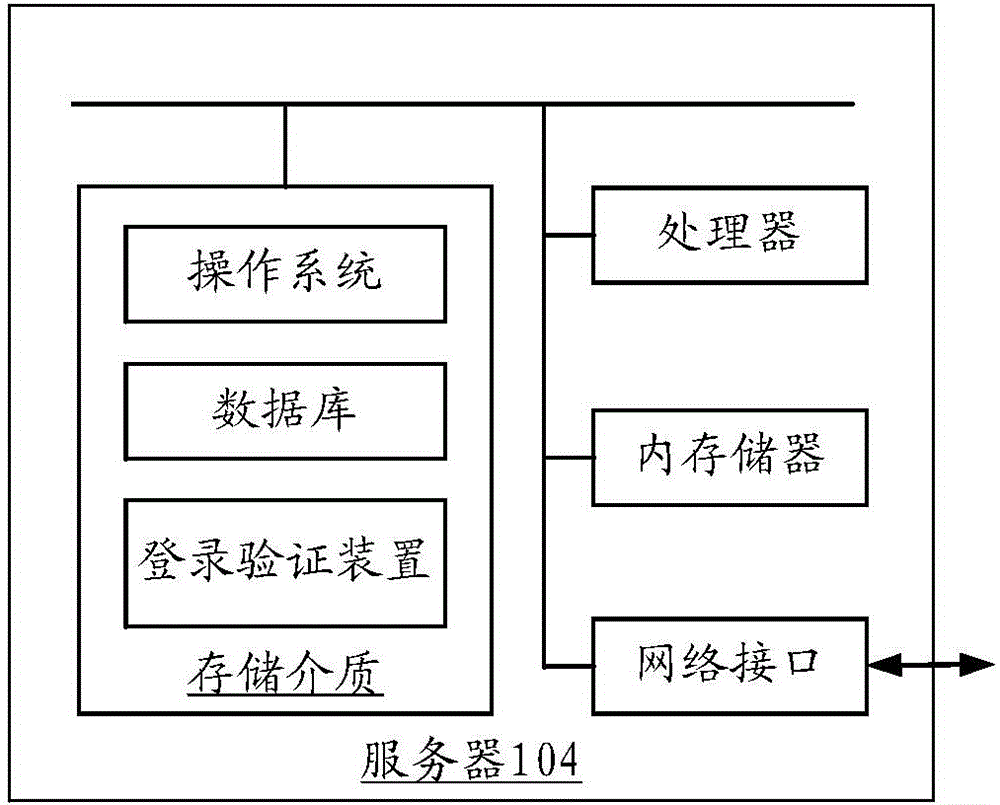

Login verification method and device, and login method and device

Status: Pending | Publication No.: CN106302339A | Topics: Prevent impersonation; Improve security; Speech recognition; Securing communication; Text independent; Validation methods

The invention relates to a login verification method and device. The method comprises the following steps: receiving a login request, containing a user identifier, sent by a terminal; generating a new verification text in response to the login request and returning it to the terminal; receiving the voice to be verified, corresponding to the user identifier, uploaded by the terminal; verifying that voice with a text-independent voiceprint recognition algorithm; after voice verification passes, converting the voice into text; and comparing that text with the verification text, allowing login if they match and refusing it otherwise. The invention further provides a login method and device. The method and device effectively prevent impersonation using users' voices recorded by phishing sites, thereby improving login security.

Owner:TENCENT TECH (SHENZHEN) CO LTD
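
The server-side flow can be sketched as below. The speaker-verification and speech-recognition engines are passed in as hypothetical callables (`verify_speaker`, `transcribe`), and the word list used to build challenge phrases is an illustrative assumption; only Python's standard `secrets` module is used for randomness.

```python
import secrets

# Hypothetical vocabulary for challenge phrases
WORDS = ["amber", "river", "seven", "lantern", "orbit", "maple", "cobalt", "harbor"]


def new_verification_text(n_words=4):
    """Generate a fresh, unpredictable challenge phrase for each login request."""
    return " ".join(secrets.choice(WORDS) for _ in range(n_words))


def _normalize(text):
    return " ".join(text.lower().split())


def verify_login(user_id, verification_text, audio, verify_speaker, transcribe):
    """Allow login only if the voice matches the user AND says the issued text.

    verify_speaker(user_id, audio) -> bool stands in for a text-independent
    voiceprint check; transcribe(audio) -> str stands in for speech recognition.
    Both are hypothetical callables supplied by the caller.
    """
    if not verify_speaker(user_id, audio):
        return False
    return _normalize(transcribe(audio)) == _normalize(verification_text)
```

A recording captured by a phishing site may pass the voiceprint check, but it will almost never contain the newly issued phrase, so the transcript comparison rejects it.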

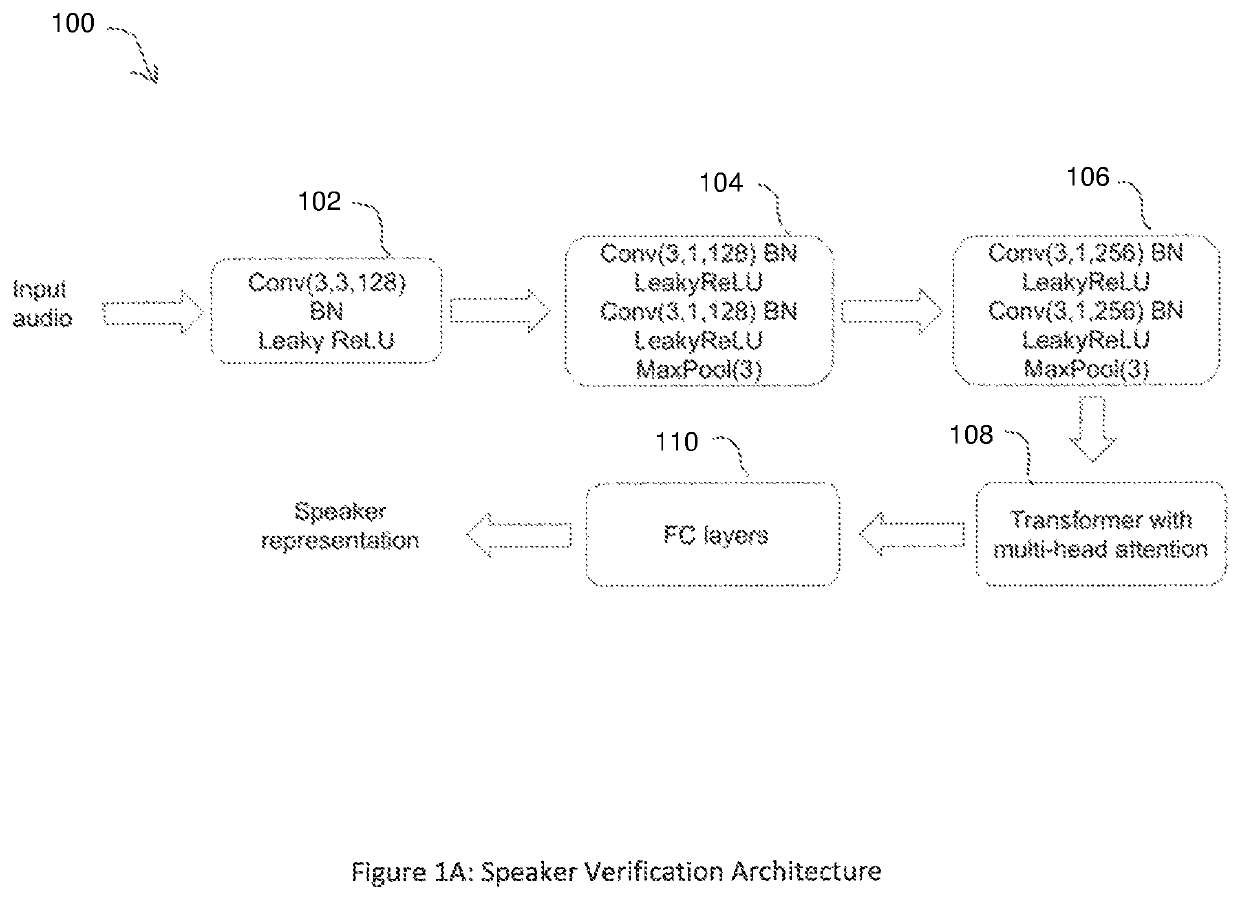

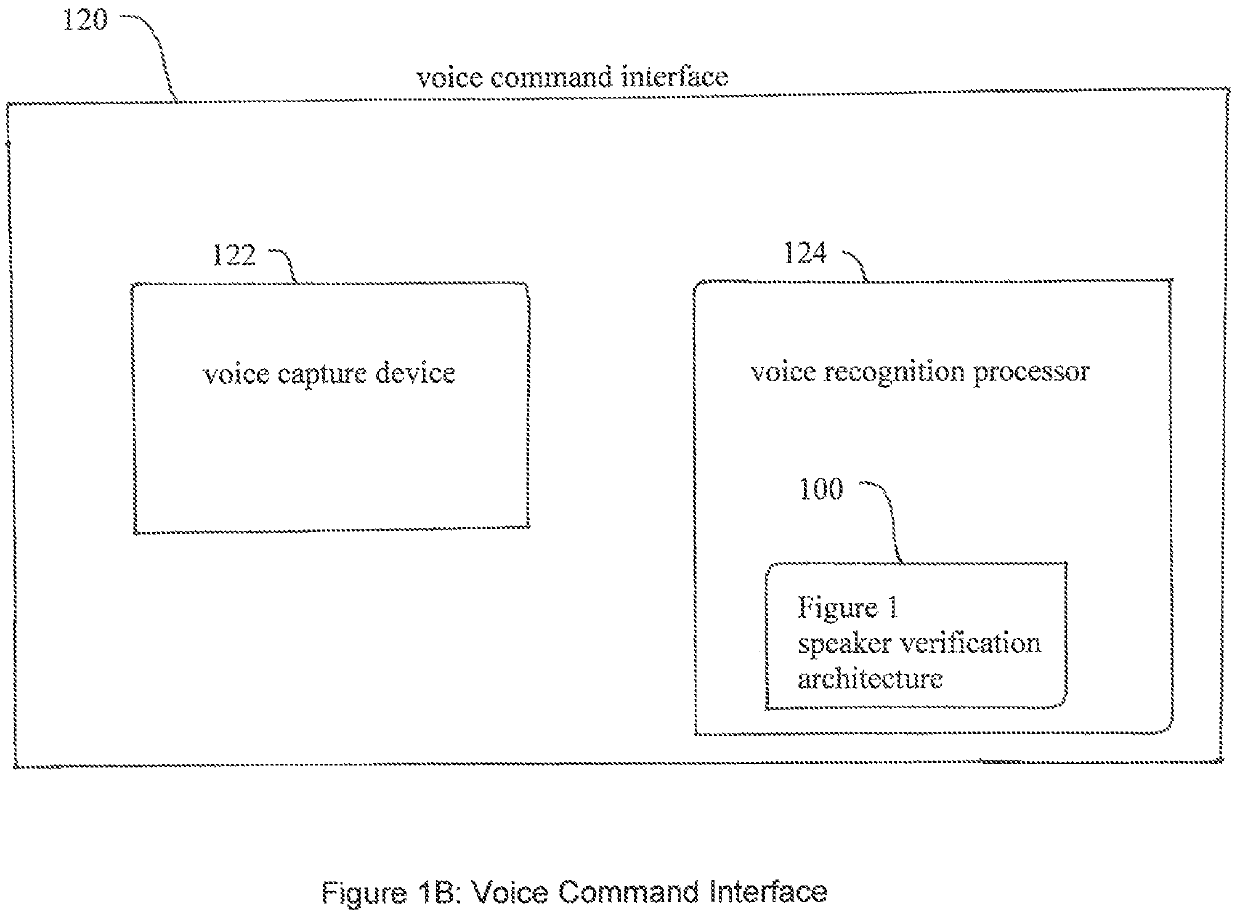

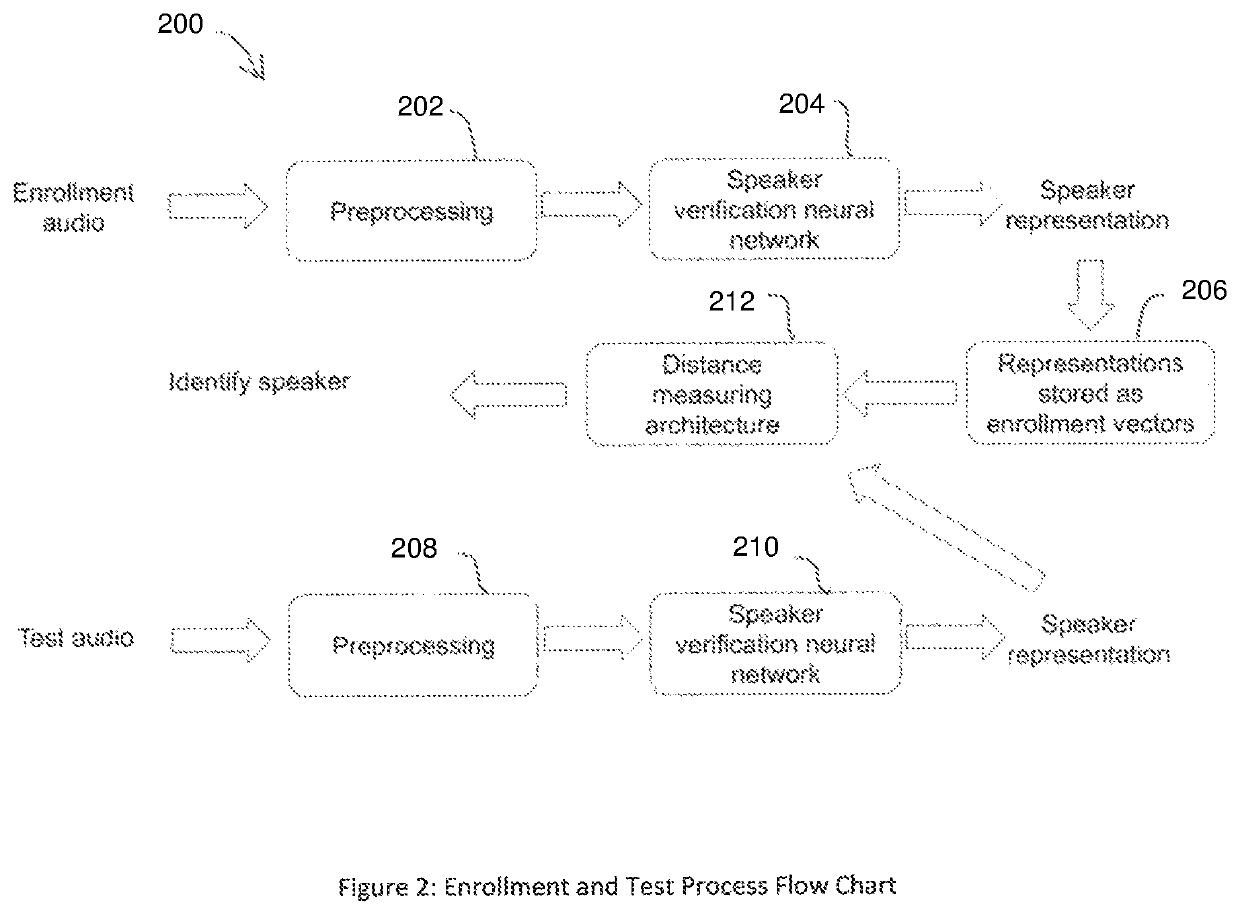

Text independent speaker-verification on a media operating system using deep learning on raw waveforms

An artificial neural network architecture is provided for processing raw audio waveforms to create speaker representations that are used for text-independent speaker verification and recognition. The artificial neural network architecture includes a strided convolution layer, first and second sequentially connected residual blocks, a transformer layer, and a final fully connected (FC) layer. The strided convolution layer is configured to receive raw audio waveforms from a speaker. The first and the second residual blocks both include multiple convolutional and max pooling layers. The transformer layer is configured to aggregate frame level embeddings to an utterance level embedding. The output of the FC layer creates a speaker representation for the speaker whose raw audio waveforms were inputted into the strided convolution layer.

Owner:ALPHONSO

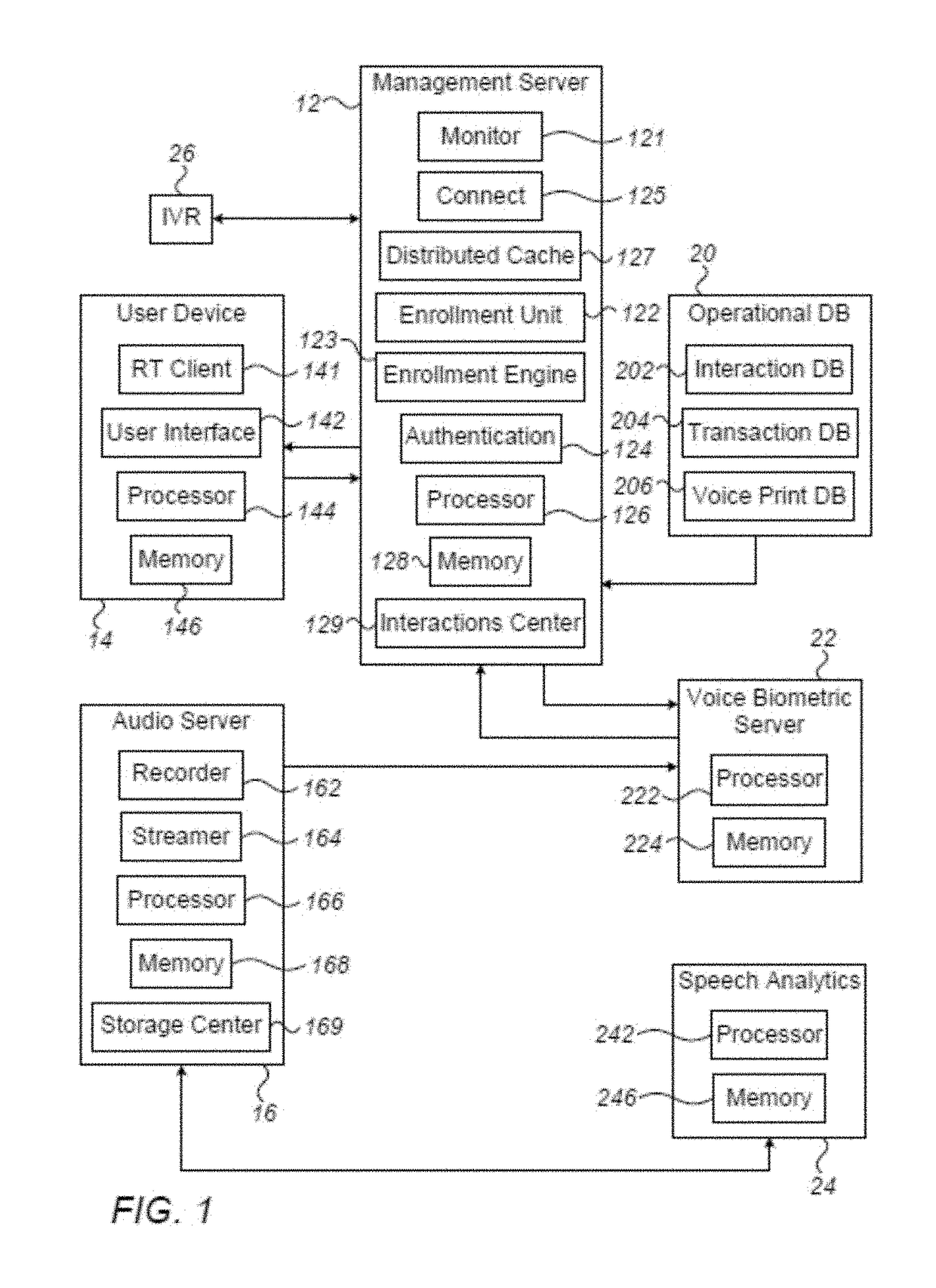

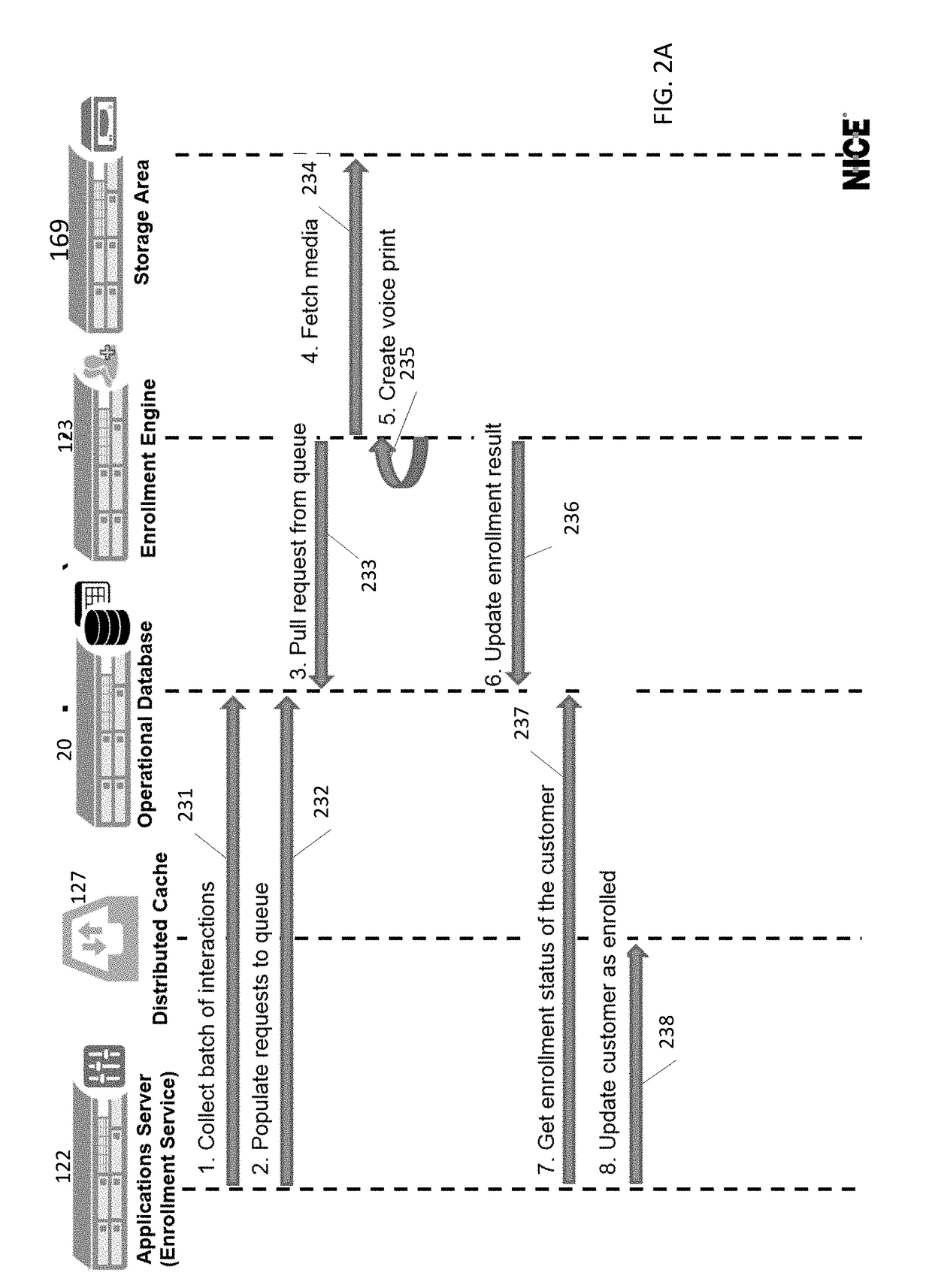

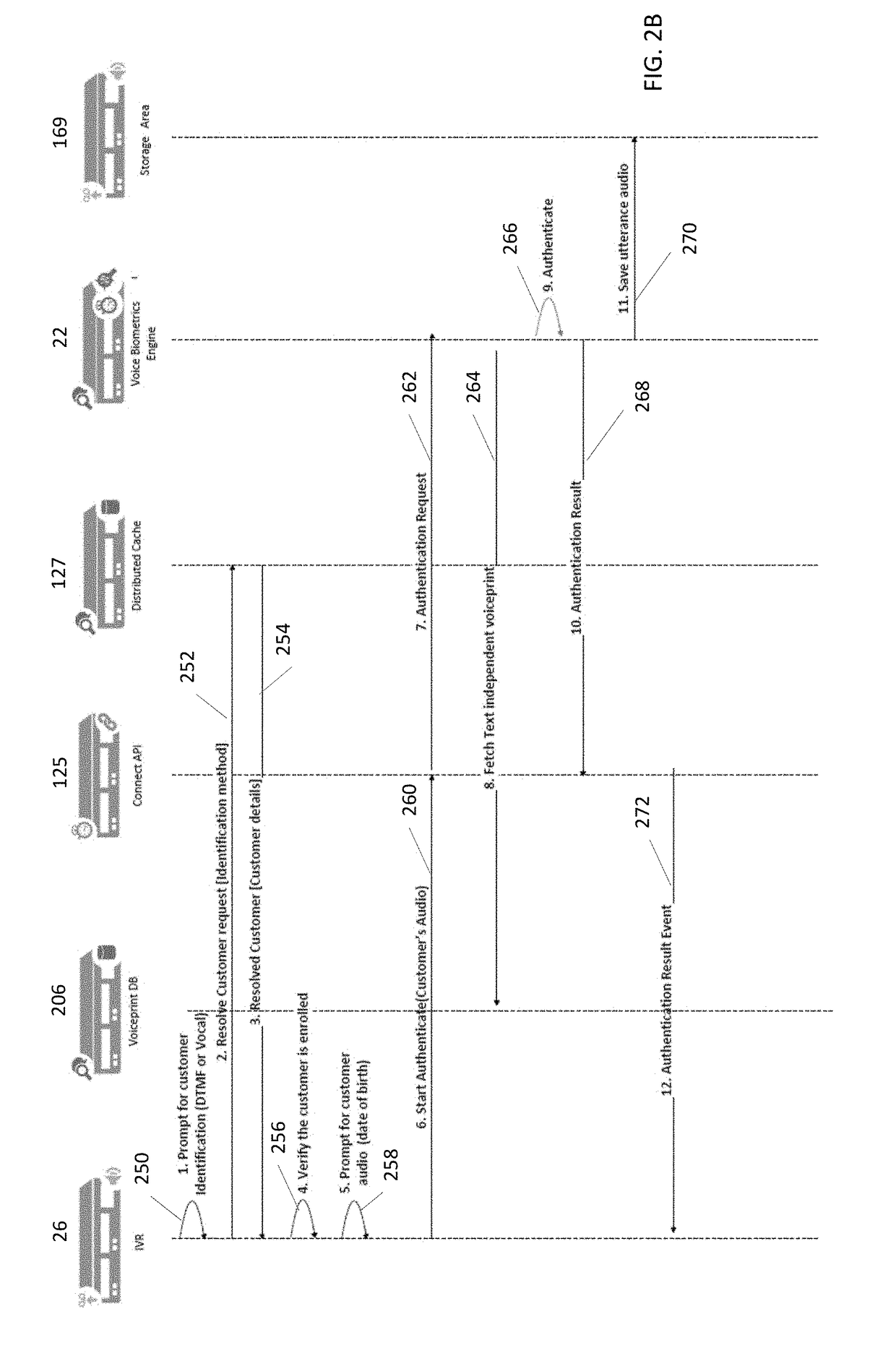

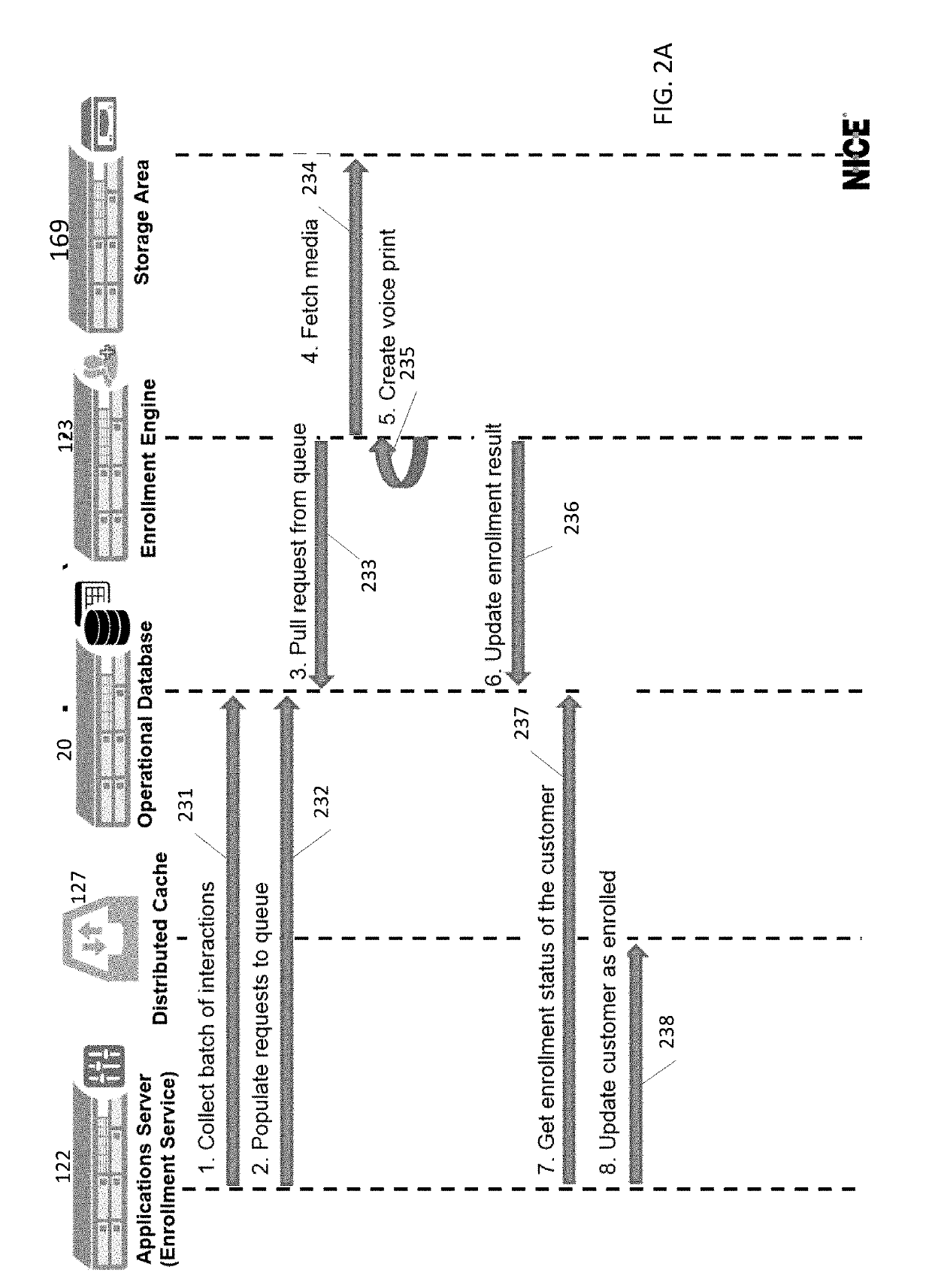

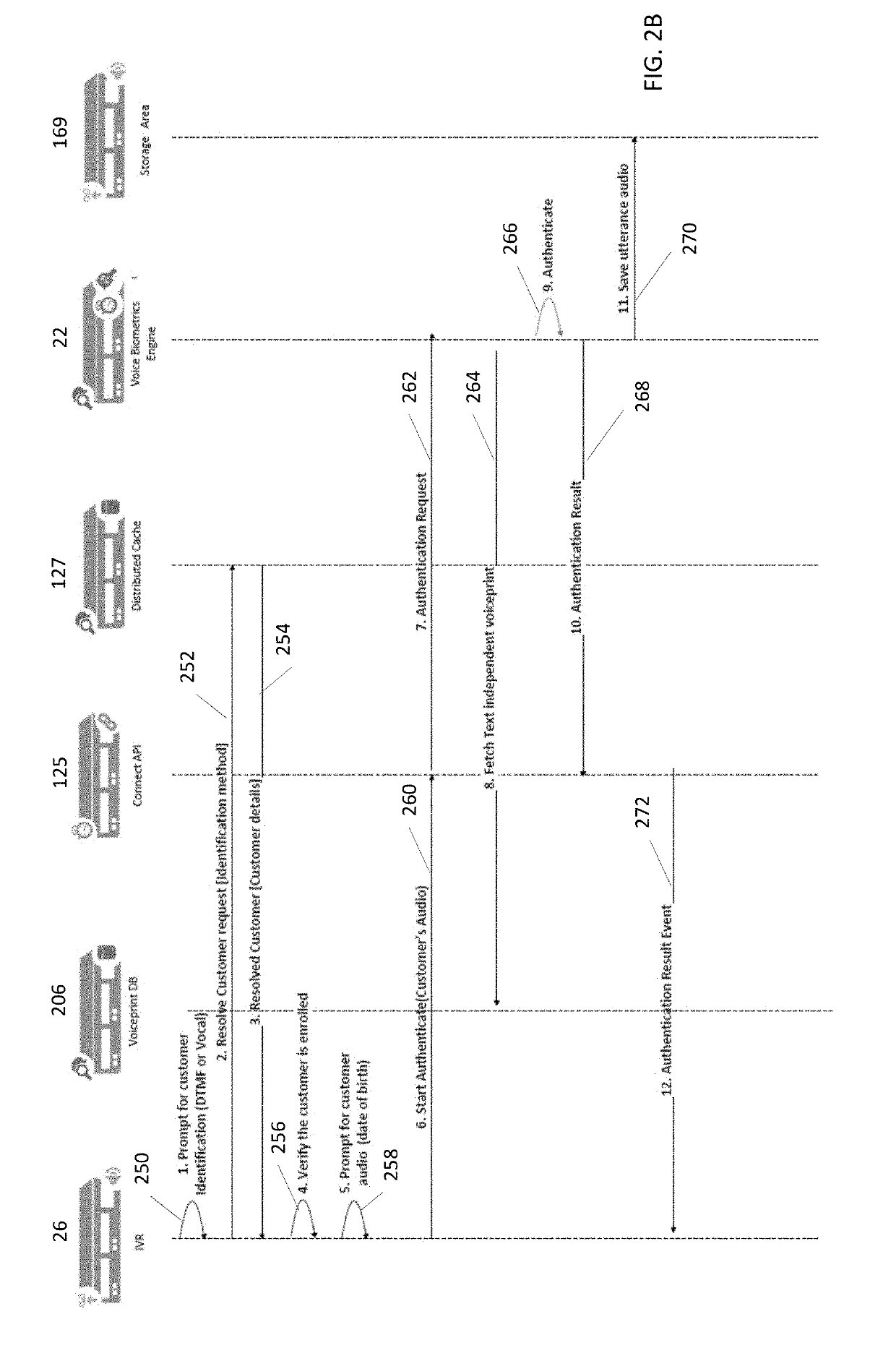

Seamless text-dependent enrollment

Methods and systems are provided for transforming a customer's text-independent enrollment in a self-service system into a text-dependent enrollment. A request is received to authenticate a customer who is enrolled in the self-service system with a text-independent voiceprint. The customer is asked to repeat a passphrase, and the response is received as an audio stream of the passphrase. The customer is authenticated by comparing this audio stream against the text-independent voiceprint; if authentication succeeds, a text-dependent voiceprint is created from the passphrase, and otherwise the audio stream is discarded.

Owner:NICE LTD
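
The authenticate-then-upgrade logic can be sketched as follows. The in-memory store, the scoring and voiceprint-building callables, and the threshold are hypothetical stand-ins for a real speaker-verification engine.

```python
from dataclasses import dataclass, field


@dataclass
class VoiceprintStore:
    """Per-customer voiceprints (hypothetical in-memory storage)."""
    text_independent: dict = field(default_factory=dict)
    text_dependent: dict = field(default_factory=dict)


def authenticate_and_upgrade(store, customer_id, passphrase_audio,
                             score_against, build_voiceprint, threshold):
    """Authenticate with the text-independent voiceprint; on success, enroll the passphrase.

    score_against(voiceprint, audio) -> float and build_voiceprint(audio) -> object
    are hypothetical stand-ins for a real speaker-verification engine.
    """
    ti_print = store.text_independent[customer_id]
    if score_against(ti_print, passphrase_audio) >= threshold:
        store.text_dependent[customer_id] = build_voiceprint(passphrase_audio)
        return True
    # Authentication failed: the passphrase audio is discarded, nothing is stored
    return False
```

Only audio that has already passed authentication becomes a text-dependent voiceprint, so an impostor's attempt cannot seed the new enrollment.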

Seamless text-dependent enrollment

Methods and systems are provided for transforming a customer's text-independent enrollment in a self-service system into a text-dependent enrollment. A request is received to authenticate a customer who is enrolled in the self-service system with a text-independent voiceprint. The customer is asked to repeat a passphrase, and the response is received as an audio stream of the passphrase. The customer is authenticated by comparing this audio stream against the text-independent voiceprint; if authentication succeeds, a text-dependent voiceprint is created from the passphrase, and otherwise the audio stream is discarded.

Owner:NICE LTD

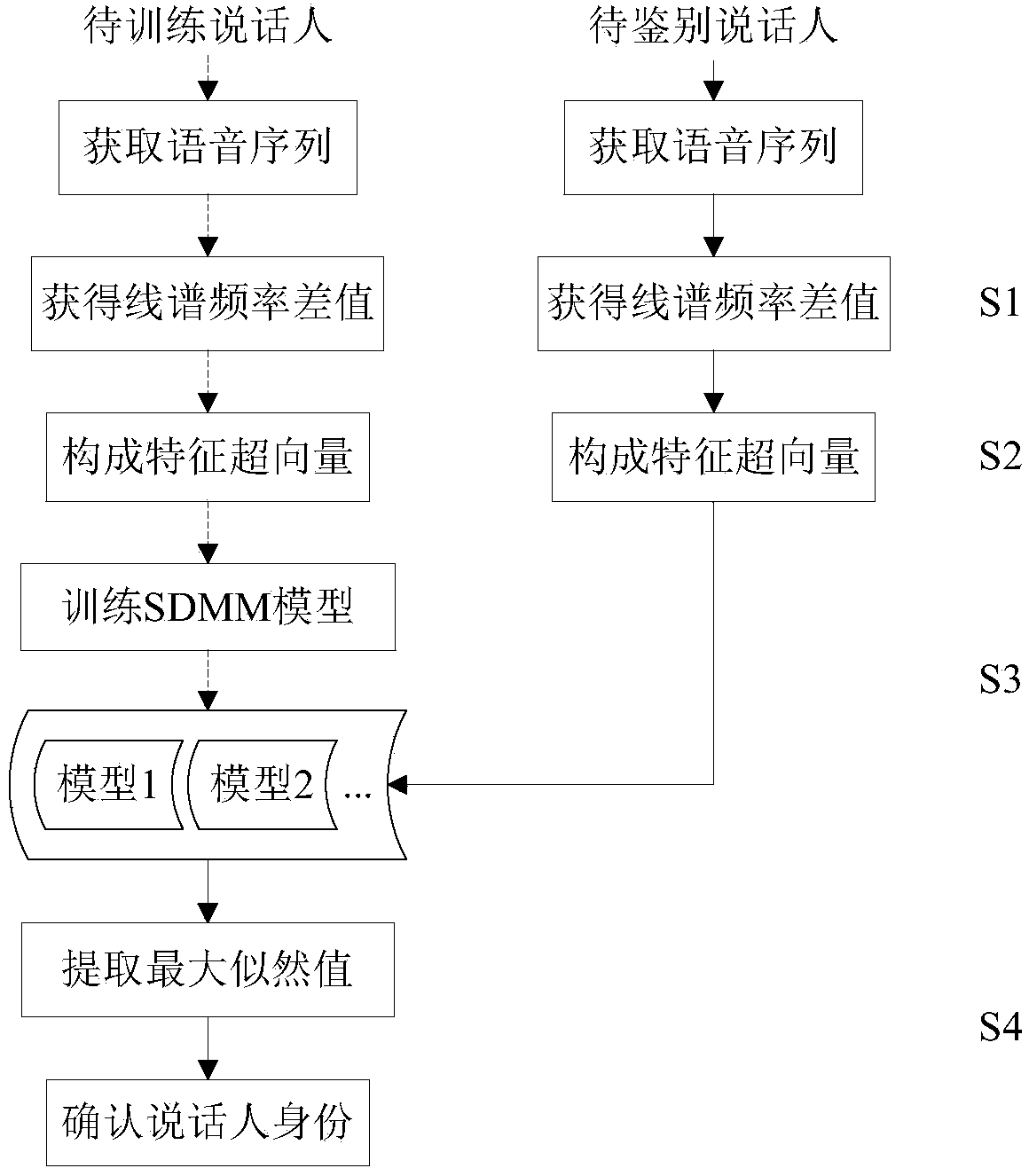

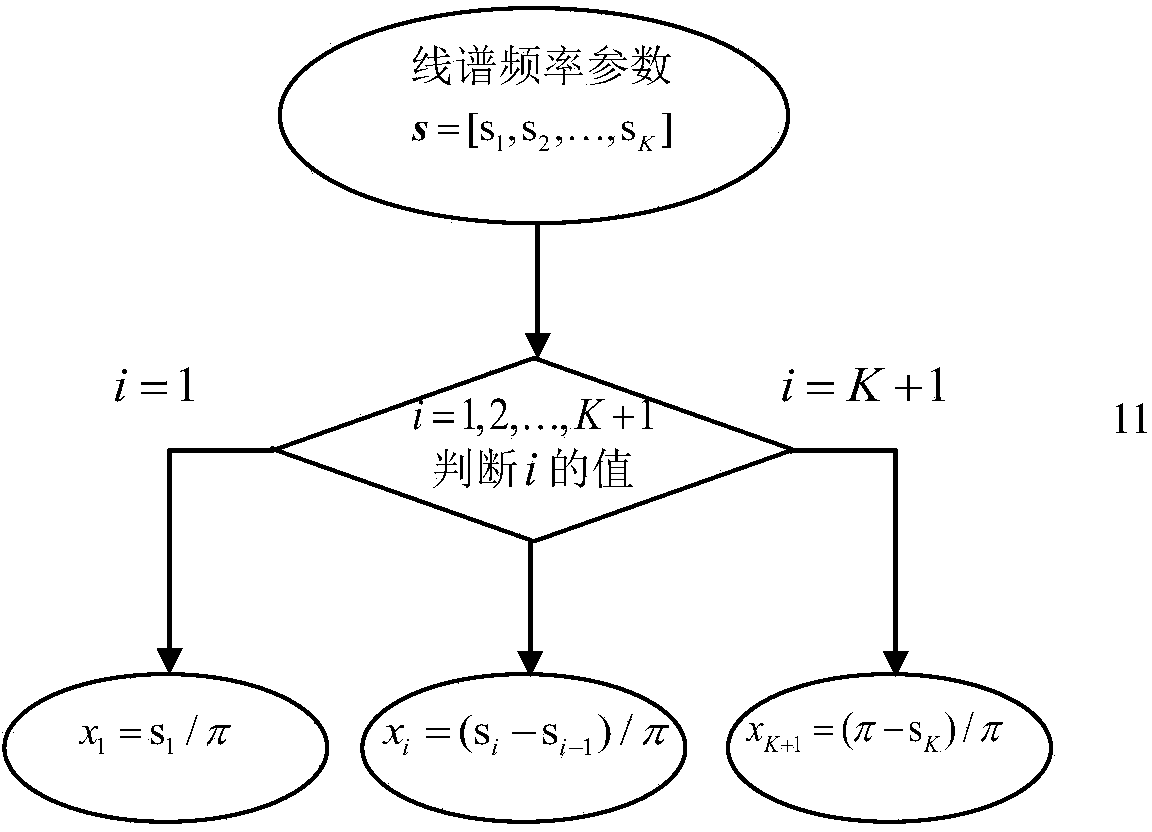

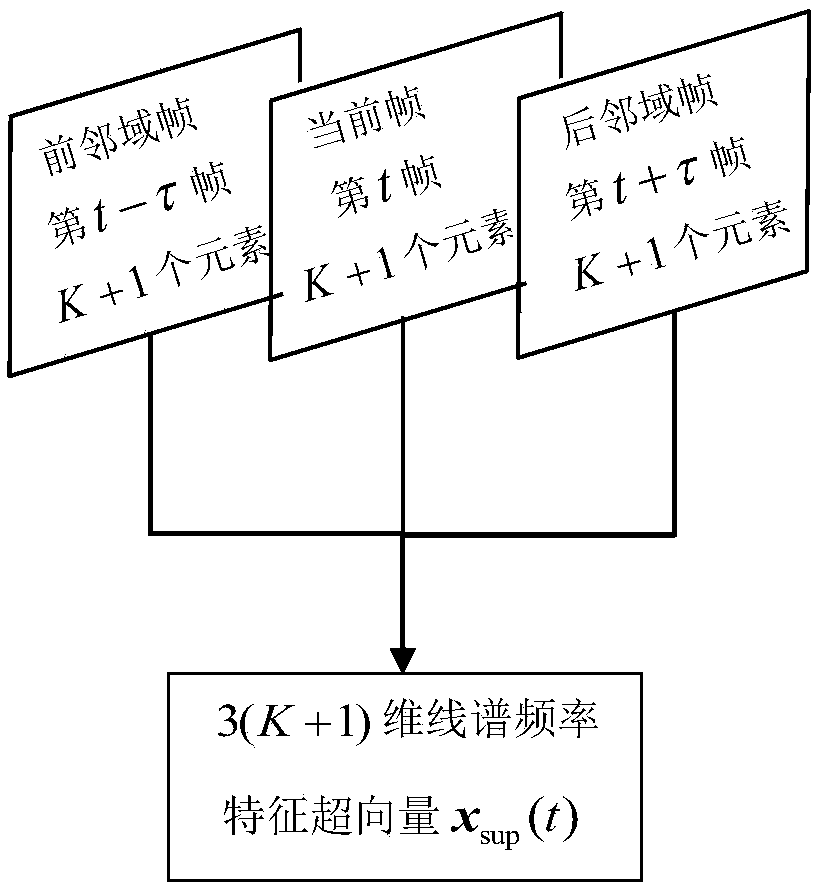

Text-independent speaker identifying device based on line spectrum frequency difference value

The embodiment of the invention discloses a text-independent speaker identification device based on line spectral frequency (LSF) differences. The method comprises the following steps. Feature extraction: the LSF parameters are linearly transformed into LSF differences, and an LSF feature supervector is formed by combining the current frame with the preceding and following frames. Model training: the distribution of the supervectors is modeled with a super-Dirichlet mixture model, and the model parameters are estimated. Identification: features are extracted from the speech of the person to be identified as in step one and input into each model obtained in step two; the likelihood under each model is computed, and the speaker whose model gives the largest likelihood is selected. The method improves the text-independent speaker identification rate and has high practical value.

Owner:BEIJING UNIV OF POSTS & TELECOMM
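
The feature-extraction step can be sketched under two labelled assumptions: that the linear transform is the usual ΔLSF mapping [ω1, ω2 - ω1, ..., π - ωp], divided by π so each vector is positive and sums to 1 (the support a Dirichlet-type model needs), and that edge frames reuse themselves as the missing neighbour. The function names are hypothetical, and training the super-Dirichlet mixture model is not shown.

```python
import numpy as np


def delta_lsf(lsf):
    """Convert per-frame LSFs (ascending, in radians within (0, pi)) to LSF differences.

    Each output row is [w1, w2 - w1, ..., pi - wp] / pi, a linear transform of the
    LSFs whose entries are positive and sum to 1.
    """
    lsf = np.asarray(lsf, dtype=float)
    frames = lsf.shape[0]
    padded = np.hstack([np.zeros((frames, 1)), lsf, np.full((frames, 1), np.pi)])
    return np.diff(padded, axis=1) / np.pi


def stack_context(features):
    """Supervector per frame: [previous frame, current frame, next frame].

    Edge frames reuse themselves as the missing neighbour (an assumption).
    """
    prev = np.vstack([features[:1], features[:-1]])
    nxt = np.vstack([features[1:], features[-1:]])
    return np.hstack([prev, features, nxt])
```

Each supervector is three concatenated simplex vectors, which is why a "super" Dirichlet mixture (a product of Dirichlet components) rather than a single Dirichlet is used to model it.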

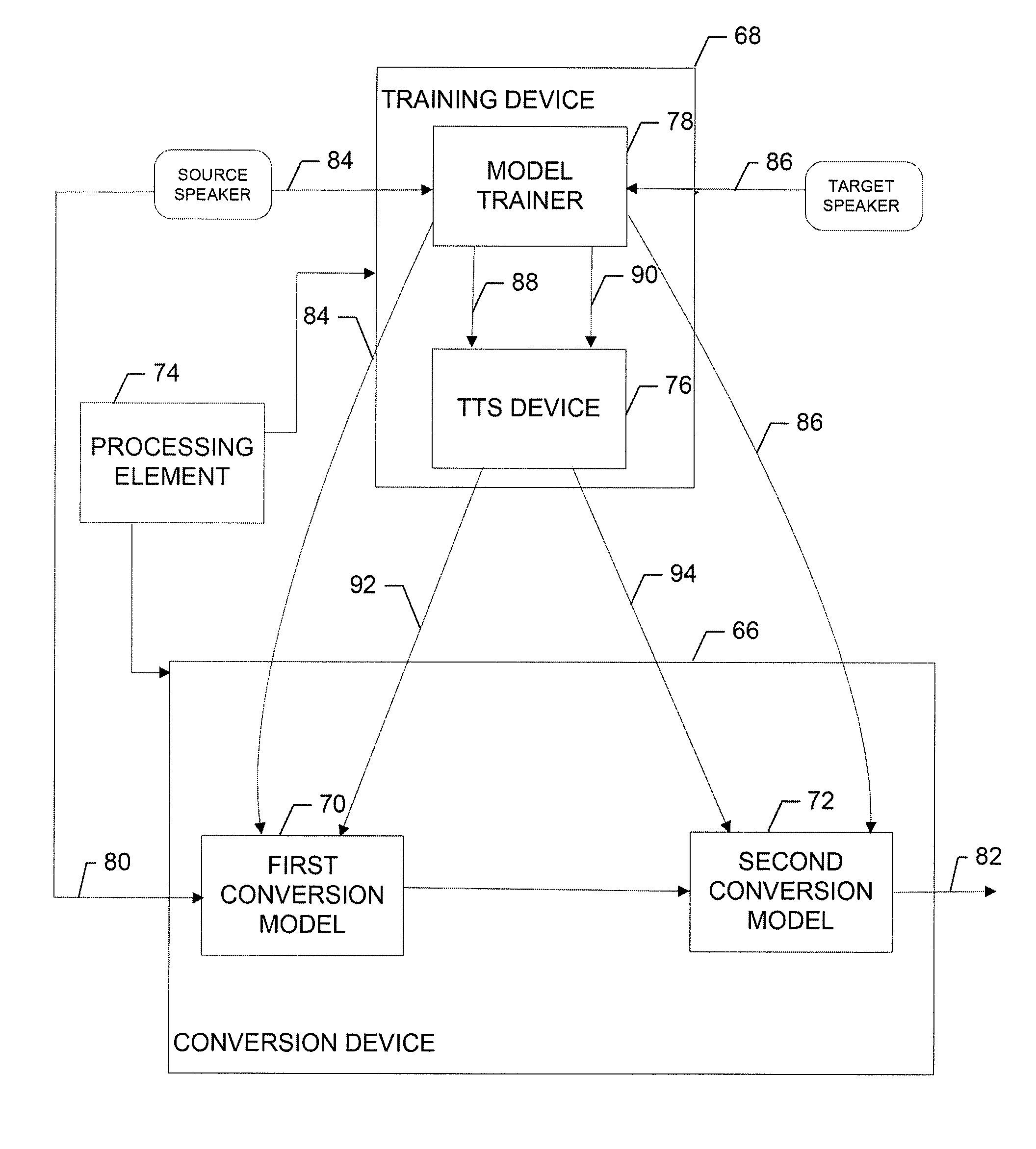

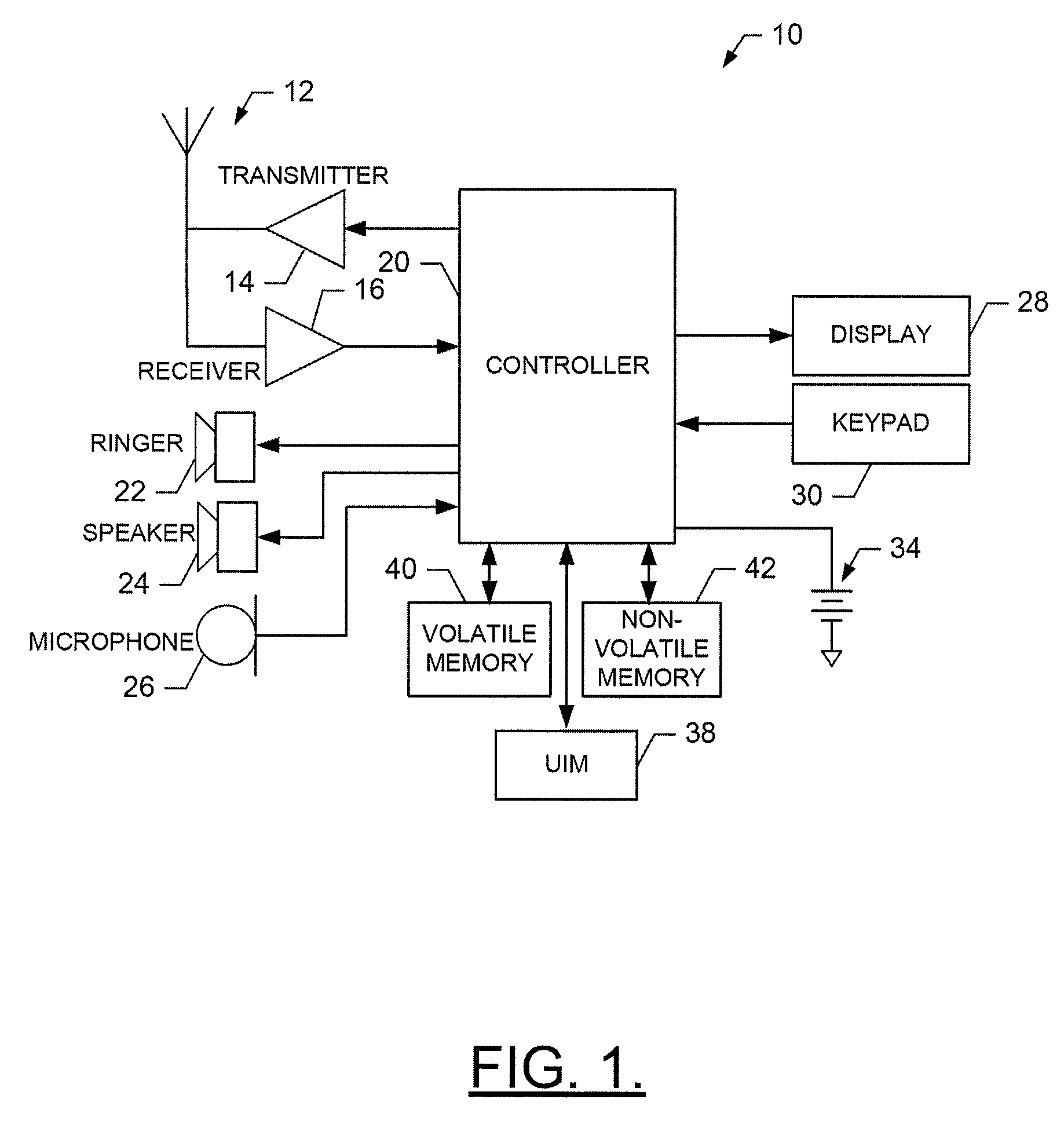

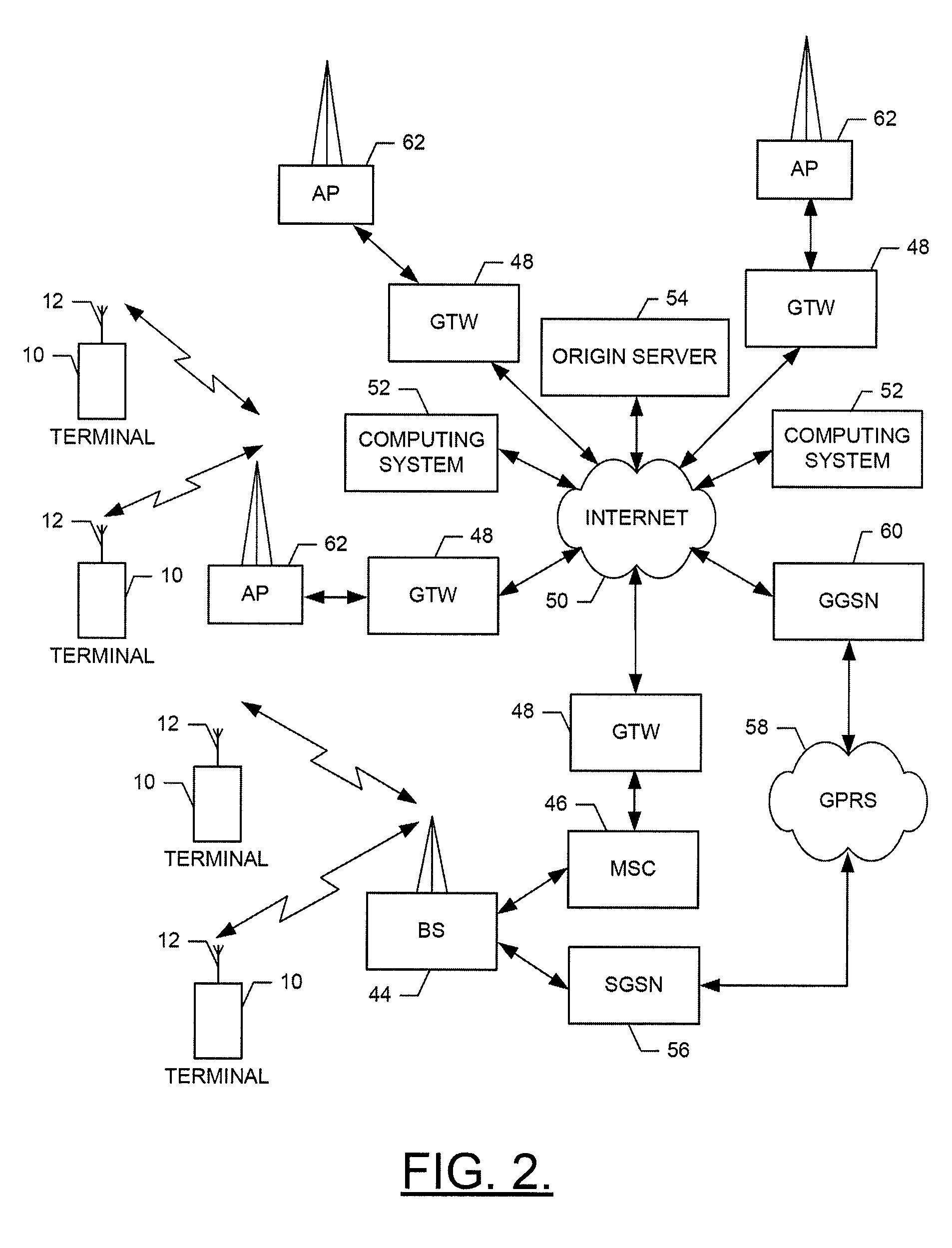

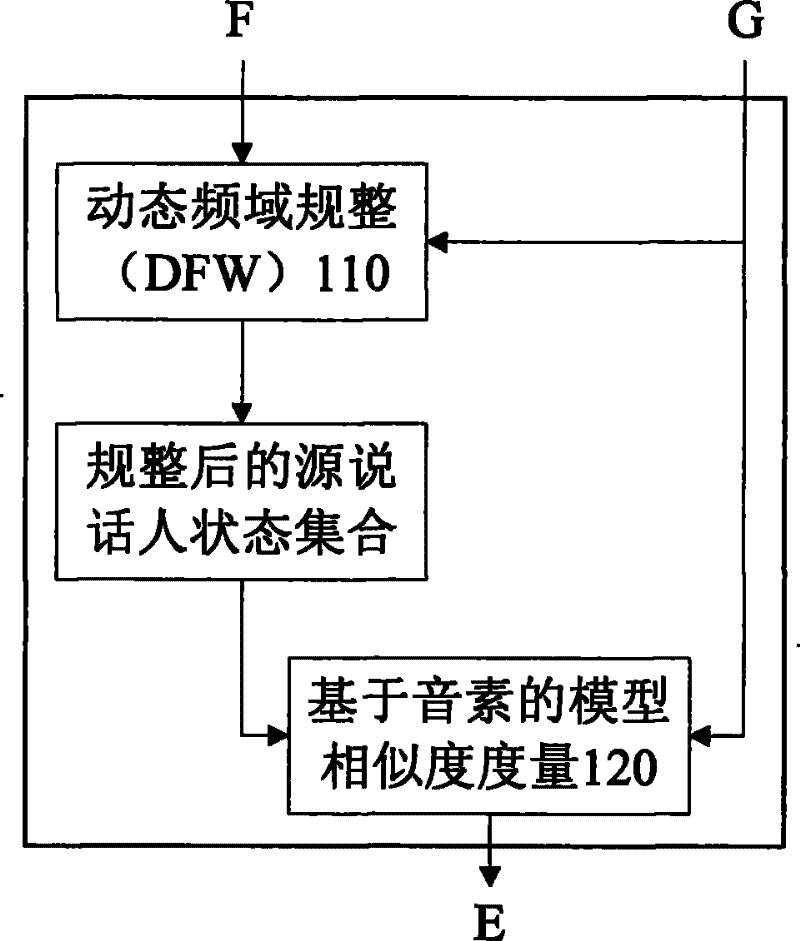

Method, apparatus and computer program product for providing text independent voice conversion

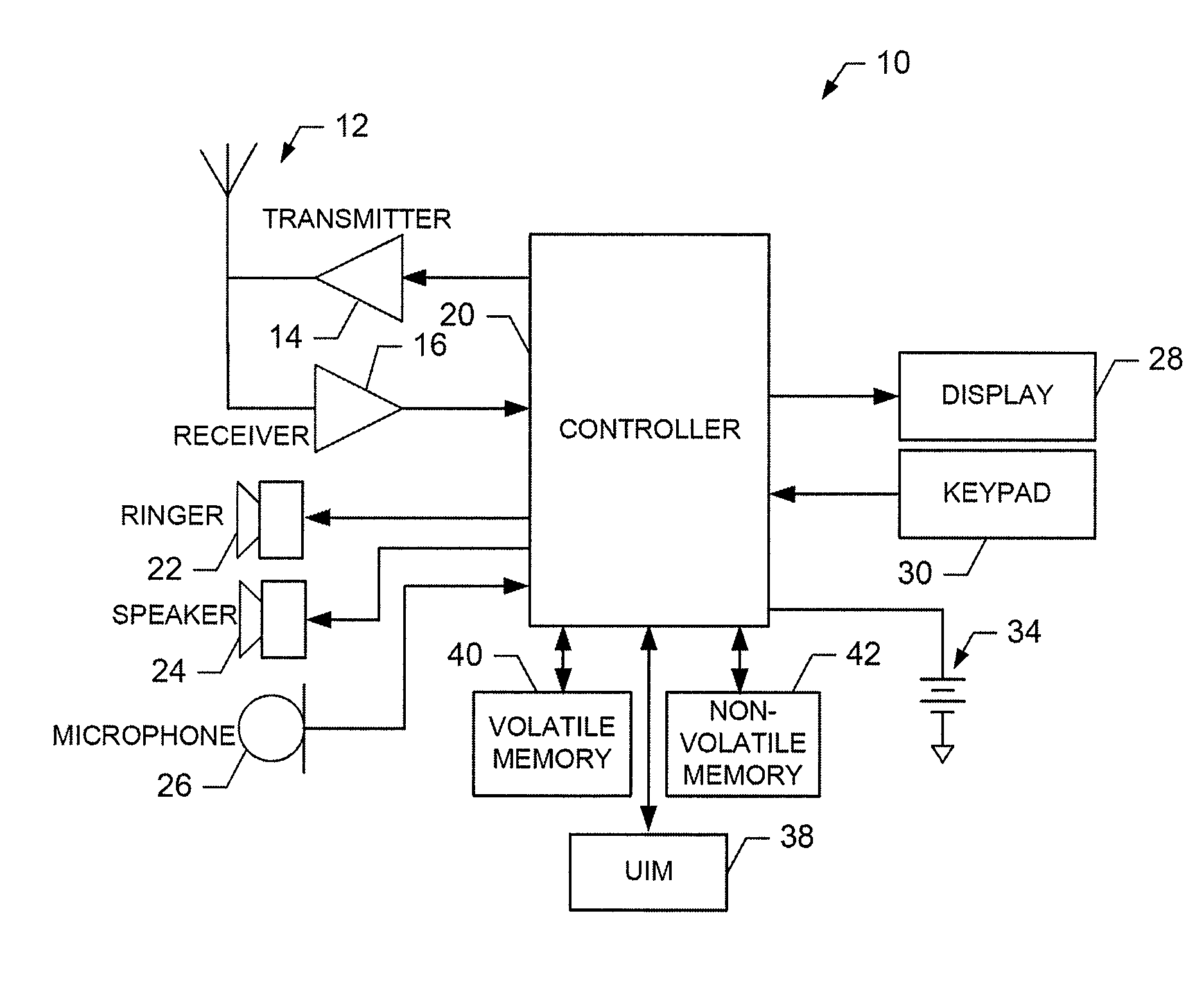

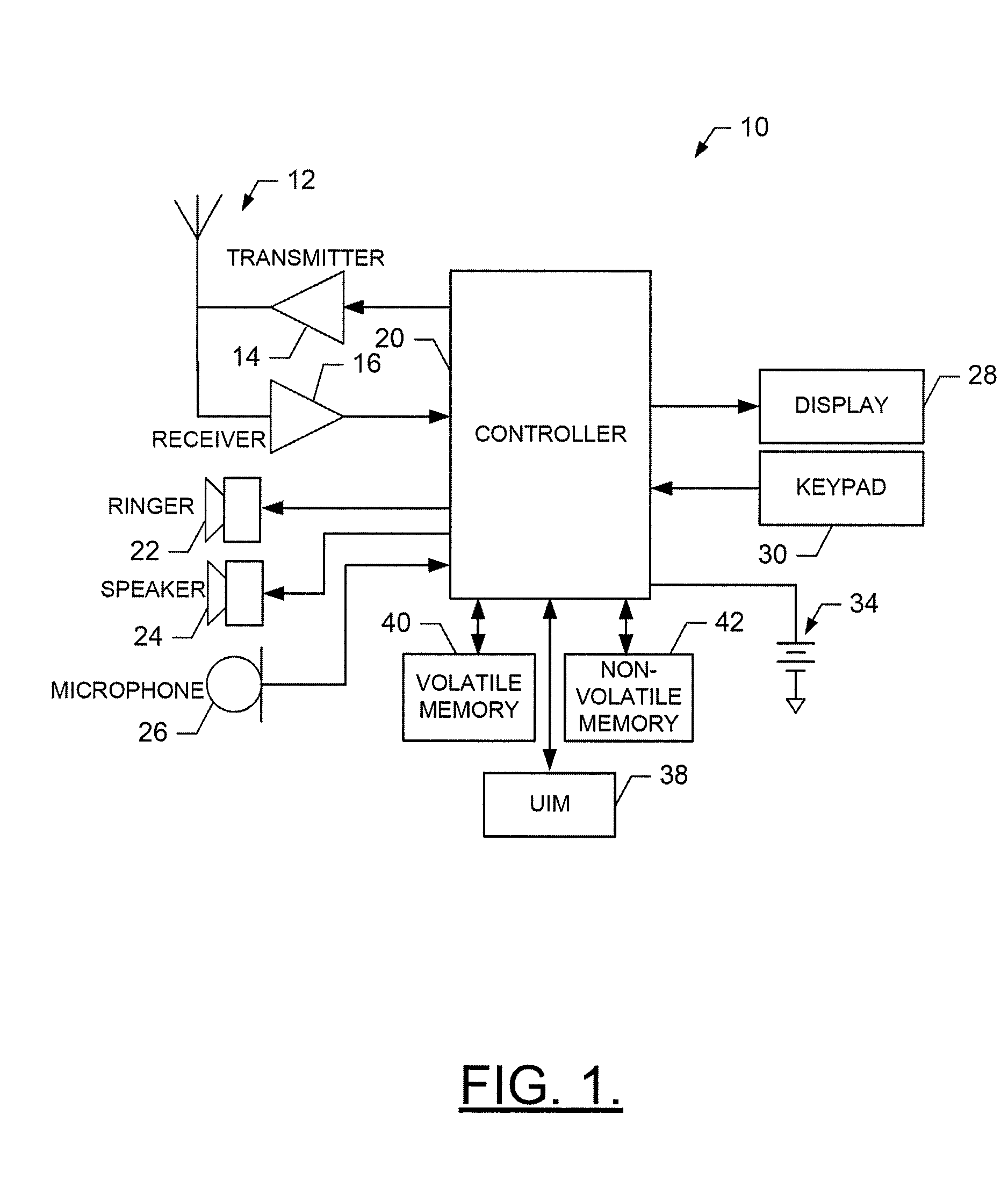

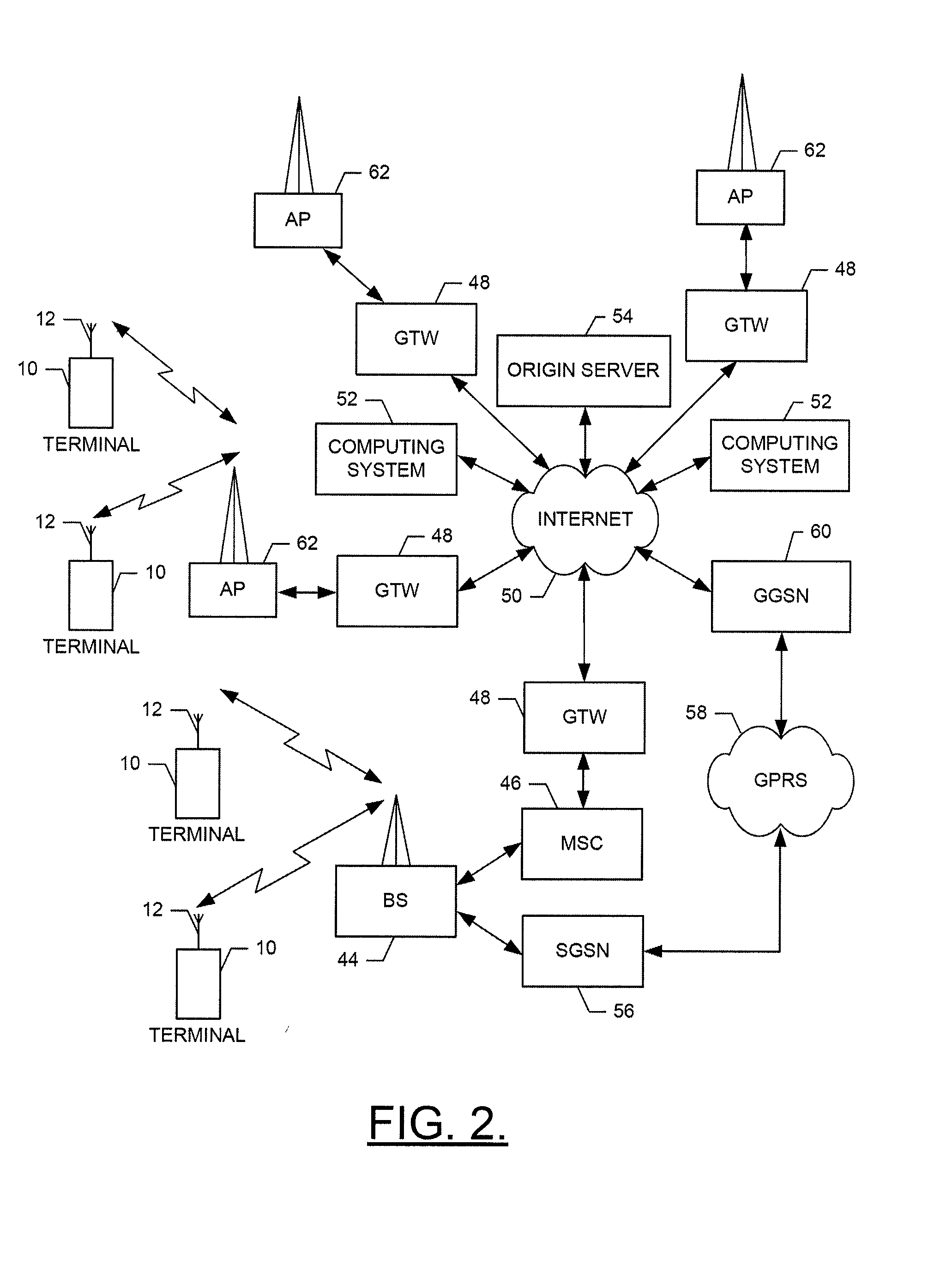

Status: Inactive | Publication No.: US8751239B2 | Topics: Quality improvement; Improve usability; Speech recognition; Speech synthesis; Text independent; Speech input

An apparatus for providing text independent voice conversion may include a first voice conversion model and a second voice conversion model. The first voice conversion model may be trained with respect to conversion of training source speech to synthetic speech corresponding to the training source speech. The second voice conversion model may be trained with respect to conversion to training target speech from synthetic speech corresponding to the training target speech. An output of the first voice conversion model may be communicated to the second voice conversion model to process source speech input into the first voice conversion model into target speech corresponding to the source speech as the output of the second voice conversion model.

Owner:CORE WIRELESS LICENSING R L

Method, apparatus and computer program product for providing text independent voice conversion

Status: Inactive | Publication No.: US20140249815A1 | Topics: Quality improvement; Improve usability; Speech recognition; Text independent; Speech input

An apparatus for providing text independent voice conversion may include a first voice conversion model and a second voice conversion model. The first voice conversion model may be trained with respect to conversion of training source speech to synthetic speech corresponding to the training source speech. The second voice conversion model may be trained with respect to conversion to training target speech from synthetic speech corresponding to the training target speech. An output of the first voice conversion model may be communicated to the second voice conversion model to process source speech input into the first voice conversion model into target speech corresponding to the source speech as the output of the second voice conversion model.

Owner:CORE WIRELESS LICENSING R L

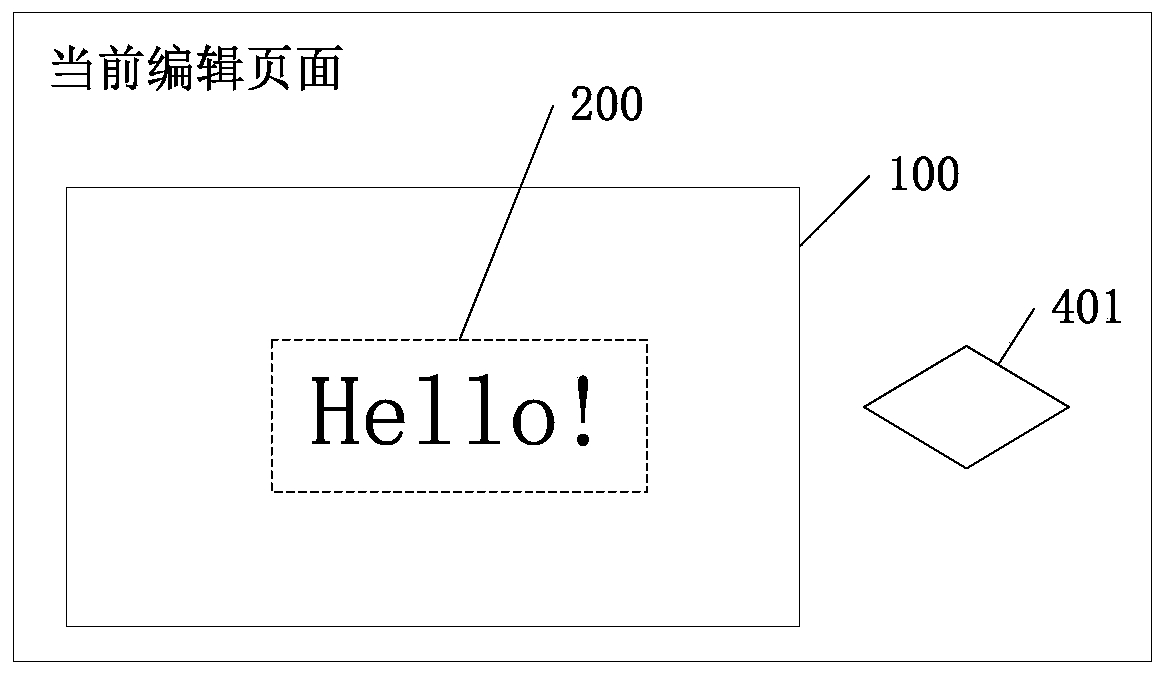

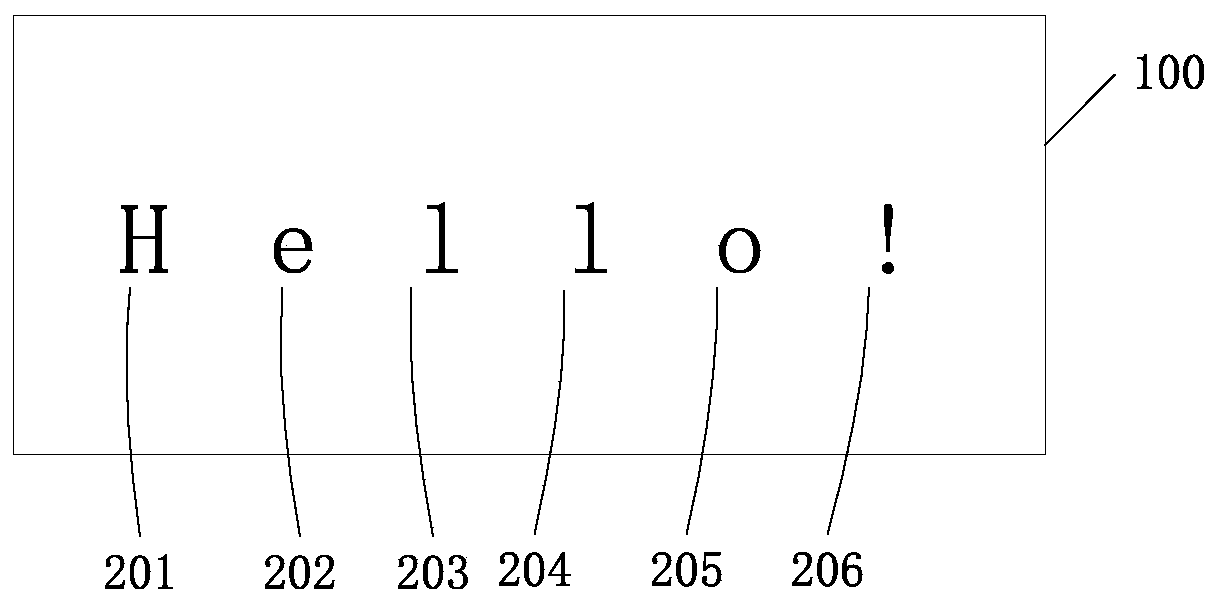

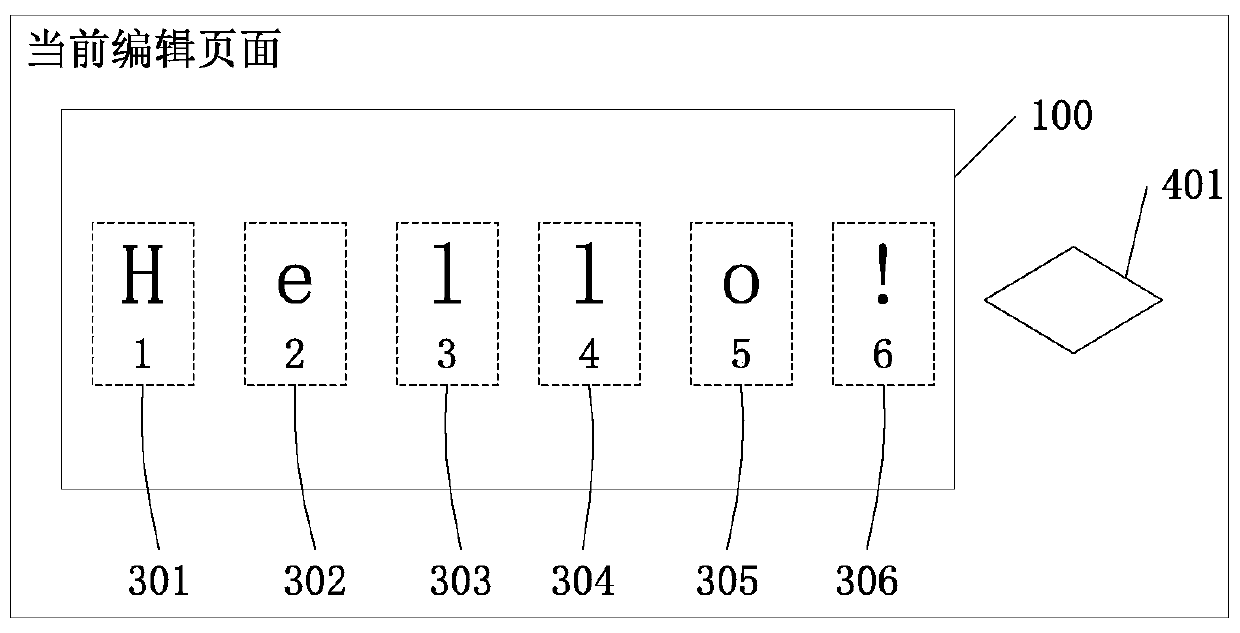

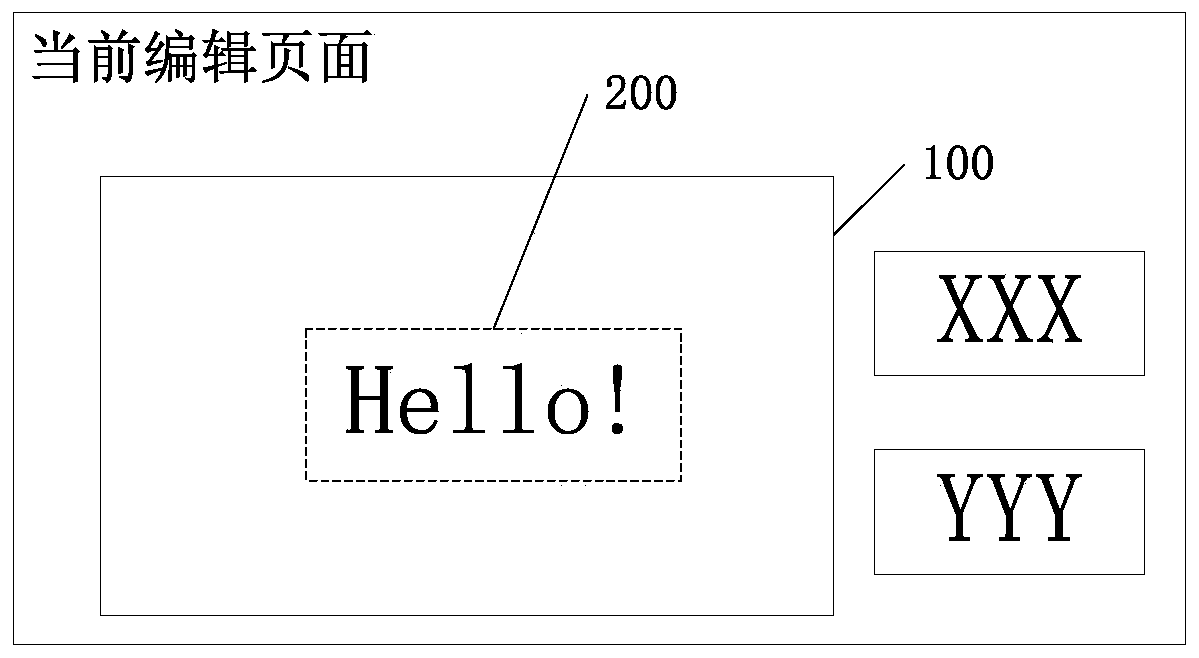

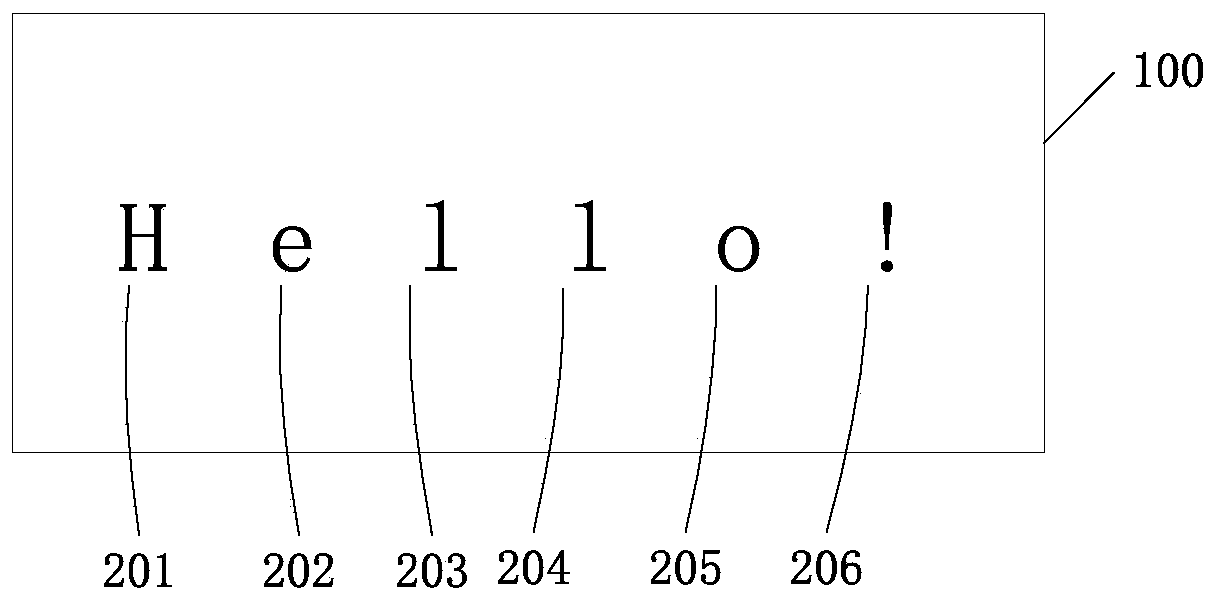

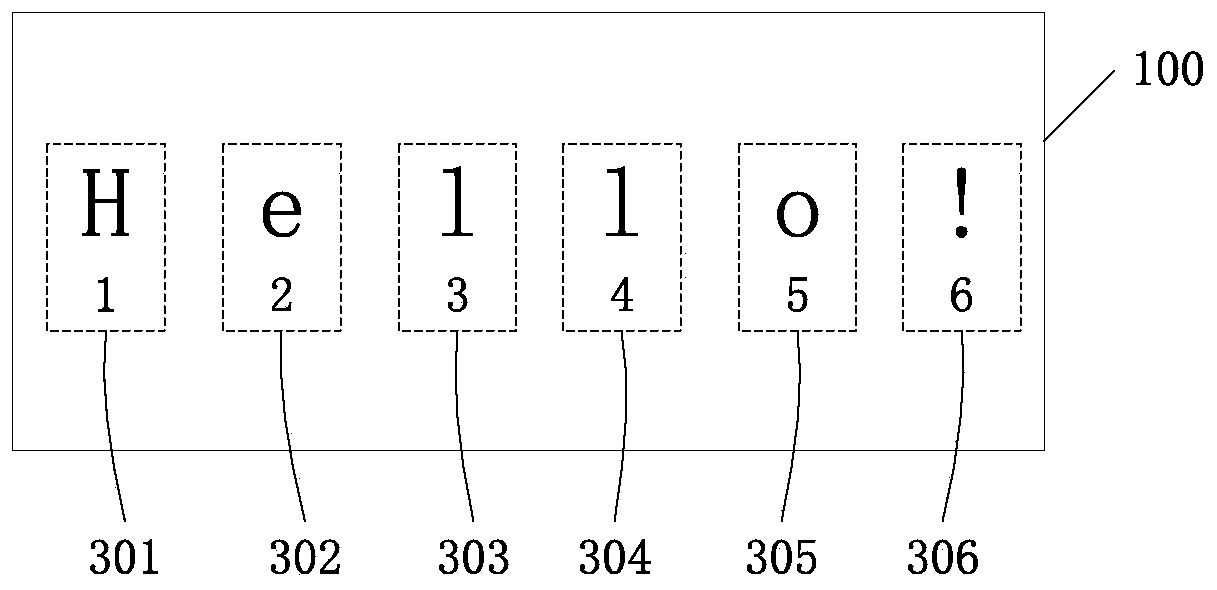

Method for extracting edited data based on online editing process of teaching courseware

The invention discloses a method for extracting editing data from the online editing process of teaching courseware. The method comprises the following steps: S1, the user enters new text or deletes existing text on the current editing page as needed, adds or deletes pictures, and/or adds or deletes audio; S2, the user preprocesses the current text on the current editing page as needed to obtain independent text elements; S3, the system monitors whether the user triggers a text style modification instruction, an animation modification instruction, and/or an audio modification instruction; S4, all editing events on the current editing page are sorted by playback sequence number to obtain the page's editing event list; and S5, the attribute information of all text style, animation, and audio modification instructions is converted into editing data in a general format and sent to a back-end server for storage.

Owner:Suzhou Yunxue Shidai Information Technology Co., Ltd. (苏州云学时代信息技术有限公司)

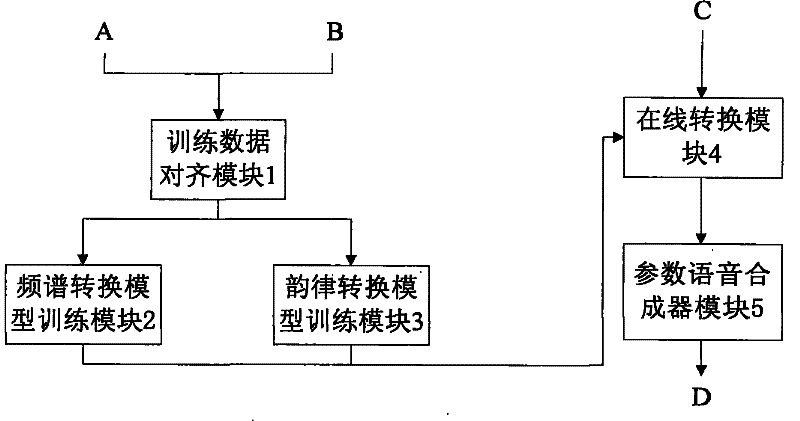

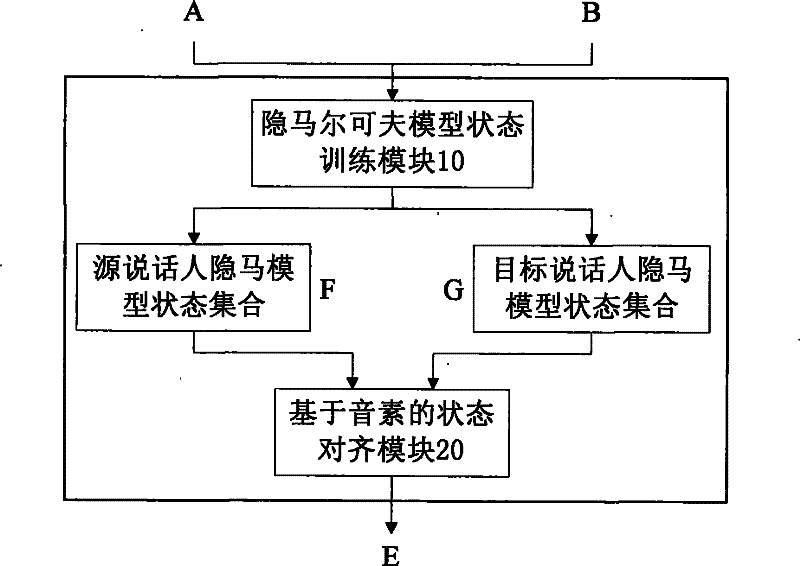

Text-independent Speech Conversion System Based on Hidden Markov Model State Mapping

Status: Active | Publication No.: CN101751922B | Topics: Improve accuracy; Rich conversion results; Speech recognition; Speech synthesis; Text independent; Frequency spectrum

The invention discloses a text-independent speech conversion system based on HMM state mapping, composed of a data alignment module, a spectrum conversion model generation module, a prosody conversion model generation module, an online conversion module, and a parametric speech synthesizer. The data alignment module receives speech parameters of the source and target speakers and aligns the input data according to phoneme information to generate state-aligned data pairs. The spectrum conversion model generation module receives the aligned data pairs and builds a spectral-parameter conversion model between the source and target speakers. The prosody conversion model generation module receives the aligned data pairs and builds a prosodic-parameter conversion model between the source and target speakers. The online conversion module applies the conversion models produced by these two modules to the source speaker's speech data to obtain converted spectral and prosodic parameters. The parametric speech synthesizer receives the converted spectral and prosodic information from the online conversion module and outputs the converted speech.

Owner:Beijing Zhongke Ouke Technology Co., Ltd. (北京中科欧科科技有限公司)

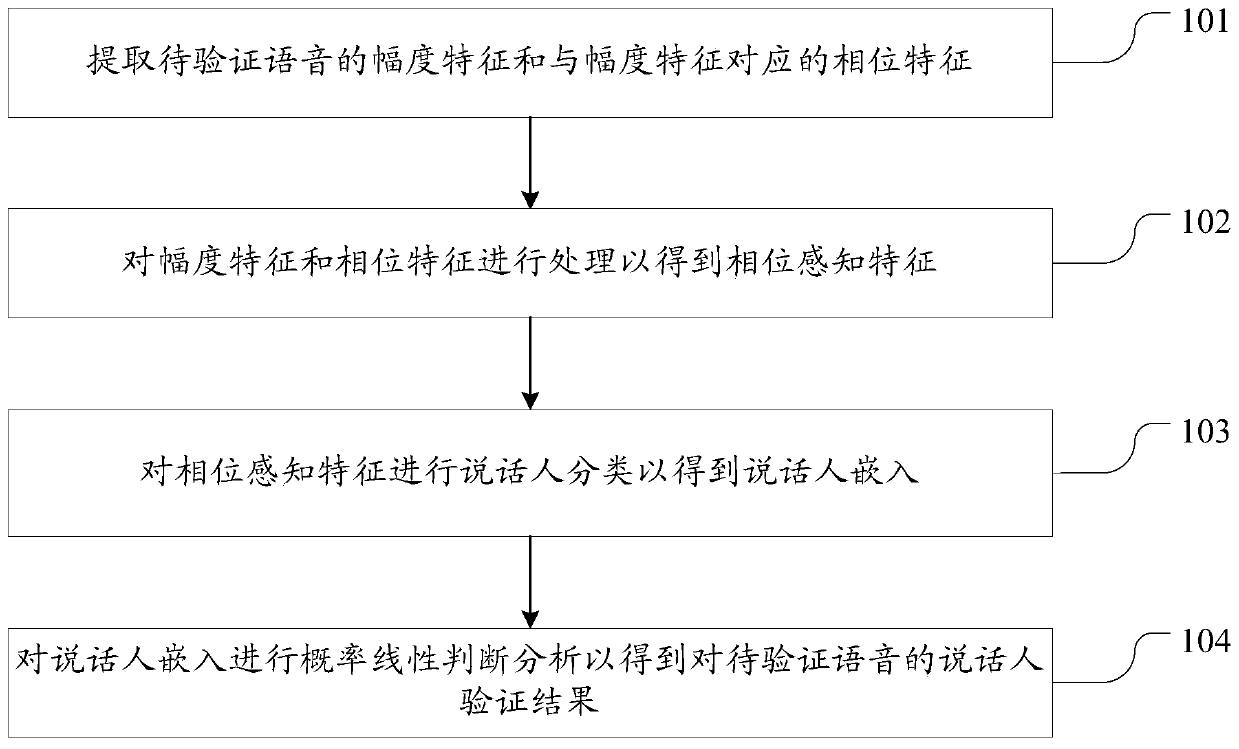

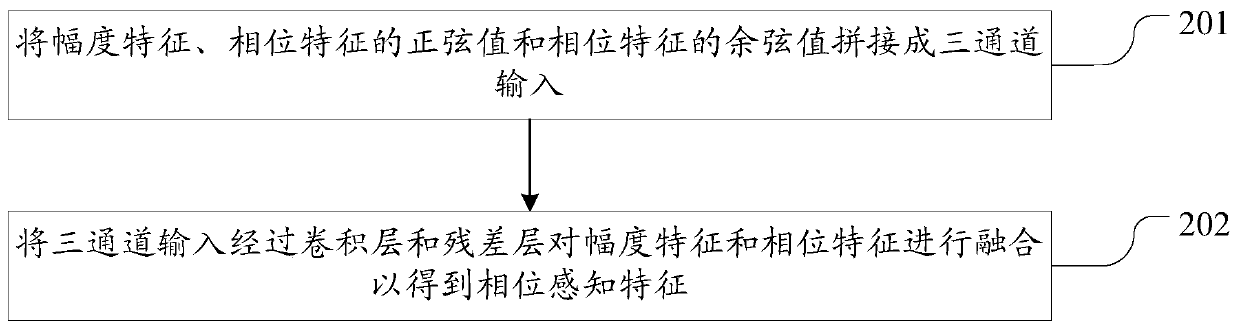

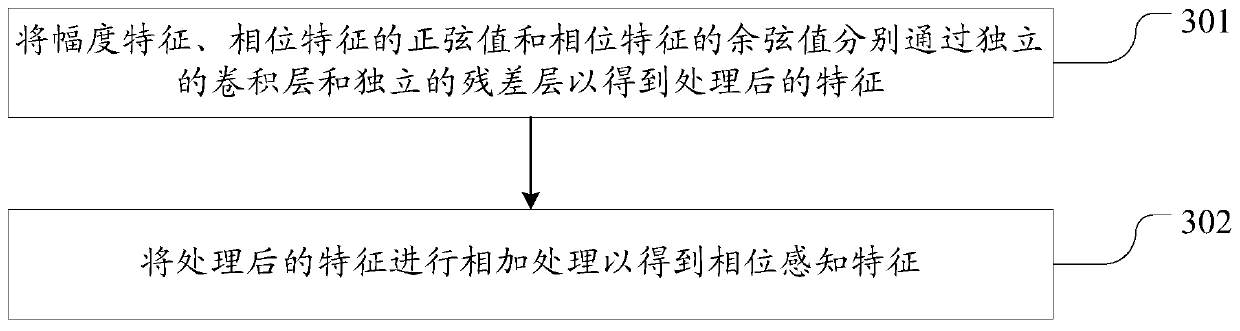

Text-independent speaker verification method and device

The invention discloses a text-independent speaker verification method and device. The method comprises: extracting amplitude features of the speech to be verified and the corresponding phase features; processing the amplitude and phase features to obtain phase-aware features; performing speaker classification on the phase-aware features to obtain a speaker embedding; and applying probabilistic linear discriminant analysis (PLDA) to the speaker embedding to obtain the verification result for the speech. By combining amplitude and phase features in deep speaker embedding learning, the scheme improves the noise robustness of the speaker verification system. It also provides a new approach to noise-robust speaker verification and shows the potential of phase features for improving performance.

Owner:AISPEECH CO LTD

Dual scoring method and system for text-dependent speaker verification

Embodiments of systems and methods for speaker verification are provided. In various embodiments, a method includes receiving an utterance from a speaker and determining a text-independent speaker verification score and a text-dependent speaker verification score in response to the utterance. Various embodiments include a system for speaker verification, the system comprising an audio receiving device for receiving an utterance from a speaker and converting the utterance to an utterance signal, and a processor coupled to the audio receiving device for determining speaker verification in response to the utterance signal, wherein the processor determines speaker verification in response to a UBM-independent speaker-normalized score.

Owner:AGENCY FOR SCI TECH & RES

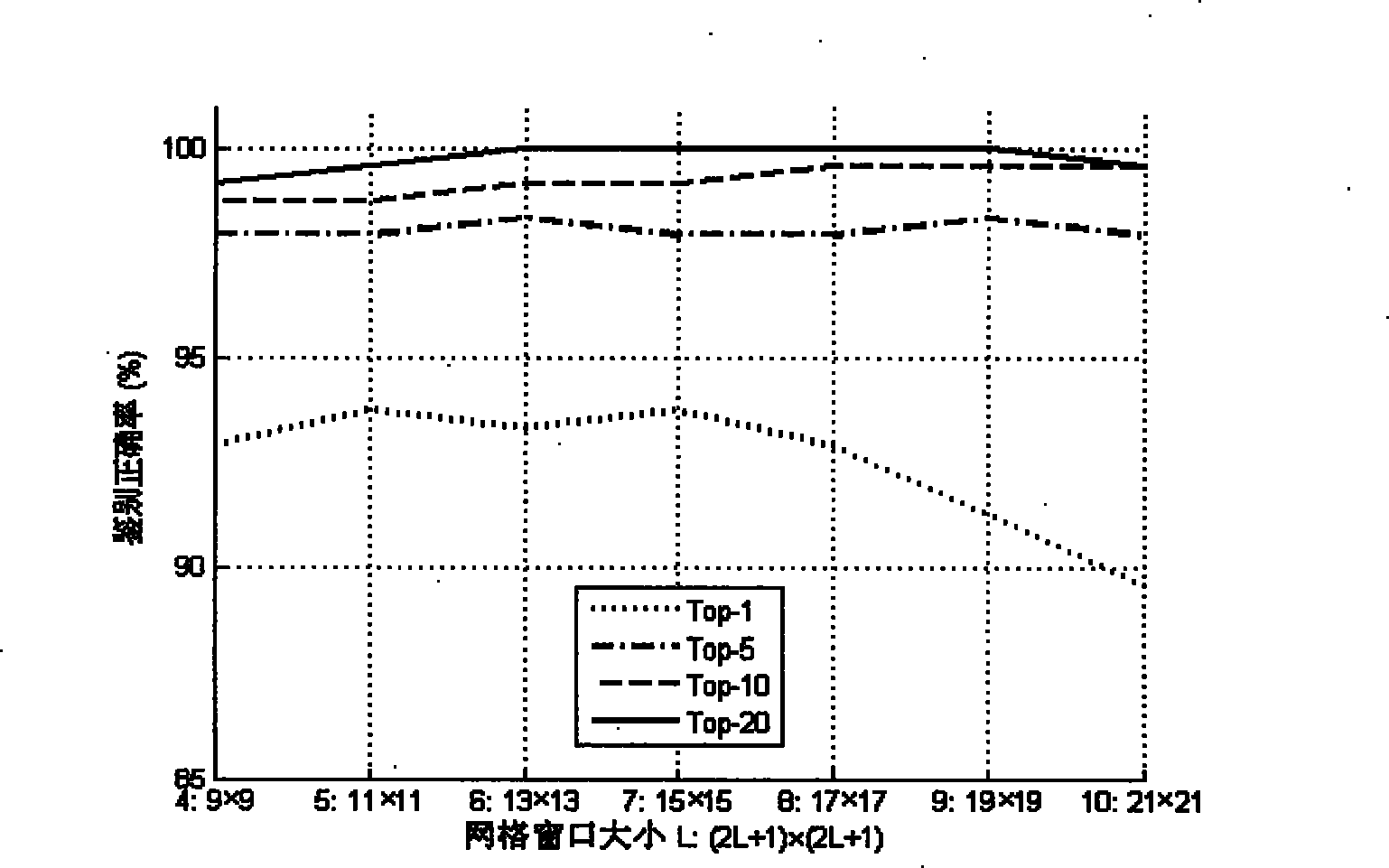

Text-independent handwriting identification method and device

Status: Active | Publication No.: CN101452532B | Topics: Improve accuracy; Easy to identify; Character and pattern recognition; Handwriting; Text independent

The invention discloses a method and device for text-independent writer identification in the field of computer vision. The method comprises: preprocessing a query handwriting sample to obtain its edge images; extracting grid microstructure features of the query sample from those edge images; computing the standard deviation of the grid microstructure features of the query sample and of each reference handwriting sample; computing, weighted by that standard deviation, the feature distance between the query sample and each reference sample; and comparing and sorting these distances to obtain a writer candidate list for the query sample. The device comprises a preprocessing module, a feature extraction module, a weight calculation module, a distance calculation module, and a comparison module. By comparing distances between grid microstructure features to obtain writer candidates, the method improves the accuracy and discriminative power of writer identification.

Owner:TSINGHUA UNIV

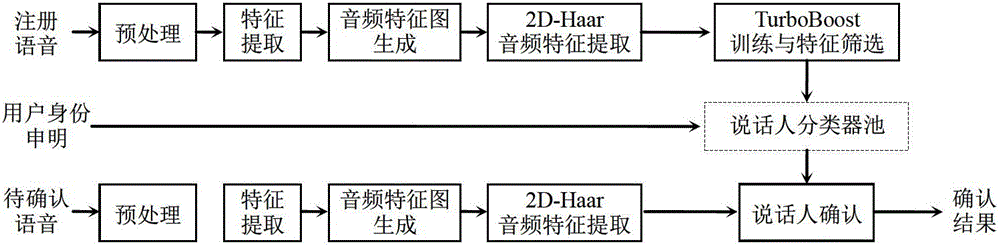

A High Accuracy Speaker Confirmation Method

Status: Inactive | Publication No.: CN103198833B | Topics: Huge feature space; Lighten the computational burden; Speech analysis; Sorting algorithm; Text independent

The invention relates to a text-independent speaker verification method that combines a Turbo-Boost classification algorithm with 2D-Haar audio features. Basic audio features are first arranged into an audio feature map, from which 2D-Haar audio features are extracted; the Turbo-Boost algorithm then performs, over two rounds of iteration, the selection of 2D-Haar features and the training of a speaker classifier; finally, the trained classifier performs speaker verification. Compared with the prior art, the method achieves higher accuracy at the same computational cost and is especially suitable for speaker verification scenarios with strict requirements on both speed and accuracy, such as automatic telephone answering systems, computer identity authentication systems, and high-security access control systems.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
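The abstract above gives no details of the Turbo-Boost algorithm, the exact audio feature pattern, or the Haar templates. As a sketch under stated assumptions, the Python below treats the audio feature pattern as a 2-D array (for example, frames by frequency bands), computes two-rectangle Haar responses with an integral image, and substitutes standard discrete AdaBoost with decision stumps for Turbo-Boost. Because each boosting round keeps a single Haar feature, one loop both screens features and trains the classifier, whereas the patent describes two separate rounds. All function names and the synthetic data are hypothetical.

```python
import numpy as np

def integral_image(pattern):
    """Summed-area table, zero-padded so rectangle sums need no bounds checks."""
    ii = np.zeros((pattern.shape[0] + 1, pattern.shape[1] + 1))
    ii[1:, 1:] = pattern.cumsum(axis=0).cumsum(axis=1)
    return ii

def rect_sum(ii, r, c, h, w):
    """Sum of pattern[r:r+h, c:c+w] in constant time."""
    return ii[r + h, c + w] - ii[r, c + w] - ii[r + h, c] + ii[r, c]

def haar_features(pattern, h=2, w=2):
    """Two-rectangle Haar responses (left half minus right half) for every h x w window; w must be even."""
    p = np.asarray(pattern, dtype=float)
    ii = integral_image(p)
    half = w // 2
    return np.array([
        rect_sum(ii, r, c, h, half) - rect_sum(ii, r, c + half, h, half)
        for r in range(p.shape[0] - h + 1)
        for c in range(p.shape[1] - w + 1)
    ])

def stump_predict(X, j, thr, sign):
    """Decision stump on feature column j: +1 where sign * (x - thr) >= 0, else -1."""
    return np.where(sign * (X[:, j] - thr) >= 0, 1, -1)

def train_adaboost(X, y, rounds=3):
    """Discrete AdaBoost (stand-in for Turbo-Boost); each round keeps the single best Haar feature."""
    n, d = X.shape
    weights = np.full(n, 1.0 / n)
    model = []
    for _ in range(rounds):
        best = None
        for j in range(d):
            for thr in np.unique(X[:, j]):
                for sign in (1, -1):
                    err = weights[stump_predict(X, j, thr, sign) != y].sum()
                    if best is None or err < best[0]:
                        best = (err, j, thr, sign)
        err, j, thr, sign = best
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid log(0) and division by zero
        alpha = 0.5 * np.log((1 - err) / err)
        # Re-weight: misclassified samples gain weight, correct ones lose weight.
        weights *= np.exp(-alpha * y * stump_predict(X, j, thr, sign))
        weights /= weights.sum()
        model.append((alpha, j, thr, sign))
    return model

def predict(model, X):
    """Sign of the alpha-weighted vote of all selected stumps."""
    score = sum(alpha * stump_predict(X, j, thr, sign) for alpha, j, thr, sign in model)
    return np.where(score >= 0, 1, -1)

# Synthetic 4x4 "audio feature patterns": speaker +1 has more energy on the left, speaker -1 on the right.
rng = np.random.default_rng(0)
labels = np.array([1, -1] * 10)
patterns = []
for label in labels:
    p = rng.normal(0.0, 0.1, (4, 4))
    if label == 1:
        p[:, :2] += 1.0
    else:
        p[:, 2:] += 1.0
    patterns.append(p)
X_demo = np.array([haar_features(p) for p in patterns])
model = train_adaboost(X_demo, labels, rounds=3)
selected_features = sorted({j for _, j, _, _ in model})  # the "screened" Haar features
train_accuracy = float(np.mean(predict(model, X_demo) == labels))
```

The integral image is what makes Haar features cheap: every rectangle sum costs four lookups regardless of its size, which matches the abstract's emphasis on accuracy at equal computational cost.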

Method for extracting animation editing data based on online editing process of teaching courseware

The invention discloses a method for extracting animation editing data from the online editing process of teaching courseware. The method comprises the following steps: S1, the user enters new text or deletes existing text, as needed, in any text editing area on the current editing page; S2, the user preprocesses, as needed, the current text in that text editing area to obtain independent text elements; S3, the system monitors whether the user triggers an animation change instruction for an independent text element or a combination of such elements; S4, all animation editing events of the current text editing area are sorted by playing sequence number to obtain an animation editing event list for that text editing area on the current editing page; and S5, the attribute information of the animation change instructions mapped to the independent text elements or their combinations is converted into animation editing data in a general format, which is transmitted over a network protocol to a backend server for storage.

Owner:苏州云学时代信息技术有限公司 (Suzhou Yunxue Shidai Information Technology Co., Ltd.)
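Steps S2, S4 and S5 of the method above can be sketched in Python as follows. The abstract does not define the element-splitting rule, the event attributes, or the "general format", so this sketch assumes sentence-level text elements, a hypothetical `AnimationEvent` record, and JSON as the general format; the network transmission to the backend server is left out.

```python
import json
import re
from dataclasses import dataclass, asdict

SENTENCE_END = ".!?。！？"

def split_into_elements(text):
    """S2 (assumed rule): treat each sentence as one independent text element."""
    pieces = re.findall(rf"[^{SENTENCE_END}]+[{SENTENCE_END}]?", text)
    return [p.strip() for p in pieces if p.strip()]

@dataclass
class AnimationEvent:
    """One animation change instruction captured in S3; all field names are hypothetical."""
    element_ids: list[str]   # a single element ID, or several for a combination of elements
    effect: str              # e.g. "fade_in"
    play_order: int          # playing sequence number within the text editing area
    duration_ms: int = 500

def export_area(area_id, events):
    """S4 + S5 (sketch): sort events by play order, then serialize them to JSON."""
    ordered = sorted(events, key=lambda e: e.play_order)
    payload = {"area_id": area_id, "events": [asdict(e) for e in ordered]}
    return json.dumps(payload, ensure_ascii=False)

elements = split_into_elements("Photosynthesis needs light. It produces oxygen!")
element_ids = [f"el-{i + 1}" for i in range(len(elements))]
demo_json = export_area("area-1", [
    AnimationEvent([element_ids[1]], "fly_in", play_order=2),
    AnimationEvent([element_ids[0]], "fade_in", play_order=1),
    AnimationEvent(element_ids, "highlight", play_order=3),  # a combination of elements
])
```

Keeping the event list as plain records and serializing only at export time means the same list could be written to any other interchange format without changing steps S2 to S4.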

A Handwriting Authentication System Based on Stroke Curvature Detection

Active | CN104809451B | Easy to operate | Improve real-time performance | Character recognition | Handwriting | Text independent

The invention discloses a handwriting authentication system based on stroke curvature detection, comprising a handwriting sample database module, an image acquisition and entry module, a preprocessing module, a feature analysis and extraction module, a feature moment processing module, a similarity measurement module and a result output feedback module. In the authentication process, the preprocessing module preprocesses the image captured in real time by the image acquisition module; the feature analysis and extraction module extracts stroke feature values in four directions (horizontal, vertical, left-slanting and right-slanting); the feature moment processing module processes these stroke feature values to generate a handwriting feature moment; the similarity measurement module uses vector-angle similarity to measure how similar the handwriting feature moment to be authenticated is to the feature moments stored in the database; and the result output feedback module outputs the corresponding result. The system can perform text-independent handwriting authentication quickly, and its authentication results are stable and objective.

Owner:HOHAI UNIV CHANGZHOU
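Vector-angle similarity can be computed as the cosine of the angle between two vectors, which equals 1.0 when they point in the same direction regardless of their lengths. A minimal Python sketch of the similarity measurement and result output steps, assuming each handwriting feature moment is a plain numeric vector (here one hypothetical value per stroke direction) and a hypothetical acceptance threshold of 0.95; the stroke-curvature feature extraction itself is not shown.

```python
import numpy as np

def vector_angle_similarity(a, b):
    """Cosine of the angle between two feature-moment vectors (1.0 = same direction)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0

def authenticate(sample, database, threshold=0.95):
    """Return (best_writer, score), or (None, score) when the best score is below the threshold."""
    scores = {writer: vector_angle_similarity(sample, moment) for writer, moment in database.items()}
    best = max(scores, key=scores.get)
    return (best if scores[best] >= threshold else None, scores[best])

# Hypothetical feature moments, one value each for horizontal, vertical,
# left-slanting and right-slanting strokes.
database = {"writer_1": [4.0, 2.0, 1.0, 3.0], "writer_2": [1.0, 4.0, 3.0, 0.0]}
match = authenticate([8.0, 4.0, 2.0, 6.0], database)     # same direction as writer_1
no_match = authenticate([0.0, 0.0, 0.0, 1.0], database)  # no sufficiently close writer
```

Because the cosine ignores vector length, a sample written larger or with more strokes overall (here, every component doubled) still matches the stored moment exactly.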

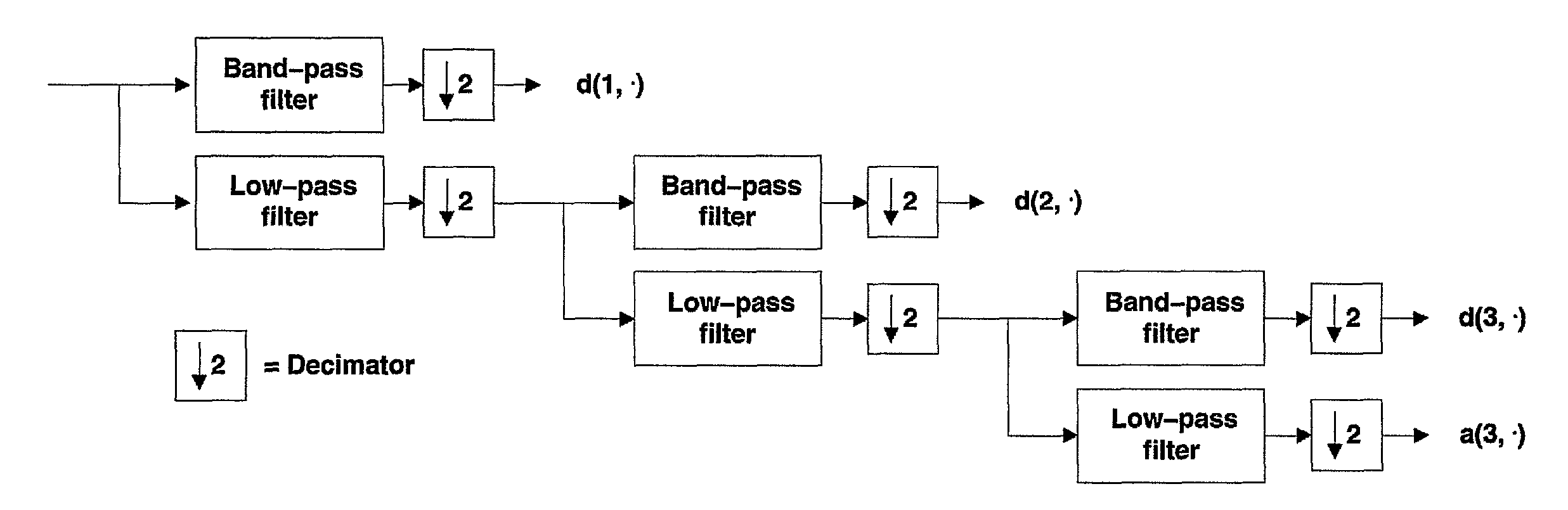

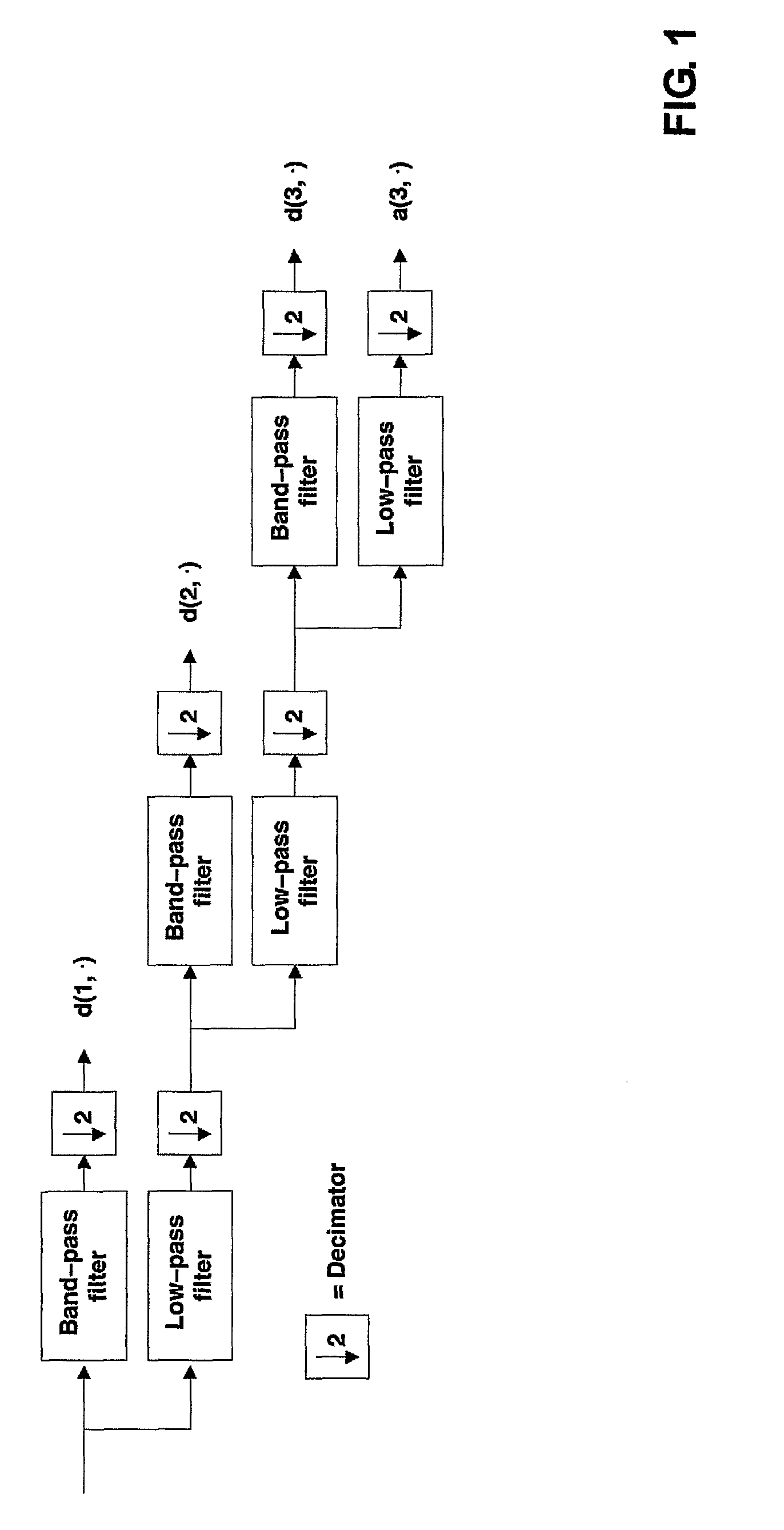

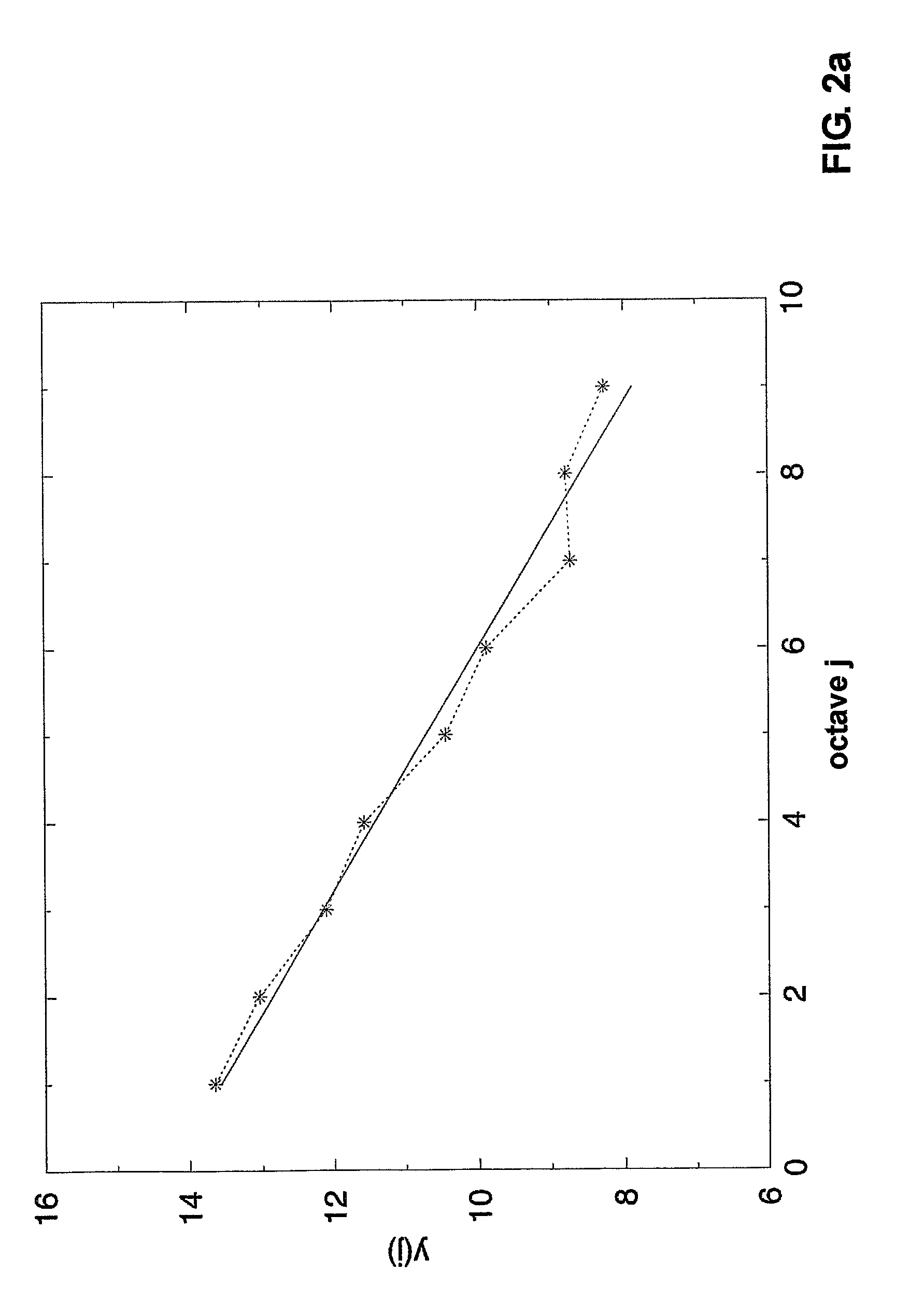

Method for automatic speaker recognition with Hurst parameter based features and method for speaker classification based on fractional Brownian motion classifiers

Inactive | US7904295B2 | Improve accuracy | Reduce the amount of calculation | Speech recognition | Fractional Brownian motion | Text independent

A text-independent automatic speaker recognition (ASkR) system is proposed that employs a new speech feature and a new classifier. The statistical feature pH is a vector of Hurst parameters obtained by applying a wavelet-based multi-dimensional estimator (M dim wavelets) to windowed short-time segments of speech. The proposed classifier for the speaker identification and verification tasks is based on the multi-dimensional fractional Brownian motion (fBm) model, denoted M dim fBm. For a given sequence of input speech features, the speaker model is obtained from the sequence of H-parameter vectors together with the means and variances of these features.

Owner:COELHO ROSANGELO FERNANDES
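The patent's M dim wavelet estimator is multi-dimensional, and its details are not given in the abstract above. As a simpler, single-dimension stand-in, the sketch below estimates H from the slope of log2 of the mean squared Haar wavelet detail coefficient against octave j: for fractional Gaussian noise (the increments of fBm) that slope is about 2H - 1, and for fBm itself about 2H + 1. The function names, the frame length and the minimum coefficient count are assumptions, and the per-frame estimates only loosely mirror the patent's per-segment pH vectors.

```python
import numpy as np

def haar_detail_energies(x, min_coeffs=64):
    """Mean squared Haar detail coefficient at each octave j = 1, 2, ... (orthonormal Haar DWT)."""
    approx = np.asarray(x, dtype=float)
    energies = []
    while approx.size // 2 >= min_coeffs:
        n = approx.size - approx.size % 2  # drop a trailing odd sample
        even, odd = approx[0:n:2], approx[1:n:2]
        energies.append(np.mean(((even - odd) / np.sqrt(2.0)) ** 2))
        approx = (even + odd) / np.sqrt(2.0)
    return np.array(energies)

def estimate_hurst(x, model="fgn", min_coeffs=64):
    """Estimate H from the slope of log2(detail energy) versus octave.

    For fGn-like input the slope is about 2H - 1; for fBm-like input it is about 2H + 1.
    """
    energies = haar_detail_energies(x, min_coeffs)
    if energies.size < 2:
        raise ValueError("signal too short for a scale regression")
    octaves = np.arange(1, energies.size + 1)
    slope = np.polyfit(octaves, np.log2(energies), 1)[0]
    return (slope + 1) / 2 if model == "fgn" else (slope - 1) / 2

def frame_hurst(signal, frame_len=4096, model="fgn"):
    """Split a signal into non-overlapping rectangular frames and estimate H for each (hypothetical framing)."""
    signal = np.asarray(signal, dtype=float)
    n_frames = signal.size // frame_len
    return np.array([
        estimate_hurst(signal[i * frame_len:(i + 1) * frame_len], model)
        for i in range(n_frames)
    ])

rng = np.random.default_rng(0)
noise = rng.standard_normal(2 ** 14)
h_noise = estimate_hurst(noise, "fgn")           # white noise should give H close to 0.5
h_walk = estimate_hurst(np.cumsum(noise), "fbm")  # a random walk should also give H close to 0.5
per_frame = frame_hurst(noise)                    # one estimate per 4096-sample frame
```

For the random walk the estimate tends to come out slightly below 0.5, because the finest octaves do not yet follow the asymptotic scaling; a weighted regression, or starting the fit at the second octave, reduces that bias.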

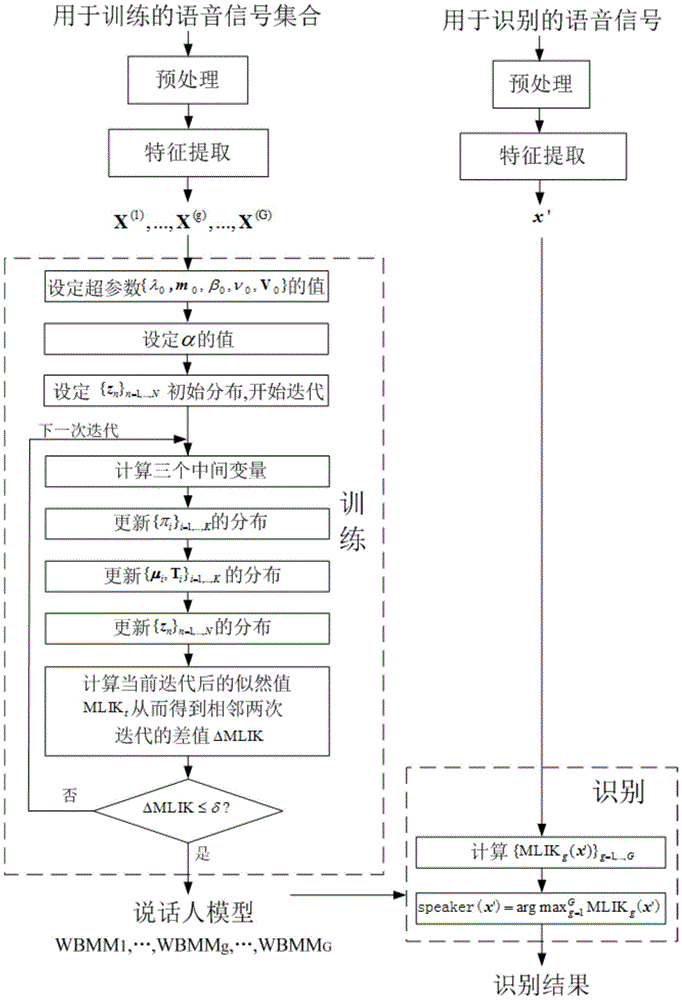

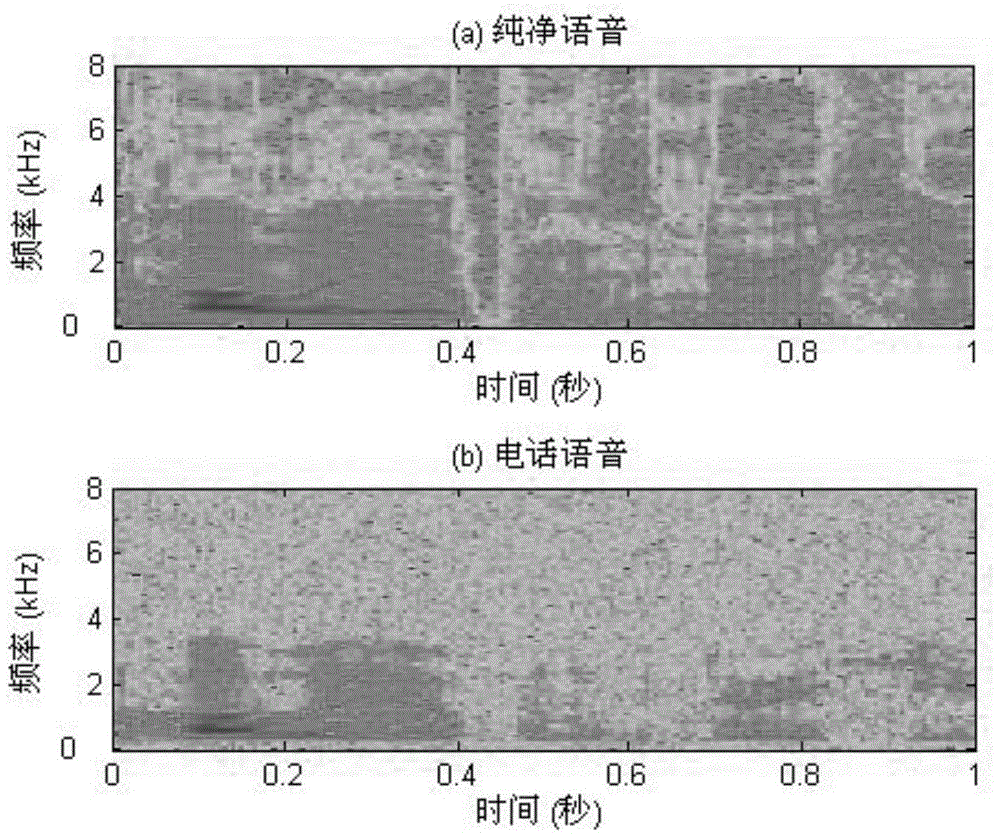

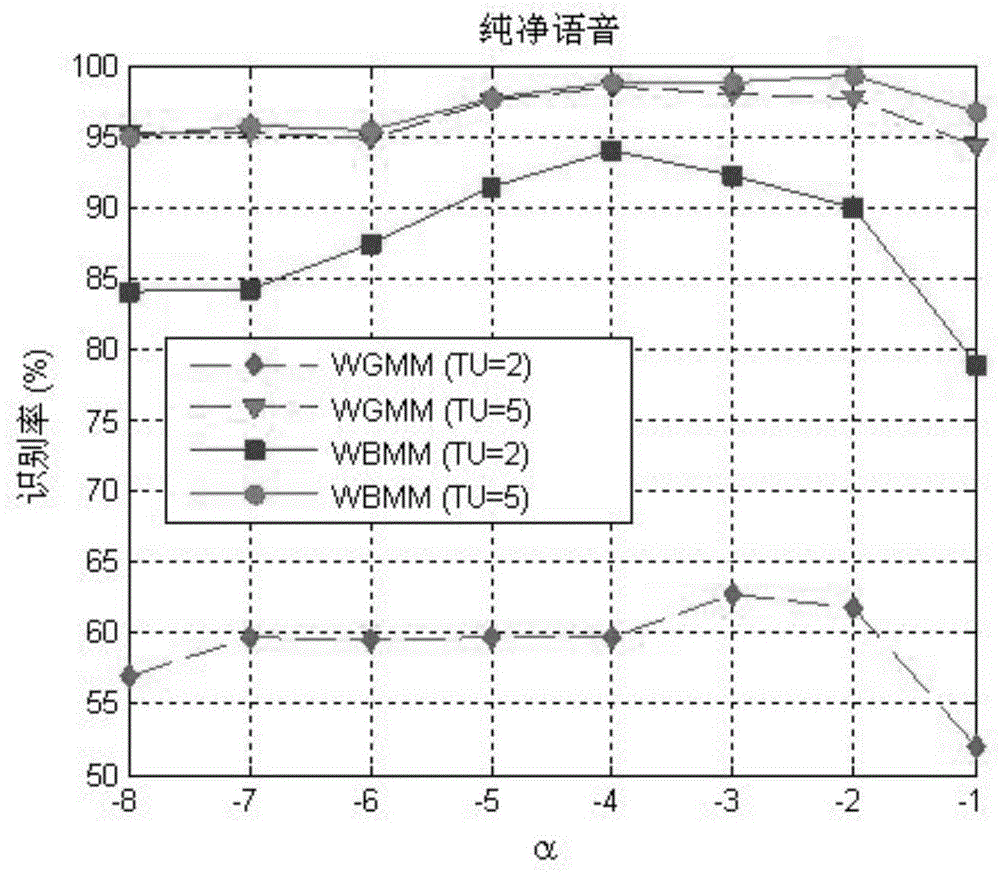

Text-Independent Speaker Recognition Method Based on Weighted Bayesian Mixture Model

Inactive | CN104183239B | Increase flexibility | Easy and flexible control | Speech analysis | Text independent | Feature extraction

Owner:INFORMATION & COMM BRANCH OF STATE GRID JIANGSU ELECTRIC POWER

© 2025 PatSnap. All rights reserved.