42 results for "Demand paging" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

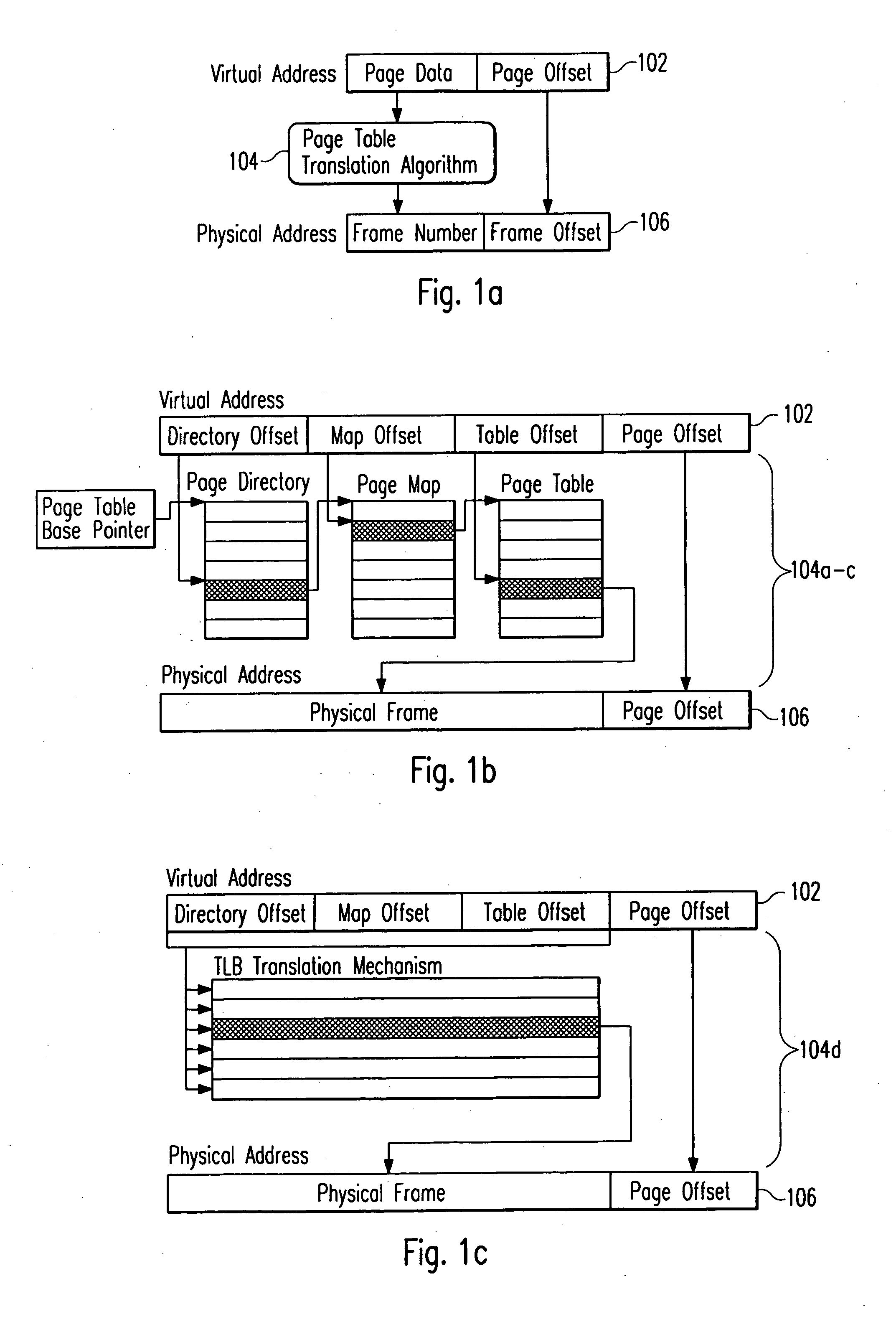

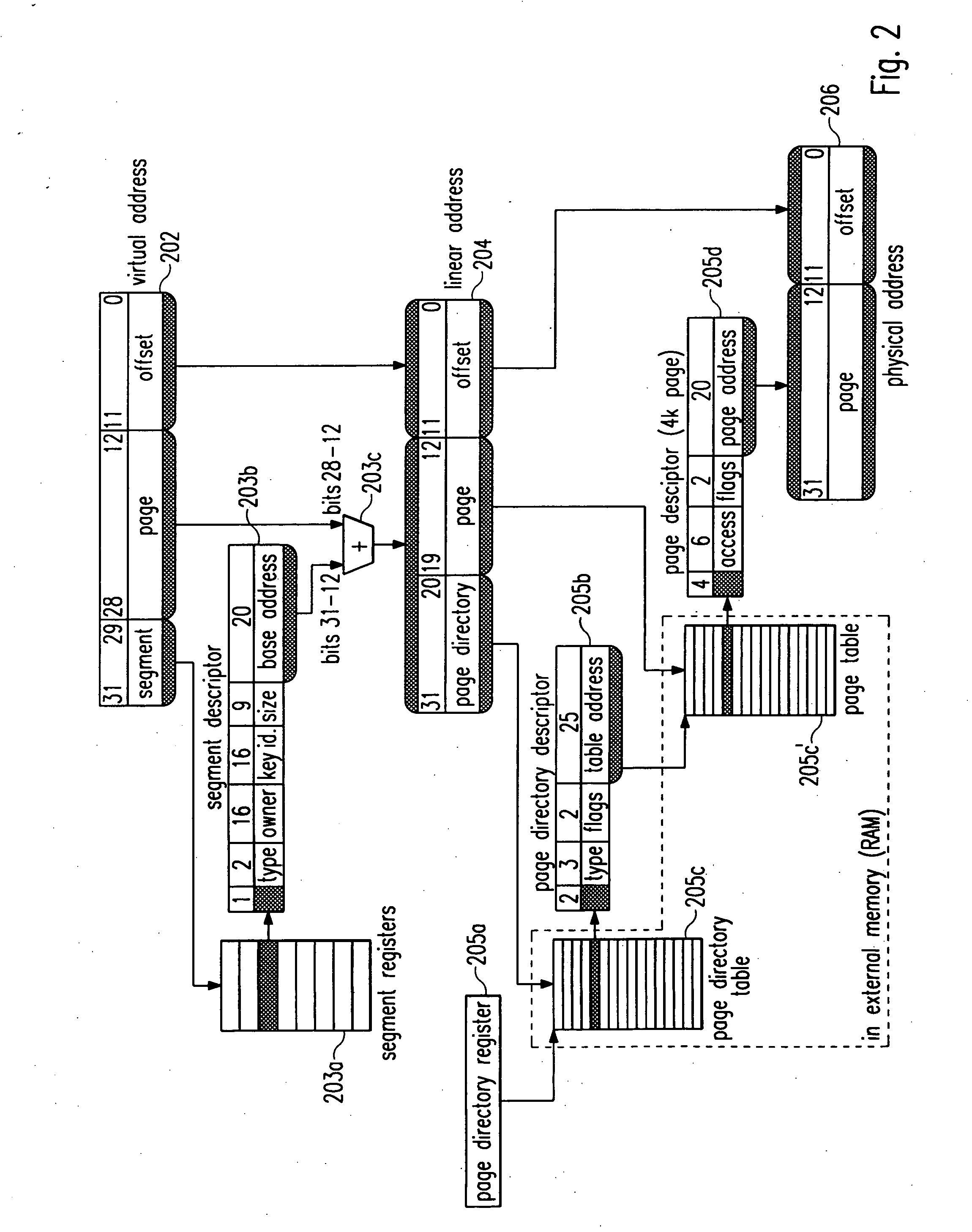

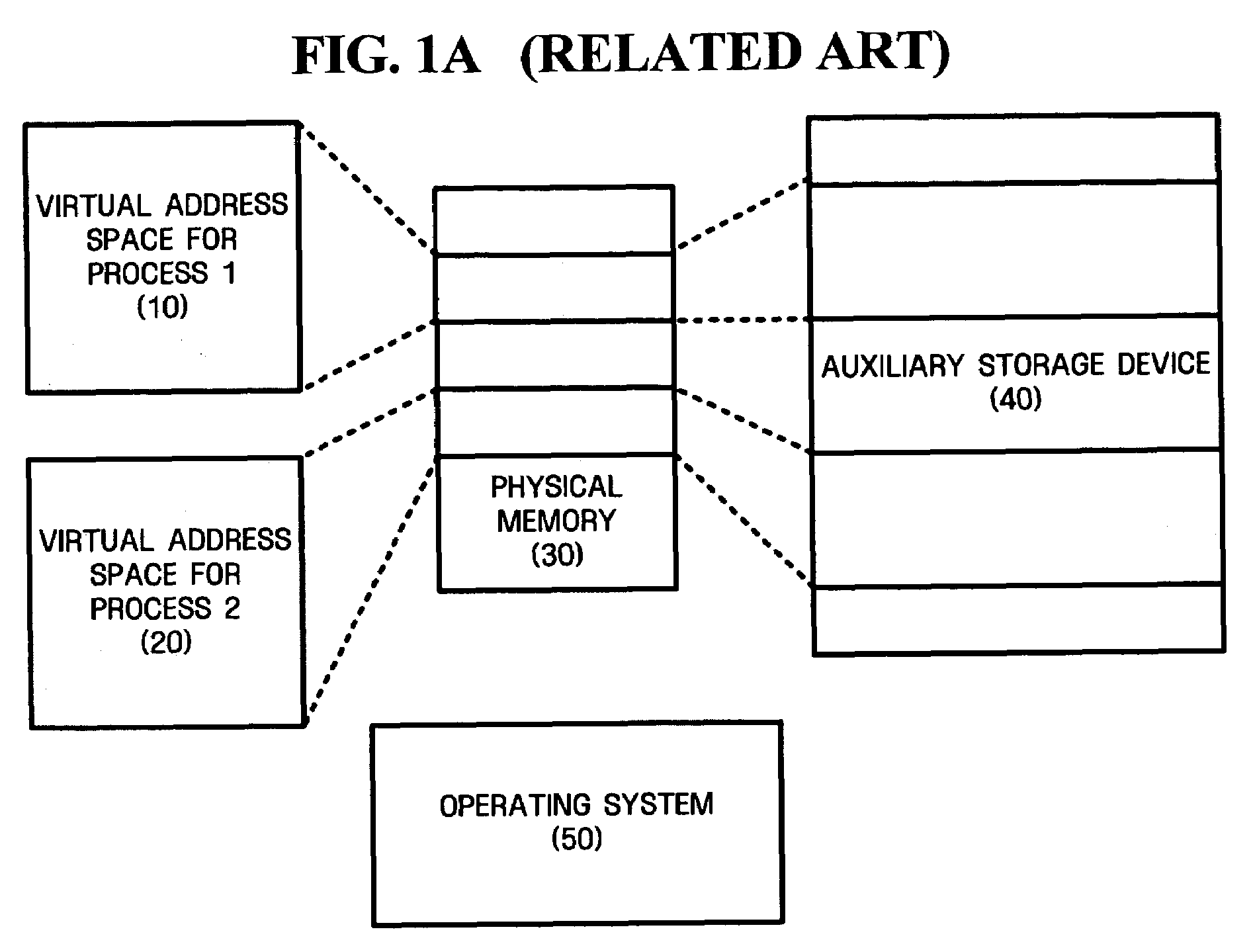

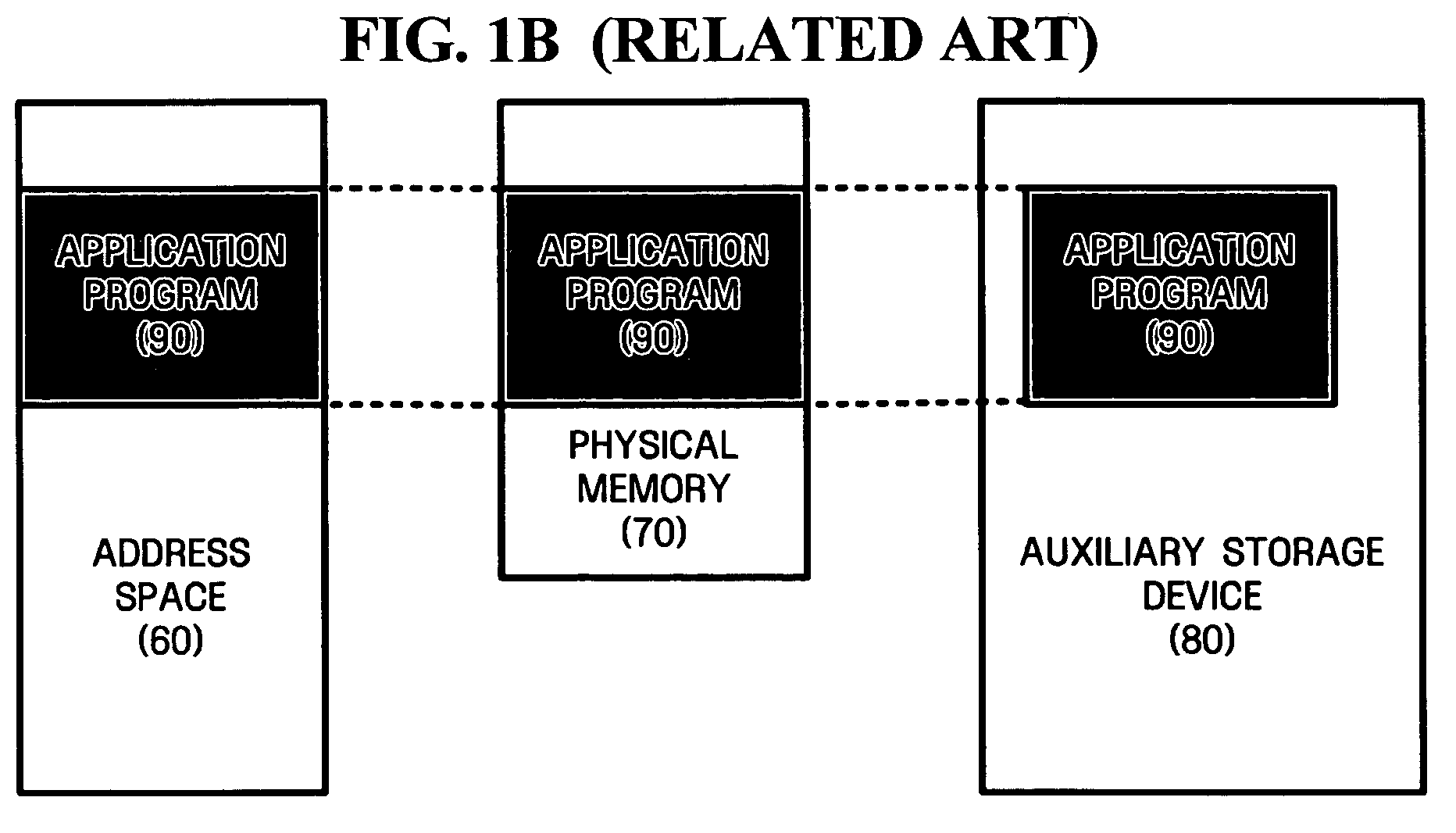

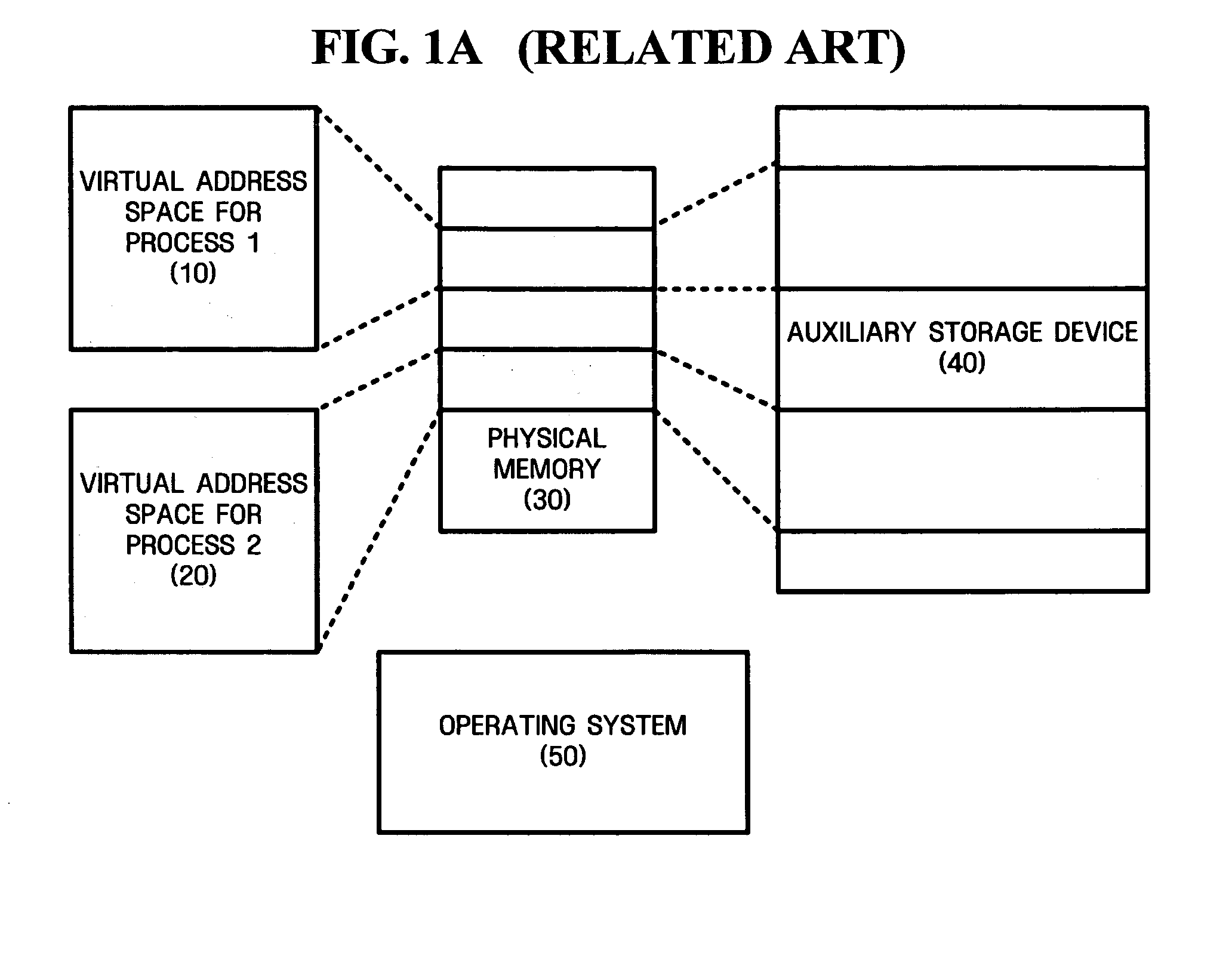

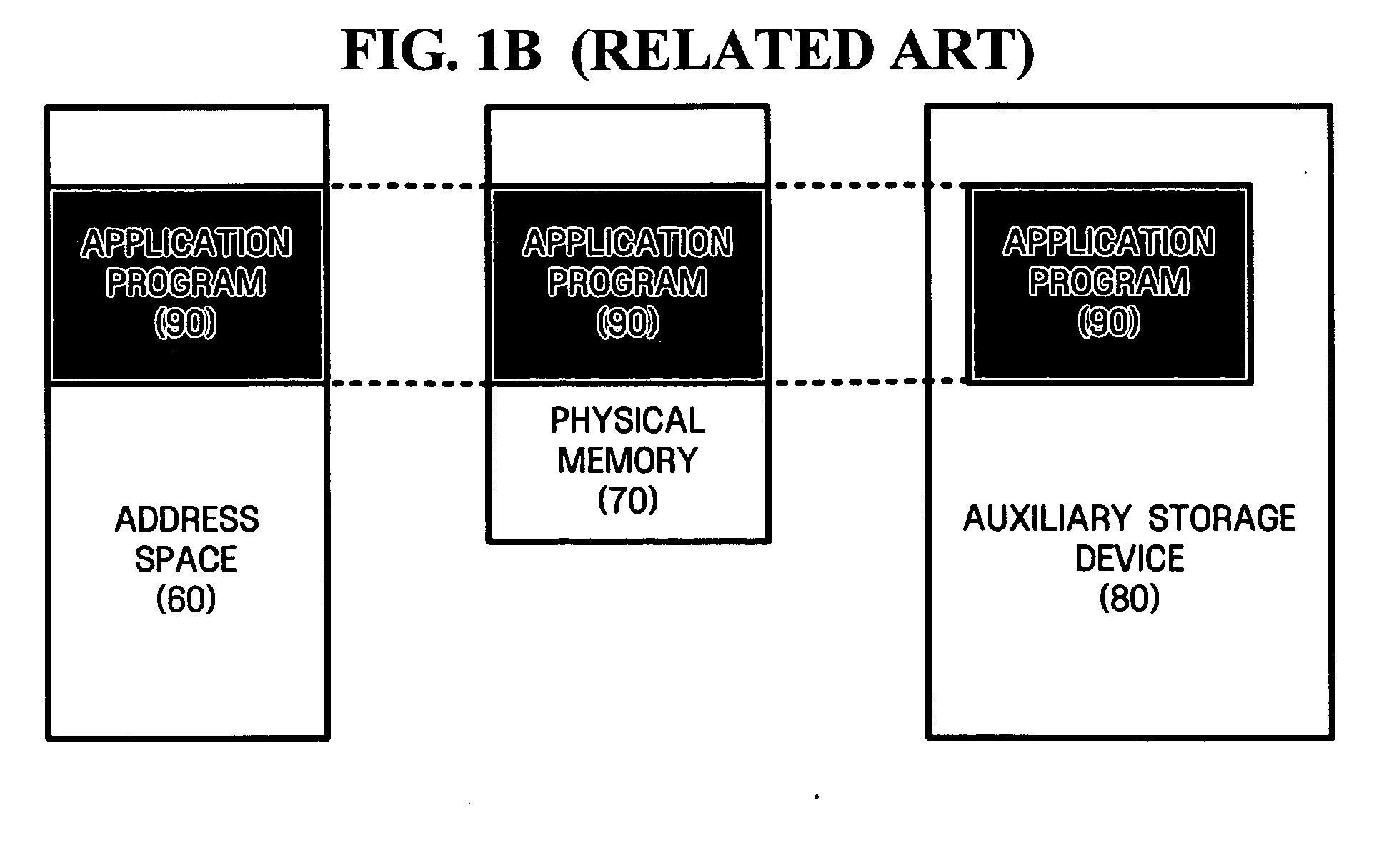

In computer operating systems, demand paging (as opposed to anticipatory paging) is a method of virtual memory management. In a system that uses demand paging, the operating system copies a disk page into physical memory only when an attempt is made to access it and that page is not already in memory (i.e., when a page fault occurs). It follows that a process begins execution with none of its pages in physical memory, and many page faults will occur until most of the process's working set of pages is located in physical memory. This is an example of a lazy loading technique.
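
The lazy-loading behavior described above can be illustrated with a toy model. This is a minimal Python sketch, not how any particular kernel implements it: the class name `DemandPager`, the fixed frame count, and the least-recently-used (LRU) eviction policy are all illustrative assumptions.

```python
from collections import OrderedDict


class DemandPager:
    """Toy demand pager: a page is copied in only when first accessed.

    Illustrative assumptions: a fixed number of physical frames and
    least-recently-used (LRU) replacement once all frames are full.
    """

    def __init__(self, num_frames, backing_store):
        self.num_frames = num_frames
        self.backing_store = backing_store  # page number -> contents ("disk")
        self.resident = OrderedDict()       # resident pages, least recent first
        self.faults = 0

    def access(self, page):
        if page in self.resident:
            # Hit: the page is already in physical memory; mark it recently used.
            self.resident.move_to_end(page)
        else:
            # Page fault: copy the page in now, evicting the LRU page if needed.
            self.faults += 1
            if len(self.resident) >= self.num_frames:
                self.resident.popitem(last=False)
            self.resident[page] = self.backing_store[page]
        return self.resident[page]


# The process starts with no resident pages, so its first accesses all fault.
pager = DemandPager(3, {p: f"page-{p}" for p in range(4)})
for p in [0, 1, 2, 0, 1, 3, 0, 3, 1, 2]:
    pager.access(p)
print(pager.faults)  # 5
```

The first three accesses all fault because the process starts with nothing resident; most later accesses hit once the working set has been paged in.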

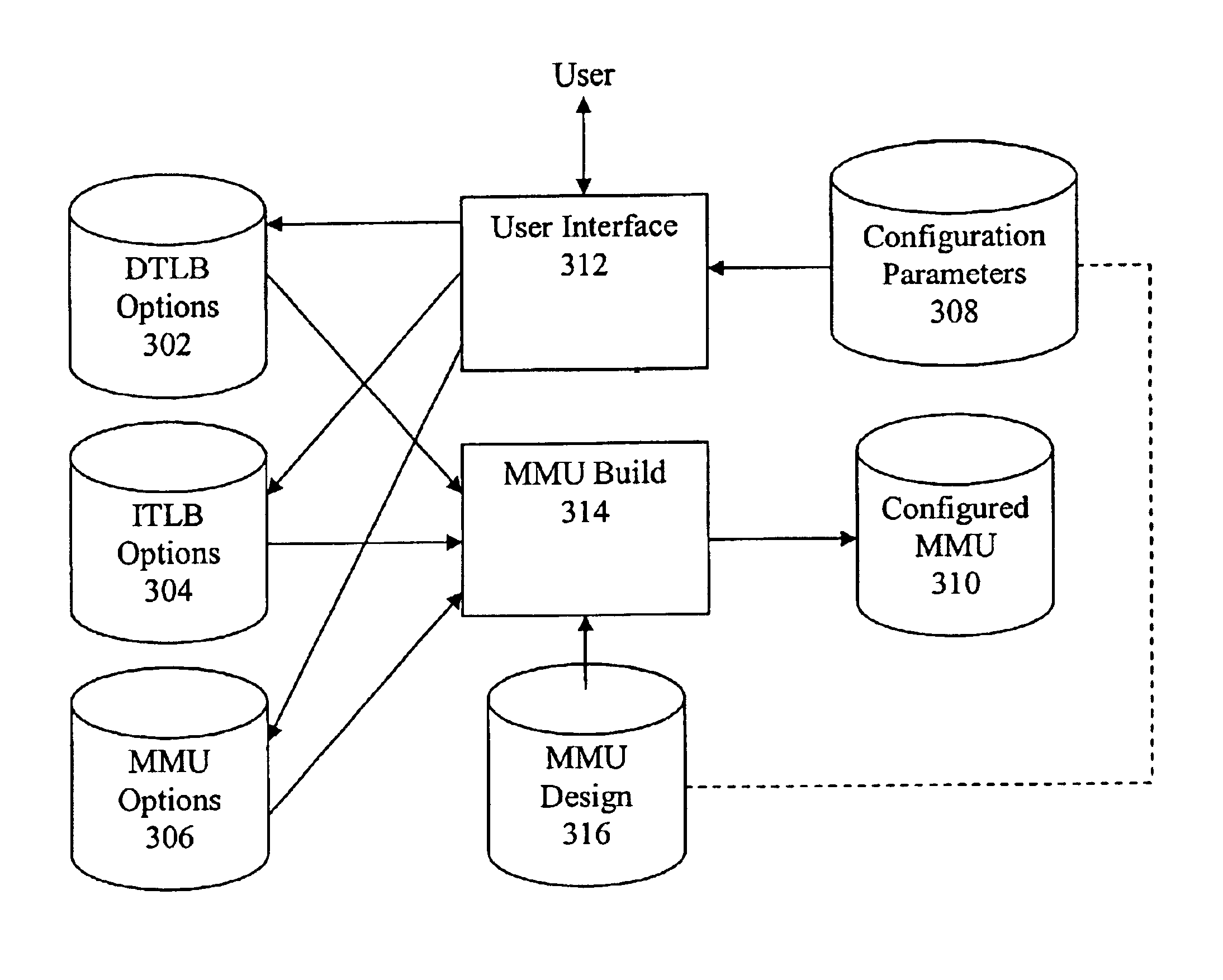

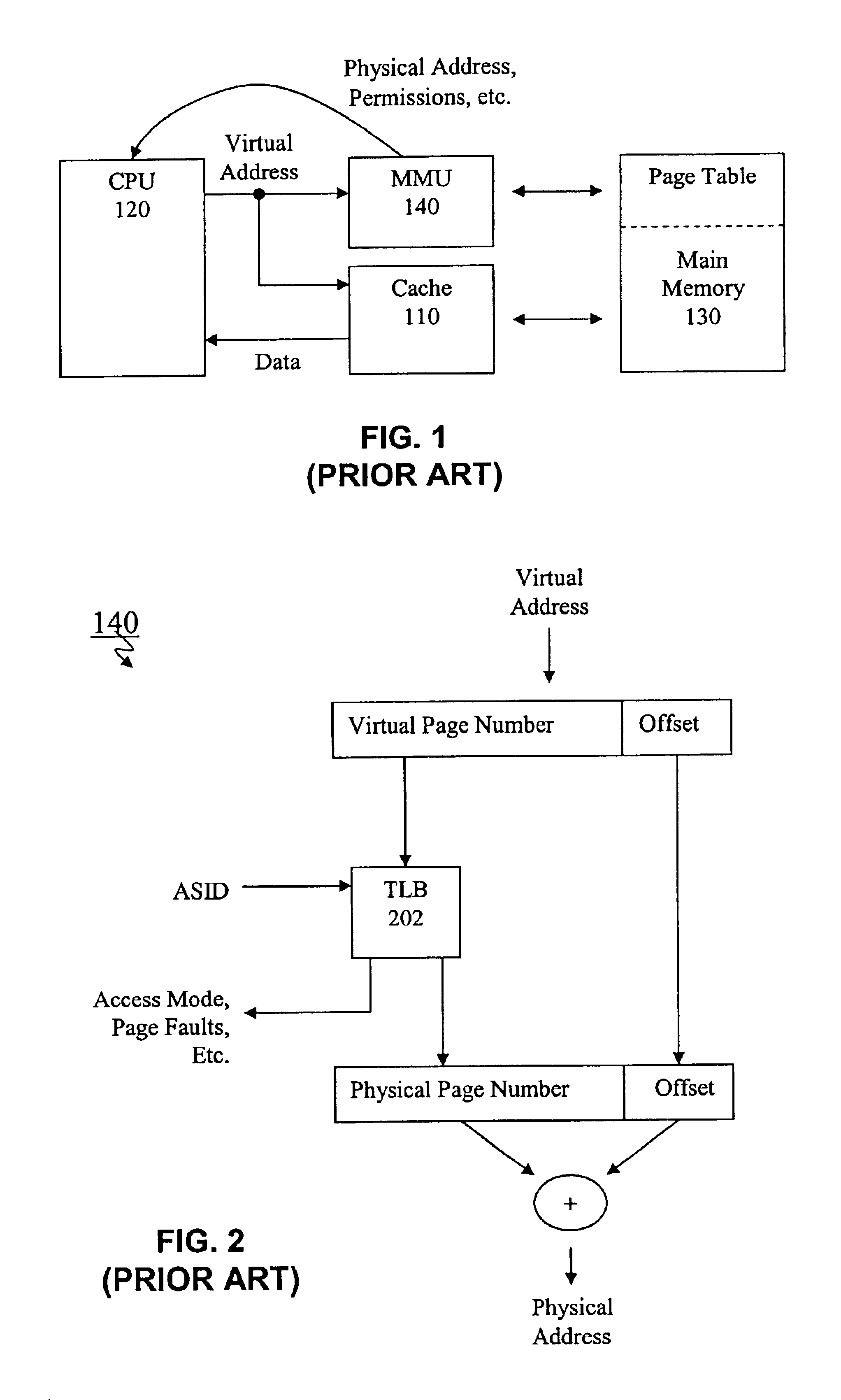

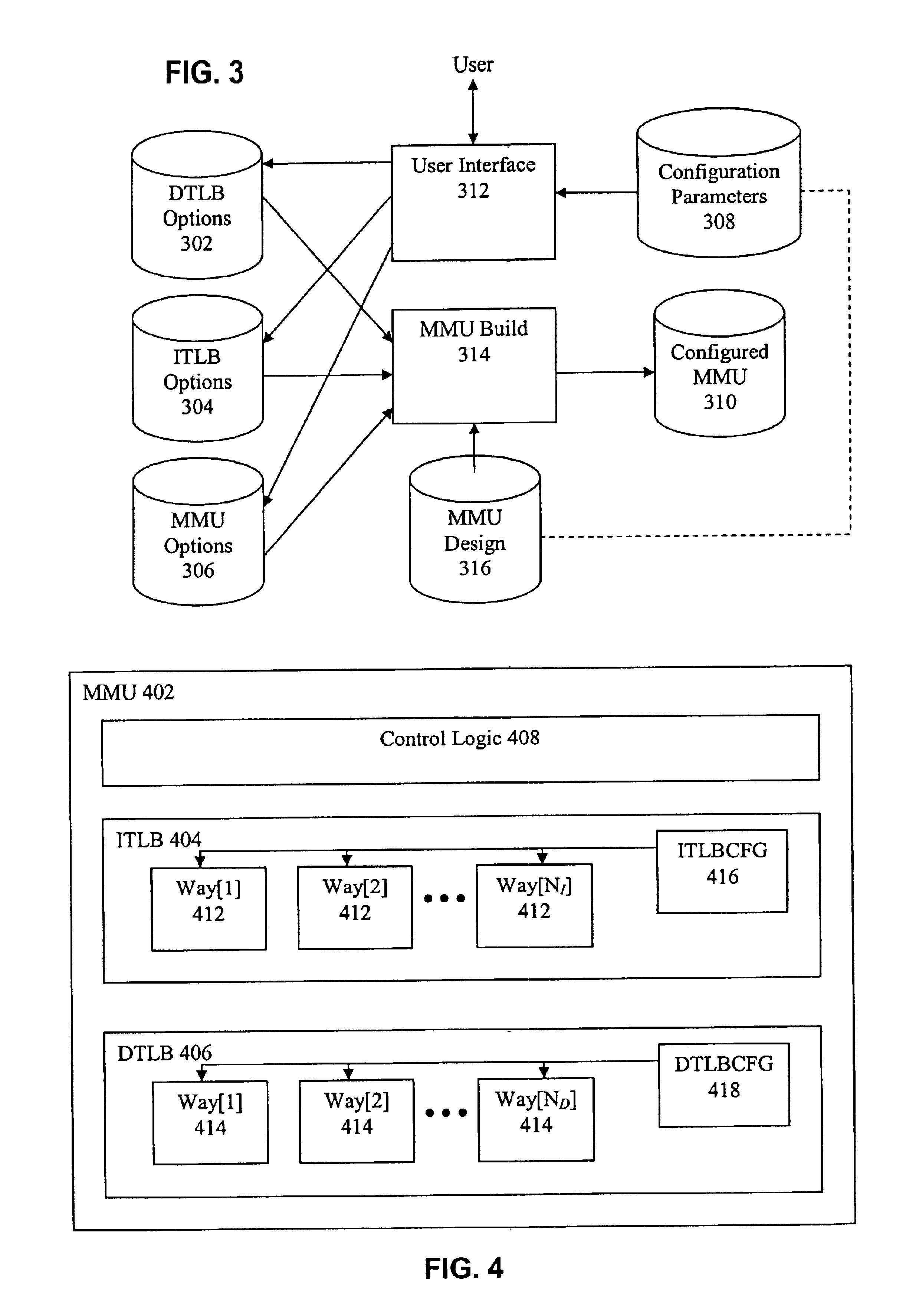

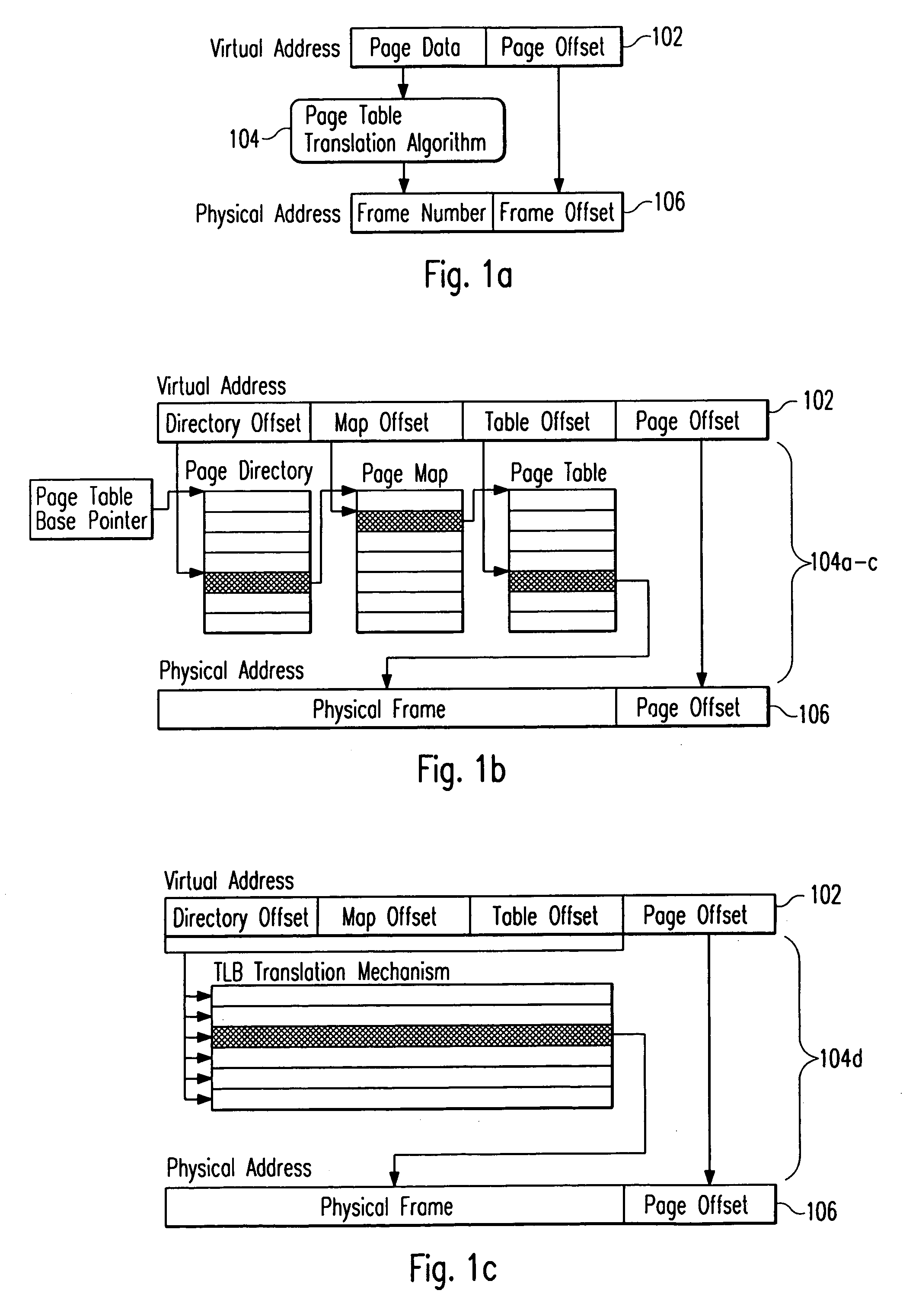

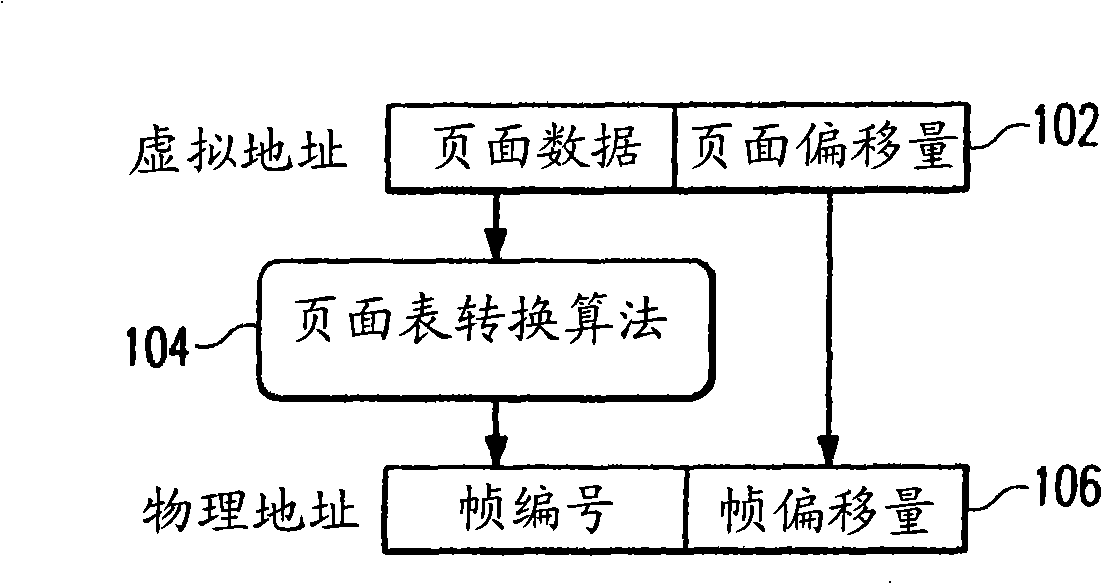

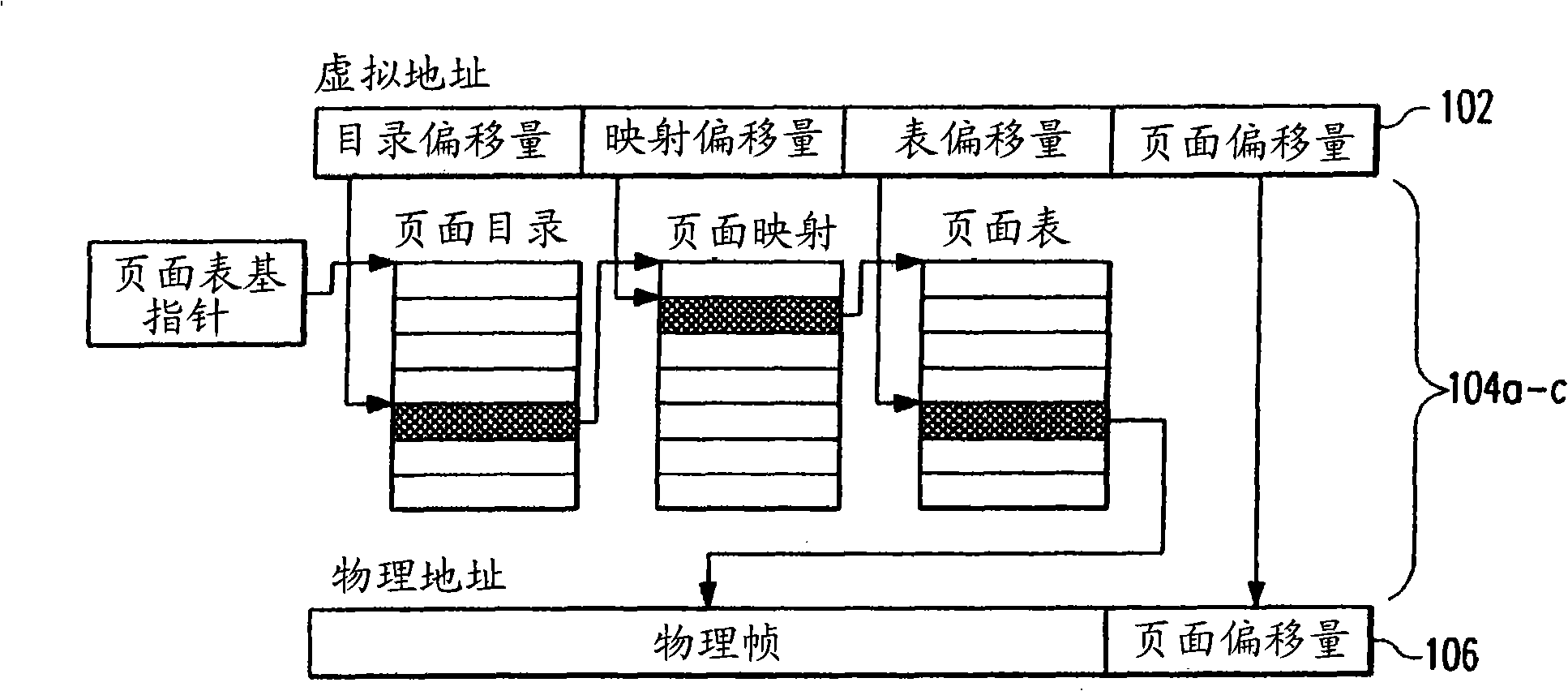

Configurable memory management unit

Inactive | US6854046B1 | Memory systems; Micro-instruction address formation; Systems design; Application software

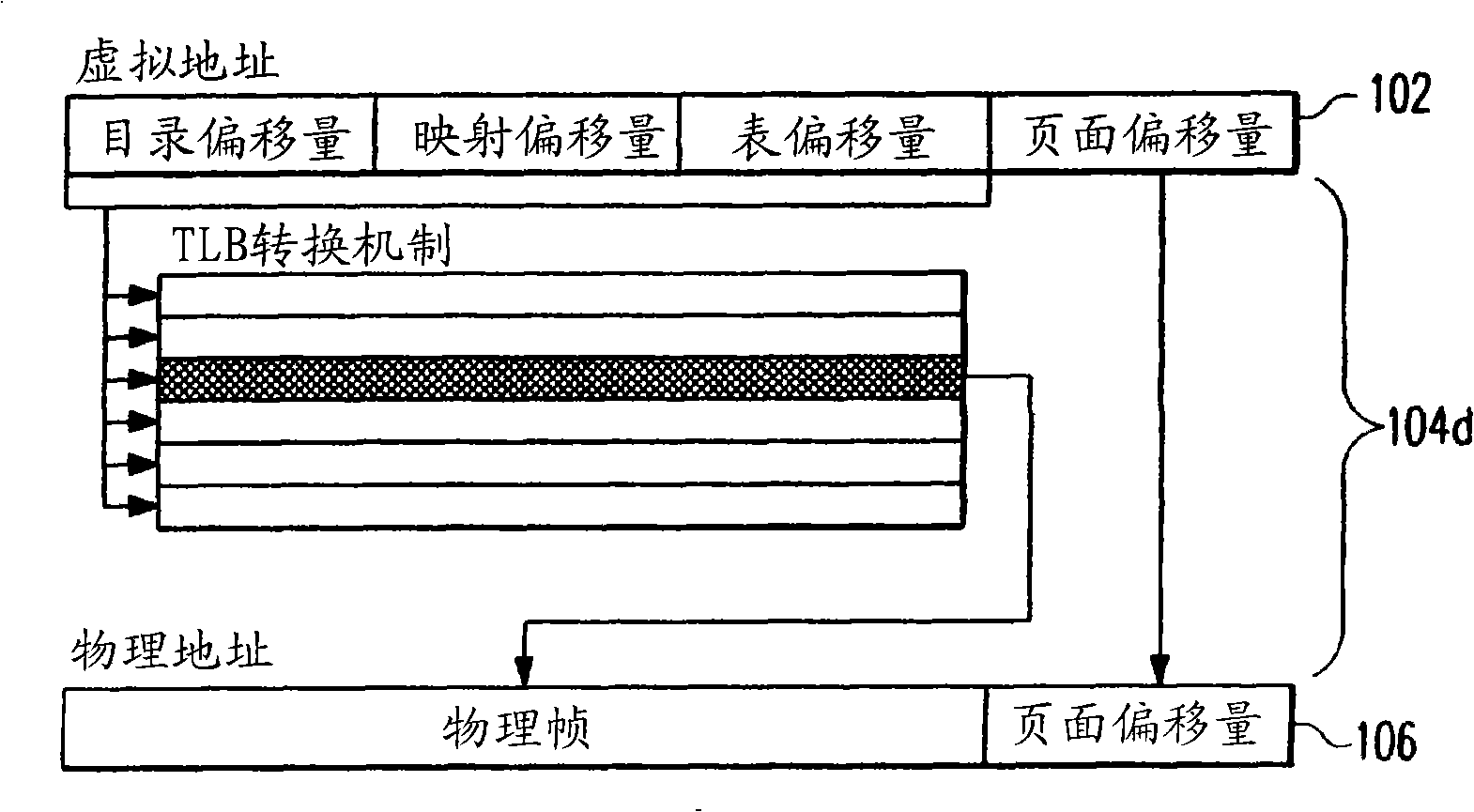

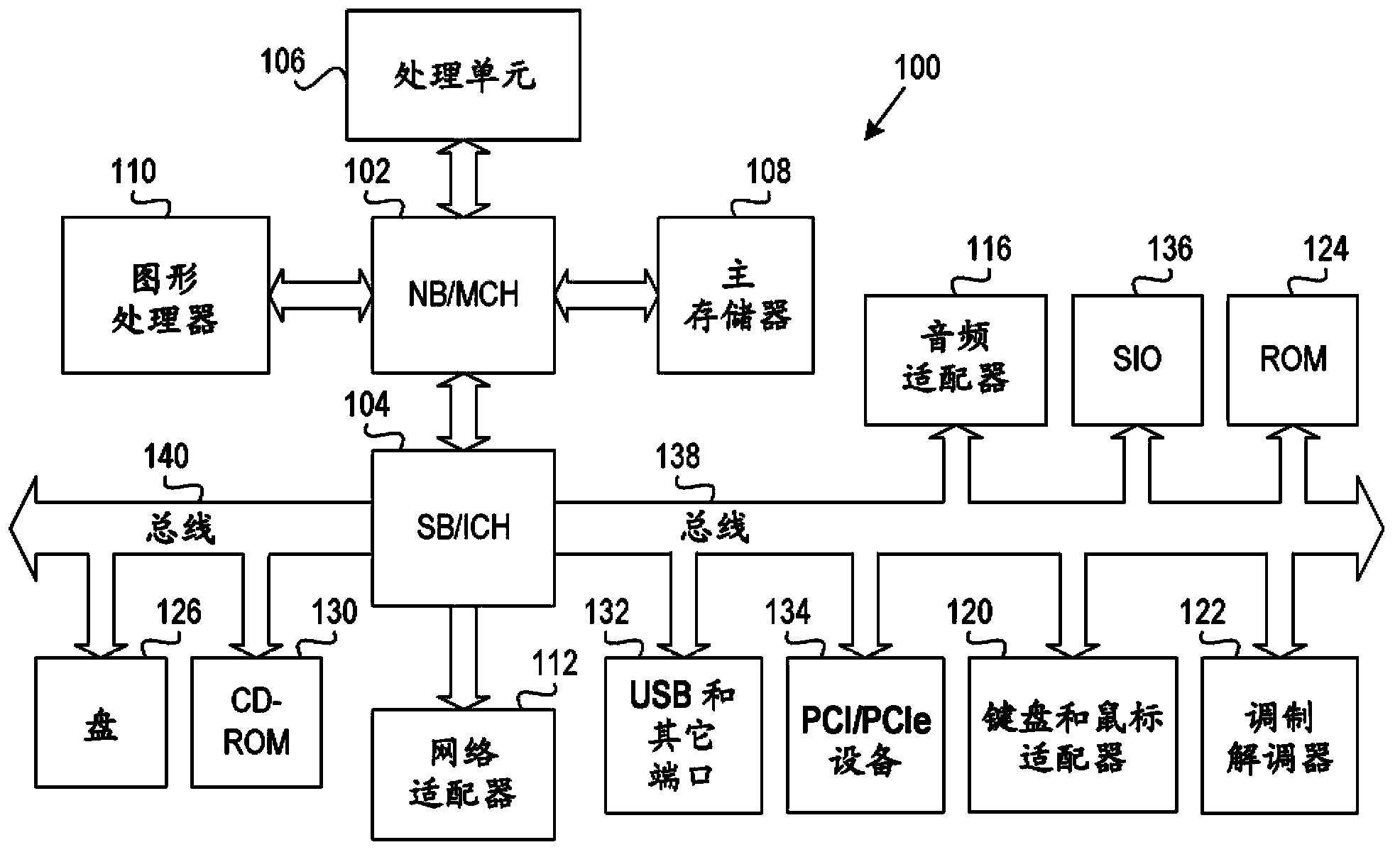

An MMU provides services at a cost more directly proportional to the needs of the system. According to one aspect, the MMU provides both address translation and sophisticated protection capabilities. Translation and protection are desirable, for example, when applications running on the processor are not completely debugged or trustworthy. According to another aspect, a system is provided for configuring the MMU design according to user specifications and system needs. The MMU configurability aspects enable the system designer to configure MMUs whose run-time programmability features span the range from completely static to completely dynamic. In addition, the MMU can be configured to support, for example, variable page sizes, multiple protection and sharing rings, demand paging, and hardware TLB refill.

Owner:TENSILICA

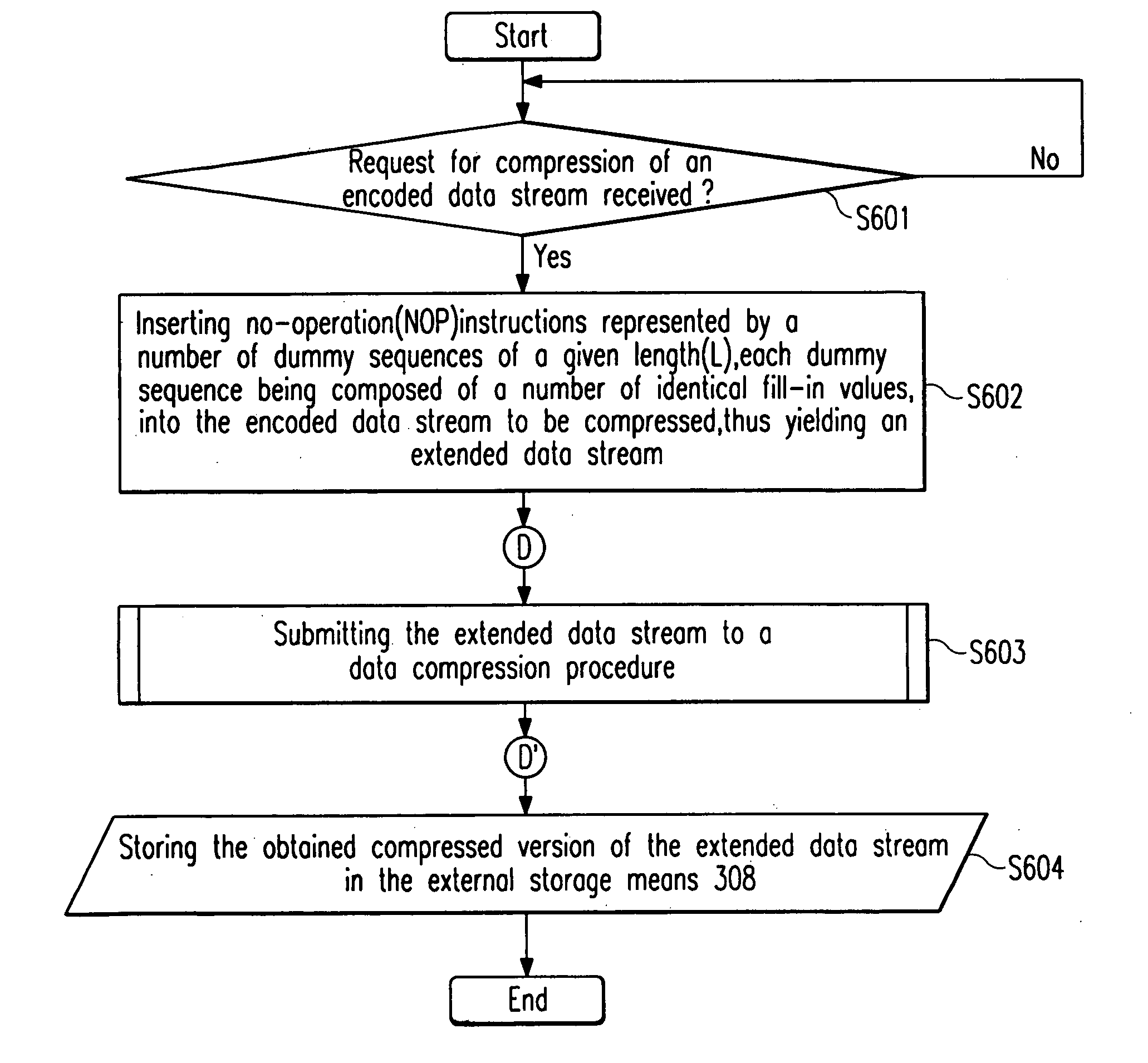

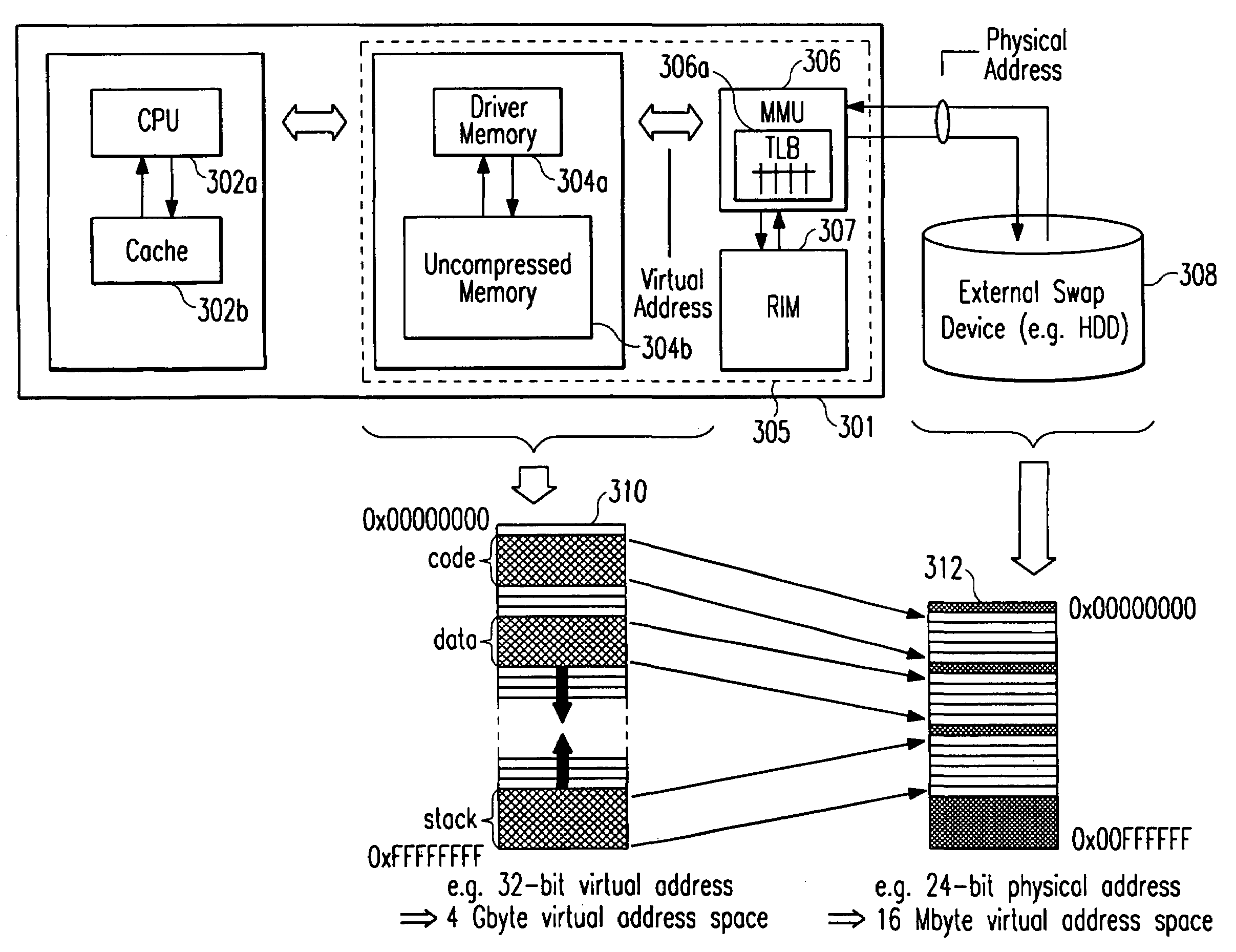

Data compression method for supporting virtual memory management in a demand paging system

Inactive | US20070157001A1 | Avoid time-consuming index search; Avoid index; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Data compression; Data stream

A virtual memory management unit (306) includes a redundancy insertion module (307), which inserts redundancy into an encoded data stream to be compressed such that, after compression, each logical data block fits into a different one of a set of equal-sized physical data blocks of a given size. For example, the redundancy may take the form of no-operation (NOP) instructions, represented as a number of dummy sequences of a given length (L) inserted into the encoded data stream to be compressed, each dummy sequence being composed of a number of identical binary or hexadecimal fill-in values.

Owner:SONY ERICSSON MOBILE COMM AB
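
The redundancy-insertion idea in the abstract above can be sketched as follows, under loudly stated assumptions: `zlib` stands in for the patent's compressor, instructions are hypothetical 4-byte words with an all-zero NOP encoding, and the 256-byte logical and 128-byte physical block sizes are arbitrary. Each logical block is filled greedily with real instructions and then padded with NOPs, and a block is closed as soon as adding another instruction would make its compressed form overflow one physical block.

```python
import random
import zlib

# Hypothetical parameters: 4-byte instruction words, an all-zero NOP word,
# 256-byte logical blocks and 128-byte physical blocks.
WORD = 4
NOP = b"\x00" * WORD
LOGICAL = 256
PHYSICAL = 128


def pad_block(words):
    """Fill a logical block with NOP words up to the fixed logical size."""
    body = b"".join(words)
    return body + NOP * ((LOGICAL - len(body)) // WORD)


def fits(words):
    """True if the NOP-padded block compresses into one physical block."""
    if len(words) * WORD > LOGICAL:
        return False
    return len(zlib.compress(pad_block(words))) <= PHYSICAL


def pack(words):
    """Greedily fill logical blocks, inserting NOP redundancy so that each
    compressed block fits in its own equal-sized physical slot."""
    blocks, counts, current = [], [], []
    for w in words:
        if current and not fits(current + [w]):
            blocks.append(pad_block(current))
            counts.append(len(current))
            current = []
        if not fits(current + [w]):
            raise ValueError("a single word does not fit in a physical block")
        current.append(w)
    if current:
        blocks.append(pad_block(current))
        counts.append(len(current))
    # Block i is stored at offset i * PHYSICAL, so no index table is needed.
    image = b"".join(zlib.compress(b).ljust(PHYSICAL, b"\xff") for b in blocks)
    return image, counts


def read_logical_block(image, index):
    """Locate a block by plain arithmetic and decompress it; the filler
    bytes after the compressed stream land in unused_data and are ignored."""
    slot = image[index * PHYSICAL:(index + 1) * PHYSICAL]
    return zlib.decompressobj().decompress(slot)


random.seed(0)
code = [bytes(random.randrange(256) for _ in range(WORD)) for _ in range(100)]
image, counts = pack(code)
```

Because each compressed block occupies exactly one `PHYSICAL`-byte slot, logical block `i` is found at offset `i * PHYSICAL` without consulting an index, in line with the "avoid index search" benefit listed in the entry's tags. The per-block `counts` exist only so the example can be checked; real NOP padding would simply be executed.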

System and method for implementing a demand paging jitter buffer algorithm

Inactive | US20060095612A1 | Reduce disadvantages; Reduce problems; Memory systems; Input/output processes for data processing; Data segment; Water level

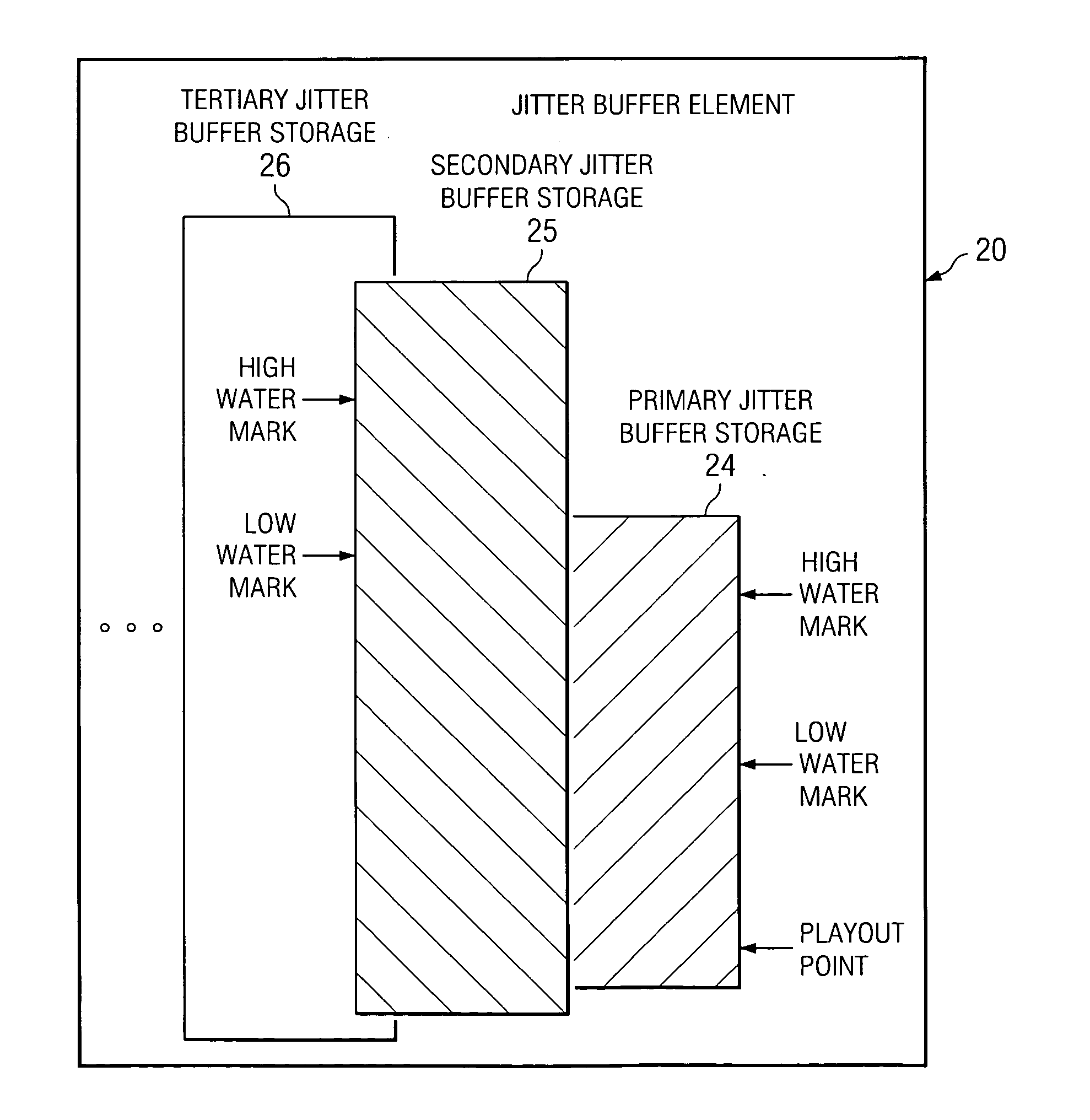

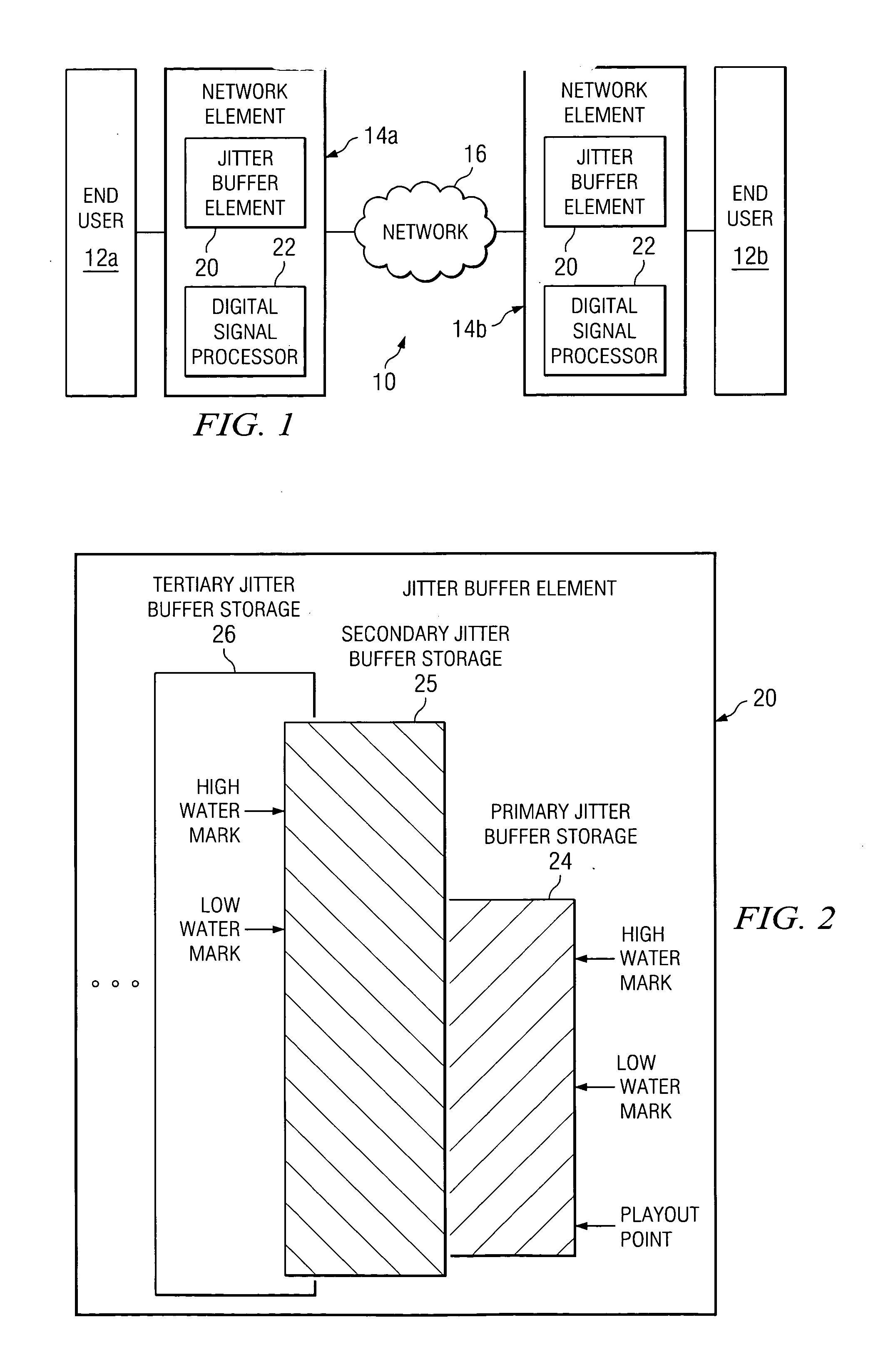

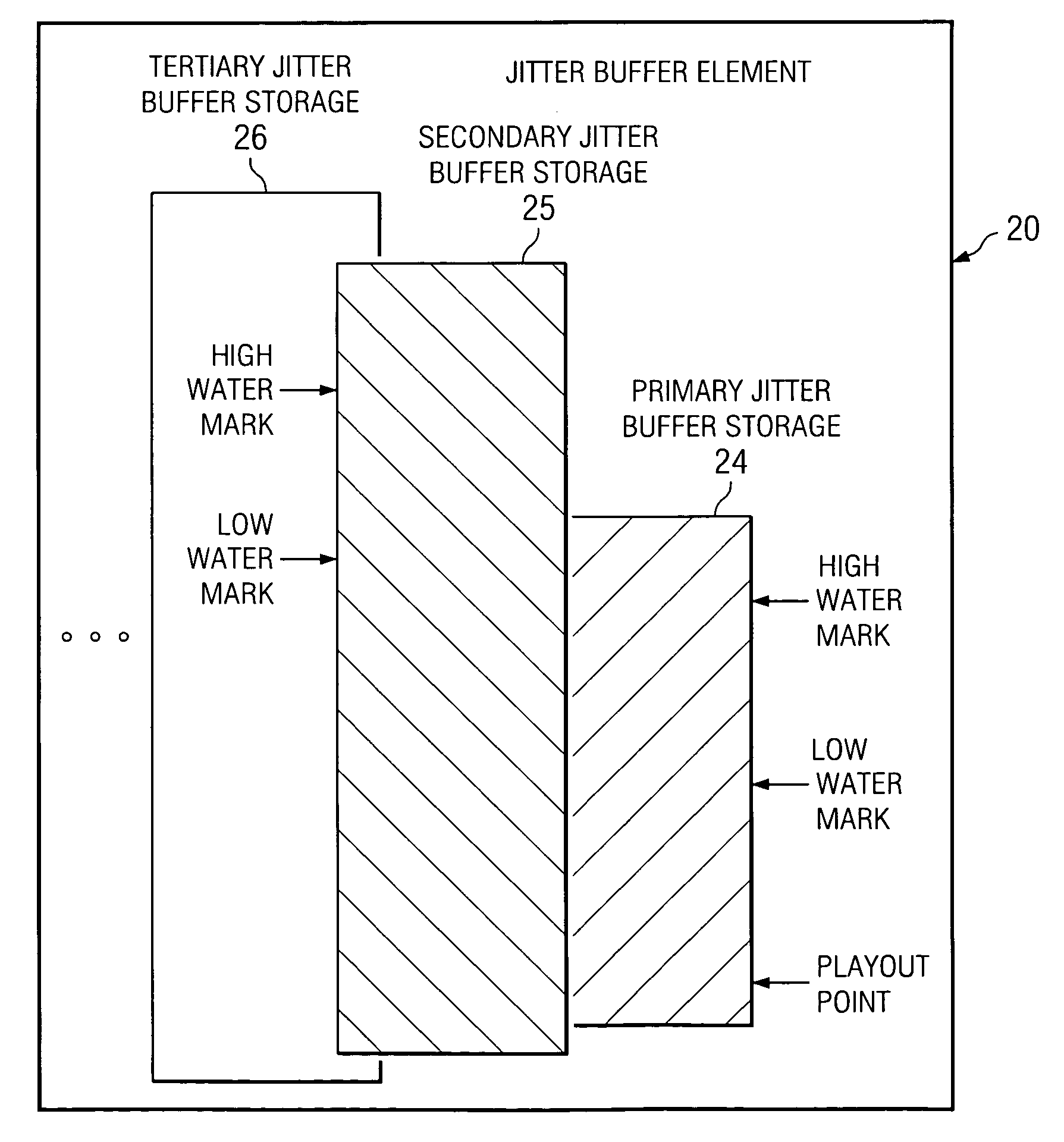

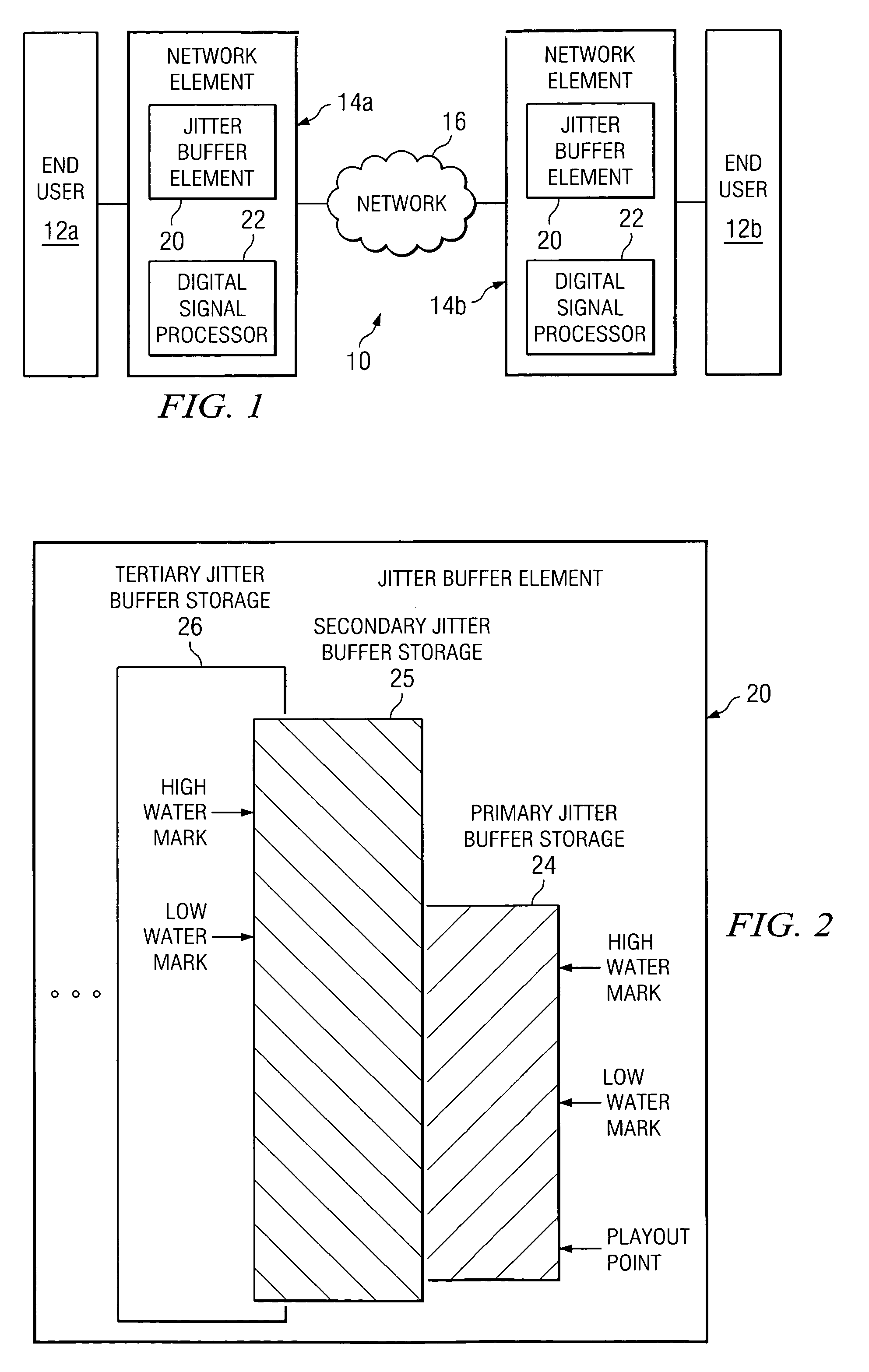

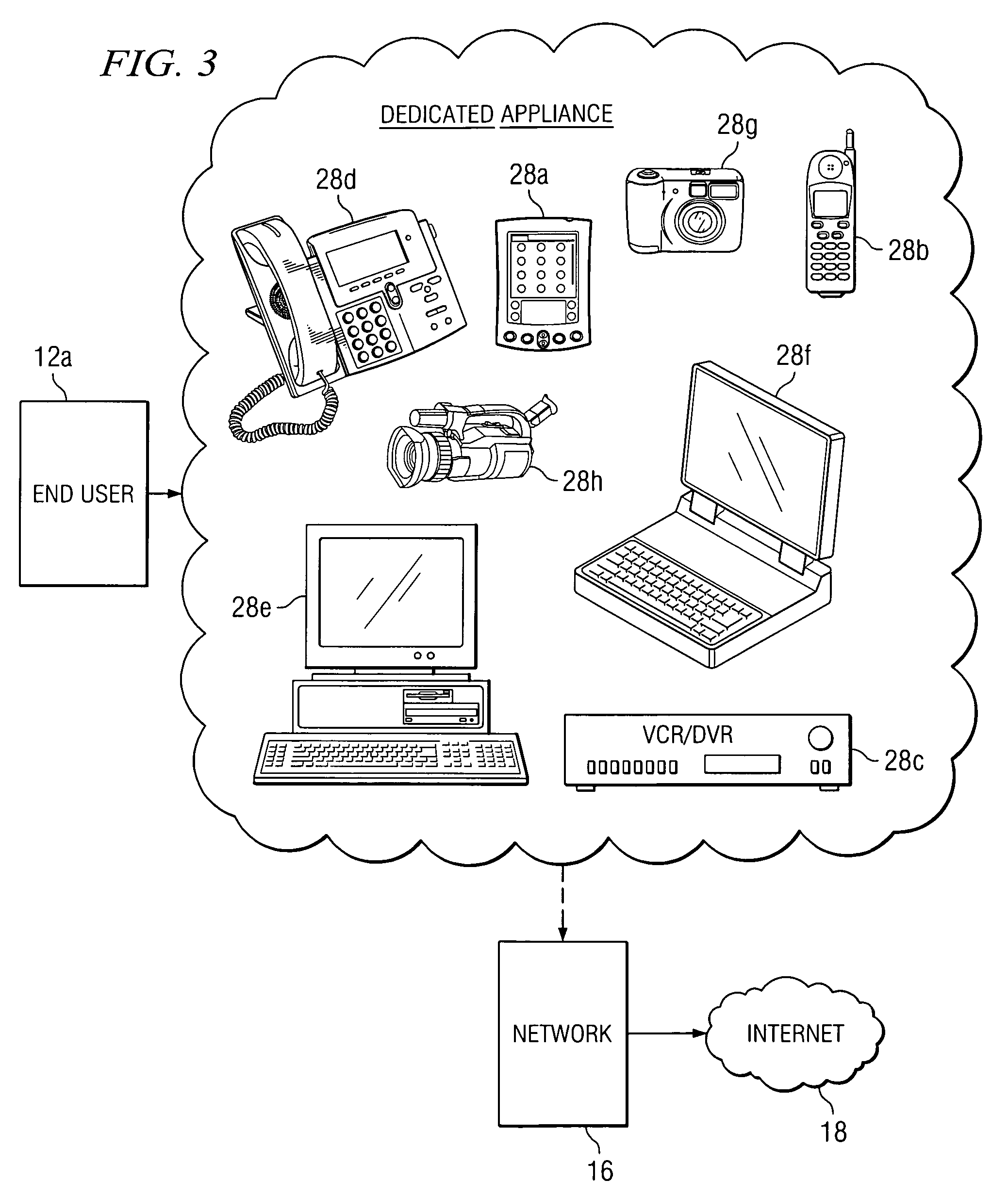

An apparatus for providing storage is provided that includes a jitter buffer element. The jitter buffer element includes a primary jitter buffer storage that includes a primary low water mark and a primary high water mark. The jitter buffer element also includes a secondary jitter buffer storage that includes a secondary low water mark and a secondary high water mark. A first data segment within the primary jitter buffer storage is held for a processor. A playout point may advance from a bottom of the primary jitter buffer storage to the primary low water mark. When the playout point reaches the primary low water mark, the processor communicates a message for the secondary jitter buffer storage to request a second data segment up to the secondary high water mark associated with the secondary jitter buffer storage.

Owner:CISCO TECH INC

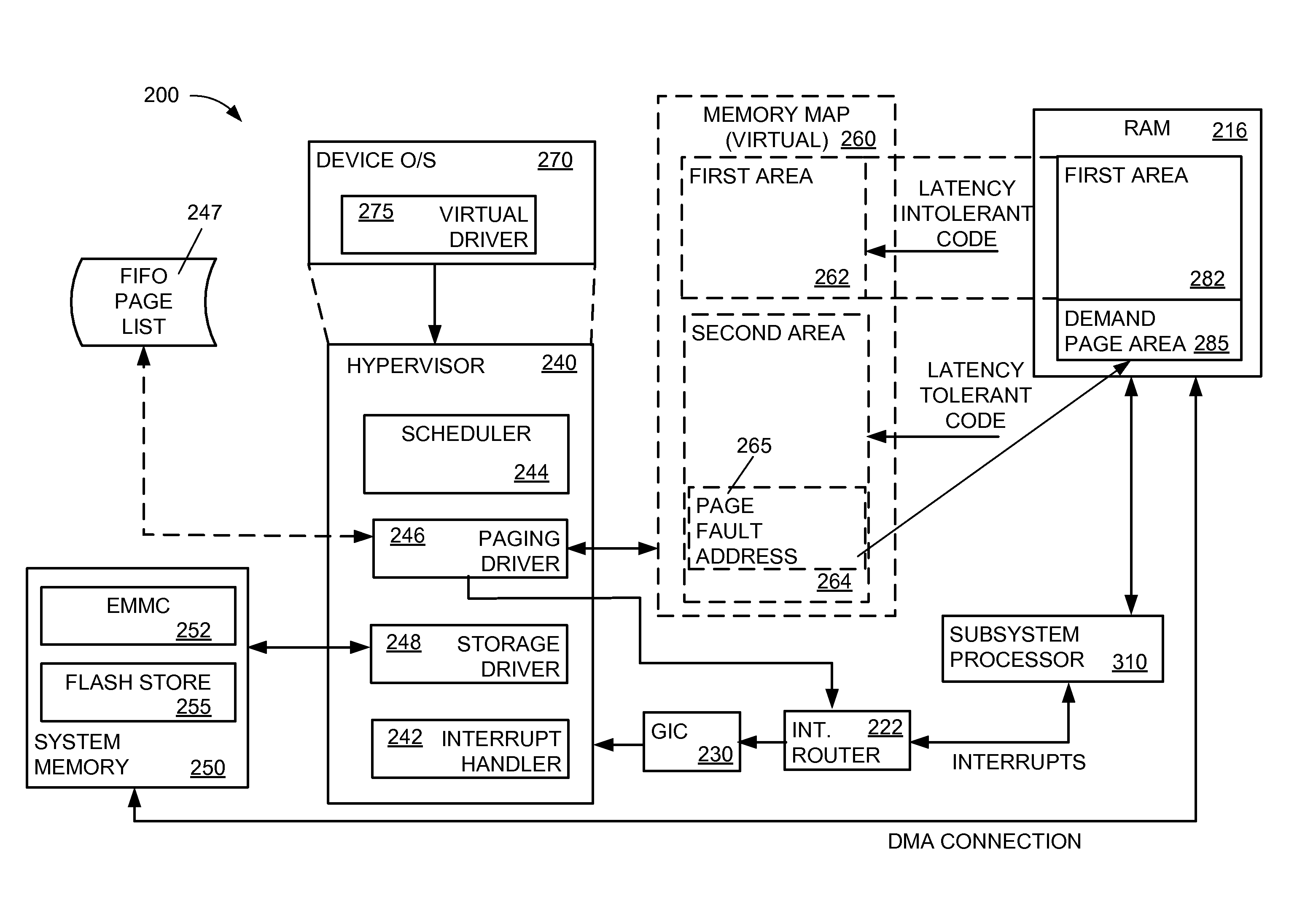

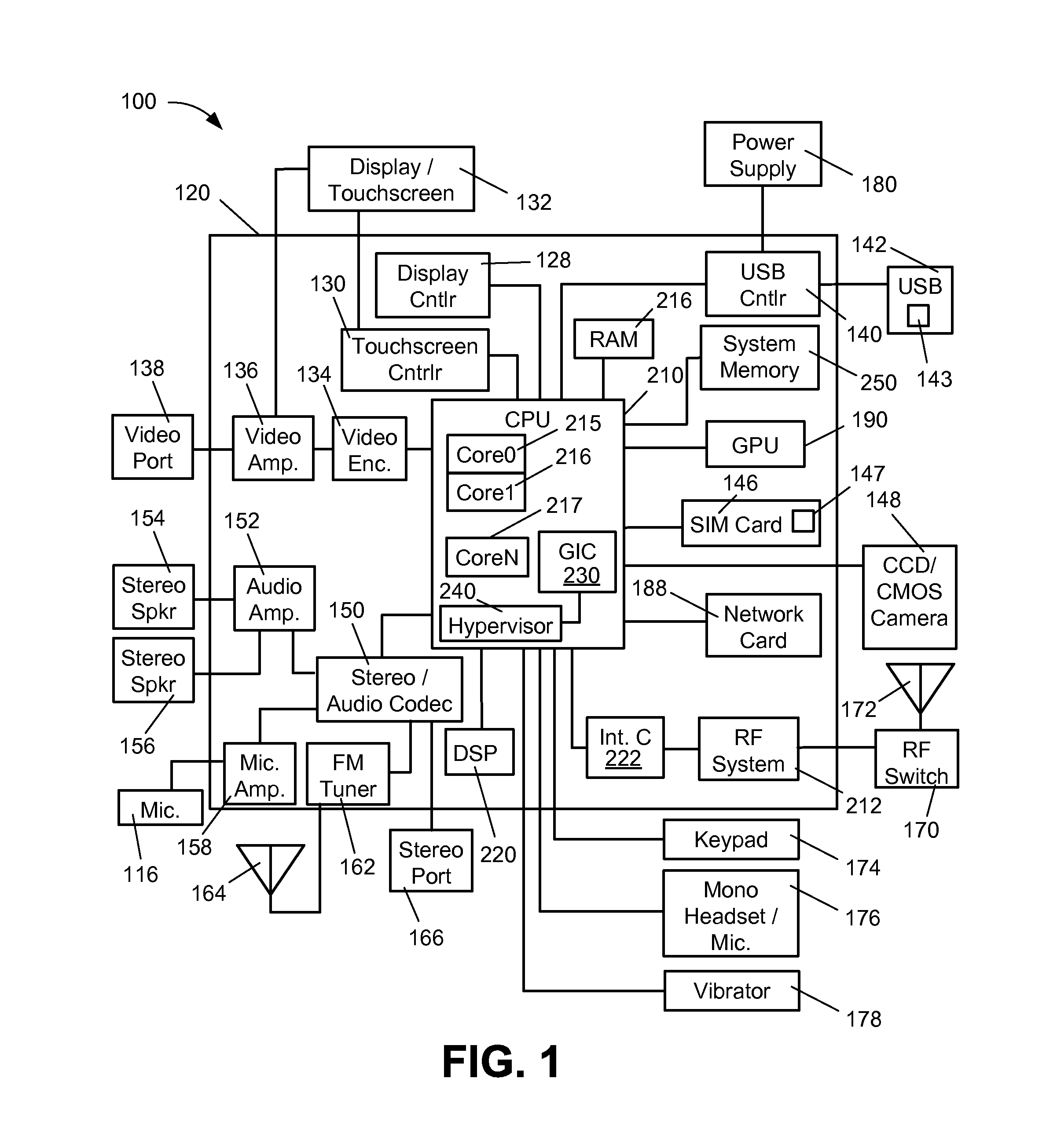

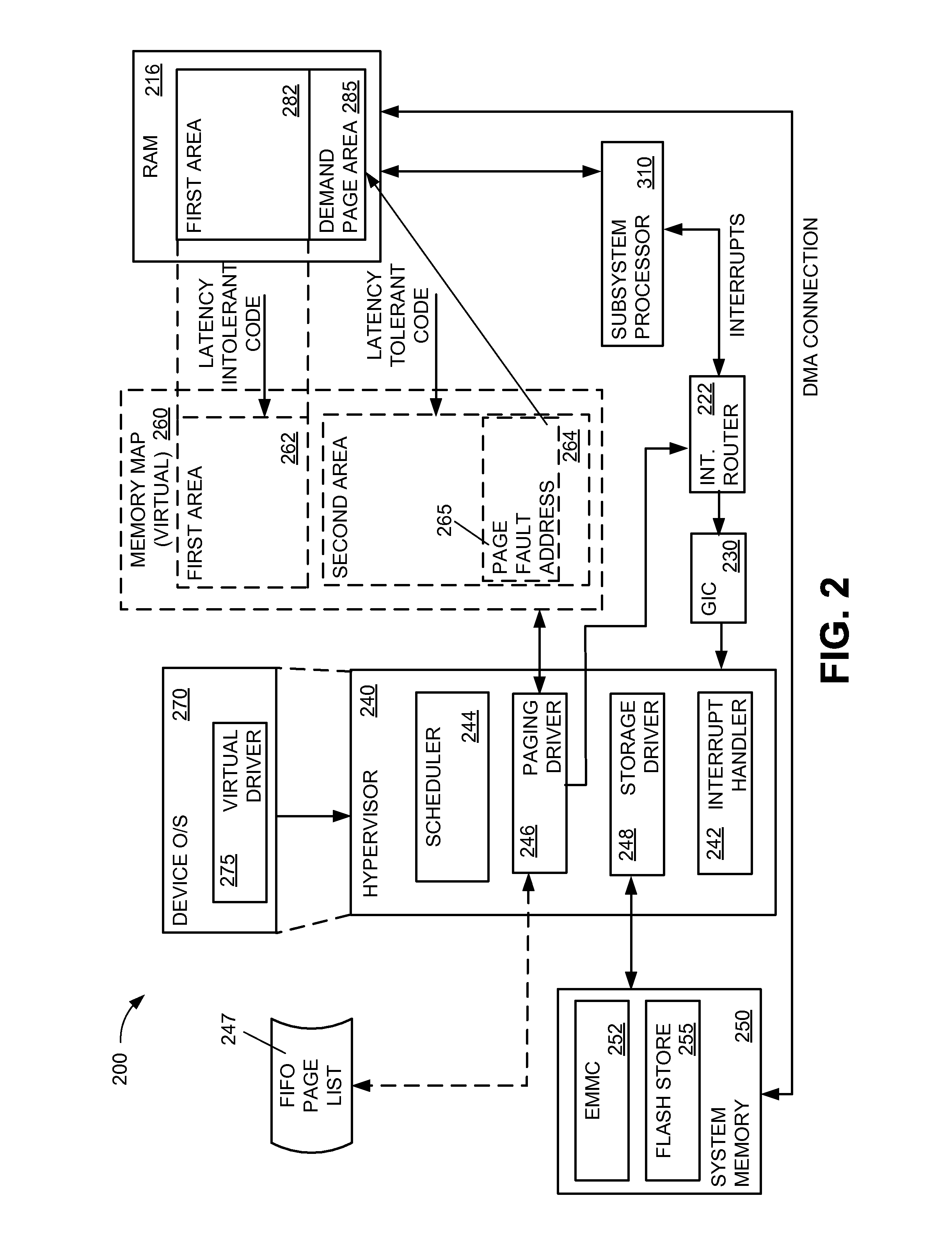

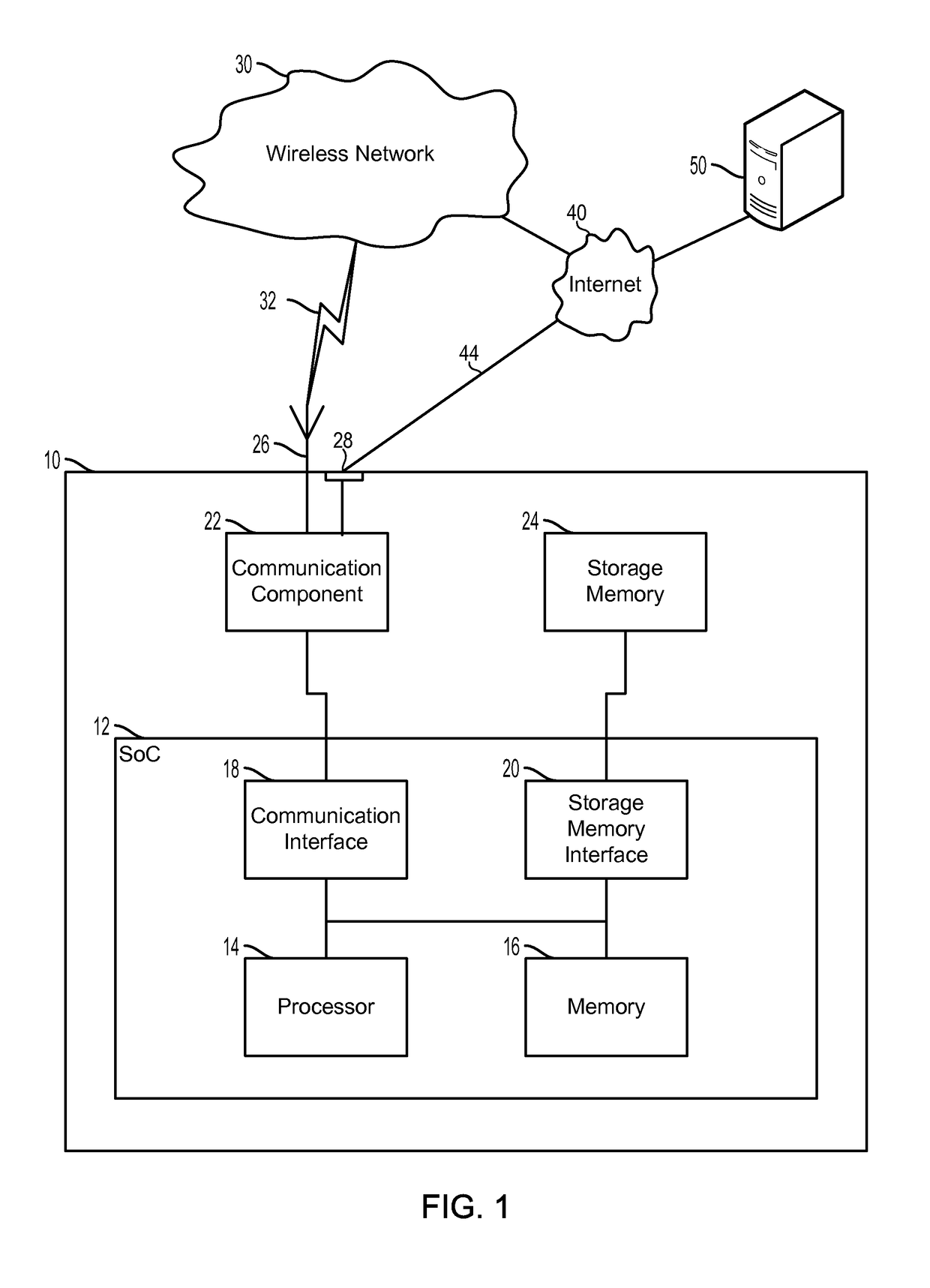

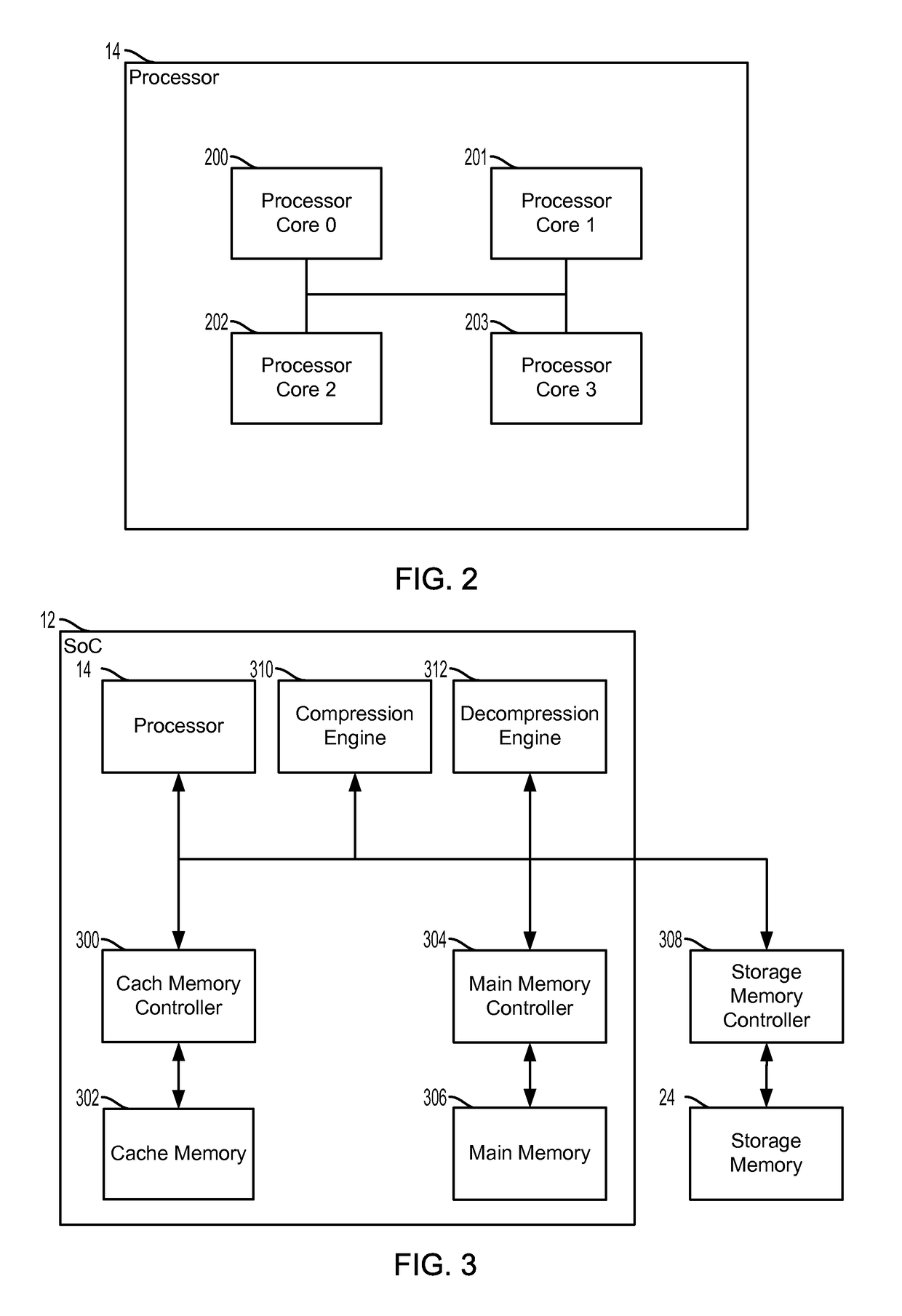

Systems and methods for supporting demand paging for subsystems in a portable computing environment with restricted memory resources

Inactive | US20150261686A1 | Reduce paging overhead; Reduce overhead; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Time critical; Auxiliary memory

A portable computing device is arranged with one or more subsystems that include a processor and a memory management unit arranged to execute threads under a subsystem level operating system. The processor is in communication with a primary memory. A first area of the primary memory is used for storing time critical code and data. A second area is available for demand pages required by a thread executing in the processor. A secondary memory is accessible to a hypervisor. The processor generates an interrupt when a page fault is detected. The hypervisor, in response to the interrupt, initiates a direct memory transfer of information in the secondary memory to the second area available for demand pages in the primary memory. Upon completion of the transfer, the hypervisor communicates a task complete acknowledgement to the processor.

Owner:QUALCOMM INC

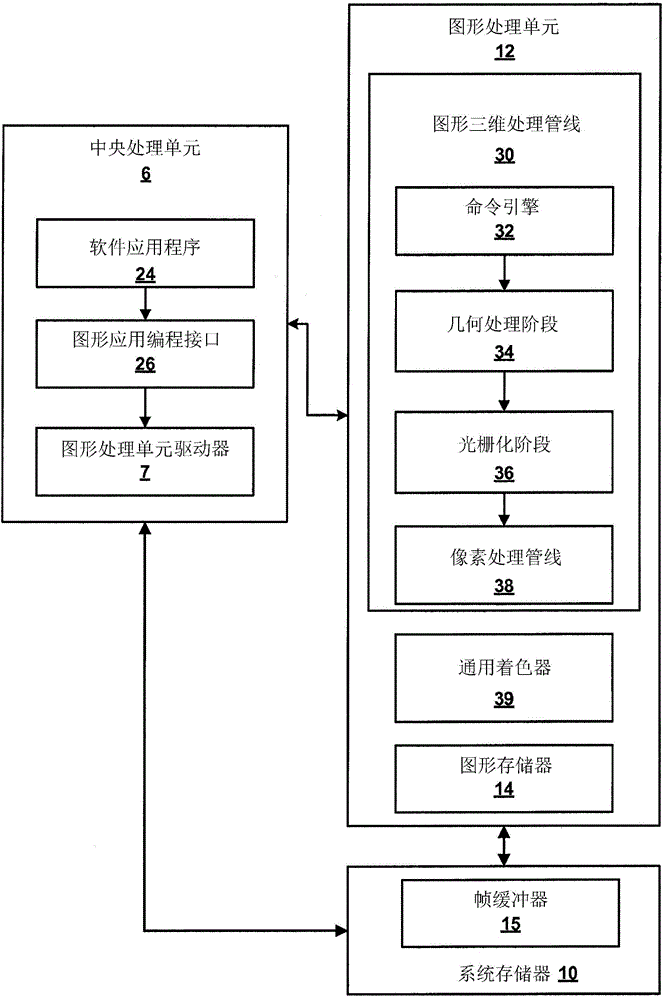

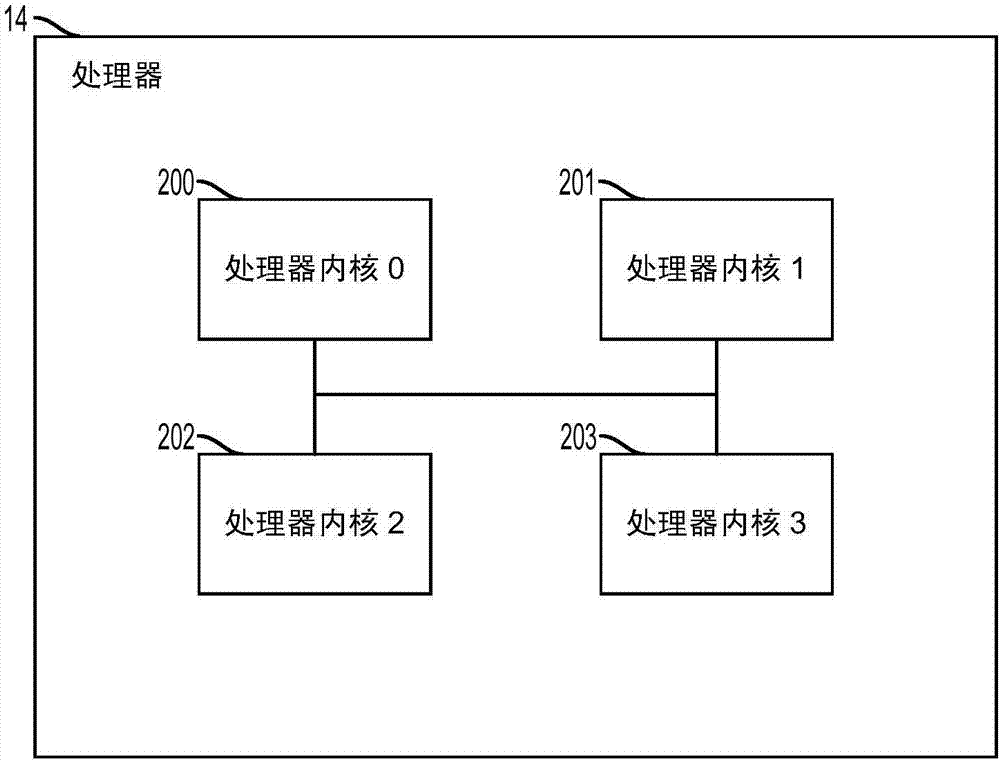

Techniques for supporting for demand paging

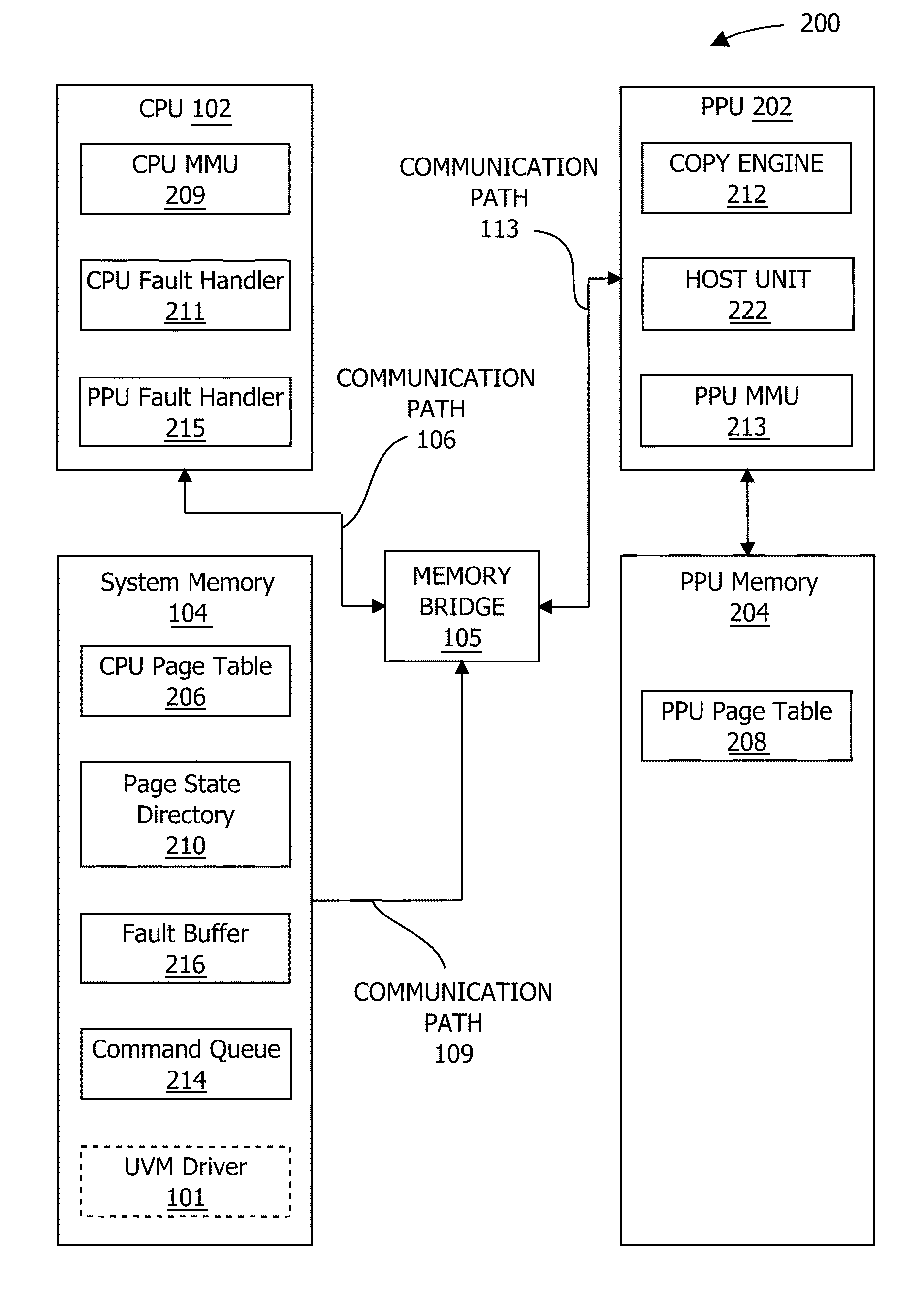

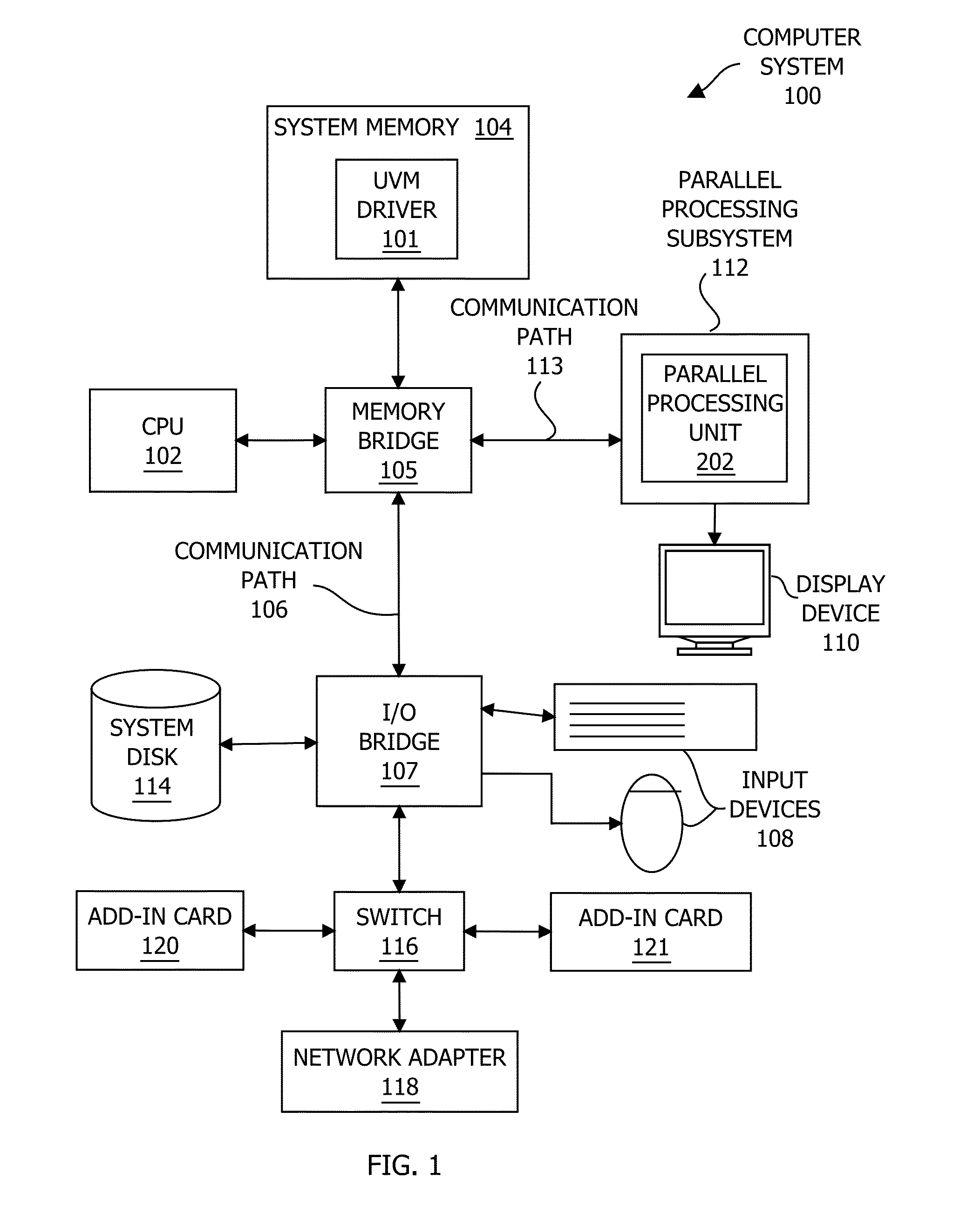

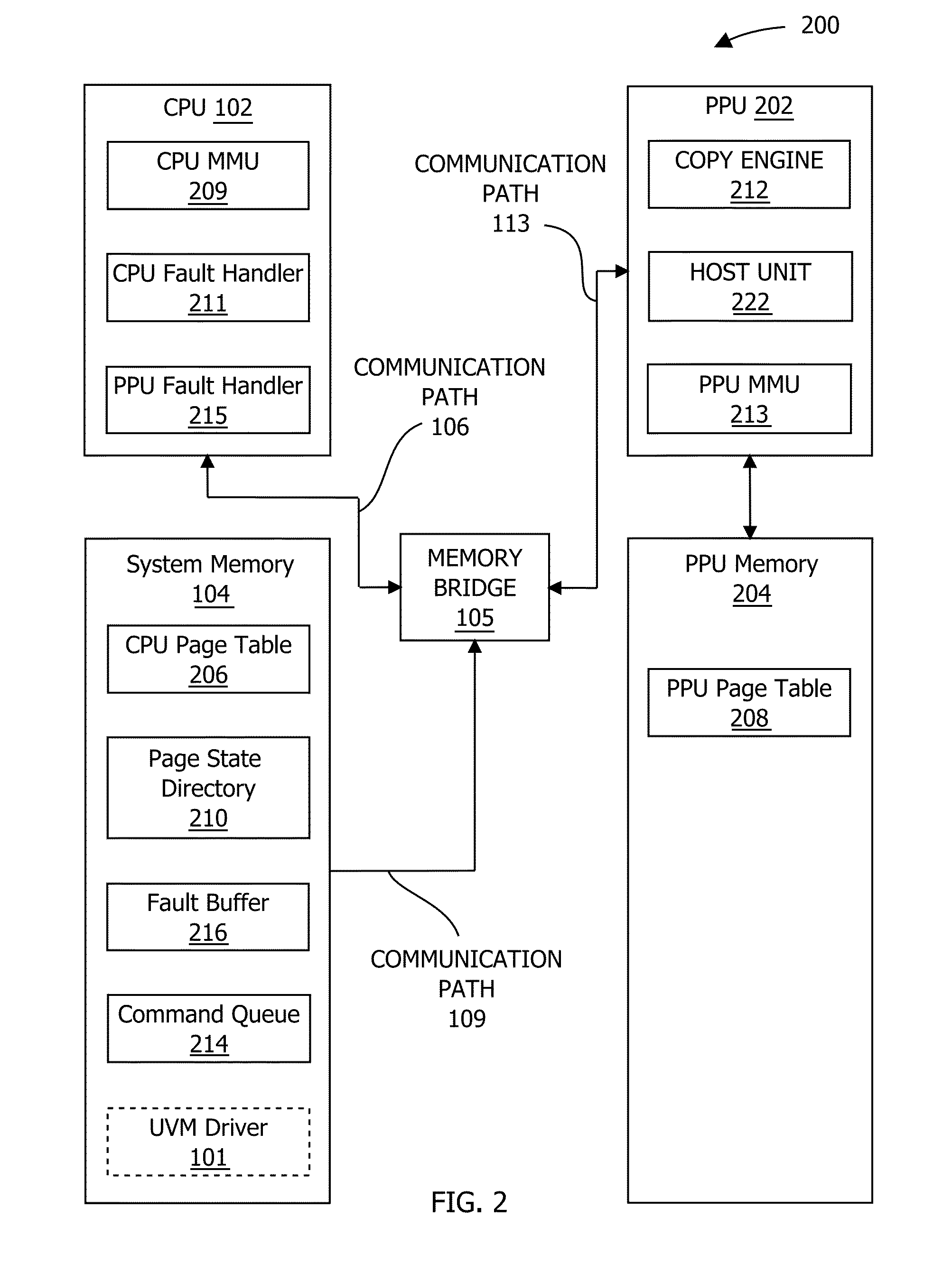

Active | US20150082001A1 | Effective support; Memory addressing/allocation/relocation; Micro-instruction address formation; Memory address; Demand paging

One embodiment of the present invention includes techniques to support demand paging across a processing unit. Before a host unit transmits a command to an engine that does not tolerate page faults, the host unit ensures that the virtual memory addresses associated with the command are appropriately mapped to physical memory addresses. In particular, if the virtual memory addresses are not appropriately mapped, then the processing unit performs actions to map the virtual memory address to appropriate locations in physical memory. Further, the processing unit ensures that the access permissions required for successful execution of the command are established. Because the virtual memory address mappings associated with the command are valid when the engine receives the command, the engine does not encounter page faults upon executing the command. Consequently, in contrast to prior-art techniques, the engine supports demand paging regardless of whether the engine is involved in remedying page faults.

Owner:NVIDIA CORP
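
The core idea of the abstract above, resolving every mapping and permission before a command reaches an engine that cannot handle page faults, can be illustrated with a small Python model. `Host`, `FaultIntolerantEngine`, and the `(page, permission)` command format are hypothetical stand-ins, not an actual driver interface.

```python
from dataclasses import dataclass, field


@dataclass
class Mapping:
    frame: int
    perms: set = field(default_factory=set)


@dataclass
class Command:
    accesses: list  # (virtual page, required permission) pairs


class FaultIntolerantEngine:
    """Hypothetical engine that cannot recover from a fault mid-command."""

    def execute(self, page_table, command):
        for vpage, perm in command.accesses:
            m = page_table.get(vpage)
            if m is None or perm not in m.perms:
                raise RuntimeError(f"unrecoverable fault on page {vpage}")
        return "done"


class Host:
    def __init__(self):
        self.page_table = {}
        self.next_frame = 0
        self.pages_mapped = 0

    def ensure_mapped(self, command):
        """Map every page the command touches and grant its permissions."""
        for vpage, perm in command.accesses:
            m = self.page_table.get(vpage)
            if m is None:
                # Stand-in for the real demand-paging work (allocate and fill).
                m = self.page_table[vpage] = Mapping(self.next_frame)
                self.next_frame += 1
                self.pages_mapped += 1
            m.perms.add(perm)

    def submit(self, engine, command):
        self.ensure_mapped(command)  # resolve would-be faults before dispatch
        return engine.execute(self.page_table, command)


host = Host()
cmd = Command([(7, "r"), (8, "w"), (7, "w")])
print(host.submit(FaultIntolerantEngine(), cmd))  # done
```

The engine never sees an unmapped page or a missing permission because `submit` does the paging work up front; calling `FaultIntolerantEngine().execute` directly with an empty page table would raise instead.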

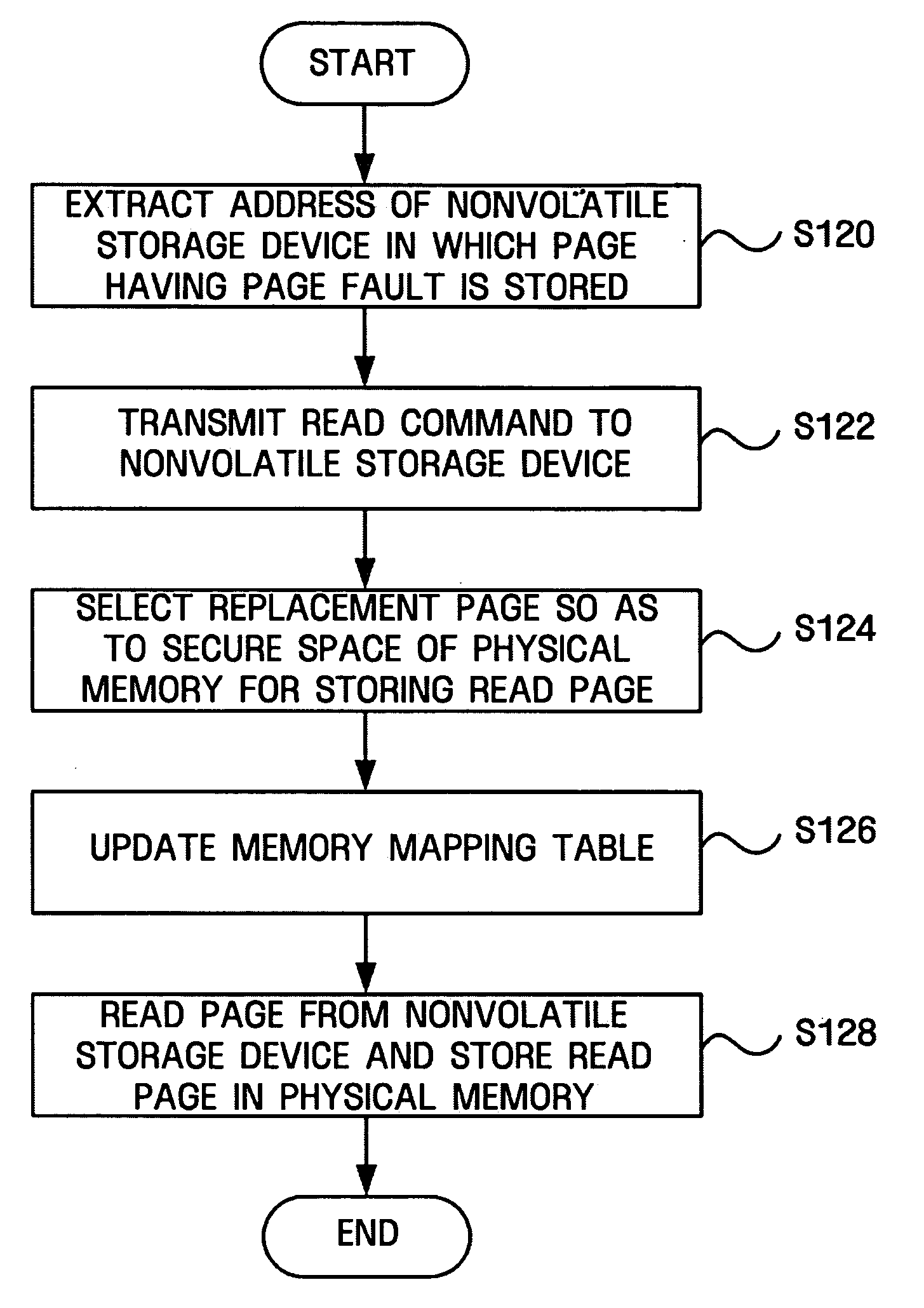

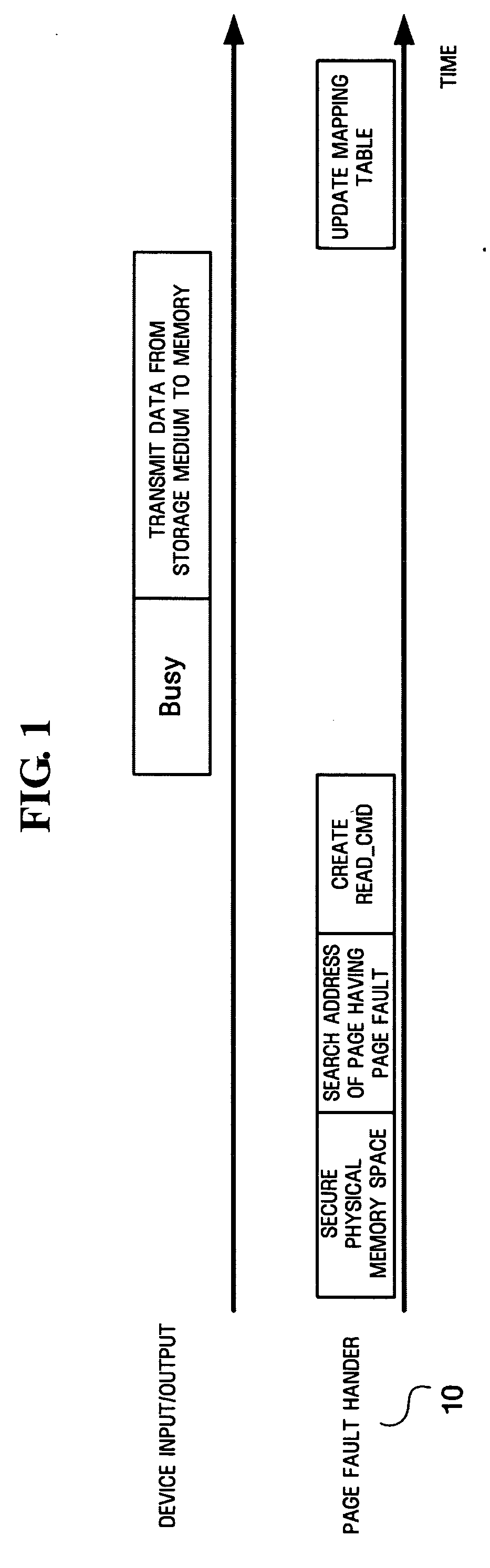

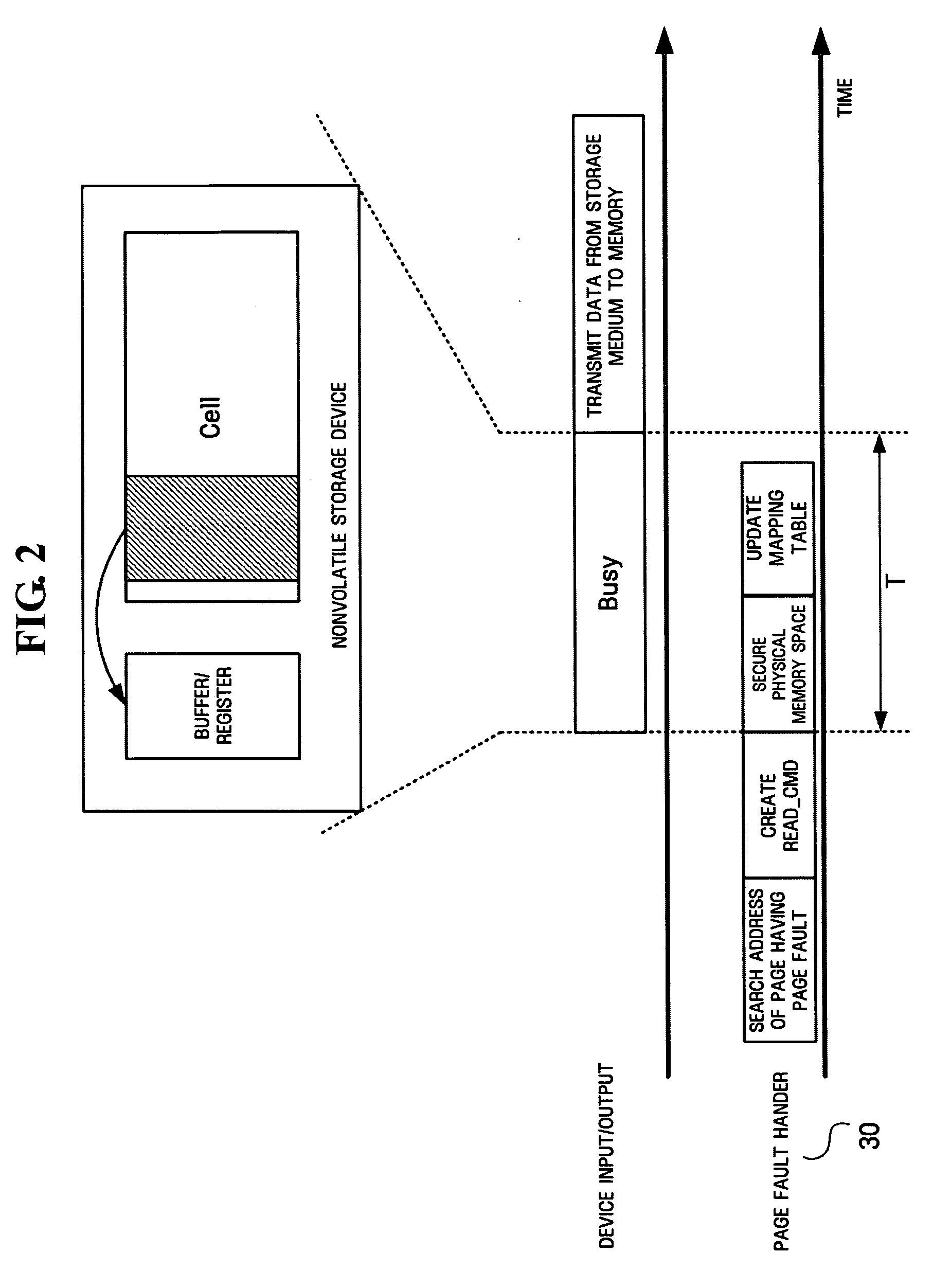

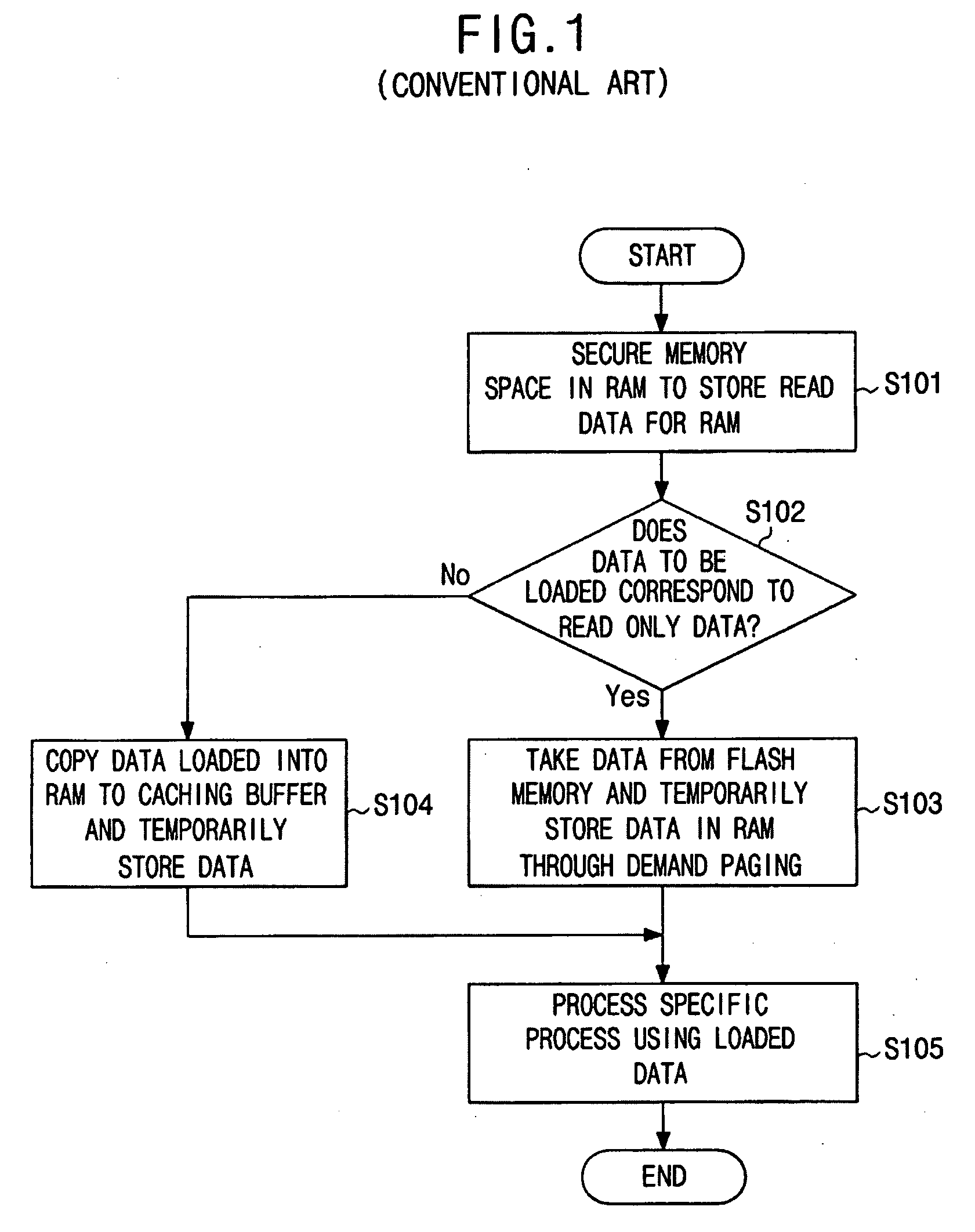

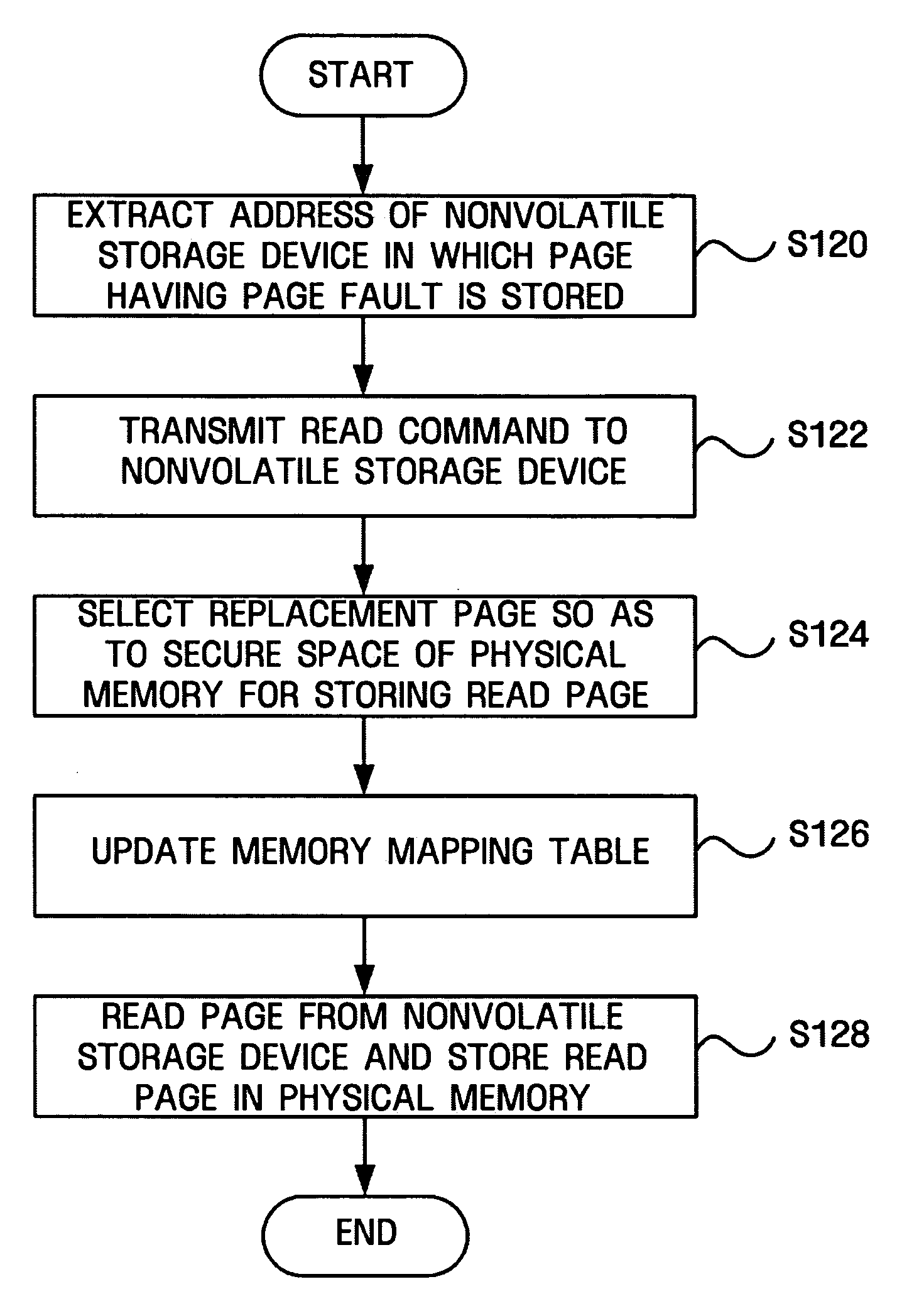

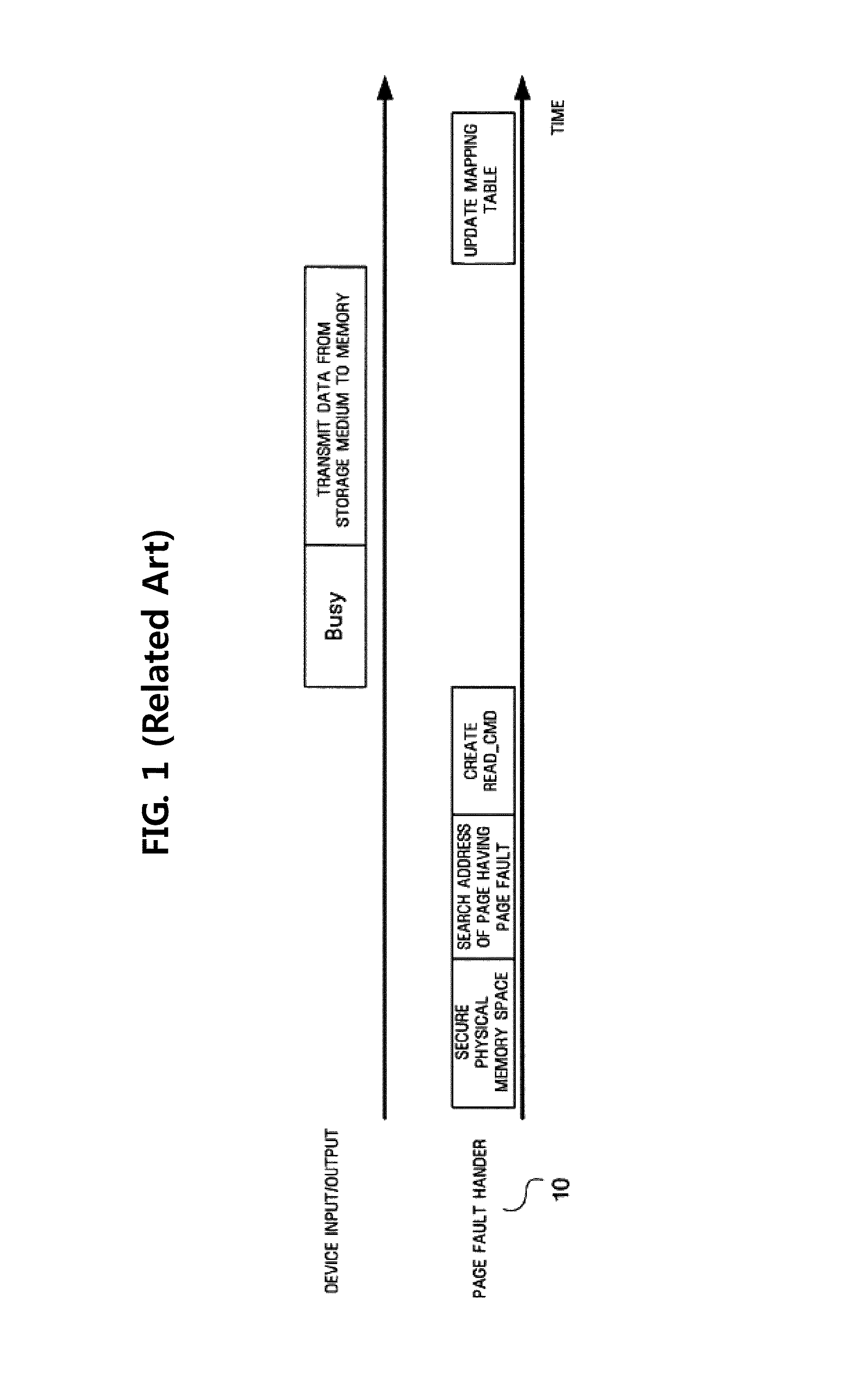

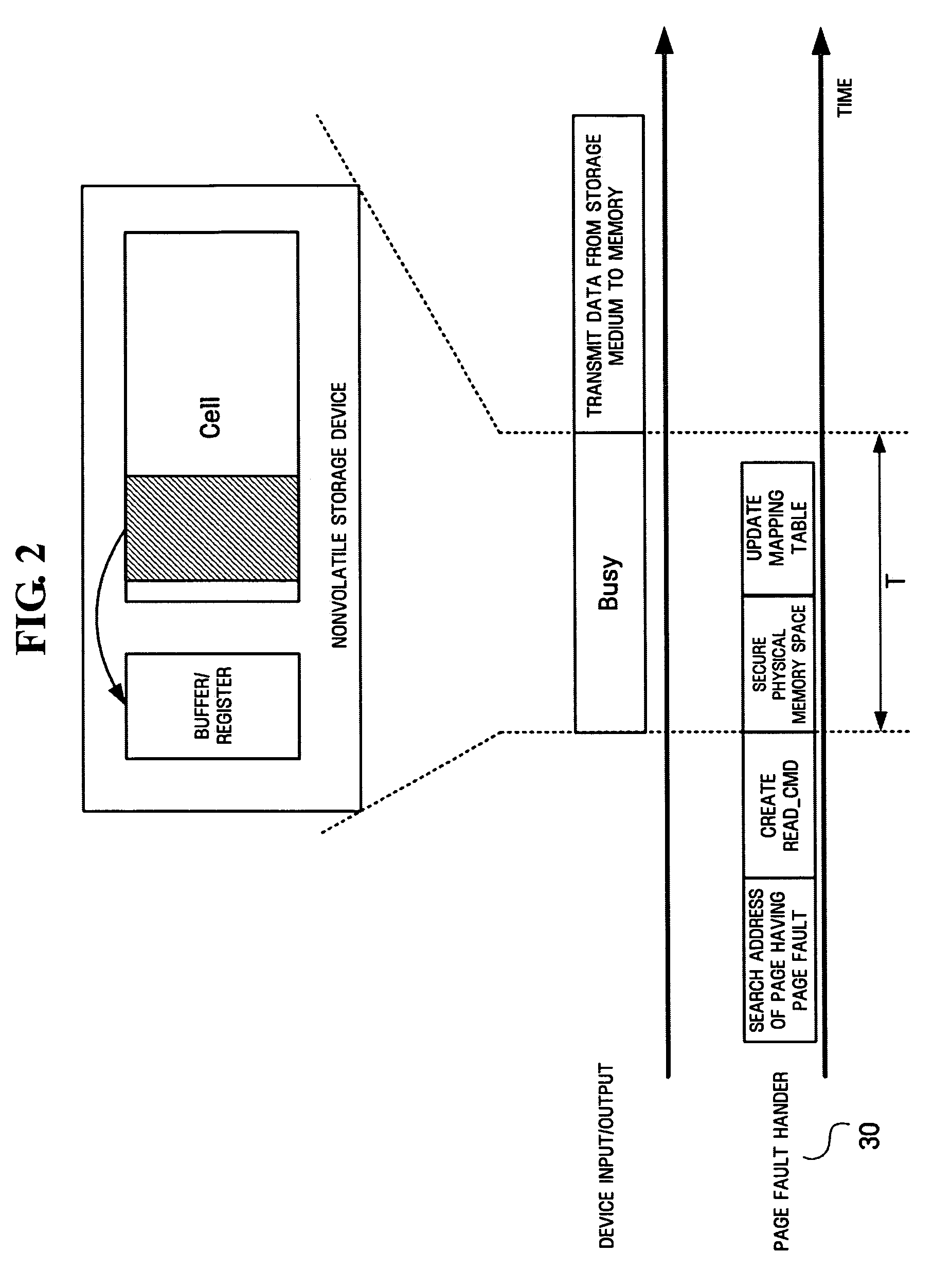

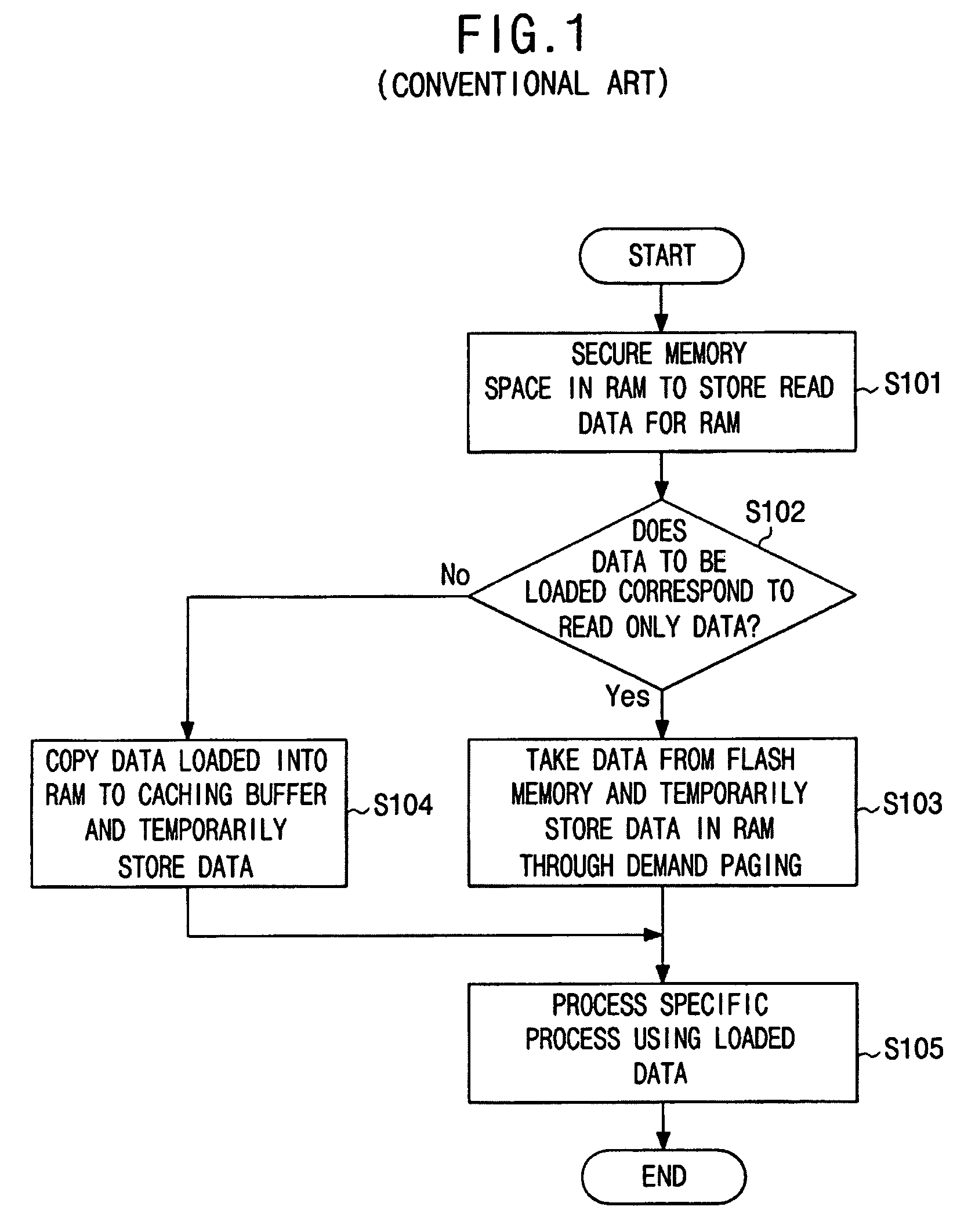

Method and apparatus for reducing page replacement time in system using demand paging technique

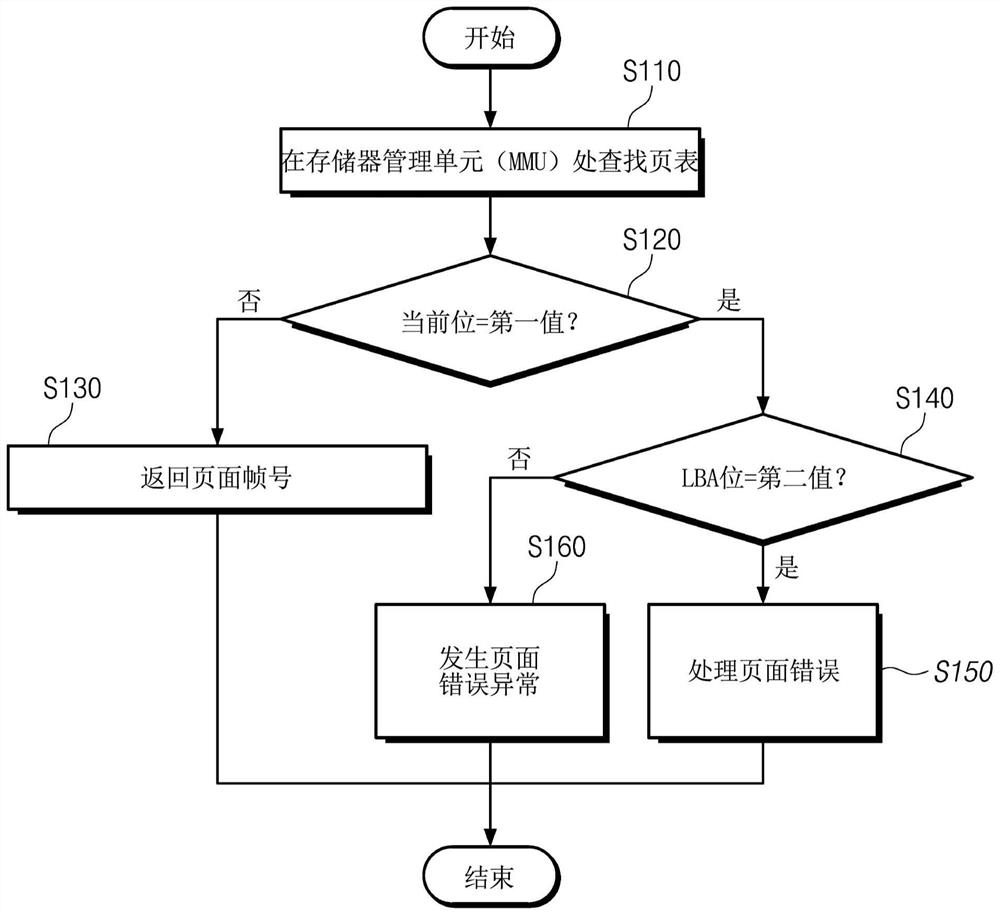

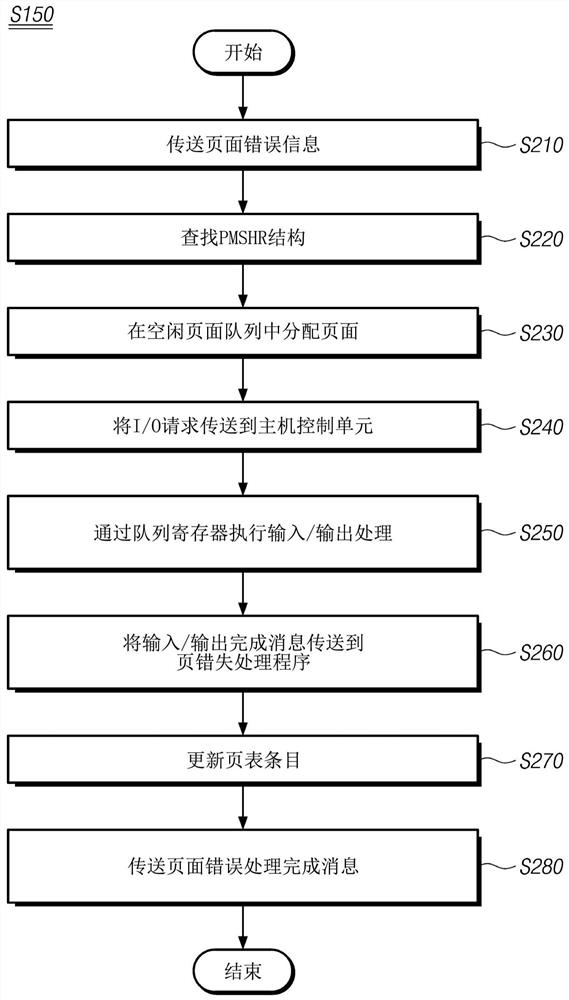

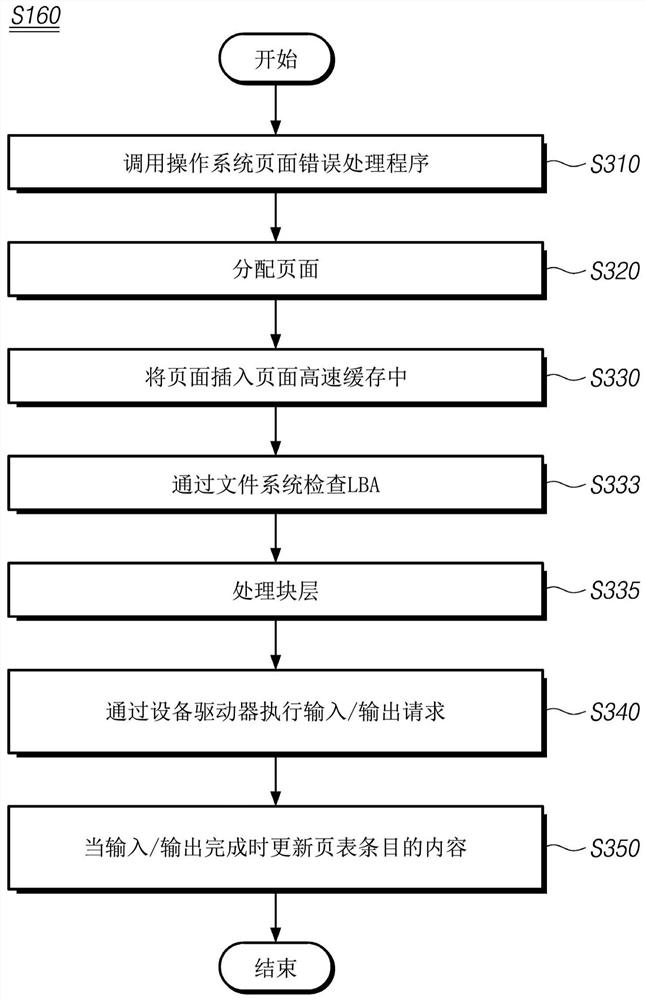

Active | US20070168627A1 | Reducing page replacement time; Improve system performance; Memory addressing/allocation/relocation; Micro-instruction address formation; Term memory; Memory management unit

A method and apparatus for reducing page replacement time in a system using a demand paging technique are provided. The apparatus includes a memory management unit that transmits a signal indicating that a page fault has occurred, a device driver that reads the faulting page from a nonvolatile memory, and a page fault handler that searches for and secures space in memory for storing the faulting page. The search for and securing of this space are performed within a time limit calculated in advance, and part of the data to be loaded into the system's memory is stored in the nonvolatile memory.

Owner:SAMSUNG ELECTRONICS CO LTD
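
One way to bound the search-and-secure step is to cap how many frames the replacement policy may inspect. The sketch below swaps in a second-chance (clock) policy with a hypothetical `max_scan` budget; the abstract does not say which policy or bound is used, so treat both as assumptions.

```python
class BoundedClock:
    """Second-chance (clock) replacement whose victim search inspects at
    most max_scan frames, giving the fault path a fixed time bound."""

    def __init__(self, num_frames, max_scan):
        self.pages = [None] * num_frames
        self.referenced = [False] * num_frames
        self.where = {}  # resident page -> frame index
        self.hand = 0
        self.max_scan = max_scan

    def _advance(self):
        frame = self.hand
        self.hand = (self.hand + 1) % len(self.pages)
        return frame

    def _find_frame(self):
        for _ in range(self.max_scan):
            frame = self._advance()
            if self.pages[frame] is None or not self.referenced[frame]:
                return frame
            self.referenced[frame] = False  # second chance
        # Budget spent: take the next frame without searching further.
        return self._advance()

    def access(self, page):
        """Touch a page; return True if it caused a page fault."""
        if page in self.where:
            self.referenced[self.where[page]] = True
            return False
        frame = self._find_frame()
        victim = self.pages[frame]
        if victim is not None:
            del self.where[victim]
        self.pages[frame] = page
        self.referenced[frame] = True
        self.where[page] = frame
        return True


clock = BoundedClock(num_frames=3, max_scan=2)
faults = [clock.access(p) for p in [0, 1, 2, 3, 0]]
print(faults, clock.pages)  # [True, True, True, True, False] [0, 1, 3]
```

With `max_scan=2` the handler gives up after clearing two reference bits and evicts page 2 even though it was recently used; an unbounded clock would have kept scanning and evicted page 0 instead. The trade is a possibly worse victim in exchange for a guaranteed search time.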

Data compression method for supporting virtual memory management in a demand paging system

Inactive | US7512767B2 | Avoid index; Improve data performance; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Data compression; Management unit

A virtual memory management unit (306) includes a redundancy insertion module (307), which inserts redundancy into an encoded data stream to be compressed such that, after compression, each logical data block fits into a different one of a set of equal-sized physical data blocks of a given size. For example, the redundancy may take the form of no-operation (NOP) instructions, represented as a number of dummy sequences of a given length (L) inserted into the encoded data stream to be compressed, each dummy sequence being composed of a number of identical binary or hexadecimal fill-in values.

Owner:SONY ERICSSON MOBILE COMM AB

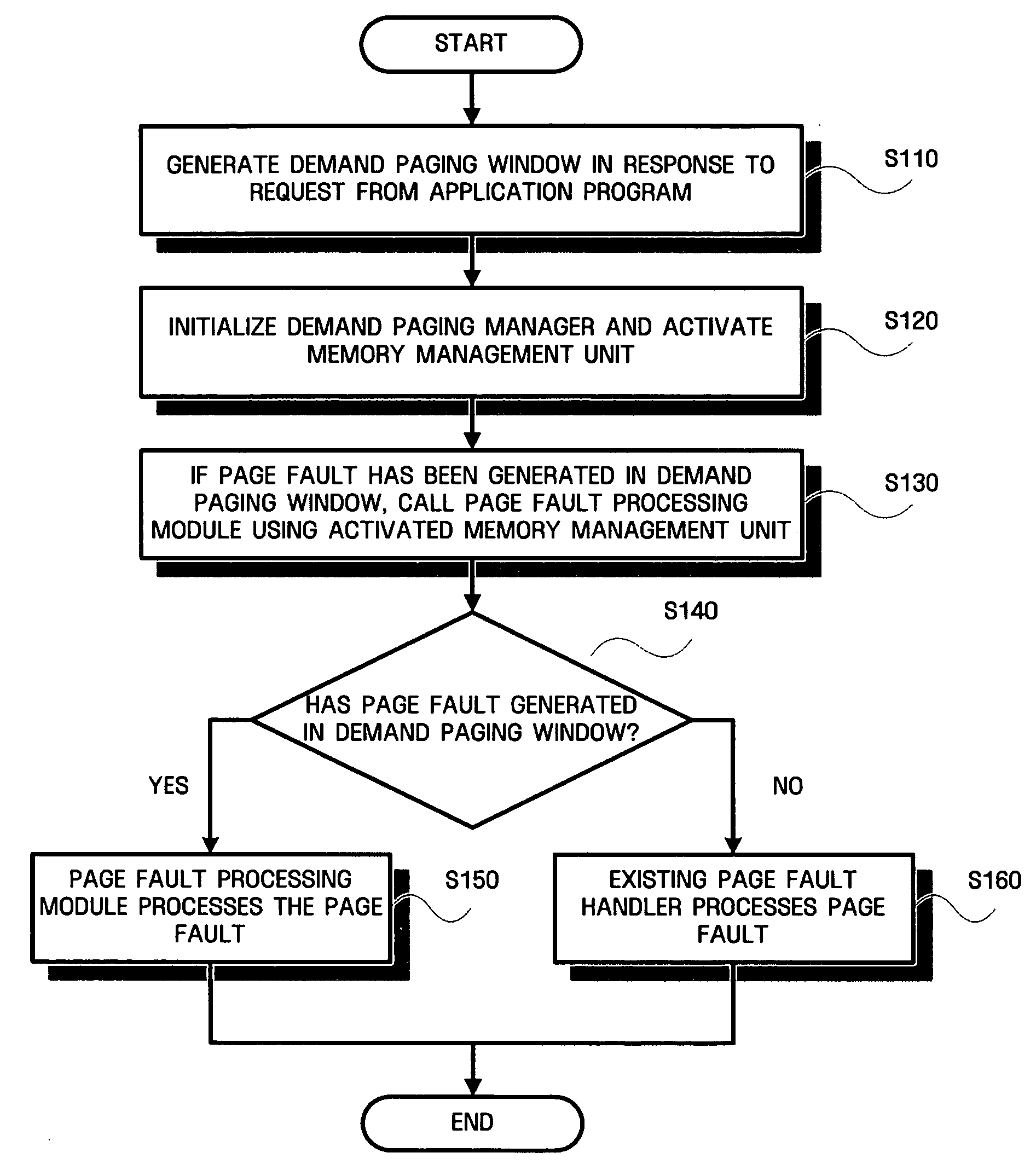

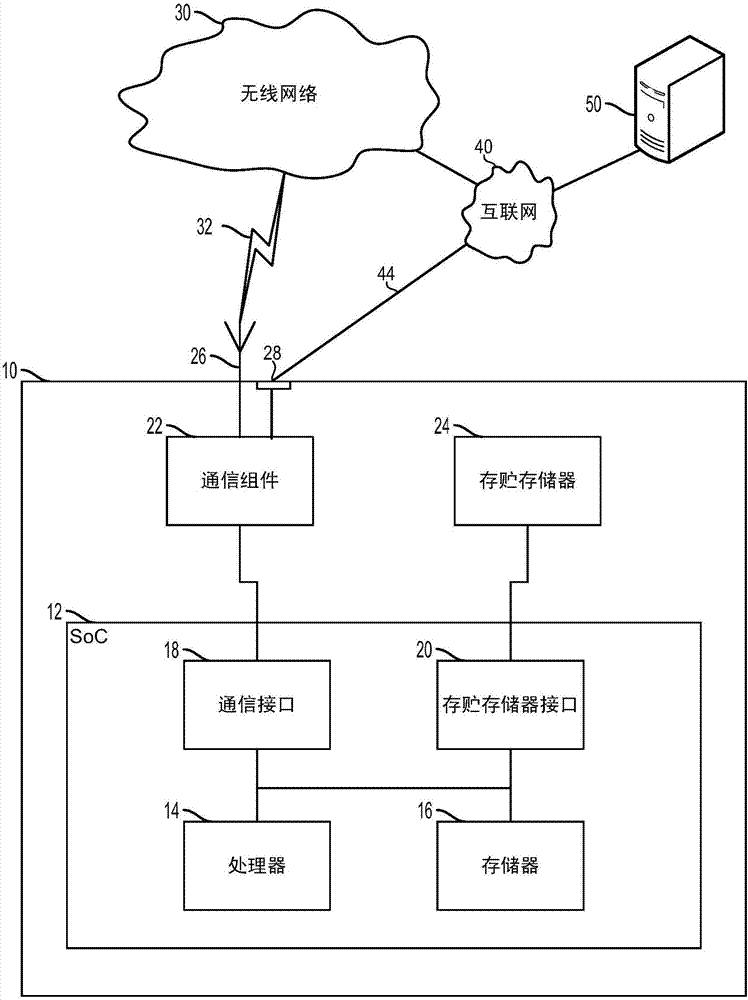

Demand paging apparatus and method for embedded system

Active | US7617381B2 | Memory addressing/allocation/relocation; Micro-instruction address formation; Memory process; Paging

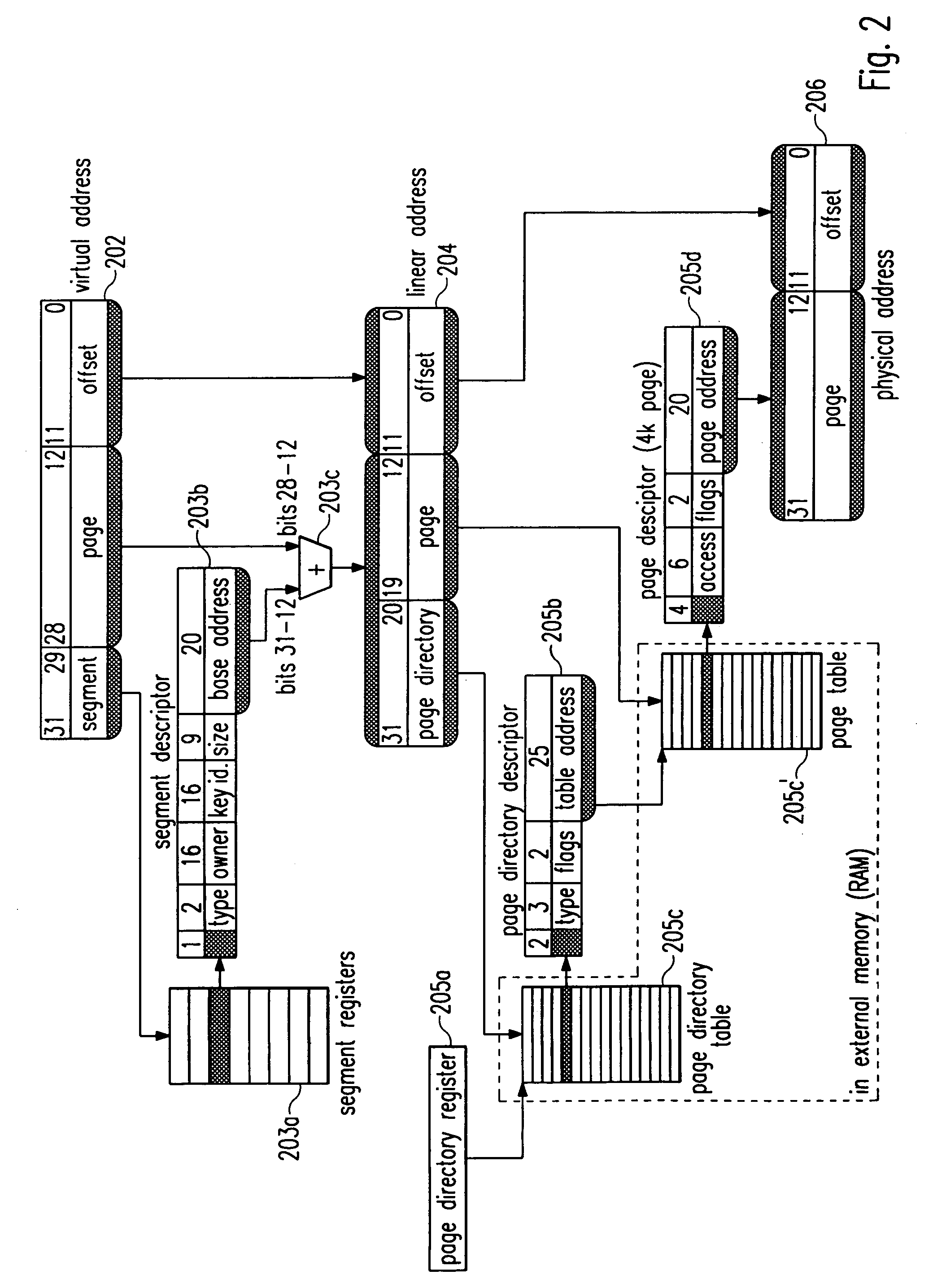

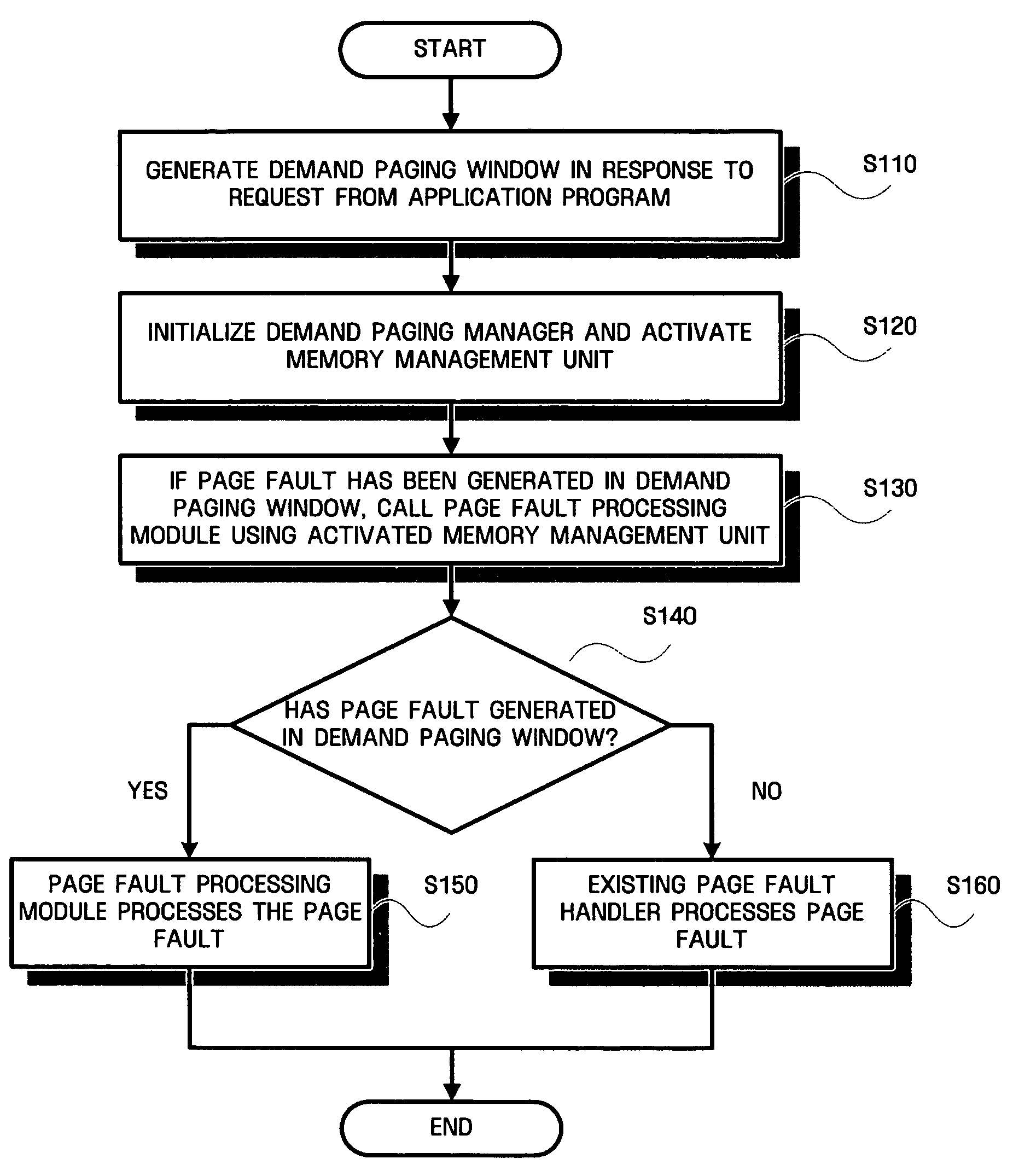

A demand paging apparatus and a method for an embedded system are provided. The demand paging apparatus includes a nonvolatile storage device, a physical memory, a demand paging window, and a demand paging manager. The nonvolatile storage device stores code and data which are handled by demand paging. The physical memory processes information about a requested page that is read from the nonvolatile storage device. The demand paging window generates a fault for the page and, thus, causes demand paging to occur. The demand paging window is part of an address space to which an application program stored in the nonvolatile storage device refers. The demand paging manager processes the page fault generated in the demand paging window.

Owner:SAMSUNG ELECTRONICS CO LTD

Demand paging apparatus and method for embedded system

Active | US20070150695A1 | Memory addressing/allocation/relocation; Micro-instruction address formation; Memory process; Paging

A demand paging apparatus and a method for an embedded system are provided. The demand paging apparatus includes a nonvolatile storage device, a physical memory, a demand paging window, and a demand paging manager. The nonvolatile storage device stores code and data which are handled by demand paging. The physical memory processes information about a requested page that is read from the nonvolatile storage device. The demand paging window generates a fault for the page and, thus, causes demand paging to occur. The demand paging window is part of an address space to which an application program stored in the nonvolatile storage device refers. The demand paging manager processes the page fault generated in the demand paging window.

Owner:SAMSUNG ELECTRONICS CO LTD

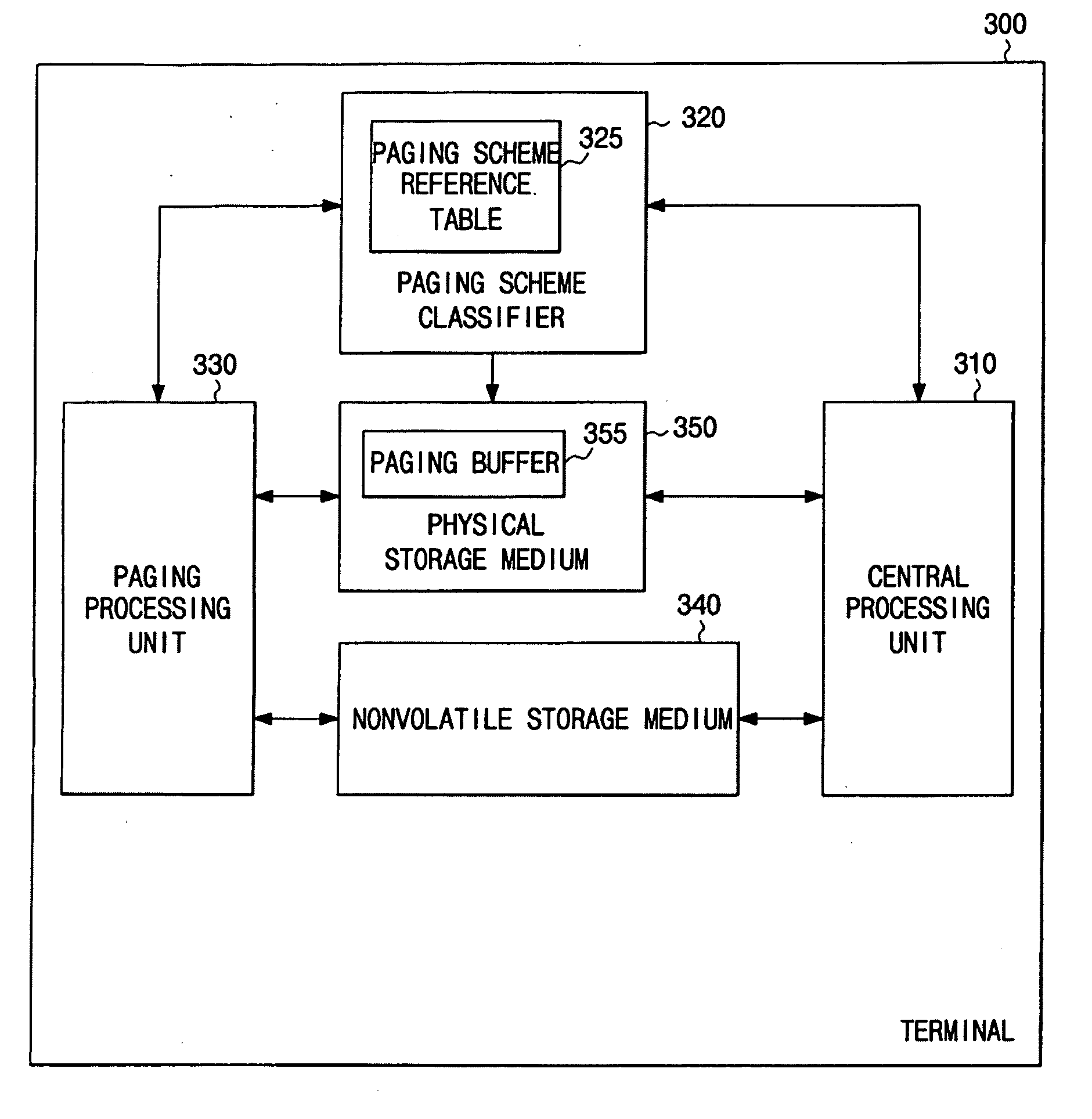

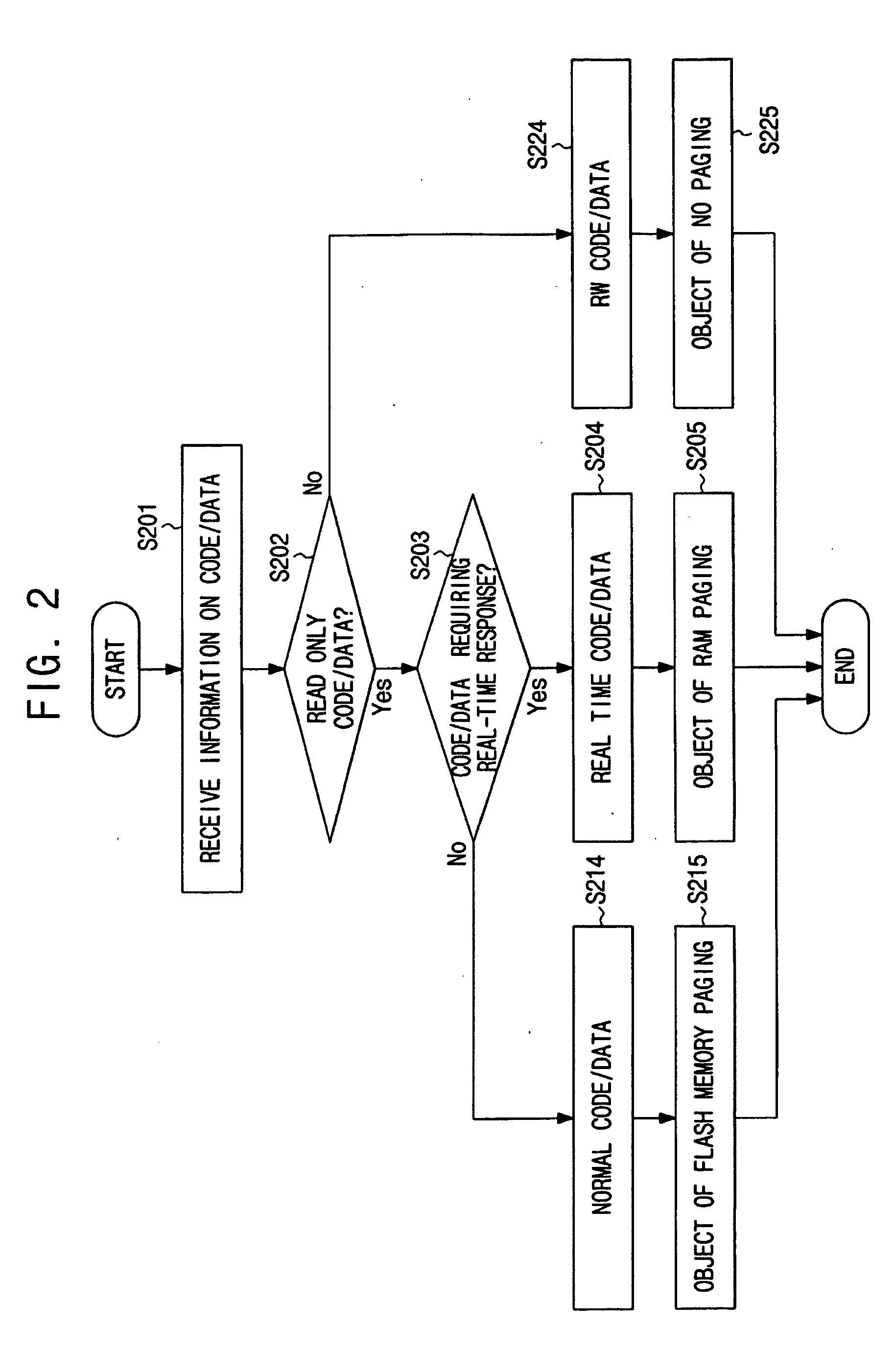

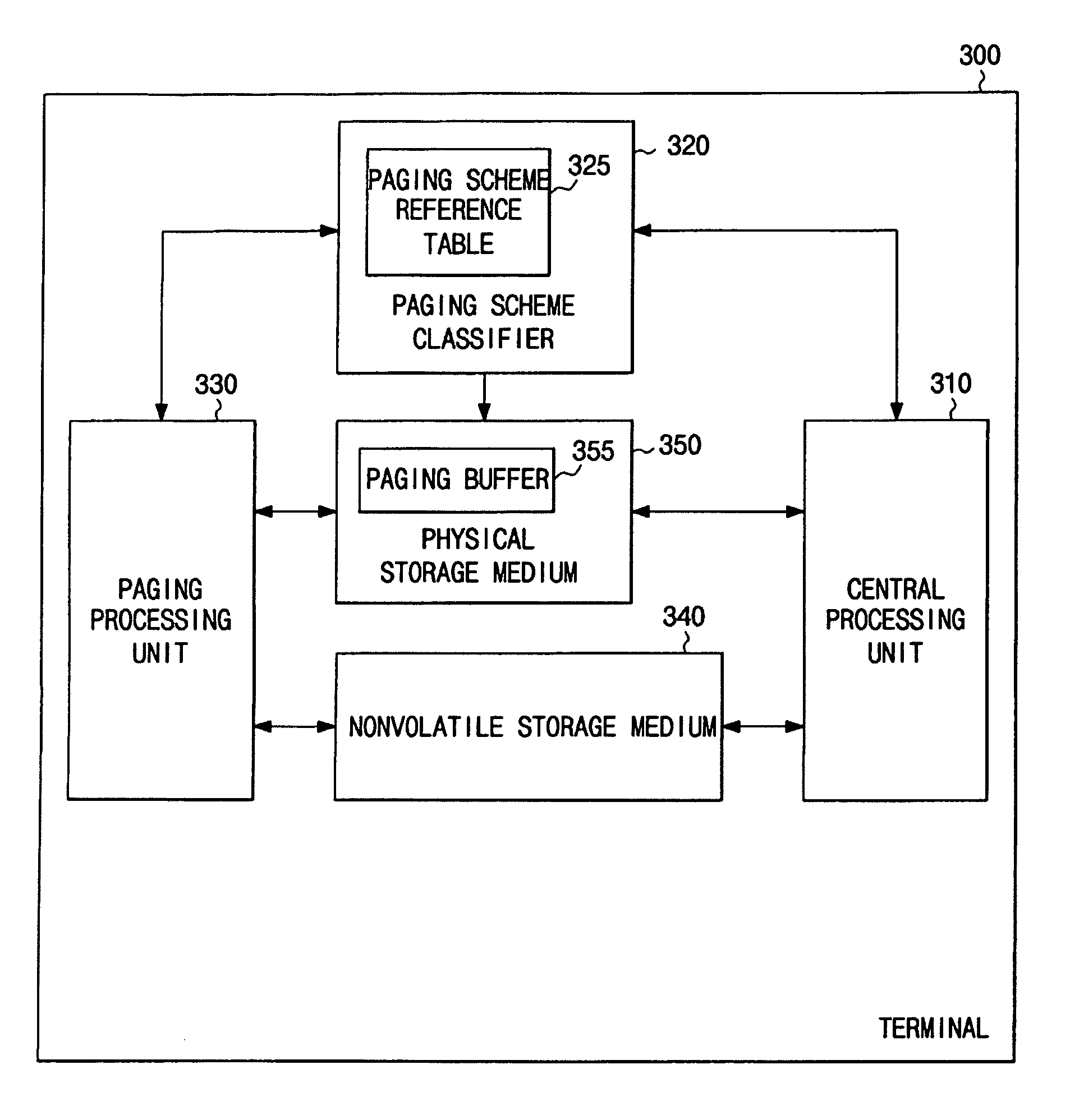

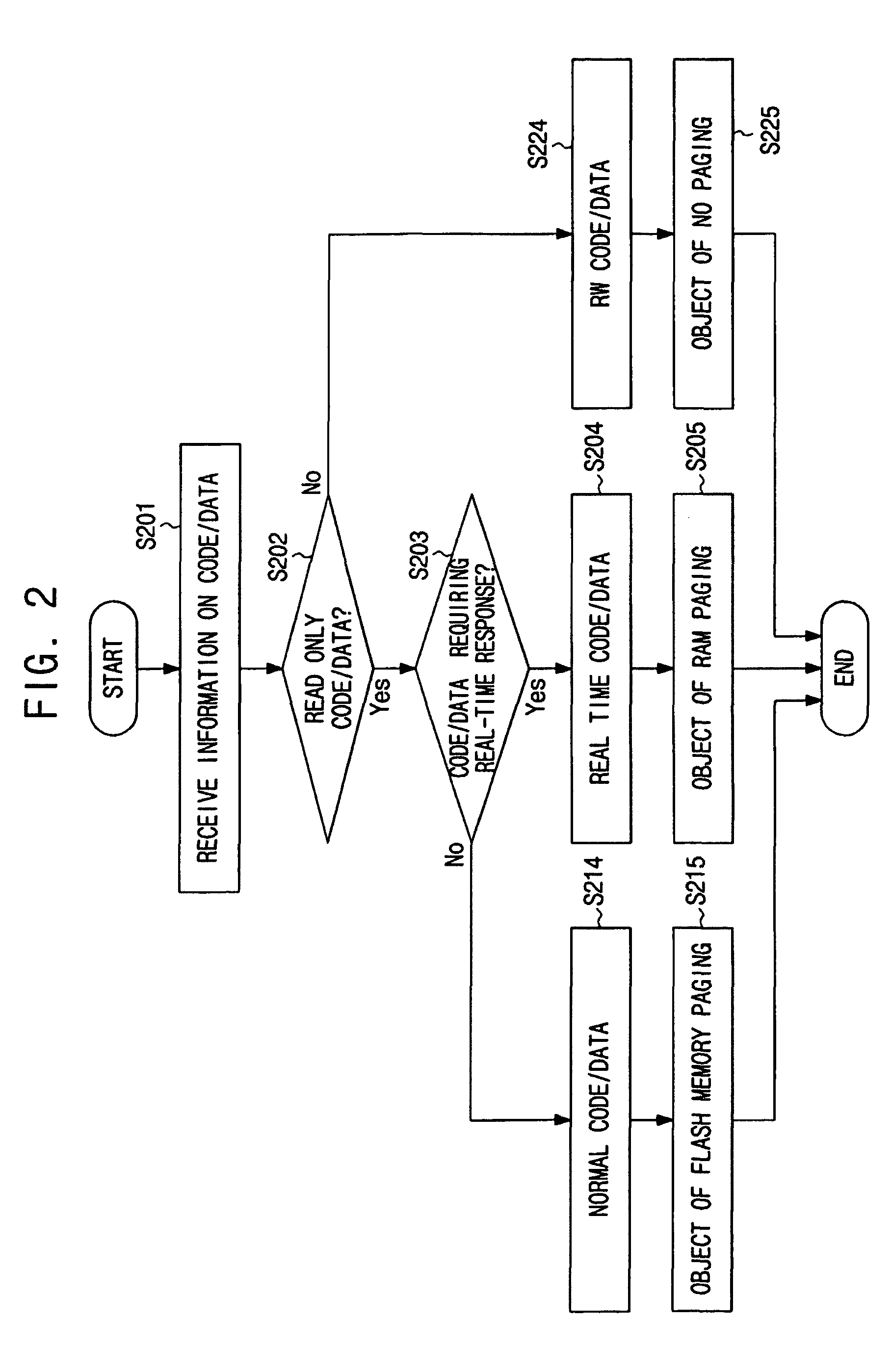

Method and terminal for demand paging at least one of code and data requiring real-time response

Inactive | US20090138655A1 | Reduce usage; Easy to use; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Time response; Random access memory

A method and terminal for demand paging at least one of code and data requiring a real-time response are provided. The method includes: splitting and compressing the code and/or data requiring a real-time response to the size of a paging buffer, and storing the compressed code and/or data in a physical storage medium; if there is a request for demand paging of that code and/or data, classifying it as an object of Random Access Memory (RAM) paging, which pages from the physical storage medium to the paging buffer; and loading the classified code and/or data into the paging buffer.

Owner:SAMSUNG ELECTRONICS CO LTD

Data compression method for supporting virtual memory management in a demand paging system

Inactive | CN101356509A | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Data compression; Intel HEX

A virtual memory management unit (306) includes a redundancy insertion module (307), which inserts redundancy into an encoded data stream to be compressed such that, after compression, each logical data block fits into a different one of a set of equal-sized physical data blocks of a given size. For example, the redundancy may take the form of no-operation (NOP) instructions, represented as a number of dummy sequences of a given length (L) inserted into the encoded data stream to be compressed, each dummy sequence being composed of a number of identical binary or hexadecimal fill-in values.

Owner:SONY ERICSSON MOBILE COMM AB

On-demand paging-in of pages with read-only file system

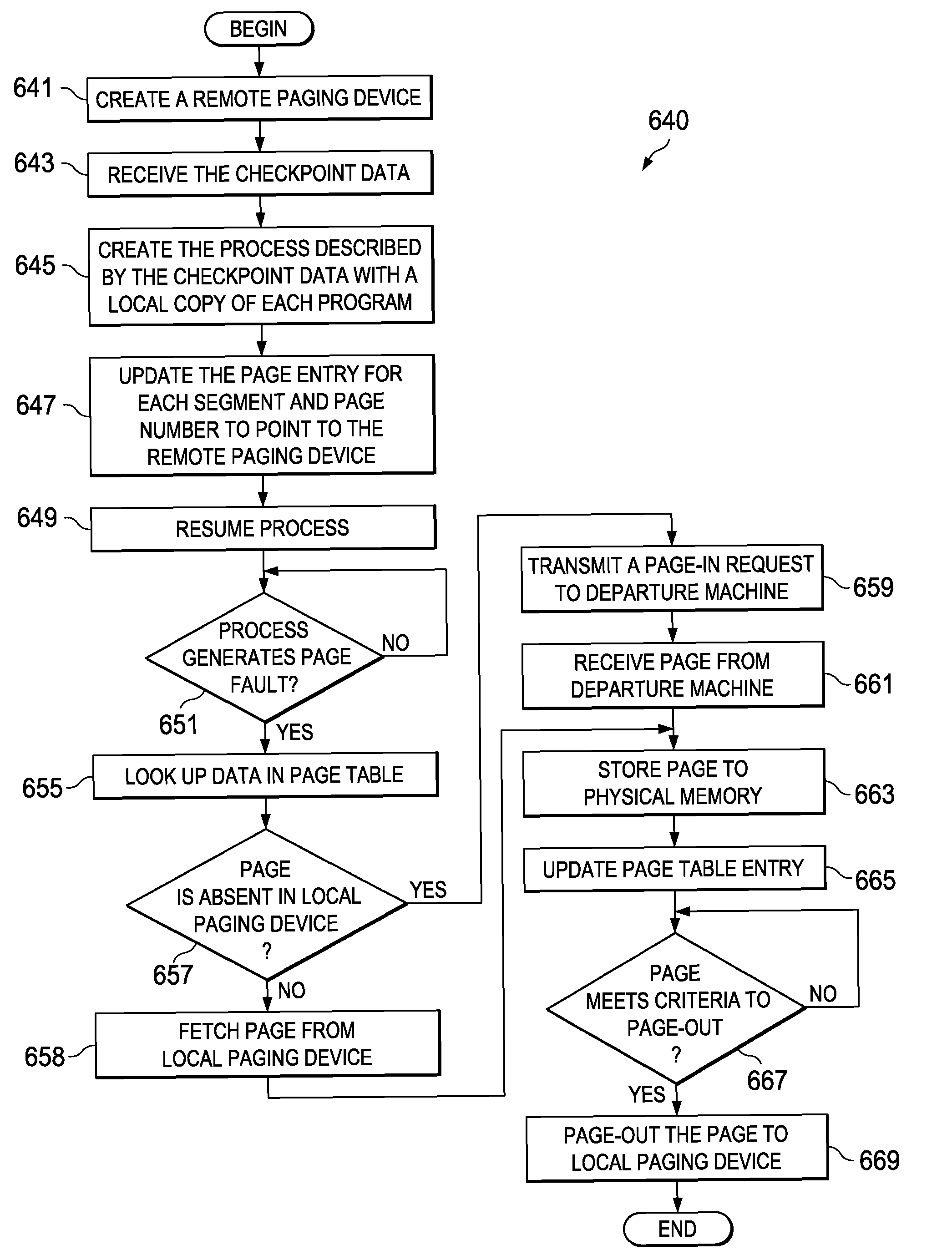

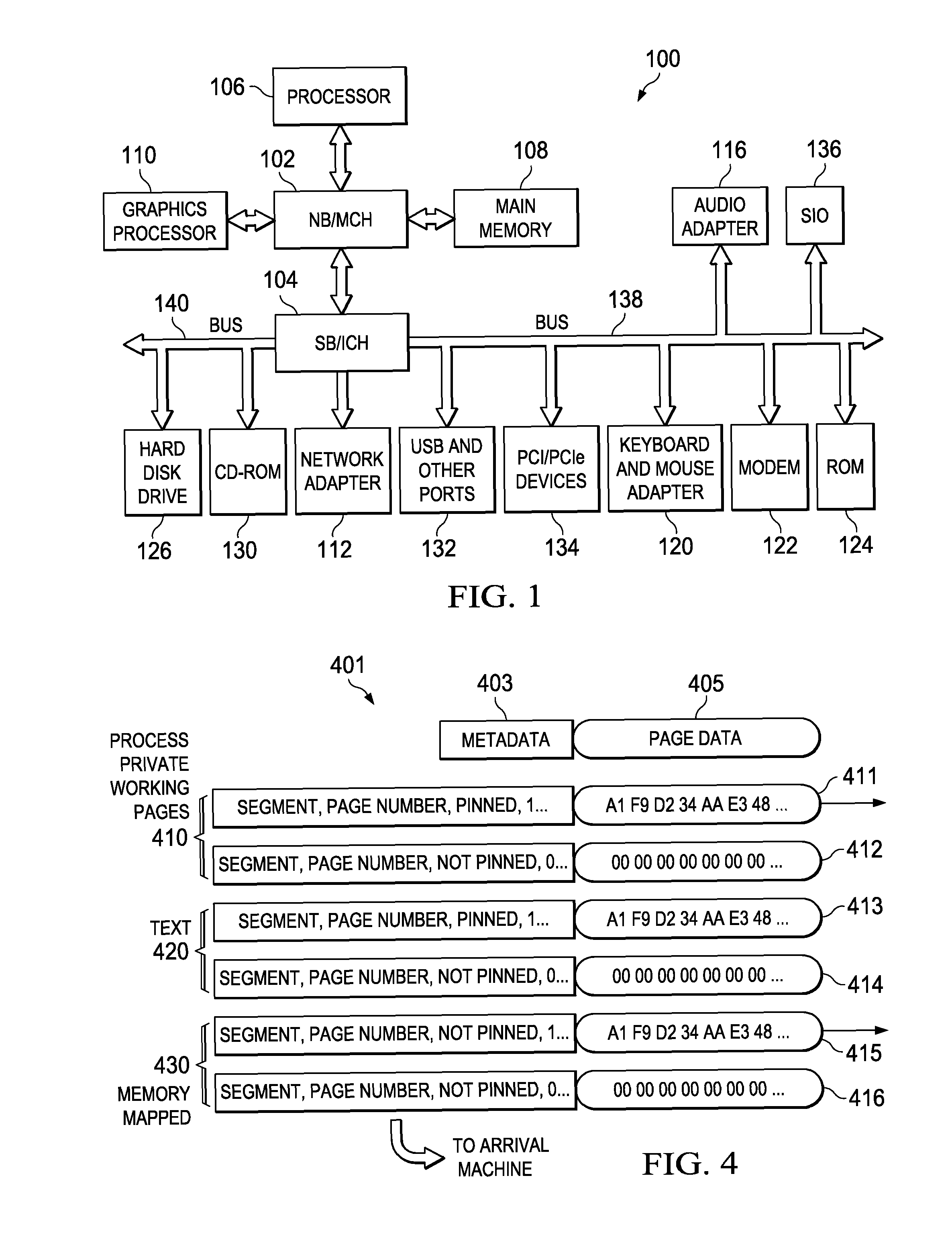

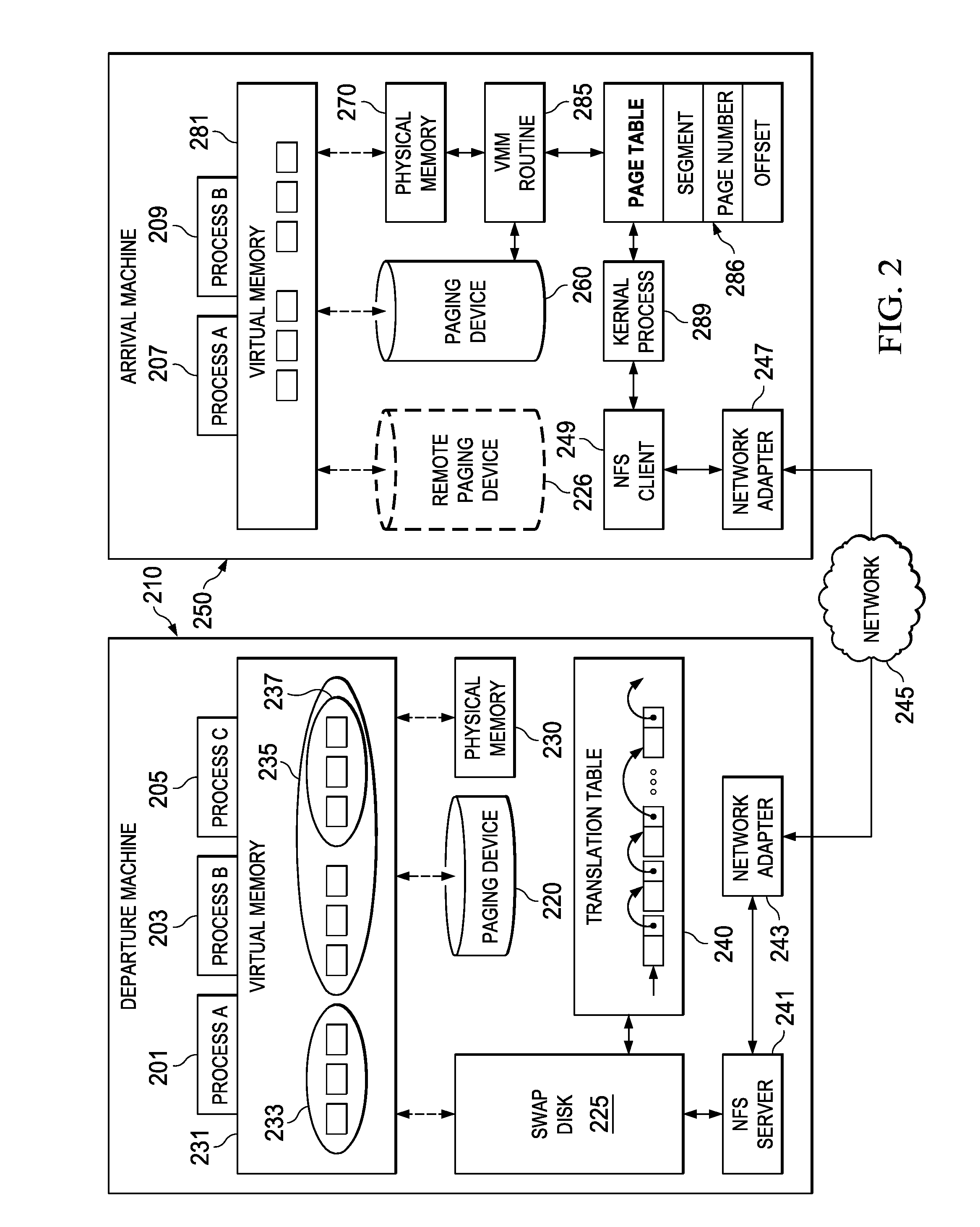

Disclosed is a computer implemented method and computer program product to resume a process at an arrival machine that is in an identical state to a frozen process on a departure machine. The arrival machine receives checkpoint data for the process from the departure machine. The arrival machine creates the process. The arrival machine updates a page table, wherein the page table comprises a segment, page number, and offset corresponding to a page of the process available from a remote paging device, wherein the remote paging device is remote from the arrival machine. The arrival machine resumes the process. The arrival machine generates a page fault for the page, responsive to resuming the process. The arrival machine looks up the page in the page table, responsive to the page fault. The arrival machine determines whether the page is absent in the arrival machine. The arrival machine transmits a page-in request to the departure machine, responsive to a determination that the page is absent. The arrival machine receives the page from the departure machine.

Owner:IBM CORP
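
The page-in protocol in the abstract above can be modeled in a few lines. In this sketch, checkpoint transfer and process creation are omitted, the two machines are plain Python objects rather than networked hosts, and the class and method names are hypothetical.

```python
class DepartureMachine:
    """Holds the frozen process's pages and serves page-in requests."""

    def __init__(self, frozen_pages):
        self.frozen_pages = frozen_pages  # (segment, page) -> bytes
        self.page_in_requests = 0

    def page_in(self, key):
        self.page_in_requests += 1
        return self.frozen_pages[key]


class ArrivalMachine:
    """Resumes the process with page-table entries that point at the
    remote paging device and fetches each page only when it faults."""

    def __init__(self, departure, checkpoint_keys):
        self.departure = departure
        # None marks a page that is available only from the remote device.
        self.page_table = {key: None for key in checkpoint_keys}

    def read(self, segment, page, offset):
        key = (segment, page)
        if key not in self.page_table:
            raise KeyError(f"no mapping for segment {segment}, page {page}")
        if self.page_table[key] is None:
            # Page fault: the page is absent locally, so request it remotely.
            self.page_table[key] = self.departure.page_in(key)
        return self.page_table[key][offset]


frozen = {(0, 0): b"hello", (0, 1): b"world"}
departure = DepartureMachine(frozen)
arrival = ArrivalMachine(departure, frozen.keys())
print(chr(arrival.read(0, 1, 0)), chr(arrival.read(0, 1, 4)))  # w d
print(departure.page_in_requests)  # 1
```

Page `(0, 1)` is requested from the departure machine only once, and page `(0, 0)` is never touched, so it is never transferred.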

System and method for implementing a demand paging jitter buffer algorithm

Inactive | US7370126B2 | Reduce disadvantages; Reduce problems; Memory systems; Input/output processes for data processing; Data segment; Parallel computing

An apparatus for providing storage is provided that includes a jitter buffer element. The jitter buffer element includes a primary jitter buffer storage that includes a primary low water mark and a primary high water mark. The jitter buffer element also includes a secondary jitter buffer storage that includes a secondary low water mark and a secondary high water mark. A first data segment within the primary jitter buffer storage is held for a processor. A playout point may advance from a bottom of the primary jitter buffer storage to the primary low water mark. When the playout point reaches the primary low water mark, the processor communicates a message for the secondary jitter buffer storage to request a second data segment up to the secondary high water mark associated with the secondary jitter buffer storage.

Owner:CISCO TECH INC

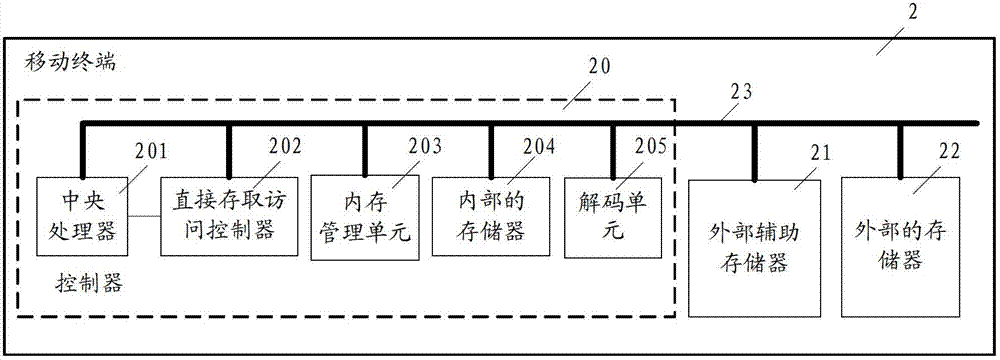

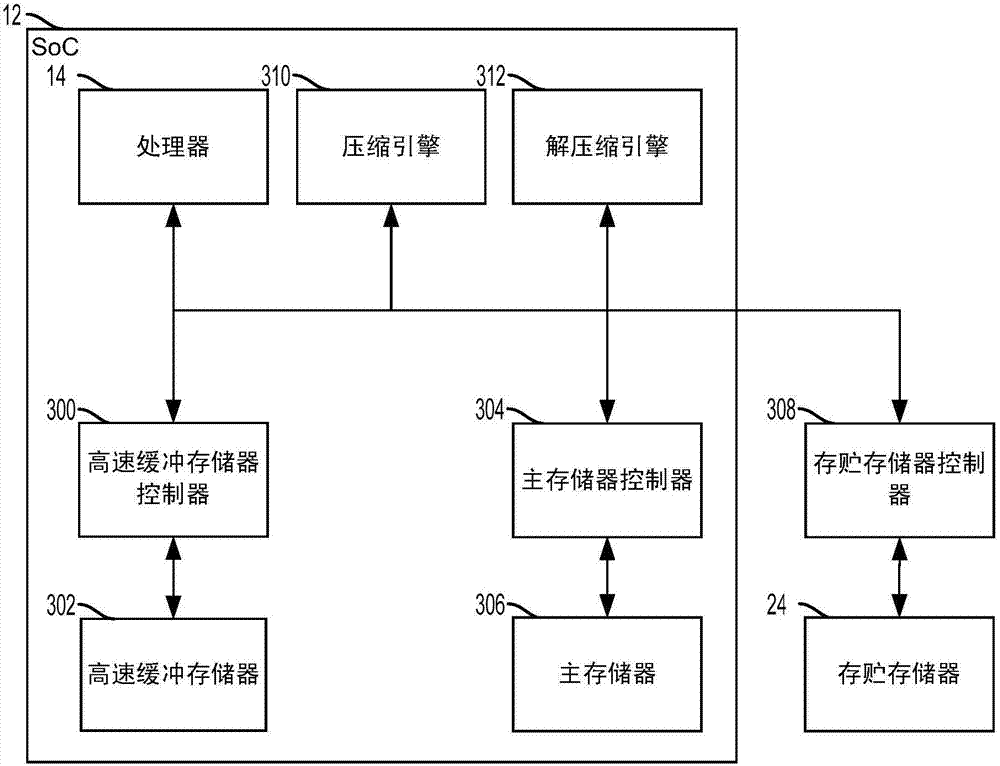

Demand paging method for mobile terminal, controller and mobile terminal

Active | CN102792296A | Increase the rate of decoding compressed files; Improve processing efficiency; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Computer terminal; Demand paging

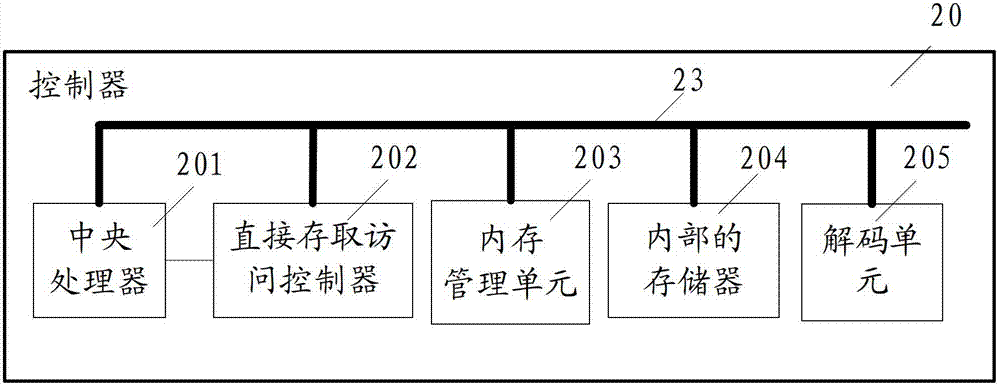

A demand paging method for a mobile terminal, a controller, and a mobile terminal are disclosed. When the mobile terminal, during operation, needs a compressed file, the method determines the storage location of the compressed file outside the controller of the mobile terminal; a decoding unit inside the controller decompresses the compressed file at that storage location; the mobile terminal saves the decompressed file to a designated part of memory, which comprises the memory inside the controller and/or the memory outside the controller; and the mobile terminal continues to operate on the basis of the decompressed file. The technical solution increases the file decompression rate of the mobile terminal, makes use of the memory resources inside the controller by saving decompressed files there, and improves the processing efficiency of demand paging on the mobile terminal.

Owner:SPREADTRUM COMM (SHANGHAI) CO LTD

Method and apparatus for reducing page replacement time in system using demand paging technique

Active | US7953953B2 | Improve system performance; Reduce replacement time; Memory addressing/allocation/relocation; Micro-instruction address formation; Term memory; Computer science

A method and apparatus for reducing page replacement time in a system using a demand paging technique are provided. The apparatus includes a memory management unit that transmits a signal indicating that a page fault has occurred, a device driver that reads the faulting page from a nonvolatile memory, and a page fault handler that searches for and secures space in memory for storing the faulting page. The search for and securing of this space are performed within a time limit calculated in advance, and part of the data to be loaded into the system's memory is stored in the nonvolatile memory.

Owner:SAMSUNG ELECTRONICS CO LTD

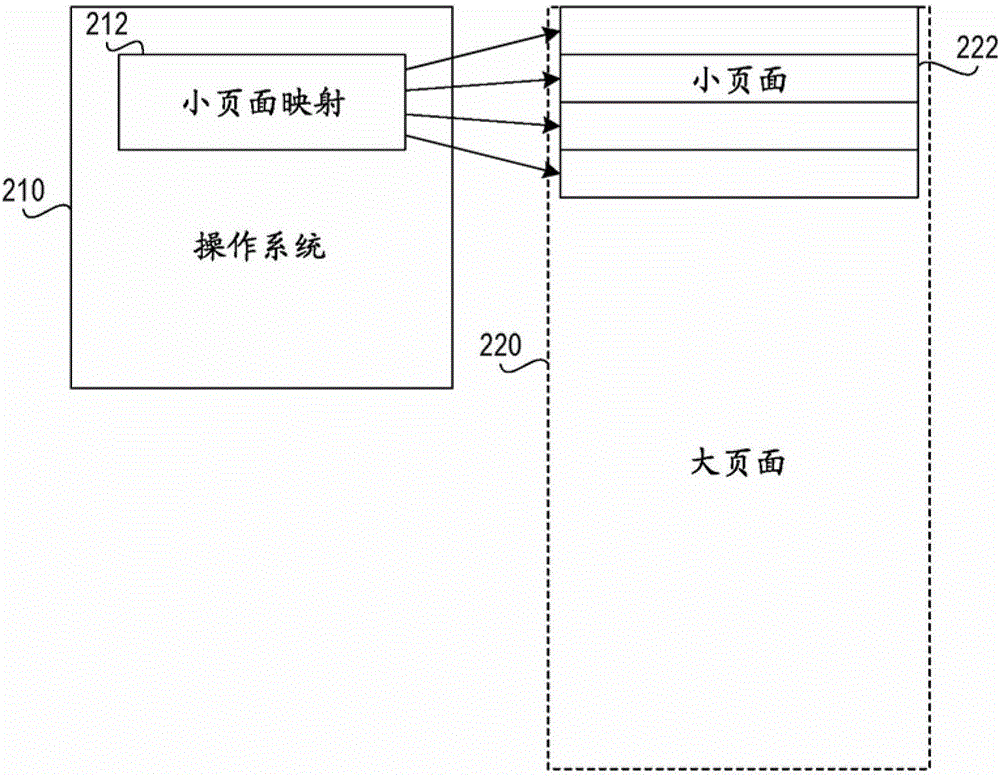

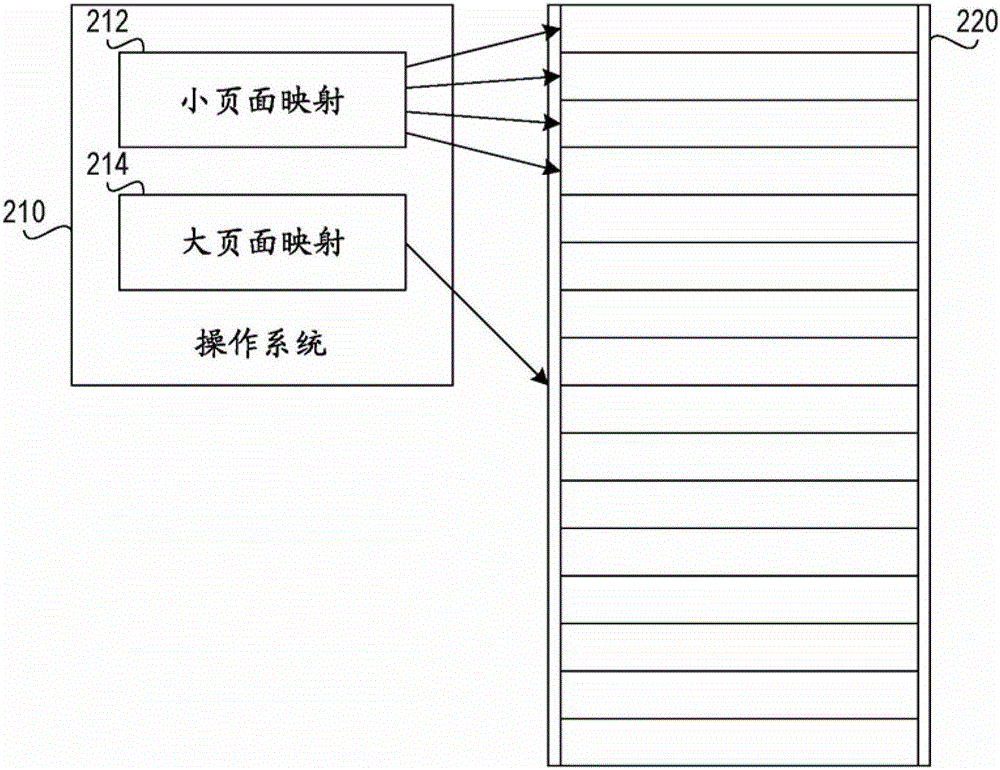

Method and device for automatic use of large pages

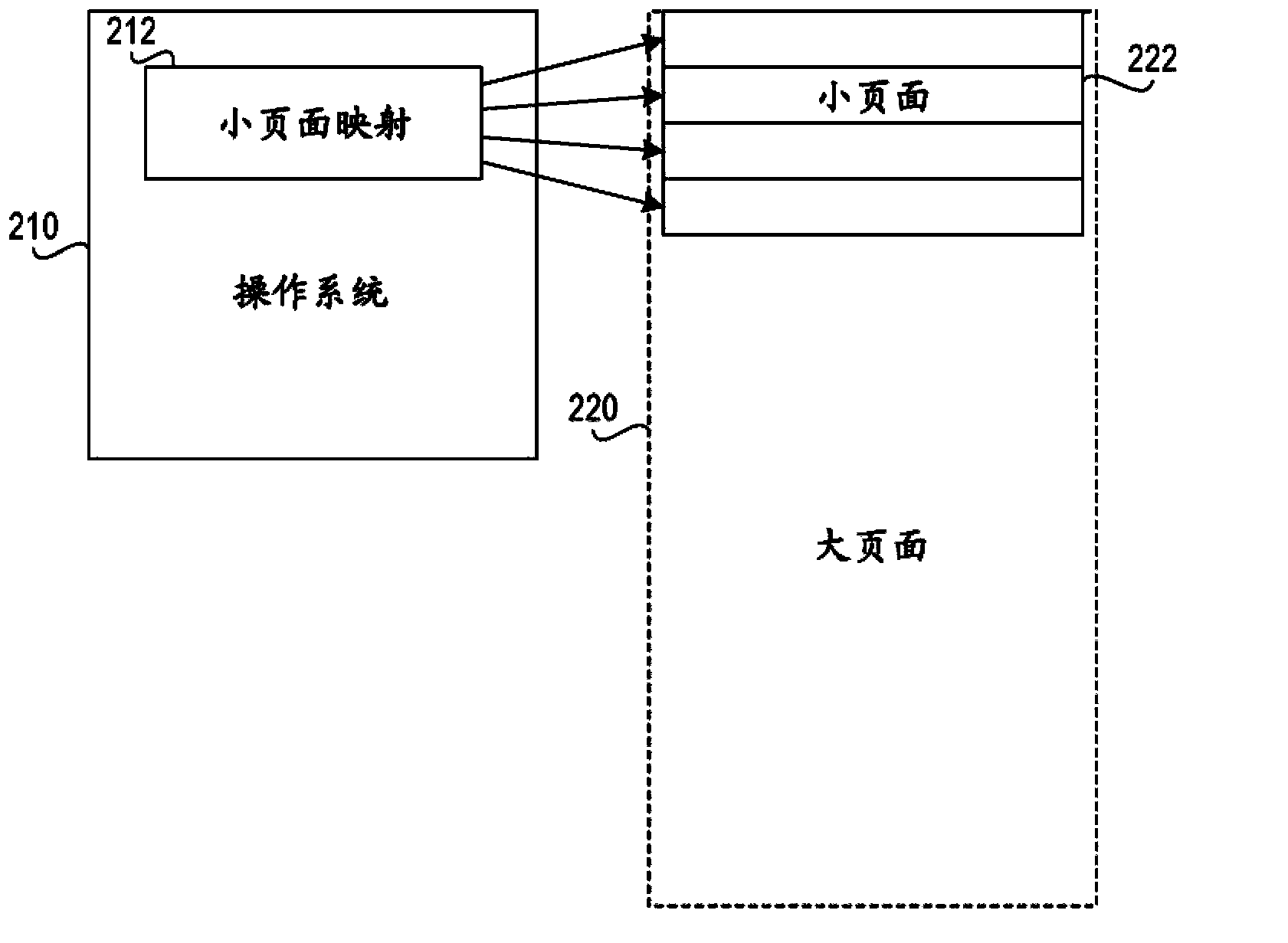

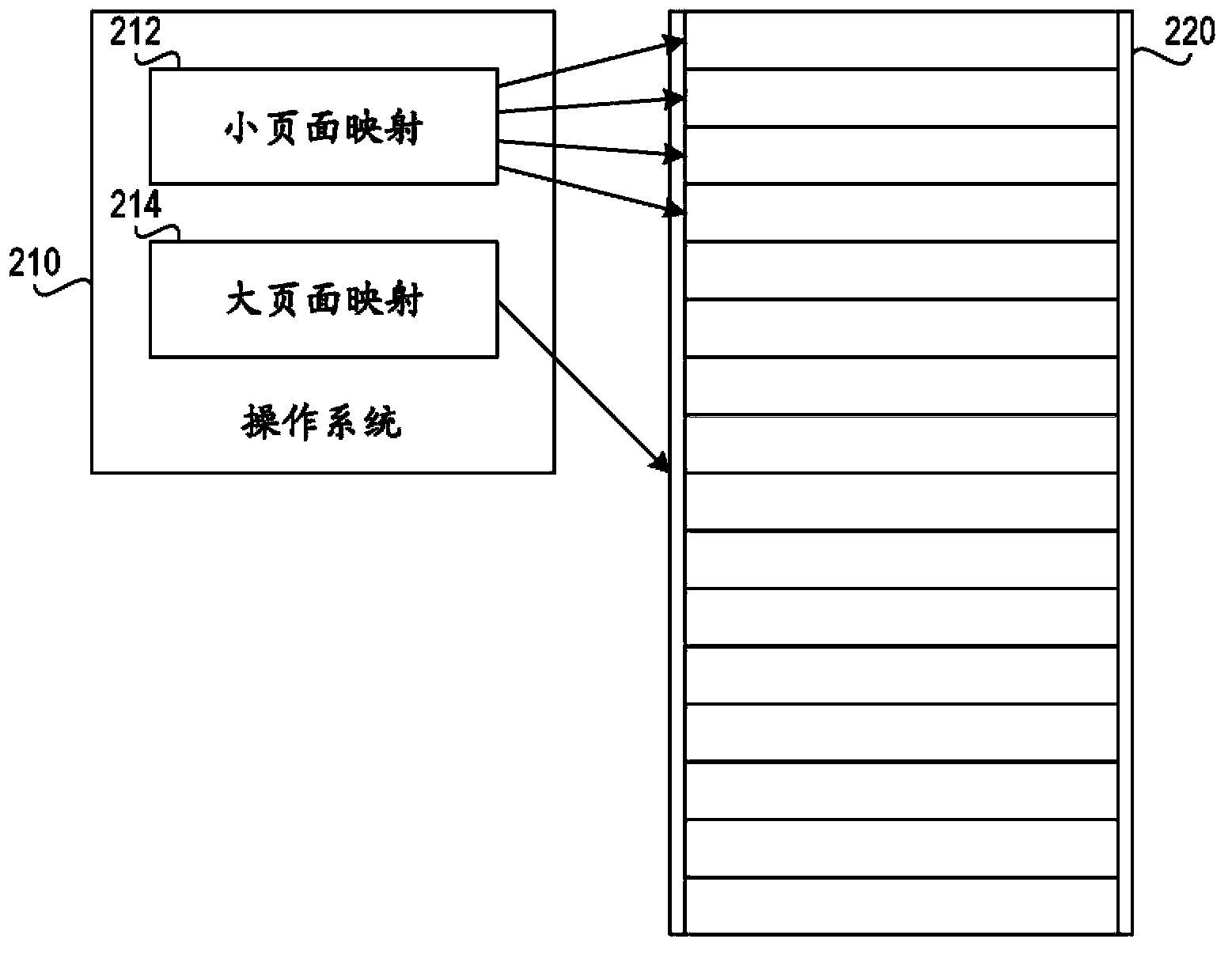

A mechanism is provided for automatic use of large pages. An operating system loader performs aggressive contiguous allocation followed by demand paging of small pages into a best-effort contiguous and naturally aligned physical address range sized for a large page. The operating system detects when the large page is fully populated and switches the mapping to use large pages. If the operating system runs low on memory, the operating system can free portions and degrade gracefully.

Owner:IBM CORP
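
A simplified model of the large-page scheme above, assuming 4 KiB small pages and 2 MiB large pages (typical x86-64 sizes, not taken from the abstract). The reserved physical range is assumed to have been allocated successfully, the low-memory fallback the abstract mentions is not modeled, and `LargePageRegion` is a hypothetical name.

```python
SMALL = 4 * 1024          # assumed small-page size
LARGE = 2 * 1024 * 1024   # assumed large-page size
PER_LARGE = LARGE // SMALL


class LargePageRegion:
    """One large-page-sized virtual range backed by a reserved, naturally
    aligned physical range that is filled one small page at a time."""

    def __init__(self, virt_base, phys_base):
        if virt_base % LARGE or phys_base % LARGE:
            raise ValueError("bases must be aligned to the large-page size")
        self.virt_base = virt_base
        self.phys_base = phys_base
        self.small_map = {}      # small-page index -> physical address
        self.large_mapped = False

    def touch(self, vaddr):
        """Access vaddr, demand-paging its small page if needed."""
        idx = (vaddr - self.virt_base) // SMALL
        if not self.large_mapped and idx not in self.small_map:
            # Place the page at its natural slot in the reserved range, so the
            # physical layout already matches a single large page.
            self.small_map[idx] = self.phys_base + idx * SMALL
            if len(self.small_map) == PER_LARGE:
                # Fully populated: replace the small mappings with one large one.
                self.small_map.clear()
                self.large_mapped = True
        return self.translate(vaddr)

    def translate(self, vaddr):
        offset = vaddr - self.virt_base
        if self.large_mapped:
            return self.phys_base + offset
        return self.small_map[offset // SMALL] + offset % SMALL


region = LargePageRegion(0x4000_0000, 0x8000_0000)
print(hex(region.touch(0x4000_0005)), region.large_mapped)  # 0x80000005 False
for i in range(PER_LARGE):
    region.touch(0x4000_0000 + i * SMALL)
print(region.large_mapped)  # True
```

Because every small page lands at the same offset it would occupy inside the large page, switching the mapping requires no copying, only replacing the small-page entries.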

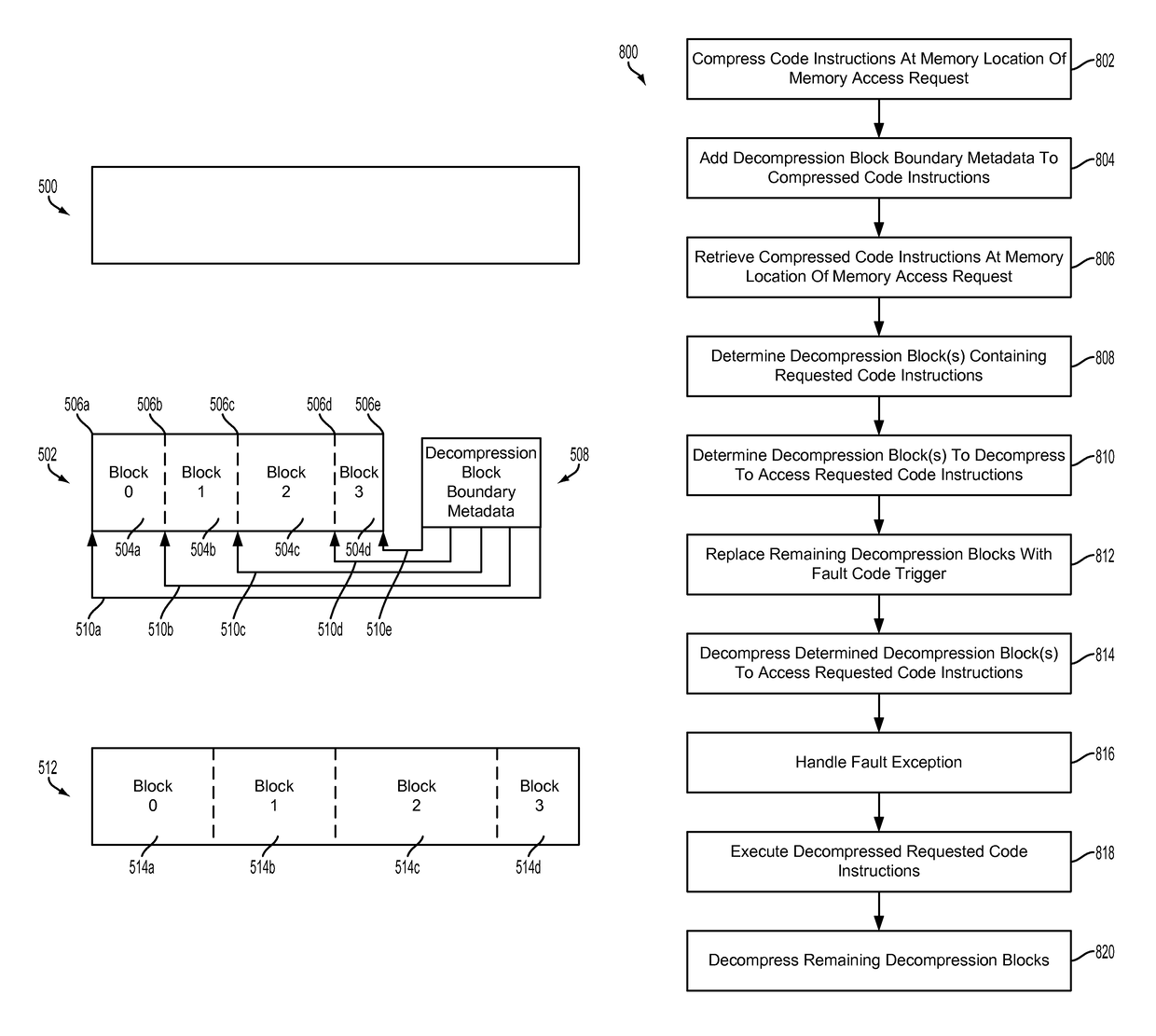

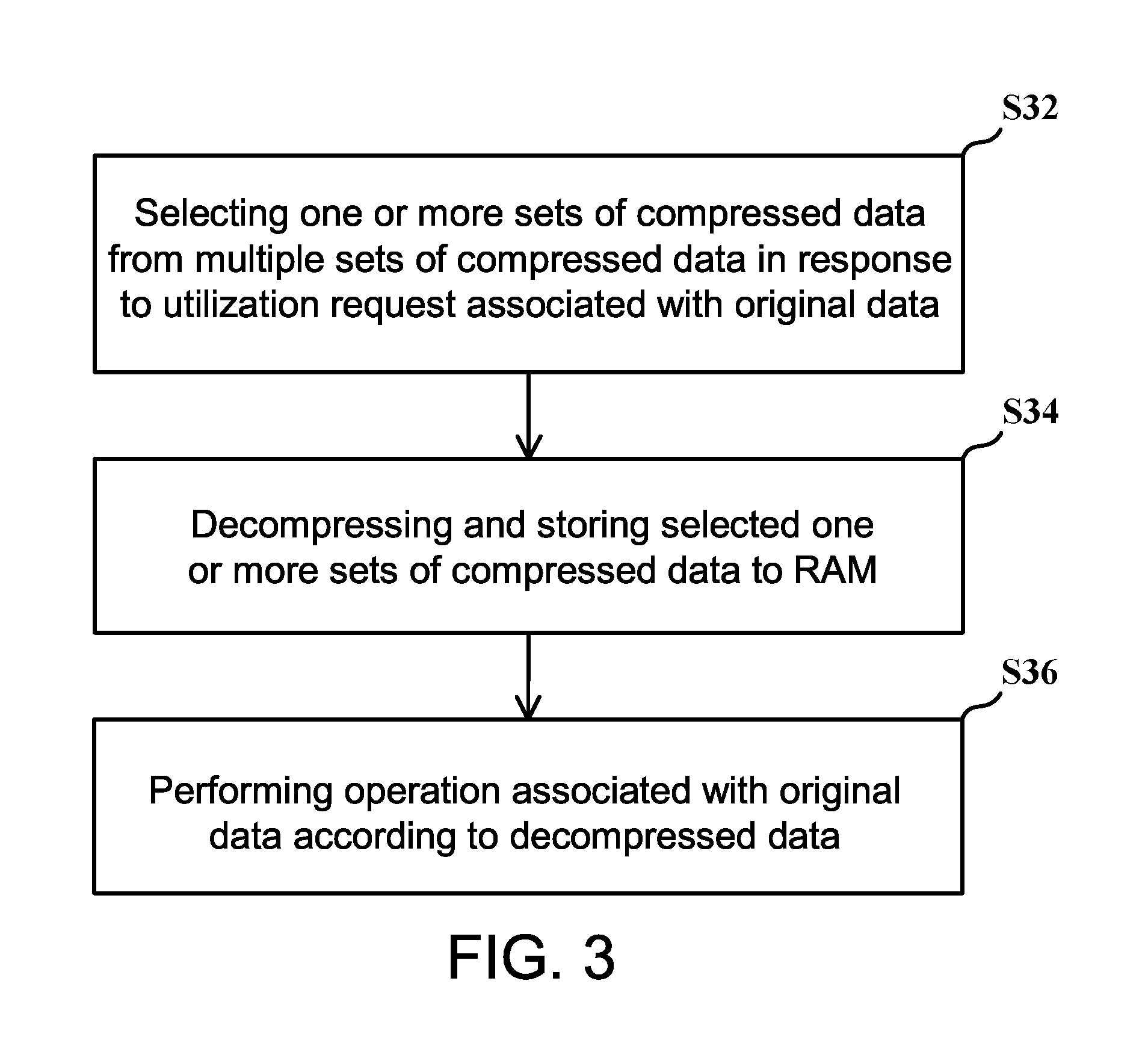

Efficient decompression locality system for demand paging

Active | US9652152B2 | Memory architecture accessing/allocation; Input/output to record carriers; Parallel computing; Demand paging

Owner:QUALCOMM INC

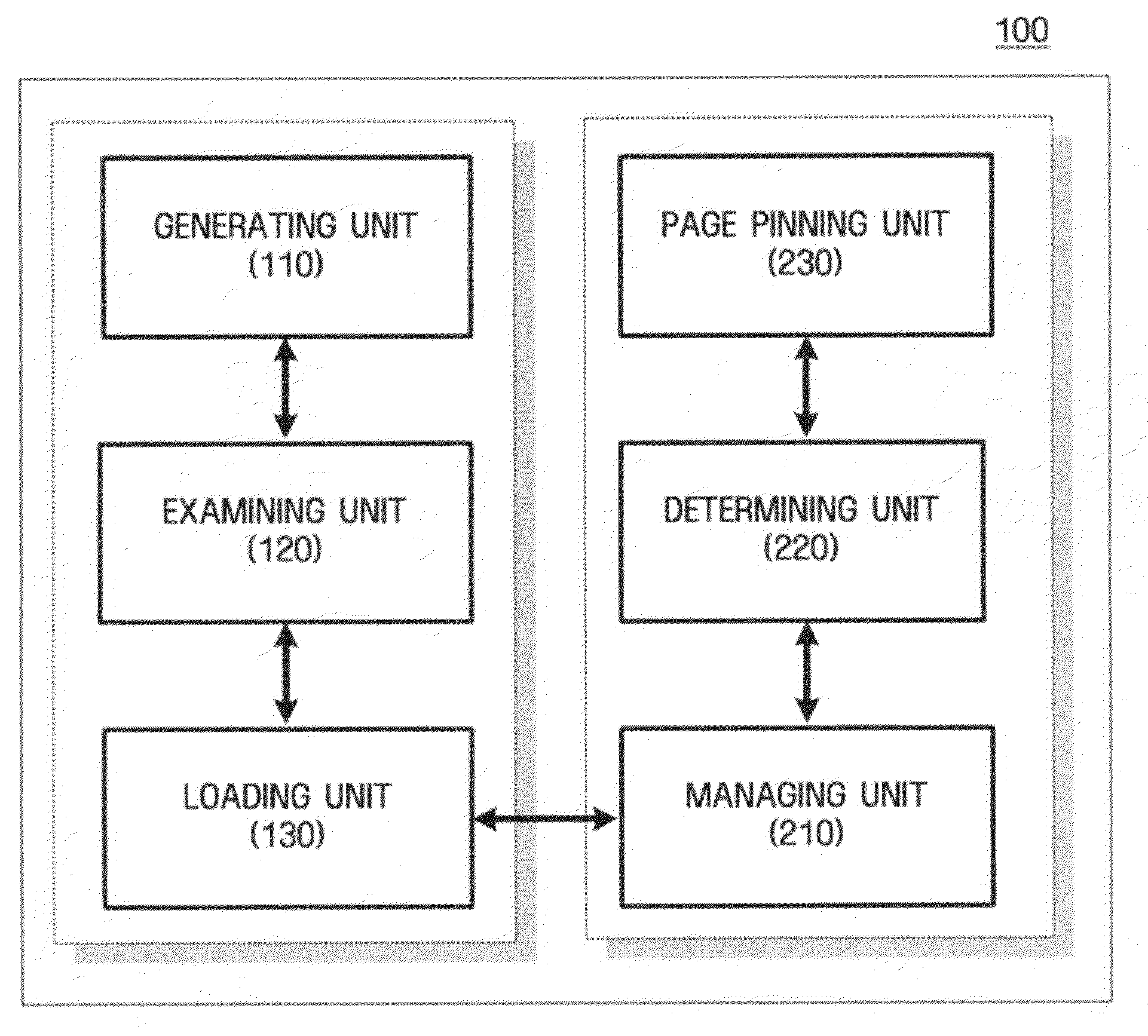

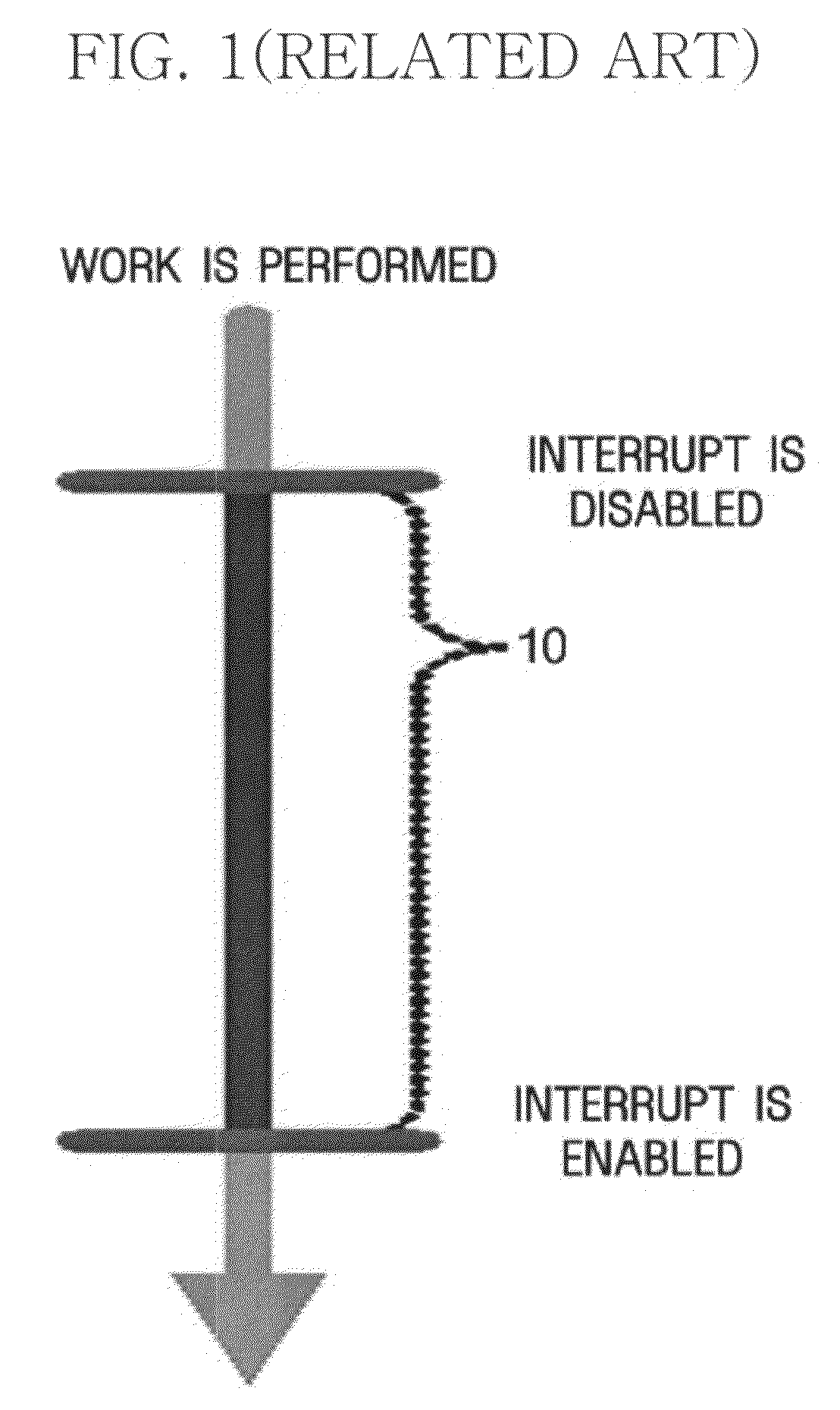

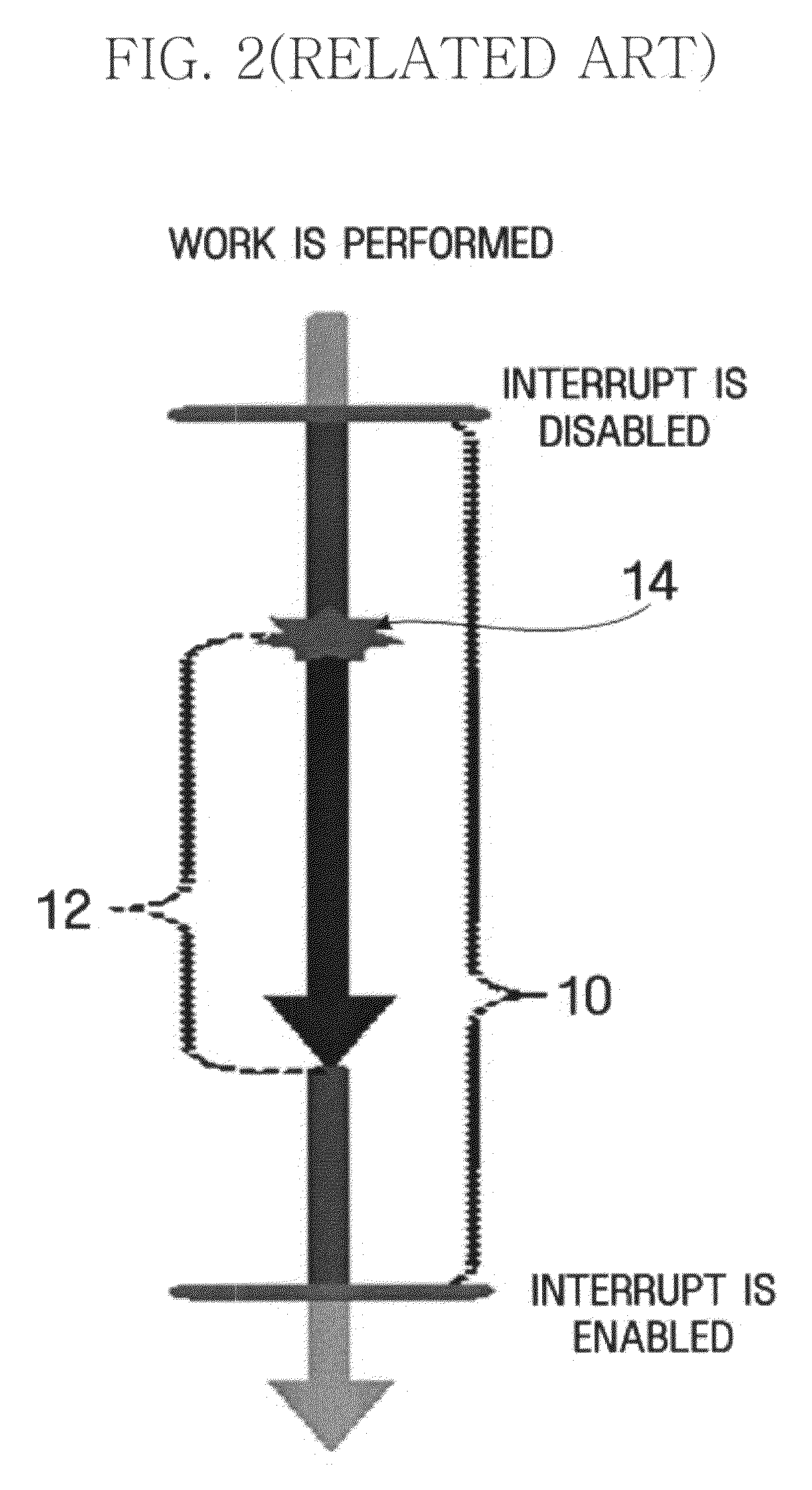

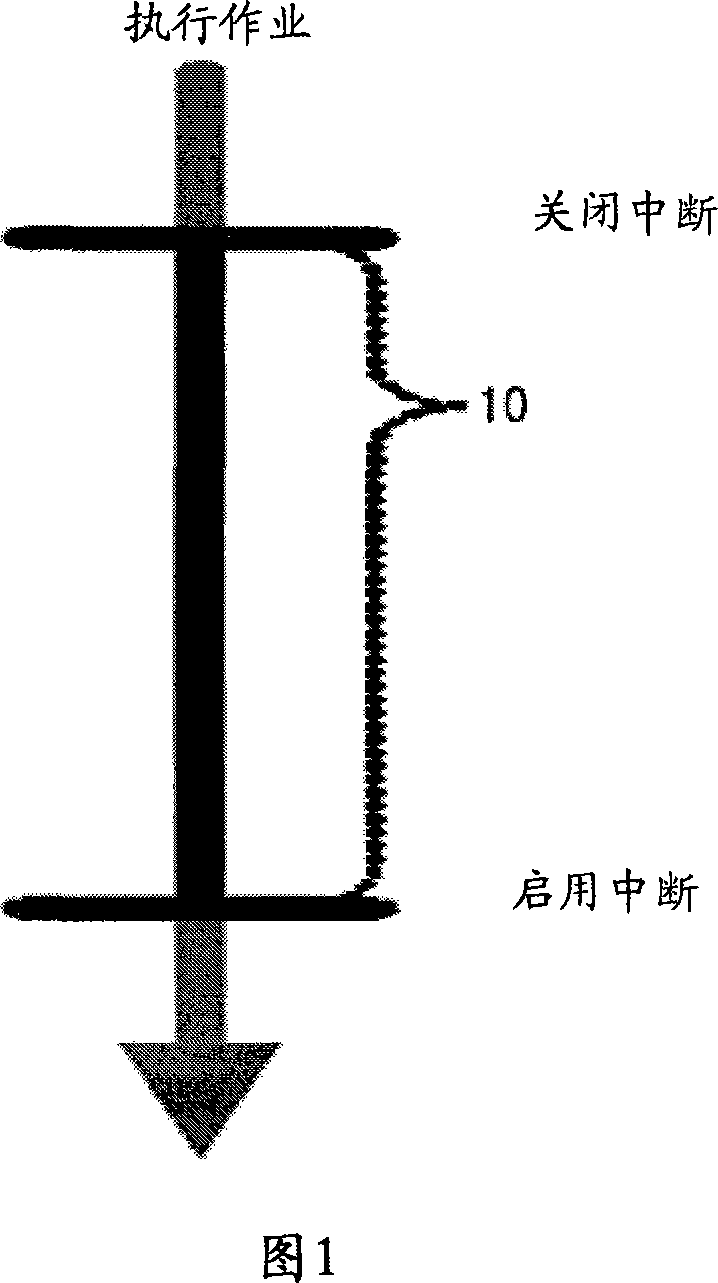

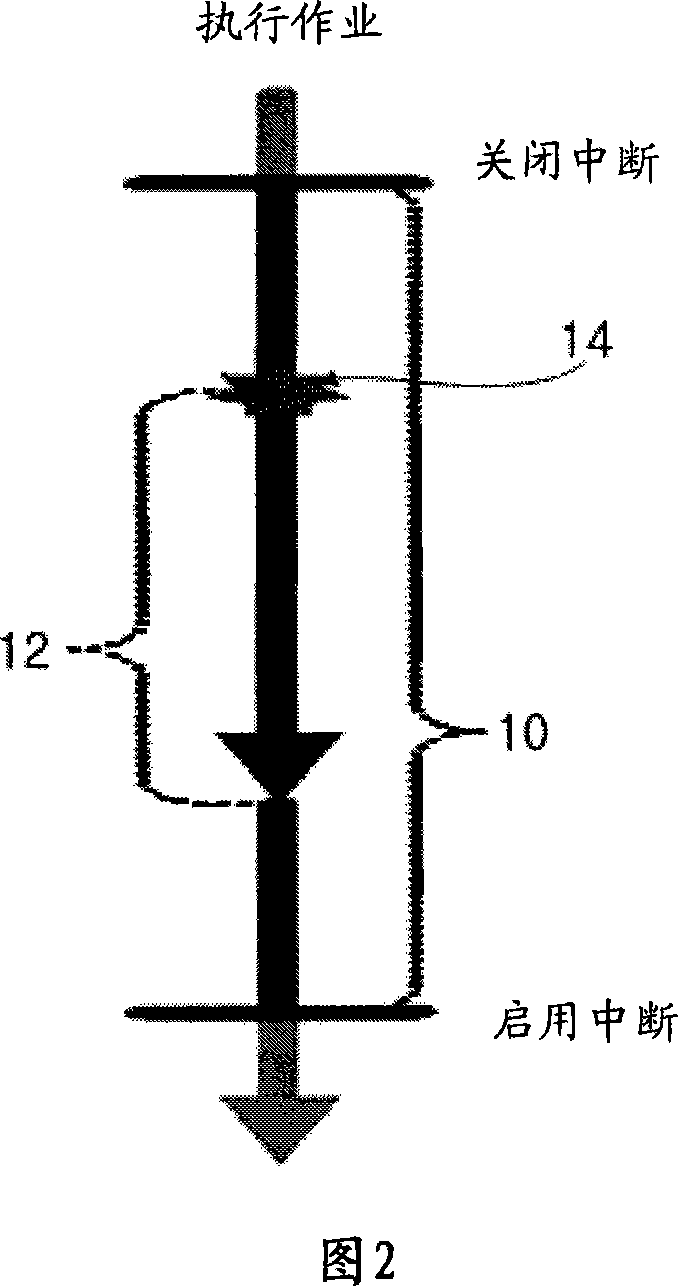

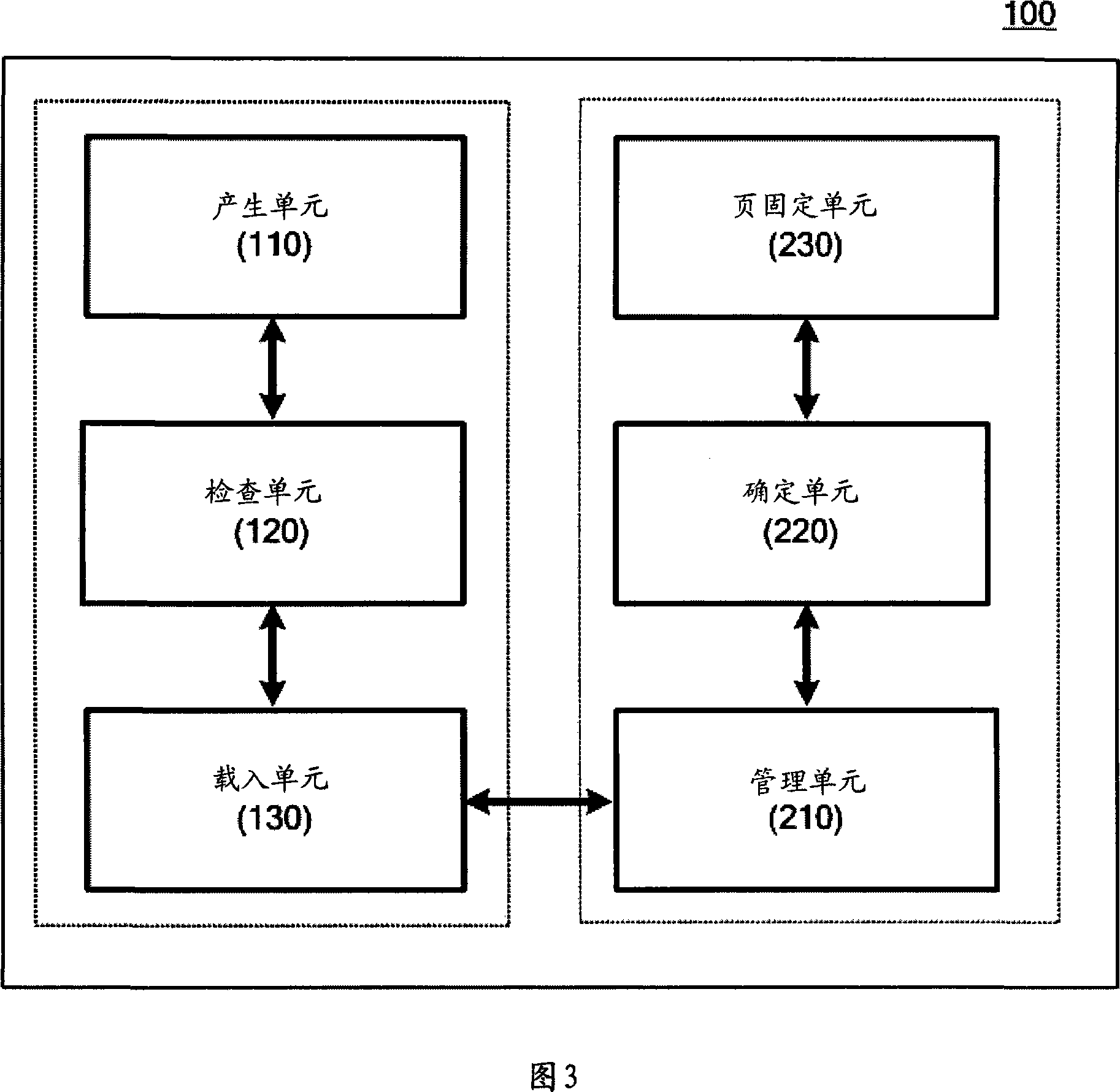

Apparatus and method for handling interrupt disabled section and page pinning apparatus and method

Inactive | US20080072009A1 | Effective application; Memory systems; Micro-instruction address formation; Computer science; Demand paging

An apparatus and method for handling an interrupt disabled section and page pinning apparatus and method are provided. An apparatus for handling an interrupt disabled section includes: a generating unit which generates a list of interrupt disabled sections, in which demand paging can occur, in order to execute a program; an examining unit which searches the generated list when the program demands to disable an interrupt and examines whether information corresponding to the interrupt disabling demand is included in the list; and a loading unit which reads out a page required to execute the program from an auxiliary storage device when the information corresponding to the interrupt disabling demand is included in the list, and loads the page into a physical memory.

Owner:SAMSUNG ELECTRONICS CO LTD
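
The list-check-then-load flow in the abstract above might look like the following sketch. The section identifiers, the per-section page lists, and the pinning bookkeeping are hypothetical; the usual motivation for this kind of scheme is that a page fault taken with interrupts disabled may not be serviceable, so the pages are loaded before interrupts go off.

```python
class InterruptDisabledSectionHandler:
    """Before interrupts are disabled, pre-load (pin) the pages of any listed
    section in which demand paging could otherwise occur."""

    def __init__(self, section_list, auxiliary_storage):
        # Hypothetical generated list: section id -> pages the section may touch.
        self.section_list = section_list
        self.auxiliary_storage = auxiliary_storage  # page -> contents
        self.physical_memory = {}
        self.pinned = set()
        self.interrupts_enabled = True

    def disable_interrupts(self, section_id):
        # Examine the list; a section not on it needs no pre-loading.
        for page in self.section_list.get(section_id, ()):
            if page not in self.physical_memory:
                self.physical_memory[page] = self.auxiliary_storage[page]
            self.pinned.add(page)  # keep it resident while interrupts are off
        self.interrupts_enabled = False

    def enable_interrupts(self, section_id):
        self.interrupts_enabled = True
        for page in self.section_list.get(section_id, ()):
            self.pinned.discard(page)


handler = InterruptDisabledSectionHandler(
    {"uart_isr": [1, 2]}, {1: "code-1", 2: "data-2", 3: "data-3"}
)
handler.disable_interrupts("uart_isr")
print(sorted(handler.physical_memory), handler.interrupts_enabled)  # [1, 2] False
```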

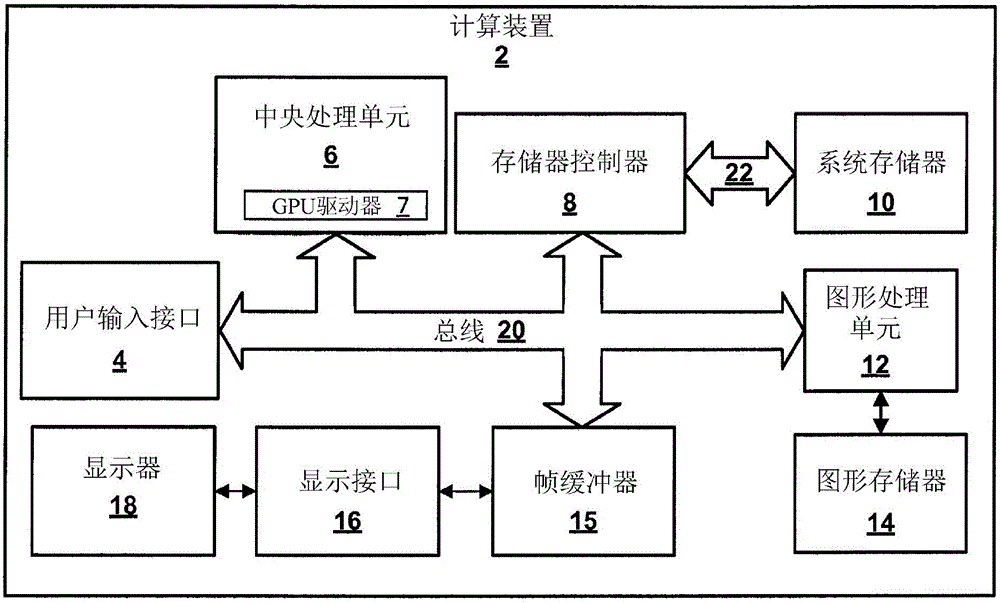

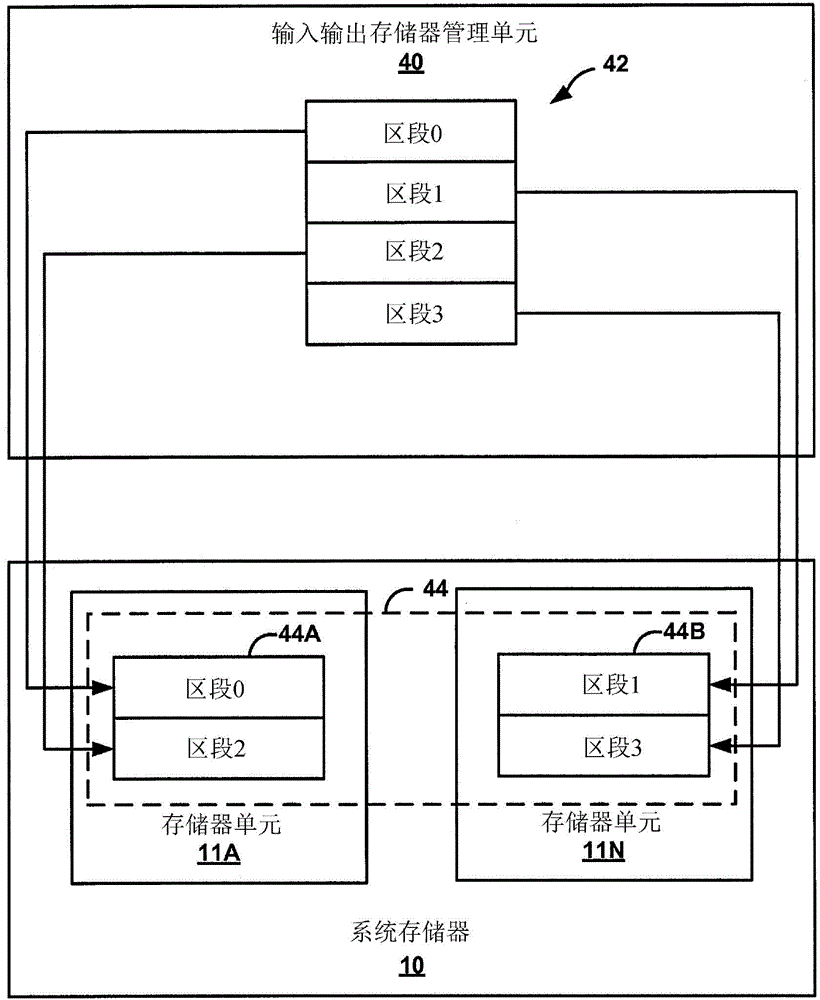

GPU memory buffer pre-fetch and pre-back signaling to avoid page-fault

This disclosure proposes techniques for demand paging for an IO device (e.g., a GPU) that utilize pre-fetch and pre-back notification event signaling to reduce latency associated with demand paging. Page faults are limited by performing the demand paging operations prior to the IO device actually requesting unbacked memory.

Owner:QUALCOMM INC

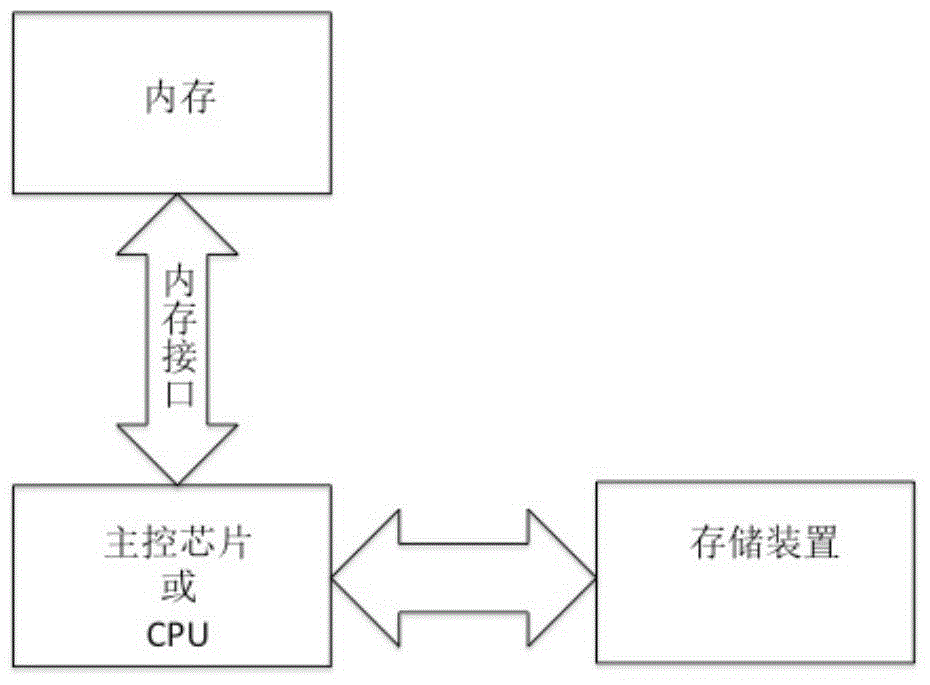

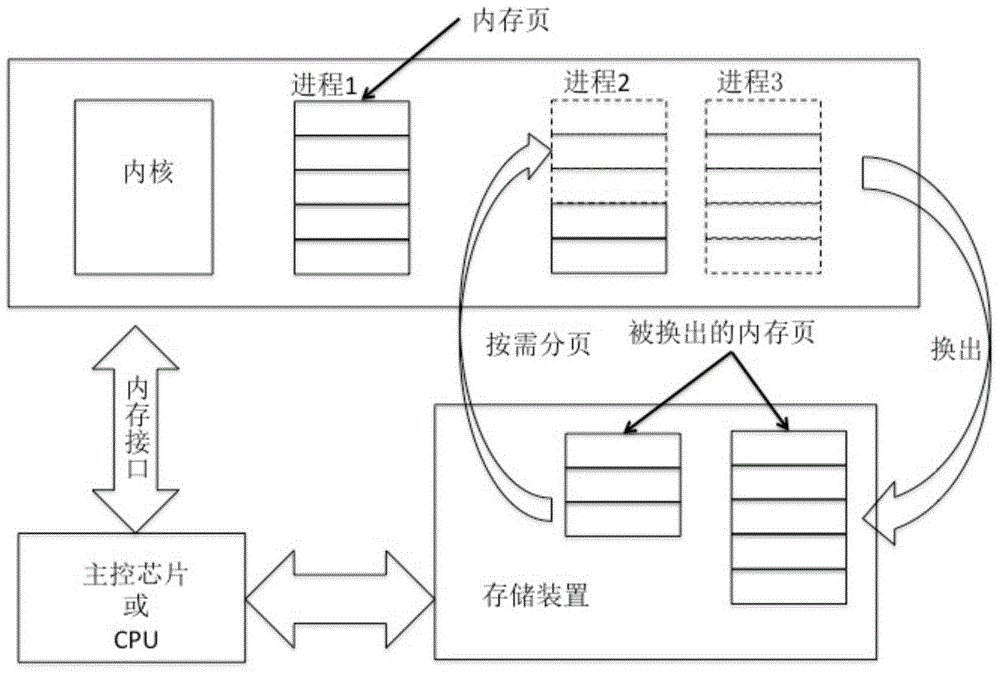

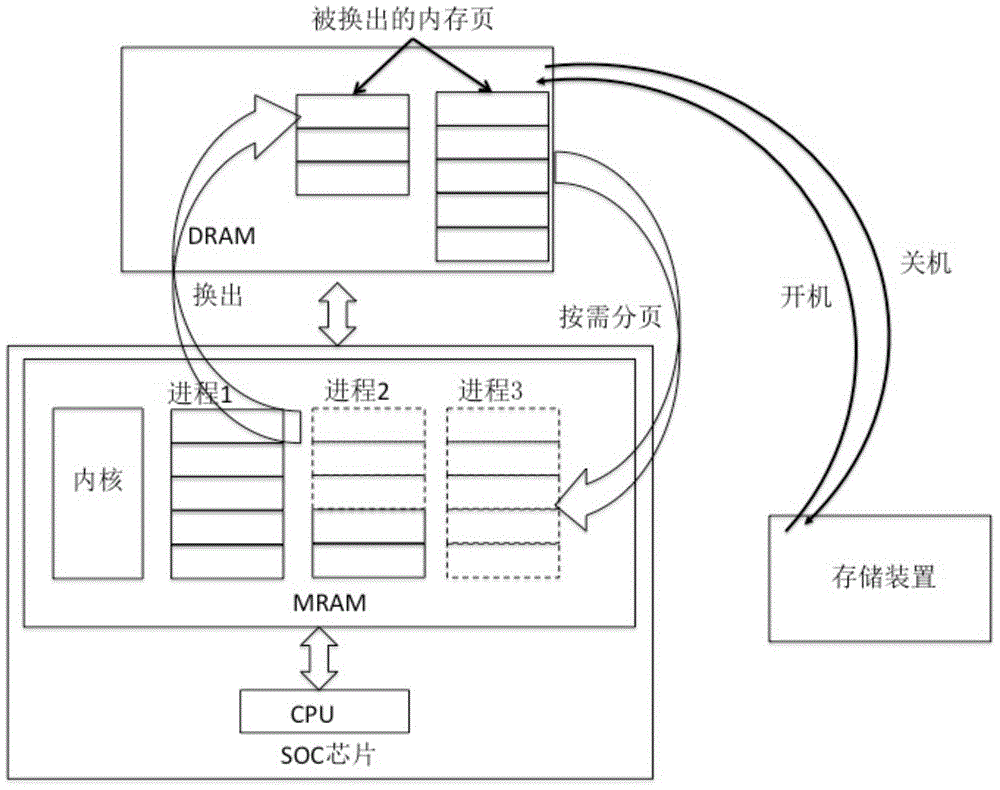

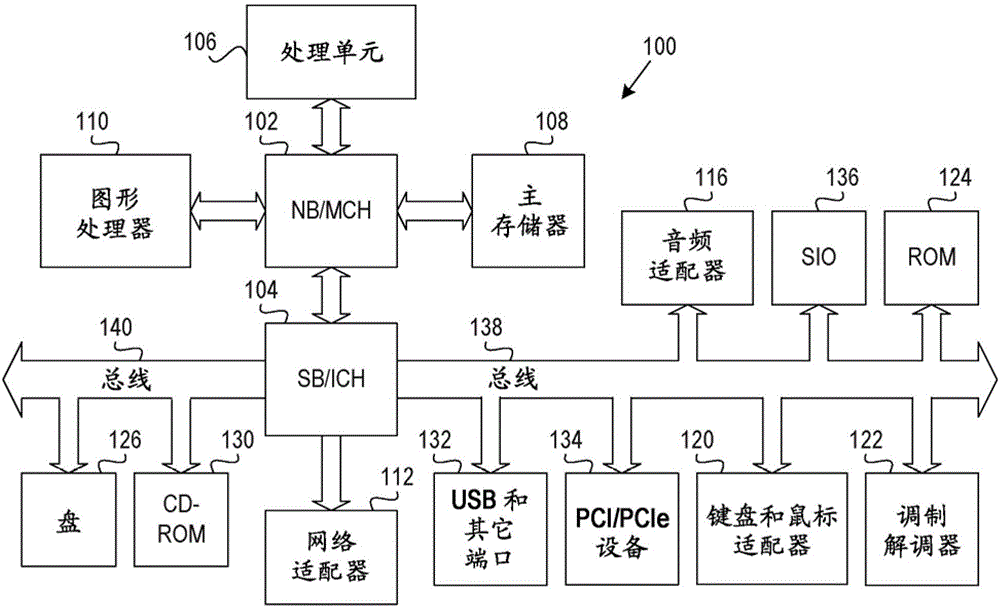

Computer system, memory scheduling method of operation system and system starting method

Inactive | CN105630407A | Input/output to record carriers; Program loading/initiating; Static random-access memory; Operational system

The invention discloses a computer system comprising a CPU, a random access memory, and a storage device. The CPU exchanges instructions and data with the random access memory through a bus and a random access memory interface, and with the storage device through a bus and an input/output interface. The computer system further comprises a nonvolatile random access memory that is integrated with the CPU and can exchange instructions and data with the CPU and the random access memory interface. The invention also discloses a memory scheduling method for an operating system: when processes in the operating system keep requesting new memory pages and the space of the nonvolatile random access memory becomes insufficient, the swap-out/demand paging mechanism commonly used in open operating systems swaps temporarily unneeded memory pages out to the peripheral random access memory instead of to the storage device.

Owner:SHANGHAI CIYU INFORMATION TECH CO LTD

Apparatus and method for handling interrupt disabled section and page pinning apparatus and method

Inactive | CN101145134A | Program control using stored programs; Memory addressing/allocation/relocation; Demand paging; Computer science

Owner:SAMSUNG ELECTRONICS CO LTD

Method and terminal for demand paging at least one of code and data requiring real-time response

Inactive | US8131918B2 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Time response; Random access memory

A method and terminal for demand paging at least one of code and data requiring a real-time response are provided. The method includes: splitting and compressing the code and/or data requiring a real-time response to the size of a paging buffer, and storing the compressed code and/or data in a physical storage medium; if there is a request for demand paging of that code and/or data, classifying it as an object of Random Access Memory (RAM) paging, which pages from the physical storage medium to the paging buffer; and loading the classified code and/or data into the paging buffer.

Owner:SAMSUNG ELECTRONICS CO LTD

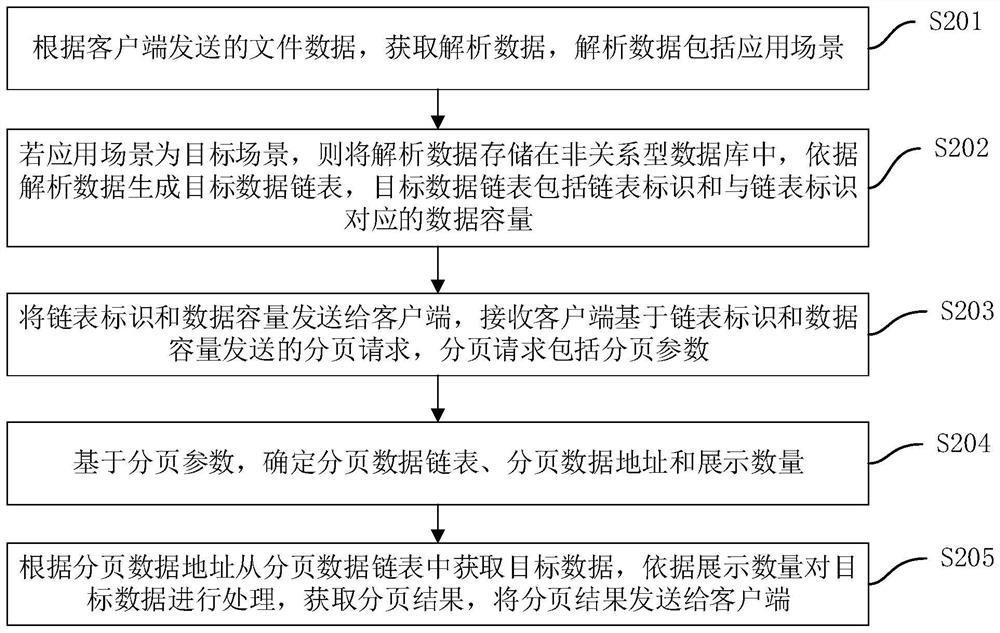

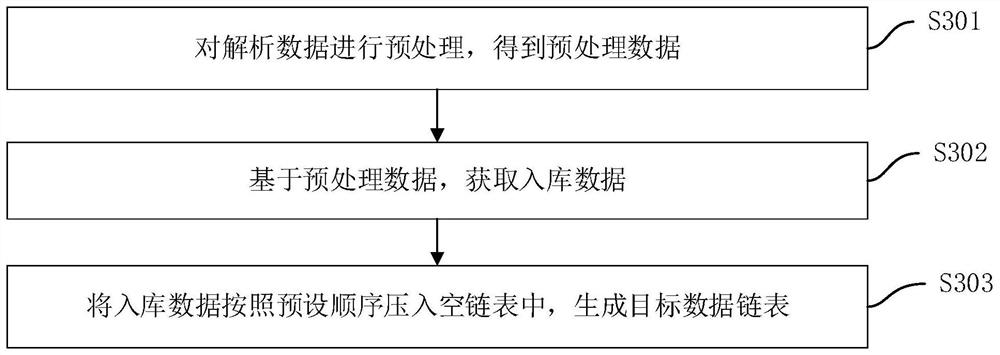

Data paging method and device, computer equipment and storage medium

Pending | CN112613271A | Quick show; Easy to view; Natural language data processing; Other databases indexing; Data display; Data pack

The invention relates to the technical field of data display and discloses a data paging method and device, computer equipment, and a storage medium. The data paging method comprises: obtaining analysis data from file data transmitted by a client, the analysis data including an application scenario; if the application scenario is a target scenario, storing the analysis data in a non-relational database and generating a target data linked list from the analysis data, the target data linked list including a linked-list identifier and the data capacity corresponding to that identifier; sending the linked-list identifier and the data capacity to the client, and receiving a paging request that the client sends based on them, the paging request including paging parameters; determining, from the paging parameters, a paging data linked list, paging data addresses, and a display quantity; and obtaining target data from the paging data linked list according to the paging data addresses, processing the target data according to the display quantity to obtain a paging result, and sending the paging result to the client. This enables fast paging of data in the target scenario.

Owner:深圳壹账通创配科技有限公司

Demand paging method and method for inputting related page information into page

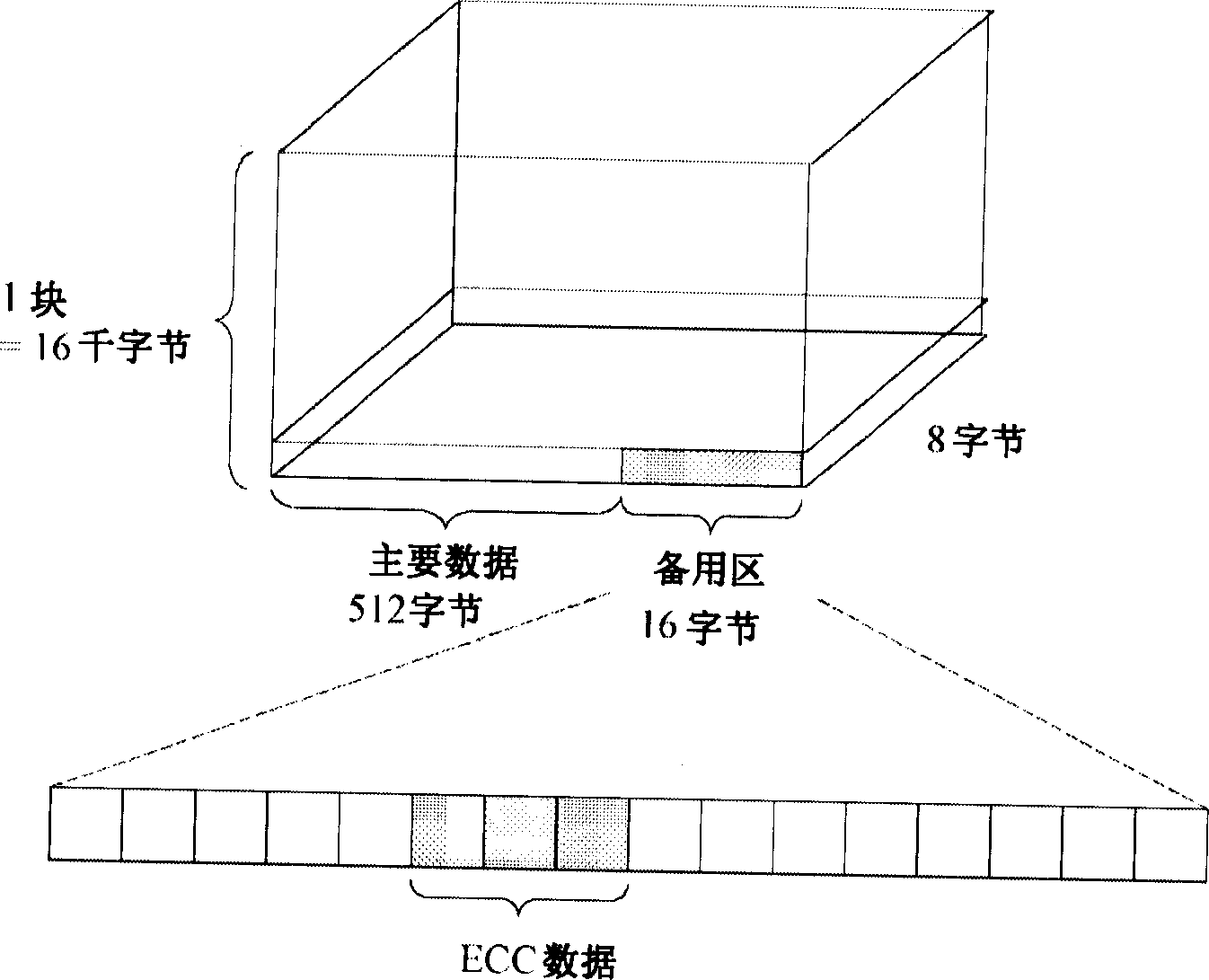

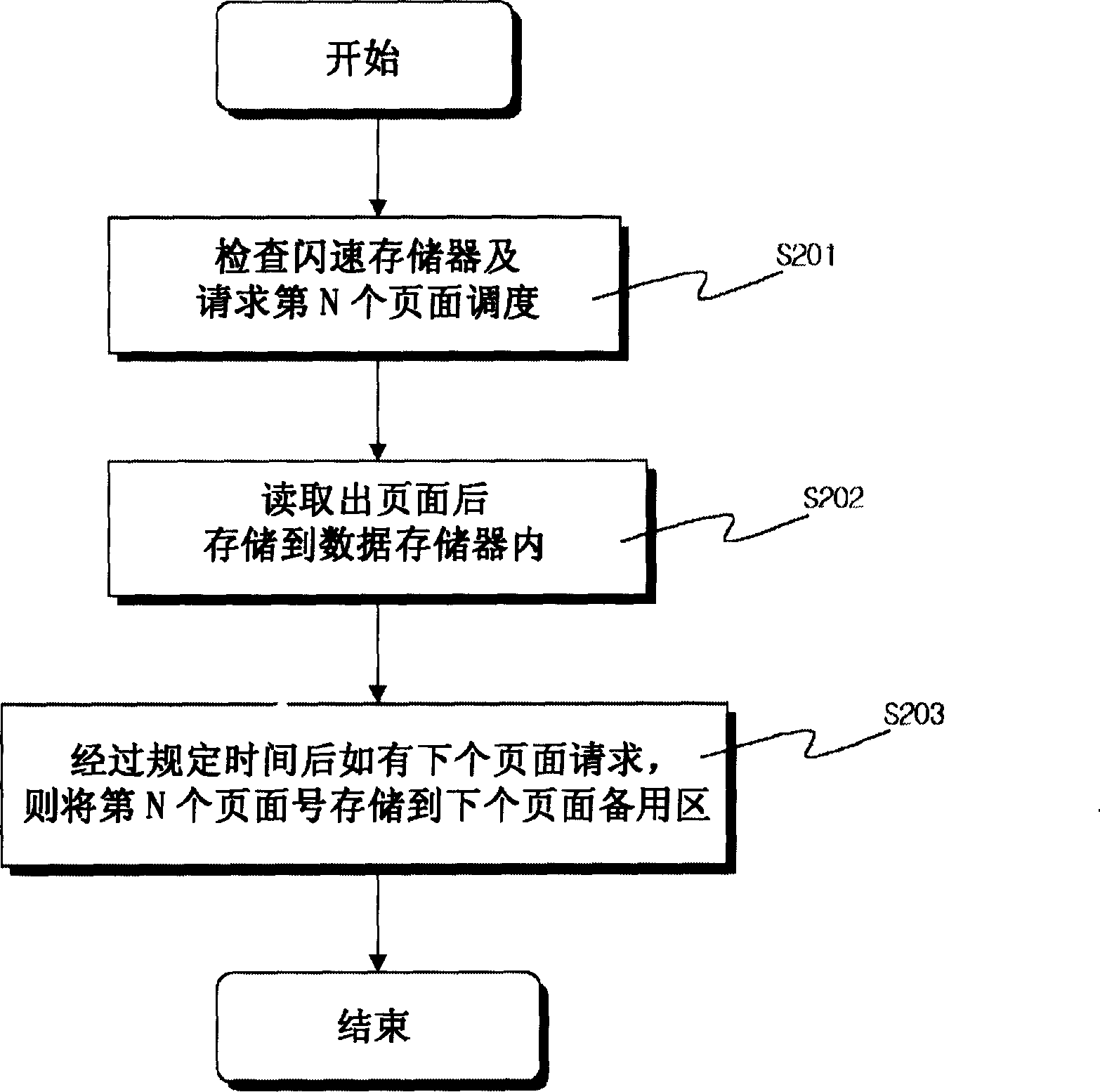

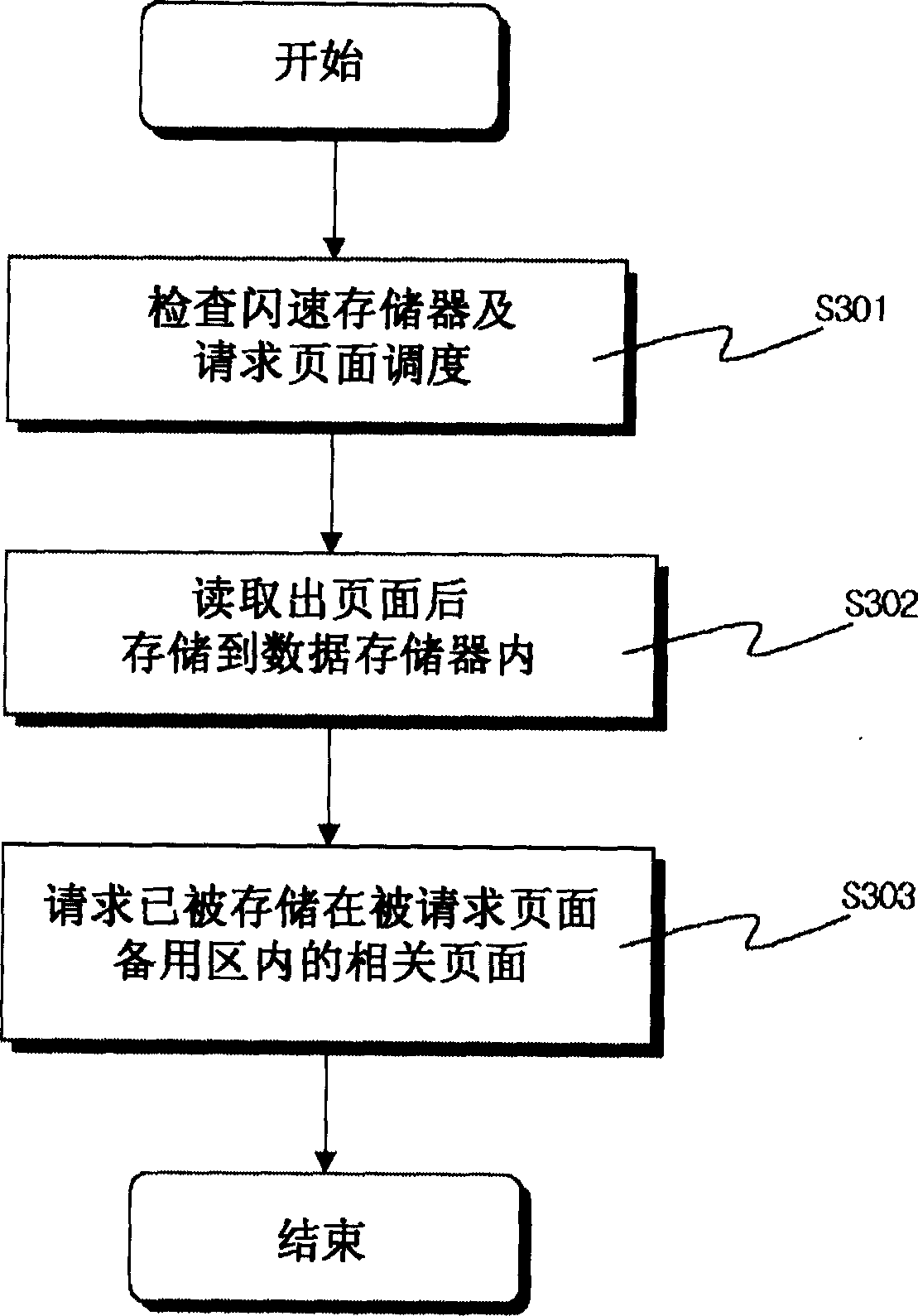

Inactive | CN1881188A | Save associated information; Memory addressing/allocation/relocation; Data memory; Demand paging

The invention relates to a demand paging method for a personal mobile terminal, comprising: (a) the central processor checks data in the flash memory and requests demand paging; (b) the related page is stored from the flash memory into the data memory; and (c) the page number stored in the related page is checked and the page is stored in the data memory.

Owner:LG ELECTRONIC (HUIZHOU) CO LTD

Efficient decompression locality system for demand paging

Active | CN107077423A | Memory architecture accessing/allocation; Input/output to record carriers; Parallel computing; Demand paging

Owner:QUALCOMM INC

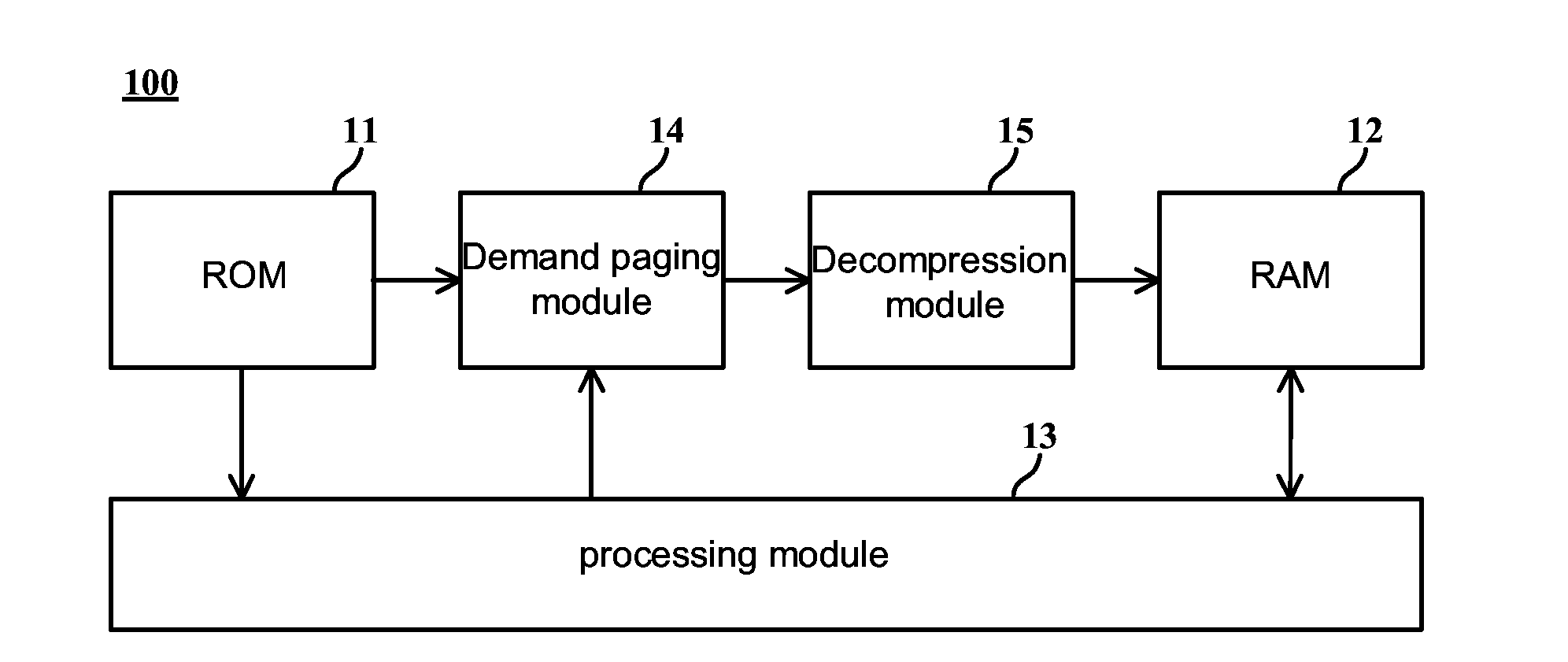

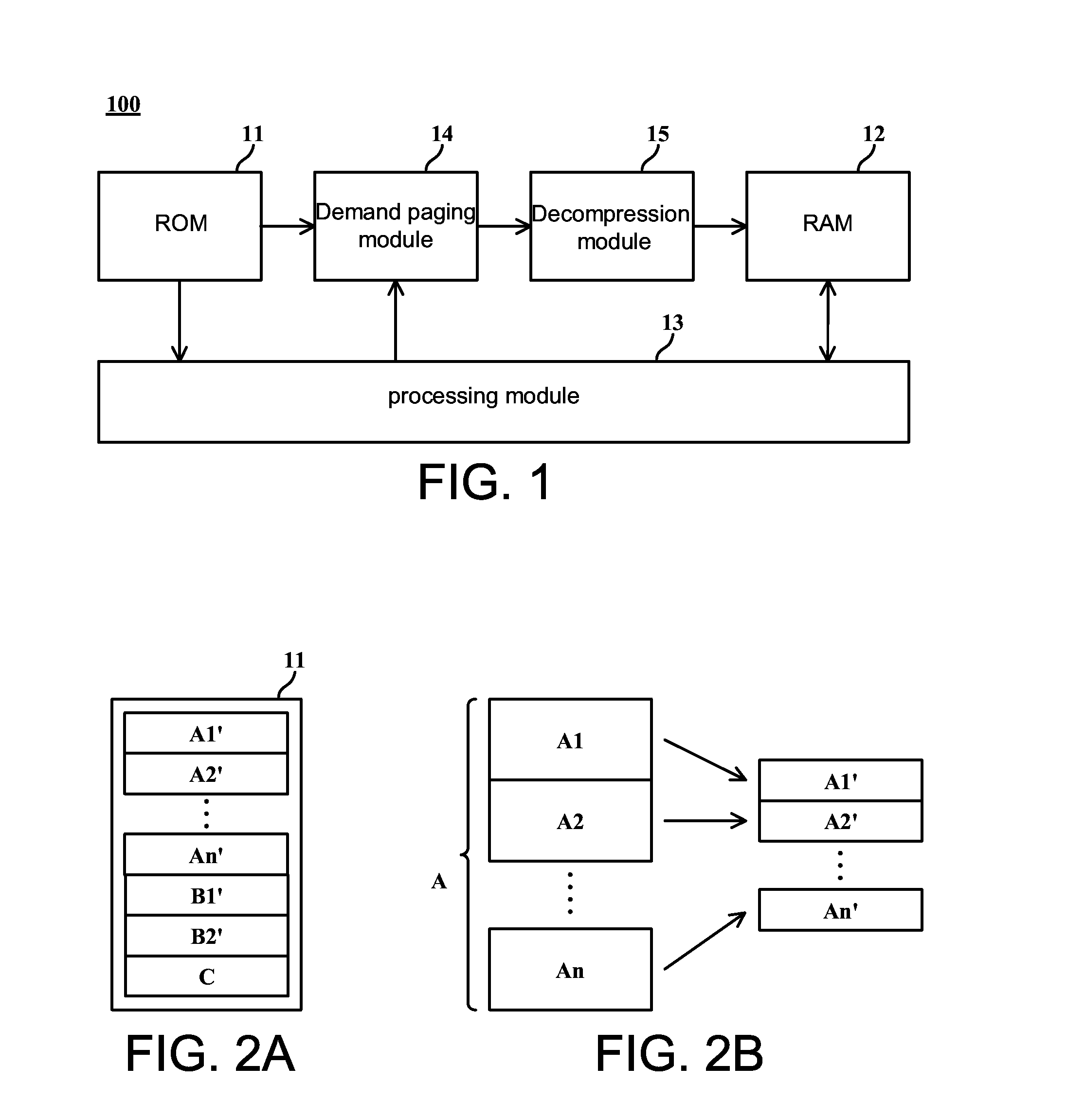

Electronic apparatus and control method thereof

Active | US20140068141A1 | Reduce memory space; Low hardware cost; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Random access memory; Original data

An electronic apparatus includes a read-only memory (ROM), a random access memory (RAM), a processing module, a demand paging module, and a decompression module. The ROM stores multiple sets of compressed data corresponding to a plurality of sets of uncompressed data, which are obtained by dividing a single set of original data. In response to a request from the processing module associated with the set of original data, the demand paging module selects one or more of the sets of compressed data. The decompression module decompresses the selected sets and stores them in the RAM for use by the processing module.

Owner:MEDIATEK INC
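
A sketch of the arrangement above, with `zlib` standing in for the decompression module and a hypothetical 1 KiB set size. The original data is divided into sets that are compressed independently, so a request only decompresses the sets that overlap the requested byte range.

```python
import zlib

CHUNK = 1024  # hypothetical size of each uncompressed set


def build_rom(original):
    """Divide the original data into sets and compress each one separately."""
    return [zlib.compress(original[i:i + CHUNK])
            for i in range(0, len(original), CHUNK)]


class DemandPagingModule:
    """Decompresses only the sets that cover a requested range into RAM."""

    def __init__(self, rom):
        self.rom = rom
        self.ram = {}            # set index -> decompressed bytes
        self.decompressions = 0

    def read(self, offset, length):
        first = offset // CHUNK
        last = (offset + length - 1) // CHUNK
        for i in range(first, last + 1):
            if i not in self.ram:
                self.ram[i] = zlib.decompress(self.rom[i])
                self.decompressions += 1
        data = b"".join(self.ram[i] for i in range(first, last + 1))
        start = offset - first * CHUNK
        return data[start:start + length]


original = bytes(range(256)) * 20          # 5120 bytes -> 5 compressed sets
module = DemandPagingModule(build_rom(original))
print(module.read(1000, 100) == original[1000:1100], module.decompressions)  # True 2
```

Reading bytes 1000 to 1099 touches sets 0 and 1 only; the other three sets stay compressed in the ROM list, which is where the RAM saving comes from.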

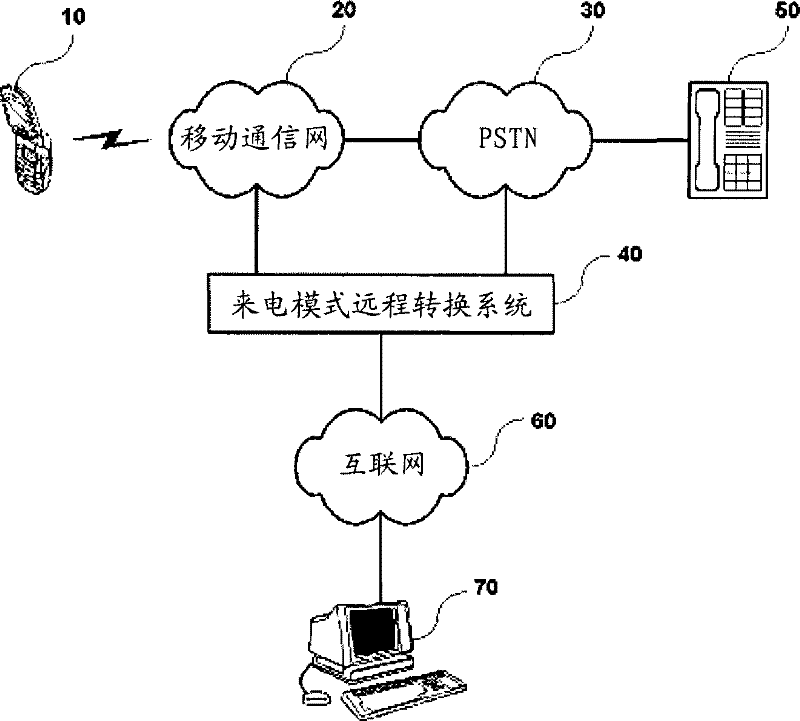

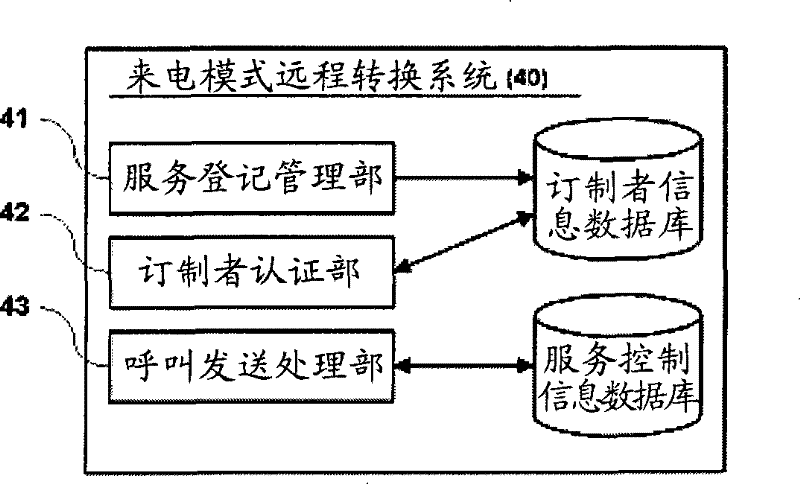

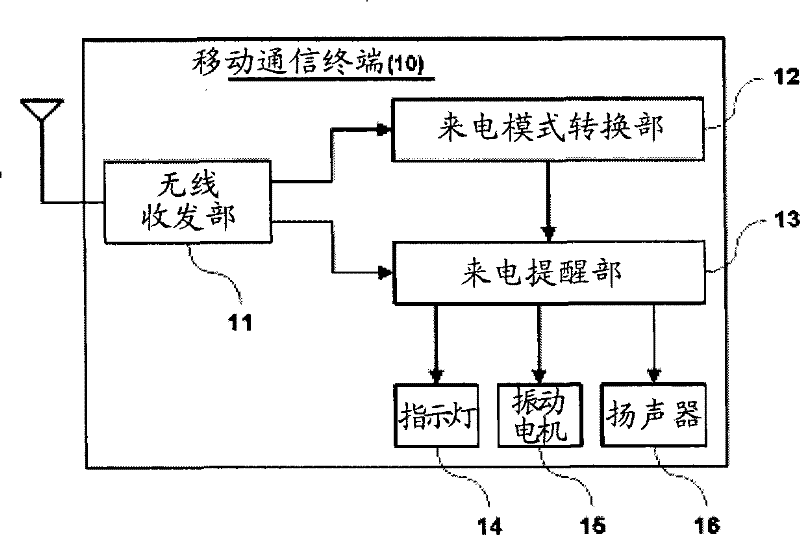

System and method for remote converting call alerting mode of mobile communication terminal, mobile communication terminal for the same

Inactive | CN101312562B | User identity/authority verification; Special service for subscribers; Telecommunications; User authentication

Owner:DONG A UNIV RES FOUND FOR IND ACAD COOP

Method for processing page fault by processor

Pending | CN113742115A | Memory architecture accessing/allocation; Non-redundant fault processing; Computer architecture; Operational system

Disclosed is a method for processing a page fault by a processor. The method includes performing demand paging depending on an application operation in a system including a processor and an operating system, and loading, by the processor, data into memory in response to the demand paging.

Owner:RES & BUSINESS FOUND SUNGKYUNKWAN UNIV +1

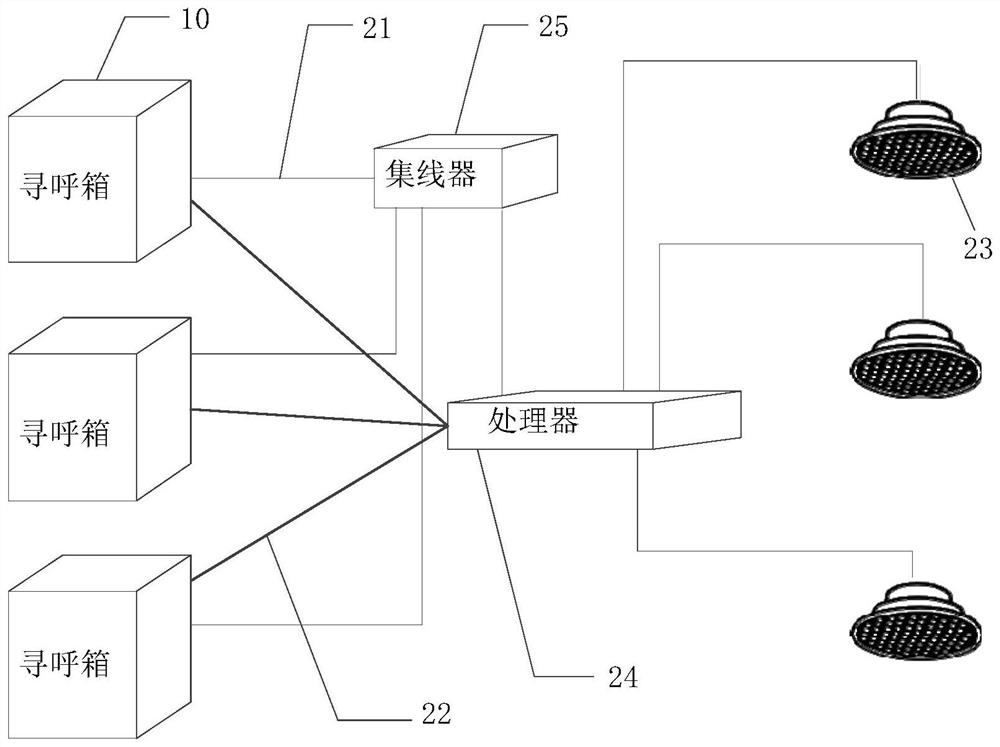

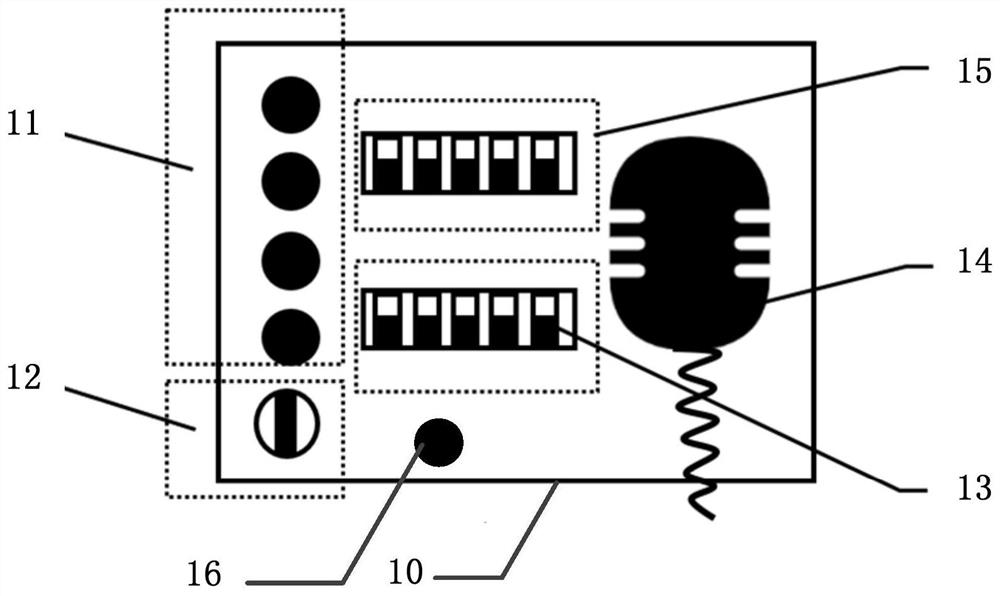

A multi-area on-demand paging system and its application method

Active | CN111555828B | Improve efficiency; Easy to control; Broadcast specific applications; Broadcast circuit arrangements; Engineering; Broadcasting

The invention discloses a multi-area on-demand paging system and its application method. The system includes at least one paging box equipped with a control box, a plurality of on-demand buttons, an area selection knob, a first DIP switch, and a microphone. Each on-demand button corresponds to a pre-recorded prompt phrase, each position of the area selection knob corresponds to a playback area, and the value of the first DIP switch forms the identity of the paging box. The control box is connected to a processor that controls the speaker systems of the broadcast areas; it outputs the paging box's identity signal, the selected playback area signal, the signal for the chosen prompt phrase, and/or a real-time audio stream to the processor, which then directs the speaker system to play the prompt phrase or live audio in the selected playback area. The invention is used for multi-area on-demand and broadcast control and makes it easy to switch freely between on-demand prompt sounds and multi-area broadcasting.

Owner:华强方特(深圳)科技有限公司

Method and apparatus for automatically using huge pages

Owner:INT BUSINESS MASCH CORP

© 2025 PatSnap. All rights reserved.