57 results about "Shared memory multiprocessor" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

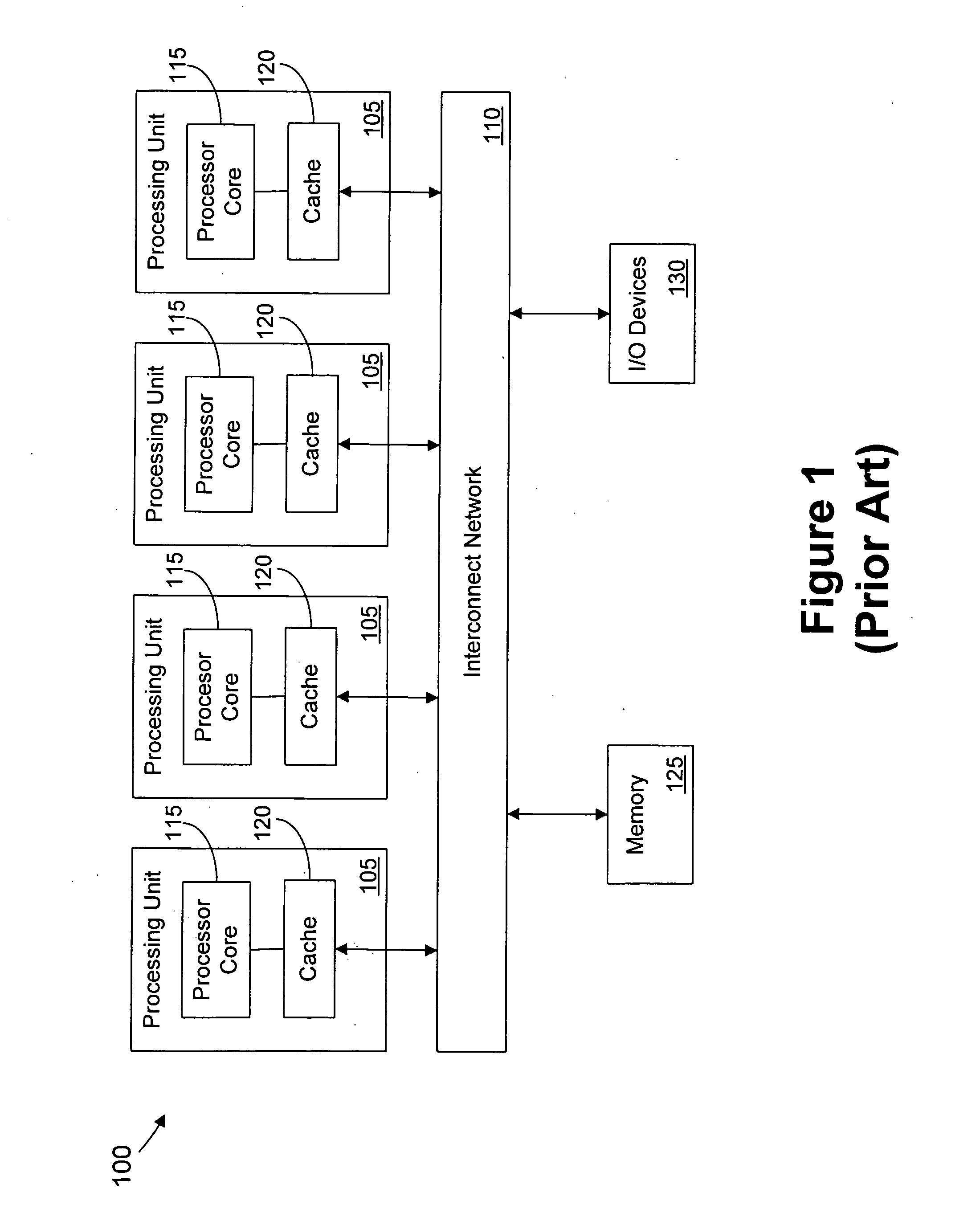

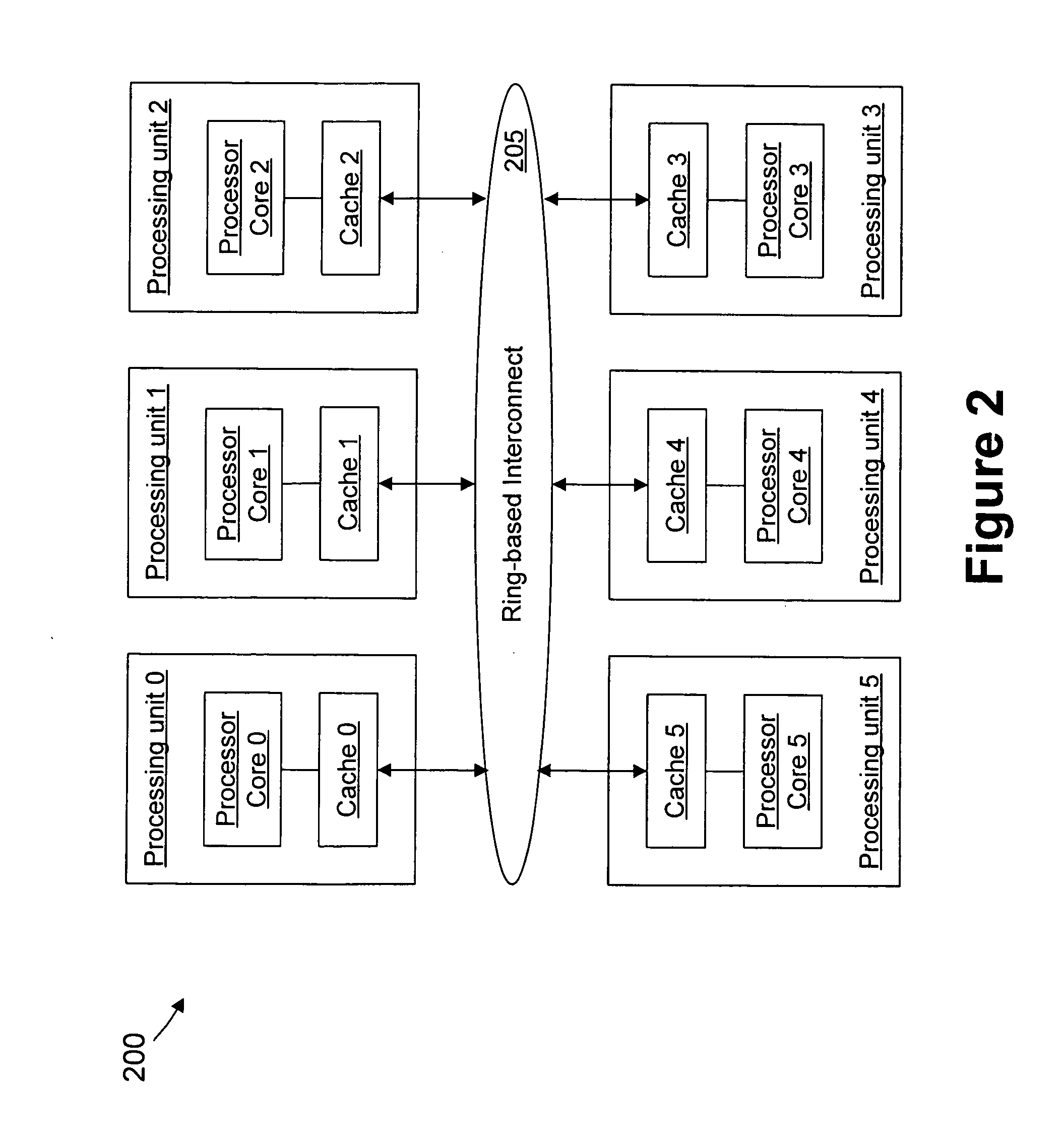

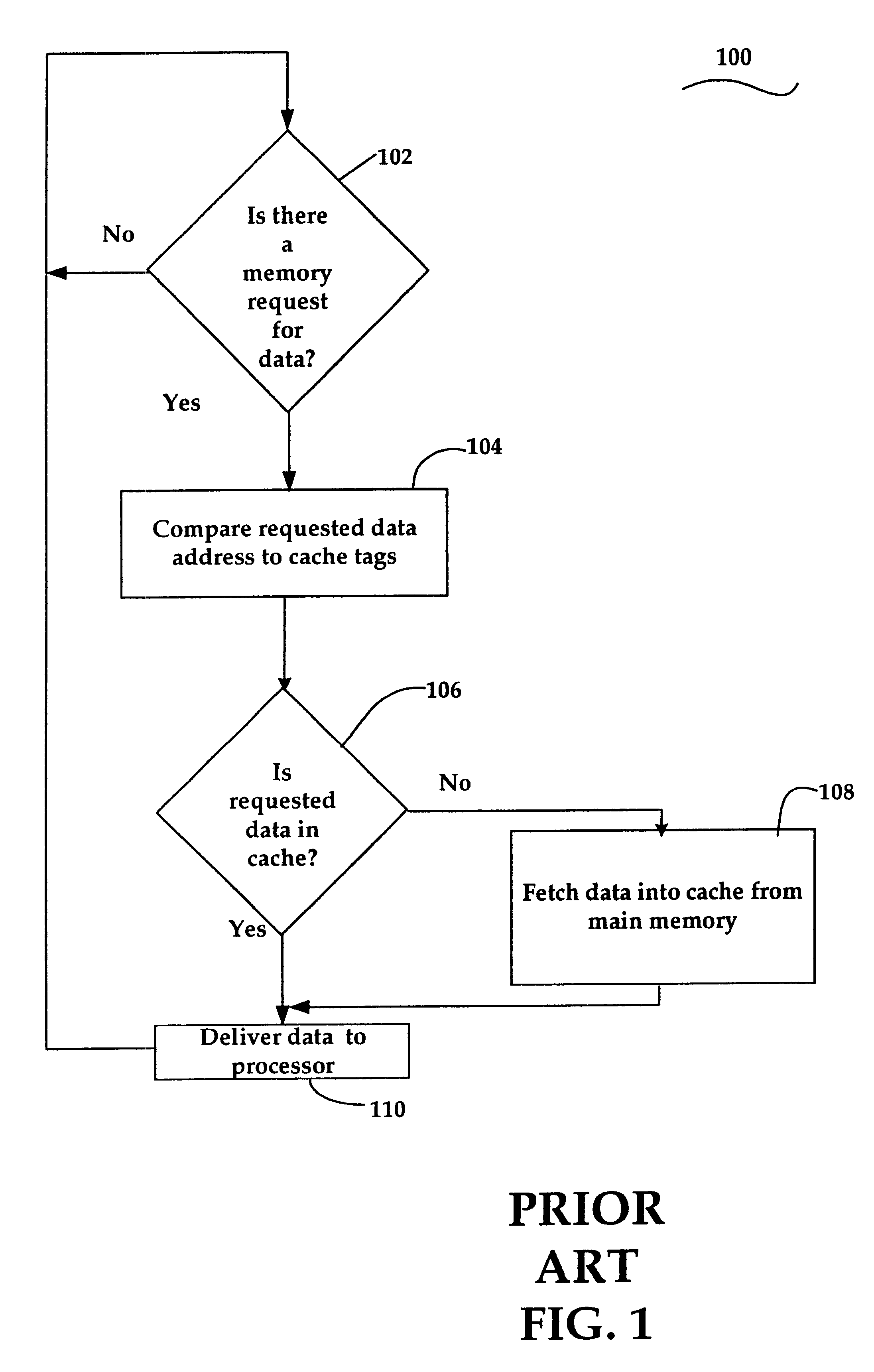

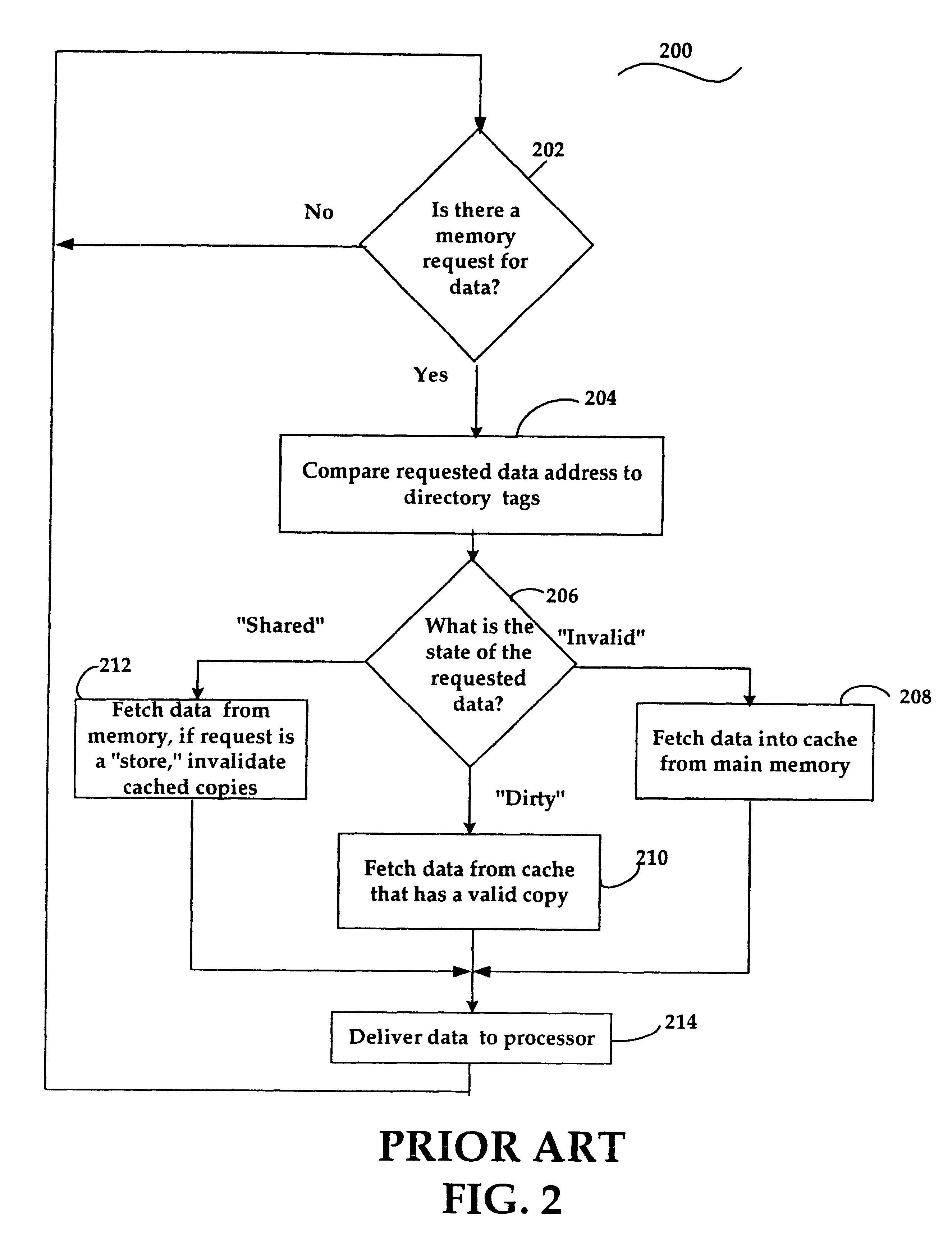

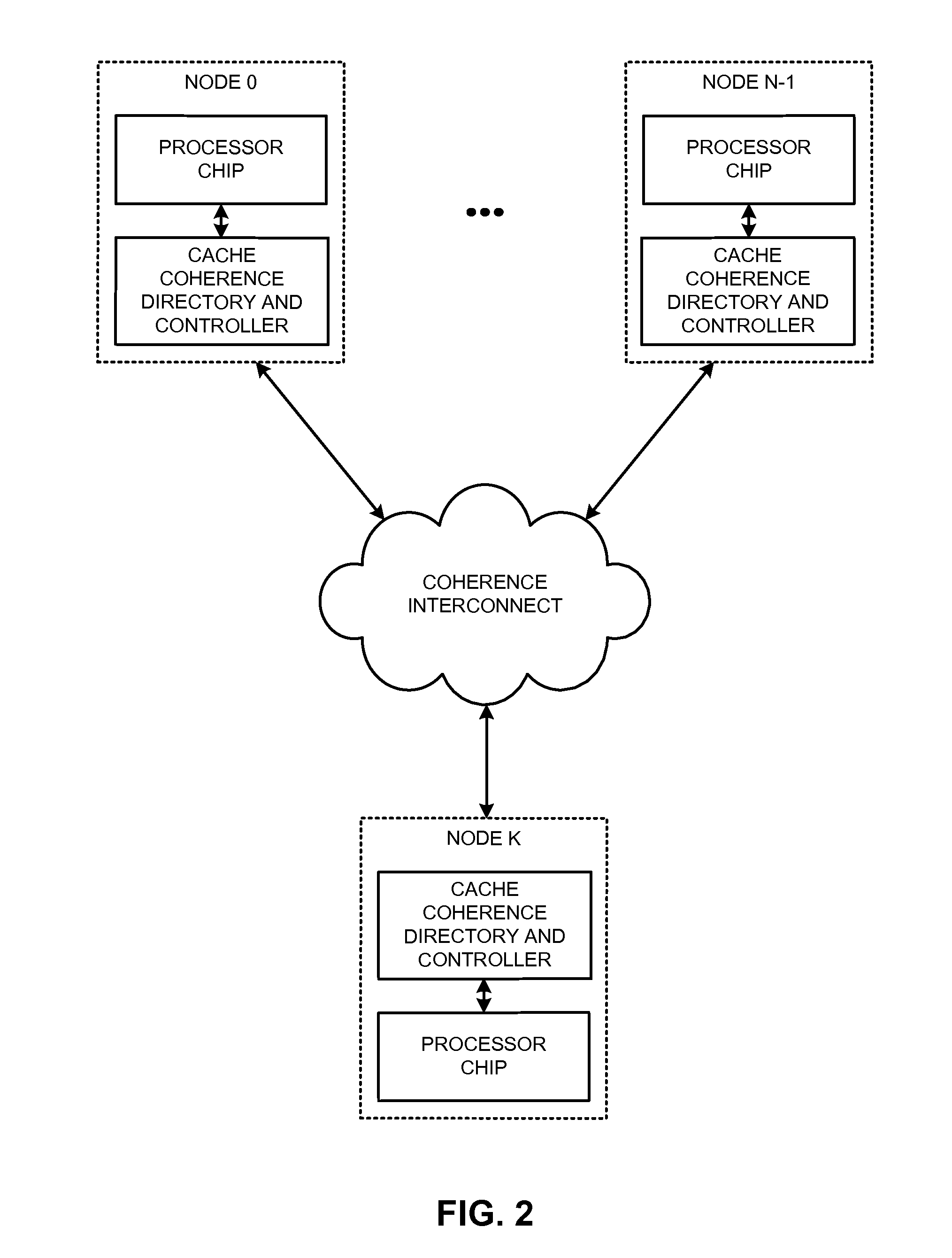

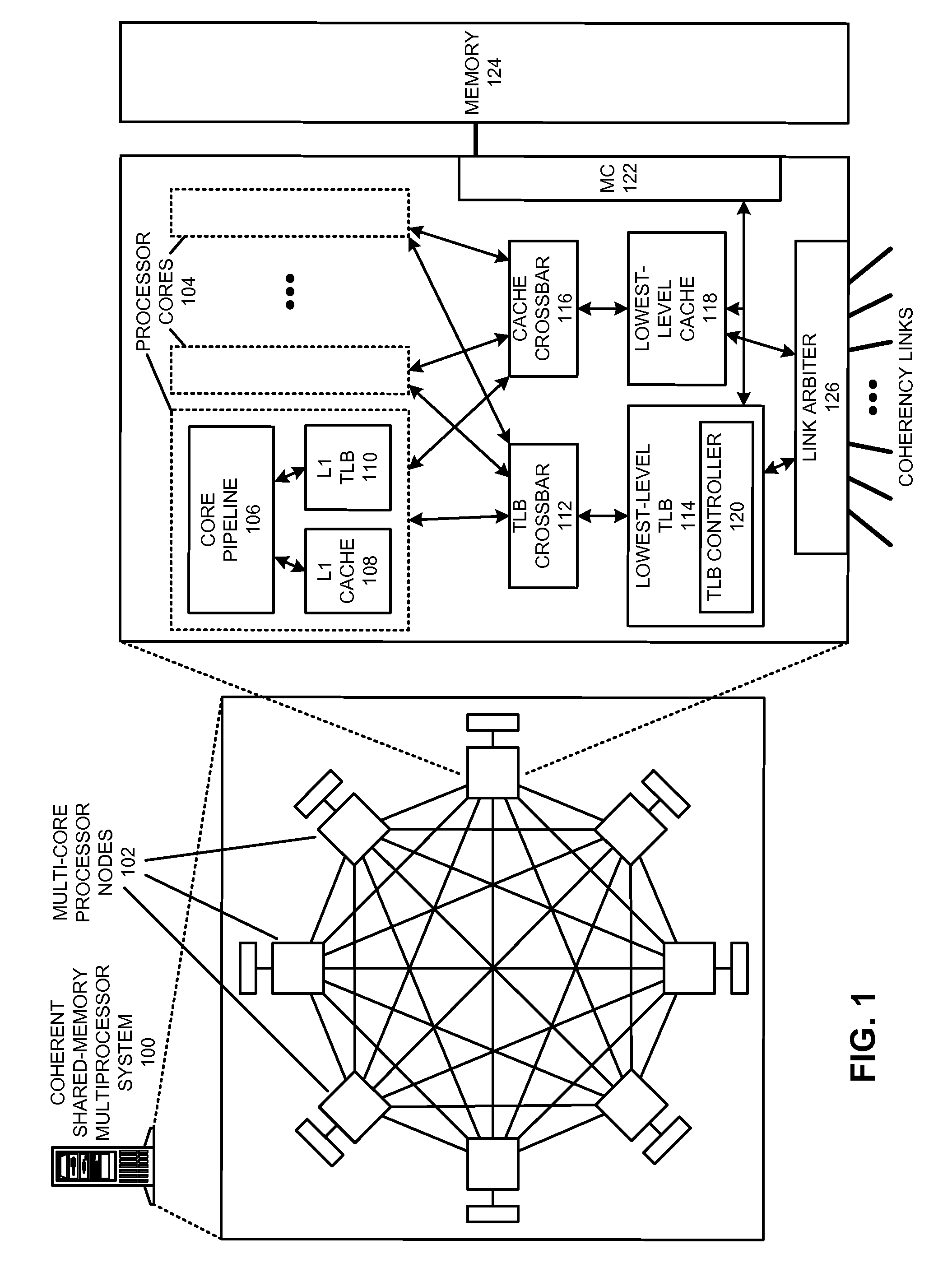

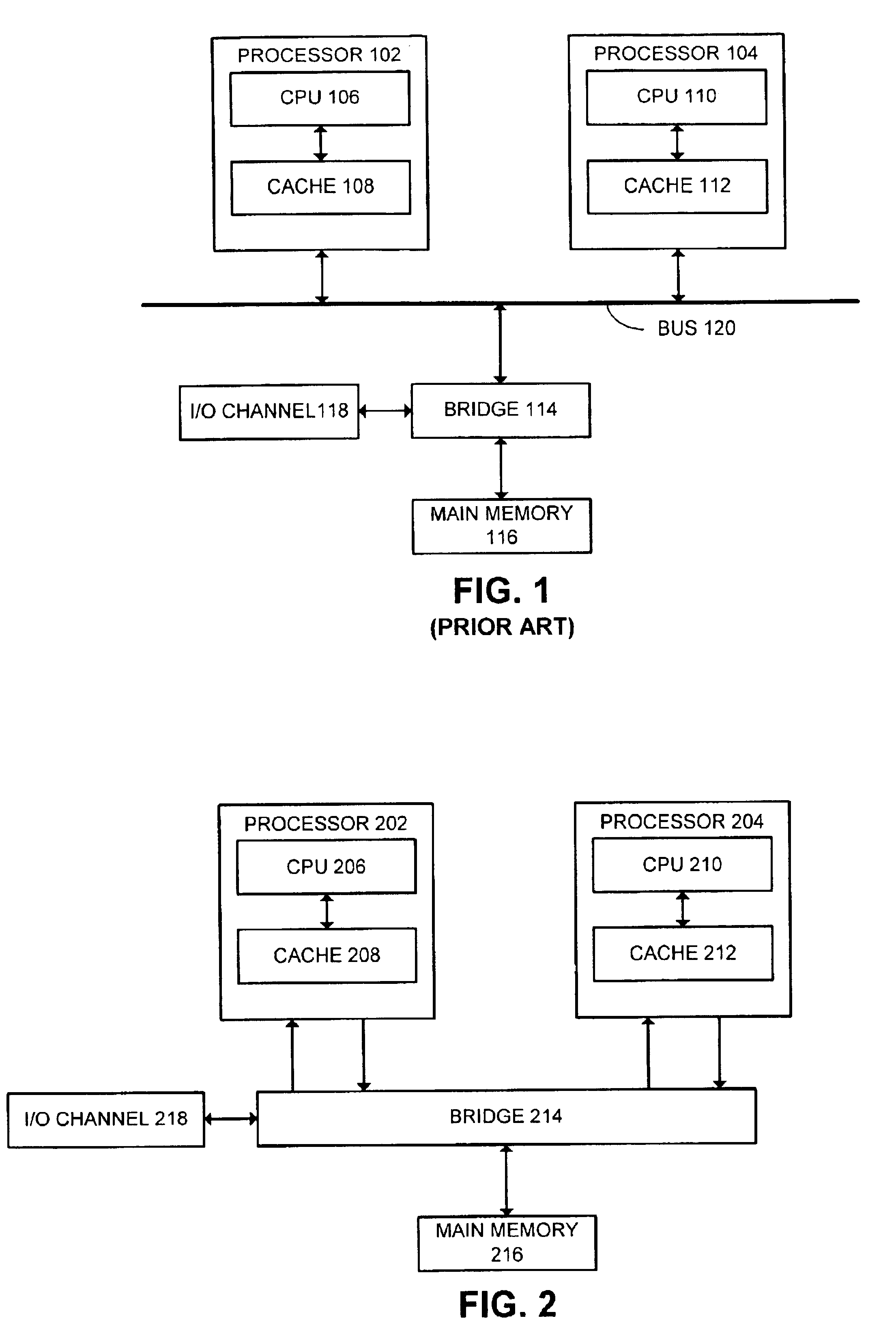

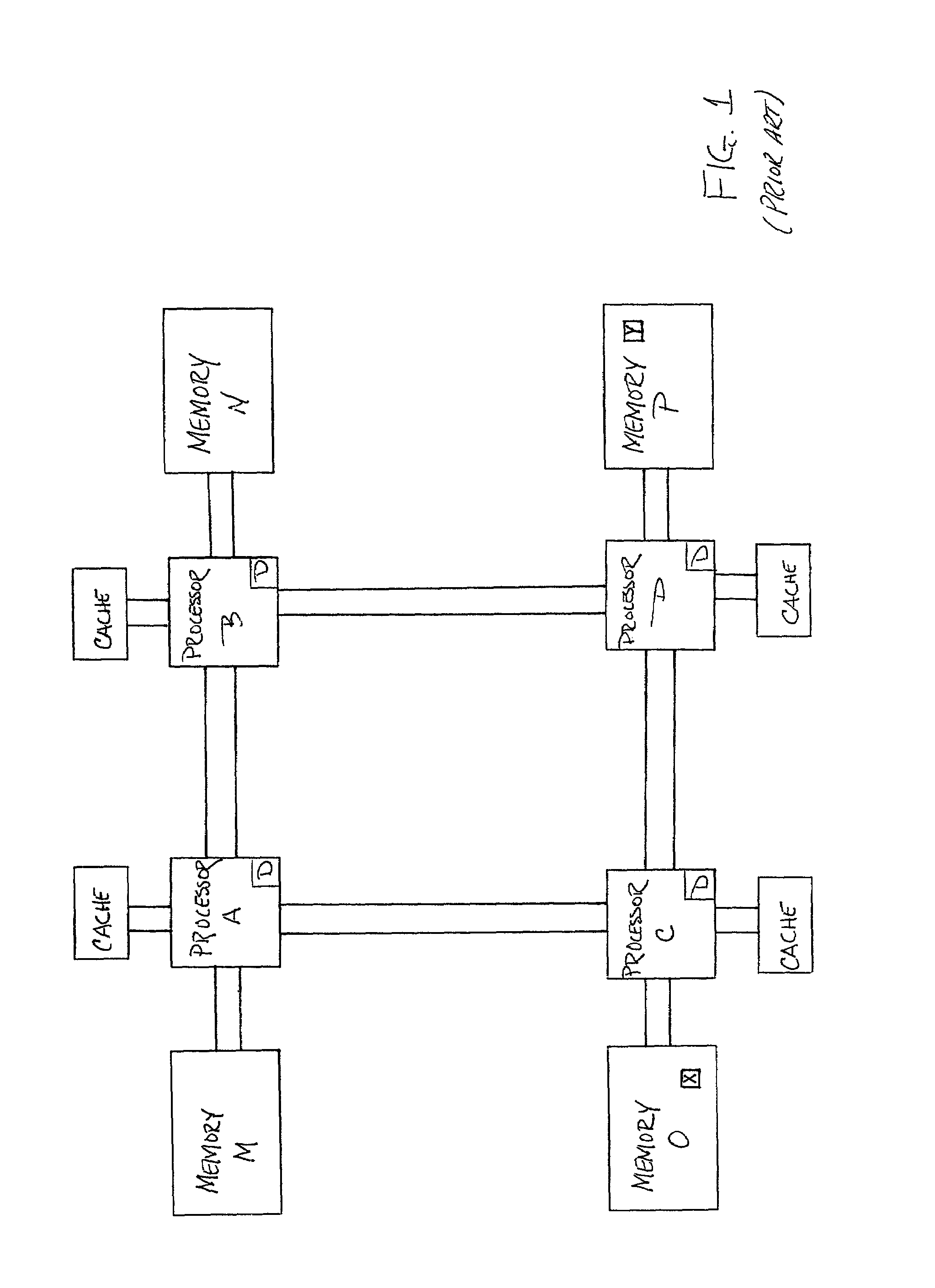

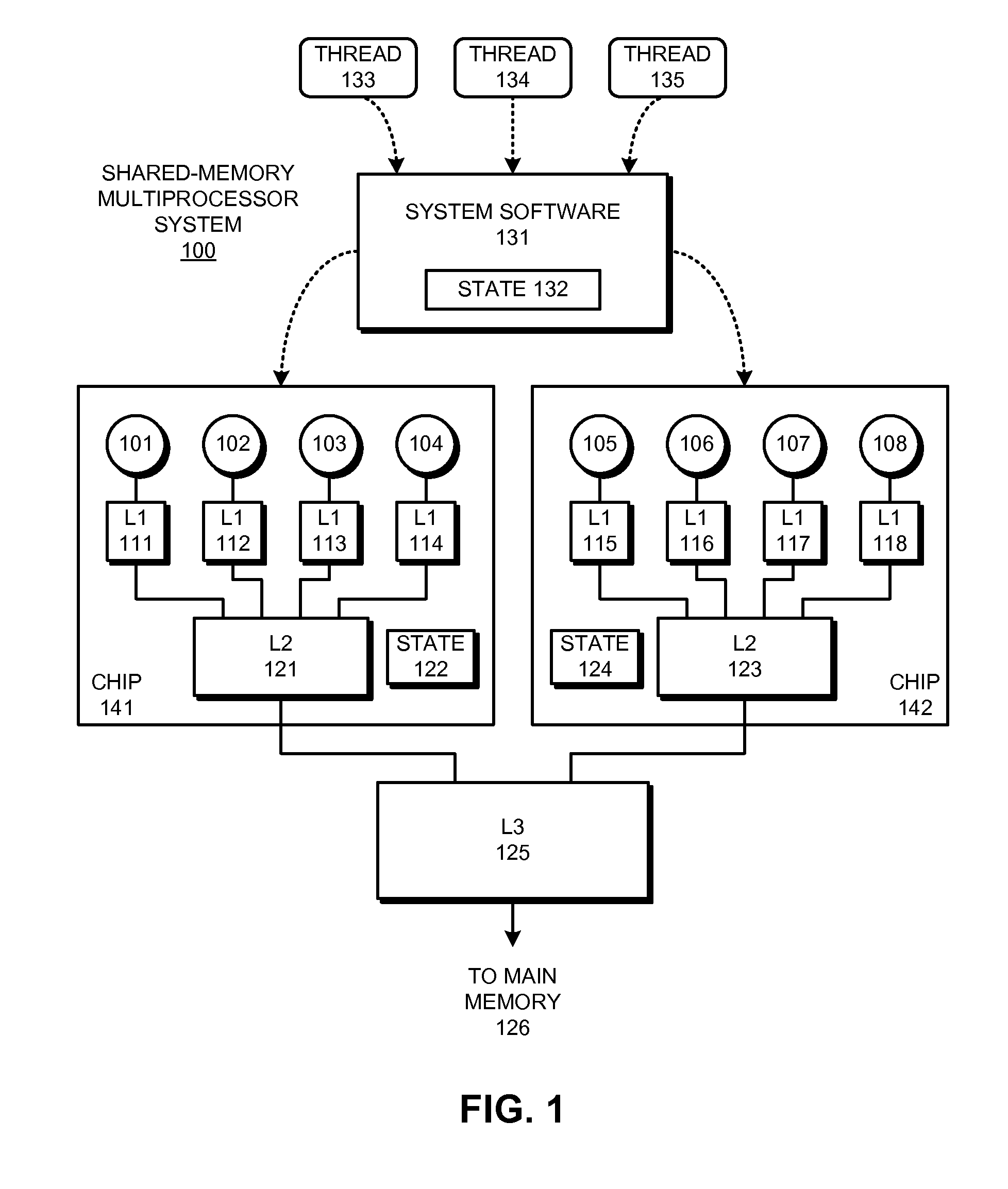

Shared-Memory Multiprocessors. [§5] Symmetric multiprocessors (SMPs) are the most common multiprocessors. They provide a shared address space, and each processor has its own cache. All processors and memories attach to the same interconnect, usually a shared bus. SMPs dominate the server market, and are the building blocks for larger systems.

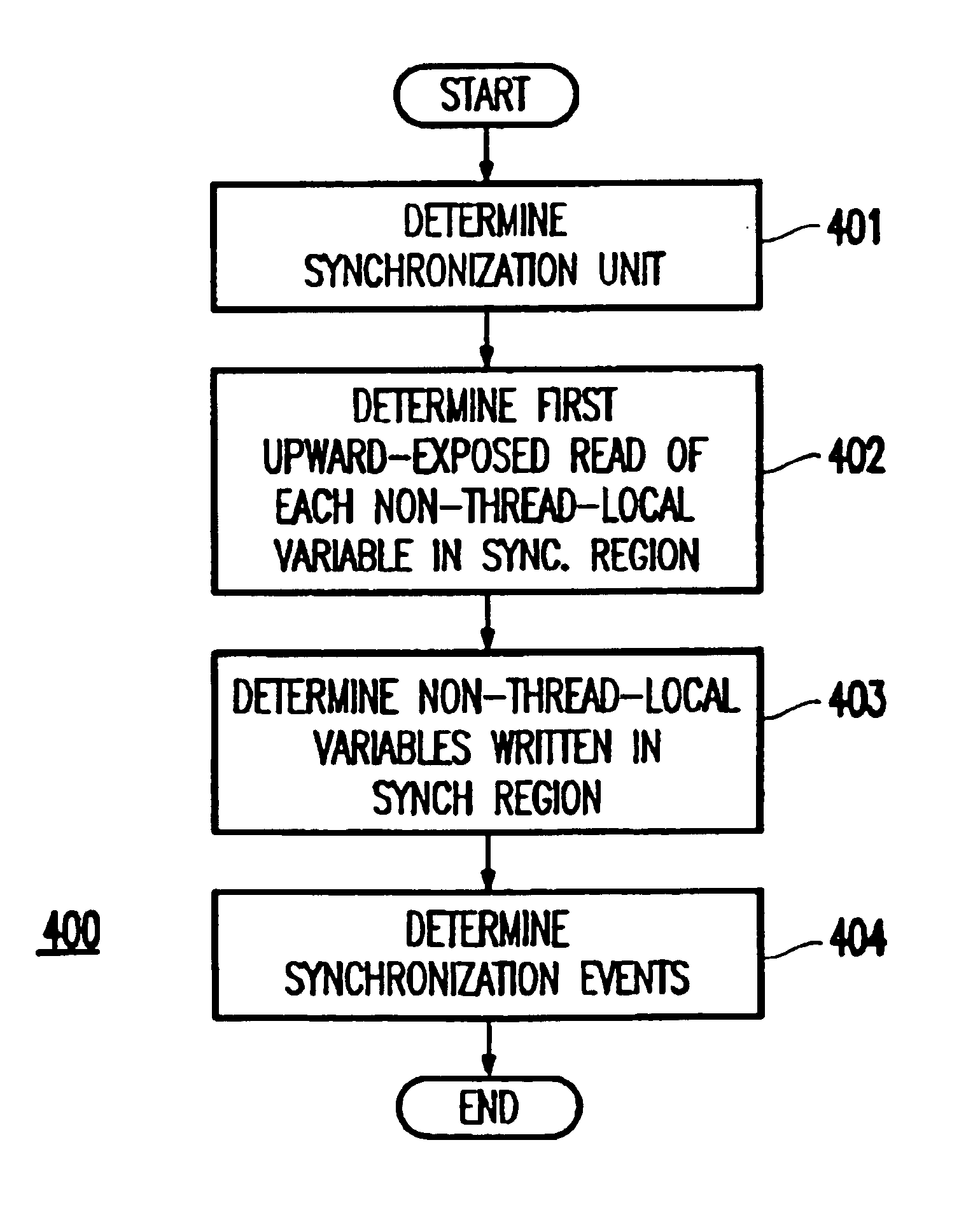

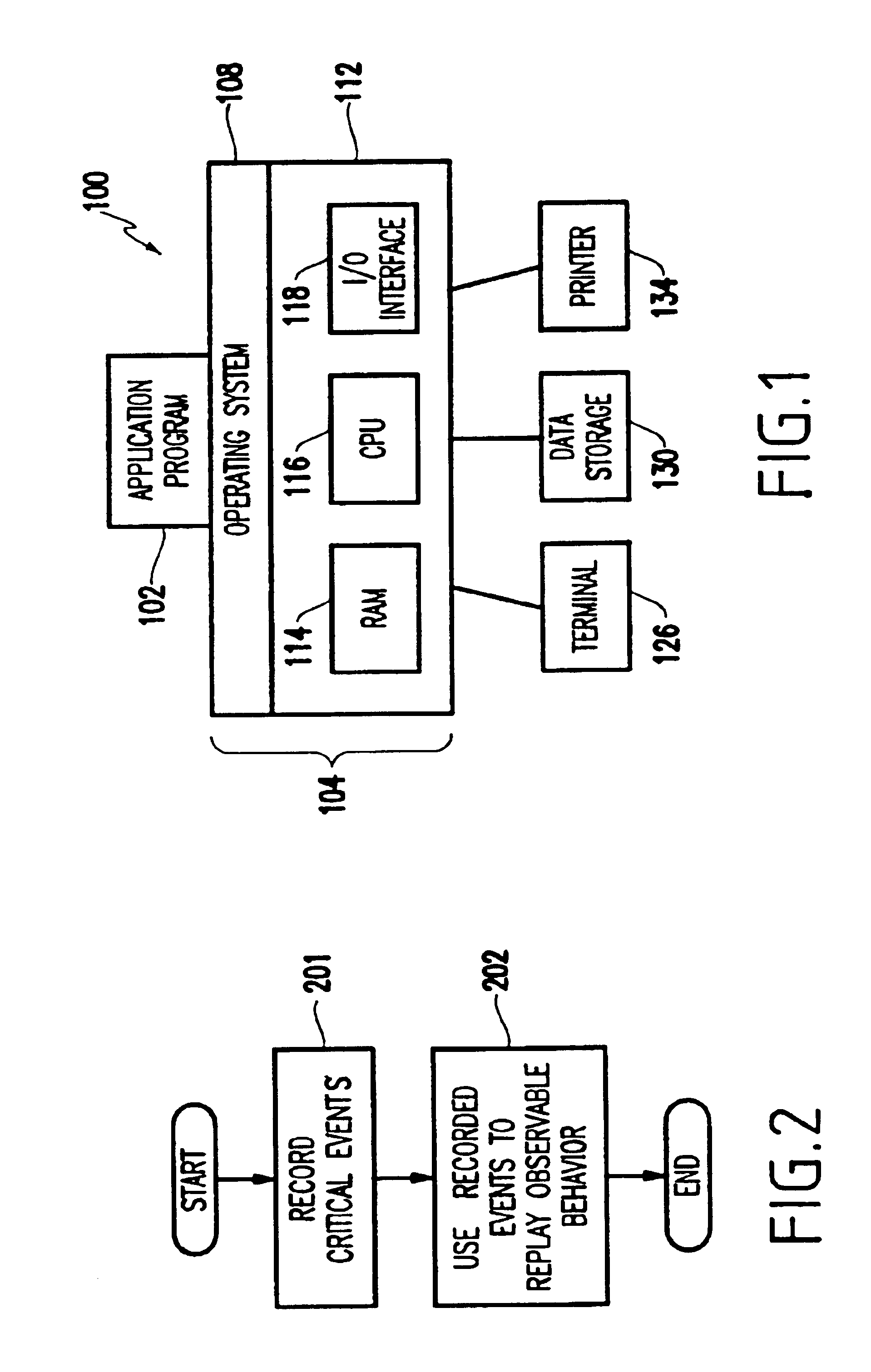

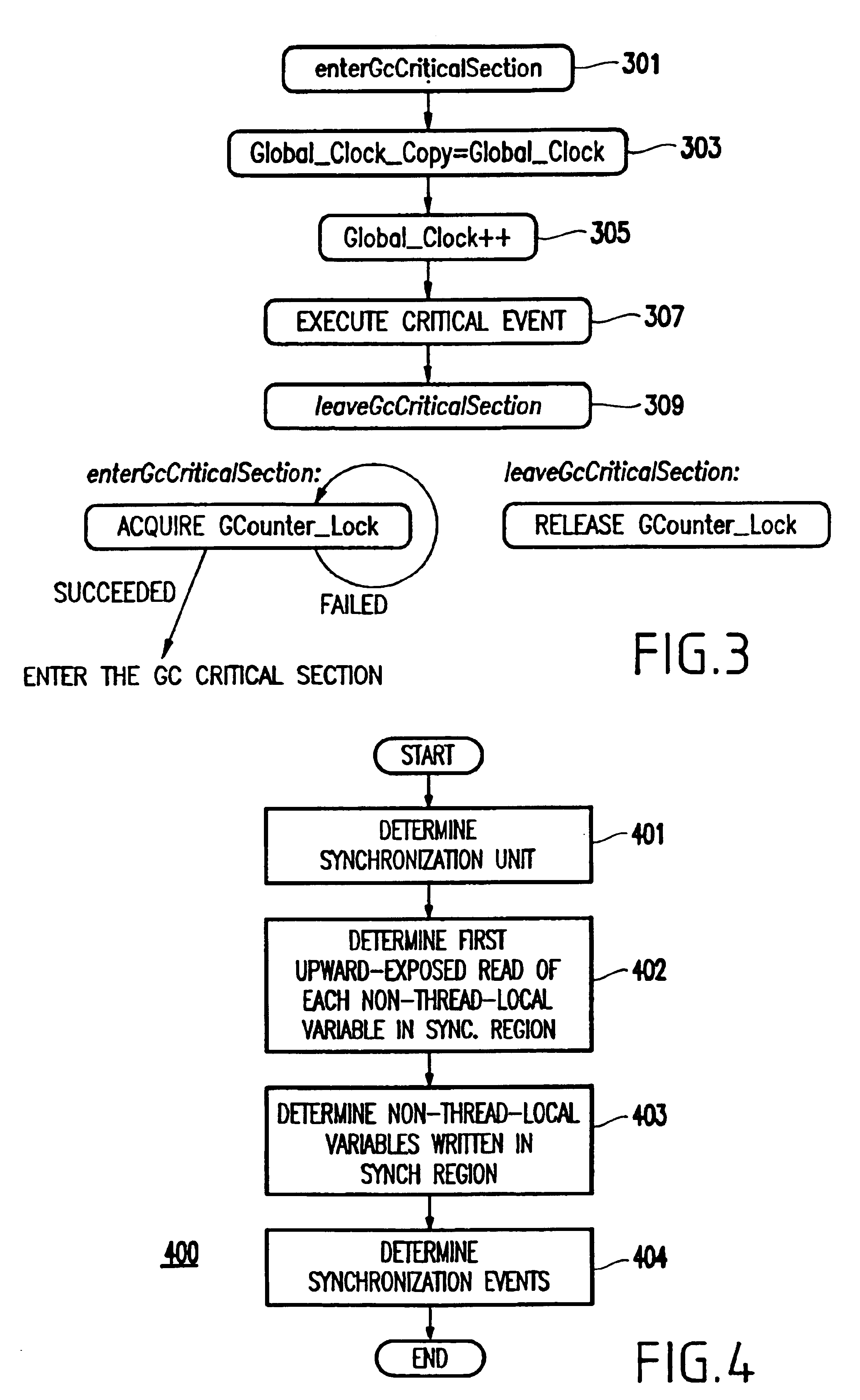

Method and apparatus for deterministic replay of java multithreaded programs on multiprocessors

Inactive; US6854108B1; Reduce in quantity; Good runtime performance; Software testing/debugging; Specific program execution arrangements; Multi processor; Thread scheduling

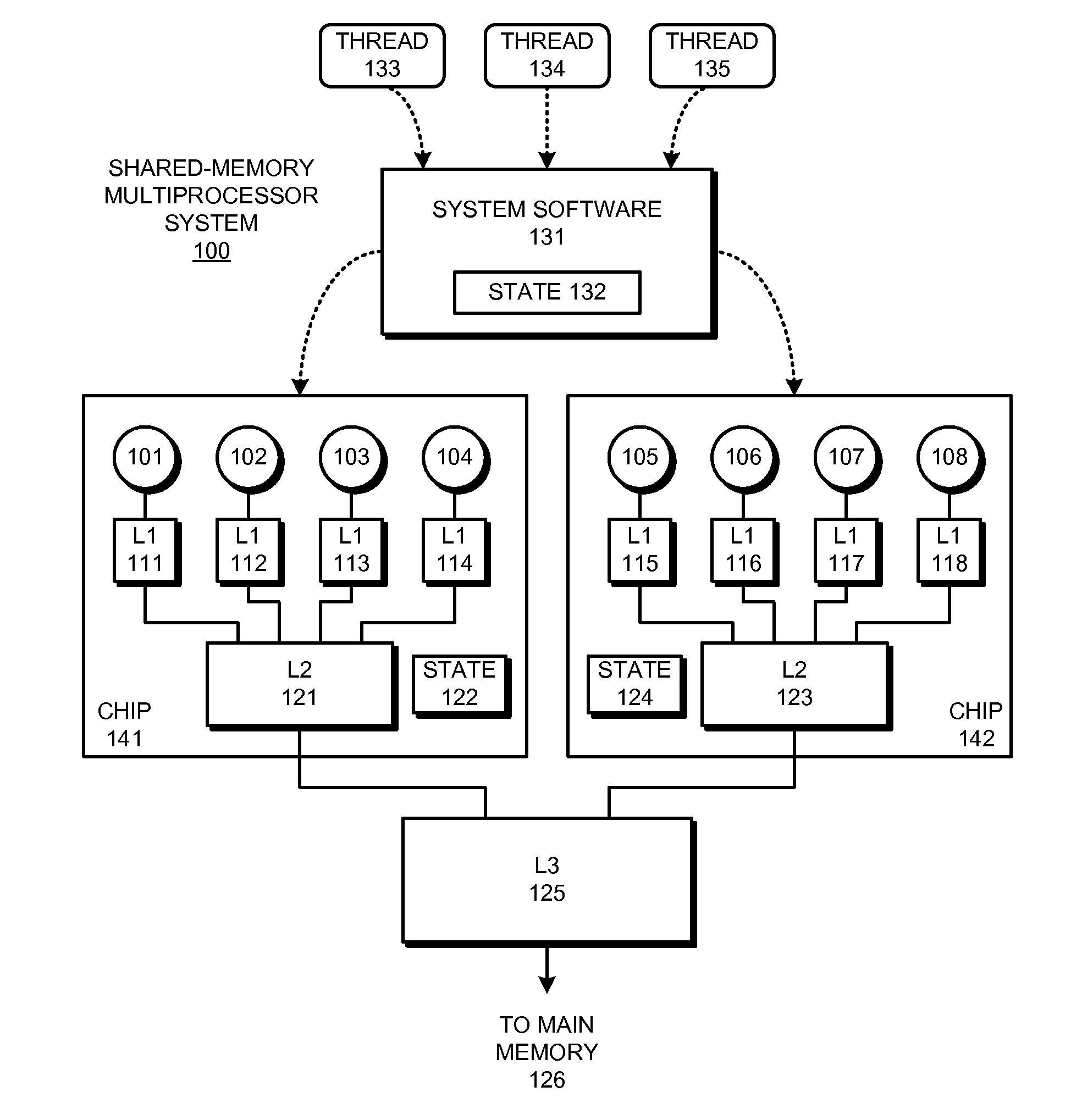

A method (and apparatus) for deterministically replaying the observable run-time behavior of distributed multi-threaded programs on multiprocessors in a shared-memory multiprocessor environment. The run-time behavior of the programs includes sequences of events, each sequence being associated with one of a plurality of execution threads. The method includes identifying an execution order of critical events of the program (the program includes both critical and non-critical events), generating groups of critical events, generating, for each execution thread, a logical thread schedule that identifies the sequence of groups associated with that thread, and storing the logical thread schedule for subsequent reuse.
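As a rough illustration of the grouping step, the sketch below assumes that a group is a maximal run of consecutive critical events executed by the same thread, identified by its first and last position in the global order. That interval encoding and the name `logical_thread_schedules` are assumptions made for this example, not details taken from the abstract.

```python
from collections import defaultdict

def logical_thread_schedules(critical_event_threads):
    """Build a per-thread schedule from a recorded global order.

    critical_event_threads[i] is the id of the thread that executed the
    i-th critical event. Each group is a (first, last) pair of positions
    in that global order (an assumed encoding for this sketch).
    """
    schedules = defaultdict(list)
    n = len(critical_event_threads)
    start = 0
    for i in range(1, n + 1):
        # Close the current group at the end of the trace or on a thread switch.
        if i == n or critical_event_threads[i] != critical_event_threads[start]:
            schedules[critical_event_threads[start]].append((start, i - 1))
            start = i
    return dict(schedules)

trace = ["A", "A", "B", "A", "B", "B"]
print(logical_thread_schedules(trace))
```

During replay, a thread would wait until the global counter reaches the start of its next group before running that group's critical events.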

Owner:IBM CORP

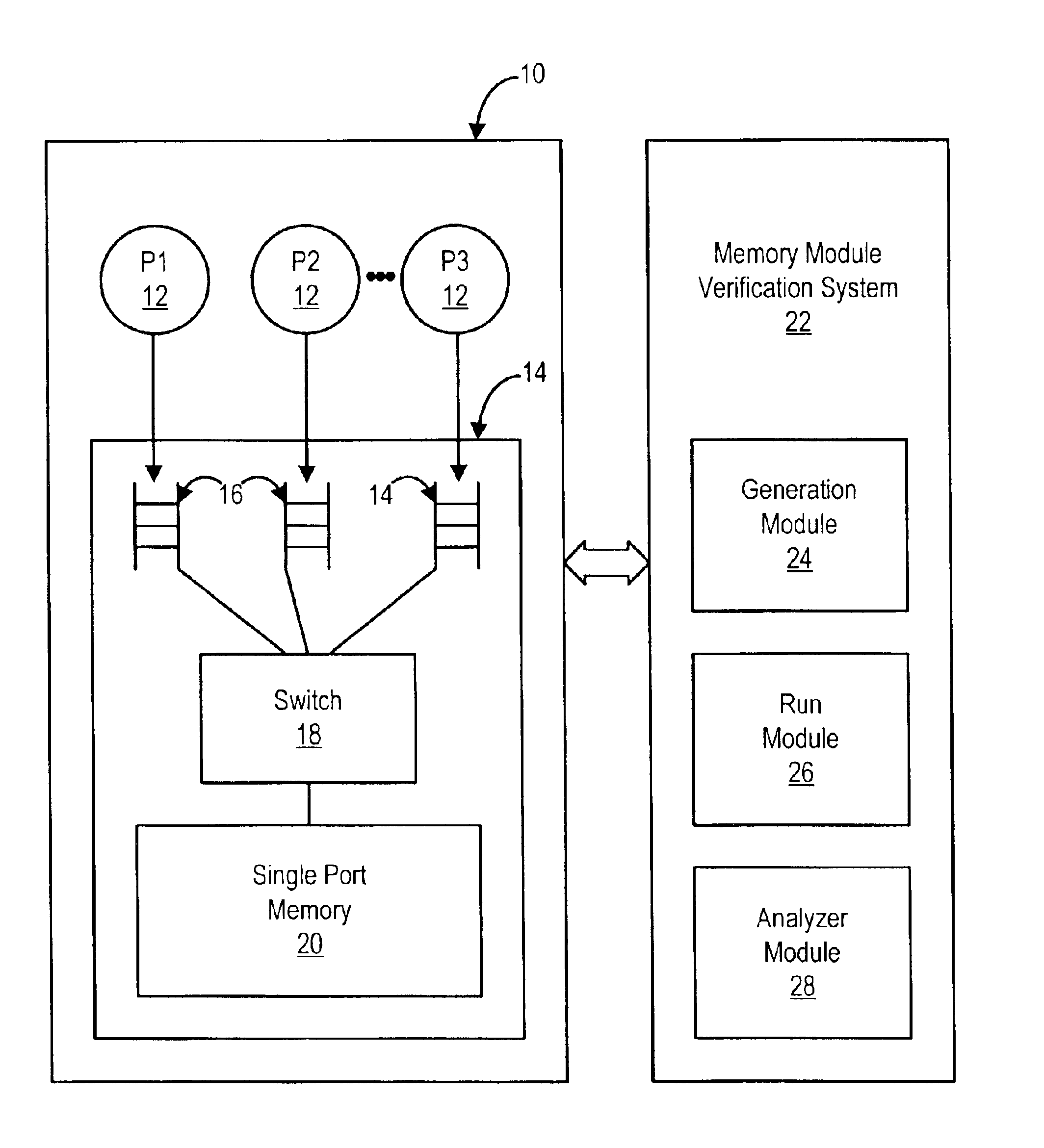

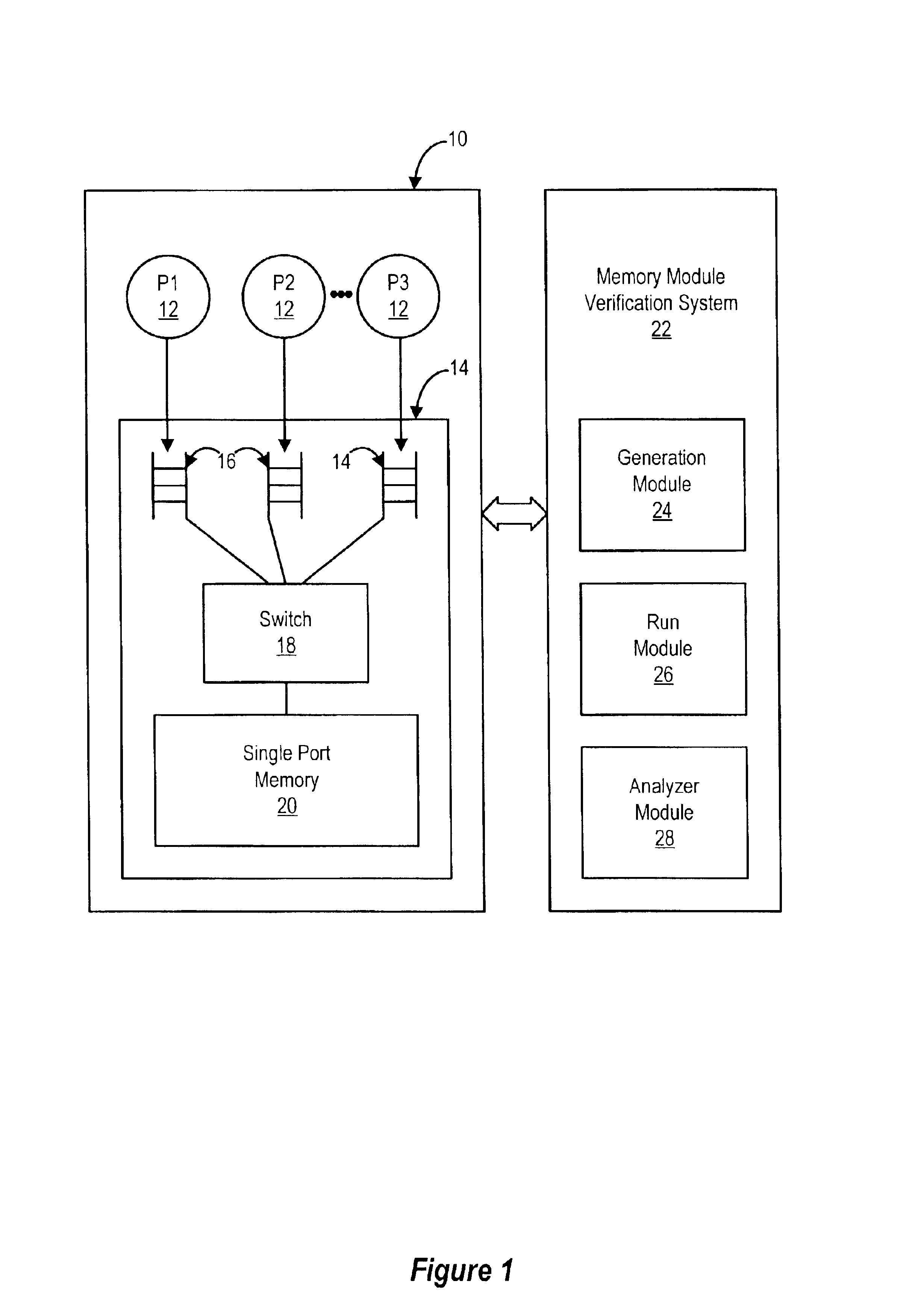

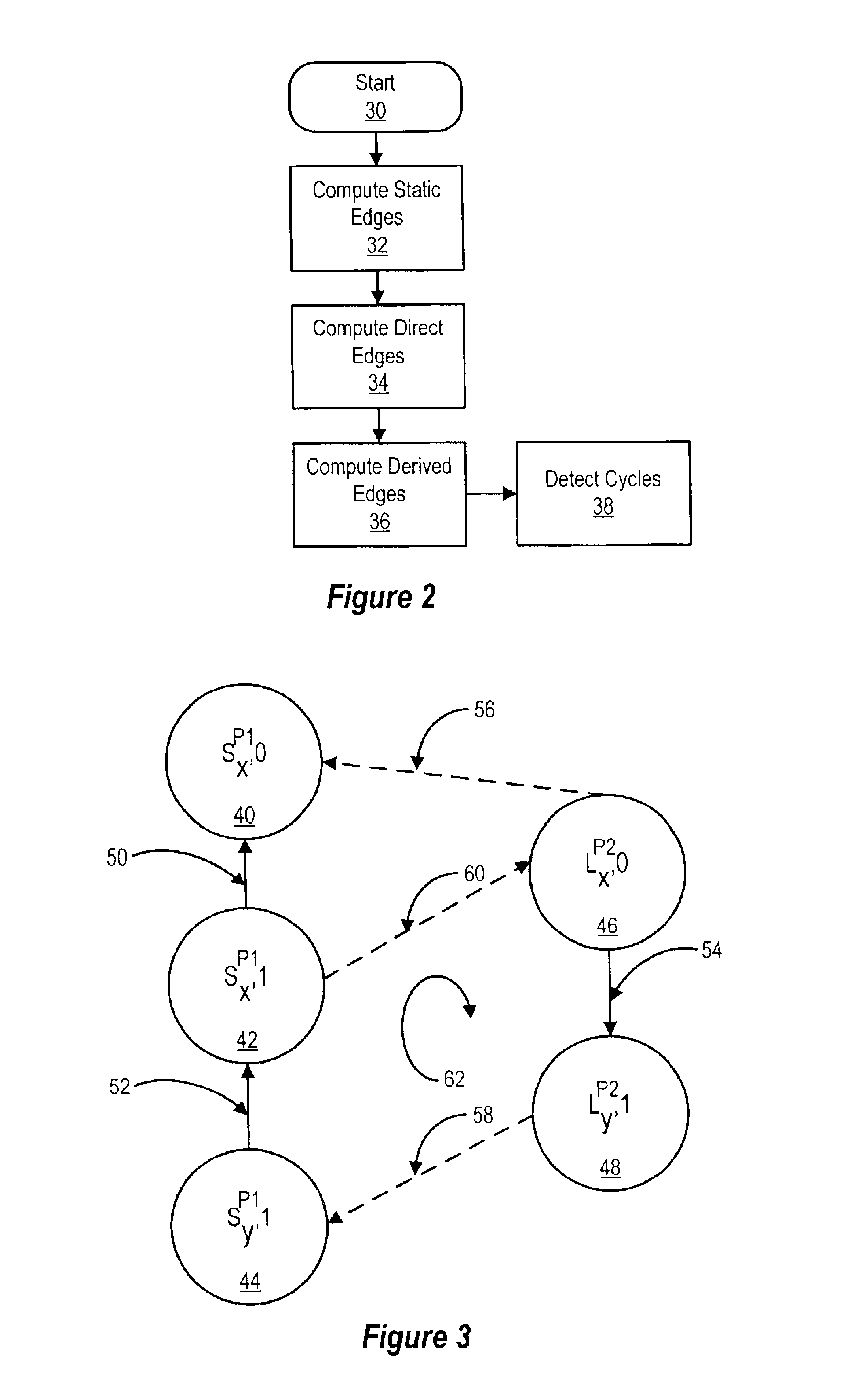

Shared memory multiprocessor memory model verification system and method

Inactive; US6892286B2; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Precedence graph; Multi processor

A system and method for verifying a memory consistency model for a shared memory multiprocessor computer system generates random instructions to run on the processors, saves the results of running the instructions, and analyzes the results to detect a memory subsystem error if the results fall outside the space of possible outcomes consistent with the memory consistency model. A precedence relationship of the results is determined by uniquely identifying results of a store location, with each result distinct, to allow association of a read result value with the instruction that created it. A precedence graph with static, direct and derived edges identifies errors when a cycle is detected, which indicates results that are inconsistent with the memory consistency model rules.
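The final check described above reduces to finding a cycle in a directed graph. The following sketch shows only that step, using a standard depth-first search; how the static, direct and derived edges are produced is not modeled, and the function name `has_cycle` and the instruction labels are hypothetical.

```python
def has_cycle(edges):
    """Return True if the directed precedence graph contains a cycle.

    edges is an iterable of (before, after) pairs between instruction labels.
    """
    graph = {}
    for src, dst in edges:
        graph.setdefault(src, []).append(dst)
        graph.setdefault(dst, [])

    WHITE, GREY, BLACK = 0, 1, 2          # unvisited, on the DFS stack, finished
    color = {node: WHITE for node in graph}

    def visit(node):
        color[node] = GREY
        for nxt in graph[node]:
            if color[nxt] == GREY:        # back edge: a cycle exists
                return True
            if color[nxt] == WHITE and visit(nxt):
                return True
        color[node] = BLACK
        return False

    return any(color[node] == WHITE and visit(node) for node in graph)

consistent = [("st_x", "ld_x"), ("ld_x", "st_y")]
violating = consistent + [("st_y", "st_x")]
print(has_cycle(consistent), has_cycle(violating))
```

An acyclic graph means some legal ordering of the observed results exists; a cycle means no ordering satisfies all precedence constraints at once.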

Owner:ORACLE INT CORP

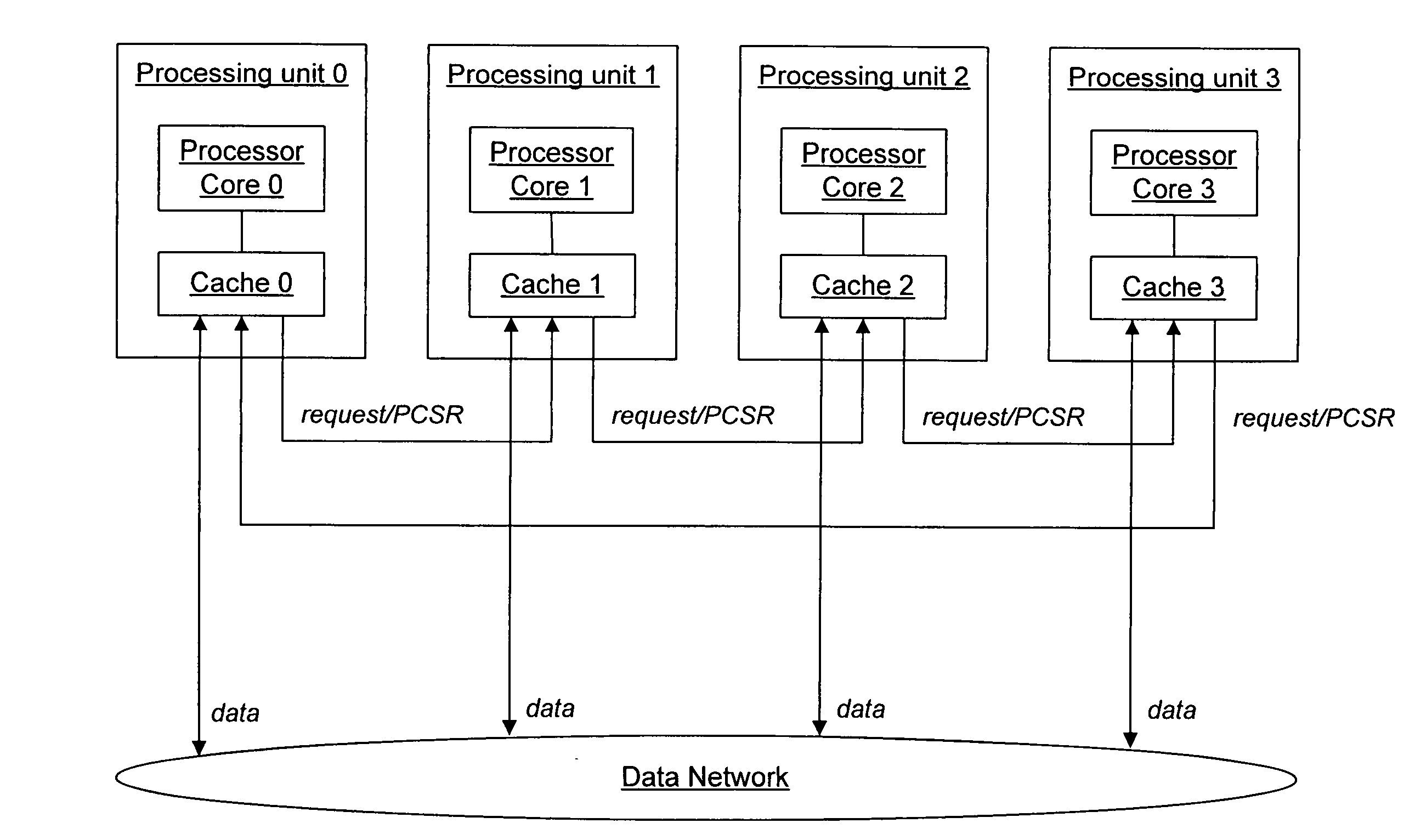

Location-aware cache-to-cache transfers

Inactive; US20050240735A1; Low cache intervention cost; Low cost; Energy efficient ICT; Memory addressing/allocation/relocation; Parallel computing; Cache coherence

In shared-memory multiprocessor systems, cache interventions from different sourcing caches can result in different cache intervention costs. With location-aware cache coherence, when a cache receives a data request, the cache can determine whether sourcing the data from the cache will result in less cache intervention cost than sourcing the data from another cache. The decision can be made based on appropriate information maintained in the cache or collected from snoop responses from other caches. If the requested data is found in more than one cache, the cache that has or likely has the lowest cache intervention cost is generally responsible for supplying the data. The intervention cost can be measured by performance metrics that include, but are not limited to, communication latency, bandwidth consumption, load balance, and power consumption.
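A minimal sketch of the selection rule, assuming each candidate cache already has a single numeric cost to the requester (in hardware this would be derived from stored state or snoop responses). The function name `choose_sourcing_cache`, the cache labels, and the tie-break by label are assumptions for illustration.

```python
def choose_sourcing_cache(holders, cost_to_requester):
    """Pick the cache that should supply the line.

    holders: caches that currently hold the requested data.
    cost_to_requester: mapping from cache id to an intervention cost
    (latency, bandwidth, power, or a weighted mix; assumed precomputed).
    """
    if not holders:
        return None                       # no cache can intervene; memory supplies the data
    # Lowest cost wins; the cache id breaks ties deterministically.
    return min(holders, key=lambda cache: (cost_to_requester[cache], cache))

costs = {"L2_chip0": 12, "L2_chip1": 40, "L2_chip2": 12}
print(choose_sourcing_cache(["L2_chip1", "L2_chip2"], costs))
```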

Owner:IBM CORP

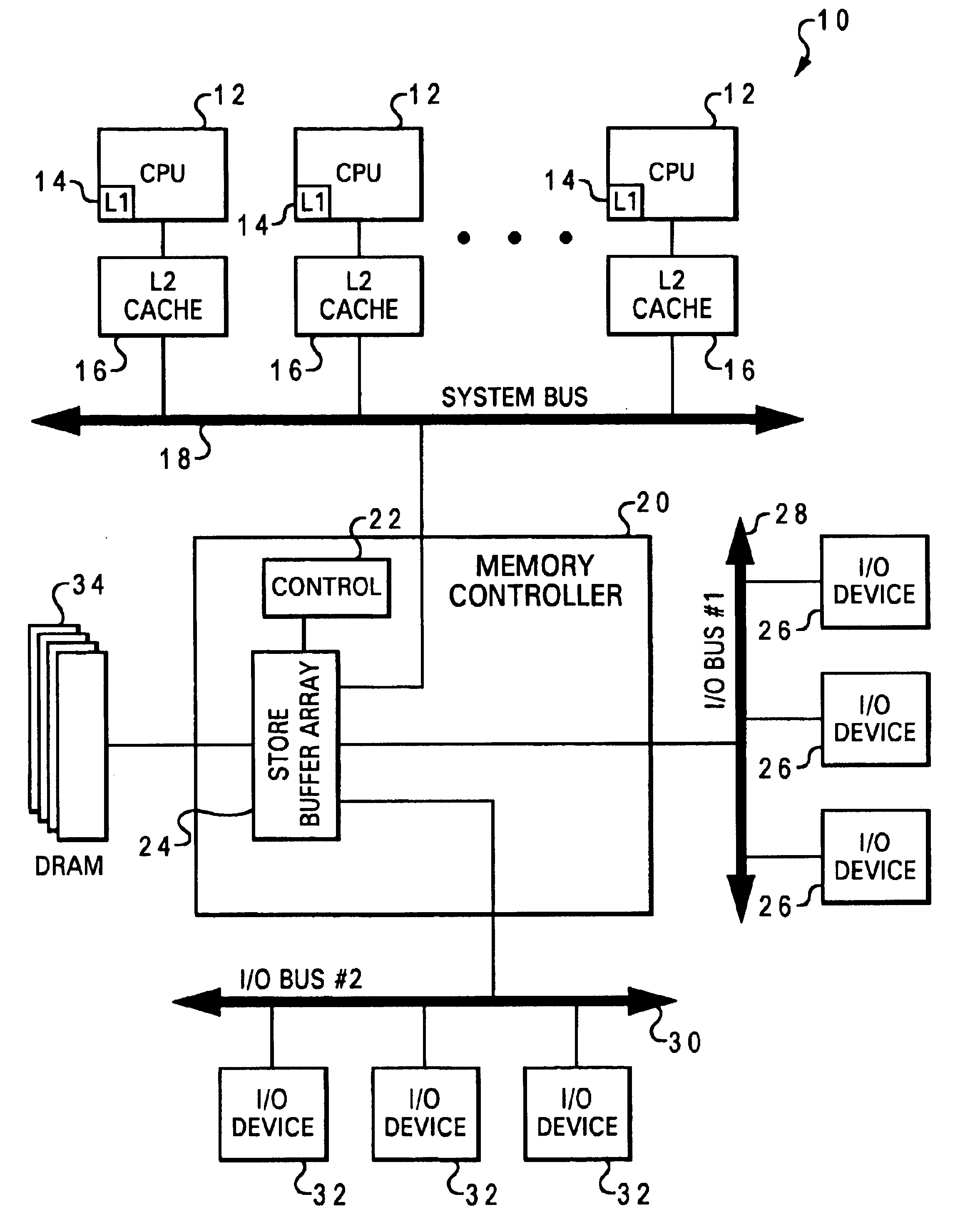

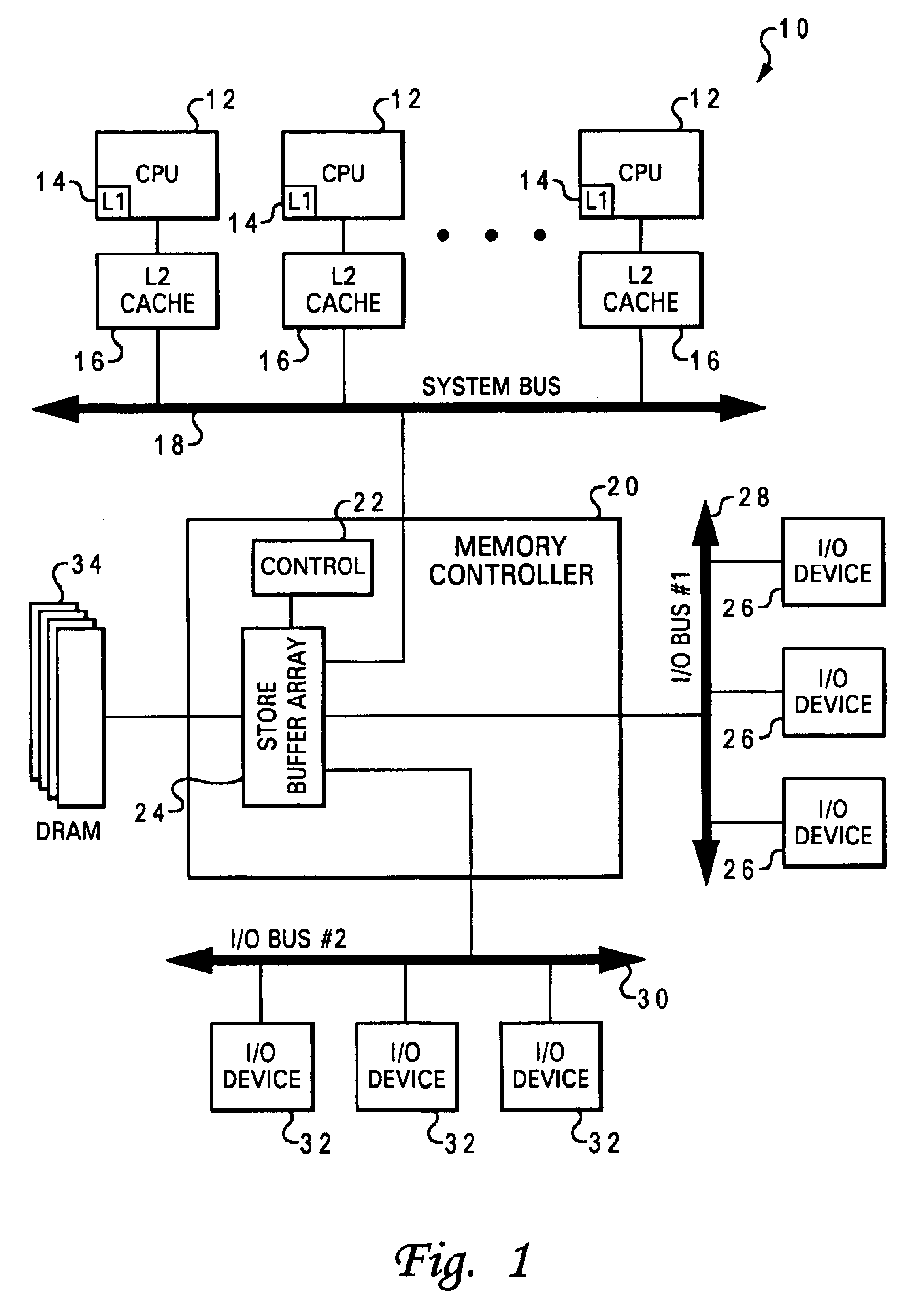

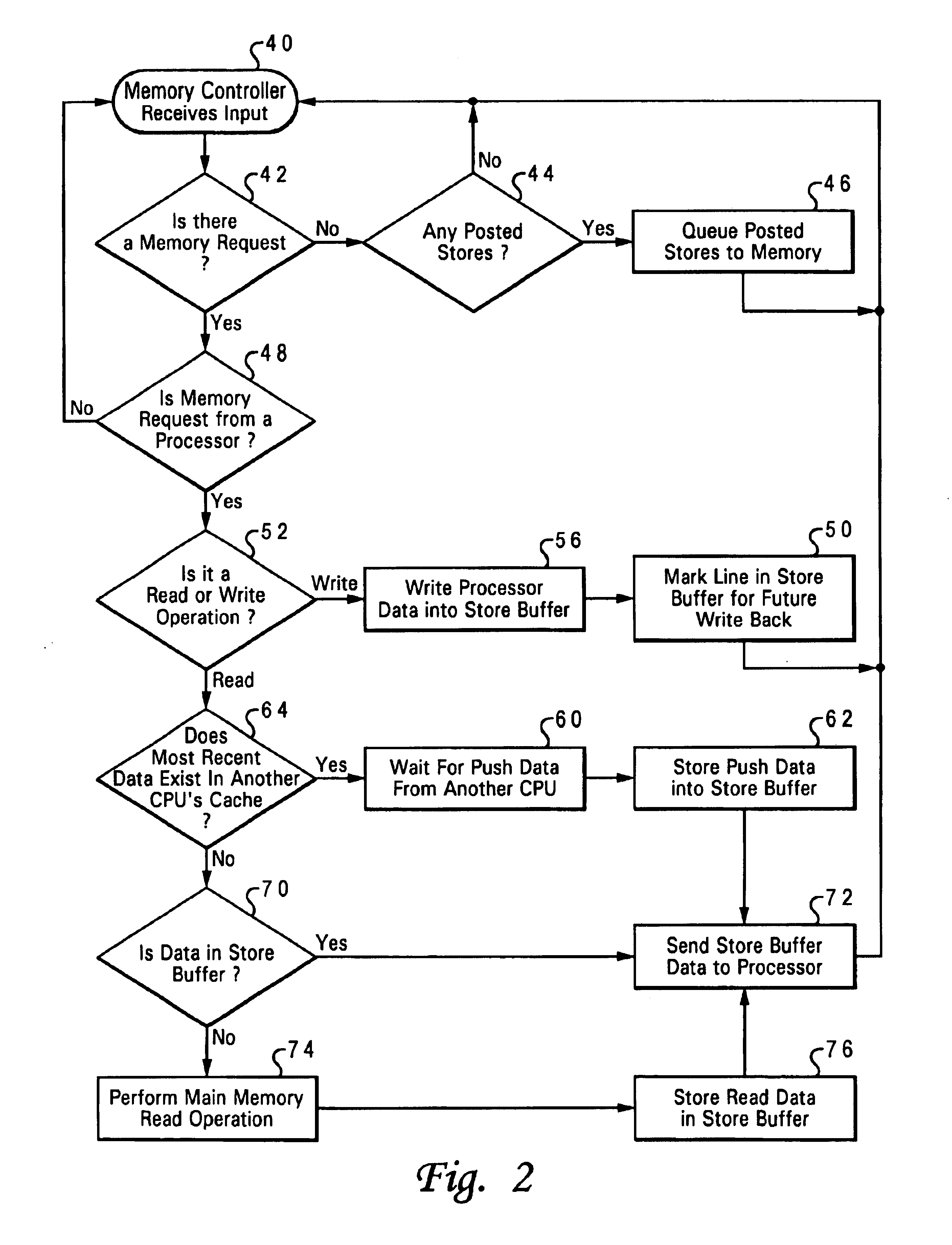

Method and apparatus for managing memory operations in a data processing system using a store buffer

Inactive; US6748493B1; Minimize impact; Lower latency; Memory addressing/allocation/relocation; Data processing system; Multi processor

A shared memory multiprocessor (SMP) data processing system includes a store buffer implemented in a memory controller for temporarily storing recently accessed memory data within the data processing system. The memory controller includes control logic for maintaining coherency between the memory controller's store buffer and memory. The memory controller's store buffer is configured into one or more arrays sufficiently mapped to handle I/O and CPU bandwidth requirements. The combination of the store buffer and the control logic operates as a front end within the memory controller in that all memory requests are first processed by the control logic/store buffer combination for reducing memory latency and increasing effective memory bandwidth by eliminating certain memory read and write operations.

Owner:GOOGLE LLC

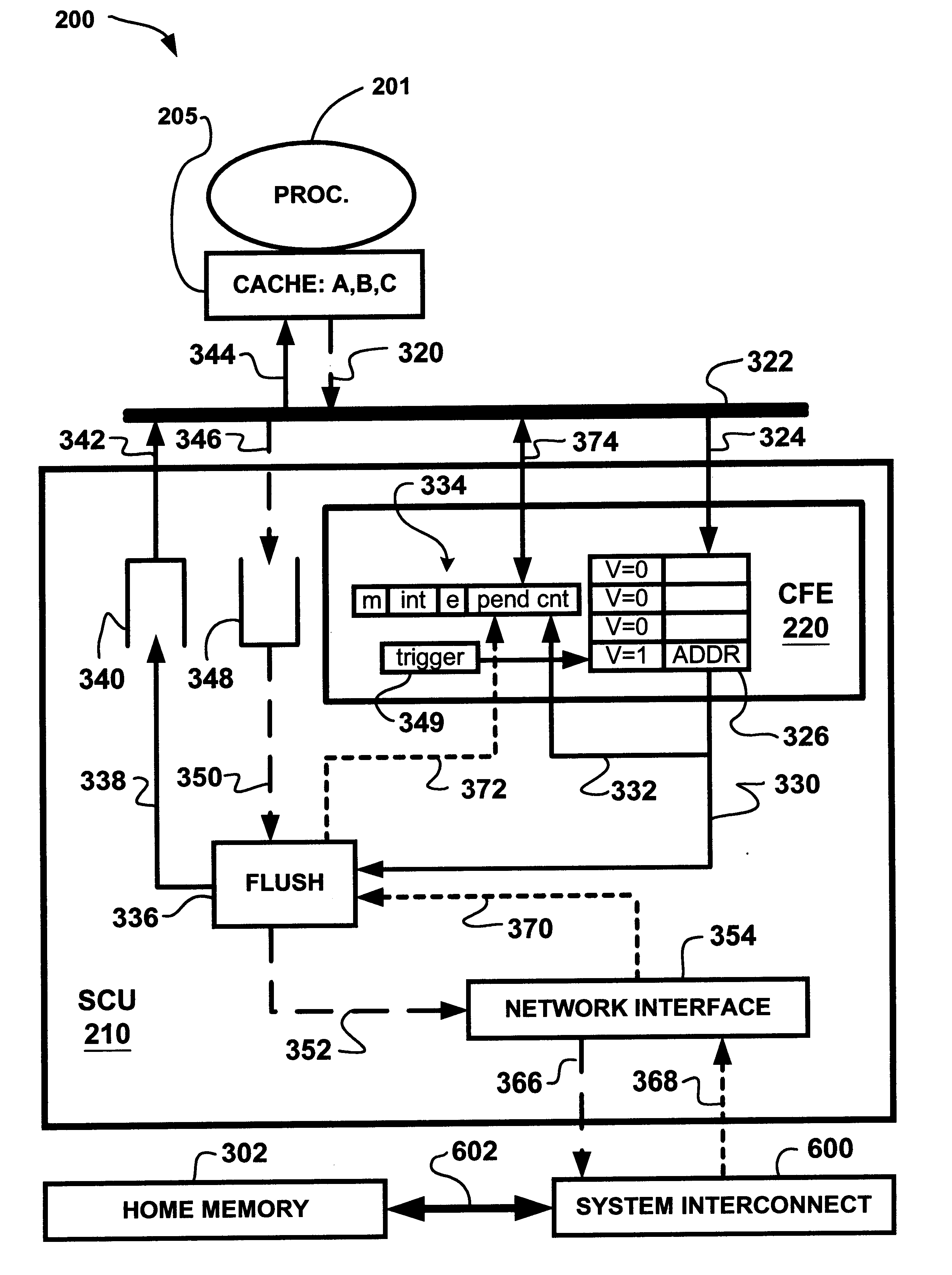

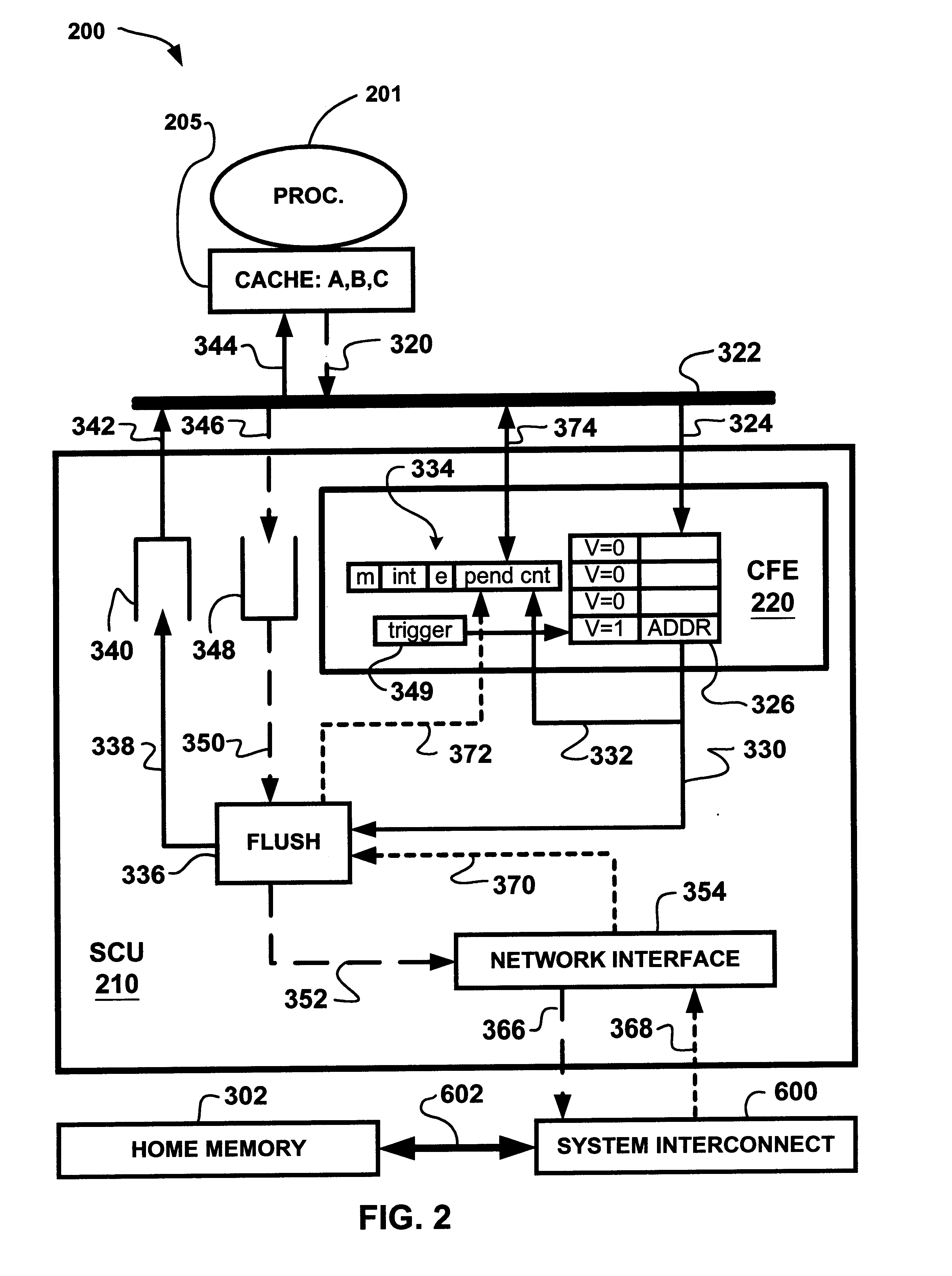

Cache-flushing engine for distributed shared memory multi-processor computer systems

Inactive; US6874065B1; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Multi processor; Parallel computing

A cache coherent distributed shared memory multi-processor computer system is provided with a cache-flushing engine which allows selective forced write-backs of dirty cache lines to the home memory. After a request is posted in the cache-flushing engine, a “flush” command is issued which forces the owner cache to write-back the dirty cache line to be flushed. Subsequently, a “flush request” is issued to the home memory of the memory block. The home node will acknowledge when the home memory is successfully updated. The cache-flushing engine operation will be interrupted when all flush requests are complete.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

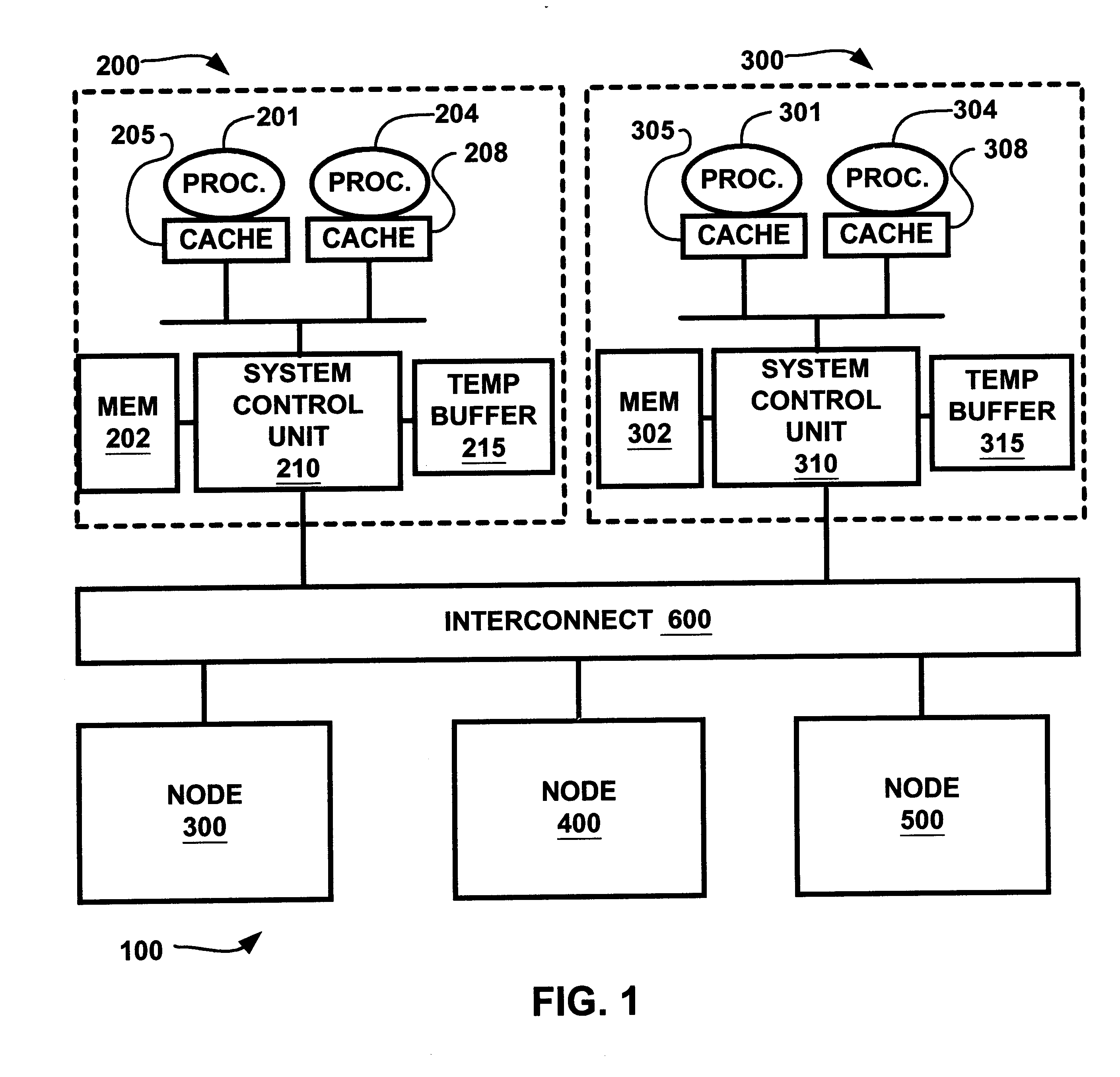

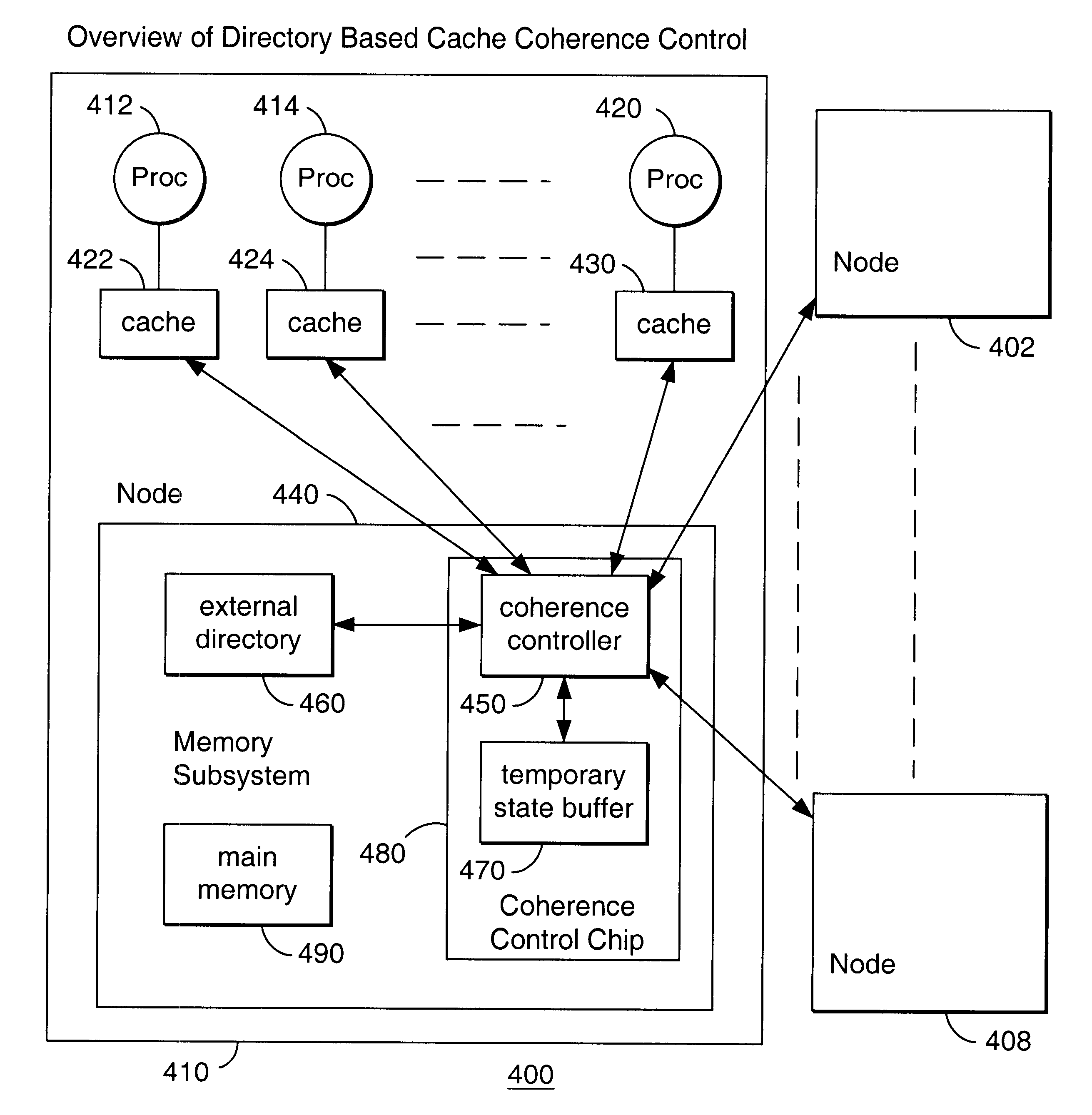

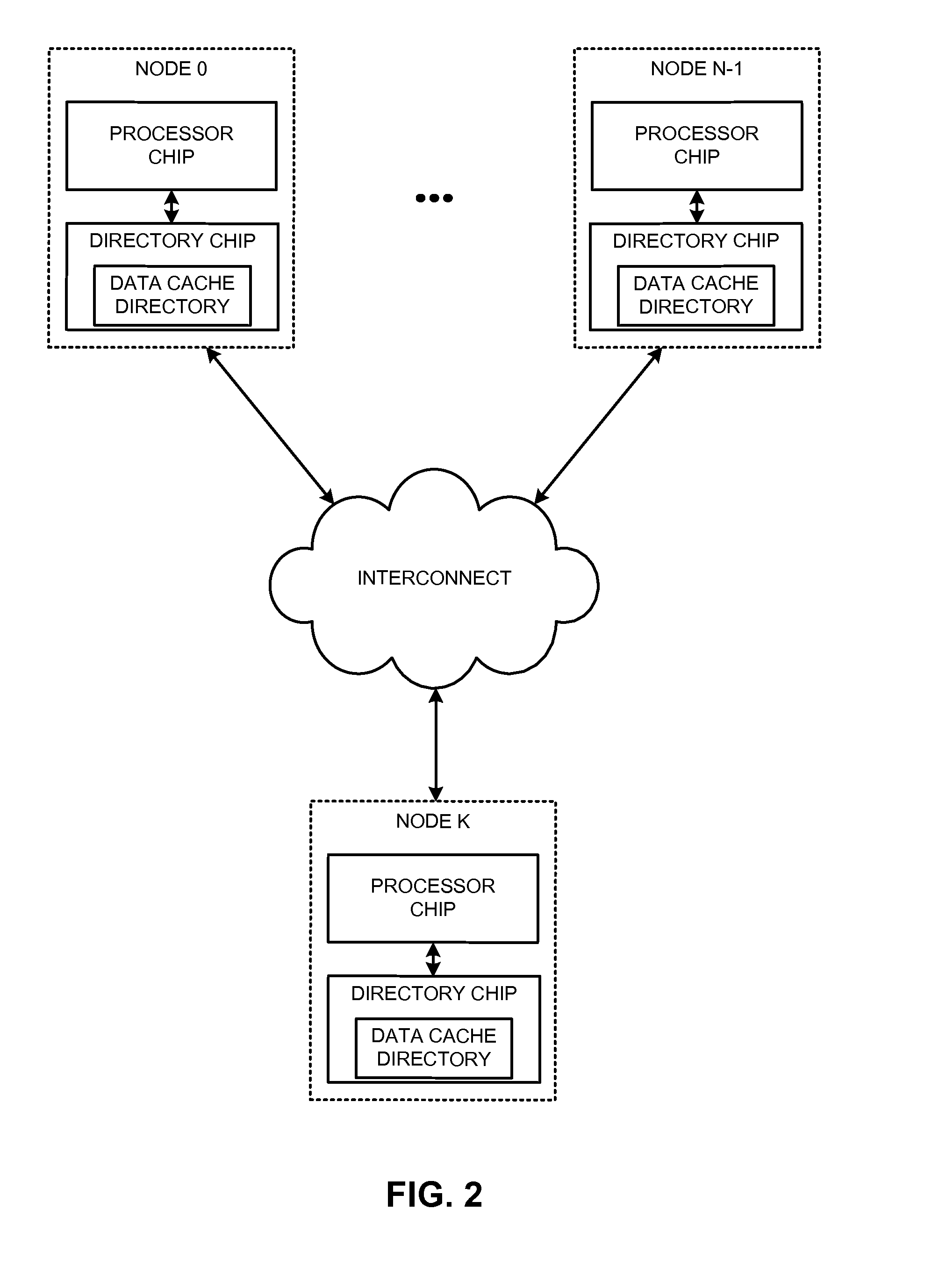

Split sparse directory for a distributed shared memory multiprocessor system

Inactive; US6560681B1; Reduce the amount required; Small size; Memory addressing/allocation/relocation; Multiple digital computer combinations; Multi processor; Parallel computing

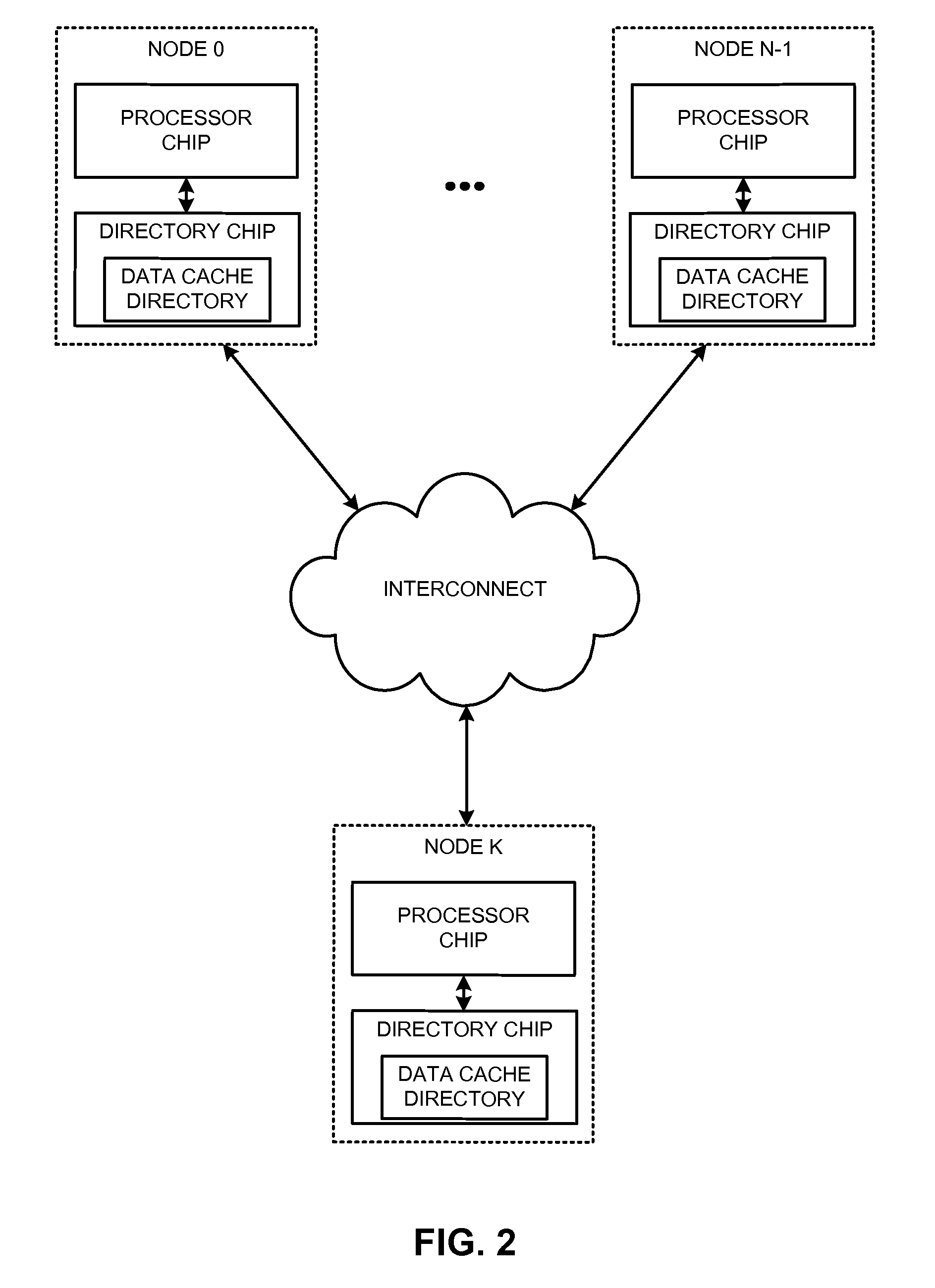

A split sparse directory for a distributed shared memory multiprocessor system with multiple nodes, each node including a plurality of processors, each processor having an associated cache. The split sparse directory is in a memory subsystem which includes a coherence controller, a temporary state buffer and an external directory. The split sparse directory stores information concerning the cache lines in the node, with the temporary state buffer holding state information about transient cache lines and the external directory holding state information about non-transient cache lines.

Owner:FUJITSU LTD

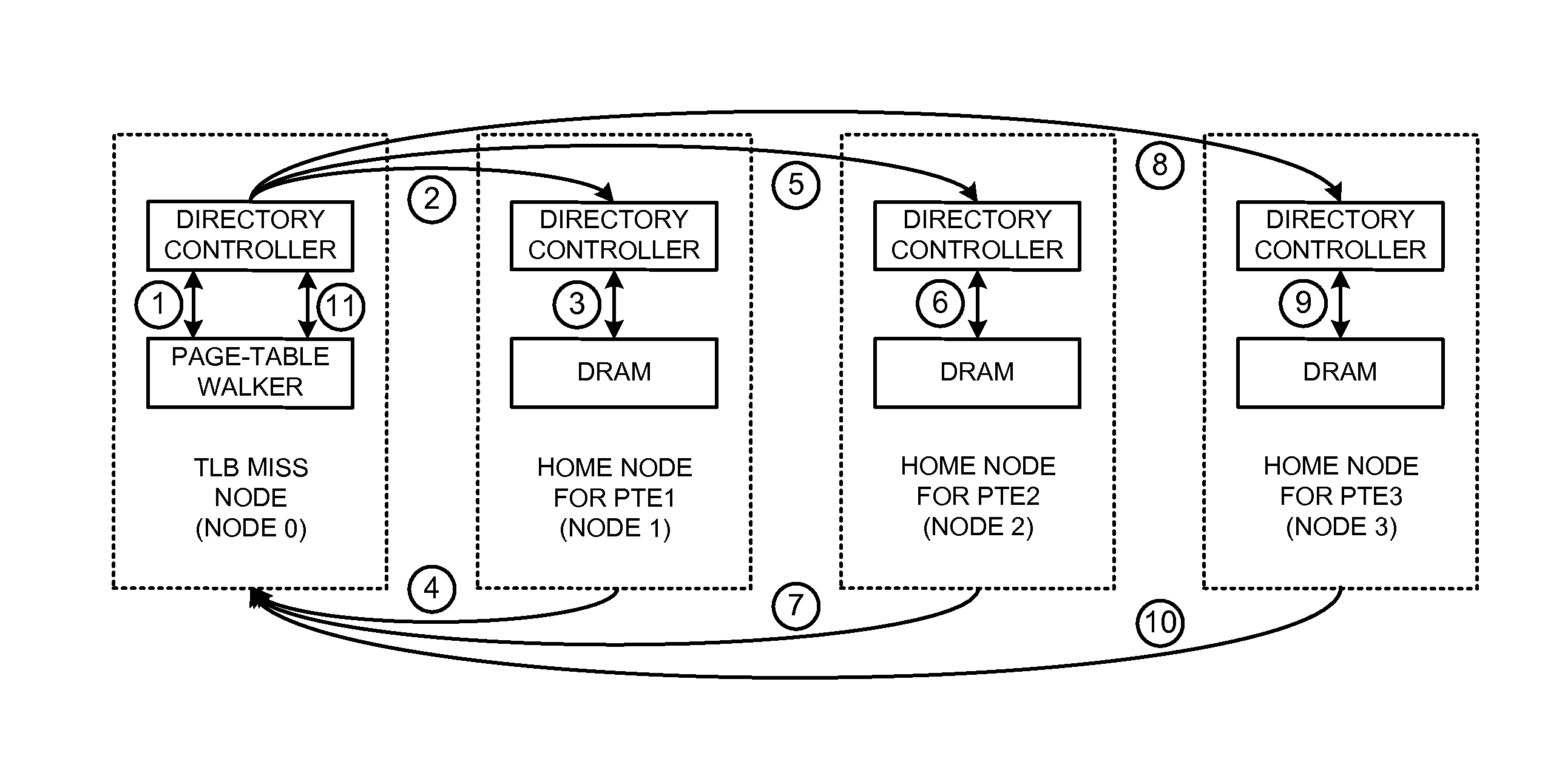

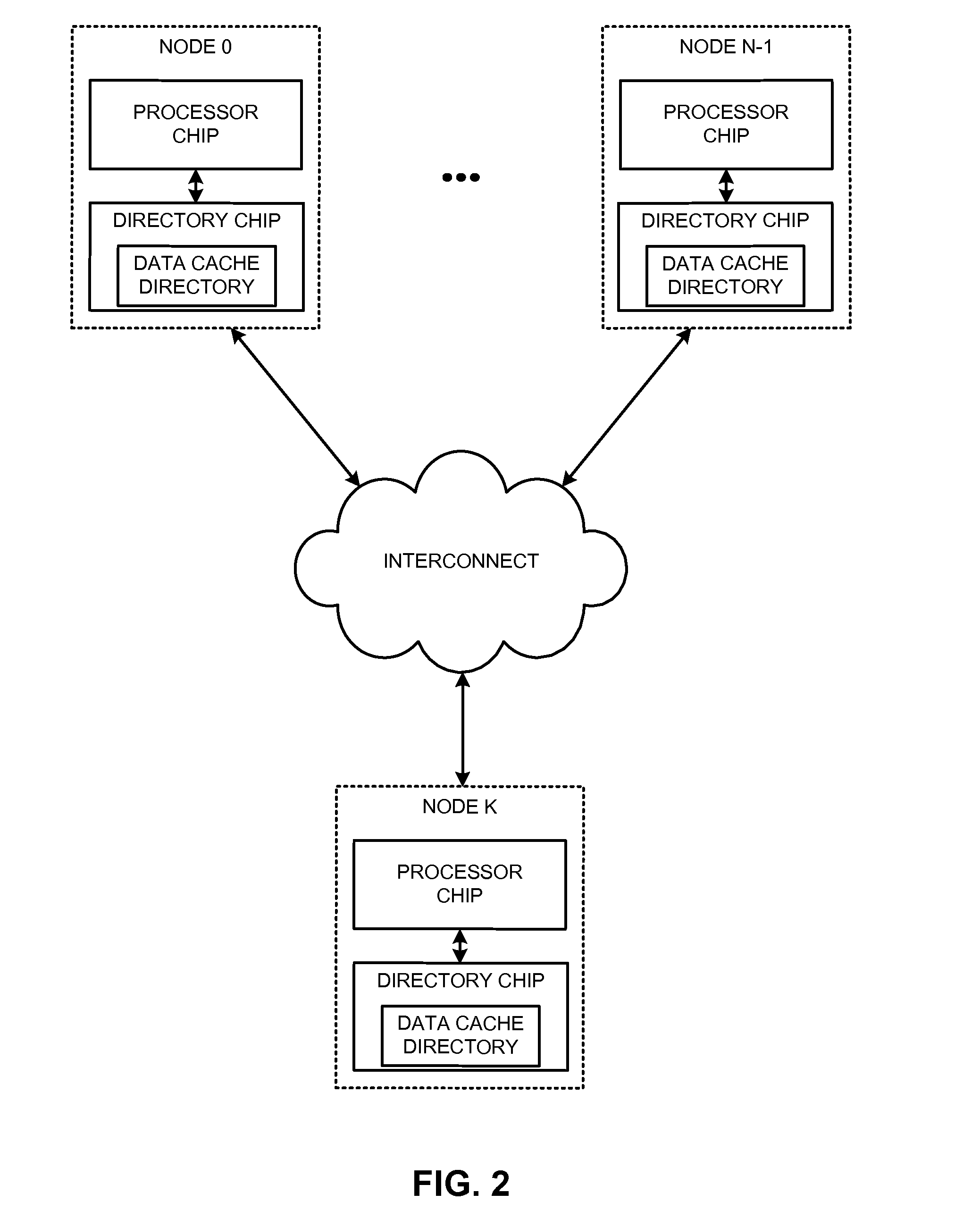

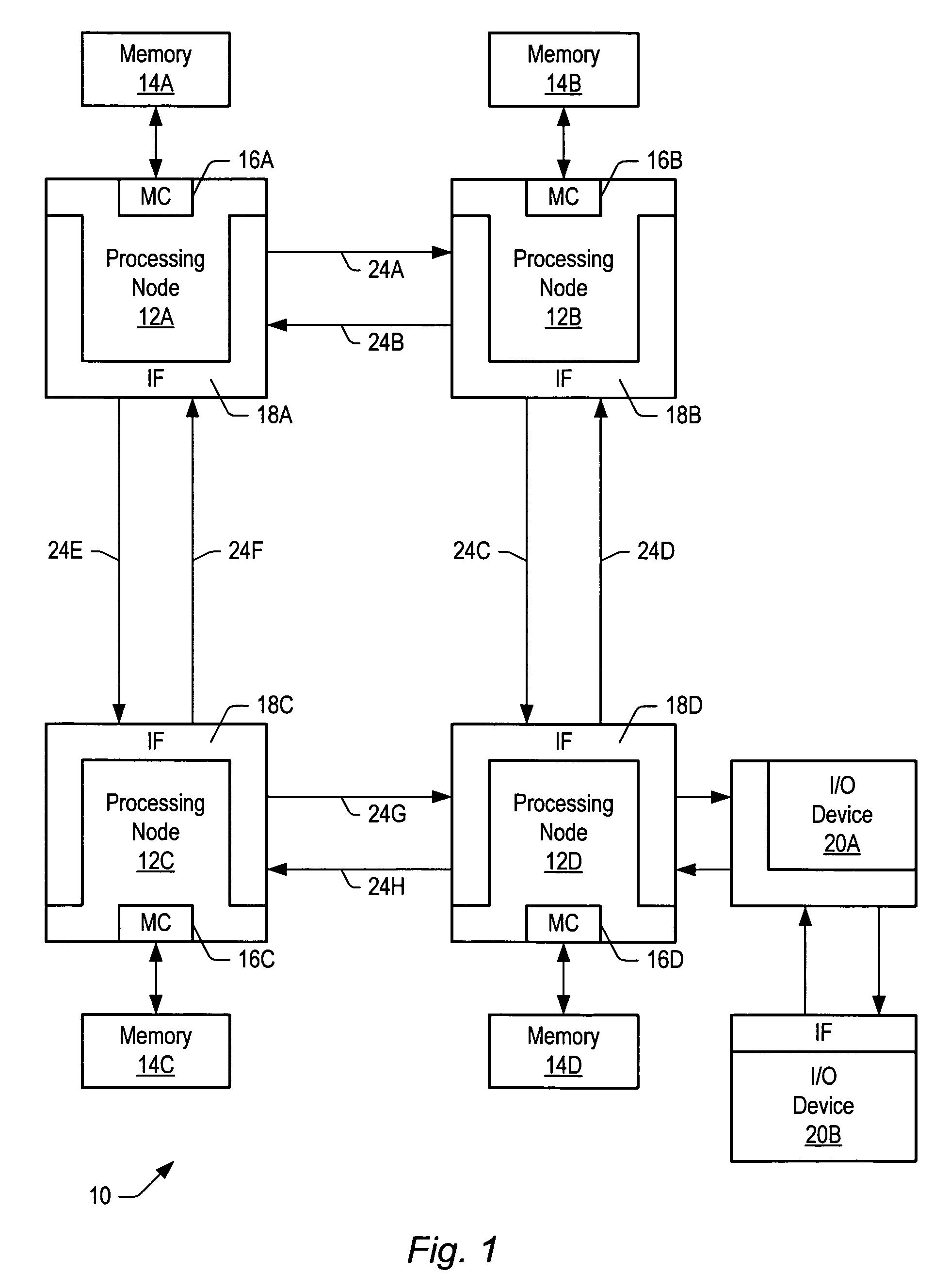

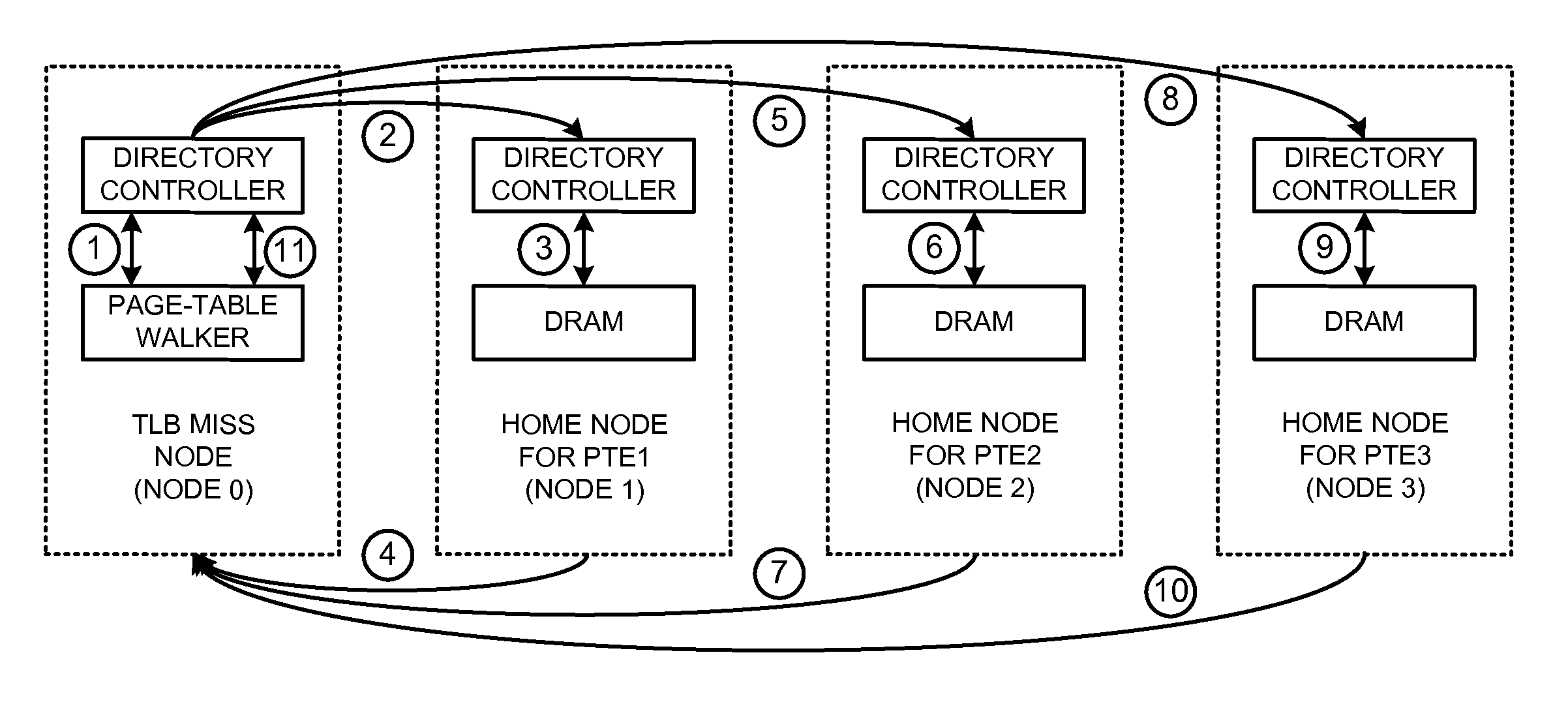

Distributed page-table lookups in a shared-memory system

Active; US20140089572A1; Reduce address-translation latency; Reduce communication overhead; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Physical address; Page table

The disclosed embodiments provide a system that performs distributed page-table lookups in a shared-memory multiprocessor system with two or more nodes, where each of these nodes includes a directory controller that manages a distinct portion of the system's address space. During operation, a first node receives a request for a page-table entry that is located at a physical address that is managed by the first node. The first node accesses its directory controller to retrieve the page-table entry, and then uses the page-table entry to calculate the physical address for a subsequent page-table entry. The first node determines the home node (e.g., the managing node) for this calculated physical address, and sends a request for the subsequent page-table entry to that home node.
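The forwarding pattern can be modeled in a few lines. This sketch assumes physical memory is split into equal contiguous regions, one per node, and that each page-table entry simply holds the base address of the next-level table; both are simplifications chosen for the example. `home_node`, `distributed_walk` and their parameters are hypothetical names.

```python
def home_node(phys_addr, bytes_per_node):
    """Node whose directory controller manages this address (assumed contiguous split)."""
    return phys_addr // bytes_per_node

def distributed_walk(root_table, vpn_indices, memory, bytes_per_node, entry_size=8):
    """Walk a multi-level page table, hopping to each entry's home node.

    vpn_indices must be non-empty: one table index per level.
    memory maps a physical address to the value stored there.
    Returns (final_entry, list_of_nodes_visited).
    """
    addr = root_table + vpn_indices[0] * entry_size
    visited = []
    for level, _ in enumerate(vpn_indices):
        visited.append(home_node(addr, bytes_per_node))
        entry = memory[addr]              # read locally by the home node
        if level == len(vpn_indices) - 1:
            return entry, visited
        # The home node computes the next entry's address and forwards the request.
        addr = entry + vpn_indices[level + 1] * entry_size

memory = {0x0008: 0x1000, 0x1010: 0x2000}
print(distributed_walk(0x0, [1, 2], memory, bytes_per_node=0x1000))
```

The requesting node is contacted only once, with the final entry, rather than once per level.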

Owner:ORACLE INT CORP

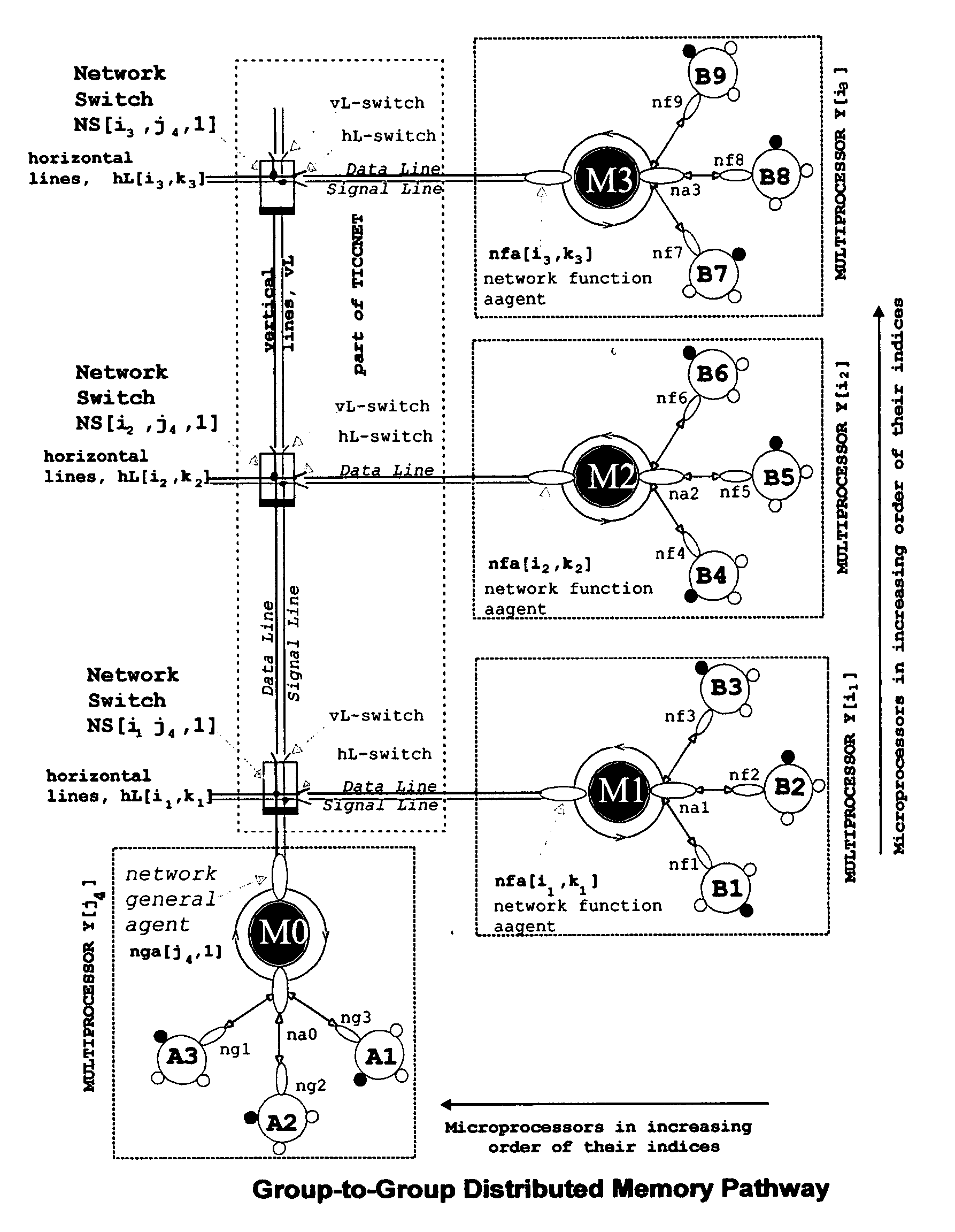

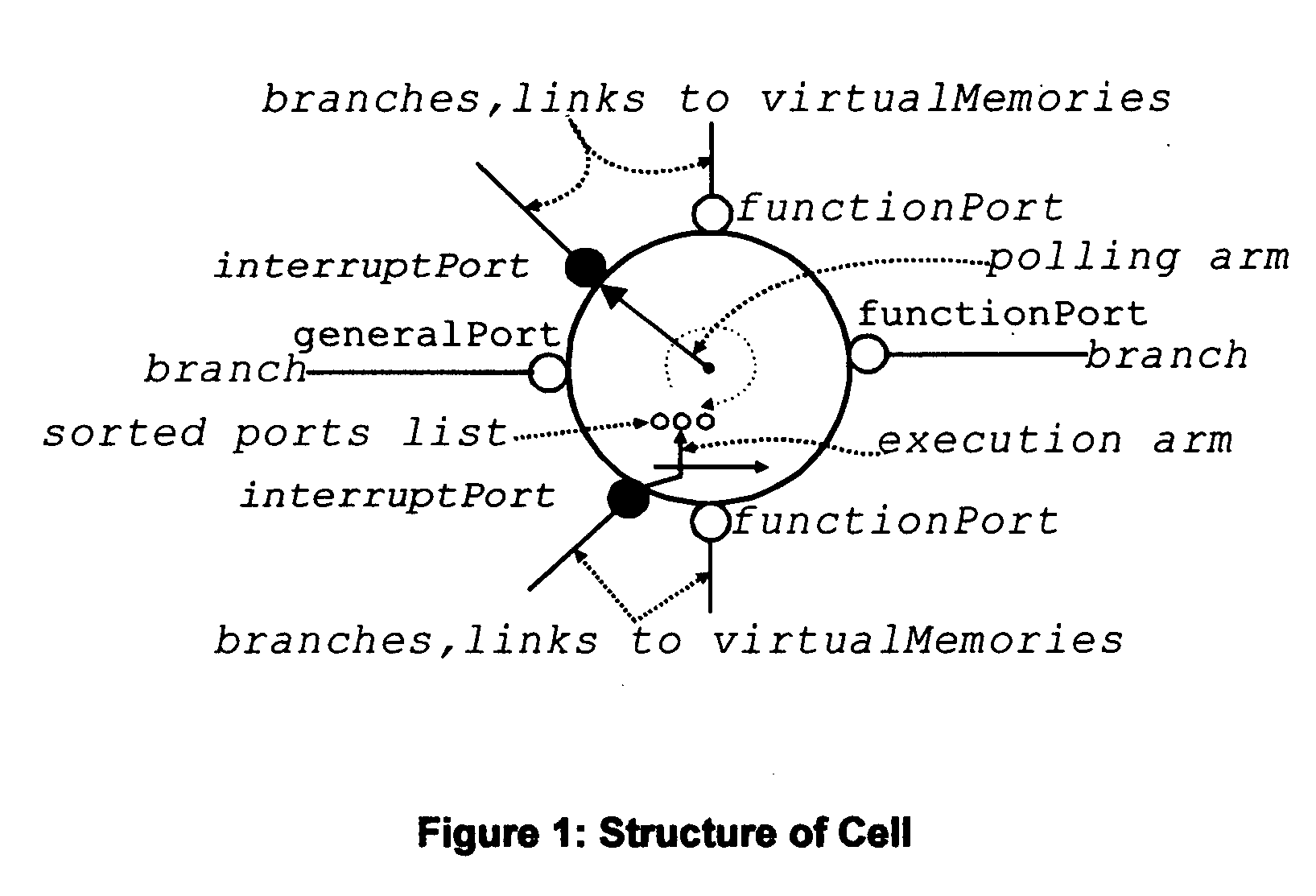

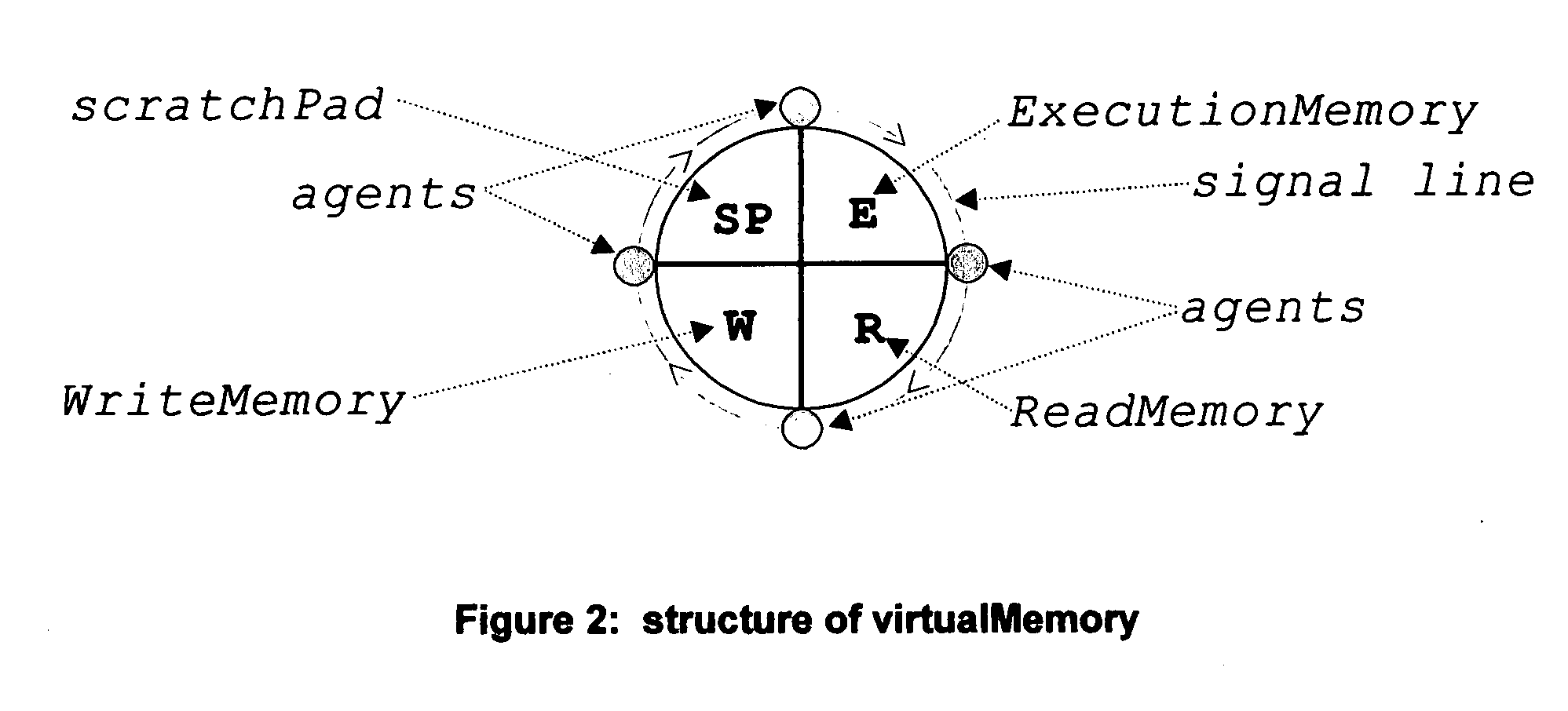

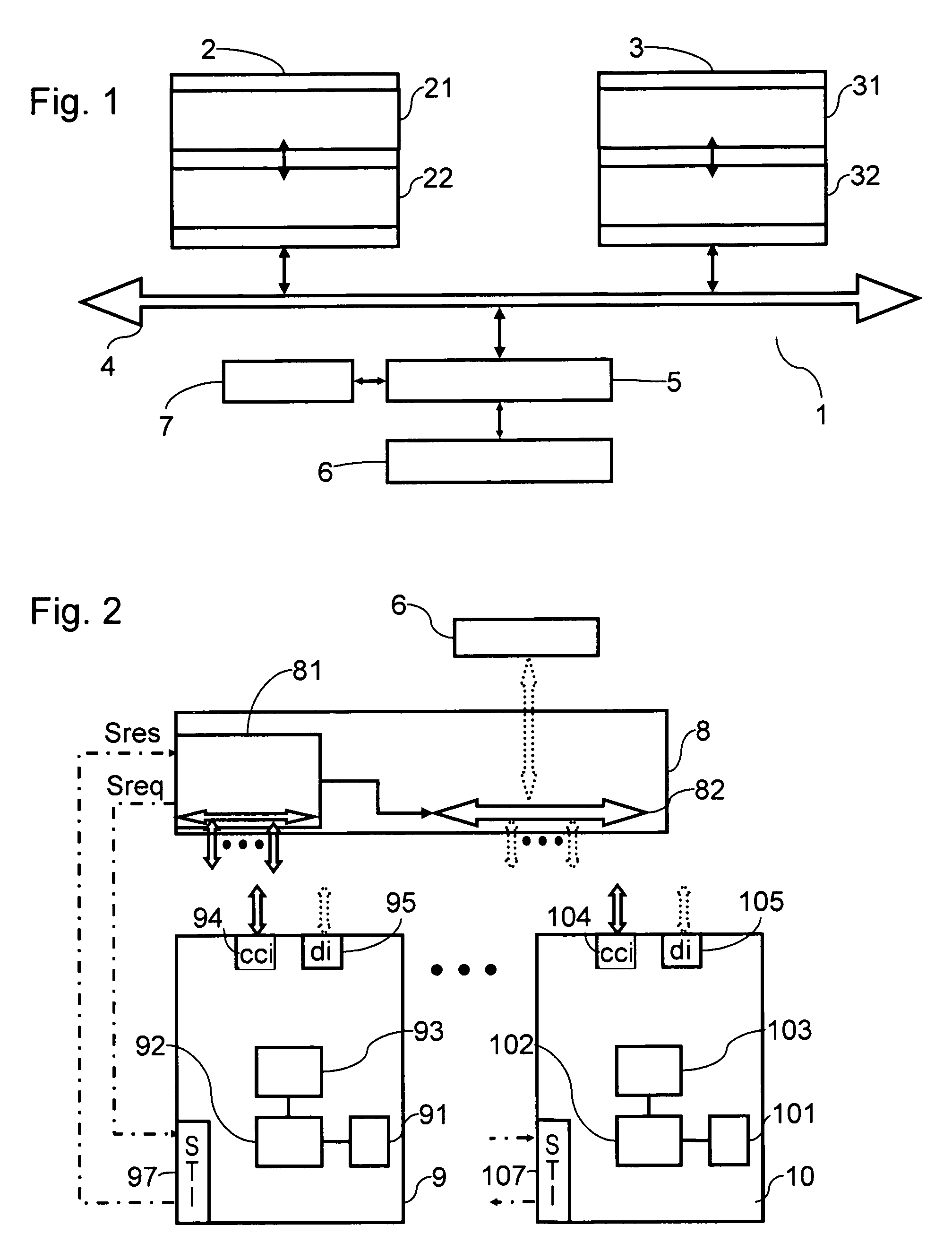

Self-scheduled real time software using real time asynchronous messaging

TICC™ (Technology for Integrated Computation and Communication), a patented technology [1], provides a high-speed message-passing interface for parallel processes. TICC™ performs high-speed asynchronous message passing with nanosecond-scale latencies in shared-memory multiprocessors and microsecond-scale latencies over the distributed-memory local area TICCNET™ (Patent Pending, [2]). Ticc-Ppde (Ticc-based Parallel Program Development and Execution platform, Patent Pending, [3]) provides a component-based parallel program development environment, and provides the infrastructure for dynamic debugging and updating of Ticc-based parallel programs, self-monitoring, self-diagnosis and self-repair. Ticc-Rtas (Ticc-based Real Time Application System) provides the system architecture for developing self-scheduled real time distributed parallel processing software with real-time asynchronous messaging, using Ticc-Ppde. Implementation of a Ticc-Rtas real time application using Ticc-Ppde will automatically generate the self-monitoring system for the Rtas. This self-monitoring system may be used to monitor the Rtas during its operation, in parallel with its operation, to recognize and report a priori specified observable events that may occur in the application, or to recognize and report system malfunctions, without interfering with the timing requirements of the Ticc-Rtas. The structure, the innovations underlying their operations, and details on developing Rtas using Ticc-Ppde and TICCNET™ are presented here together with three illustrative examples: one on sensor fusion, another on image fusion, and a third on power transmission control in a fuel cell powered automobile.

Owner:EDSS INC

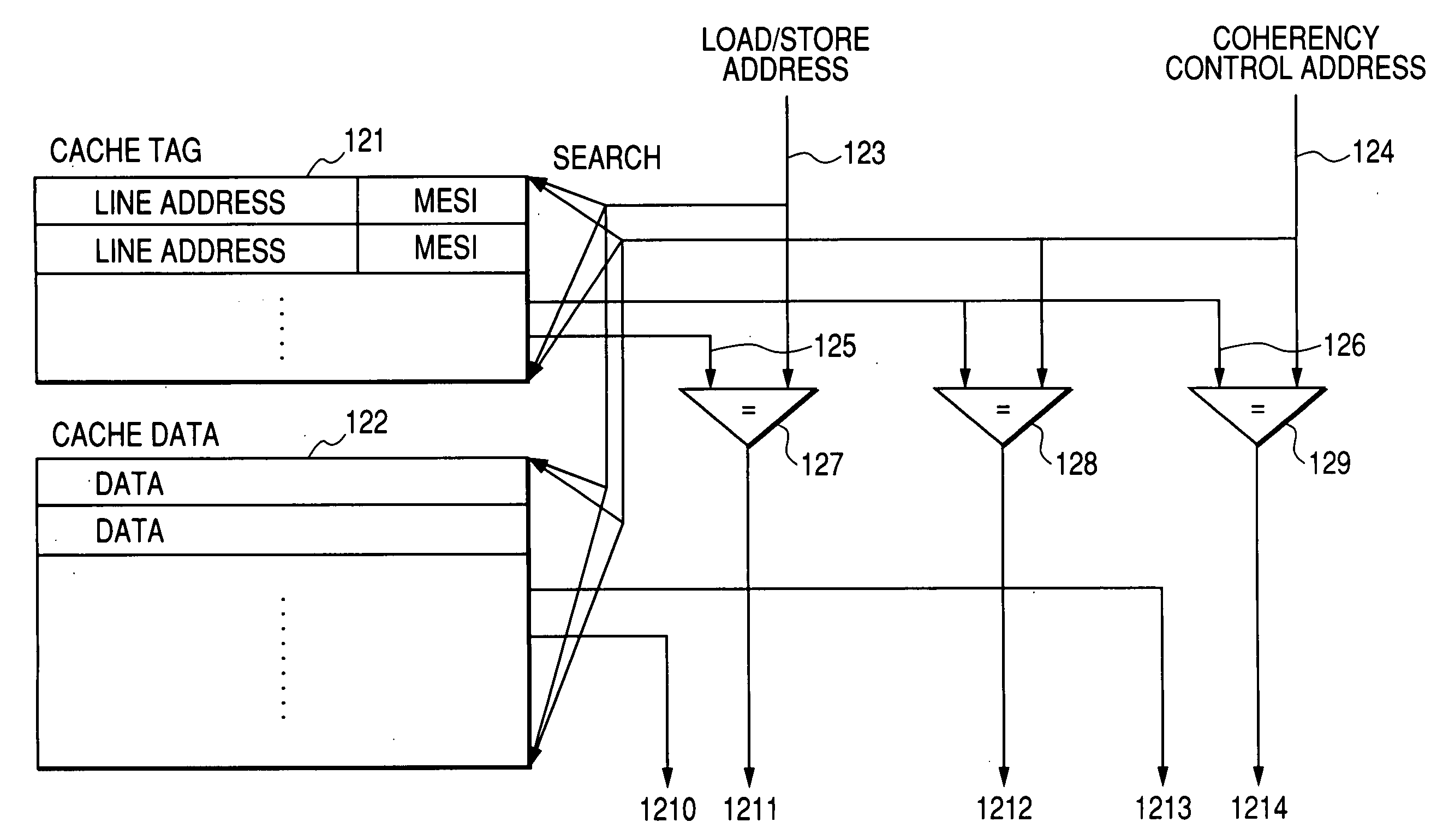

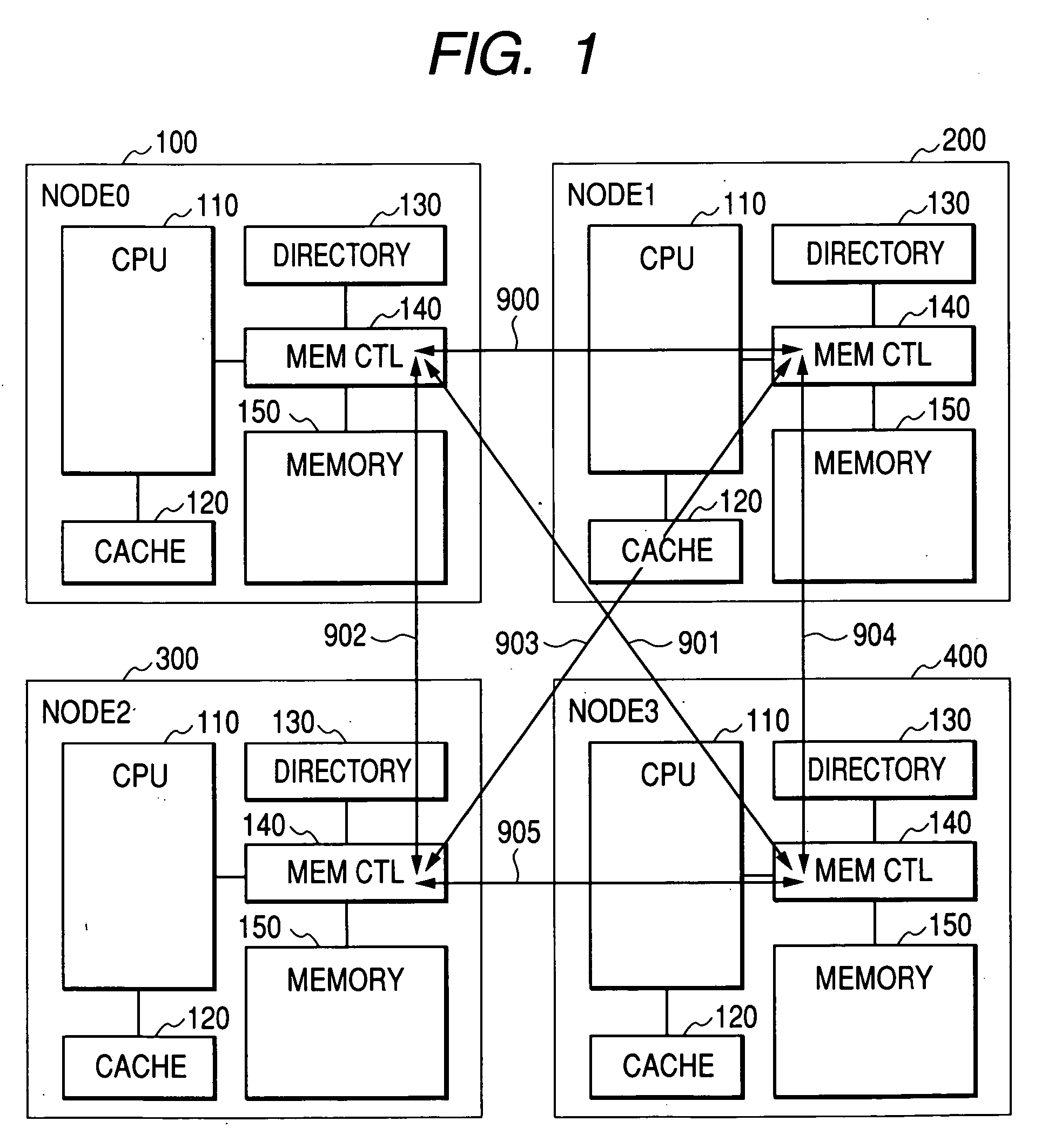

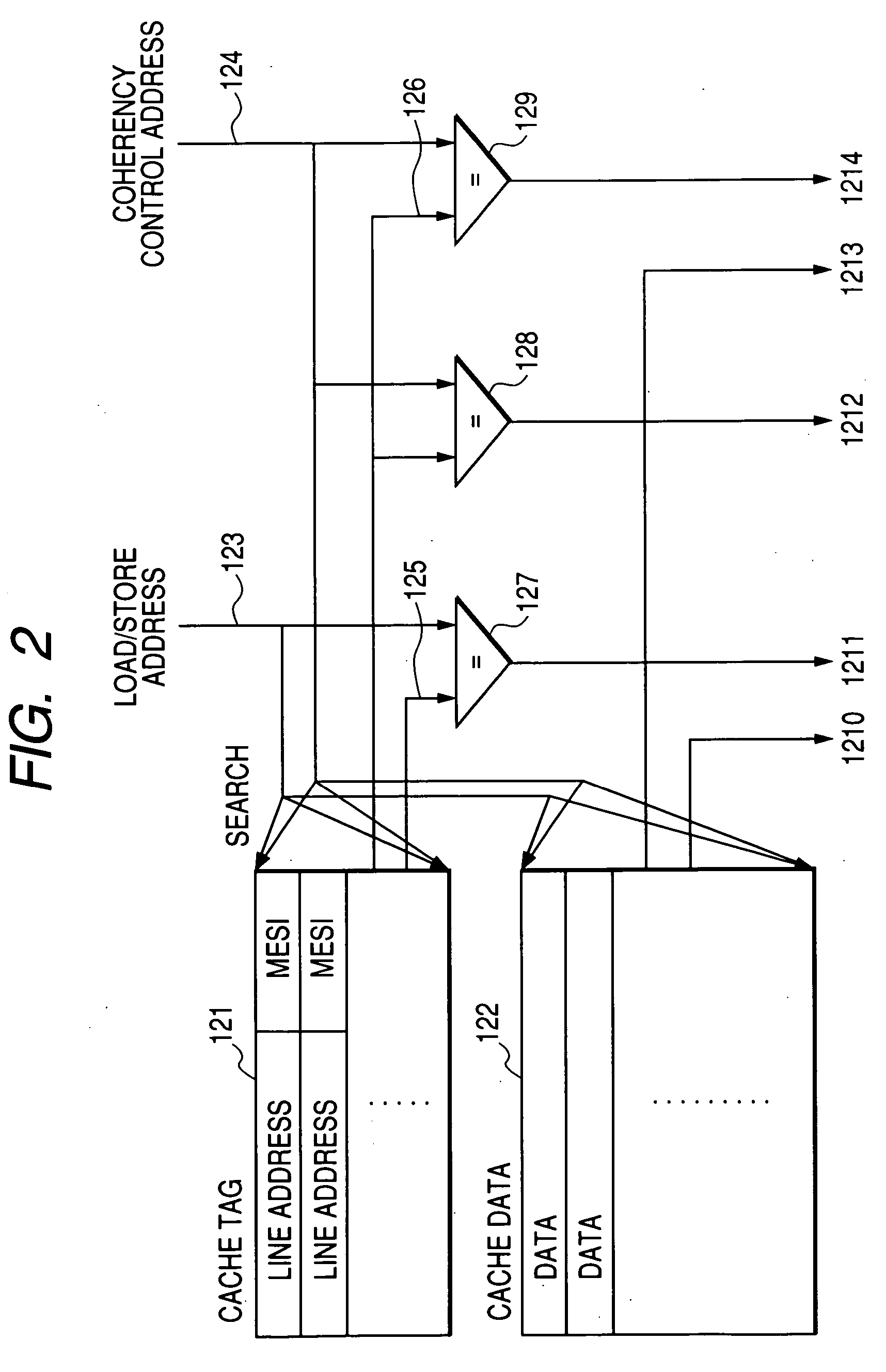

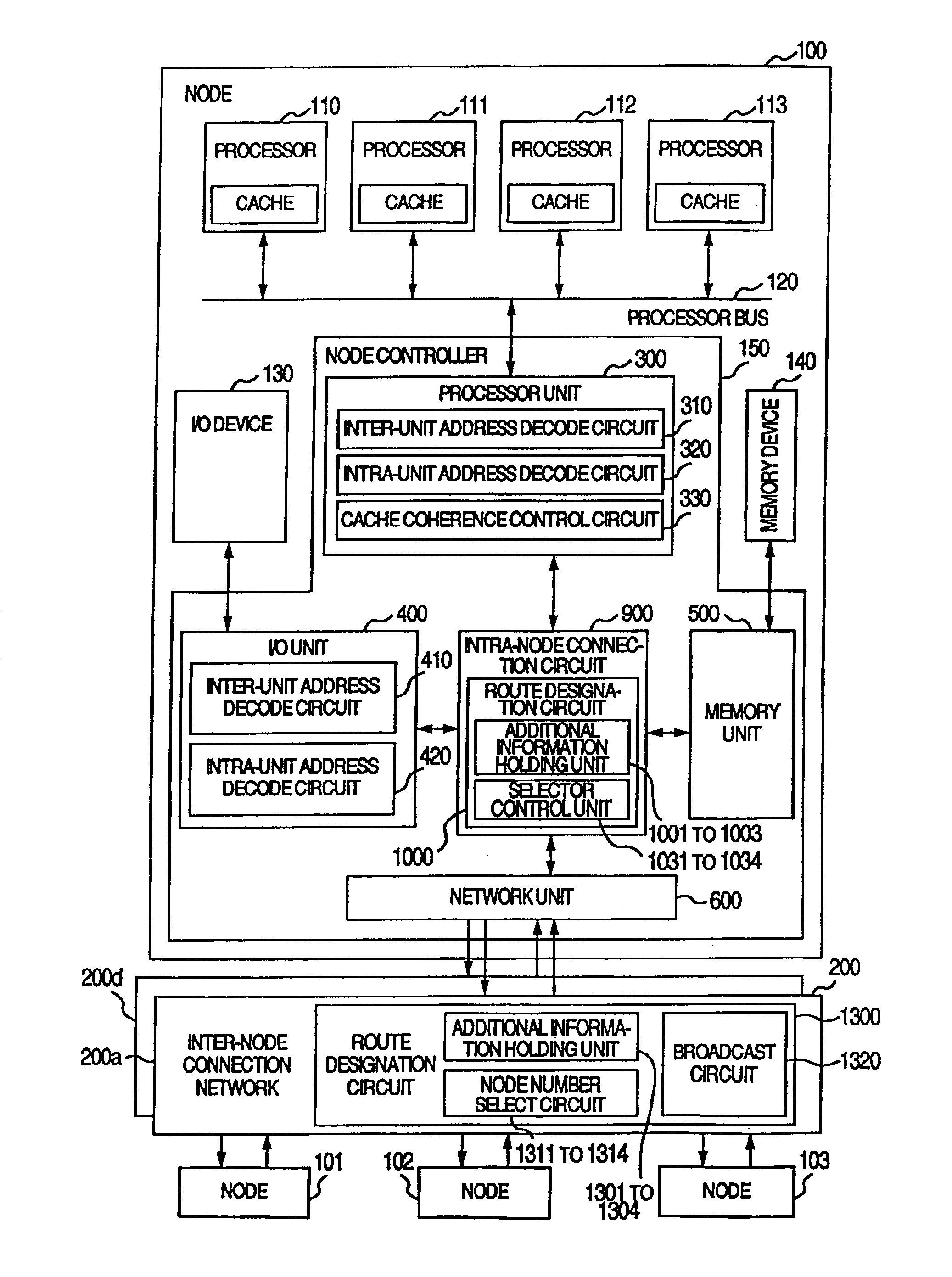

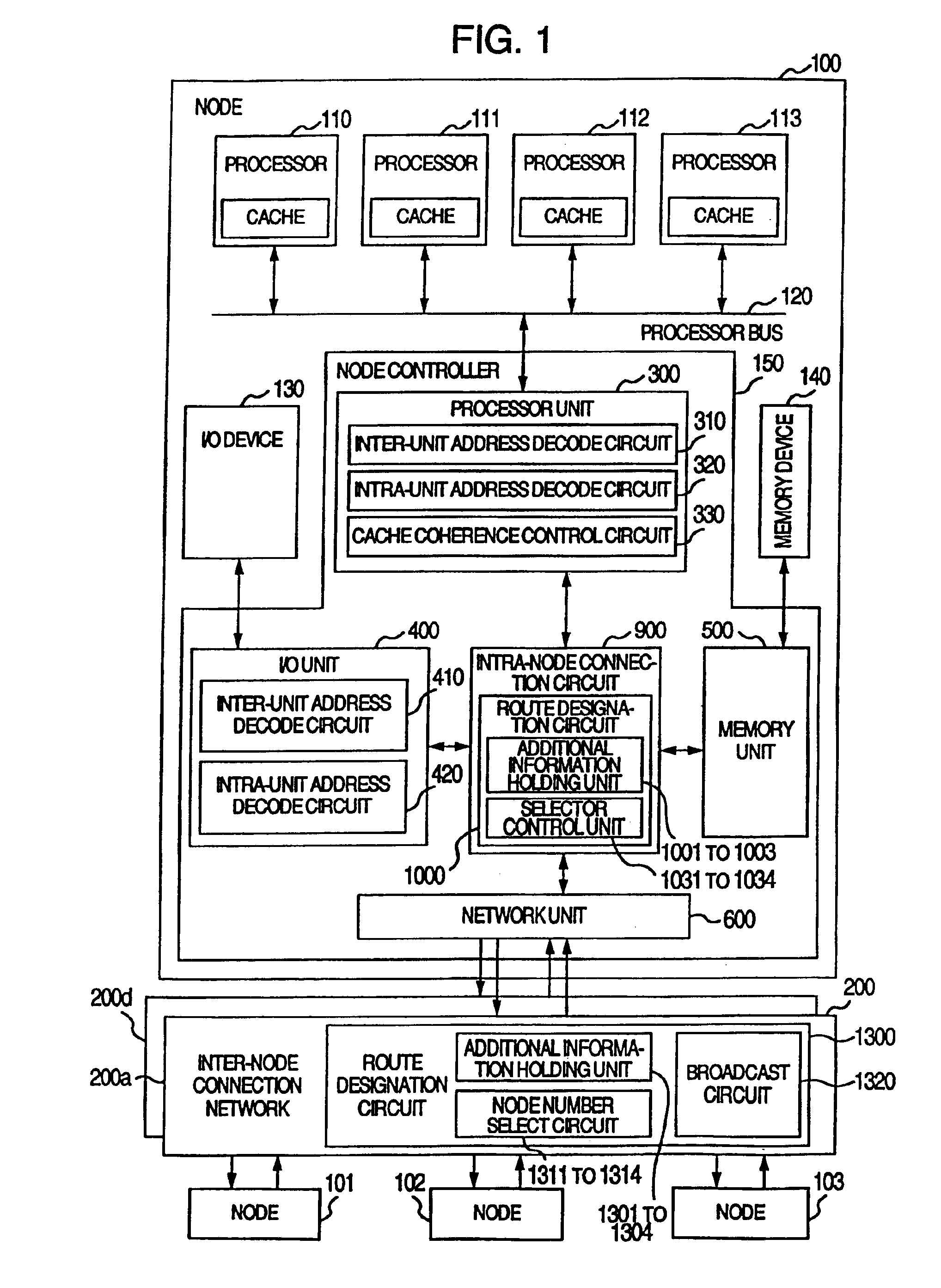

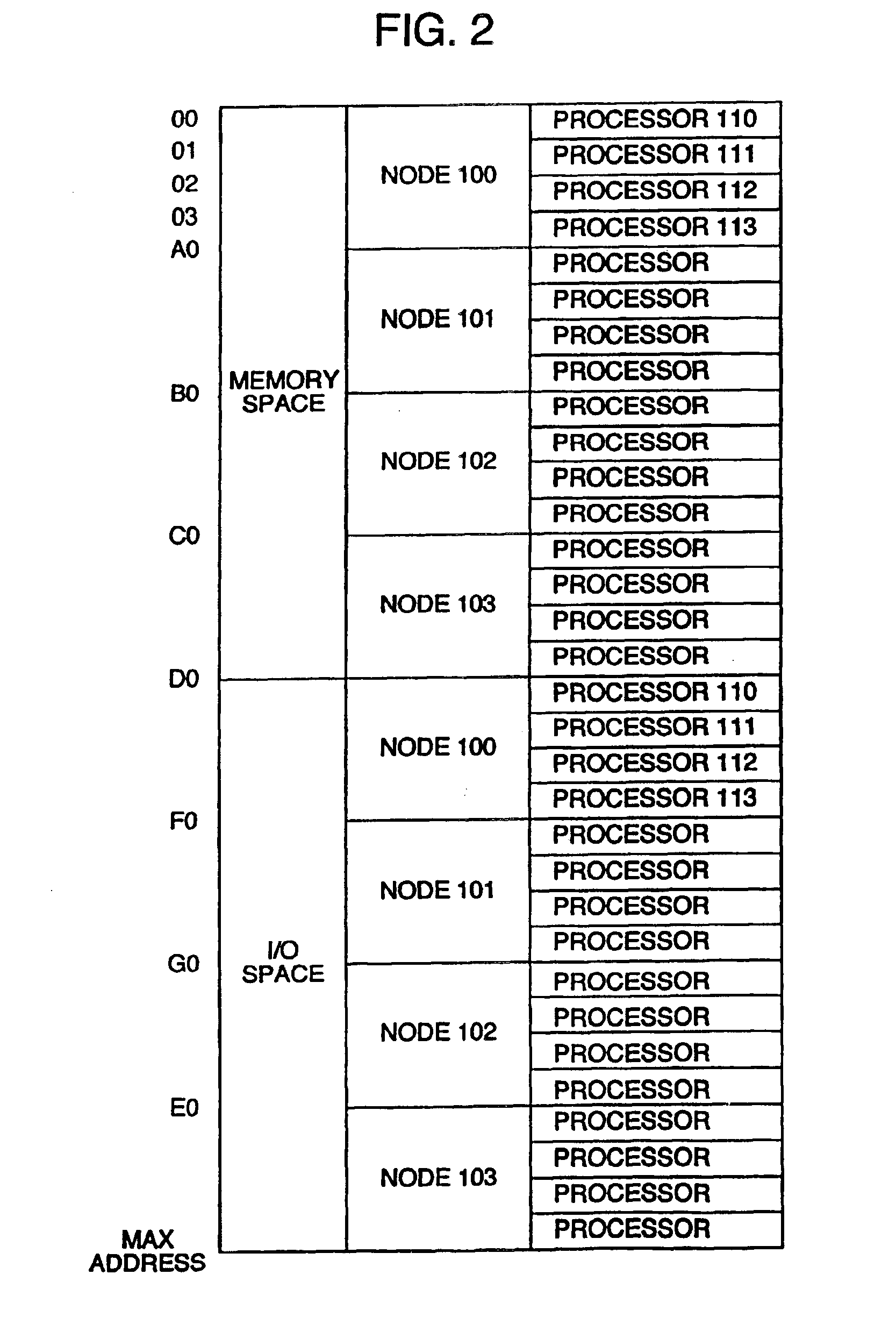

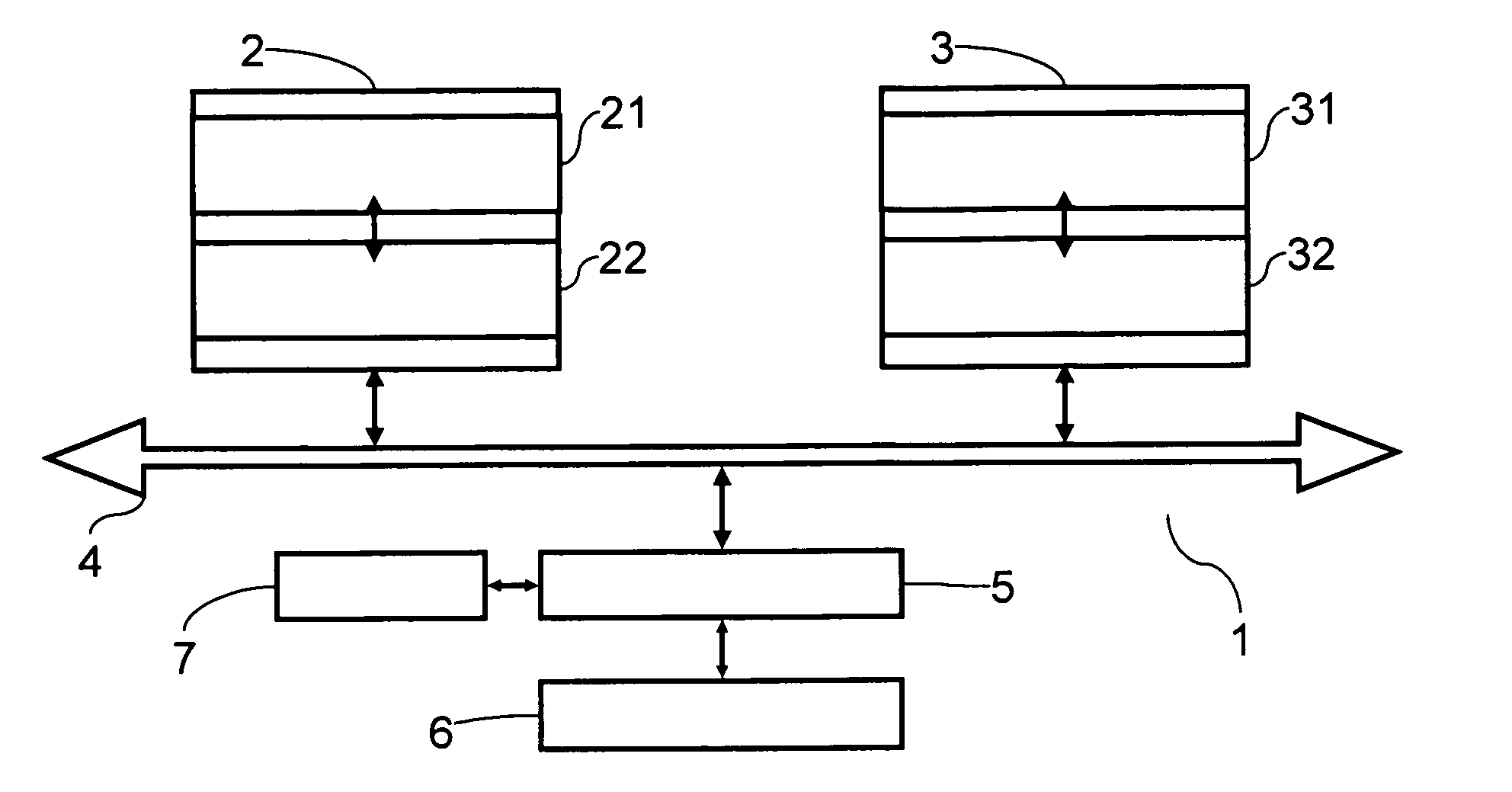

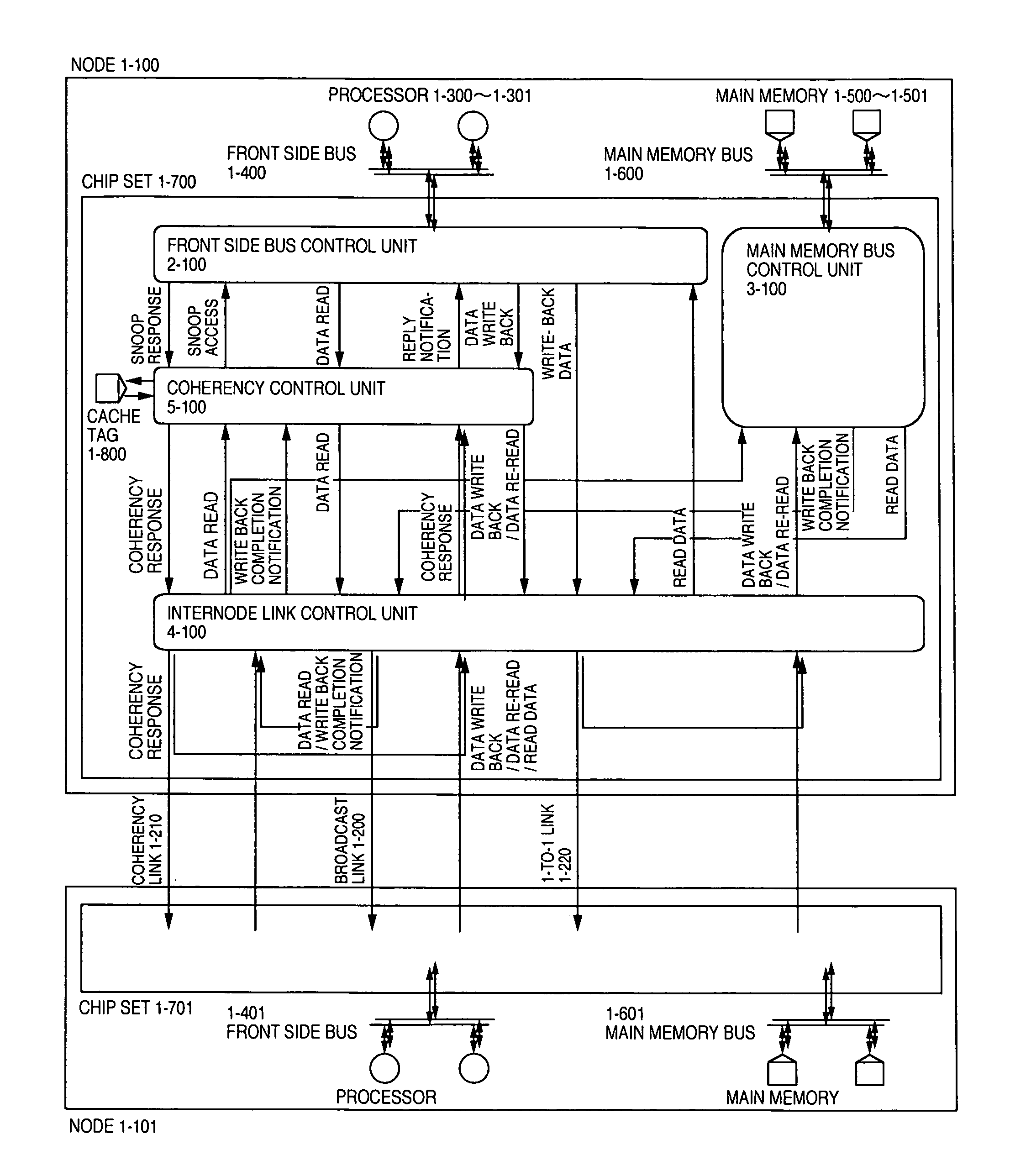

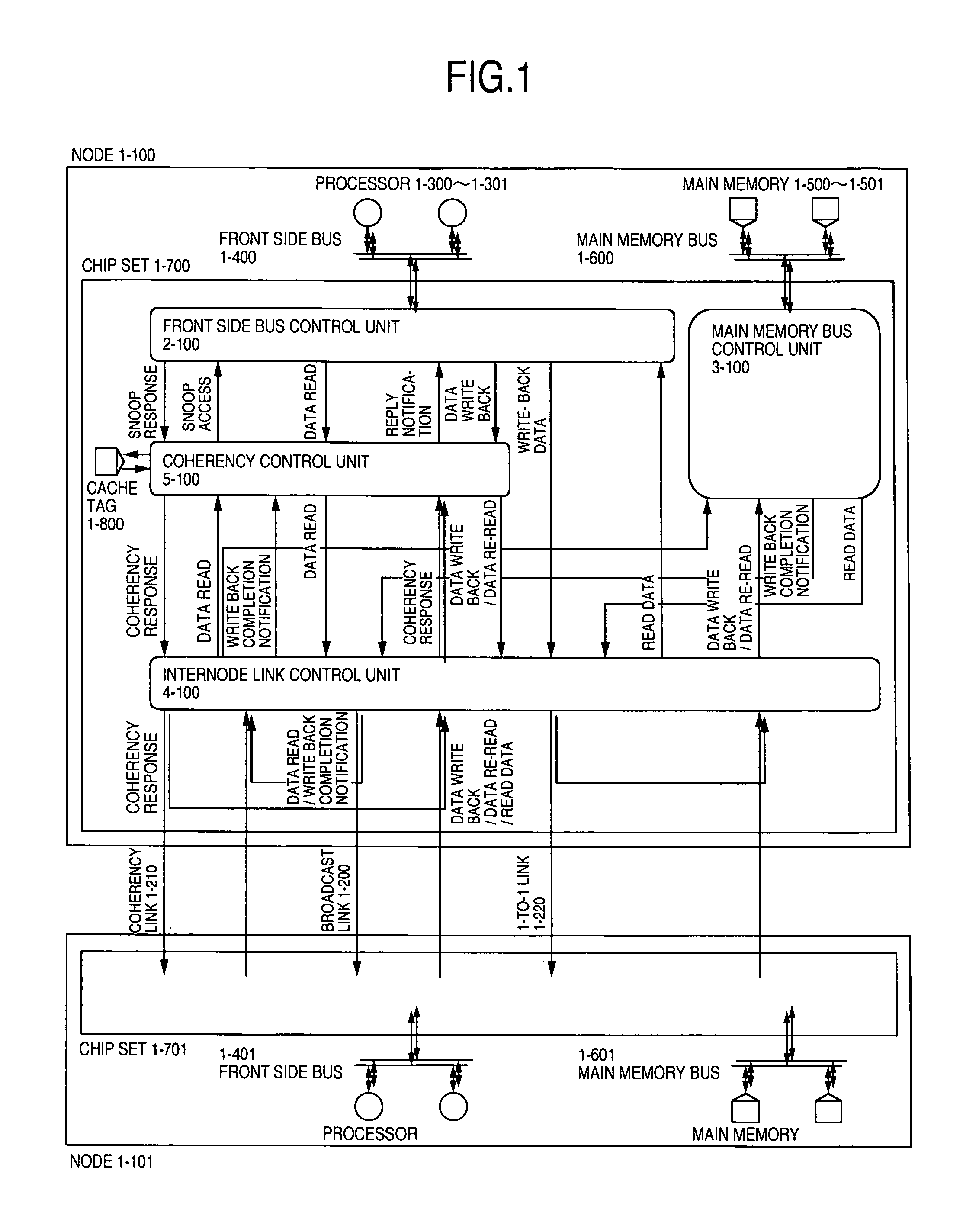

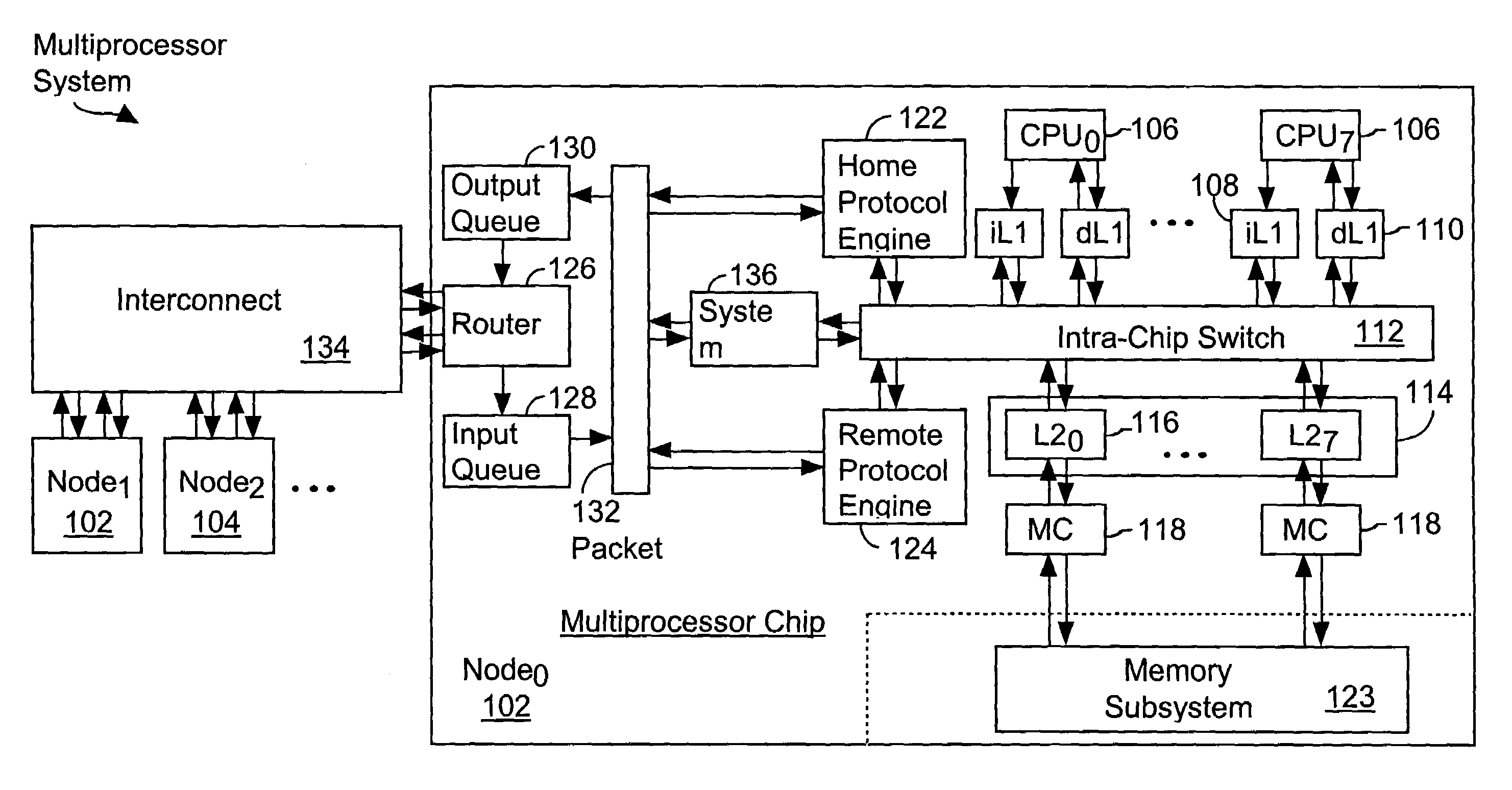

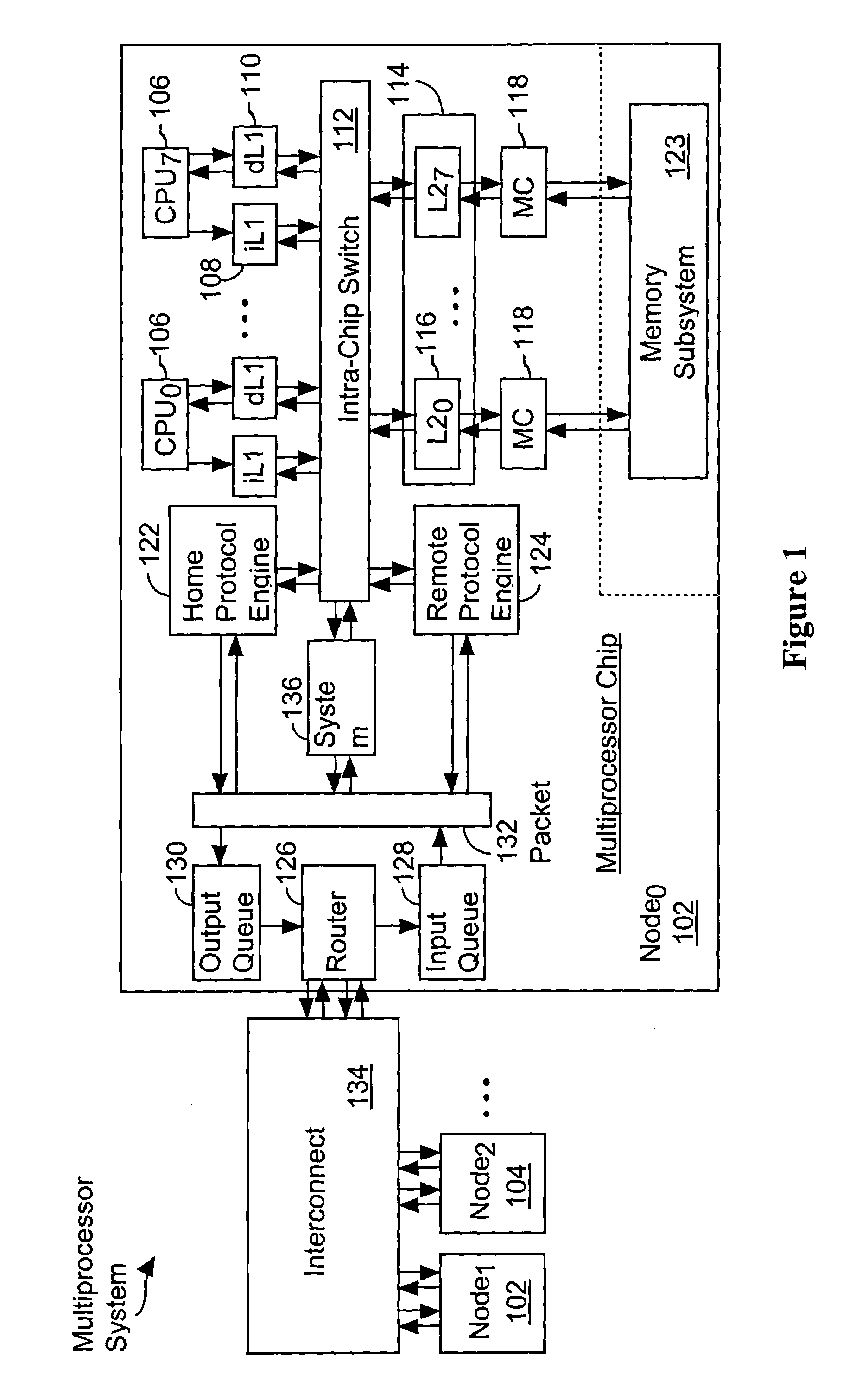

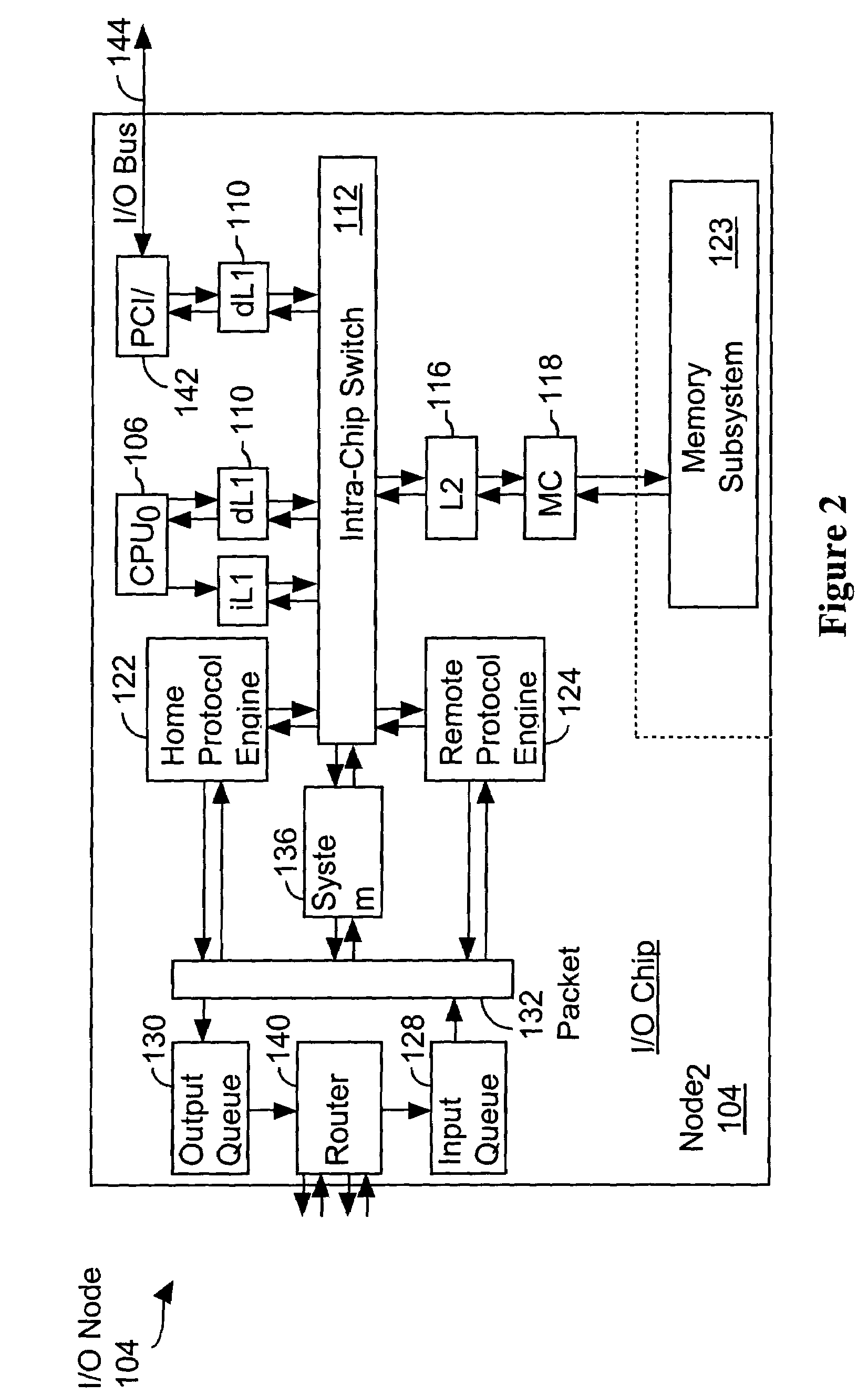

Multiprocessor system

Inactive; US20050198441A1; Reduce the amount of hardware; Reduce the amount required; Memory addressing/allocation/relocation; Memory address; Multi processor

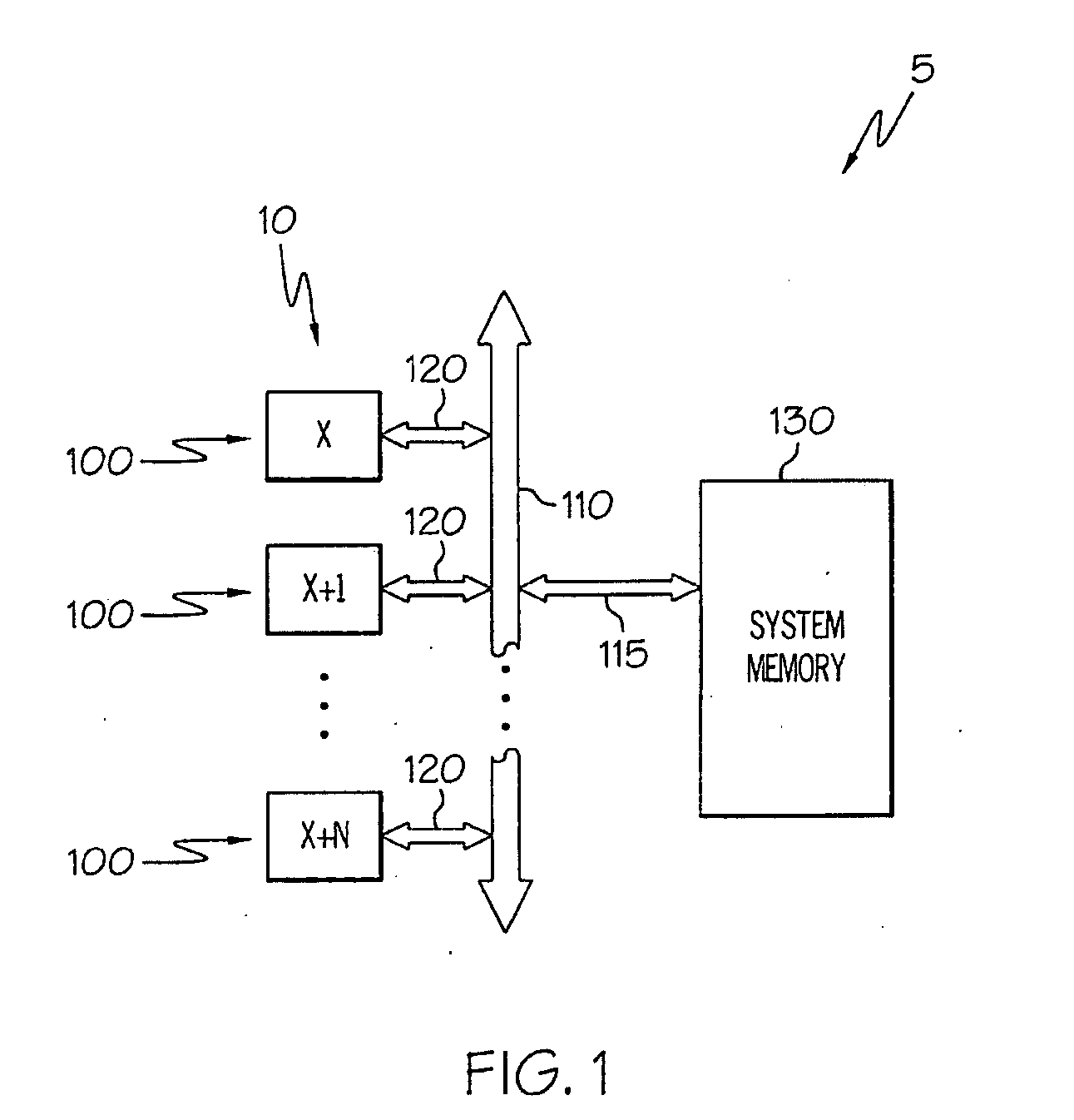

A shared memory multiprocessor is provided which includes a plurality of nodes connected to one another. Each node includes: a main memory for storing data; a cache memory for storing a copy of data obtained from the main memory; and a CPU for accessing the main memory and the cache memory and processing data. The node further includes a directory and a memory region group. The directory is made up of directory entries, each including one or more directory bits, each of which indicates whether the cache memory of another node stores a copy of a part of a memory region group of the main memory of this node. The memory region group consists of memory regions having the same memory address portion, including a cache index portion. Each node is assigned to one of the one or more directory bits.

Owner:HITACHI LTD

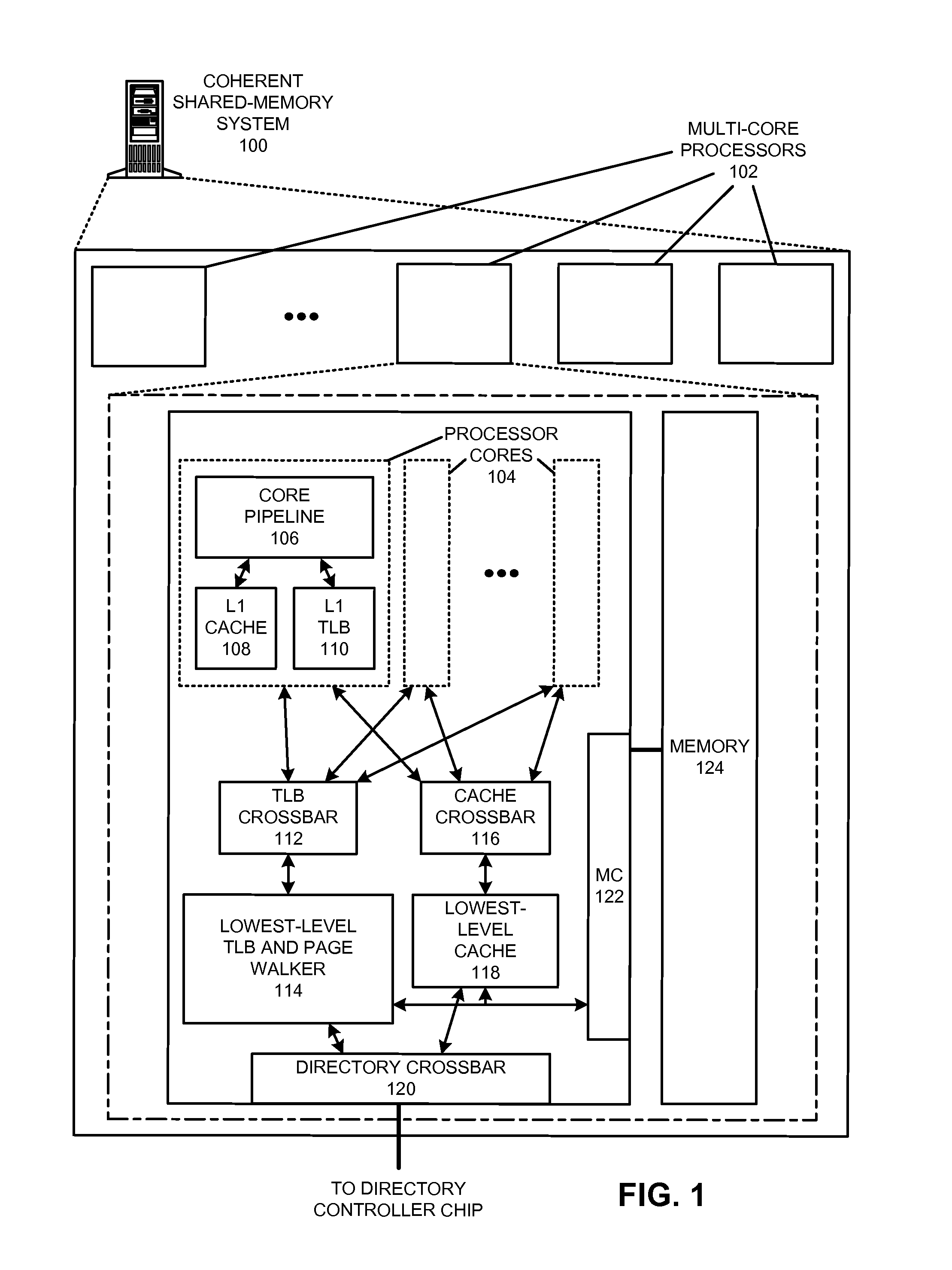

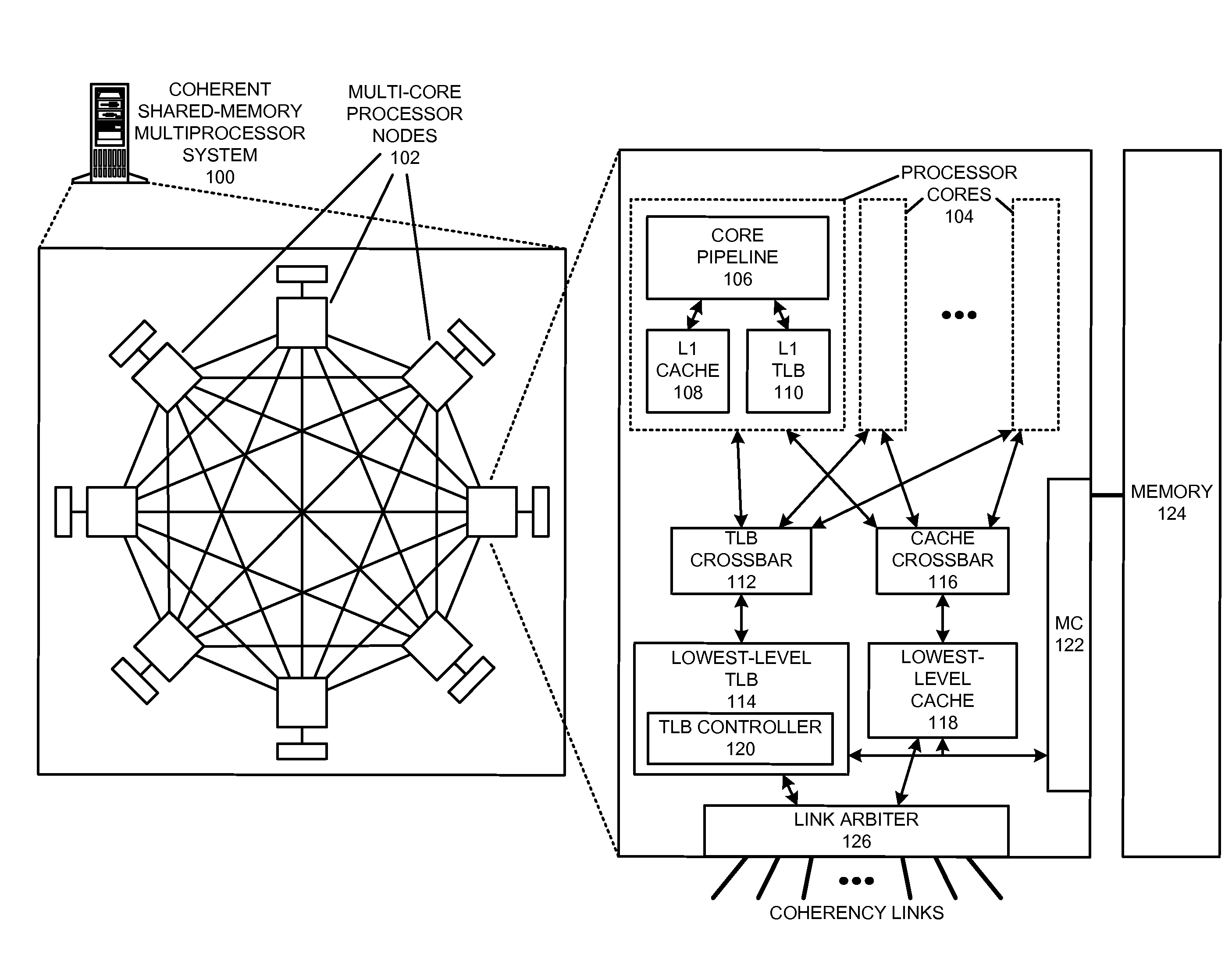

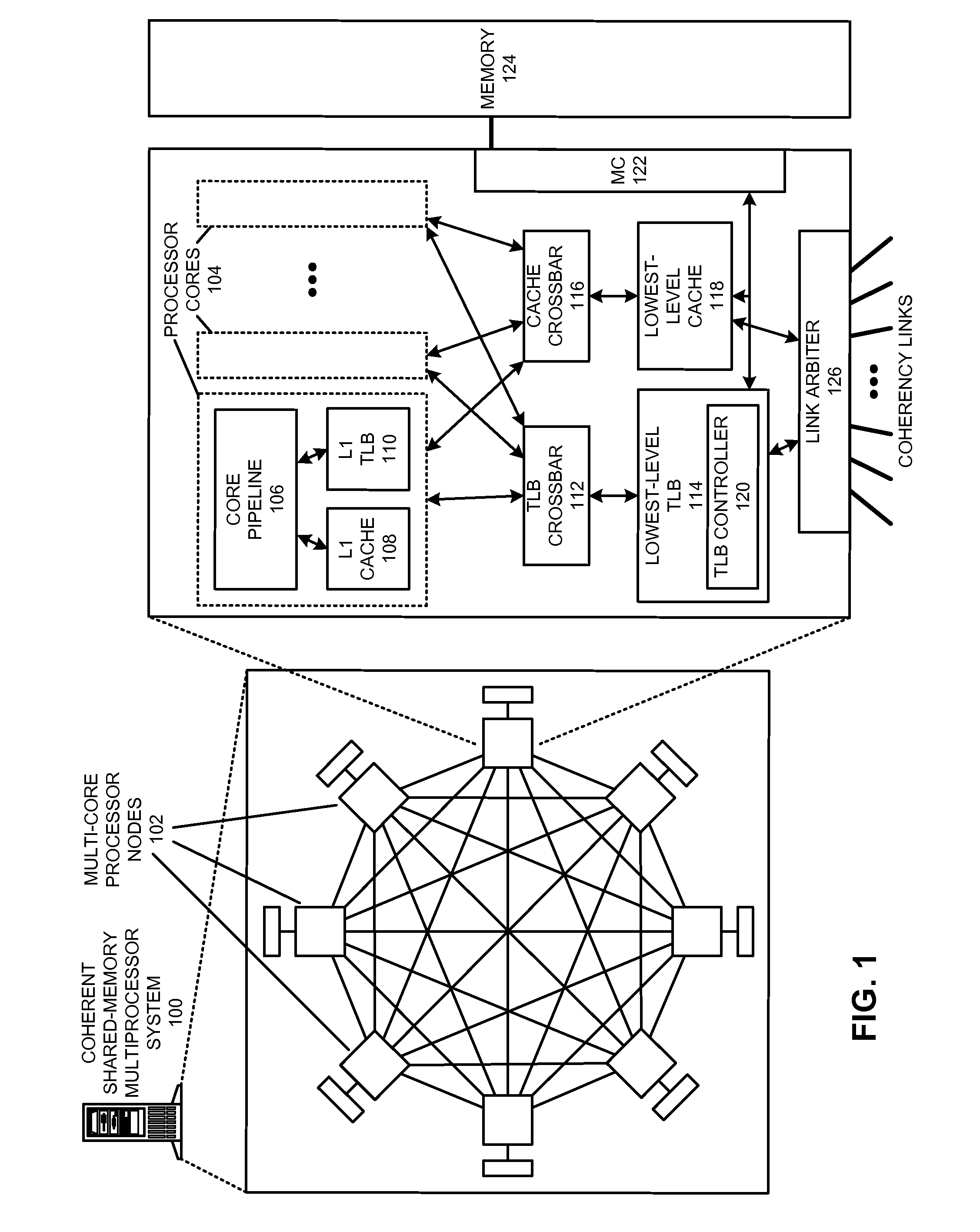

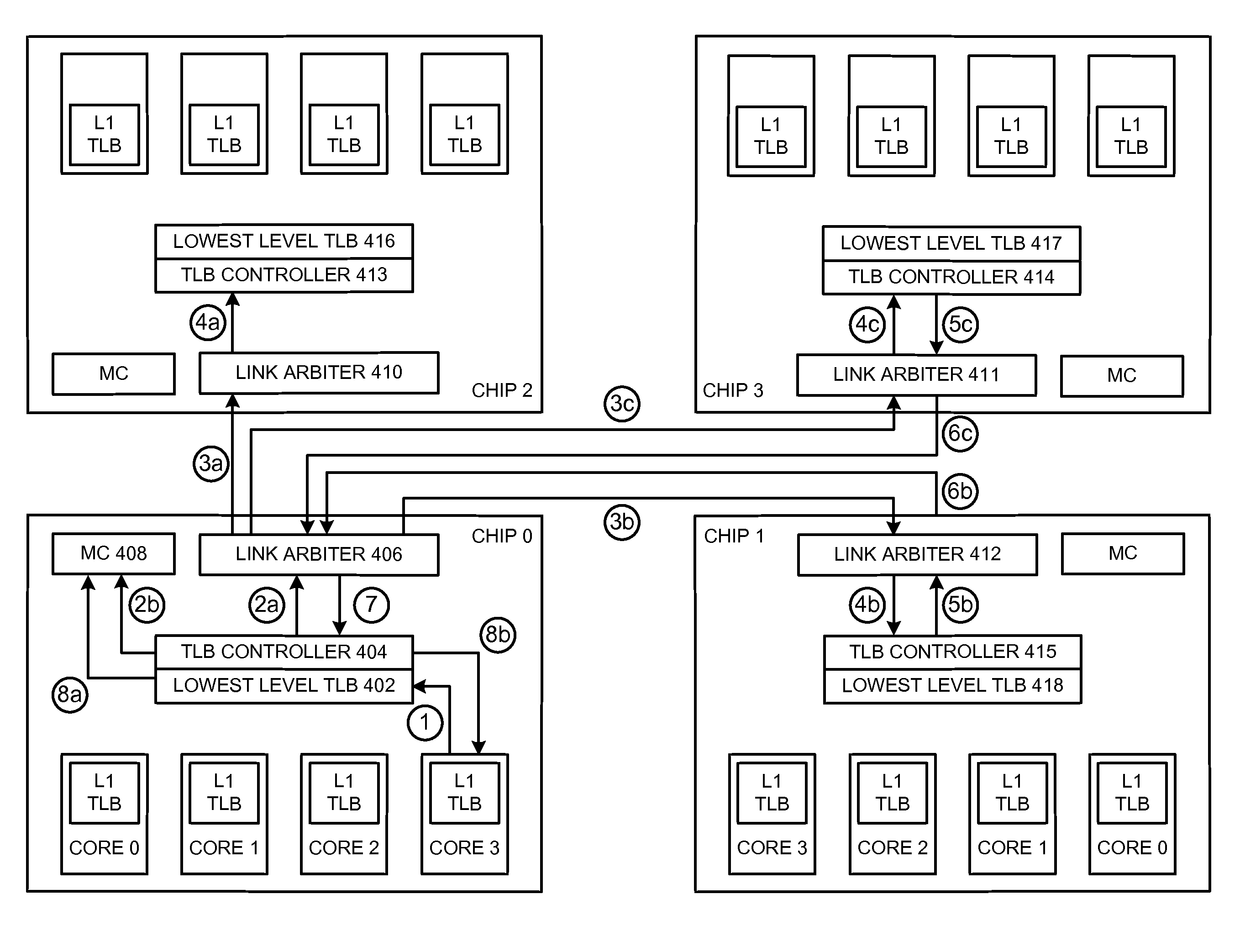

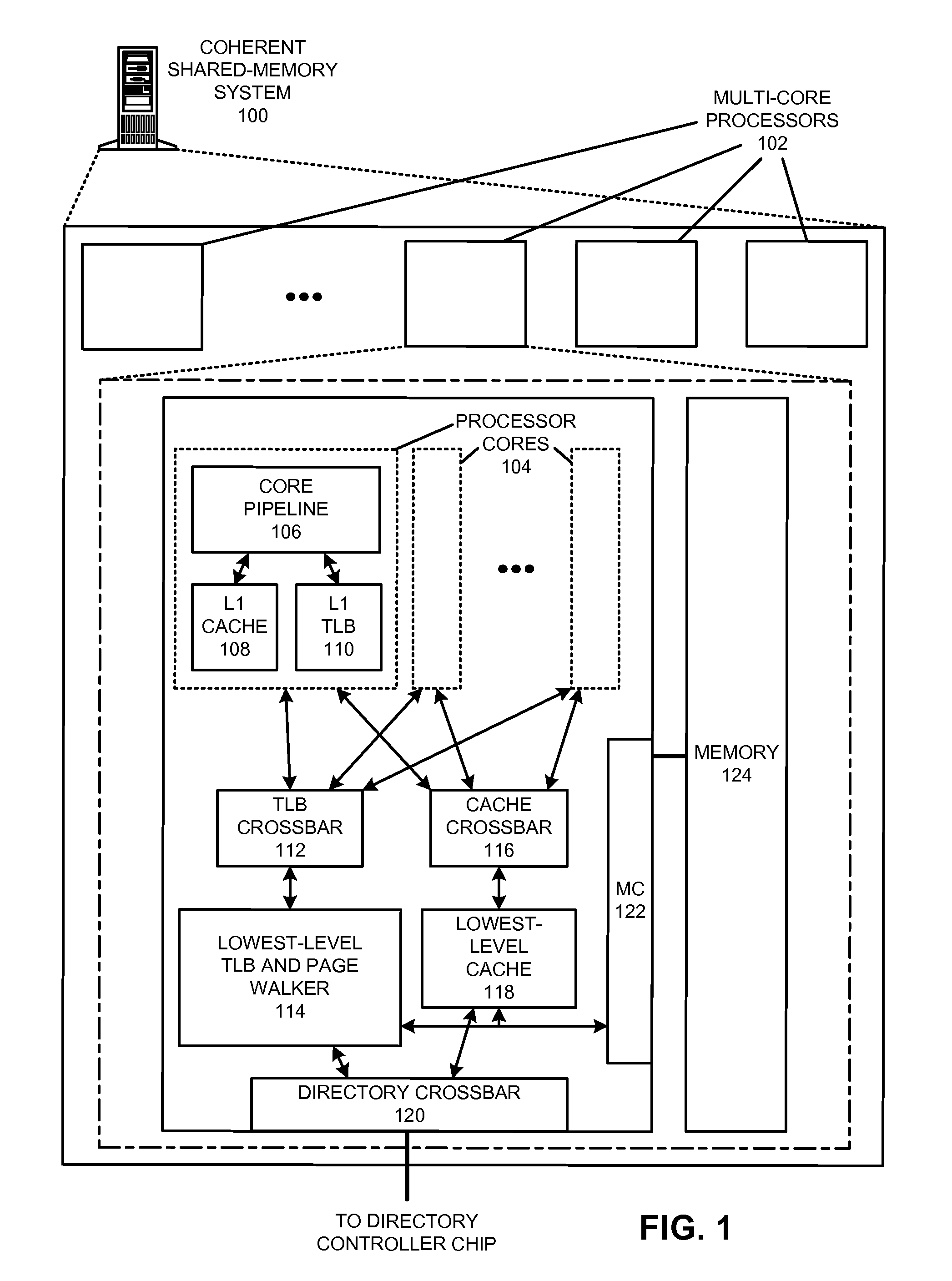

Using broadcast-based TLB sharing to reduce address-translation latency in a shared-memory system with optical interconnect

Active; US20150301949A1; Reduce latency; Facilitate communication; Memory architecture accessing/allocation; Multiplex system selection arrangements; Multi processor; Page table

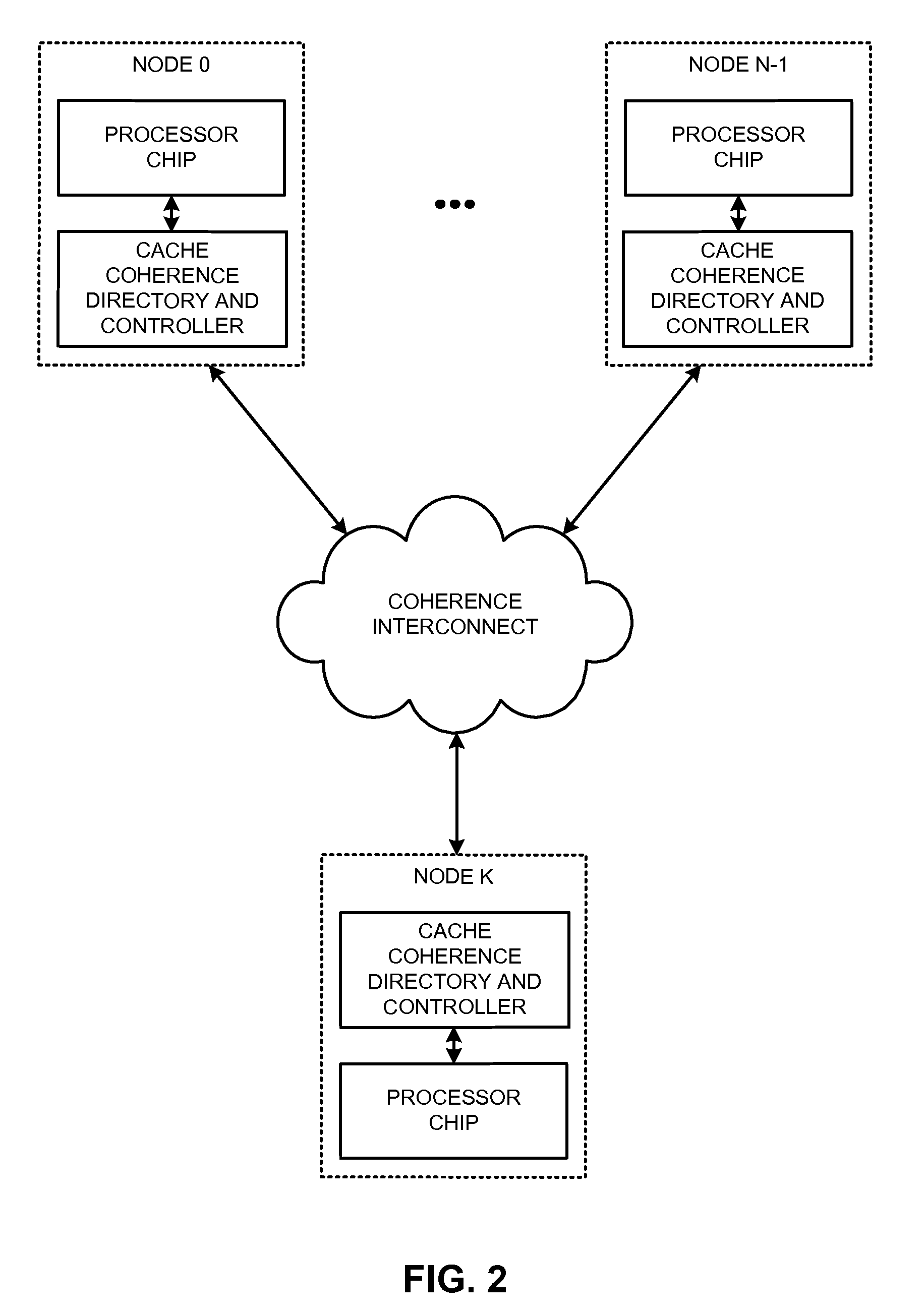

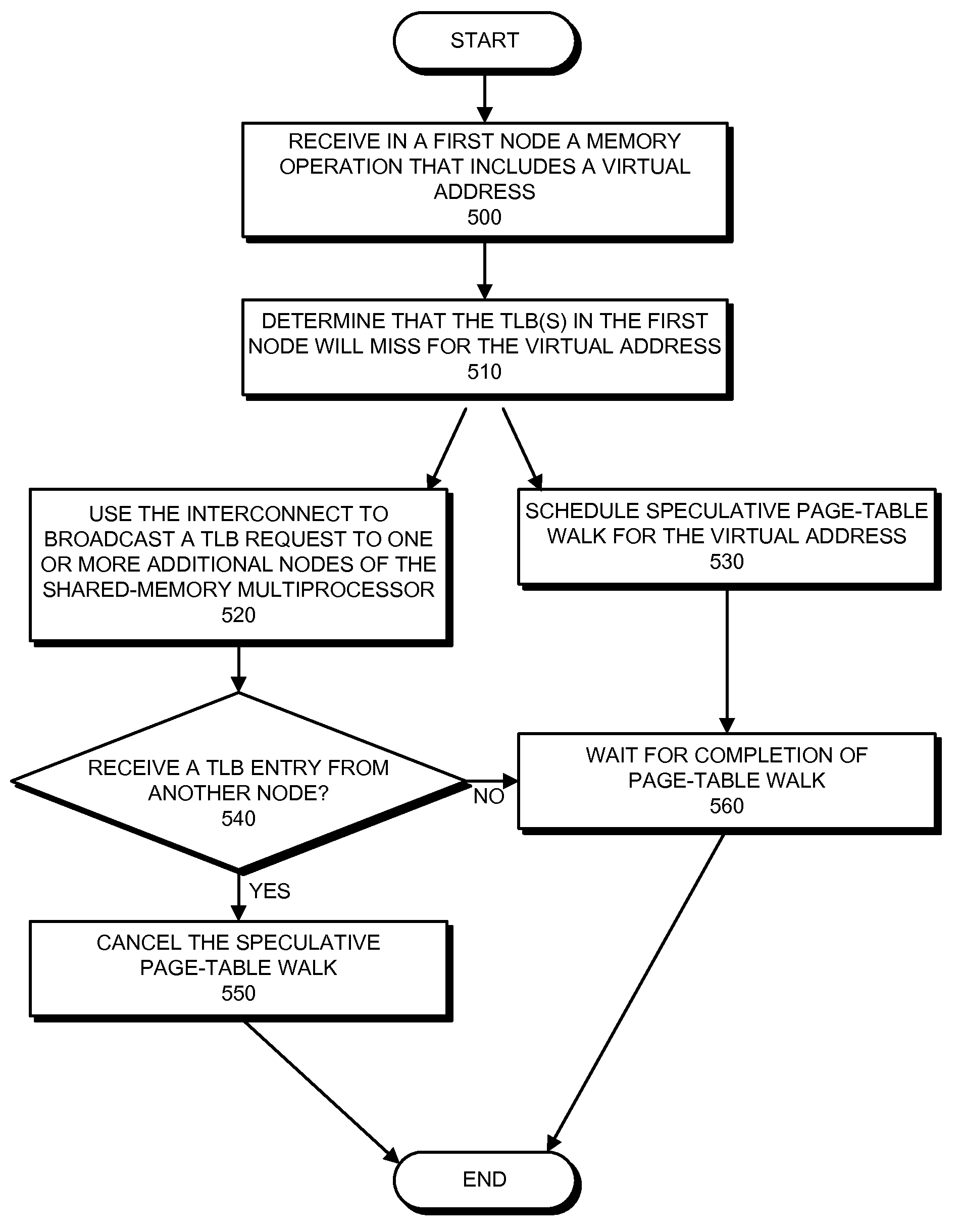

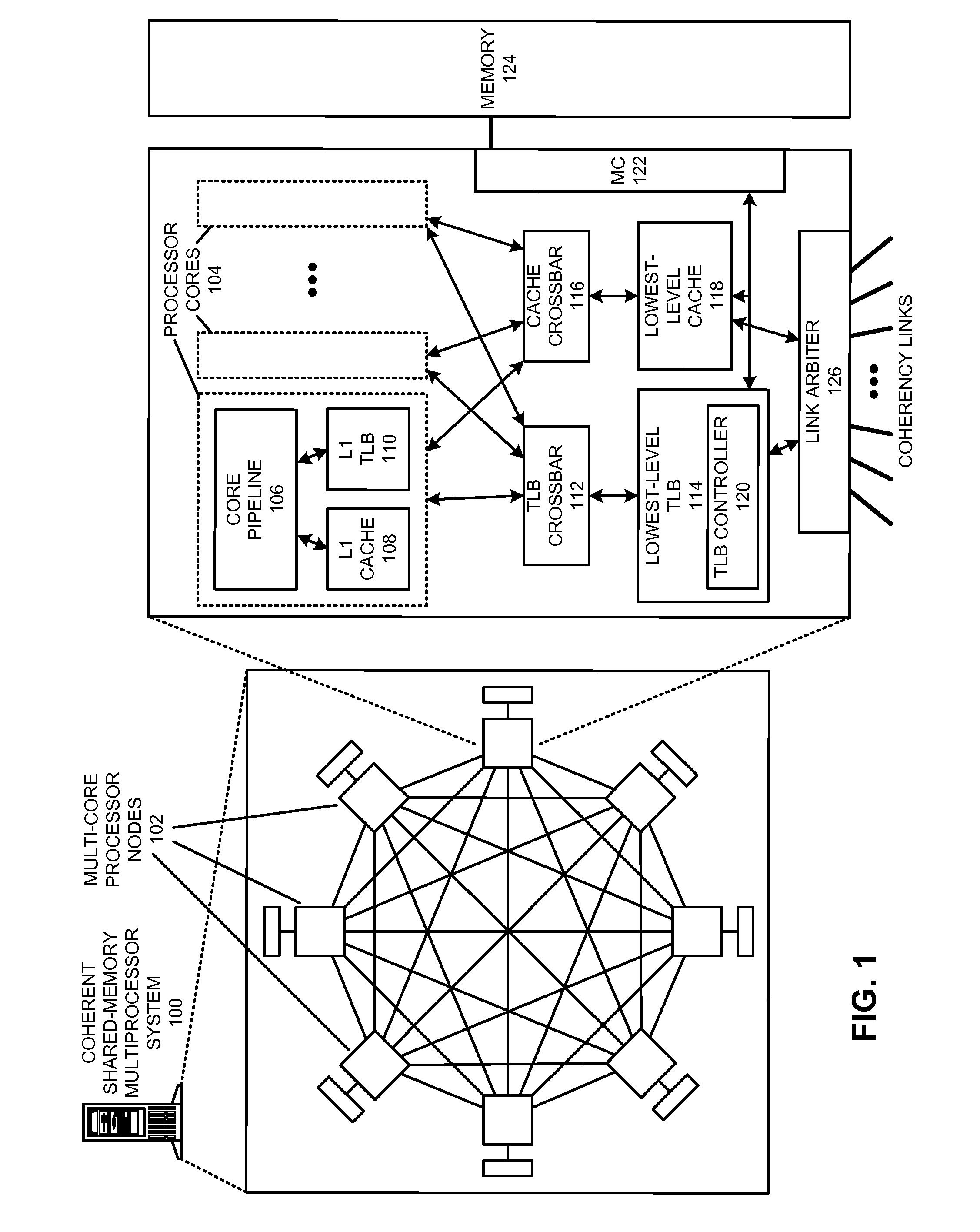

The disclosed embodiments provide a system that uses broadcast-based TLB sharing to reduce address-translation latency in a shared-memory multiprocessor system with two or more nodes that are connected by an optical interconnect. During operation, a first node receives a memory operation that includes a virtual address. Upon determining that one or more TLB levels of the first node will miss for the virtual address, the first node uses the optical interconnect to broadcast a TLB request to one or more additional nodes of the shared-memory multiprocessor in parallel with scheduling a speculative page-table walk for the virtual address. If the first node receives a TLB entry from another node of the shared-memory multiprocessor via the optical interconnect in response to the TLB request, the first node cancels the speculative page-table walk. Otherwise, if no response is received, the first node instead waits for the completion of the page-table walk.
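The control flow can be sketched as follows. This is a sequential software model of what the abstract describes as parallel hardware activity: the broadcast is represented by a loop over remote TLBs and the speculative walk by a plain dictionary lookup. The name `resolve_tlb_miss` and the dictionary-based TLBs are assumptions for illustration.

```python
def resolve_tlb_miss(virtual_page, remote_tlbs, page_table):
    """Return (translation, source, walk_cancelled) after a local TLB miss.

    remote_tlbs: list of dicts, one per other node, mapping page -> frame.
    page_table: dict standing in for the result of a full page-table walk.
    """
    # In hardware the walk is scheduled speculatively alongside the broadcast;
    # here the broadcast is simply checked first.
    for tlb in remote_tlbs:
        if virtual_page in tlb:
            # A remote node supplied the entry, so the speculative walk is cancelled.
            return tlb[virtual_page], "remote TLB", True
    # No node responded: wait for the page-table walk to complete.
    return page_table[virtual_page], "page-table walk", False

page_table = {7: 0x70, 9: 0x90}
remote = [{}, {7: 0x70}]
print(resolve_tlb_miss(7, remote, page_table))
print(resolve_tlb_miss(9, remote, page_table))
```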

Owner:ORACLE INT CORP

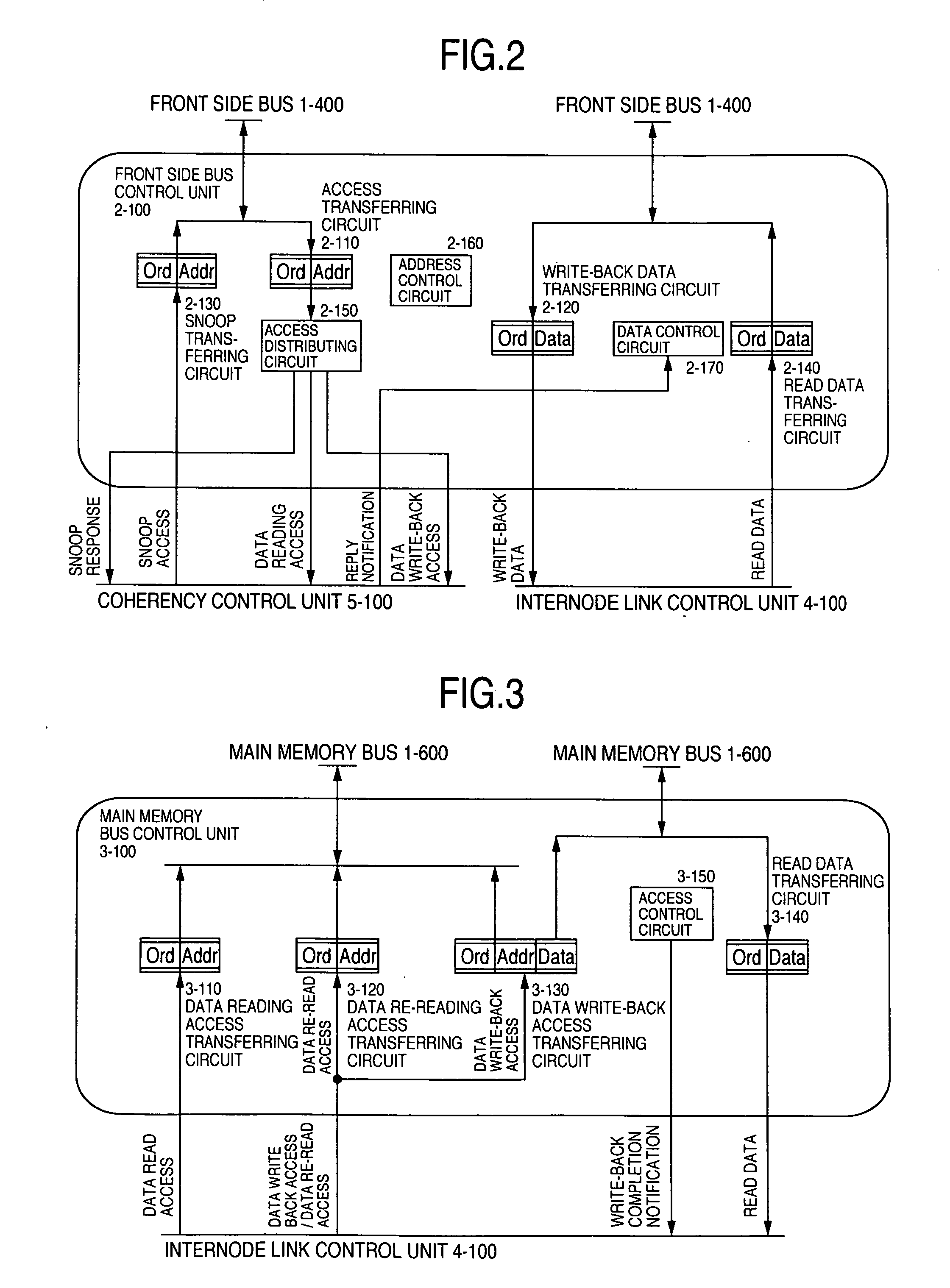

Shared memory multiprocessor performing cache coherence control and node controller therefor

Inactive; US6874053B2; Efficient address snoop system; Improve effective throughput; Memory architecture accessing/allocation; Computer control; Multi processor; Parallel computing

Each node includes a node controller that decodes the control information and the address information of an access request issued by a processor or an I/O device. Based on the result of decoding, the node controller generates cache coherence control information indicating whether cache coherence control is required, together with the node information and the unit information for the transfer destination, and adds this information to the access request. An intra-node connection circuit for connecting the units in the node controller holds the cache coherence control information, the node information and the unit information added to the access request. When the cache coherence control information indicates that cache coherence control is not required and the node information indicates the local node, the intra-node connection circuit transfers the access request not to the inter-node connection circuit interconnecting the nodes but directly to the unit designated by the unit information.

Owner:HITACHI LTD

Using broadcast-based TLB sharing to reduce address-translation latency in a shared-memory system with electrical interconnect

Active; US20140040562A1; Reduce address-translation latency; Reduce latency; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Multi processor; Page table

The disclosed embodiments provide a system that uses broadcast-based TLB-sharing techniques to reduce address-translation latency in a shared-memory multiprocessor system with two or more nodes that are connected by an electrical interconnect. During operation, a first node receives a memory operation that includes a virtual address. Upon determining that one or more TLB levels of the first node will miss for the virtual address, the first node uses the electrical interconnect to broadcast a TLB request to one or more additional nodes of the shared-memory multiprocessor in parallel with scheduling a speculative page-table walk for the virtual address. If the first node receives a TLB entry from another node of the shared-memory multiprocessor via the electrical interconnect in response to the TLB request, the first node cancels the speculative page-table walk. Otherwise, if no response is received, the first node instead waits for the completion of the page-table walk.

Owner:ORACLE INT CORP

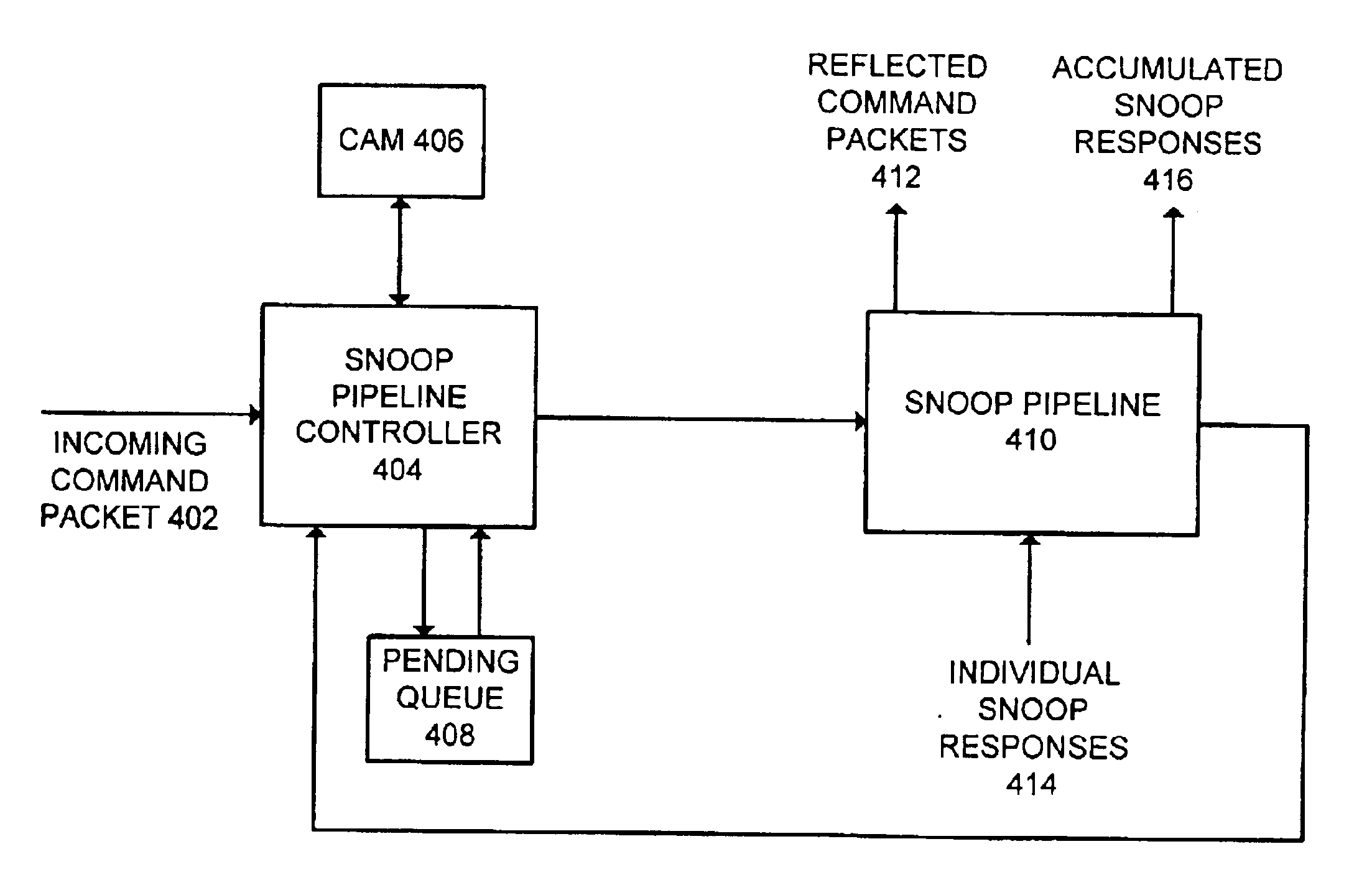

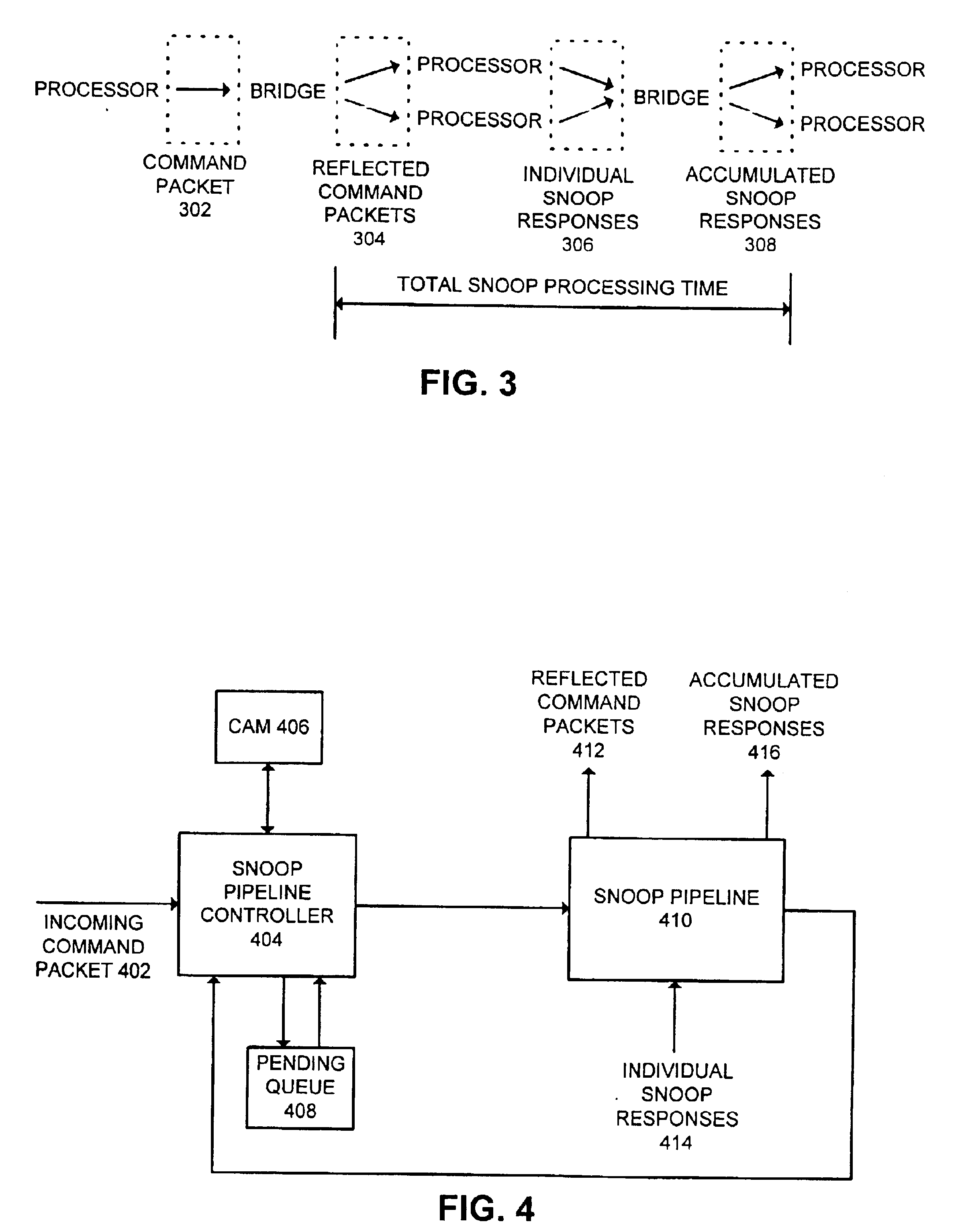

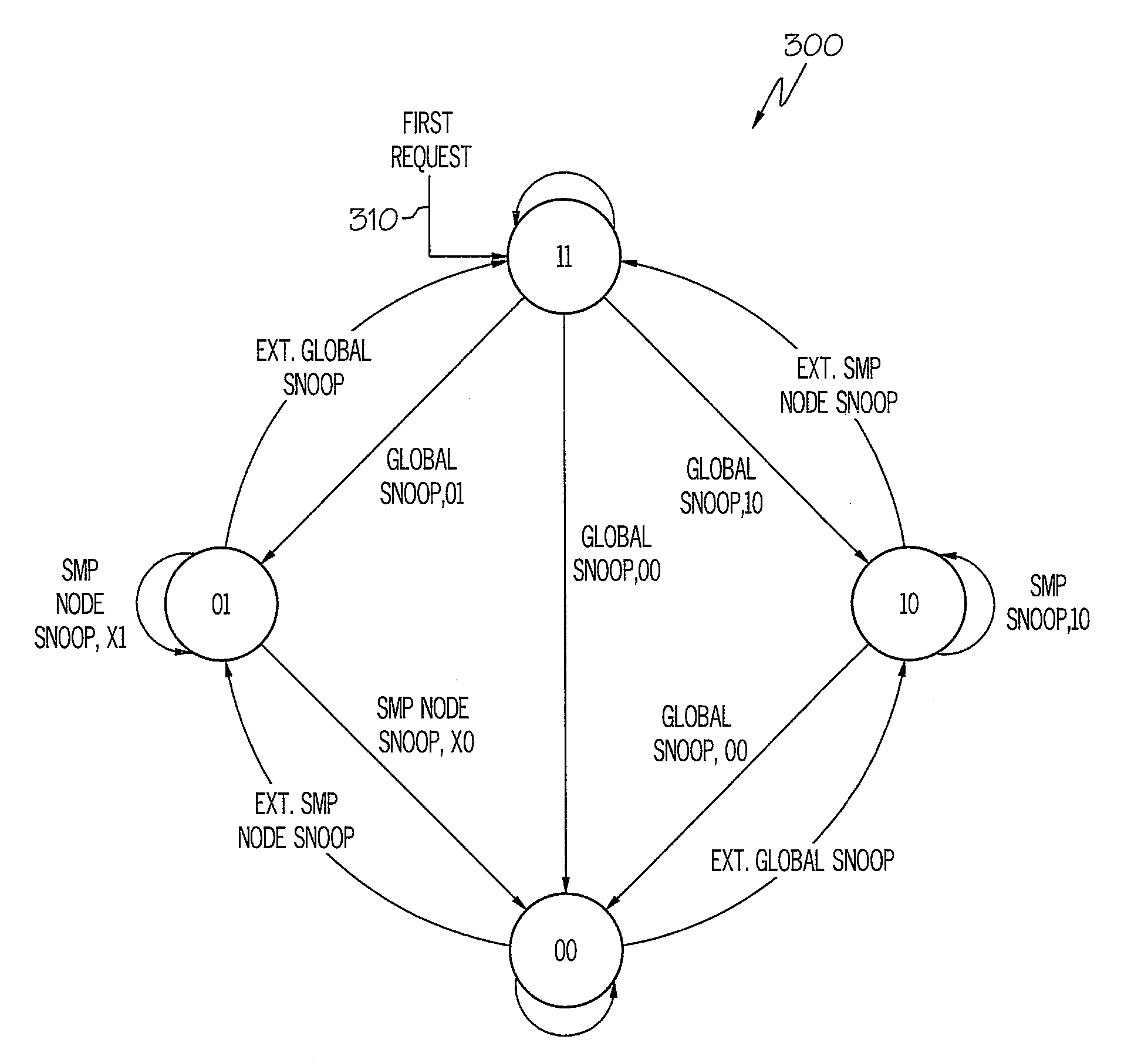

Pipelining cache-coherence operations in a shared-memory multiprocessing system

Inactive; US6848032B2; Facilitates pipelining cache coherence operation; Memory addressing/allocation/relocation; Multiple digital computer combinations; Multi processor; Cache coherence

One embodiment of the present invention provides a system that facilitates pipelining cache coherence operations in a shared memory multiprocessor system. During operation, the system receives a command to perform a memory operation from a processor in the shared memory multiprocessor system. This command is received at a bridge that is coupled to the local caches of the processors in the shared memory multiprocessor system. If the command is directed to a cache line that is subject to an in-progress pipelined cache coherency operation, the system delays the command until the in-progress pipelined cache coherency operation completes. Otherwise, the system reflects the command to local caches of other processors in the shared memory multiprocessor system. The system then accumulates snoop responses from the local caches of the other processors and sends the accumulated snoop response to the local caches of other processors in the shared memory multiprocessor system.

Owner:APPLE INC

Cache coherency in a shared-memory multiprocessor system

Active; US20060259705A1; Reduce disadvantages; Energy efficient ICT; Energy efficient computing; External storage; Term memory

A method of making cache memories of a plurality of processors coherent with a shared memory includes one of the processors determining whether an external memory operation is needed for data that is to be maintained coherent. If so, the processor transmits a cache coherency request to a traffic-monitoring device. The traffic-monitoring device transmits memory operation information to the plurality of processors, which includes an address of the data. Each of the processors determines whether the data is in its cache memory and whether a memory operation is needed to make the data coherent. Each processor also transmits to the traffic-monitoring device a message that indicates a state of the data and the memory operation that it will perform on the data. The processors then perform the memory operations on the data. The traffic-monitoring device performs the transmitted memory operations in a fixed order that is based on the states of the data in the processors' cache memories.

Owner:SK HYNIX INC

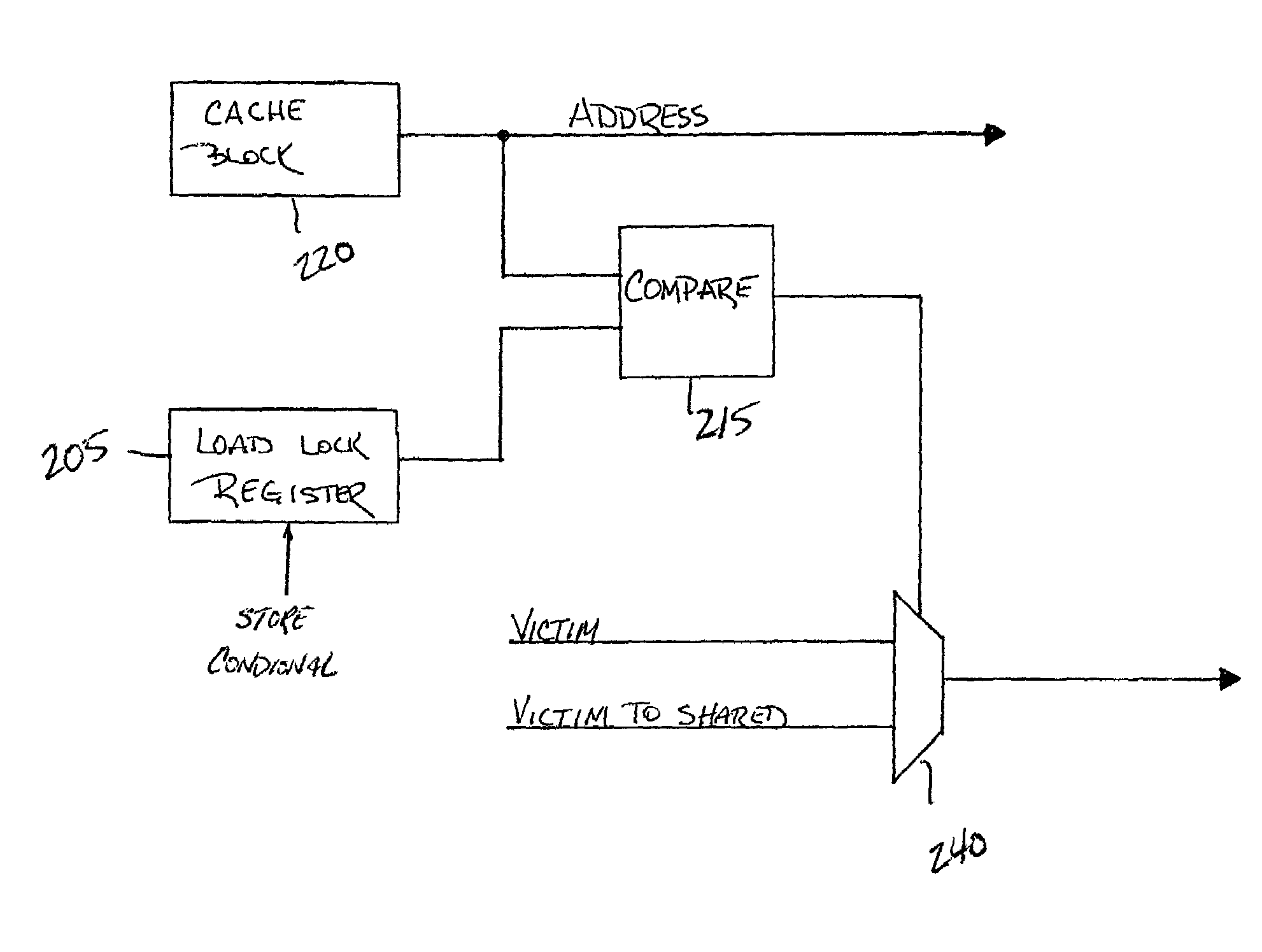

Mechanism for handling load lock/store conditional primitives in directory-based distributed shared memory multiprocessors

Inactive; US7620954B2; Memory addressing/allocation/relocation; General purpose stored program computer; Parallel computing; Control memory

Each processor in a distributed shared memory system has an associated memory and a coherence directory. The processor that controls a memory is the Home processor. Under certain conditions, another processor may obtain exclusive control of a data block by issuing a Load Lock instruction, and obtaining a writeable copy of the data block that is stored in the cache of the Owner processor. If the Owner processor does not complete operations on the writeable copy of the data prior to the time that the data block is displaced from the cache, it issues a Victim To Shared message, thereby indicating to the Home processor that it should remain a sharer of the data block. In the event that another processor seeks exclusive rights to the same data block, the Home processor issues an Invalidate message to the Owner processor. When the Owner processor is ready to resume operation on the data block, the Owner processor again obtains exclusive control of the data block by issuing a Read-with Modify Intent Store Conditional instruction to the Home processor. If the Owner processor is still a sharer, a writeable copy of the data block is sent to the Owner processor, who completes modification of the data block and returns it to the Home processor with a Store Conditional instruction.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

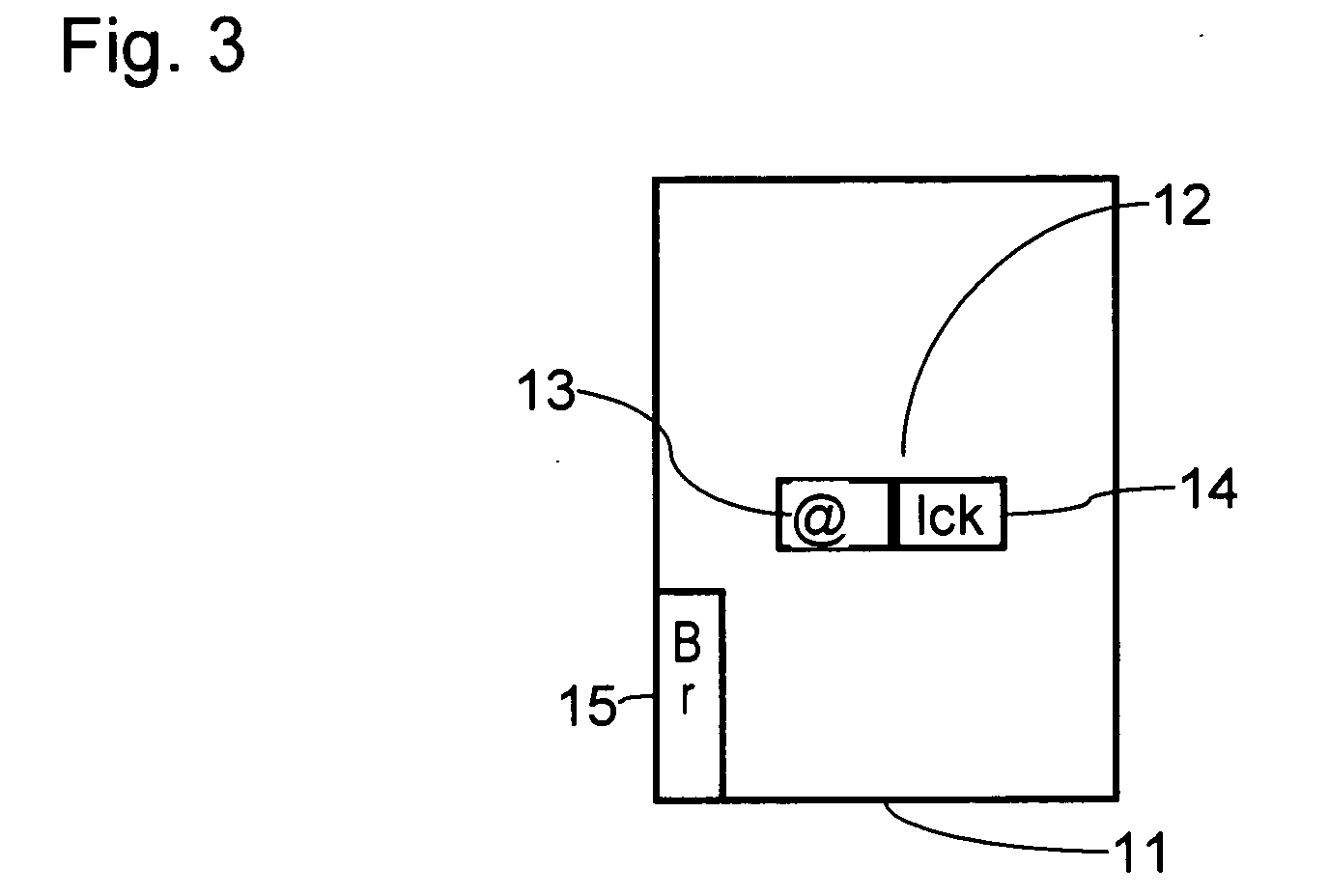

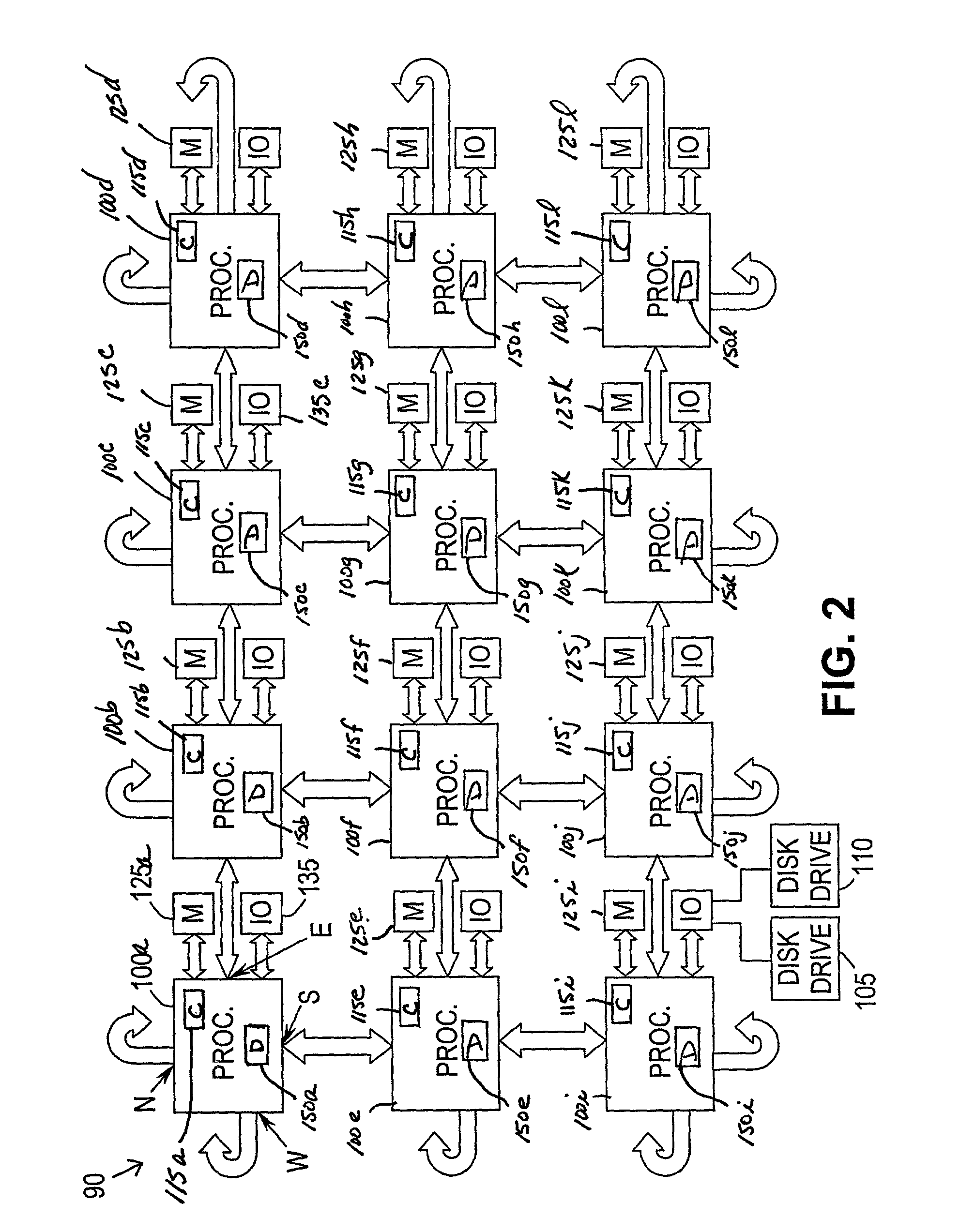

Shared memory multiprocessor system

Inactive; US20060265466A1; Improve system performance; Achieve effect; Multiple digital computer combinations; Memory systems; Term memory; Synchronism

In a shared memory multiprocessor system, data reading accesses and data write-back completion notifications are selected in synchronism with all of the nodes to order them. In each of the nodes, a subject address of ordered data reading access is compared with a subject address of ordered data write-back completion notification to detect a data reading operation of the same address which is passed by the completion of the data writing-back operation. Both a data reading sequence and a data writing-back sequence are determined. At this time, such a coherency response for prompting a re-reading operation of the data is transmitted to the node which transmitted the data reading access, so that coherency of the data is maintained.

Owner:HITACHI LTD

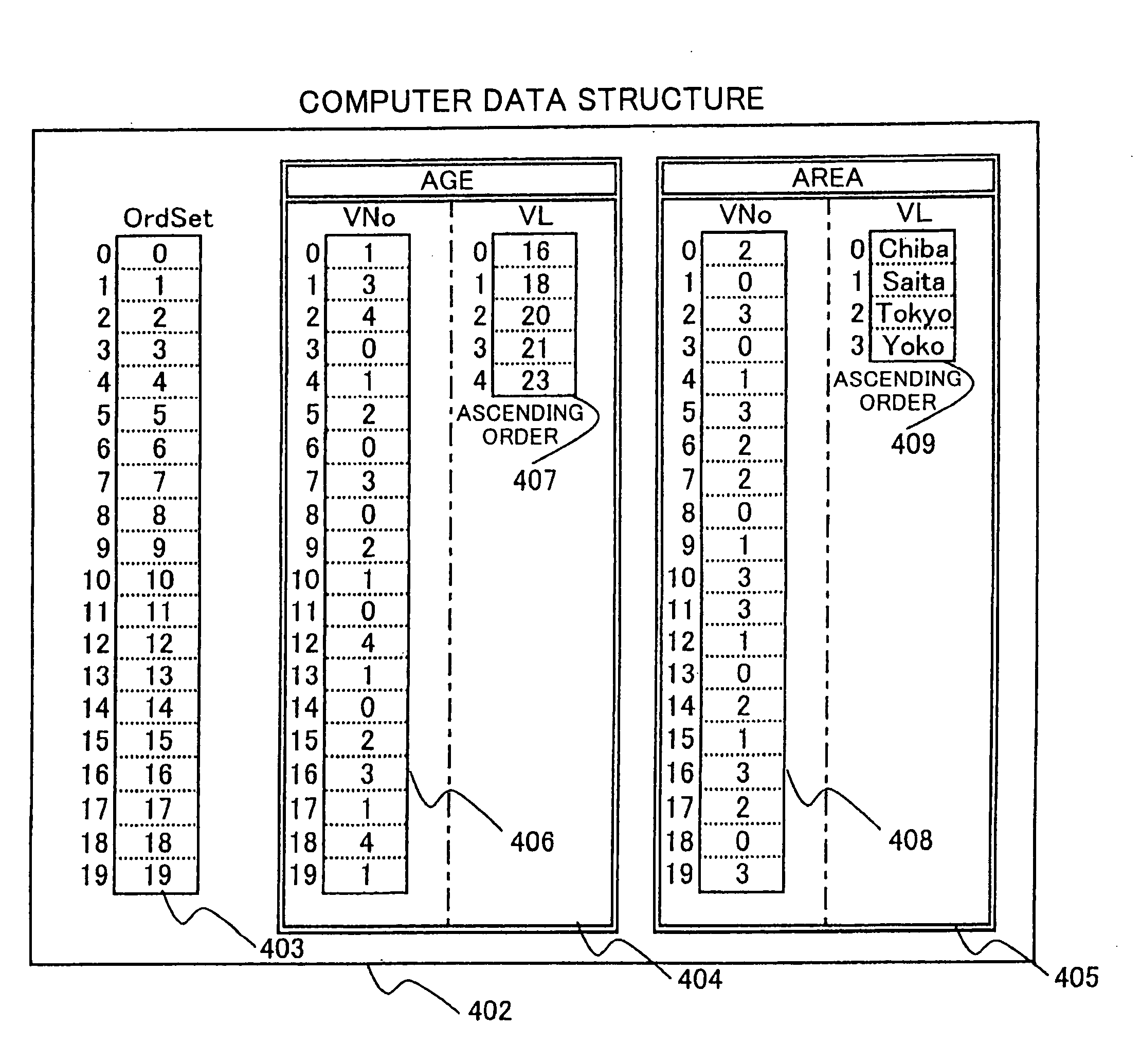

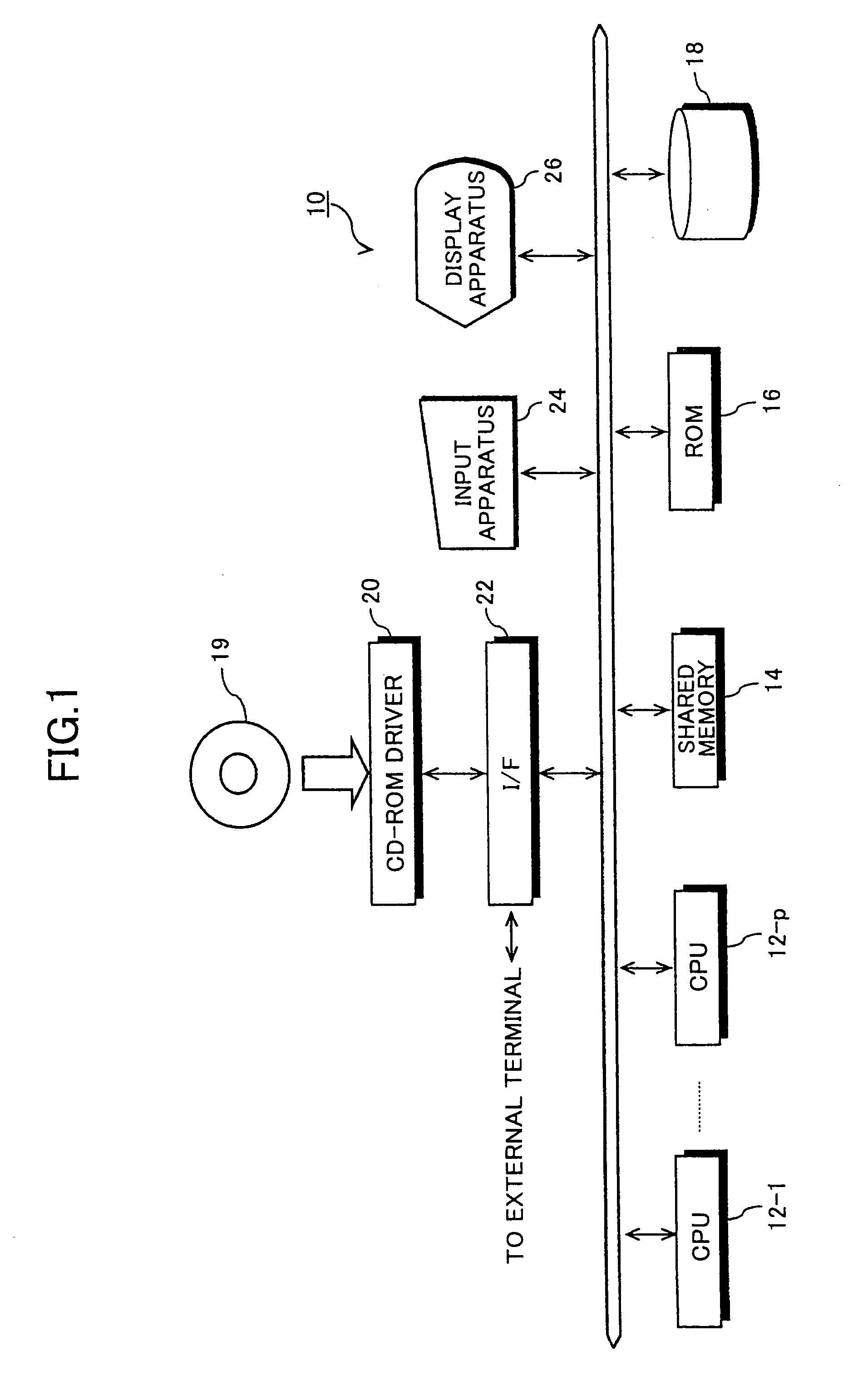

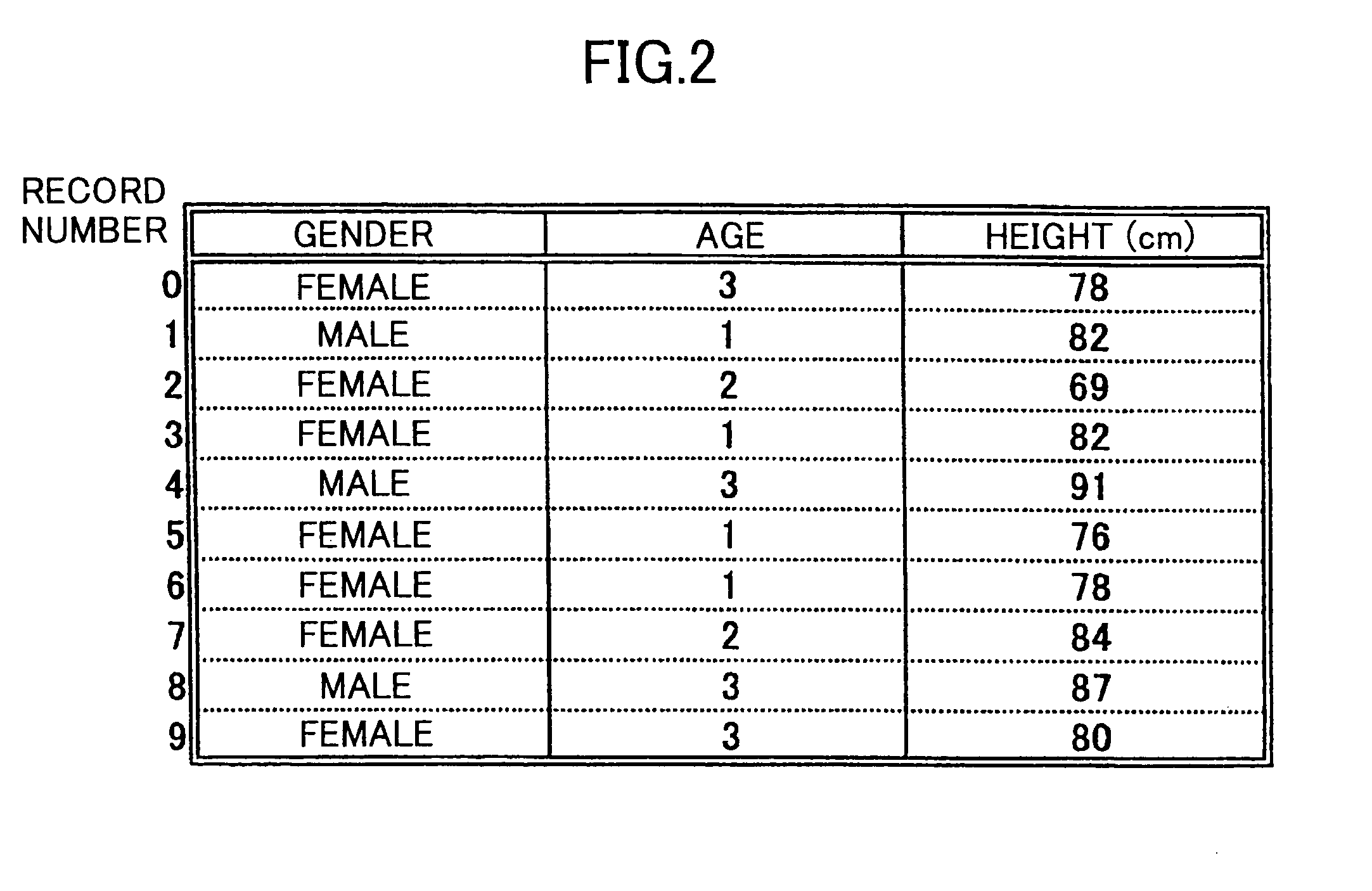

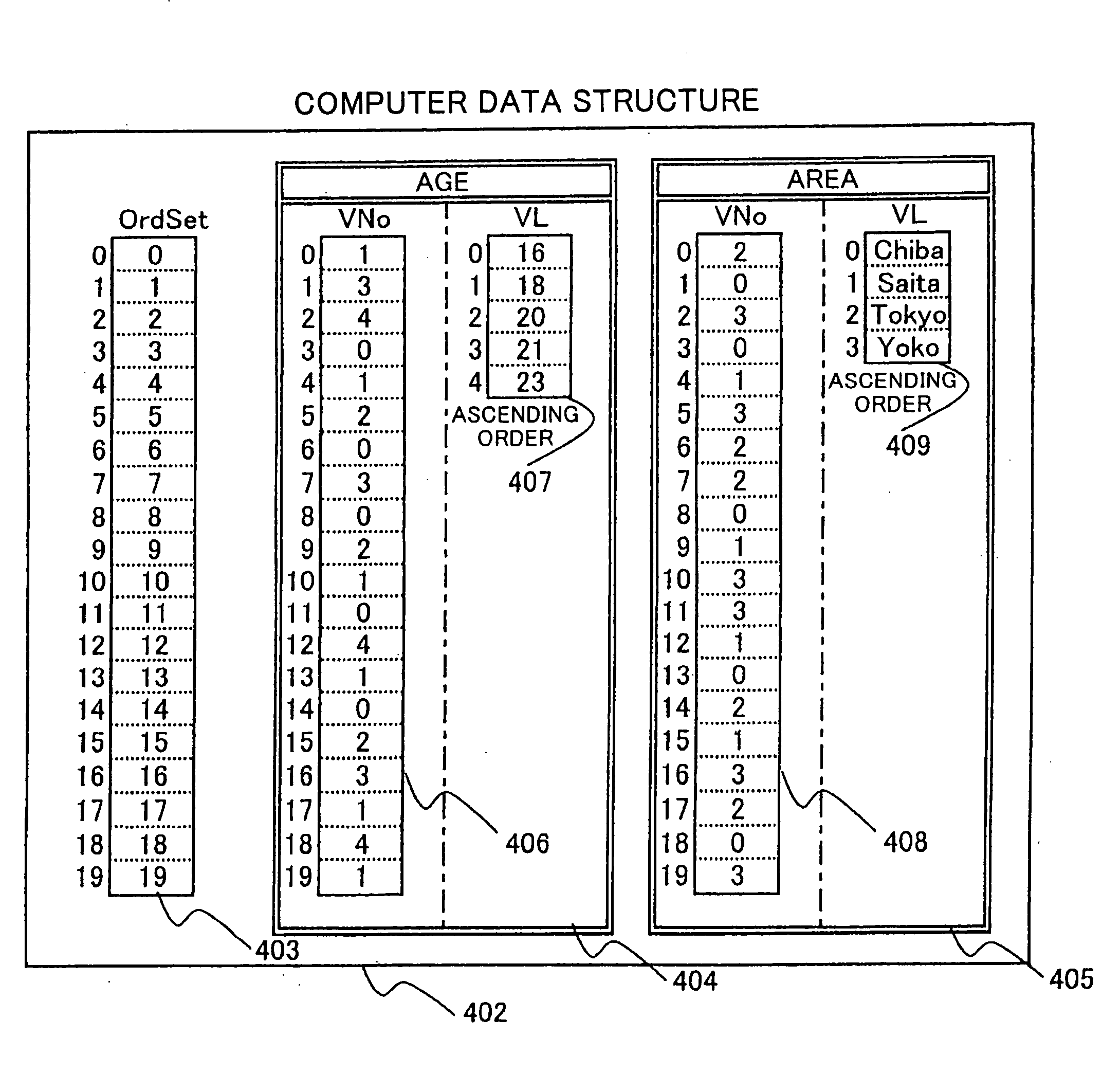

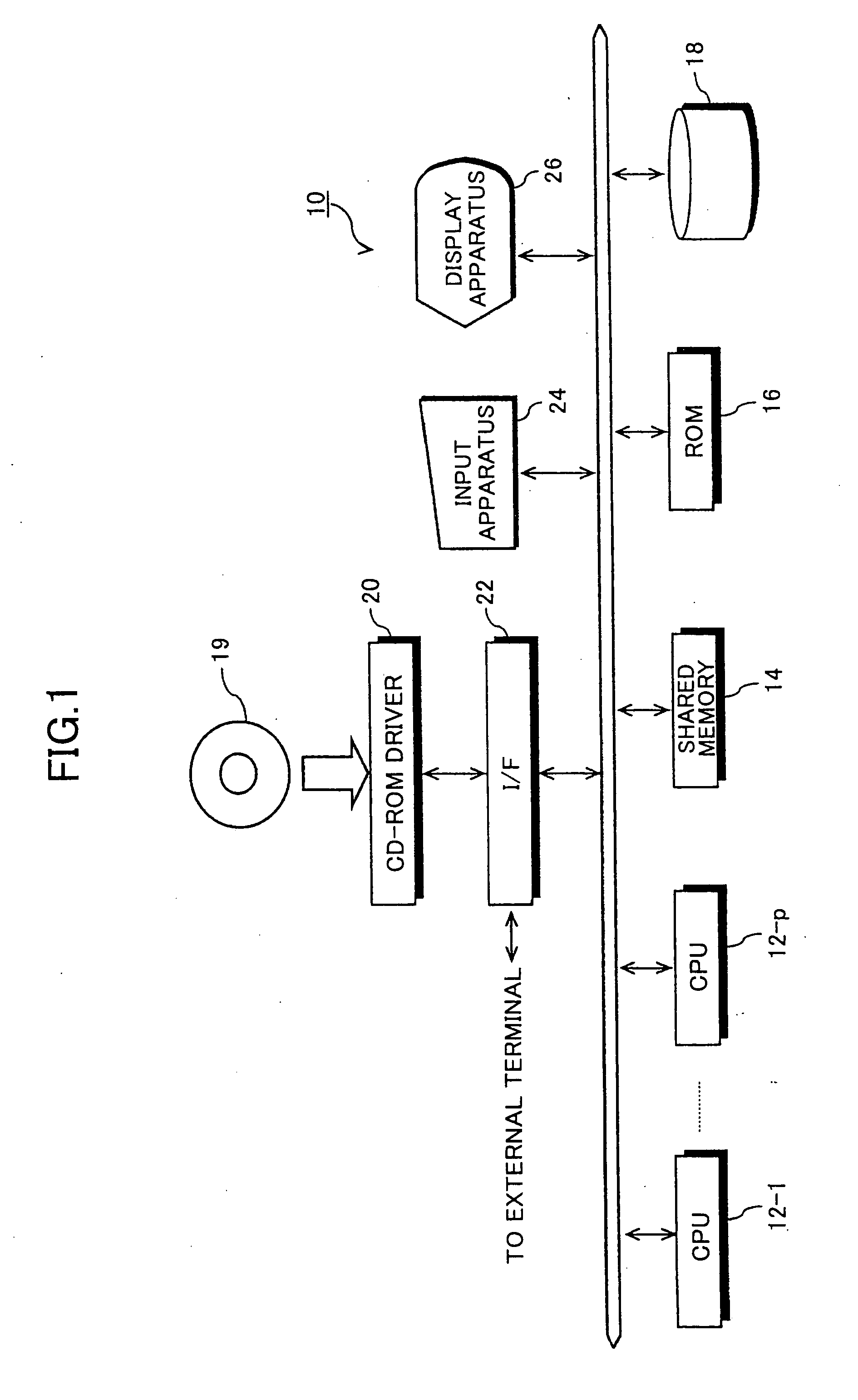

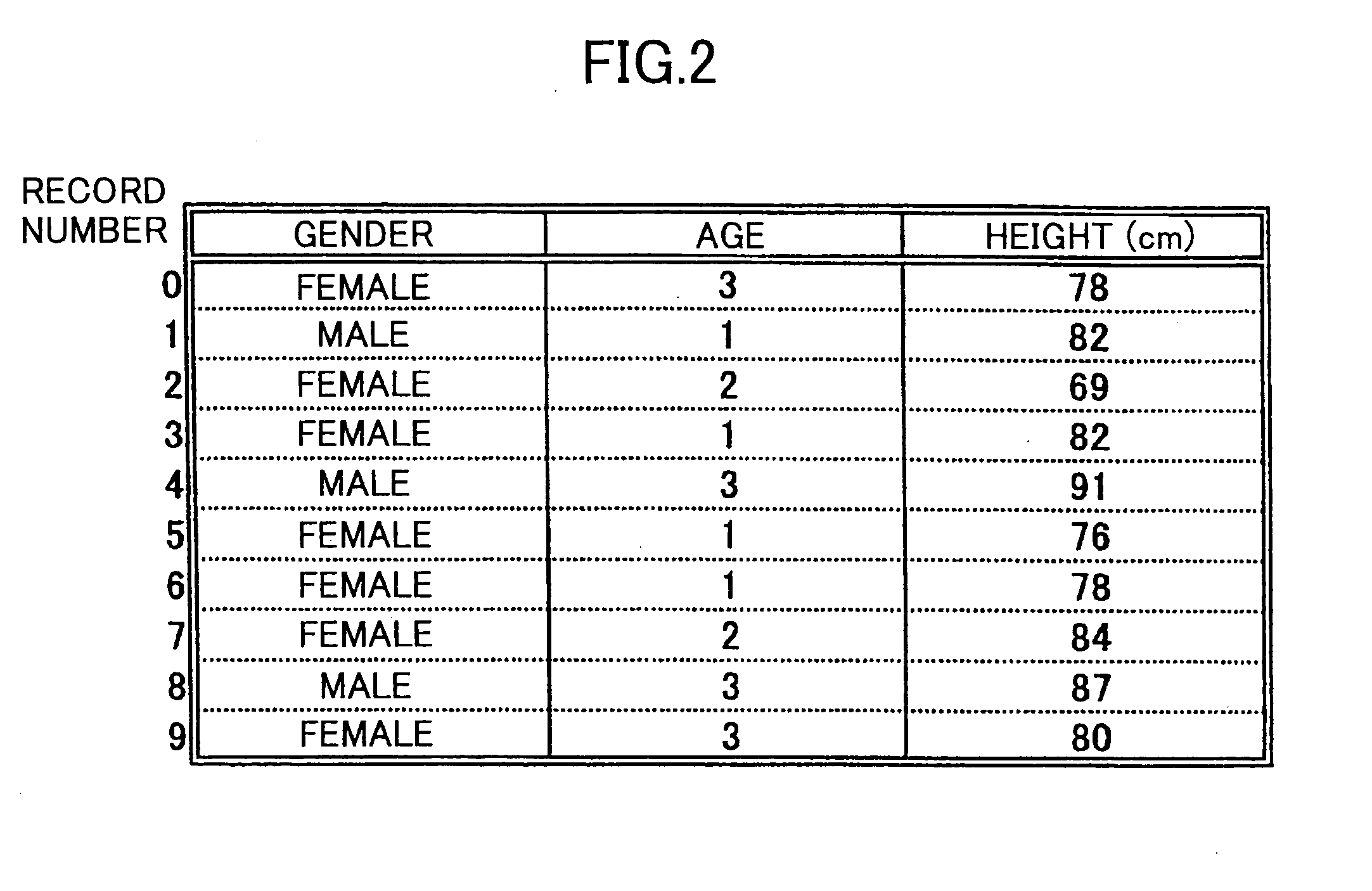

Shared-memory multiprocessor system and method for processing information

Active; US7801903B2; Increase speed; Digital data information retrieval; Digital data processing details; Multi processor; Data storing

Large-scale table data stored in a shared memory are sorted by a plurality of processors in parallel. According to the present invention, the records subjected to processing are first divided for allocation to the plurality of processors. Then, each processor counts the numbers of local occurrences of the field value sequence numbers associated with the records to be processed. The numbers of local occurrences of the field value sequence numbers counted by each processor are then converted into global cumulative numbers, i.e., the cumulative numbers used in common by the plurality of processors. Finally, each processor utilizes the global cumulative numbers as pointers to rearrange the order of the allocated records.
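The four steps read like a parallel counting sort, and the sketch below follows them under that interpretation. The processors are simulated by ordinary loops, keys are assumed to be small integers standing in for field value sequence numbers, and `parallel_counting_sort` is a hypothetical name.

```python
def parallel_counting_sort(keys, num_procs, num_values):
    """Return record indices ordered by key, mimicking the four steps.

    keys[r] is the field value sequence number of record r (0 <= key < num_values).
    """
    n = len(keys)
    # Step 1: divide the records into contiguous chunks, one per processor.
    chunk = (n + num_procs - 1) // num_procs
    chunks = [range(p * chunk, min(n, (p + 1) * chunk)) for p in range(num_procs)]

    # Step 2: each processor counts local occurrences of every value.
    local = [[0] * num_values for _ in range(num_procs)]
    for p, rows in enumerate(chunks):
        for r in rows:
            local[p][keys[r]] += 1

    # Step 3: convert local counts to global cumulative numbers, ordered by
    # value first and processor second, so every (processor, value) pair
    # gets its own starting slot in the output.
    start = [[0] * num_values for _ in range(num_procs)]
    offset = 0
    for v in range(num_values):
        for p in range(num_procs):
            start[p][v] = offset
            offset += local[p][v]

    # Step 4: each processor uses its cumulative numbers as write pointers.
    out = [None] * n
    for p, rows in enumerate(chunks):
        for r in rows:
            out[start[p][keys[r]]] = r
            start[p][keys[r]] += 1
    return out

keys = [2, 0, 1, 0, 2, 1]
order = parallel_counting_sort(keys, num_procs=2, num_values=3)
print(order, [keys[r] for r in order])
```

Because every processor writes to a disjoint range of output slots, steps 2 and 4 need no locking in a real shared-memory implementation; only step 3 requires the processors to combine their counts.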

Owner:ESS HLDG CO LTD

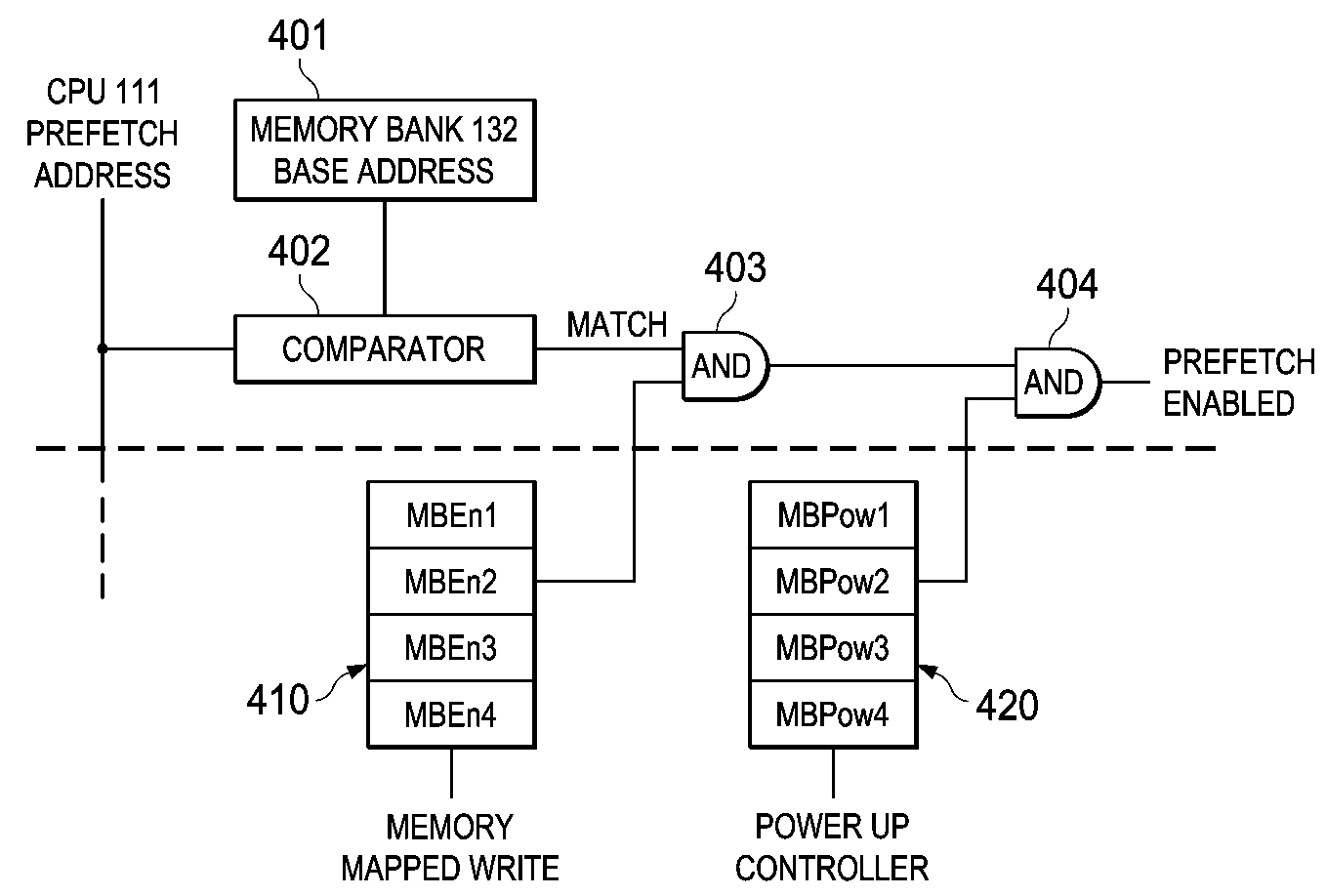

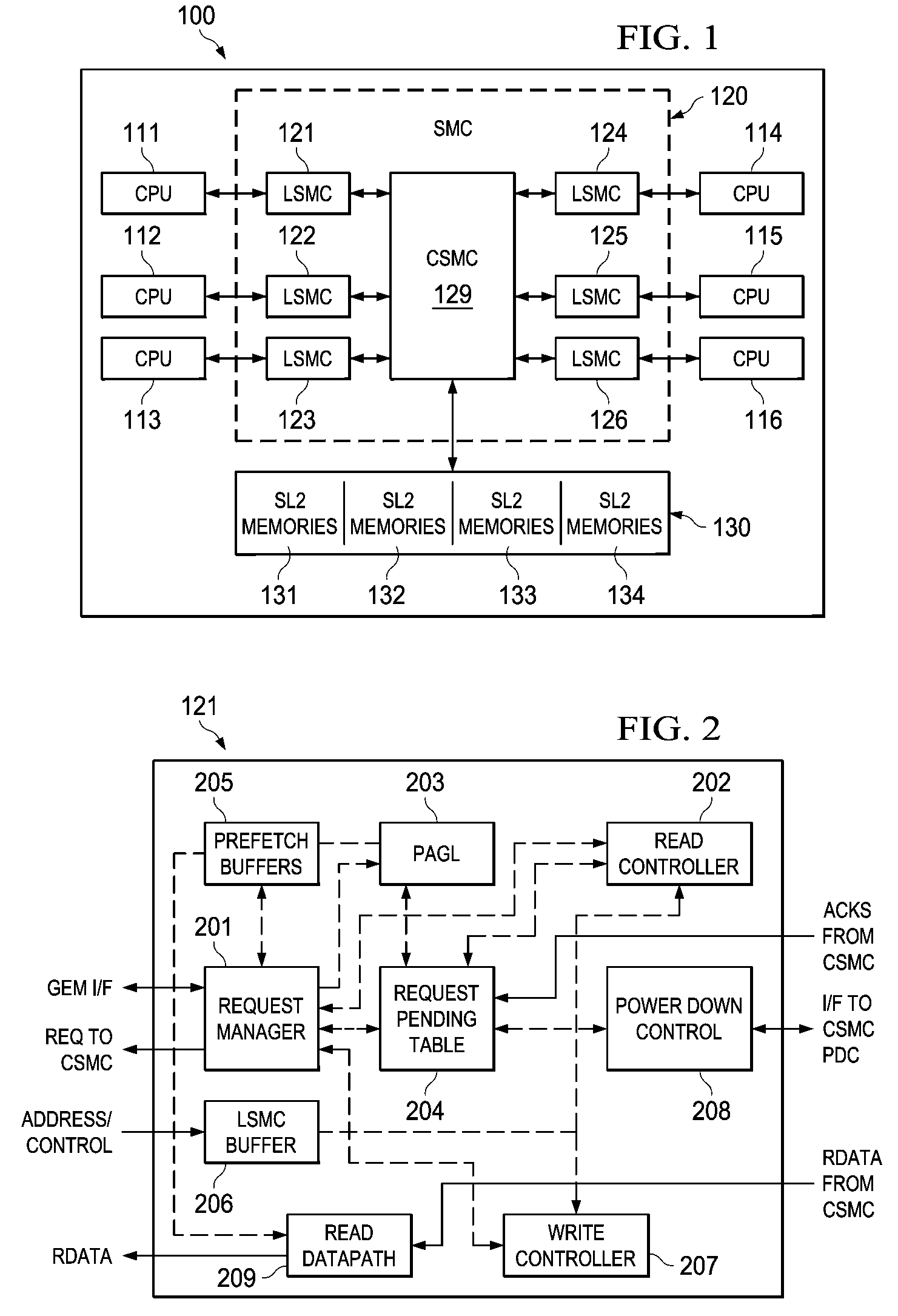

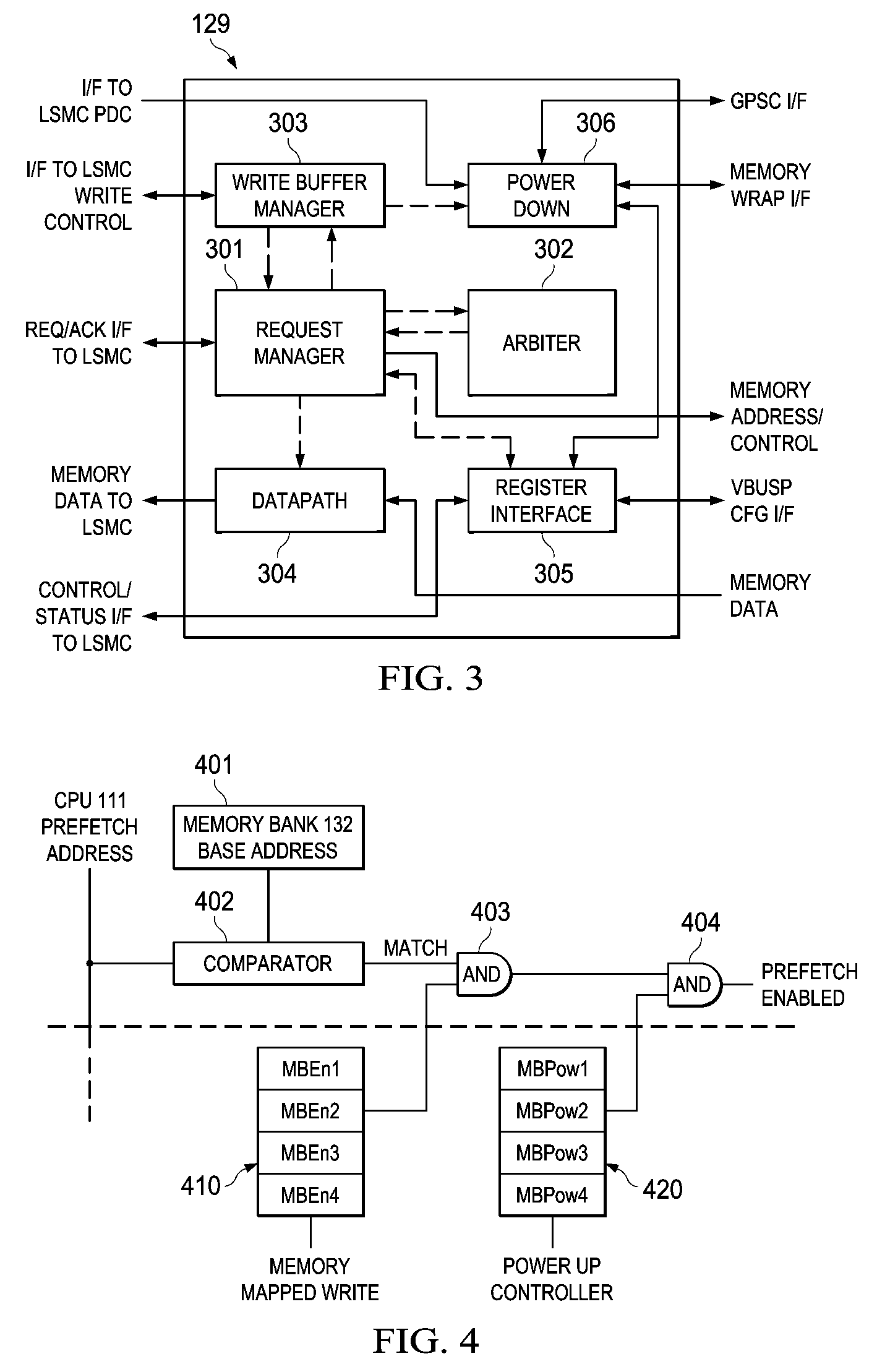

Prefetch Termination at Powered Down Memory Bank Boundary in Shared Memory Controller

Active; US20090187715A1; Avoid intervention; Memory architecture accessing/allocation; Energy efficient ICT; Memory bank; Multi processor

A prefetch scheme in a shared memory multiprocessor disables the prefetch when an address falls within a powered down memory bank. A register stores a bit corresponding to each independently powered memory bank to determine whether that memory bank is prefetchable. When a memory bank is powered down, all bits corresponding to the pages in this row are masked so that they appear as non-prefetchable pages to the prefetch access generation engine preventing an access to any page in this memory bank. A powered down status bit corresponding to the memory bank is used for masking the output of the prefetch enable register. The prefetch enable register is unmodified. This also seamlessly restores the prefetch property of the memory banks when the corresponding memory row is powered up.
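The masking can be expressed as a single bitwise operation. In this sketch both registers are modeled as integers with one bit per memory bank, which simplifies the per-page bits mentioned in the abstract; the function names are hypothetical.

```python
def effective_prefetch_mask(prefetch_enable, powered_down):
    """Mask out powered-down banks without modifying the enable register."""
    return prefetch_enable & ~powered_down

def may_prefetch(bank, prefetch_enable, powered_down):
    """True if the prefetch engine is allowed to access the given bank."""
    return bool((effective_prefetch_mask(prefetch_enable, powered_down) >> bank) & 1)

enable = 0b1011          # banks 0, 1 and 3 are prefetchable
down = 0b0010            # bank 1 is powered down
print(bin(effective_prefetch_mask(enable, down)))
print(may_prefetch(1, enable, down), may_prefetch(1, enable, 0))
```

Since `enable` is never written, clearing the powered-down bit restores prefetching for that bank with no extra bookkeeping, which matches the seamless restore behavior described above.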

Owner:TEXAS INSTR INC

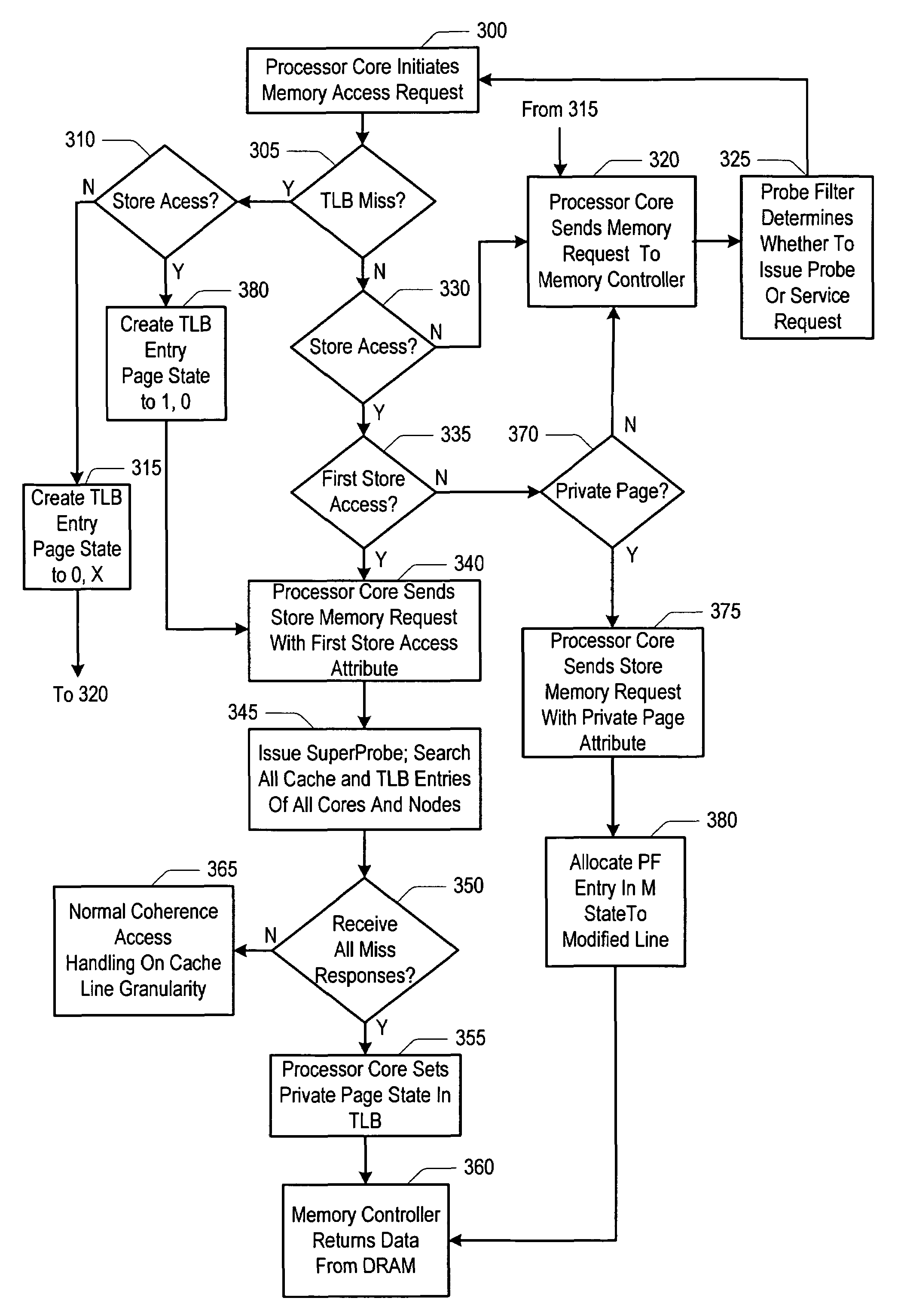

Method and apparatus for detecting and tracking private pages in a shared memory multiprocessor

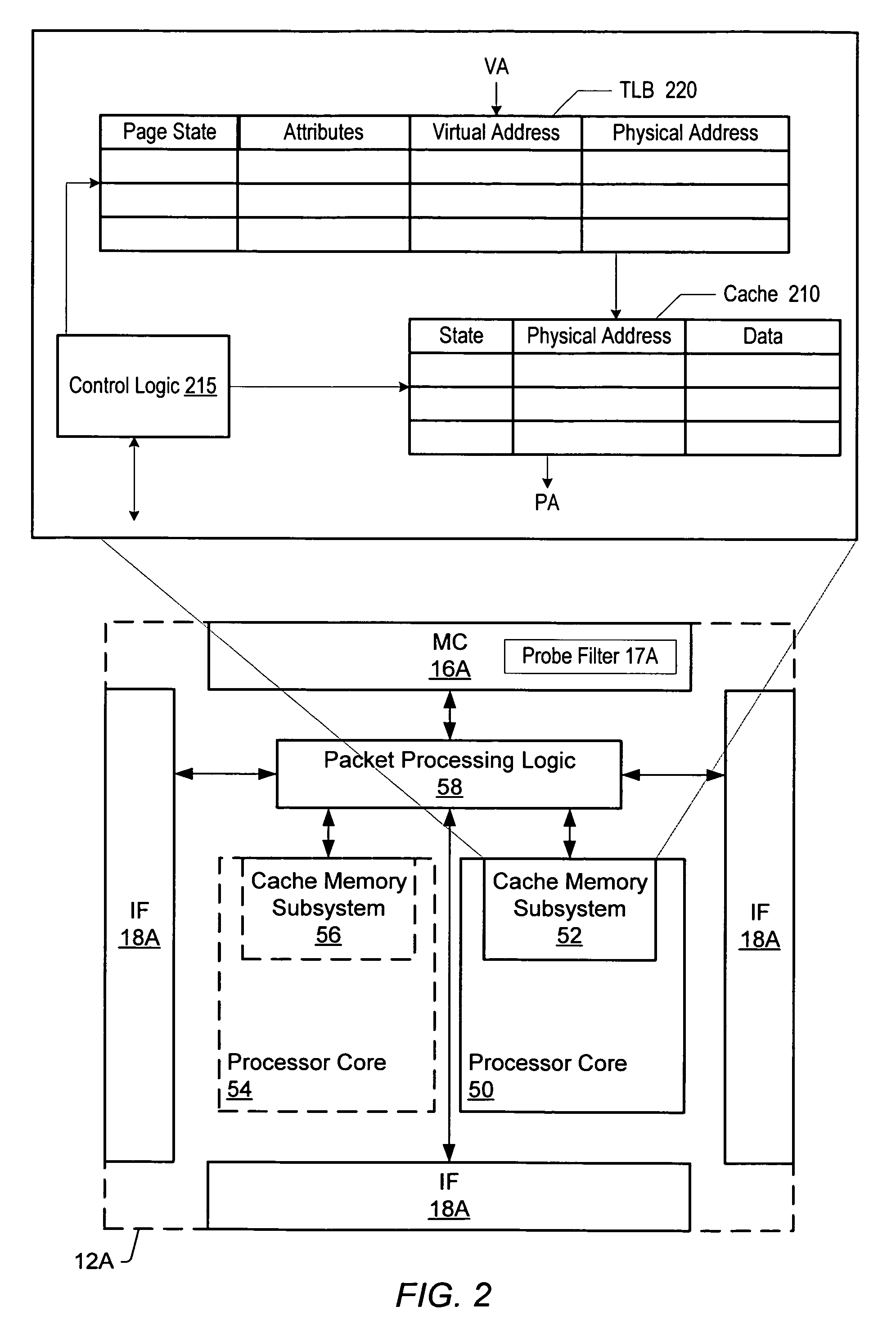

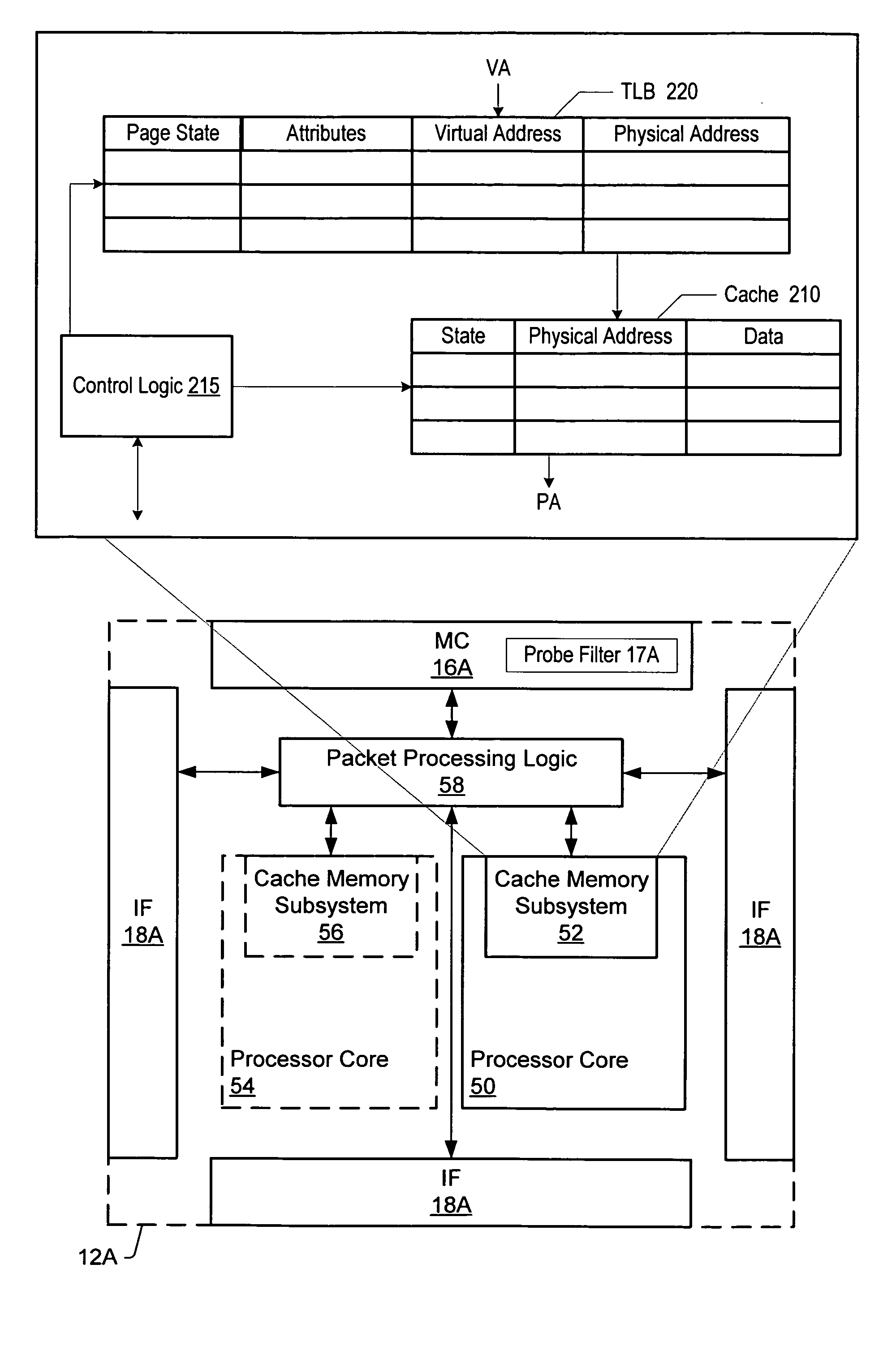

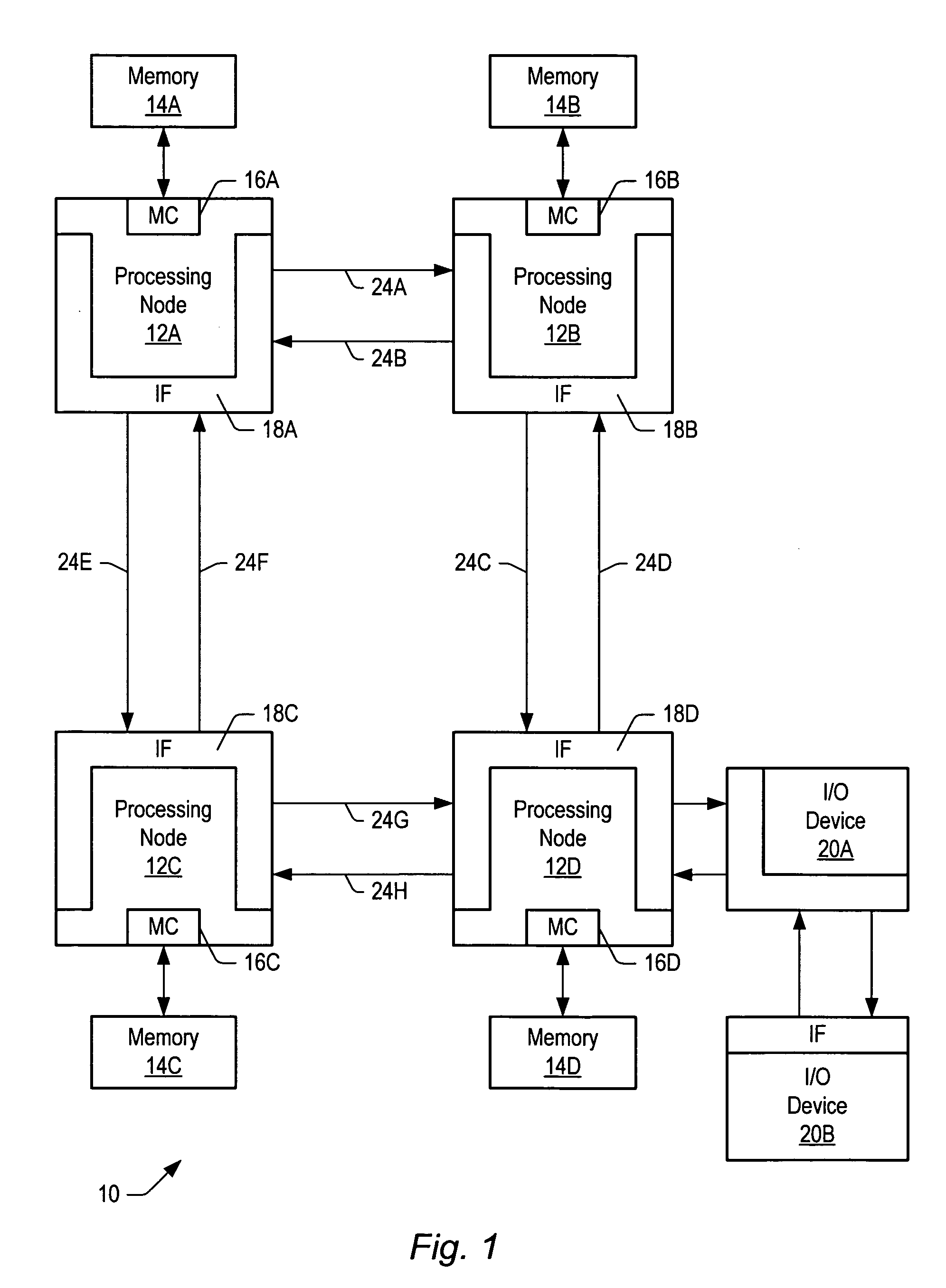

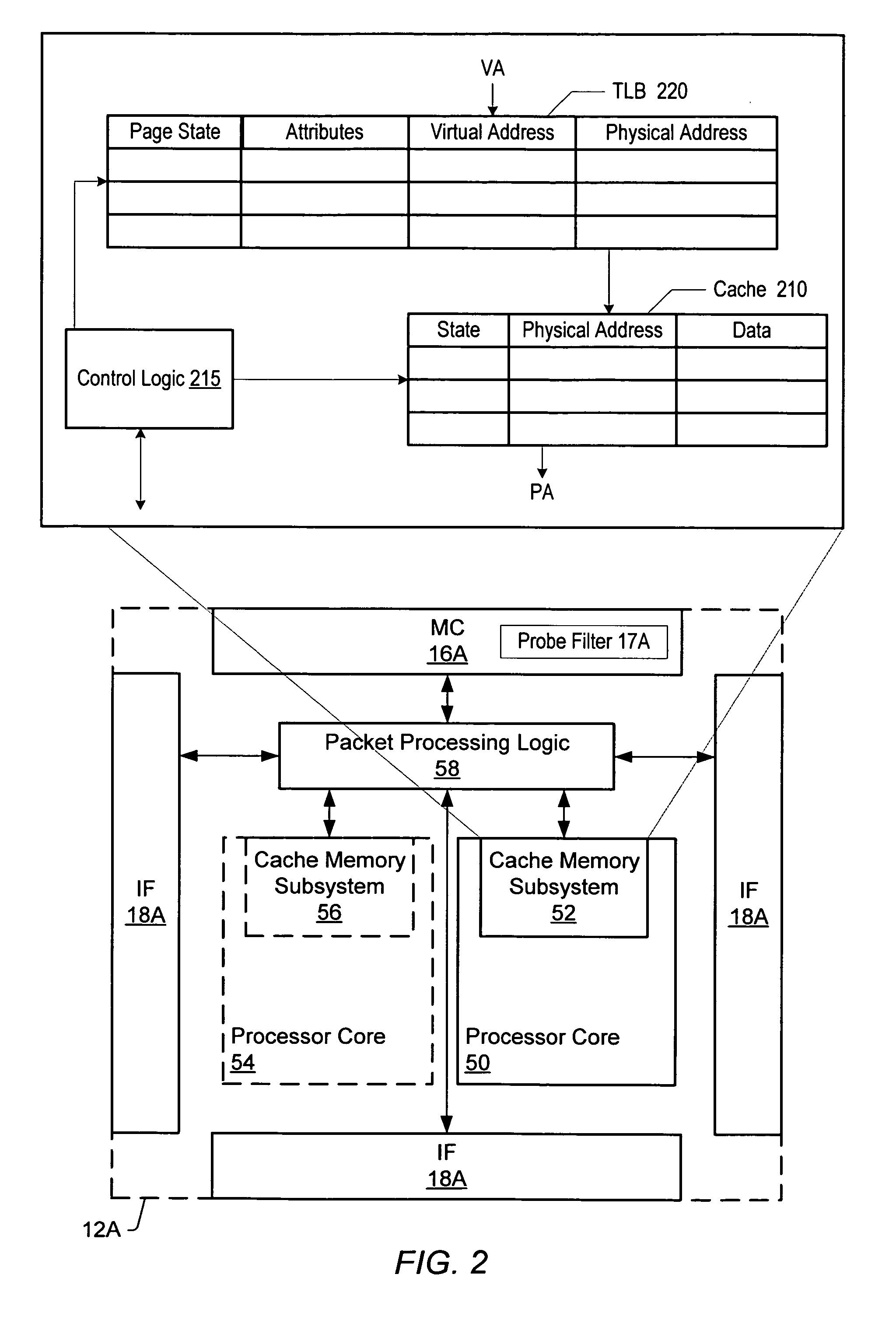

Active; US7669011B2; Memory architecture accessing/allocation; Energy efficient ICT; Multi processor; Physical address

A processor includes a processor core coupled to an address translation storage structure. The address translation storage structure includes a plurality of entries, each corresponding to a memory page. Each entry also includes a physical address of a memory page, and a private page indication that indicates whether any other processors have an entry, in either a respective address translation storage structure or a respective cache memory, that maps to the memory page. The processor also includes a memory controller that may inhibit issuance of a probe message to other processors in response to receiving a write memory request to a given memory page. The write request includes a private page attribute that is associated with the private page indication, and indicates that no other processor has an entry, in either the respective address translation storage structure or the respective cache memory, that maps to the memory page.

Owner:ADVANCED MICRO DEVICES INC

Method and apparatus for detecting and tracking private pages in a shared memory multiprocessor

Active; US20080155200A1; Memory architecture accessing/allocation; Energy efficient ICT; Multi processor; Physical address

A processor includes a processor core coupled to an address translation storage structure. The address translation storage structure includes a plurality of entries, each corresponding to a memory page. Each entry also includes a physical address of a memory page, and a private page indication that indicates whether any other processors have an entry, in either a respective address translation storage structure or a respective cache memory, that maps to the memory page. The processor also includes a memory controller that may inhibit issuance of a probe message to other processors in response to receiving a write memory request to a given memory page. The write request includes a private page attribute that is associated with the private page indication, and indicates that no other processor has an entry, in either the respective address translation storage structure or the respective cache memory, that maps to the memory page.

Owner:ADVANCED MICRO DEVICES INC

Shared-Memory Multiprocessor System and Method for Processing Information

Active; US20080215584A1; Increase speed; Digital data information retrieval; Data acquisition and logging; Multi processor; Data storing

Large-scale table data stored in a shared memory are sorted by a plurality of processors in parallel. According to the present invention, the records subjected to processing are first divided for allocation to the plurality of processors. Then, each processor counts the numbers of local occurrences of the field value sequence numbers associated with the records to be processed. The numbers of local occurrences of the field value sequence numbers counted by each processor are then converted into global cumulative numbers, i.e., the cumulative numbers used in common by the plurality of processors. Finally, each processor utilizes the global cumulative numbers as pointers to rearrange the order of the allocated records.

Owner:ESS HLDG CO LTD

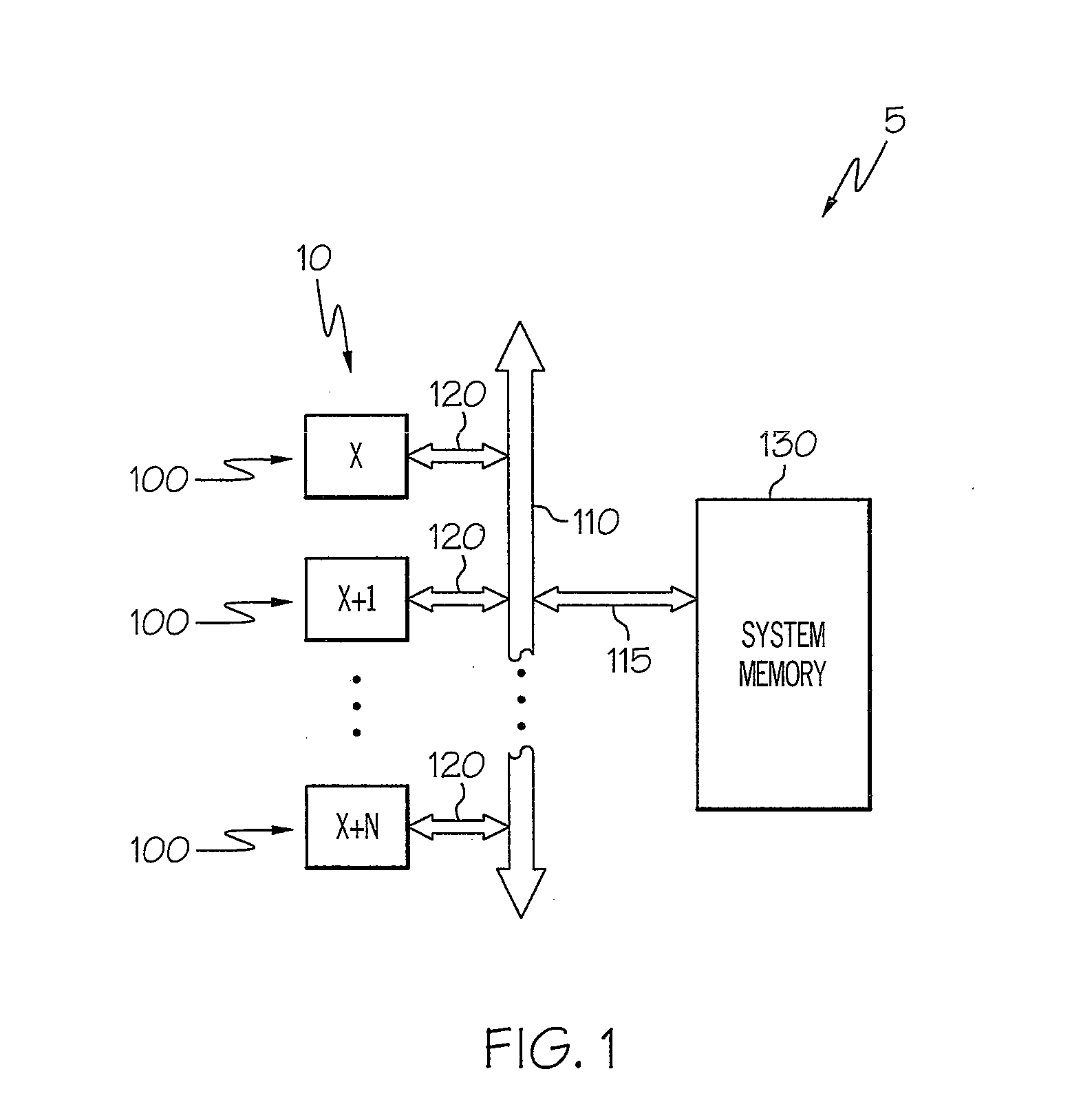

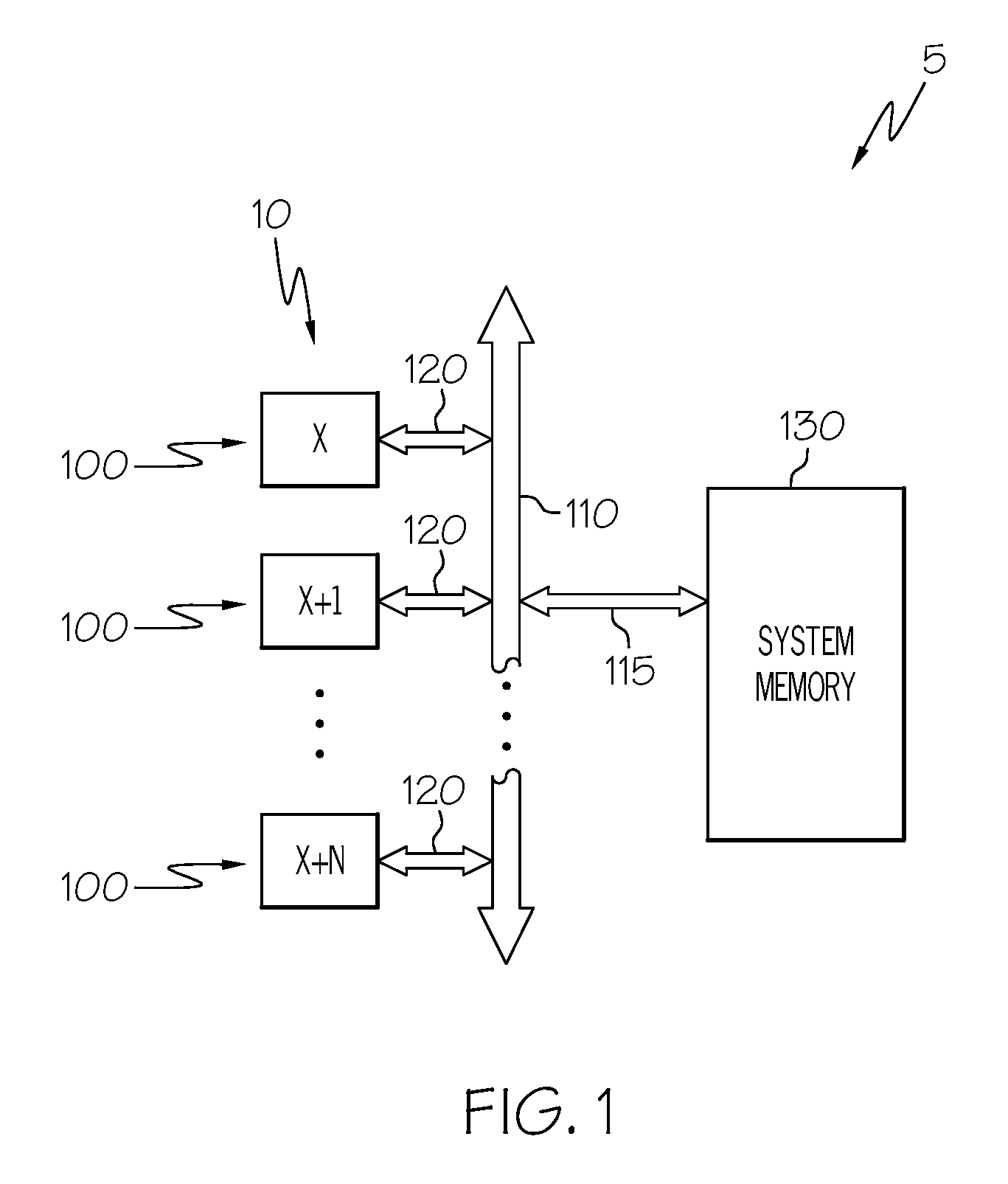

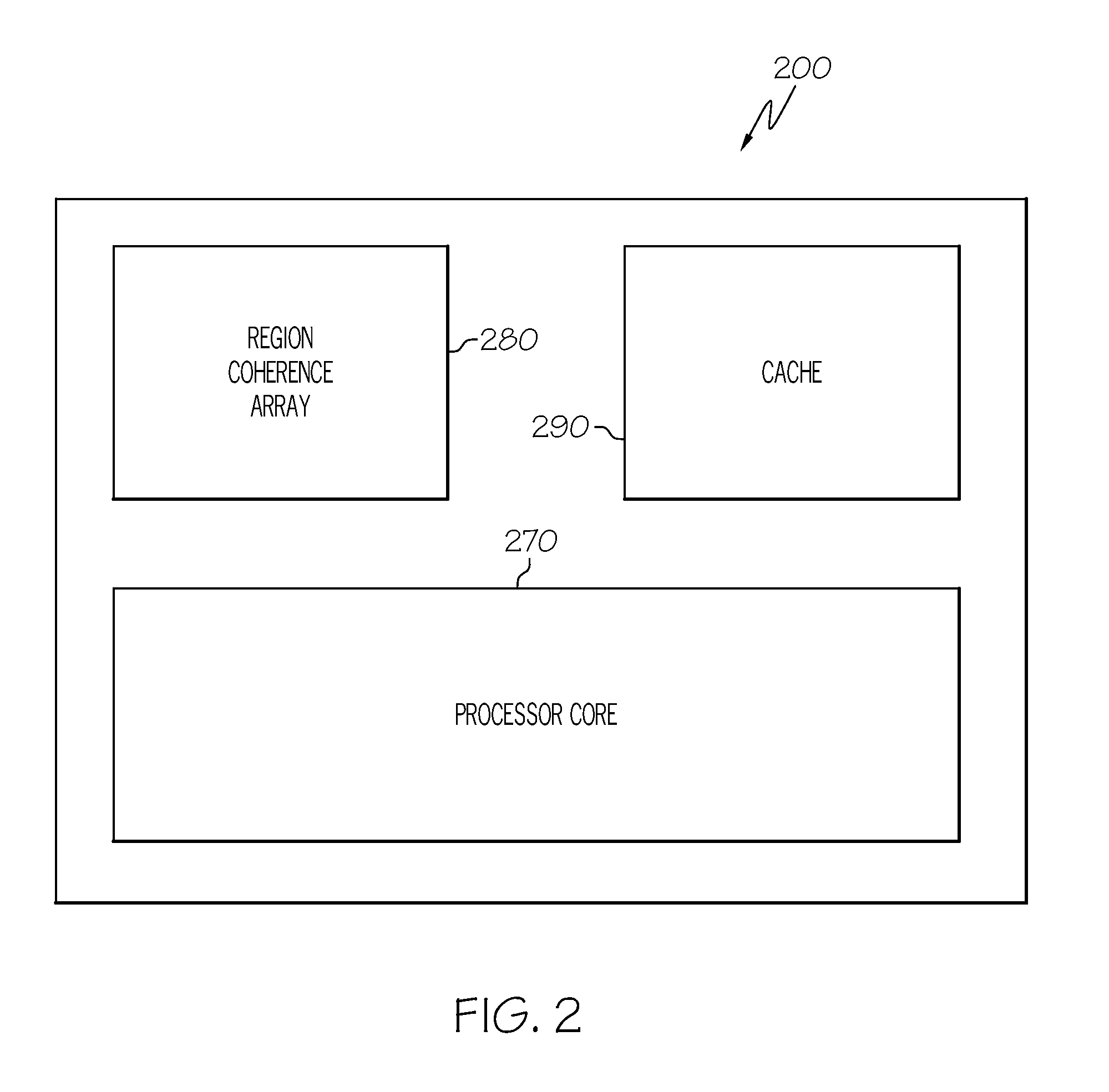

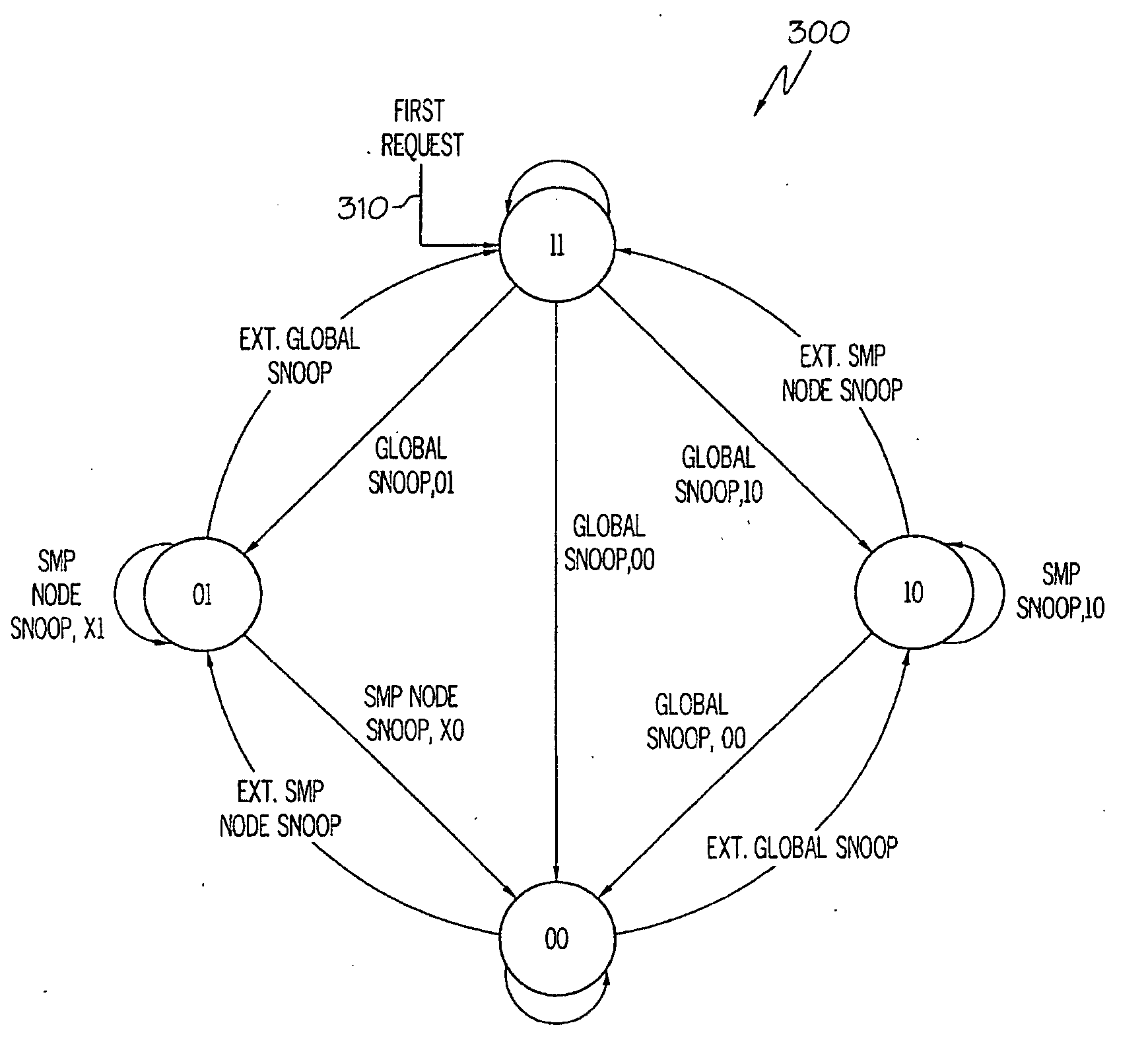

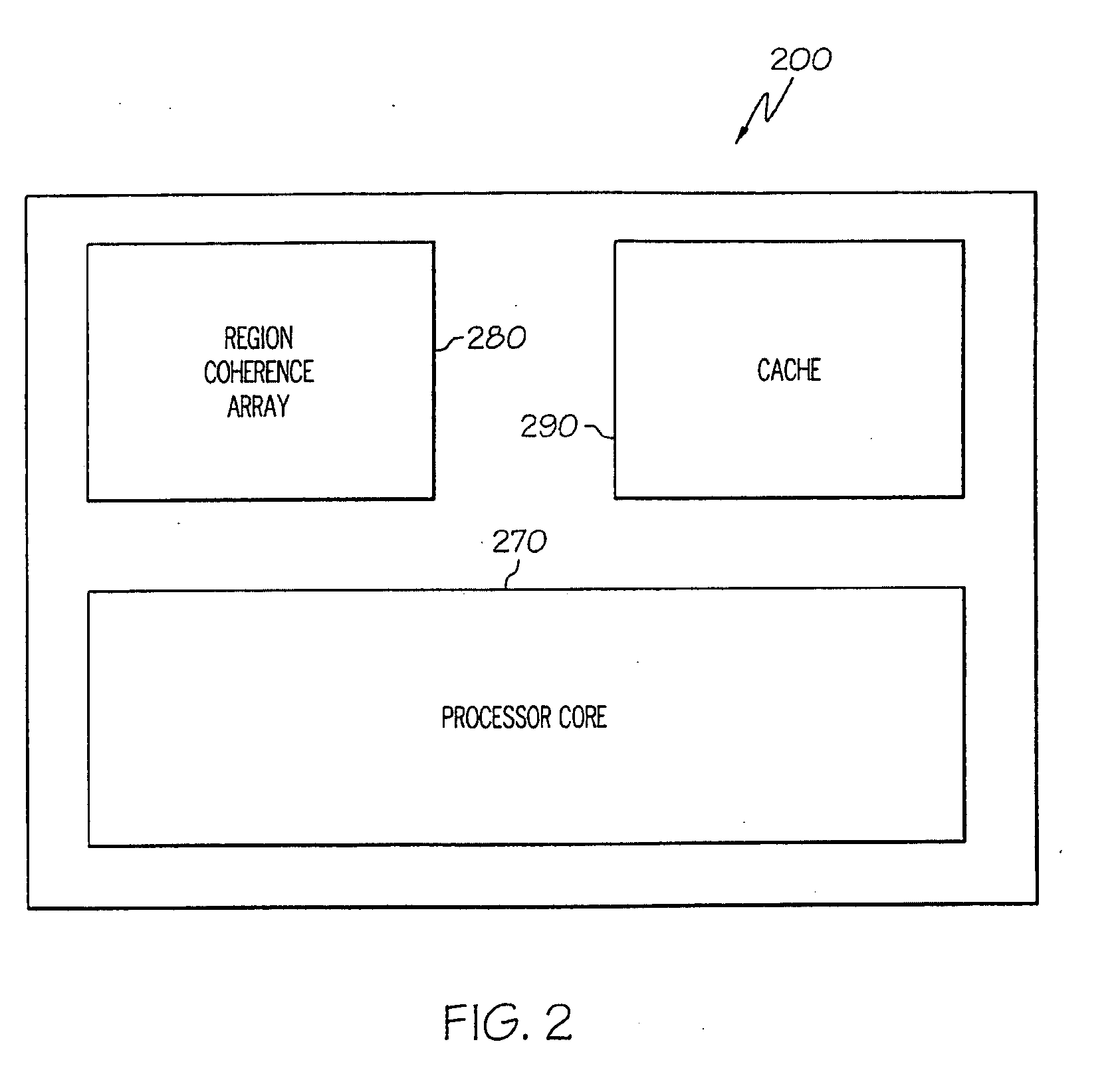

Efficient Region Coherence Protocol for Clustered Shared-Memory Multiprocessor Systems

Active; US20090319726A1; Easy to optimize; Easy to implement; Memory addressing/allocation/relocation; Multi processor; Printed circuit board

A system and method of a region coherence protocol for use in Region Coherence Arrays (RCAs) deployed in clustered shared-memory multiprocessor systems which optimize cache-to-cache transfers by allowing broadcast memory requests to be provided to only a portion of a clustered shared-memory multiprocessor system. Interconnect hierarchy levels can be devised for logical groups of processors, processors on the same chip, processors on chips aggregated into a multichip module, multichip modules on the same printed circuit board, and for processors on other printed circuit boards or in other cabinets. The present region coherence protocol includes, for example, one bit per level of interconnect hierarchy, such that the one bit has a value of “1” to indicate that there may be processors caching copies of lines from the region at that level of the interconnect hierarchy, and the one bit has a value of “0” to indicate that there are no cached copies of any lines from the region at that respective level of the interconnect hierarchy.
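One plausible way to use the per-level bits is to limit a broadcast to the outermost level whose bit is set. The sketch below shows that reading only; the level names, their order, and the function `broadcast_scope` are assumptions, and the actual protocol may combine the bits differently.

```python
# Interconnect hierarchy levels, innermost first (assumed names and order).
LEVELS = ("chip", "module", "board", "system")

def broadcast_scope(level_bits):
    """Return the outermost level that may hold cached lines from the region.

    level_bits[i] is 1 if processors at LEVELS[i] may cache lines from the
    region, 0 if no cached copies exist at that level.
    Returns None when every bit is 0, meaning no broadcast is needed.
    """
    scope = None
    for level, bit in zip(LEVELS, level_bits):
        if bit:
            scope = level
    return scope

print(broadcast_scope([1, 1, 0, 0]))
print(broadcast_scope([0, 0, 0, 0]))
```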

Owner:IBM CORP

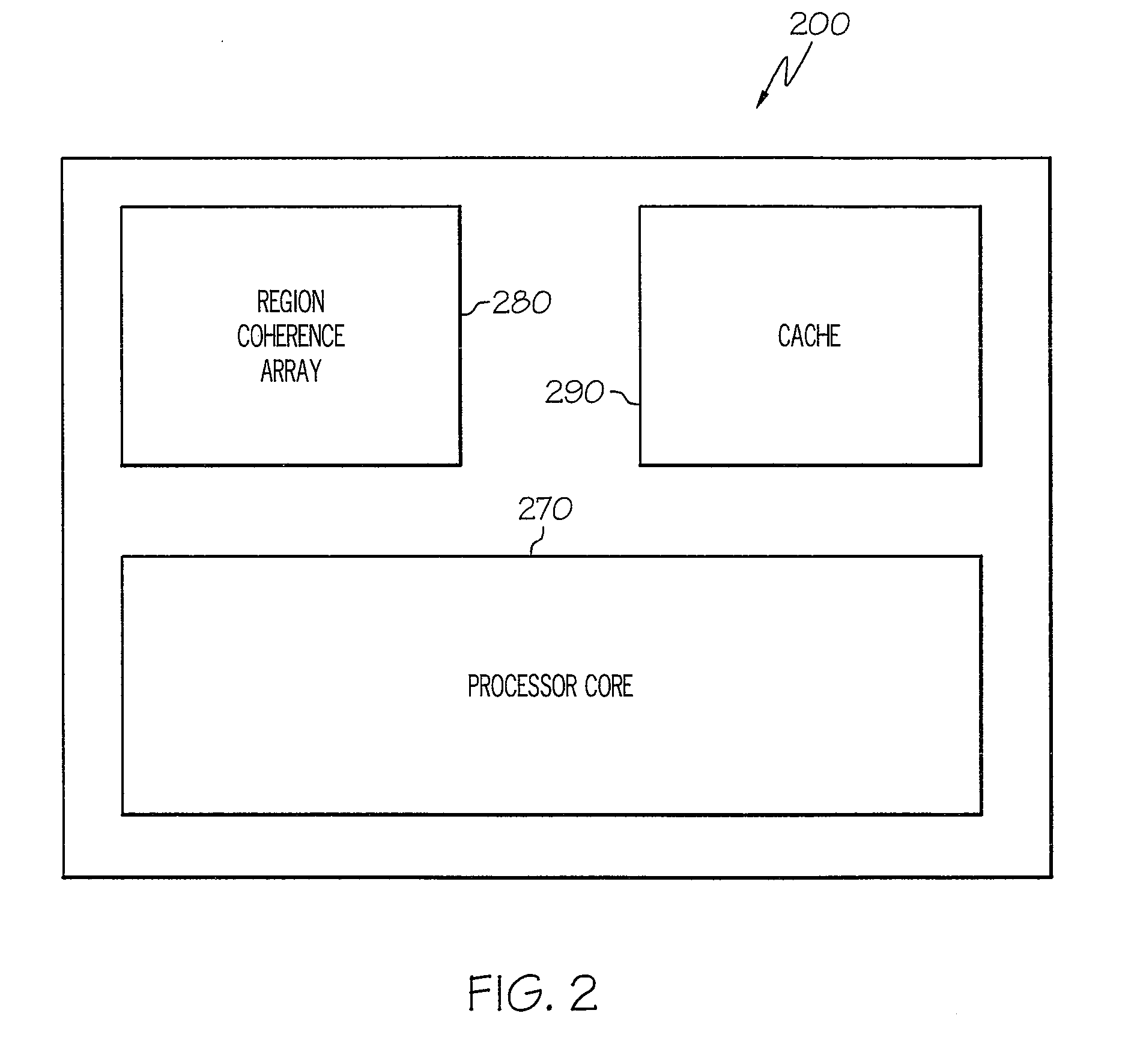

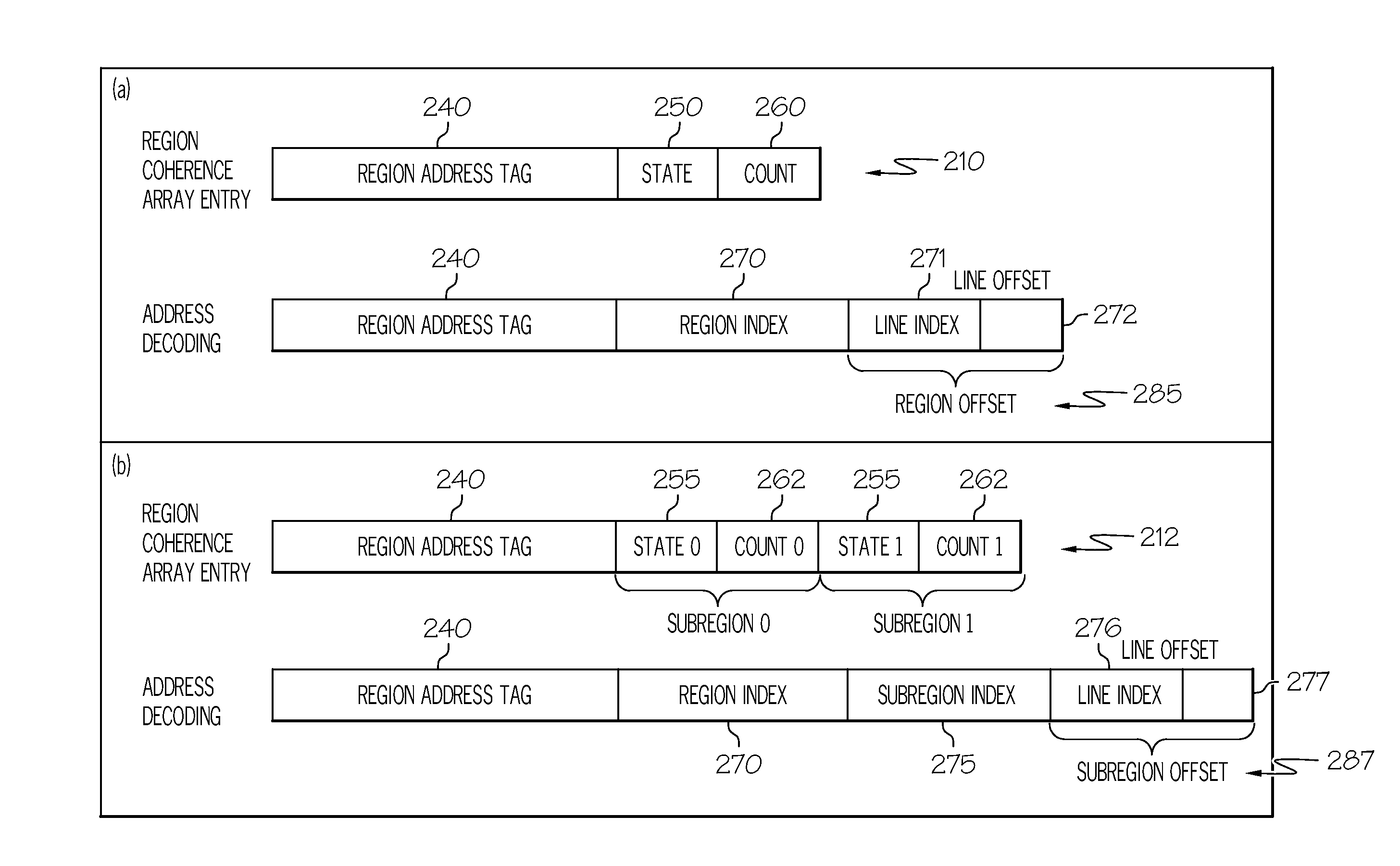

Region coherence array for a mult-processor system having subregions and subregion prefetching

Inactive; US20100191921A1; Tracking; Accurate tracking; Memory architecture accessing/allocation; Memory systems; Multi processor; Single level

Owner:IBM CORP

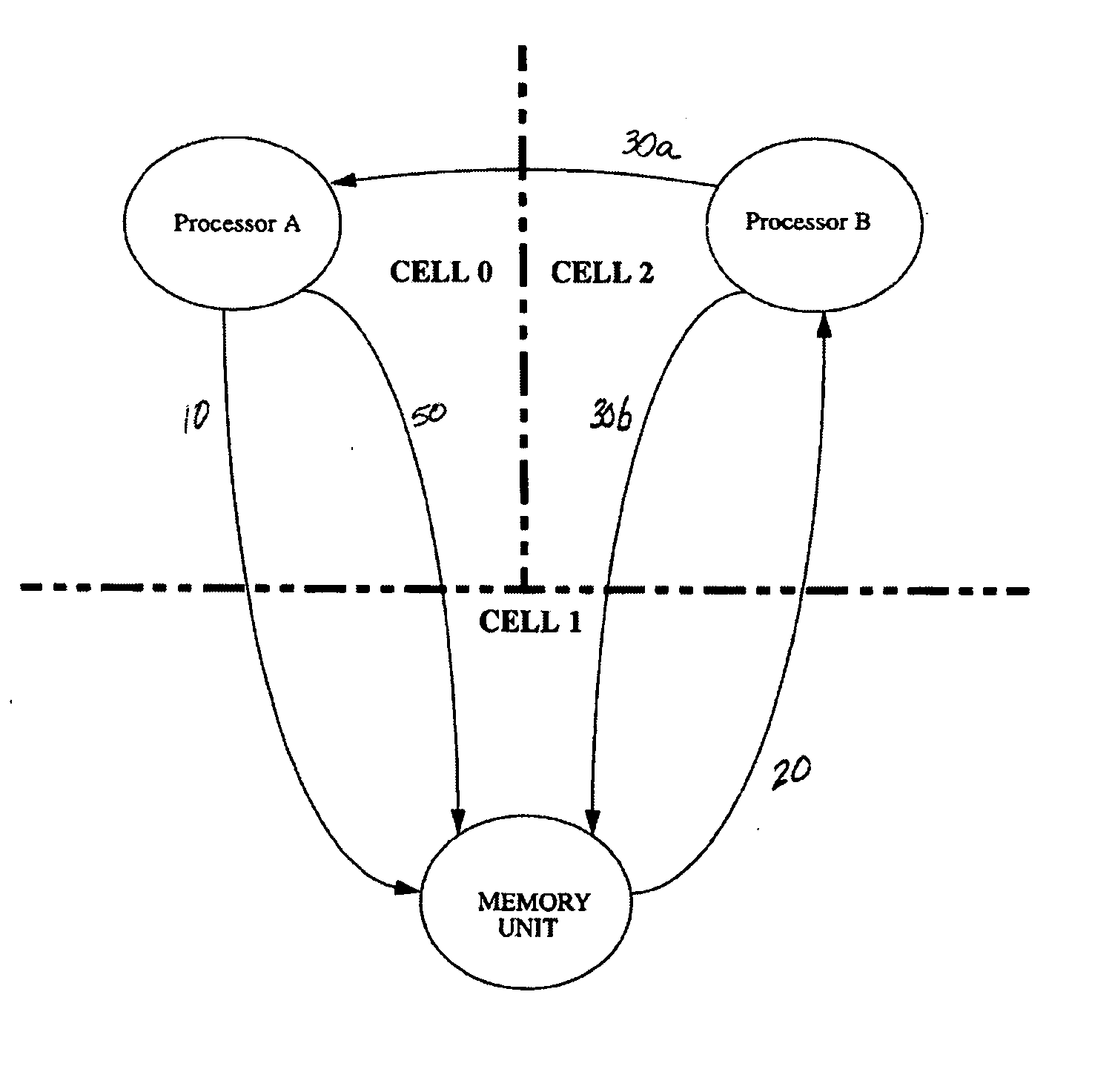

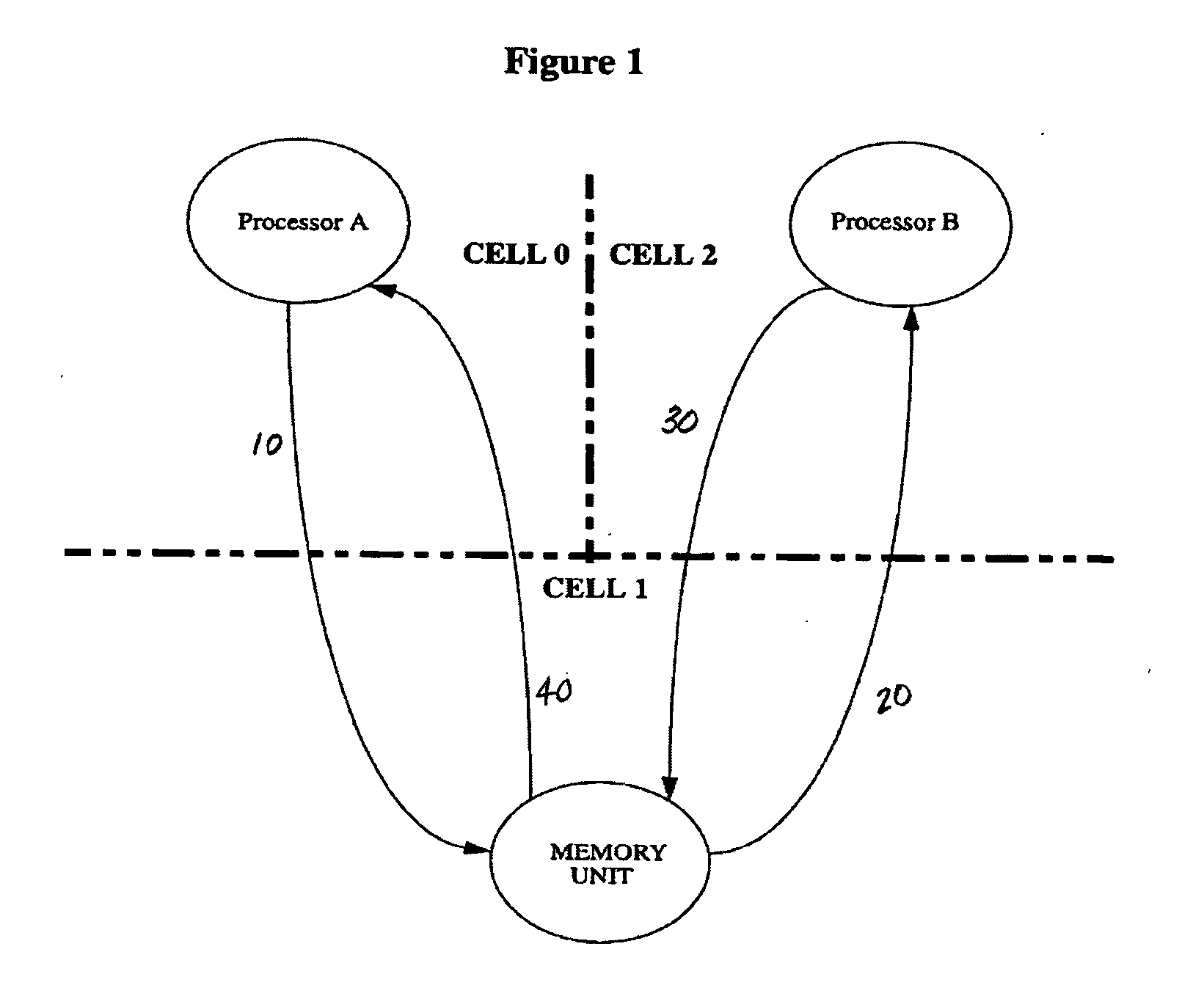

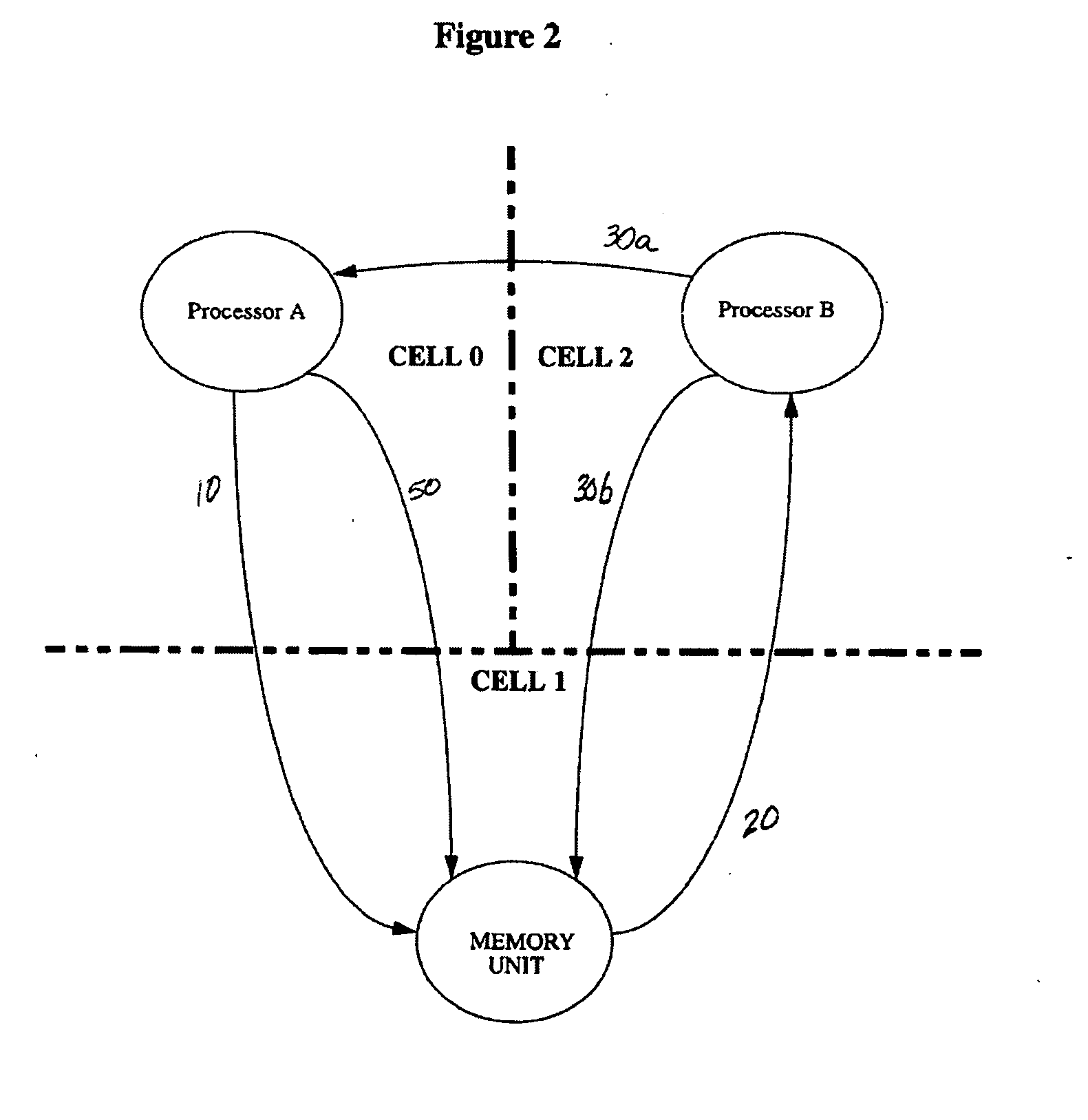

Cache line ownership transfer in multi-processor computer systems

Inactive; US20050154840A1; Reduce cache miss latency; Reduce the number of steps; Data processing applications; Memory addressing/allocation/relocation; Multi processor; Receipt

Transferring cache line ownership between processors in a shared memory multi-processor computer system. A request for ownership of a cache line is sent from a requesting processor to a memory unit. The memory unit receives the request and determines which one of a plurality of processors other than the requesting processor has ownership of the requested cache line. The memory sends an ownership recall to that processor. In response to the ownership recall, the other processor sends the requested cache line to the requesting processor, which may send a response to the memory unit to confirm receipt of the requested cache line. The other processor may optionally send a response to the memory unit to confirm that the other processor has sent the requested cache line to the requesting processor. A copy of the data for the requested cache line may, under some circumstances, also be sent to the memory unit by the other processor as part of the response.

Owner:HEWLETT PACKARD DEV CO LP

System and method for limited fanout daisy chaining of cache invalidation requests in a shared-memory multiprocessor system

Inactive; US7389389B2; Memory architecture accessing/allocation; Digital data processing details; Cache invalidation; Multi processor

A protocol engine is for use in each node of a computer system having a plurality of nodes. Each node includes an interface to a local memory subsystem that stores memory lines of information, a directory, and a memory cache. The directory includes an entry associated with a memory line of information stored in the local memory subsystem. The directory entry includes an identification field for identifying sharer nodes that potentially cache the memory line of information. The identification field has a plurality of bits at associated positions within the identification field. Each respective bit of the identification field is associated with one or more nodes. The protocol engine furthermore sets each bit in the identification field for which the memory line is cached in at least one of the associated nodes. In response to a request for exclusive ownership of a memory line, the protocol engine sends an initial invalidation request to no more than a first predefined number of the nodes associated with set bits in the identification field of the directory entry associated with the memory line.
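A small model of the identification field and the fanout limit is sketched below. It assumes node `n` maps to bit `n % num_bits`, which is one simple way for a bit to cover several nodes; the abstract does not state the mapping. How the remaining nodes are reached (the daisy chaining in the title) is not modeled, and all function names are hypothetical.

```python
def nodes_for_bit(bit, num_bits, num_nodes):
    """All nodes associated with one bit of the identification field."""
    return [n for n in range(num_nodes) if n % num_bits == bit]

def add_sharer(field, node, num_bits):
    """Set the bit covering a node that has cached the memory line."""
    return field | (1 << (node % num_bits))

def initial_invalidation_targets(field, num_bits, num_nodes, max_fanout):
    """Split potential sharers into (first wave, remaining nodes).

    The first wave holds at most max_fanout nodes, matching the
    'no more than a first predefined number' limit in the abstract.
    """
    candidates = [
        node
        for bit in range(num_bits)
        if (field >> bit) & 1
        for node in nodes_for_bit(bit, num_bits, num_nodes)
    ]
    return candidates[:max_fanout], candidates[max_fanout:]

field = add_sharer(add_sharer(0, 1, 4), 6, 4)   # nodes 1 and 6 cache the line
print(bin(field), initial_invalidation_targets(field, 4, 8, max_fanout=2))
```

Because one bit covers several nodes, some invalidations go to nodes that never cached the line; this imprecision is the price of a shorter directory entry.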

Owner:SK HYNIX INC

Region coherence array having hint bits for a clustered shared-memory multiprocessor system

A system and method for a multilevel region coherence protocol for use in Region Coherence Arrays (RCAs) deployed in clustered shared-memory multiprocessor systems which optimize cache-to-cache transfers (interventions) by using region hint bits in each RCA to allow memory requests for lines of a region of the memory to be optimally sent to only a determined portion of the clustered shared-memory multiprocessor system without broadcasting the requests to all processors in the system. A sufficient number of region hint bits are used to uniquely identify each level of the system's interconnect hierarchy to optimally predict which level of the system likely includes a processor that has cached copies of lines of data from the region.

Owner:IBM CORP

Distributed page-table lookups in a shared-memory system

Active; US9213649B2; Reduce latency; Reduce communication overhead; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Multi processor; Physical address

Owner:ORACLE INT CORP

Using broadcast-based TLB sharing to reduce address-translation latency in a shared-memory system with optical interconnect

Active; US9235529B2; Reduce latency; Facilitate communication; Memory architecture accessing/allocation; Multiplex system selection arrangements; Page table; Optical interconnect

The disclosed embodiments provide a system that uses broadcast-based TLB sharing to reduce address-translation latency in a shared-memory multiprocessor system with two or more nodes that are connected by an optical interconnect. During operation, a first node receives a memory operation that includes a virtual address. Upon determining that one or more TLB levels of the first node will miss for the virtual address, the first node uses the optical interconnect to broadcast a TLB request to one or more additional nodes of the shared-memory multiprocessor in parallel with scheduling a speculative page-table walk for the virtual address. If the first node receives a TLB entry from another node of the shared-memory multiprocessor via the optical interconnect in response to the TLB request, the first node cancels the speculative page-table walk. Otherwise, if no response is received, the first node instead waits for the completion of the page-table walk.

Owner:ORACLE INT CORP

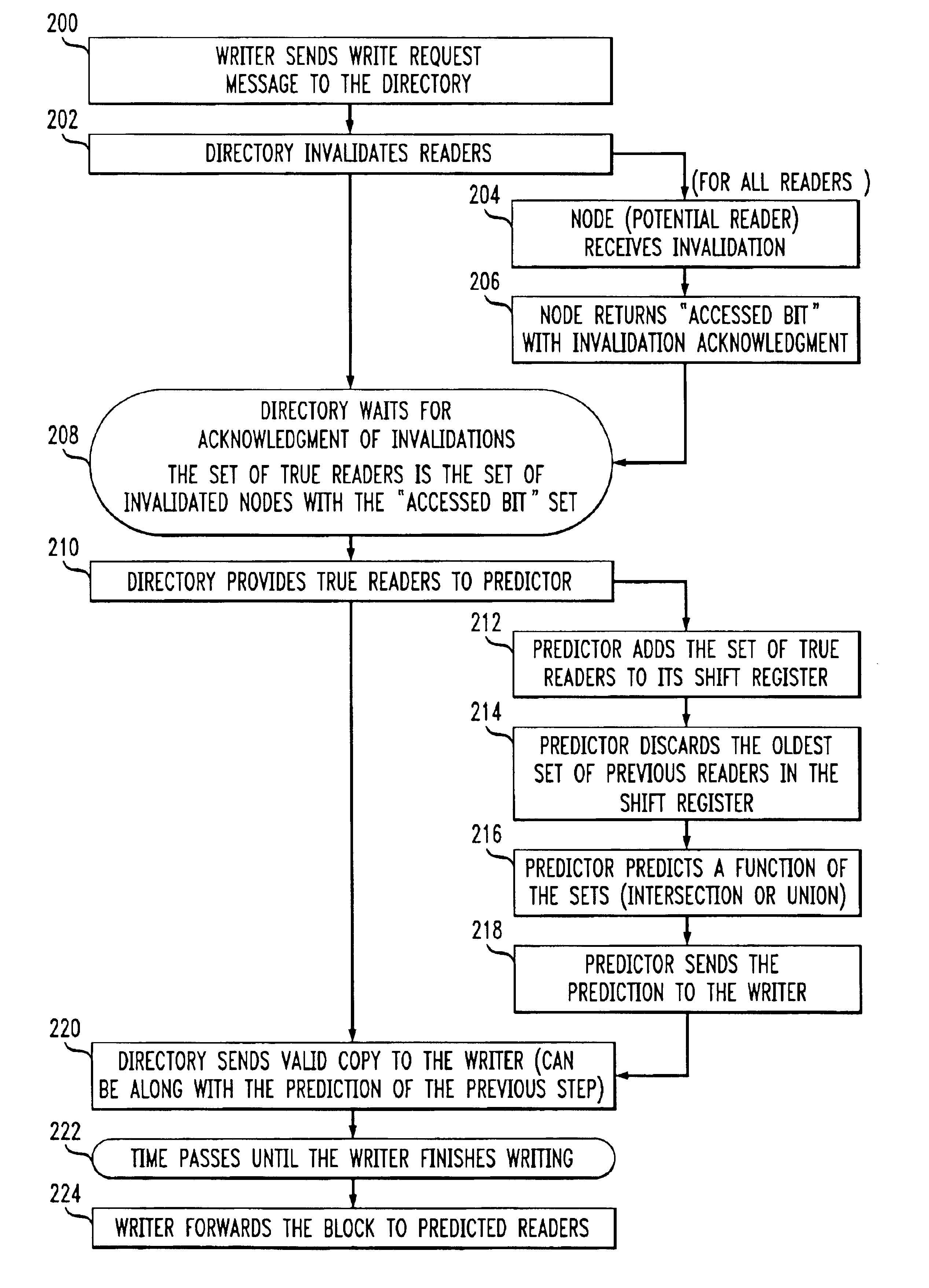

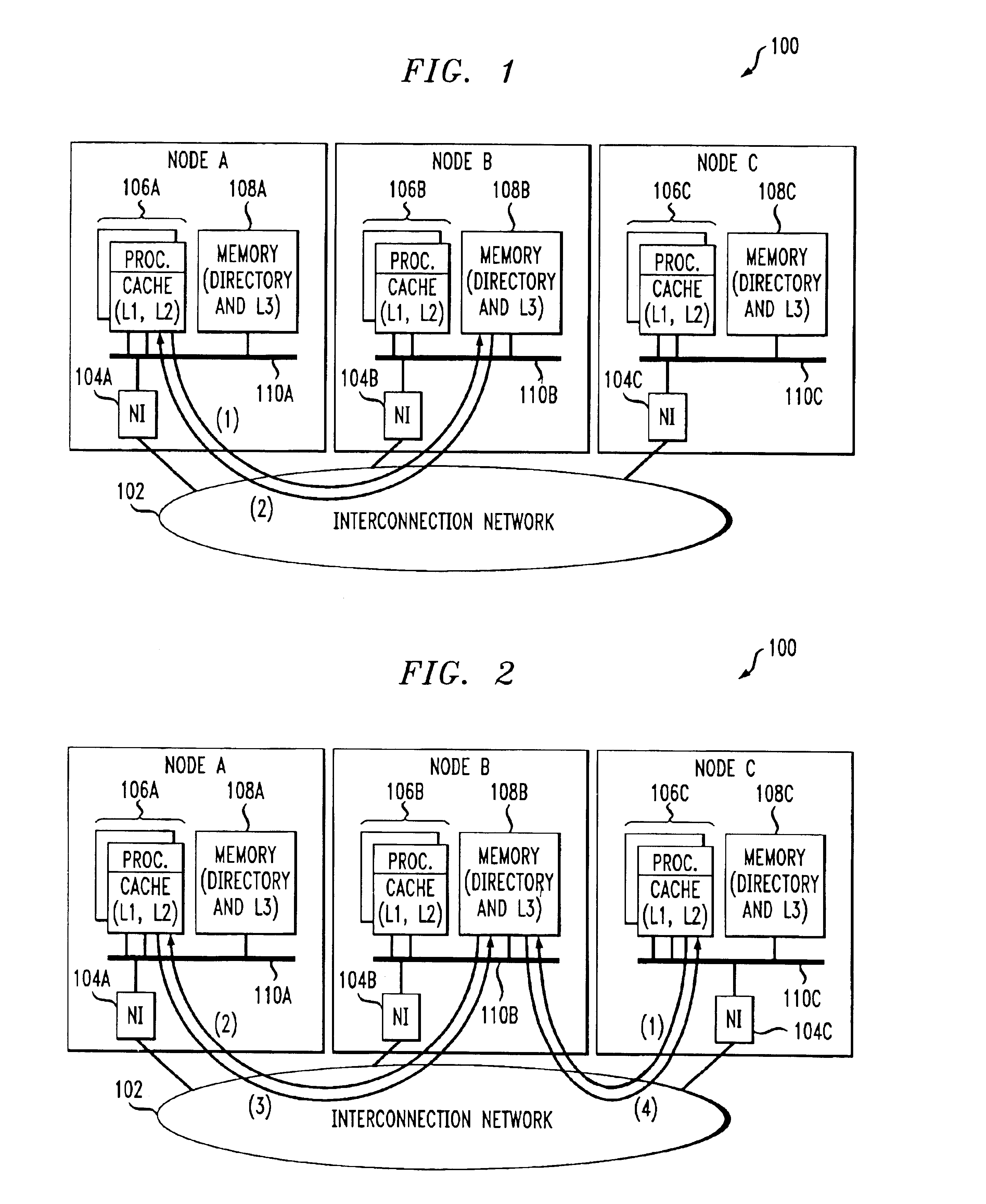

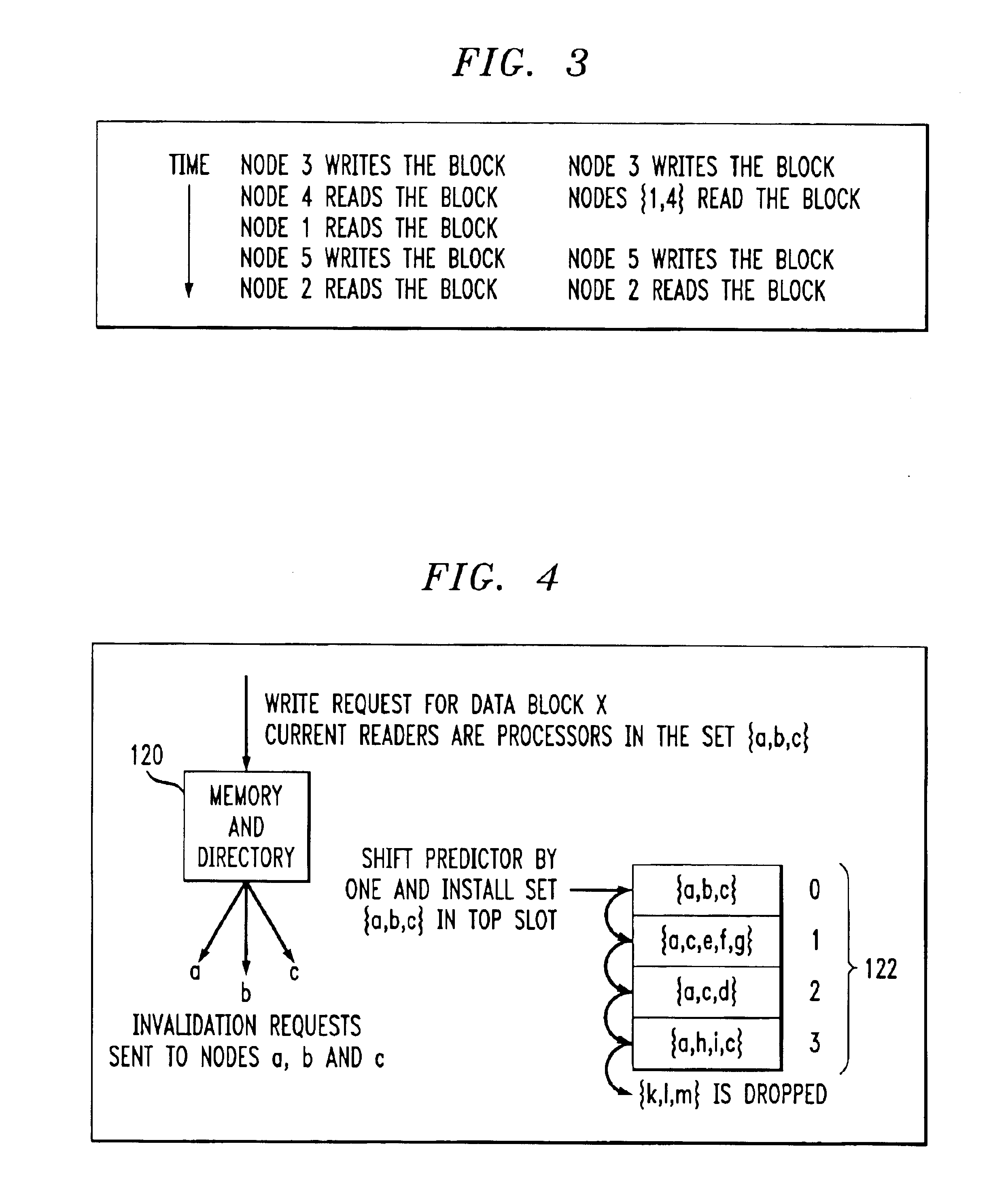

Directory-based prediction methods and apparatus for shared-memory multiprocessor systems

Inactive; US6889293B1; Improve forecast accuracy; Memory addressing/allocation/relocation; Digital computer details; Algorithm; Multi processor

A set of predicted readers are determined for a data block subject to a write request in a shared-memory multiprocessor system by first determining a current set of readers of the data block, and then generating the set of predicted readers based on the current set of readers and at least one additional set of readers representative of at least a portion of a global history of a directory associated with the data block. In one possible implementation, the set of predicted readers are generated by applying a function to the current set of readers and one or more additional sets of readers.
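The abstract leaves the combining function open. The sketch below tries two obvious candidates, set union and set intersection, over the current reader set and the most recent history sets; the choice of functions, the `depth` parameter and the name `predict_readers` are assumptions for illustration.

```python
def predict_readers(current_readers, history, depth=1, combine="union"):
    """Predict which processors will read a block after it is written.

    current_readers: set of processors currently reading the block.
    history: earlier reader sets from the directory's global history,
             oldest first.
    depth: how many of the most recent history sets to use.
    combine: "union" predicts more readers, "intersection" fewer.
    """
    recent = history[-depth:] if depth > 0 else []
    sets = [set(current_readers)] + [set(s) for s in recent]
    result = sets[0]
    for s in sets[1:]:
        result = result | s if combine == "union" else result & s
    return result

history = [{0, 1}, {1, 2}]
print(predict_readers({1, 3}, history, depth=1, combine="union"))
print(predict_readers({1, 3}, history, depth=2, combine="intersection"))
```

Union favors coverage (fewer missed readers, more wasted forwards), while intersection favors accuracy; a real predictor would tune this trade-off against measured traffic.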

Owner:LUCENT TECH INC +1

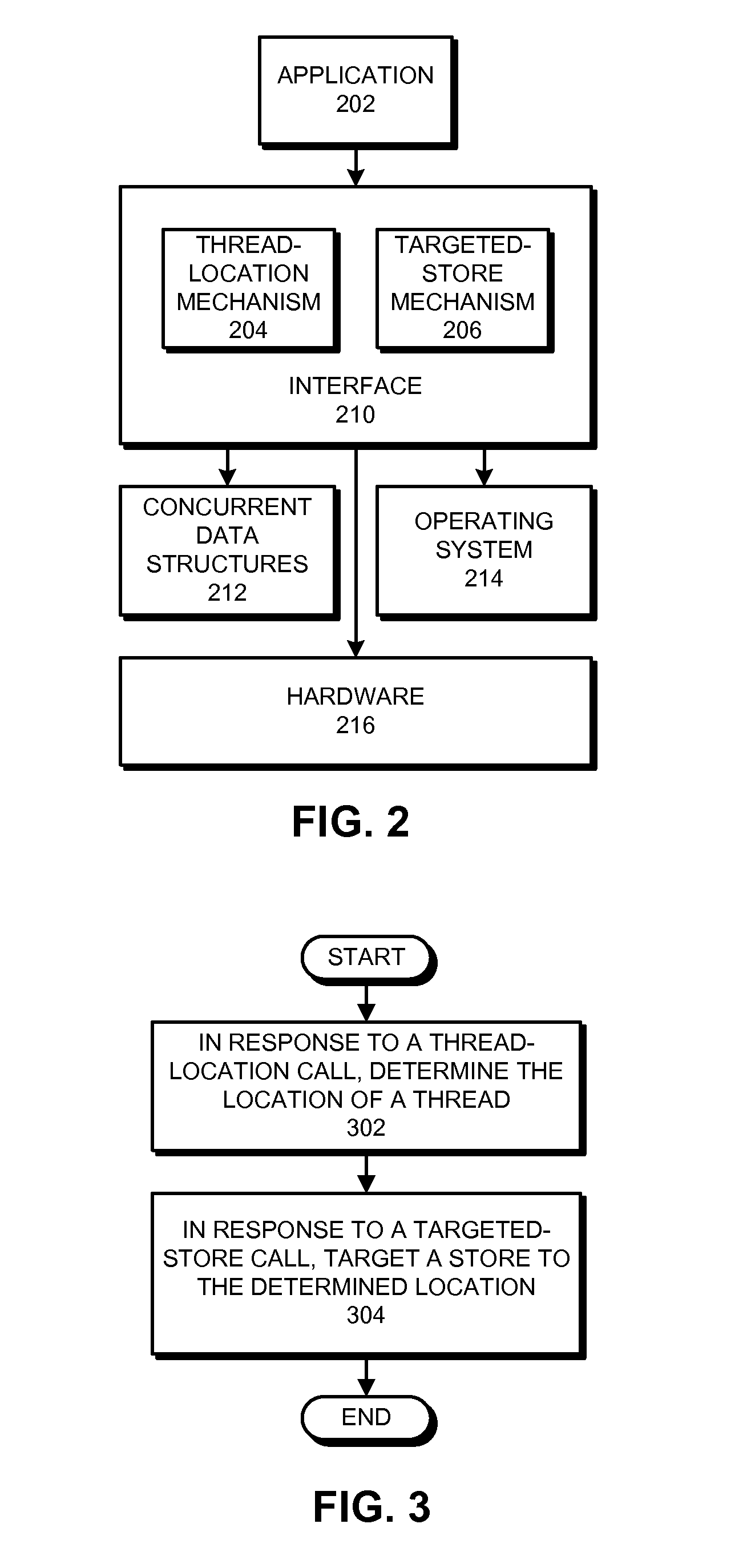

Monitoring multiple memory locations for targeted stores in a shared-memory multiprocessor

Active; US20140215157A1; Eliminate need; Error detection/correction; Memory addressing/allocation/relocation; Multi processor; Parallel computing

A system and method for supporting targeted stores in a shared-memory multiprocessor. A targeted store enables a first processor to push a cache line to be stored in a cache memory of a second processor. This eliminates the need for multiple cache-coherence operations to transfer the cache line from the first processor to the second processor. More specifically, the disclosed embodiments provide a system that notifies a waiting thread when a targeted store is directed to monitored memory locations. During operation, the system receives a targeted store which is directed to a specific cache in a shared-memory multiprocessor system. In response, the system examines a destination address for the targeted store to determine whether the targeted store is directed to a monitored memory location which is being monitored for a thread associated with the specific cache. If so, the system informs the thread about the targeted store.

Owner:ORACLE INT CORP
