35 results about "Bigram" patented technology

A bigram or digram is a sequence of two adjacent elements from a string of tokens, which are typically letters, syllables, or words. A bigram is an n-gram for n=2. The frequency distribution of every bigram in a string is commonly used for simple statistical analysis of text in many applications, including in computational linguistics, cryptography, speech recognition, and so on. Gappy bigrams or skipping bigrams are word pairs which allow gaps (perhaps avoiding connecting words, or allowing some simulation of dependencies, as in a dependency grammar).
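The definition above can be made concrete with a minimal Python sketch. The function names `bigrams` and `skip_bigrams` and the `max_gap` parameter are illustrative choices, not part of any cited patent; the sketch only shows how adjacent pairs, gappy pairs, and a frequency distribution might be computed.

```python
from collections import Counter

def bigrams(tokens):
    """Return the list of adjacent pairs from a sequence of tokens."""
    return list(zip(tokens, tokens[1:]))

def skip_bigrams(tokens, max_gap=1):
    """Return ordered pairs separated by at most `max_gap` intervening tokens."""
    pairs = []
    for i, left in enumerate(tokens):
        # j ranges over the next (max_gap + 1) positions, clipped to the sequence end
        for j in range(i + 1, min(i + 2 + max_gap, len(tokens))):
            pairs.append((left, tokens[j]))
    return pairs

# Word-level bigram frequency distribution
words = "the cat sat on the mat".split()
word_counts = Counter(bigrams(words))

# Letter-level bigrams work the same way, because a string is a sequence of characters
char_counts = Counter(bigrams("banana"))
print(char_counts.most_common(2))
```

With `max_gap=0` the gappy variant reduces to ordinary bigrams, which is a convenient sanity check.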

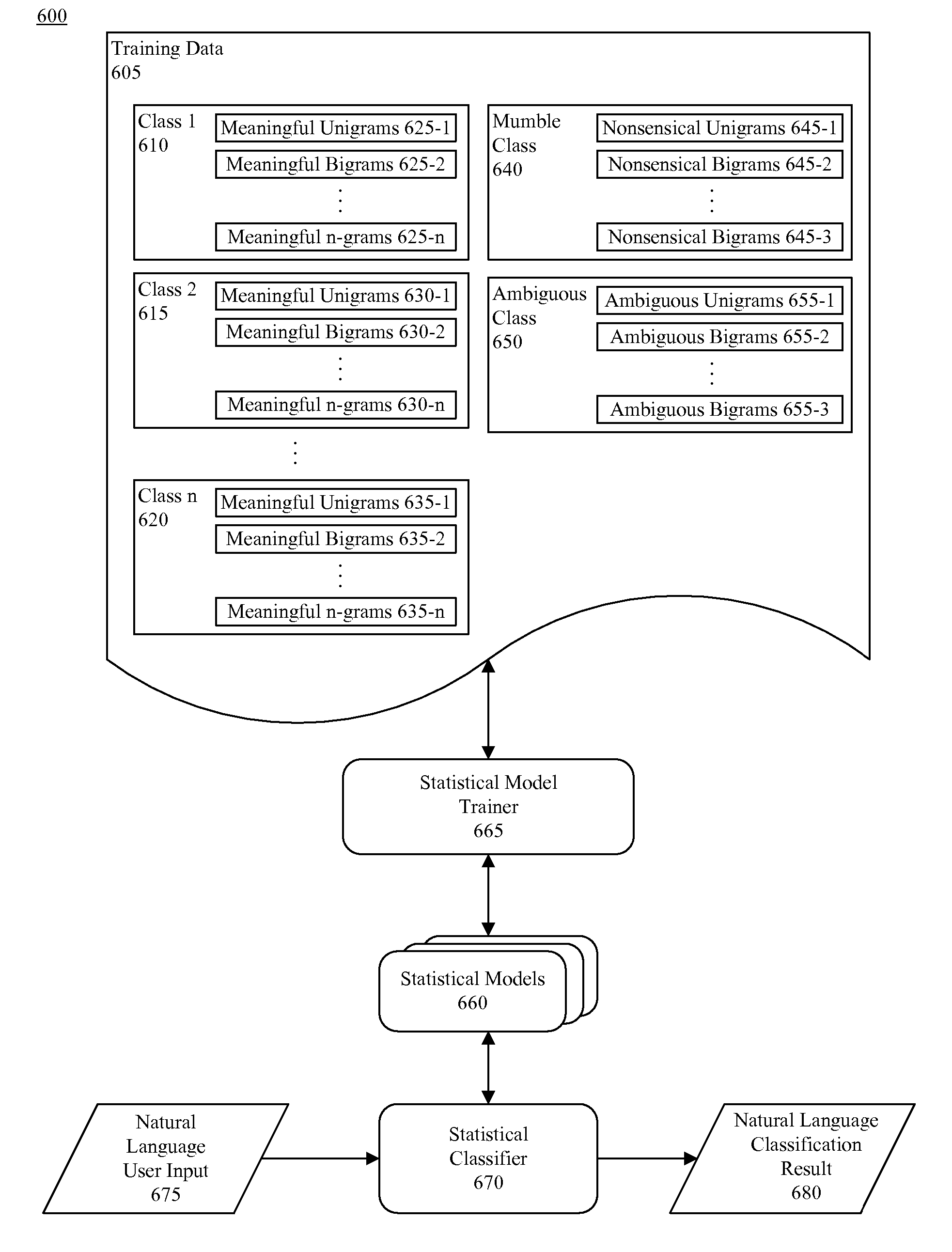

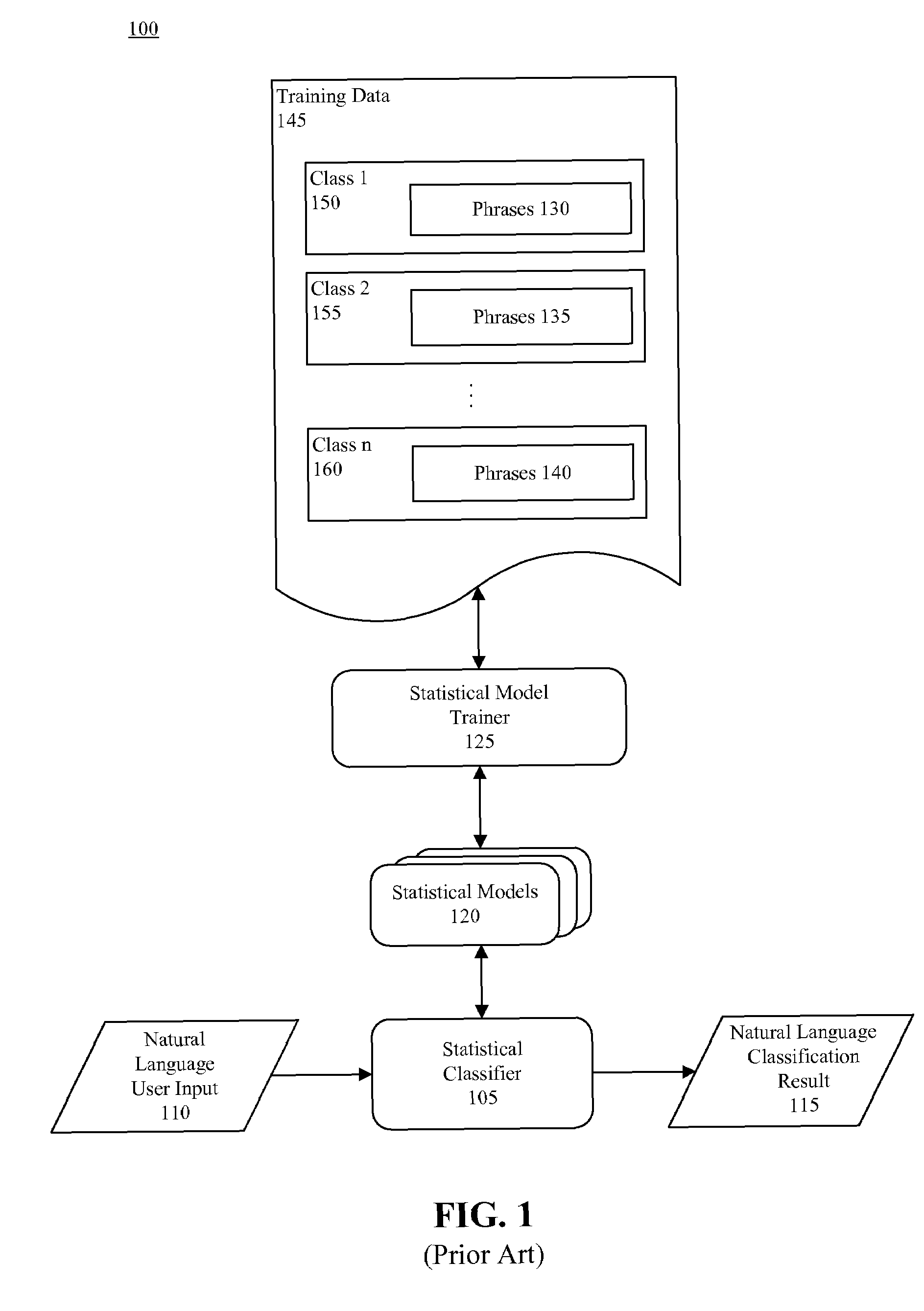

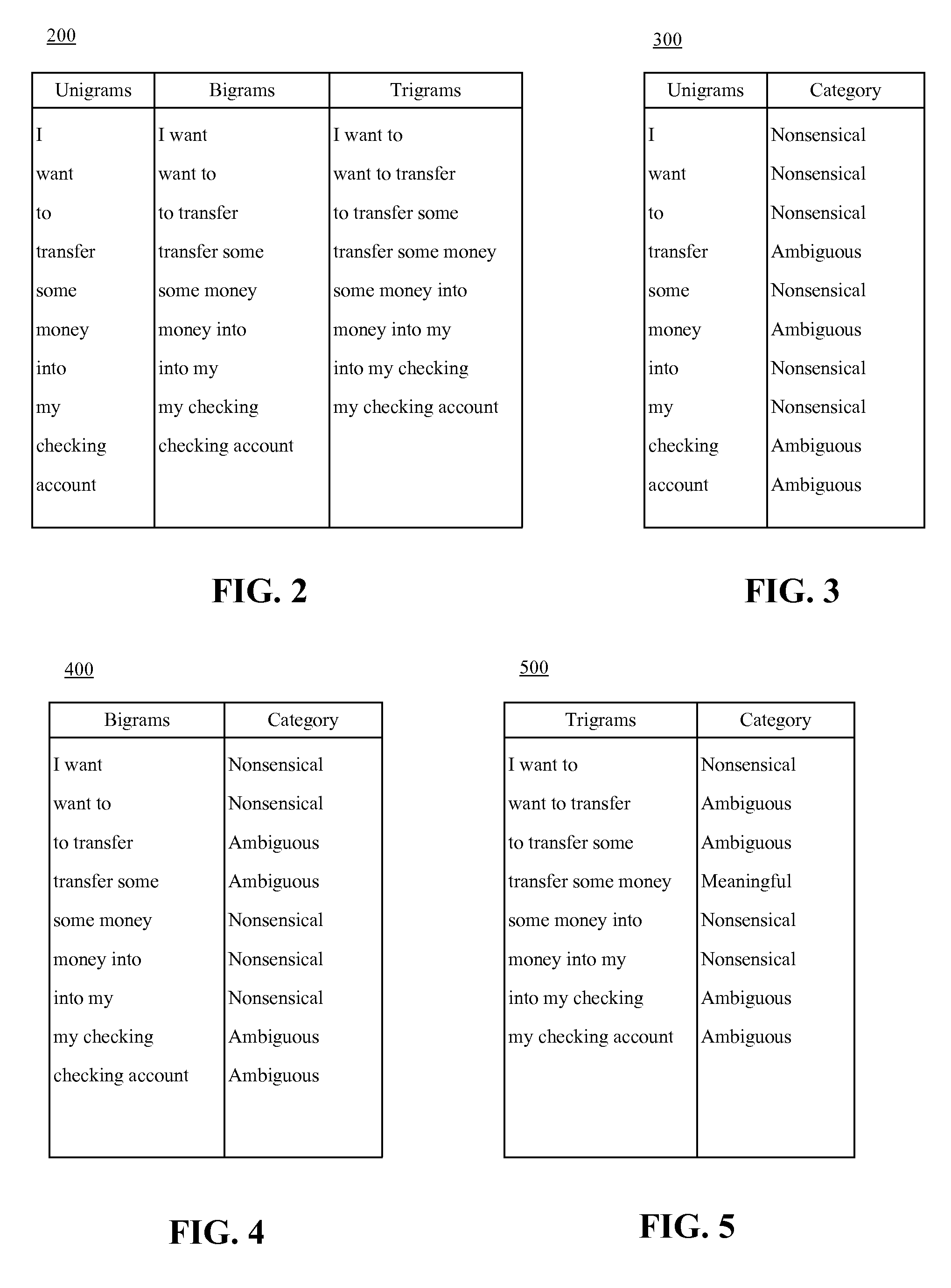

Identification and rejection of meaningless input during natural language classification

Active | US7707027B2 | Digital data information retrieval | Natural language data processing | Algorithm | Computer science

A method for identifying data that is meaningless and generating a natural language statistical model which can reject meaningless input. The method can include identifying unigrams that are individually meaningless from a set of training data. At least a portion of the unigrams identified as being meaningless can be assigned to a first n-gram class. The method also can include identifying bigrams that are entirely composed of meaningless unigrams and determining whether the identified bigrams are individually meaningless. At least a portion of the bigrams identified as being individually meaningless can be assigned to the first n-gram class.

Owner:MICROSOFT TECH LICENSING LLC

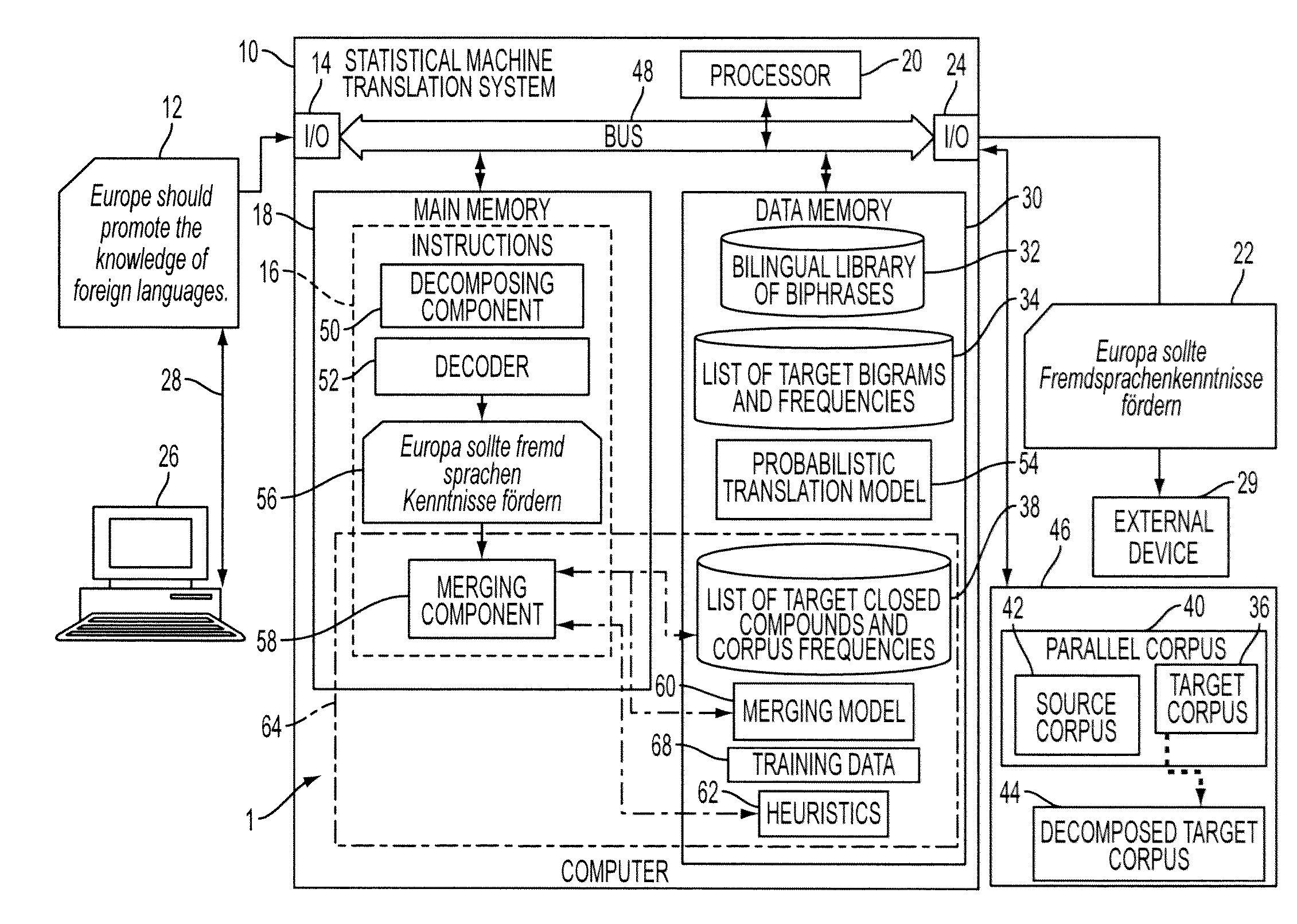

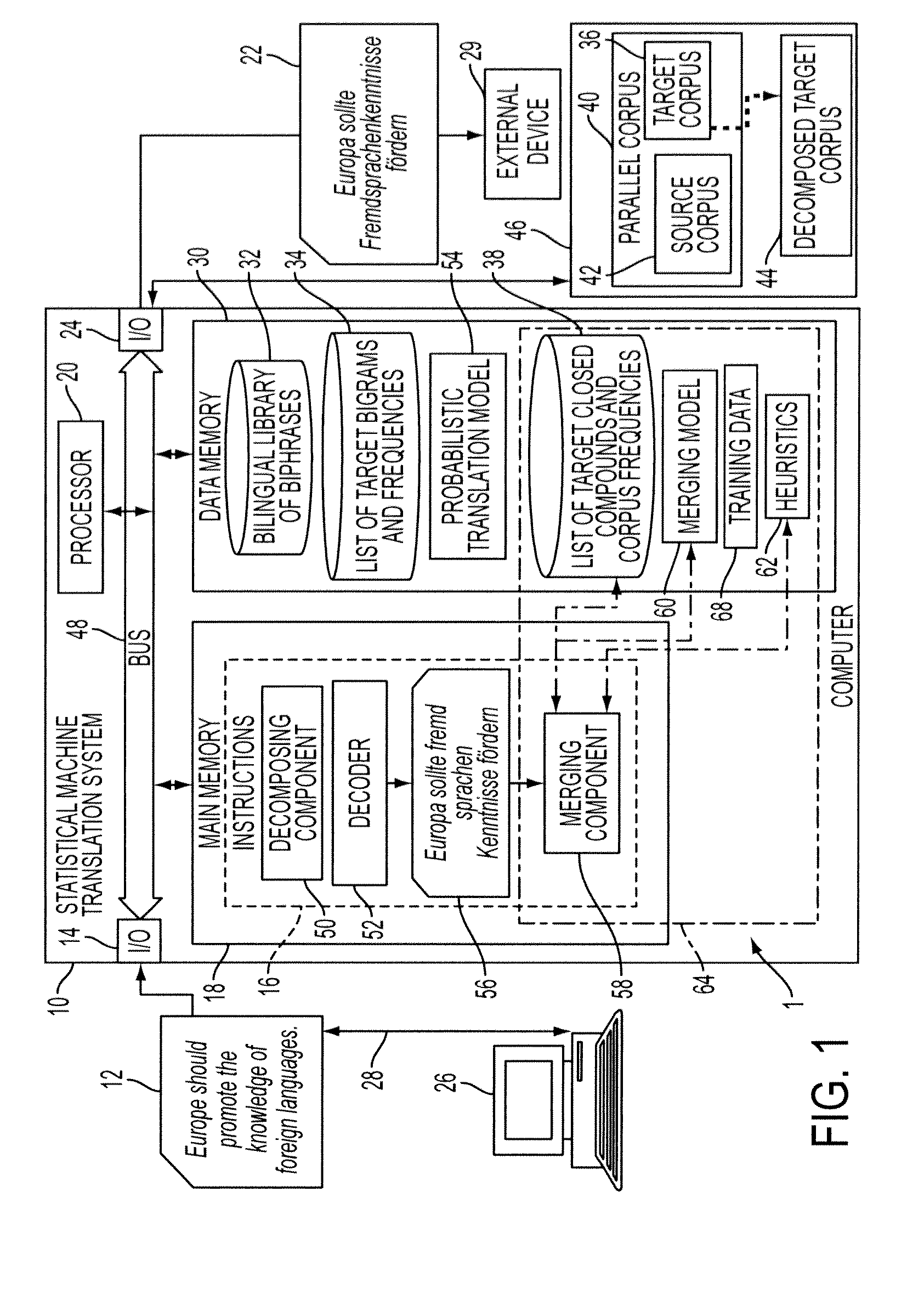

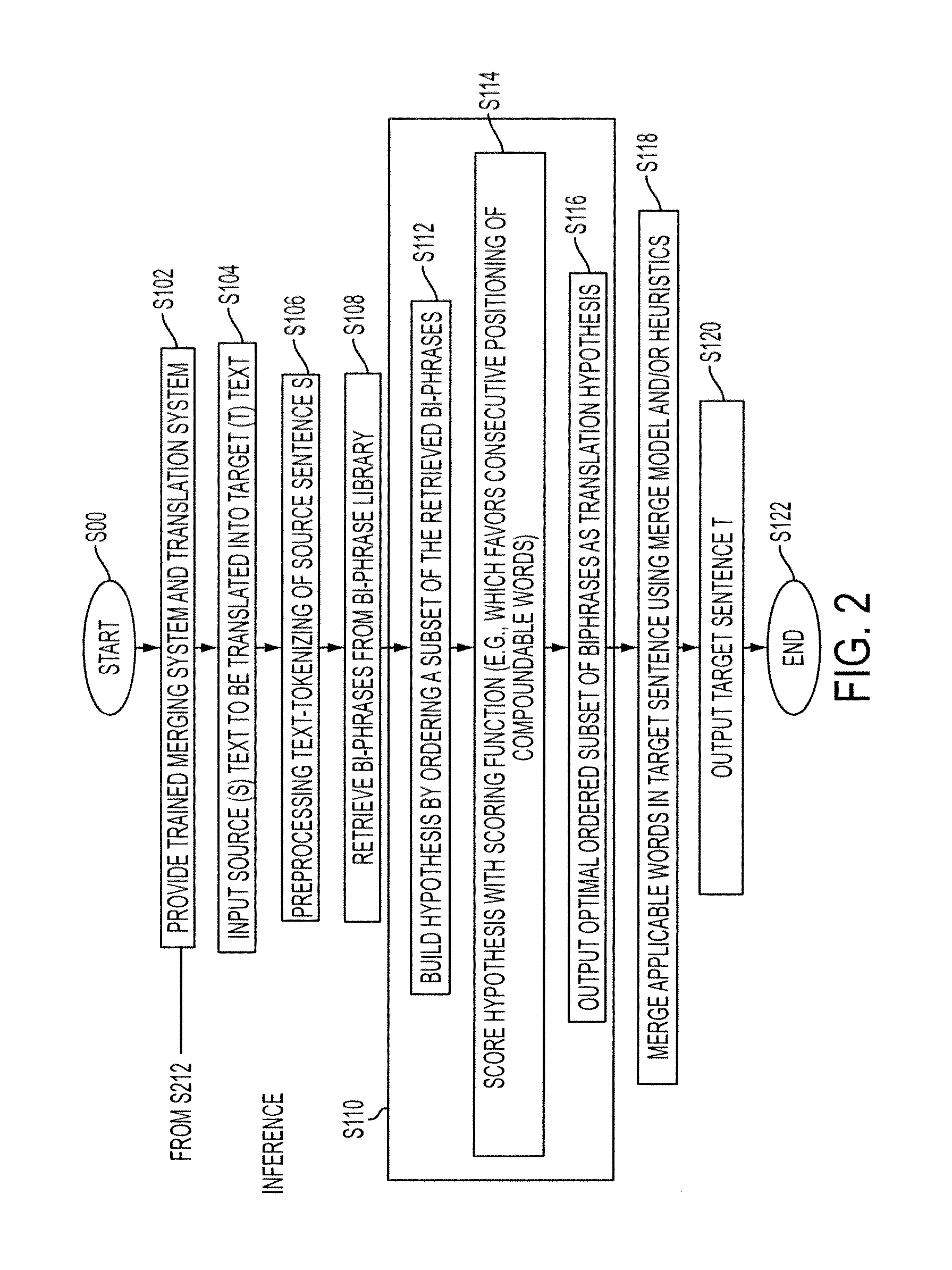

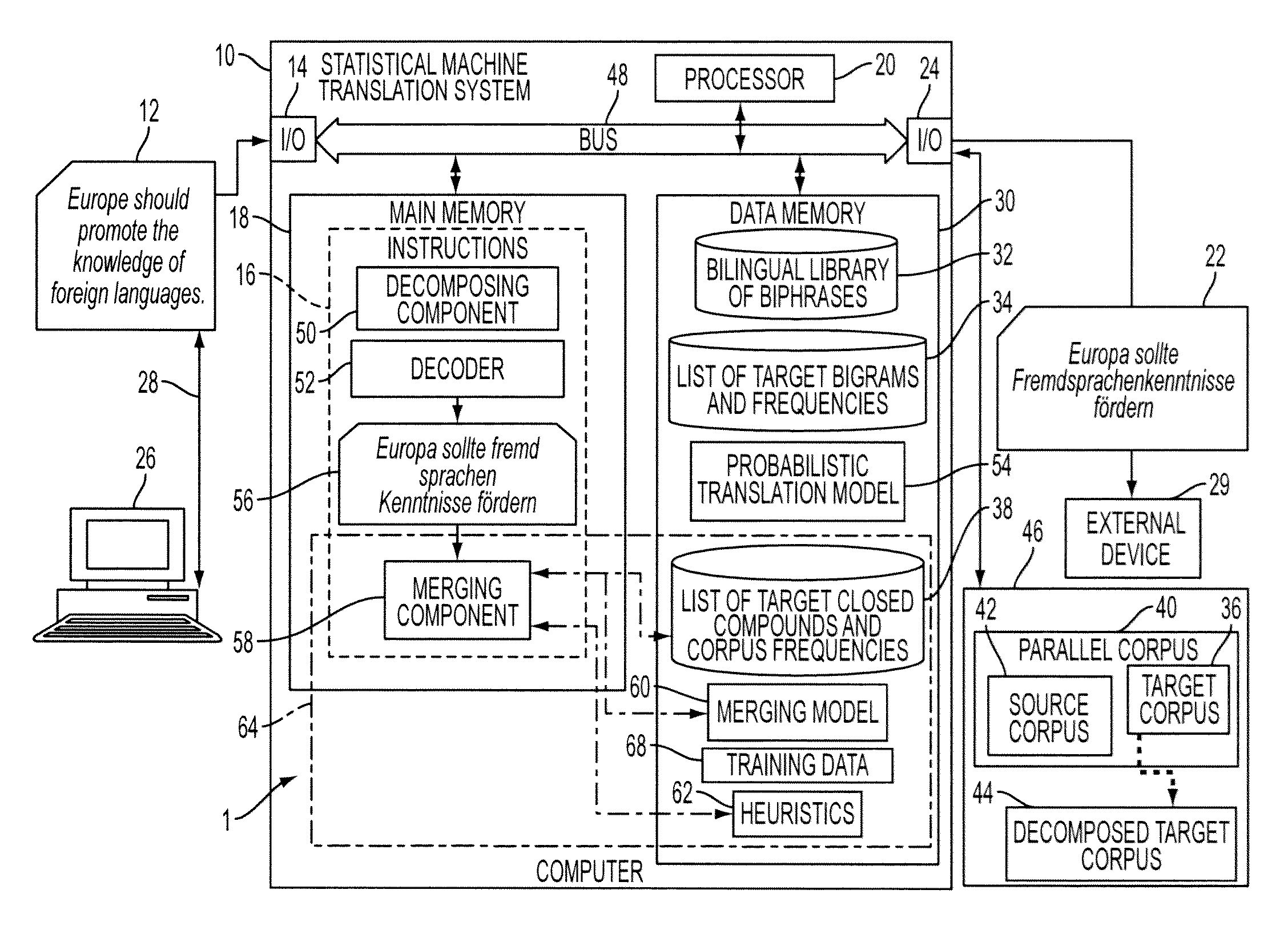

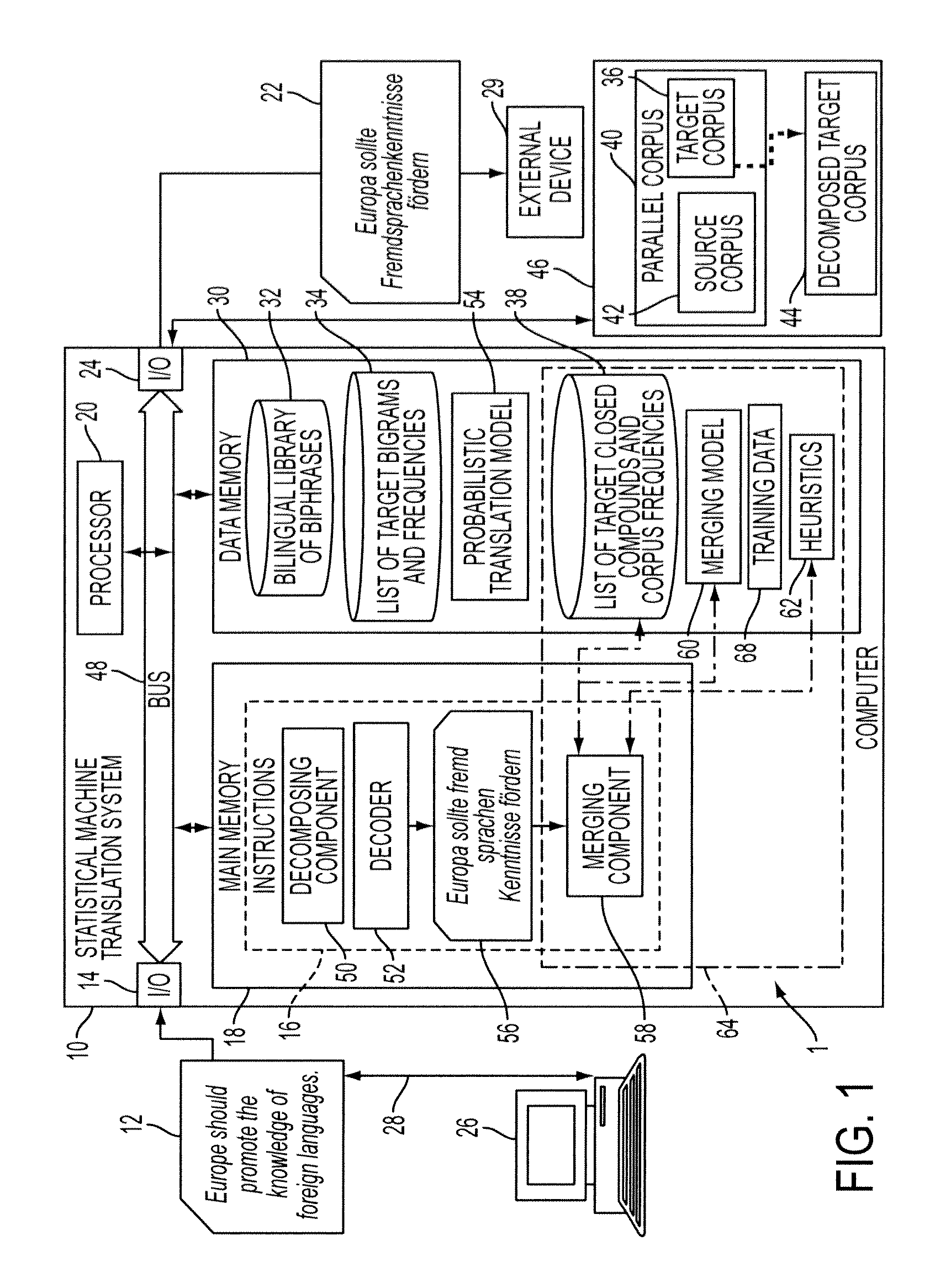

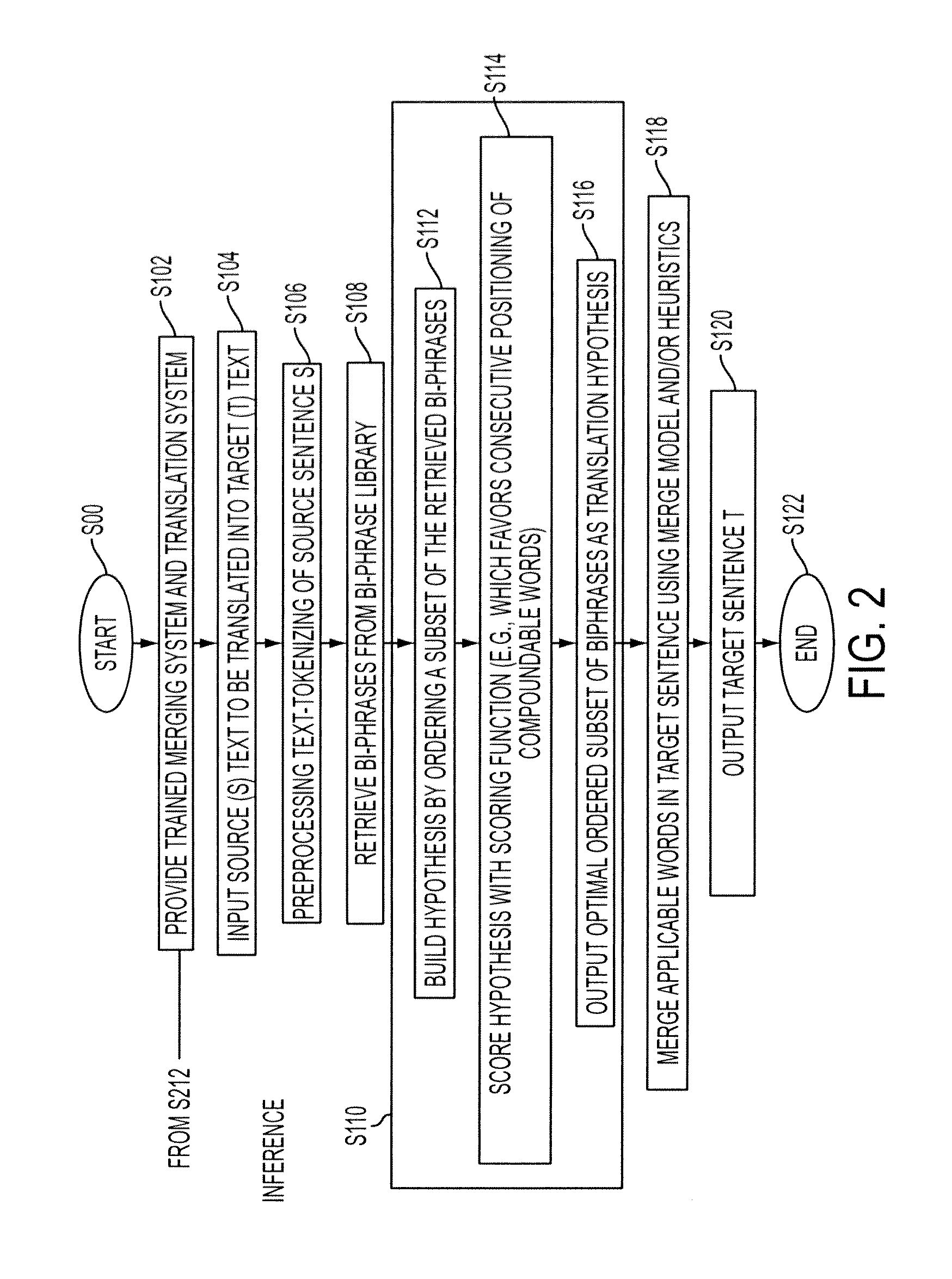

System and method for productive generation of compound words in statistical machine translation

Inactive | US20130030787A1 | Natural language translation | Special data processing applications | Algorithm | Theoretical computer science

A method and a system for making merging decisions for a translation are disclosed which are suited to use where the target language is a productive compounding one. The method includes outputting decisions on merging of pairs of words in a translated text string with a merging system. The merging system can include a set of stored heuristics and / or a merging model. In the case of heuristics, these can include a heuristic by which two consecutive words in the string are considered for merging if the first word of the two consecutive words is recognized as a compound modifier and their observed frequency f1 as a closed compound word is larger than an observed frequency f2 of the two consecutive words as a bigram. In the case of a merging model, it can be one that is trained on features associated with pairs of consecutive tokens of text strings in a training set and predetermined merging decisions for the pairs. A translation in the target language is output, based on the merging decisions for the translated text string.

Owner:XEROX CORP
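The frequency heuristic in this abstract can be sketched as follows. This is only an illustration under stated assumptions: the function name, the dictionaries, and the toy counts are hypothetical, and the compound is formed by plain concatenation, whereas a real compounding language may need linking elements.

```python
def should_consider_merge(first, second, modifiers, compound_freq, bigram_freq):
    """Return True if two consecutive words are a merge candidate.

    The pair qualifies when `first` is a known compound modifier and the
    frequency f1 of the closed compound exceeds the frequency f2 of the
    two words observed as a separate bigram.
    """
    if first not in modifiers:
        return False
    f1 = compound_freq.get(first + second, 0)   # assumed: compound = simple concatenation
    f2 = bigram_freq.get((first, second), 0)
    return f1 > f2

# Hypothetical toy statistics, not taken from the patent
modifiers = {"fuss", "haus"}
compound_freq = {"fussball": 120, "hausboot": 15}
bigram_freq = {("fuss", "ball"): 3, ("haus", "und"): 40}

print(should_consider_merge("fuss", "ball", modifiers, compound_freq, bigram_freq))
```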

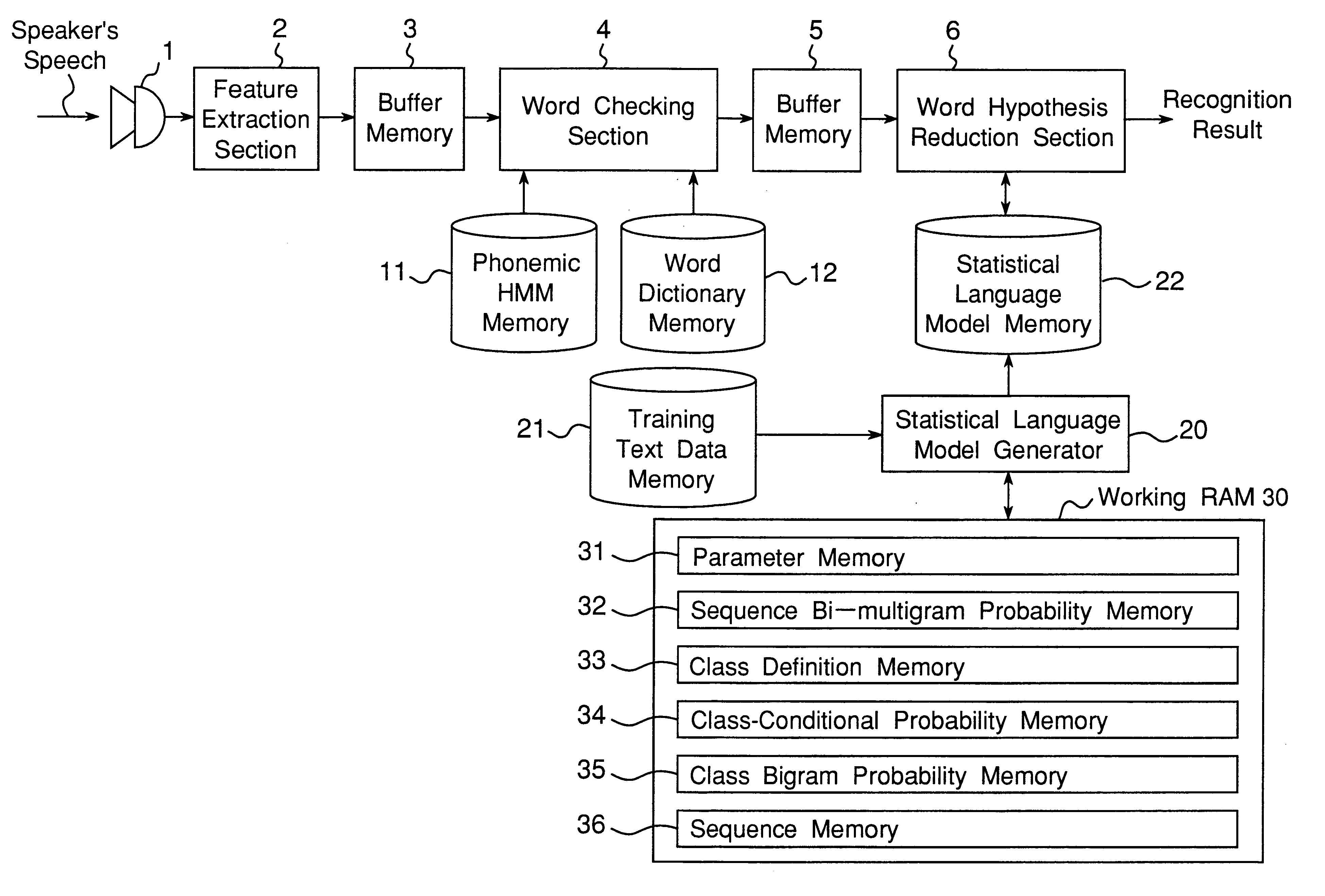

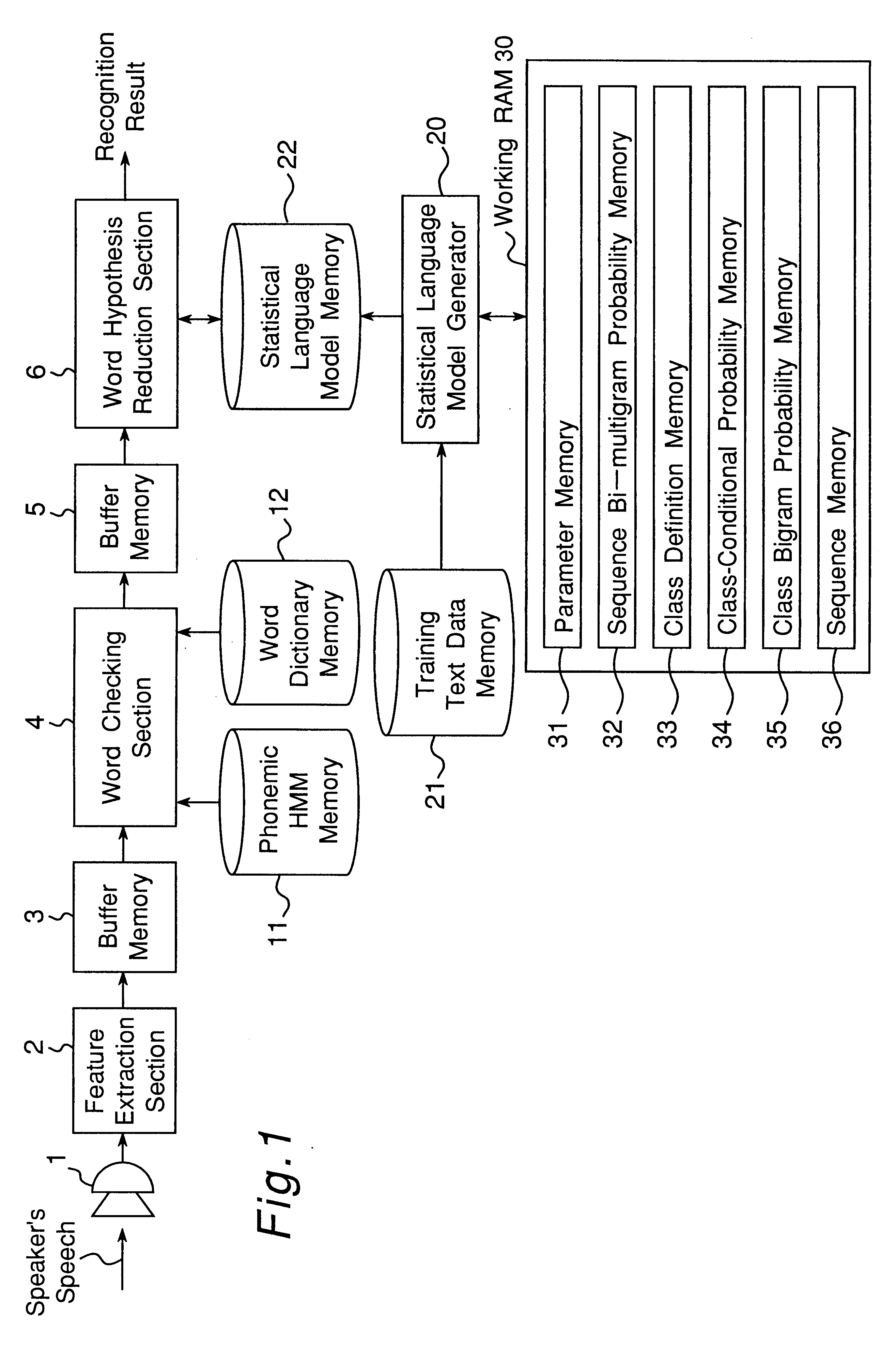

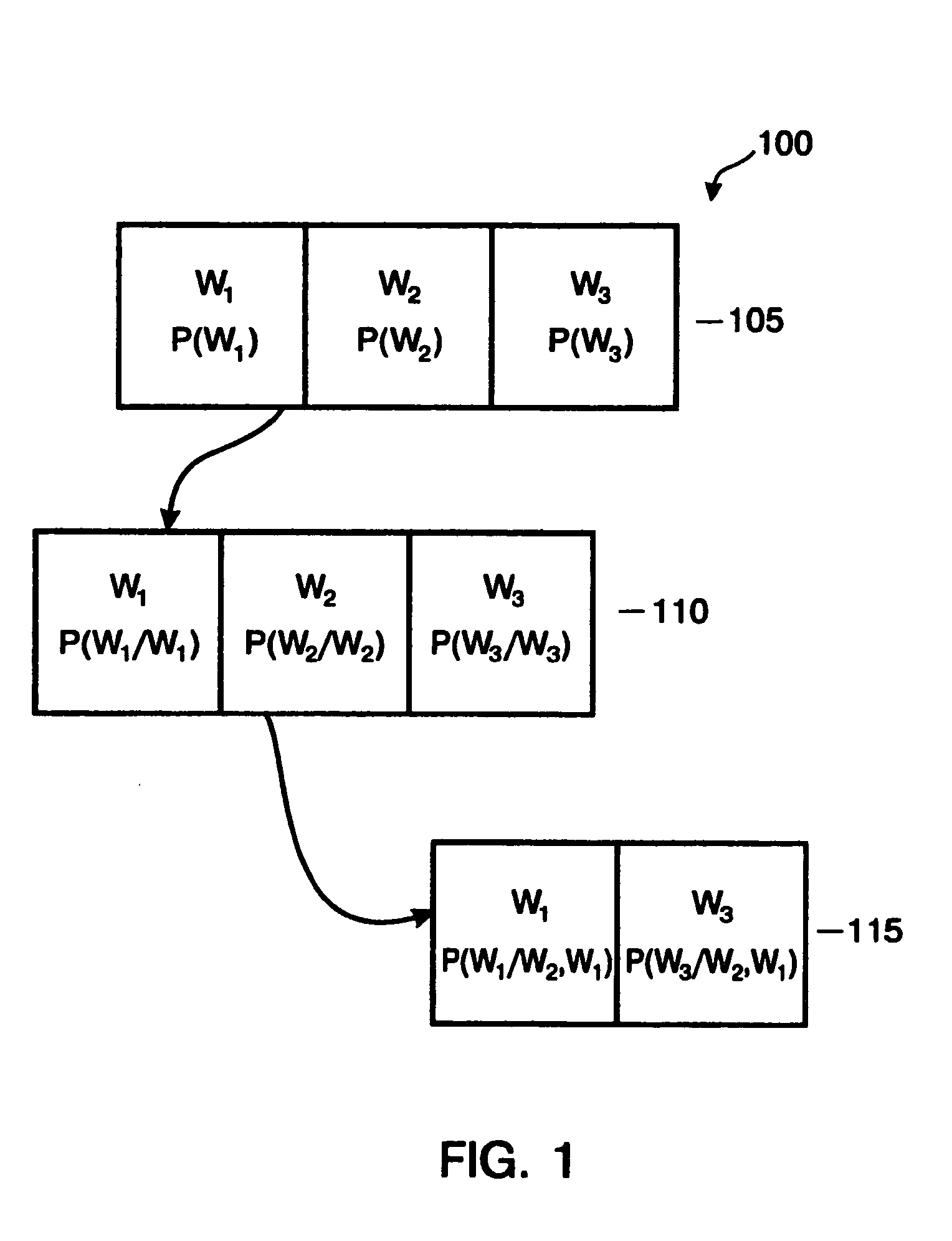

Apparatus for generating a statistical sequence model called class bi-multigram model with bigram dependencies assumed between adjacent sequences

Inactive | US6314399B1 | High speed machining | Recombinant DNA-technology | Speech recognition | Sequence model | Probit

An apparatus generates a statistical class sequence model, called a class bi-multigram model, from input training strings of discrete-valued units, where bigram dependencies are assumed between adjacent variable-length sequences of at most N units, and where class labels are assigned to the sequences. The apparatus counts the number of times each sequence of units occurs, as well as the number of times each pair of sequences co-occurs in the input training strings. An initial bigram probability distribution over all pairs of sequences is computed as the number of times the two sequences co-occur, divided by the number of times the first sequence occurs in the input training string. Then, the input sequences are classified into a pre-specified desired number of classes. Further, an estimate of the bigram probability distribution of the sequences is calculated by using an EM algorithm to maximize the likelihood of the input training string computed with the input probability distributions. These processes are then performed iteratively to generate the statistical class sequence model.

Owner:DENSO CORP
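The initial estimate described in this abstract (co-occurrence count divided by the count of the first sequence) can be sketched as below. The sketch assumes the training string has already been split into one fixed segmentation, which is a simplification; the classification and EM steps are not shown. The function name and toy data are hypothetical.

```python
from collections import Counter

def initial_bigram_probs(segmented):
    """Estimate p(second | first) for adjacent sequences.

    `segmented` is a list of sequences (tuples of units), assumed to be
    one fixed segmentation of the training string.
    """
    seq_counts = Counter(segmented)
    pair_counts = Counter(zip(segmented, segmented[1:]))
    return {
        (first, second): count / seq_counts[first]
        for (first, second), count in pair_counts.items()
    }

# Toy segmentation of a training string into variable-length sequences
segmented = [("a",), ("b", "c"), ("a",), ("b", "c"), ("a",), ("d",)]
probs = initial_bigram_probs(segmented)
print(probs[(("a",), ("b", "c"))])
```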

Systems and methods for improved spell checking

Inactive | US20070106937A1 | Raise check | Quality improvement | Dry-docking | Digital data information retrieval | Personalization | Query string

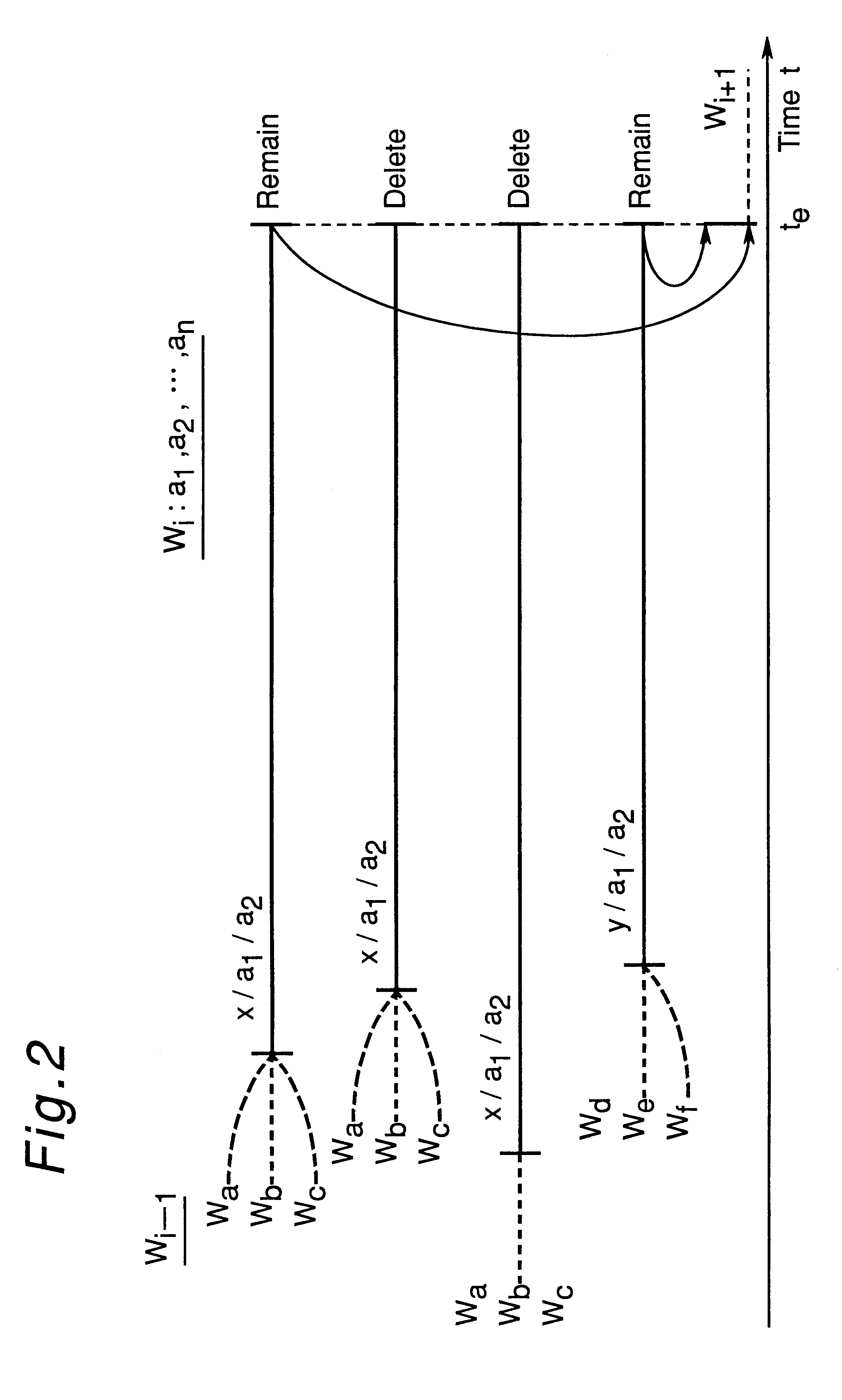

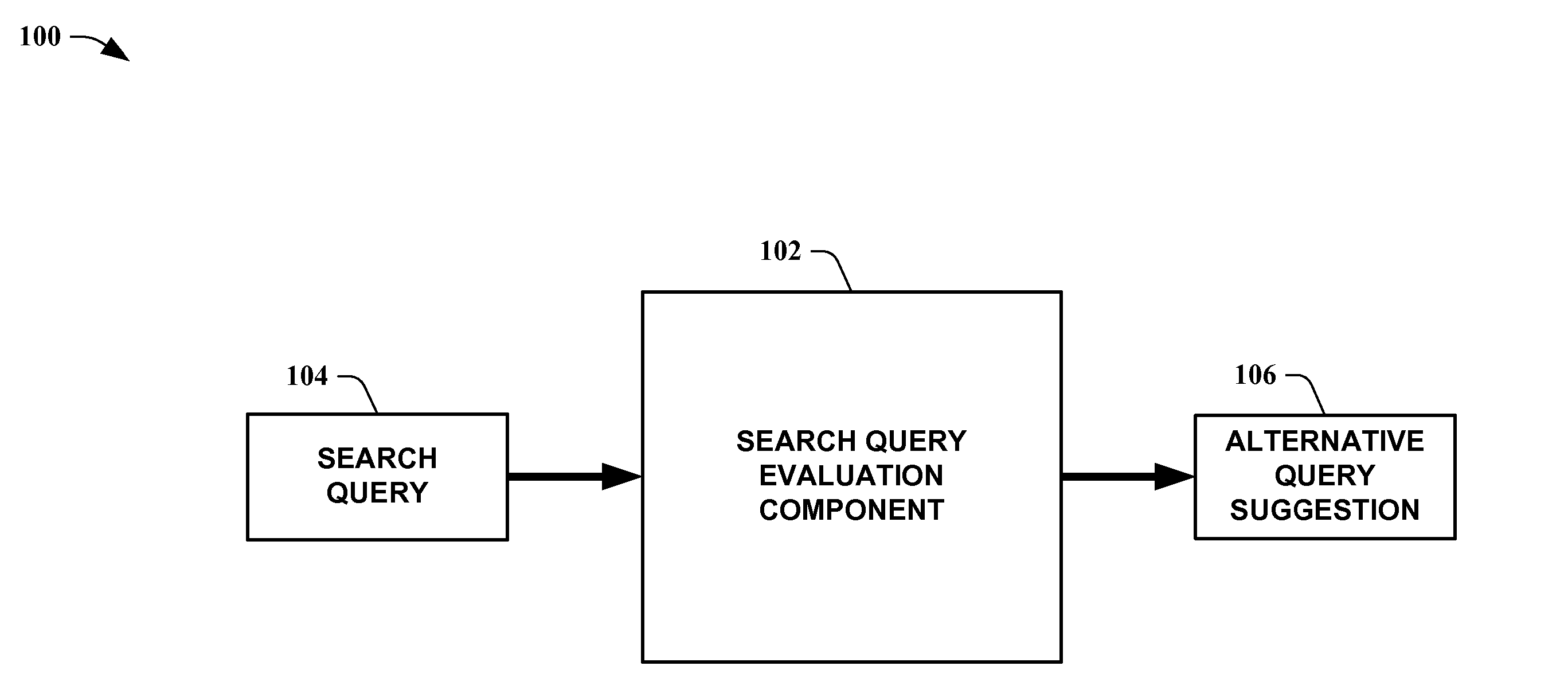

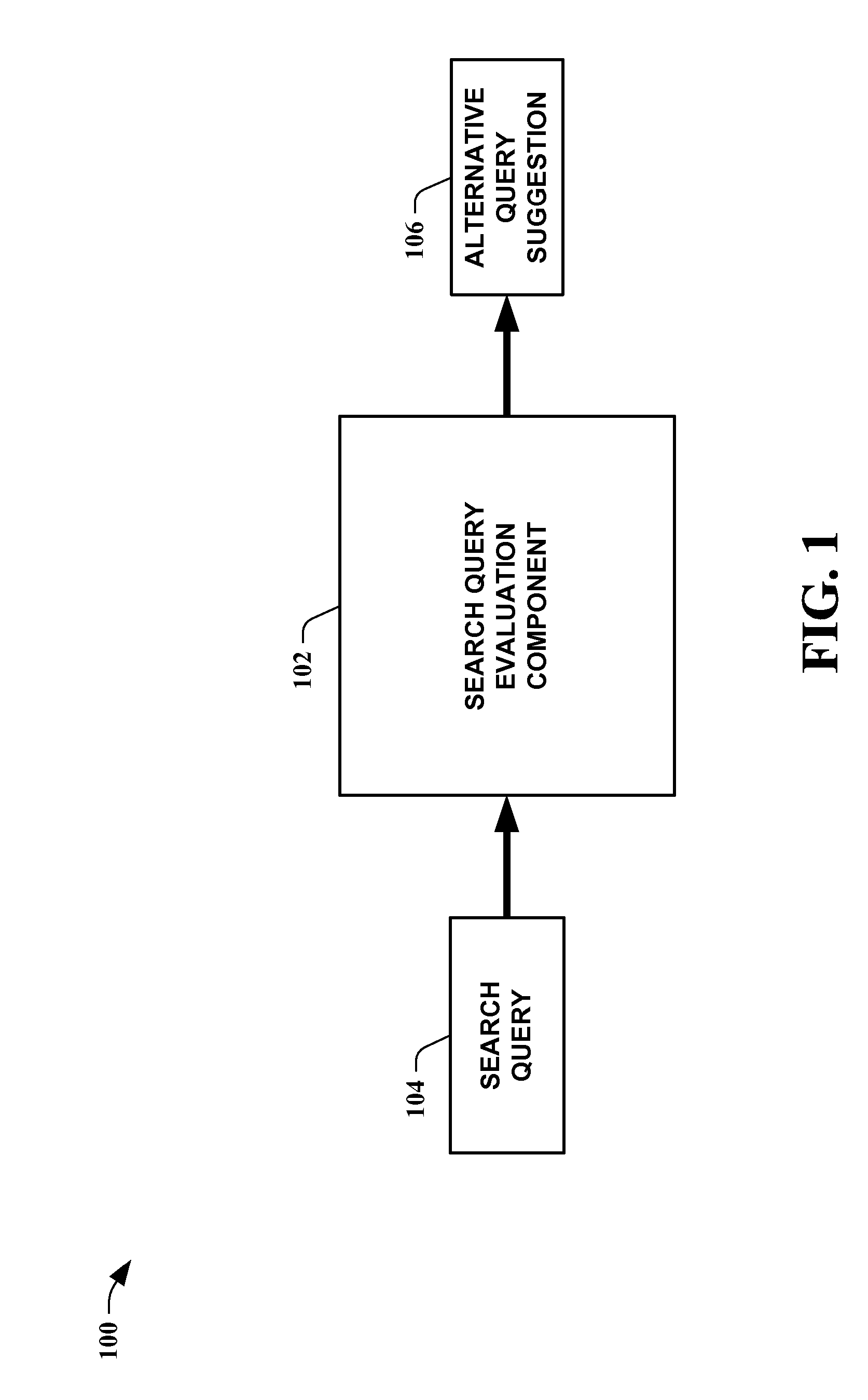

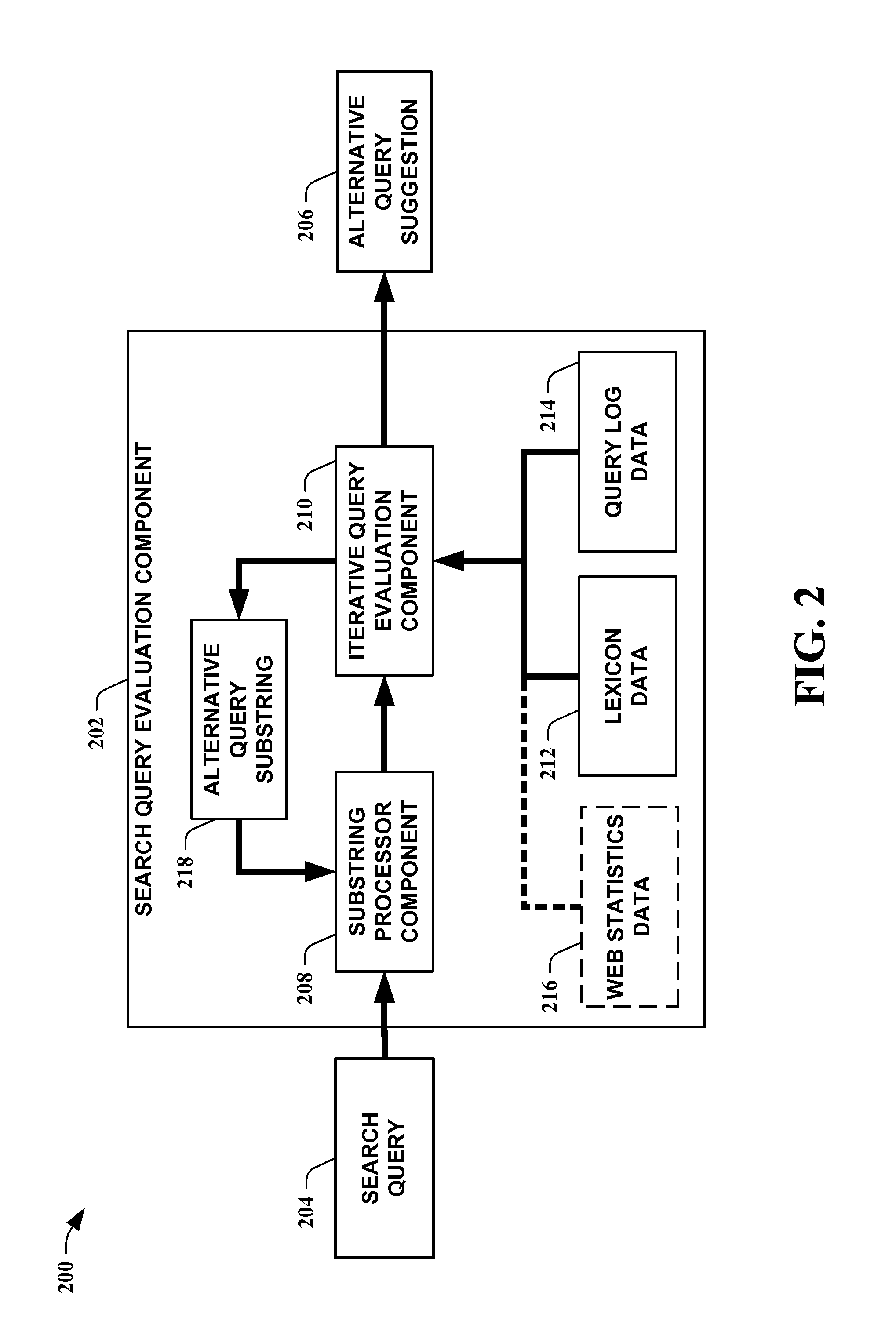

The present invention leverages iterative transformations of search query strings along with statistics extracted from search query logs and / or web data to provide possible alternative spellings for the search query strings. This provides a spell checking means that can be influenced to provide individualized suggestions for each user. By utilizing search query logs, the present invention can account for substrings not found in a lexicon but still acceptable as a search query of interest. This allows a means to provide a higher quality proposal for alternative spellings, beyond the content of the lexicon. One instance of the present invention operates at a substring level by utilizing word unigram and / or bigram statistics extracted from query logs combined with an iterative search. This provides substantially better spelling alternatives for a given query than employing only substring matching. Other instances can receive input data from sources other than a search query input.

Owner:MICROSOFT TECH LICENSING LLC

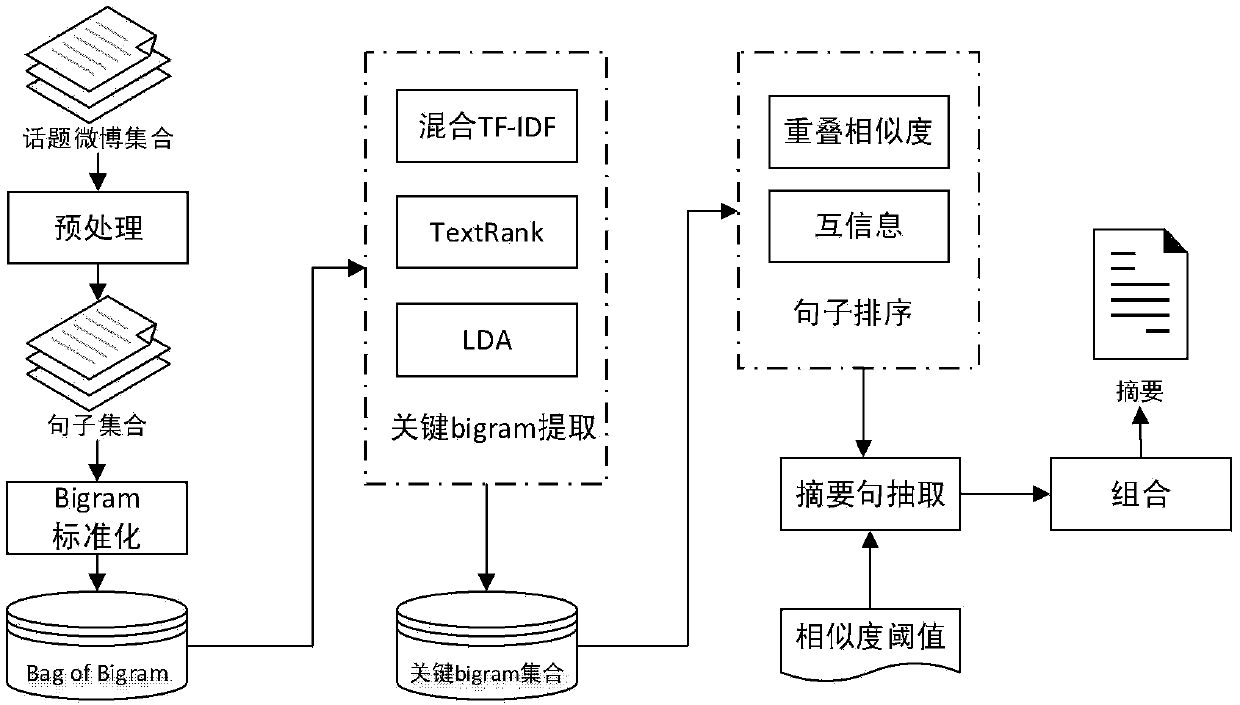

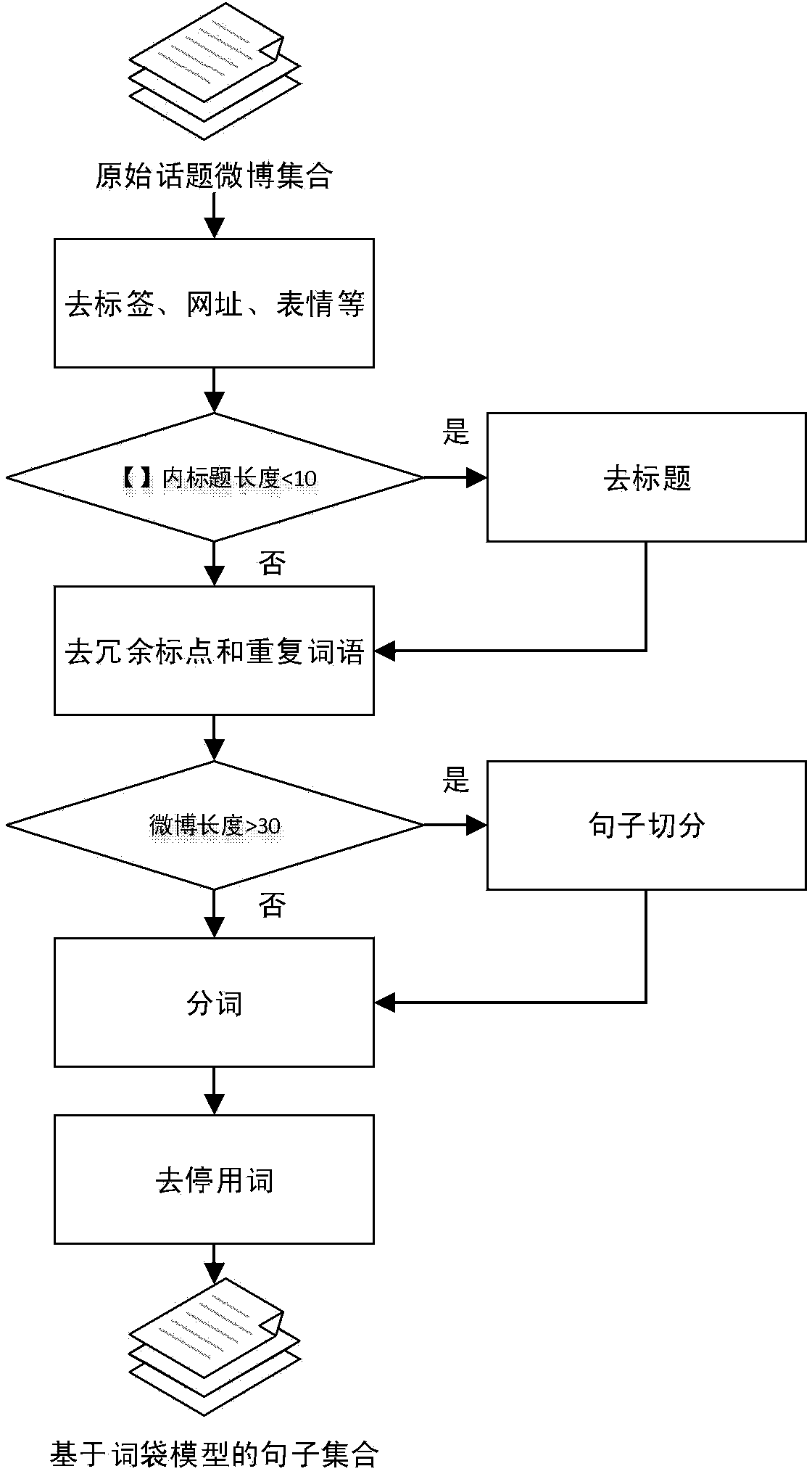

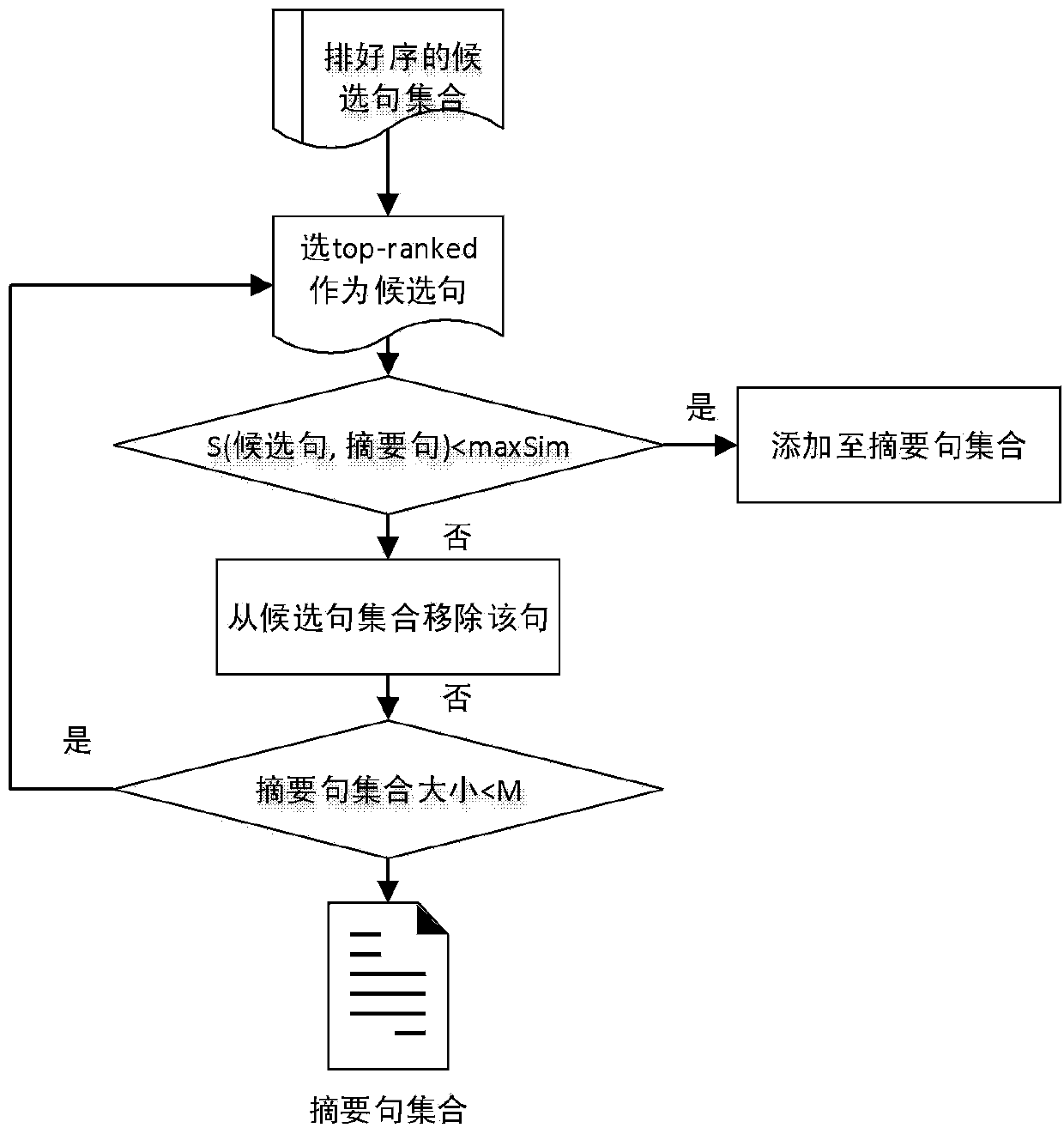

Automatic microblog text abstracting method based on unsupervised key bigram extraction

Active | CN104216875A | Quality improvement | Improve efficiency | Special data processing applications | Microblogging | Mutual information

The invention discloses an automatic microblog text abstracting method based on unsupervised key bigram extraction. The method comprises the steps of preprocessing the microblog posts; normalizing the bigrams; extracting key bigrams based on a hybrid of TF-IDF (term frequency-inverse document frequency), TextRank and LDA (latent Dirichlet allocation); ranking sentences based on intersection similarity and a mutual information strategy; extracting abstract sentences based on a similarity threshold; and generating the abstract by combining the abstract sentences. The method uses the bigram as the minimum vocabulary unit. Because a bigram carries richer textual information than a single word, sentence selection based on key bigrams is more robust to noise and more accurate than selection based on keyword extraction. In addition, the similarity threshold introduced when extracting abstract sentences controls redundancy, so the abstract achieves a higher recall rate. The abstract generated by the method is described as accurate, concise and comprehensive, improving the efficiency and quality with which a user acquires knowledge and saving the user's time.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

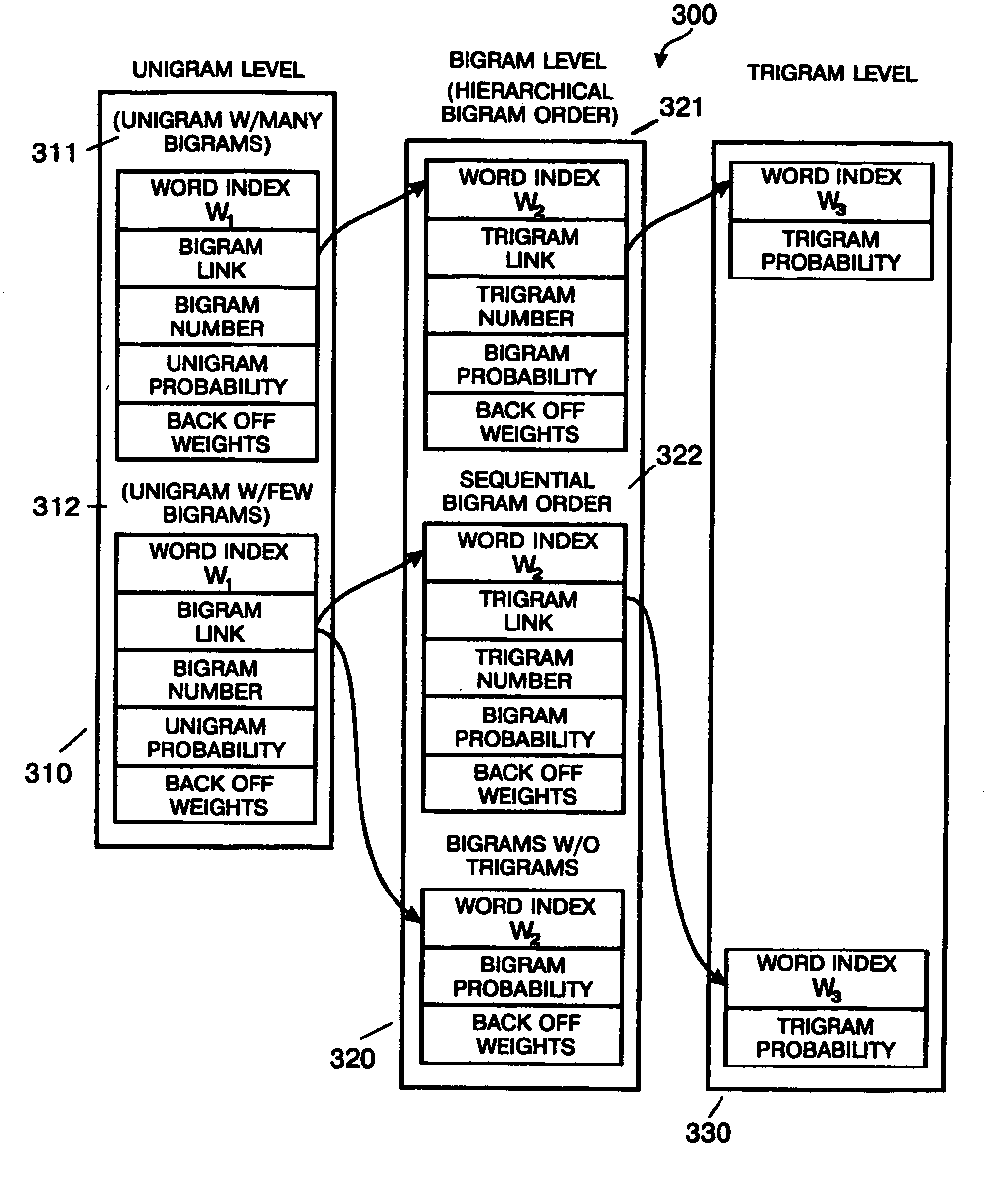

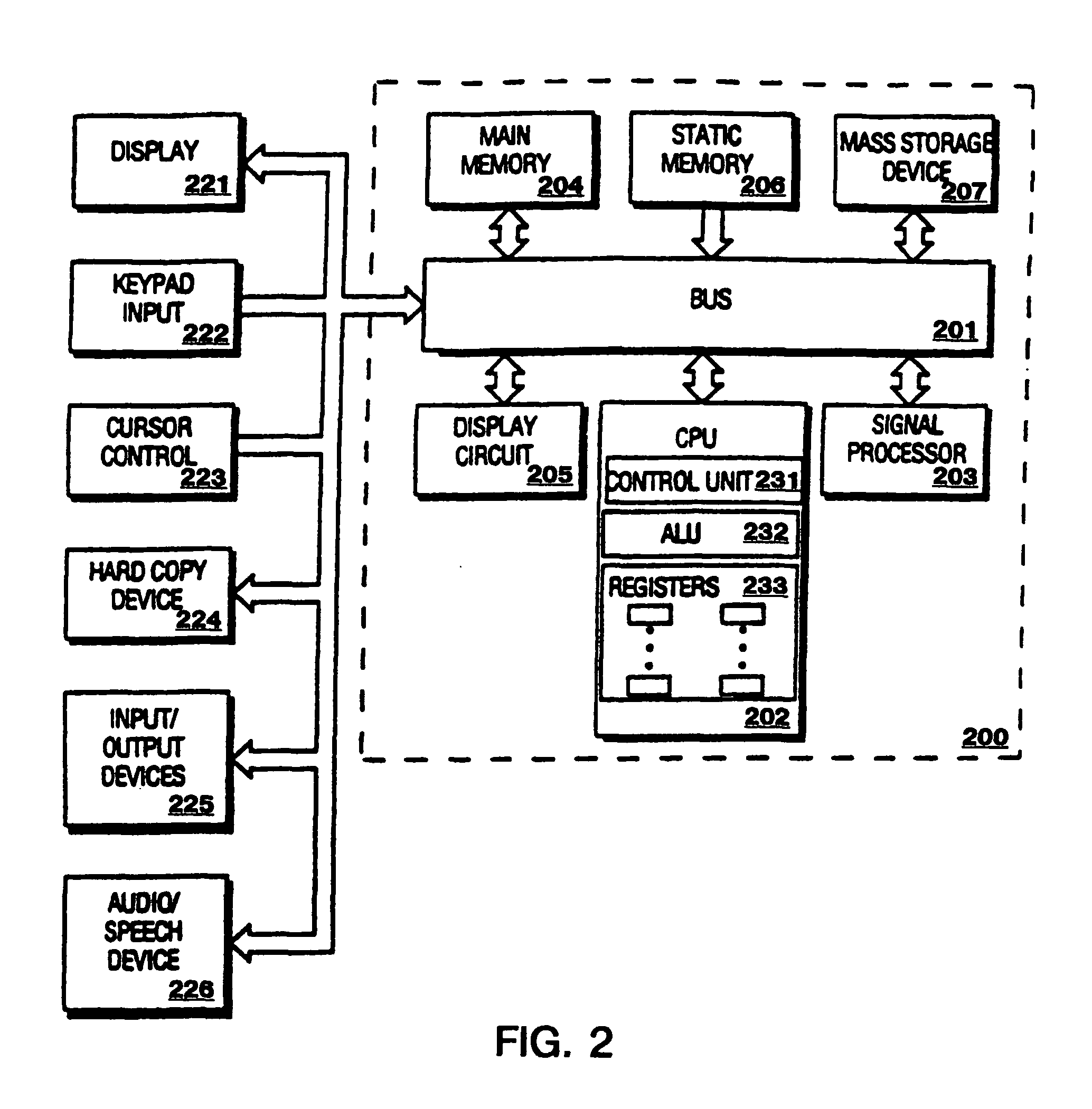

Method and apparatus to provide a hierarchical index for a language model data structure

Inactive | US20050055199A1 | Small size | Digital data information retrieval | Speech recognition | Algorithm | Theoretical computer science

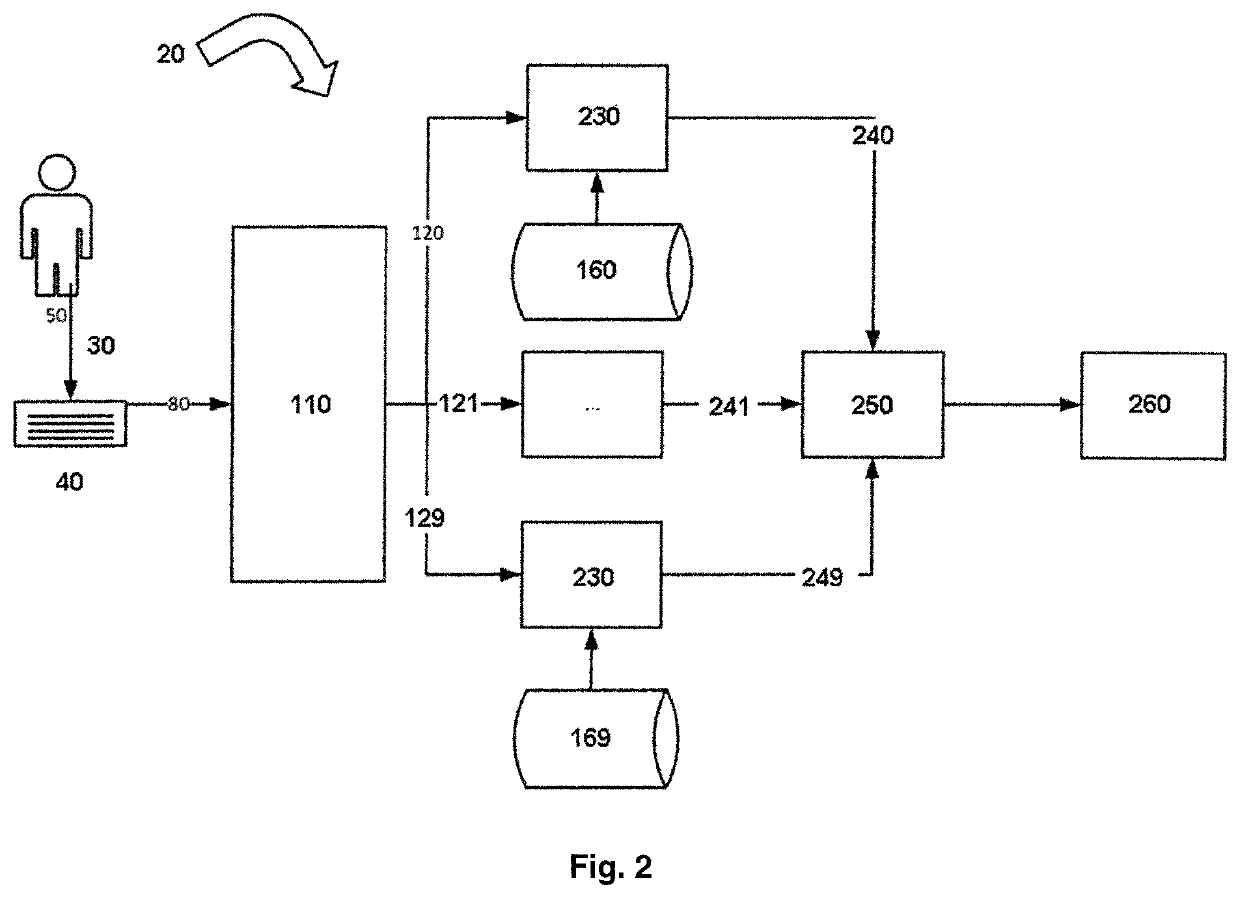

A method for storing bigram word indexes of a language model for a consecutive speech recognition system (200) is described. The bigram word indexes (321) are stored as a common two-byte base with a specific one-byte offset to significantly reduce storage requirements of the language model data file. In one embodiment the storage space required for storing the bigram word indexes (321) sequentially is compared to the storage space required to store the bigram word indexes as a common base with specific offset. The bigram word indexes (321) are then stored so as to minimize the size of the language model data file.

Owner:INTEL CORP
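The storage comparison in this abstract can be illustrated with a rough sketch. Several details are assumptions rather than facts from the patent: full word indexes are taken to be three bytes wide, each group of indexes sharing a two-byte base is charged one extra byte for a count field, and all function names are hypothetical.

```python
from itertools import groupby

INDEX_BYTES = 3   # assumed width of a full word index
BASE_BYTES = 2    # common base: upper two bytes of the index
COUNT_BYTES = 1   # assumed per-group field holding the number of offsets

def base_offset_groups(indexes):
    """Group indexes by their upper two bytes; keep the low byte as the offset."""
    groups = []
    for base, run in groupby(sorted(indexes), key=lambda i: i >> 8):
        groups.append((base, [i & 0xFF for i in run]))
    return groups

def sequential_size(indexes):
    """Bytes needed when every index is stored in full."""
    return INDEX_BYTES * len(indexes)

def base_offset_size(indexes):
    """Bytes needed when each group stores one base plus one byte per offset."""
    return sum(BASE_BYTES + COUNT_BYTES + len(offsets)
               for _, offsets in base_offset_groups(indexes))

def choose_layout(indexes):
    """Pick whichever layout is smaller for this set of indexes."""
    if base_offset_size(indexes) < sequential_size(indexes):
        return "base+offset"
    return "sequential"

indexes = [0x012301, 0x012305, 0x0123FF, 0x045600]
print(sequential_size(indexes), base_offset_size(indexes), choose_layout(indexes))
```

Under these assumptions the base-plus-offset layout only wins when several indexes share a base, which is why the comparison is made before choosing a layout.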

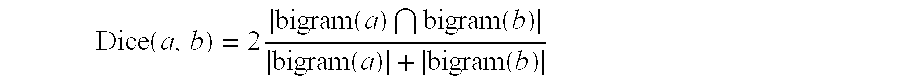

Apparatus for secure computation of string comparators

Inactive | US20090282039A1 | Rule out the possibility | Digital data information retrieval | Digital data processing details | Confidentiality | Theoretical computer science

We present an apparatus which can be used so that one party learns the value of a string distance metric applied to a pair of strings, each of which is held by a different party, in such a way that none of the parties can learn anything else significant about the strings. This apparatus can be applied to the problem of linking records from different databases, where privacy and confidentiality concerns prohibit the sharing of records. The apparatus can compute two different string similarity metrics: the bigram-based Dice coefficient and the Jaro-Winkler string comparator. The apparatus can implement a three-party protocol for the secure computation of the bigram-based Dice coefficient and a two-party protocol for the Jaro-Winkler string comparator, both of which are secure against collusion and cheating. The apparatus also implements a three-party Jaro-Winkler string comparator computation which is secure in the case of semi-honest participants.

Owner:TELECOMM RES LAB
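For reference, the bigram-based Dice coefficient named in this abstract can be computed in the clear as shown below. This sketch covers only the plaintext metric over sets of character bigrams; it implements none of the secure multi-party protocol, and the function names are illustrative.

```python
def char_bigrams(s):
    """Return the set of adjacent character pairs in a string."""
    return {s[i:i + 2] for i in range(len(s) - 1)}

def dice_coefficient(a, b):
    """Dice coefficient 2|X ∩ Y| / (|X| + |Y|) over character-bigram sets."""
    x, y = char_bigrams(a), char_bigrams(b)
    if not x and not y:
        # Strings shorter than two characters have no bigrams; fall back to equality
        return 1.0 if a == b else 0.0
    return 2 * len(x & y) / (len(x) + len(y))

print(dice_coefficient("night", "nacht"))  # only "ht" is shared: 2 * 1 / (4 + 4)
```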

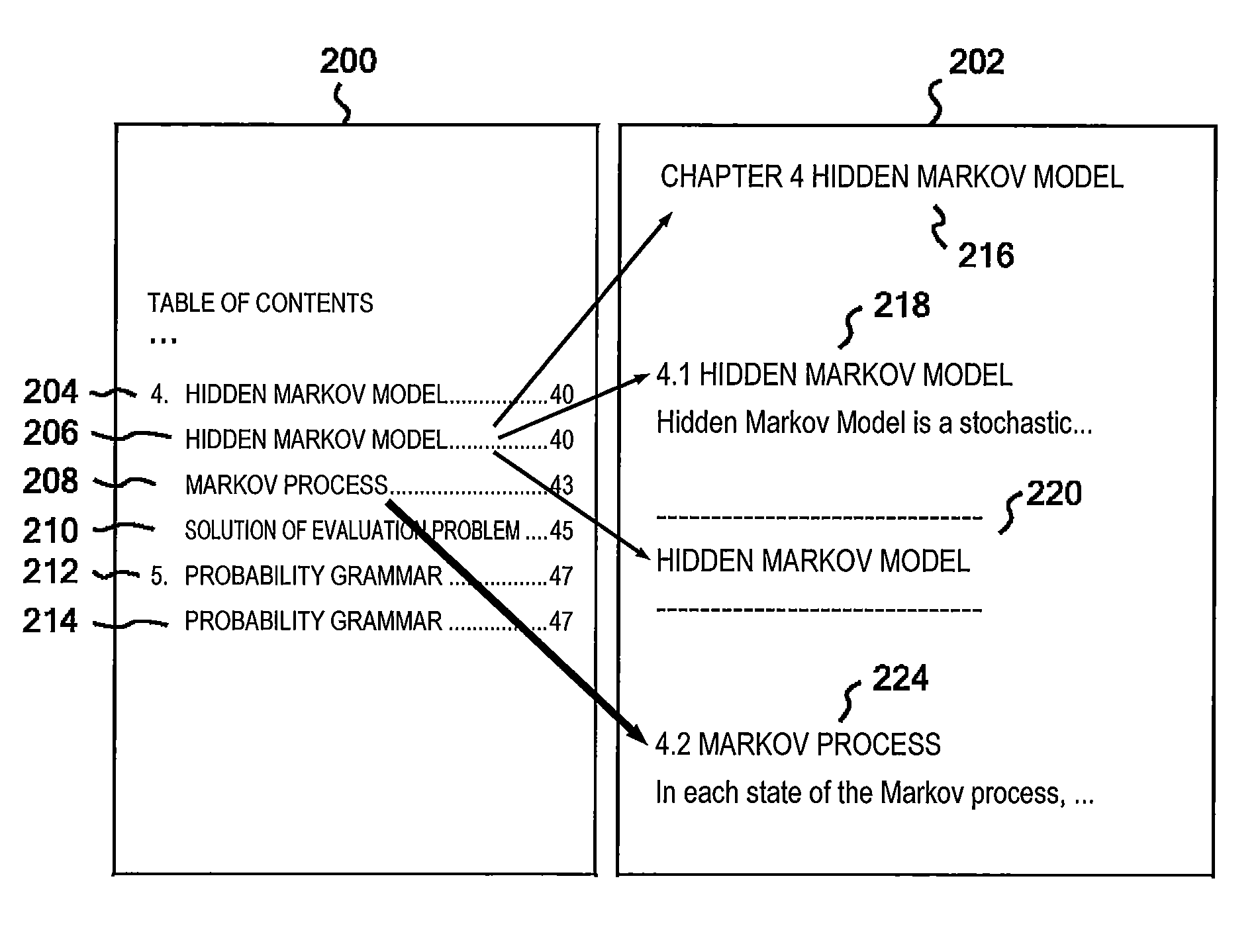

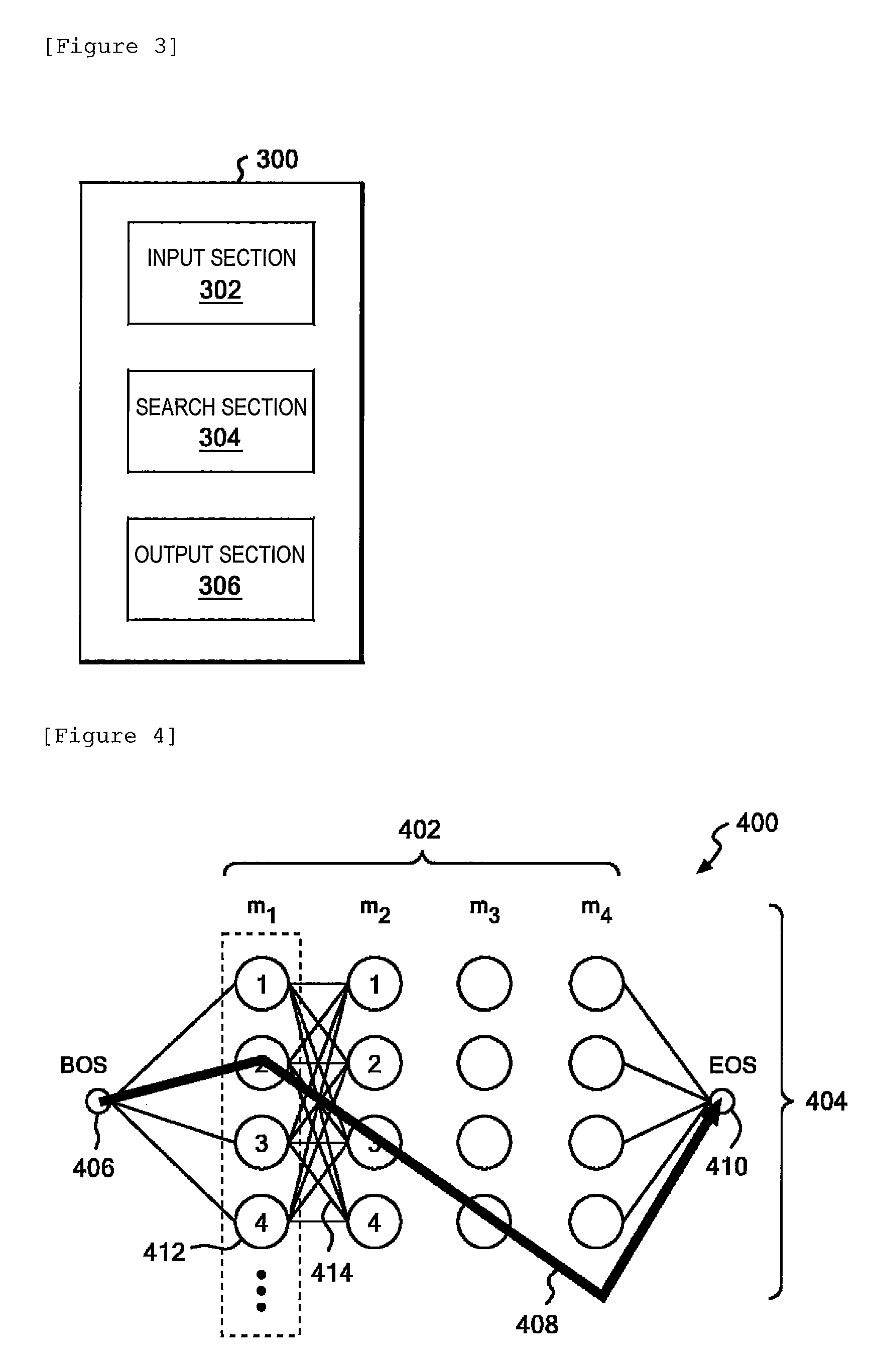

Method and apparatus for associating a table of contents and headings

Inactive | US20120197908A1 | Digital data processing details | Natural language data processing | Data mining | Bigram

Apparatus to associate a table of contents (TOC) and headings. An input section inputs TOC data C and body data D. A search section seeks the maximum value of a score function S which indicates the likelihood of associations M between a TOC and headings. An output section outputs associations M which maximize the score function S. The score function S is the total of a first sum obtained by summing unigram scores u for all the TOC items, where the unigram score u evaluates the likelihood of association of TOC item with a heading candidate line independently, and a second sum obtained by summing bigram scores b for all pairs of TOC items, where the bigram score b evaluates the likelihood of associations of paired TOC items with heading candidate lines on the basis of a degree of commonality.

Owner:IBM CORP
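The score function S in this abstract (a sum of unigram scores plus a sum of bigram scores over pairs of TOC items) can be sketched as follows. The concrete scoring functions `u` and `b` here are toy stand-ins invented for illustration, and the exhaustive search is only practical for tiny inputs; the patent's actual scores and search strategy are not given in the abstract.

```python
from itertools import combinations, product

def score(assignment, toc, lines, u, b):
    """S = sum of unigram scores + sum of bigram scores over all item pairs.

    `assignment[i]` is the index of the candidate line associated with toc[i].
    """
    first = sum(u(toc[i], lines[m]) for i, m in enumerate(assignment))
    second = sum(b(lines[assignment[i]], lines[assignment[j]])
                 for i, j in combinations(range(len(toc)), 2))
    return first + second

def best_association(toc, lines, u, b):
    """Exhaustively search all assignments for the maximum score (exponential cost)."""
    candidates = product(range(len(lines)), repeat=len(toc))
    return max(candidates, key=lambda m: score(m, toc, lines, u, b))

# Toy scoring functions (assumptions, not the patent's definitions)
def u(item, line):
    """Unigram score: does the candidate line contain the TOC item text?"""
    return 1.0 if item.lower() in line.lower() else 0.0

def b(line_a, line_b):
    """Bigram score: do both candidate lines share a numbered-heading format?"""
    return 1.0 if line_a[:1].isdigit() and line_b[:1].isdigit() else 0.0

toc = ["Introduction", "Methods"]
lines = ["1 Introduction", "Some body text", "2 Methods"]
best = best_association(toc, lines, u, b)
print(best, score(best, toc, lines, u, b))
```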

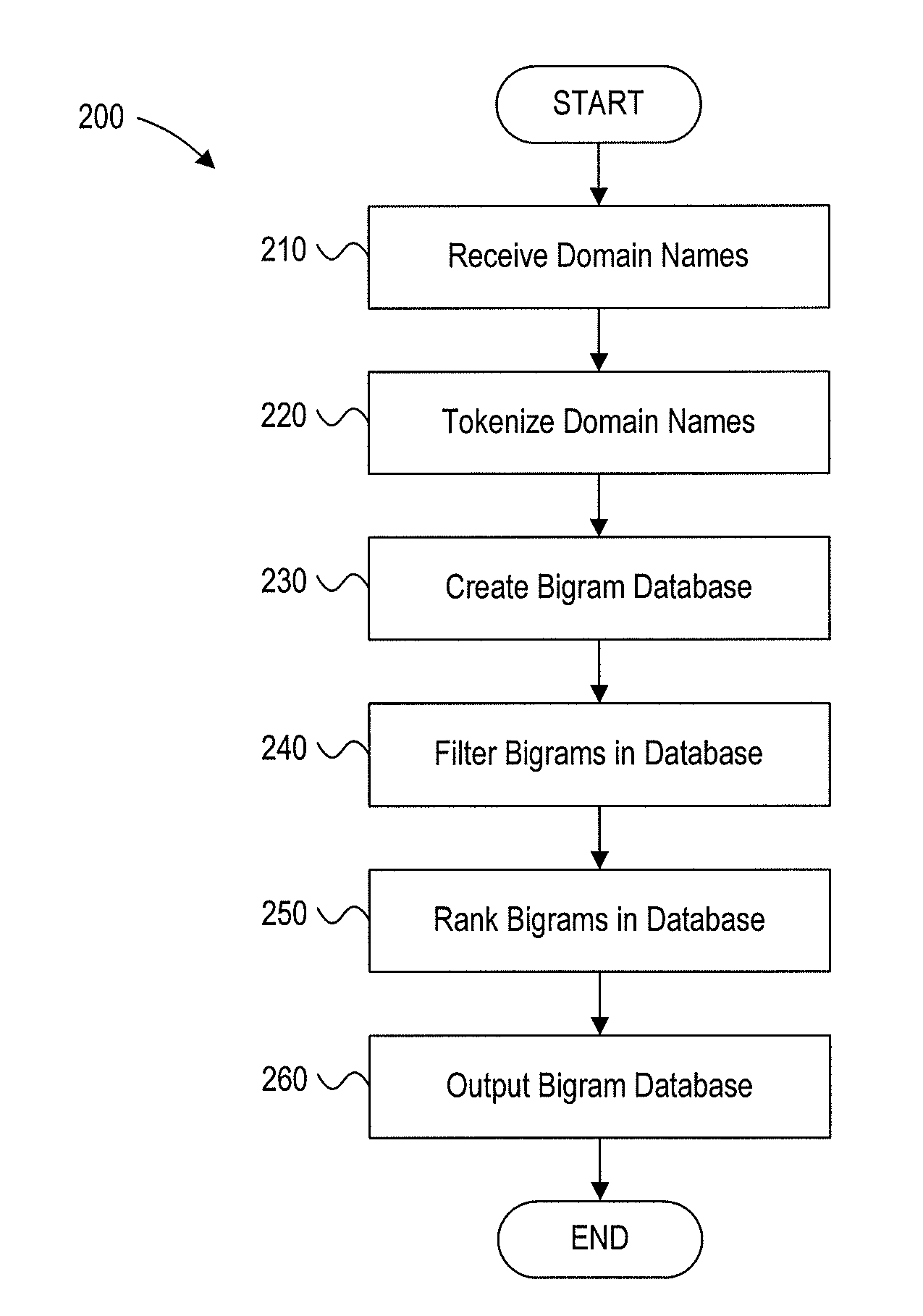

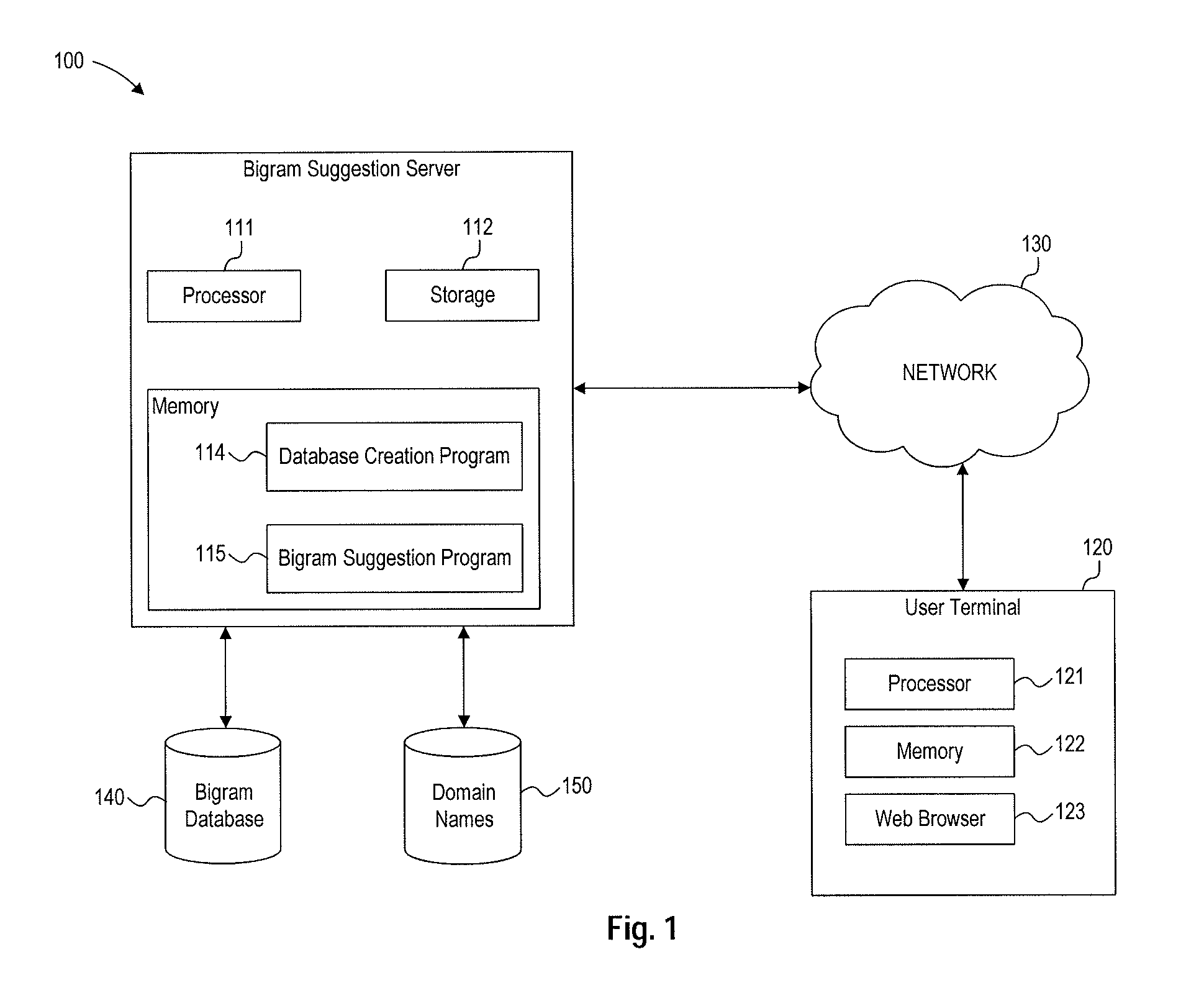

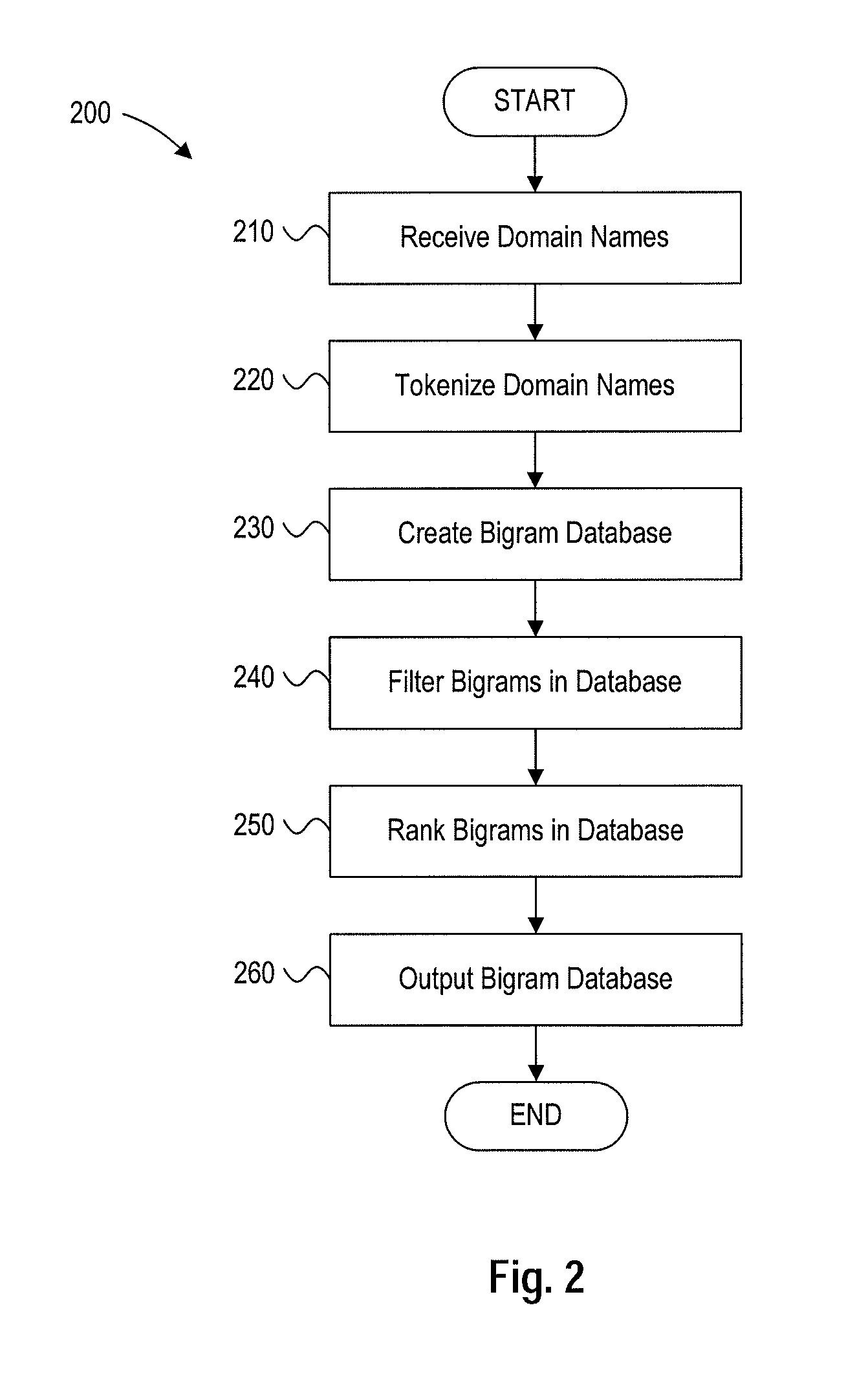

Bigram suggestions

Active | US20130091143A1 | Digital data processing details | Special data processing applications | Domain name | Bigram

A method for generating a bigram database may include receiving domain names, tokenizing the domain names, generating token bigrams from the tokenized domain names, filtering the token bigrams, ranking the token bigrams, and storing the filtered and ranked token bigrams in a bigram database. A method for suggesting alternative domain names may include receiving a requested domain name, tokenizing the requested domain name to divide the requested domain name into a series of tokens, retrieving token bigrams for tokens of the requested domain name, generating alternative domain name suggestions based on the token bigrams and the requested domain name, ranking the alternative domain name suggestions, and outputting at least one of the alternative domain name suggestions.

Owner:VERISIGN
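The two methods in this abstract (building a token bigram database, then suggesting alternative names) can be sketched roughly as below. Everything concrete here is an assumption: the vocabulary, the greedy longest-match tokenizer, the count-based filter and ranking, and the rule of swapping in an alternative following token are all illustrative, and domain names are given without a TLD.

```python
from collections import Counter

VOCAB = {"cheap", "flights", "hotels", "best", "deals"}  # hypothetical token vocabulary

def tokenize(name, vocab=VOCAB):
    """Greedy longest-match segmentation; returns [] if the name cannot be segmented."""
    tokens, i = [], 0
    while i < len(name):
        for j in range(len(name), i, -1):
            if name[i:j] in vocab:
                tokens.append(name[i:j])
                i = j
                break
        else:
            return []  # no vocabulary token starts at position i
    return tokens

def build_bigram_db(domain_names, min_count=2):
    """Count token bigrams, drop rare ones, and store followers ranked by count."""
    counts = Counter()
    for name in domain_names:
        toks = tokenize(name)
        counts.update(zip(toks, toks[1:]))
    db = {}
    for (first, second), count in counts.most_common():
        if count >= min_count:
            db.setdefault(first, []).append(second)
    return db

def suggest(requested, db):
    """Suggest names by replacing a token with other known followers of its predecessor."""
    toks = tokenize(requested)
    suggestions = []
    for i in range(len(toks) - 1):
        for alt in db.get(toks[i], []):
            if alt != toks[i + 1]:
                suggestions.append("".join(toks[:i + 1] + [alt] + toks[i + 2:]))
    return suggestions

names = ["cheapflights", "cheapflights", "cheaphotels",
         "cheaphotels", "cheaphotels", "bestdeals"]
db = build_bigram_db(names)
print(suggest("cheapflights", db))
```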

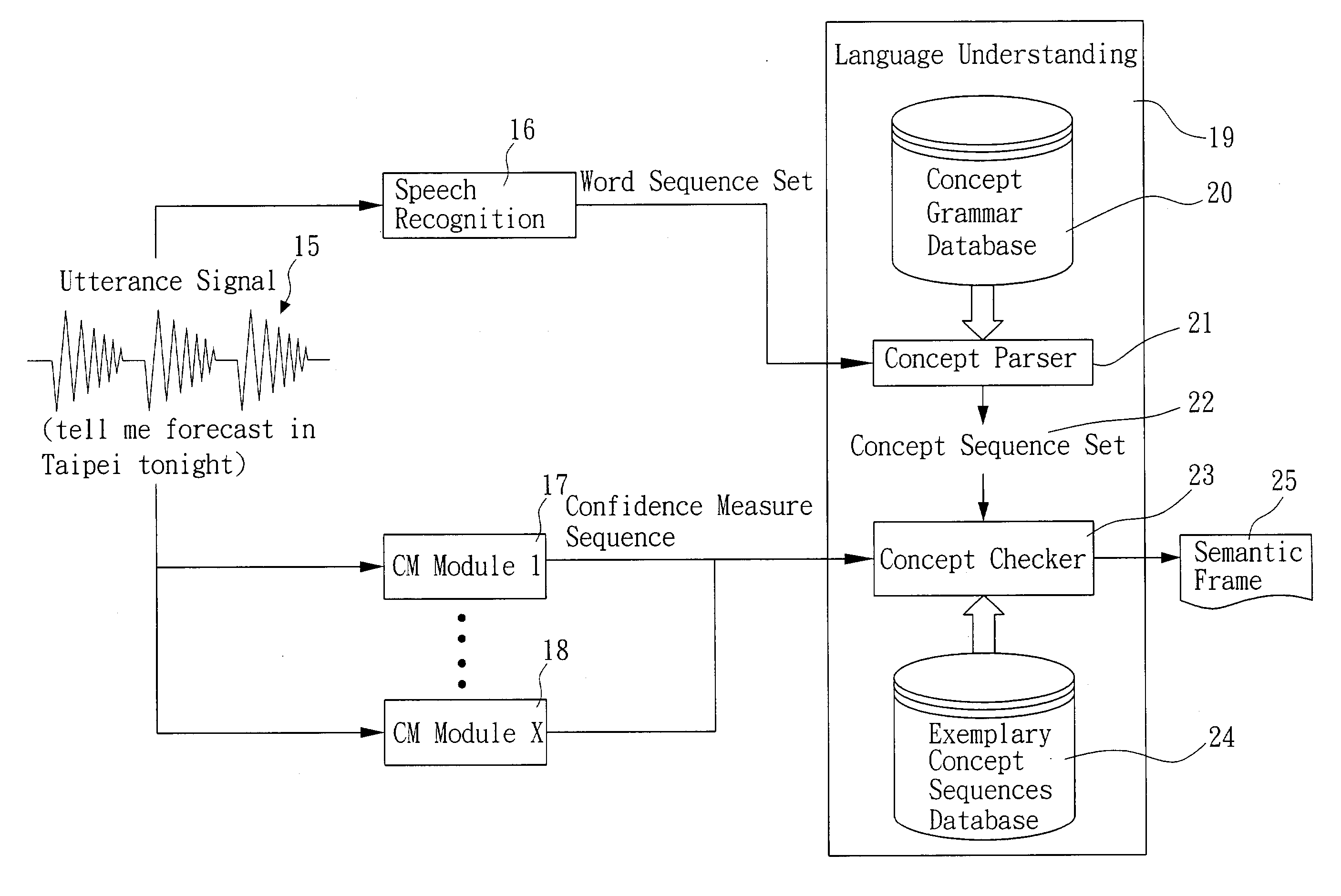

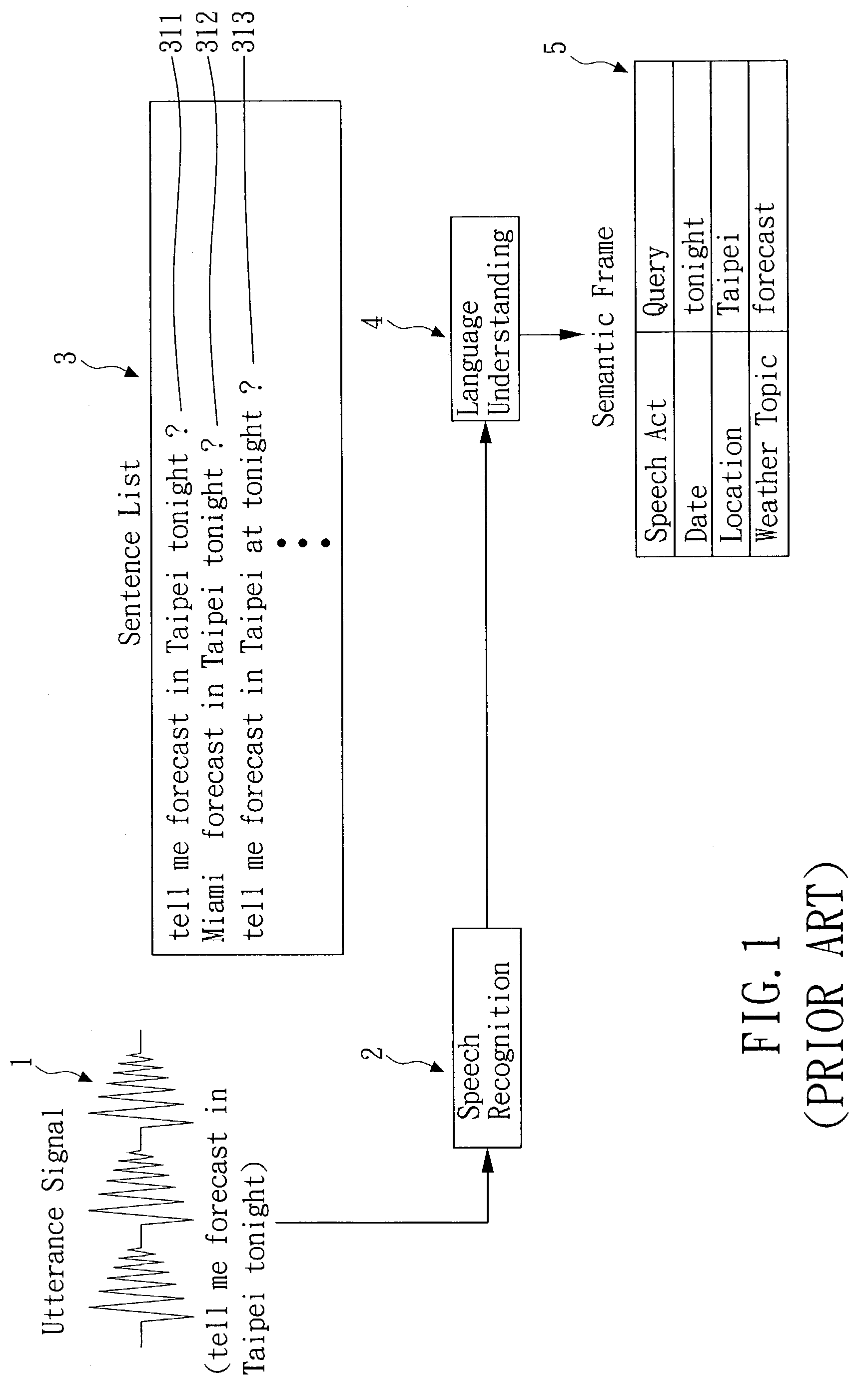

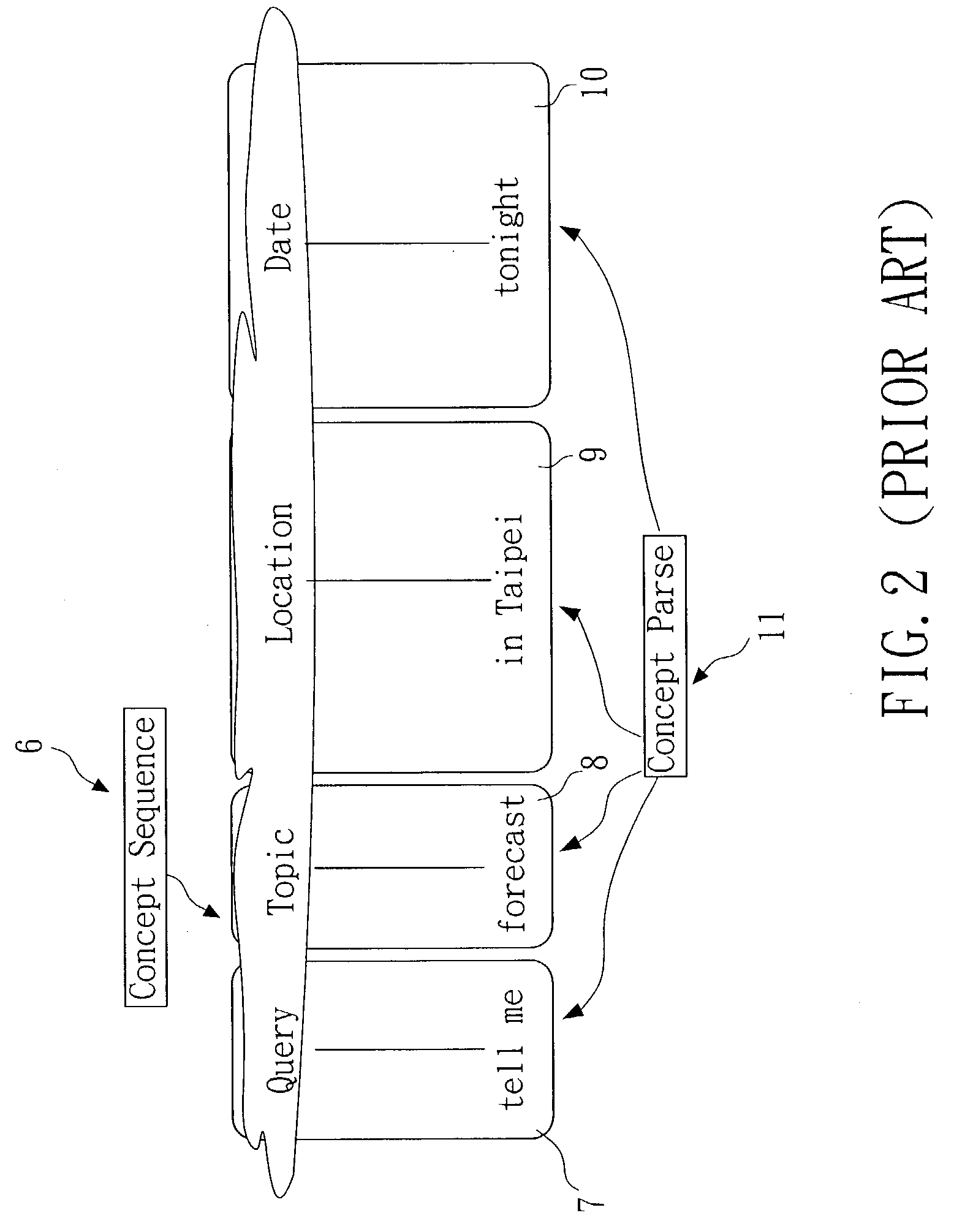

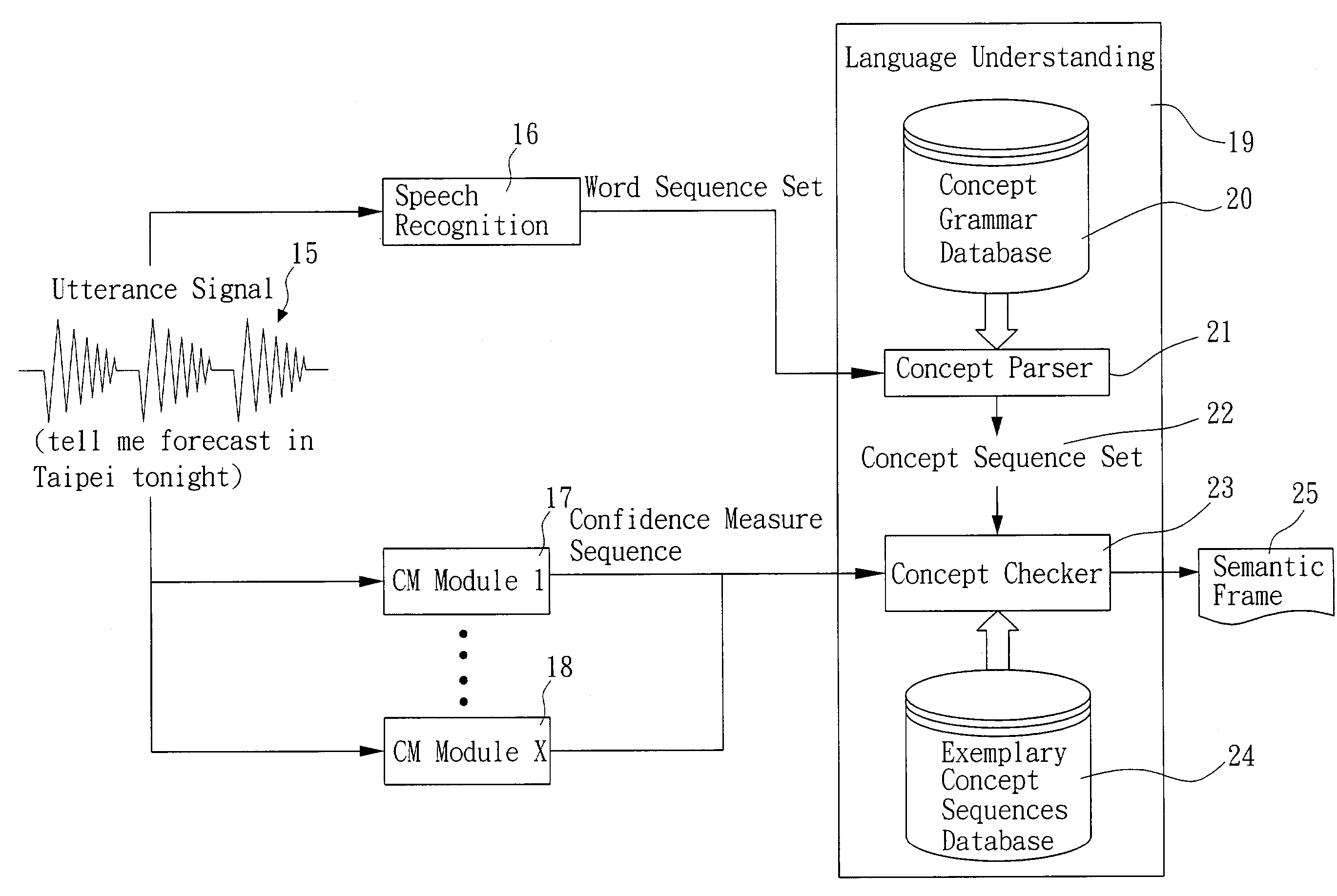

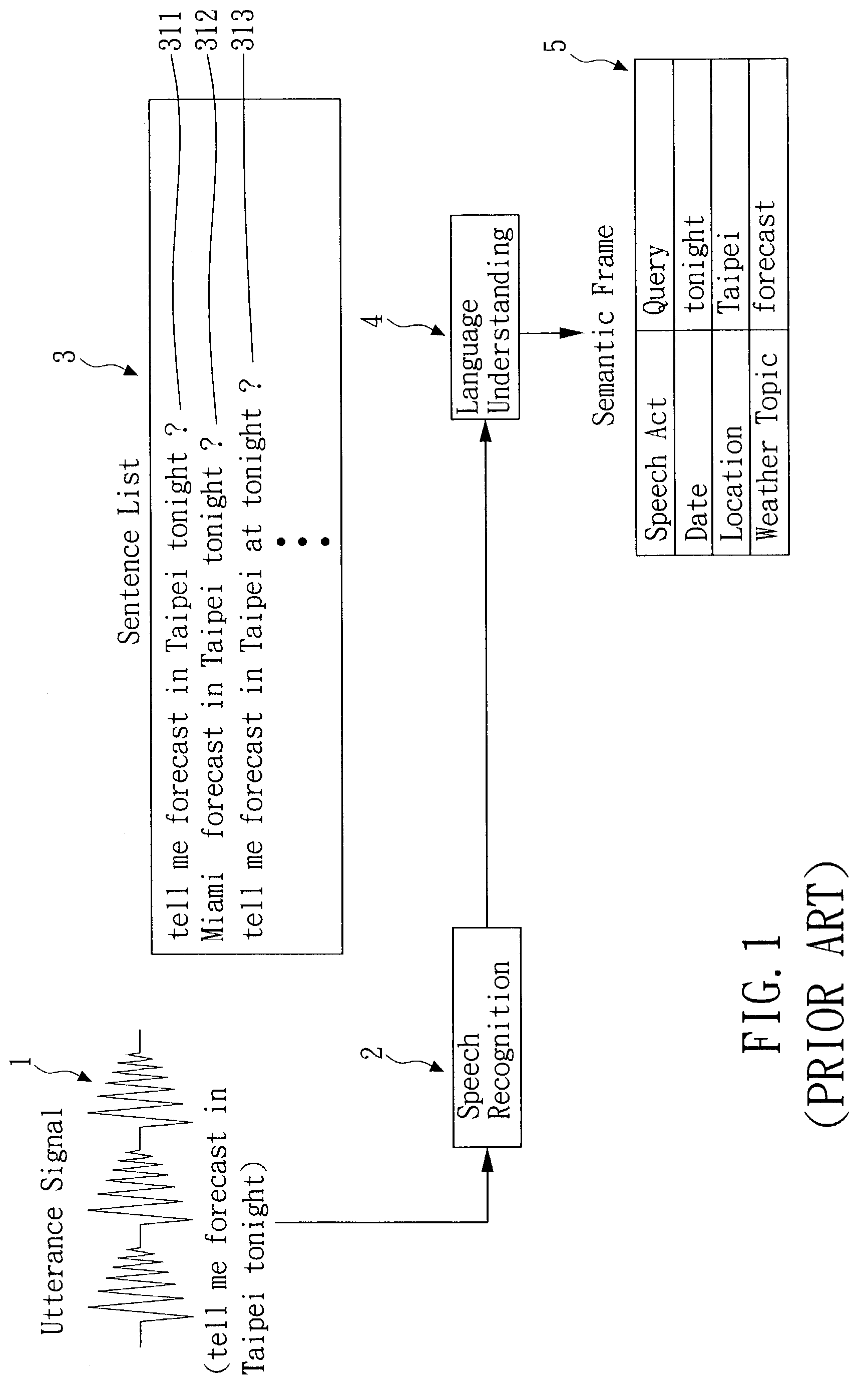

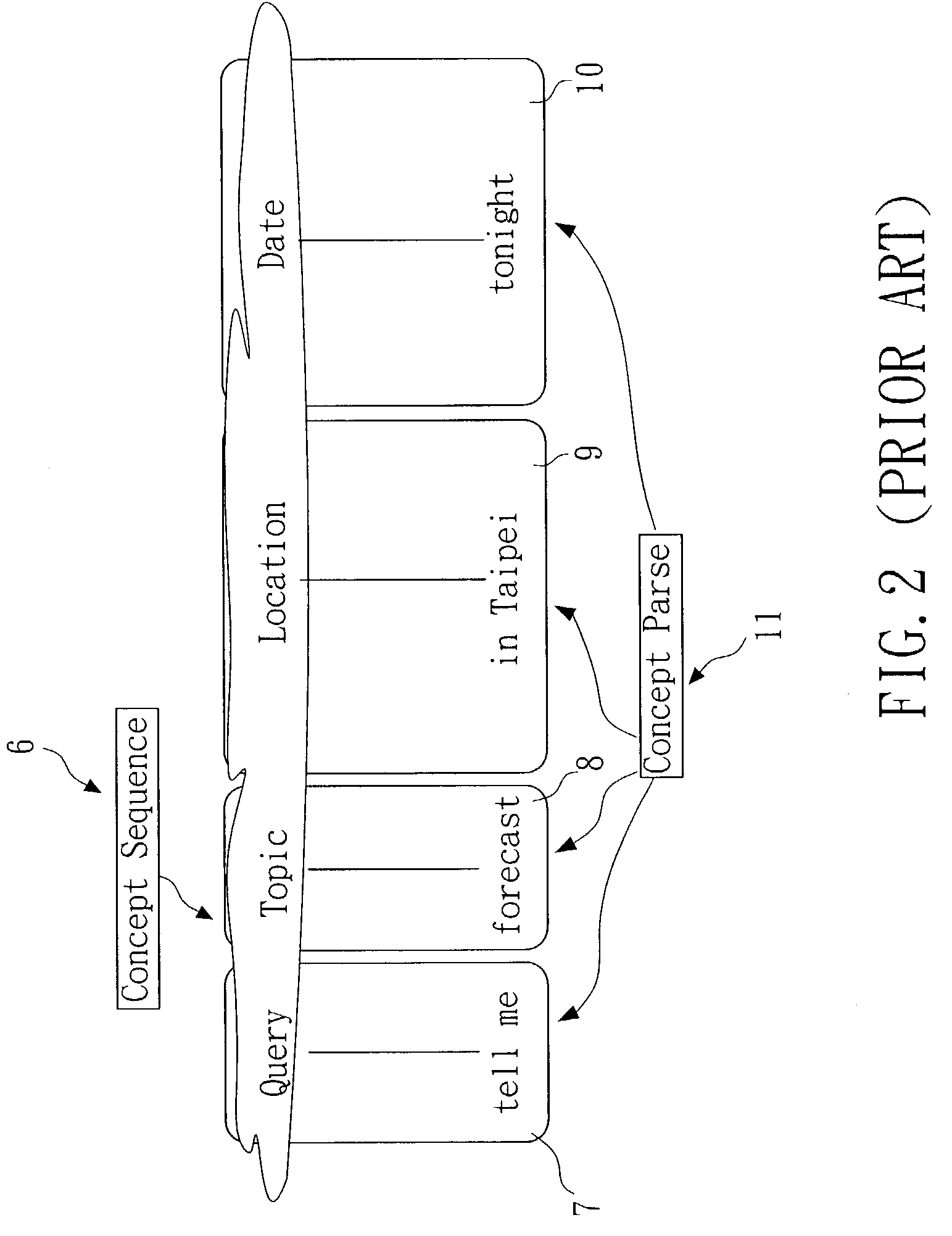

Error-tolerant language understanding system and method

Inactive | US20030225579A1 | Speech recognition | Special data processing applications | Language understanding | Speech sound

The present invention relates to an error-tolerant language understanding system and method. The system and method use example sentences to provide clues for detecting and recovering from errors. The detection and recovery procedure is guided by a probabilistic scoring function that integrates the scores from the speech recognizer and the concept parser with the scores of concept bigrams and of edit operations, such as deleting, inserting and substituting concepts. The score of an edit operation takes the confidence measure into account, which allows more precise detection and recovery of speech recognition errors: a concept with a lower confidence measure tends to be deleted or substituted, while a concept with a higher one tends to be retained.

Owner:IND TECH RES INST

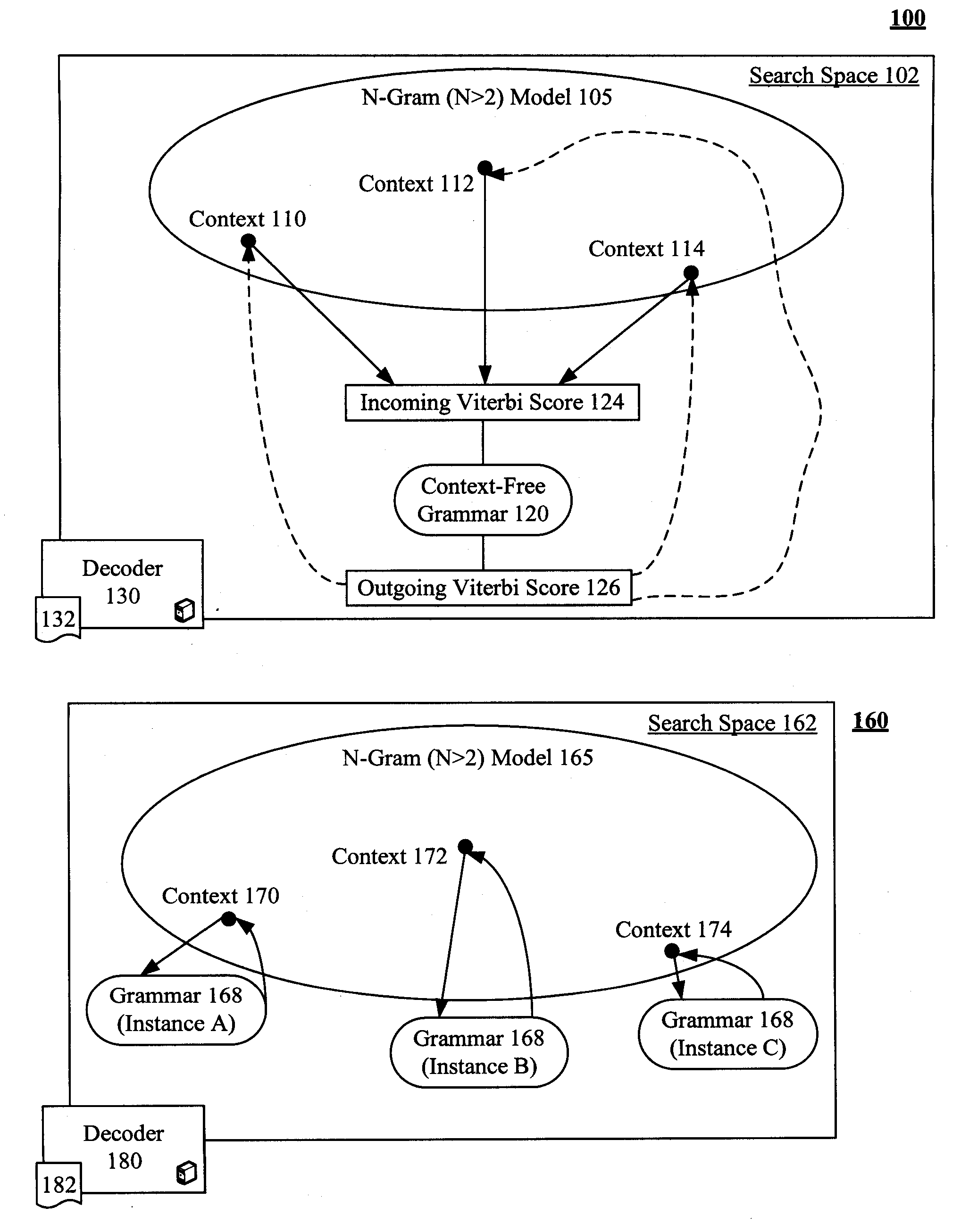

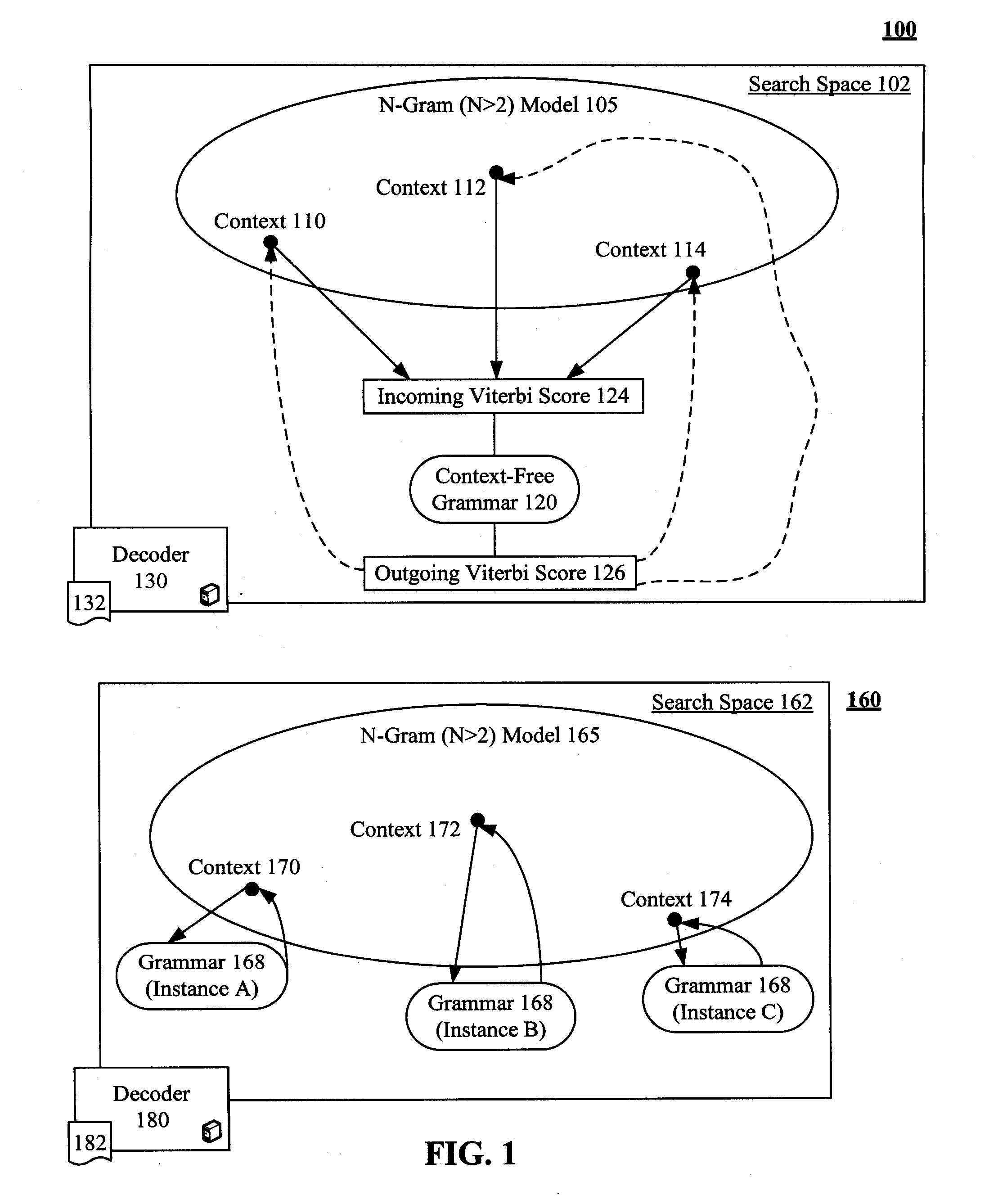

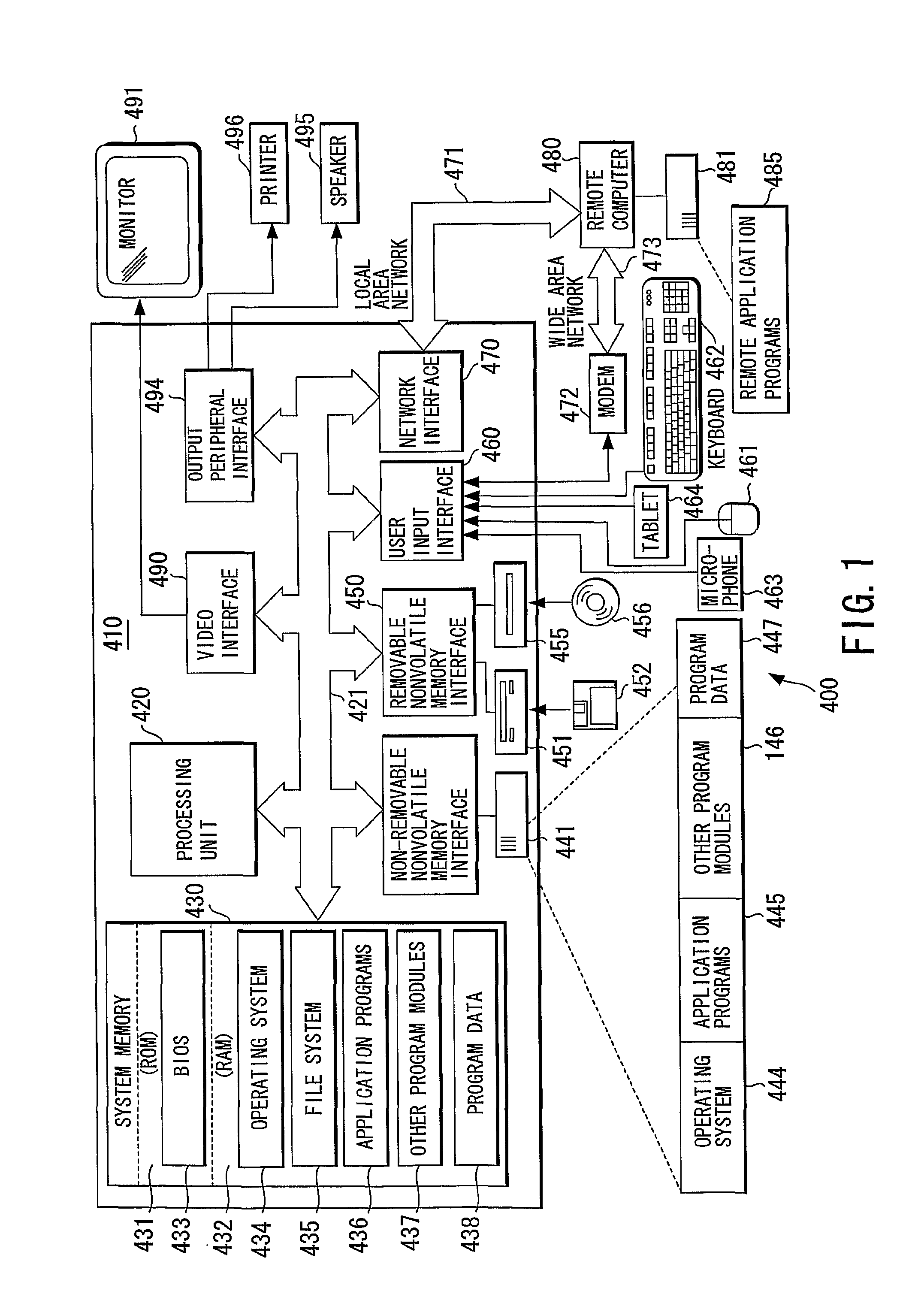

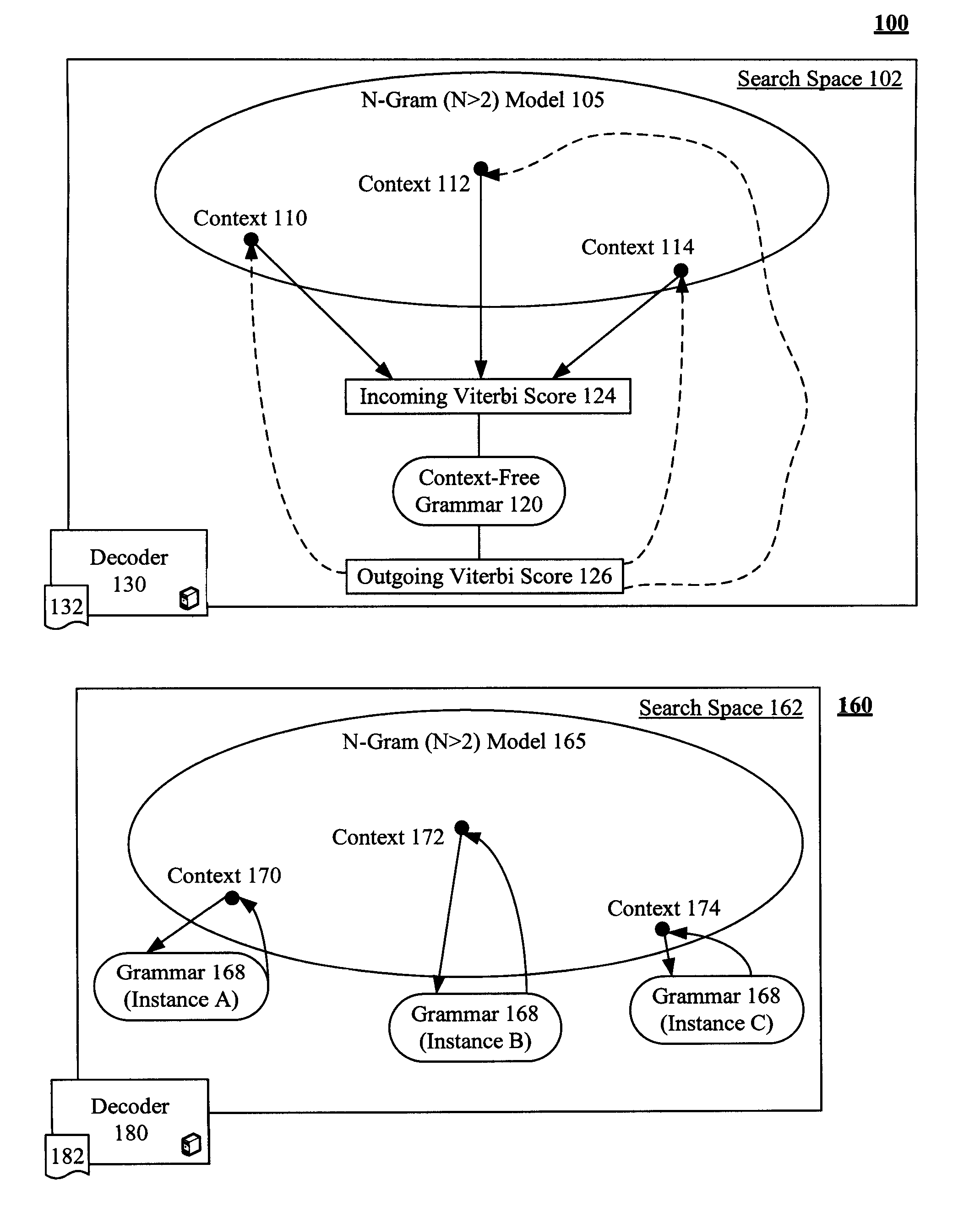

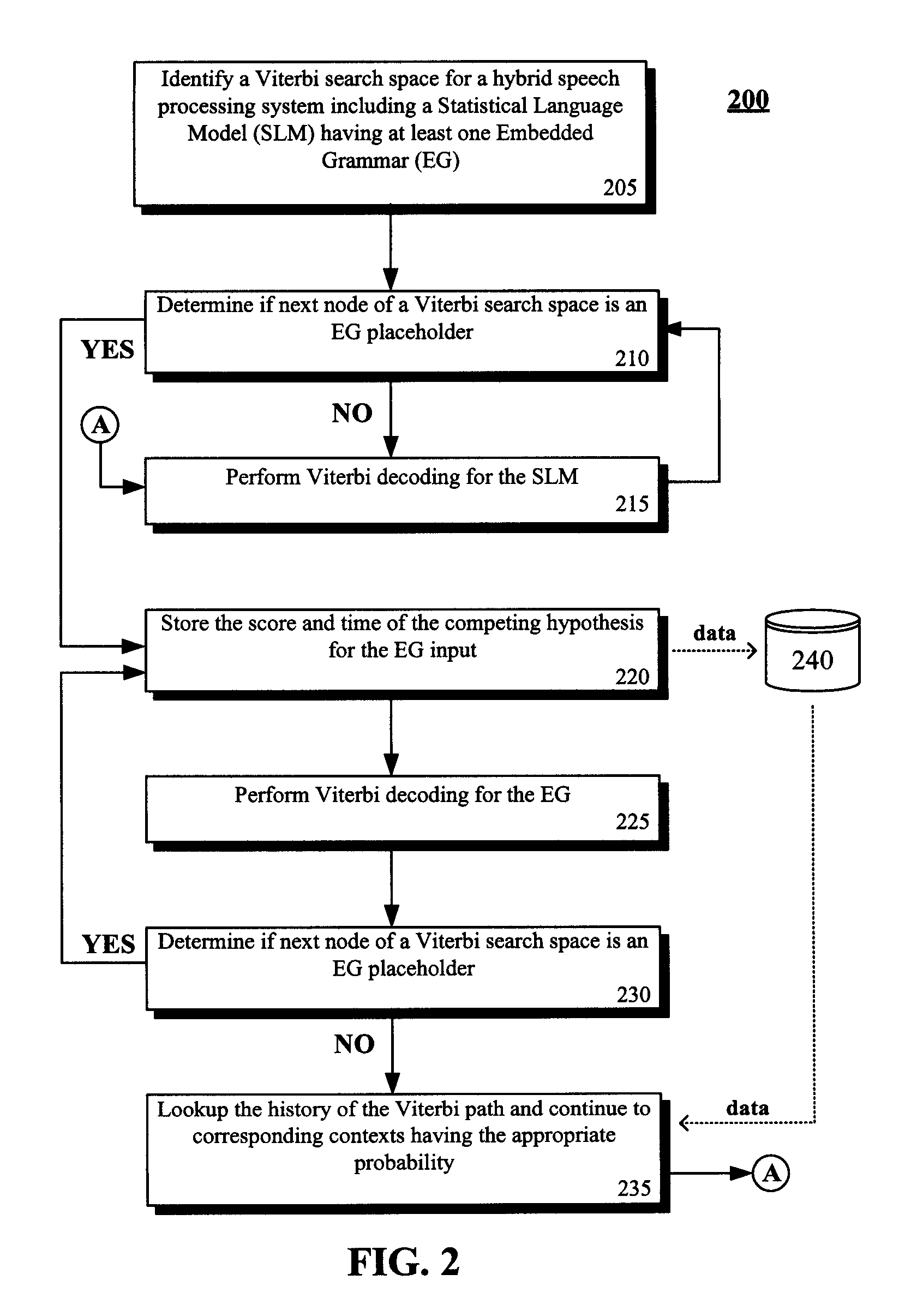

Enhancement to viterbi speech processing algorithm for hybrid speech models that conserves memory

Inactive | US20080091429A1 | Reduce complexity | Reduce decrease | Speech recognition | Special data processing applications | Multiple context | Hidden Markov model

The present invention discloses a method for semantically processing speech for speech recognition purposes. The method can reduce the amount of memory required for a Viterbi search of an N-gram language model having a value of N greater than two and also having at least one embedded grammar that appears in multiple contexts to a memory size of approximately a bigram model search space with respect to the embedded grammar. The method also reduces CPU requirements. The reductions can be achieved by representing the embedded grammar as a recursive transition network (RTN), where only one instance of the recursive transition network is used for the contexts. Other than the embedded grammars, a Hidden Markov Model (HMM) strategy can be used for the search space.

Owner:NUANCE COMM INC

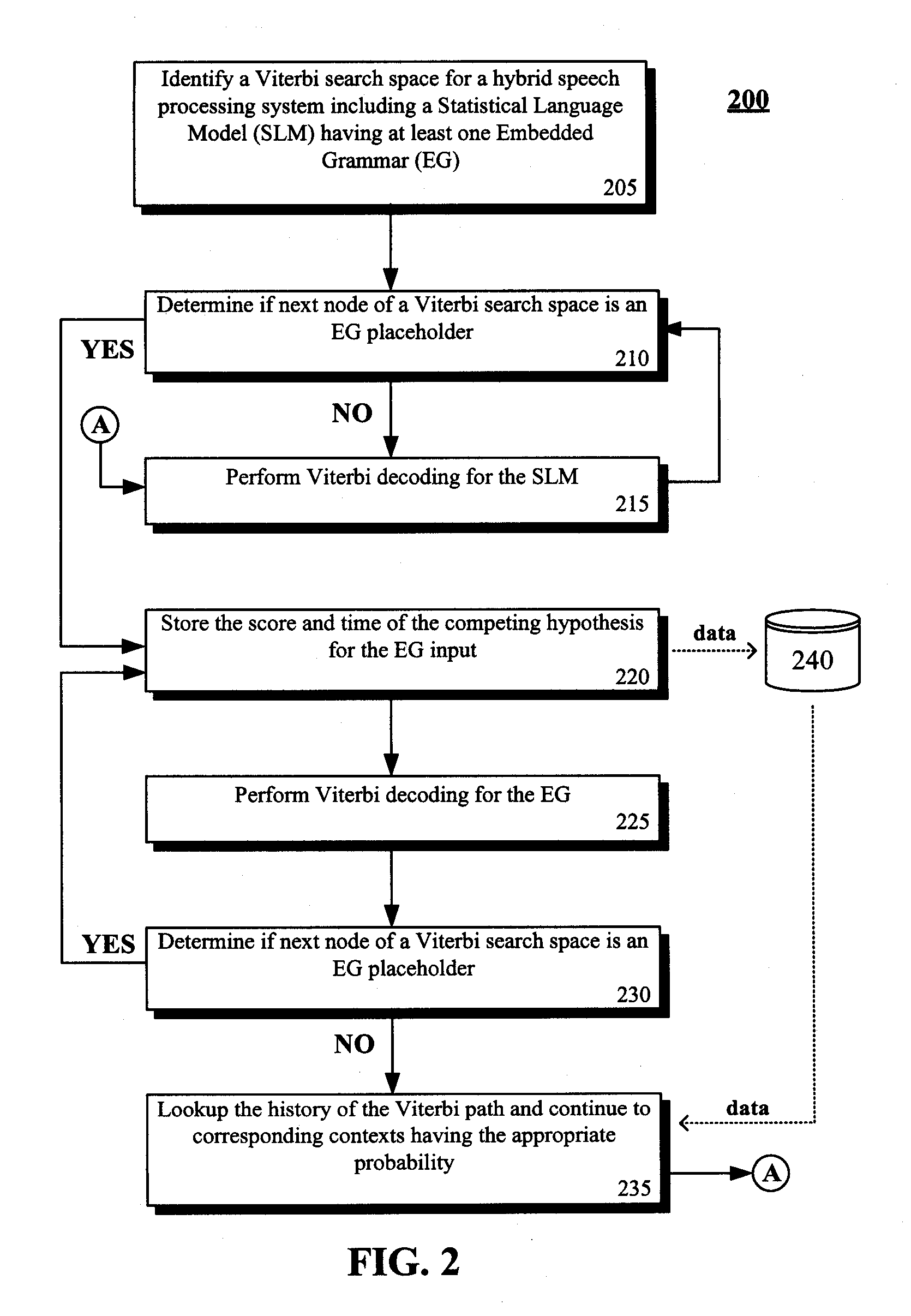

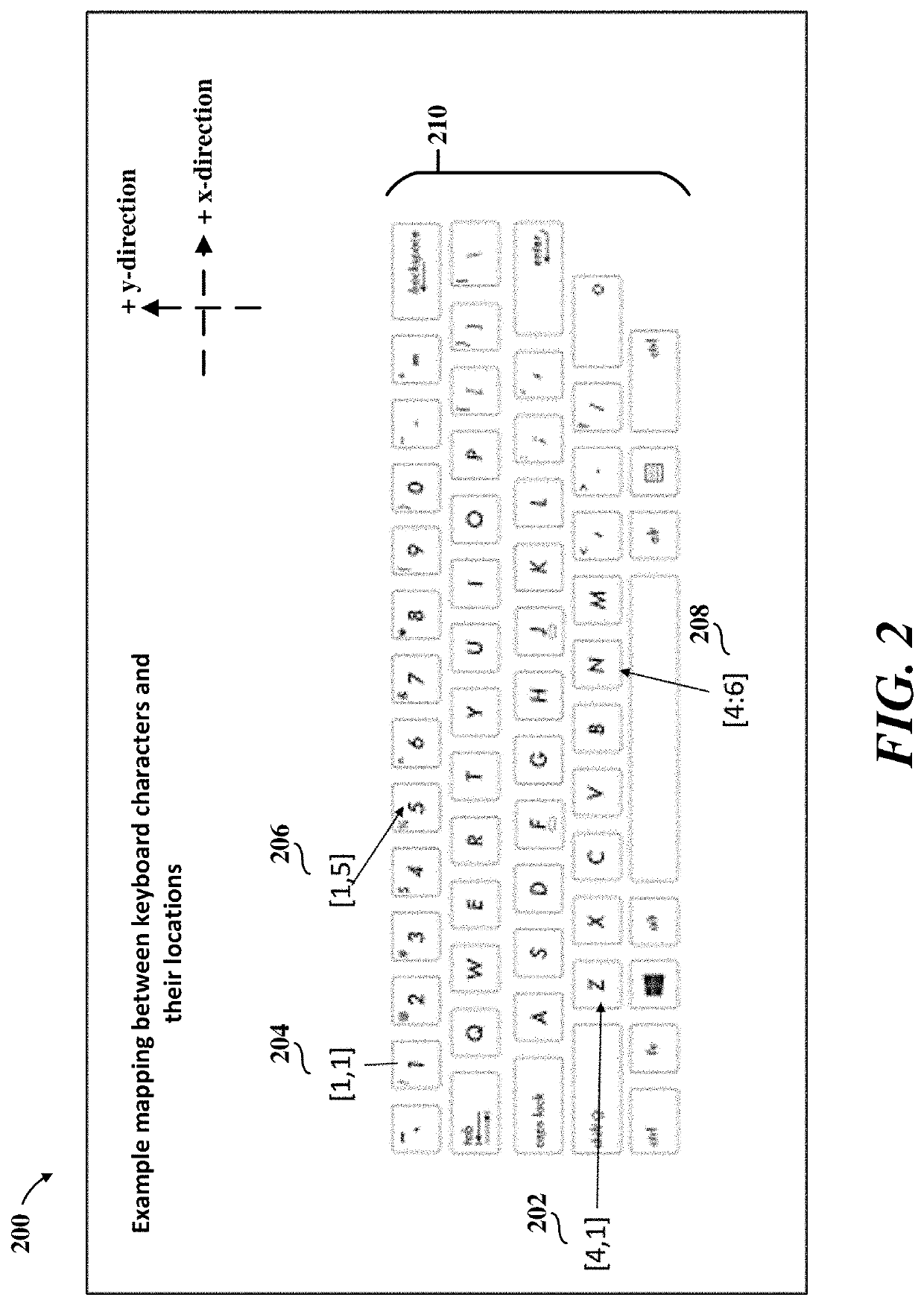

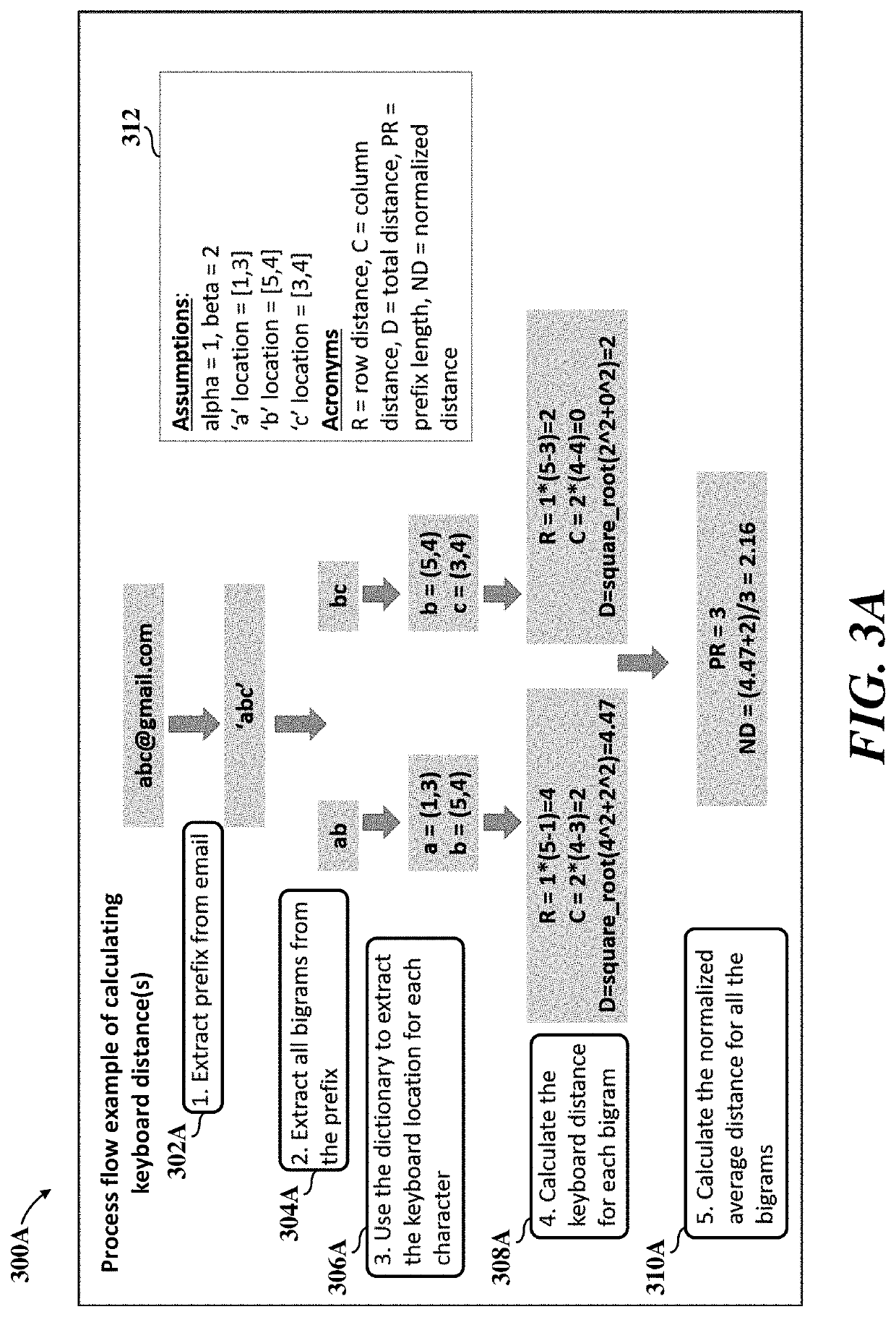

Detecting fraud by calculating email address prefix mean keyboard distances using machine learning optimization

Owner:INTUIT INC

Error-tolerant language understanding system and method

Inactive | US7333928B2 | Reduce negative impact | Improve accuracy | Speech recognition | Special data processing applications | Language understanding | Speech identification

The present invention relates to an error-tolerant language understanding system and method. The system and method use example sentences to provide clues for detecting and recovering from errors. The detection and recovery procedure is guided by a probabilistic scoring function that integrates the scores from the speech recognizer and the concept parser with the scores of concept bigrams and of edit operations, such as deleting, inserting and substituting concepts. The score of an edit operation takes the confidence measure into account, which allows more precise detection and recovery of speech recognition errors: a concept with a lower confidence measure tends to be deleted or substituted, while a concept with a higher one tends to be retained.

Owner:IND TECH RES INST

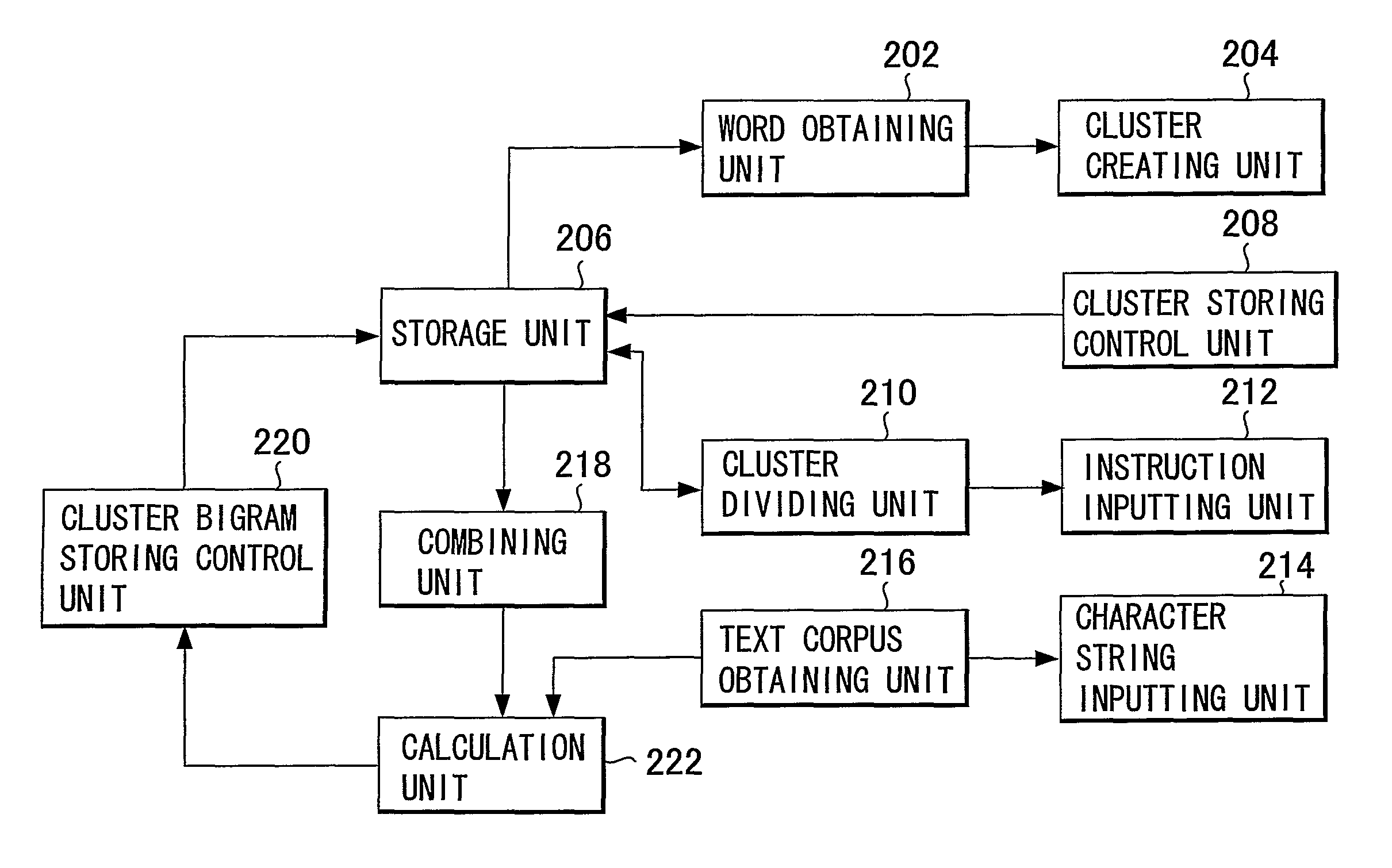

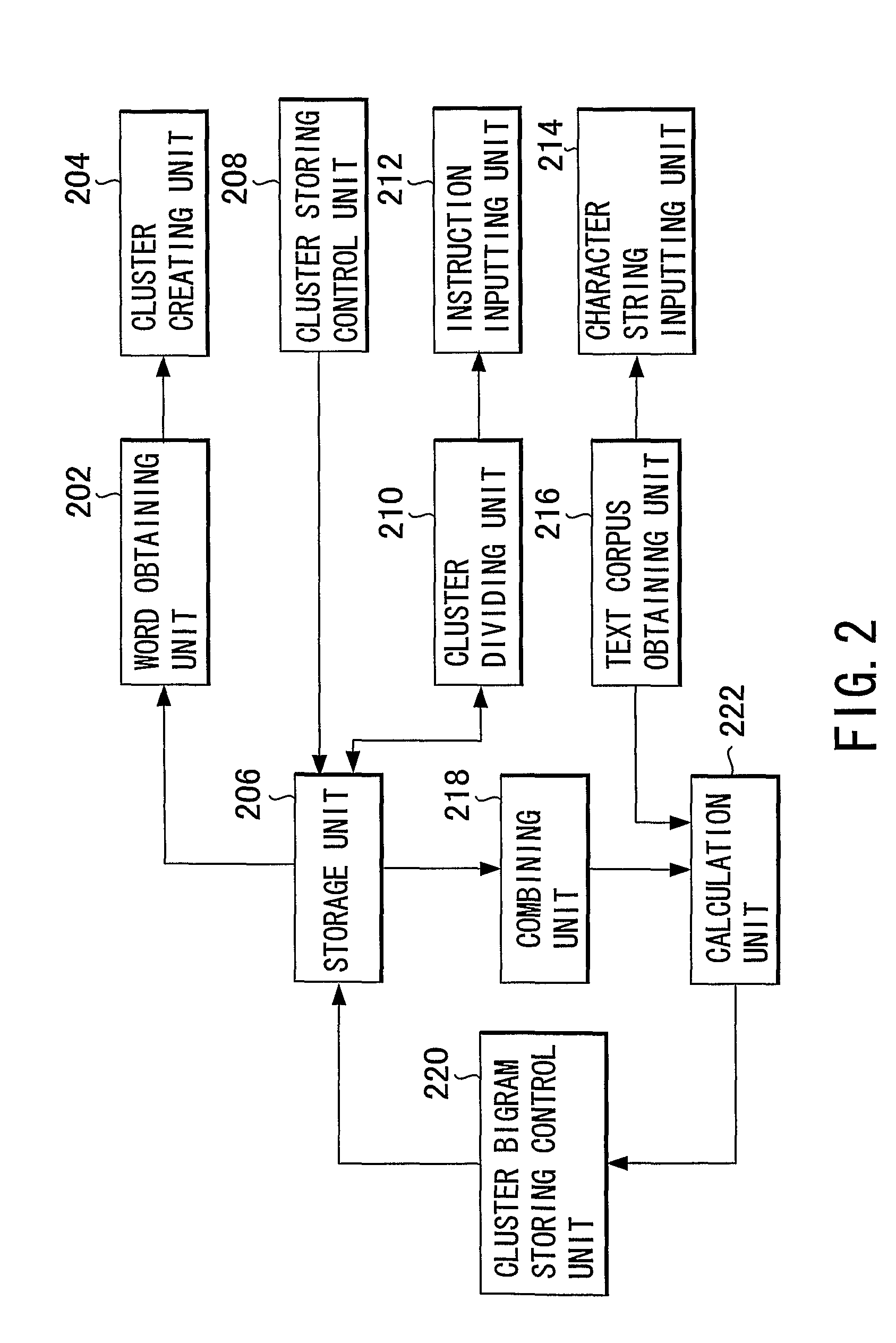

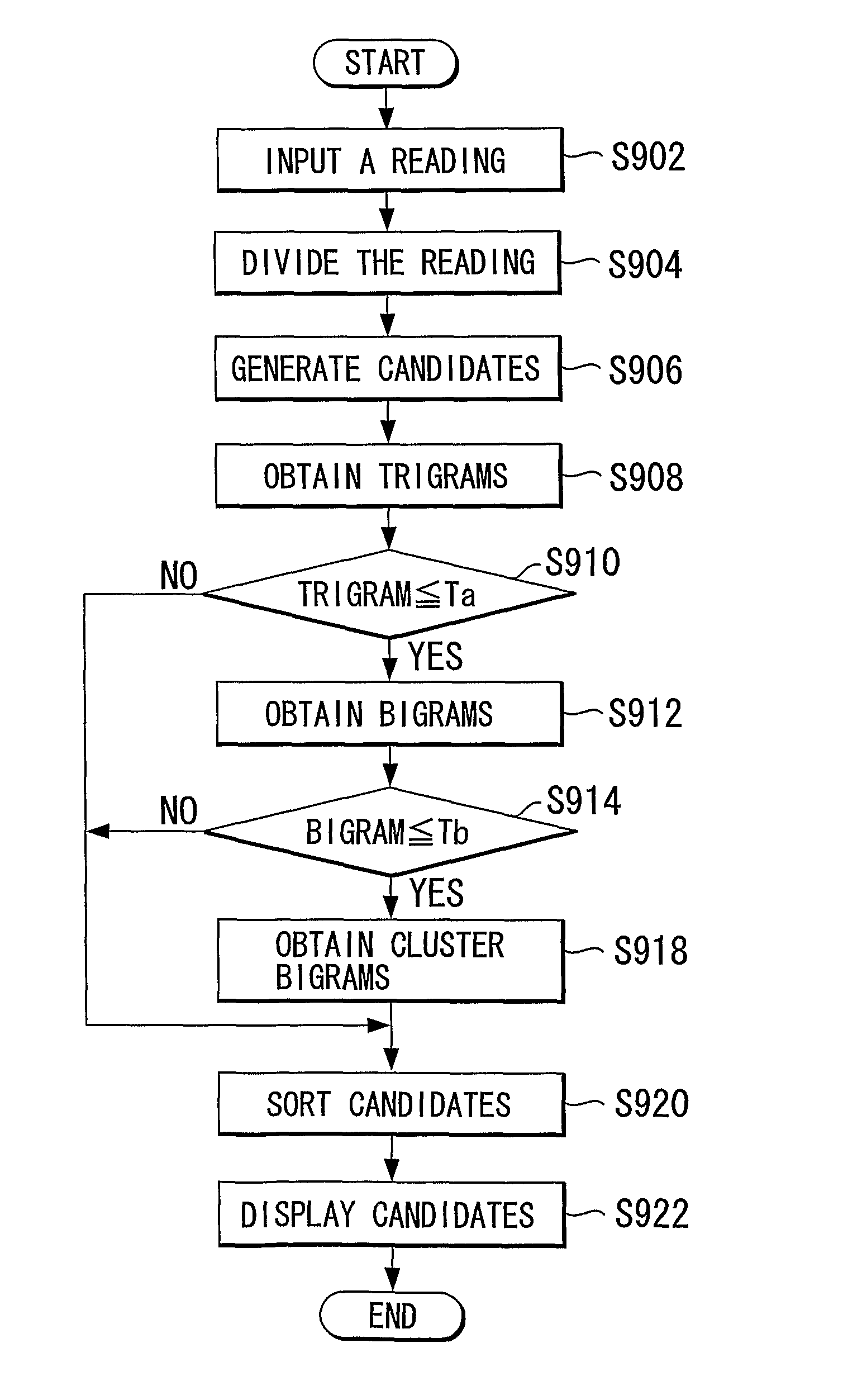

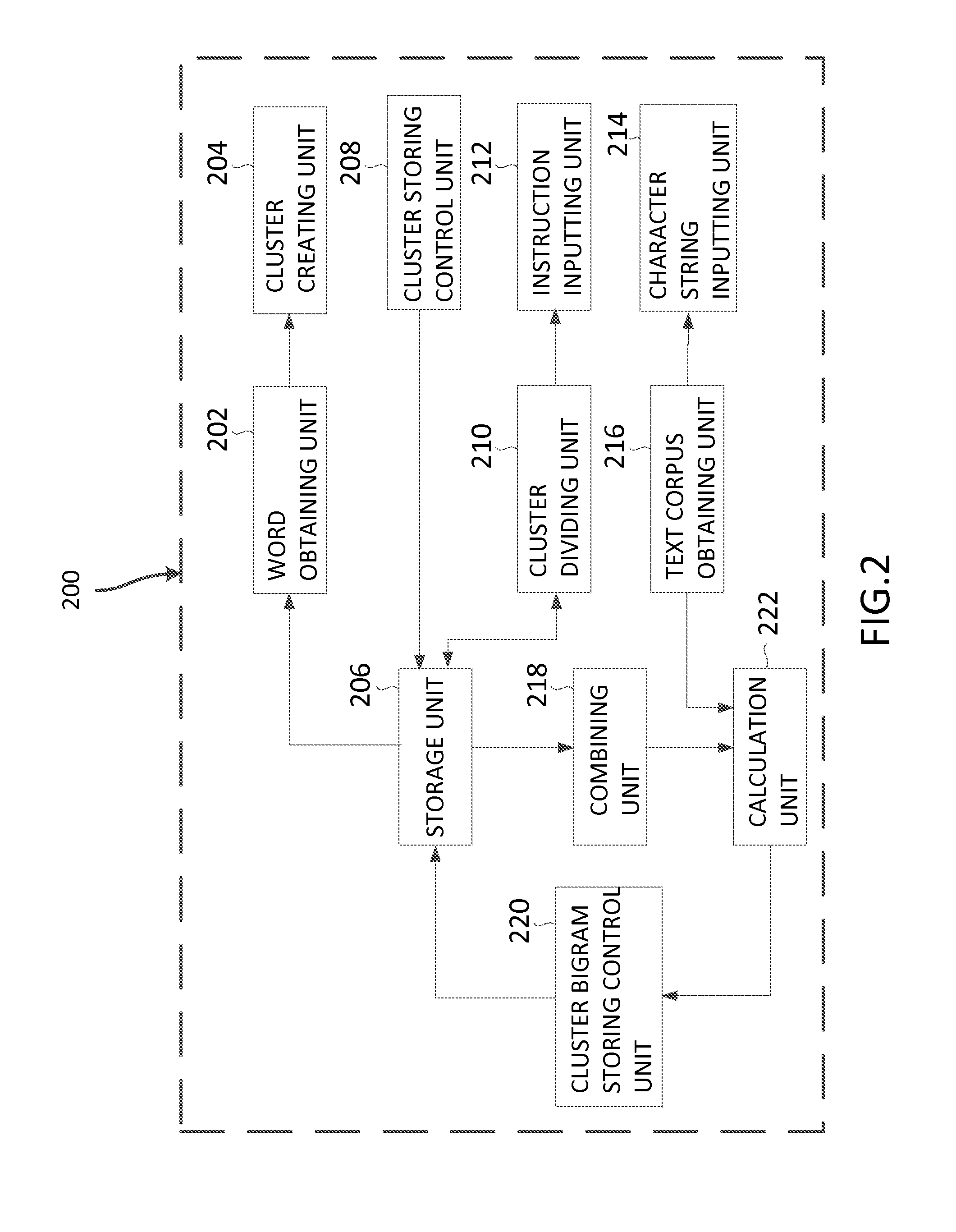

Method and Apparatus for Creating a Language Model and Kana-Kanji Conversion

Inactive | US20110106523A1 | Quality improvement | Improve accuracy | Natural language translation | Speech recognition | Part of speech | Chinese characters

Method for creating a language model capable of preventing deterioration of quality caused by the conventional back-off to unigram. Parts-of-speech with the same display and reading are obtained from a storage device (206). A cluster (204) is created by combining the obtained parts-of-speech. The created cluster (204) is stored in the storage device (206). In addition, when an instruction (214) for dividing the cluster is inputted, the cluster stored in the storage device (206) is divided (210) in accordance with the inputted instruction (212). Two of the clusters stored in the storage device are combined (218), and a probability of occurrence of the combined clusters in the text corpus is calculated (222). The combined cluster is associated with the bigram indicating the calculated probability and stored into the storage device.

Owner:MICROSOFT TECH LICENSING LLC

System and method for productive generation of compound words in statistical machine translation

Inactive | US8781810B2 | Natural language translation | Special data processing applications | Heuristic | Decision taking

A method and a system for making merging decisions for a translation are disclosed which are suited to use where the target language is a productive compounding one. The method includes outputting decisions on merging of pairs of words in a translated text string with a merging system. The merging system can include a set of stored heuristics and / or a merging model. In the case of heuristics, these can include a heuristic by which two consecutive words in the string are considered for merging if the first word of the two consecutive words is recognized as a compound modifier and their observed frequency f1 as a closed compound word is larger than an observed frequency f2 of the two consecutive words as a bigram. In the case of a merging model, it can be one that is trained on features associated with pairs of consecutive tokens of text strings in a training set and predetermined merging decisions for the pairs. A translation in the target language is output, based on the merging decisions for the translated text string.

Owner:XEROX CORP

Method and apparatus for creating a language model and kana-kanji conversion

Inactive | US8744833B2 | Avoid quality loss | Quality improvement | Natural language translation | Speech recognition | Part of speech | Embedded system

Method for creating a language model capable of preventing deterioration of quality caused by the conventional back-off to unigram. Parts-of-speech with the same display and reading are obtained from a storage device (206). A cluster (204) is created by combining the obtained parts-of-speech. The created cluster (204) is stored in the storage device (206). In addition, when an instruction (214) for dividing the cluster is inputted, the cluster stored in the storage device (206) is divided (210) in accordance with the inputted instruction (212). Two of the clusters stored in the storage device are combined (218), and a probability of occurrence of the combined clusters in the text corpus is calculated (222). The combined cluster is associated with the bigram indicating the calculated probability and stored into the storage device.

Owner:MICROSOFT TECH LICENSING LLC

Enhancement to Viterbi speech processing algorithm for hybrid speech models that conserves memory

Inactive | US7805305B2 | Reduce complexity | Reducing the search space reduces an amount of memory needed | Speech recognition | Special data processing applications | Multiple context | Hidden Markov model

The present invention discloses a method for semantically processing speech for speech recognition purposes. The method can reduce the amount of memory required for a Viterbi search of an N-gram language model having a value of N greater than two and also having at least one embedded grammar that appears in multiple contexts to a memory size of approximately a bigram model search space with respect to the embedded grammar. The method also reduces CPU requirements. The reductions can be achieved by representing the embedded grammar as a recursive transition network (RTN), where only one instance of the recursive transition network is used for the contexts. Other than the embedded grammars, a Hidden Markov Model (HMM) strategy can be used for the search space.

Owner:NUANCE COMM INC

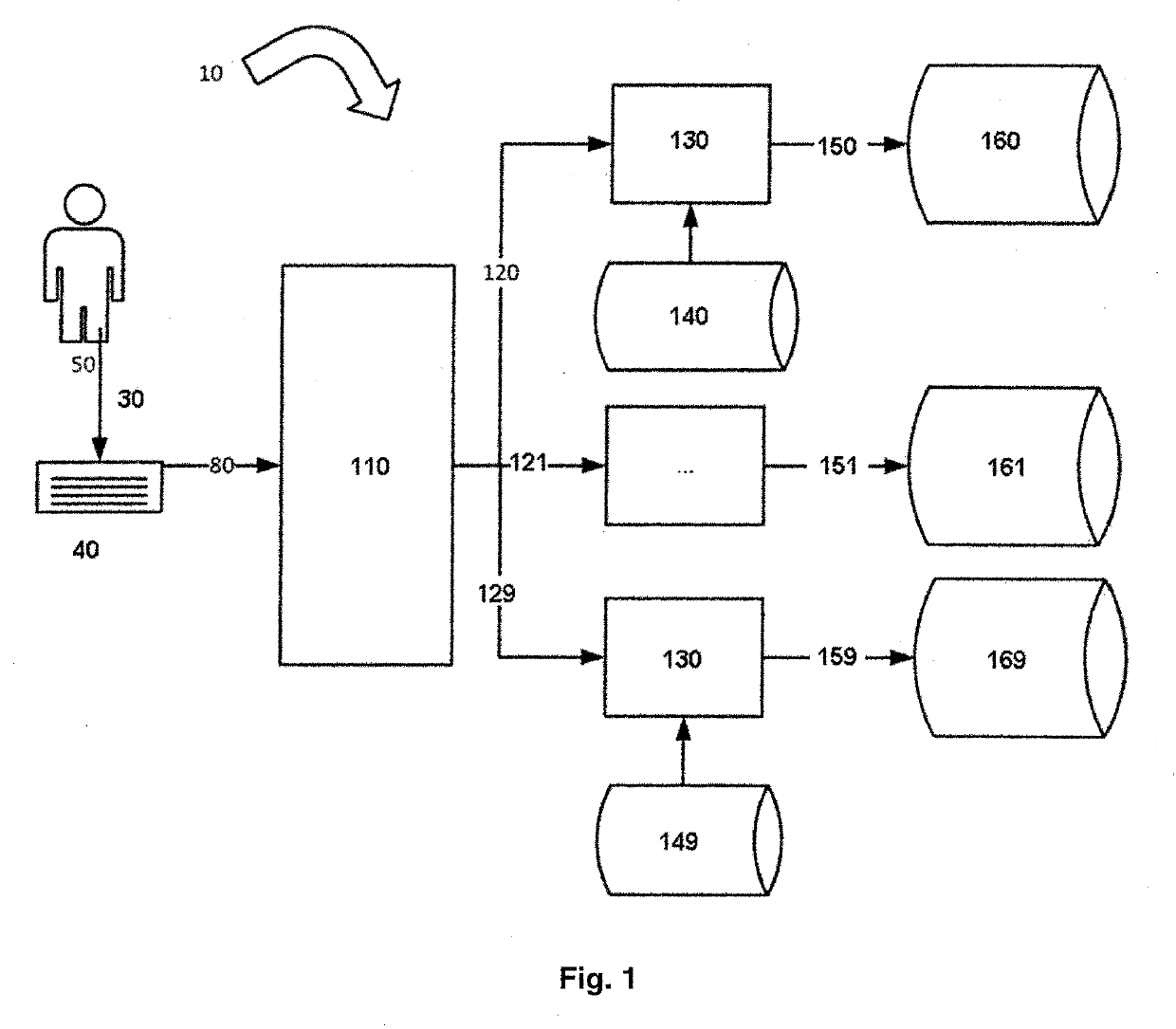

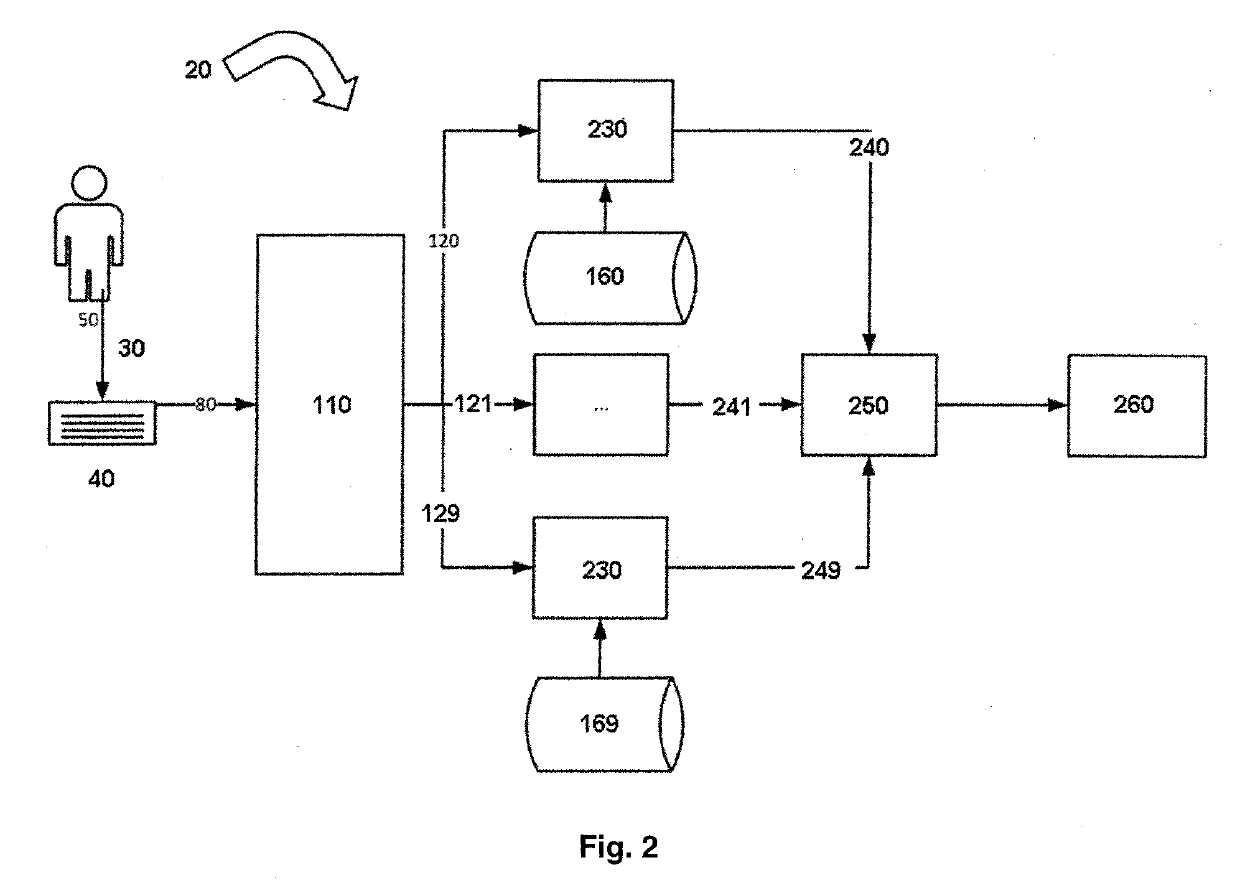

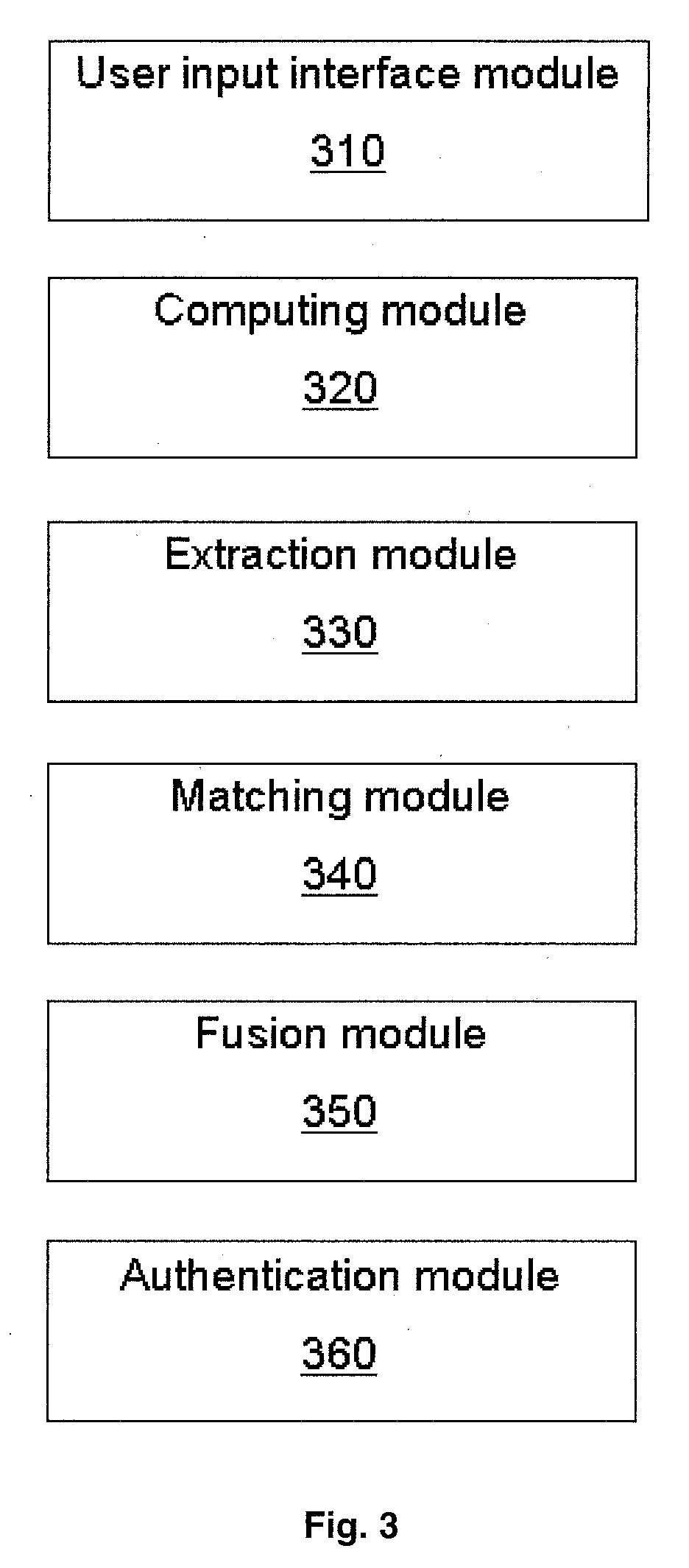

Method and system for free text keystroke biometric authentication

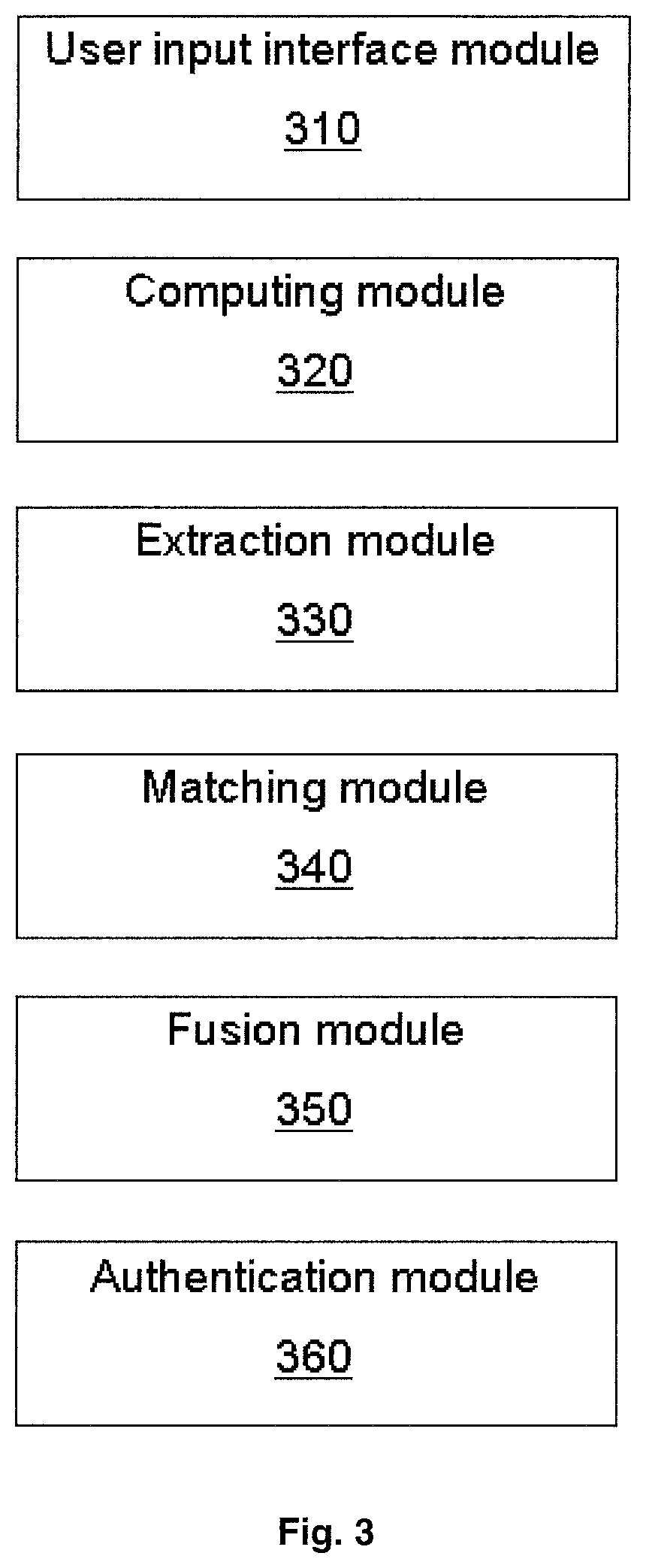

Active | US20190332876A1 | Accurate and fast and simple method for user authentication | Quality improvement | Digital data authentication | Biometric pattern recognition | Feature vector | Text entry

A method for user authentication based on keystroke dynamics is provided. The user authentication method includes receiving keystroke input from a user; separating the sequence of pressed keys into a sequence of named bigrams while the user is typing free text; collecting timing information for each bigram in the sequence; extracting a feature vector for each bigram based on the timing information; separating the feature vectors into subsets according to the bigram names; estimating a GMM user model from the subset of feature vectors for each bigram; and providing real-time user authentication using the estimated GMM user model for each bigram and the bigram features from the user's current keystroke input. The corresponding system is also provided. The GMM-based analysis of keystroke data separated by bigram provides strong authentication using free text input, while the additional user actions required for verification are kept to a minimum. The present invention drastically improves the accuracy of user authentication with low performance requirements, which allows authentication software to be implemented on low-power mobile devices.

Owner:ID R&D INC
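The feature-extraction steps in this abstract can be sketched as below. The event format and the four timing features (two hold times and two latencies) are assumptions chosen for illustration; the abstract does not list the actual features, and the GMM estimation step is not shown.

```python
from collections import defaultdict

def bigram_features(events):
    """Split key events into bigrams and group timing feature vectors by bigram name.

    `events` is a list of (key, press_ms, release_ms) tuples in typing order.
    Each feature vector is (hold time of first key, hold time of second key,
    press-to-press latency, release-to-press latency); this set is an assumption.
    """
    by_name = defaultdict(list)
    for (k1, p1, r1), (k2, p2, r2) in zip(events, events[1:]):
        by_name[k1 + k2].append((r1 - p1, r2 - p2, p2 - p1, p2 - r1))
    return dict(by_name)

# Hypothetical timestamps in milliseconds for the typed text "theth"
events = [("t", 0, 80), ("h", 120, 190), ("e", 260, 330),
          ("t", 400, 470), ("h", 530, 600)]
features = bigram_features(events)
print(features["th"])
```

Each per-bigram subset could then be passed to a mixture-model estimator; which estimator and how many components to use are left open here.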

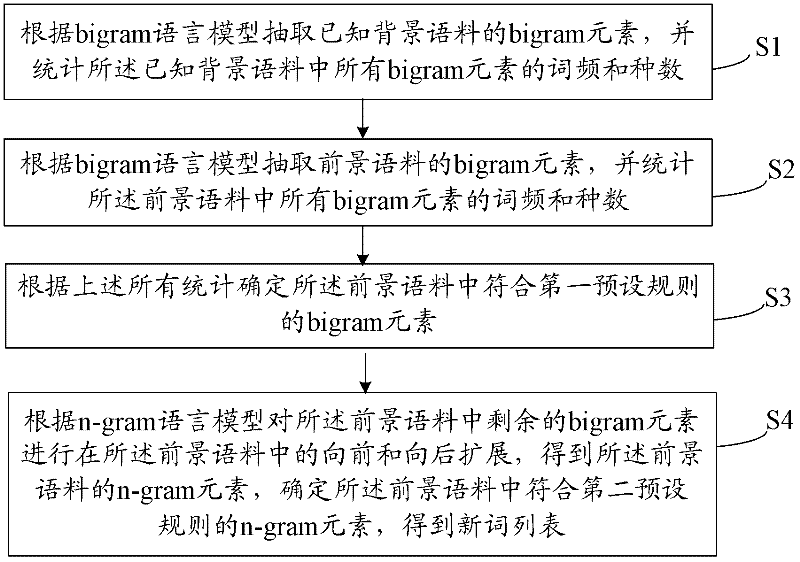

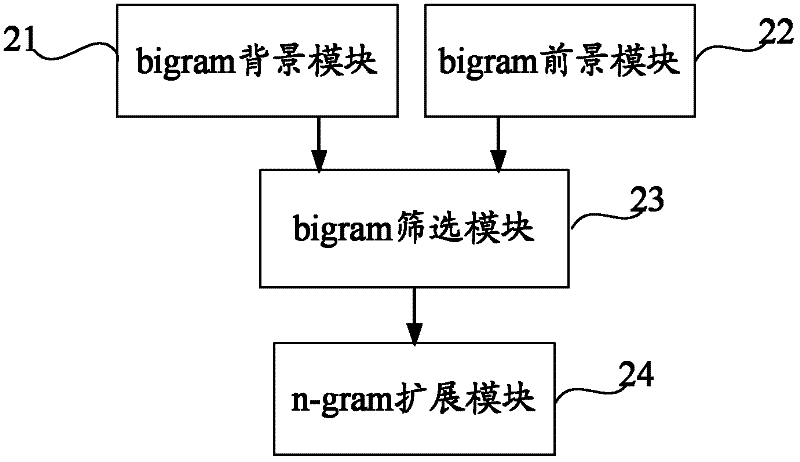

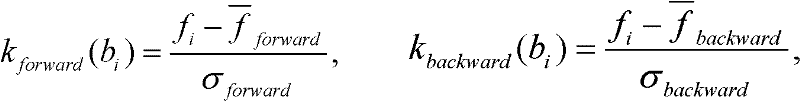

Method and system for finding out new words

The invention provides a method and system for finding new words. The method comprises the following steps: extracting the bigram elements of a foreground corpus based on a bigram language model; obtaining statistical information for the foreground corpus; filtering the bigram elements according to the statistical information and a first preset rule; expanding the remaining bigram elements in the foreground corpus using an n-gram language model and a second preset rule, without needing to re-count a background corpus when the n-gram elements are updated; avoiding rediscovery of new words that already exist in the background corpus; determining the boundaries of the new words according to the second preset rule; and removing garbage bigram and n-gram elements. The method is simple to use and reduces the manual correction burden.

Owner:SHENGLE INFORMATION TECH SHANGHAI

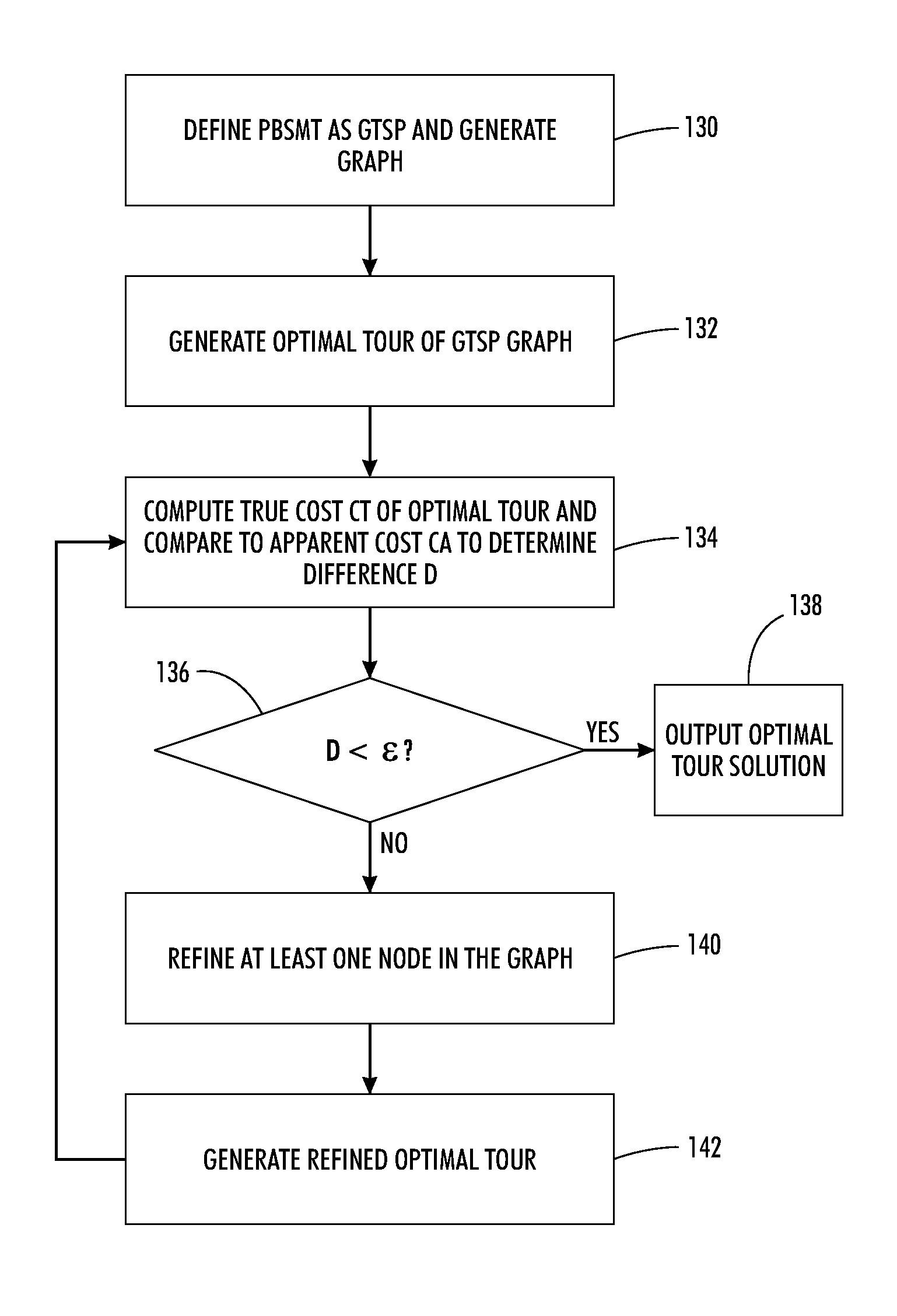

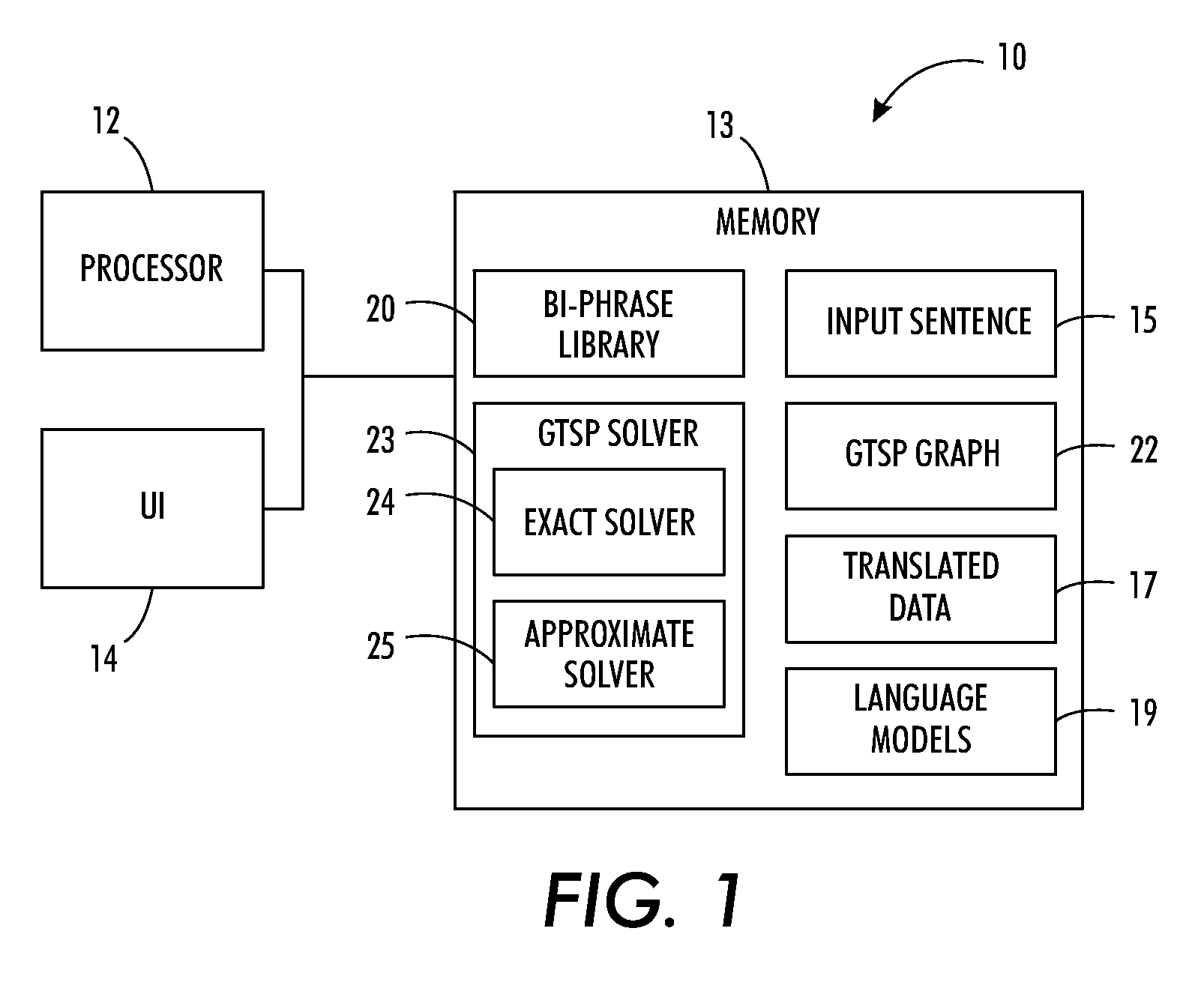

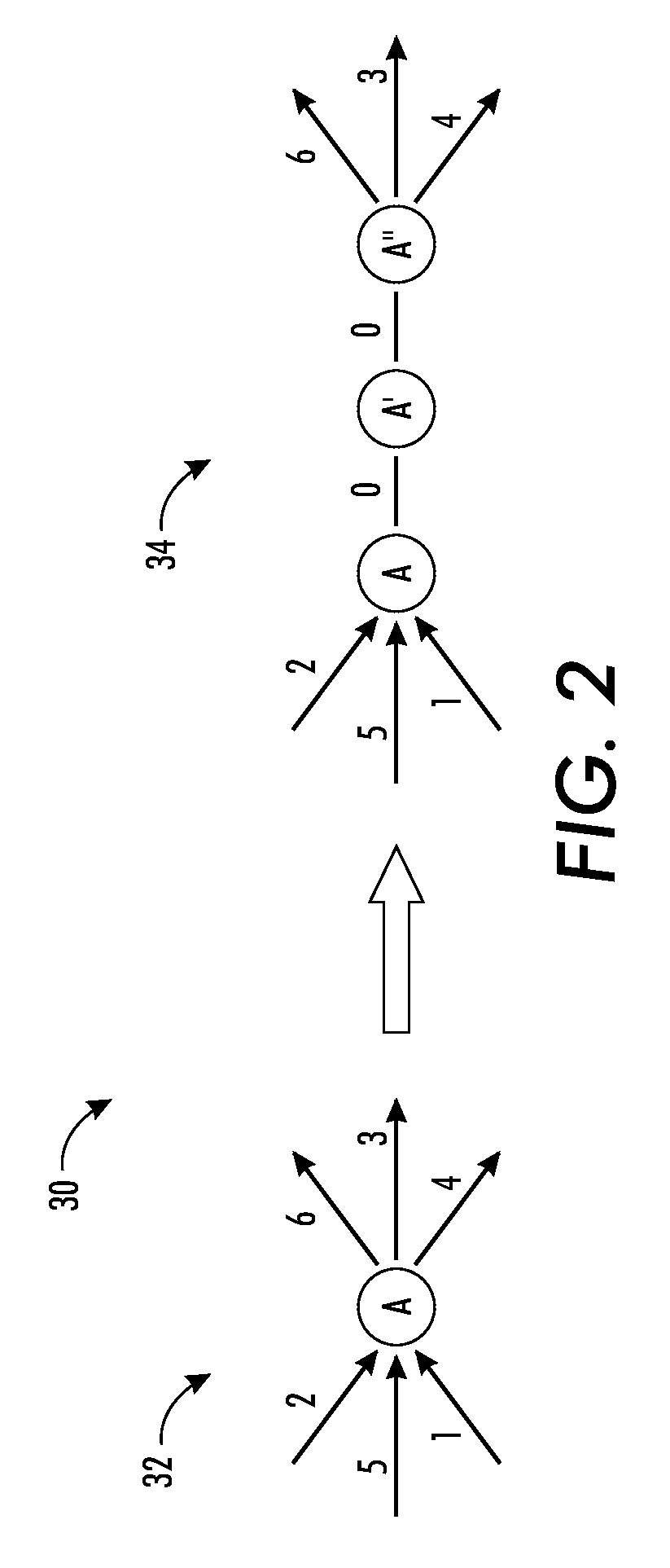

Phrase-based statistical machine translation as a generalized traveling salesman problem

Inactive | US8504353B2 | Improve translation | Natural language translation | Special data processing applications | NODAL | Graphics

Owner:XEROX CORP

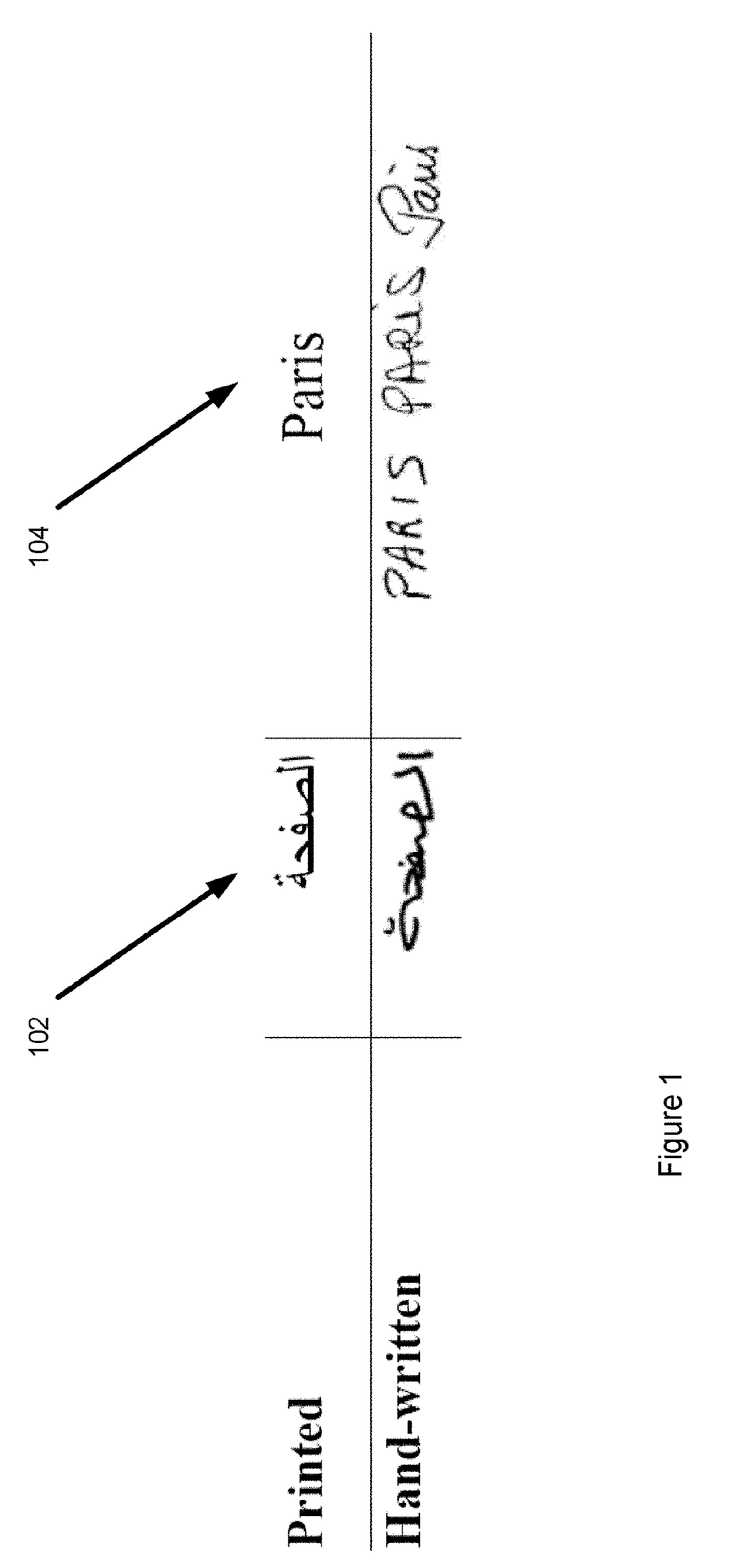

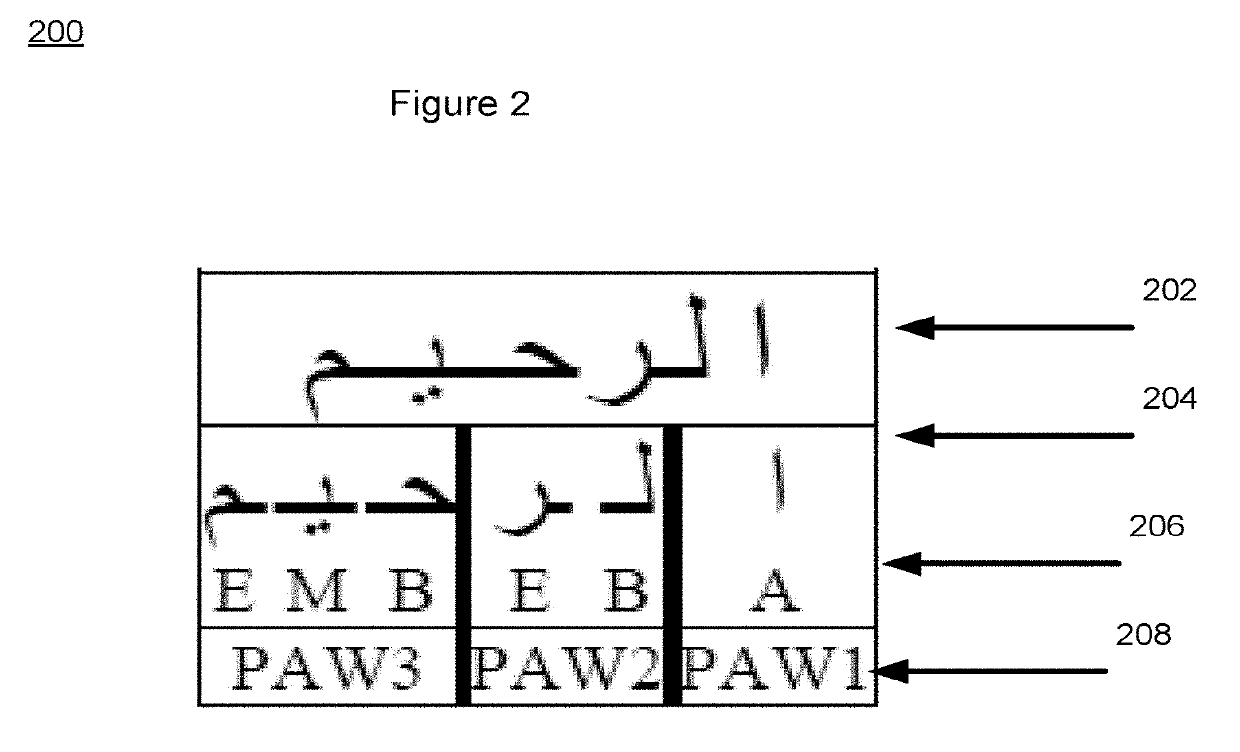

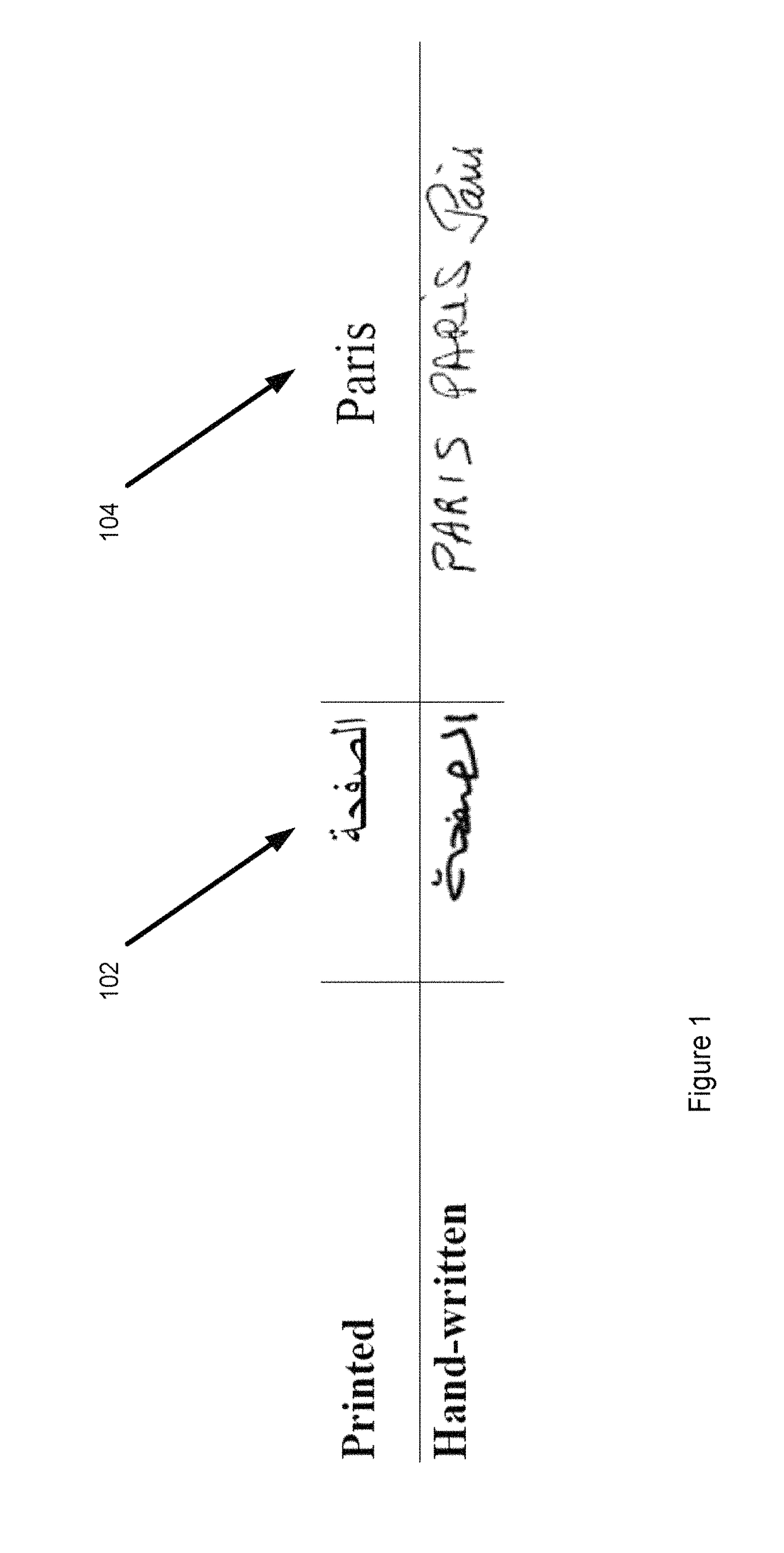

Method for analyzing and reproducing text data

Inactive | US20190114310A1 | Character and pattern recognition | Natural language data processing | Data set | Algorithm

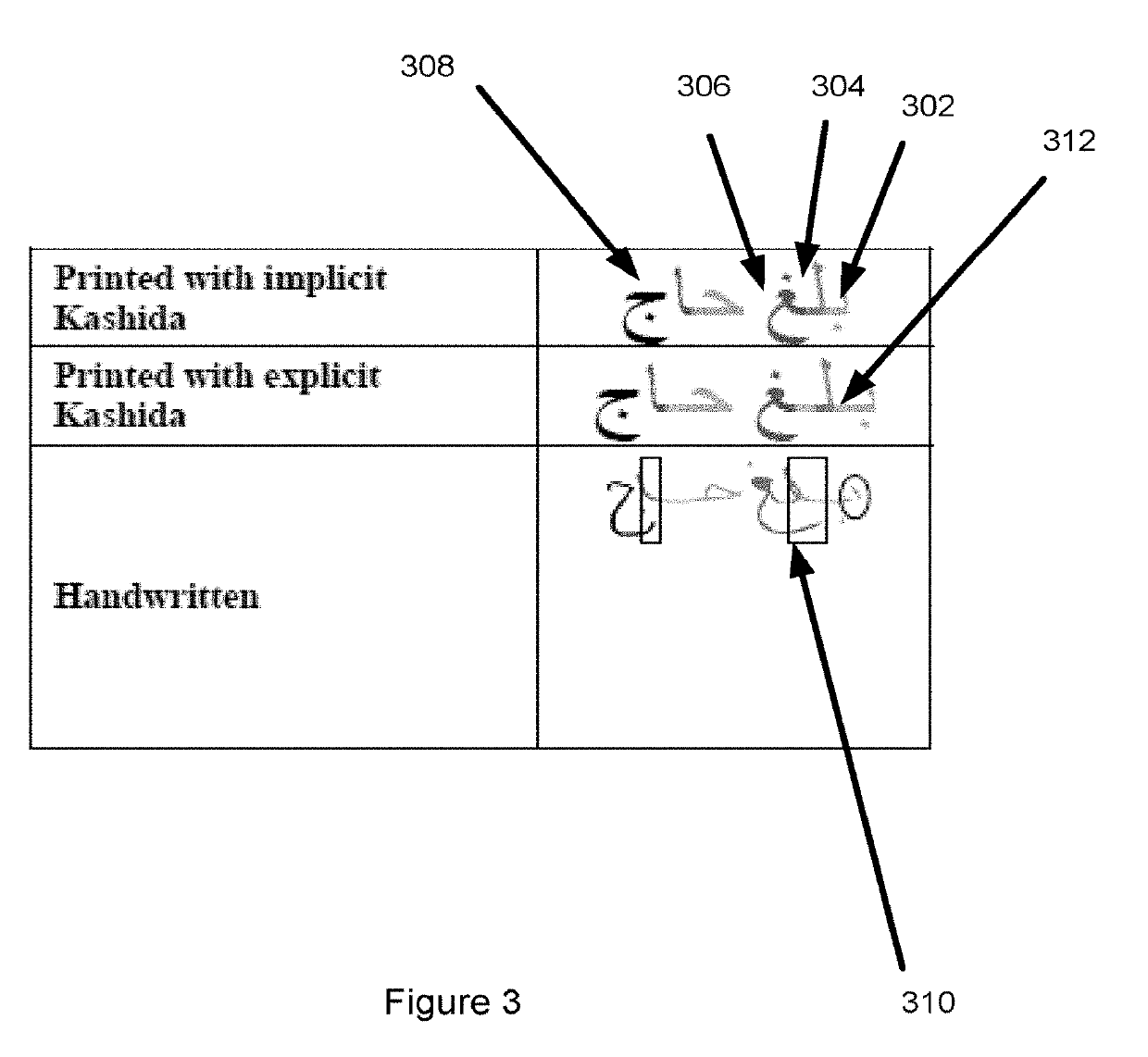

Systems and associated methodology are presented for Arabic handwriting synthesis including partitioning a dataset of sentences associated with the alphabet into a legative partition including isolated bigram representation and classified words that contain ligature representations of the collected dataset, an unlegative partition including single character shape representation of the collected data set, an isolated characters partition, and a passages and repeated phrases partition, generating a pangram, the pangram including the occurrence of every character shape in the collected dataset and further including a special lipogram condition set based on a desired digital output of the collected dataset, and outputting a digital representation of the pangram including synthesized text.

Owner:KING FAHD UNIVERSITY OF PETROLEUM AND MINERALS

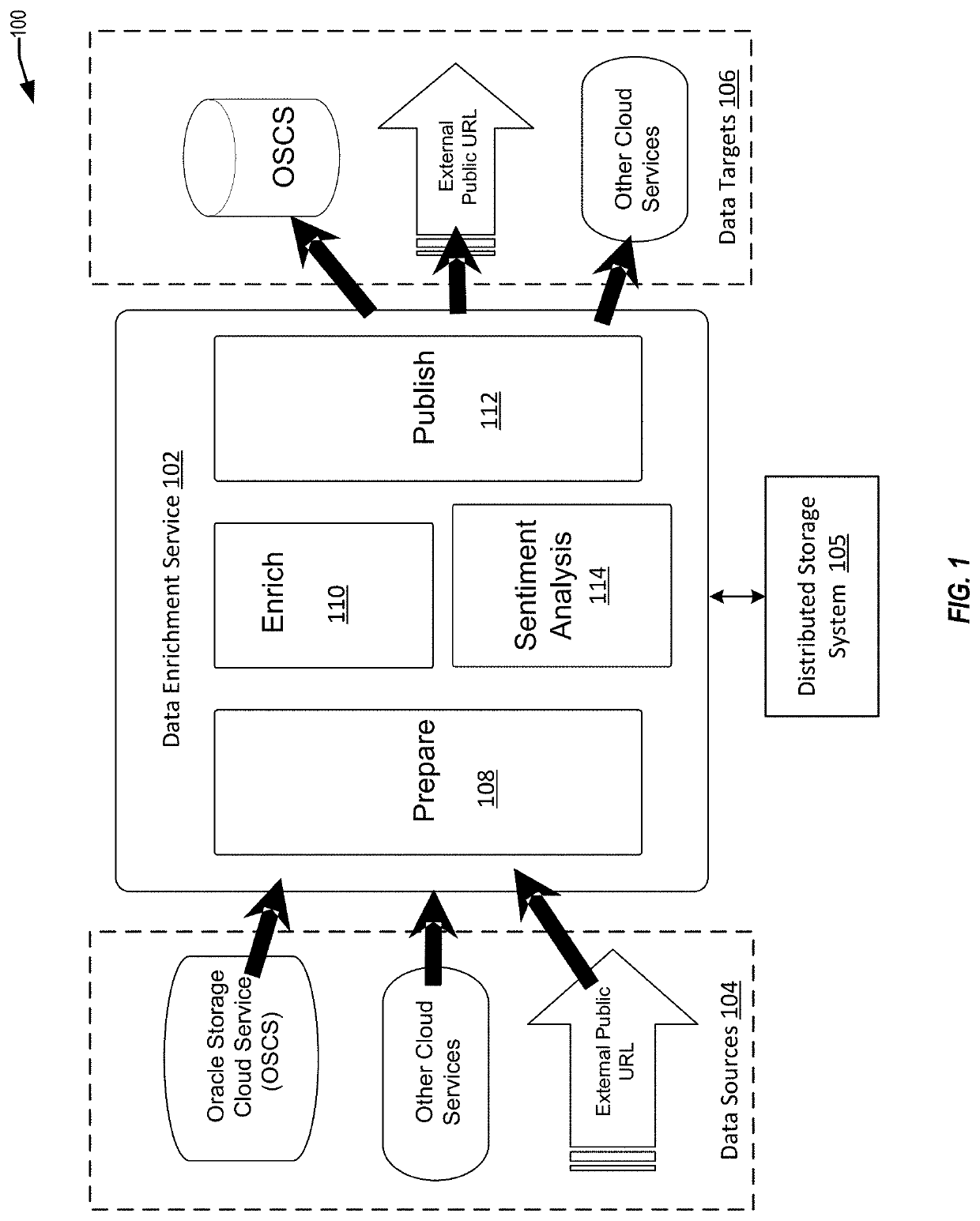

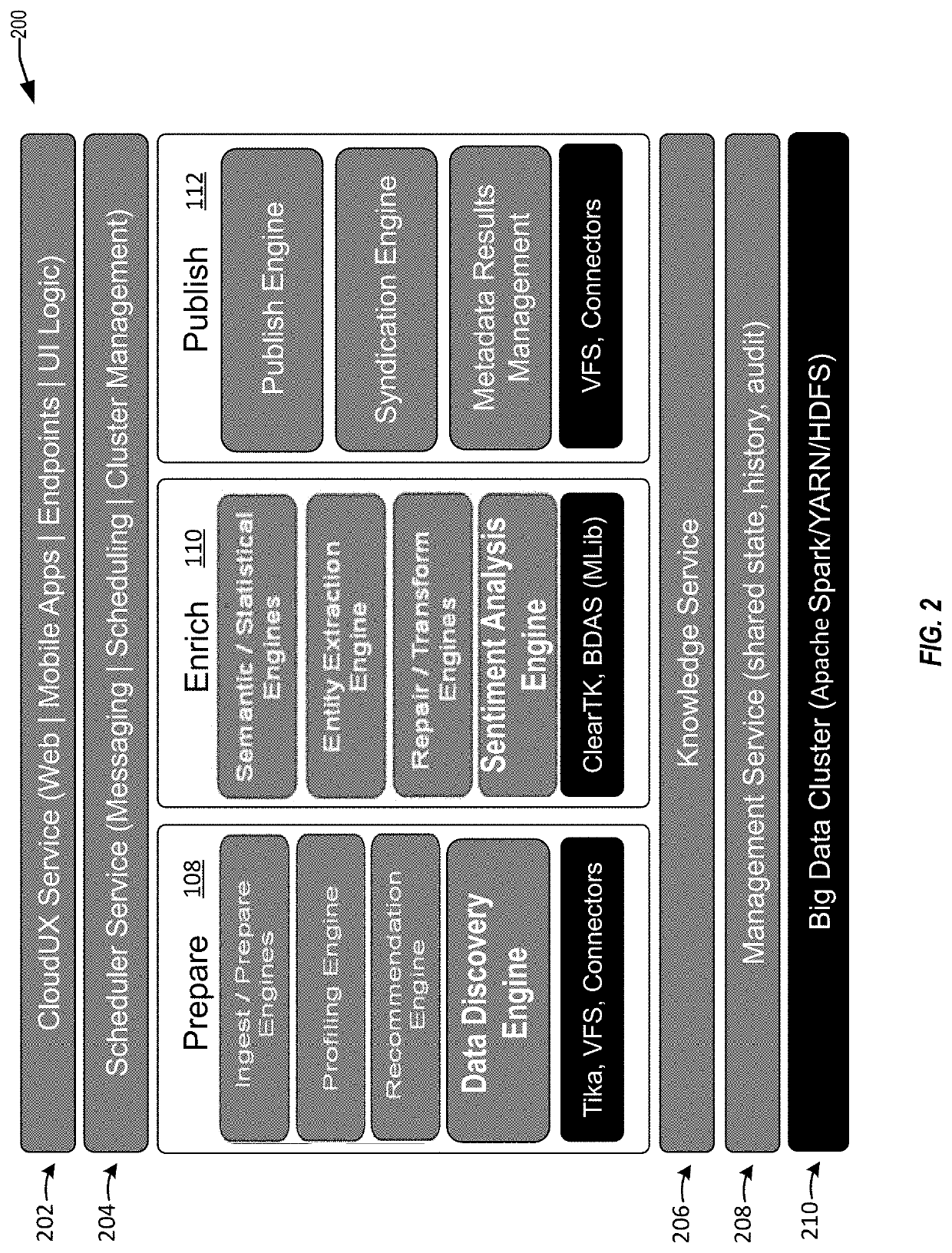

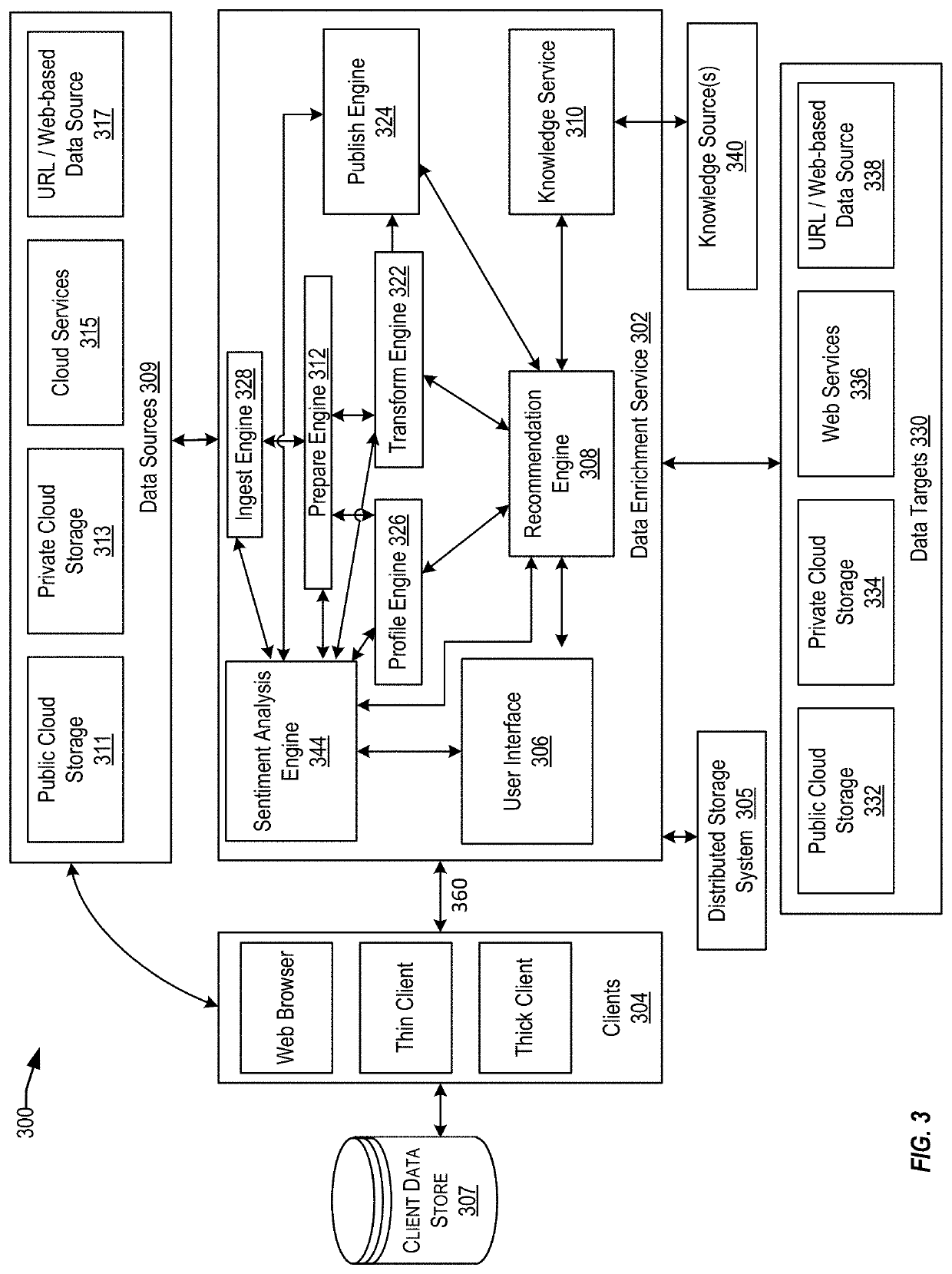

Techniques for sentiment analysis of data using a convolutional neural network and a co-occurrence network

Active | US10810472B2 | Improve accuracy | Fast way | Semantic analysis | Character and pattern recognition | Data set | Graph generation

Techniques are provided for performing sentiment analysis on words in a first data set. An example embodiment includes generating a word embedding model including a first plurality of features. A value indicating sentiment for the words in the first data set can be determined using a convolutional neural network (CNN). A second plurality of features are generated based on bigrams identified in the data set. The bigrams can be generated using a co-occurrence graph. The model is updated to include the second plurality of features, and sentiment analysis can be performed on a second data set using the updated model.

Owner:ORACLE INT CORP

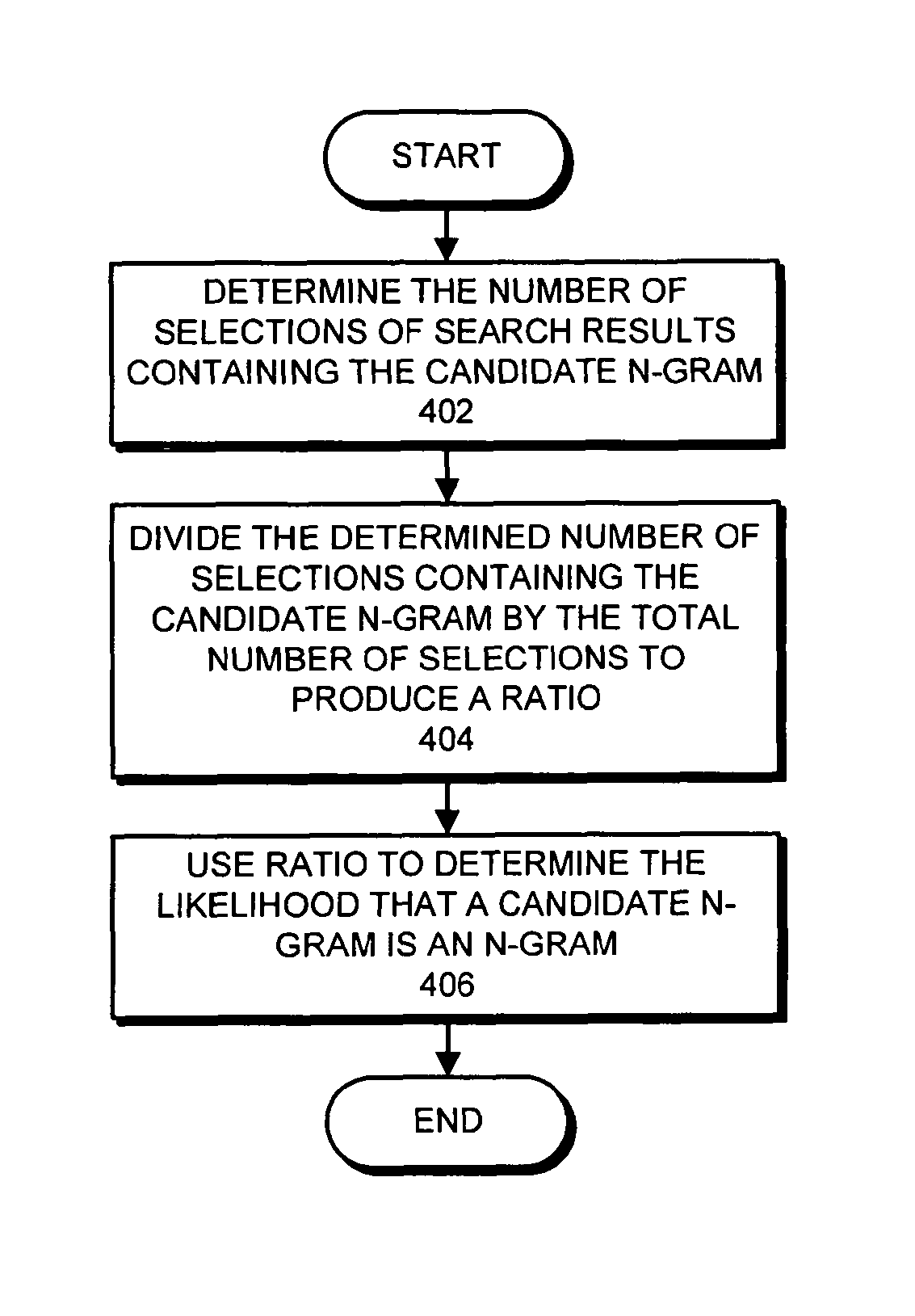

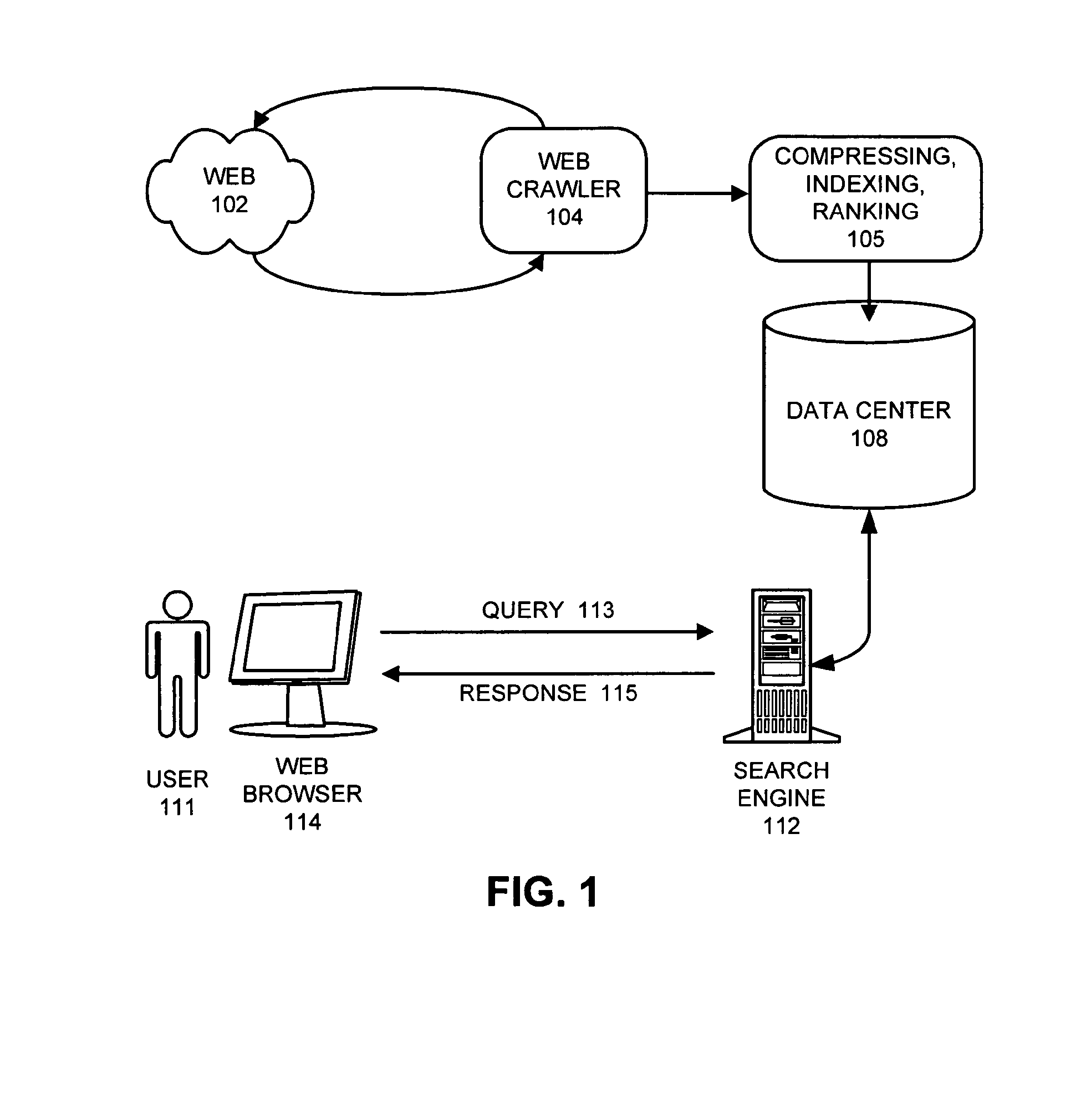

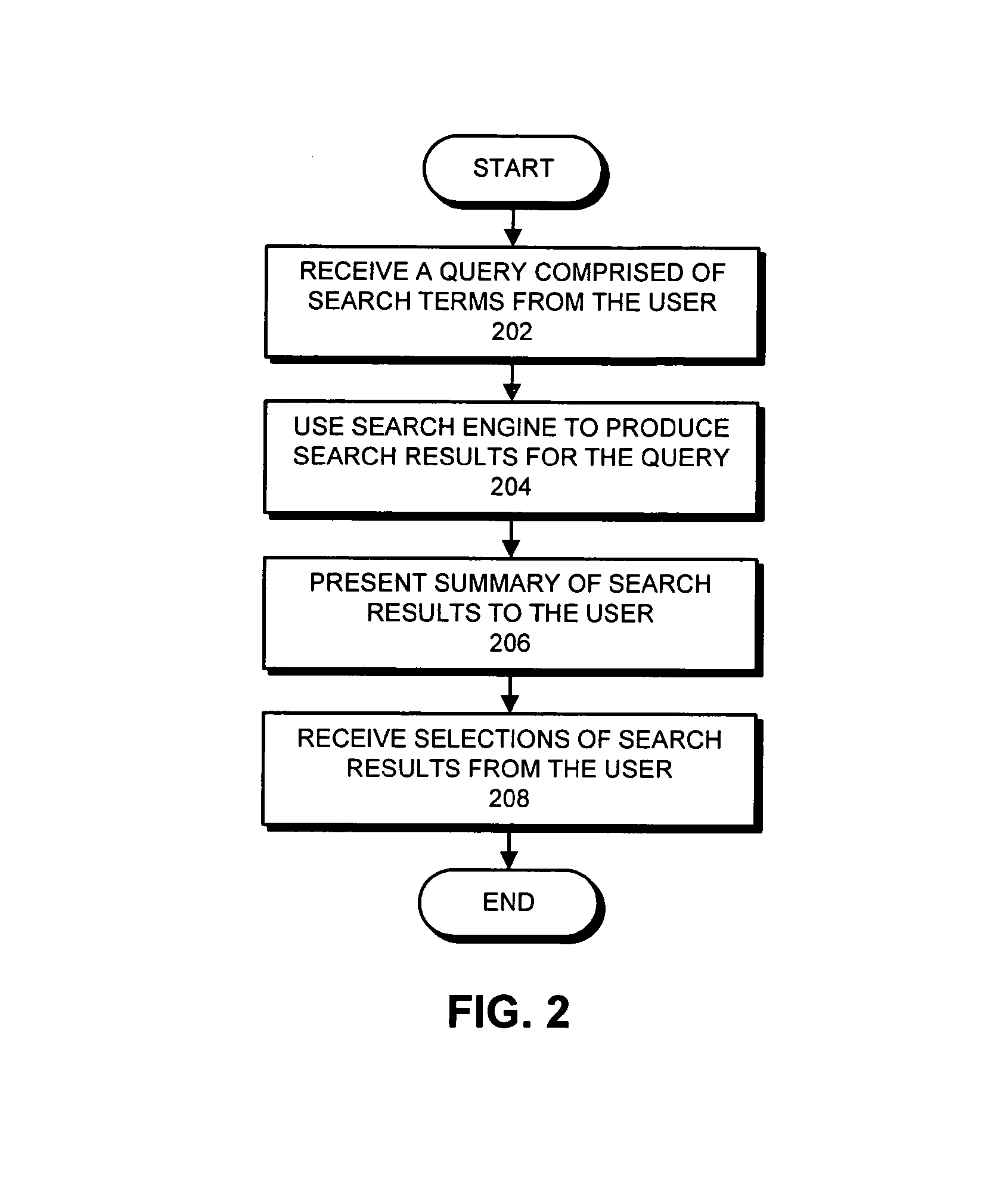

Method and apparatus for automatically identifying compounds

Active | US8332391B1 | Digital data information retrieval | Digital data processing details | Systems analysis | Search terms

One embodiment of the present invention provides a system that automatically identifies compounds, such as bigrams or n-grams. During operation, the system obtains selections of search results which were selected by one or more users, wherein the search results were previously generated by a search engine in response to queries containing search terms. Next, the system forms a set of candidate compounds from the queries, wherein each candidate compound comprises n consecutive terms from a query. Then, for each candidate compound in the set, the system analyzes the selections of search results to calculate a likelihood that the candidate compound is a compound.

Owner:GOOGLE LLC

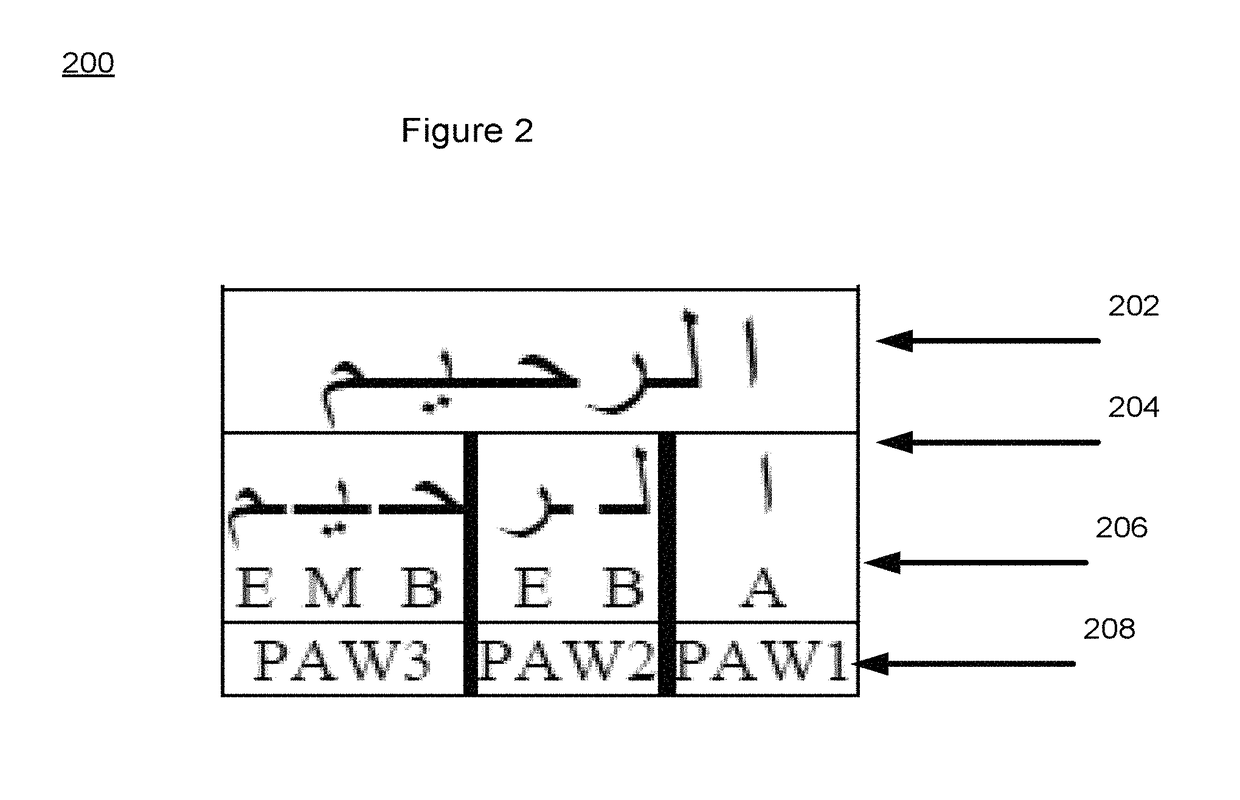

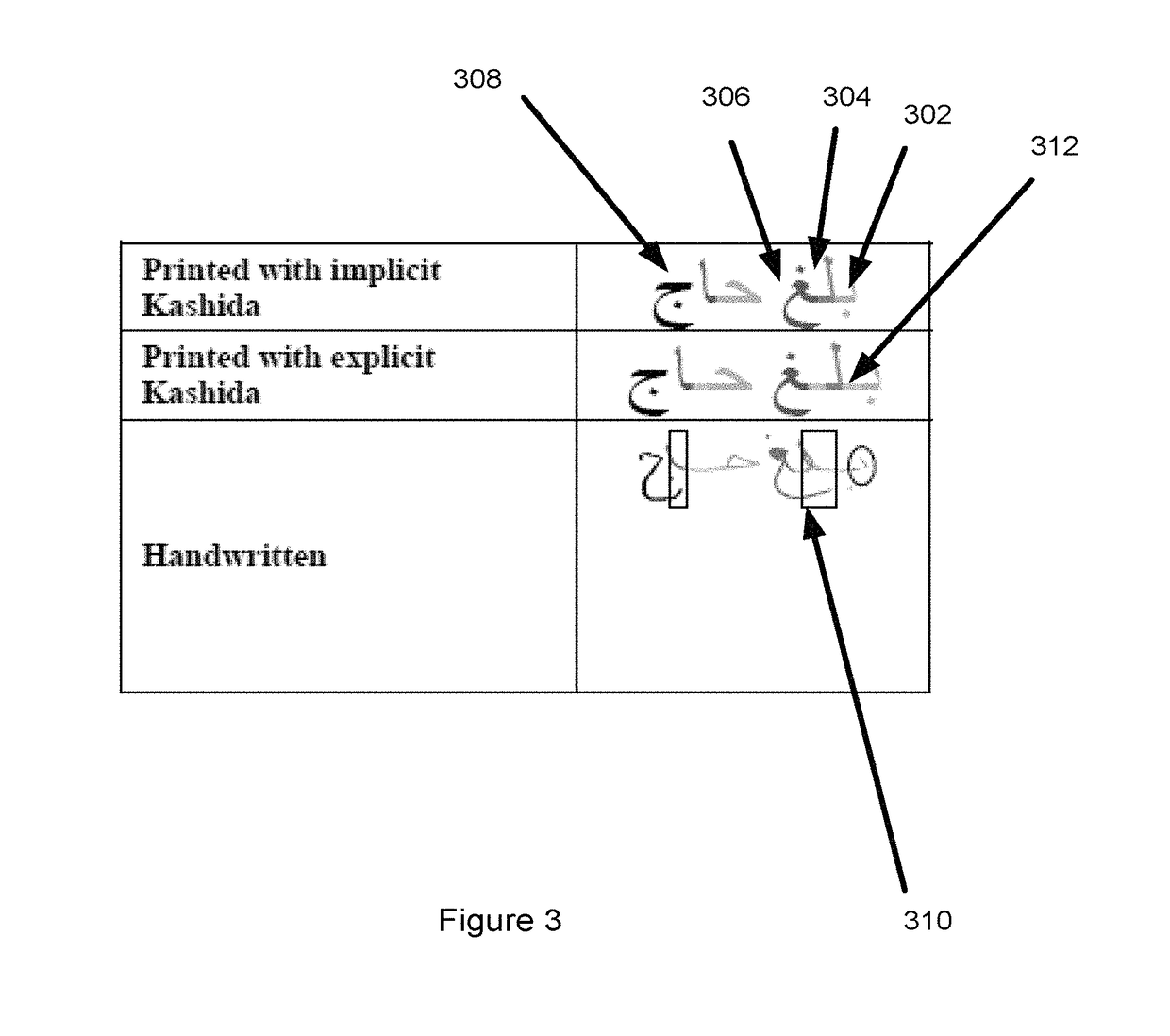

Systems and associated methods for Arabic handwriting synthesis and dataset design

Inactive | US10140262B2 | Character and pattern recognition | Natural language data processing | Pattern recognition | Data set

Systems and associated methodology are presented for Arabic handwriting synthesis including partitioning a dataset of sentences associated with the alphabet into a legative partition including isolated bigram representation and classified words that contain ligature representations of the collected dataset, an unlegative partition including single character shape representation of the collected data set, an isolated characters partition, and a passages and repeated phrases partition, generating a pangram, the pangram including the occurrence of every character shape in the collected dataset and further including a special lipogram condition set based on a desired digital output of the collected dataset, and outputting a digital representation of the pangram including synthesized text.

Owner:KING FAHD UNIVERSITY OF PETROLEUM AND MINERALS

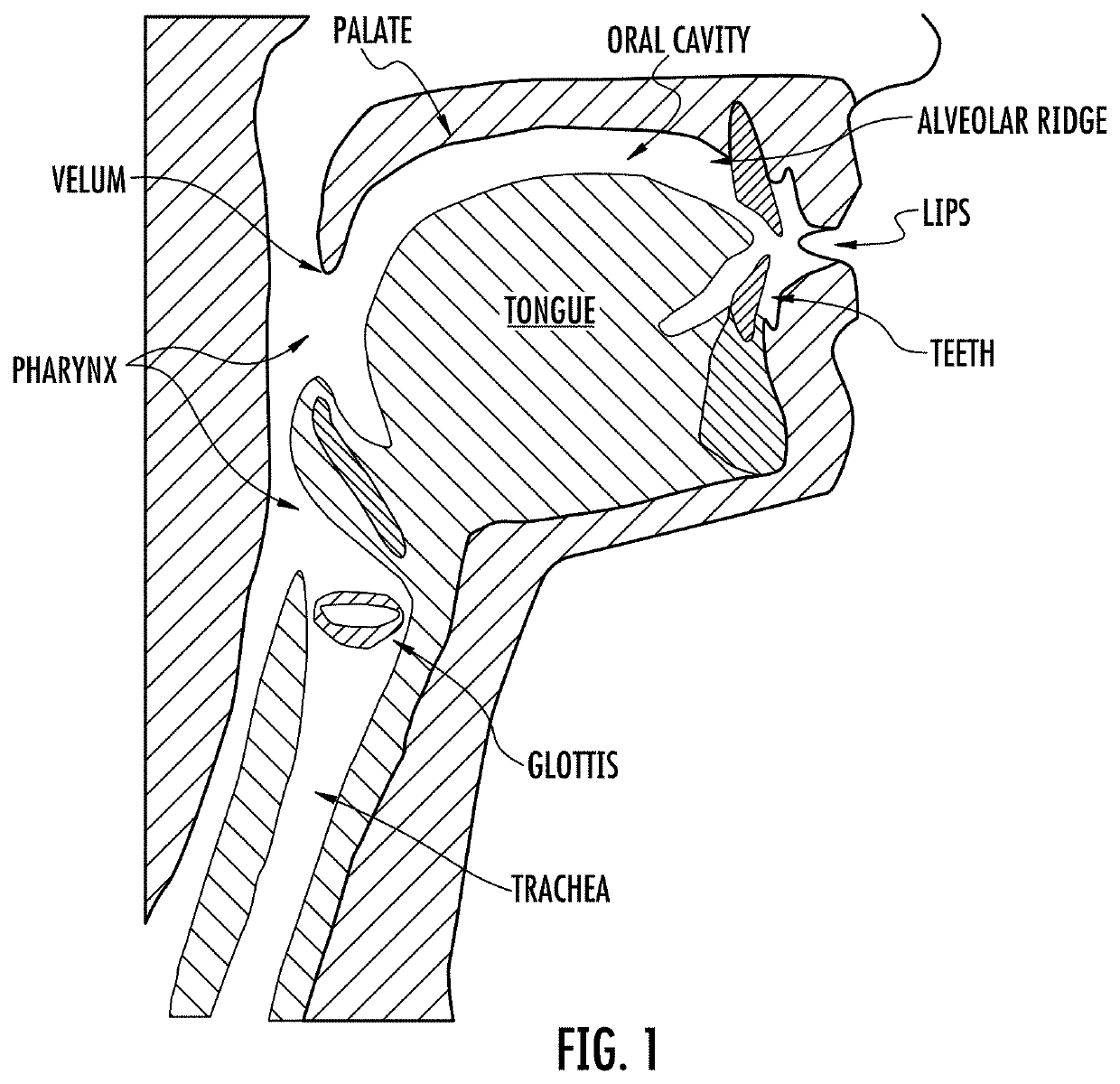

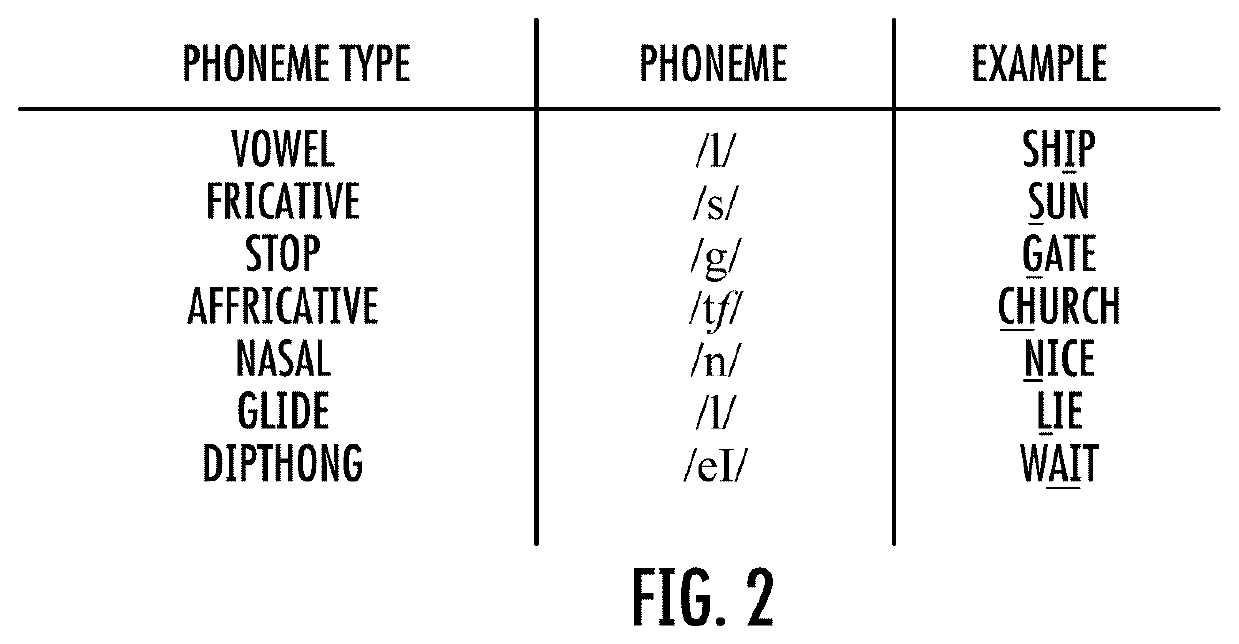

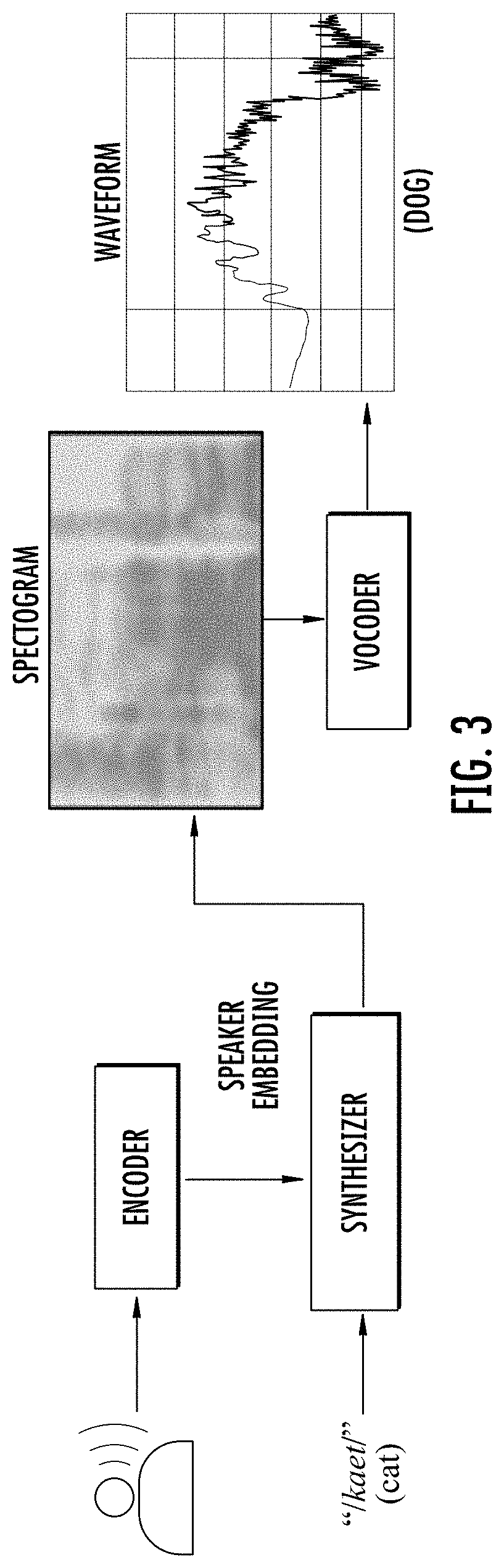

Detecting deep-fake audio through vocal tract reconstruction

A method is provided for identifying synthetic “deep-fake” audio samples versus organic audio samples. Methods may include: generating a model of a vocal tract using one or more organic audio samples from a user; identifying a set of bigram-feature pairs from the one or more audio samples; estimating the cross-sectional area of the vocal tract of the user when speaking the set of bigram-feature pairs; receiving a candidate audio sample; identifying bigram-feature pairs of the candidate audio sample that are in the set of bigram-feature pairs; calculating a cross-sectional area of a theoretical vocal tract of a user when speaking the identified bigram-feature pairs; and identifying the candidate audio sample as a deep-fake audio sample in response to the calculated cross-sectional area of the theoretical vocal tract of a user failing to correspond within a predetermined measure of the estimated cross sectional area of the vocal tract of the user.

Owner:UNIV OF FLORIDA RES FOUNDATION INC

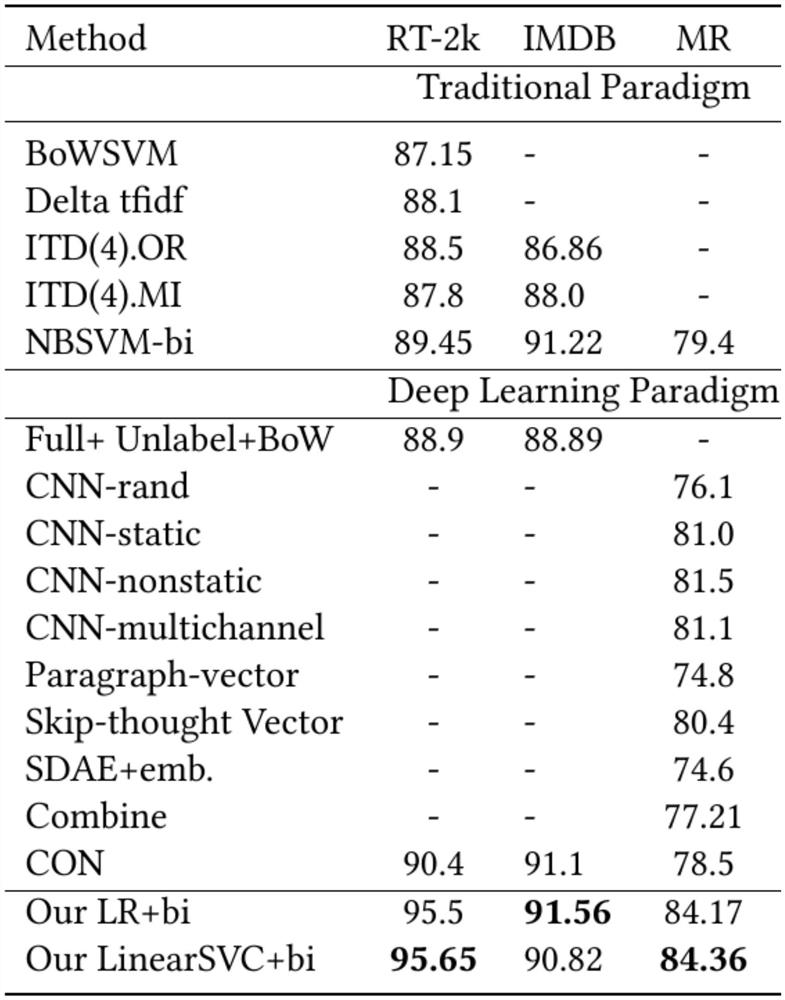

A Text Positive and Negative Sentiment Classification Method

Inactive | CN107423371B | Improve the efficiency of scientific computing | Maximize class discrimination | Semantic analysis | Special data processing applications | Lexical item | Classification methods

The invention discloses a method for classifying text by positive and negative sentiment. The method comprises the following steps: all texts in a text set are preprocessed to form a noise-free set of positive and negative texts; unigram and bigram segmentation is performed on the positive and negative texts; after stop words are removed, a multidimensional feature vector space without duplicate features is formed; an inverse document frequency calculation is performed on a variant of the term frequency for every dimension of the feature vector space; and finally the resulting term-document matrix, together with the labeled positive and negative sentiment tags, is used as the input for training supervised classifiers (a support vector machine and logistic regression), yielding a final linear text classifier that can classify the sentiment of new, unseen text. The method exploits the inherent discriminative power of sentiment words in a labeled corpus and proposes a new calculation that maximizes the class discrimination of those words, thereby improving the precision of automatic text sentiment classification.

Owner:HUBEI NORMAL UNIV

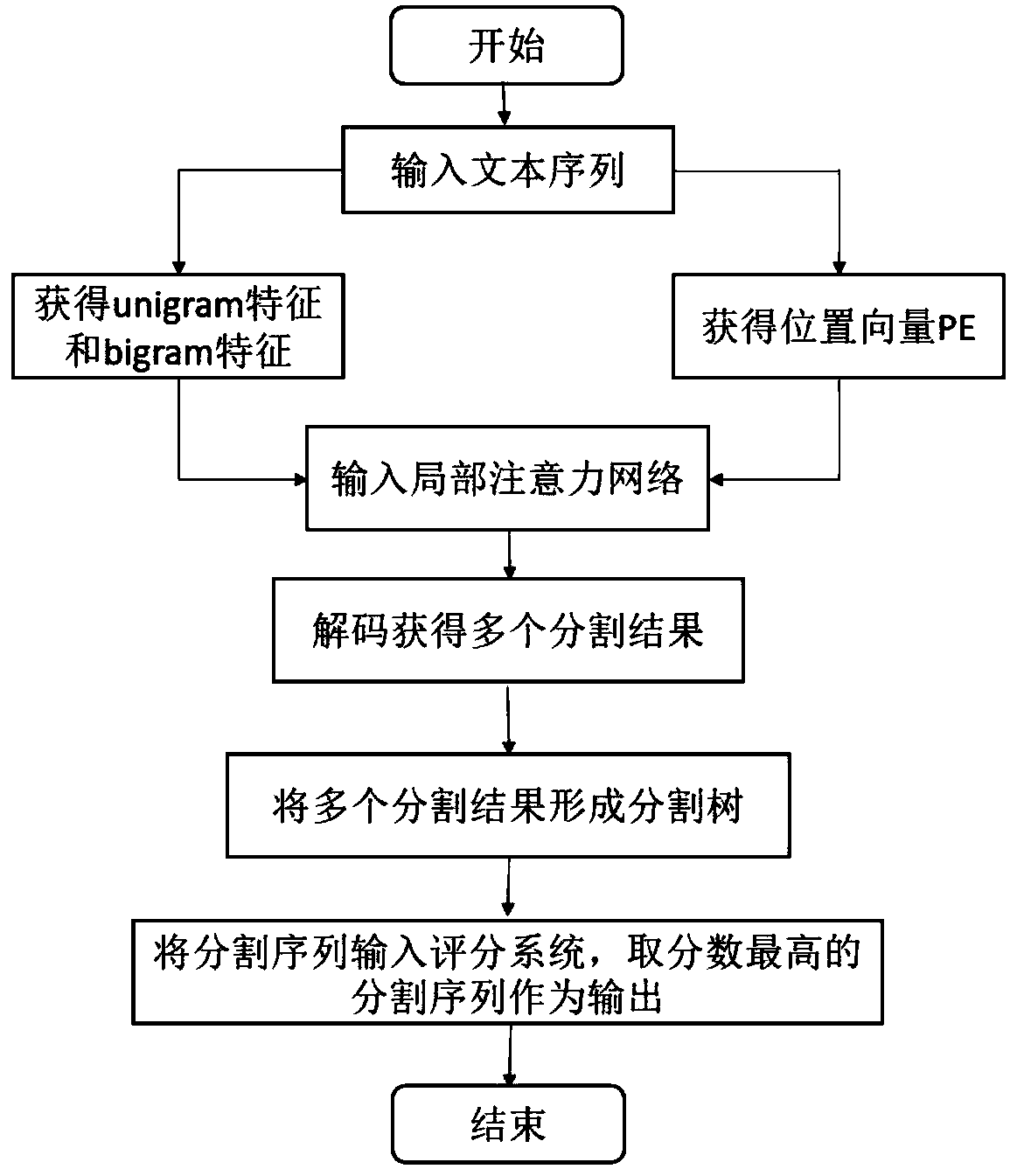

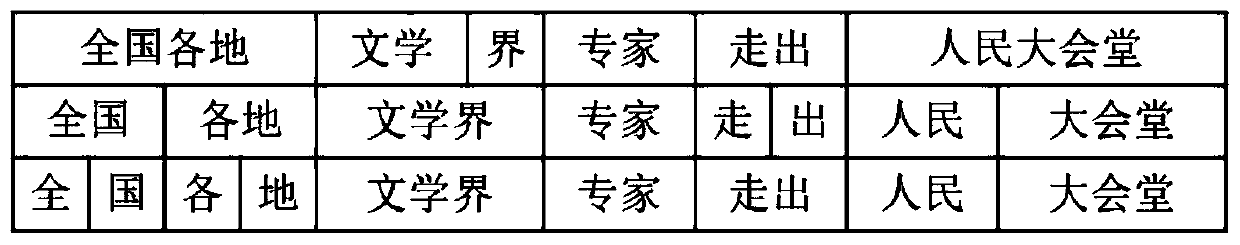

Multi-criterion Chinese word segmentation method based on local self-attention mechanism and segmentation tree

Inactive | CN111507102A | Improve accuracy | Reduce the impact | Natural language data processing | Neural architectures | Pattern recognition | Chinese word

The invention discloses a multi-criterion Chinese word segmentation method based on a local attention mechanism and a segmentation tree. For a text sequence from a corpus, the method comprises the following steps: inputting the text sequence; obtaining the unigram features and bigram features of each character through word2vec; combining the unigram and bigram features with a predefined position vector to form an embedding layer; passing the embedding layer to a self-attention network and obtaining its output; labeling each character through CRF layer decoding to obtain a plurality of labeling results; combining the labeling results into a segmentation tree to form a plurality of segmentation sequences; and inputting the segmentation sequences into a scoring system and selecting the segmentation sequence with the highest score as the output. The method improves the accuracy of multi-criterion word segmentation.

Owner:HANGZHOU DIANZI UNIV

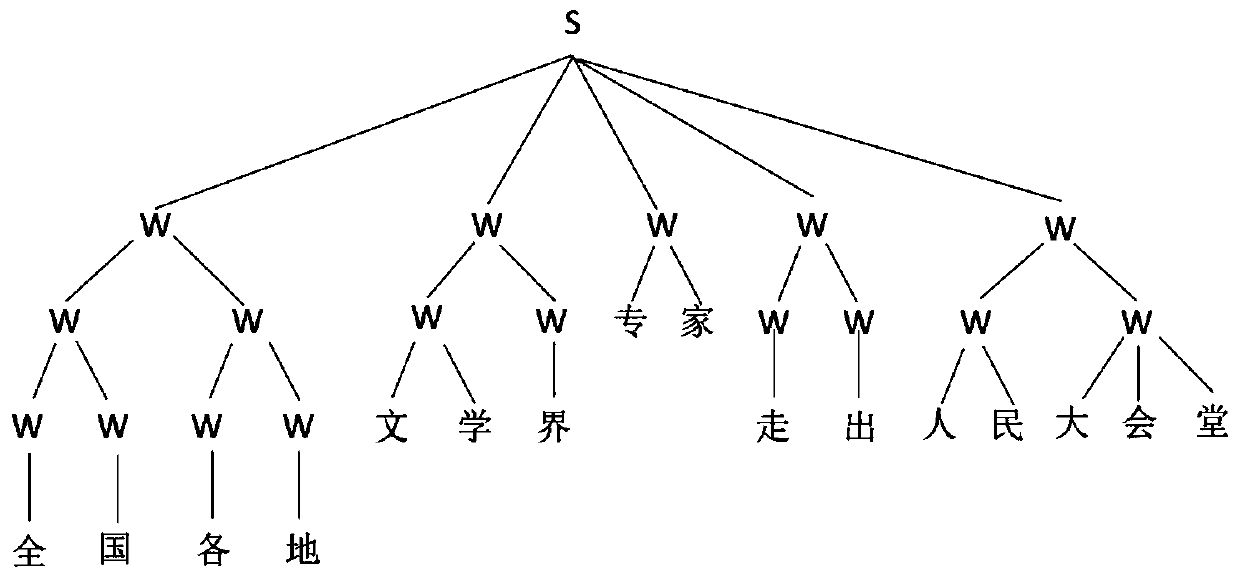

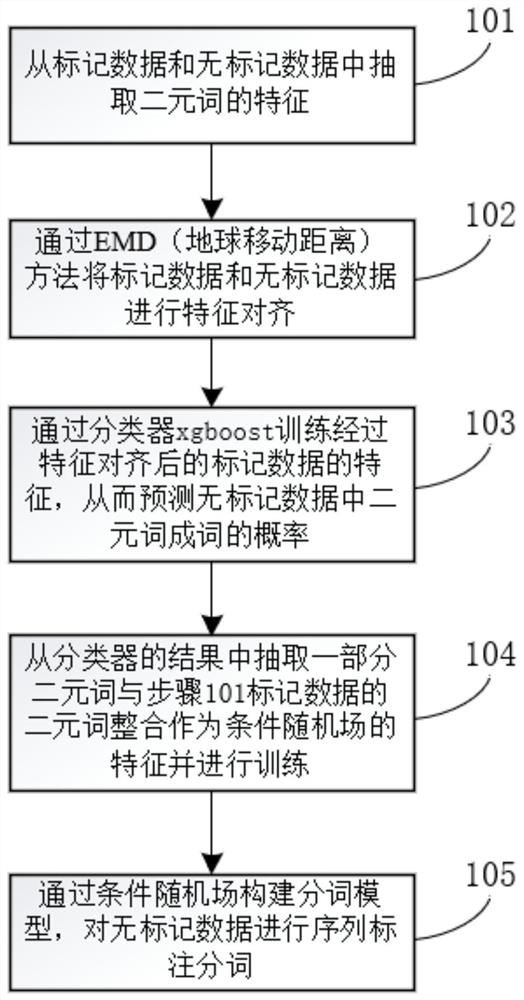

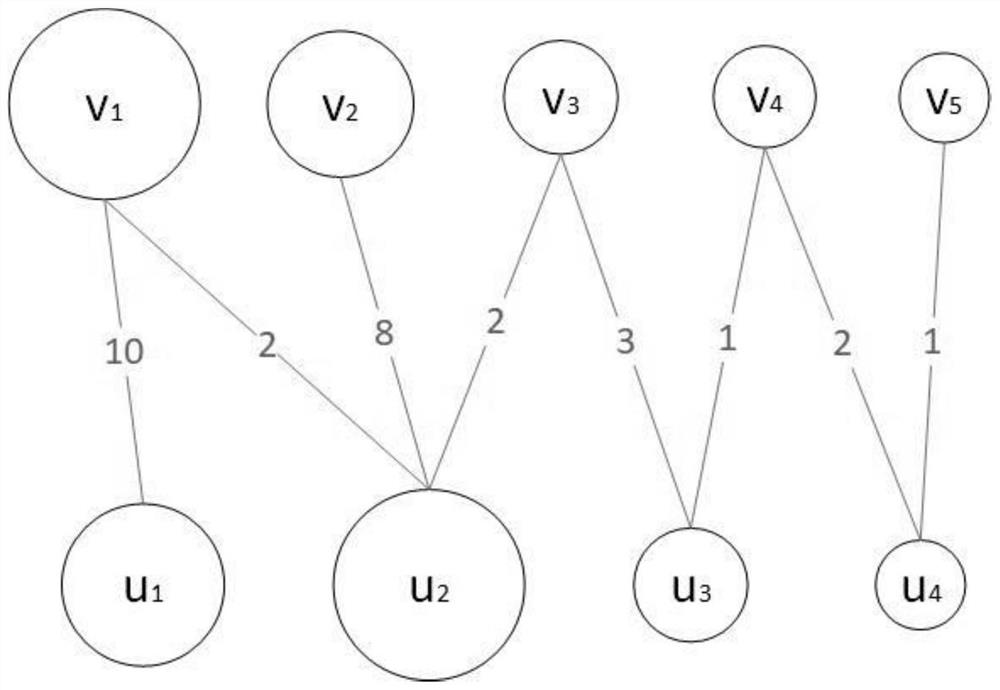

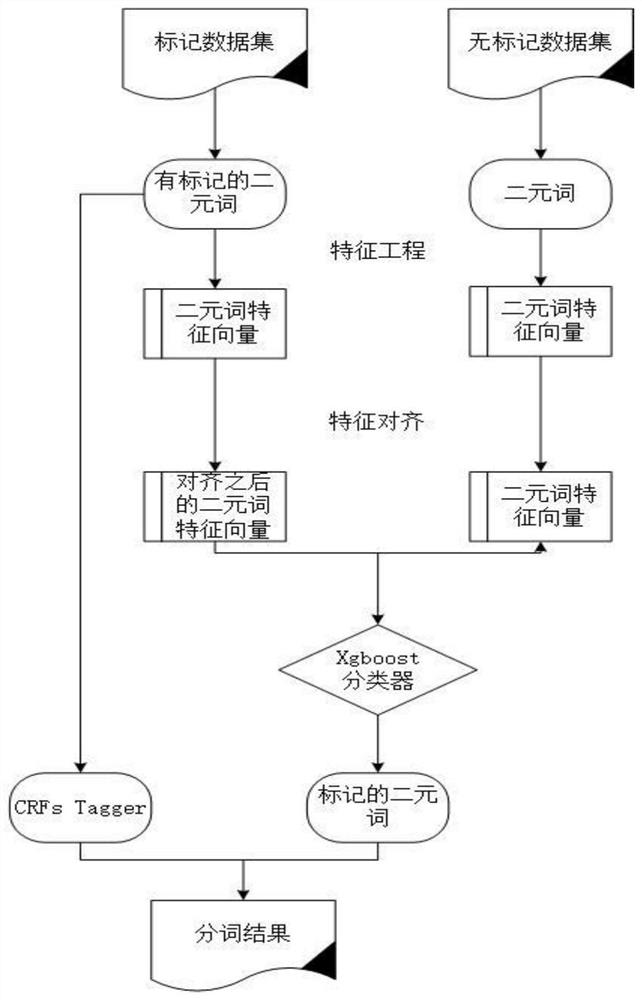

A feature-aligned Chinese word segmentation method

Active | CN109472020B | Mitigating feature distribution differences | Prevent overfitting | Character and pattern recognition | Natural language data processing | Conditional random field | Earth mover's distance

The present invention claims a feature-aligned Chinese word segmentation method comprising the following steps: (101) extracting bigram features from labeled data and unlabeled data; (102) aligning the features of the labeled data with those of the unlabeled data using the Earth Mover's Distance (EMD) method; (103) training the XGBoost classifier on the features of the labeled data after feature alignment, in order to predict the probability that a bigram in the unlabeled data forms a word; (104) extracting some bigrams from the classifier results, integrating them with the labeled-data bigrams from step 101, and using them as features to train a conditional random field; (105) sequence-labeling and segmenting the unlabeled data with the resulting model. In summary, the invention aligns the features of labeled and unlabeled data through EMD, predicts the word-formation probability of bigrams through classifier learning, and then integrates a conditional random field in a stacking manner to form a new tokenizer.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

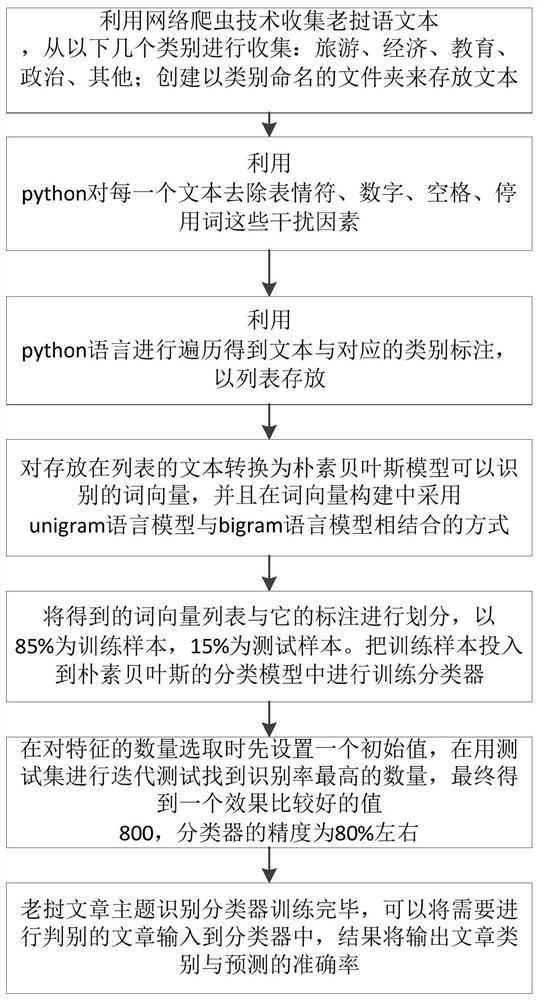

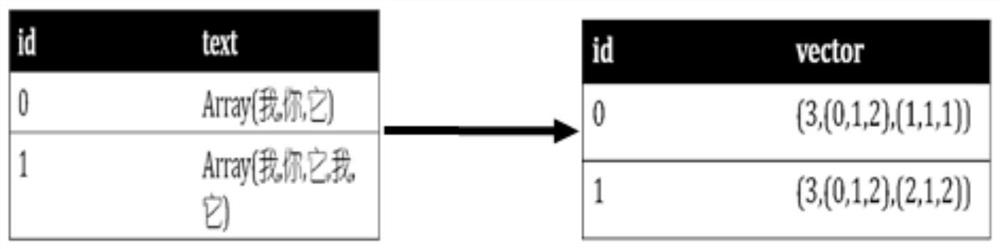

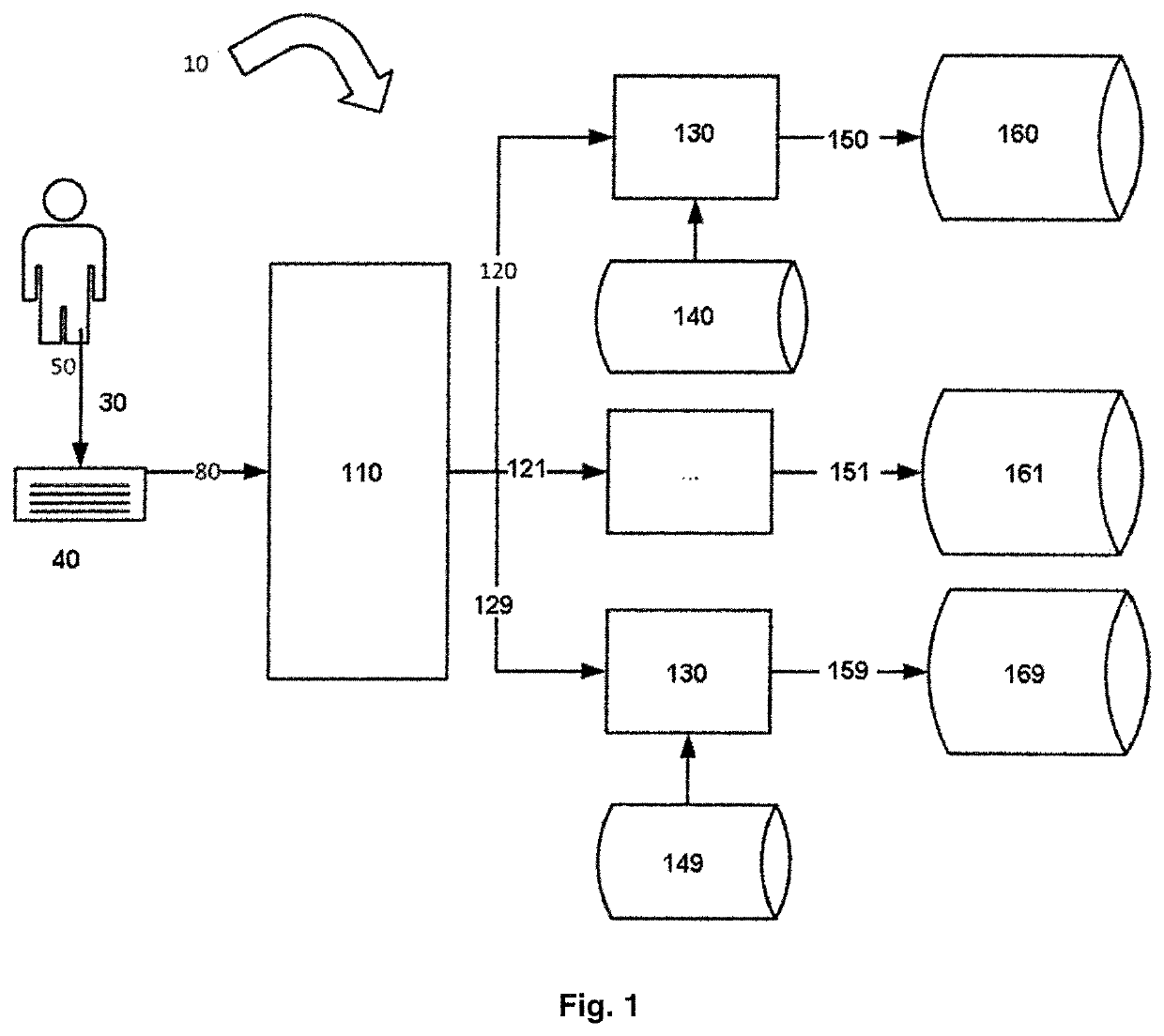

A Topic Classification Method for Lao Language Texts

Active | CN109299357B | Eliminate limitations | Improve recognition rate | Digital data information retrieval | Special data processing applications | Feature extraction | Mathematical model

The invention discloses a Lao language text topic classification method, which belongs to the technical fields of natural language processing and machine learning. The invention combines an N-gram language feature extraction model with the naive Bayes model to recognize the topics of Lao articles, and it mitigates a limitation of naive Bayes to a certain extent. Naive Bayes relies on the conditional independence assumption, treats the text as a bag of words, and ignores the order of words; the method therefore uses unigram and bigram feature models together to improve the recognition rate of the text.

Owner:KUNMING UNIV OF SCI & TECH
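The combination of unigram and bigram features with naive Bayes can be sketched with a small multinomial classifier. This is an illustration under stated assumptions: the add-one smoothing, the class and function names, and the English toy documents are all stand-ins, and real Lao text would first need word segmentation.

```python
import math
from collections import Counter, defaultdict

def features(tokens):
    """Unigram features plus bigram features for adjacent tokens."""
    return list(tokens) + [a + "_" + b for a, b in zip(tokens, tokens[1:])]

class NaiveBayes:
    """Multinomial naive Bayes with add-one smoothing (a minimal sketch)."""

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)
        self.feat_counts = defaultdict(Counter)
        self.vocab = set()
        for tokens, label in zip(docs, labels):
            feats = features(tokens)
            self.feat_counts[label].update(feats)
            self.vocab.update(feats)
        return self

    def predict(self, tokens):
        total_docs = sum(self.class_counts.values())
        best_label, best_logp = None, -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.feat_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            logp = math.log(n_docs / total_docs)
            for f in features(tokens):
                if f in self.vocab:  # features never seen in training are skipped
                    logp += math.log((counts[f] + 1) / denom)
            if logp > best_logp:
                best_label, best_logp = label, logp
        return best_label

# Toy training data (hypothetical)
docs = [["stock", "market", "rises"], ["market", "prices", "fall"],
        ["team", "wins", "match"], ["football", "match", "today"]]
labels = ["finance", "finance", "sports", "sports"]
model = NaiveBayes().fit(docs, labels)
print(model.predict(["stock", "market", "today"]))
```

The bigram features such as `stock_market` reintroduce a small amount of word-order information that a pure bag-of-words model discards.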

Method and system for free text keystroke biometric authentication

Active | US10970573B2 | Accurate and fast and simple method for user authentication | Quality improvement | Digital data authentication | Biometric pattern recognition | Key pressing | Feature vector

A method for user authentication based on keystroke dynamics is provided. The user authentication method includes receiving keystroke input from a user; separating the sequence of pressed keys into a sequence of named bigrams while the user is typing free text; collecting timing information for each bigram in the sequence; extracting a feature vector for each bigram based on the timing information; separating the feature vectors into subsets according to the bigram names; estimating a GMM user model from the subset of feature vectors for each bigram; and providing real-time user authentication using the estimated GMM user model for each bigram and the bigram features from the user's current keystroke input. The corresponding system is also provided. The GMM-based analysis of keystroke data separated by bigram provides strong authentication using free text input, while the additional user actions required for verification are kept to a minimum. The present invention drastically improves the accuracy of user authentication with low performance requirements, which allows authentication software to be implemented on low-power mobile devices.

Owner:ID R&D INC
