About 50% of those affected do not reach the hospital due to poor recognition of the disease before a cataclysmic, often terminal event has occurred.

For this reason, the use of visual analysis has been limited to academic research, and it has not been possible to extend its benefit to patient care in the community.

The current methods are unable to reliably detect ambulatory ischemia or risk for potentially lethal arrhythmia.

Such risks are not detectable in a cost-effective manner with prior art techniques.

These shortcomings of the prior art have a significant impact on cardiovascular morbidity and mortality.

By failing to disclose evidence of risk for catastrophic events, current Holter analysis lulls clinicians into a false sense of security, in which absence of evidence is misread as evidence of absence of potentially lethal risks.

Consecutive obsolete methodologic steps in current Holter analysis severely diminish the quantity and degrade the quality of the signal encoded in original Holter recording media.

However, today, the only reliable form of Holter analysis is visual scanning of the magnetic tape itself, not the “over reading” of the expunged and distorted digital file, which misrepresents the original signal.

Visual analysis by an expert electrocardiographer is a very time-consuming method used only by highly motivated experts in research programs.

Due to the time and cost involved, visual analysis of the analog signal cannot be applied to clinical practice or mass screening of the at-risk population with known methods.

Communications engineering paradigms and techniques are best limited to the evaluation of non-biological signals where reproducibility and repetition of waves and other phenomena are the norm.

A major drawback of engineering autocorrelation is that it is sensitive to waveform changes in the time domain (X-axis) and poorly sensitive to changes in the voltage domain (Y-axis).
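The asymmetry described above can be illustrated with a minimal sketch. It uses a normalized (Pearson) correlation between a synthetic template and a modified copy, which is an assumption standing in for whatever correlation measure a given Holter algorithm actually applies. The Gaussian pulse, the function names and every numeric value are hypothetical and are not taken from the source.

```python
import math

def gaussian_pulse(center, sigma, amplitude, n=200):
    """Synthetic stand-in for one wave of a beat (not real ECG data)."""
    return [amplitude * math.exp(-((i - center) ** 2) / (2 * sigma ** 2))
            for i in range(n)]

def normalized_correlation(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical template and two altered copies.
template = gaussian_pulse(center=100, sigma=10, amplitude=1.0)
half_voltage = gaussian_pulse(center=100, sigma=10, amplitude=0.5)  # Y-axis change only
shifted = gaussian_pulse(center=110, sigma=10, amplitude=1.0)       # X-axis change only

r_voltage = normalized_correlation(template, half_voltage)
r_time = normalized_correlation(template, shifted)

print(f"amplitude halved:   r = {r_voltage:.3f}")
print(f"shifted 10 samples: r = {r_time:.3f}")
```

In this sketch, halving the voltage leaves the coefficient at essentially 1 because the normalization divides the scale out, whereas a modest shift along the time axis lowers it noticeably. A correlation-based classifier built this way would therefore tend to overlook a pure amplitude change.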

In current Holter analysis, autocorrelation is wrongly applied to a small sample of degraded biological signal with poor dynamic gain, which magnifies the limitations of autocorrelation to recognize voltage changes.

Non-biological techniques used to analyze biological data yield, at best, mediocre results, which become poor when analysis is done using a distorted, minuscule fraction of the original signal recorded.

Speed fluctuation in the 10% range is a signal acquisition problem; the best research efforts have dropped it to 3%, which is still too high for accurate quantitative ECG analysis.
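A rough worked example shows why even 3% may be too high. The sketch below assumes that the fluctuation refers to tape transport speed and that it acts as a uniform scaling of time over one interval; the function name and the 800 ms interval are hypothetical.

```python
def interval_error_ms(true_interval_ms, speed_error_fraction):
    """Timing error when playback speed deviates by the given fraction.

    A tape running (1 + e) times too fast shortens every interval to
    true / (1 + e); the error is the difference from the true value.
    """
    measured = true_interval_ms / (1.0 + speed_error_fraction)
    return true_interval_ms - measured

rr_ms = 800.0  # hypothetical RR interval (75 beats per minute)
for error in (0.10, 0.03):  # the 10% and 3% figures from the text
    err_ms = interval_error_ms(rr_ms, error)
    print(f"{error:.0%} speed error -> {err_ms:.1f} ms on an {rr_ms:.0f} ms interval")
```

Under these assumptions the timing error is on the order of tens of milliseconds in both cases, far coarser than measurements that depend on millisecond or finer resolution.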

The norm today is to digitize the analog signal by playing back the cassette tapes at speeds as fast as 480 times real time; this is the beginning of major degradation of the analog ECG.
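To put the 480-fold playback speed in perspective, the following sketch computes how long a 24-hour tape takes to play and how many samples per second of recorded ECG a converter captures at that speed. The converter rate of 60,000 samples per second is a hypothetical value chosen only for illustration; the source does not state one.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def playback_seconds(speedup):
    """Wall-clock time needed to play back a 24-hour recording."""
    return SECONDS_PER_DAY / speedup

def effective_rate_hz(digitizer_rate_hz, speedup):
    """Samples captured per second of recorded (real-time) ECG."""
    return digitizer_rate_hz / speedup

speedup = 480                 # from the text
digitizer_rate_hz = 60_000.0  # hypothetical converter rate during playback

print(playback_seconds(speedup))                      # 180.0 seconds, i.e. 3 minutes
print(effective_rate_hz(digitizer_rate_hz, speedup))  # 125.0 samples per recorded second
```

The point of the sketch is that any fixed converter rate is divided by the playback factor, so fast playback directly reduces the sampling density of the recovered signal.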

Tape stretching due to repeated stopping and starting of the tape is another source of signal degradation.

Current algorithms use elision and omission of vast amounts of the originally recorded ECG signal to achieve extreme, unnecessary and deleterious data compression.

However, extreme digital compression gravely decreases the integrity, fidelity, resolution and, most importantly, the dynamic range of the stored electrocardiogram or any other signal.

Such a creative approach is applied after drastic lossy compression has irretrievably discarded more than 90% of the original signal, with great loss of integrity, dynamic range, resolution and fidelity.

The continuing use of vastly outmoded computer and signal processing technology impedes the use of Dr.

Obsolete and unnecessary compression strategies reduce 24 hours' worth of analog Holter data down to a digital file of little more than a single megabyte.
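The scale of that reduction can be estimated with simple arithmetic. The sketch below assumes a two-channel recording digitized at 1,000 samples per second with 2 bytes per sample; these parameters are hypothetical, since the source does not give the recorder's specifications, and the one-megabyte target is taken as exactly 10^6 bytes.

```python
def raw_size_bytes(hours, channels, sample_rate_hz, bytes_per_sample):
    """Uncompressed size of a continuous multi-channel recording."""
    return int(hours * 3600 * channels * sample_rate_hz * bytes_per_sample)

# Hypothetical acquisition parameters (not stated in the source).
raw = raw_size_bytes(hours=24, channels=2, sample_rate_hz=1000, bytes_per_sample=2)
compressed = 1_000_000  # "a single megabyte" taken as 10**6 bytes

ratio = raw / compressed
kept_fraction = compressed / raw

print(f"raw size:          {raw / 1e6:.1f} MB")
print(f"compression ratio: {ratio:.1f}:1")
print(f"fraction retained: {kept_fraction:.2%}")
```

Under these assumptions the ratio comes out at roughly 346:1, the same order as the 350:1 figure cited elsewhere in this document, and well under 1% of the raw data volume is retained.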

When the algorithms for Holter analysis were created, extreme limitations in available memory existed.

Thirty years ago, in the infancy of the computer industry, when silicon chips were as expensive as they were limited in their RAM or ROM capacity, data compression was a necessary evil.

Now that computer memory is as cheap as it is truly vast in capacity, data compression is an undesirable tool mainly used by producers of entertainment and other non-essential computer applications, i.e. whenever loss of data is deemed acceptable for reasons of practicality and/or fast transmission over consumer-level internet connections.

Like all biologic signals, ECG data, like audio data, are remarkably hard to compress effectively.

All compression routines are known to deteriorate dynamic range, signal quantity and quality.

For 16-bit data, companies like Sony and Philips are spending millions of dollars to develop proprietary schemes that as yet are not fully successful.

Although great strides of innovation are now being made in techniques of data compression, a 350:1 data compression ratio that preserves the integrity of the signal is as yet impossible, nor is it necessary.

The problem is that such a pre-compression decision regarding the ambulatory ECG signal is not, and cannot be, made without rendering compressed Holter files useless except for detection of gross arrhythmia.

Although the algorithm used in MP3 compression is quite advanced, the process still degrades the quality of the original signal in an invariably noticeable (almost ‘trademark’) fashion.

Such degradation, however, lies within an ‘acceptable’ window of loss for the consumer-oriented purposes of the technology, i.e. exchanging recordings of popular songs over the Internet.

One overriding fact remains clear: applying any inherently omissive data compression strategy to a 24-hour ECG recording before the totality of the signal has been analyzed is wrong.

Holter analysis remains a vastly under-addressed case of technological obsolescence, which is an obstacle to detecting risk for lethal events and, in doing so, puts lives directly at risk.

In addition, the only limits containing further development and refinement of the CVAT process are those temporarily imposed by the ephemeral and upwardly spiraling limits of computer and signal analysis technology.

Correction for the presence of Ta (atrial ischemia) is unheard of in the current art, since it is unable to visualize this subtle but important change.

The analytic paradigm and totally obsolete limitations in computer technology imposed this major source of false negative reports.

Current Holter algorithms cannot detect ECG signs of abnormal repolarization in a reliable and reproducible manner.

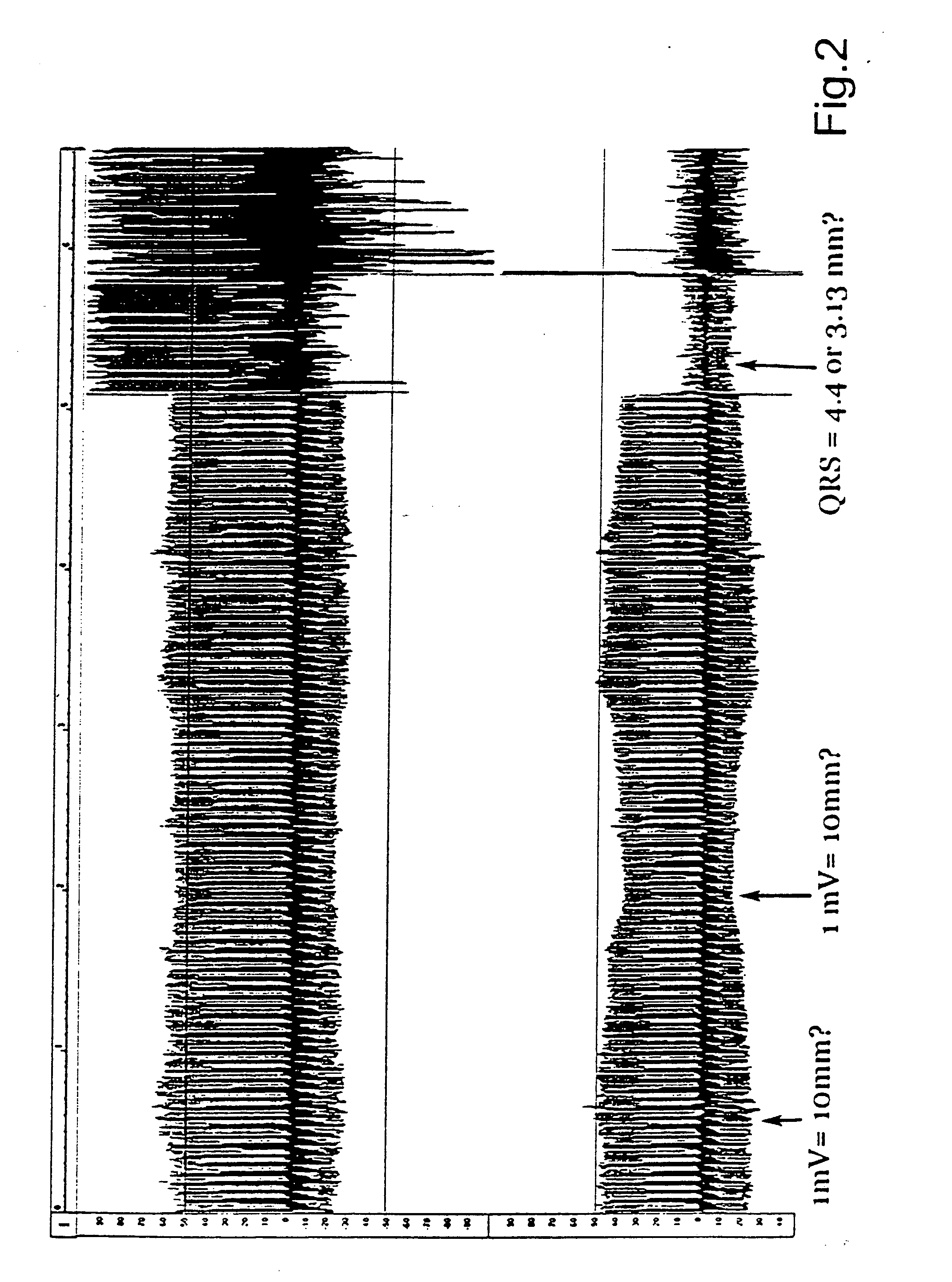

The prior art taught by conventional Holter monitoring systems cannot retrieve, store, display or analyze high-fidelity signals in the microvolt or microsecond range.
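One way to see why microvolt-level detail depends on quantization is to compute the smallest voltage step an ideal converter can represent. The sketch below assumes a 10 mV full-scale input span (for example, plus or minus 5 mV) and compares three bit depths; the span, the bit depths and the function names are hypothetical and are not taken from the source.

```python
import math

def lsb_microvolts(input_range_mv, bits):
    """Smallest voltage step an ideal converter can represent, in microvolts."""
    return input_range_mv * 1000.0 / (2 ** bits)

def ideal_dynamic_range_db(bits):
    """Dynamic range of an ideal N-bit quantizer (about 6.02 dB per bit)."""
    return 20 * math.log10(2 ** bits)

input_range_mv = 10.0  # hypothetical full-scale span
for bits in (8, 12, 16):
    step = lsb_microvolts(input_range_mv, bits)
    dr = ideal_dynamic_range_db(bits)
    print(f"{bits:2d} bits: step = {step:8.4f} uV, dynamic range = {dr:5.1f} dB")
```

With these assumed values, an 8-bit representation resolves steps of about 39 microvolts, so changes of a few microvolts fall below one quantization level, while a 16-bit representation resolves steps well under one microvolt.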

Fast magnetic tape playback done without optimizing the dynamic range, together with scanty sampling, poor quantization and extreme data compression, deteriorates and diminishes the signal.

All the above contribute to the poor diagnostic performance of current Holter technology for conditions other than gross arrhythmia.

Conventional Holter monitoring and ECG systems cannot detect, preserve or recover signals at or beyond the microvolt or microsecond range.

Undue reliance is placed on a physician's over reading of very small depictions of low-fidelity, greatly deteriorated ECG tracings recovered from the digital file.

This is a basic problem which has to be dealt with even when neural networks, used in research only, select beats to “train” the computer to recognize “normal” beats.

Therefore, the method is used as a last resort, when setting the other parameters does not help, which can occur with patients who have peaked T waves.

Whenever the ST segment shifts up or down due to myocardial ischemia, the T morphology is usually abnormal and not amenable to template classification.

While templates work well for arrhythmia, over-reliance on abnormal beat classification using predetermined templates is a reason for the poor performance of computer automated Holter analysis in the diagnosis of conditions other than arrhythmia.

However, superimposition of fast played back, scantily sampled, mercilessly compressed, filtered, smoothed and/or Fast Fourier Transformed beats cannot be trusted, since it processes a signal different from that originally encoded in the magnetic tape.

Template detection may be convenient, but applied to a digital file which lacks integrity, dynamic range, fidelity and resolution, it cannot be sensitive or specific nor can it detect abnormalities in microvolt regions such as the PQ, ST segments or the P and T waves.

The sophisticated cardiology community is aware of the current Holter analysis shortcomings; hence, this method is not routinely used as an aid in the diagnosis of highly lethal cardiovascular risks.

After the “decimating compression”, it is putting it mildly to say that the algorithm-driven file will have poor resolution and fidelity.

This is a grave problem that needs immediate redress.

It is not surprising that the quality of the ECG recovered from current Holter analysis algorithms is too poor to identify anything but arrhythmia with some degree of certainty.

With this algorithm, all the microvolt nuances will certainly be irretrievably lost.

The 12-lead electrocardiogram is not expected or designed to detect transient and unpredictable episodes of myocardial ischemia or arrhythmia, since it depicts only 3 of the 100,000 or more heartbeats that occur in 24 hours.
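The sampling fraction implied by those figures can be checked with a short calculation. The average heart rate of 70 beats per minute used below is a hypothetical value chosen to reproduce the order of magnitude in the text; the count of 3 beats comes from the text itself.

```python
beats_per_day = 70 * 60 * 24  # hypothetical average heart rate of 70 beats per minute
beats_shown = 3               # from the text

fraction = beats_shown / beats_per_day

print(beats_per_day)                     # 100800
print(f"fraction shown: {fraction:.4%}")
```

At that assumed heart rate, the beats shown amount to about three thousandths of one percent of a day's total, which is why a brief, unpredictable episode is very unlikely to coincide with the tracing.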

All methods available today, other than the Holter technique, are unable to detect myocardial ischemia due to transient spastic and/or thrombotic causes of decreased coronary blood flow.

Hence, this grave condition escapes detection unless Holter recordings are done under the fleeting and often difficult to identify forms of daily life stress that induce the attacks in a given patient.

The limiting factor is the current computerized Holter analysis, which is unsuitable for detection of anything but gross arrhythmia.

The current art suffers from false negative findings which have dire consequences for patients considered healthy when they are not.

Such visual Holter analysis is time-consuming and hence is done in only a few research efforts; it is not cost-effective or applicable to daily clinical practice or mass screening.

Ischemia-induced abnormalities are in the microvolt range and are unlikely to withstand the decimating effects of current algorithms devoted to minimizing file size.

The minor changes introduced by computer algorithms are not sufficient for reliable detection of ischemia or risk for potentially lethal arrhythmia.

The magnitude and morphologic changes of the T wave are additional indicators of ischemia which the current algorithms are unable to detect.

All these intensive computational niceties are done on a digital file known to be incomplete and with major fidelity, resolution and dynamic range deficiencies.

Fibrillation occurs when transient triggers impinge upon an electrically unstable heart, causing normally organized electrical activity to become disorganized and chaotic.

Microvolt signals are easily obliterated by poor dynamic range, “decimating” compression algorithms, creation of “imaginary” points, etc., used by algorithms in the quest for automation and trans-telephonic transmission of minimized Holter files.

Such computer programs have had only limited success in diagnosing pathological conditions which compromise a patient's cardiovascular system.

As a result, many patients have had pathological conditions go undetected.
