48 results about "Sound event detection" patented technology

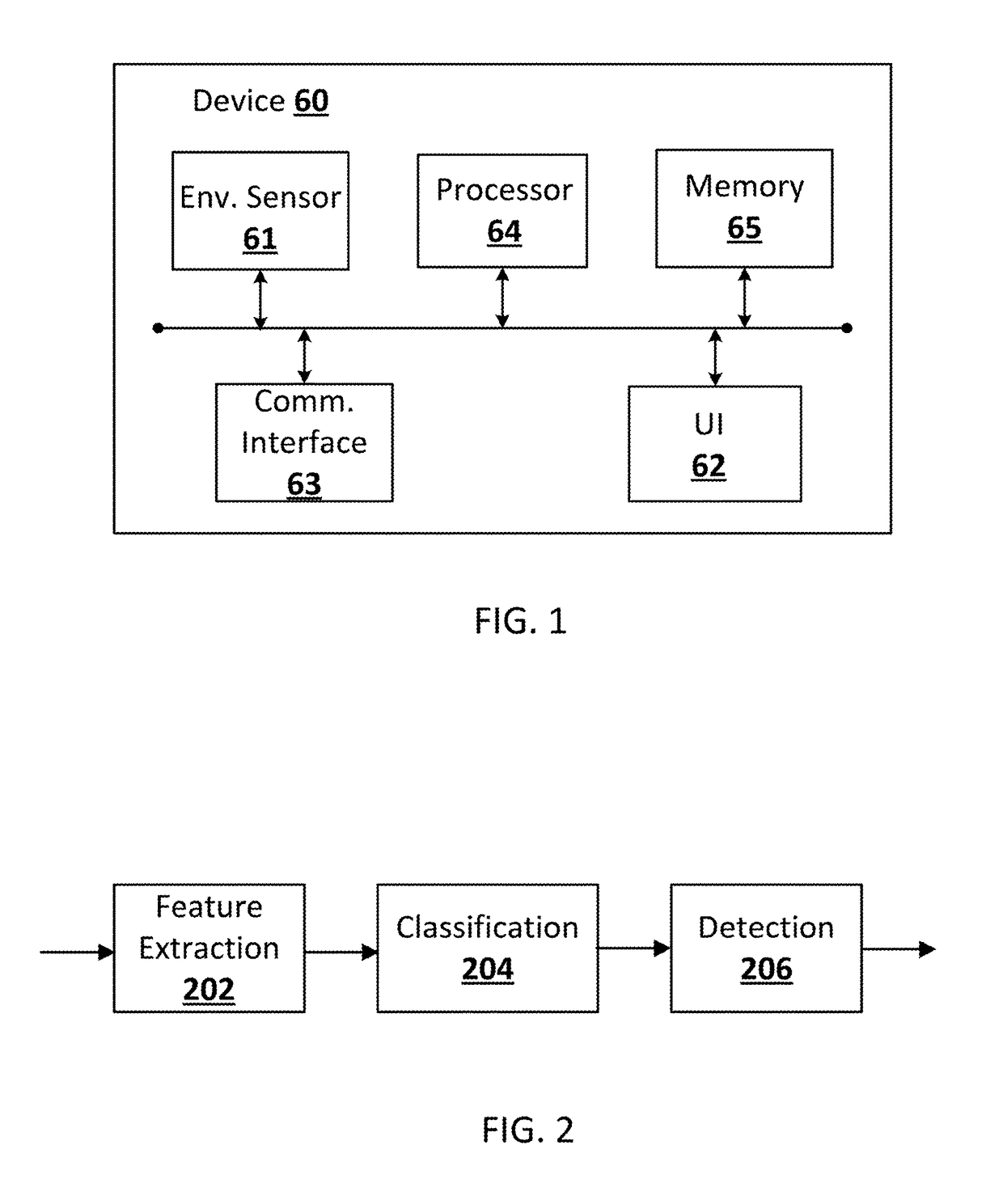

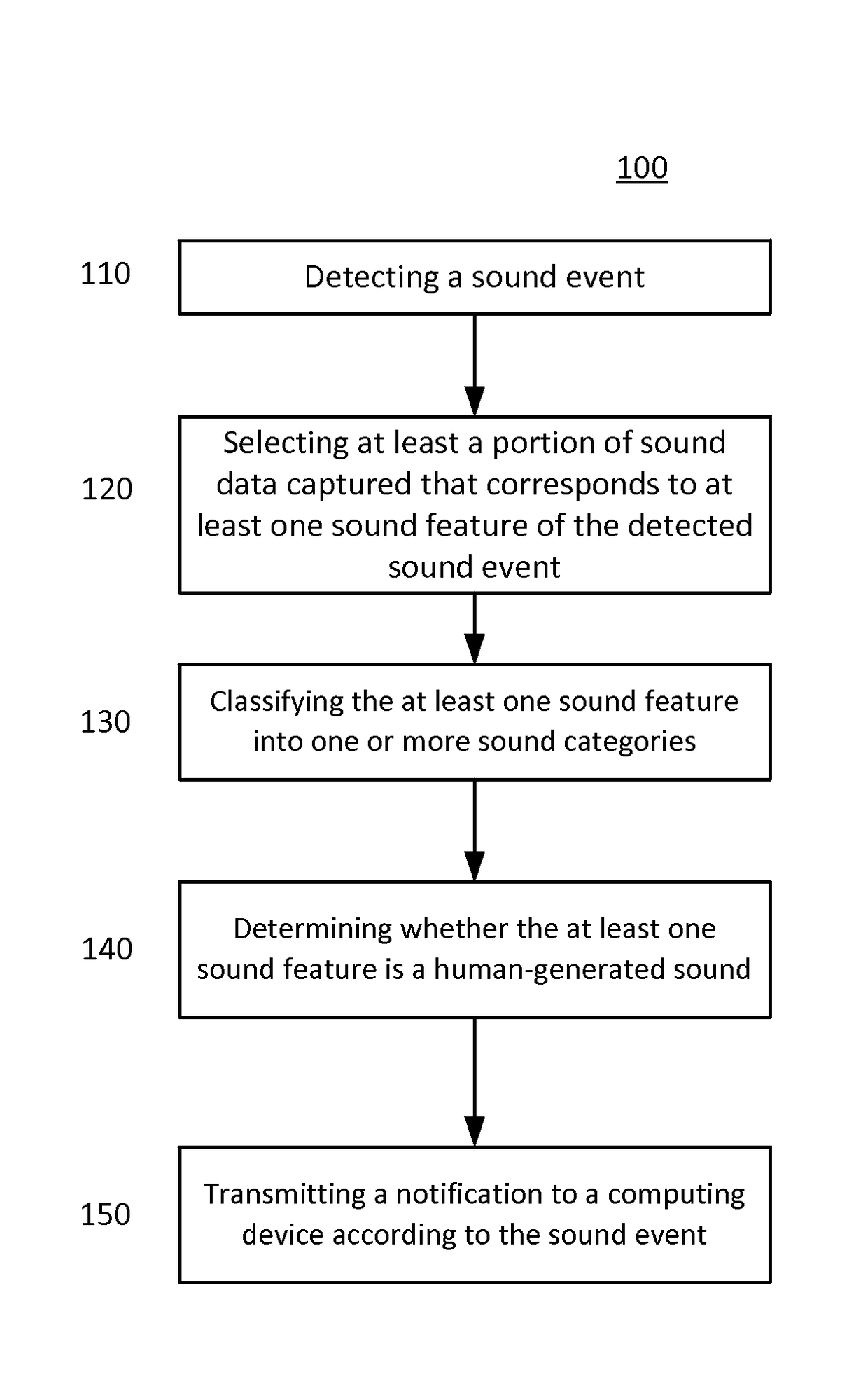

Systems and methods of home-specific sound event detection

Active · US20160379456A1 · Confidence · Accurate identification · Burglar alarm mechanical vibrations actuation · Computer science · Sound event detection

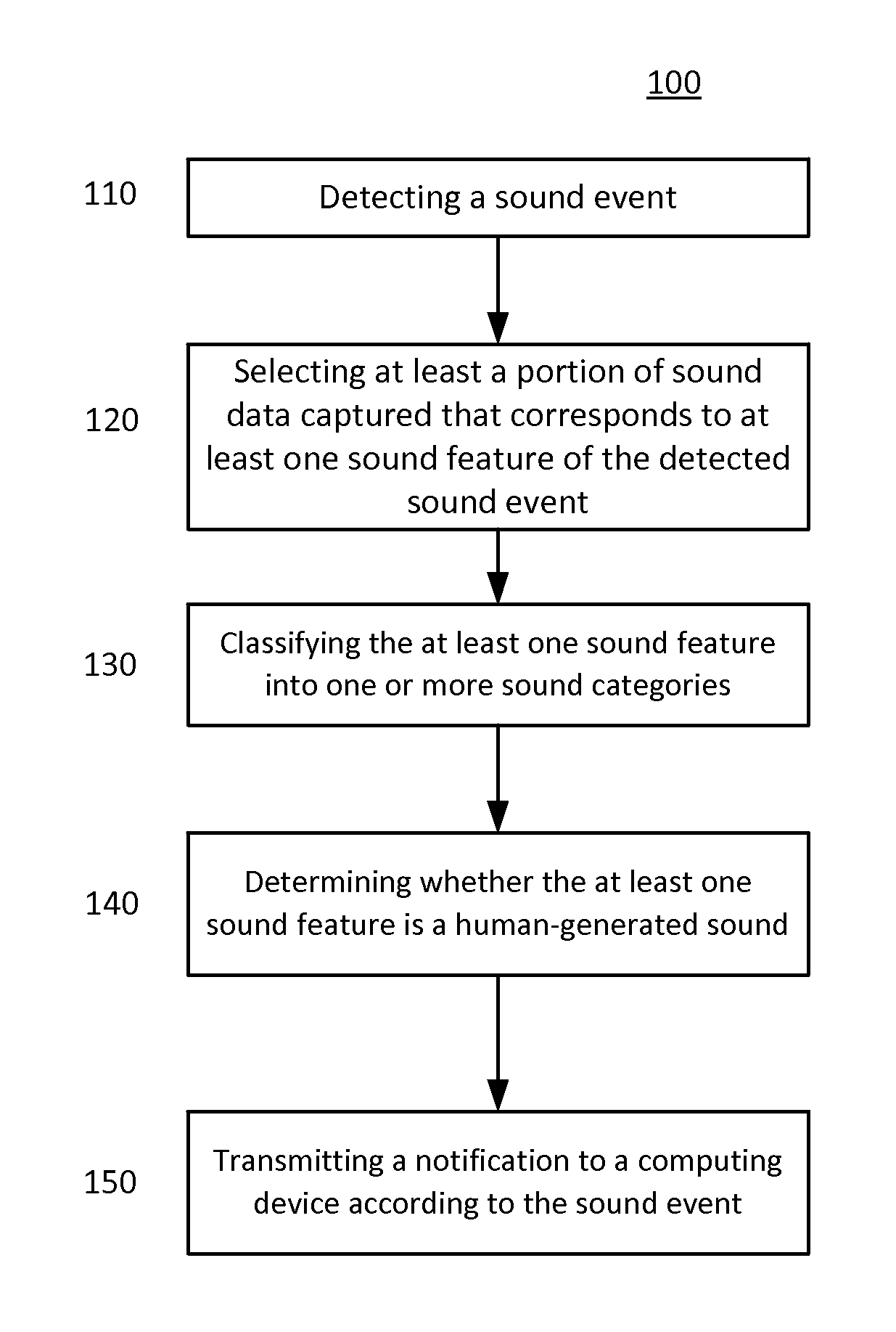

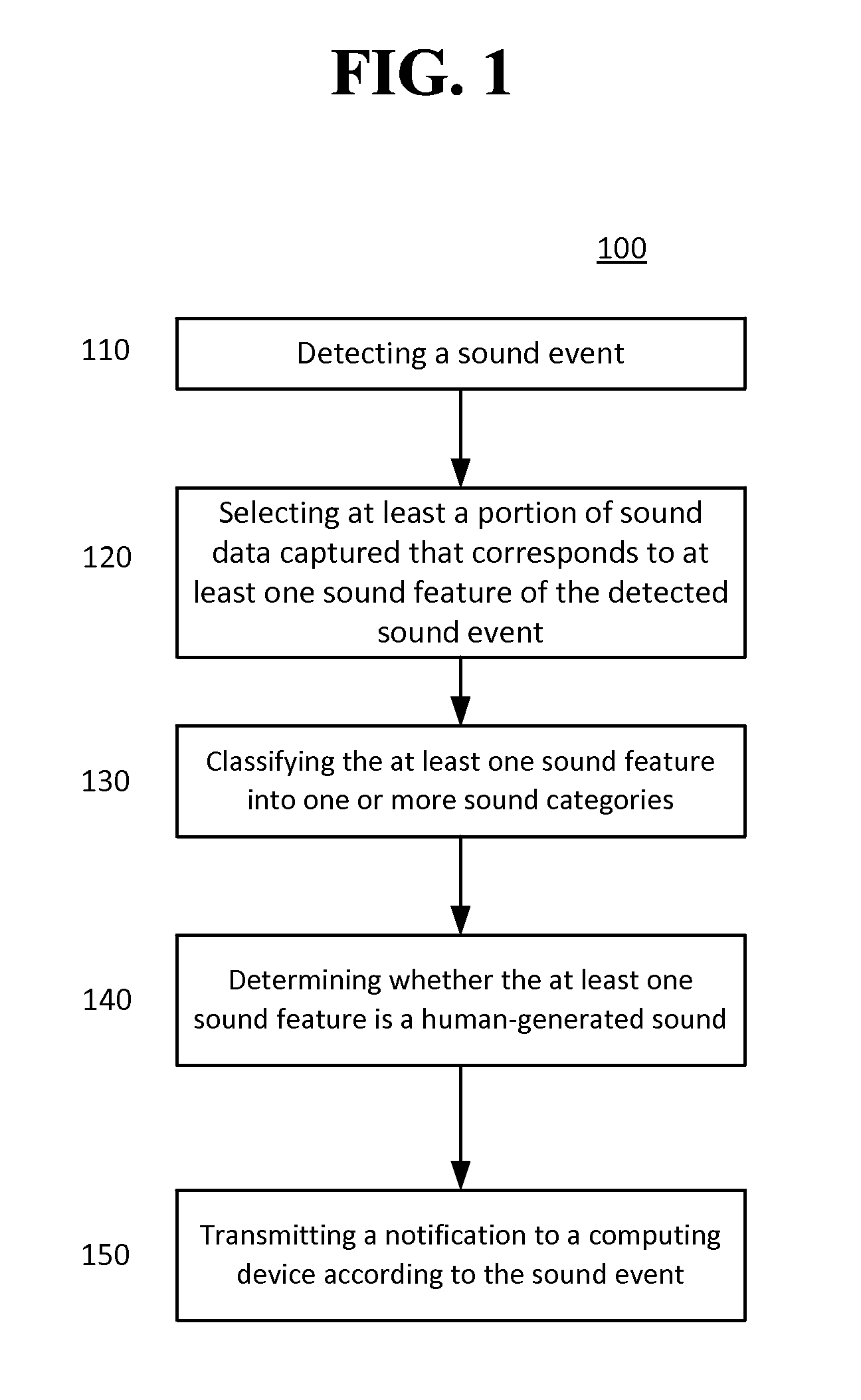

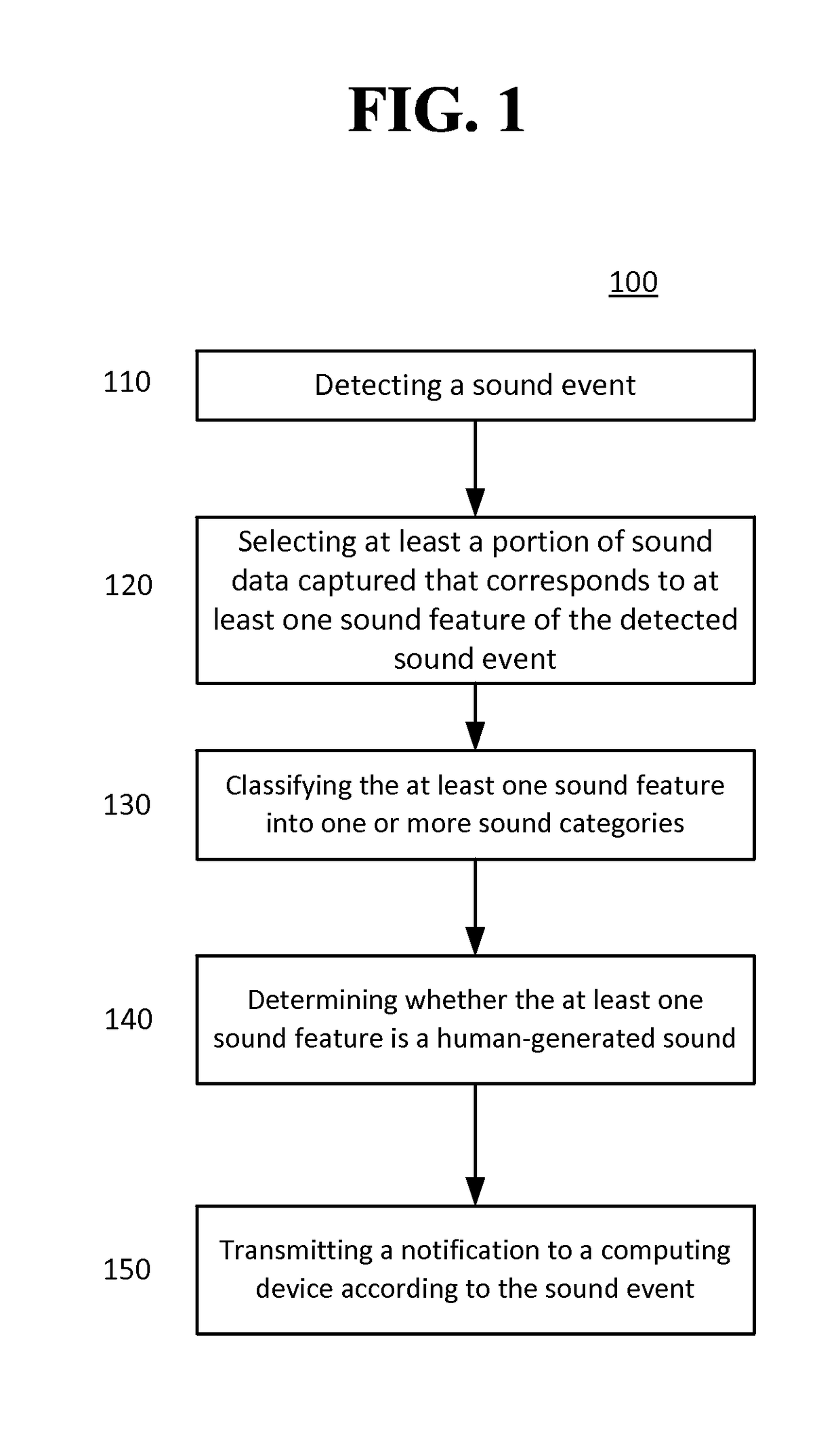

Systems and methods of a security system are provided, including detecting, by a sensor, a sound event, and selecting, by a processor coupled to the sensor, at least a portion of sound data captured by the sensor that corresponds to at least one sound feature of the detected sound event. The systems and methods include classifying the at least one sound feature into one or more sound categories, and determining, by a processor, based upon a database of home-specific sound data, whether the at least one sound feature is a human-generated sound. A notification can be transmitted to a computing device according to the sound event.

Owner:GOOGLE LLC

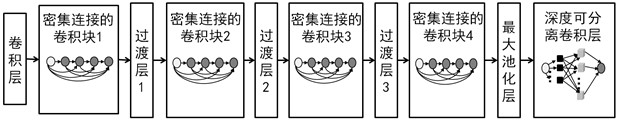

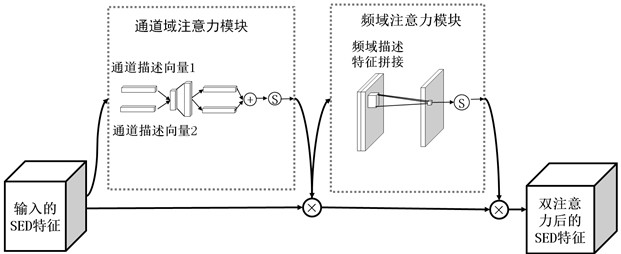

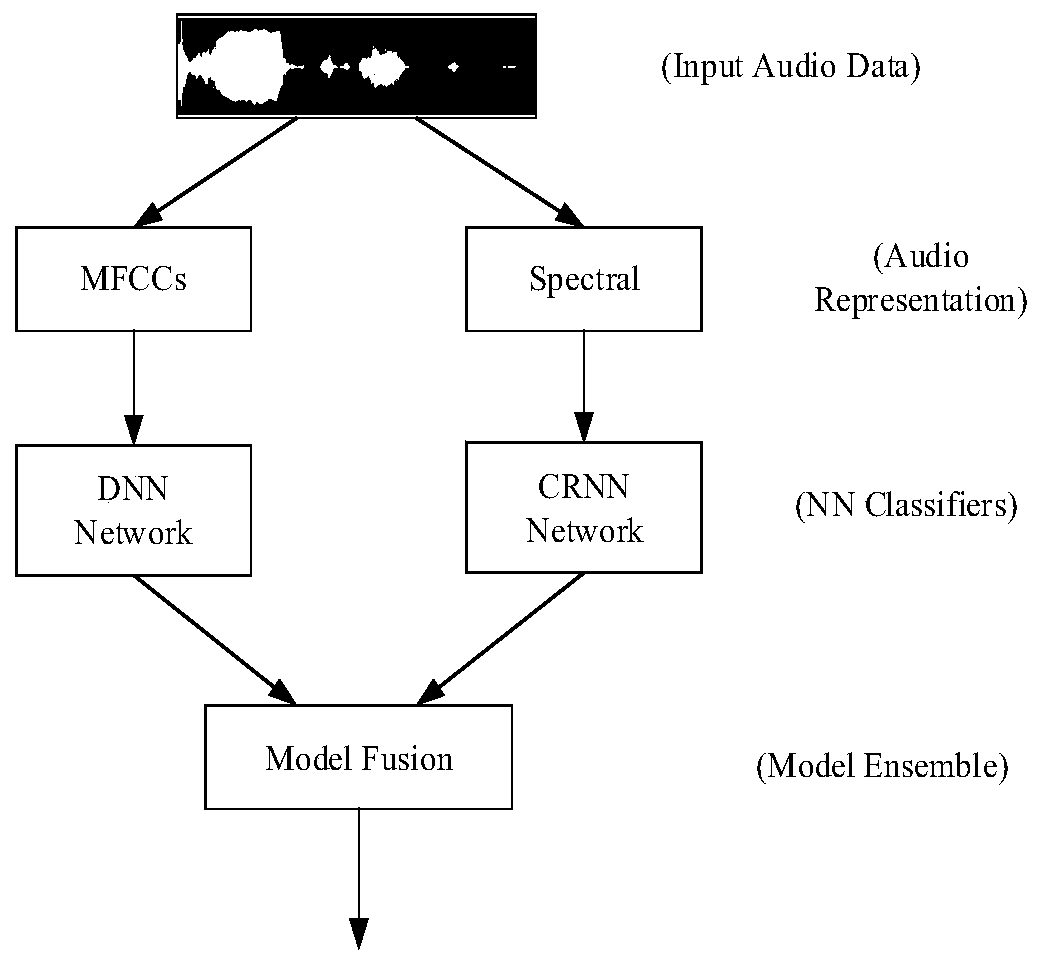

Sound event detection method based on convolutional neural network

Active · CN111933188A · Reduce power consumption · Reduce computational complexity · Speech recognition · Neural architectures · Sound detection · Computation complexity

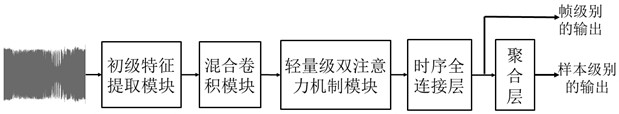

The invention discloses a sound event detection method based on a convolutional neural network, and belongs to the technical field of audio processing. The method comprises the steps: firstly, performing primary feature extraction on an audio stream; then sending the extracted primary feature to a neural network for feature extraction and classification of sound events, and finally obtaining prediction probabilities of various sound events; and if the prediction probability of the current type of sound event exceeds a preset classification threshold, considering that a corresponding sound event exists in the current audio stream. A sound event detection model is small in parameter quantity and low in calculation complexity, so that the power consumption and the calculation complexity of the related Internet of Things equipment during sound detection processing are greatly reduced; and the detection precision equivalent to that of an existing sound event detection model is maintained. Therefore, the sound event detection method provided by the invention can be effectively applied to embedded intelligent equipment and the like.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
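
The final decision step described in the abstract above, comparing each predicted probability with a preset classification threshold, can be sketched as follows. This is a minimal illustration, not the patented implementation: the function name `detect_events`, the class labels, and the threshold value of 0.5 are assumptions made for the example.

```python
from typing import Dict, List


def detect_events(probabilities: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Return the labels whose predicted probability exceeds the threshold."""
    return sorted(label for label, p in probabilities.items() if p > threshold)


# Hypothetical network output for one chunk of the audio stream.
probs = {"dog_bark": 0.91, "glass_break": 0.12, "speech": 0.64}
detected = detect_events(probs, threshold=0.5)
```

Raising the threshold trades recall for precision, so in practice it would be tuned per deployment.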

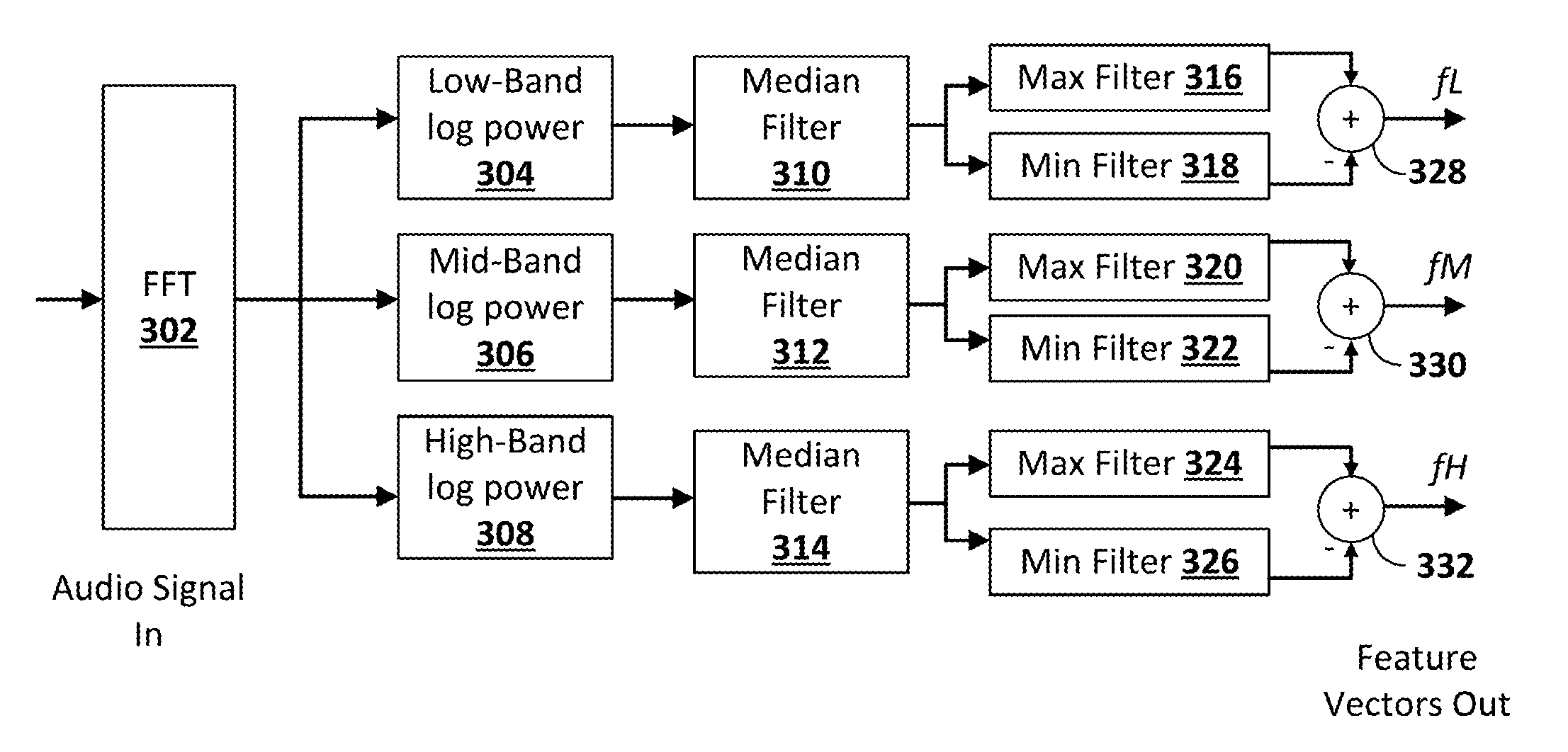

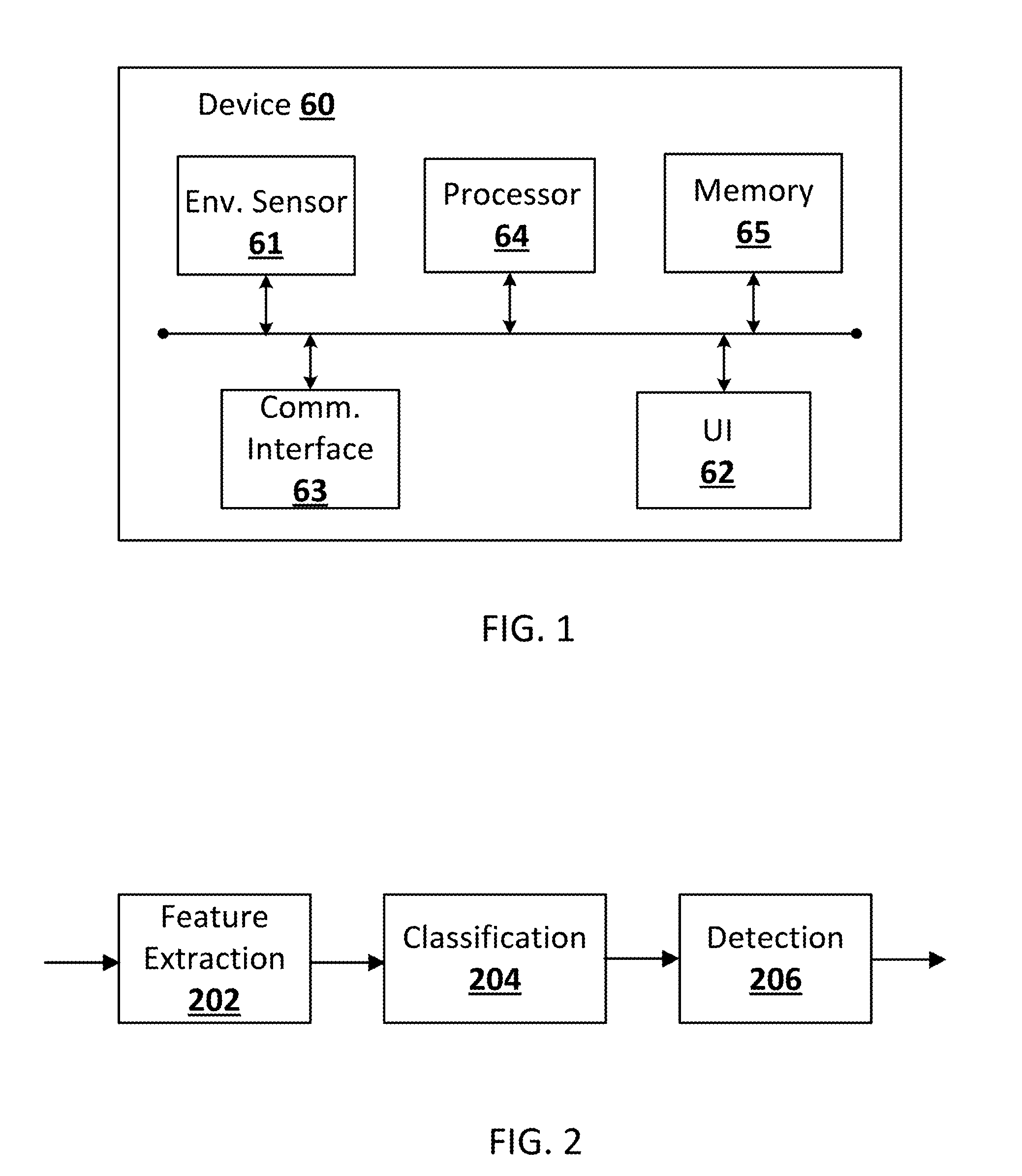

Sound event detection

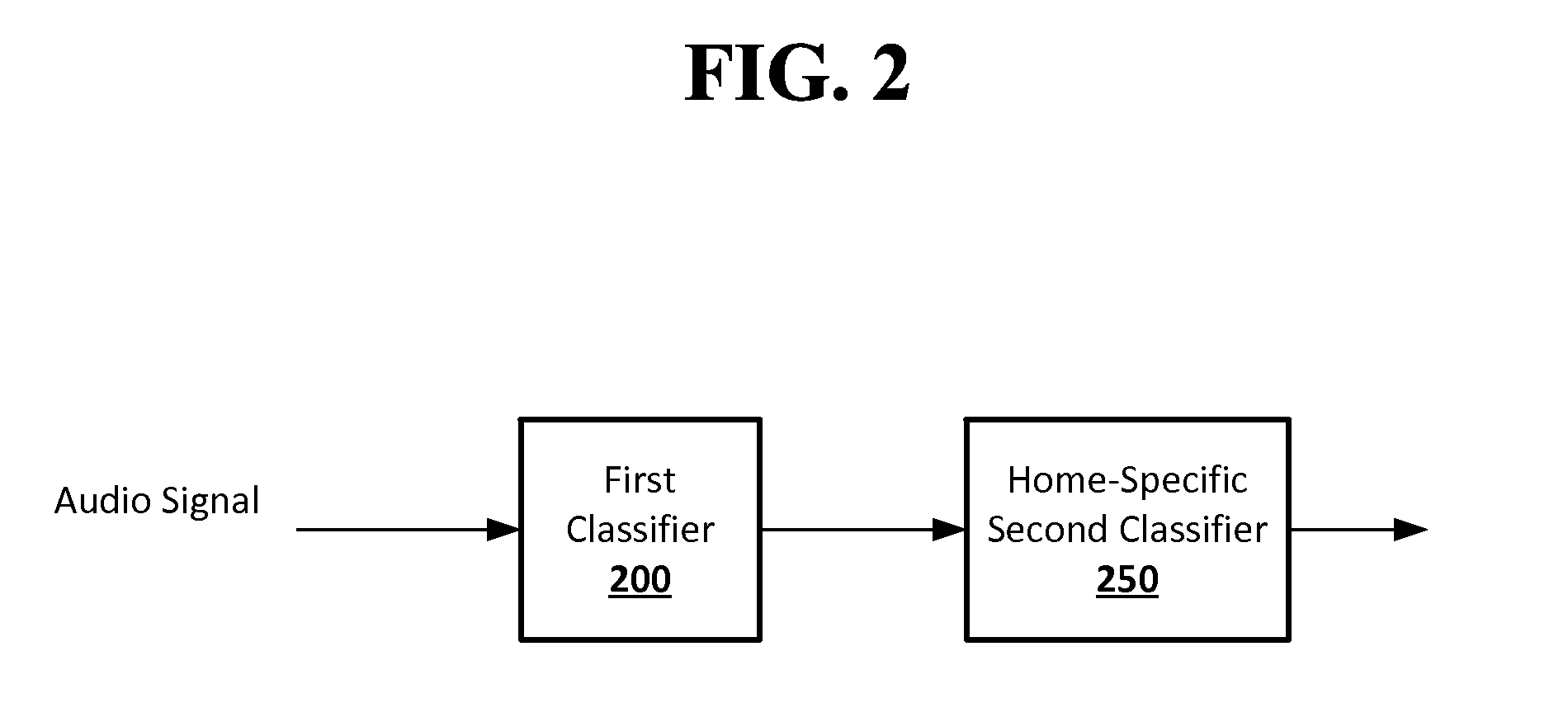

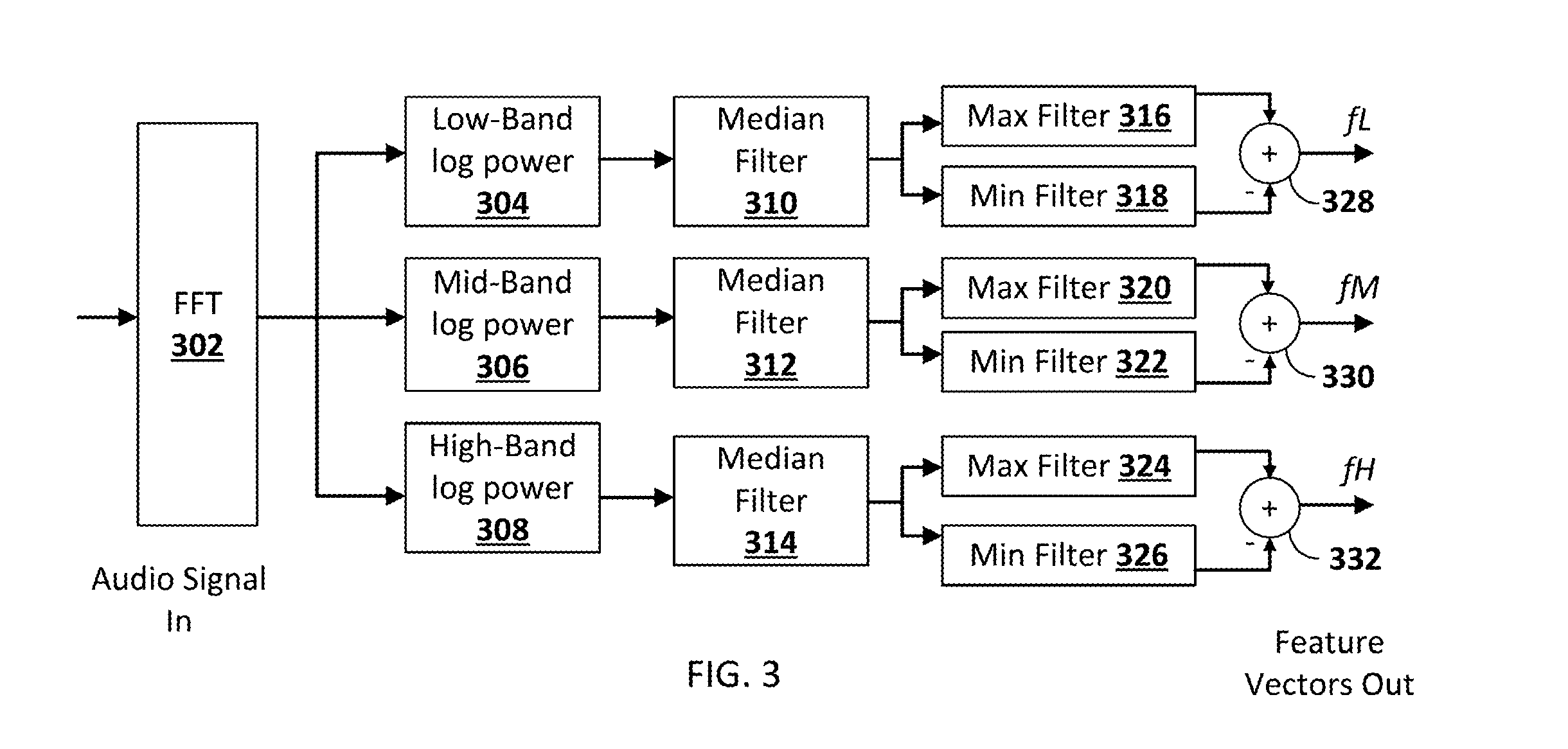

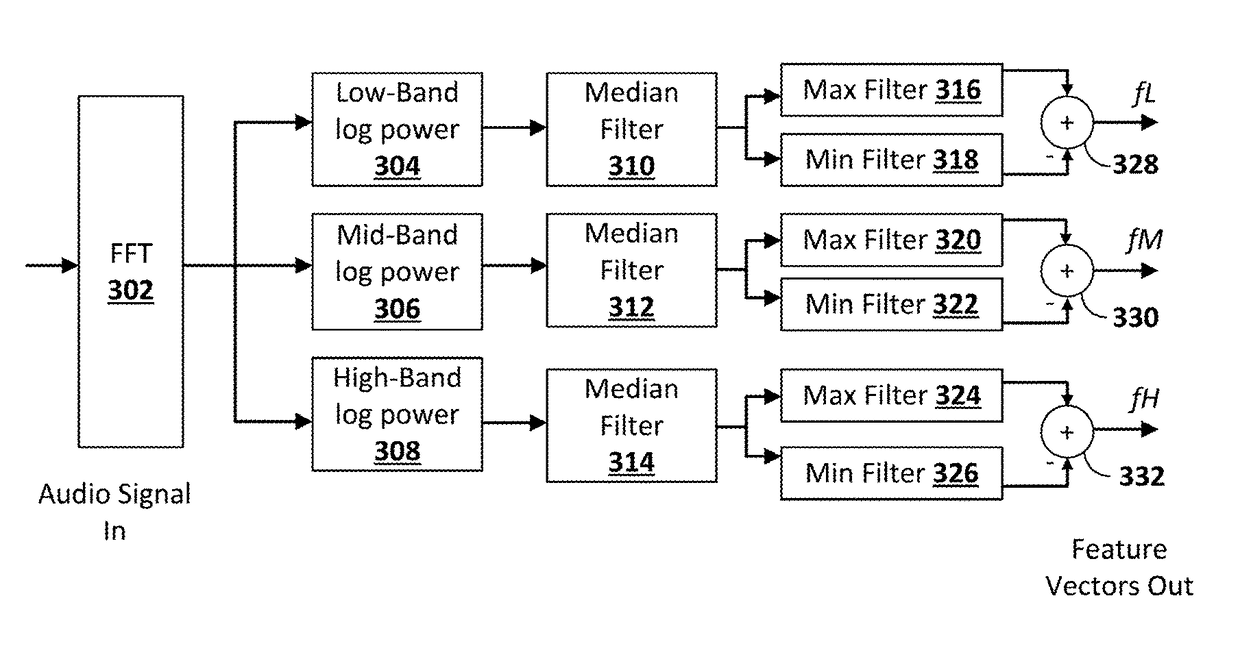

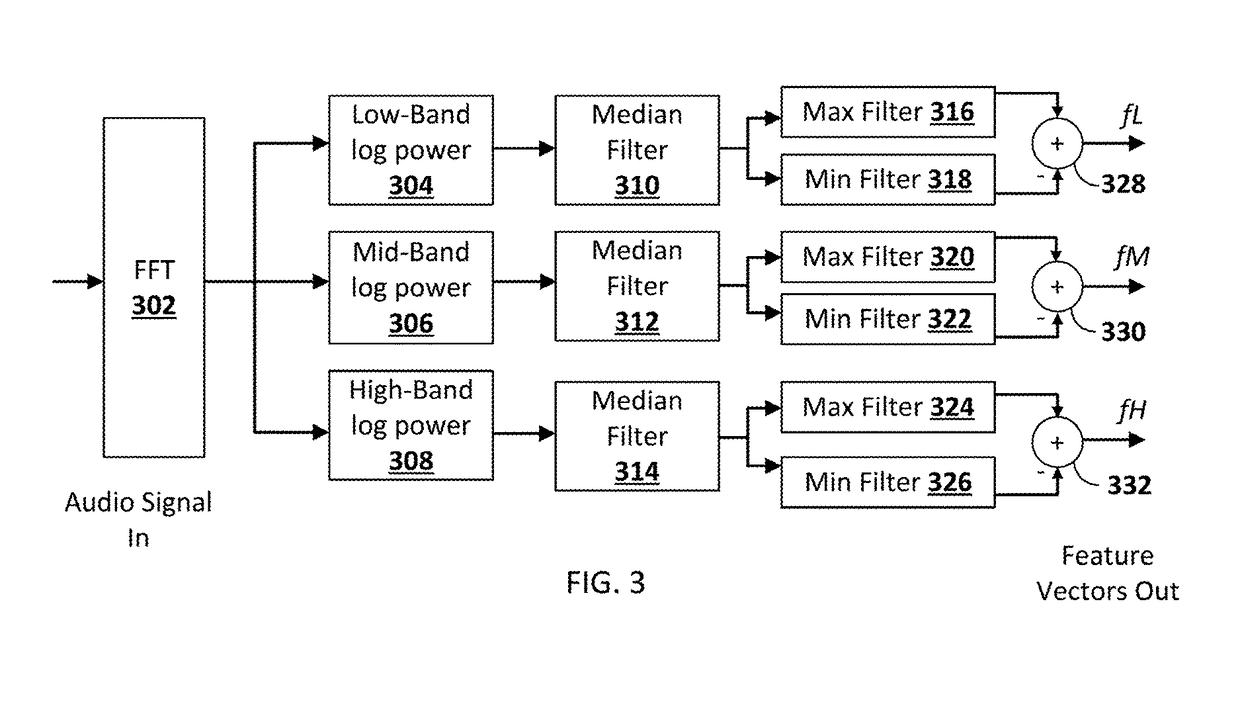

Active · US20160335488A1 · Easy to detect · Improve classification · Speech analysis · Character and pattern recognition · Computation complexity · Relevant feature

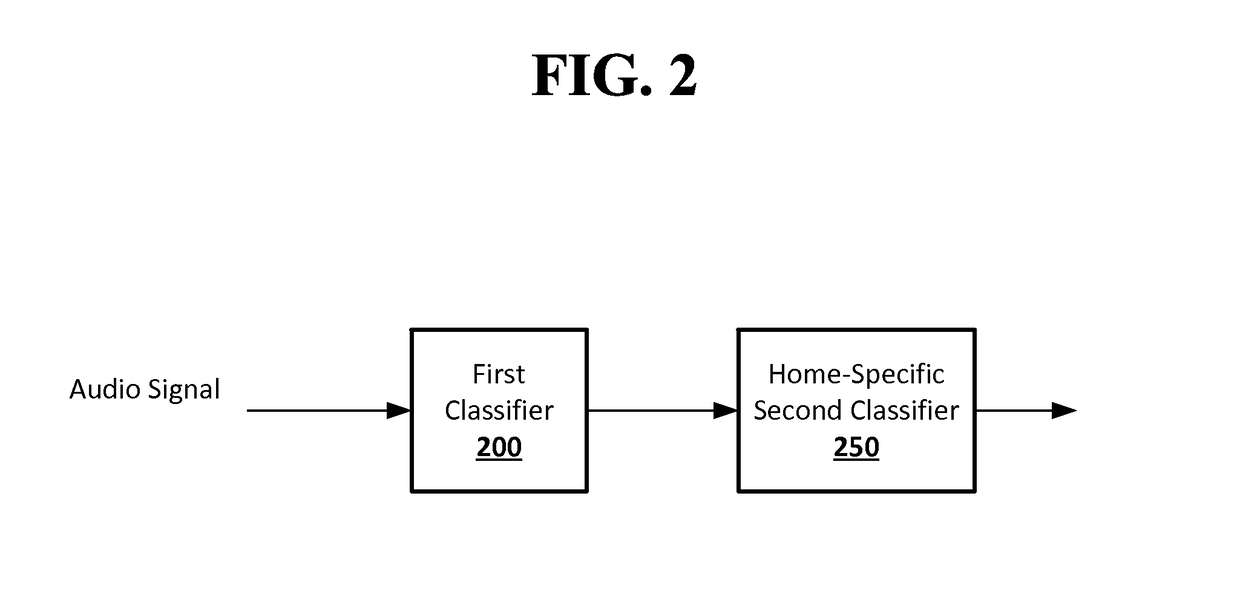

A system and method use the sensors and processors of existing, distributed systems, operating individually or in cooperation with other systems, networks or cloud-based services, to enhance the detection and classification of sound events in an environment (e.g., a home) while keeping computational complexity low. The system and method provide functions in which the features most relevant to discriminating sounds are extracted from an audio signal and then classified according to whether they correspond to a sound event that should result in a communication to a user. Threshold values and other variables can be determined by training on audio signals of known sounds in defined environments, and implemented to distinguish human and pet sounds from other sounds and to compensate for variations in the magnitude of the audio signal, different sizes and reverberation characteristics of the environment, and variations in microphone responses.

Owner:GOOGLE LLC

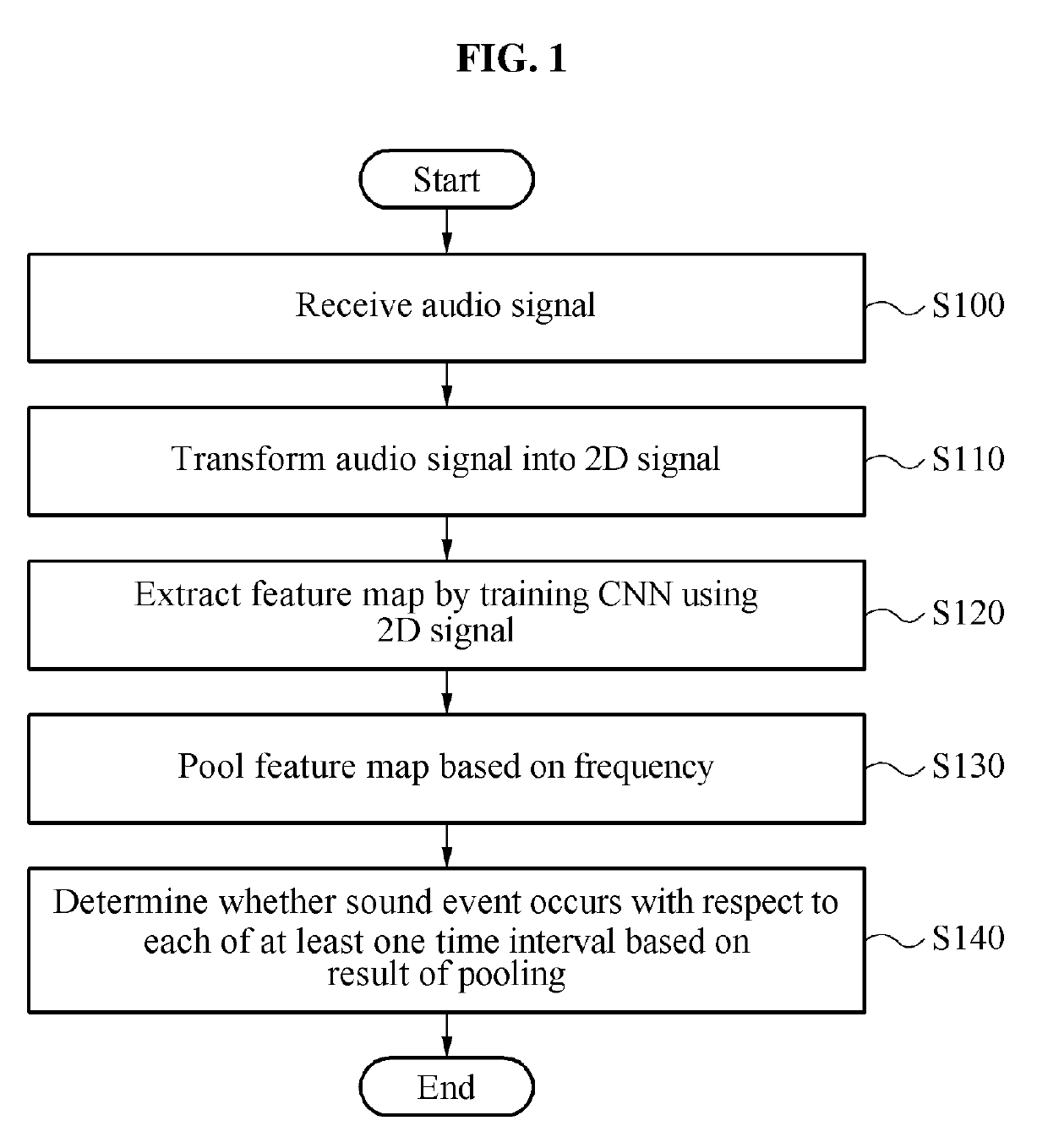

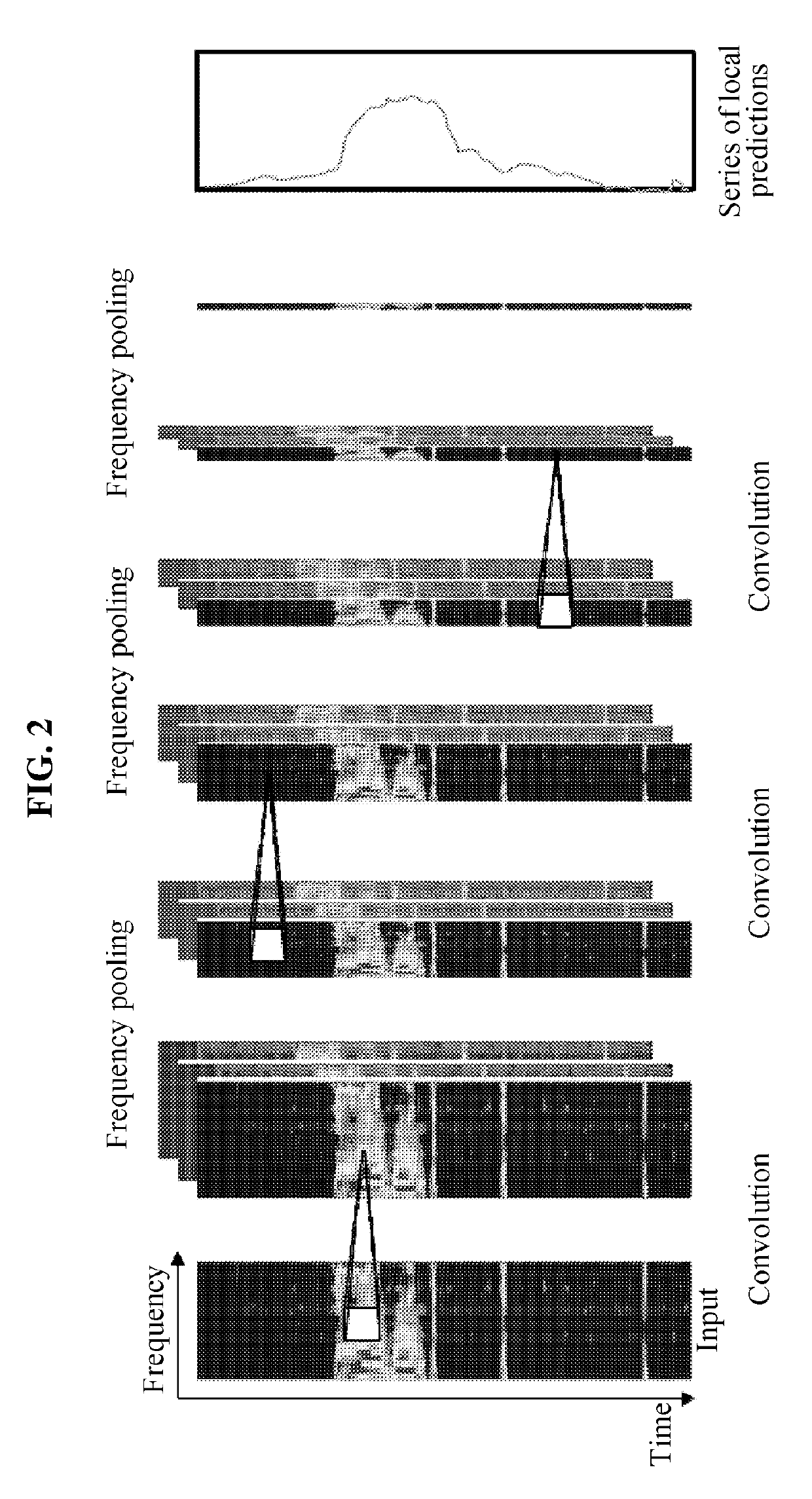

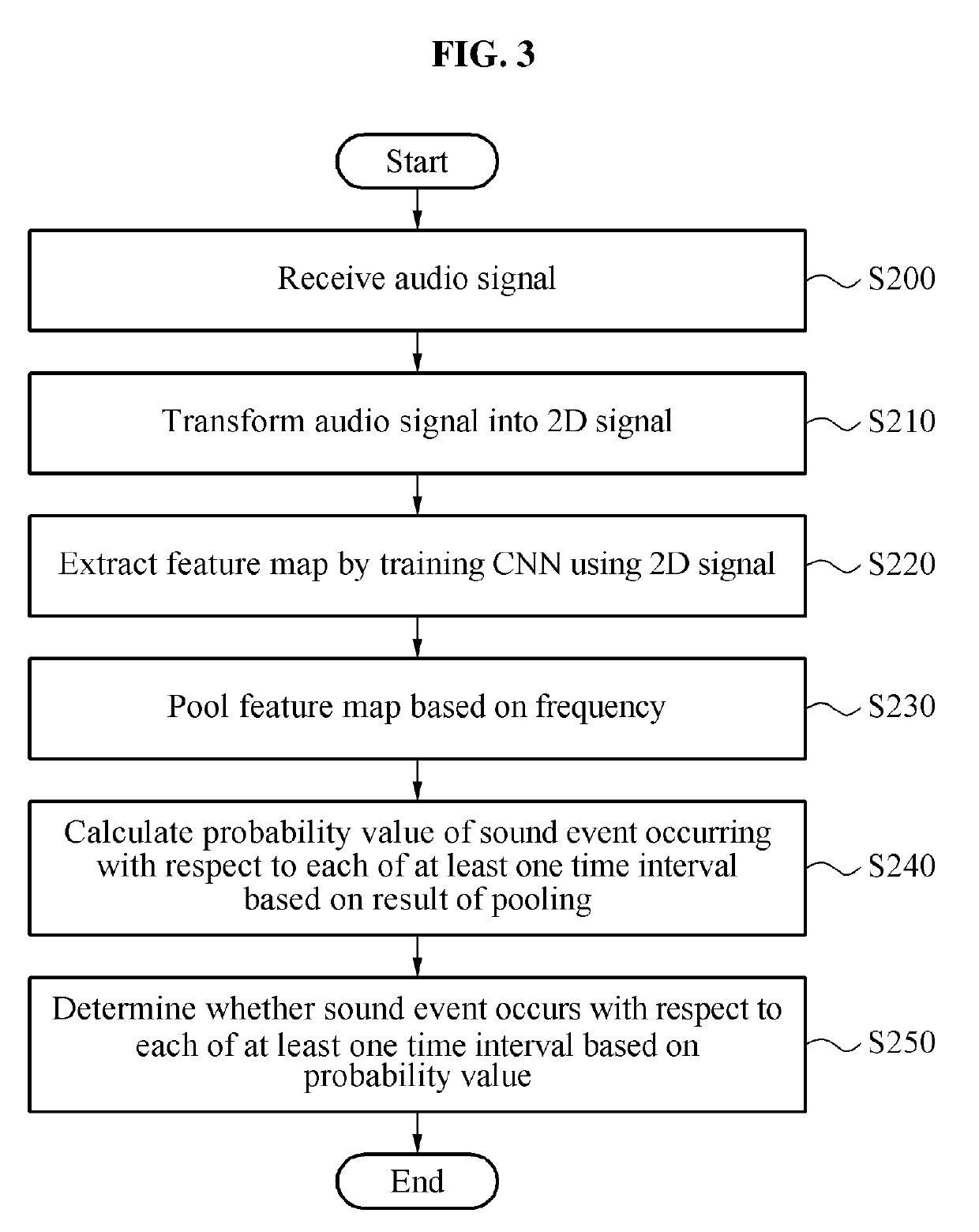

Method and apparatus for sound event detection robust to frequency change

Disclosed is a sound event detecting method including receiving an audio signal, transforming the audio signal into a two-dimensional (2D) signal, extracting a feature map by training a convolutional neural network (CNN) using the 2D signal, pooling the feature map based on a frequency, and determining whether a sound event occurs with respect to each of at least one time interval based on a result of the pooling.

Owner:ELECTRONICS & TELECOMM RES INST
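
Why pooling over frequency helps with frequency changes can be seen in a small sketch. It assumes max pooling (the abstract only says "pooling the feature map based on a frequency") and a fixed decision threshold; the function names and the toy feature maps are hypothetical.

```python
def pool_over_frequency(feature_map):
    """Collapse each time frame (a list of frequency-bin activations) to its maximum."""
    return [max(frame) for frame in feature_map]


def frame_decisions(feature_map, threshold=0.5):
    """Decide per time interval whether an event is present after frequency pooling."""
    return [score > threshold for score in pool_over_frequency(feature_map)]


# Two toy feature maps (time x frequency): the same event, shifted by one frequency bin.
feature_a = [[0.1, 0.9, 0.1], [0.1, 0.1, 0.1]]
feature_b = [[0.1, 0.1, 0.9], [0.1, 0.1, 0.1]]
decisions_a = frame_decisions(feature_a)
decisions_b = frame_decisions(feature_b)
```

Both maps yield the same per-frame decisions, because the pooled value no longer depends on which frequency bin carried the activation.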

Method for estimating at-home activities of elderly people living alone based on sound event detection

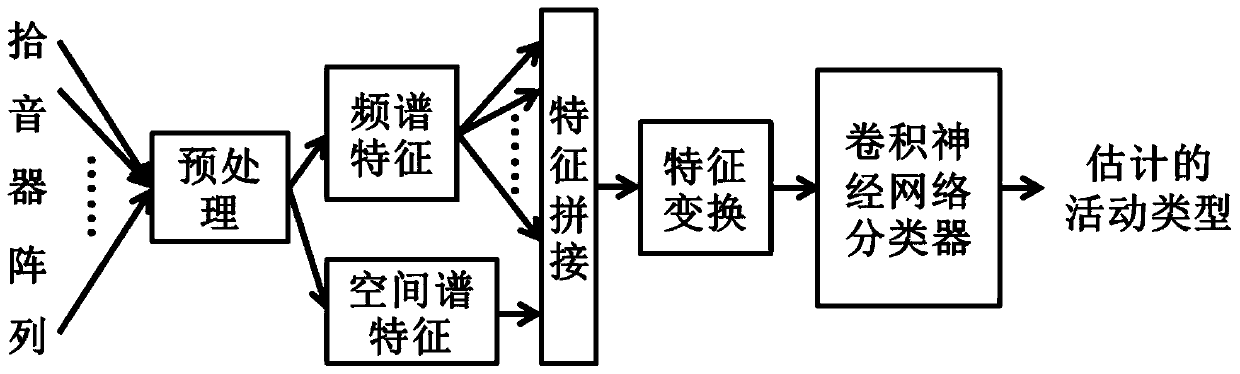

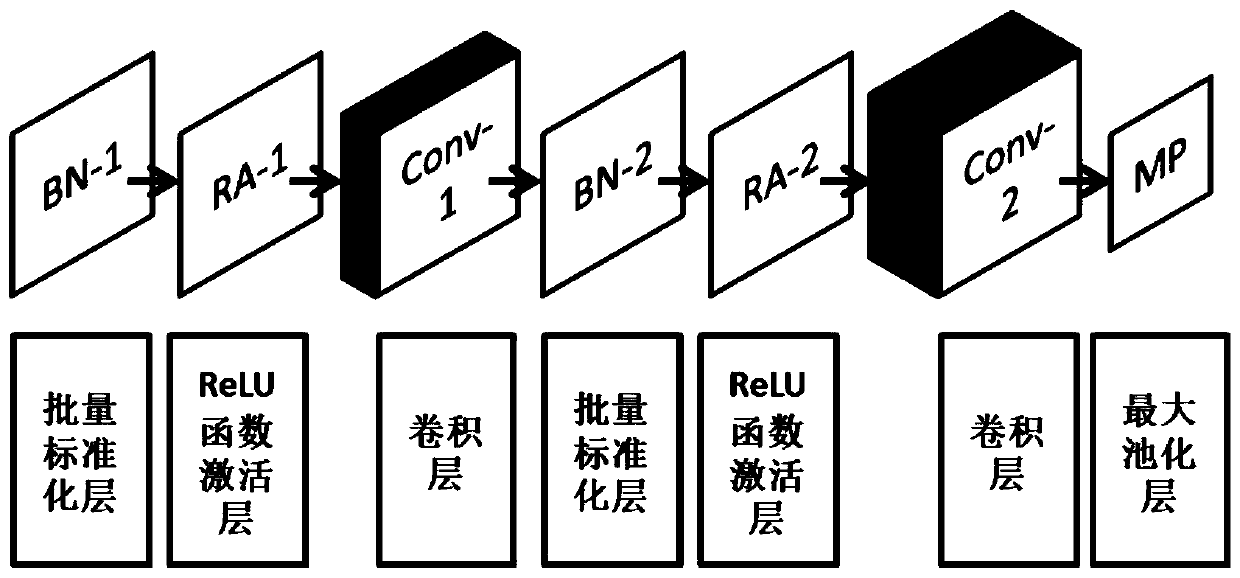

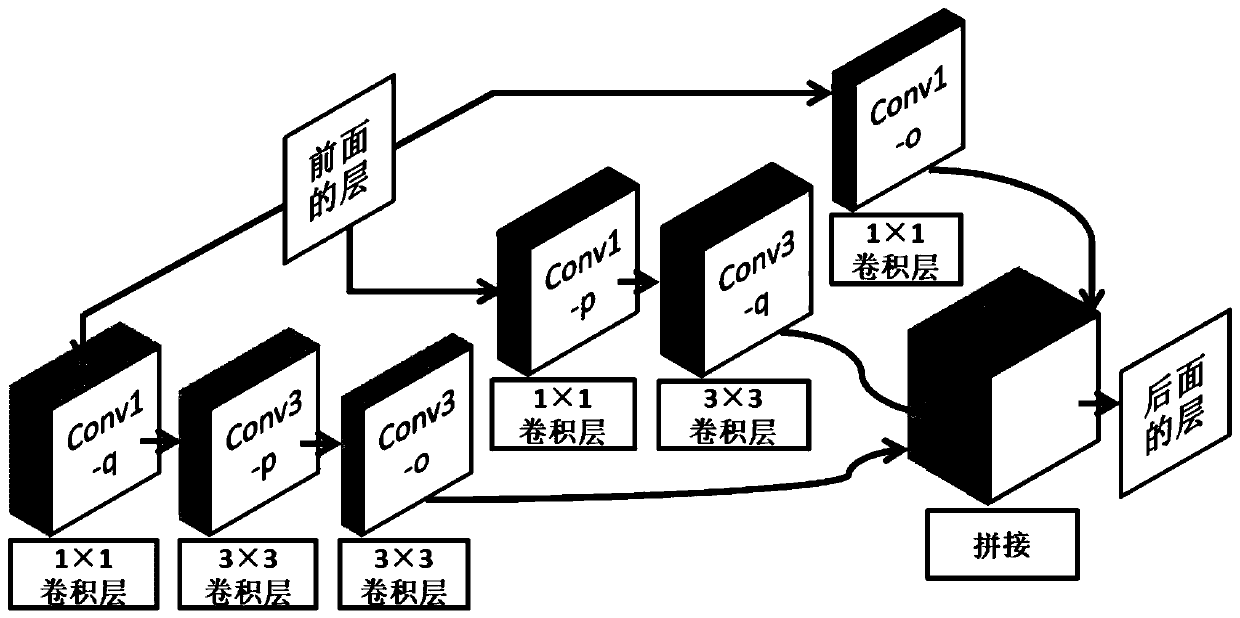

Active · CN110223715A · Wide coverage · Does not involve privacy issues · Speech analysis · Character and pattern recognition · Frequency spectrum · Audio frequency

The invention discloses a method for estimating at-home activities of elderly people living alone based on sound event detection. The method comprises the following steps: first, a pickup array is placed in the indoor space to collect multi-channel audio data, and the audio data is pre-processed, where pre-processing comprises framing and windowing; then log Mel spectrum features are extracted from the audio data in each channel, direction of arrival (DOA) spatial spectrum features are extracted from the audio data in all the channels, and the log Mel spectrum features and the DOA spatial spectrum features are spliced; next, the spliced features are input into a convolutional neural network for feature transformation; and finally, the transformed features are input into a convolutional neural network classifier, and the type of activity is estimated. Because the method extracts spectrum features and transformed features from the multi-channel audio data, the diversity of the training data can be improved, the generalization ability of the convolutional neural network classifier is effectively improved, and a higher accuracy rate can be obtained when estimating the at-home activities of elderly people.

Owner:SOUTH CHINA UNIV OF TECH
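
The pre-processing (framing and windowing) and the feature splicing mentioned in the abstract above can be sketched in plain Python. The Hann window, the frame length, the hop size, and all function names are assumptions for illustration; computing the log Mel and DOA features themselves is not shown, so the spliced values below are placeholders.

```python
import math


def hann_window(length):
    """Symmetric Hann window of the given length."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (length - 1)) for i in range(length)]


def frame_and_window(signal, frame_len, hop):
    """Split a signal into overlapping frames and apply a Hann window to each."""
    window = hann_window(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frames.append([signal[start + i] * window[i] for i in range(frame_len)])
    return frames


def splice(mel_frames, doa_frames):
    """Concatenate per-frame log Mel features with per-frame DOA features."""
    return [mel + doa for mel, doa in zip(mel_frames, doa_frames)]


frames = frame_and_window([1.0] * 10, frame_len=4, hop=2)
spliced = splice([[1.0, 2.0]], [[3.0]])
```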

Method and apparatus for detecting sound event considering the characteristics of each sound event

Inactive · US20200312350A1 · Reduce errors · Using detectable carrier information · Speech recognition · Letter to sound · Acoustics

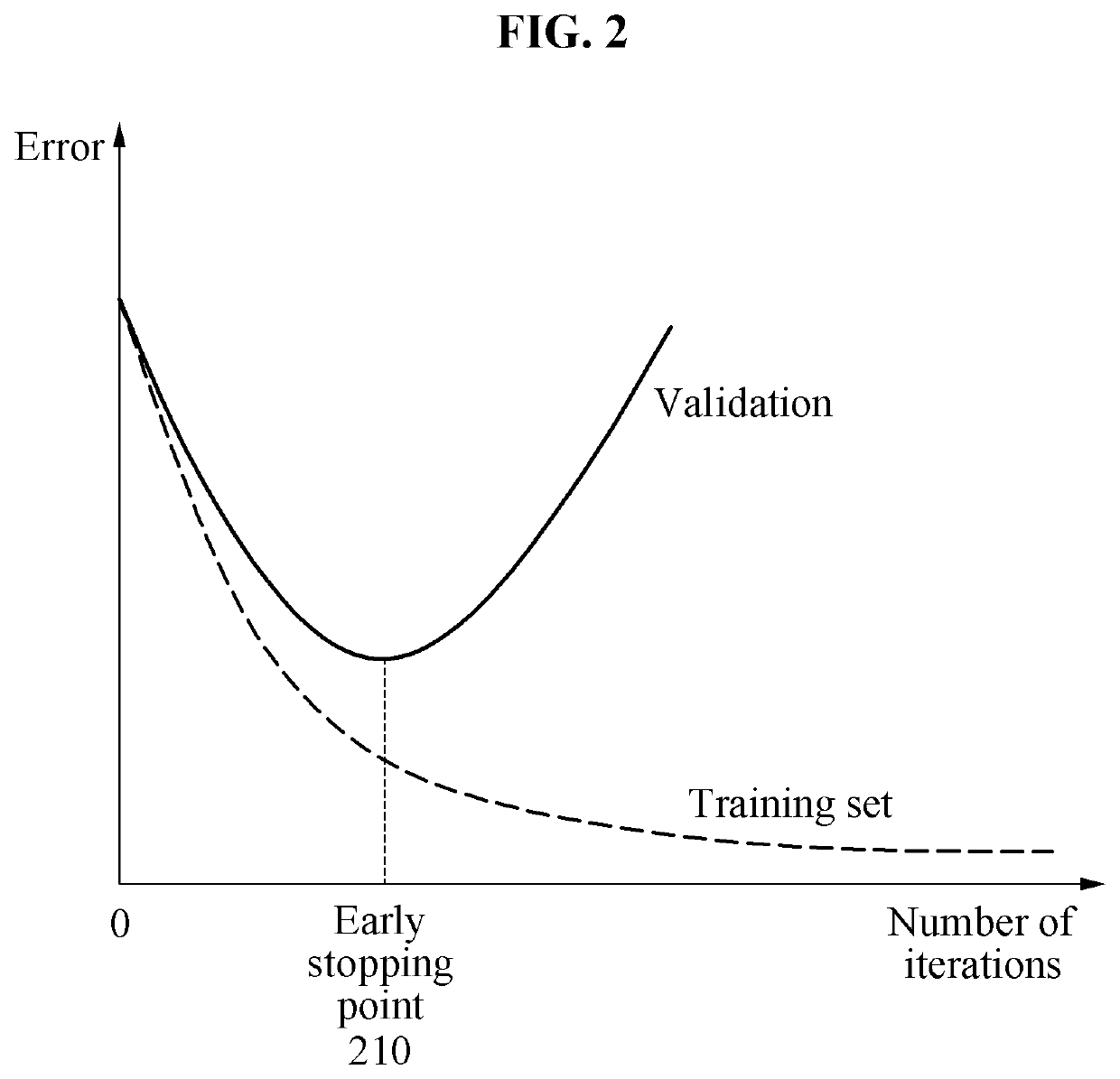

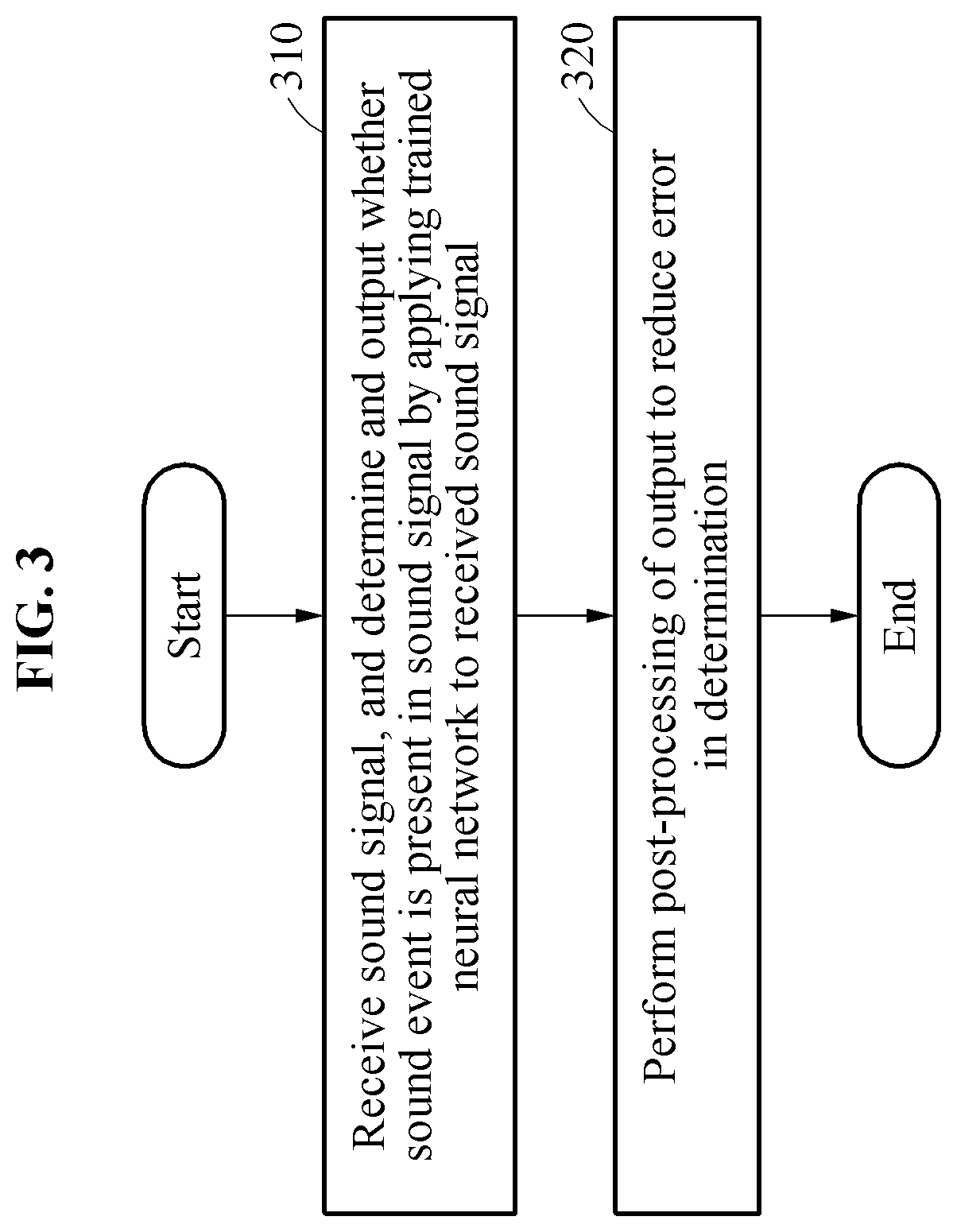

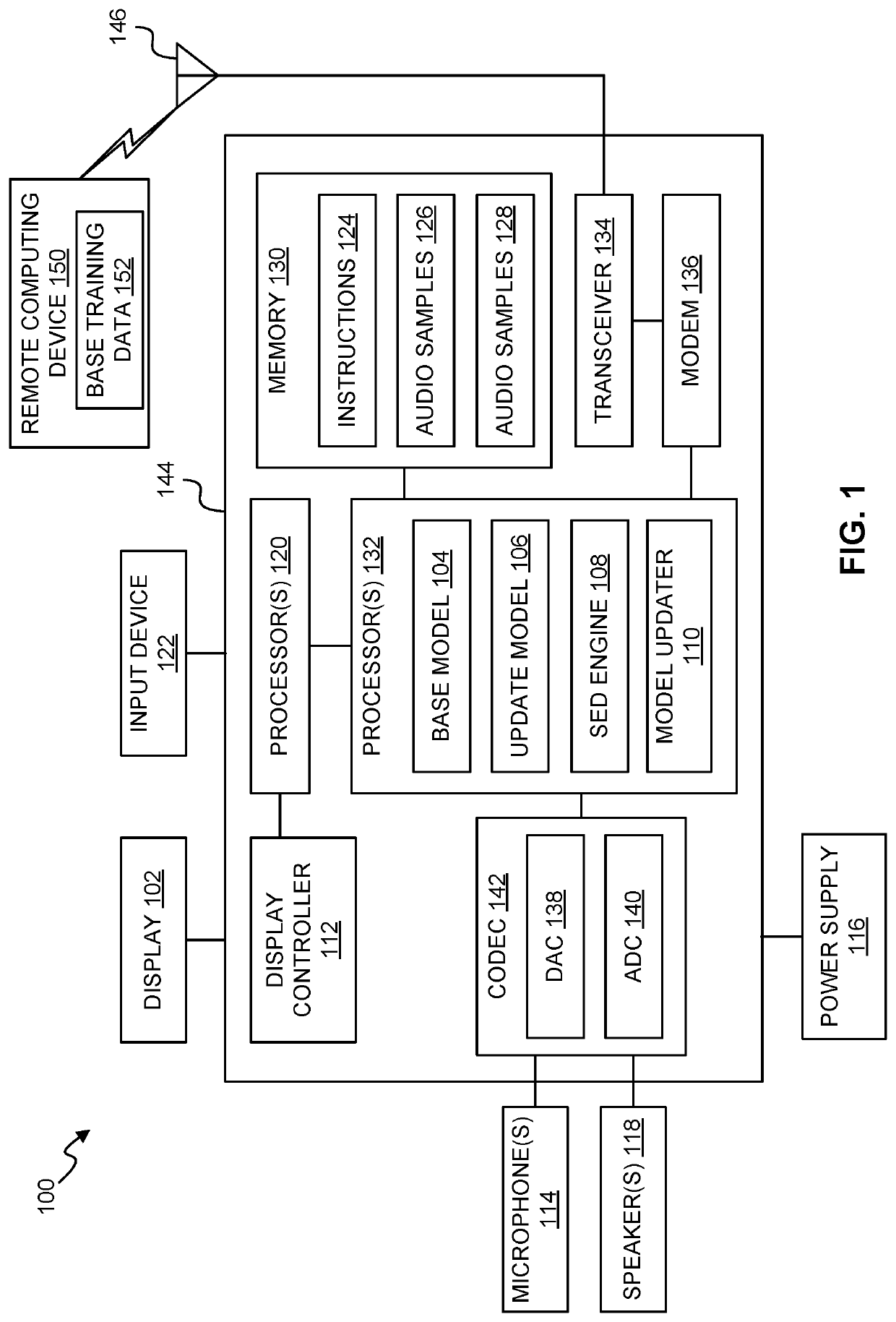

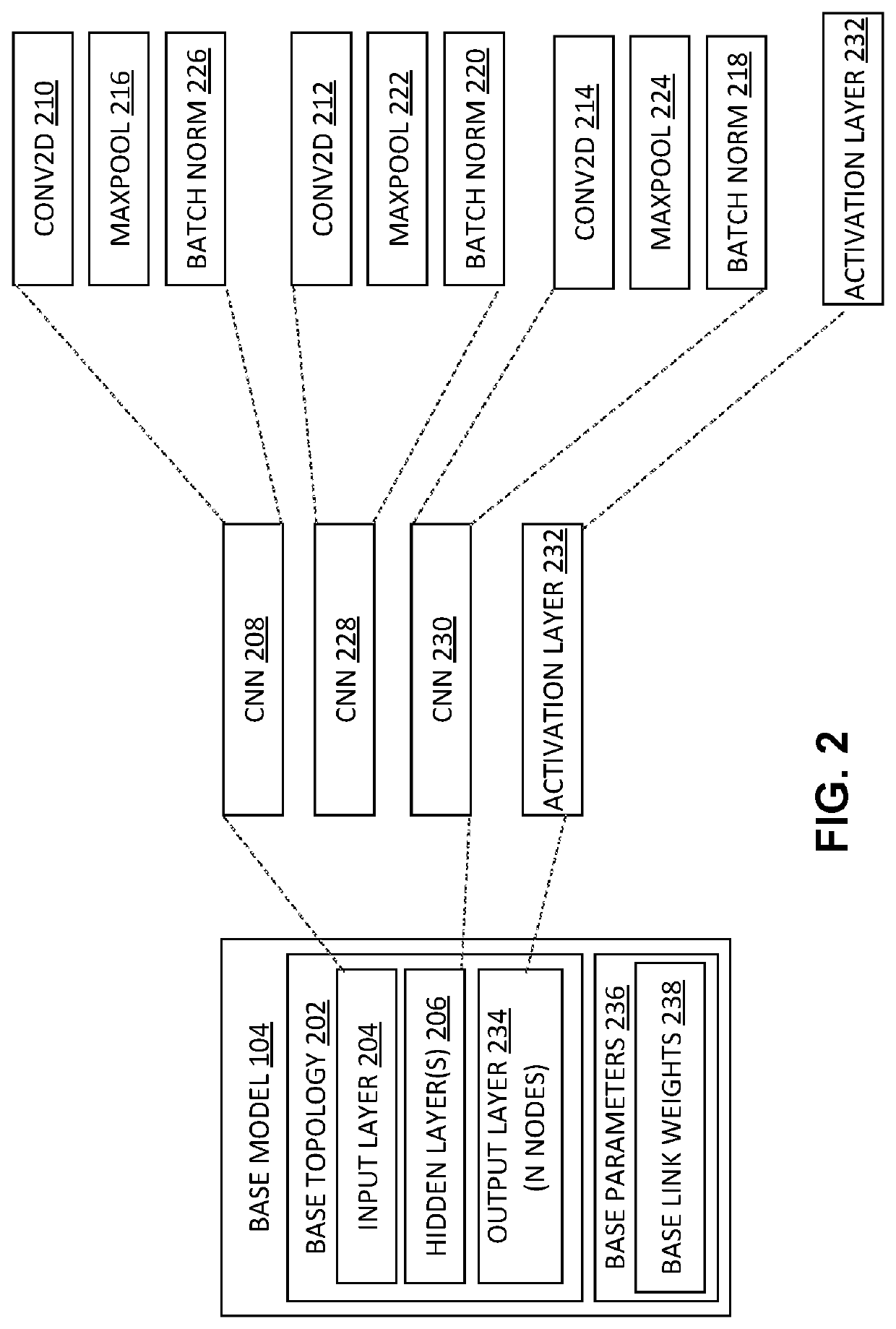

A sound event detection method includes receiving a sound signal and determining and outputting whether a sound event is present in the sound signal by applying a trained neural network to the received sound signal, and performing post-processing of the output to reduce an error in the determination, wherein the neural network is trained to early stop at an optimal epoch based on a different threshold for each of at least one sound event present in a pre-processed sound signal. That is, the sound event detection method may detect an optimal epoch to stop training by applying different characteristics for respective sound events and improve the sound event detection performance based on the optimal epoch.

Owner:ELECTRONICS & TELECOMM RES INST

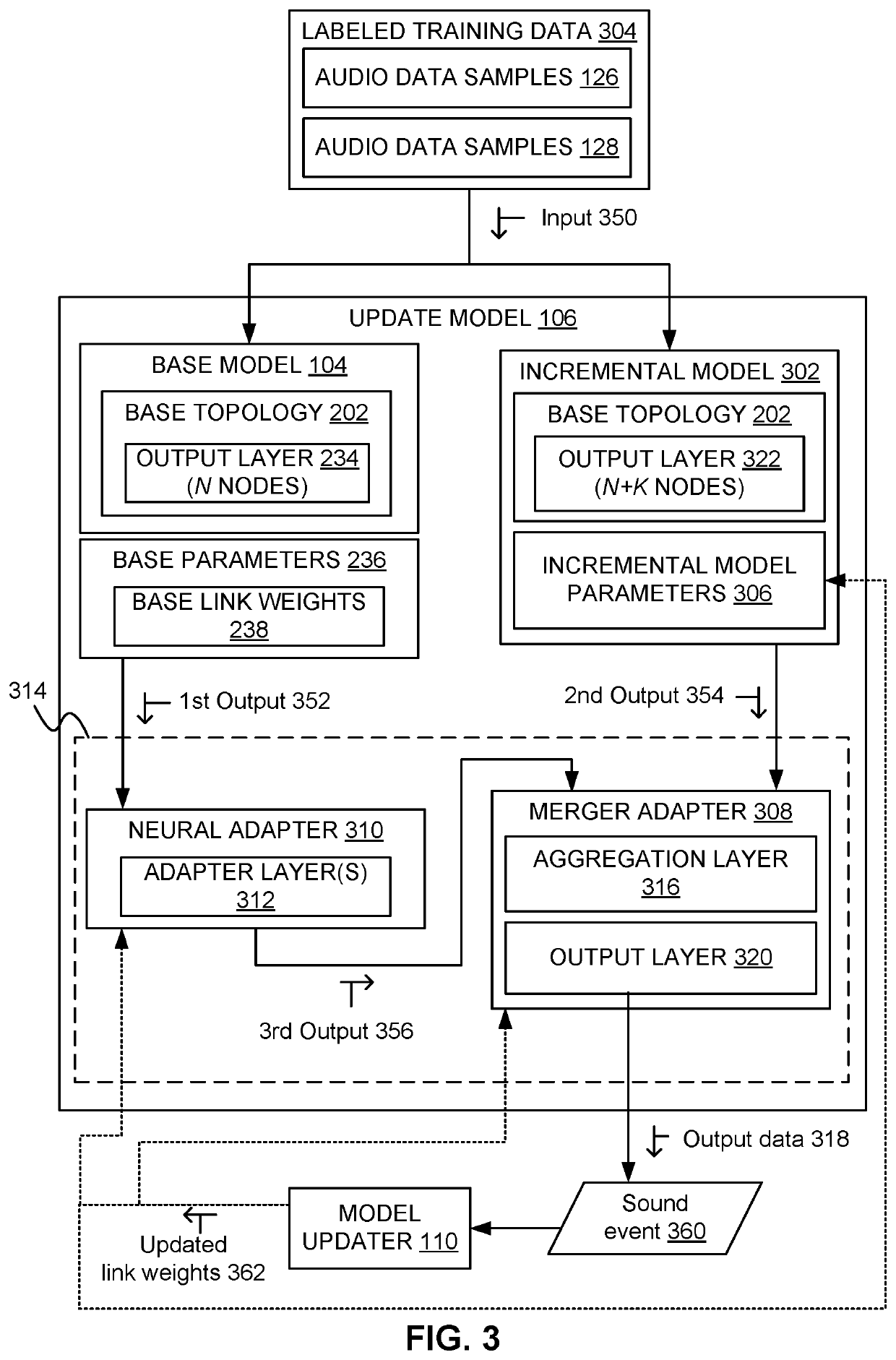

Sound event detection learning

Active · US20210158837A1 · Hearing device active noise cancellation · Headphones for stereophonic communication · Engineering · Sound event detection

Owner:QUALCOMM INC

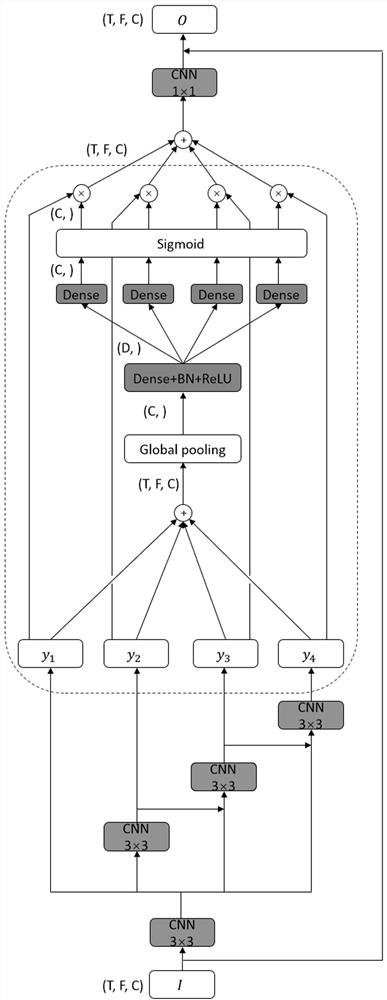

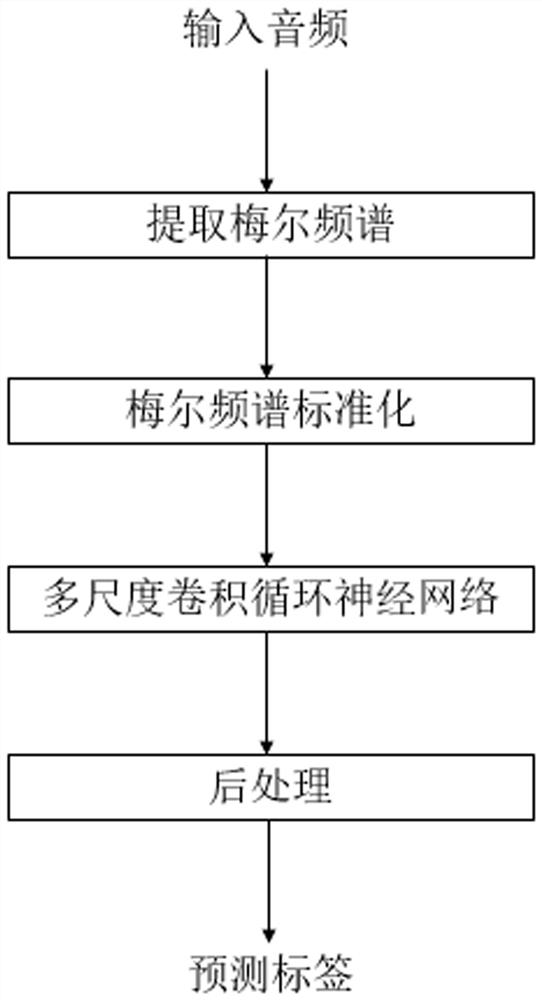

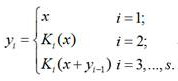

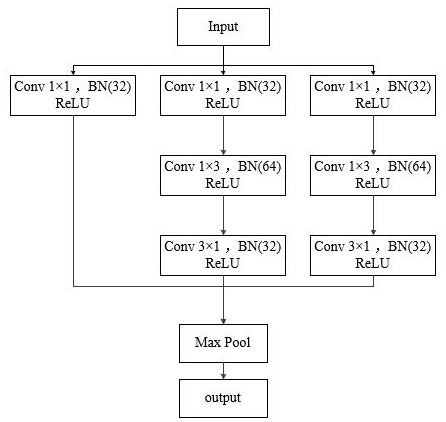

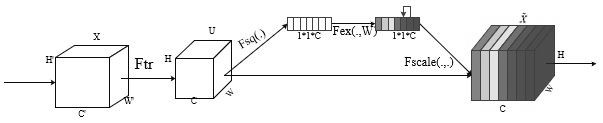

Panda sound event detection method and system under mixed audio

Active · CN112802484A · Easy to detect · Improve recognition efficiency · Speech analysis · Sound event detection · Neural network

The invention discloses a panda sound event detection method and system under mixed audio. The panda sound event detection method comprises the following steps: acquiring audio data of a detected environment; extracting a logarithmic Mel spectrum from the audio data, and standardizing the logarithmic Mel spectrum; dividing the processed audio data into development set data and test set data; building a multi-scale attention convolutional recurrent neural network; training the multi-scale attention convolutional recurrent neural network through the development set data; and predicting the test set data through the trained multi-scale attention convolutional recurrent neural network, and generating a prediction result. According to the method, the multi-scale attention convolution module is utilized to extract the multi-scale feature information, and the recurrent neural network is utilized to fully utilize the context information, so that the method has relatively high detection precision for the giant panda sound, and has a very good detection effect for the giant panda sound with different cry types and cry durations.

Owner:SICHUAN UNIV +1
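
The standardization step applied to the logarithmic Mel spectrum can be illustrated with a short sketch. It assumes per-bin zero-mean, unit-variance scaling, which is one common reading of "standardizing"; the abstract does not say which statistics are used, and the function name and `eps` guard are hypothetical.

```python
import math


def standardize(frames, eps=1e-8):
    """Scale each Mel bin to zero mean and unit variance across frames."""
    n = len(frames)
    dims = len(frames[0])
    means = [sum(f[d] for f in frames) / n for d in range(dims)]
    stds = [math.sqrt(sum((f[d] - means[d]) ** 2 for f in frames) / n) for d in range(dims)]
    # eps avoids division by zero for bins that never vary.
    return [[(f[d] - means[d]) / (stds[d] + eps) for d in range(dims)] for f in frames]


log_mel = [[1.0, 10.0], [3.0, 10.0]]  # two frames, two Mel bins (toy values)
normalized = standardize(log_mel)
```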

Sound event detection

Active · US9805739B2 · Enhance detection and classification · Reduce complexity · Electrical apparatus · Speech analysis · Sound detection · Computation complexity

A system and method use the sensors and processors of existing, distributed systems, operating individually or in cooperation with other systems, networks or cloud-based services, to enhance the detection and classification of sound events in an environment (e.g., a home) while keeping computational complexity low. The system and method provide functions in which the features most relevant to discriminating sounds are extracted from an audio signal and then classified according to whether they correspond to a sound event that should result in a communication to a user. Threshold values and other variables can be determined by training on audio signals of known sounds in defined environments, and implemented to distinguish human and pet sounds from other sounds and to compensate for variations in the magnitude of the audio signal, different sizes and reverberation characteristics of the environment, and variations in microphone responses.

Owner:GOOGLE LLC

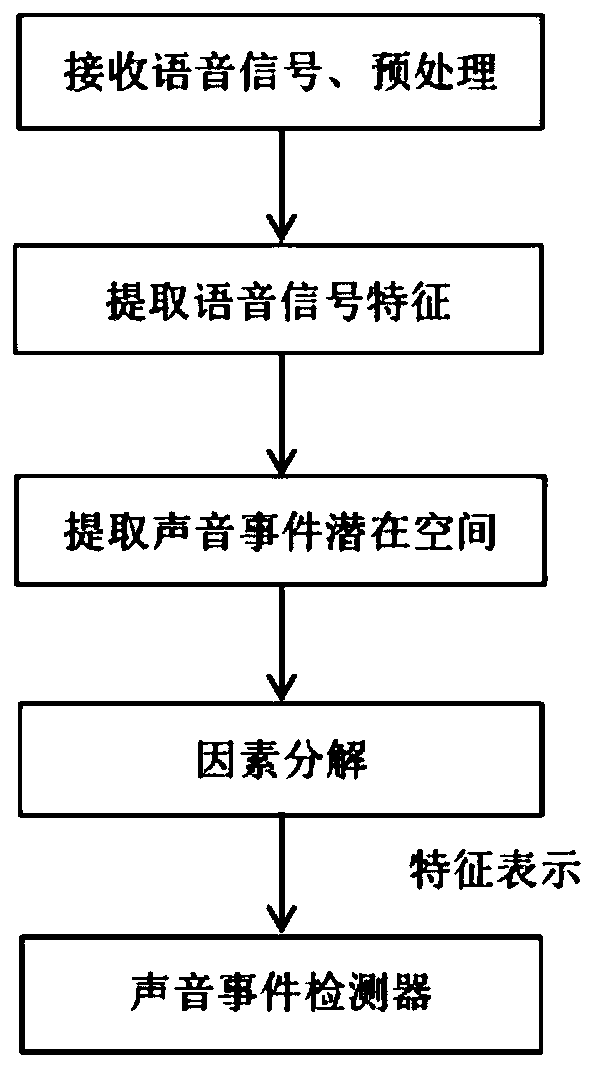

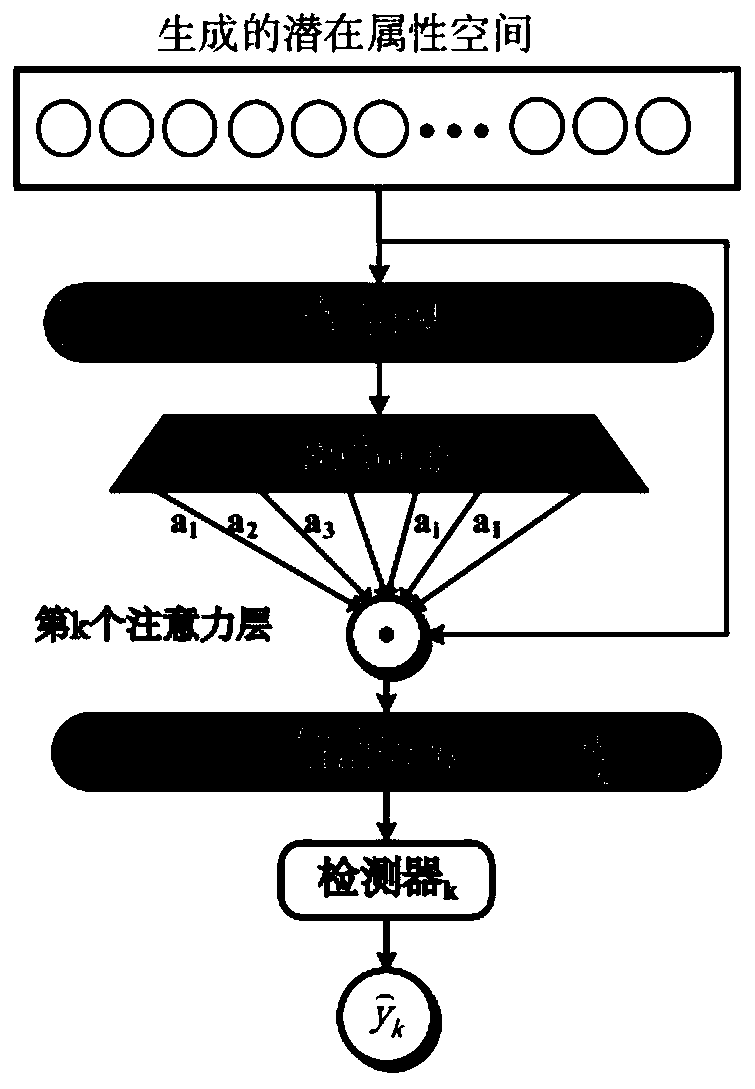

Hybrid sound event detection method based on factor decomposition of supervised variational encoder

The invention discloses a hybrid sound event detection method based on factor decomposition of a supervised variational encoder. The method includes the following steps: a voice signal is received and preprocessed; features of the preprocessed voice signal are extracted; a supervised variational autoencoder is used to extract the latent attribute space of sound events; a factor decomposition method is used to decompose the various factors that make up a hybrid sound, and the feature representation related to each specific sound event is then obtained through learning; and a corresponding sound event detector is used to detect whether each specific sound event occurs. The factor decomposition learning approach addresses the problem that sound event detection accuracy is low when the hybrid sound contains relatively many sound event categories; it effectively improves the accuracy of sound event detection in real scenes, and the method can also be used for speaker recognition and other tasks.

Owner:JIANGSU UNIV

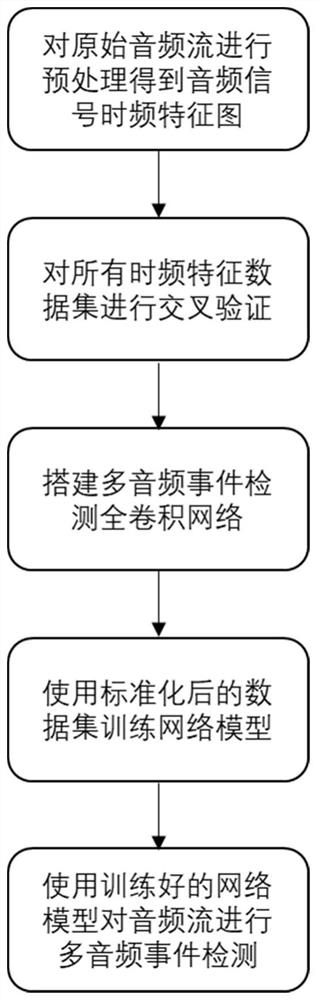

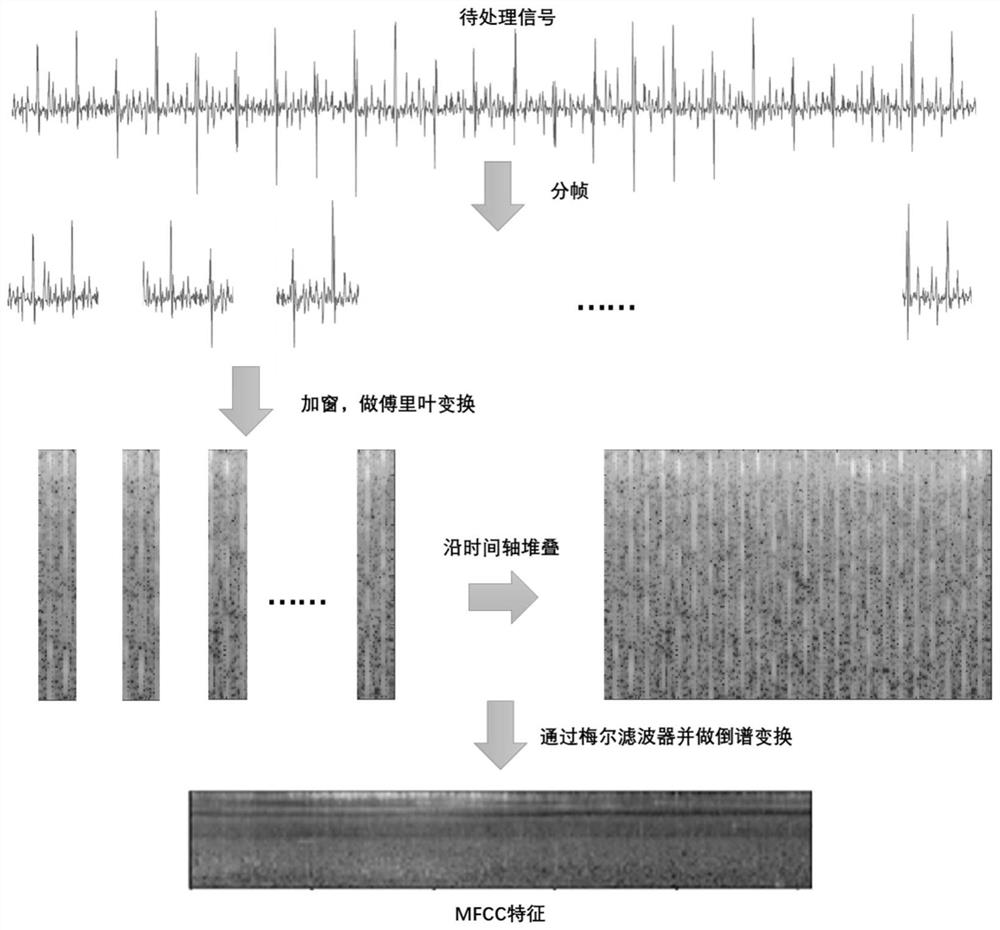

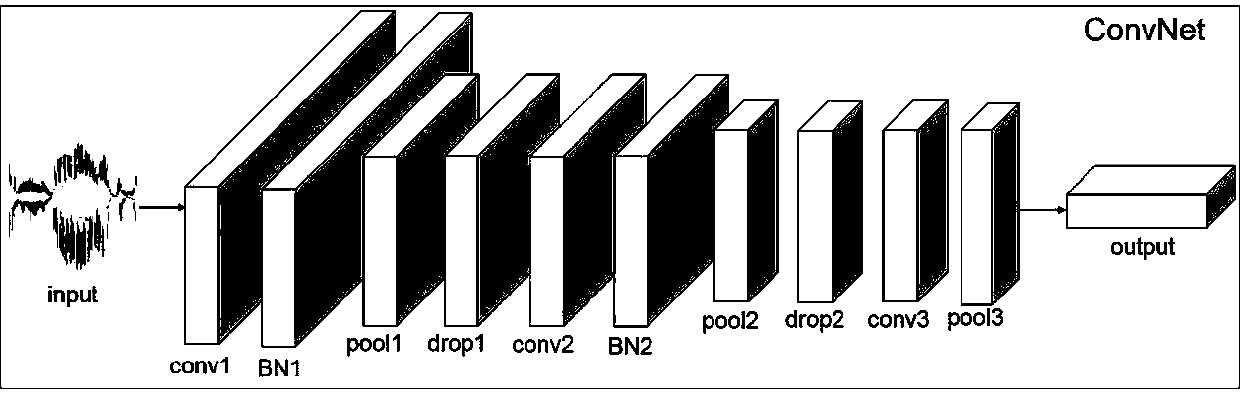

Sound event detection method based on full convolutional network

Active · CN111986699A · High precision · Reduce time complexity · Speech analysis · Character and pattern recognition · Data set · Feature extraction

The invention discloses a sound event detection method based on a full convolutional neural network, and mainly solves the problems of low multi-audio event detection precision and high time complexity of an existing network. According to the implementation scheme, the method comprises the following steps: 1) performing Mel cepstrum feature extraction on an audio stream to obtain time-frequency feature graphs of the audio stream, and forming a training data set by using the time-frequency feature graphs; 2) establishing a full convolution multi-audio event detection network composed of a frequency convolution network, a time convolution network and a decoding convolution network from top to bottom; 3) training the full convolution multi-audio event detection network by using the data set; and 4) inputting the audio stream to be detected into the trained full convolution multi-audio event detection network for multi-audio event detection to obtain the category of each audio event and its start and end times. Simulation results show that compared with an existing network 3D-CRNN with the highest precision, the precision of the method is improved by 2%, the operation speed is improved by about 5 times, and the method can be used for safety monitoring.

Owner:XIDIAN UNIV
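
Step 4 of the abstract above outputs the start and end time of each detected event. A minimal sketch of turning frame-level activity flags into such time segments is shown below; the function name, the frame hop of 0.5 seconds, and the toy activity sequence are assumptions, and the patent's decoding network is not reproduced here.

```python
def frames_to_events(active, hop_seconds):
    """Convert per-frame activity flags for one class into (start, end) times in seconds."""
    events = []
    start = None
    for i, flag in enumerate(active):
        if flag and start is None:
            start = i  # an event begins at this frame
        elif not flag and start is not None:
            events.append((start * hop_seconds, i * hop_seconds))
            start = None
    if start is not None:
        # The event is still active when the clip ends.
        events.append((start * hop_seconds, len(active) * hop_seconds))
    return events


segments = frames_to_events([0, 1, 1, 0, 0, 1], hop_seconds=0.5)
```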

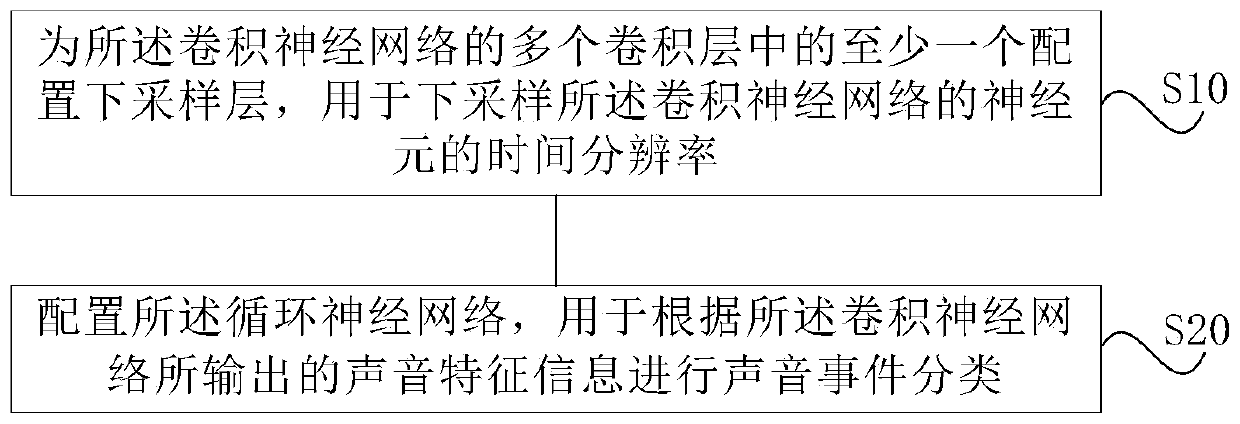

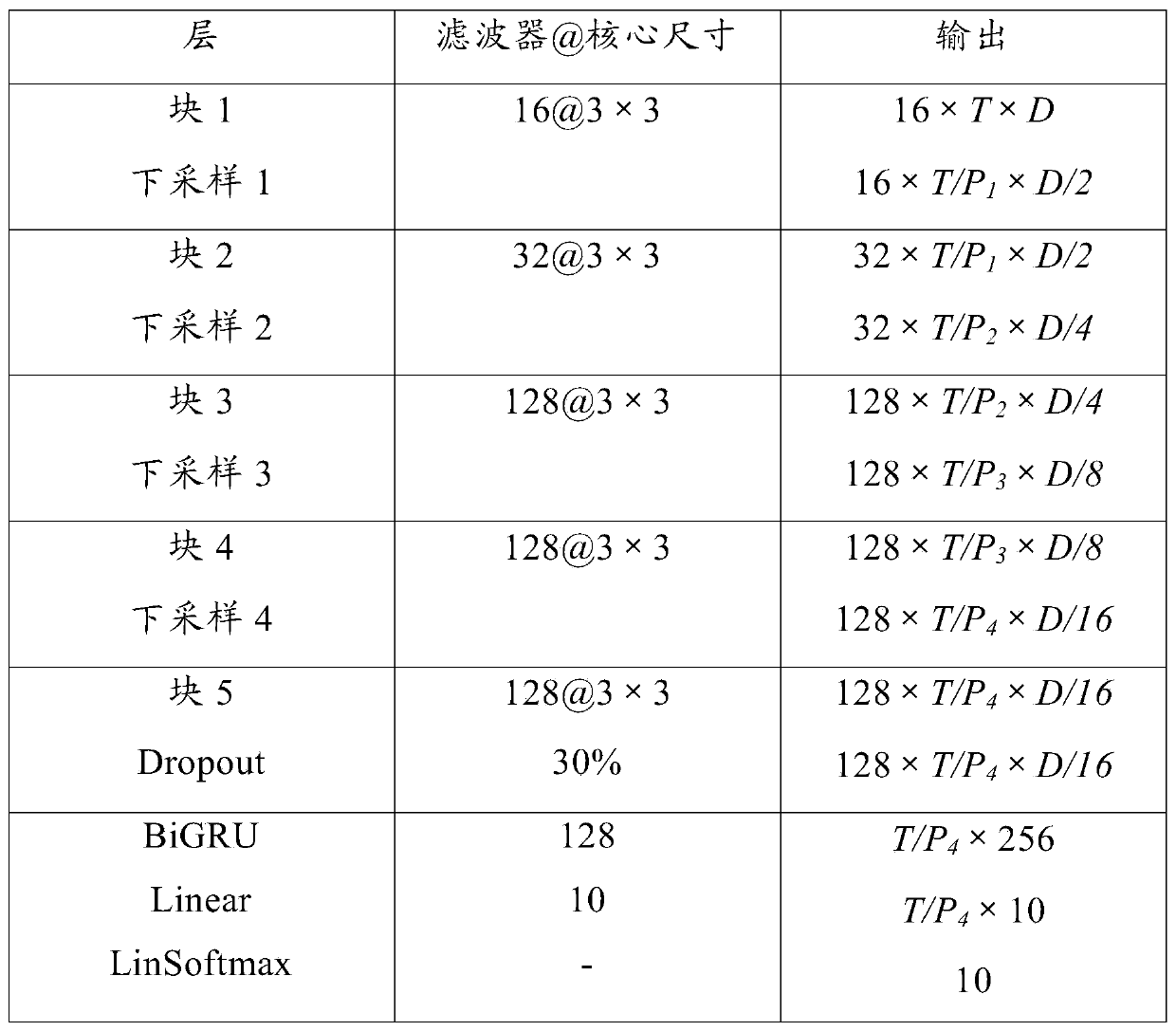

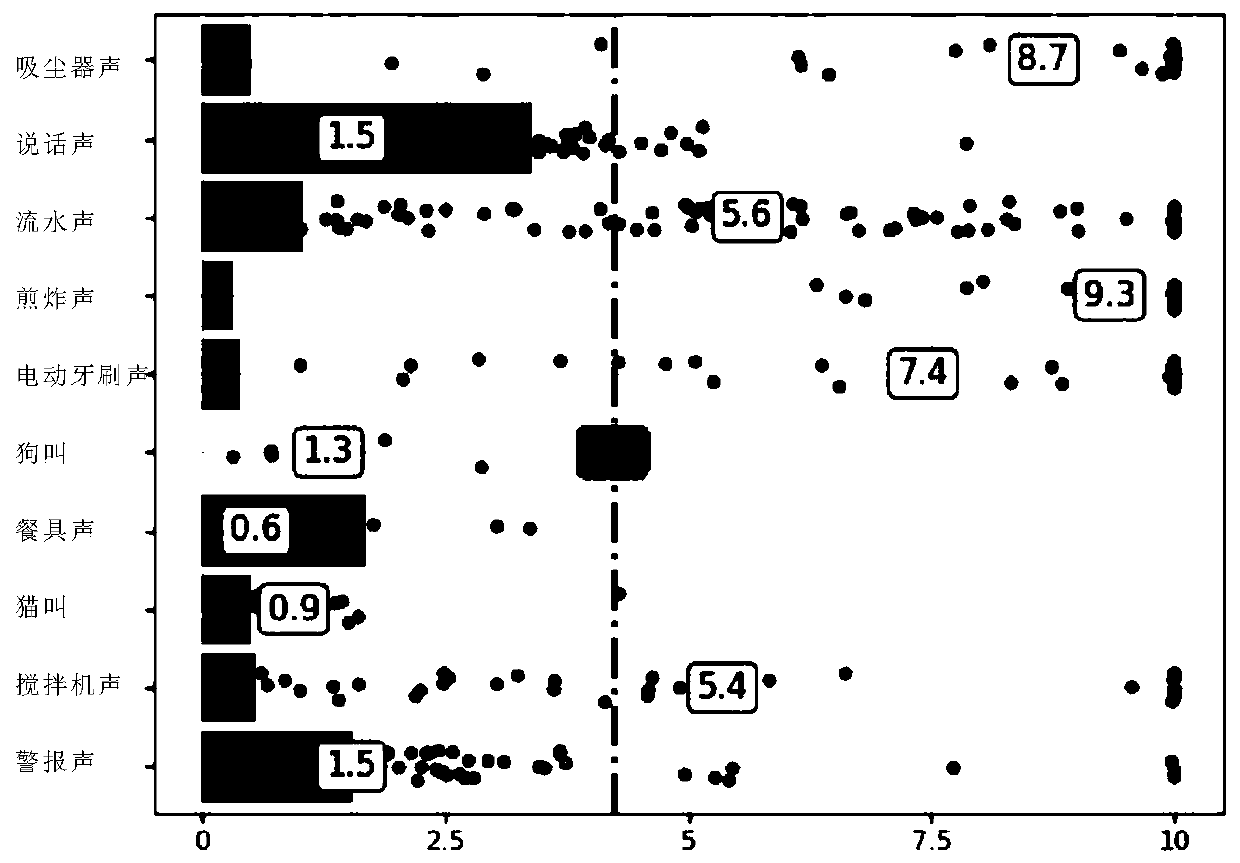

Sound event detection model training method and sound event detection method

Inactive · CN110223713A · Clear boundaries · Easy to classify · Speech analysis · Neural architectures · Image resolution · Acoustics

The invention discloses a sound event detection model training method. The sound event detection model comprises a convolutional neural network and a recurrent neural network. The training method comprises the following steps: a downsampling layer is arranged in at least one of a plurality of convolutional layers of the convolutional neural network, the downsampling layer being used to downsample the time resolution at the neuron level of the convolutional neural network; and the recurrent neural network is arranged to classify sound events according to the sound feature information output by the convolutional neural network. Because the time resolution is downsampled at the neuron level while the convolutional neural network extracts the sound feature information, sound feature information with clearer event boundaries is obtained, which makes the sound events easier for the subsequent recurrent neural network to classify, improving the accuracy of sound event classification and reducing its difficulty.

Owner:AISPEECH CO LTD

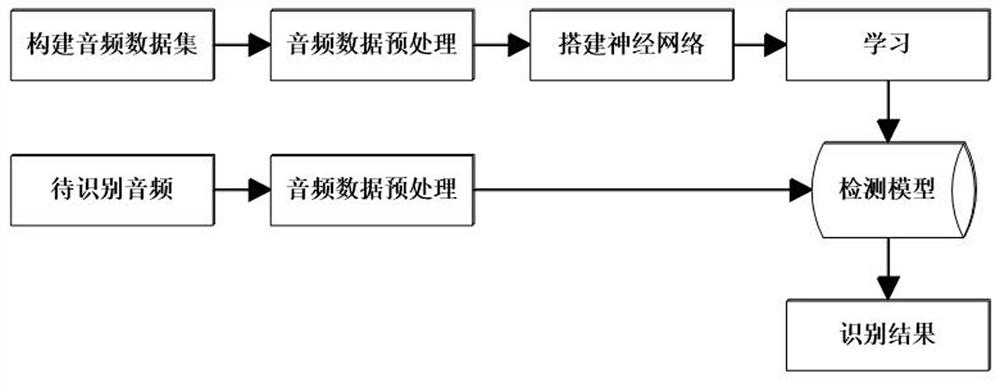

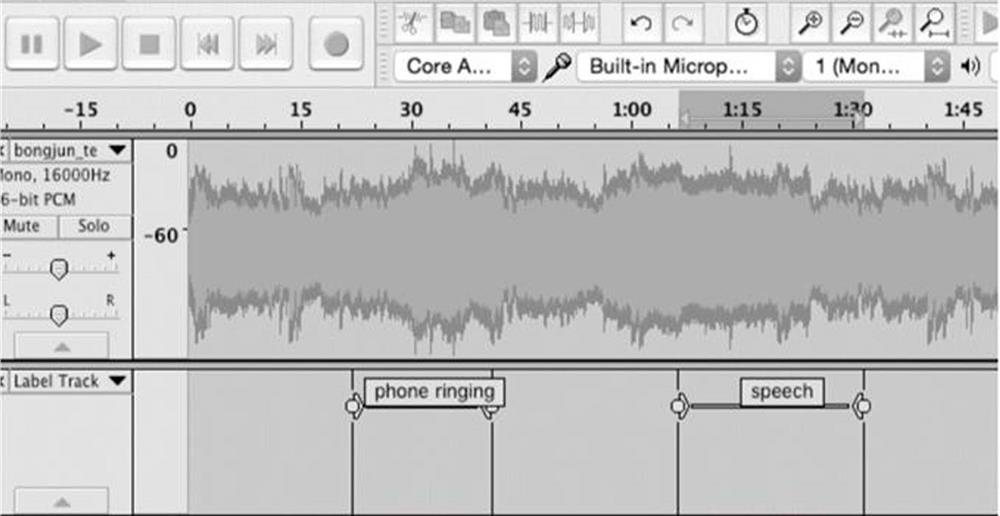

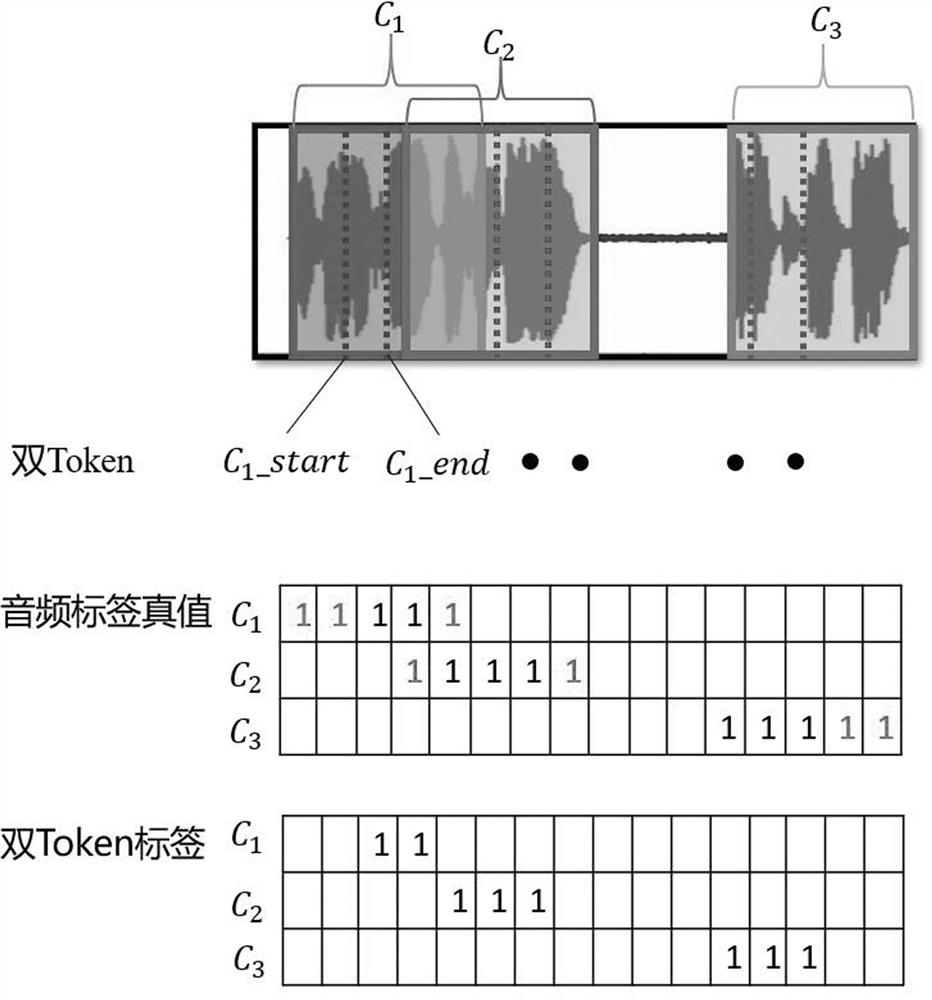

Sound event labeling and identification method adopting double Token tags

Active · CN113140226A · Accurate detection · Accurate monitoring · Speech analysis · Neural architectures · Data set · Engineering

The invention discloses a sound event labeling and recognition method adopting double Token tags, comprising a sound event labeling process and a sound event recognition process. The labeling process is as follows: 1-1) establishing the audio tag form; 1-2) completing the annotation of all audio in the data set. The recognition process comprises the following steps: 2-1) constructing an audio data set; 2-2) preprocessing the audio data and extracting features; 2-3) augmenting the audio data; 2-4) building a convolutional recurrent neural network; 2-5) training the convolutional recurrent neural network to learn a detection model; and 2-6) using the trained detection model to identify the audio to be detected. The method maintains accuracy while widening the range of recognizable sound events at low cost, and enables accurate sound event detection and monitoring in people's living environments, thereby better serving smart city construction.

Owner:GUILIN UNIV OF ELECTRONIC TECH

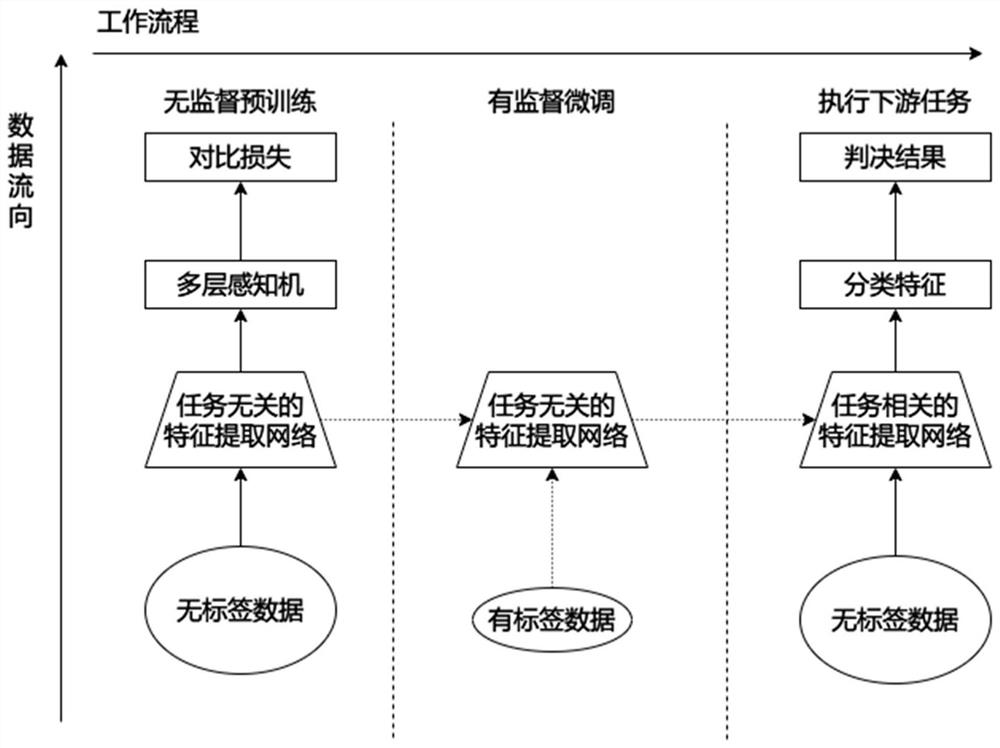

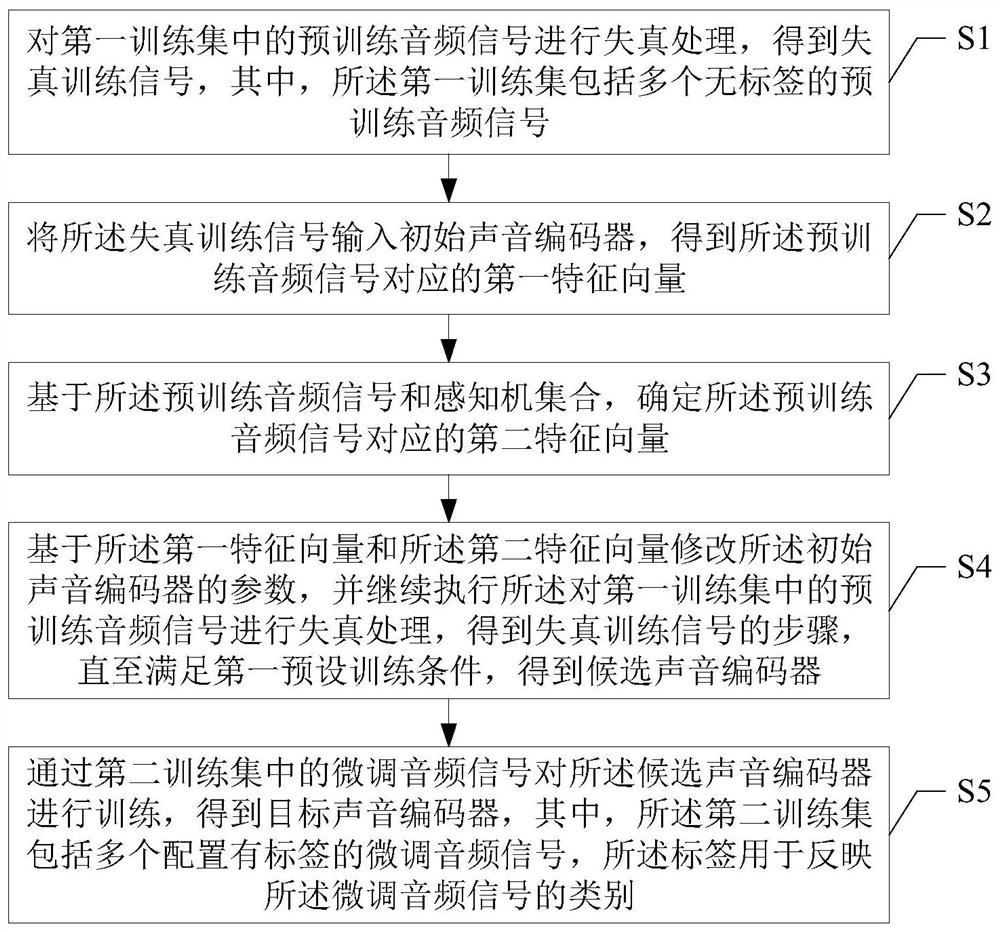

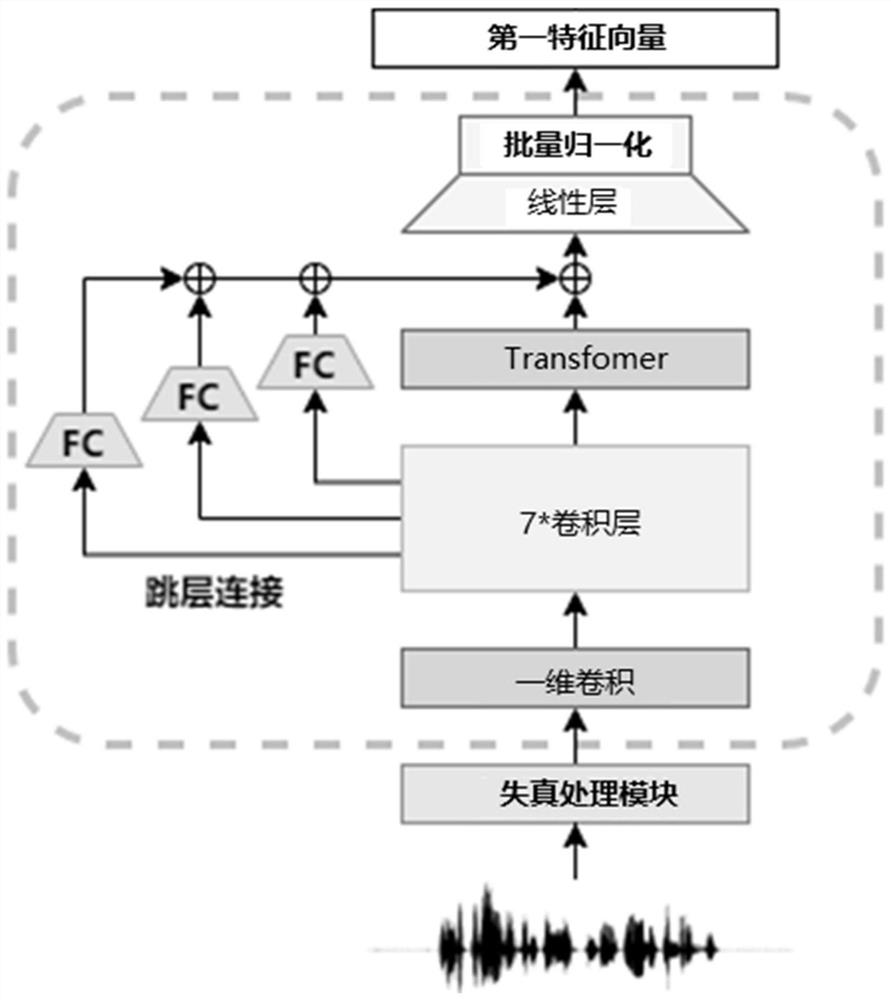

Method for generating sound encoder for sound event detection

Active · CN113205820A · Reduce dependence · Improve robustness · Speech analysis · Character and pattern recognition · Sound event detection · Audio frequency

The invention provides a method for generating a sound encoder for sound event detection, which comprises the following steps of: performing distortion processing on unlabeled pre-training audio signals in a first training set to obtain distortion training signals; inputting the distortion training signal into an initial sound encoder to obtain a first feature vector; determining a second feature vector based on the pre-training audio signal and the perceptron set; modifying parameters of the initial sound encoder based on the first feature vector and the second feature vector to obtain a candidate sound encoder; and training the candidate sound encoder through the fine tuning audio signal with the label in the second training set to obtain a target sound encoder. According to the invention, the initial sound encoder is pre-trained through the pre-training audio signal without the label to obtain the candidate sound encoder, and then the candidate sound encoder is fine-tuned through the fine-tuning audio signal with the label, so that the dependence on a strong label sample in the training process is reduced, and the robustness of the sound encoder is improved through distortion processing.

Owner:WUHAN UNIV

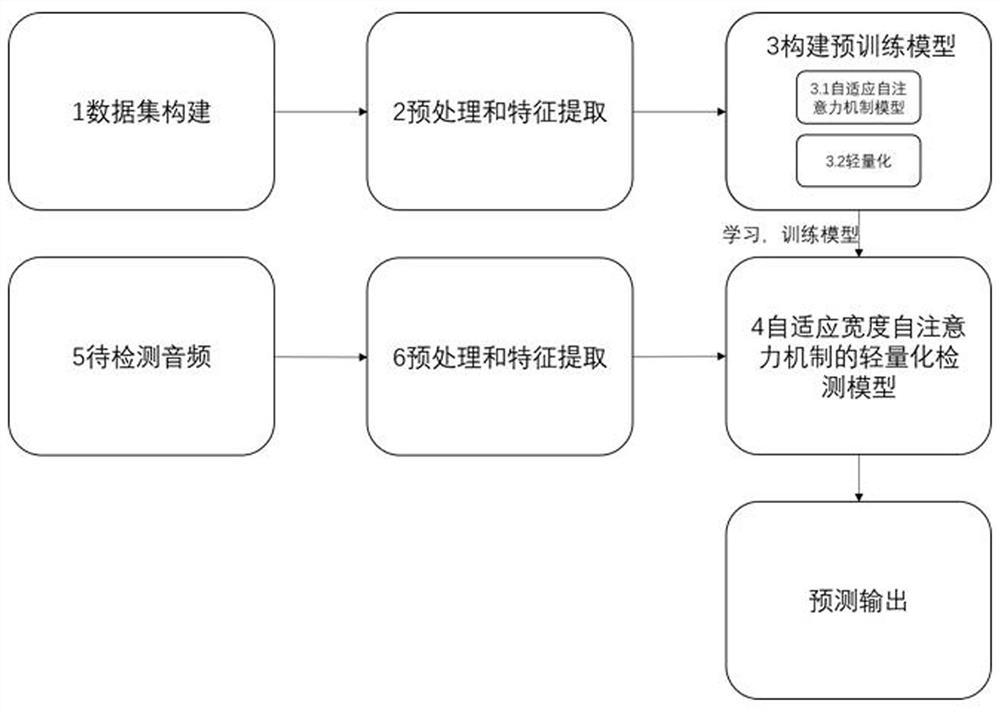

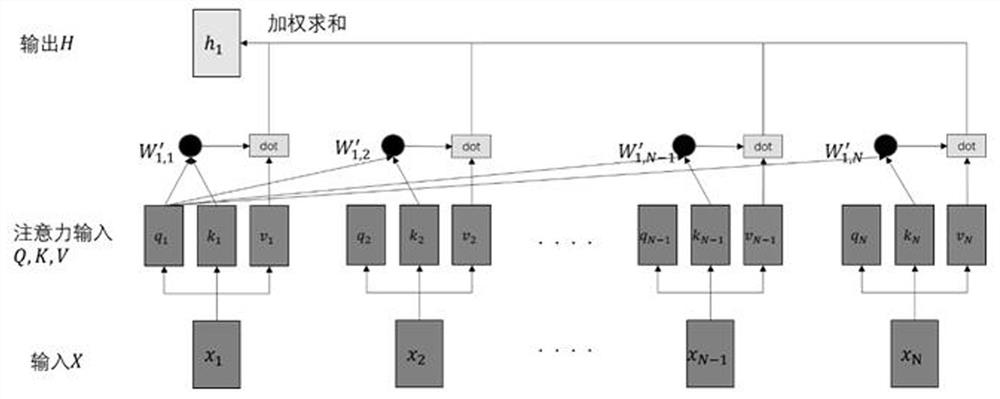

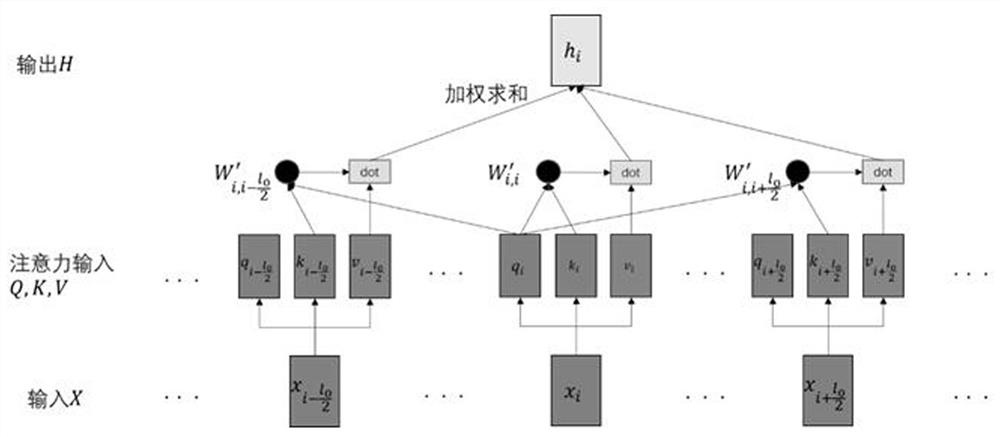

Lightweight abnormal sound event detection method based on adaptive width self-attention mechanism

Pending · CN114386518A · Avoid vanishing gradients · Avoid dependency · Character and pattern recognition · Neural architectures · Algorithm · Engineering

The invention discloses a lightweight abnormal sound event detection method based on an adaptive-width self-attention mechanism. The method comprises the following steps: first, signal processing is performed on labeled audio to obtain a time-frequency feature representation of the audio; second, the labeled feature representation (generally a vector or a matrix) is given as input to the adaptive-width self-attention model, which has a defined loss function and randomly initialized attention weights, and the loss value against the label is calculated according to the adaptive self-attention algorithm; next, the adaptive attention weights are updated by back propagation, and the three input weights of attention are updated iteratively until the loss function reaches a minimum or an acceptable state; and finally, the weight parameters at that point are stored using a lightweight method and used as the model to predict a section of unlabeled audio, so as to detect abnormal sound events quickly and accurately.

Owner:GUILIN UNIV OF ELECTRONIC TECH

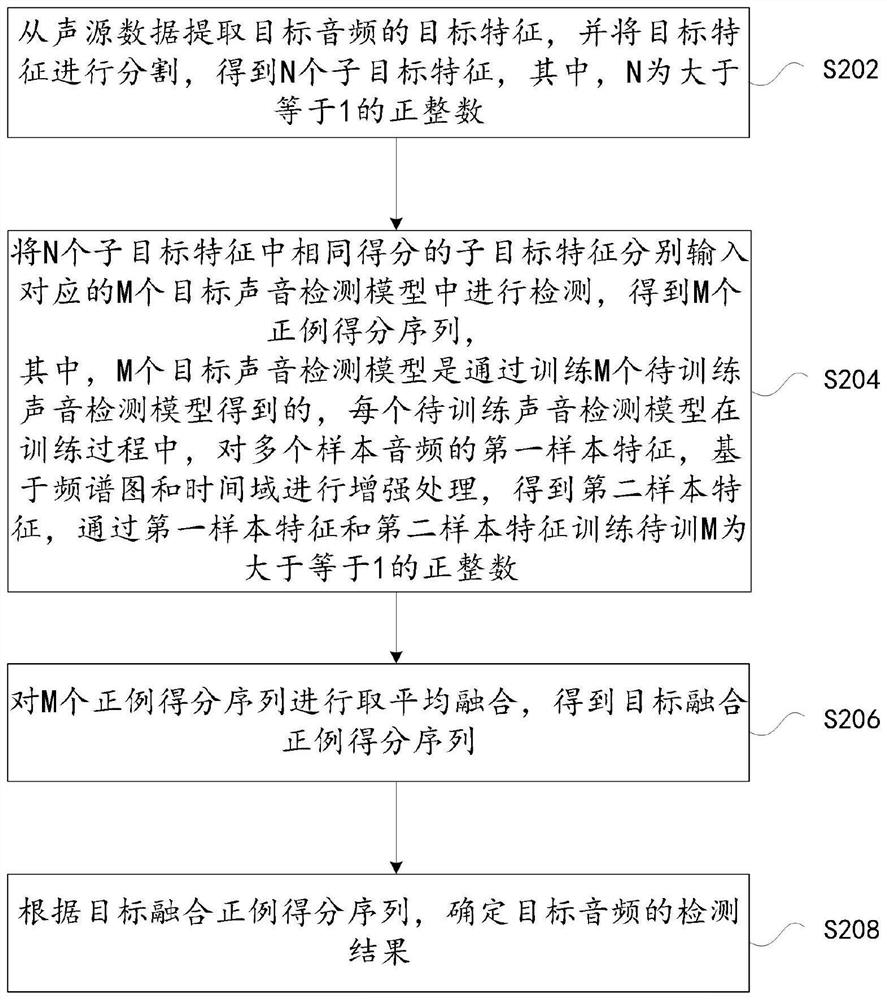

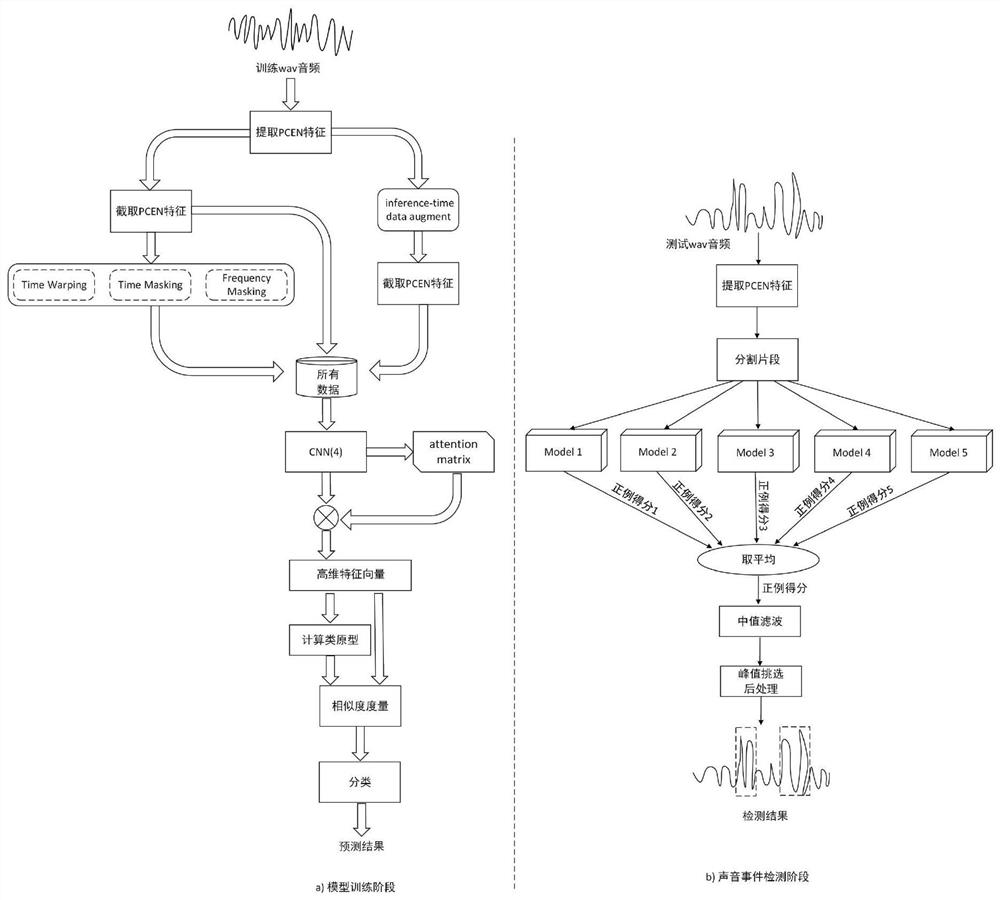

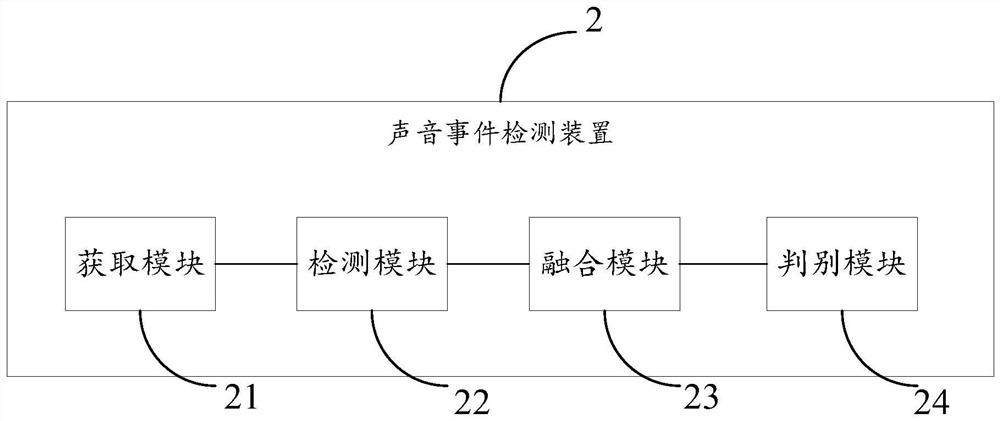

Sound event detection method and device, storage medium and electronic device

The invention discloses a sound event detection method and device, a storage medium and an electronic device. The sound event detection method comprises the following steps: acquiring a target feature of a target audio and segmenting it to obtain N sub-target features; respectively inputting the sub-target features with the same score in the N sub-target features into the corresponding M target sound detection models to obtain M positive example score sequences, where, in the training process of each target sound detection model, enhancement processing based on the spectrogram and the time domain is performed on first sample features of multiple sample audios to obtain second sample features, and the M sound detection models are trained with the first sample features and the second sample features, M being a positive integer greater than or equal to 1; averaging and fusing the M positive example score sequences; and determining the detection result of the target audio according to the resulting target fusion positive example score sequence. This addresses the technical problem of low accuracy of sound event detection results in the prior art.

Owner:SHANGHAI NORMAL UNIVERSITY +1
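
The "averaging and fusing" of the M positive example score sequences can be sketched as an element-wise mean followed by a threshold. The threshold of 0.5, the function names, and the toy scores are assumptions; the abstract does not state how the fused sequence is turned into a detection result.

```python
def fuse_scores(score_sequences):
    """Average M equally long score sequences element by element."""
    m = len(score_sequences)
    return [sum(column) / m for column in zip(*score_sequences)]


def decide(fused, threshold=0.5):
    """Mark each position as a detection when the fused score exceeds the threshold."""
    return [score > threshold for score in fused]


# Hypothetical positive-example scores from M = 2 models over two segments.
fused = fuse_scores([[0.2, 0.8], [0.4, 0.6]])
result = decide(fused)
```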

Systems and methods of home-specific sound event detection

Active · US10068445B2 · Confidence · Accurate identification · Burglar alarm by opening · Speech analysis · Computer science · Sound event detection

Systems and methods of a security system are provided, including detecting, by a sensor, a sound event, and selecting, by a processor coupled to the sensor, at least a portion of sound data captured by the sensor that corresponds to at least one sound feature of the detected sound event. The systems and methods include classifying the at least one sound feature into one or more sound categories, and determining, by a processor, based upon a database of home-specific sound data, whether the at least one sound feature is a human-generated sound. A notification can be transmitted to a computing device according to the sound event.

Owner:GOOGLE LLC

Sound event detection method based on hole convolution recurrent neural network

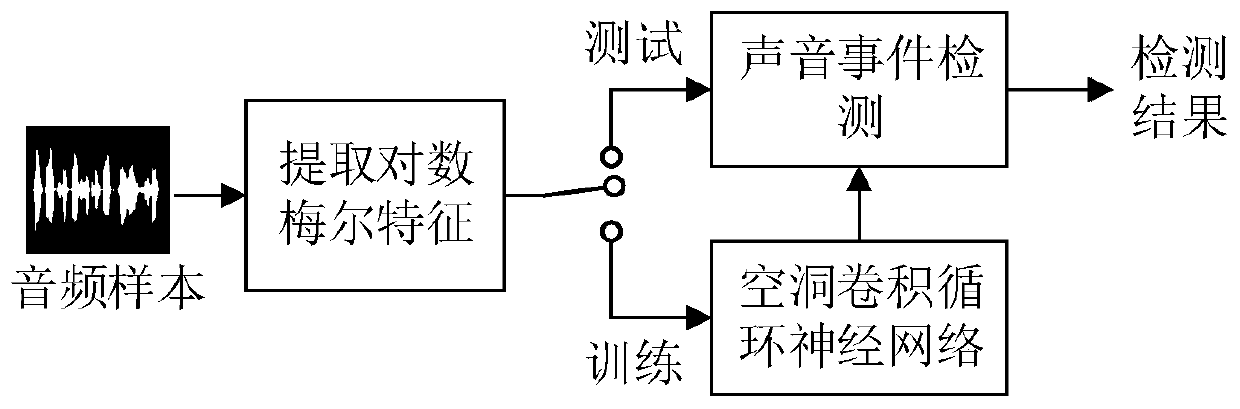

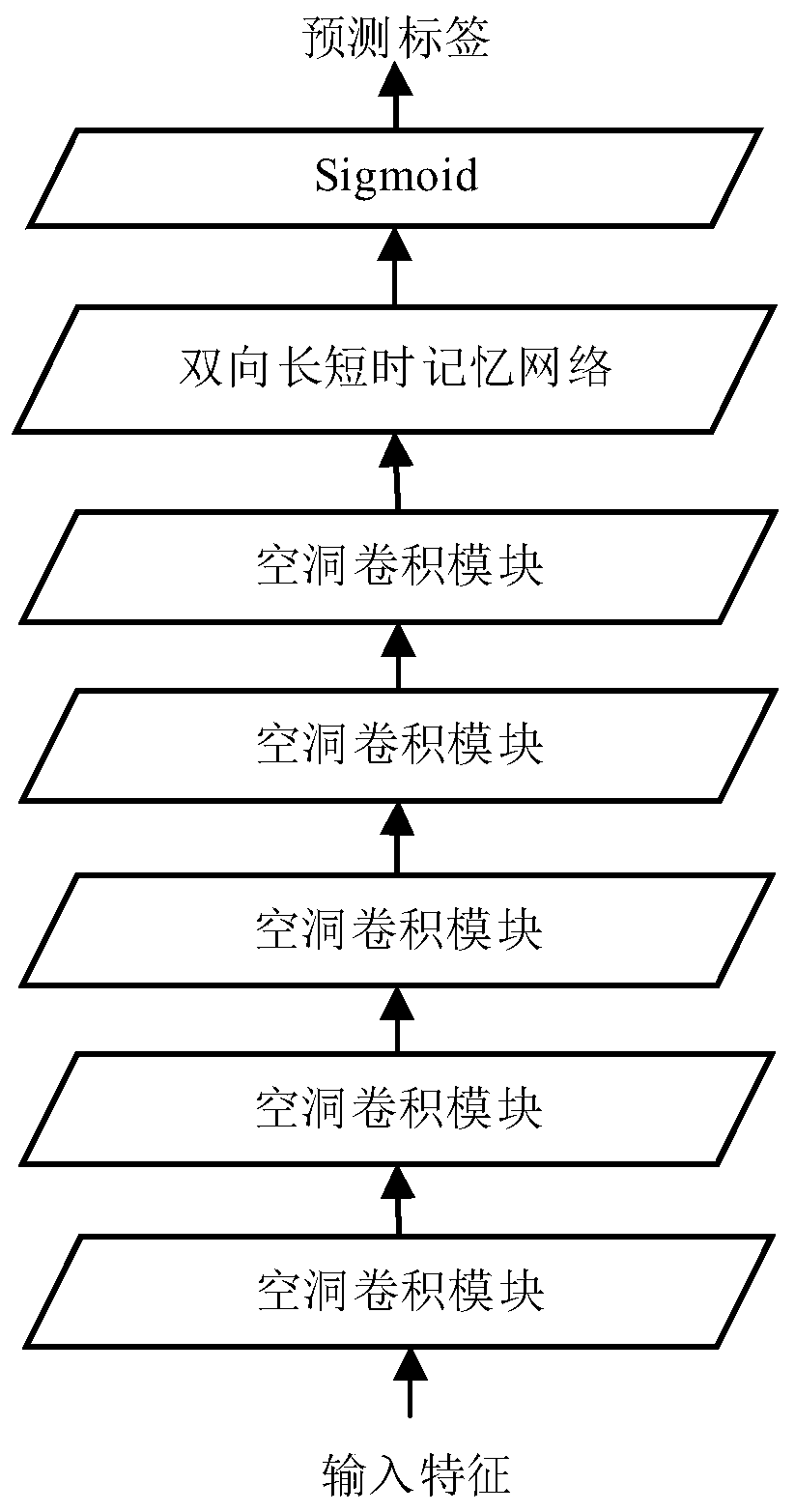

The invention discloses a sound event detection method based on a dilated (hole) convolution recurrent neural network. The method comprises the following steps: extracting log Mel spectrum features of each sample; constructing a dilated convolution recurrent neural network comprising a convolutional neural network, a bidirectional long short-term memory neural network and a Sigmoid output layer; training the dilated convolution recurrent neural network with the log Mel spectrum features extracted from the training samples as input; and identifying the sound events in the test samples with the trained network to obtain a sound event detection result. In the method, dilated convolution is introduced into a convolutional neural network, and the convolutional neural network and a recurrent neural network are optimized and combined to obtain the dilated convolution recurrent neural network. Compared with a traditional convolutional neural network, the dilated convolution recurrent neural network has a larger receptive field for the same number of network parameters, can use the contextual information of audio samples more effectively, and obtains a better sound event detection result.

Owner:SOUTH CHINA UNIV OF TECH
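
The claim that hole (dilated) convolution enlarges the receptive field without adding parameters can be checked with a small sketch. It assumes one-dimensional convolutions with stride 1 and no padding; the kernel size and the dilation rates 1, 2, 4 are illustrative choices, not values from the patent.

```python
def receptive_field(kernel_size, dilations):
    """Receptive field of stacked stride-1 convolutions with the given dilation rates."""
    field = 1
    for dilation in dilations:
        field += (kernel_size - 1) * dilation
    return field


def dilated_conv1d(x, kernel, dilation):
    """Valid (unpadded) 1-D convolution whose kernel taps are `dilation` samples apart."""
    span = (len(kernel) - 1) * dilation
    return [
        sum(kernel[k] * x[i + k * dilation] for k in range(len(kernel)))
        for i in range(len(x) - span)
    ]


plain = receptive_field(3, [1, 1, 1])    # three ordinary layers
dilated = receptive_field(3, [1, 2, 4])  # same kernels, growing dilation
output = dilated_conv1d([1, 2, 3, 4, 5], [1, 1, 1], dilation=2)
```

Both stacks use three kernels of size 3, so the parameter count is identical, yet the dilated stack sees more than twice as many input frames.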

Sound event detection method, device and system and readable storage medium

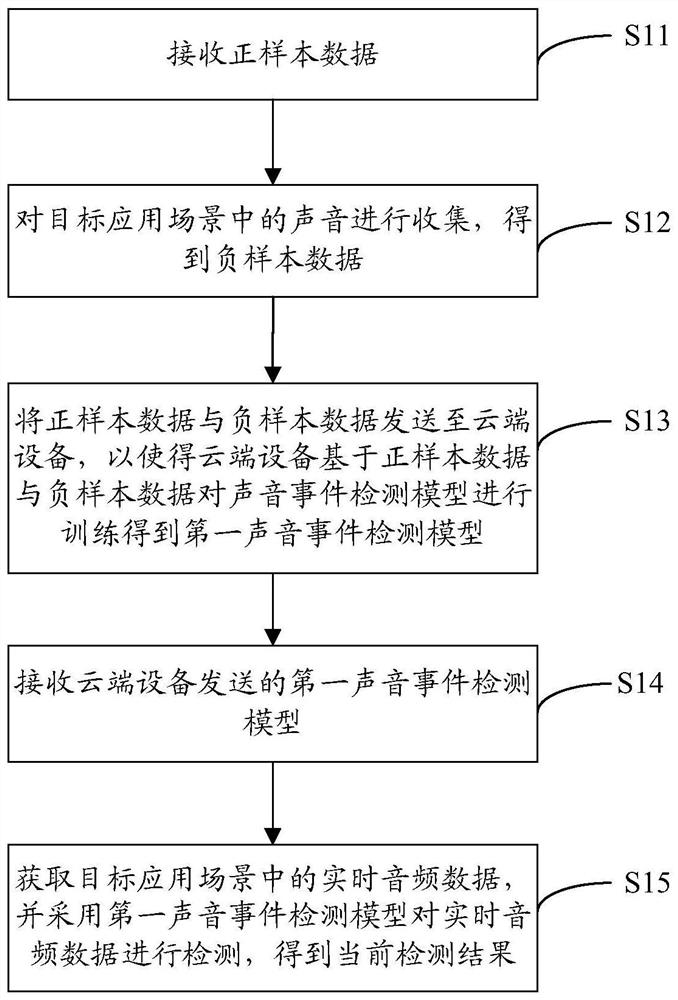

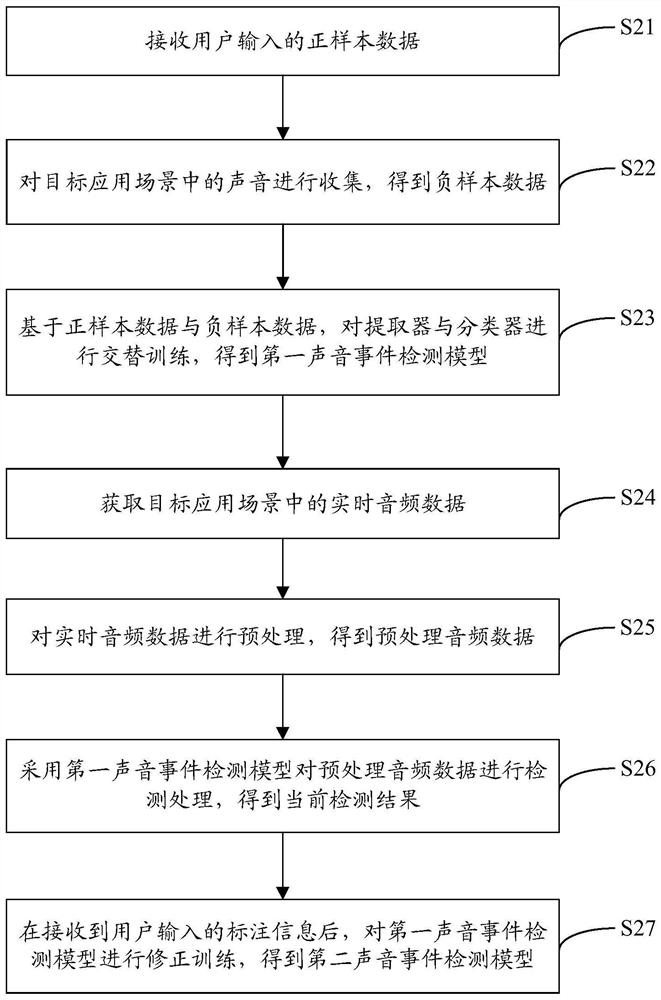

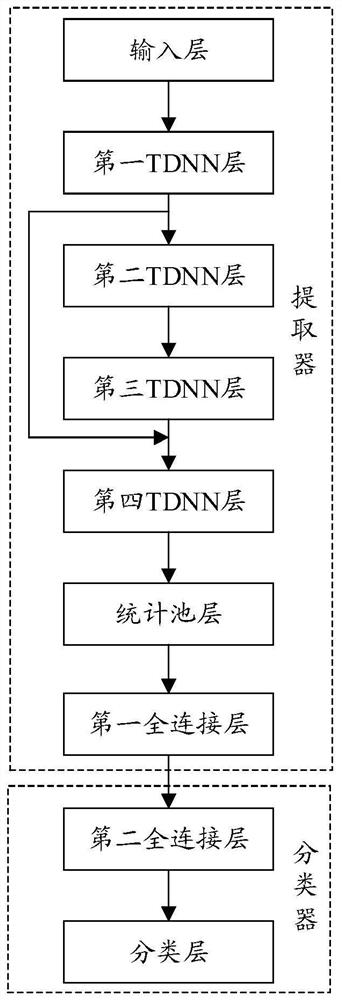

Pending · CN114333898A · The cost of customization is not high · Privacy protection · Speech analysis · Neural architectures · Positive sample · Sound event detection

The invention discloses a sound event detection method, device and system and a readable storage medium, the method is applied to a detection device, and the method comprises the following steps: receiving positive sample data, the positive sample data being audio data related to a target sound event occurring in a target application scene; collecting sound in the target application scene to obtain negative sample data; sending the positive sample data and the negative sample data to a cloud device, so that the cloud device trains a sound event detection model based on the positive sample data and the negative sample data to obtain a first sound event detection model; and obtaining real-time audio data in the target application scene, and detecting the real-time audio data by using the first sound event detection model to obtain a current detection result, the current detection result being a detection result of whether the target sound event occurs in the target application scene. In this way, the detection effect can be improved, and a user can customize the detection type of the sound event detection model.

Owner:IFLYTEK CO LTD

Sound event detection method and device and readable storage medium

Active · CN114664290A · Lighten the computational burden · Reduce occupancy · Speech recognition · Neural architectures · Feature extraction · Engineering

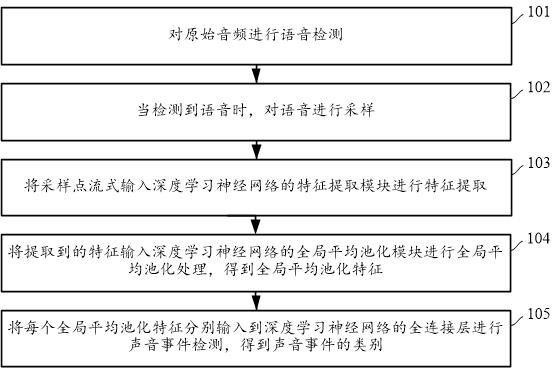

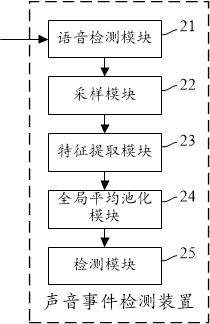

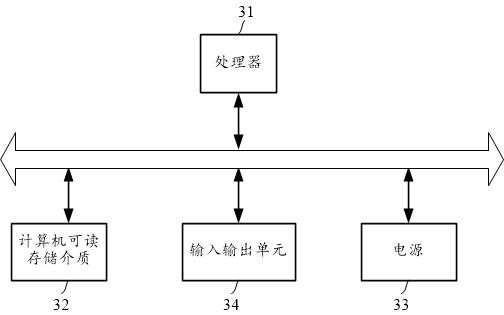

The embodiment of the invention provides a sound event detection method and device and a readable storage medium. The method comprises the following steps: performing voice detection on original audio; when the voice is detected, sampling the voice; inputting the sampling points into a feature extraction module of a deep learning neural network in a streaming manner for feature extraction; inputting the extracted features into a global average pooling module of a deep learning neural network for global average pooling processing to obtain global average pooling features; and respectively inputting each global average pooling feature into a full connection layer of a deep learning neural network to carry out sound event detection so as to obtain the category of the sound event. According to the embodiment of the invention, the calculation burden of the NPU is reduced, the calculation resources are saved, the occupation of the cache is reduced, and the real-time performance of sound event detection is improved.

Owner:SHENZHEN MICROBT ELECTRONICS TECH CO LTD
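
One way to see why global average pooling suits streaming input is that the average can be maintained as a running sum, so past feature frames need not be cached. The sketch below illustrates that idea only; the class name, the two-channel toy frames, and the weights of the fully connected layer are hypothetical and not taken from the patent.

```python
class StreamingAveragePool:
    """Global average pooling over time, updated one feature frame at a time."""

    def __init__(self, channels):
        self.sums = [0.0] * channels
        self.count = 0

    def update(self, frame):
        # Accumulate each channel; the frame itself can be discarded afterwards.
        for channel, value in enumerate(frame):
            self.sums[channel] += value
        self.count += 1

    def value(self):
        return [total / self.count for total in self.sums]


def fully_connected(features, weights, biases):
    """Dense layer: one output score per row of weights."""
    return [sum(w * f for w, f in zip(row, features)) + b for row, b in zip(weights, biases)]


pool = StreamingAveragePool(channels=2)
for frame in [[1.0, 4.0], [2.0, 4.0], [3.0, 4.0]]:
    pool.update(frame)
pooled = pool.value()
scores = fully_connected(pooled, weights=[[1.0, 0.0], [0.0, 1.0]], biases=[0.0, 0.5])
predicted_class = scores.index(max(scores))
```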

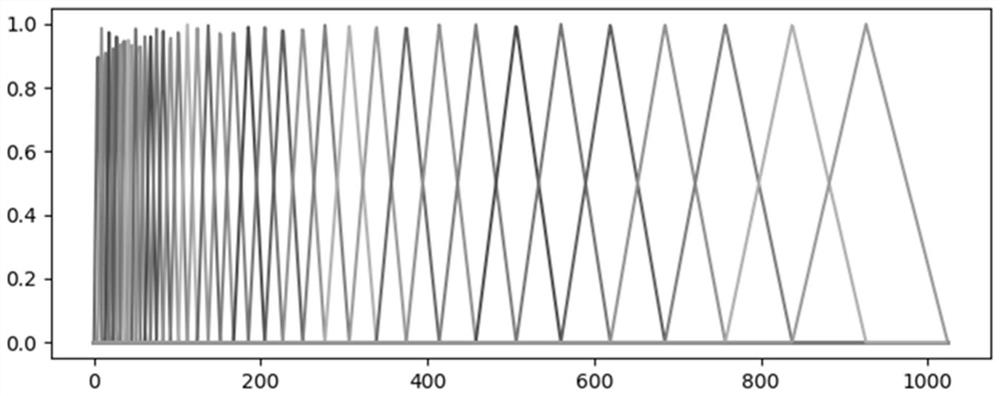

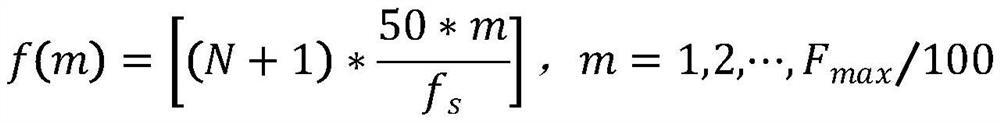

Transformer sound event detection method based on voiceprint recognition

Inactive · CN112599134A · Resolve overlap · Improve intelligence · Speech analysis · Sound event detection · Speech sound

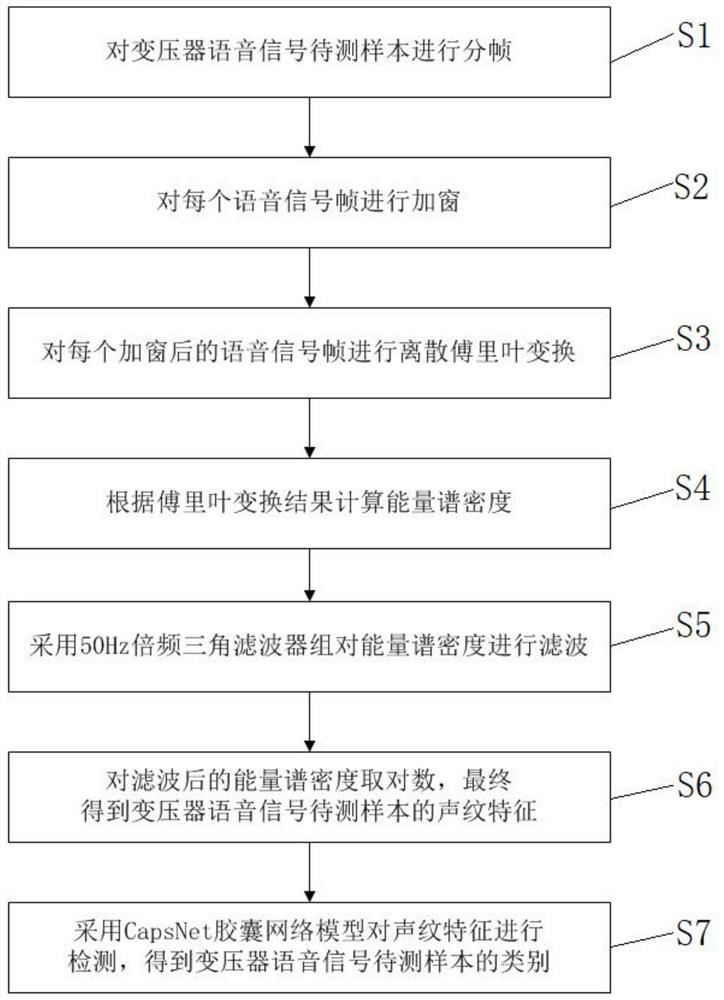

The invention provides a transformer sound event detection method based on voiceprint recognition. The method comprises the following steps: framing a to-be-detected sample of a transformer voice signal; windowing each voice signal frame; carrying out a discrete Fourier transform on each windowed voice signal frame; calculating an energy spectral density from the Fourier transform result; filtering the energy spectral density with a triangular filter bank centered on multiples of 50 Hz; taking the logarithm of the filtered energy spectral density to obtain the voiceprint features of the to-be-detected sample of the transformer voice signal; and detecting the voiceprint features with a CapsNet capsule network model trained in advance to obtain the category of the to-be-detected sample. According to the method, the problem of overlapping transformer sound events can be solved, the recognition accuracy is high, and the intelligence level of online detection of transformer sound events is improved.

Owner:STATE GRID ANHUI ELECTRIC POWER +4
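
The feature pipeline in the abstract above (window, discrete Fourier transform, energy spectrum, 50 Hz-based triangular filters, logarithm) can be sketched in plain Python. This is an interpretation under stated assumptions: the filters are taken to be triangles centered on multiples of 50 Hz with a half-width of 50 Hz, the sample rate of 800 Hz and frame length of 64 are toy values, and all function names are hypothetical. The CapsNet classifier is not shown.

```python
import cmath
import math


def power_spectrum(frame):
    """Energy spectrum of one frame via a direct DFT (non-negative frequency bins)."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        spectrum.append(abs(coeff) ** 2 / n)
    return spectrum


def harmonic_filterbank(n_filters, n_fft, sample_rate, base_hz=50.0):
    """Triangular filters centered on base_hz, 2*base_hz, ... (assumed layout)."""
    bin_hz = sample_rate / n_fft
    bank = []
    for m in range(1, n_filters + 1):
        center = m * base_hz
        bank.append([max(0.0, 1.0 - abs(k * bin_hz - center) / base_hz)
                     for k in range(n_fft // 2 + 1)])
    return bank


def log_filterbank_features(frame, bank, floor=1e-10):
    """Log of the filtered energy spectrum; `floor` guards against log(0)."""
    spectrum = power_spectrum(frame)
    return [math.log(max(floor, sum(w * p for w, p in zip(weights, spectrum))))
            for weights in bank]


sample_rate, n_fft = 800, 64
window = [0.5 - 0.5 * math.cos(2 * math.pi * t / (n_fft - 1)) for t in range(n_fft)]
# Toy frame: a windowed 100 Hz tone, i.e. the second multiple of 50 Hz.
frame = [window[t] * math.sin(2 * math.pi * 100 * t / sample_rate) for t in range(n_fft)]
bank = harmonic_filterbank(4, n_fft, sample_rate)
features = log_filterbank_features(frame, bank)
```

In this toy case the largest feature is the one from the filter centered on 100 Hz.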

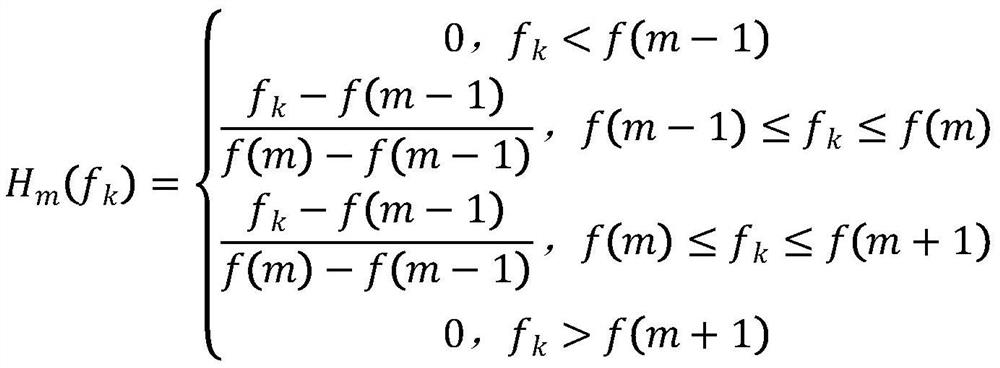

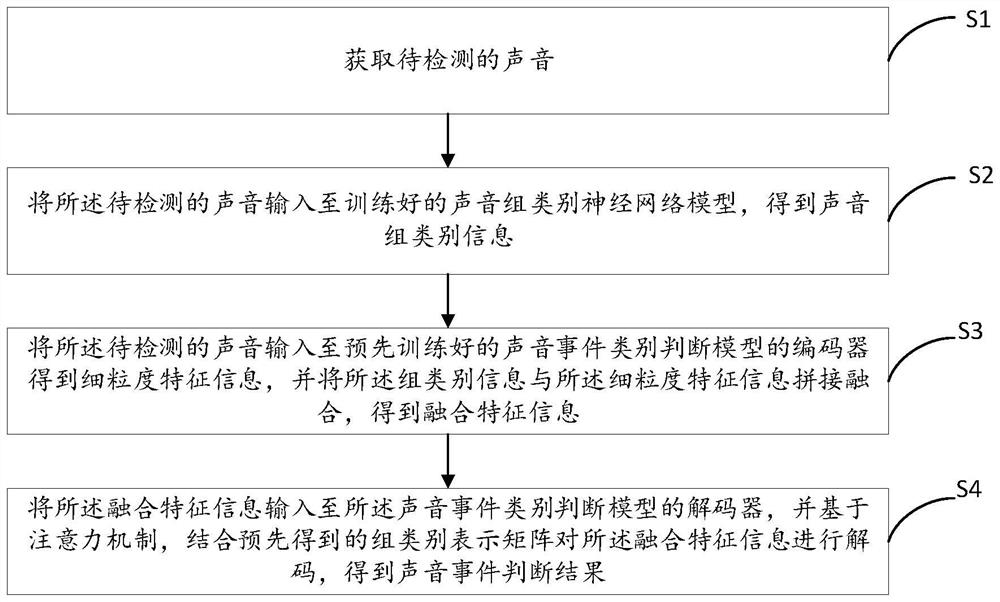

Sound event detection method and device, equipment and storage medium

The invention relates to the technical field of sound recognition, and provides a low-resource sound event detection method and device, equipment and a storage medium. The method comprises the steps of: obtaining a to-be-detected sound; inputting the to-be-detected sound into a trained sound group category neural network model to obtain sound group category information; inputting the to-be-detected sound into the encoder of a pre-trained sound event category judgment model to obtain fine-grained feature information, and splicing and fusing the group category information and the fine-grained feature information to obtain fused feature information; and inputting the fused feature information into the decoder of the sound event category judgment model, which decodes it based on an attention mechanism in combination with a pre-obtained group category representation matrix to obtain a sound event judgment result. In the invention, information-rich coarse-grained group category information is derived from the to-be-detected sound, and auxiliary discrimination based on the coarse-grained sound event results is realized through a self-attention mechanism.

Owner:PING AN TECH (SHENZHEN) CO LTD

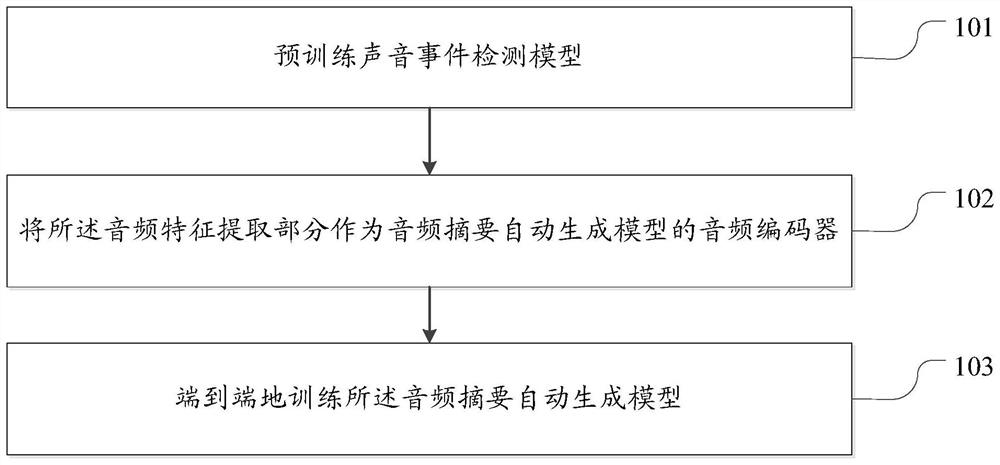

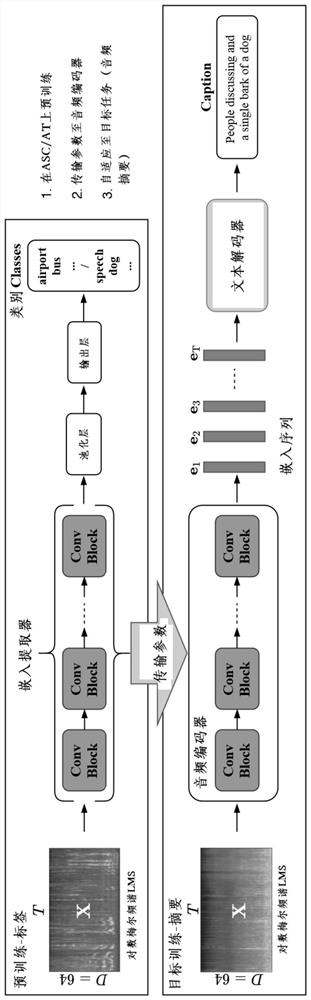

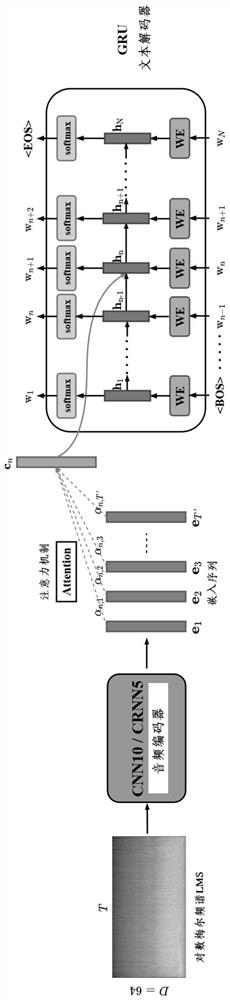

Automatic audio summary generation method and device

Active · CN112784094A · Support for practical applications · Accurate Audio Summary Description · Character and pattern recognition · Special data processing applications · Text database · Feature extraction

The invention discloses an automatic audio summary generation method and device. The method comprises the steps of: pre-training a sound event detection model comprising an audio feature extraction part and an output part; using the audio feature extraction part as the audio encoder of an automatic audio summary generation model; and training the automatic audio summary generation model end to end. According to the scheme provided by the embodiment of the invention, a better audio encoder is obtained through pre-training and transfer learning on the sound event detection task, so that more accurate audio summary descriptions are generated, a corresponding text description can be generated for any new audio, an audio-text database can be established automatically, and practical applications such as audio retrieval engines driven by unrestricted natural language can be supported.

Owner:AISPEECH CO LTD

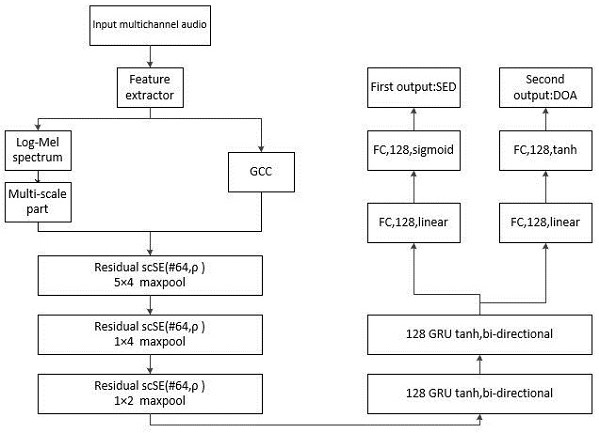

Sound event detection and positioning method based on deep learning

In an overlapped sound event detection task, extracted global features sometimes cannot accurately detect and locate the sound events in the overlapped parts. Using a Gated Recurrent Unit (GRU) on top of a multi-scale spatial channel squeeze excitation convolutional network to obtain the short-term and long-term context-related sequence features of a sound event, the invention provides a sound event detection and positioning model based on multi-scale spatial channel squeeze excitation (MscSE). The model is compared experimentally with a baseline model and a residual network model on the public data set DCASE 2020 Task 3. In the best results, the detection ER is 0.59 and the F1 score is 50.7%, and the positioning error DE score and the DE_F1 score are 15.8% and 70.3% respectively; the F1 score is 2%-5% higher than that of the other models, and the ER is also lower. Compared with a single-scale model, the multi-scale squeeze excitation model therefore improves sound event detection and positioning performance.

Owner:HARBIN UNIV OF SCI & TECH
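
For readers unfamiliar with the ER and F1 figures quoted above, the sketch below computes the widely used segment-based error rate and F1 score from per-segment label sets. It is a generic illustration with made-up labels, not the scoring code behind the reported numbers; the challenge's own evaluation also takes localization into account, which this sketch omits.

```python
def segment_metrics(reference, predicted):
    """Segment-based error rate (ER) and F1 from per-segment sets of active labels."""
    tp = fp = fn = 0
    substitutions = deletions = insertions = 0
    n_reference = 0
    for ref, pred in zip(reference, predicted):
        seg_fp = len(pred - ref)  # predicted but not in the reference
        seg_fn = len(ref - pred)  # in the reference but missed
        tp += len(ref & pred)
        fp += seg_fp
        fn += seg_fn
        # A miss paired with a false alarm in the same segment counts as a substitution.
        substitutions += min(seg_fp, seg_fn)
        deletions += max(0, seg_fn - seg_fp)
        insertions += max(0, seg_fp - seg_fn)
        n_reference += len(ref)
    error_rate = (substitutions + deletions + insertions) / n_reference
    f1 = 2 * tp / (2 * tp + fp + fn)
    return error_rate, f1


reference = [{"a"}, {"a", "b"}, set(), {"c"}]
predicted = [{"a"}, {"a"}, {"b"}, {"d"}]
er, f1 = segment_metrics(reference, predicted)
```

Lower ER and higher F1 are better; ER can exceed 1 when there are many insertions.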

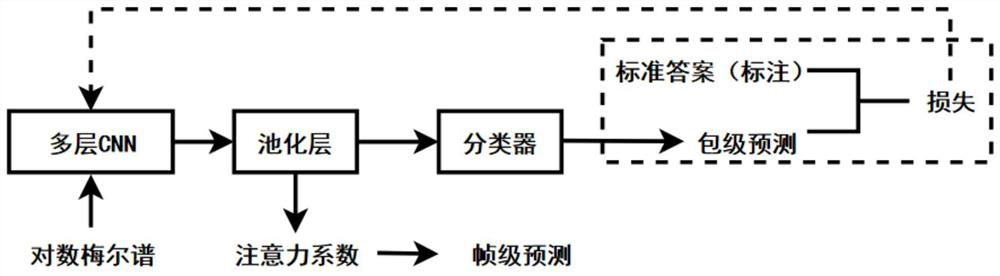

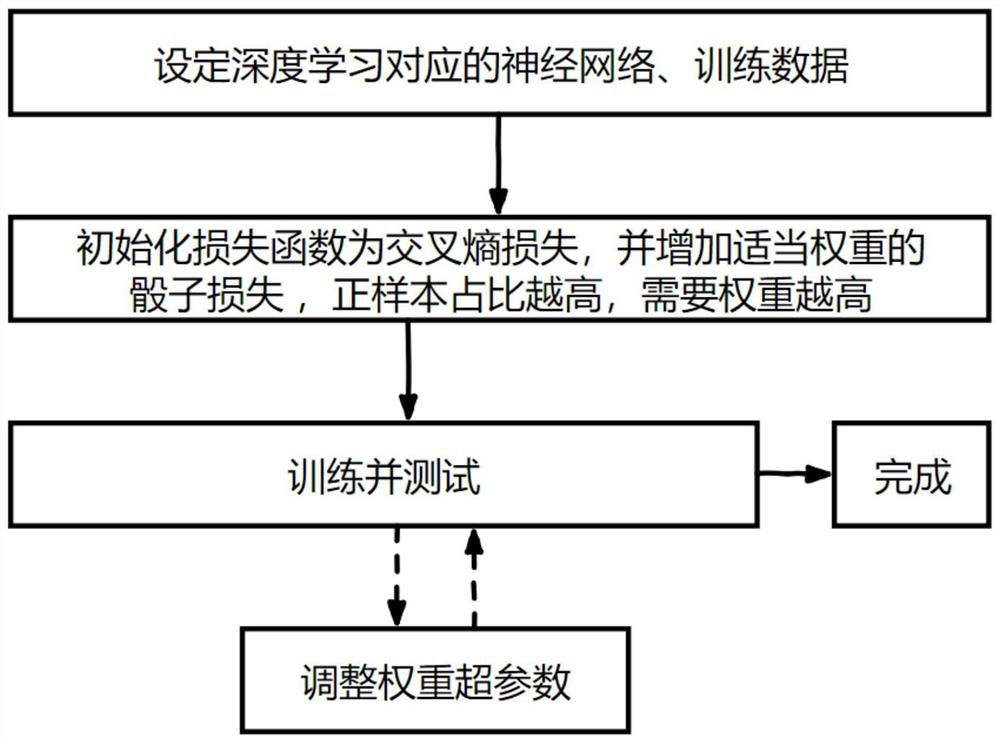

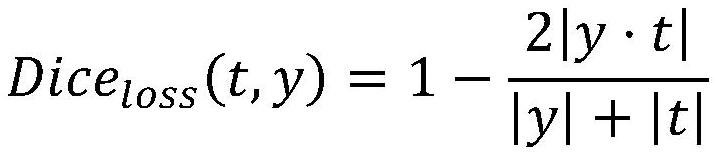

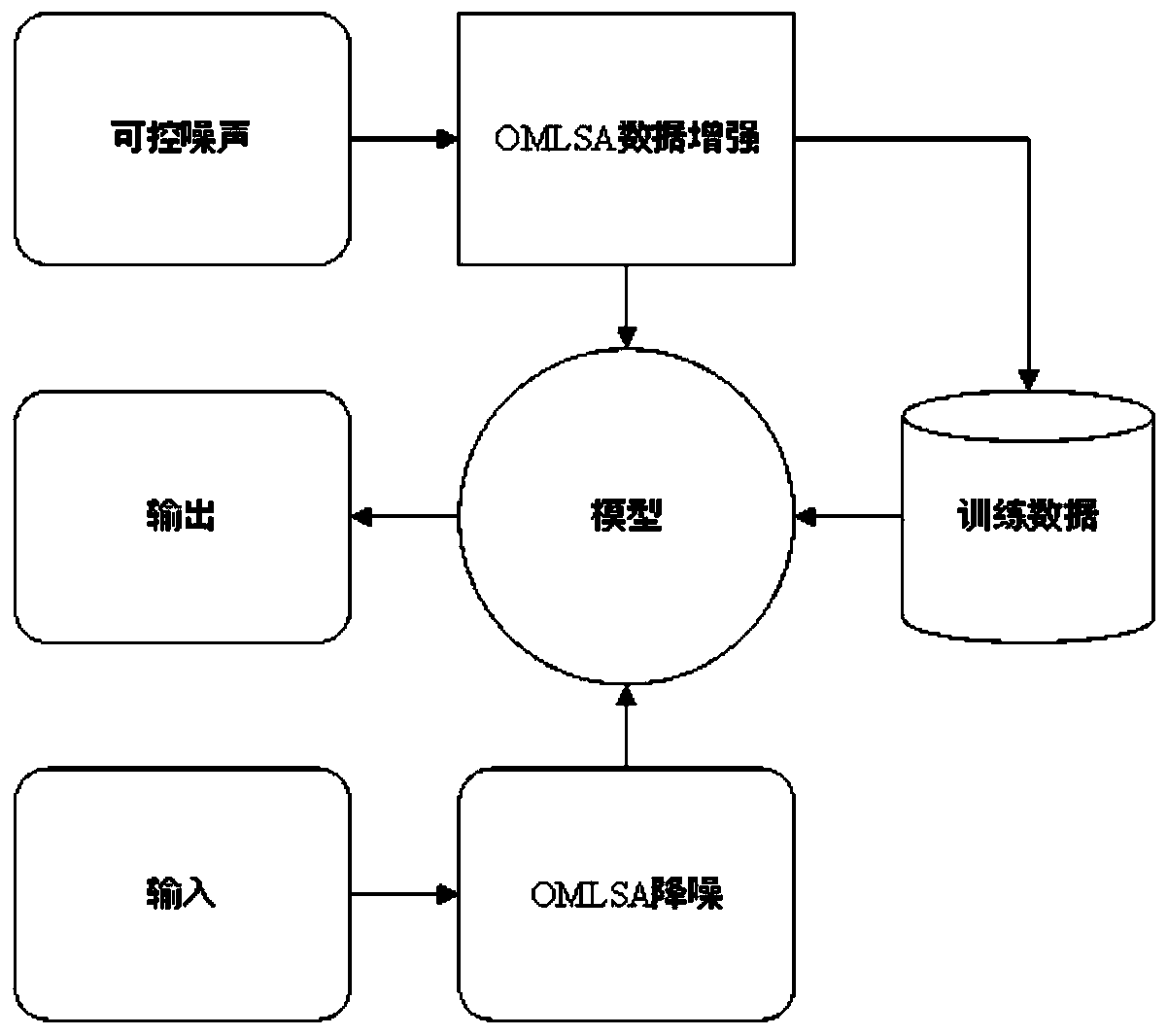

High-recall-rate weak-annotation sound event detection method

Active · CN112036477A · Solve the problem of uneven sample distribution · Character and pattern recognition · Neural architectures · Pattern recognition · Positive sample

The invention discloses a high-recall-rate weak-annotation sound event detection method, and the method comprises the steps: setting a neural network and training data corresponding to deep learning; initializing a loss function as cross entropy loss, and adding a plurality of groups of dice losses with different weights, wherein the higher the positive sample proportion is, the larger the required weight is; training, testing and observing experimental results of only using cross entropy loss and increasing a plurality of groups of dice loss with different weights; adjusting a weight hyper-parameter in the loss, and re-performing a plurality of groups of dice loss weight values; carrying out the loop iteration to find out the best effect to complete training, and obtaining a final loss function; applying the final loss function to a neural network detection model, applying the obtained model to a sound event detection system, and obtaining packet-level prediction and frame-level prediction of a sound event through a neural network classifier. According to the method, the problem of non-uniform sample distribution caused by one-to-many classification generally adopted in sound event detection can be solved, and the F2 score paying more attention to the recall rate is effectively improved.

Owner:TSINGHUA UNIV
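
The loss described in the abstract above can be sketched as binary cross entropy plus a weighted dice term, evaluated with an F-beta score at beta = 2. This is only an illustration under stated assumptions: the function names, the smoothing constant, and the use of a single scalar `dice_weight` are not taken from the patent, which does not give its exact formulation.

```python
import math

def bce_loss(probs, targets, eps=1e-7):
    """Mean binary cross entropy over frame-level predictions."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(probs)

def dice_loss(probs, targets, smooth=1.0):
    """Soft dice loss: 1 - (2*overlap + smooth) / (sum(p) + sum(t) + smooth)."""
    overlap = sum(p * t for p, t in zip(probs, targets))
    return 1.0 - (2.0 * overlap + smooth) / (sum(probs) + sum(targets) + smooth)

def combined_loss(probs, targets, dice_weight):
    """Cross entropy plus a weighted dice term (dice_weight is the tuned hyper-parameter)."""
    return bce_loss(probs, targets) + dice_weight * dice_loss(probs, targets)

def f_beta(tp, fp, fn, beta=2.0):
    """F-beta score; beta=2 weights recall more heavily than precision."""
    b2 = beta * beta
    denom = (1.0 + b2) * tp + b2 * fn + fp
    return (1.0 + b2) * tp / denom if denom else 0.0

# Trying several candidate dice weights, as in the abstract's search loop.
probs, targets = [0.9, 0.2, 0.6, 0.1], [1, 0, 1, 0]
losses = {w: combined_loss(probs, targets, w) for w in (0.0, 0.5, 1.0)}
```

In a real training loop each candidate weight would be used to train a model, and the weight giving the best validation F2 score would be kept.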

Sound event detection system and method based on machine learning

The invention relates to a sound event detection system and method based on machine learning, which belong to the technical field of audio detection and fault detection. The system comprises a pickup module, an identification module, and a background management module. The pickup module performs audio acquisition and consists of a microphone and its peripheral circuit; the identification module consists of an identification model based on a machine learning method and performs real-time identification of the audio; and the background management module displays the identification results. The system can detect sound events more accurately and is more robust under noise interference.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

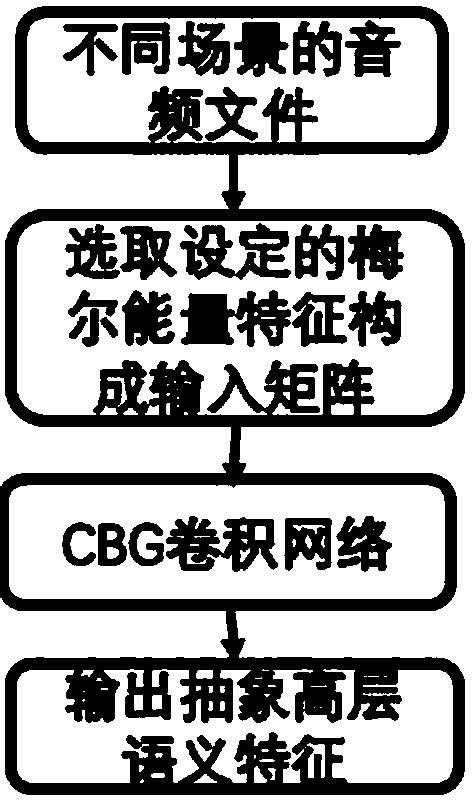

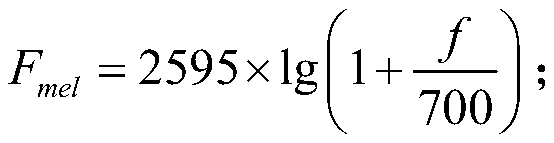

Audio high-level semantic feature extraction method and system for overlapped sound event detection

The invention relates to an audio high-level semantic feature extraction method and system for overlapped sound event detection. The method comprises the steps of: first, constructing an audio file training data set and extracting Mel energy features from the audio files of different scenes in the training set to form an input matrix; second, constructing a CBG deep convolutional neural network and feeding the input matrix obtained in the first step into the network for training; and finally, for a given audio file, extracting its Mel energy features and feeding them into the trained CBG deep convolutional neural network to obtain the high-level semantic feature output. The method converts traditional physical audio features into high-level semantic features, which can improve the precision of subsequent detection.

Owner:FUZHOU UNIV
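
As a rough illustration of the Mel energy features mentioned in the abstract above, the sketch below builds a triangular mel filterbank and applies it to one power-spectrum frame. The parameter values, function names, and the common `2595 * log10(1 + f/700)` mel formula are assumptions; the patent does not state which variant or settings it uses.

```python
import math

def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_mels, n_fft, sample_rate):
    """Return n_mels triangular filters, each with n_fft // 2 + 1 weights."""
    n_bins = n_fft // 2 + 1
    mel_max = hz_to_mel(sample_rate / 2.0)
    # n_mels + 2 edge frequencies, evenly spaced on the mel scale.
    edges = [mel_to_hz(mel_max * i / (n_mels + 1)) for i in range(n_mels + 2)]
    bin_freqs = [k * sample_rate / n_fft for k in range(n_bins)]
    bank = []
    for m in range(1, n_mels + 1):
        lo, center, hi = edges[m - 1], edges[m], edges[m + 1]
        row = []
        for f in bin_freqs:
            if lo < f <= center:
                row.append((f - lo) / (center - lo))   # rising slope
            elif center < f < hi:
                row.append((hi - f) / (hi - center))   # falling slope
            else:
                row.append(0.0)
        bank.append(row)
    return bank

def log_mel_energies(power_frame, bank, eps=1e-10):
    """Log of the filterbank-weighted energy for one power-spectrum frame."""
    return [math.log(sum(w * p for w, p in zip(row, power_frame)) + eps)
            for row in bank]
```

Stacking the output of `log_mel_energies` over consecutive frames gives the kind of time-by-mel input matrix a convolutional network would consume.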

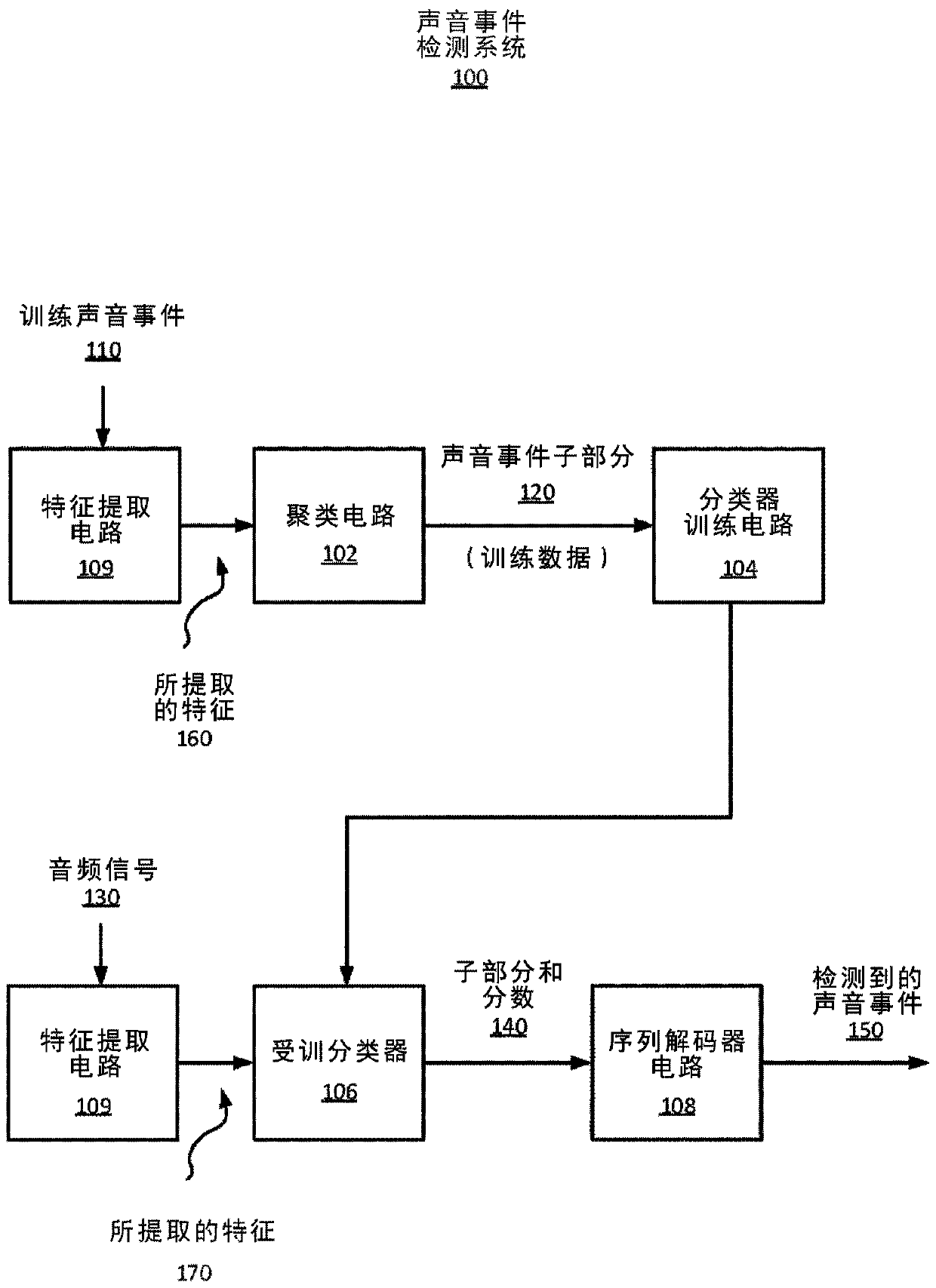

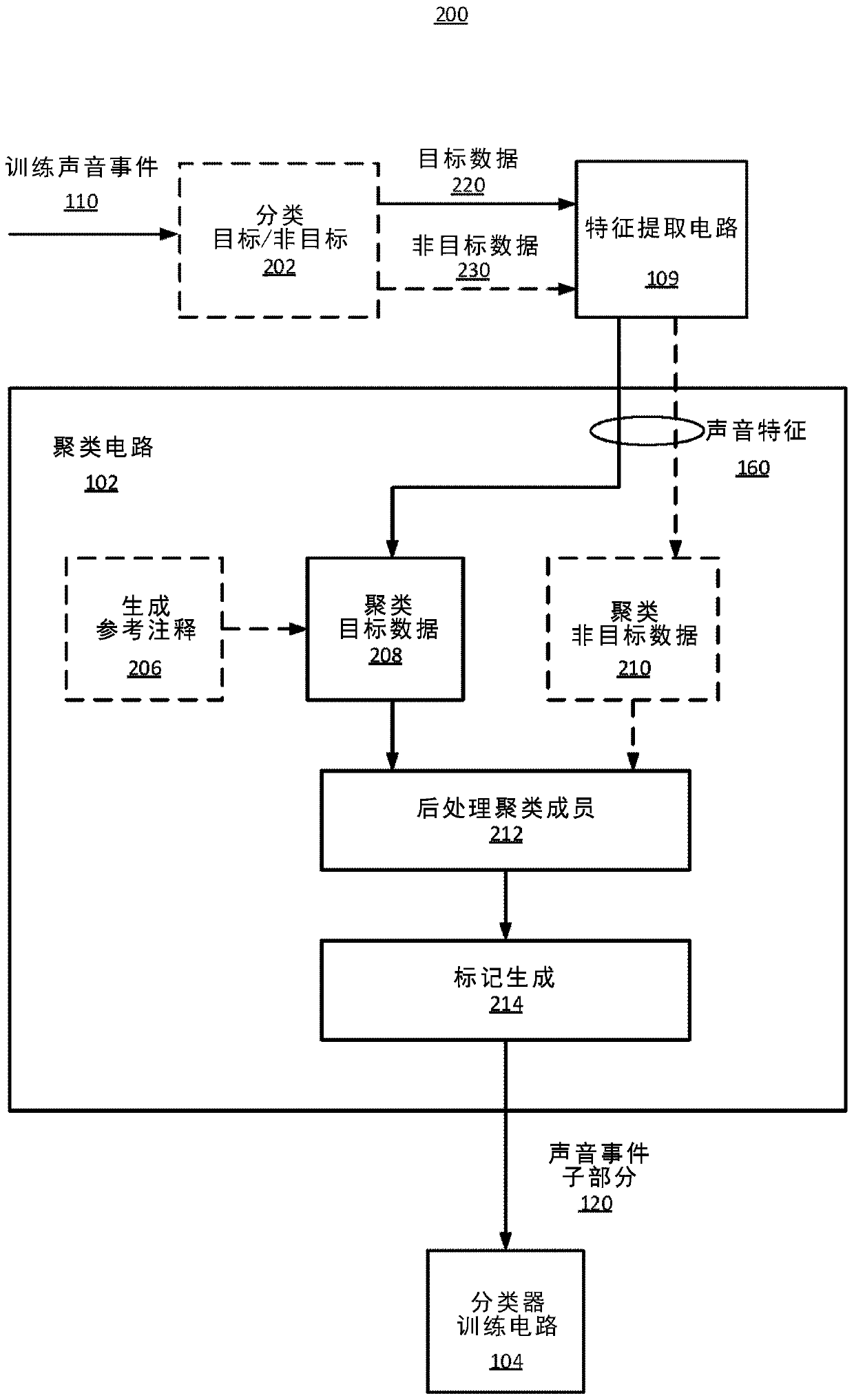

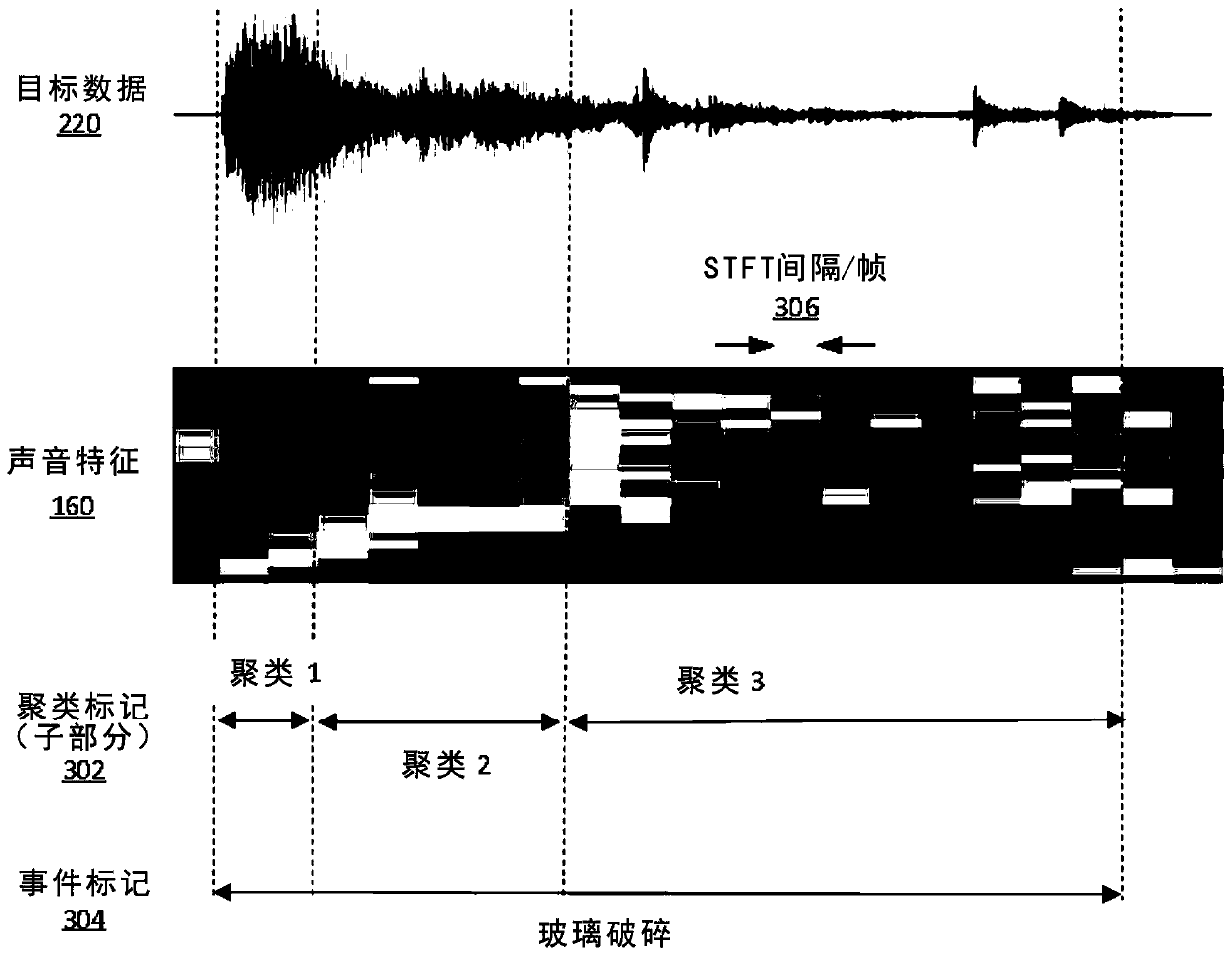

Acoustic event detection based on modelling of sequence of event subparts

The invention relates to acoustic event detection based on modeling of sequence of event subparts. Techniques are provided for acoustic event detection. A methodology implementing the techniques according to an embodiment includes extracting acoustic features from a received audio signal. The acoustic features may include, for example, one or more short-term Fourier transform frames, or other spectral energy characteristics, of the audio signal. The method also includes applying a trained classifier to the extracted acoustic features to identify and label acoustic event subparts of the audio signal and to generate scores associated with the subparts. The method further includes performing sequence decoding of the acoustic event subparts and associated scores to detect target acoustic events of interest based on the scores and temporal ordering sequence of the event subparts. The classifier is trained on acoustic event subparts that are generated through unsupervised subspace clustering techniques applied to training data that includes target acoustic events.

Owner:INTEL CORP
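
The sequence-decoding step in the abstract above can be pictured with a deliberately simplified stand-in: collapse the per-frame subpart labels into segments, then check whether the target event's subparts occur in the expected order with sufficient scores. The greedy matching rule, the `min_score` threshold, and all names here are hypothetical; the patent does not specify its decoder, which may be considerably more elaborate.

```python
def detect_event(frame_labels, frame_scores, target_sequence, min_score=0.5):
    """Return True if target_sequence appears in order among confident segments."""
    # Collapse consecutive frames sharing a label into [label, scores] segments.
    segments = []
    for label, score in zip(frame_labels, frame_scores):
        if segments and segments[-1][0] == label:
            segments[-1][1].append(score)
        else:
            segments.append([label, [score]])
    # Greedily match the expected subparts in temporal order.
    next_idx = 0
    for label, scores in segments:
        if next_idx == len(target_sequence):
            break
        mean_score = sum(scores) / len(scores)
        if label == target_sequence[next_idx] and mean_score >= min_score:
            next_idx += 1
    return next_idx == len(target_sequence)

labels = ["bg", "a", "a", "b", "bg", "c"]
scores = [0.9, 0.8, 0.6, 0.9, 0.5, 0.7]
found = detect_event(labels, scores, ["a", "b", "c"])
```

Here the subparts "a", "b", "c" appear in order, so the event is reported; the same frames would not match a target whose subparts are expected in the reverse order.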

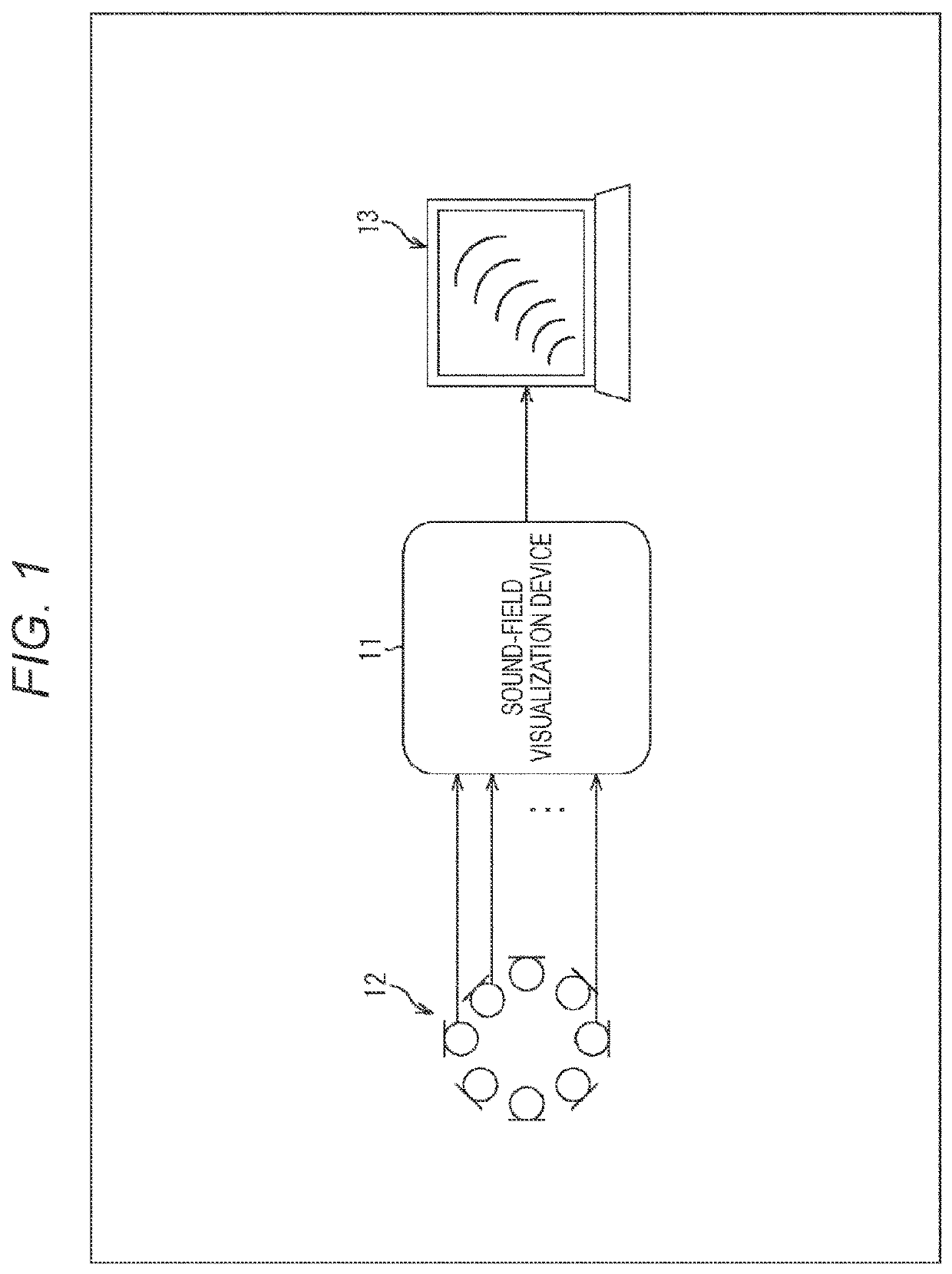

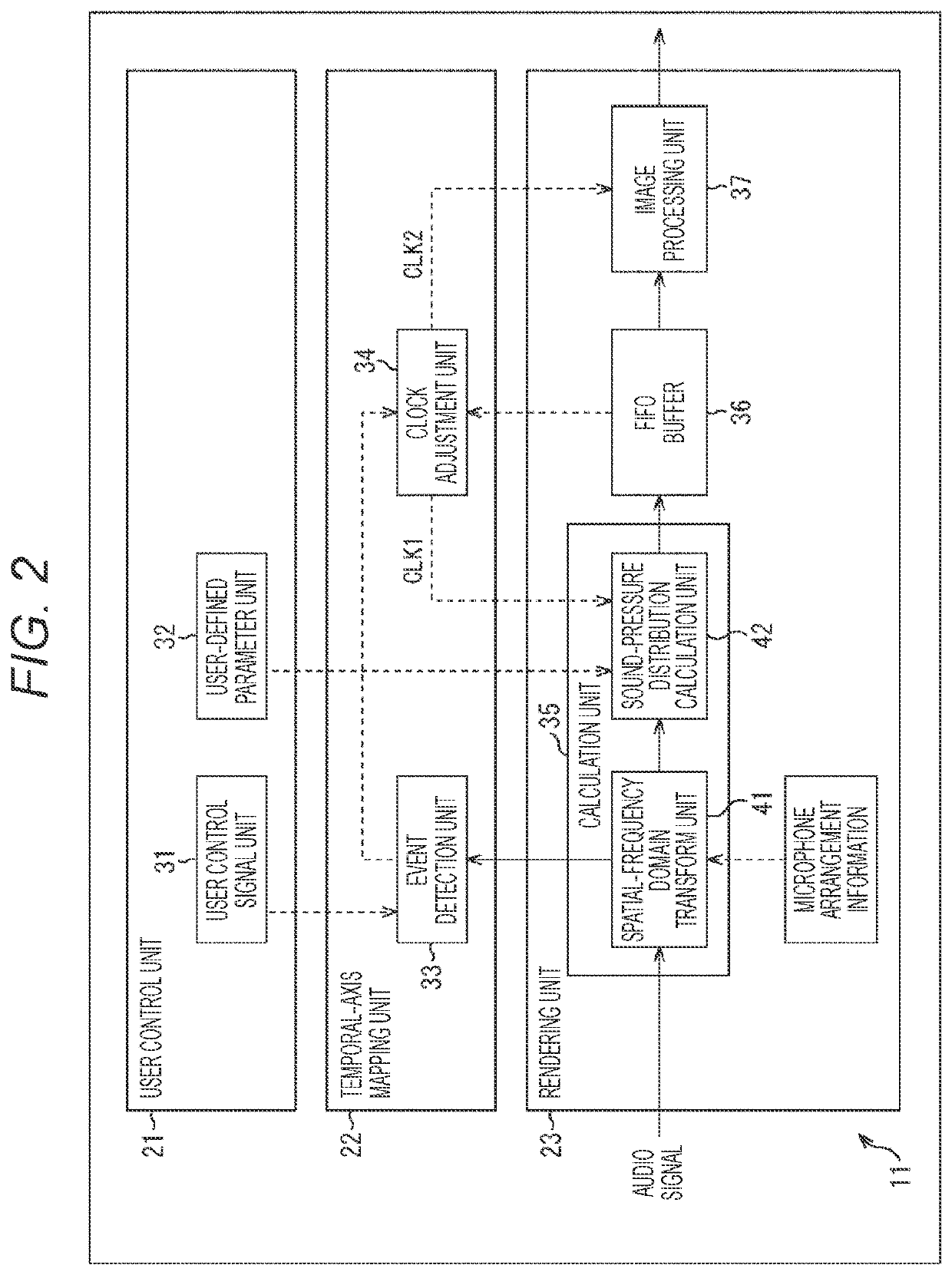

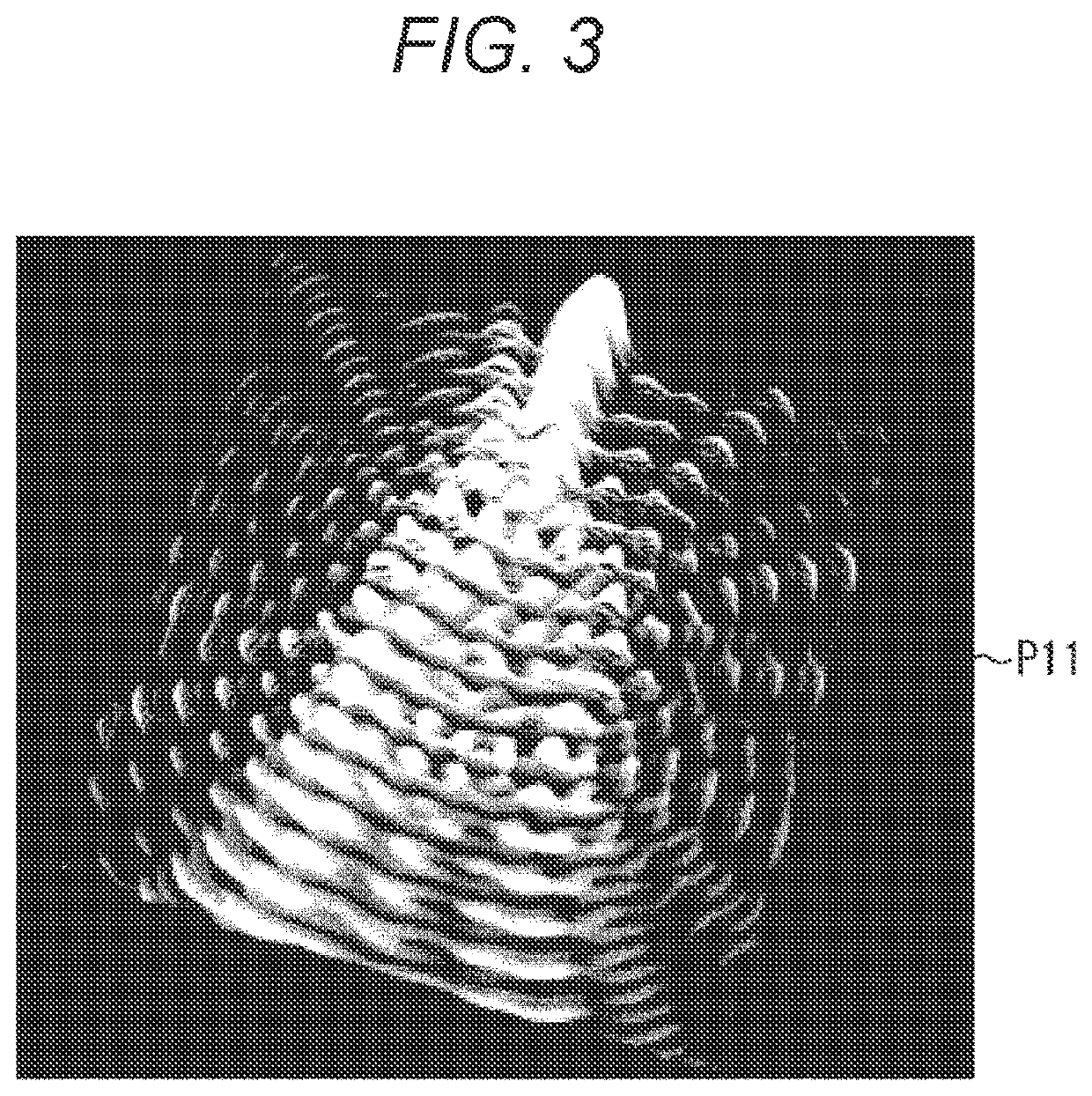

Information processing device, information processing method, and program

Pending | US20220208212A1 | Vibration measurement in fluid | Speech analysis | Information processing | Engineering

The present technology relates to an information processing device, an information processing method, and a program that enable easier visualization of a sound field. The information processing device includes: a calculation unit configured to calculate, on the basis of positional information indicating a plurality of observation positions in a space and an audio signal of sound observed at each of the plurality of observation positions, an amplitude or phase of the sound at each of a plurality of positions in the space at a first time interval; an event detection unit configured to detect an event; and an adjustment unit configured to perform, in a case where the event is detected, control such that the amplitude or the phase is calculated at a second time interval shorter than the first time interval. The present technology can be applied to a sound-field visualization system.

Owner:SONY CORP
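
The interval-switching behaviour in the abstract above can be sketched as a scheduler that picks the next calculation time from a coarse or a fine interval, depending on whether an event is currently detected. The function name, the millisecond units, and the `event_active` callback are assumptions for illustration only; the actual amplitude or phase calculation is not shown.

```python
def calculation_times(duration_ms, first_interval_ms, second_interval_ms, event_active):
    """List the times (in ms) at which the sound field would be recalculated.

    event_active is a hypothetical callback returning True while an event
    is detected; second_interval_ms must be shorter than first_interval_ms.
    """
    if second_interval_ms >= first_interval_ms:
        raise ValueError("second interval must be shorter than the first")
    times = []
    t = 0
    while t < duration_ms:
        times.append(t)
        # Use the shorter interval while an event is being detected.
        t += second_interval_ms if event_active(t) else first_interval_ms
    return times

# An event lasting from 400 ms to 600 ms triggers denser updates.
times = calculation_times(1000, 200, 50, lambda t: 400 <= t < 600)
```

Integer milliseconds are used so that the schedule is free of floating-point drift.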

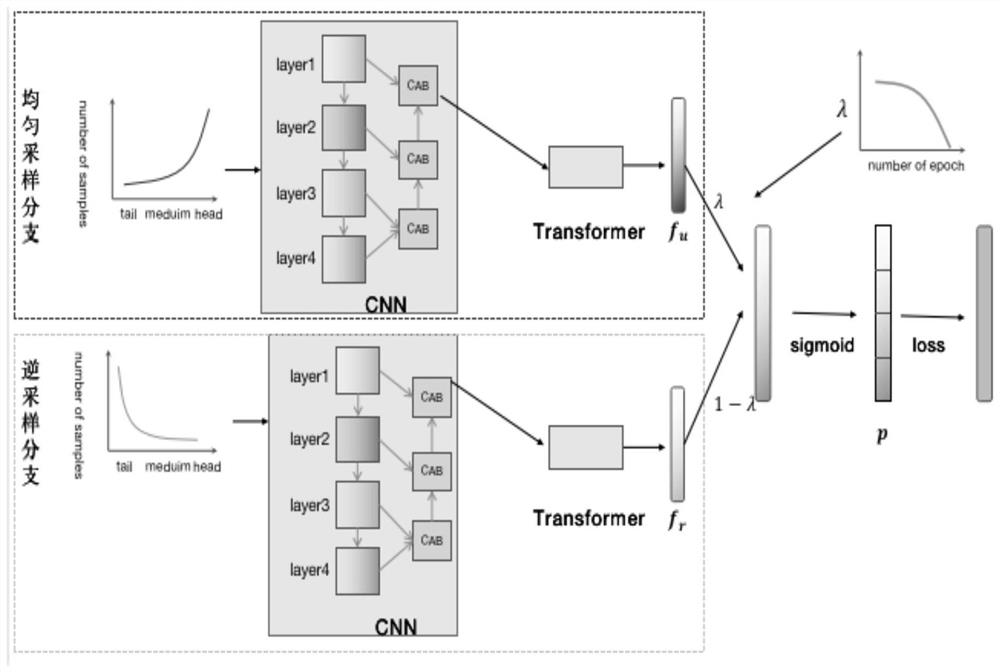

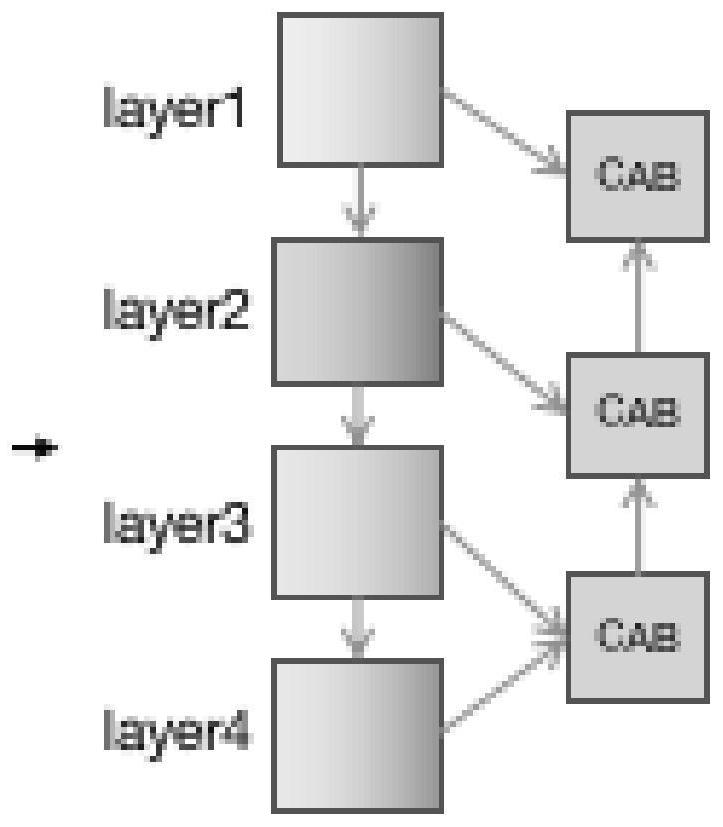

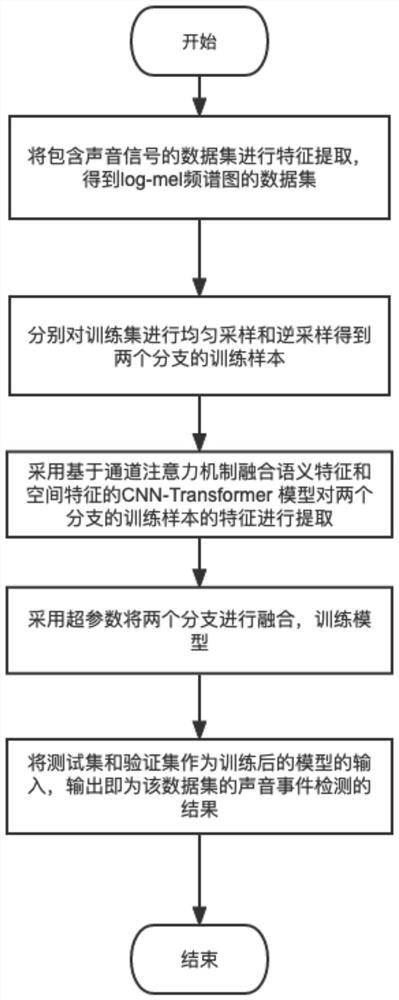

Sound event detection method based on double-branch discriminant feature neural network

Pending | CN114881212A | Improve generalization | Good overall | Speech analysis | Character and pattern recognition | Data set | Network model

The invention discloses a sound event detection method based on a double-branch discriminant feature neural network. The method comprises the steps of: performing feature extraction on a data set containing sound signals to obtain a log-mel spectrogram data set, and dividing it into a training set, a test set, and a verification set; and establishing a double-branch discriminant feature network model, which comprises double-branch sampling, feature extraction, double-branch feature fusion, and loss fusion. The test set and the verification set are used as the input of the trained model, and the output of the model is the sound event detection result for the data set, comprising the sound event types contained in the audio and the start and end time of each event. The discriminative features of the tail classes and the hard-to-distinguish classes are obtained through double-branch discriminative feature fusion, the class weights of the classifier are balanced to a certain extent, and the sound event detection performance is improved.

Owner:TIANJIN UNIV
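
The detection output described in the abstract above (event type plus start and end time) is commonly derived from frame-level probabilities by thresholding. The sketch below shows that post-processing step for one event class; the threshold, the hop duration, and the function name are assumptions and are not taken from the patent.

```python
def frames_to_events(probs, threshold=0.5, hop_seconds=0.02):
    """Convert frame-level probabilities for one class into (onset, offset) pairs."""
    events = []
    start = None
    for i, p in enumerate(probs):
        active = p >= threshold
        if active and start is None:
            start = i                      # event begins at this frame
        elif not active and start is not None:
            events.append((start * hop_seconds, i * hop_seconds))
            start = None                   # event ended before this frame
    if start is not None:
        # Event still active at the end of the clip.
        events.append((start * hop_seconds, len(probs) * hop_seconds))
    return events

events = frames_to_events([0.1, 0.8, 0.9, 0.2, 0.7], threshold=0.5, hop_seconds=1.0)
```

Running this once per class yields the list of typed events with their start and end times.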


© 2025 PatSnap. All rights reserved.