58 results about "Speech segmentation" patented technology

Speech segmentation is the process of identifying the boundaries between words, syllables, or phonemes in spoken natural languages. The term applies both to the mental processes used by humans, and to artificial processes of natural language processing.

Voice print system and method

Inactive | US20030009333A1 | Increase in size | Improve generalization ability | Speech recognition | Speech segmentation | Speaker verification

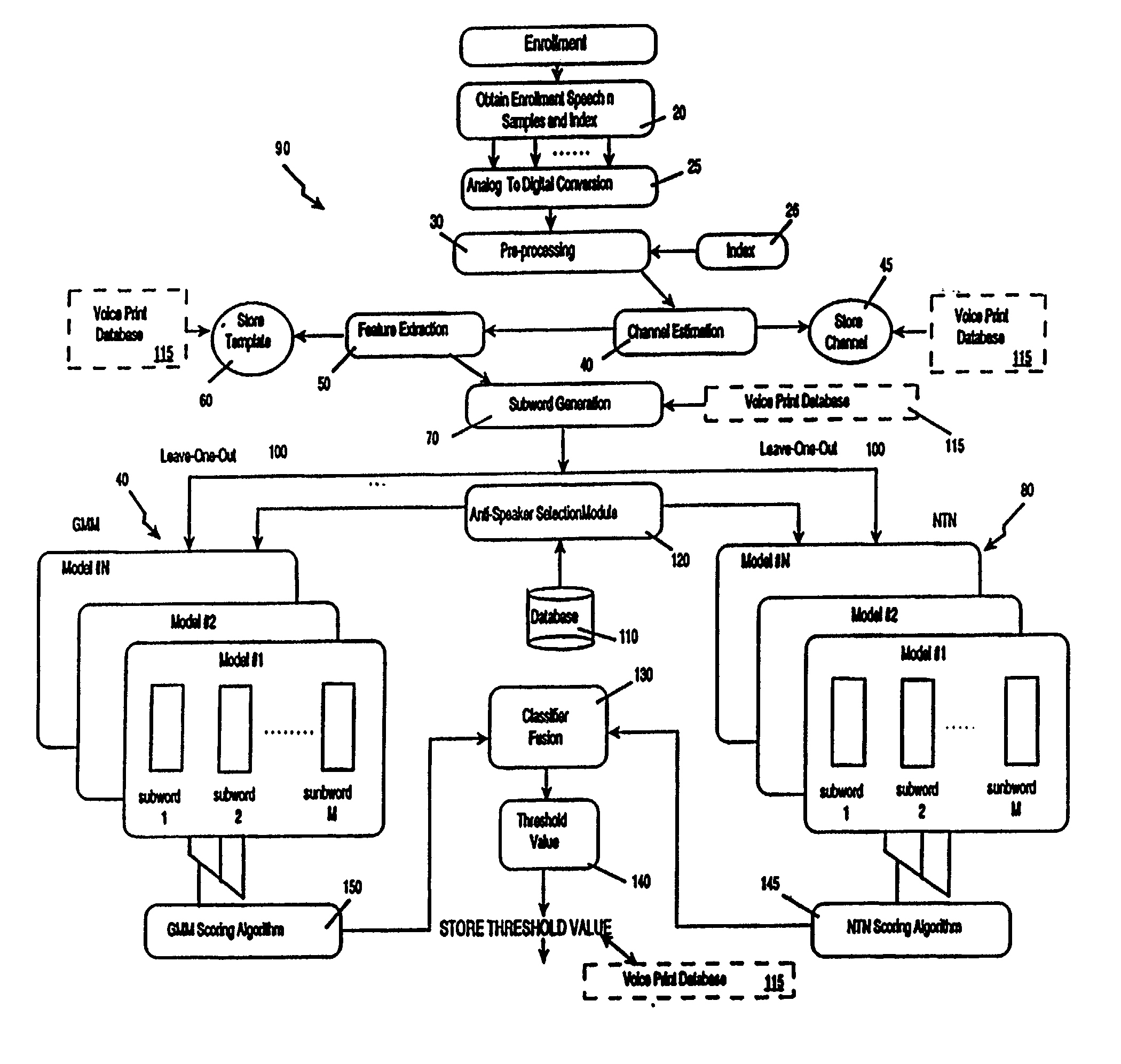

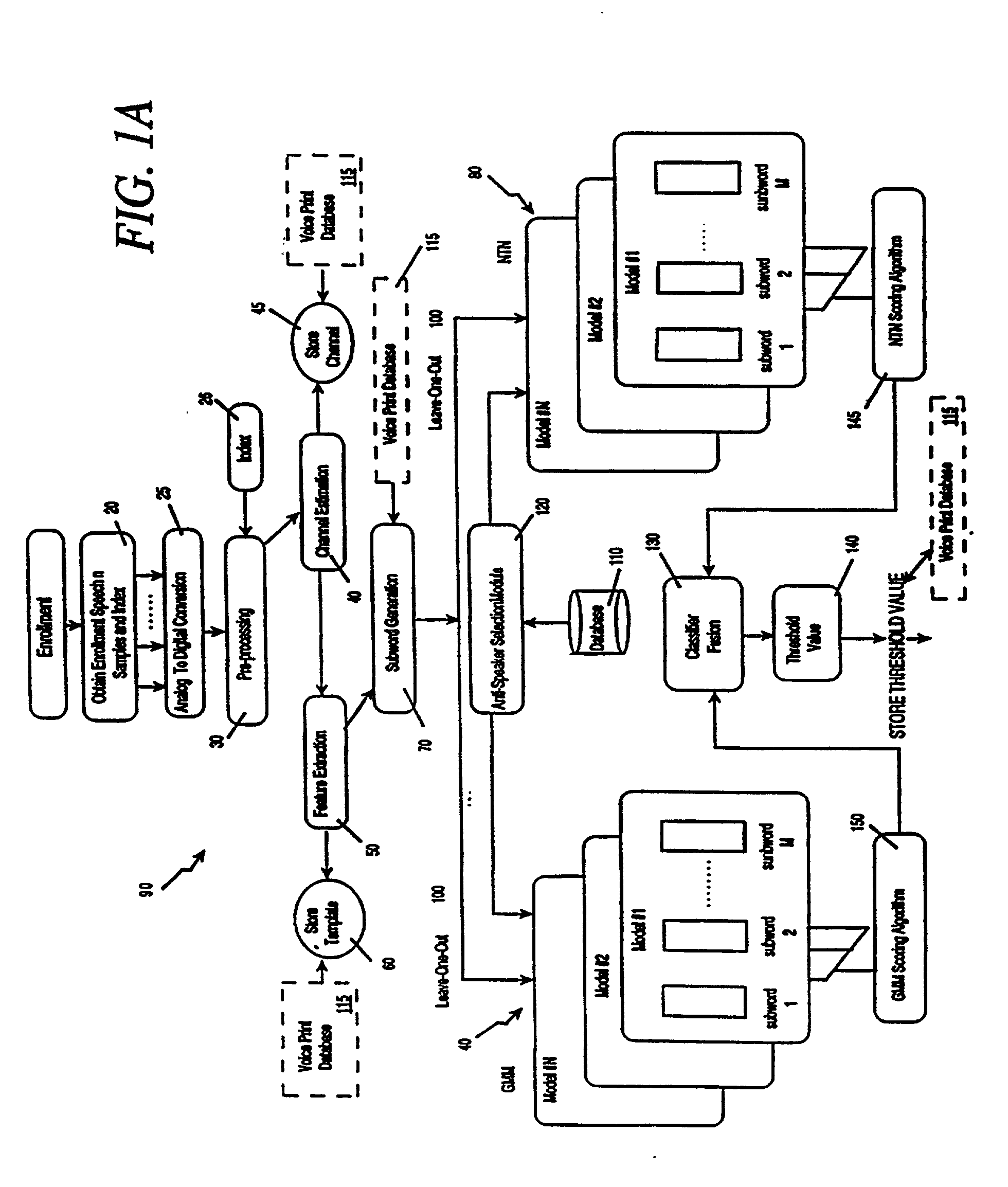

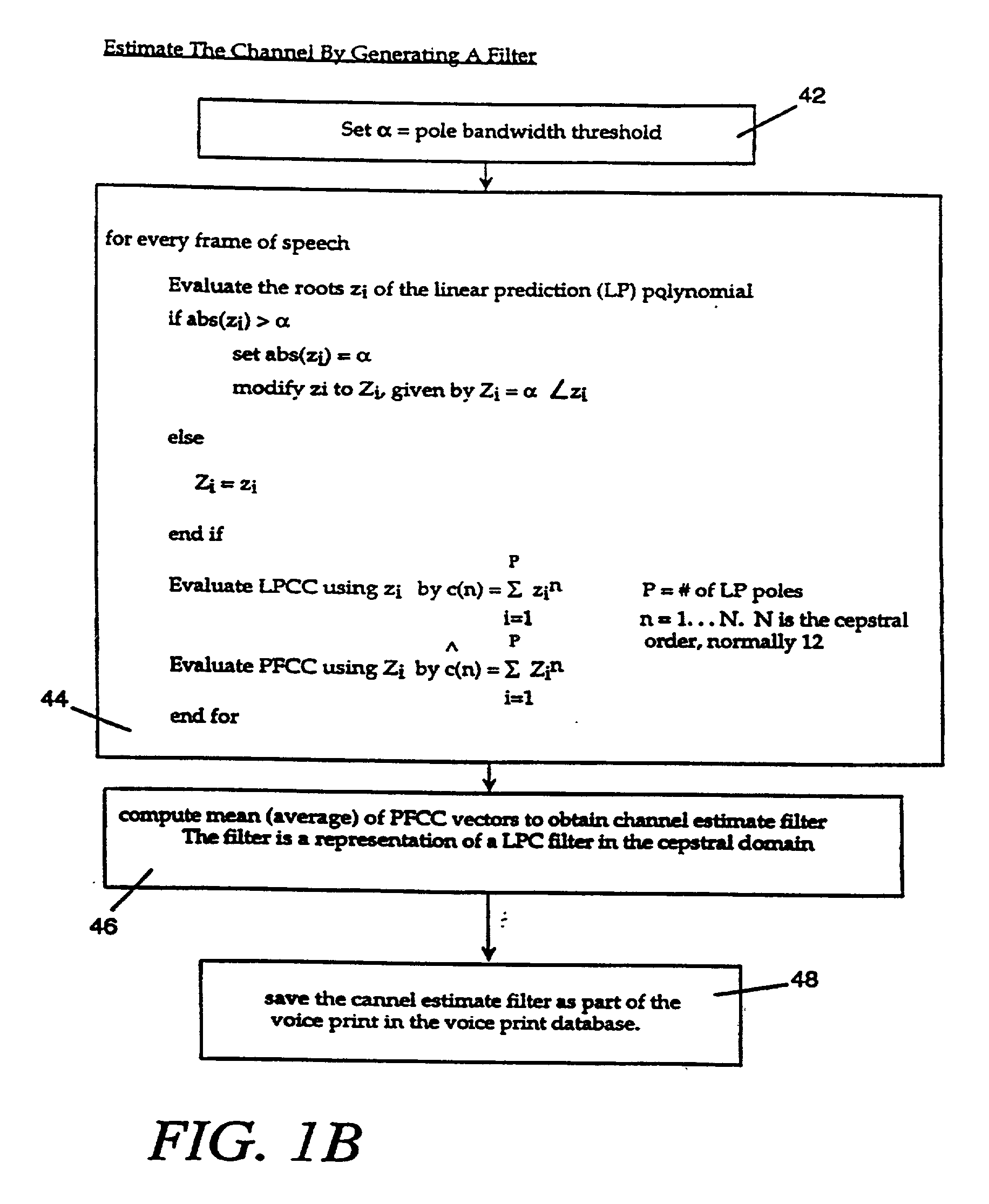

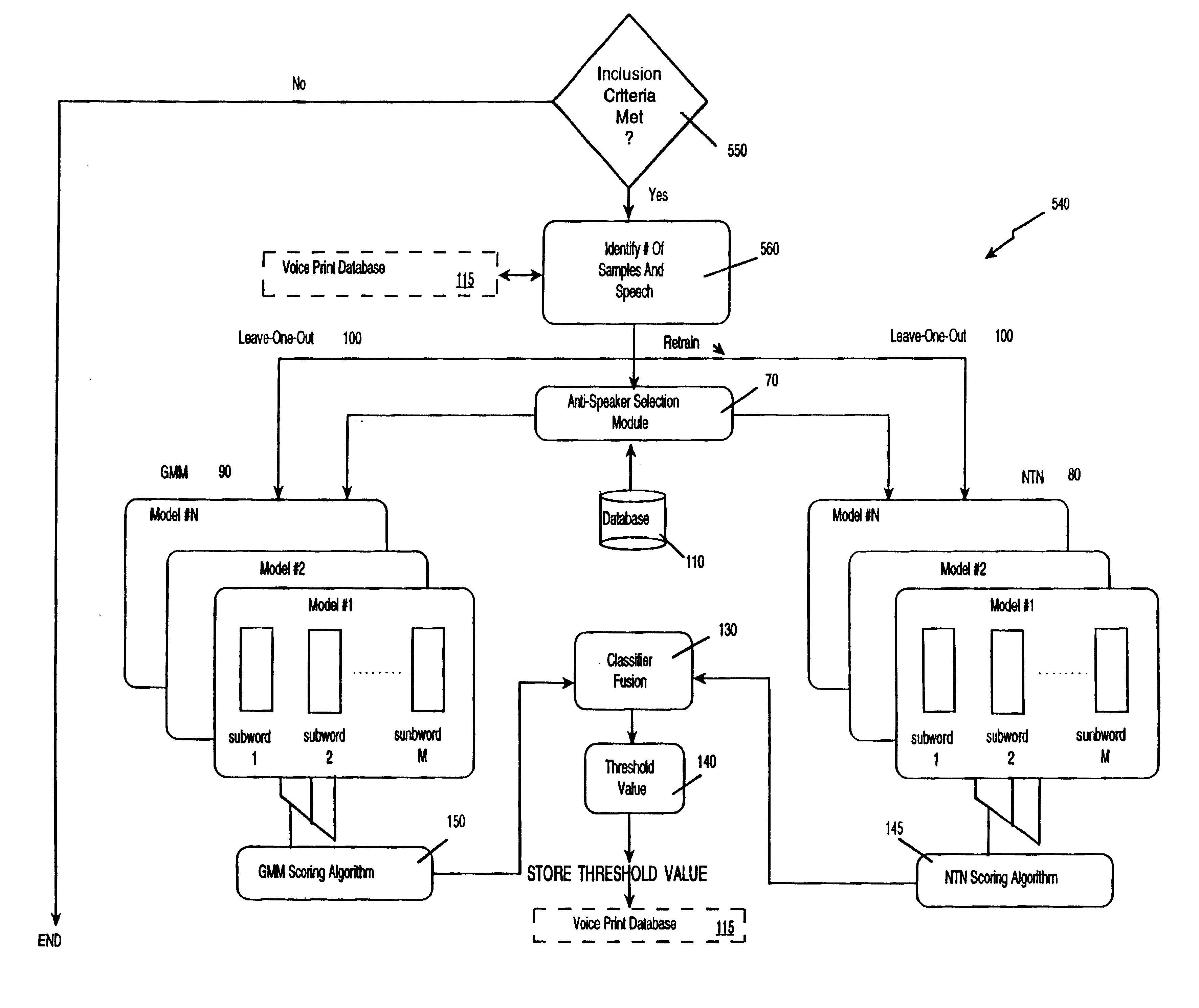

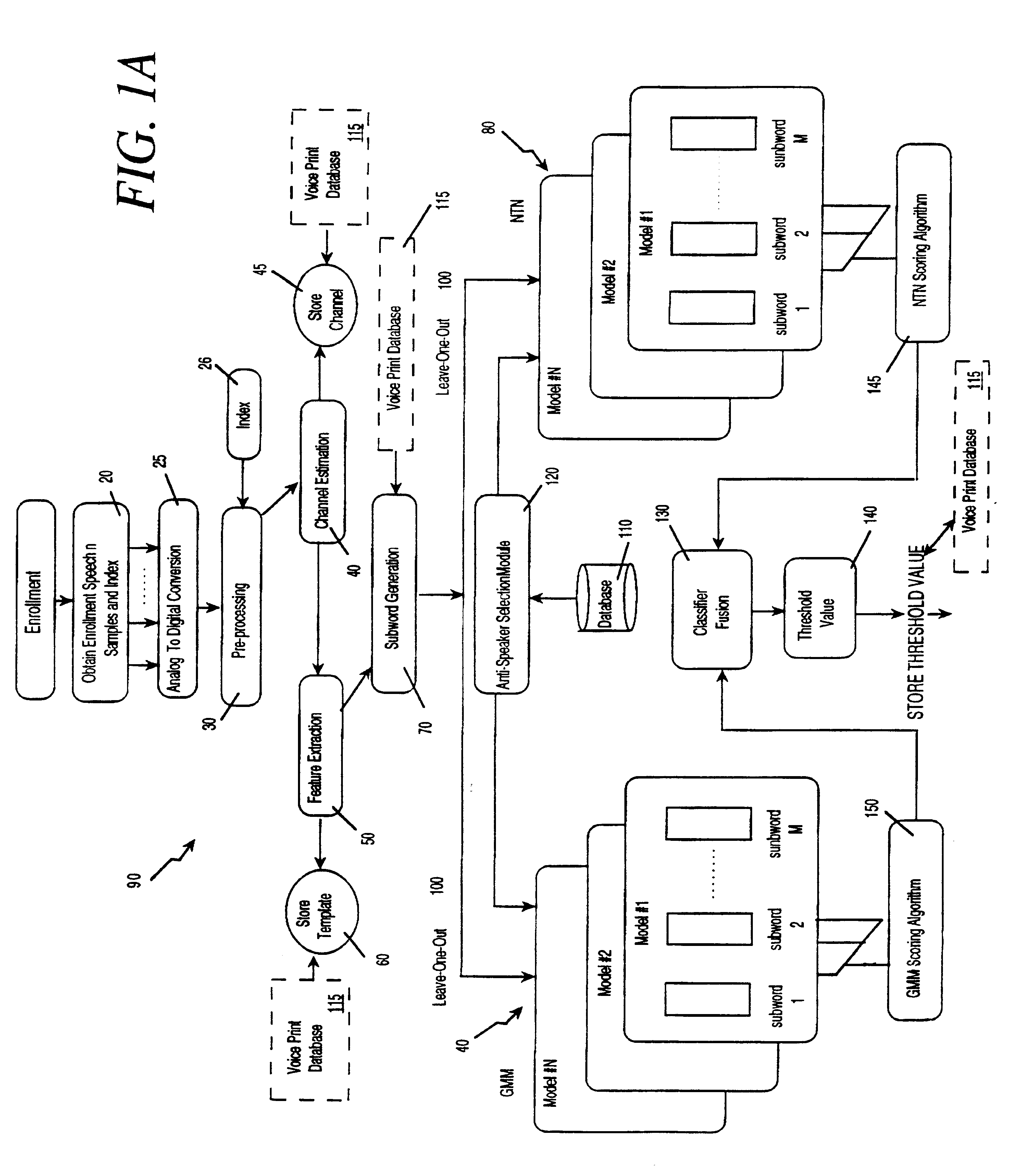

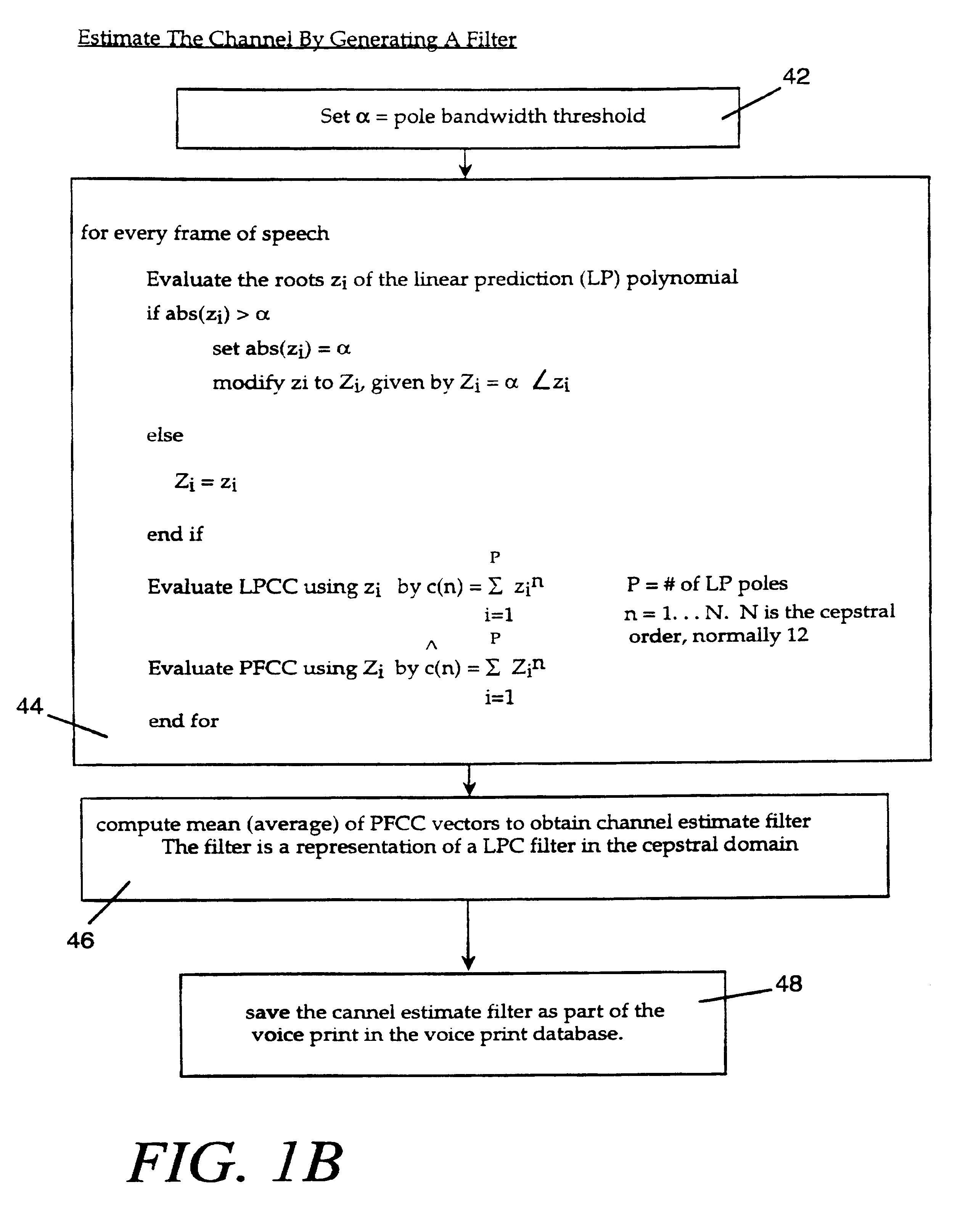

The voice print system of the present invention is a subword-based, text-dependent automatic speaker verification system that embodies the capability of user-selectable passwords with no constraints on the choice of vocabulary words or the language. Automatic blind speech segmentation allows speech to be segmented into subword units without any linguistic knowledge of the password. Subword modeling is performed using multiple classifiers. The system also takes advantage of such concepts as multiple classifier fusion and data resampling to boost performance. Key word / key phrase spotting is used to optimally locate the password phrase. Numerous adaptation techniques increase the flexibility of the base system, and include: channel adaptation, fusion adaptation, model adaptation and threshold adaptation.

Owner:SPEECHWORKS INT

Subword-based speaker verification with multiple-classifier score fusion weight and threshold adaptation

Inactive | US6539352B1 | Increase in size | Improve generalization ability | Speech recognition | Speech segmentation | Speaker verification

The voice print system of the present invention is a subword-based, text-dependent automatic speaker verification system that embodies the capability of user-selectable passwords with no constraints on the choice of vocabulary words or the language. Automatic blind speech segmentation allows speech to be segmented into subword units without any linguistic knowledge of the password. Subword modeling is performed using multiple classifiers. The system also takes advantage of such concepts as multiple classifier fusion and data resampling to boost performance. Key word / key phrase spotting is used to optimally locate the password phrase. Numerous adaptation techniques increase the flexibility of the base system, and include: channel adaptation, fusion adaptation, model adaptation and threshold adaptation.

Owner:BANK ONE COLORADO NA AS AGENT +1

Bimodal man-man conversation sentiment analysis system and method thereof based on machine learning

Active | CN106503805A | Features are comprehensive and thoughtful | Improve accuracy | Semantic analysis | Machine learning | Speech segmentation | Single sentence

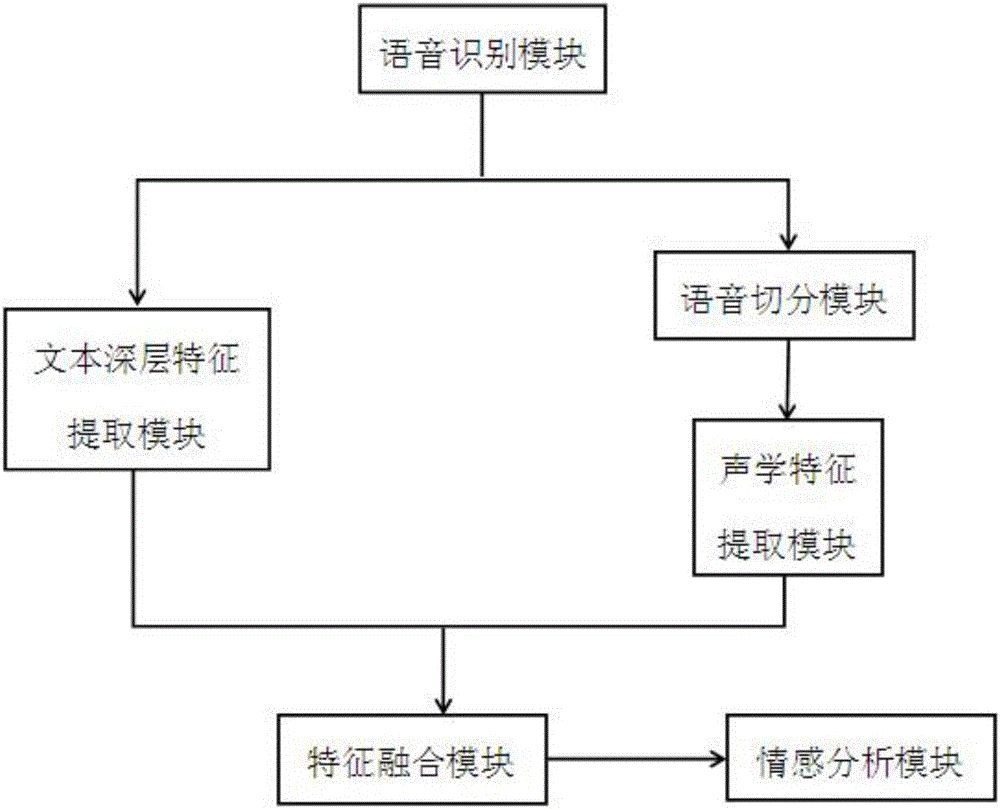

The invention comprises a bimodal man-man conversation sentiment analysis system and a bimodal man-man conversation sentiment analysis method based on machine learning. The bimodal man-man conversation sentiment analysis system is characterized by comprising a speech recognition module, a text deep-layer feature extraction module, a speech segmentation module, an acoustic feature extraction module, a feature fusion module and a sentiment analysis module, wherein the speech recognition module is used for recognizing speech content and a time label; the text deep-layer feature extraction module is used for completing the extraction of text deep-layer word level features and text deep-layer sentence level features; the speech segmentation module is used for segmenting single sentence speech from entire speech; the acoustic feature extraction module is used for completing the extraction of acoustic features of the speech; the feature fusion module is used for fusing the obtained text deep-layer features with the acoustic features; and the sentiment analysis module is used for acquiring sentiment polarities of the speech to be subjected to sentiment analysis. The bimodal man-man conversation sentiment analysis method can integrate the text and audio modalities for recognizing conversation sentiment, and fully utilizes features of word vectors and sentence vectors, thereby improving the precision of recognition.

Owner:山东心法科技有限公司

Speech segment determination device, and storage medium

Owner:OKI ELECTRIC IND CO LTD

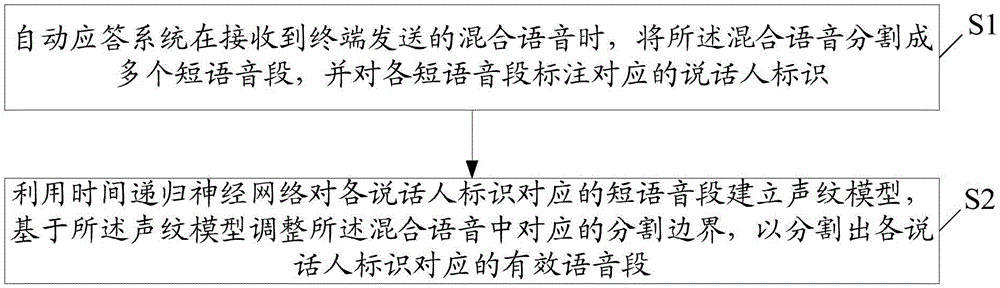

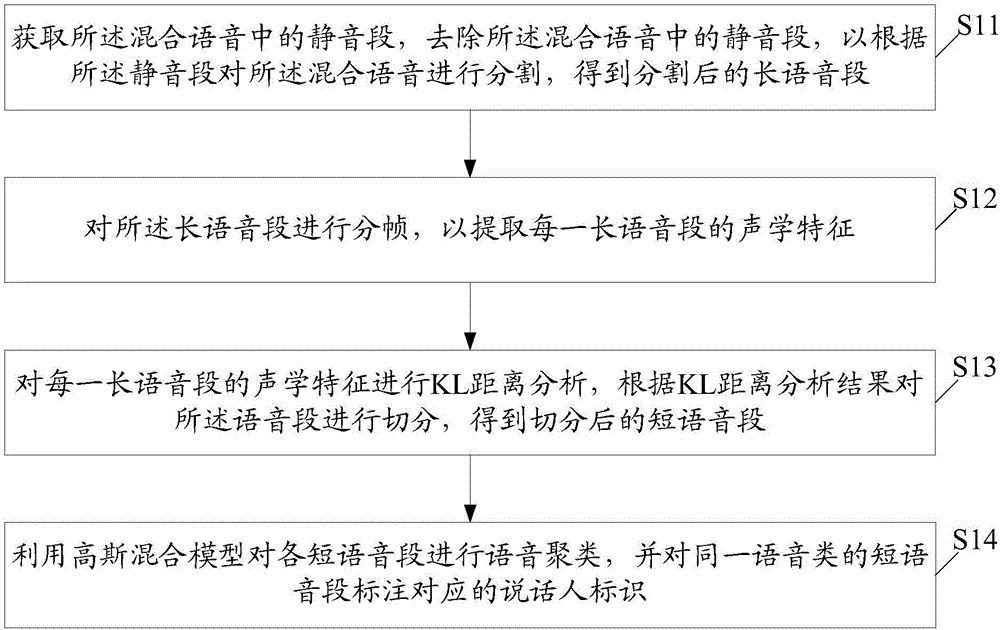

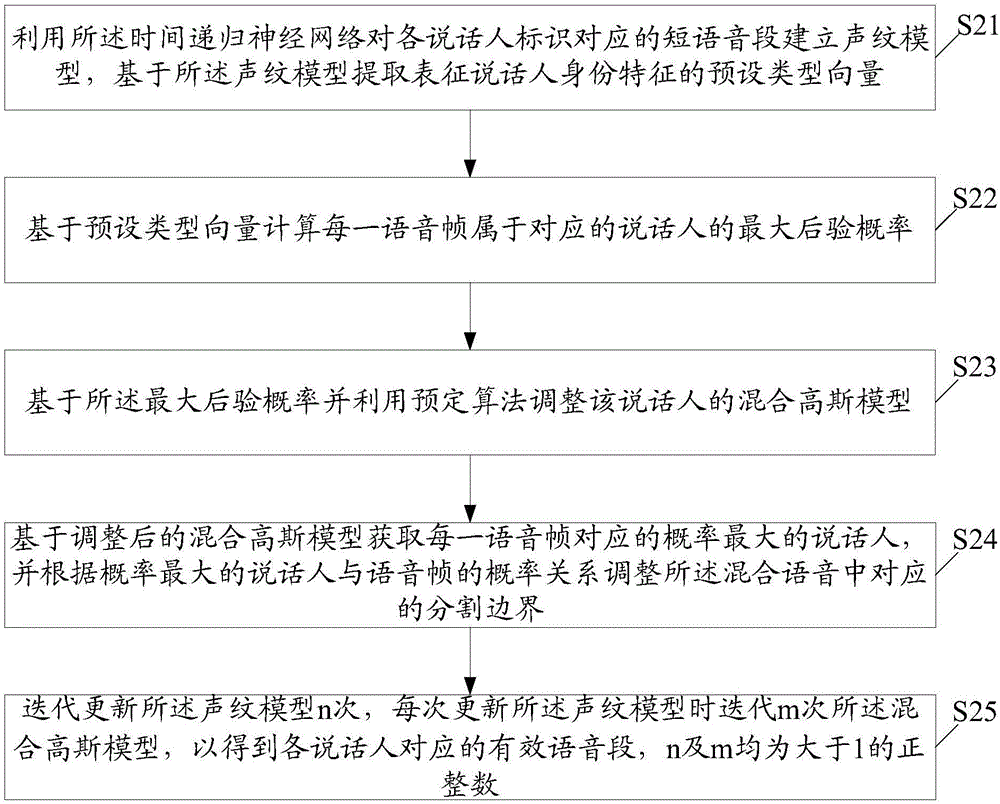

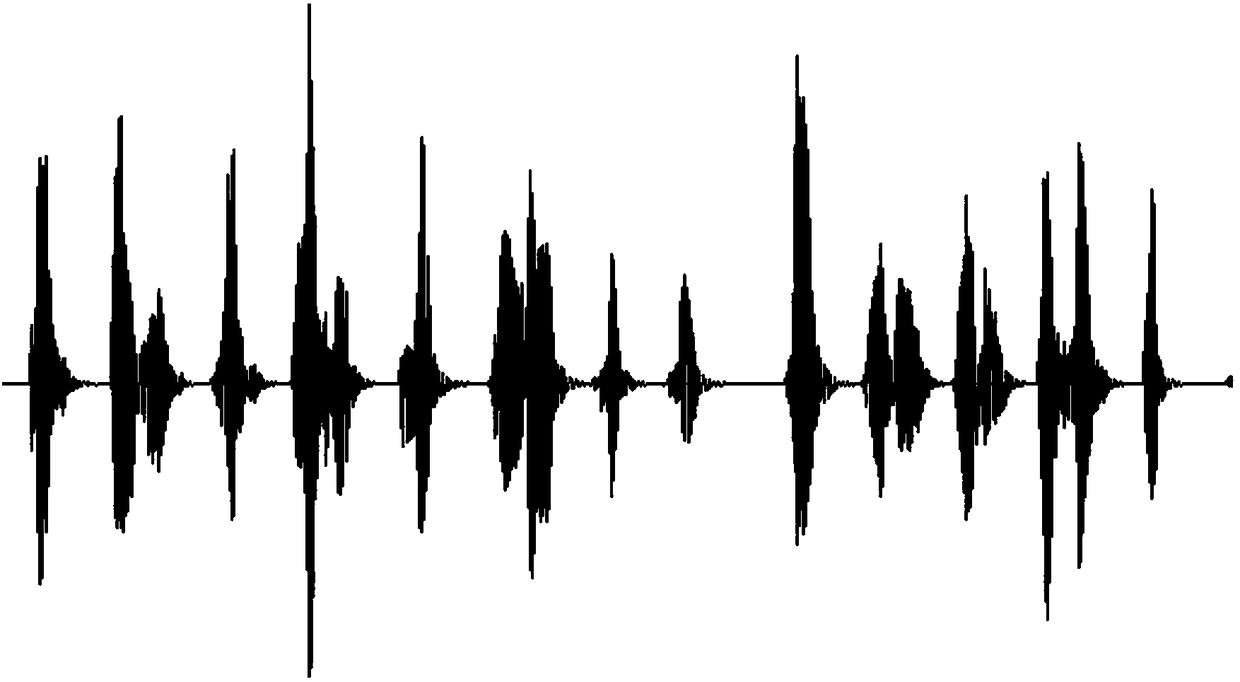

Speech segmentation method and device

Active | CN106782507A | Split Boundary Adjustment | High precision | Speech recognition | Speech segmentation | Speech sound

The invention relates to a speech segmentation method and a speech segmentation device. The speech segmentation method comprises the following steps: segmenting mixed speech into a plurality of short speech segments when the mixed speech, which is transmitted from a terminal, is received by virtue of an automatic response system, and labeling the various short speech segments with corresponding speaker identifiers; and establishing a voiceprint model for the short speech segments corresponding to the various speaker identifiers by virtue of a time recurrent neural network, and adjusting the corresponding segmentation boundaries in the mixed speech on the basis of the voiceprint model, so as to segment out effective speech segments corresponding to the various speaker identifiers. With the application of the method and the device provided by the invention, the precision of speech segmentation can be effectively enhanced; a relatively good segmentation result is achieved especially for speech in which the speakers alternate frequently or overlap.

Owner:PING AN TECH (SHENZHEN) CO LTD

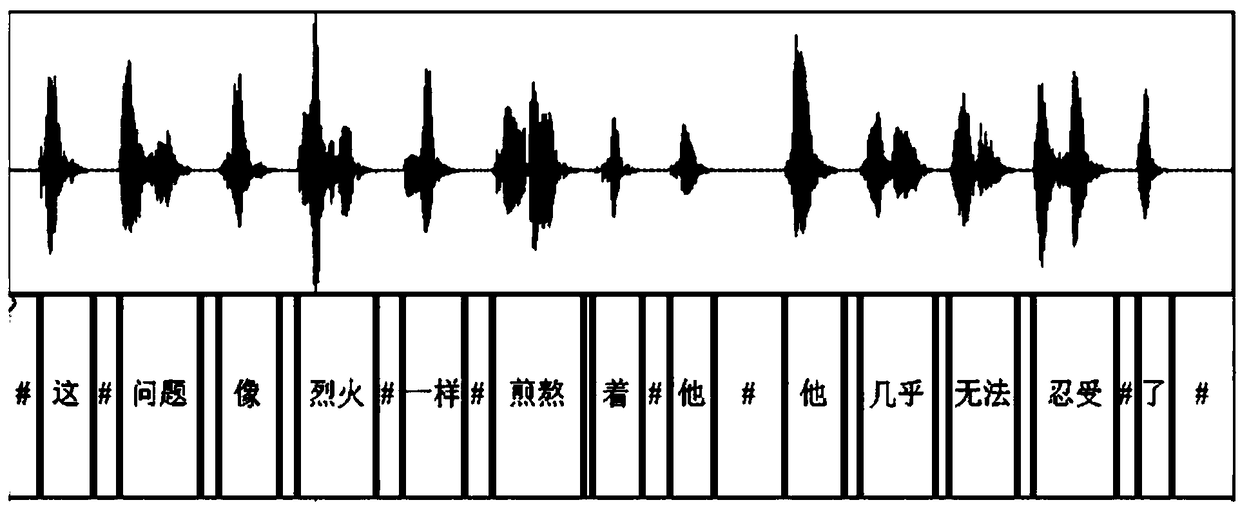

Emotional Chinese text human voice synthesis method

Active | CN108364632A | Improve performance | Improve the emotional rhythm effect | Natural language data processing | Special data processing applications | Part of speech | Speech segmentation

The invention discloses an emotional Chinese text human voice synthesis method, which mainly comprises the steps of: (1) constructing an emotional corpus; and (2) performing emotional speech synthesis based on waveform splicing. The emotional corpus establishment is mainly implemented by the steps of: (11) segmenting terms and acquiring parts of speech of the terms; (12) performing speech segmentation, and acquiring audio data corresponding to segmented terms based on speech data features and text corpora; and (13) performing emotion analysis, and acquiring emotional feature values of terms, clauses and whole sentences based on text term segmentation and audio features. The emotional speech synthesis based on waveform splicing is implemented by the steps of: (21) segmenting terms and performing emotion analysis on a text to be synthesized, and acquiring parts of speech of words, sentence patterns and emotional features in the text to be synthesized; (22) selecting the optimal corpus, and carrying out matching to obtain the optimal corpus set based on text eigenvalues; and (23) performing speech synthesis and waveform splicing, extracting a word audio sequence set from the corpus set, and synthesizing the audio to output a final speech. The emotional Chinese text human voice synthesis method is used for synthesizing and outputting a true human voice speech with emotional features.

Owner:SOUTHEAST UNIV

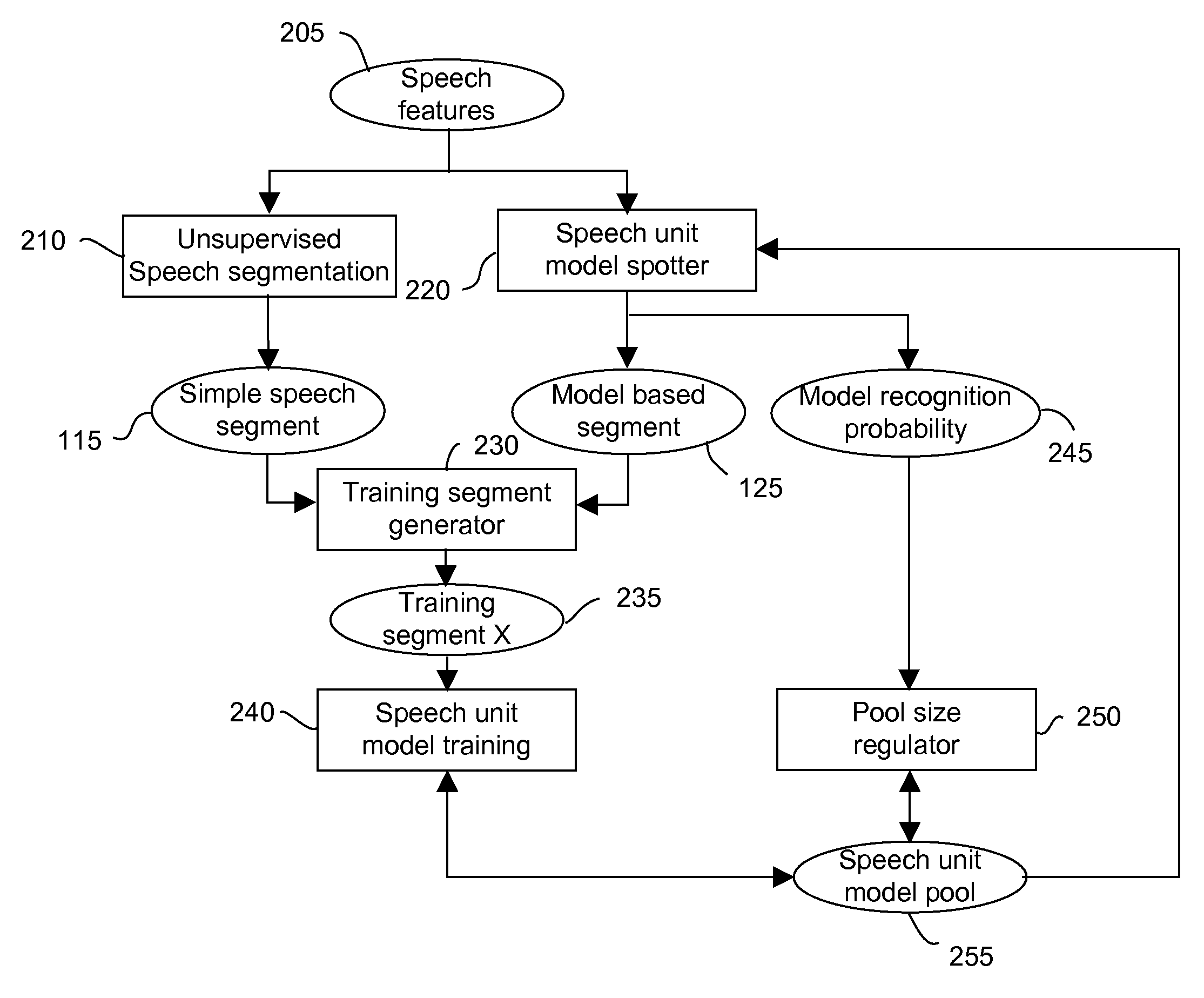

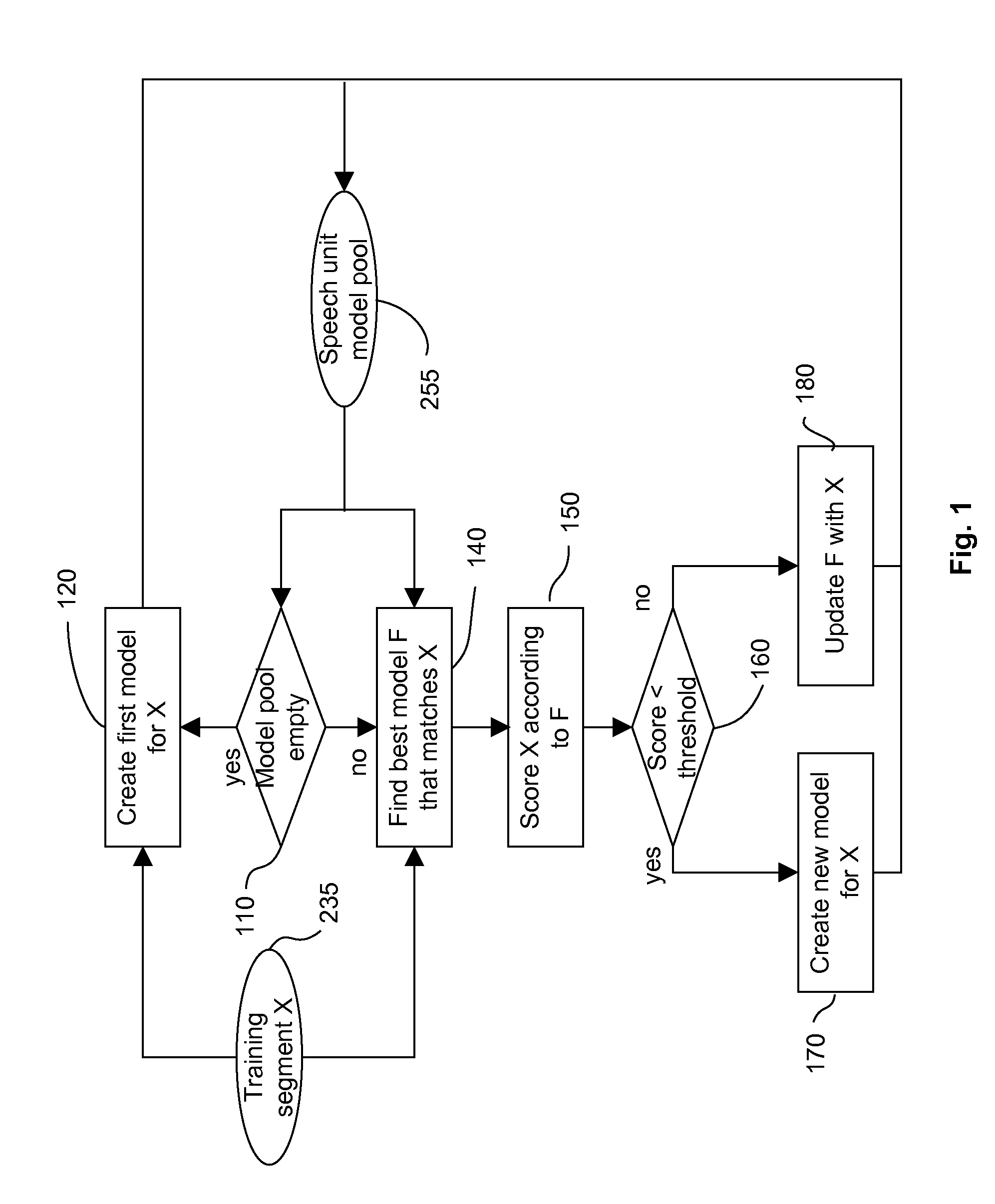

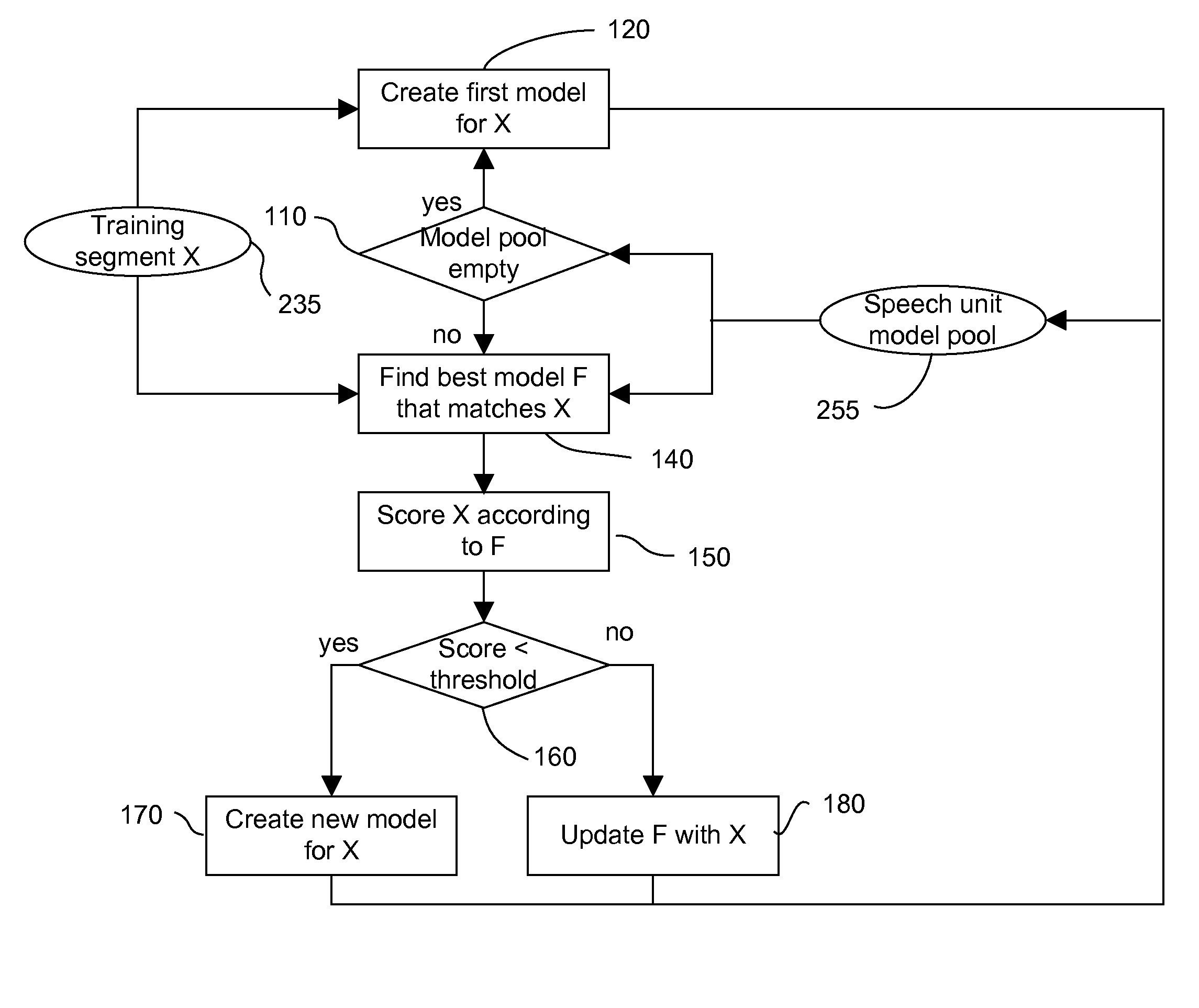

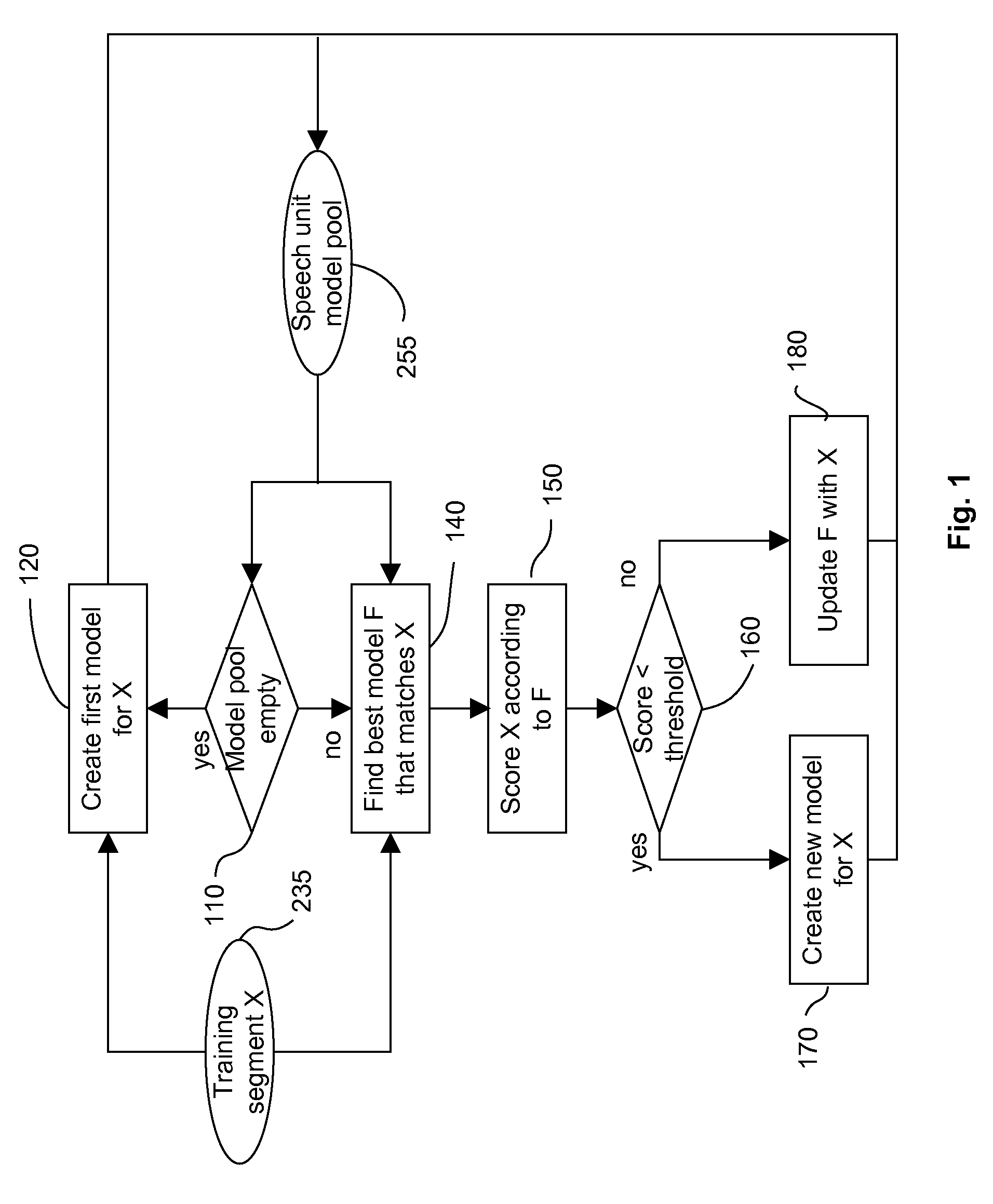

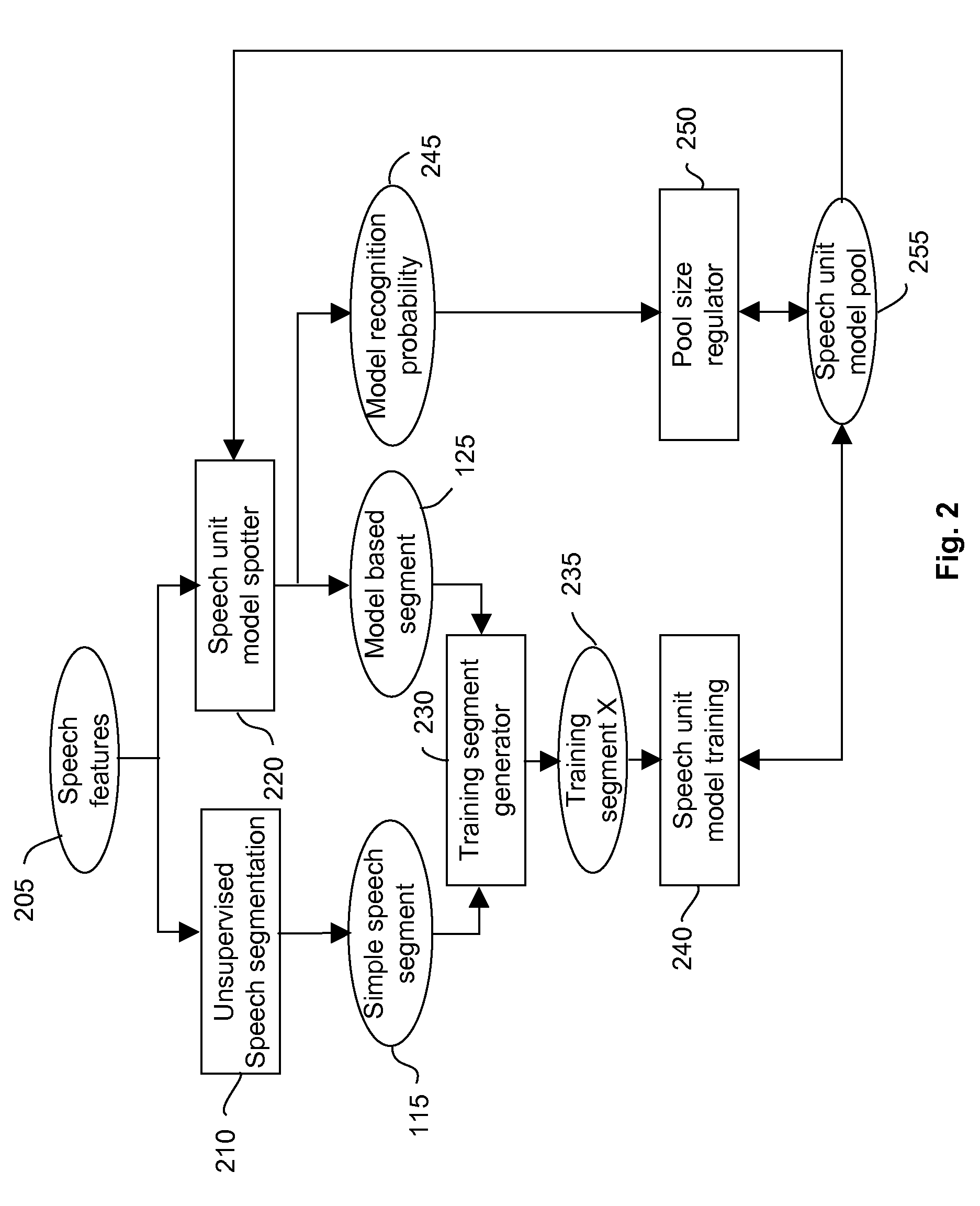

Using Child Directed Speech to Bootstrap a Model Based Speech Segmentation and Recognition System

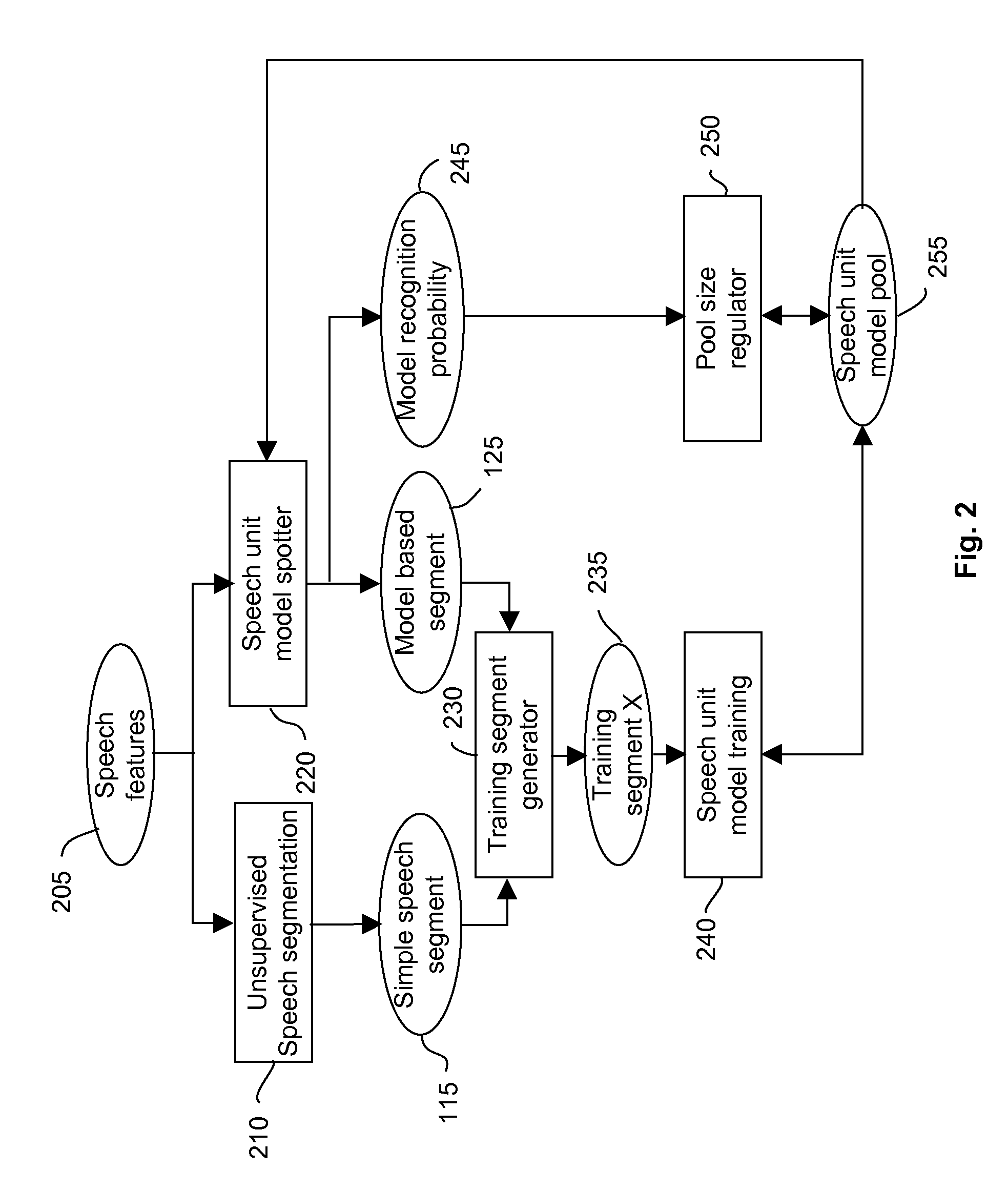

Inactive | US20080082337A1 | Simple method | Improve intelligibility | Speech recognition | Speech segmentation | Algorithm

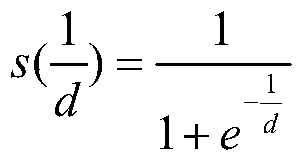

A method and system for obtaining a pool of speech syllable models. The model pool is generated by first detecting a training segment using unsupervised speech segmentation or speech unit spotting. If the model pool is empty, a first speech syllable model is trained and added to the model pool. If the model pool is not empty, an existing model is determined from the model pool that best matches the training segment. Then the existing model is scored for the training segment. If the score is less than a predefined threshold, a new model for the training segment is created and added to the pool. If the score equals the threshold or is larger than the threshold, the training segment is used to improve or to re-estimate the model.

Owner:HONDA RES INST EUROPE
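
The pool-update rule in the abstract above can be sketched as follows. This is a minimal illustration and not the patented implementation: the function `update_model_pool` and the toy helpers (`toy_train`, `toy_score`, `toy_refine`), which model a syllable as a running mean of a one-dimensional feature, are hypothetical stand-ins for real syllable model training and scoring.

```python
def update_model_pool(pool, segment, score_fn, train_fn, refine_fn, threshold):
    """Apply one bootstrapping step; return the index of the model that took the segment."""
    if not pool:
        # Empty pool: train the first model from this segment.
        pool.append(train_fn(segment))
        return 0
    # Find the existing model that best matches the training segment.
    best = max(range(len(pool)), key=lambda i: score_fn(pool[i], segment))
    if score_fn(pool[best], segment) < threshold:
        # Poor match: create a new model for this segment.
        pool.append(train_fn(segment))
        return len(pool) - 1
    # Score at or above the threshold: re-estimate the matched model.
    pool[best] = refine_fn(pool[best], segment)
    return best


# Hypothetical toy model: (mean of a 1-D feature, number of segments absorbed).
def toy_train(segment):
    return (sum(segment) / len(segment), 1)


def toy_score(model, segment):
    mean, _ = model
    return -abs(mean - sum(segment) / len(segment))  # higher is better


def toy_refine(model, segment):
    mean, count = model
    seg_mean = sum(segment) / len(segment)
    return ((mean * count + seg_mean) / (count + 1), count + 1)


if __name__ == "__main__":
    pool = []
    for seg in ([1.0, 1.0], [1.2, 1.2], [5.0]):
        update_model_pool(pool, seg, toy_score, toy_train, toy_refine, threshold=-0.5)
    print(pool)
```

In this toy run the second segment refines the first model and the third segment, being far from it, starts a second model.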

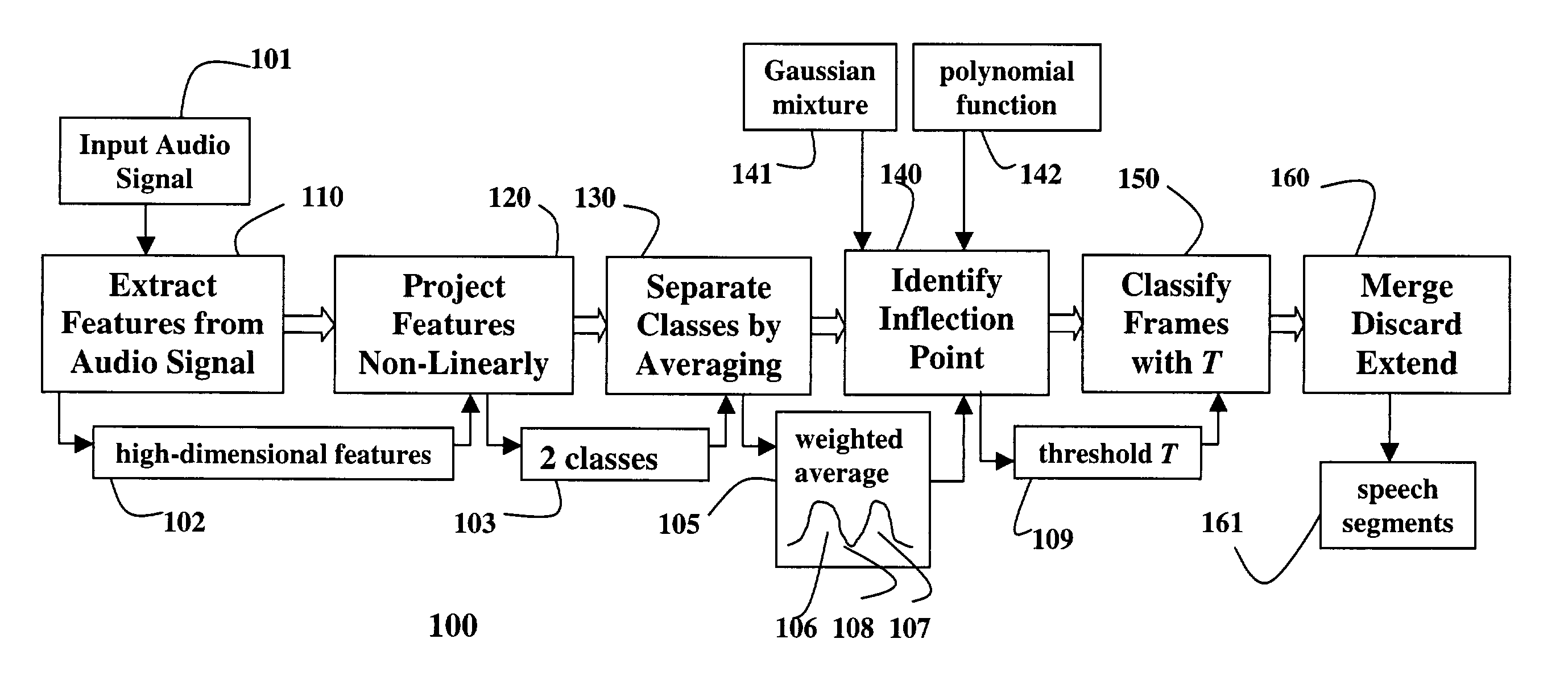

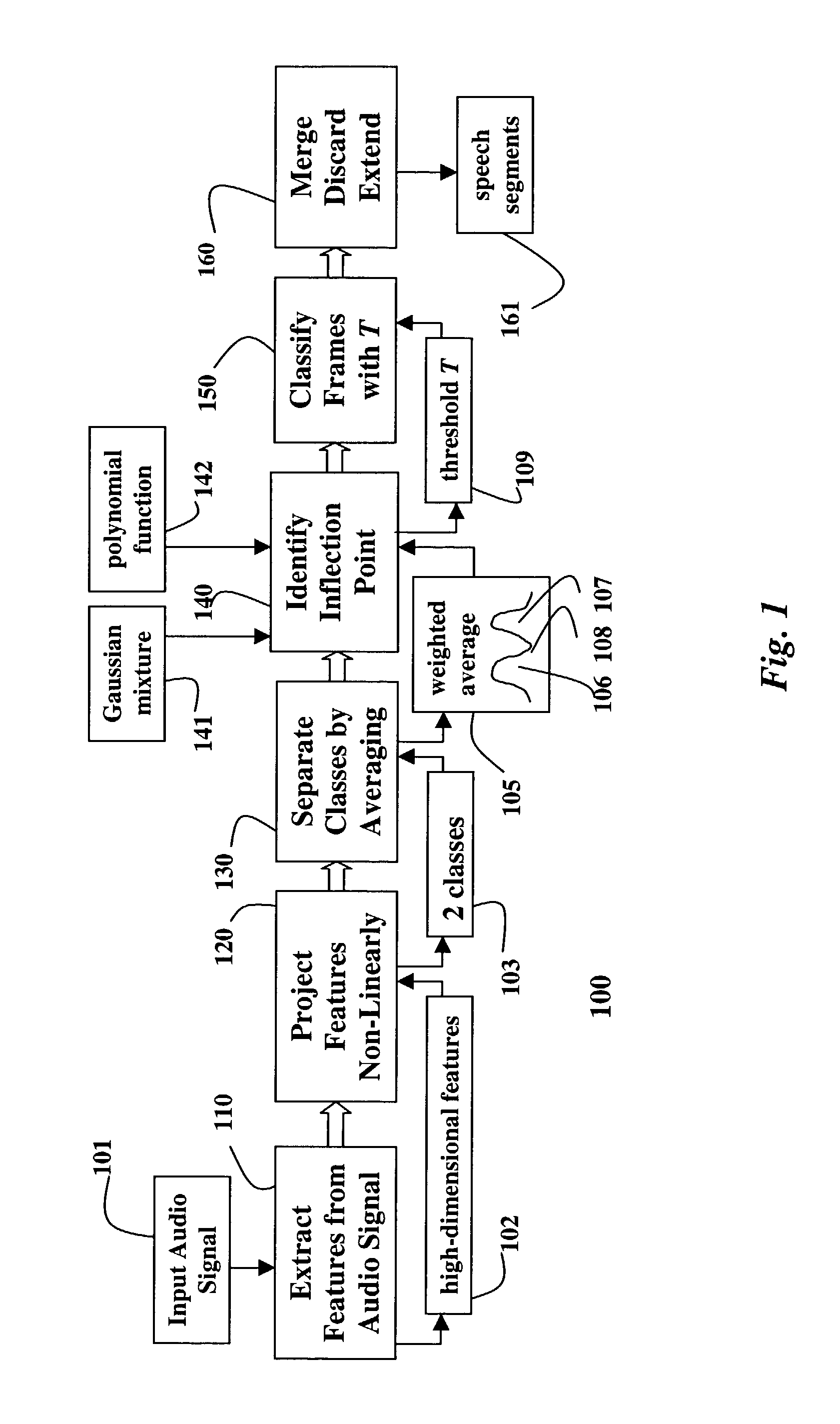

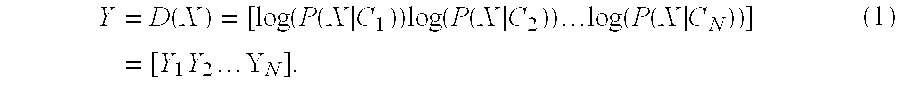

Classifier-based non-linear projection for continuous speech segmentation

A method segments an audio signal including frames into non-speech and speech segments. First, high-dimensional spectral features are extracted from the audio signal. The high-dimensional features are then projected non-linearly to low-dimensional features that are subsequently averaged using a sliding window and weighted averages. A linear discriminant is applied to the averaged low-dimensional features to determine a threshold separating the low-dimensional features. The linear discriminant can be determined from a Gaussian mixture or a polynomial applied to a bimodal histogram distribution of the low-dimensional features. Then, the threshold can be used to classify the frames into either non-speech or speech segments. Speech segments having a very short duration can be discarded, and the longer speech segments can be further extended. In batch-mode or real-time the threshold can be updated continuously.

Owner:MITSUBISHI ELECTRIC RES LAB INC
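
The last stages described in the abstract above (sliding-window averaging, thresholding, discarding very short speech segments and extending the rest) can be sketched as below. The sketch assumes each frame has already been projected to a single score; the non-linear projection and the discriminant that picks the threshold are not shown, a plain unweighted mean stands in for the weighted average, and the names `smooth`, `speech_segments`, `min_len` and `pad` are hypothetical.

```python
def smooth(values, window):
    """Centered sliding-window mean (shorter window at the edges)."""
    half = window // 2
    out = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out


def speech_segments(scores, threshold, window=3, min_len=3, pad=1):
    """Return (start, end) frame ranges, end exclusive, classified as speech."""
    flags = [s > threshold for s in smooth(scores, window)]
    segments, start = [], None
    # A trailing False closes any segment still open at the end.
    for i, is_speech in enumerate(flags + [False]):
        if is_speech and start is None:
            start = i
        elif not is_speech and start is not None:
            if i - start >= min_len:  # discard very short speech segments
                # Extend the surviving segment by `pad` frames on each side.
                segments.append((max(0, start - pad), min(len(scores), i + pad)))
            start = None
    return segments


if __name__ == "__main__":
    scores = [0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 0, 0, 0]
    print(speech_segments(scores, threshold=0.5))
```

The isolated high score near the end of the toy input is smoothed below the threshold, so only the longer run survives.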

Single channel-based non-supervision target speaker speech extraction method

Active | CN108962229A | Improve adaptability | Improve intelligence | Character and pattern recognition | Speech recognition | Speech segmentation | Speech sound

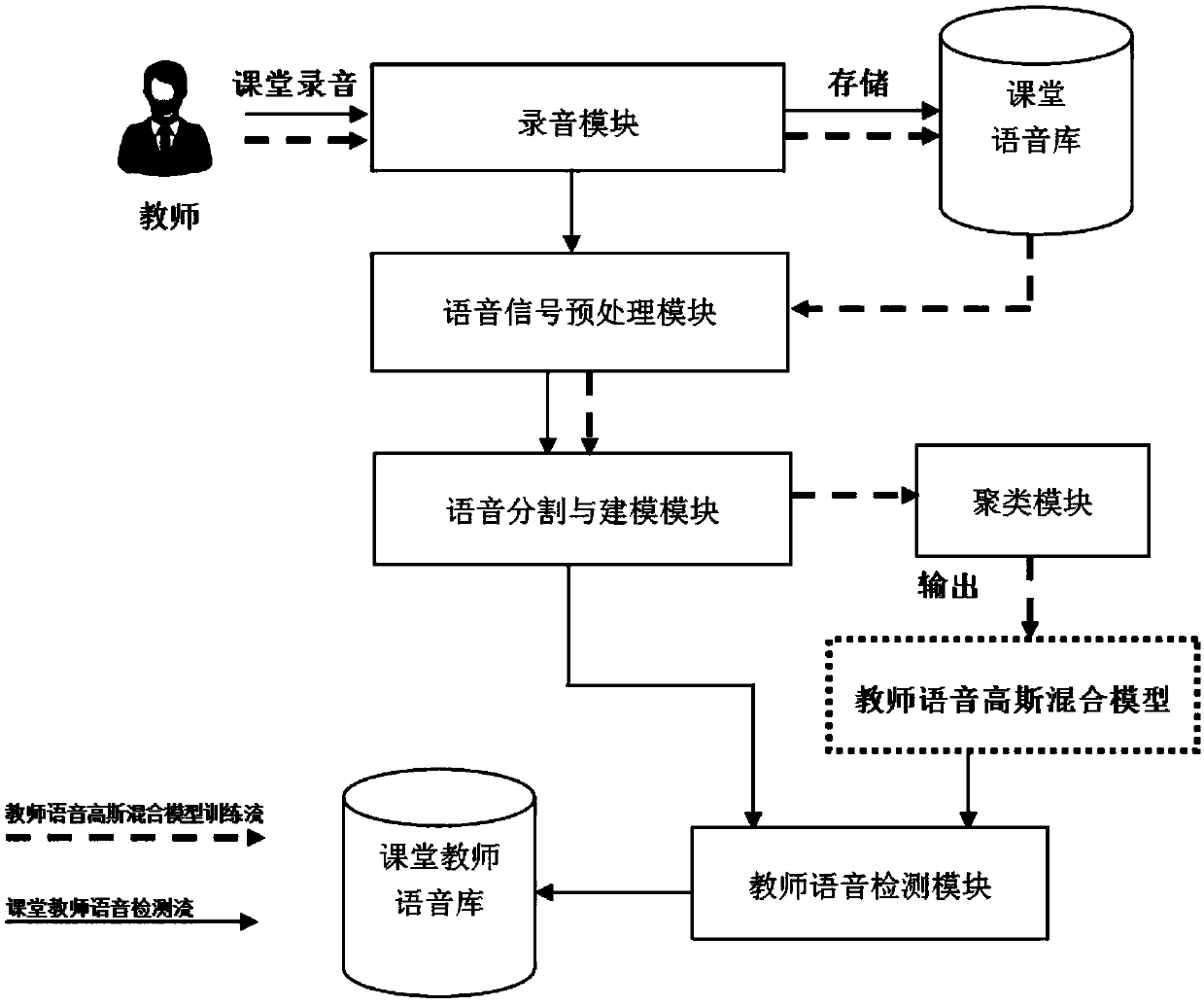

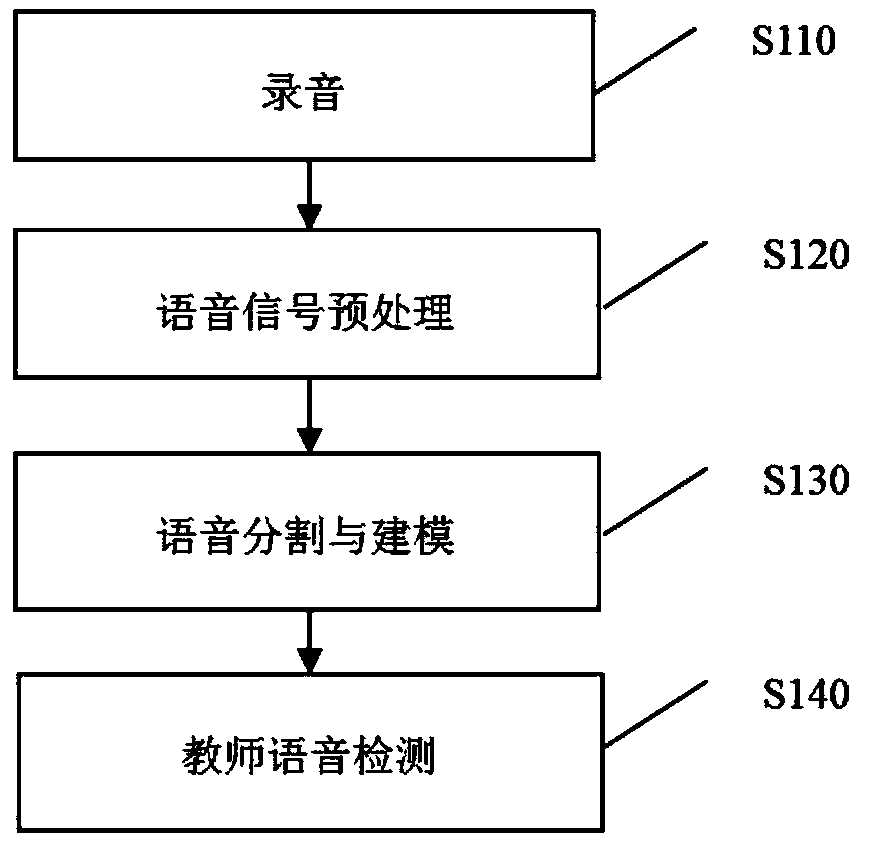

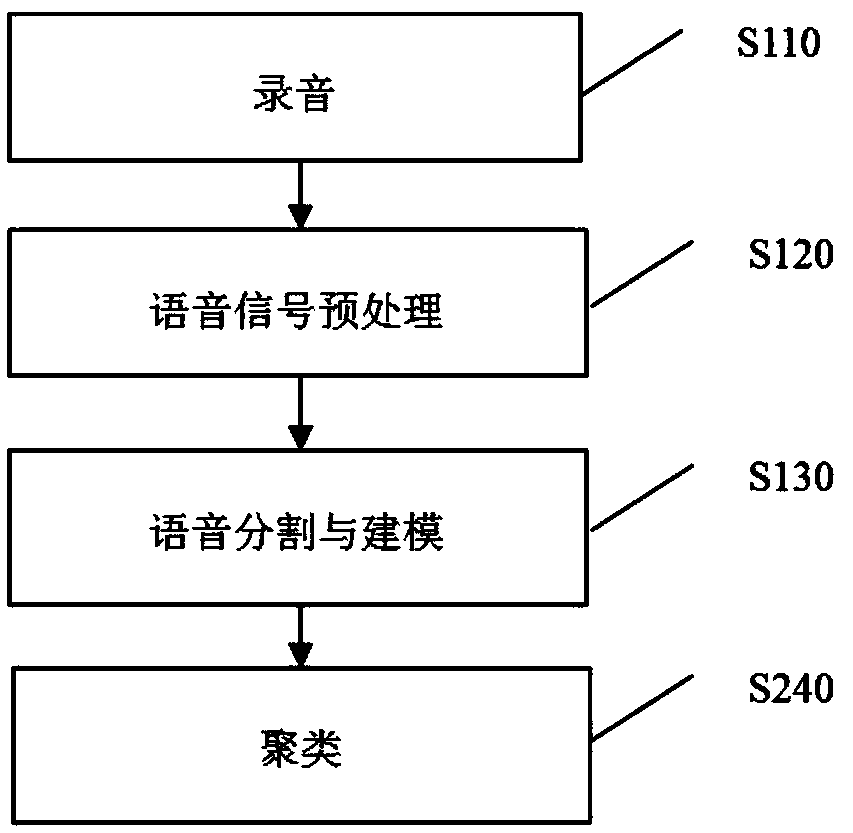

The embodiment of the invention discloses a single channel-based non-supervision target speaker speech extraction method comprising a teacher language detection step and a teacher language model training step; the teacher language detection step comprises the following parts: obtaining speech data from a classroom recording; processing speech signals; speech segmentation and modeling, where the speech segmentation comprises segmenting the classroom speech at equal length, extracting the corresponding MFCC features for each speech segment, and building a GMM model for each speech segment according to the MFCC features; teacher speech detection, calculating the similarity between the GMM model of each speech segment, except for teacher speech types, and a GGMM, and tagging the GMM models smaller than a set threshold as teacher speech types, thus obtaining the final teacher speech types; the teacher language GGMM model training step comprises the following parts: clustering the speech data obtained in S3; obtaining an initial teacher speech type, and extracting the GGMM model according to the initial teacher speech type. The method can effectively improve the system adaptability and intelligence in real applications, thus laying a foundation for subsequent applications and research.

Owner:SHANTOU UNIV

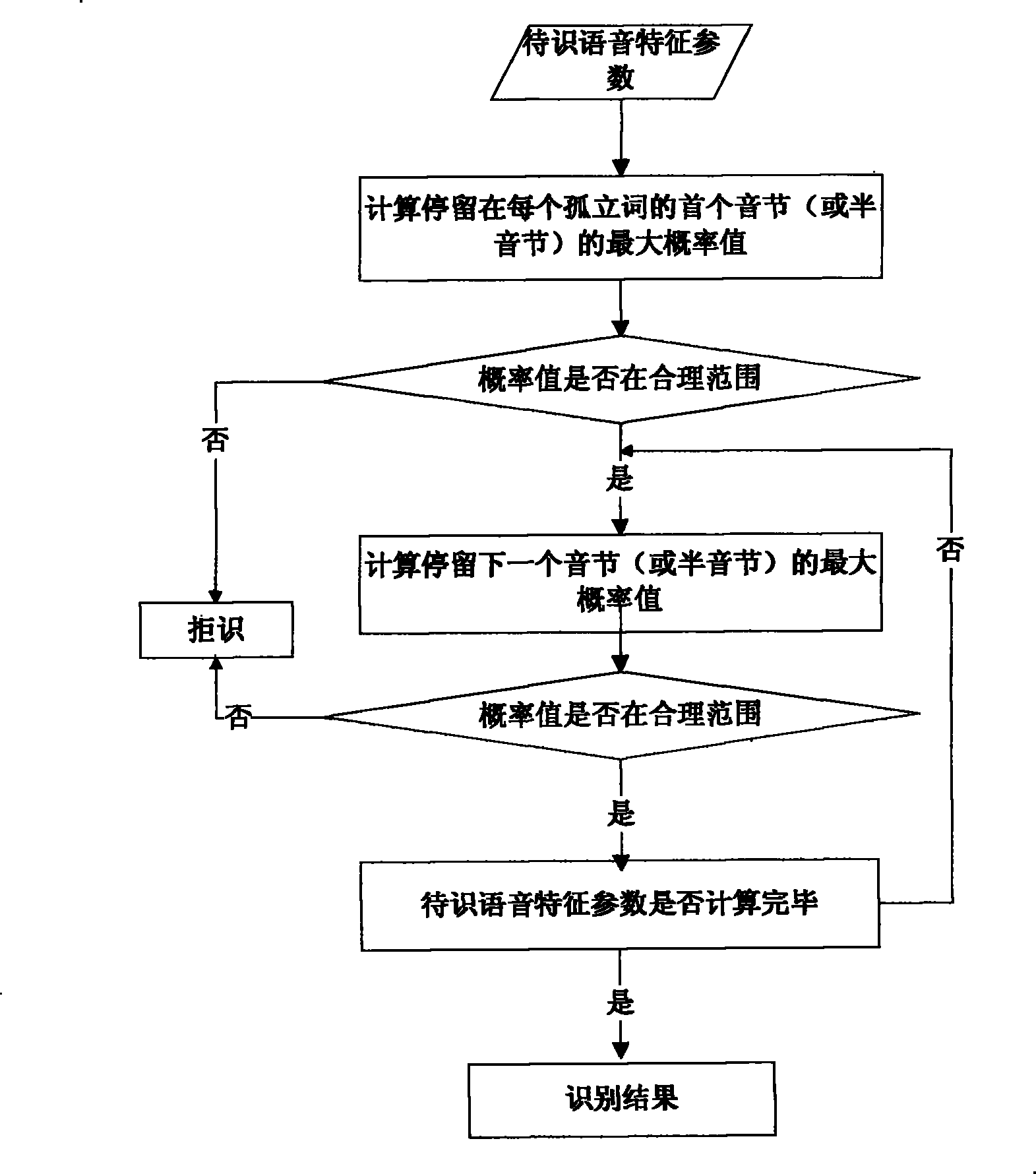

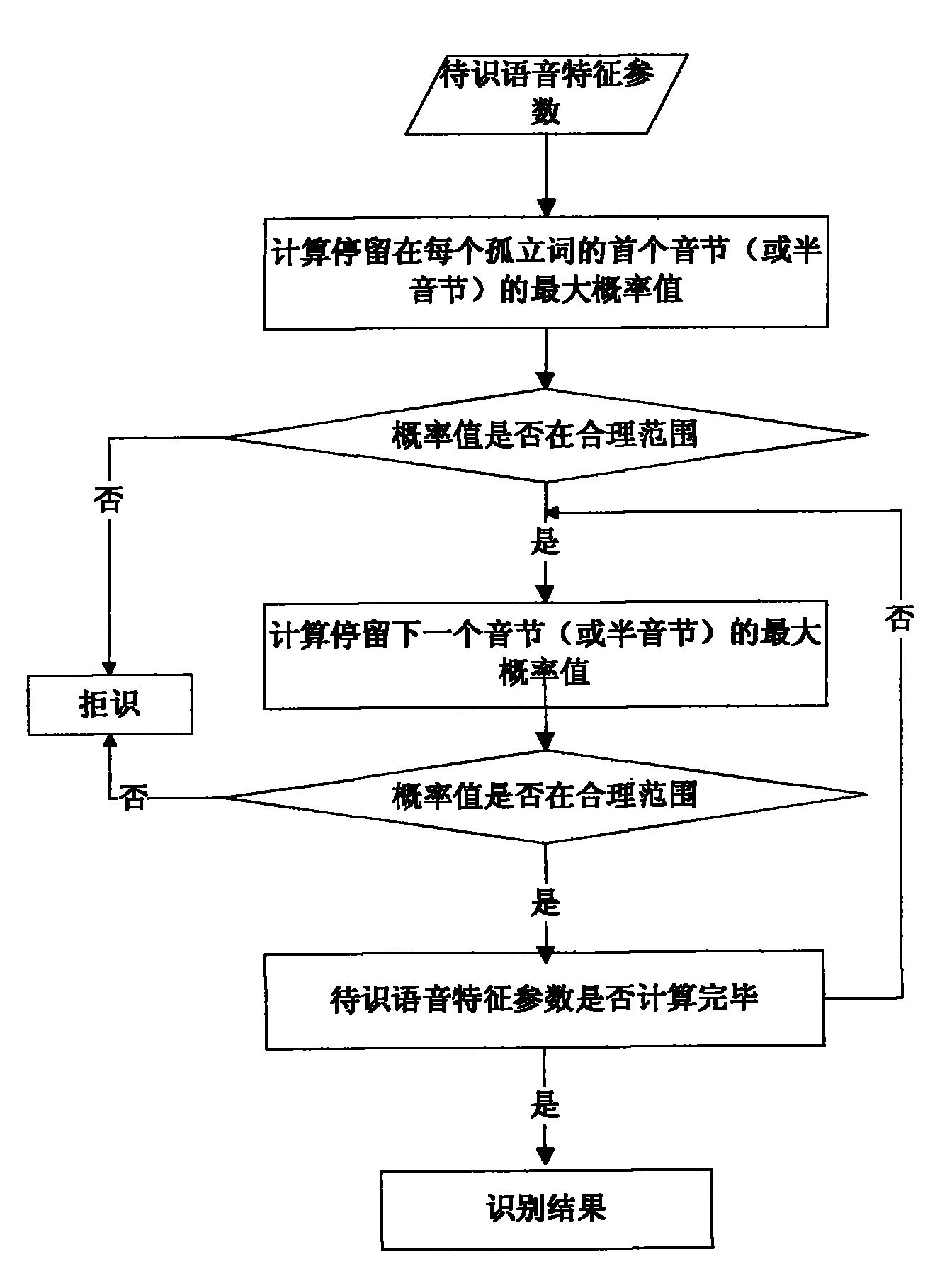

Phonetic segmentation-based isolate word recognition method

Inactive | CN101819772A | Easy to identify | Improve recognition response time | Speech recognition | Syllable | Small probability

The invention discloses a phonetic segmentation-based isolate word recognition method. In the method, a continuous HMM model obtained by voice training takes syllable or semi-syllable as a unit set, trained unit set models are spliced into whole word models according to the syllables or semi-syllables of isolate words in a word list, and a Viterbi algorithm is adopted in recognition. The method has the advantages of improving recognition performance because each segment of HMM model in recognition results (accurately recognized isolate words) can be better matched with each segment of characteristic parameters of voices to be recognized, and shortening recognition response time because the recognition of the isolate words with relatively smaller probability values can be directly refused every time recognition operation is performed on the tail state of one syllable or semi-syllable.

Owner:NO 709 RES INST OF CHINA SHIPBUILDING IND CORP

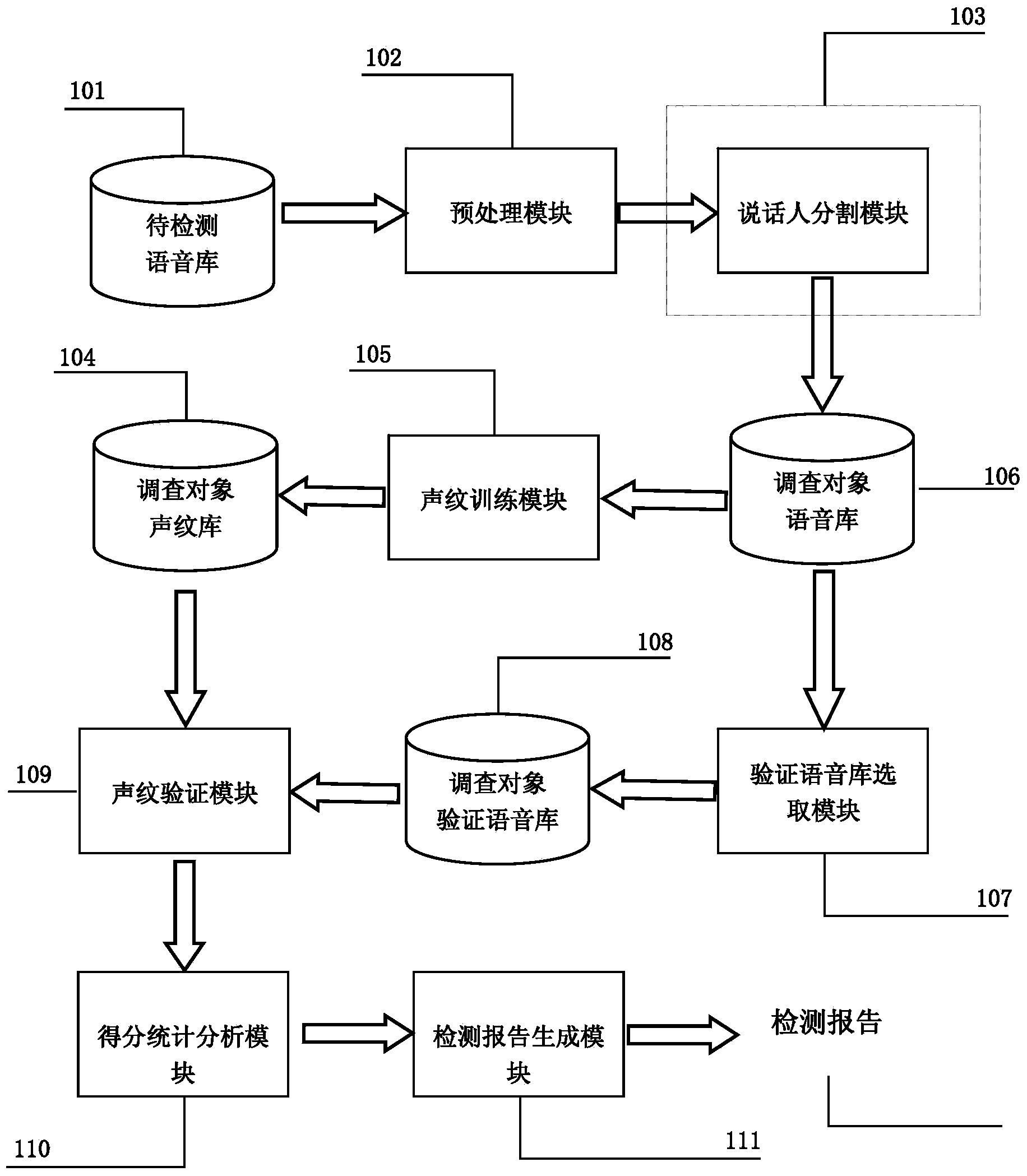

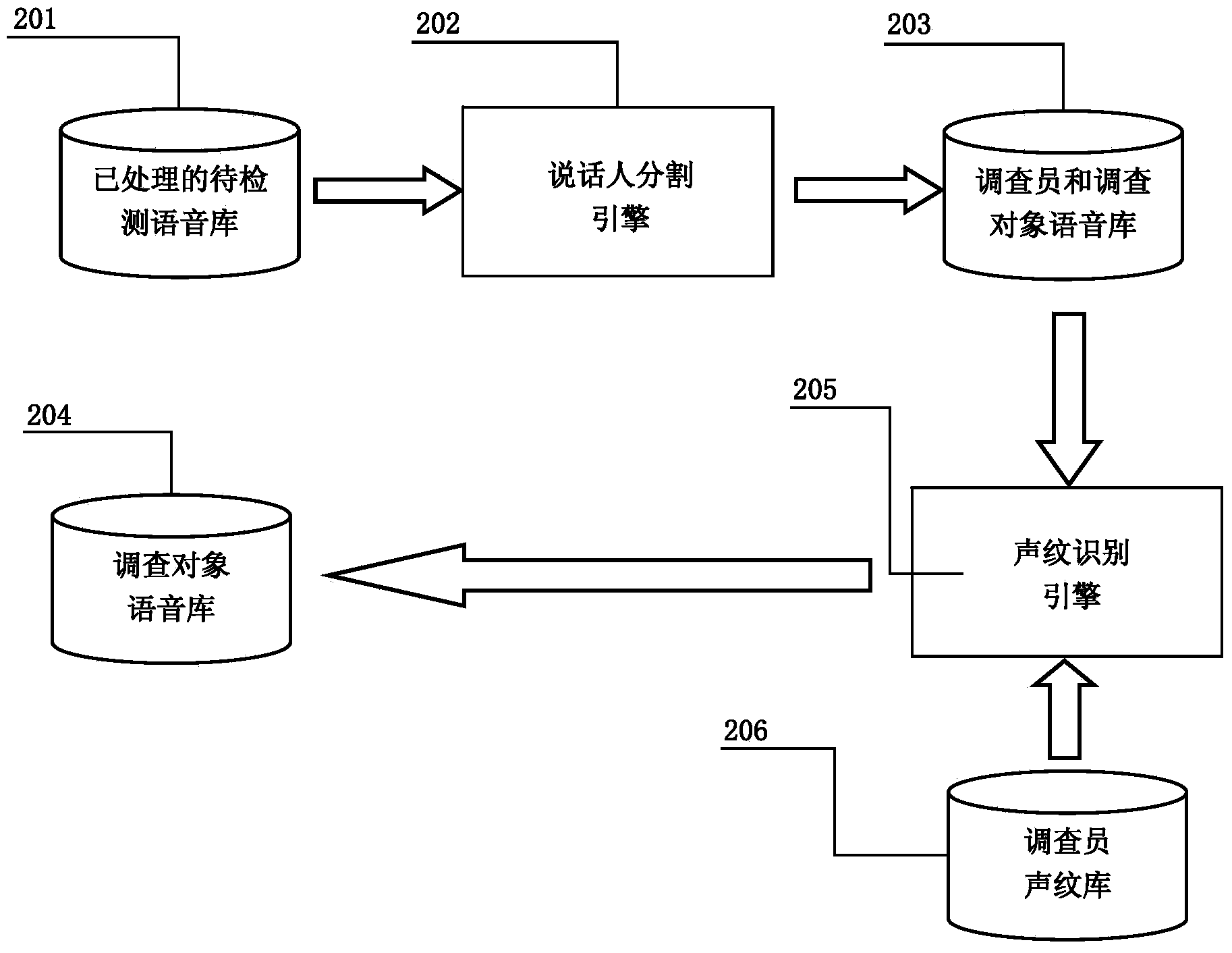

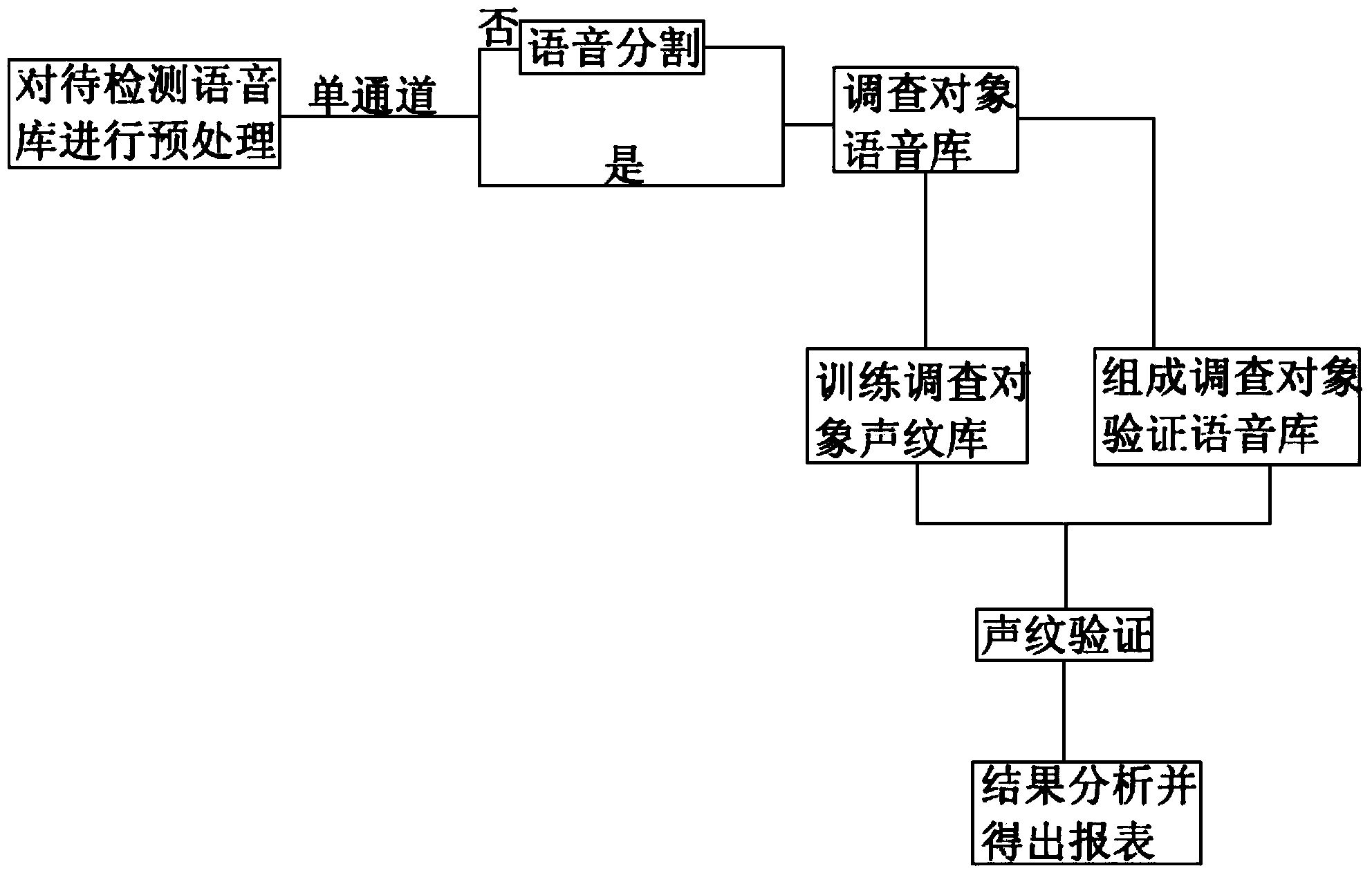

System and method for detecting identity impersonation in telephone satisfaction survey

Active | CN103778917A | Improve detection efficiency | Reduce detection error rate | Speech analysis | Marketing | Telephone survey | Speech segmentation

The invention provides a system and a method for detecting identity impersonation in telephone satisfaction survey and provides a solution for the following problems: identity impersonation detection can be carried out only for single-channel telephone speech in previous telephone satisfaction surveys, the method for speech processing is rough, telephone survey speech contains a variety of non-effective speeches such as noise and ring-back tone, and the like. The system of the invention is composed of a to-be-detected speech library 101, a preprocessing module 102, a speaker speech segmentation module 103, a respondent voiceprint library 104, a voiceprint training module 105, a respondent speech database 106, a verification speech selection module 107, a respondent verification speech library 108, a voiceprint verification module 109, a score statistical analysis module 110 and a detection report generation module 111. Identity impersonation is detected by using the voiceprint recognition technology and the speaker speech segmentation technology, and a clear and readable identity impersonation detection report is given finally to be reflected on the authenticity of survey data in telephone satisfaction survey.

Owner:XIAMEN KUAISHANGTONG INFORMATION TECH CO LTD

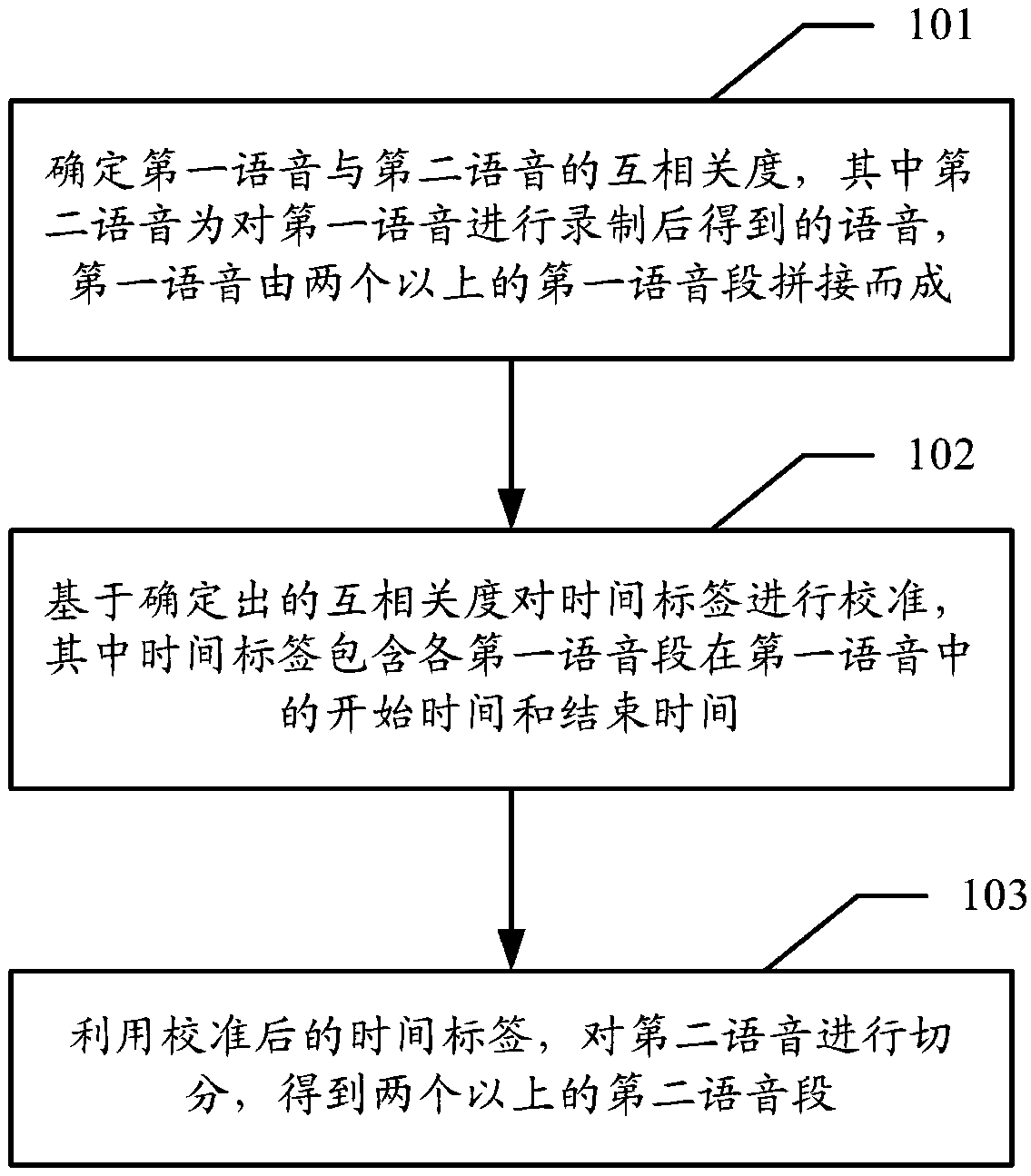

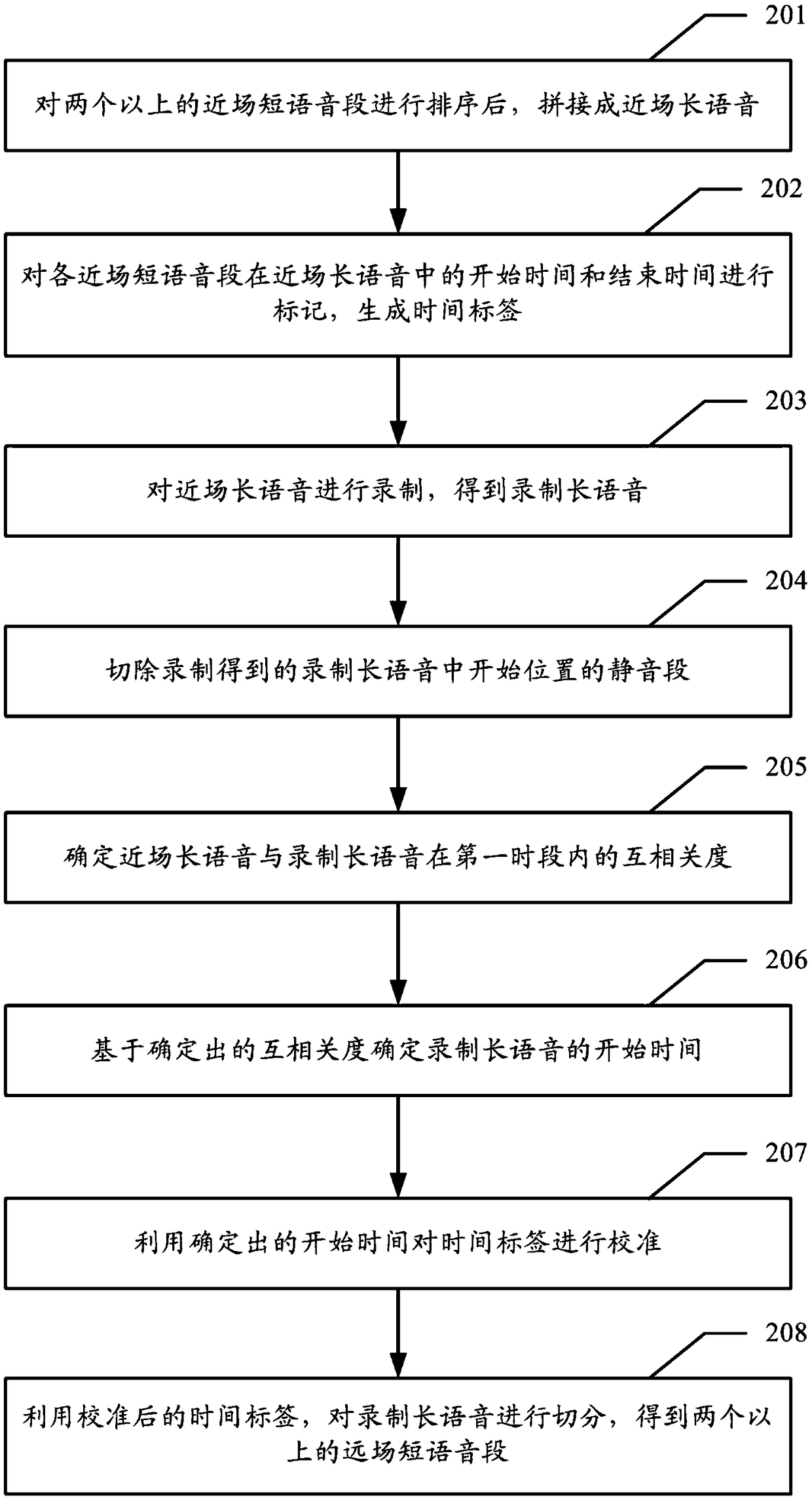

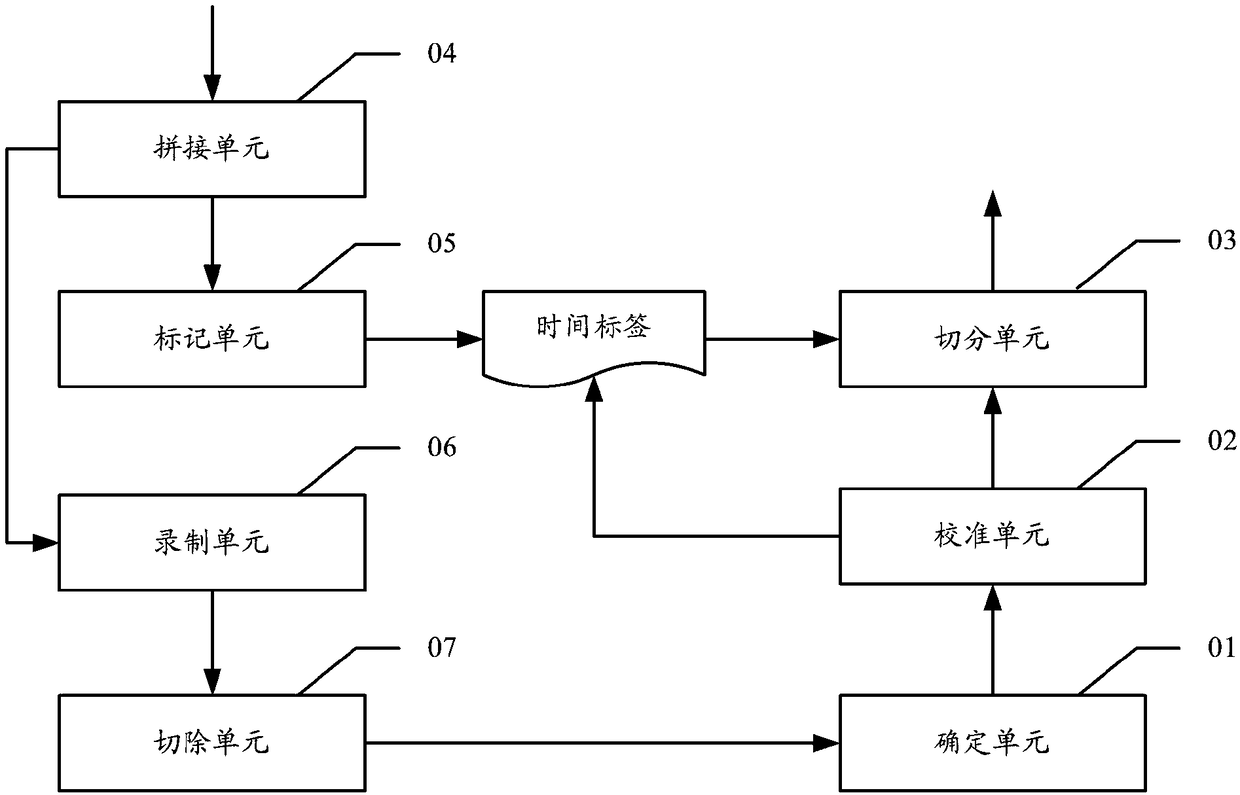

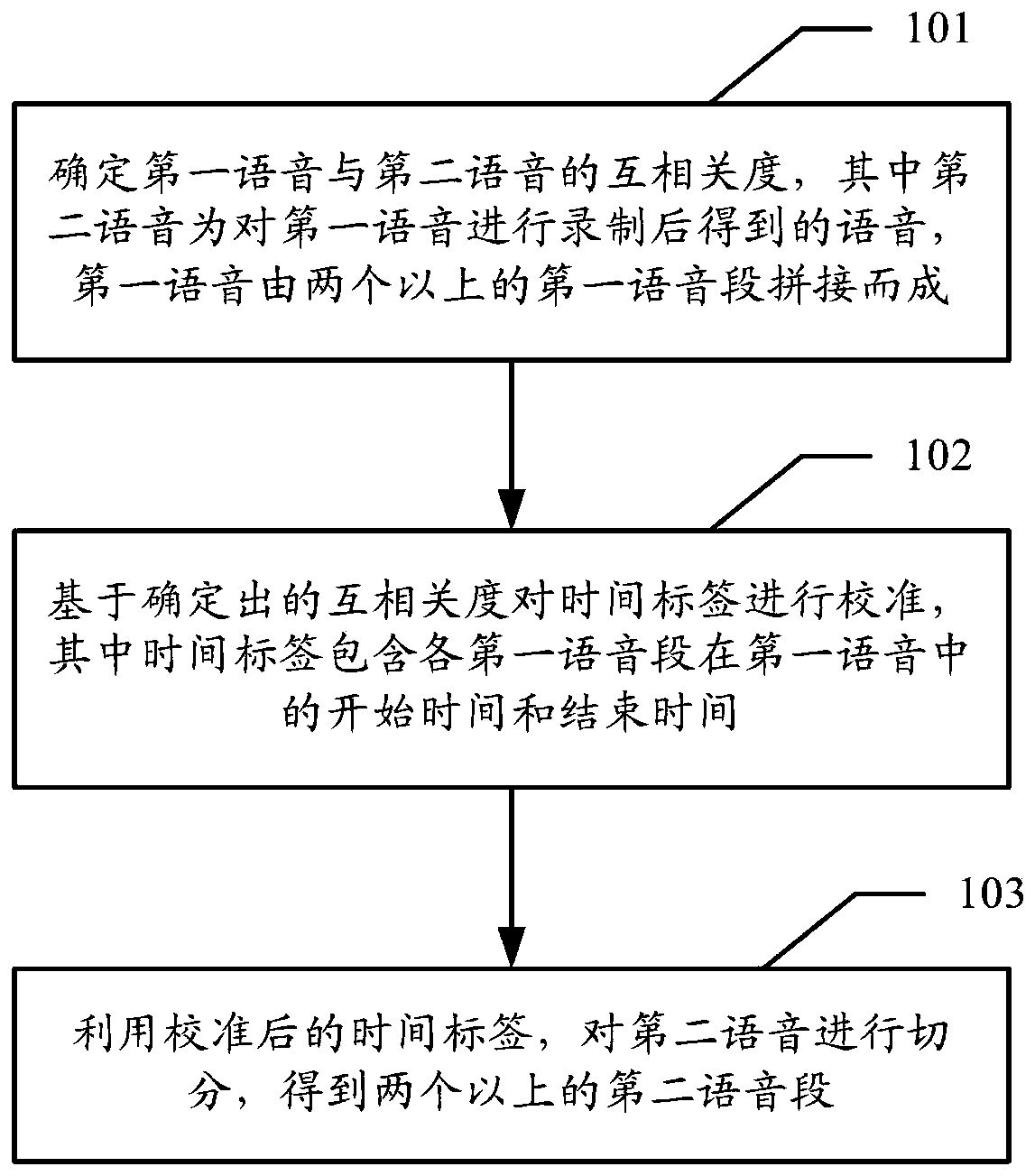

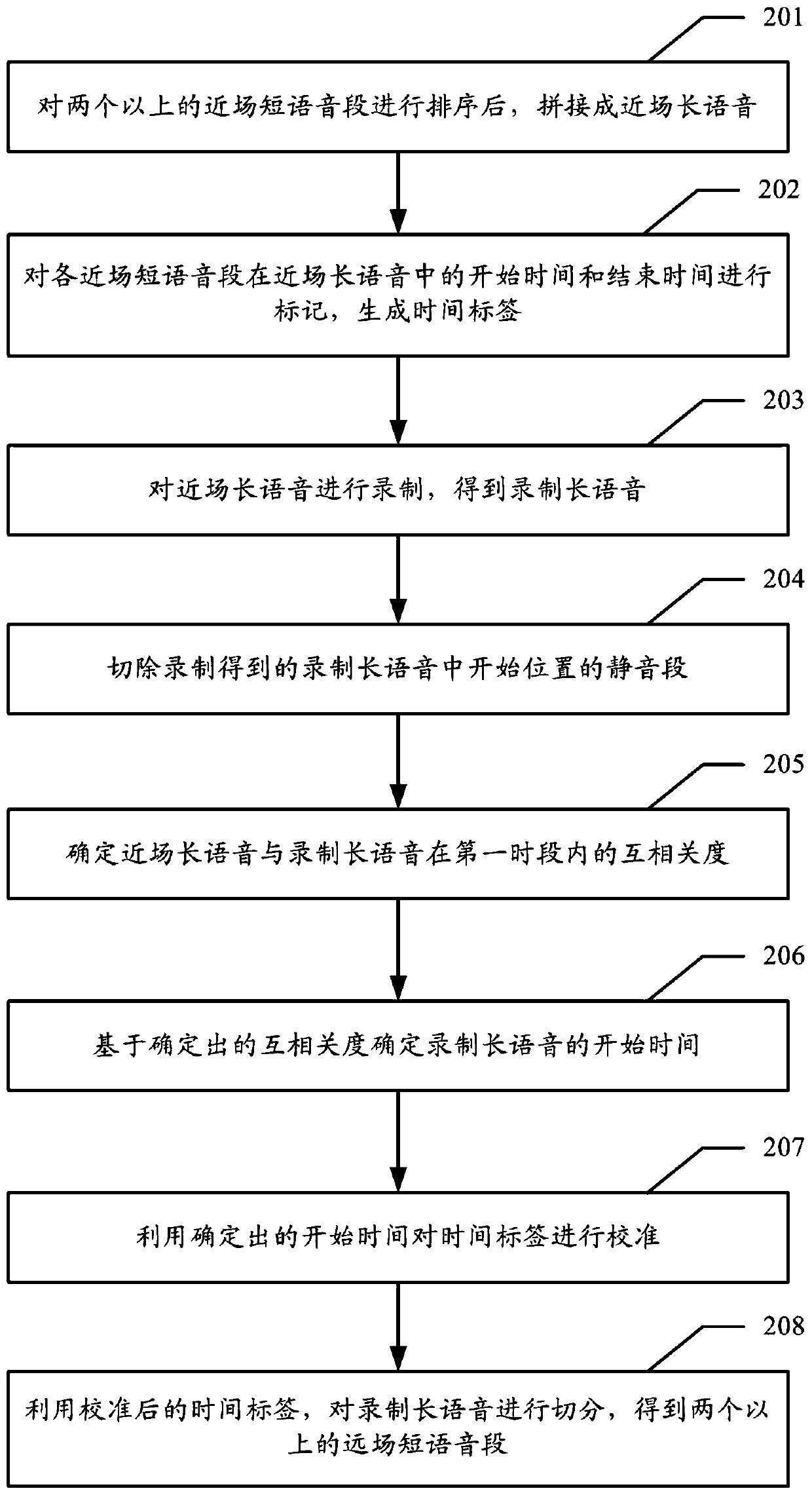

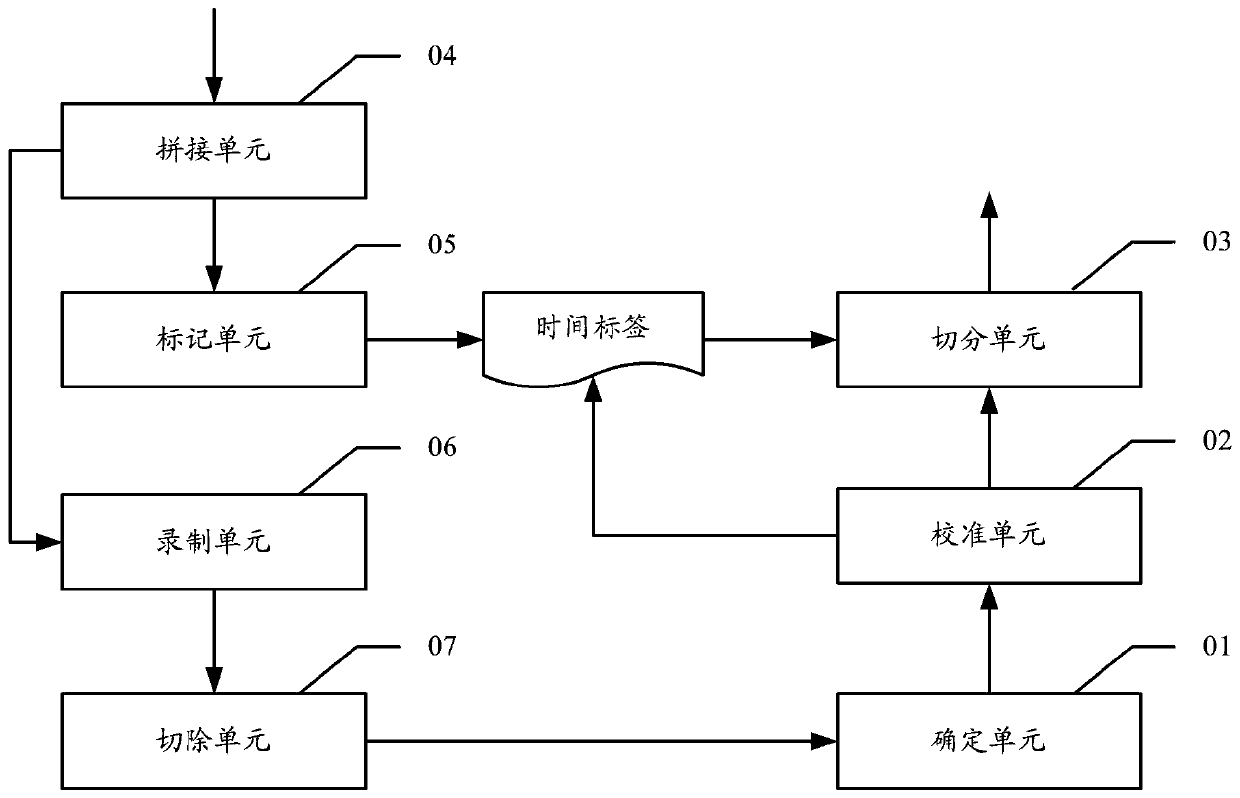

Speech segmentation method, apparatus, apparatus and computer storage medium

Active | CN109166570A | Improve Segmentation Accuracy | Easy alignment | Speech recognition | Start time | Speech segmentation

The invention provides a speech segmentation method, an apparatus, a device and a computer storage medium, wherein the method comprises the following steps: determining the cross-correlation of a first speech and a second speech, wherein the second speech is the speech obtained after recording the first speech, and the first speech is formed by splicing two or more first speech segments; calibrating a time tag based on the cross-correlation, the time tag comprising a start time and an end time of each first speech segment in the first speech; and using the calibrated time tag, segmenting the second speech to obtain more than two second speech segments. The invention can better align the calibrated time tag with the second speech, thereby improving the segmentation accuracy of the second speech.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
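
The idea in the abstract above can be sketched as follows, under a strong simplifying assumption that is not taken from the patent: the recording differs from the spliced original only by one constant delay, so a single lag found by cross-correlation calibrates every time tag. The functions `best_lag`, `calibrate_tags` and `cut` are hypothetical names, and signals are plain lists of samples.

```python
def best_lag(reference, recording, max_lag):
    """Lag in samples (searched within +/- max_lag) that maximizes the cross-correlation."""
    def corr(lag):
        return sum(reference[i] * recording[i + lag]
                   for i in range(len(reference))
                   if 0 <= i + lag < len(recording))
    return max(range(-max_lag, max_lag + 1), key=corr)


def calibrate_tags(tags, lag, sample_rate):
    """Shift each (start, end) time tag, in seconds, by the estimated delay."""
    shift = lag / sample_rate
    return [(start + shift, end + shift) for start, end in tags]


def cut(recording, tags, sample_rate):
    """Slice the recording at the calibrated time tags."""
    return [recording[max(0, round(s * sample_rate)):round(e * sample_rate)]
            for s, e in tags]


if __name__ == "__main__":
    first = [0, 1, 2, 1, 0, 0, 3, 1, 0, 0]  # spliced original speech
    second = [0, 0, 0] + first               # re-recorded copy, delayed by 3 samples
    lag = best_lag(first, second, max_lag=5)
    tags = calibrate_tags([(1.0, 4.0), (6.0, 8.0)], lag, sample_rate=1)
    print(lag, tags, cut(second, tags, sample_rate=1))
```

A real recording would also introduce noise and clock drift, which a single global lag does not model.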

Using child directed speech to bootstrap a model based speech segmentation and recognition system

A method and system for obtaining a pool of speech syllable models. The model pool is generated by first detecting a training segment using unsupervised speech segmentation or speech unit spotting. If the model pool is empty, a first speech syllable model is trained and added to the model pool. If the model pool is not empty, an existing model is determined from the model pool that best matches the training segment. Then the existing model is scored for the training segment. If the score is less than a predefined threshold, a new model for the training segment is created and added to the pool. If the score equals the threshold or is larger than the threshold, the training segment is used to improve or to re-estimate the model.

Owner:HONDA RES INST EUROPE

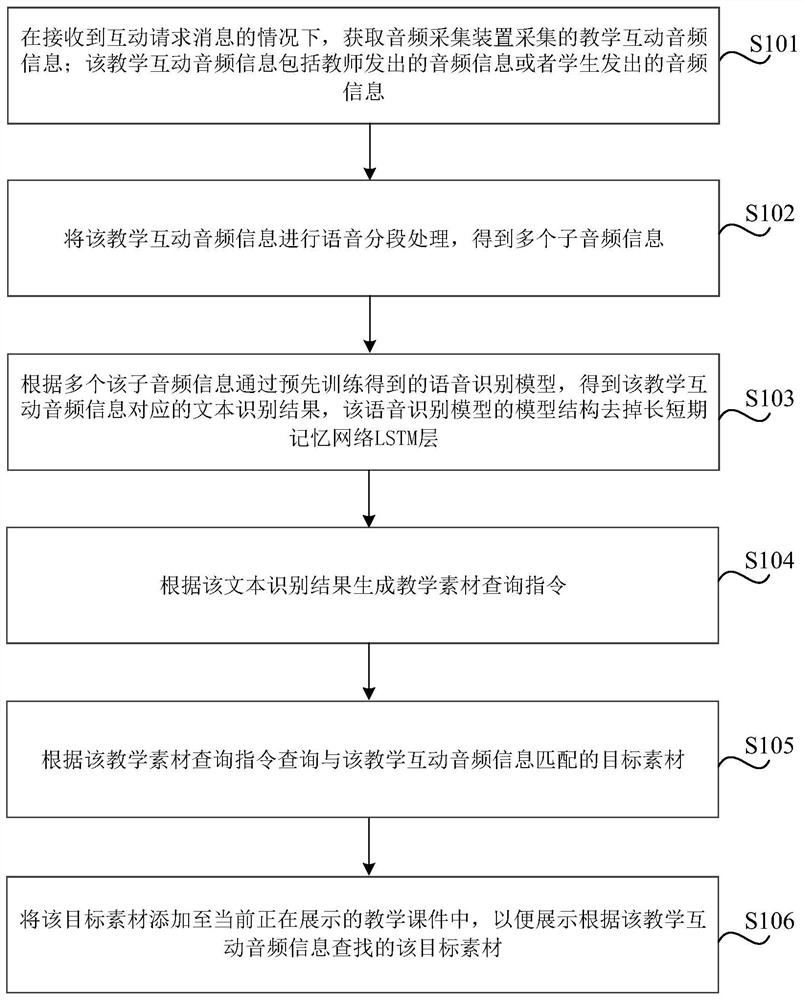

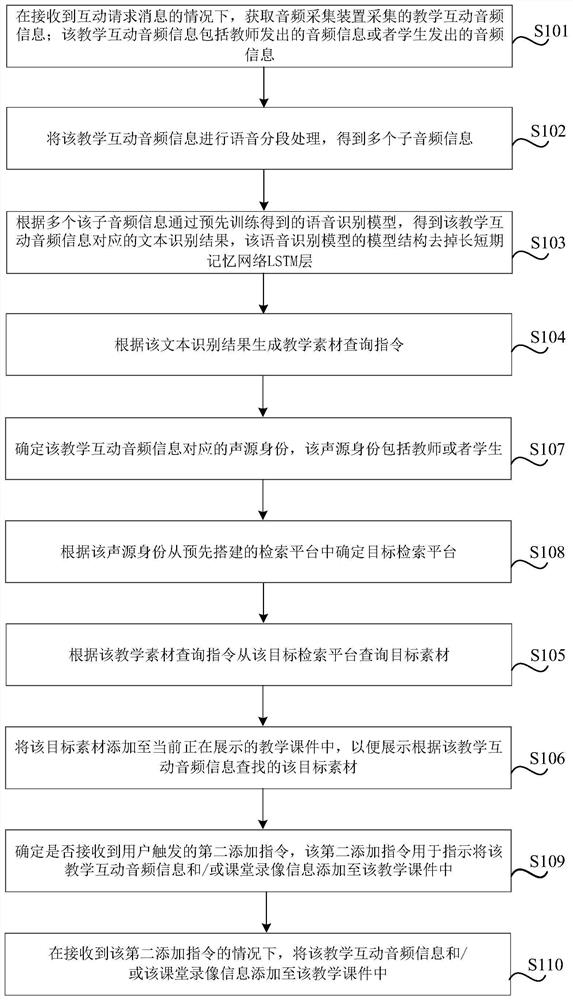

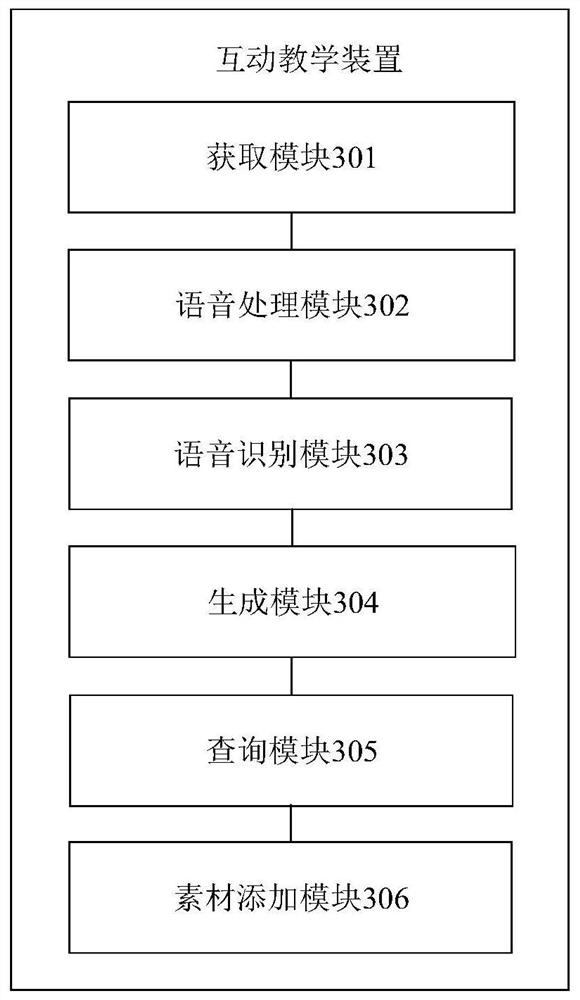

Interactive teaching method and device, storage medium and electronic equipment

Inactive | CN113140138A | Improve interactivity | Improve intelligence | Speech recognition | Electrical appliances | Speech segmentation | Text recognition

The invention relates to an interactive teaching method and device, a storage medium and electronic equipment, and the method comprises the steps: obtaining teaching interaction audio information collected by an audio collection device under the condition that an interaction request message is received, wherein the teaching interaction audio information comprises audio information sent by teachers or audio information sent by students; performing voice segmentation processing on the teaching interaction audio information to obtain multiple pieces of sub-audio information; obtaining a text recognition result corresponding to the teaching interaction audio information according to a voice recognition model obtained through pre-training of the multiple pieces of sub-audio information, and removing a long short-term memory (LSTM) network layer from a model structure of the voice recognition model; generating a teaching material query instruction according to the text recognition result; querying a target material matched with the teaching interaction audio information according to the teaching material query instruction; and adding the target material to the currently displayed teaching courseware so as to display the target material searched according to the teaching interaction audio information.

Owner:NEW ORIENTAL EDUCATION & TECH GRP CO LTD

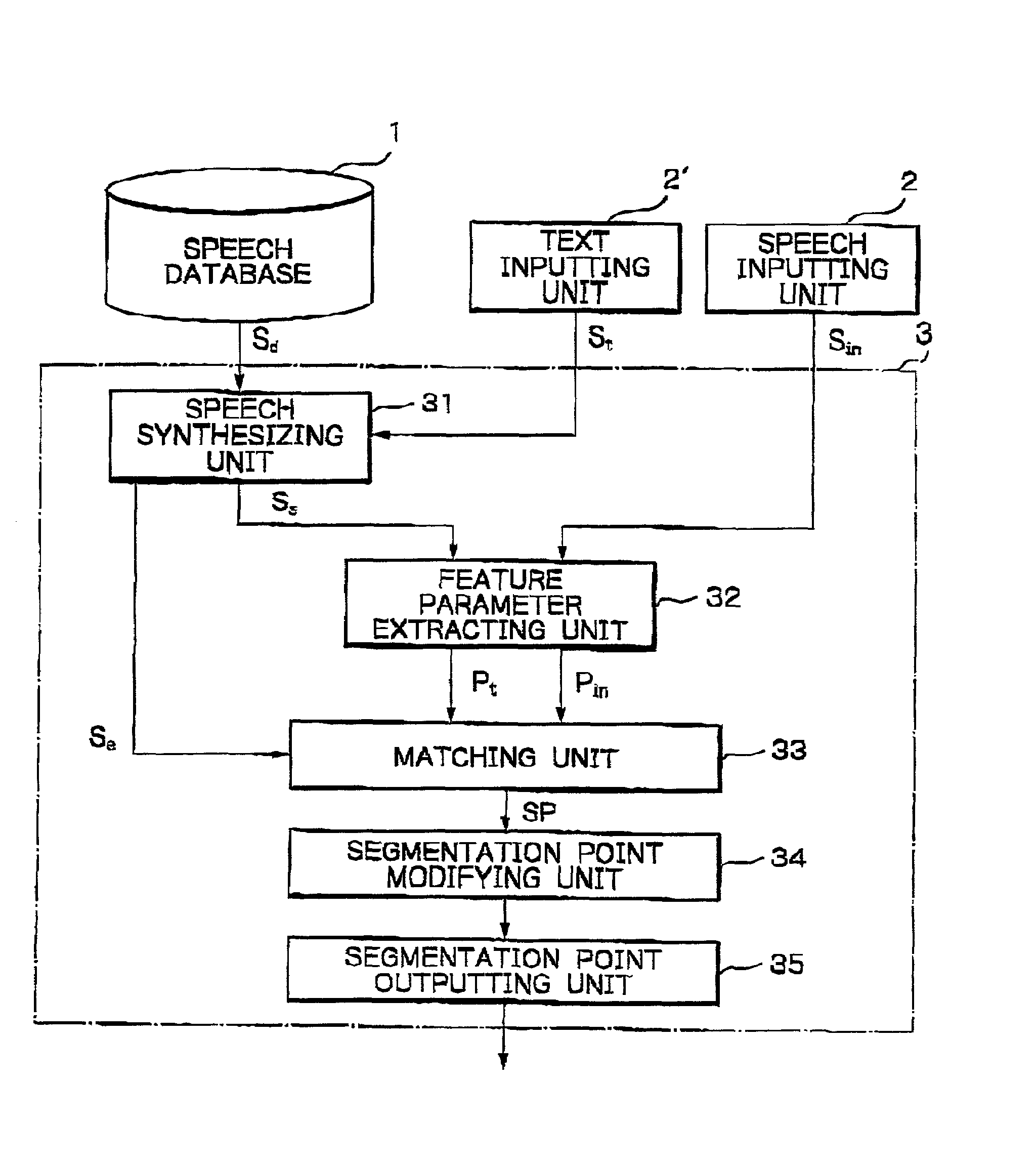

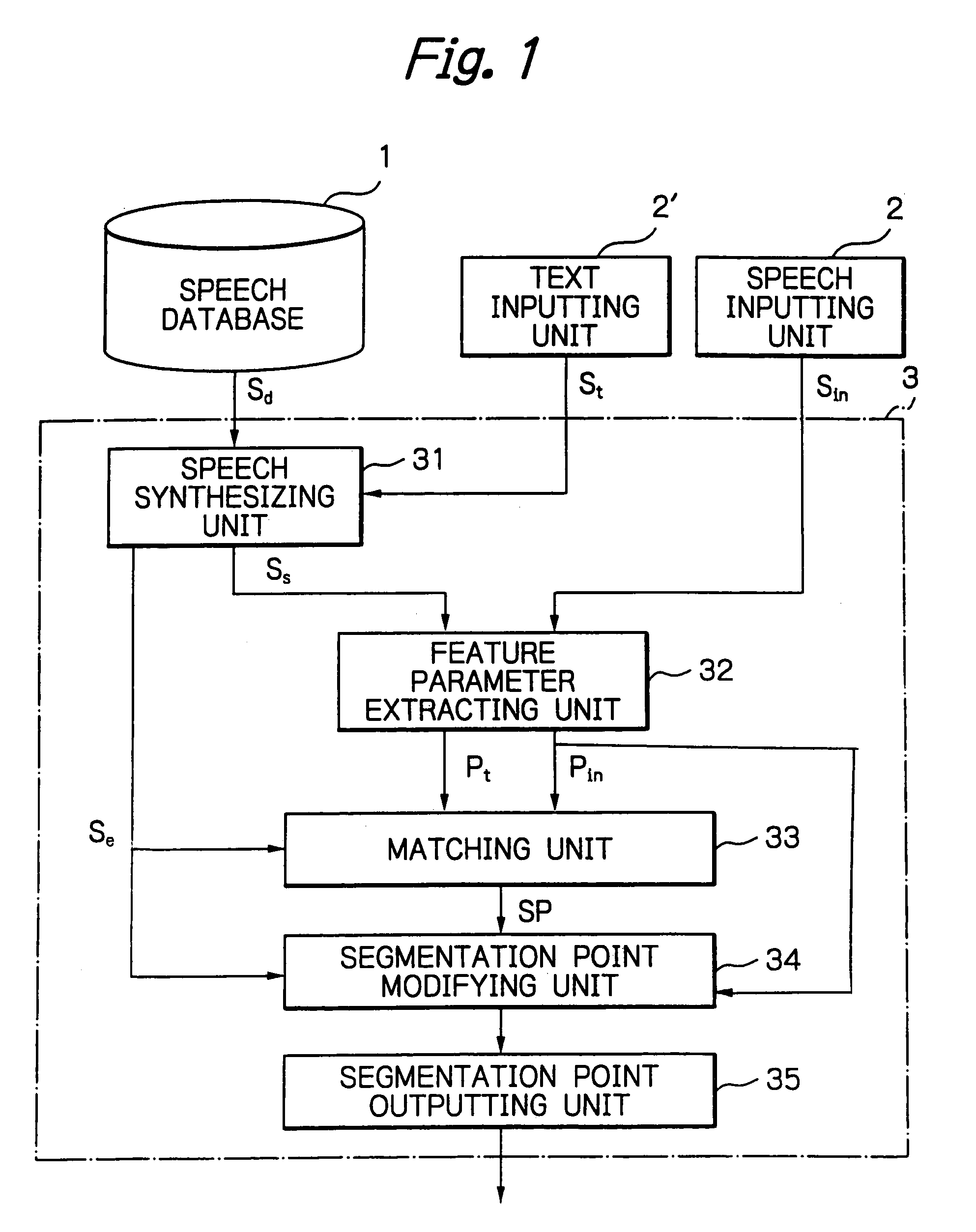

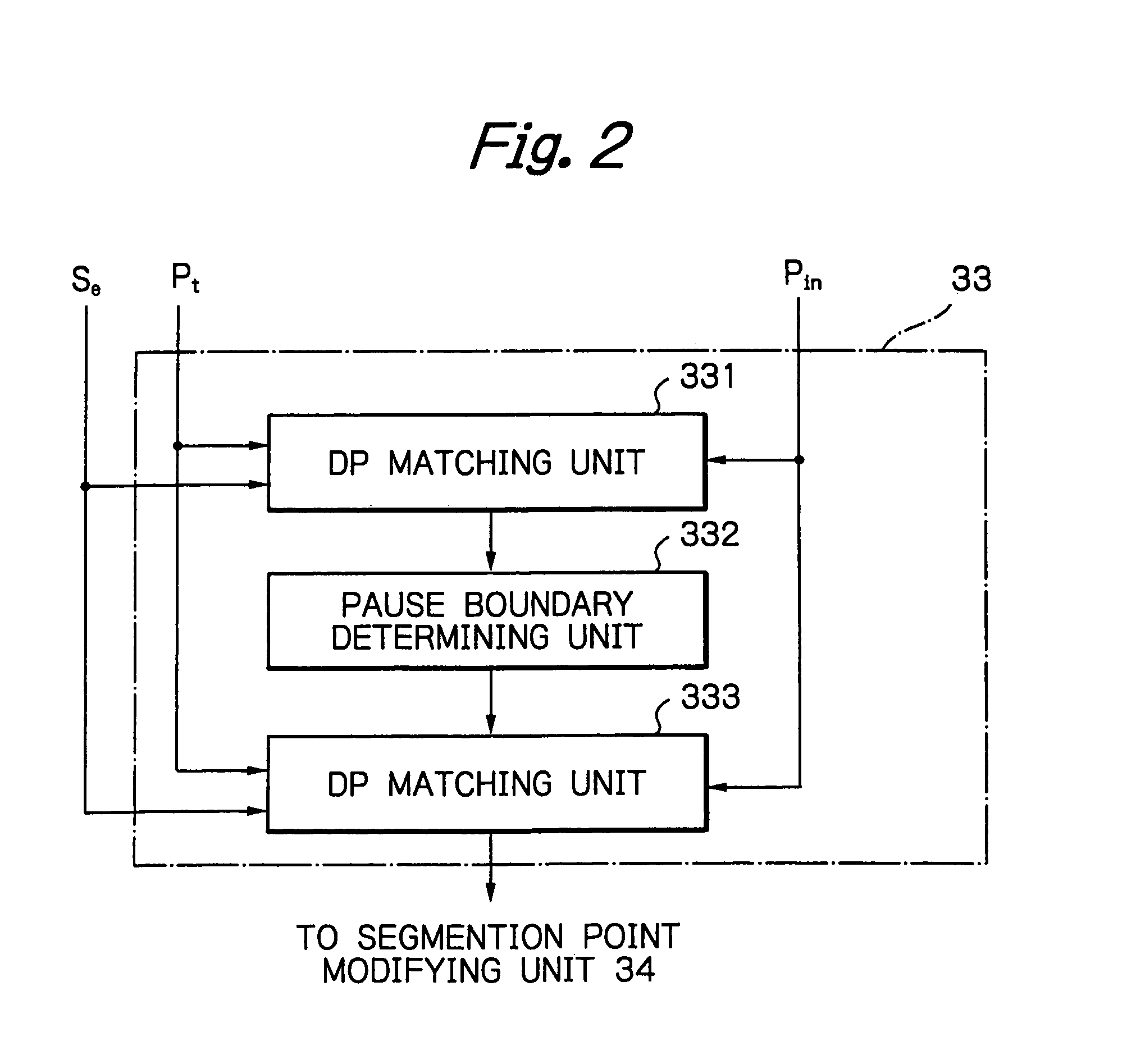

Method and apparatus for performing speech segmentation

Inactive | US7010481B2 | Improve performance | Speech recognition | Speech synthesis | Speech segmentation | Feature parameter

In a method for performing a segmentation operation upon a synthesizing speech signal and an input speech signal, a synthesized speech signal and a speech element duration signal are generated from the synthesizing speech signal. A first feature parameter is extracted from the synthesized speech signal, and a second feature parameter is extracted from the input speech signal. A dynamic programming matching operation is performed upon the second feature parameter with reference to the first feature parameter and the speech element duration signal to obtain segmentation points of the input speech signal.

Owner:NEC CORP +1
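
Dynamic programming matching of this kind is commonly realized as dynamic time warping (DTW). The sketch below is an illustration under assumptions rather than the patented procedure: features are one-dimensional, the local cost is an absolute difference, and the element boundaries of the synthesized signal (which the abstract derives from the speech element duration signal) are passed in directly as frame indices. `dtw_path` and `map_boundaries` are hypothetical names.

```python
def dtw_path(ref, inp):
    """Align two 1-D feature sequences; return the warping path as (ref_idx, inp_idx) pairs."""
    n, m = len(ref), len(inp)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(ref[i - 1] - inp[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    # Backtrack from the end along the cheapest predecessors.
    path, i, j = [], n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min((cost[i - 1][j - 1], i - 1, j - 1),
                      (cost[i - 1][j], i - 1, j),
                      (cost[i][j - 1], i, j - 1))
    path.reverse()
    return path


def map_boundaries(path, ref_boundaries):
    """Map each reference frame that starts a speech element to its first aligned input frame."""
    first_match = {}
    for ref_idx, inp_idx in path:
        first_match.setdefault(ref_idx, inp_idx)
    return [first_match[b] for b in ref_boundaries]


if __name__ == "__main__":
    synthesized = [0, 0, 5, 5, 9, 9]        # elements start at frames 0, 2 and 4
    spoken = [0, 0, 0, 5, 5, 5, 5, 9, 9]    # same elements, different durations
    print(map_boundaries(dtw_path(synthesized, spoken), [2, 4]))
```

The returned indices are the segmentation points of the input sequence that correspond to the known element starts of the synthesized sequence.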

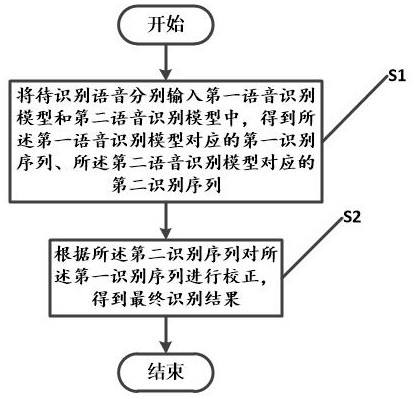

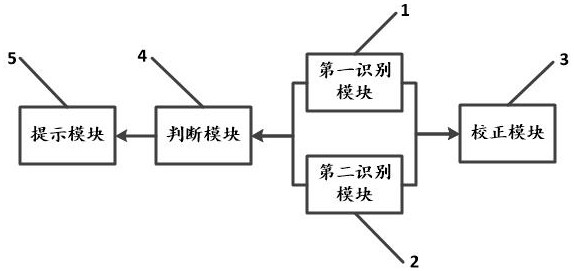

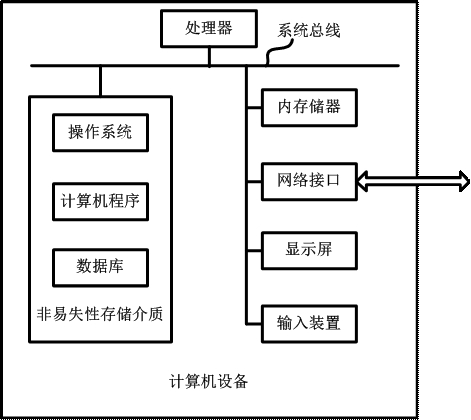

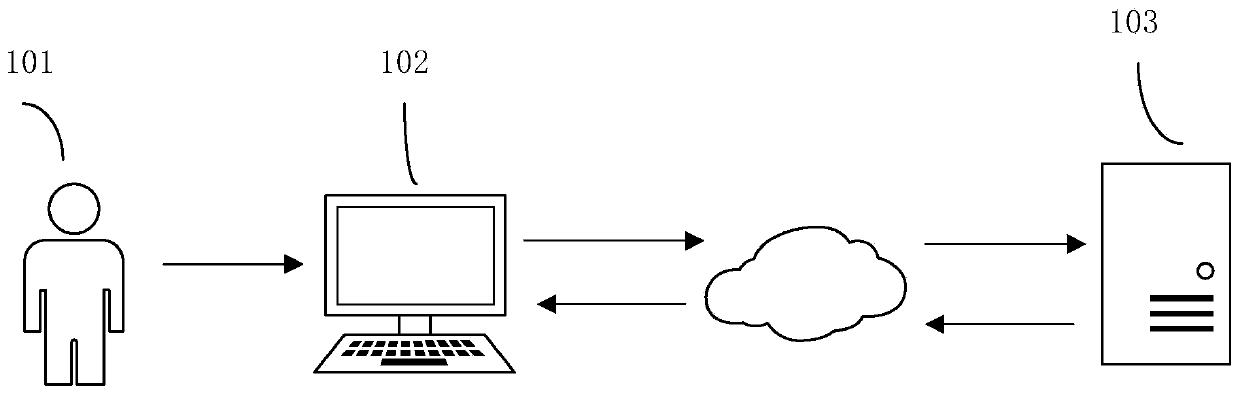

Speech recognition method and device of joint model, and computer equipment

The invention provides a speech recognition method and device of a joint model, and computer equipment. Firstly, to-be-recognized speech is input into a first speech recognition model and a second speech recognition model; a first recognition sequence corresponding to the first speech recognition model and a second recognition sequence corresponding to the second speech recognition model are obtained, wherein the first speech recognition model is a speech recognition model based on an HMM, and the second speech recognition model is an end-to-end speech recognition model. A system corrects the first recognition sequence according to the second recognition sequence to obtain a final recognition result. According to the invention, the recognition sequences of two different types of speech recognition models are combined with each other, and the first recognition sequence is corrected through the second recognition sequence, so that the speech segmentation accuracy is effectively improved.

Owner:深圳市友杰智新科技有限公司

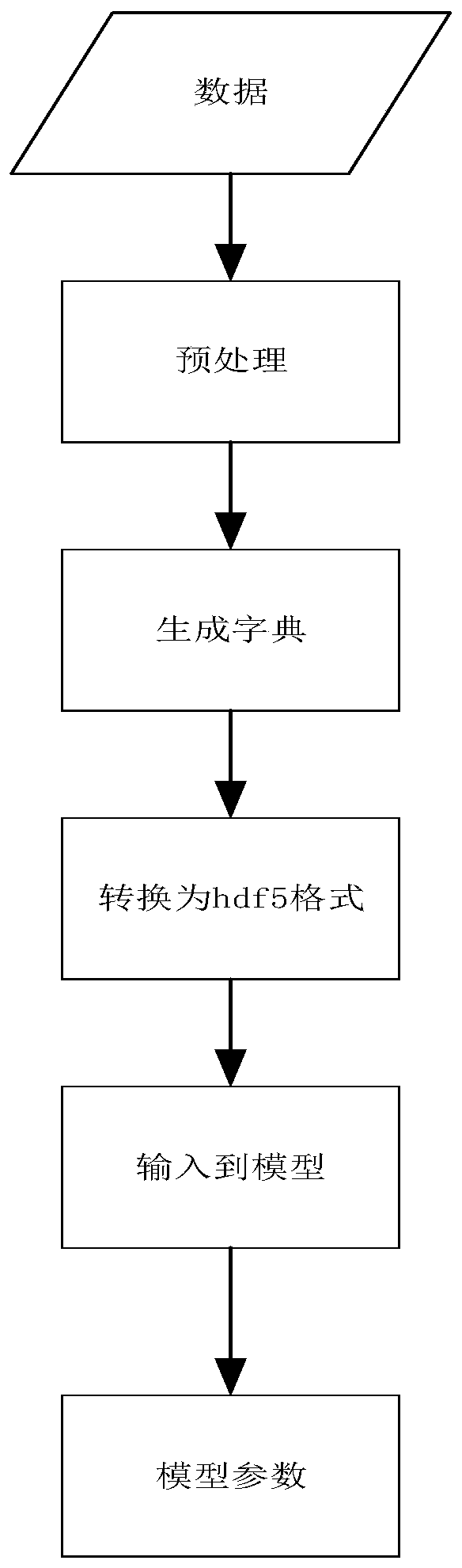

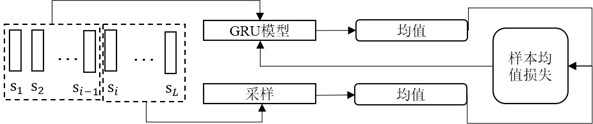

Voice segmentation model training method and device and electronic equipment

Active | CN111312223A | Solve the technical problem of inaccurate voice segmentation | Internal combustion piston engines | Speech recognition | Pattern recognition | Speech segmentation

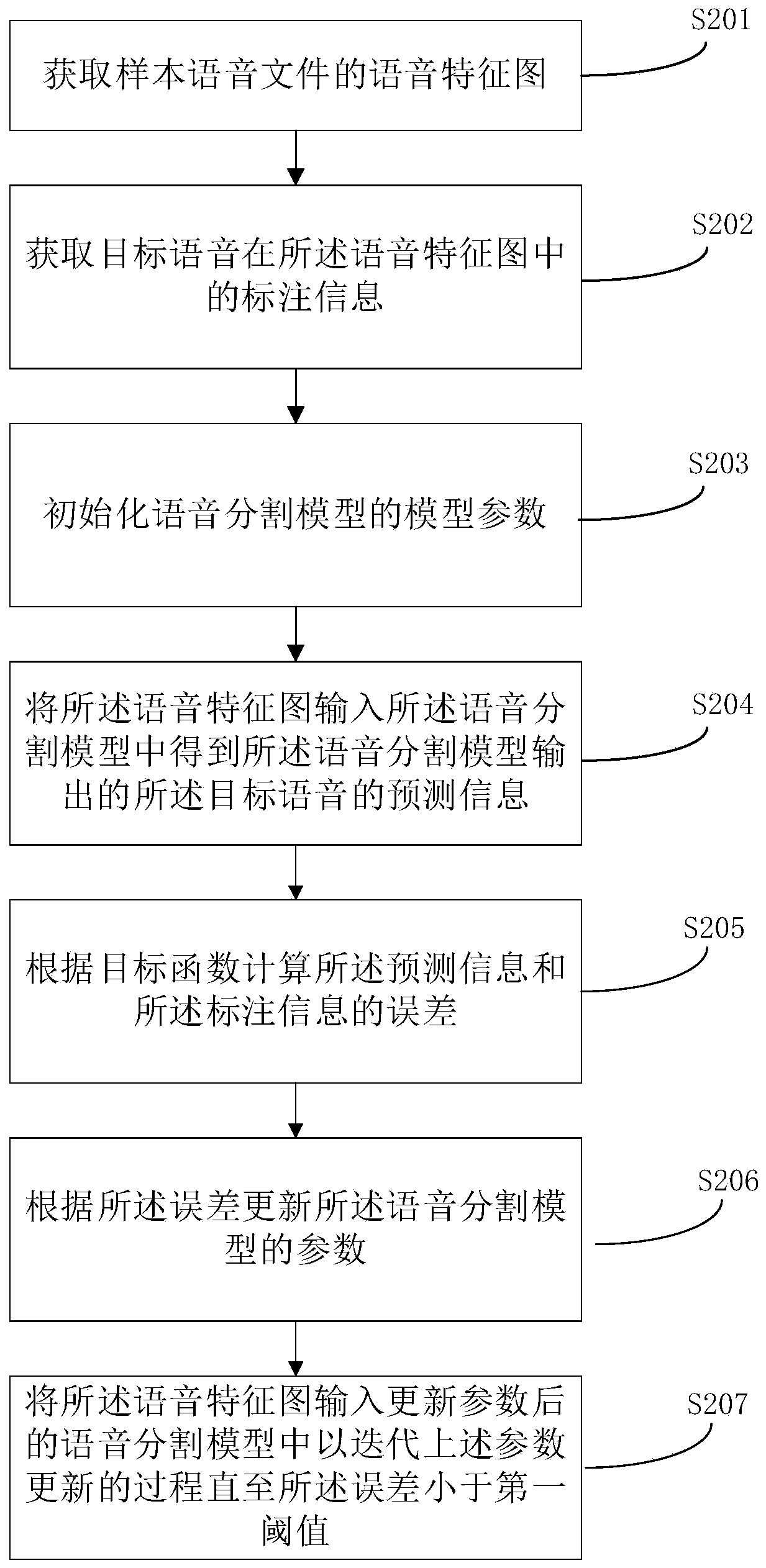

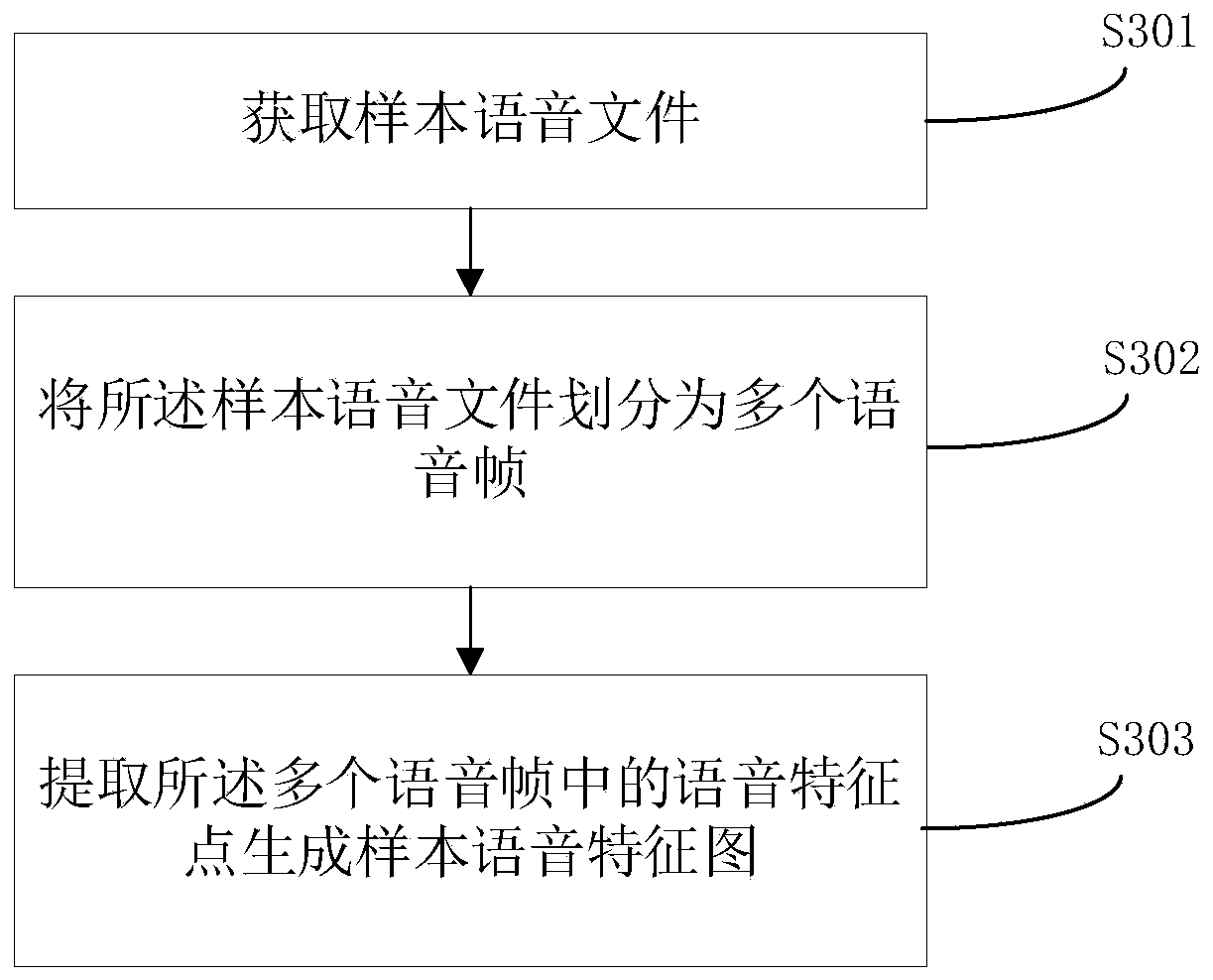

The embodiment of the disclosure discloses a voice segmentation model training method and device, electronic equipment and a computer readable storage medium. The training method of the voice segmentation model comprises the following steps: acquiring a voice feature map of a sample voice file; acquiring annotation information of a target voice in the voice feature map; initializing model parameters of a voice segmentation model; inputting the voice feature map into the voice segmentation model to obtain prediction information of a target voice output by the voice segmentation model; calculating an error between the prediction information and the annotation information according to a target function; updating parameters of the voice segmentation model according to the error; and inputting the voice feature map into the voice segmentation model after parameter updating to iterate the parameter updating process until the error is less than a first threshold. According to the method, the speech segmentation model is trained through the speech feature image, and a technical problem of inaccurate speech segmentation caused by complex speech signals in the prior art is solved.

Owner:SOUNDAI TECH CO LTD
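
The training procedure in the abstract above follows a familiar loop: initialize parameters, predict, compute the error against the annotation, update, and repeat until the error falls below a threshold. The sketch below shows only that loop shape. It swaps the voice segmentation model for a hypothetical two-parameter linear model, uses mean squared error as the target function, and adds a `max_iters` guard; none of these choices come from the patent.

```python
def train_until_converged(features, targets, lr=0.1, error_threshold=1e-4, max_iters=10_000):
    """Gradient descent on a linear stand-in model; stops once the error is below the threshold."""
    w, b = 0.0, 0.0  # initialize model parameters
    mse = float("inf")
    for _ in range(max_iters):
        # Forward pass: predictions for every input.
        preds = [w * x + b for x in features]
        # Error between predictions and annotations (mean squared error).
        errors = [p - t for p, t in zip(preds, targets)]
        mse = sum(e * e for e in errors) / len(errors)
        if mse < error_threshold:
            break
        # Update the parameters according to the error gradient.
        grad_w = 2 * sum(e * x for e, x in zip(errors, features)) / len(errors)
        grad_b = 2 * sum(errors) / len(errors)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, mse


if __name__ == "__main__":
    print(train_until_converged([0, 1, 2, 3], [1, 3, 5, 7]))
```

On the toy data, which follows `y = 2x + 1`, the loop stops with parameters close to those values.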

Speech segmentation, recombination and output method and device

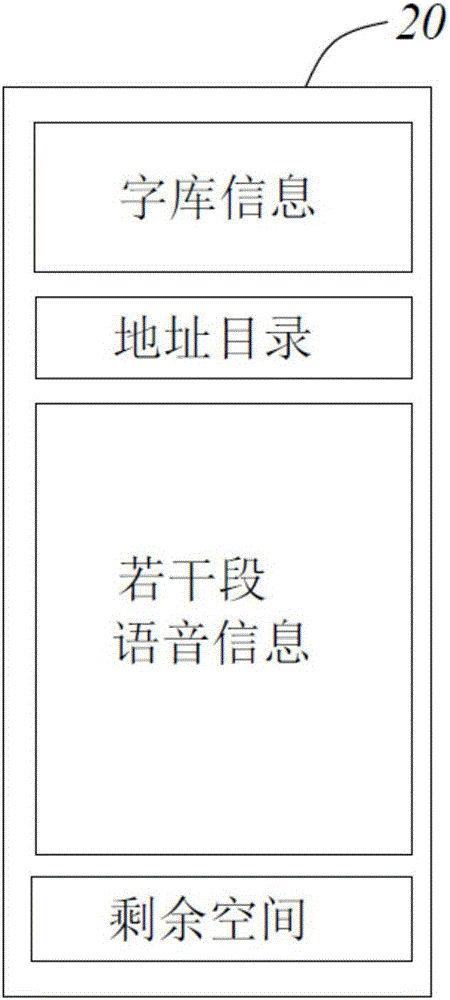

Active | CN105810225A | Guaranteed change | Reduce resource costs | Using non-detectable carrier information | Digital recording/reproducing | Microcontroller | Microcomputer

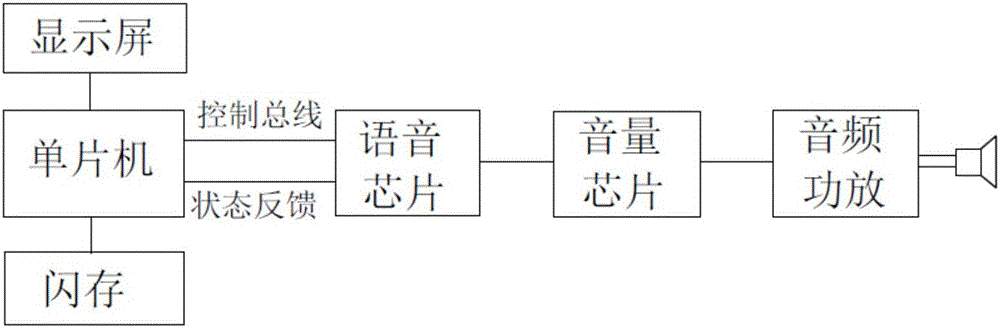

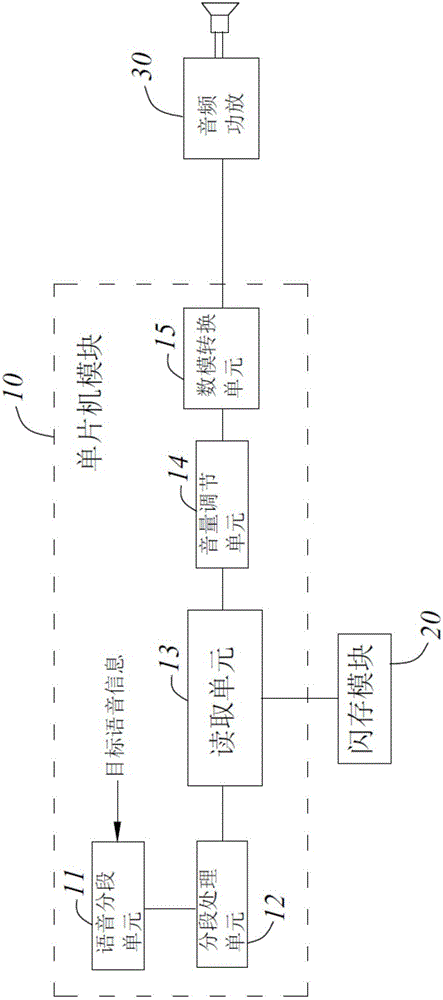

The invention discloses a speech segmentation, recombination and output method and device. The speech segmentation, recombination and output method and device are used for reducing occupied IO port resources and reducing production and manufacturing cost while providing a speech content alteration function. The speech segmentation, recombination and output device comprises a flash memory module and a single-chip microcomputer module. The flash memory module is used for storing several segments of speech broadcasting information and an address directory corresponding to the segments of speech broadcasting information. The single-chip microcomputer module comprises a speech segmentation unit, a segment processing unit and a reading unit, wherein the speech segmentation unit is used for decomposing target speech information into several ordered segments of speech information, the segment processing unit is used for converting the segments of speech information decomposed by the speech segmentation unit into address directory numbers in order, and the reading unit is used for reading and outputting the corresponding speech broadcasting information in the flash memory module in order according to the converted address directory numbers, so that recombination and output of segmented speech are realized.

Owner:苏州蓝博控制技术有限公司
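
The decompose, look up, and read-in-order flow described in the abstract above can be sketched as below. The sketch makes several assumptions of its own: the target speech information is represented as a text phrase, the decomposition is a greedy longest match against the stored segment names, and the flash memory is a plain dictionary keyed by address directory number. `split_into_segments` and `recombine` are hypothetical names.

```python
def split_into_segments(target, known_segments):
    """Greedy longest-match decomposition of a phrase into stored segment names."""
    words = target.split()
    segments, i = [], 0
    while i < len(words):
        for j in range(len(words), i, -1):
            candidate = " ".join(words[i:j])
            if candidate in known_segments:
                segments.append(candidate)
                i = j
                break
        else:
            raise KeyError(f"no stored segment for: {words[i]!r}")
    return segments


def recombine(target, directory, flash):
    """Return the address directory numbers and the clips read back in order."""
    addresses = [directory[s] for s in split_into_segments(target, directory)]
    return addresses, [flash[a] for a in addresses]


if __name__ == "__main__":
    directory = {"engine": 0, "oil pressure": 1, "low": 2}  # segment -> address number
    flash = {0: b"E", 1: b"OP", 2: b"L"}                    # address number -> stored clip
    print(recombine("engine oil pressure low", directory, flash))
```

Changing the announced message then only requires a different sequence of address numbers, not new stored audio.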

Sound acquisition method and device based on microphone array

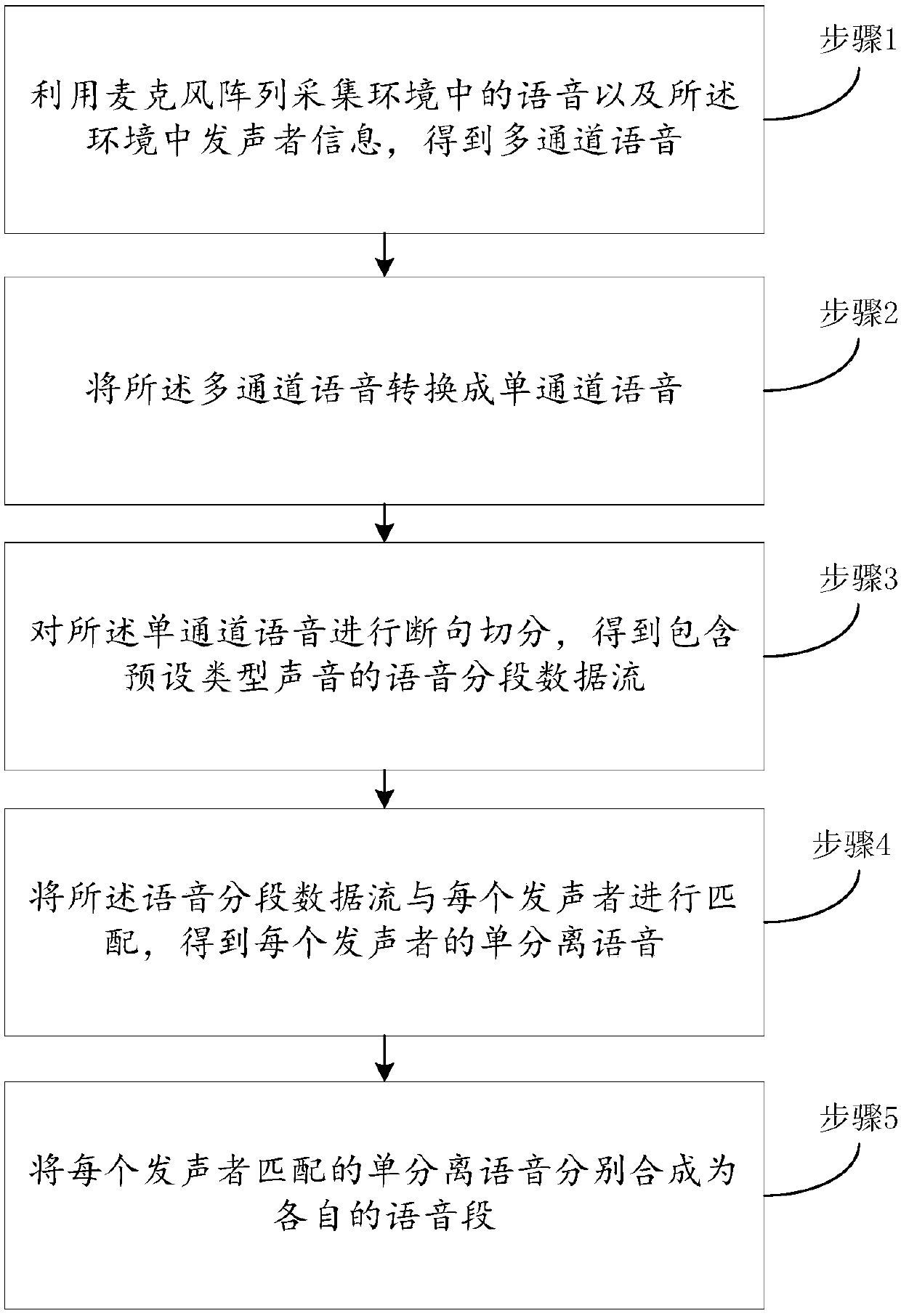

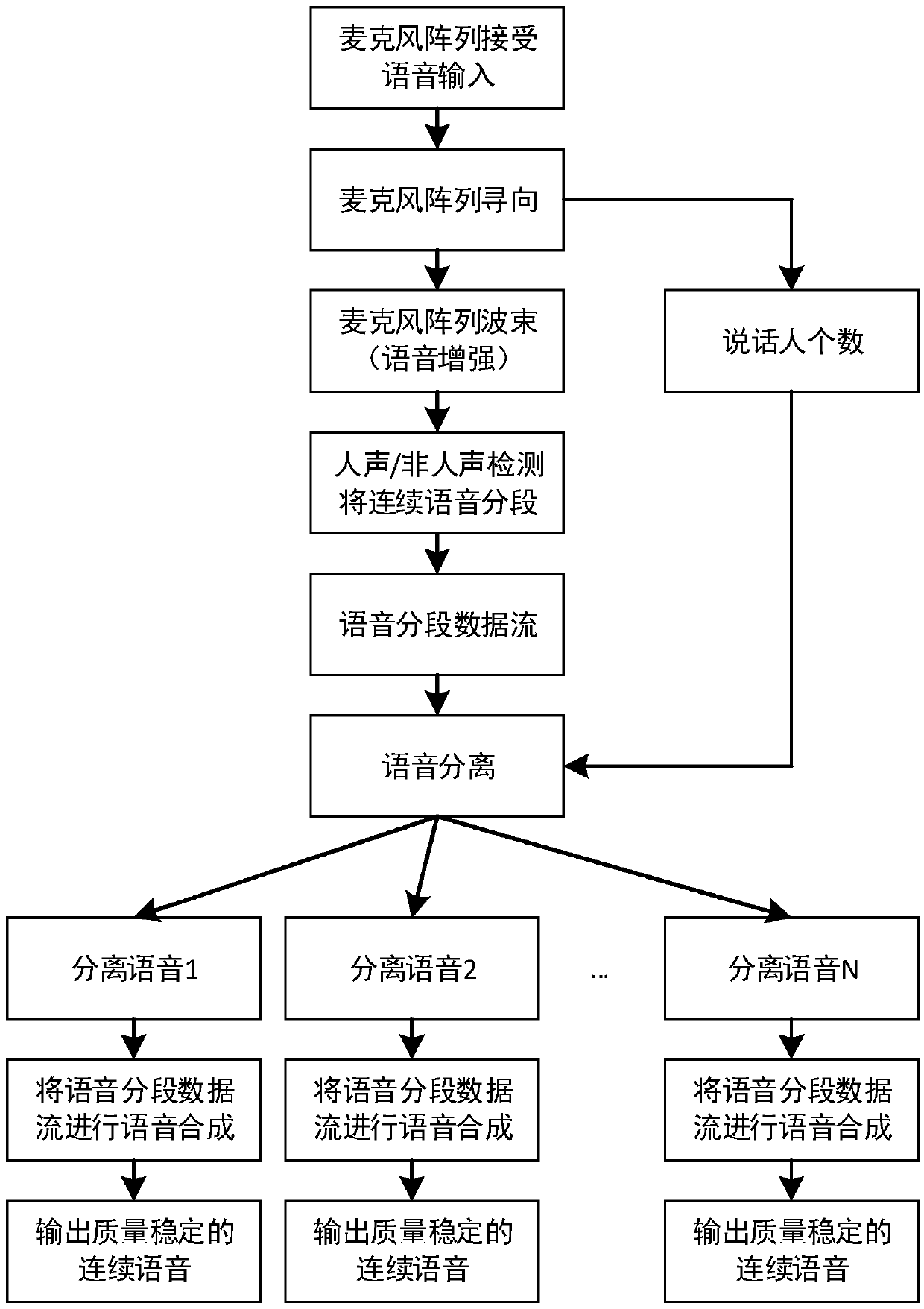

Active | CN110858476A | Improve accuracy | Enhanced speech signal | Speech recognition | Speech synthesis | Sound detection | Speech segmentation

The invention provides a sound acquisition method and device based on a microphone array. The method comprises the following steps of acquiring voices in an environment and sounder information in the environment by utilizing the microphone array to obtain multi-channel voices, wherein the sounder information comprises sounding directions of sounders and the number of the sounders; converting the multi-channel voices into single-channel voices; carrying out sentence segmentation on the single-channel voices to obtain voice segment data streams containing a preset type of sound; matching the voice segment data streams with each sounder to obtain a single-separated voice of each sounder; and respectively synthesizing the single-separated voice matched with each sounder into a respective voice segment. The technical scheme provided by the invention is high in applicability, can be suitable for near-field and far-field voice environments, has the characteristics of high sound detection accuracy, and can separate the voices of a plurality of sounders, so that each sounder corresponds to one voice separated voice segment.

Owner:BEIJING ZIDONG COGNITIVE TECH CO LTD

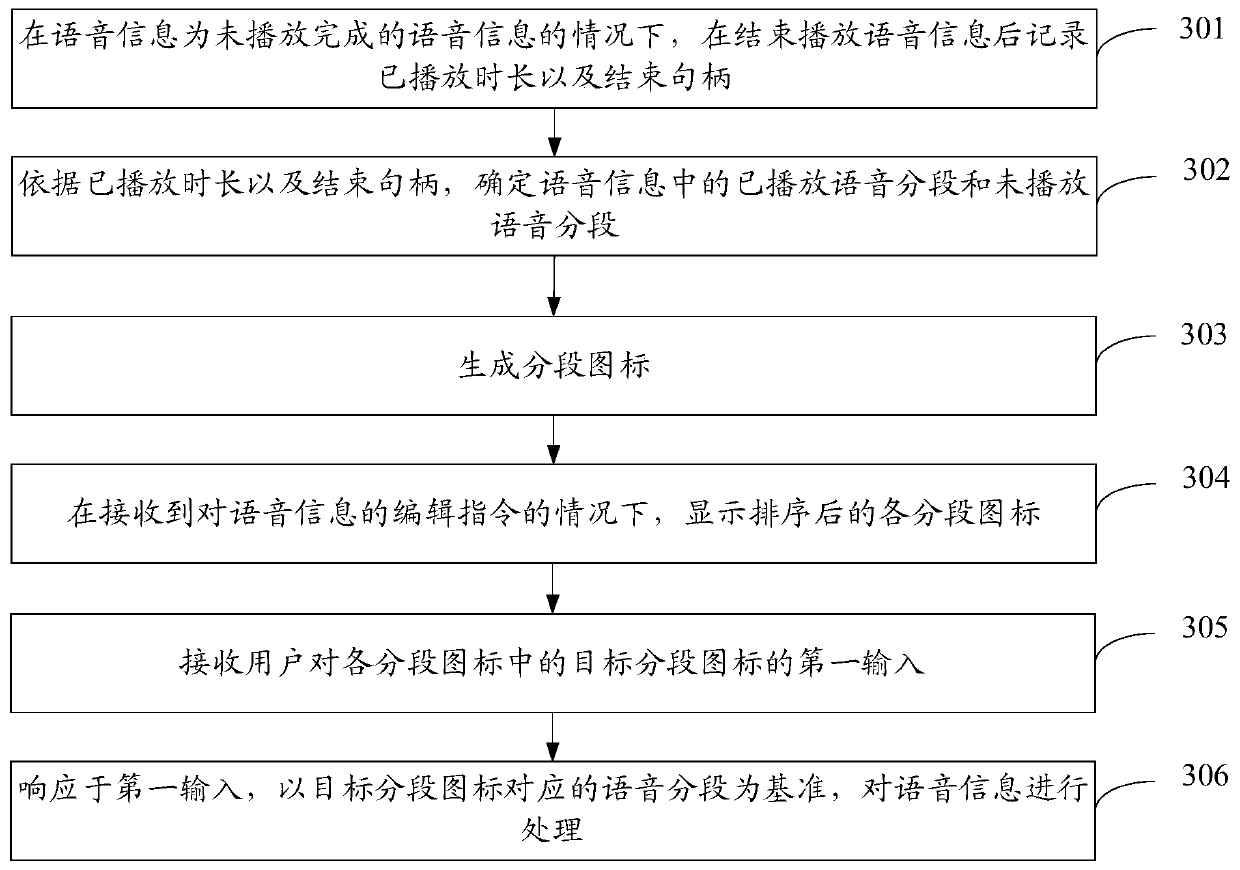

Voice information processing method and electronic equipment

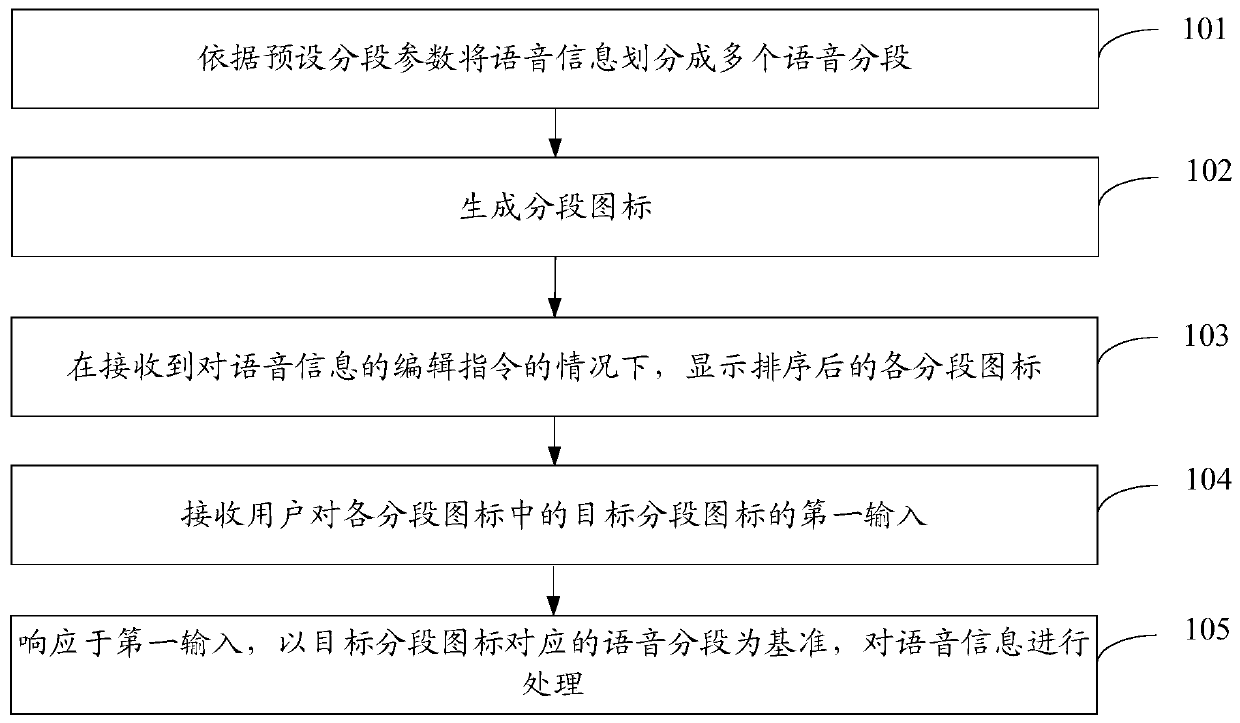

Pending | CN111445929A | Improve user experience | Easy to operate | Speech analysis | Substation equipment | Information processing | Speech segmentation

The embodiment of the invention provides a voice information processing method and electronic equipment. The method comprises the following steps: dividing voice information into a plurality of voice segments according to preset segment parameters; generating segmented icons; under the condition that an editing instruction for the voice information is received, displaying the sorted segmented icons; receiving a first input of a user to a target segmented icon in the segmented icons; and in response to the first input, processing the voice information by taking the voice segment corresponding to the target segment icon as a reference. According to the voice information processing method provided by the embodiment of the invention, a user can flexibly trigger the electronic equipment to insert the newly added voice content into the voice information, delete the voice segments in the voice information or continuously play the voice information, the operation is convenient, and the use experience of the user can be improved.

Owner:VIVO MOBILE COMM CO LTD

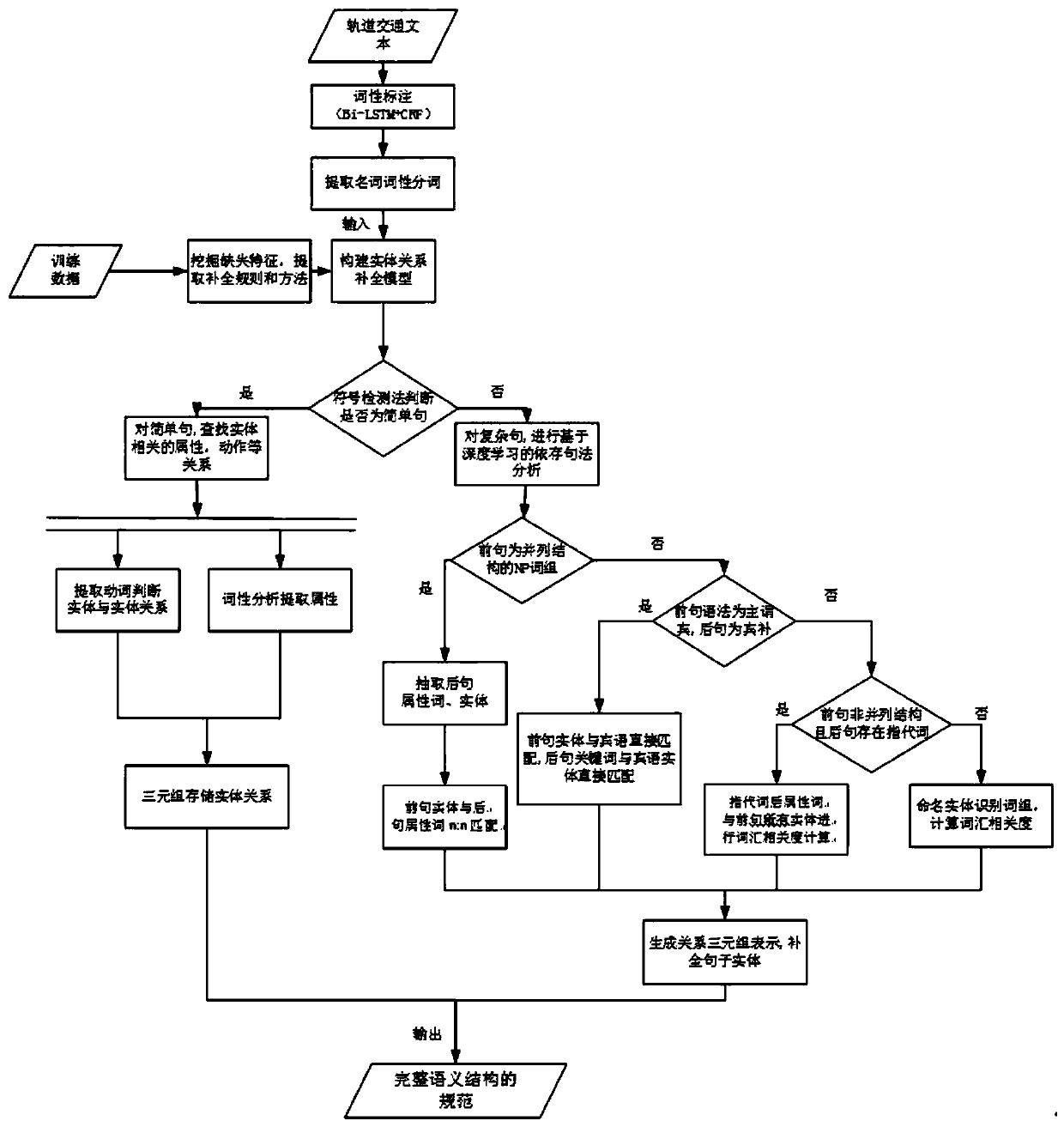

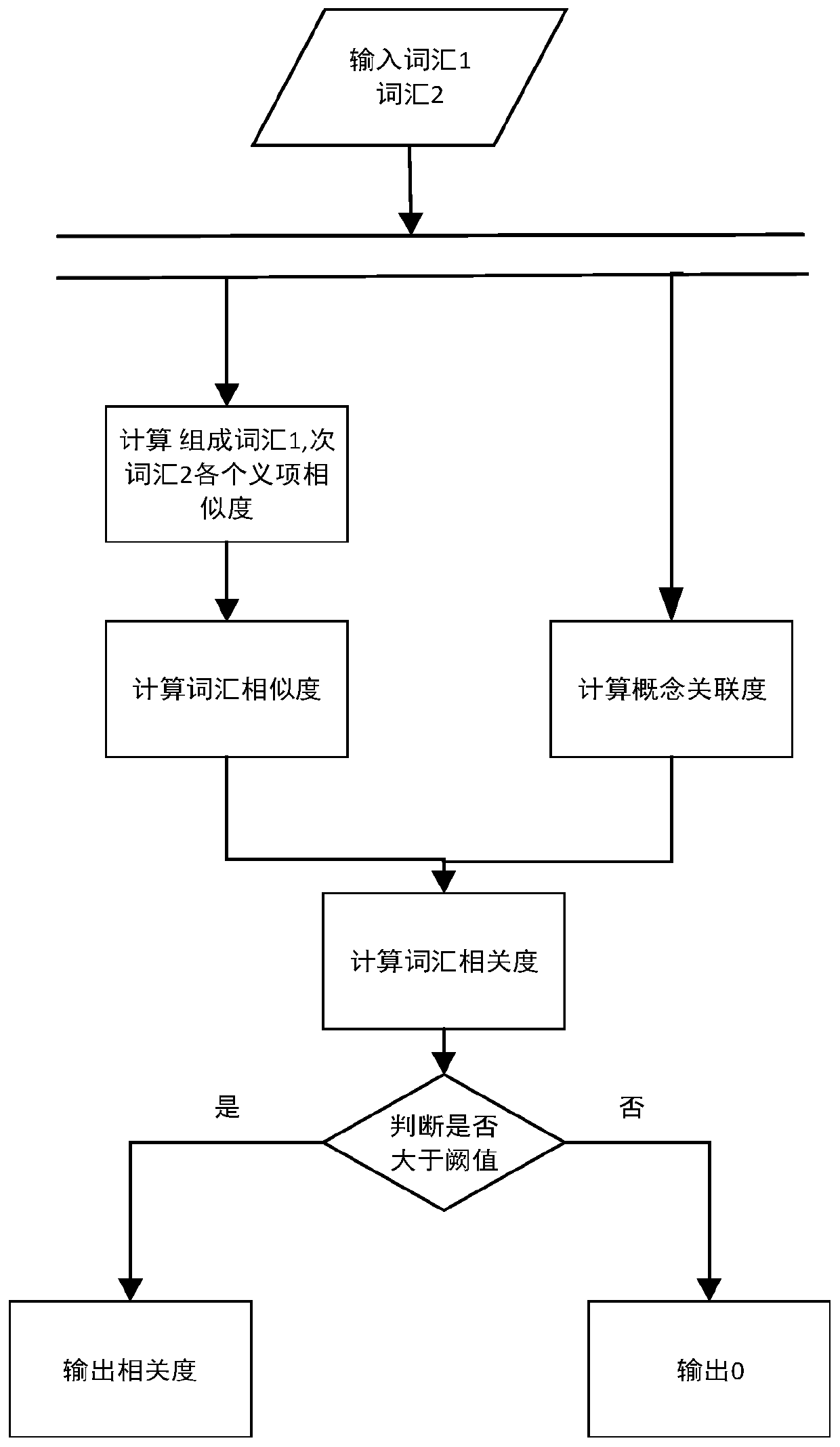

Rail transit standard entity relationship automatic completion method based on artificial intelligence

Active | CN111597349A | Improve accuracy | Reduce workload | Natural language data processing | Text database querying | Part of speech | Speech segmentation

The invention discloses a rail transit standard entity relationship automatic completion method based on artificial intelligence. The method comprises the steps of constructing an entity relationship completion model, inputting the rail transit specification and part-of-speech segmentation of nouns into an entity relationship completion model; judging whether the input specification is a simple sentence or not; if yes, searching entity related attributes in the rail transit specification; generating an entity relationship triple; if not, extracting later sentence attribute words and entities of the rail transit specification, matching the former sentence entities and later sentence attribute words in a n:n manner, or judging whether the former sentence grammar is subject-verb-object or not and the latter sentence grammar is object complement; if yes, directly matching the former sentence entities with the objects and directly matching the later sentence keywords with the object entities to generate entity relationship triples, and if not, outputting the entities of which the vocabulary relevancy exceeds a threshold value and the entity relationships to generate the entity relationship triples to obtain complete semantic structure entity specifications, thereby finishing automatic completion of the rail transit specification entity relationships.

Owner:XIAN UNIV OF TECH

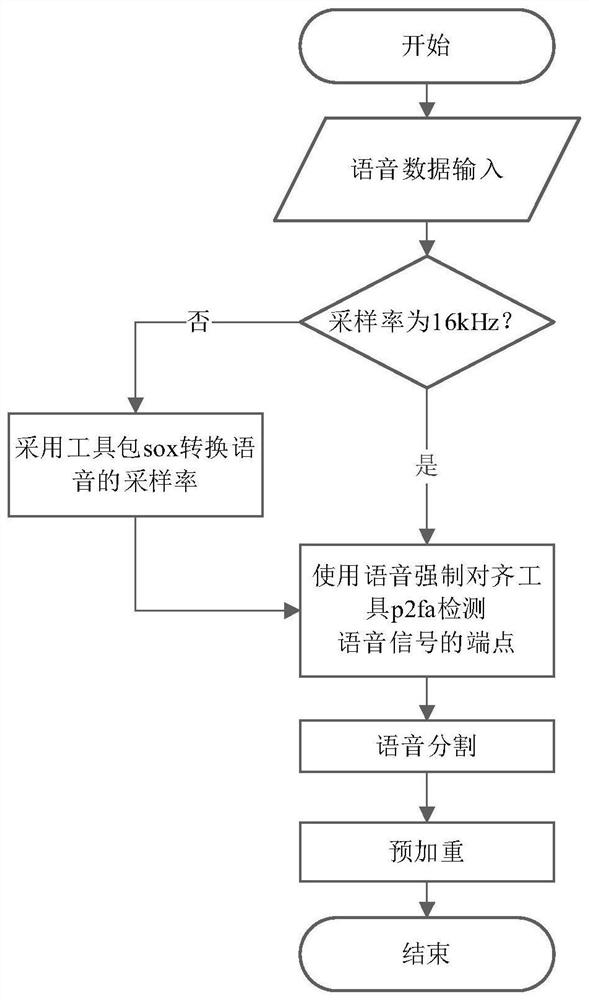

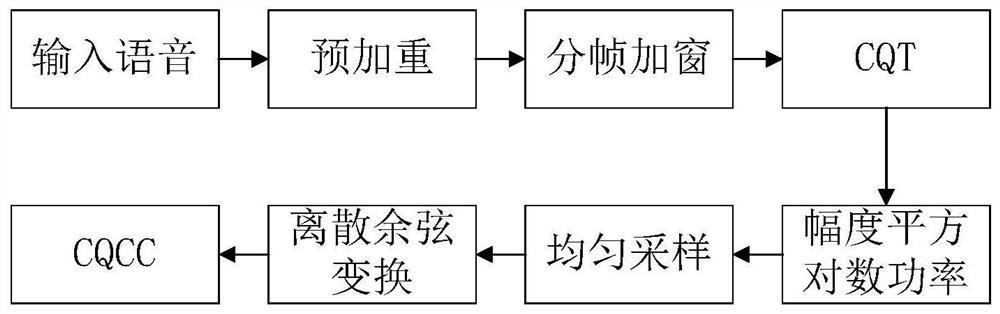

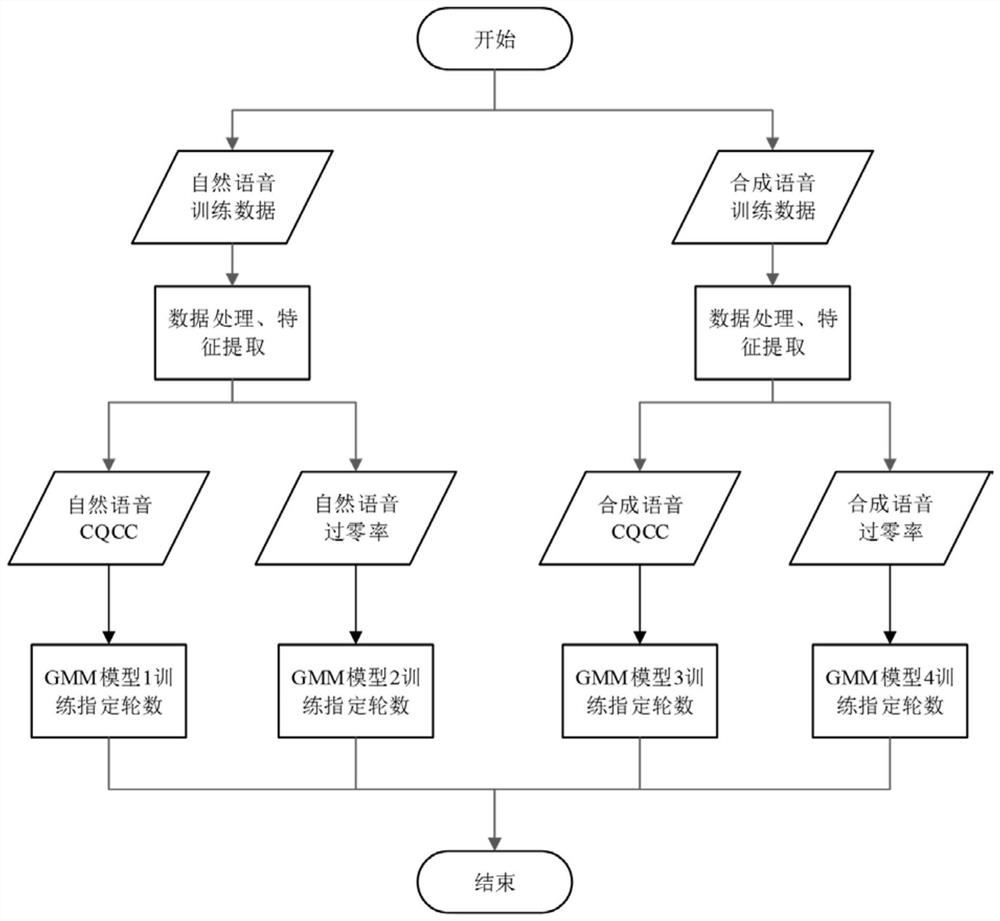

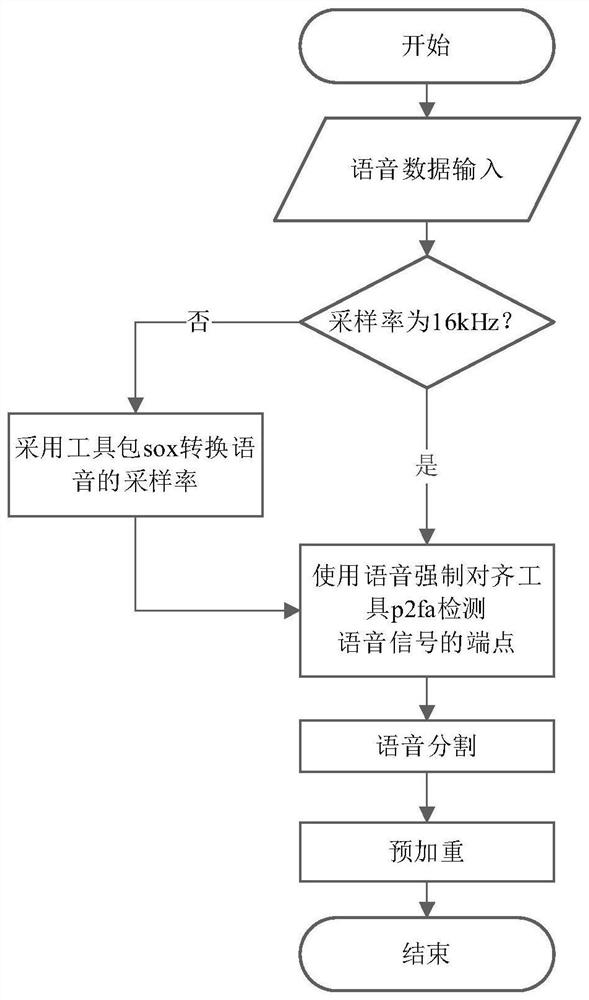

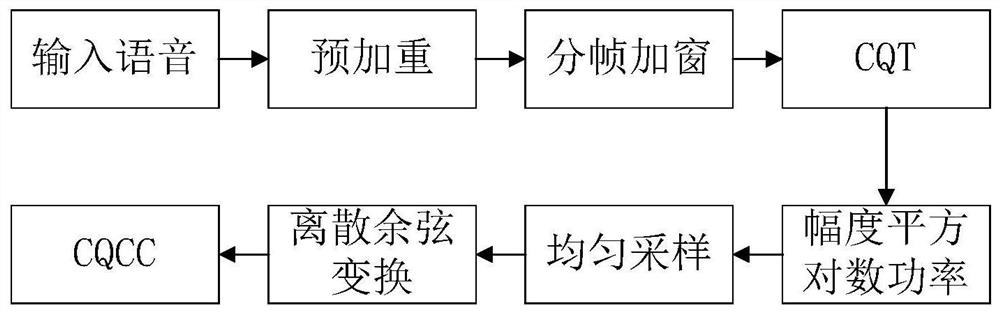

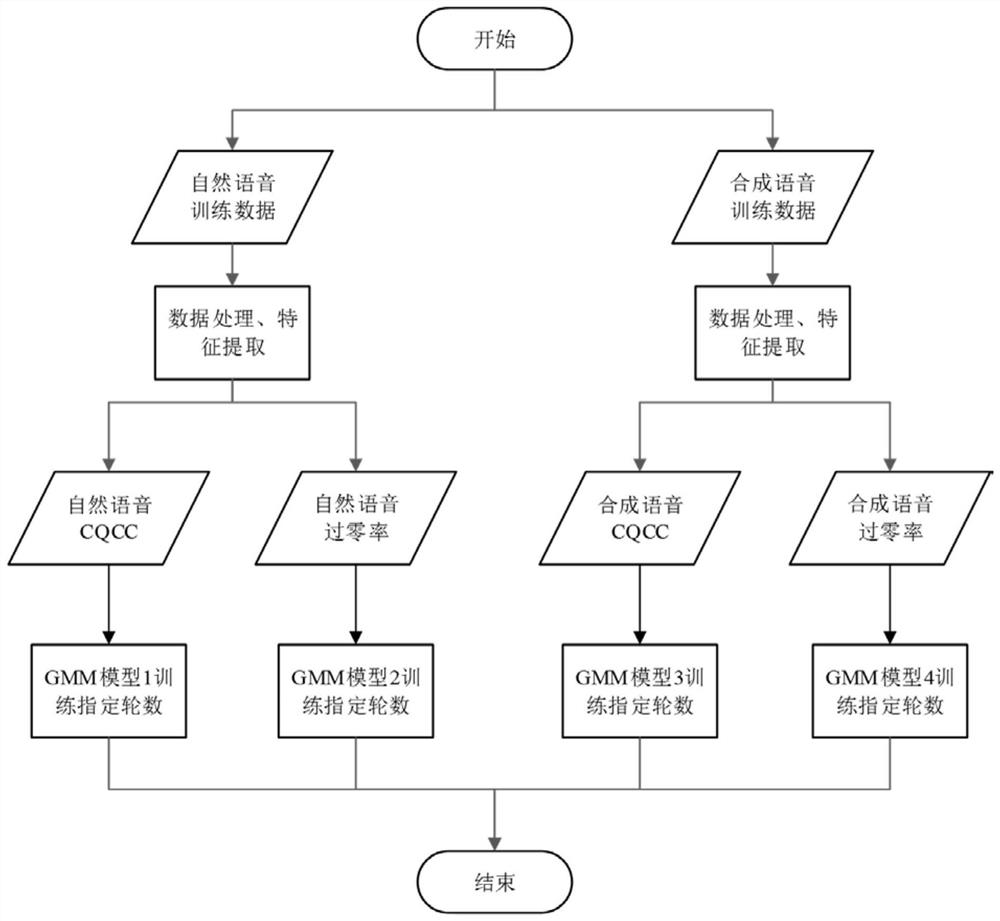

Synthetic speech detection method based on speech segmentation

Active | CN113012684A | Improve detection accuracy | High precision | Speech recognition | Speech segmentation | Speech sound

The invention discloses a synthetic speech detection method based on speech segmentation, belongs to the field of speech detection, and aims to solve the problem of low detection precision in the prior art. The method comprises the following steps of: extracting two kinds of features in an audio, namely a CQCC feature of a voiced segment of the audio and an average zero-crossing rate feature of a silent (mute) segment of the audio; and adopting two GMM models to fit the two kinds of features respectively, giving different weights to the two GMM models, carrying out testing, and finding the most appropriate weight. The detection precision of synthetic speech is obviously improved.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA
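
Two pieces of the abstract above lend themselves to a short sketch: the zero-crossing rate of a frame, and the search for the weight that best combines two per-utterance scores. The sketch assumes each GMM has already produced a score in which positive values point to synthetic speech; CQCC extraction and GMM fitting are not shown, and `zero_crossing_rate`, `fused_score` and `best_weight` are hypothetical names.

```python
def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def fused_score(voiced_score, silent_score, weight):
    """Weighted combination of the voiced-segment and silent-segment model scores."""
    return weight * voiced_score + (1.0 - weight) * silent_score


def best_weight(trials, candidates):
    """Pick the candidate weight with the highest accuracy on labeled trials.

    trials: list of (voiced_score, silent_score, is_synthetic) tuples.
    """
    def accuracy(w):
        hits = sum((fused_score(v, s, w) > 0) == label for v, s, label in trials)
        return hits / len(trials)
    return max(candidates, key=accuracy)


if __name__ == "__main__":
    trials = [(1.0, -3.0, True), (-1.0, 3.0, False), (2.0, -1.0, True)]
    print(zero_crossing_rate([1, -1, 1, -1]), best_weight(trials, [0.0, 0.5, 1.0]))
```

A grid of candidate weights evaluated on held-out trials is only one way to find the most appropriate weight; the abstract does not say how the search is carried out.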

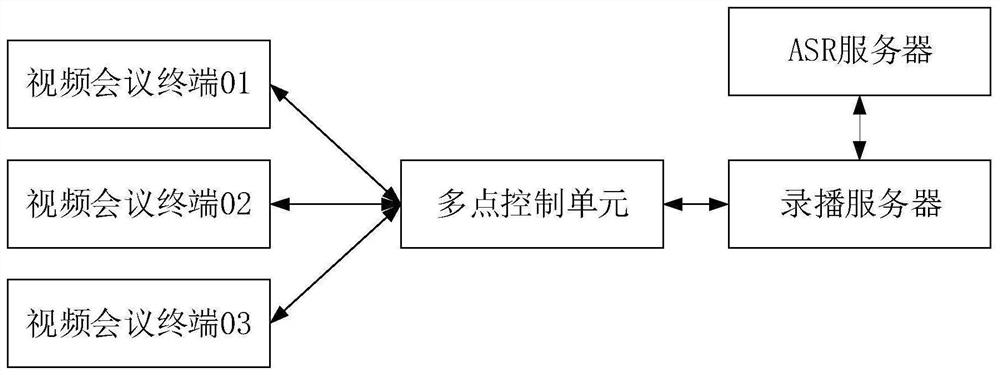

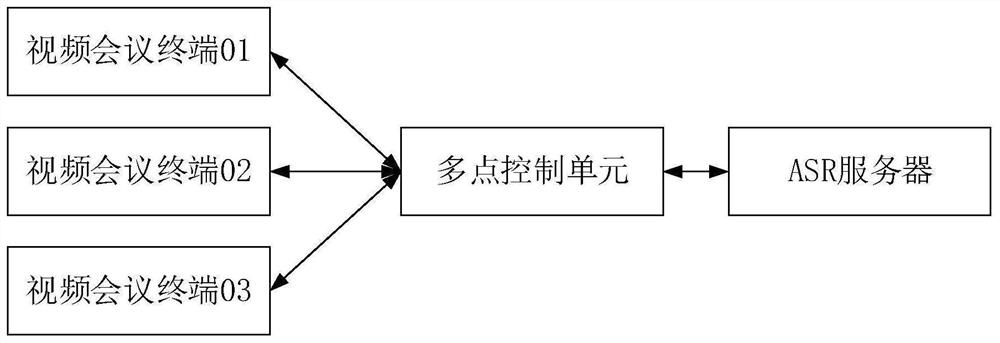

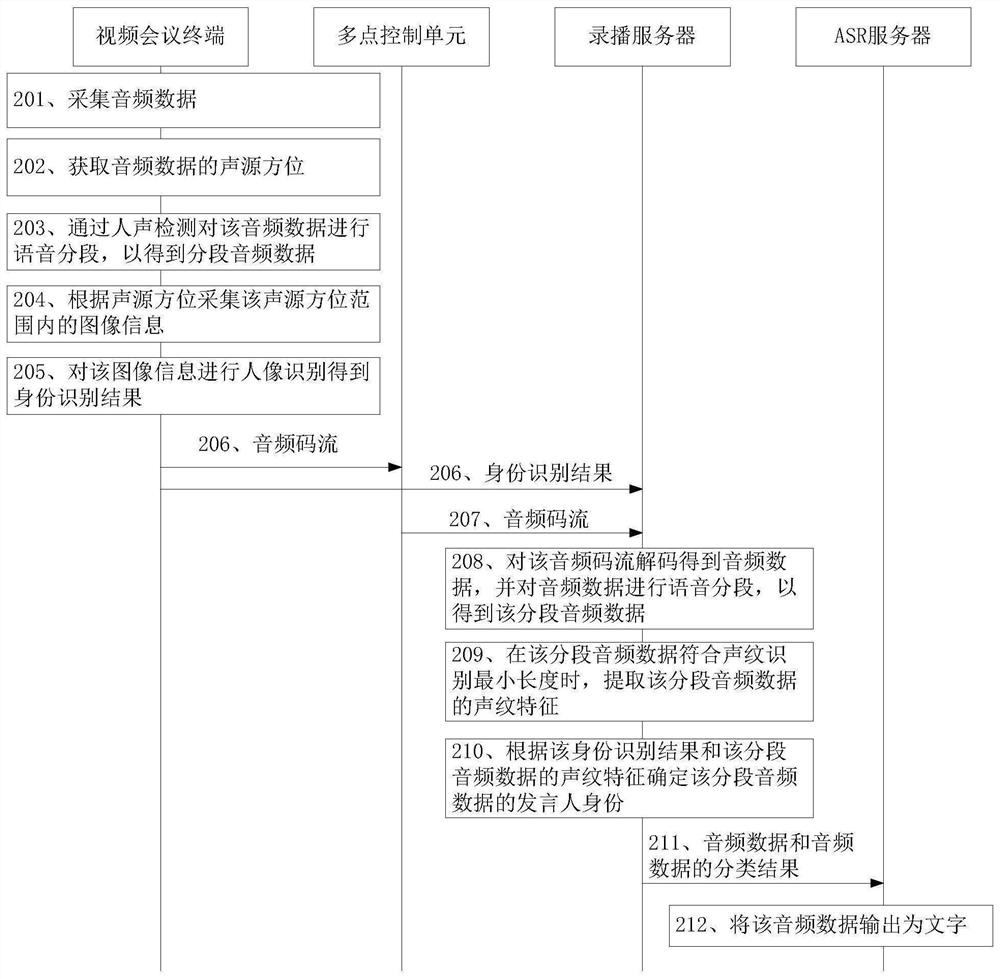

Audio data processing method, device and system

Pending | CN114333853A | Television conference systems | Biometric pattern recognition | Temporal information | Sound sources

The embodiment of the invention provides an audio data processing method, equipment and system, which are used for classifying conference audio data according to identities of spokesmen. The conference record processing method specifically comprises the steps that the conference record processing device obtains audio data of a first conference place, sound source orientation information corresponding to the audio data and an identity recognition result, and additional domain information comprises the sound source orientation information corresponding to the audio data; the identity recognition result is used for indicating a corresponding relation between spokesman identity information obtained through a portrait recognition method and speaking time information of a spokesman; then the conference record processing device performs voice segmentation on the audio data to obtain first segmented audio data of the audio data; and finally, the conference record processing device determines a spokesman corresponding to the first segment of audio data according to the voiceprint feature of the first segment of audio data and the identity recognition result.

Owner:HUAWEI TECH CO LTD

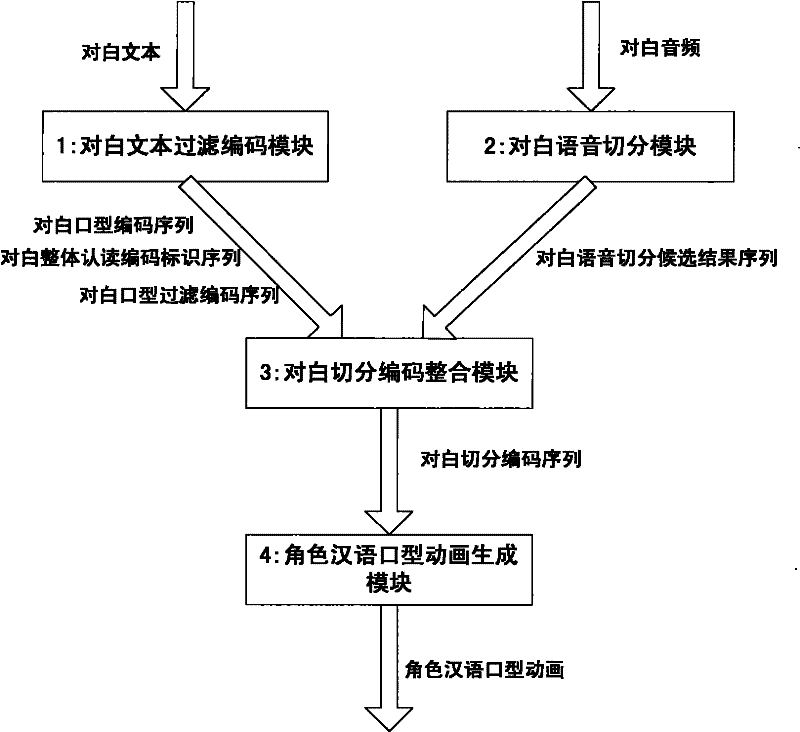

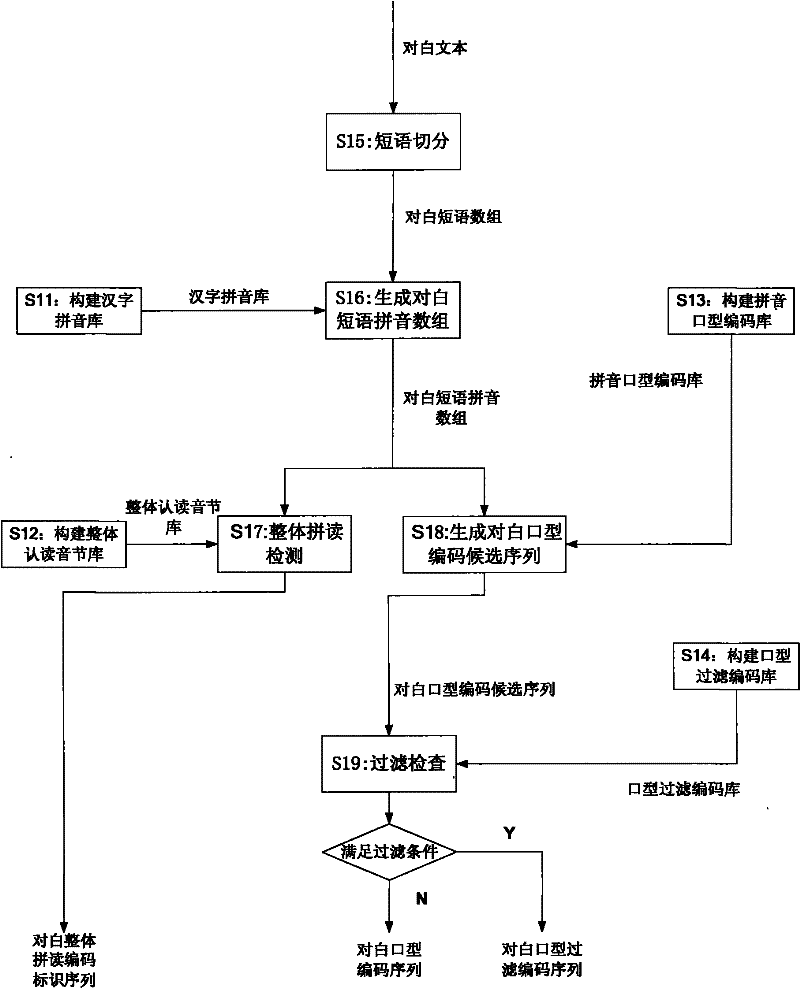

An automatic generation system for character Chinese lip animation

Inactive | CN101826216B | Improve production efficiency | Improve practicality | Animation | Chinese characters | Speech segmentation

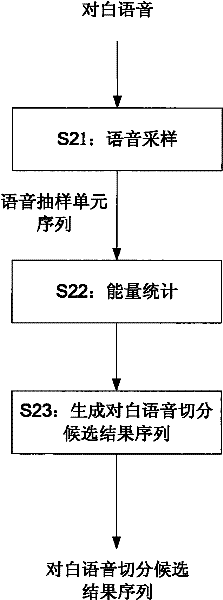

The invention discloses a system for automatically generating animations of characters' Chinese mouth shapes. A dialogue text filtering and coding module performs phrase segmentation, pinyin mouth-shape coding, overall recognition-and-reading mark setting, and coding filtering on the dialogue text, and generates and outputs the dialogue mouth-shape codes, the dialogue overall recognition-and-reading code marks, and the dialogue mouth-shape filter coding sequence. A dialogue speech segmentation module performs speech sampling and speech energy statistics on the dialogue audio, and generates and outputs a sequence of candidate dialogue speech segmentation results. A dialogue segmentation coding integration module, connected to the dialogue text filtering and coding module and the dialogue speech segmentation module, integrates and corrects the candidate result sequence, and generates and outputs the dialogue segmentation coding sequence. A character Chinese mouth animation generation module, connected to the dialogue segmentation coding integration module, generates and outputs the character's Chinese lip animation from that sequence. Throughout this processing, the Chinese mouth animation for the whole character can be produced automatically without loading a corresponding voice library.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

Speech processing device, speech processing method, and non-transitory computer readable medium storing program

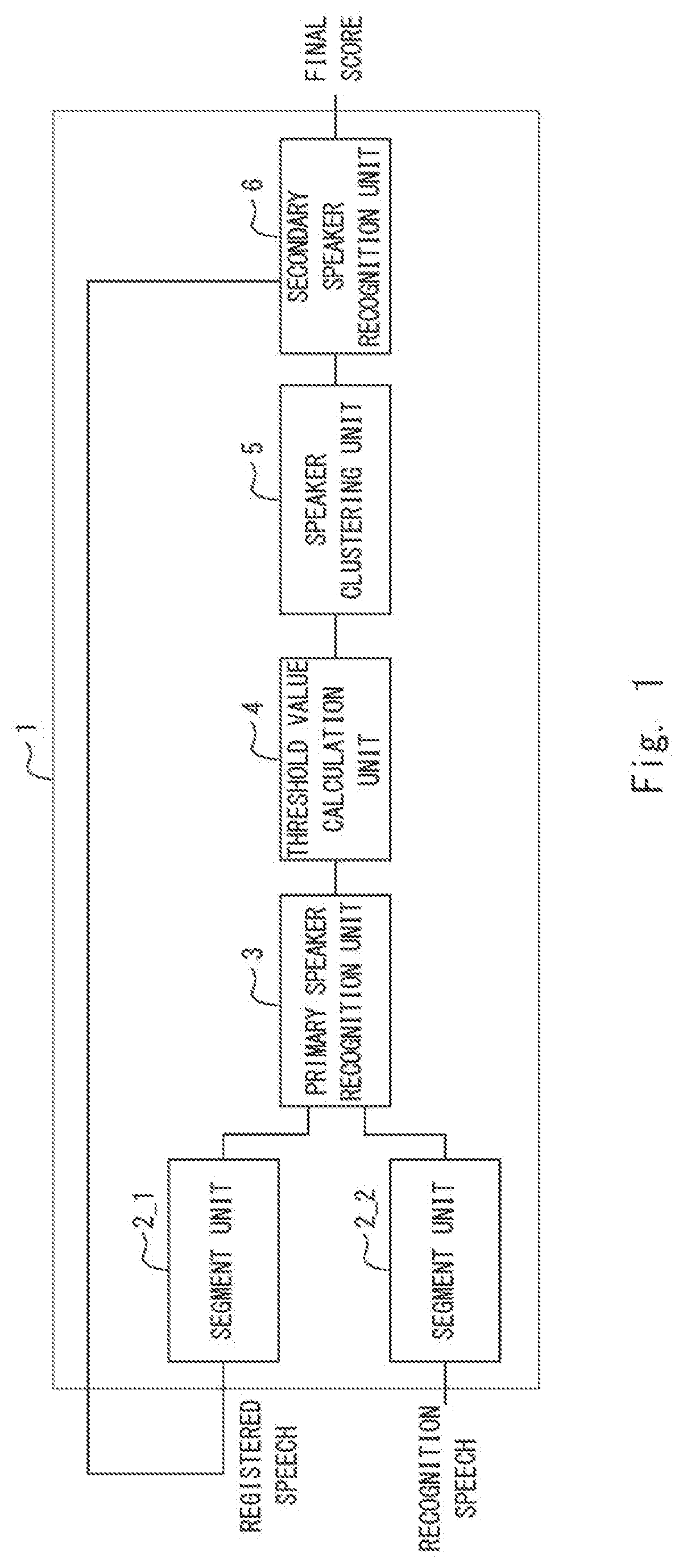

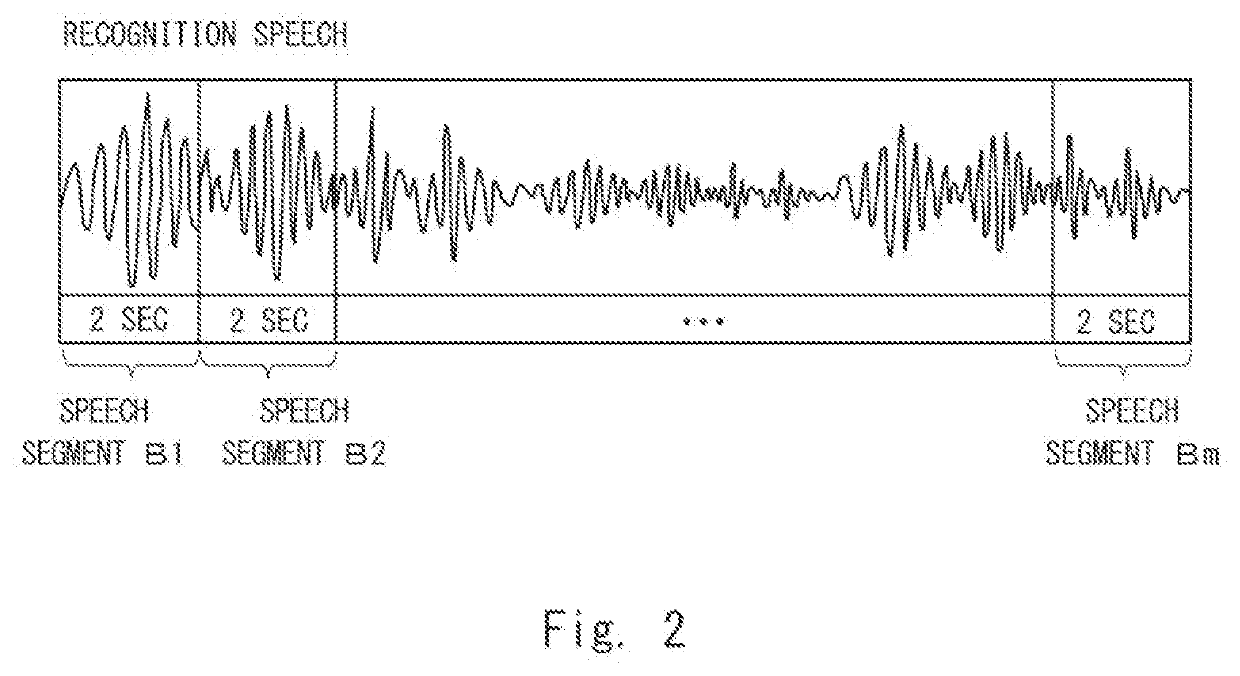

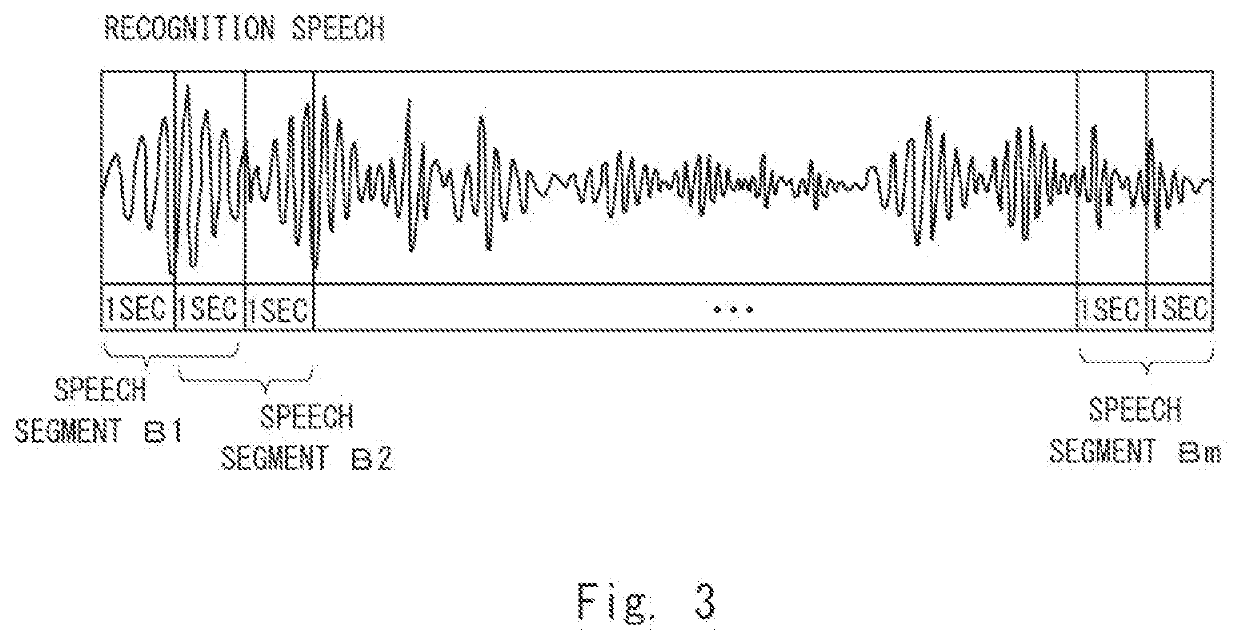

A speech processing device includes: first segment means for dividing first speech into a plurality of first speech segments; second segment means for dividing second speech into a plurality of second speech segments; primary speaker recognition means for calculating scores indicating similarities between the plurality of first and second speech segments; threshold value calculation means for calculating a threshold value based on scores indicating similarities between the plurality of first speech segments; speaker clustering means for classifying each of the plurality of second speech segments into one or more clusters having a similarity higher than the similarity indicated by the threshold value; and secondary speaker recognition means for calculating a similarity between each of the one or more clusters and the first speech and determining based on a result of the calculation whether speech corresponding to the first speech is contained in any of the one or more clusters.

Owner:NEC CORP

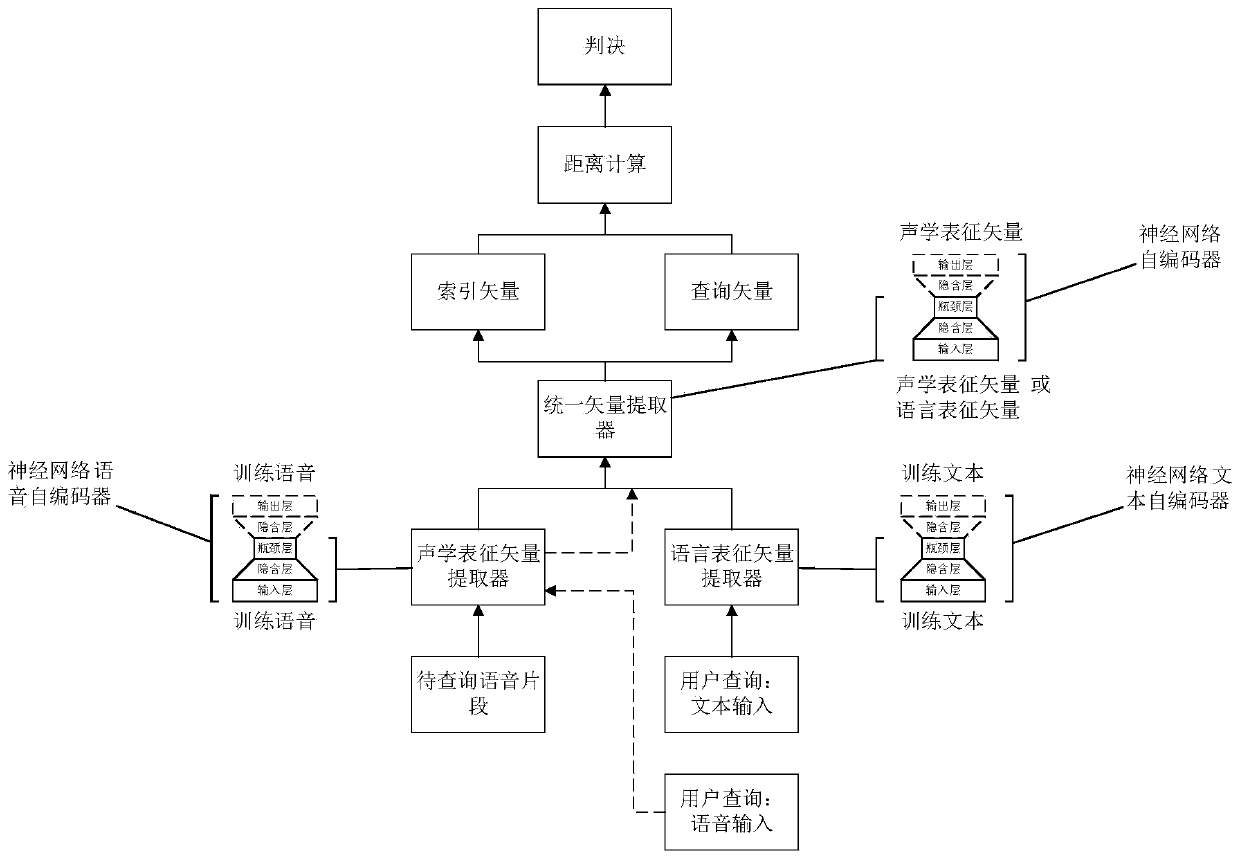

Keyword search method based on unified representation

ActiveCN110738987AGood modelingEasy to useMetadata audio data retrievalSpeech recognitionSpeech segmentationData segment

The invention belongs to the technical field of voice signal processing, and in particular relates to a keyword search method based on unified representation. The method comprises the following steps: training a neural network voice auto-encoder with a bottleneck layer on a large amount of voice data to obtain an acoustic representation vector extractor; training a neural network text auto-encoder with a bottleneck layer on a large amount of text data to obtain a language representation vector extractor; extracting acoustic representation vectors and language representation vectors from a large number of voice data segments and their corresponding text data segments, and using them to train a unified vector extractor; obtaining the query vector of a text keyword through the language representation vector extractor and the unified vector extractor; obtaining the query vector of a voice keyword through the acoustic representation vector extractor and the unified vector extractor; and, for the voice to be searched, obtaining a sequence of index vectors segment by segment through the acoustic representation vector extractor and the unified vector extractor, and calculating the distances between the index vectors and the query vector, wherein the keyword is considered hit if a distance is smaller than a preset threshold.

Owner:TSINGHUA UNIV
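
The final matching step in the abstract above, comparing a query vector against per-segment index vectors, can be sketched in Python as follows. Euclidean distance is an assumption here, since the abstract does not name the distance measure, and `find_hits` is a hypothetical helper; the trained extractors themselves are out of scope.

```python
import math


def find_hits(query, index_vectors, threshold):
    """Return positions of index vectors closer to the query than threshold.

    query: one unified-representation vector (list of floats).
    index_vectors: one vector per segment of the searched speech.
    """
    return [
        position
        for position, vector in enumerate(index_vectors)
        if math.dist(query, vector) < threshold   # Euclidean distance (assumed)
    ]
```

Because text and voice keywords map into the same vector space, the same function would serve both query types.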

A Synthetic Speech Detection Method Based on Speech Segmentation

ActiveCN113012684BImprove detection accuracyHigh precisionSpeech recognitionSpeech segmentationSpeech sound

The invention discloses a synthesized speech detection method based on speech segmentation, applied in the field of speech detection. To address the low detection accuracy of the prior art, the invention extracts two features from the audio: the CQCC feature of the audio segments, and the average zero-crossing rate feature of the silent segments. Two GMM models are then fitted to the two features respectively, different weights are assigned to the two GMMs, and the weights are tested to find the most suitable one. This significantly improves synthesized speech detection accuracy.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
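
Two pieces of the abstract above lend themselves to a short Python sketch: the zero-crossing rate of a frame, and the search for a fusion weight between two model scores. The GMM fitting and CQCC extraction are omitted; the scores are assumed to be signed values where a positive fused score means "genuine", and the decision threshold of 0 and all function names are assumptions for illustration.

```python
def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(
        1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0)
    )
    return crossings / (len(frame) - 1)


def fuse_scores(cqcc_score, zcr_score, weight):
    """Weighted sum of the two model scores, with weight in [0, 1]."""
    return weight * cqcc_score + (1.0 - weight) * zcr_score


def best_weight(score_pairs, labels, candidates):
    """Pick the candidate weight with the highest accuracy on labeled data."""
    def accuracy(weight):
        correct = sum(
            (fuse_scores(a, b, weight) > 0) == label
            for (a, b), label in zip(score_pairs, labels)
        )
        return correct / len(labels)
    return max(candidates, key=accuracy)
```

In practice the candidate weights would be swept on a held-out development set rather than on the test data.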

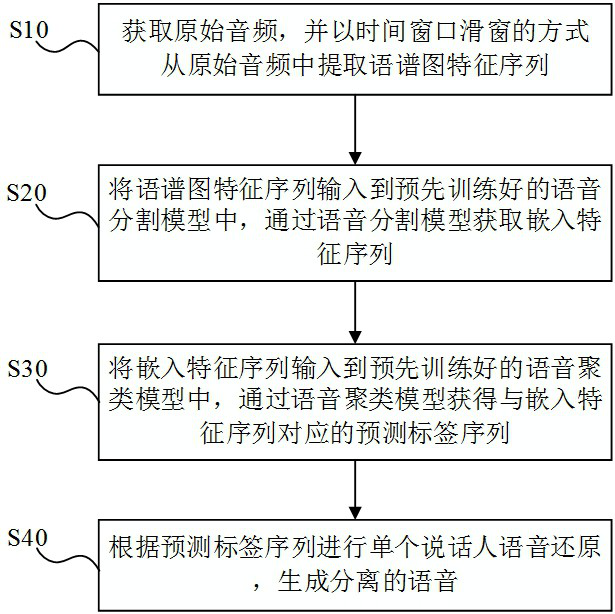

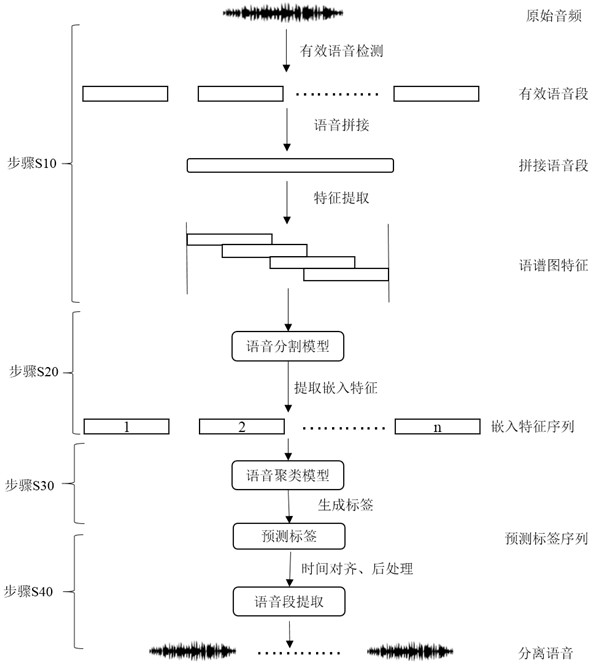

Voice separation method, voice separation device, electronic equipment and storage medium

ActiveCN112634875BAccurate estimateCharacter and pattern recognitionSpeech recognitionSpeech segmentationSpeech sound

The application provides a speech separation method, a speech separation device, electronic equipment, and a storage medium. The speech separation method includes: obtaining the original audio and extracting a spectrogram feature sequence from it with a sliding time window; inputting the spectrogram feature sequence into a pre-trained speech segmentation model to obtain an embedded feature sequence; inputting the embedded feature sequence into a pre-trained speech clustering model to obtain the predicted label sequence corresponding to the embedded feature sequence; and performing single-speaker voice restoration based on the predicted label sequence to generate separated speech. The method, device, electronic equipment, and storage medium address unsatisfactory speech separation results: speech segments belonging to a single speaker can be separated from short audio files in which multiple people speak alternately, and the number of speakers can be accurately estimated using contextual information.

Owner:北京远鉴信息技术有限公司

Method, device, equipment and computer storage medium for voice segmentation

ActiveCN109166570BImprove Segmentation AccuracyEasy alignmentSpeech recognitionStart timeSpeech segmentation

The invention provides a speech segmentation method, device, equipment, and computer storage medium. The method comprises the following steps: determining the cross-correlation between a first speech and a second speech, wherein the second speech is obtained by recording the first speech, and the first speech is formed by splicing two or more first speech segments; calibrating a time tag based on the cross-correlation, the time tag comprising the start time and end time of each first speech segment in the first speech; and segmenting the second speech using the calibrated time tag to obtain two or more second speech segments. The invention aligns the calibrated time tag more closely with the second speech, thereby improving the segmentation accuracy of the second speech.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
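
The calibration idea in the abstract above can be sketched in Python: estimate the lag between the original and the recorded signal by cross-correlation, shift the time tags by that lag, then cut the recording. This is a simplified illustration that assumes a single constant, non-negative lag measured in samples; the function names are hypothetical.

```python
def best_lag(reference, recorded, max_lag):
    """Lag in samples at which the recording best matches the reference."""
    def cross_correlation(lag):
        return sum(
            reference[i] * recorded[i + lag]
            for i in range(len(reference))
            if i + lag < len(recorded)
        )
    # Only non-negative lags are searched (assumption: the recording starts late).
    return max(range(max_lag + 1), key=cross_correlation)


def calibrate_tags(tags, lag):
    """Shift each (start, end) sample tag by the estimated lag."""
    return [(start + lag, end + lag) for start, end in tags]


def split_by_tags(recorded, tags):
    """Cut the recording into segments using calibrated tags."""
    return [recorded[start:end] for start, end in tags]
```

For example, with the tags of the spliced first speech and a lag from `best_lag`, `split_by_tags(recorded, calibrate_tags(tags, lag))` yields the second speech segments.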

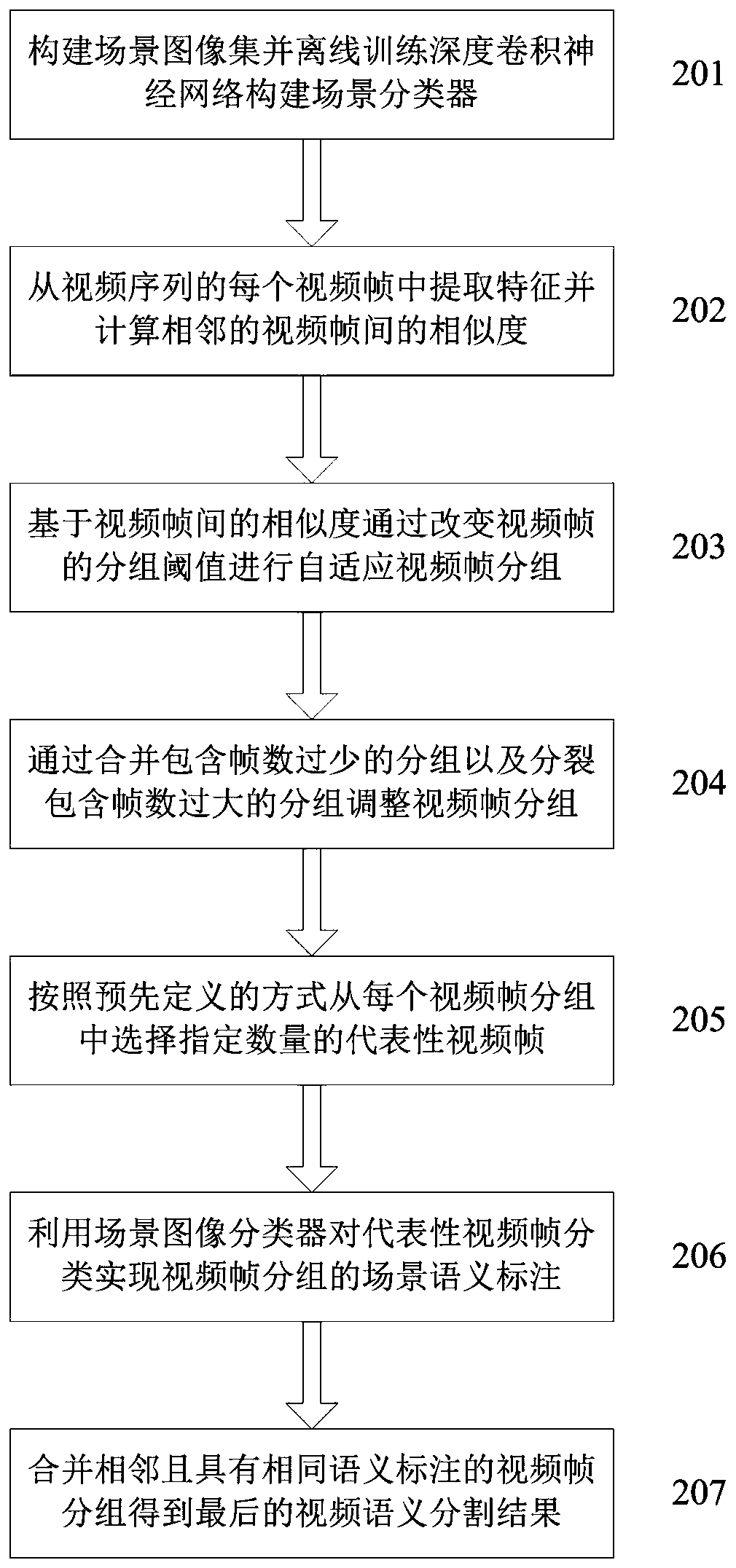

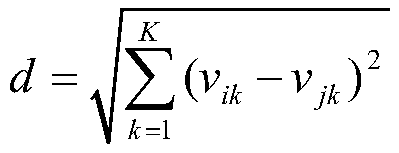

A Video Semantic Scene Segmentation and Labeling Method

InactiveCN108537134BImprove processing efficiencyImprove experienceCharacter and pattern recognitionVideo retrievalSpeech segmentation

Owner:BEIJING JIAOTONG UNIV

