82 results about "Word graph" patented technology

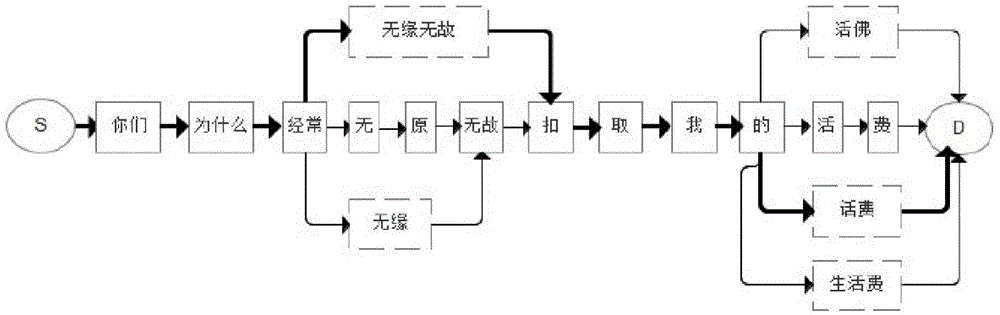

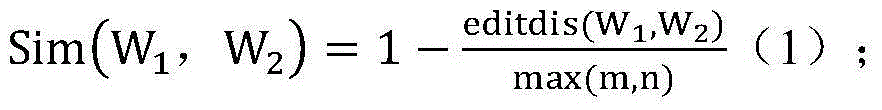

Fuzzy word segmentation based non-multi-character word error automatic proofreading method

Active | CN104991889A | Quick response | Meet the actual application needs | Special data processing applications | Chinese word | Word graph

The invention discloses an automatic proofreading method for non-multi-character word errors based on fuzzy word segmentation. Exact segmentation is first carried out against a correct-word dictionary and a wrong-character-word dictionary to generate a word graph. The similarity of Chinese word strings is then calculated with a fuzzy matching algorithm, the scattered strings left over by exact segmentation are fuzzily matched, and the fuzzy matching results are added to the word graph to form a fuzzy word graph. Finally, the shortest path through the fuzzy word graph is calculated using a word bigram model combined with the similarity scores, which realizes automatic proofreading of Chinese non-multi-character word errors. According to the abstract, the system responds quickly, its precision meets practical application demands, and its effectiveness and accuracy are high.

Owner:南方电网互联网服务有限公司

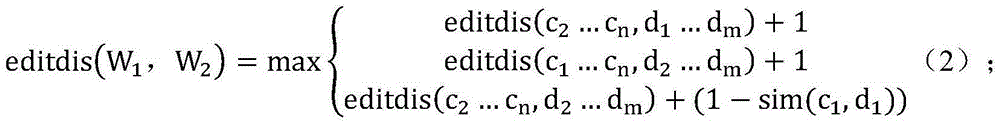

Speech-recognition text classification method and device

Active | CN103514170A | Accurate Speech Recognition Results | Increase coverage | Special data processing applications | Natural language processing | Text categorization

The invention discloses a speech-recognition text classification method and device. For each service class, the method collects training texts and training speech whose content is identical to the training texts; decodes the training speech to obtain its word confusion network; extracts text features from the training texts and the word confusion network; trains the support vector machine classifiers in a set on those text features; and uses the trained classifiers to classify texts. The method and device convert a word graph network into a word confusion network suited to text classification. After the confusion words contained in the word confusion network are converted into text features, a support vector machine algorithm carries out text classification based on the confusion words. More accurate classification results can thus be obtained, and the accuracy of speech-recognition text classification is improved.

Owner:CHINA MOBILE GROUP ANHUI

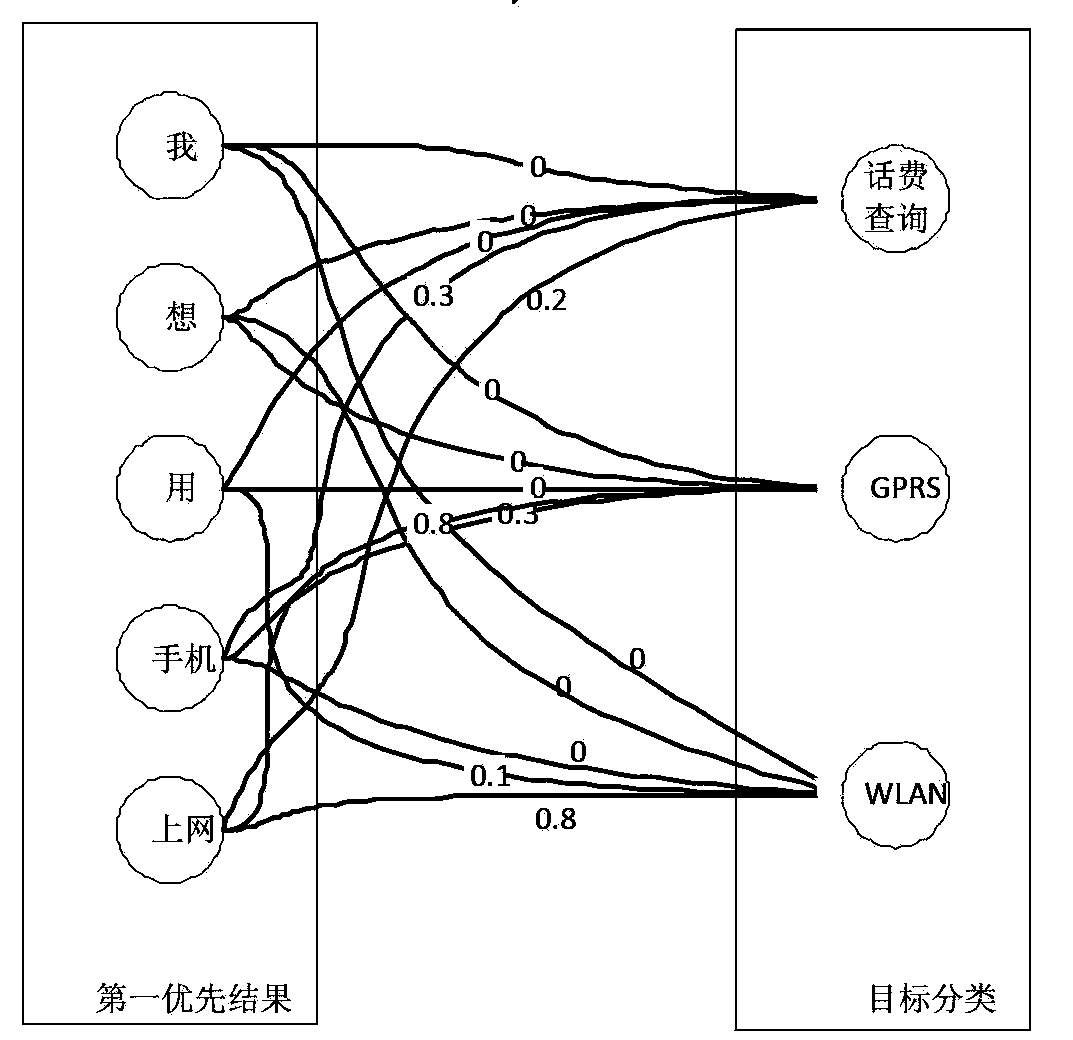

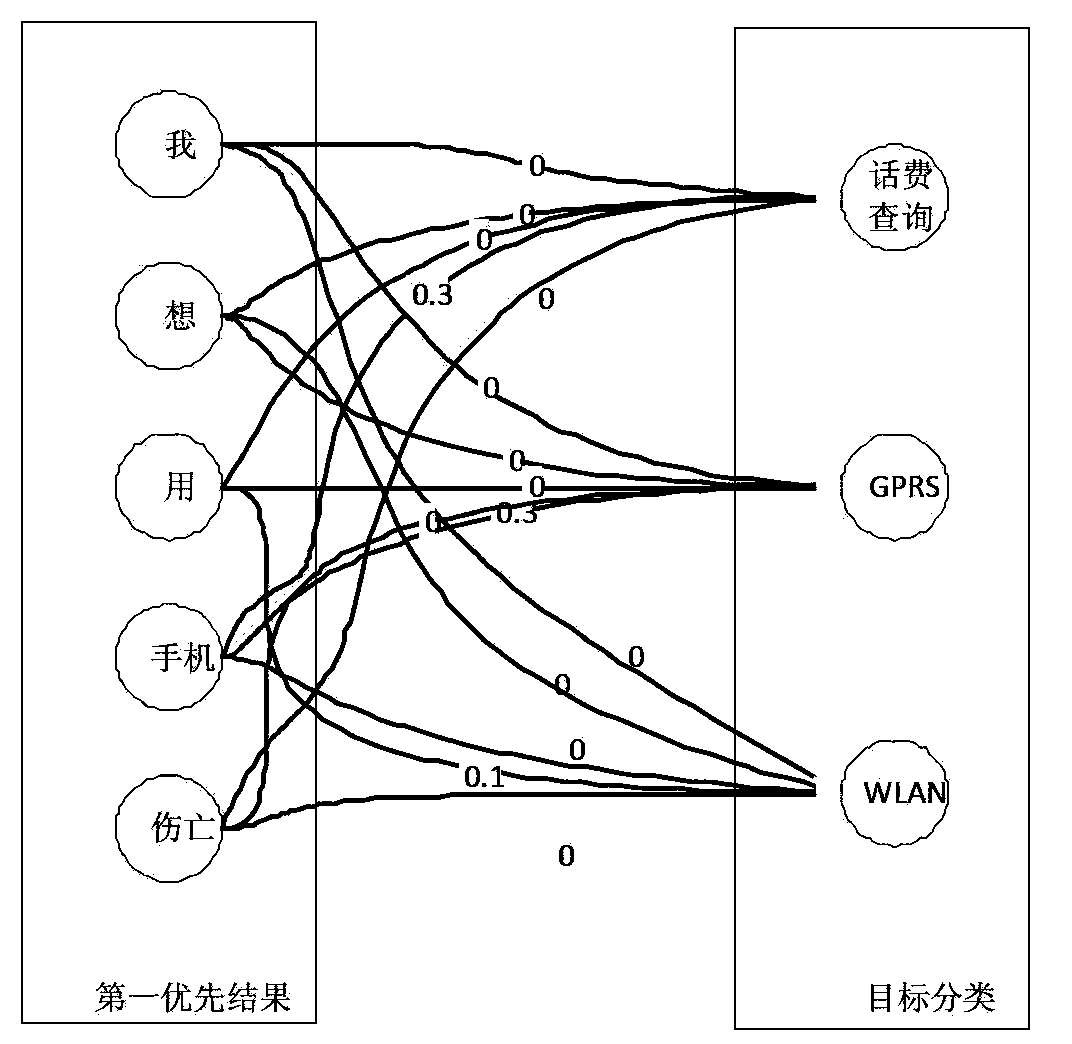

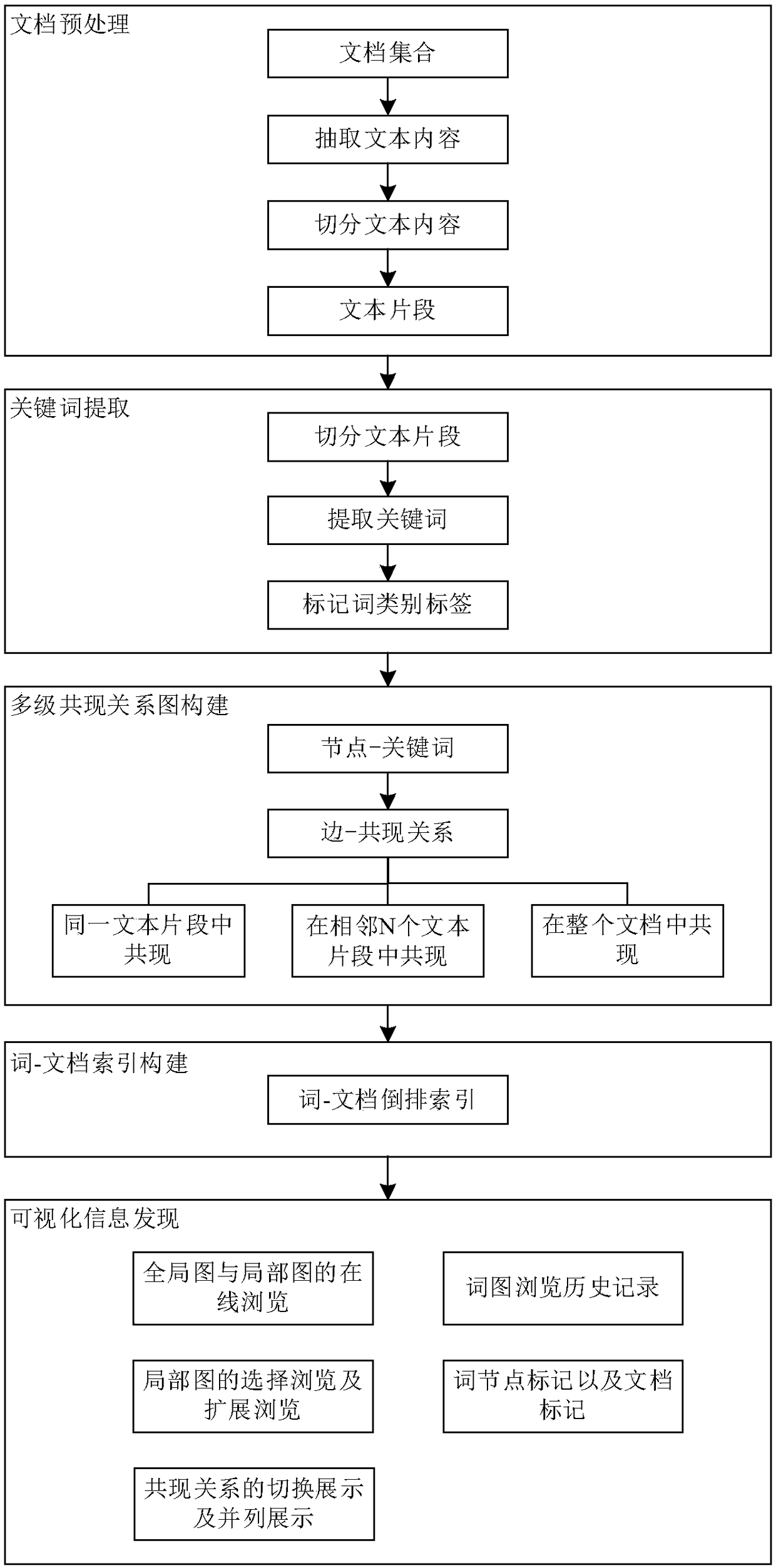

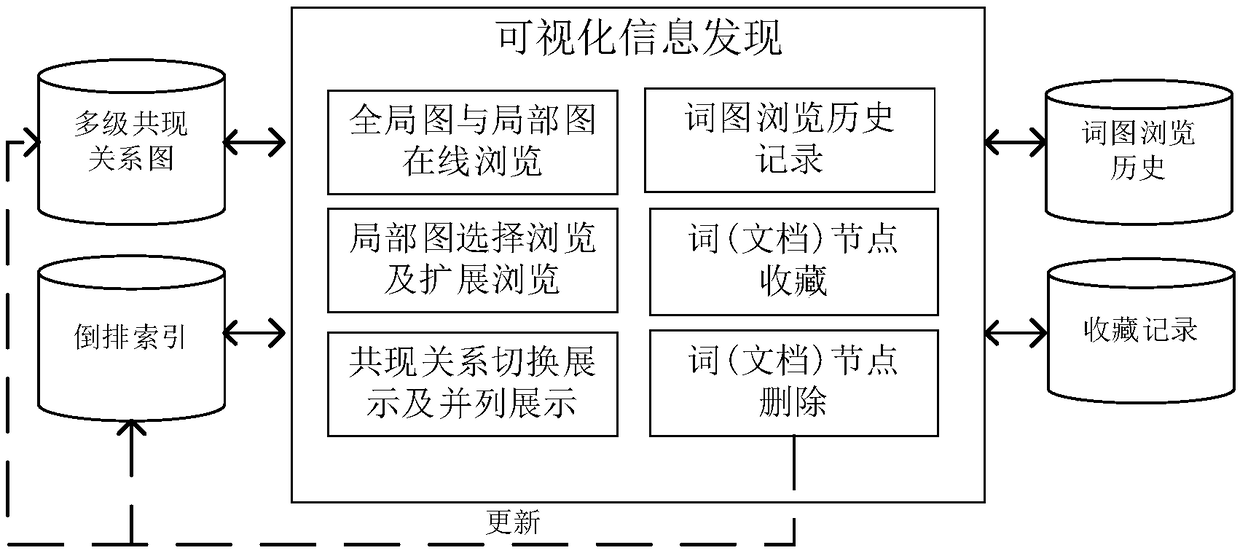

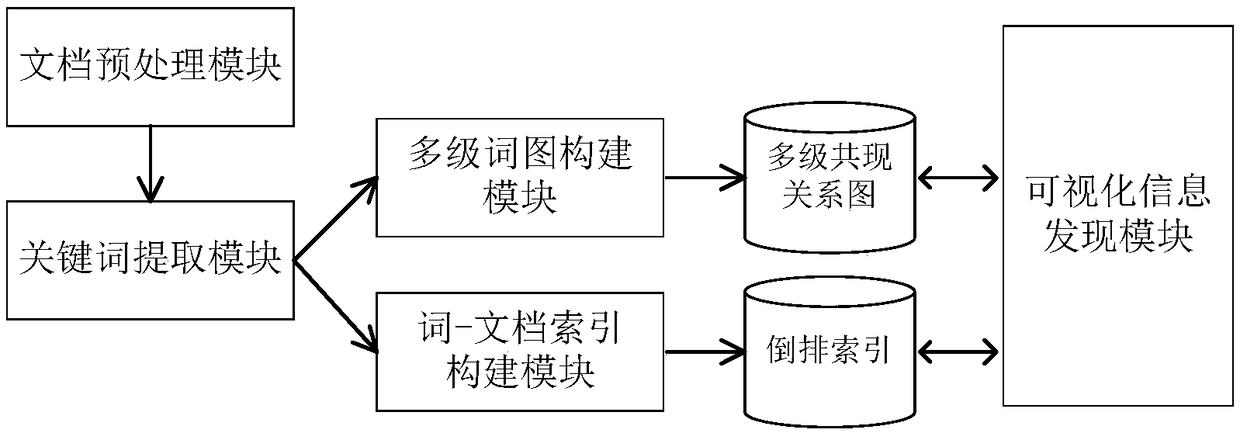

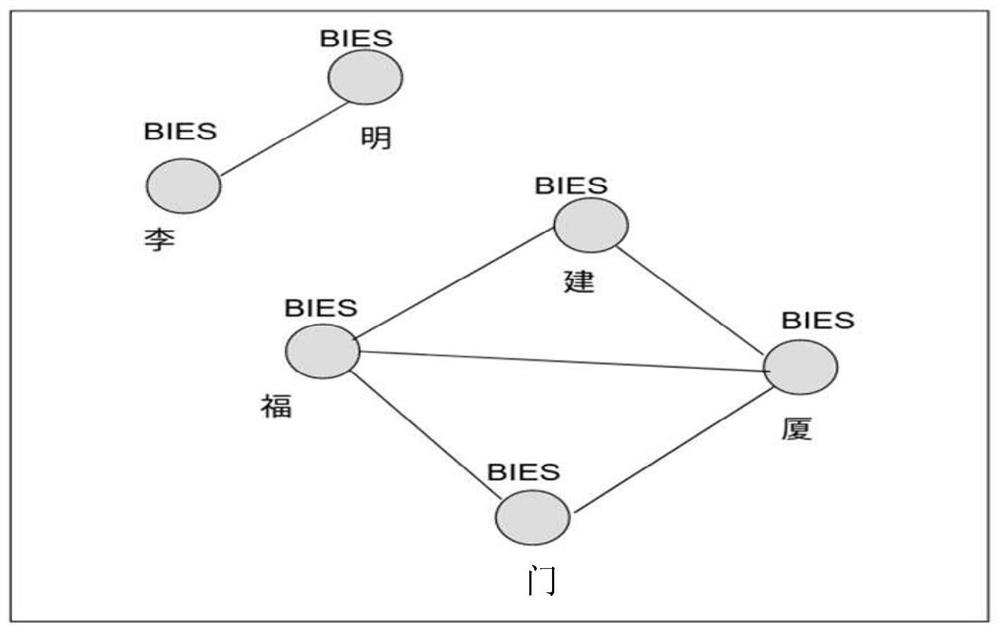

Visual text information discovery method and device based on multilevel cooccurrence relationship word graph

Inactive | CN108415900A | The method is flexible and convenient | Semantic analysis | Special data processing applications | Co-occurrence | Document preparation

The invention provides a visual text information discovery method based on a multilevel co-occurrence relationship word graph. The method comprises the following steps: extracting the text content of a document and splitting it into text segments; segmenting the text segments into words, extracting keywords, and labeling each with a word category tag; constructing the multilevel co-occurrence relationship word graph from the co-occurrence of keywords in the text segments, where nodes in the graph correspond to keywords and edges correspond to keyword co-occurrence; constructing a word-document inverted index for each keyword in the graph, for retrieving the documents that contain the keyword; and obtaining the visual text information through the co-occurrence relationship word graph. The invention also provides a visual text information discovery system based on the multilevel co-occurrence relationship word graph. The system comprises a document preprocessing module, a keyword extraction module, a multilevel word graph construction module, a word-document index construction module and a visual information discovery module.

Owner:INST OF INFORMATION ENG CHINESE ACAD OF SCI
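
The graph and index construction described in the abstract above can be sketched as follows. This is a minimal, single-level illustration only: the input shape (`docs` mapping a document id to lists of already-extracted keywords) and the function name `build_cooccurrence` are assumptions for the example, and the patent's multilevel structure, word category tags and visualization are not modelled.

```python
from collections import defaultdict
from itertools import combinations


def build_cooccurrence(docs):
    """Build keyword co-occurrence edges and a word-document inverted index.

    docs: {doc_id: [segment, ...]}, where each segment is a list of keywords
    (a hypothetical input format chosen for this sketch).
    """
    edges = defaultdict(int)     # (kw_a, kw_b) -> number of segments they share
    inverted = defaultdict(set)  # keyword -> ids of documents containing it
    for doc_id, segments in docs.items():
        for segment in segments:
            unique = sorted(set(segment))
            for kw in unique:
                inverted[kw].add(doc_id)
            # Every unordered keyword pair in the same segment is one co-occurrence.
            for a, b in combinations(unique, 2):
                edges[(a, b)] += 1
    return dict(edges), dict(inverted)


docs = {
    "d1": [["word", "graph"], ["graph", "index"]],
    "d2": [["word", "graph", "index"]],
}
edges, inverted = build_cooccurrence(docs)
```

Edge keys are stored in sorted order, so `("graph", "word")` and `("word", "graph")` refer to the same undirected edge.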

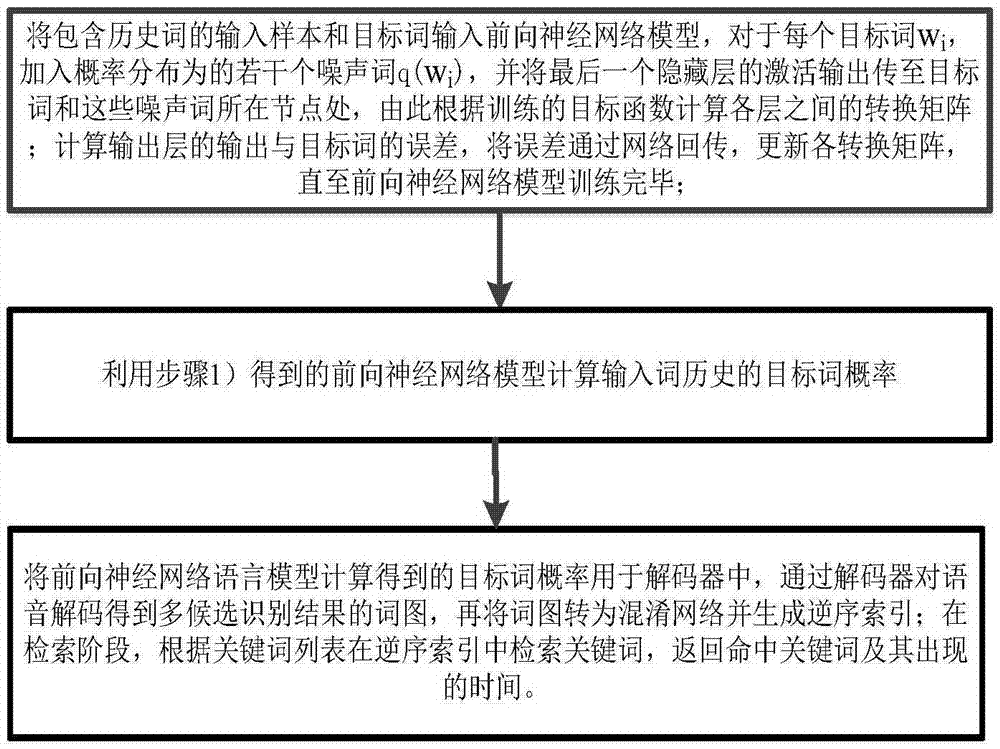

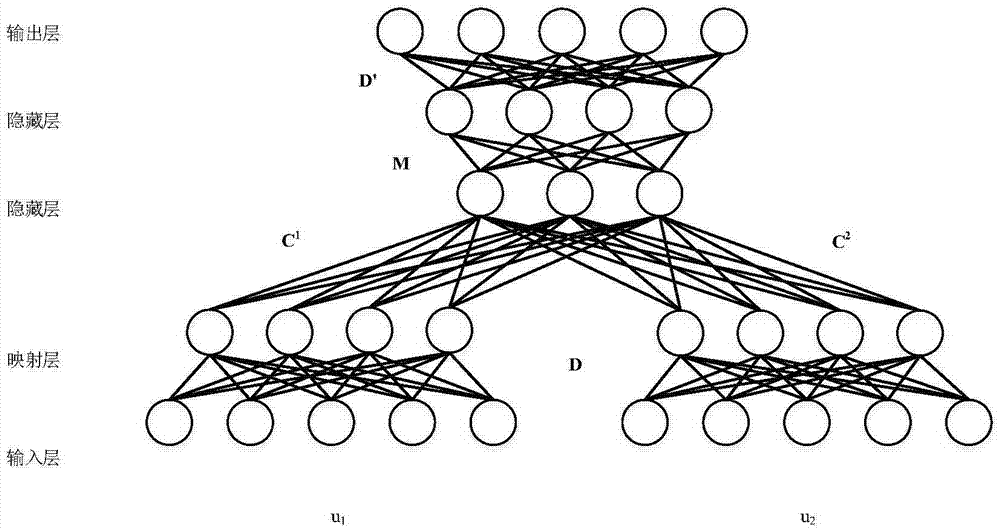

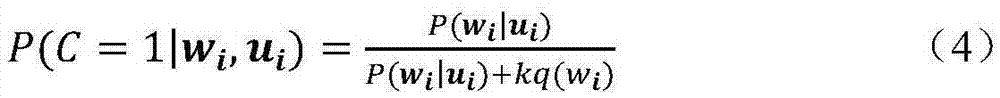

Chinese phonetic symbol keyword retrieving method based on feed forward neural network language model

Active | CN106856092A | Reduce processing | Increase training speed | Speech recognition | Pattern recognition | NODAL

The invention provides a Chinese phonetic symbol keyword retrieving method based on a feed forward neural network language model. The method comprises: (1) inputting an input sample consisting of history words and target words into a feed forward neural network model; for each target word wi, adding a plurality of noise words with probability distribution q(wi), propagating the activation output of the last hidden layer to the nodes of the target word and the noise words, and calculating the transformation matrices between all layers based on an objective function; calculating the error between the output of the output layer and the target word, and updating all transformation matrices until training of the feed forward neural network model is complete; (2) using the feed forward neural network model to calculate the probability of a target word given an input word history; and (3) applying the target word probability in a decoder and decoding speech with the decoder to obtain word graphs containing multiple candidate recognition results, converting the word graphs into a confusion network and generating an inverted index, then retrieving a keyword in the inverted index and returning the matched keyword and its occurrence time.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1
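
Step (3) of the abstract above, indexing a confusion network and looking up a keyword with its occurrence time, can be illustrated with a small sketch. The confusion-network representation (a list of `(start_time, {word: posterior})` slots), the function names and the `min_posterior` threshold are all assumptions made for this example; the decoder and the neural language model are out of scope.

```python
from collections import defaultdict


def build_index(confusion_network):
    """Invert a confusion network into word -> [(start_time, posterior), ...].

    confusion_network: list of slots, each a (start_time, {word: posterior})
    pair (a hypothetical format for this sketch).
    """
    index = defaultdict(list)
    for start_time, candidates in confusion_network:
        for word, posterior in candidates.items():
            index[word].append((start_time, posterior))
    return index


def search(index, keyword, min_posterior=0.3):
    """Return the (time, posterior) hits for keyword that clear the threshold."""
    return [(t, p) for t, p in index.get(keyword, []) if p >= min_posterior]


cn = [
    (0.0, {"word": 0.7, "ward": 0.3}),
    (0.4, {"graph": 0.9, "giraffe": 0.1}),
    (0.9, {"word": 0.2, "world": 0.8}),
]
index = build_index(cn)
hits = search(index, "word")
```

Because the index keeps every competing candidate rather than only the single best hypothesis, a keyword that lost the one-best competition can still be found by lowering `min_posterior`.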

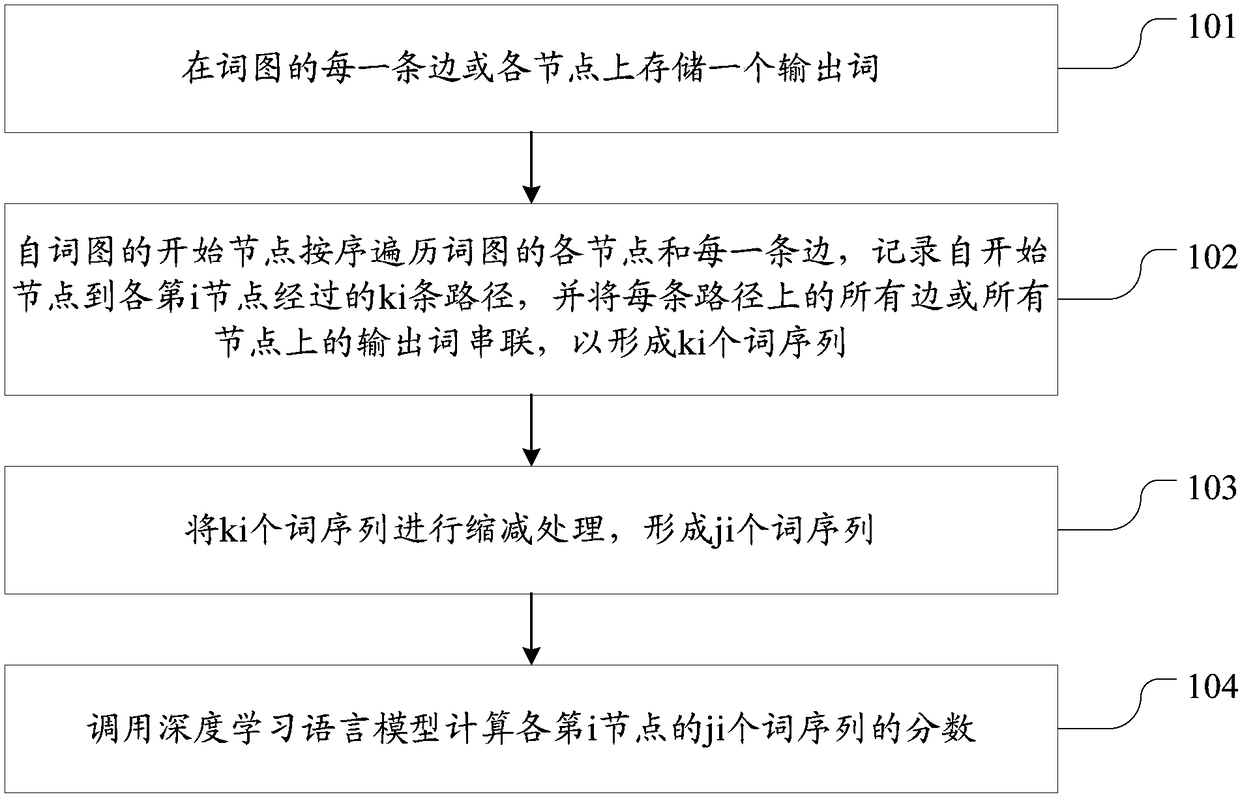

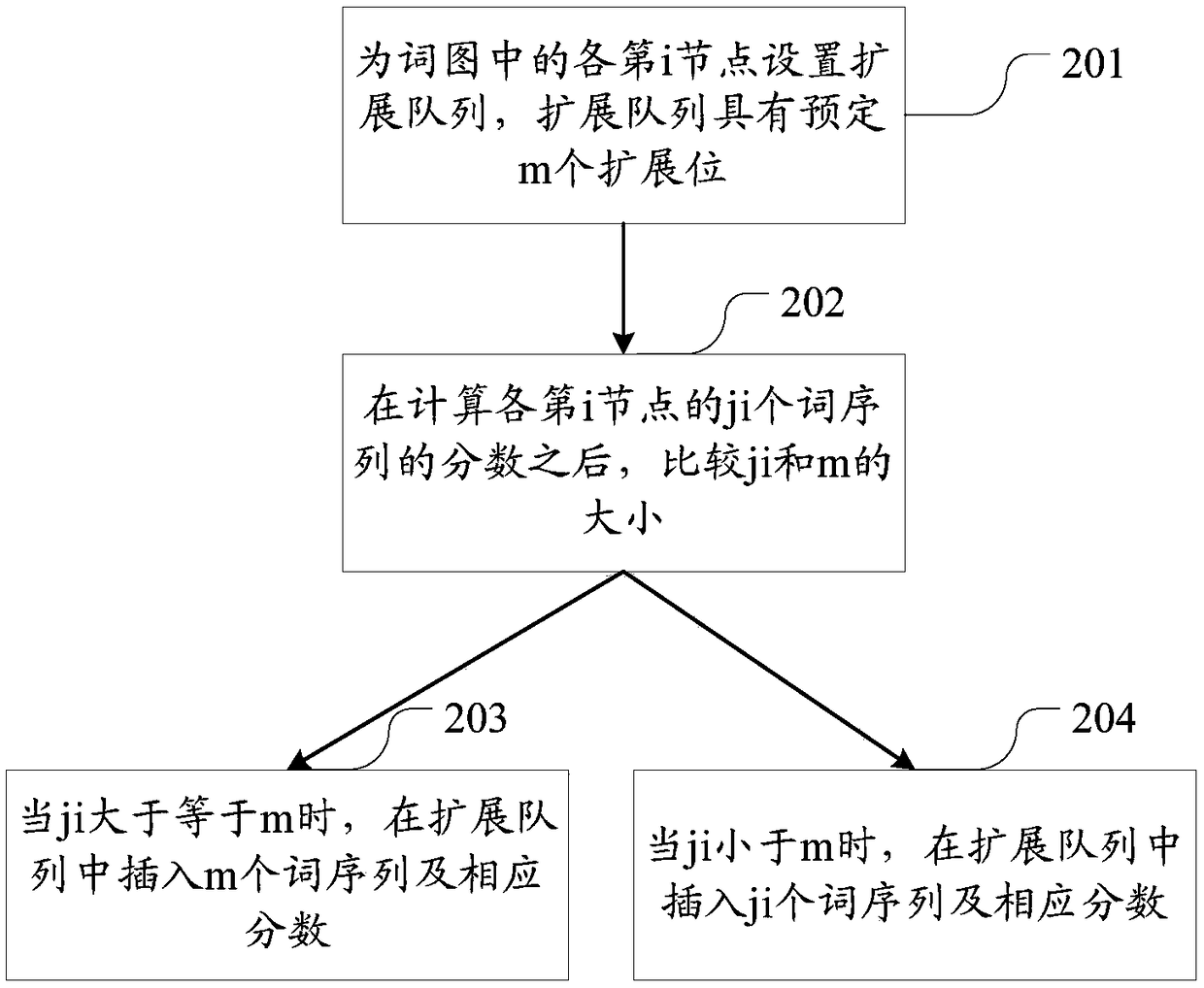

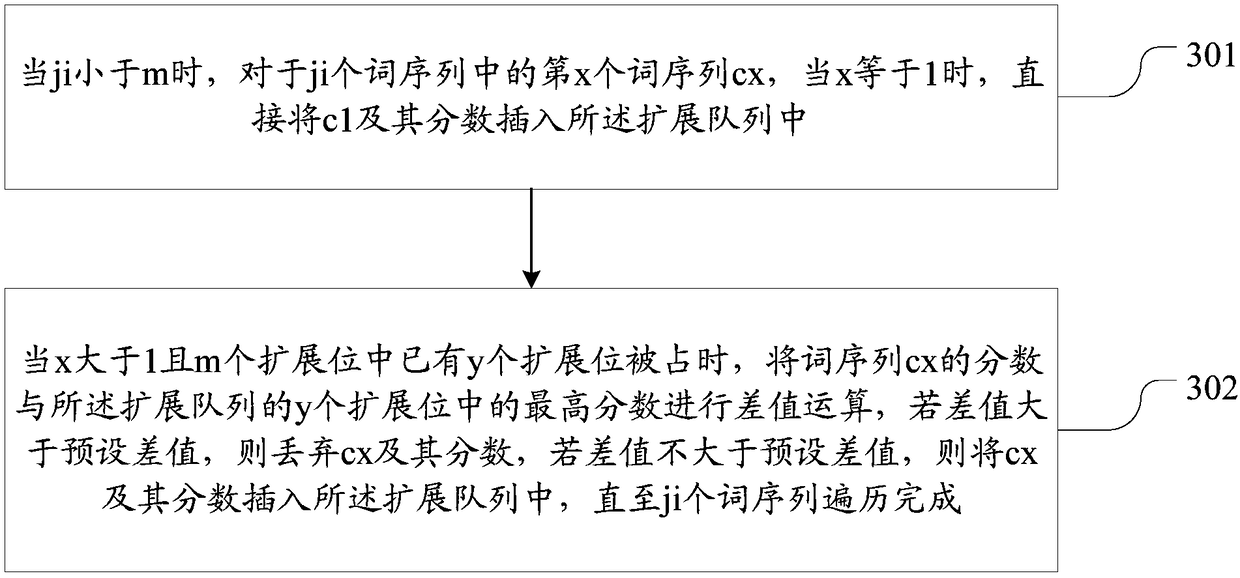

Word graph rescoring method and system for deep learning language models

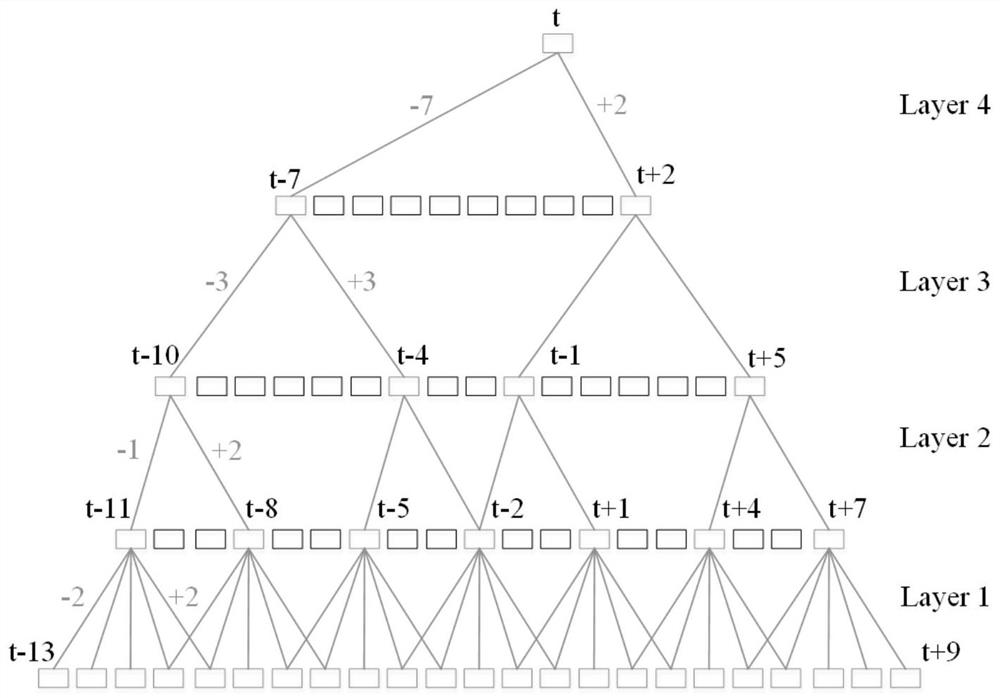

Active | CN108415898A | Delayed expansion | Reduce the number of extensions | Natural language data processing | Special data processing applications | Algorithm | Conversational speech

The invention discloses a word graph rescoring method and system for deep learning language models, for intelligent conversational voice platforms, and electronic equipment. The method comprises the following steps: storing an output word on each edge or each node of a word graph; traversing each node and each edge of the word graph in sequence from the starting node, recording the ki paths from the starting node to the i-th node, and concatenating the output words on all edges or nodes of each path to form ki word sequences; reducing the ki word sequences to ji word sequences; and calculating the scores of the ji word sequences at each i-th node by calling a deep learning language model. Using the word graph as the rescoring target addresses the problem of a small search space; a history cache avoids redundant repeated calculation; history clustering, token pruning and cluster pruning limit the expansion of the word graph, speed up calculation and reduce memory consumption; and node-parallel calculation improves word graph rescoring efficiency.

Owner:AISPEECH CO LTD
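
A rough sketch of the traversal and reduction idea in the abstract above: hypotheses are carried node by node, sequences that share the same recent history are merged (one simple reading of "history clustering"), and only the best few per node are kept (one simple reading of "token pruning"). The function `rescore`, the lattice format, the `history_len` and `beam` parameters and the `lm_score` callable are all hypothetical; `lm_score` stands in for the deep learning language model, and the patent's cache and parallelism are not shown.

```python
def rescore(lattice, order, start, lm_score, history_len=2, beam=3):
    """Propagate word sequences through a word graph, merging equal histories.

    lattice: {node: [(word, next_node), ...]}
    order:   nodes in topological order (must include every next_node)
    lm_score(history, word): log-score from a language model (assumed callable)
    Returns {node: {history: (score, word_sequence)}}.
    """
    hyps = {node: {} for node in order}
    hyps[start][()] = (0.0, ())
    for node in order:
        # Pruning: keep only the `beam` best hypotheses at this node.
        kept = sorted(hyps[node].items(), key=lambda kv: kv[1][0], reverse=True)[:beam]
        hyps[node] = dict(kept)
        for word, nxt in lattice.get(node, []):
            for history, (score, seq) in kept:
                new_seq = seq + (word,)
                new_score = score + lm_score(history, word)
                # Sequences ending in the same last words share one slot.
                key = new_seq[-history_len:]
                if key not in hyps[nxt] or new_score > hyps[nxt][key][0]:
                    hyps[nxt][key] = (new_score, new_seq)
    return hyps


lattice = {0: [("a", 1), ("b", 1)], 1: [("c", 2)]}
toy_lm = lambda history, word: {"a": -1.0, "b": -2.0, "c": -0.5}[word]
result = rescore(lattice, [0, 1, 2], 0, toy_lm, history_len=1)
```

With `history_len=1` both paths into node 2 end in `"c"`, so they collapse into a single hypothesis and only the better-scoring one survives.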

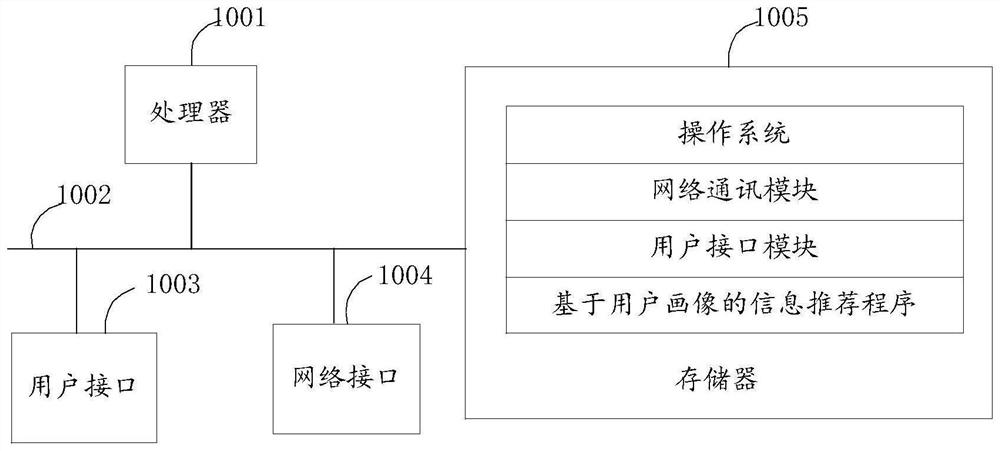

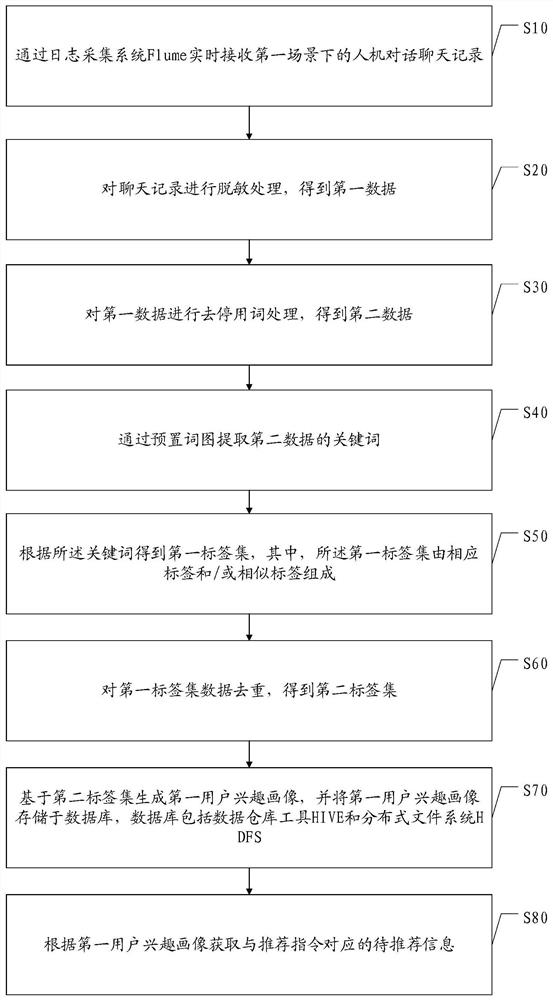

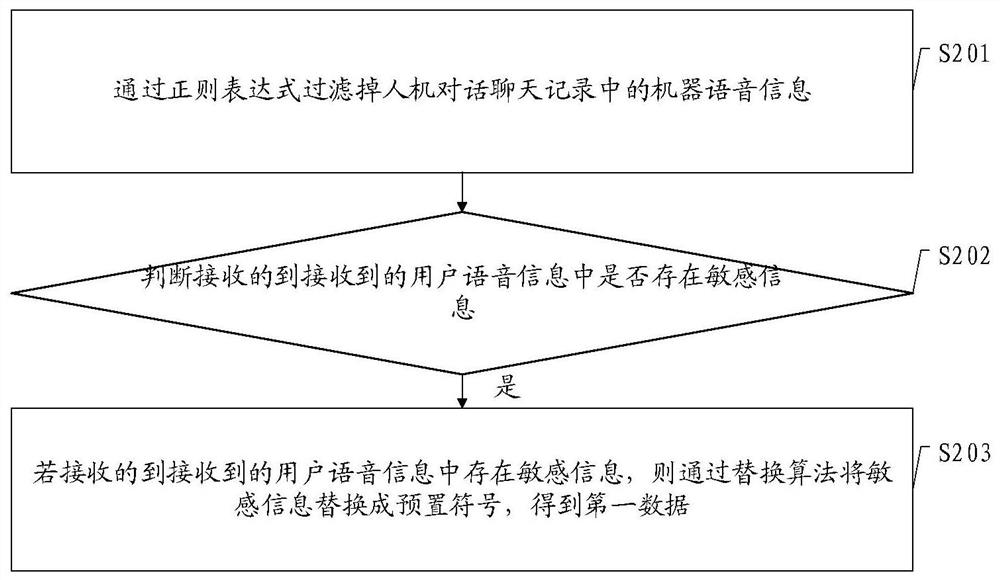

Information recommendation method and device based on user portrait, equipment and storage medium

Pending | CN111797210A | Improve accuracy | Market predictions | Buying/selling/leasing transactions | Collection system | Engineering

The invention relates to the technical field of big data, and discloses a user portrait-based information recommendation method. The method comprises the following steps: receiving a man-machine conversation chat record in a first scene in real time through the log collection system Flume; performing desensitization processing on the chat record to obtain first data; performing stop word removal on the first data to obtain second data; extracting a keyword of the second data through a preset word graph; obtaining a first label set according to the keyword; performing duplicate removal on the first label set to obtain a second label set; generating a user interest portrait based on the second label set, and storing the user interest portrait in a database; receiving a recommendation instruction, and obtaining a user interest portrait according to the recommendation instruction; and obtaining to-be-recommended information corresponding to the recommendation instruction according to the user interest portrait. The invention also provides an information recommendation device and equipment based on the user portrait, and a storage medium. The information recommendation method based on the user portrait provided by the invention improves the accuracy of the user portrait.

Owner:CHINA PING AN LIFE INSURANCE CO LTD

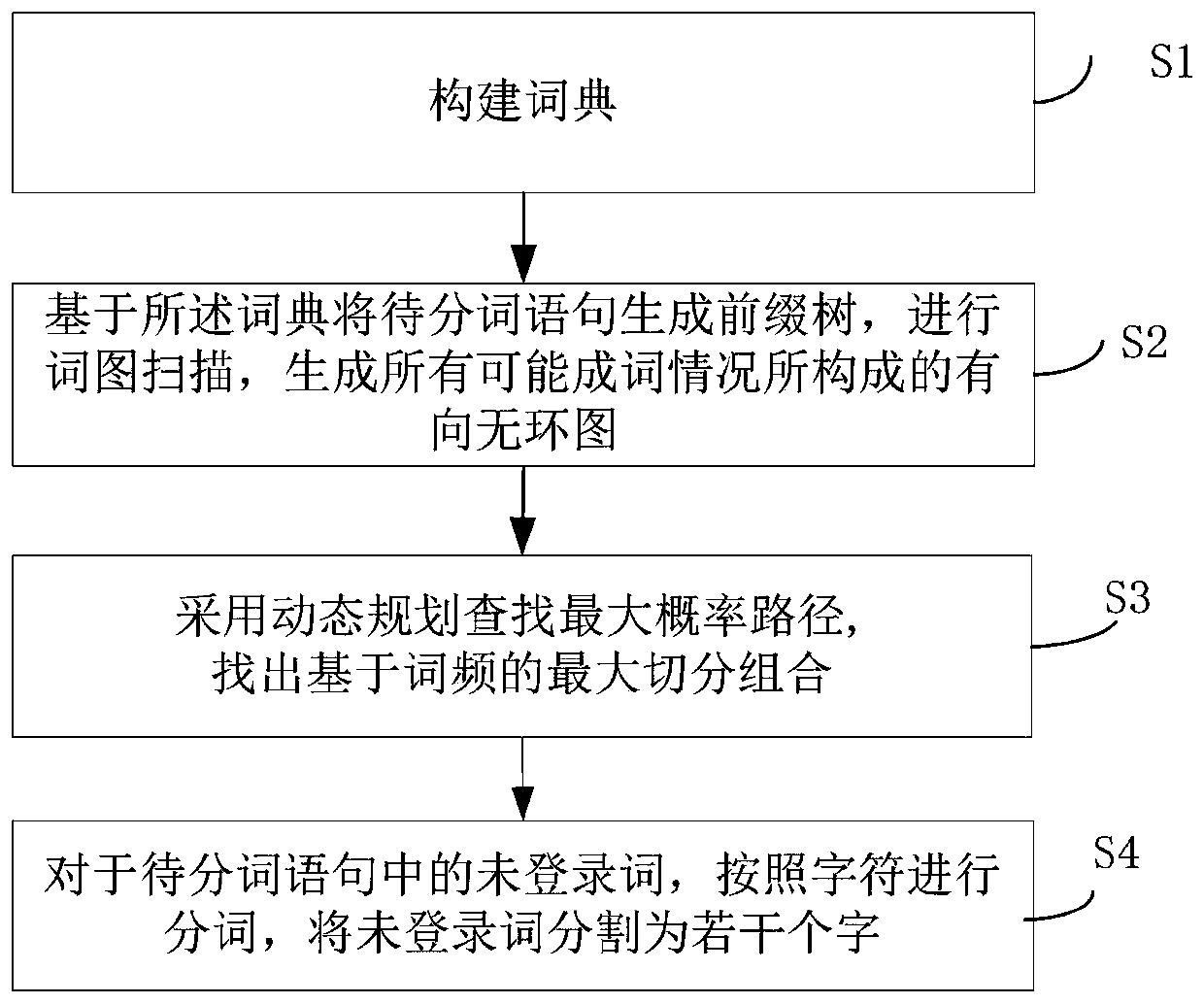

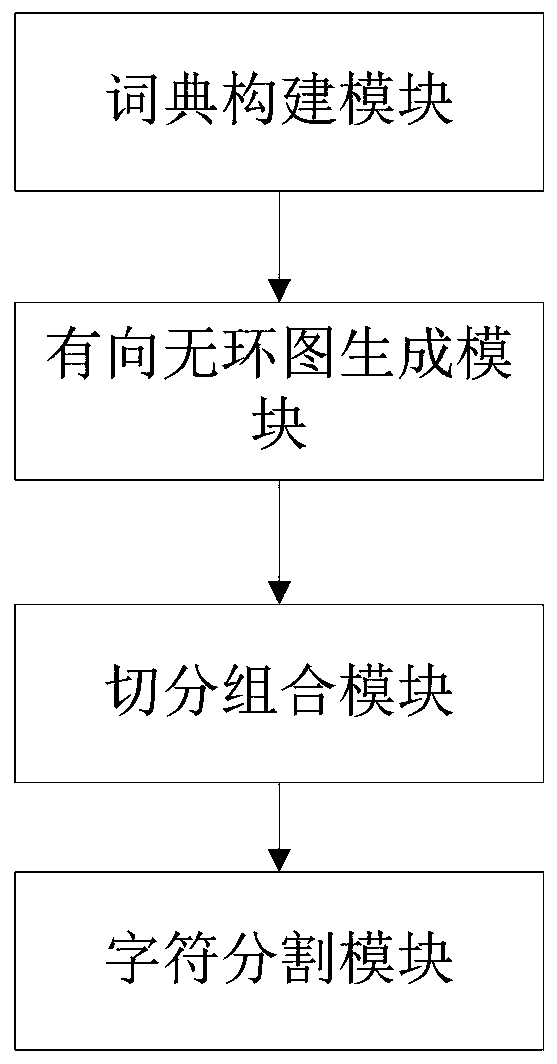

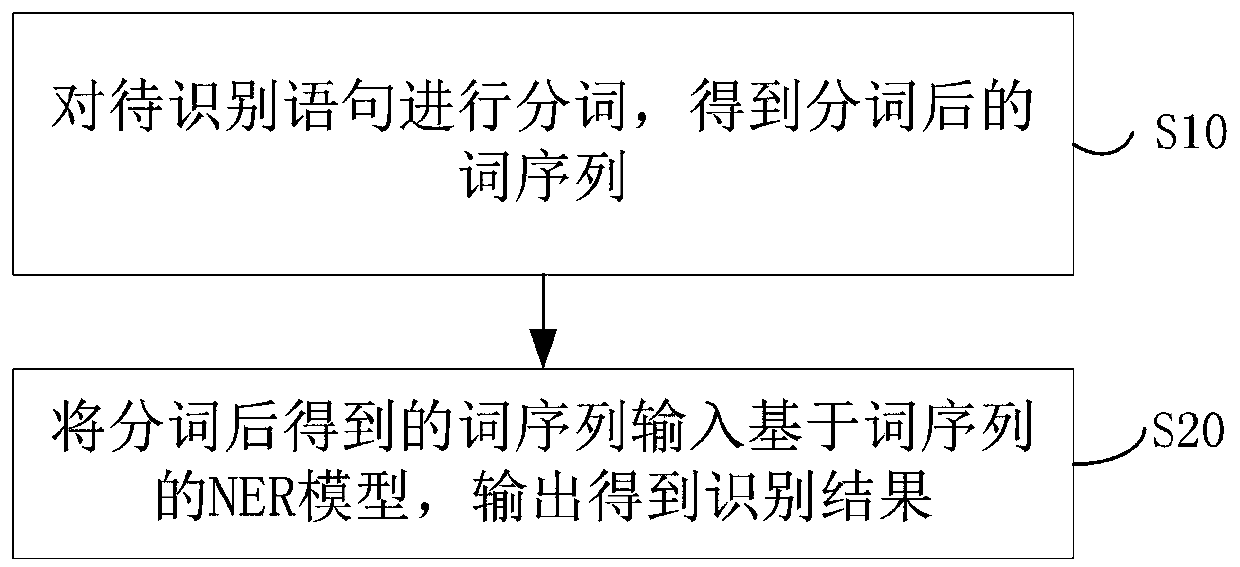

Word segmentation method, word segmentation device, named entity identification method and named entity identification system

Pending | CN110750993A | Guaranteed accuracy | Improve accuracy | Natural language data processing | Pattern recognition | Named-entity recognition

The invention relates to a word segmentation method, a word segmentation device, a named entity identification method and a named entity identification system. The word segmentation method comprises the following steps: constructing a dictionary; generating a prefix tree for the sentence to be segmented based on the dictionary and performing word graph scanning to generate a directed acyclic graph of all possible word formations; searching for the maximum probability path by dynamic programming, and finding the best segmentation combination based on word frequency; and, for unregistered (out-of-vocabulary) words in the sentence that do not exist in the dictionary, segmenting them character by character into a plurality of single characters. Because unregistered words are handled separately and split into single characters rather than into words, unregistered names are prevented from being recombined with the preceding and following words after segmentation, and the identification accuracy for unregistered names can be improved.

Owner:成都数联铭品科技有限公司
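
The directed-acyclic-graph scan and maximum-probability path search described in the abstract above can be sketched as below. This is an illustrative sketch under simplifying assumptions: the dictionary is a plain `{word: frequency}` mapping looked up by substring rather than a prefix tree, unknown characters get a pseudo-frequency of 1, and the names `build_dag` and `segment` are made up for the example.

```python
import math


def build_dag(sentence, freq):
    """Map each start index to the end indices of dictionary words starting there."""
    dag = {}
    n = len(sentence)
    for i in range(n):
        ends = [j for j in range(i + 1, n + 1) if sentence[i:j] in freq]
        # No dictionary word starts here: fall back to a single character.
        dag[i] = ends or [i + 1]
    return dag


def segment(sentence, freq):
    """Choose the maximum log-probability path through the DAG, right to left."""
    total = sum(freq.values())
    dag = build_dag(sentence, freq)
    n = len(sentence)
    best = {n: (0.0, n)}  # position -> (best log-prob to the end, next cut)
    for i in range(n - 1, -1, -1):
        best[i] = max(
            (math.log(freq.get(sentence[i:j], 1) / total) + best[j][0], j)
            for j in dag[i]
        )
    words, i = [], 0
    while i < n:
        j = best[i][1]
        words.append(sentence[i:j])
        i = j
    return words


freq = {"北京": 50, "大学": 40, "北京大学": 30, "生": 5, "大学生": 20}
print(segment("北京大学生", freq))
print(segment("北京xy", freq))
```

In the second call the characters `x` and `y` are not in the dictionary, so they come out as separate single characters, which mirrors the character-level handling of unregistered words that the abstract describes.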

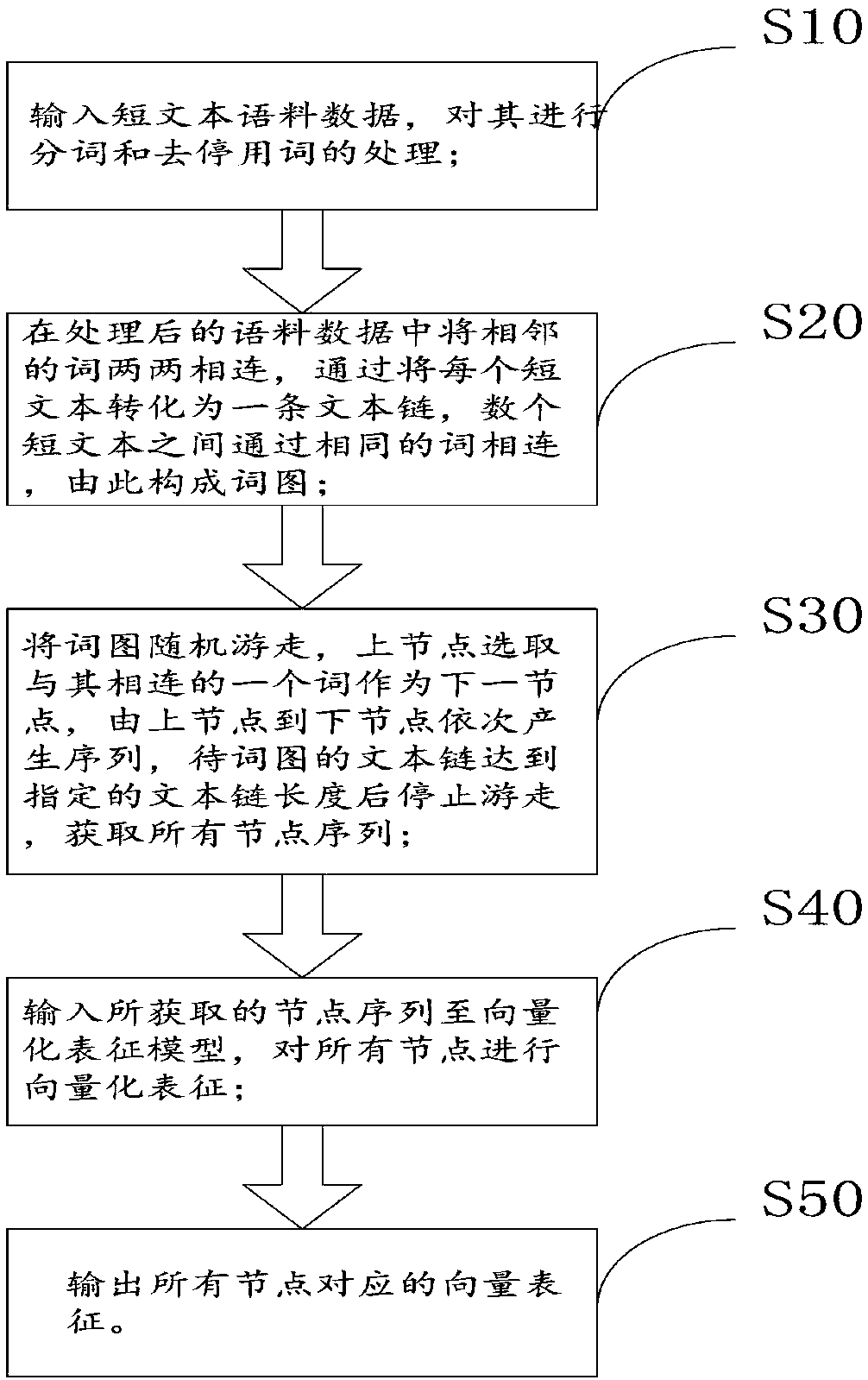

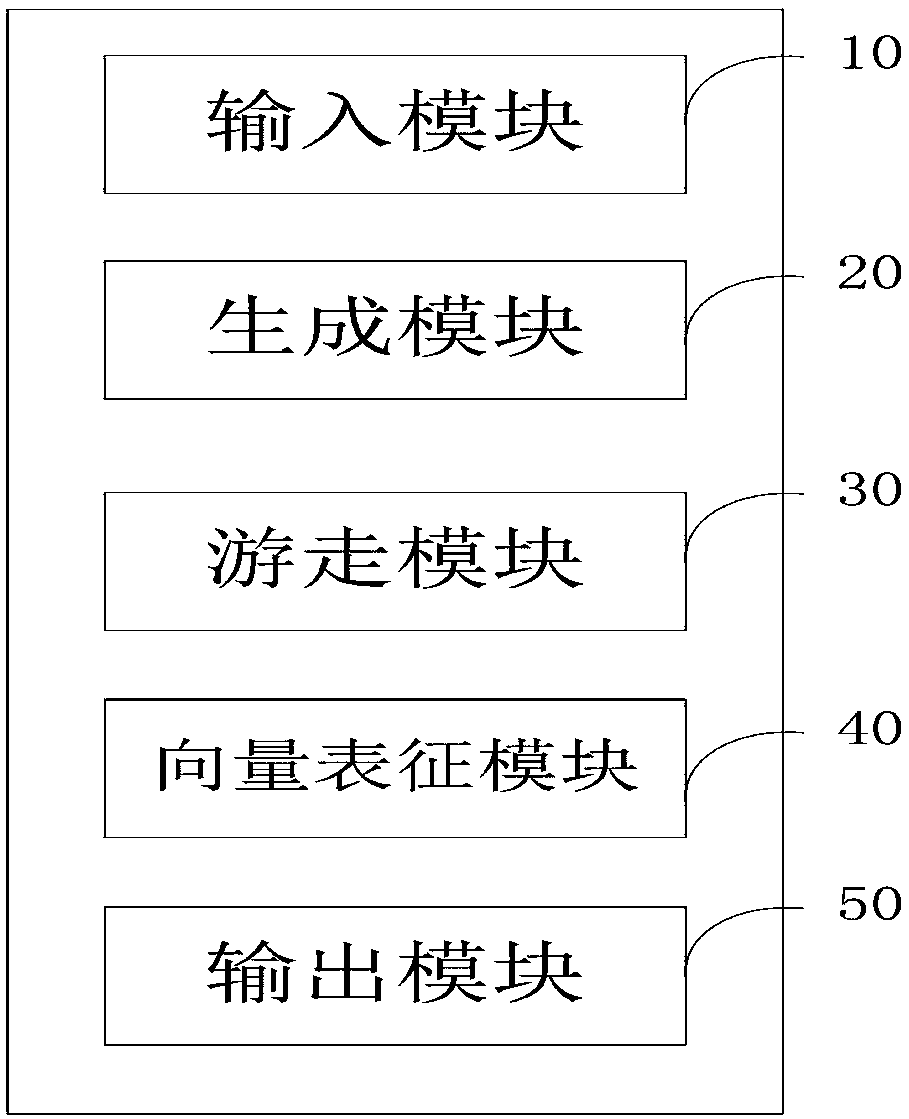

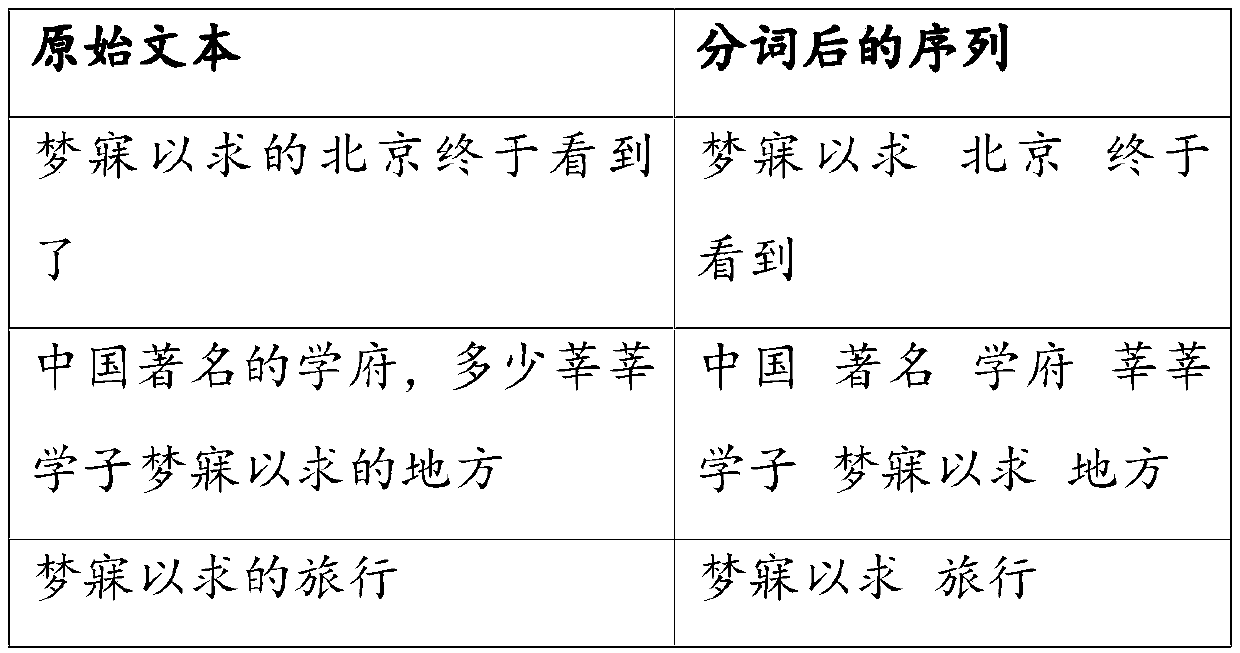

A method and apparatus for enriching short text semantics based on graph vector representation

Active | CN109543176A | Semantic analysis | Special data processing applications | Algorithm | Theoretical computer science

The invention discloses a method and apparatus for enriching short text semantics based on graph vector representation; the apparatus is used to carry out the method. The method includes: applying word segmentation and stop-word removal to short text corpus data; connecting adjacent words in the processed corpus data to form a word graph; performing random walks on the word graph, generating the sequence in order from each node to the next, and stopping the walk when the text chain reaches the specified length, so as to obtain node sequences covering all nodes; inputting the node sequences into a vectorized representation model to compute a vector representation for every node; and outputting the vector representations of all nodes. The invention builds a chain by connecting adjacent words in a short text, builds a graph by linking the chains of different short texts through shared keywords, and obtains a vector representation of each node by applying a graph vector representation algorithm to the resulting word graph, for use in a machine learning model.

Owner:SUN YAT SEN UNIV
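
The graph construction and fixed-length random walk from the abstract above might look like the sketch below. It assumes an undirected graph and uniform neighbour sampling, neither of which the abstract specifies, and the function names and parameters are hypothetical. The walks it returns would then be passed to a sequence embedding model, which is not shown.

```python
import random
from collections import defaultdict


def build_word_graph(texts):
    """Link adjacent words; texts that share a word are joined through that node."""
    graph = defaultdict(set)
    for words in texts:
        for a, b in zip(words, words[1:]):
            graph[a].add(b)
            graph[b].add(a)
    return graph


def random_walks(graph, walk_length, walks_per_node=2, seed=0):
    """Start walks from every node and stop each at walk_length nodes."""
    rng = random.Random(seed)
    walks = []
    for start in sorted(graph):
        for _ in range(walks_per_node):
            walk = [start]
            while len(walk) < walk_length:
                neighbours = sorted(graph[walk[-1]])
                if not neighbours:
                    break
                walk.append(rng.choice(neighbours))
            walks.append(walk)
    return walks


texts = [["short", "text", "semantics"], ["text", "graph", "vector"]]
graph = build_word_graph(texts)
walks = random_walks(graph, walk_length=4)
```

Here the two short texts share the word `text`, so a walk can cross from one text's chain into the other, which is how the graph lends extra context to each short text.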

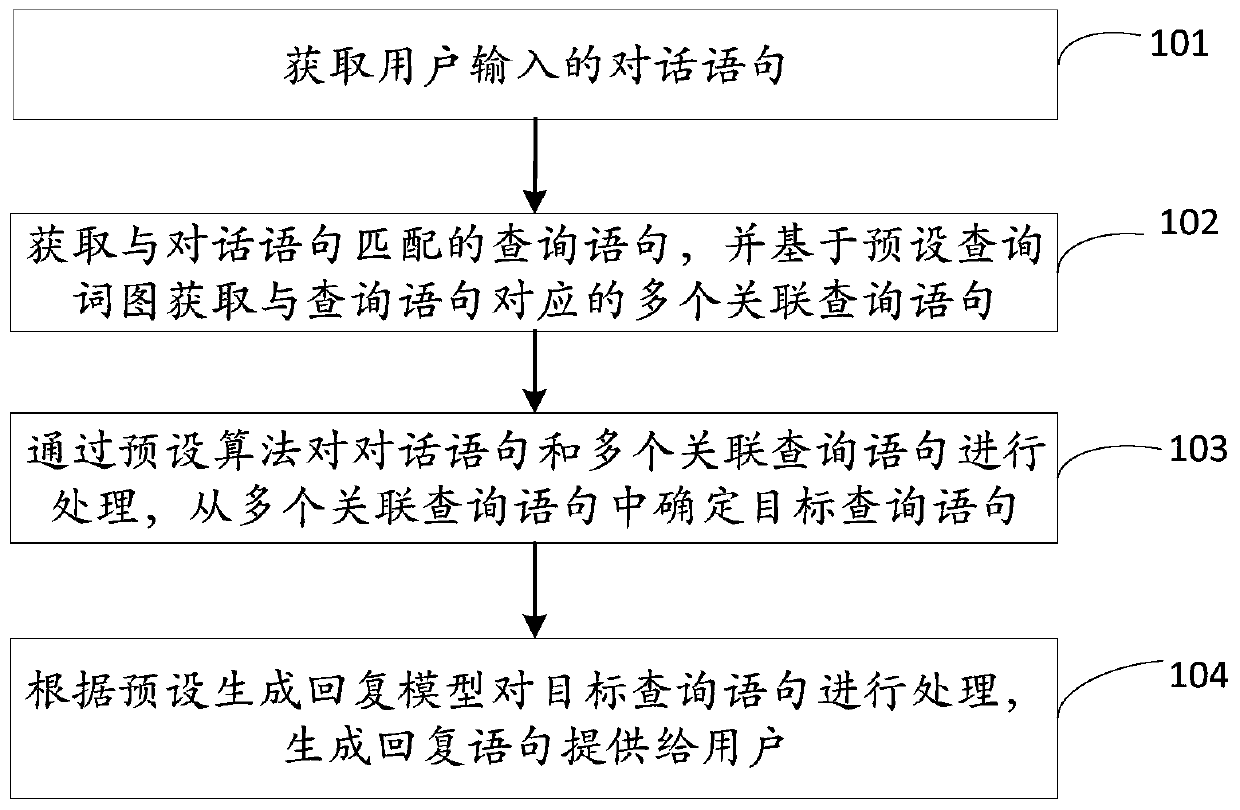

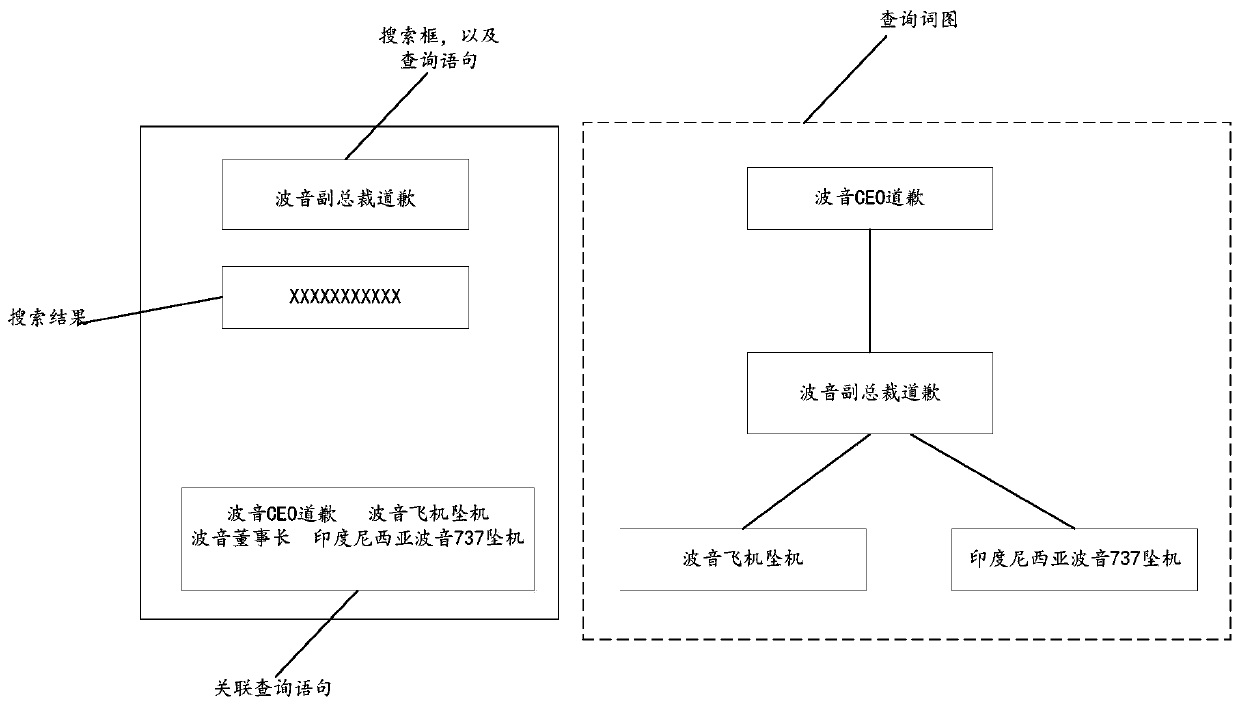

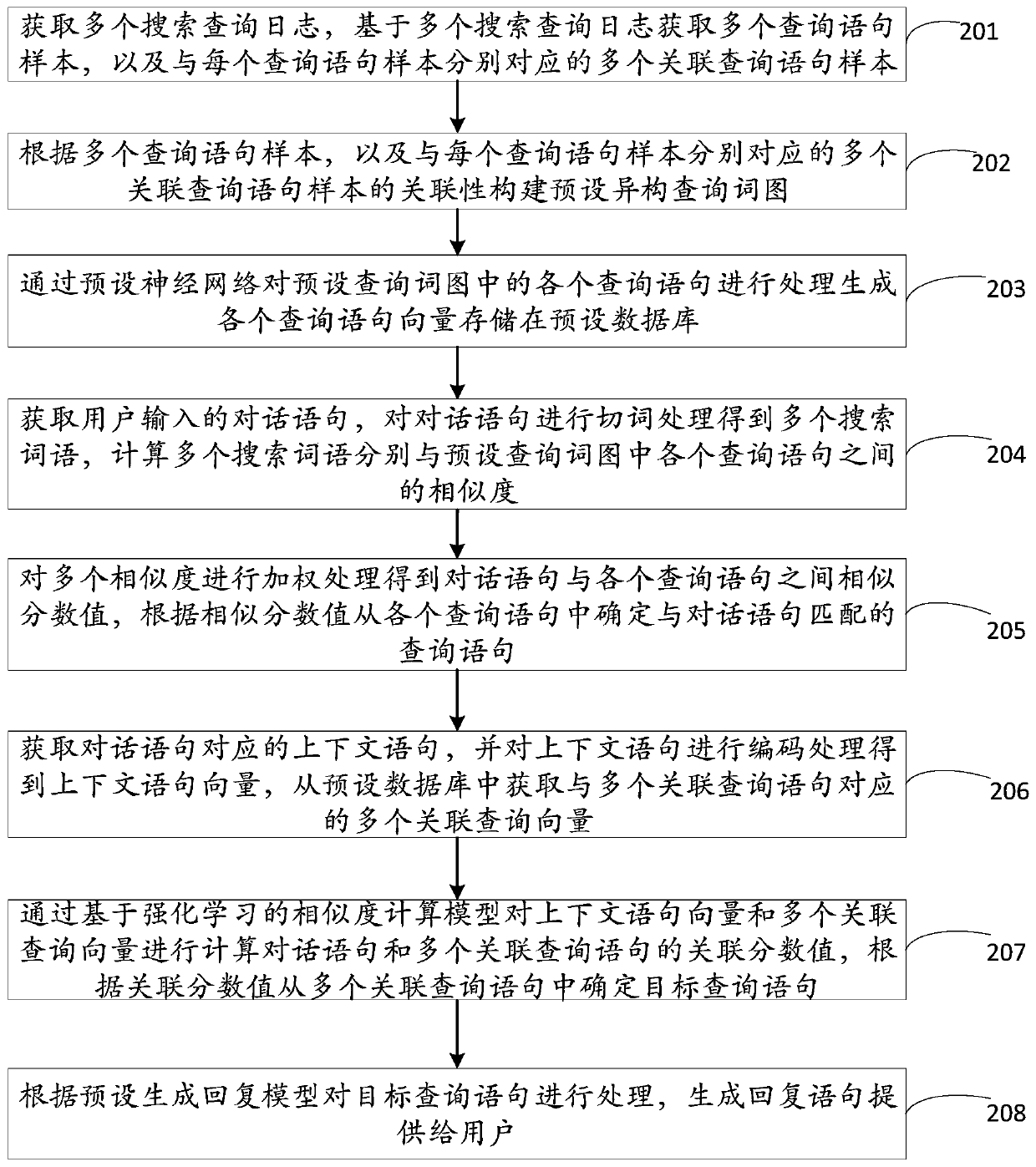

Man-machine conversation interaction method and device based on search data, and electronic equipment

Active | CN111177355A | Digital data information retrieval | Natural language data processing | Engineering | Data mining

The invention discloses a man-machine conversation interaction method and a device based on search data, and electronic equipment, and relates to the technical field of artificial intelligence, and the method comprises the steps: obtaining a conversation statement inputted by a user; obtaining a query statement matched with the dialogue statement, and obtaining a plurality of associated query statements corresponding to the query statement based on a preset query word graph; processing the dialogue statement and the plurality of associated query statements through a preset algorithm, and determining a target query statement from the plurality of associated query statements; and processing the target query statement according to a preset generation reply model, and generating a reply statement to be provided for the user. Thus, the technical problems that reply content in man-machine conversation is not rich enough and the conversation effect is not good enough are solved, a high-quality reply content candidate list is provided by querying the relevance of query statements in the word graph, and therefore richer content reflecting user interests is provided.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

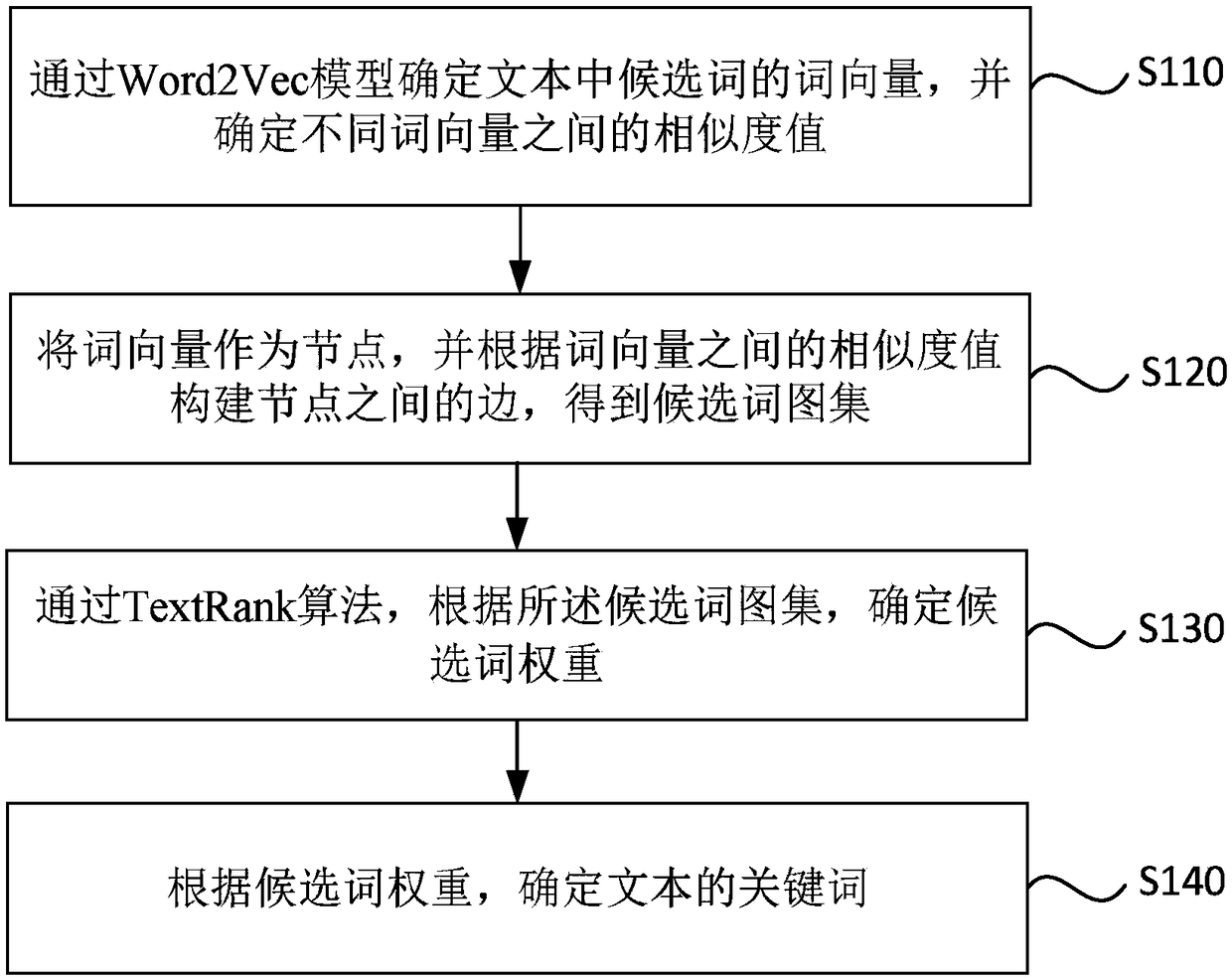

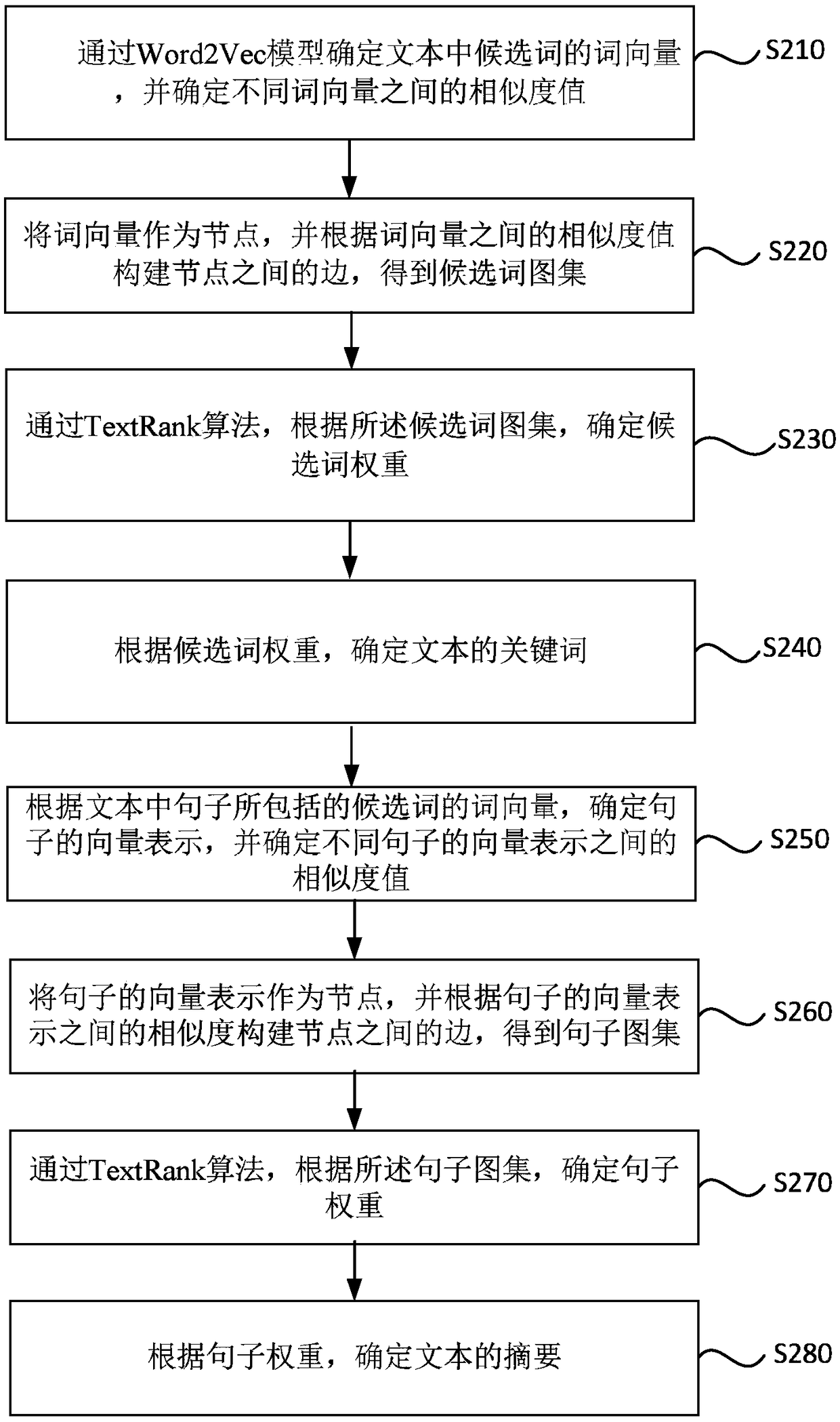

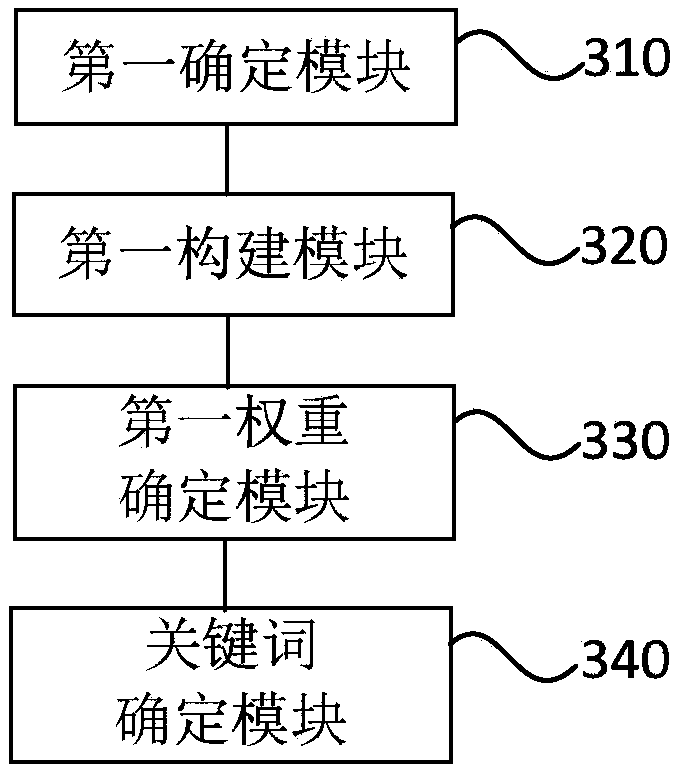

Text information extraction method and device, server and storage medium

Pending | CN109408826A | Reflect the relationship | Accurately and fully determine | Natural language data processing | Special data processing applications | Algorithm | Theoretical computer science

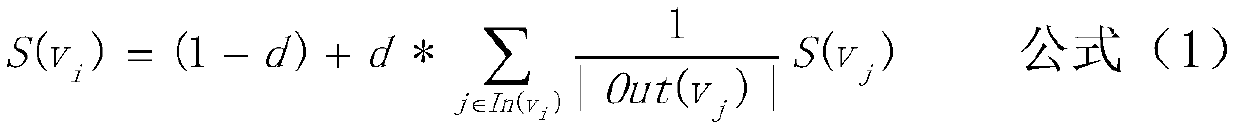

The embodiment of the invention provides a text information extraction method and device, a server and a storage medium. The method comprises: determining word vectors for the candidate words in a text through a Word2Vec model, and determining similarity values between the word vectors; taking the word vectors as nodes and constructing edges between nodes according to the similarity values to obtain a candidate word graph set; determining candidate word weights from the candidate word graph set through the TextRank algorithm; and determining the keywords of the text according to the candidate word weights. Converting candidate words into word vectors with a Word2Vec model lets them be represented by low-dimensional vectors, which improves processing efficiency; the similarity calculation and graph set construction reflect the associations between candidate words; and calculating the candidate word weights with the TextRank algorithm allows the keywords of the text to be determined more accurately and comprehensively.

Owner:RUN TECH CO LTD BEIJING
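
As an illustration of the similarity graph plus TextRank weighting outlined in the abstract above, here is a minimal pure-Python sketch. The two-dimensional vectors are hand-written stand-ins for Word2Vec output, and the similarity `threshold`, `damping` factor and iteration count are assumed values, not figures from the patent.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def textrank(vectors, threshold=0.5, damping=0.85, iterations=50):
    """Rank words on a graph whose edge weights are vector similarities."""
    words = list(vectors)
    weight = {w: {} for w in words}
    for i, a in enumerate(words):
        for b in words[i + 1:]:
            s = cosine(vectors[a], vectors[b])
            if s >= threshold:  # only sufficiently similar words are linked
                weight[a][b] = s
                weight[b][a] = s
    score = {w: 1.0 for w in words}
    for _ in range(iterations):
        new = {}
        for w in words:
            # Each neighbour passes on its score in proportion to edge weight.
            rank = sum(
                weight[n][w] / sum(weight[n].values()) * score[n]
                for n in weight[w]
            )
            new[w] = (1 - damping) + damping * rank
        score = new
    return sorted(score.items(), key=lambda kv: kv[1], reverse=True)


vectors = {
    "graph": [1.0, 0.0],
    "lattice": [0.9, 0.1],
    "node": [0.8, 0.3],
    "banana": [0.0, 1.0],
}
ranked = textrank(vectors)
```

The word `banana` is not similar enough to any other candidate to get an edge, so it receives only the baseline score and ranks last; the top-ranked words would be taken as keywords.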

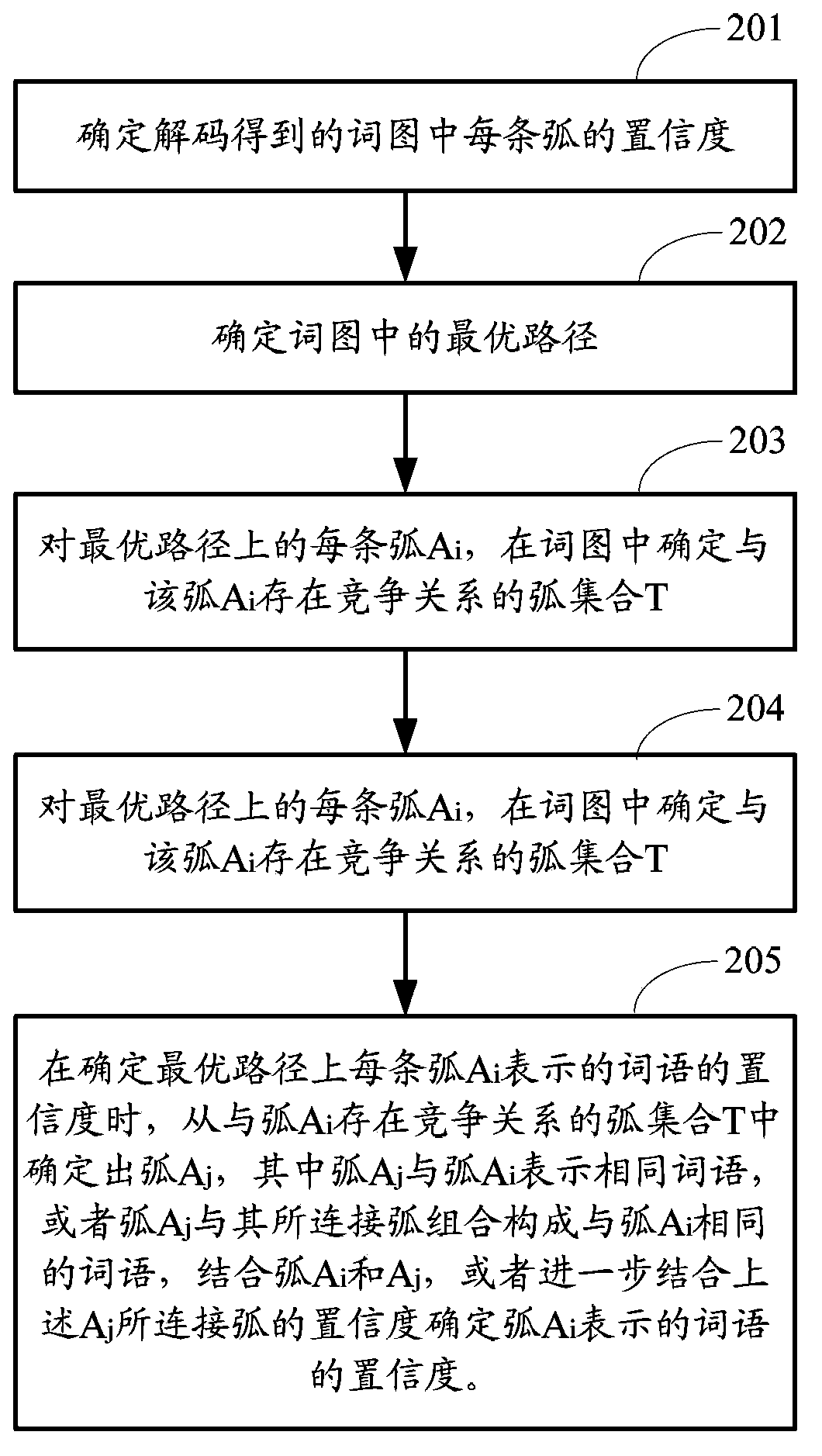

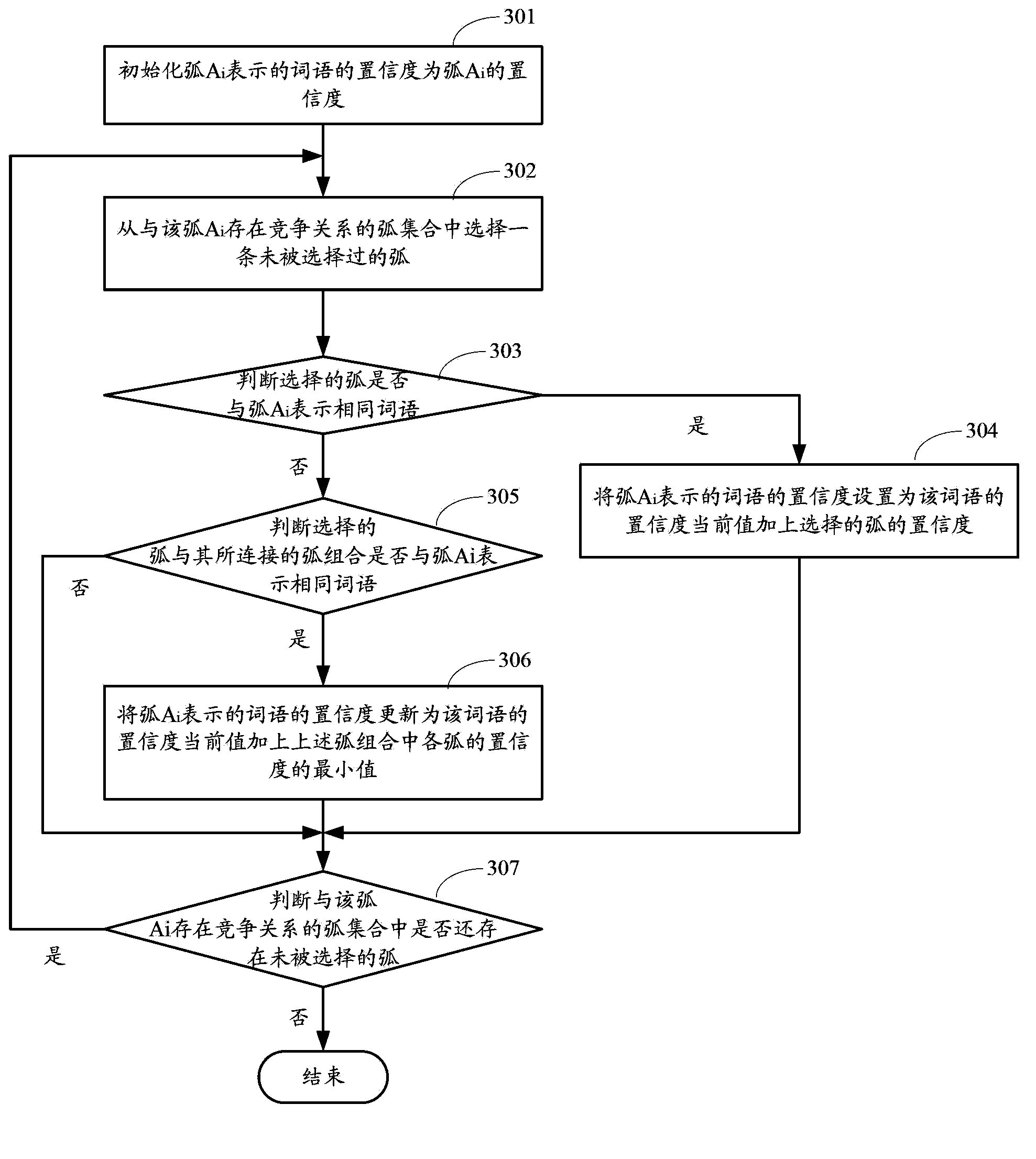

Method and device for determining confidence of voice recognition result

The invention provides a method and a device for determining the confidence of a voice recognition result. The method comprises the following steps: determining the confidence of each arc in a word graph obtained by decoding, and determining an optimal path in the word graph; for each arc Ai on the optimal path, determining the set T of arcs in the word graph that compete with Ai; when determining the confidence of the word represented by arc Ai, selecting from T an arc Aj such that Aj represents the same word as Ai, or such that Aj together with the arcs connected to it forms the same word as Ai; and determining the confidence of the word represented by Ai from the confidence of Ai and the confidence of Aj, or additionally from the confidence of the arcs connected to Aj. Because the components of a compound word are taken into account when determining the confidence of the recognition result, the confidence reflects the actual state more accurately.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

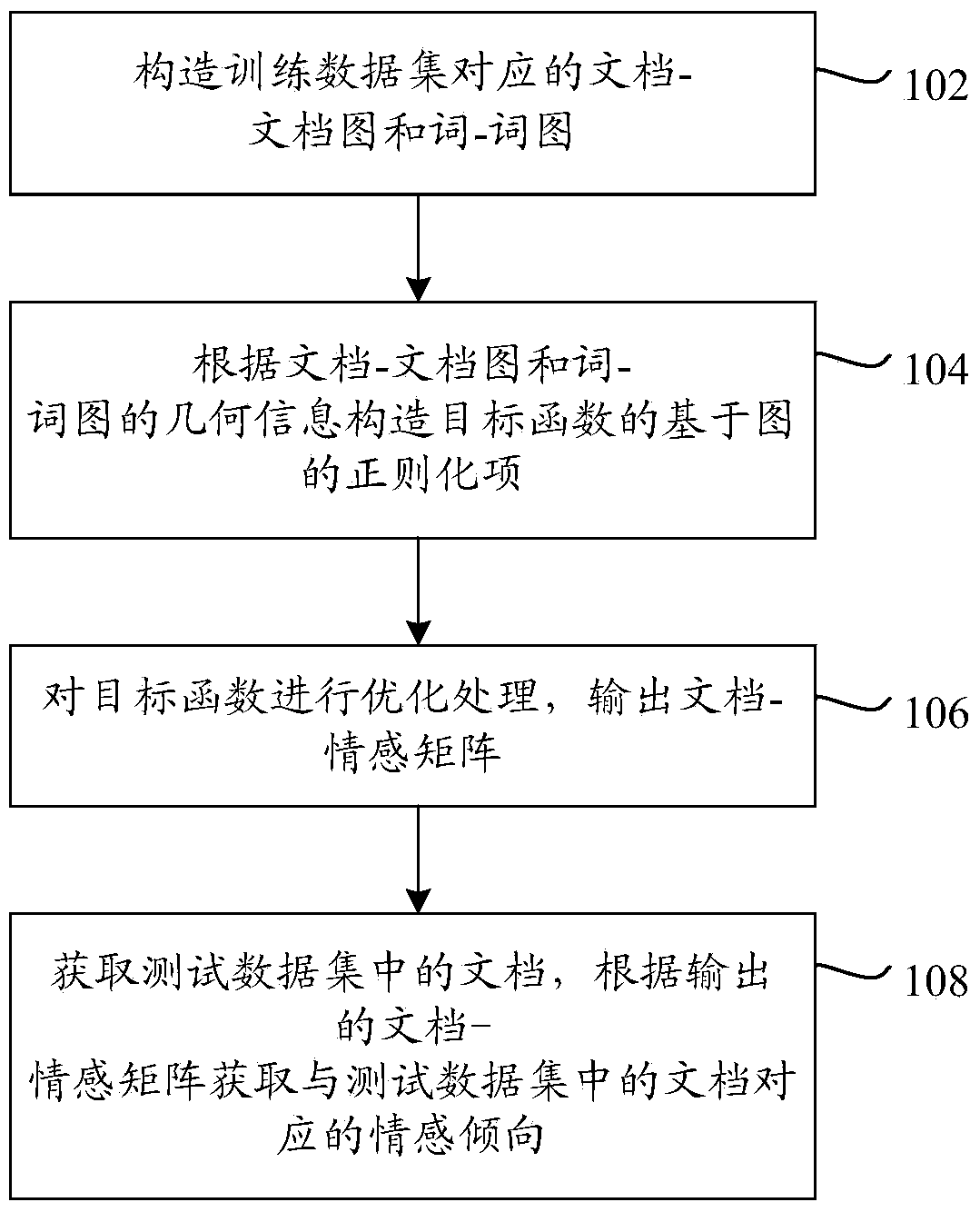

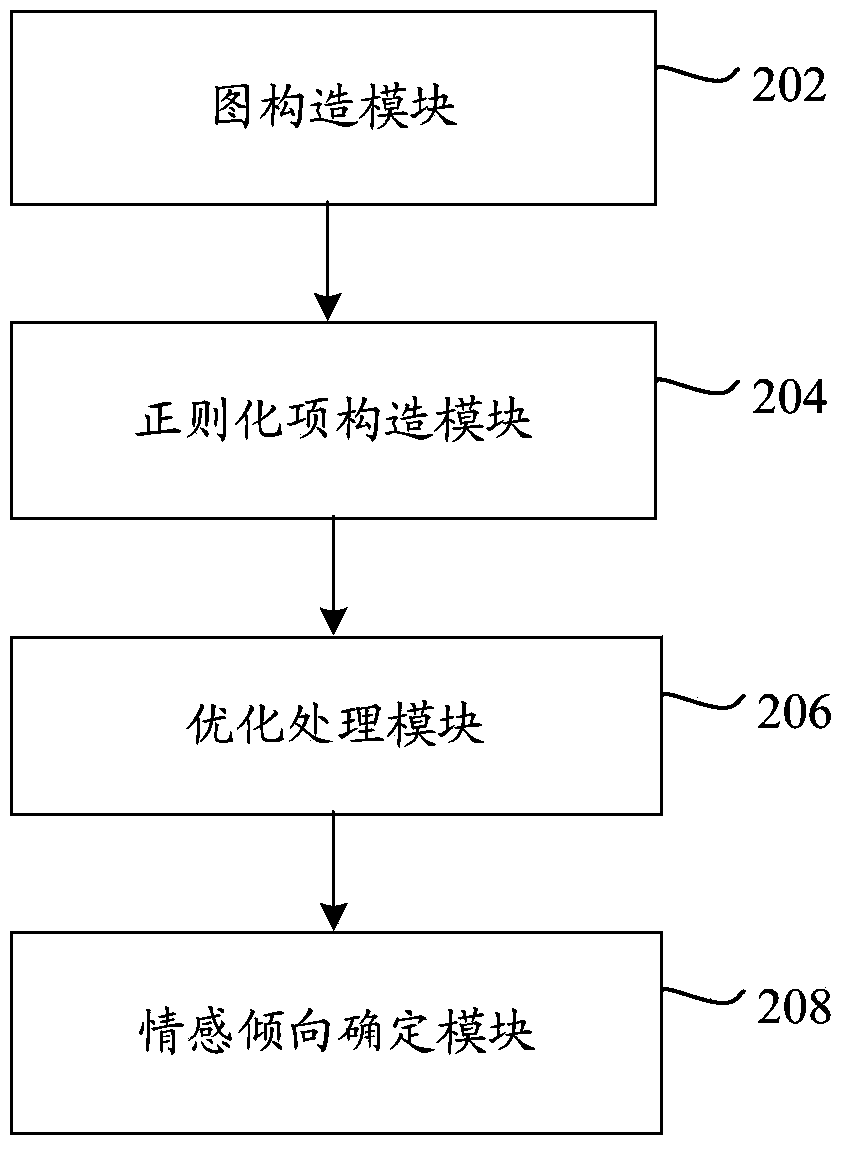

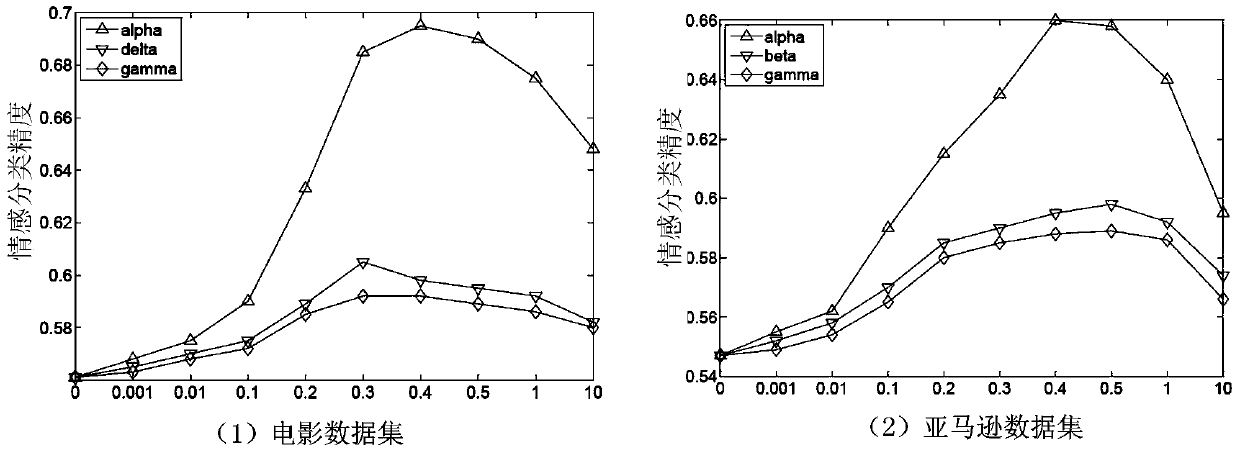

Emotion data classifying method and system

Active | CN104199829A | Improve classification accuracy | Accurate Emotional Tendency | Web data indexing | Special data processing applications | Data set | Algorithm

The invention provides an emotion data classifying method and system. The method comprises the steps that a document-document graph and a word-word graph corresponding to a training data set are established, in the document-document graph, nodes signify documents in the training data set, the geometrical information of edges signifies the relevance between the documents, in the word-word graph, nodes signify words in the training data set, and the geometrical information of edges signifies the relevance between the words; regularization items based on the graphs are established according to the geometrical information of the document-document graph and the word-word graph; an objective function is subjected to optimizing processing, and a document-emotion matrix is output; the documents in a testing data set are acquired, and the emotional tendency corresponding to the documents in the testing data set is acquired according to the document-emotion matrix. The emotion data classifying method and system are used, and the emotion classifying precision can be improved.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI +1

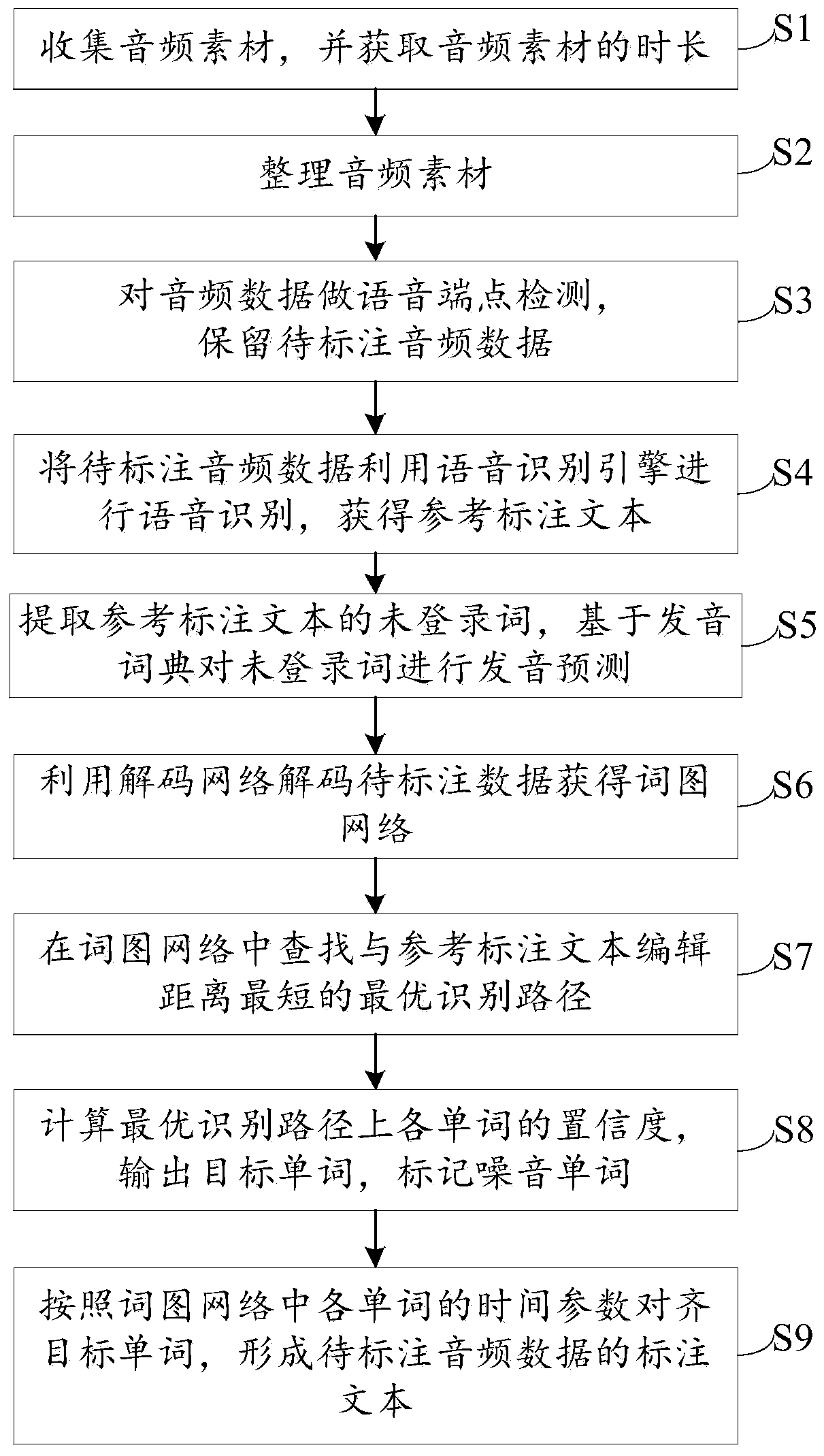

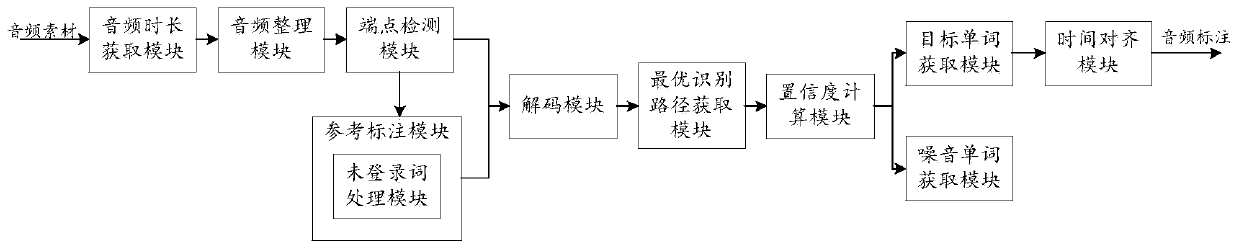

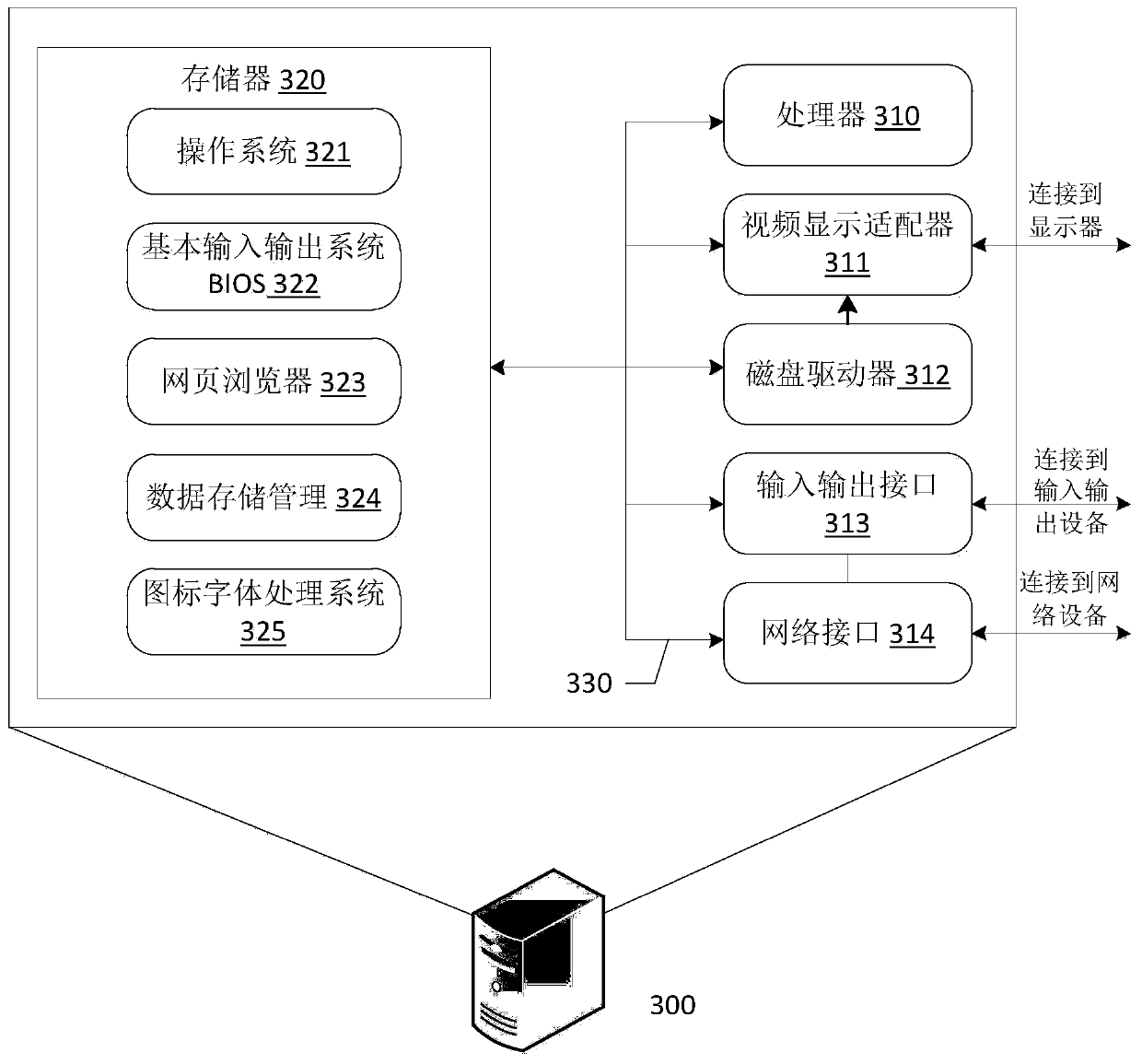

Audio data labeling method, device and system

Pending | CN111341305A | Improve labeling efficiency | Improve accuracy | Speech recognition | Text editing | Data Annotation

The invention discloses an audio data labeling method, device and system. The method comprises the following steps: performing voice recognition on the to-be-labeled audio data with a voice recognition engine to obtain a reference labeled text; searching the word graph network obtained by decoding the to-be-labeled audio data for the optimal recognition path, namely the one with the shortest edit distance from the reference labeled text; calculating the confidence of each word on the optimal recognition path, comparing it with a preset first confidence condition, and outputting the target words on the optimal recognition path that meet the first confidence condition; and aligning the target words according to the time parameter of each word in the word graph network to form a labeled text for the to-be-labeled audio data. The words in the word graph network are distinguished by confidence: words with high confidence are extracted to form the annotation text of the audio data, and words with low confidence are marked, so that audio data annotation is completed automatically and both annotation efficiency and annotation accuracy are improved.

Owner:SUNING CLOUD COMPUTING CO LTD
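
The path search in the abstract above, finding the word graph path with the shortest edit distance to a reference text, can be sketched as follows. For clarity this sketch simply enumerates every path through a small lattice, which would be too slow for real decoder output; the lattice format and function names are assumptions, and confidence filtering and time alignment are left out.

```python
def edit_distance(a, b):
    """Word-level Levenshtein distance between two sequences."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (x != y)))
        prev = cur
    return prev[-1]


def all_paths(lattice, node, end):
    """Yield every word sequence from node to end in an acyclic lattice."""
    if node == end:
        yield []
        return
    for word, nxt in lattice.get(node, []):
        for rest in all_paths(lattice, nxt, end):
            yield [word] + rest


def closest_path(lattice, start, end, reference):
    """Return the lattice path with the smallest edit distance to reference."""
    return min(
        all_paths(lattice, start, end),
        key=lambda path: edit_distance(path, reference),
    )


lattice = {
    0: [("turn", 1), ("burn", 1)],
    1: [("on", 2), ("in", 2)],
    2: [("the", 3)],
    3: [("light", 4), ("night", 4)],
}
reference = ["turn", "on", "a", "light"]
best = closest_path(lattice, 0, 4, reference)
```

A production system would more likely compute the same result with dynamic programming over the lattice instead of enumerating paths, since the number of paths grows exponentially with lattice size.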

Speech recognition system with huge vocabulary

Active | CN101326572A | Extended Speech Recognition System | Extended Adaptive Technology | Speech recognition | Word graph | Speech sound

The invention concerns speech recognition, for example a system for recognizing words in continuous speech. A speech recognition system is disclosed that is capable of recognizing a huge number of words, in principle even an unlimited number. The system comprises a word recognizer that derives a best path through a word graph, and words are assigned to the speech based on that best path. The word score is obtained by applying a phonemic language model to each word of the word graph. The invention also covers an apparatus and a method for identifying words from a sound block, and computer readable code for implementing the method.

Owner:NUANCE COMM INC

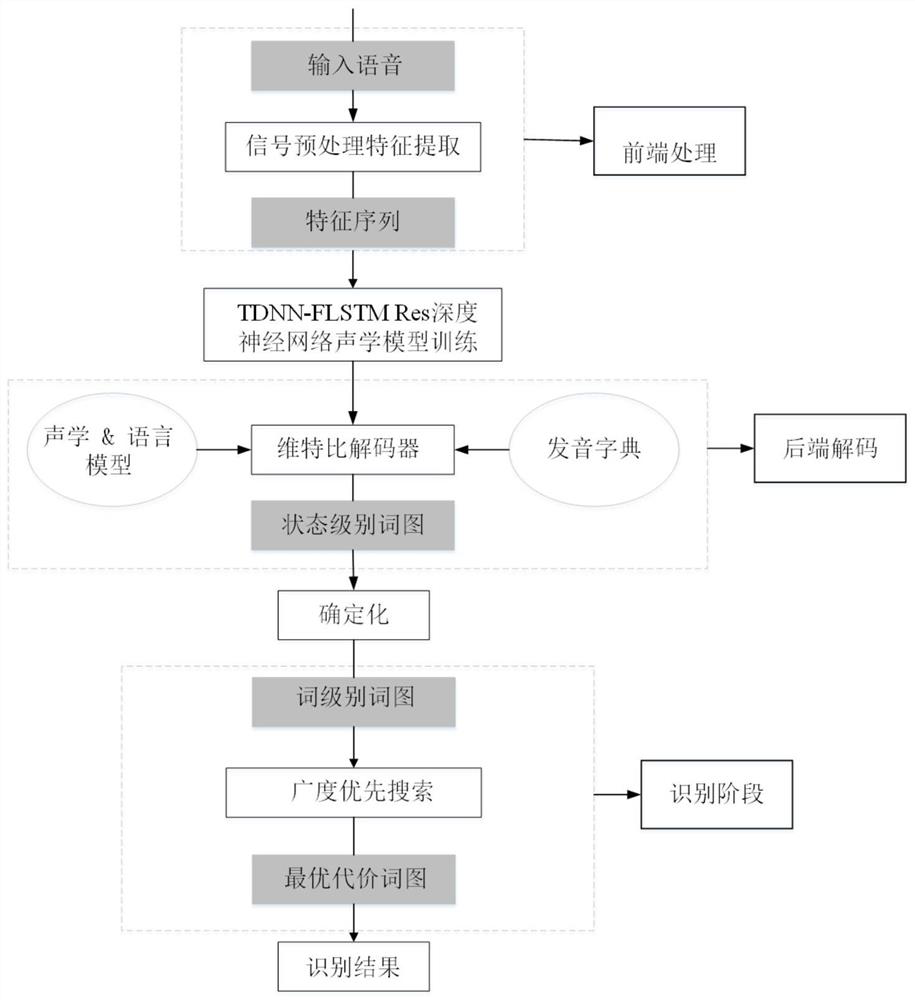

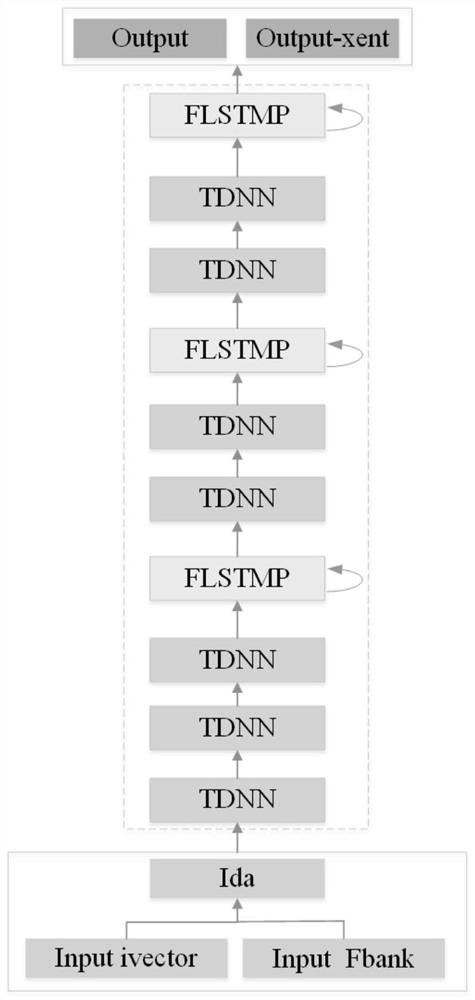

Voice recognition method and system based on deep neural network acoustic model

Pending | CN112927682A | Increase training speed | Small amount of calculation | Speech recognition | Pattern recognition | Network model

The invention discloses a voice recognition method and system based on a deep neural network acoustic model. The method comprises the steps of: applying sliding-window preprocessing to the speech to be recognized and extracting acoustic features; constructing and training a deep neural network acoustic model; calculating the likelihood probability corresponding to the extracted acoustic features with the deep neural network acoustic model; constructing a static decoding graph, from which a decoder, using the static decoding graph and the likelihood probabilities with a dynamic-programming Viterbi algorithm, builds a directed acyclic graph containing all recognition results as the decoding network; obtaining a state-level word graph from the decoding network and determinizing it to obtain a word-level word graph; and obtaining the optimal-cost path of the word-level word graph, taking the word sequence corresponding to its optimal state sequence as the final recognition result, which completes voice recognition. According to the abstract, the method addresses the vanishing and exploding gradients caused by a complex network structure, reduces the word error rate while preserving decoding speed, and improves recognition accuracy.

Owner:XI AN JIAOTONG UNIV

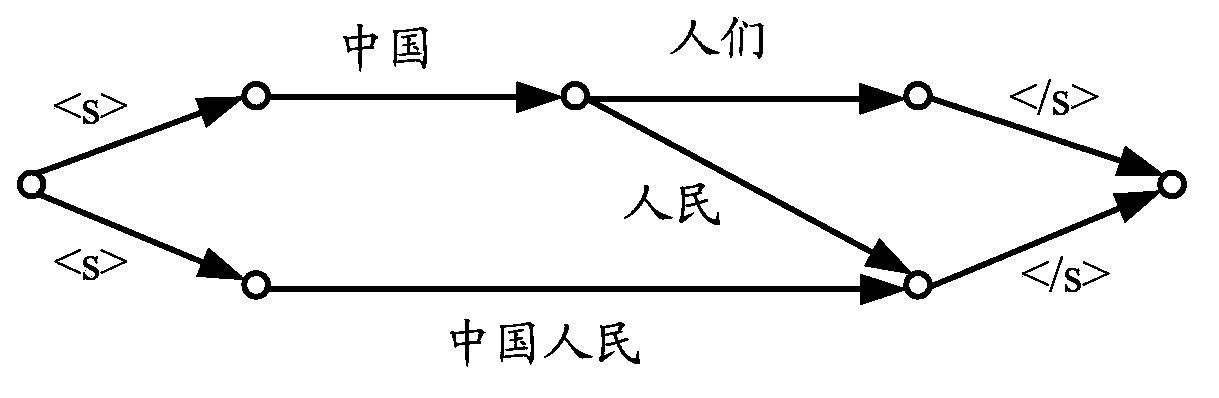

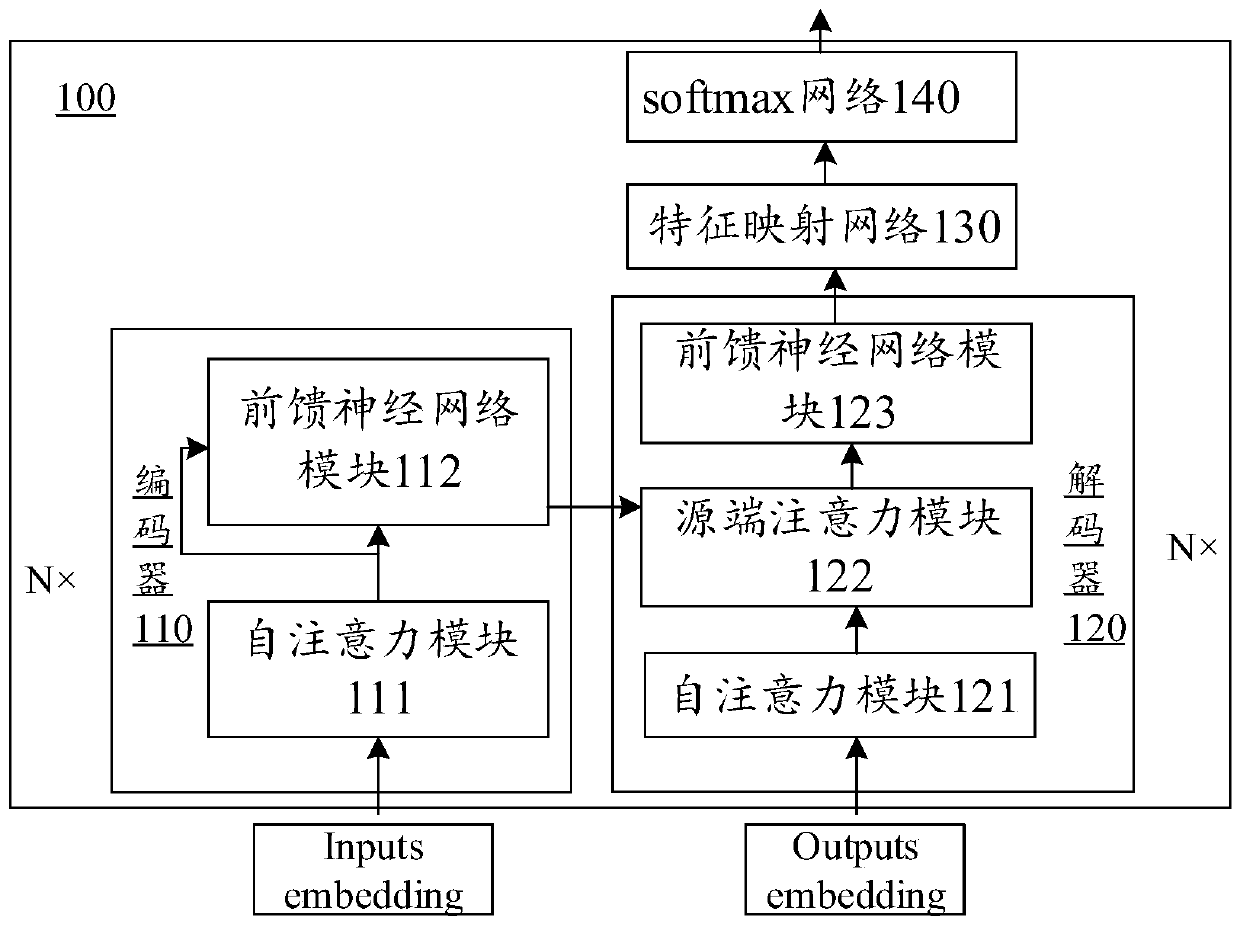

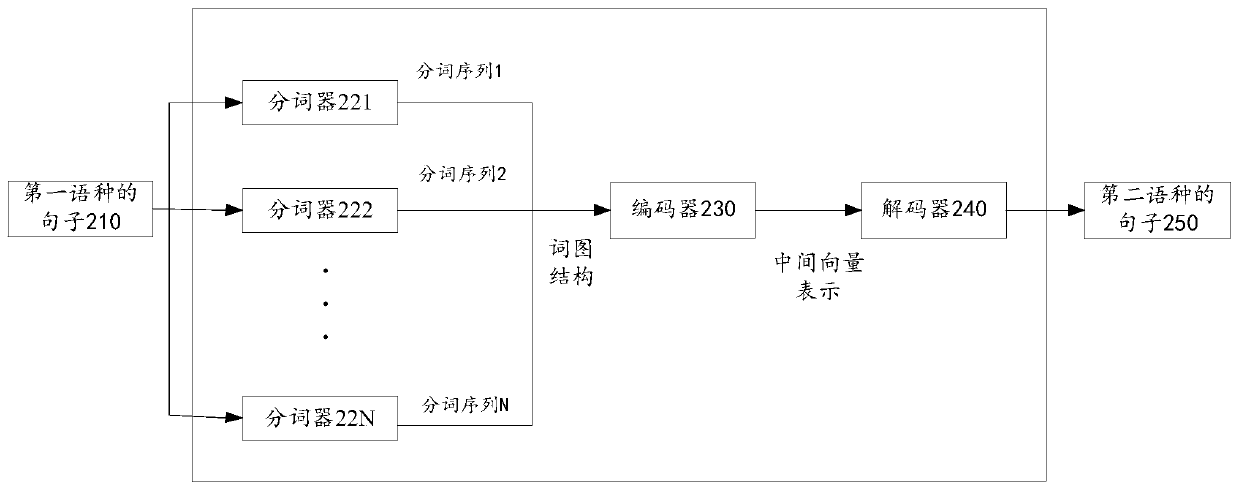

Translation method, device and equipment based on machine learning and storage medium

Active | CN110807335A | Improve translation accuracy | Natural language translation | Special data processing applications | Algorithm | Semantics

The invention discloses a translation method, device and equipment based on machine learning, and a storage medium, and relates to the field of artificial intelligence. The method comprises the steps of: obtaining a sentence of a first language type; dividing the sentence into at least two word segmentation sequences using different word segmenters; generating a word graph structure of the sentence from the at least two word segmentation sequences; calling an encoder to convert the word graph structure into an intermediate vector representation of the sentence; and calling a decoder to convert the intermediate vector representation into a sentence of a second language type. Because the word graph covers multiple possible segmentations of the sentence, it mitigates the problem of a wrong segmentation introducing incorrect semantics or ambiguity and causing irreparable damage to the meaning of the sentence, and the translation accuracy of the machine translation model is improved.

Owner:TENCENT TECH (SHENZHEN) CO LTD
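
The word graph structure in the abstract above, built from several segmentations of the same sentence, can be illustrated with a short sketch. The representation as `(start, end, word)` edges over character positions and the name `build_lattice` are assumptions for this example; the encoder and decoder are not shown.

```python
def build_lattice(sentence, segmentations):
    """Merge several segmentations of one sentence into a set of lattice edges.

    Each edge is (start_char, end_char, word); nodes are character positions.
    """
    edges = set()
    for words in segmentations:
        if "".join(words) != sentence:
            raise ValueError("segmentation does not cover the sentence")
        pos = 0
        for word in words:
            edges.add((pos, pos + len(word), word))
            pos += len(word)
    return sorted(edges)


sentence = "南京市长江大桥"
lattice = build_lattice(
    sentence,
    [["南京市", "长江大桥"], ["南京", "市长", "江大桥"]],
)
```

Both readings of the sentence survive as alternative paths from position 0 to position 7, so a downstream model is not committed to whichever single segmenter happened to be wrong.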

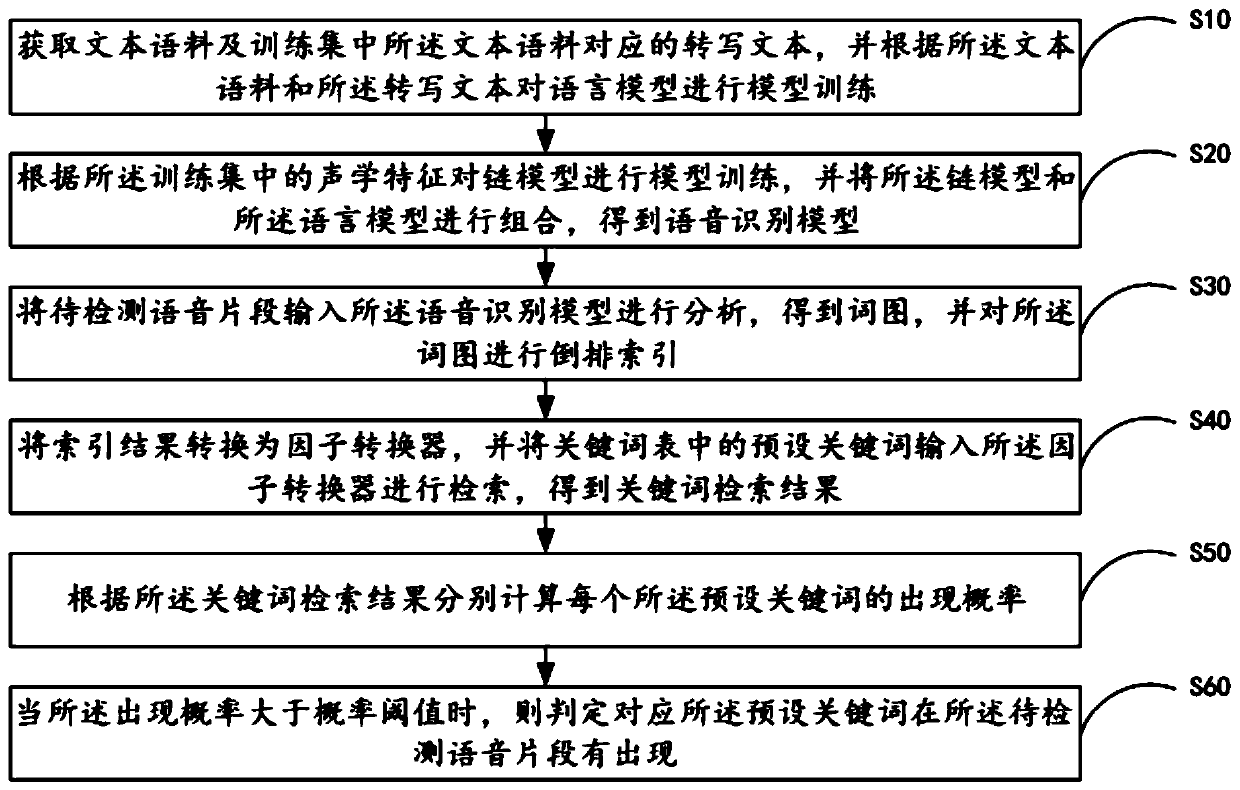

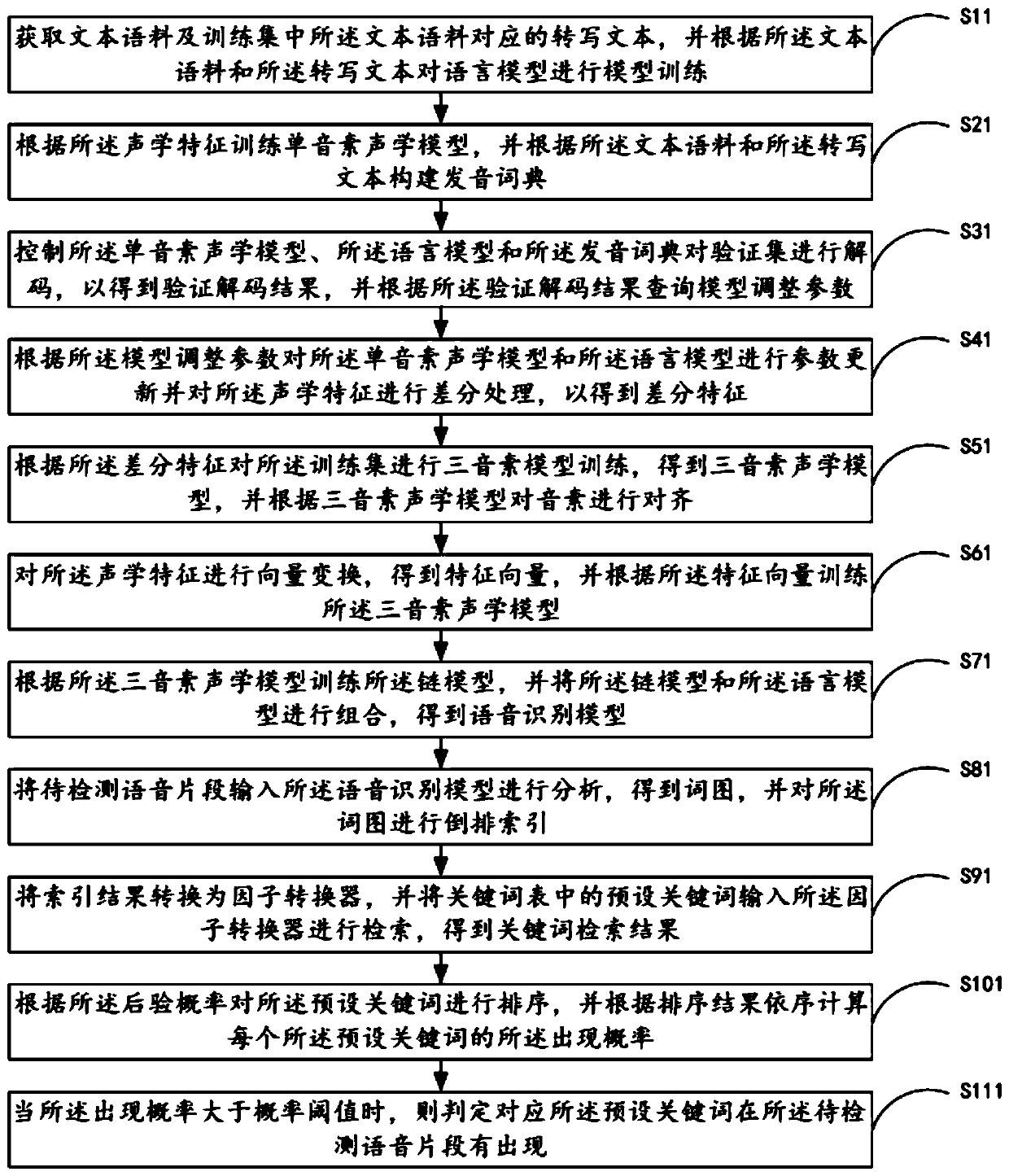

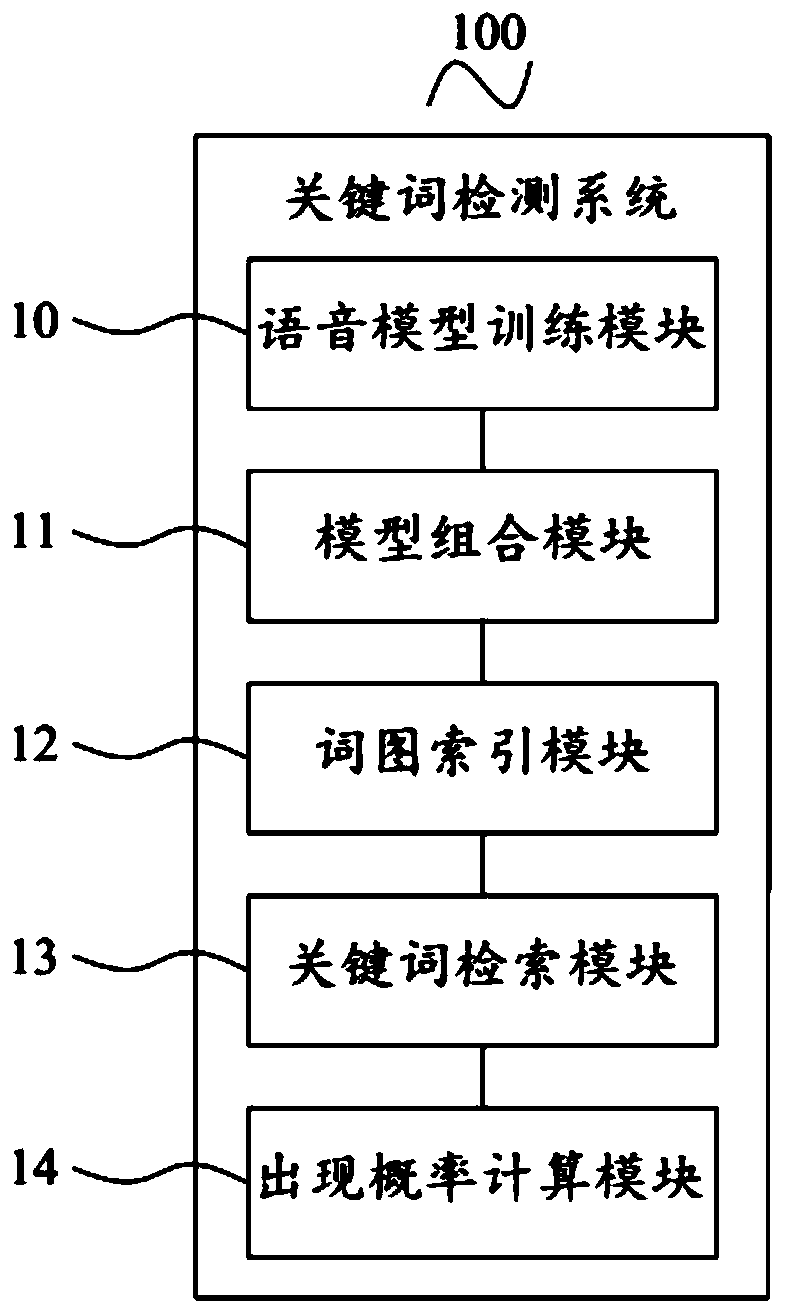

Keyword detection method and system, mobile terminal and storage medium

Active | CN111429912A | Avoid false detection cases | Improve accuracy | Speech recognition | Machine learning | Transliteration | Word graph

The invention provides a keyword detection method and system, a mobile terminal and a storage medium. The method comprises the steps of: acquiring a text corpus and transliteration text to train a language model; training a chain model on the acoustic features in a training set, and combining the chain model with the language model to obtain a speech recognition model; inputting a to-be-detected speech segment into the speech recognition model for analysis to obtain a word graph, and performing inverted indexing on the word graph; converting the index result into a factor converter, and inputting a preset keyword into the factor converter for retrieval to obtain a keyword retrieval result; and calculating the occurrence probability of the preset keyword from the keyword retrieval result, and judging that the preset keyword occurs in the to-be-detected speech segment when the occurrence probability is greater than a probability threshold. Because the speech recognition model decodes the to-be-detected speech segment into a word graph, keyword detection errors caused by speech recognition errors are avoided, and the accuracy of keyword detection is improved.

Owner:XIAMEN KUAISHANGTONG TECH CORP LTD

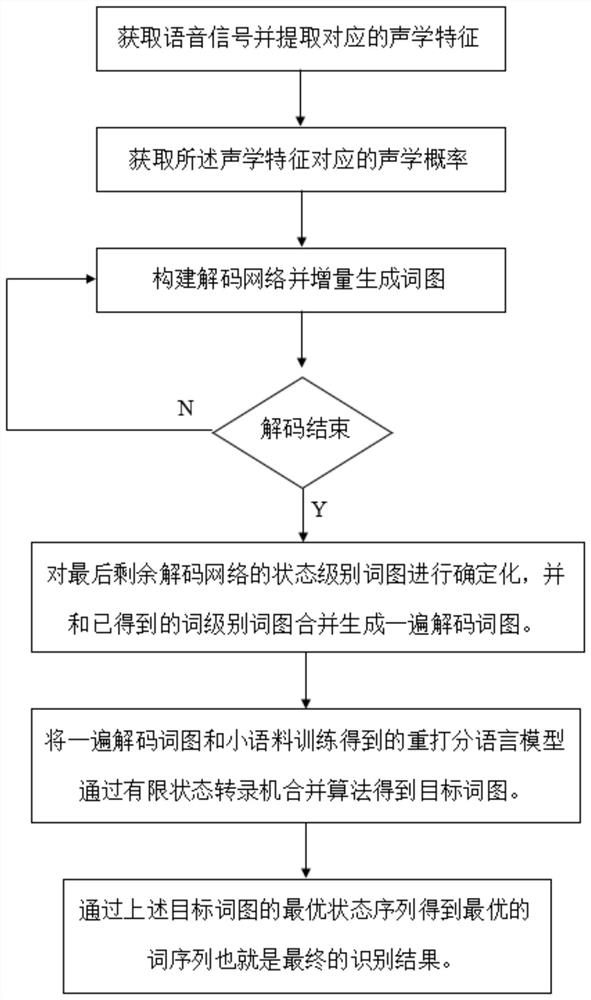

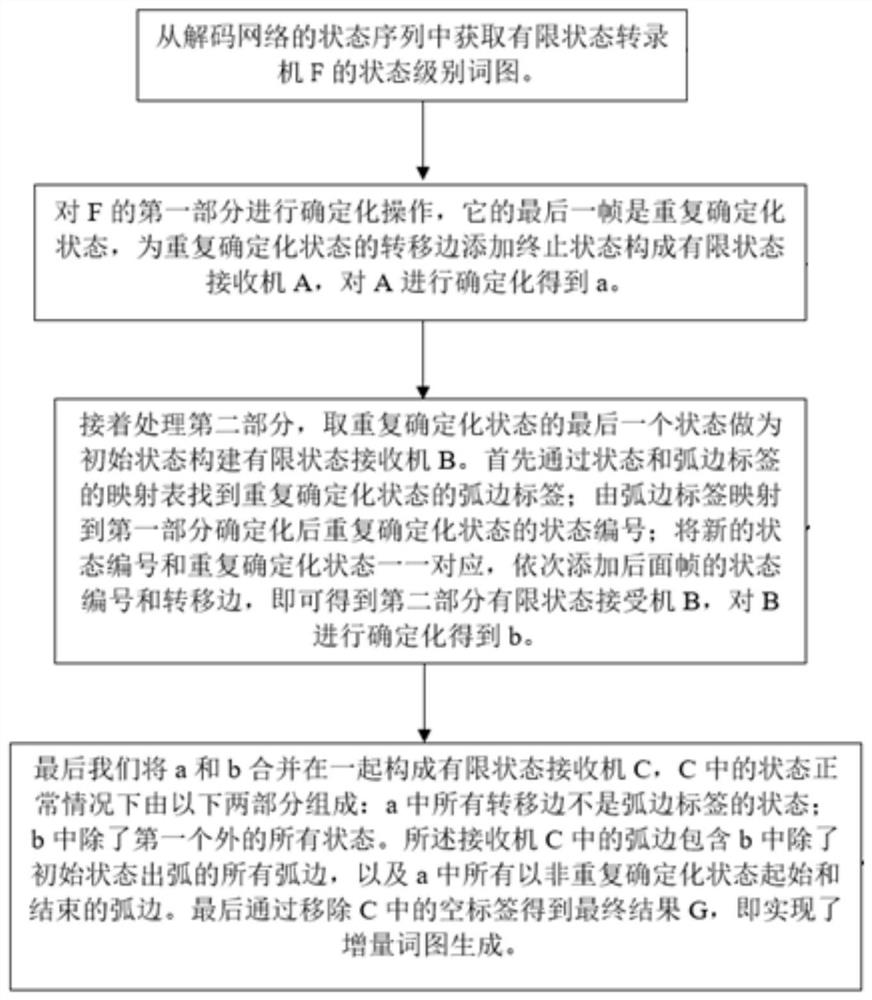

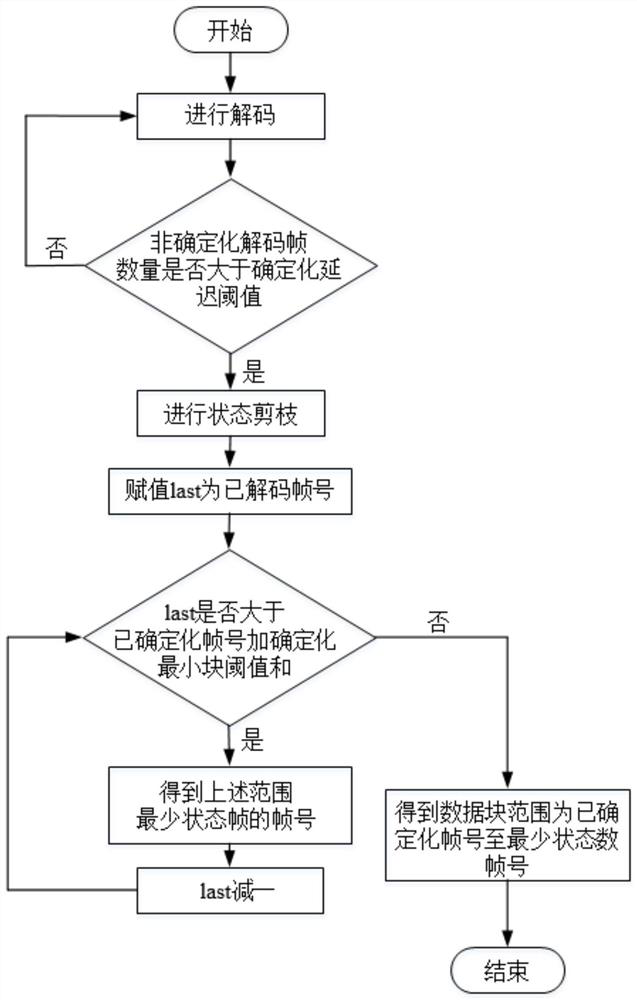

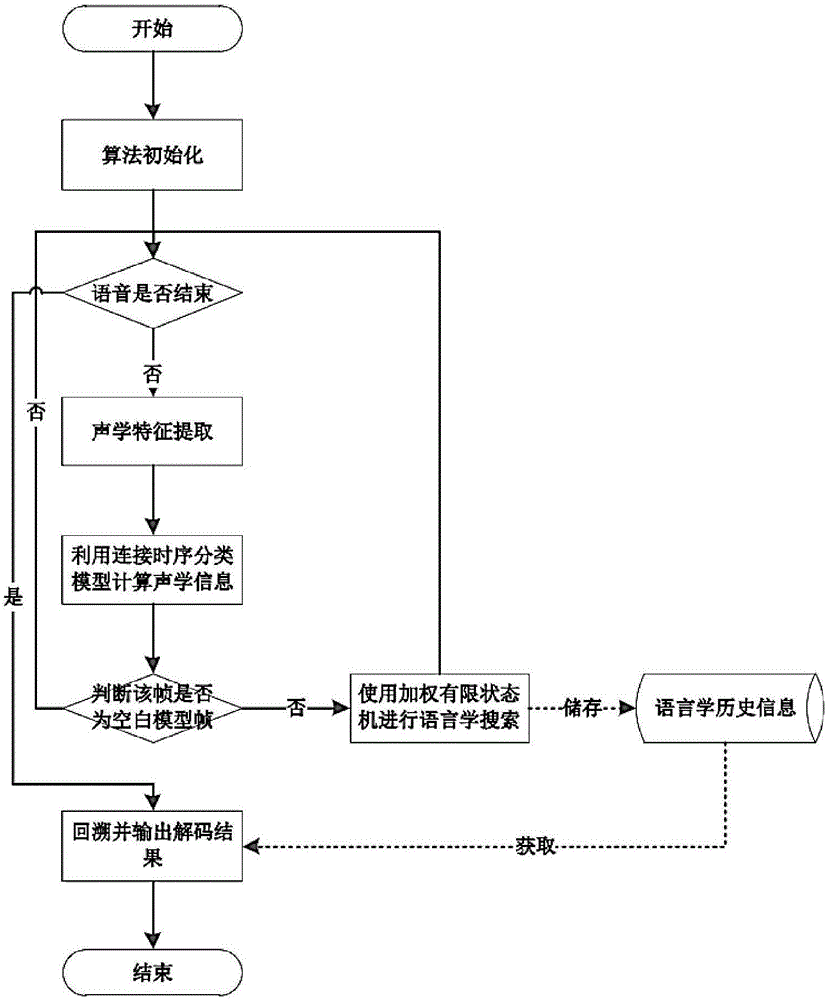

Voice recognition method and system based on incremental word graph re-scoring

The invention discloses a voice recognition method and system based on incremental word graph re-scoring. The method comprises the steps of: obtaining a to-be-recognized voice signal and extracting acoustic features; calculating the likelihood probability corresponding to the acoustic features with a trained acoustic model; having a decoder construct the corresponding decoding network, obtain a state-level word graph from the decoding network, and obtain a word-level word graph by updating and determinizing it; determinizing the state-level word graphs of the remaining decoding network and combining them with the word-level word graphs already obtained to generate a decoded word graph; obtaining a target word graph through a finite-state transducer merging algorithm from the first-pass decoded word graph and a re-scoring language model trained on a small corpus; and obtaining the optimal-cost path of the target word graph, then the corresponding word sequence, which is taken as the final recognition result. According to the abstract, the amount of determinization work left after an ordinary decoder finishes decoding is reduced, which speeds up decoding, and the word error rate of speech recognition in a specific scenario is reduced, which improves accuracy.

Owner:XI AN JIAOTONG UNIV

Finger knitting auxiliary method using computer drawing

Inactive | CN1737809A | Realize drawing | Hand lacing/braiding | Special data processing applications | Tight frame | Auxiliary memory

This invention discloses one computer assistant hand knitting method, which comprises the following steps: opening Word graph process on Windows platform and inputting fonts and graph formed pattern; pre-processing step by the amplifying, color filling and extending in tool box. The steps comprise form process, frame unit copy process and the finally graph printing step.

Owner:刘立宏

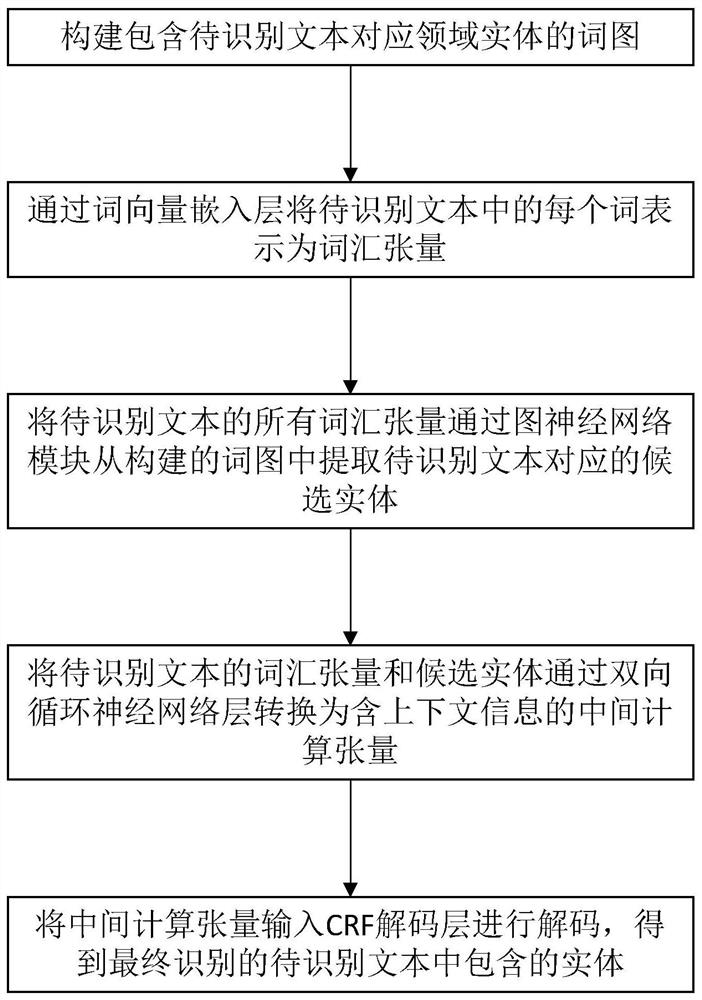

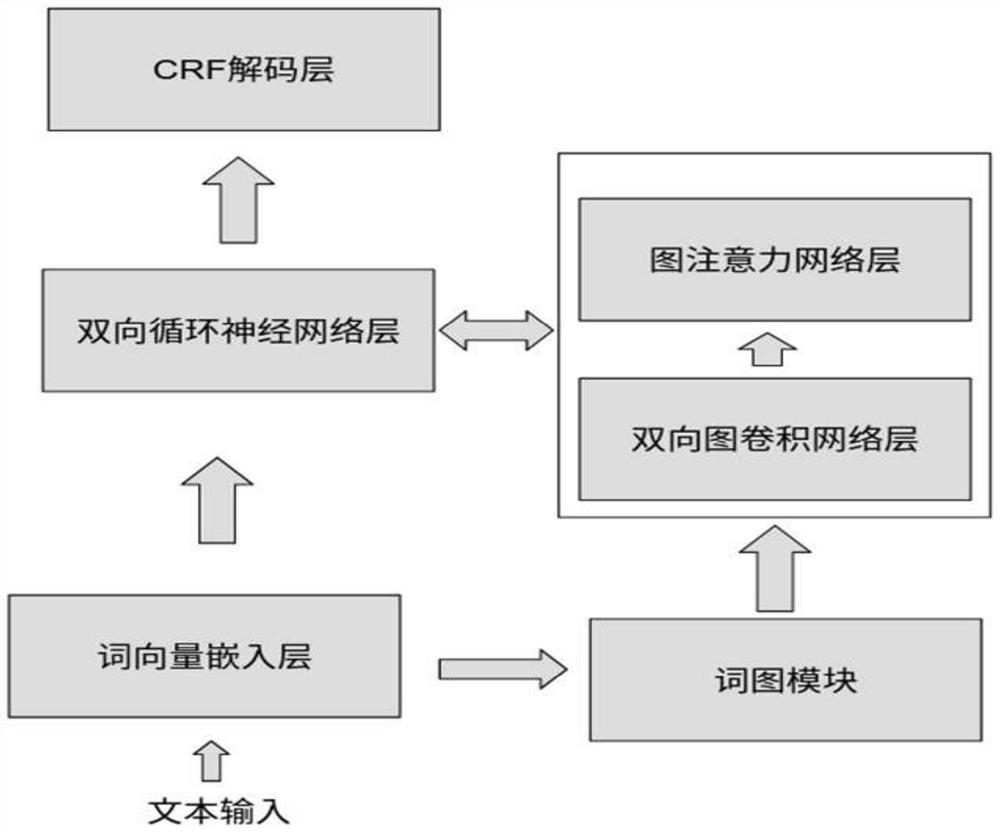

Entity identification method, terminal equipment and storage medium

Active | CN112101031A | Realize identification | Reduce the impact of accuracy | Natural language data processing | Other databases indexing | Algorithm | Theoretical computer science

The invention relates to an entity recognition method, terminal equipment and a storage medium. The method comprises the steps of: S1, constructing a word graph containing the domain entities corresponding to a to-be-recognized text; S2, expressing each word in the to-be-recognized text as a vocabulary tensor through a word vector embedding layer; S3, extracting candidate entities corresponding to the to-be-recognized text from the constructed word graph through a graph neural network module according to all vocabulary tensors of the to-be-recognized text, wherein the graph neural network module comprises a graph attention network layer and a bidirectional graph convolutional network layer; S4, converting the vocabulary tensors and candidate entities of the to-be-recognized text into an intermediate calculation tensor containing context information through a bidirectional recurrent neural network layer; and S5, inputting the intermediate calculation tensor into a CRF decoding layer for decoding to obtain the entities contained in the to-be-recognized text. According to the method, the secondary graph structure of the entity boundary is modeled, and the relationship between the entity boundary and the graph neural network is analyzed, so that the influence of insufficient entity boundary judgment on result accuracy is reduced.

Owner:厦门渊亭信息科技有限公司

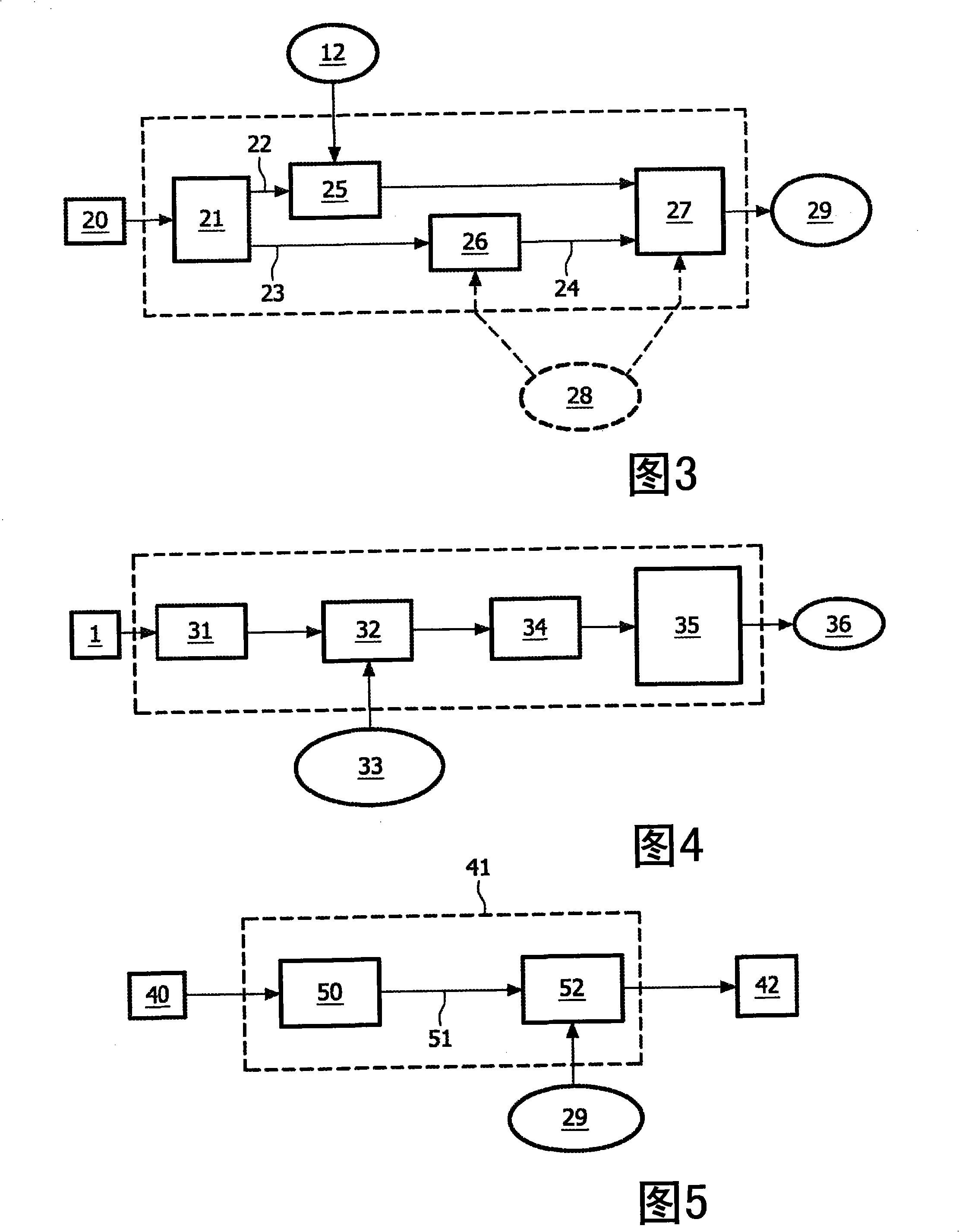

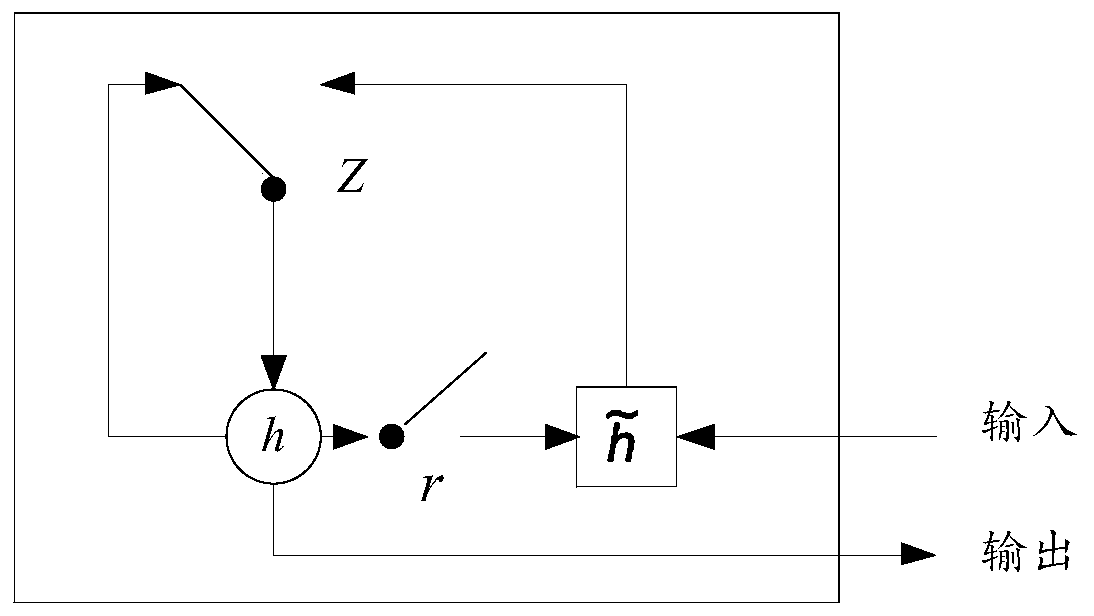

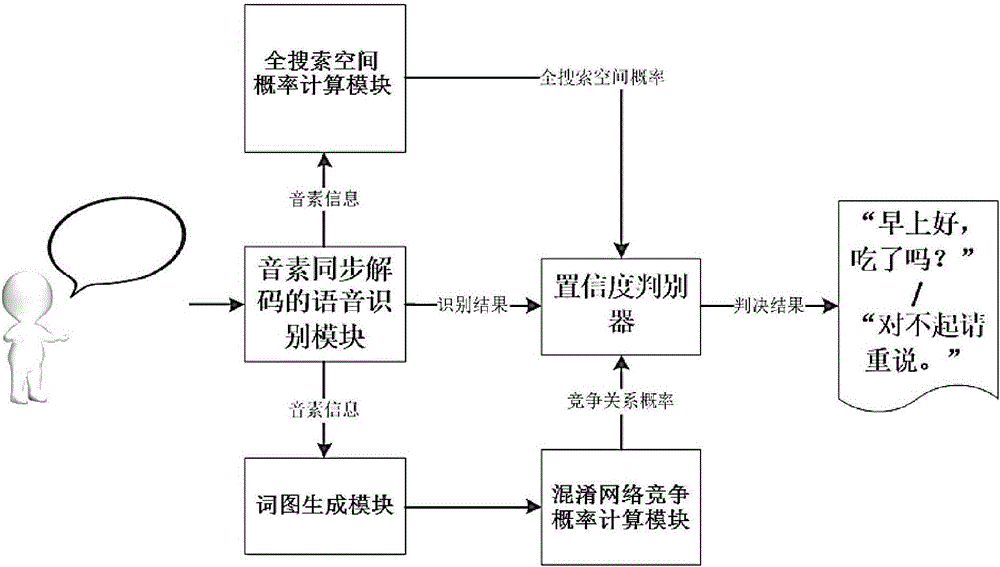

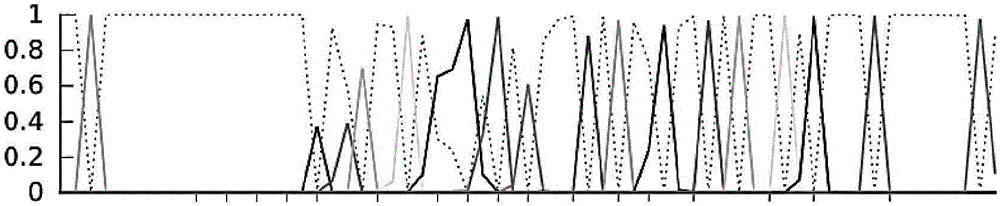

Voice recognition implementing method and system based on confidence coefficient

Active | CN106782513A | Phoneme synchronization decoding is accurate | Probability Confusion Network Competition Probability Accuracy | Speech recognition | Speech identification | Language model

The invention relates to a voice recognition implementing method and system based on a confidence coefficient. The method comprises the following steps: performing phoneme-synchronous decoding on the user's speech to obtain a word graph acoustic information structure synchronized with the phonemes generated during decoding; generating a confusion network from the word graph acoustic information structure so as to establish the competitive relation between candidate recognition results, namely the competition probability of the confusion network; meanwhile, establishing the full search space of voice recognition using an auxiliary search network based on a language model; calculating a complete, lossless full search space probability by recording the search process that phoneme-synchronous decoding carries out over the full search space and tracing paths back through the whole search history; and finally, fusing the competition probability of the confusion network with the full search space probability to obtain the judgment result of voice recognition. On the one hand, a correct confidence coefficient can be given for a voice recognition result, improving the user experience of voice recognition; on the other hand, the computation and memory consumed by the confidence algorithm can be reduced remarkably.

Owner:AISPEECH CO LTD

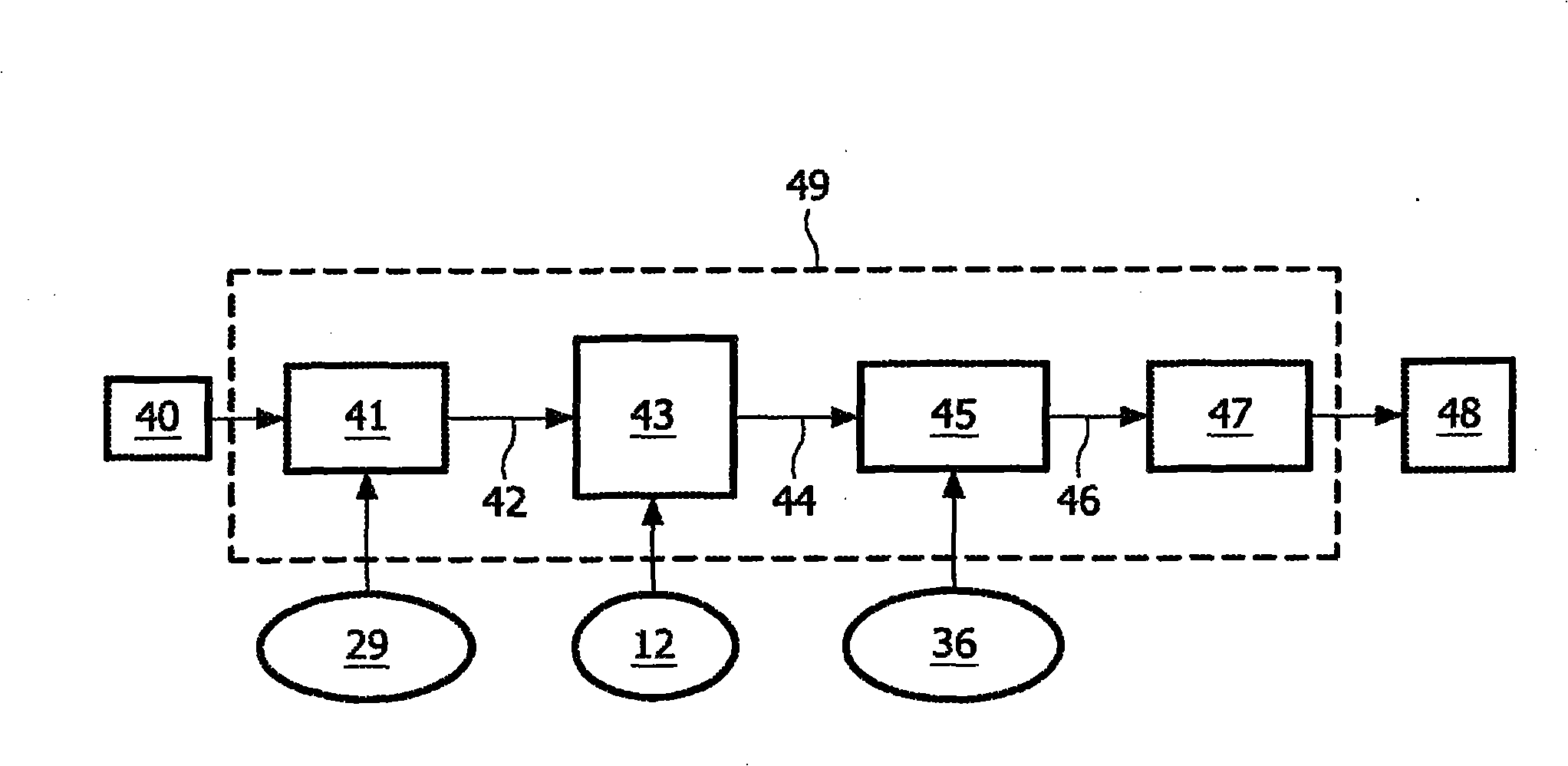

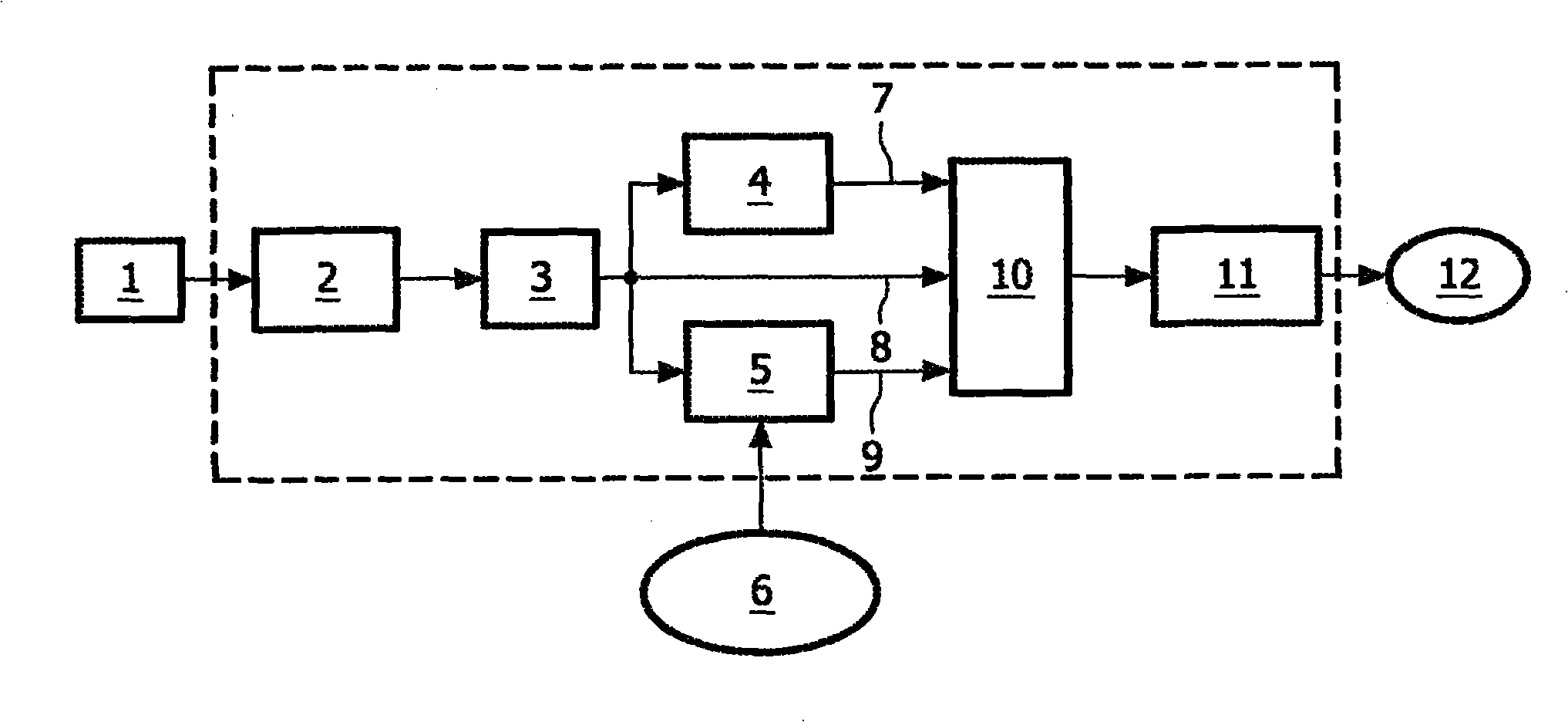

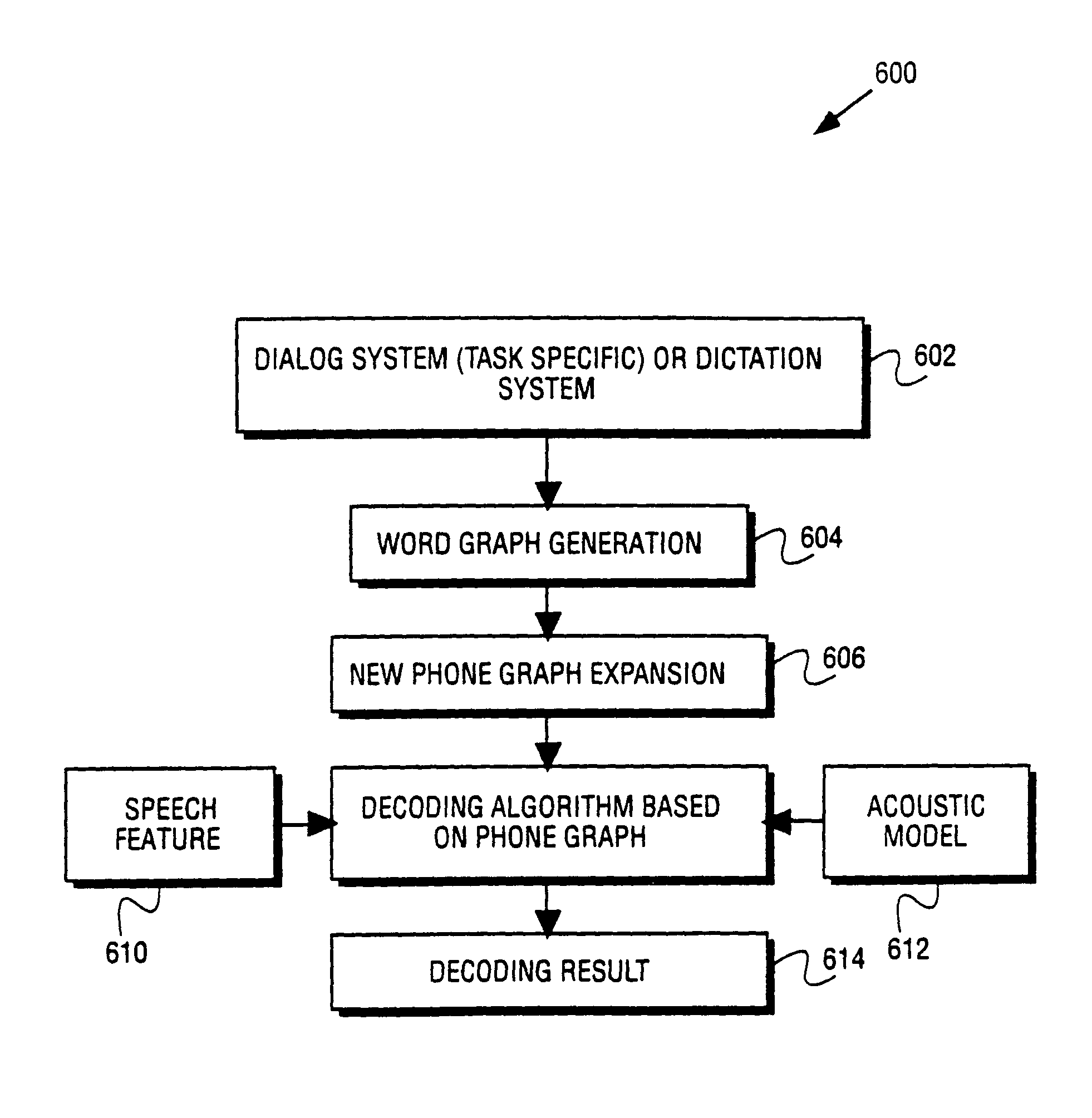

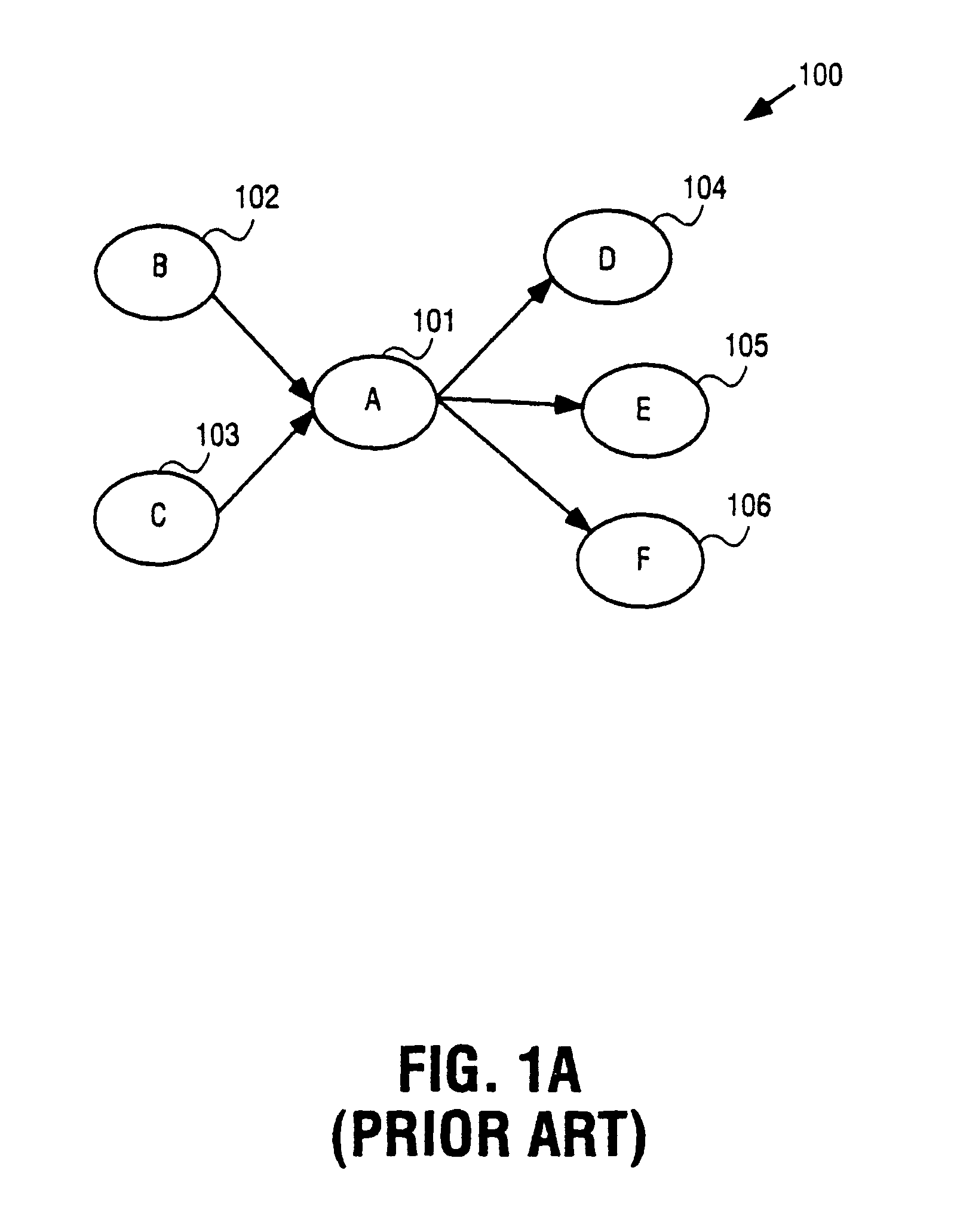

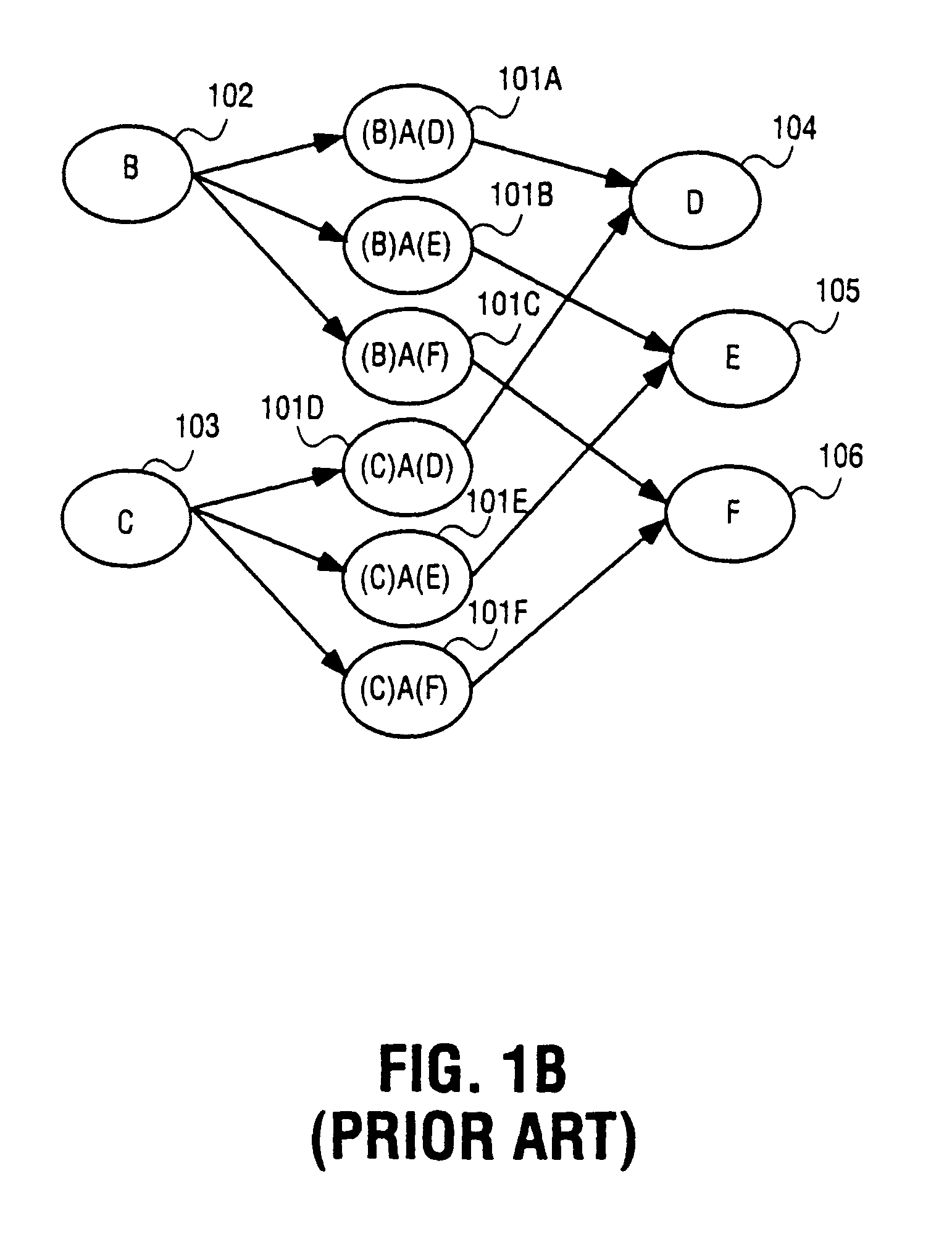

Method and system for expanding a word graph to a phone graph based on a cross-word acoustical model to improve continuous speech recognition

A method and system that expands a word graph to a phone graph. An unknown speech signal is received. A word graph is generated based on an application task or based on information extracted from the unknown speech signal. The word graph is expanded into a phone graph. The unknown speech signal is recognized using the phone graph. The phone graph can be based on a cross-word acoustical model to improve continuous speech recognition. By expanding a word graph into a phone graph, the phone graph can consume less memory than the word graph and can greatly reduce the computation cost of decoding compared with the word graph, thus improving system performance. Furthermore, the continuous speech recognition error rate can be reduced by using the phone graph, which provides a more accurate graph for continuous speech recognition.

Owner:INTEL CORP

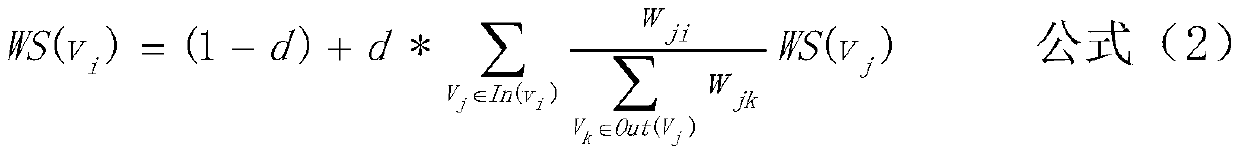

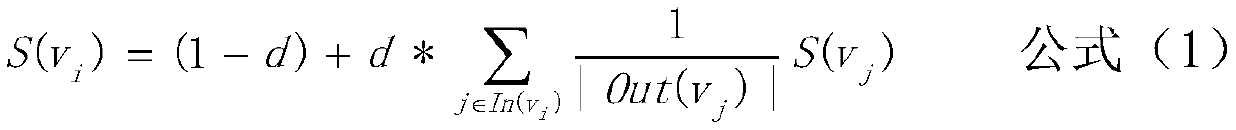

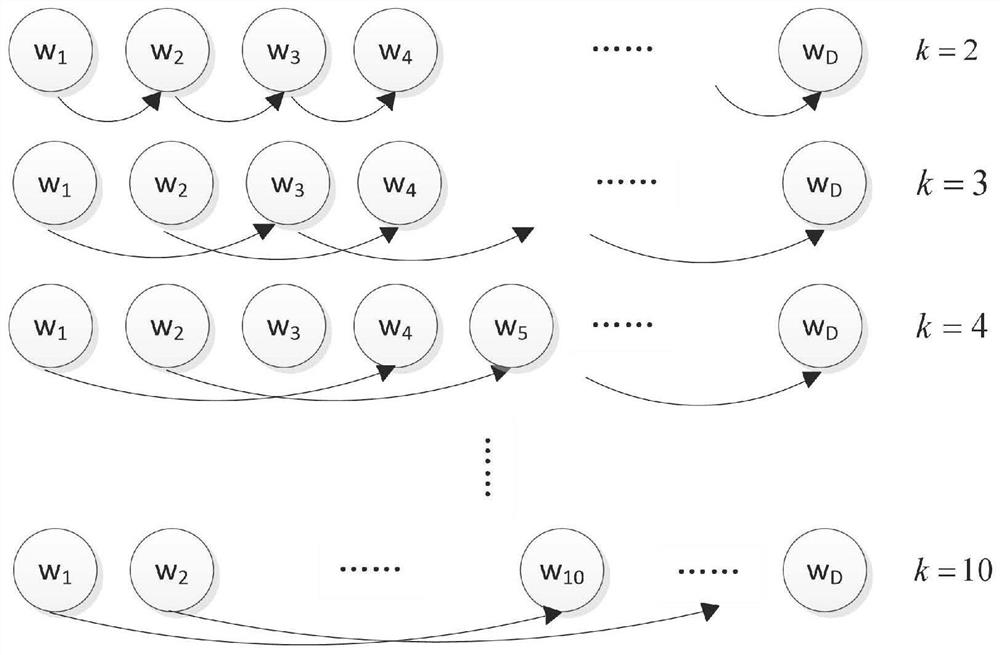

Multi-factor fused textrank keyword extraction algorithm

Pending | CN110728136A | Small local feature influence | Global features have a significant impact | Natural language data processing | Algorithm | Theoretical computer science

The invention relates to the technical field of natural language processing, and especially to a multi-factor fused TextRank keyword extraction algorithm. Five factors influence the TextRank keyword extraction algorithm: word coverage, word position, word frequency, word length and word span. The findings are as follows: (1) global factors outweigh local factors in the keyword extraction process; (2) the influence weights increase in the order word coverage, word length, word frequency, word span, word position; (3) the influence weights of word coverage and word length are roughly equal, as are those of word span and word frequency, so when keywords are extracted with the TextRank algorithm only two factors, word position and word span, need to be considered, in a ratio of 7:3; (4) because computing the word span requires traversing the text again after the word graph has been built, it costs additional running time; if the running speed of the algorithm is critical, word span can be replaced by word frequency, which degrades the extraction result slightly but still gives good results.

Owner:YANAN UNIV
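
The TextRank abstract above can be illustrated with a small sketch: build a co-occurrence word graph, then run a TextRank-style iteration in which each word's preference blends word position and word span in the stated 7:3 ratio. The abstract does not define how position and span are scored or how they enter the ranking, so the score formulas, the window size, the damping factor and all function names below are illustrative assumptions, not the patented algorithm.

```python
from collections import defaultdict


def build_word_graph(tokens, window=2):
    """Undirected co-occurrence graph: words within `window` tokens are linked."""
    graph = defaultdict(set)
    for i, word in enumerate(tokens):
        for j in range(i + 1, min(i + window + 1, len(tokens))):
            if tokens[j] != word:  # skip self-loops
                graph[word].add(tokens[j])
                graph[tokens[j]].add(word)
    return graph


def node_preferences(tokens, pos_weight=0.7, span_weight=0.3):
    """Blend position and span scores 7:3 (both score formulas are assumptions)."""
    n = len(tokens)
    first, last = {}, {}
    for i, word in enumerate(tokens):
        first.setdefault(word, i)
        last[word] = i
    raw = {}
    for word in first:
        position = 1.0 - first[word] / n            # earlier first occurrence scores higher
        span = (last[word] - first[word] + 1) / n   # share of the text the word stretches over
        raw[word] = pos_weight * position + span_weight * span
    total = sum(raw.values())
    return {word: value / total for word, value in raw.items()}


def textrank(tokens, damping=0.85, iterations=50, window=2):
    """Return words ranked by a TextRank score biased by the blended preferences."""
    graph = build_word_graph(tokens, window)
    pref = node_preferences(tokens)
    scores = dict(pref)
    for _ in range(iterations):
        updated = {}
        for word in pref:
            incoming = sum(scores[u] / len(graph[u]) for u in graph[word])
            updated[word] = (1 - damping) * pref[word] + damping * incoming
        scores = updated
    return sorted(scores, key=scores.get, reverse=True)
```

Computing the span needs the first and last occurrence of every word, which is the extra pass over the text that the abstract mentions; swapping the `span` term for a relative frequency count would be the faster variant it describes.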

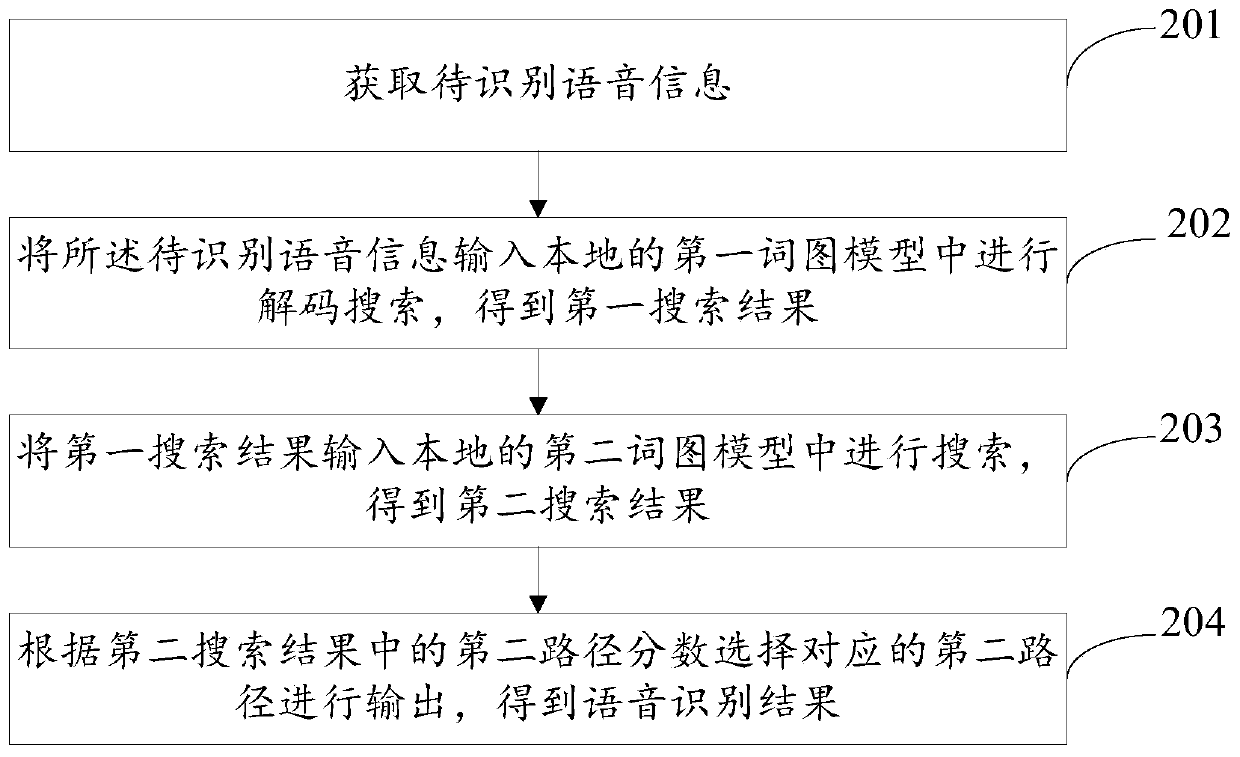

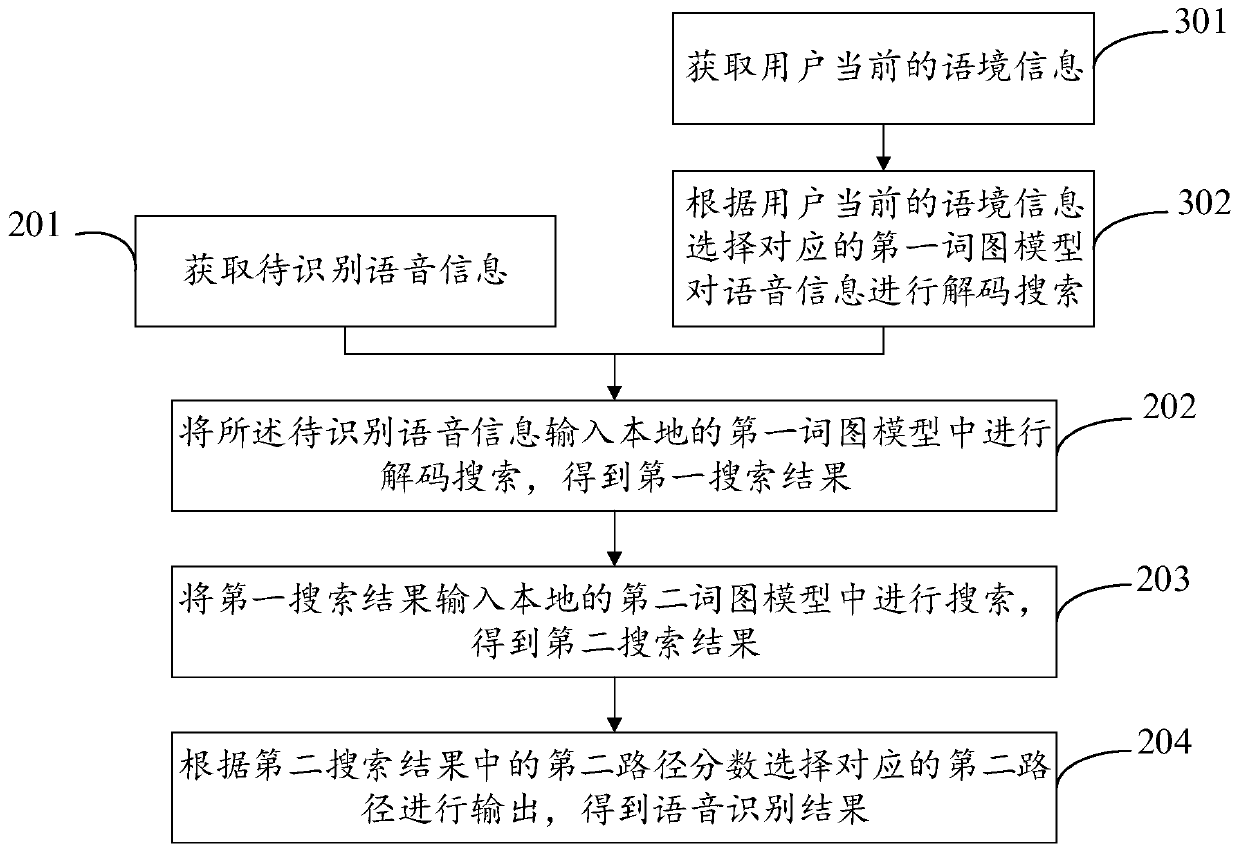

Speech identification method and device, computer equipment and storage medium

Pending | CN110808032A | Reduce search volume | High speed | Speech recognition | Theoretical computer science | Engineering

The application belongs to the technical field of artificial intelligence and relates to a speech identification method and device, computer equipment, and a storage medium. The method comprises the following steps: acquiring to-be-identified speech information; inputting the to-be-identified speech information into a local first word graph model for a decoding search to obtain a first search result, wherein the first search result comprises a first path and a corresponding first path score, and the first word graph model comprises an acoustic model, a pronunciation dictionary and a first word graph space; inputting the first search result into a local second word graph model for a search to obtain a second search result, wherein the second search result comprises a second path and a corresponding second path score, the second word graph model comprises a second word graph space, and the first word graph space is a sub-space of the second word graph space; and selecting the corresponding second path for output according to the second path score. The search dimension is lowered, the amount of word graph search is reduced, the search time is shortened, and the speech identification speed is improved.

Owner:PING AN TECH (SHENZHEN) CO LTD

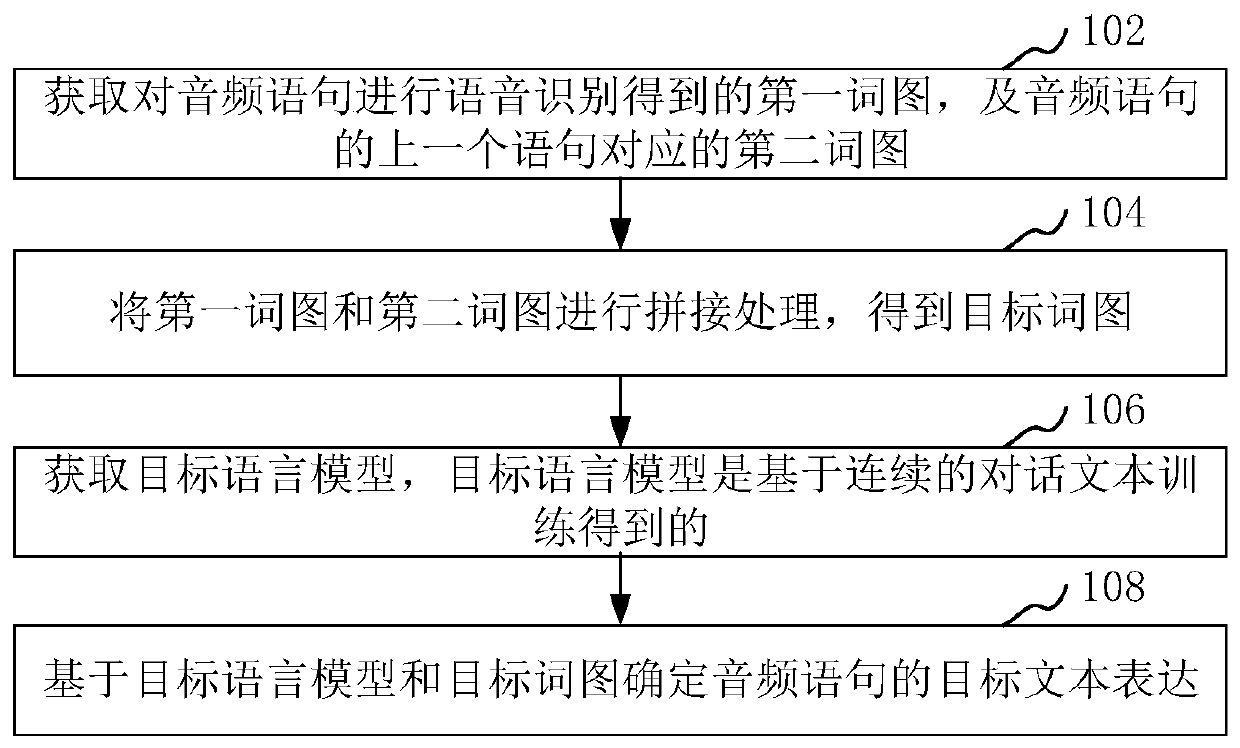

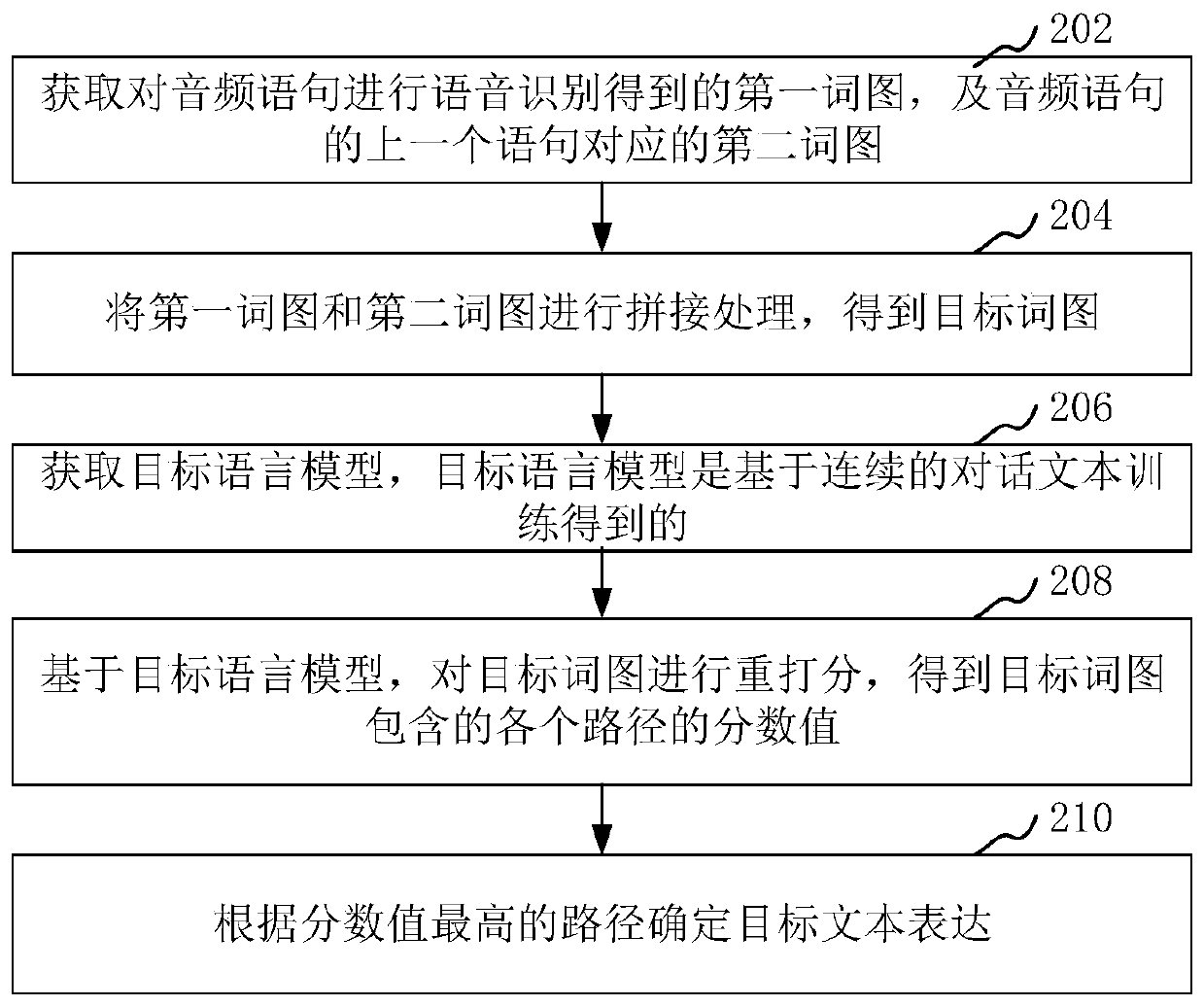

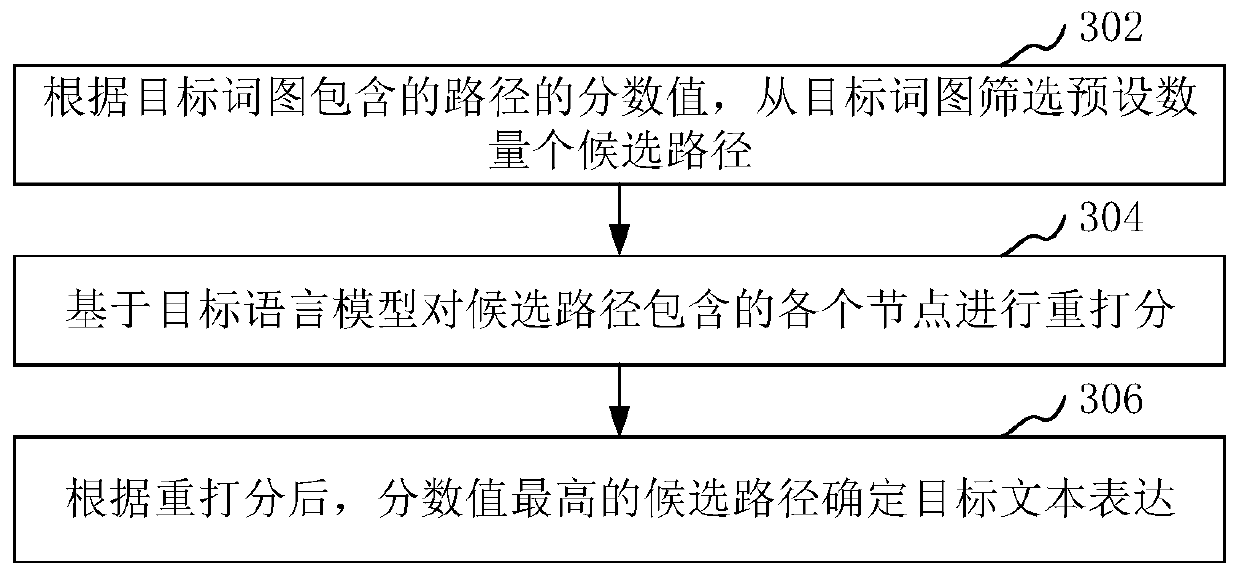

Voice recognition method, device, computer equipment, and computer readable storage medium

The invention relates to a voice recognition method, a device, computer equipment and a computer readable storage medium. The voice recognition method comprises the steps of obtaining a first word graph obtained by performing voice recognition on an audio statement and a second word graph corresponding to a previous statement of the audio statement; splicing the first word graph and the second word graph to obtain a target word graph; obtaining a target language model, wherein the target language model is obtained based on continuous dialogue text training; and determining a target text expression of the audio statement based on the target language model and the target word graph. By adopting the method, the accuracy of voice recognition can be improved.

Owner:SHENZHEN ZHUIYI TECH CO LTD
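
The splicing step in the abstract above can be sketched as joining two small lattices so that every path through the target word graph is a path through the previous statement's graph followed by a path through the current statement's graph. The `WordGraph` structure, the choice to merge the previous graph's end node with the current graph's start node, and the ordering (previous first) are assumptions made for illustration; the abstract does not say how the splice is performed, and the rescoring with the dialogue-trained language model is not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WordGraph:
    """A tiny acyclic word lattice with non-negative integer node ids (hypothetical structure)."""
    start: int
    end: int
    edges: tuple  # each edge is (src, dst, word, log_score)


def splice(previous, current):
    """Append `current` after `previous`, merging previous.end with current.start."""
    prev_nodes = {previous.start, previous.end}
    for src, dst, _, _ in previous.edges:
        prev_nodes.update((src, dst))
    offset = max(prev_nodes) + 1  # shift current's node ids past previous's

    def remap(node):
        return previous.end if node == current.start else node + offset

    shifted = tuple((remap(s), remap(d), w, sc) for s, d, w, sc in current.edges)
    return WordGraph(previous.start, remap(current.end), previous.edges + shifted)


def paths(graph, node=None):
    """Enumerate every word sequence from start to end (small acyclic graphs only)."""
    node = graph.start if node is None else node
    if node == graph.end:
        yield []
        return
    for src, dst, word, _ in graph.edges:
        if src == node:
            for rest in paths(graph, dst):
                yield [word] + rest
```

With the spliced graph in hand, a language model trained on continuous dialogue text could score each full path, so that context from the previous statement influences which hypothesis of the current statement wins.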

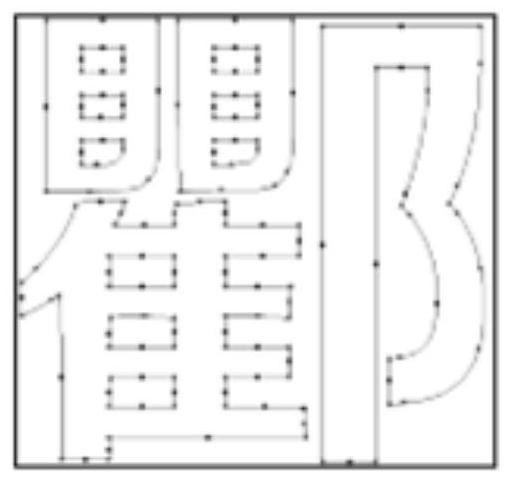

Word graph

Inactive | US20120065959A1 | Well formed | Natural language data processing | Special data processing applications | Word graph | Speech recognition

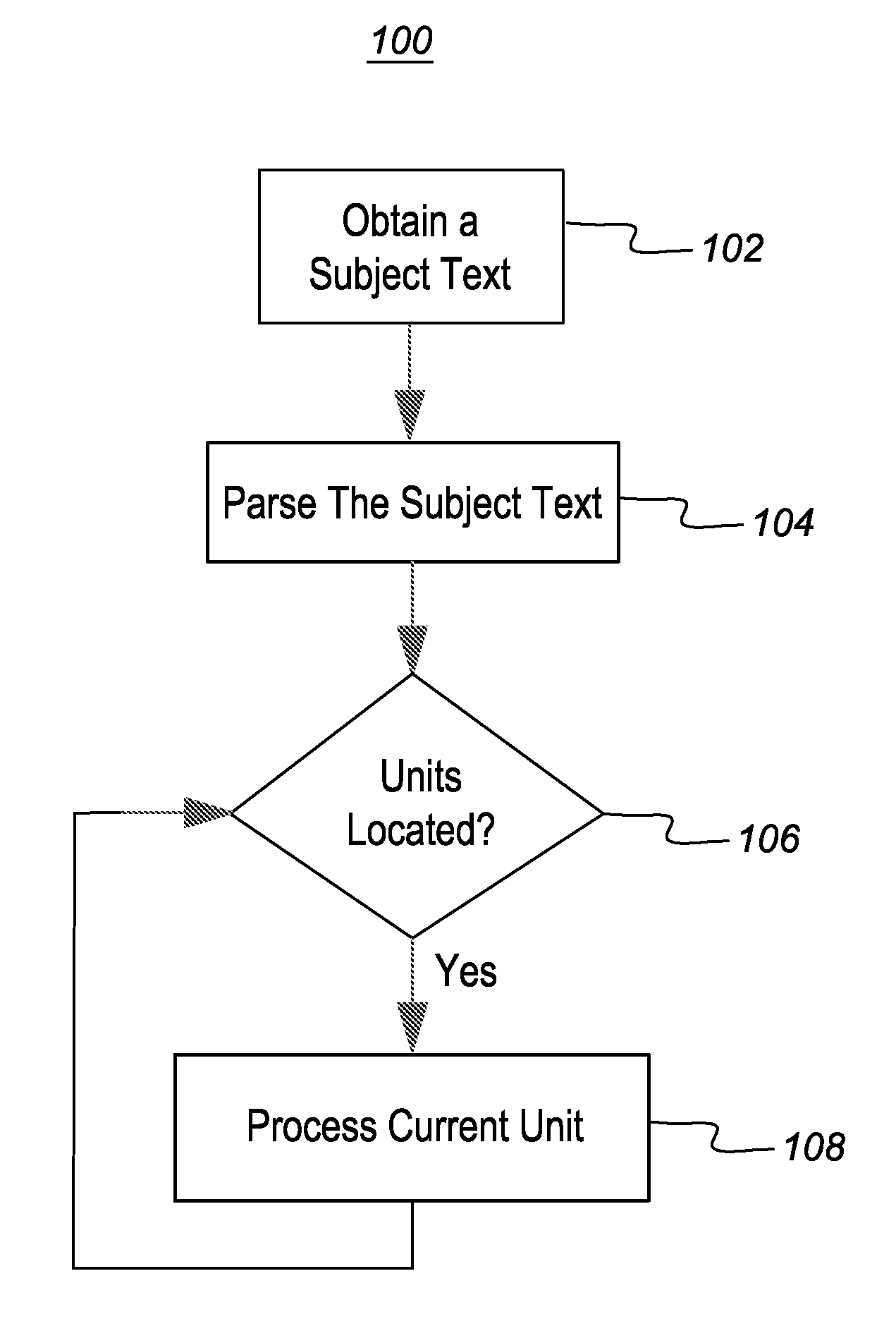

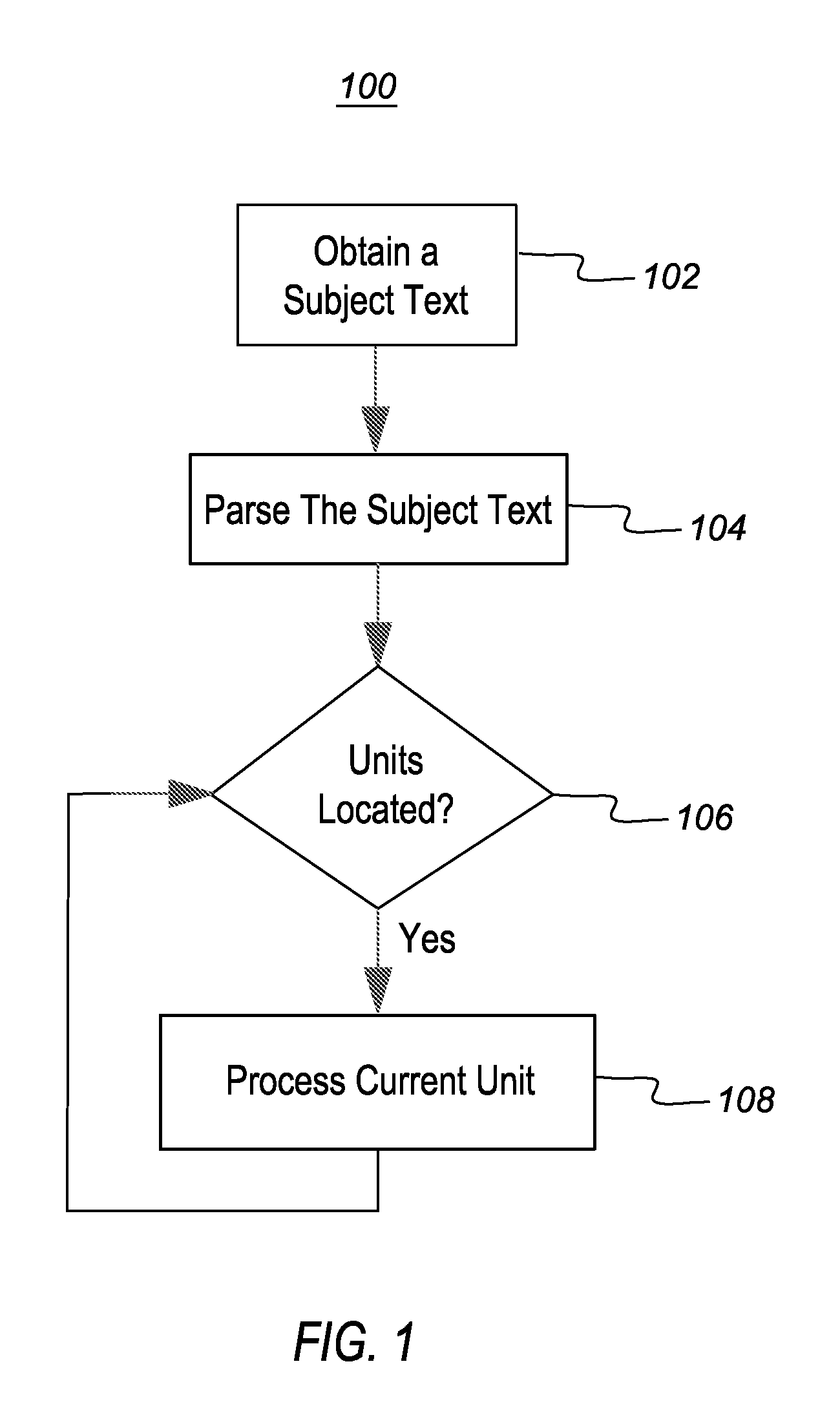

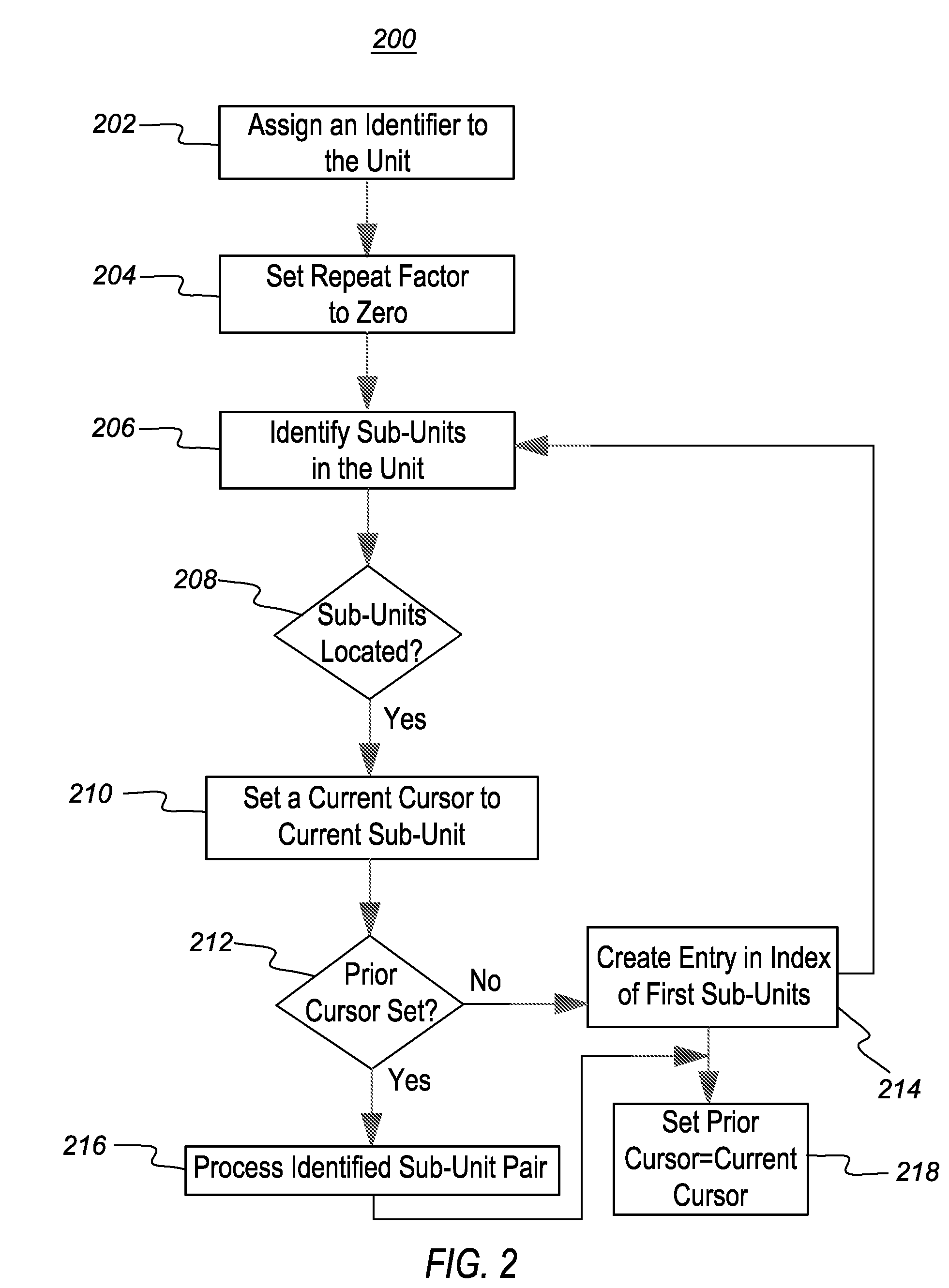

One example embodiment includes a method for constructing a word graph. The method includes obtaining a subject text and dividing the subject text into one or more units. The method also includes dividing the units into one or more sub-units and recording each of the one or more sub-units.

Owner:SALISBURY RICHARD

Vectorization method of word pattern

Pending | CN112347288A | Improve development efficiency | Boost Vector Effects | Character and pattern recognition | Natural language data processing | Engineering | Word graph

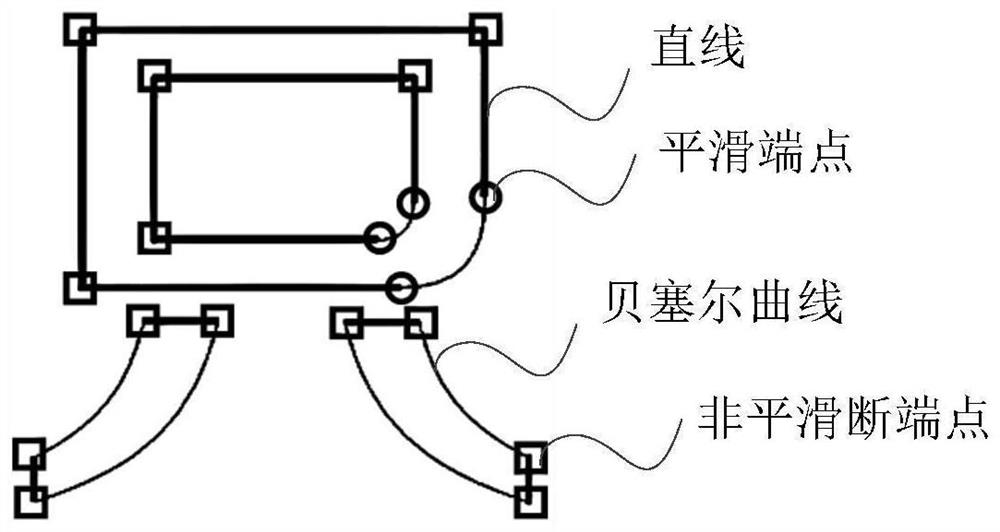

The invention provides a vectorization method and device for a word pattern. The method comprises the steps of extracting the contour of a to-be-vectorized word pattern; determining, from that contour, the straight lines of the word pattern, the smooth endpoints of its curves and the non-smooth endpoints of its curves; segmenting the contour according to the smooth and non-smooth curve endpoints to obtain at least one contour segment; vectorizing the curve contour segments among the at least one contour segment according to a vector curve library; and determining the vectorization data of the word pattern from the vectorized curve contour segments and the straight-line contour segments. The method effectively addresses the difficulty of applying image generation technology to fine font library development, greatly improves the conversion from image to vector data, and raises the efficiency of font library development and production.

Owner:BEIJING FOUNDER ELECTRONICS CO LTD

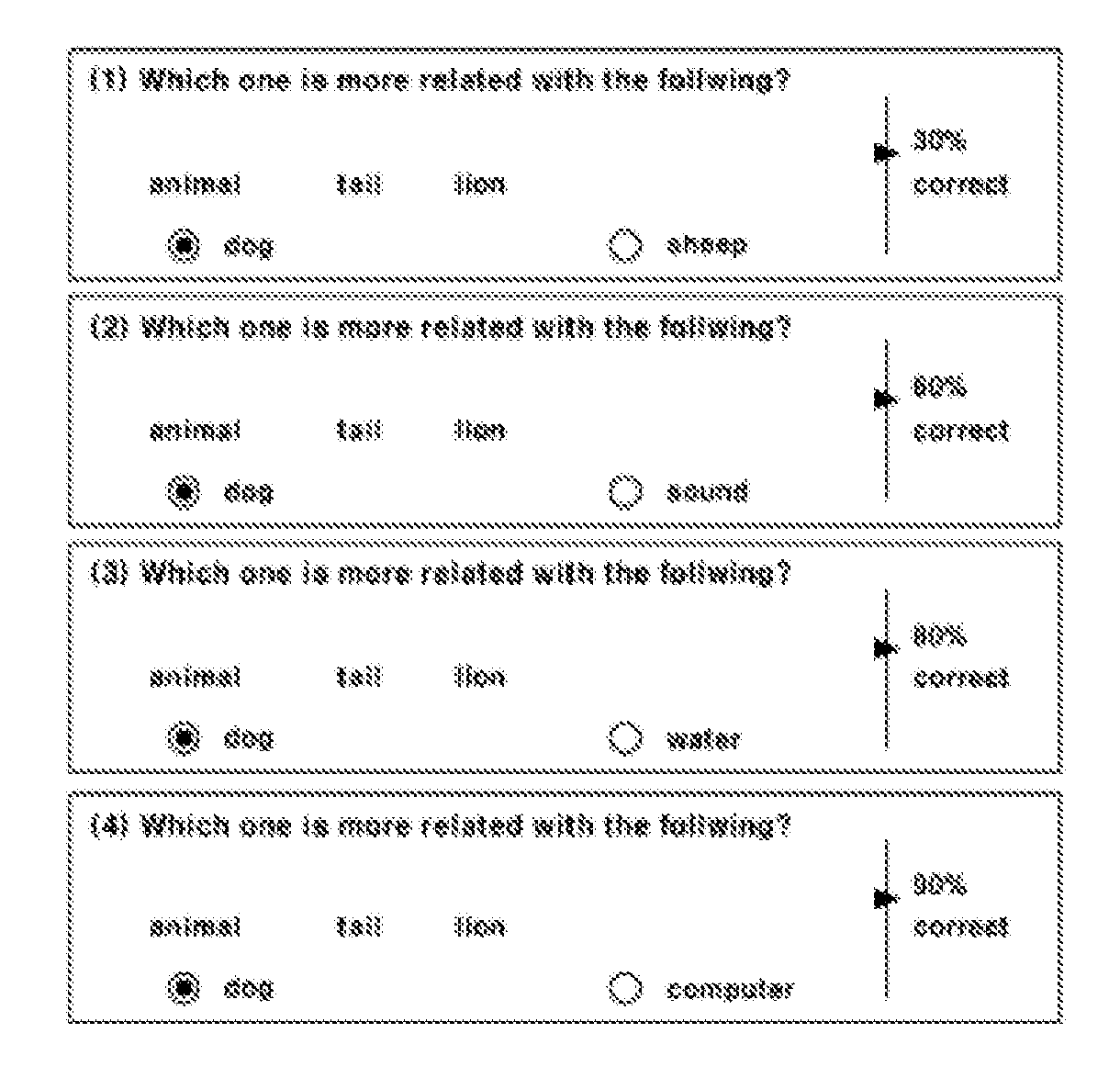

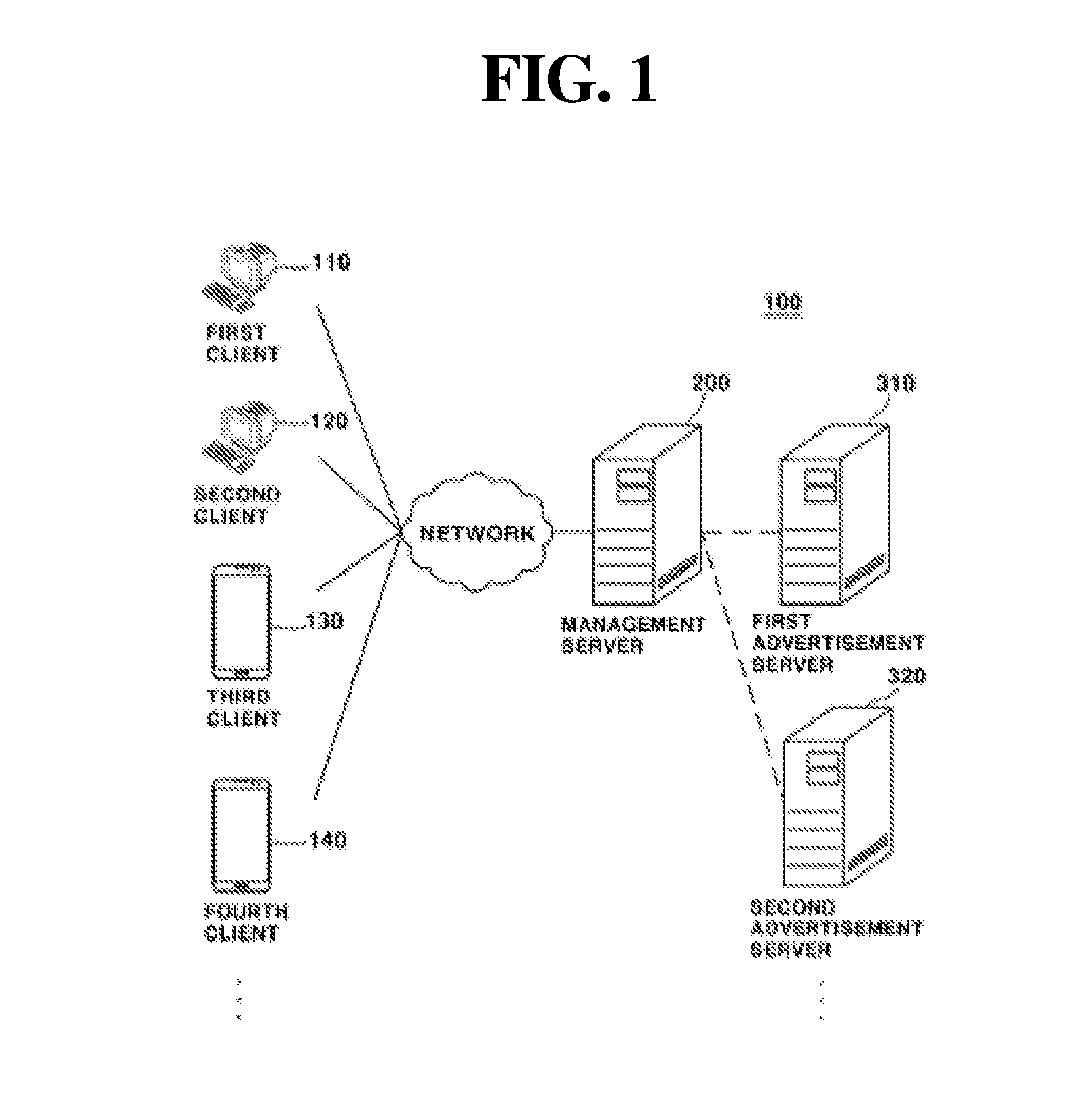

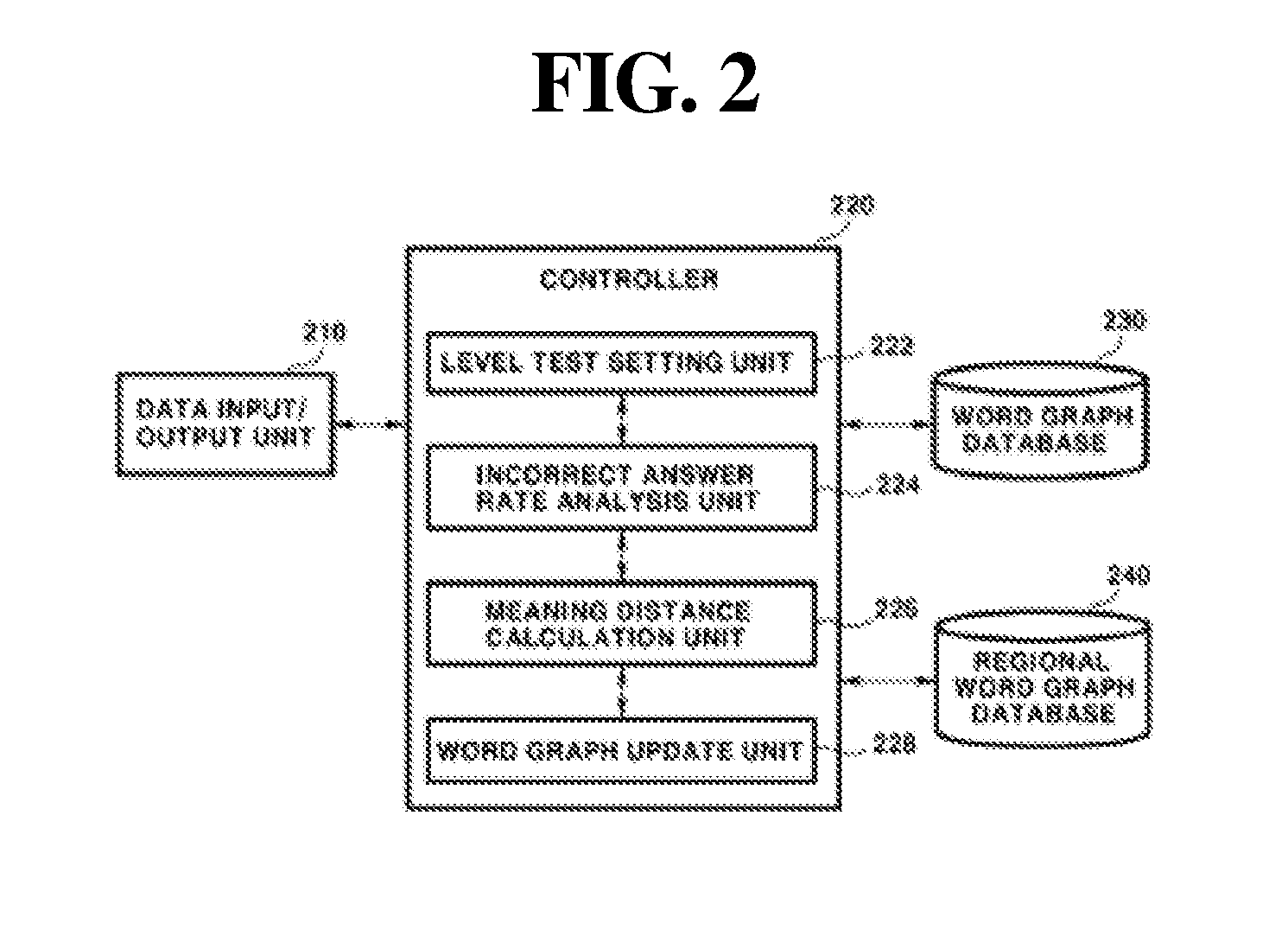

Method and system for managing a wordgraph

Inactive | US20160314701A1 | Reduce effort | Automatically set | Electrical appliances | Teaching apparatus | Algorithm | Theoretical computer science

A method and system for managing a word graph are provided. The method includes receiving, by a management server, the result of a vocabulary level test from a client device; extracting a correct answer rate or an incorrect answer rate for each word pair by analyzing the received result; calculating a meaning distance for each word pair from the extracted correct answer rate or incorrect answer rate; and updating a pre-stored word graph, or generating a new word graph, using the calculated meaning distance.

Owner:TWINWORD

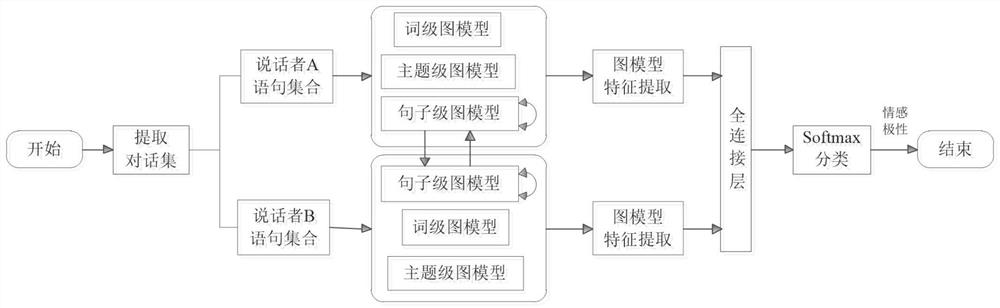

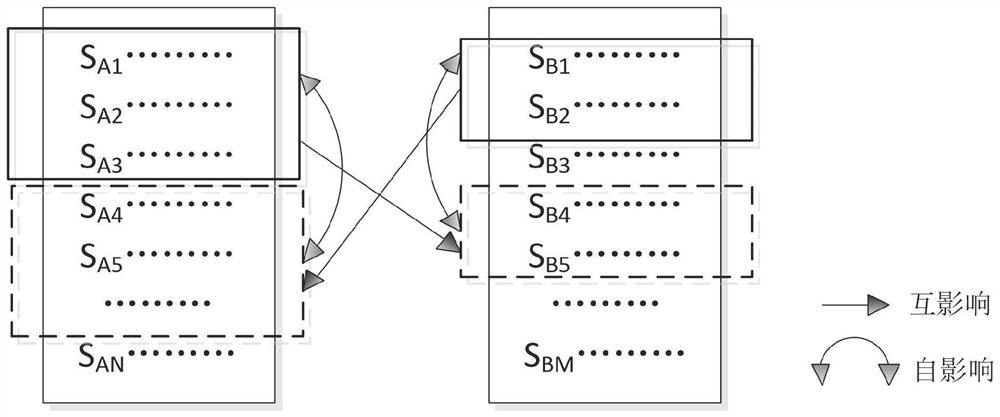

Power grid service conversation data emotion detection method based on graph neural network

Pending | CN113656564A | Improve accuracy | Customer relationship | Semantic analysis | Relation graph | Undirected graph

The invention relates to a power grid service conversation data emotion detection method based on a graph neural network. The method comprises the following steps: step 1, extracting a conversation set, and constructing a statement-level self-influence and mutual-influence relation graph and a feature extraction model; step 2, constructing a word-level undirected graph and a feature extraction model; step 3, constructing an undirected relation graph between the topic vocabulary and the context words, and a feature extraction model; and step 4, fusing the statement-level features, the word graph features and the topic-context relation features obtained in steps 1 to 3, and calculating the conversation emotion. The method can greatly improve the accuracy of interactive sentiment analysis, providing important technical support for building human-like interaction systems such as question answering systems, chat robots and public service robots.

Owner:STATE GRID TIANJIN ELECTRIC POWER +1

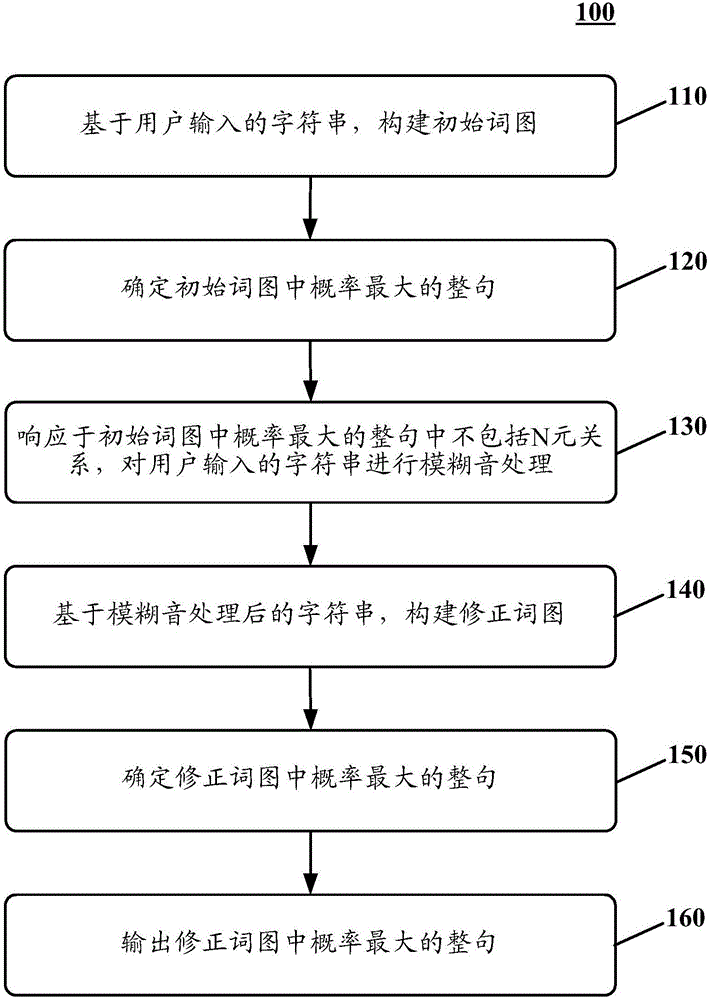

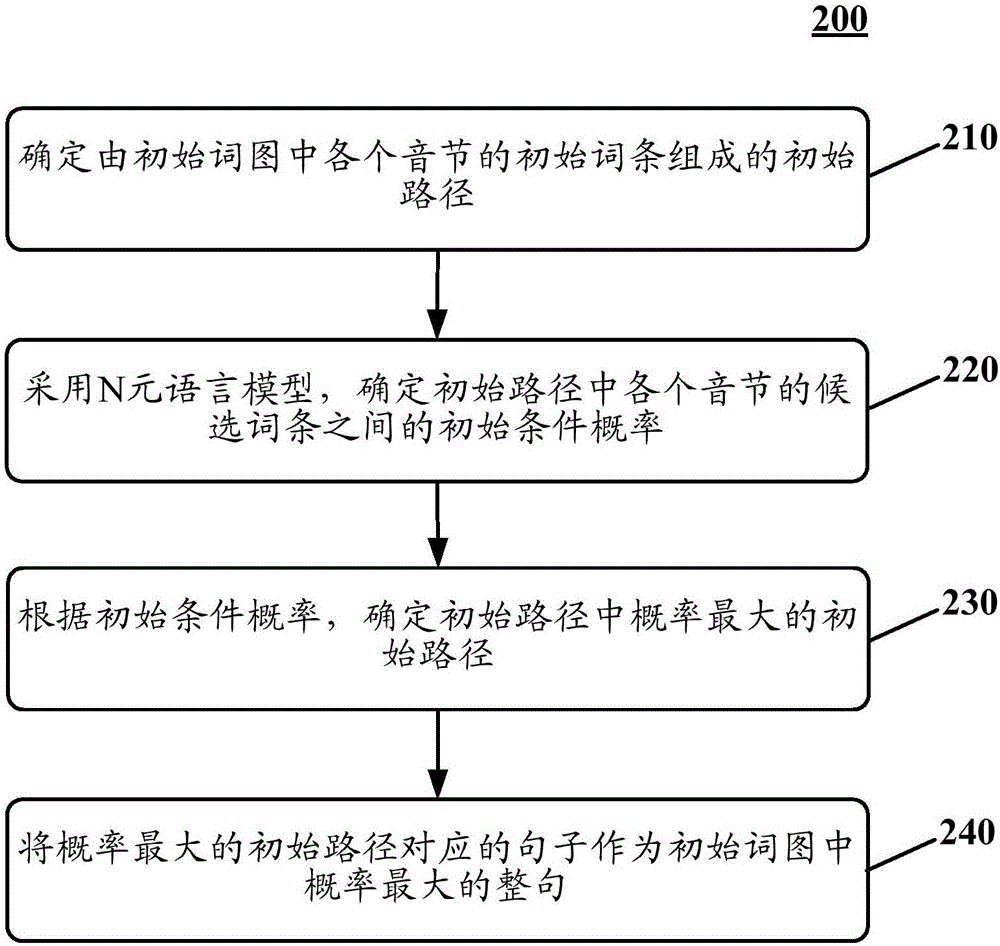

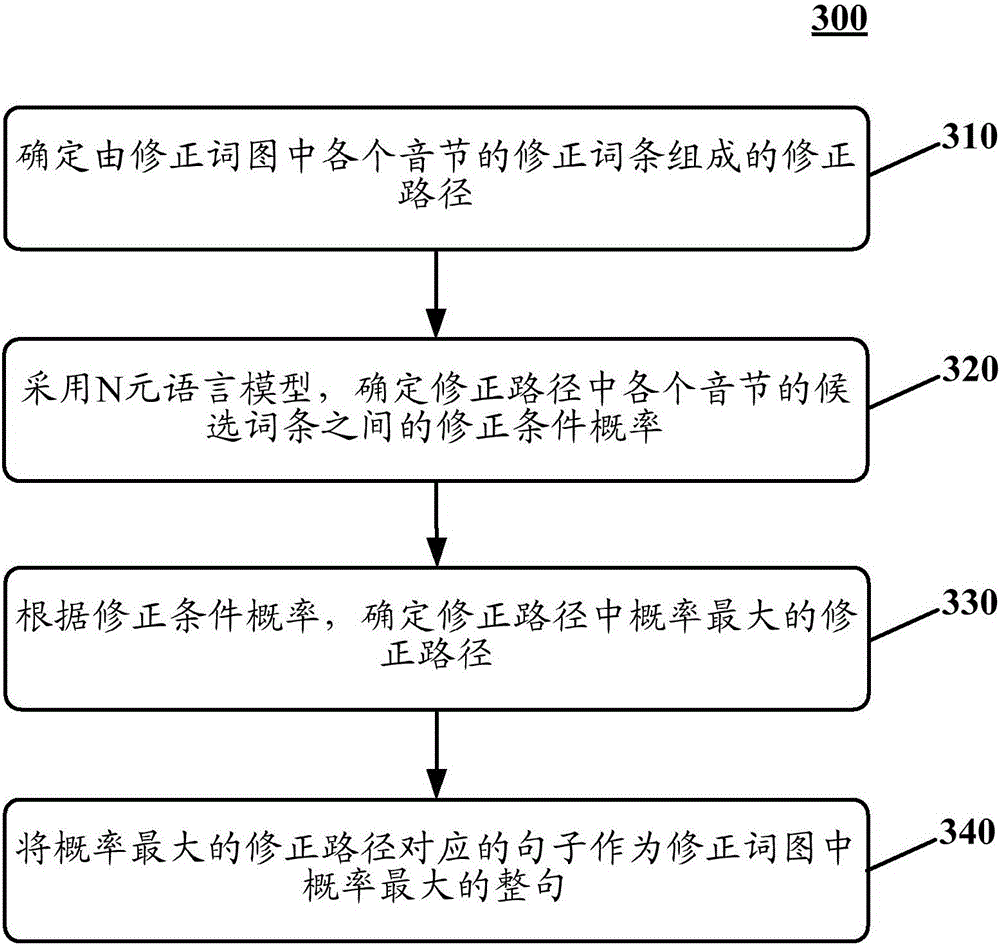

Method and device for outputting whole sentence

Active | CN106843520A | No significant reduction in deterministic performance | Improve word quality | Input/output for user-computer interaction | Programming language | User input

The invention discloses a method and a device for outputting a whole sentence. In one specific embodiment, the method comprises the following steps: establishing an original word graph from a character string input by a user; determining the whole sentence with the highest probability in the original word graph; in response to determining that this highest-probability sentence does not contain an N-gram (N-element) relation, performing fuzzy syllable processing on the character string input by the user; establishing a corrected word graph from the character string after fuzzy syllable processing; determining the whole sentence with the highest probability in the corrected word graph; and outputting that sentence. With this method, the quality of whole-sentence output on the client is greatly improved without significantly reducing the client's whole-sentence determination performance.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
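
The whole-sentence flow in the abstract above can be sketched as a best-path search over a word graph with a fuzzy-syllable fallback. Everything concrete here is an assumption for illustration: the toy `LEXICON` and `BIGRAMS` tables, the unigram scoring, the single z/zh fuzzy rule, and the reading of "contains an N-gram relation" as "at least one adjacent word pair appears in a bigram table".

```python
import math
import re

# Toy data (hypothetical): pinyin string -> (word, unigram probability).
LEXICON = {
    "zong": ("总", 0.1),
    "guo": ("国", 0.3),
    "ren": ("人", 0.3),
    "zhongguo": ("中国", 0.2),
}
BIGRAMS = {("中国", "人")}


def best_sentence(text, lexicon):
    """Highest-probability path through the word graph of `text`, by dynamic programming."""
    best = [(-math.inf, [])] * (len(text) + 1)
    best[0] = (0.0, [])
    for end in range(1, len(text) + 1):
        for start in range(end):
            piece = text[start:end]
            if piece in lexicon and best[start][0] > -math.inf:
                word, prob = lexicon[piece]
                score = best[start][0] + math.log(prob)
                if score > best[end][0]:
                    best[end] = (score, best[start][1] + [word])
    return best[len(text)][1]  # empty list when no full path exists


def has_ngram_relation(words, bigrams):
    """Assumed check: some adjacent word pair is a known bigram."""
    return any(pair in bigrams for pair in zip(words, words[1:]))


def fuzzy_syllables(text):
    """Assumed fuzzy rule: treat a bare 'z' initial as 'zh'."""
    return re.sub(r"z(?!h)", "zh", text)


def output_sentence(text, lexicon, bigrams):
    original = best_sentence(text, lexicon)
    if has_ngram_relation(original, bigrams):
        return original
    corrected = best_sentence(fuzzy_syllables(text), lexicon)
    # Falling back to the original when the corrected graph has no path is an added assumption.
    return corrected or original
```

For the input `zongguoren`, the original graph yields three unrelated single characters, so the sketch rebuilds the graph from `zhongguoren` and returns the two-word sentence instead. The second graph is built only when the first result looks poor, which is consistent with the abstract's claim that performance is not significantly reduced.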

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com