77 patent results for "Audiovisual technology"

The proliferation of audiovisual communications technologies, including sound, video, lighting, display and projection systems, is evident in every sector of society: in business, education, government, the military, healthcare, retail environments, worship, sports and entertainment, hospitality, restaurants, and museums.

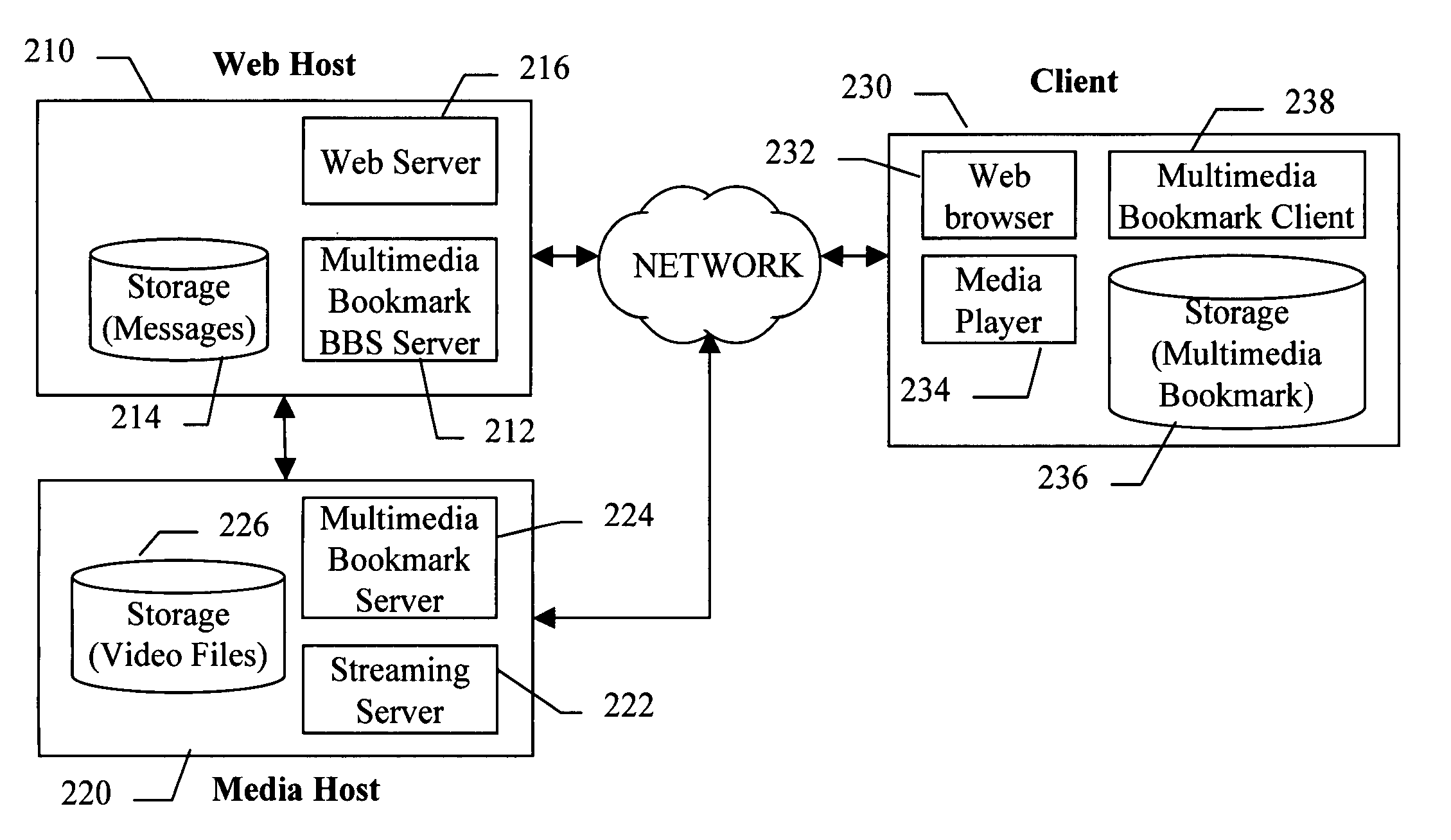

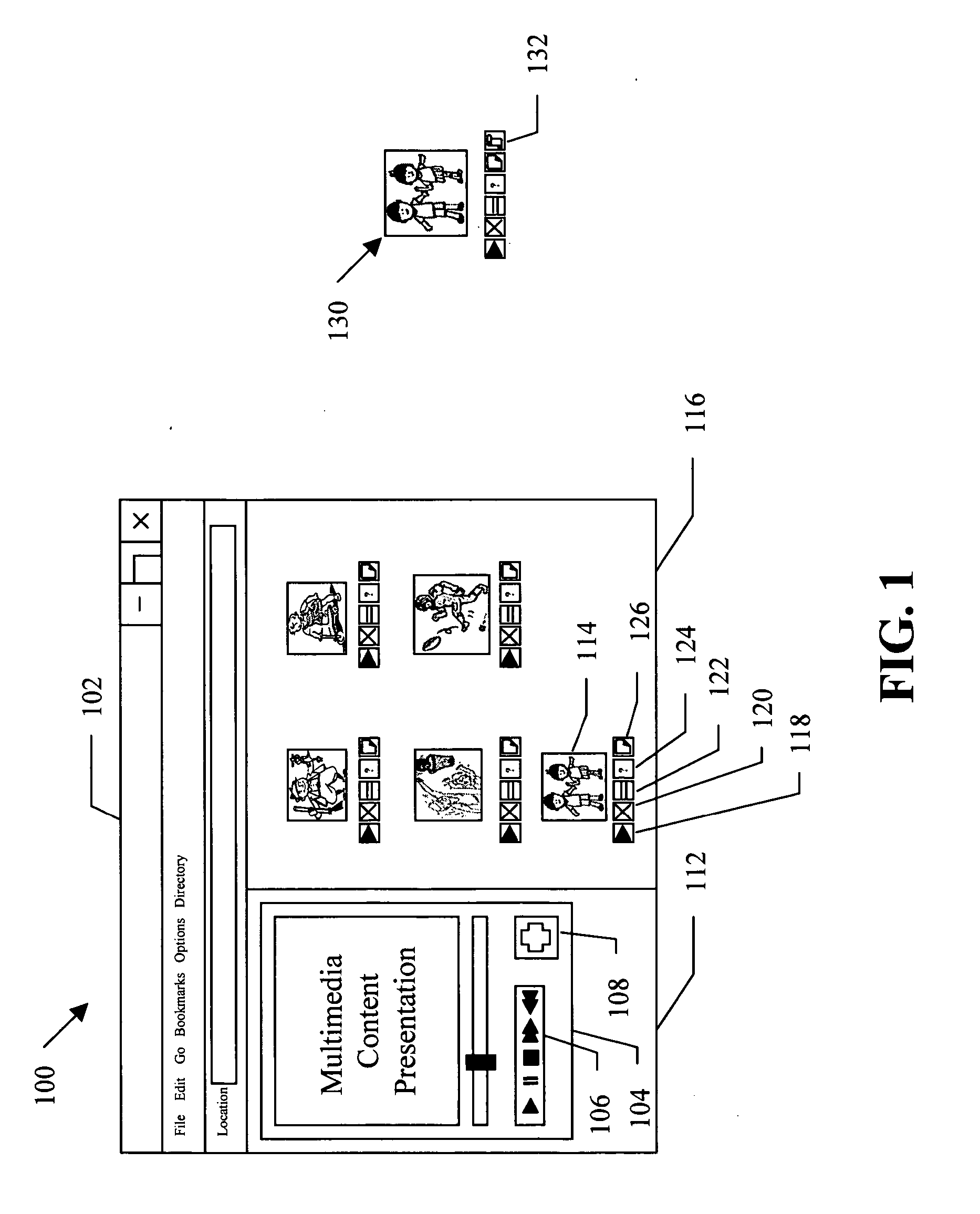

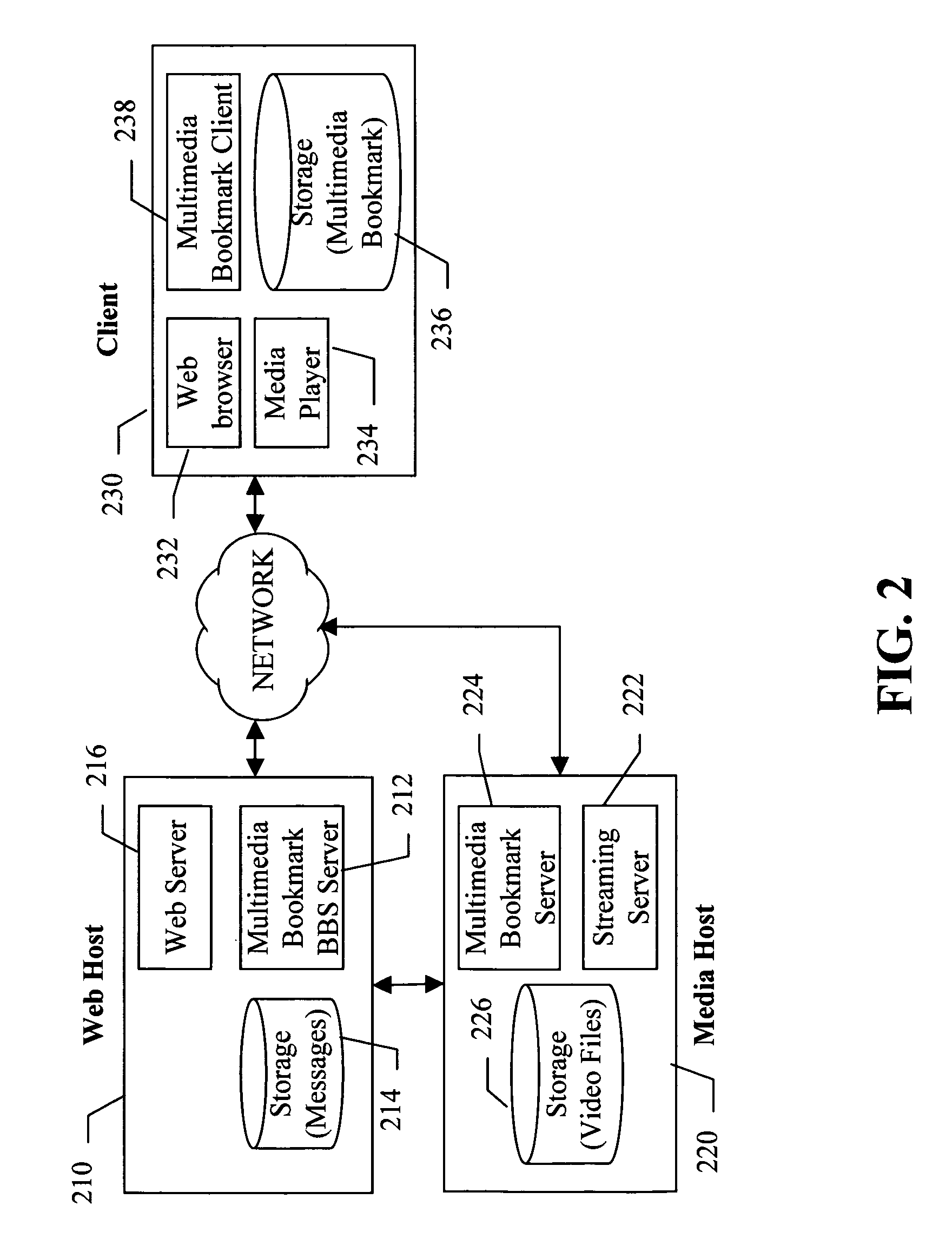

Delivering and processing multimedia bookmark

Inactive | US20050210145A1 | Easy to move | Easy detection | Pulse modulation television signal transmission | Digital data information retrieval | Web browser | Media server

A multimedia bookmark (VMark) bulletin board service (BBS) system comprises: a web host comprising storage for messages, a web server, and a VMark BBS server; a media host comprising storage for audiovisual (AV) files, and a streaming server; a client comprising storage for VMarks, a web browser, a media player and a VMark client; a VMark server located at the media host or at the client; and a communication network connecting the web host, the media host and the client. A method of performing a multimedia bookmark bulletin board service (BBS) comprises: creating a message including a multimedia bookmark for an AV file; and posting the message to the multimedia bookmark BBS. A method of sending a multimedia bookmark (VMark) between clients comprises: at a first client, making a VMark indicative of a bookmarked position in an AV program; sending the VMark from the first client to a second client; and playing the program at the second client from the bookmarked position. A system for sharing multimedia content comprises: a multimedia bookmark bulletin board system (BBS); and means for posting a multimedia bookmark to the BBS.

Owner:VIVCOM INC

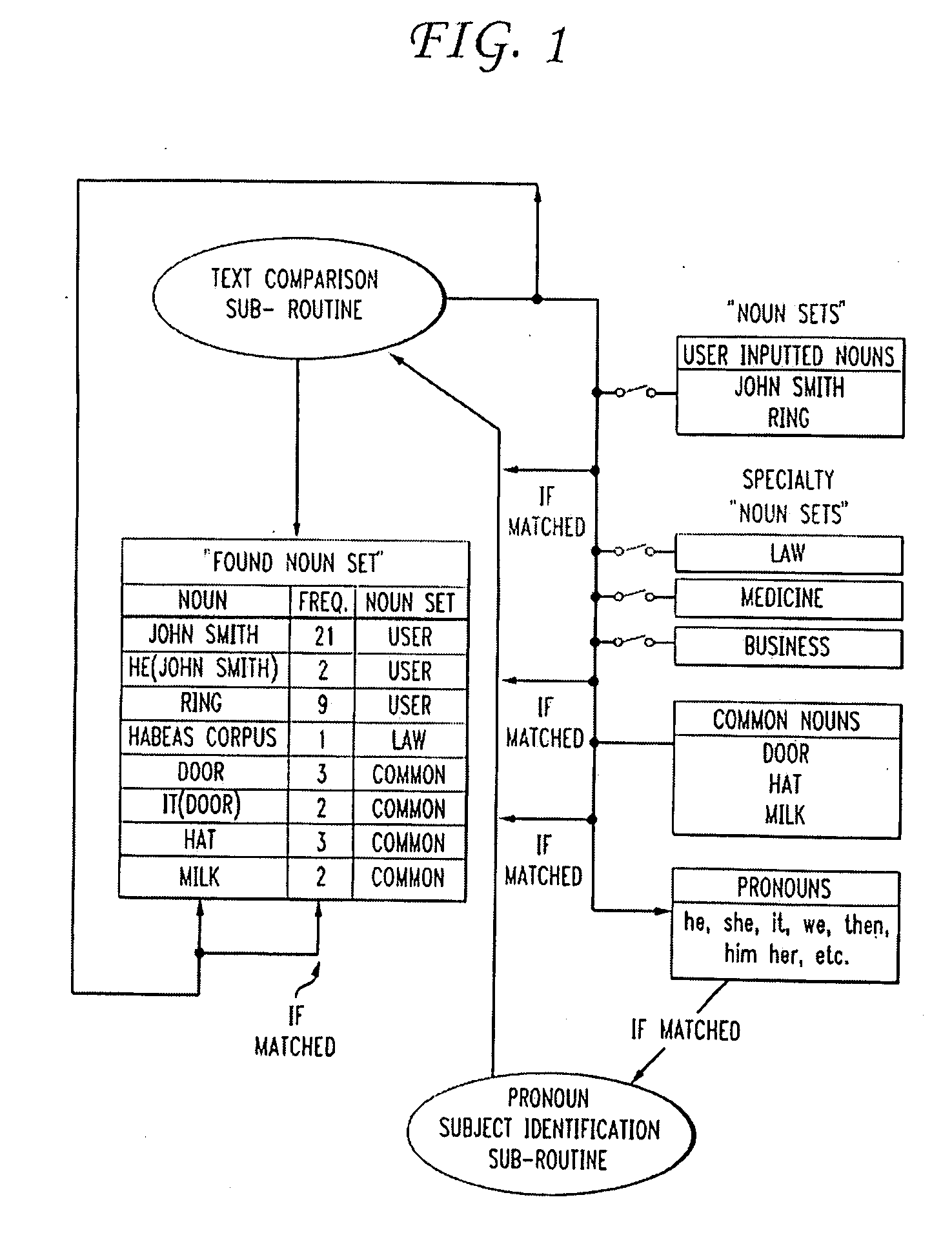

Character-based automated media summarization

Active | US20090100454A1 | Reduce and eliminate artifact | Stable output | Input/output for user-computer interaction | Digital data processing details | Digital video | Computer graphics (images)

Methods, devices, systems and tools are presented that allow the summarization of text, audio, and audiovisual presentations, such as movies, into less lengthy forms. High-content media files are shortened in a manner that preserves important details, by splitting the files into segments, rating the segments, and reassembling preferred segments into a final abridged piece. Summarization of media can be customized by user selection of criteria, and opens new possibilities for delivering entertainment, news, and information in the form of dense, information-rich content that can be viewed by means of broadcast or cable distribution, “on-demand” distribution, internet and cell phone digital video streaming, or can be downloaded onto an iPod™ and other portable video playback devices.

Owner:NUTSHELL MEDIA
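
The split, rate, and reassemble idea in the abstract above can be illustrated with a minimal Python sketch. The `Segment` fields, the numeric `rating`, and the greedy selection under a time budget are all assumptions made for illustration; the abstract does not say how segments are rated or chosen.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    index: int       # position of the segment in the original presentation
    duration: float  # length in seconds
    rating: float    # hypothetical importance score; higher is better


def summarize(segments, max_duration):
    """Greedily keep the best-rated segments that fit, then restore story order."""
    chosen = []
    total = 0.0
    for seg in sorted(segments, key=lambda s: s.rating, reverse=True):
        if total + seg.duration <= max_duration:
            chosen.append(seg)
            total += seg.duration
    # Reassemble the preferred segments in their original order.
    return sorted(chosen, key=lambda s: s.index)


segments = [
    Segment(0, 30, 0.9),
    Segment(1, 60, 0.2),
    Segment(2, 45, 0.7),
    Segment(3, 20, 0.5),
]
summary = summarize(segments, max_duration=100)
print([s.index for s in summary])
```

User-selected criteria, as mentioned in the abstract, could be modeled by changing how `rating` is computed before calling `summarize`.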

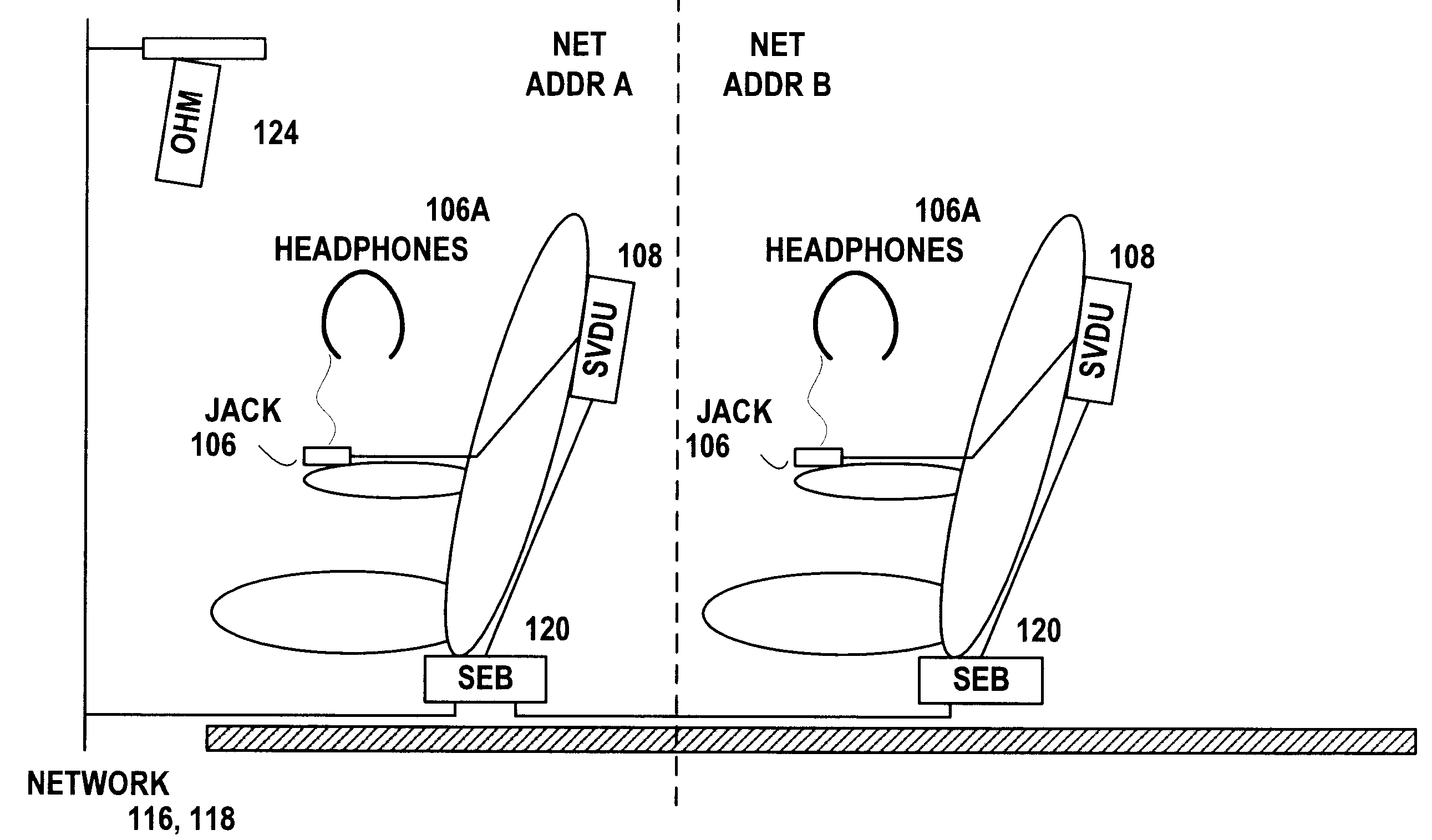

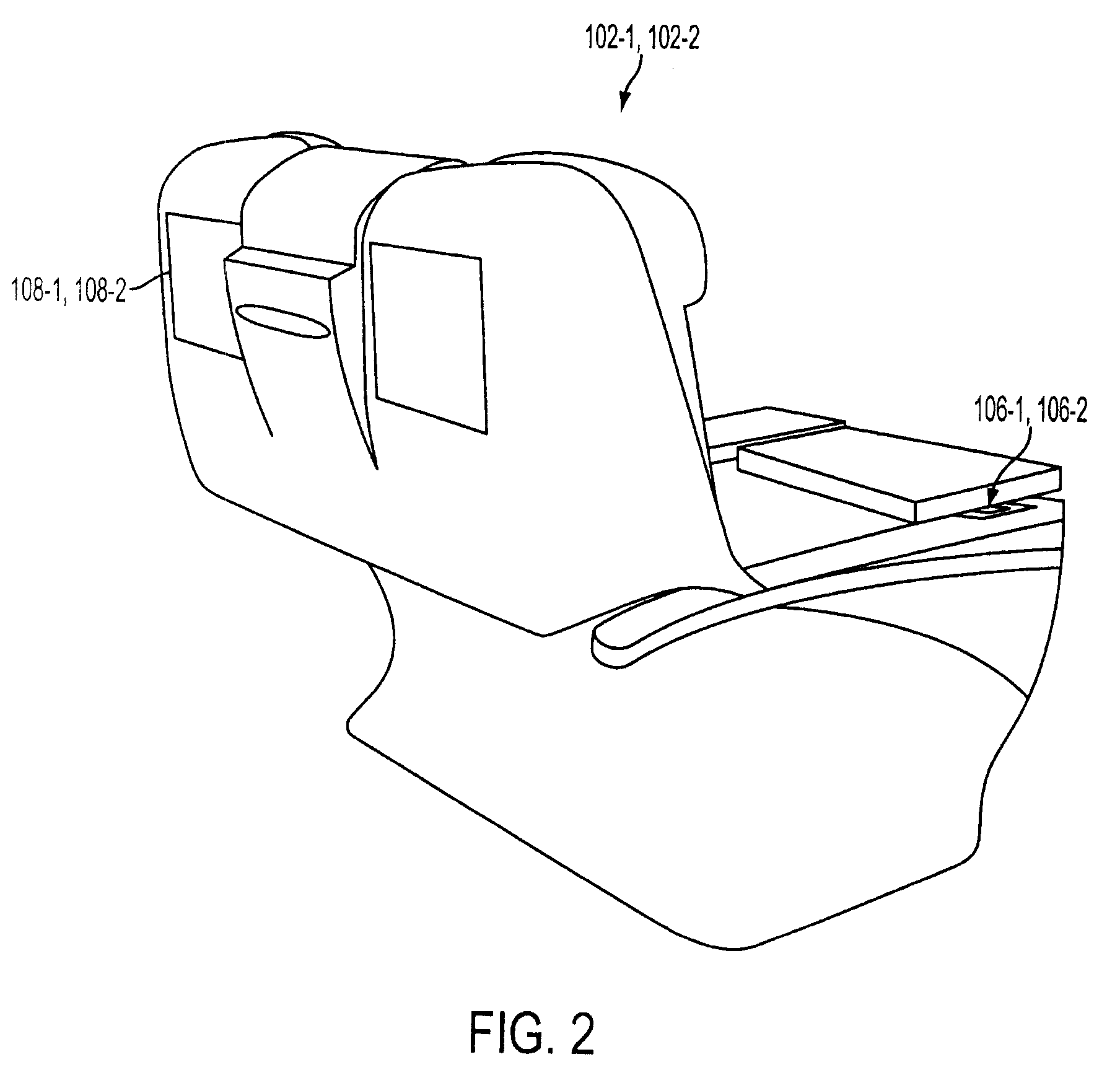

Remote recovery of in-flight entertainment video seat back display audio

Inactive | US20090007194A1 | Analogue secrecy/subscription systems | Closed circuit television systems | Computer hardware | Network addressing

A system and method permit remote recovery of audio from audiovisual or multimedia content for a video display unit. Audio is recovered from the audiovisual content sent to a first network address and is packetized for transmission over a network that may utilize an existing wiring infrastructure that provides audio and video-on-demand content to a second network address. The audio packets are reassembled by hardware associated with the second network address and analog audio created from the audio packets is provided at an output to an audio device.

Owner:THALES AVIONICS INC

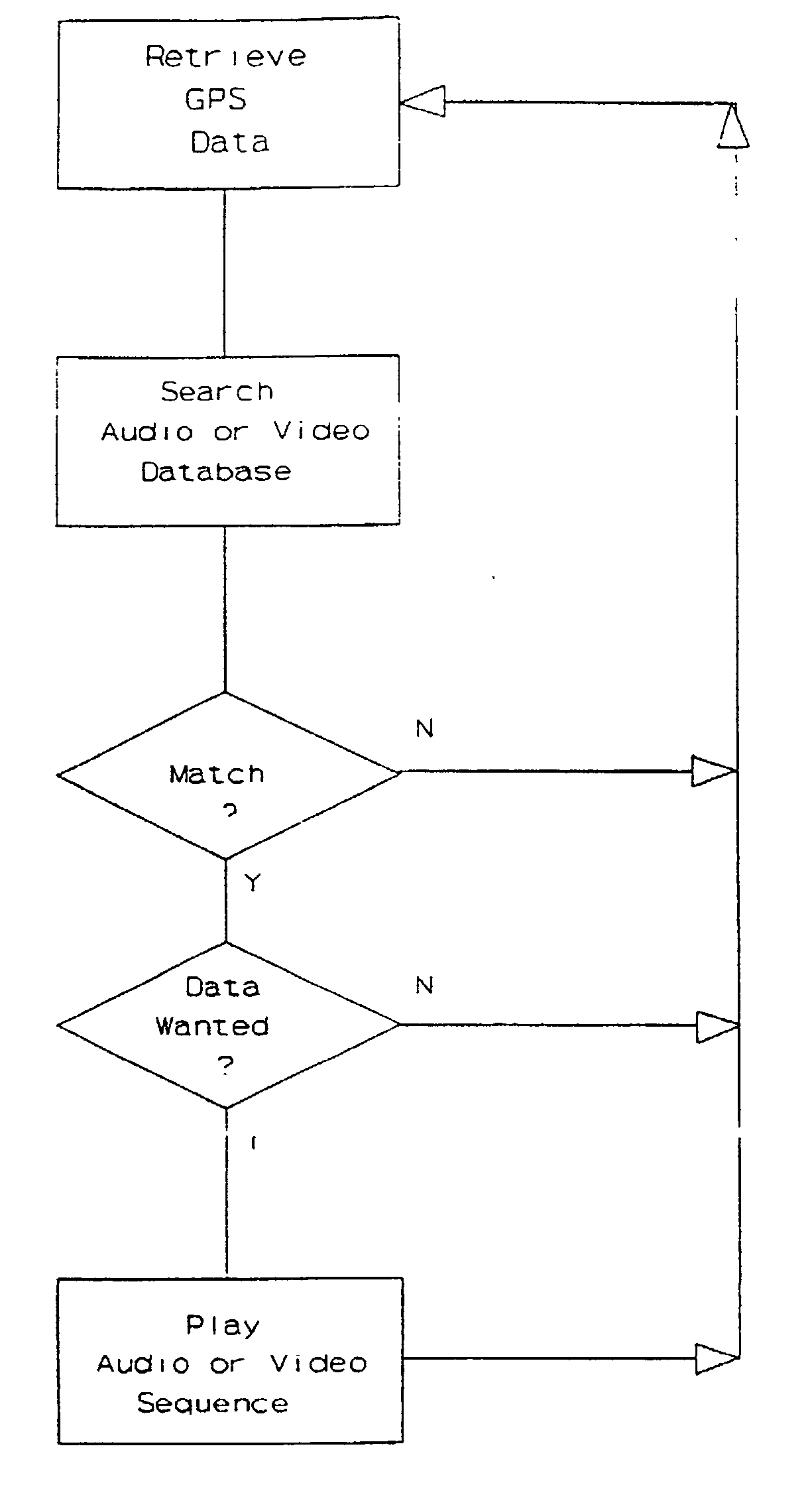

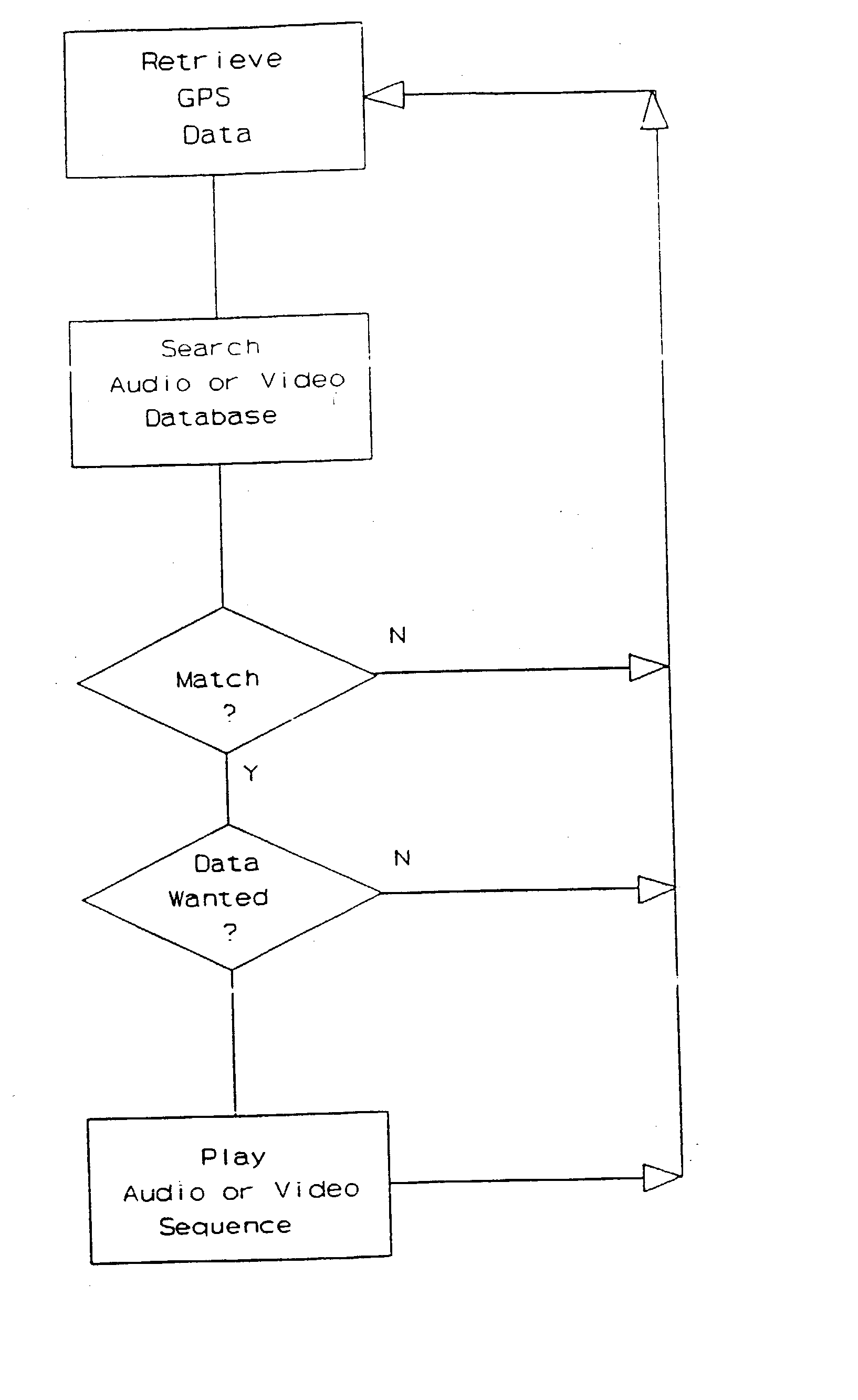

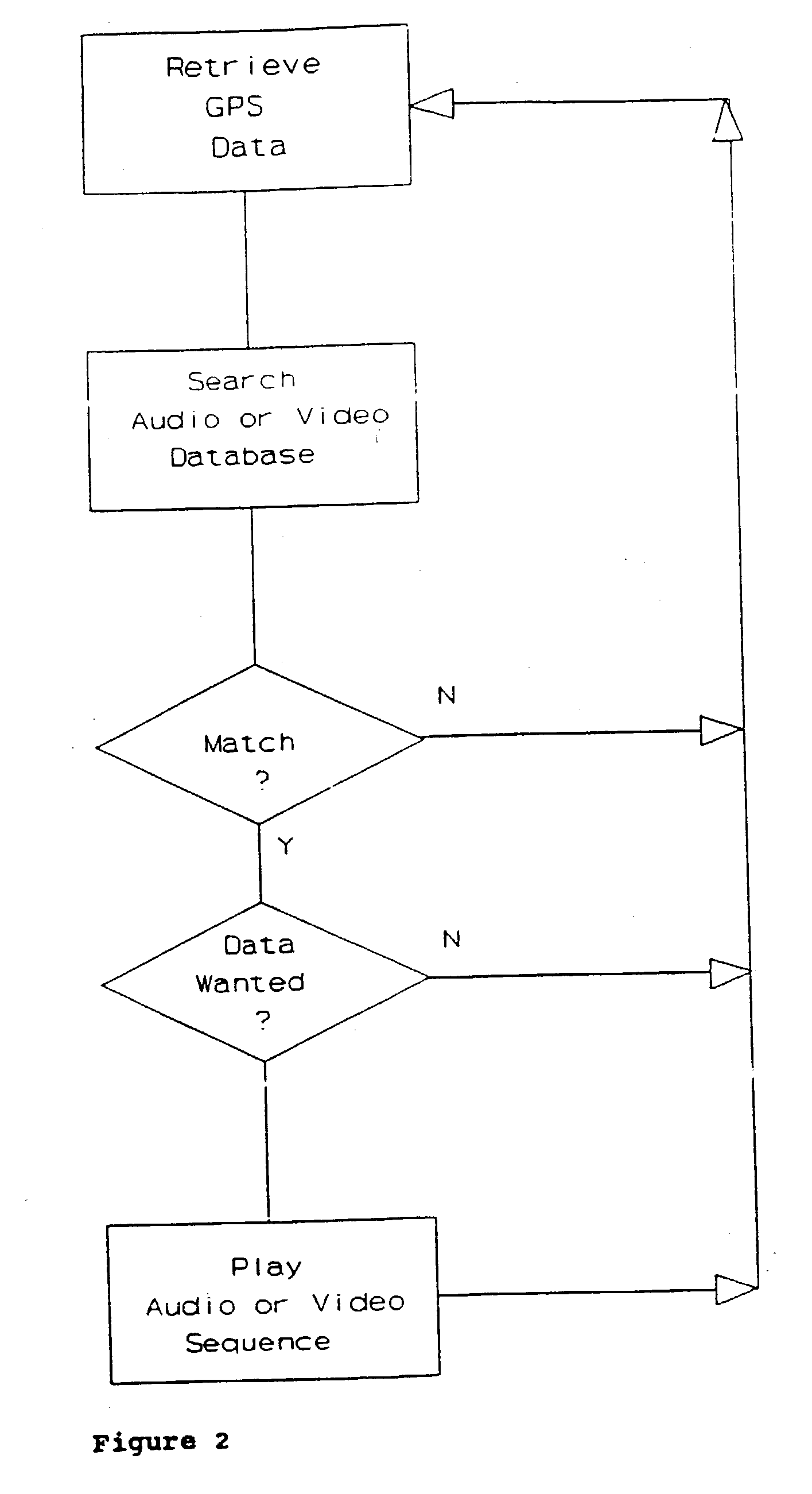

GPS explorer

Inactive | US20020167442A1 | Instruments for road network navigation | Data processing applications | Hands free | Global Positioning System

This is a portable information system which uses Global Positioning System (GPS) data as a key to automatically retrieve audiovisual data from a database. On a journey the system can automatically identify and describe places of specific interest to the user, landmarks and the history of nearby buildings, or locate hotels, hospitals, shops and products within a radius of the present position. Audible menus and voice command give hands-free and eyes-free control while driving, flying, sailing or walking.

Owner:TAYLOR WILLIAM MICHAEL FREDERICK

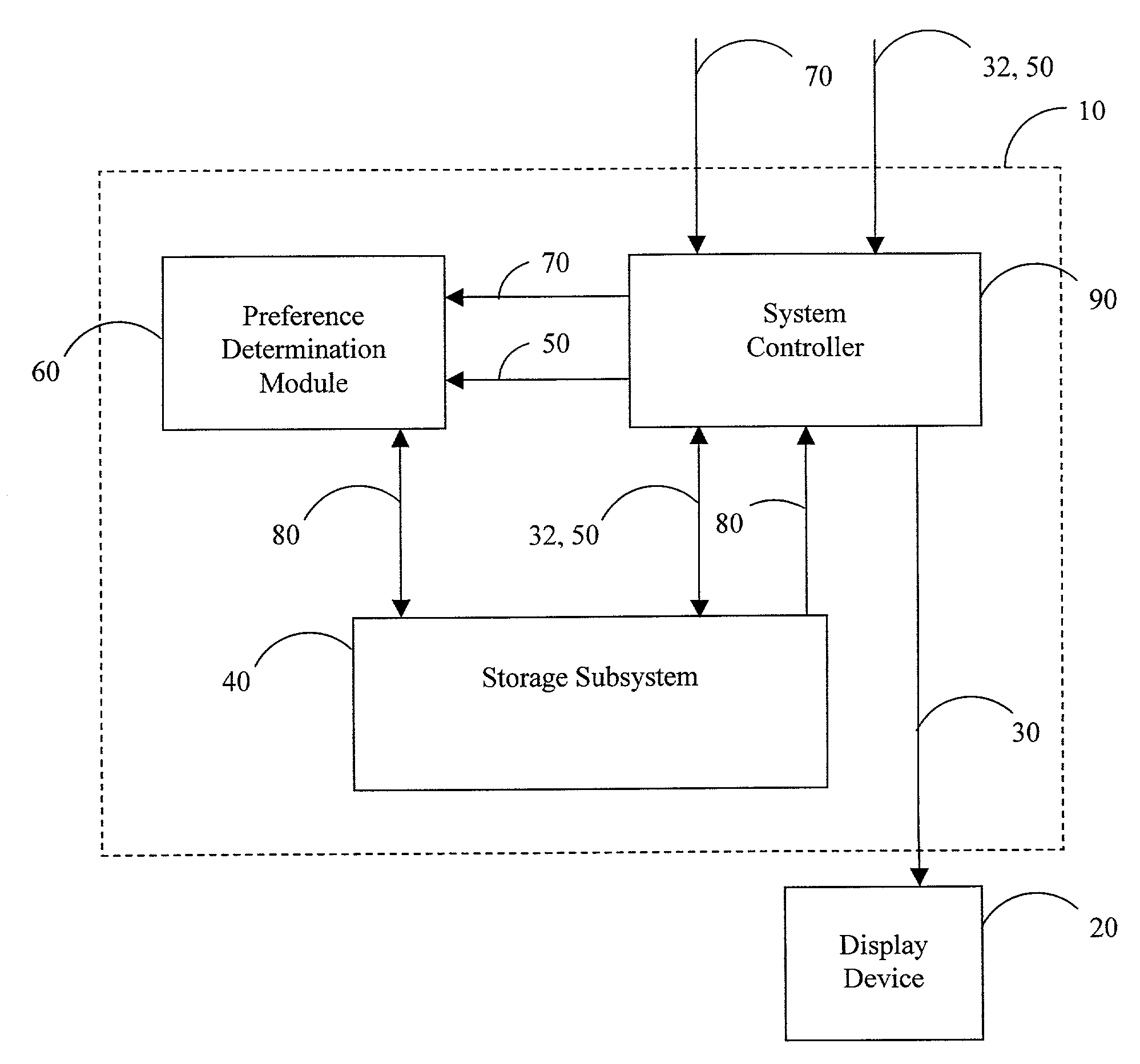

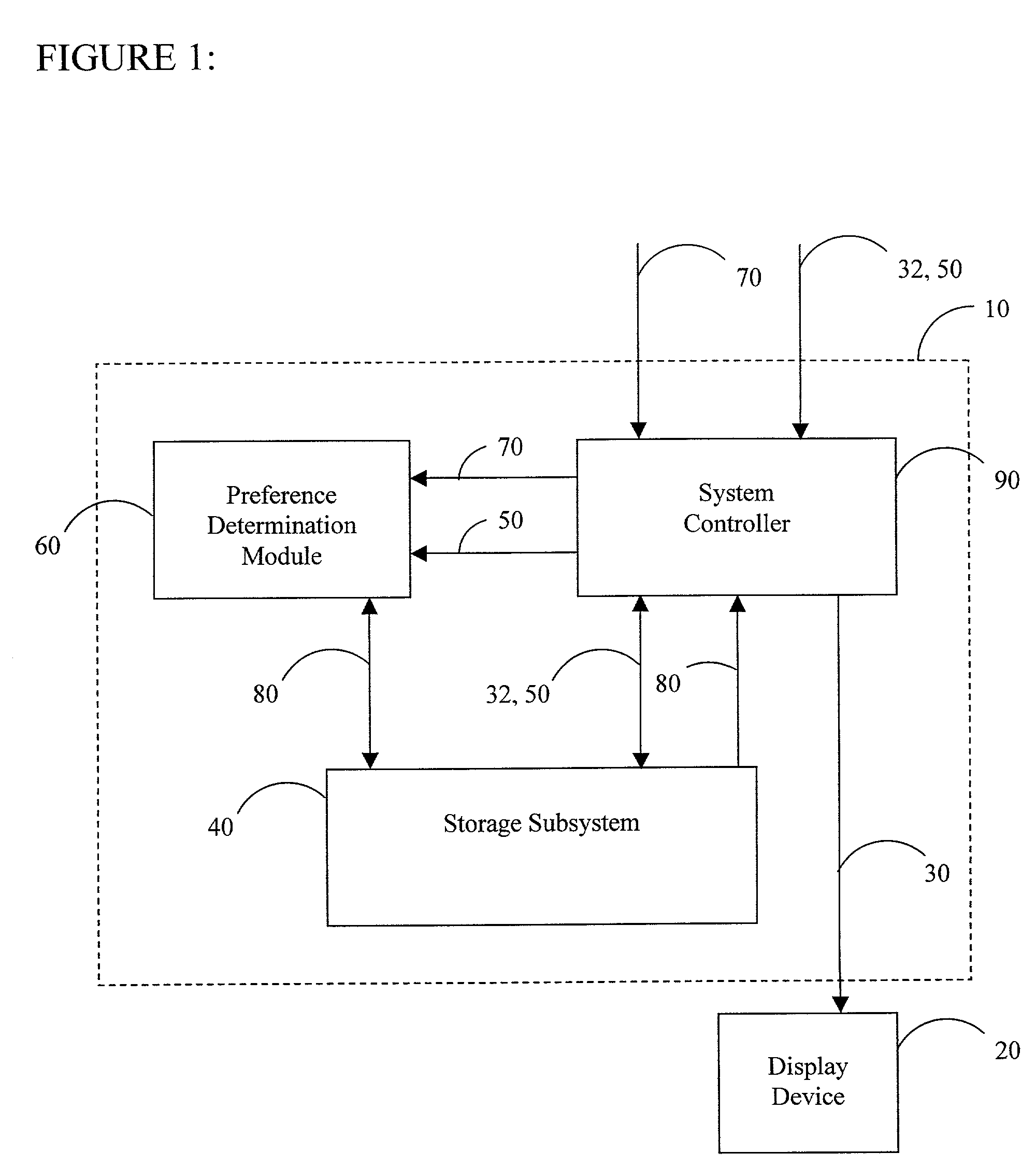

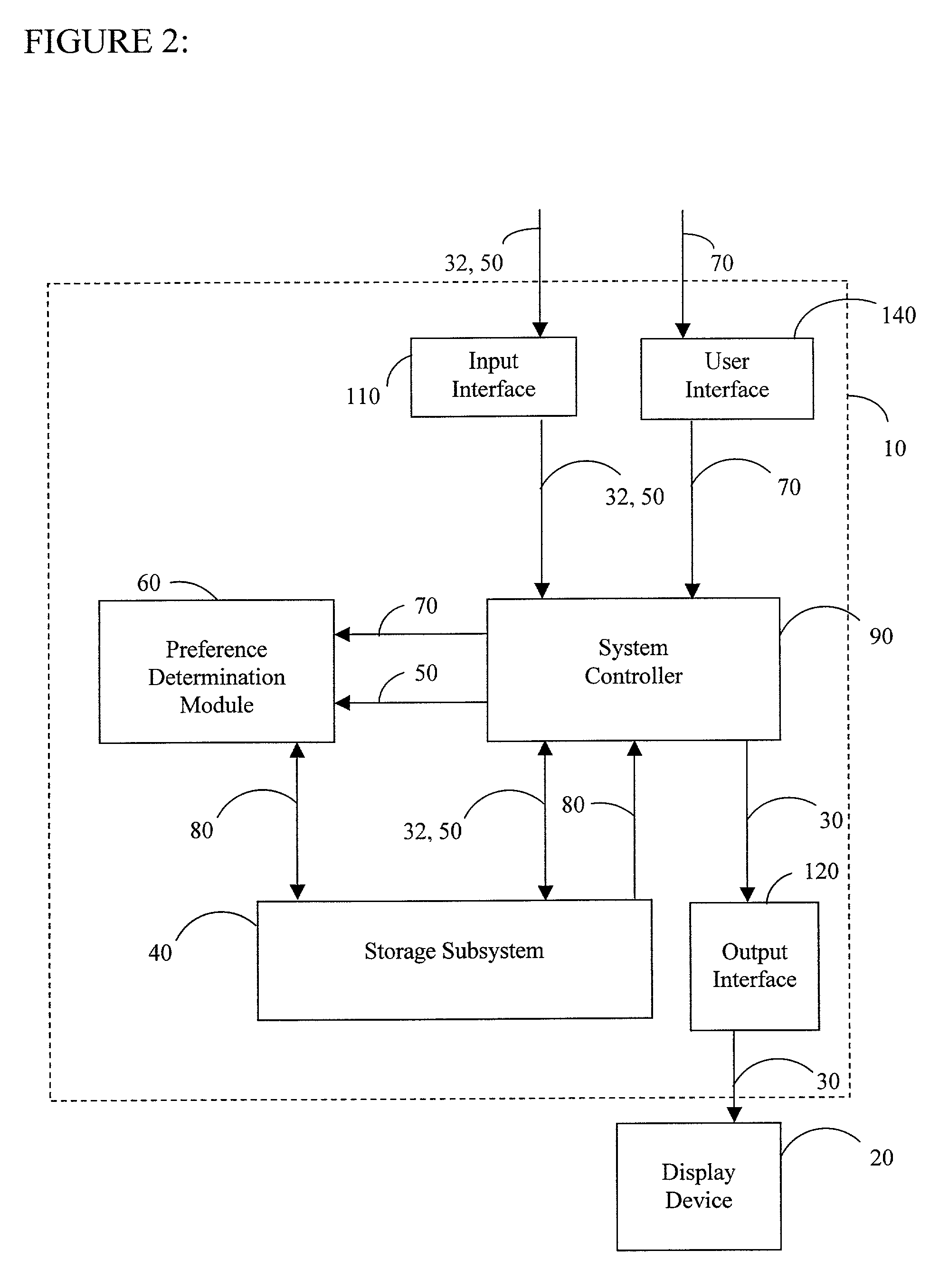

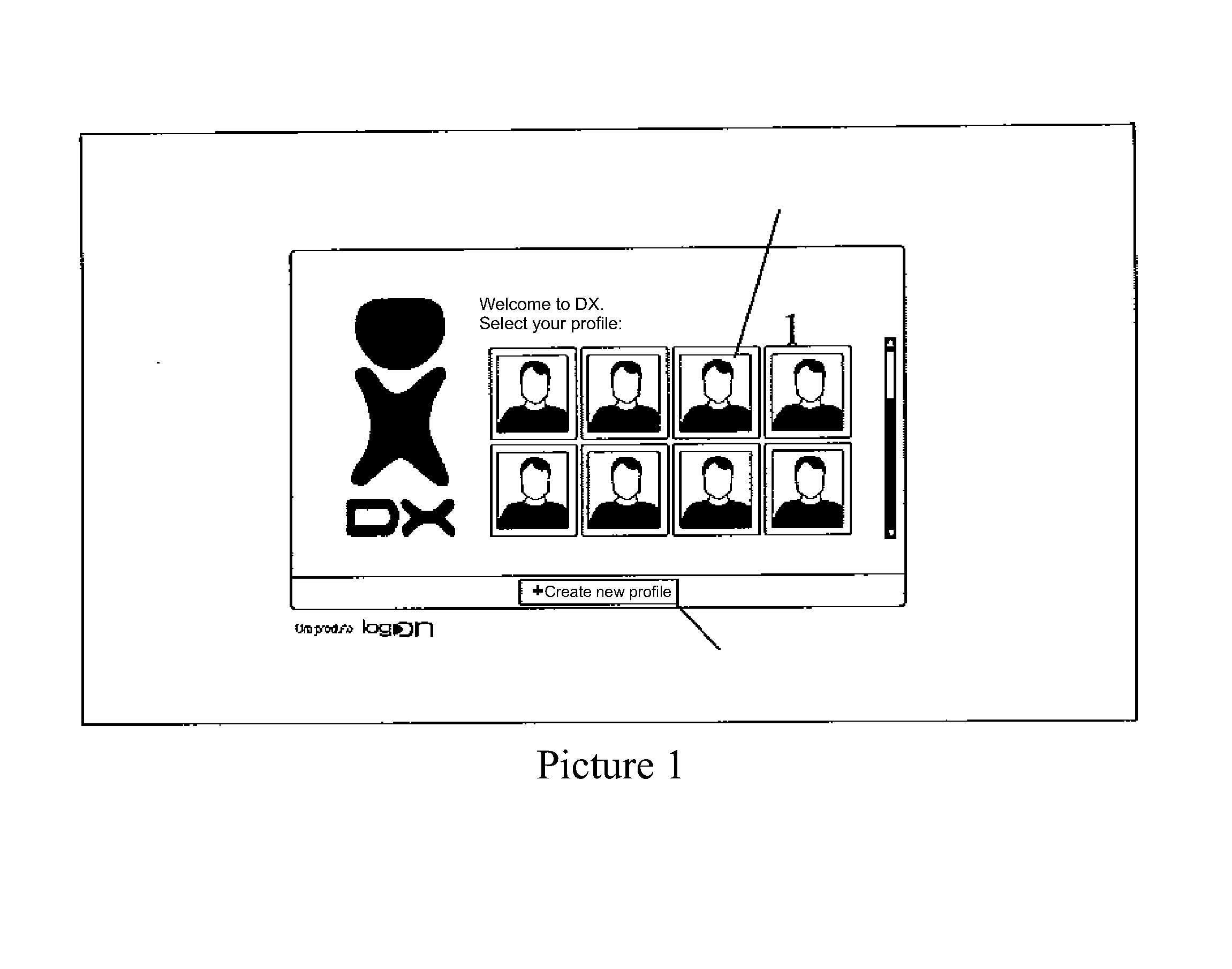

Audiovisual system and method for displaying segmented advertisements tailored to the characteristic viewing preferences of a user

An audiovisual system for displaying an audiovisual advertisement to a user includes a storage subsystem adapted to receive and store audiovisual advertising segments and to retrieve and transmit stored advertising segments. The audiovisual system further includes a preference determination module coupled to the storage subsystem. The preference determination module is responsive to user input and to metadata to generate one or more user profiles. Each user profile is indicative of characteristic viewing preferences of a corresponding user. The audiovisual system further includes a system controller coupled to the storage subsystem. The system controller is responsive to the metadata and to the user profile corresponding to the user to select and retrieve a plurality of stored advertising segments from the storage subsystem and to dynamically assemble the retrieved plurality of stored advertising segments to form the advertisement which is tailored to the characteristic viewing preferences of the user.

Owner:KEEN PERSONAL MEDIA

GPS explorer

Inactive | US20040036649A1 | Instruments for road network navigation | Data processing applications | Hands free | Global Positioning System

This is a portable information system which uses Global Positioning System (GPS) data as a key to automatically retrieve audiovisual data from a database. On a journey the system can automatically identify and describe places of specific interest to the user, landmarks and the history of nearby buildings, or locate hotels, hospitals, shops and products within a radius of the present position. Audible menus and voice command give hands-free and eyes-free control while driving, flying, sailing or walking.

Owner:TAYLOR WILLIAM MICHAEL FREDERICK

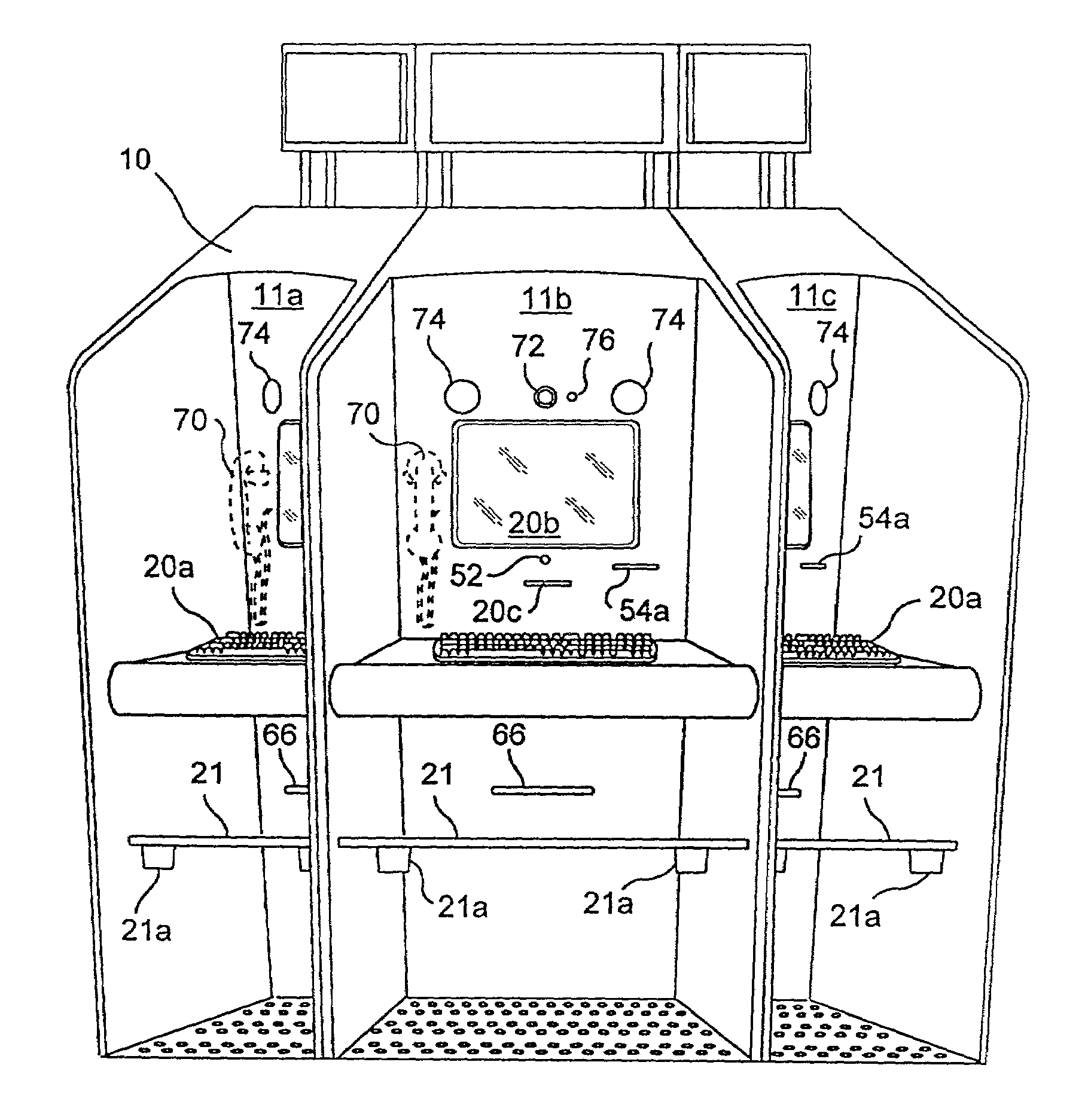

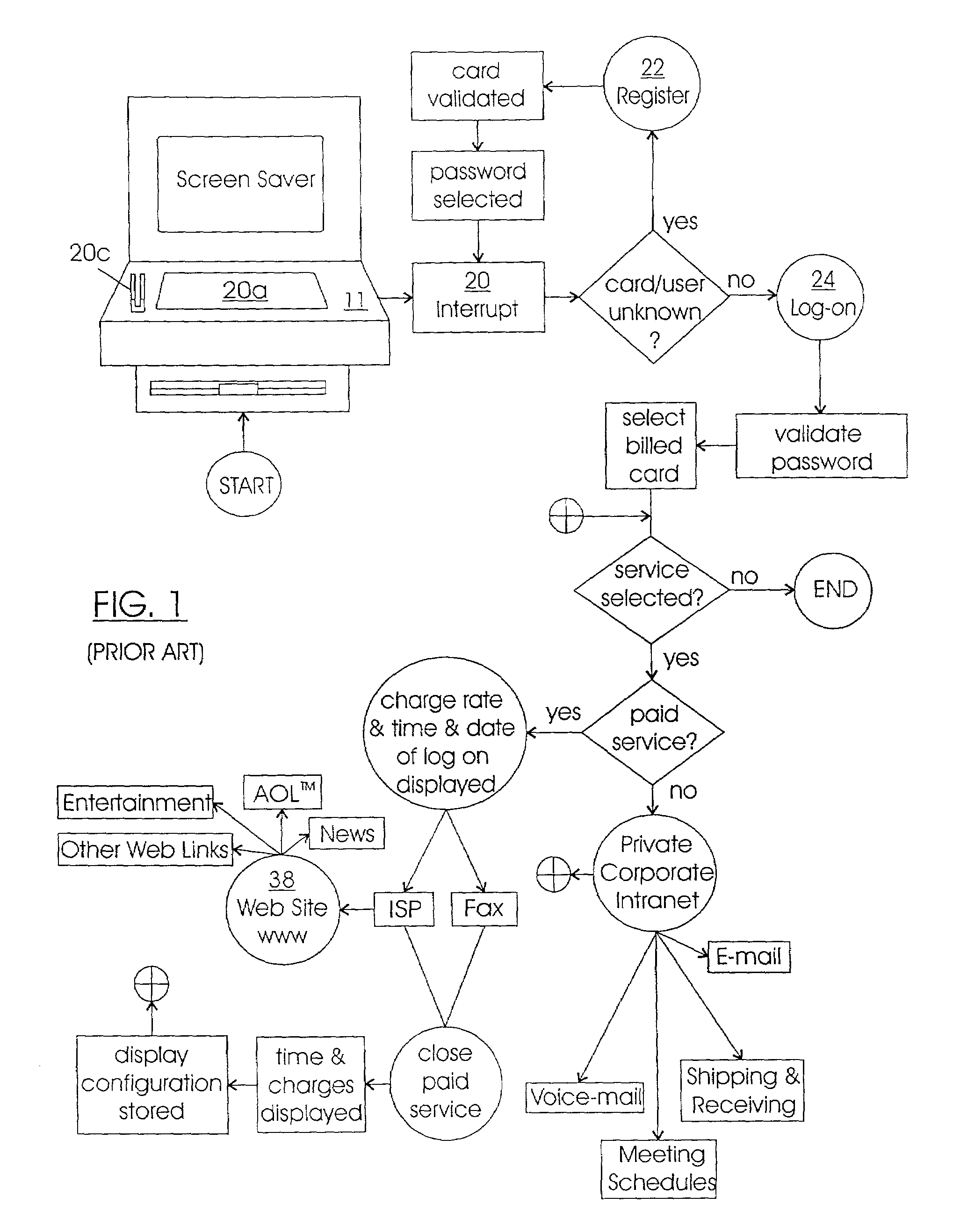

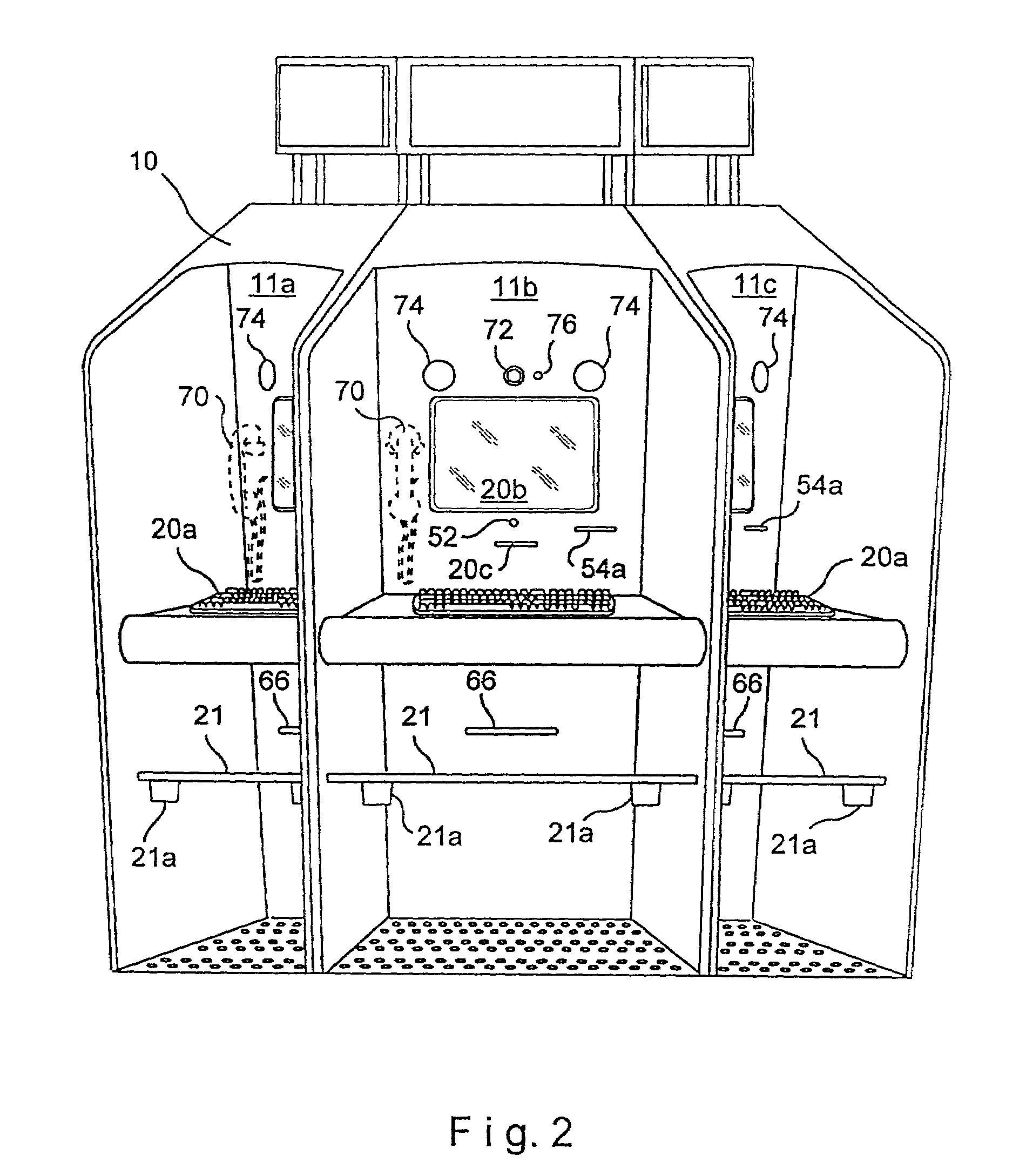

E-commerce development intranet portal

An intranet providing a multiple-carrel public-access kiosk is disclosed. The intranet provides free access to foreign and domestic informational e-commerce intranet sites as well as e-mail and public service educational and informational materials. The kiosk accepts anonymous pre-paid cards issued by a local franchisee of a network of e-commerce intranets that includes the local intranet. The franchisee owns or leases kiosks and also provides a walk-in e-commerce support center where e-commerce support services and goods, such as pre-paid accounts for access to paid services at a kiosk, can be purchased. The paid services provided by the carrel include video-conference and chat room time, playing and/or copying audio-visual materials such as computer games and music videos, and international e-commerce purchase support services such as customs and currency exchange. The third-party sponsored public service materials include audio-visual instructional materials in local dialects introducing the user to the use of the kiosk's services and providing training for using standard business software programs. Sponsors include pop-up market research questions in the sponsored public service information and receive clickstream data correlated with the user's intranet ID and answers to the original demographic questions answered by the user.

Owner:DE FABREGA INGRID PERSCKY

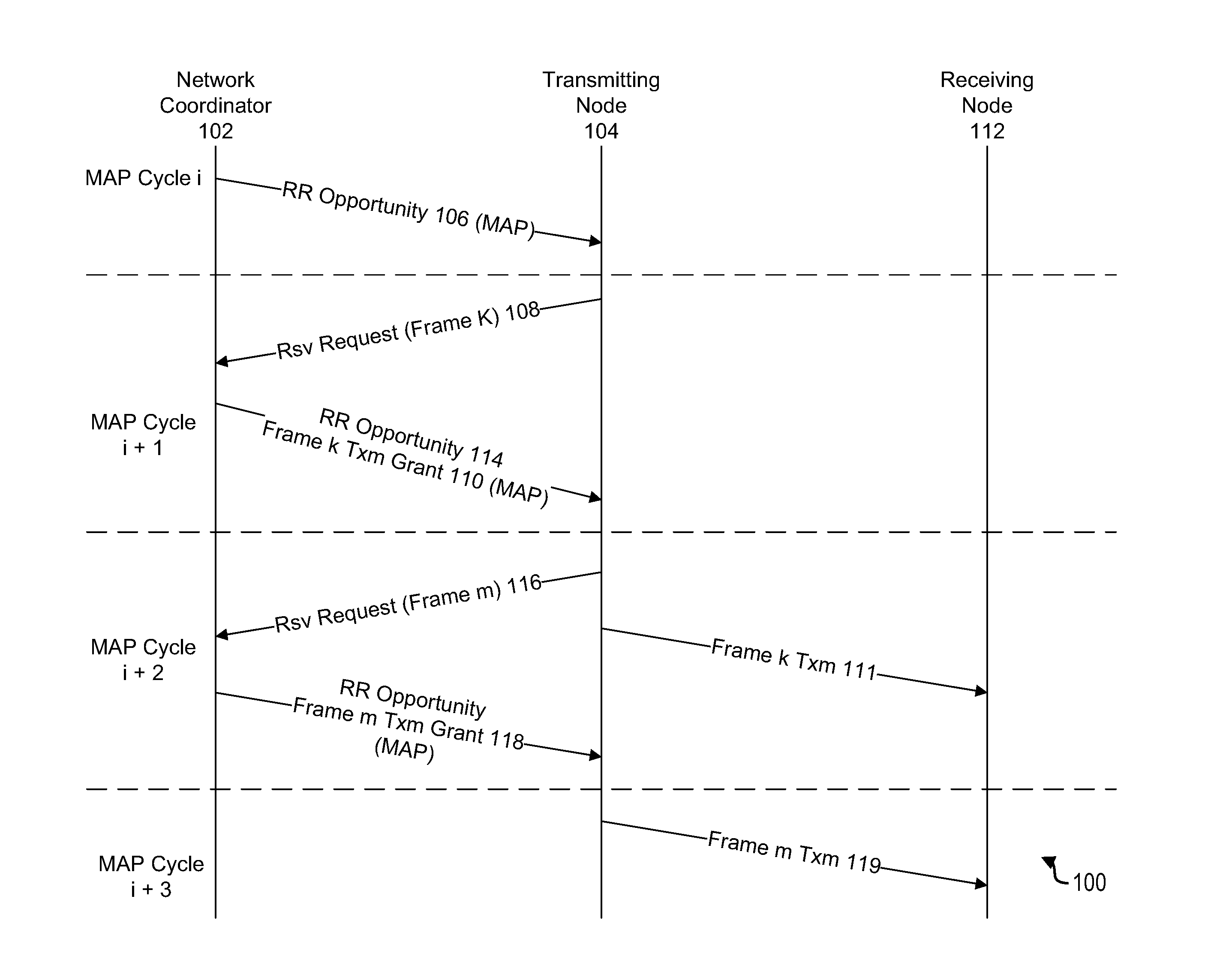

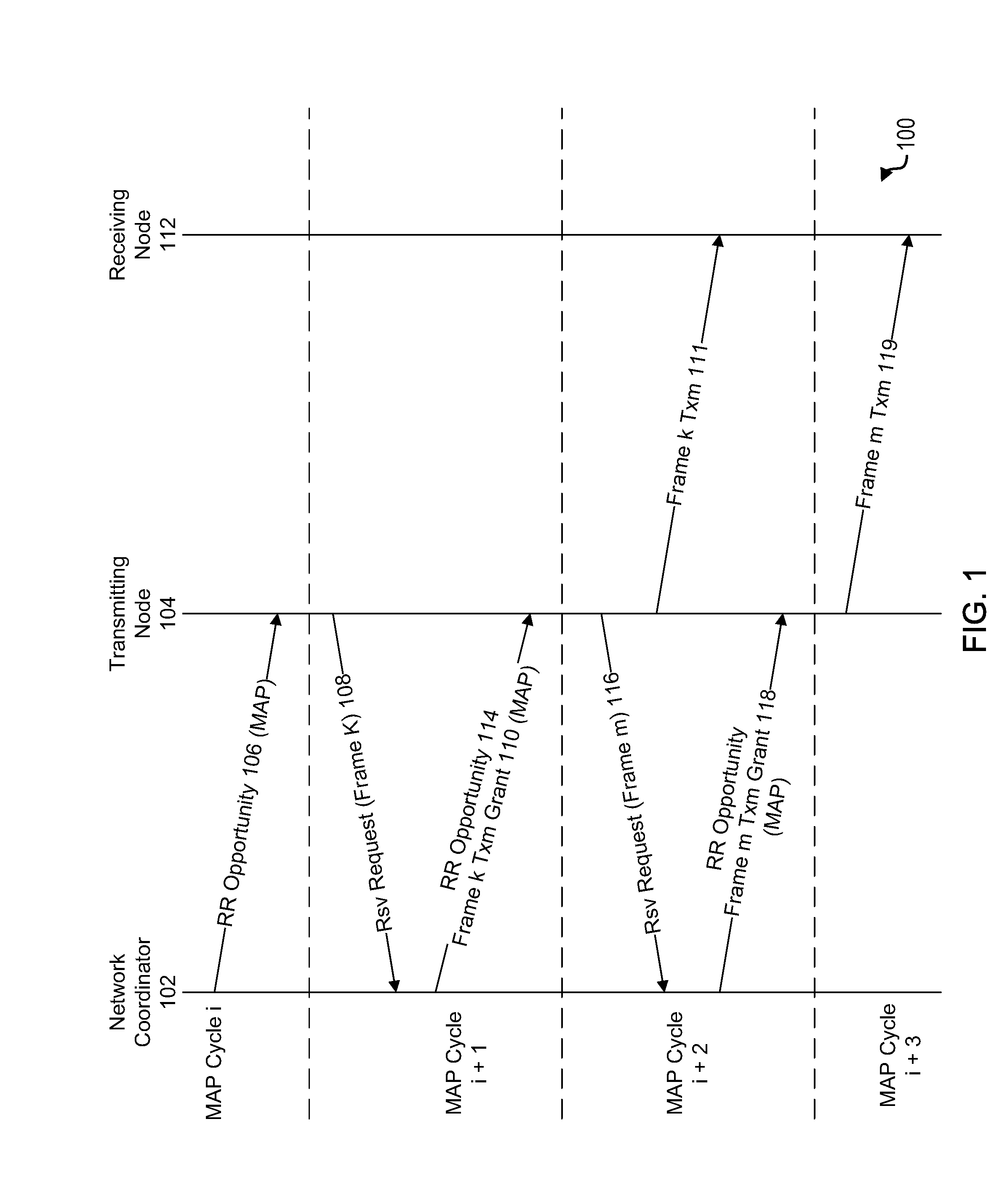

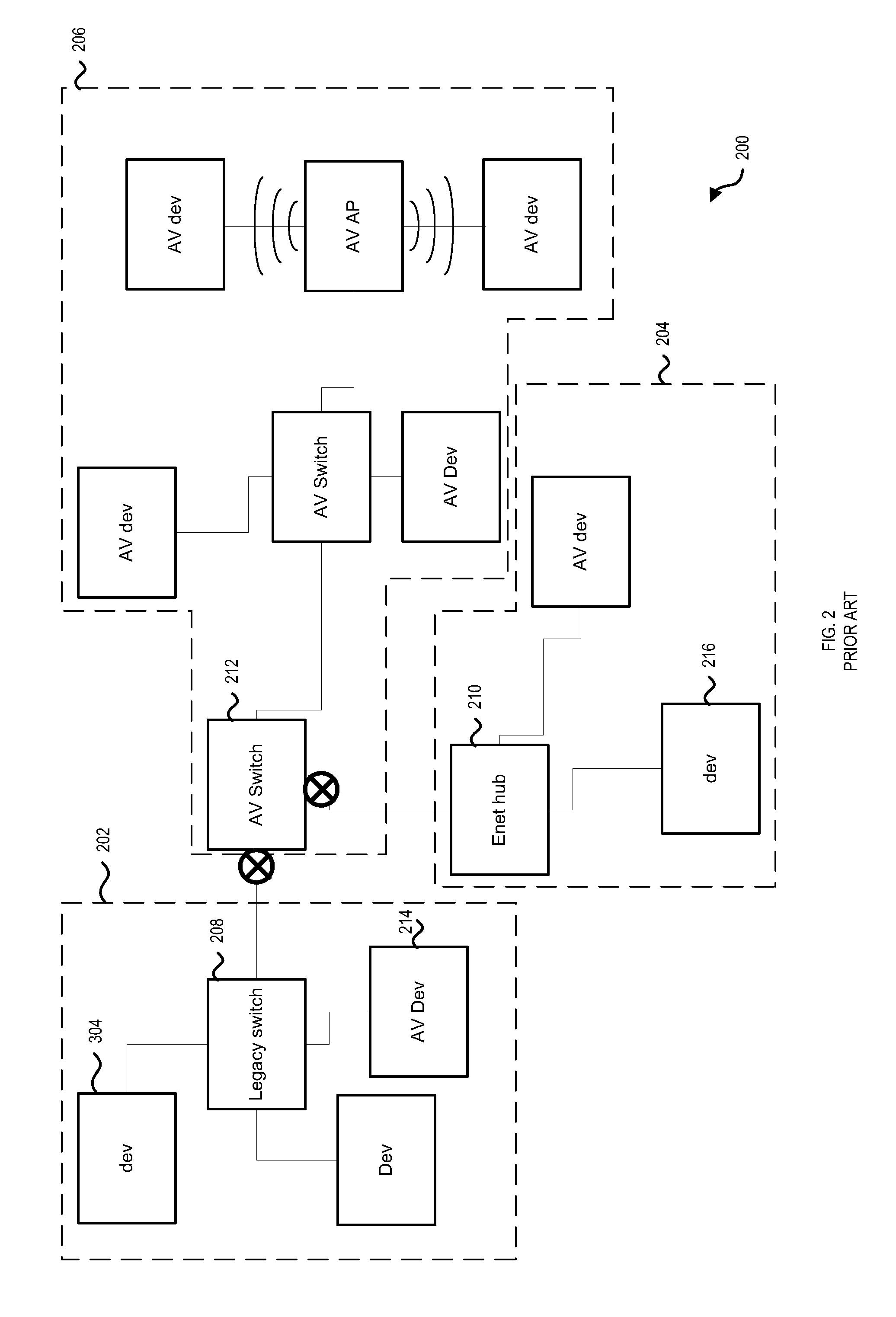

Apparatus and methods for reduction of transmission delay in a communication network

Apparatus and methods for reducing latency in coordinated networks. The apparatus and methods relate to a protocol that may be referred to as the Persistent Reservation Request (“p-RR”), which may be viewed as a type of RR (reservation request). p-RRs may reduce latency, on average, to one MAP cycle or less. A p-RR may be used to facilitate Ethernet audiovisual bridging. Apparatus and methods of the invention may be used in connection with coaxial-cable-based networks that serve as a backbone for a managed network, which may interface with a packet-switched network.

Owner:AVAGO TECH INT SALES PTE LTD

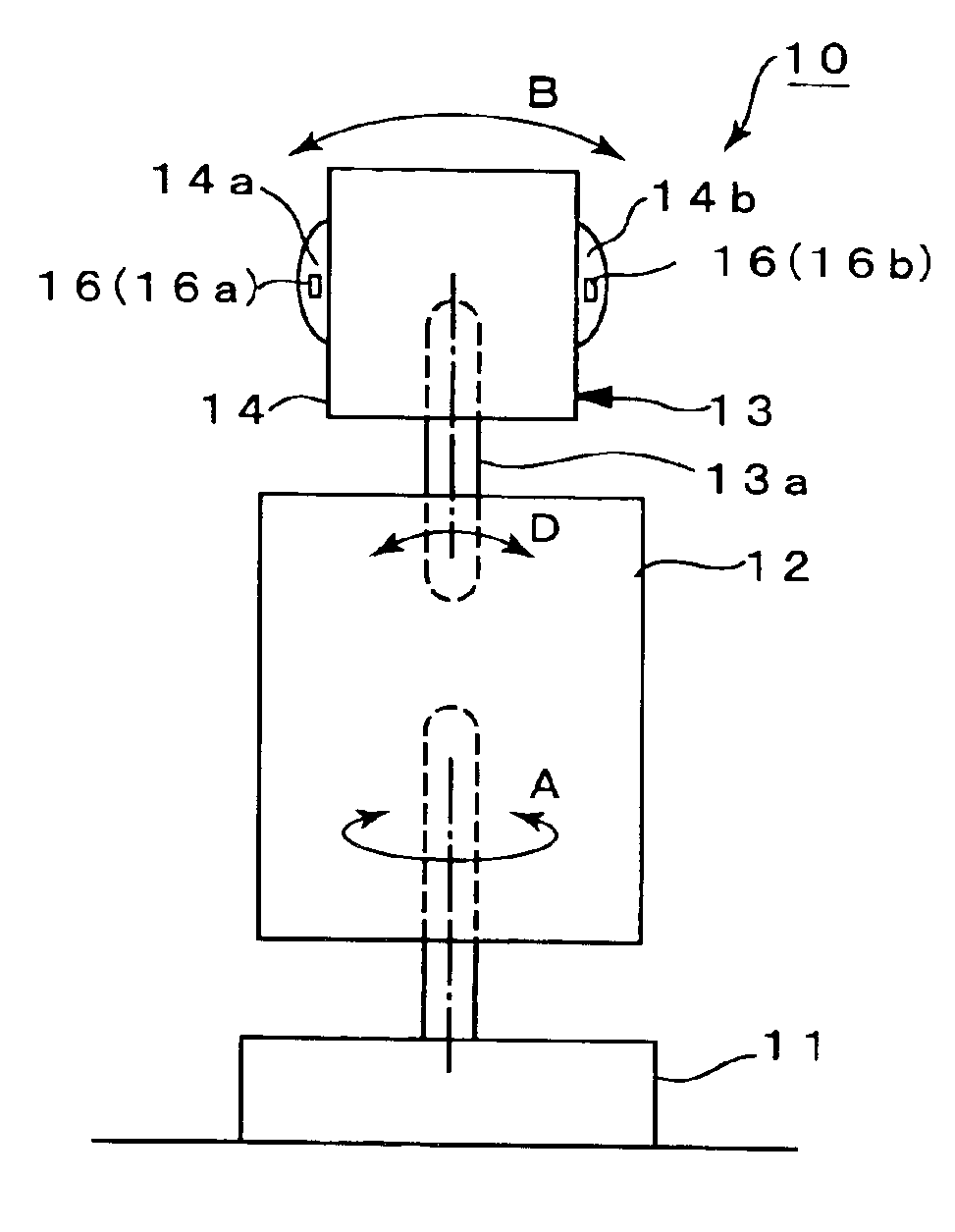

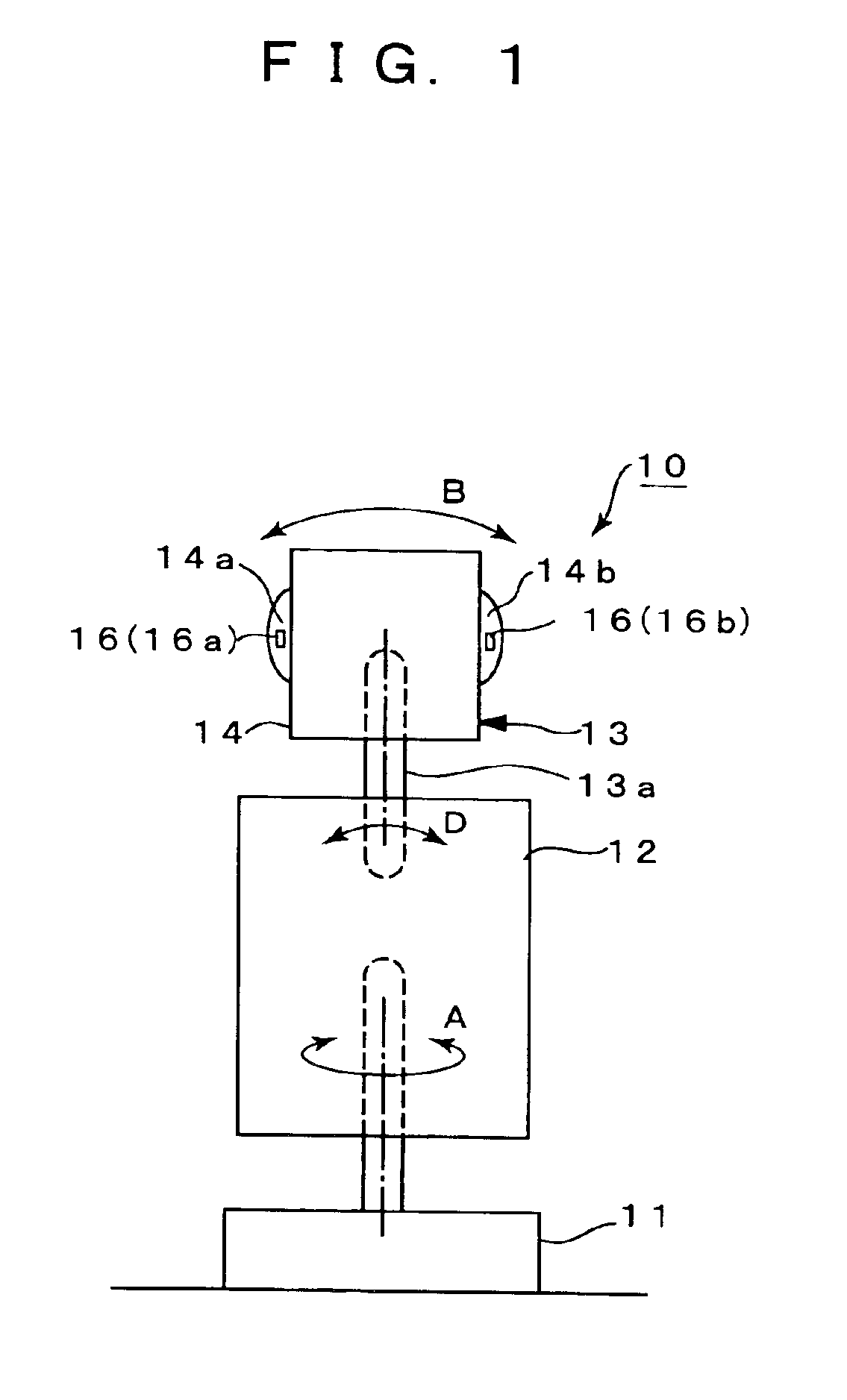

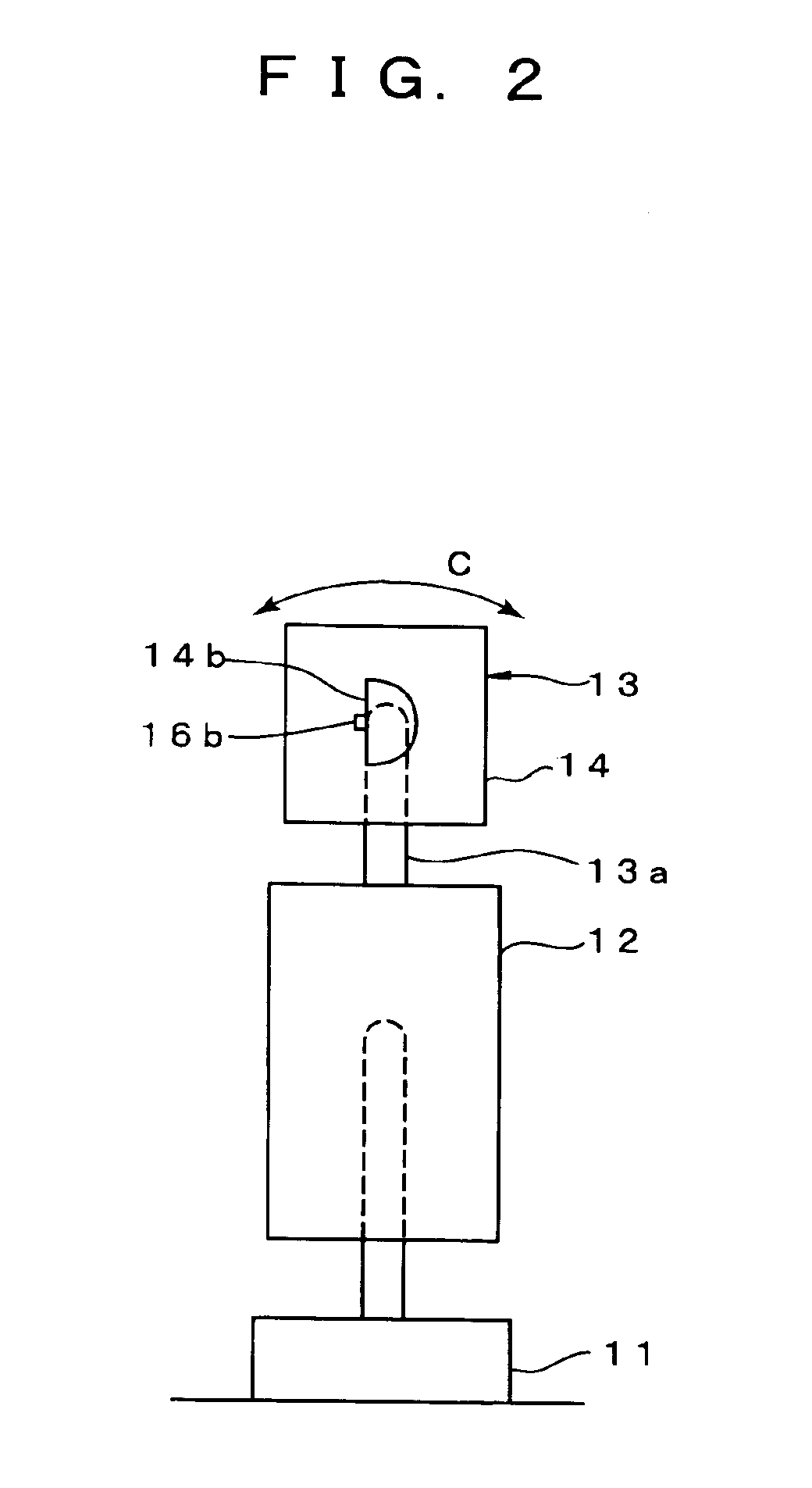

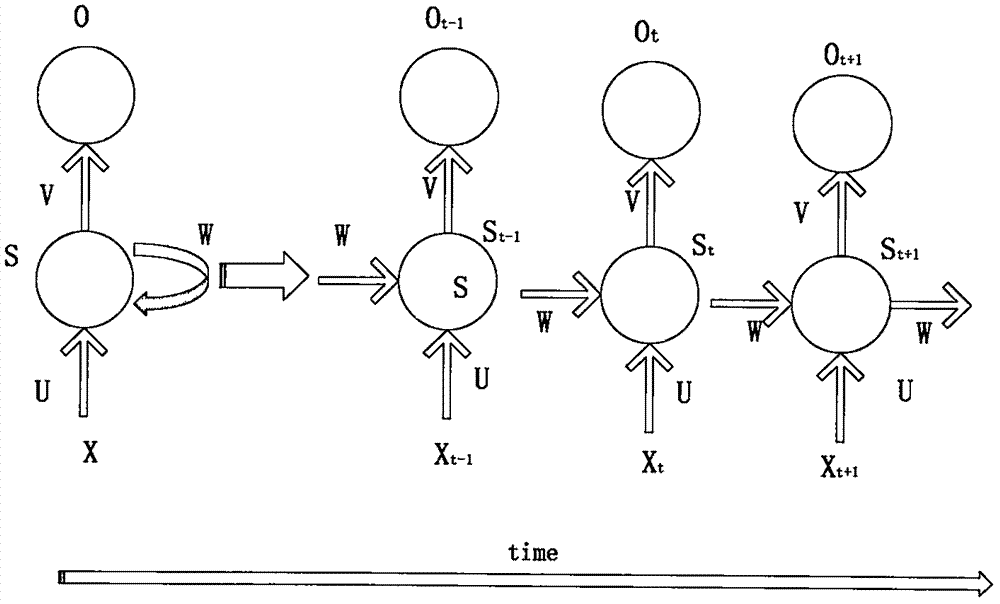

Robot audiovisual system

A robot visuoauditory system is disclosed that processes data in real time to track an object by both vision and audition, integrates visual and auditory information so that the object is tracked without interruption, and visualizes the real-time processing. In the system, the audition module (20), in response to sound signals from microphones, extracts pitches therefrom, separates their sound sources from each other, and locates the sound sources so as to identify a sound source as at least one speaker, thereby extracting an auditory event (28) for each speaker. The vision module (30), on the basis of an image taken by a camera, identifies each such speaker by face and locates the speaker, thereby extracting a visual event (39) therefor. The motor control module (40), which turns the robot horizontally, extracts a motor event (49) from a rotary position of the motor. The association module (60), which controls these modules, forms from the auditory, visual and motor control events an auditory stream (65) and a visual stream (66) and then associates these streams with each other to form an association stream (67). The attention control module (64) effects attention control designed to plan the course in which to control the drive motor, e.g., upon locating the sound source for the auditory event and locating the face for the visual event, thereby determining the direction in which each speaker lies. The system also includes a display (27, 37, 48, 68) for displaying at least a portion of the auditory, visual and motor information. The attention control module (64) servo-controls the robot on the basis of the association stream or streams.

Owner:JAPAN SCI & TECH CORP

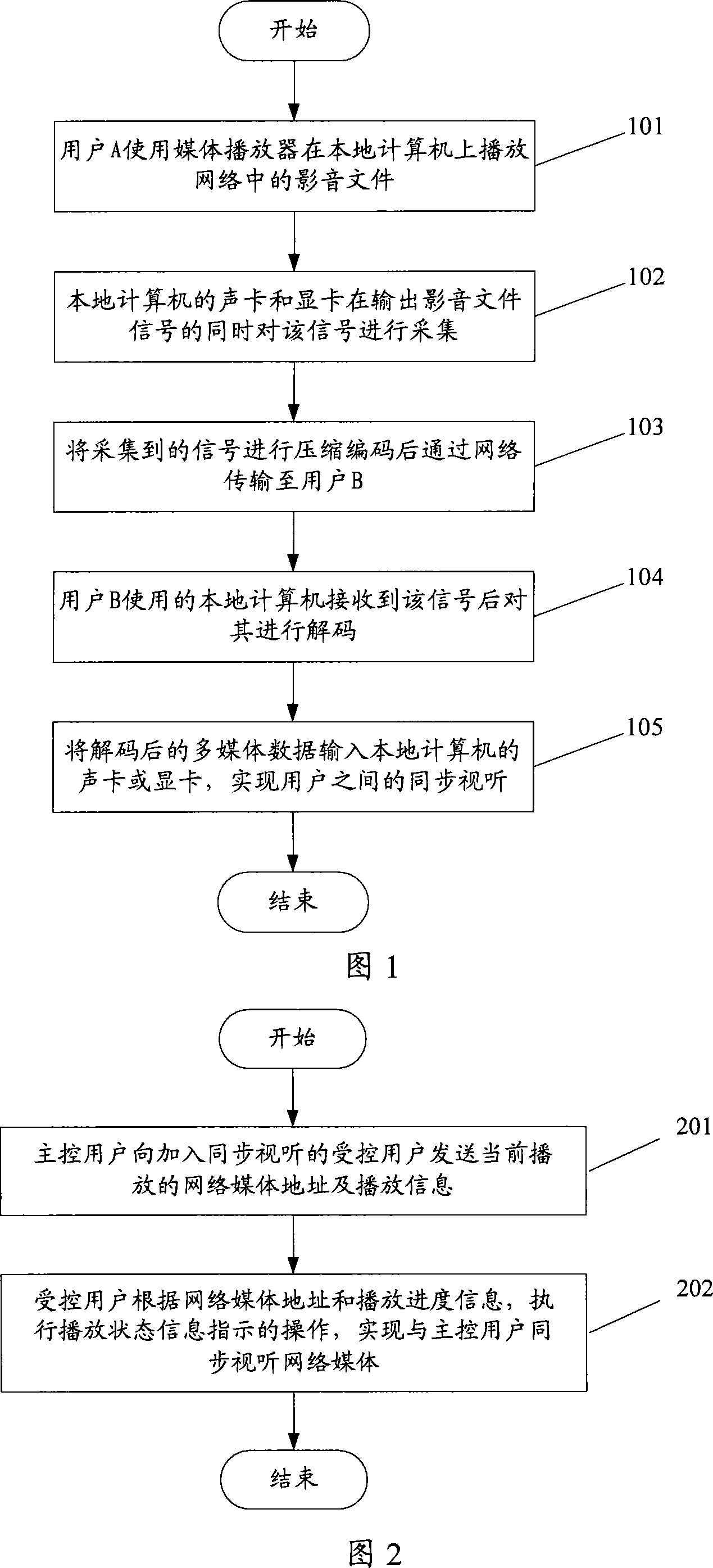

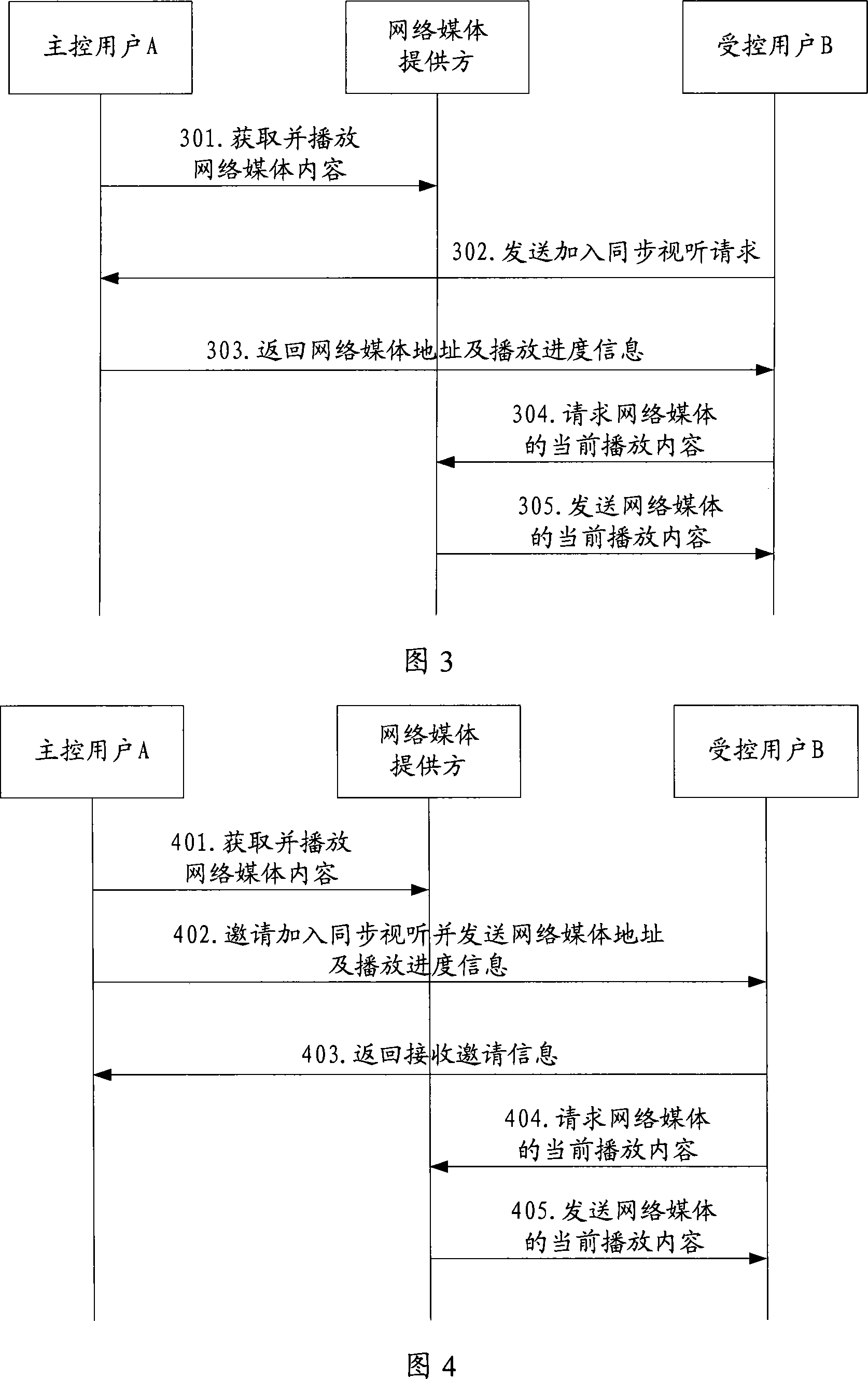

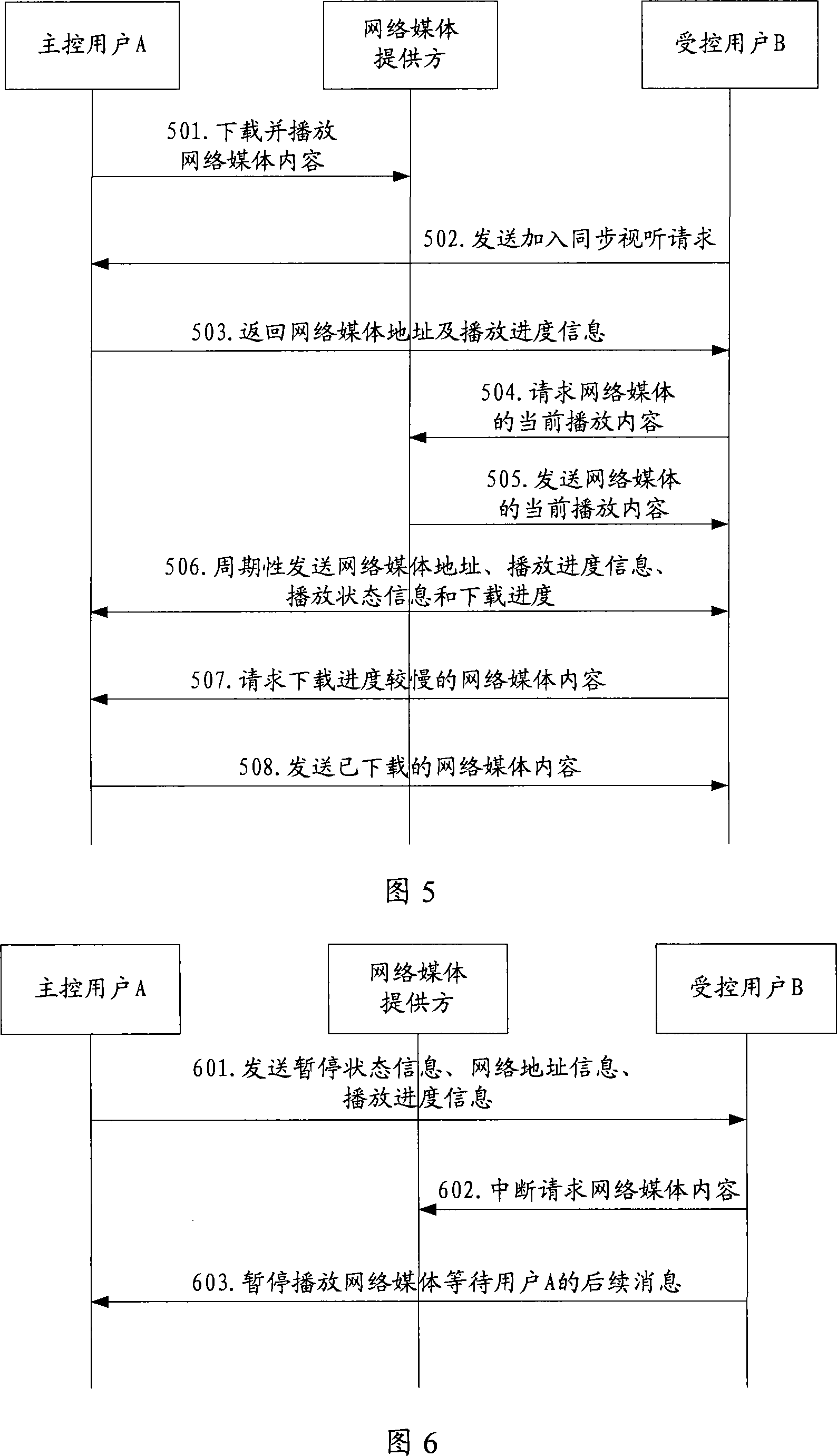

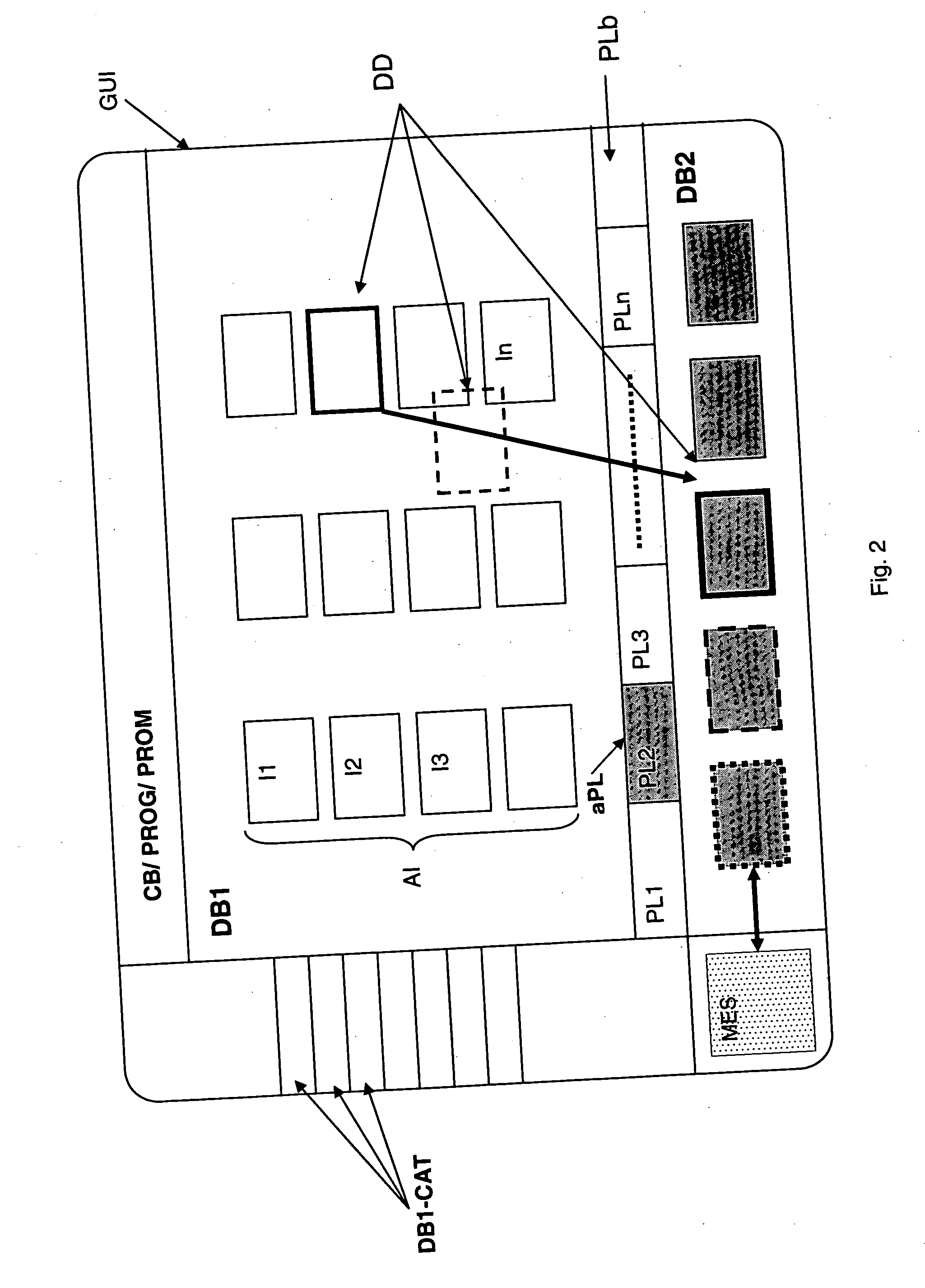

Method, system and user end for realizing network media audio-video synchronization

Inactive | CN101072359A | Synchronized audio-visual flexibility | Good synergistic entertainment effect | Pulse modulation television signal transmission | Transmission | Network media | Audiovisual technology

In the method, a main controlling user sends the address of the network media currently being played, together with playing information, to the controlled users who have joined the synchronized viewing session; the playing information includes the playback progress and the playing state. Based on the media address and the playback progress, each controlled user executes the operation indicated by the playing state, so that playback is synchronized with the main controlling user. The invention also discloses a system and a user end for synchronized audiovisual playback of network media. The main controlling user does not need to sample the content currently being played and send the samples to the controlled users; it sends only the media address and playing information, and the controlled users execute the operations indicated by the playing state. Users can thus synchronize playback of network media flexibly.

Owner:TENCENT TECH (SHENZHEN) CO LTD
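
As a rough illustration of the synchronization scheme in the abstract above, the following Python sketch passes only a media address and playing information to the controlled clients. `PlayInfo`, `Player`, `broadcast`, and the example URL are hypothetical names chosen for this sketch, and the network transport is replaced by a direct function call.

```python
from dataclasses import dataclass


@dataclass
class PlayInfo:
    media_url: str     # address of the network media being played
    position_s: float  # playback progress, in seconds
    state: str         # playing state, e.g. "playing" or "paused"


class Player:
    """Toy stand-in for the media player on a controlled client."""

    def __init__(self):
        self.media_url = None
        self.position_s = 0.0
        self.state = "stopped"

    def apply(self, info: PlayInfo) -> None:
        # Open the same media by address rather than receiving sampled content,
        # then jump to the reported position and mirror the playing state.
        self.media_url = info.media_url
        self.position_s = info.position_s
        self.state = info.state


def broadcast(info, followers):
    """Deliver the controller's playing information to every controlled client."""
    for player in followers:
        player.apply(info)


followers = [Player(), Player()]
broadcast(PlayInfo("http://example.com/movie.mp4", 754.0, "playing"), followers)
print([(p.position_s, p.state) for p in followers])
```

A real client would also need to compensate for network delay between the controller and each follower, which this sketch ignores.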

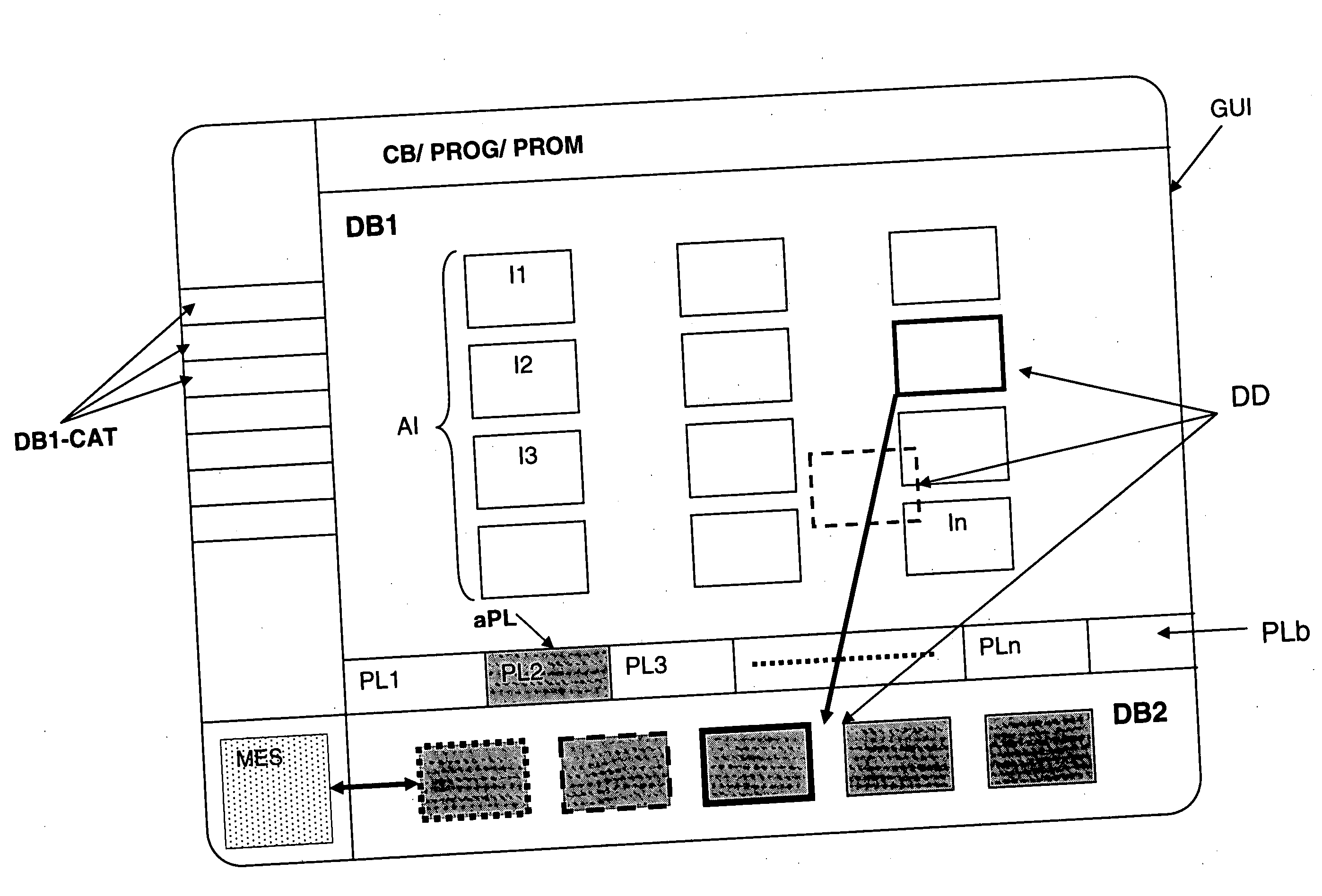

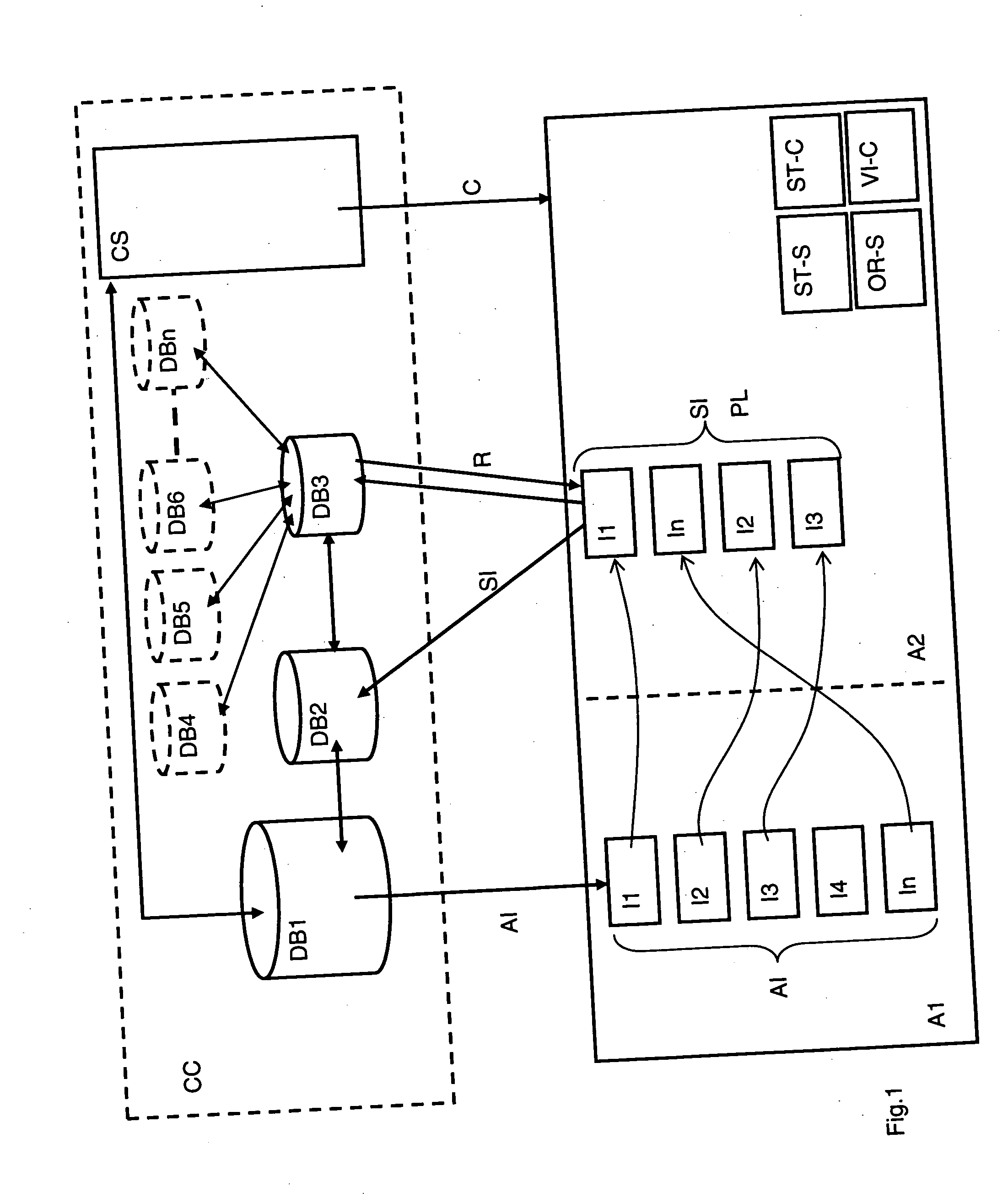

Method and system for dynamically organizing audio-visual items stored in a central database

Inactive | US20070156697A1 | Easy to change | Large degree of independence | Multimedia data browsing/visualisation | Special data processing applications | Personalization | Graphics

Embodiments of a method and a system are disclosed for remotely and dynamically managing, sequencing and retrieving audio-visual items from a central database in order to generate a customized digital audio-visual data stream. The content of this stream is associated with the selected items and can be visualized and/or stored in a storage device for later use. An embodiment of this method is carried out via a central content database, a central rights database and any number of rules databases (“Filters”), which can reflect changing parameters and rules of the system, which can pertain to evolving digital rights and permissions, marketing and other system goals and rules pertaining to information exchange between users. An example of a graphic and user-friendly interface is presented on a user terminal unit which can dynamically reflect both user and system generated changes, and dynamically present customized audio-visual streams (playlists) based on user selection and personalized preferences, system objectives and eventual playlist sharing between users.

Owner:TRANSMEDIA COMM

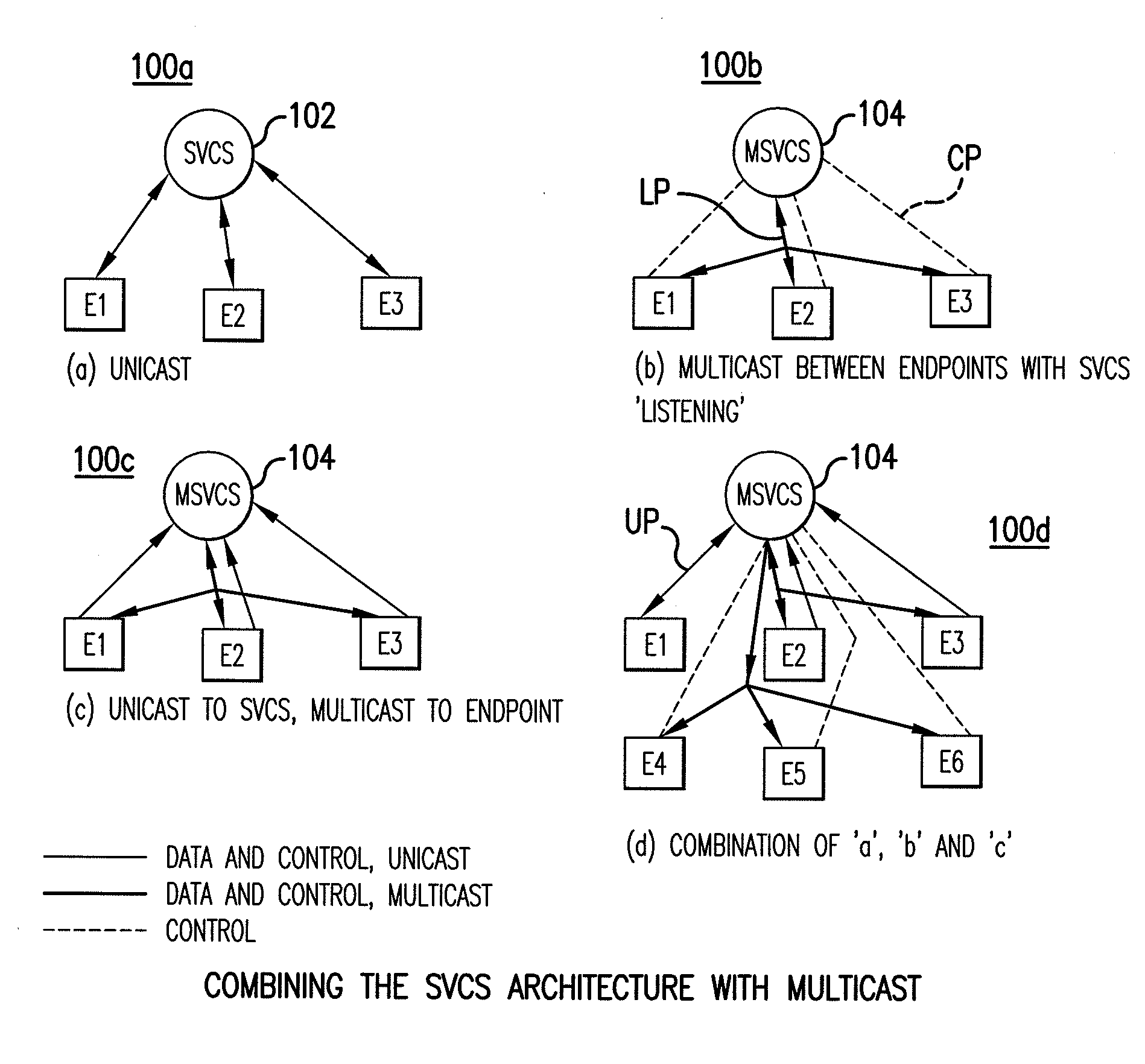

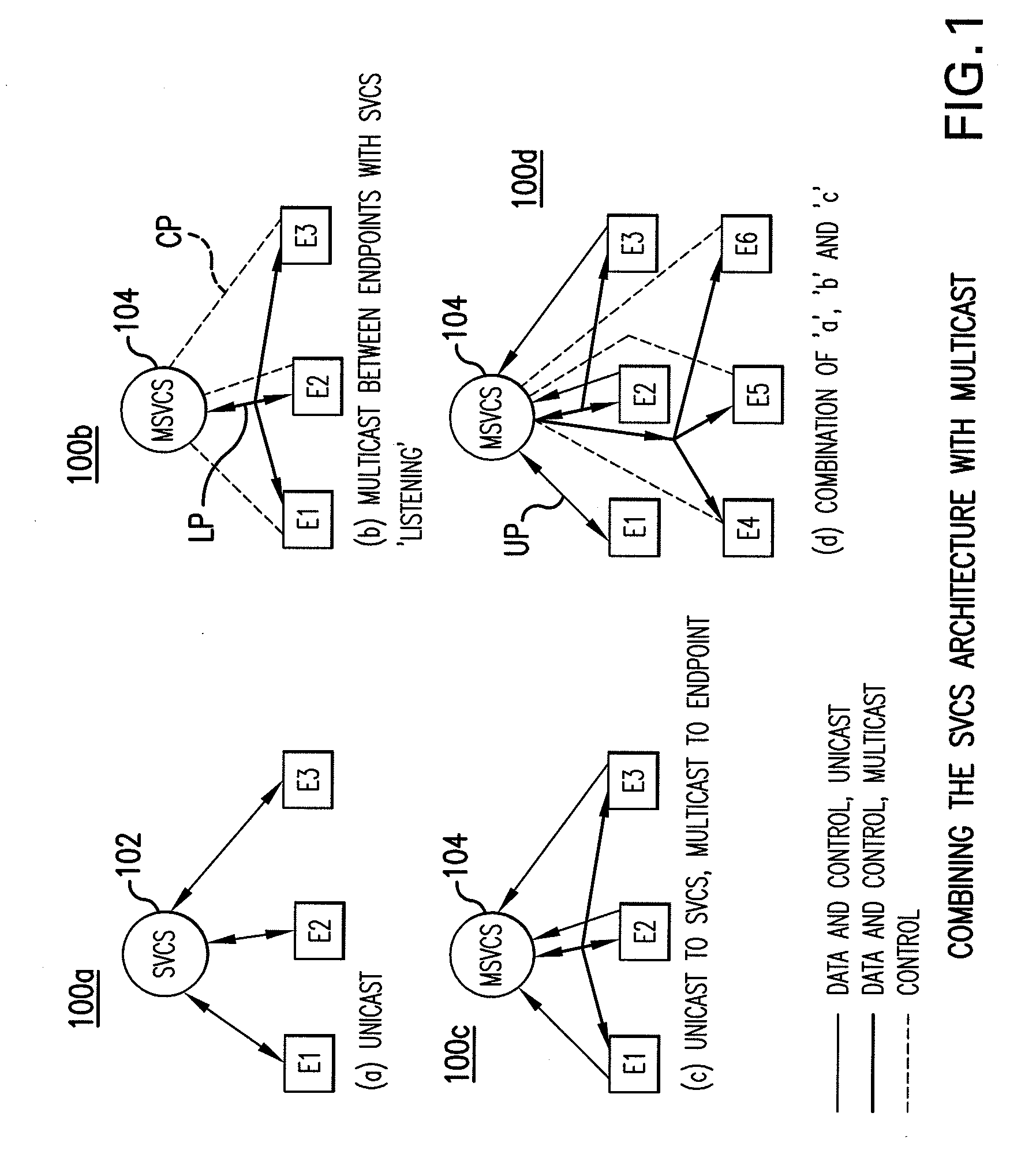

System and method for multipoint conferencing with scalable video coding servers and multicast

Active | US20080239062A1 | Easy to use | Minimize bandwidth overhead | Two-way working systems | Digital video signal modification | Multicast communication | Communications server

A multicast scalable video communication server (MSVCS) is disposed in a multi-endpoint video conferencing system having multicast capabilities and in which audiovisual signals are scalably coded. The MSVCS additionally has unicast links to endpoints. The MSVCS caches audiovisual signal data received from endpoints over multicast communication channels, and retransmits the data over either unicast or multicast communication channels to an endpoint that requests the cached data.

Owner:VIDYO

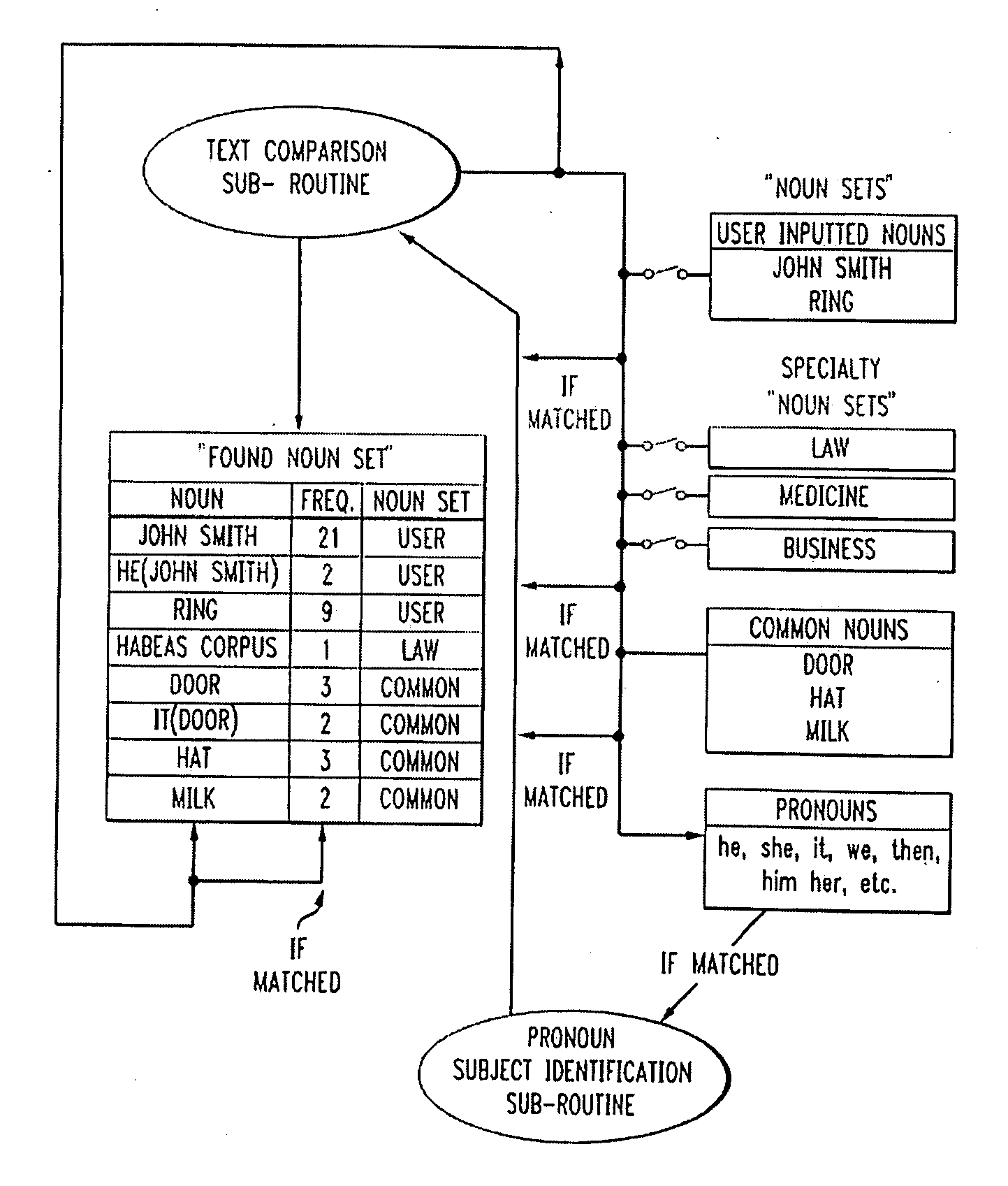

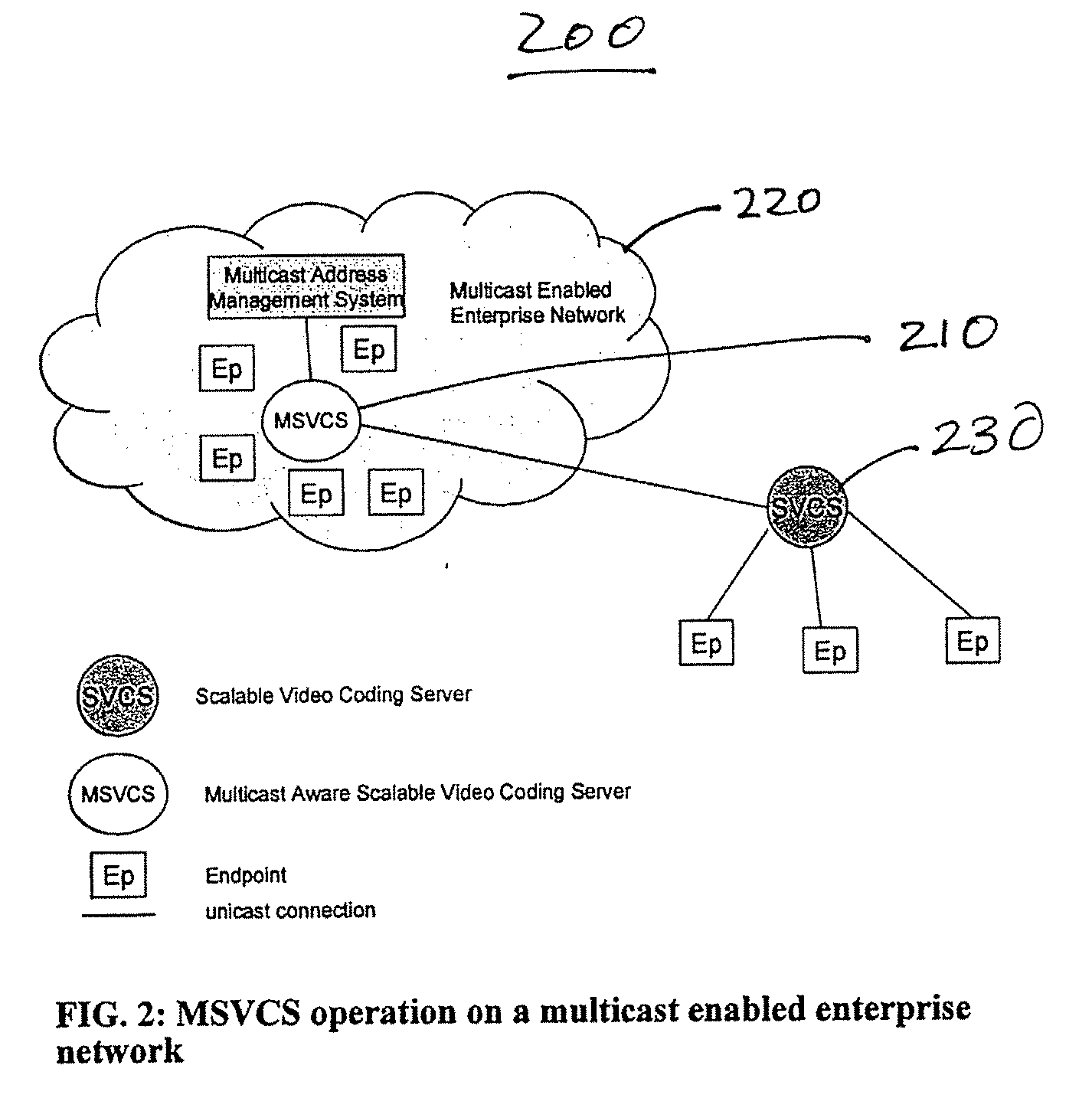

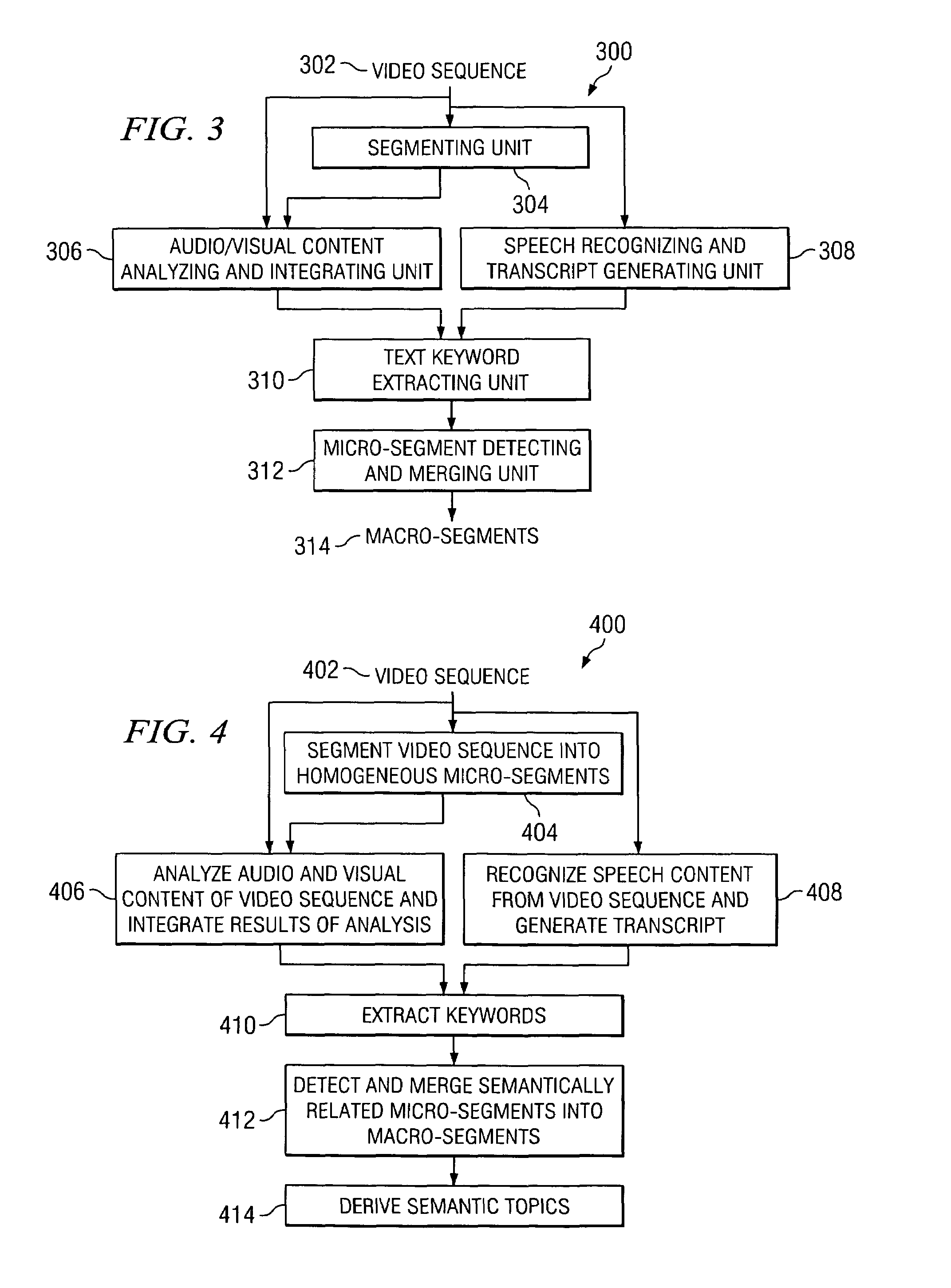

System and method for semantic video segmentation based on joint audiovisual and text analysis

Inactive | US7382933B2 | Digital data information retrieval | Character and pattern recognition | Visual perception | Computer science

System and method for partitioning a video into a series of semantic units where each semantic unit relates to a generally complete thematic topic. A computer implemented method for partitioning a video into a series of semantic units wherein each semantic unit relates to a theme or a topic, comprises dividing a video into a plurality of homogeneous segments, analyzing audio and visual content of the video, extracting a plurality of keywords from the speech content of each of the plurality of homogeneous segments of the video, and detecting and merging a plurality of groups of semantically related and temporally adjacent homogeneous segments into a series of semantic units in accordance with the results of both the audio and visual analysis and the keyword extraction. The present invention can be applied to generate important table-of-contents as well as index tables for videos to facilitate efficient video topic searching and browsing.

Owner:IBM CORP
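
The merging step in the abstract above can be sketched in Python under strong simplifying assumptions: the sketch uses only per-segment keyword sets, measures relatedness with Jaccard overlap, and merges greedily from left to right. The `jaccard` and `merge_segments` names, the threshold value, and the choice of Jaccard overlap are illustrative assumptions; the audio and visual analysis named in the abstract is not modeled.

```python
def jaccard(a, b):
    """Overlap between two keyword sets, from 0.0 (disjoint) to 1.0 (identical)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def merge_segments(keywords, threshold=0.25):
    """Group temporally adjacent segments whose keywords overlap enough.

    `keywords` holds one keyword set per homogeneous segment, in time order.
    Returns a list of semantic units, each with its segment indices and keywords.
    """
    units = []
    for i, kw in enumerate(keywords):
        if units and jaccard(units[-1]["keywords"], kw) >= threshold:
            # Related to the running unit: extend it.
            units[-1]["segments"].append(i)
            units[-1]["keywords"] |= kw
        else:
            # Topic change: start a new semantic unit.
            units.append({"segments": [i], "keywords": set(kw)})
    return units


segment_keywords = [
    {"budget", "tax"},
    {"tax", "revenue"},
    {"goal", "match"},
    {"match", "coach"},
]
units = merge_segments(segment_keywords)
print([u["segments"] for u in units])
```

The resulting units could serve as entries in a table of contents, with each unit's keyword set acting as index terms.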

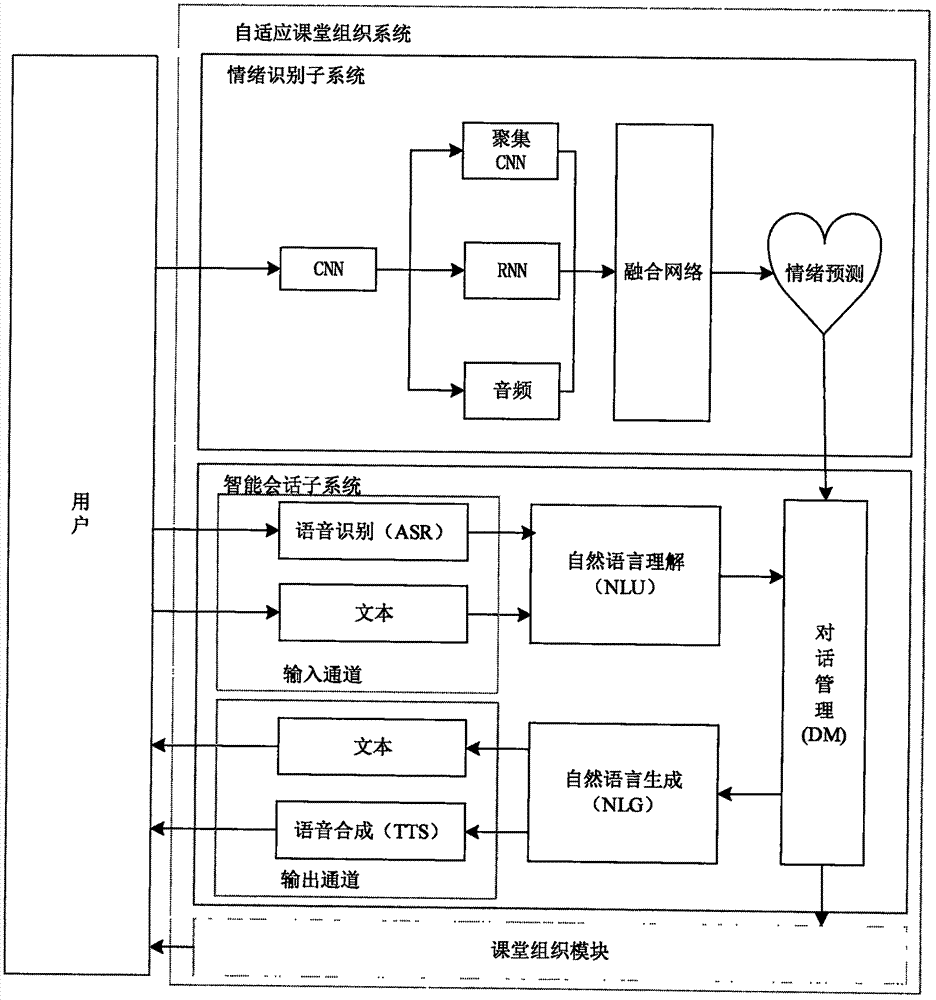

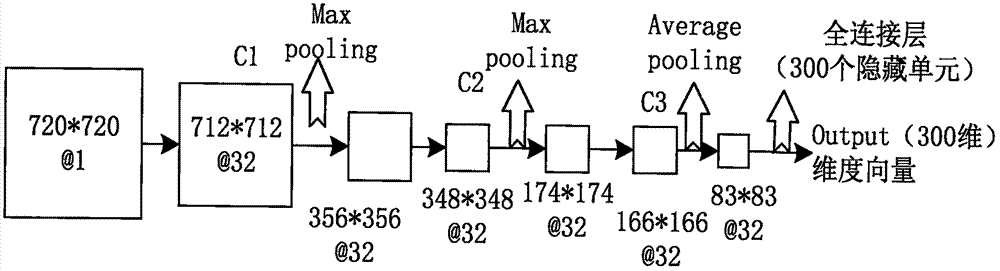

Man-machine interaction method and system for online education based on artificial intelligence

Pending | CN107958433A | Solve the problem of poor learning effect | Data processing applications | Speech recognition | Personalization | Online learning

The invention discloses a man-machine interaction method and system for online education based on artificial intelligence, and relates to digitalized visual and acoustic technology in the field of electronic information. The system comprises a subsystem that recognizes the emotion of an audience and an intelligent session subsystem. The two subsystems are combined with an online education system, thereby achieving better presentation of personalized teaching content for the audience. The system starts from improving the vividness of man-machine interaction in online education. The emotion recognition subsystem judges the learning state of a user from the user's expression while watching a video, and the intelligent session subsystem then carries out machine Q&A interaction. The emotion recognition subsystem classifies the emotions of the audience into seven types: anger, aversion, fear, sadness, surprise, neutrality, and happiness. The intelligent session subsystem adjusts the corresponding course content according to the different emotions and carries out the machine Q&A interaction, so that the teacher-student interaction and feedback of a conventional class can be presented online and the online class becomes more personalized.

Owner:JILIN UNIV

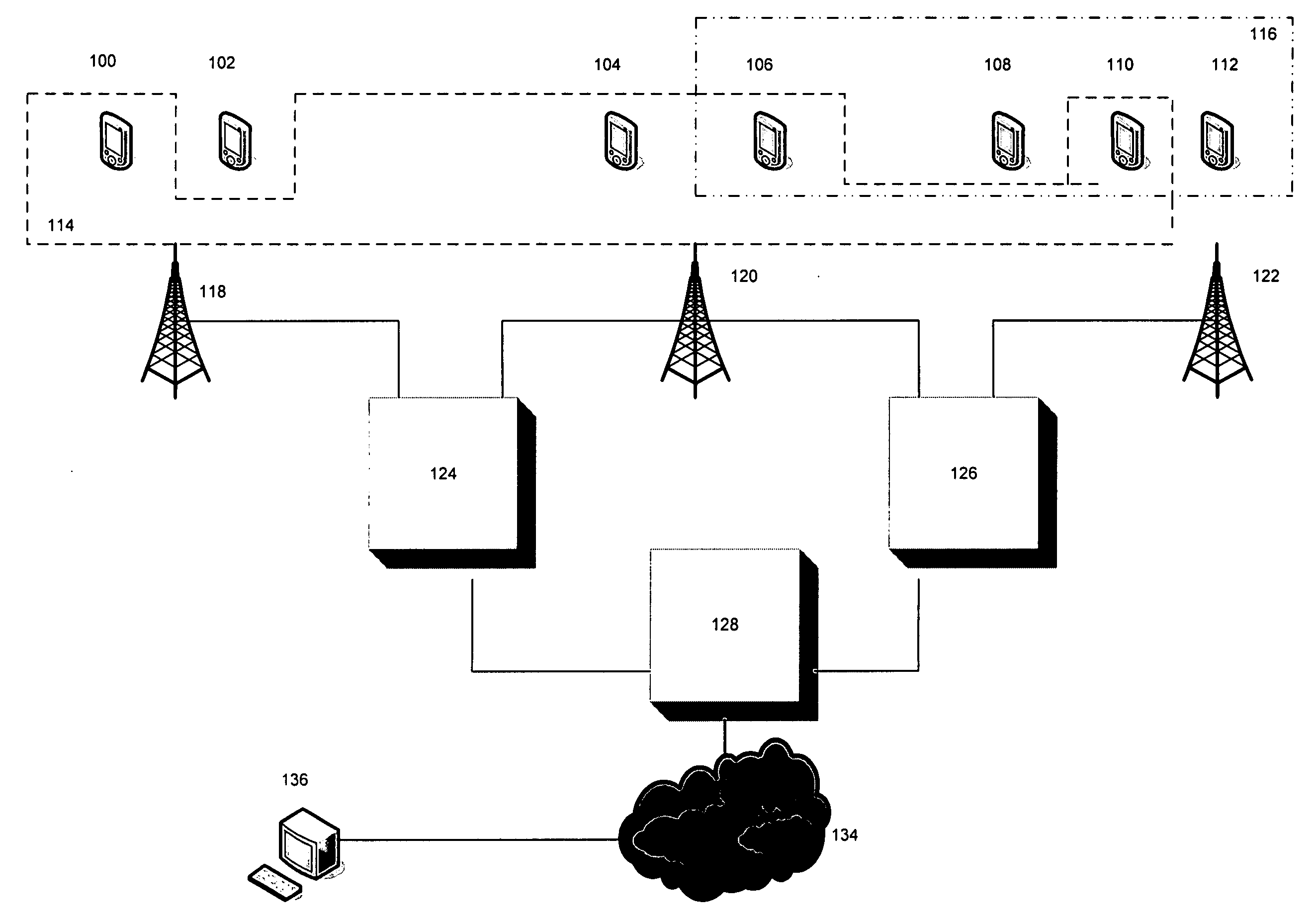

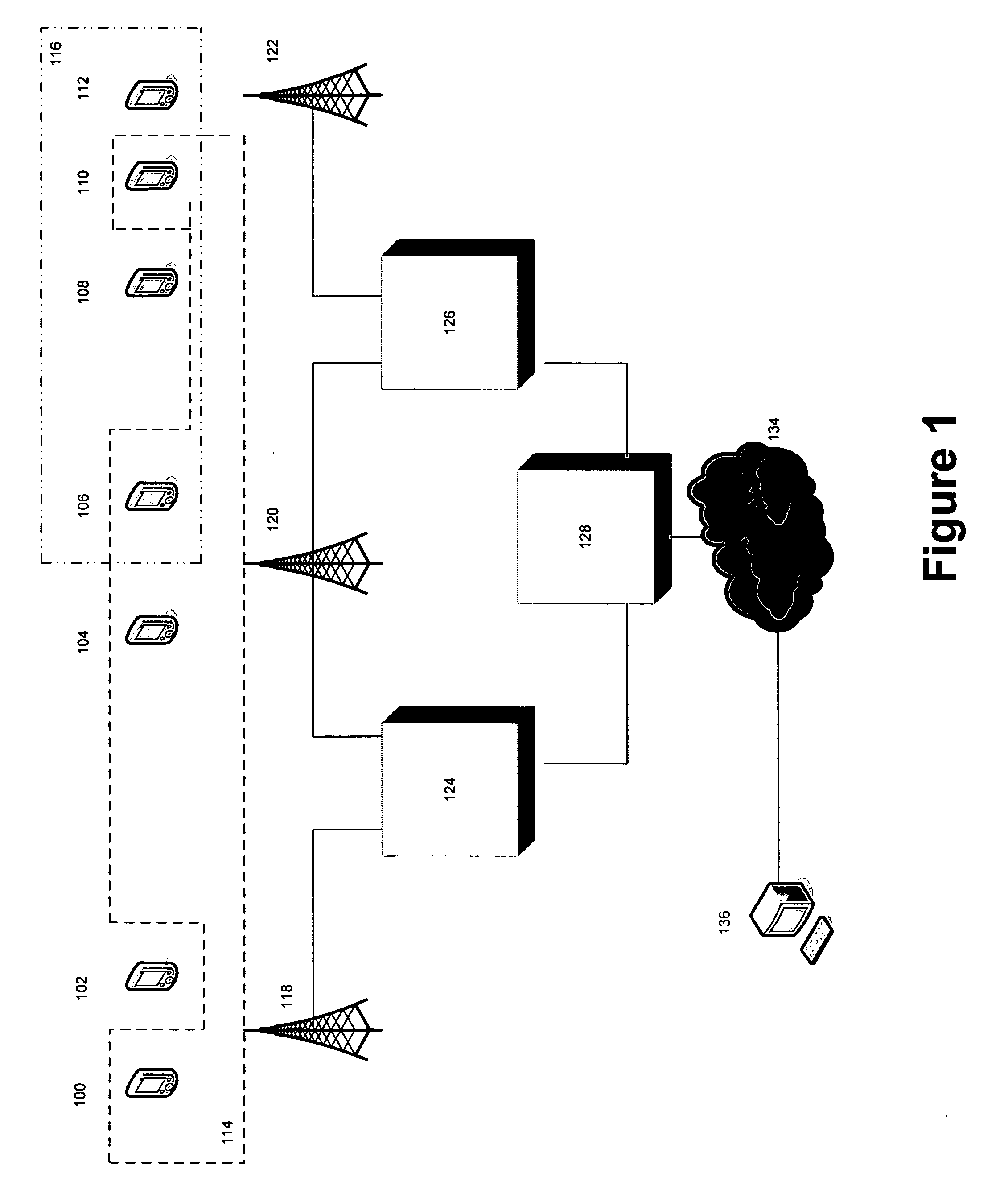

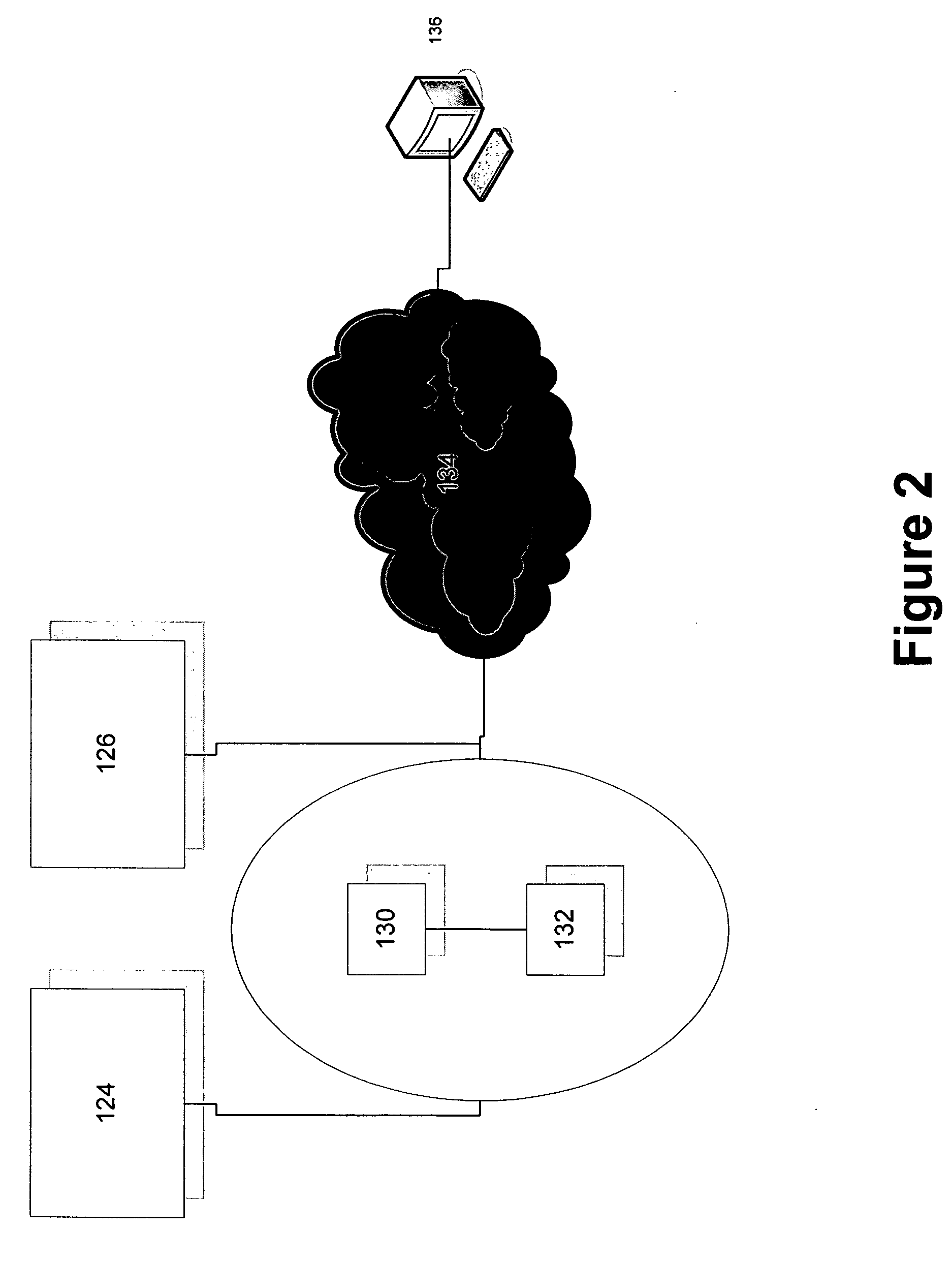

Systems and methods for creating and participating in ad-hoc virtual communities

Inactive | US20080126113A1 | Data processing applications | Special service for subscribers | Internet users | Mobile device

Systems and methods are described for creating and expiring ad hoc communities of mobile and fixed-line phone and Internet users for the purpose of facilitating one-to-many communication amongst the users in response to live events. Embodiments of the invention allow one-to-many communication by any individual participant in a community to all other participants in a community via standard messaging protocols, and further allow dynamically generated content to be distributed amongst the participants in the community. Such dynamically generated content may include interactive contests amongst participants, surveys of the participants, or the distribution of audio visual content generated from or transmitted via mobile devices used by the participants. Embodiments of the invention include systems and methods for preserving state data regarding the communities, participants, and events in order to facilitate concurrent participation in multiple communities.

Owner:FRENGO CORP

Obscuring data in an audiovisual product

Active | US20050019017A1 | Simple but effective | Television system details | Electronic editing digitised analogue information signals | Computer graphics (images) | Audiovisual technology

Owner:ZOO DIGITAL

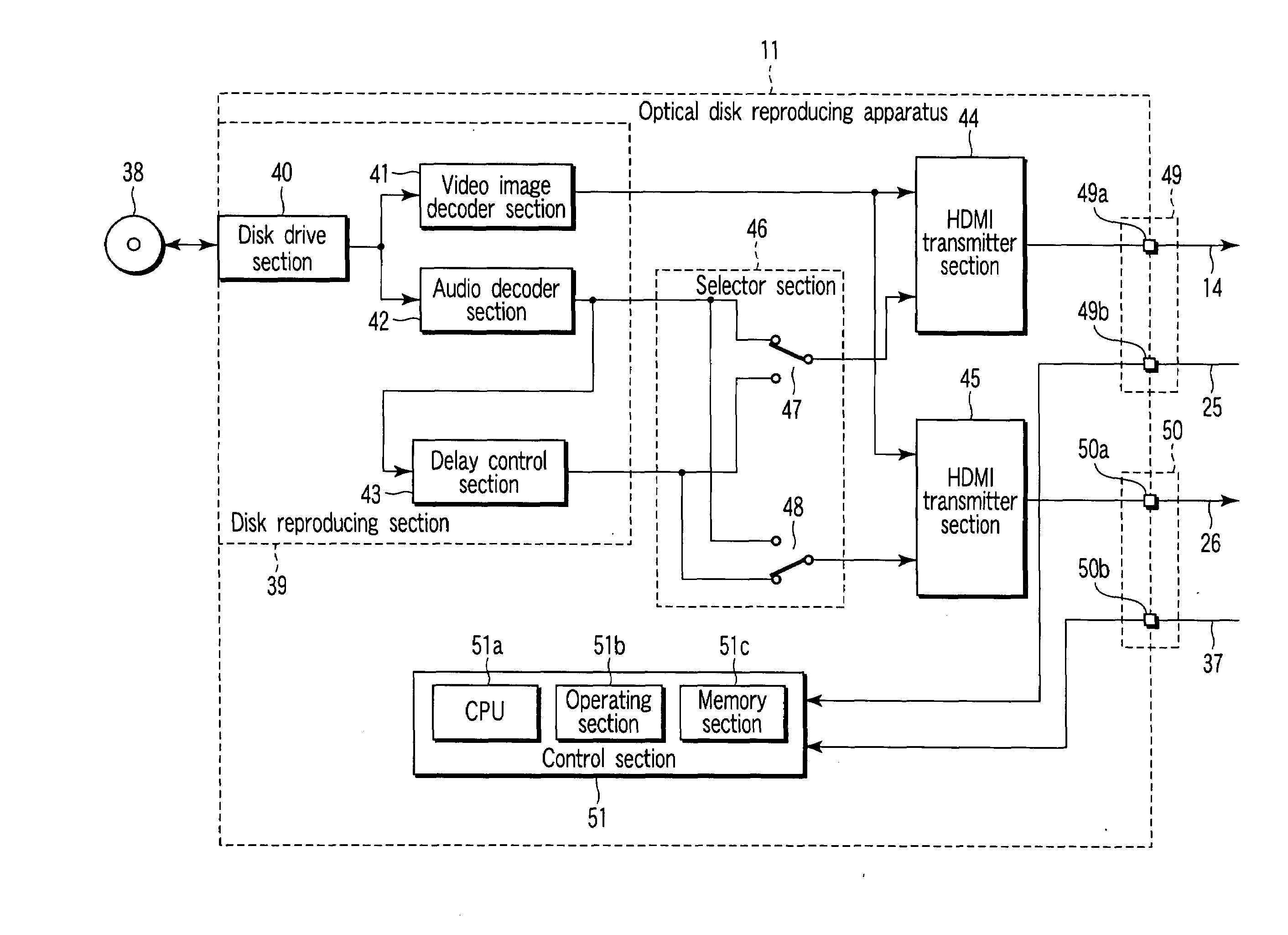

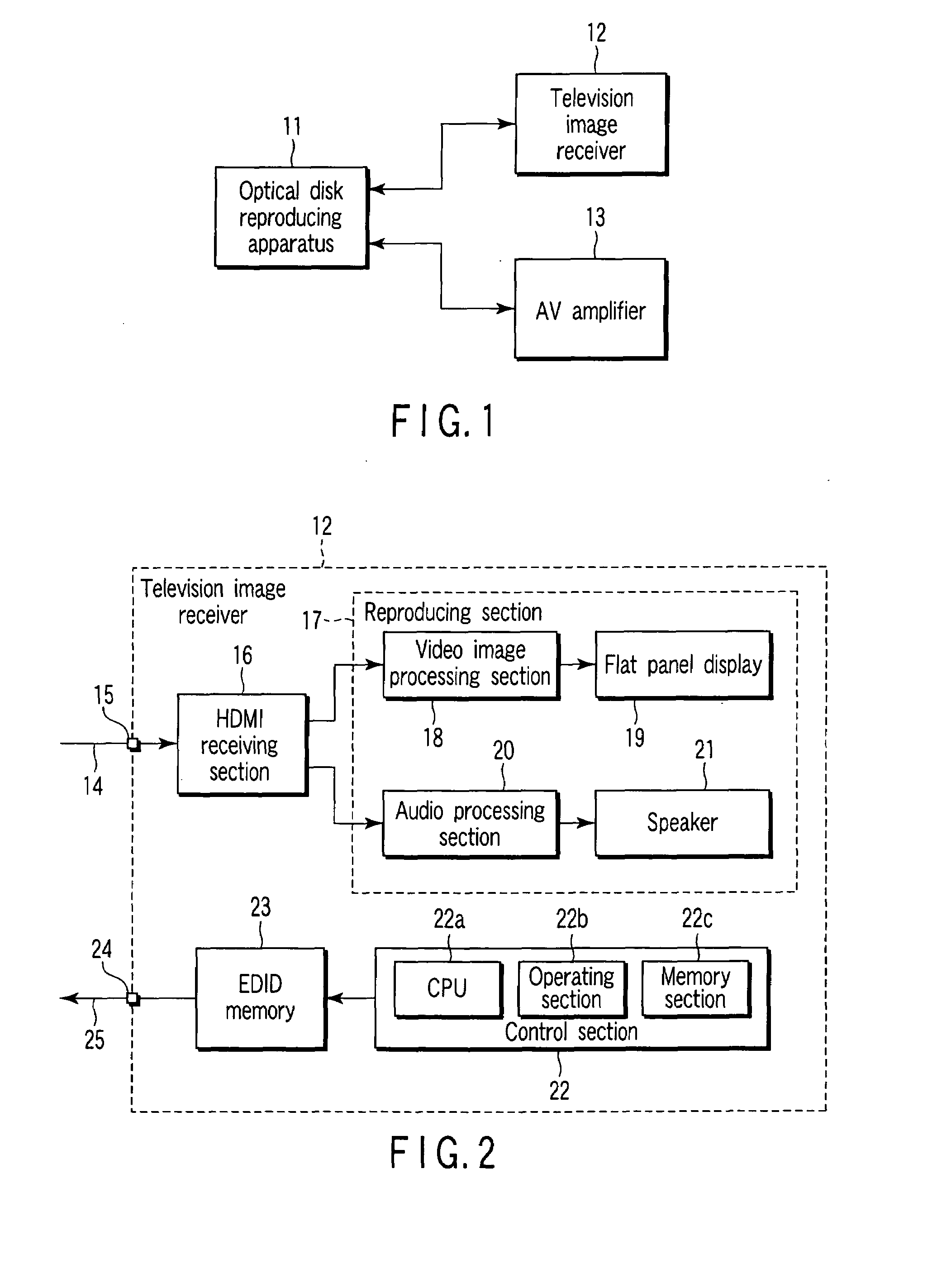

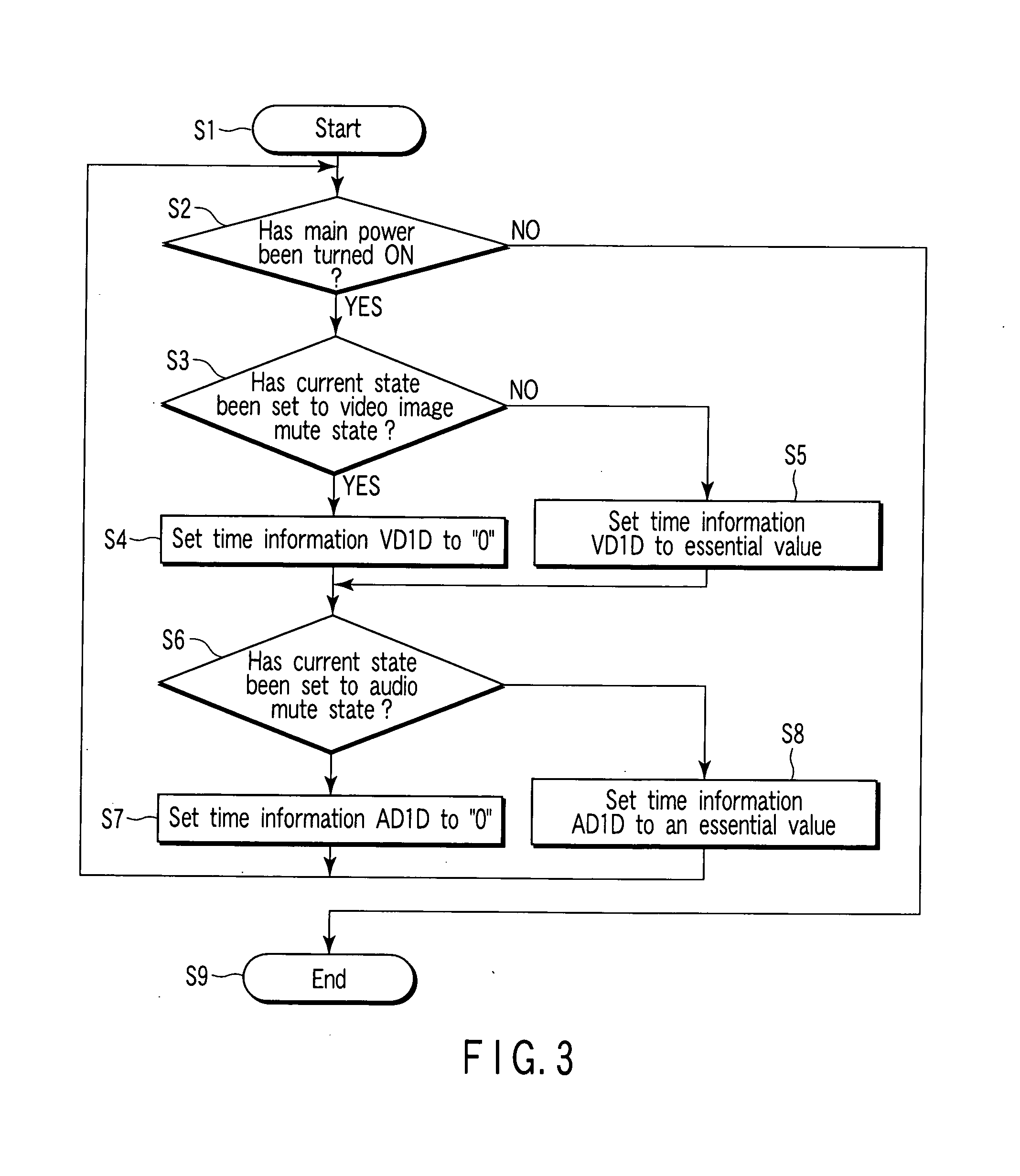

Audiovisual (AV) device and control method thereof

Active | US20070230909A1 | Television system details | Analogue recording/reproducing | Computer graphics (images) | Video image

According to one embodiment, video image and audio signals are transmitted to a plurality of electronic devices each having at least one of a video image display function and an audio reproducing function. Information indicating the fact that at least one of a video image and audio has been set in a mute state is acquired from each of the electronic devices. Based on the information, a processing operation is applied to the video image and audio signals supplied to each of the electronic devices.

Owner:KK TOSHIBA

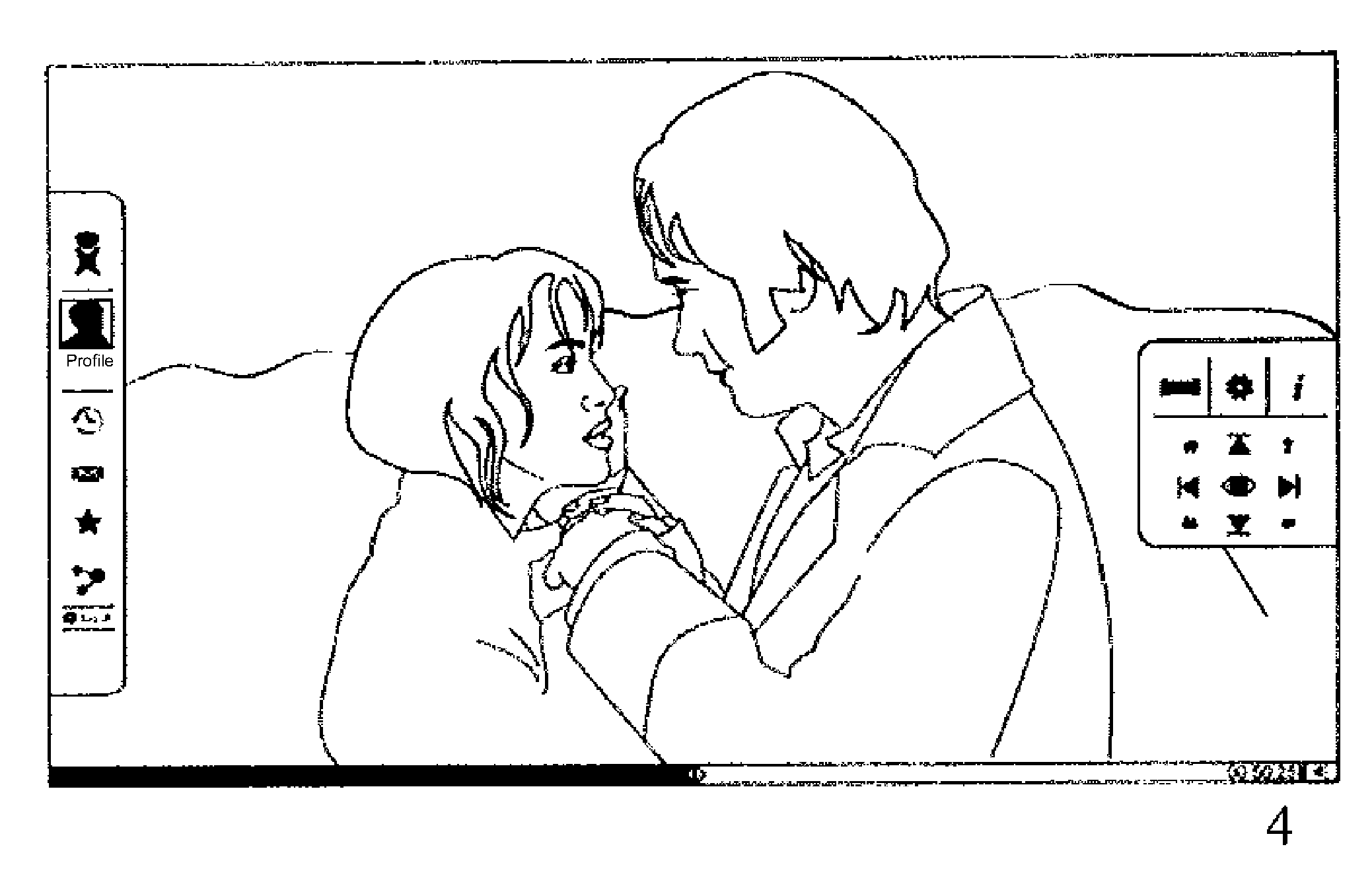

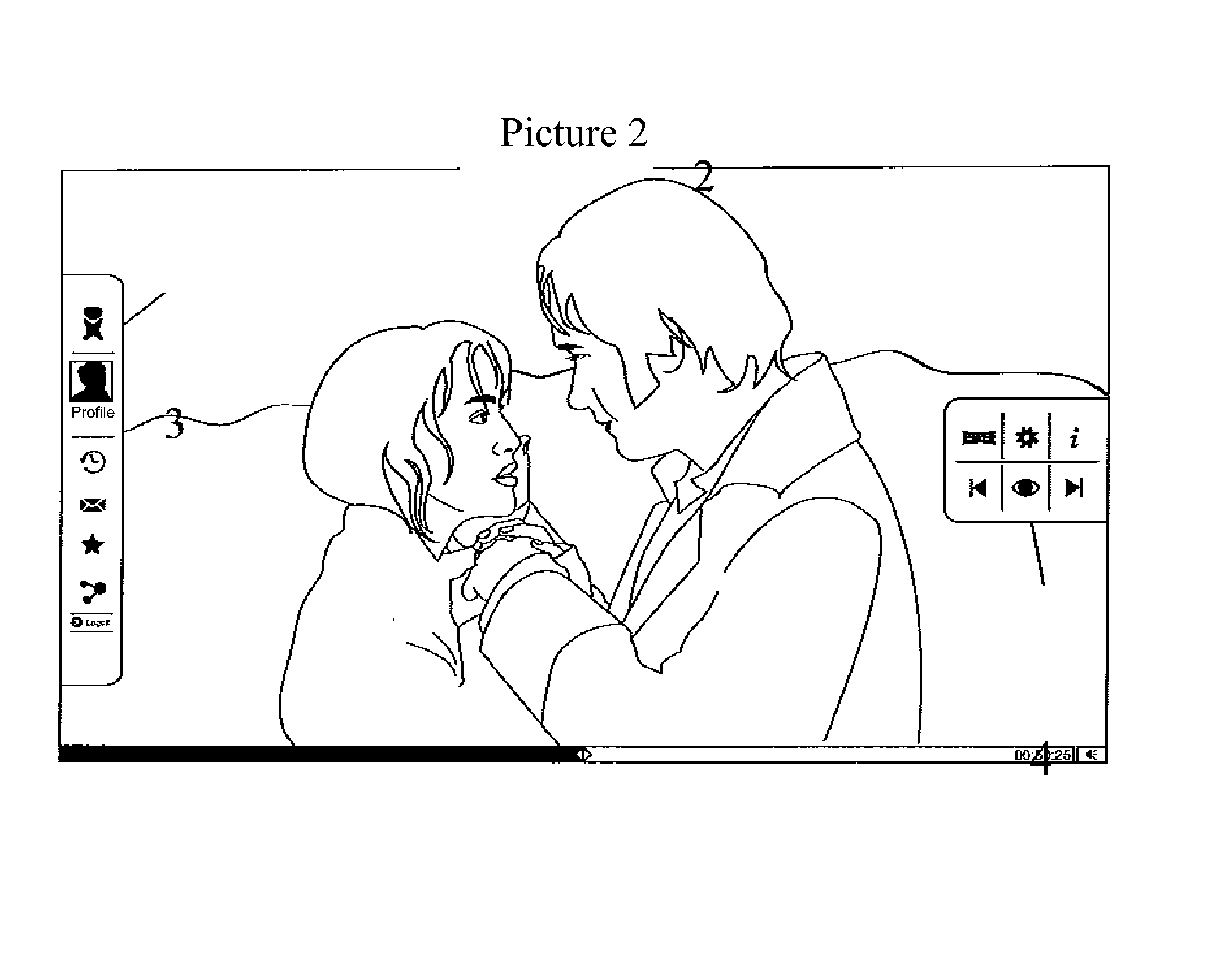

Dynamic audiovisual browser and method

Inactive | US20130332835A1 | Facilitates and enhances transmission surveillance | Move quickly | Input/output for user-computer interaction | Selective content distribution | Personalization | User interface

This invention relates to computing and its applications, more specifically to information systems, in the areas of user interfaces and interactive systems for the distribution of videos and games, with applications in various segments of networking systems and digital television and radio broadcasting. In particular, it concerns a dynamic visual browser and its method of operation, which allow users to interact with video playlists while videos are being played, ensuring an individualized, intuitive, and continuous experience that simulates the way television content is consumed and that can be shared with other users.

Owner:LOG ON MULTIMIDIA

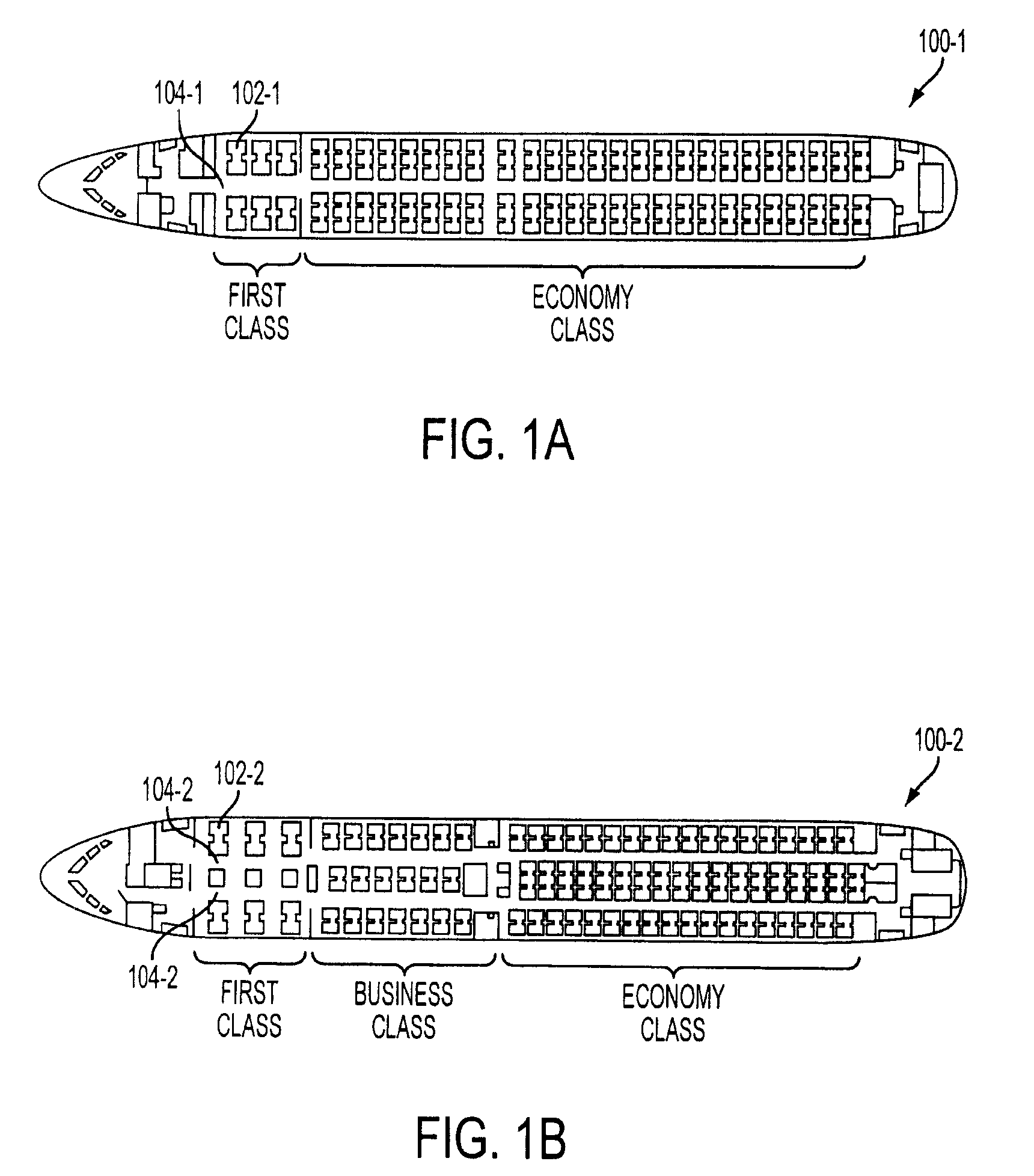

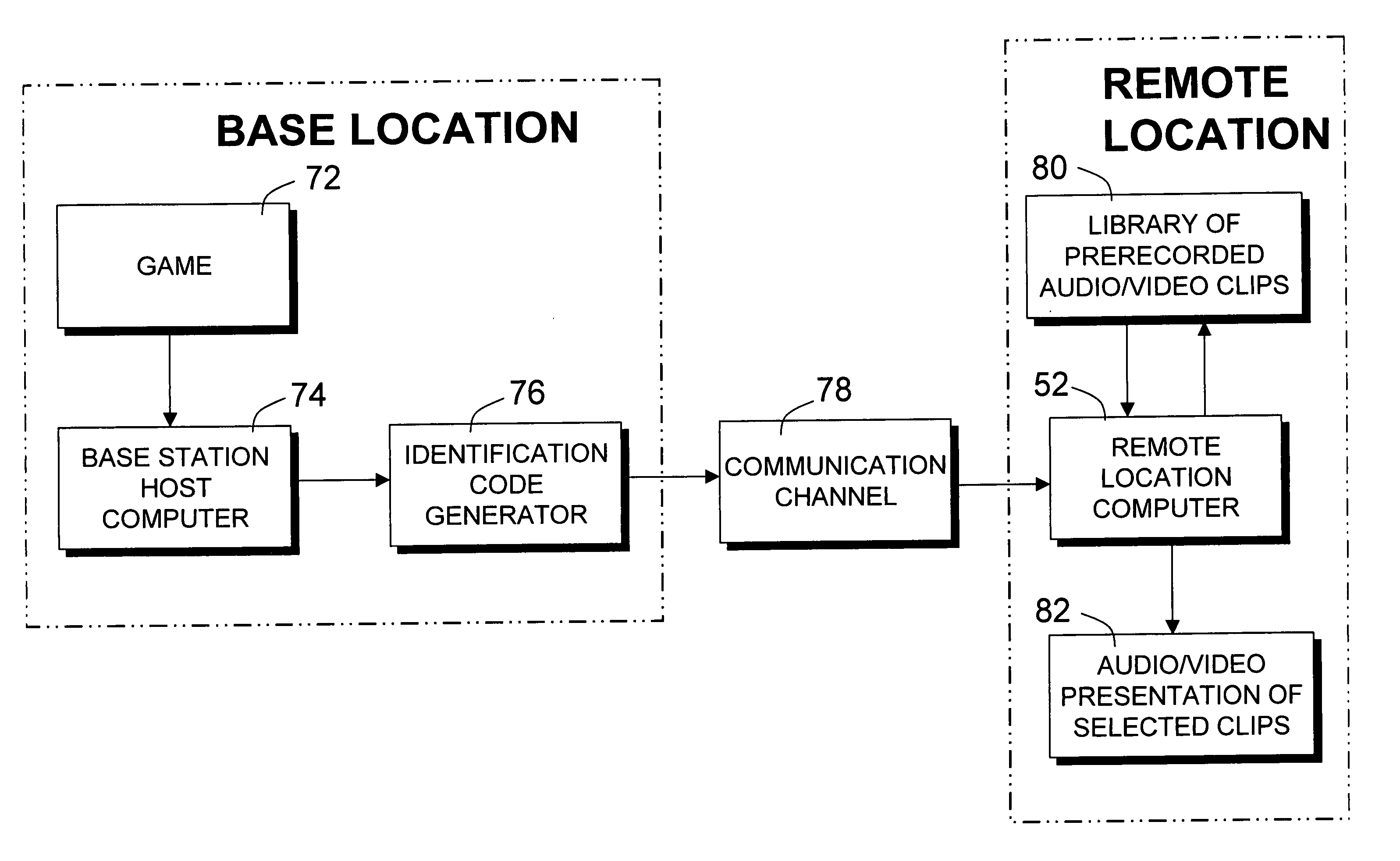

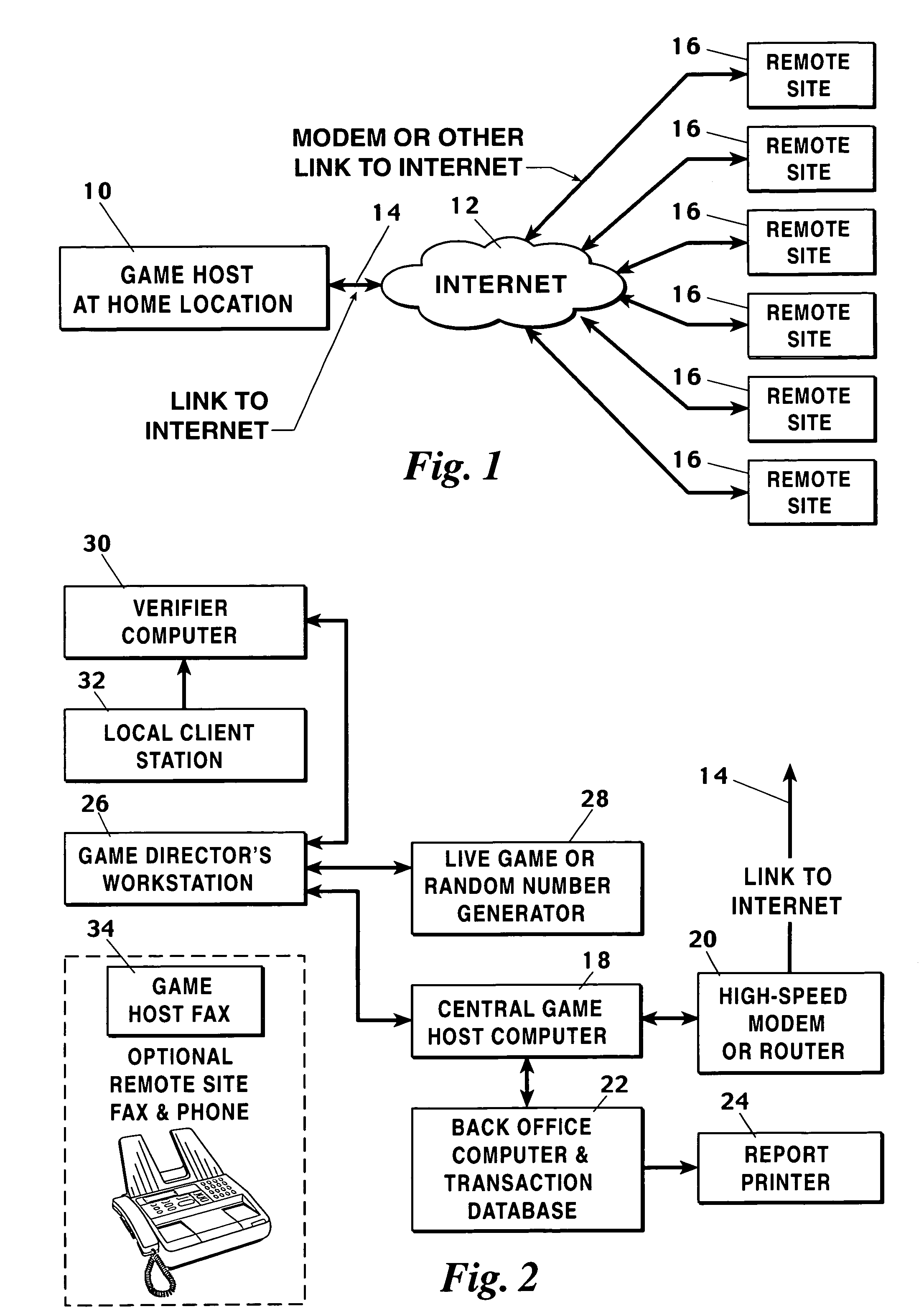

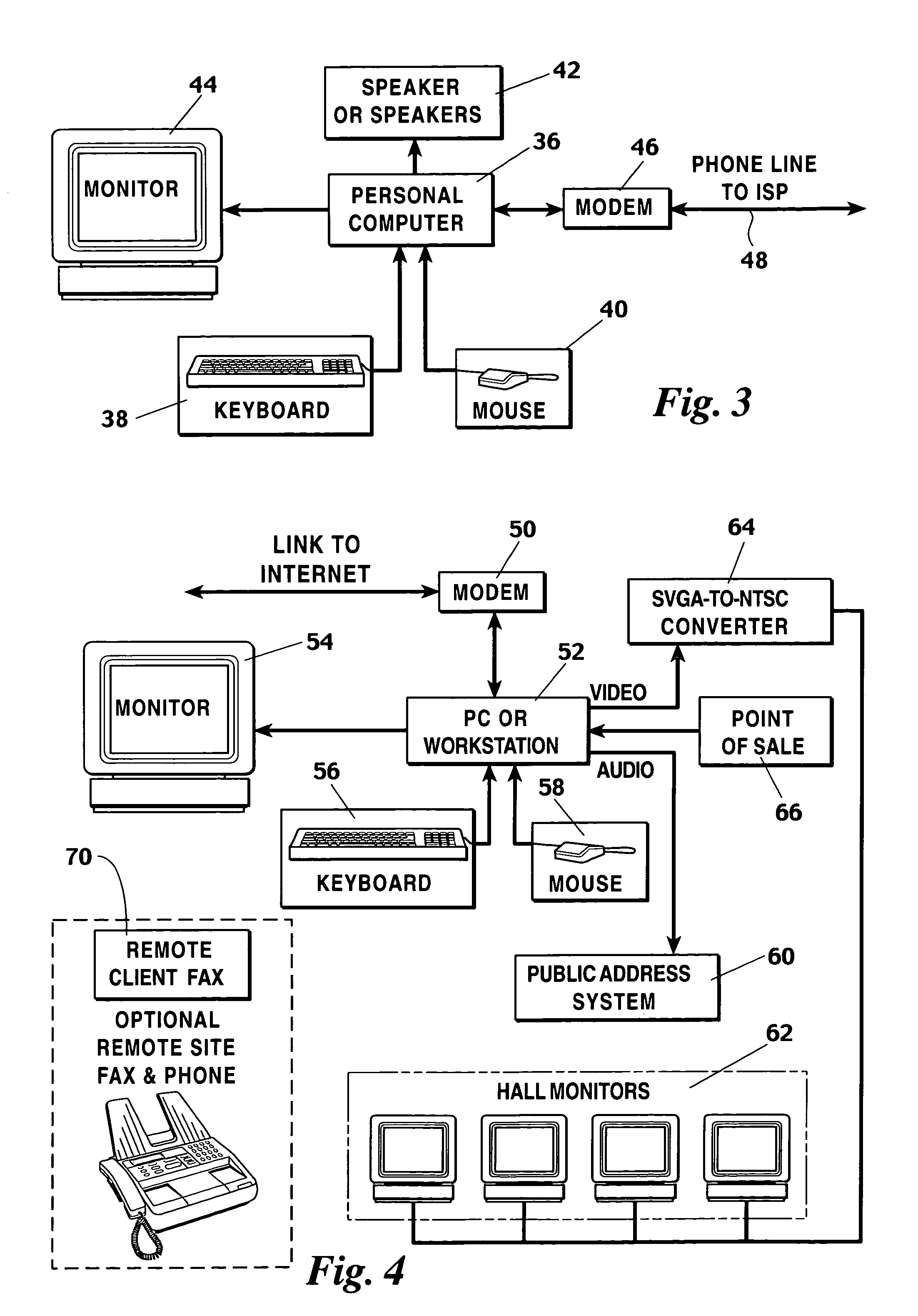

System and method for providing a realistic audiovisual representation of a game among widely separated participants

Inactive | US6955604B1 | Low cost | Run at high speed | Apparatus for meter-controlled dispensing | Video games | Computer graphics (images) | Audiovisual technology

A method and system for providing a realistic audiovisual representation at a remote location of a game occurring at a base location in which the base location and remote location are linked by a communications channel, including the steps of preparing a library of prerecorded video clips depicting events typically encountered in conducting a game, storing the library at the remote location, transmitting information as to the progress of a game from the base location to the remote location over the communication channel at the base location using the information to select appropriate video clips from the library that replicate the game, and presenting the selected video clips at the remote location to provide a realistic audiovisual representation.

Owner:EVERI GAMES
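
The clip-selection step in the abstract above amounts to a lookup from reported game events into a stored clip library. The Python sketch below assumes hypothetical event names and file paths and simply skips events that have no clip; the abstract does not specify the event vocabulary or how unmatched events are handled.

```python
# Hypothetical library of prerecorded clips, keyed by game event.
CLIP_LIBRARY = {
    "shuffle": "clips/shuffle.mp4",
    "deal": "clips/deal.mp4",
    "win": "clips/win.mp4",
}


def select_clips(events, library=CLIP_LIBRARY):
    """Map each reported game event to a stored clip, skipping unknown events."""
    return [library[event] for event in events if event in library]


# Only short event names cross the communication channel, not video.
received = ["shuffle", "deal", "fold", "win"]
playlist = select_clips(received)
print(playlist)
```

Sending event names instead of video keeps the bandwidth needed on the communication channel small, which is consistent with the "Low cost" tag on this entry.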

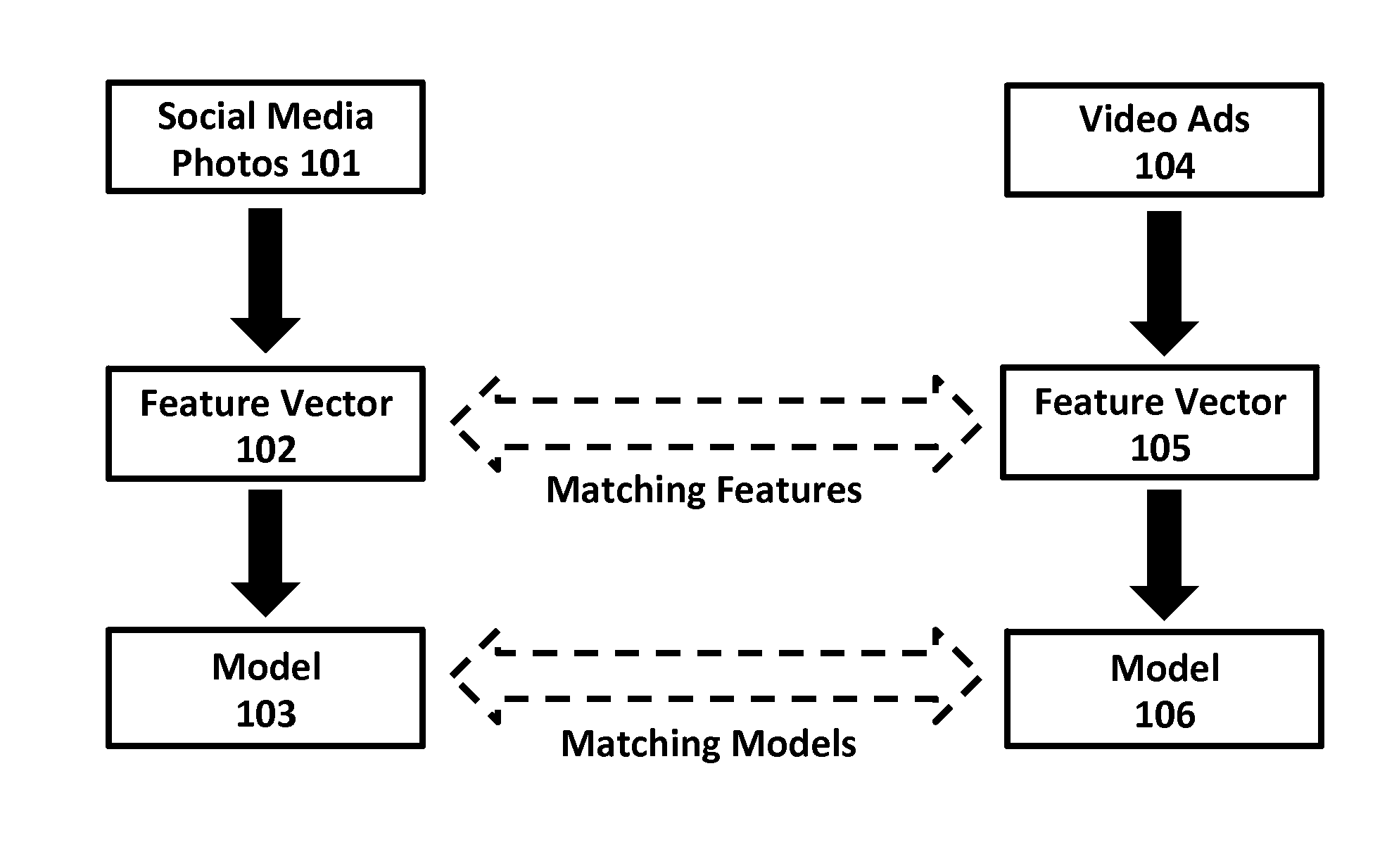

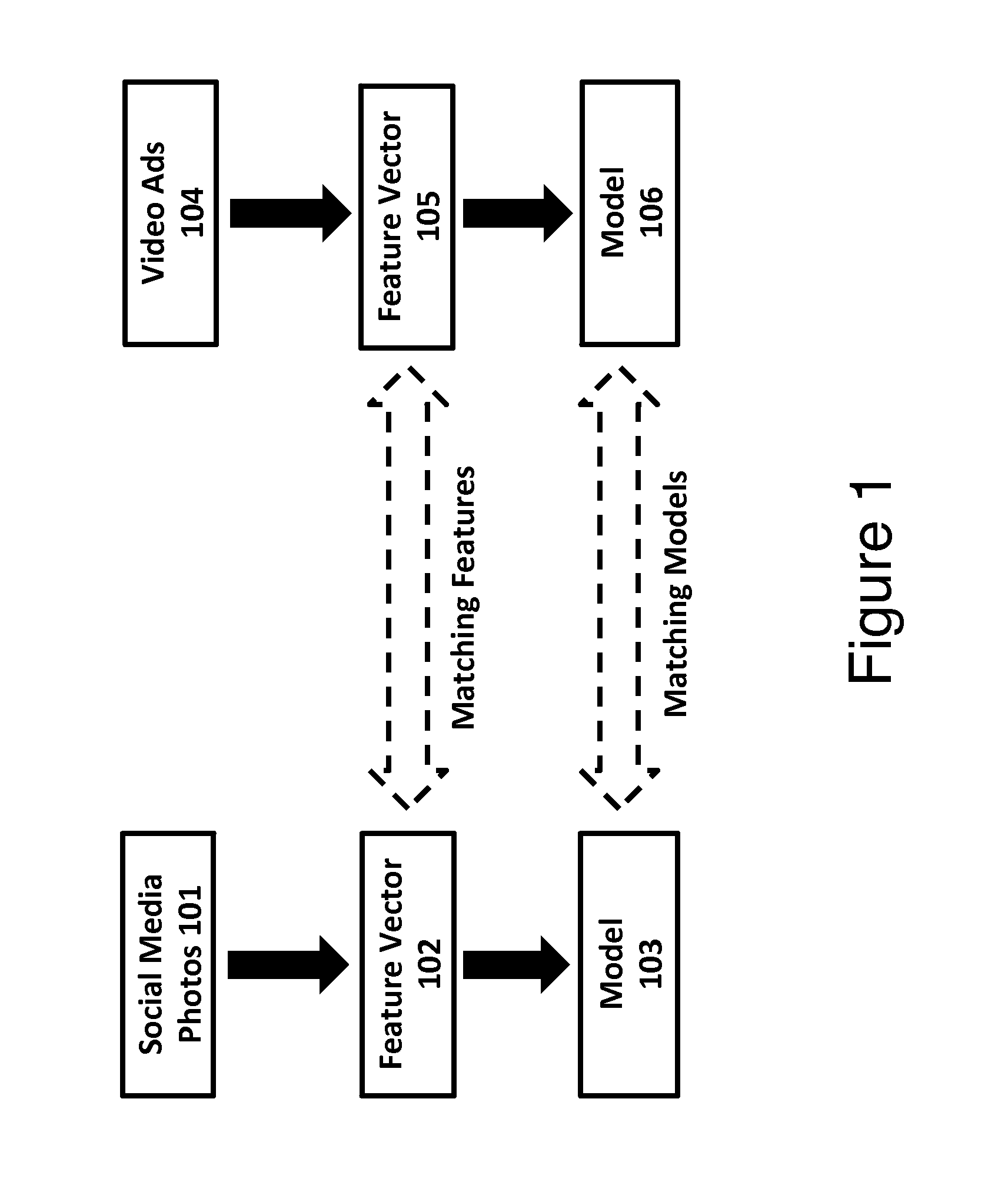

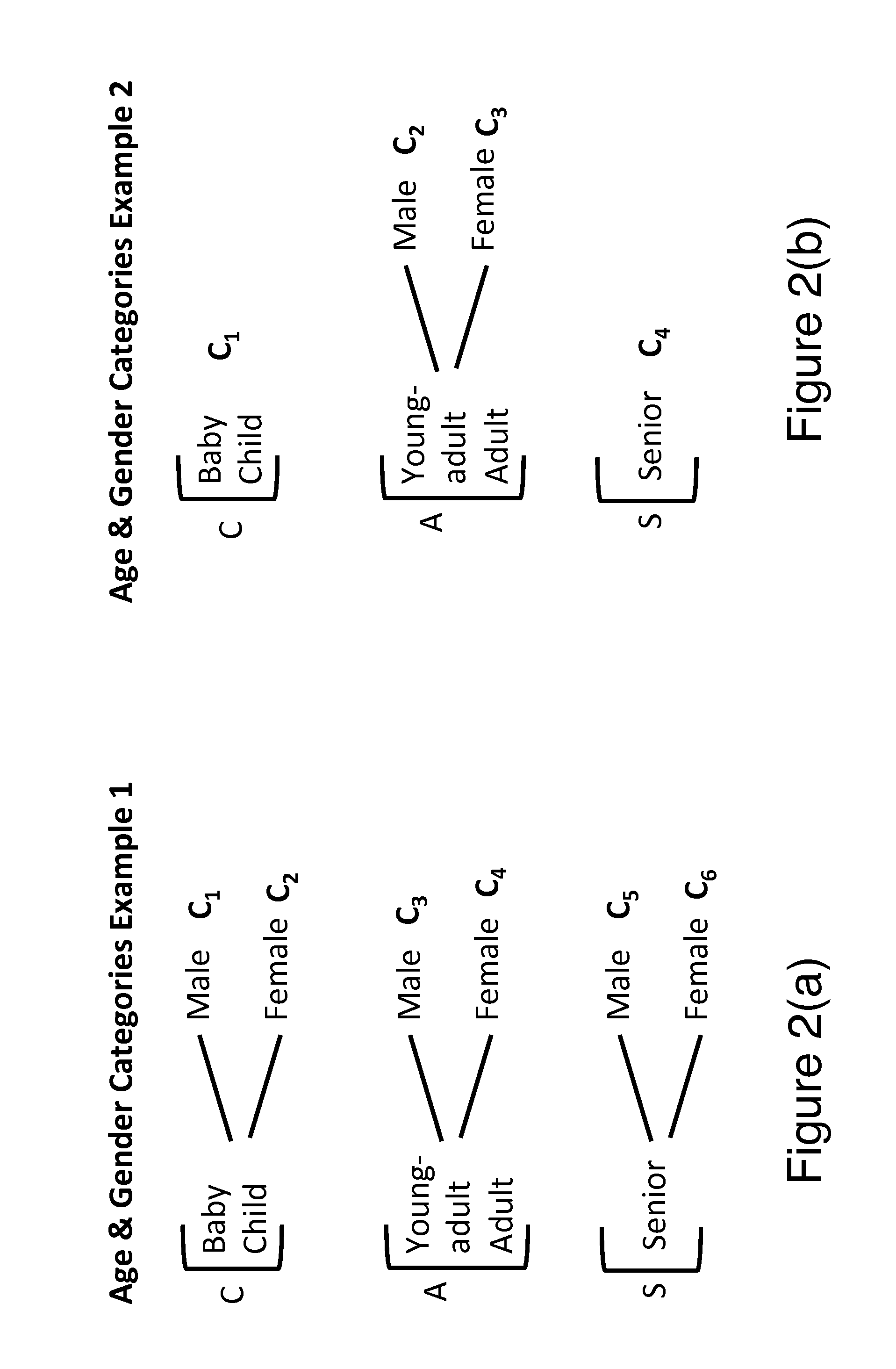

Systems and methods for gaining knowledge about aspects of social life of a person using visual content associated with that person

Active | US20160048887A1 | Eliminate the problem | Advertisements | Character and pattern recognition | Personalization | Social media

Systems and methods to analyze a person's social media photos or videos, such as those posted on Twitter, Facebook, Instagram, etc. and determine properties of their social life. Using information on the number of people appearing in the photos or videos, their ages, and genders, this method can predict whether the person is in a romantic relationship, has a close family, is a group person, or is single. This information is valuable for generating audiovisual content recommendations as well as for advertisers, because it allows targeting personalized advertisements to the person posting the photos. The described methods may be performed (and the advertisements or other content may be selected for recommendation) substantially in real-time as the user accesses a specific online resource.

Owner:FUJIFILM BUSINESS INNOVATION CORP

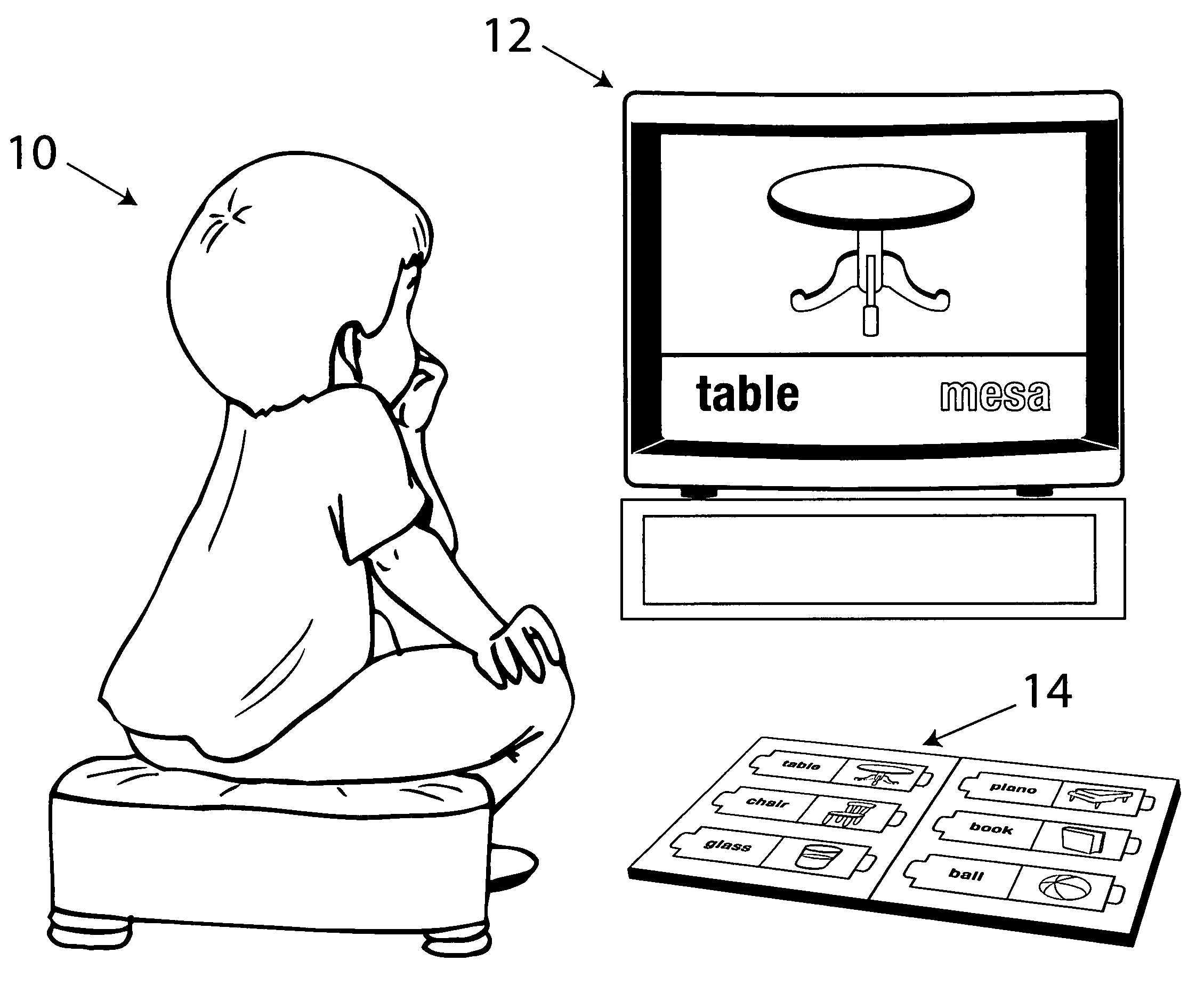

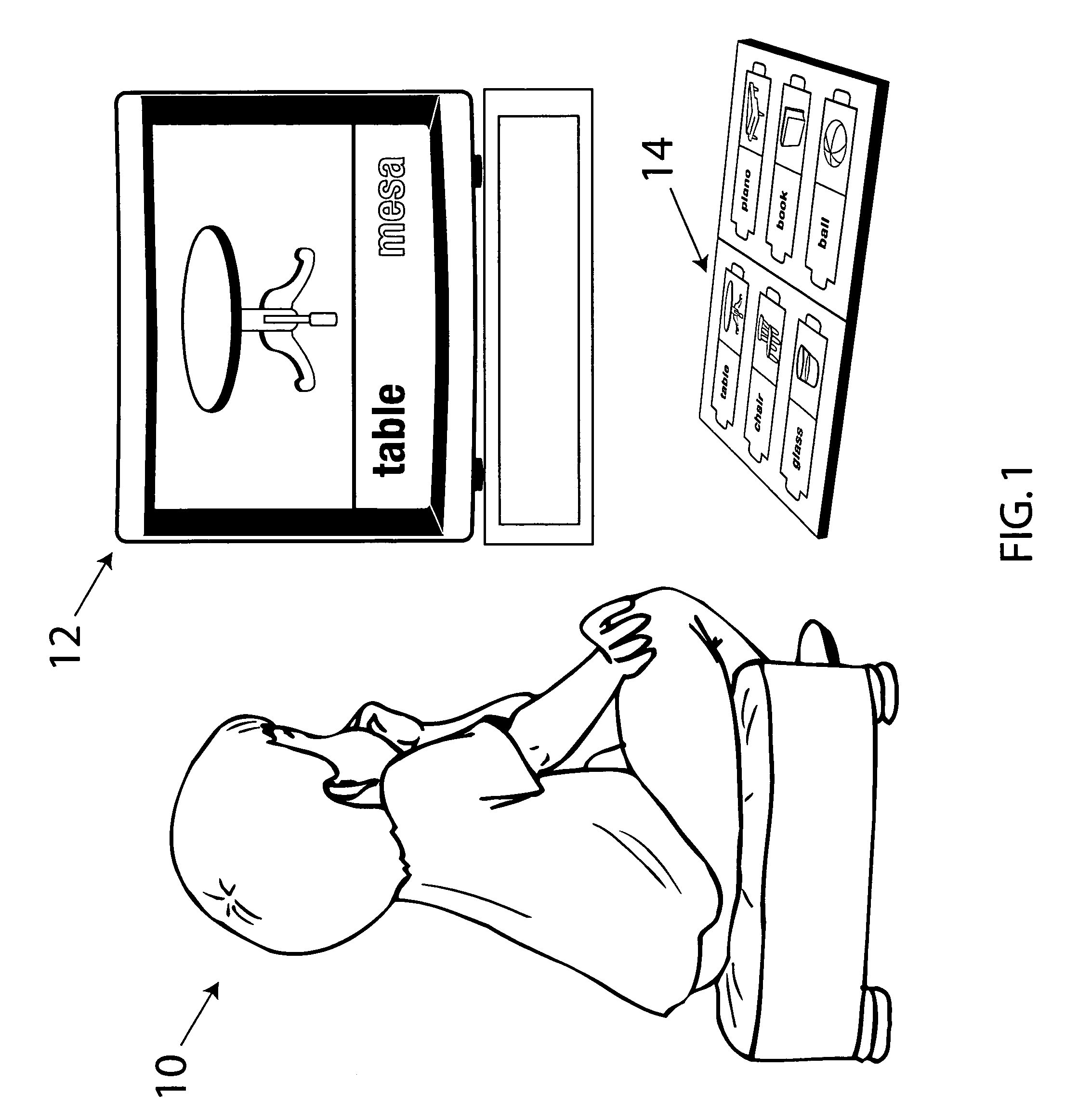

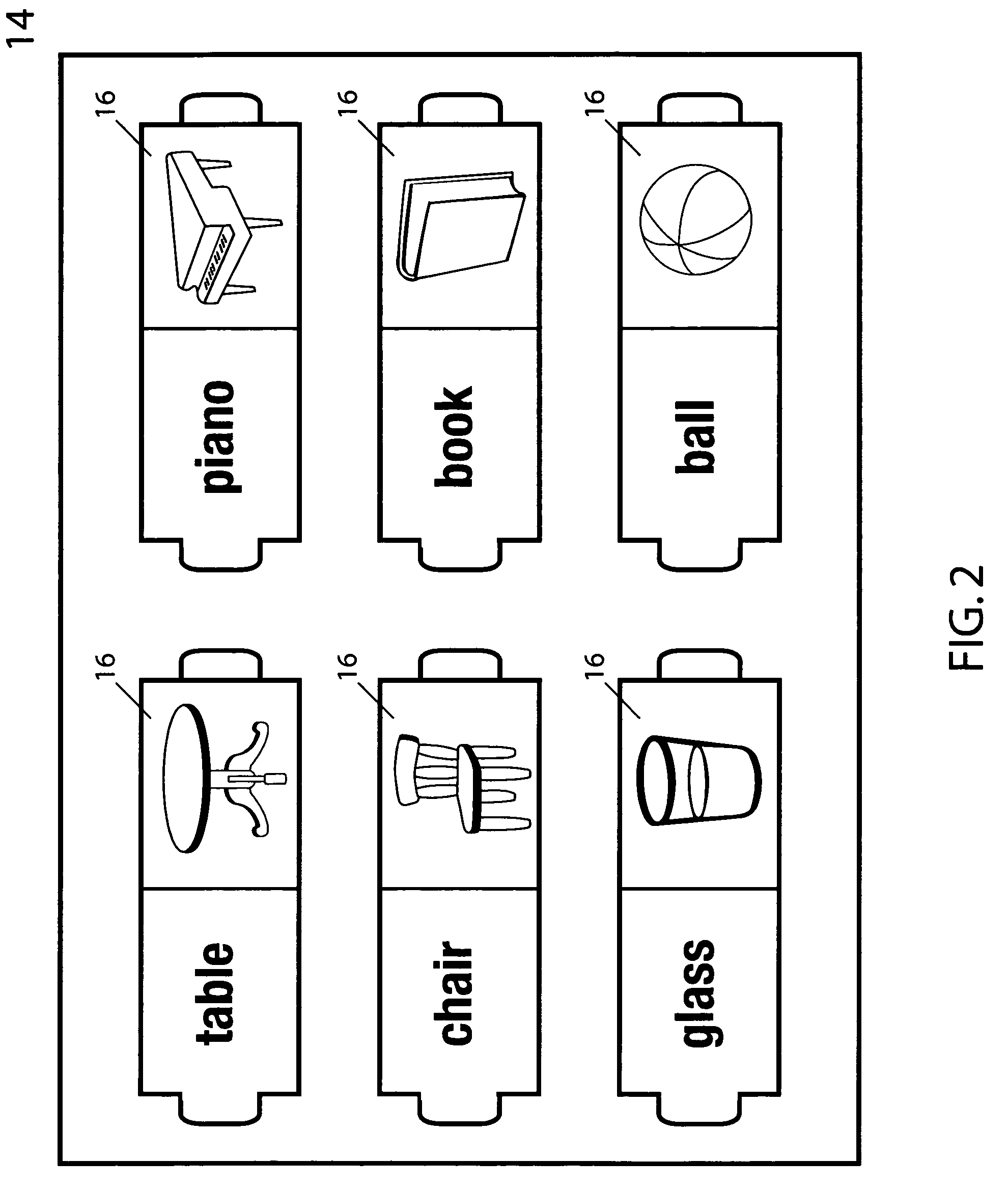

System and method for teaching a new language

Inactive | US20050112531A1 | Easy to distinguish | Stay interested | Electrical appliances | Teaching apparatus | Computer graphics (images) | Electronic book

Owner:OSMOSIS

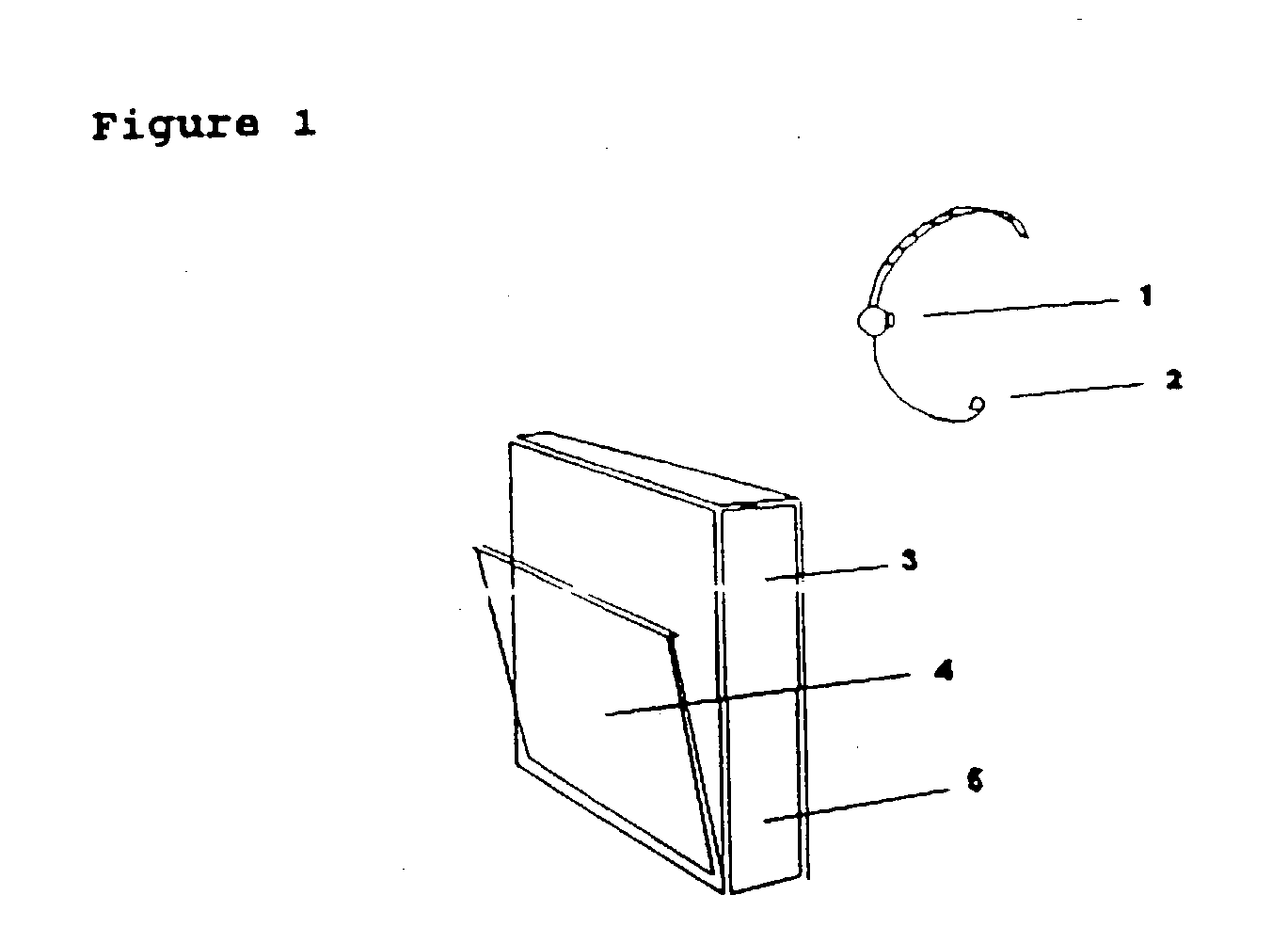

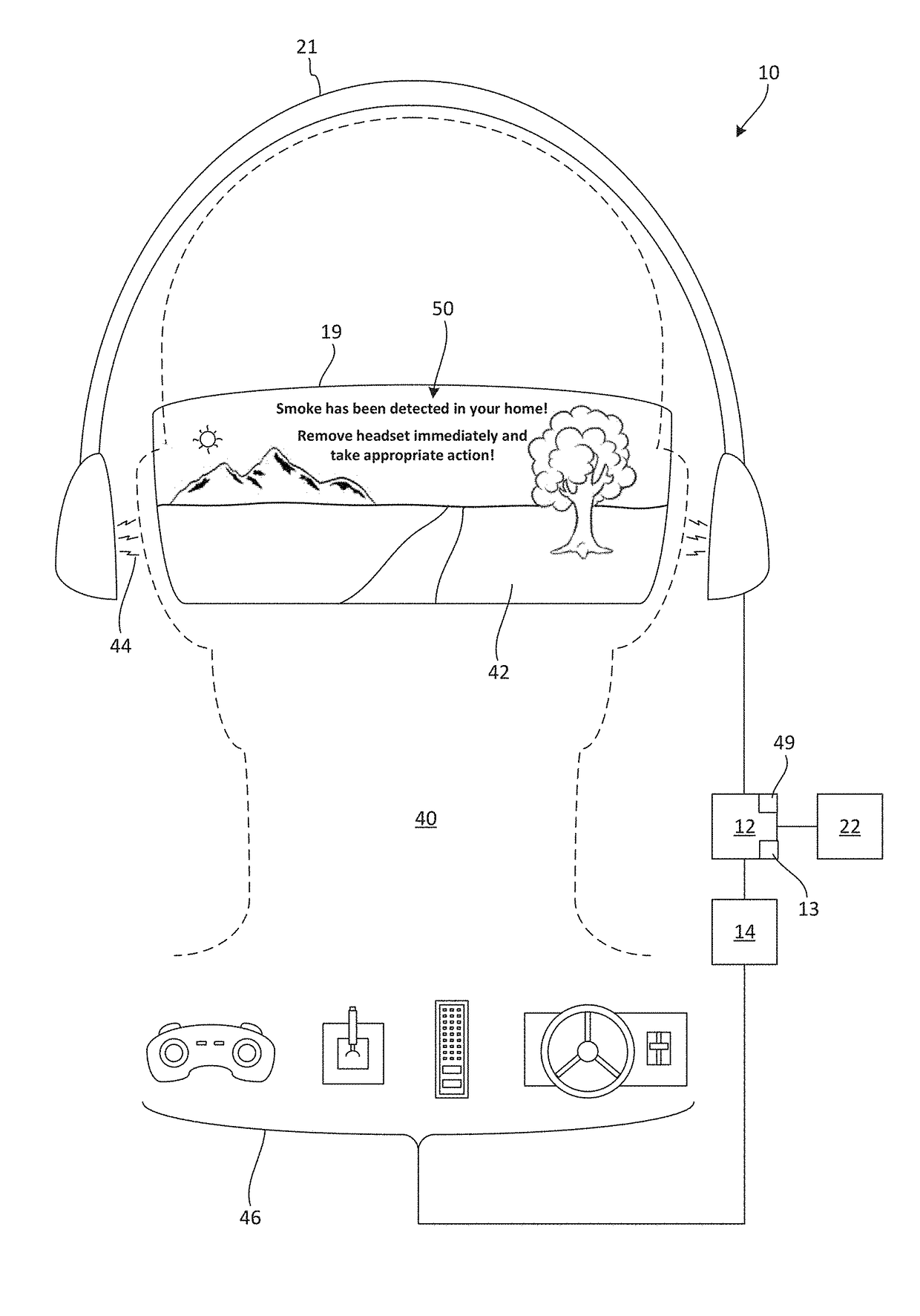

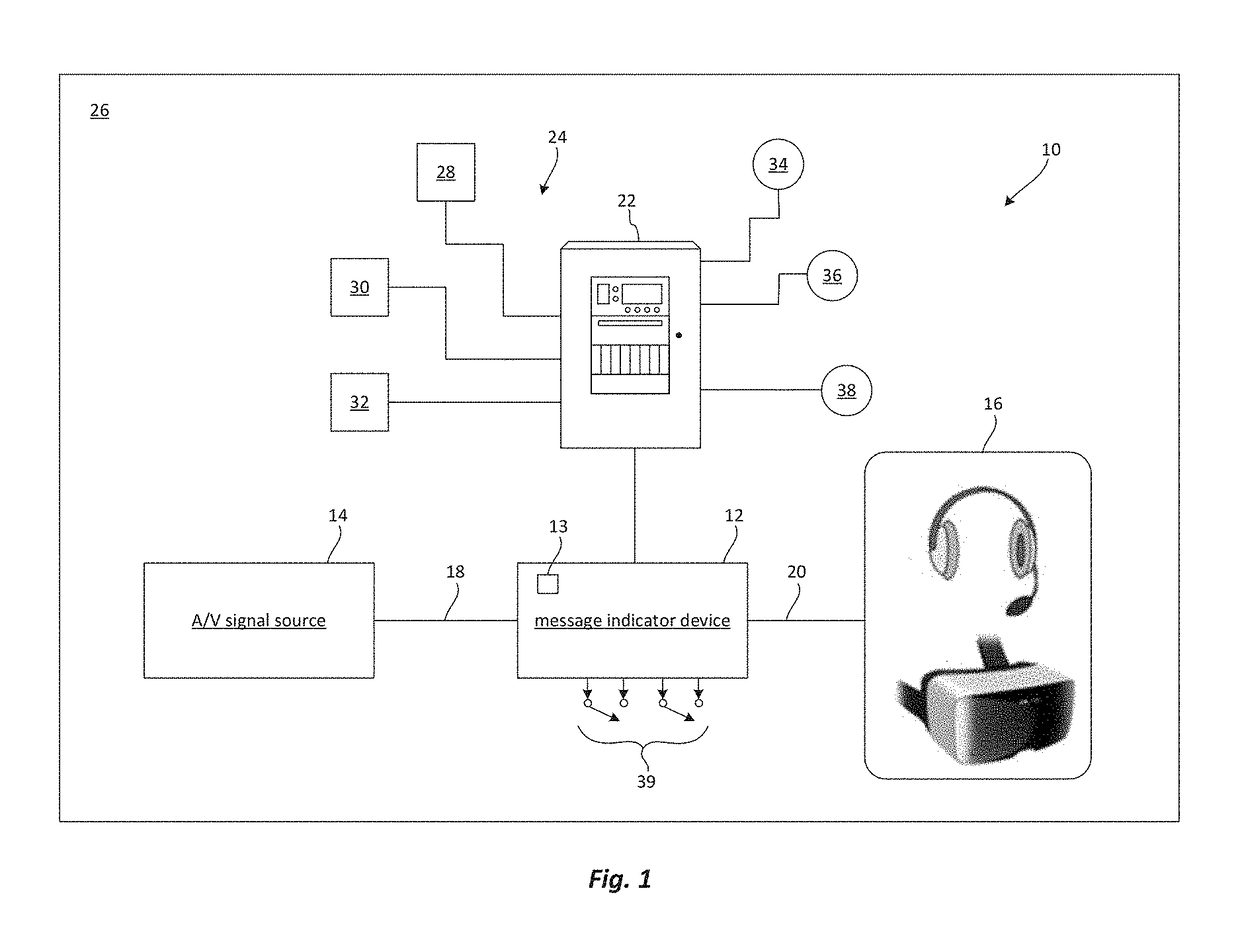

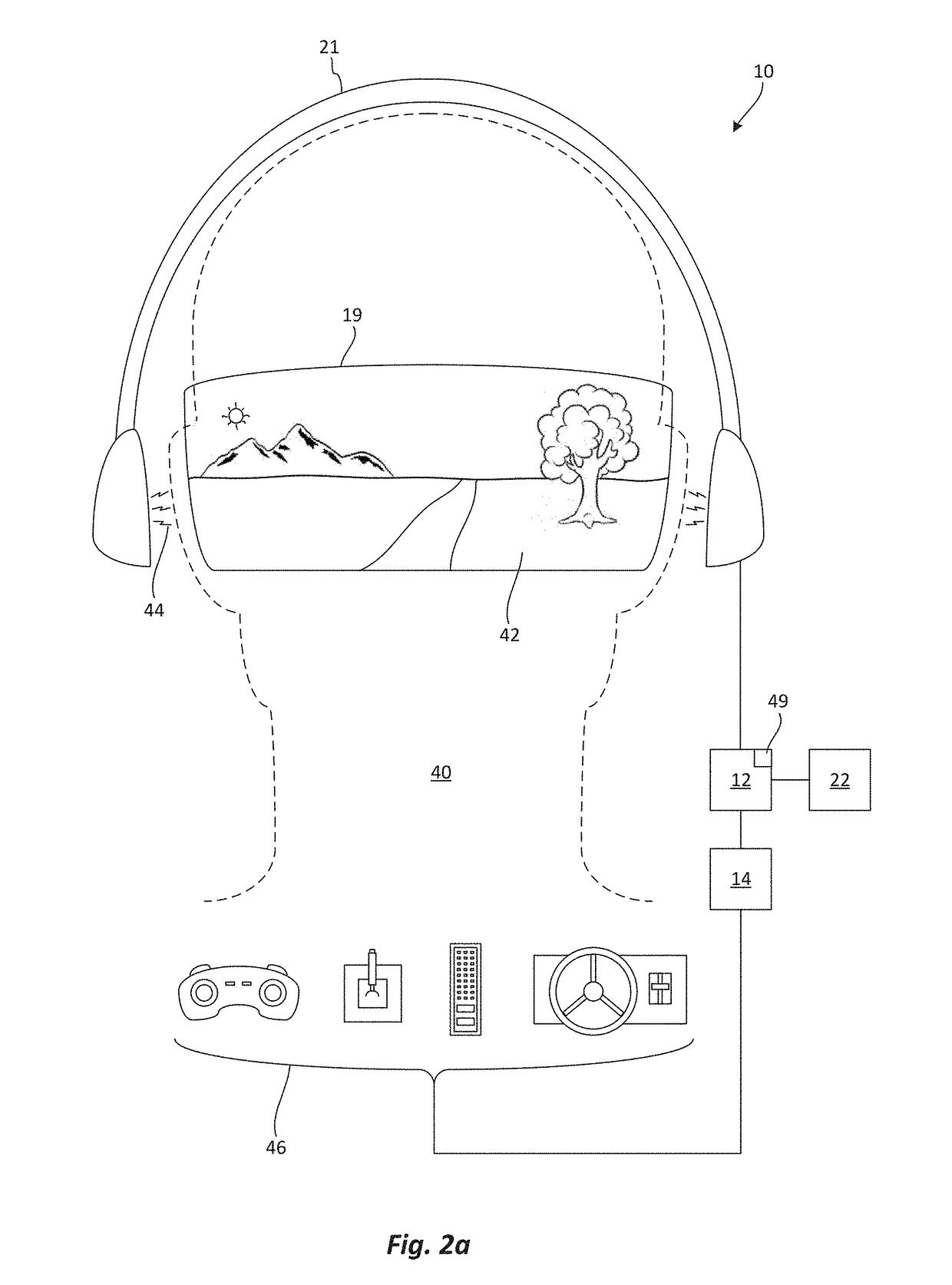

Notification system for virtual reality devices

Active | US20180047212A1 | Cathode-ray tube indicators | Image data processing | Computer hardware | Monitoring system

A notification system for virtual reality devices that includes a message indicator device, an audiovisual (A/V) signal source connected to the message indicator device and adapted to provide an A/V feed containing at least one of video information and audio information, a monitoring system connected to the message indicator device and configured to detect notification events at a monitored premises, and a virtual reality (VR) headset connected to the message indicator device and adapted to receive and present the A/V feed, wherein the message indicator device is adapted to communicate the A/V feed to the VR headset during a normal operating state, and wherein the message indicator device is adapted to communicate an alert signal to the VR headset upon detection of a notification event by the monitoring system.

Owner:JOHNSON CONTROLS TYCO IP HLDG LLP

E-commerce development intranet portal

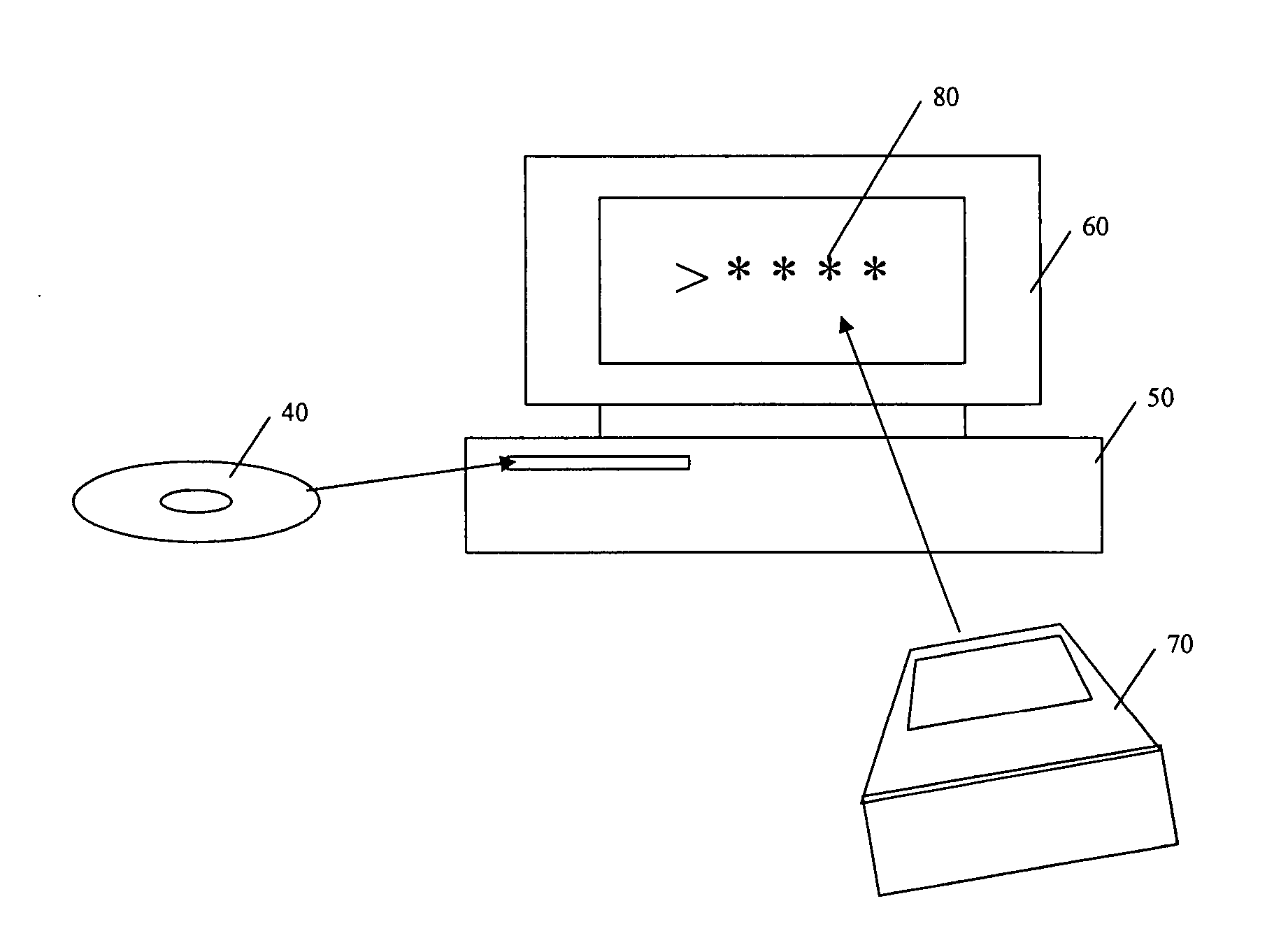

Inactive | US7647259B2 | Improve performance | Solve low usage | Credit registering devices actuation | Finance | Free access | Self-service

An intranet providing a multiple-carrel public-access kiosk provides free access to foreign and domestic informational e-commerce intranet sites as well as e-mail and public service educational and informational materials. The kiosk accepts anonymous pre-paid cards issued by a local franchisee of a network of e-commerce intranets that includes the local intranet. The paid services provided by each carrel may include video-conference and chat room time, playing and/or copying audio-visual materials such as computer games and music videos, and international e-commerce purchase support services such as customs and currency exchange. Third-party sponsored public service materials may include audio-visual instructional materials in local dialects introducing the user to the use of the kiosk's services and providing training for using standard business software programs.

Owner:DE FABREGA INGRID PERSCKY

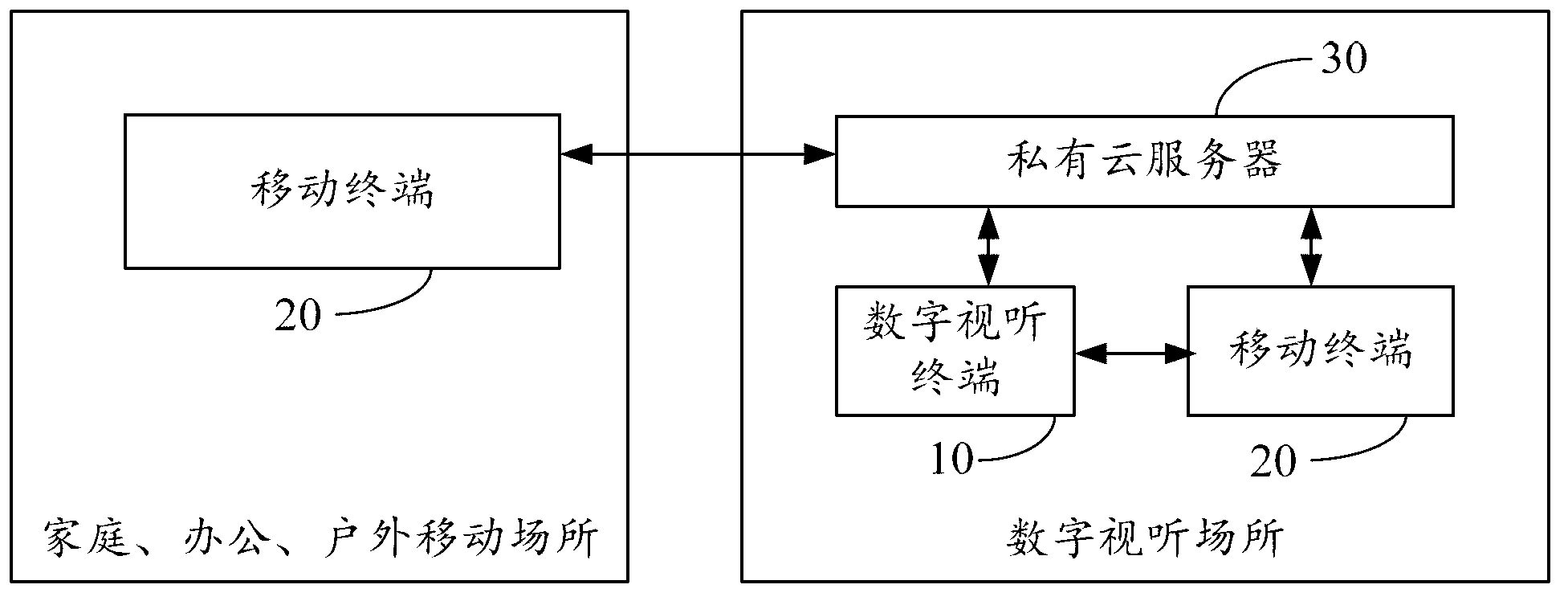

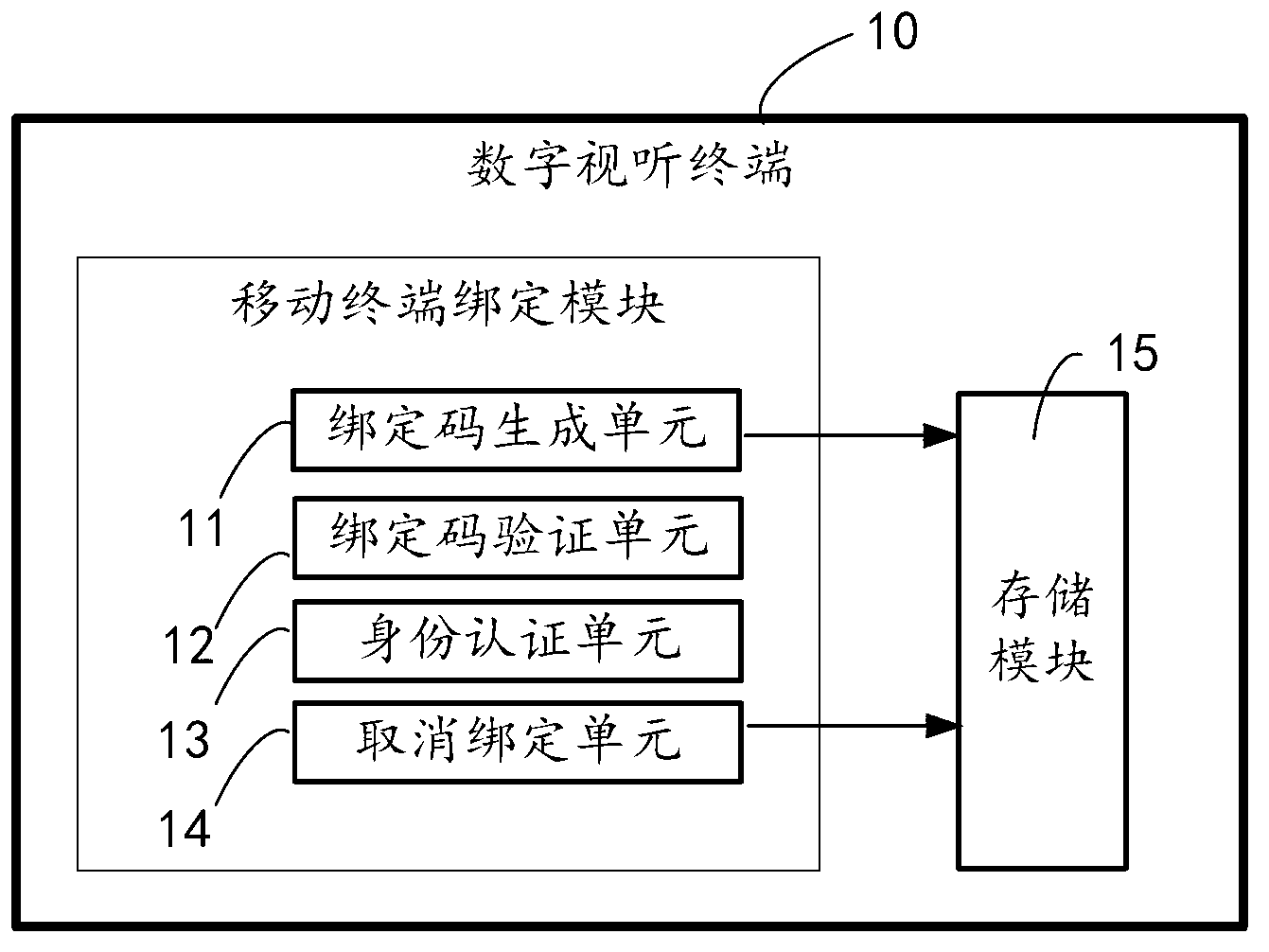

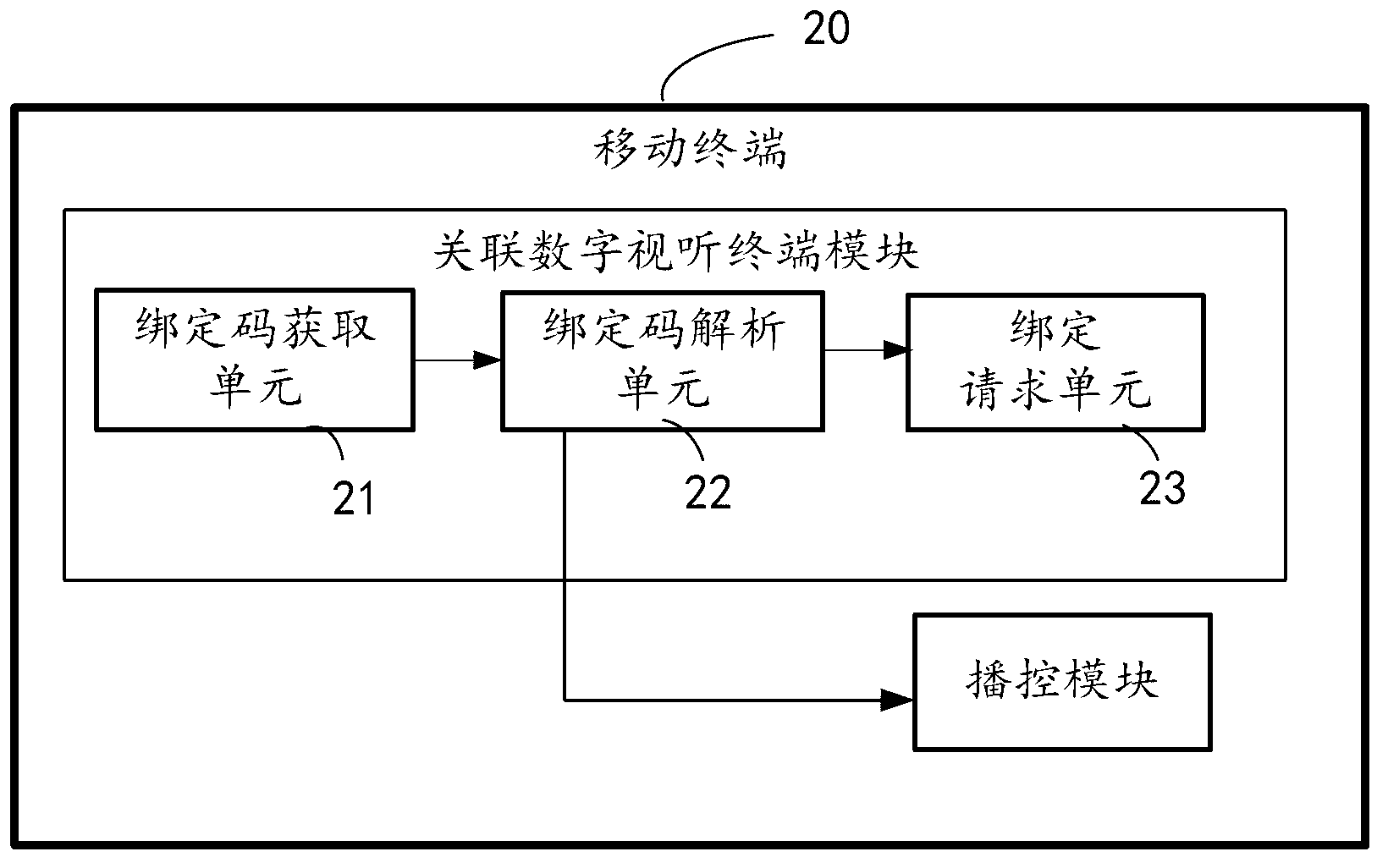

Method for binding mobile terminal to digital audiovisual terminal, bind controlling method and bind controlling system

The invention discloses a method for binding a mobile terminal to a digital audiovisual terminal. The method includes: the digital audiovisual terminal acquiring the IP (internet protocol) address of a private cloud server, generating a binding code from its own IP address, the IP address of the private cloud server and a random code, and storing the binding code locally; the mobile terminal acquiring the binding code from the digital audiovisual terminal and parsing the IP address of the digital audiovisual terminal and the IP address of the private cloud server out of the binding code; the mobile terminal transmitting the binding code to the private cloud server; the private cloud server parsing the binding code to obtain the IP address of the digital audiovisual terminal and transmitting the binding code to that address; and the digital audiovisual terminal comparing the received binding code with the locally stored binding code, judging whether the two are identical, and marking the binding code as a legal binding code when they are identical.

Owner:福建凯米网络科技有限公司
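
The binding-code exchange in the abstract above can be sketched in Python as follows. The encoding (fields joined with `|` and Base64-encoded), the 8-byte random code, and the function names are assumptions made for illustration; the abstract does not state how the binding code is encoded, and network transport is omitted.

```python
import base64
import hmac
import secrets


def make_binding_code(terminal_ip, server_ip):
    """Terminal side: combine both IP addresses with a random code."""
    nonce = secrets.token_hex(8)  # assumed form of the random code
    raw = f"{terminal_ip}|{server_ip}|{nonce}"
    return base64.urlsafe_b64encode(raw.encode()).decode()


def parse_binding_code(code):
    """Mobile terminal or cloud server side: recover the two IP addresses."""
    raw = base64.urlsafe_b64decode(code.encode()).decode()
    terminal_ip, server_ip, nonce = raw.split("|")
    return terminal_ip, server_ip, nonce


def is_legal(received_code, stored_code):
    """Terminal side: accept only a code identical to the locally stored one."""
    return hmac.compare_digest(received_code, stored_code)


# 1. The terminal generates and stores the code (IP addresses are examples).
stored = make_binding_code("192.168.1.20", "10.0.0.5")
# 2. The mobile terminal obtains the code and learns where the cloud server is.
_, server_ip, _ = parse_binding_code(stored)
# 3. The cloud server parses the code and forwards it to the terminal's address.
terminal_ip, _, _ = parse_binding_code(stored)
# 4. The terminal compares the forwarded code with its stored copy.
print(terminal_ip, server_ip, is_legal(stored, stored))
```

Note that Base64 is an encoding, not encryption: anyone holding the code can read both IP addresses, so a real deployment would need additional protection that this sketch does not provide.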

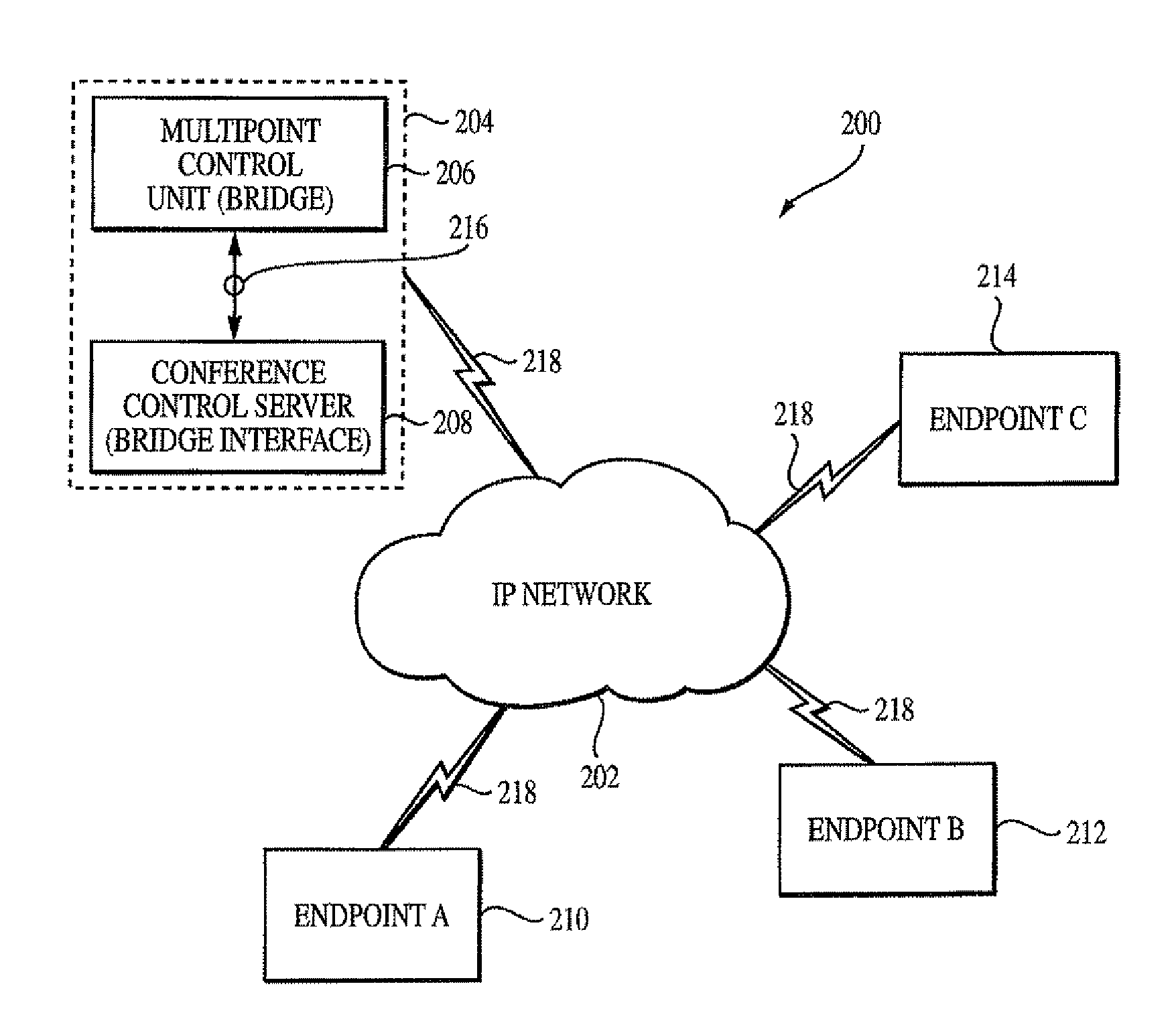

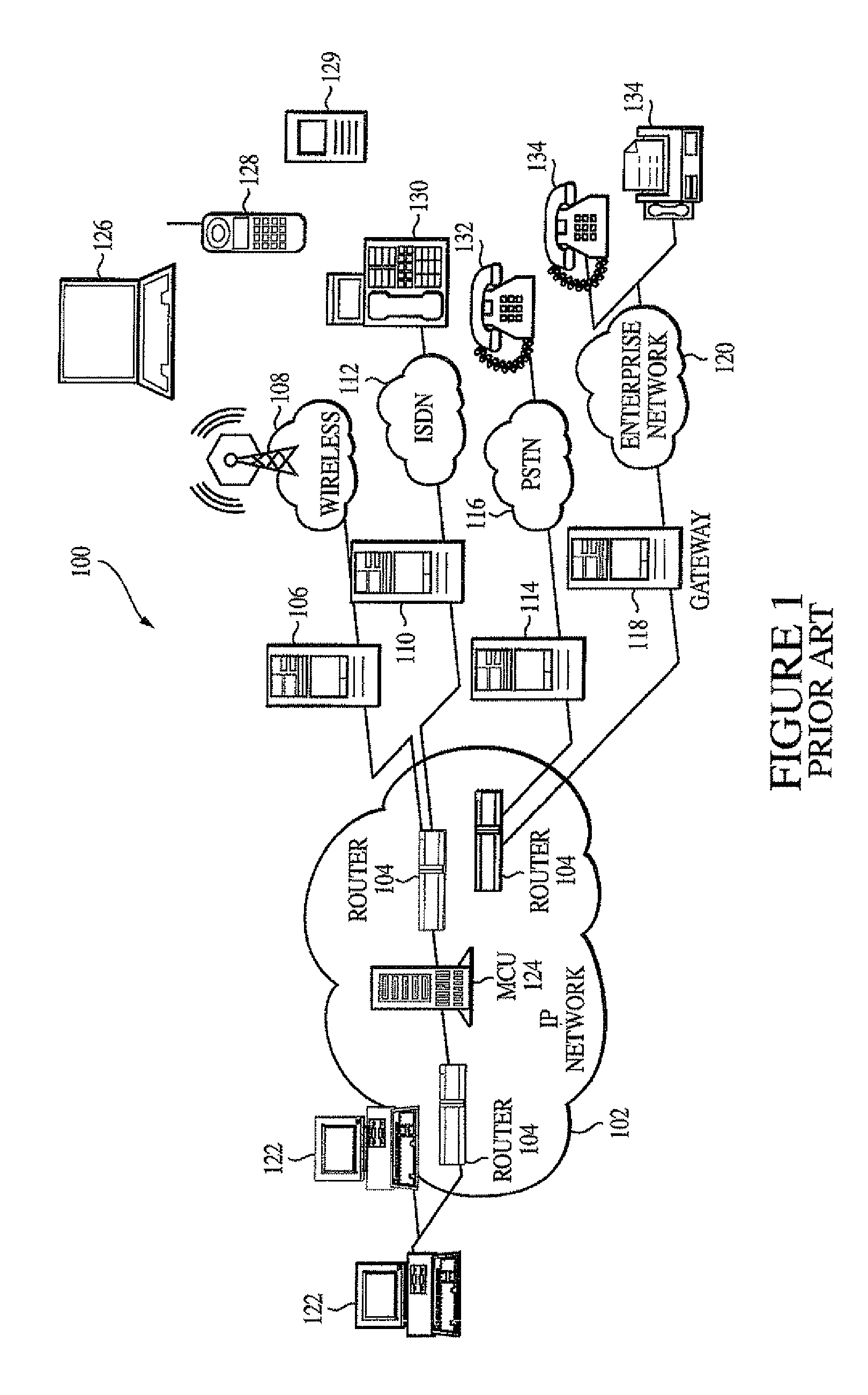

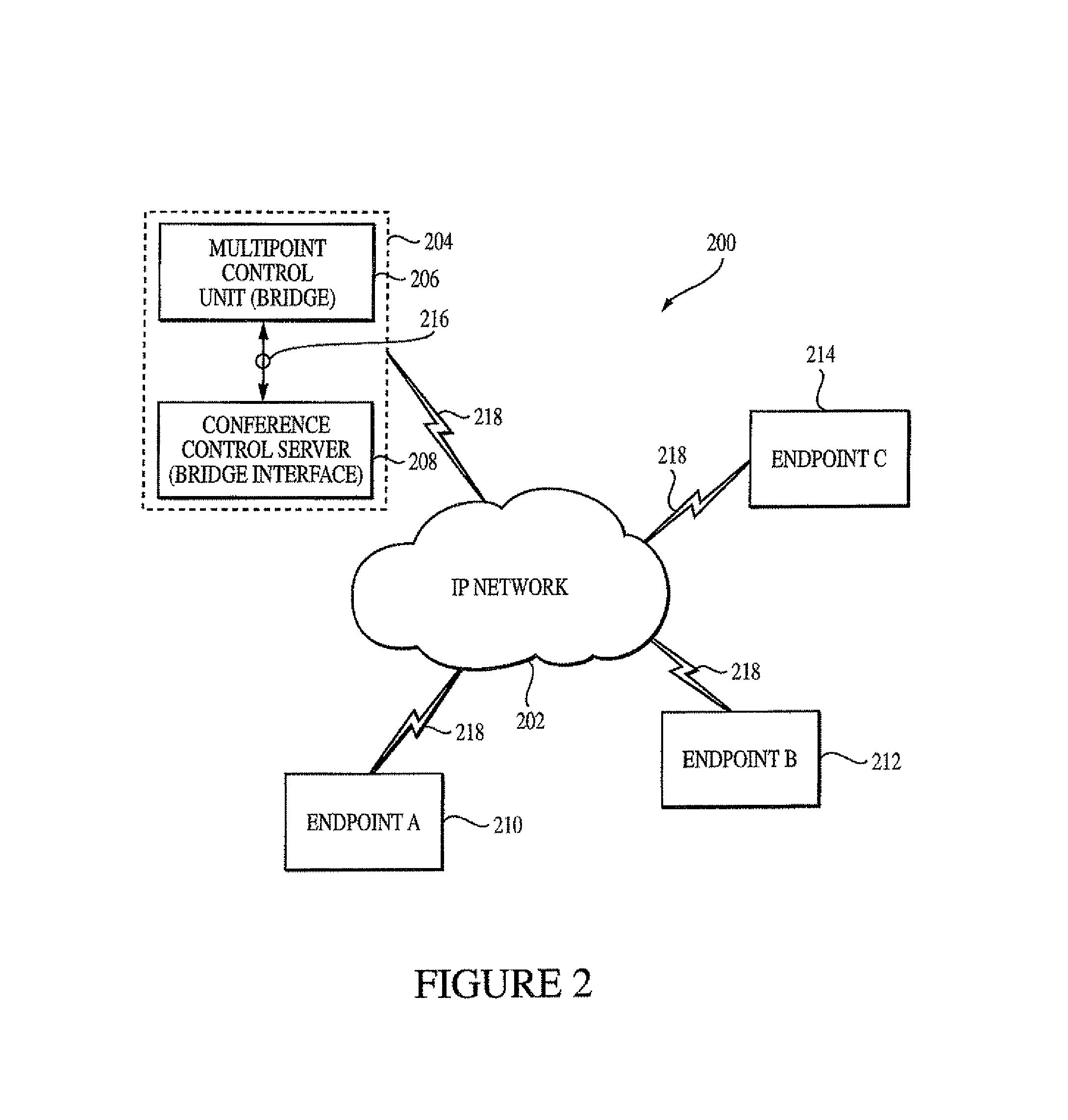

Multipoint audiovisual conferencing system

Inactive | US7292544B2 | Special service provision for substation | Television conference systems | Telecommunications | Multipoint control unit

Owner:RAMBUS INC

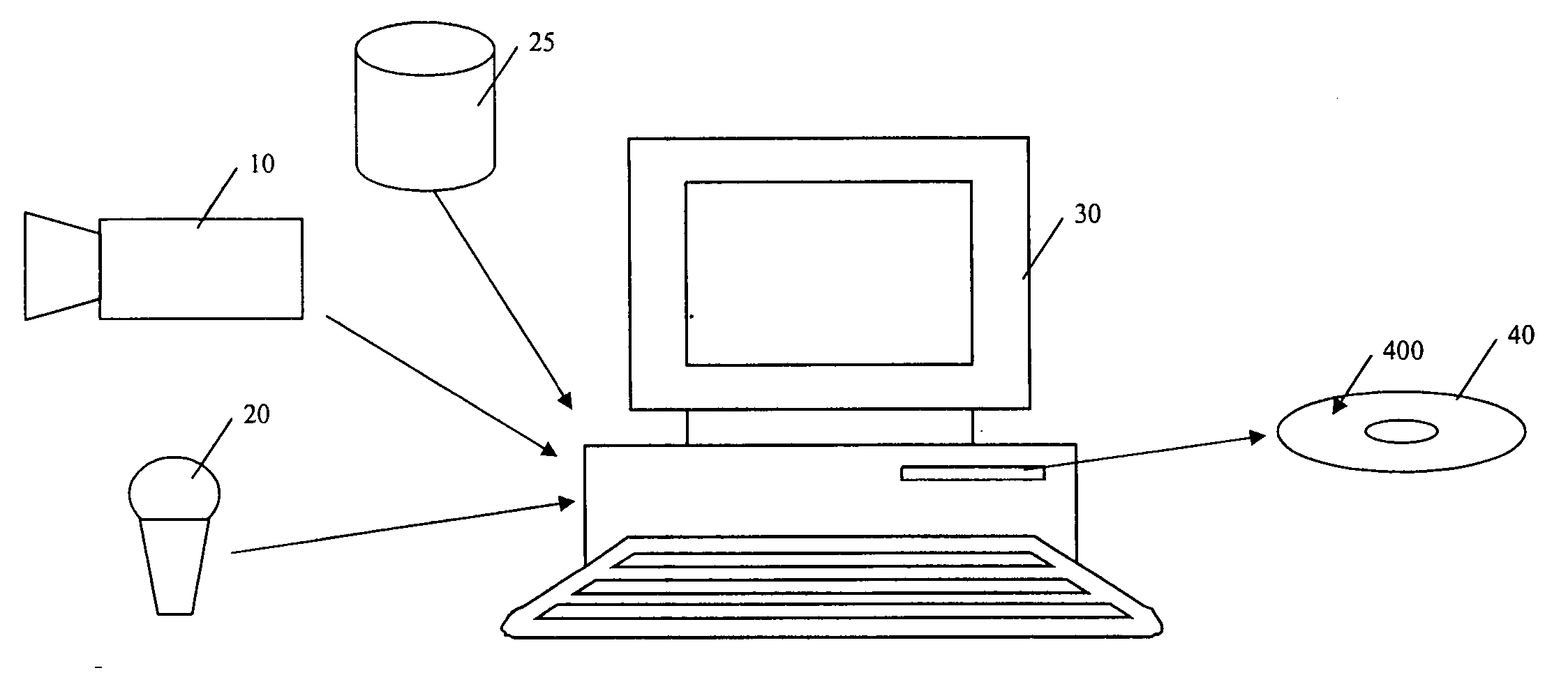

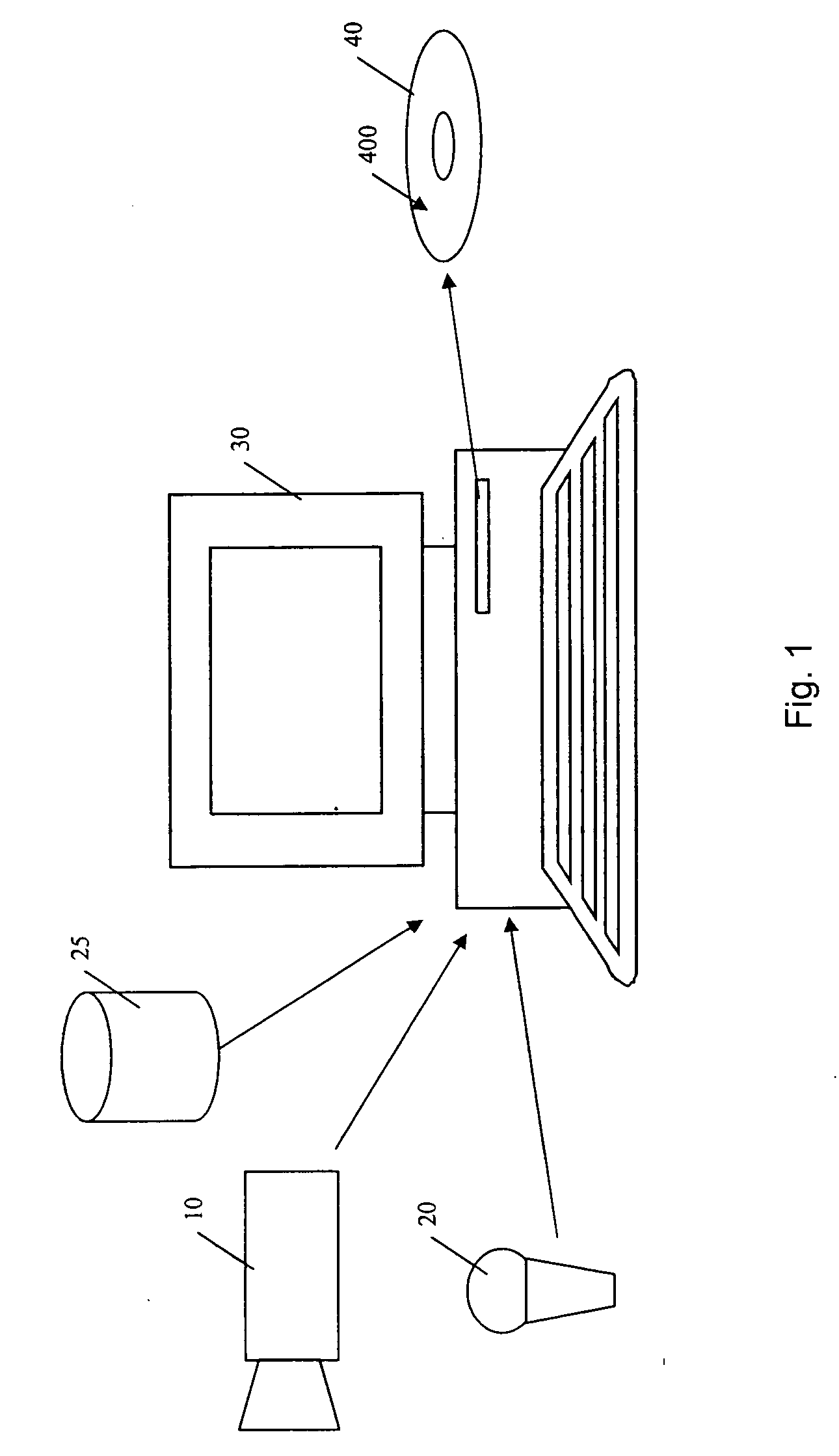

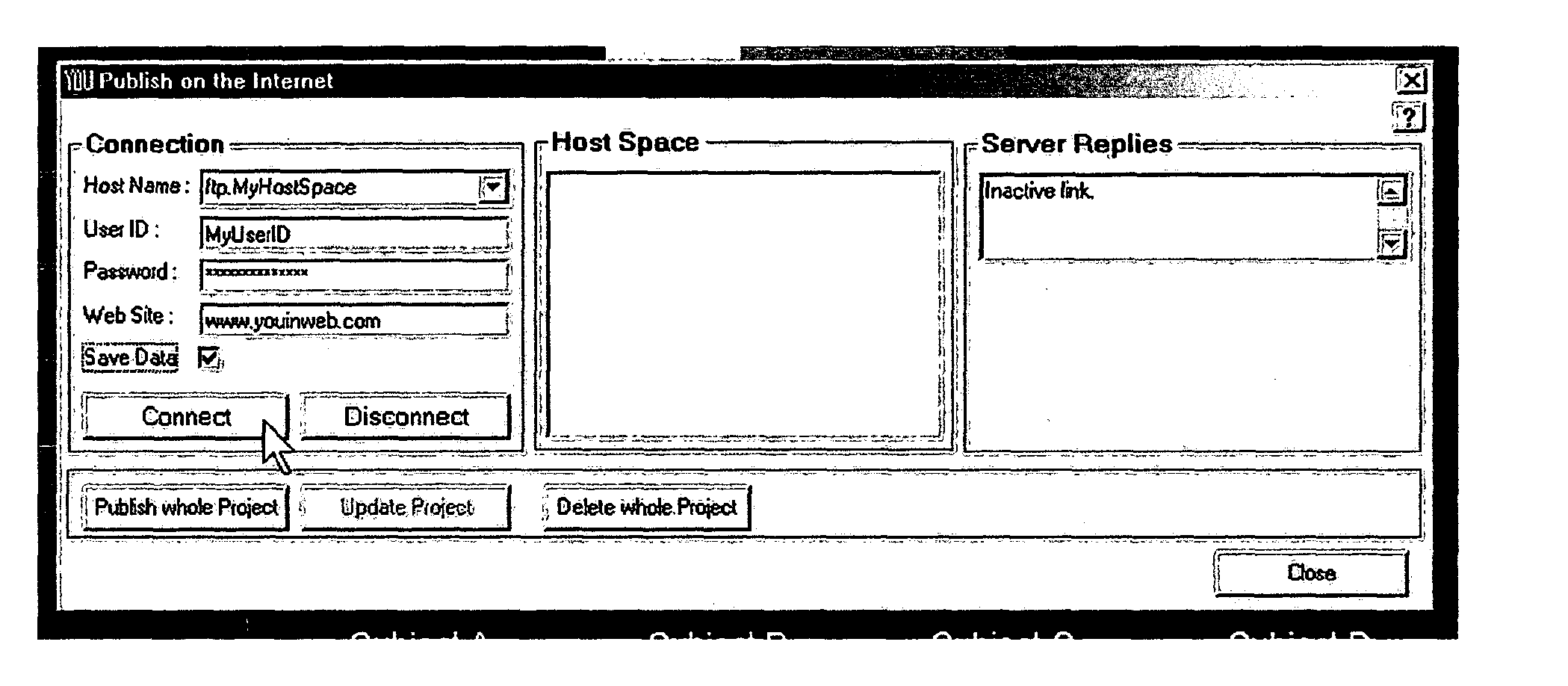

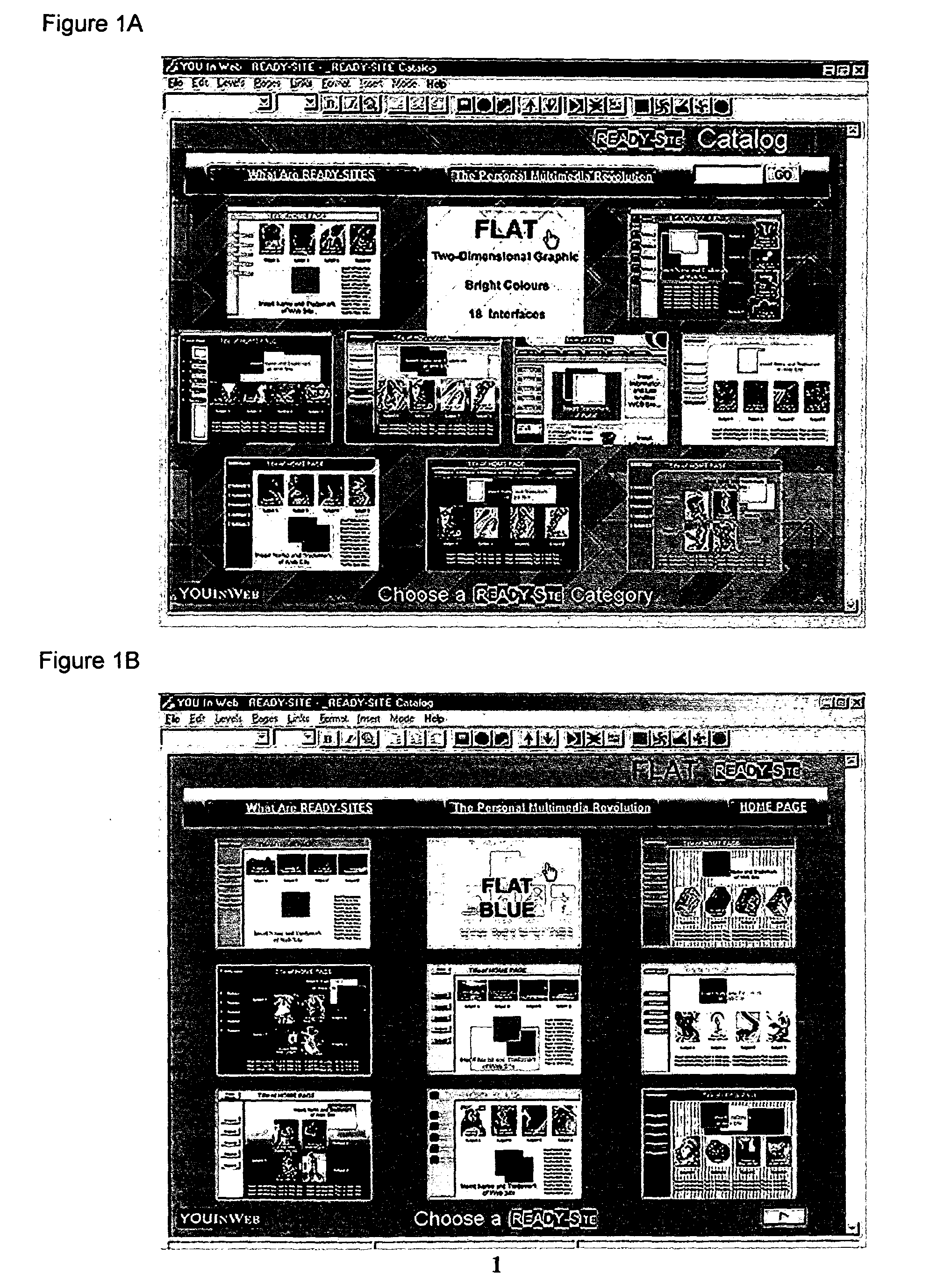

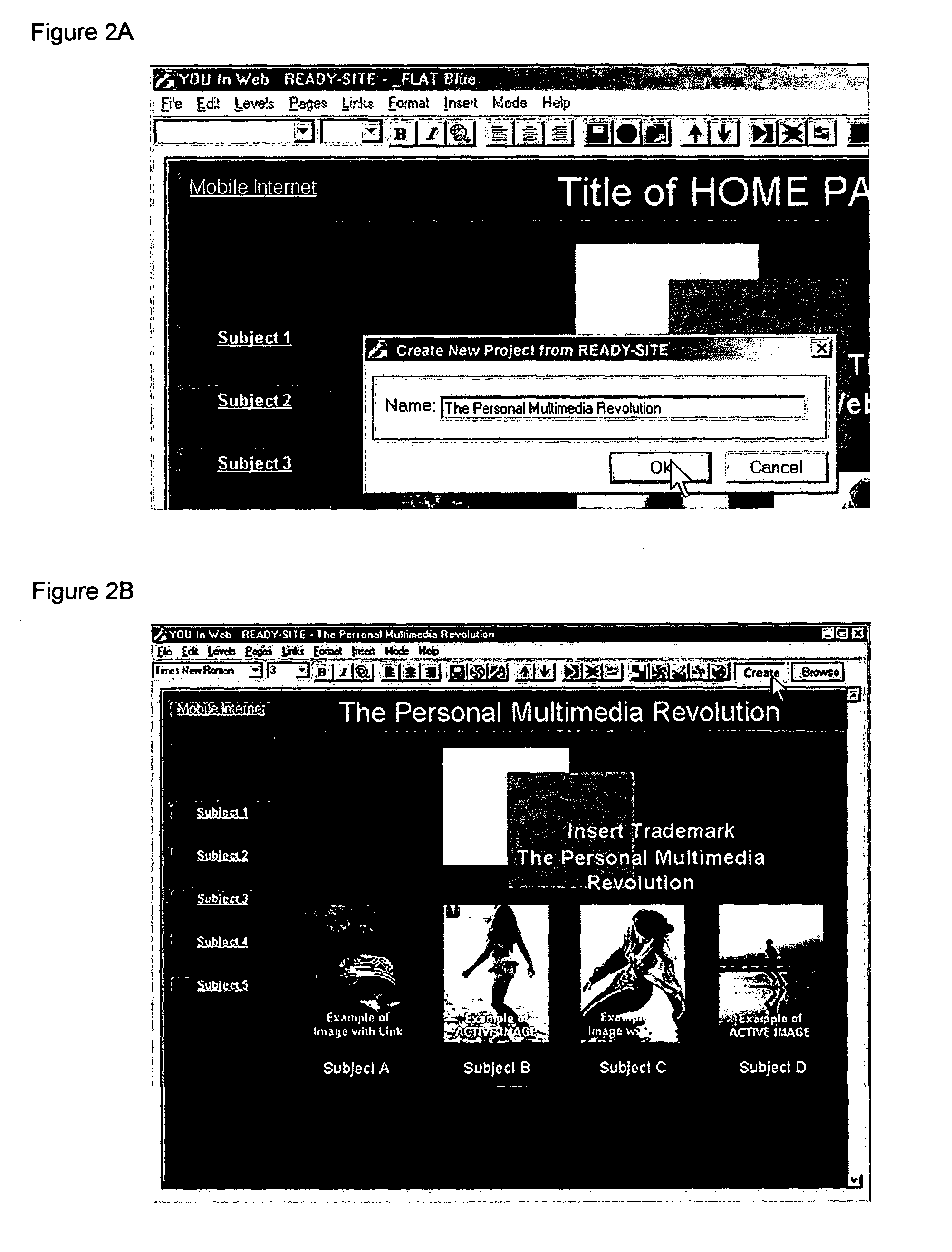

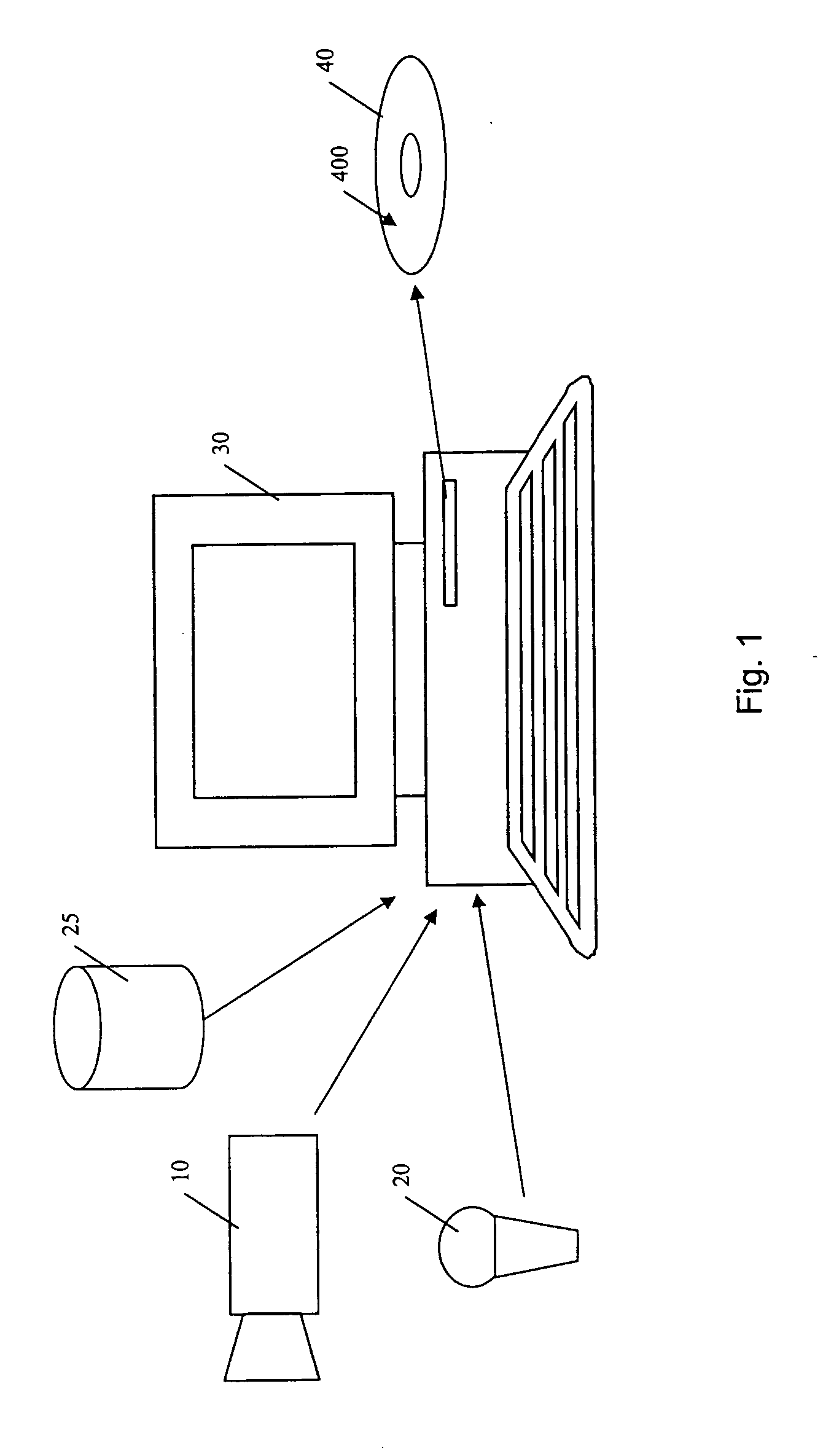

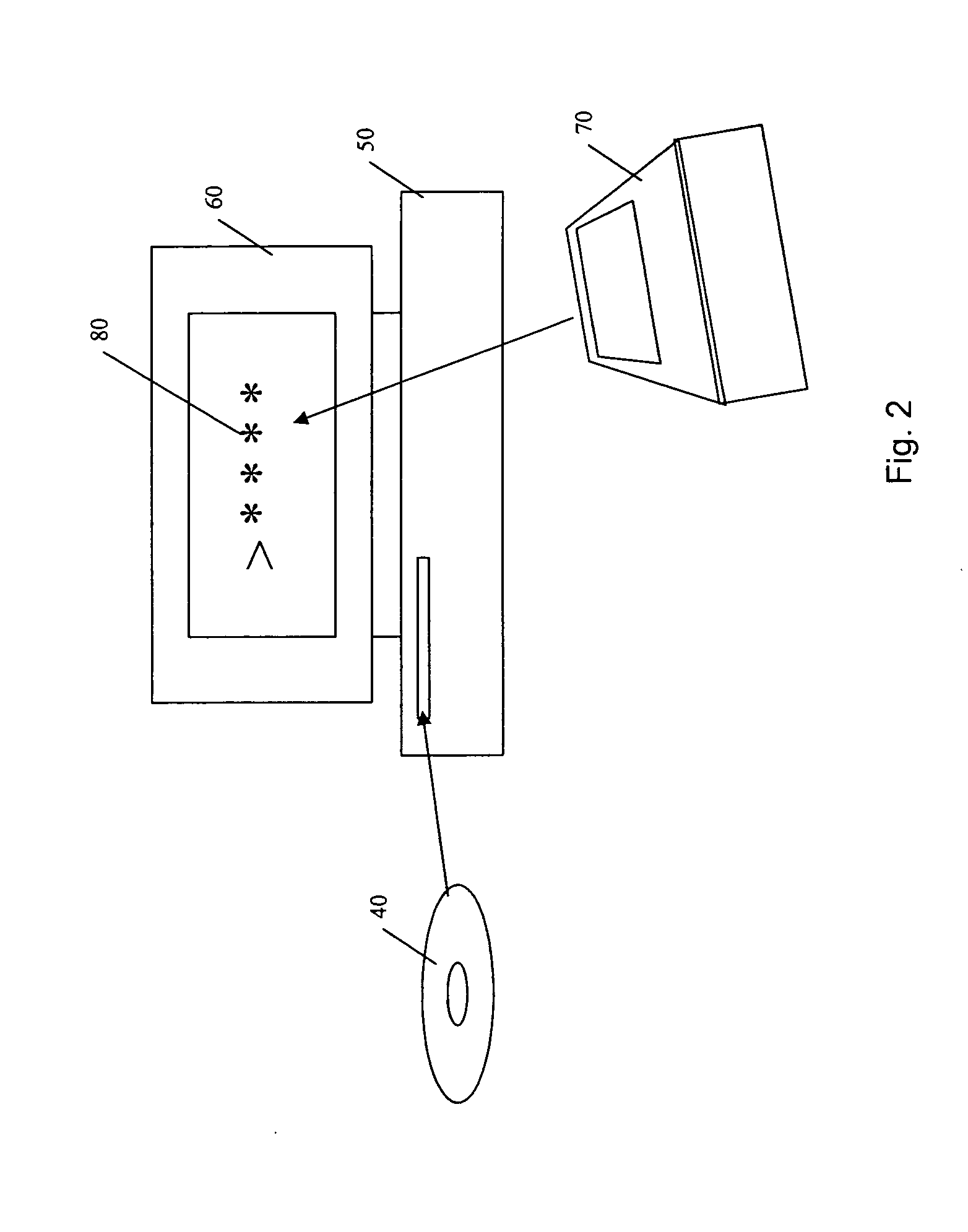

System, method and computer program product for the immediate creation and management of websites and multimedia audiovisuals for CD-ROM ready-to-use and already perfectly operating

A system, method and computer program product are provided for the immediate creation and management of websites and multimedia audiovisuals for CD-ROM that are ready to use and fully operational. The program is easy-to-use software which enables anyone who knows how to surf the web and write an e-mail to create professional-level websites and multimedia audiovisuals at once, avoiding the technical problems related to programming, graphical layout, connections, interactivity, etc. The software's functions are applied with “Just One Click”: to create a search engine inside the website, to add new pages that are already linked, to create slide-show presentations with fading images, to offer any file for download, to insert several videos on a single web page, to update or add data, to publish on the Internet, and to create multimedia audiovisuals for CD-ROM.

Owner:YOUNIVERSE

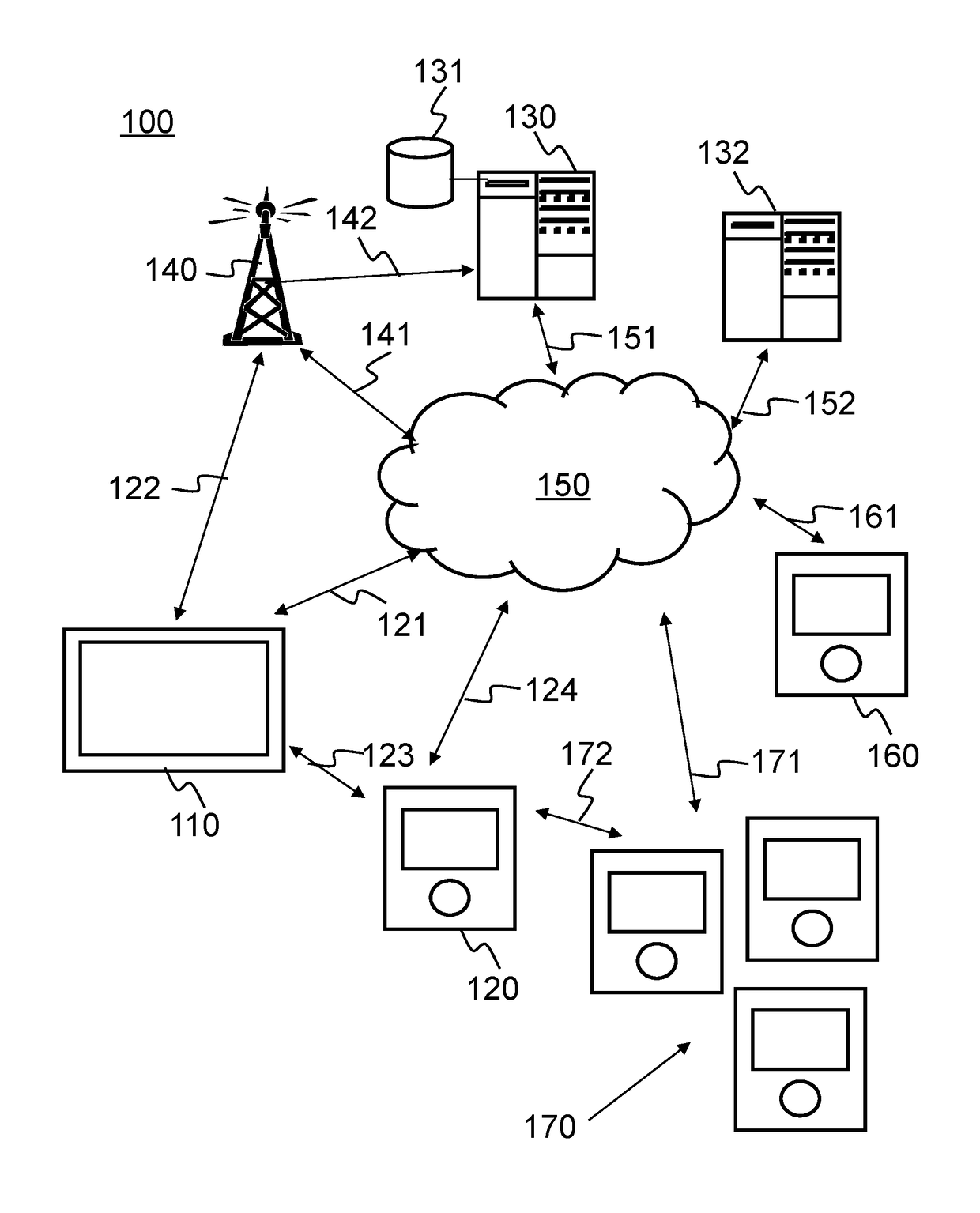

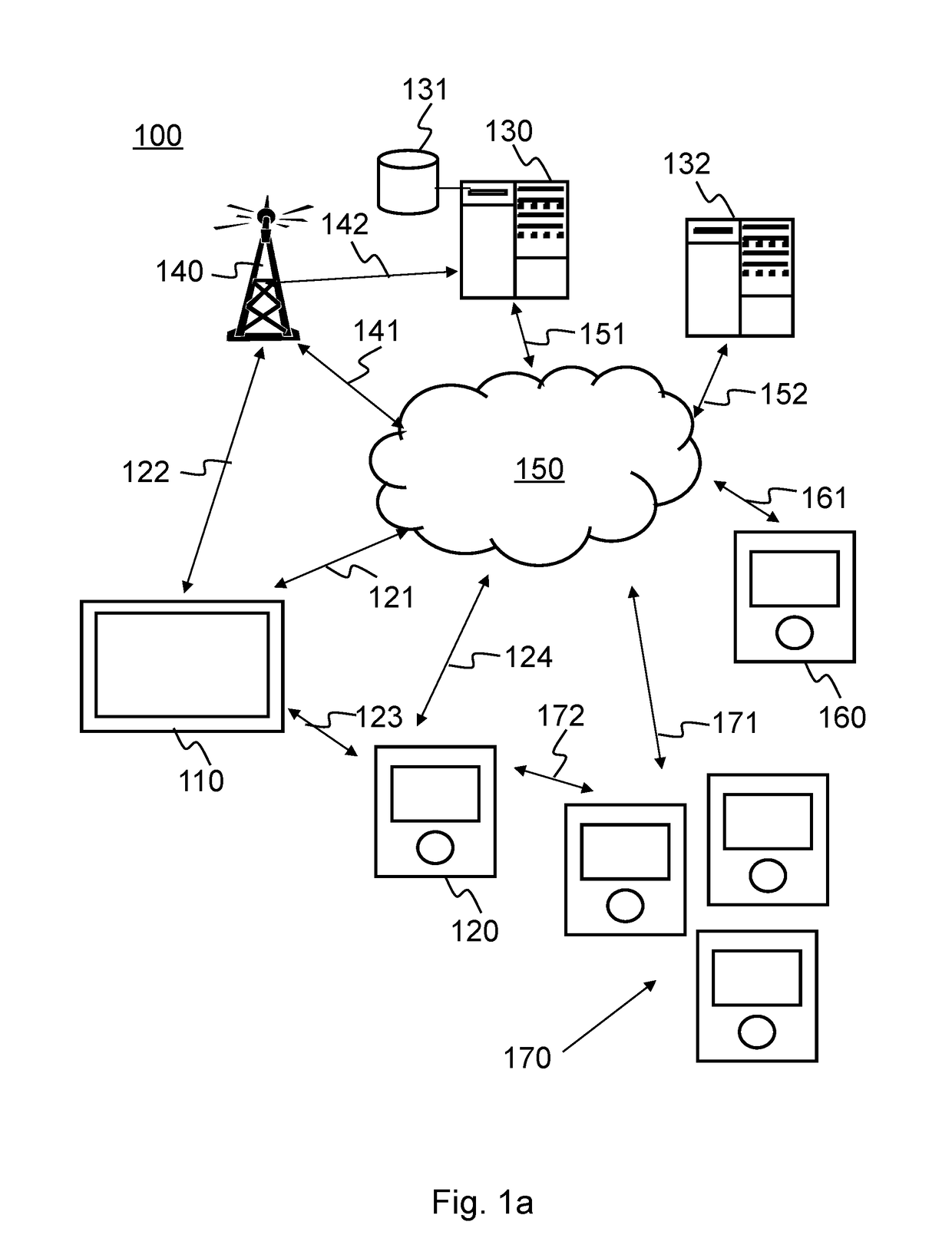

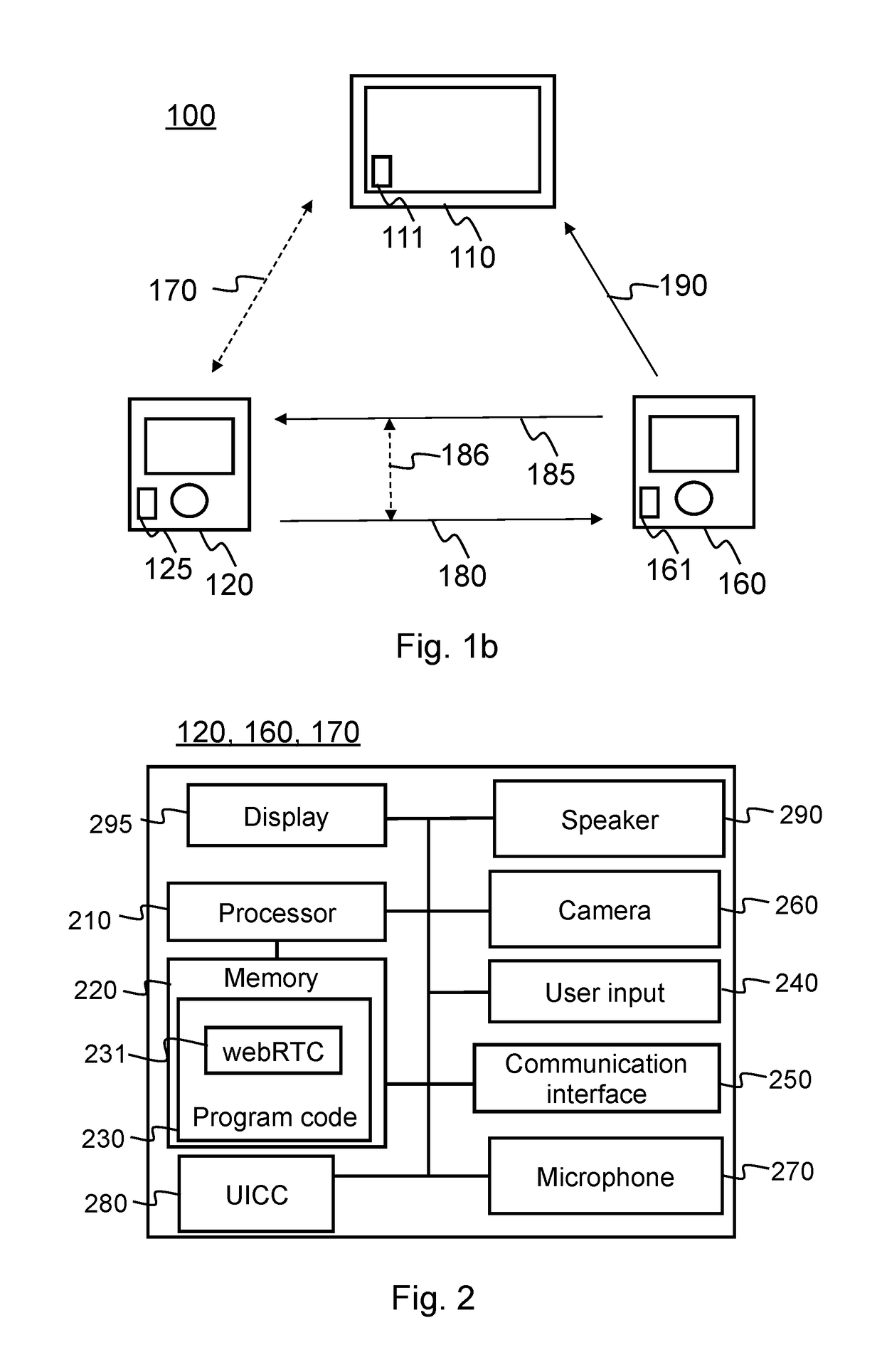

Computer implemented method for providing multi-camera live broadcasting service

A computer-implemented method for providing a multi-camera live video capturing and broadcasting service, comprising: connecting a master device via a local network or a public communication network to at least one wireless slave device using a peer connection; establishing a real-time/live session associating the master device and the at least one wireless slave device; capturing real-time content of audiovisual (AV) input signals using the at least one wireless slave device and transmitting the real-time content to a mixer application of the master device; decoding and mixing the received real-time content by the mixer application; encoding the mixed real-time content by the mixer application; and broadcasting the encoded real-time content by the mixer application to a streaming service of the public communication network.

Owner:TELLYBEAN

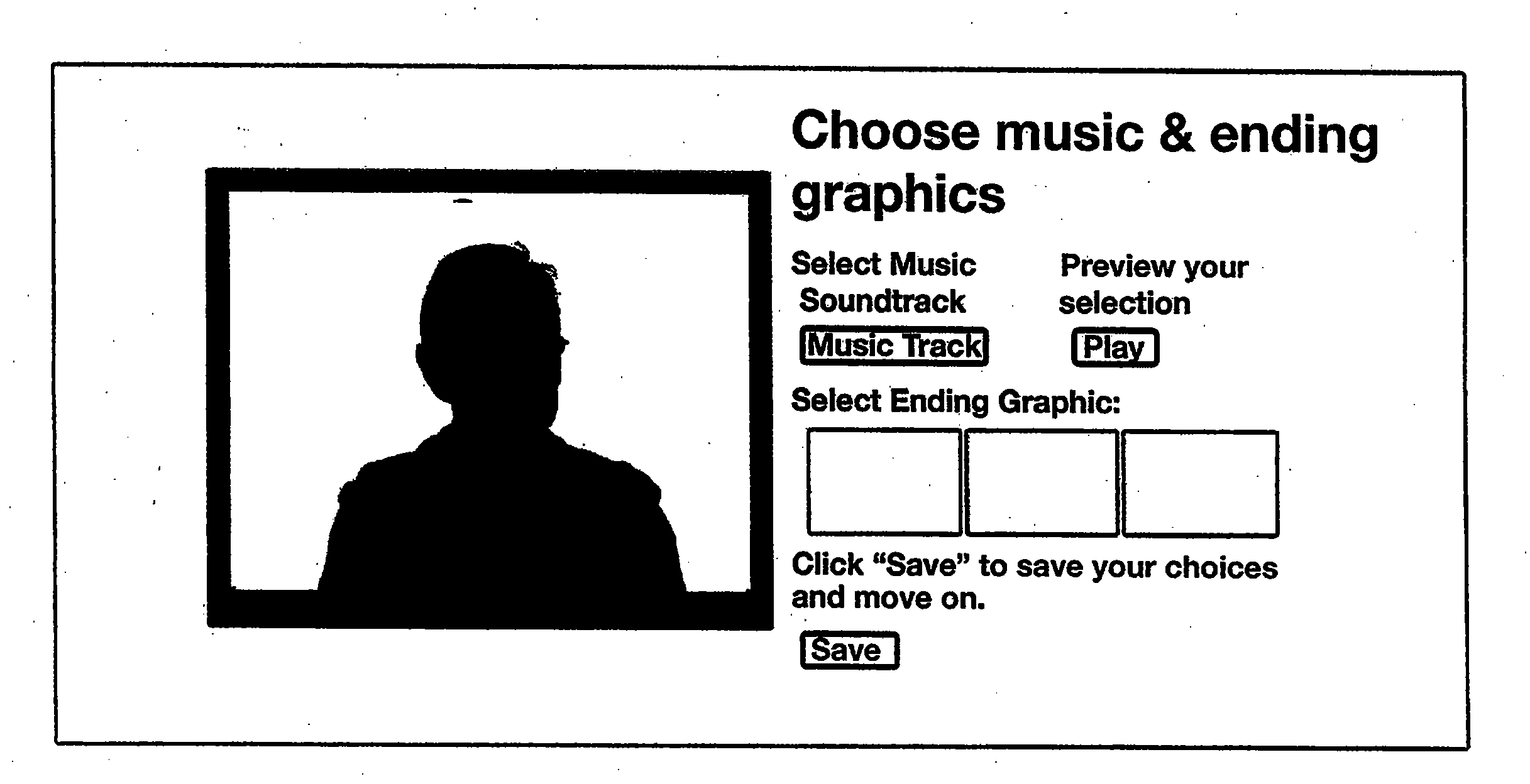

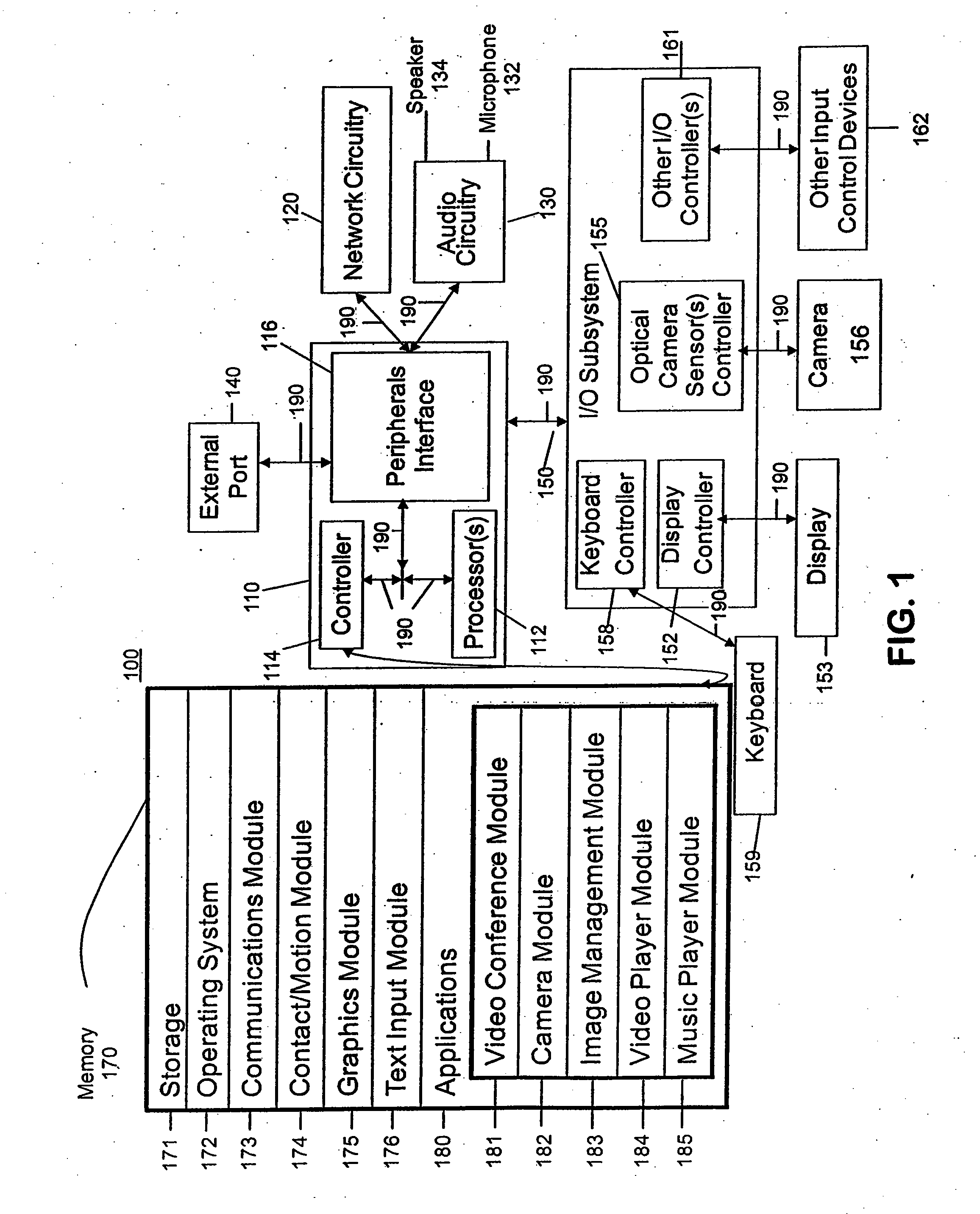

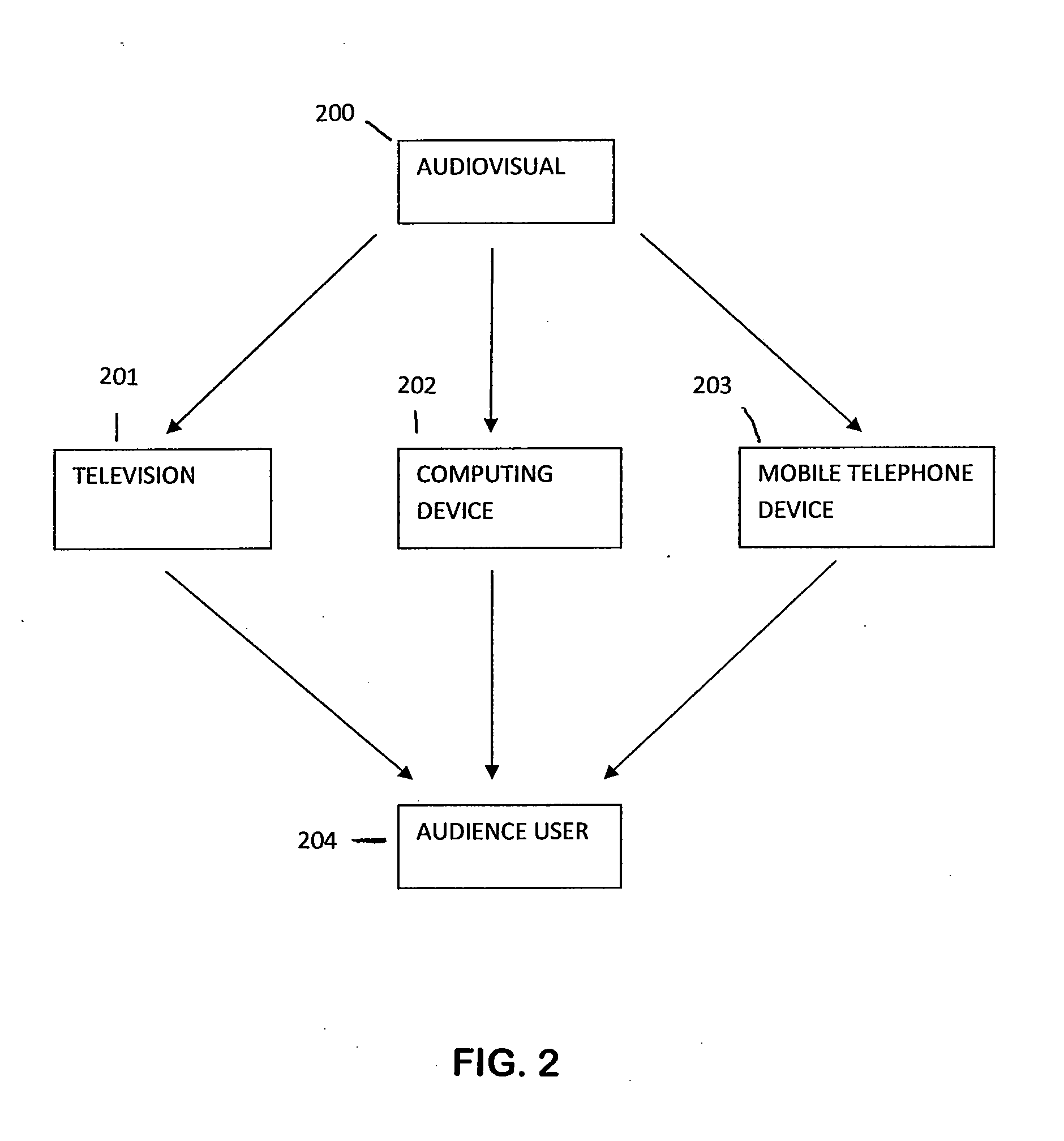

Computer device, method, and graphical user interface for automating the digital transformation, enhancement, and database cataloging of presentation videos

Inactive | US20100293061A1 | Save resources | Shorten the time | Advertisements | Multimedia data retrieval | Graphics | Digital transformation

A computer-implemented method is described for receiving at a computer identification of a product or service that is to be the subject of a video presentation, generating at the computer step-by-step guidance to a user specific to the subject of the video presentation on what to say in the video presentation, when to say it and how to record the subject of the video presentation, providing the step-by-step guidance from the computer to the user, receiving the video presentation at the computer and editing the video presentation at the computer. In some embodiments, the presentation is automatically processed to enhance it by combining it with additional audiovisual material, to compress it, to format it for Internet or broadcast delivery, and/or to route it for Internet or broadcast delivery. Computer apparatus for performing these steps is also described.

Owner:THE TALK MARKET

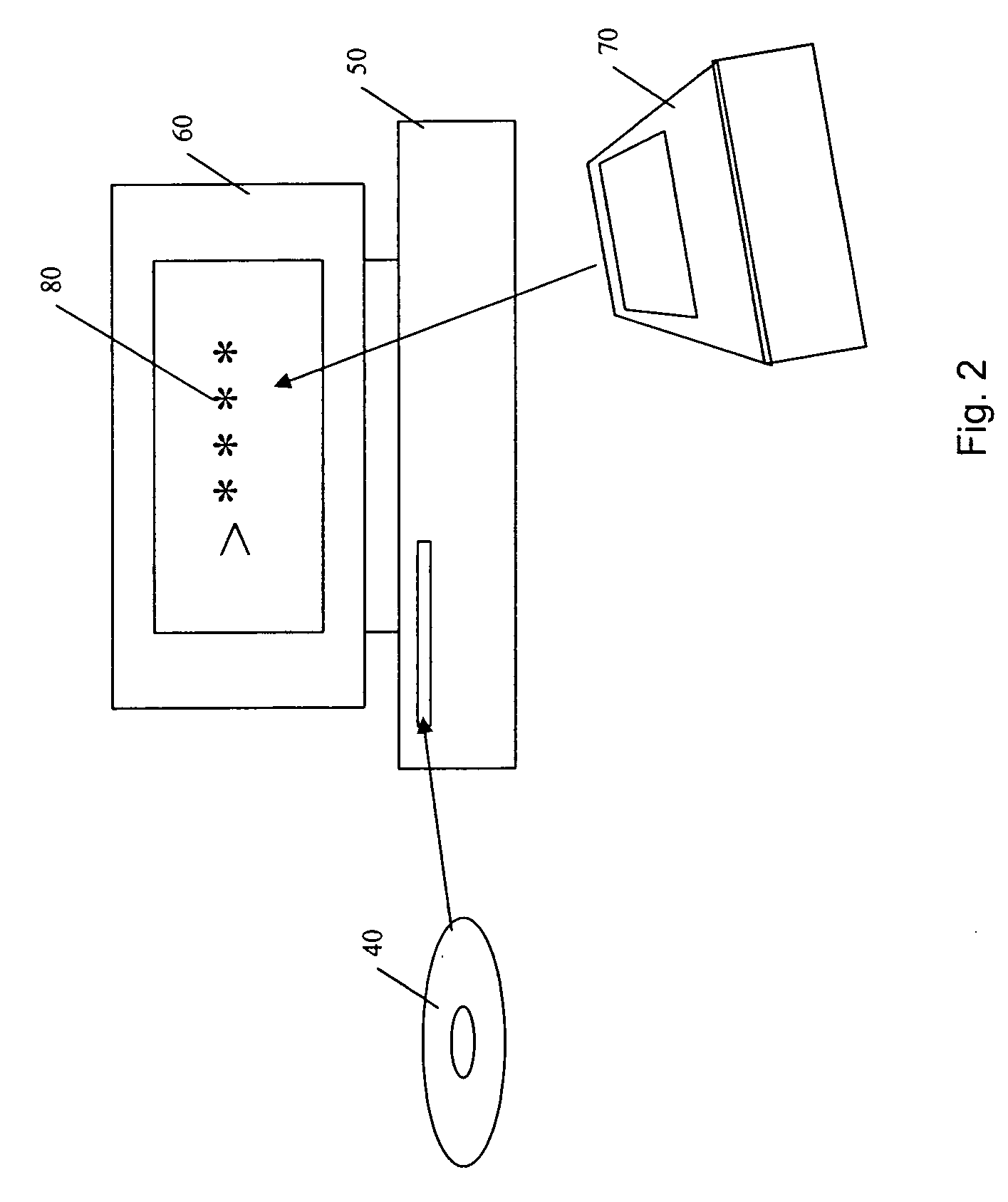

Scrambled video streams in an audiovisual product

Active | US20050022232A1 | Limited access | Simple but effective | Television system details | Electronic editing digitised analogue information signals | Computer graphics (images) | Audiovisual technology

Access to an audiovisual product is controlled, through the use of scrambled video streams. Cells 420 of audiovisual data A,B,C1,D are scrambled into at least first and second video streams 1901, 1902. A video stream switch instruction 2011, 2021 automatically switches between at least first and second video streams 1901, 1902 during reproduction, so that cells are reproduced from an appropriate video stream 1901, 1902 at appropriate times. Multi-angle video objects 2020 hold the cells 420 in a selected video stream 1902, and hold nil or erroneous data C2 in other streams 1901.

Owner:ZOO DIGITAL

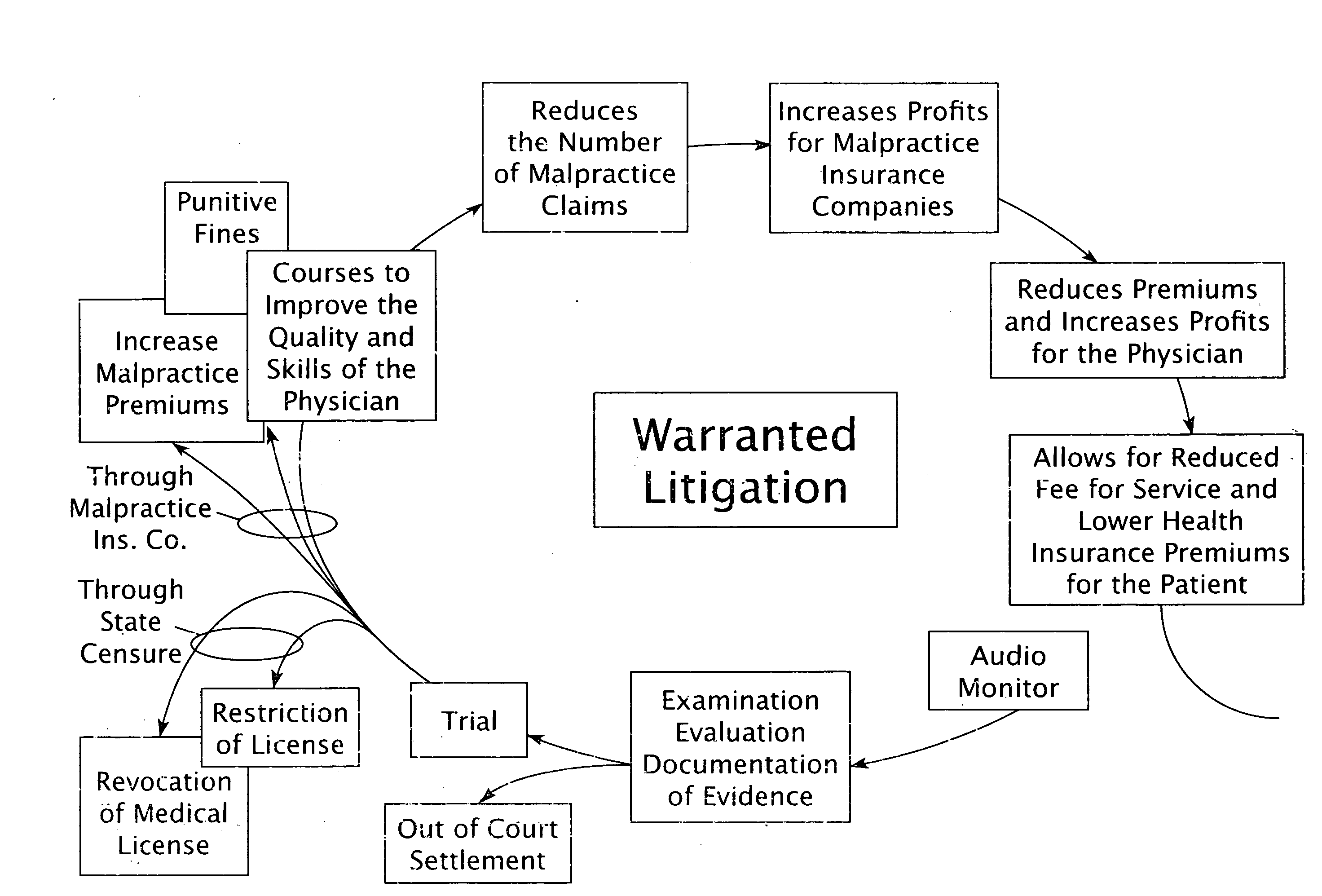

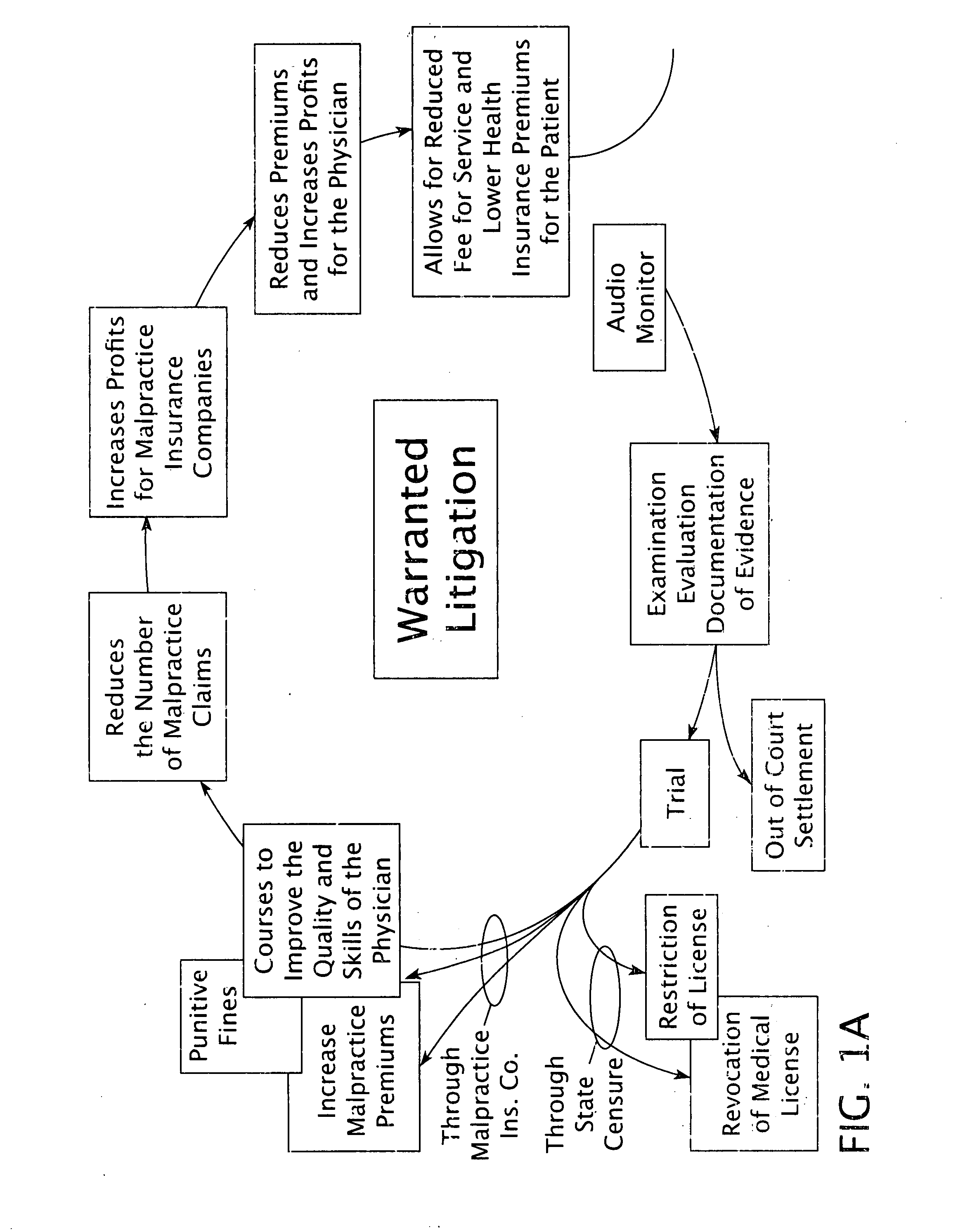

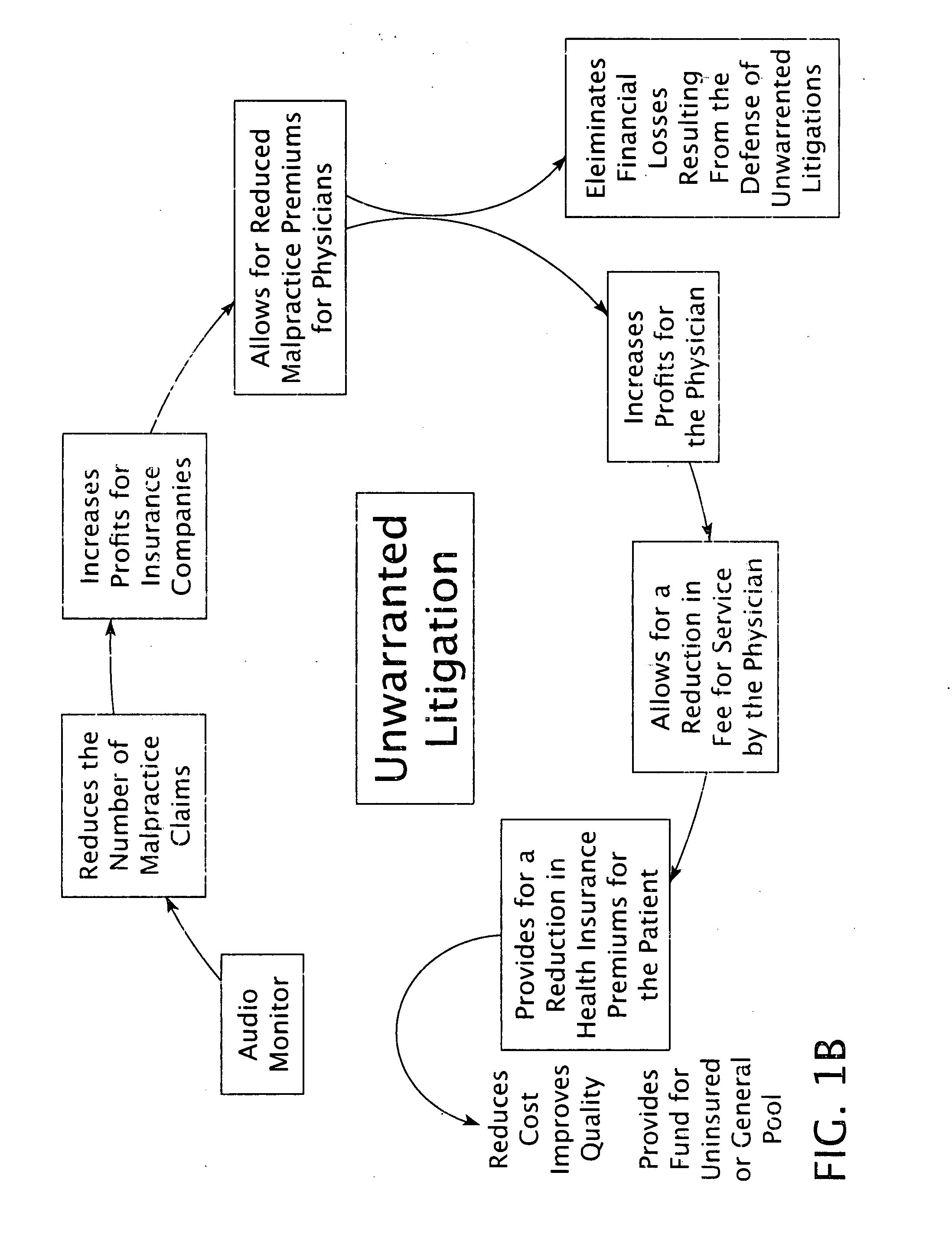

Healthcare management system

Inactive | US20070265888A1 | Reduced premium | Increase pain | Finance | Office automation | Management system | Audiovisual technology

Owner:CASTELLI DARIO DANTE
