406 results about "File caching" patented technology

File caching. By default, Windows caches file data that is read from and written to disks. Read operations therefore read file data from an area of system memory known as the system file cache, rather than from the physical disk.

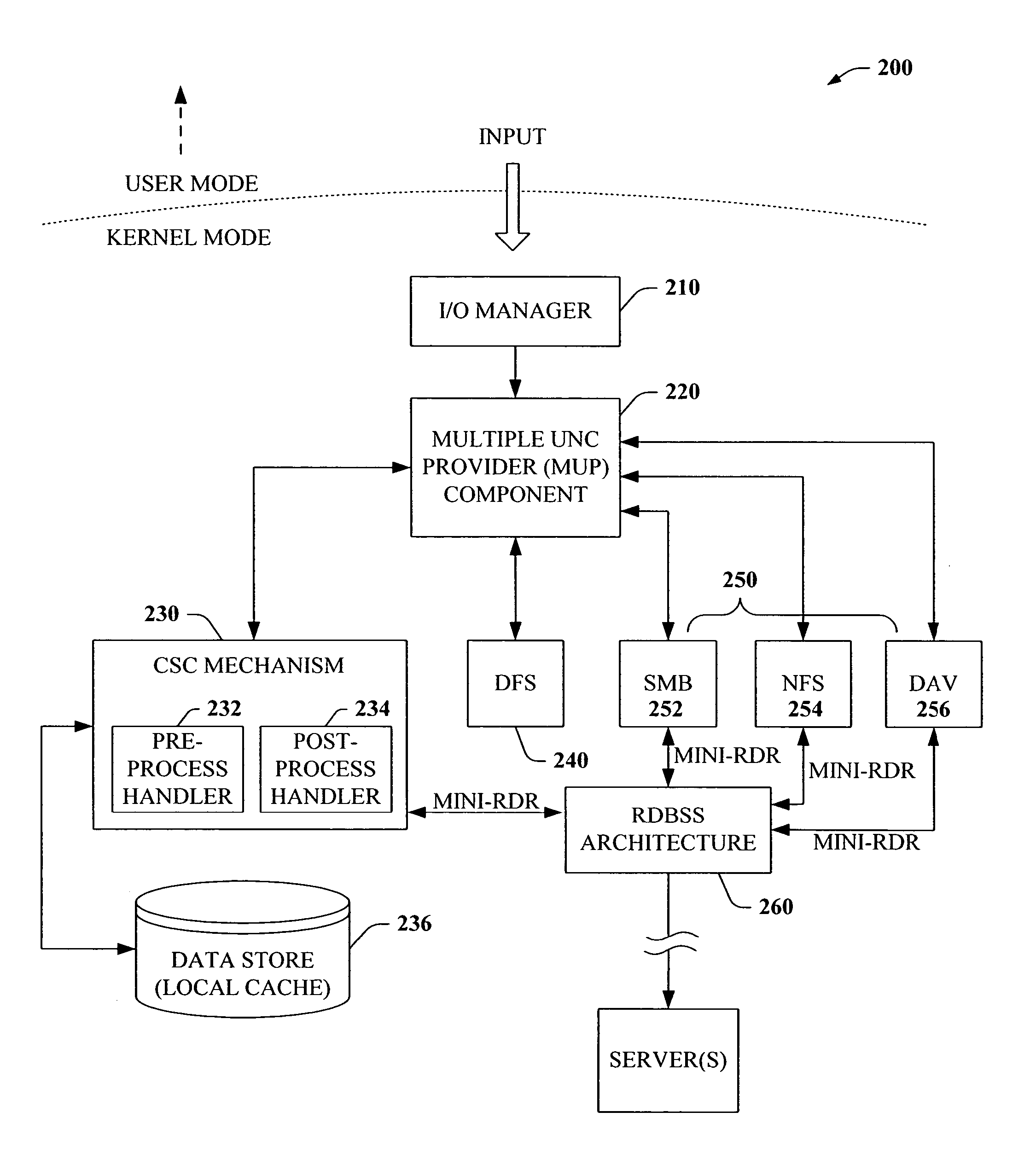

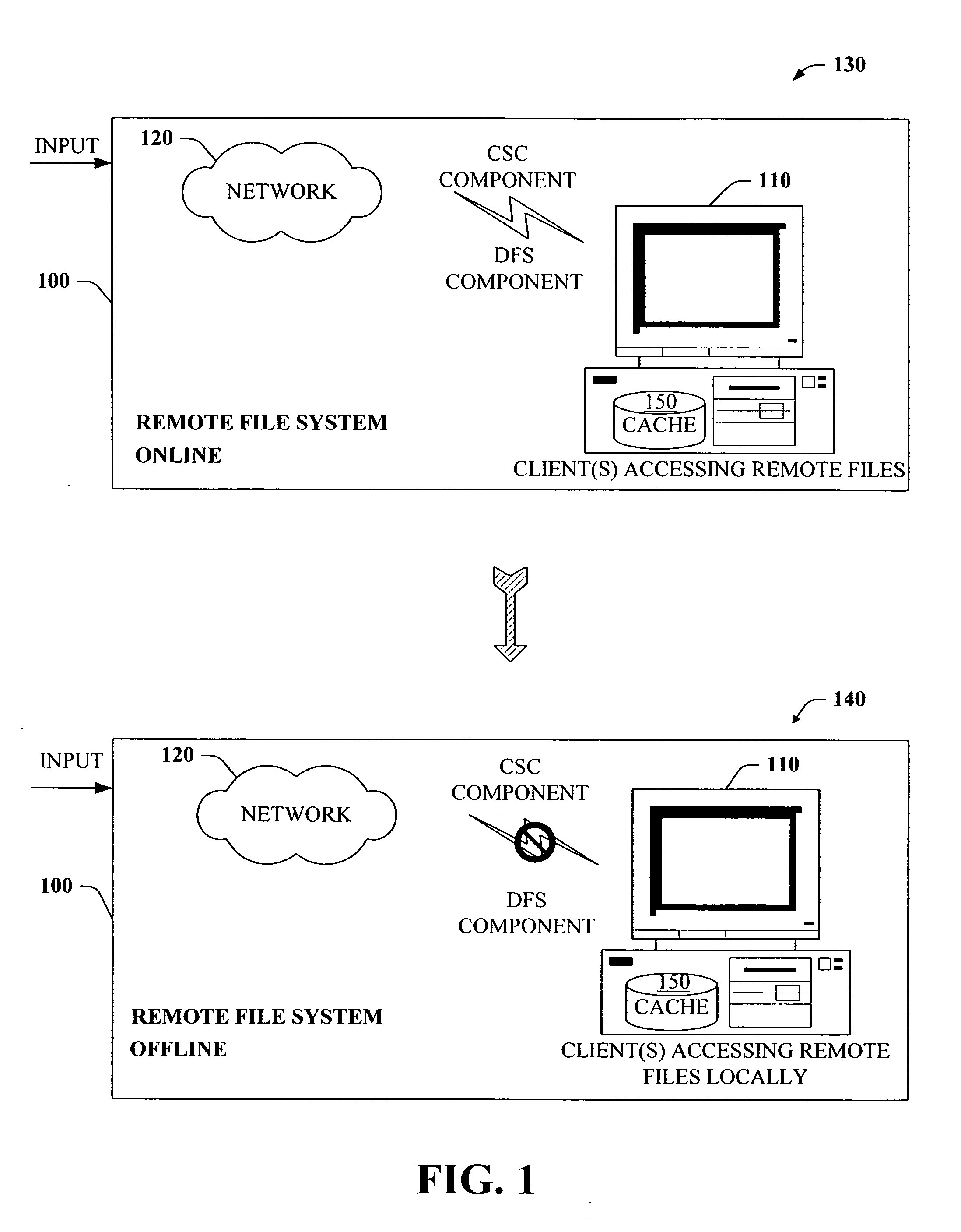

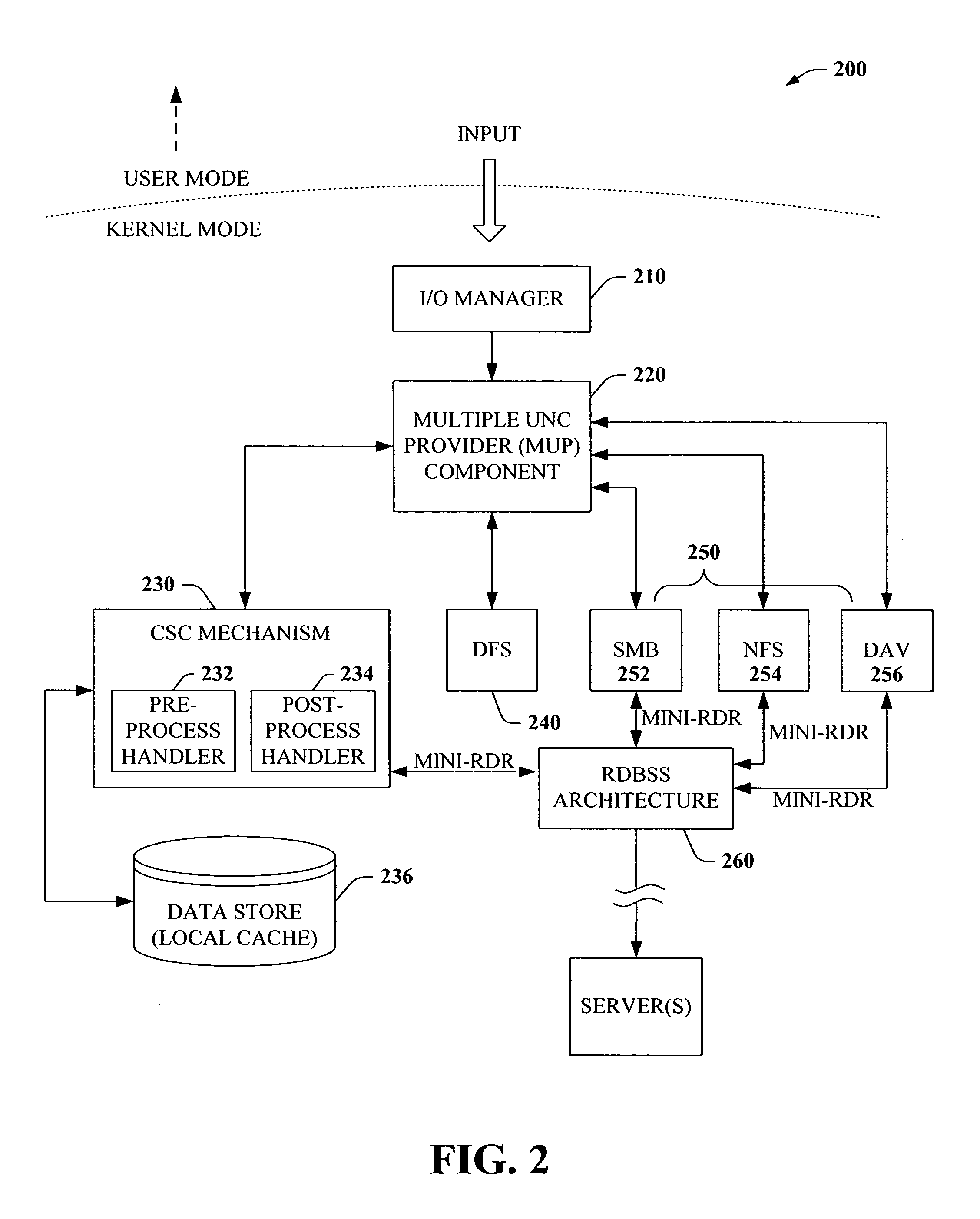

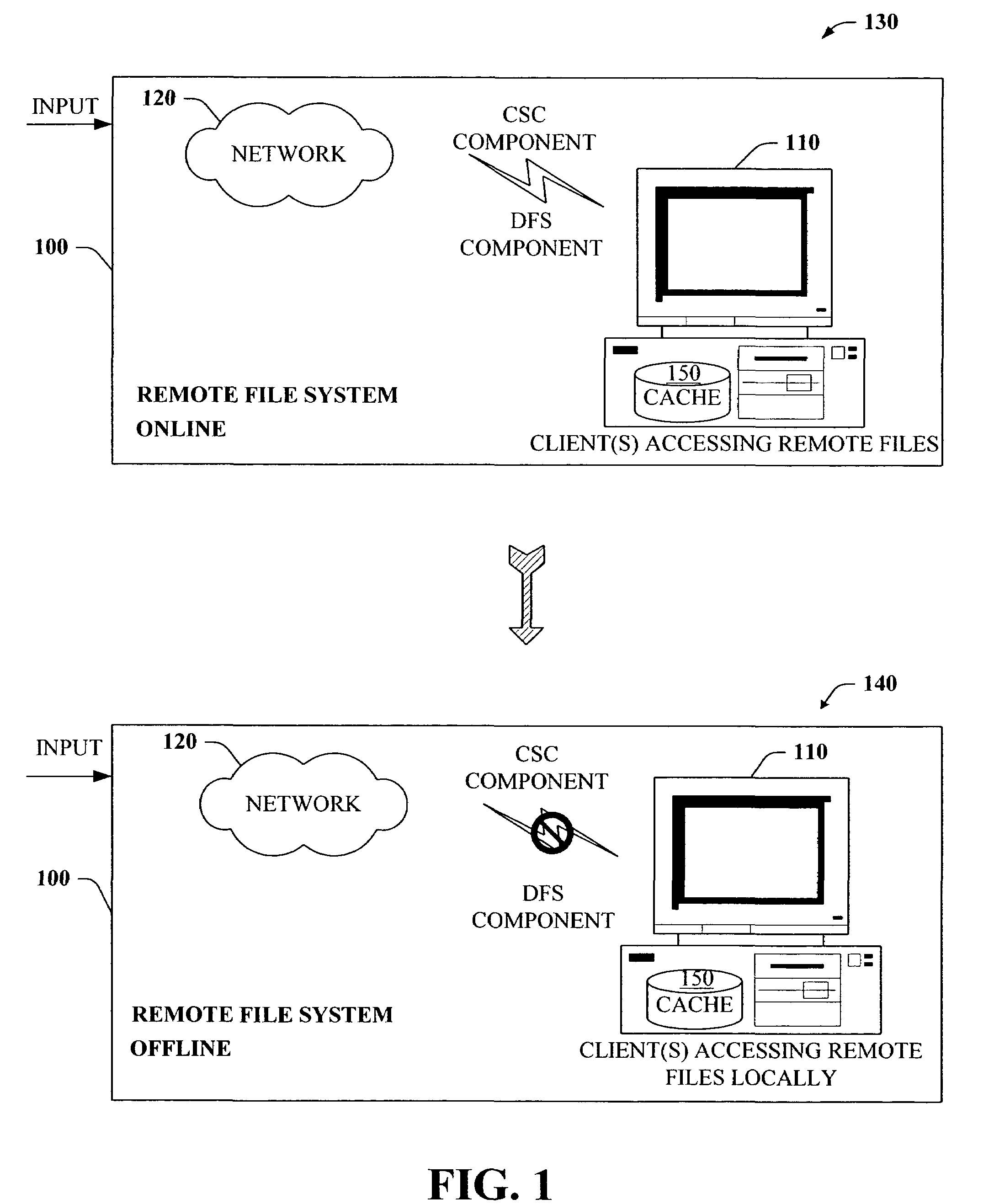

Truth on client persistent caching

Status: Active | Publication: US20050102370A1 | Tags: Easy to operate; Memory architecture accessing/allocation; Digital data information retrieval; Client data; Client-side

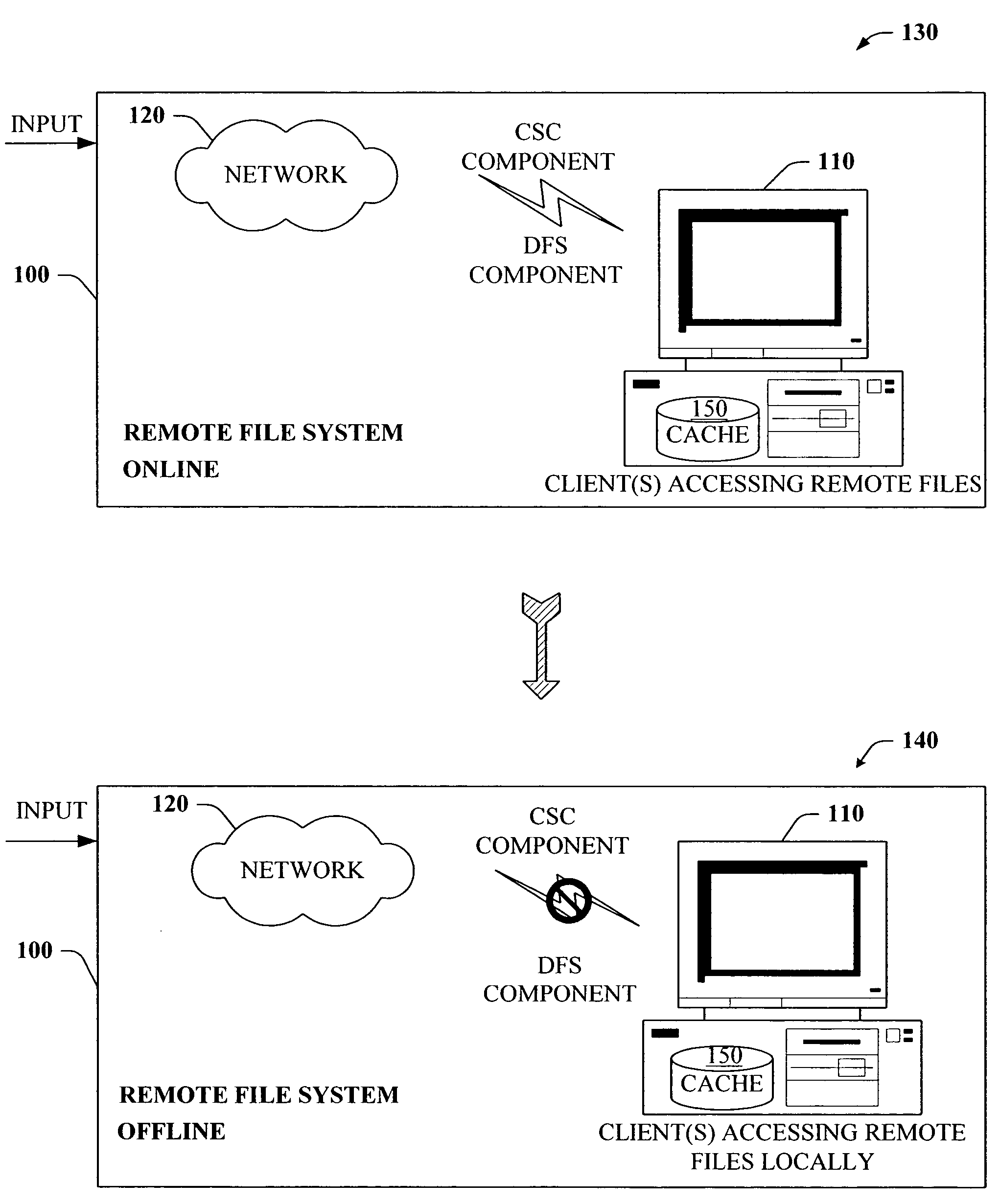

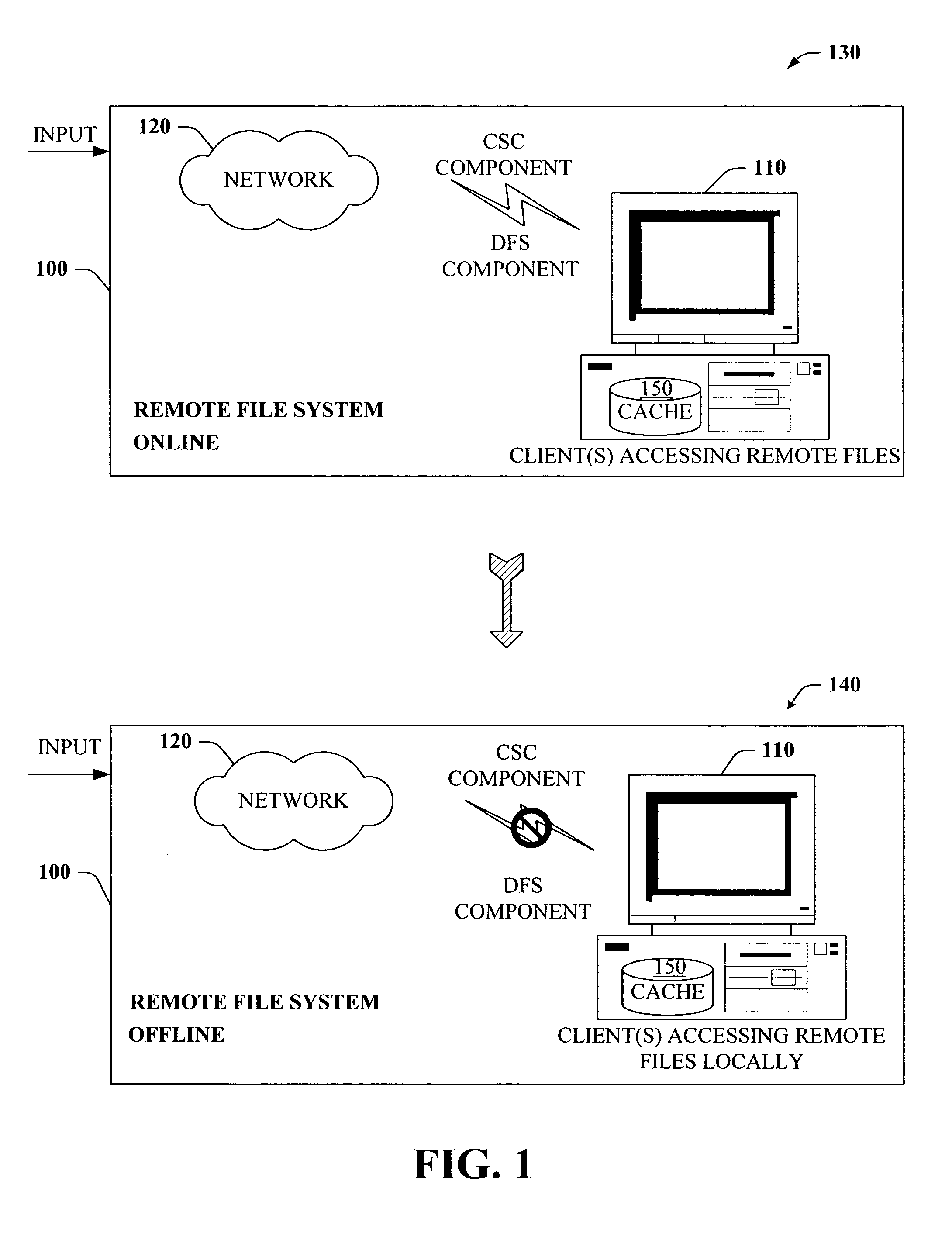

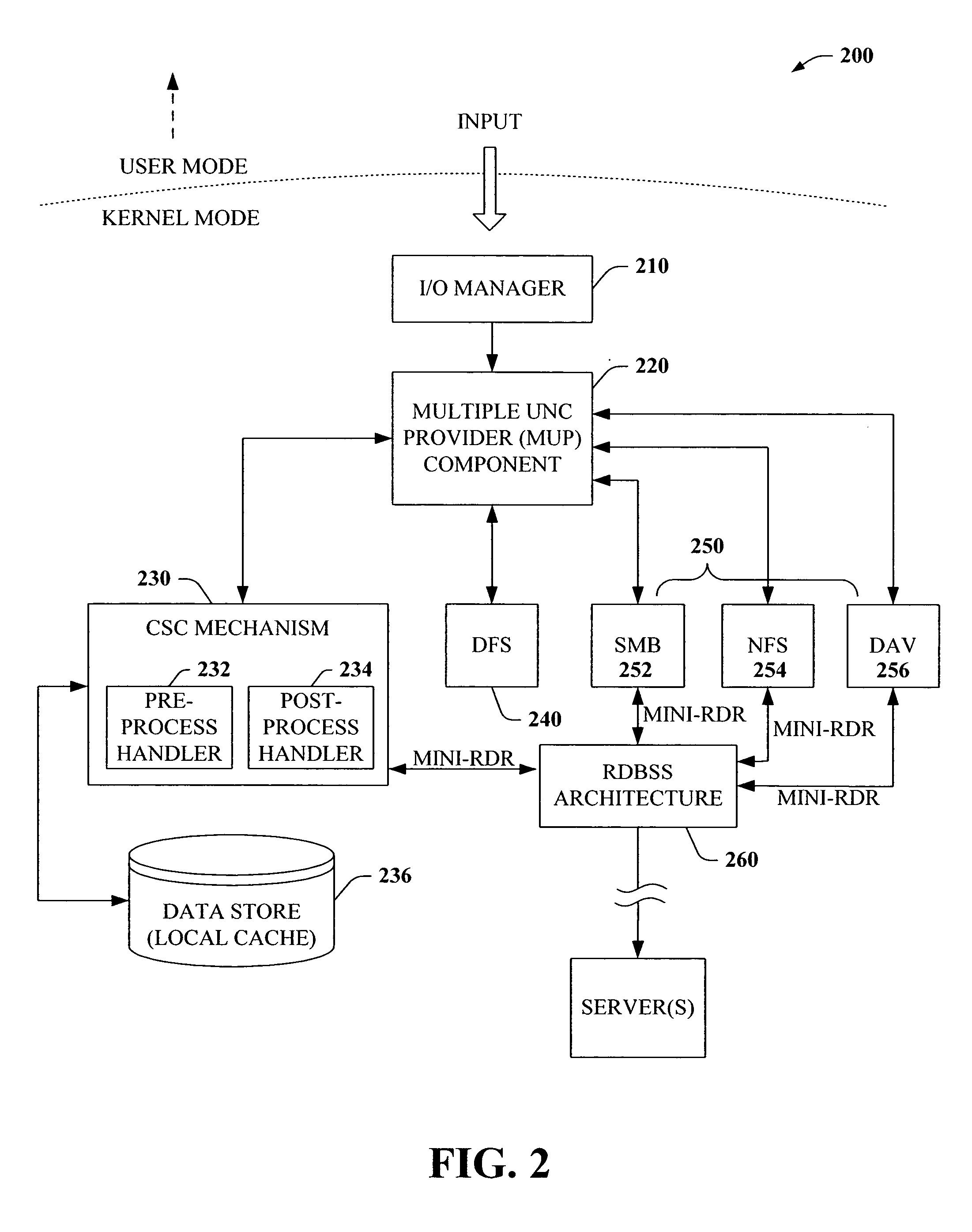

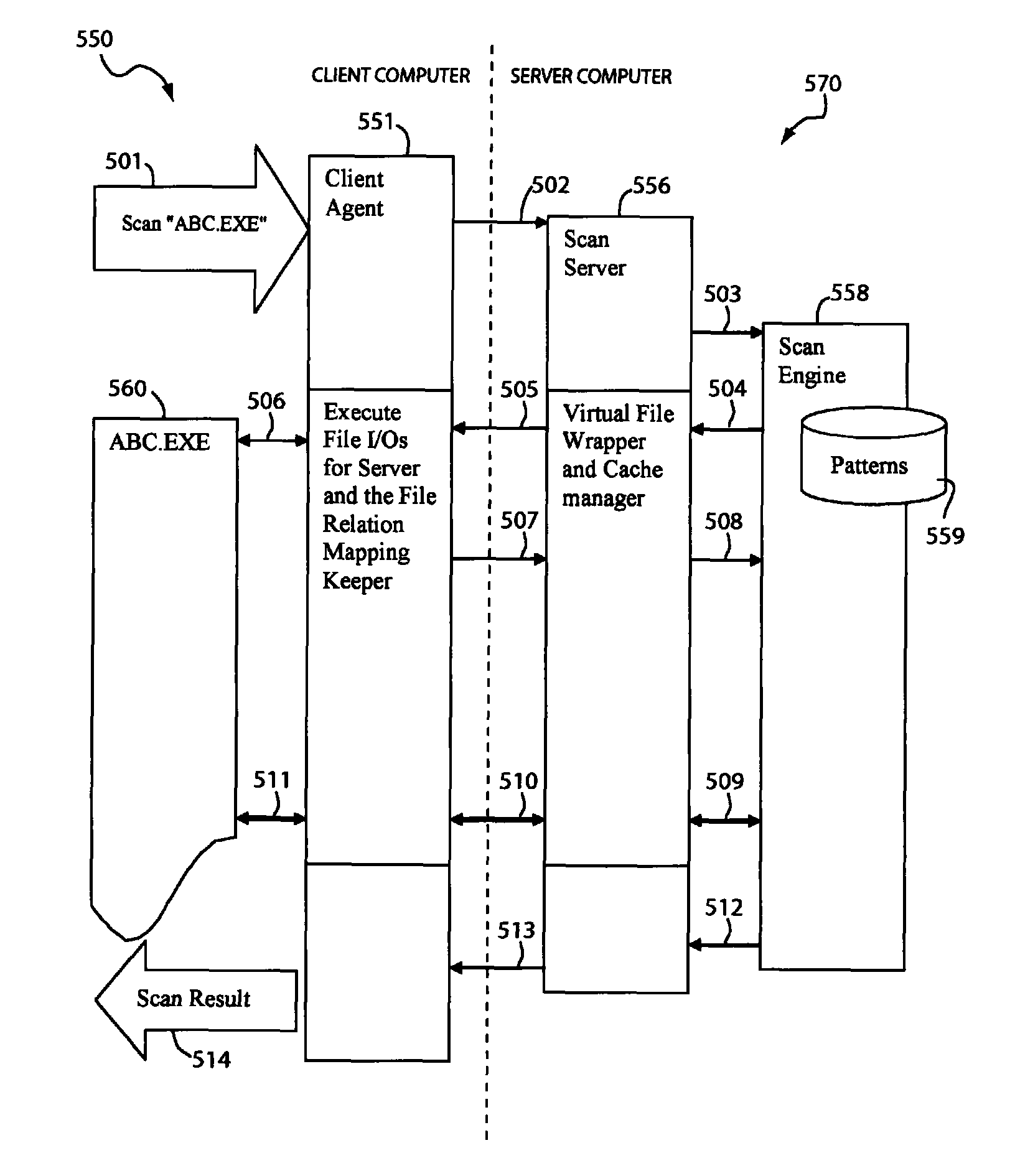

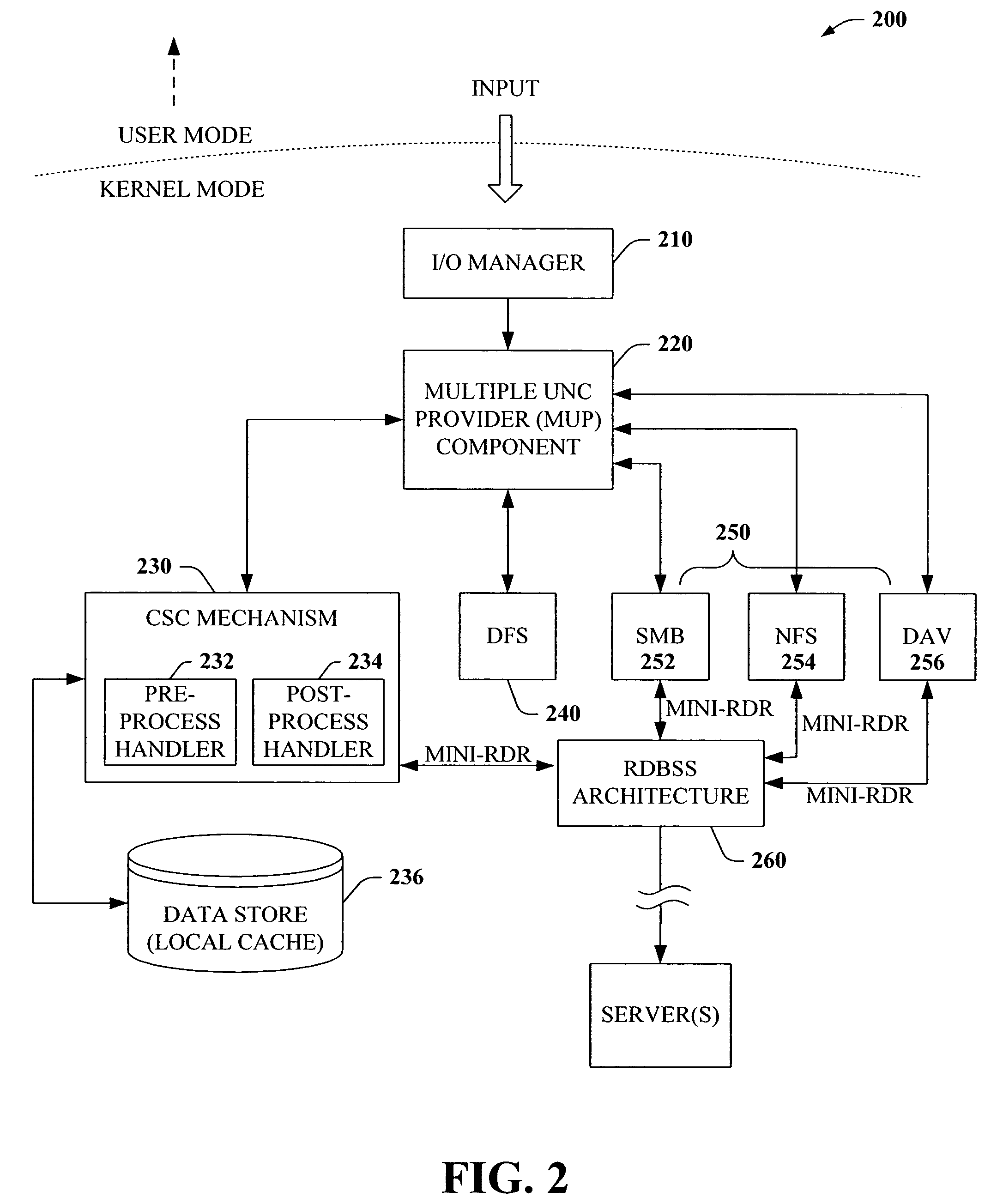

The present invention provides a novel client side caching (CSC) infrastructure that supports transition states at the directory level to facilitate a seamless operation across connectivity states between client and remote server. More specifically, persistent caching is performed to safeguard the user (e.g., client) and / or the client applications across connectivity interruptions and / or bandwidth changes. This is accomplished in part by caching to a client data store the desirable file(s) together with the appropriate file access parameters. Moreover, the client maintains access to cached files during periods of disconnect. Furthermore, portions of a path can be offline while other portions upstream can remain online. CSC operates on the logical path which cooperates with DFS which operates on the physical path to keep track of files cached, accessed and changes in the directories. In addition, truth on the client is facilitated whether or not a conflict of file copies exists.

Owner:MICROSOFT TECH LICENSING LLC

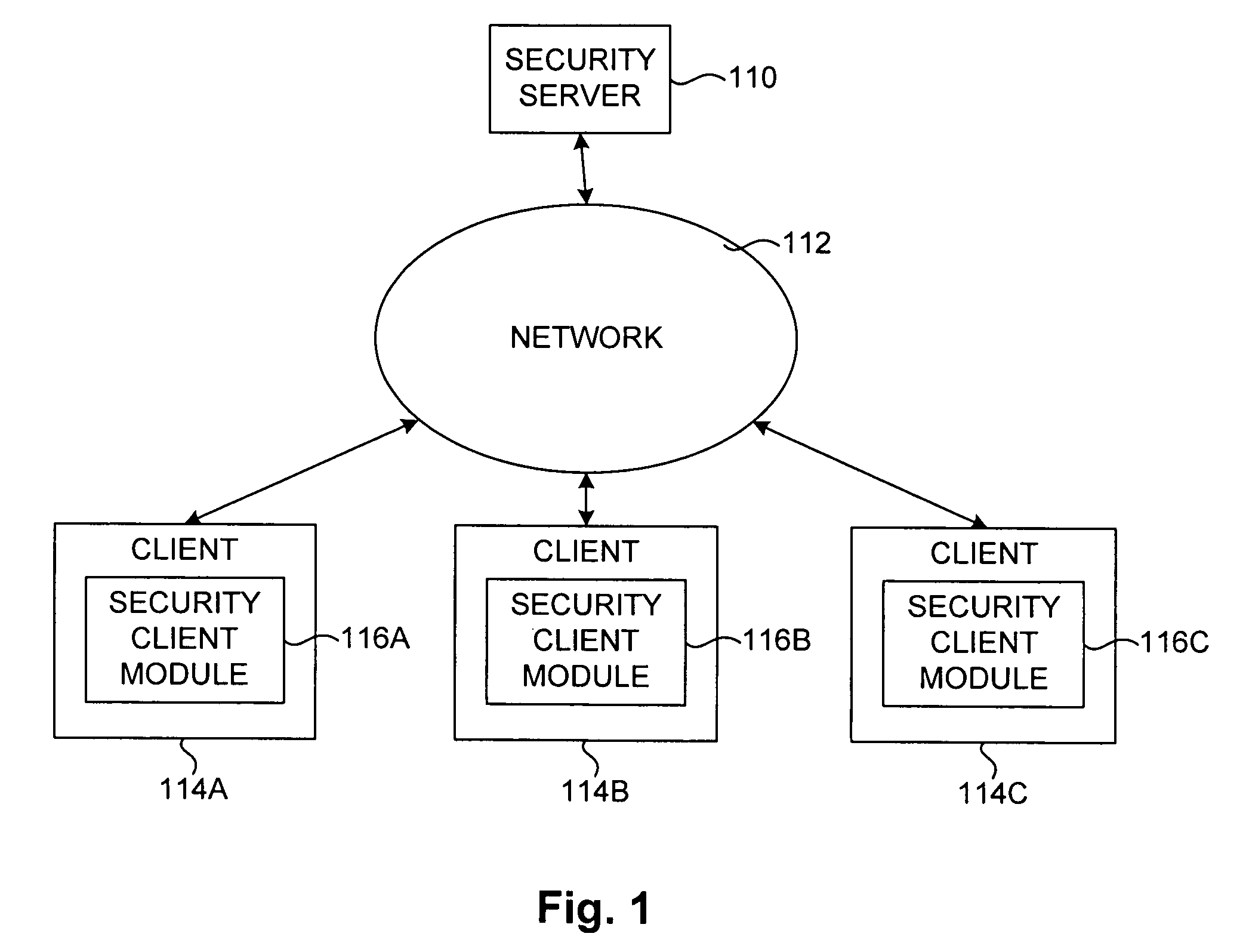

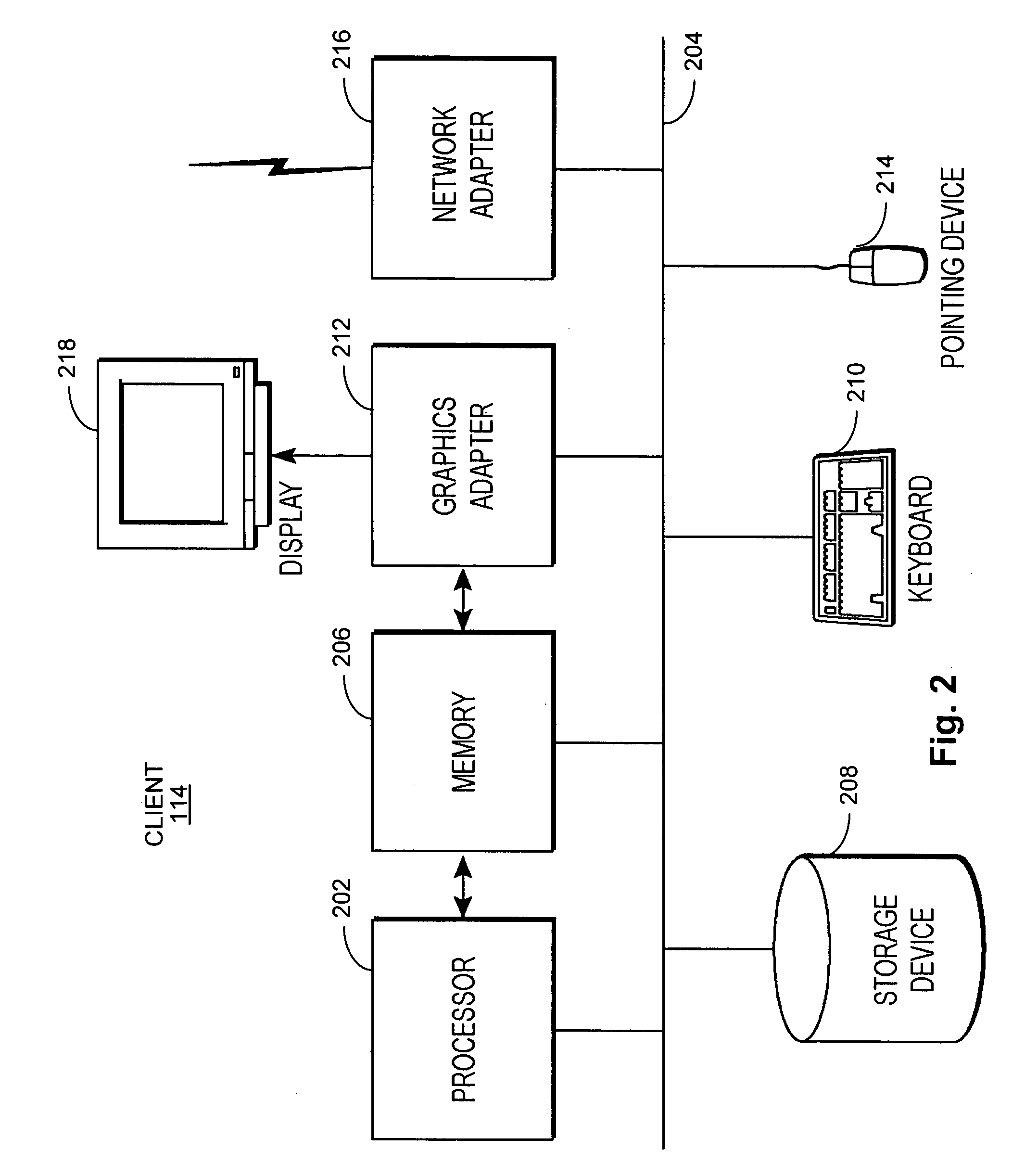

Thin client for computer security applications

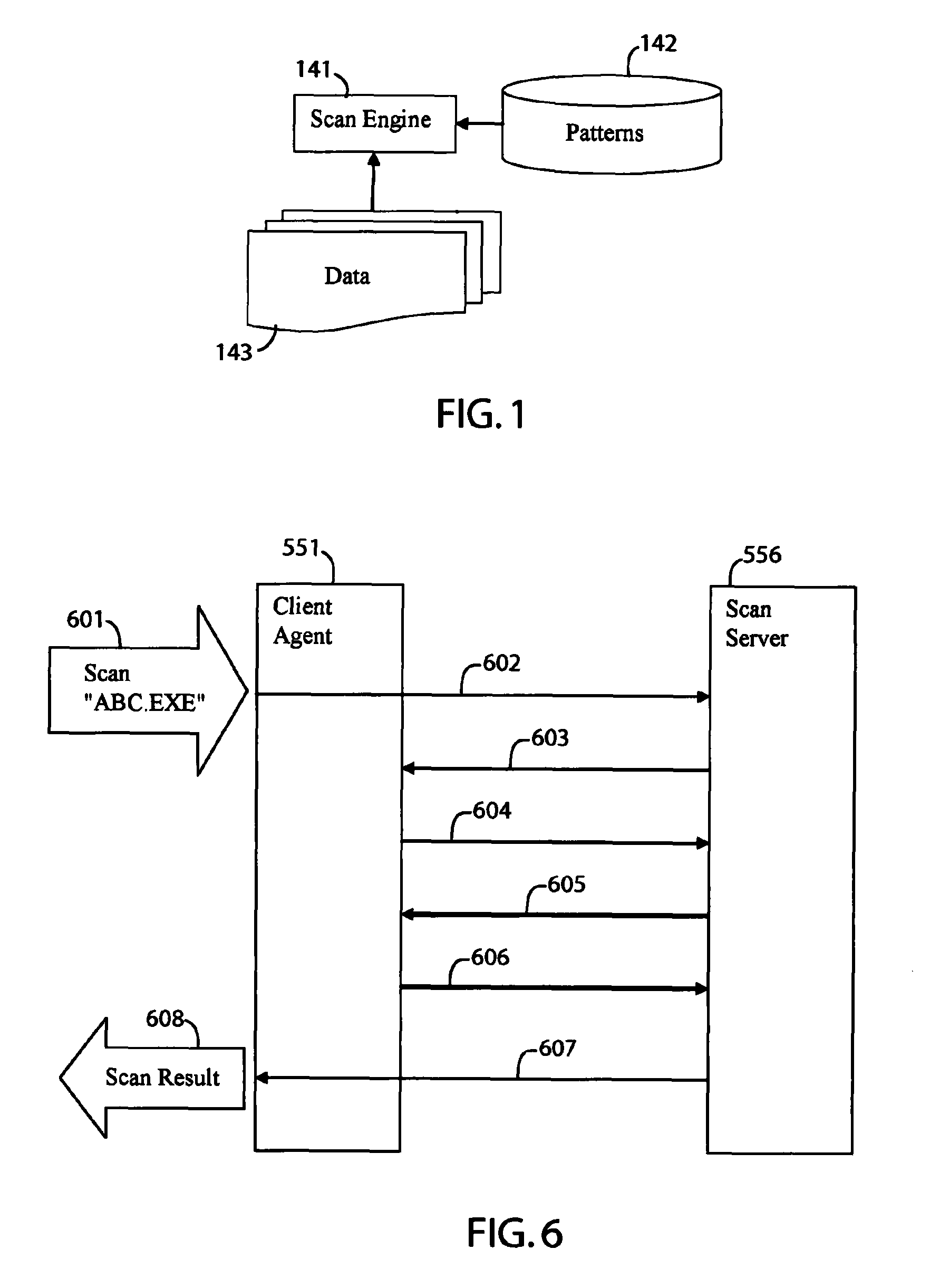

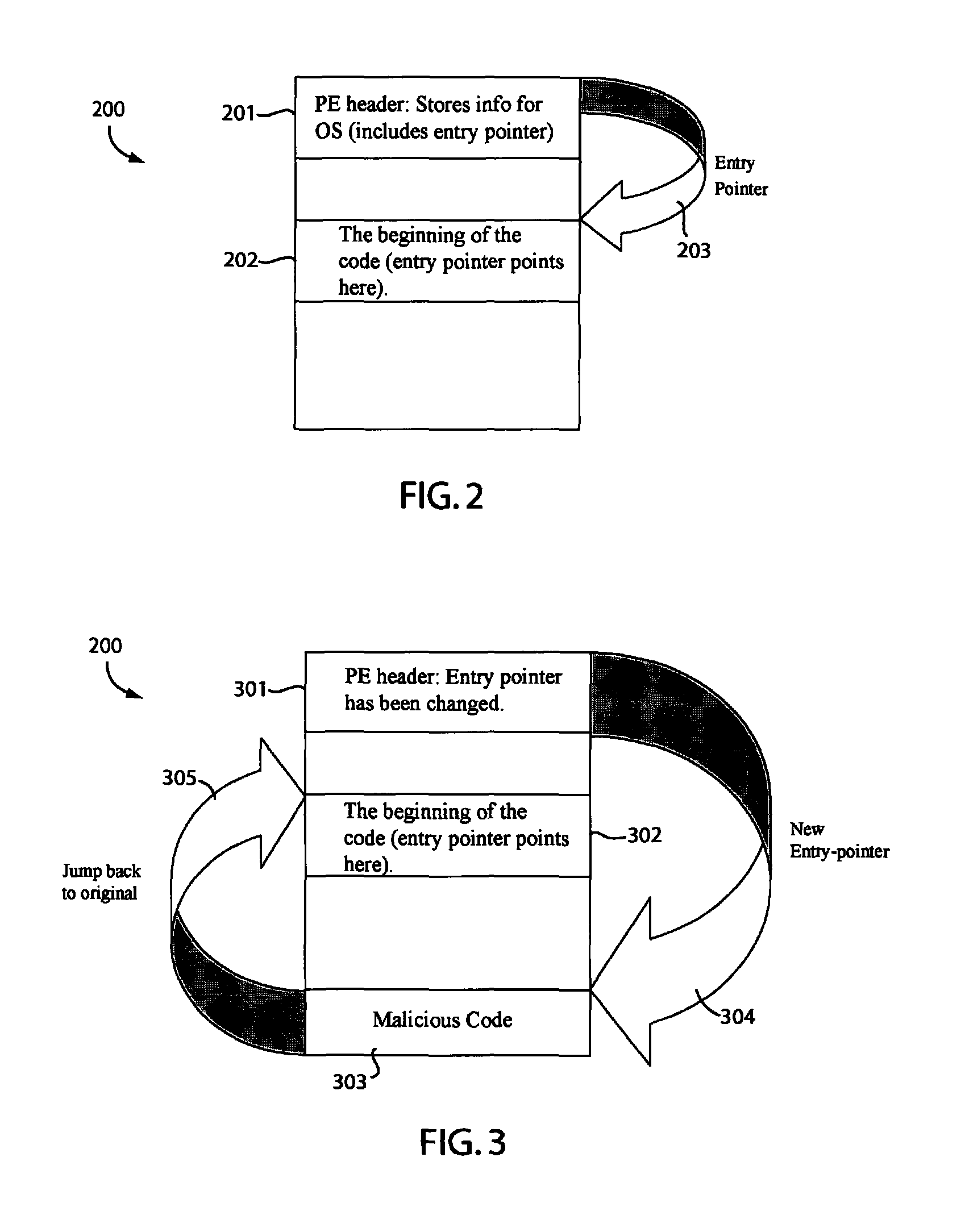

Status: Active | Publication: US8127358B1 | Tags: Minimize network traffic; Traffic minimization; Memory loss protection; Error detection/correction; Computer hardware; Client agent

A system for scanning a file for malicious codes may include a client agent running in a client computer and a scan server running in a server computer, the client computer and the server computer communicating over a computer network. The client agent may be configured to locally receive a scan request to scan a target file for malicious codes and to communicate with the scan server to scan the target file using a scan engine running in the server computer. The scan server in communication with the client agent allows the scan engine to scan the target file by issuing file I / O requests to access the target file located in the client computer. The client agent may be configured to check for digital signatures and to maintain a file cache of previously scanned files to minimize network traffic.

Owner:TREND MICRO INC

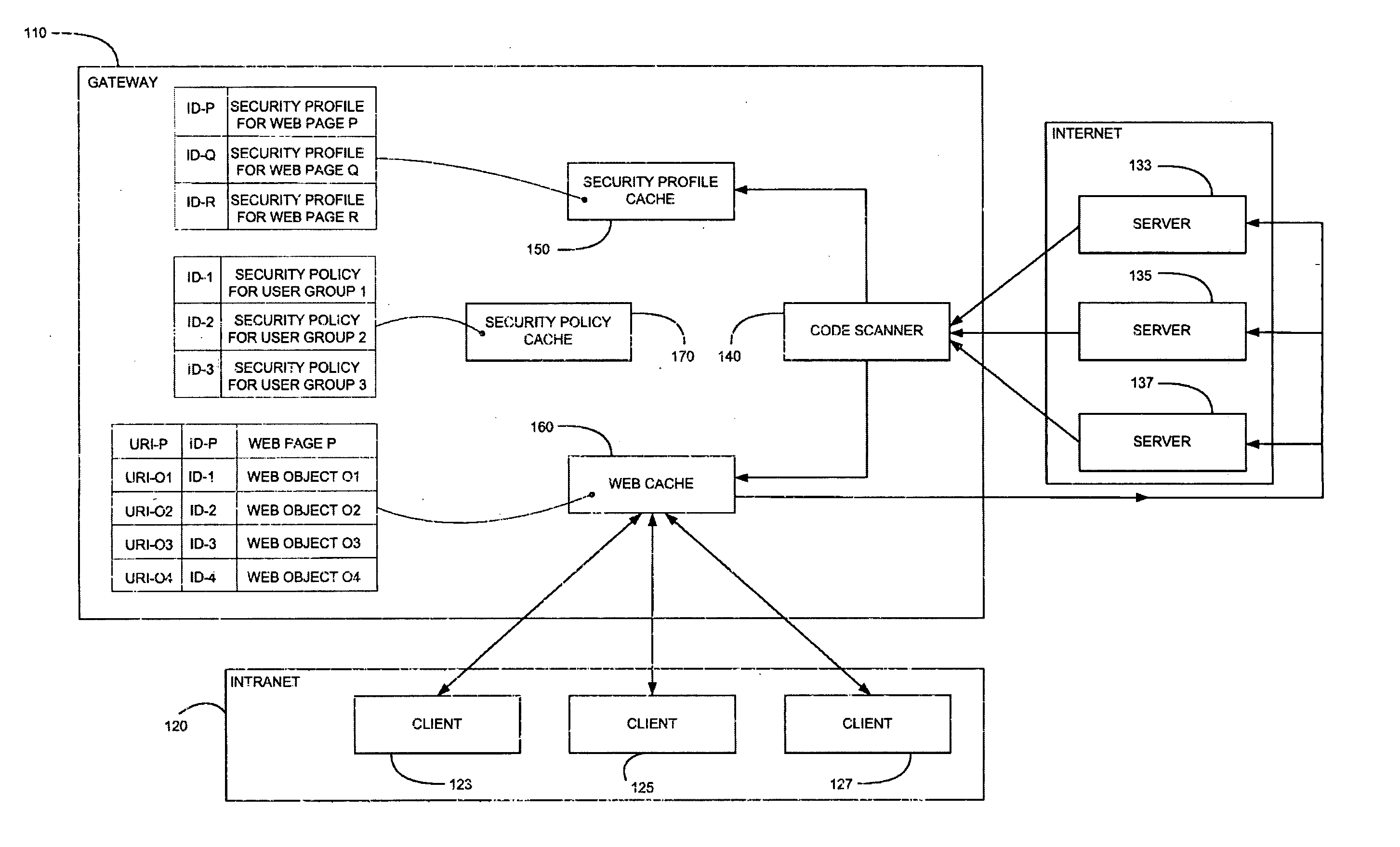

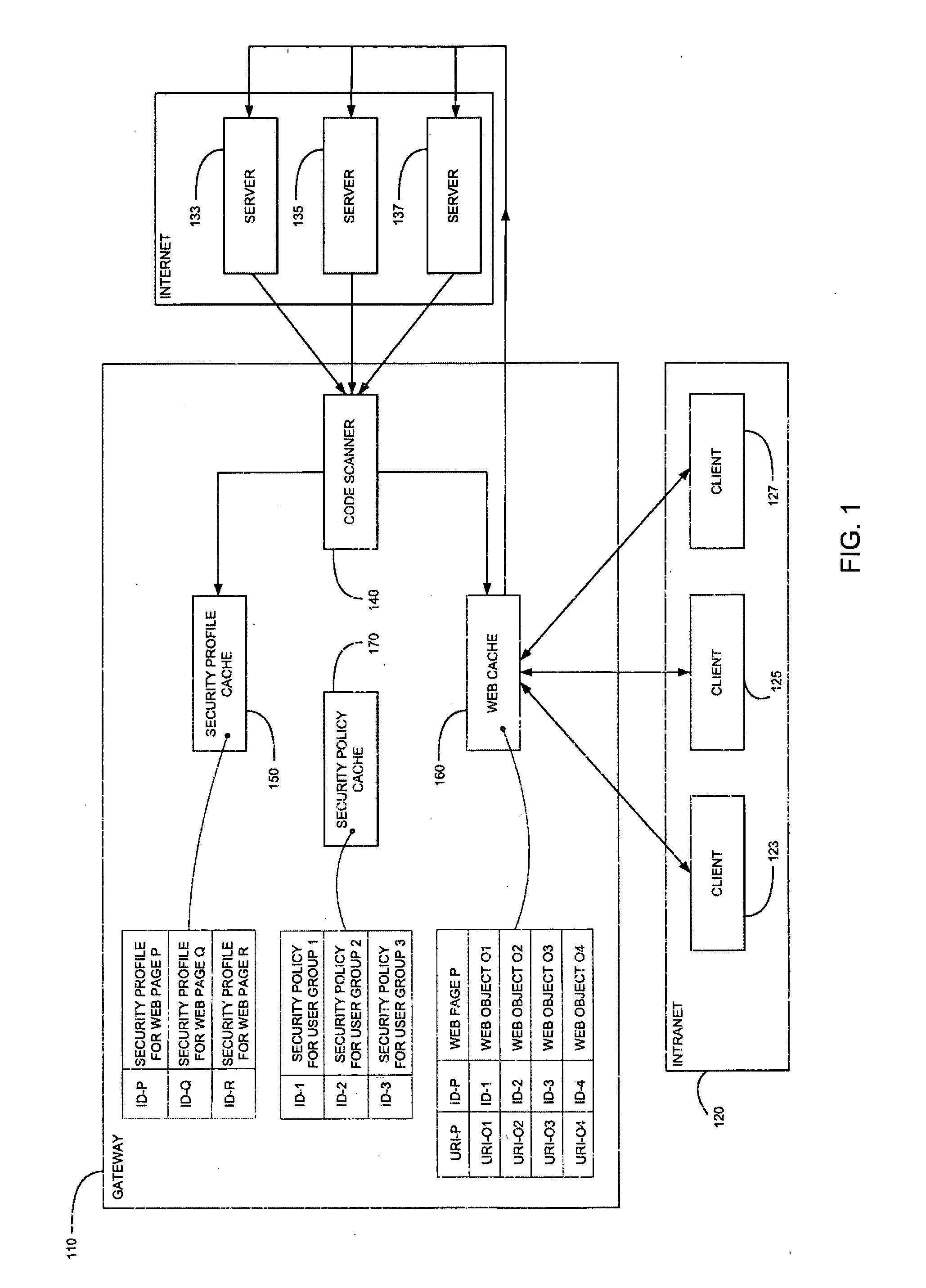

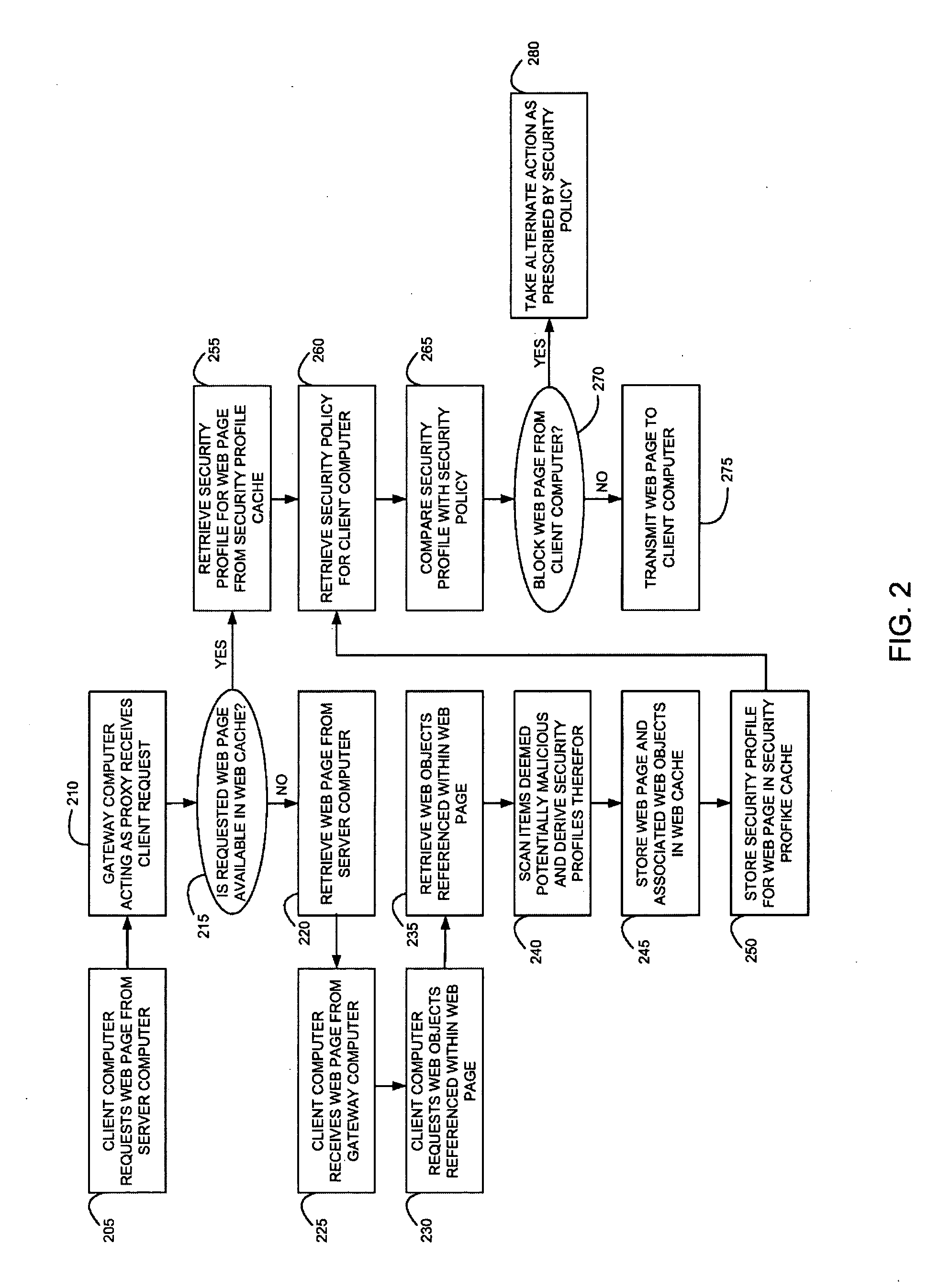

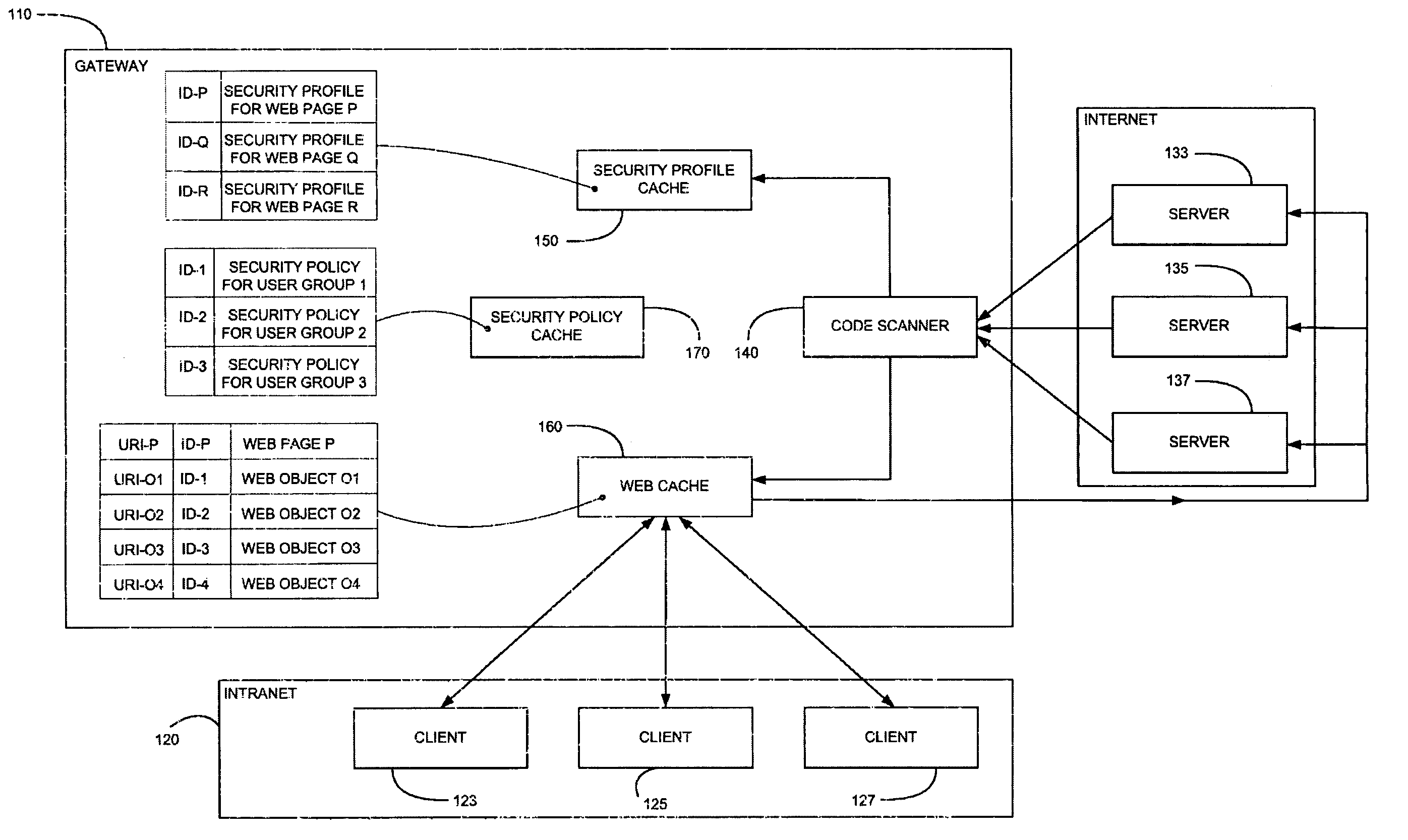

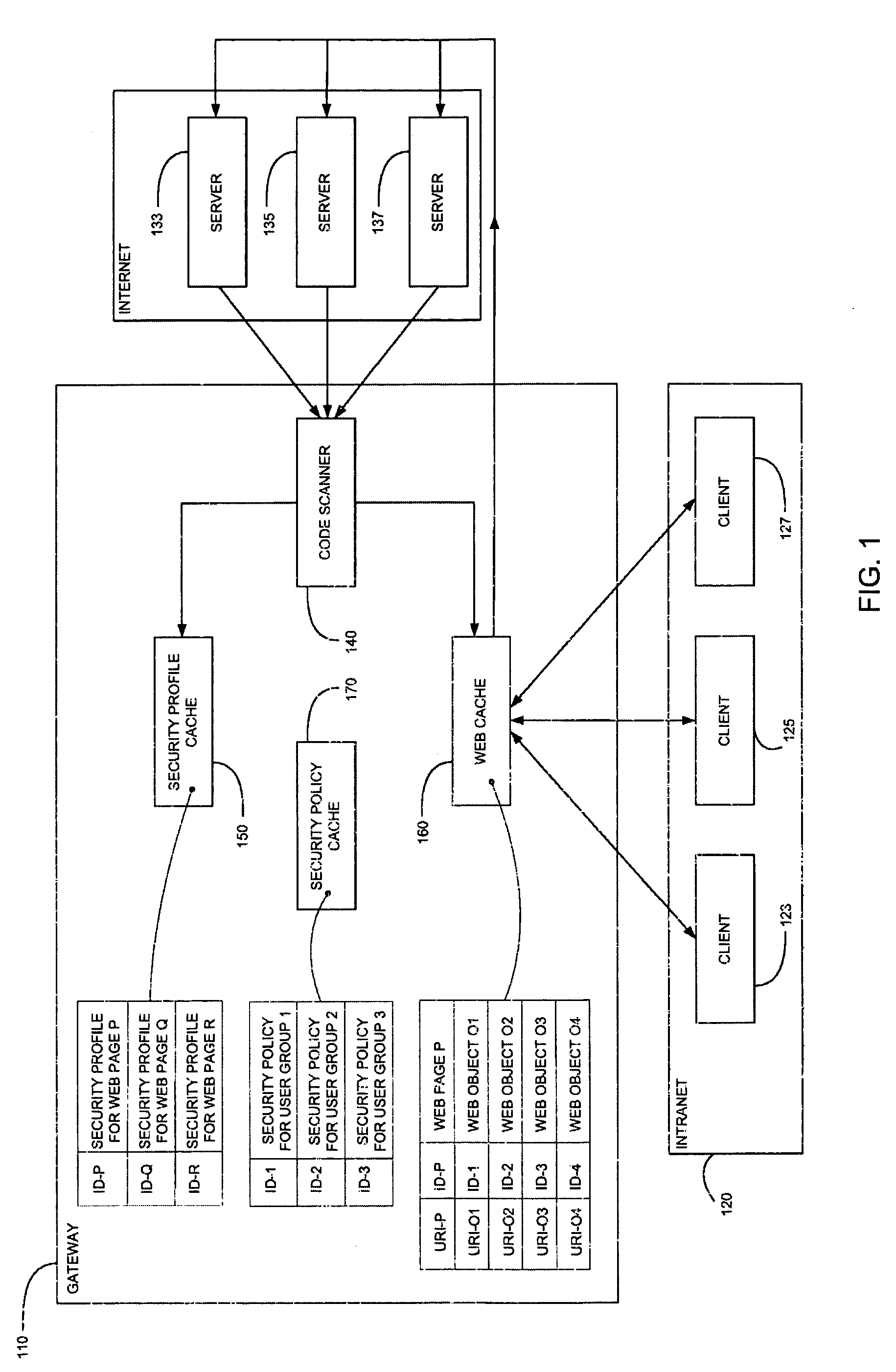

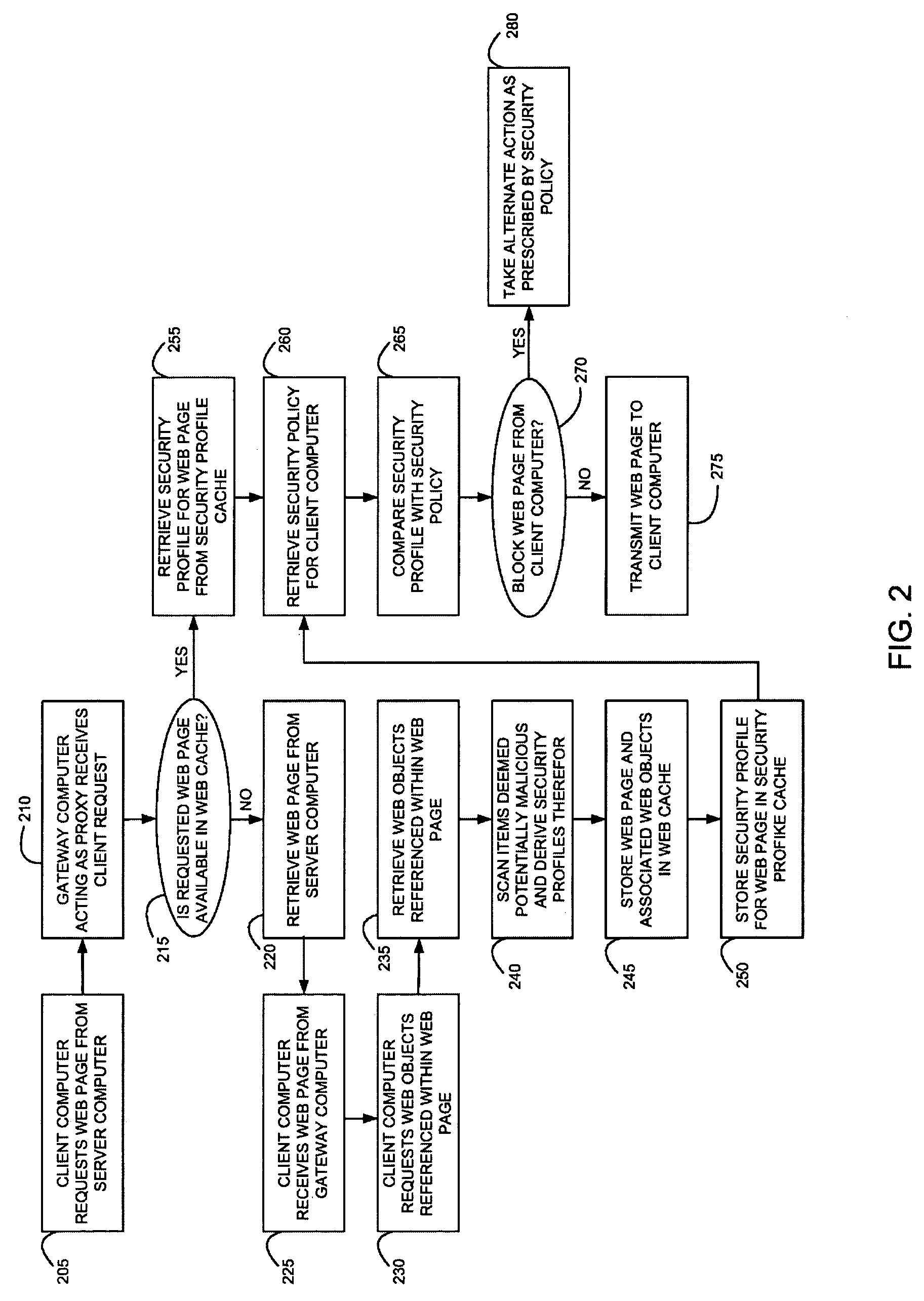

Method and system for caching at secure gateways

Status: Inactive | Publication: US20050005107A1 | Tags: Improve performance; Reduce network latency; Memory loss protection; Error detection/correction; Configfs; Client-side

A computer gateway for an intranet of computers, including a scanner for scanning incoming files from the Internet and deriving security profiles therefor, the security profiles being lists of computer commands that the files are programmed to perform, a file cache for storing files, a security profile cache for storing security profiles for files, and a security policy cache for storing security policies for client computers within an intranet, the security policies including a list of restrictions for files that are transmitted to intranet computers. A method and a computer-readable storage medium are also described and claimed.

Owner:FINJAN LLC

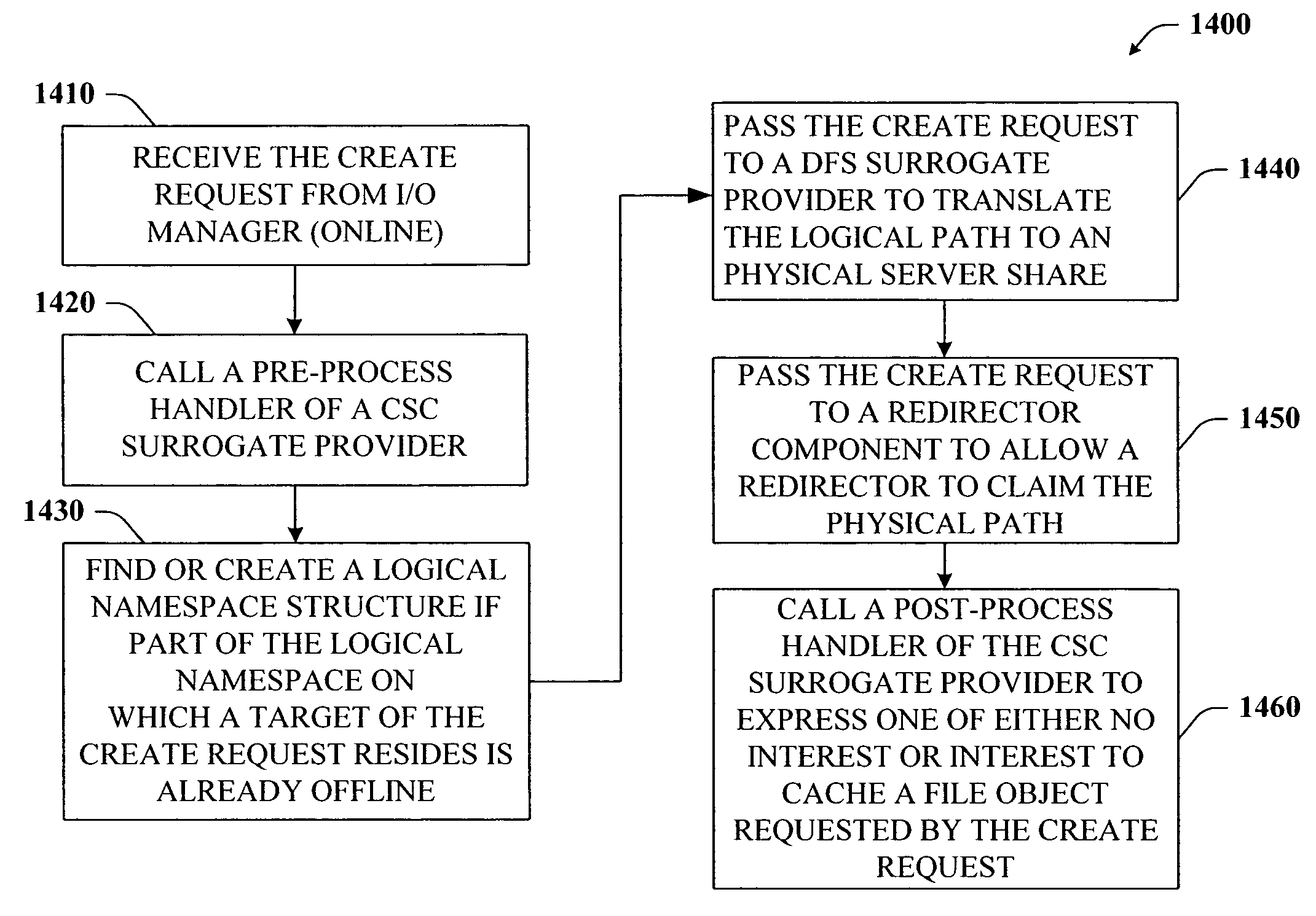

Persistent caching directory level support

Status: Inactive | Publication: US20050091226A1 | Tags: Easy to operate; Digital data information retrieval; Digital data processing details; PathPing; Client data

The present invention provides a novel client side caching (CSC) infrastructure that supports transition states at the directory level to facilitate a seamless operation across connectivity states between client and remote server. More specifically, persistent caching is performed to safeguard the user (e.g., client) and / or the client applications across connectivity interruptions and / or bandwidth changes. This is accomplished in part by caching to a client data store the desirable file(s) together with the appropriate file access parameters. Moreover, the client maintains access to cached files during periods of disconnect. Furthermore, portions of a path can be offline while other portions upstream can remain online. CSC operates on the logical path which cooperates with DFS which operates on the physical path to keep track of files cached, accessed and changes in the directories. In addition, truth on the client is facilitated whether or not a conflict of file copies exists.

Owner:MICROSOFT TECH LICENSING LLC

Method and system for caching at secure gateways

Status: Inactive | Publication: US7418731B2 | Tags: Improve performance; Reduce network latency; Memory loss protection; Error detection/correction; Client-side; Security policy

A computer gateway for an intranet of computers, including a scanner for scanning incoming files from the Internet and deriving security profiles therefor, the security profiles being lists of computer commands that the files are programmed to perform, a file cache for storing files, a security profile cache for storing security profiles for files, and a security policy cache for storing security policies for client computers within an intranet, the security policies including a list of restrictions for files that are transmitted to intranet computers. A method and a computer-readable storage medium are also described and claimed.

Owner:FINJAN LLC

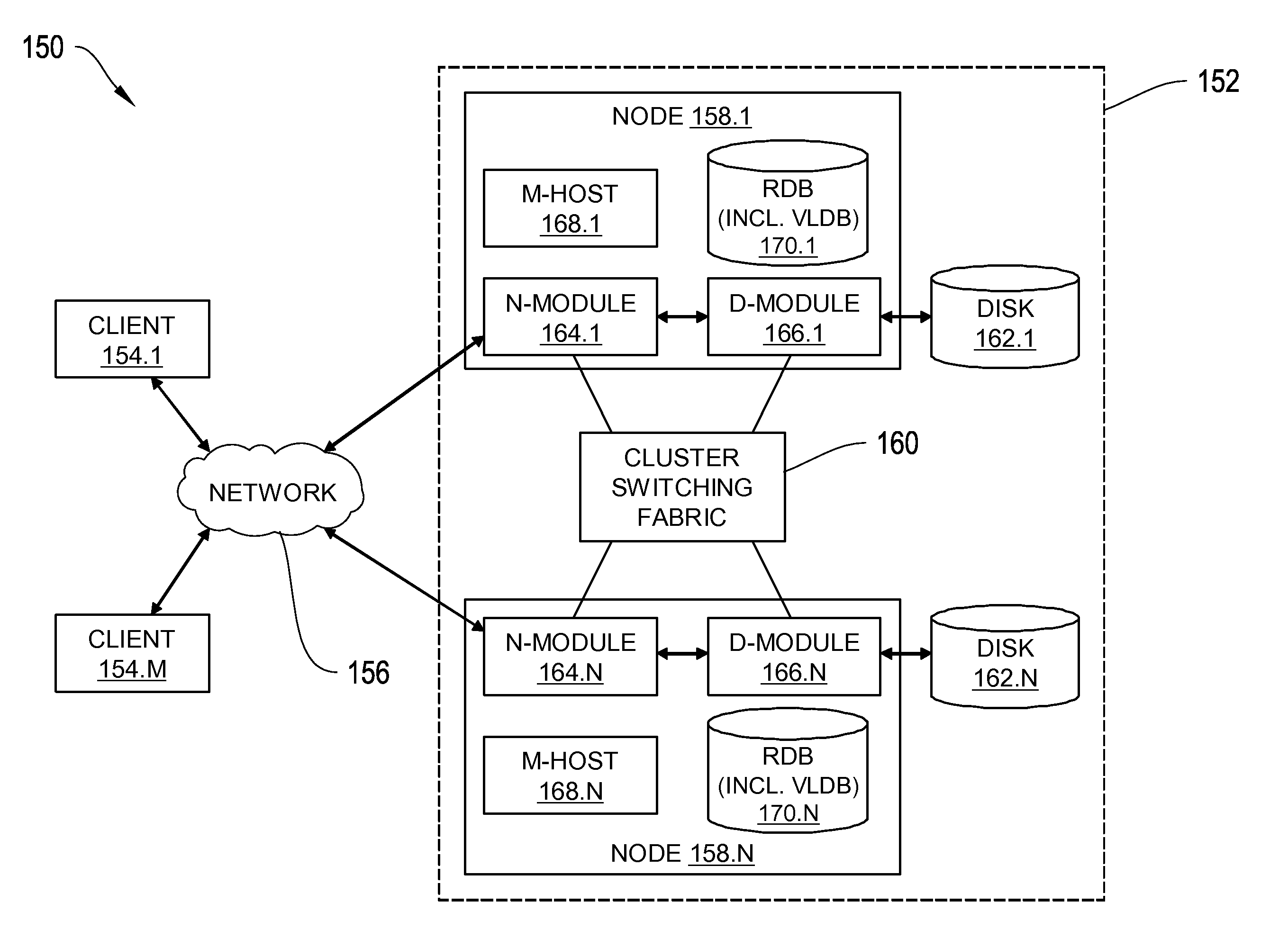

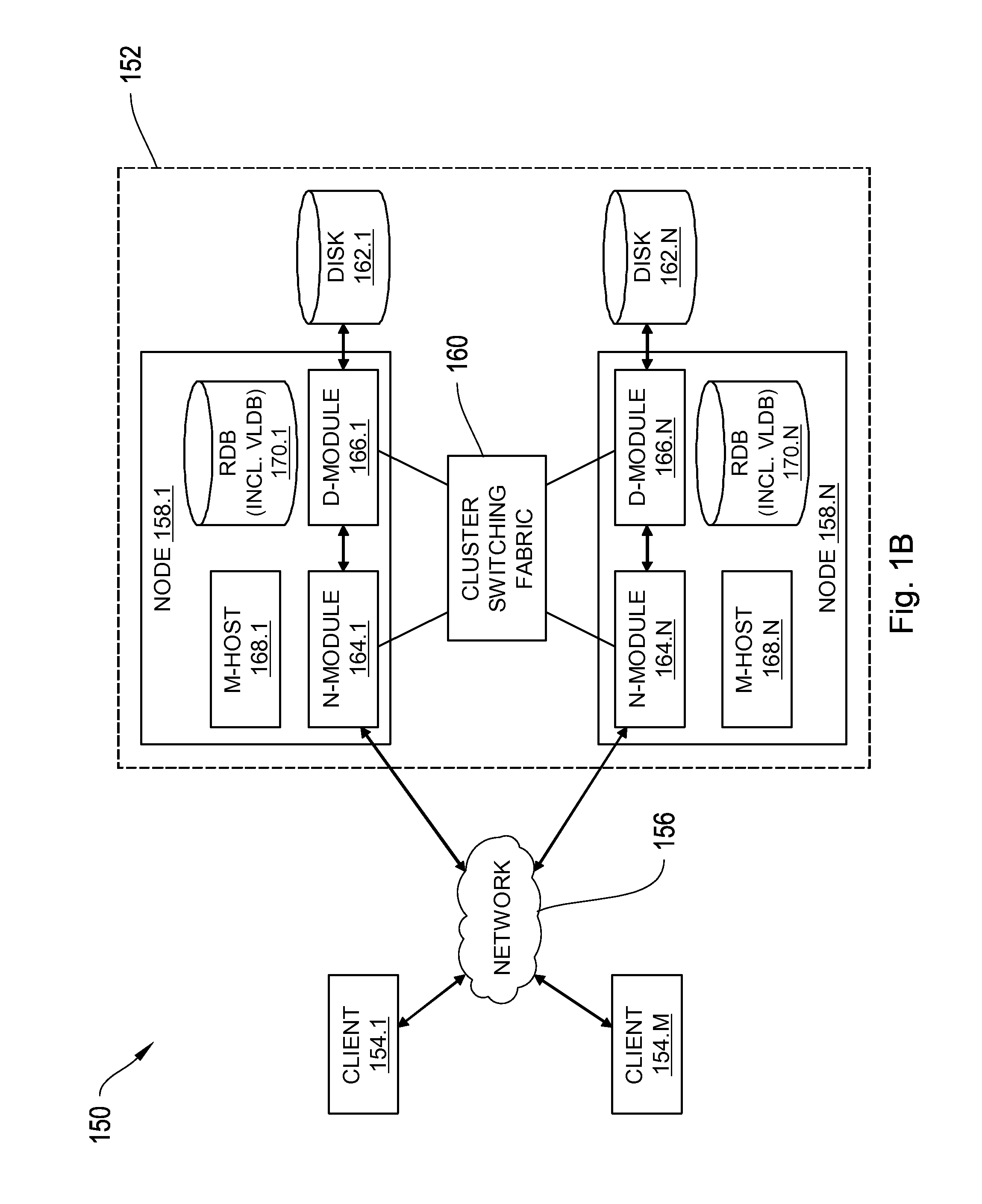

Systems and methods for caching data files

Status: Inactive | Publication: US20130226888A1 | Tags: Digital data information retrieval; Digital data processing details; State variable; Data file

Systems and methods including storage systems that employ local file caching processes and that generate state variables to record, for subsequent use, intermediate states of a file hash process. In certain specific examples, there are systems that interrupt a hash process as it processes the data blocks of a file and store the current product of the interrupted hash process as a state variable that represents the hash value generated from the data blocks processed prior to the interruption. After the interruption, the hash process continues processing the file data blocks. The stored state variables may be organized into a table that associates each state variable with the range of data blocks that were processed to generate it. Such exemplary systems can be used with any type of storage system, including filers, database systems or other storage applications.

Owner:NETWORK APPLIANCE INC
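
The idea of saving intermediate hash states can be sketched in a few lines. This is only an illustration, not the patented implementation: the block size, the use of SHA-256, and the names `hash_with_checkpoints` and `resume_from` are assumptions made for the example. It relies on the `copy()` method of Python's `hashlib` objects to snapshot the running state.

```python
import hashlib

BLOCK_SIZE = 4  # hypothetical block size in bytes, kept tiny for illustration


def hash_with_checkpoints(data: bytes, every: int = 2):
    """Hash data block by block, saving the hash state every `every` blocks.

    Returns the final hex digest and a table that maps a block range
    (first_block, last_block) to the saved intermediate hash object.
    """
    h = hashlib.sha256()
    table = {}
    blocks = [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]
    for n, block in enumerate(blocks, start=1):
        h.update(block)
        if n % every == 0:
            # copy() snapshots the state; hashing continues on the original
            table[(0, n - 1)] = h.copy()
    return h.hexdigest(), table


def resume_from(table, block_range, remaining: bytes) -> str:
    """Continue hashing from a saved state without re-reading earlier blocks."""
    h = table[block_range].copy()
    h.update(remaining)
    return h.hexdigest()
```

With a saved state for blocks 0 to 1, `resume_from(table, (0, 1), data[8:])` yields the same digest as hashing the whole file, while reading only the remaining blocks.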

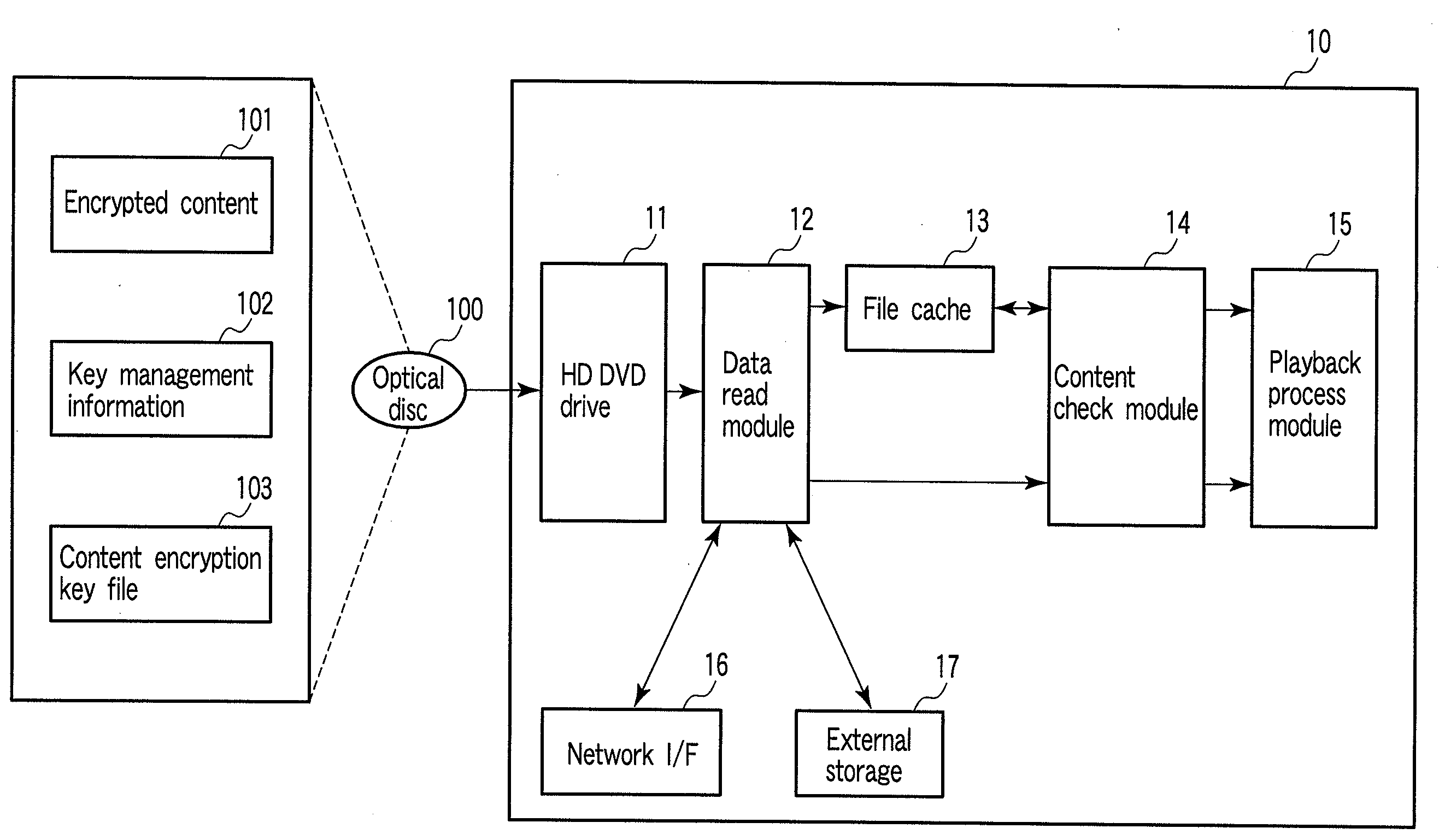

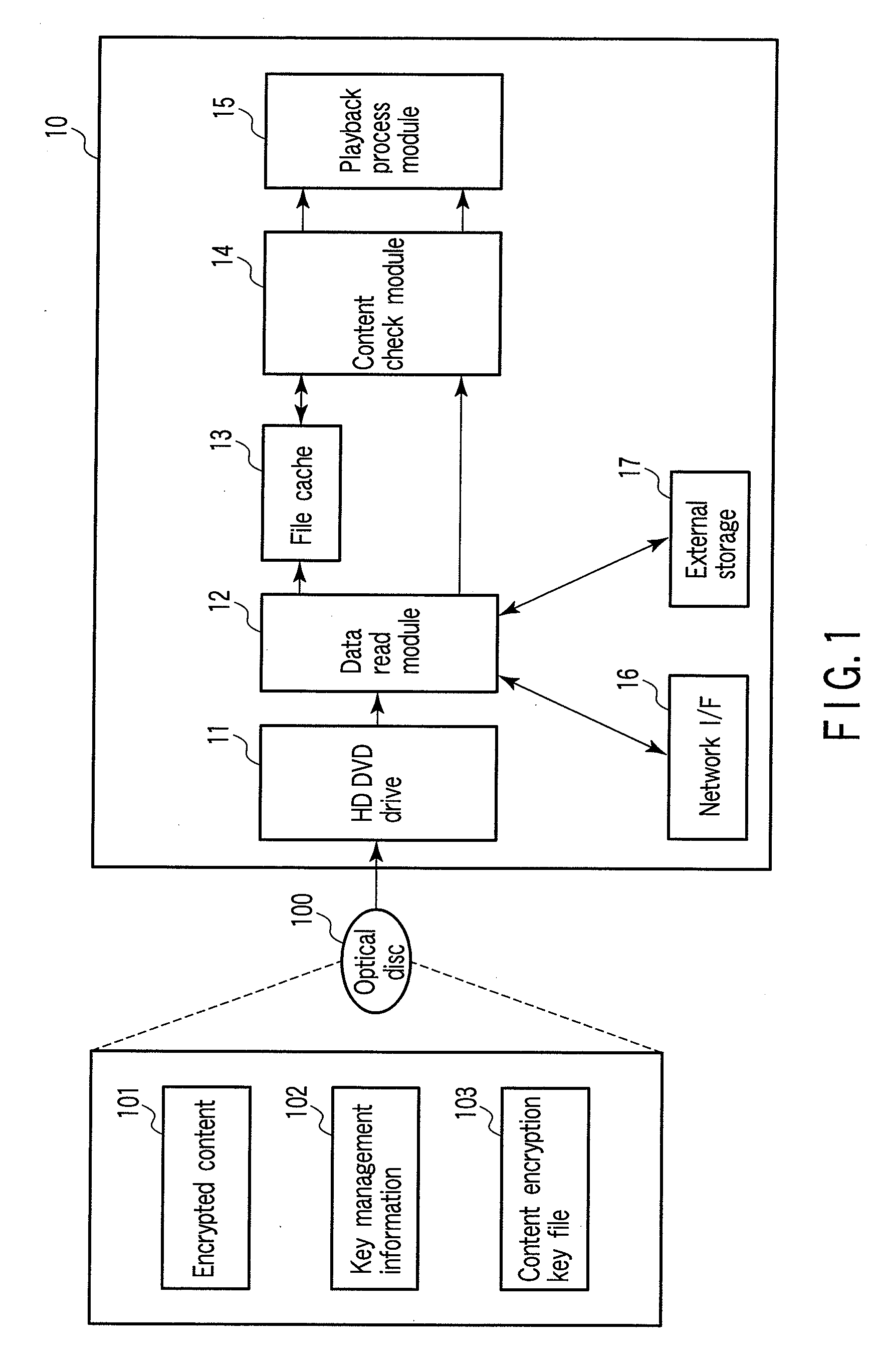

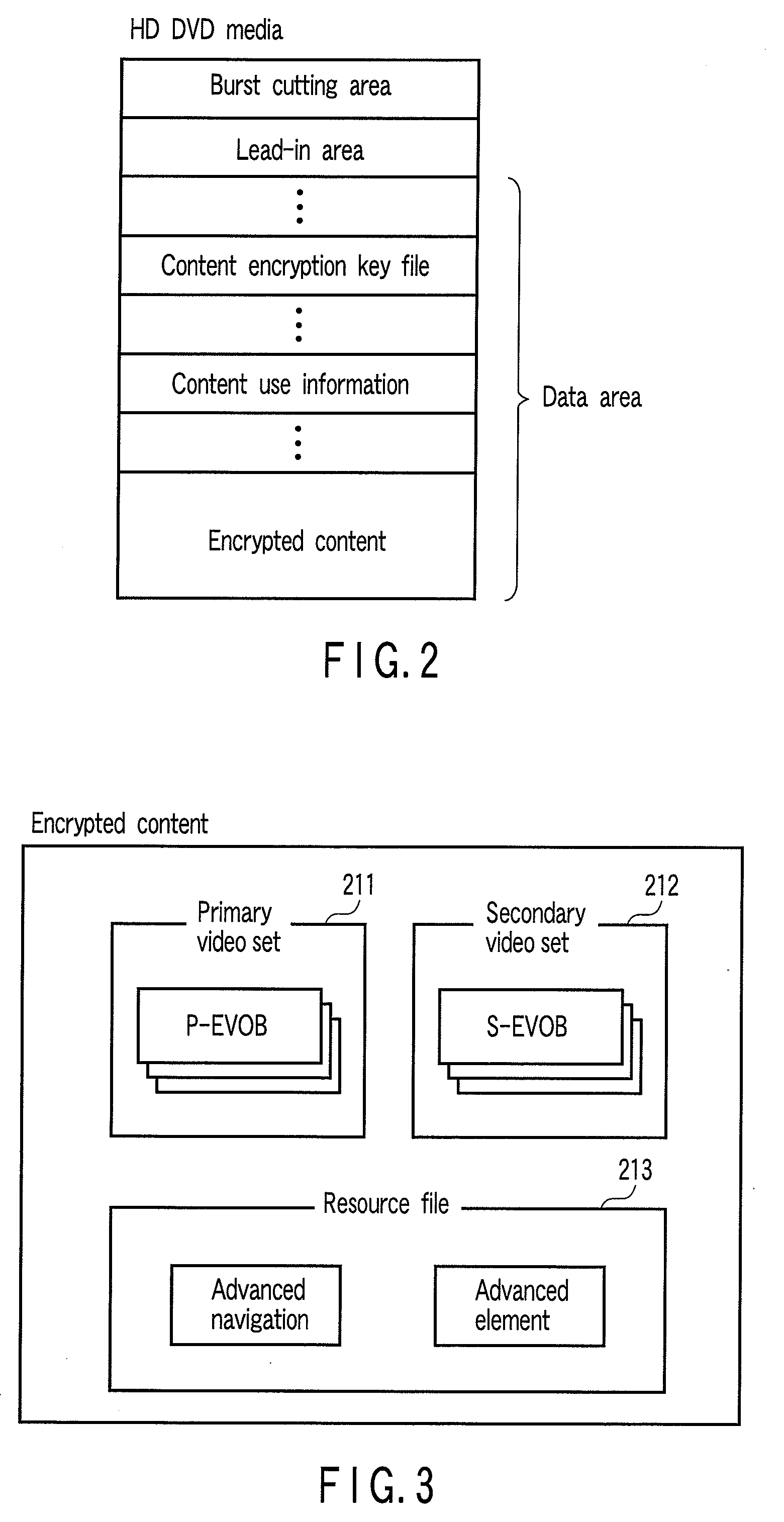

Playback apparatus and playback control method

Status: Inactive | Publication: US20090097644A1 | Tags: Digital data processing details; Memory addressing/allocation/relocation; Process module; Encryption decryption

According to one embodiment, there is provided a playback apparatus which plays back content including an encrypted video object and an encrypted resource file, including a memory including a file cache area, a module configured to decrypt the video object, a playback process module configured to play back the decrypted video object and to output a resource file acquisition request, a module configured to determine whether the resource file is decrypted, to decrypt the resource file, to write the decrypted resource file over the encrypted resource file, to update the management information, and to send the decrypted resource file to the playback process module, and a module configured to determine whether the resource file is decrypted, to encrypt the decrypted resource file, to write the encrypted resource file over the decrypted resource file, to update the management information, and to send the encrypted resource file to the storage.

Owner:KK TOSHIBA

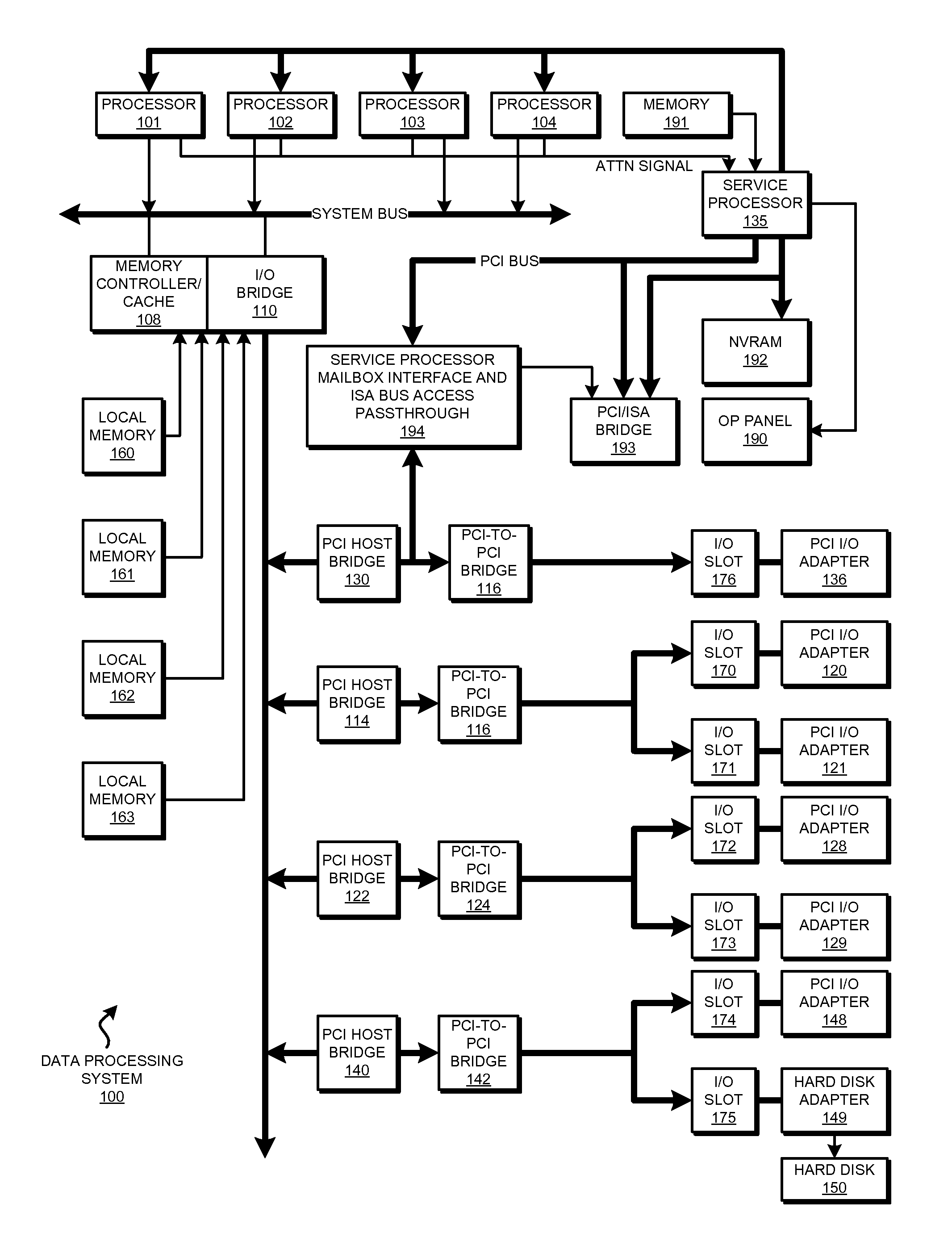

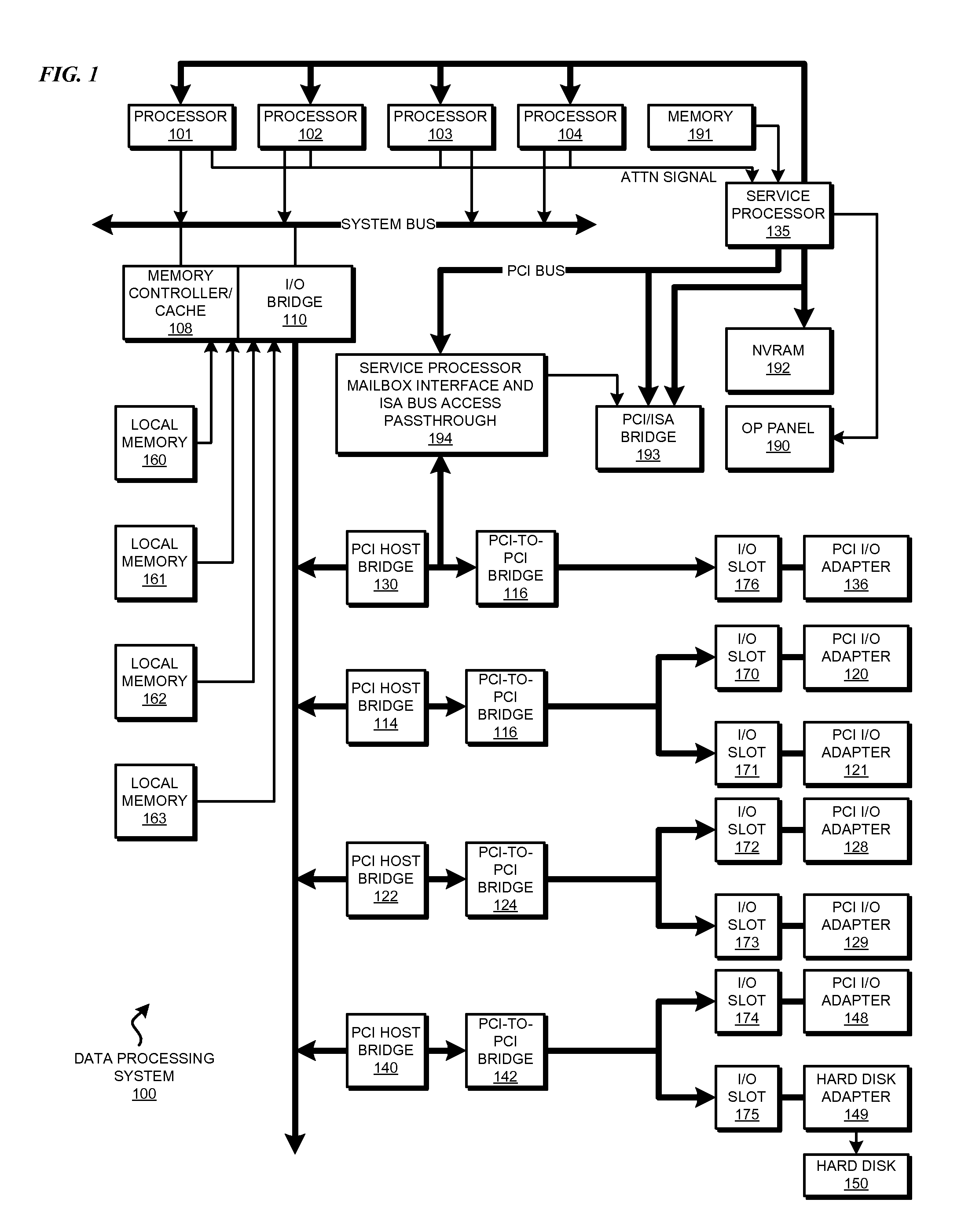

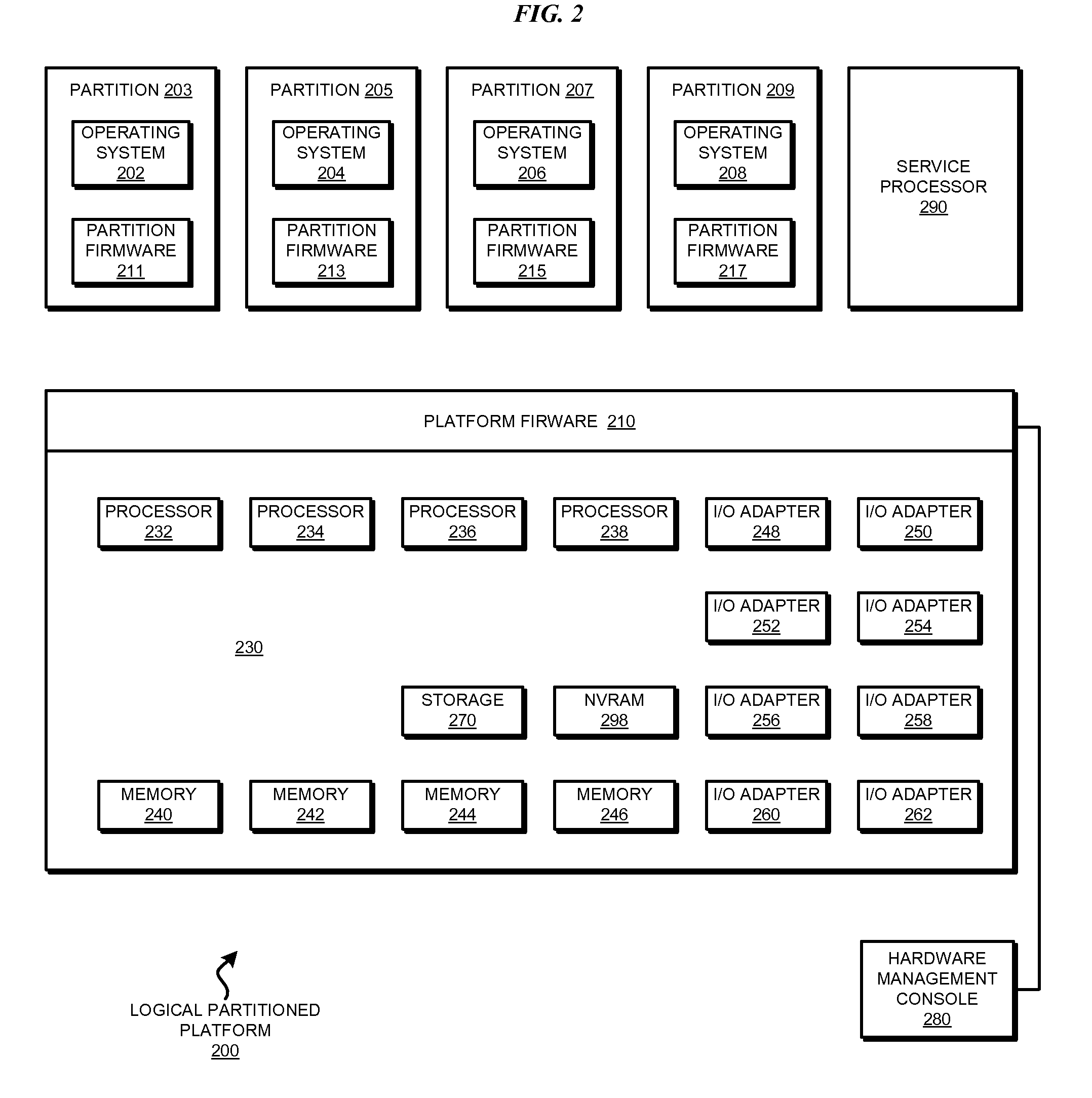

Selective memory donation in virtual real memory environment

Status: Inactive | Publication: US20090307686A1 | Tags: Memory addressing/allocation/relocation; Software simulation/interpretation/emulation; Term memory; Virtual machine

A method, system, and computer usable program product for selective memory donation in a virtual real memory environment are provided in the illustrative embodiments. A virtual machine receives a request for memory donation. A component of the virtual machine determines whether a portion of a memory space being used for file caching exceeds a threshold. The determining forms a threshold determination, and the portion of the memory space being used for file caching forms a file cache. If the threshold determination is false, the component ignores the request. If the threshold determination is true, a component of the virtual machine releases a part of the file cache that exceeds the threshold. The part of the file cache forms a released file cache. In response to the request, the virtual machine makes the released file cache available to a requester of the request.

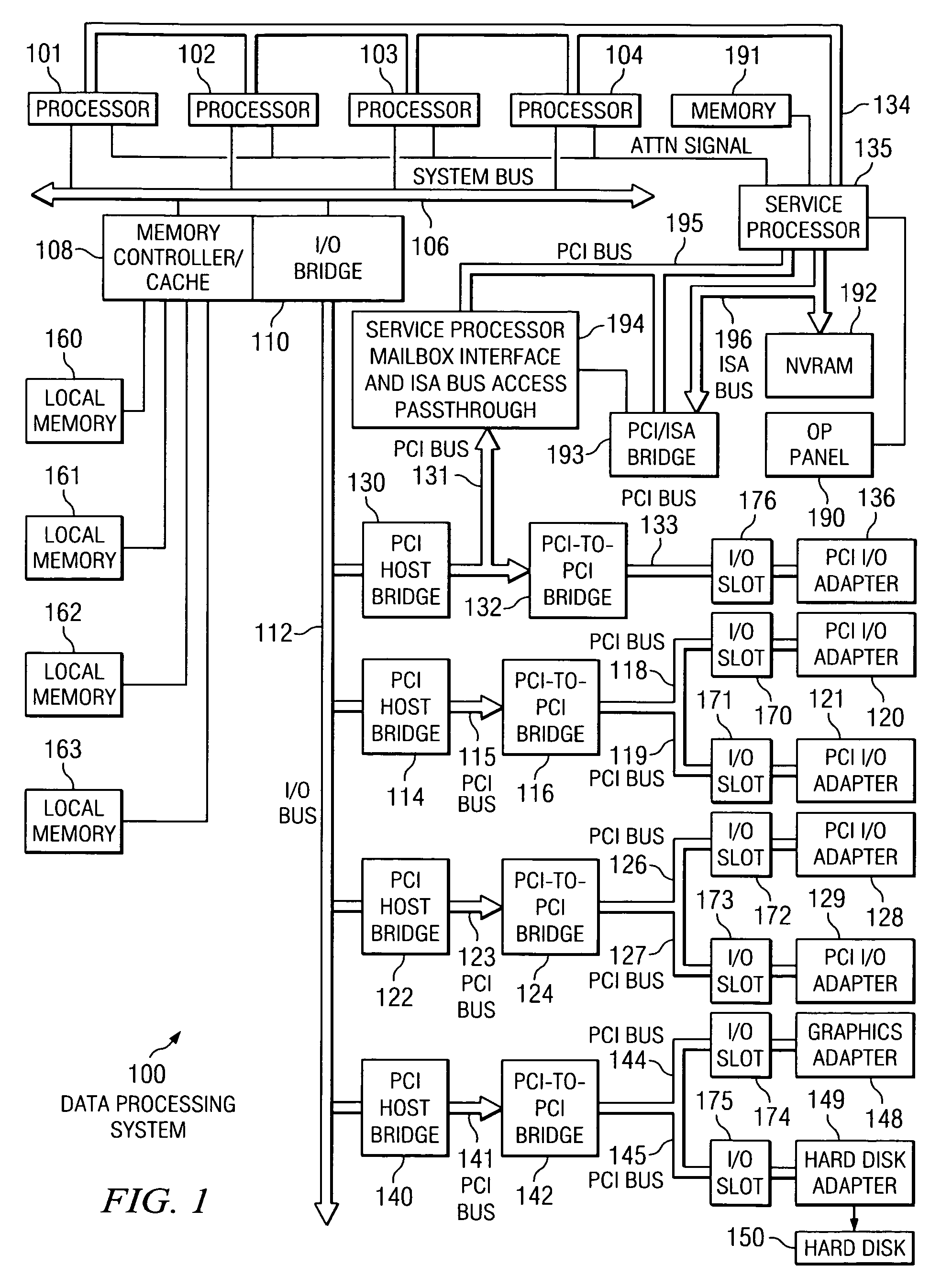

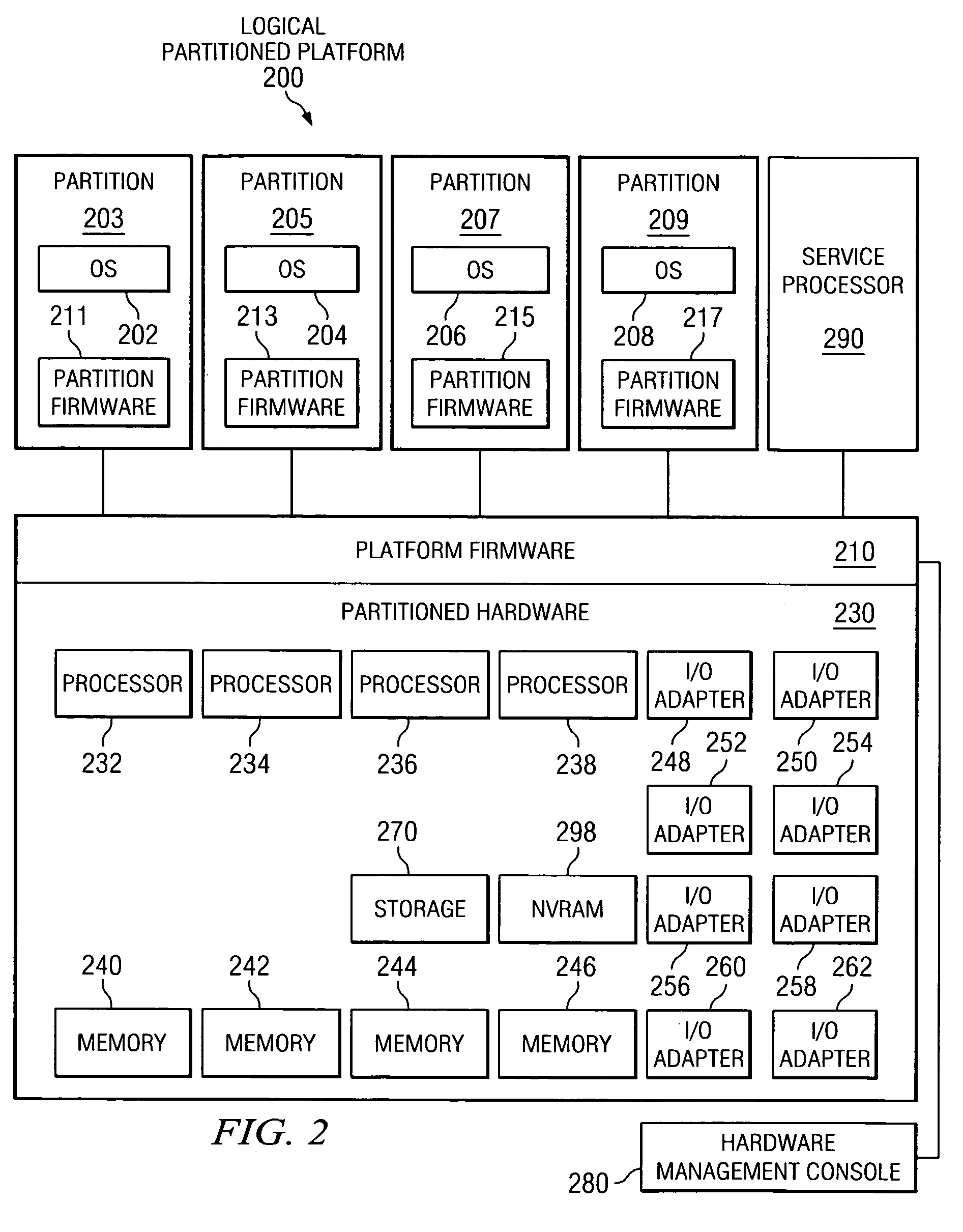

Owner:IBM CORP
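
The threshold check described in the selective memory donation abstract can be sketched as follows. This is a minimal model under stated assumptions: memory is counted in pages, and the class name `VirtualMachine` and its fields are hypothetical, chosen only for this example.

```python
from dataclasses import dataclass


@dataclass
class VirtualMachine:
    file_cache_pages: int   # pages currently used for file caching
    threshold_pages: int    # hypothetical threshold for the file cache size

    def handle_donation_request(self) -> int:
        """Return the number of pages released to the requester (0 if ignored)."""
        if self.file_cache_pages <= self.threshold_pages:
            # Threshold determination is false: ignore the request
            return 0
        # Release only the part of the file cache that exceeds the threshold
        released = self.file_cache_pages - self.threshold_pages
        self.file_cache_pages = self.threshold_pages
        return released
```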

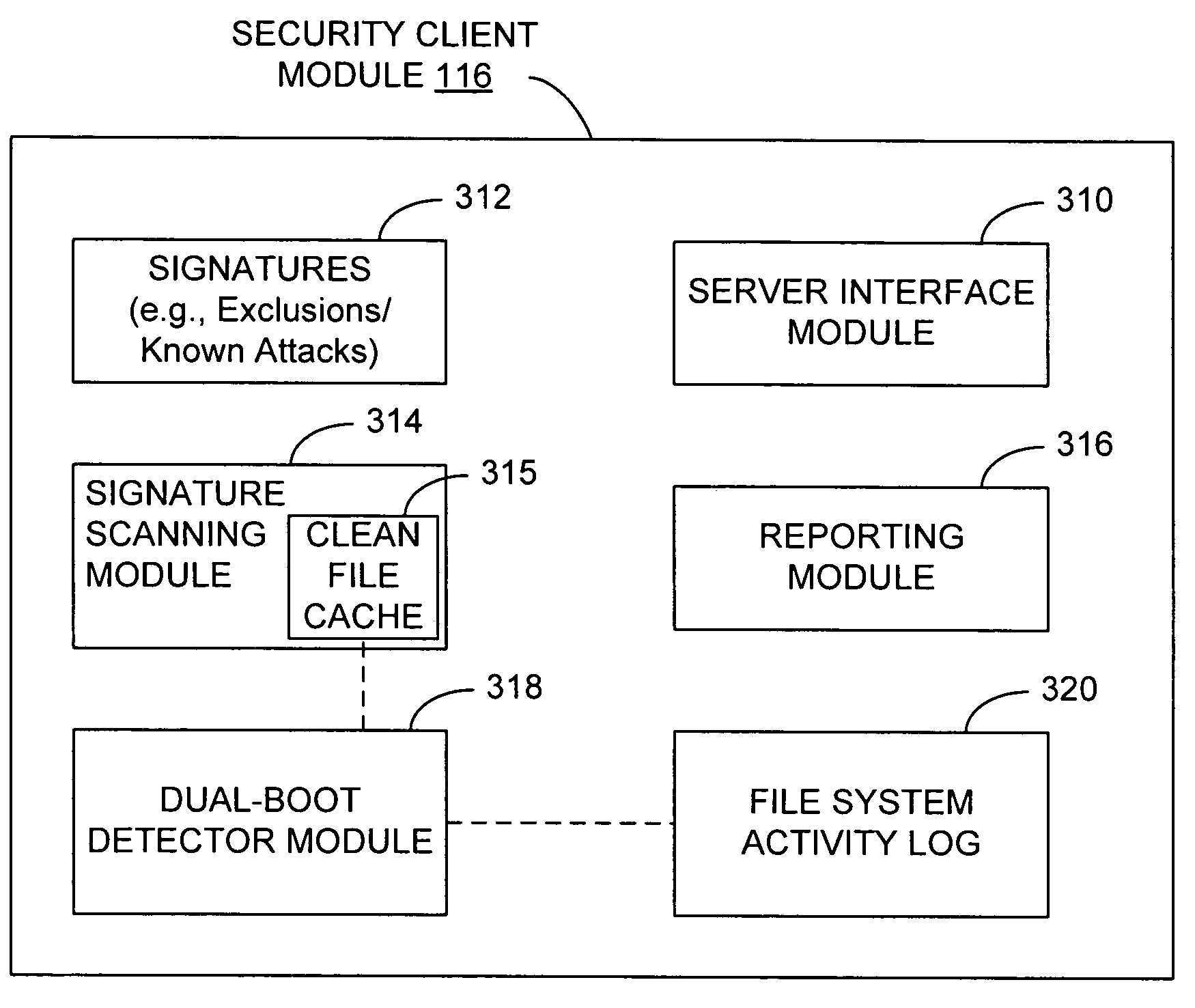

Enabling clean file cache persistence using dual-boot detection

A robust and reliable mechanism is disclosed for detecting whether a system has (or may have) been booted into a compromised or otherwise unprotected environment, so that a persisted clean file cache can be used across boots when appropriate. A security application can maintain and use a clean file cache to avoid re-scanning a file that has not been modified since it was last scanned and determined clean. Unnecessary scans are therefore avoided.

Owner:CA TECH INC

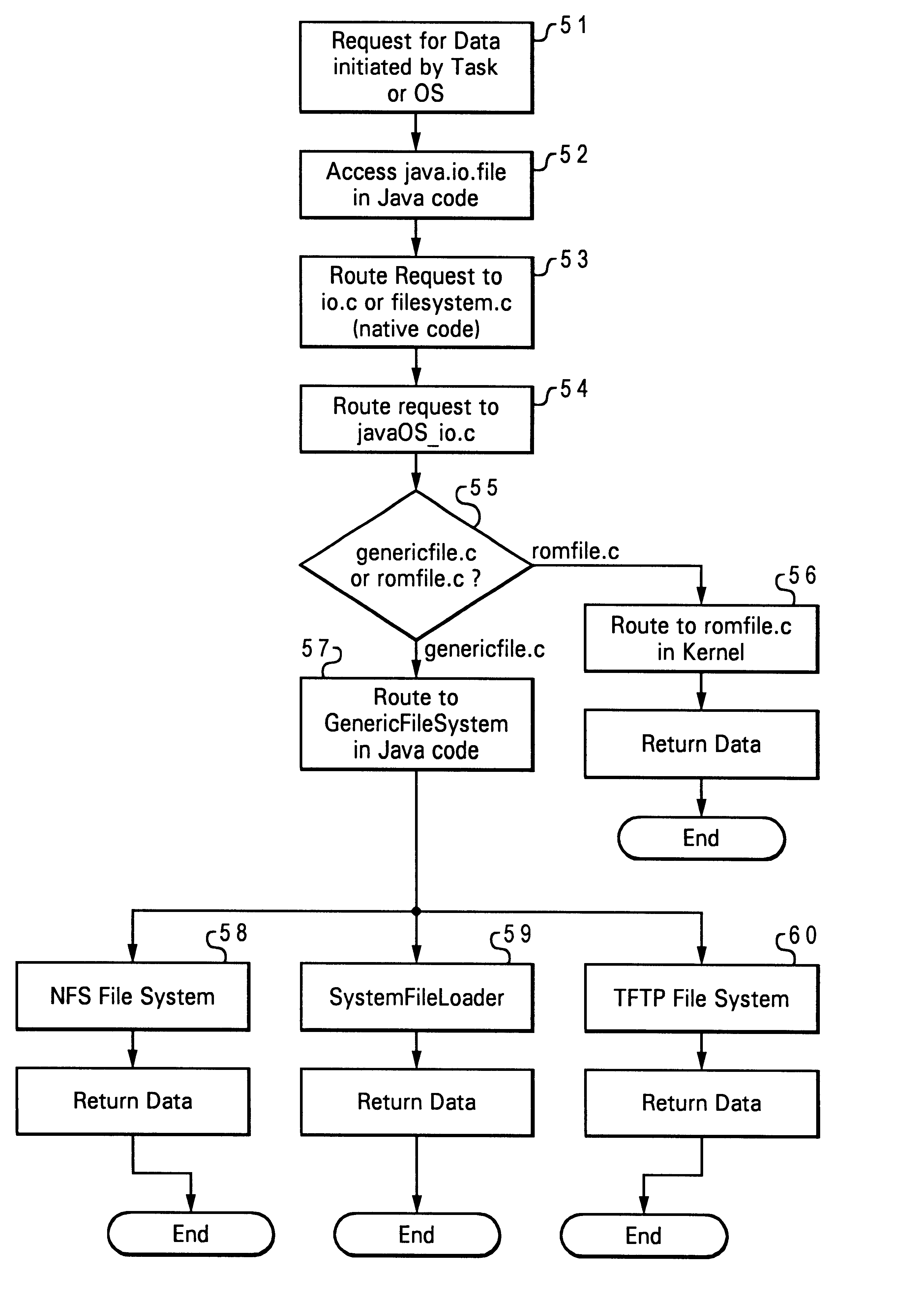

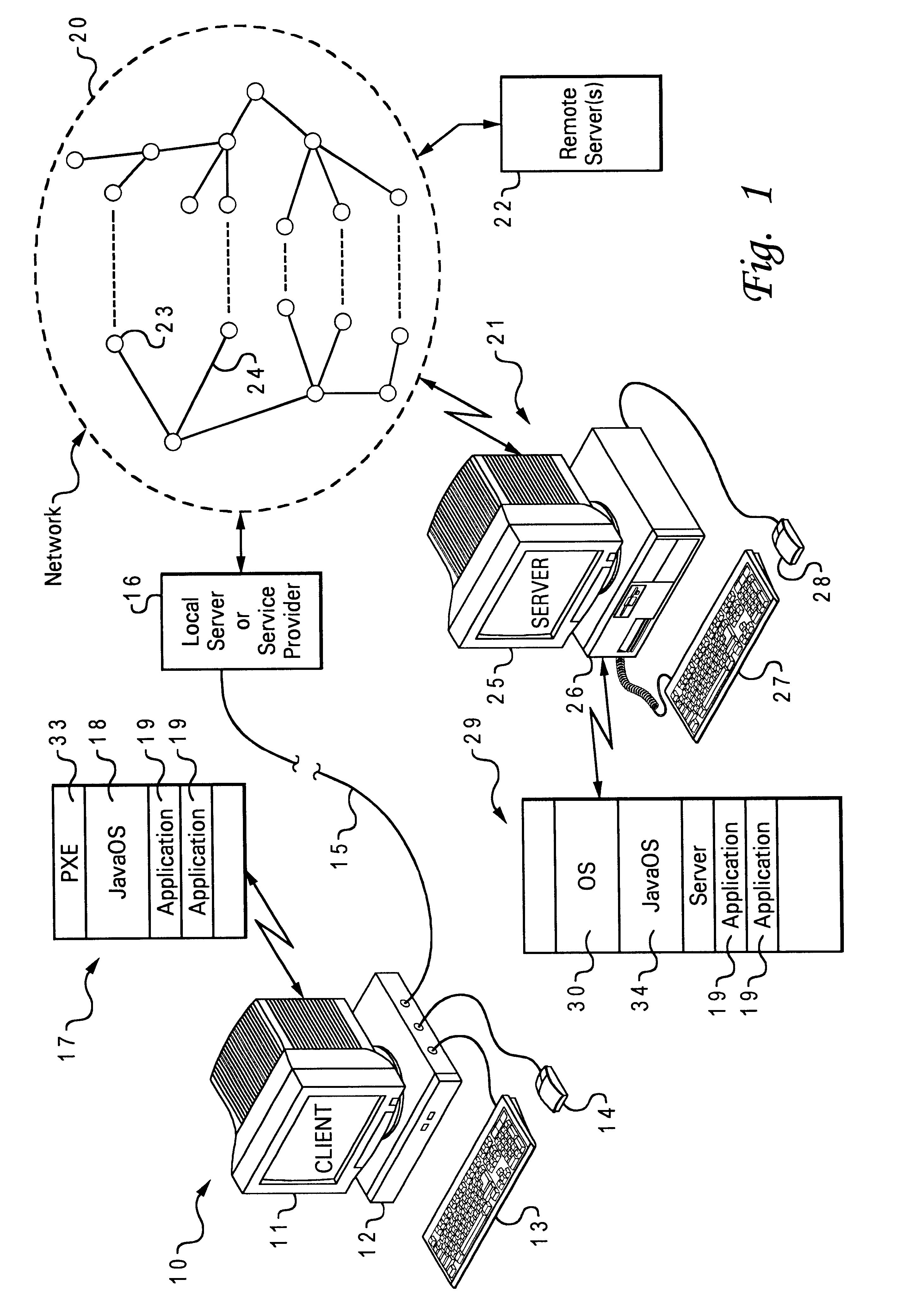

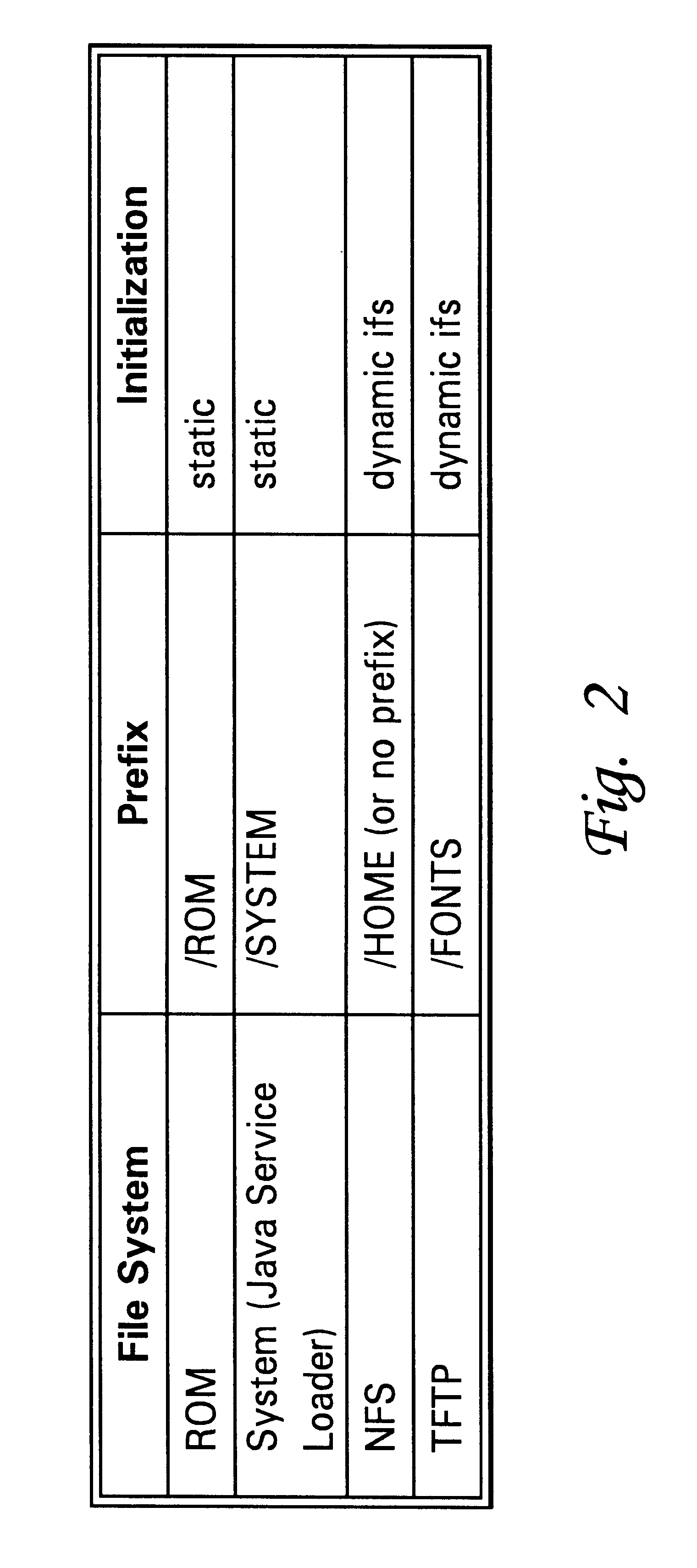

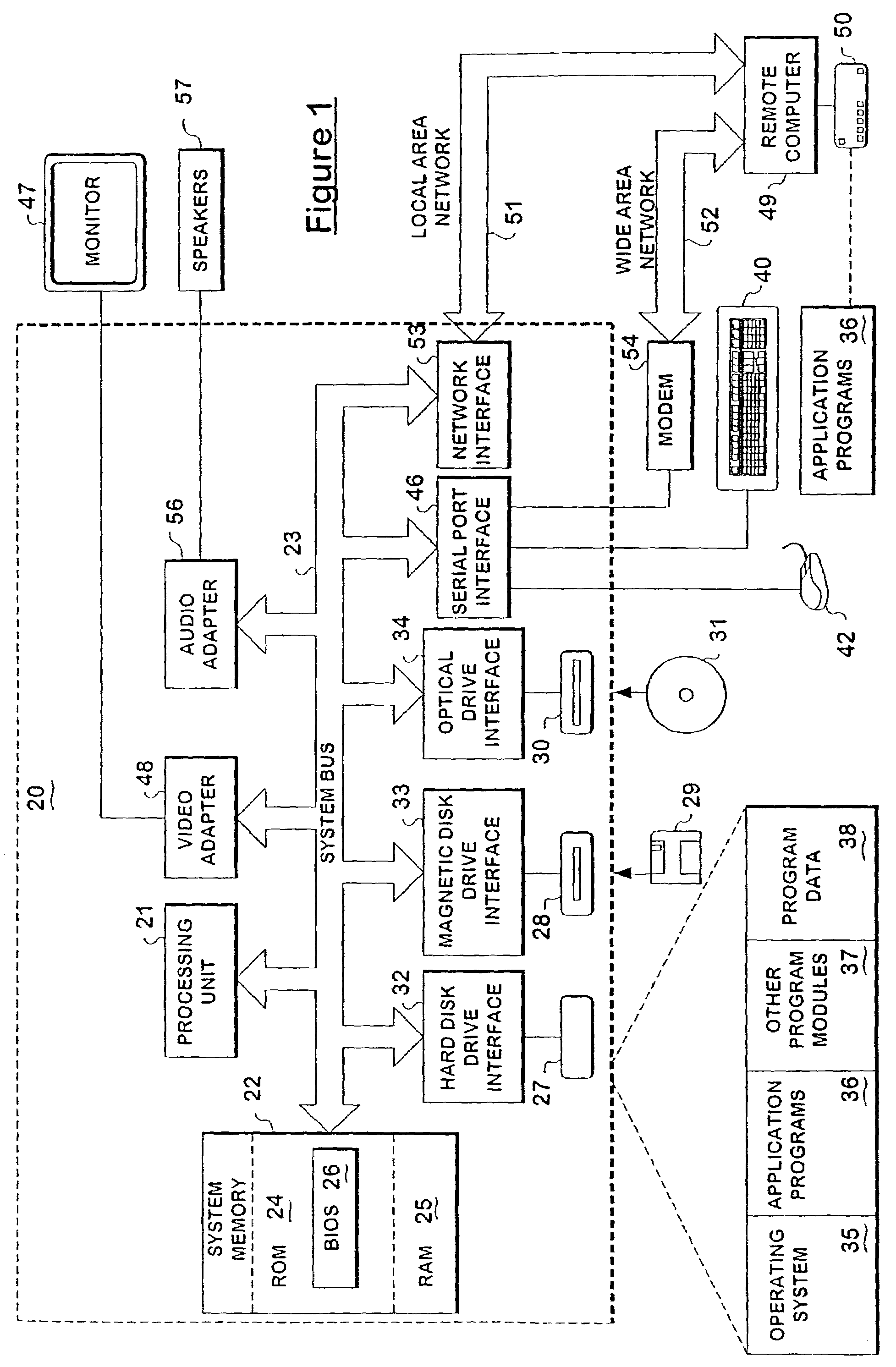

Selective loading of client operating system in a computer network

Status: Inactive | Publication: US6611915B1 | Tags: Improved and efficient method; Way of increase; Data processing applications; Multiple digital computer combinations; Operational system; File system

A client station on a computer network uses an operating system such as JavaOS which is permanently stored at the server rather than on storage media at the client location. JavaOS is loaded and installed at the client upon bootup of the client. Once the basic system is booted using local firmware, and the base file systems on the network are enabled, an application can begin running, and when it needs to use a particular class file a request will be made through the file system and will be routed over to a generic file system driver, on the client, which will then determine, using a set of configured information, where this class exists; it will utilize the particular file systems available to that booted client, whether it be NFS, or TFTP, to determine where the server is and how to retrieve that particular class file. It will go ahead and force that operation to occur and the class file will be retrieved and cached locally on the client to be used by the application. In order to avoid loading unneeded or lesser-used parts of the JavaOS from the server to the client memory at boot time, groups of classes are broken out of the monolithic image of JavaOS, as part of the Java service loader model (JSL). JSL-provided packages allow a URL prefix to be provided as part of a package's configuration information. When a class method / data is requested by the loader via the filesystem, if it is not already present, the URL prefix is used to lazily retrieve and cache that file in memory. This allows classes and data files to be delivered as needed, and significantly reduces the amount of data to be retrieved by TFTP by the client at boot time.

Owner:IBM CORP

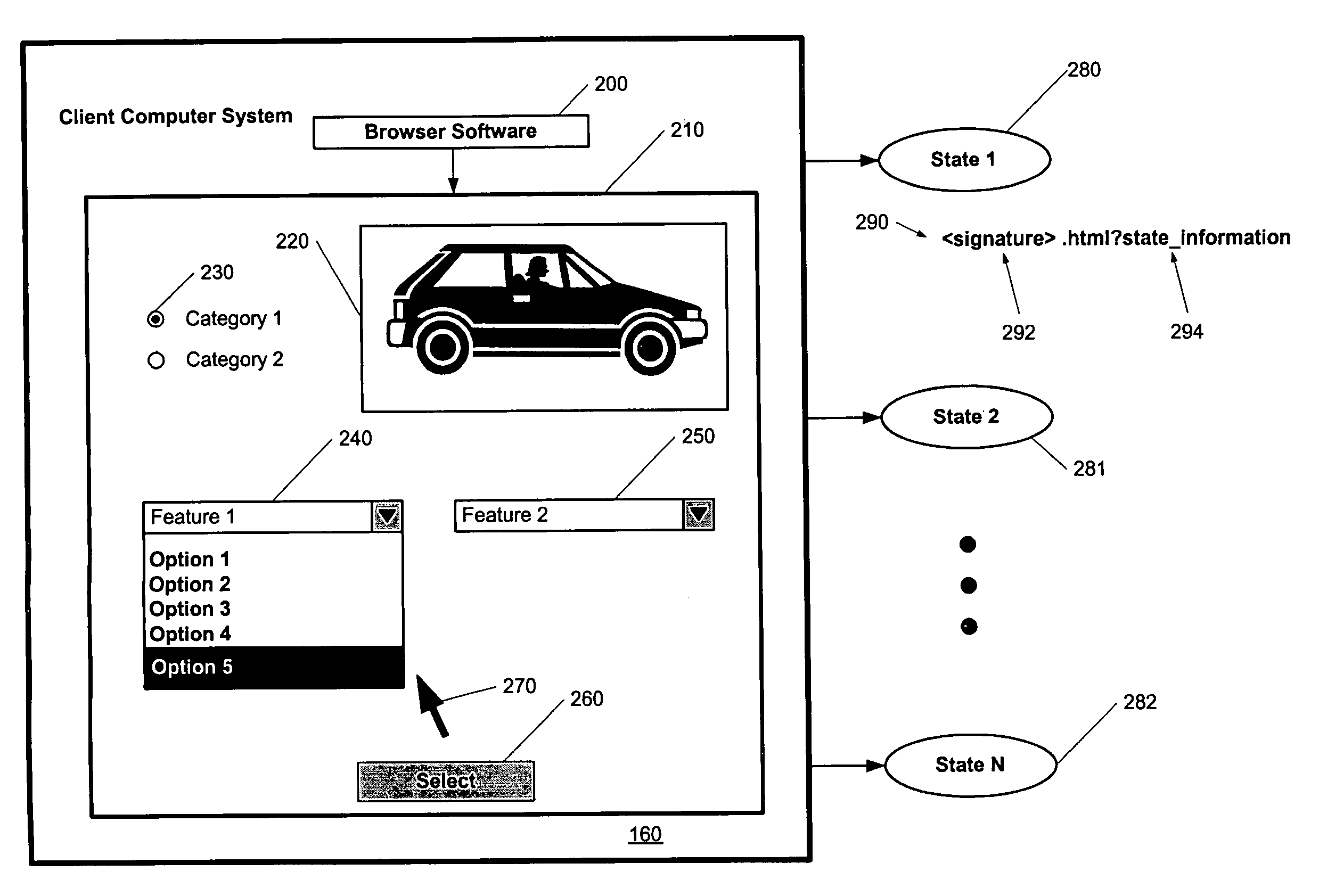

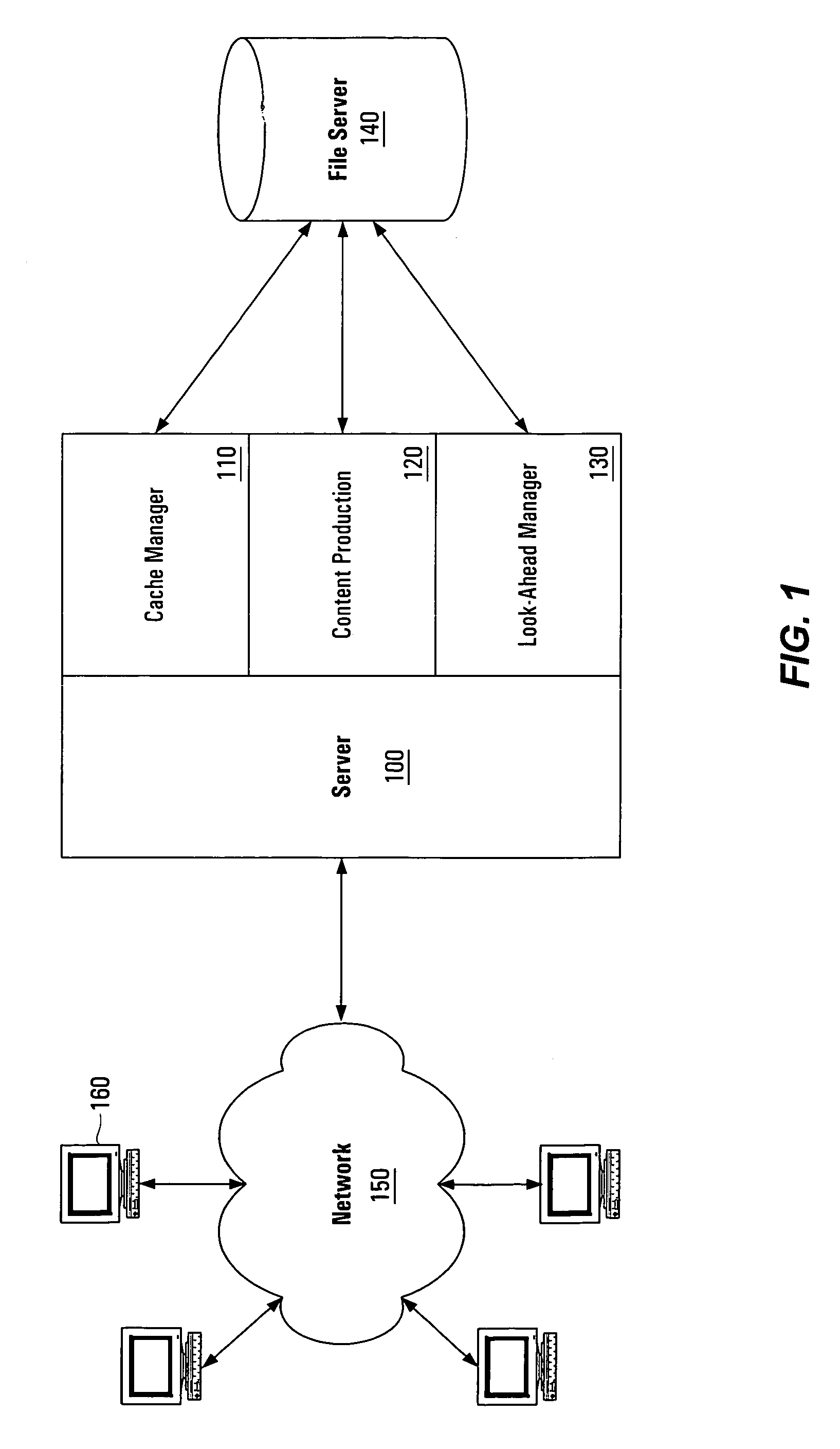

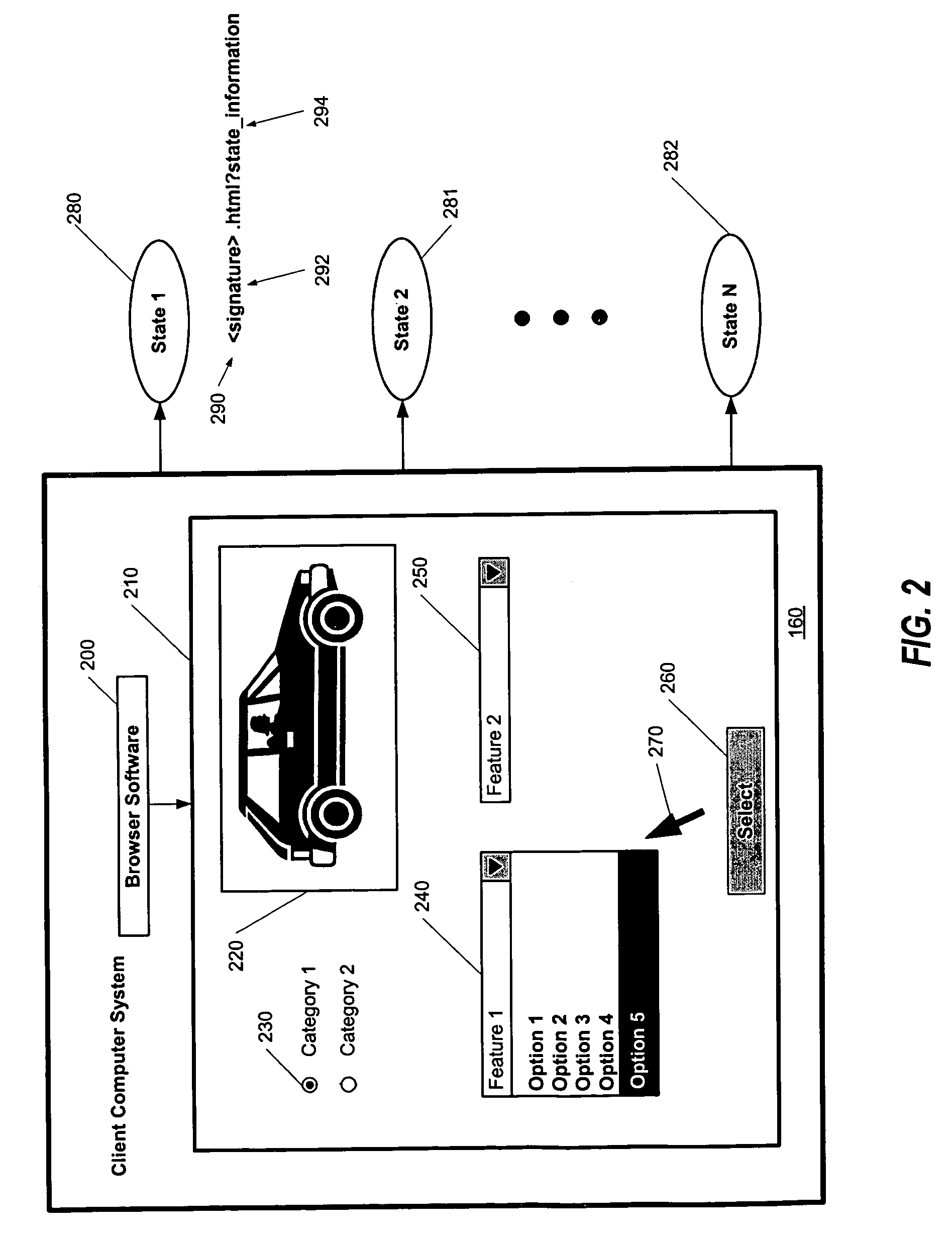

Dynamic content caching framework

Status: Inactive | Publication: US7082454B1 | Tags: Reduce calculation; Quick reuse; Digital data information retrieval; Multiple digital computer combinations; Hash function; Documentation procedure

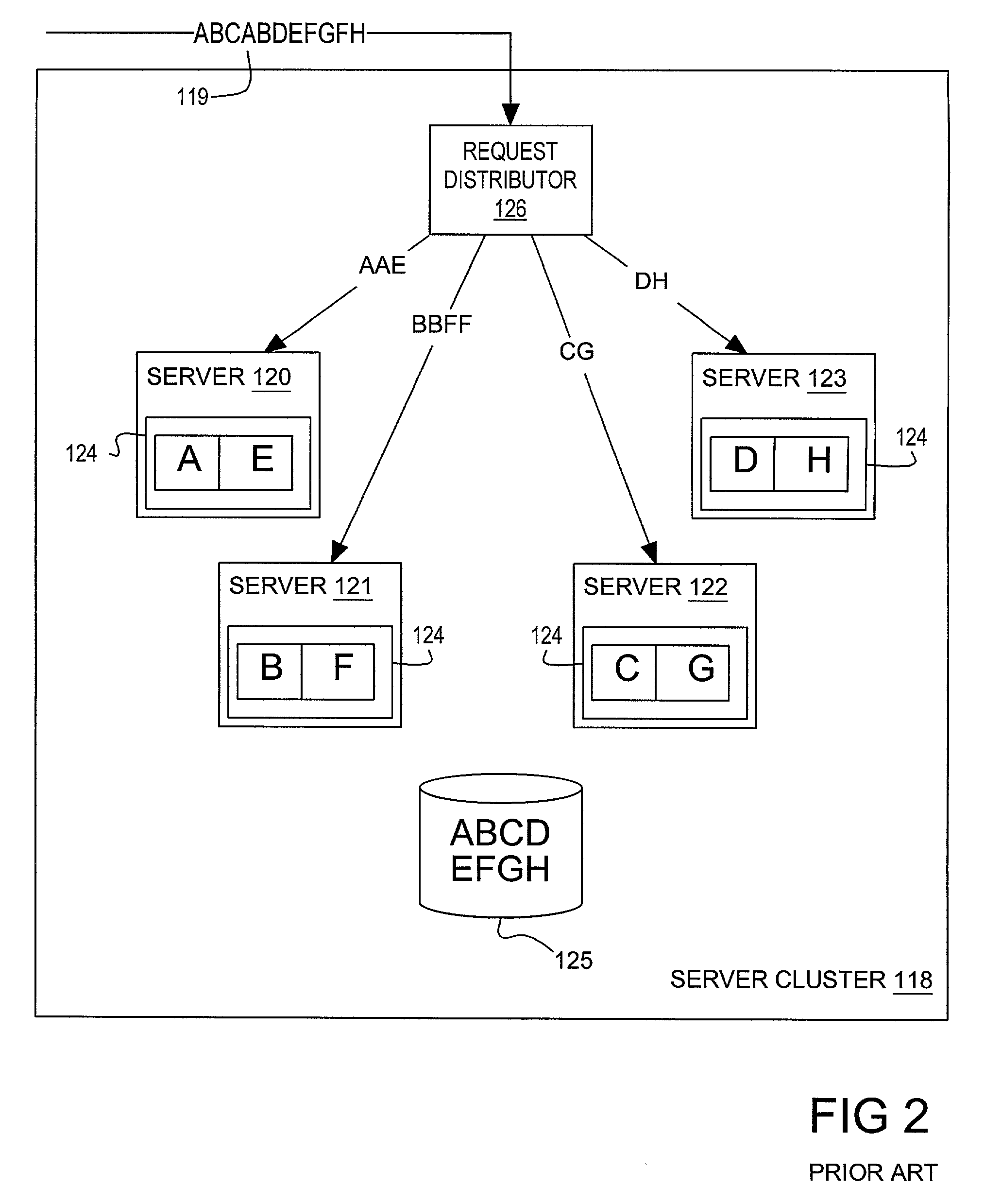

A dynamic content caching framework that encodes dynamically created documents with a filename that is derived from the state information describing the contents of the document, advantageously allows for the dynamically created documents to be cached and reused, thereby reducing server computation, and allowing more users to utilize a particular web site. A file cache management system manages files that can be provided by a web server computer system to a client computer system. Parameters selected by a user viewing a web page define a presentation state that describes, and is used to produce, a subsequent web page. The presentation state is processed using a one-way hashing function to form a hash value, or signature, for that presentation state which is then used to identify the file in which presentation information for the presentation state is stored. When another user chooses the same presentation state, the existing file having presentation information can be identified quickly and reused.

Owner:VERSATA DEV GROUP
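
A rough sketch of naming cached pages after a hash of their presentation state is shown below. The abstract does not name a hash function or a serialization format; SHA-256, JSON with sorted keys, the `.html` suffix, and the helper names `cache_filename` and `get_page` are all assumptions made for illustration.

```python
import hashlib
import json
from pathlib import Path


def cache_filename(state: dict) -> str:
    """Derive a filename from the presentation state with a one-way hash."""
    # Sorting keys makes equal states serialize identically (an assumption
    # about how states are compared; the abstract does not specify this)
    canonical = json.dumps(state, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest() + ".html"


def get_page(state: dict, cache_dir: Path, render) -> str:
    """Reuse the cached page for this state, or render and store it."""
    path = cache_dir / cache_filename(state)
    if path.exists():
        return path.read_text(encoding="utf-8")
    html = render(state)  # `render` is a caller-supplied page generator
    path.write_text(html, encoding="utf-8")
    return html
```

When a second user selects the same parameters, `get_page` finds the existing file by its hashed name and skips rendering.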

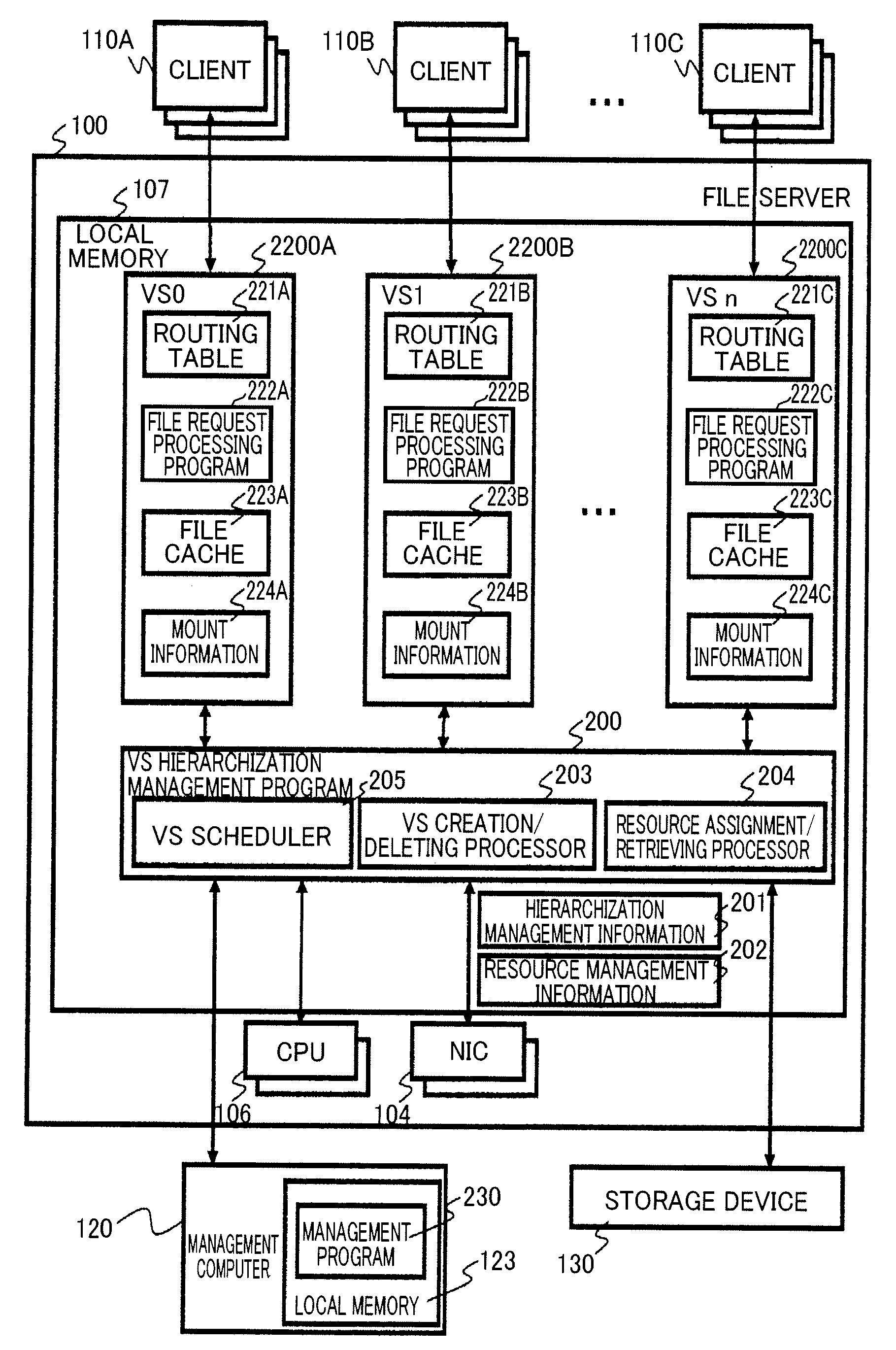

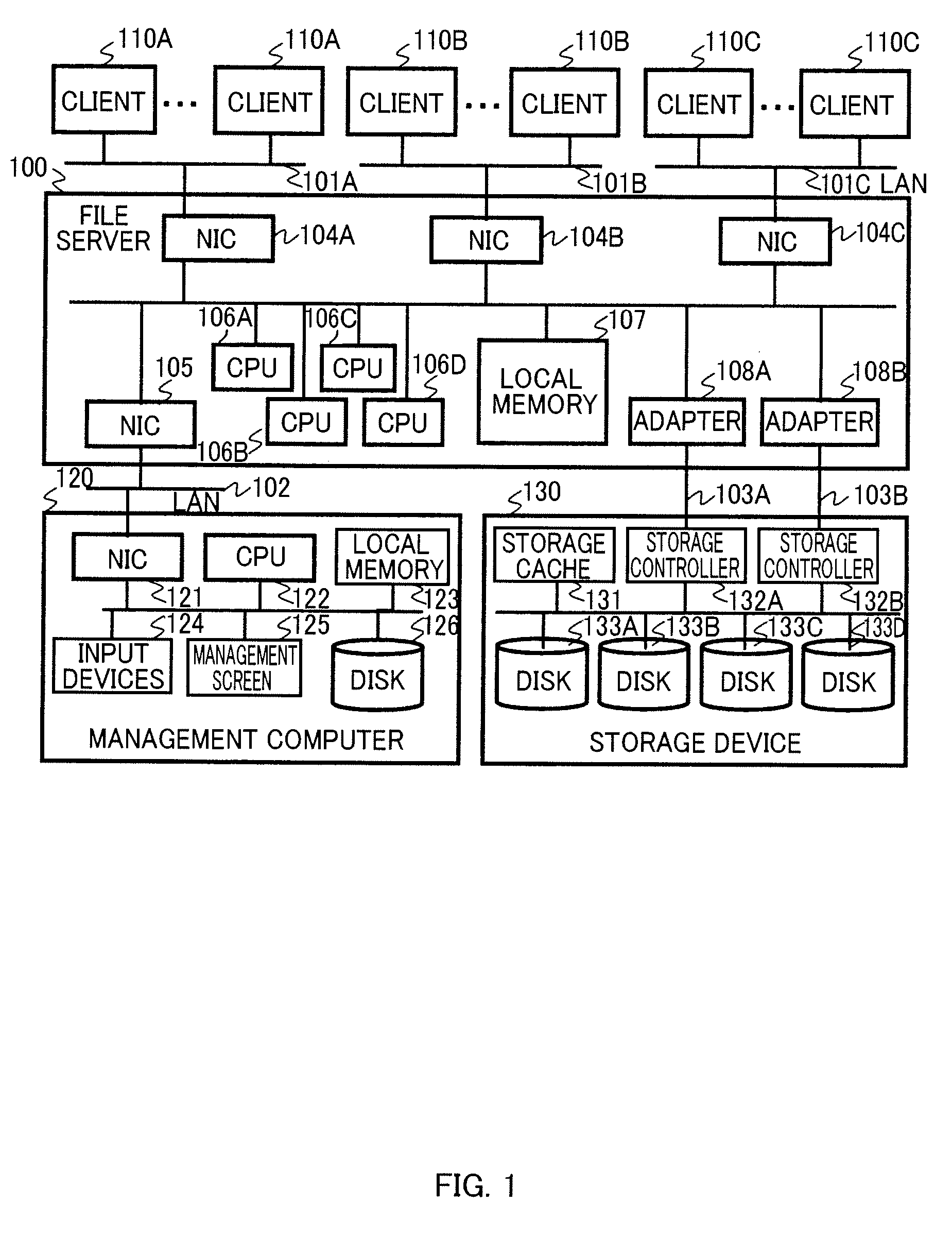

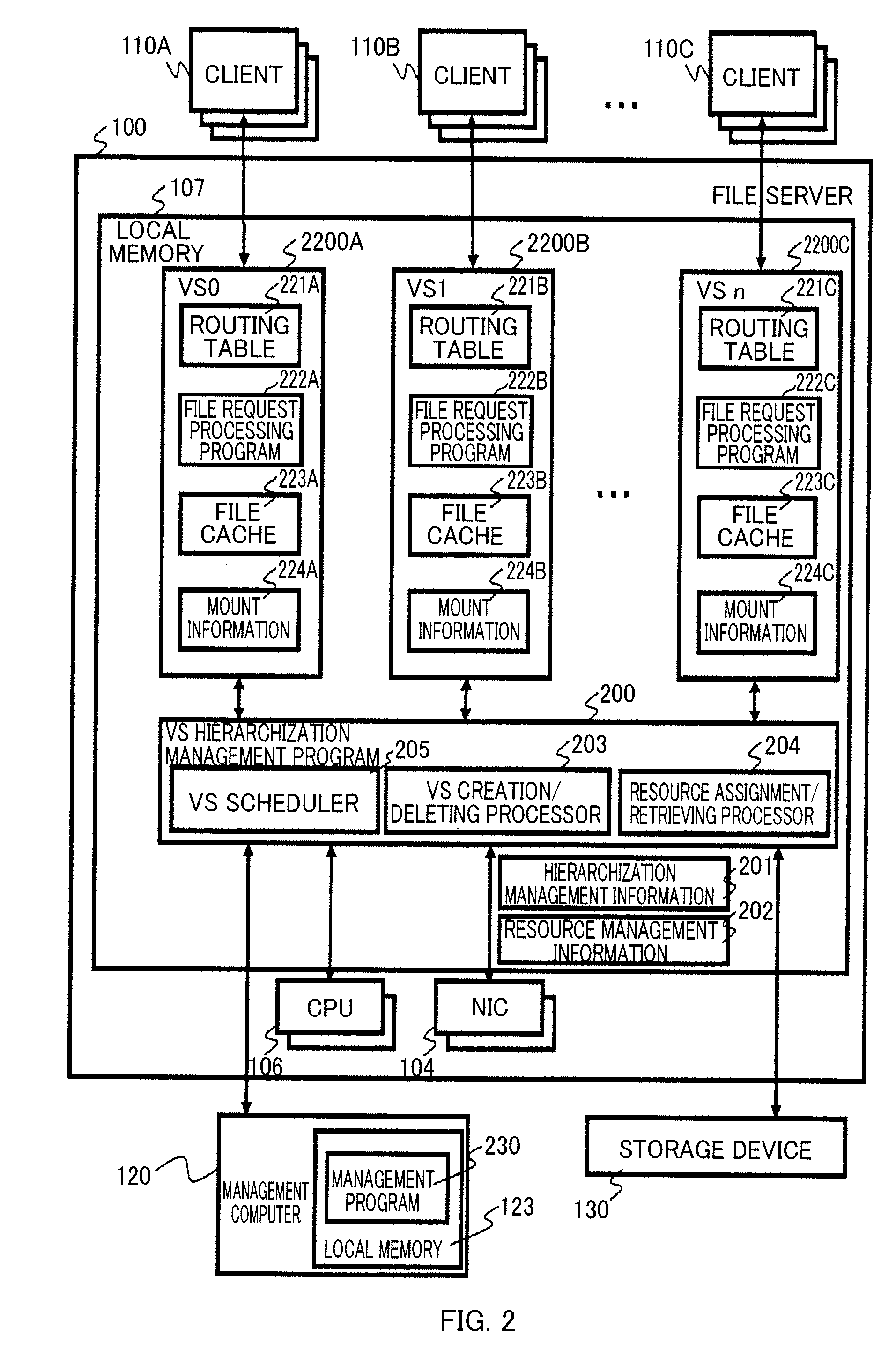

Method, system, and apparatus for file server resource division

Status: Inactive | Publication: US20100082716A1 | Tags: Digital data processing details; Multiple digital computer combinations; IP address; Resource management

Provided is a method including the steps of: storing multiple IP addresses and resource management information including correspondence relationships among the multiple IP addresses, multiple file cache regions and multiple virtual file servers; processing multiple file requests transmitted from a client computer in accordance with the resource management information, by use of a lent file cache region being a part of the multiple file cache regions corresponding to the multiple virtual file servers, a lent IP address being a part of the multiple IP addresses, and a lent volume being a part of multiple volumes, the requests transmitted by designating the multiple virtual file servers, respectively; and creating a child virtual file server by receiving a child virtual file server creation request designating one of the multiple virtual file servers.

Owner:HITACHI LTD

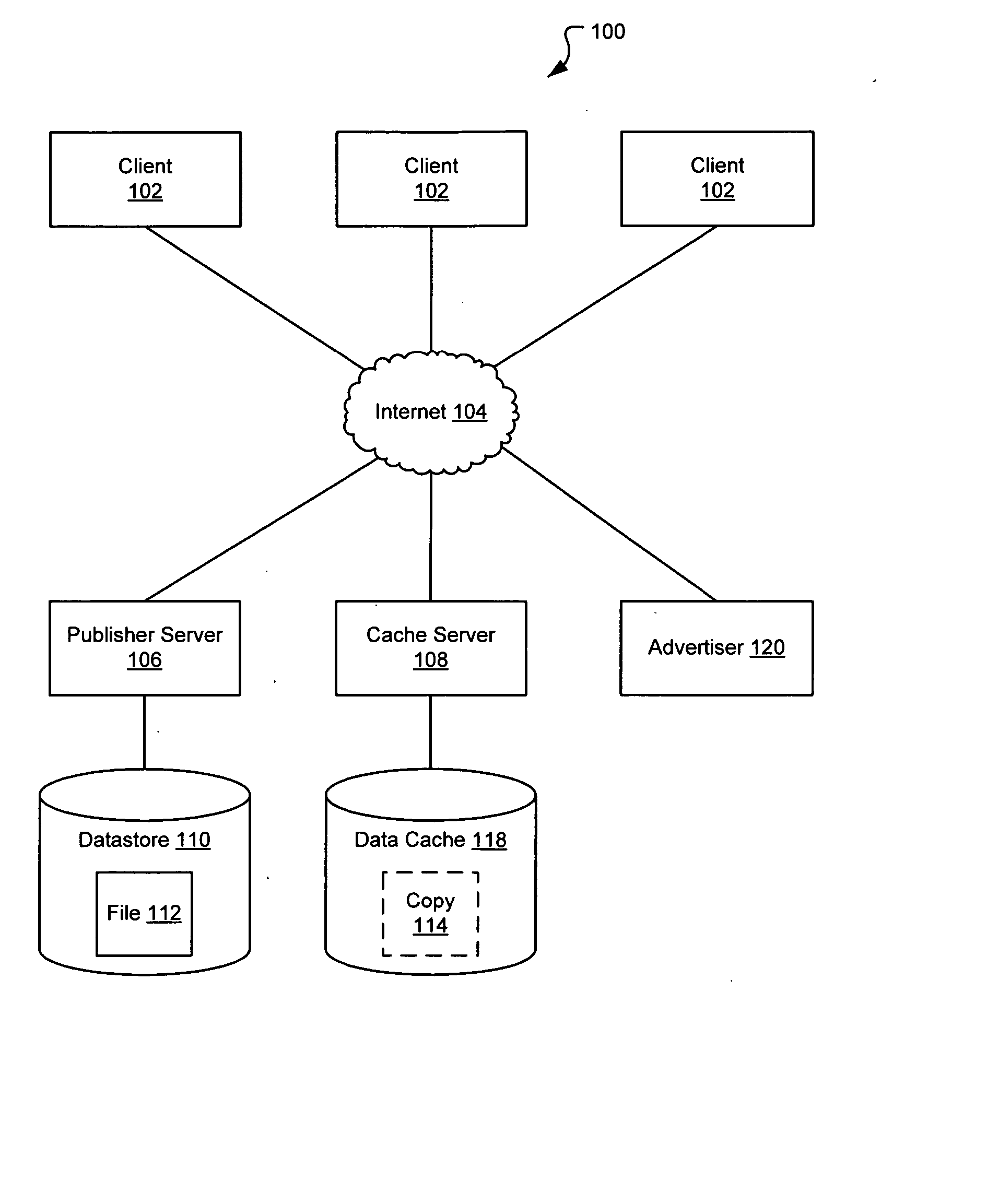

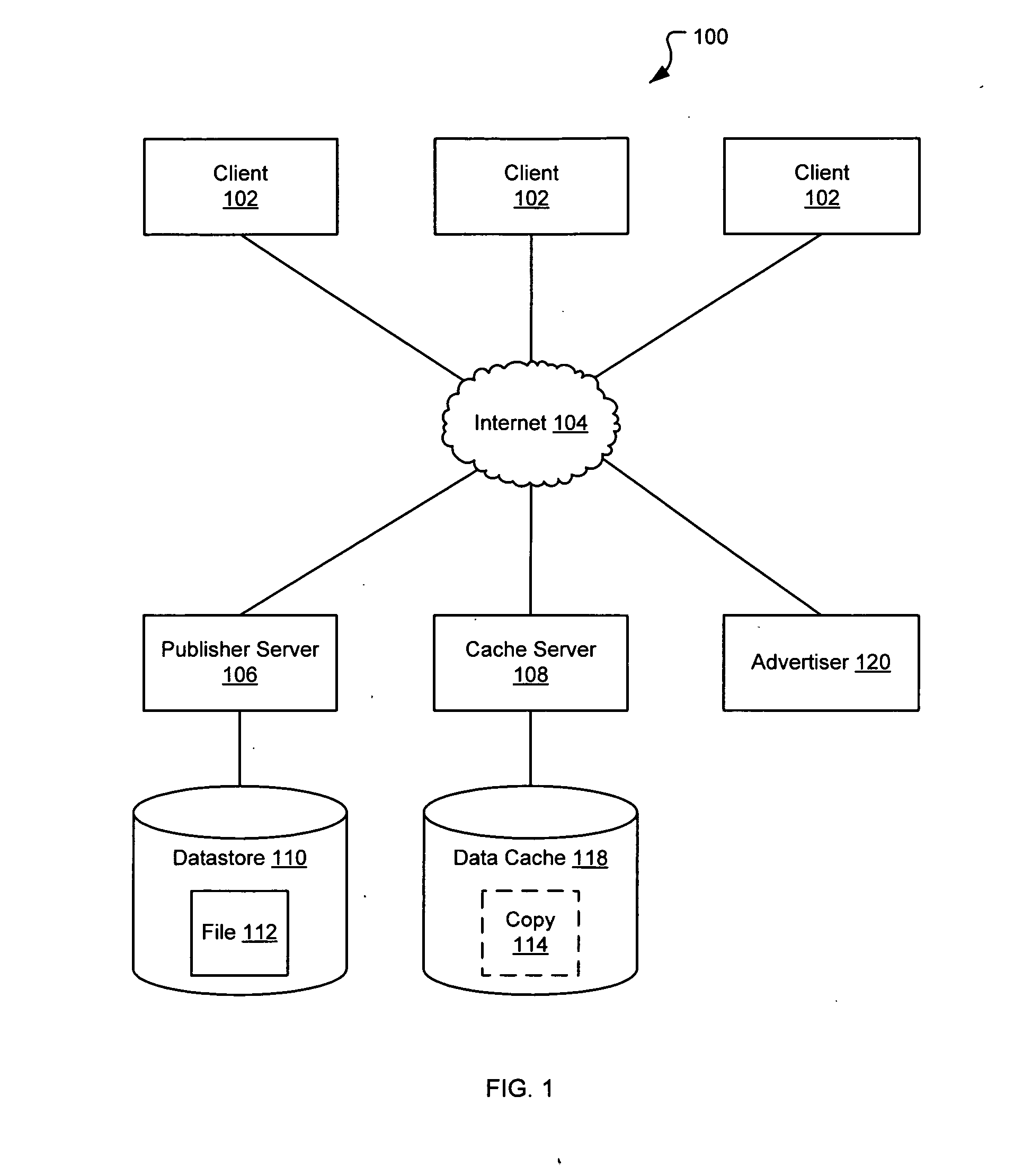

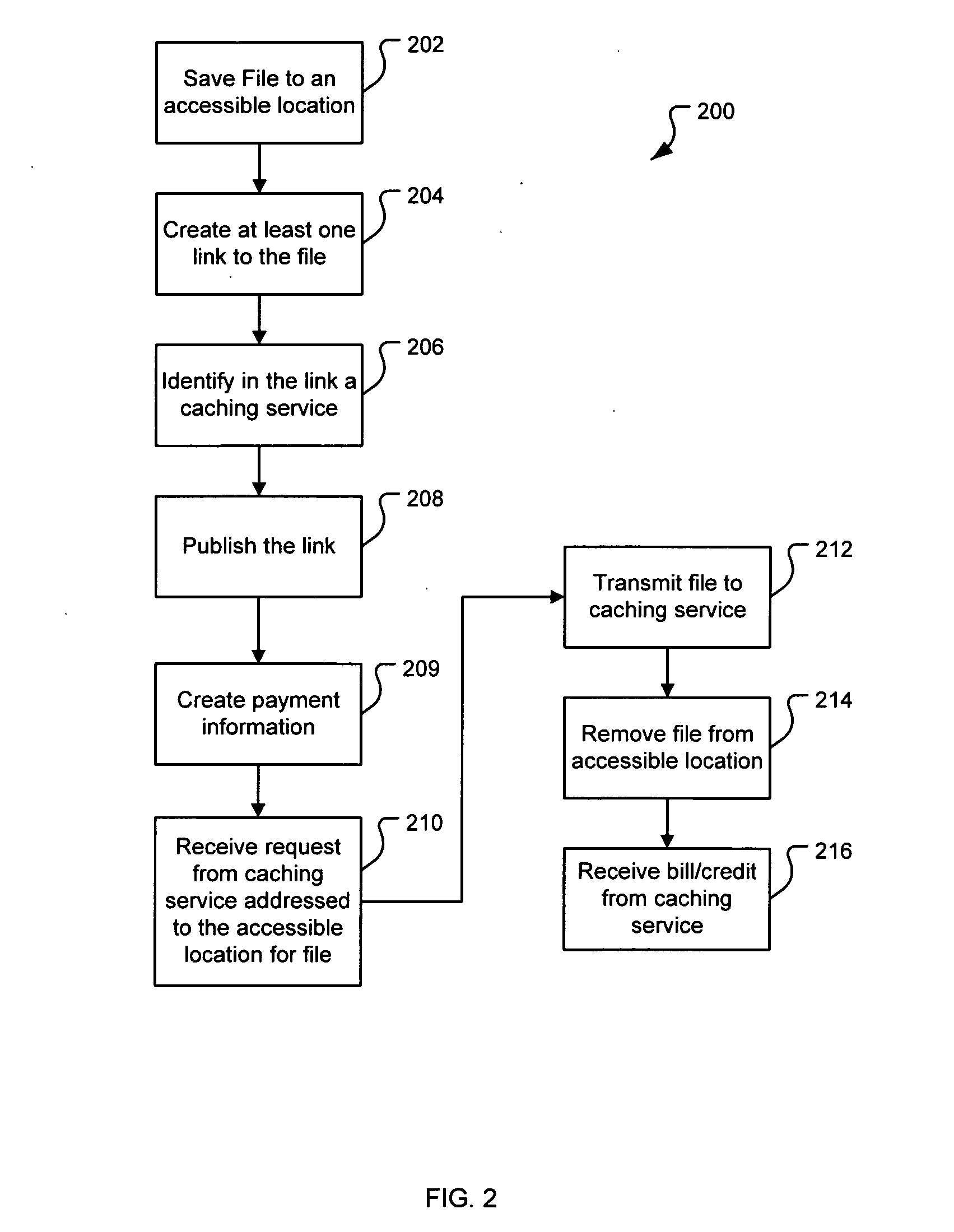

File caching

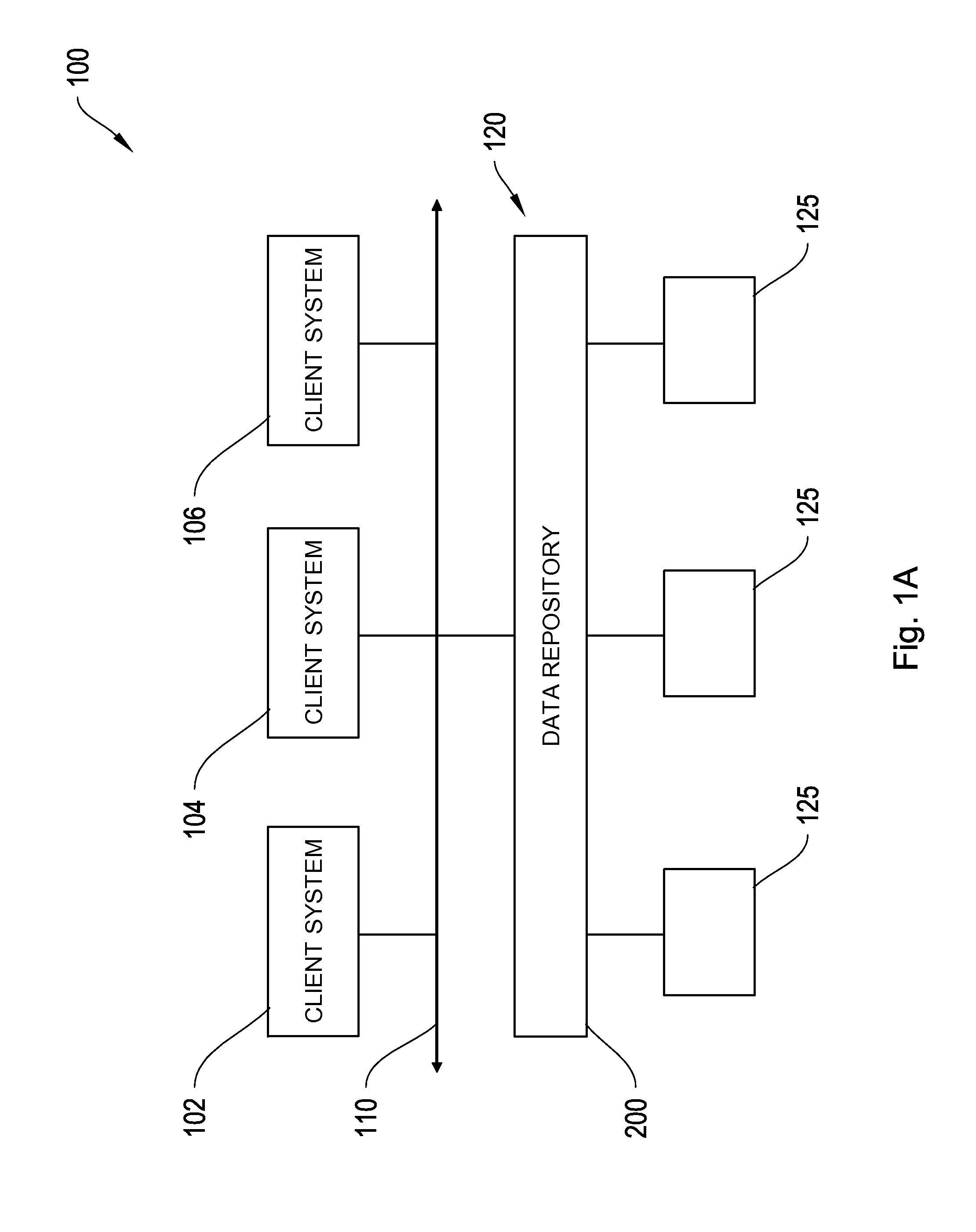

Status: Active | Publication: US20070250513A1 | Tags: Reduce load; Digital data information retrieval; Digital data processing details; Database; File caching

A system and method for publishing a file on a network is disclosed. A caching service is disclosed that reduces the load on the publisher's server by publishing that the file is at a fictitious network location. Requests directed to the fictitious location are received by the caching service and the fictitious network location is parsed to determine what file is being requested. If the caching service already has a copy of the file, then the copy is transmitted to the requester without alerting the requester that the address is fictitious. If the caching service does not have a copy of the file, then a copy is automatically obtained based on information contained in the fictitious network location.

Owner:R2 SOLUTIONS

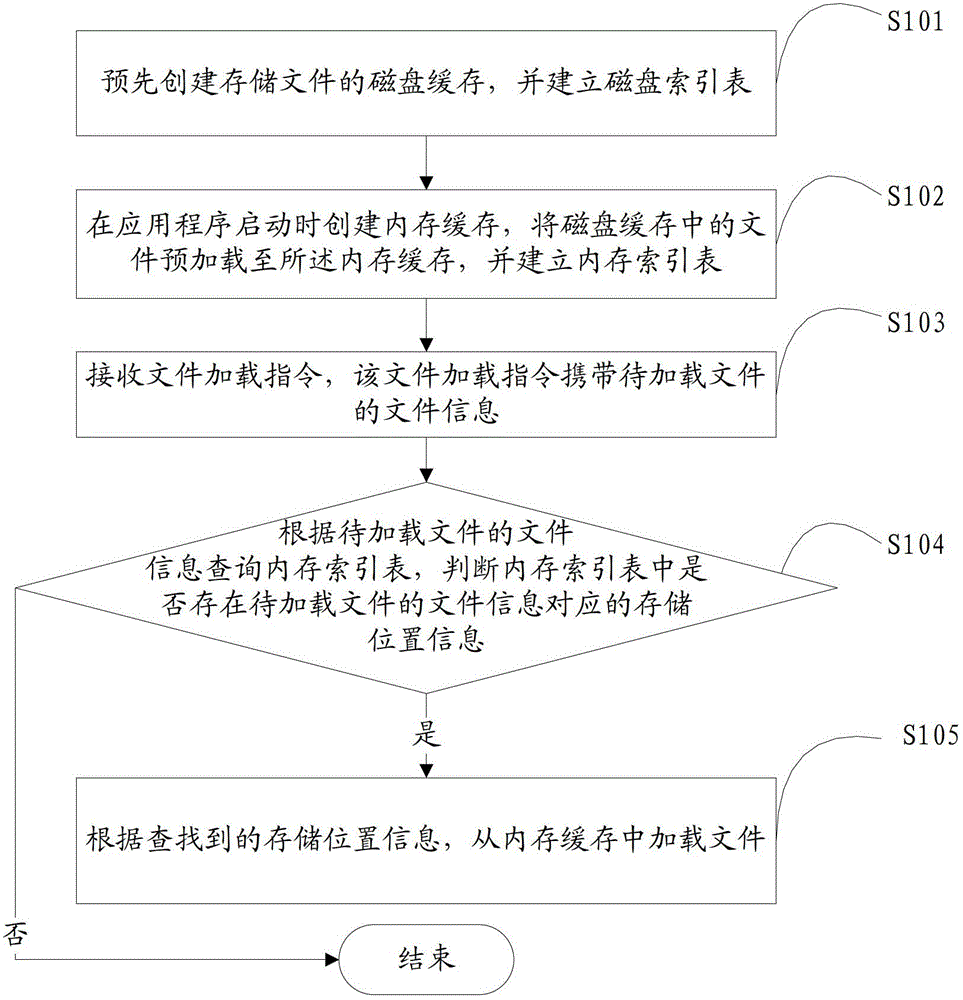

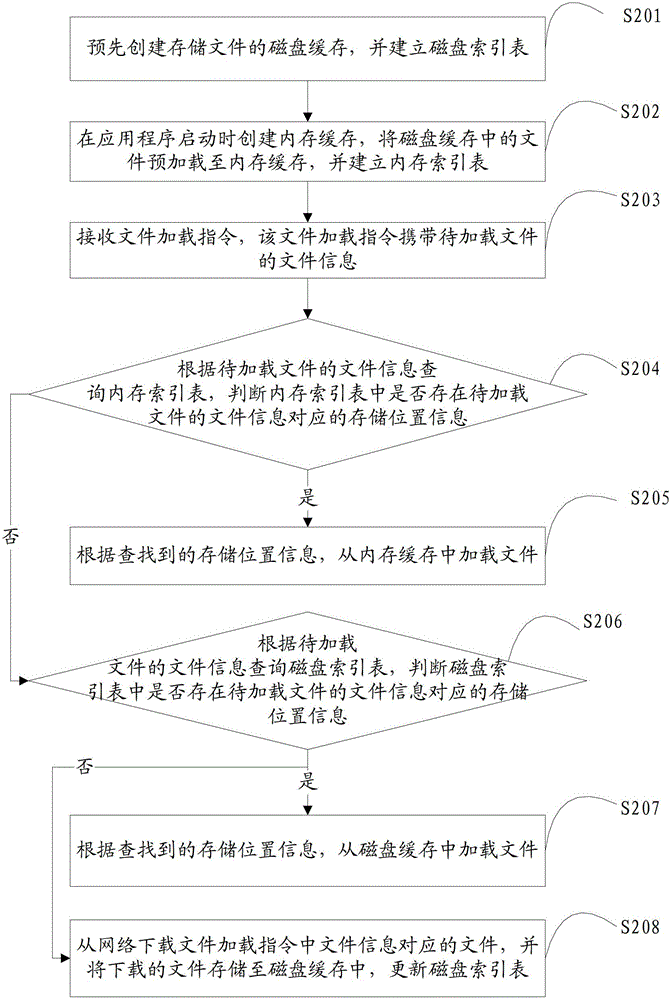

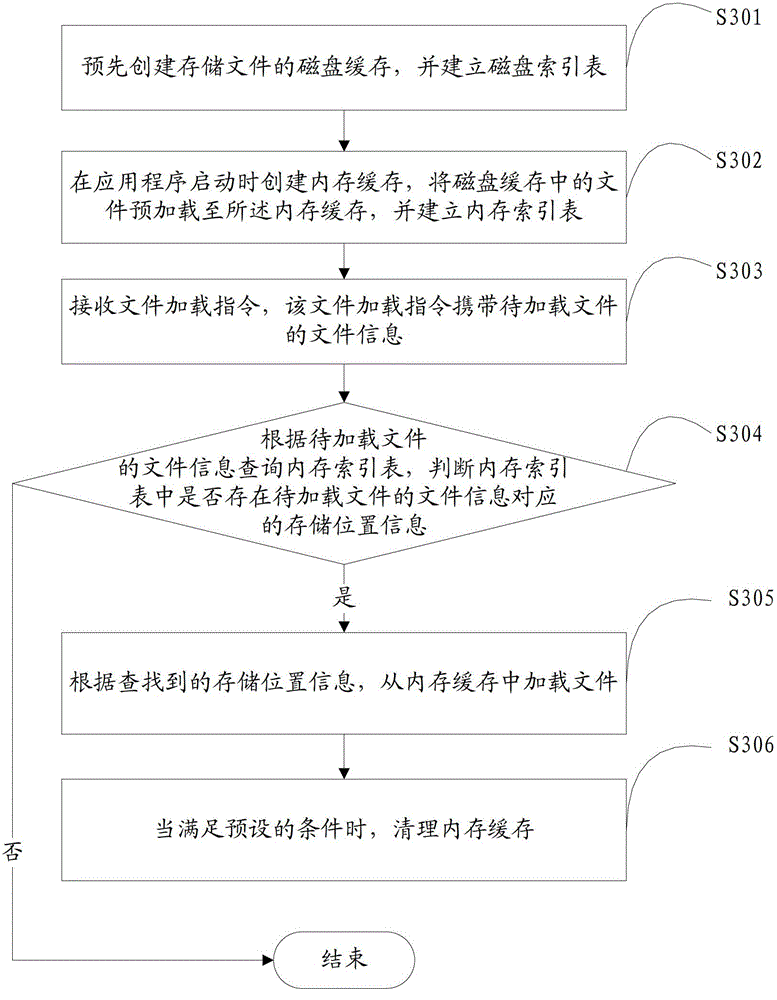

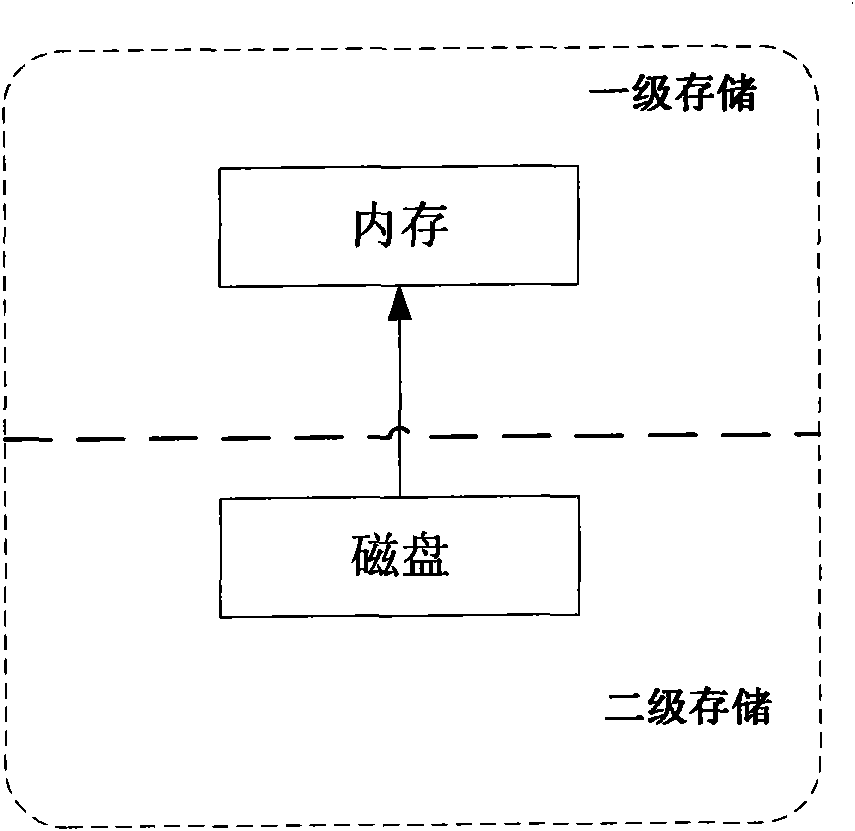

Method and device for loading file

Status: Inactive | Publication: CN102750174A | Tags: Improve loading efficiency; High speed; Program loading/initiating; Access frequency; Computer terminal

The invention applies to the field of multimedia applications and provides a method and a device for loading a file. The method comprises the following steps: receiving a file loading instruction that carries file information of a file to be loaded; querying a memory index table according to that file information, wherein the memory index table contains the storage location information and access frequency of each file preloaded from a disk cache into a memory cache; and, if storage location information corresponding to the file information is found in the memory index table, loading the file from the memory cache according to that storage location information and updating the access frequency of the file in the memory index table. Files downloaded from the network are stored at a terminal in a two-level file cache, and because the access delay of memory is much lower than that of the disk cache, a file held in the memory cache can be loaded for display quickly, so file loading efficiency and loading speed are high.

Owner:TCL CORPORATION
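
The memory index table lookup described above might look like the following sketch. The class name `TwoLevelCache`, the dictionary standing in for the disk cache, and the fallback to the disk cache on a miss are assumptions; the abstract only describes the memory-hit path.

```python
class TwoLevelCache:
    """Toy model of a memory cache in front of a disk cache."""

    def __init__(self, disk_cache: dict):
        self.disk_cache = disk_cache   # stands in for files cached on disk
        self.memory_cache = []         # contents of preloaded files
        self.index = {}                # name -> [slot in memory_cache, access frequency]

    def preload(self, name: str) -> None:
        """Copy a file from the disk cache into the memory cache and index it."""
        self.memory_cache.append(self.disk_cache[name])
        self.index[name] = [len(self.memory_cache) - 1, 0]

    def load(self, name: str) -> bytes:
        entry = self.index.get(name)
        if entry is not None:
            entry[1] += 1                       # update the access frequency
            return self.memory_cache[entry[0]]  # load via the stored location
        # Assumption: on a miss, fall back to the slower disk cache
        return self.disk_cache[name]
```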

Truth on client persistent caching

Status: Active | Publication: US7441011B2 | Tags: Easy to operate; Memory architecture accessing/allocation; Digital data information retrieval; Client data; Client-side

The present invention provides a novel client side caching (CSC) infrastructure that supports transition states at the directory level to facilitate a seamless operation across connectivity states between client and remote server. More specifically, persistent caching is performed to safeguard the user (e.g., client) and / or the client applications across connectivity interruptions and / or bandwidth changes. This is accomplished in part by caching to a client data store the desirable file(s) together with the appropriate file access parameters. Moreover, the client maintains access to cached files during periods of disconnect. Furthermore, portions of a path can be offline while other portions upstream can remain online. CSC operates on the logical path which cooperates with DFS which operates on the physical path to keep track of files cached, accessed and changes in the directories. In addition, truth on the client is facilitated whether or not a conflict of file copies exists.

Owner:MICROSOFT TECH LICENSING LLC

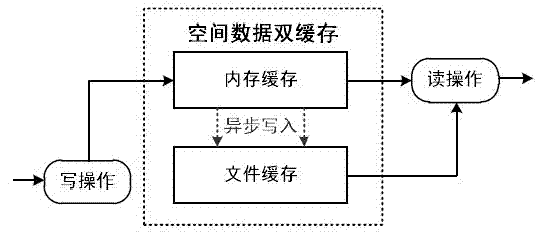

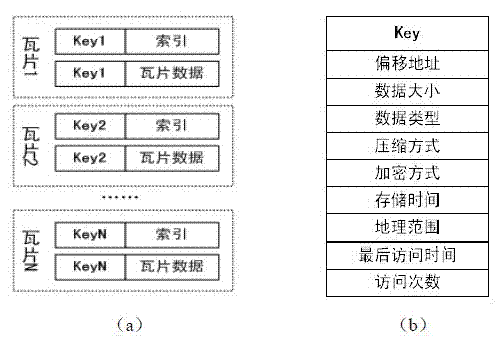

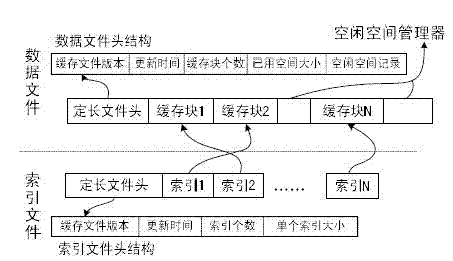

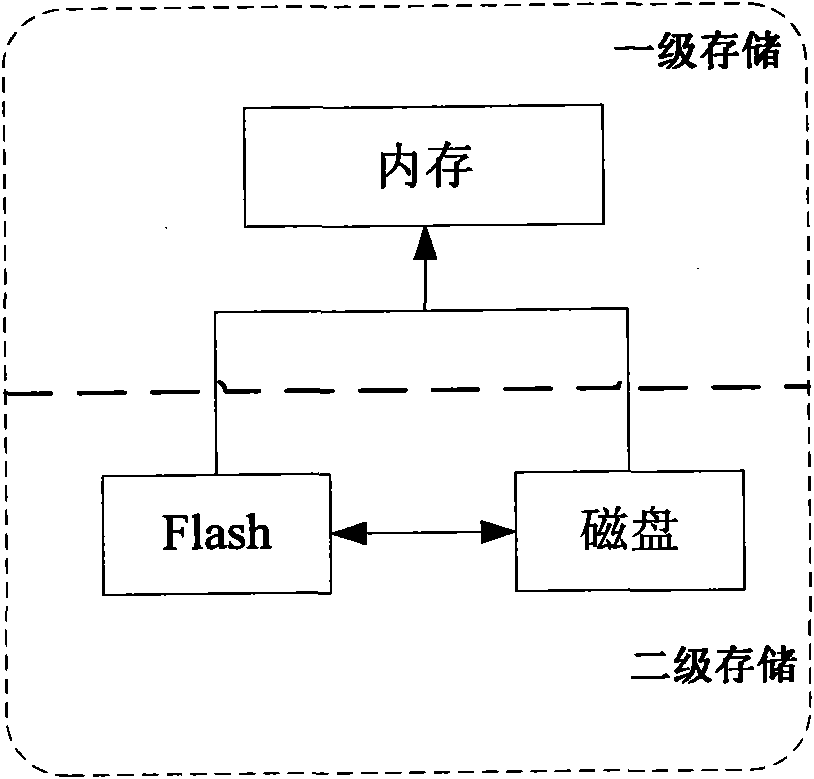

Spatial data double cache method and mechanism based on key value structure

Status: Active | Publication: CN103092775A | Tags: Improve retrieval speed; Key-value store model freedom; Memory addressing/allocation/relocation; Special data processing applications; Spatial management; Parallel computing

The invention discloses a spatial data double cache method and mechanism based on a key-value structure, and belongs to the technical field of spatial data storage and management. The method uses a double cache consisting of a memory cache and a file cache. The memory cache is the first-level cache; it organizes data in a B+ tree and is written to the file cache asynchronously through a write-back mechanism. The file cache is the second-level cache; it is built from large files and uses a B+ tree cache index to speed up searching. Free space in the file cache is also managed with a B+ tree. The method offers a flexible key-value storage model, fast searching, and high concurrency. It improves the storage and access efficiency of spatial data caching in a network environment, and can be used to cache generic spatial data such as remote-sensing images, vector data and digital elevation models (DEM) in a network geographic information system (GIS).

Owner:WUHAN UNIV

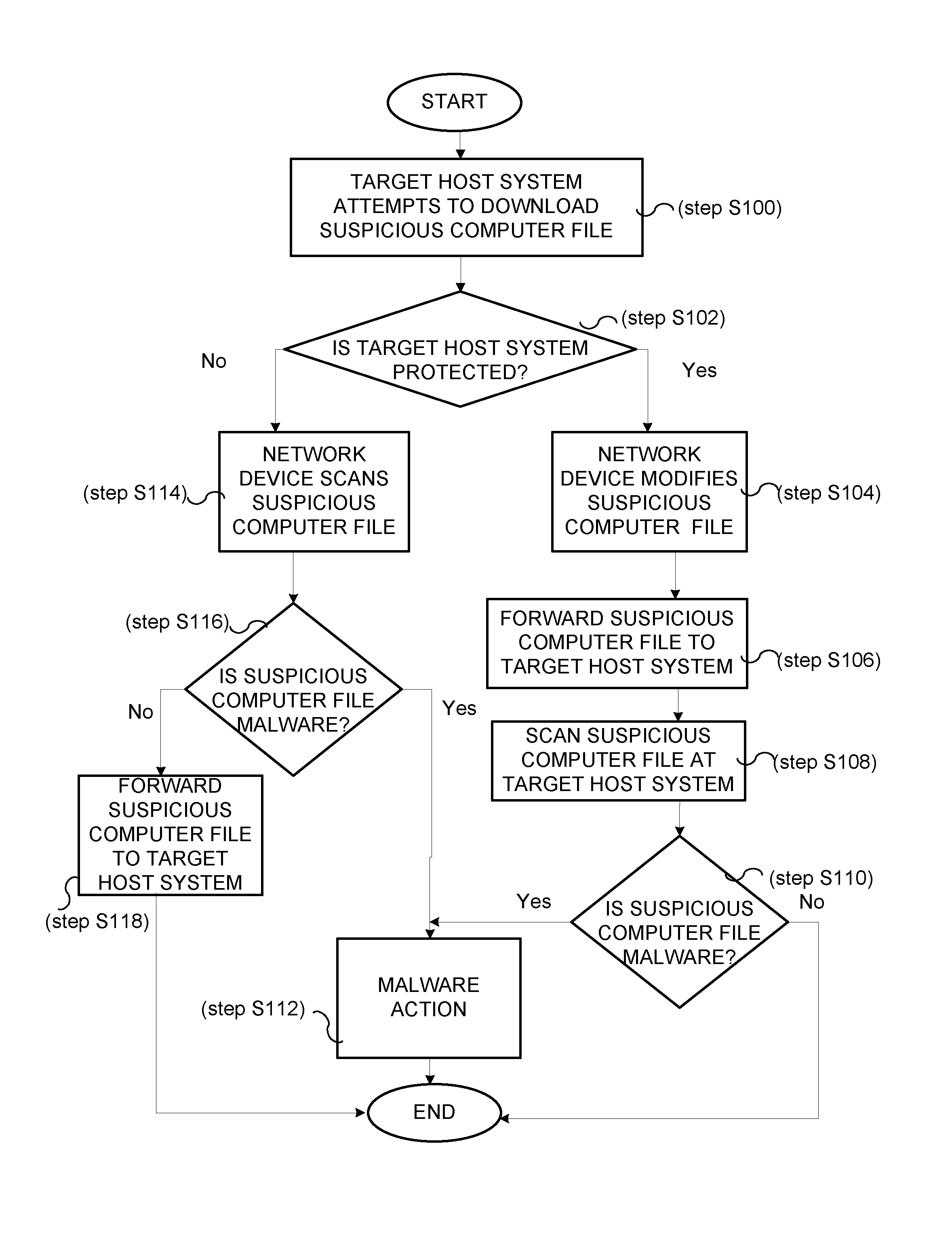

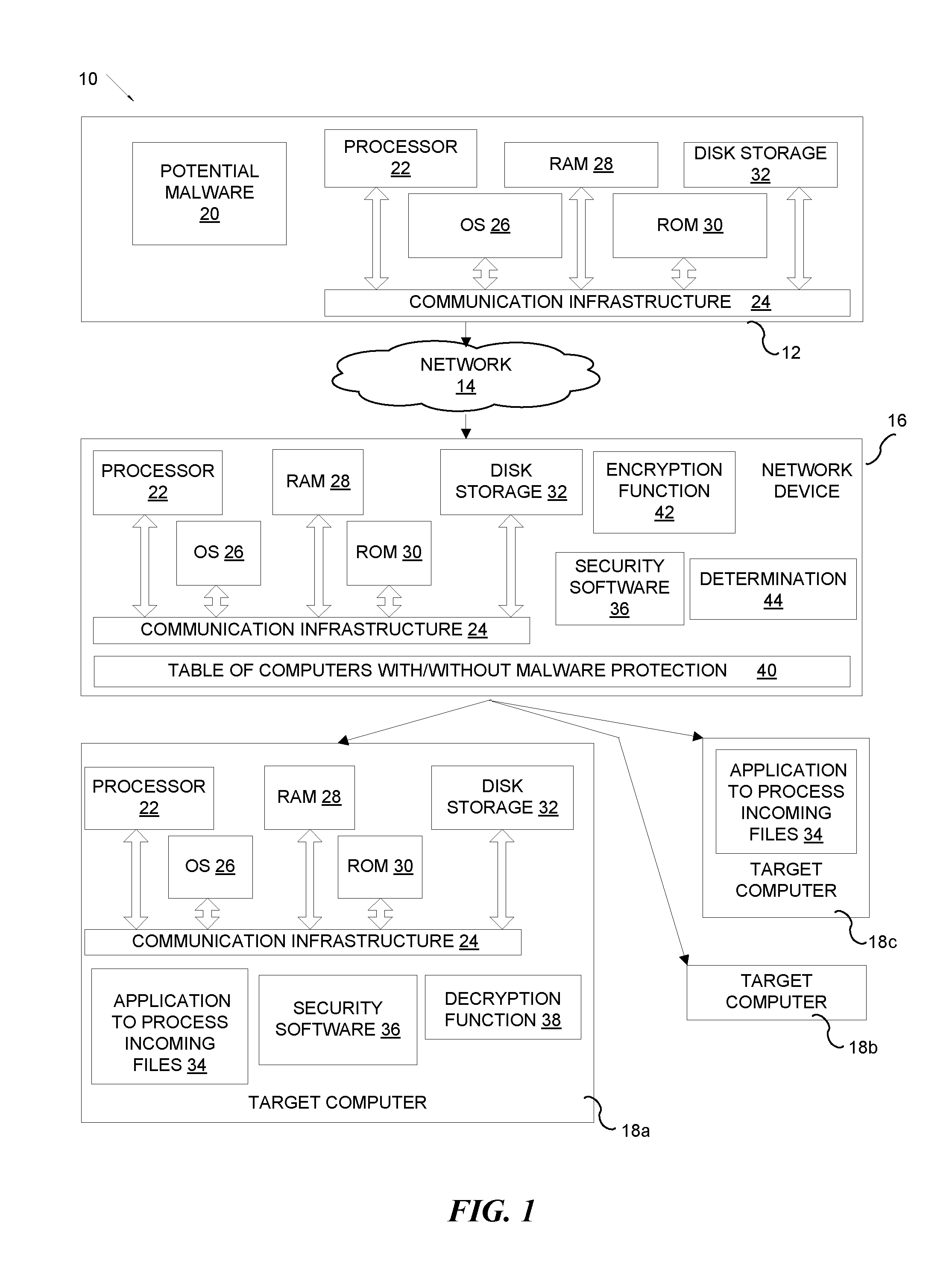

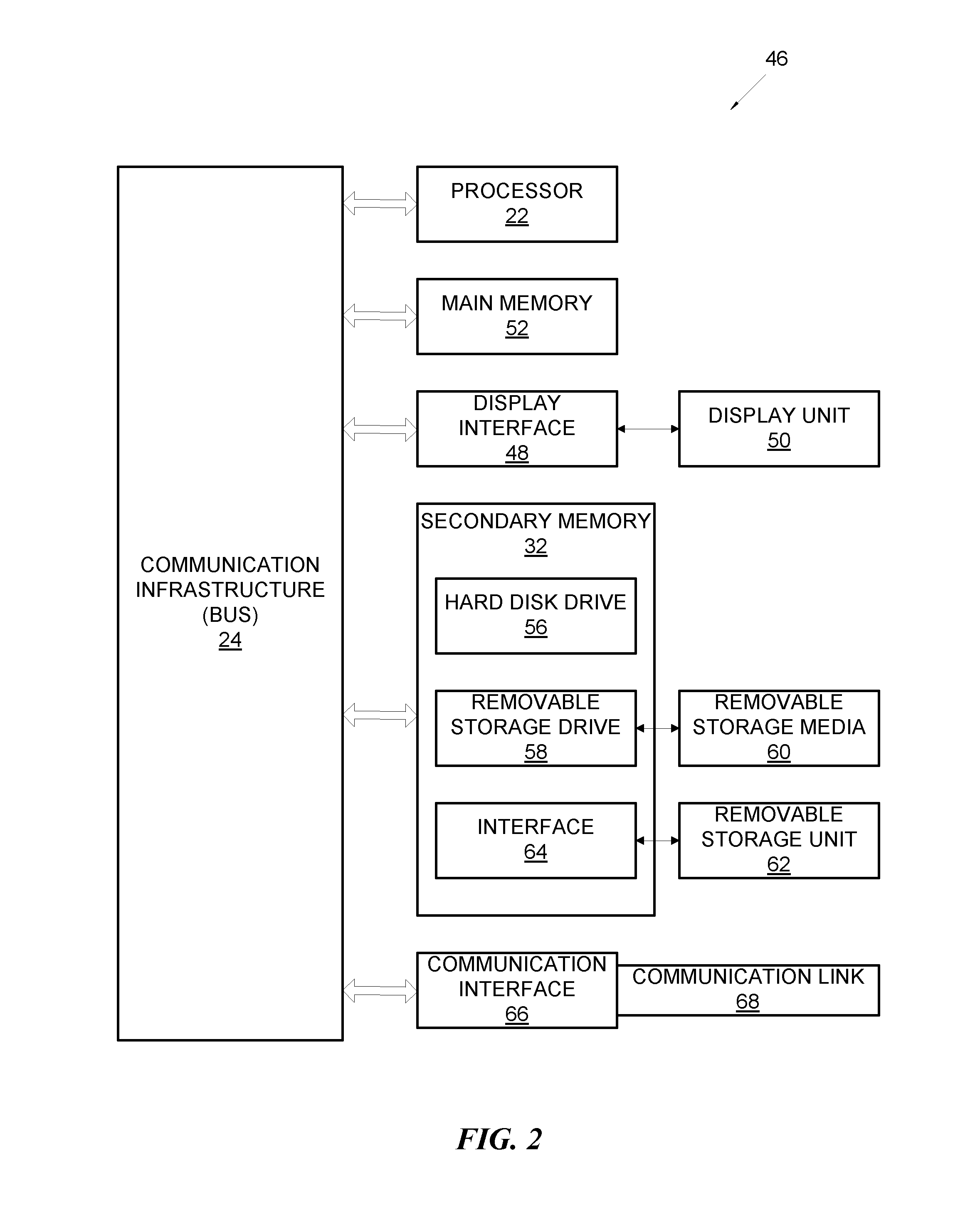

System and method for ensuring scanning of files without caching the files to network device

Status: Active | Publication: US20110252474A1 | Tags: Memory loss protection; Digital data processing details; Software engineering; Malware

A method and system for ensuring scanning of suspicious computer files without unnecessary caching of the files on a network device is provided. A network device receives a suspicious computer file and determines whether a target computer is protected by a malware protection program. If the network device determines that the target computer is protected by the malware protection program, the network device modifies the suspicious computer file to make the suspicious computer file unusable by the target computer and sends the modified suspicious computer file to the target computer.

Owner:KYNDRYL INC

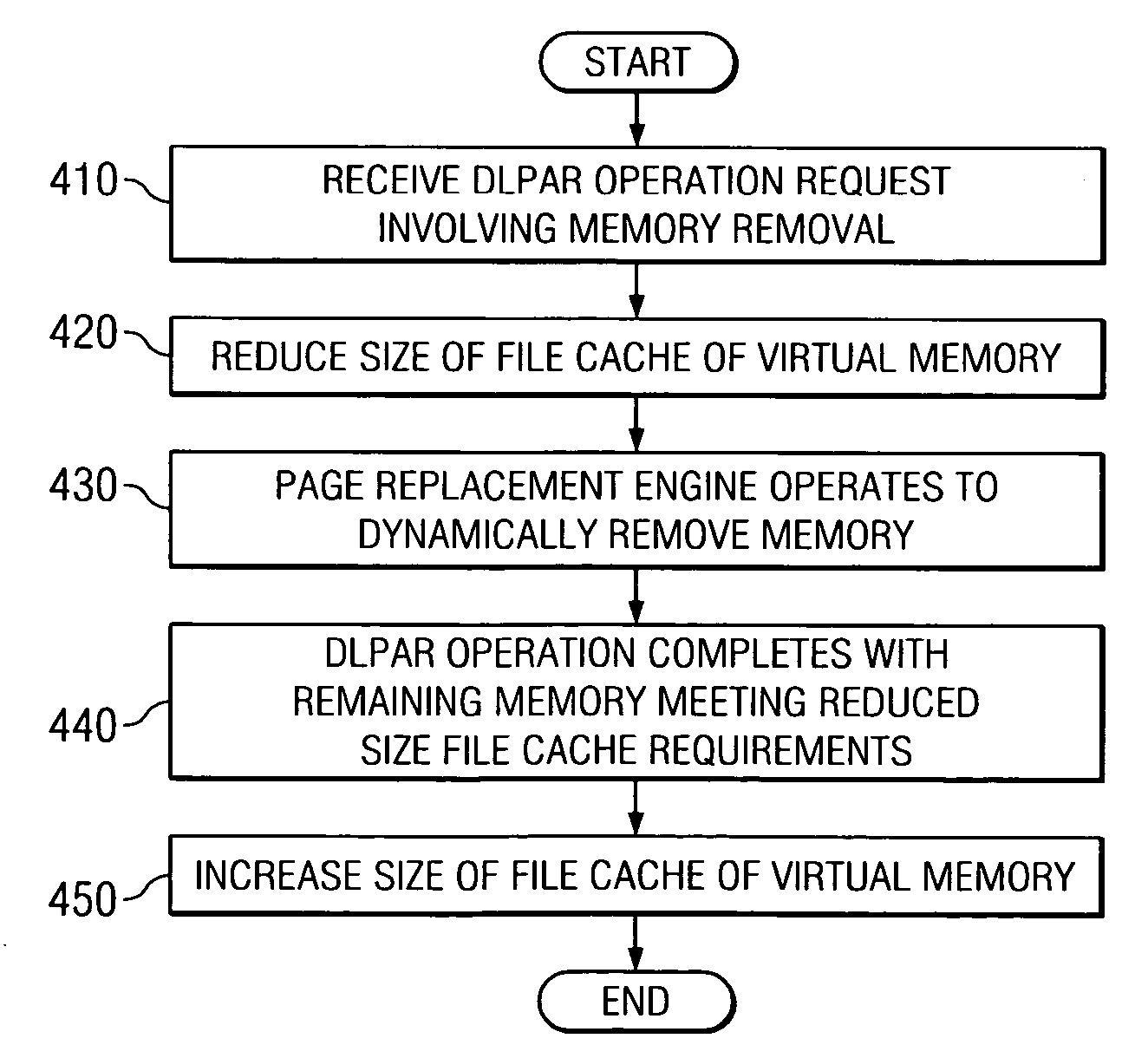

System and method for improving performance of dynamic memory removals by reducing file cache size

Status: Active | Publication: US20050268052A1 | Tags: Improving dynamic memory removal; Reduce cache size; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Dynamic storage

A system and method for improving dynamic memory removals by reducing the file cache size prior to the dynamic memory removal operation initiating are provided. In one exemplary embodiment, the maximum amount of physical memory that can be used to cache files is reduced prior to performing a dynamic memory removal operation. Reducing the maximum amount of physical memory that can be used to cache files causes the page replacement algorithm to aggressively target file pages to bring the size of the file cache below the new maximum limit on the file cache size. This results in more file pages, rather than working storage pages, being paged-out.

Owner:GOOGLE LLC

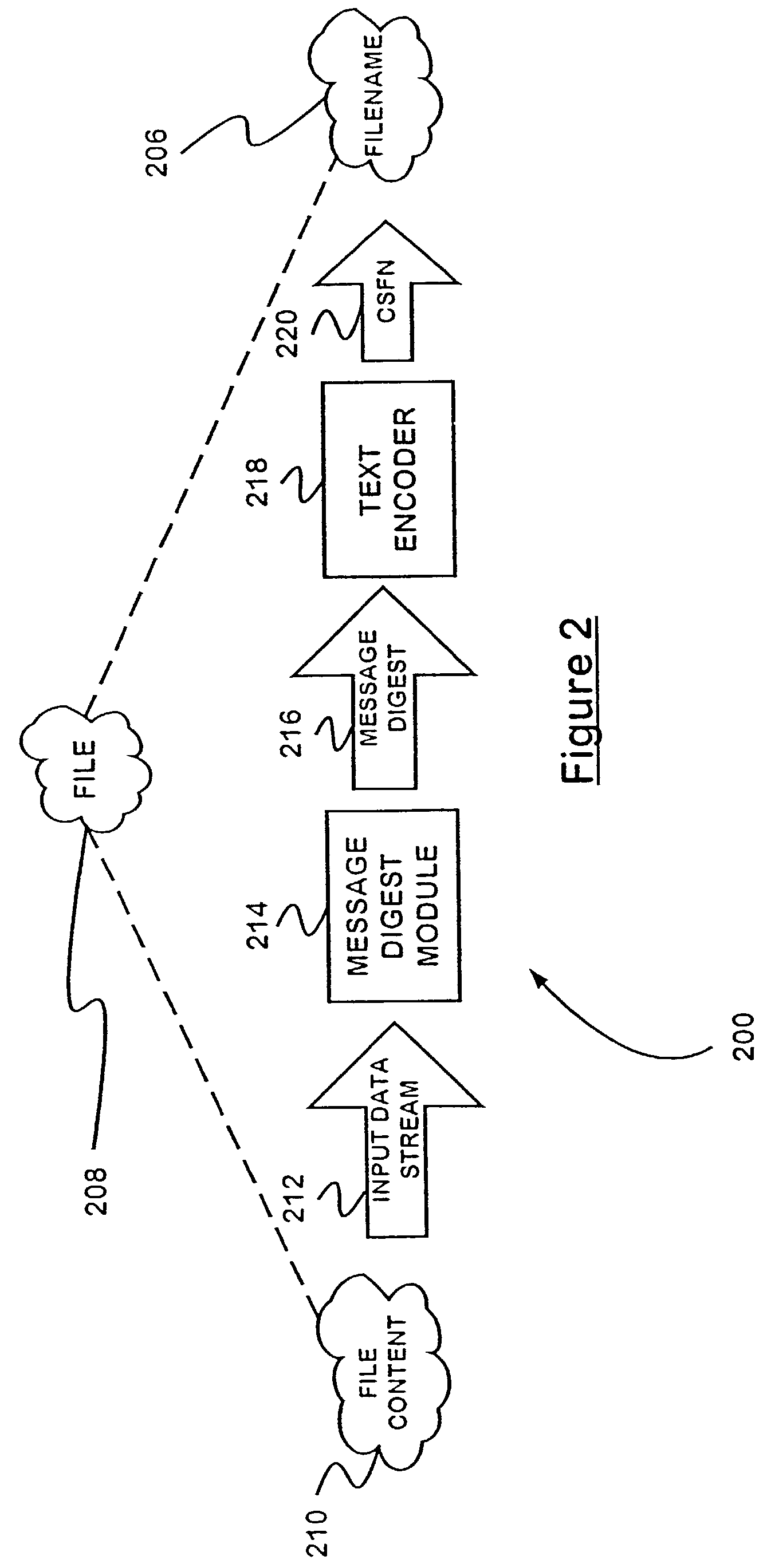

Installing content specific filename systems

Status: Inactive | Publication: US7284243B2 | Tags: Eliminating potential; Eliminate for reference; Digital data information retrieval; Program loading/initiating; Web browser; File system

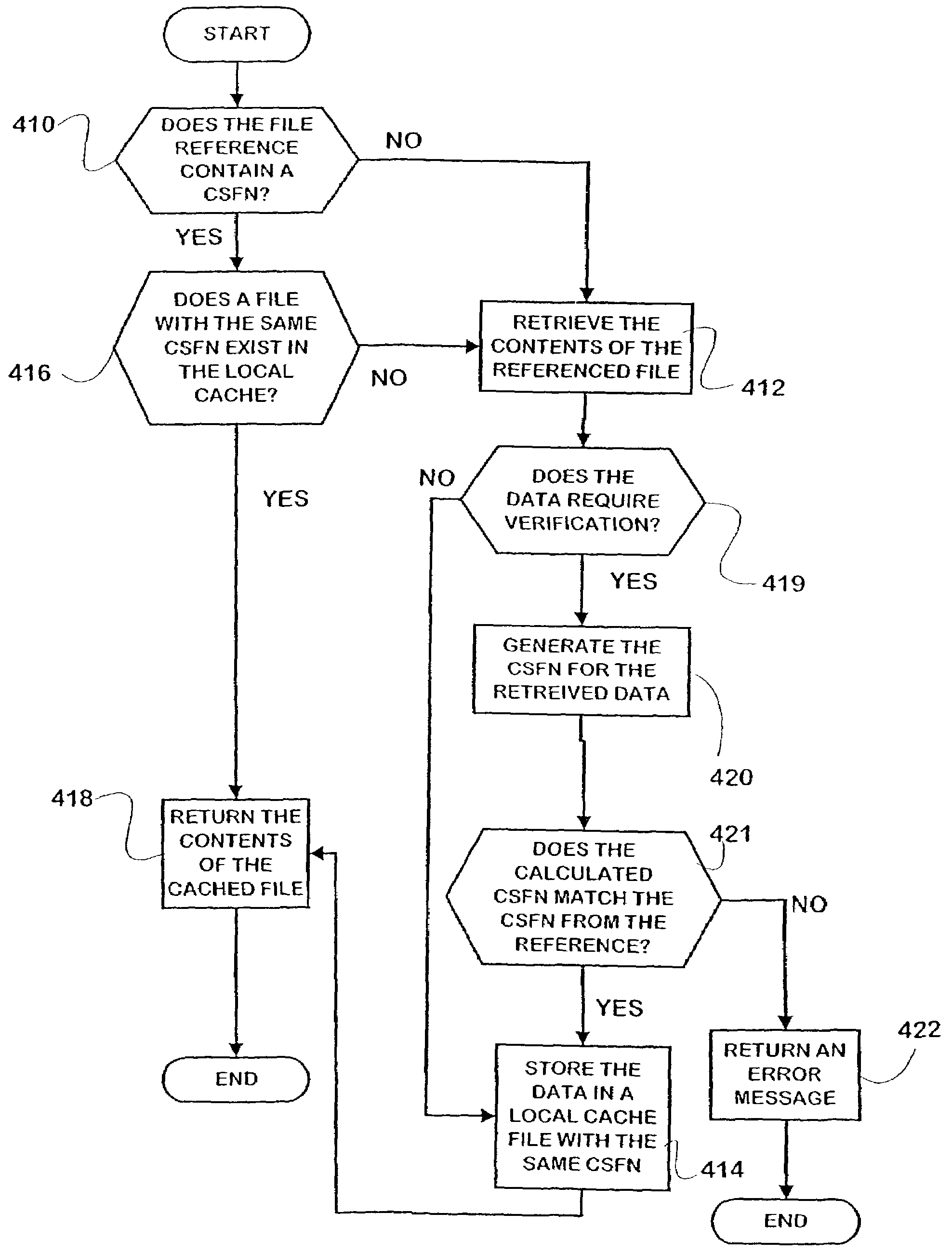

A computer file naming technique employs content-specific filenames (CSFN's) that represent globally-unique identifiers for the contents of a file. Since file references incorporating the CSFN's are not location-specific, they offer unique advantages in the areas of file caching and file installation. Particularly, web browsers enabled to recognize CSFN's inherently verify the content of files when they are retrieved from a local cache, eliminating the need for comparison of file data or time stamps of the cached file copy and the server copy. Thus, file verification occurs solely in the local context. The invention includes caching and software installation systems that incorporate the benefits of CSFN's.

Owner:MICROSOFT TECH LICENSING LLC
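
Why a content-specific filename allows purely local verification can be illustrated with a short sketch. The abstract does not say how a CSFN is computed; using a SHA-256 digest of the file contents as the name, and the helpers `csfn` and `fetch`, are assumptions made only for this example.

```python
import hashlib


def csfn(content: bytes) -> str:
    """Hypothetical content-specific filename: a digest of the file contents."""
    return hashlib.sha256(content).hexdigest()


def fetch(name: str, local_cache: dict, download) -> bytes:
    """Return the file named `name`, verifying cached copies locally."""
    cached = local_cache.get(name)
    if cached is not None and csfn(cached) == name:
        # The name itself proves the content; no timestamp or server check
        return cached
    content = download(name)  # `download` is a caller-supplied retrieval function
    if csfn(content) != name:
        raise ValueError("content does not match its content-specific filename")
    local_cache[name] = content
    return content
```

Because the name depends only on the contents, a cached copy that still hashes to its name is known to be the right file wherever it was originally retrieved from.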

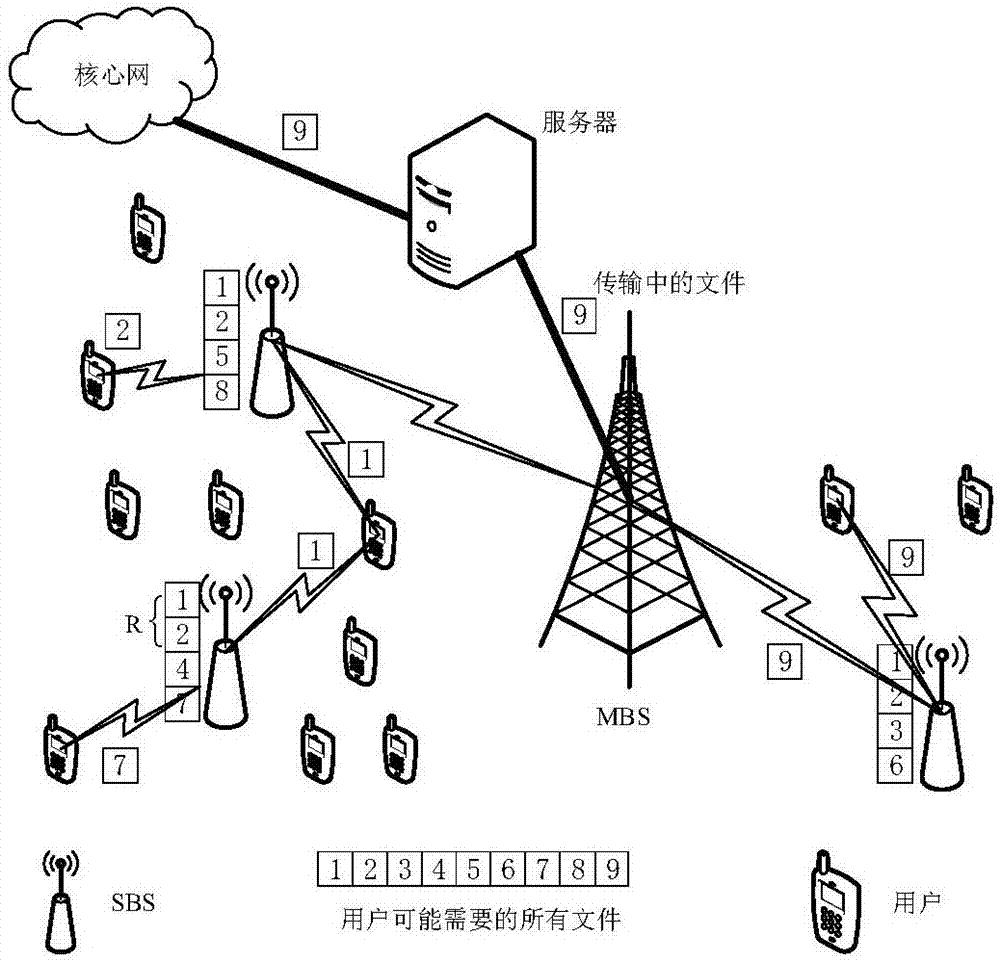

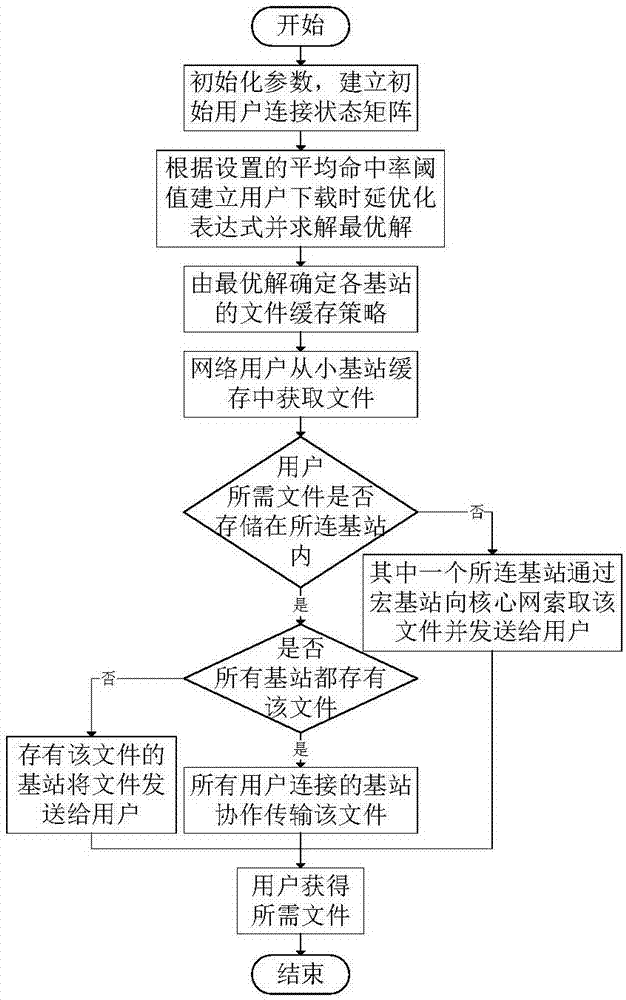

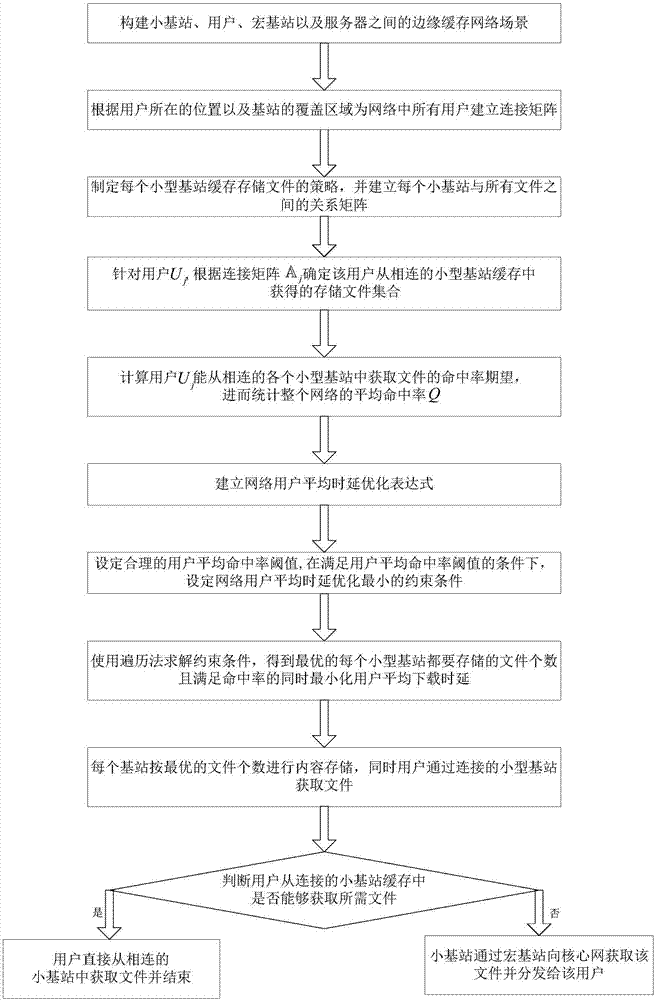

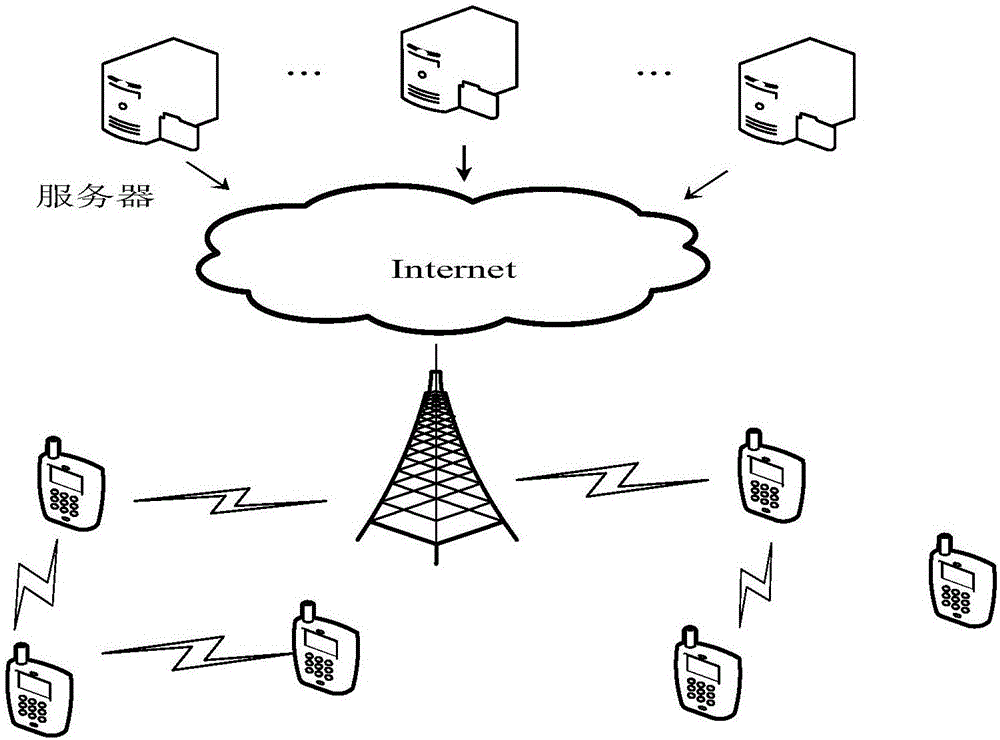

Base station caching method based on minimized user delay in edge caching network

Status: Active | Publication: CN107548102A | Tags: Implementation Latency; High average hit rate; Network traffic/resource management; Balance problems; Hit rate

The invention discloses a base station caching method that minimizes user delay in an edge caching network, and belongs to the field of wireless mobile communication. In an edge caching network scenario, a connection matrix between users and base stations is first established; at the same time, a strategy for caching files at the base stations is generated and a relationship matrix between base stations and files is established. The average hit rate with which all users in the network obtain files from the base stations is then computed, and a constraint is set so that the average user delay is minimized while a reasonable average hit rate threshold is satisfied. A traversal method is used to find the optimal base station content storage strategy, small base stations are deployed according to that strategy, and users finally connect to the small base stations to obtain files. The method takes full account of the trade-off between average delay and average hit rate when designing the file caching strategy for small base stations in an edge caching network, and thereby minimizes the average user download delay while a given average hit rate is satisfied.

Owner:BEIJING UNIV OF POSTS & TELECOMM

Transmission control method and player of online streaming media

Status: Active | Publication: CN102571894A | Tags: Smooth transmission; Smooth playback; Transmission; Content distribution; Computer terminal

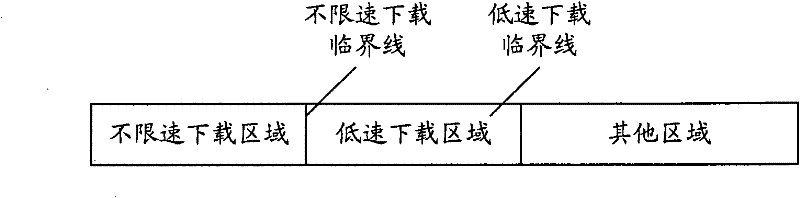

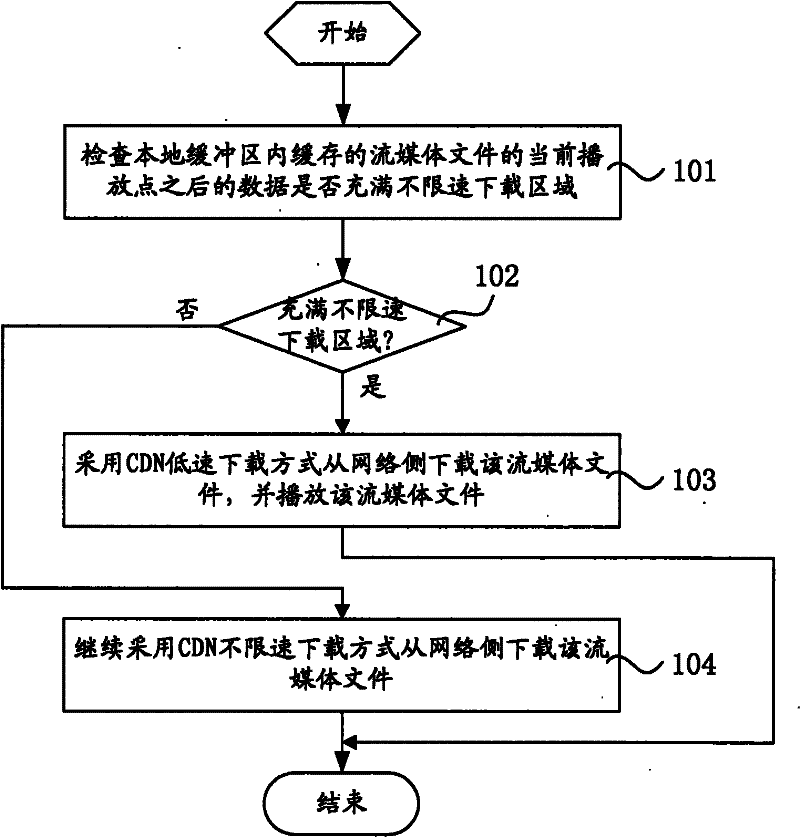

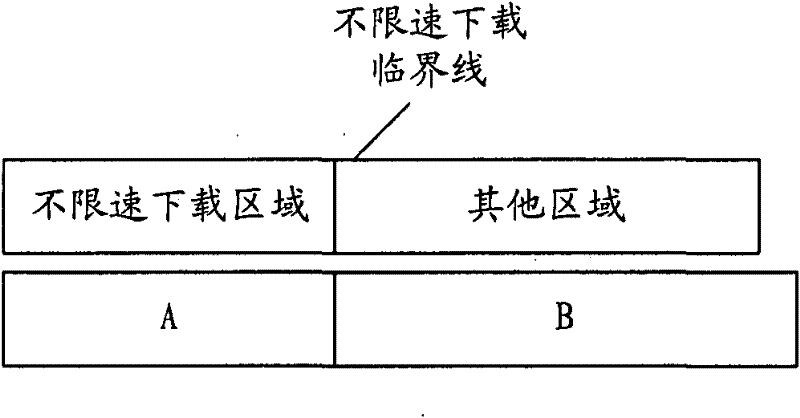

The invention relates to a transmission control method for online streaming media, comprising the following steps: checking whether the data of a streaming media file cached in a local buffer after the current playback point fully occupies a non-speed-limited download area; if it does, downloading the streaming media file from the network side in a CDN (content distribution network) low-speed download mode while playing it; otherwise, continuing to download the streaming media file from the network side in a CDN non-speed-limited download mode. The non-speed-limited download area is the data storage area between a starting storage position and a preset non-speed-limited download critical line, and the low-speed download mode is a download mode whose speed is not higher than the bit rate of the streaming media file. The invention further relates to a player for online streaming media. The method and the player can switch transmission speed and transmission mode according to how much streaming media data the terminal has received, and save system resources and network bandwidth on both the terminal side and the server side while still ensuring smooth transmission and playback of the streaming media file.

Owner:CHINA TELECOM CORP LTD
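
The mode switch in the streaming abstract reduces to a single comparison, sketched below. The function name, the byte-based buffer measure, and the choice to cap the low-speed mode exactly at the bit rate are assumptions; the abstract only requires the low-speed mode to be no faster than the bit rate.

```python
from typing import Optional


def download_rate_limit(buffered_ahead: int, critical_line: int,
                        bitrate_bps: int) -> Optional[int]:
    """Return a speed cap in bits per second, or None for no speed limit.

    buffered_ahead: bytes buffered after the current playback point
    critical_line:  size in bytes of the non-speed-limited download area
    """
    if buffered_ahead >= critical_line:
        # The non-speed-limited area is full: switch to low-speed mode,
        # capped here at the media bit rate (one possible choice)
        return bitrate_bps
    # Buffer not yet full: keep downloading without a speed limit
    return None
```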

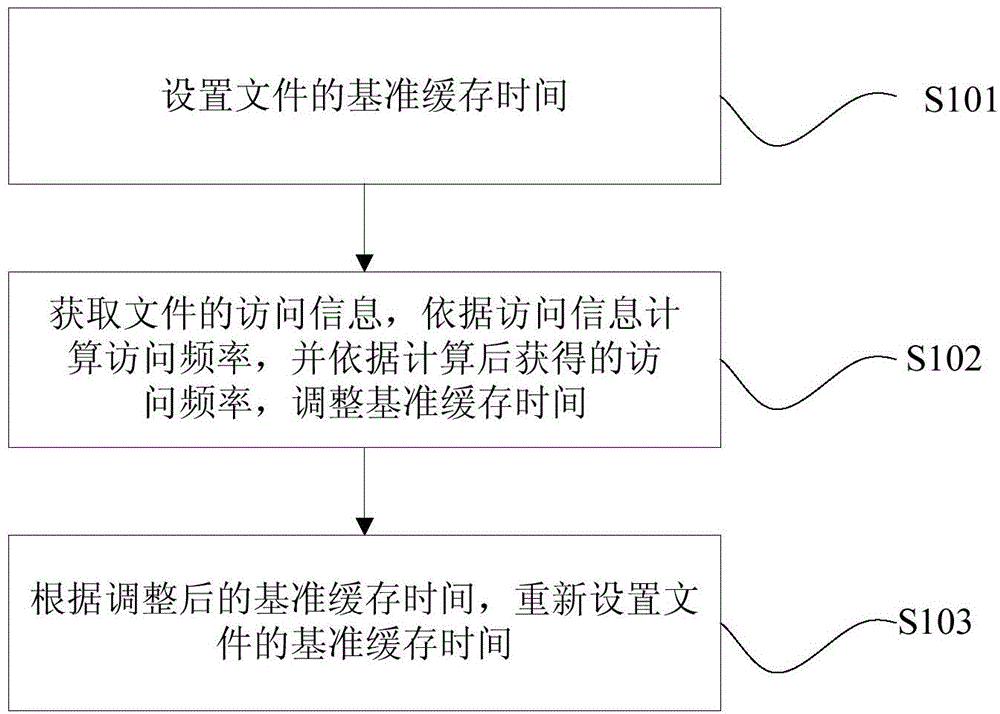

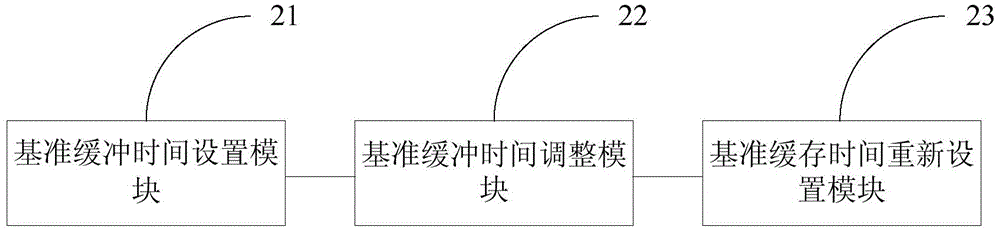

Method and device for setting file cache time

Status: Active | Publication: CN104133880A | Tags: Reduce the number of times to go back to the source; Memory addressing/allocation/relocation; Transmission; Access frequency; Server-side

The embodiment of the invention discloses a method and a device for setting the file cache time. The method comprises the following steps: a reference cache time is set for a file; access information for the file is obtained, the access frequency is calculated from the access information, and the reference cache time is adjusted according to the calculated access frequency; and the reference cache time of the file is reset to the adjusted value. The method and device thus adjust the file cache time dynamically, allocate cache resources reasonably, prevent the excessive cache resource occupation caused by an overly long or overly short file cache time, reduce the number of back-to-source requests for file resources, and save bandwidth and server-side resources.

Owner:GUANGDONG EFLYCLOUD COMPUTING CO LTD

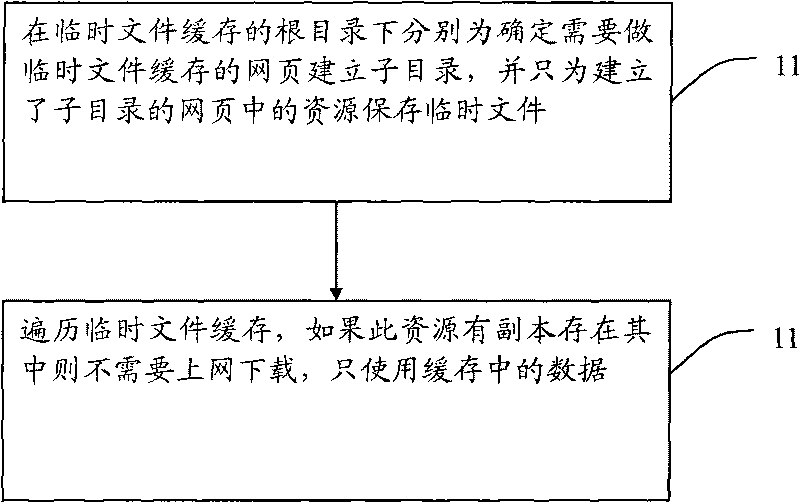

Method for caching local temporary files into embedded browser

Status: Inactive | Publication: CN101710327A | Tags: Play complementarity; Improve the efficiency of visiting web pages; Special data processing applications; Web page; Database

The invention discloses a method for caching local temporary files in an embedded browser, comprising the steps of: creating, in the root directory of the temporary file cache, a subdirectory for each web page that requires temporary file caching, and saving temporary files only for resources of web pages for which a subdirectory has been created; and traversing the temporary file cache and, if a copy of a resource exists there, using only the cached data without downloading it again online. This technical scheme improves the efficiency with which the embedded browser accesses web pages.

Owner:SHENZHEN SKYWORTH DIGITAL TECH CO LTD

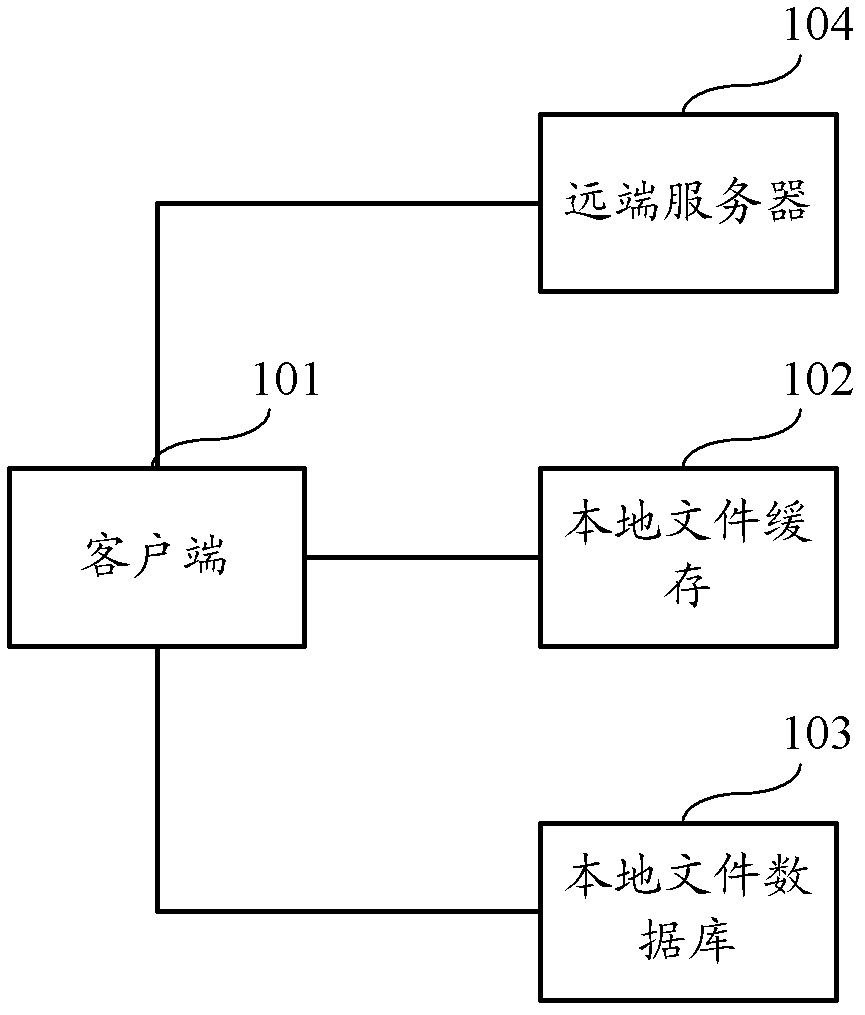

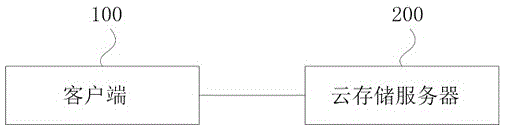

Network file system and method for accessing network file system

The invention discloses a network file system. The system comprises a local file cache, a local file database, a remote server and a client. The local file cache stores the access targets that users have accessed recently. The local file database stores all access targets that users have accessed and is synchronized, according to a preset synchronization strategy, with the original user file systems stored on the remote server. The remote server stores the original user file systems. When the client determines the location of an access target in response to a user's operation command, it first searches the local file cache; if the target is not found there, it searches the local file database; and if the target is not found there either, it searches the remote server. The invention also discloses a method for accessing the network file system. The method reduces interaction between the client and the remote server and improves file access stability and speed.

Owner:TENCENT TECH (SHENZHEN) CO LTD +1

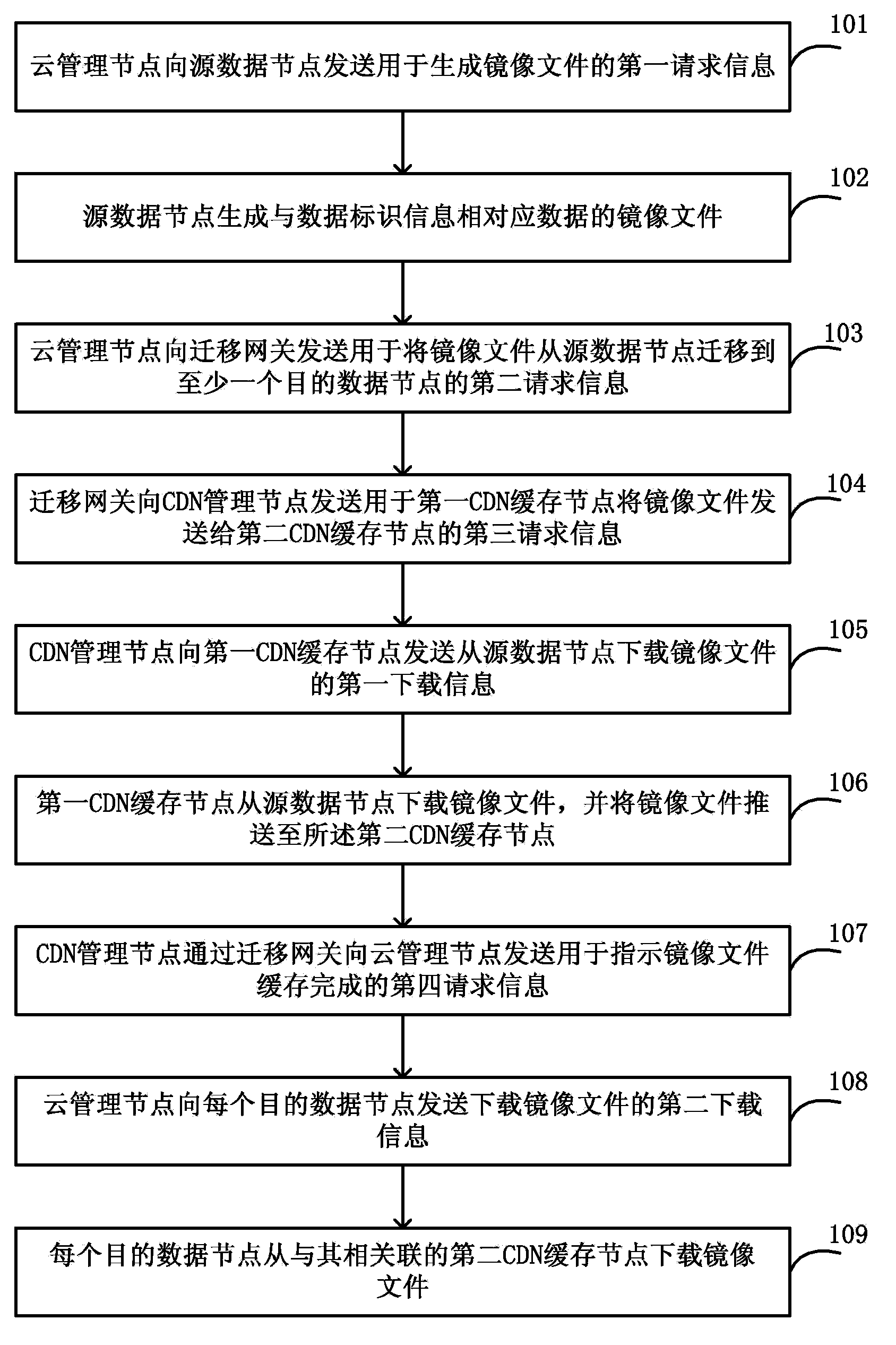

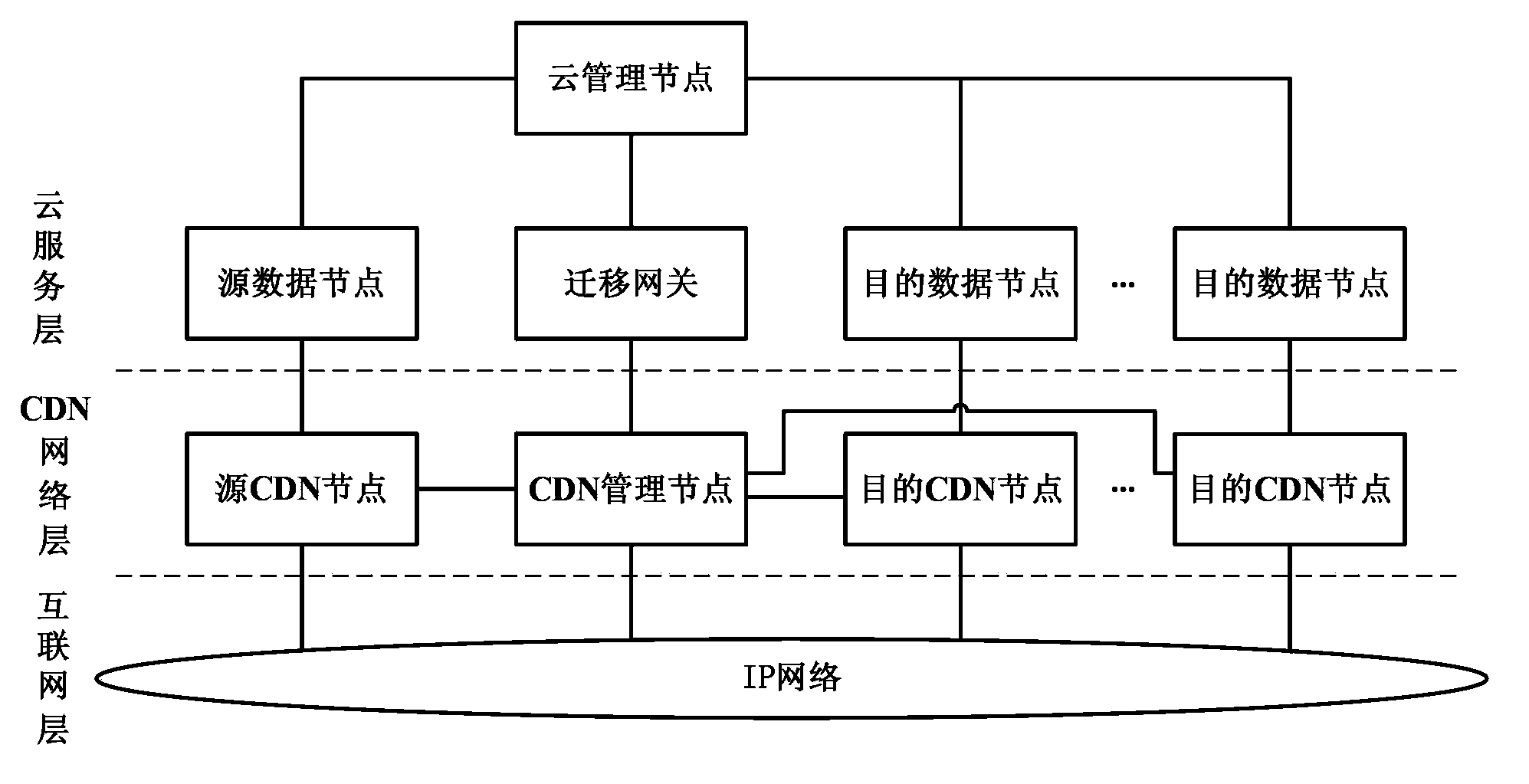

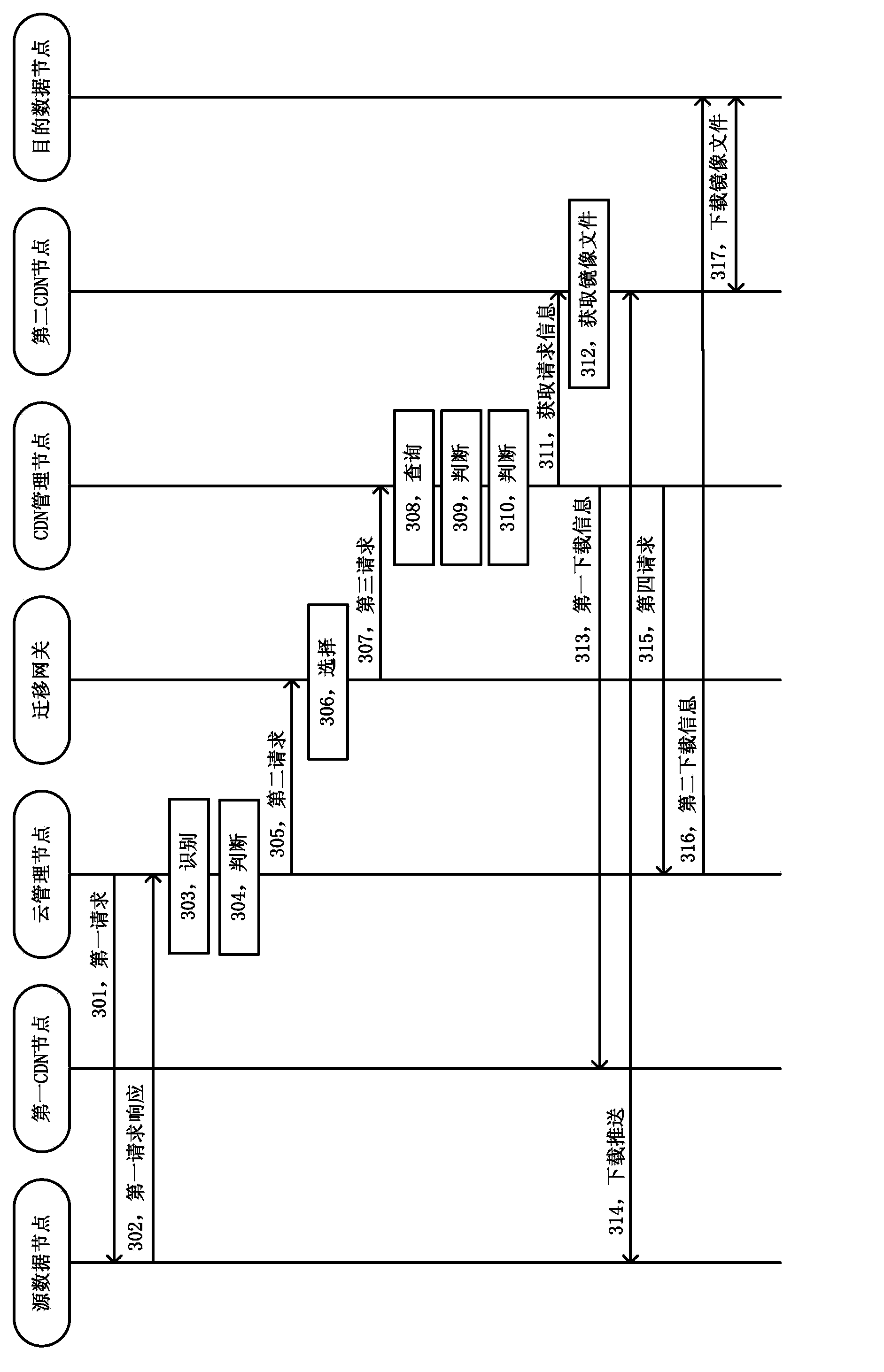

Method and system for migrating data

The invention discloses a method and system for migrating data. The method comprises the following steps: a migration gateway selects first CDN cache nodes associated with the source data nodes and selects second CDN cache nodes associated with each target data node; the first CDN cache nodes download image files from the source data nodes and forward them to the second CDN cache nodes; a CDN management node sends, through the migration gateway, a message to a cloud management node indicating that caching of the image files is complete; the cloud management node sends each target data node the information needed to download the image files; and after receiving this download information, each target data node acquires the image files from its associated second CDN cache nodes. Because the image files are transferred over a CDN, the virtualization capability of cloud computing can be extended to all networked computing resources without rebuilding the existing network.

Owner:CHINA TELECOM CORP LTD

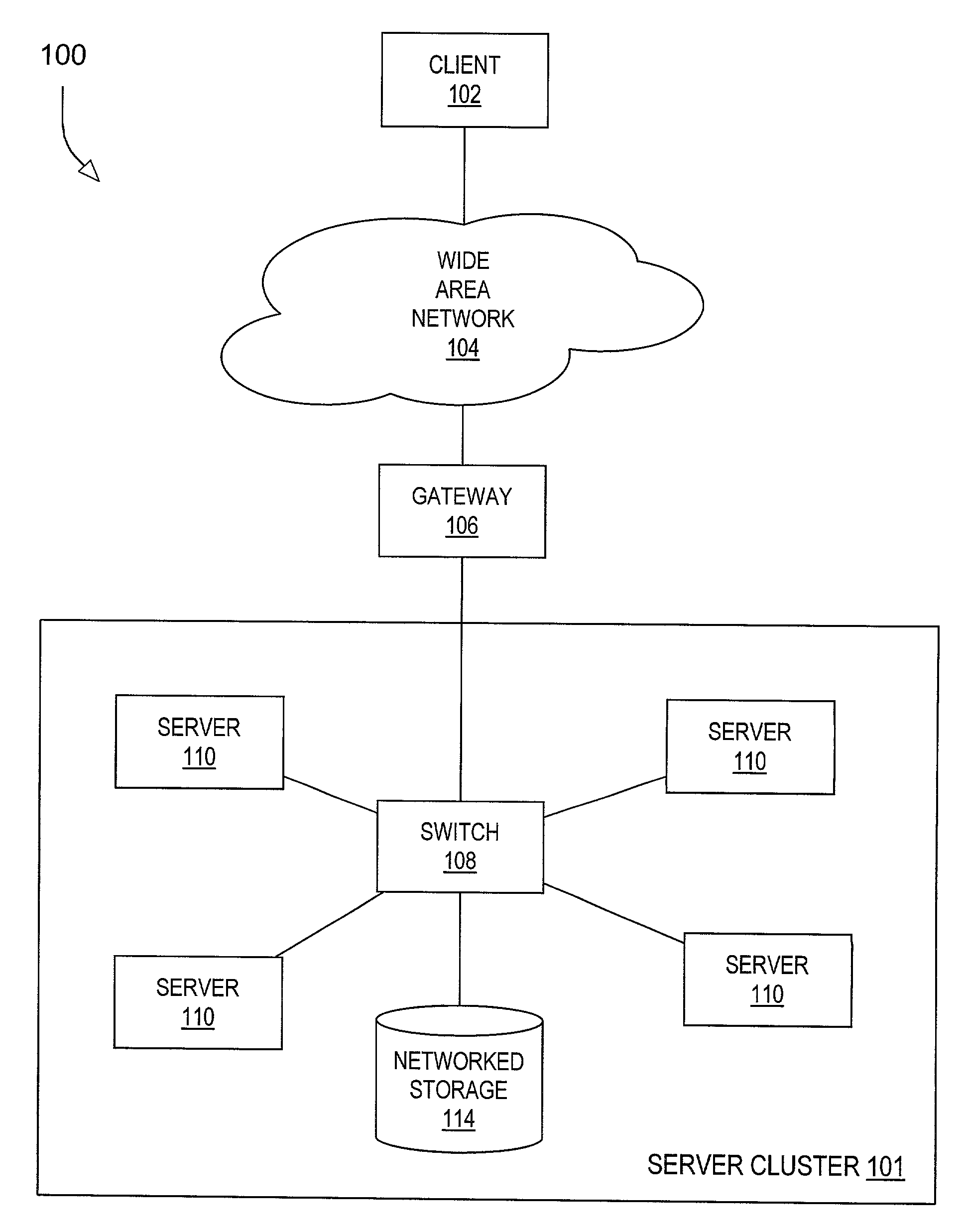

Power conservation in a server cluster

A system and method for operating a server cluster that includes a set of server devices connected to a local area network (LAN). Each server device maintains a directory of the contents of its file cache. When a decrease in server cluster traffic is detected, a server device on the server cluster is selected for powering down. Prior to powering down a server device, the device's file cache directory is broadcast over the LAN to each of the other server devices on the cluster. If a subsequent request for a file stored in the powered-down server's file cache is received by the cluster, the request is routed to one of the remaining active server devices. This server device then retrieves the requested file from the powered-down server's file cache over the LAN. Prior to broadcasting the file cache directory, pending client requests on the selected server device are completed. The powered-down server may continue to provide power to its NIC and system memory while the processor is deactivated. The server device NIC may include direct memory access capability enabling the NIC to retrieve files from the system memory while the processor is powered-down.

Owner:TWITTER INC
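
The hand-off described in the abstract above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the patented implementation: the `Server` and `Cluster` classes, the `peer_dirs` field, and the choice of "first active server" as the routing rule are all hypothetical, and the NIC direct-memory-access read is simulated by reading the sleeping server's in-memory dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    name: str
    file_cache: dict[str, bytes] = field(default_factory=dict)
    active: bool = True
    pending: list[str] = field(default_factory=list)
    # Directories received from powered-down peers: peer name -> cached file names.
    peer_dirs: dict[str, set[str]] = field(default_factory=dict)

    def directory(self) -> set[str]:
        """Directory of this server's file cache contents."""
        return set(self.file_cache)


class Cluster:
    def __init__(self, servers):
        self.servers = {s.name: s for s in servers}

    def power_down(self, name: str) -> None:
        victim = self.servers[name]
        # Stand-in for "complete pending client requests" (assumption).
        victim.pending.clear()
        # Broadcast the cache directory to every other active server.
        for peer in self.servers.values():
            if peer is not victim and peer.active:
                peer.peer_dirs[name] = victim.directory()
        # Processor off; the cache in memory stays readable in this model.
        victim.active = False

    def handle(self, filename: str):
        # Routing rule is an assumption: pick the first active server.
        handler = next(s for s in self.servers.values() if s.active)
        if filename in handler.file_cache:
            return handler.name, handler.file_cache[filename]
        for peer_name, names in handler.peer_dirs.items():
            if filename in names:
                # Simulated NIC/DMA read from the powered-down peer's memory.
                return handler.name, self.servers[peer_name].file_cache[filename]
        return handler.name, None
```

After `power_down("b")`, a request for a file that only server `b` had cached is answered by an active server, which looks the file up in the directory it received and reads it from `b`'s memory.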

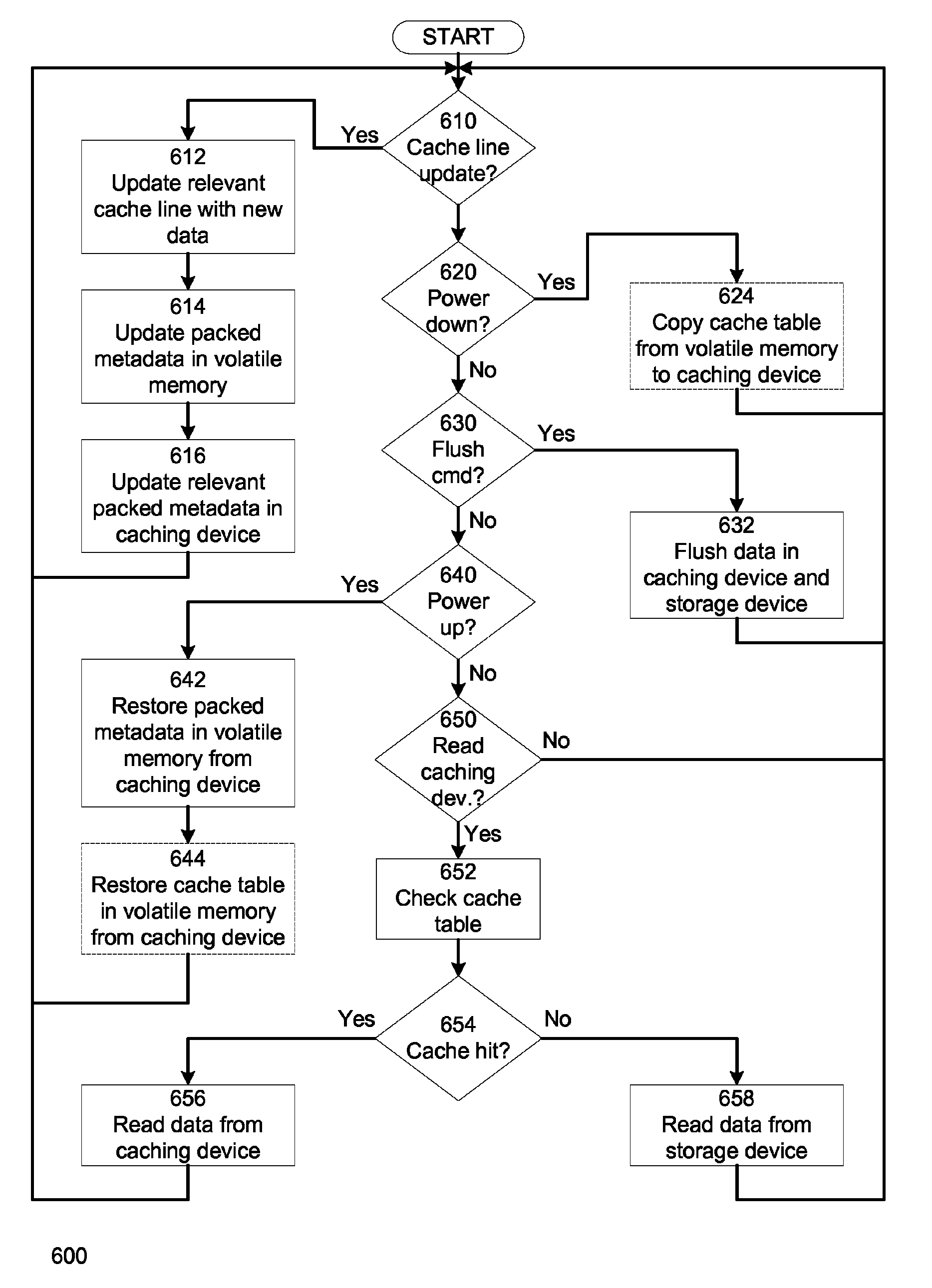

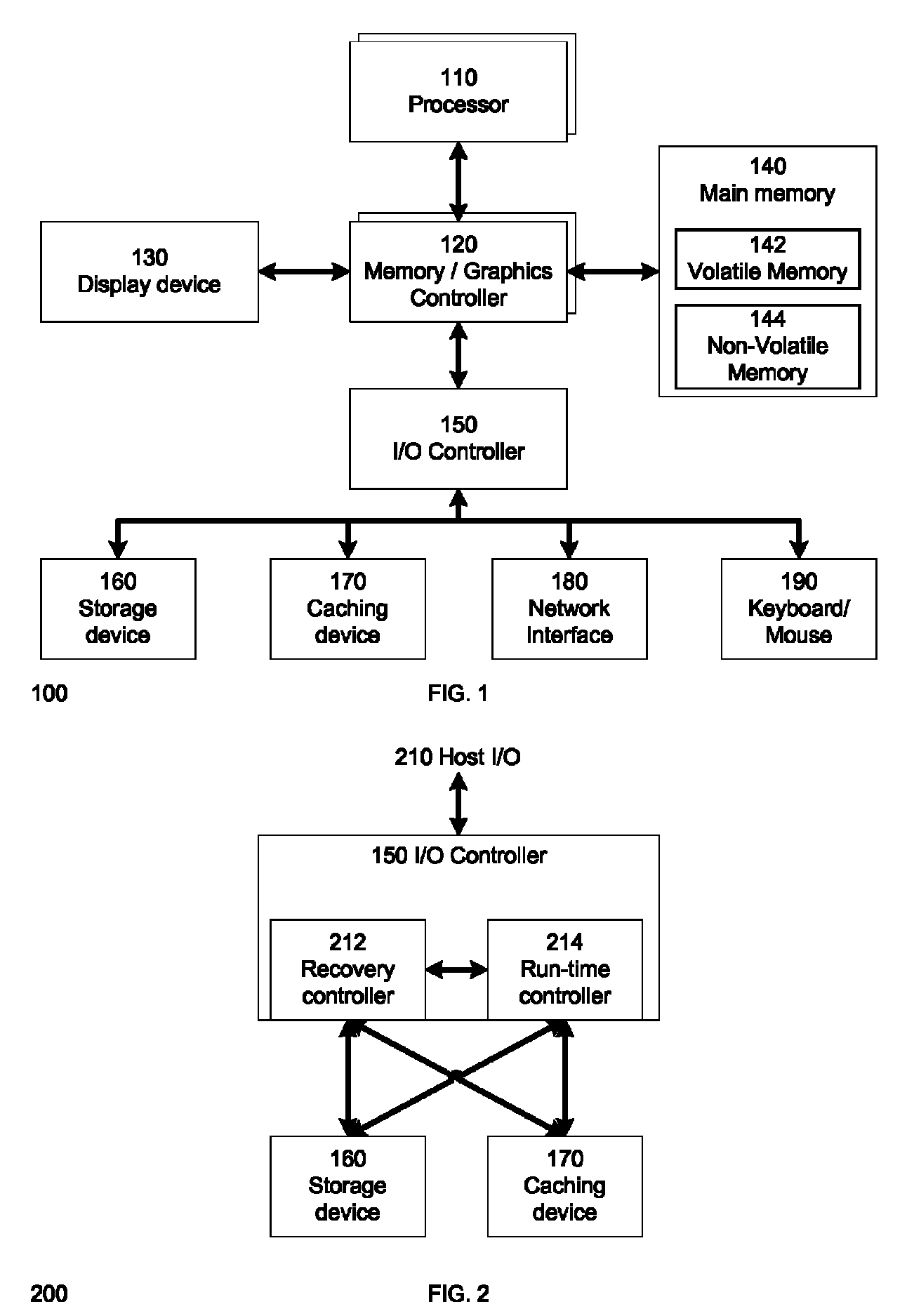

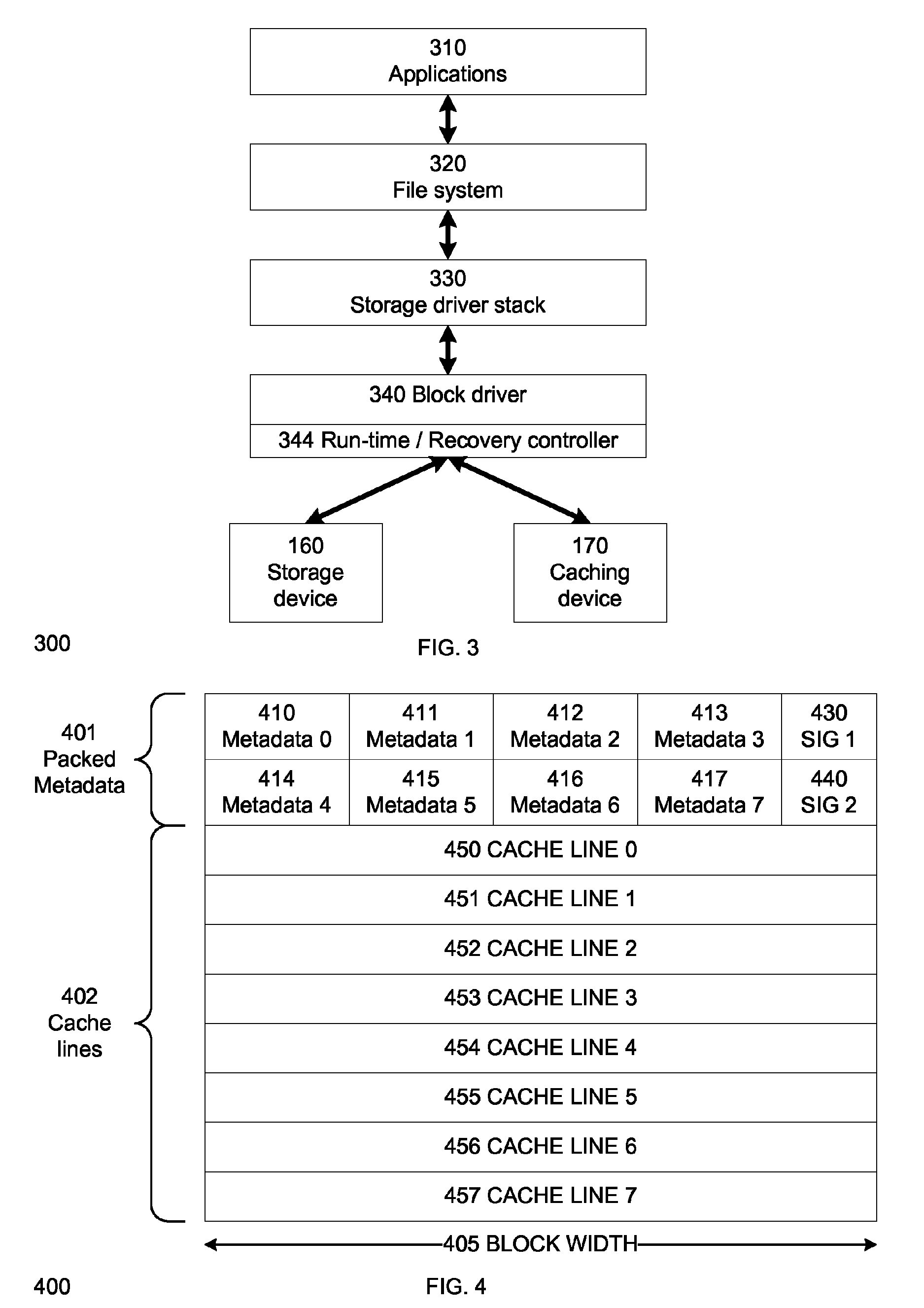

Techniques to perform power fail-safe caching without atomic metadata

Active | US8195891B2 | Memory architecture accessing/allocation | Error detection/correction | Fail-safe | Database

Owner:SK HYNIX NAND PROD SOLUTIONS CORP

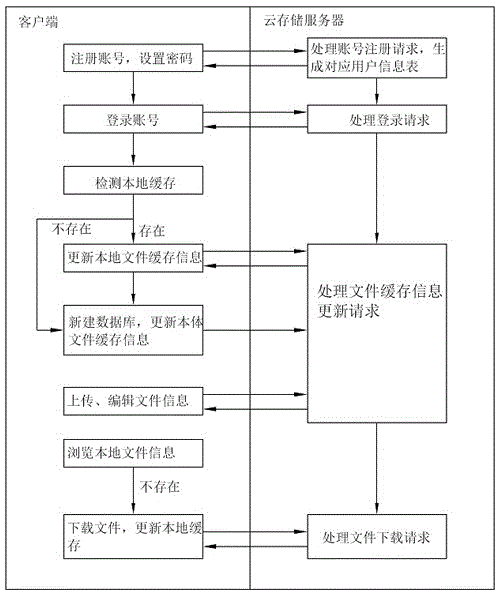

File cache method and device applied to client

The invention provides a file cache method and device applied to a client. The cache method comprises: step 1, a user logs in to the client with a registered account and password; step 2, the client detects whether a local cache file exists in a local file information database; if not, it establishes a new file information database and sends a file cache information update request to a cloud storage server, and if so, it sends the file cache information update request to the cloud storage server directly; step 3, the cloud storage server processes the file cache information update request sent by the client and updates the file information database; when the file cache information update request contains a file download request, the cloud storage server downloads a file according to the file download request and updates the file information database. With this method, operating the client updates the backup data on the cloud storage server and, at the same time, the local file cache information.

Owner:MEIBEIKE TECH CO LTD
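
Steps 2 and 3 of the abstract above can be illustrated with a small Python sketch. Everything named here is an assumption made for illustration: the `files` table layout, the request and reply dictionaries, and the `server_process` function standing in for the cloud storage server. The login in step 1 is omitted.

```python
import os
import sqlite3


def open_file_info_db(path):
    """Step 2: open the local file information database, creating it if absent."""
    existed = os.path.exists(path)
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS files (name TEXT PRIMARY KEY, version INTEGER)"
    )
    db.commit()
    return db, existed


def build_update_request(db, download=None):
    """Build a cache information update request, optionally with a download request."""
    rows = db.execute("SELECT name, version FROM files").fetchall()
    return {"cached": dict(rows), "download": download}


def server_process(request, server_files):
    """Hypothetical server side of step 3: report entries the client lacks or has stale."""
    cached = request["cached"]
    updated = {n: v for n, (v, _) in server_files.items() if cached.get(n) != v}
    payload = None
    if request["download"] in server_files:
        payload = server_files[request["download"]][1]
    return {"updated": updated, "payload": payload}


def apply_server_reply(db, reply):
    """Record the server's updates in the local file information database."""
    for name, version in reply["updated"].items():
        db.execute(
            "INSERT OR REPLACE INTO files (name, version) VALUES (?, ?)",
            (name, version),
        )
    db.commit()
```

Here `server_files` maps a file name to a `(version, content)` pair; a real client would exchange these dictionaries over the network rather than through a direct function call.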

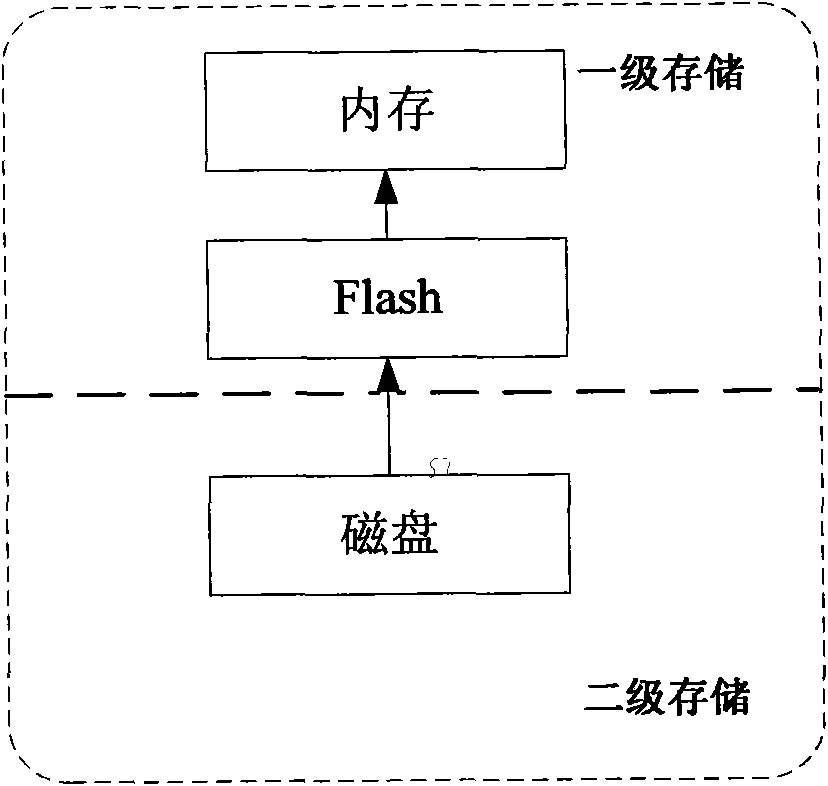

Realization method and device of mixed secondary storage system

Inactive | CN101777028A | Low cost | Reduce power consumption | Energy efficient ICT | Input/output to record carriers | Hybrid type | Access frequency

The invention relates to a realization method and a device of a mixed secondary storage system. The realization method comprises the following steps: step 1, a file is stored on a disk by default; step 2, if the required file is not in memory, the flash memory is accessed, and if the required file is not in the flash memory, the disk is accessed and the file is obtained; step 3, if the required file is stored on the disk and its access frequency reaches a preset value, the required file is cached in the flash memory; and step 4, when the total size of the files cached in the flash memory reaches a certain value, some files are selected to be written back to the disk. Compared with the prior art, the invention can realize a large-capacity, low-power secondary storage system at low cost.

Owner:北京北大众志微系统科技有限责任公司
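
The four steps in the abstract above map onto a small tiered store. The Python sketch below is an assumption-laden illustration, not the patented design: the class name `HybridStore`, the parameters `promote_after` and `flash_capacity`, and the eviction rule (write back the least-accessed file first) are all hypothetical, since the abstract only says that "some files are selected".

```python
class HybridStore:
    def __init__(self, promote_after=3, flash_capacity=8):
        # RAM tier; the abstract does not say how it is filled, so it stays empty here.
        self.memory = {}
        self.flash = {}
        self.disk = {}
        self.hits = {}
        self.promote_after = promote_after    # access count that triggers caching
        self.flash_capacity = flash_capacity  # flash budget in bytes

    def write(self, name, data):
        # Step 1: files are stored on disk by default.
        self.disk[name] = data

    def read(self, name):
        # Step 2: look in memory, then flash, then disk.
        self.hits[name] = self.hits.get(name, 0) + 1
        if name in self.memory:
            return self.memory[name], "memory"
        if name in self.flash:
            return self.flash[name], "flash"
        data = self.disk[name]
        # Step 3: cache a disk file in flash once it is accessed often enough.
        if self.hits[name] >= self.promote_after:
            self.flash[name] = data
            self._evict()
        return data, "disk"

    def _evict(self):
        # Step 4: when flash is over budget, write selected files back to disk.
        # Assumed policy: the least-accessed file goes first.
        while self.flash and sum(len(d) for d in self.flash.values()) > self.flash_capacity:
            coldest = min(self.flash, key=lambda n: self.hits[n])
            self.disk[coldest] = self.flash.pop(coldest)
```

Each `read` returns the data together with the tier that served it, which makes the promotion and eviction behaviour easy to observe.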

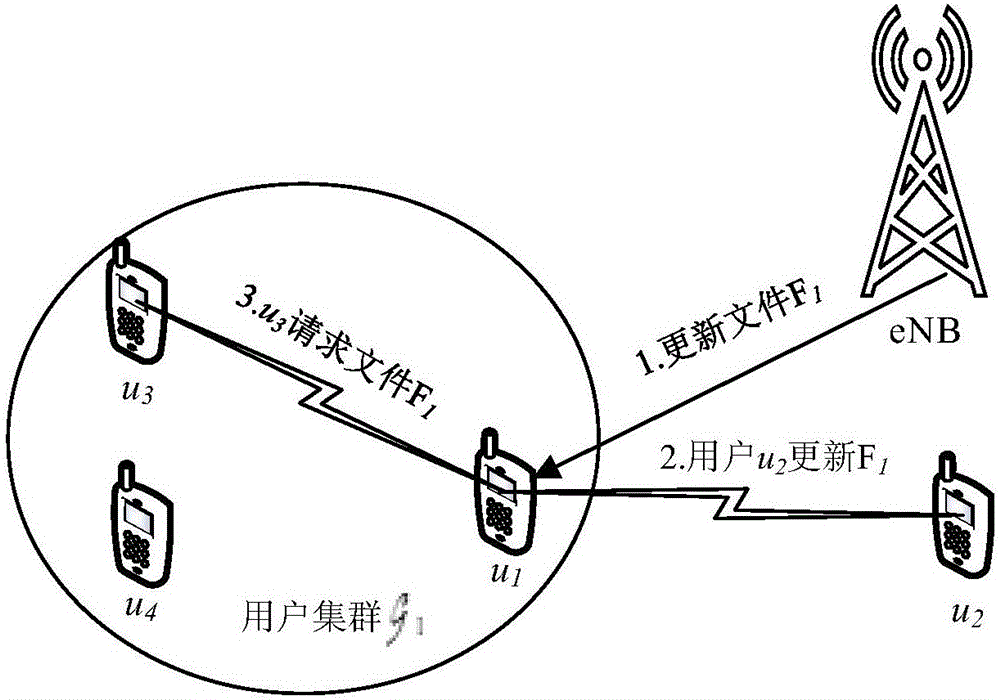

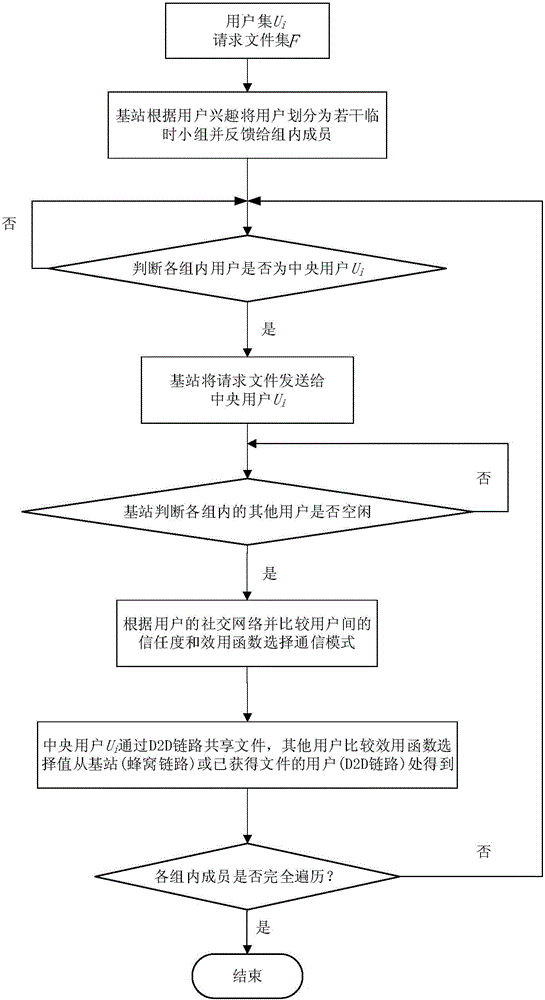

File sharing method based on social network

Active | CN106230973A | Improve sharing efficiency | Reduce latency | Data processing applications | Network traffic/resource management | Telecommunications link | Communications system

The invention discloses a file sharing method based on a social network. It provides a new method for sharing and distributing files among users, based on a social network, in a D2D communication environment controlled by a base station. In the method, the base station divides the users into multiple temporary groups according to their demands and selects a central user for each group according to the users' degrees of sociality. In the case of no file cache demand in the system, the base station first sends a file to the central user over a wireless cellular communication link, and the central user shares the file over D2D communication with the users in the group with whom it has social relations. Users who have no social relation with the central user can acquire the file from the base station or from other users who have already acquired it, and thus file sharing is achieved. In addition, the problem that one D2D user can only process (receive or send) one request at a time is solved. With the disclosed file sharing method, the transmission delay is greatly reduced, the load on the base station is relieved, and the overall performance of the cellular communication system is optimized.

Owner:NANJING UNIV OF POSTS & TELECOMM
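
The grouping and central-user selection described in the abstract above can be sketched as follows. This is a simplified illustration under assumptions that are not in the source: users are grouped by the file they request, the "degree of sociality" is taken to be the number of social ties a user has inside the group, and the function name `plan_sharing` and its input shapes are hypothetical.

```python
from collections import defaultdict


def plan_sharing(demands, friends):
    """demands: user -> requested file; friends: user -> set of socially related users."""
    # The base station forms temporary groups from user demands.
    groups = defaultdict(list)
    for user, filename in demands.items():
        groups[filename].append(user)

    plan = {}
    for filename, members in groups.items():
        def degree(u):
            # Assumed sociality measure: number of ties inside the group.
            return sum(1 for v in members if v in friends.get(u, set()))

        # Ties are broken by user name so the result is deterministic.
        central = max(sorted(members), key=degree)
        # Users with a social relation to the central user are served over D2D.
        via_d2d = [u for u in members if u in friends.get(central, set())]
        # The rest fall back to the base station or to users who already hold the file.
        fallback = [u for u in members if u != central and u not in via_d2d]
        plan[filename] = {"central": central, "d2d": via_d2d, "fallback": fallback}
    return plan
```

The returned plan lists, per file, which user receives it over the cellular link, which users get it from that central user over D2D, and which users need another source.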

© 2025 PatSnap. All rights reserved.