81 results for "Residual frame" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

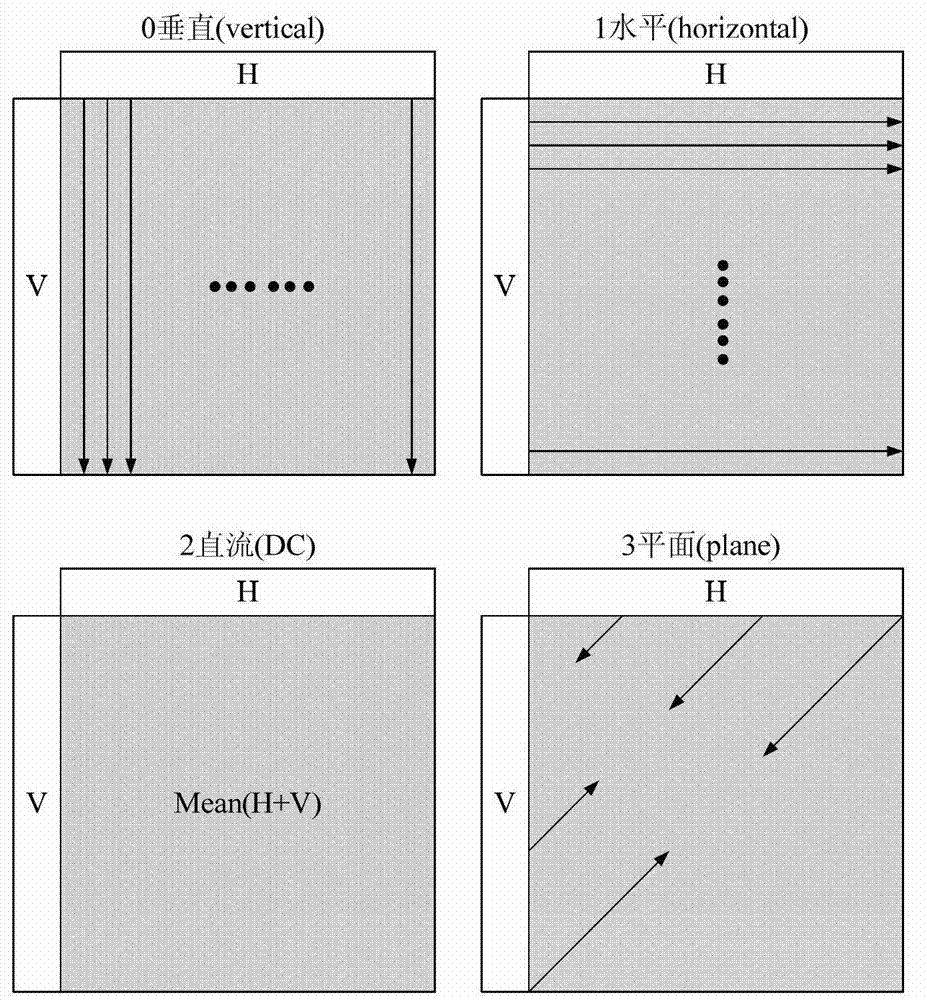

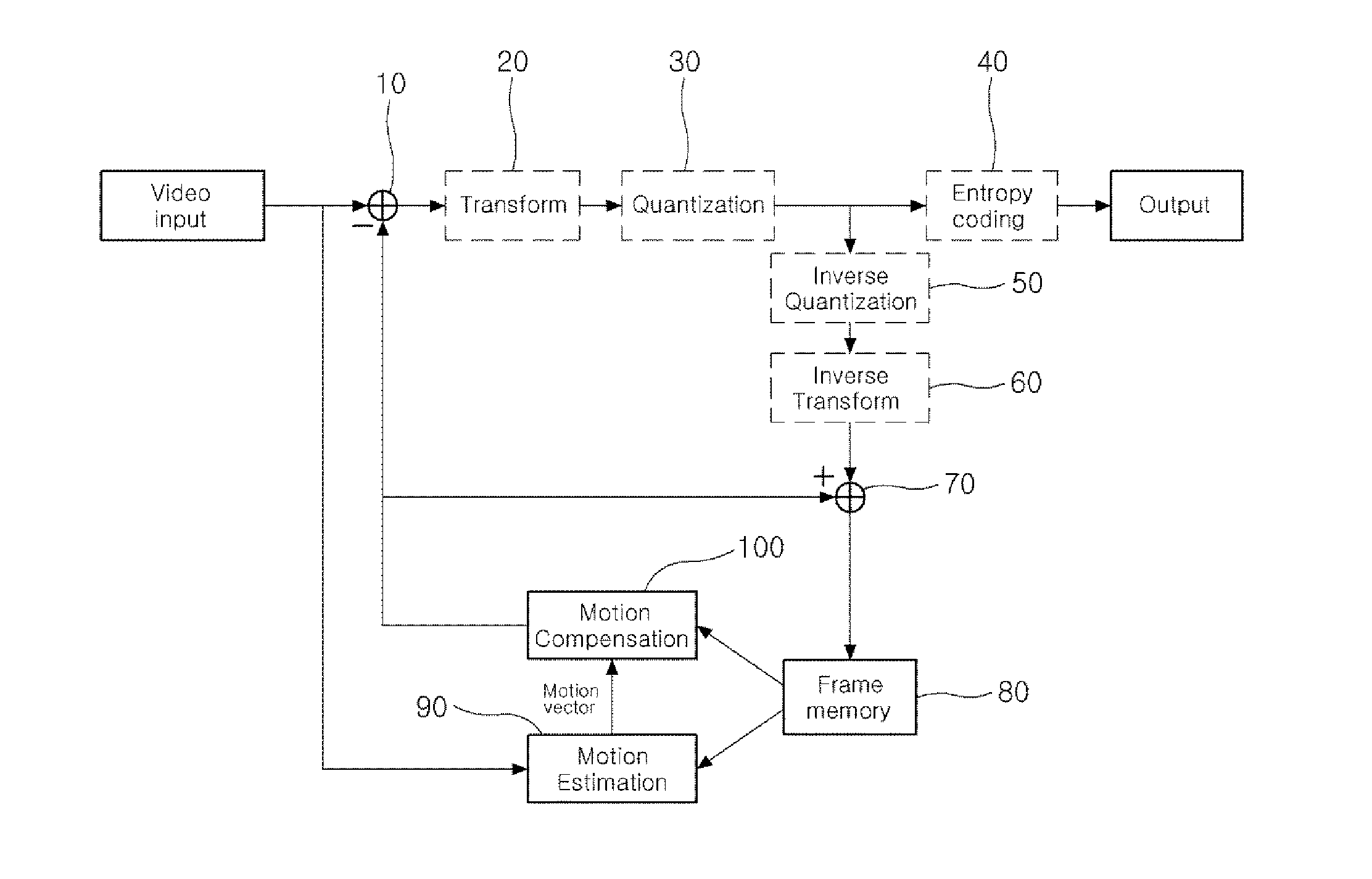

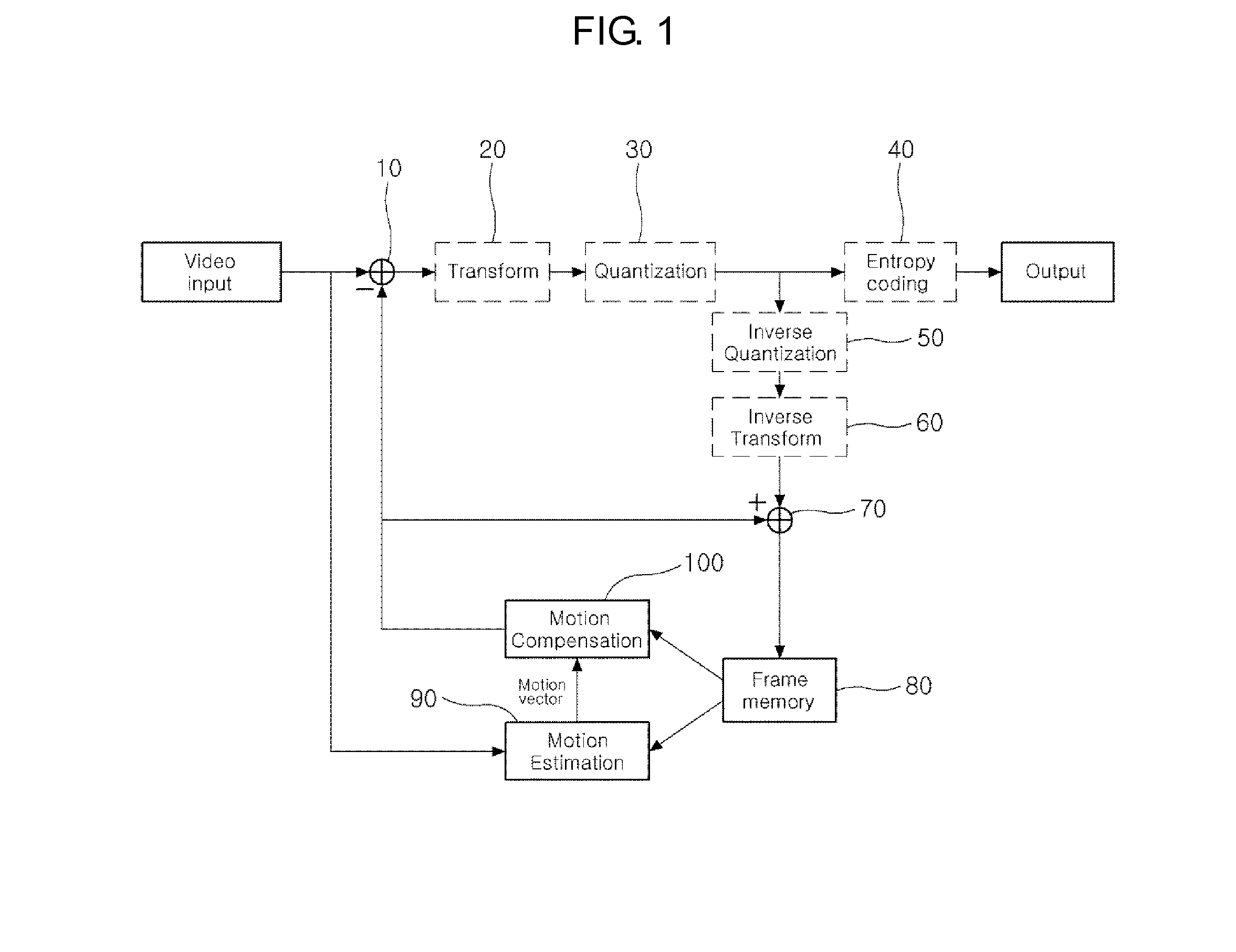

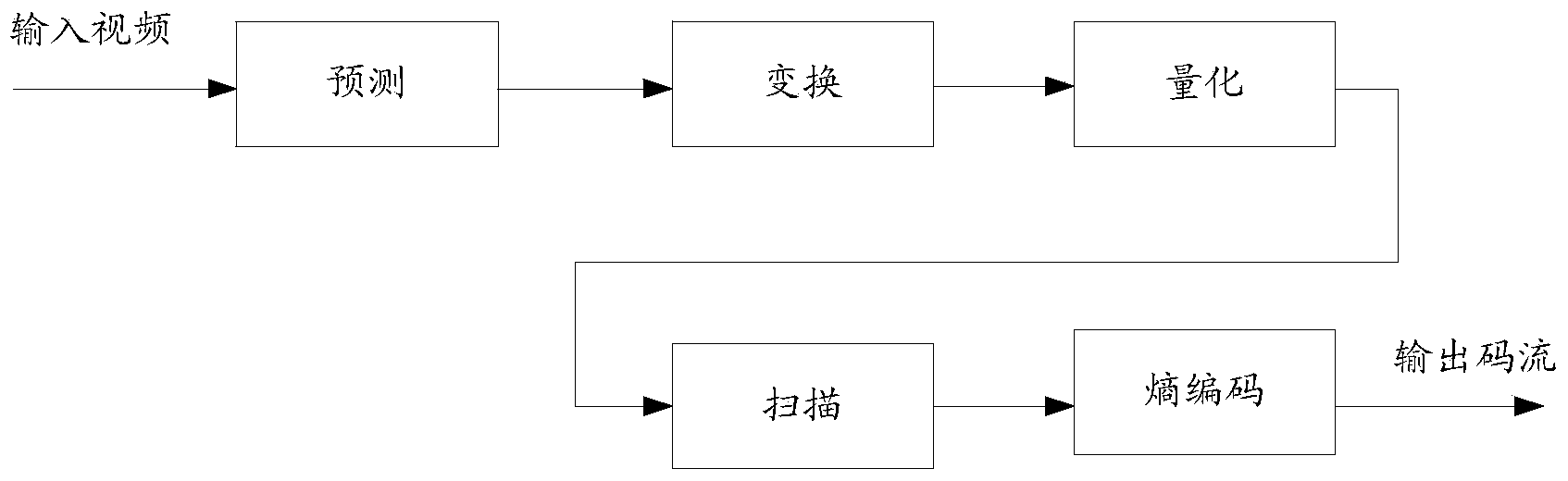

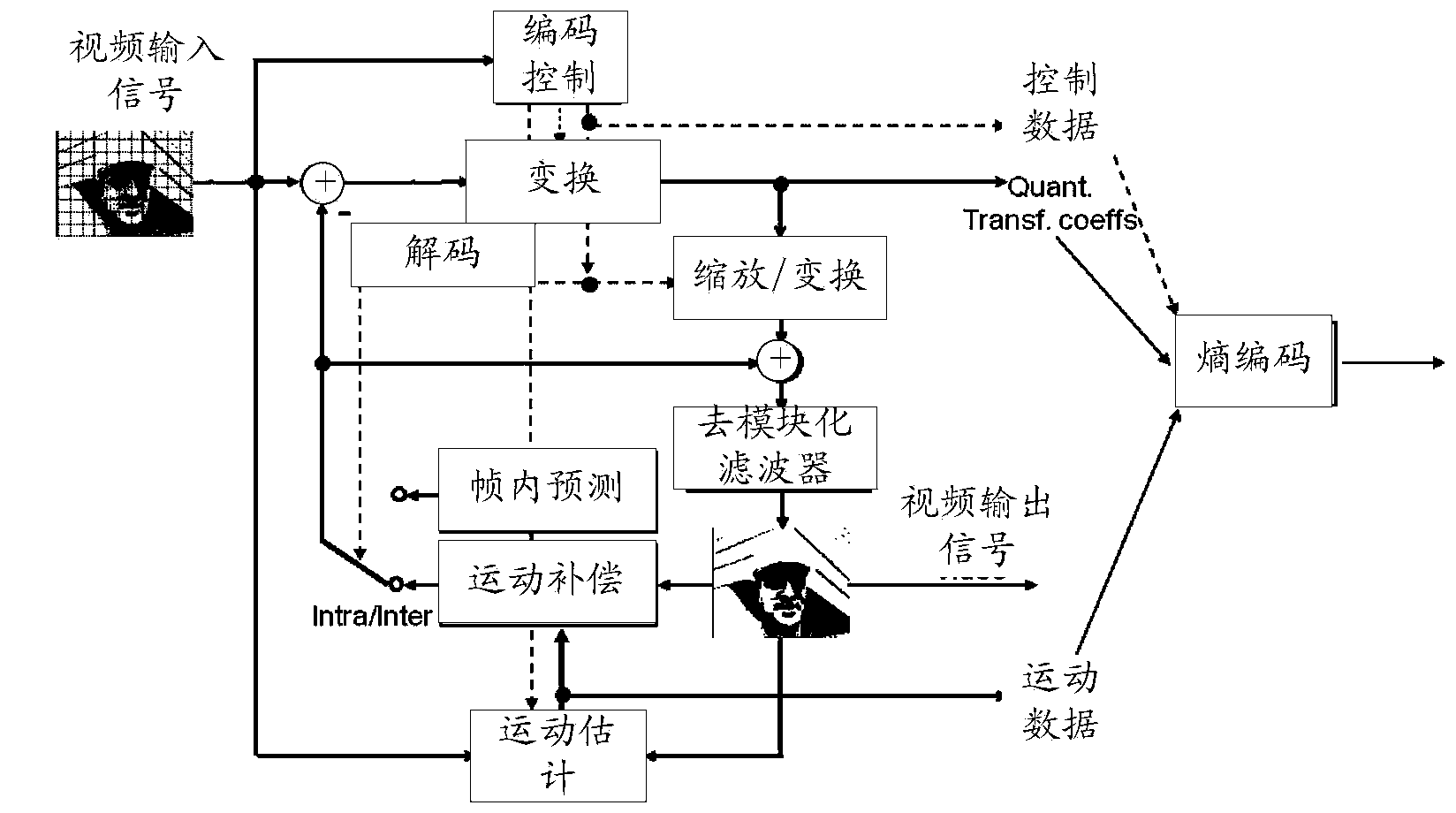

In video compression algorithms, a residual frame is formed by subtracting a reference frame (in practice, usually a motion-compensated prediction derived from one or more reference frames) from the frame being coded. This difference is known as the error or residual frame. Because neighboring video frames are usually similar, the residual frame normally has lower information entropy than the original frame and therefore requires fewer bits to compress.
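
A minimal sketch of the idea, assuming two small grayscale frames stored as nested Python lists and no motion compensation (the reference frame itself serves as the prediction). The zeroth-order entropy of the sample values is used only as a rough proxy for coding cost; real codecs entropy-code transformed and quantized residuals.

```python
import math
from collections import Counter


def residual_frame(current, reference):
    """Pixel-wise difference current - reference (zero-motion prediction)."""
    return [[c - r for c, r in zip(row_c, row_r)]
            for row_c, row_r in zip(current, reference)]


def entropy_bits(frame):
    """Zeroth-order Shannon entropy, in bits per sample, of the frame's values."""
    values = [v for row in frame for v in row]
    n = len(values)
    return -sum((k / n) * math.log2(k / n) for k in Counter(values).values())


# Toy 4x4 frames: the current frame is the reference brightened by 1,
# plus one locally changed pixel.
reference = [[10, 20, 30, 40],
             [50, 60, 70, 80],
             [90, 100, 110, 120],
             [130, 140, 150, 160]]
current = [[v + 1 for v in row] for row in reference]
current[2][2] += 3

res = residual_frame(current, reference)
print(entropy_bits(current))  # 16 distinct values -> 4.0 bits/sample
print(entropy_bits(res))      # mostly 1s -> about 0.34 bits/sample
```

The drop from 4.0 to roughly 0.34 bits per sample is the effect the paragraph describes: similar frames leave a residual whose values are concentrated on a few levels.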

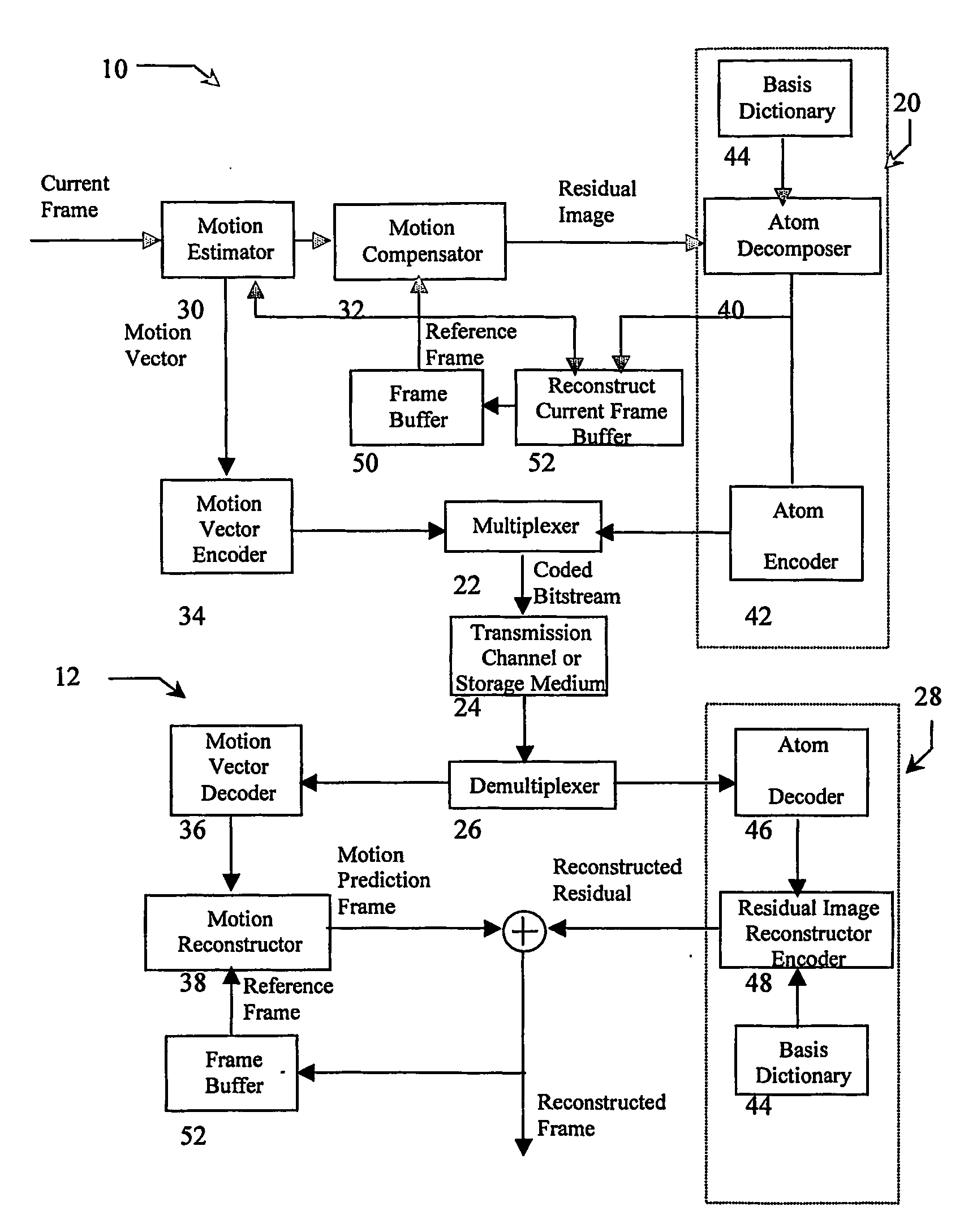

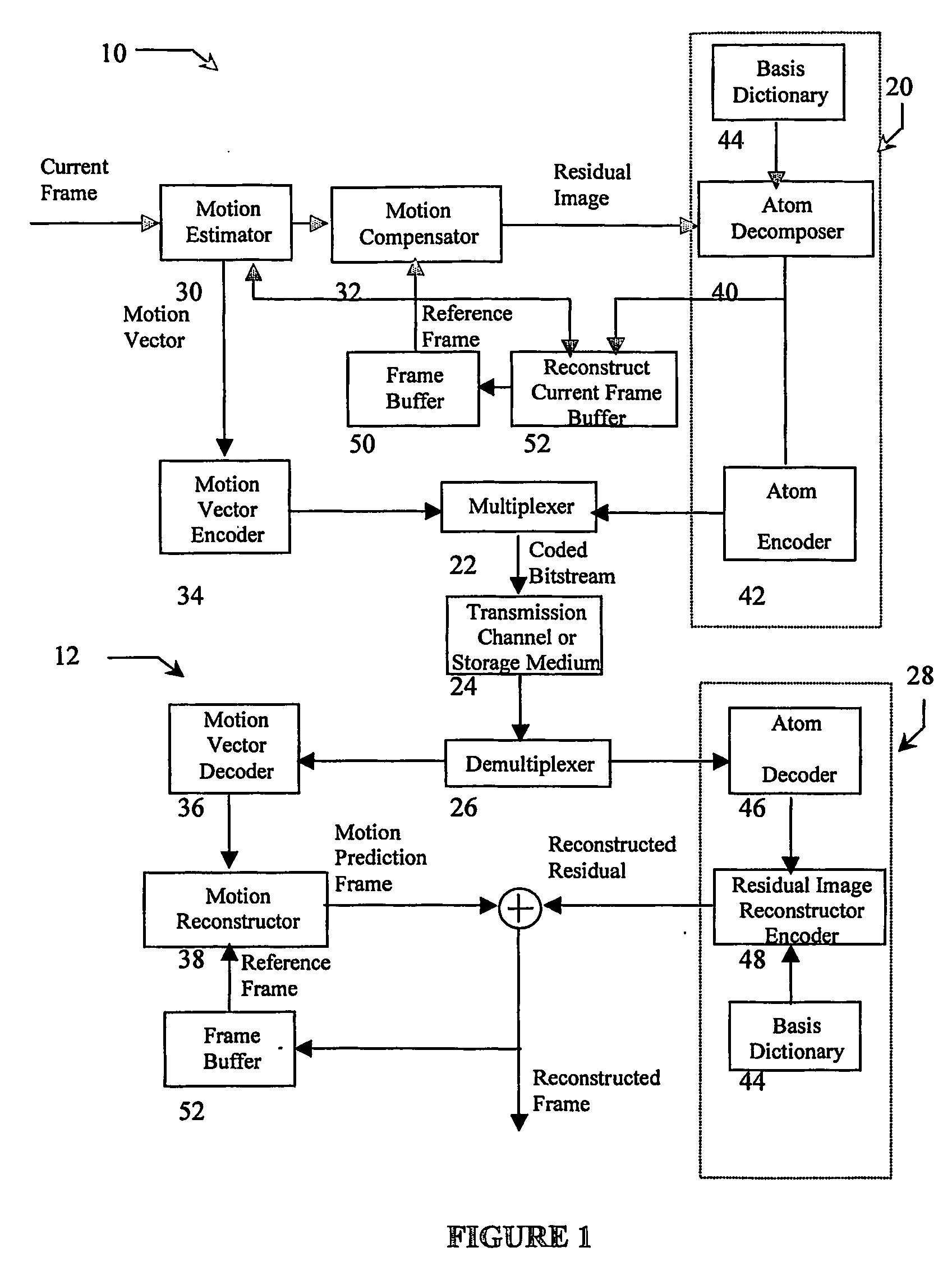

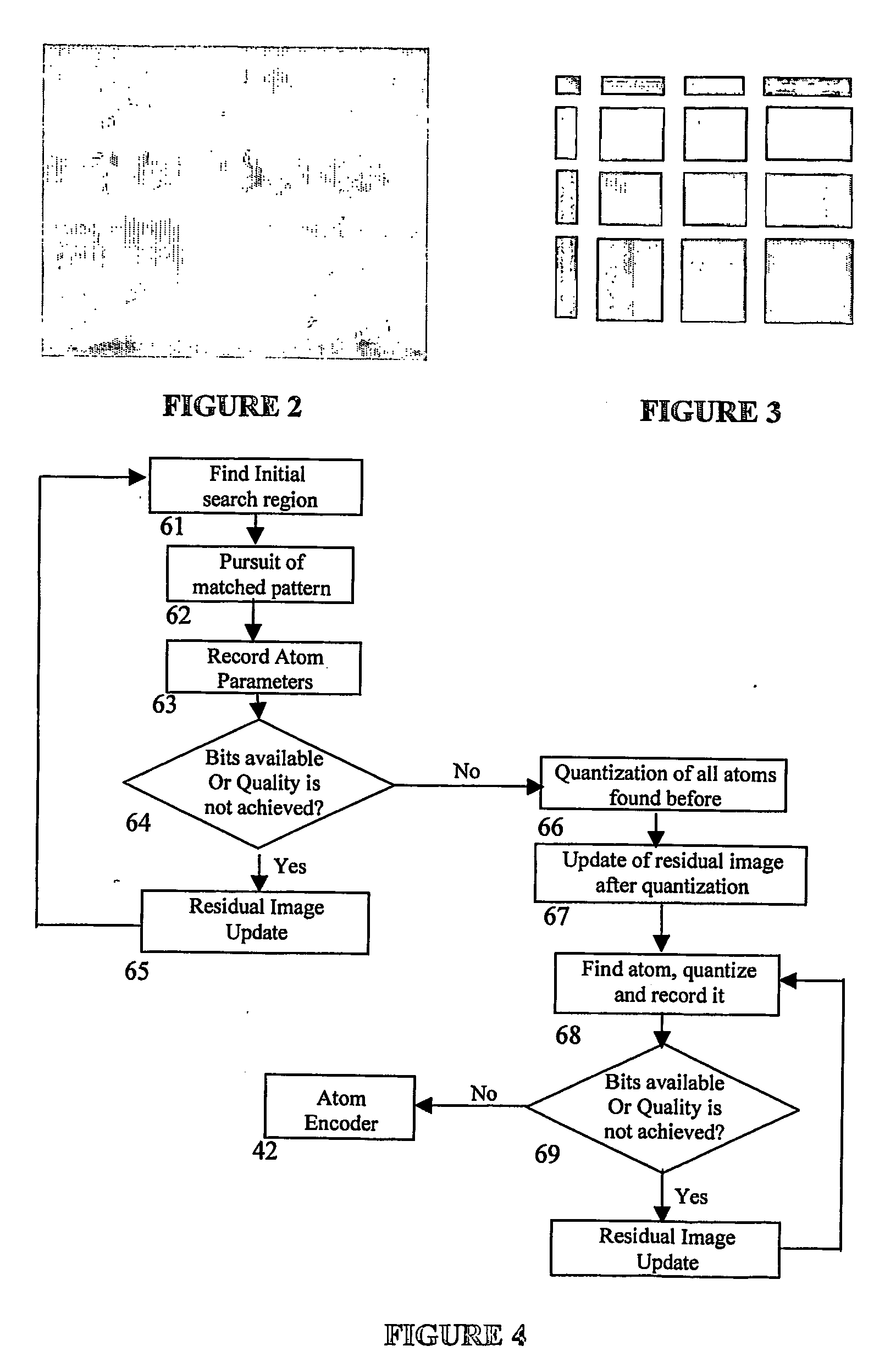

Overcomplete basis transform-based motion residual frame coding method and apparatus for video compression

Status: Active | Publication: US20090103602A1 | Tags: Weaken energy, Small size, Color television with pulse code modulation, Color television with bandwidth reduction, Pattern recognition, High energy

The present invention provides a method for compressing digital moving pictures or video signals based on an overcomplete basis transform using a modified Matching Pursuit algorithm. More specifically, it focuses on efficient coding of the motion residual image produced by motion estimation and compensation. A residual energy segmentation algorithm (RESA) can be used to obtain an initial estimate of the shape and position of high-energy regions in the residual image. A progressive elimination algorithm (PEA) can be used to reduce the number of matching evaluations in the matching pursuit process. Together, RESA and PEA can speed up the encoder's search for the matched basis in the pre-specified overcomplete basis dictionary many times over. Three parameters of the matched pattern form an atom: the index into the dictionary, the position of the selected basis, and the inner product between the chosen basis pattern and the residual signal. The invention also provides a new atom position coding method using quadtree-like techniques and a new atom modulus quantization scheme. A simple and efficient adaptive mechanism for the quantization and position coding design allows the system to operate properly at low, medium, and high bit rates. These new algorithm components can yield faster encoding and better compression performance than previous matching pursuit based video coders.
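
The core matching pursuit loop can be sketched as follows. This is a plain, exhaustive one-dimensional version, assuming a small hypothetical dictionary of unit-norm patterns; it does not implement the patent's RESA, PEA, quadtree position coding, or modulus quantization. Each selected atom is the (dictionary index, position, inner product) triple described in the abstract.

```python
import math


def normalize(v):
    """Scale a pattern to unit Euclidean norm."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def matching_pursuit(signal, dictionary, n_atoms):
    """Greedy matching pursuit over every dictionary entry at every shift.

    Returns (atoms, residual), where each atom is
    (dictionary_index, position, inner_product). Entries must be unit-norm.
    """
    residual = list(signal)
    atoms = []
    for _ in range(n_atoms):
        best = None
        for k, basis in enumerate(dictionary):
            for pos in range(len(residual) - len(basis) + 1):
                ip = sum(b * residual[pos + i] for i, b in enumerate(basis))
                if best is None or abs(ip) > abs(best[2]):
                    best = (k, pos, ip)
        k, pos, ip = best
        # Remove the projection onto the chosen, shifted basis pattern.
        for i, b in enumerate(dictionary[k]):
            residual[pos + i] -= ip * b
        atoms.append(best)
    return atoms, residual


# Hypothetical dictionary: a two-sample "flat" pattern and a unit impulse.
dictionary = [normalize([1, 1]), [1.0]]
motion_residual = [0, 0, 3, 3, 0, 0, 0, -2]
atoms, leftover = matching_pursuit(motion_residual, dictionary, n_atoms=2)
# atoms[0] is (0, 2, ~4.243); atoms[1] is (1, 7, -2.0)
```

The exhaustive double loop over dictionary entries and positions is exactly the cost that RESA and PEA are meant to cut down.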

Owner: ETIIP HLDG

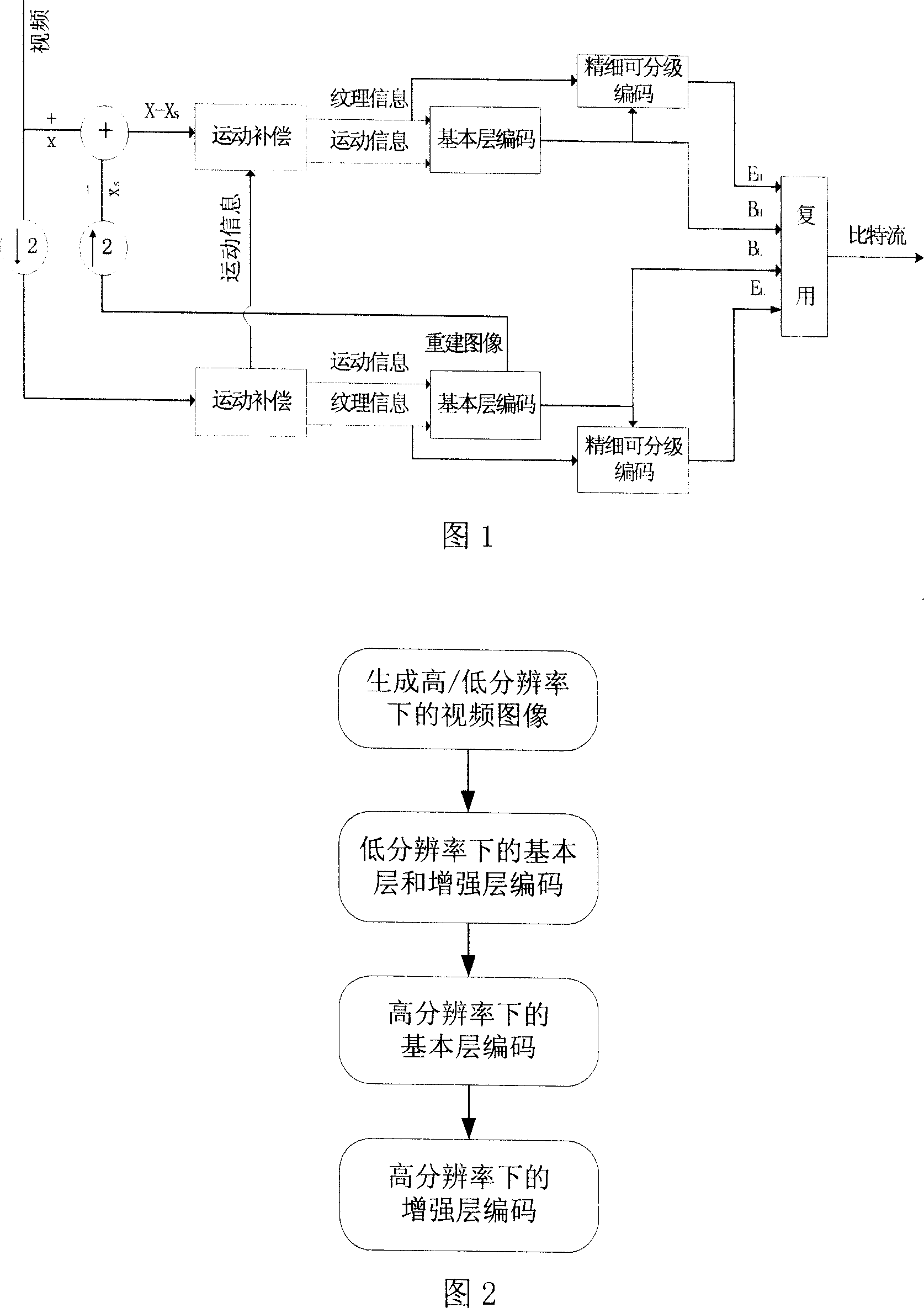

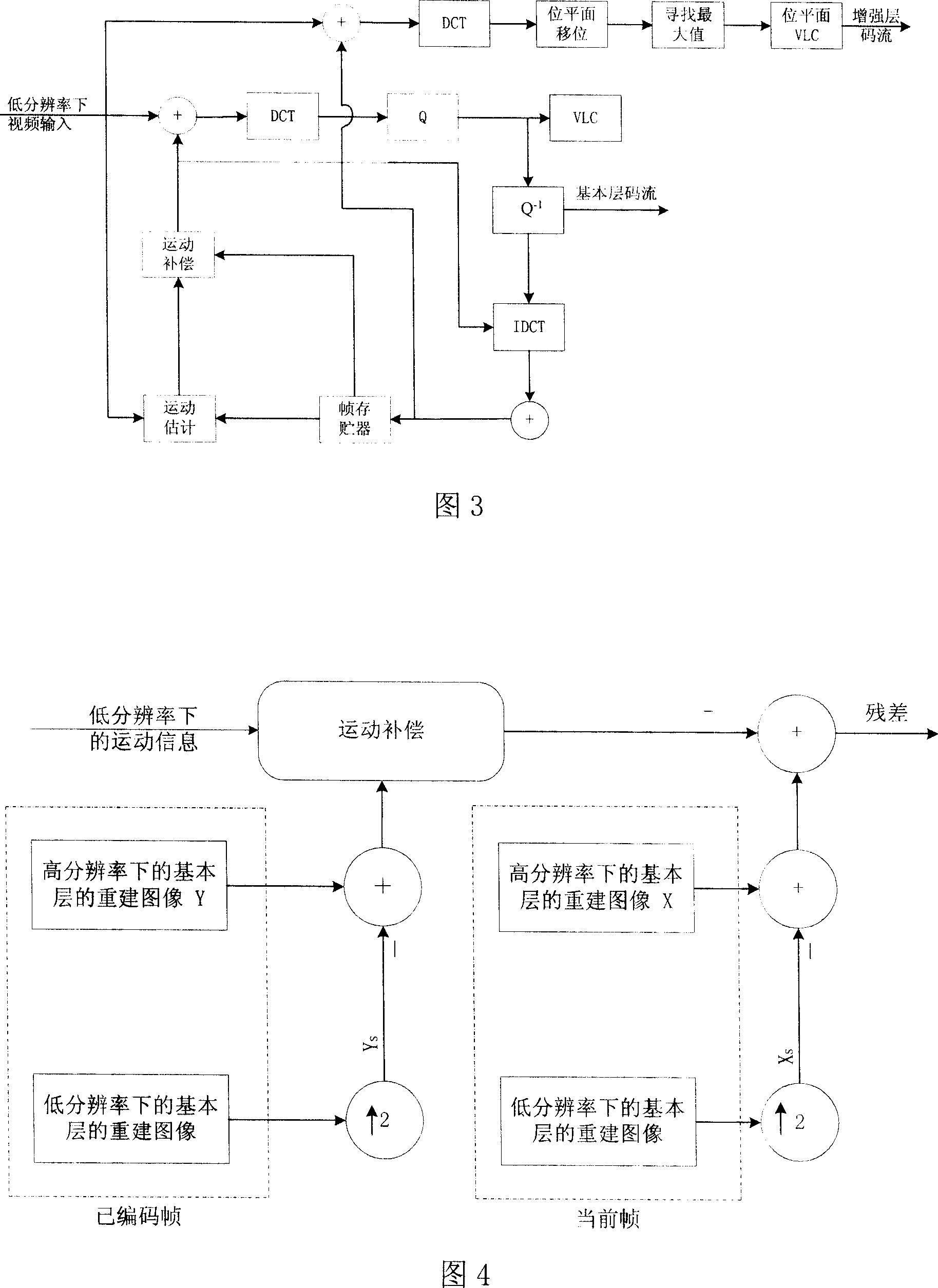

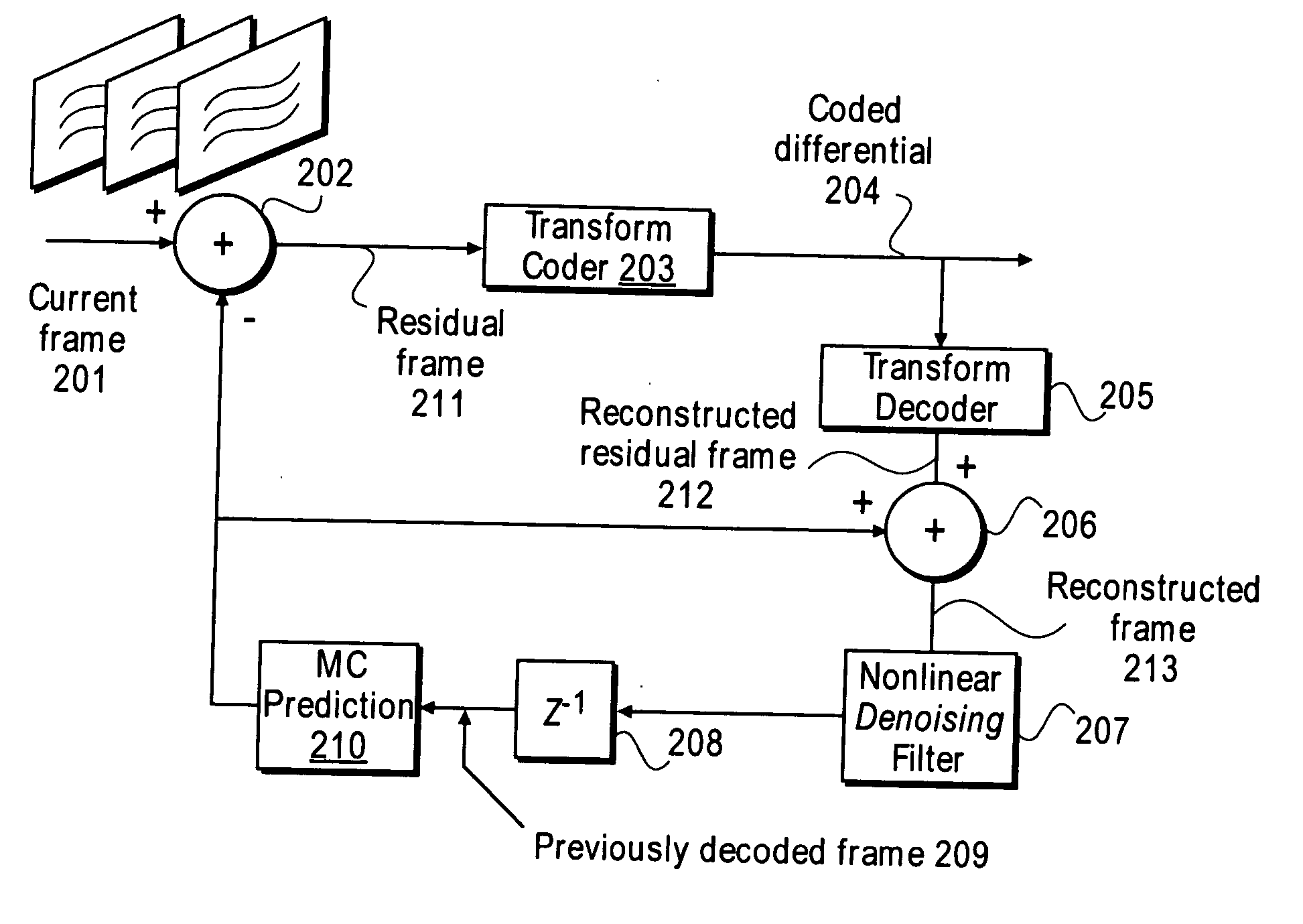

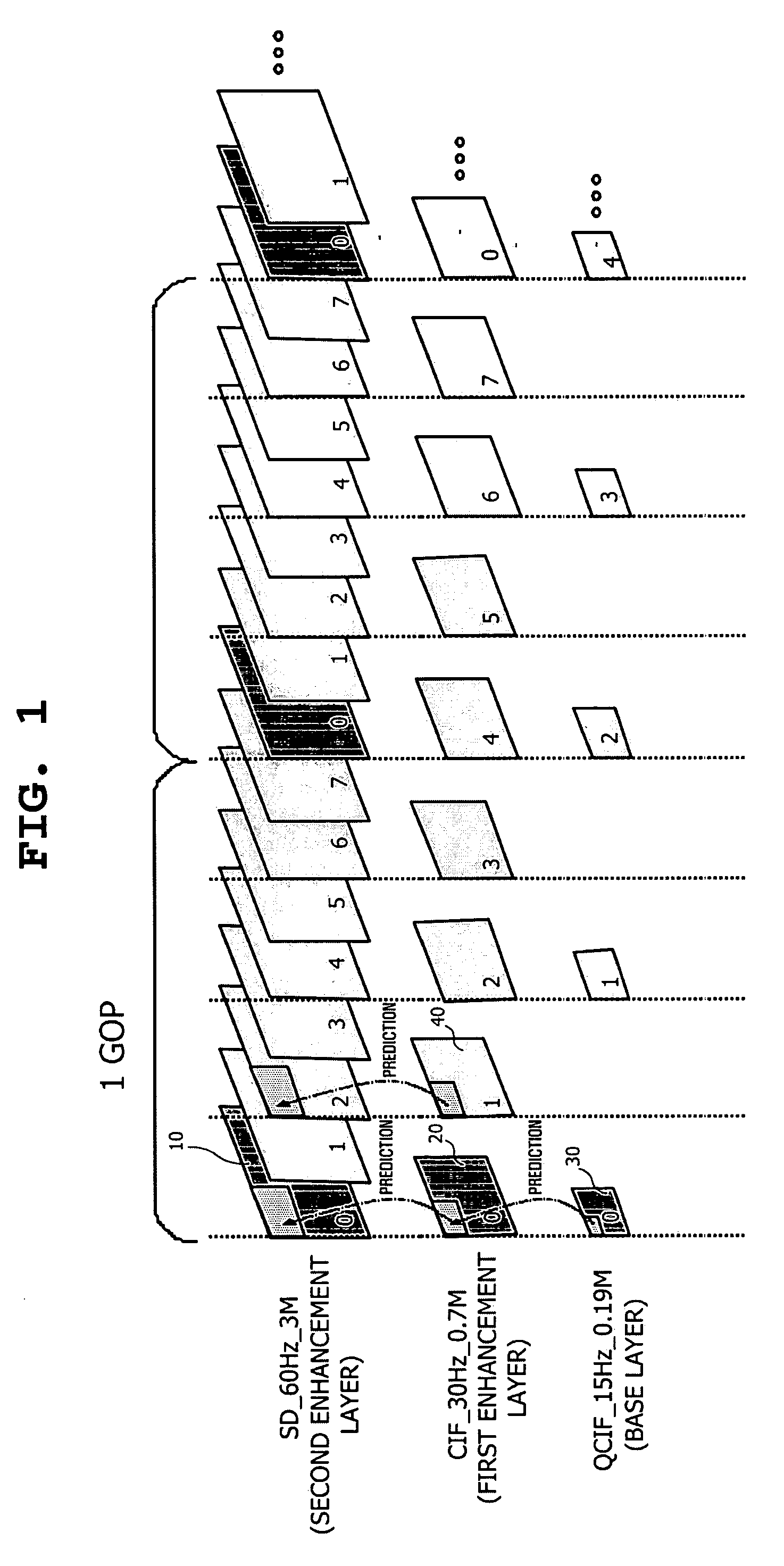

Spatially scalable, SNR fine-granularity scalable video coding method

Status: Inactive | Publication: CN101018333A | Tags: With fine-grained adjustable features, The quality is fine and gradable, Television systems, Digital video signal modification, Signal-to-noise ratio (imaging), Motion vector

The spatially scalable, SNR fine-granularity scalable video coding method combines spatial scalable coding with SNR fine-granularity scalable coding so that both the high- and low-resolution video have fine-granular enhancement layers. The low-resolution reference frame is supplied as the reference frame for the high-resolution video, and twice the low-resolution motion vector is used as the motion estimate at high resolution; at high resolution, motion compensation is applied directly to the spatially predicted residual frame. Compared with the existing GFSS scheme in MPEG-4, for CIF-format video the invention improves Y1-PSNR by 0.52 dB while reducing the bit rate by 10.91%, and improves Y0-PSNR by 0.20 dB.

Owner: SHANGHAI UNIV +1

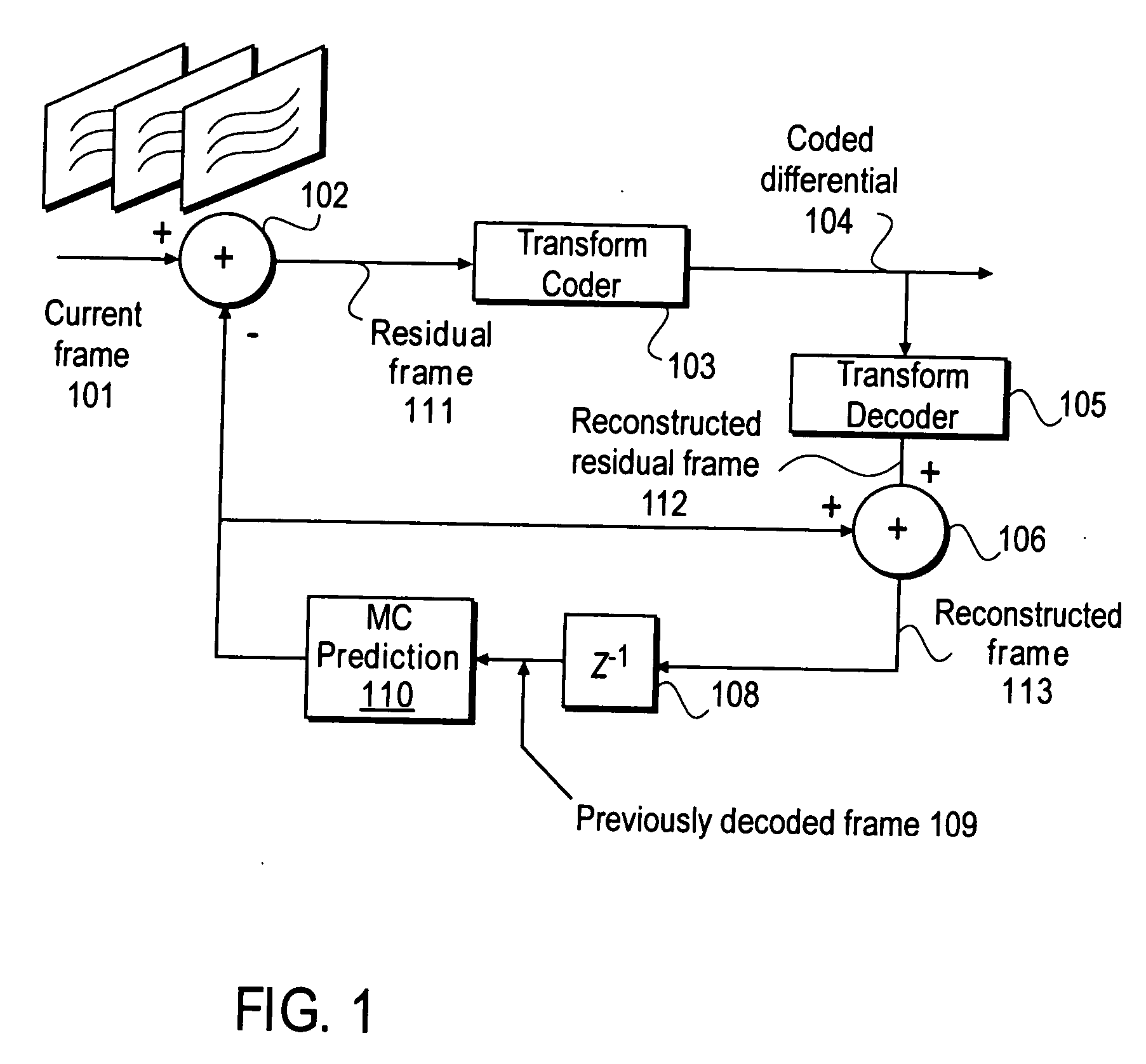

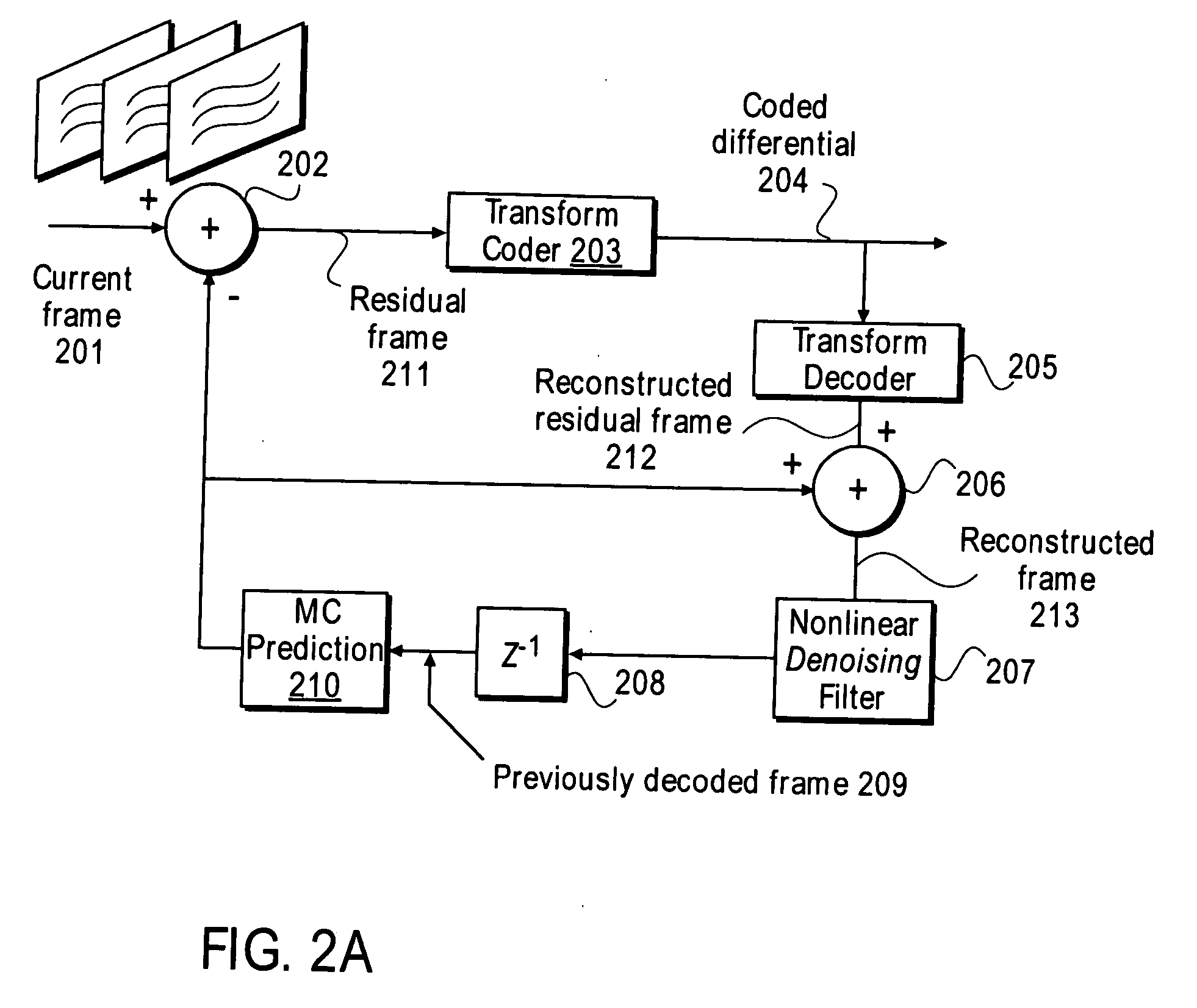

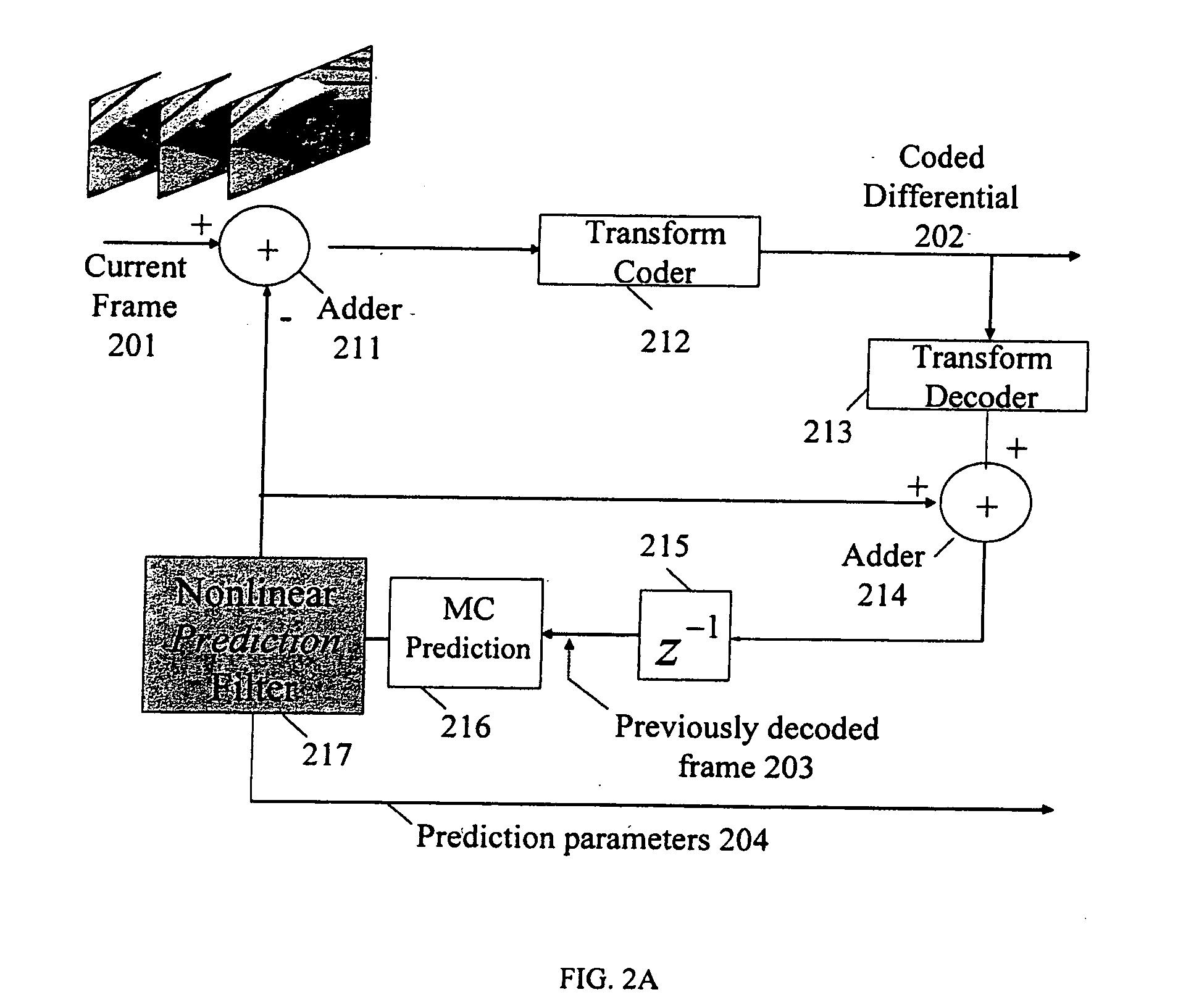

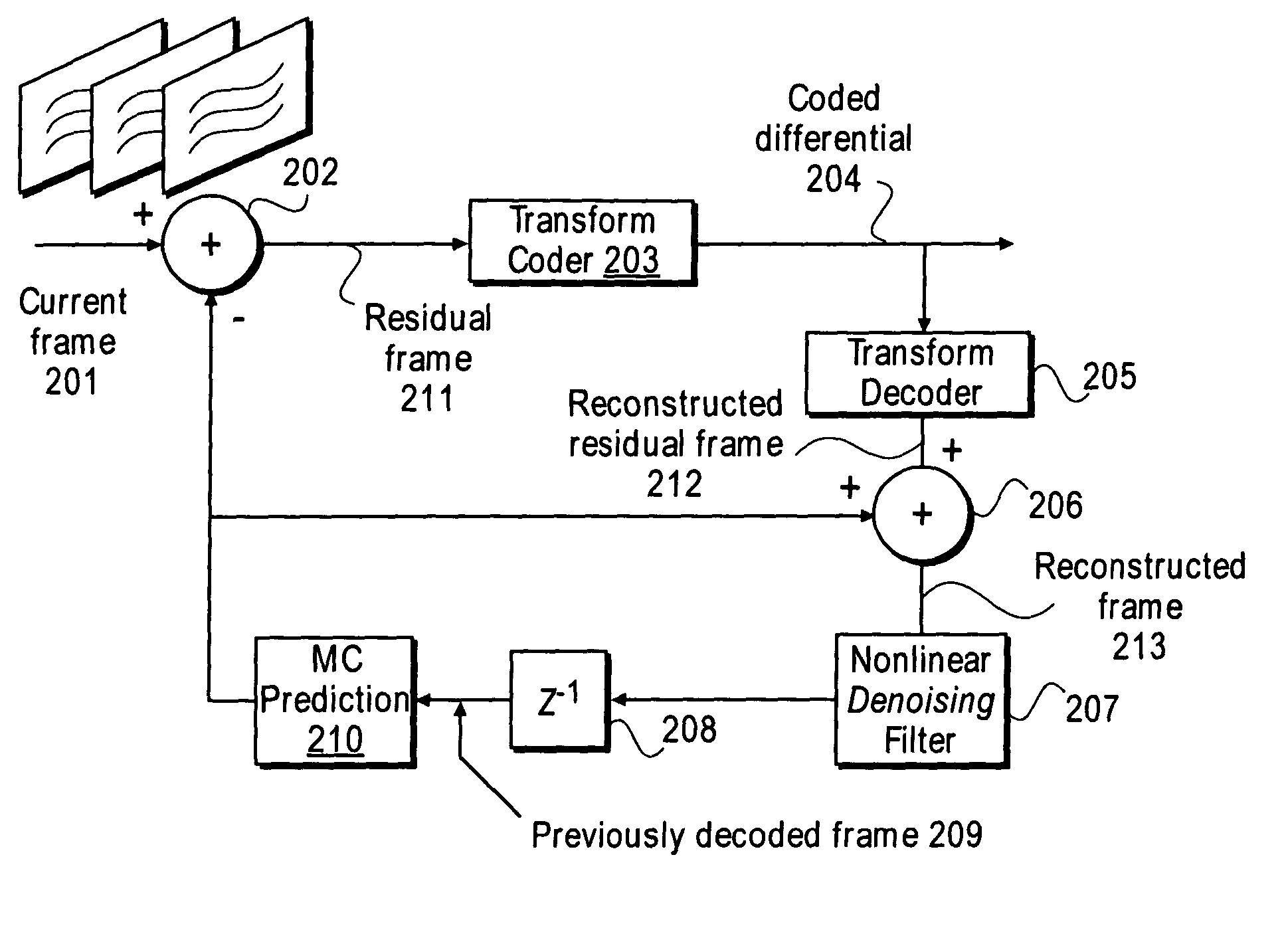

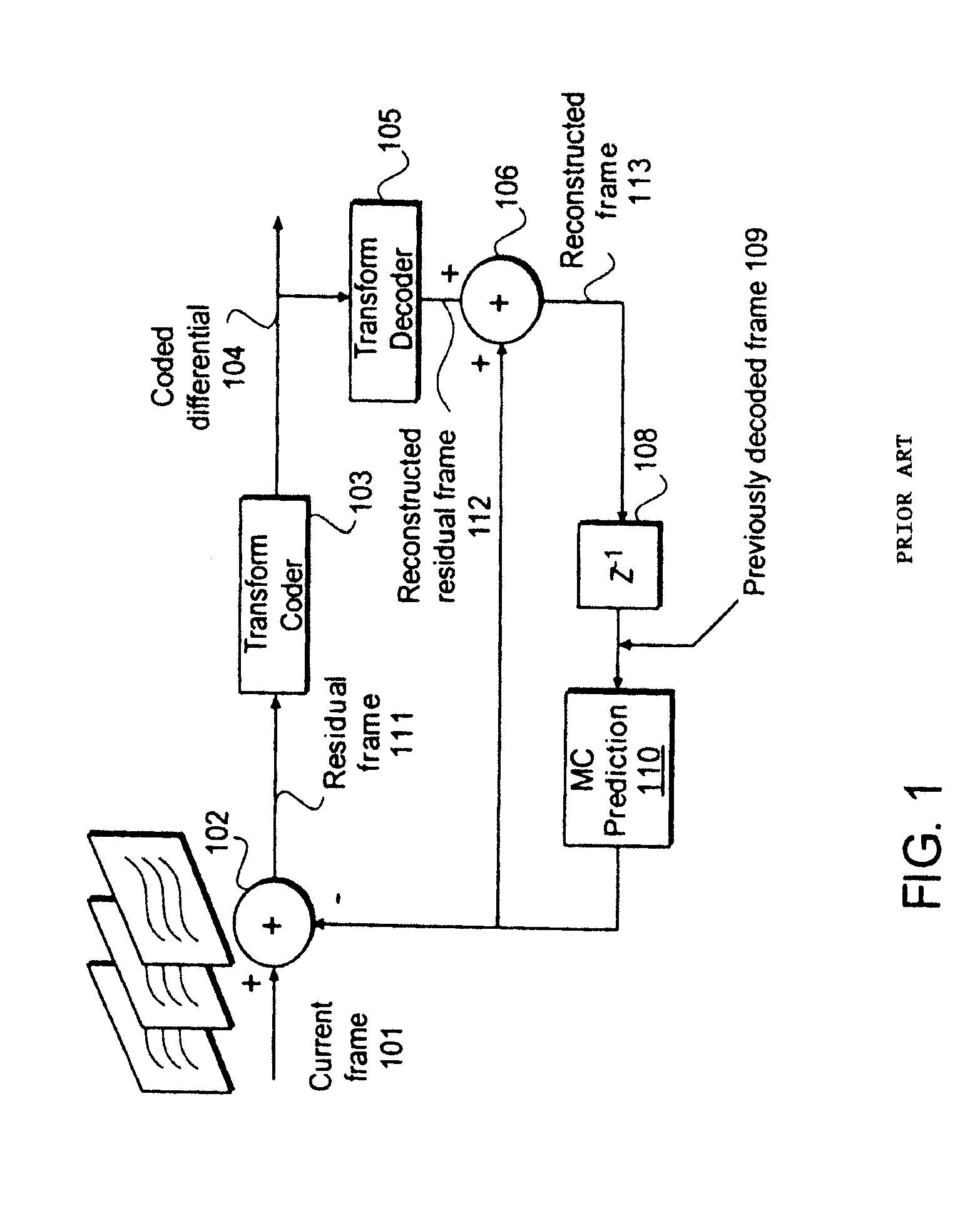

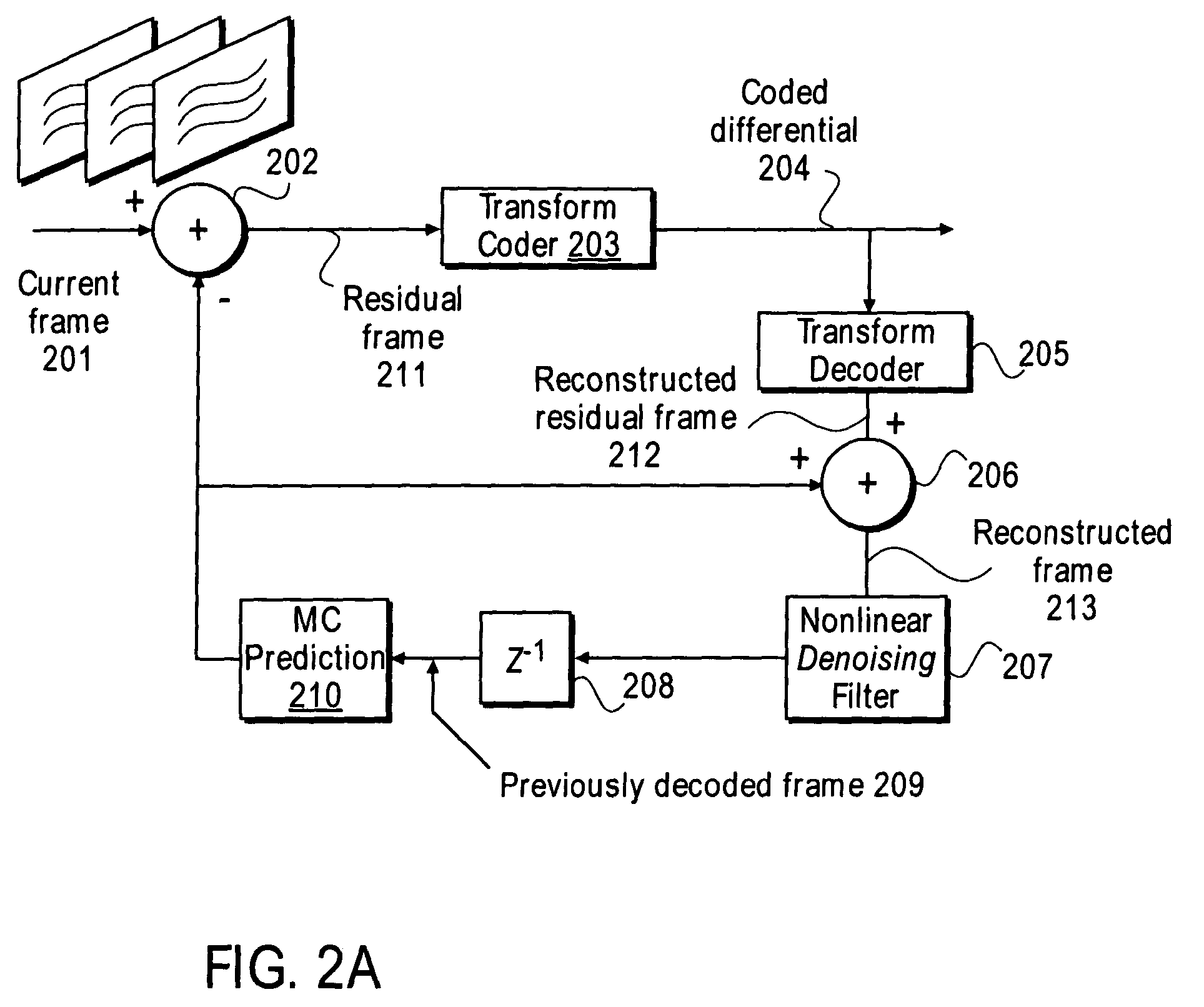

Nonlinear, in-the-loop, denoising filter for quantization noise removal for hybrid video compression

Status: Inactive | Publication: US20060153301A1 | Tags: Color television with pulse code modulation, Color television with bandwidth reduction, Noise removal, Computer science

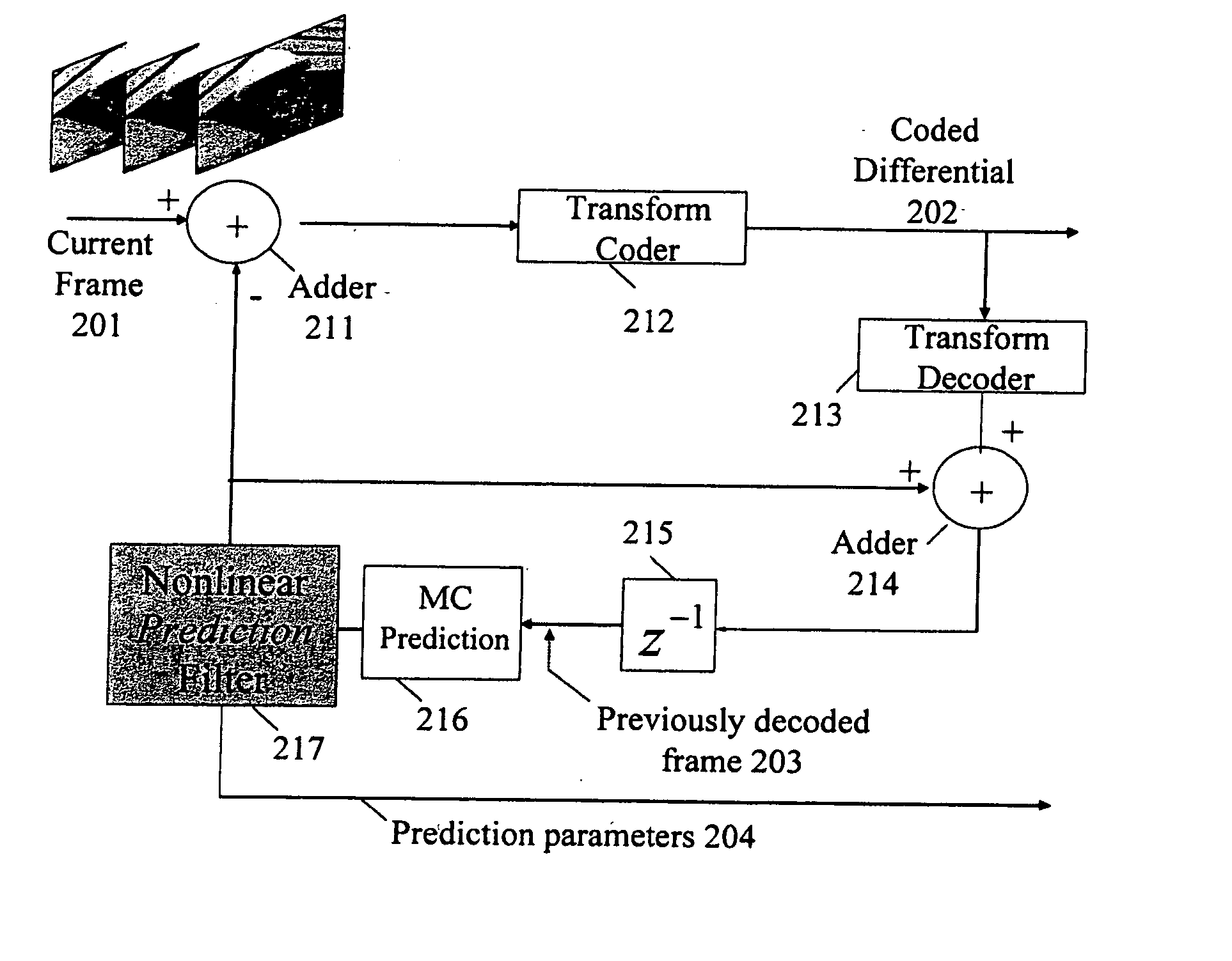

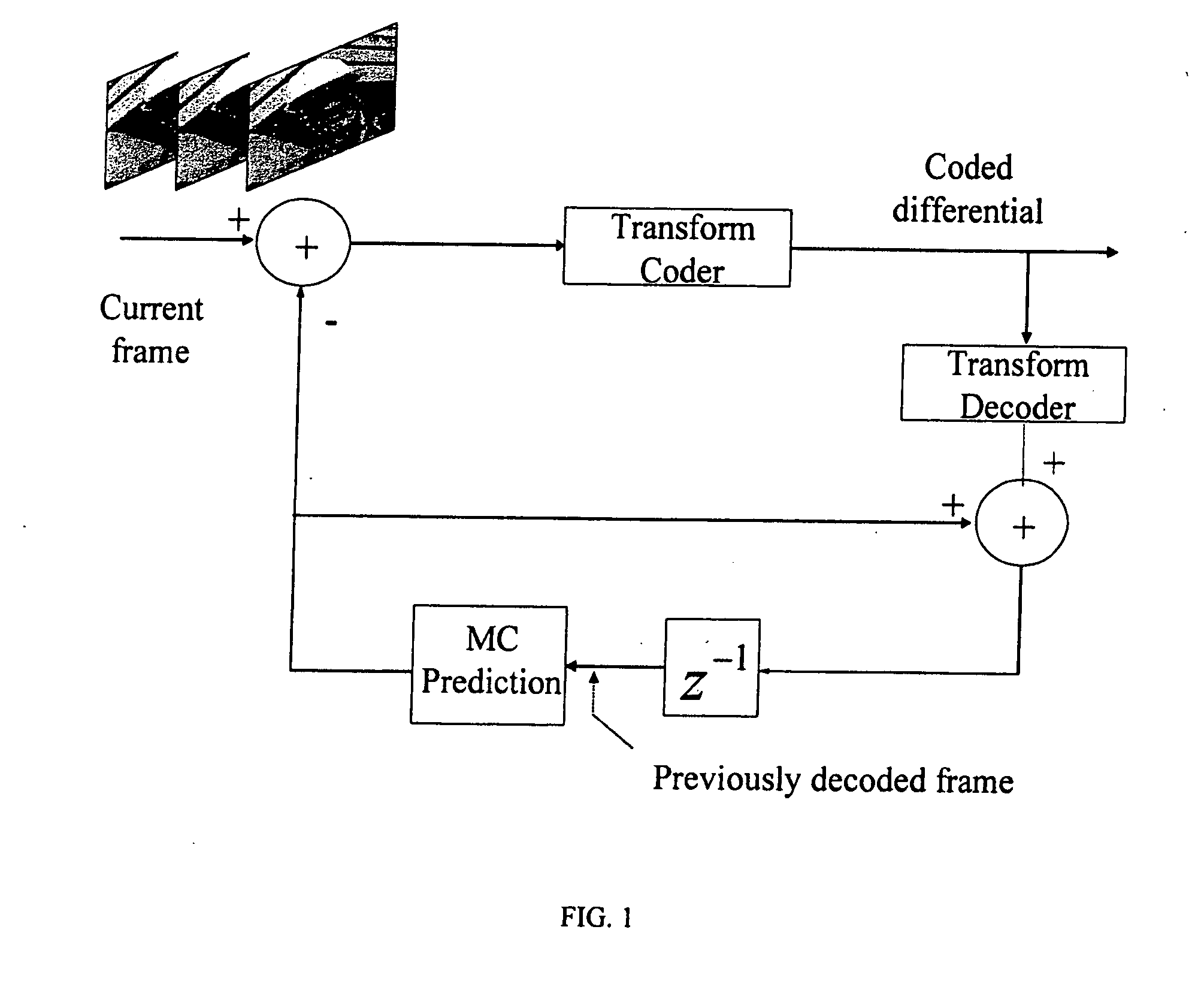

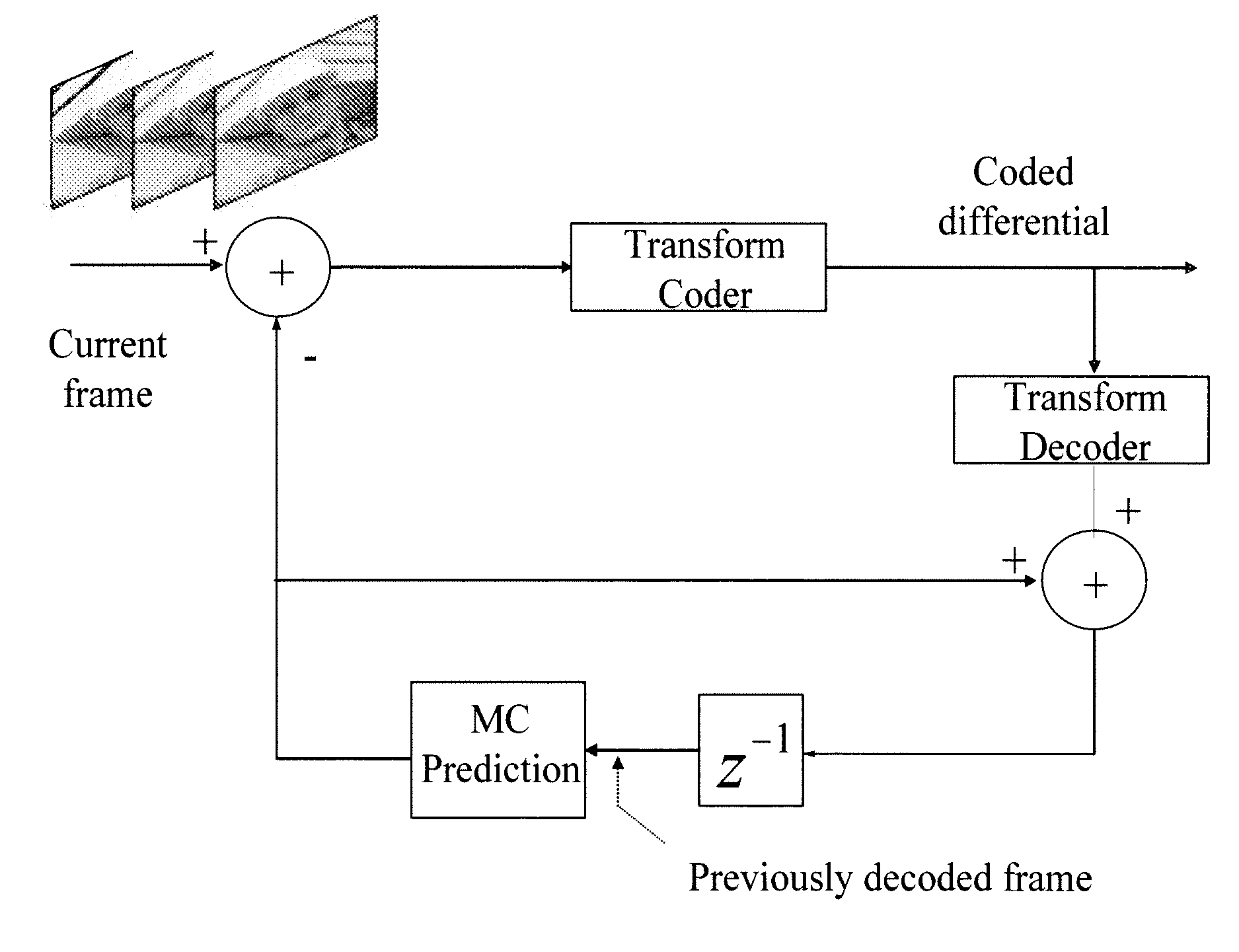

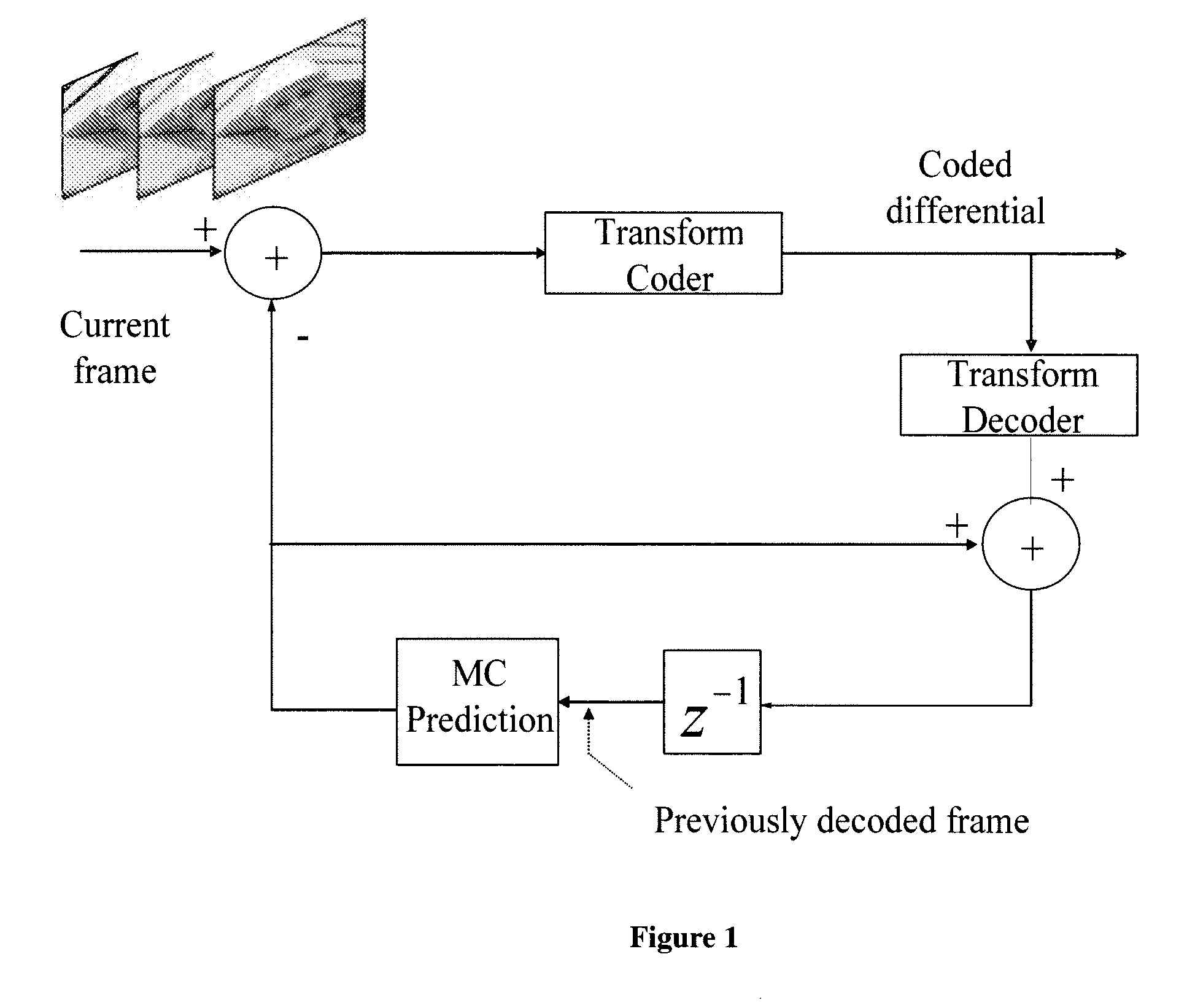

A method and apparatus are disclosed for using an in-the-loop denoising filter to remove quantization noise in video compression. In one embodiment, the video encoder comprises: a transform coder that applies a transform to a residual frame representing the difference between a current frame and a first prediction, and outputs a coded differential frame as the output of the video encoder; a transform decoder that generates a reconstructed residual frame from the coded differential frame; a first adder that creates a reconstructed frame by adding the reconstructed residual frame to the first prediction; a non-linear denoising filter that filters the reconstructed frame by deriving expectations and performing denoising operations based on them; and a prediction module that generates predictions, including the first prediction, based on previously decoded frames.

Owner: NTT DOCOMO INC

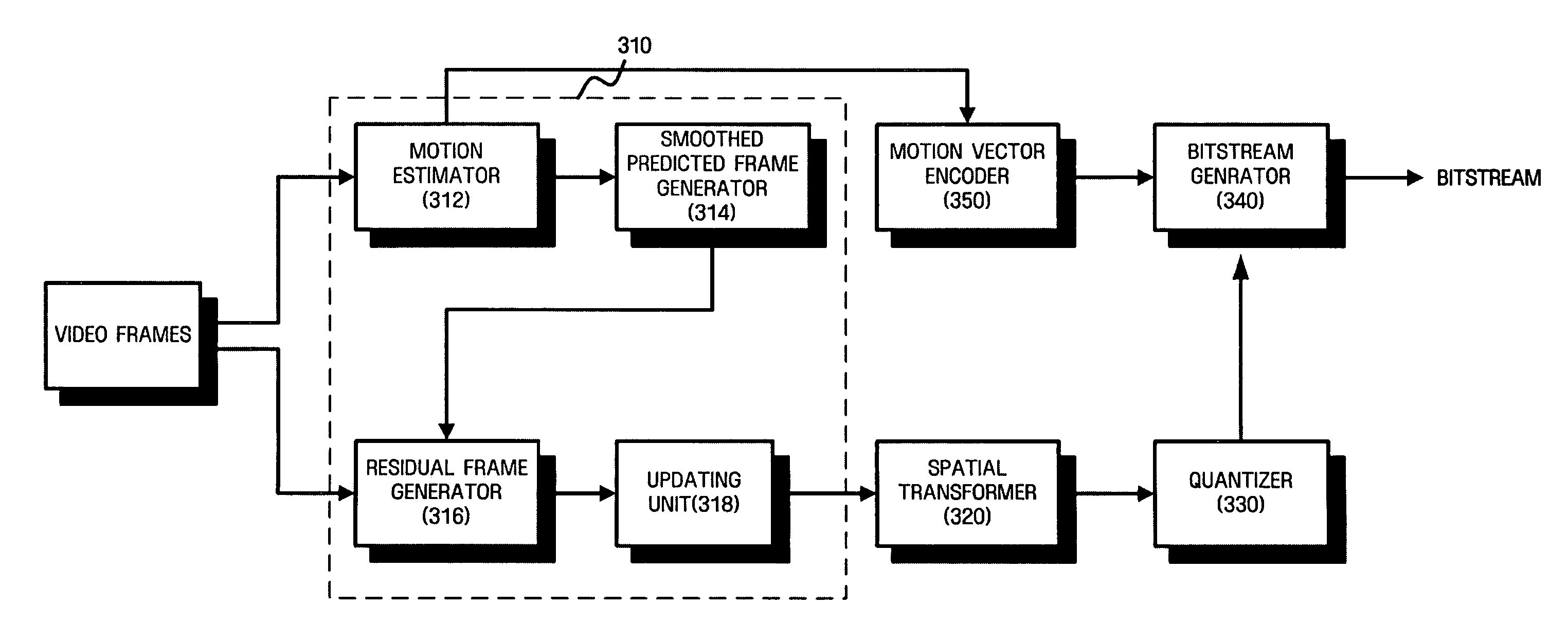

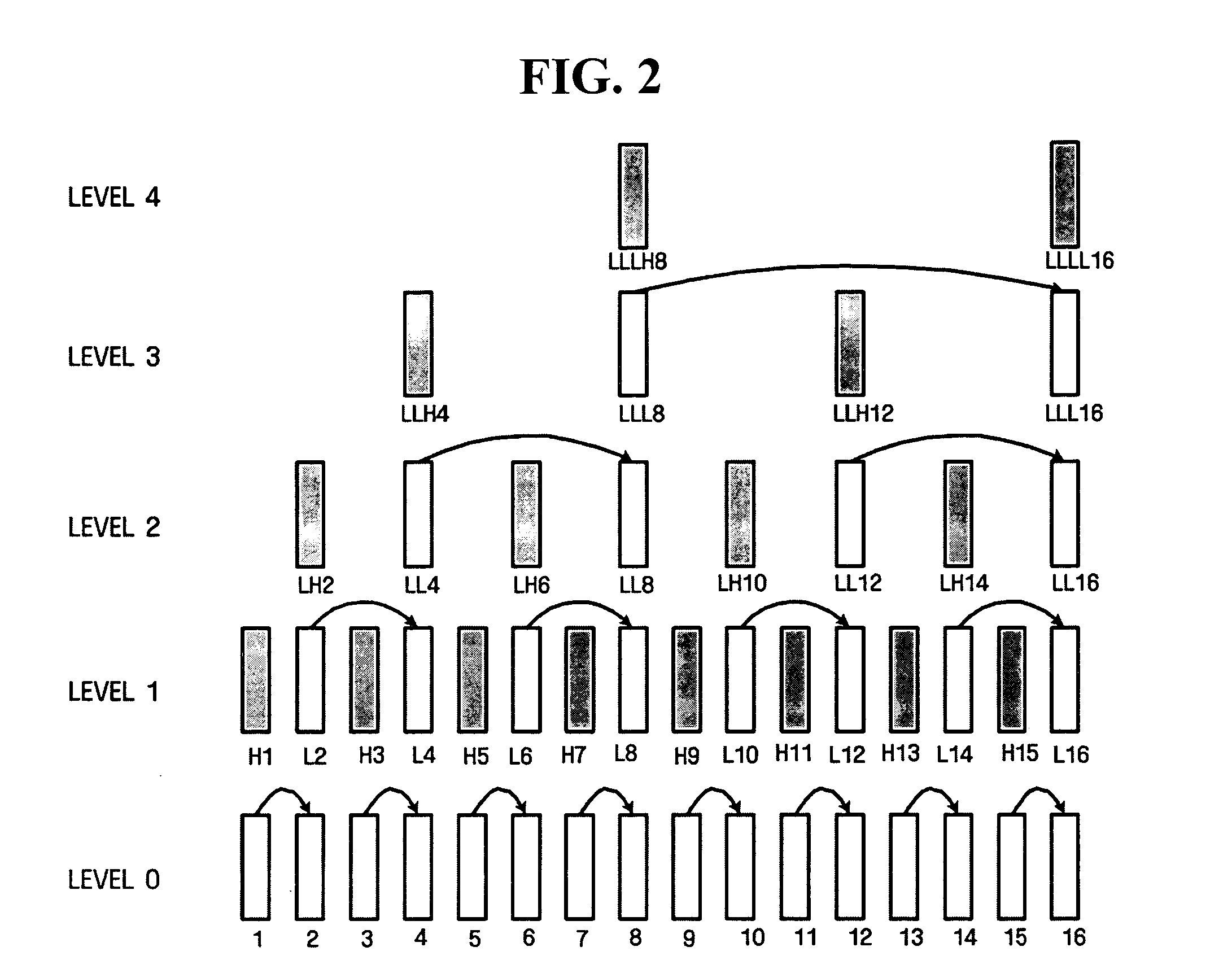

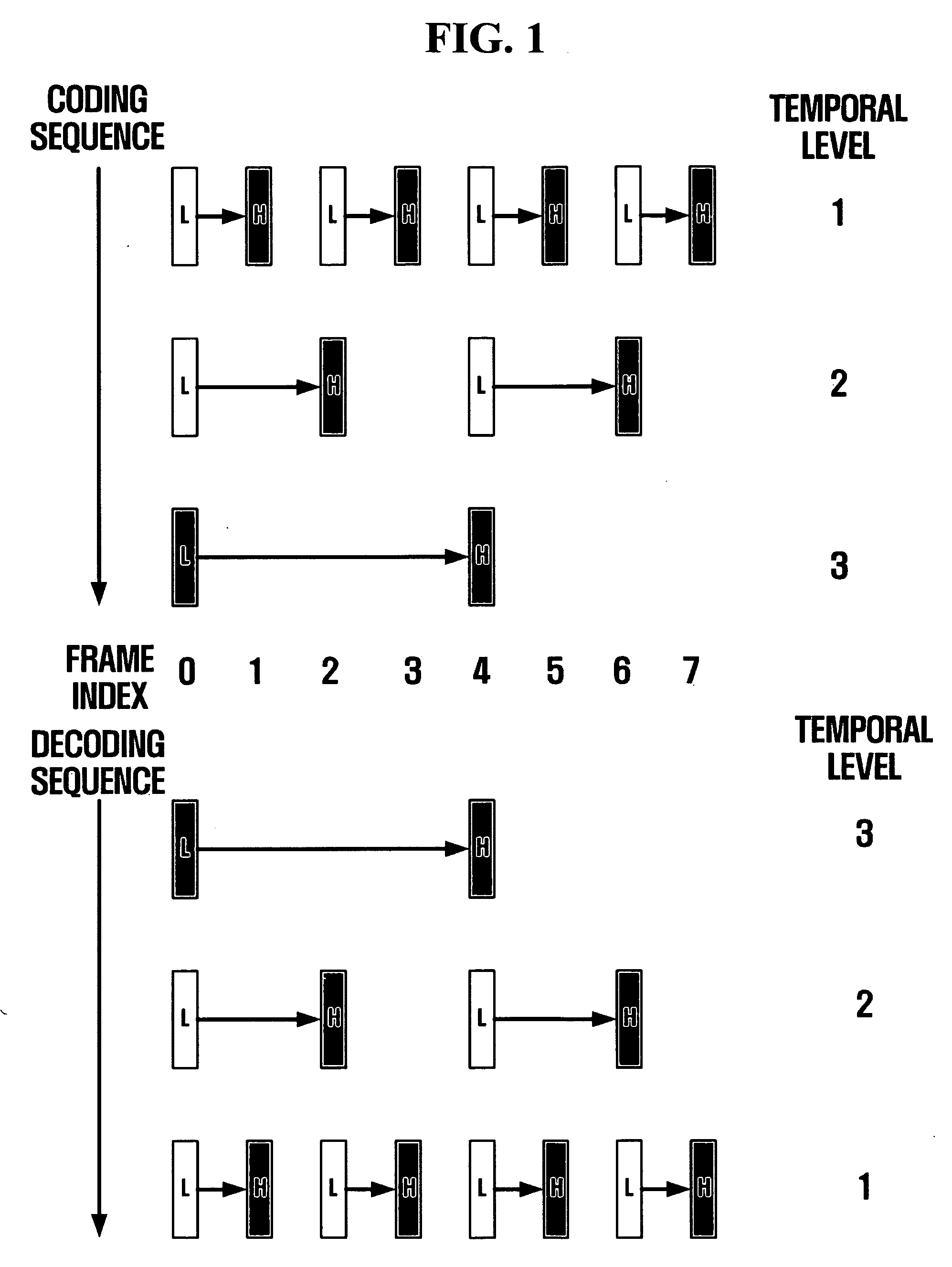

Temporal decomposition and inverse temporal decomposition methods for video encoding and decoding and video encoder and decoder

Status: Inactive | Publication: US20060013310A1 | Tags: Color television with pulse code modulation, Color television with bandwidth reduction, Pattern recognition, Video encoding

Temporal decomposition and inverse temporal decomposition methods using smoothed predicted frames for video encoding and decoding and video encoder and decoder are provided. The temporal decomposition method for video encoding includes estimating the motion of a current frame using at least one frame as a reference and generating a predicted frame, smoothing the predicted frame and generating a smoothed predicted frame, and generating a residual frame by comparing the smoothed predicted frame with the current frame.
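
A minimal sketch of the residual step, assuming the motion-compensated predicted frame is already available and using a horizontal [1, 2, 1] / 4 low-pass filter with edge replication as a hypothetical stand-in for the unspecified smoothing filter.

```python
def smooth_row(row):
    """[1, 2, 1] / 4 low-pass filter with edge replication (assumed filter)."""
    n = len(row)
    out = []
    for i in range(n):
        left = row[max(i - 1, 0)]
        right = row[min(i + 1, n - 1)]
        out.append((left + 2 * row[i] + right) / 4)
    return out


def smoothed_residual(current, predicted):
    """Residual = current frame - smoothed predicted frame."""
    smoothed = [smooth_row(row) for row in predicted]
    return [[c - s for c, s in zip(rc, rs)]
            for rc, rs in zip(current, smoothed)]


predicted = [[0, 0, 8, 0, 0]]  # sharp prediction
current = [[0, 2, 4, 2, 0]]    # blurrier actual frame
print(smoothed_residual(current, predicted))  # [[0.0, 0.0, 0.0, 0.0, 0.0]]
```

Without smoothing, the residual for the same toy frames would be [[0, 2, -4, 2, 0]], so in this contrived case the smoothed prediction removes the residual entirely.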

Owner: SAMSUNG ELECTRONICS CO LTD

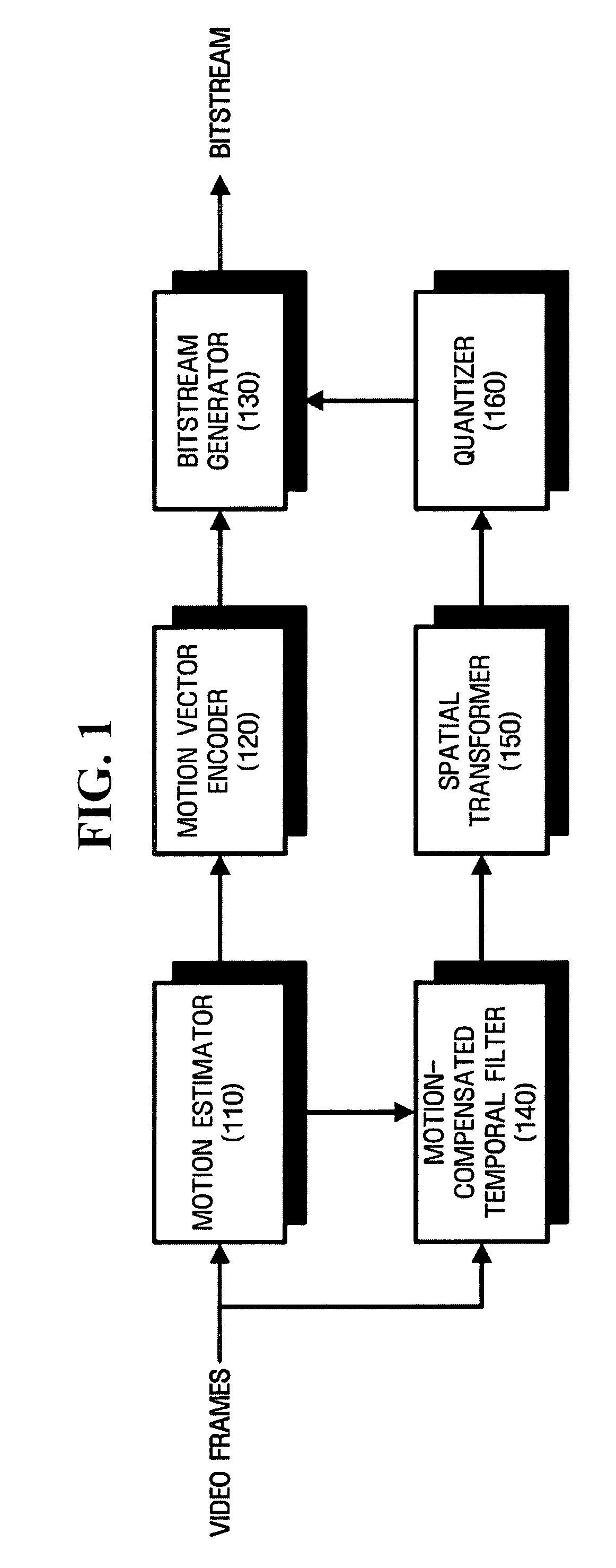

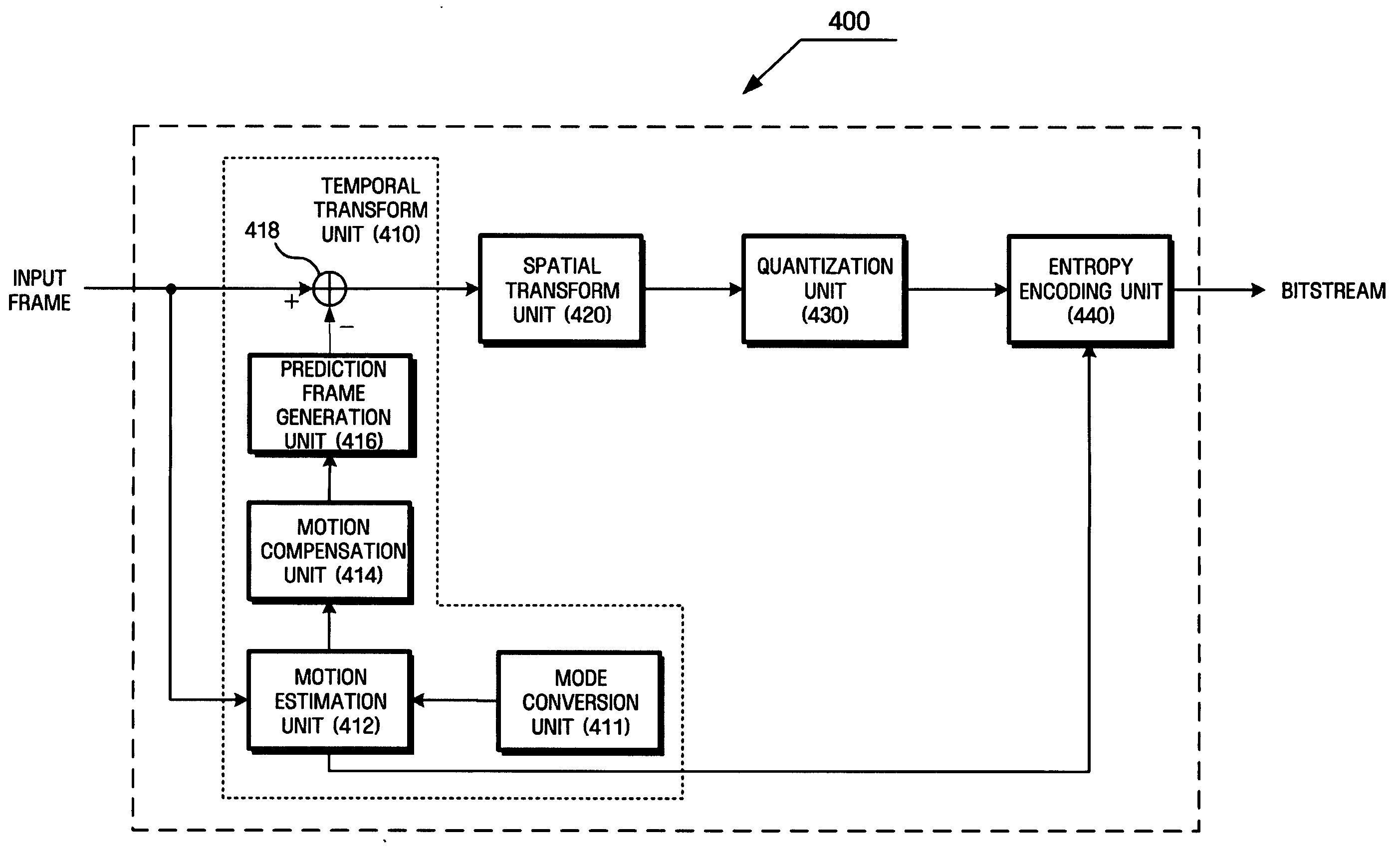

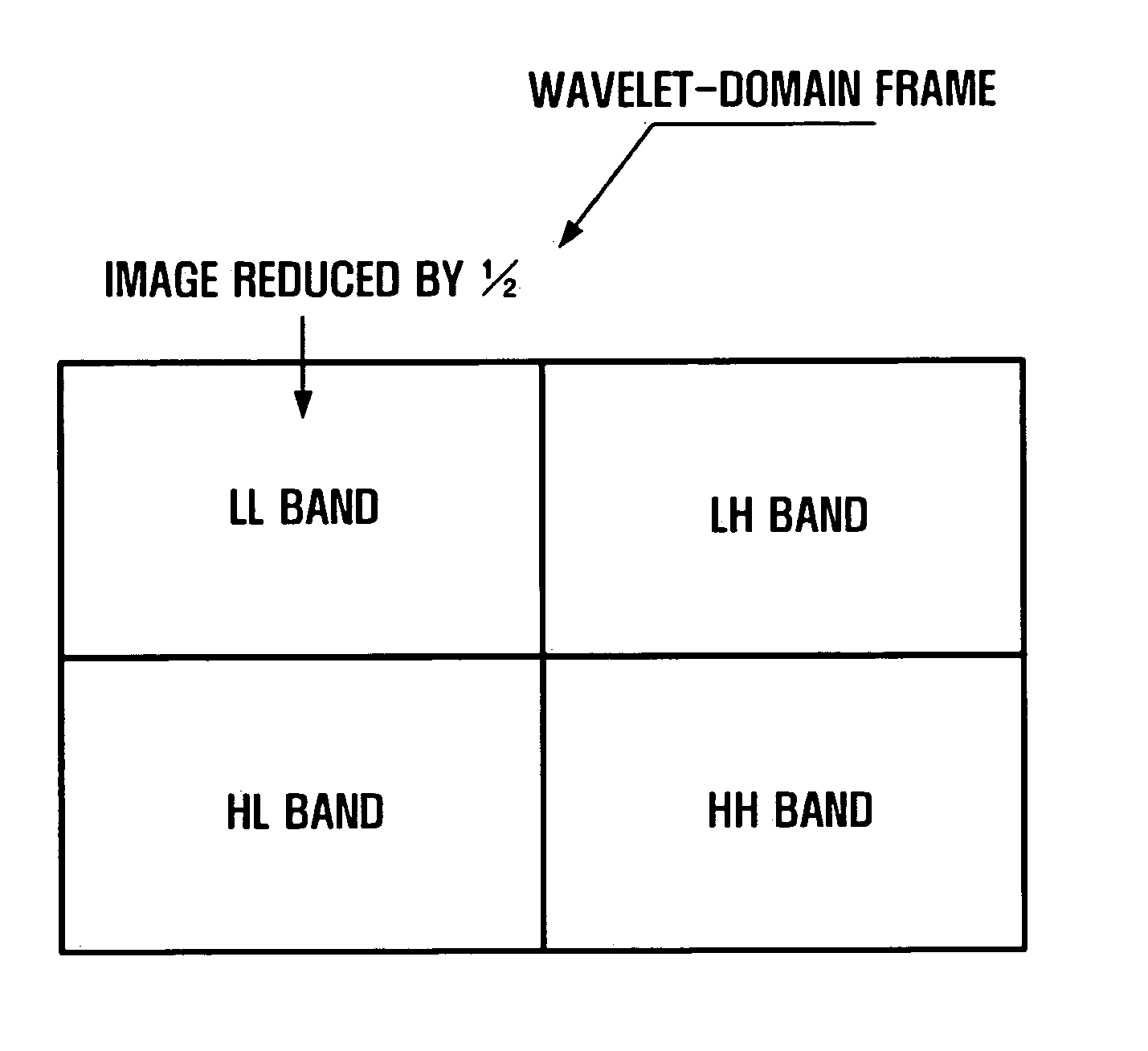

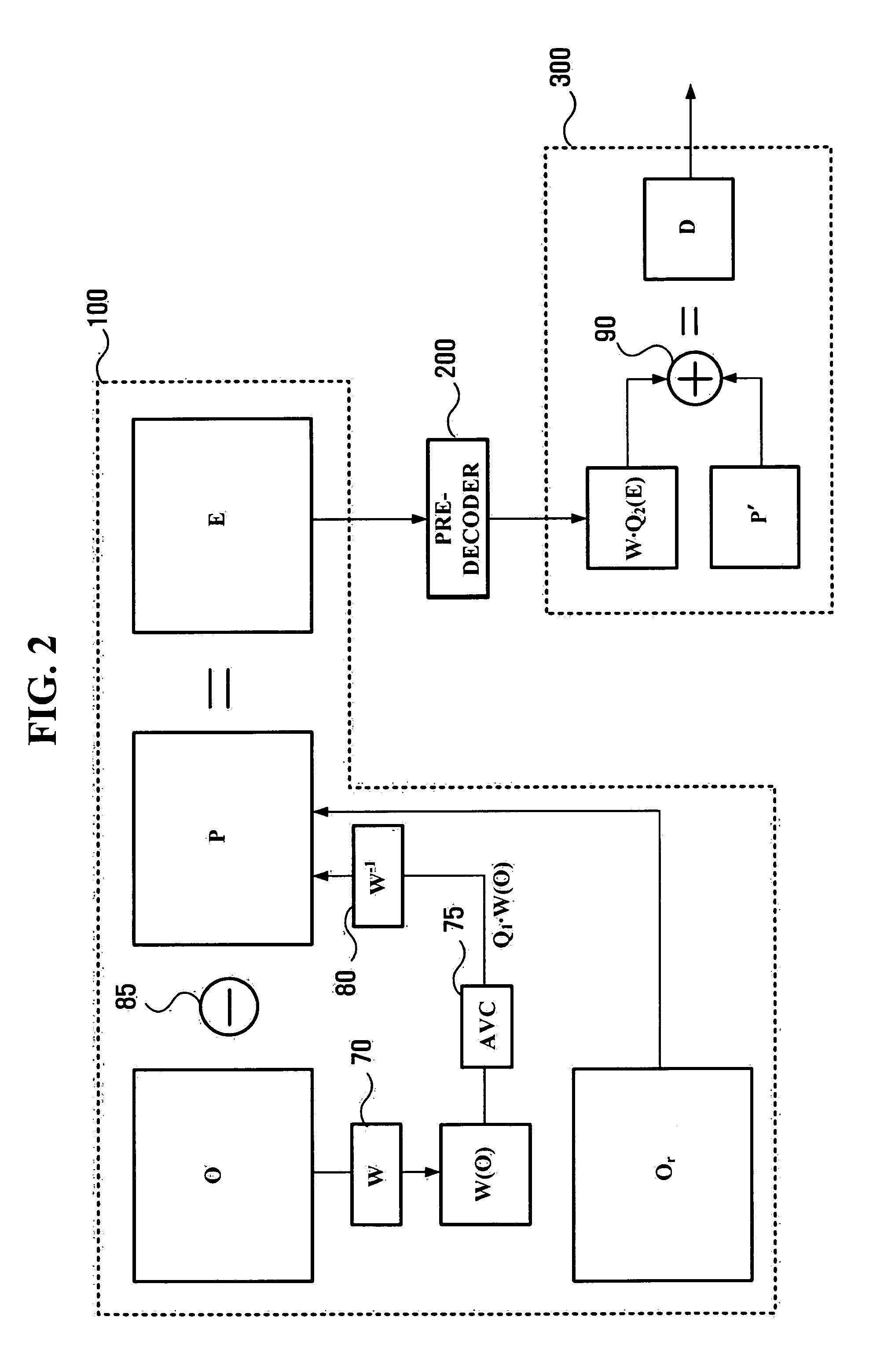

Video coding method and apparatus

Status: Inactive | Publication: US20060088096A1 | Tags: Quality improvement, Color television with pulse code modulation, Color television with bandwidth reduction, Spatial correlation, Video encoding

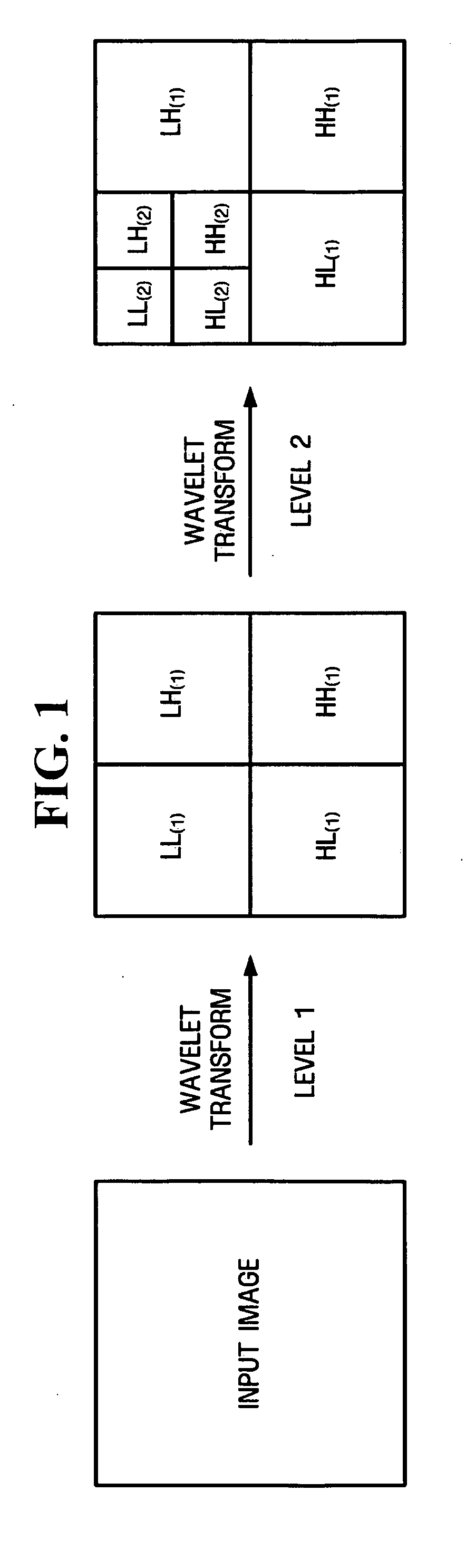

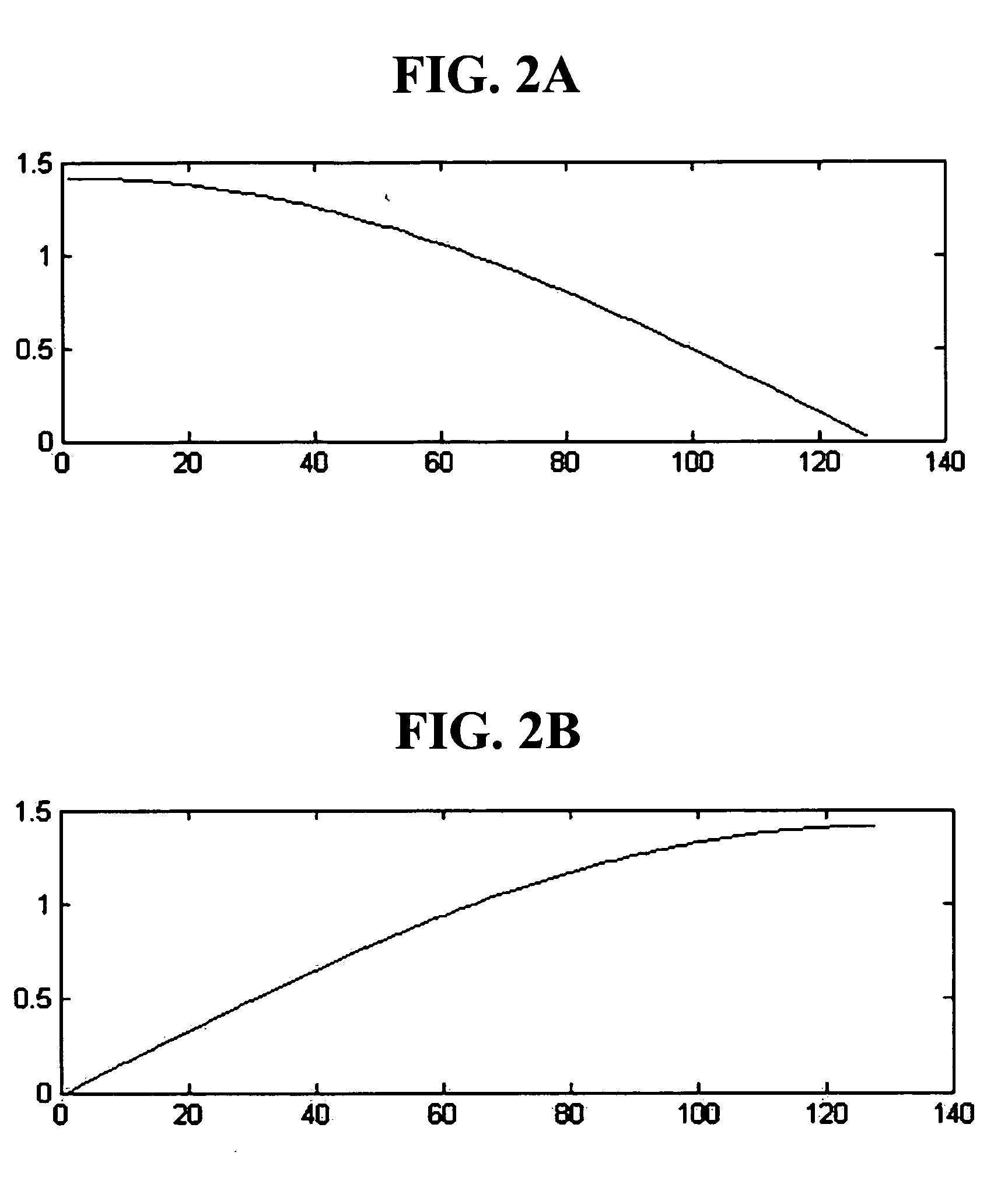

A method and apparatus are provided for improving compression efficiency or picture quality in video/image compression by selecting a wavelet transform technique suited to the characteristics of the input video/image scene. The video encoder includes a temporal transform module that removes temporal redundancy from an input frame and generates a residual frame; a selection module that selects an appropriate wavelet filter from a plurality of wavelet filters with different numbers of taps according to the spatial correlation of the residual frame; a wavelet transform module that generates wavelet coefficients by applying the selected wavelet filter to the residual frame; and a quantization module that quantizes the wavelet coefficients.
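
One way to picture the selection module is sketched below. It assumes the horizontal lag-1 correlation coefficient as the "spatial correlation" measure, a hypothetical threshold of 0.5, and hypothetical filter labels ("9/7" for highly correlated residuals, "5/3" otherwise); the abstract specifies none of these.

```python
import math


def lag1_correlation(frame):
    """Pearson correlation between horizontally adjacent residual samples."""
    pairs = [(row[i], row[i + 1]) for row in frame for i in range(len(row) - 1)]
    xs = [a for a, _ in pairs]
    ys = [b for _, b in pairs]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((a - mx) * (b - my) for a, b in pairs)
    vx = sum((a - mx) ** 2 for a in xs)
    vy = sum((b - my) ** 2 for b in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def select_wavelet_filter(residual, threshold=0.5):
    """Assumed rule: longer filter for smooth (correlated) residuals, shorter otherwise."""
    return "9/7" if lag1_correlation(residual) >= threshold else "5/3"


print(select_wavelet_filter([[0, 1, 2, 3, 4]]))    # 9/7
print(select_wavelet_filter([[1, -1, 1, -1, 1]]))  # 5/3
```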

Owner: SAMSUNG ELECTRONICS CO LTD

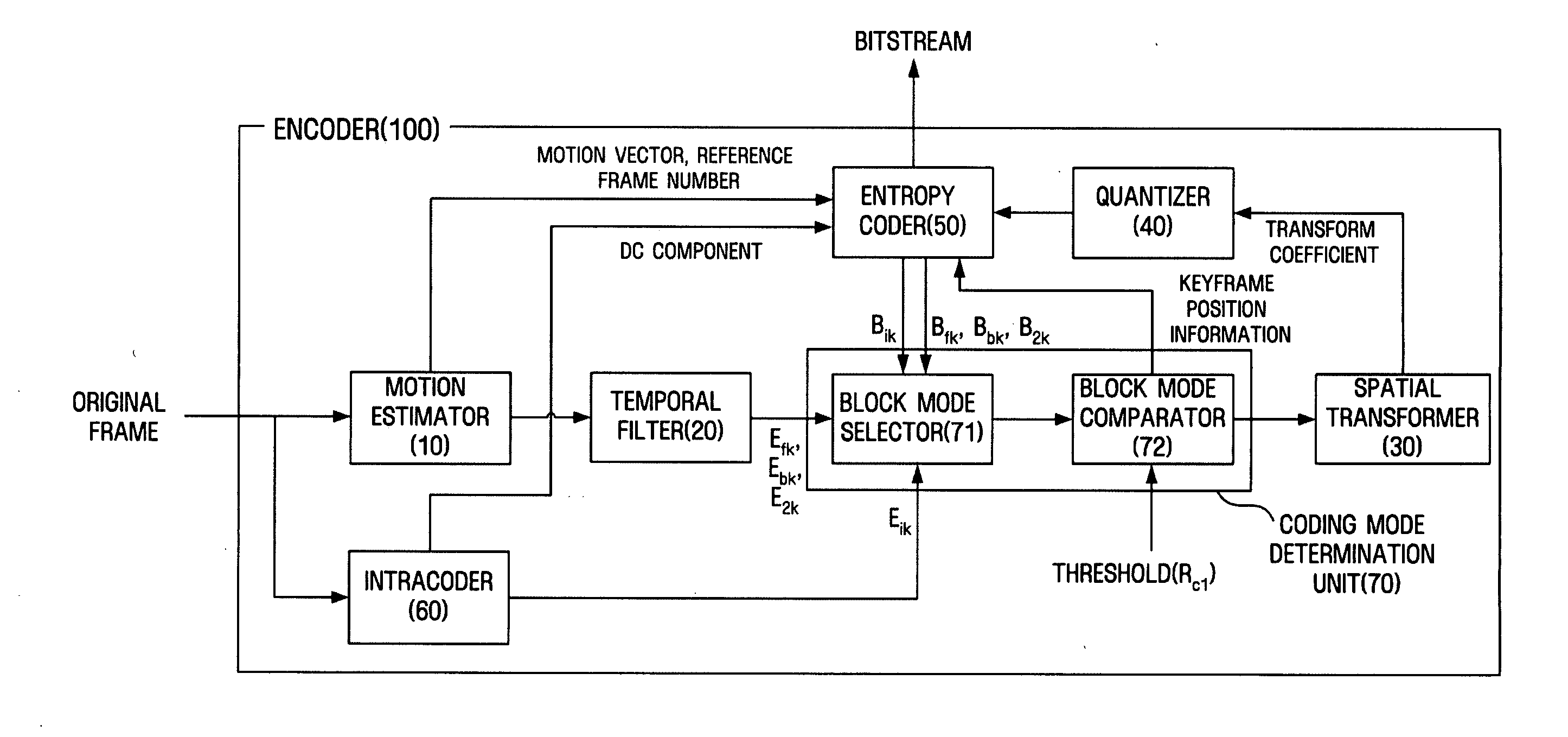

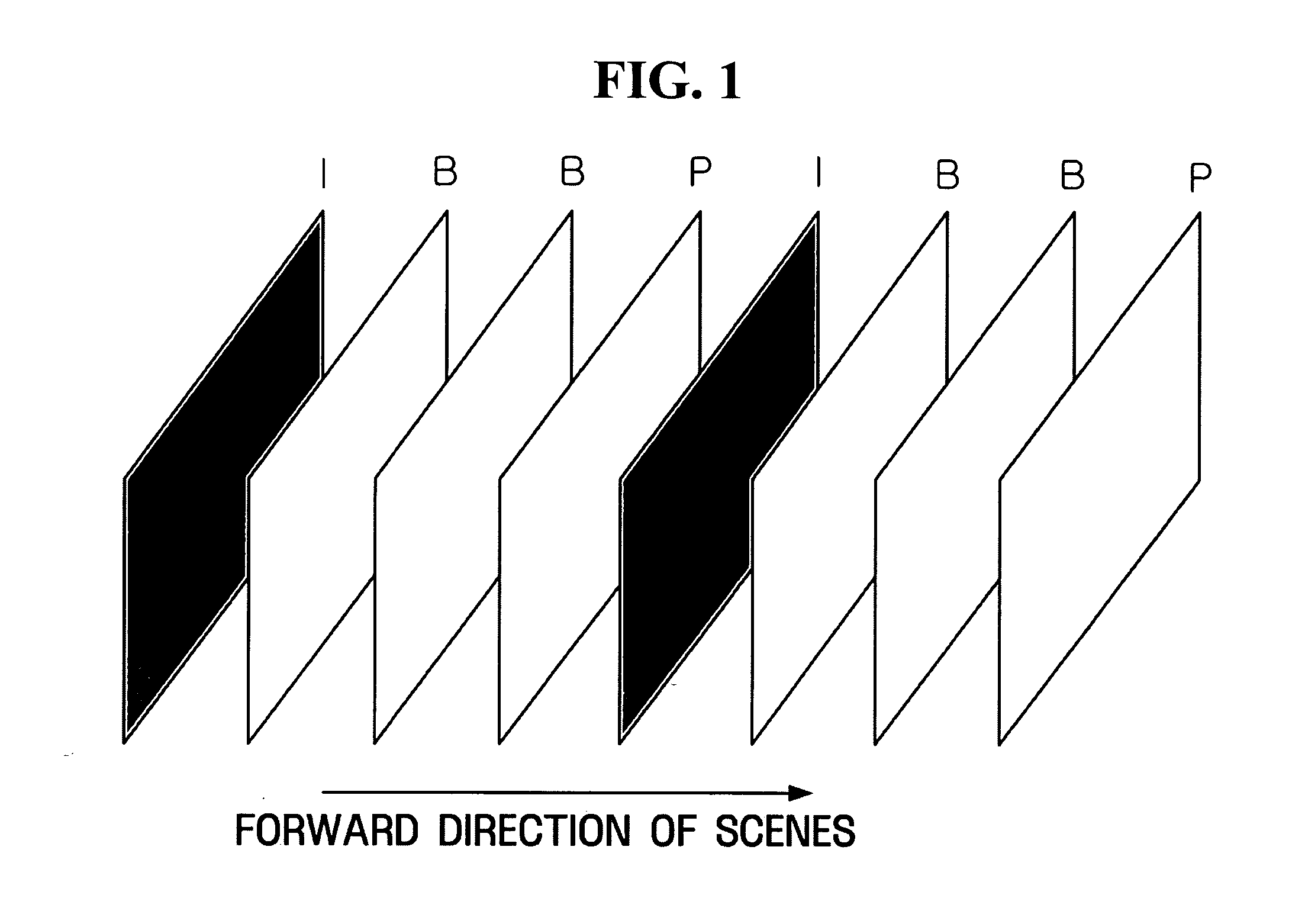

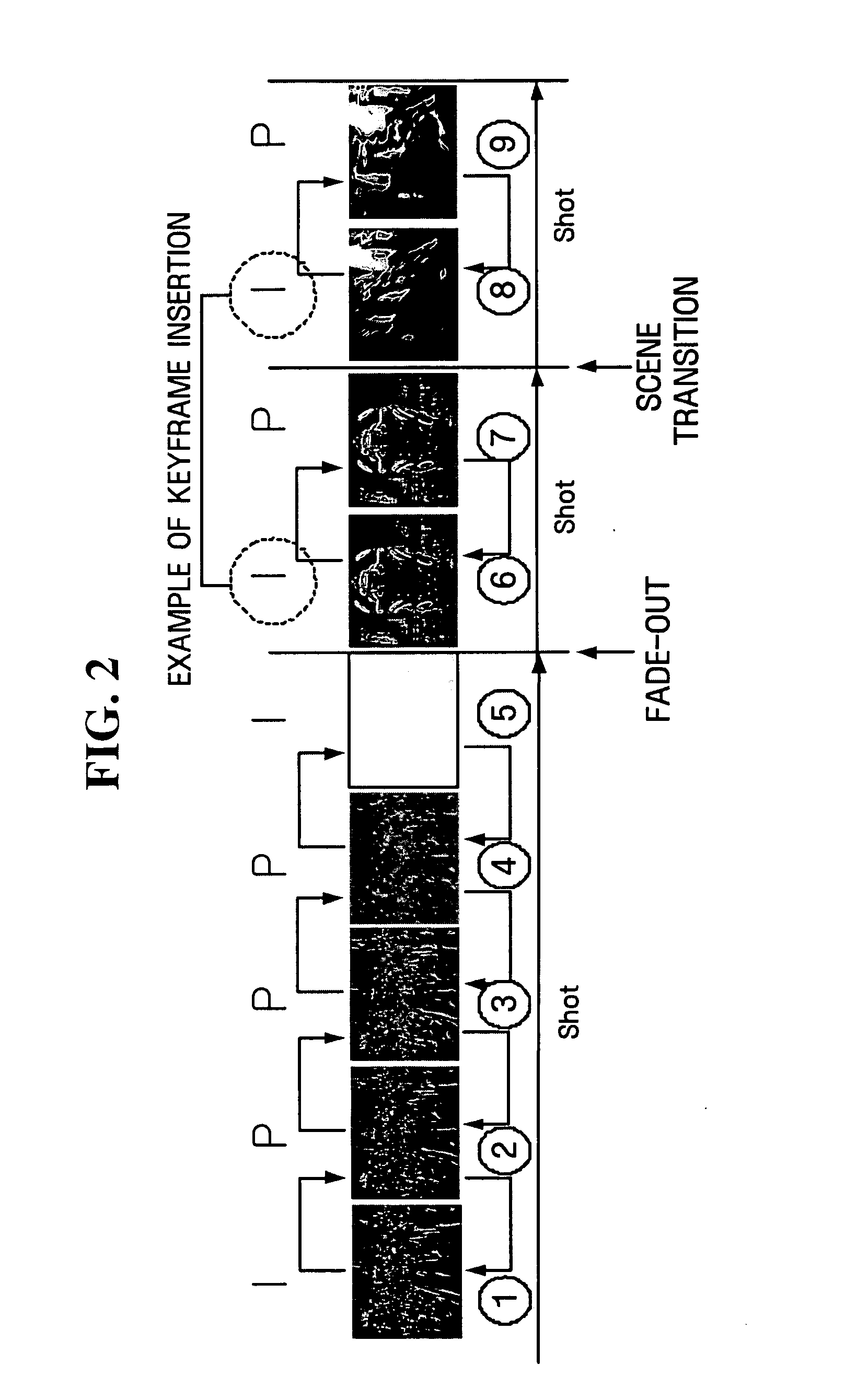

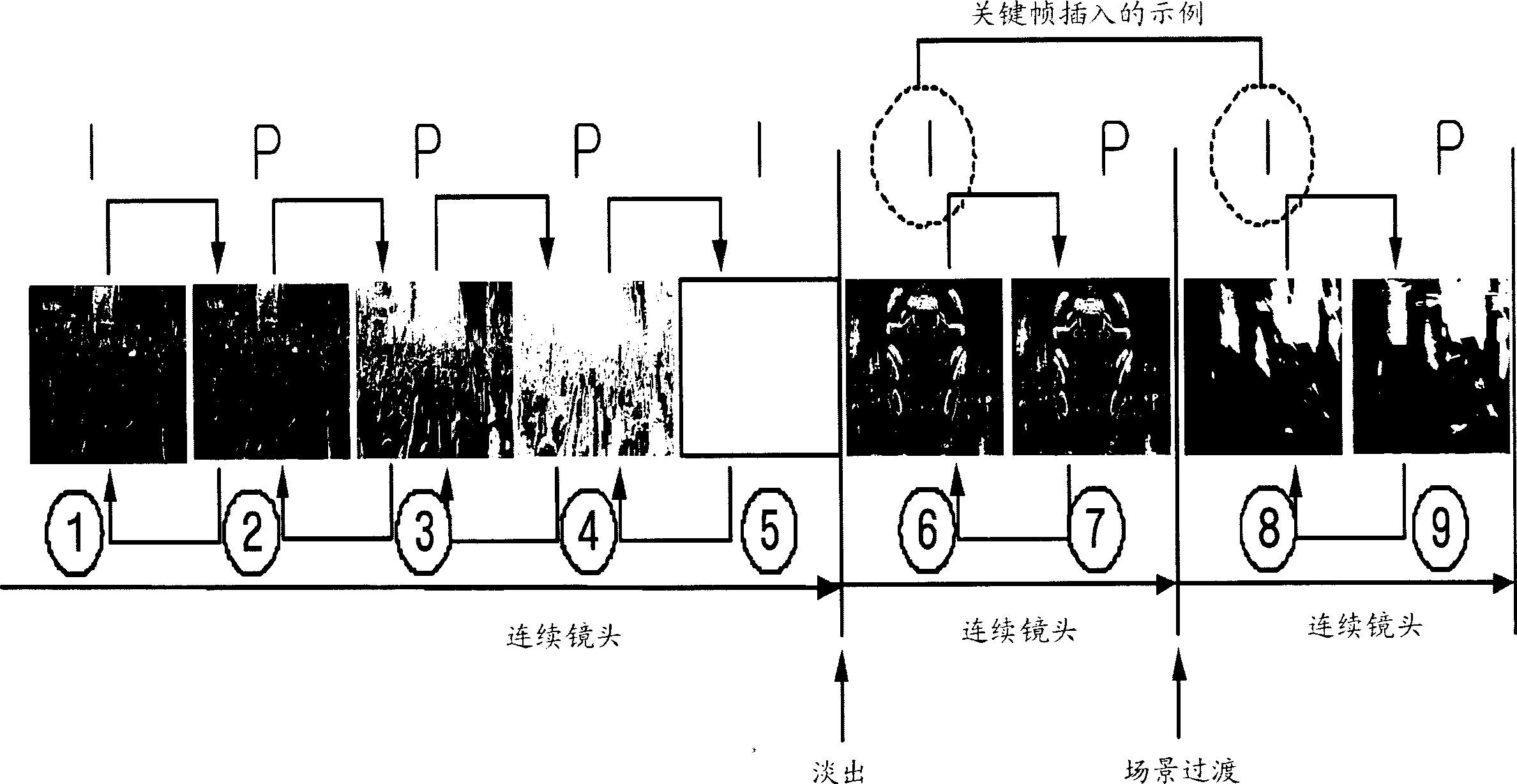

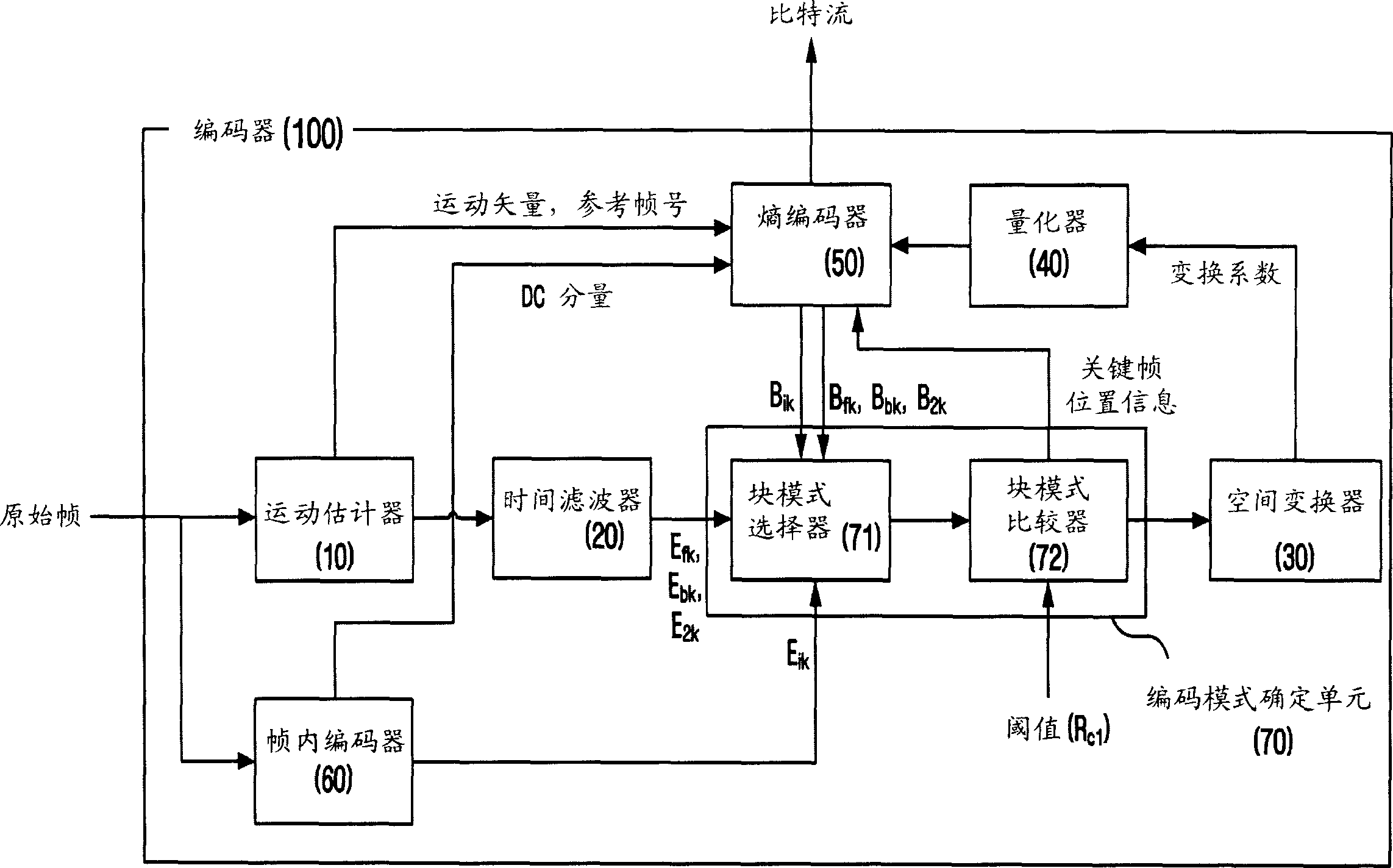

Video coding apparatus and method for inserting key frame adaptively

Status: Inactive | Publication: US20050169371A1 | Tags: Minimal cost, Television system details, Pulse modulation television signal transmission, Transformer, Video encoding

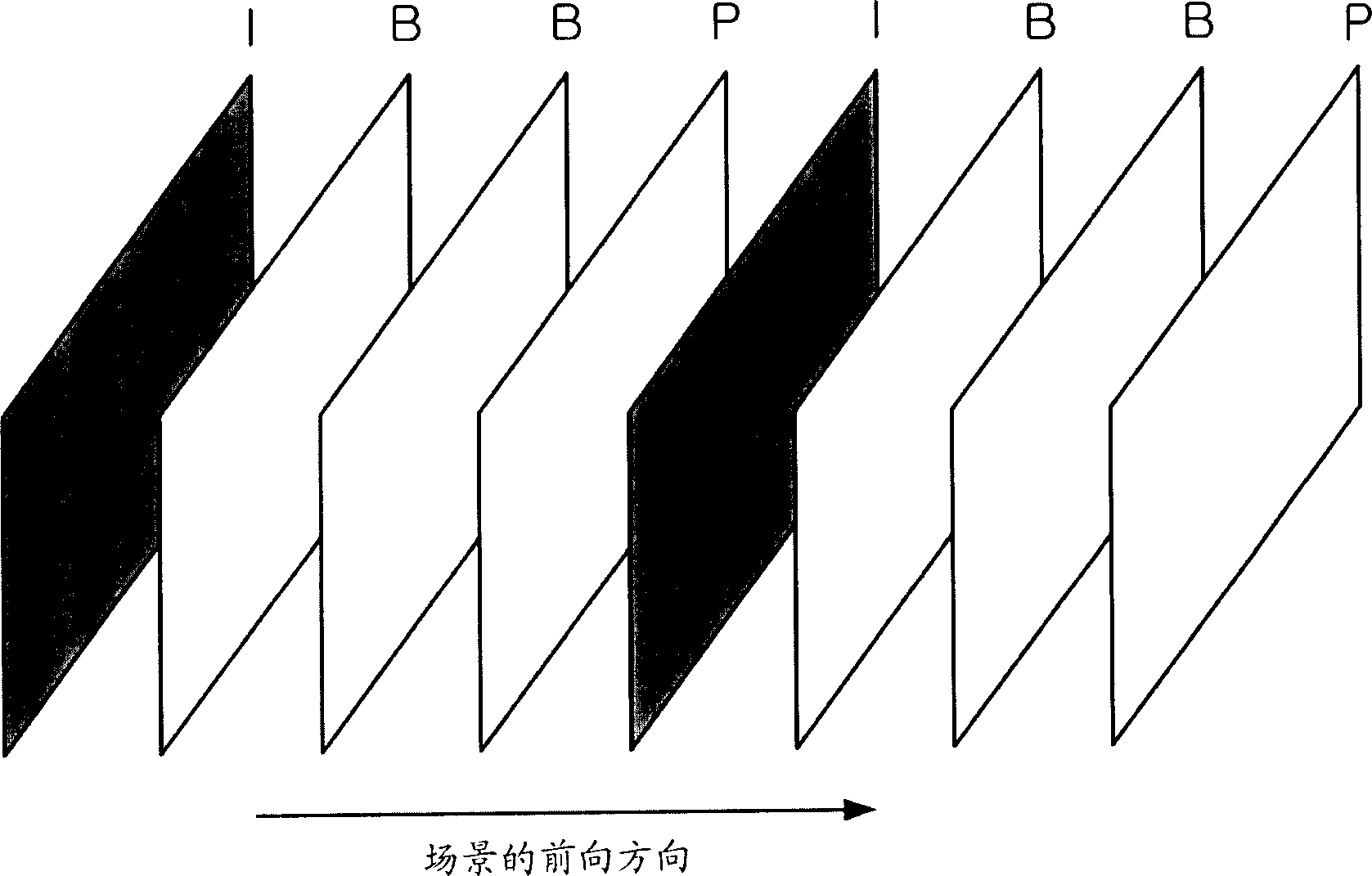

A method is provided for adaptively inserting key frames according to video content so that a user can easily access a desired scene. A video encoder includes a coding mode determination unit and a spatial transformer. The coding mode determination unit receives a temporal residual frame for an original frame and determines whether the original frame contains a scene change by comparing the temporal residual frame with a predetermined reference; it decides to encode the temporal residual frame when there is no scene change and to encode the original frame when there is one. The spatial transformer applies a spatial transform to either the temporal residual frame or the original frame, according to that decision, to obtain transform coefficients. Because key frames are inserted according to image content, the function allowing random access to image frames becomes more useful.
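
The coding mode decision can be sketched as below, assuming the mean absolute value of the temporal residual as the comparison measure and a caller-supplied threshold as the "predetermined reference"; both are illustrative choices, not the patent's definitions. The spatial transform that follows is omitted.

```python
def choose_coding_mode(original, residual, threshold):
    """Return ("intra", original) on a detected scene change, else ("inter", residual).

    Scene-change test (assumed): mean absolute residual above the threshold.
    An intra-coded frame doubles as a key frame / random-access point.
    """
    samples = [abs(v) for row in residual for v in row]
    mean_abs = sum(samples) / len(samples)
    if mean_abs > threshold:
        return "intra", original
    return "inter", residual


frame = [[120, 121], [119, 118]]
print(choose_coding_mode(frame, [[1, -2], [0, 1]], threshold=20)[0])       # inter
print(choose_coding_mode(frame, [[90, -75], [60, 110]], threshold=20)[0])  # intra
```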

Owner: SAMSUNG ELECTRONICS CO LTD

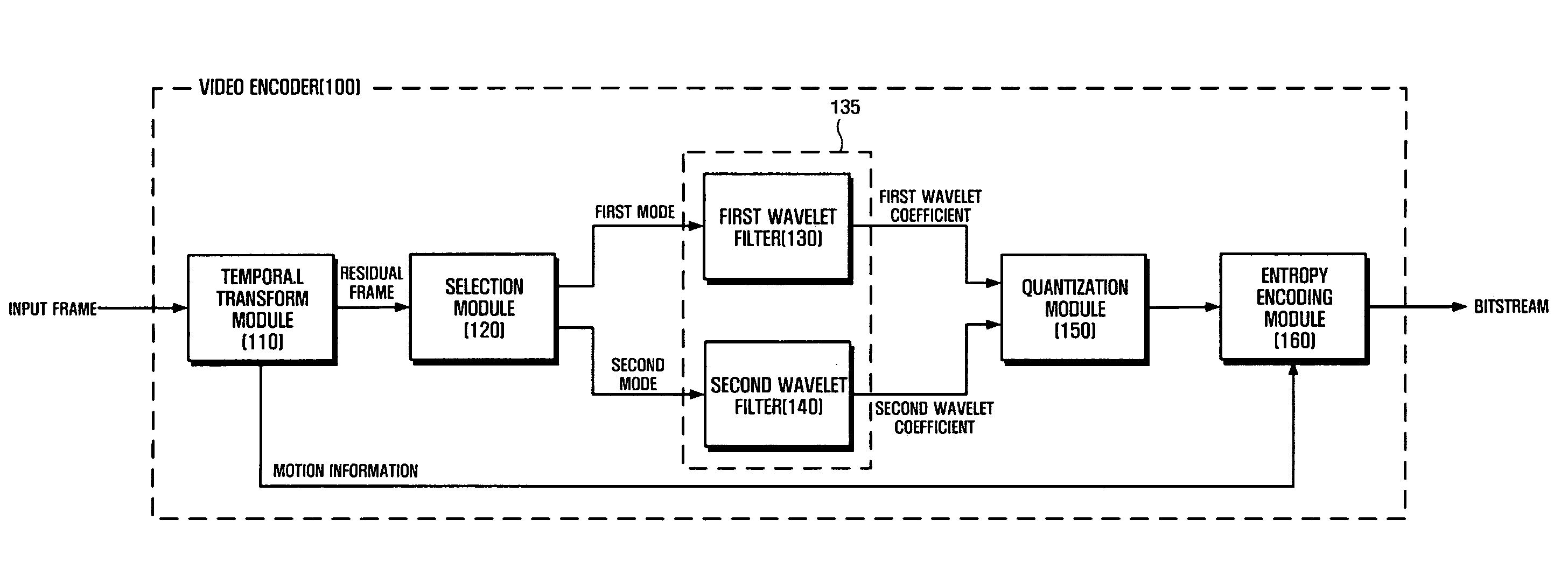

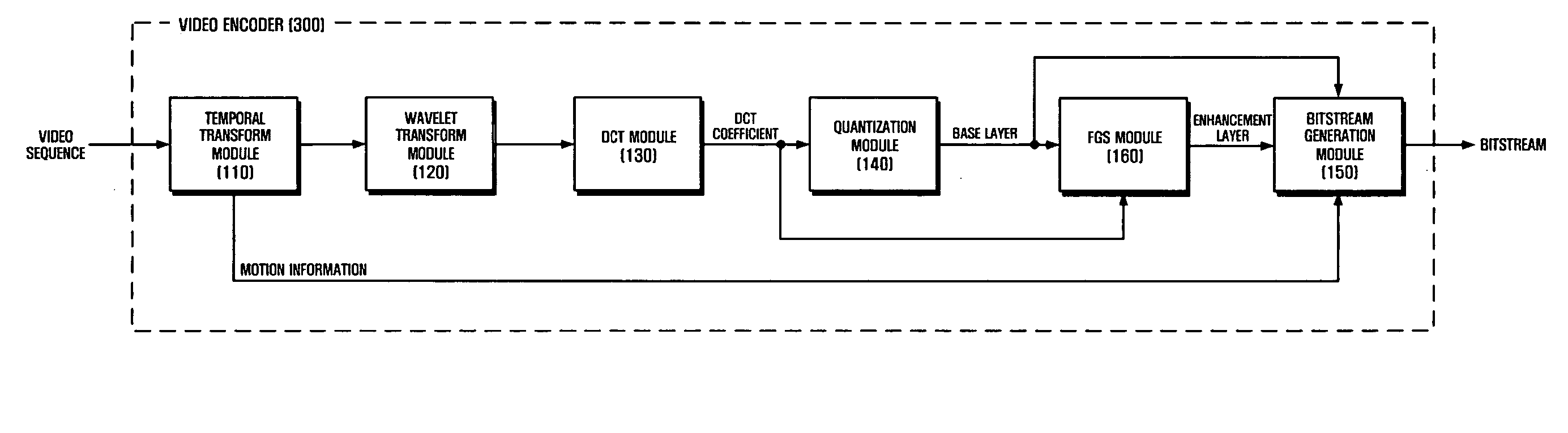

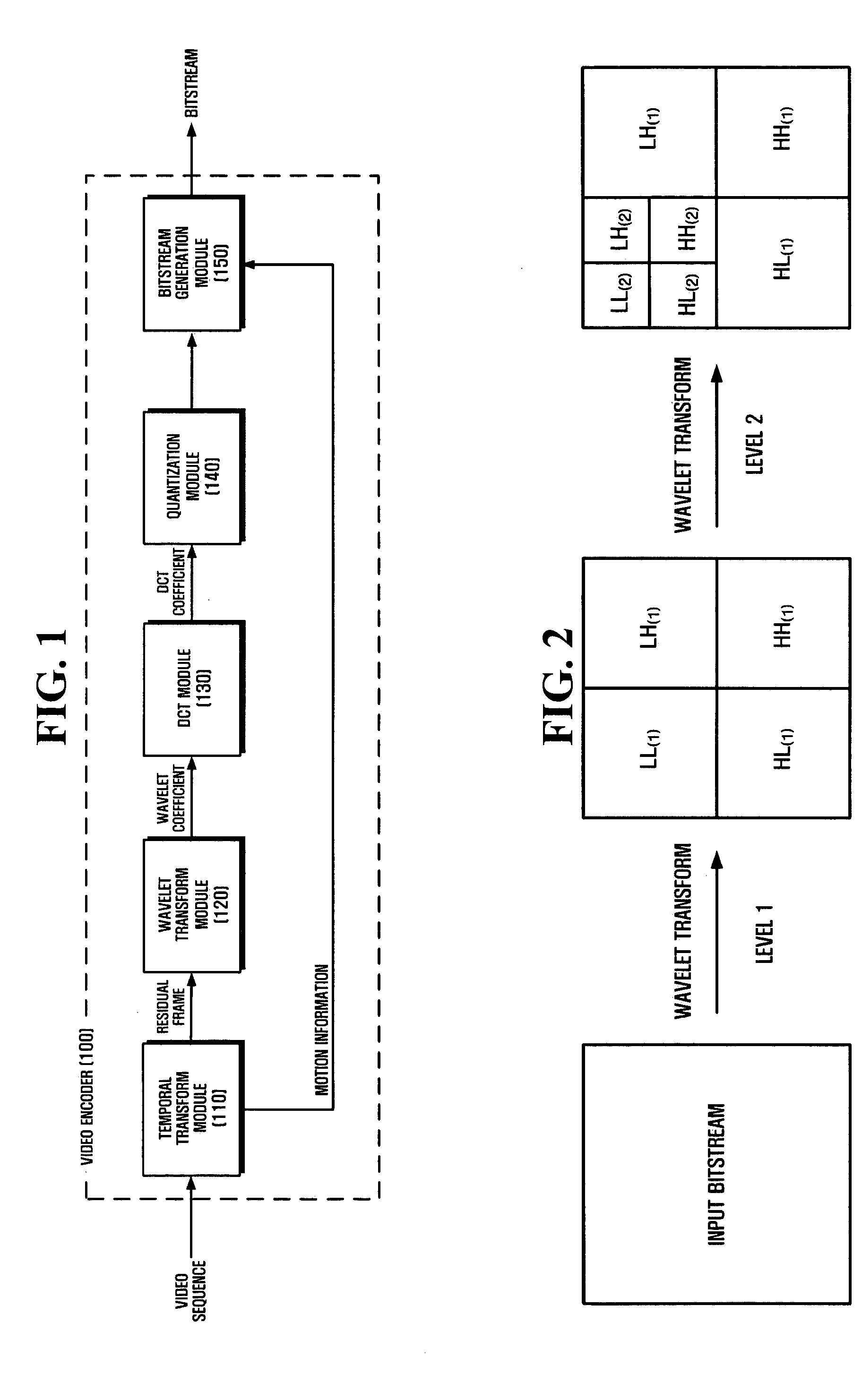

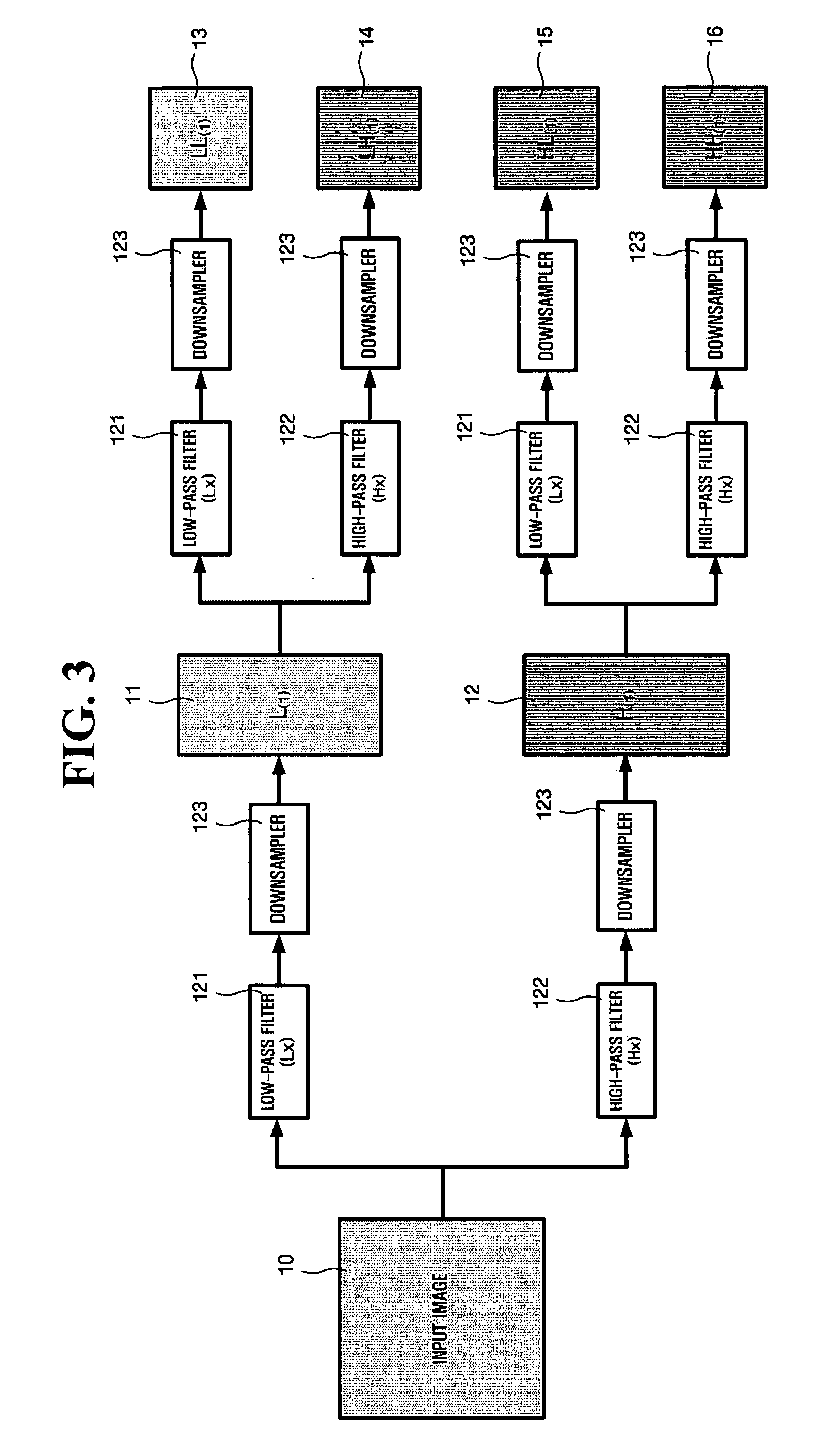

Video coding method and apparatus

Status: Inactive | Publication: US20060088222A1 | Tags: Quality improvement, Character and pattern recognition, Digital video signal modification, Imaging quality, Video encoding

A video coding method and apparatus are provided for improving compression efficiency or video/image quality by selecting a spatial transform method suited to the characteristics of the incoming video/image during compression. The video coding apparatus includes a temporal transform module that removes temporal redundancy in an input frame to generate a residual frame; a wavelet transform module that performs a wavelet transform on the residual frame to generate wavelet coefficients; a Discrete Cosine Transform (DCT) module that performs a DCT on the wavelet coefficients of each DCT block to create DCT coefficients; and a quantization module that quantizes the DCT coefficients.

Owner: SAMSUNG ELECTRONICS CO LTD

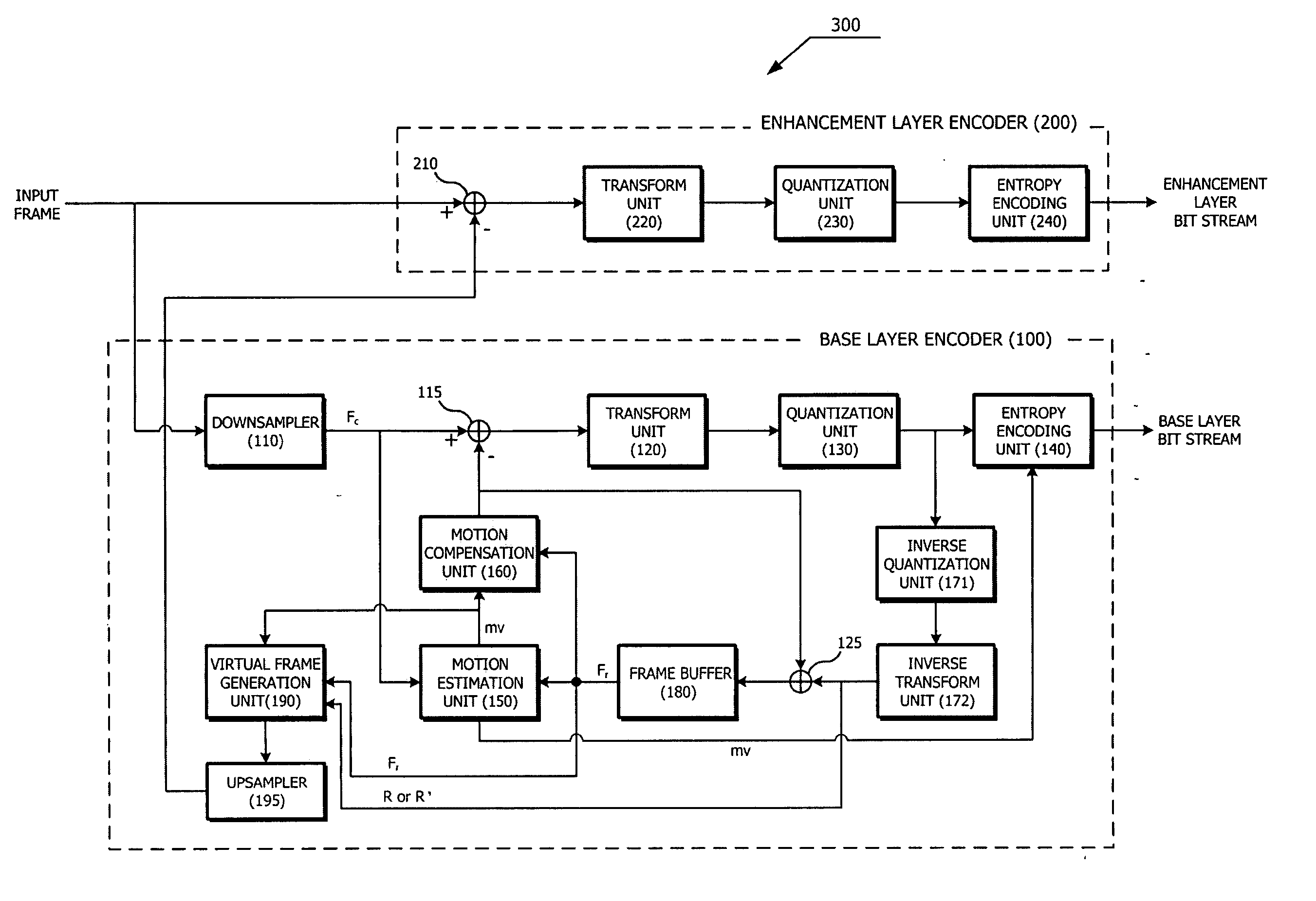

Video coding method and apparatus for efficiently predicting unsynchronized frame

Status: Inactive | Publication: US20060165301A1 | Tags: Improve performance, Character and pattern recognition, Digital video signal modification, Motion vector, Video encoding

A method is provided for efficiently predicting a frame that has no corresponding lower-layer frame in video with a multi-layered structure, together with a video coding apparatus that uses the method. In the video encoding method, motion estimation is performed using, as the reference frame, the first of the two lower-layer frames temporally closest to an unsynchronized frame of the current layer. A residual frame between the reference frame and the second of those lower-layer frames is obtained. A virtual base layer frame at the same temporal location as the unsynchronized frame is then generated from the motion vector obtained by the motion estimation, the reference frame, and the residual frame. The virtual base layer frame is subtracted from the unsynchronized frame, and the resulting difference is encoded.

Owner: SAMSUNG ELECTRONICS CO LTD

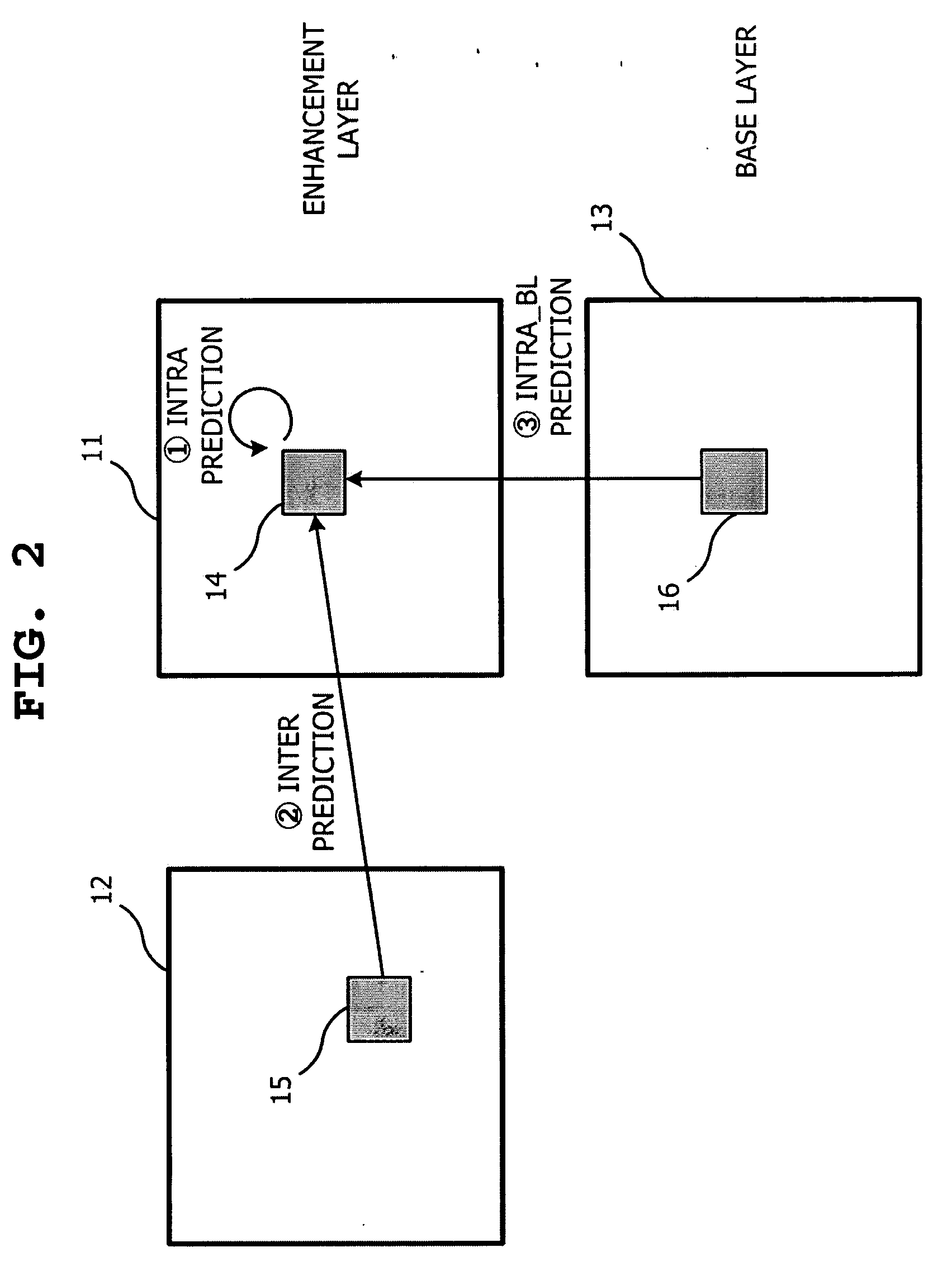

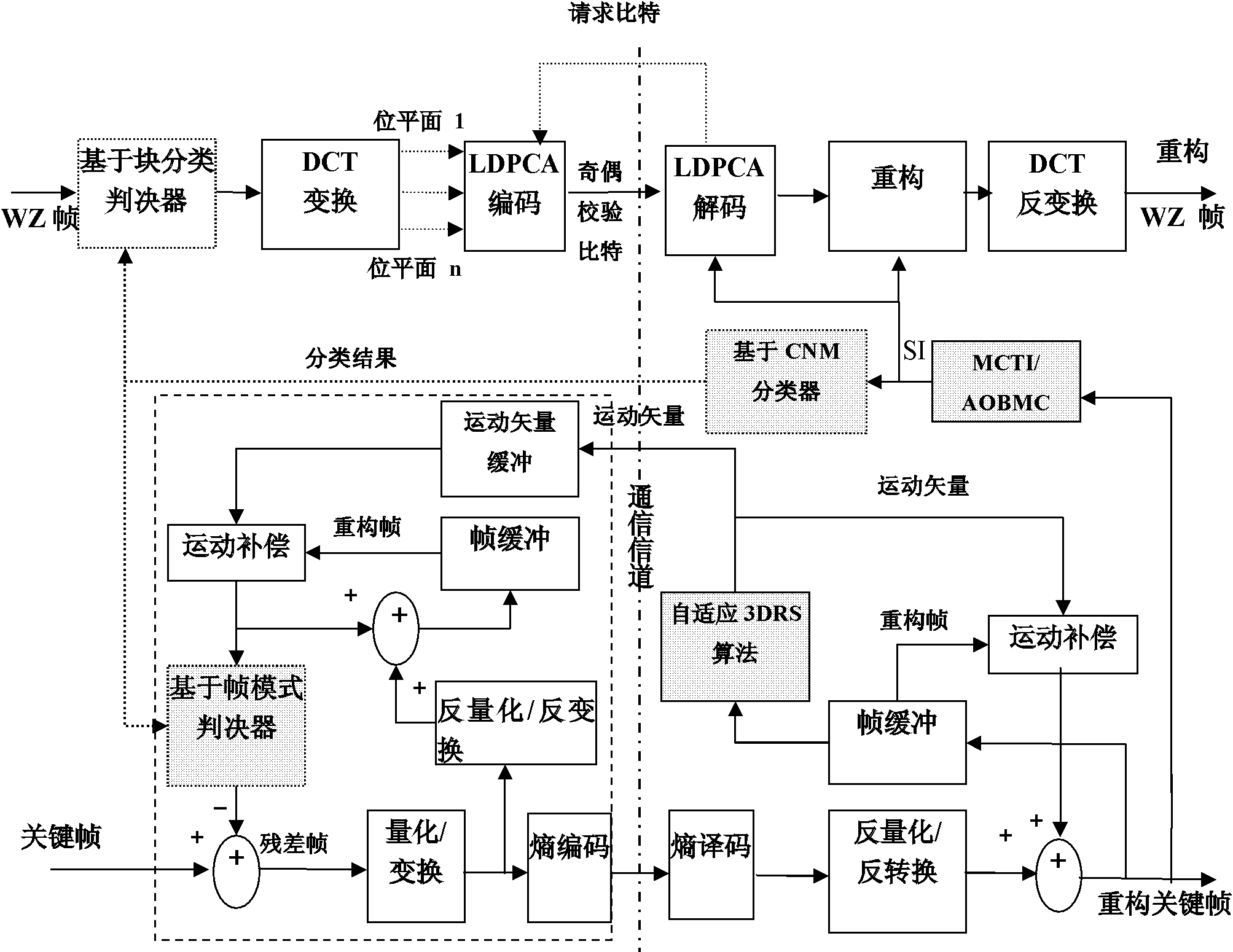

Distributed video coding and decoding methods based on classification of key frames of correlation noise model (CNM)

Status: Inactive | Publication: CN102137263A | Tags: Quality improvement, Precise Motion Vectors, Television systems, Digital video signal modification, Pattern recognition, Computation complexity

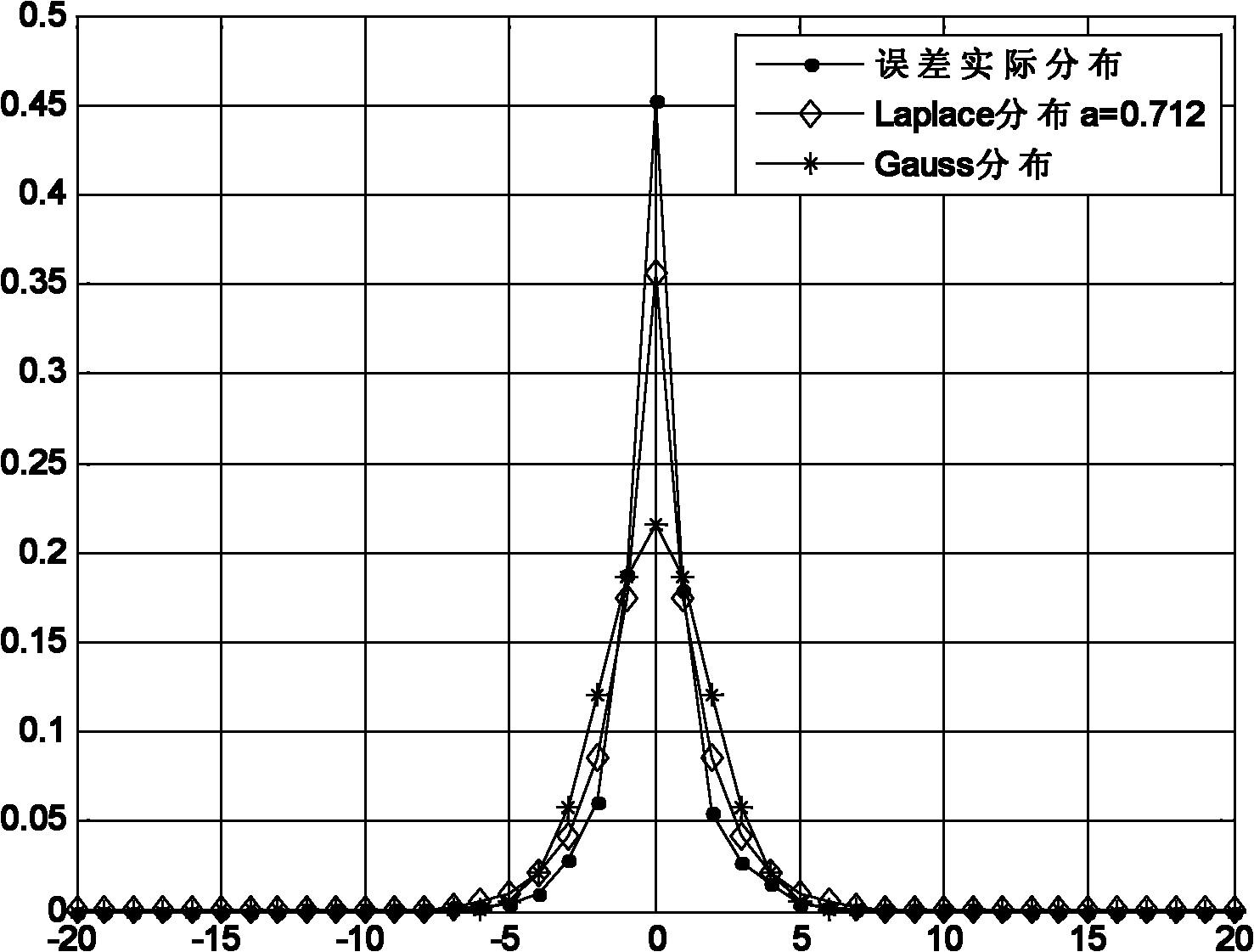

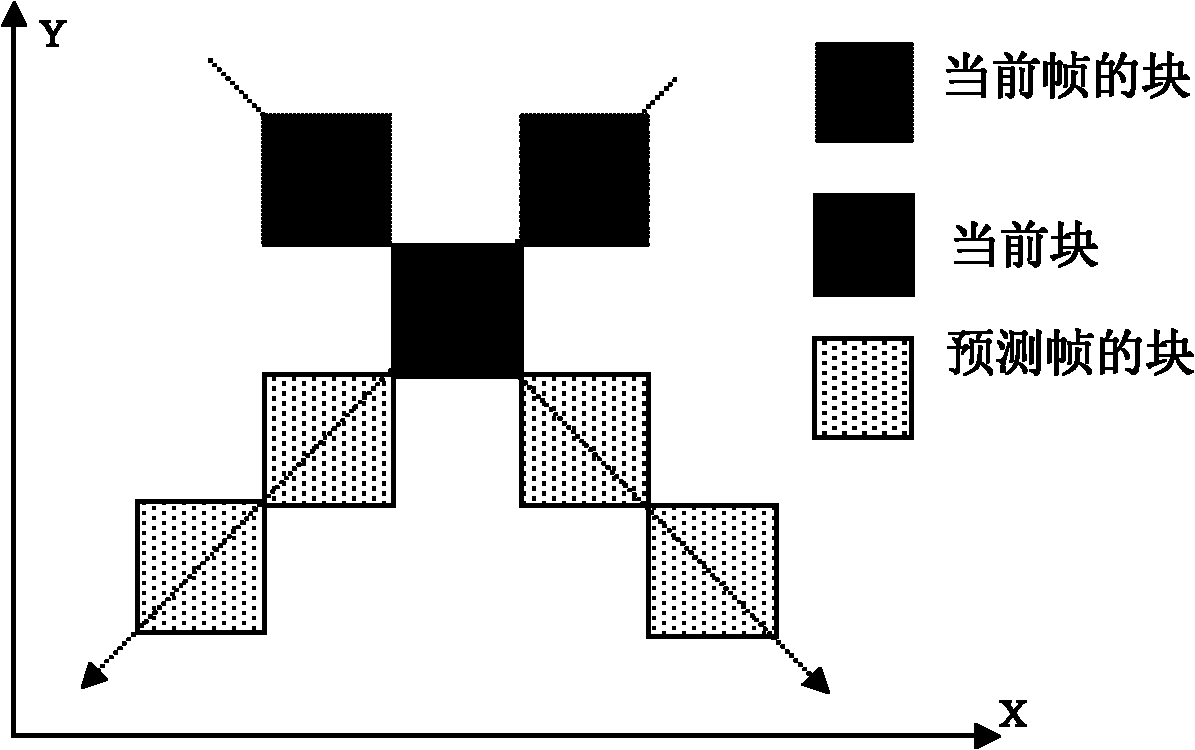

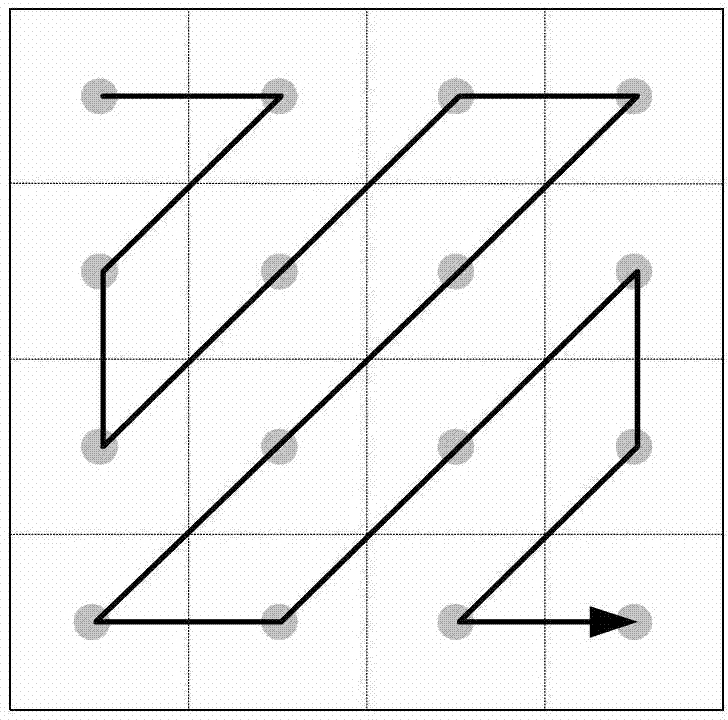

The invention discloses distributed video coding and decoding methods based on classifying key frames with a correlation noise model (CNM). The coding method comprises the following steps: (1) computing a residual frame; (2) calculating Laplacian parameter values of the transform coefficients at the frame, block, and frequency-band levels, and building CNM parameter tables for the different frequency bands from the band-level Laplacian parameters; and (3) according to the residual frame values and the CNM, dividing the coding sequence into high-speed, medium-speed, and low-speed motion blocks, which are coded in intra-frame mode, inverse motion vector estimation mode, and frame-skipping mode, respectively. The decoding method comprises an adaptive three-dimensional recursive search method and an adaptive overlapped block motion compensation method, both based on the CNM key-frame classification. The methods effectively improve the quality of side information in distributed video coding and more effectively solve the problem of incorrect motion vector estimation without increasing the computational complexity of the encoder, while also yielding more accurate motion vectors.
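
Step (3) can be pictured with the usual Laplacian correlation-noise estimate, alpha = sqrt(2 / variance), computed per residual block. The two alpha thresholds below are hypothetical, and the frame- and band-level CNM tables are collapsed into a single block-level estimate for brevity.

```python
import math


def laplace_alpha(block):
    """Laplacian parameter estimate alpha = sqrt(2 / variance) of a residual block."""
    values = [v for row in block for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return math.inf if var == 0 else math.sqrt(2.0 / var)


def classify_block(block, alpha_high=0.1, alpha_low=0.5):
    """Map a residual block to (motion class, coding mode).

    A small alpha means a wide residual distribution, i.e. fast motion.
    Threshold values are hypothetical.
    """
    alpha = laplace_alpha(block)
    if alpha < alpha_high:
        return "high", "intra-frame"
    if alpha < alpha_low:
        return "medium", "inverse motion vector estimation"
    return "low", "frame skipping"


print(classify_block([[-20, 20], [20, -20]]))  # ('high', 'intra-frame')
print(classify_block([[-4, 4], [4, -4]]))      # ('medium', 'inverse motion vector estimation')
print(classify_block([[0, 0], [0, 0]]))        # ('low', 'frame skipping')
```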

Owner: 松日数码发展(深圳)有限公司 +1

Nonlinear, prediction filter for hybrid video compression

Status: Inactive | Publication: US20060285590A1 | Tags: Color television with pulse code modulation, Color television with bandwidth reduction, Nonlinear filter, Linear filter

A method and apparatus for non-linear prediction filtering are disclosed. In one embodiment, the method comprises performing motion compensation to generate a motion compensated prediction using a block from a previously coded frame, performing non-linear filtering on the motion compensated prediction in the transform domain with a non-linear filter as part of a fractional interpolation process to generate a motion compensated non-linear prediction, subtracting the motion compensated non-linear prediction from a block in a current frame to produce a residual frame, and coding the residual frame.

Owner: NTT DOCOMO INC

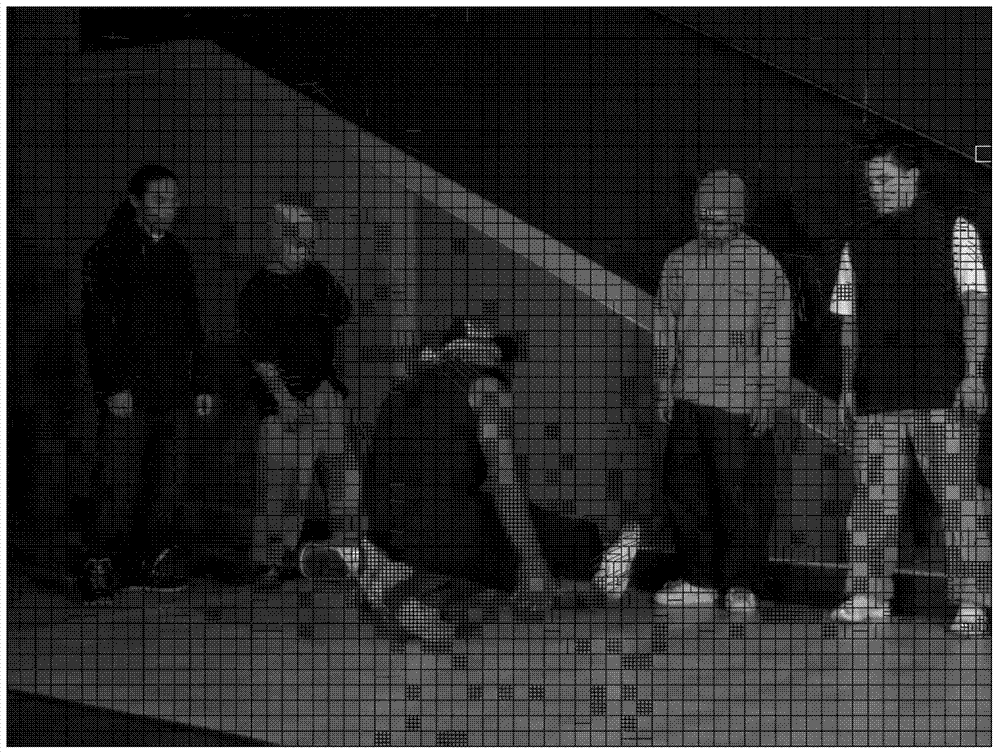

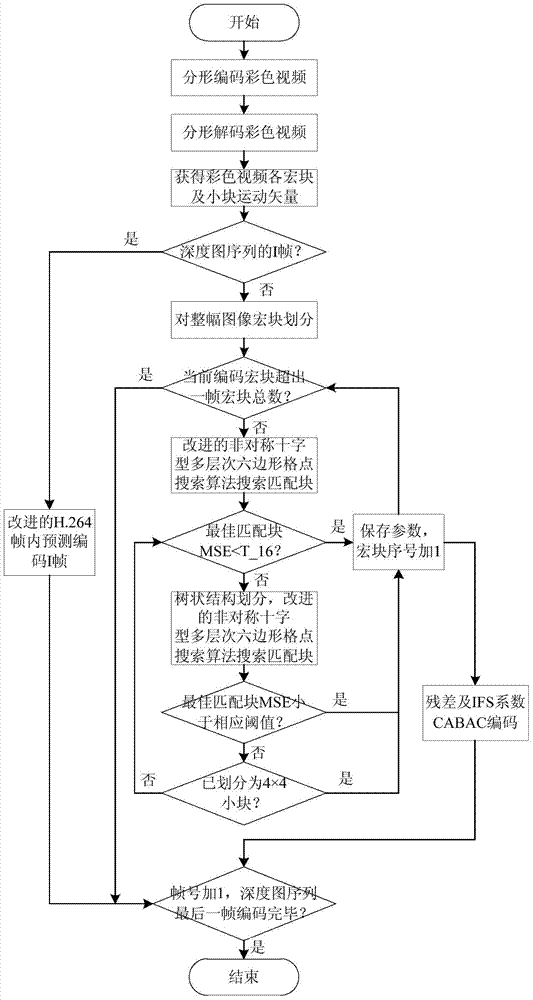

Depth map sequence fractal coding method based on motion vectors of color video

Status: Active | Publication: CN103581647A | Tags: Guaranteed coding quality, Improve forecast accuracy, Stereoscopic systems, Motion vector, Intra-frame

The invention provides a fractal coding method for depth map sequences based on the motion vectors of the corresponding color video. First, the color video is coded with a fractal video compression method. Second, the color video is decoded with a fractal video decompression method to obtain the motion vectors of all its macroblocks and small blocks. Third, for coding the I-frames of the depth map sequence, a smooth block is defined based on the H.264 intra-frame prediction coding method; for smooth blocks, the values of adjacent reference pixels are copied directly, without traversing the various prediction directions. Fourth, the P-frames of the depth map sequence are coded with block motion estimation/compensation fractal coding: the motion vectors of depth-map macroblocks are predicted from the correlation between them and the corresponding color-video macroblock motion vectors; an enhanced non-uniform multilevel hexagon search template replaces the original non-uniform cross multilevel hexagon search template in UMHexagonS; the most similar matching blocks are searched for with this improved UMHexagonS; and the fractal parameters are recorded. Fifth, the residual frames of the I-frames, the residual frames of the P-frames, and the fractal parameters of the P-frames are compressed with CABAC entropy coding.
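
Block-based fractal video coders commonly describe each block by a displacement plus a contrast scale s and brightness offset o, chosen so that s * (reference block) + o best matches the current block in the least-squares sense. The sketch below assumes that parameterization and a small full search over displacements; it is not UMHexagonS, and it does not constrain s as classical fractal image coders do.

```python
def fractal_params(domain, target):
    """Least-squares (s, o) minimizing sum((target - (s * domain + o)) ** 2)."""
    d = [v for row in domain for v in row]
    r = [v for row in target for v in row]
    n = len(d)
    sd, sr = sum(d), sum(r)
    sdd = sum(x * x for x in d)
    sdr = sum(x * y for x, y in zip(d, r))
    denom = n * sdd - sd * sd
    s = 0.0 if denom == 0 else (n * sdr - sd * sr) / denom
    o = (sr - s * sd) / n
    return s, o


def best_match(ref, block, top, left, search):
    """Full search within +/-search pixels; returns (dy, dx, s, o, sq_error)."""
    h, w = len(block), len(block[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = top + dy, left + dx
            if y < 0 or x < 0 or y + h > len(ref) or x + w > len(ref[0]):
                continue  # candidate block would fall outside the reference
            cand = [row[x:x + w] for row in ref[y:y + h]]
            s, o = fractal_params(cand, block)
            err = sum((rv - (s * dv + o)) ** 2
                      for crow, brow in zip(cand, block)
                      for dv, rv in zip(crow, brow))
            if best is None or err < best[4]:
                best = (dy, dx, s, o, err)
    return best


ref = [[3, 1, 4, 1],
       [5, 9, 2, 6],
       [5, 3, 5, 8],
       [9, 7, 9, 3]]
block = [[23, 9], [11, 15]]  # 2 * (ref block at row 1, col 1) + 5
print(best_match(ref, block, top=1, left=1, search=1))  # (0, 0, 2.0, 5.0, 0.0)
```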

Owner: 江苏华普泰克石油装备有限公司

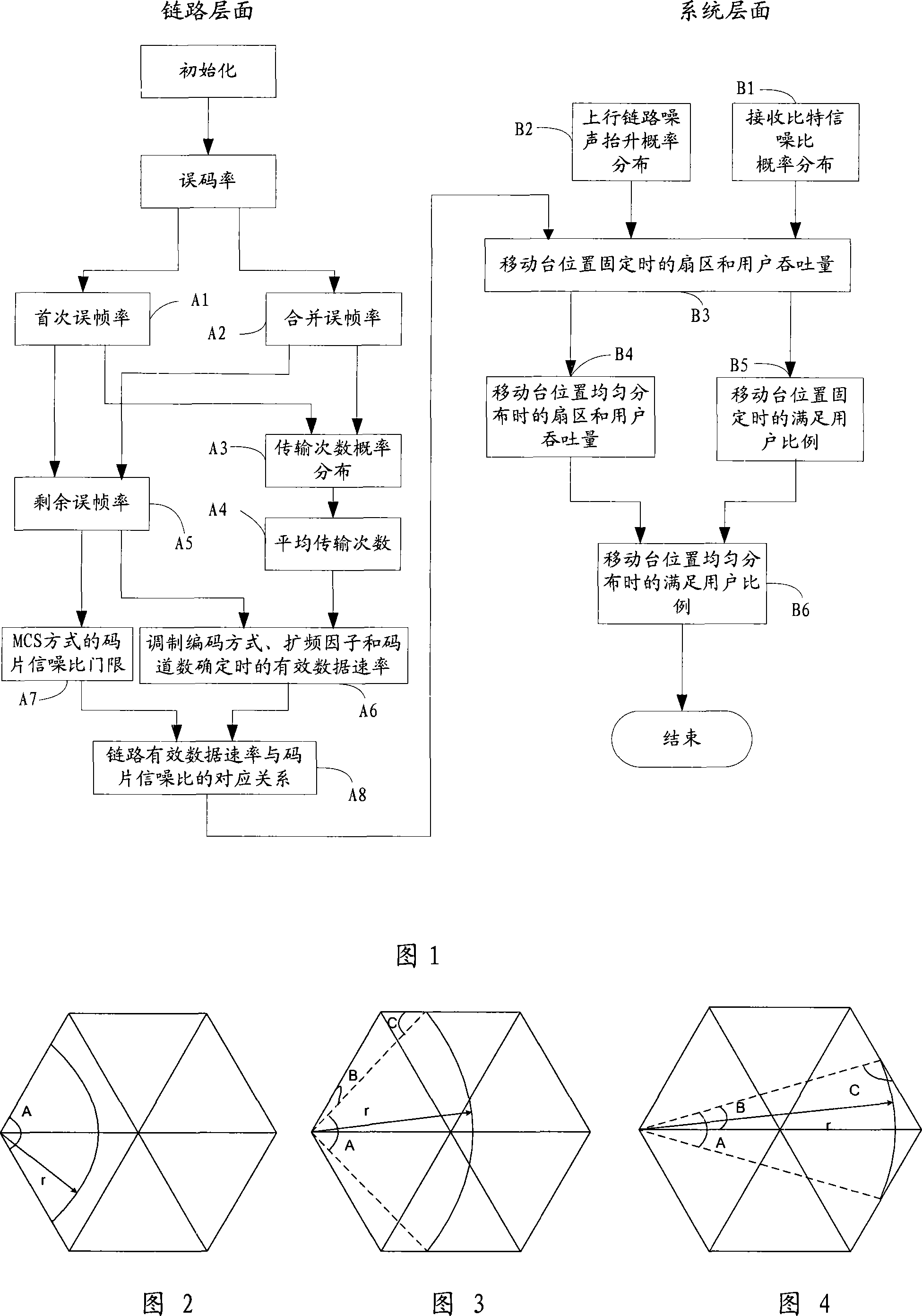

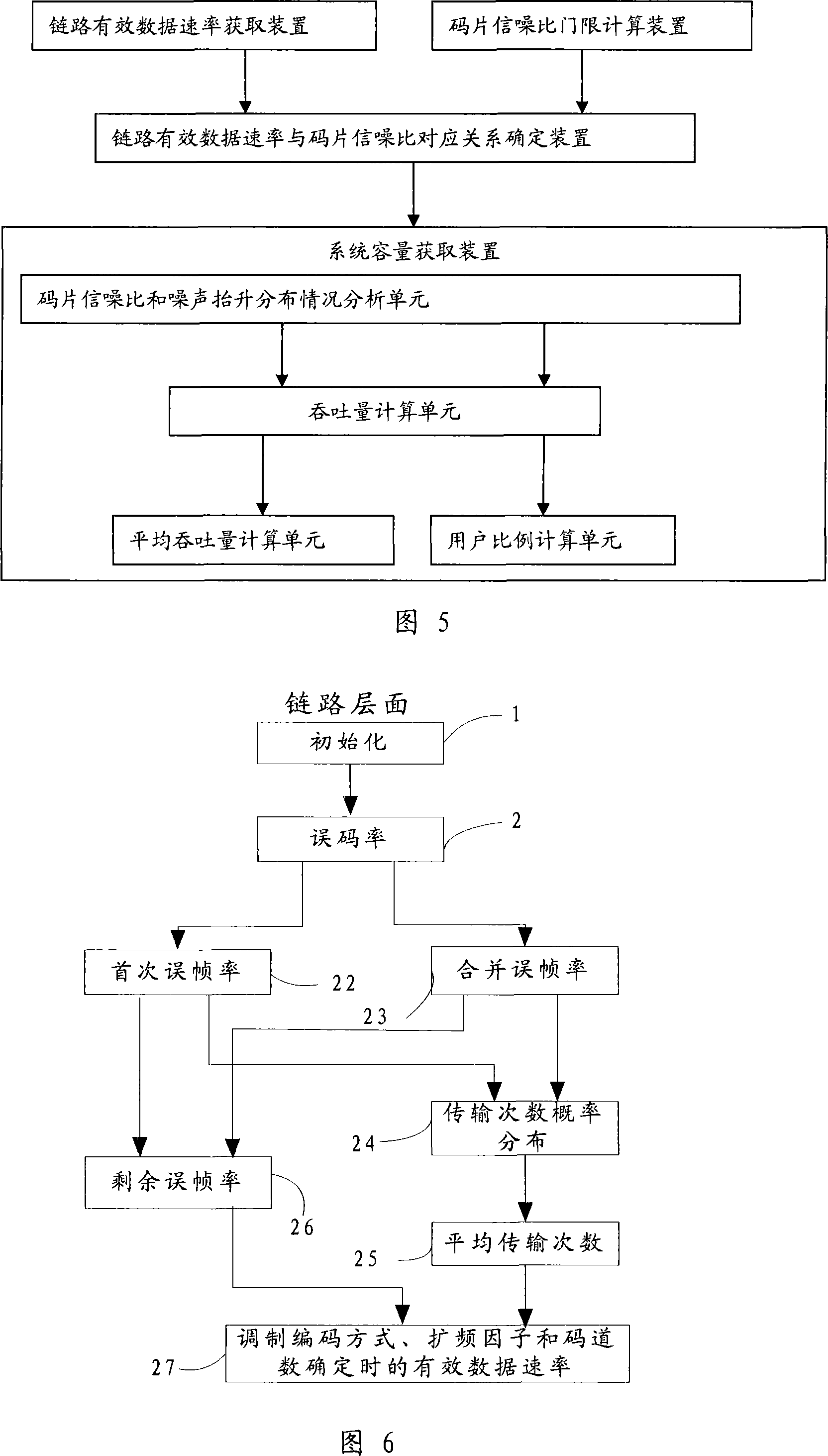

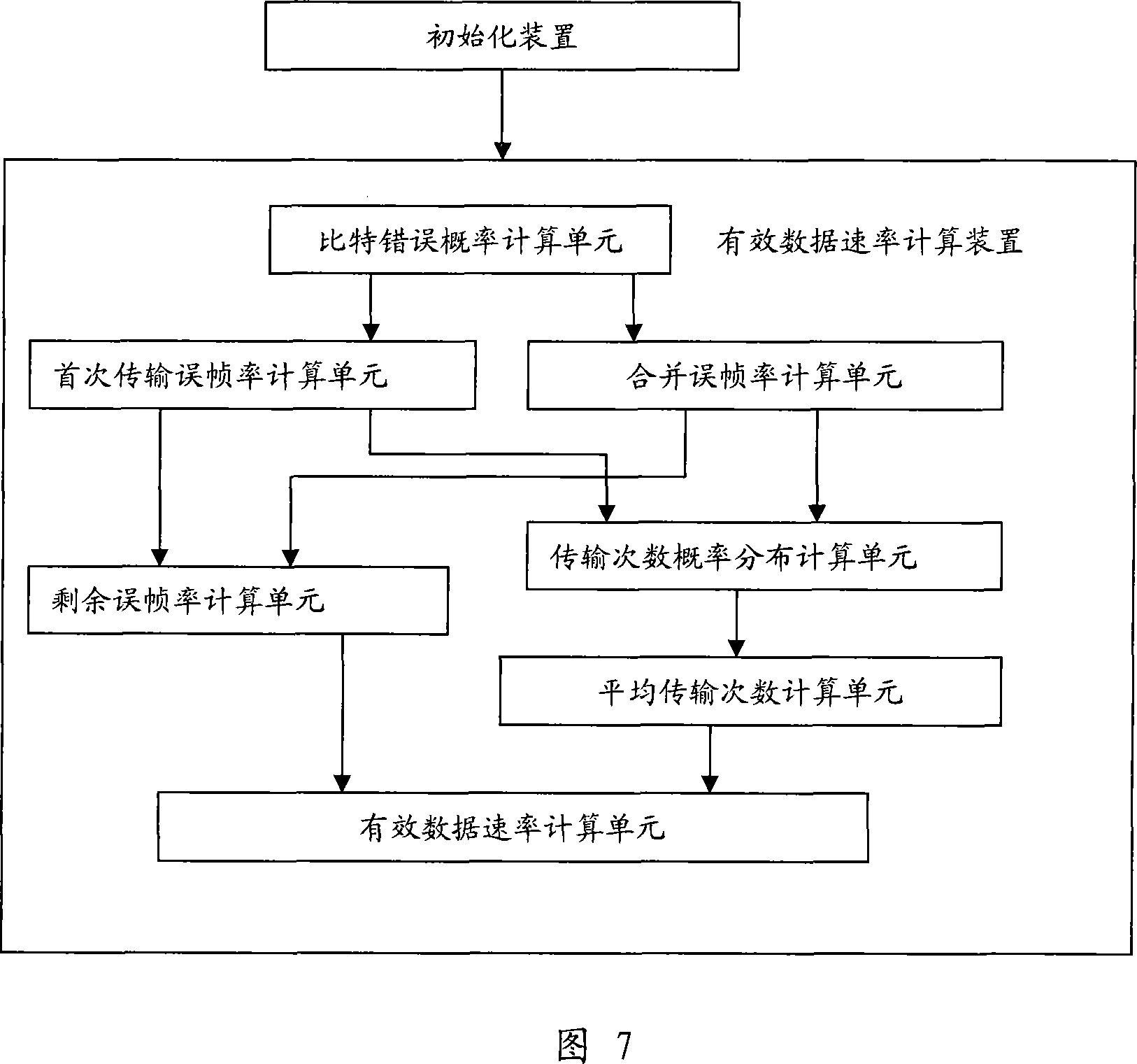

Method and system for obtaining high-speed uplink packet scheduling capacity, and method and device for obtaining link effective data rate

Status: Active | Publication: CN101072085A | Tags: Easy to analyze, Error prevention/detection by using return channel, Transmission control/equalising, System capacity, Packet scheduling

The invention discloses a method and system for obtaining the high-speed uplink packet scheduling capacity, and a method and device for obtaining the effective data rate (EDR) of a link. It addresses a shortcoming of current techniques, which consider only the system layer and therefore give capacity estimates that do not match practical conditions. Based on the physical-layer code rate, the modulation and coding scheme, the spreading factor, and the number of channels, the method determines the link EDR under different fast hybrid automatic repeat request (HARQ) modes. Based on the system's residual frame error rate, it determines the SNR thresholds of the codes and obtains the correspondence between the system EDR and the code SNR. The link EDR is then used to obtain the system capacity. By jointly analyzing the effects of link adaptation, fast HARQ, and fast scheduling at the system layer on the high-speed uplink packet scheduling capacity, the invention gives estimates that match practical conditions more closely.

Owner: XFUSION DIGITAL TECH CO LTD

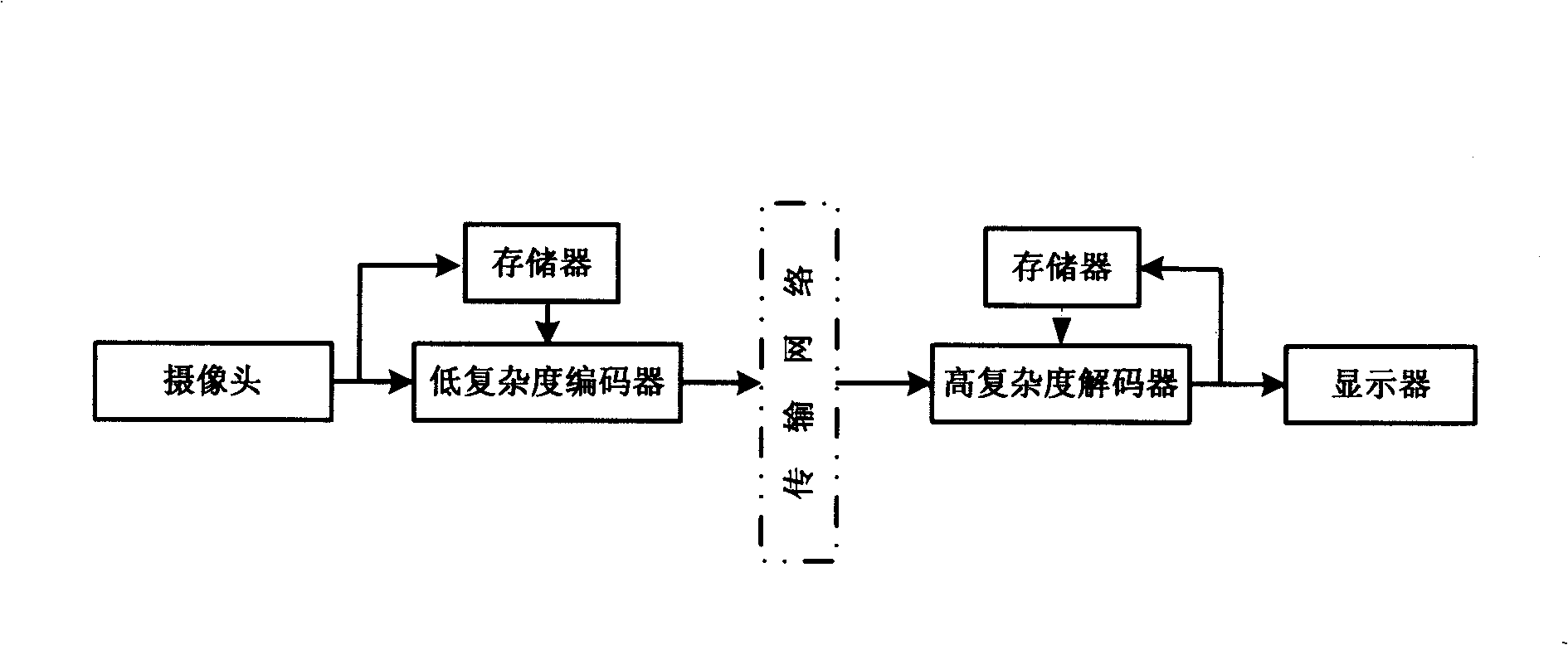

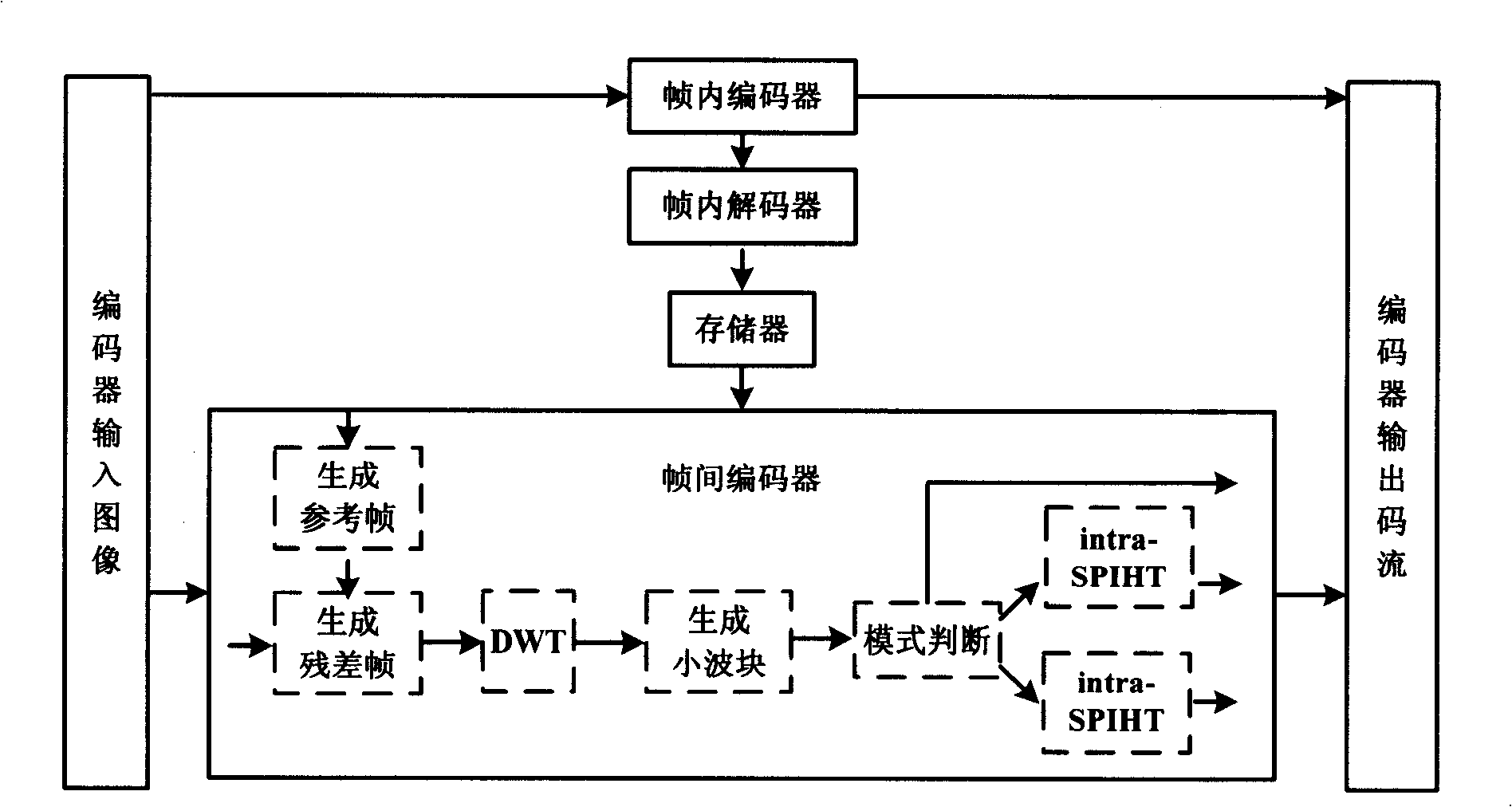

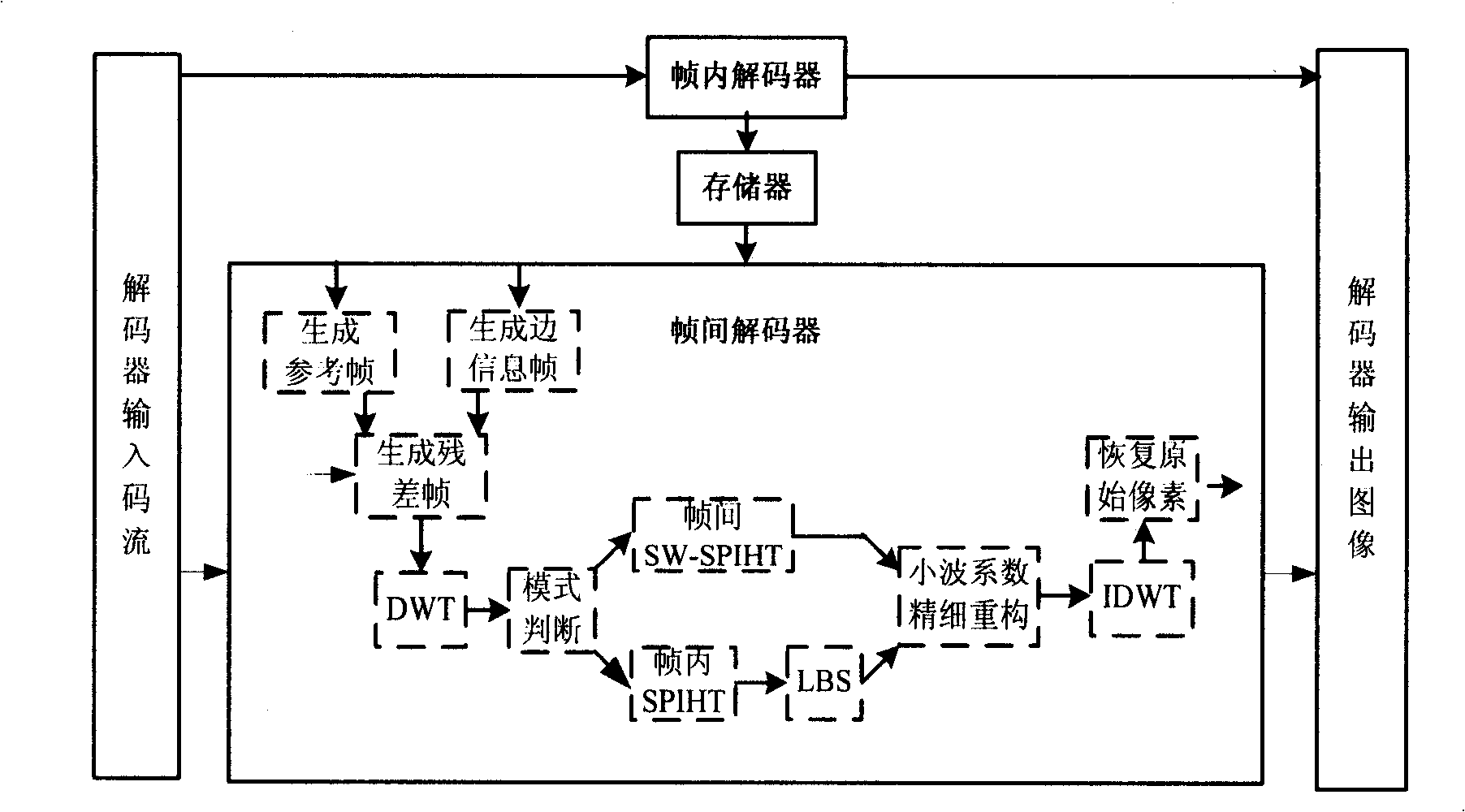

Hybrid distributed video encoding method based on wavelet-domain intra-frame mode decision

Status: Inactive | Publication: CN101335892A | Tags: Joint Quantitative Facility, Improve rate-distortion performance, Television systems, Digital video signal modification, Side information, Key frame

A hybrid distributed video encoding method based on wavelet-domain intra-frame mode decision, which improves rate-distortion performance, is characterized by the following steps. (1) Low-complexity encoding: key frames are coded with a conventional intra-frame encoder; the reference frame of the Wyner-Ziv frame is generated by weighted-average interpolation; a residual frame is generated by subtraction; a discrete wavelet transform (DWT) is applied to the residual frame to produce wavelet blocks; an intra-frame mode decision is made for each wavelet block; the mode information is entropy coded; and each wavelet block is coded with inter-frame SW-SPIHT or intra-frame SPIHT. (2) High-complexity decoding: key frames are decoded with a conventional intra-frame decoding algorithm; motion-estimation interpolation produces the side-information frame of the Wyner-Ziv frame; the decoder-side reference frame is generated by weighted-average interpolation; a decoder-side residual frame is generated by subtraction; DWT is applied to this residual frame; the mode information is entropy decoded; LBS is used for motion estimation to generate more accurate side information; the wavelet coefficients are finely reconstructed; and the original pixels are recovered by inverse discrete wavelet transform (IDWT) and addition.
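
The encoder-side reference, residual, and transform steps can be sketched as follows, assuming equal weights (w = 0.5) for the weighted-average interpolation and a one-level Haar wavelet in its simple averaging form as a stand-in for the unspecified DWT. Mode decision and the SW-SPIHT/SPIHT coding are omitted.

```python
def weighted_interpolation(key1, key2, w=0.5):
    """Reference for a Wyner-Ziv frame: w * key1 + (1 - w) * key2."""
    return [[w * a + (1 - w) * b for a, b in zip(r1, r2)]
            for r1, r2 in zip(key1, key2)]


def haar_1d(x):
    """One level of an averaging Haar transform: [averages..., details...]."""
    half = len(x) // 2
    avg = [(x[2 * i] + x[2 * i + 1]) / 2 for i in range(half)]
    det = [(x[2 * i] - x[2 * i + 1]) / 2 for i in range(half)]
    return avg + det


def inverse_haar_1d(y):
    """Invert haar_1d: x[2i] = avg + det, x[2i + 1] = avg - det."""
    half = len(y) // 2
    out = []
    for a, d in zip(y[:half], y[half:]):
        out += [a + d, a - d]
    return out


def haar_2d(frame):
    """Separable one-level 2-D Haar: rows first, then columns (even sizes)."""
    rows = [haar_1d(r) for r in frame]
    cols = [haar_1d(list(c)) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]


key1 = [[0, 0], [0, 0]]
key2 = [[4, 4], [4, 4]]
wyner_ziv = [[3, 3], [3, 3]]
reference = weighted_interpolation(key1, key2)  # [[2.0, 2.0], [2.0, 2.0]]
residual = [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(wyner_ziv, reference)]
print(haar_2d(residual))  # [[1.0, 0.0], [0.0, 0.0]]: energy compacted into LL
```

The decoder mirrors these steps and finishes with the inverse transform plus addition, which `inverse_haar_1d` illustrates for one dimension.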

Owner: TAIYUAN UNIVERSITY OF SCIENCE AND TECHNOLOGY

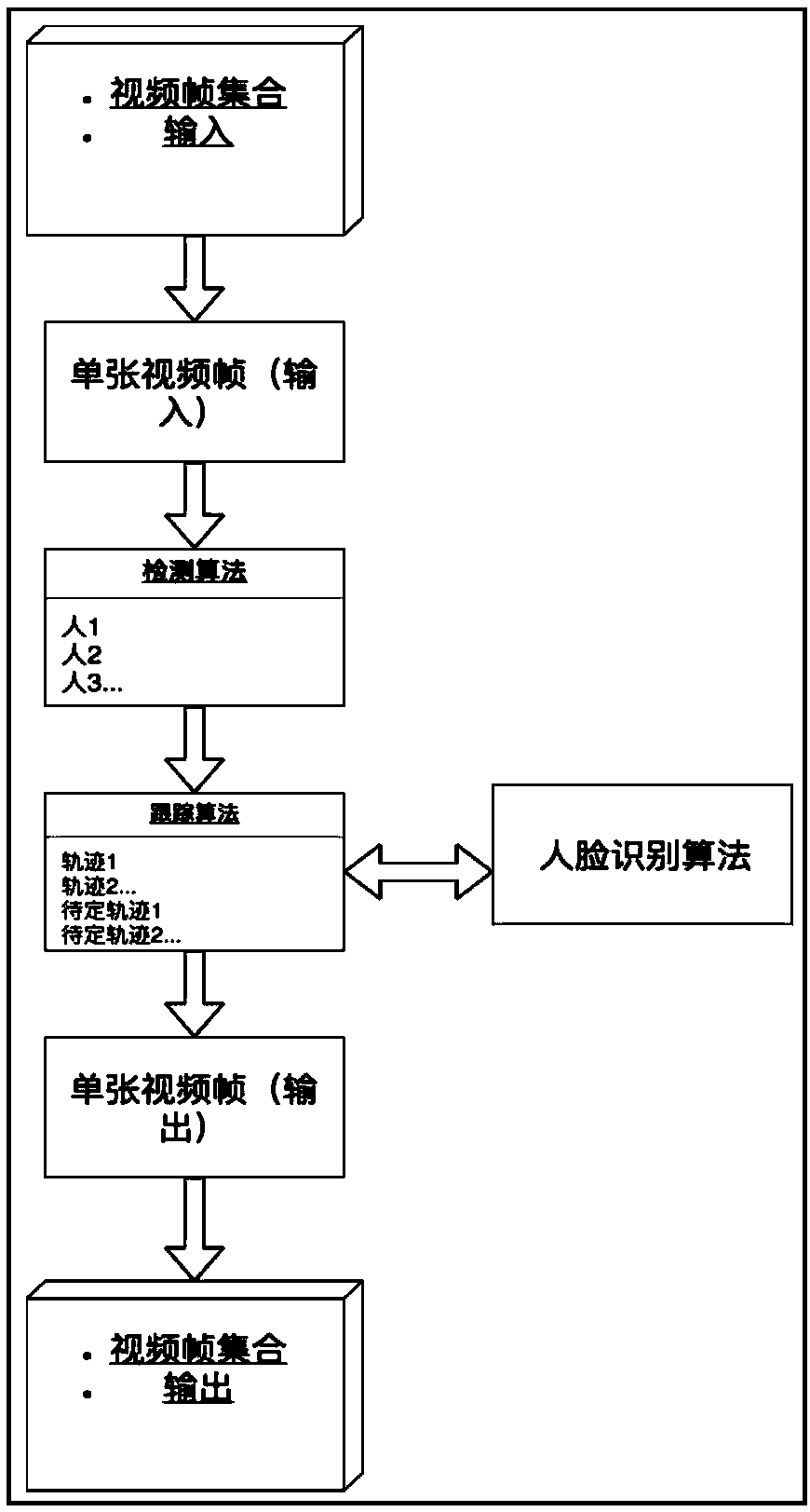

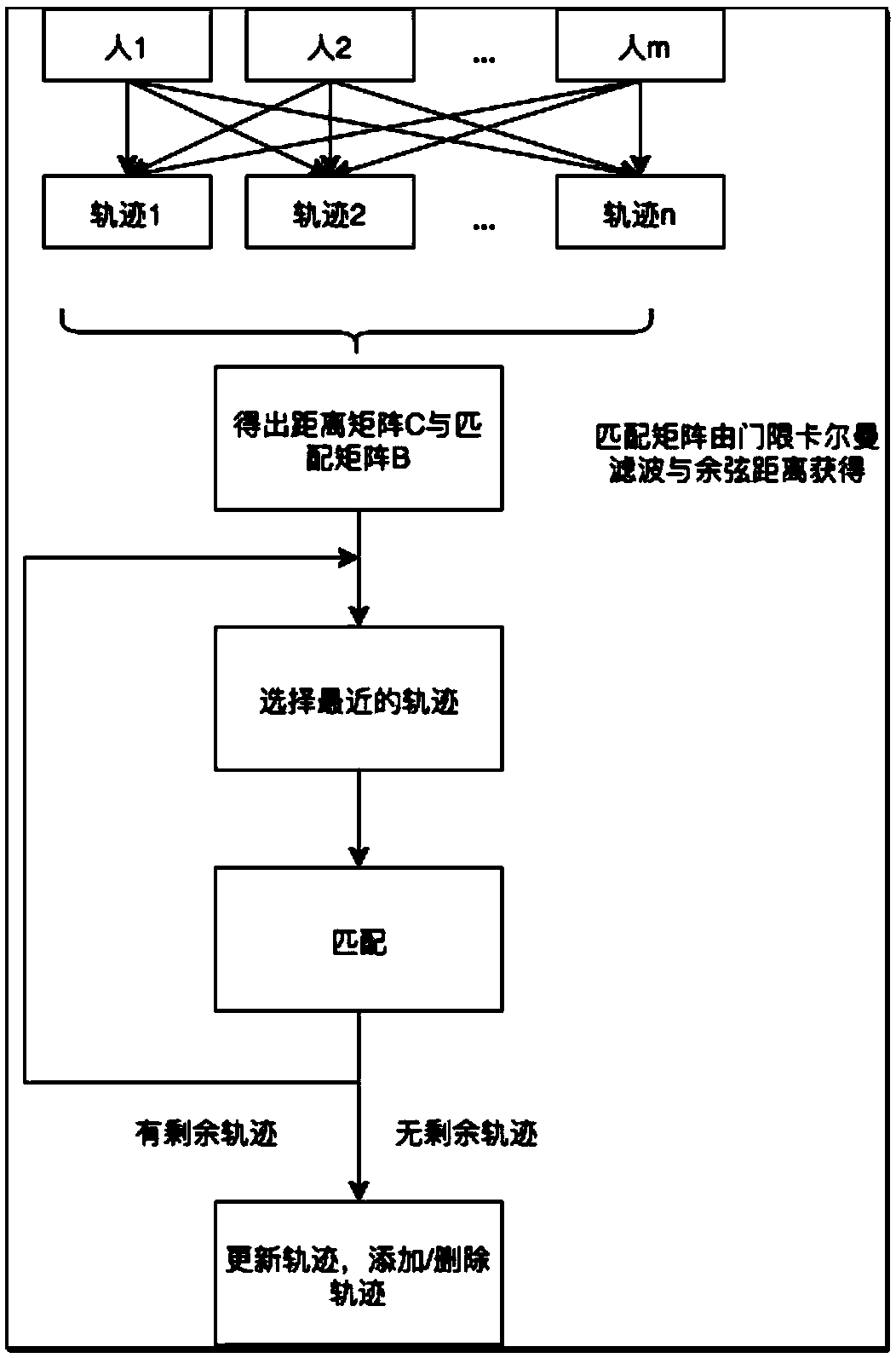

Real-time tracking method for a specific person in a video

Status: Inactive | Publication: CN108363997A | Tags: Improve generalization ability, Improve forecast accuracy, Character and pattern recognition, Neural architectures, Track algorithm, Residual frame

The invention discloses a real-time method for tracking a specific person in a video, comprising the following steps. Step one: the input video is cut into images, each corresponding to one frame. Step two: a detection algorithm is run on the images to detect persons. Step three: the detection results are fed to a tracking algorithm, which creates new tracking paths as conditions require, updates existing paths, and deletes paths. Step four: the tracking algorithm calls a face identification algorithm to name paths that have not yet been named, with naming determined by the paths that already have names. Step five: if residual (remaining) frames of the video have not yet been processed, the process returns to step two; otherwise, the processed frames are assembled into an output video. The method can greatly reduce the computation of a fully connected neural network, increase computation speed, better preserve spatial information, and improve the generalization ability and prediction accuracy of the model.

Owner: 南京云思创智信息科技有限公司

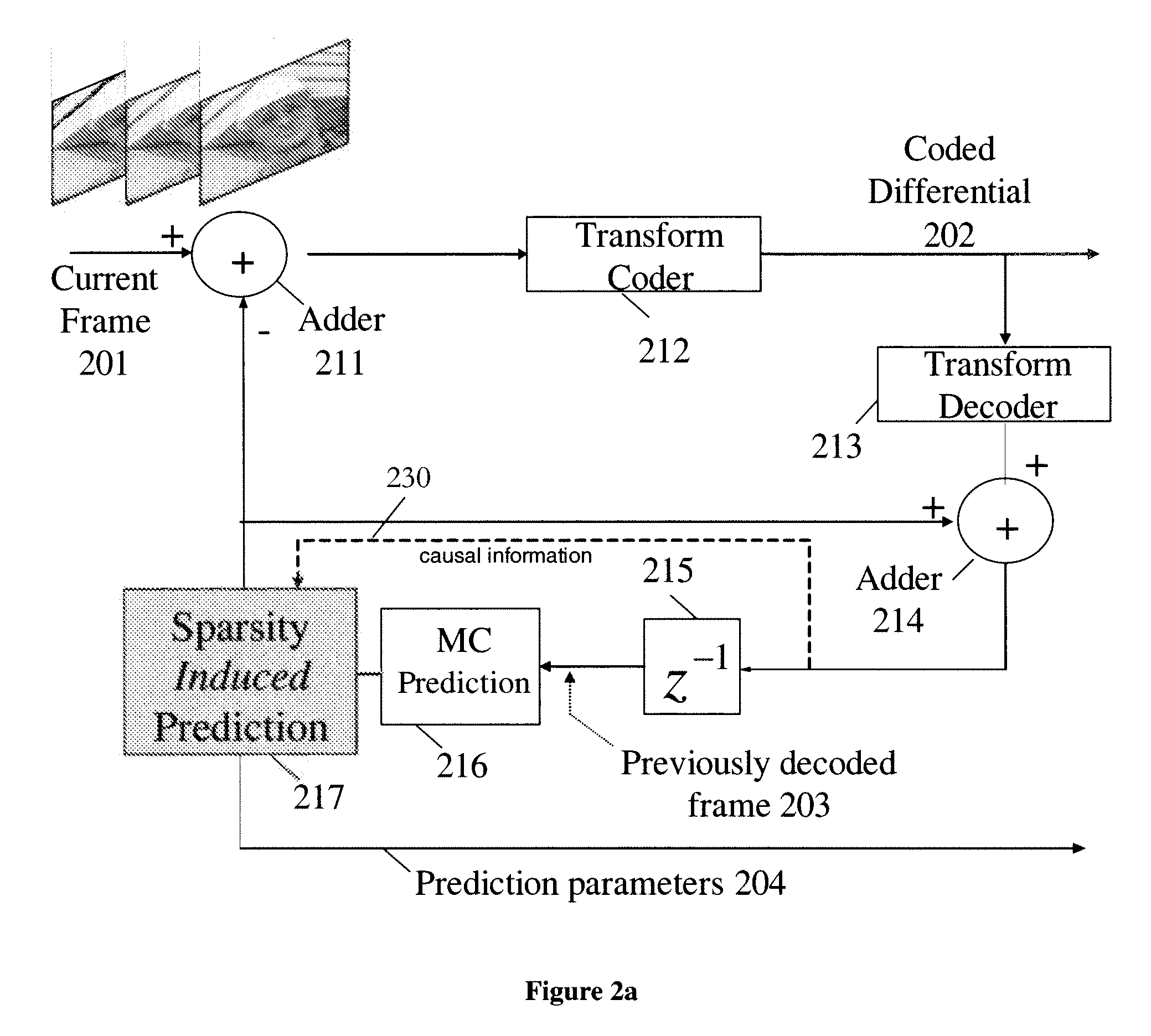

Spatial sparsity induced temporal prediction for video compression

A method and apparatus are disclosed herein for spatial sparsity induced temporal prediction. In one embodiment, the method comprises: performing motion compensation to generate a first motion compensated prediction using a first block from a previously coded frame; generating a second motion compensated prediction for a second block to be coded from the first motion compensated prediction using a plurality of predictions in the spatial domain, including generating each of the plurality of predictions by generating block transform coefficients for the first block using a transform, generating predicted transform coefficients of the second block to be coded using the block transform coefficients, and performing an inverse transform on the predicted transform coefficients to create the second motion compensated prediction in the pixel domain; subtracting the second motion compensated prediction from a block in a current frame to produce a residual frame; and coding the residual frame.

Owner: NTT DOCOMO INC

Video compression and decompression method based on fractal and H.264

Status: Active | Publication: CN103037219A | Tags: Improve decoding quality, Easy to solve, Television systems, Digital video signal modification, Pattern recognition, Entropy encoding

The invention provides a video compression and decompression method based on fractal coding and H.264. For an I-frame, H.264 intra prediction is used to obtain the prediction frame. For a P-frame, fractal-based block motion estimation/compensation coding is used to obtain the prediction frame. The difference between each original frame and its prediction frame is the residual frame. After DCT and quantization, the residual frames are, on the one hand, written into the bitstream; on the other hand, they are inverse-quantized, inverse DCT transformed, and added to the prediction frames to obtain reconstructed frames (used as reference frames). Fractal parameters are generated when a P-frame is predictively encoded. The residual frames of the I-frames and P-frames are compressed with CAVLC entropy coding, and the fractal parameters are compressed with a signed exponential-Golomb code. In the corresponding decompression process, the prediction frame of an I-frame is obtained by intra prediction, and the prediction frame of a P-frame is obtained by prediction from the preceding frame. The residual information in the bitstream is inverse-quantized and inverse DCT transformed to obtain the residual frames of the I-frames and P-frames, respectively.
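
The residual coding and reconstruction loop described above can be sketched in one dimension as below. It assumes a floating-point orthonormal DCT-II and a uniform scalar quantizer with a hypothetical step `qstep`; H.264 itself uses an integer transform with scaling, and entropy coding is omitted.

```python
import math


def _c(k, n):
    """Orthonormal DCT-II scale factor."""
    return math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)


def dct(x):
    n = len(x)
    return [_c(k, n) * sum(x[i] * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                           for i in range(n))
            for k in range(n)]


def idct(coeffs):
    n = len(coeffs)
    return [sum(_c(k, n) * coeffs[k] * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                for k in range(n))
            for i in range(n)]


def encode_row(original, prediction, qstep):
    """Return (quantized levels, reconstructed row) for one row of pixels."""
    residual = [o - p for o, p in zip(original, prediction)]
    levels = [round(c / qstep) for c in dct(residual)]  # sent in the bitstream
    # Encoder mirrors the decoder: dequantize, inverse DCT, add the prediction.
    recon_residual = idct([lv * qstep for lv in levels])
    reconstructed = [p + r for p, r in zip(prediction, recon_residual)]
    return levels, reconstructed  # reconstructed row serves as a future reference


levels, recon = encode_row([12, 12, 12, 12], [10, 10, 10, 10], qstep=1)
print(levels)  # [4, 0, 0, 0]
```

With a coarser step such as `qstep=3`, the same call yields levels `[1, 0, 0, 0]` and a reconstruction of 11.5 per pixel; because the encoder uses this reconstruction, not the original, as its reference, encoder and decoder stay in sync.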

Owner: 海宁经开产业园区开发建设有限公司

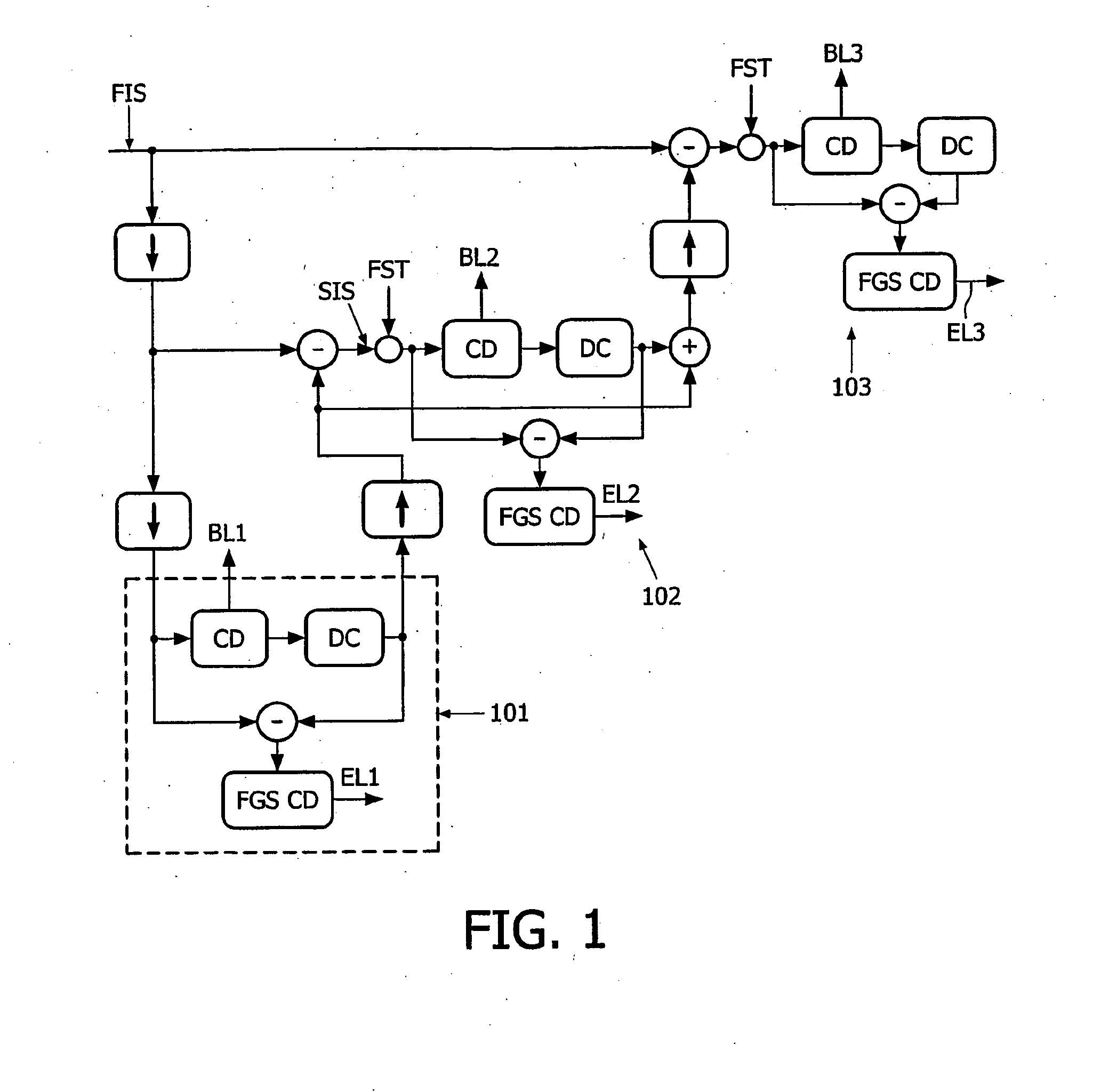

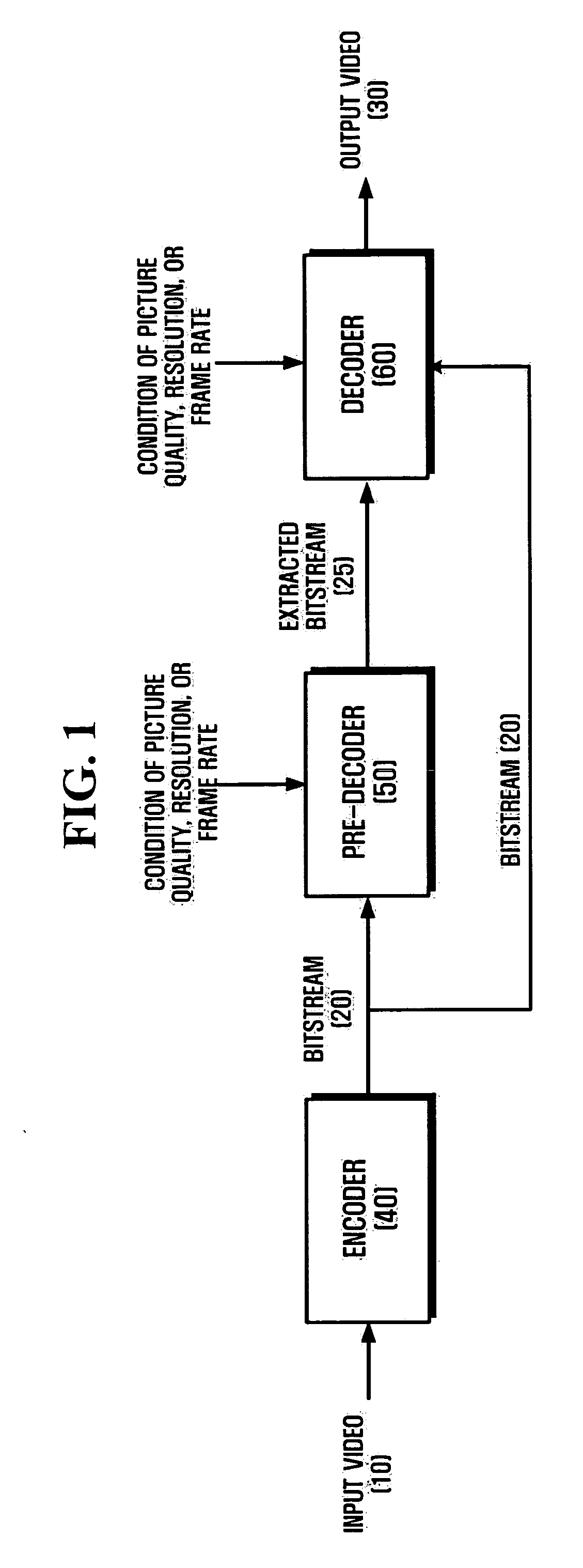

Method of spatial and SNR fine granular scalable video encoding and transmission

Status: Inactive | Publication: US20090022230A1 | Tags: Fine granularity, Reduce bitrate, Color television with pulse code modulation, Color television with bandwidth reduction, Granularity, Video encoding

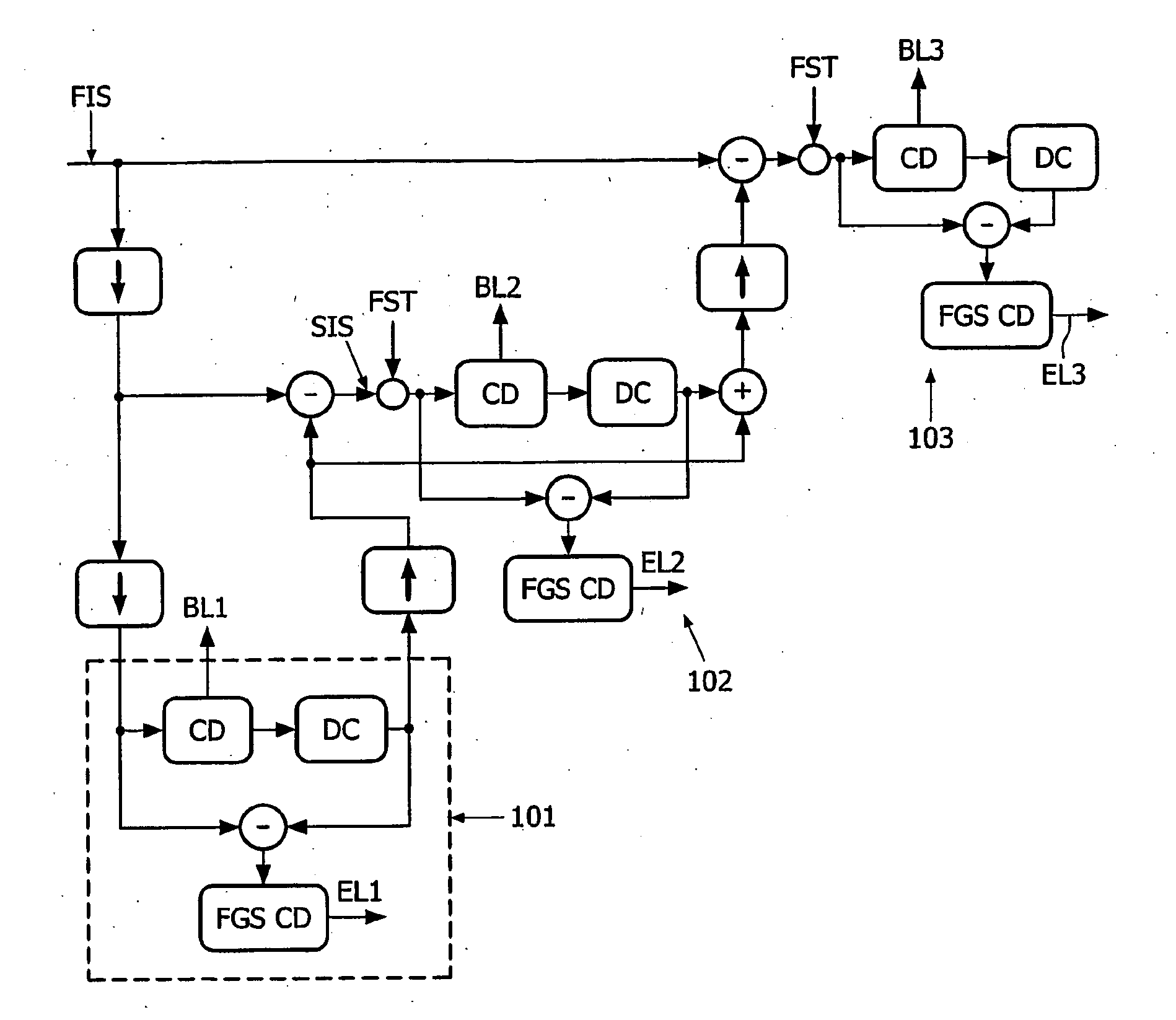

The invention relates to a method of coding video data available as a first input stream of video frames, and to a corresponding coding device. The method, implemented for instance in three successive stages (101, 102, 103), comprises the steps of (a) encoding said first input stream to produce a first coded base layer stream (BL1) suitable for transmission at a first base layer bitrate; (b) based on said first input stream and a decoded version of said encoded first base layer stream, generating a first set of residual frames in the form of a first enhancement layer stream and encoding that stream to produce a first coded enhancement layer stream (EL1); and (c) repeating a similar process at least once to produce further coded base layer streams (BL2, BL3, ...) and further coded enhancement layer streams (EL2, EL3, ...). To obtain a required spatial resolution, the first input stream is thus compressed by encoding the base layers up to that spatial resolution at a lower bitrate and allocating a higher bitrate to the last base layer and/or to the enhancement layer corresponding to the required spatial resolution. A corresponding transmission method is also proposed.
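
The base-plus-residual layering can be sketched as below. This is a simplified, SNR-only illustration under stated assumptions: a one-dimensional "frame", a uniform quantizer as a hypothetical stand-in for each layer's coder, and no spatial down- or up-sampling between stages, so it shows only how each later stage codes the residual left by the stages before it.

```python
def quantize(samples, step):
    """Uniform quantize-and-reconstruct; stands in for a real layer coder."""
    return [round(v / step) * step for v in samples]


def layered_encode(frame, steps):
    """Code the frame in stages; each stage codes what earlier stages missed."""
    layers = []
    remaining = list(frame)
    for step in steps:
        coded = quantize(remaining, step)
        layers.append(coded)
        remaining = [r - c for r, c in zip(remaining, coded)]  # residual for next stage
    return layers


def decode(layers, n_layers):
    """Sum the first n_layers decoded layers."""
    out = [0] * len(layers[0])
    for layer in layers[:n_layers]:
        out = [o + v for o, v in zip(out, layer)]
    return out


layers = layered_encode([13, 27, 42, 8], steps=[10, 1])
print(decode(layers, 1))  # base layer only: [10, 30, 40, 10]
print(decode(layers, 2))  # base + enhancement: [13, 27, 42, 8]
```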

Owner: KONINKLIJKE PHILIPS ELECTRONICS NV

Multi-view stereoscopic video compression and decompression method based on fractal and H.264

Status: Active | Publication: CN103037218A | Tags: Reduce bit rate, Improve decoding quality, Television systems, Digital video signal modification, Stereoscopic video, Filtration

The invention provides a multi-view stereoscopic video compression and decompression method based on fractal coding and H.264. The middle view is used as the base layer and is encoded with motion-compensated prediction (MCP), while the other views are encoded with MCP plus disparity-compensated prediction (DCP). Taking three views as an example: the I-frame of the middle view is coded with H.264 intra prediction, and a reconstructed frame (used as a reference frame) is obtained after filtering; each P-frame of the middle view is encoded with block MCP using the encoded reconstructed frame as the reference, the best-matching fractal parameters of each block are recorded, the fractal parameters are substituted into the compressive iterated function system to obtain the prediction frame of the P-frame, and the reconstructed frame is obtained after filtering. The left and right views are coded with MCP + DCP, and the reference frame can be a previously encoded frame of the same view or an encoded frame of another view. CAVLC entropy coding is used to compress the residual frame of each frame, and a signed exponential-Golomb code is used to compress the fractal parameters.

Owner: 海宁经开产业园区开发建设有限公司

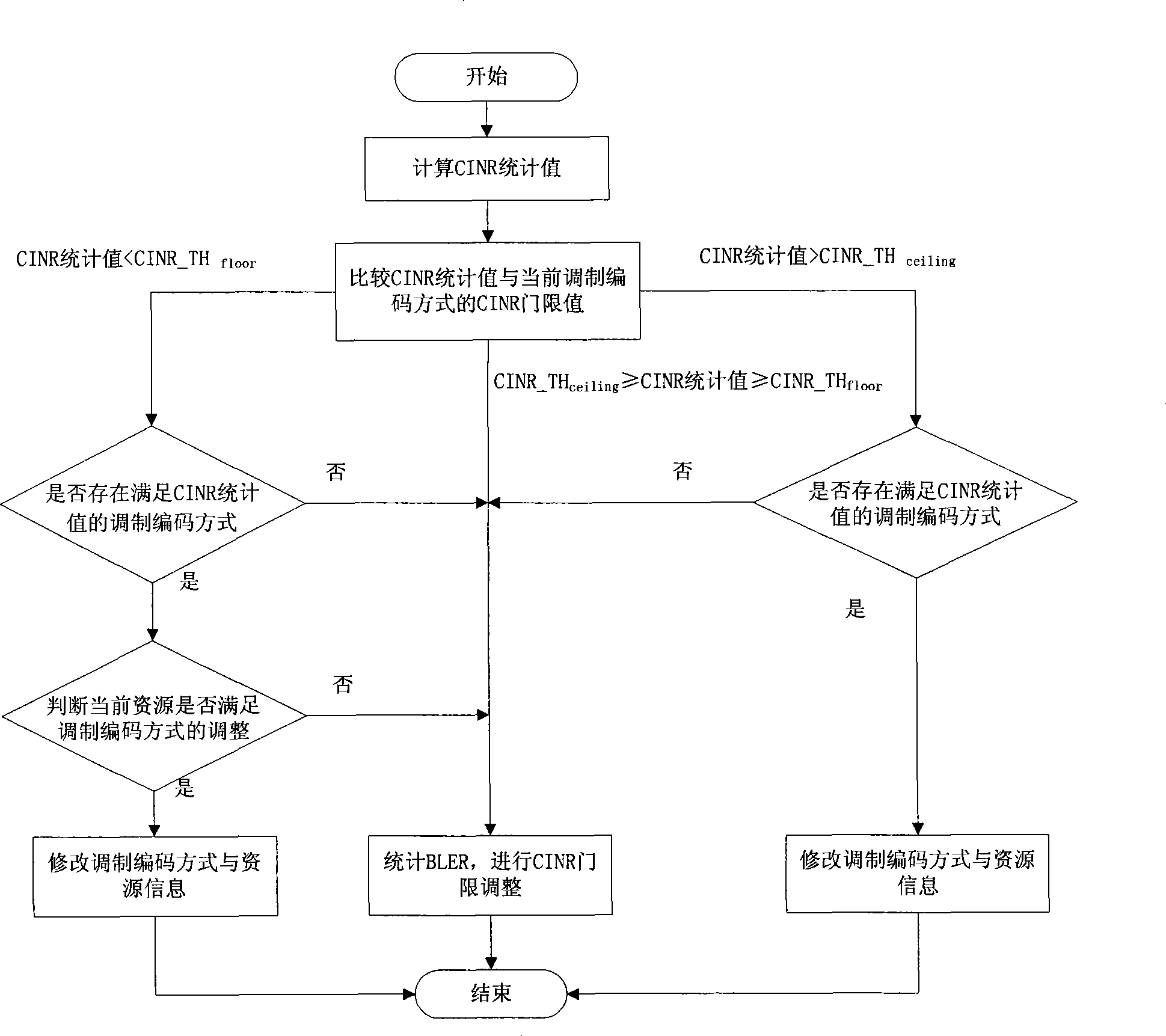

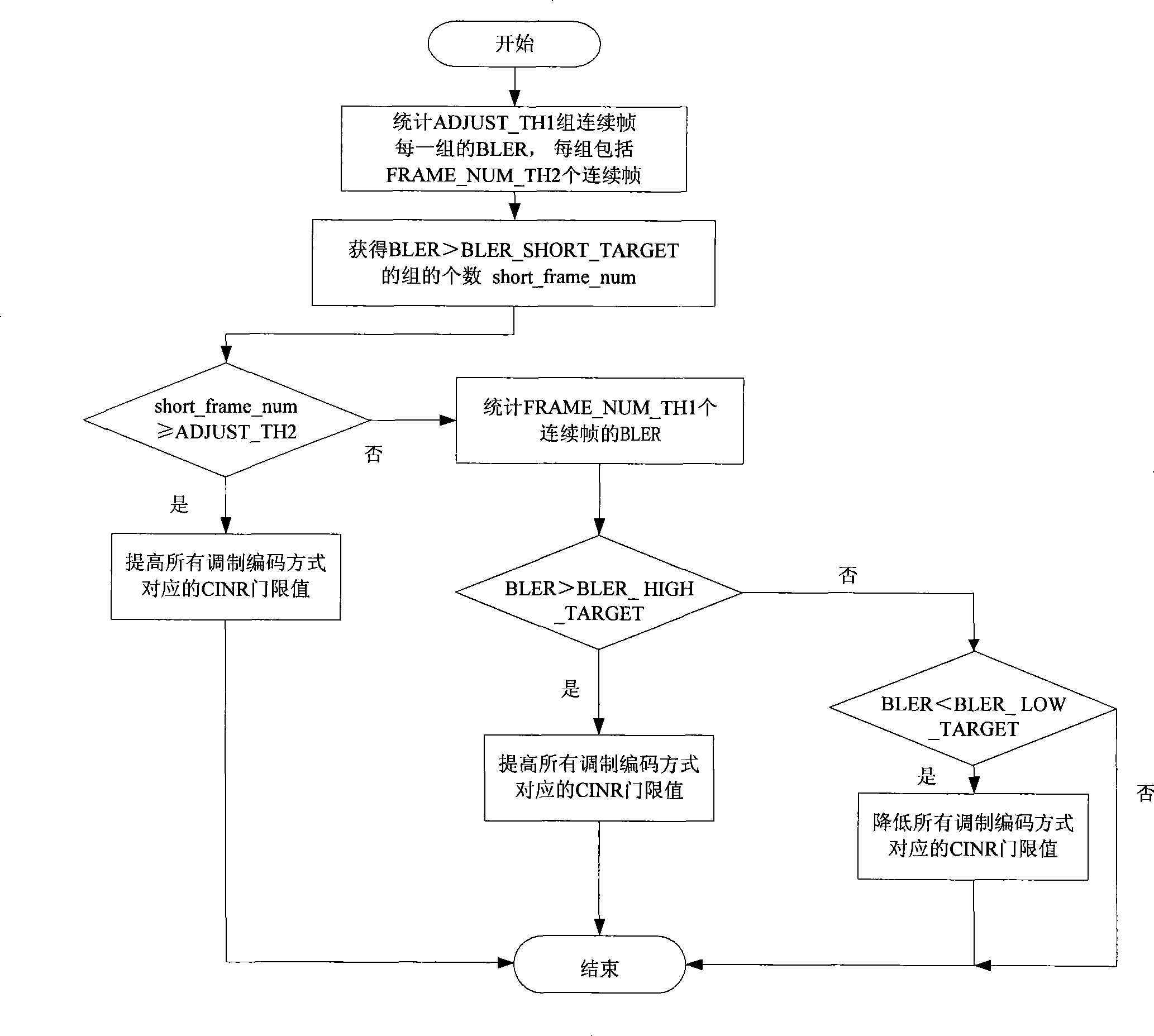

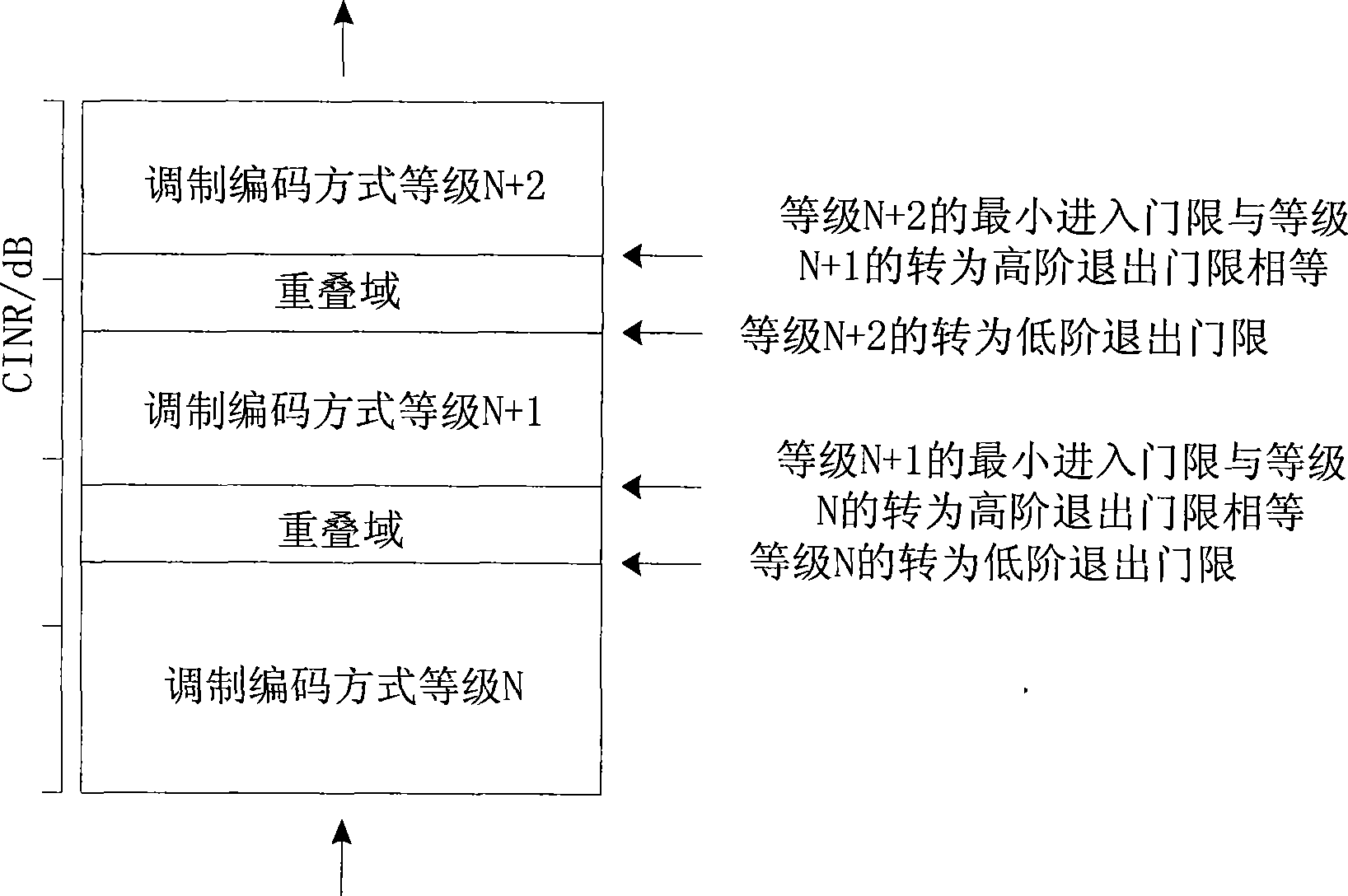

Method for adaptively adjusting the modulation and coding mode based on CINR

Status: Active | Publication: CN101483501A | Tags: Overcoming Adjustment Speed Effects, Make the most of your bandwidth, Error prevention, Communication quality, Frequency spectrum

The present invention provides a method for adaptively adjusting the modulation/coding mode based on CINR. The method comprises the following steps: computing the multi-frame average of the measured CINR values, eliminating abnormal CINR measurements according to this multi-frame average, and using the average over the residual (remaining) frames, after the abnormal values have been eliminated, as the CINR statistic; preventing the selected modulation/coding mode from fluctuating around the CINR threshold boundary between two adjacent modulation/coding levels, by giving each level an exit threshold CINR_THfloor for switching down, a minimum entry threshold CINR_THin, and an exit threshold CINR_THceiling for switching up, with a switching region between adjacent levels; and dynamically adjusting the CINR threshold of each modulation/coding mode according to the block error rate (BLER). System stability and communication quality are thereby ensured while the bandwidth is fully used and spectral efficiency is increased.
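
Two of the mechanisms above, outlier rejection before averaging and hysteresis between adjacent modulation/coding levels, can be sketched as below. The deviation limit and the threshold table are hypothetical, and only the floor and ceiling thresholds are modeled; CINR_THin and the BLER-driven threshold adjustment are omitted.

```python
def cinr_statistic(measurements, max_dev_db):
    """Average CINR after discarding samples more than max_dev_db from the multi-frame mean."""
    mean = sum(measurements) / len(measurements)
    kept = [m for m in measurements if abs(m - mean) <= max_dev_db]
    return sum(kept) / len(kept) if kept else mean


def select_level(current, cinr, thresholds):
    """Hysteresis switching between modulation/coding levels.

    thresholds[i] = (floor, ceiling) for level i: drop a level below the
    floor, climb a level above the ceiling, otherwise stay. Overlapping
    ranges between adjacent levels prevent ping-ponging at a boundary.
    """
    floor, ceiling = thresholds[current]
    if cinr < floor and current > 0:
        return current - 1
    if cinr > ceiling and current < len(thresholds) - 1:
        return current + 1
    return current


# Hypothetical table (dB): level 0 = most robust, level 2 = highest rate.
thresholds = [(float("-inf"), 12.0), (8.0, 18.0), (14.0, float("inf"))]
cinr = cinr_statistic([10.0, 11.0, 9.0, 30.0, 10.0], max_dev_db=5.0)  # 30 dB spike dropped
print(cinr)                               # 10.0
print(select_level(1, cinr, thresholds))  # 1: stays inside level 1's band
print(select_level(0, cinr, thresholds))  # 0: 10 dB is not enough to climb from level 0
```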

Owner: WUHAN HONGXIN TELECOMM TECH CO LTD

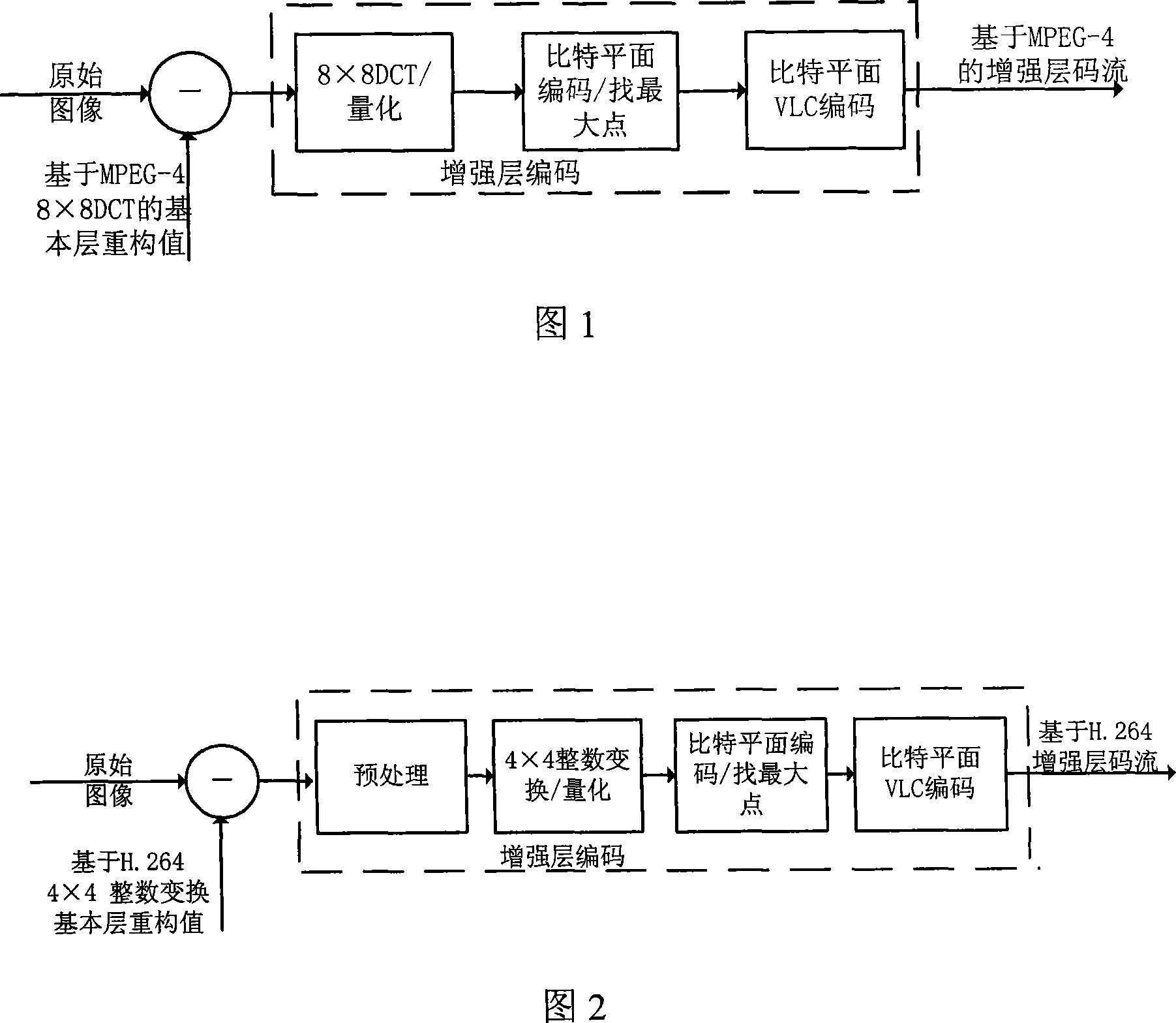

Real-time fine-granularity scalable coding method based on H.264

Status: Inactive | Publication: CN101106695A | Tags: Save coding time, Save code rate, Analogue secrecy/subscription systems, Digital video signal modification, Signal-to-noise ratio (imaging), Granularity

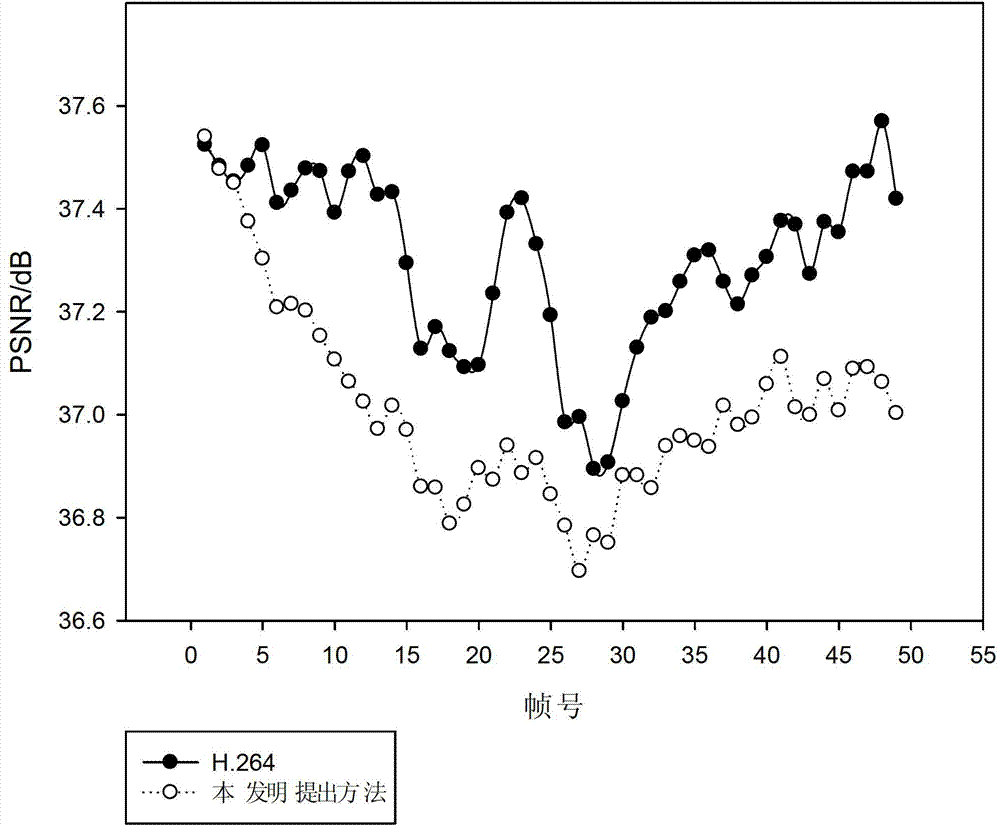

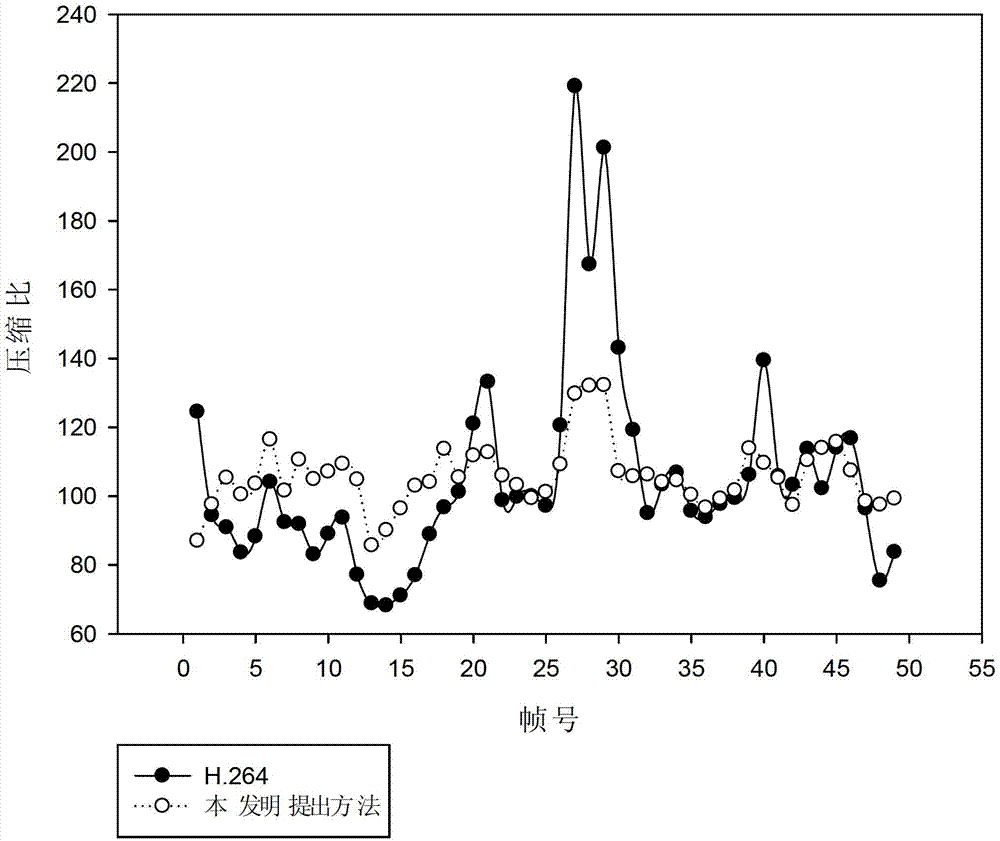

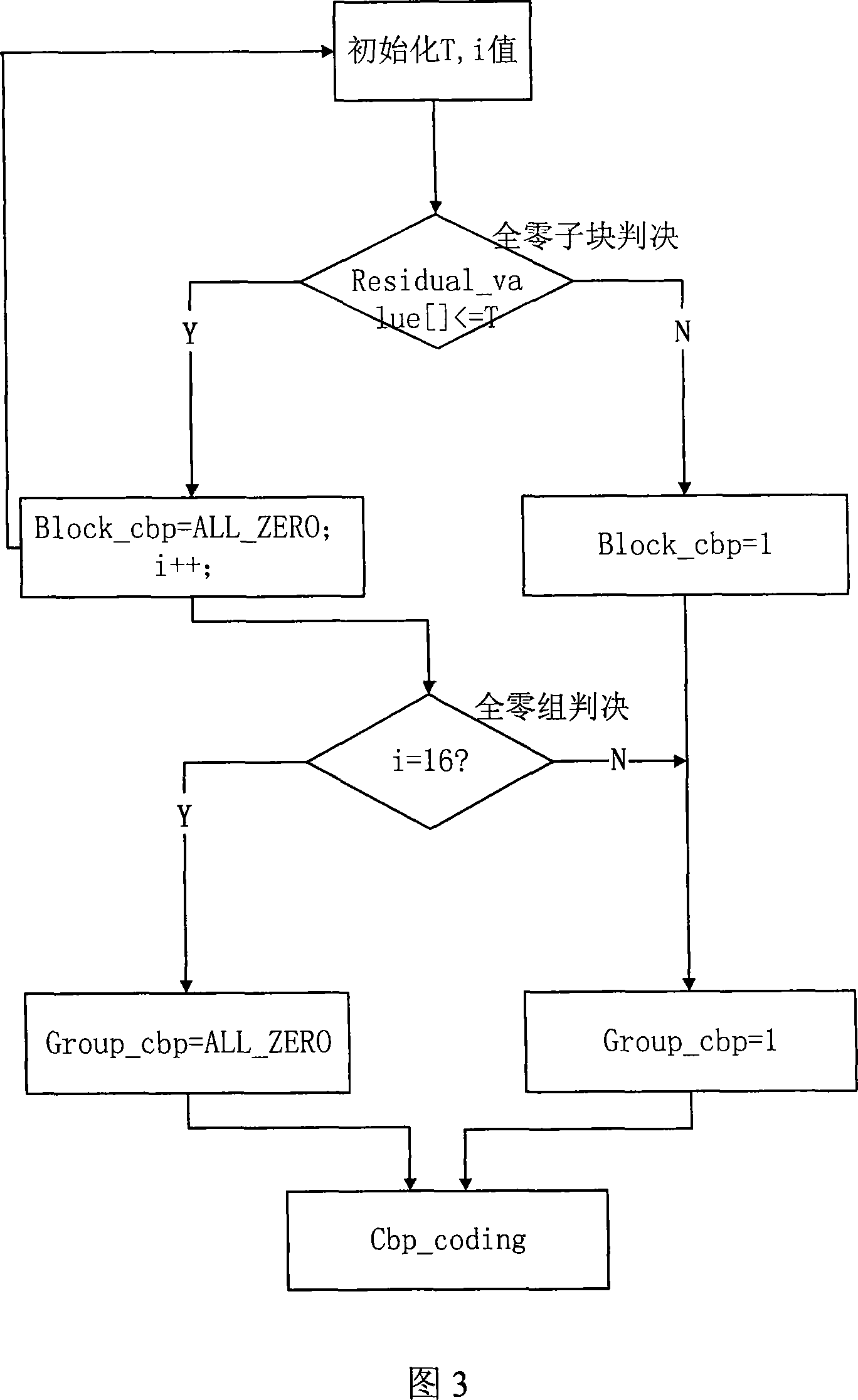

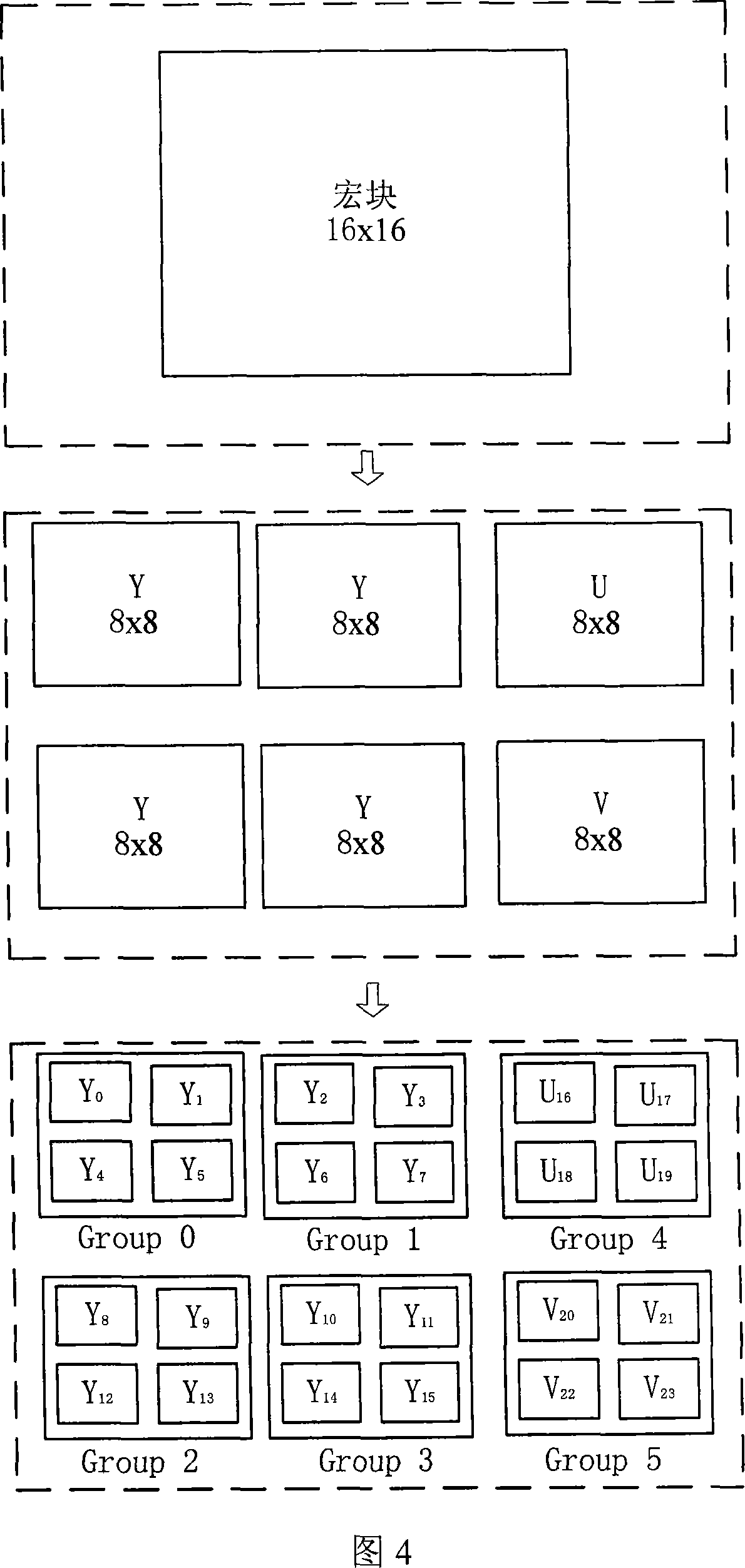

The invention relates to a real-time fine granularity scalable (FGS) encoding method based on H.264. Based on the global characteristics of the current residual frame and the local distribution of the current residual blocks, the invention finds singular points through global analysis, balances the number of bit planes across the frame by shifting the singular values down, and applies the corresponding upward shift at the decoder; it identifies all-zero blocks through local analysis. During encoding, a 4×4 integer transform is used for the enhancement-layer transform. Compared with the general FGS method, the complexity is markedly lower, the video quality is higher, and the overall PSNR (peak signal-to-noise ratio) varies more smoothly. Experiments show that, compared with the MPEG-4 FGS method at a similar bit rate, the invention increases the average luminance PSNR by 0.37 dB and the average encoding speed by 13.86 fps, i.e., by 97 percent.

Owner: SHANGHAI UNIV +1

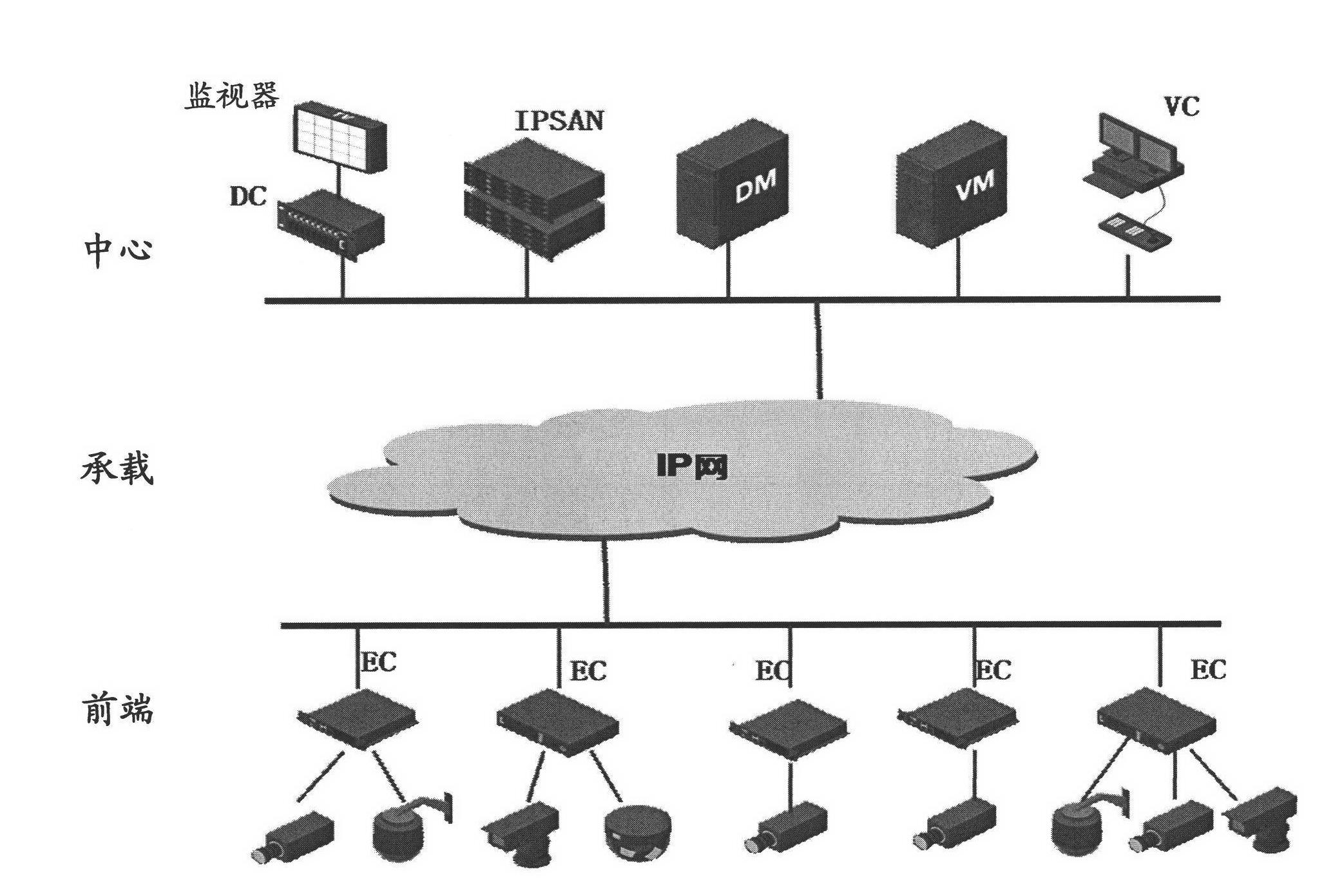

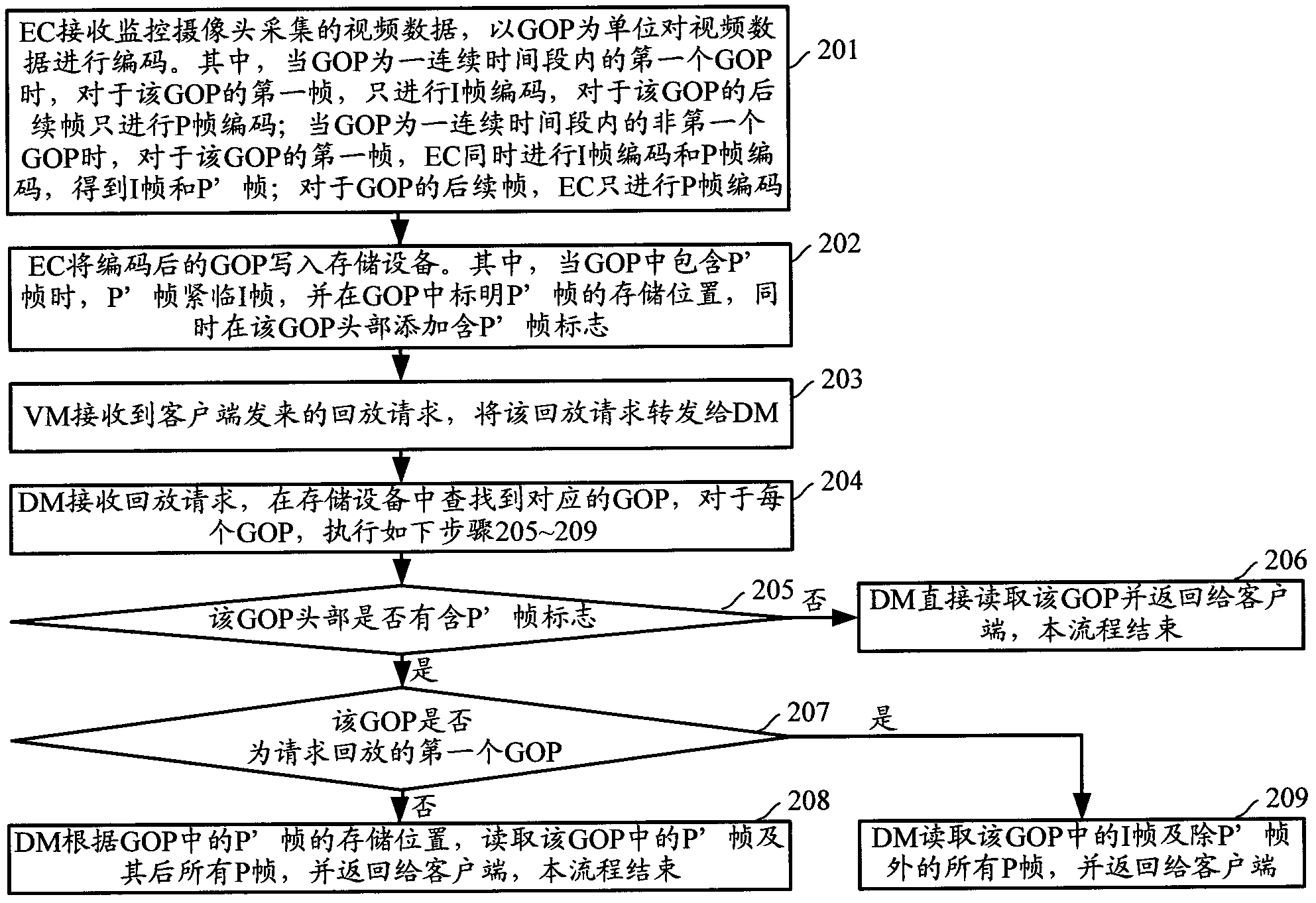

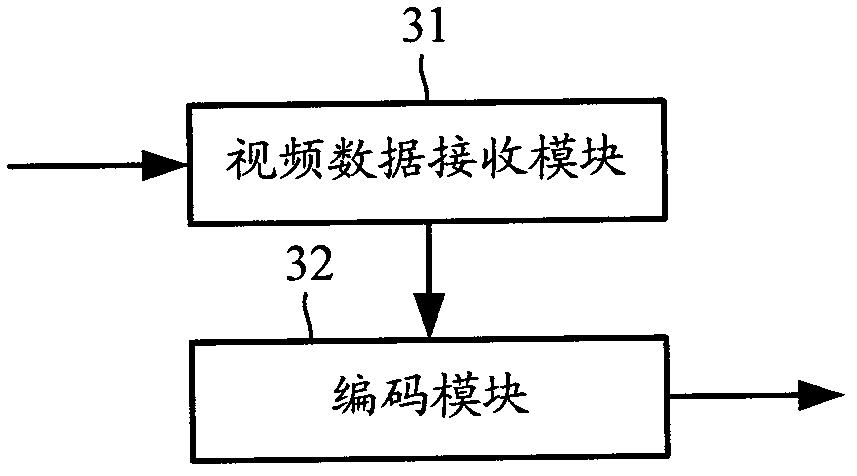

Monitoring data playback method, EC (Encoder) and video management server

Status: Active | Publication: CN102196249A | Tags: Reduce bandwidth consumption, Guaranteed continuity, Television system details, Color television details, Computer hardware, Group of pictures

The invention discloses a monitoring data playback method, an EC (Encoder), and a video management server. The playback method comprises the following steps: the EC receives video data captured by a monitoring camera and encodes it; when encoding any GOP (Group of Pictures) other than the first in a continuous time period, the EC encodes the first frame of the GOP as both an I-frame and a P-frame; the EC applies inter-frame coding to all other frames of the GOP; the EC writes the encoded frames to a storage device; the management server receives a playback request from a client and locates the corresponding GOPs in the storage device, where the first frame of the first GOP read is taken as the I-frame and the residual (remaining) frames of that GOP as inter-coded frames, while for each subsequent GOP read the first frame is taken as the P-frame and the remaining frames as inter-coded frames; and the management server returns the frames read to the client. The method, EC, and video management server reduce the bandwidth consumed by playback of monitoring data.

Owner: ZHEJIANG UNIVIEW TECH CO LTD

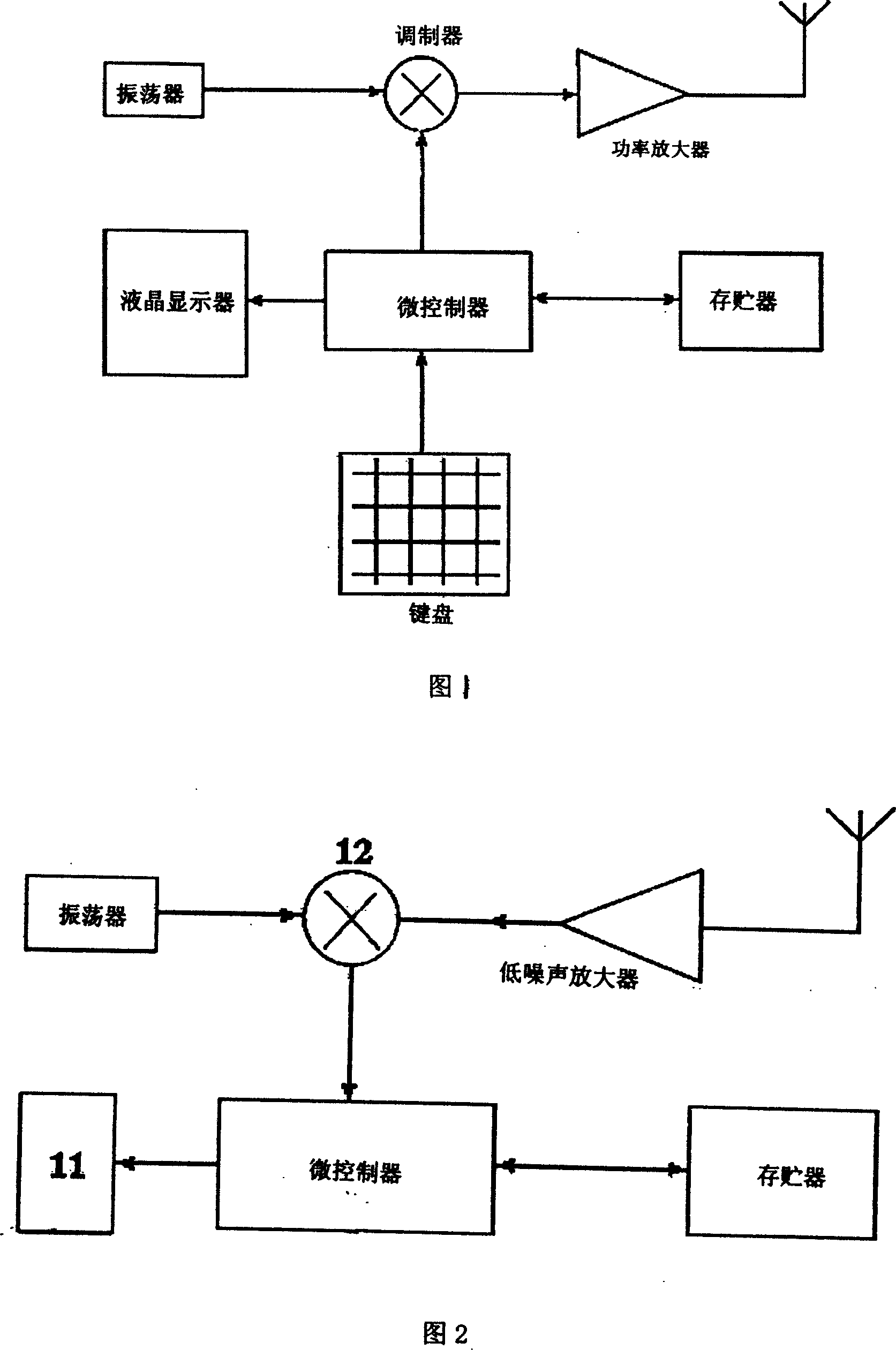

Remote-control detonation control method for industrial electric detonators

Status: Inactive | Publication: CN101135904A | Tags: Solve complexity, Solve the accuracy problem, Transmission systems, Total factory control, Electricity, Detonator

The invention is used to ensure the synchronous detonation of all electric detonators. In the method, the radio detonation signal sent by the transmitter consists of a fixed number of identically structured information frames. After the signal processing device connected to a controlled electric detonator receives the radio detonation signal, it parses the frame sequence number and calculates the number of residual (remaining) frames; from that number, it derives the delay time needed to transmit the remaining frames; the signal processing device of each controlled detonator then sends the control signal that fires its electric detonator.
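
The timing idea can be checked with a little arithmetic, sketched below under stated assumptions: frames are numbered 0 to N - 1, every frame takes the same hypothetical duration, and a detonator fires when the last frame would have finished. Receivers that first decode different frames then compute different delays but arrive at the same firing instant.

```python
def remaining_frames(seq_no, total_frames):
    """Frames still to be sent after frame seq_no (frames numbered 0..N-1)."""
    return total_frames - 1 - seq_no


def firing_delay_ms(seq_no, total_frames, frame_ms):
    """Delay from the end of frame seq_no to the end of the last frame."""
    return remaining_frames(seq_no, total_frames) * frame_ms


def firing_time_ms(seq_no, total_frames, frame_ms):
    """Absolute firing time, measured from the start of frame 0."""
    end_of_received_frame = (seq_no + 1) * frame_ms
    return end_of_received_frame + firing_delay_ms(seq_no, total_frames, frame_ms)


# Two detonators that lock on to different frames of a 10-frame, 20 ms/frame burst
print(firing_time_ms(3, 10, 20), firing_time_ms(7, 10, 20))  # 200 200
```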

Owner: HUNAN FUXIN TECH CO LTD

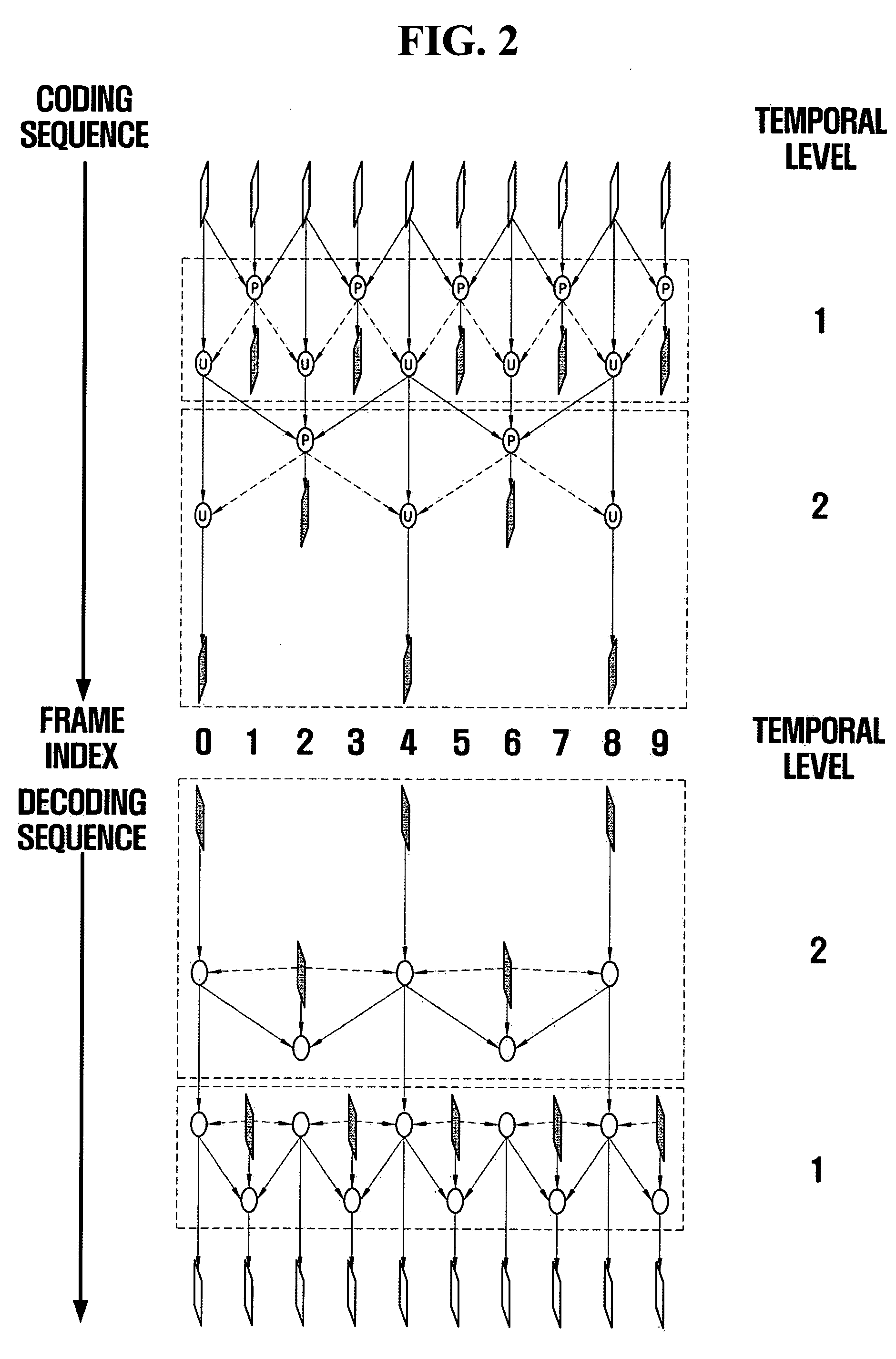

Video coding and decoding methods with hierarchical temporal filtering structure, and apparatus for the same

Status: Inactive | Publication: US20060182179A1 | Tags: Reduce artifacts, Eliminate space, Domestic stoves or ranges, Lighting and heating apparatus, Video encoding, Computer vision

A method and apparatus for video coding and decoding with a hierarchical temporal filtering structure are disclosed. A video encoding method at a temporal level of a hierarchical temporal filtering structure includes generating prediction frames from two or more reference frames that temporally precede the current frame, generating a residual frame by subtracting the prediction frames from the current frame, and encoding and transmitting the residual frame.

Owner:SAMSUNG ELECTRONICS CO LTD
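The residual step in the Samsung encoding method above can be sketched with NumPy. The abstract does not say how the two or more preceding reference frames are combined, so this sketch assumes a zero-motion weighted average of grayscale frames; a real codec would motion-compensate each reference first. `predict_from_past` and `residual_frame` are hypothetical names.

```python
import numpy as np
from typing import Optional, Sequence


def predict_from_past(refs: Sequence[np.ndarray],
                      weights: Optional[Sequence[float]] = None) -> np.ndarray:
    """Combine two or more temporally preceding 2-D frames into one prediction.

    Assumption: a plain weighted average with zero motion (equal weights by default).
    """
    if len(refs) < 2:
        raise ValueError("need at least two preceding reference frames")
    stack = np.stack([r.astype(np.float64) for r in refs])   # shape (K, H, W)
    if weights is None:
        weights = np.full(len(refs), 1.0 / len(refs))
    w = np.asarray(weights, dtype=np.float64).reshape(-1, 1, 1)
    return (w * stack).sum(axis=0)


def residual_frame(current: np.ndarray, refs: Sequence[np.ndarray]) -> np.ndarray:
    """Residual = current frame minus its prediction (float, so negatives survive)."""
    return current.astype(np.float64) - predict_from_past(refs)
```

Converting to float before subtracting matters: with unsigned 8-bit frames, negative residuals would otherwise wrap around.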

Video decoding method using smoothing filter and video decoder therefor

Inactive · US20060013311A1 · Soft quality · Color television with pulse code modulation · Color television with bandwidth reduction · Video decoding · Video decoder

A method and apparatus for increasing output picture quality at a decoding terminal by using a smoothing filter are provided. The video decoding method includes generating a residual frame from an input bitstream, performing wavelet-based upsampling on the residual frame, performing non-wavelet-based downsampling on the upsampled frame, and performing inverse temporal filtering on the downsampled frame.

Owner:SAMSUNG ELECTRONICS CO LTD
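The upsample-then-downsample pair in the decoding method above acts as a smoothing filter on the residual frame. The abstract names neither the wavelet nor the downsampling filter, so this sketch assumes LeGall 5/3 synthesis lifting with all high-band coefficients set to zero for the wavelet-based upsampling, and a [1, 2, 1]/4 low-pass followed by decimation for the non-wavelet-based downsampling; the inverse temporal filtering step is omitted. All function names are hypothetical.

```python
import numpy as np


def upsample_53(s: np.ndarray) -> np.ndarray:
    """Inverse LeGall 5/3 lifting along the last axis with the high band set to zero."""
    s = np.asarray(s, dtype=np.float64)
    out = np.empty(s.shape[:-1] + (2 * s.shape[-1],))
    out[..., 0::2] = s  # x[2k] = s[k] - (d[k-1] + d[k]) / 4, with d = 0
    right = np.concatenate([s[..., 1:], s[..., -1:]], axis=-1)  # symmetric extension
    out[..., 1::2] = 0.5 * (s + right)  # x[2k+1] = d[k] + (x[2k] + x[2k+2]) / 2
    return out


def downsample_121(x: np.ndarray) -> np.ndarray:
    """Non-wavelet downsampling: [1, 2, 1] / 4 low-pass, then keep even samples."""
    x = np.asarray(x, dtype=np.float64)
    left = np.concatenate([x[..., 1:2], x[..., :-1]], axis=-1)    # mirror at the left edge
    right = np.concatenate([x[..., 1:], x[..., -2:-1]], axis=-1)  # mirror at the right edge
    return (0.25 * left + 0.5 * x + 0.25 * right)[..., 0::2]


def smooth_residual(frame: np.ndarray) -> np.ndarray:
    """Upsample, then downsample, along columns and rows; output keeps the input shape."""
    up = upsample_53(upsample_53(frame).swapaxes(-1, -2)).swapaxes(-1, -2)
    return downsample_121(downsample_121(up).swapaxes(-1, -2)).swapaxes(-1, -2)
```

Per axis, the combined operation replaces each interior residual sample s[n] with (s[n-1] + 6 s[n] + s[n+1]) / 8, a mild low-pass that leaves the frame size unchanged.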

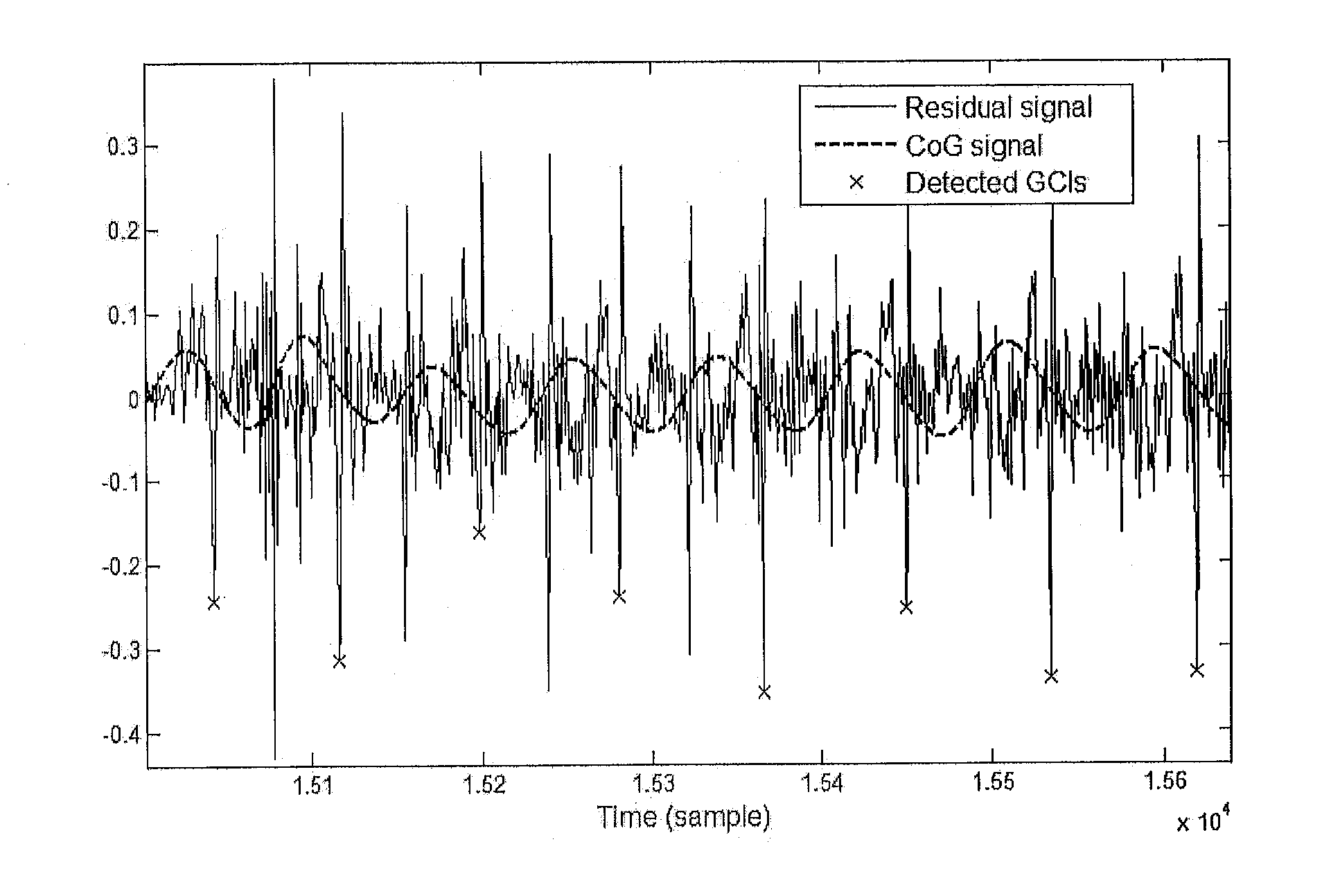

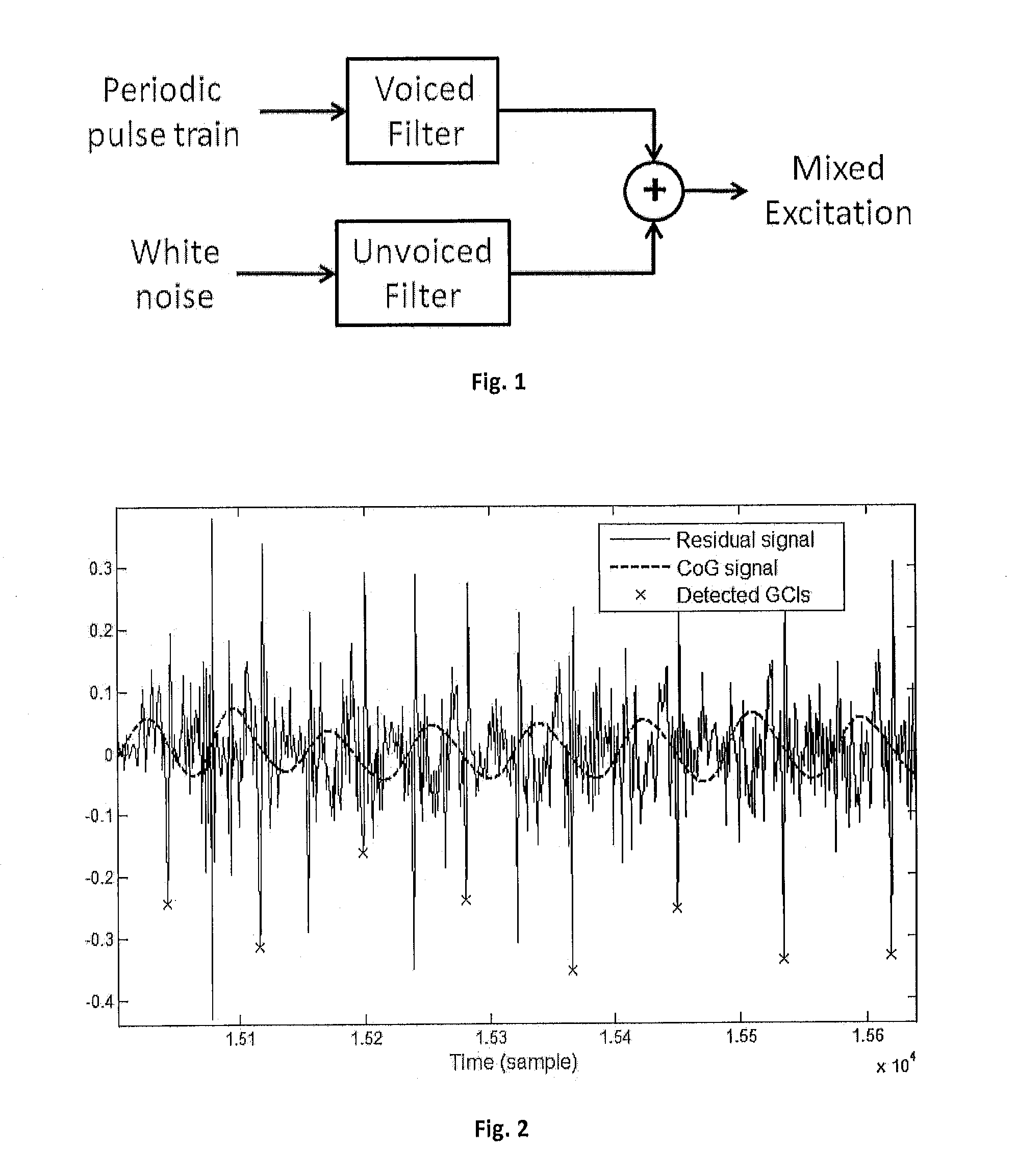

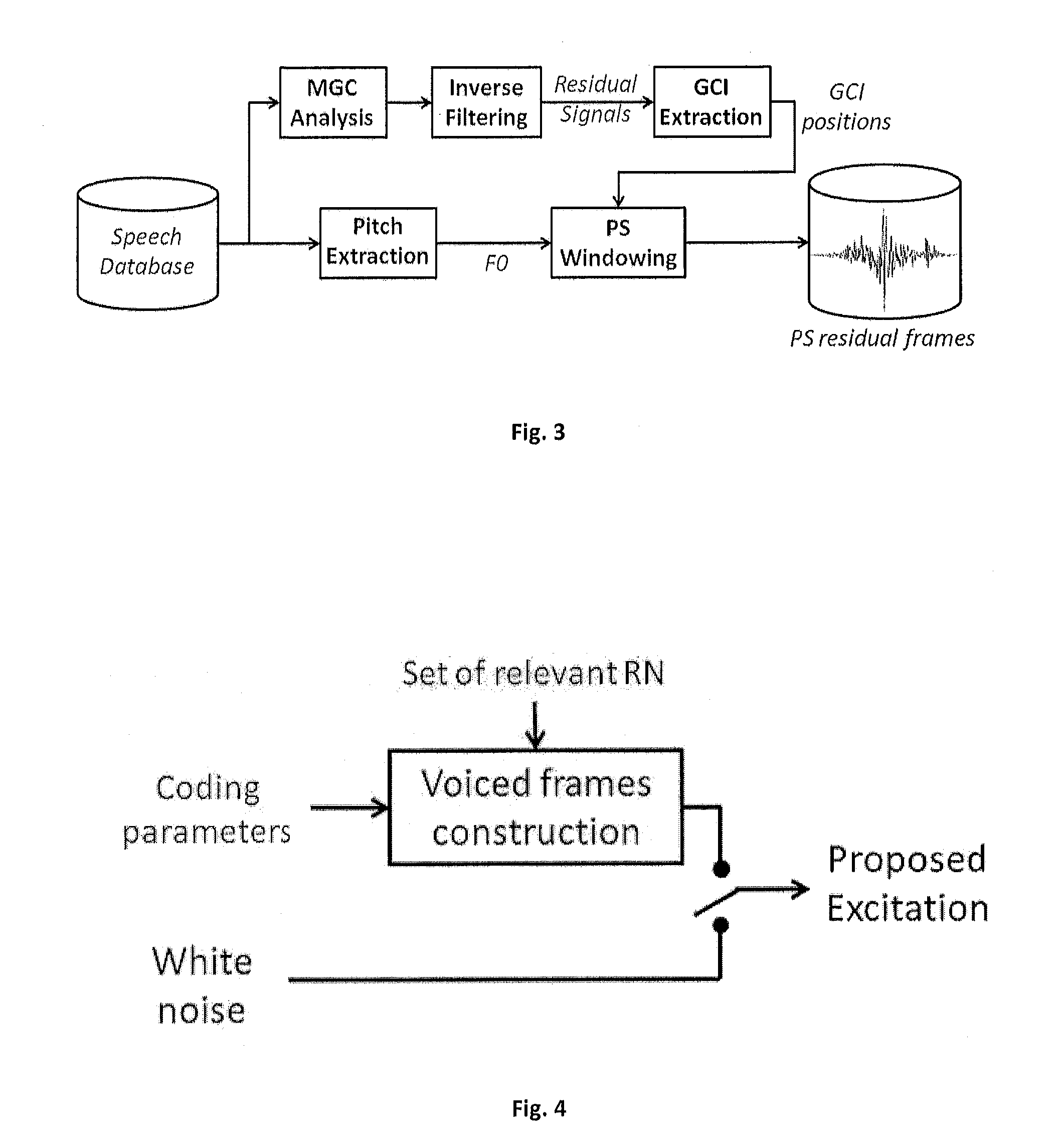

Speech synthesis and coding methods

The present invention relates to a method for coding the excitation signal of a target speech, comprising the steps of: extracting, from a set of training normalised residual frames, a set of relevant normalised residual frames, the training residual frames being extracted from a training speech, synchronised on Glottal Closure Instants (GCI), and normalised in pitch and energy; determining the target excitation signal of the target speech; dividing the target excitation signal into GCI-synchronised target frames; determining the local pitch and energy of the GCI-synchronised target frames; normalising the GCI-synchronised target frames in both energy and pitch to obtain target normalised residual frames; and determining the coefficients of a linear combination of the extracted set of relevant normalised residual frames that builds a synthetic normalised residual frame close to each target normalised residual frame; wherein the coding parameters for each target residual frame comprise the determined coefficients.

Owner:UNIVERSITY OF MONS +1
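The final coding step of the speech method above, representing each target normalised residual frame as a linear combination of the relevant normalised residual frames, can be sketched with NumPy. The abstract does not say how the coefficients are determined, so this assumes an ordinary least-squares fit; pitch normalisation is approximated by linear-interpolation resampling to a fixed length, and energy normalisation by scaling to unit norm. `normalise_frame` and `code_target_frame` are hypothetical names.

```python
import numpy as np


def normalise_frame(frame: np.ndarray, length: int) -> np.ndarray:
    """Pitch-normalise by resampling to `length` samples, then scale to unit energy."""
    frame = np.asarray(frame, dtype=np.float64)
    src = np.linspace(0.0, 1.0, num=frame.size)
    dst = np.linspace(0.0, 1.0, num=length)
    resampled = np.interp(dst, src, frame)  # linear interpolation onto the fixed grid
    energy = np.sqrt(np.sum(resampled ** 2))
    return resampled / energy if energy > 0 else resampled


def code_target_frame(basis: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Least-squares coefficients c minimising ||basis.T @ c - target||^2.

    `basis` holds one relevant normalised residual frame per row.
    """
    coeffs, *_ = np.linalg.lstsq(basis.T, target, rcond=None)
    return coeffs
```

A decoder would rebuild the synthetic frame as `basis.T @ coeffs` and then undo the pitch and energy normalisation using the transmitted local pitch and energy.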

Fractal and H.264-based binocular three-dimensional video compression and decompression method

Active · CN103051894A · Improve encoding performance · High speed · Television systems · Digital video signal modification · Parallax · Filtration

The invention provides a fractal and H.264-based binocular three-dimensional video compression and decompression method, comprising the following steps: performing H.264-based intra-frame prediction coding on the starting frame (I frame) of the left eye and filtering it to obtain a reconstructed frame, which can serve as a reference frame; performing block motion-compensated prediction (MCP) coding on the P frames of the left eye, recording the fractal parameters of each block in its best-match search, substituting those fractal parameters into the compressive iterated function system to obtain the predicted frame of each P frame, and filtering it to obtain a reconstructed frame; predicting the starting frame of the right eye from the I frame of the base layer by disparity-compensated prediction (DCP); performing both MCP and DCP on the right-eye P frames and selecting the result with the smaller error as the prediction for coding; and compressing the residual frames of the I and P frames with CAVLC entropy coding and the fractal parameters with signed Exp-Golomb coding.

Owner:CHENGDU VISION ZENITH TECH DEV
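The signed exponential-Golomb code used for the fractal parameters in the method above is the same mapping H.264 applies to its se(v) syntax elements: a signed value is first mapped to a non-negative code number, which is then written with the unsigned Exp-Golomb code. A minimal sketch, returning bit strings for readability; `ue` and `se` are illustrative names, and how the fractal parameters are quantised to integers beforehand is not specified in the abstract.

```python
def ue(code_num: int) -> str:
    """Unsigned Exp-Golomb codeword (as a bit string) for code_num >= 0."""
    if code_num < 0:
        raise ValueError("code_num must be non-negative")
    value = code_num + 1
    prefix_len = value.bit_length() - 1  # number of leading zeros
    return "0" * prefix_len + format(value, "b")


def se(k: int) -> str:
    """Signed Exp-Golomb: map k > 0 to 2k - 1 and k <= 0 to -2k, then code with ue()."""
    return ue(2 * k - 1 if k > 0 else -2 * k)
```

Small magnitudes get the shortest codewords (0 costs one bit, plus or minus 1 cost three), which suits parameters that cluster around zero.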

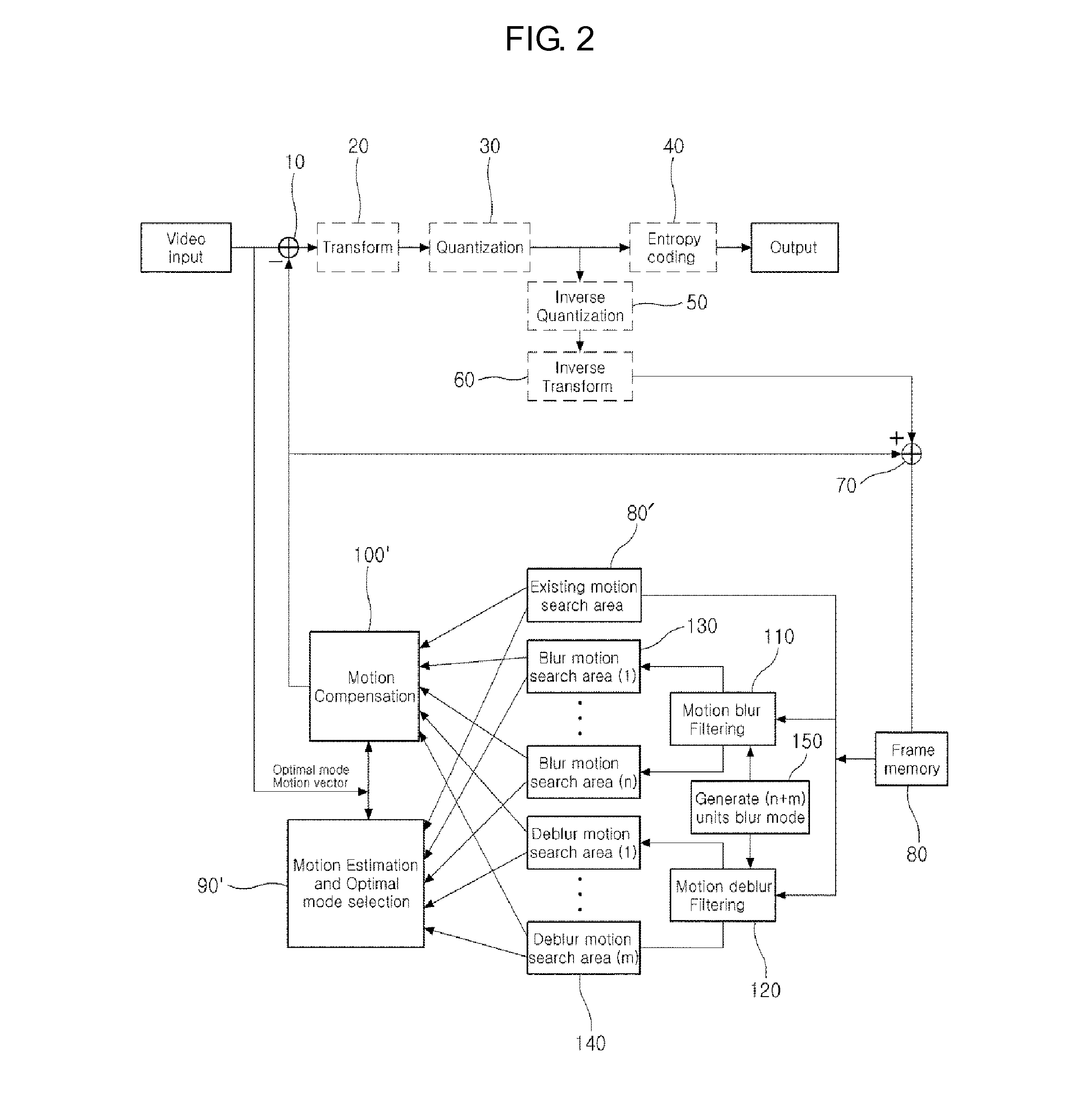

Video compression encoding device implementing an applied motion compensation technique using a selective motion search, and method for determining selective motion compensation

Inactive · US20130070862A1 · Reduce time redundancy · Improve compression efficiency · Color television with pulse code modulation · Color television with bandwidth reduction · Fuzzy filter · Residual frame

Disclosed is a method for generating the motion search area of a video CODEC that performs block-based motion compensation through motion estimation. Compression efficiency can be further improved by additionally creating various reference frames with an intentional motion blur filter and a deblur filter: the motion search area is filtered block by block (the block being the unit of video coding) with a motion blur filter and a motion deblur filter, and during motion estimation the reference frames yielding the least residual frame data are selected as the final reference frames from among the original reference frames and the additionally created reference frames (motion search areas).

Owner:GALAXIA COMM
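The reference selection in the GALAXIA abstract above can be sketched as block matching against several candidate references. The abstract gives no filter kernels, search range, or matching cost, so this sketch assumes a 3x3 mean filter as a stand-in for the motion blur filter (a deblurred candidate would be added to the dictionary the same way), a full search within a small radius, and the sum of absolute differences (SAD) as the residual measure. All names are hypothetical.

```python
import numpy as np
from typing import Dict, Tuple


def box_blur(frame: np.ndarray) -> np.ndarray:
    """3x3 mean filter with edge replication (stand-in for a motion blur filter)."""
    padded = np.pad(frame.astype(np.float64), 1, mode="edge")
    h, w = frame.shape
    acc = sum(padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3))
    return acc / 9.0


def best_match(block: np.ndarray, ref: np.ndarray, top: int, left: int,
               radius: int) -> Tuple[float, int, int]:
    """Full search within +/-radius of (top, left); returns (sad, dy, dx)."""
    block = np.asarray(block, dtype=np.float64)
    bh, bw = block.shape
    best = (np.inf, 0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            y, x = top + dy, left + dx
            if y < 0 or x < 0 or y + bh > ref.shape[0] or x + bw > ref.shape[1]:
                continue  # candidate block falls outside the reference frame
            sad = np.abs(block - ref[y:y + bh, x:x + bw]).sum()
            if sad < best[0]:
                best = (sad, dy, dx)
    return best


def select_reference(block: np.ndarray, refs: Dict[str, np.ndarray], top: int, left: int,
                     radius: int = 2) -> Tuple[str, Tuple[int, int], float]:
    """Pick the candidate reference (original or filtered) with the smallest SAD."""
    results = [(best_match(block, r, top, left, radius), name) for name, r in refs.items()]
    (sad, dy, dx), name = min(results, key=lambda item: item[0][0])
    return name, (dy, dx), sad
```

Typical use: `select_reference(block, {"original": ref, "blurred": box_blur(ref)}, top, left)` returns the name of the winning reference, the motion vector, and its SAD, so a blurred reference wins only when it genuinely leaves less residual.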

Nonlinear, in-the-loop, denoising filter for quantization noise removal for hybrid video compression

Inactive · US8218634B2 · Color television with pulse code modulation · Color television with bandwidth reduction · Noise removal · Transform coding

A method and apparatus are disclosed herein for using an in-the-loop denoising filter for quantization noise removal in video compression. In one embodiment, the video encoder comprises a transform coder to apply a transform to a residual frame representing the difference between a current frame and a first prediction, the transform coder outputting a coded differential frame as the output of the video encoder; a transform decoder to generate a reconstructed residual frame in response to the coded differential frame; a first adder to create a reconstructed frame by adding the reconstructed residual frame to the first prediction; a non-linear denoising filter to filter the reconstructed frame by deriving expectations and performing denoising operations based on the expectations; and a prediction module to generate predictions, including the first prediction, based on previously decoded frames.

Owner:NTT DOCOMO INC
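One pass of the encoder loop described in the NTT DOCOMO abstract above can be sketched with NumPy. This is not the patented filter: the abstract does not explain how the expectations are derived, so a 3x3 median filter stands in for the non-linear denoiser, and the transform coder is assumed to be a whole-frame orthonormal 2-D DCT with a uniform quantiser of step `step`. Function names are hypothetical.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II basis matrix (rows are basis vectors)."""
    k = np.arange(n).reshape(-1, 1)
    i = np.arange(n).reshape(1, -1)
    m = np.cos(np.pi * (2 * i + 1) * k / (2 * n)) * np.sqrt(2.0 / n)
    m[0, :] = np.sqrt(1.0 / n)
    return m


def median3x3(frame: np.ndarray) -> np.ndarray:
    """Non-linear stand-in denoiser: 3x3 median with edge replication."""
    padded = np.pad(frame, 1, mode="edge")
    h, w = frame.shape
    windows = np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])
    return np.median(windows, axis=0)


def encode_frame(current: np.ndarray, prediction: np.ndarray, step: float):
    """One loop iteration; returns (coded differential frame, denoised reference)."""
    h, w = current.shape
    d_r, d_c = dct_matrix(h), dct_matrix(w)
    residual = current.astype(np.float64) - prediction   # residual frame
    coded = np.round(d_r @ residual @ d_c.T / step)       # transform coder + quantiser
    rec_residual = d_r.T @ (coded * step) @ d_c           # transform decoder
    reconstructed = prediction + rec_residual             # first adder
    return coded, median3x3(reconstructed)                # denoised frame feeds later predictions
```

Because the filter sits inside the loop, the decoder must apply the same denoising to its own reconstruction so that encoder and decoder predictions stay identical.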

Method and device for identifying video monitoring scenes

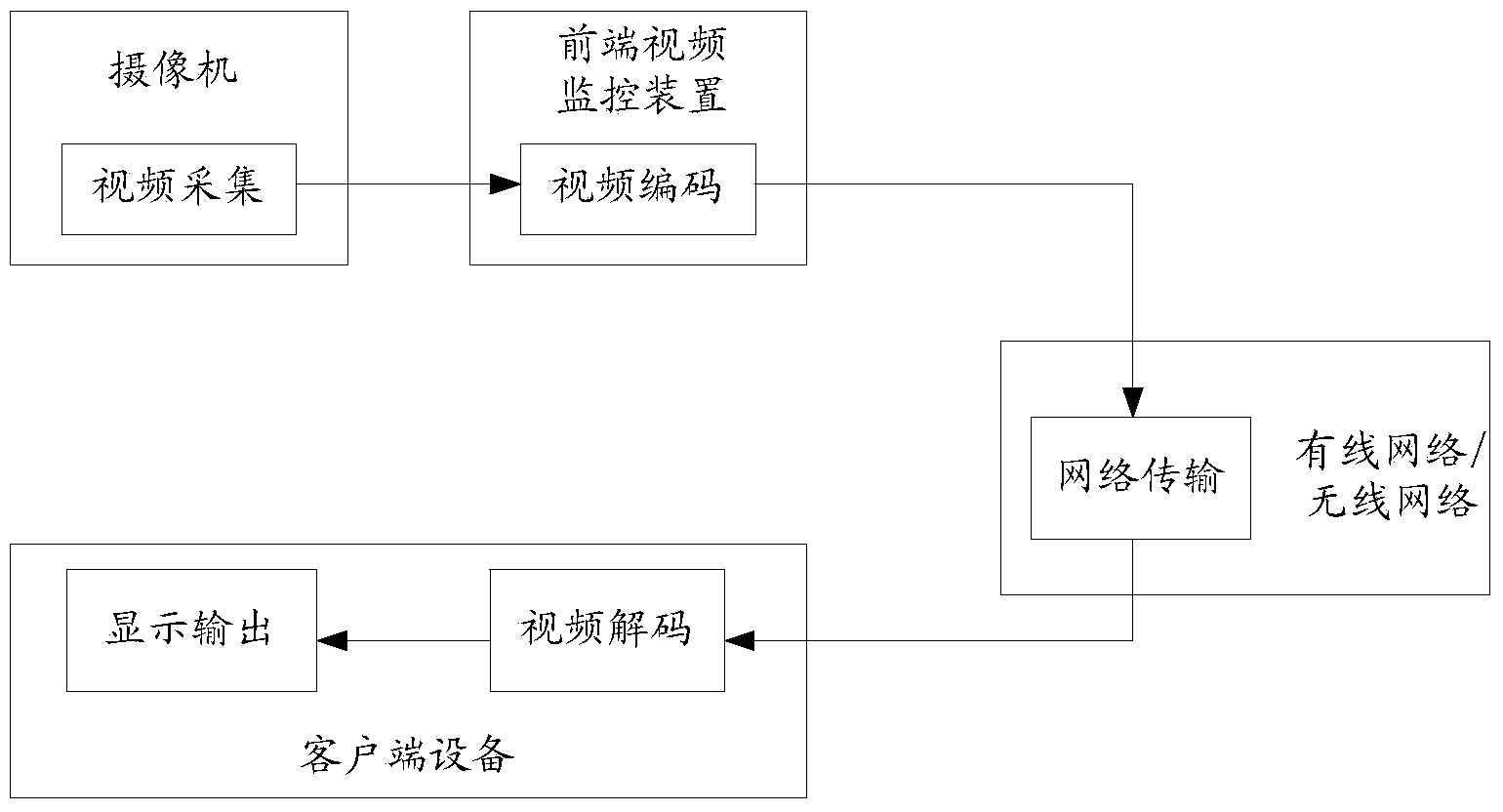

Inactive · CN103561232A · Improve surveillance image quality · Closed circuit television systems · Digital video signal modification · Video monitoring · Video image

The invention discloses a method and device for identifying video monitoring scenes. The method comprises the steps of: (1) obtaining the previous video image frame and the current video image frame captured by a front-end video monitoring device; (2) subtracting the previous frame from the current frame to obtain an image residual frame; (3) determining the brightness value of each pixel of the image residual frame; (4) from those brightness values, calculating the ratio of the number of pixels with non-zero brightness to the total number of pixels in the image residual frame; (5) determining that the front-end video monitoring device is currently in a moving scene when the ratio is greater than a set ratio threshold; and (6) determining that it is in a static scene when the ratio is less than or equal to the threshold. The method and device can thus distinguish between different monitoring scenes.

Owner:CHINA MOBILE COMM GRP CO LTD
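Steps (2) to (6) of the scene-identification method above reduce to a few NumPy operations. This sketch assumes single-channel (brightness) frames of equal shape and, like the abstract, counts every non-zero residual pixel without any noise tolerance; `classify_scene` is a hypothetical name.

```python
import numpy as np


def classify_scene(previous: np.ndarray, current: np.ndarray, ratio_threshold: float) -> str:
    """Return "moving" or "static" by thresholding the share of changed pixels."""
    # (2) image residual frame; a signed type keeps darkening pixels from wrapping around
    residual = current.astype(np.int32) - previous.astype(np.int32)
    # (3)-(4) ratio of pixels with non-zero residual brightness to all pixels
    ratio = np.count_nonzero(residual) / residual.size
    # (5)-(6) strictly greater than the threshold means moving, otherwise static
    return "moving" if ratio > ratio_threshold else "static"
```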


© 2025 PatSnap. All rights reserved.