49 results about "Text alignment" patented technology

Automatic document classification using lexical and physical features

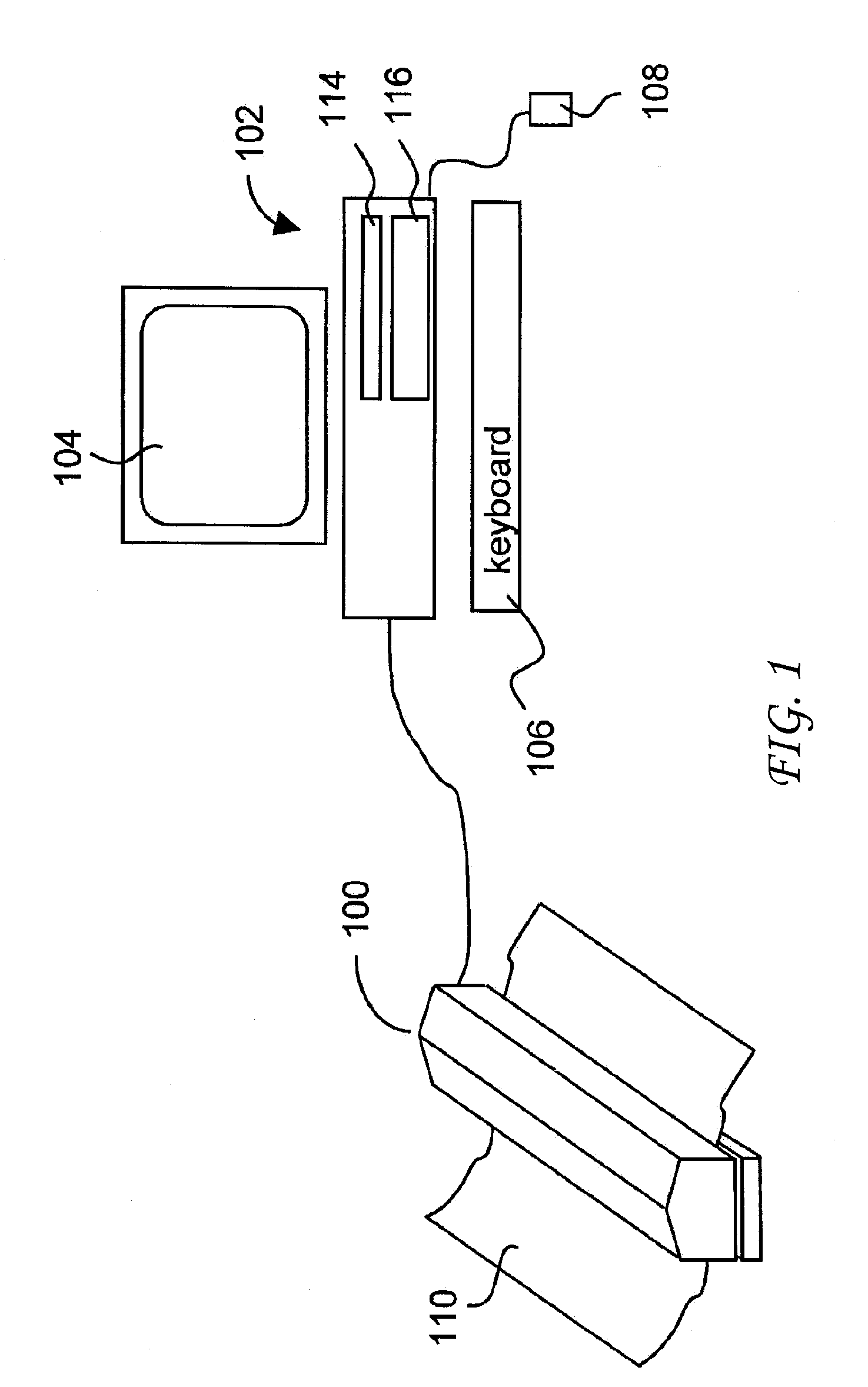

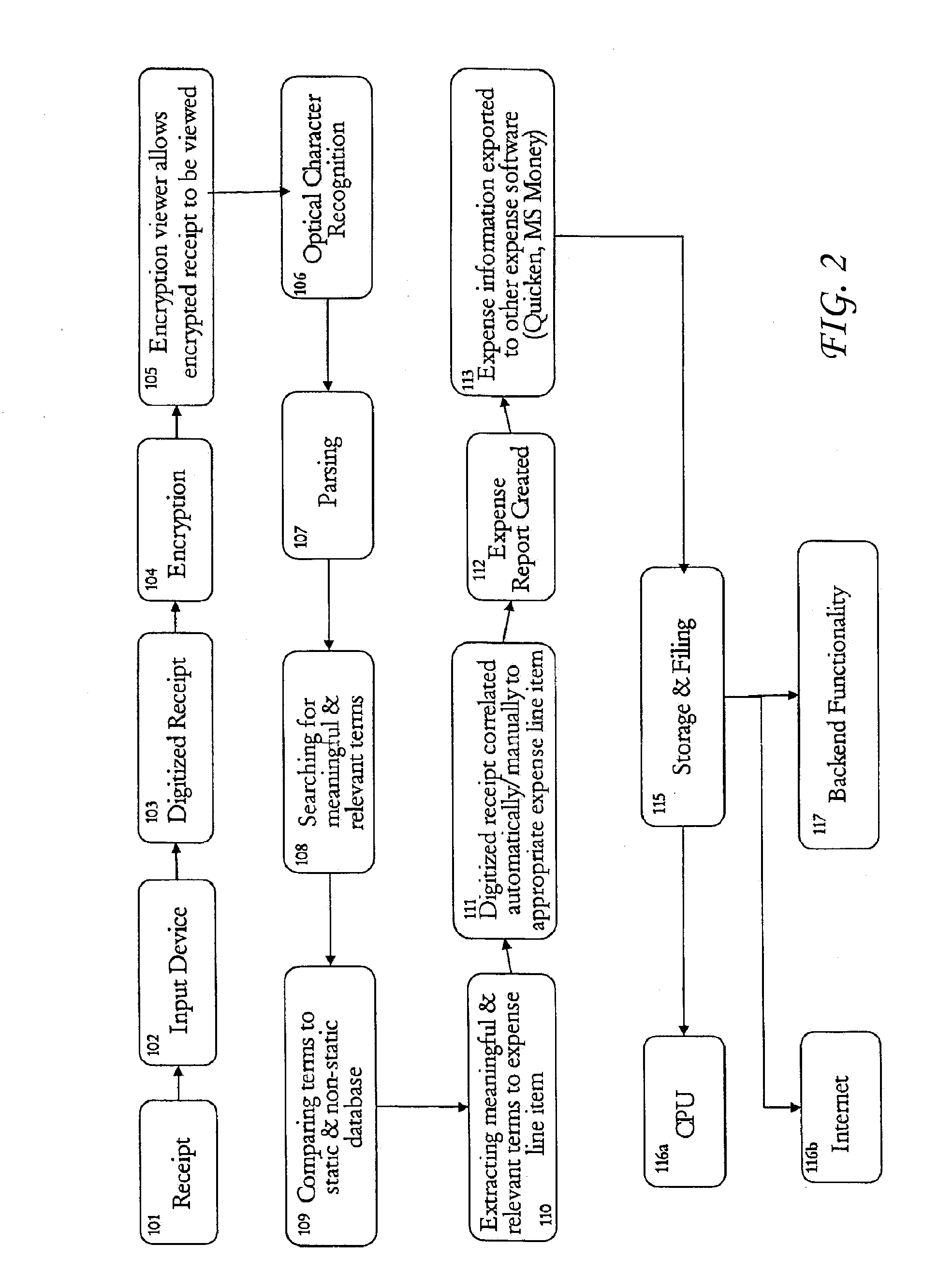

Active · US20090067729A1 · Digital data information retrieval · Special data processing applications · Text alignment · Type frequency

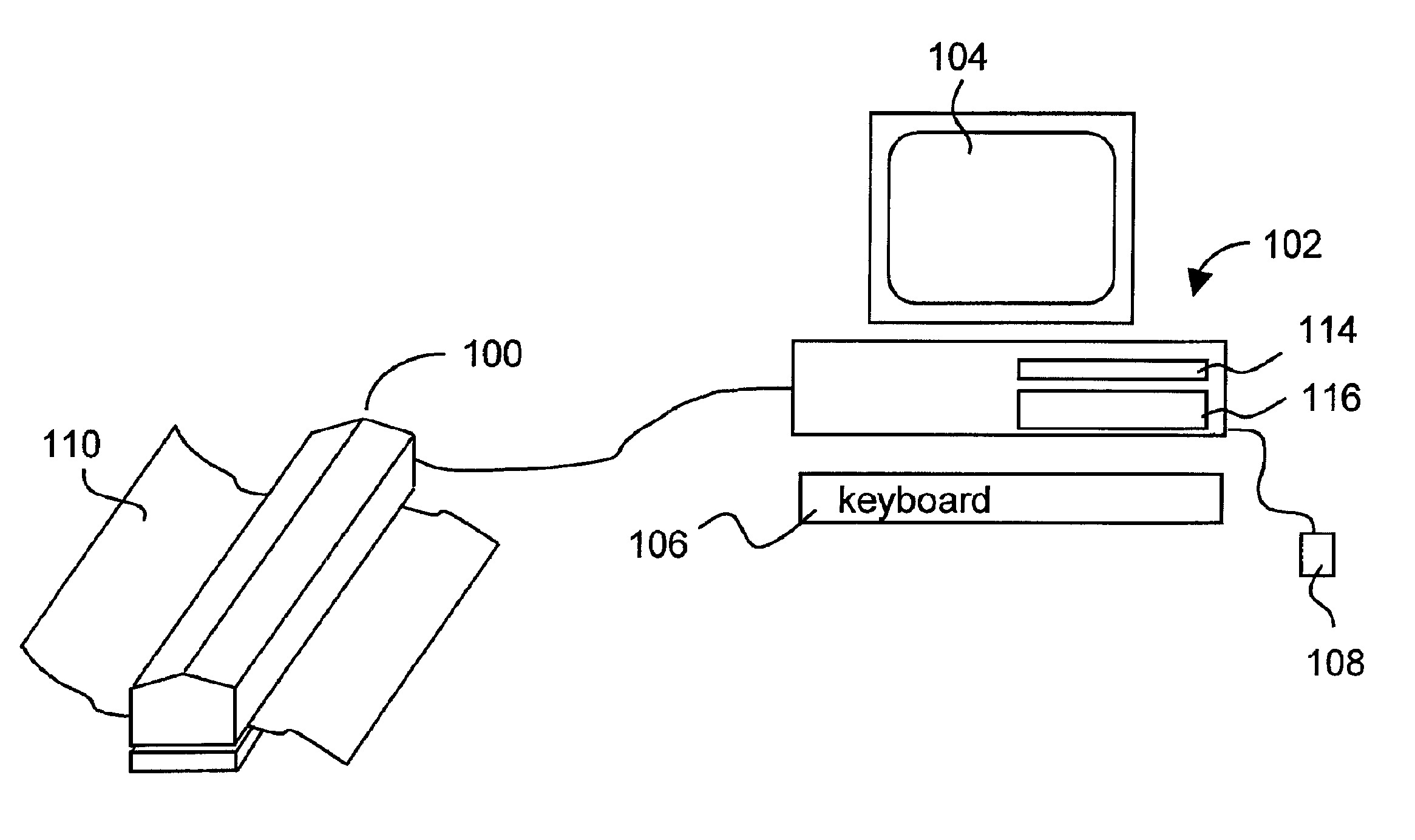

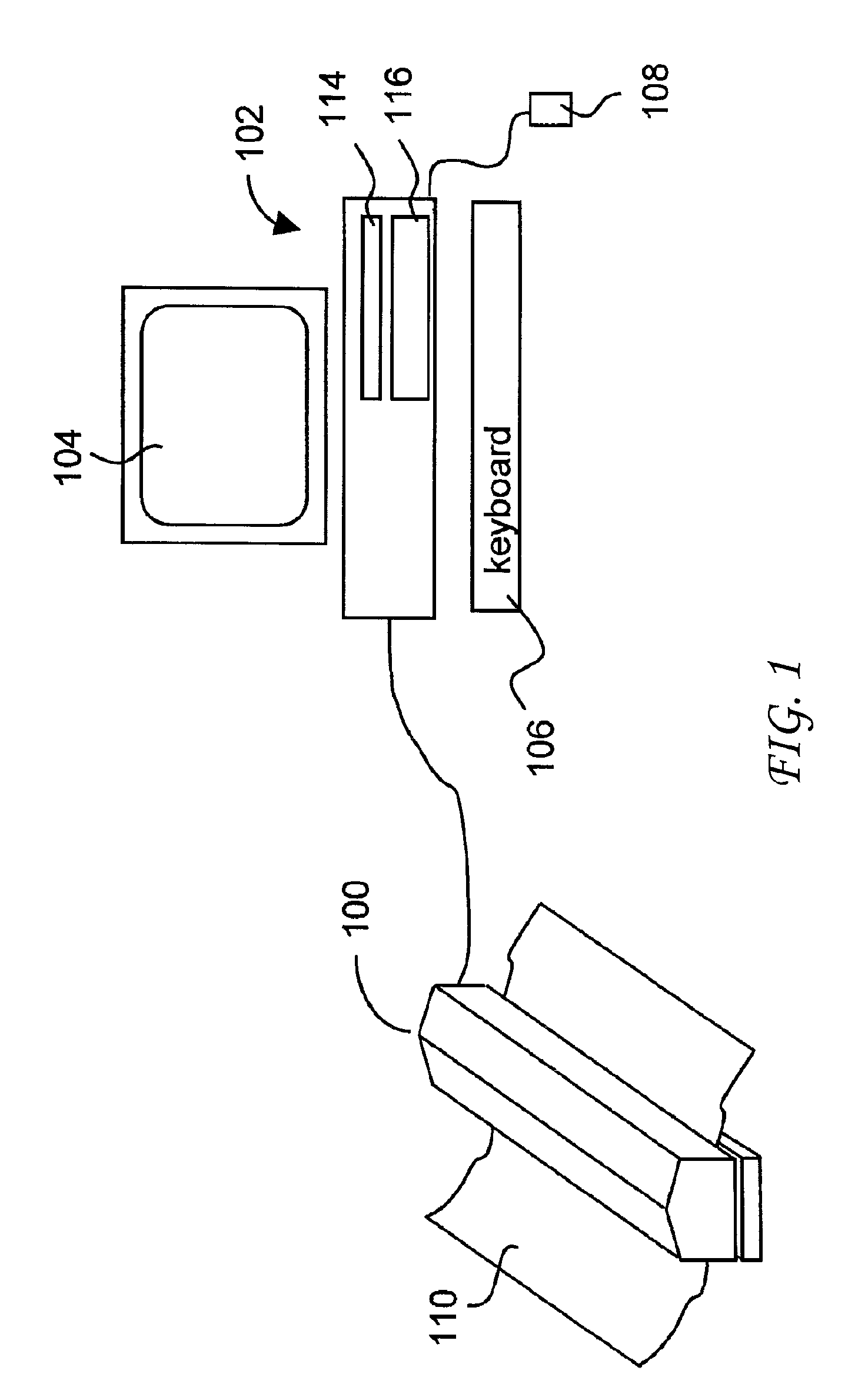

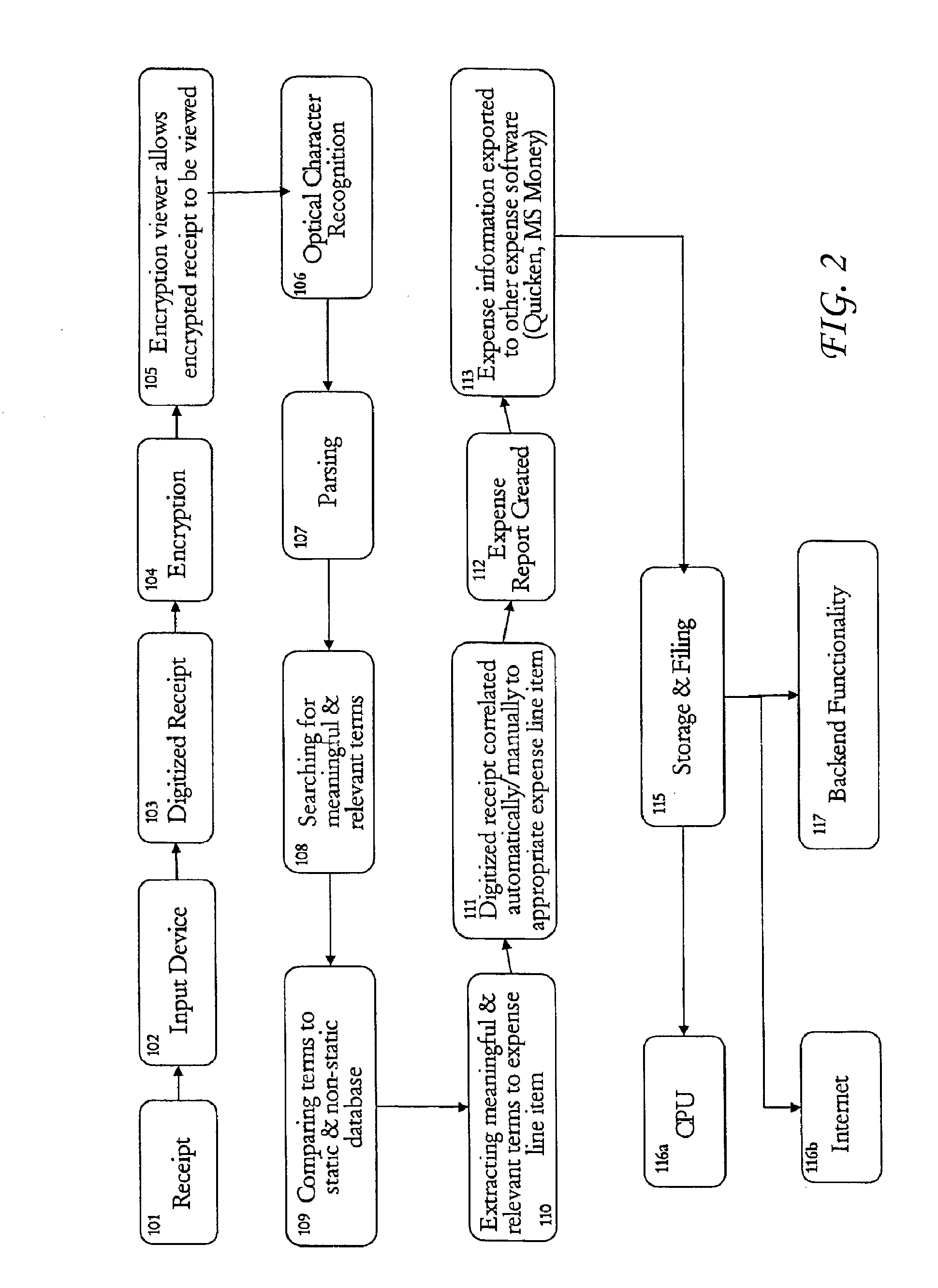

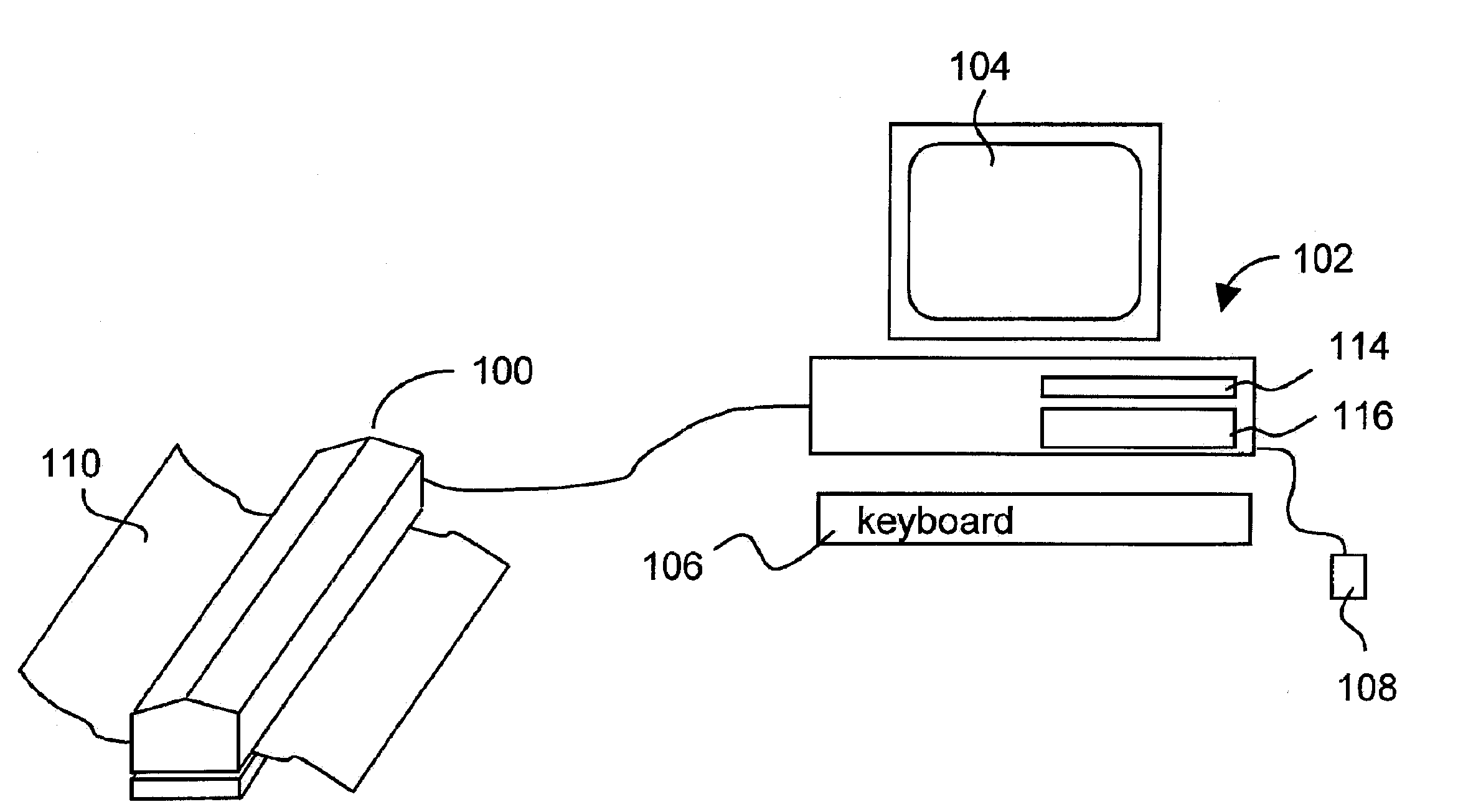

An automatic document classification system is described that uses lexical and physical features to assign a class $c_i \in C = \{c_1, c_2, \ldots, c_i\}$ to a document d. The primary lexical features are the result of a feature selection method known as Orthogonal Centroid Feature Selection (OCFS). Additional information may be gathered on character type frequencies (digits, letters, and symbols) within d. Physical information is assembled through image analysis to yield physical attributes such as document dimensionality, text alignment, and color distribution. The resulting lexical and physical information is combined into an input vector X and is used to train a supervised neural network to perform the classification.

Owner:THE NEAT COMPANY INC DOING BUSINESS AS NEATRECEIPTS

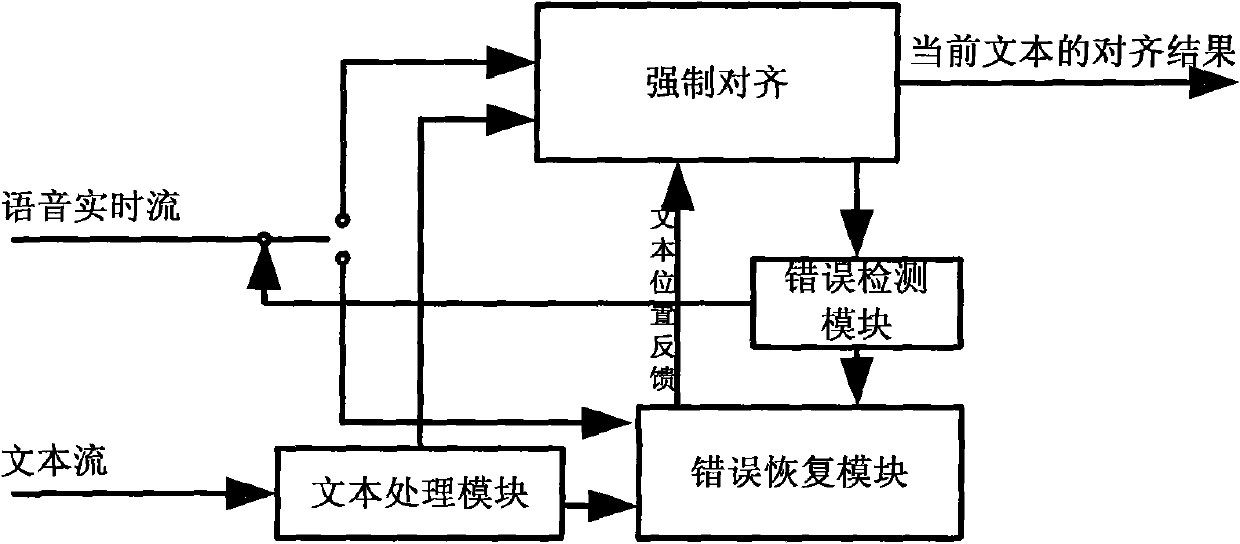

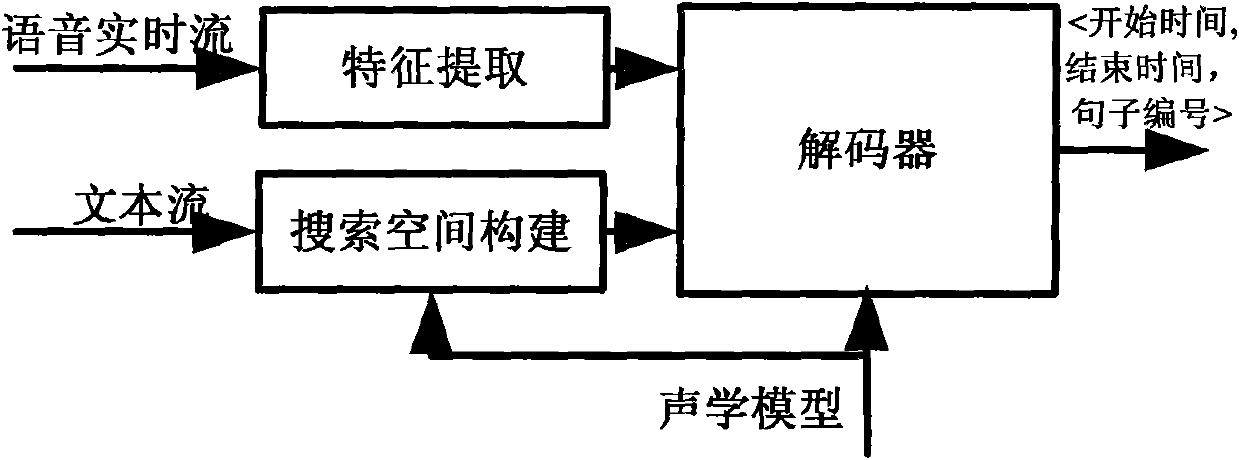

Alignment system of on-line speech text and method thereof

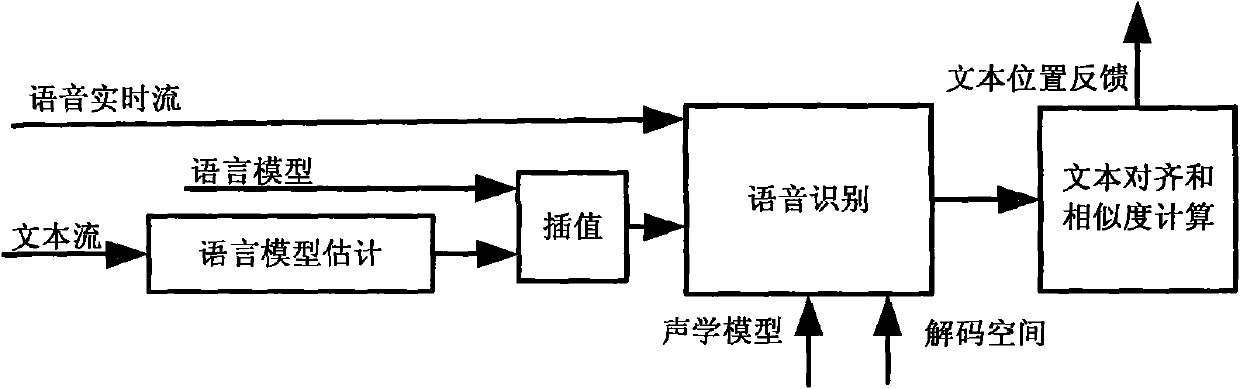

Active · CN101651788A · Generate alignment results in real time · Generate online input in real time · Television system details · Color television details · Text alignment · Feature extraction

The invention relates to a system and method for aligning on-line speech with text. The system comprises a text processing module, an error detection module, an error recovery module and a compulsory (forced) alignment module. The compulsory alignment module comprises a feature extraction module, a search space construction module and an alignment decoding module. The error recovery module comprises a language model estimation module, a language model interpolation module, a speech recognition module and a text alignment and similarity calculation module. The sentence-end detection method improves on the conventional method based on Viterbi alignment: information from the beam-search search space is used to estimate the activity degree A (t, somegae) of the search space at the end of the sentence and to estimate the sentence end time in partial meaning *<*>. The system and method automatically detect and skip unmatched segment errors between the text and the speech, generate the alignment between the incoming speech stream and the corresponding text in real time, and can process long texts that contain errors.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1

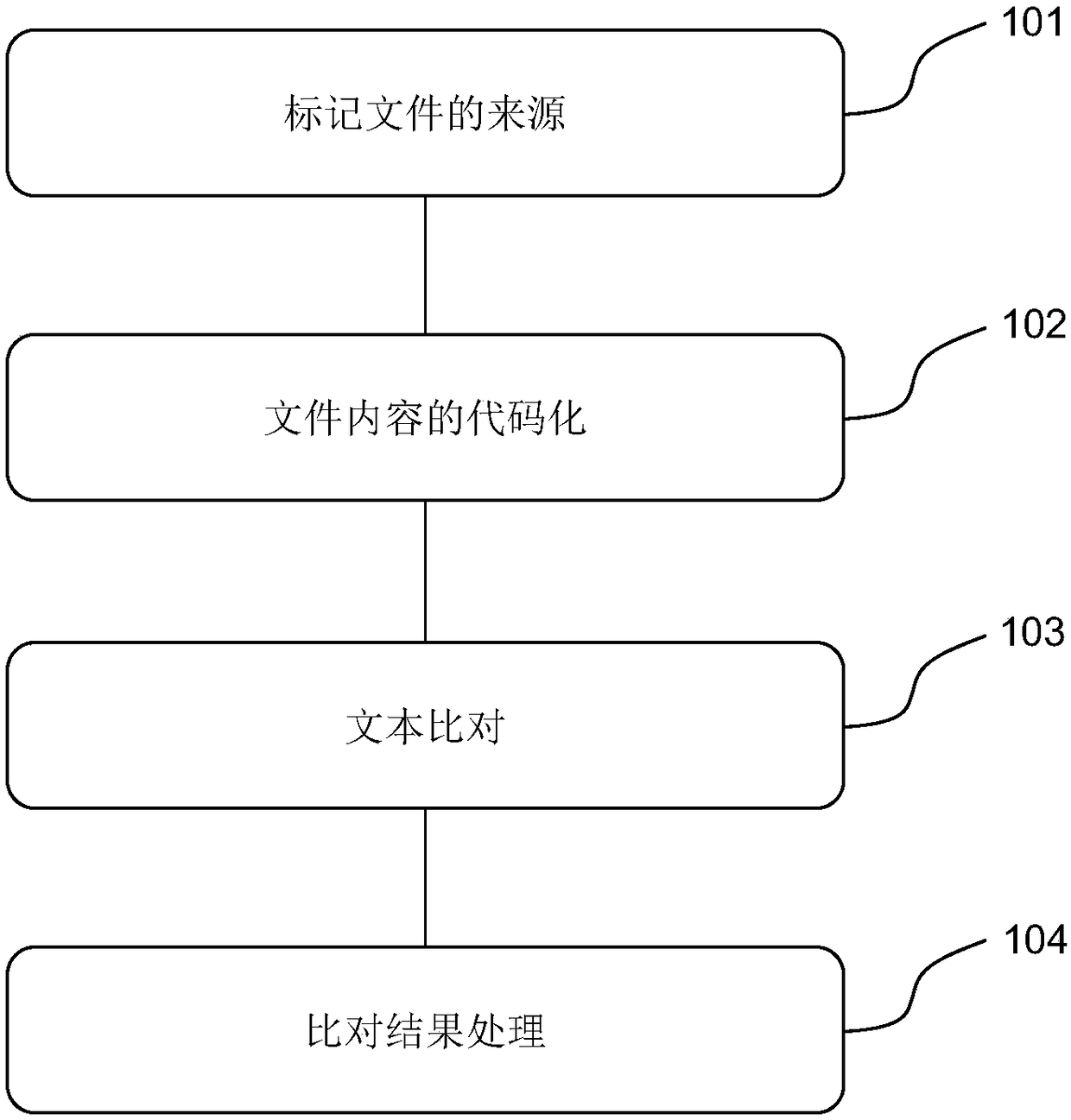

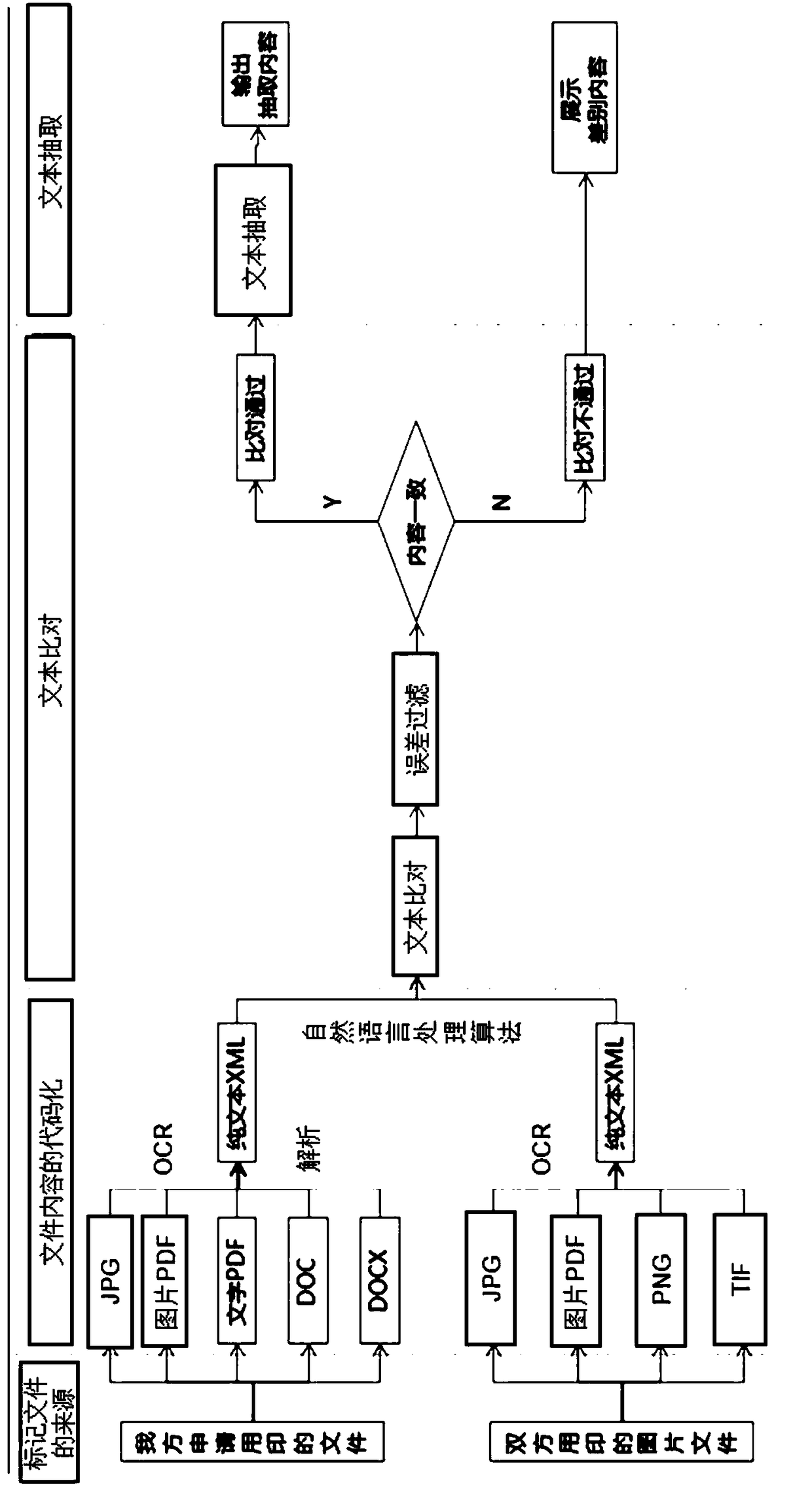

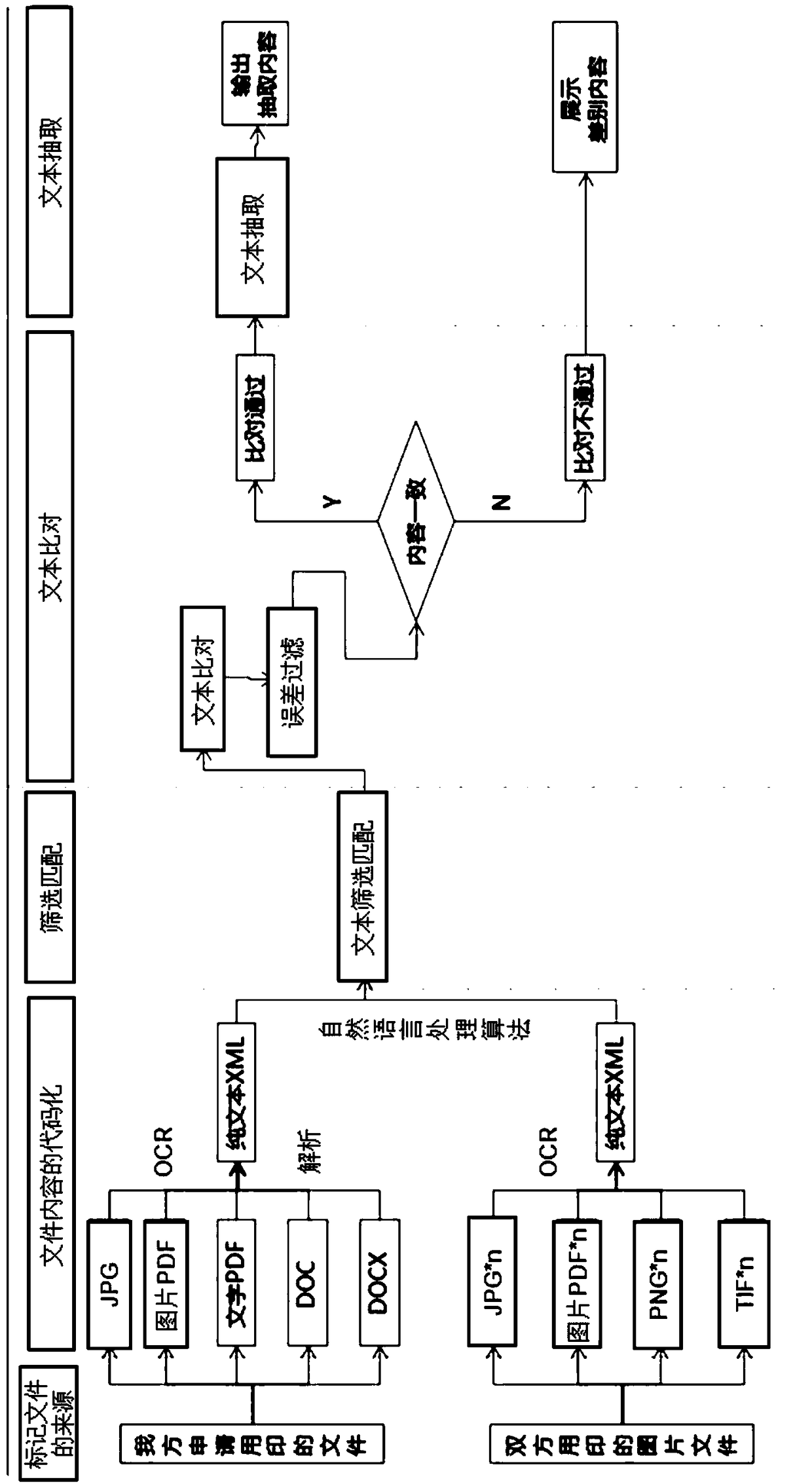

Consistency auditing method for different source files

Inactive · CN109190092A · Reduce work intensity · Reduce financial risk · Finance · Semantic analysis · Text alignment · Text file

The invention discloses a consistency auditing method for files from different sources, comprising the following steps. Marking the file sources: the two files to be checked come from different sources; one is used as the reference part and the other as the part to be audited. Encoding the file contents: the two files are encoded separately to obtain text files in a uniform format. Performing text alignment: the two text files are aligned, content that was added, deleted or modified is marked in the text of the part to be audited, and a first alignment result is generated; error filtering is applied to the first alignment result to generate a second alignment result, and a similarity value is computed from the second alignment result. Processing the comparison result: if the similarity value is greater than the set threshold, the two text files are extracted from each other; if it is not greater than the set threshold, the differences between the two text files are displayed on the basis of the text of the part to be audited.

Owner:深圳平安综合金融服务有限公司
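
The alignment, filtering and threshold steps in the abstract above can be illustrated with a minimal Python sketch. It is not the patented implementation: the whitespace tokenization, the `normalize` filter (which ignores differences in case and surrounding punctuation), the similarity formula, the default threshold and the reading of "above the threshold" as "consistent" are all assumptions made for illustration. Only the standard-library `difflib` module is used.

```python
import difflib
import string


def normalize(tokens):
    """Hypothetical error filter: lower-case and strip surrounding punctuation."""
    return [t.lower().strip(string.punctuation) for t in tokens]


def audit(reference: str, candidate: str, threshold: float = 0.9):
    """Return (is_consistent, similarity, remaining_differences)."""
    ref, cand = reference.split(), candidate.split()
    matcher = difflib.SequenceMatcher(a=ref, b=cand, autojunk=False)

    # First alignment result: every added, deleted or modified span.
    first = [(tag, ref[i1:i2], cand[j1:j2])
             for tag, i1, i2, j1, j2 in matcher.get_opcodes()
             if tag != "equal"]

    # Second alignment result: drop differences that vanish after normalization.
    second = [c for c in first if normalize(c[1]) != normalize(c[2])]

    # Assumed similarity: share of tokens not covered by a remaining difference.
    changed = sum(max(len(old), len(new)) for _, old, new in second)
    similarity = max(0.0, 1.0 - changed / max(len(ref), len(cand), 1))
    return similarity > threshold, similarity, second
```

Calling `audit("Total amount: 100 USD", "Total amount: 900 USD")` reports one remaining `replace` difference and a similarity below the default threshold, so the pair would be shown to the auditor.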

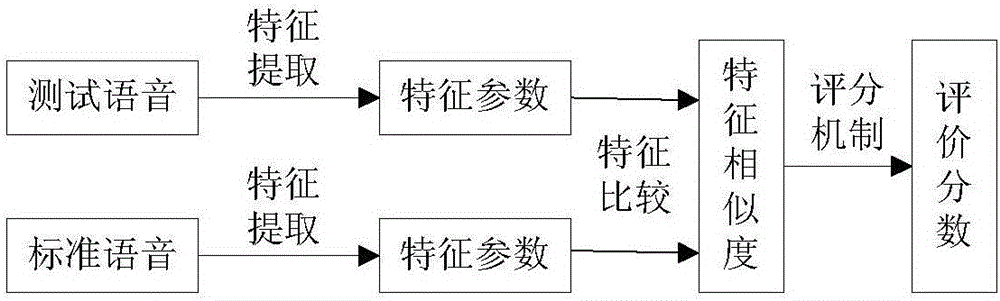

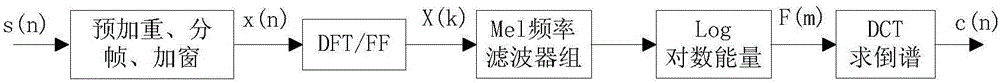

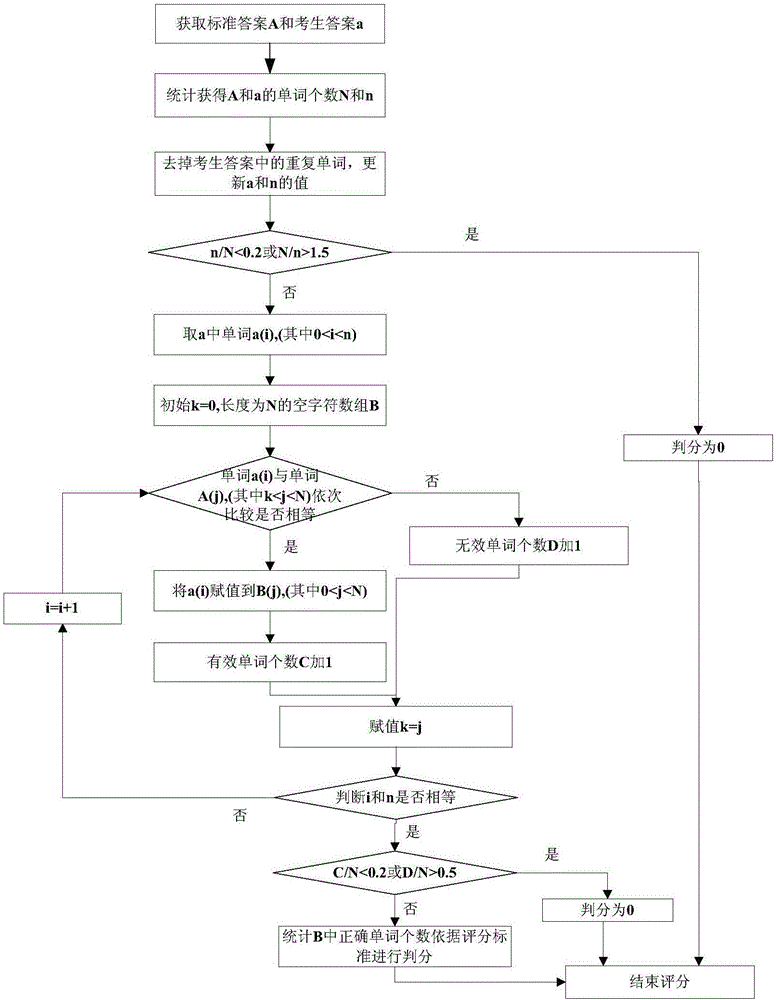

Automatic oral English marking method based on feature fusion

The invention provides an automatic oral English marking method based on feature fusion. A multi-feature fusion method is used to mark the intonation tests in large-scale oral English examinations. Taking the continuous speech signal and the speech recognition text as research objects, the intonation tests are analyzed from two angles, speech and text. On the one hand, the input speech is analyzed, and speech features are extracted and compared with a reference standard; on the other hand, the speech recognition text is compared with the reading text via a text alignment method, and a marking mechanism assigns an assessment and a mark according to the similarity level. Experimental results show that the method has relatively low algorithmic complexity and that its marks agree with human subjective judgment.

Owner:山东山大鸥玛软件股份有限公司

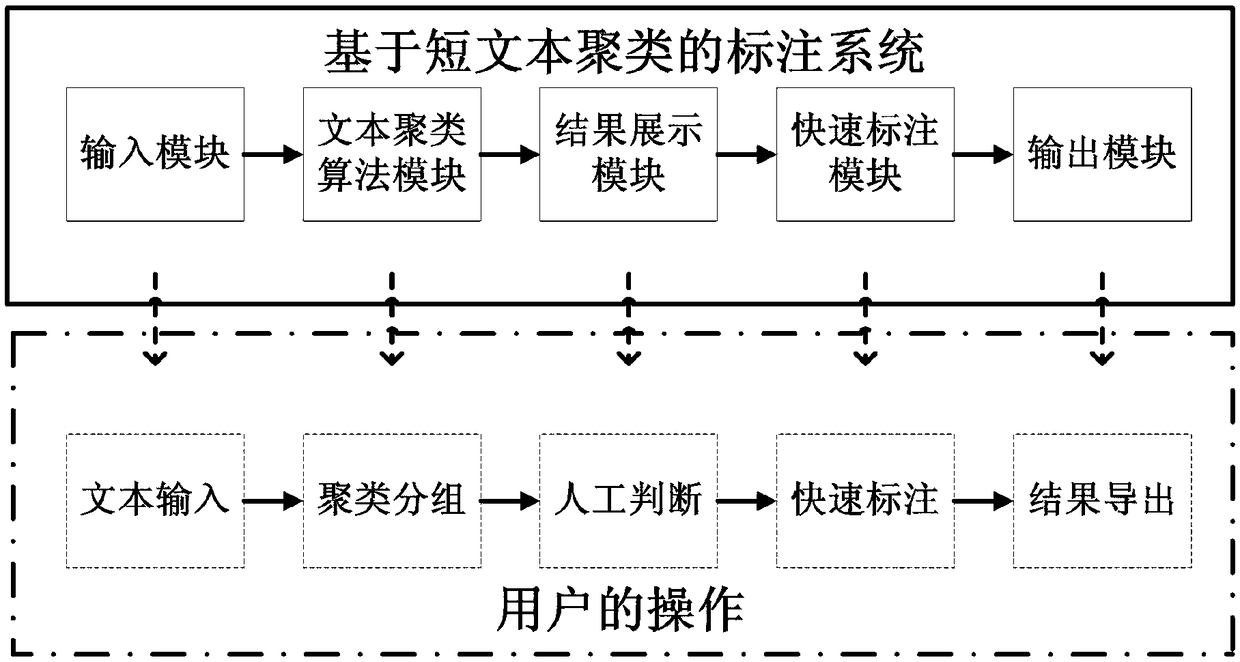

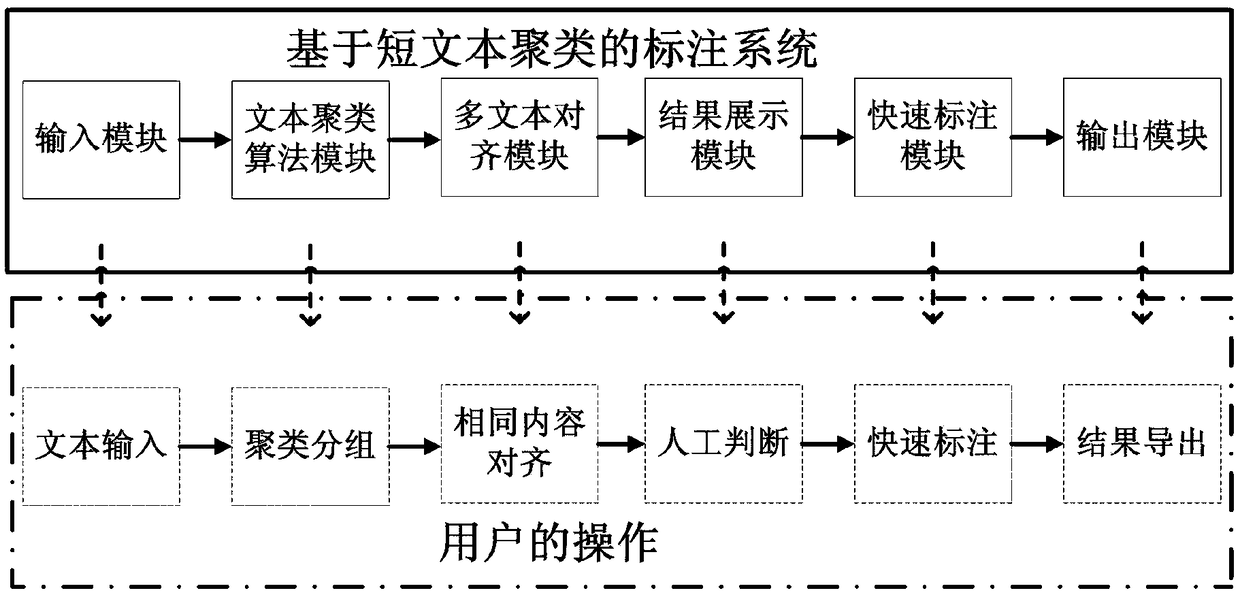

Short text clustering-based labeling system and method

Active · CN108647319A · Improve labeling efficiency · Reduce reading workload · Character and pattern recognition · Natural language data processing · Text alignment · Cluster algorithm

The invention relates to a short text clustering-based labeling system and method in the technical field of clinical medical labeling, and addresses the low labeling efficiency, difficult training, poor result accuracy and excessively high communication cost of the prior art. The system comprises an input module, a text clustering algorithm module, a multi-text alignment module, a result display module, a quick labeling module and an output module. Because a text clustering algorithm and a multi-text alignment algorithm are adopted, the amount of similar sub-text to be read is greatly reduced and reading speed is increased; longitudinal multi-text comparative browsing makes manual comparison convenient for the user; and the algorithm can be trained without any training set, so IT personnel do not need to modify it for different medical texts and the communication cost is extremely low.

Owner:思派(北京)网络科技有限公司

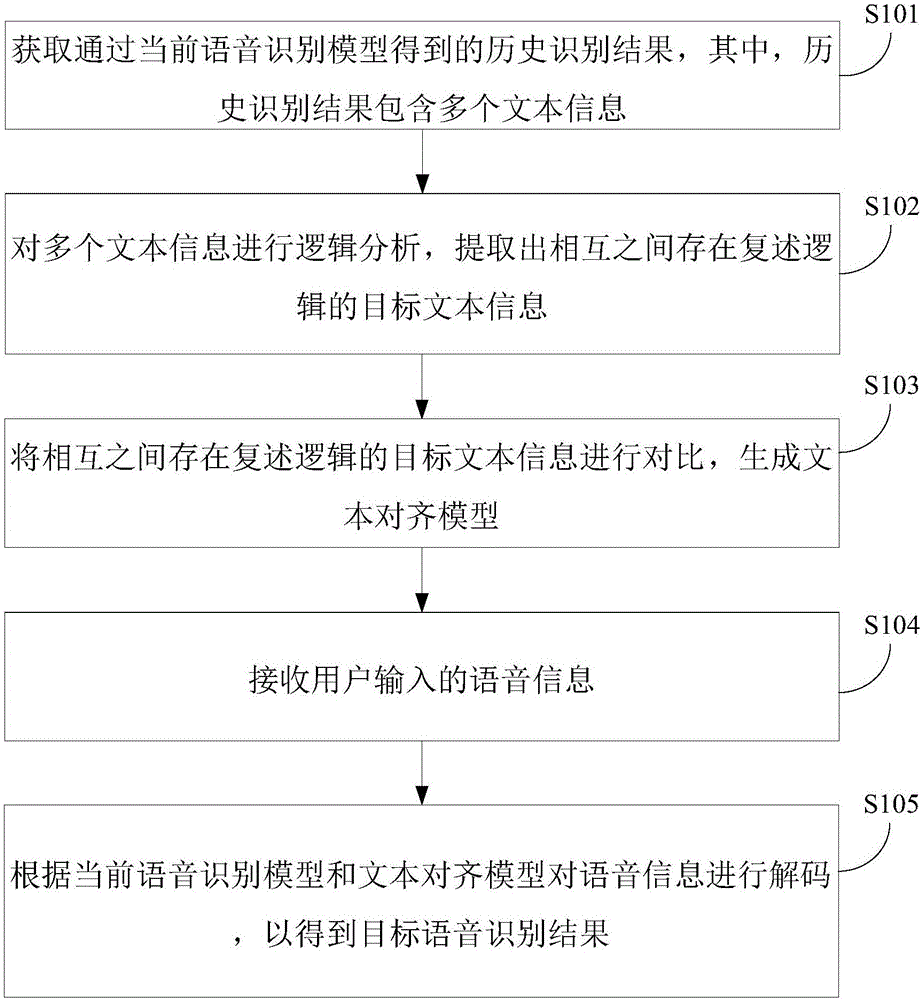

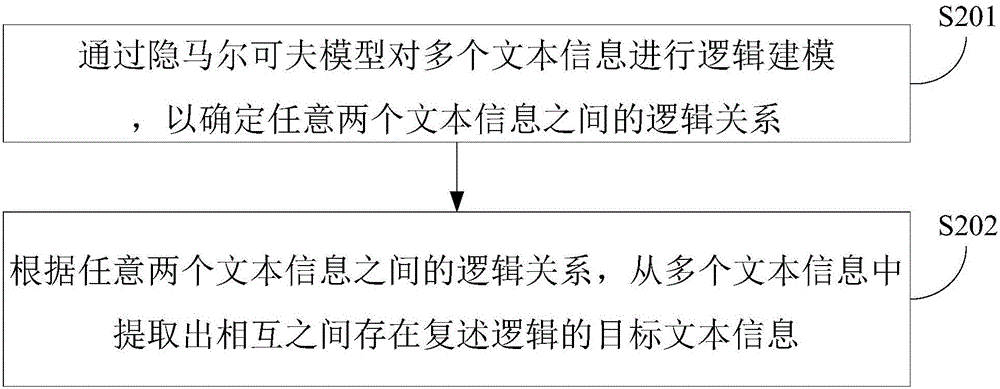

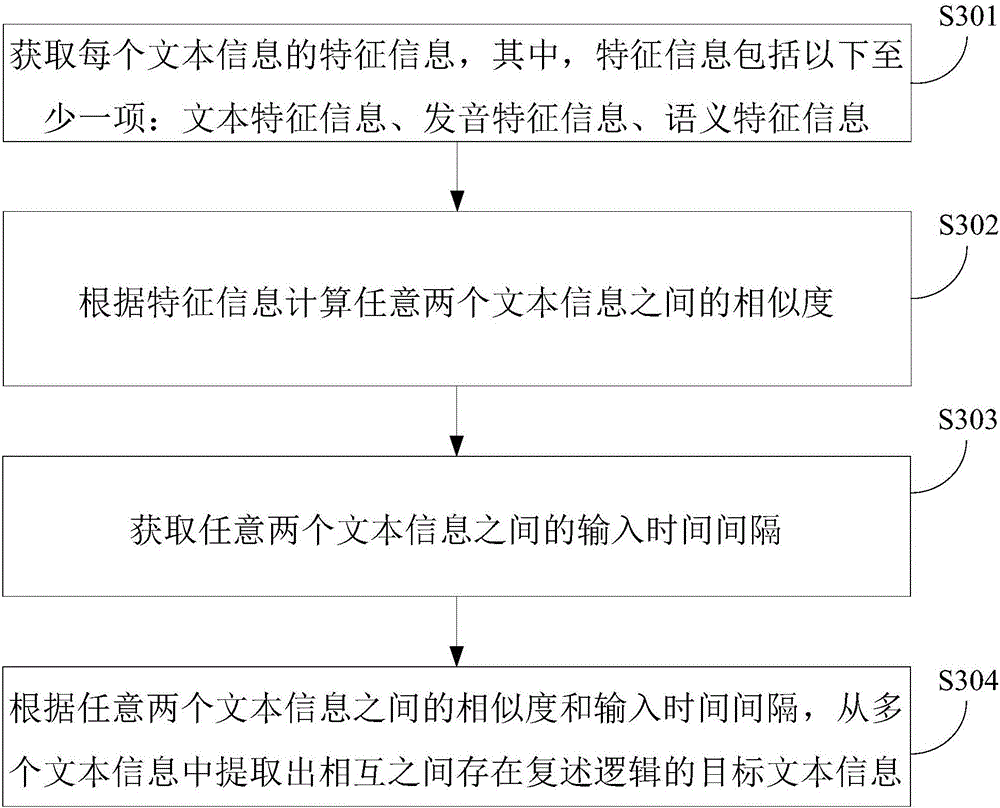

Speech recognition error correction method and device

The present invention relates to a speech recognition error correction method and device. The method includes the following steps: historical recognition results obtained by a current speech recognition model are acquired, wherein the historical recognition results include a plurality of pieces of text information; logical analysis is performed on the plurality of pieces of text information, and target text information with repeat logic is extracted; the target text information with repeat logic is subjected to wrong channel statistics to generate a text alignment model; speech information input by a user is received; and the speech information is decoded according to the current speech recognition model and the text alignment model to obtain a target speech recognition result. With this method and device, the speech recognition result can be more accurate and better meet the user's requirements, improving the user experience.

Owner:BEIJING UNISOUND INFORMATION TECH +1

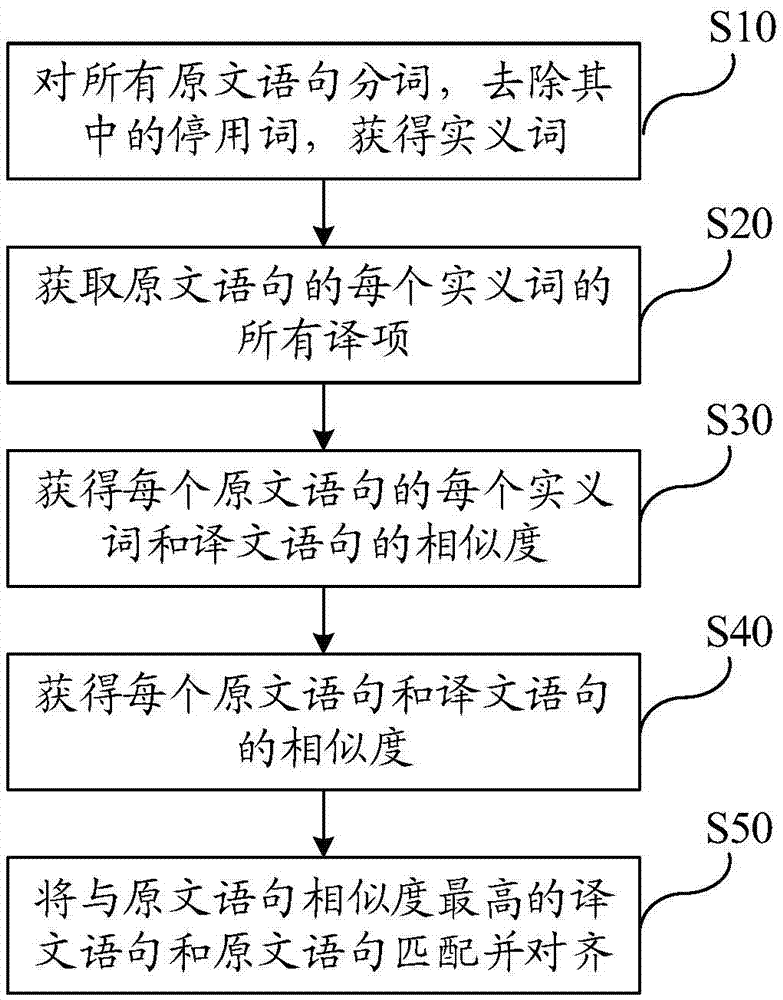

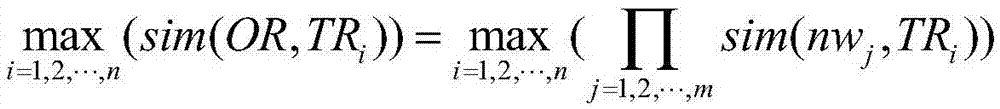

Original text and translated text alignment method and apparatus

Active · CN105446962A · Fix alignment issues · Shorten the time · Natural language translation · Special data processing applications · Text alignment · Theoretical computer science

The invention discloses an original text and translated text alignment method. The method comprises: performing word segmentation on all original text statements to remove stop words and obtain content words; obtaining all translation items of the content words of the original text statements; matching all the translation items of the content words of the original text statements in all translated text statements to obtain the similarity between the content words of the original text statements and the translated text statements; according to the similarity between the content words of the original text statements and the translated text statements, matching the original text statements with the translated text statements to obtain the similarity between the original text statements and the translated text statements; and performing matching and alignment on a translated text statement with highest similarity with an original text statement and the original text statement. The invention discloses an original text and translated text alignment apparatus. According to the method and the apparatus, the problem in original text and translated text alignment is solved.

Owner:IOL WUHAN INFORMATION TECH CO LTD
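
The dictionary-based matching described in the abstract above can be sketched in a few lines of Python. This is an illustrative sketch only: the stop-word list, the toy English-French `LEXICON`, whitespace word segmentation and the scoring rule (share of content words with a translation present in the target sentence) are assumptions, not details taken from the patent. The target list is assumed to be non-empty.

```python
# Hypothetical resources for illustration only.
STOP_WORDS = {"the", "a", "is", "on"}
LEXICON = {
    "cat": {"chat"}, "sleeps": {"dort"}, "mat": {"tapis"},
    "dog": {"chien"}, "barks": {"aboie"},
}


def content_words(sentence):
    """Segment a sentence on whitespace and drop stop words."""
    return [w for w in sentence.lower().split() if w not in STOP_WORDS]


def similarity(source, target):
    """Share of source content words with a translation item found in the target."""
    words = content_words(source)
    if not words:
        return 0.0
    target_tokens = set(target.lower().split())
    hits = sum(1 for w in words if LEXICON.get(w, set()) & target_tokens)
    return hits / len(words)


def align(sources, targets):
    """For each source sentence, return the index of the most similar target."""
    return [max(range(len(targets)), key=lambda j: similarity(s, targets[j]))
            for s in sources]
```

With `sources = ["The cat sleeps on the mat", "The dog barks"]` and `targets = ["Le chien aboie", "Le chat dort sur le tapis"]`, `align` pairs the first source sentence with the second target sentence and the second with the first.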

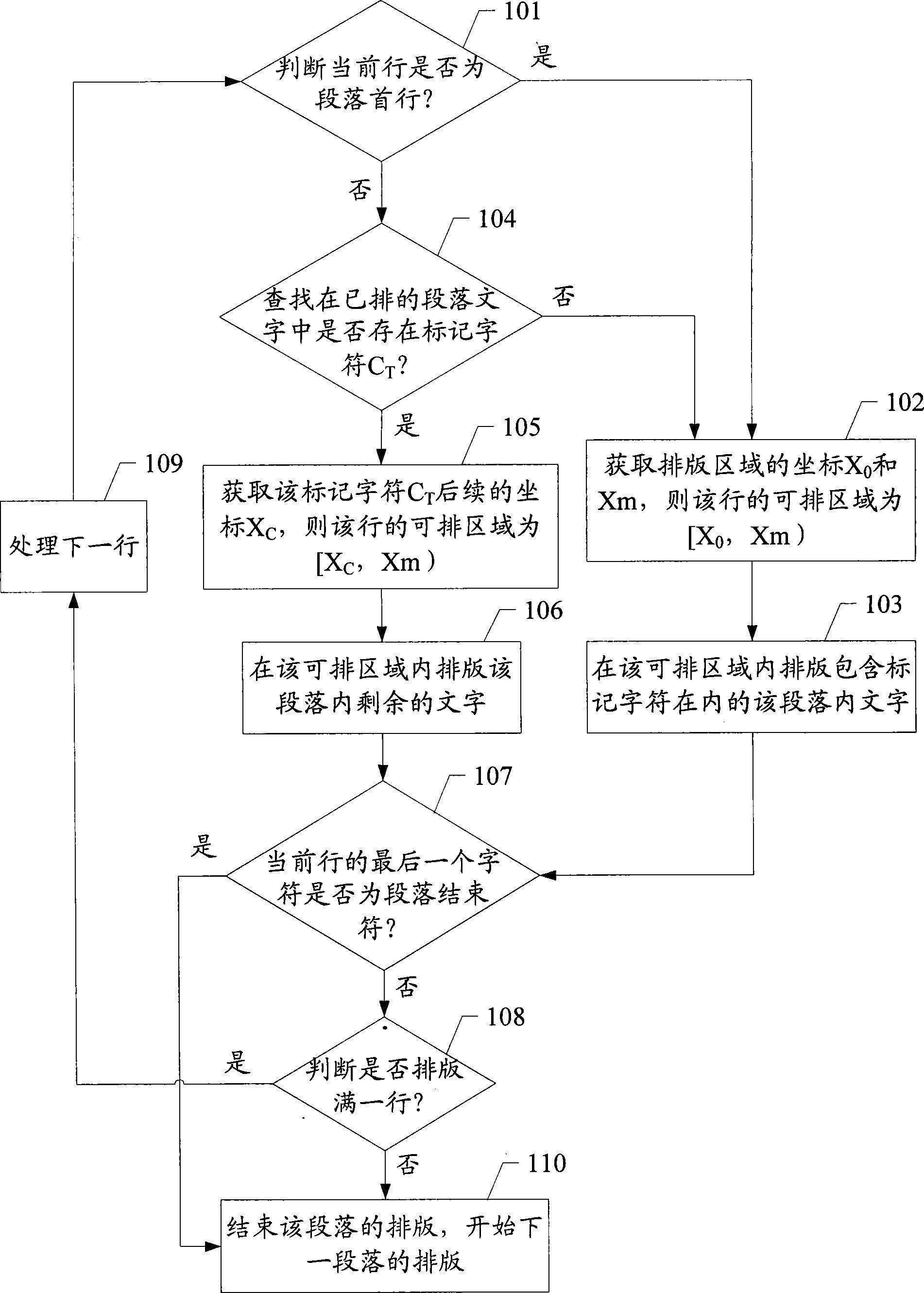

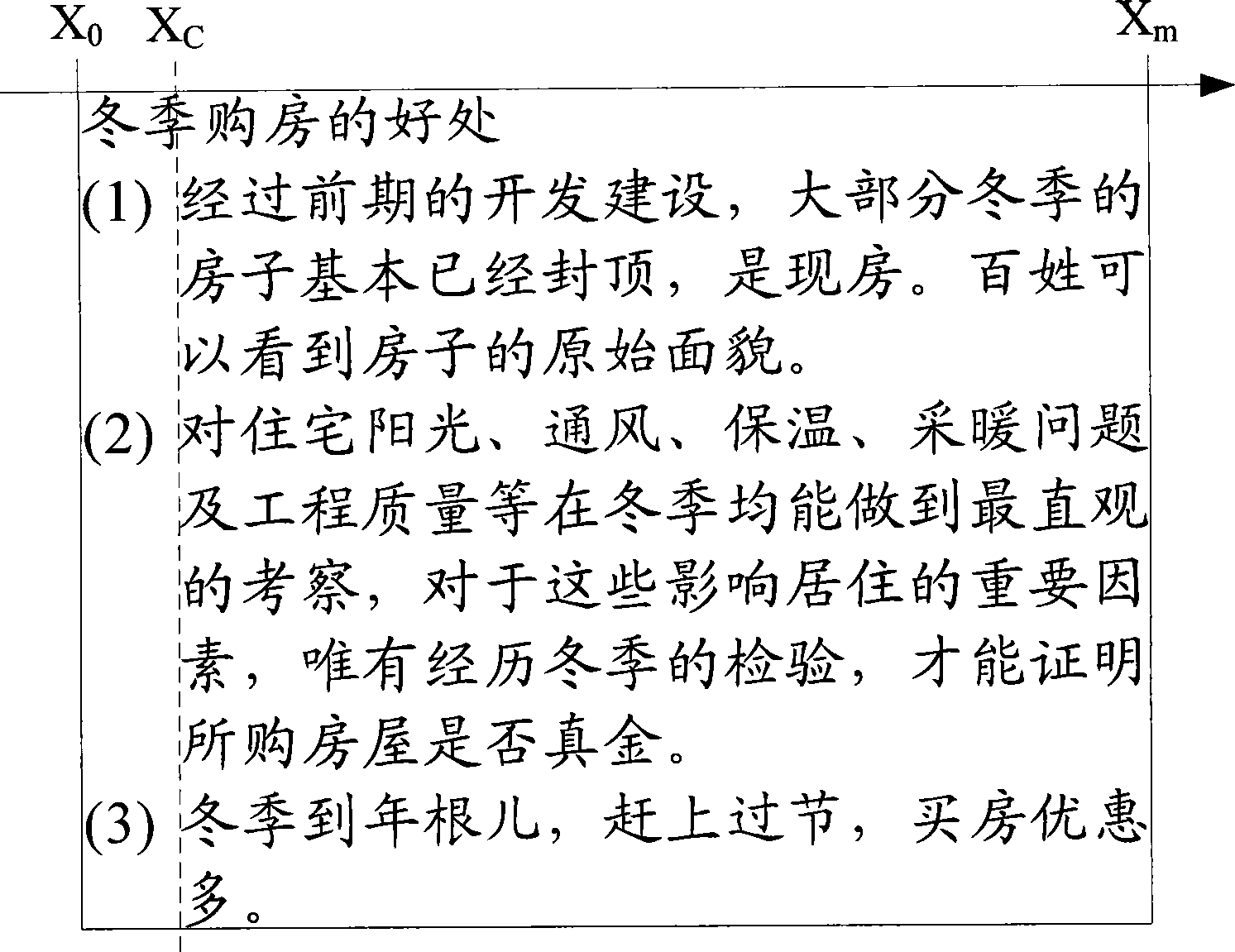

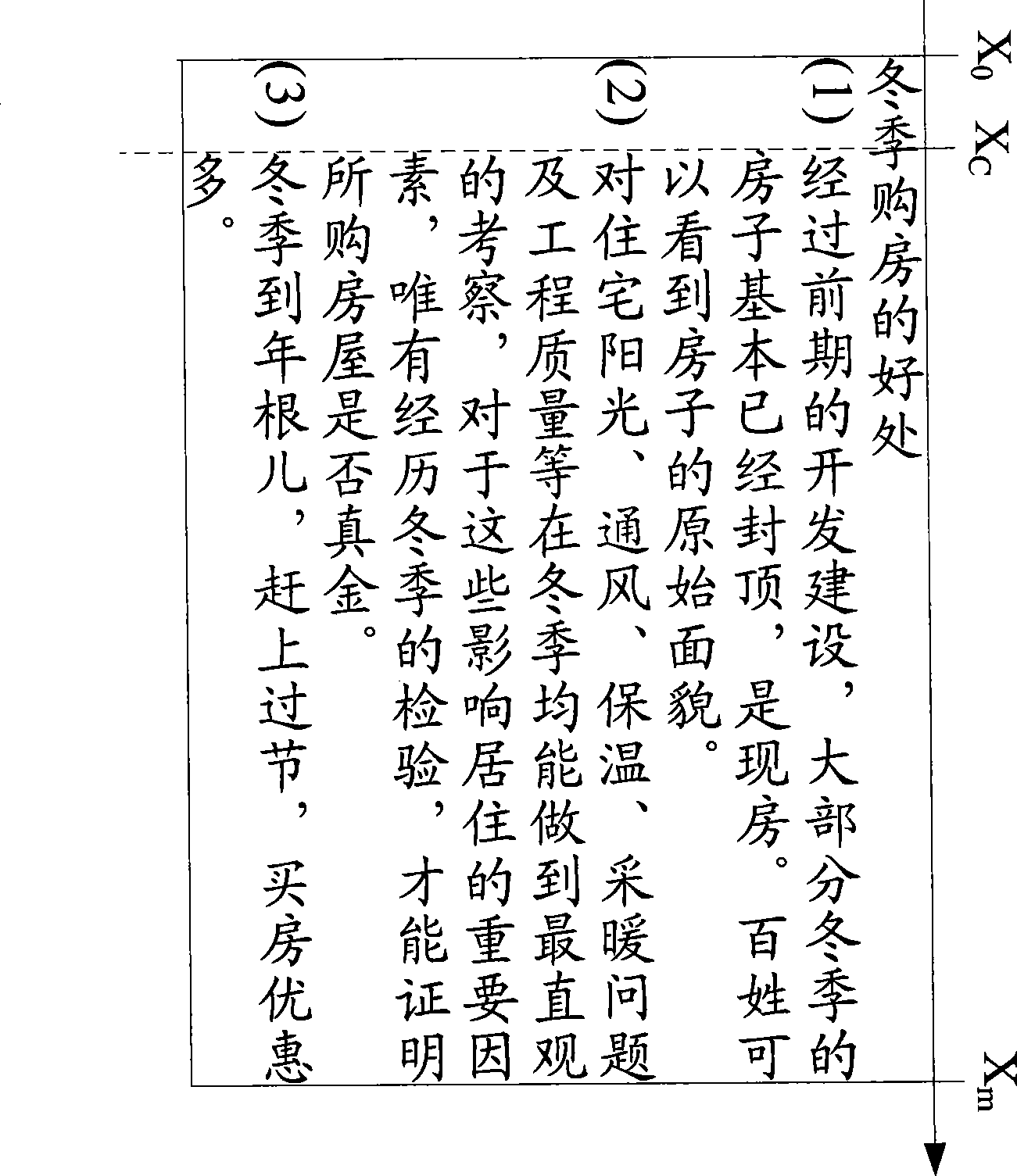

Typesetting method and device for text alignment in paragraph

Inactive · CN101452445A · Typesetting operation is simple · Improve typesetting efficiency · Natural language data processing · Special data processing applications · Text alignment · Paragraph

The invention discloses a typesetting method and a typesetting device for text alignment in a paragraph, which are used for solving the problems of low typesetting efficiency or nonideal adjustment effect in the prior art. The method determines a typesettable area of a row according to an entered tab character and the attribute of the current row in the paragraph, and then typesets characters in the paragraph in the typesettable area. The proposal provided by the invention can improve the efficiency of the typesetting and assure the adjustment effect.

Owner:PEKING UNIV FOUNDER GRP CO LTD +1

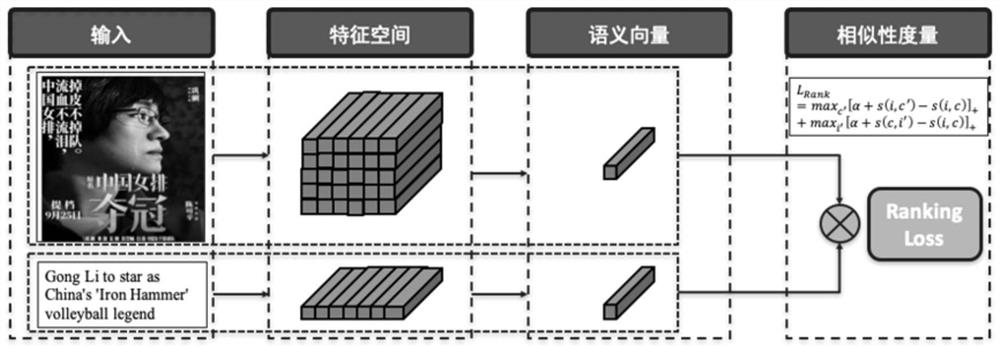

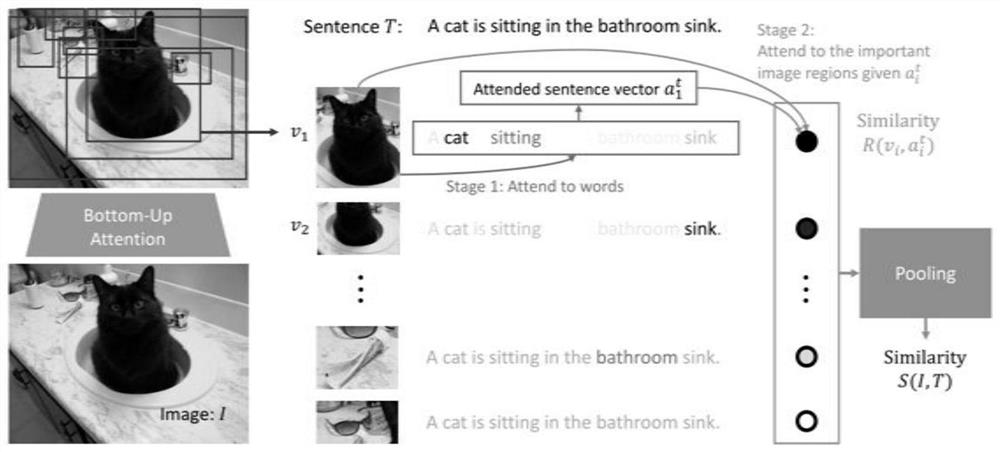

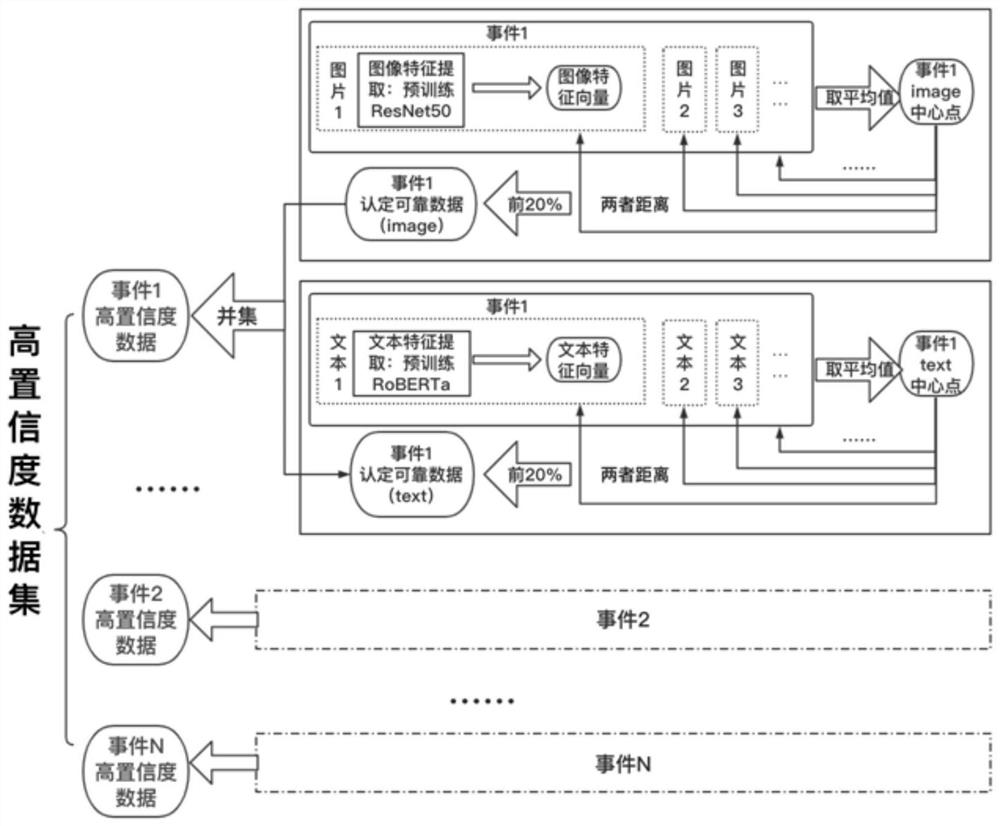

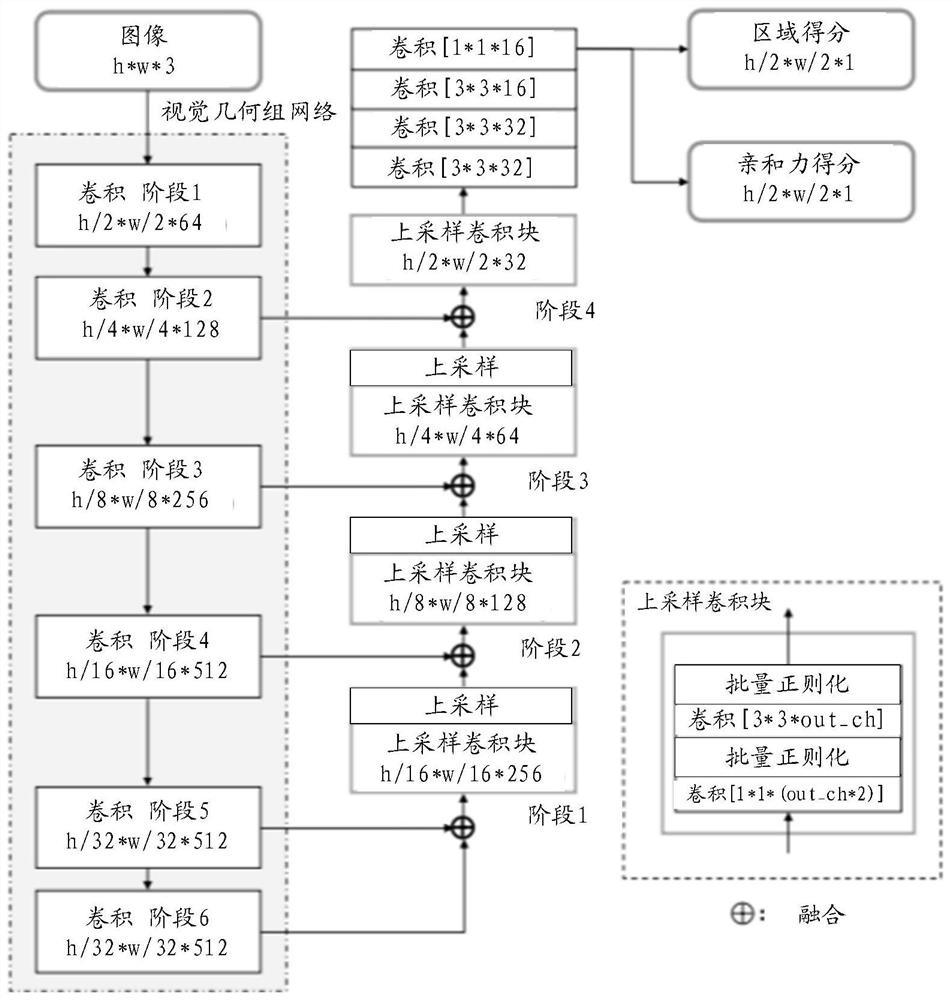

News event searching method and system based on multistage image-text semantic alignment model

Pending · CN114297473A · Rich cognition · Fill vacancy · Neural architectures · Other databases clustering/classification · Text alignment · Semantic alignment

The invention provides MSAVT, a multi-level vision-text semantic alignment model for image-text matching, together with a news event retrieval method based on it, achieving cross-modal image-text search for news events and meeting current news retrieval requirements. The proposed cross-modal retrieval model has higher image-text alignment precision, and when it is applied to cross-modal image-text retrieval of news events, indexes such as multi-level recall rates and average accuracy are remarkably improved. A pre-trained BERT model is introduced to extract text features, which improves the generalization performance of the algorithm. The model adopts a public space feature learning method, so vector representations of images and texts can be obtained independently; that is, vector representations of retrieval results can be stored in advance, retrieval time is short, and the method can be applied to real-world scenarios.

Owner:BEIJING UNIV OF POSTS & TELECOMM

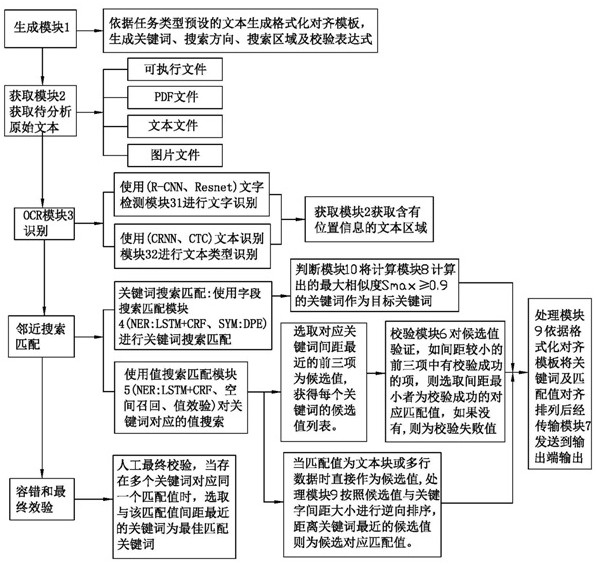

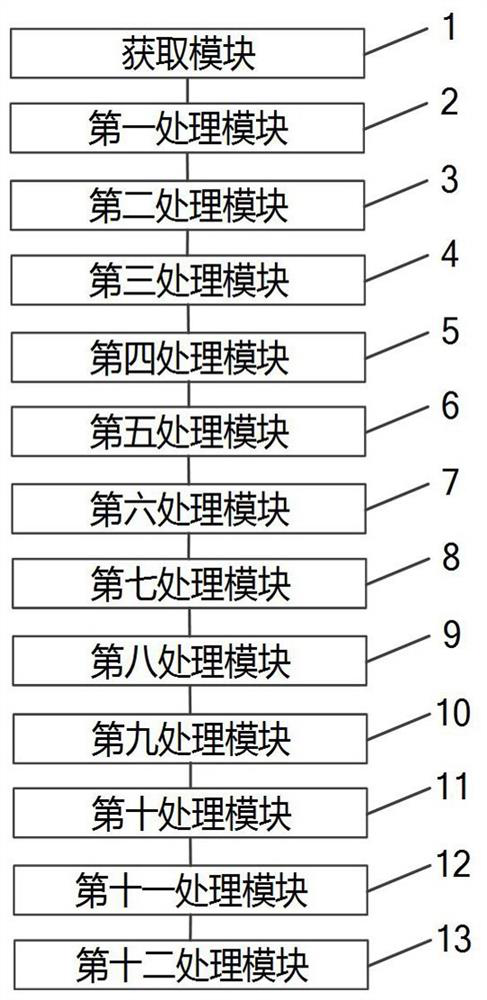

Text alignment method and device, electronic equipment and computer readable storage medium

Active · CN112836484A · Guaranteed accuracy · Character and pattern recognition · Natural language data processing · Text alignment · Fault tolerance

The invention discloses a text alignment method and device, electronic equipment and a computer readable storage medium. The method comprises: presetting, according to the task type, a formatting alignment template, keywords, a search direction, a search region and corresponding check expressions for a text; performing keyword recognition and text type recognition on the text (for example, a picture) through an OCR module to obtain text regions containing position information; performing adjacent search matching to identify each keyword and its matching value according to the preset search direction and search region; verifying the result against the preset check expression; judging qualification according to a preset judgment rule and outputting the match once qualified; and finally performing fault tolerance and final verification. This further ensures the accuracy of aligning the various text keywords with their matching values. The method addresses the prior-art problems that layouts vary, noisy formatted text cannot be aligned, and slow manual operation seriously affects formatting efficiency.

Owner:BEIJING MORE HEALTH TECH GRP CO LTD

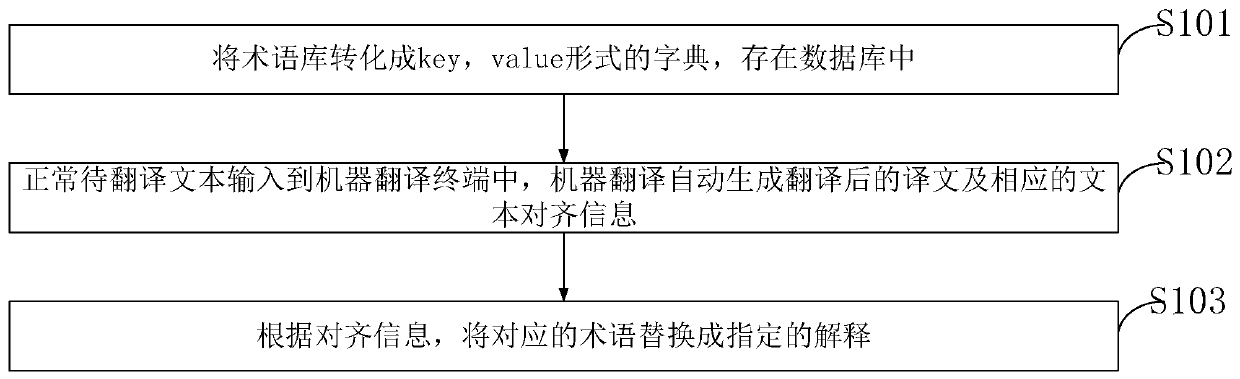

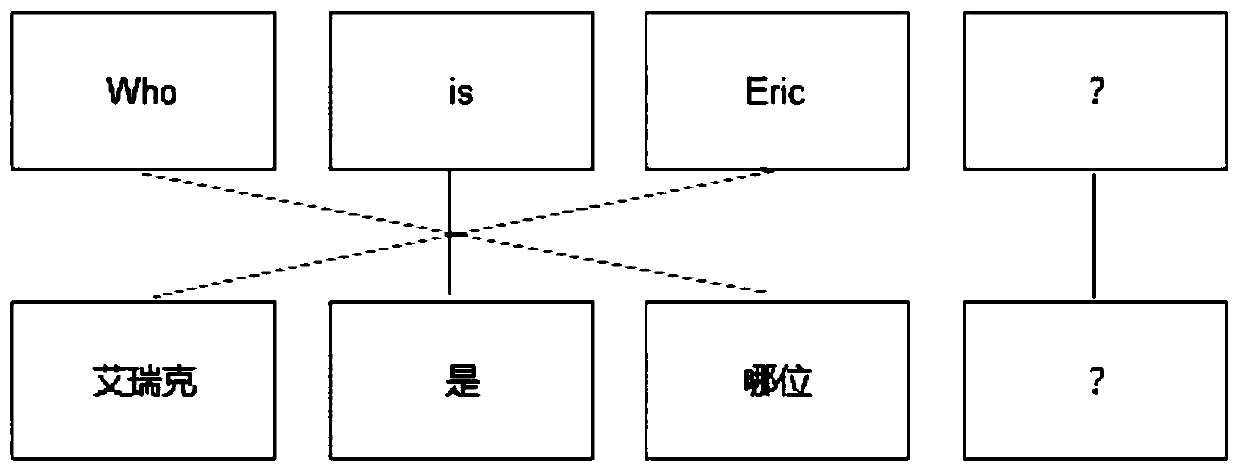

A term translation method and device

Pending · CN109902314A · Easy to expand · Meet the expected effect · Special data processing applications · Text alignment · Human language

The invention belongs to the technical field of language translation and discloses a term translation method and device. The method comprises the steps of: converting a term library into dictionaries in key-value form and storing them in a database; inputting the text to be translated into a machine translation terminal, which automatically generates a translated text and the corresponding text alignment information; and, according to the alignment information, replacing the corresponding term with the specified explanation. By combining machine translation with the term translation used in manual translation, the method lets machine translation draw on more results summarized from human experience, further improving translation quality and meeting the expectations of translators. Users can apply a pre-defined term library to machine translation, so that the term translation in the machine output better matches the expected effect.

Owner:GLOBAL TONE COMM TECH
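
The replacement step in the abstract above can be sketched as follows, assuming the machine translation system returns token-level alignment as `(source_index, target_index)` pairs. The function name `apply_terms`, the data shapes and the restriction to single-token terms are illustrative assumptions, not the patent's actual interface.

```python
def apply_terms(source_tokens, target_tokens, alignment, term_dict):
    """Overwrite aligned target tokens with the rendering from the term library.

    alignment: iterable of (source_index, target_index) pairs (assumed format).
    term_dict: key-value term library, keyed by lower-cased source term.
    """
    output = list(target_tokens)
    for src_idx, tgt_idx in alignment:
        term = term_dict.get(source_tokens[src_idx].lower())
        if term is not None:
            output[tgt_idx] = term
    return output
```

For example, with source tokens `["open", "the", "terminal"]`, machine output `["ouvrez", "le", "borne"]`, a one-to-one alignment and the term library `{"terminal": "terminal"}`, the last target token is replaced by the library entry while the other tokens are left untouched.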

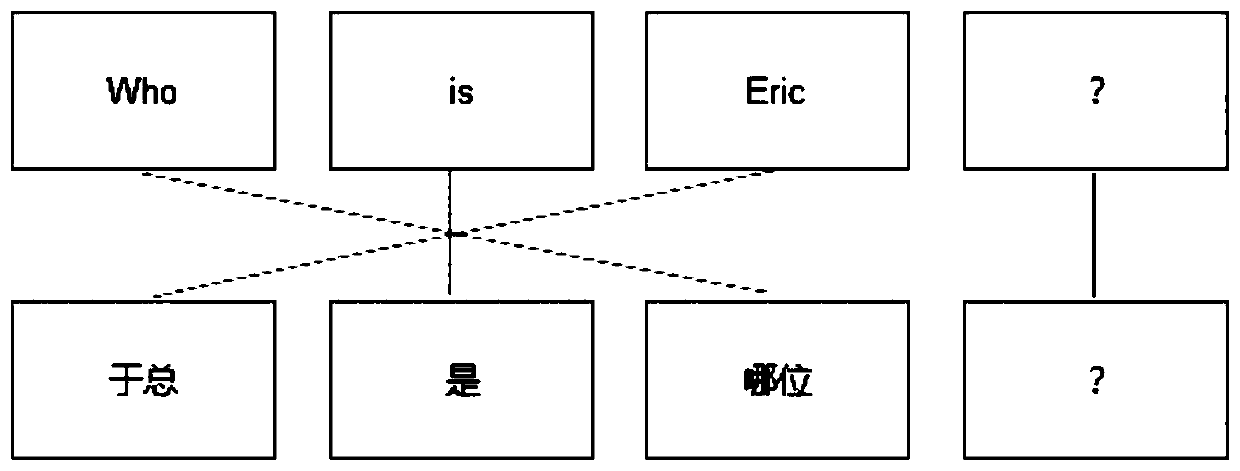

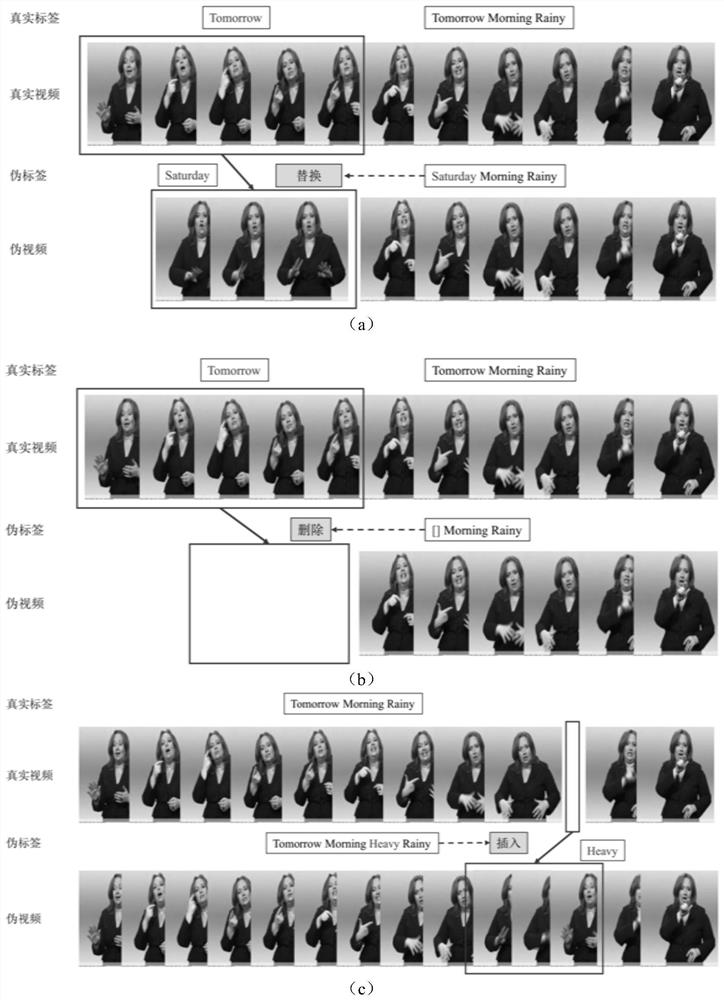

Continuous sign language recognition method based on cross-modal data augmentation

Active · CN112149603A · Scale up · Reduce cross-modal distance · Semantic analysis · Character and pattern recognition · Pattern recognition · Text alignment

The invention discloses a continuous sign language recognition method based on cross-modal data augmentation. The method randomly deletes, inserts and replaces items in the original video-text data to generate a series of labeled pseudo video-text data, augmenting the existing data set and enlarging the data scale. Based on the original data and the augmented data, a new multi-objective optimization function is designed so that the cross-modal distance between a video and its corresponding text is reduced while weakly supervised video-text alignment learning is carried out, and the network can distinguish real data from augmented pseudo data. Through cross-modal data augmentation and multi-task learning, continuous sign language recognition performance is improved.

Owner:UNIV OF SCI & TECH OF CHINA
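
The random deletion, insertion and replacement described in the abstract above can be illustrated on the text side alone. The sketch below edits a token (gloss) sequence; the corresponding edit to the video clips, which the patent also performs, is not modeled here. The function name `augment` and the vocabulary argument are hypothetical.

```python
import random


def augment(tokens, vocabulary, rng):
    """Apply one random edit and return (operation, new_tokens).

    The returned operation can serve as the label marking the sample as pseudo data.
    """
    tokens = list(tokens)
    op = rng.choice(["delete", "insert", "replace"])
    if op == "delete" and len(tokens) > 1:
        del tokens[rng.randrange(len(tokens))]
    elif op == "insert":
        tokens.insert(rng.randrange(len(tokens) + 1), rng.choice(vocabulary))
    elif op == "replace" and tokens:
        tokens[rng.randrange(len(tokens))] = rng.choice(vocabulary)
    return op, tokens
```

Passing a seeded `random.Random` instance keeps the generated pseudo samples reproducible across runs.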

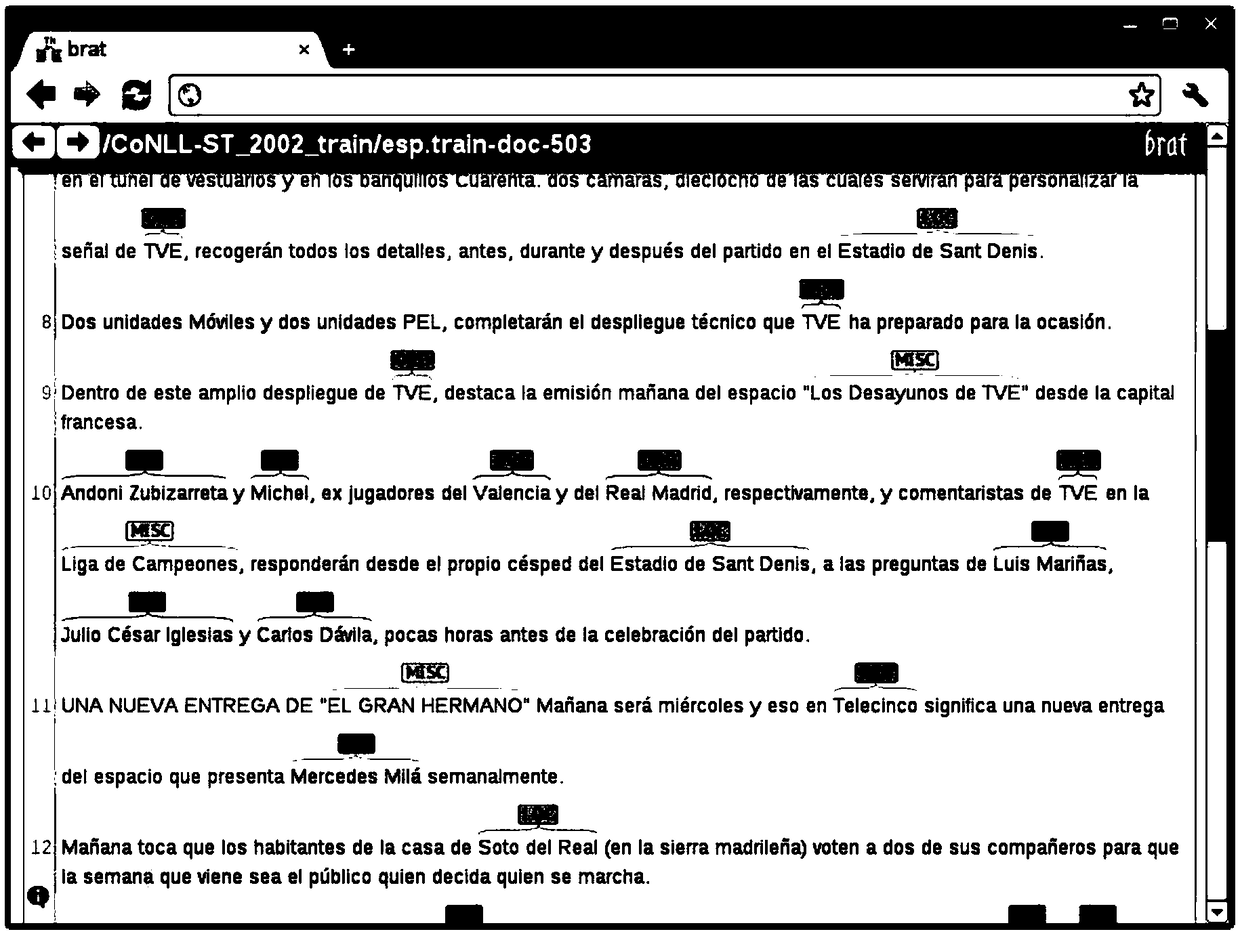

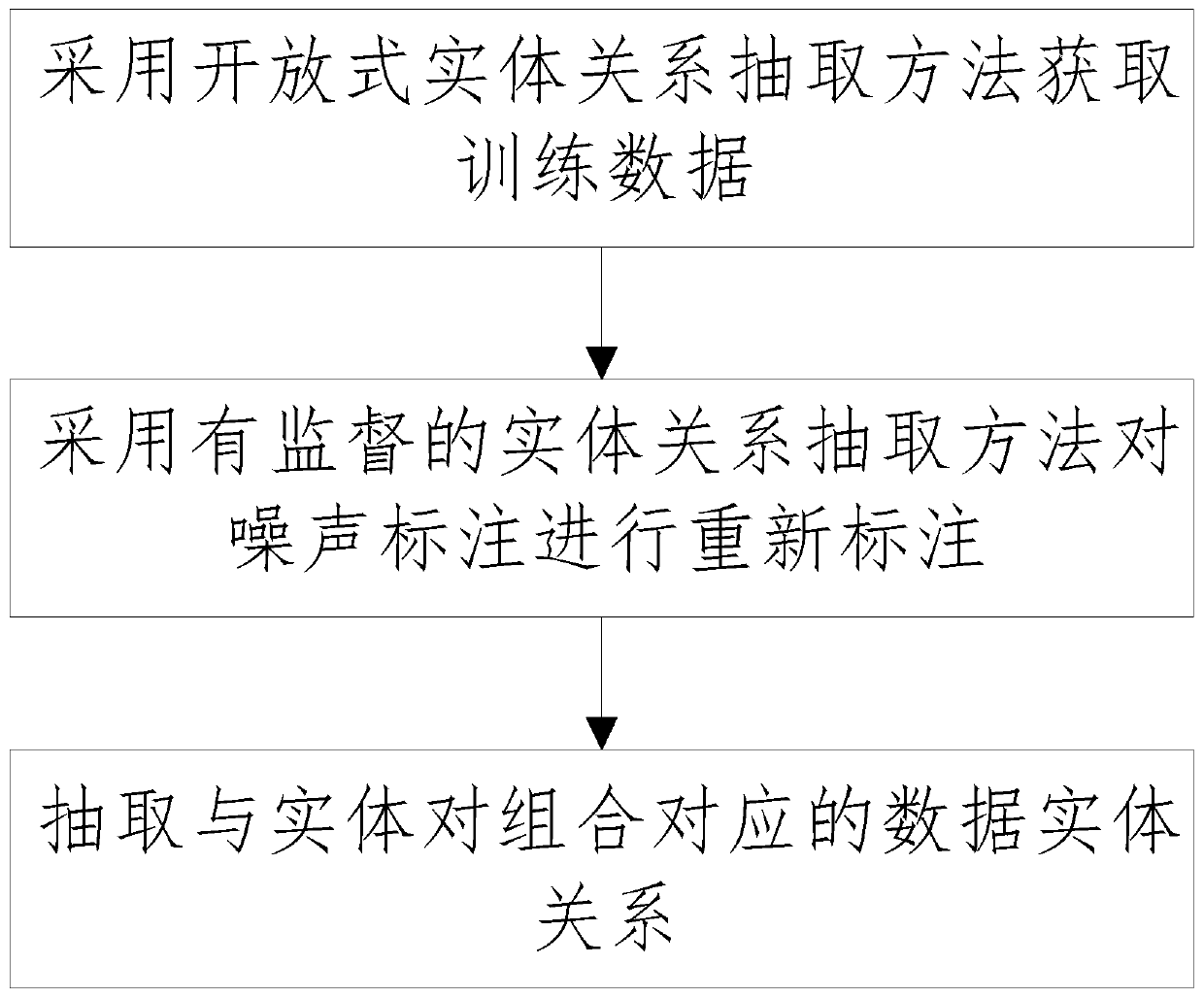

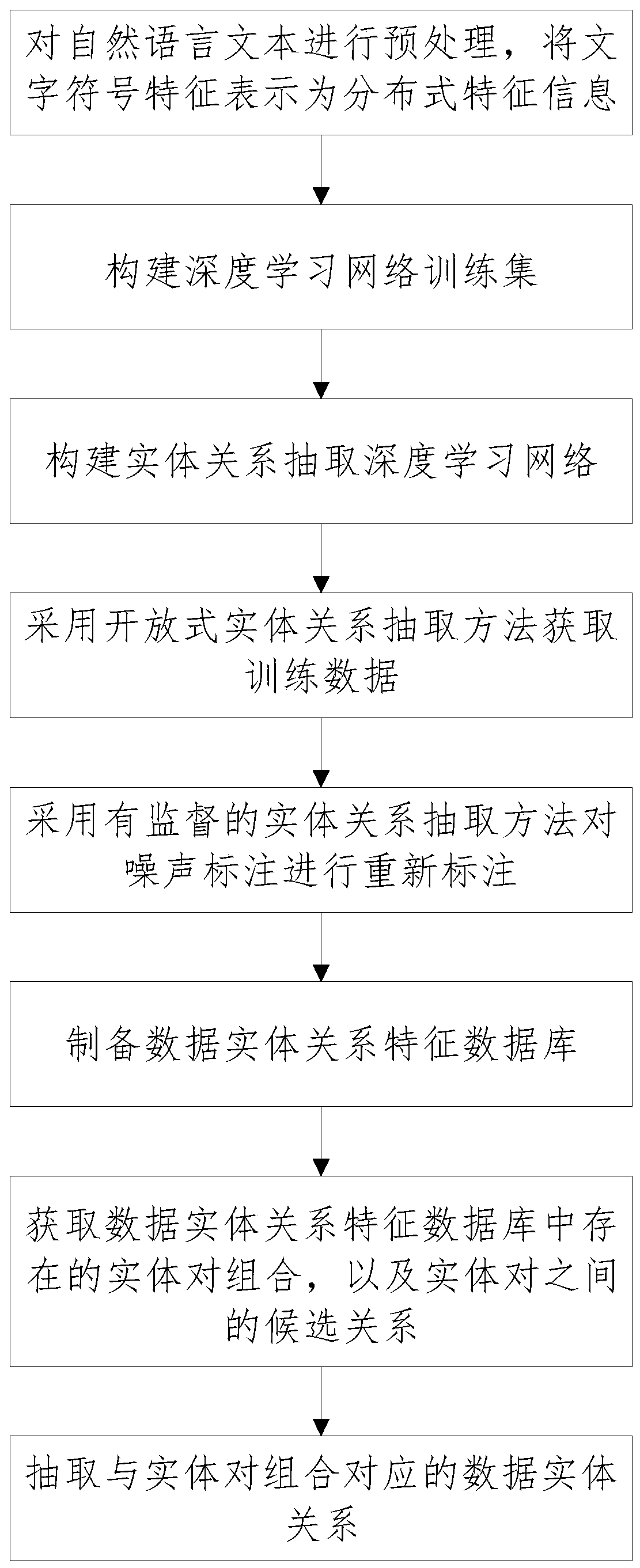

Data entity relationship extraction method based on deep learning

Inactive · CN110399433A · Improve extraction efficiency · High precision · Data processing applications · Relational databases · Entity–relationship model · Text alignment

The invention discloses a data entity relationship extraction method based on deep learning. The method comprises the following steps: 1, obtaining training data by adopting an open entity relationship extraction method; mapping the data entity relationship instances to a large number of texts in an entity knowledge base by means of a DBPedia, OpenCyc, YAGO or FreeBase entity knowledge base, obtaining training data through a text alignment method, and obtaining training corpora with noise annotations; re-annotating the noise annotation by adopting a supervised entity relationship extraction method, and training a machine learning model on the basis of annotated training data; and extracting a data entity relationship corresponding to the entity pair combination. According to the method, the data entity relationship is extracted by combining the open entity relationship extraction method and the supervised entity relationship extraction method. The training data acquisition efficiency of the open entity relationship extraction method is high. The training data acquired by the supervised entity relationship extraction method is high in accuracy. The extraction efficiency and the accuracy of the entity relationship are improved.

Owner:福建奇点时空数字科技有限公司

Method for extracting translations from translated texts using punctuation-based sub-sentential alignment

Active · US7774192B2 · Natural language translation · Special data processing applications · Text alignment · Document preparation

A method for text alignment of a first document and a second document that is a translation version of the first document. The method first divides paragraphs of the first and second documents into sub-sentential segments according to the punctuations in the language of the first and second documents. Each of sub-sentential segments corresponds to a plurality of words. After the sub-sentential segmenting process, pairs of alignment units are summarized from the first and second documents. The alignment units in the first and second documents are then aligned and scored mainly based on the probability of corresponding punctuations. To increase the alignment accuracy, the pairs of alignment units can also be aligned and scored based on at least one of length corresponding probability, match type probability, and lexical information. The method allows for fast, reliable, and robust alignment of document and translated document in two disparate languages.

Owner:IND TECH RES INST
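
The first step of the abstract above, dividing a paragraph into sub-sentential segments at punctuation, can be sketched in Python. Only the segmentation is shown; the probabilistic scoring of aligned units is not reproduced. The punctuation set and the function name `split_segments` are assumptions chosen for illustration.

```python
import re

# Assumed punctuation inventory covering Western and CJK marks.
PUNCTUATION = ",;:.!?，；：。！？"


def split_segments(paragraph):
    """Split a paragraph into segments, each ending at a punctuation mark."""
    marks = re.escape(PUNCTUATION)
    pattern = rf"[^{marks}]+[{marks}]?"
    return [m.strip() for m in re.findall(pattern, paragraph) if m.strip()]
```

Each returned segment keeps its closing punctuation mark, which is the information the abstract says is mainly used when scoring candidate pairs.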

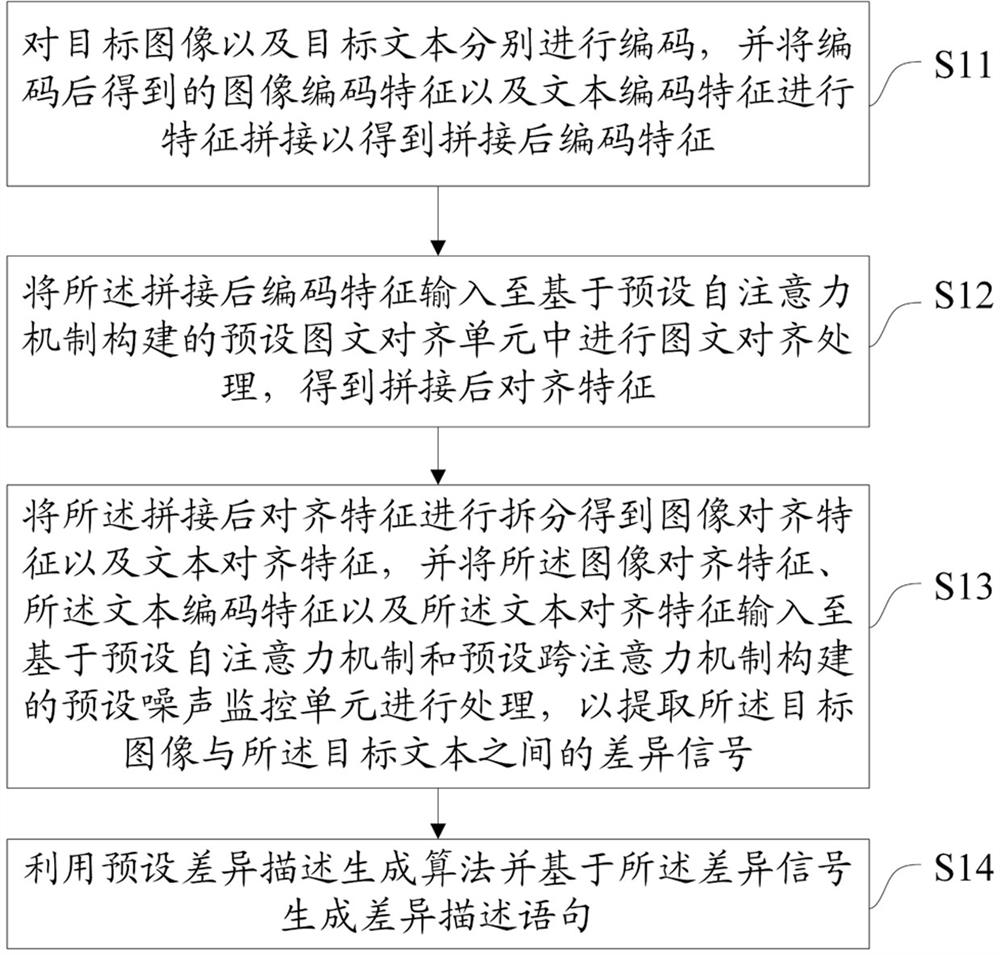

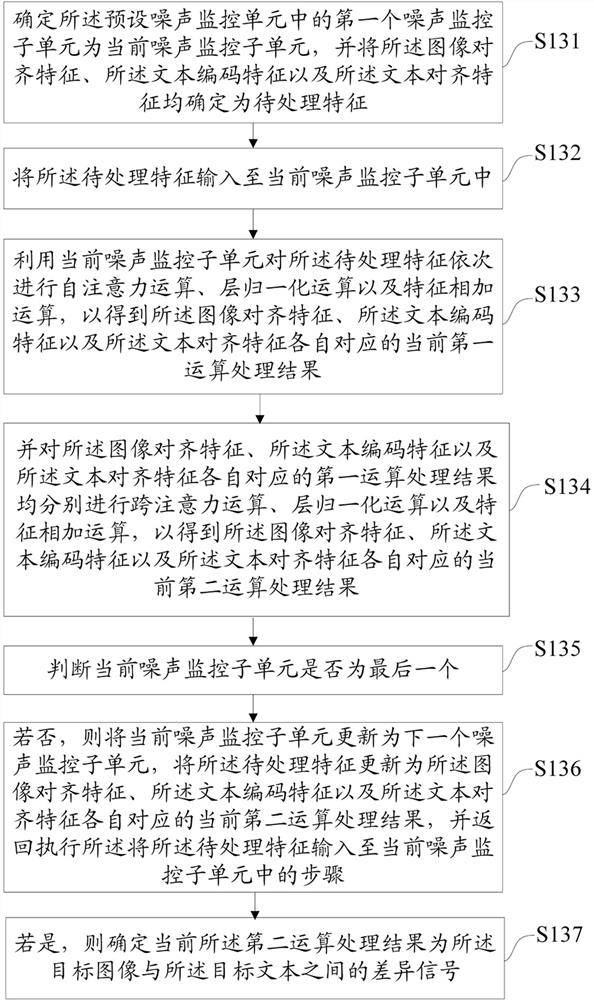

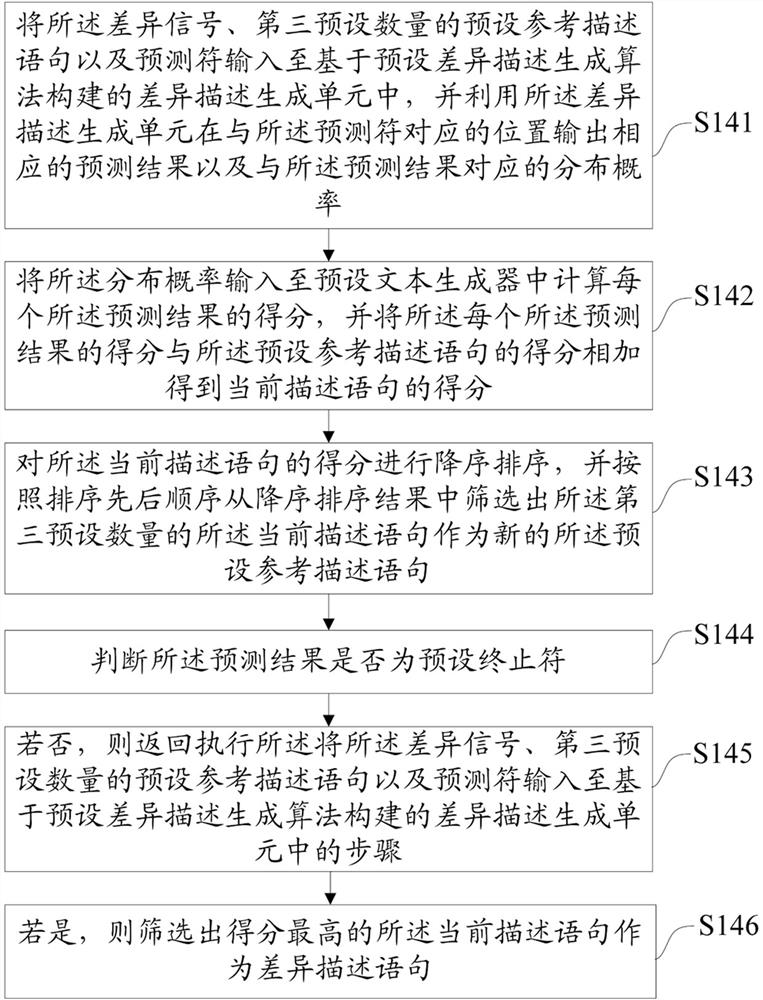

Differential description statement generation method and device, equipment and medium

Active · CN114511860A · Improve accuracy · Guaranteed normal reasoning function · Character and pattern recognition · Text alignment · Noise monitoring

The invention discloses a difference description statement generation method and device, equipment and a medium, and relates to the technical field of artificial intelligence, and the method comprises the steps: carrying out the feature splicing of image coding features and text coding features, inputting the spliced coding features into a preset image-text alignment unit constructed based on a preset self-attention mechanism to obtain spliced alignment features, using a preset noise monitoring unit constructed based on a preset self-attention mechanism and a preset cross-attention mechanism to process image alignment features and text alignment features obtained after splitting the text coding features and the spliced alignment features so as to extract difference signals; the difference description statement is generated by utilizing the preset difference description generation algorithm and based on the difference signal, and therefore, the part, which cannot be aligned with the image, in the human language text is positioned based on the preset cross-attention mechanism, and the corresponding interpretation description is given, so that the problem that a computer cannot perform normal reasoning due to human language errors is solved.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD

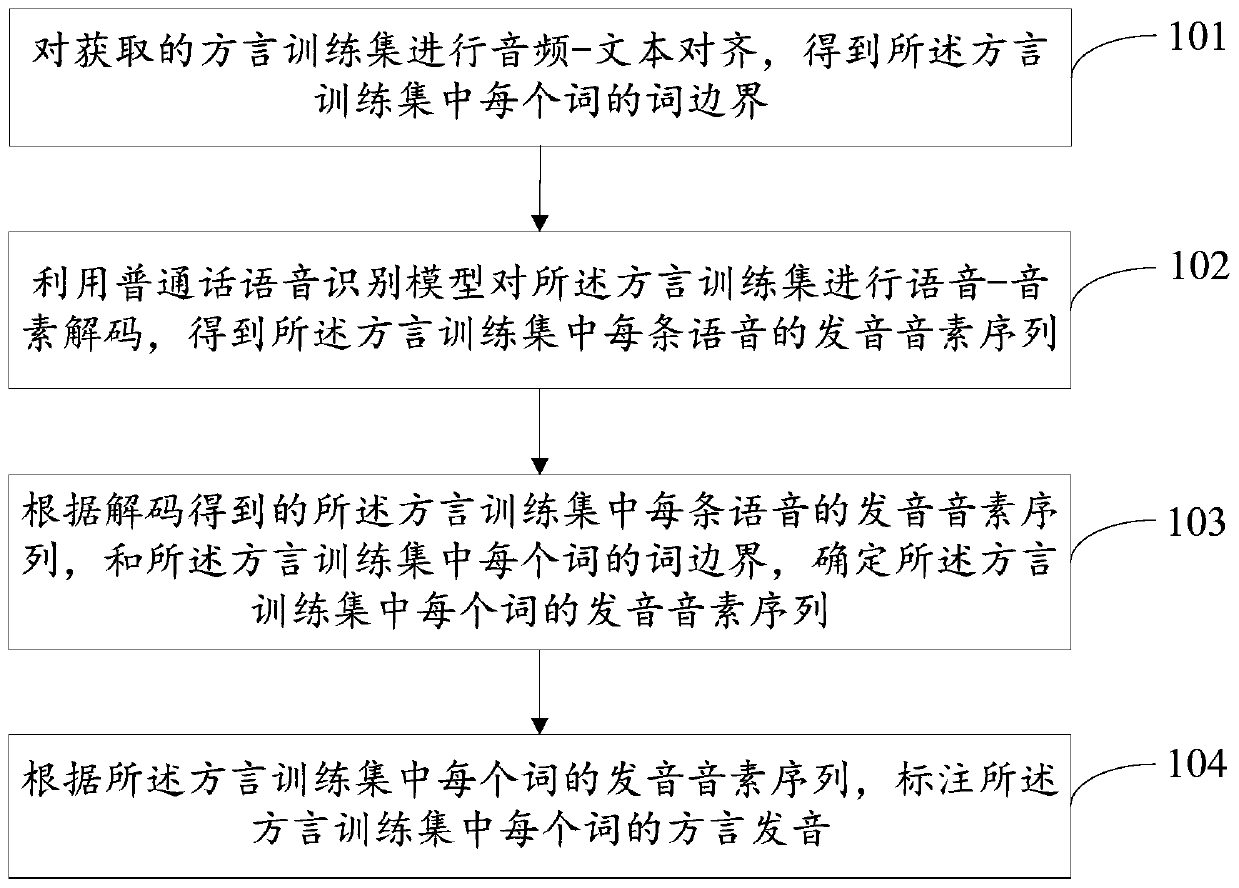

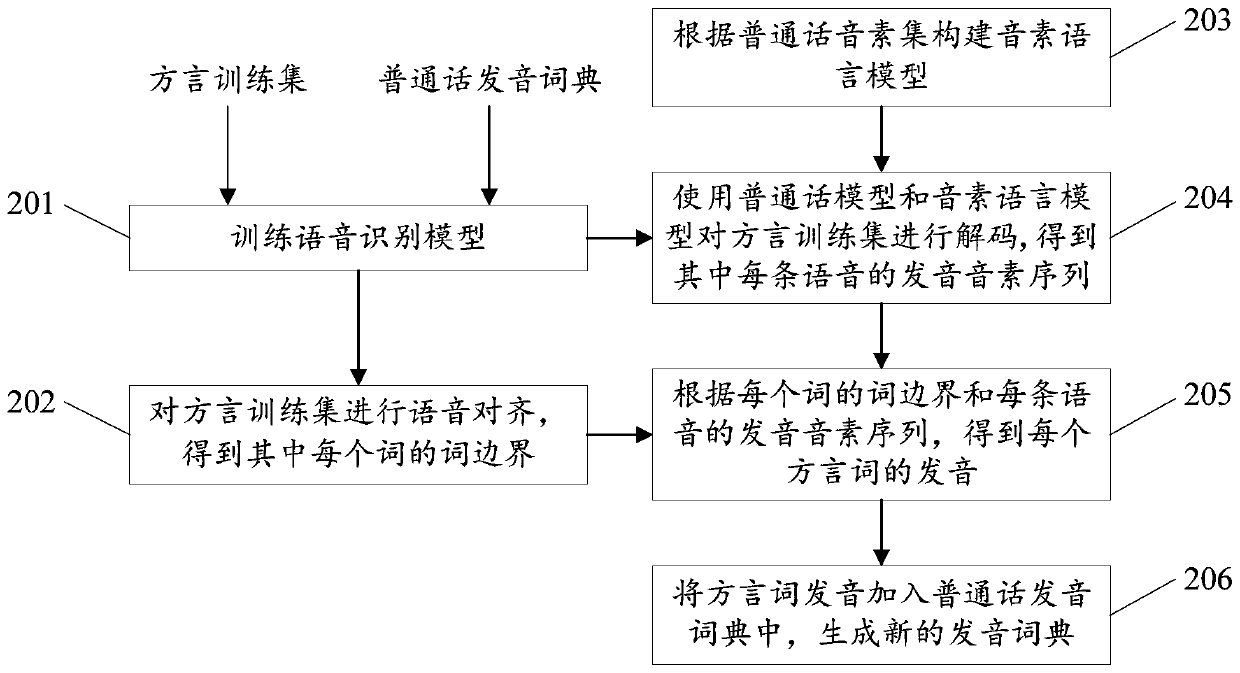

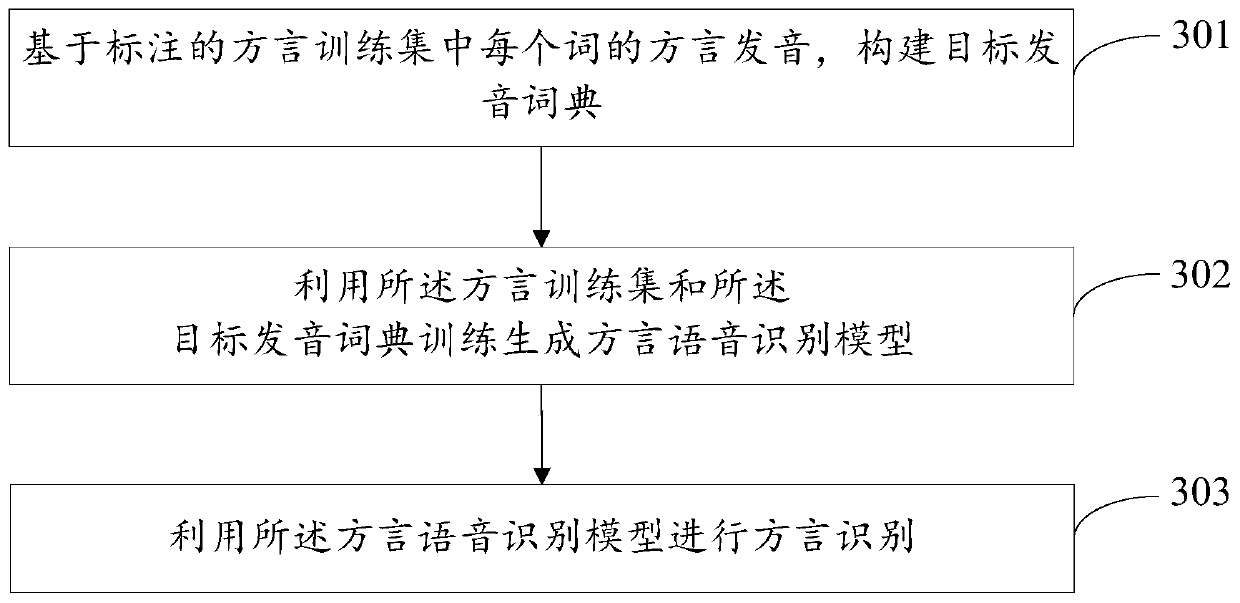

Dialect pronunciation labeling method, language recognition method and related devices

The invention provides a dialect pronunciation labeling method, a language recognition method and a related device. The dialect pronunciation labeling method comprises the steps of: carrying out audio-text alignment on an obtained dialect training set to obtain the word boundary of each word in the dialect training set; carrying out voice-phoneme decoding on the dialect training set by using a Mandarin voice recognition model to obtain a pronunciation phoneme sequence of each voice in the dialect training set; determining a pronunciation phoneme sequence of each word in the dialect training set according to the decoded pronunciation phoneme sequence of each voice and the word boundary of each word; determining a target word with a plurality of pronunciations according to the pronunciation phoneme sequence of each word; and adding the target pronunciation of the target word into a Mandarin pronunciation dictionary to obtain a target pronunciation dictionary. According to the embodiment of the invention, dialect pronunciation annotation can be completed automatically without manual work, saving labor and time.

Owner:SOUNDAI TECH CO LTD

Text processing method and device, medium and computing equipment

Pending · CN110807334A · Lower performance requirements · Reduce labeling costs · Natural language translation · Text alignment · Algorithm

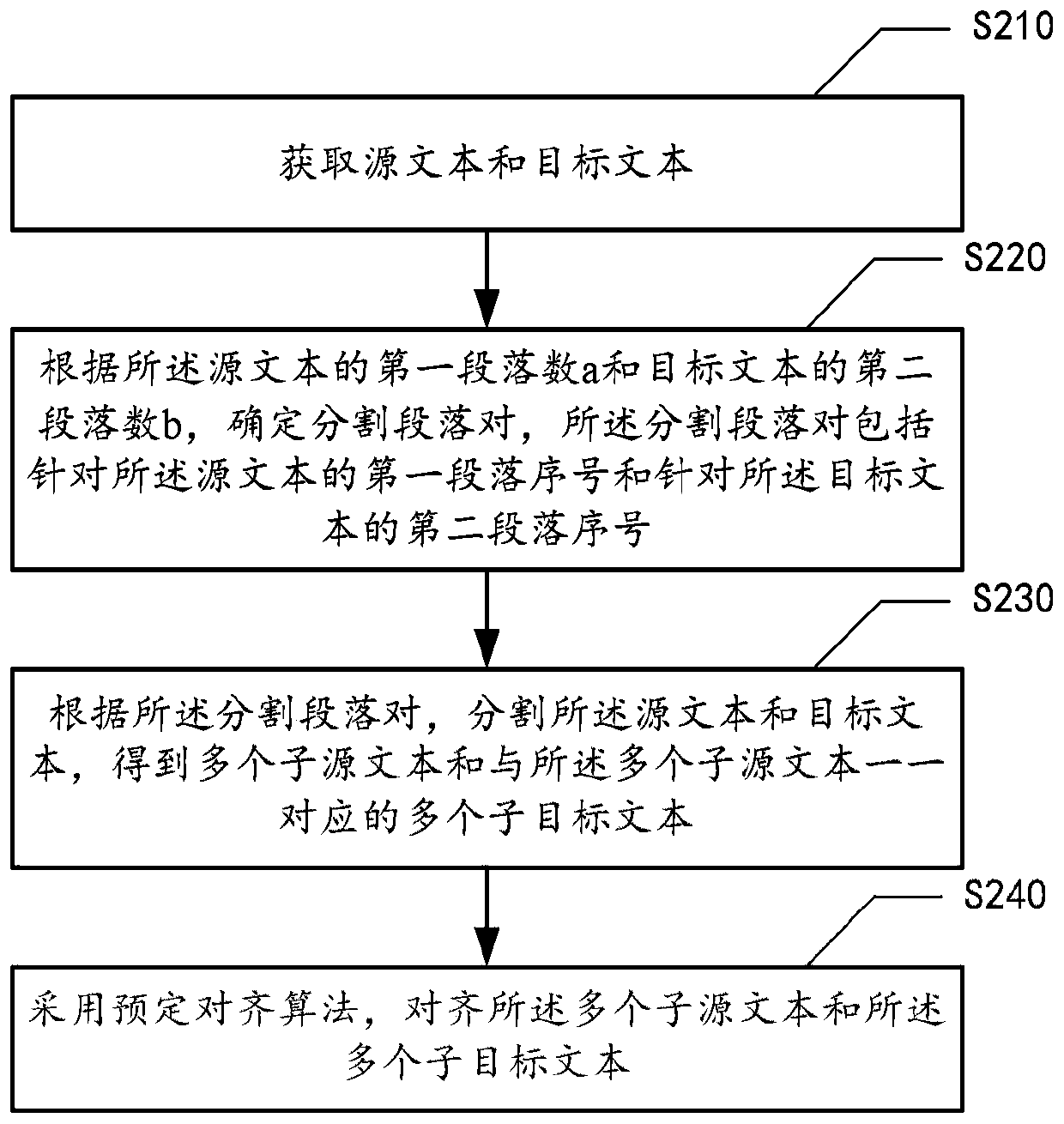

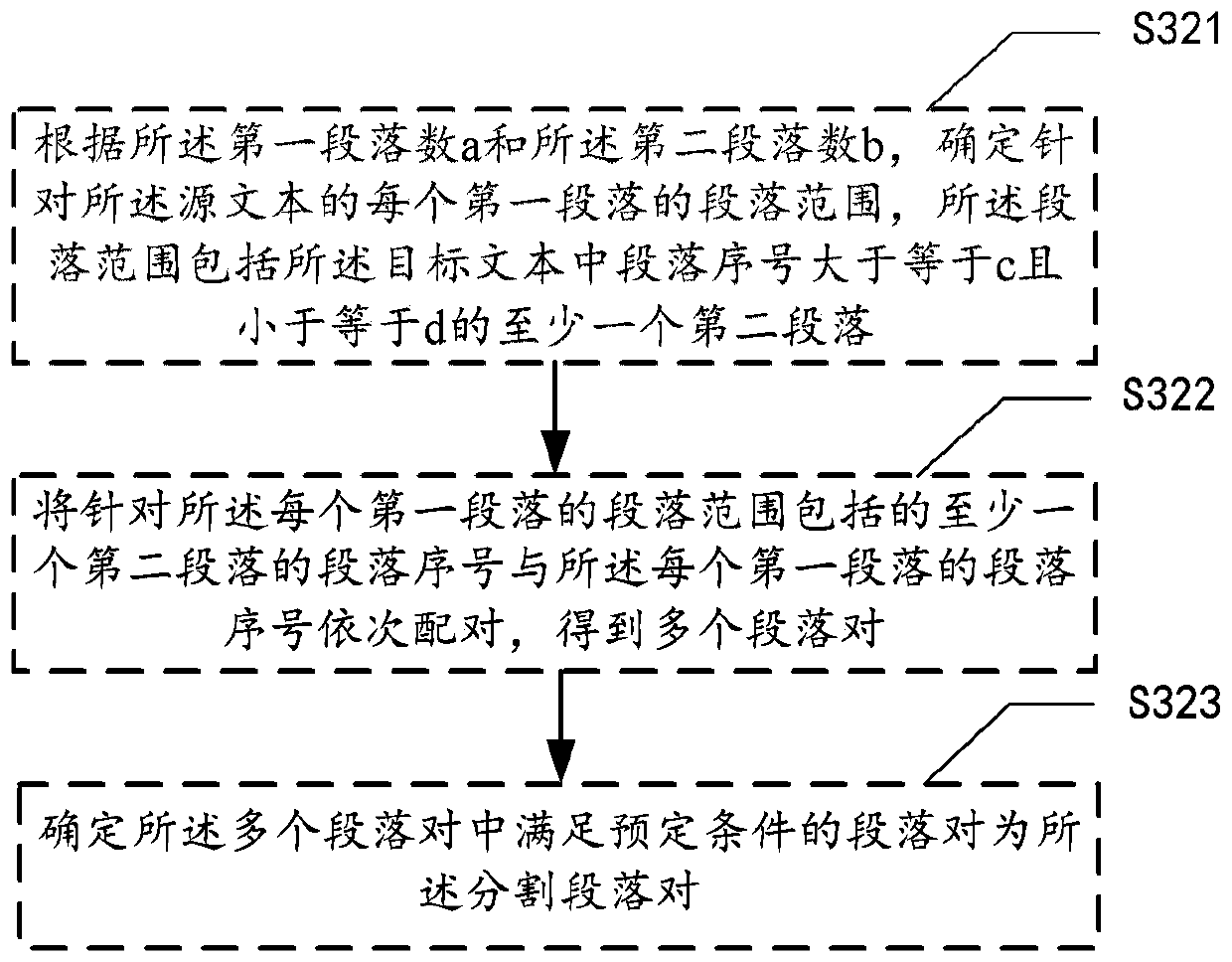

The embodiment of the invention provides a text processing method. The method comprises the steps of: obtaining a source text and a target text; determining a segmented paragraph pair according to the first paragraph number a of the source text and the second paragraph number b of the target text, the segmented paragraph pair comprising a first paragraph serial number for the source text and a second paragraph serial number for the target text; segmenting the source text and the target text according to the segmented paragraph pair to obtain a plurality of sub-source texts and a plurality of sub-target texts in one-to-one correspondence with the sub-source texts; and aligning the sub-source texts and the sub-target texts by adopting a predetermined alignment algorithm. Because the two texts are divided into sub-texts before the sub-texts are aligned, cascading errors caused by non-standard texts during subsequent paragraph alignment and sentence alignment can be reduced, the text alignment quality is improved, and the quality requirement on the two texts is lowered.

Owner:网易有道信息技术(北京)有限公司

Voice data labeling method and system, electronic equipment and storage medium

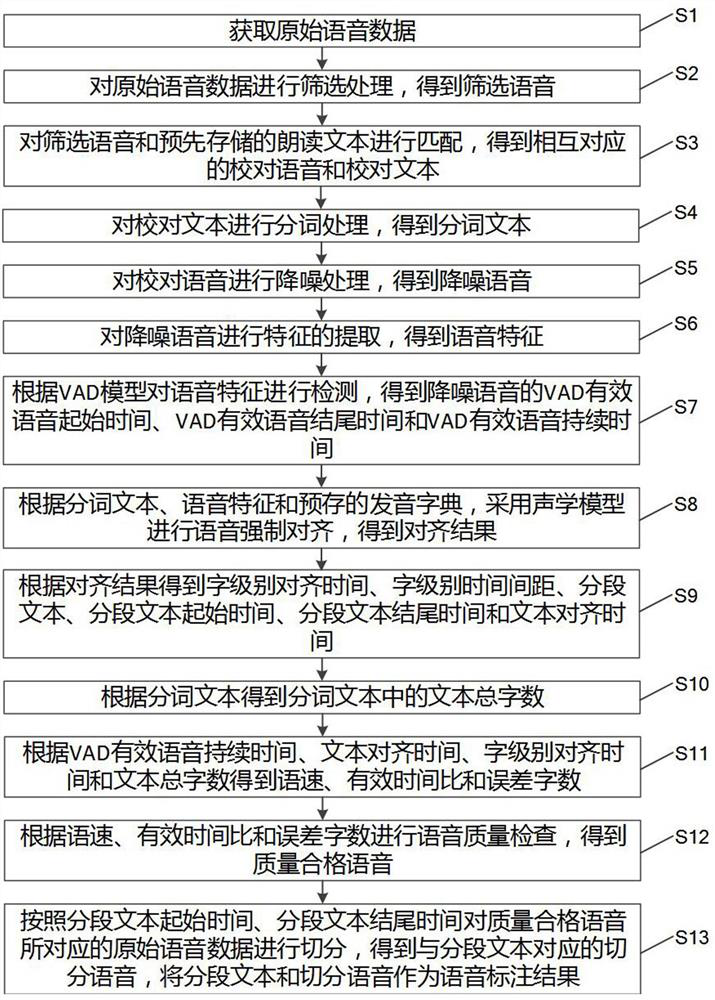

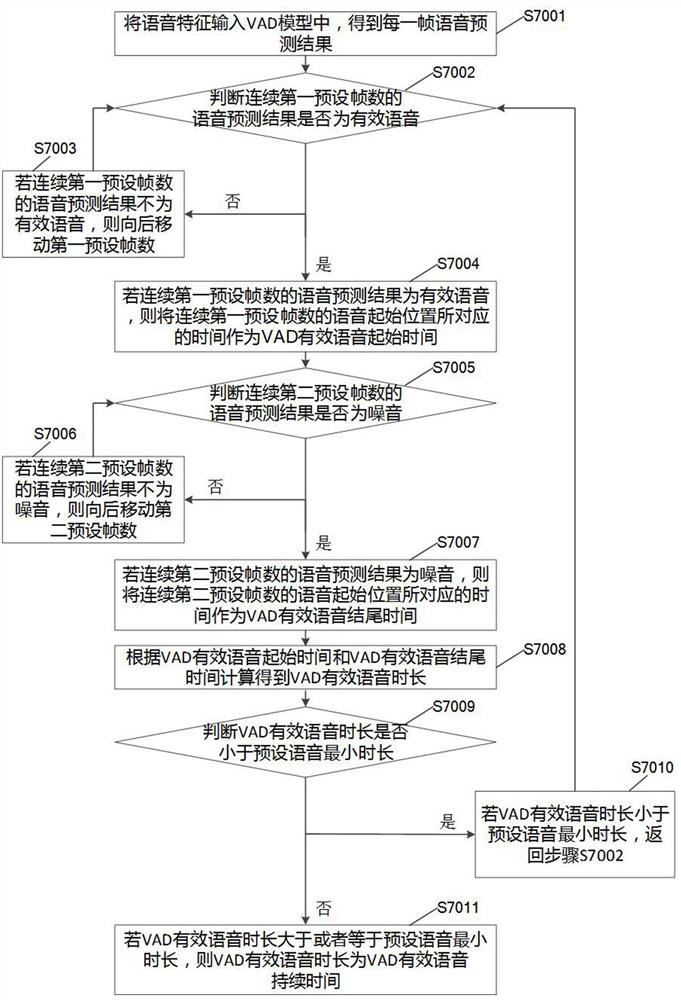

The invention discloses a voice data labeling method and system, electronic equipment and a storage medium. The method comprises the steps of: screening the original voice data and matching the screened voice against the reading text to obtain proofreading voice and proofreading text; performing word segmentation on the proofreading text to obtain a word segmentation text; performing noise reduction on the proofreading voice to obtain noise reduction voice, extracting its voice features and inputting them into a VAD model to obtain the VAD effective voice duration of the noise reduction voice; carrying out voice forced alignment on the word segmentation text by adopting an acoustic model to obtain the word-level alignment time, word-level time intervals, segmented texts, the start time and end time of each segmented text, and the text alignment time; determining a speech speed, an effective time ratio and an error word number from these times and performing speech quality inspection; and segmenting the original voice according to the start time and end time of the segmented text, and taking the segmented text and the segmented voice as the voice annotation results. Voice annotation text of qualified quality can thus be acquired automatically.

Owner:北京智慧星光信息技术有限公司

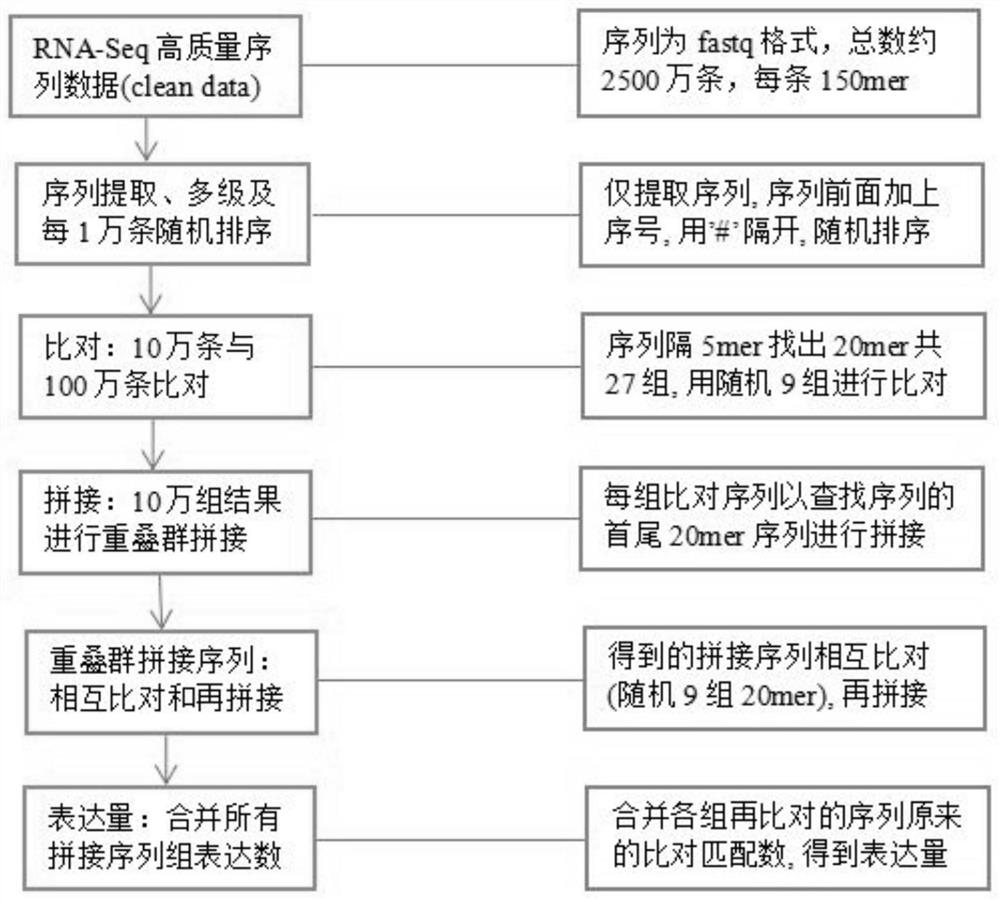

A Method for Analyzing High-Throughput Sequencing Gene Expression Levels Using Text Alignment

Active · CN108388772B · Easy to compare · Simplify complexity · Sequence analysis · Instruments · Negative strand · Text alignment

The invention belongs to the field of bioinformatics and provides a method for analyzing the gene expression of high-throughput sequencing sequences. First, the sequencing sequences are coded, broken up, and randomly combined, and 100,000 sequences are selected as query sequences and respectively compared with 1 million sequences. The sequence was compared, and nine groups of 20mers were randomly selected from each query sequence, and the number of transcripts of the sequence was obtained after deduplication of 1 million sequences. The first and last 20mers of the query sequence were used to assemble from the matched aligned contigs. The expression amount of the spliced sequence is obtained by merging the expression amounts of all query sequence groups, which is equivalent to the expression amount of the negative strand obtained by alignment with the complementary strand. This method can be effectively used in the analysis of high-throughput sequencing gene expression and sequence de novo assembly.

Owner:FOSHAN UNIVERSITY

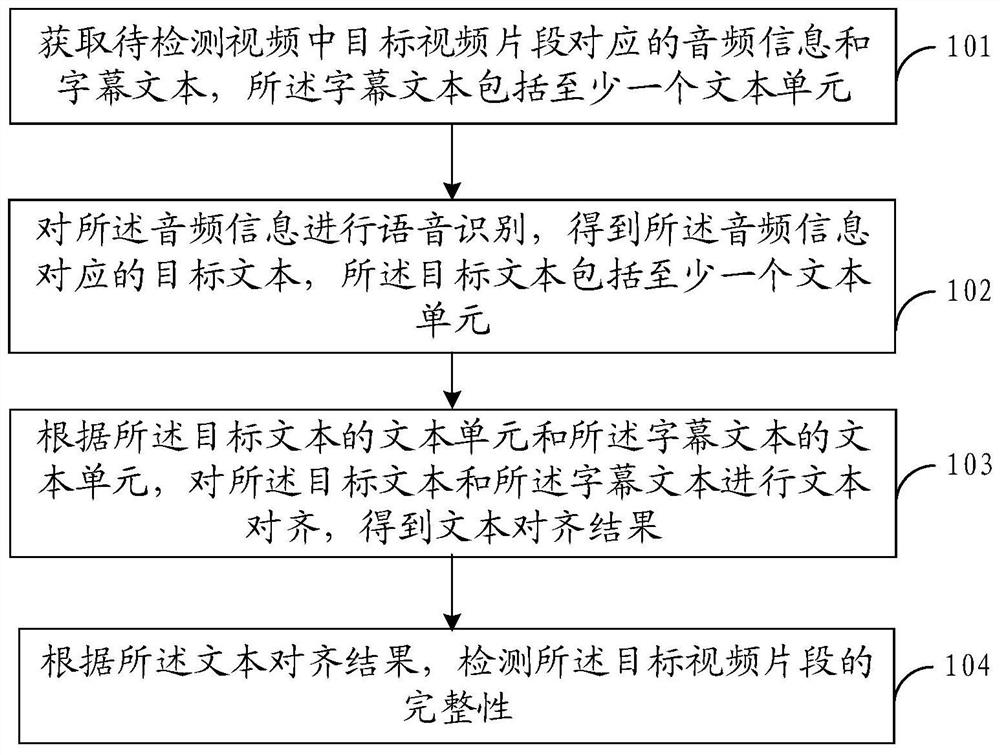

Video detection method and device, electronic equipment and storage medium

Pending · CN113591530A · Improve the efficiency of integrity detection · Reduce testing costs · Digital data information retrieval · Character and pattern recognition · Computer hardware · Text alignment

The invention discloses a video detection method and device, electronic equipment and a storage medium. The method comprises the following steps: acquiring audio information and a subtitle text corresponding to a target video clip in a to-be-detected video, wherein the subtitle text comprises at least one text unit; performing voice recognition on the audio information to obtain a target text corresponding to the audio information, the target text comprising at least one text unit; according to the text unit of the target text and the text unit of the subtitle text, performing text alignment on the target text and the subtitle text to obtain a text alignment result; and detecting the integrity of the target video clip according to the text alignment result. According to the embodiment of the invention, the video content integrity detection can be performed based on the audio and subtitles of the target video clip, the video integrity detection efficiency is improved, and the detection cost is reduced.

Owner:深圳市雅阅科技有限公司

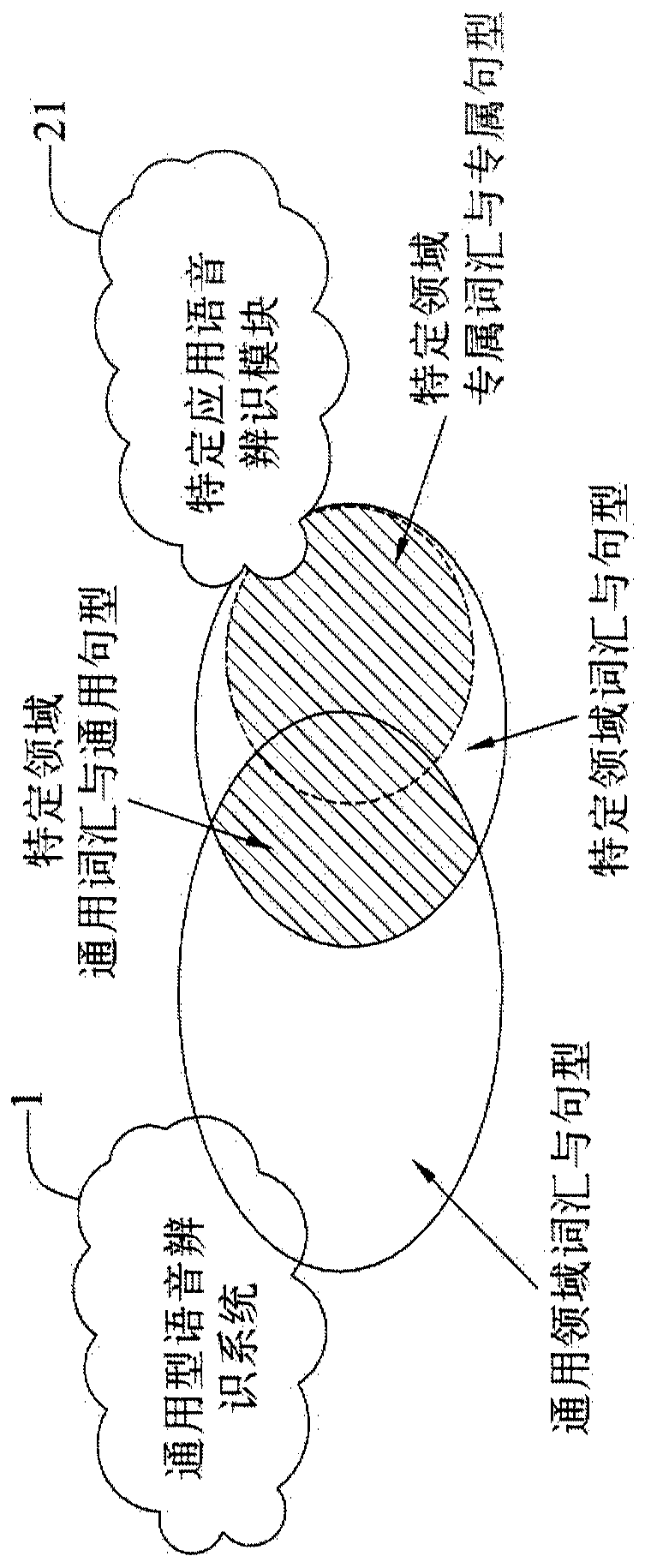

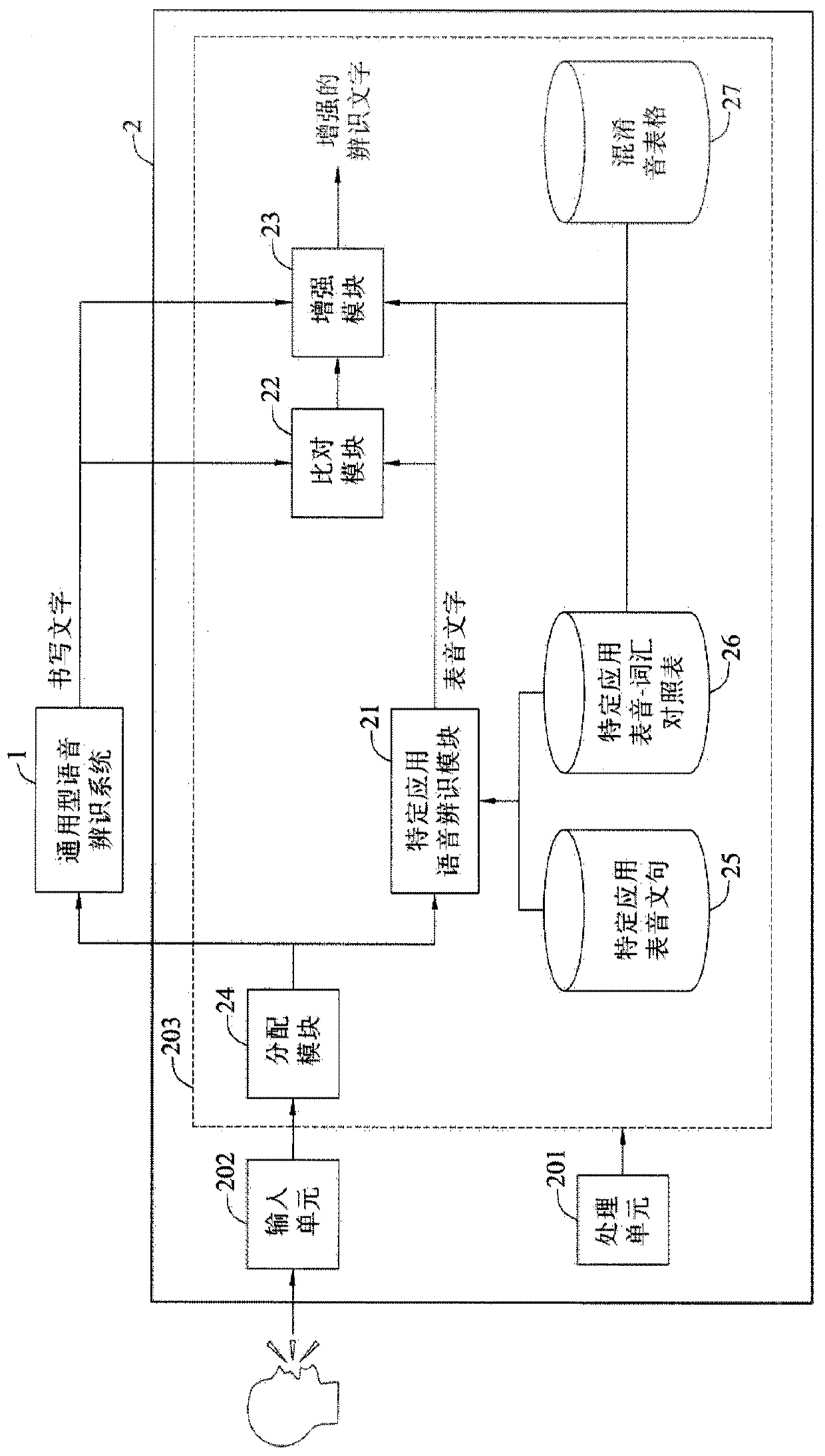

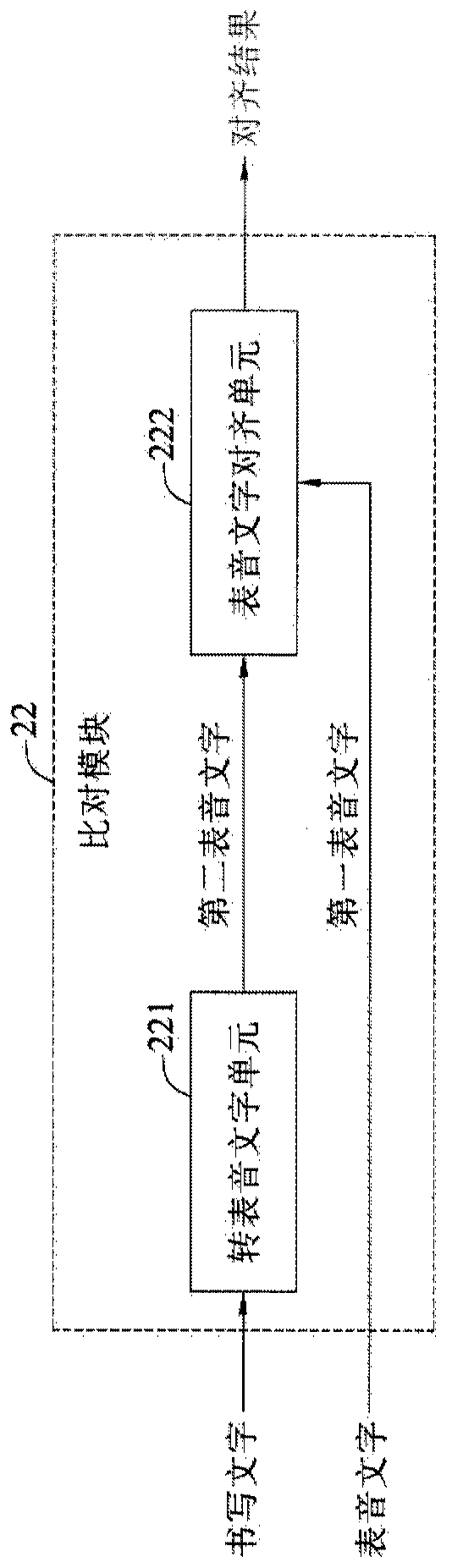

Speech recognition system, speech recognition method and computer program product

Active · CN111292740A · Natural language data processing · Speech recognition · Text alignment · Processing element

The invention discloses a speech recognition system and method thereof, and a computer program product. The speech recognition system is connected to an external general-purpose speech recognition system, and includes a storage unit and a processing unit. The storage unit stores a specific application speech recognition module, a comparison module and an enhancement module. The specific application speech recognition module converts an input speech signal into a first phonetic text. The general-purpose speech recognition system converts the speech signal into a written text. The comparison module receives the first phonetic text from the specific application speech recognition module and the written text from the general-purpose speech recognition system, converts the written text into a second phonetic text, and aligns the second phonetic text with the first phonetic text based on similarity of pronunciation to output a phonetic text alignment result. The enhancement module receives the phonetic text alignment result from the comparison module, and constructs the phonetic text alignment result after a path weighting with the written text and the first phonetic text to form an outputting recognized text.

Owner:IND TECH RES INST

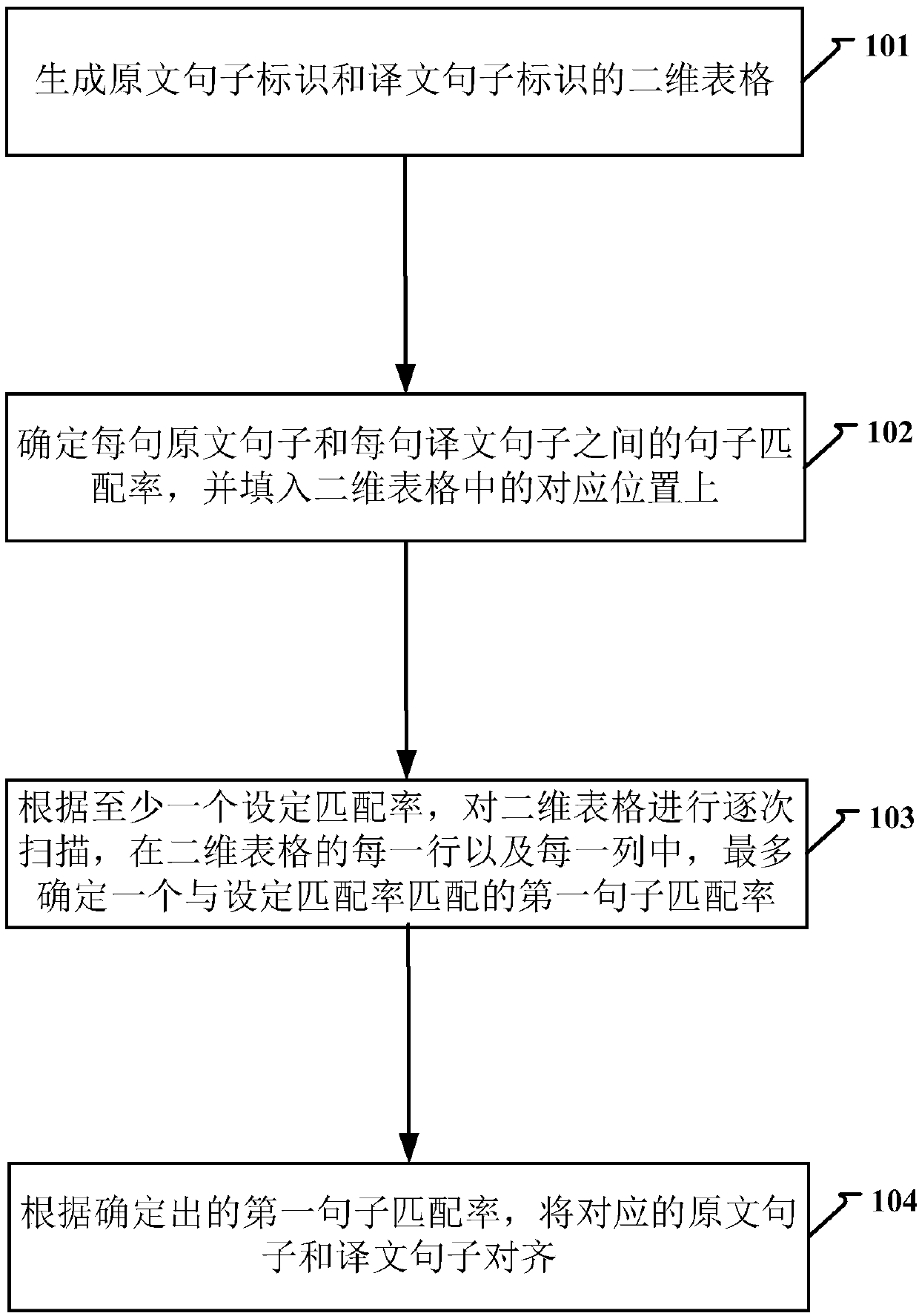

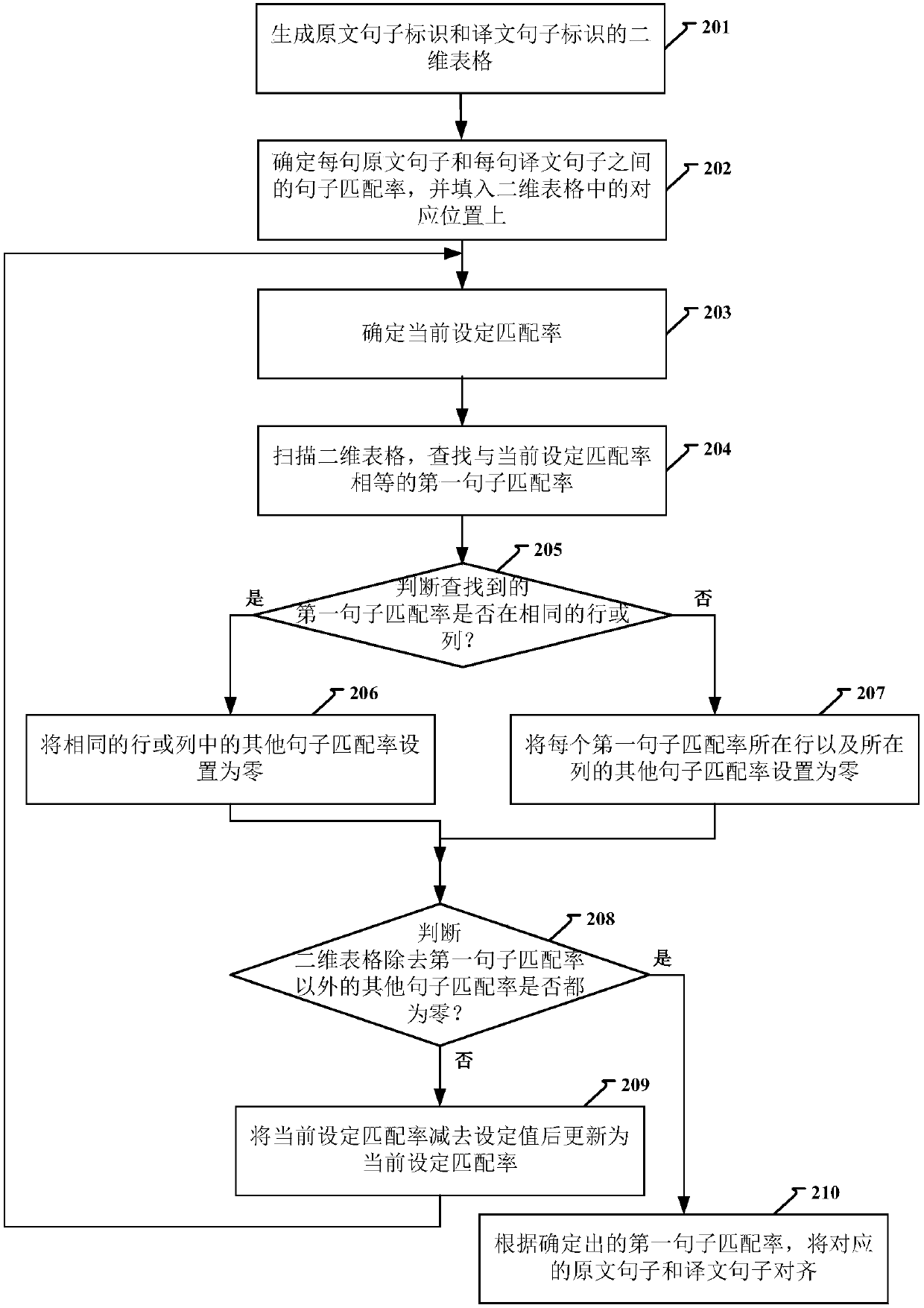

Original translated text alignment method and apparatus

Inactive · CN107766339A · Automate alignment · Fully automated · Natural language translation · Special data processing applications · Text alignment

The invention discloses an original translated text alignment method and apparatus, and belongs to the technical field of translation. The method comprises the steps of: generating a two-dimensional table of original text sentence identifiers and translated text sentence identifiers; determining a sentence matching rate between each original text sentence and each translated text sentence, and filling the corresponding position in the table with that rate; scanning the table step by step according to at least one set matching rate and, in each row and each column of the table, determining at most one first sentence matching rate that matches the set matching rate; and aligning the corresponding original text sentences and translated text sentences according to the determined first sentence matching rates. Because alignment is based on the sentence matching rates among all the sentences participating in alignment, the accuracy of original translated text alignment is improved.

Owner:IOL WUHAN INFORMATION TECH CO LTD
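
The table scan described in the abstract above can be sketched as a greedy pass over a matrix of matching rates, repeated for each set matching rate in descending order. The sketch assumes the table is already filled in; the threshold values, the rule of taking the highest rate in a row, and the function name `align_by_table` are illustrative choices, not details confirmed by the patent.

```python
def align_by_table(table, thresholds=(0.8, 0.5)):
    """Pair rows (original sentences) with columns (translated sentences).

    table[i][j] is the matching rate between original sentence i and
    translated sentence j. Each row and each column is used at most once.
    """
    used_rows, used_cols, pairs = set(), set(), []
    for threshold in thresholds:            # scan once per set matching rate
        for i, row in enumerate(table):
            if i in used_rows:
                continue
            best = None
            for j, rate in enumerate(row):
                if j in used_cols or rate < threshold:
                    continue
                if best is None or rate > row[best]:
                    best = j
            if best is not None:
                pairs.append((i, best))
                used_rows.add(i)
                used_cols.add(best)
    return sorted(pairs)
```

Scanning with the strictest rate first lets confident pairs claim their row and column before weaker candidates are considered in later passes.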

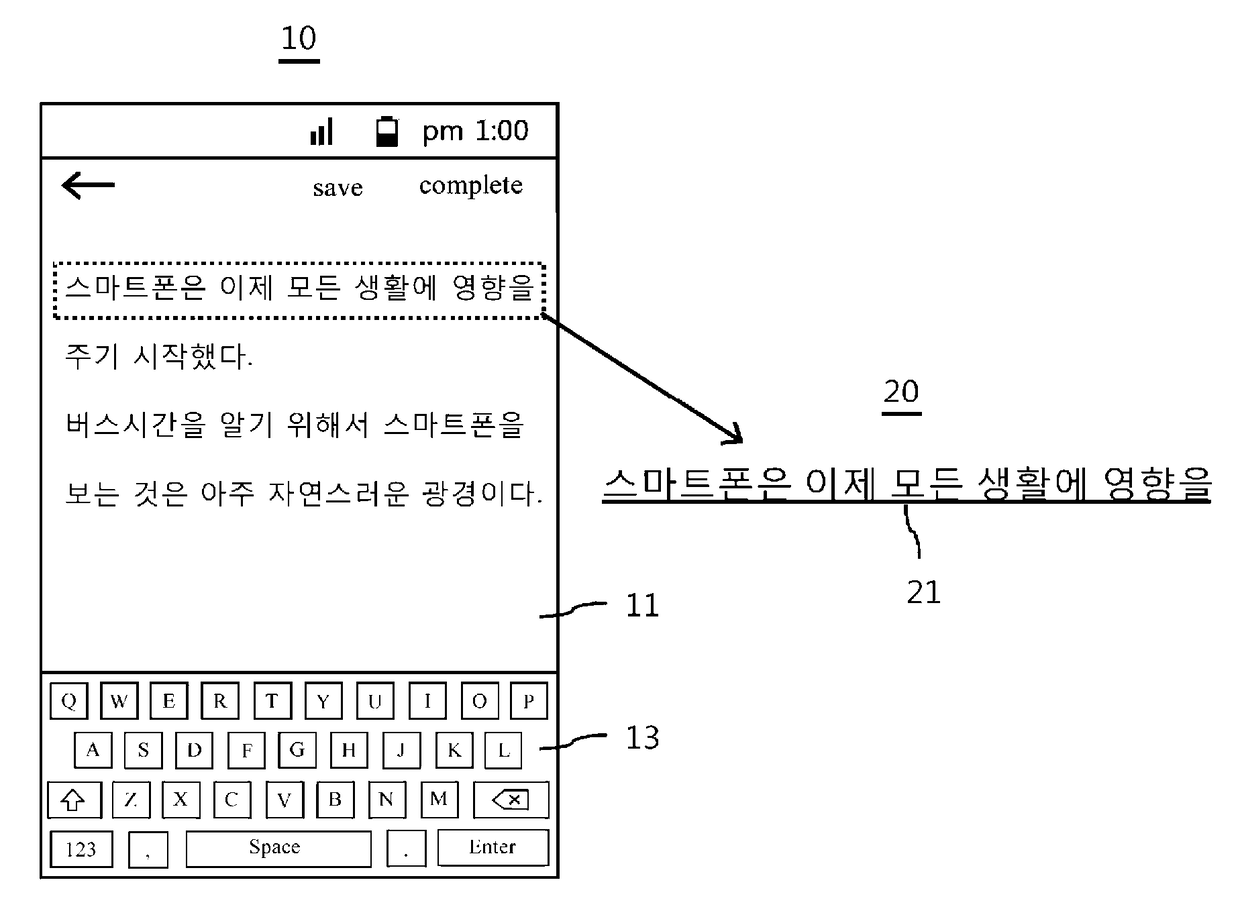

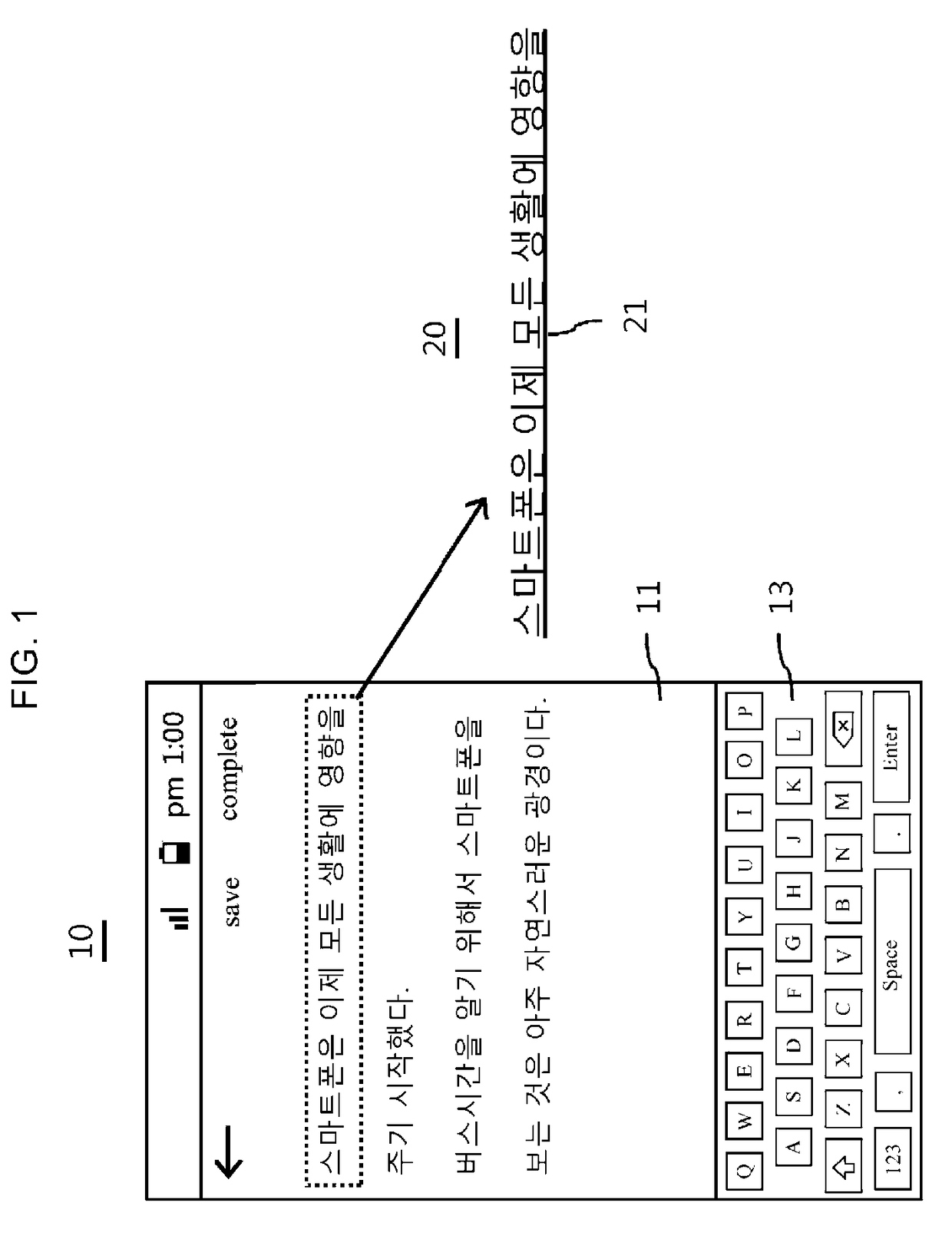

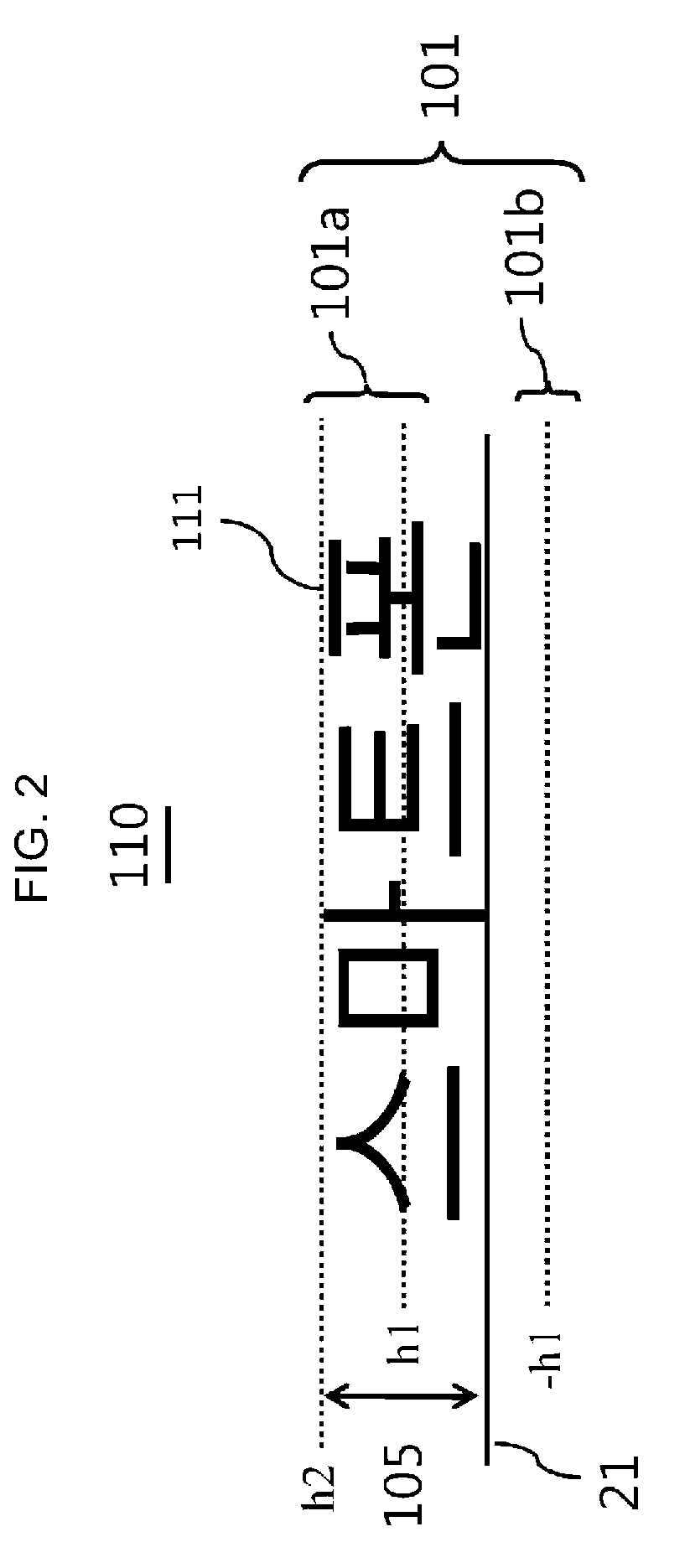

Method and storage medium for displaying text on screen of portable terminal

Active · US20180196786A1 · Alphabetical characters entering · Natural language data processing · Text alignment · Computer graphics (images)

A method for displaying a text on a screen of a portable terminal includes at least one special text alignment line for aligning and displaying a text in at least one of positions above and below a normal text alignment line along which a text is normally aligned and displayed, where a text is aligned and displayed along the special text alignment line such that the text positioned on the special text alignment line has a predetermined height which is 90% or greater than the height of a text positioned on the normal text alignment line just in front of the text on the special text alignment line.

Owner:KIM SUNG IL

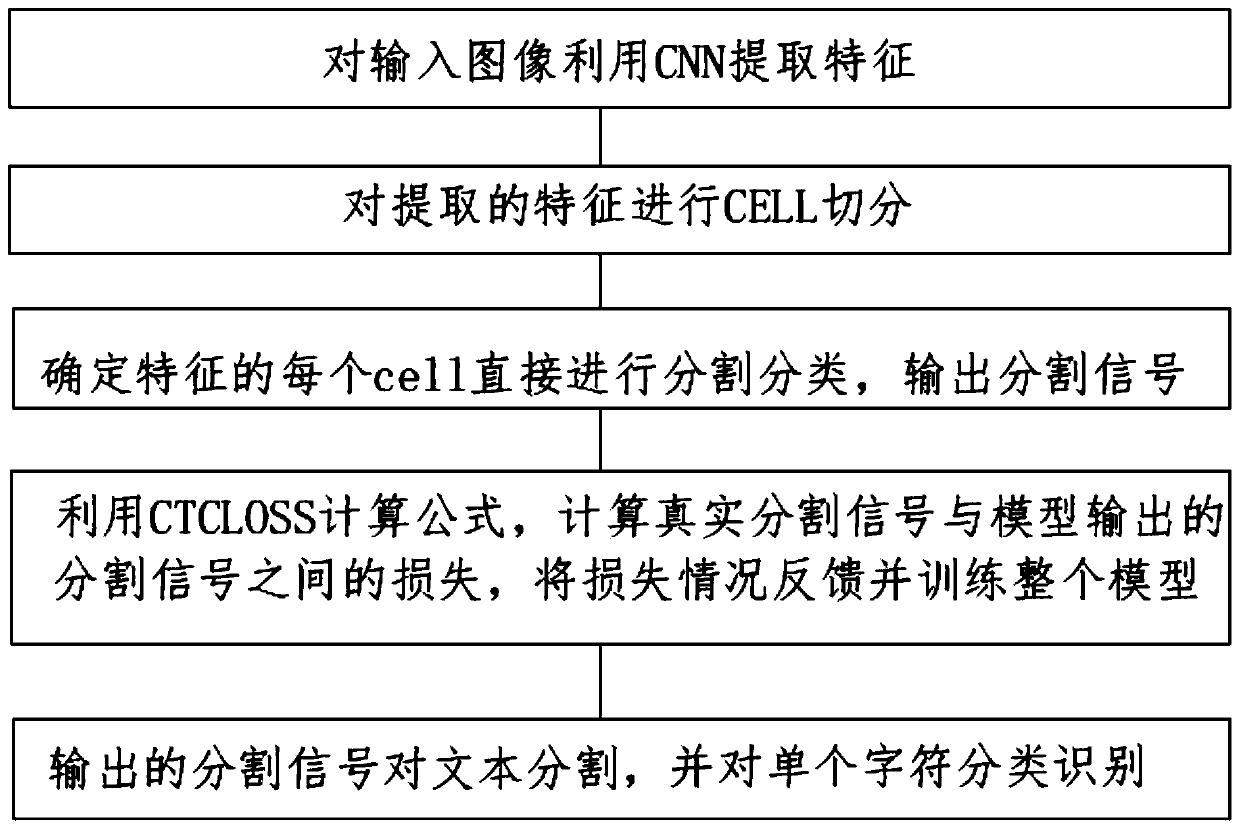

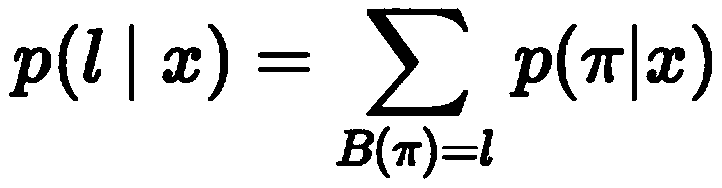

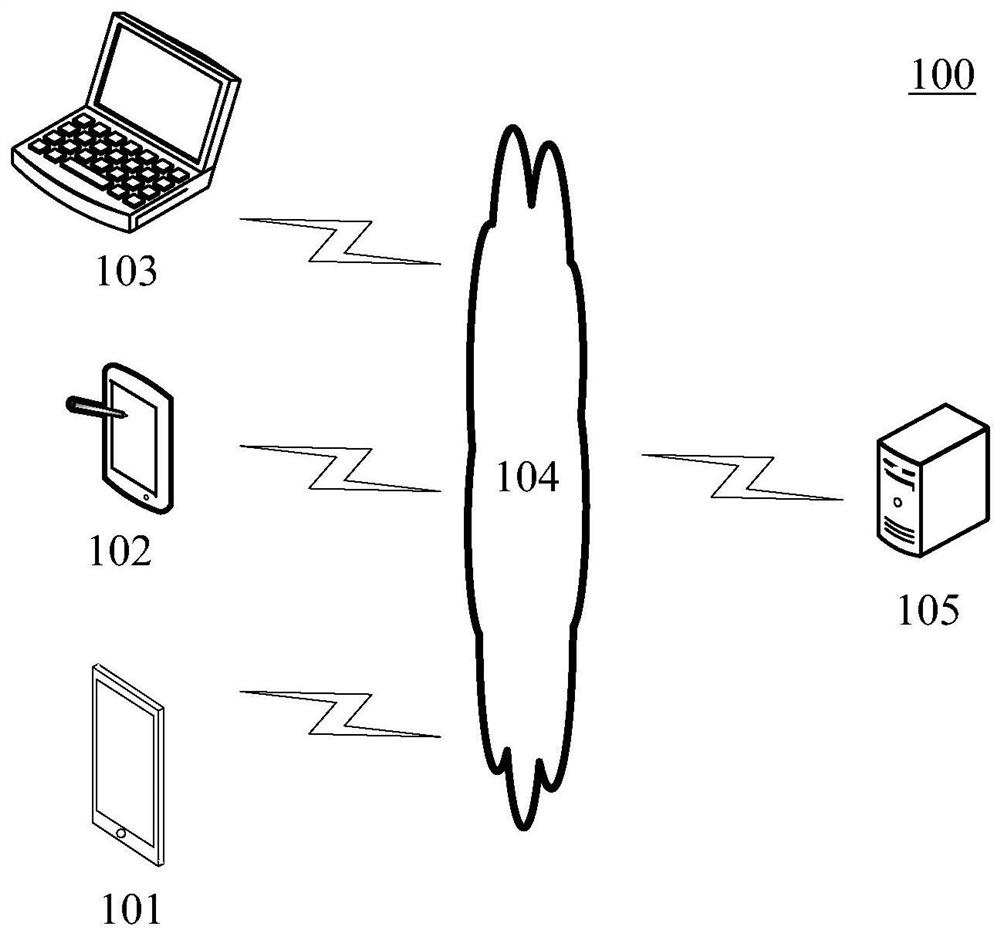

Character segmentation and recognition method based on CTC deep neural network

Pending · CN111507348A · Fix alignment issues · Fast recognition · Neural architectures · Neural learning methods · Text alignment · Cell segmentation

The invention provides a character segmentation and recognition method based on a CTC deep neural network. The method comprises the following steps: a1, extracting features of an input image by using a CNN; a2, carrying out CELL segmentation on the features extracted in a1, with the height and width of each CELL fixed and the number of CELLs determined by the length of the image; a3, directly segmenting and classifying each CELL of the determined features and outputting segmentation signals; a4, calculating the loss between the real segmentation signal and the segmentation signal output by the model by using CTCLOSS, feeding back the loss and training the whole model; a5, segmenting the text by using the segmentation signal output in step a3 and carrying out CNN + softmax classification on each single character, where the real segmentation signal is mapped from the annotated text and the text alignment problem is solved automatically by CTCLOSS. The invention improves OCR recognition speed, and because recognition is optimized after the text is cut into single characters, the final precision is improved; separating the recognition process into character segmentation and single-character recognition also allows each stage to be optimized separately.

Owner:北京深智恒际科技有限公司
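Step a4 of the abstract above relies on CTC loss to compare a per-cell output sequence with a shorter label sequence without a hand-made alignment. The following is a minimal pure-Python sketch of the standard CTC forward computation, not the patented model: the function name `ctc_loss`, the `probs` layout (one probability row per cell), and the choice of index 0 for the blank symbol are assumptions made for illustration.

```python
import math

def ctc_loss(probs, target, blank=0):
    """Negative log-likelihood of `target` under per-frame `probs` (CTC forward pass).

    probs[t][k] is the probability of symbol k at frame (or cell) t.
    target is a list of label indices that contains no blank.
    """
    # Interleave blanks around the labels: [blank, l1, blank, l2, ..., blank].
    ext = [blank]
    for label in target:
        ext += [label, blank]
    T, S = len(probs), len(ext)

    # alpha[s] = total probability of all partial paths ending in ext[s].
    alpha = [0.0] * S
    alpha[0] = probs[0][ext[0]]
    if S > 1:
        alpha[1] = probs[0][ext[1]]

    for t in range(1, T):
        prev = alpha
        alpha = [0.0] * S
        for s in range(S):
            total = prev[s]                      # stay on the same symbol
            if s >= 1:
                total += prev[s - 1]             # advance by one position
            # Skipping a blank is allowed only between two different labels.
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                total += prev[s - 2]
            alpha[s] = total * probs[t][ext[s]]

    # Valid paths end on the last label or on the trailing blank.
    likelihood = alpha[S - 1] + (alpha[S - 2] if S > 1 else 0.0)
    return -math.log(likelihood) if likelihood > 0 else math.inf


# Two cells, alphabet {blank, "a"}, uniform outputs, target "a".
uniform = [[0.5, 0.5], [0.5, 0.5]]
print(ctc_loss(uniform, [1]))
```

With two cells and the single-label target, three paths are valid ("a" then blank, blank then "a", and "a" twice), which is why the loss sums over alignments instead of requiring one. In practice a training pipeline would most likely use a deep learning framework's built-in CTC loss in log space for numerical stability.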

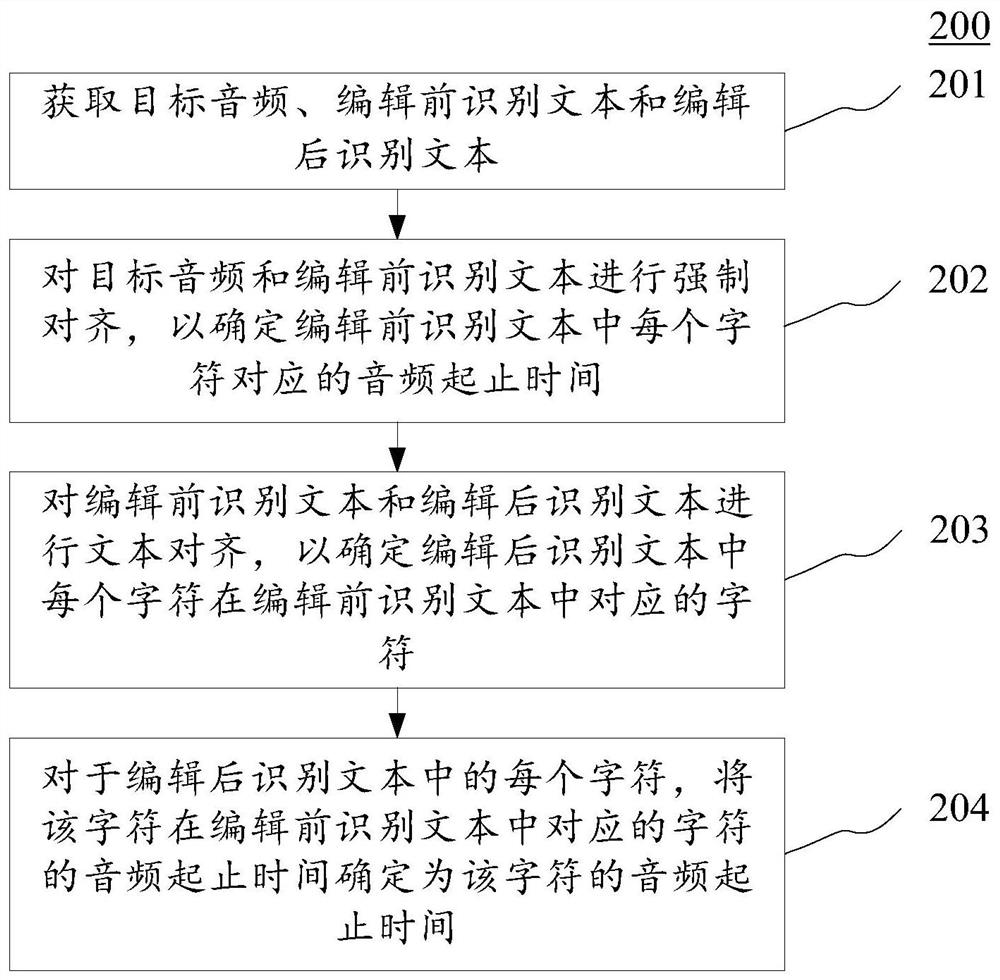

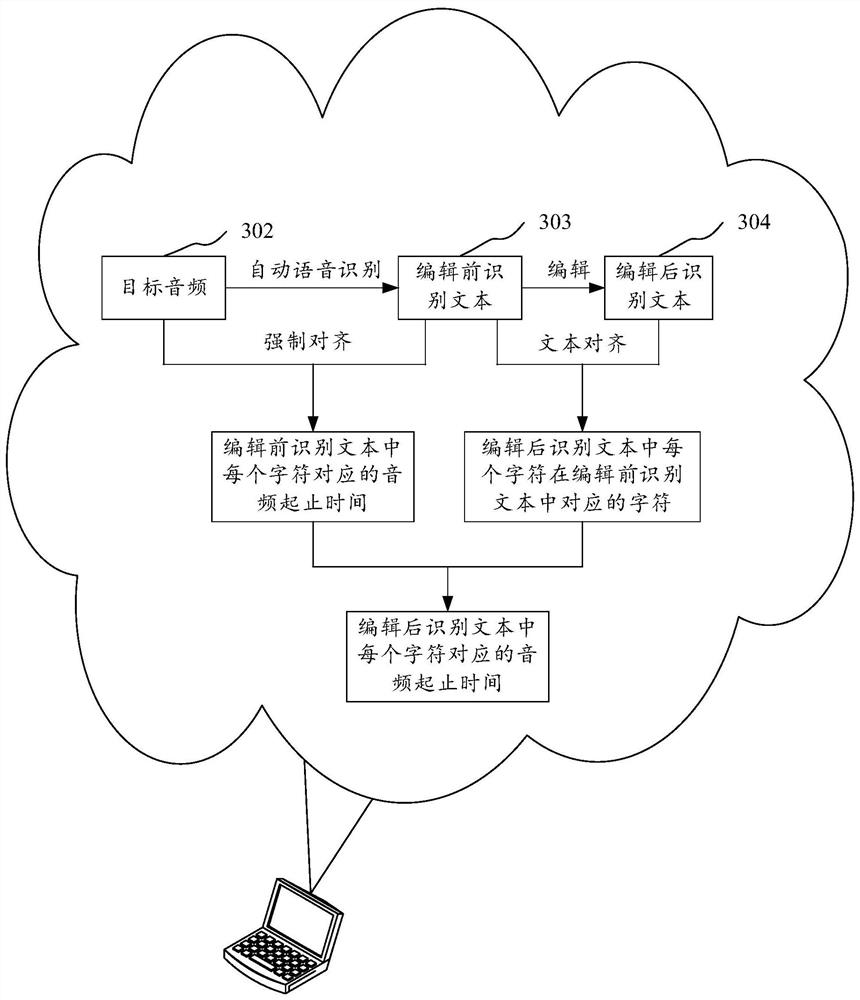

Sound and text realignment and information presentation method and device, electronic equipment and storage medium

Pending | CN113761865A | Small granularity | High precision | Natural language data processing | Speech recognition | Text alignment | Text editing

The invention provides a sound and text realignment and information presentation method and device, electronic equipment and a storage medium. The method comprises: obtaining a target audio, a pre-edit recognition text and a post-edit recognition text, wherein the pre-edit recognition text is obtained through automatic speech recognition of the target audio and the post-edit recognition text is obtained by editing the pre-edit recognition text; performing forced alignment on the target audio and the pre-edit recognition text to determine the audio start and end time of each character in the pre-edit recognition text; performing text alignment on the pre-edit and post-edit recognition texts to determine, for each character in the post-edit recognition text, the corresponding character in the pre-edit recognition text; and, for each character in the post-edit recognition text, taking the audio start and end time of its corresponding pre-edit character as its own audio start and end time. The invention thereby achieves high-precision sound and text realignment between the target audio and the post-edit recognition text.

Owner:BEIJING ZITIAO NETWORK TECH CO LTD
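The timestamp transfer described in the abstract above can be sketched in a few lines. This is an illustrative sketch only: it assumes the forced-alignment times are already available as a list of `(start, end)` pairs, it uses Python's `difflib.SequenceMatcher` as a stand-in for whatever text alignment the patent actually uses, and the function name `transfer_timestamps` is hypothetical. Characters inserted during editing have no counterpart in the pre-edit text, so this sketch leaves them as `None`.

```python
from difflib import SequenceMatcher

def transfer_timestamps(pre_text, pre_times, post_text):
    """Copy per-character (start, end) times from pre_text onto post_text.

    pre_times[i] is the audio span of pre_text[i], as produced by forced
    alignment. Characters of post_text with no matching character in
    pre_text receive None.
    """
    post_times = [None] * len(post_text)
    matcher = SequenceMatcher(None, pre_text, post_text, autojunk=False)
    for block in matcher.get_matching_blocks():
        # Each block says pre_text[a:a+size] == post_text[b:b+size].
        for k in range(block.size):
            post_times[block.b + k] = pre_times[block.a + k]
    return post_times


pre = "abc"
times = [(0.0, 0.1), (0.1, 0.2), (0.2, 0.3)]
post = "abXc"  # the editor inserted "X"
print(transfer_timestamps(pre, times, post))
```

A fuller version might interpolate a time span for inserted characters from their neighbours; that policy is a design choice the abstract does not specify.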

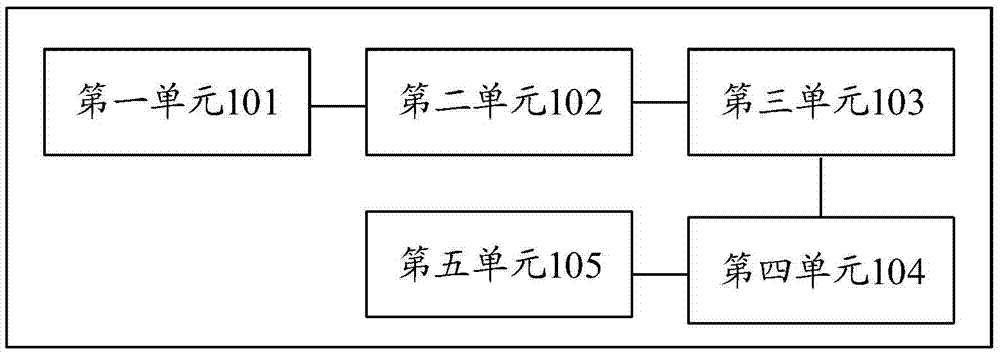

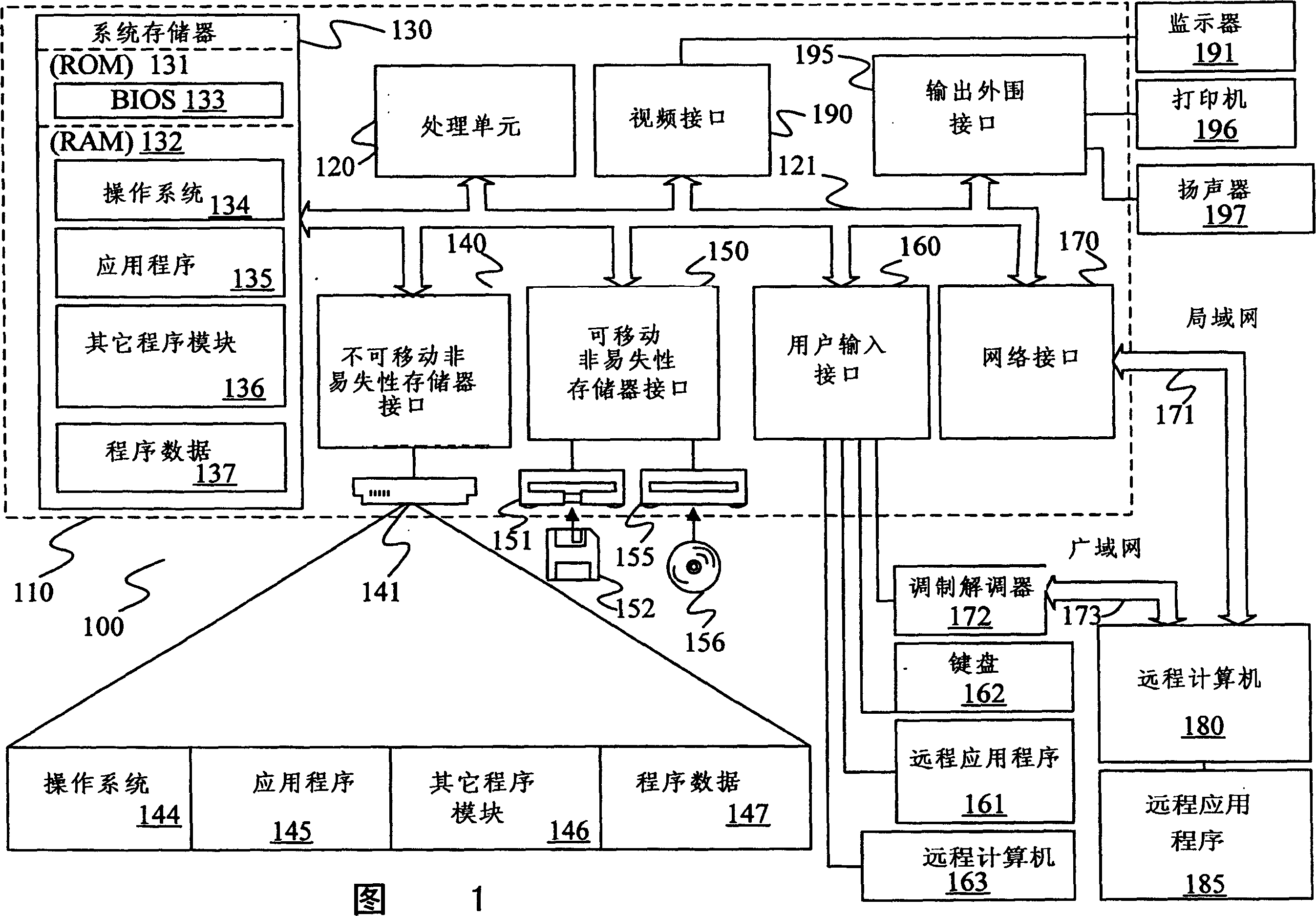

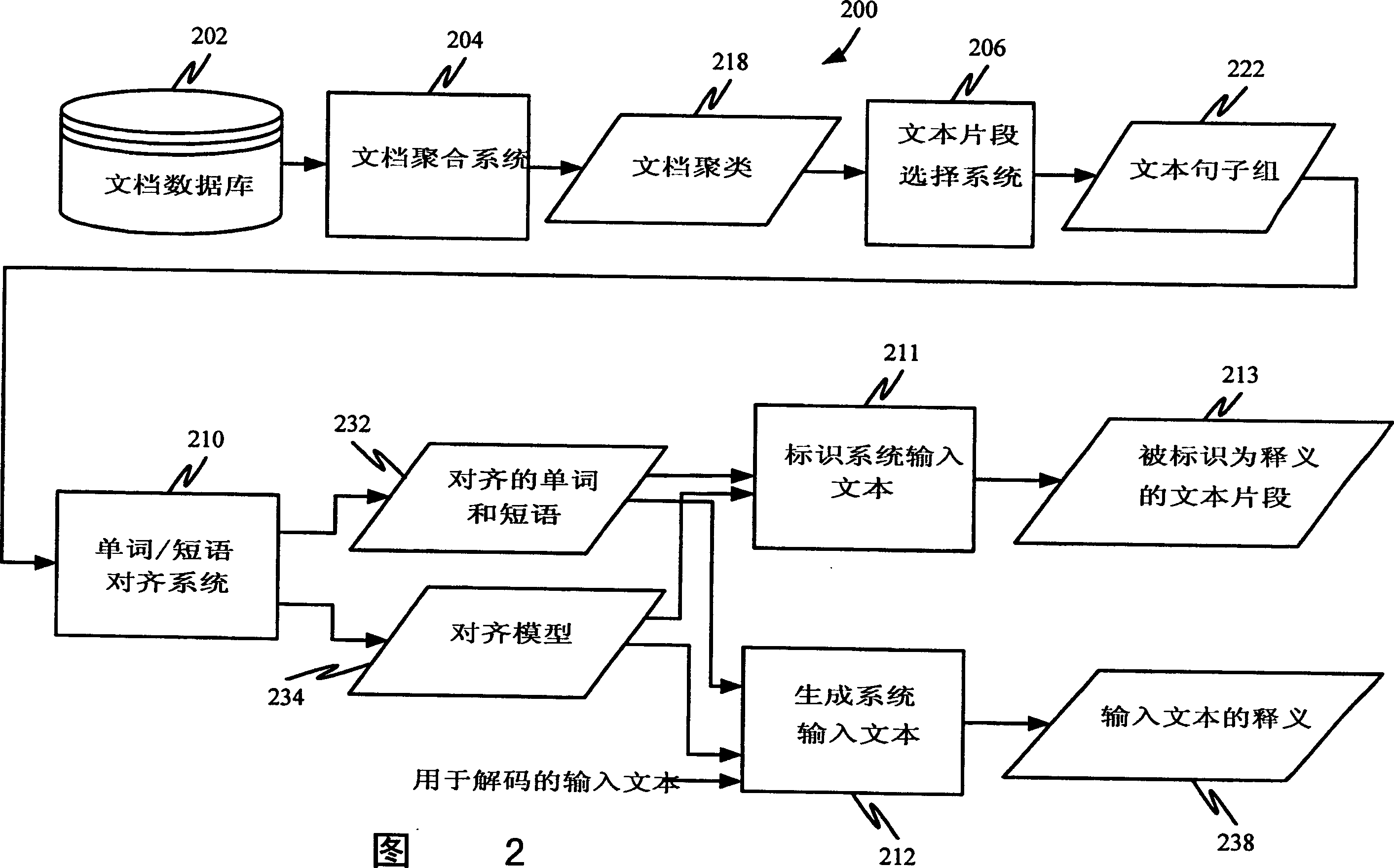

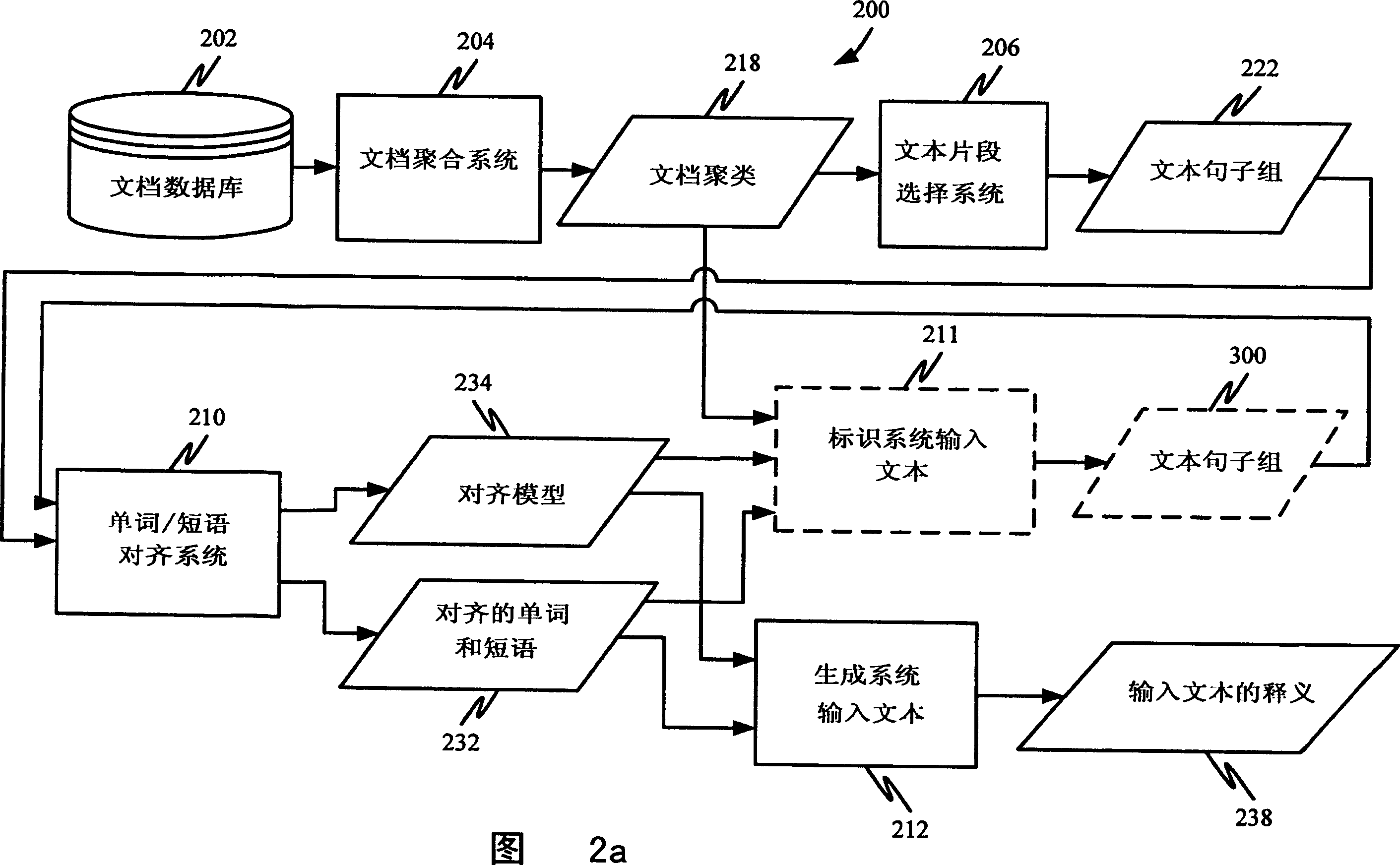

System for identifying paraphrases using machine translation techniques

The present invention obtains a set of text fragments from a cluster of different documents written about a common event. The set of text fragments is then subjected to text alignment techniques to identify paraphrases among those fragments. The invention can also be used to generate paraphrases.

Owner:MICROSOFT TECH LICENSING LLC

Single-line text alignment method and translated file processing method of DWG file

Inactive | CN106649248A | Save looking | Save work | Natural language translation | Special data processing applications | Text alignment | Theoretical computer science

The invention discloses a single-line text alignment method and a translated file processing method for DWG files. The single-line text alignment method comprises the following steps: A, obtaining the number of bytes of the original text and of the translated text according to the variable-length character section; and B, adjusting the width of the translated text according to the width and number of bytes of the original text and the number of bytes of the translated text. The translated text is scaled relative to the original text so that its width is appropriate, which prevents an overly wide translation from covering other lines or words in AutoCAD and improves the neatness of the translated text.

Owner:成都优译信息技术股份有限公司
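Steps A and B of the abstract above can be illustrated with a small calculation. The abstract does not give the exact formula, so this sketch assumes the simplest proportional rule: the translated text's width factor is the original width factor multiplied by the ratio of byte counts, and it is never enlarged. The GBK encoding (two bytes per Chinese character, one per ASCII character) and the function names are also assumptions.

```python
def byte_length(text, encoding="gbk"):
    """Number of bytes of `text` in a variable-length encoding (assumed GBK)."""
    return len(text.encode(encoding))

def translated_width_factor(original, translated, original_factor=1.0, encoding="gbk"):
    """Width factor for the translated single-line text.

    Assumed rule: shrink in proportion to the byte-count ratio so the
    translation occupies roughly the same horizontal span as the original;
    a translation that is already shorter keeps the original factor.
    """
    translated_bytes = byte_length(translated, encoding)
    if translated_bytes == 0:
        return original_factor
    ratio = byte_length(original, encoding) / translated_bytes
    return original_factor * min(1.0, ratio)


# "图纸" is 4 bytes in GBK; "Drawing sheet" is 13 bytes.
print(translated_width_factor("图纸", "Drawing sheet"))
```

Byte count is only a rough proxy for rendered width; a production tool would probably measure glyph widths in the drawing's text style.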

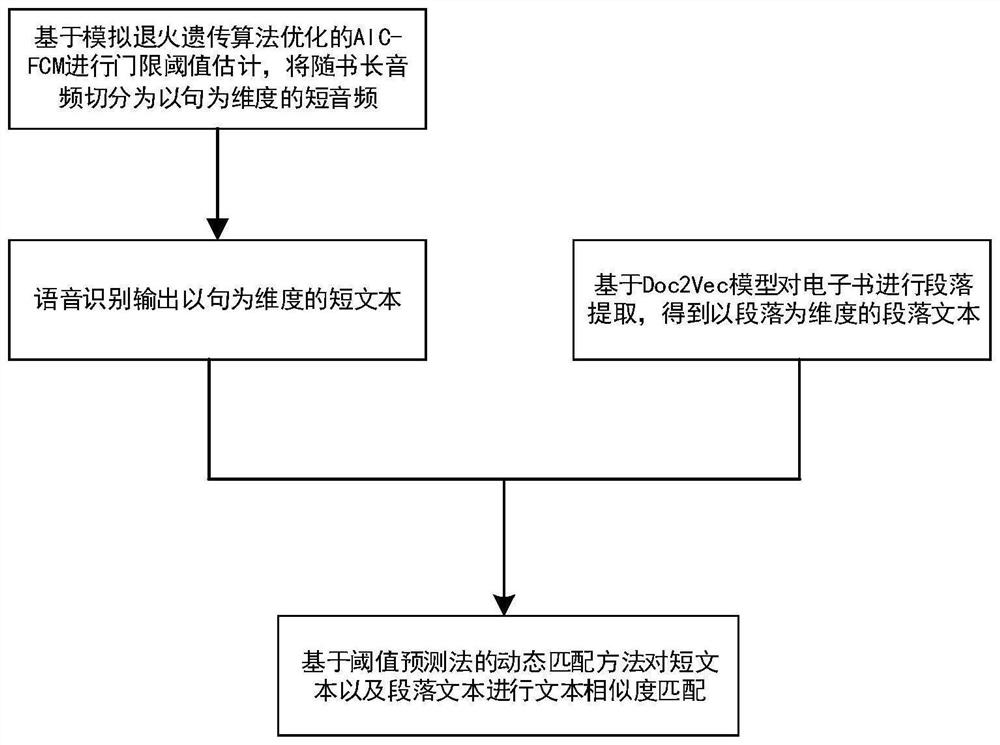

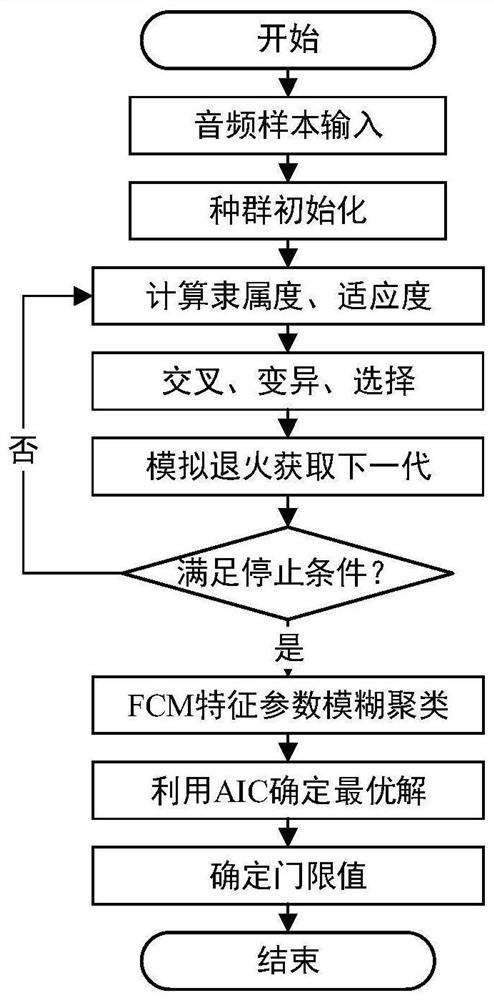

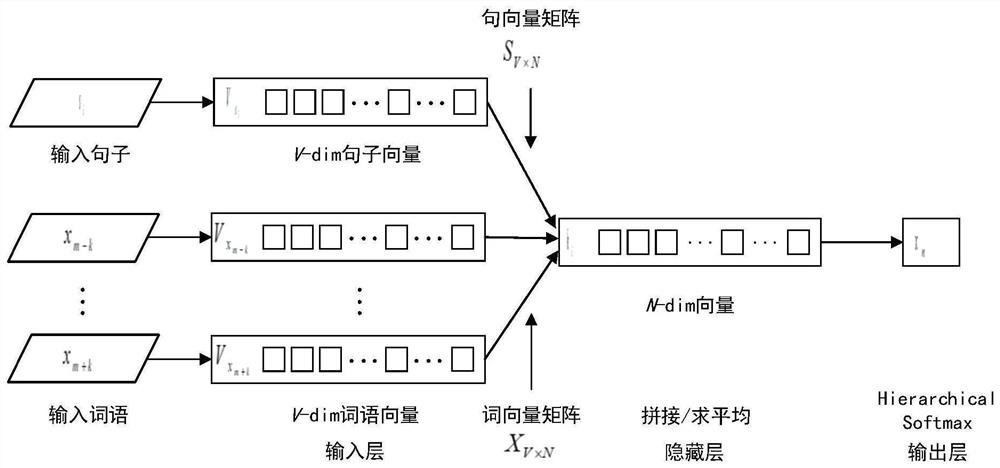

A doc2vec-based audio text alignment method and system

Active | CN113191133B | Realize the comparison relationship | Reduce time complexity | Natural language data processing | Special data processing applications | Text alignment | Audio segmentation

The invention discloses a Doc2Vec-based audio-text alignment method and system. The method includes: performing threshold estimation based on AIC-FCM optimized by a simulated annealing genetic algorithm, dividing the long audio accompanying the book into short audio segments at sentence granularity, and performing speech recognition on the short audio to output short texts at sentence granularity; extracting paragraphs from the e-book based on the Doc2Vec model to obtain paragraph texts at paragraph granularity; and performing text similarity matching between the short texts and the paragraph texts with a dynamic matching method based on threshold prediction to complete the text alignment. Compared with the traditional audio-text alignment algorithm, the method comes closer to the ideal segmentation result in long-audio segmentation, its alignment effect is basically the same as that of Doc2Vec, and its time complexity is reduced by about 35%.

Owner:BEIJING UNIV OF POSTS & TELECOMM +1
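The matching stage in the abstract above pairs sentence-level recognized texts with paragraph-level book texts in reading order. The sketch below shows only that forward-moving matching idea. It swaps in a simple token-containment score where the patent uses Doc2Vec similarity, and the function names, the fixed `threshold`, and the one-step-ahead lookahead are all assumptions. It also assumes `paragraphs` is non-empty.

```python
def containment(sentence, paragraph):
    """Fraction of the sentence's tokens that also occur in the paragraph."""
    sent_tokens = set(sentence.lower().split())
    para_tokens = set(paragraph.lower().split())
    if not sent_tokens:
        return 0.0
    return len(sent_tokens & para_tokens) / len(sent_tokens)

def align_sentences(sentences, paragraphs, threshold=0.5):
    """Assign each recognized sentence to a paragraph index, moving forward only."""
    assignment = []
    current = 0
    for sentence in sentences:
        stay = containment(sentence, paragraphs[current])
        if current + 1 < len(paragraphs):
            advance = containment(sentence, paragraphs[current + 1])
        else:
            advance = -1.0  # no next paragraph to move to
        # Move on only when the next paragraph is a clearly better match.
        if advance > stay and advance >= threshold:
            current += 1
        assignment.append(current)
    return assignment


paragraphs = ["the cat sat on the mat", "a dog barked at the moon"]
sentences = ["the cat sat", "on the mat", "a dog barked", "at the moon"]
print(align_sentences(sentences, paragraphs))
```

Restricting the search to the current and next paragraph keeps the cost linear in the number of sentences, which is one plausible way to reduce matching time; the abstract does not state how its own reduction is achieved.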

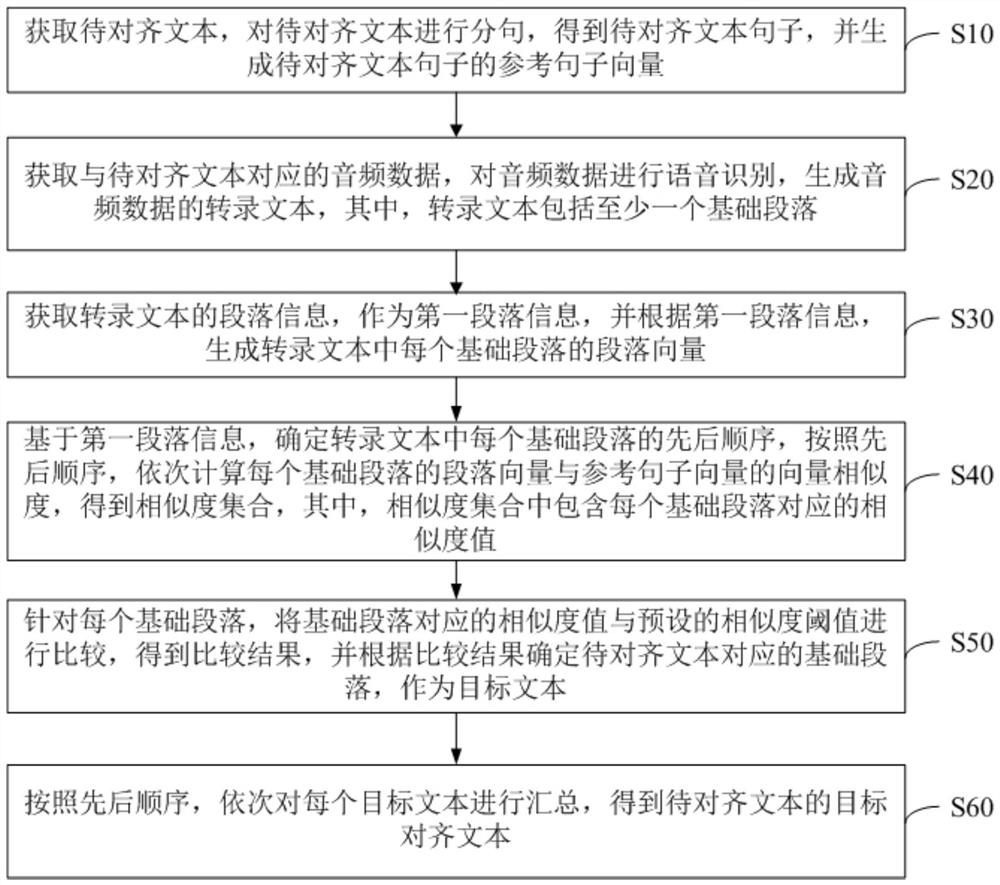

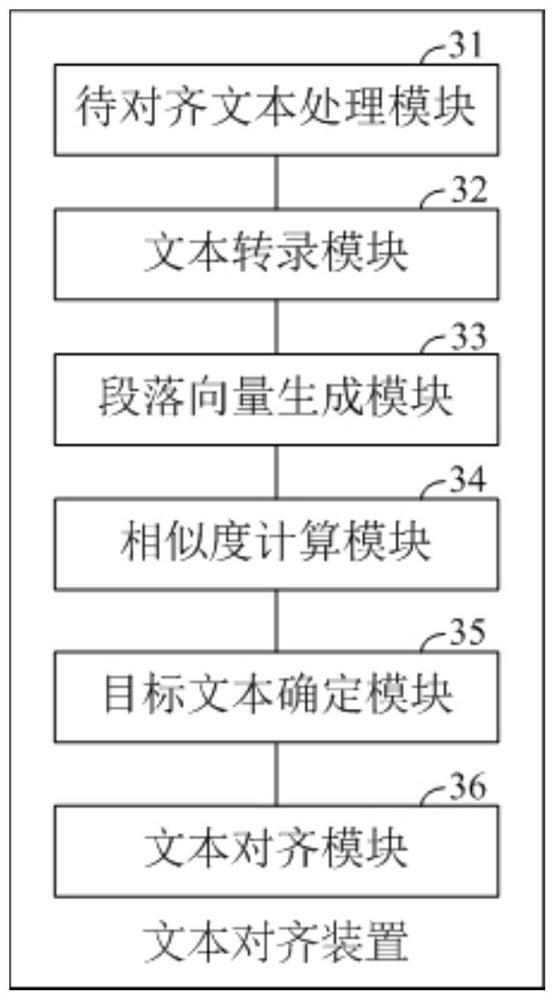

Text alignment method and device, computer equipment and storage medium

Pending | CN114818646A | Improve accuracy | Character and pattern recognition | Natural language data processing | Text alignment | Sentence segmentation

The invention discloses a text alignment method, applied in the technical field of text processing, for improving the accuracy of text alignment. The method comprises: obtaining a to-be-aligned text, performing sentence segmentation on it, and generating a reference sentence vector for each of its sentences; obtaining audio data corresponding to the to-be-aligned text, generating a transcribed text of the audio data, and generating a paragraph vector for each basic paragraph in the transcribed text; based on the first paragraph information, determining the order of the basic paragraphs in the transcribed text and, following that order, calculating the vector similarity between the paragraph vector of each basic paragraph and the reference sentence vectors to obtain a similarity set; and, for each basic paragraph, comparing its similarity value with a preset similarity threshold and, according to the comparison result, taking the basic paragraphs that correspond to the to-be-aligned text as target text, thereby obtaining the target aligned text.

Owner:东莞点慧科技有限公司
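The thresholding step in the abstract above can be sketched with plain cosine similarity. The abstract does not say how the vectors are produced or how one paragraph is scored against several sentence vectors, so this sketch assumes precomputed vectors and takes the best-matching sentence for each paragraph; the function names and the default threshold are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def select_paragraphs(paragraph_vectors, sentence_vectors, threshold=0.8):
    """Indices of transcribed paragraphs whose best similarity meets the threshold.

    Paragraphs are visited in their given order, so the returned indices
    preserve the order of the transcribed text.
    """
    selected = []
    for index, paragraph_vector in enumerate(paragraph_vectors):
        best = max(
            (cosine(paragraph_vector, s) for s in sentence_vectors),
            default=0.0,
        )
        if best >= threshold:
            selected.append(index)
    return selected


paragraph_vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
sentence_vectors = [[1.0, 0.0]]
print(select_paragraphs(paragraph_vectors, sentence_vectors, threshold=0.7))
```

The threshold trades recall for precision: a lower value keeps loosely related paragraphs, a higher one drops paragraphs whose transcription contains recognition errors.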
