32 results about "Orchestration (computing)" patented technology

Orchestration is the automated configuration, coordination, and management of computer systems and software. A number of tools exist for automating server configuration and management, including Ansible, Puppet, Salt, Terraform, and AWS CloudFormation. For container orchestration, options include self-managed software such as Kubernetes and managed services such as Amazon EKS, Amazon ECS, and AWS Fargate.

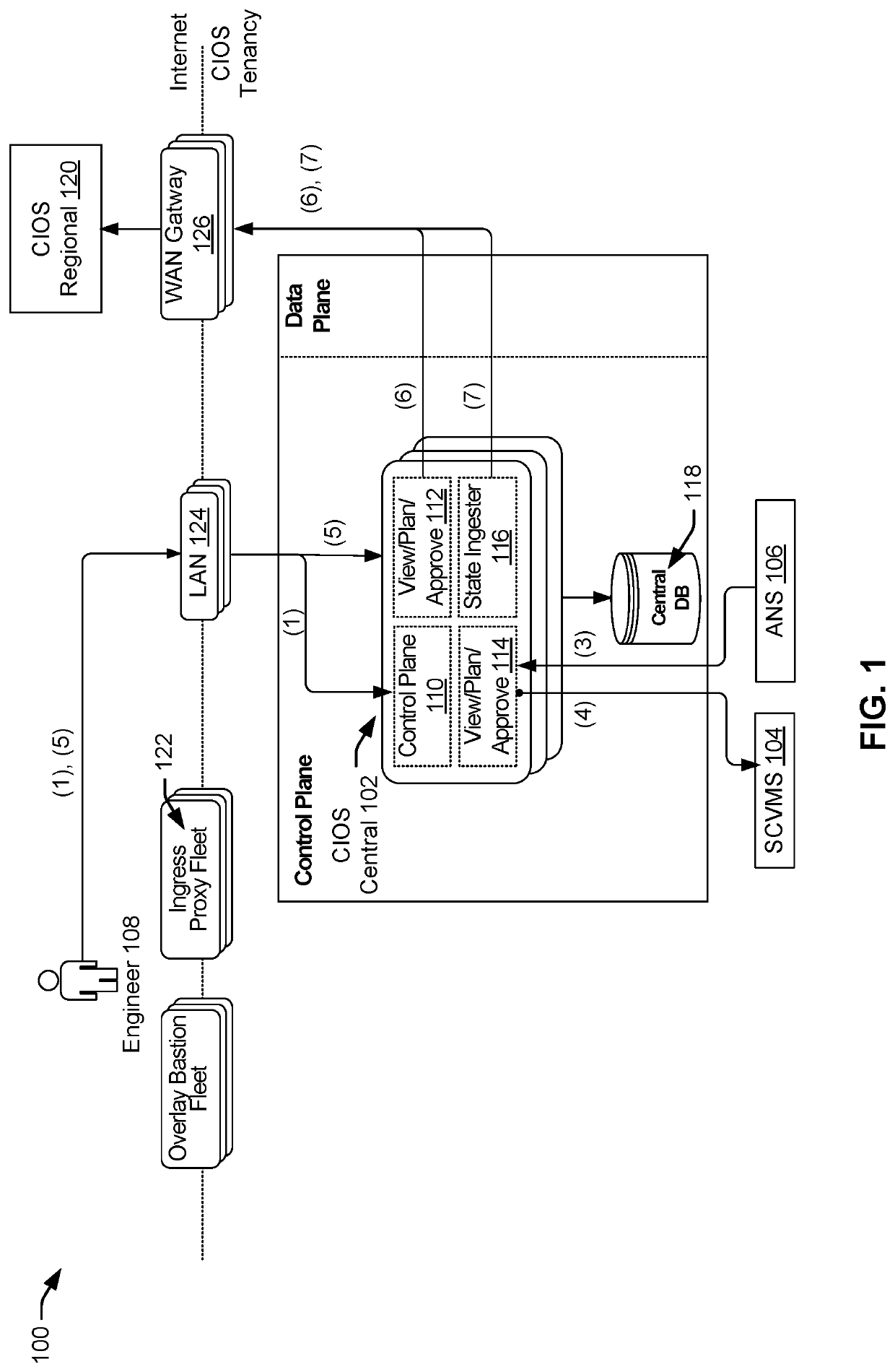

Apparatus, systems, and methods for distributed application orchestration and deployment

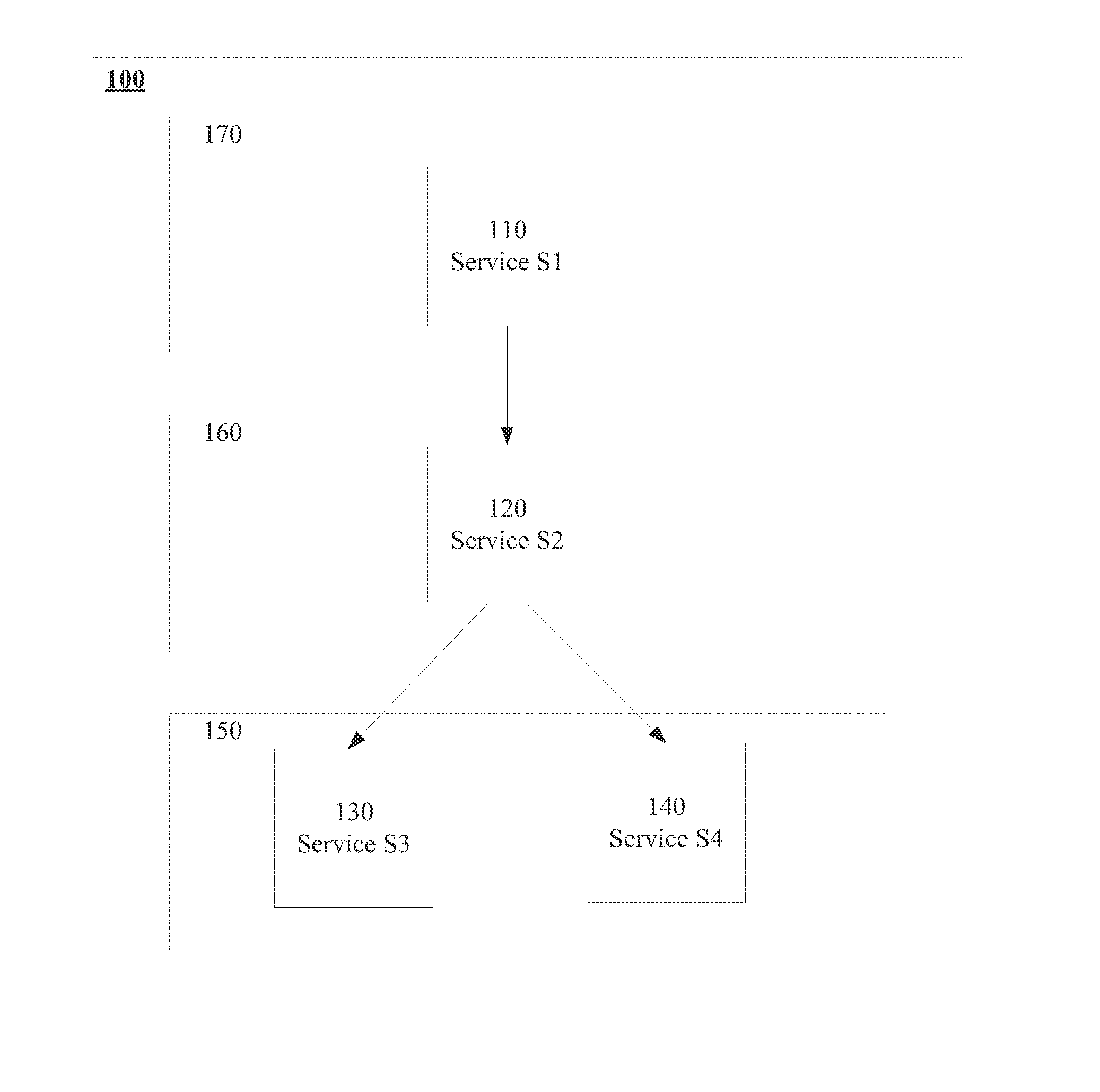

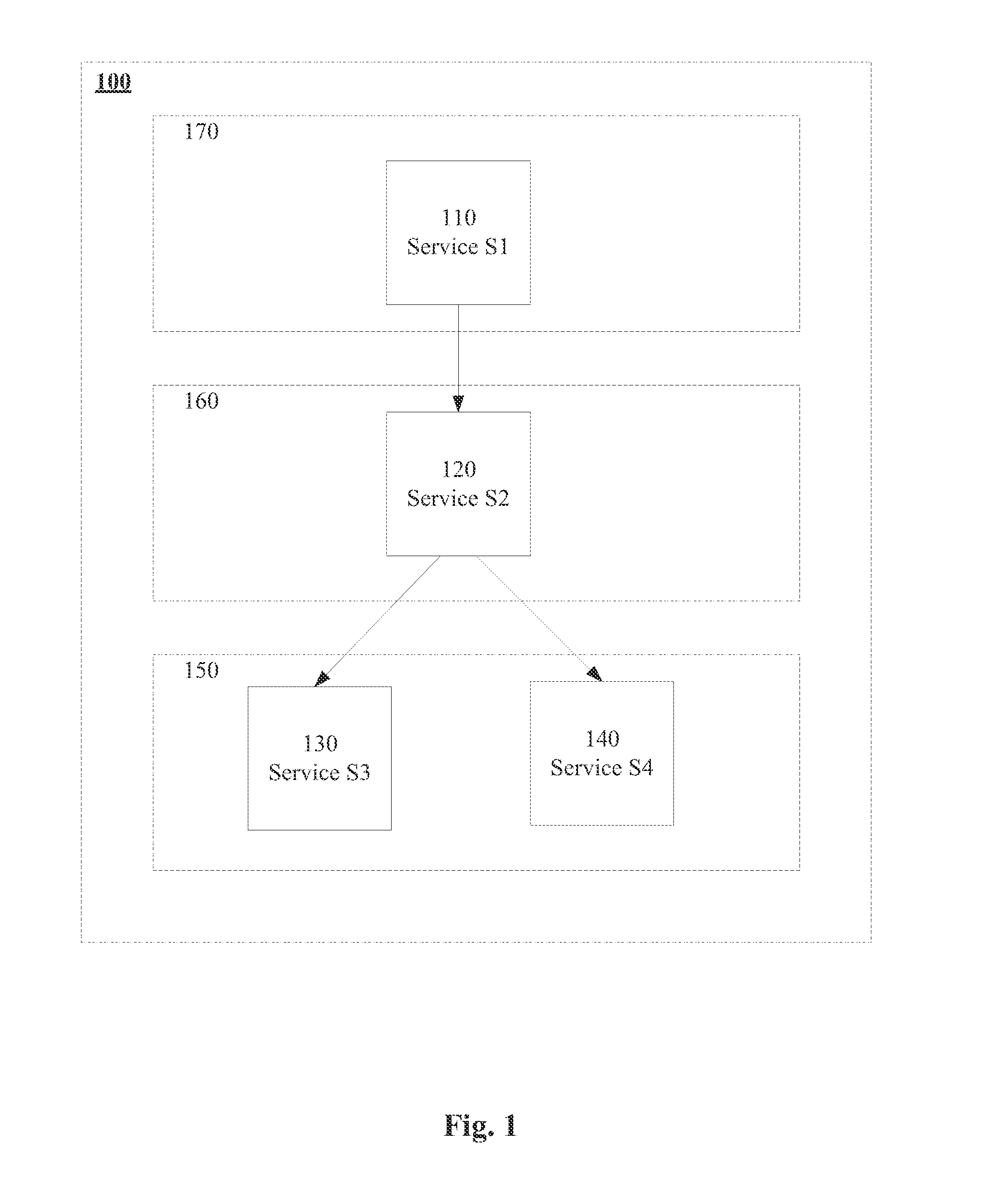

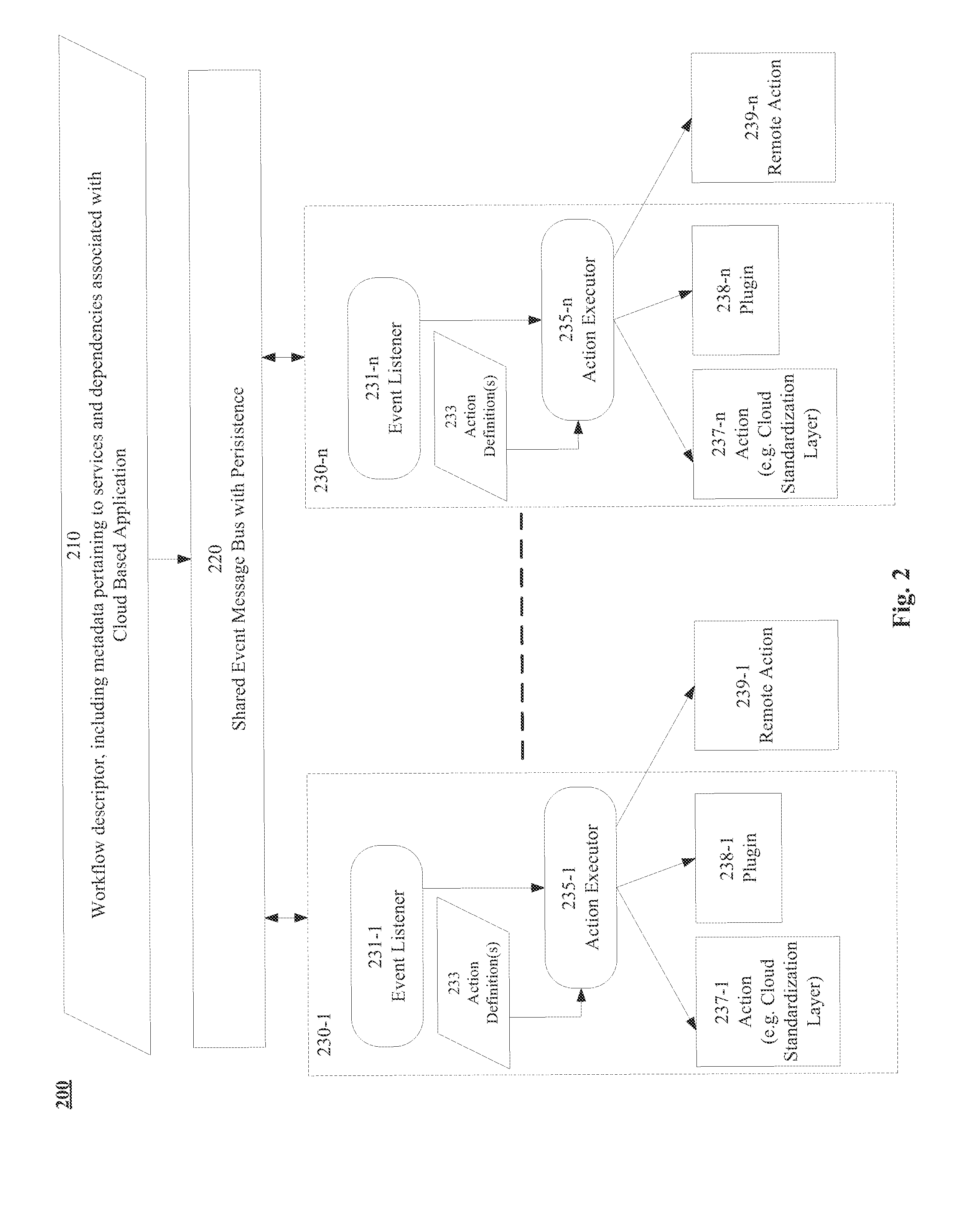

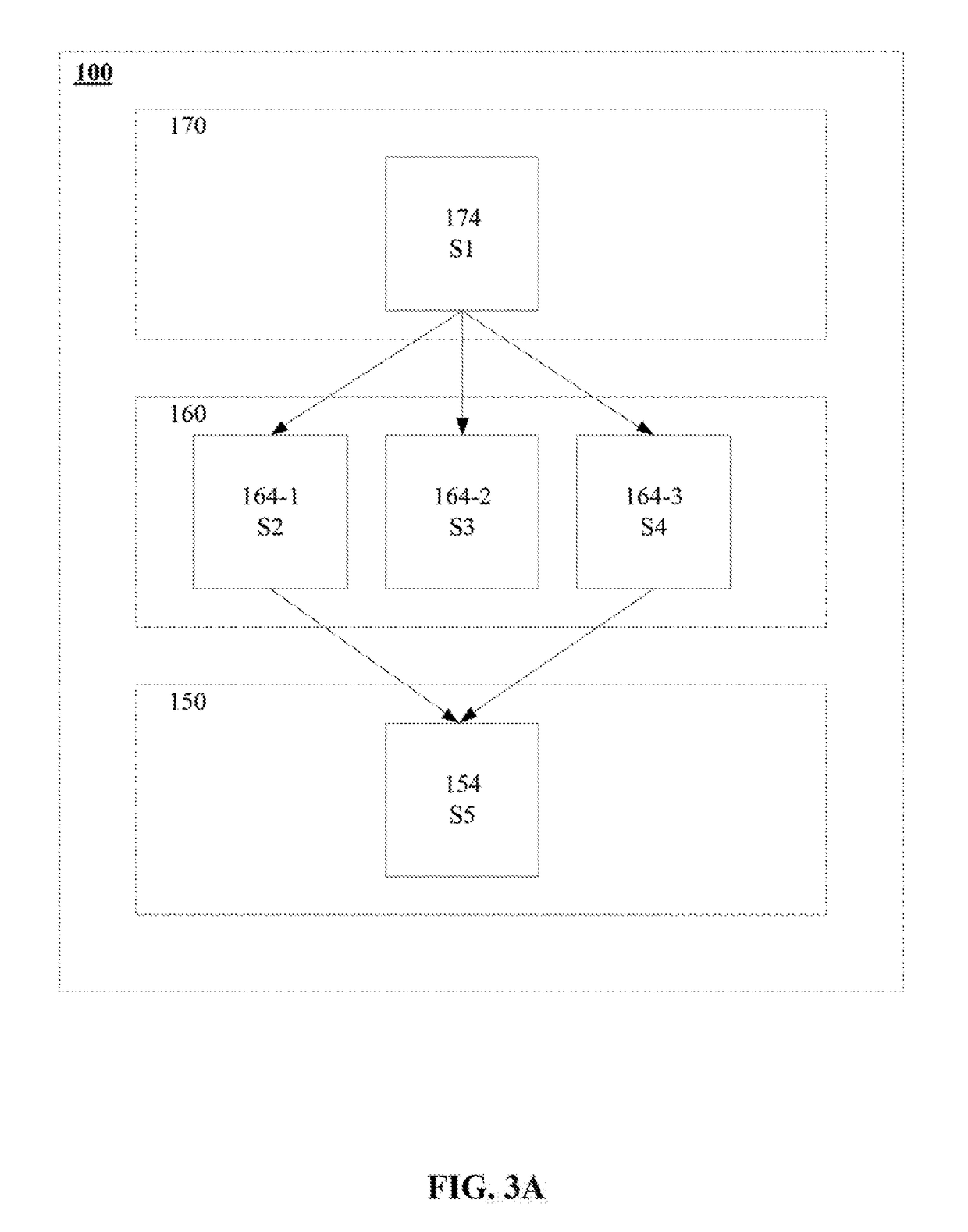

Embodiments disclosed facilitate distributed orchestration and deployment of a cloud based distributed computing application. In some embodiments, the distributed orchestration may be based on a cloud agnostic representation of the application. In some embodiments, an ordered set of events may be obtained based on a starting sequence for the plurality of components, where each event in the ordered set is associated with a corresponding set of prerequisites for initiating execution of the event. Event identifiers corresponding to the ordered set of events may be placed on an event message bus with persistence that is shared between a plurality of distributed nodes associated with a cloud. Upon receiving an indication of completion of prerequisites for one or more events, a plurality of nodes of a distributed orchestration engine may select the one or more events corresponding to the one or more selected event identifiers for execution on the cloud.

Owner:CISCO TECH INC
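
The prerequisite-driven event ordering described in the abstract above can be sketched as follows. This is a minimal single-process illustration, not the patented method: the in-memory `deque` stands in for the persistent, shared event message bus, and the function name `run_events` and the event identifiers are hypothetical.

```python
from collections import deque


def run_events(prerequisites):
    """Return an execution order for events.

    prerequisites maps each event id to the set of event ids that must
    complete before it may start (an assumed input format).
    """
    # Copy the sets so the caller's mapping is not mutated.
    waiting = {event: set(deps) for event, deps in prerequisites.items()}
    # Events with no prerequisites are ready immediately.
    ready = deque(sorted(e for e, deps in waiting.items() if not deps))
    for event in ready:
        del waiting[event]

    completed = []
    while ready:
        event = ready.popleft()
        completed.append(event)  # stand-in for executing the event on the cloud
        # A completion notification releases events whose prerequisites are now met.
        for other in sorted(waiting):
            waiting[other].discard(event)
            if not waiting[other]:
                ready.append(other)
                del waiting[other]

    if waiting:
        raise ValueError(f"unsatisfiable prerequisites: {sorted(waiting)}")
    return completed
```

For example, `run_events({"db": set(), "cache": set(), "app": {"db", "cache"}, "lb": {"app"}})` starts `app` only after both `db` and `cache` have completed. A real distributed engine would additionally need persistence and coordination between nodes, which this sketch omits.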

System and method for identification of discrepancies in actual and expected inventories in computing environment having multiple provisioning orchestration server pool boundaries

Inactive | US20060178953A1 | Performance impact is minimal | Better respond | Resources | Software simulation/interpretation/emulation | Orchestration | Distributed computing

A system for establishing and maintaining inventories of computing environment assets comprising one or more custom collector interfaces that detect movement of assets from one environment to another, and an inventory scanner which modifies inventories for each environment based on monitored asset movements. The present invention is of especial benefit to autonomic and on-demand computing architectures.

Owner:IBM CORP

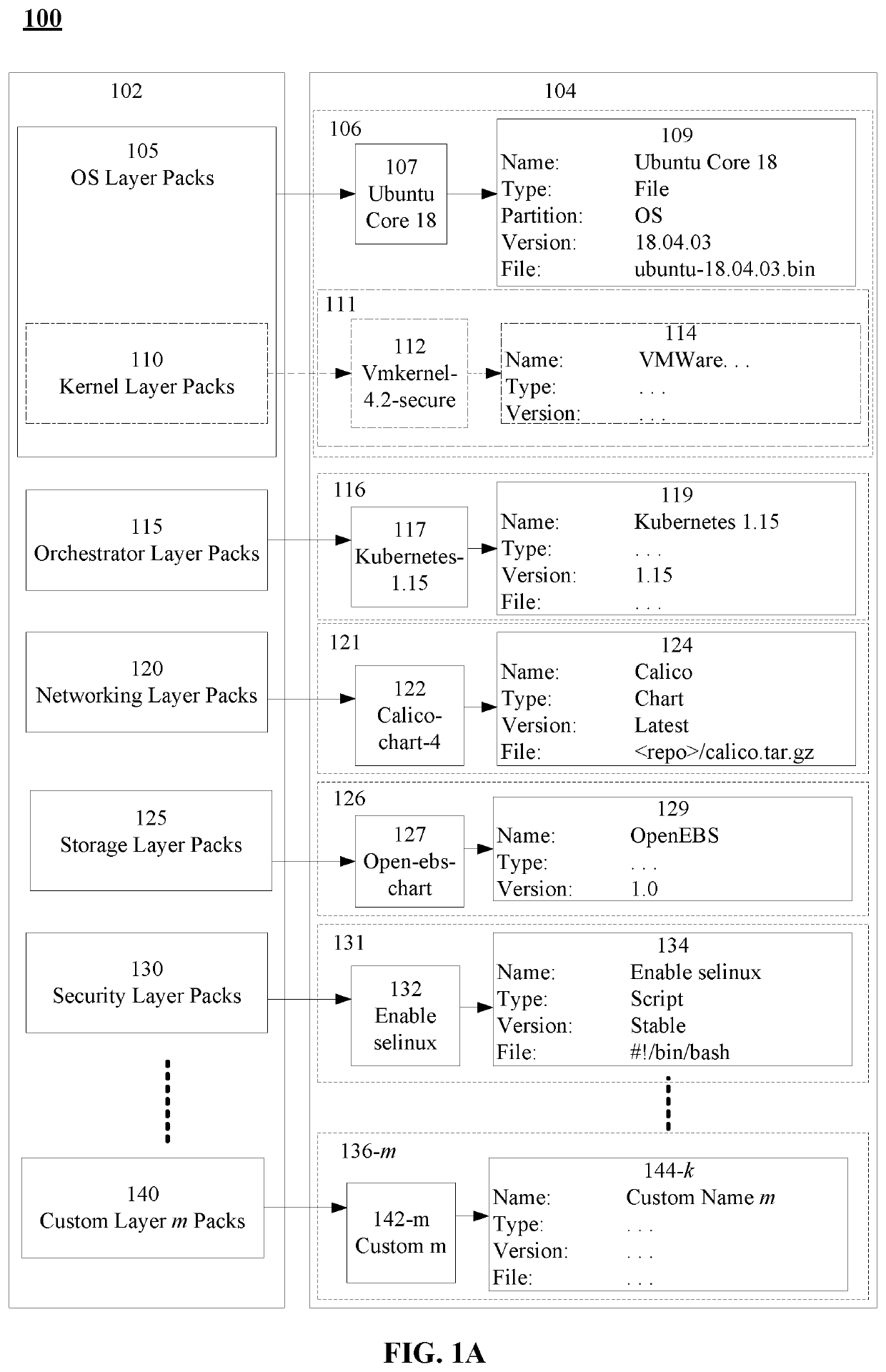

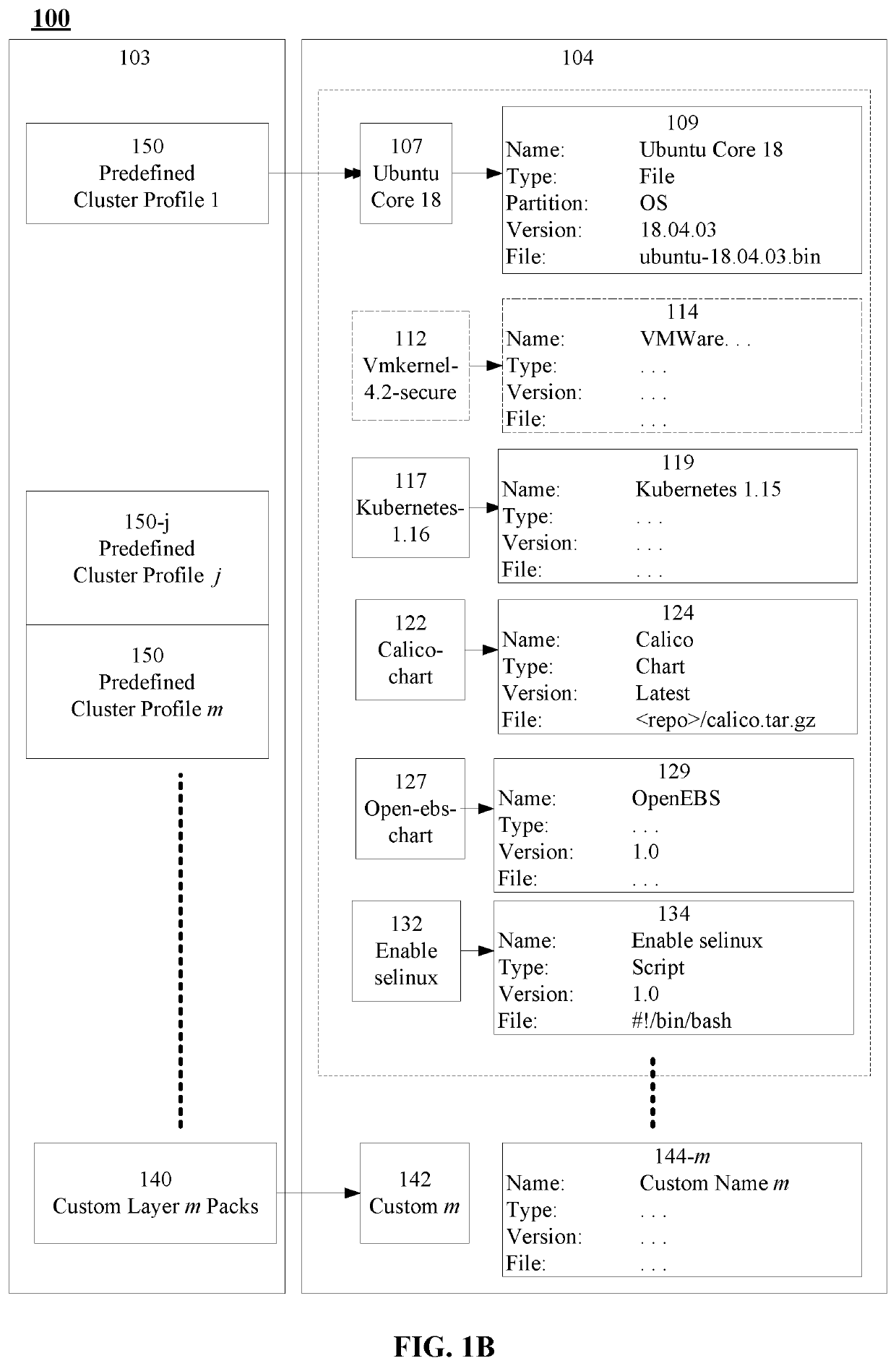

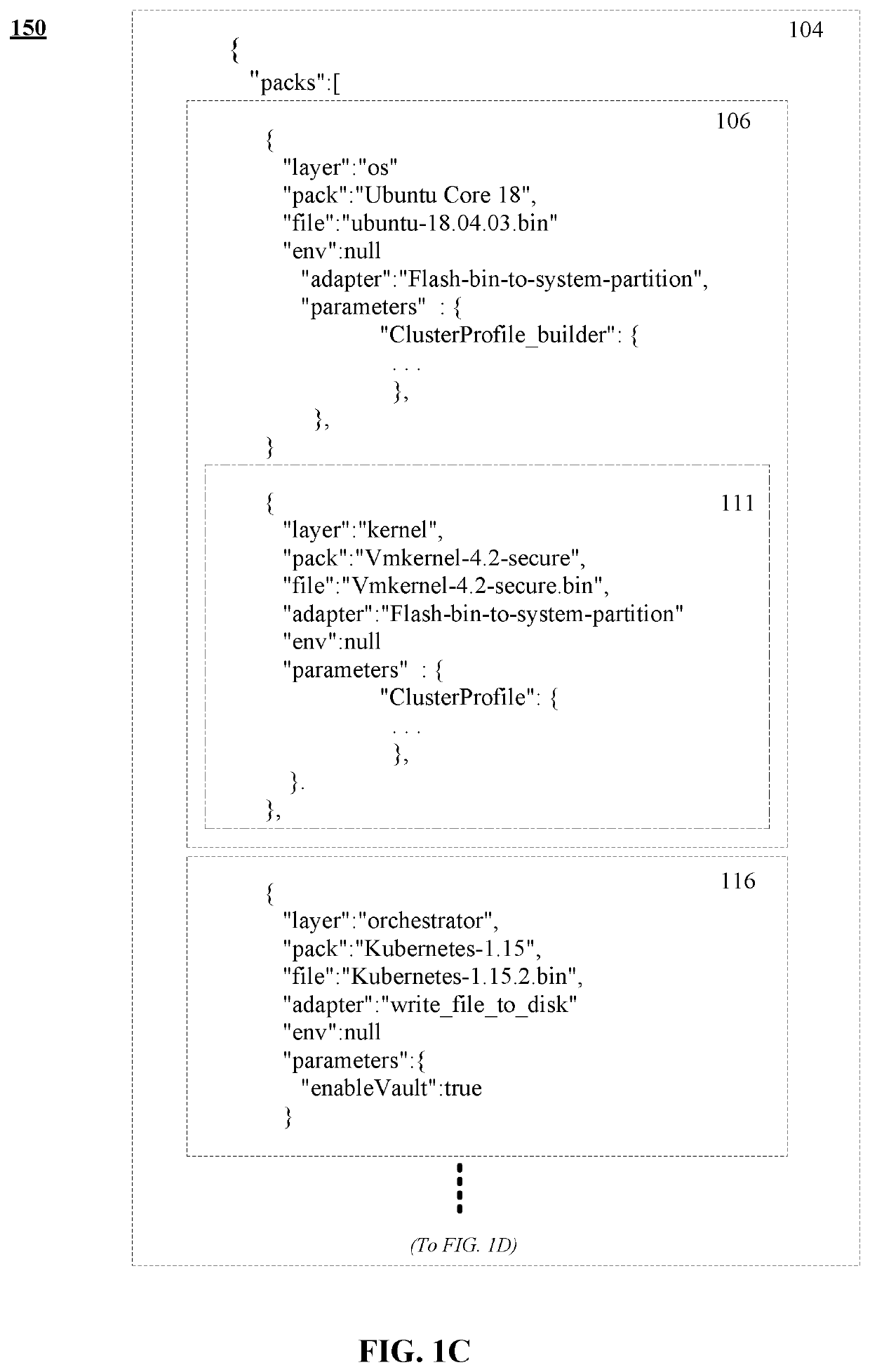

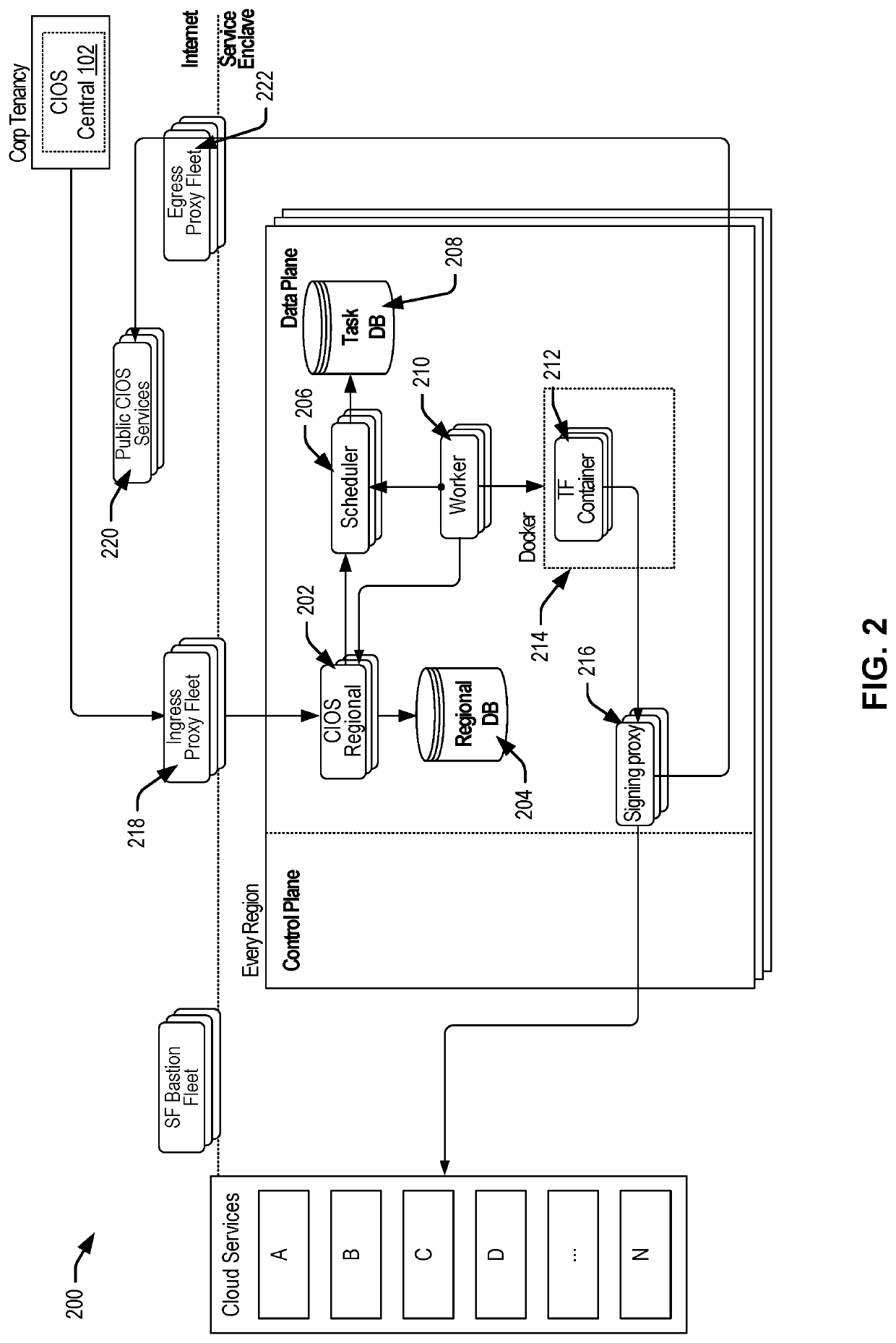

Apparatus, systems, and methods for composable distributed computing

Active | US20210224093A1 | Bootstrapping | Software simulation/interpretation/emulation | Orchestration (computing) | Distributed computing

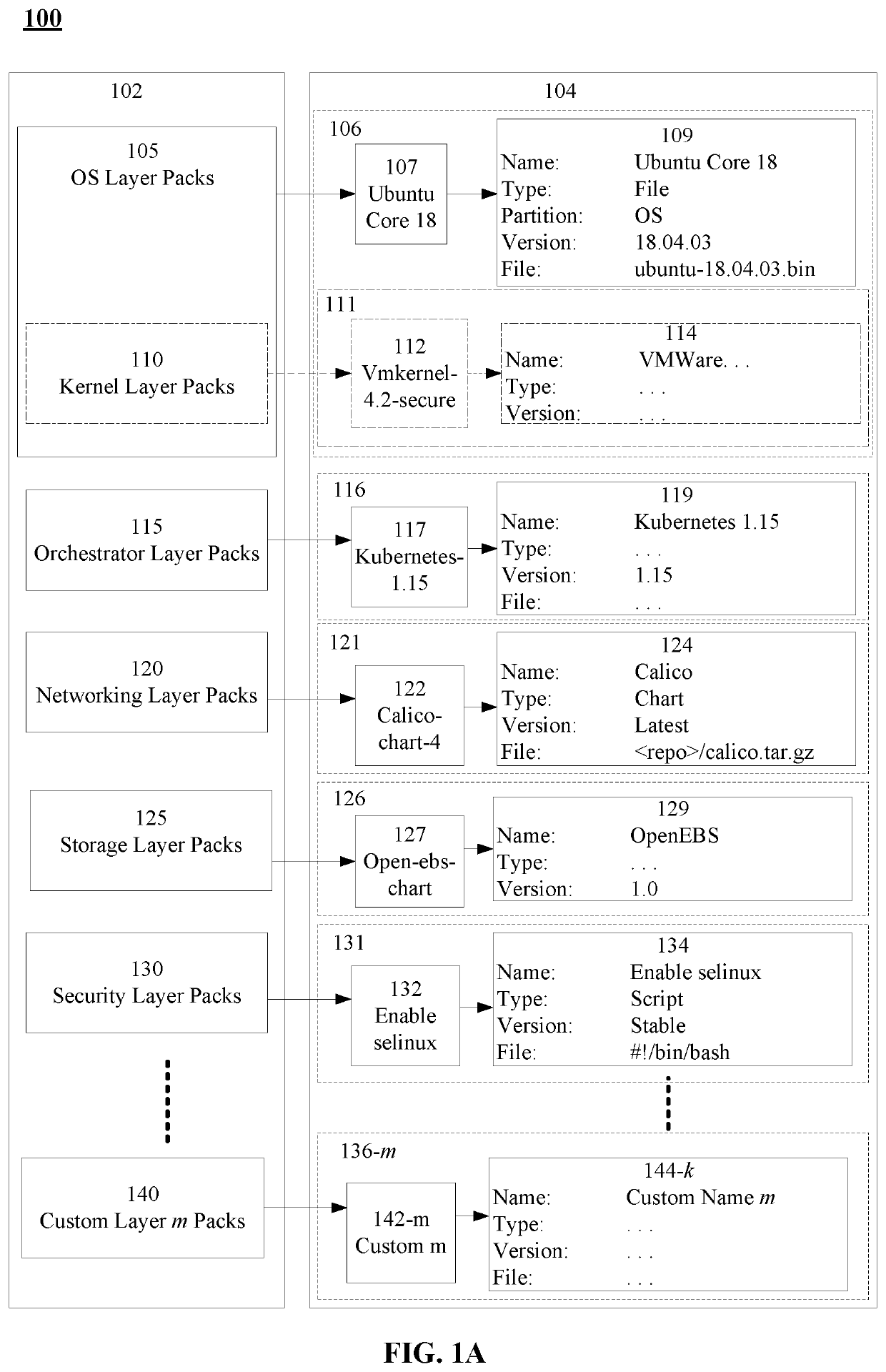

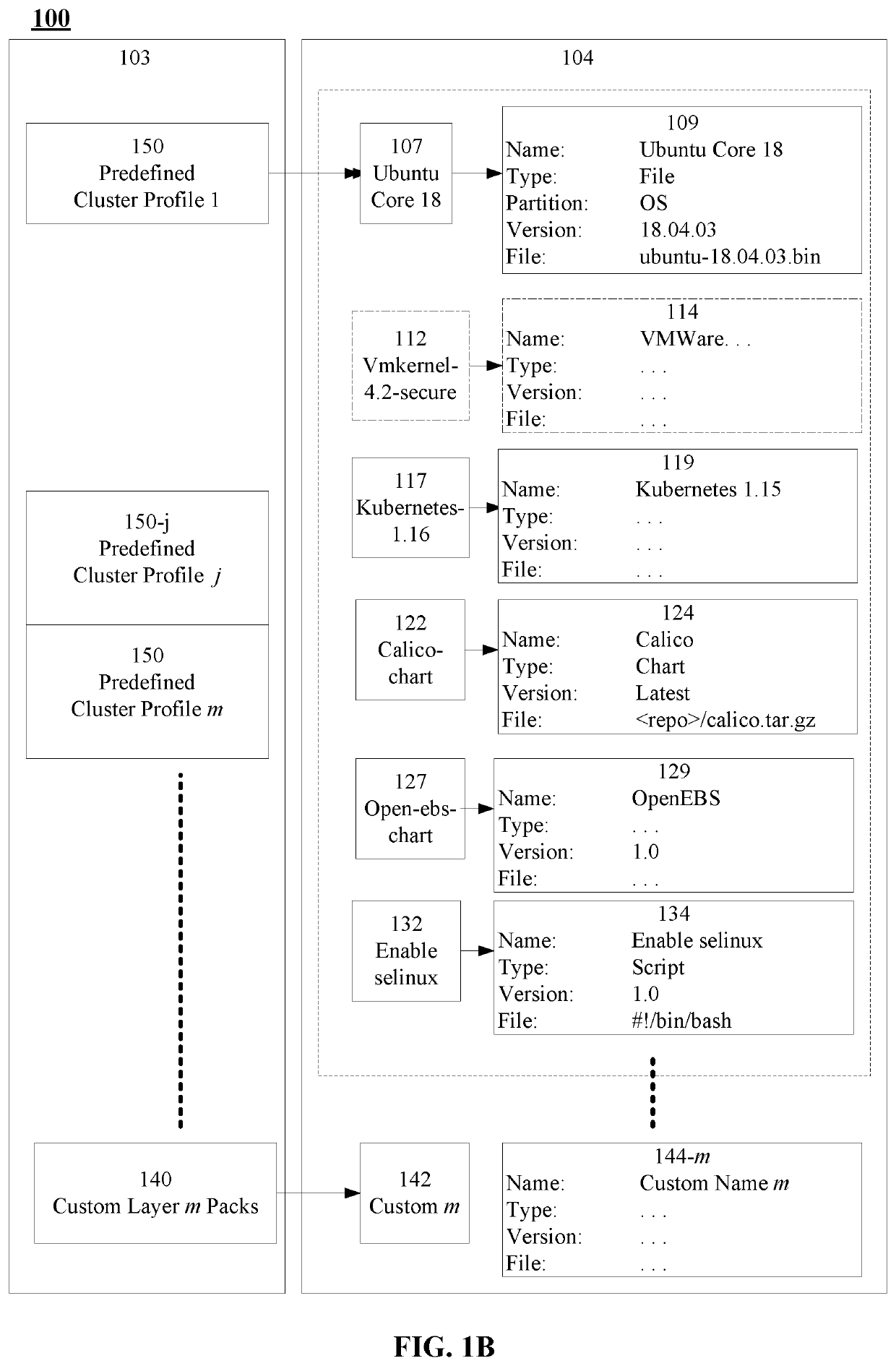

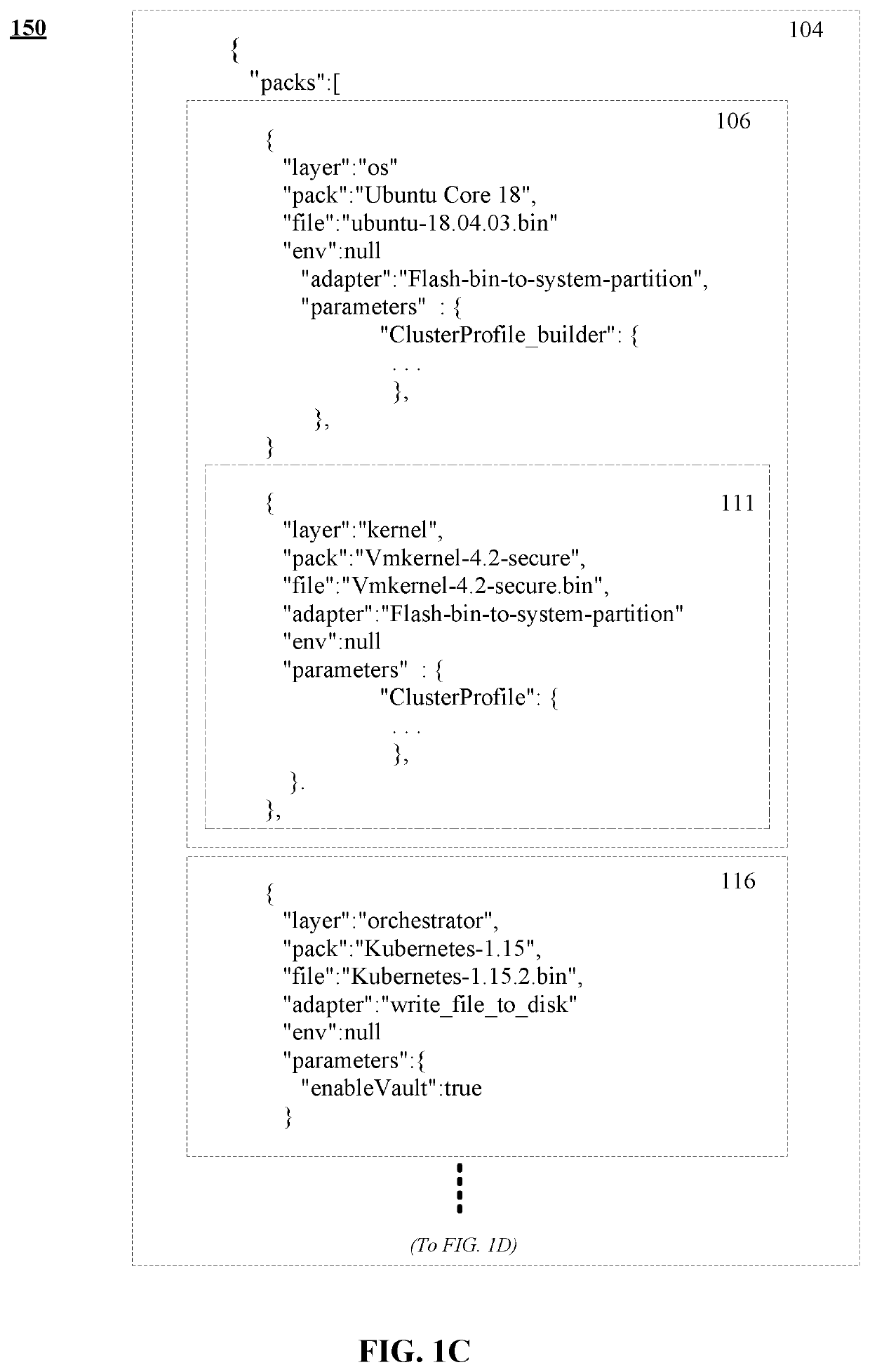

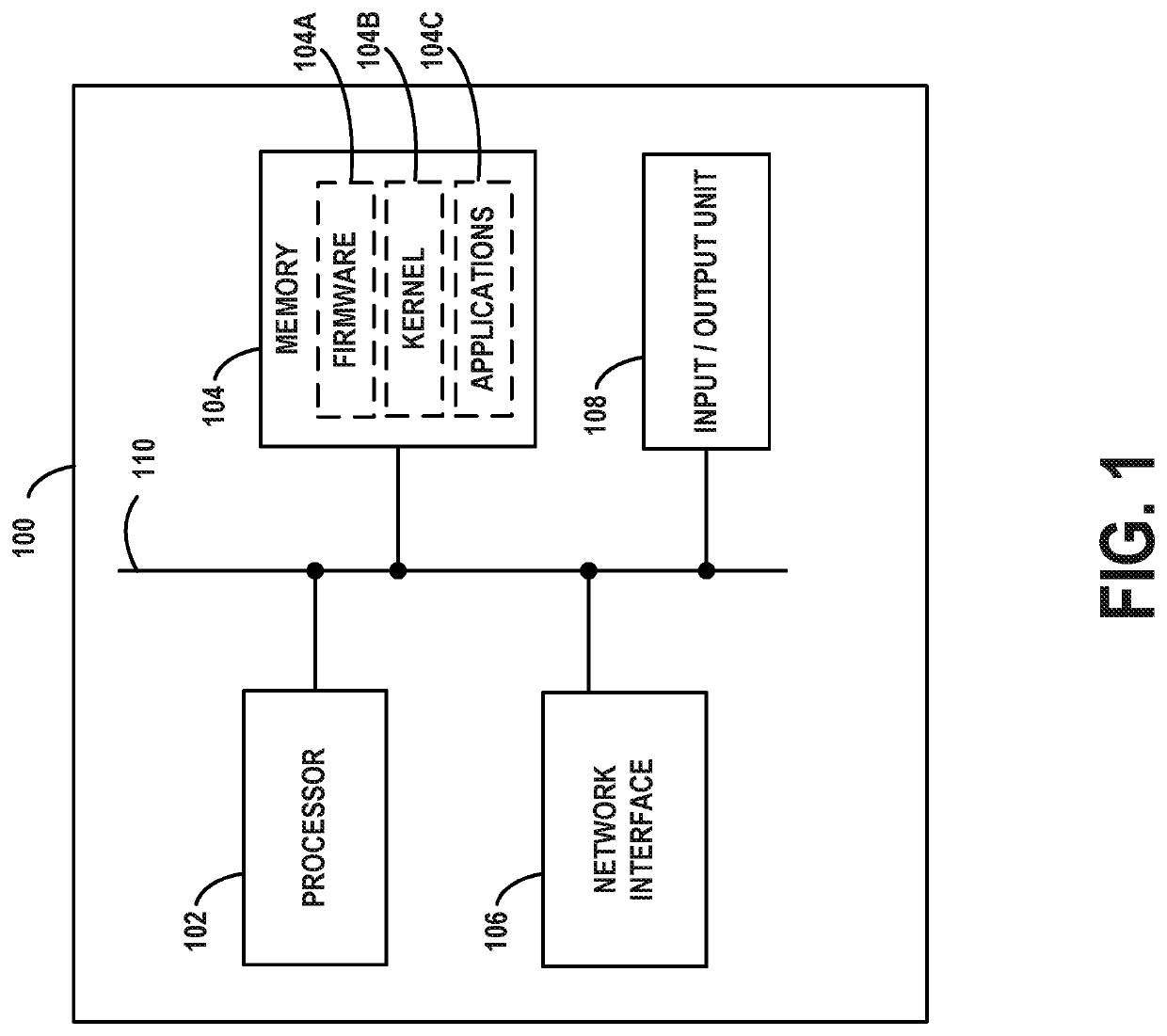

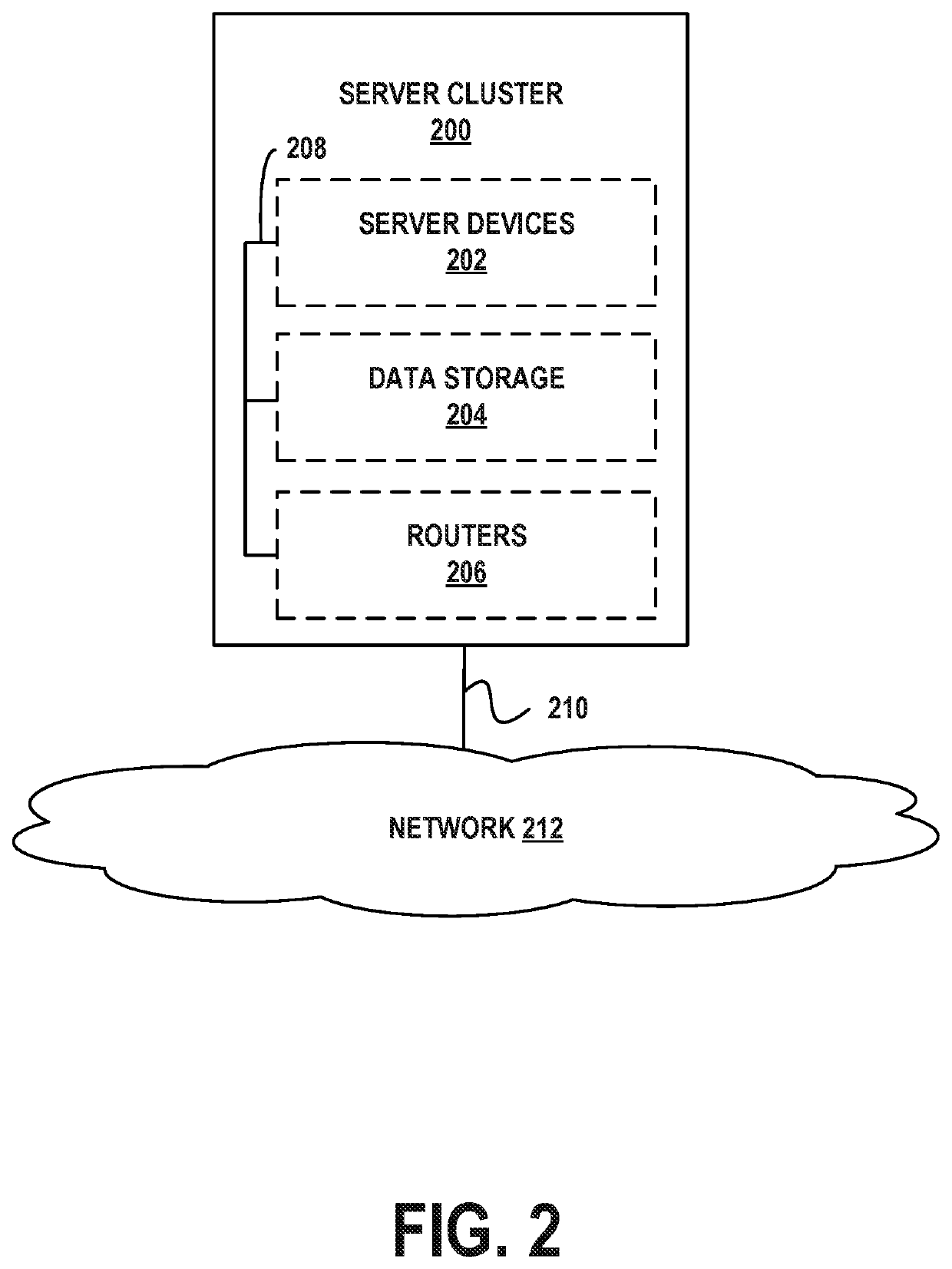

Embodiments disclosed facilitate specification, configuration, orchestration, deployment, and management of composable distributed systems. A method to realize a composable distributed system may comprise: determining, based on a system composition specification for the distributed system, one or more cluster configurations, wherein the system composition specification comprises, for each cluster, a corresponding cluster specification and a corresponding cluster profile, which may comprise a corresponding software stack specification. First software stack images applicable to a first plurality of nodes in a first cluster may be obtained based on a corresponding first software stack specification, which may be comprised in a first cluster profile associated with the first cluster. Deployment of the first cluster may be initiated by instantiating the first cluster in a first cluster configuration in accordance with a corresponding first cluster specification, wherein each of the first plurality of nodes is instantiated using the corresponding first software stack images.

Owner:SPECTRO CLOUD INC

Discovery of containerized platform and orchestration services

Active | US20210200814A1 | Easy to analyze | Easy to implement | Version control | Still image data querying | Application programming interface | Services computing

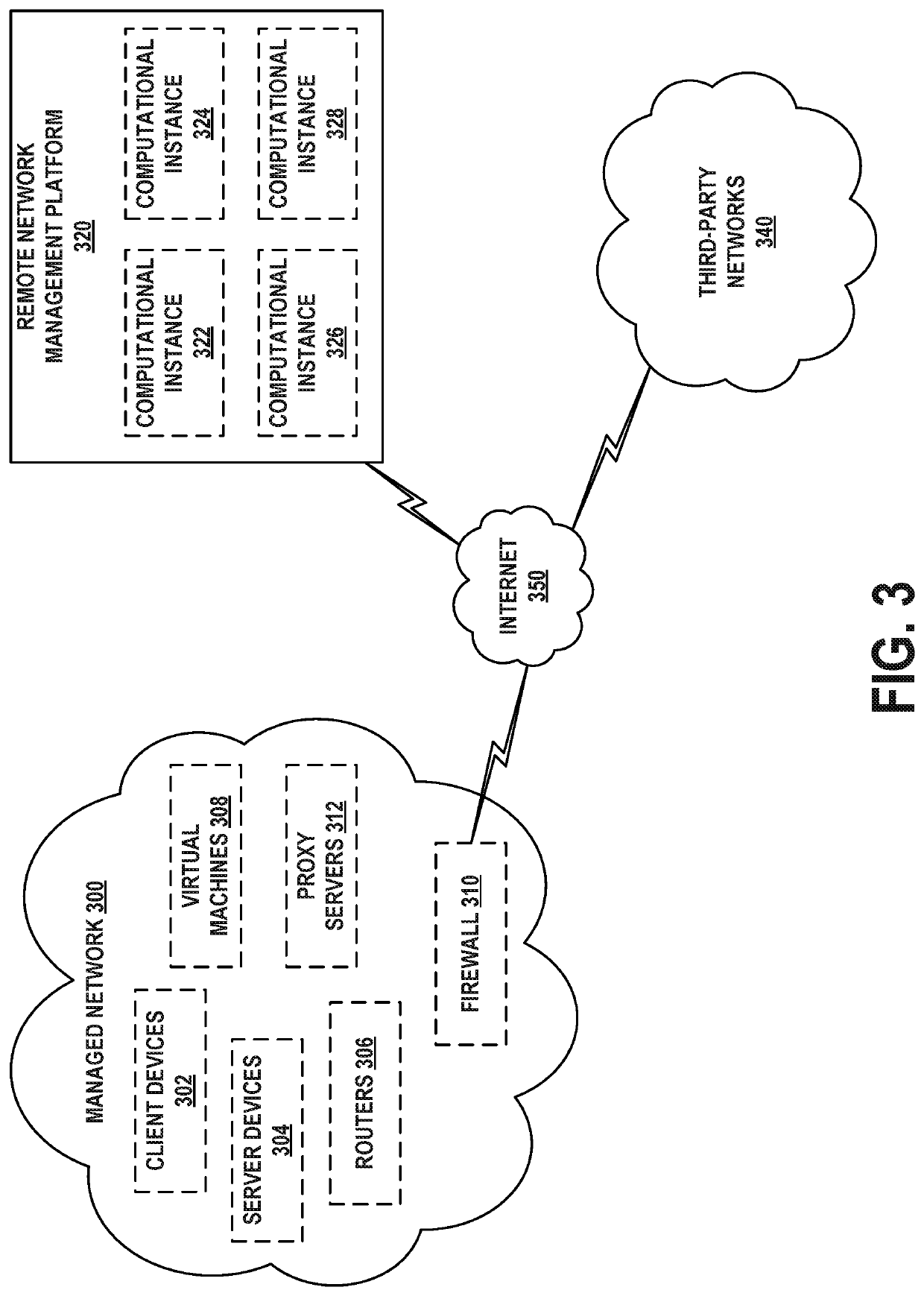

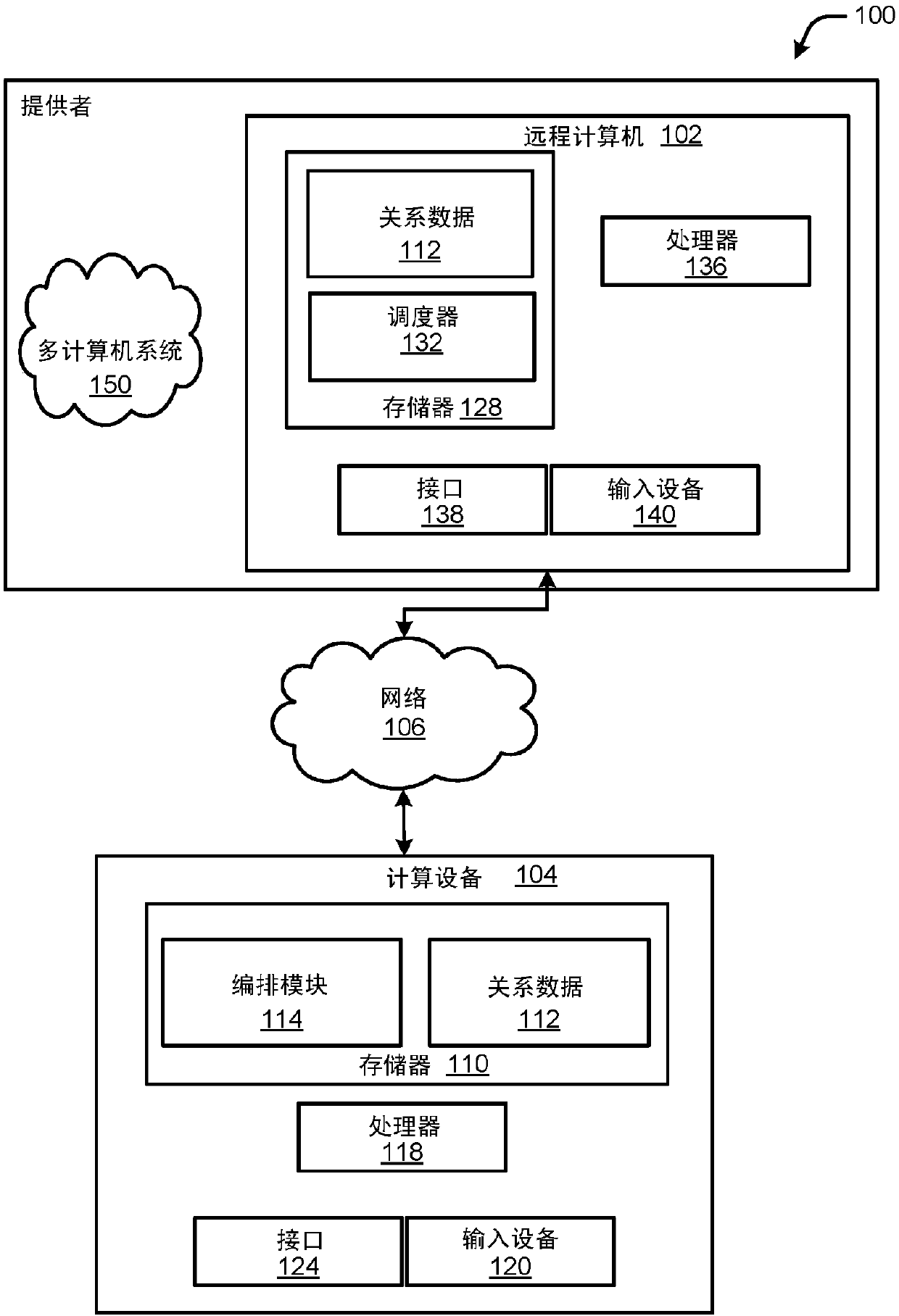

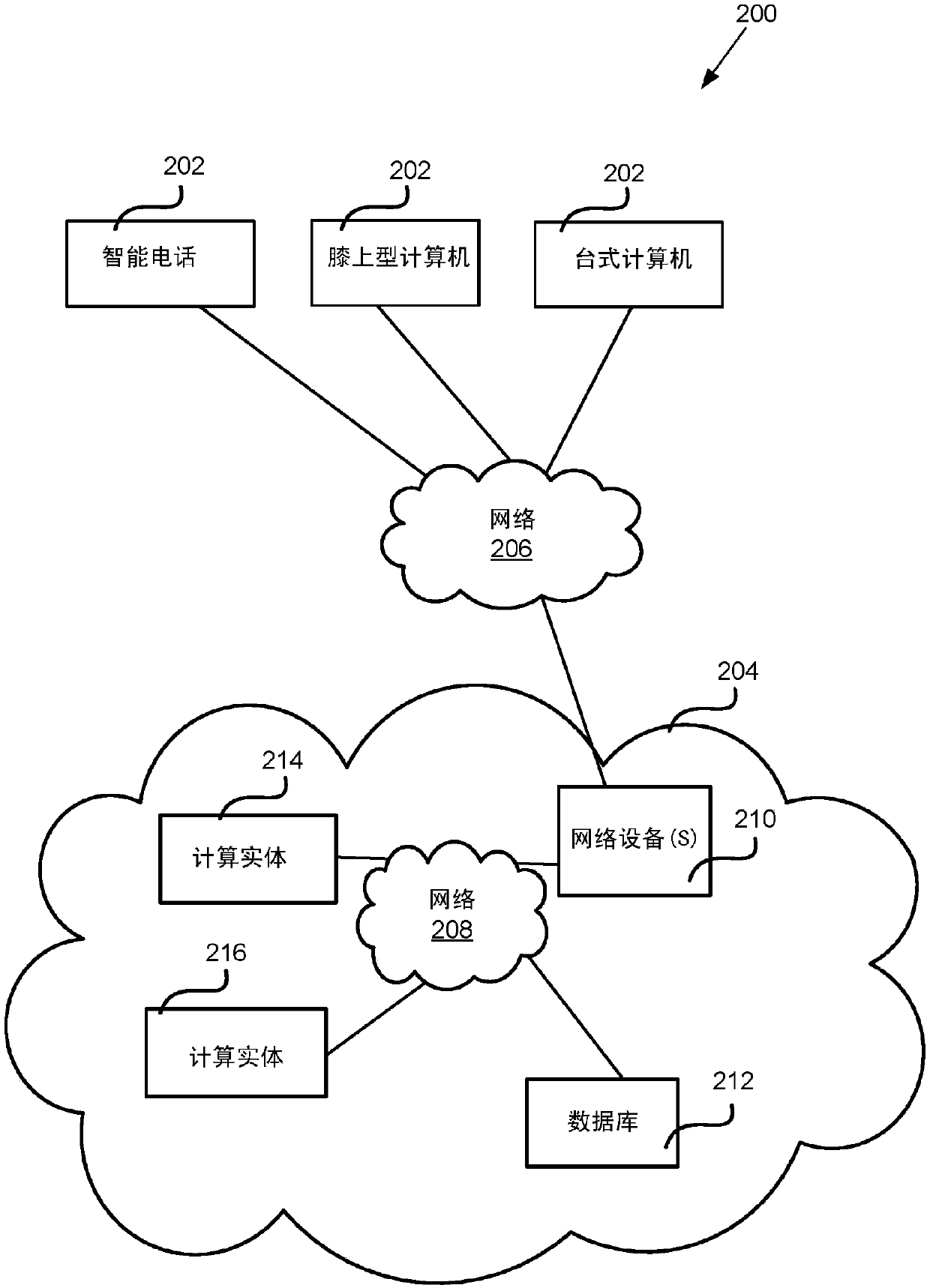

An example computing system includes a database disposed within a remote network management platform associated with a managed network that obtains service from a computing cluster that includes one or more worker nodes configured to execute containerized software applications using a containerized orchestration engine. The computing system also includes a computing device configured to identify a namespace associated with the containerized orchestration engine. The computing device is also configured to query a deployment configuration application programming interface (API) associated with a containerized application platform to obtain deployment configuration data. Further, the computing device is configured to query a build configuration API associated with the containerized application platform to obtain build configuration data. In addition, the computing device is configured to store, in the database, the deployment configuration data, the build configuration data, and one or more relationships between the deployment configuration data and the build configuration data.

Owner:SERVICENOW INC

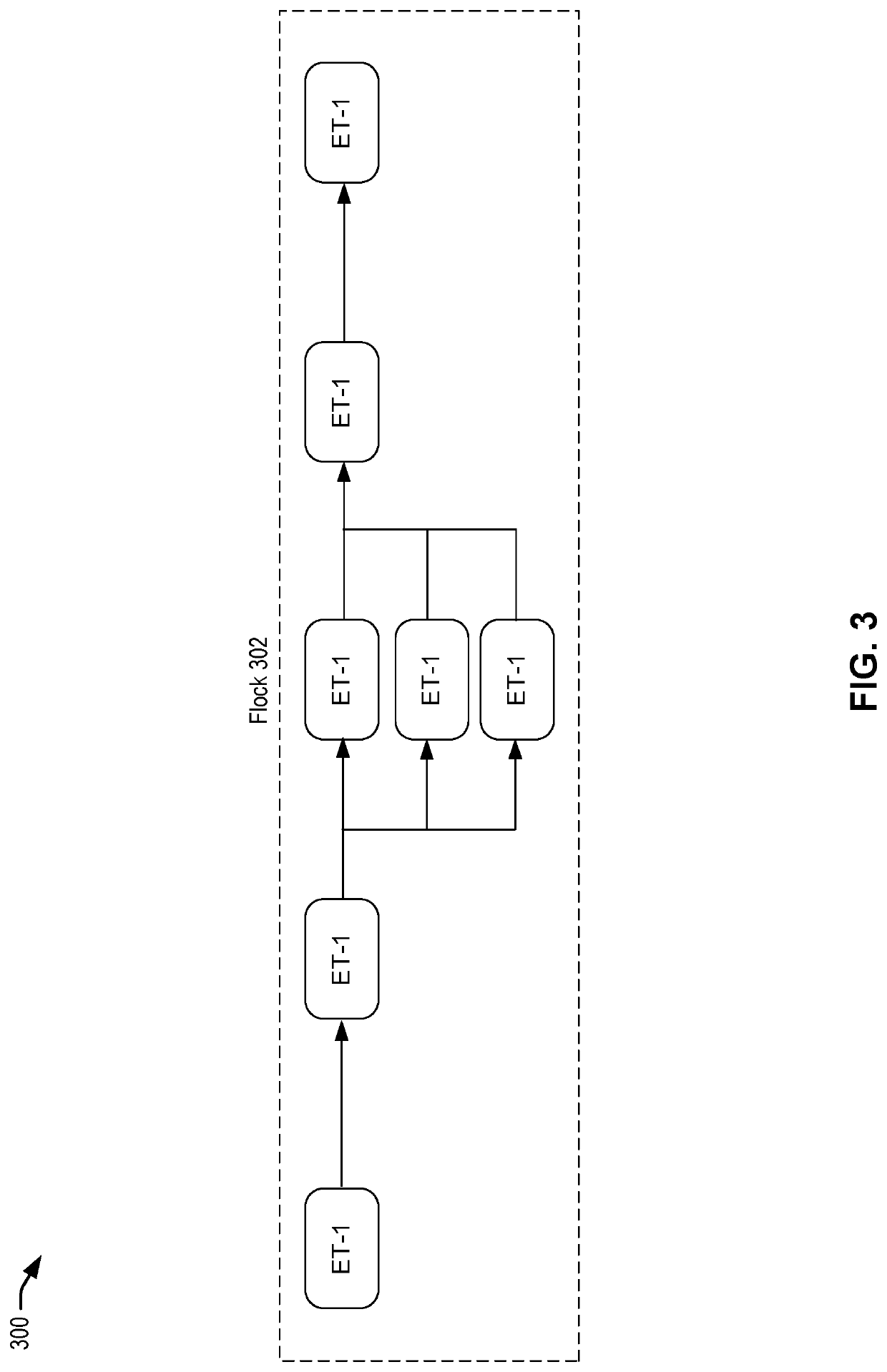

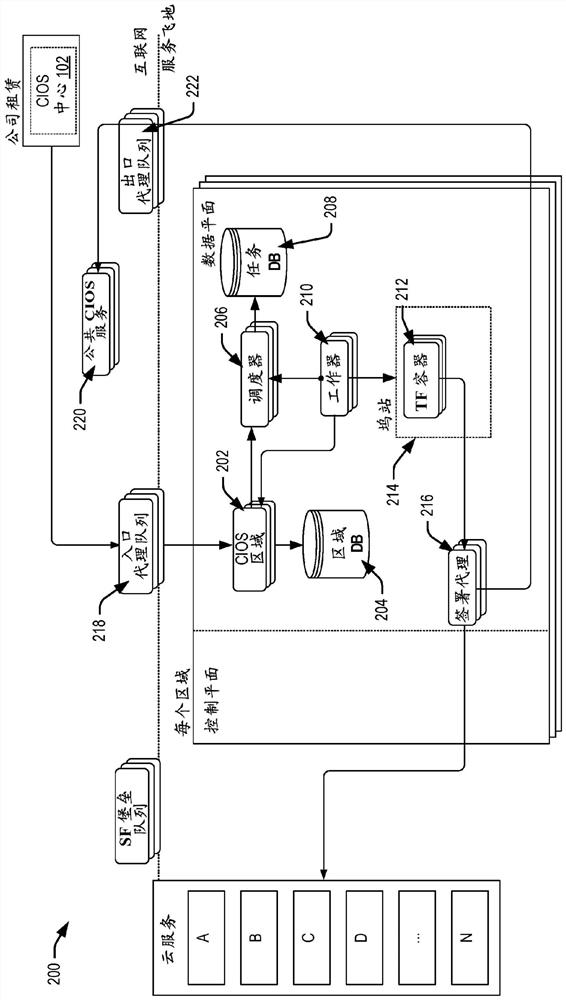

Apparatus, systems and methods for container based service deployment

Active | US10225335B2 | Resource allocation | Data switching by path configuration | Parallel computing | Engineering

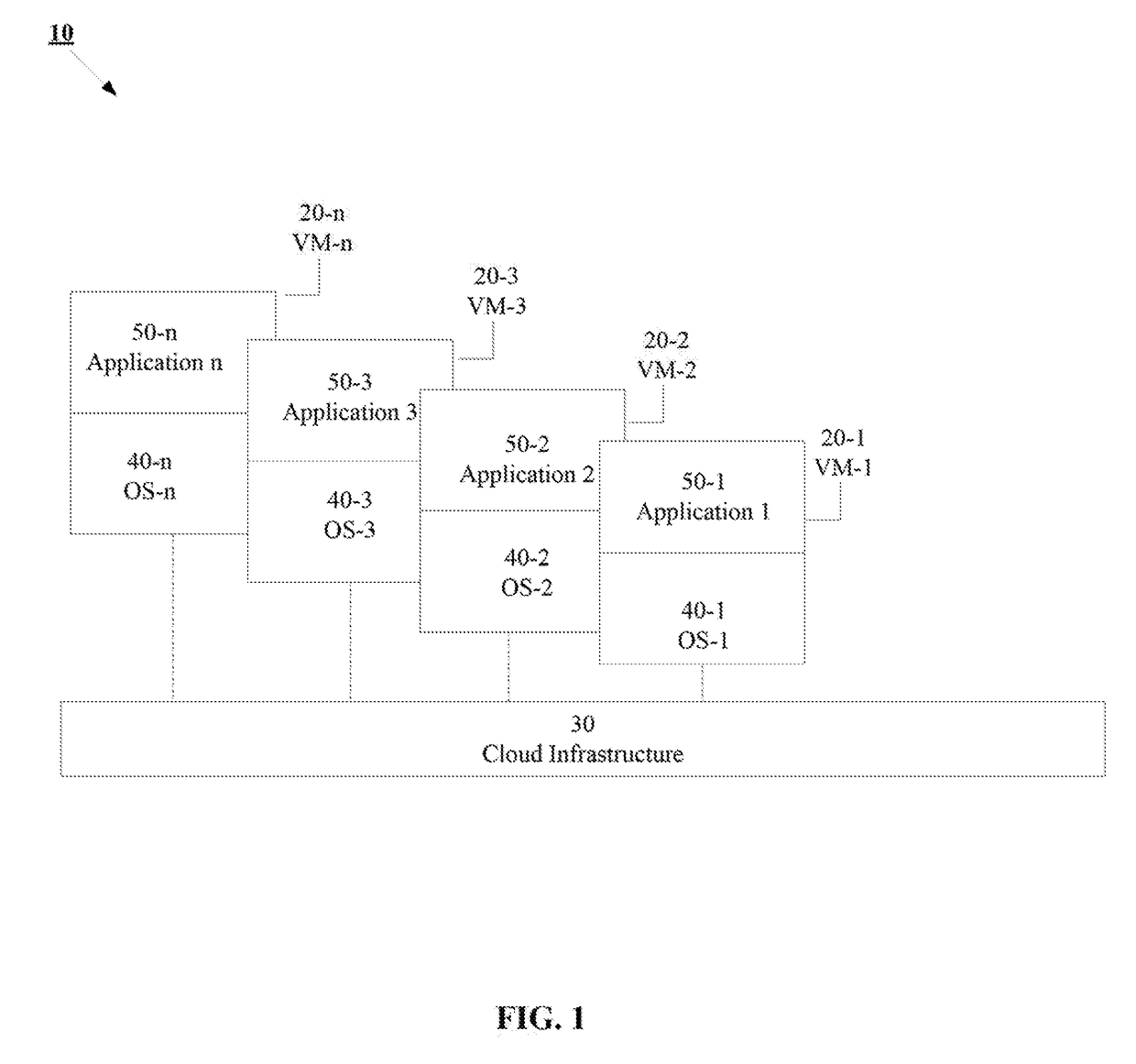

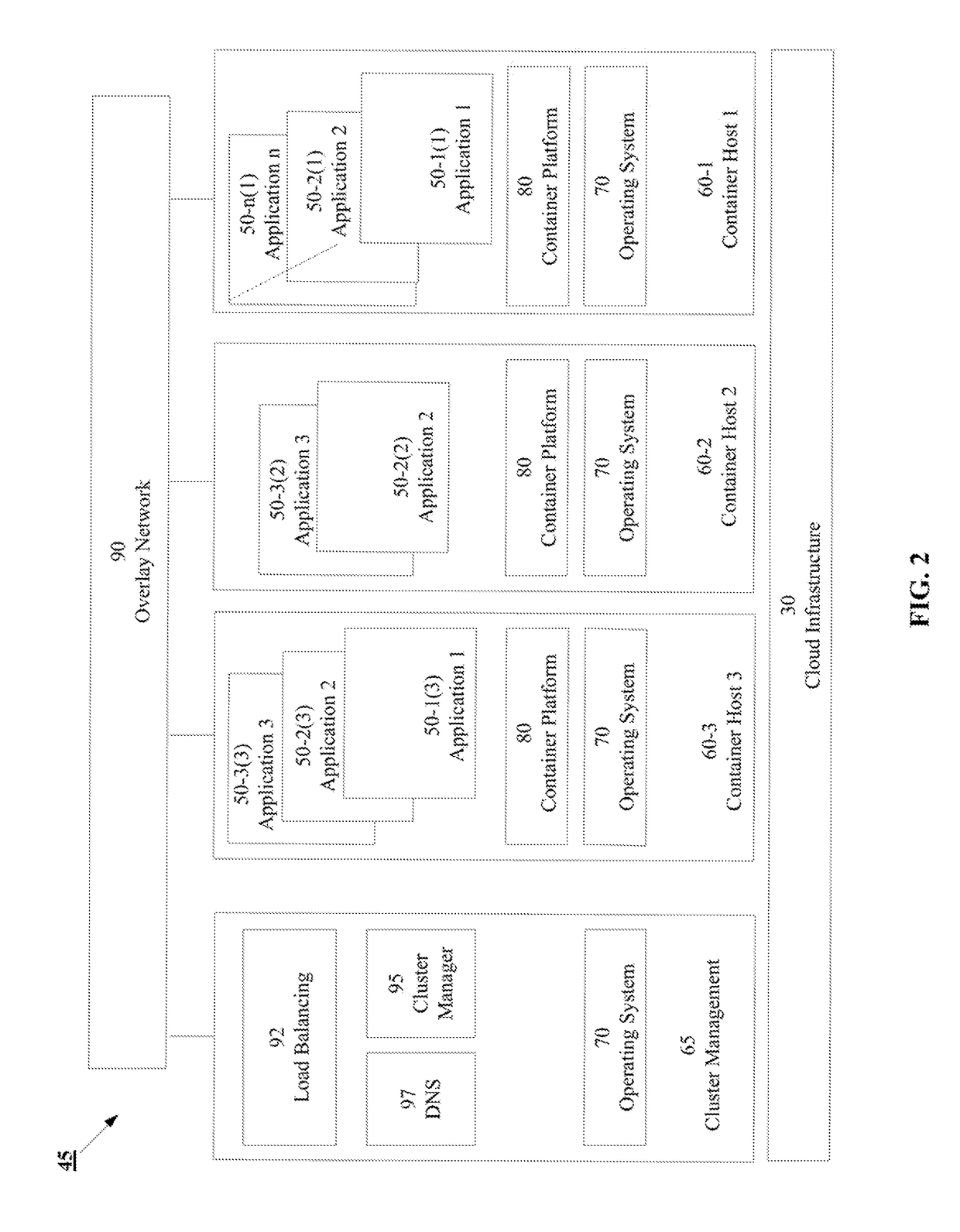

Embodiments disclosed facilitate distributed orchestration and deployment of a single instance of a distributed computing application over a plurality of clouds and container clusters, including container clusters provided through a Container as a Service (CaaS) offering. In some embodiments, system and pattern constructs associated with a hybrid distributed multi-tier application may be used to obtain an infrastructure independent representation of the distributed multi-tier application. The infrastructure independent representation may comprise a representation of an underlying pattern of resource utilization of the application. Further, the underlying pattern of resource utilization of the application may be neither cloud specific nor container cluster specific. In some embodiments, a single instance of the hybrid distributed multi-tier application may be deployed on a plurality of cloud infrastructures and on at least one container cluster, based, in part, on the cloud-infrastructure independent representation of the application.

Owner:CISCO TECH INC

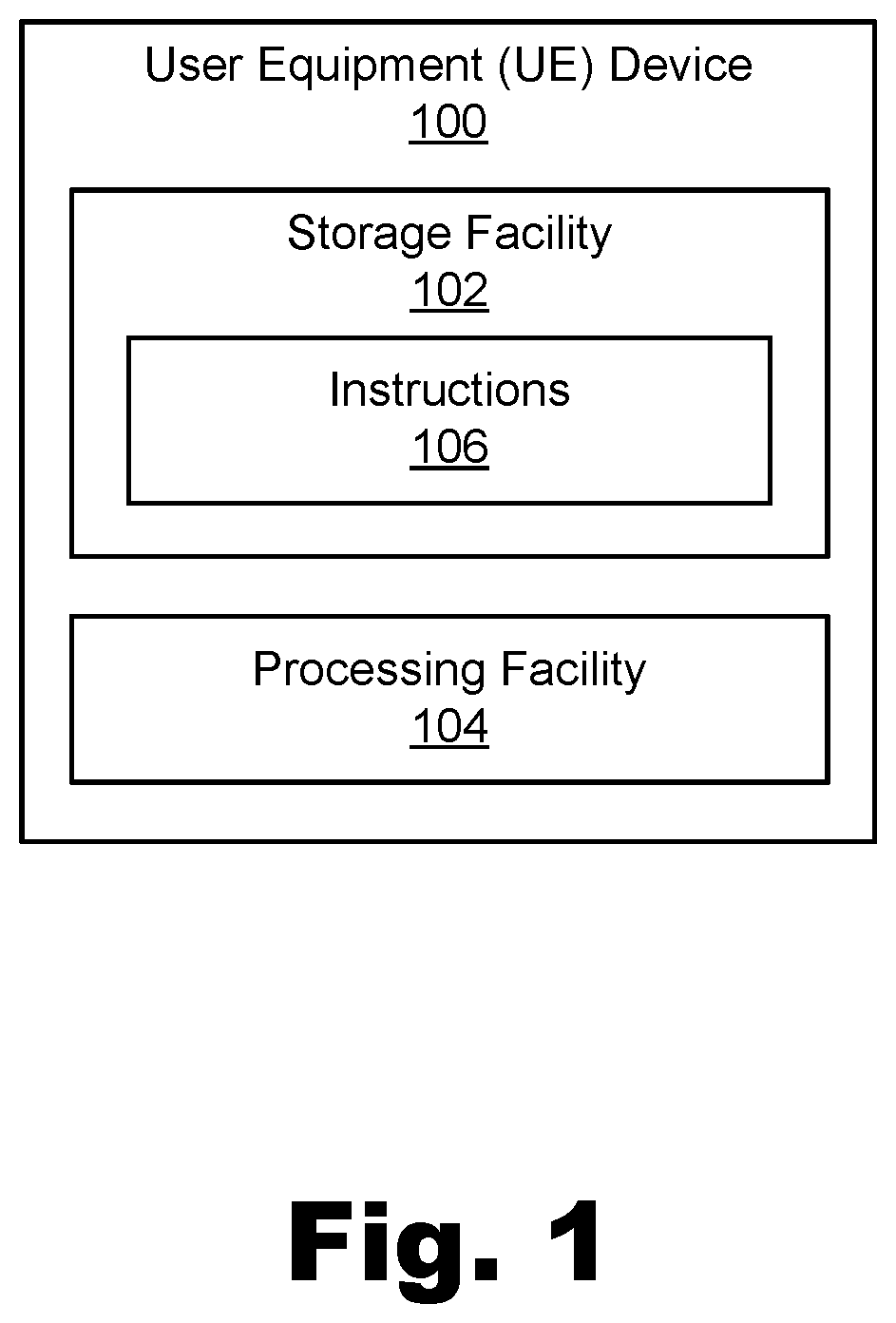

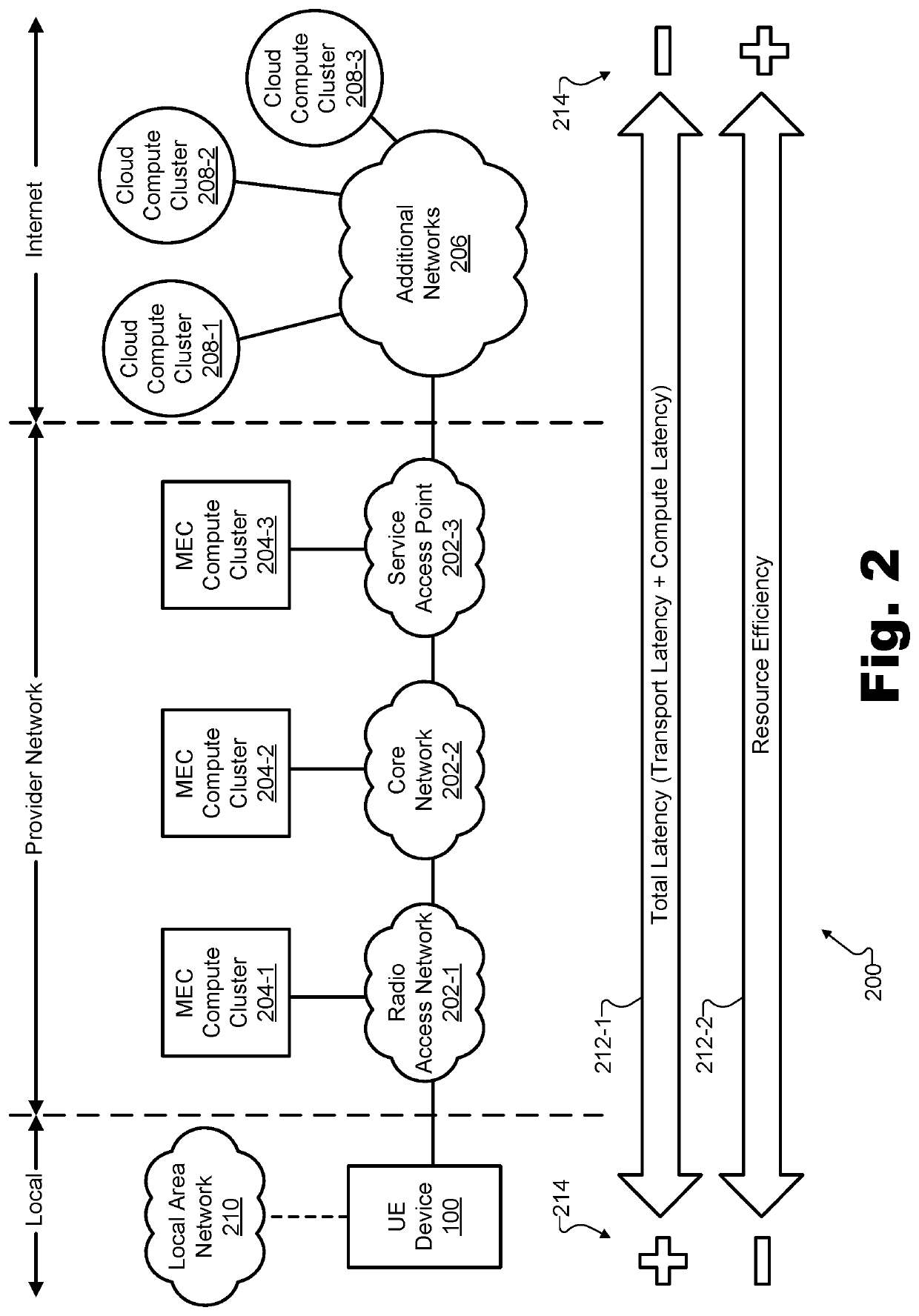

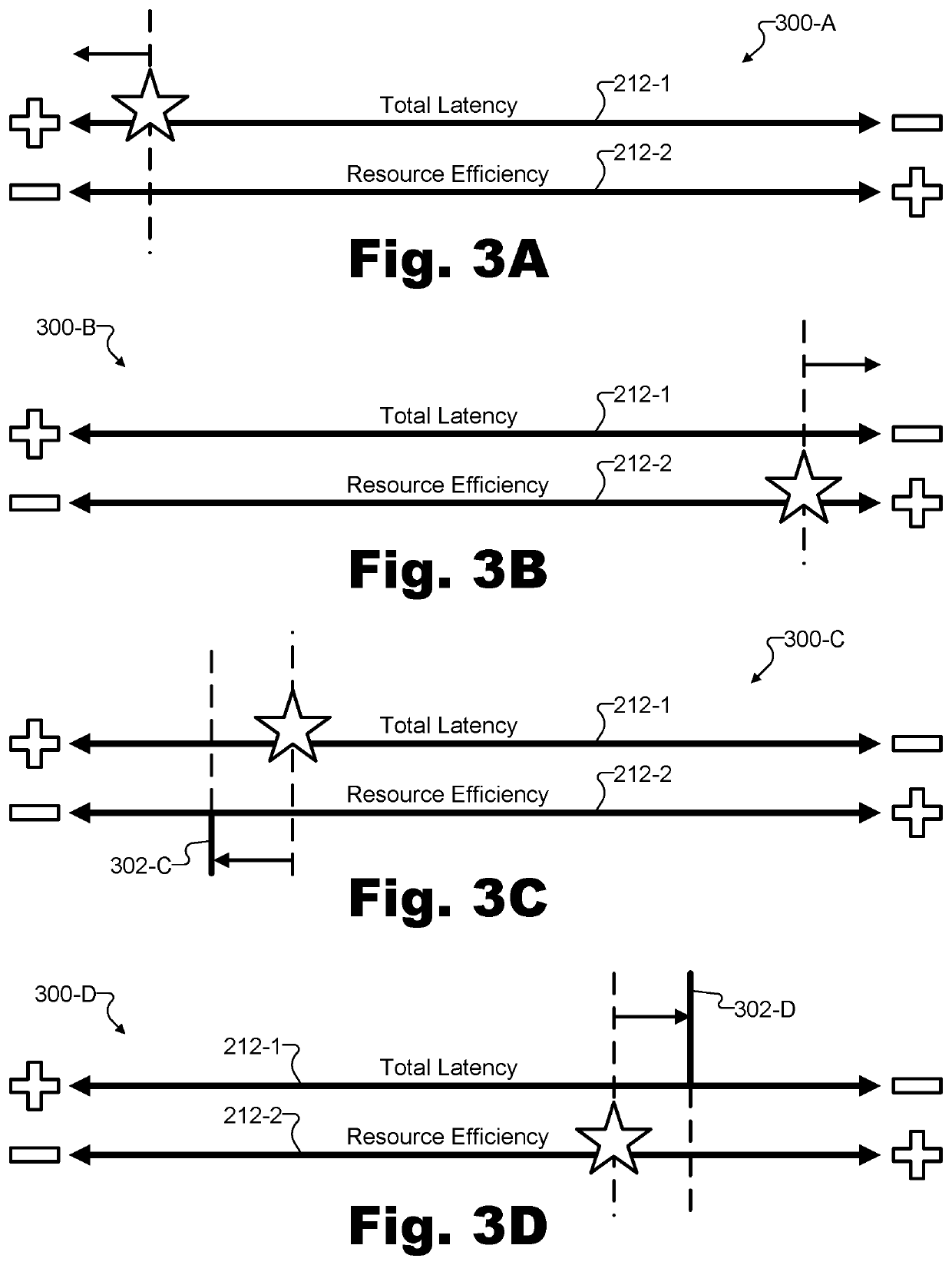

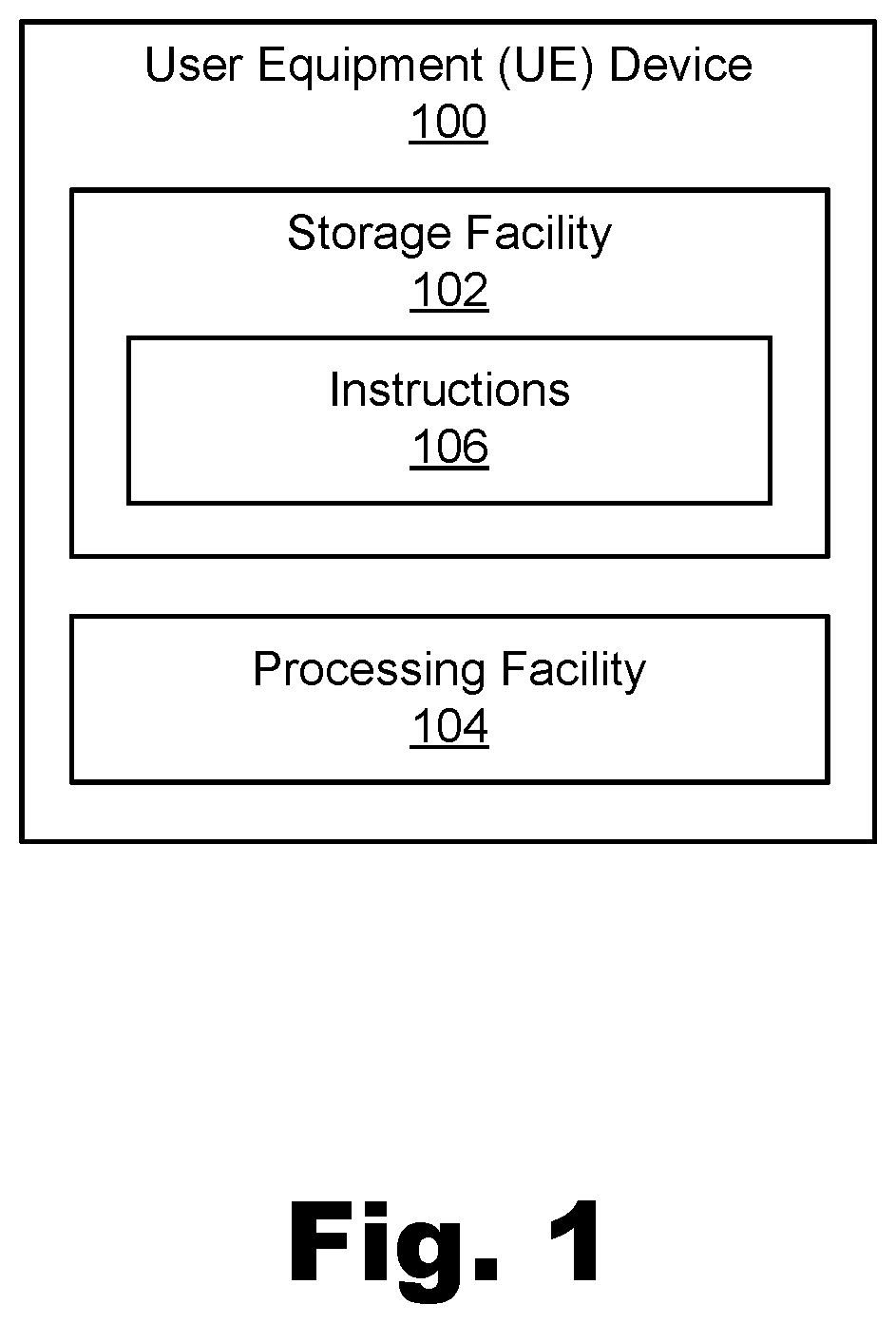

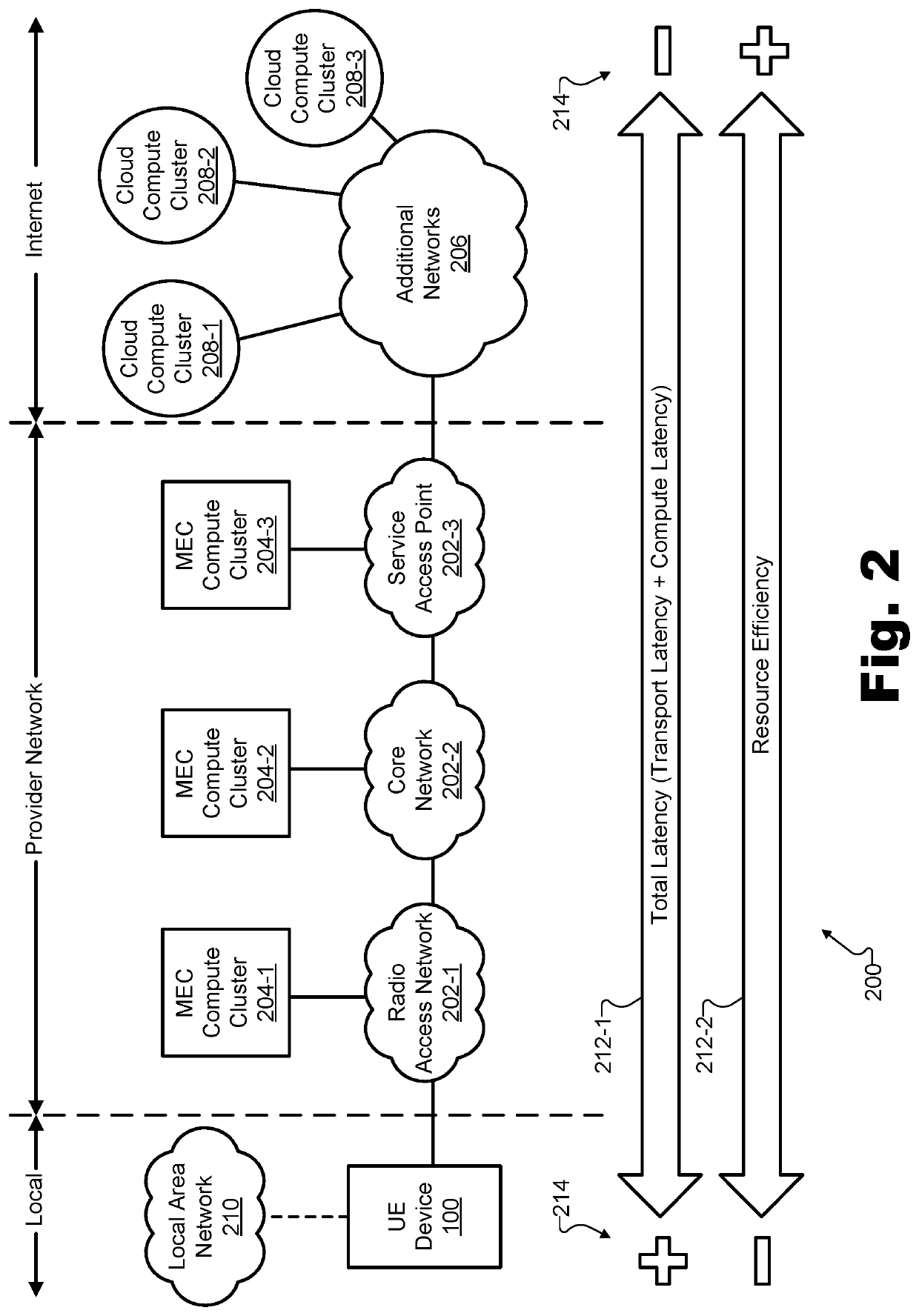

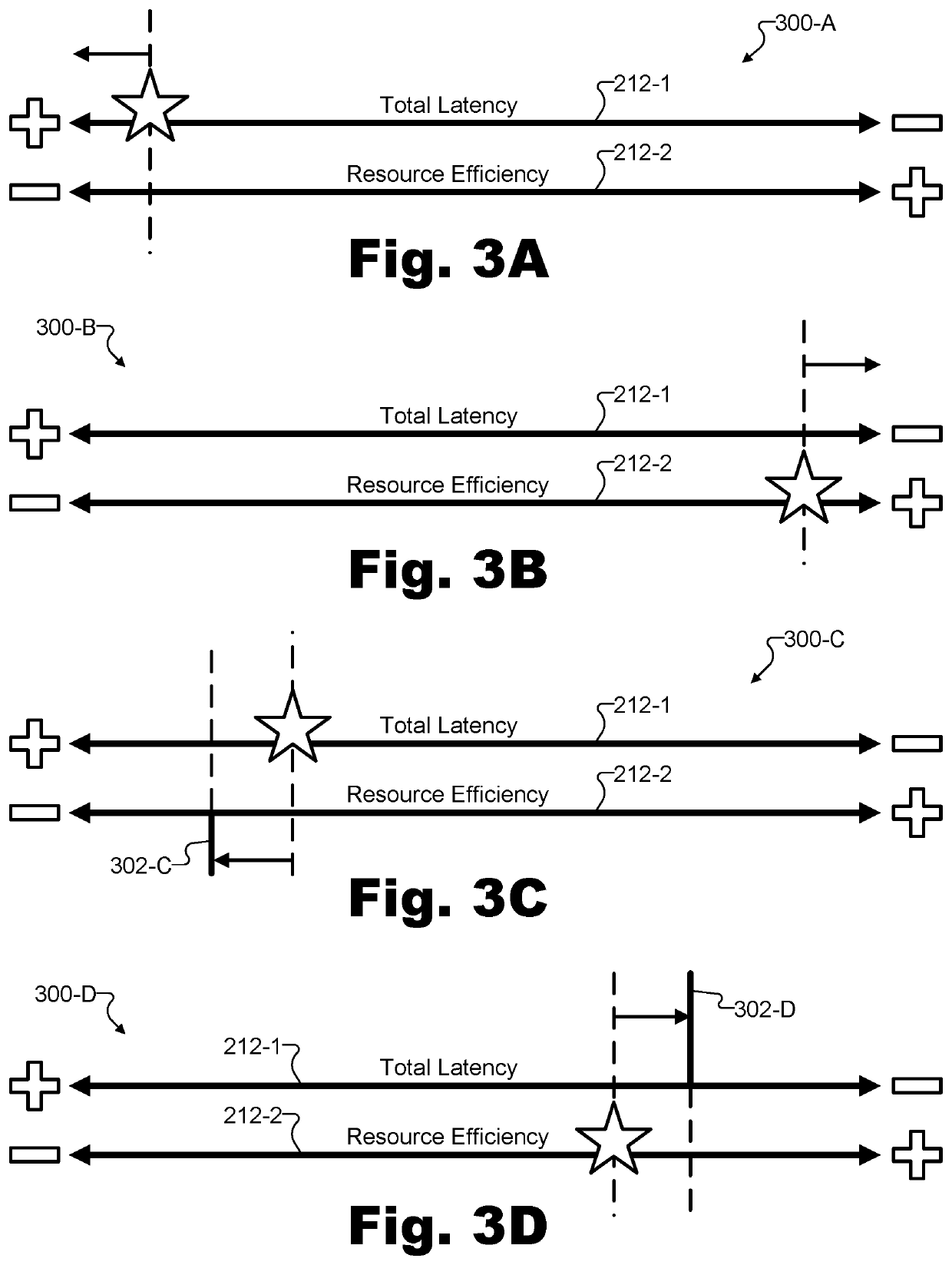

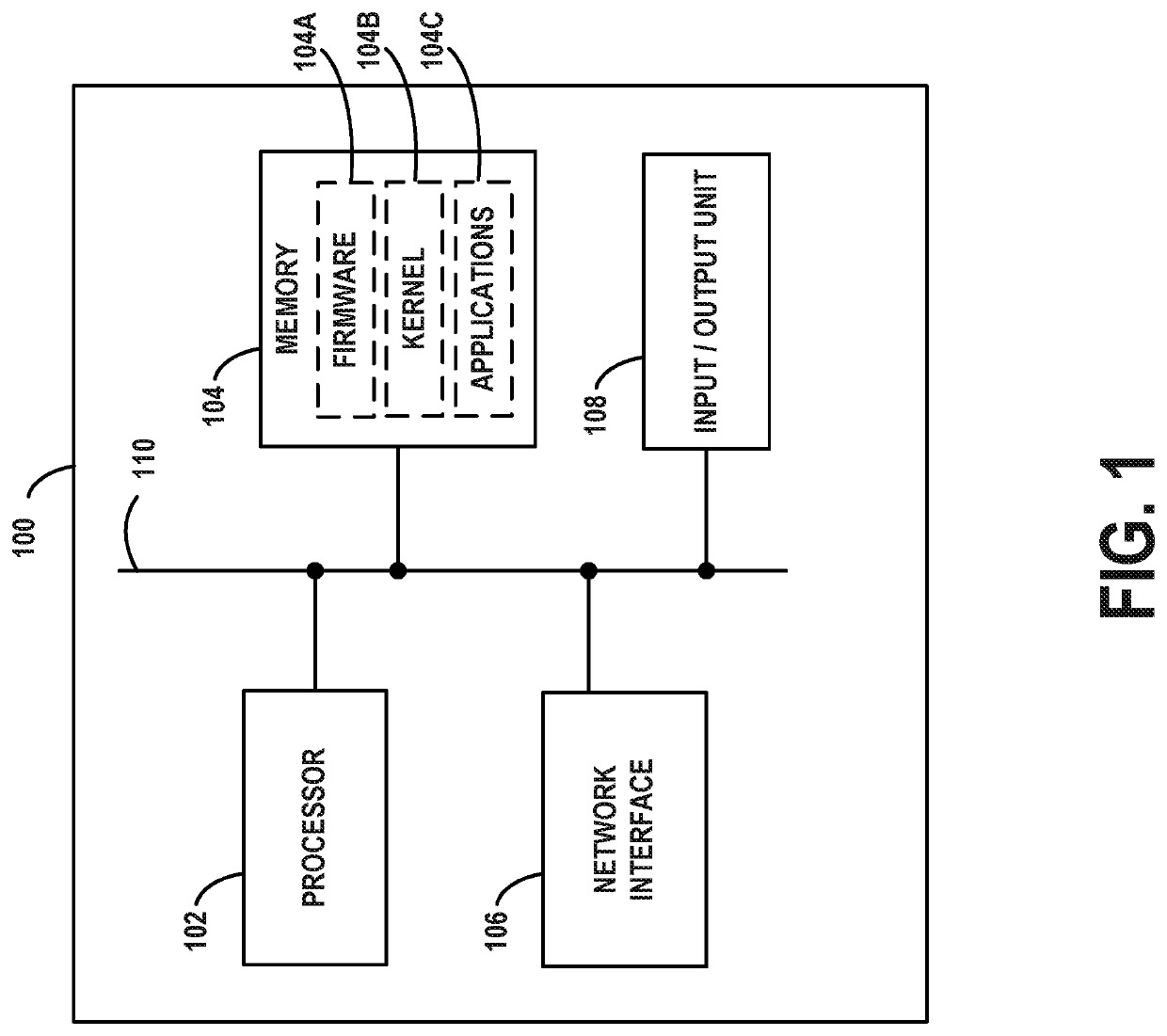

Methods and devices for discovering and employing distributed computing resources to balance performance priorities

An exemplary user equipment (“UE”) device provides a service request to an orchestration system associated with a federation of compute clusters available to fulfill the service request. The UE device also identifies a service optimization policy associated with a user preference for balancing performance priorities during fulfillment of the service request. In response to the service request, the UE device receives cluster selection data from the orchestration system. Based on the cluster selection data, the UE device characterizes compute clusters, within a subset of compute clusters represented in the cluster selection data, with respect to the performance priorities. Based on the service optimization policy and the characterization of compute clusters in the subset, the UE device selects a compute cluster from the subset to fulfill the service request. The UE device then provides an orchestration request indicative of the selected compute cluster to the orchestration system.

Owner:VERIZON PATENT & LICENSING INC

Methods and Devices for Discovering and Employing Distributed Computing Resources to Balance Performance Priorities

Owner:VERIZON PATENT & LICENSING INC

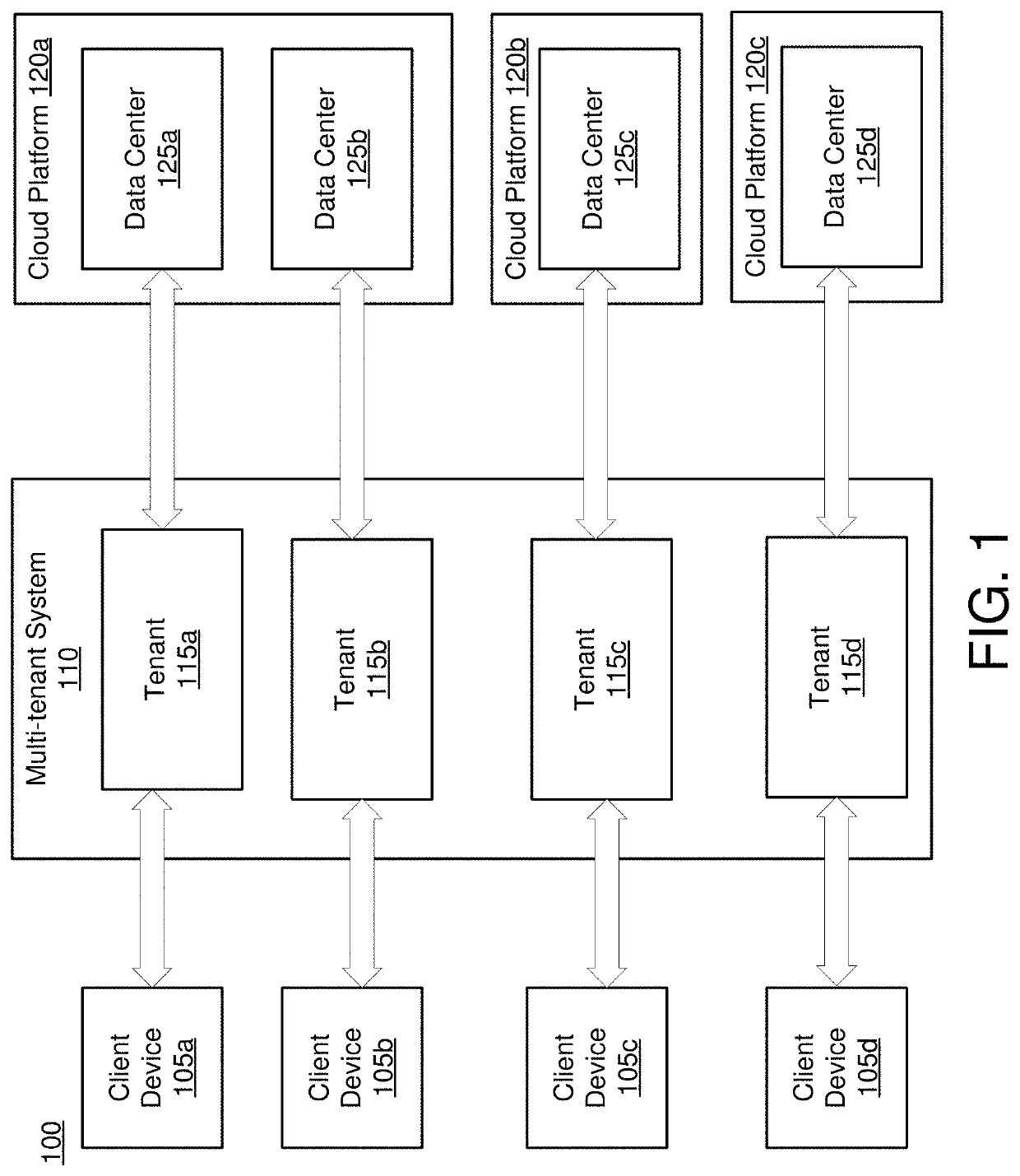

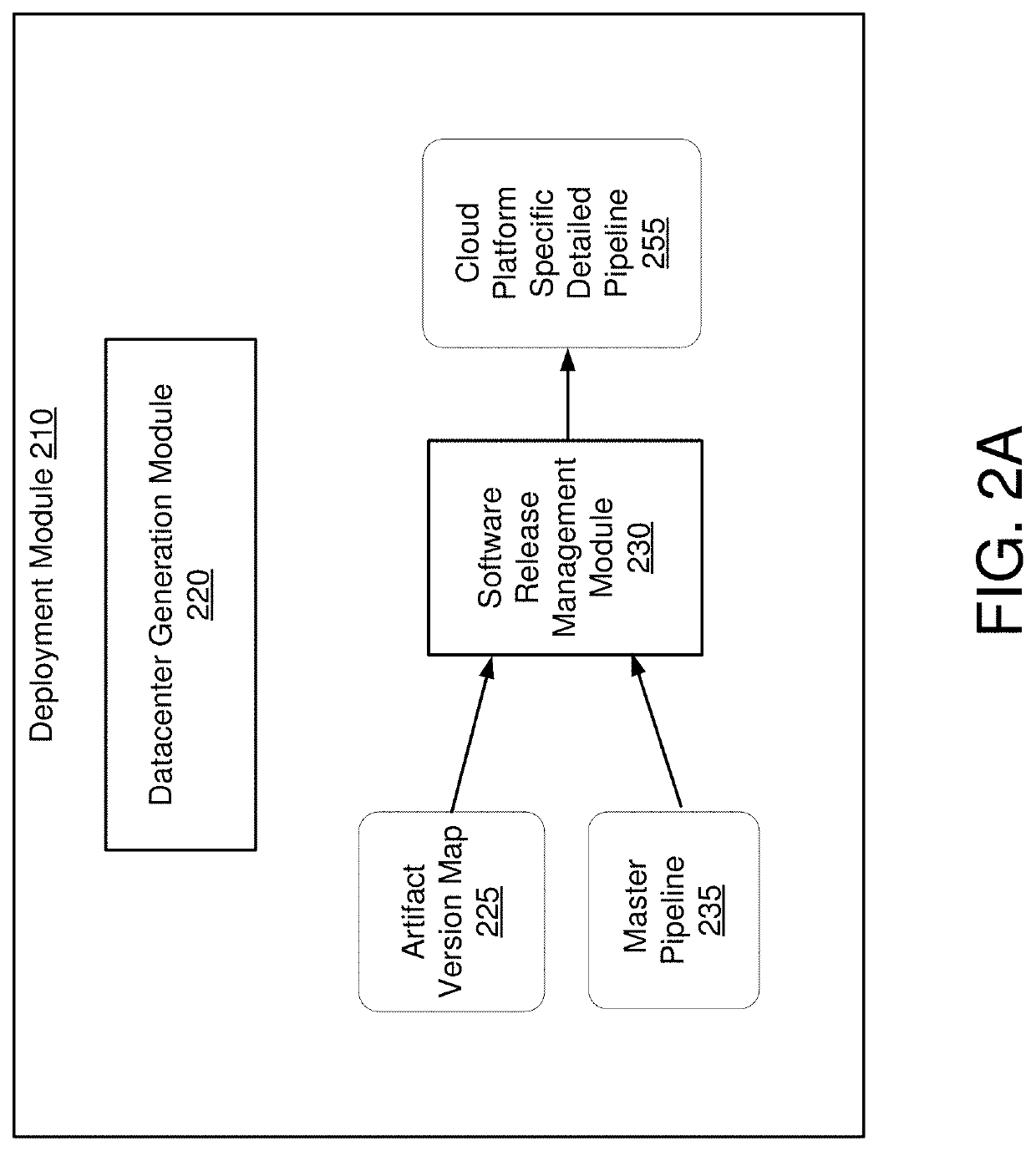

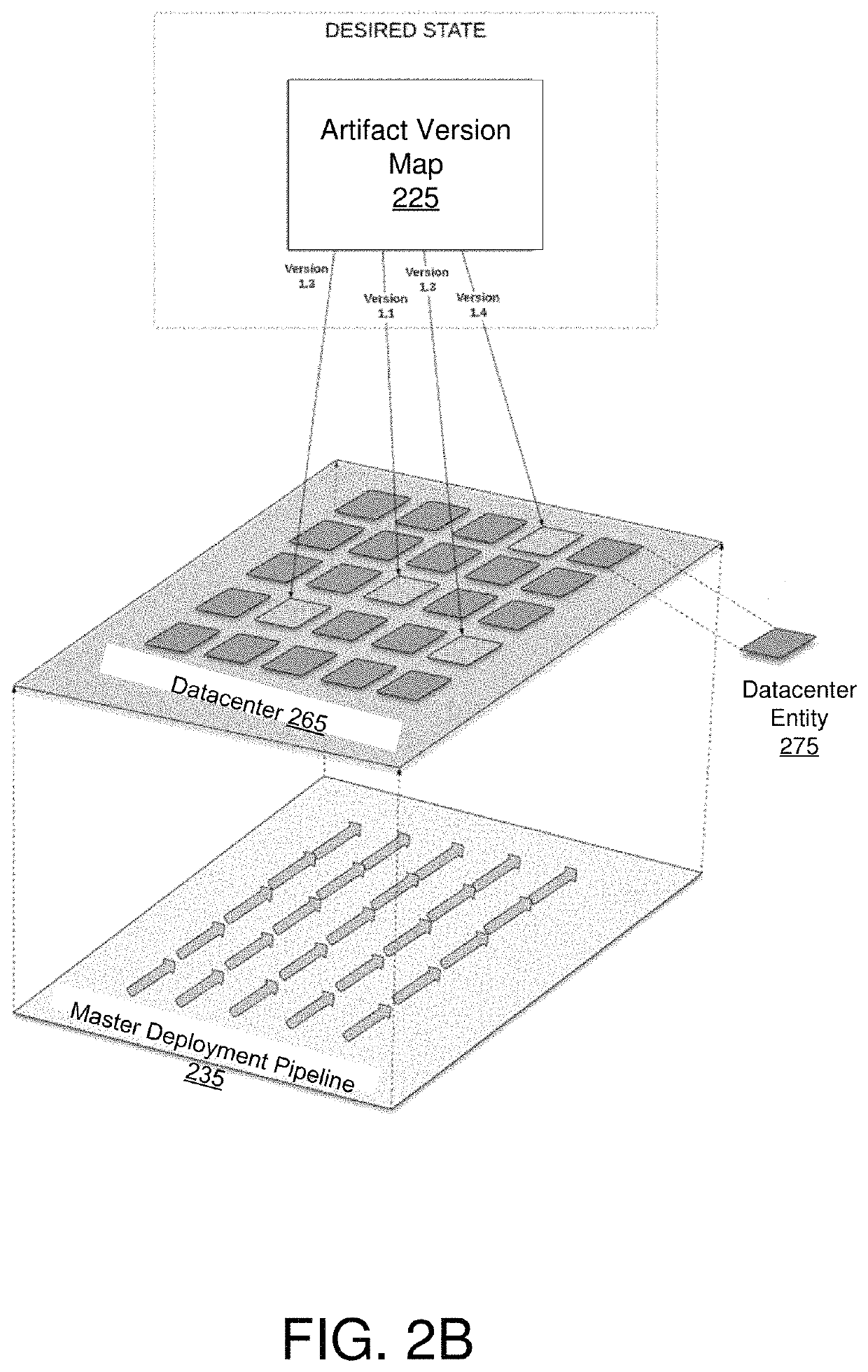

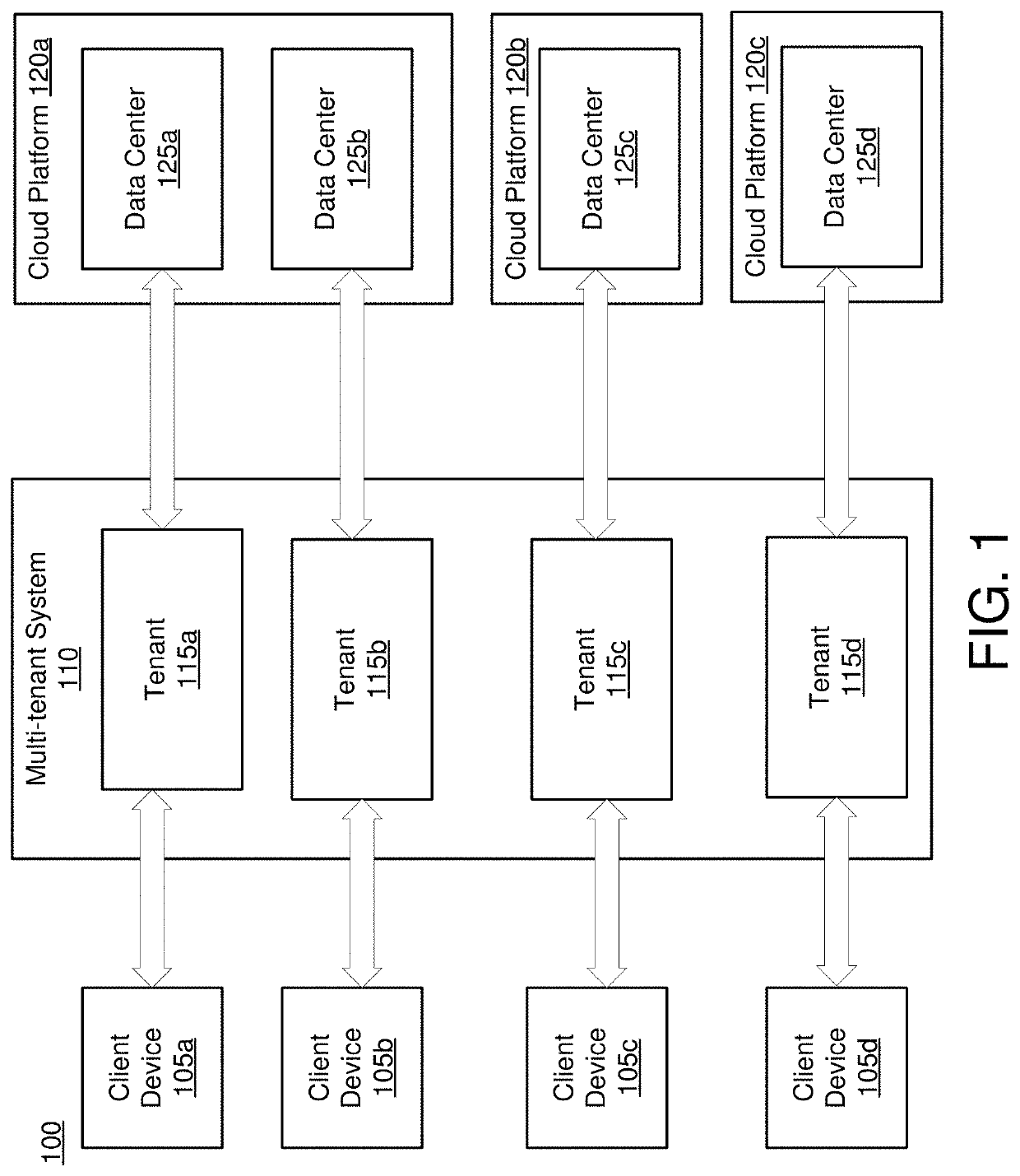

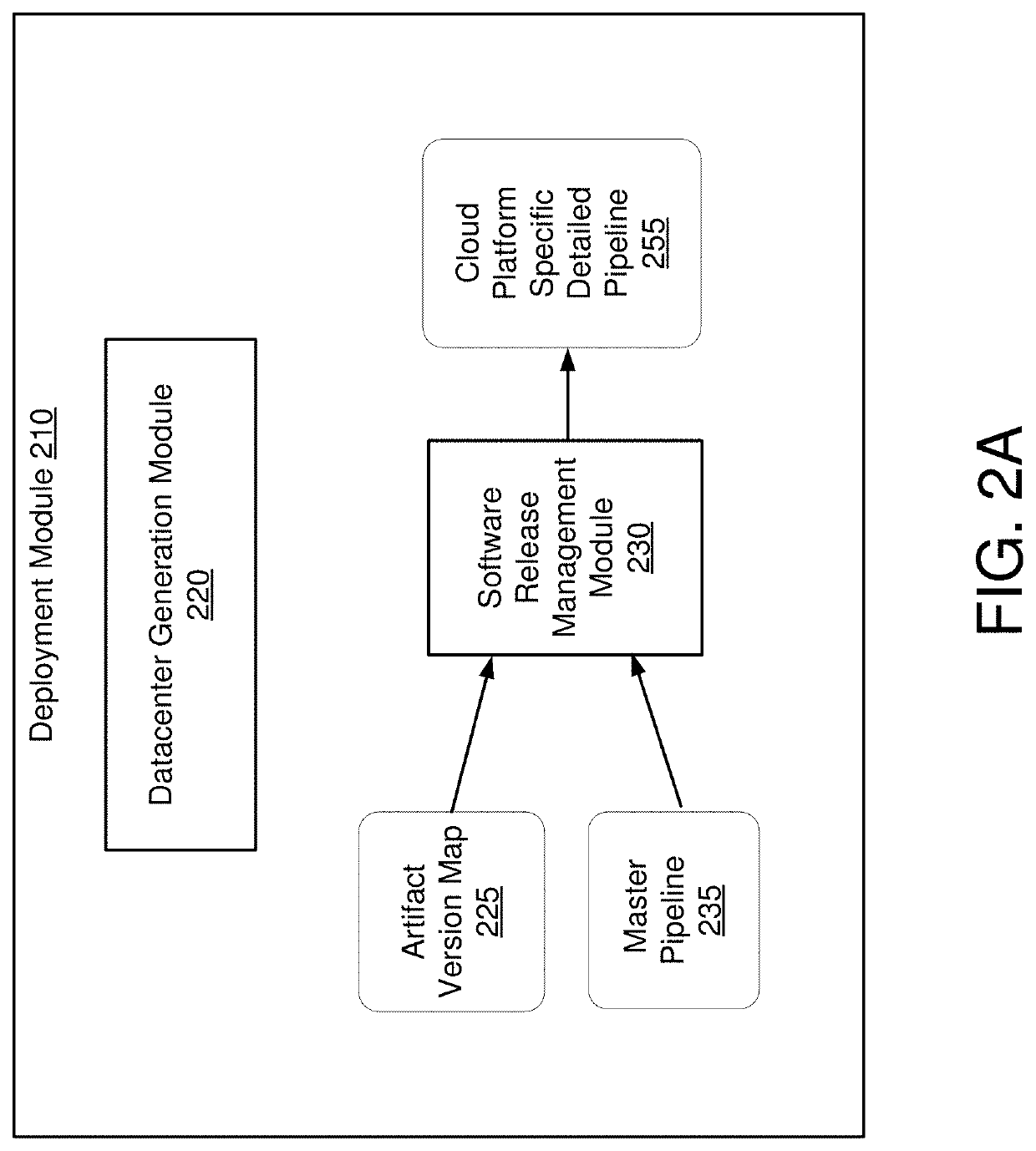

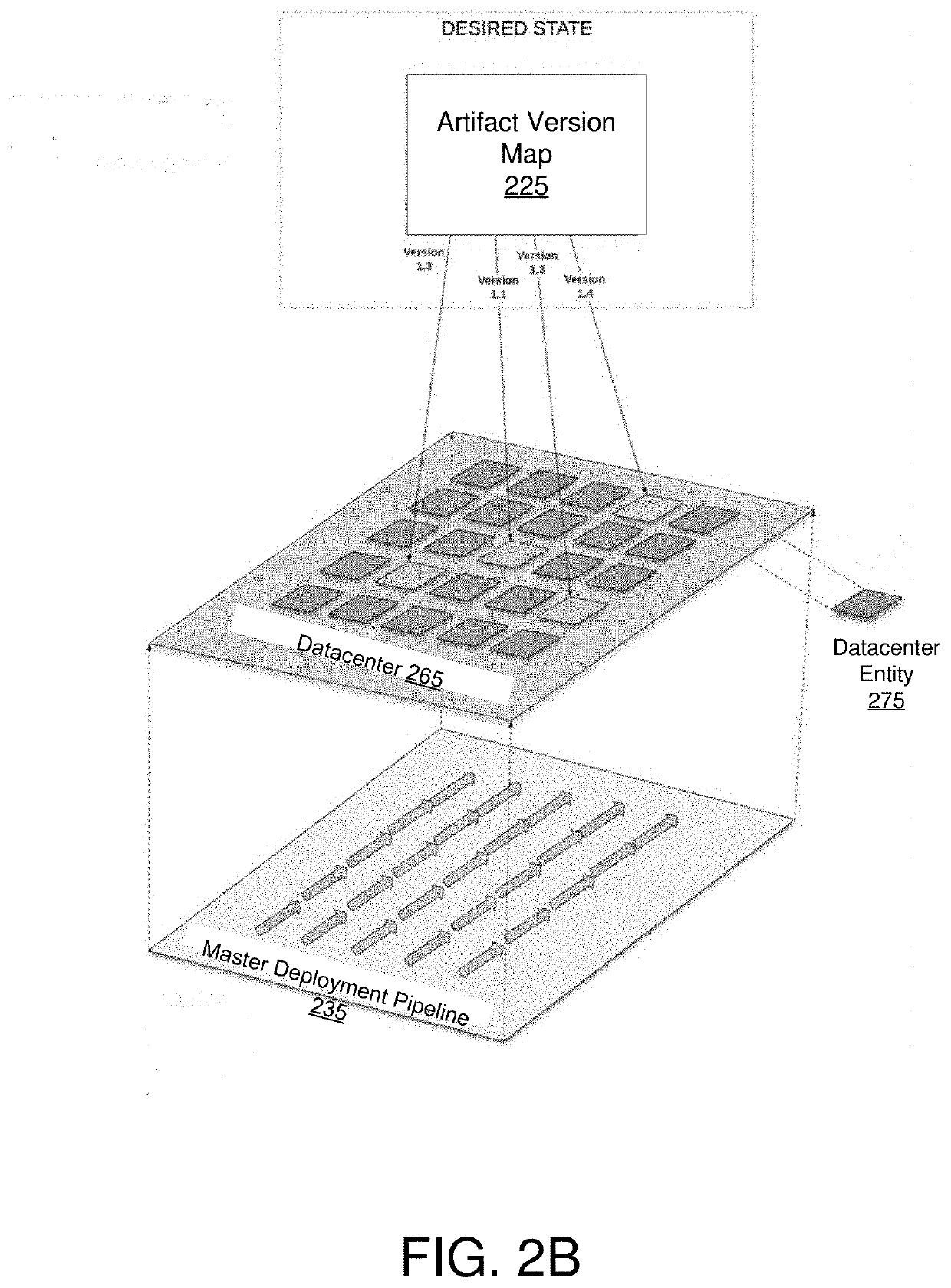

Software release orchestration for continuous delivery of features in a cloud platform based data center

Active | US11392361B2 | Version control | Program loading/initiating | Orchestration (computing) | Distributed computing

A system deploys software artifacts in data centers created in a cloud platform using a cloud platform infrastructure language that is cloud platform independent. The system receives an artifact version map that identifies versions of software artifacts for datacenter entities of the datacenter, and a cloud platform independent master pipeline that includes instructions for performing operations related to services on the datacenter, including deploying software artifacts and provisioning computing resources. The system compiles the cloud platform independent master pipeline in conjunction with the artifact version map to generate a cloud platform specific detailed pipeline that deploys the appropriate versions of deployment artifacts on the datacenter entities in accordance with the artifact version map. The system sends the cloud platform specific detailed pipeline to a target cloud platform for execution.

Owner:SALESFORCE COM INC

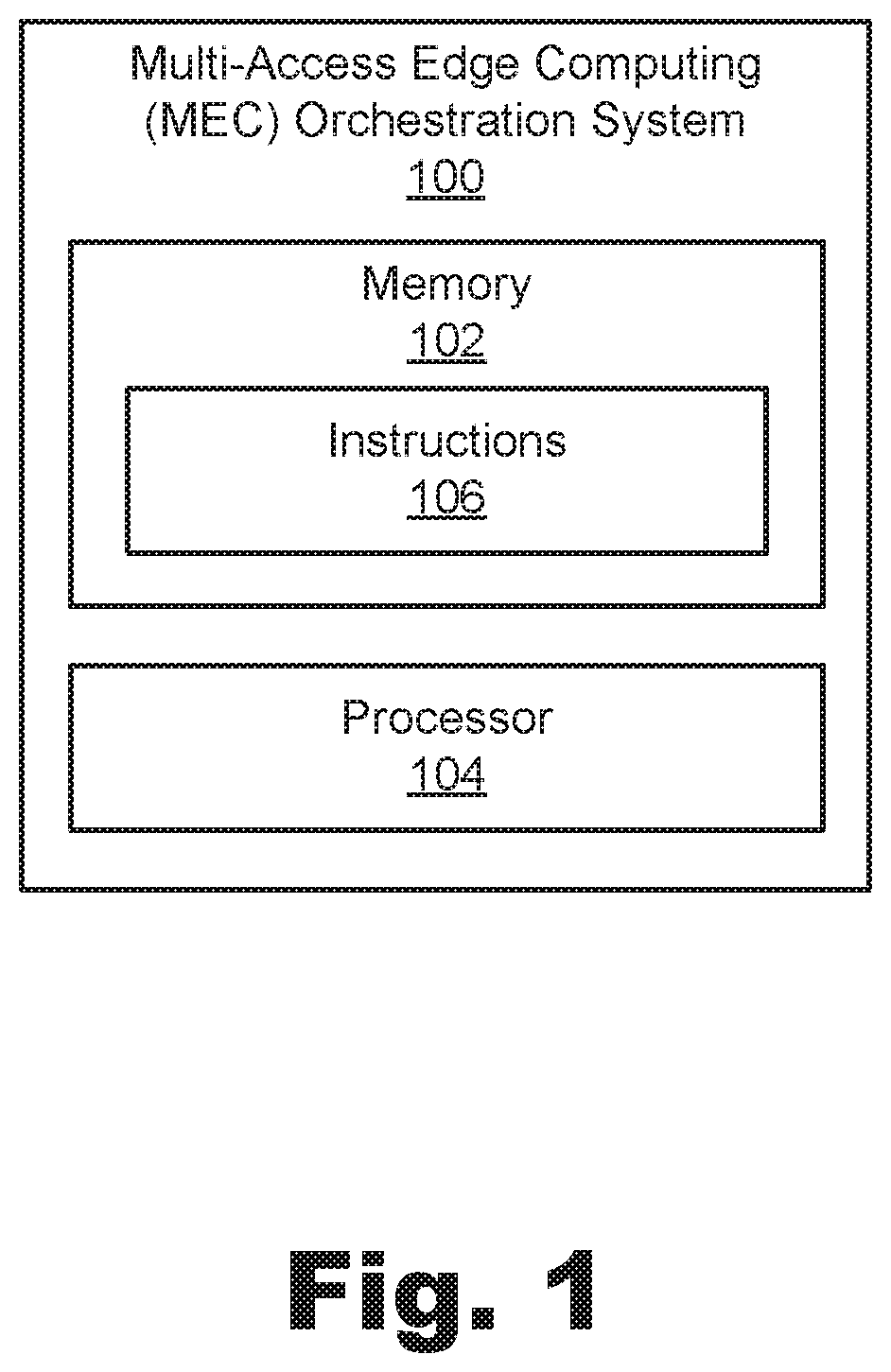

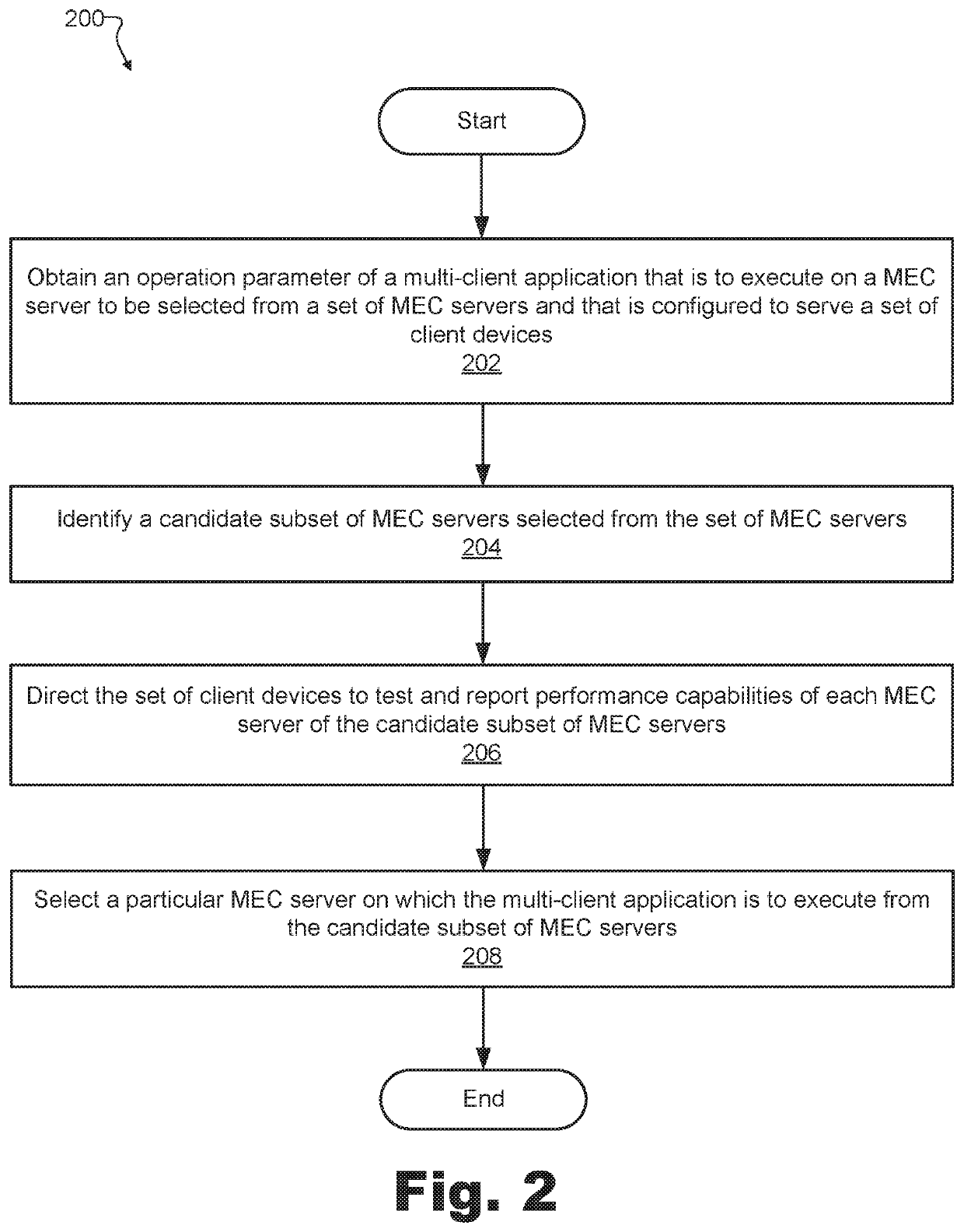

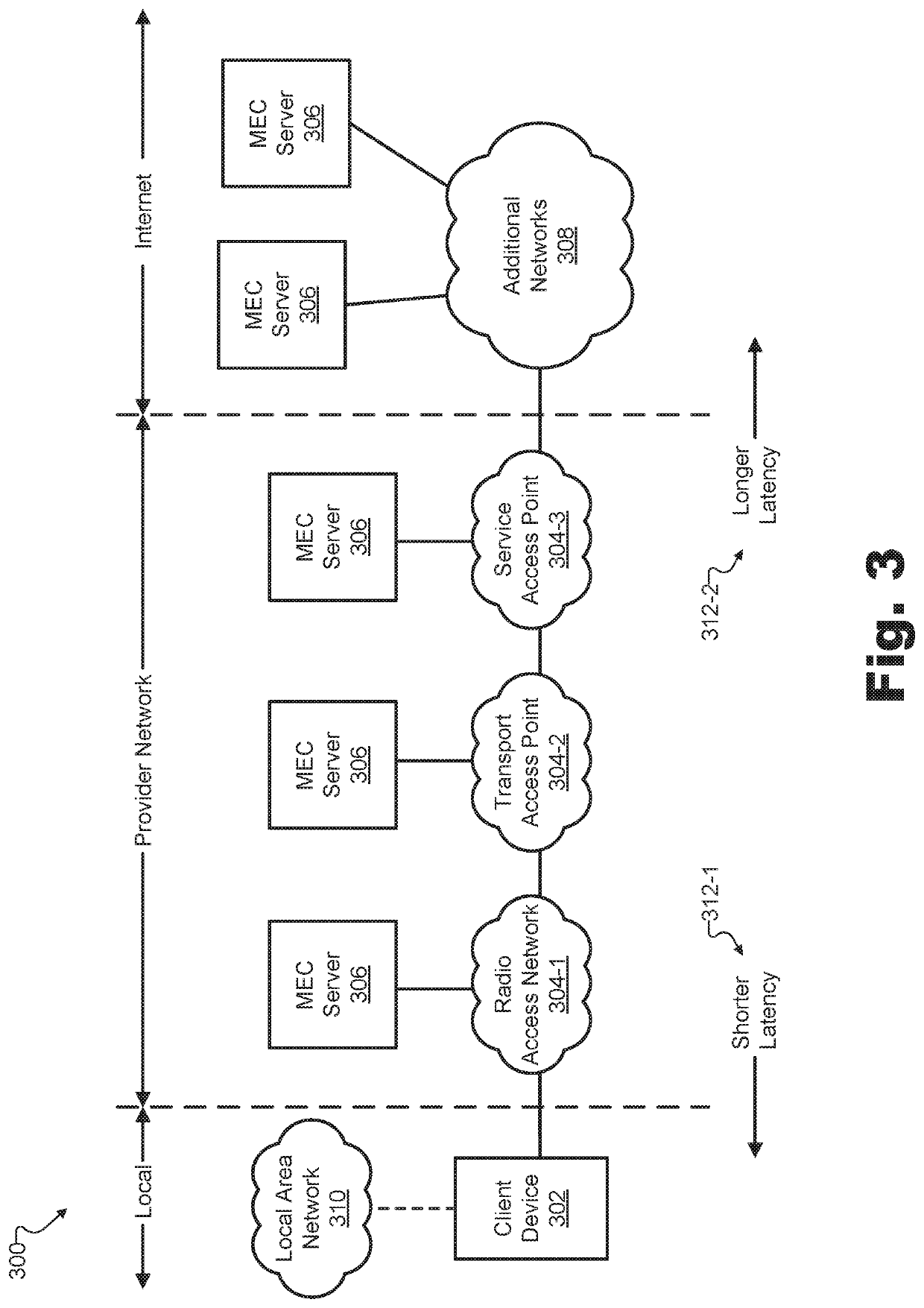

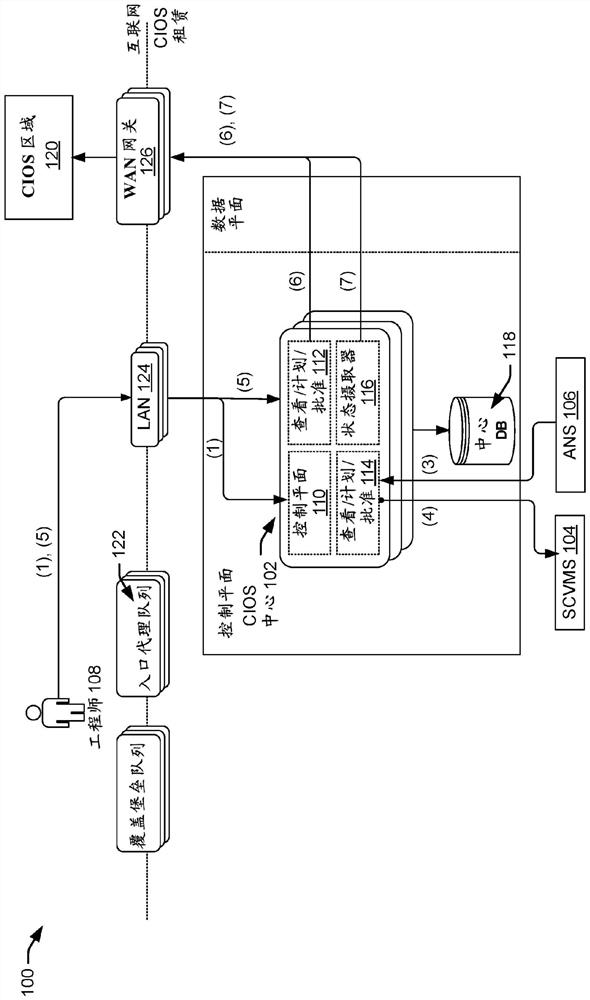

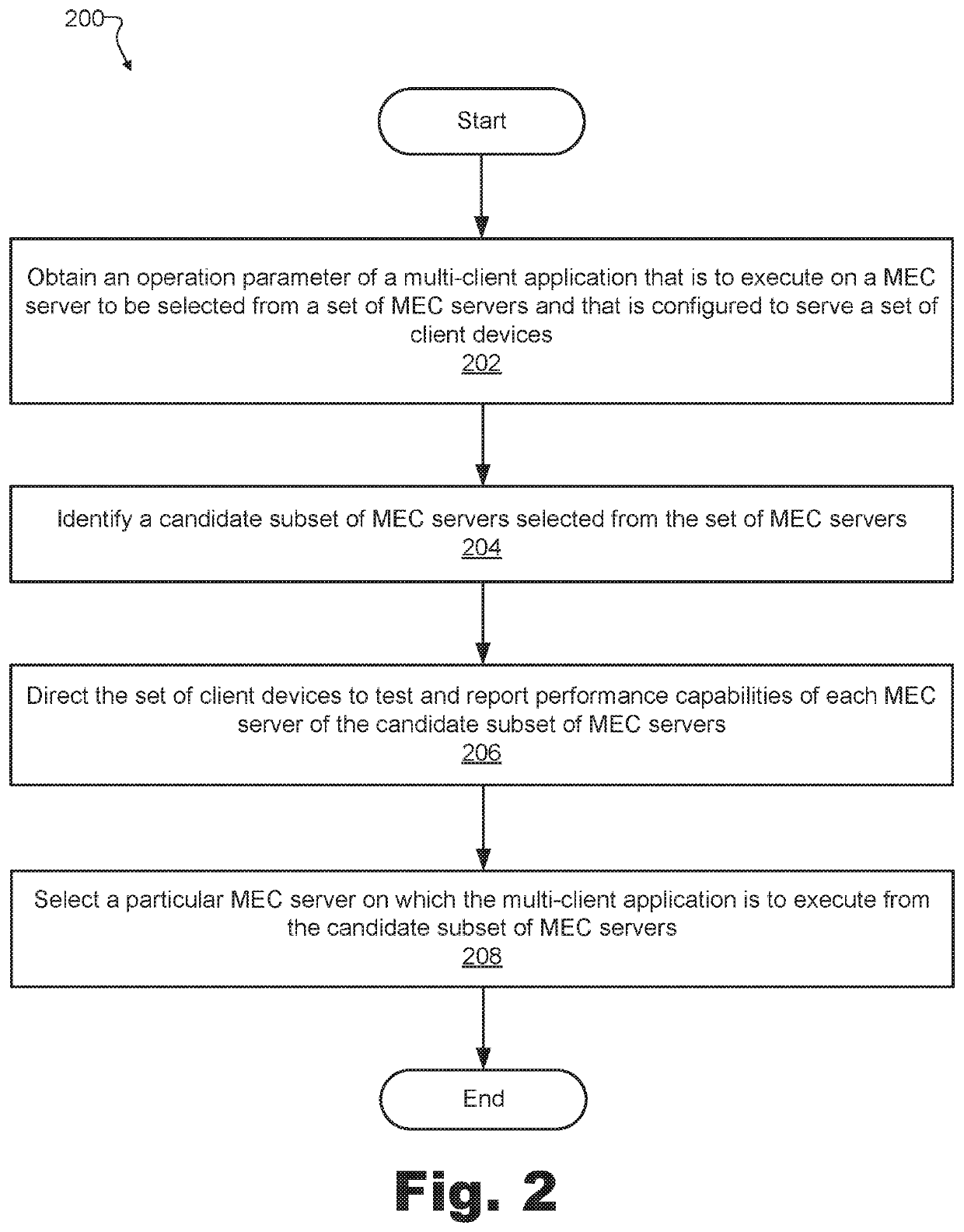

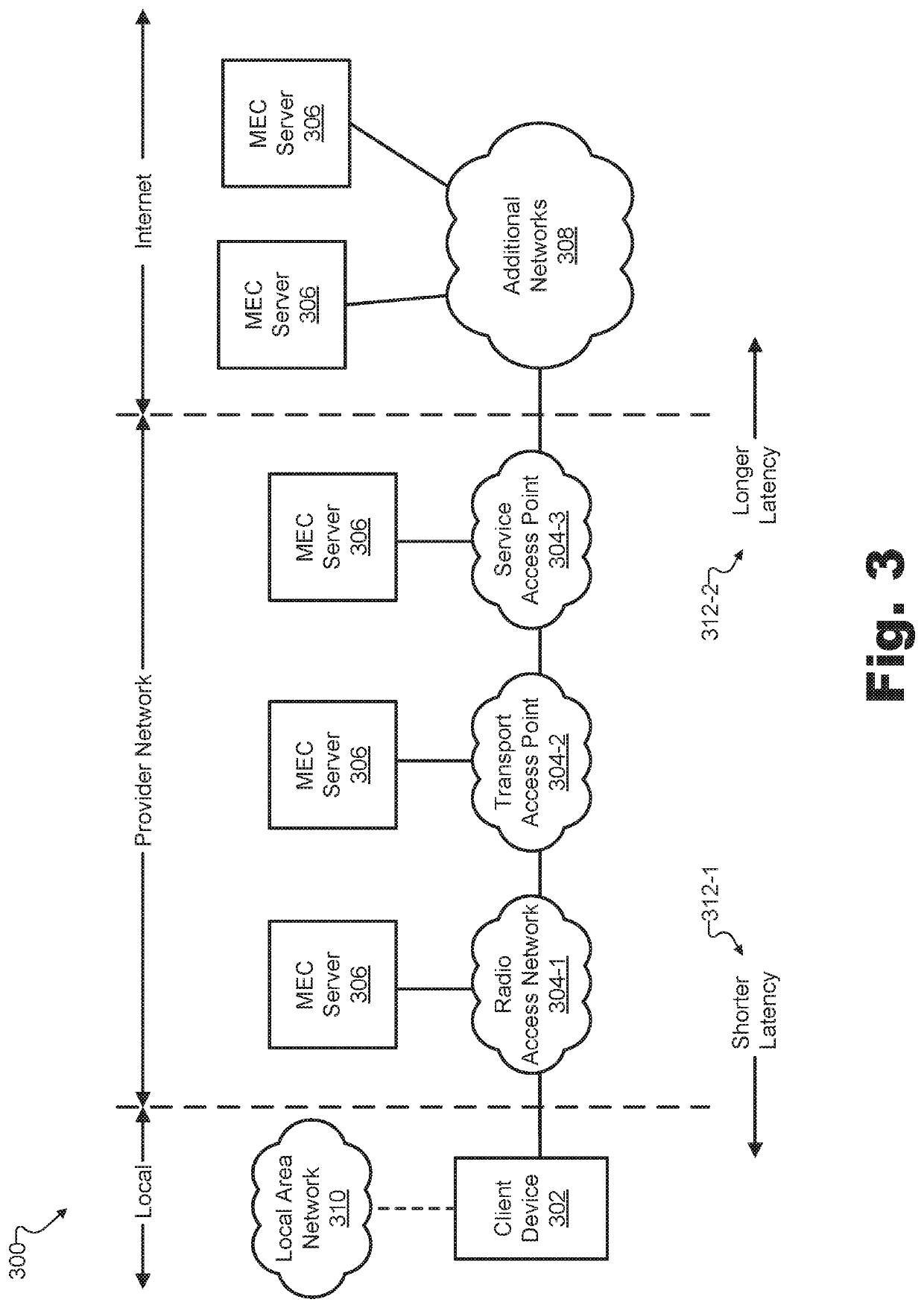

Methods and Devices for Orchestrating Selection of a Multi-Access Edge Computing Server for a Multi-Client Application

An exemplary multi-access edge computing (MEC) orchestration system obtains an operation parameter of a multi-client application that is to execute on a MEC server to be selected from a set of MEC servers located at a first set of locations within a coverage area of a provider network. When executing, the multi-client application serves respective client applications of a set of client devices located at a second set of locations within the coverage area. Based on the operation parameter, the MEC orchestration system identifies a candidate subset of MEC servers from the set of MEC servers and directs the set of client devices to test and report performance capabilities of the MEC servers in the candidate subset. Based on the reported performance capabilities, the MEC orchestration system selects, from the candidate subset of MEC servers, a particular MEC server on which the multi-client application is to execute.

Owner:VERIZON PATENT & LICENSING INC
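
The two-stage selection in the abstract above (filter candidates by an operation parameter, then choose based on client-reported performance) can be sketched as below. The abstract does not say which parameter or which selection criterion is used, so both are assumptions here: the operation parameter is modelled as a required capacity, and the chosen server is the one with the lowest worst-case reported latency. All names are hypothetical.

```python
def candidate_servers(servers, required_capacity):
    """Stage 1: keep MEC servers whose capacity meets the operation parameter.

    servers maps server name -> capacity (an assumed representation).
    """
    return sorted(name for name, capacity in servers.items()
                  if capacity >= required_capacity)


def select_server(candidates, reports):
    """Stage 2: pick the candidate with the lowest worst-case client latency.

    reports maps client id -> {server name: measured latency in ms}.
    Ties are broken by server name so the result is deterministic.
    """
    def worst_latency(server):
        return max(client_report[server] for client_report in reports.values())

    return min(candidates, key=lambda server: (worst_latency(server), server))
```

Minimising the worst-case latency favours fairness across clients; minimising the mean instead would favour aggregate performance. Either is a plausible reading of "reported performance capabilities".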

Discovery of containerized platform and orchestration services

Active | US11347806B2 | Easy to analyze | Easy to implement | Version control | Still image data querying | Application programming interface | Network management

Owner:SERVICENOW INC

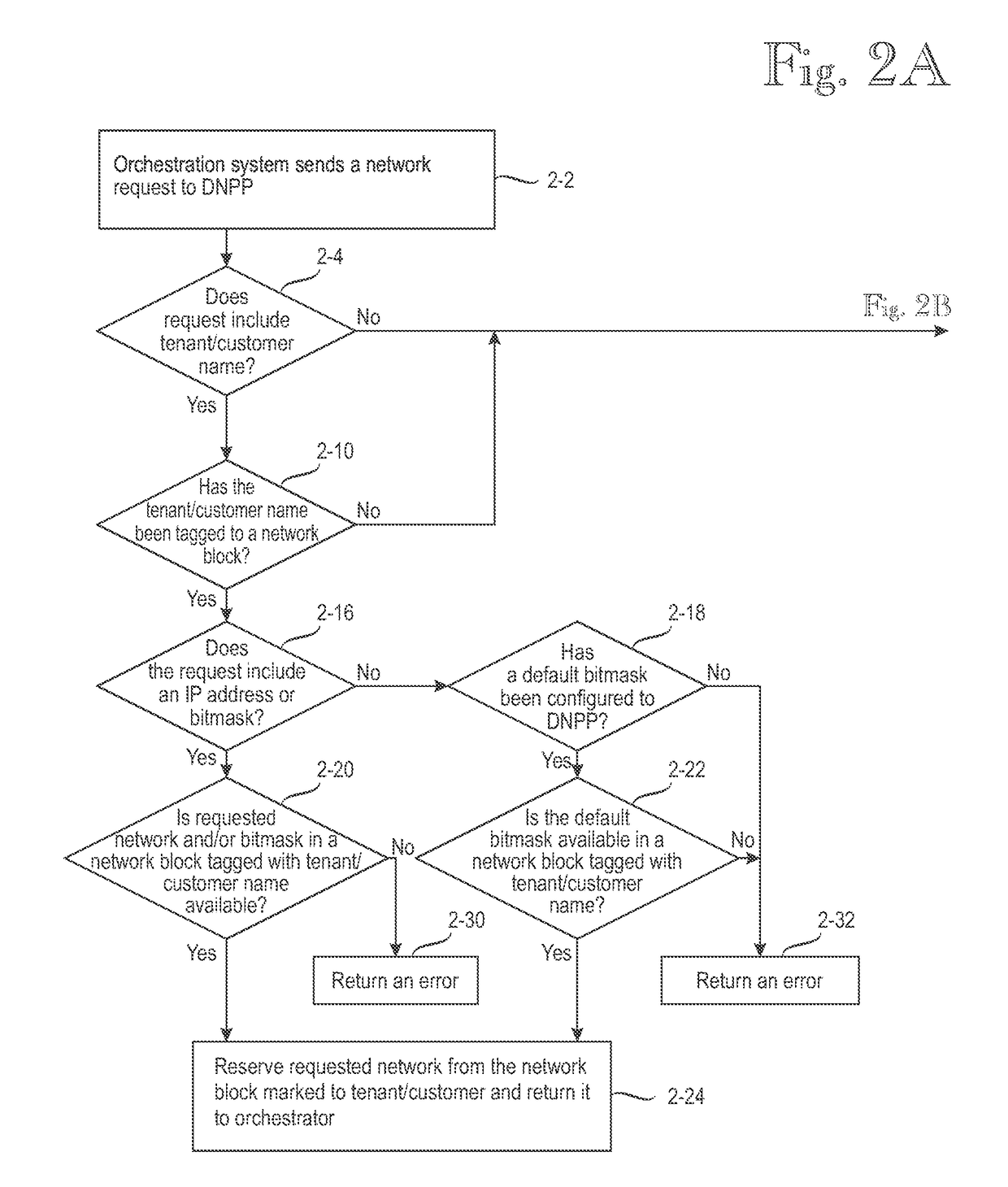

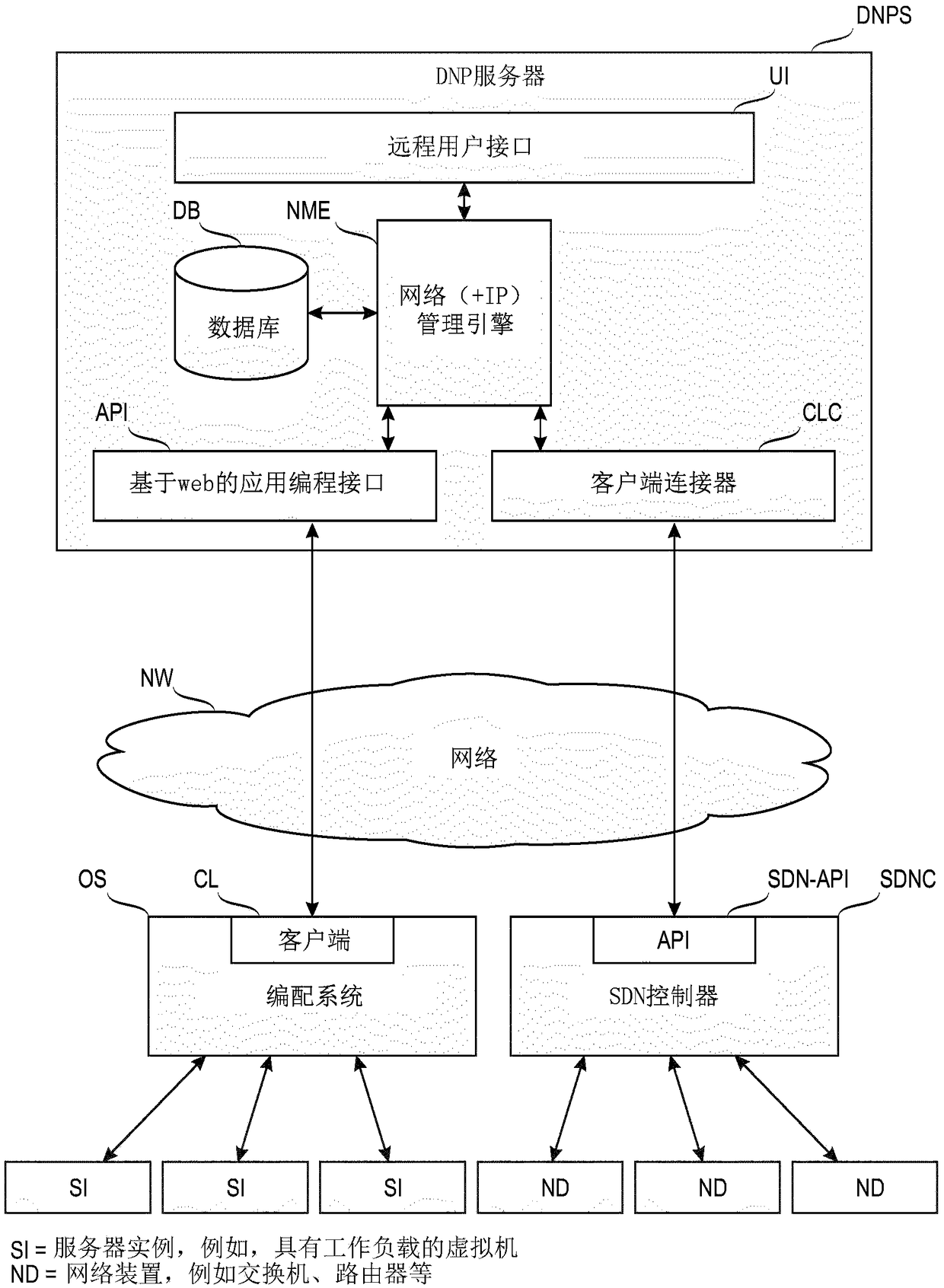

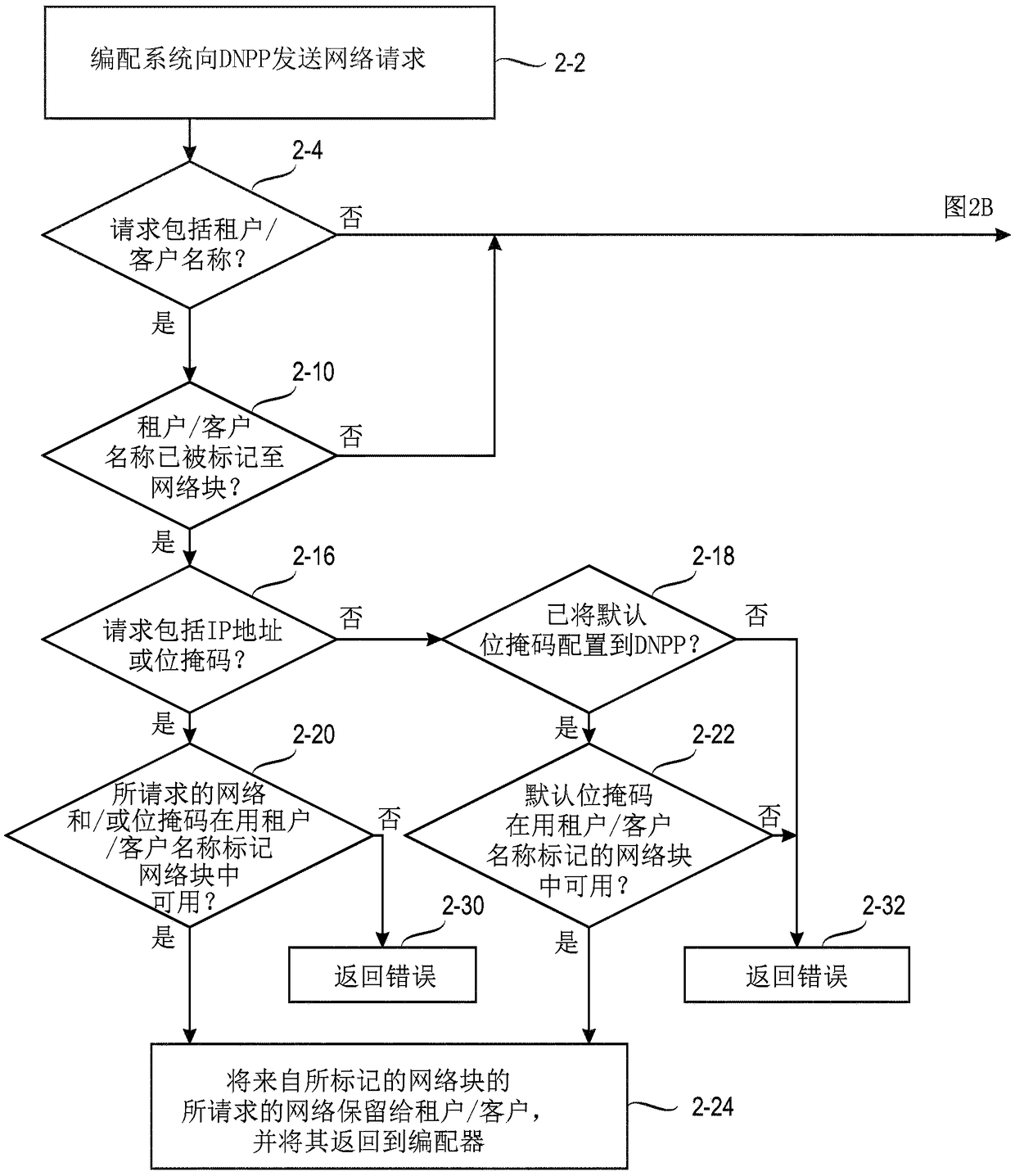

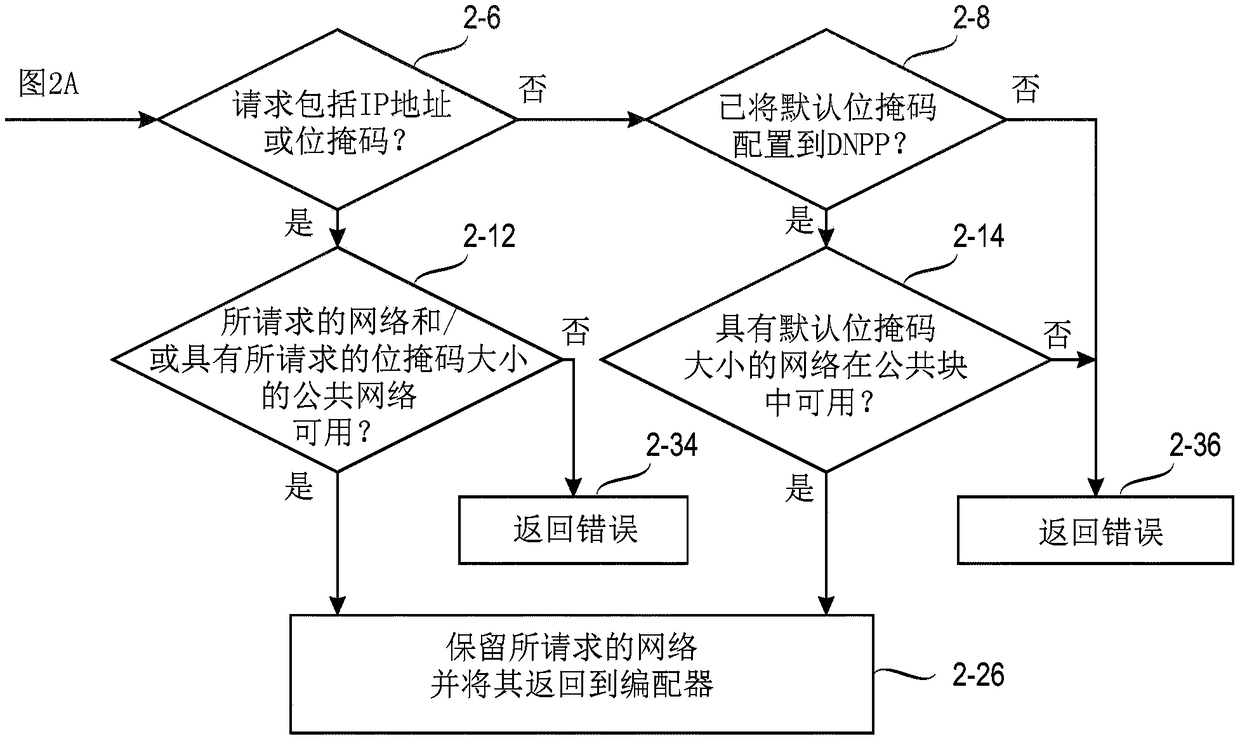

Commissioning/decommissioning networks in orchestrated or software-defined computing environments

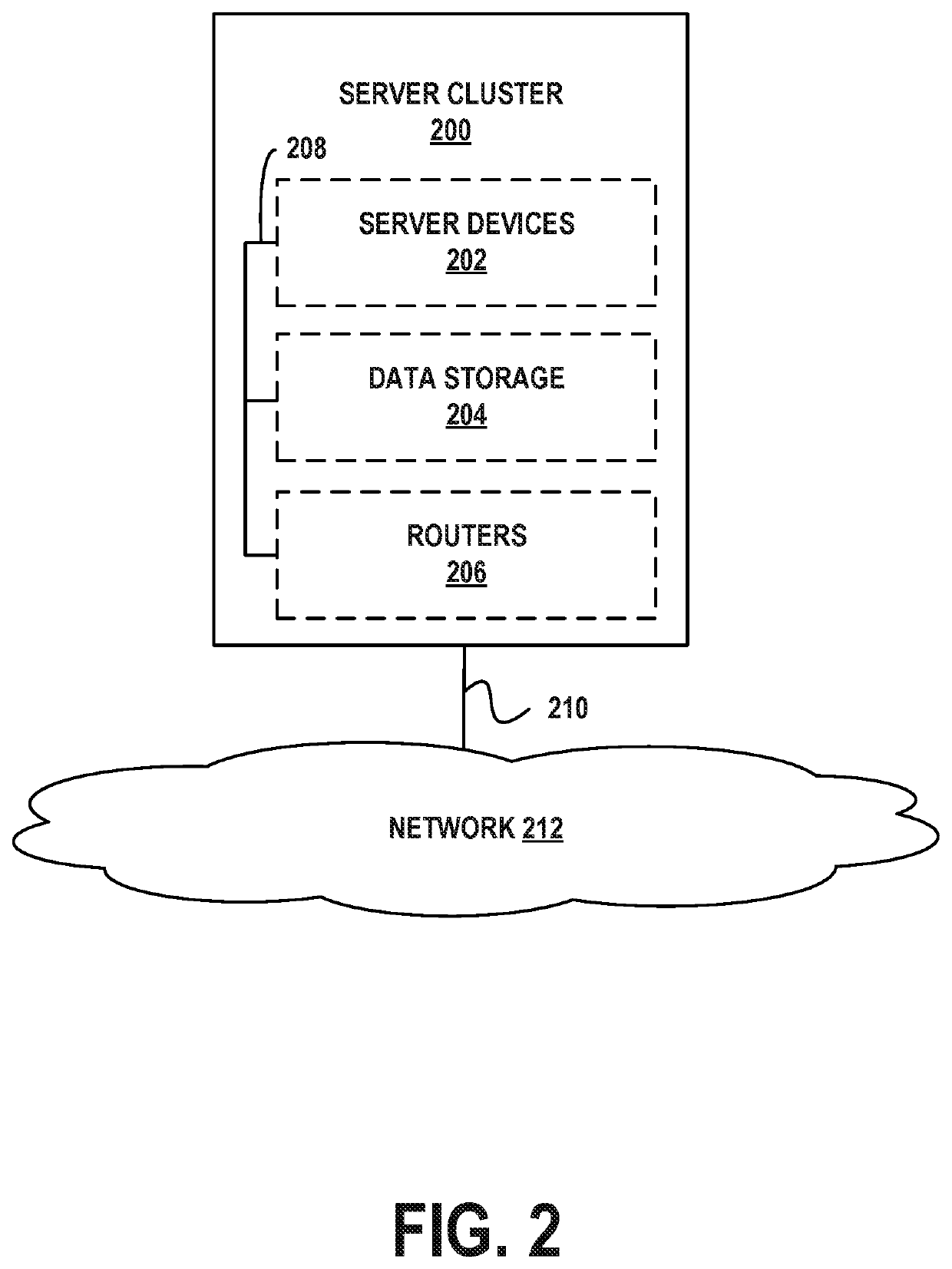

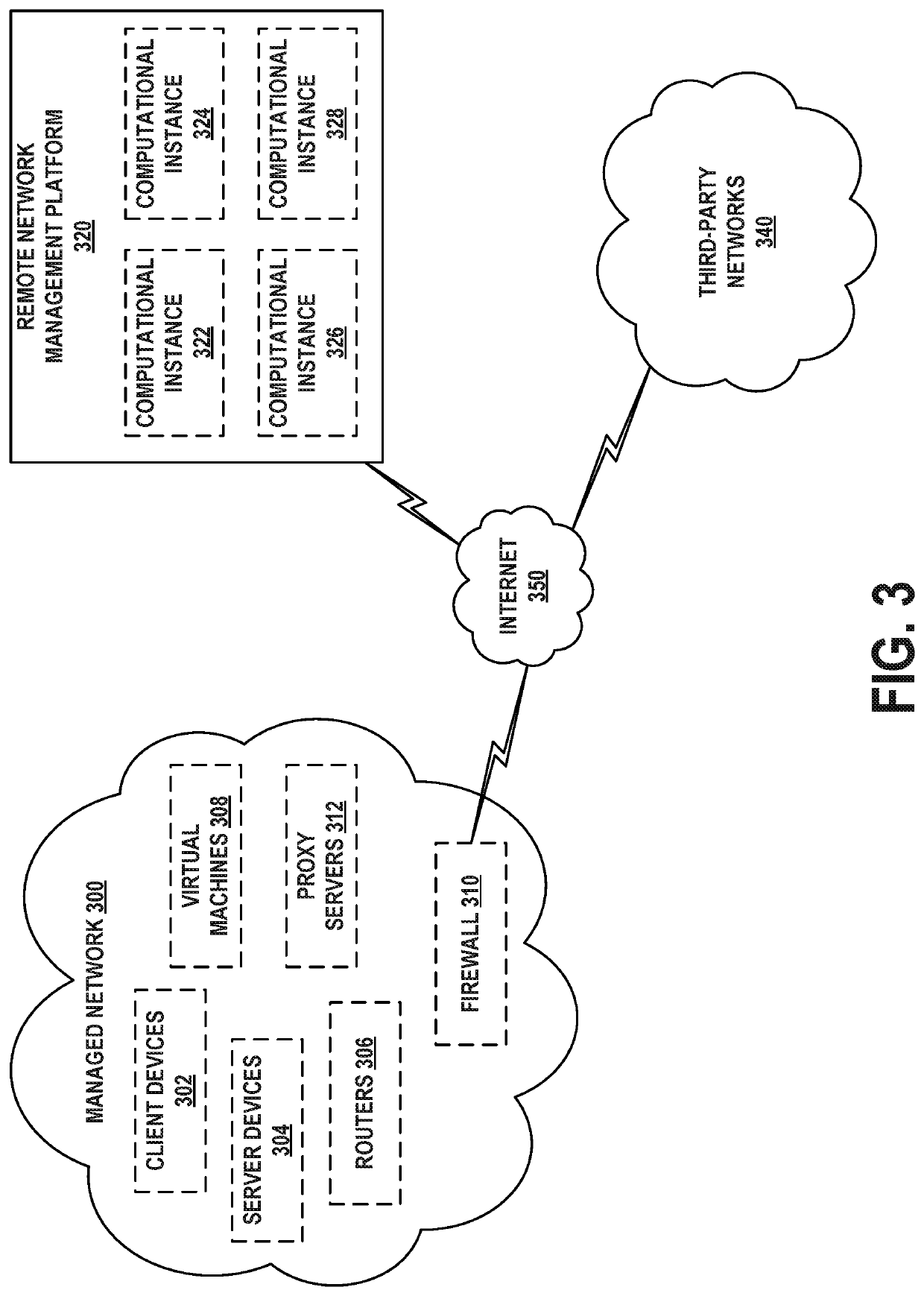

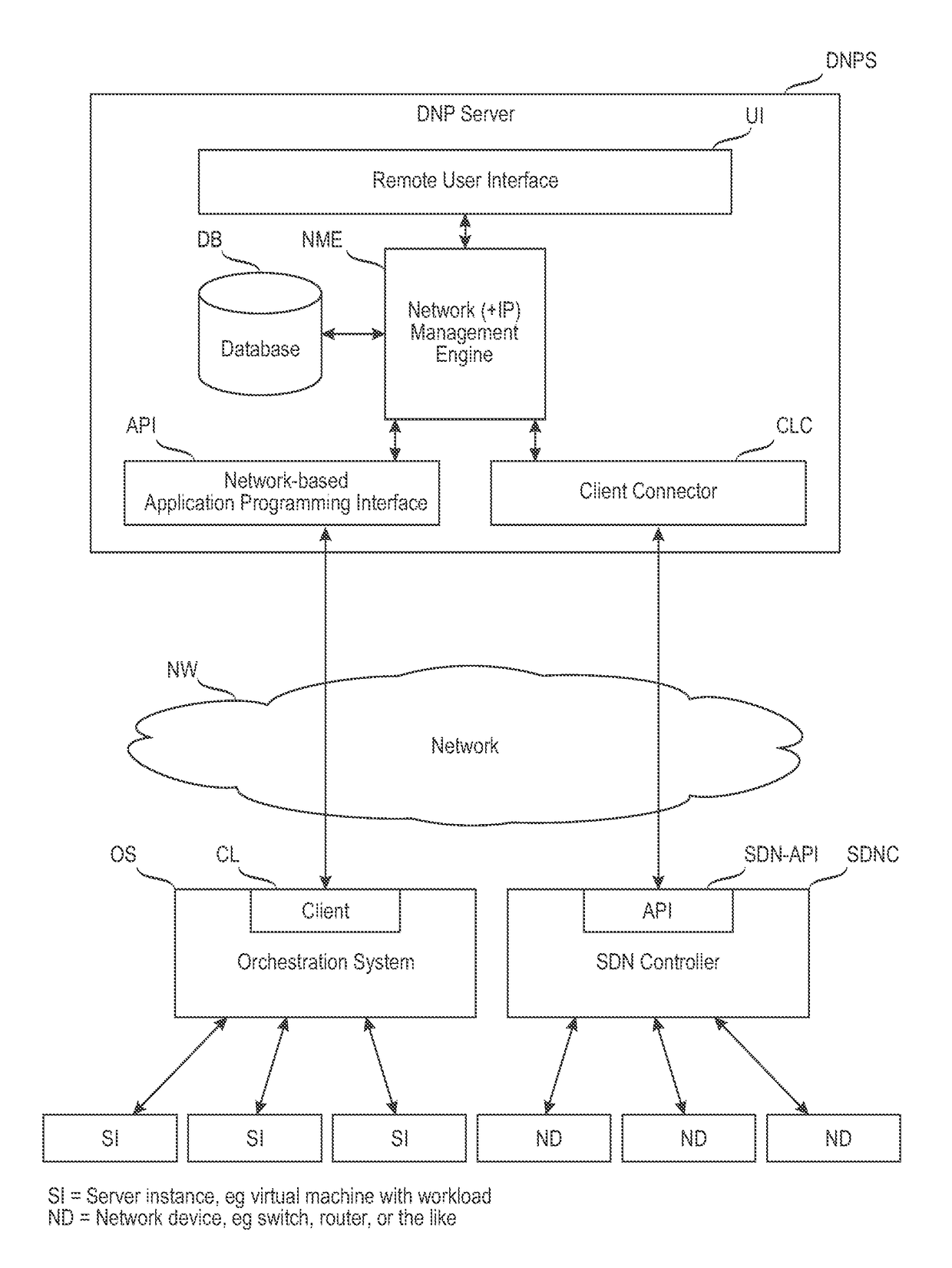

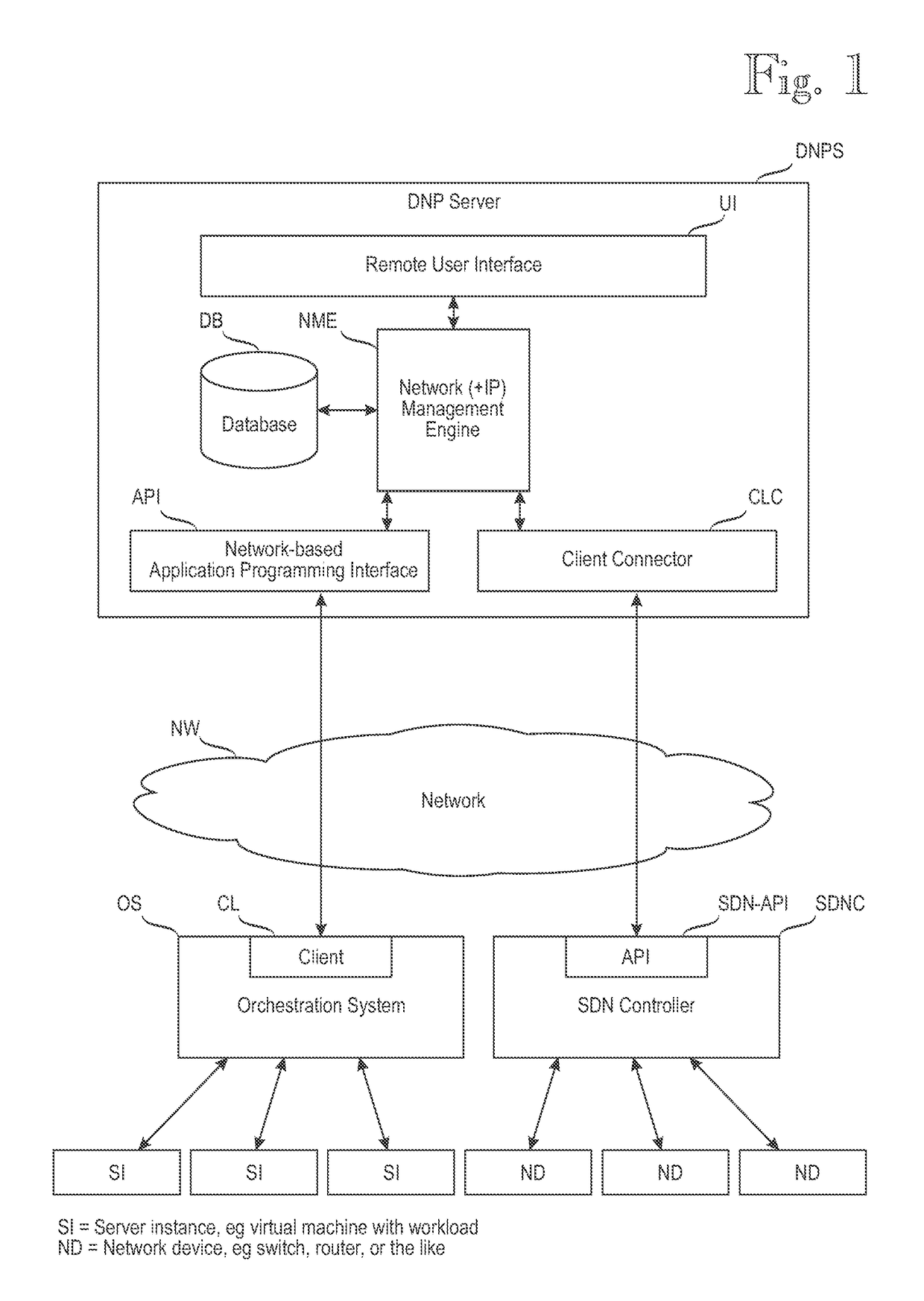

Active | US10129096B2 | Digital computer details | Networks interconnection | Network management | Application software

A server computer (DNPS) commissions / decommissions networks provisioned using one or more orchestration solutions (OS) in a client-server architecture. Program code instructions instruct the server computer to implement a user interface (UI) for remote management of the server computer, wherein the user interface provides access to data managed by the server computer; a web-based application programming interface (API) that supports service-oriented architecture ("SOA"); and a network management logic (NME) that dynamically assigns and releases networks via the one or more orchestration solutions (OS) and the web-based API. In an alternative implementation, the network management logic cooperates with Software-Defined Networking Controller(s) (SDNC) to commission / decommission networks. A physical embodiment may implement either or both of the SOA-based and the SDN-based implementations.

Owner:FUSIONLAYER

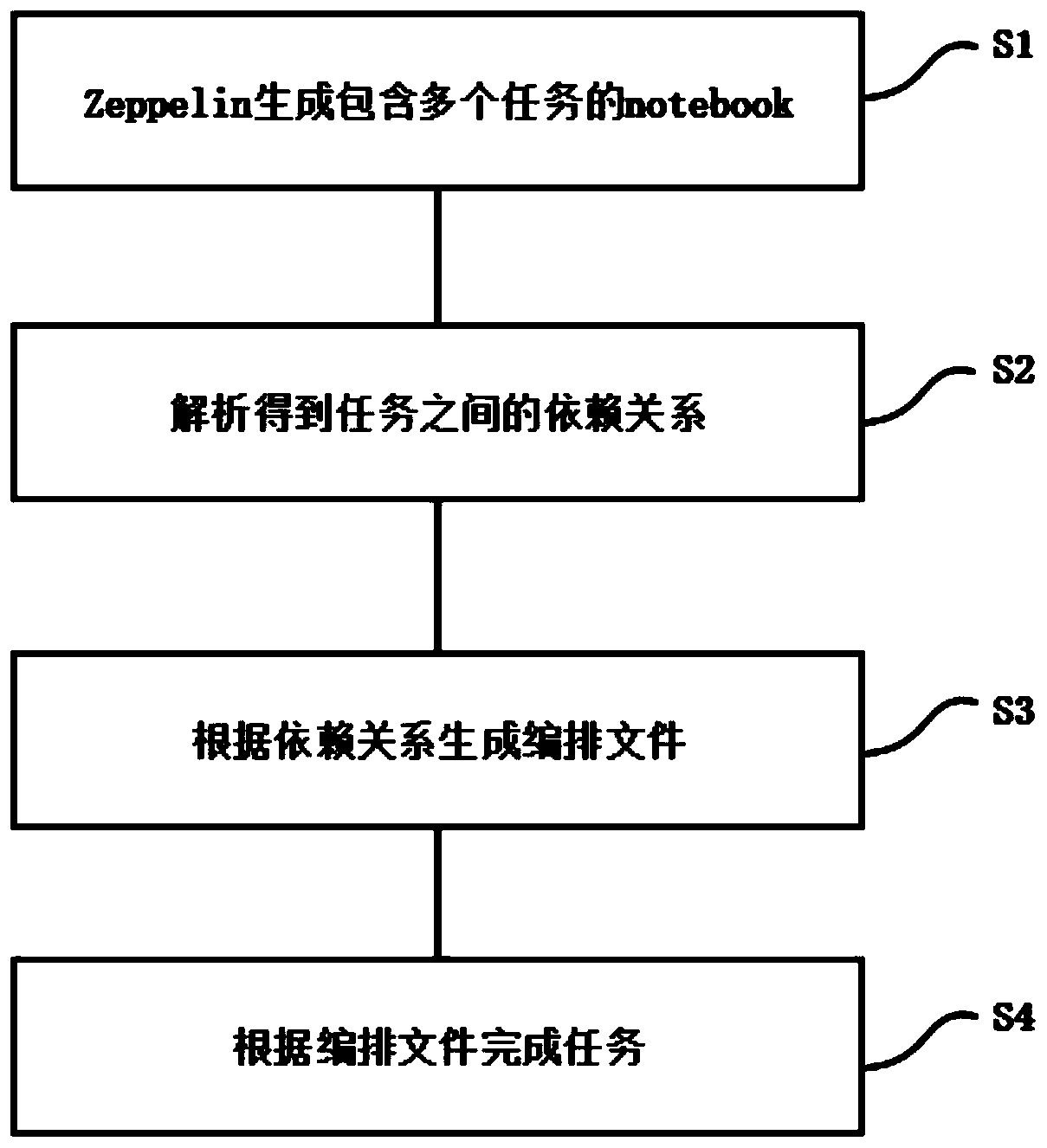

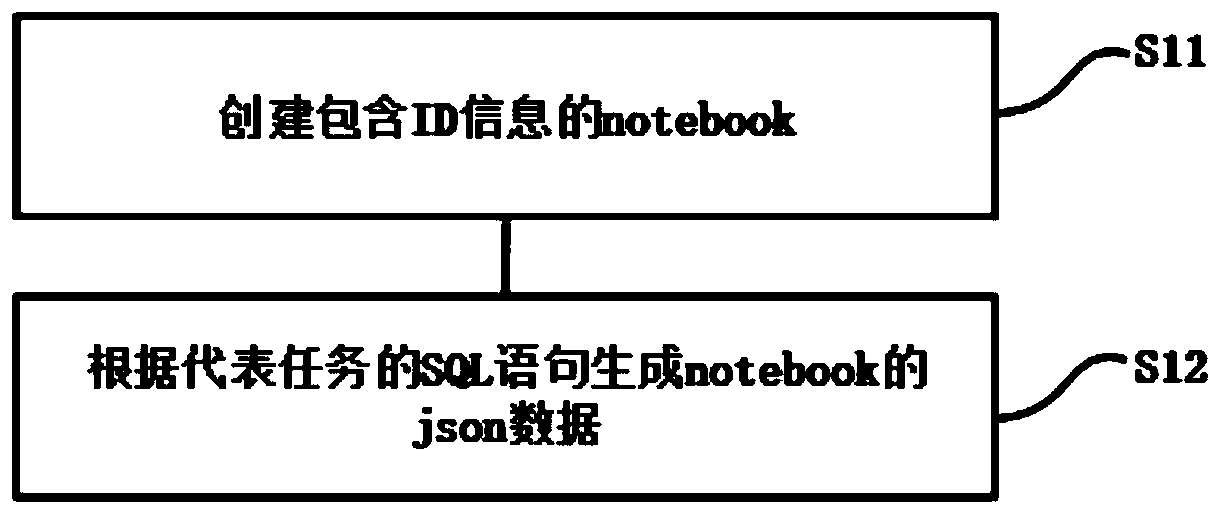

Method and system for scheduling Zeppelin tasks, computing device and storage medium

Pending | CN111159270A | Realize automatic scheduling | Program initiation/switching | Database management systems | Parallel computing | Orchestration (computing)

The invention discloses a method for scheduling Zeppelin tasks. The method comprises the following steps: Zeppelin generates a notebook containing a plurality of tasks; the notebook is analyzed to obtain the dependency relationships between the tasks; an orchestration file is generated according to the dependency relationships; and the tasks are executed according to the orchestration file. The execution sequence of Zeppelin tasks with dependency relationships does not need to be set manually, and such tasks can be scheduled automatically. The invention further provides a system for scheduling Zeppelin tasks, a computing device, and a storage medium.

Owner:HANGZHOU YITU MEDIAL TECH CO LTD
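
Deriving an orchestration file from task dependencies, as the abstract above describes, can be sketched with Python's standard `graphlib` module. This is an illustrative assumption about the approach, not the patent's implementation: the JSON layout with a `stages` key, the function name `build_orchestration`, and the task names are all hypothetical, and nothing here calls Zeppelin itself.

```python
import json
from graphlib import TopologicalSorter


def build_orchestration(dependencies):
    """Turn task dependencies into a staged orchestration file (JSON text).

    dependencies maps each task to the set of tasks it depends on.
    Tasks in the same stage have no dependencies on each other and
    could run in parallel.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    stages = []
    while sorter.is_active():
        batch = sorted(sorter.get_ready())  # all tasks whose dependencies are done
        stages.append(batch)
        sorter.done(*batch)
    return json.dumps({"stages": stages})
```

A scheduler would then walk the stages in order, running each stage's tasks before moving to the next, so no execution sequence has to be set by hand.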

Apparatus, systems, and methods for composable distributed computing

Active | US11449354B2 | Bootstrapping | Software simulation/interpretation/emulation | Orchestration (computing) | Distributed computing

Embodiments disclosed facilitate specification, configuration, orchestration, deployment, and management of composable distributed systems. A method to realize a composable distributed system may comprise: determining, based on a system composition specification for the distributed system, one or more cluster configurations, wherein the system composition specification comprises, for each cluster, a corresponding cluster specification and a corresponding cluster profile, which may comprise a corresponding software stack specification. First software stack images applicable to a first plurality of nodes in a first cluster may be obtained based on a corresponding first software stack specification, which may be comprised in a first cluster profile associated with the first cluster. Deployment of the first cluster may be initiated by instantiating the first cluster in a first cluster configuration in accordance with a corresponding first cluster specification, wherein each of the first plurality of nodes is instantiated using the corresponding first software stack images.

Owner:SPECTRO CLOUD INC

Software release orchestration for continuous delivery of features in a cloud platform based data center

Computing systems, for example, multi-tenant systems, deploy software artifacts in data centers created in a cloud platform using a cloud platform infrastructure language that is cloud platform independent. The system receives an artifact version map that identifies versions of software artifacts for datacenter entities of the datacenter and a cloud platform independent master pipeline that includes instructions for performing operations related to services on the datacenter, for example, deploying software artifacts, provisioning computing resources, and so on. The system compiles the cloud platform independent master pipeline in conjunction with the artifact version map to generate a cloud platform specific detailed pipeline that deploys the appropriate versions of deployment artifacts on the datacenter entities in accordance with the artifact version map. The system sends the cloud platform specific detailed pipeline to a target cloud platform for execution.

Owner:SALESFORCE COM INC

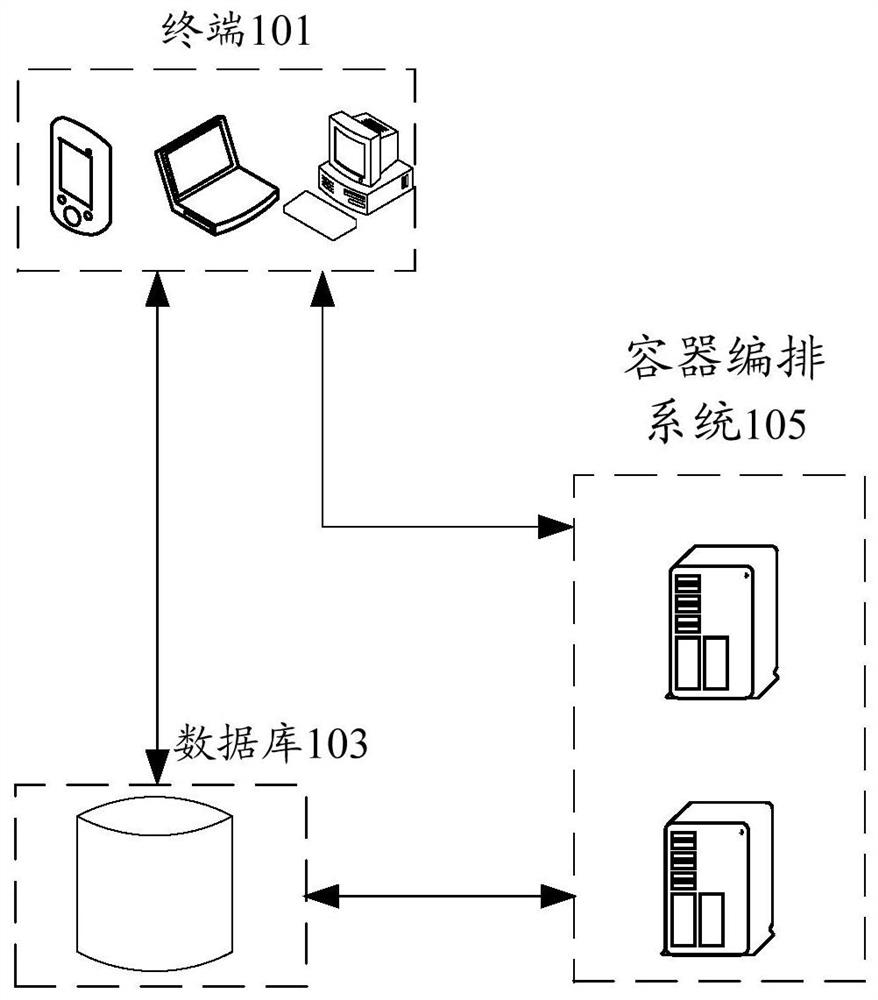

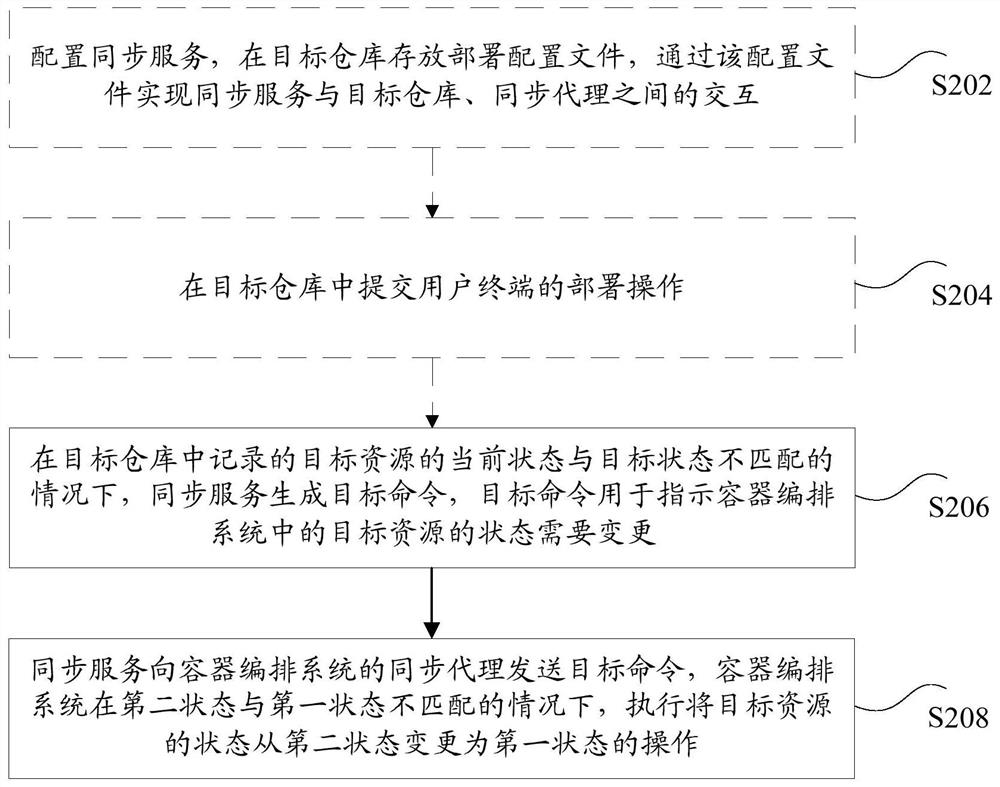

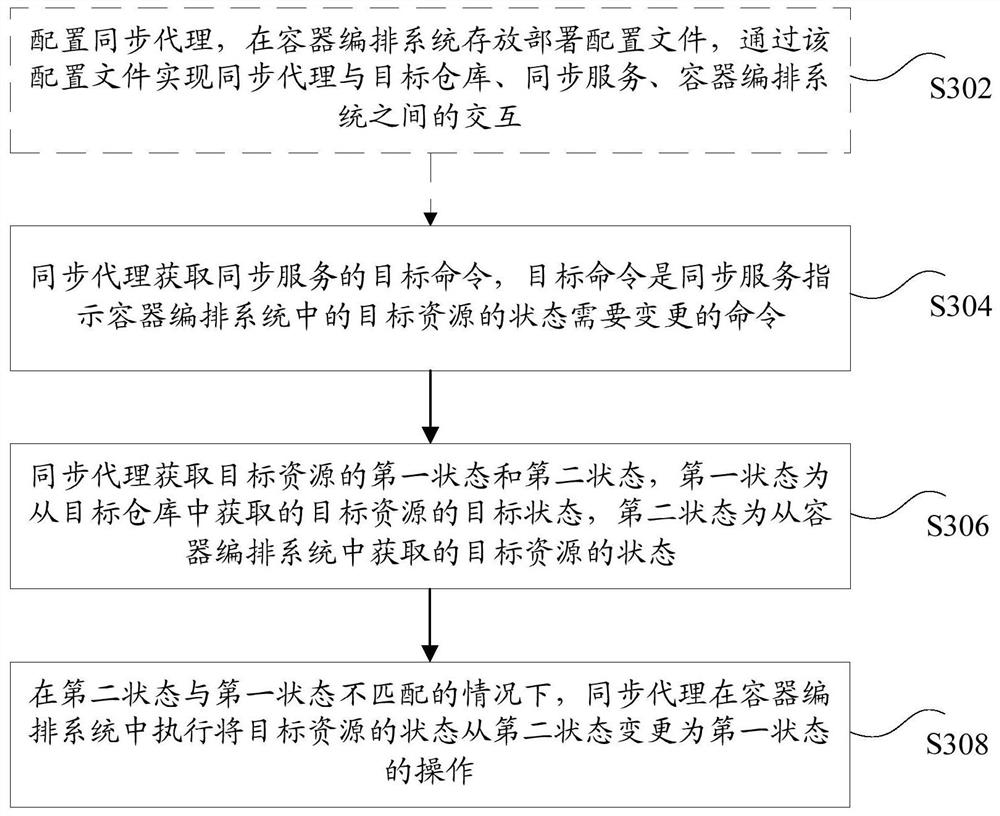

Resource management method and device, storage medium and electronic device

Pending | CN112130889A | Improve accuracy | Troubleshoot inaccurate technical issues | Version control | Software deployment | Orchestration (computing) | Resource management

The invention discloses a resource management method and device, a storage medium and an electronic device in the field of cloud computing. The method comprises the steps of acquiring a target command, wherein the target command indicates that the state of a target resource in the container orchestration system needs to be changed; obtaining a first state and a second state of the target resource, the first state being the target state of the target resource recorded in the target warehouse, and the second state being the state of the target resource recorded in the container orchestration system; and, if the second state does not match the first state, executing an operation in the container orchestration system that changes the state of the target resource from the second state to the first state. The invention addresses the technical problem of inaccurate operation results in related technologies.

Owner:BEIJING KINGSOFT CLOUD NETWORK TECH CO LTD
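
The comparison of a recorded target state against the state held by the container orchestration system resembles a reconciliation step, which can be sketched as below. This is a generic illustration under assumed data shapes (plain dictionaries keyed by resource name), not the method claimed in the patent; `reconcile` and `apply_operations` are hypothetical names.

```python
def reconcile(desired, actual):
    """List the operations that would move `actual` to `desired`.

    desired: resource name -> state recorded in the target warehouse.
    actual:  resource name -> state recorded in the orchestration system.
    """
    operations = []
    for name in sorted(desired.keys() | actual.keys()):
        want, have = desired.get(name), actual.get(name)
        if want == have:
            continue  # states match, nothing to do
        if want is None:
            operations.append(("delete", name, None))
        elif have is None:
            operations.append(("create", name, want))
        else:
            operations.append(("update", name, want))
    return operations


def apply_operations(operations, actual):
    """Apply the operations to a copy of `actual` and return the new state."""
    result = dict(actual)
    for action, name, state in operations:
        if action == "delete":
            result.pop(name)
        else:
            result[name] = state
    return result
```

Computing the operation list separately from applying it makes the change reviewable before anything is modified, and a second `reconcile` call after applying should return an empty list.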

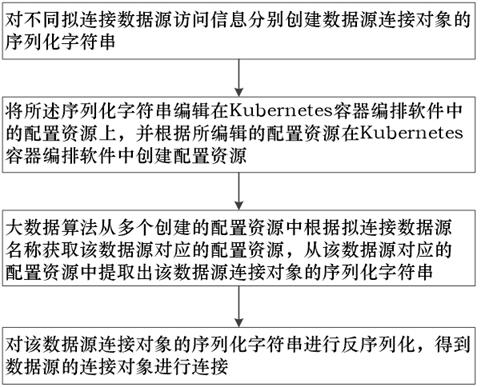

Method and device for replacing big data computing job data source based on kubernetes

Active | CN114490834B | Shorten the time | Improve efficiency | Database updating | Other databases querying | Software engineering | Data source

The present invention provides a method and device for replacing the data sources of big data computing jobs based on Kubernetes. Different data source connection objects are saved as character strings in configuration resources in the Kubernetes container orchestration software, so that when the number of data sources increases, the data source to be connected can be found quickly through the query command provided by the Kubernetes container orchestration software. This saves the time spent searching multiple data sources for the one to be connected and greatly improves the efficiency of querying the data source to be submitted.

Owner:梯度云科技(北京)有限公司

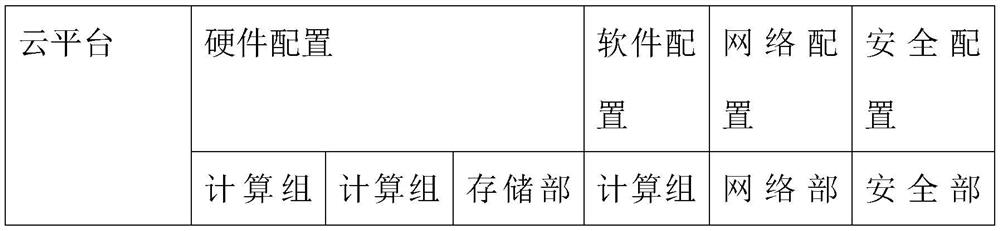

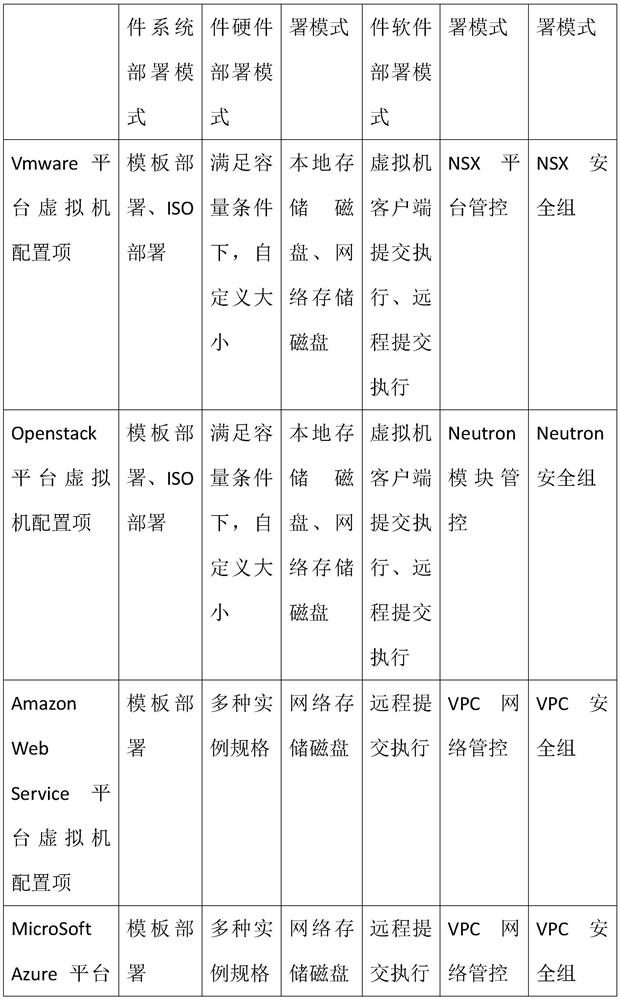

A cloud-based system framework automatic deployment time optimization method

Active | CN109032757B | Meet the needs of deployment | Complete deployment | Software simulation/interpretation/emulation | Software deployment | Dynamic resource | Deployment time

The invention discloses a method for optimizing the automatic deployment time of a system framework based on a cloud platform. The method includes: step 1, building a virtual machine deployment stack warehouse; step 2, automatically escaping the virtual machine deployment stack; step 3, optimizing the deployment process and deploying; step 4, archiving the version after the deployment succeeds. Existing cloud orchestration technology already provides life cycle management, dynamic adjustment of resources, and automatic deployment and configuration of cloud computing resources, which basically meets enterprise deployment needs, but it suffers from long system architecture build times, difficult architecture migration, and low management efficiency of the system architecture. The invention addresses these problems.

Owner:GUIZHOU POWER GRID CO LTD

Commissioning/decommissioning networks in orchestrated or software-defined computing environments

A server computer (DNPS) commissions / decommissions networks provisioned using one or more orchestration solutions (OS) in a client-server architecture. Program code instructions instruct the server computer to implement a user interface (UI) for remote management of the server computer, wherein the user interface provides access to data managed by the server computer; a web-based application programming interface (API) that supports service-oriented architecture ("SOA"); and a network management logic (NME) that dynamically assigns and releases networks via the one or more orchestration solutions (OS) and the web-based API. In an alternative implementation, the network management logic cooperates with Software-Defined Networking Controller(s) (SDNC) to commission / decommission networks. A physical embodiment may implement either or both of the SOA-based and the SDN-based implementations.

Owner:FUSIONLAYER

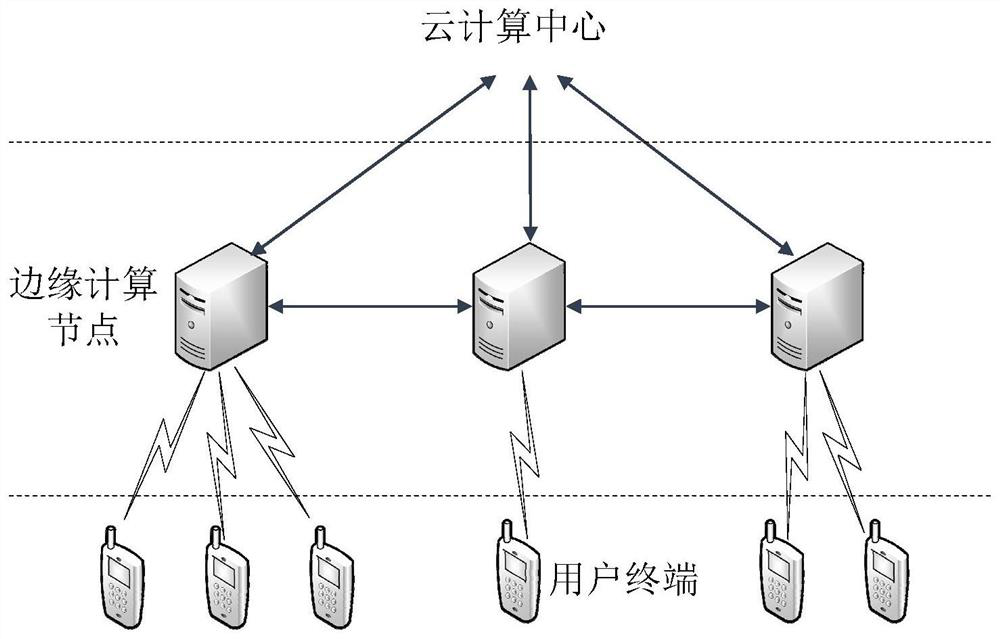

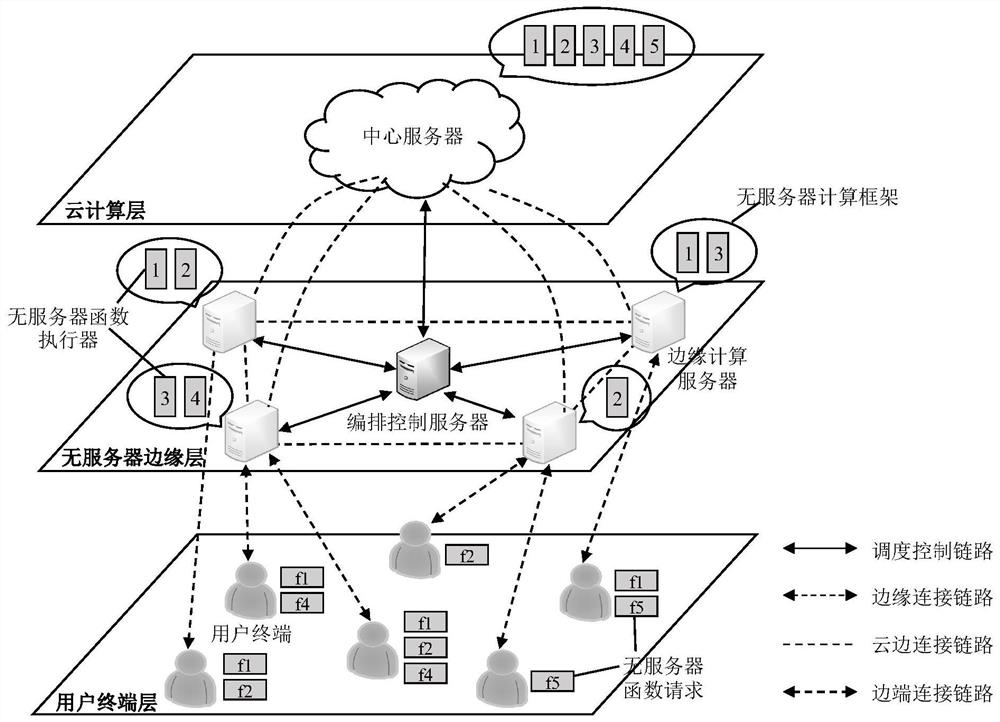

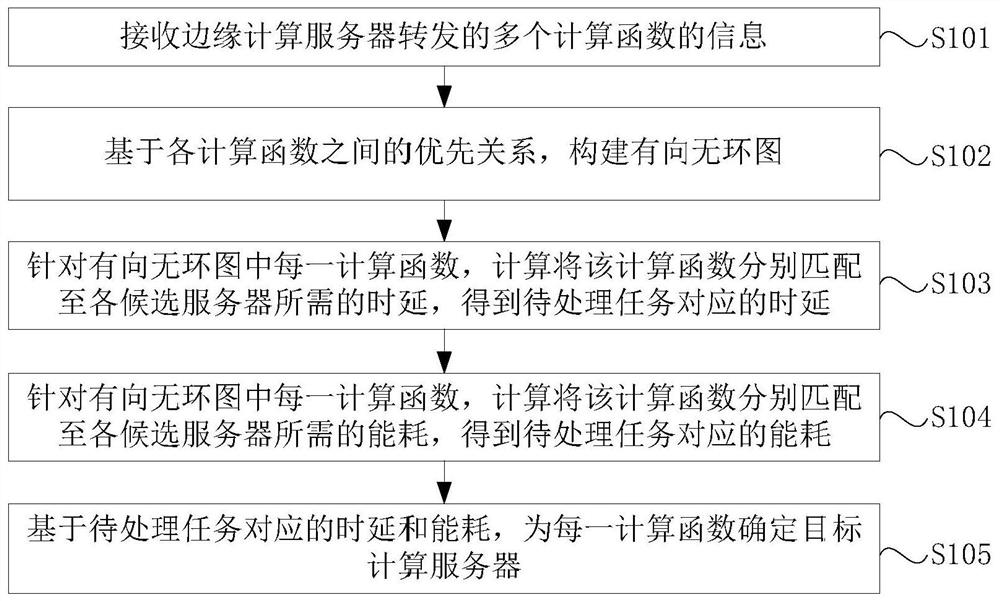

A task scheduling method and device for serverless edge computing

Active | CN113114758B | Flexible deployment | Realize unified management | Transmission | Edge computing | Orchestration (computing)

The embodiment of the present invention provides a task scheduling method and device for serverless edge computing. In the method, the orchestration control server receives the information of multiple computing functions forwarded by the edge computing server and, based on the priority relationship between the computing functions, constructs a directed acyclic graph. For each computing function in the directed acyclic graph, it calculates the delay and energy consumption required to match the computing function to each candidate server, and obtains the delay and energy consumption corresponding to the task to be processed. The candidate servers include each edge computing server and the central server in the serverless edge network where the orchestration control server is located. Based on the delay and energy consumption corresponding to the task to be processed, the target computing server is determined for each computing function. The present invention thereby realizes task scheduling oriented to serverless edge computing.

Owner:BEIJING UNIV OF POSTS & TELECOMM
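
A simplified reading of the abstract above is sketched below: functions are visited in the order given by the directed acyclic graph, and each one is matched to the candidate server with the lowest combined delay and energy cost. The abstract does not state how delay and energy are combined, so the weighted sum and the `delay_weight` parameter are assumptions, as are the function and server names.

```python
from graphlib import TopologicalSorter


def assign_servers(precedence, costs, delay_weight=0.5):
    """Match each computing function to a target server.

    precedence: function -> set of functions that must run before it (the DAG).
    costs: function -> {server: (delay, energy)} for every candidate server.
    delay_weight: assumed trade-off in [0, 1] between delay and energy.
    """
    assignment = {}
    # static_order() yields functions so that predecessors come first.
    for function in TopologicalSorter(precedence).static_order():
        candidates = costs[function]
        assignment[function] = min(
            candidates,
            key=lambda server: (
                delay_weight * candidates[server][0]
                + (1 - delay_weight) * candidates[server][1],
                server,  # tie-break by name for determinism
            ),
        )
    return assignment
```

In this sketch each function is costed independently, so the graph only fixes the visiting order. A fuller model would also charge for moving data between the servers chosen for dependent functions.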

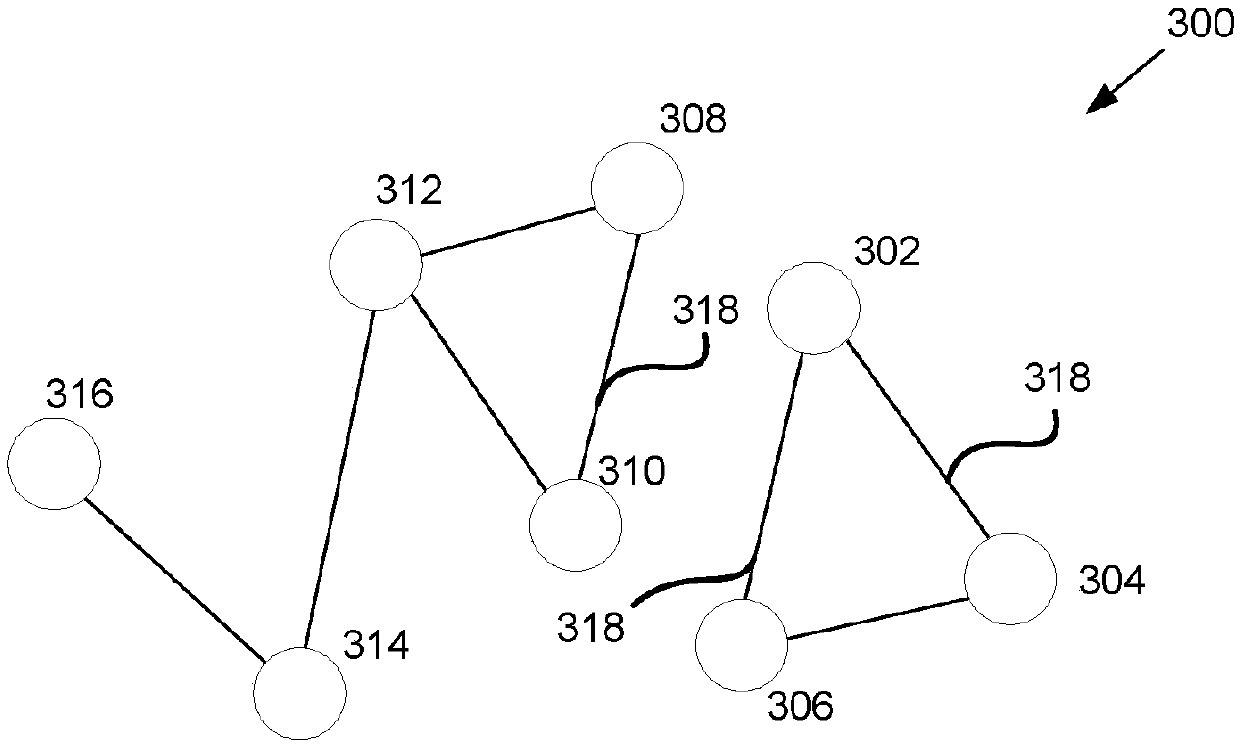

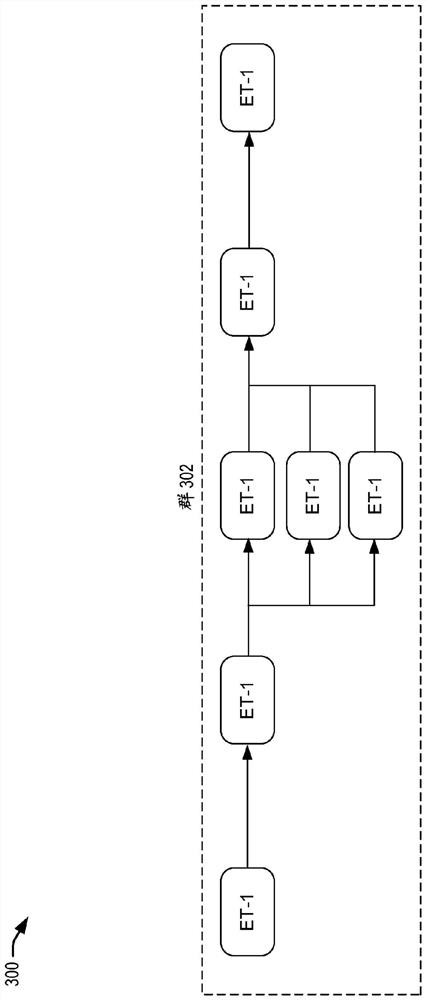

Action orchestration in fault domains

Concepts and technologies are described herein for providing an automated mechanism for grouping devices to allow safe and scalable actions to be taken in parallel. A computing device obtains data that defines service relationships between computing entities in a network of computing entities controlled by a service provider. The computing device determines two or more groups of computing entities having one of a direct or indirect relationship with other computing entities within one of the two or more groups based on the obtained data. Then, the computing device determines one or more subgroups of one of the two or more groups based on the obtained data. Individual computing entities within a first subgroup of the one or more subgroups do not have a direct relationship with any of the other computing entities within the first subgroup. Output data identifying at least a portion of the subgroups is generated.

Owner:MICROSOFT TECH LICENSING LLC
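
One way to read the grouping in the abstract above is in graph terms: groups are connected components of the service-relationship graph, and subgroups are sets of entities within a group that share no direct edge. The sketch below takes that interpretation, using a depth-first search and a greedy first-fit partition. It is an assumed illustration rather than the patented algorithm, and the function names are hypothetical.

```python
def connected_groups(nodes, edges):
    """Group entities that are directly or indirectly related.

    Returns (groups, neighbors), where neighbors maps each entity to the
    set of entities it has a direct relationship with.
    """
    neighbors = {node: set() for node in nodes}
    for a, b in edges:
        neighbors[a].add(b)
        neighbors[b].add(a)

    seen, groups = set(), []
    for start in sorted(neighbors):
        if start in seen:
            continue
        seen.add(start)
        stack, group = [start], []
        while stack:  # iterative depth-first search
            node = stack.pop()
            group.append(node)
            for nxt in neighbors[node] - seen:
                seen.add(nxt)
                stack.append(nxt)
        groups.append(sorted(group))
    return groups, neighbors


def independent_subgroups(group, neighbors):
    """Split a group so that no two entities in a subgroup are directly related."""
    subgroups = []
    for node in group:
        for subgroup in subgroups:
            if not neighbors[node] & set(subgroup):
                subgroup.append(node)  # first subgroup with no direct neighbor
                break
        else:
            subgroups.append([node])
    return subgroups
```

An action could then be applied to all entities of one subgroup in parallel, since none of them depends directly on another member of the same subgroup. The greedy partition does not guarantee the minimum number of subgroups.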

User interface techniques for an infrastructure orchestration service

Pending | US20210223923A1 | Version control | Non-redundant fault processing | Orchestration (computing) | Human–computer interaction

Techniques are disclosed for providing a number of user interfaces. A computing system may execute a declarative infrastructure provisioner. The computing system may provide declarative instructions and instruct the declarative infrastructure provisioner to deploy a plurality of infrastructure resources and a plurality of artifacts. One example user interface may provide a global view of the plurality of infrastructure components and artifacts. Another example user interface may provide corresponding states and change activity of the plurality of infrastructure components and artifacts. Yet another user interface may be provided that presents similarities and / or differences between a locally-generated safety plan indicating first changes for a computing environment and a remotely-generated safety plan indicating second changes for the computing environment.

Owner:ORACLE INT CORP

User interface techniques for infrastructure orchestration services

Pending | CN114730258A | Program control | Software deployment | Orchestration (computing) | Multiple user interface

Techniques for providing multiple user interfaces are disclosed. A computing system may execute a declarative infrastructure provisioner. The computing system may provide declarative instructions and instruct the declarative infrastructure provisioner to deploy a plurality of infrastructure resources and a plurality of artifacts. One example user interface may provide a global view of the plurality of infrastructure components and artifacts. Another example user interface may provide corresponding status and change activities for the plurality of infrastructure components and artifacts. Yet another user interface may be provided that presents similarities and / or differences between a locally generated safety plan indicative of a first change to the computing environment and a remotely generated safety plan indicative of a second change to the computing environment.

Owner:ORACLE INT CORP

Methods and devices for orchestrating selection of a multi-access edge computing server for a multi-client application

An exemplary multi-access edge computing (MEC) orchestration system obtains an operation parameter of a multi-client application that is to execute on a MEC server to be selected from a set of MEC servers located at a first set of locations within a coverage area of a provider network. When executing, the multi-client application serves respective client applications of a set of client devices located at a second set of locations within the coverage area. Based on the operation parameter, the MEC orchestration system identifies a candidate subset of MEC servers from the set of MEC servers and directs the set of client devices to test and report performance capabilities of the MEC servers in the candidate subset. Based on the reported performance capabilities, the MEC orchestration system selects, from the candidate subset of MEC servers, a particular MEC server on which the multi-client application is to execute.

Owner:VERIZON PATENT & LICENSING INC

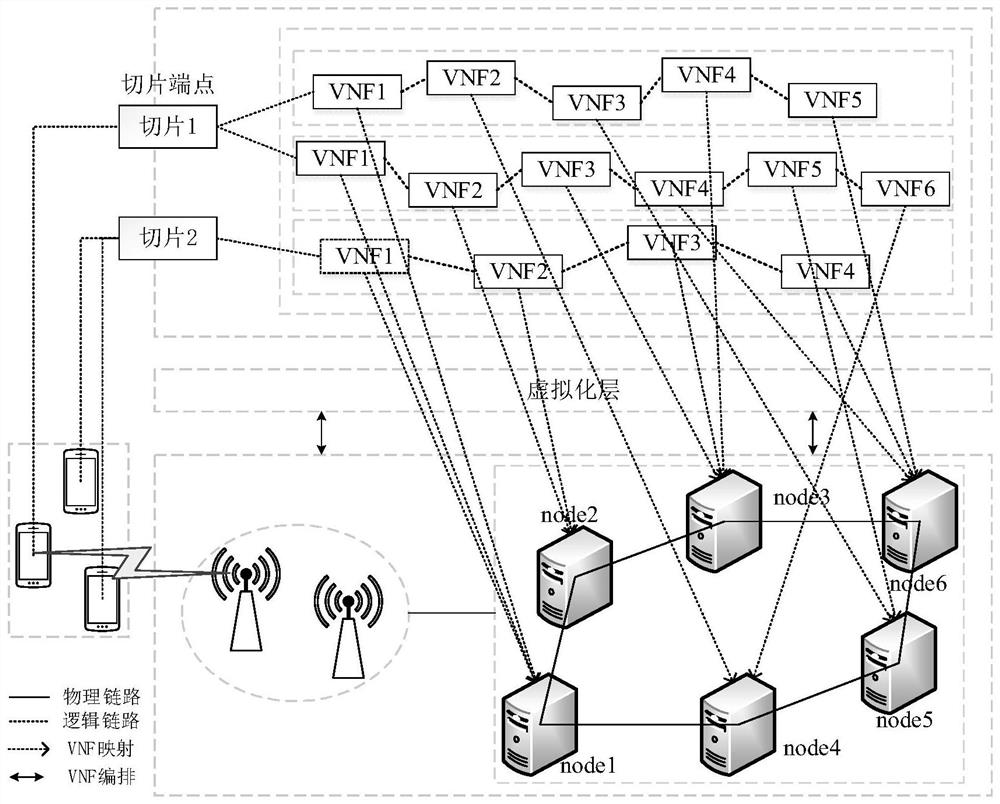

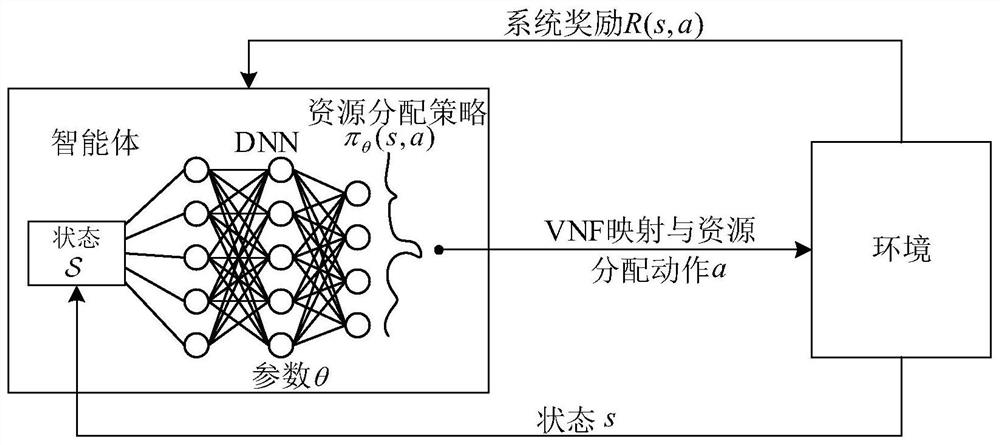

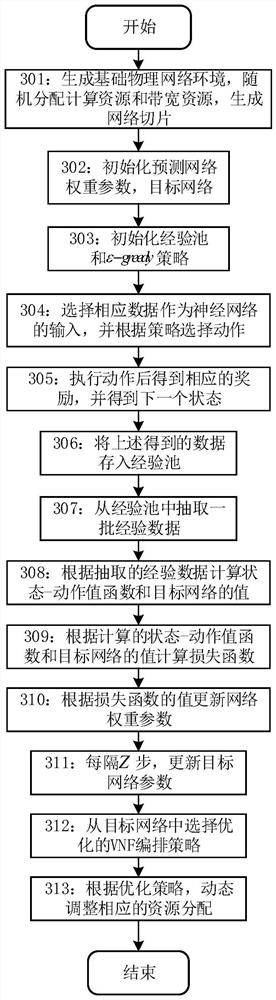

A Dynamic Virtual Network Function Orchestration Method Based on Deep Reinforcement Learning

Active | CN112887156B | Optimize orchestration costs | Guaranteed performance | Neural architectures | Transmission | Mathematical model | Orchestration (computing)

The invention relates to a dynamic virtual network function (VNF) orchestration method based on deep reinforcement learning, which belongs to the field of wireless communication. The method includes: to address the high cost of VNF orchestration caused by dynamic changes in the physical network topology, establishing a mathematical model that minimizes the resource cost and operating cost of VNF orchestration under delay constraints; establishing a Markov decision process (MDP) model according to the dynamic changes in network topology and in VNFs, and solving the MDP with a deep Q network; and, to address the excessive state space and action space and the dynamic change of network load in the MDP model, designing a dynamic optimal VNF orchestration strategy that reduces the VNF orchestration cost. While ensuring user delay performance, and subject to the computing resource capacity and link bandwidth resource capacity in the network, the invention dynamically adjusts the VNF orchestration strategy of each network slice to ensure user performance, optimize VNF orchestration costs, and improve resource utilization.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
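
For orientation, the standard deep Q network formulation that the abstract above refers to can be written as follows. This is the textbook form, not a formula taken from the patent. The symbols are assumptions: $s$ is the network state, $a$ an orchestration action, $r$ the reward (for a cost-minimizing problem, plausibly the negative of the orchestration cost), $\gamma$ the discount factor, and $\theta$ and $\theta^{-}$ the parameters of the online and target networks.

$$
y = r + \gamma \max_{a'} Q(s', a'; \theta^{-}), \qquad
L(\theta) = \mathbb{E}\left[\left(y - Q(s, a; \theta)\right)^{2}\right]
$$

The network is trained by minimizing $L(\theta)$ over sampled transitions $(s, a, r, s')$, and the learned policy selects $\arg\max_{a} Q(s, a; \theta)$ in each state.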

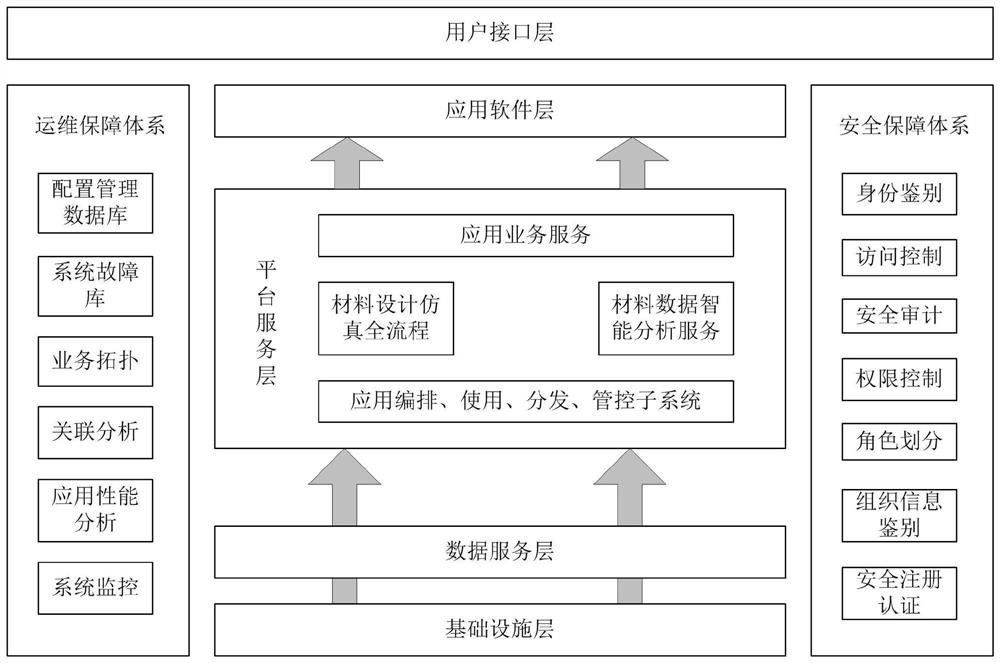

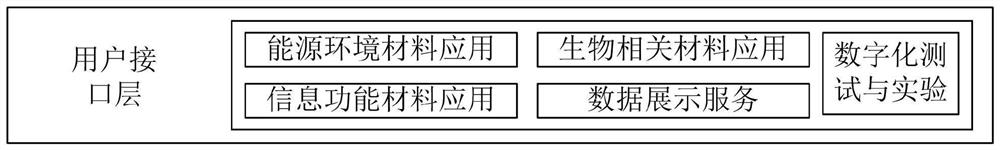

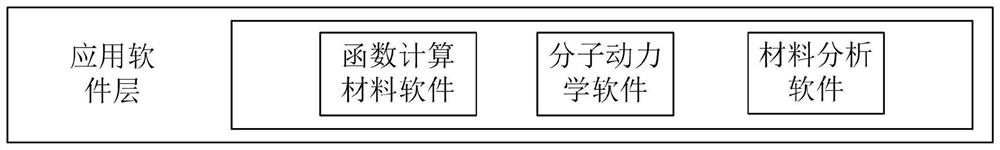

Hyper-converged hybrid architecture computing material platform

Pending | CN112988695A | Easy to use | Reduce difficulty | General purpose stored program computer | Multi-dimensional databases | Data pack | Performance computing

A hyper-converged hybrid architecture computing material platform includes: an infrastructure module configured to implement a cloud host service, a cloud storage service, a high performance computing service, and a research and development environment support service with a resilient infrastructure architecture; the data service module that is configured to manage data by taking a data packet as granularity, improve data productivity by constructing an annotation system and standardizing a data annotation behavior, shield various heterogeneous storage and file systems, and realize operation of various data tasks by taking a shielding layer as a support so as to realize data management; the platform service module that is configured to realize an application service by integrating a plurality of HPC applications related to computing materials and combining access of a data warehouse, and realize a development service through an HPC application orchestration subsystem, a Monte Carlo algorithm and a general algorithm library; an application software module that is configured to implement compilation and operation of a computing job by assigning computing material-related software; and a user interface module.

Owner:BEIJING COMPUTING CENT

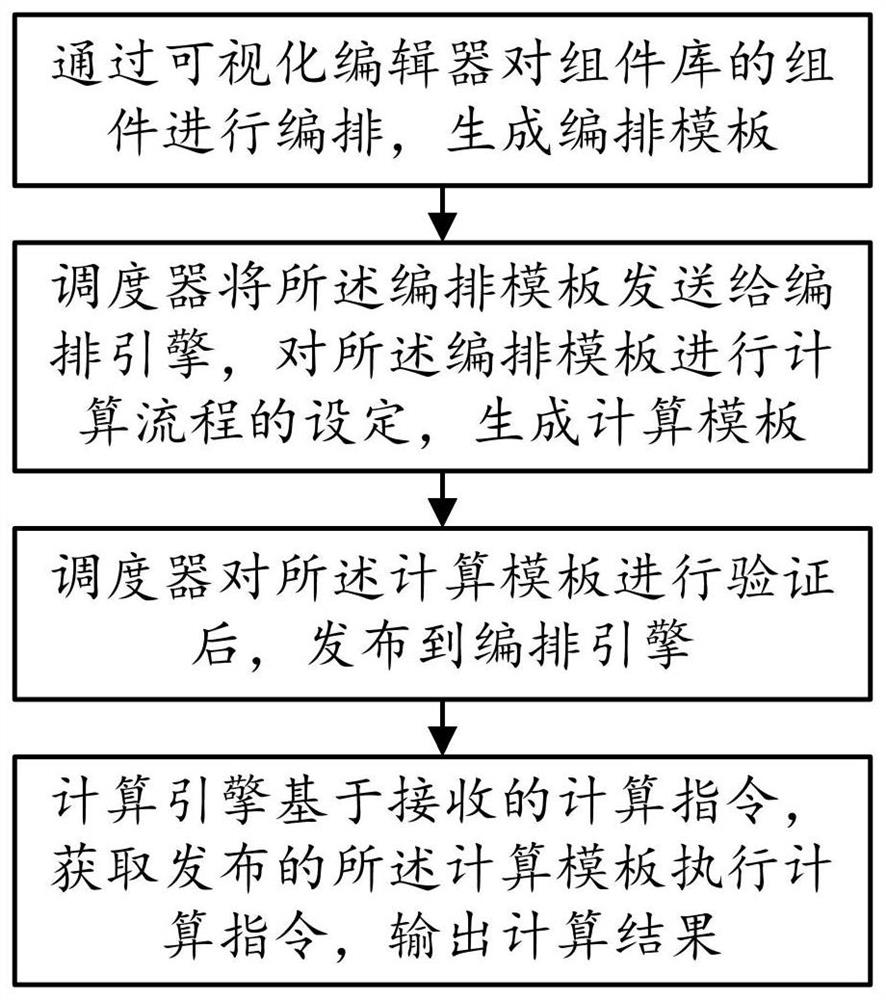

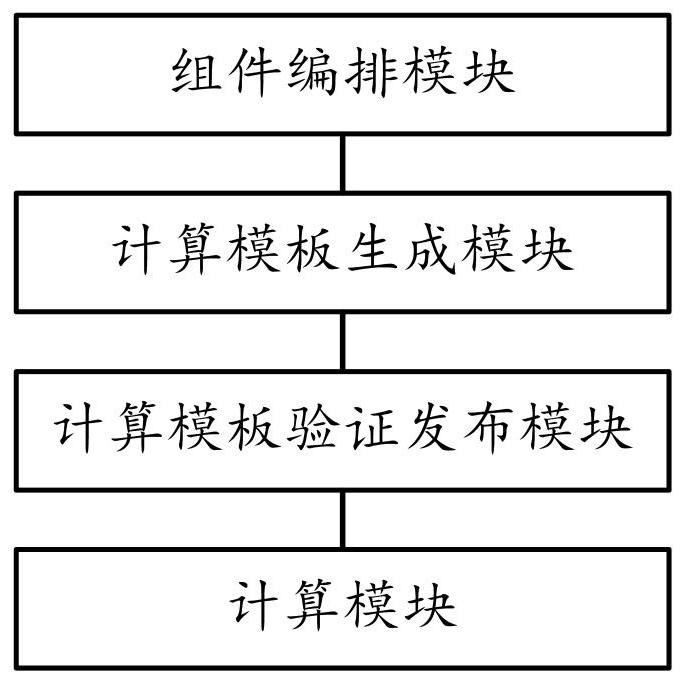

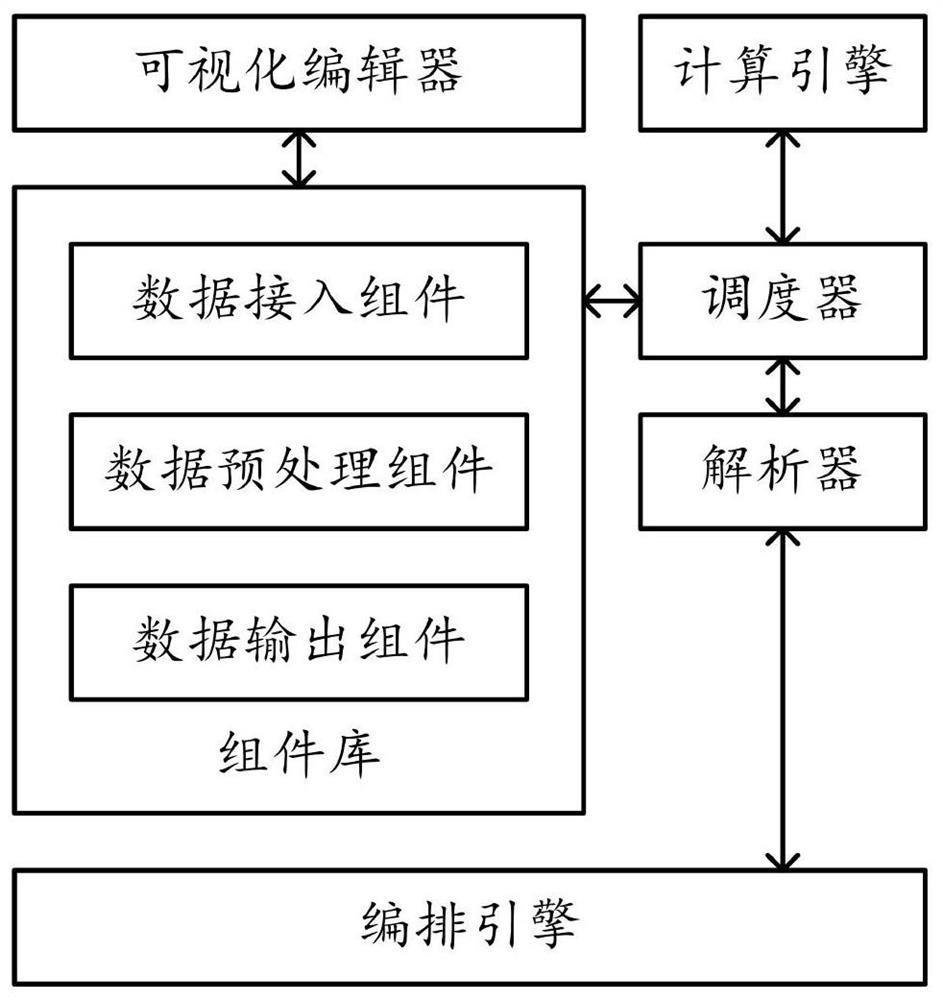

An online component orchestration computing method and system based on real-time stream computing

Active | CN112685004B | Improve orchestration efficiency | Easy to adjust | Database management systems | Software design | Theoretical computer science | Orchestration (computing)

The present invention provides an online component orchestration computing method and system based on real-time stream computing, in the field of big data application technology. The method includes the following steps: step S10, the components of the component library are orchestrated through a visual editor to generate an orchestration template; step S20, the scheduler sends the orchestration template to the orchestration engine, sets the calculation process for the orchestration template, and generates a calculation template; step S30, after verifying the calculation template, the scheduler publishes it to the orchestration engine; step S40, based on a received calculation instruction, the calculation engine obtains the published calculation template to execute the calculation instruction and outputs the calculation result. The advantage of the present invention is that the efficiency of component orchestration is greatly improved.

Owner:FUJIAN NEWLAND SOFTWARE ENGINEERING CO LTD

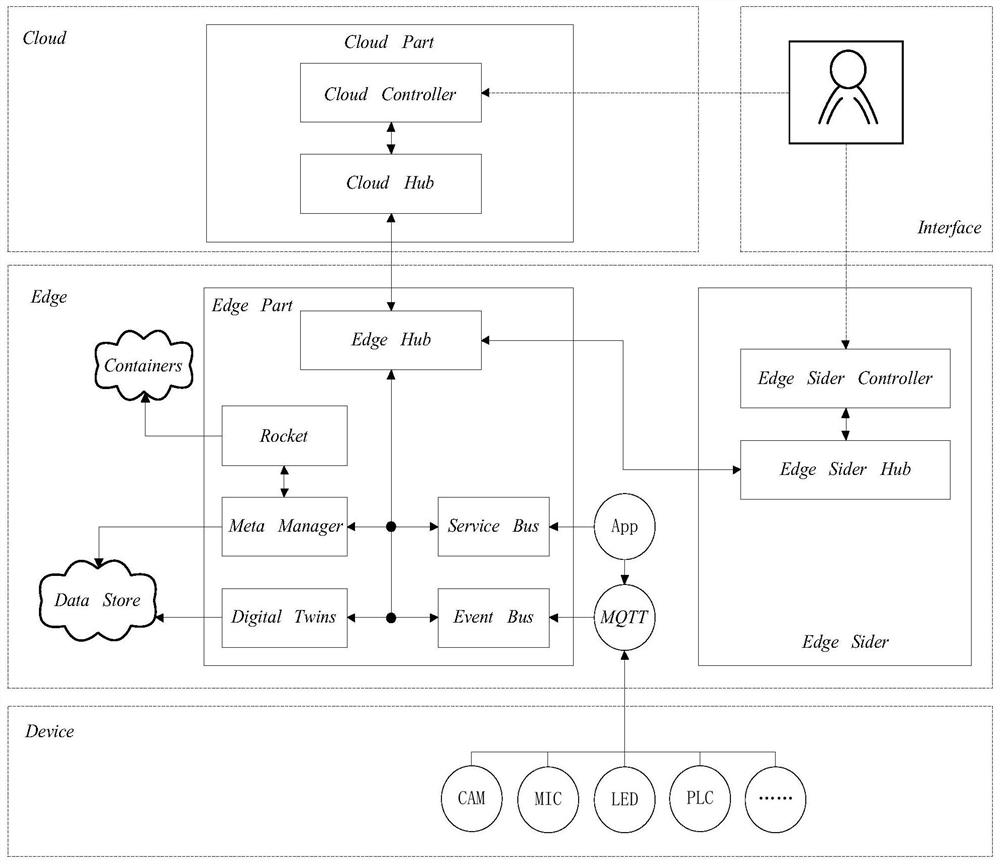

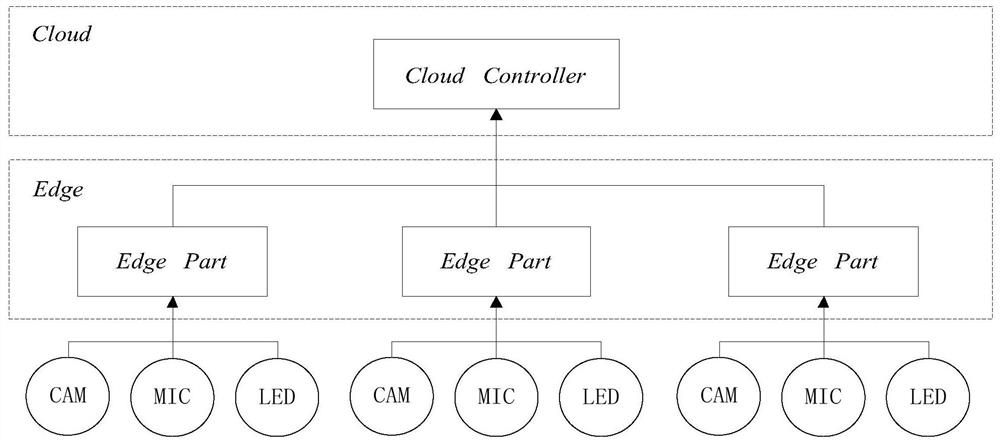

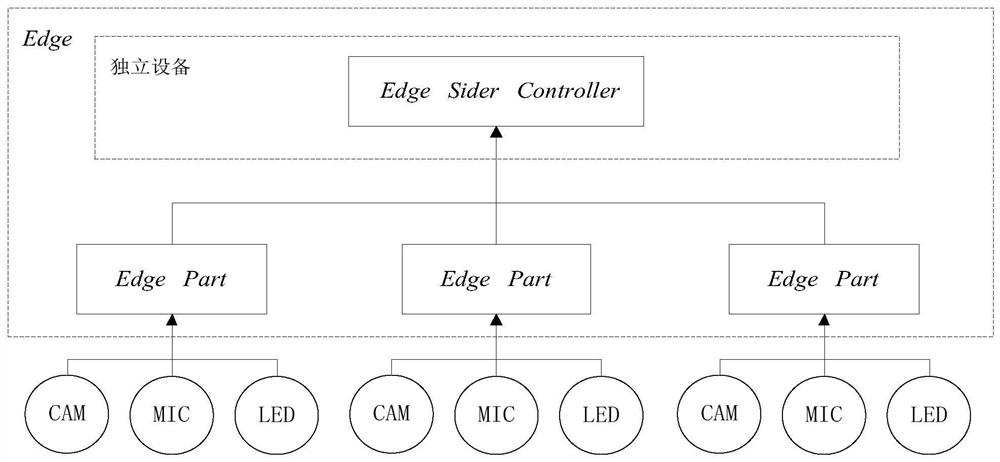

A novel architecture of edge computing system

Active | CN112202900B | Reduce the difficulty of operation and maintenance | Reduce intervention | Transmission | Computer hardware | Computer architecture

The invention discloses an edge computing system with a new architecture, which relates to the field of edge computing. The system includes at least a cloud layer deployed in a cloud data center and an edge layer deployed in an edge node. The cloud layer includes a cloud control module, and the edge layer includes an edge module. The edge module includes at least a Rocket component, a Meta Manager component and an edge message channel, and communicates with the cloud control module through the edge message channel. The system is based on lightweight containerization technology and provides a stable and reliable edge software deployment and orchestration system. Combined with the advantages of cloud-edge collaboration and edge autonomy, it can effectively reduce personnel intervention in edge-side software orchestration and deployment, reduce the difficulty of edge-side software operation and maintenance, and address technical challenges faced by edge computing such as cloud-edge collaboration, networking, management, expansion, and heterogeneity.

Owner:WUXI XUELANG DIGITAL TECH CO LTD

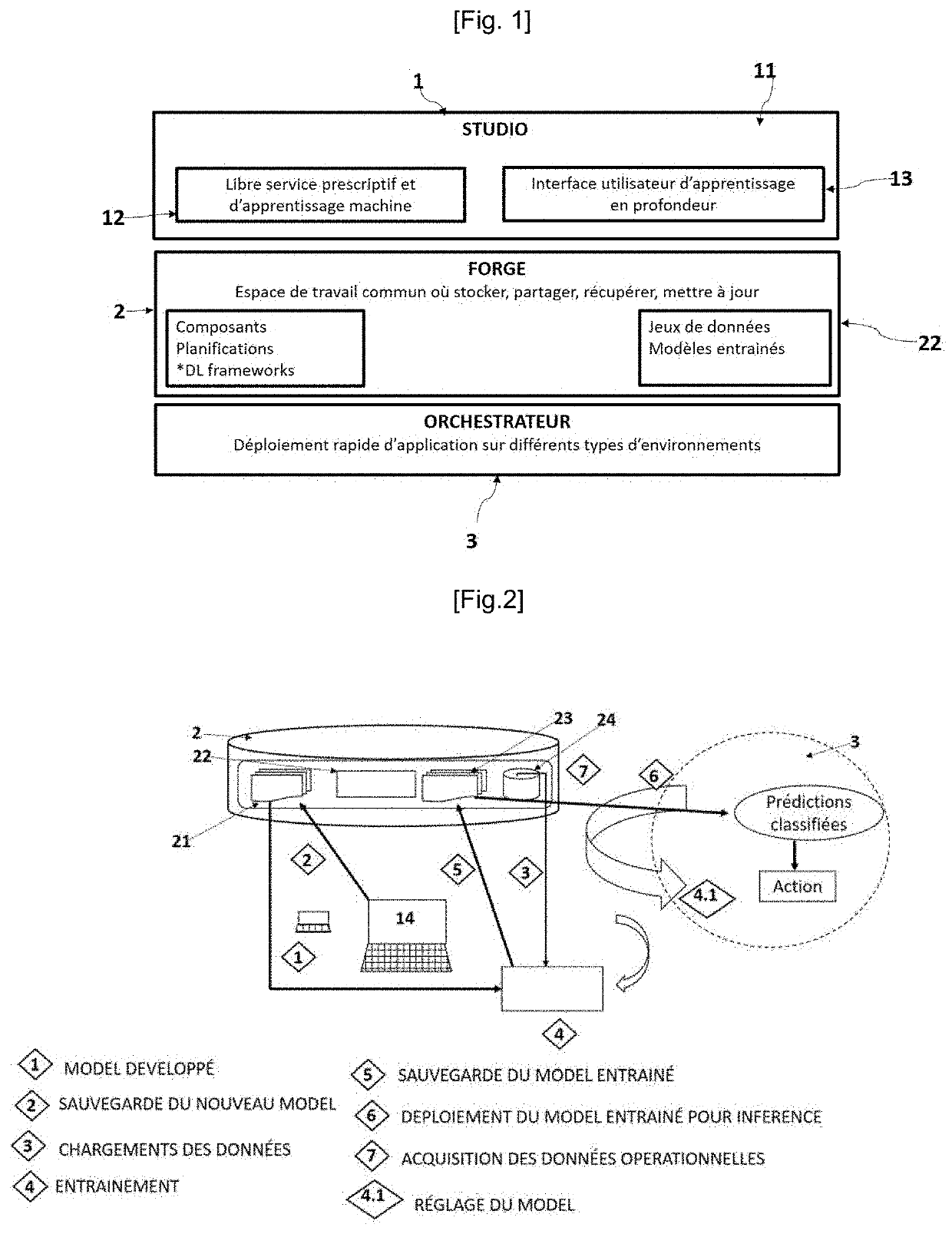

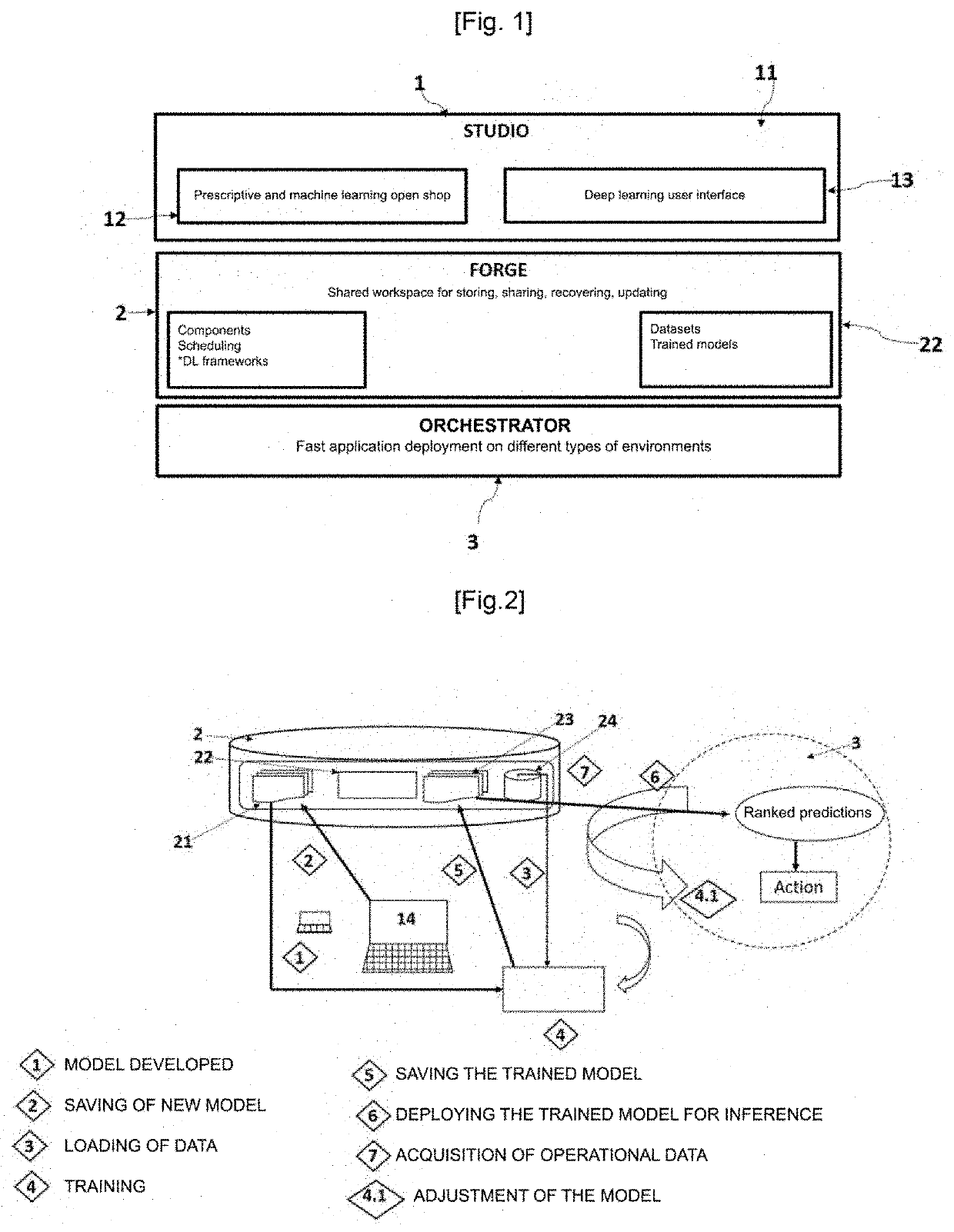

Support system for designing an artificial intelligence application, executable on distributed computing platforms

A system using a suite of modular and clearly structured artificial intelligence application design tools (SOACAIA), executable on distributed or non-distributed computing platforms, to browse, develop, make available, and manage AI applications. The tool set implements three functions: a Studio function, which establishes a secure and private shared space for the company; a Forge function, which industrializes AI instances and makes analytical models and their associated datasets available to the development teams; and an Orchestration function, which manages the overall implementation of the AI instances designed by the Studio function and industrialized by the Forge function and performs ongoing management on a hybrid cloud or HPC infrastructure.

Owner:BULL SA

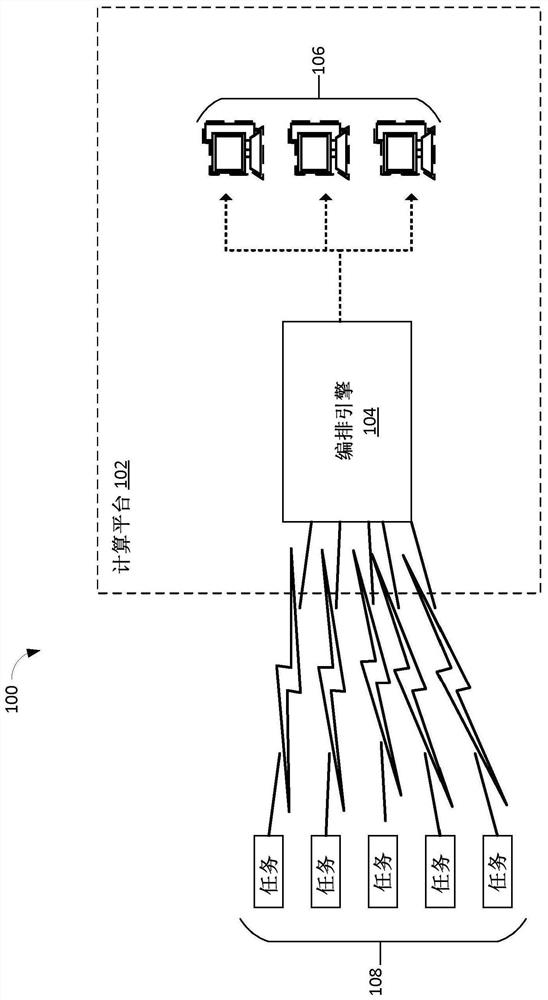

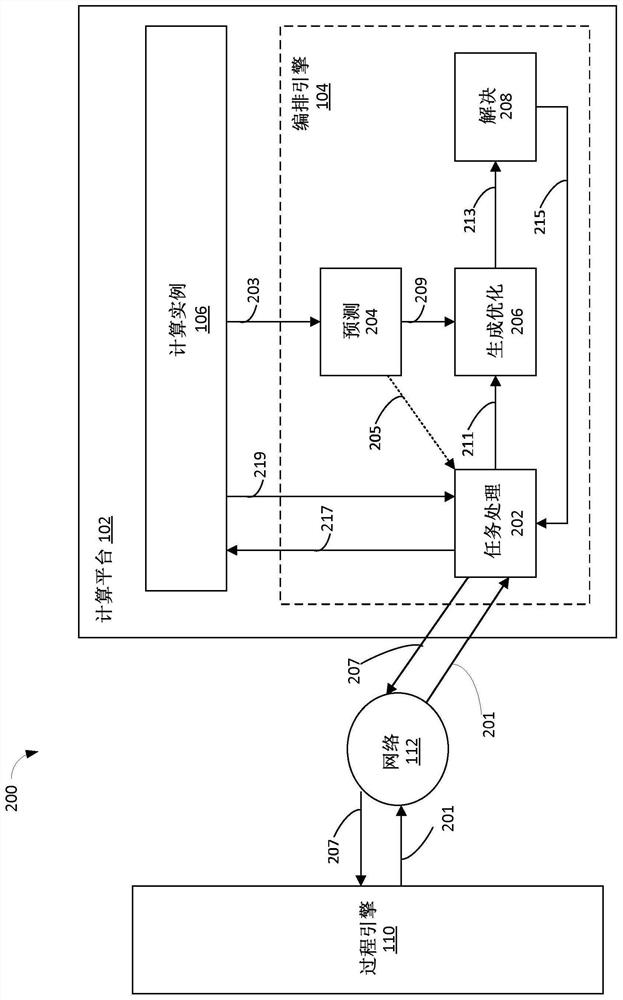

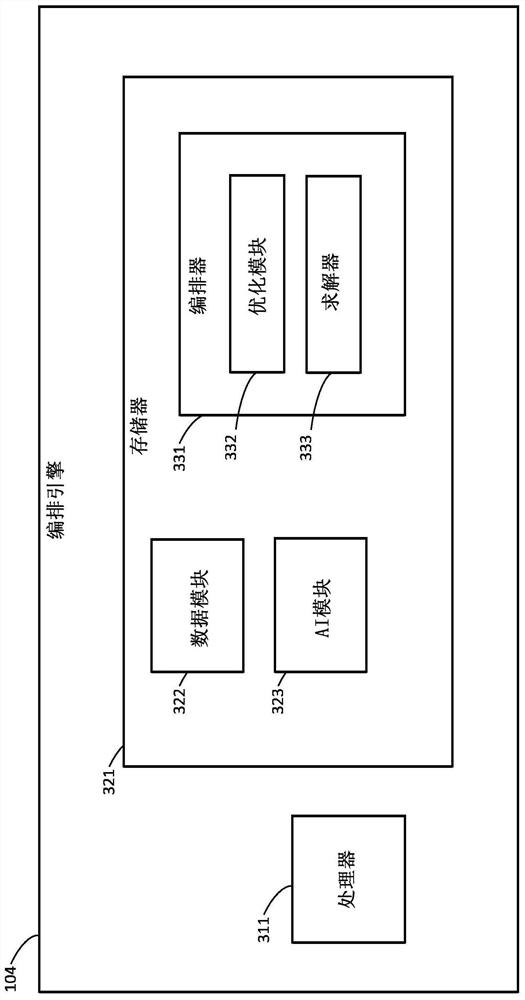

Orchestration of containerized applications

Pending | CN113168347A | Resource allocation | Knowledge representation | Network conditions | Orchestration (computing)

A system and method are disclosed for orchestrating the execution of computing tasks. An orchestration engine can receive task requests over a network from a plurality of process engines. The process engines may correspond to respective edge or field devices that are remotely located as compared to the orchestration engine. Each task request may indicate at least one task requirement for executing a respective computing task. A plurality of computing instances that have available computing resources can be selected from a set of computing instances. A predicted runtime can be generated for each of the computing tasks. In an example, based on the predicted runtimes, task requirements, available computing resources, and associated network conditions, a schedule and allocation scheme are determined by the orchestration engine. The schedule and allocation scheme define when each of the plurality of computing tasks is performed, and which of the plurality of selected computing instances performs each of the plurality of computing tasks. The selected computing instances execute the plurality of computing tasks according to the schedule and allocation scheme.

Owner:SIEMENS AG
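The abstract above describes deriving a schedule and allocation scheme from predicted runtimes, task requirements, available resources, and network conditions, without naming an algorithm. The sketch below swaps in a simple greedy heuristic (longest predicted runtime first, earliest finish time wins) purely for illustration. All names (`Task`, `Instance`, `schedule`), the single CPU-count requirement, and the modeling of network conditions as a fixed per-dispatch delay are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Task:
    name: str
    cpus_required: int        # assumed stand-in for "task requirement"
    predicted_runtime: float  # seconds, assumed to come from a runtime predictor


@dataclass
class Instance:
    name: str
    cpus_available: int
    network_delay: float      # seconds added each time a task is dispatched


def schedule(tasks: List[Task], instances: List[Instance]) -> Dict[str, Tuple[str, float, float]]:
    """Greedy plan: map each task name to (instance name, start time, end time)."""
    free_at = {inst.name: 0.0 for inst in instances}  # when each instance is next idle
    plan: Dict[str, Tuple[str, float, float]] = {}
    # Place the longest tasks first so they do not end up queued behind short ones.
    for task in sorted(tasks, key=lambda t: t.predicted_runtime, reverse=True):
        eligible = [i for i in instances if i.cpus_available >= task.cpus_required]
        if not eligible:
            raise ValueError(f"no instance can run task {task.name!r}")
        # Pick the eligible instance on which this task would finish earliest.
        best = min(
            eligible,
            key=lambda i: free_at[i.name] + i.network_delay + task.predicted_runtime,
        )
        start = free_at[best.name] + best.network_delay
        end = start + task.predicted_runtime
        free_at[best.name] = end
        plan[task.name] = (best.name, start, end)
    return plan


tasks = [Task("A", 2, 10.0), Task("B", 1, 4.0), Task("C", 1, 3.0)]
instances = [Instance("big", 4, 1.0), Instance("small", 1, 0.5)]
plan = schedule(tasks, instances)
```

In this example task `A` only fits on `big`, while `B` and `C` finish sooner on `small` than they would by waiting for `big`, so they run there back to back. The sketch runs one task at a time per instance; a fuller model would track partial CPU usage and time-varying network conditions.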

Cloud-based automatic control system network programming platform

Pending | CN113220284A | Easy to operate | Not easy to make mistakes | Programming languages/paradigms | Intelligent editors | Computer architecture | Automatic control

The invention belongs to the technical field of programming computing platforms and relates in particular to a cloud-based network programming platform for automatic control systems. The platform comprises: a programmable processor coupled to a memory; a third-party orchestration module, stored in the memory and configured to be executed by the programmable processor to communicate with a cloud service provider's orchestration system; a user login management module, one side of which is connected to the programming platform, with one side of the programming platform connected to an automatic control system; and the automatic control system, which internally comprises a centralized network controller, a distributed database, and a distributed file system, as well as a virtual computing system, a virtual network system, a virtual storage system, and an operating system. According to the abstract, the platform is easy to operate, is not prone to errors, and meets the automatic control requirements of actual production.

Owner:陕西大唐高科机电科技有限公司

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com