265 results about "Reference counting" patented technology

In computer science, reference counting is a programming technique of storing the number of references, pointers, or handles to a resource, such as an object, a block of memory, disk space, and others.
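
To make the definition concrete, here is a minimal single-threaded sketch in Python. The class and method names (`RefCounted`, `retain`, `release`) are illustrative assumptions, not taken from any patent listed here. Real implementations usually also need atomic updates when several threads share an object.

```python
class RefCounted:
    """A resource that is released as soon as its reference count reaches zero."""

    def __init__(self, name, on_release):
        self.name = name
        self.count = 1                # the creator holds the first reference
        self._on_release = on_release

    def retain(self):
        if self.count == 0:
            raise RuntimeError(f"{self.name} was already released")
        self.count += 1
        return self

    def release(self):
        if self.count == 0:
            raise RuntimeError(f"{self.name} was already released")
        self.count -= 1
        if self.count == 0:           # last reference dropped: free immediately
            self._on_release(self.name)


released = []
buf = RefCounted("buffer", released.append)
alias = buf.retain()   # two references
buf.release()          # one reference left, resource still alive
alias.release()        # count hits zero, on_release runs
```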

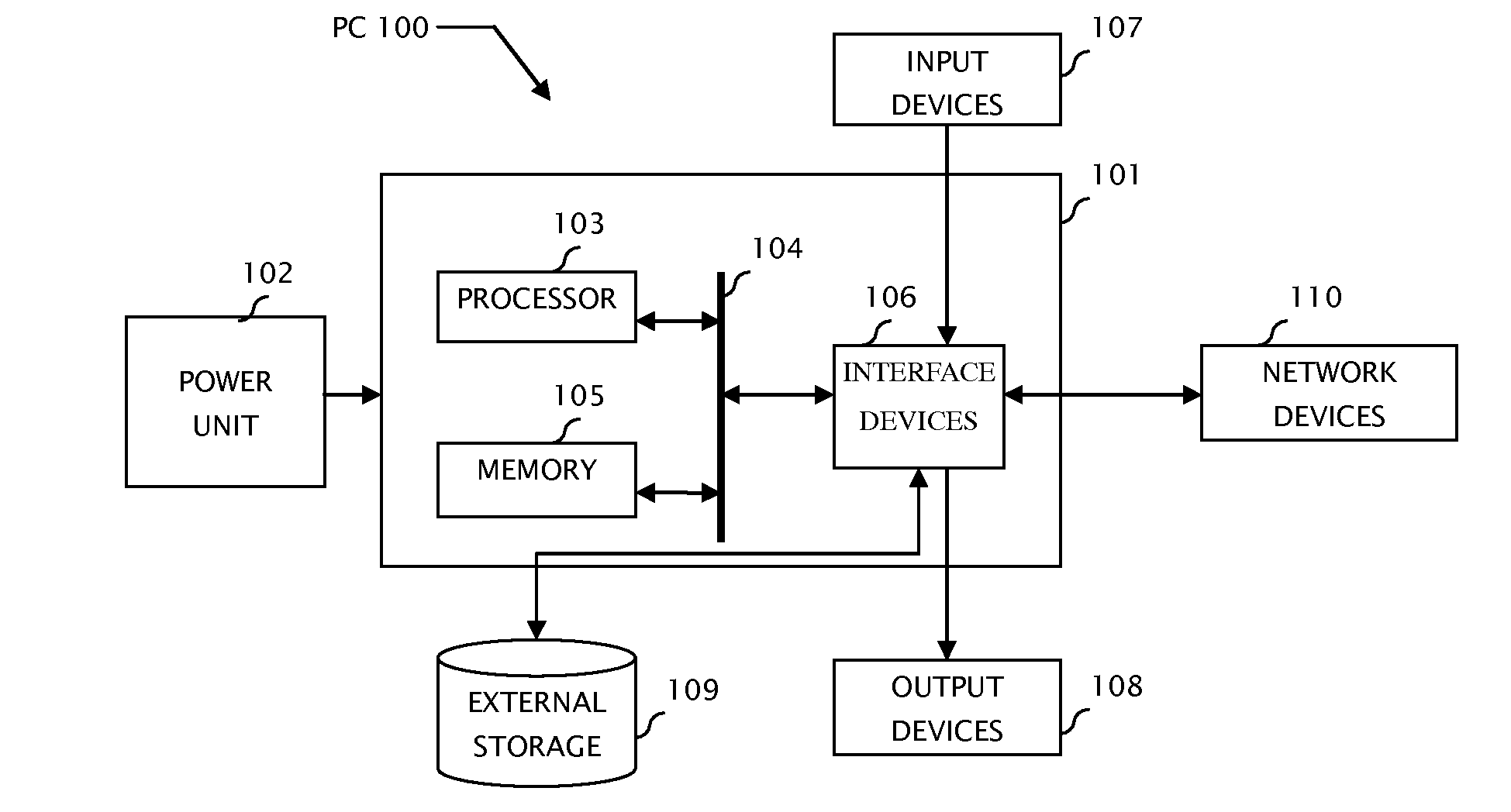

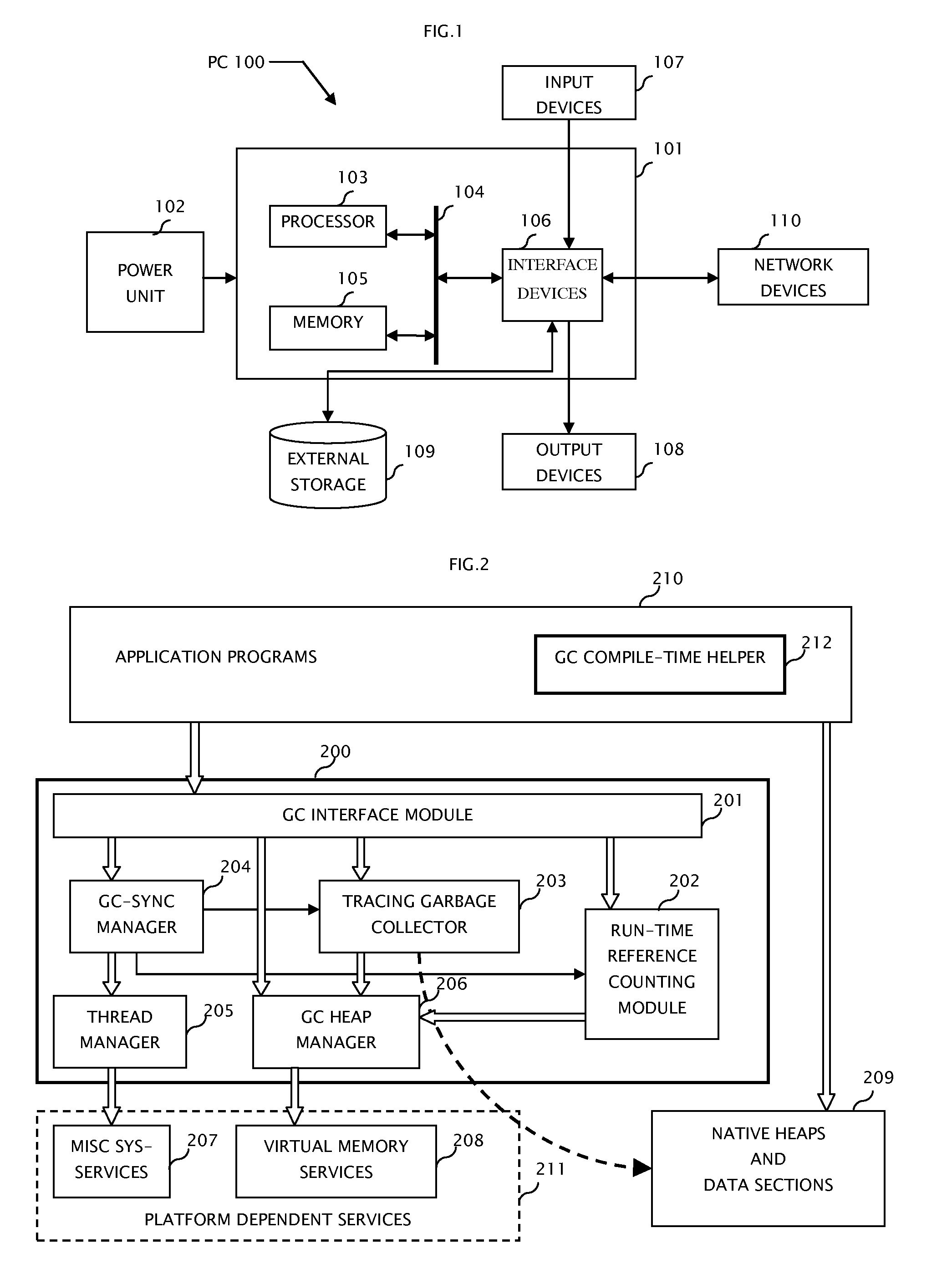

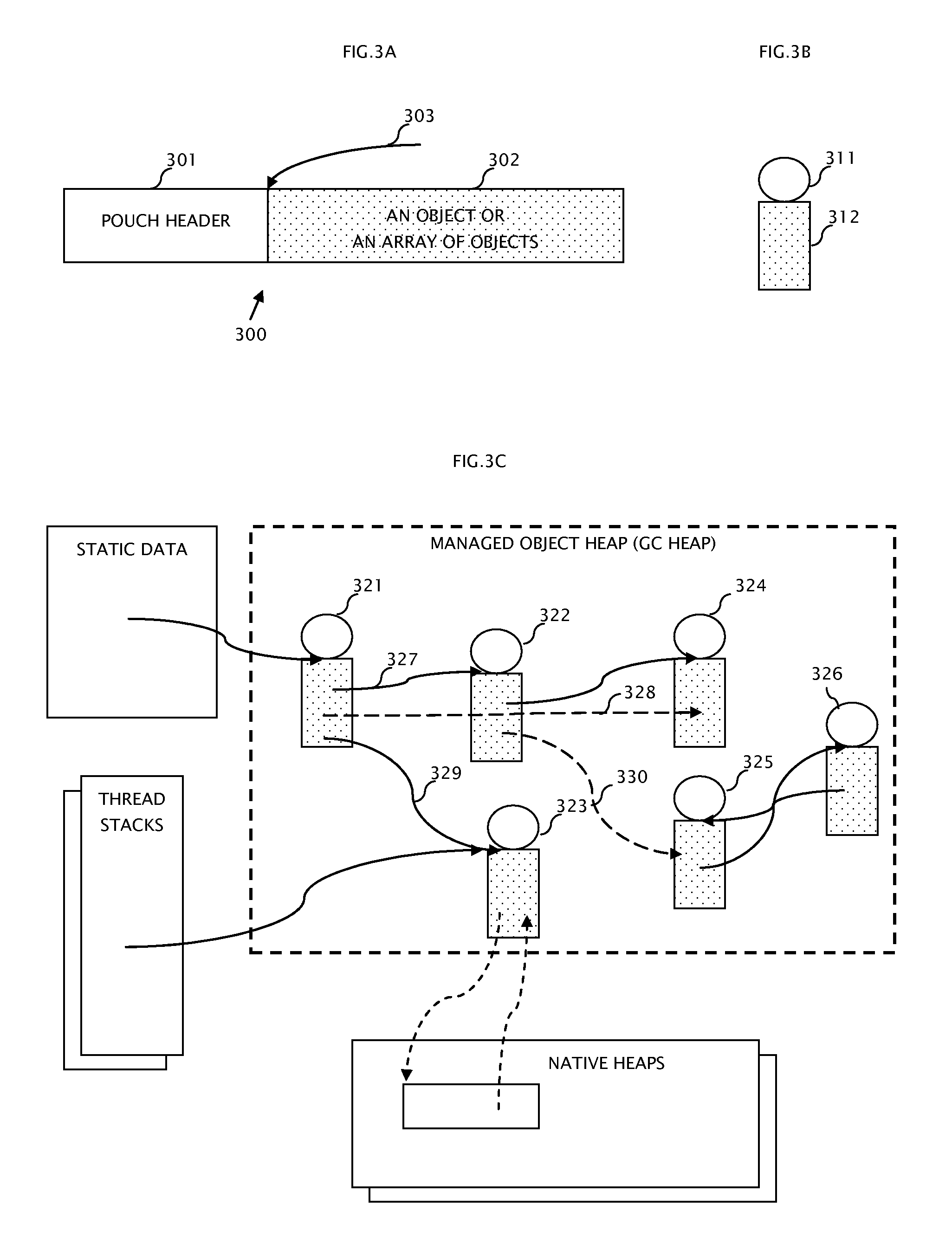

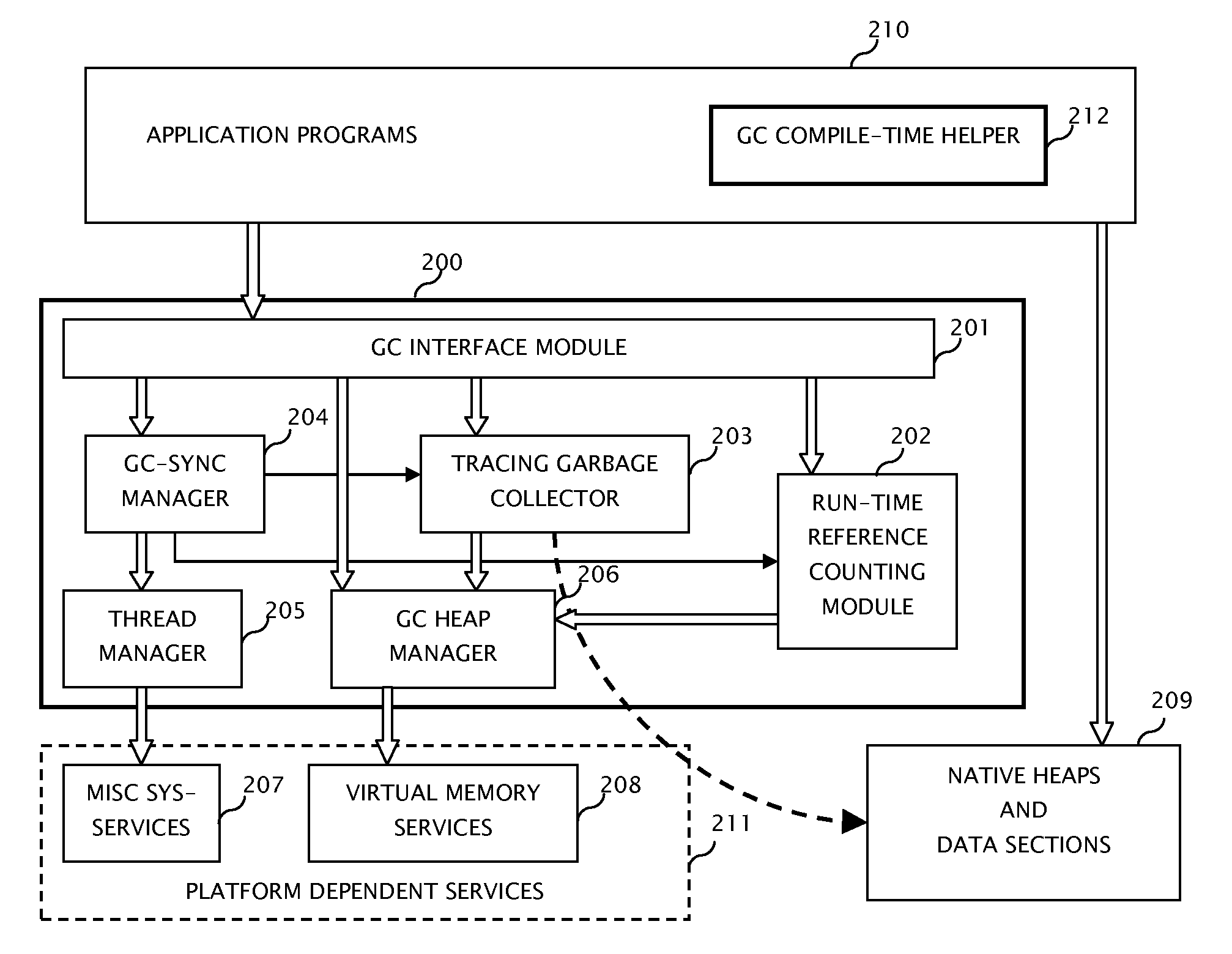

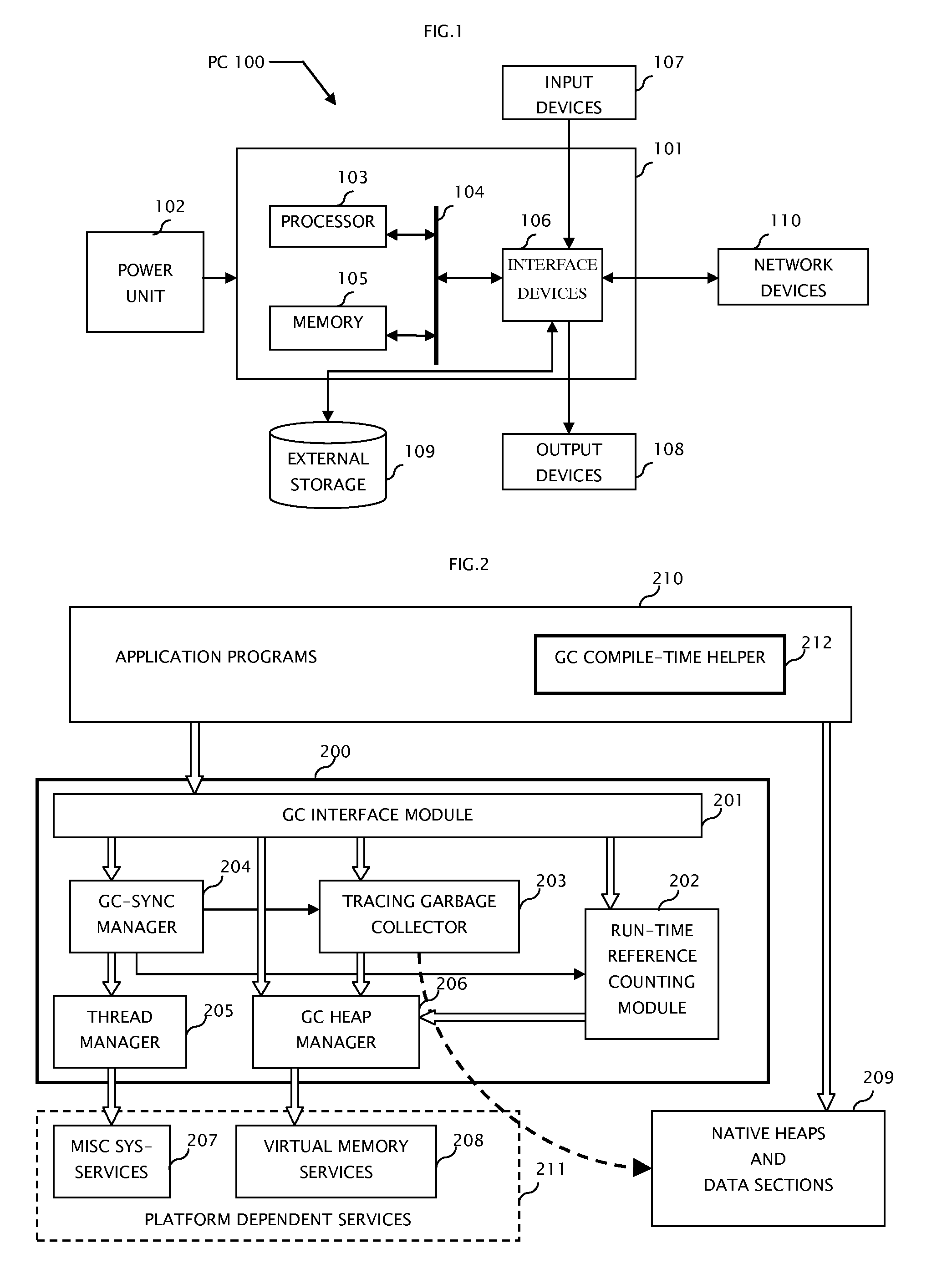

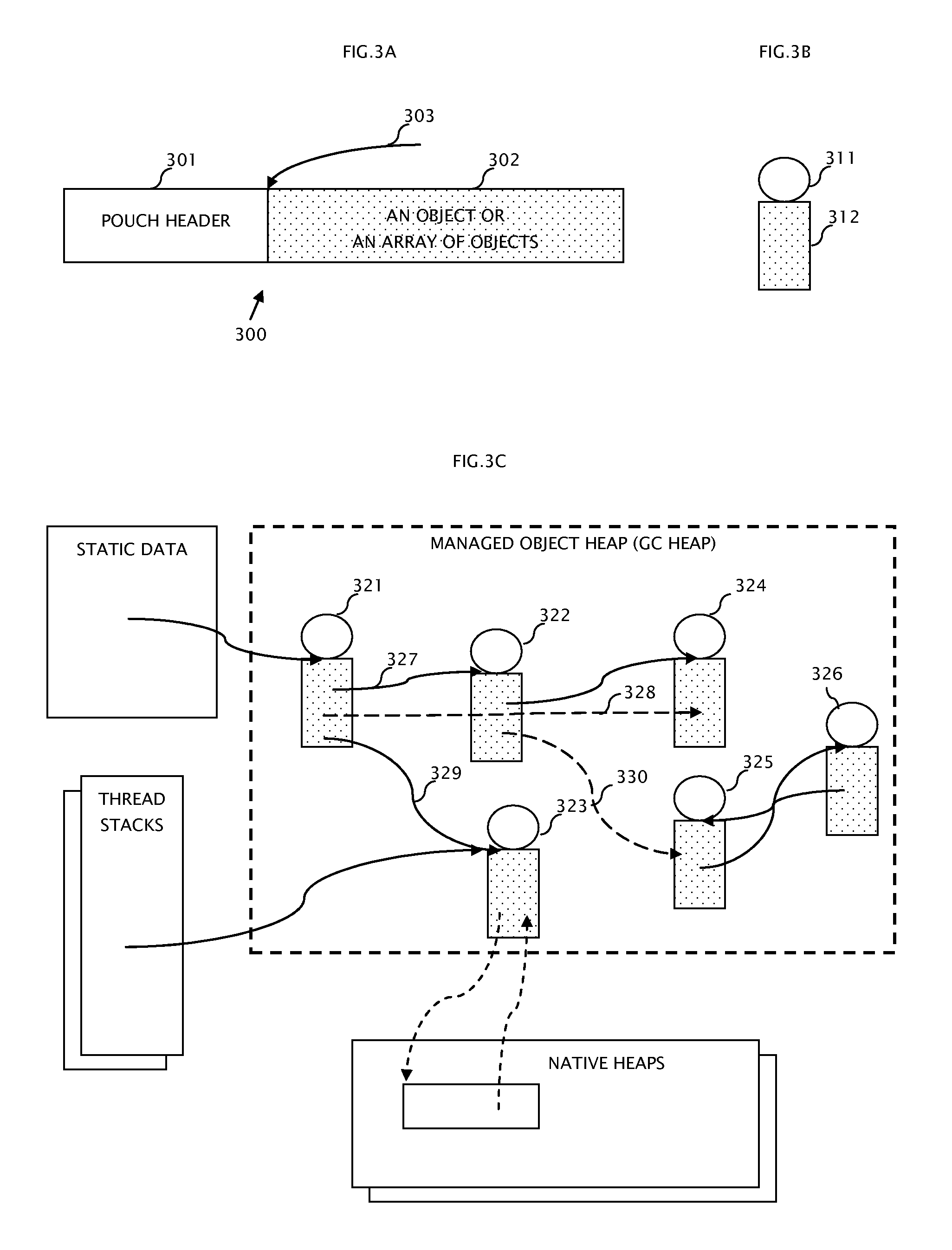

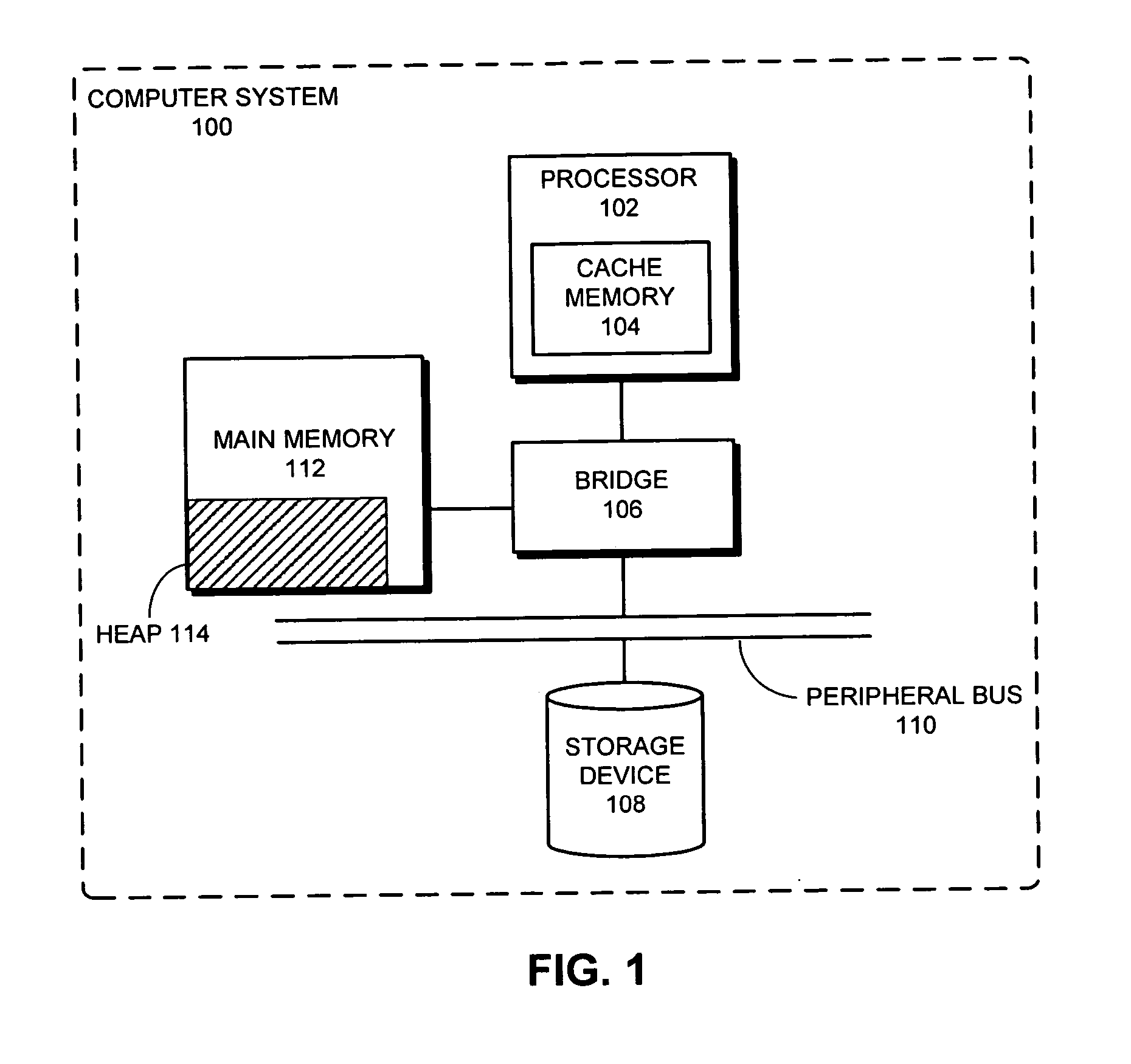

System and method for computer automatic memory management

Status: Active | Publication: US20070203960A1 | Tags: Ensure correct execution, Efficient memory usage, Data processing applications, Special data processing applications, Immediate release, Waste collection

The present invention is a method and system of automatic memory management (garbage collection). An application automatically marks objects referenced from the “extended root set”. At garbage collection time, the system starts its traversal from the marked objects. It can perform accurate garbage collection in a language without built-in garbage collection, such as C++. It also provides deterministic reclamation: an object and its resources are released immediately when the last reference to it is dropped. Application code automatically becomes entirely GC-safe and interruptible. A concurrent collector can be pauseless, with a predictable worst-case latency on the order of microseconds. Memory usage is efficient, and the cost of reference counting is significantly reduced.

Owner:GUO MINGNAN

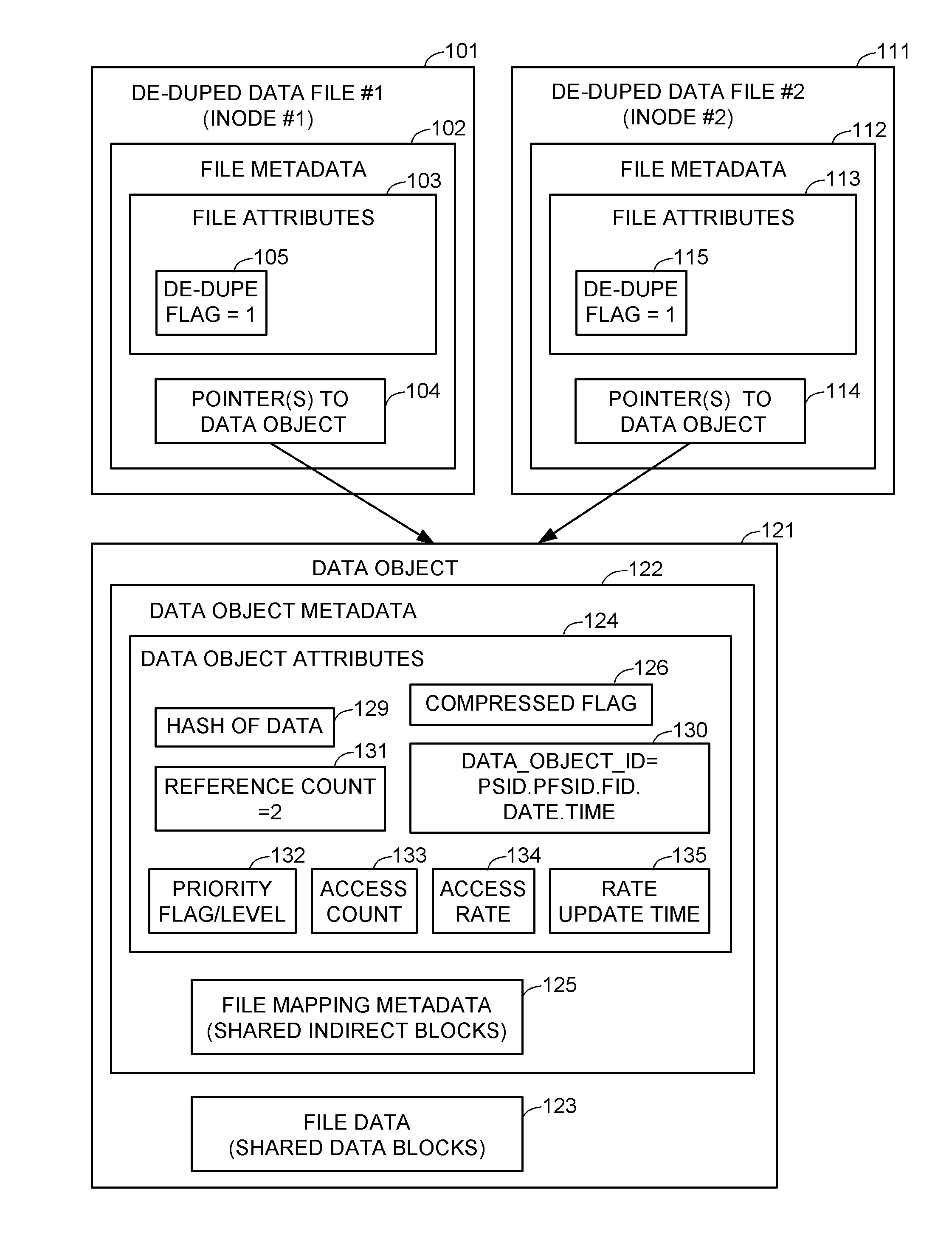

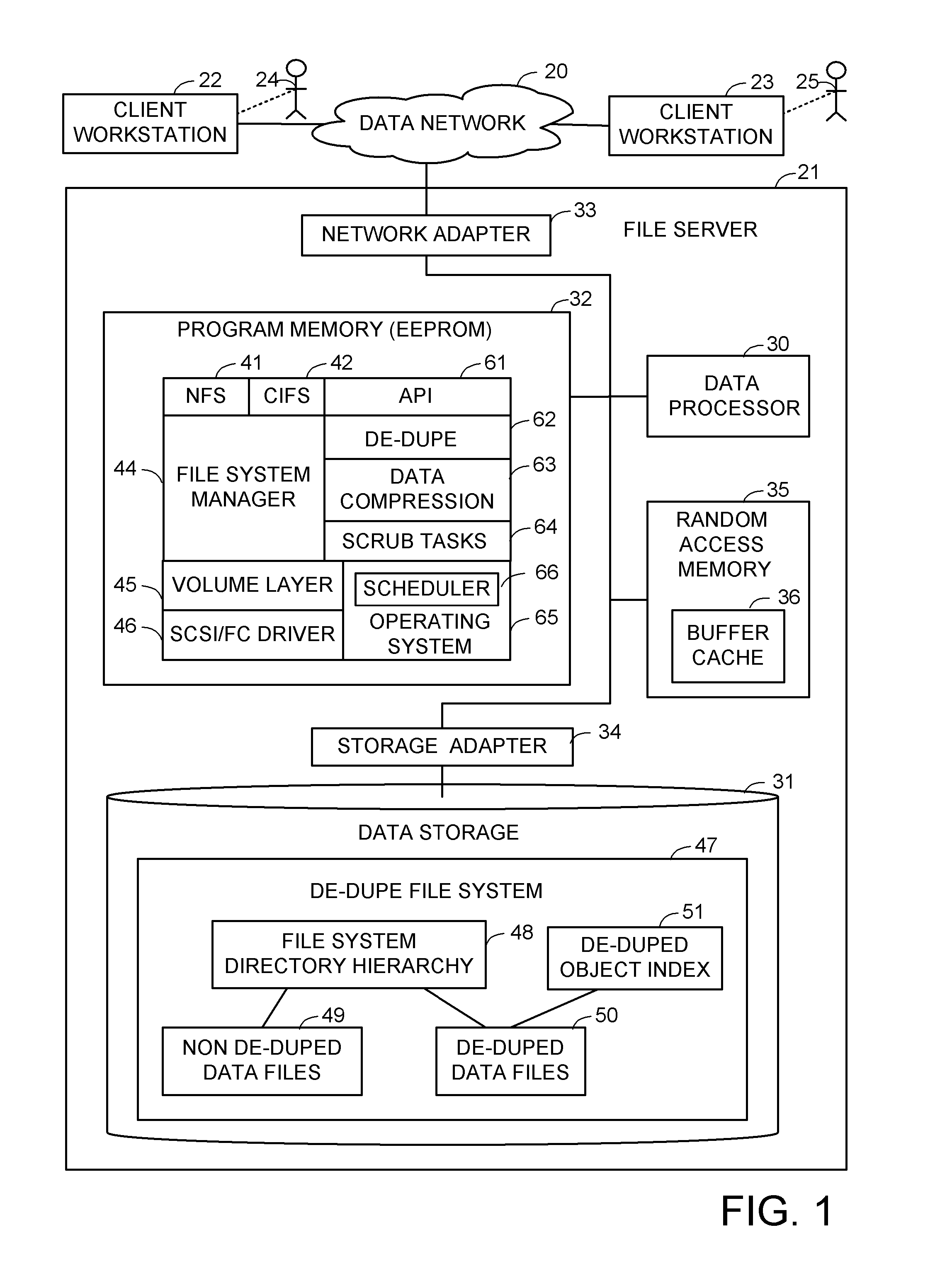

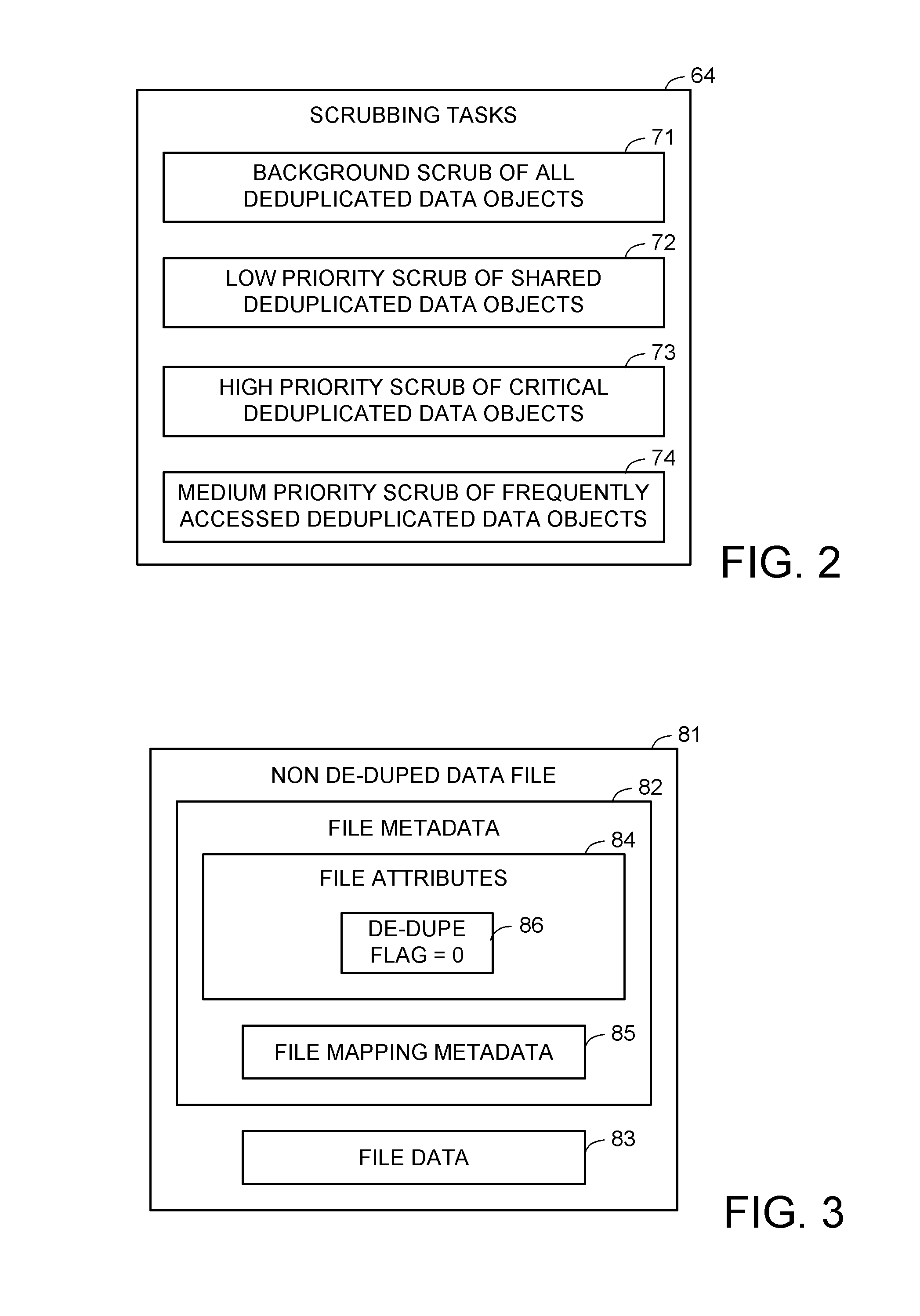

Priority based data scrubbing on a deduplicated data store

Status: Active | Publication: US8407191B1 | Tags: Digital data information retrieval, Digital data processing details, Access frequency, Data store

Deduplicated data objects are scrubbed by executing a priority scrubbing task that scans the deduplicated data objects and applies a condition that enables priority data scrubbing based on the value of at least one attribute of the deduplicated data objects. For example, a low priority task scrubs a deduplicated data object when its reference count reaches a threshold. A high priority task scrubs a deduplicated data object when a priority attribute indicates that the object is used by a critical source data object. A medium priority task scrubs a deduplicated data object when its access frequency reaches a threshold. The condition may encode a scrubbing policy or heuristic, and may trigger further action in addition to scrubbing, such as an update of the access rate.

Owner:EMC IP HLDG CO LLC
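
One way to picture the scrubbing condition is as a function from an object's attributes to a task priority. The sketch below is hypothetical: the thresholds, the `DedupObject` fields, and the rule that a critical object outranks the other two conditions are assumptions for illustration, since the abstract gives no concrete values or precedence.

```python
from dataclasses import dataclass

# Hypothetical thresholds: the abstract names the conditions but not the values.
REFCOUNT_THRESHOLD = 100
ACCESS_FREQ_THRESHOLD = 50

@dataclass
class DedupObject:
    oid: str
    ref_count: int
    access_freq: int
    critical: bool   # priority attribute: used by a critical source data object

def scrub_priority(obj):
    """Return 'high', 'medium', 'low', or None when no condition holds."""
    if obj.critical:
        return "high"
    if obj.access_freq >= ACCESS_FREQ_THRESHOLD:
        return "medium"
    if obj.ref_count >= REFCOUNT_THRESHOLD:
        return "low"
    return None

def plan_scrub(objects):
    """Order the objects that need scrubbing from high to low priority."""
    rank = {"high": 0, "medium": 1, "low": 2}
    due = [(scrub_priority(o), o.oid) for o in objects]
    due = [(p, oid) for p, oid in due if p is not None]
    due.sort(key=lambda item: rank[item[0]])   # stable: ties keep input order
    return [oid for _, oid in due]
```

A heavily shared object (high reference count) is scrubbed last here because damage to it is costly but it is not known to back critical data; a real policy could weigh these factors differently.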

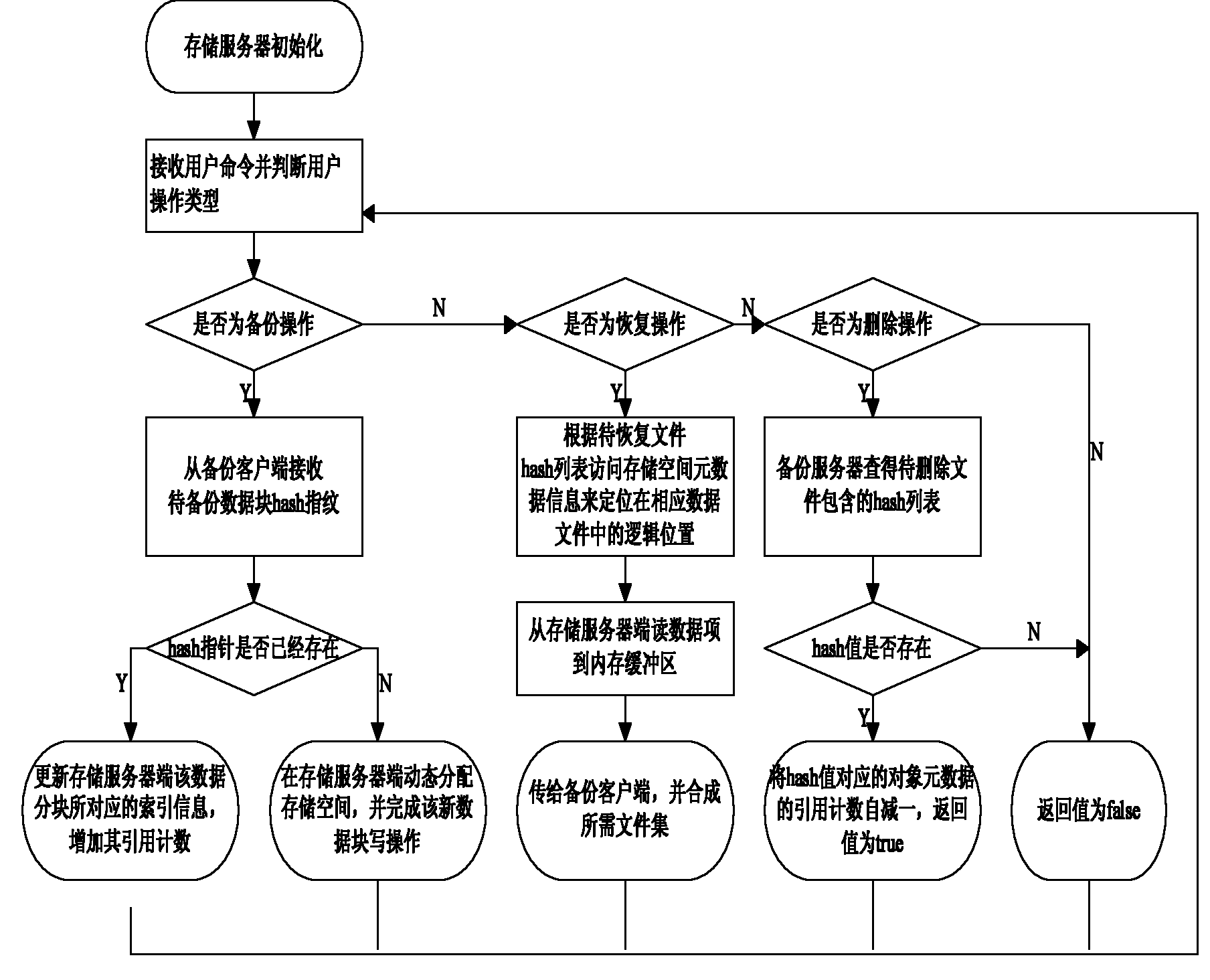

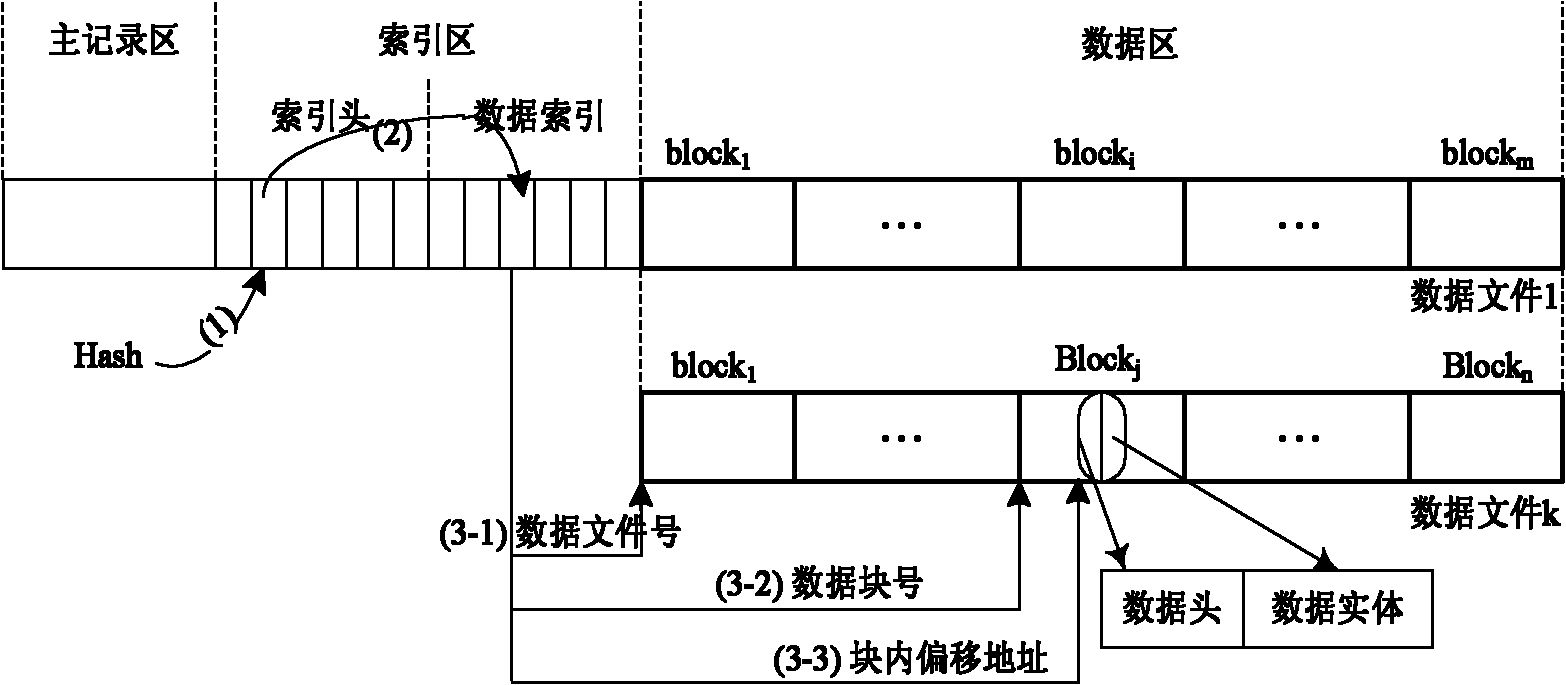

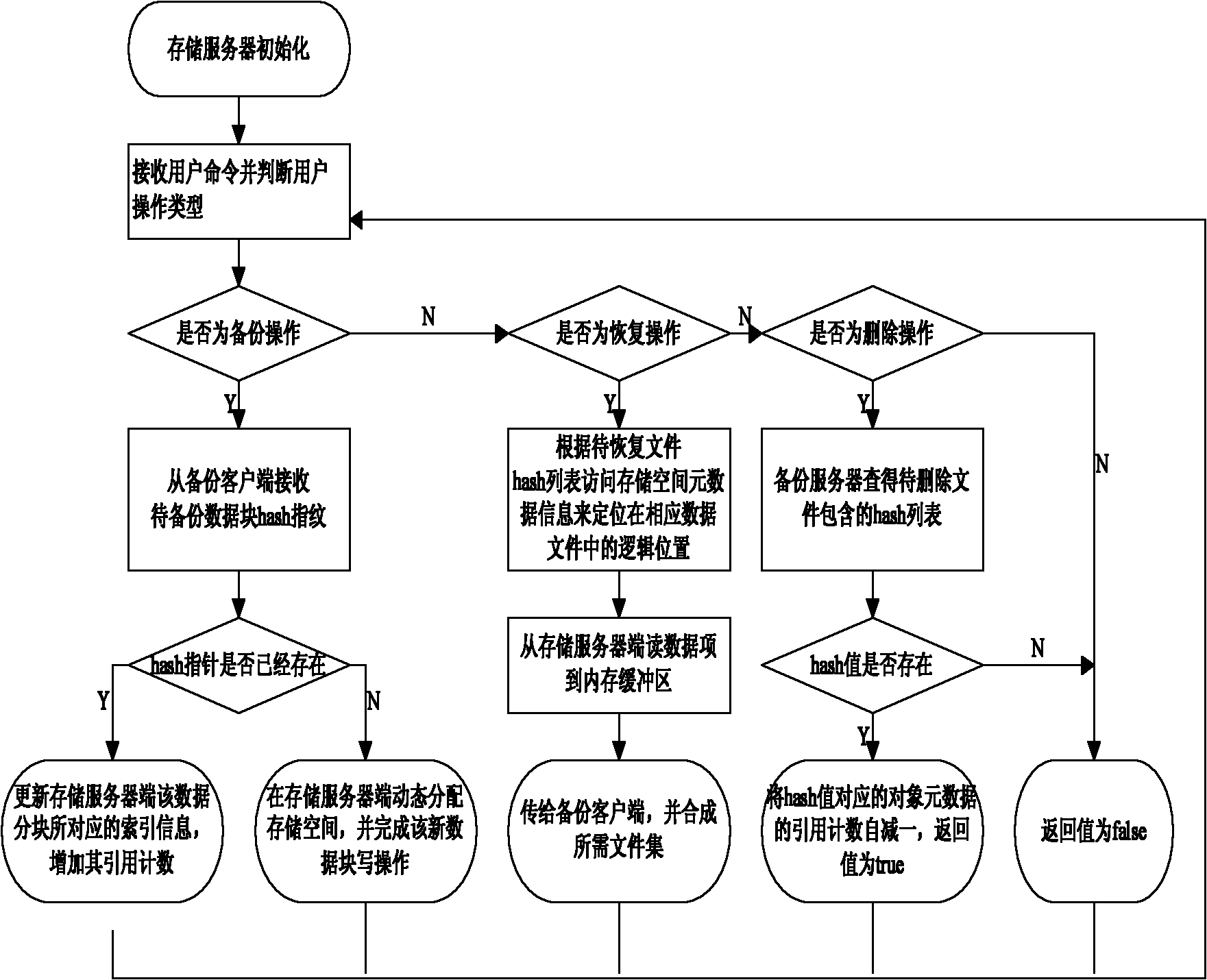

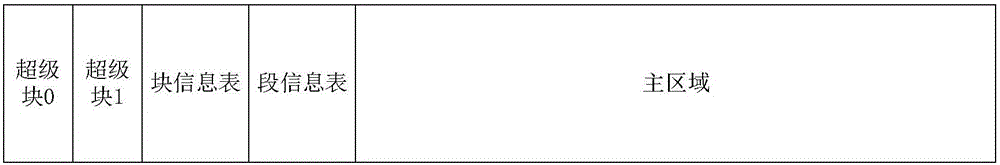

Data organization method for backup services

Status: Inactive | Publication: CN101814045A | Tags: Low cost, Efficient execution of transfers, Memory addressing/allocation/relocation, Redundant operation error correction, Extensibility, Data management

The invention discloses a data organization method for the storage server side of backup service software, intended to improve the efficiency of data organization and data management on the storage server. The method comprises the following steps: (1) initializing the storage space of the storage server as a metadata area (comprising a main record, an index header and a data index) and a data area; (2) receiving and parsing a user command: for a backup operation, going to step (3); for a recovery operation, going to step (4); and for a cancel operation, going to step (5); (3) processing the backup operation by backing up user data to the data area of the storage server, using data deduplication to avoid storing repeated data, and returning to step (2); (4) processing the recovery operation by locating the list of data to be recovered, as specified by the user, in the data area of the storage server, transmitting it to the user side, and returning to step (2); and (5) processing the cancel operation by finding the data the user specified for cancellation, handling it according to the reference counts kept for the backed-up data blocks in the data area of the storage server, and returning to step (2). The method improves the utilization and manageability of the storage server and the extensibility of the system, saves network bandwidth, and improves backup efficiency.

Owner:HUAZHONG UNIV OF SCI & TECH
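
The reference counting in the cancel step can be sketched as a small deduplicating block store in which cancelling a backup decrements the count of each of its blocks and frees a block only when no remaining backup uses it. `BackupStore`, the SHA-256 fingerprint, and the 4-byte block size (chosen only to keep the example small) are assumptions, not details from the patent.

```python
import hashlib

class BackupStore:
    """Hypothetical block store that deduplicates and reference-counts blocks."""

    def __init__(self):
        self.blocks = {}     # fingerprint -> block bytes (the "data area")
        self.refcount = {}   # fingerprint -> number of block uses across backups
        self.backups = {}    # backup id -> list of fingerprints (the "index")

    def backup(self, backup_id, data, block_size=4):
        fps = []
        for i in range(0, len(data), block_size):
            block = data[i:i + block_size]
            fp = hashlib.sha256(block).hexdigest()
            if fp not in self.blocks:          # new block: store it only once
                self.blocks[fp] = block
            self.refcount[fp] = self.refcount.get(fp, 0) + 1
            fps.append(fp)
        self.backups[backup_id] = fps

    def restore(self, backup_id):
        return b"".join(self.blocks[fp] for fp in self.backups[backup_id])

    def cancel(self, backup_id):
        for fp in self.backups.pop(backup_id):
            self.refcount[fp] -= 1
            if self.refcount[fp] == 0:         # no remaining backup uses it
                del self.refcount[fp]
                del self.blocks[fp]
```

Two backups that share a block store it once; cancelling one of them leaves the shared block in place for the other.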

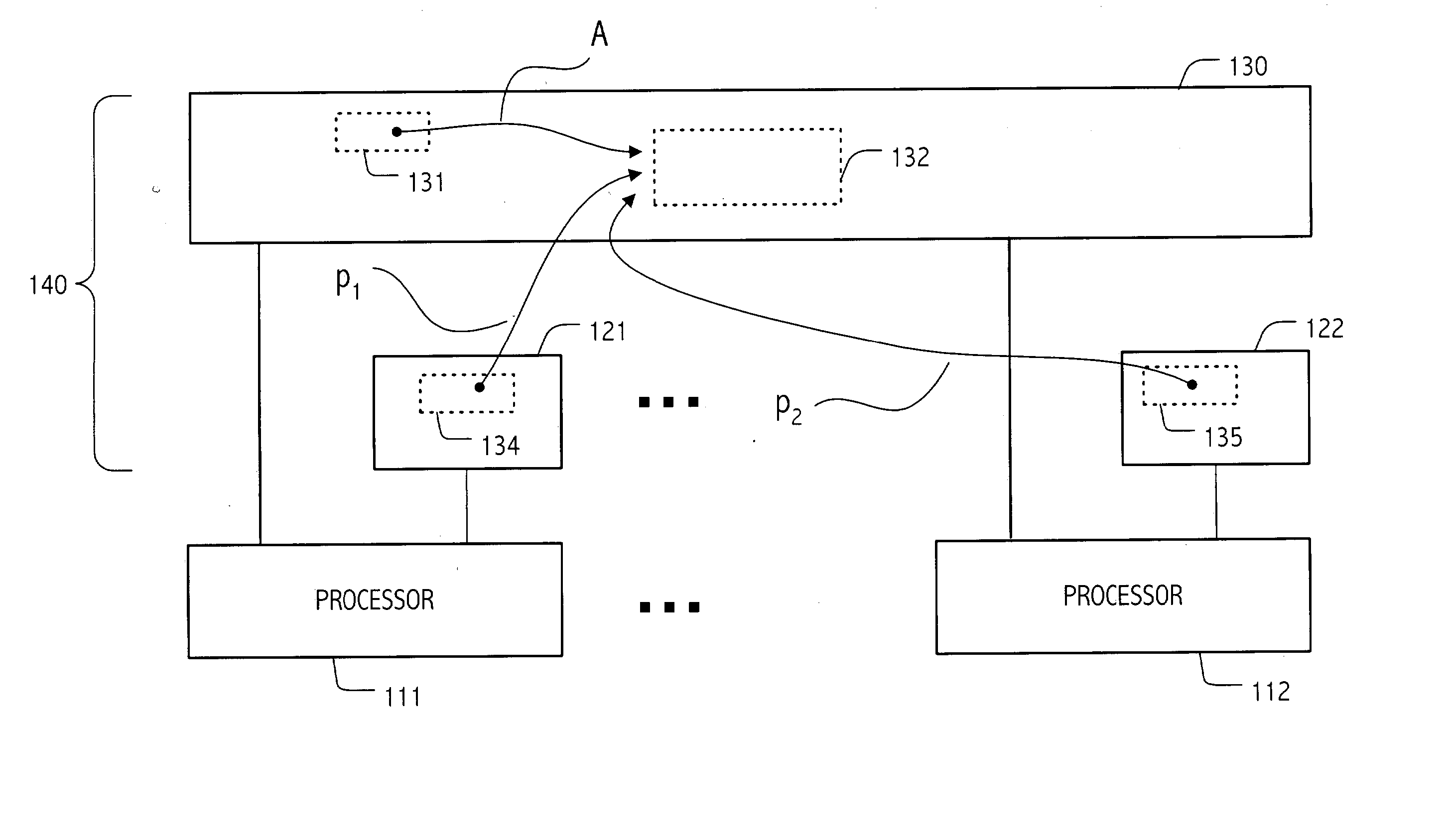

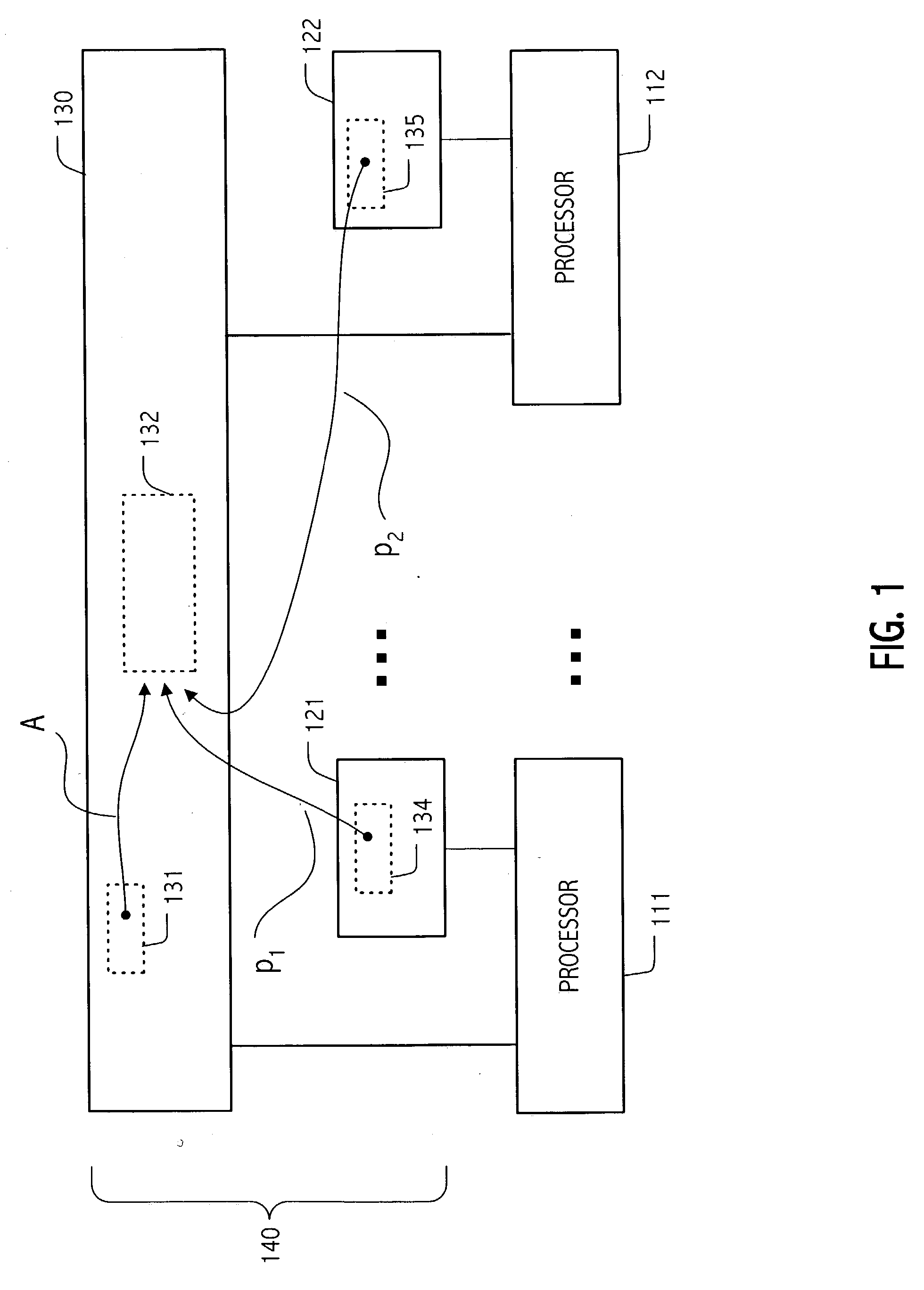

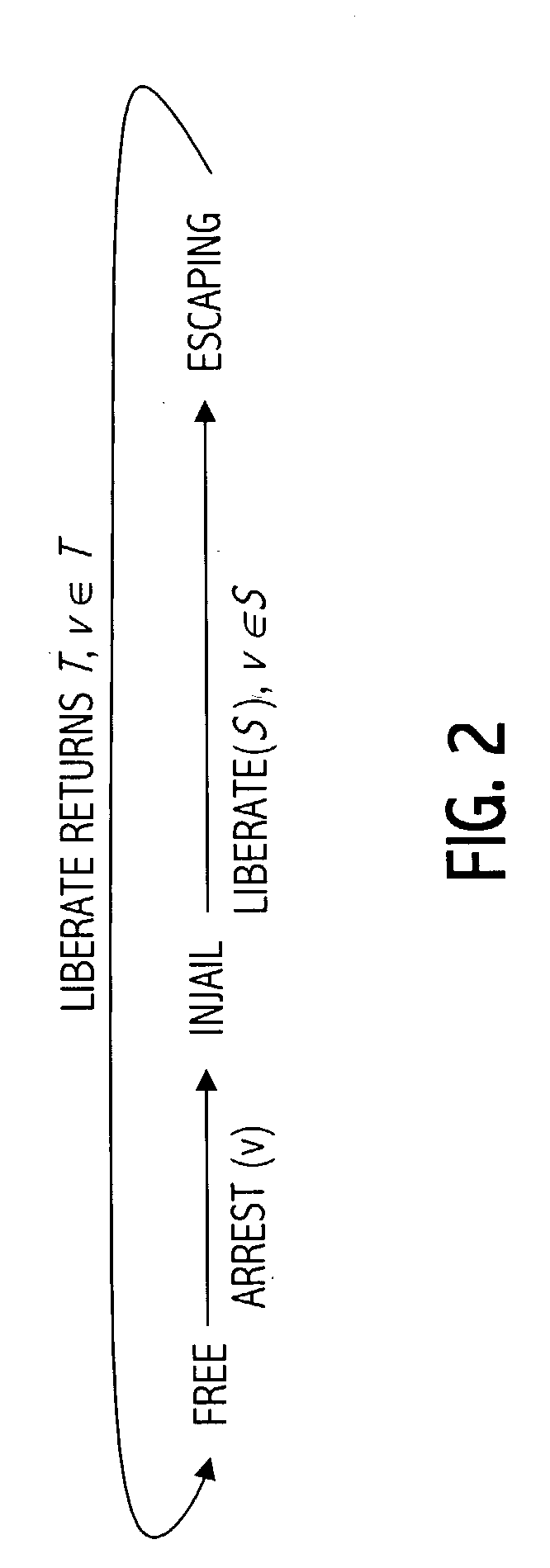

Single-word lock-free reference counting

Status: Active | Publication: US20030140085A1 | Tags: Data processing applications, Program synchronisation, Waste collection, Theoretical computer science

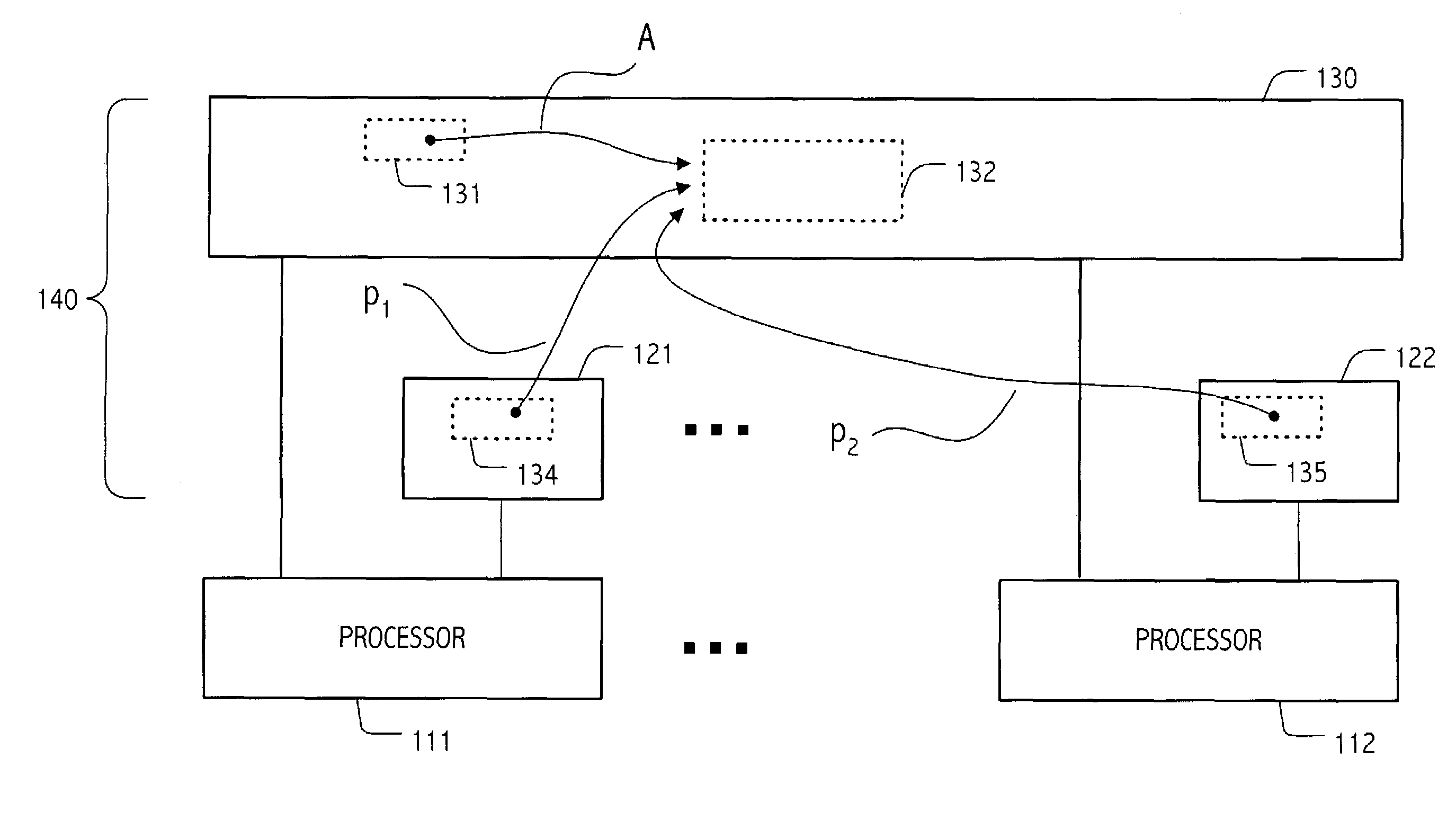

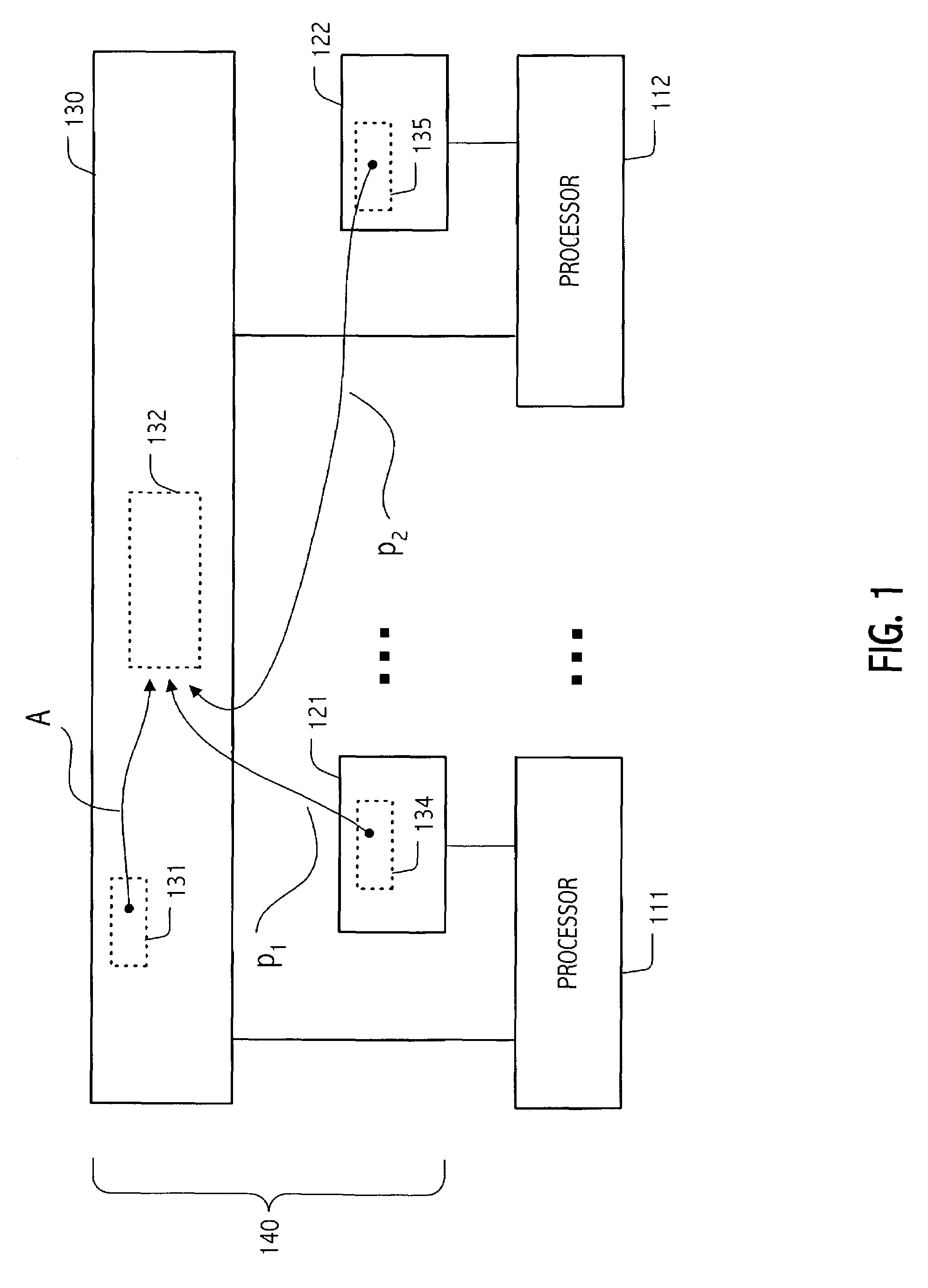

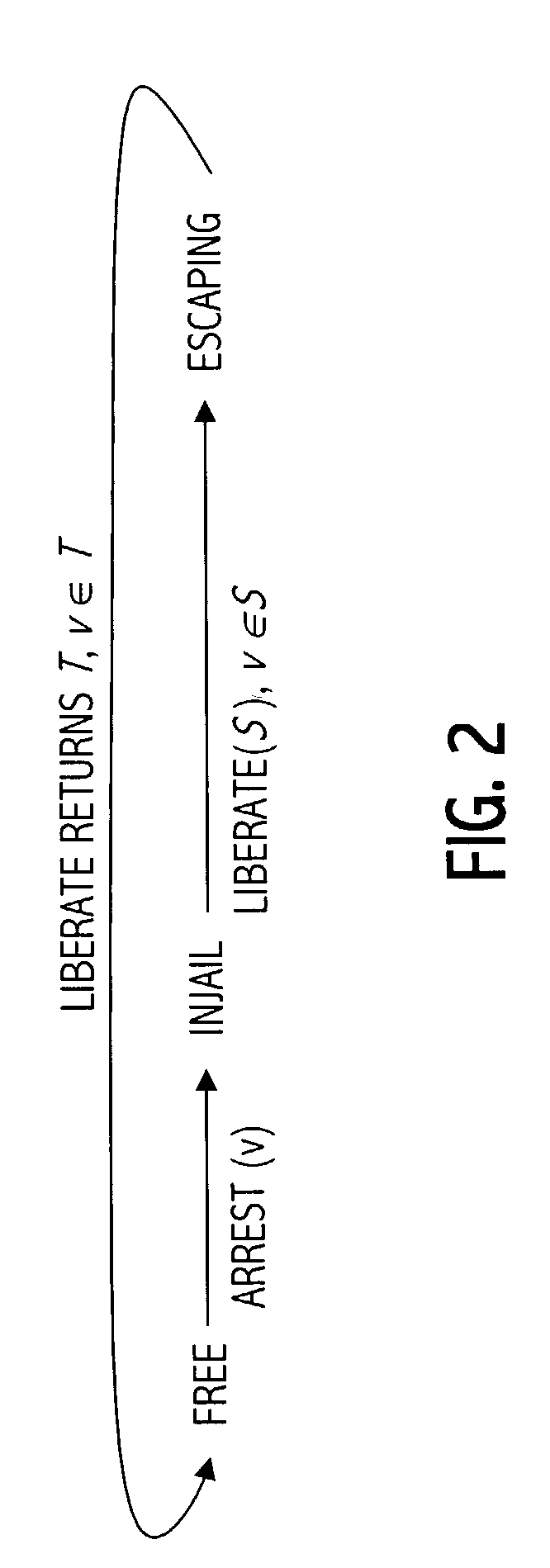

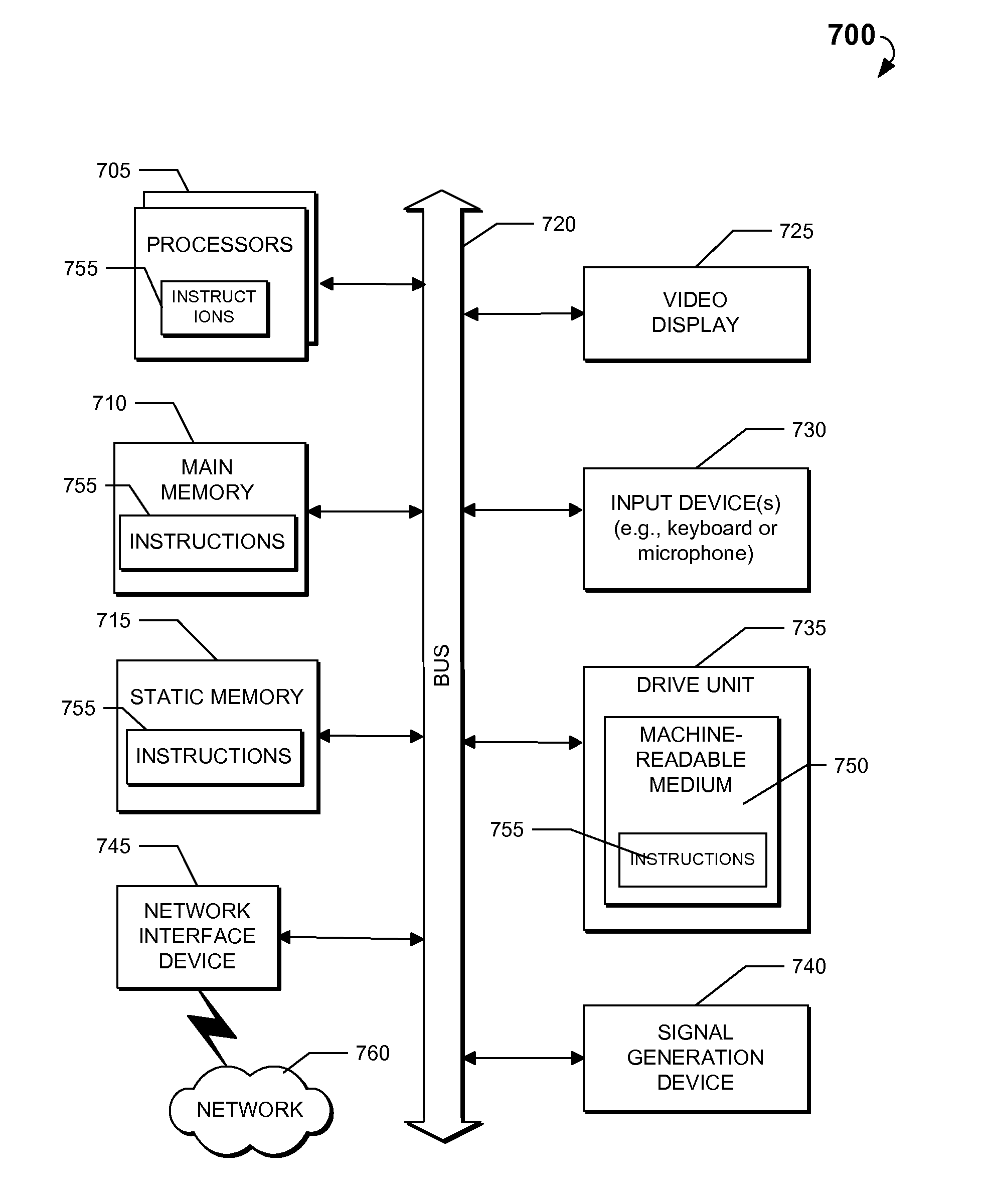

Solutions to a value recycling problem that we define herein facilitate implementations of computer programs that may execute as multithreaded computations in multiprocessor computers, as well as implementations of related shared data structures. Some exploitations of the techniques described herein allow non-blocking, shared data structures to be implemented using standard dynamic allocation mechanisms (such as malloc and free). A class of general solutions to value recycling is described in the context of an illustration we call the Repeat Offender Problem (ROP), including illustrative Application Program Interfaces (APIs) defined in terms of the ROP terminology. Furthermore, specific solutions, implementations and algorithms, including a Pass-The-Buck (PTB) implementation, are also described. Solutions to the proposed value recycling problem have a variety of uses. For example, a single-word lock-free reference counting (SLFRC) technique may build on any of a variety of value recycling solutions to transform, in a straightforward manner, many lock-free data structure implementations that assume garbage collection (i.e., which do not explicitly free memory) into dynamic-sized data structures.

Owner:ORACLE INT CORP

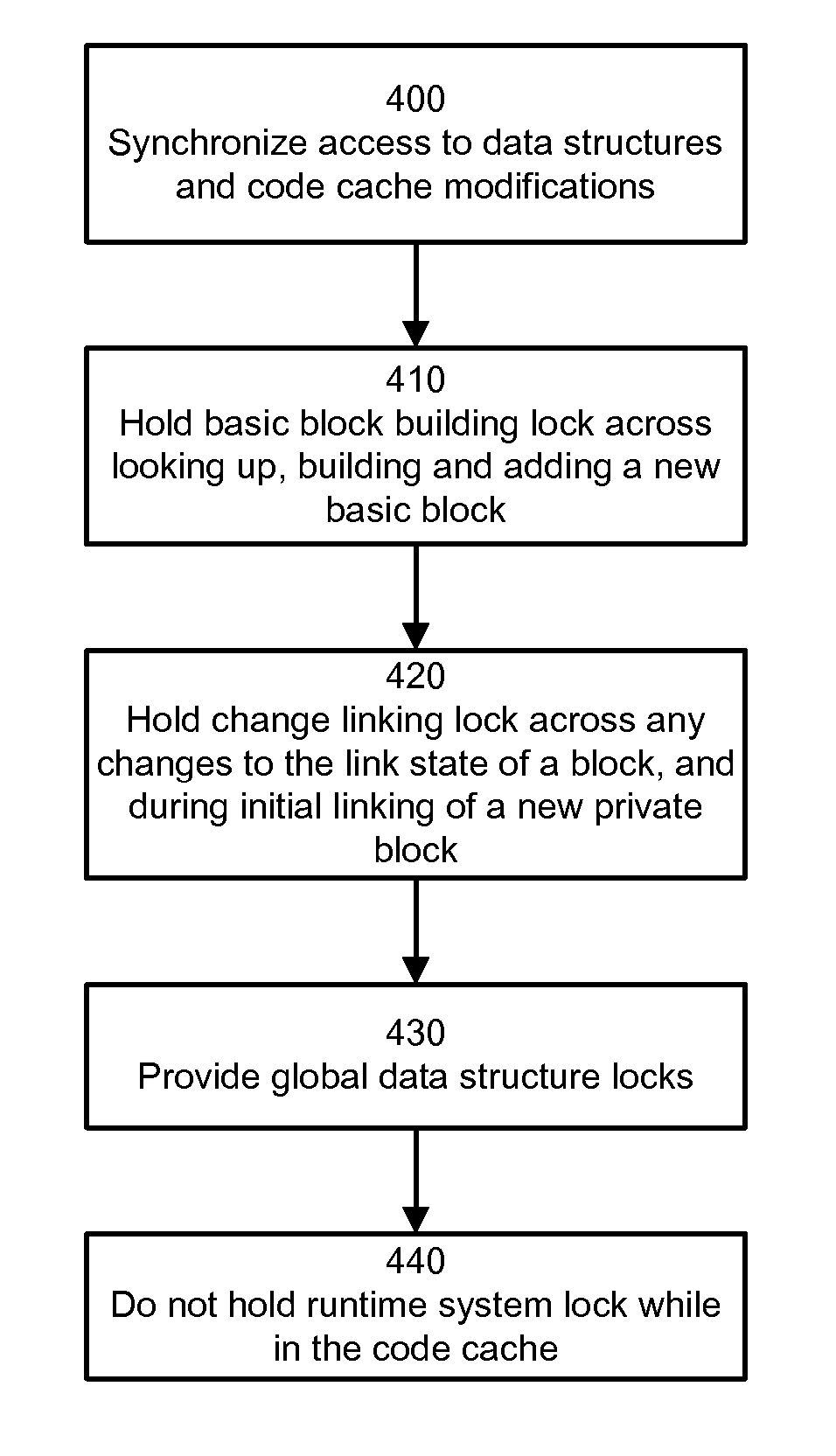

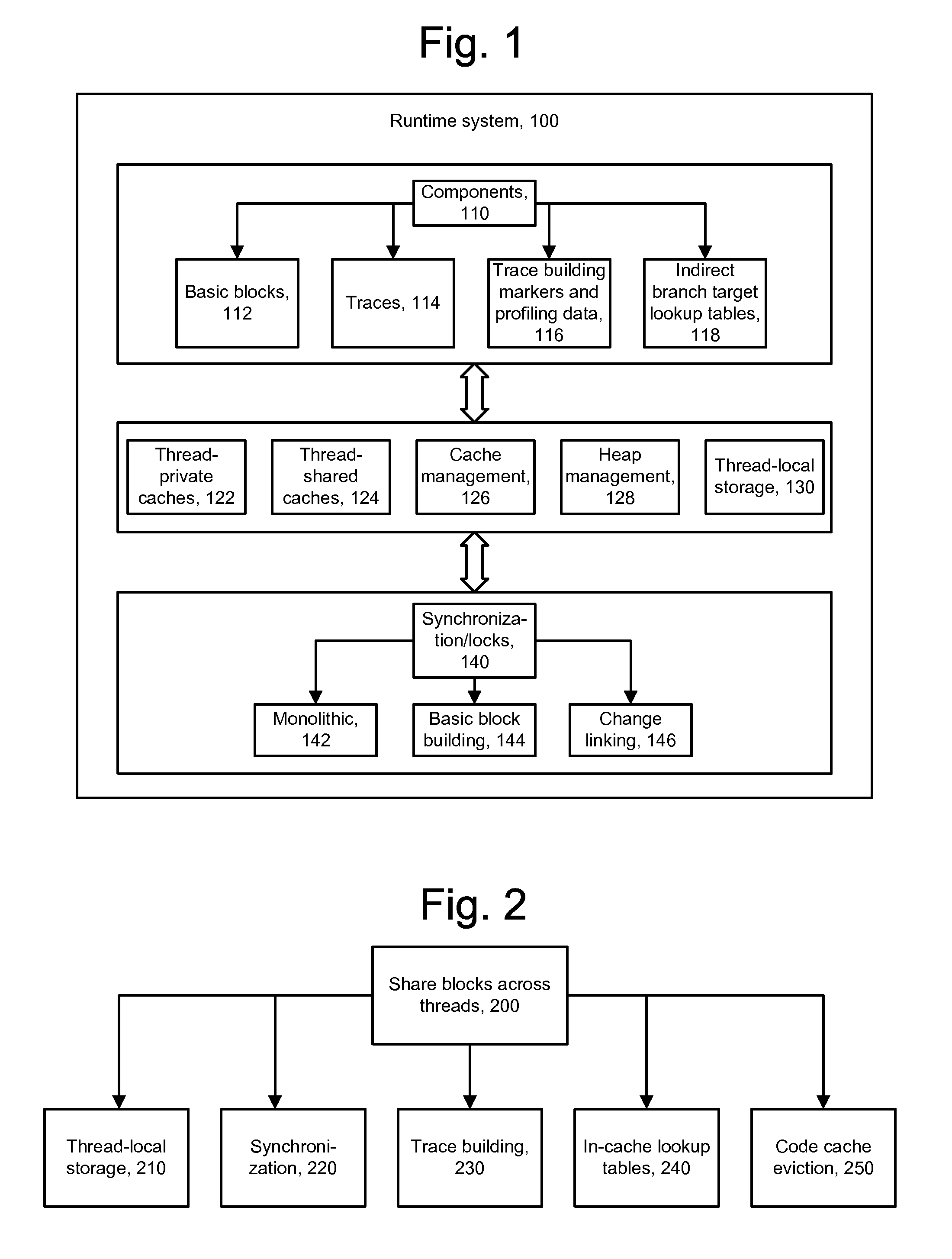

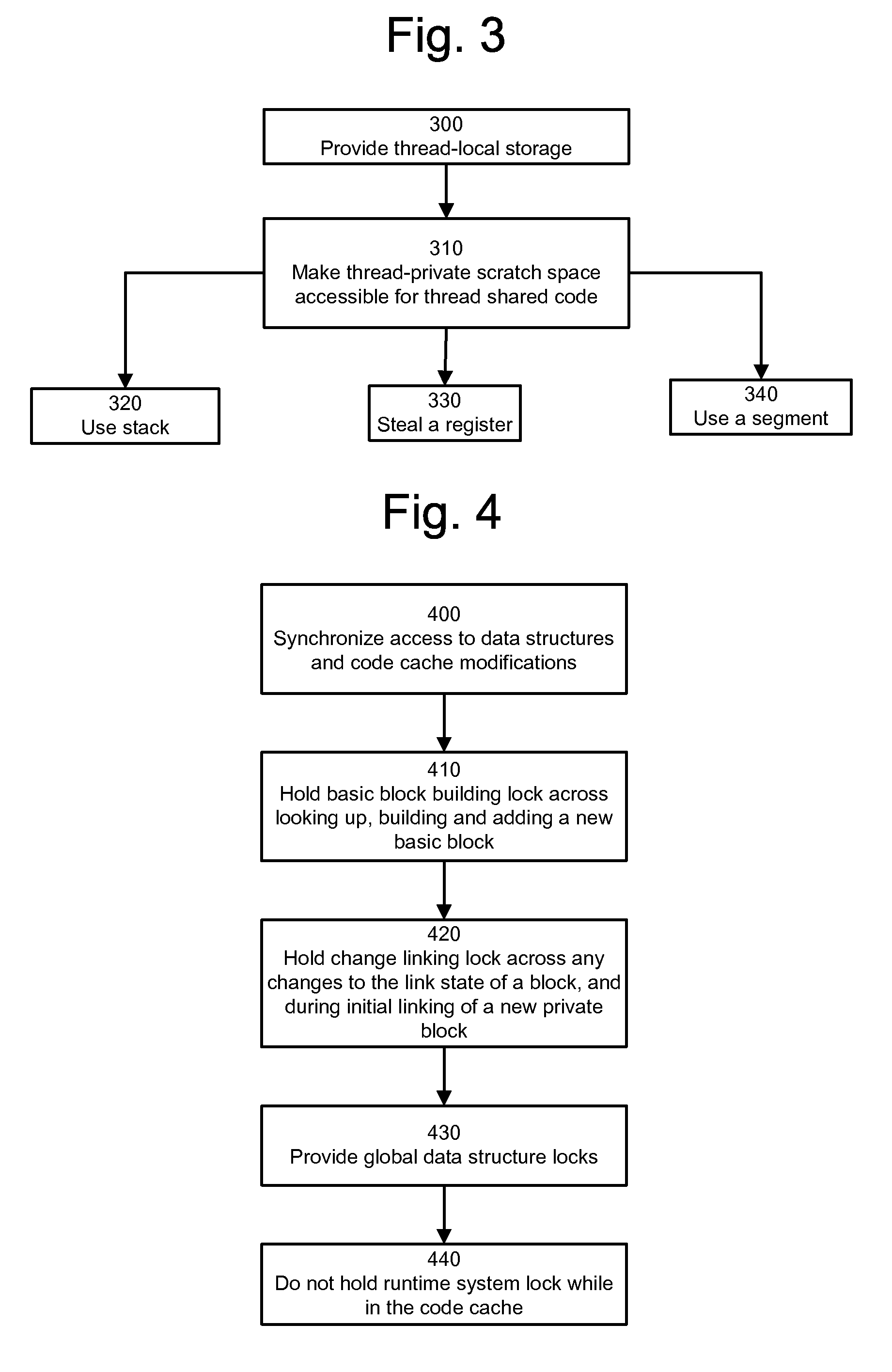

Thread-shared software code caches

Status: Active | Publication: US20070067573A1 | Tags: Avoiding brute-force all-thread-suspension, Avoiding monolithic global locks, Memory addressing/allocation/relocation, Multiprogramming arrangements, Timestamp, Brute force

A runtime system using thread-shared code caches is provided which avoids brute-force all-thread-suspension and monolithic global locks. In one embodiment, medium-grained runtime system synchronization reduces lock contention. The system includes trace building that combines efficient private construction with shared results, in-cache lock-free lookup table access in the presence of entry invalidations, and a delayed deletion algorithm based on timestamps and reference counts. These enable reductions in memory usage and performance overhead.

Owner:VMWARE INC
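
The delayed-deletion idea (free an unlinked cache entry only after every thread that might still be executing it has reached a safe point) can be modeled sequentially as below. This is a hypothetical sketch loosely following the abstract, not the patented algorithm: `DelayedDeleter`, the global clock, and the per-thread `last_seen` timestamps are assumptions, and a real runtime would need atomic operations.

```python
class PendingDeletion:
    def __init__(self, items, stamp, refs):
        self.items = items    # code-cache entries already unlinked from lookup
        self.stamp = stamp    # global timestamp at unlink time
        self.refs = refs      # threads that may still be executing these entries

class DelayedDeleter:
    """Sequential model: entries are freed once every thread has passed a
    safe point after their deletion (reference count reaches zero)."""

    def __init__(self, thread_ids):
        self.clock = 0
        self.last_seen = {t: 0 for t in thread_ids}  # per-thread timestamp
        self.pending = []
        self.freed = []

    def delete(self, items):
        self.clock += 1
        self.pending.append(PendingDeletion(items, self.clock, len(self.last_seen)))

    def safe_point(self, tid):
        """Thread `tid` is outside the cache: drop its references to pending entries."""
        for entry in self.pending:
            if entry.stamp > self.last_seen[tid]:   # not yet acknowledged by tid
                entry.refs -= 1
        self.last_seen[tid] = self.clock            # timestamps prevent double-counting
        still_pending = []
        for entry in self.pending:
            if entry.refs == 0:
                self.freed.extend(entry.items)
            else:
                still_pending.append(entry)
        self.pending = still_pending
```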

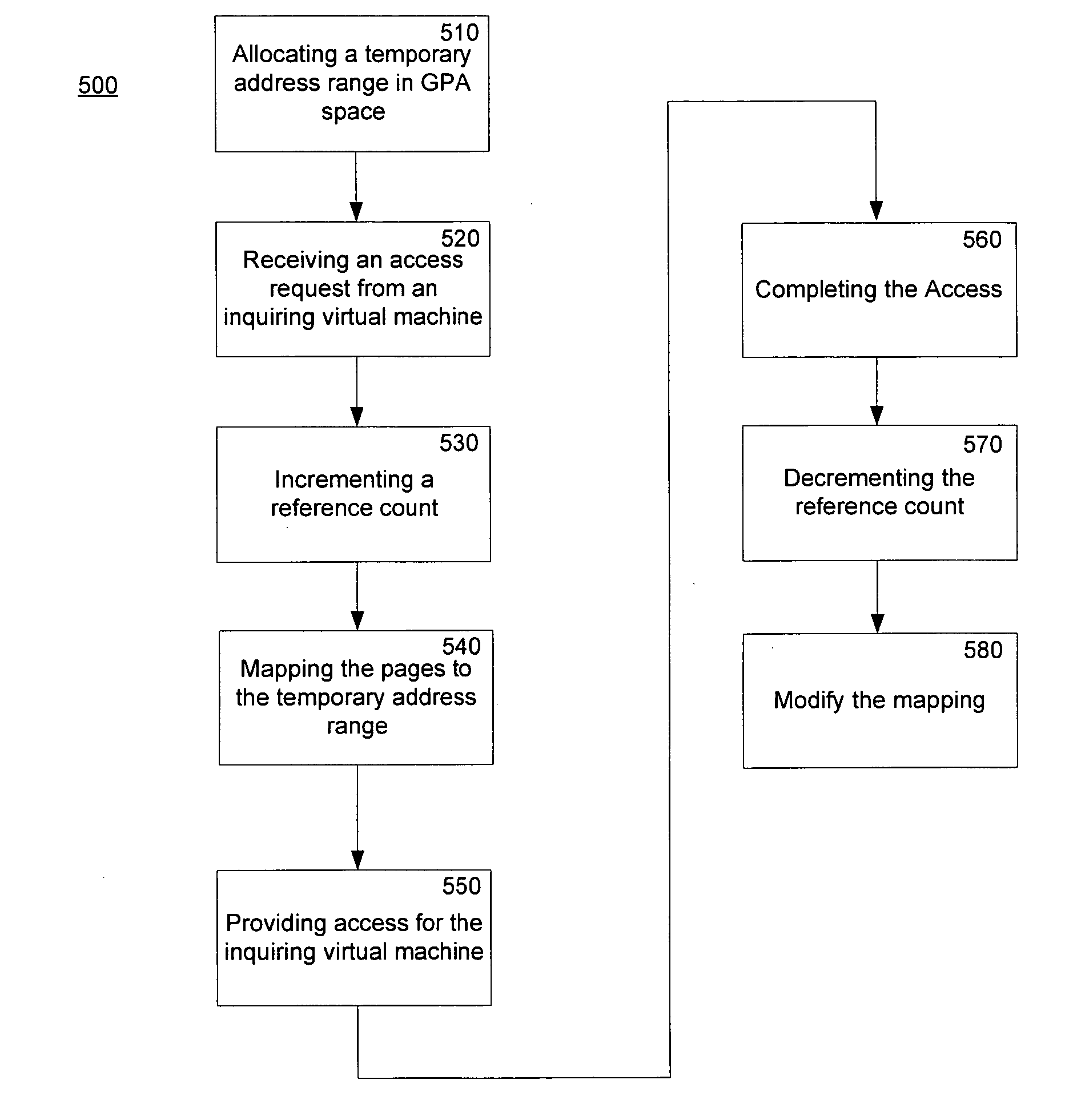

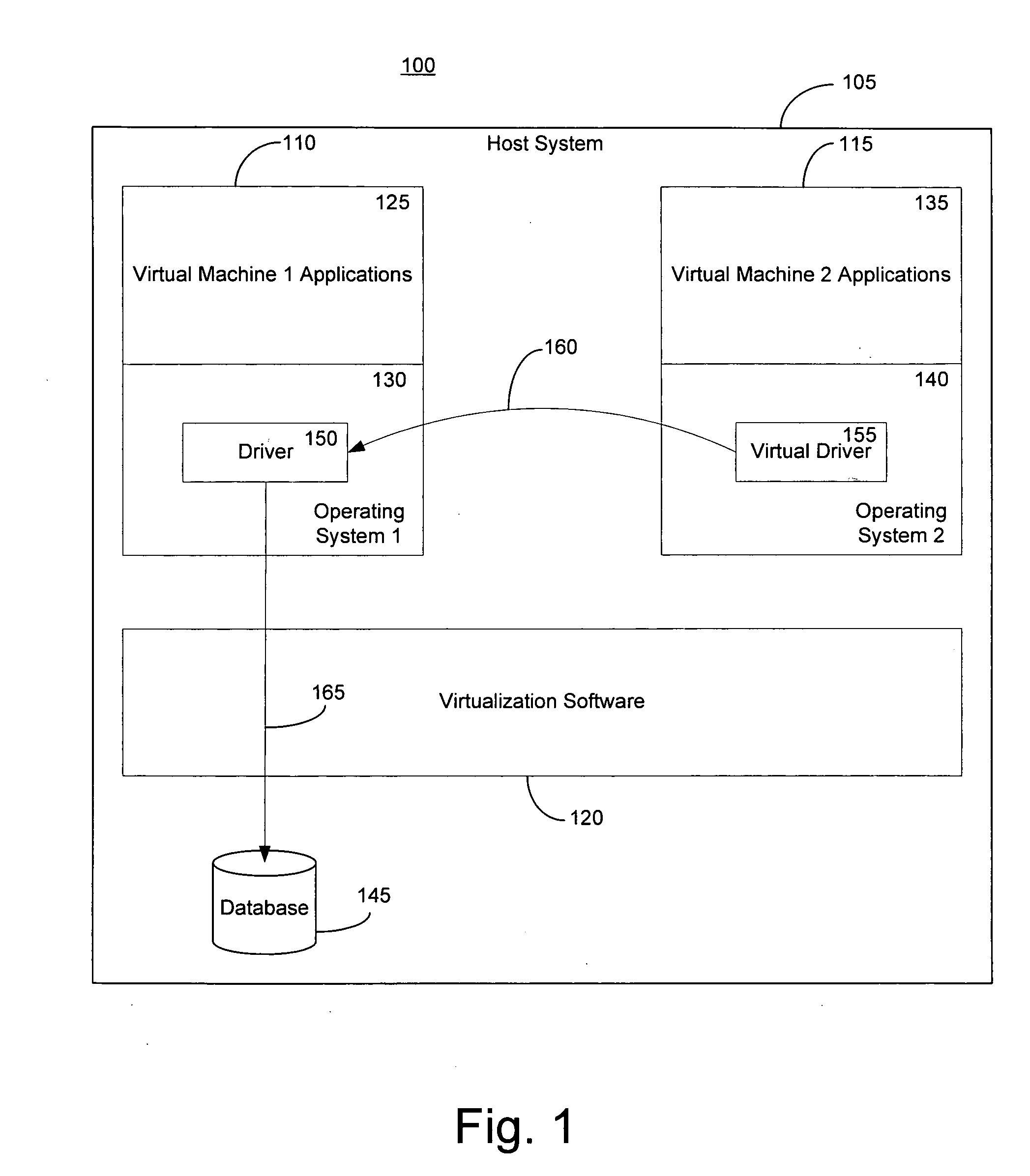

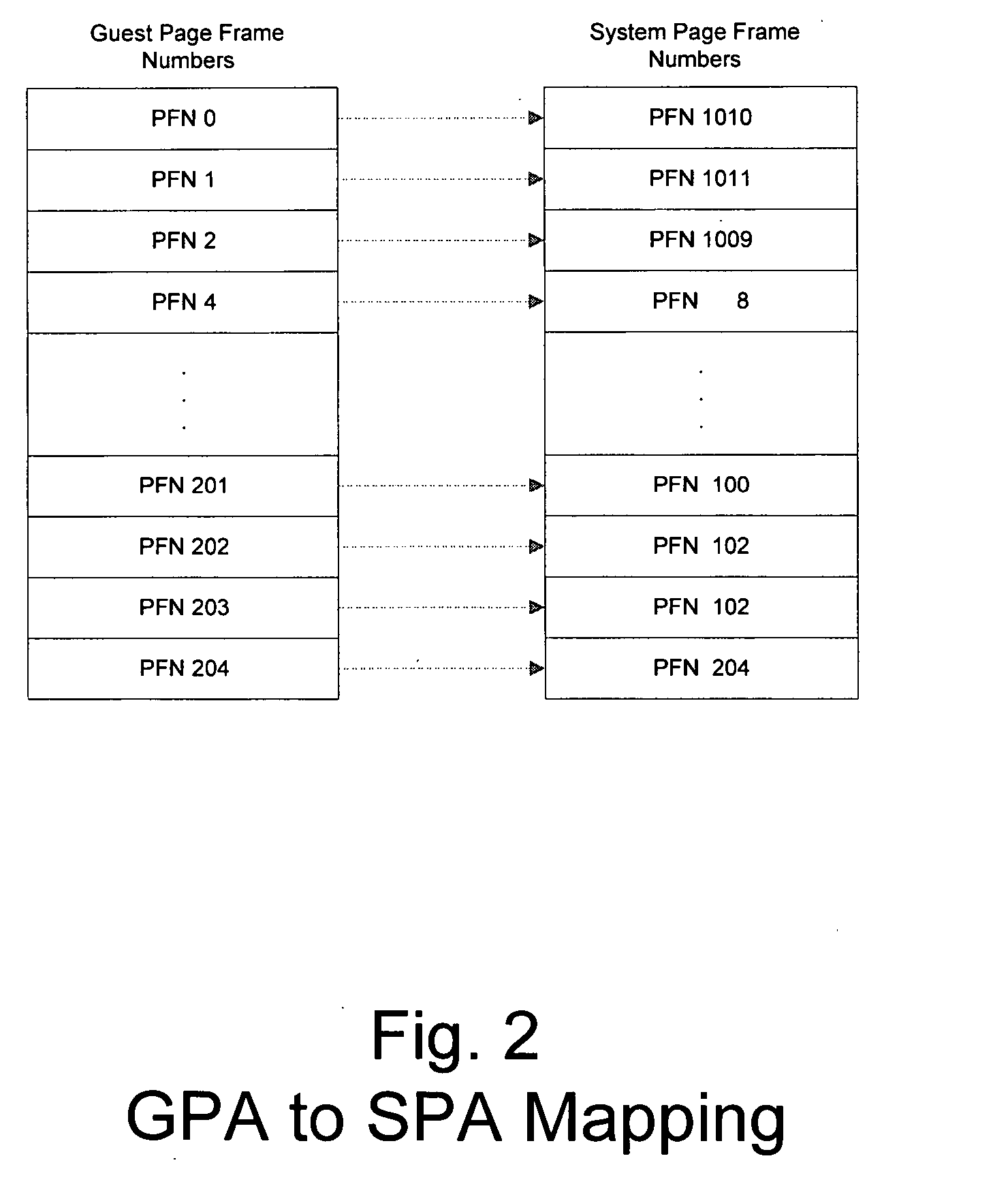

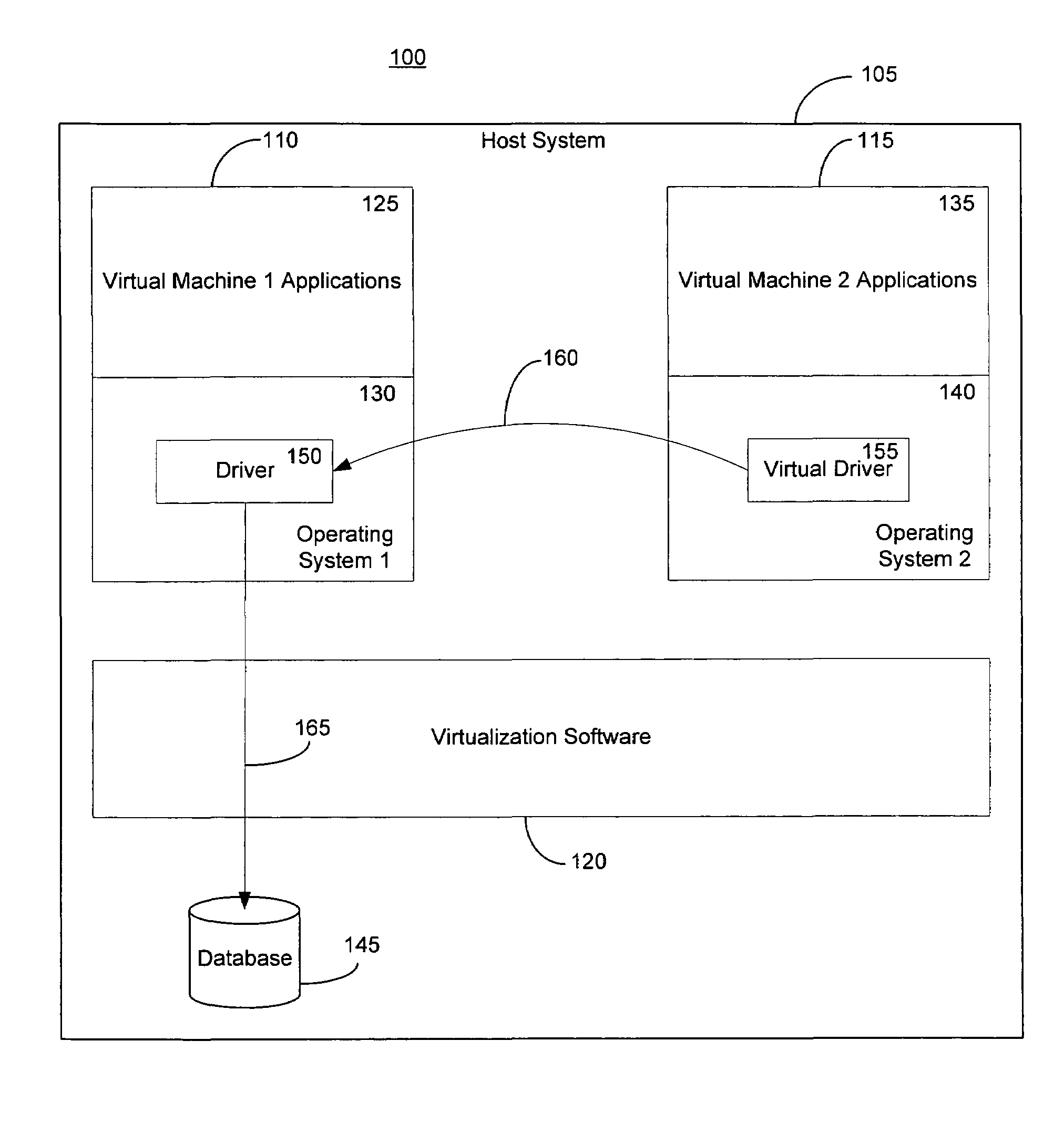

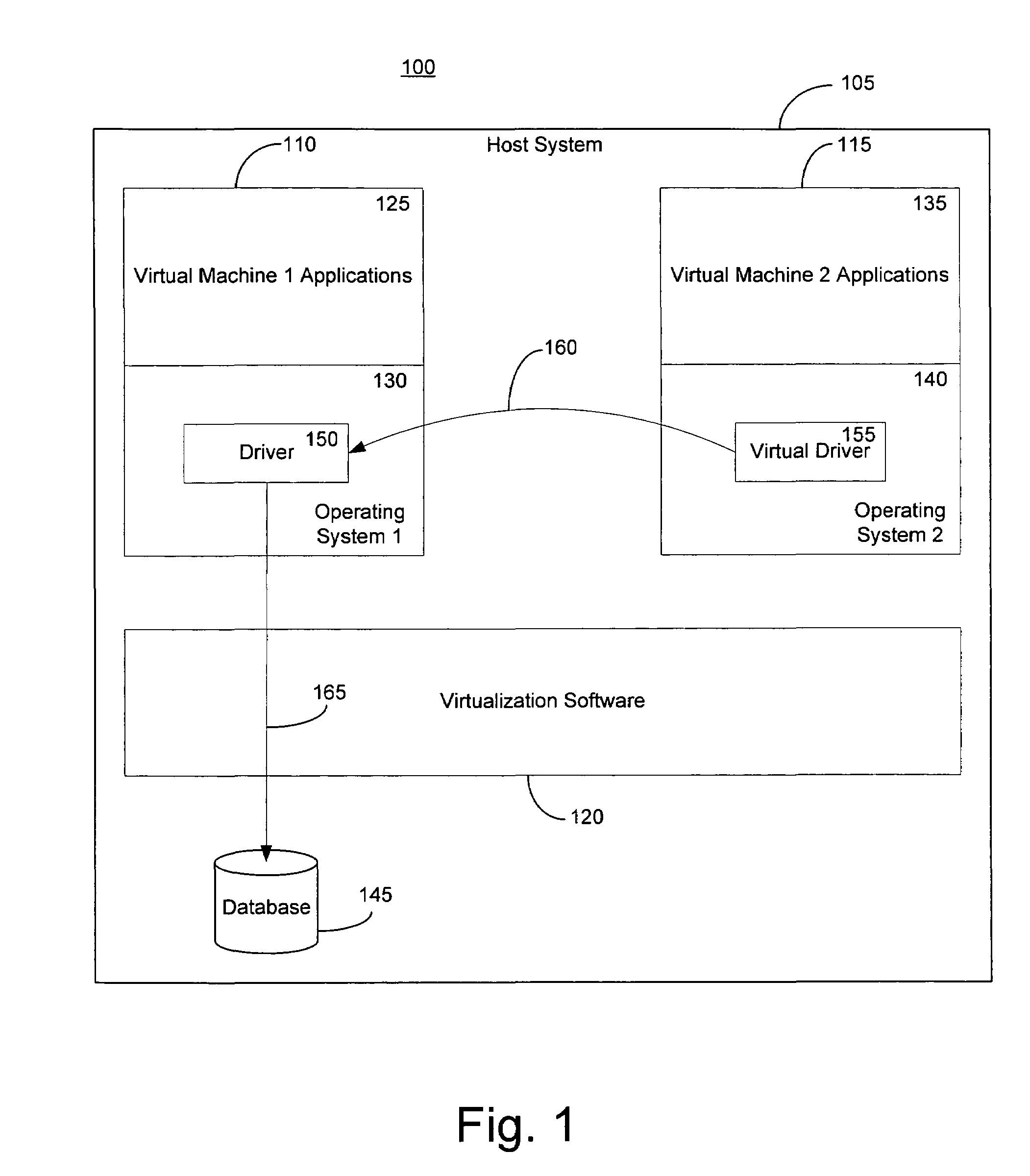

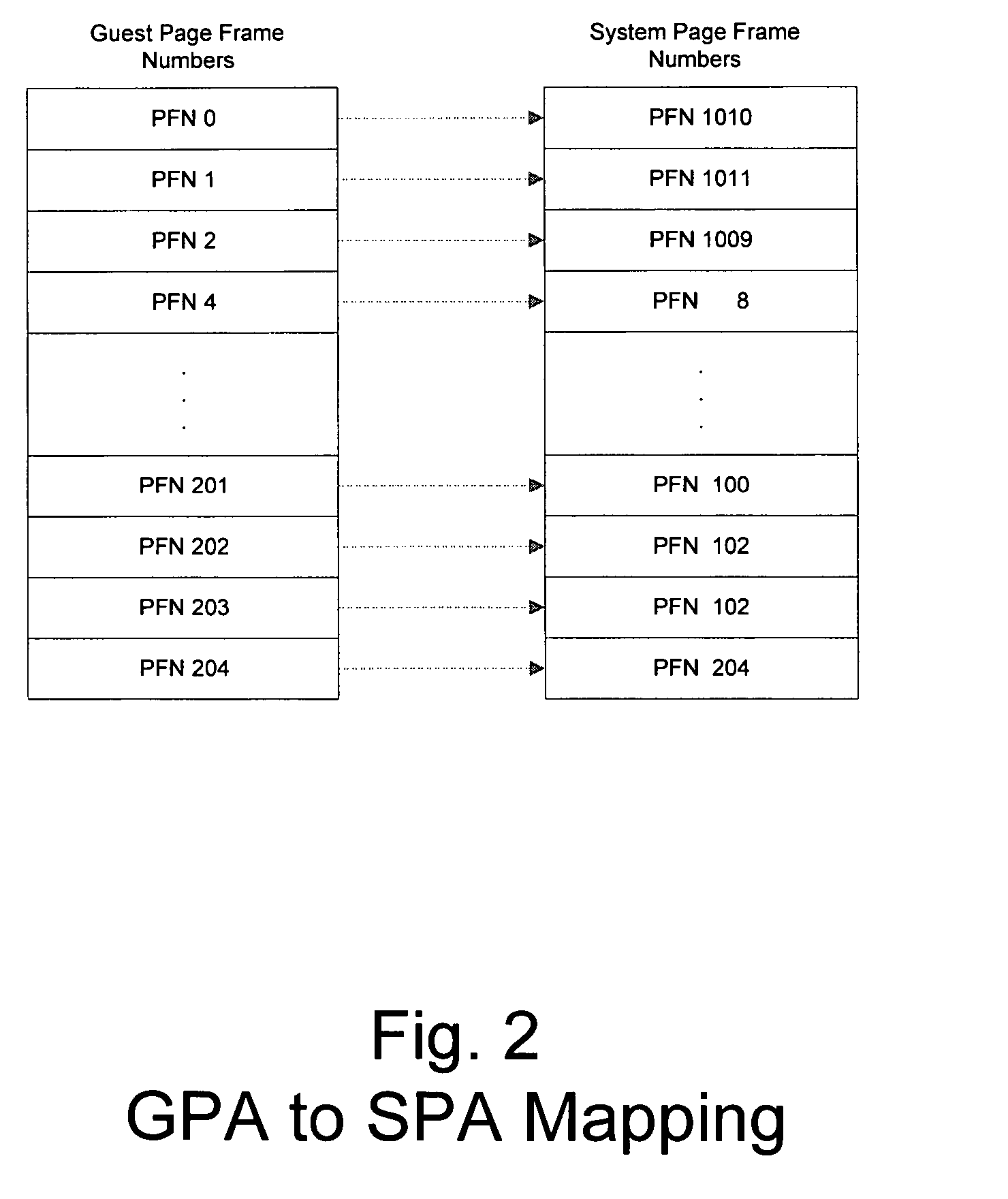

Method and system for a guest physical address virtualization in a virtual machine environment

Status: Inactive | Publication: US20060206658A1 | Tags: Memory architecture accessing/allocation, Resource allocation, Virtualization, Physical address

A method of sharing pages between virtual machines in a multiple virtual machine environment includes initially allocating a temporary guest physical address range of a first virtual machine for sharing pages with a second virtual machine. The temporary range is within the guest physical address space of the first virtual machine. An access request, such as a DMA request, from the second virtual machine for pages available to the first virtual machine is received. A reference count of pending accesses to the pages is incremented to indicate a pending access, and the pages are mapped into the temporary guest physical address range. The pages are accessed and the reference count is decremented. The mapping in the temporary guest physical address range is then removed if the reference count is zero.

Owner:MICROSOFT TECH LICENSING LLC
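
The pending-access counting in this abstract maps naturally onto a small table keyed by page. In this sketch, `TempGpaWindow`, the page identifiers, and the free-list allocation of temporary addresses are illustrative assumptions; the hypervisor-side mechanics are omitted.

```python
class TempGpaWindow:
    """Temporary guest-physical window in the first VM for pages that the
    second VM accesses; a mapping lives while accesses are pending."""

    def __init__(self, base, size):
        self.free = list(range(base, base + size))  # unused temporary addresses
        self.mappings = {}   # page -> temporary guest physical address
        self.pending = {}    # page -> number of pending accesses

    def begin_access(self, page):
        self.pending[page] = self.pending.get(page, 0) + 1
        if page not in self.mappings:
            if not self.free:
                raise MemoryError("temporary GPA range exhausted")
            self.mappings[page] = self.free.pop(0)
        return self.mappings[page]

    def end_access(self, page):
        self.pending[page] -= 1
        if self.pending[page] == 0:        # last pending access finished
            del self.pending[page]
            self.free.append(self.mappings.pop(page))  # remove the mapping
```

Two overlapping accesses to the same page share one temporary address, and the mapping disappears only after both have ended.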

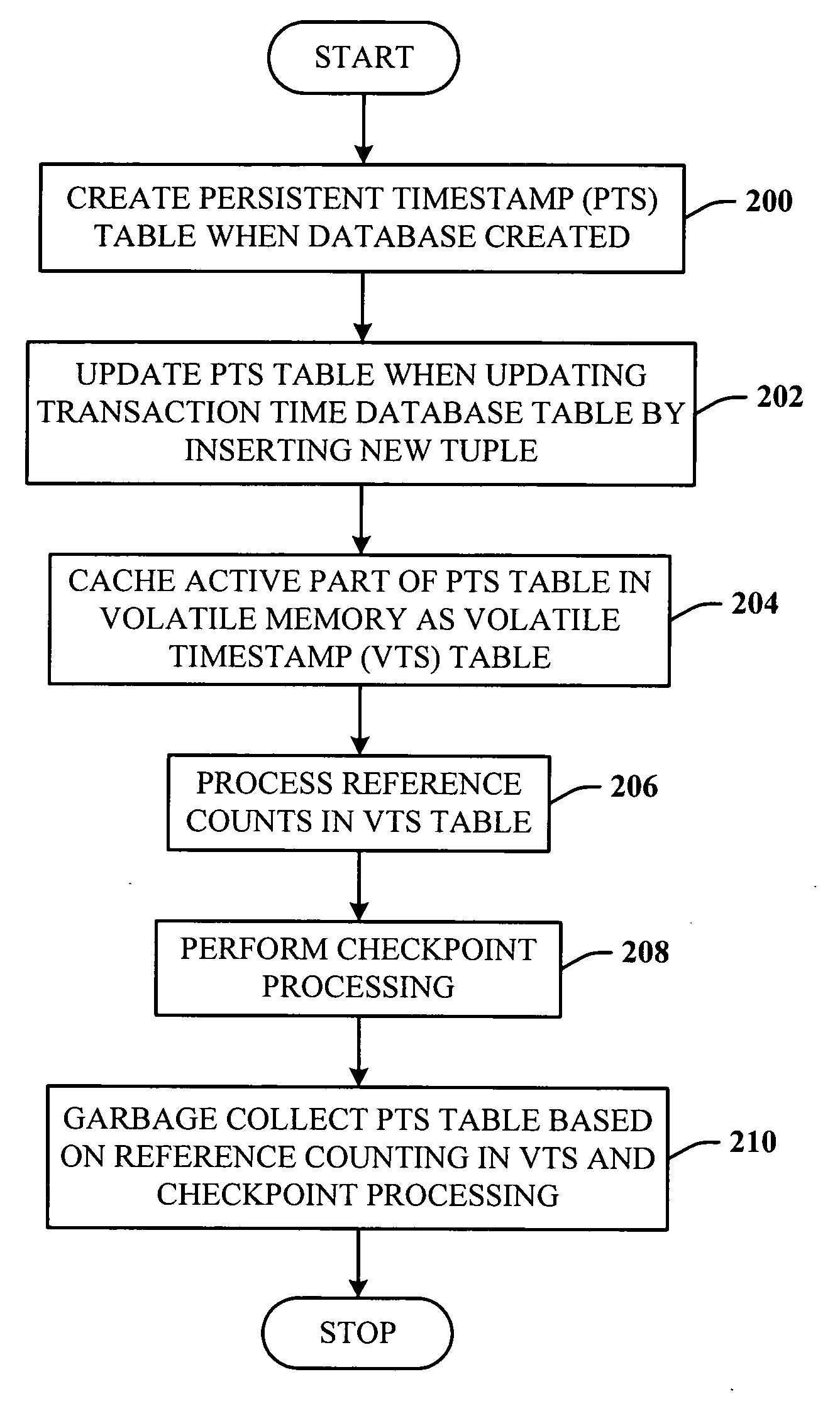

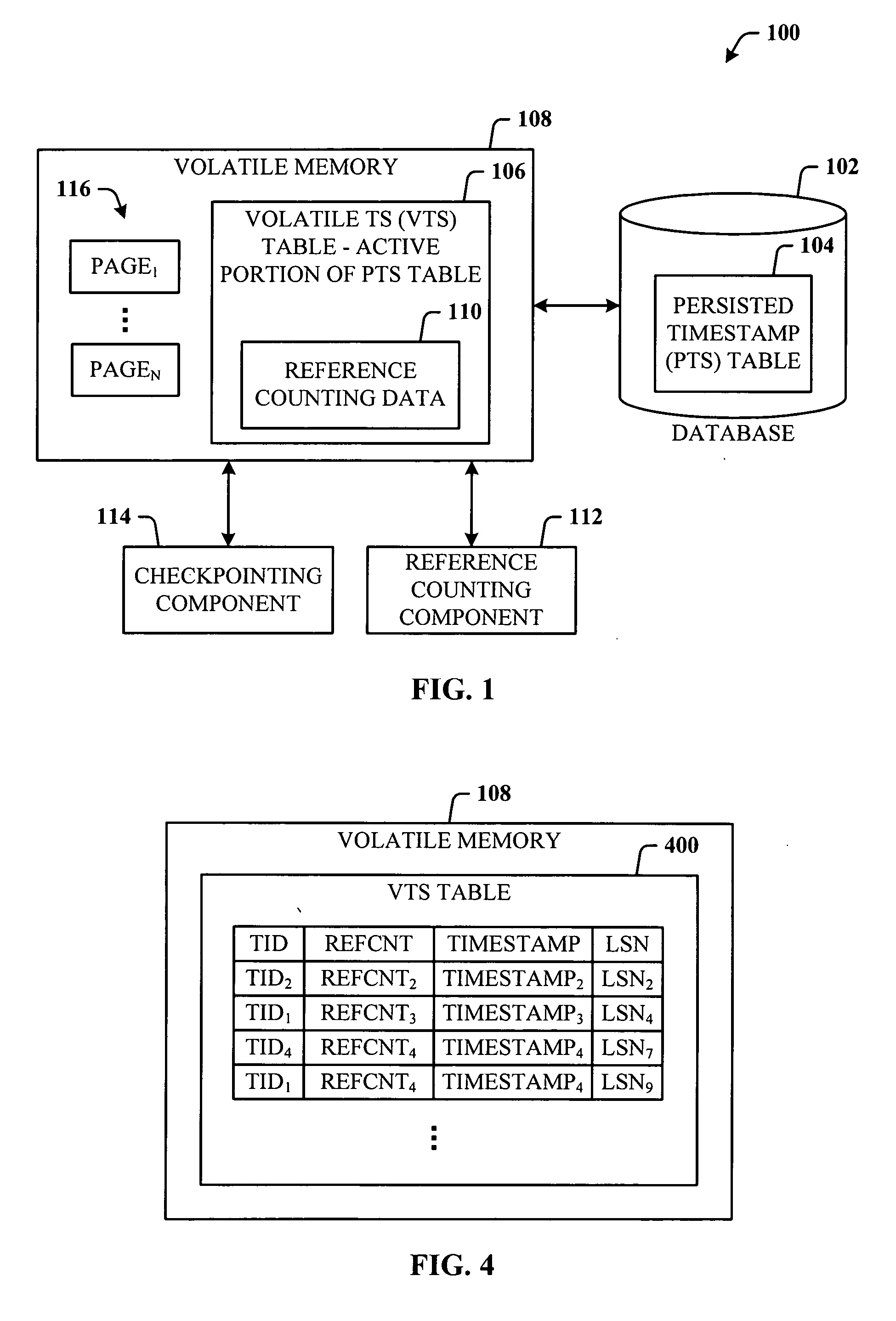

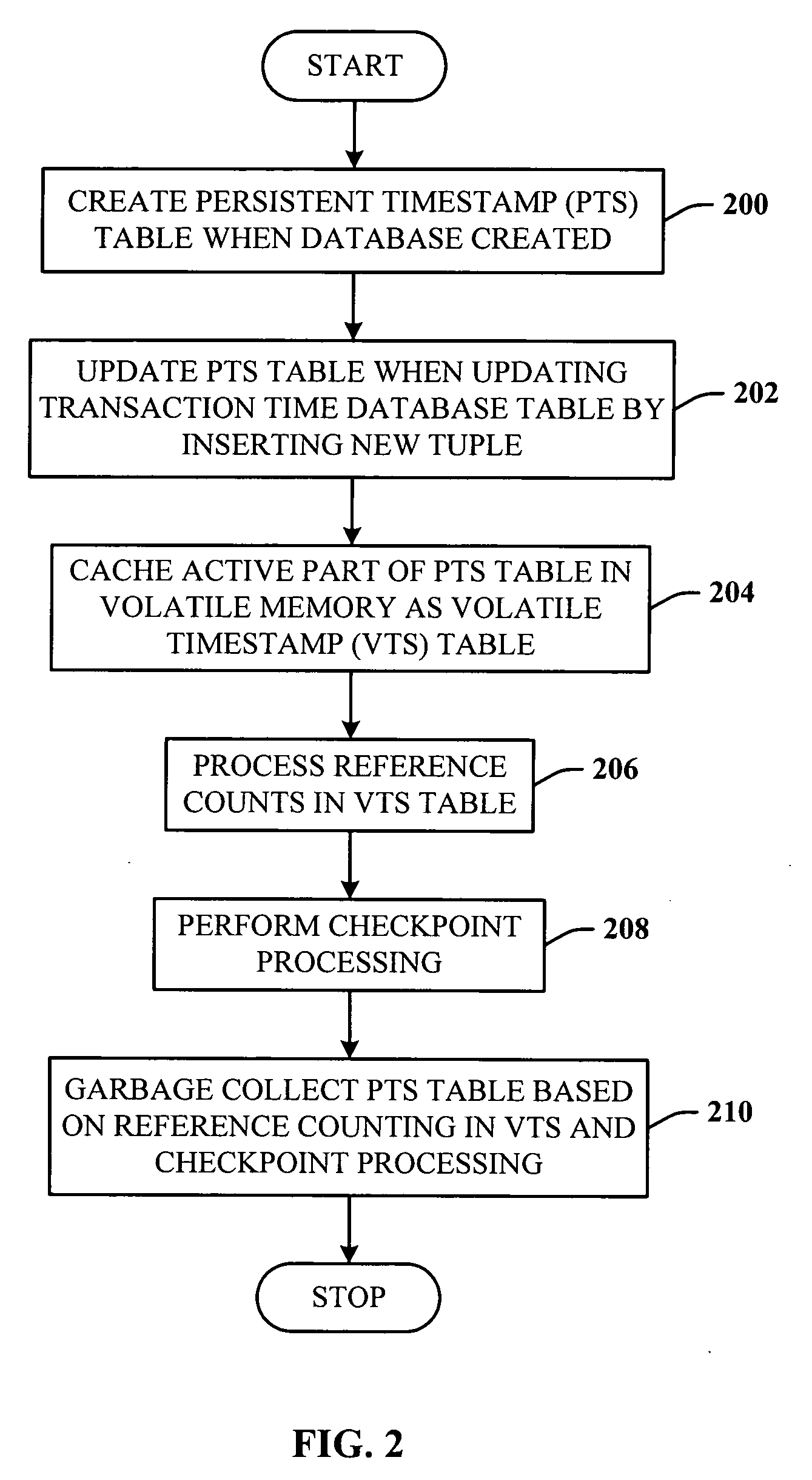

Lazy timestamping in transaction time database

Status: Inactive | Publication: US20060167960A1 | Tags: Minimize access, Efficient record timestamping process, Data processing applications, Digital data information retrieval, Temporal database, Timestamp

Lazy timestamping in a transaction time database is performed using volatile reference counting and checkpointing. Volatile reference counting is employed to provide a low cost way of garbage collecting persistent timestamp information about a transaction by identifying exactly when all record versions of a transaction are timestamped and the versions are persistent. A volatile timestamp (VTS) table is created in a volatile memory, and stores timestamp, reference count, transaction ID, and LSN information. Active portions of a persisted timestamp (PTS) table are stored in the VTS table to provide faster and more efficient timestamp processing via accesses to the VTS table information. The reference count information is stored only in the VTS table for faster access. When the reference count information decrements to zero, it is known that all record versions that were updates for a transaction were timestamped. A checkpointing component facilitates checkpoint processing for verifying that timestamped records have been written to the persistent database and that garbage collection of the PTS table can be performed for transaction entries with zero reference counts.

Owner:MICROSOFT TECH LICENSING LLC
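
The volatile reference count in the VTS table can be sketched as a per-transaction counter of record versions that still carry a transaction ID instead of a timestamp. The class name, the dictionary-based records, and the `collectable` helper are assumptions made for illustration; persistence and checkpointing are left out.

```python
class VolatileTimestampTable:
    """Per-transaction timestamp plus a volatile count of unstamped versions."""

    def __init__(self):
        self.entries = {}  # txn id -> {"ts": commit timestamp, "refs": unstamped versions}

    def commit(self, txn_id, ts, versions_written):
        self.entries[txn_id] = {"ts": ts, "refs": versions_written}

    def stamp_version(self, record, txn_id):
        """Lazily replace a version's transaction ID with its commit timestamp."""
        entry = self.entries[txn_id]
        record["ts"] = entry["ts"]
        record.pop("txn", None)
        entry["refs"] -= 1

    def collectable(self):
        """Transactions whose versions are all stamped. Their persistent (PTS)
        entries may be dropped once a checkpoint makes the stamps durable."""
        return [t for t, e in self.entries.items() if e["refs"] == 0]
```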

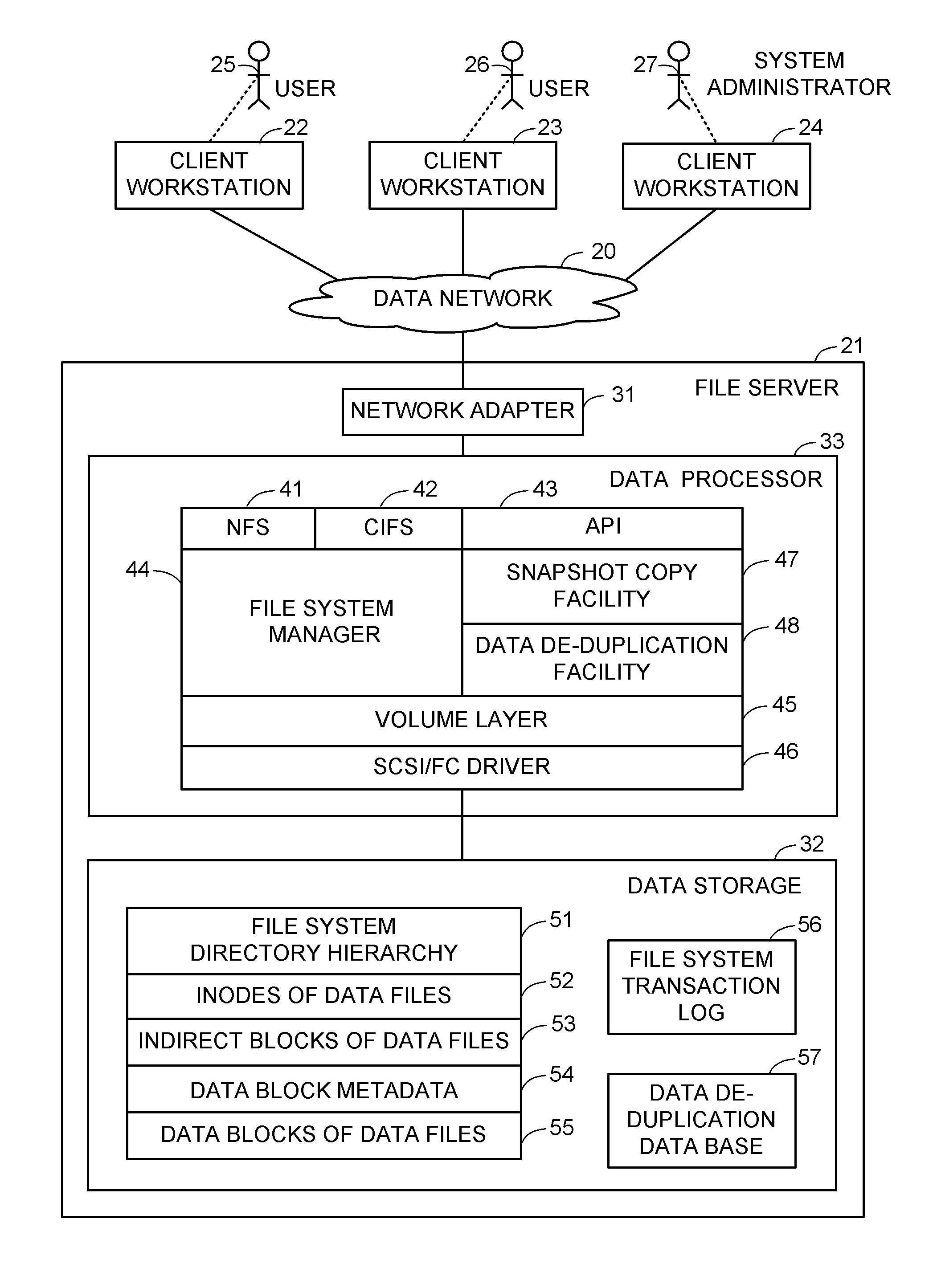

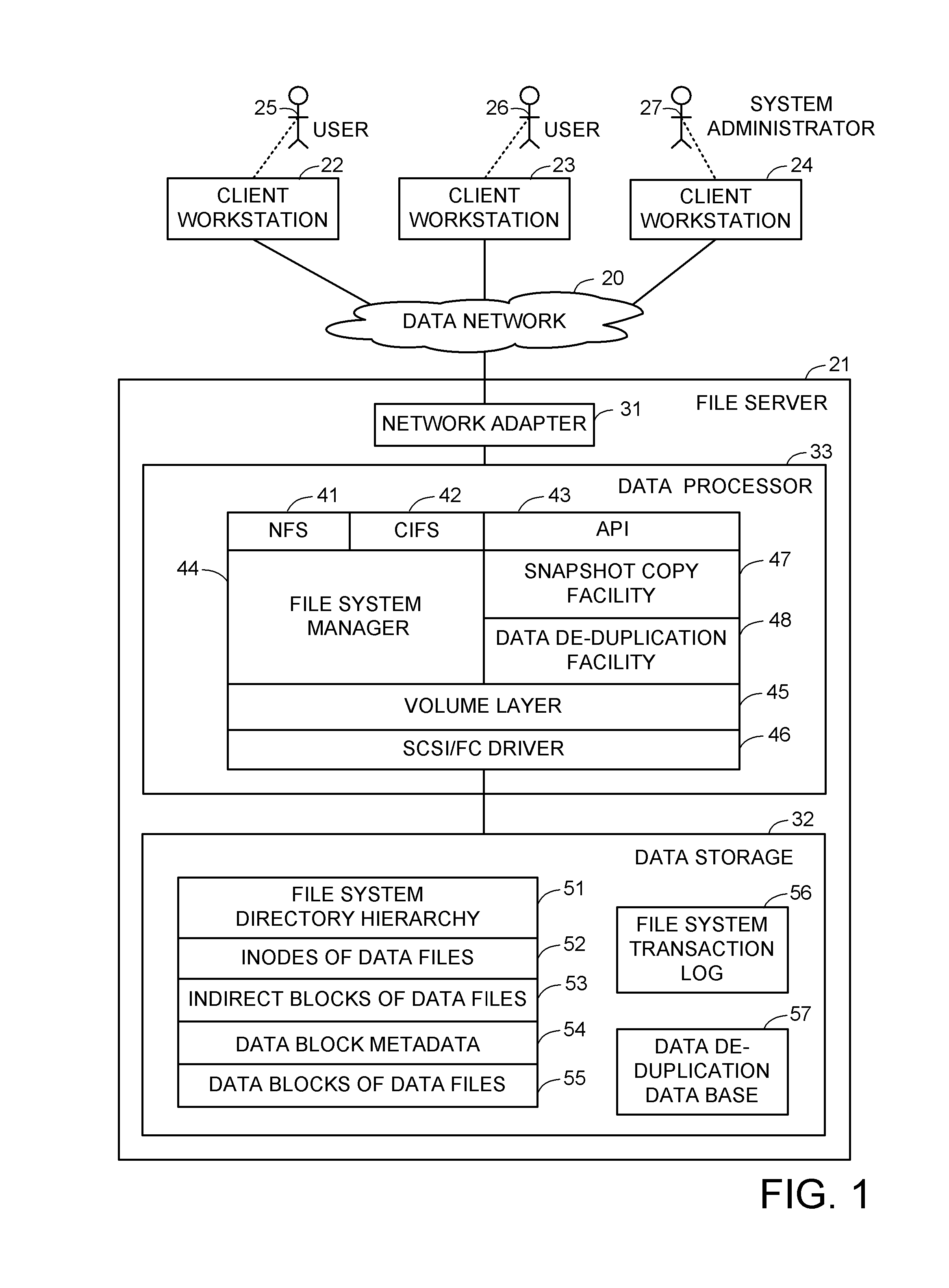

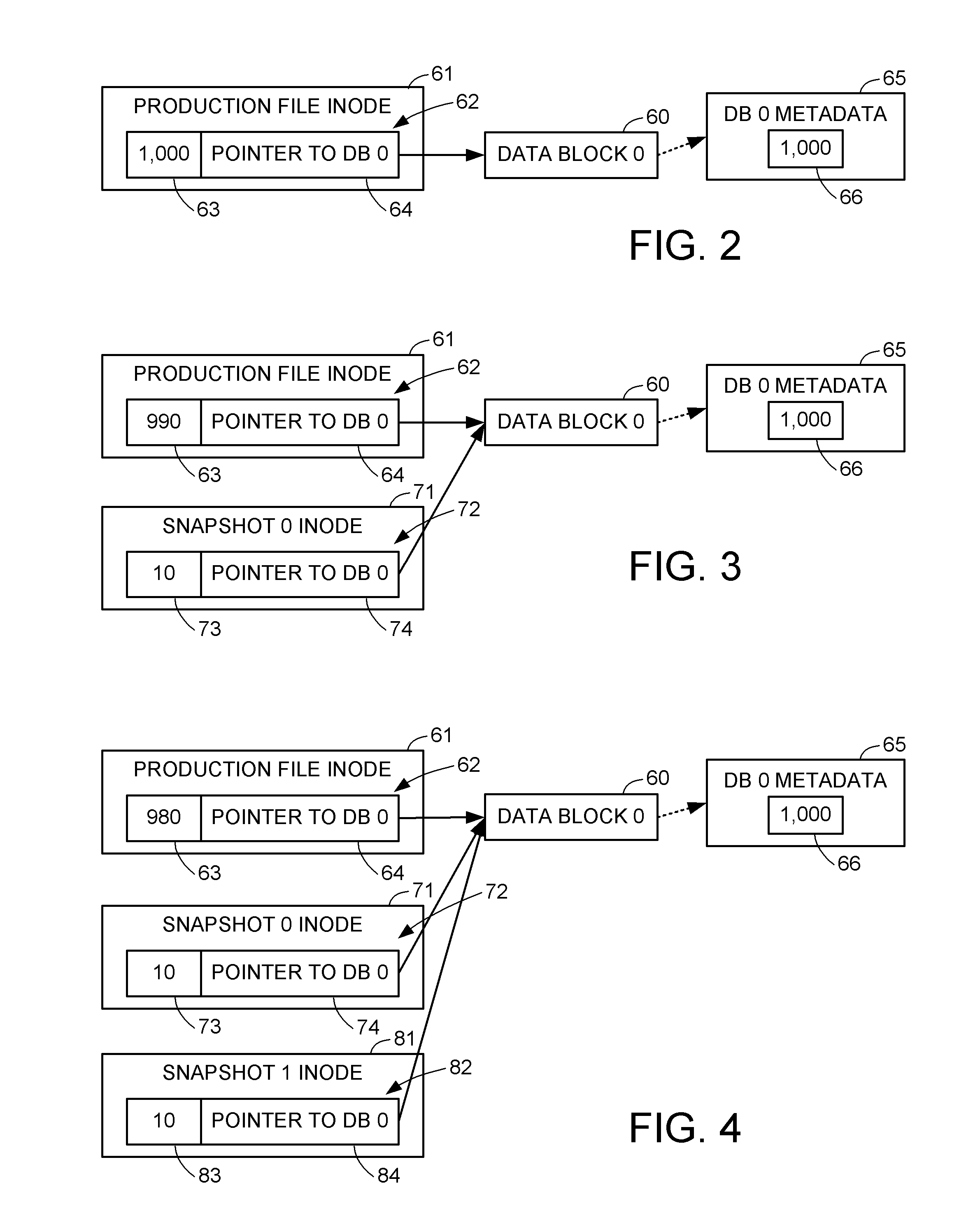

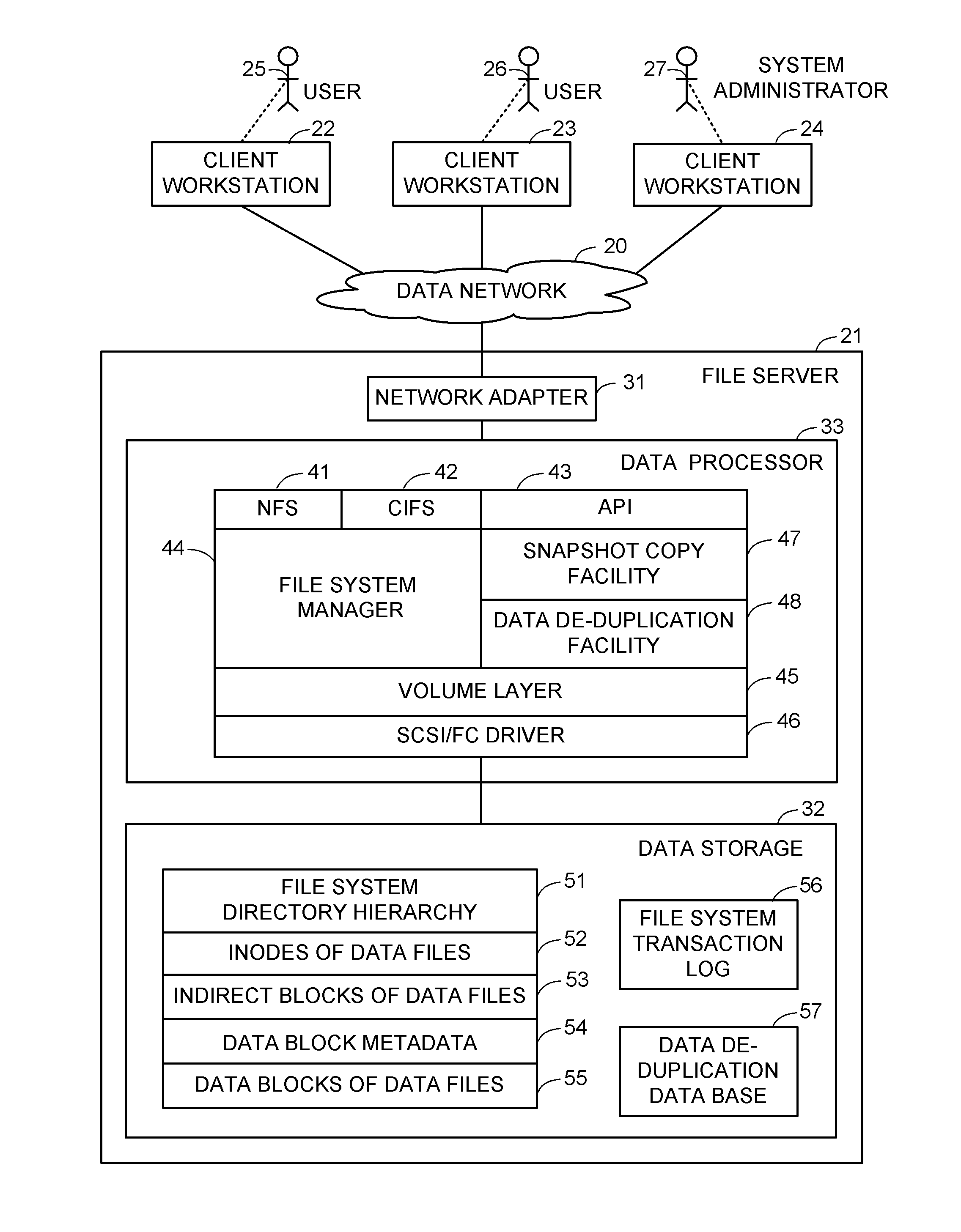

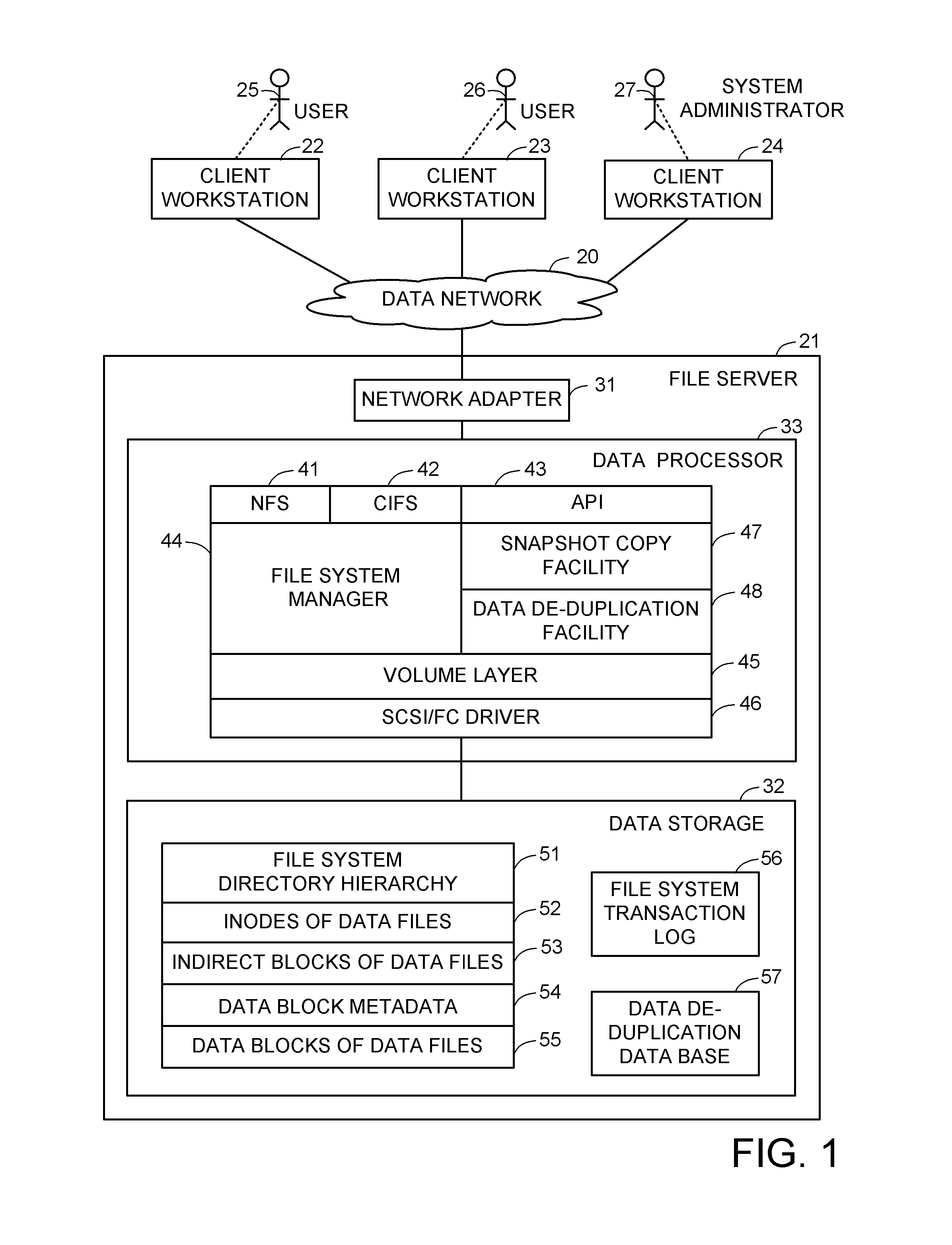

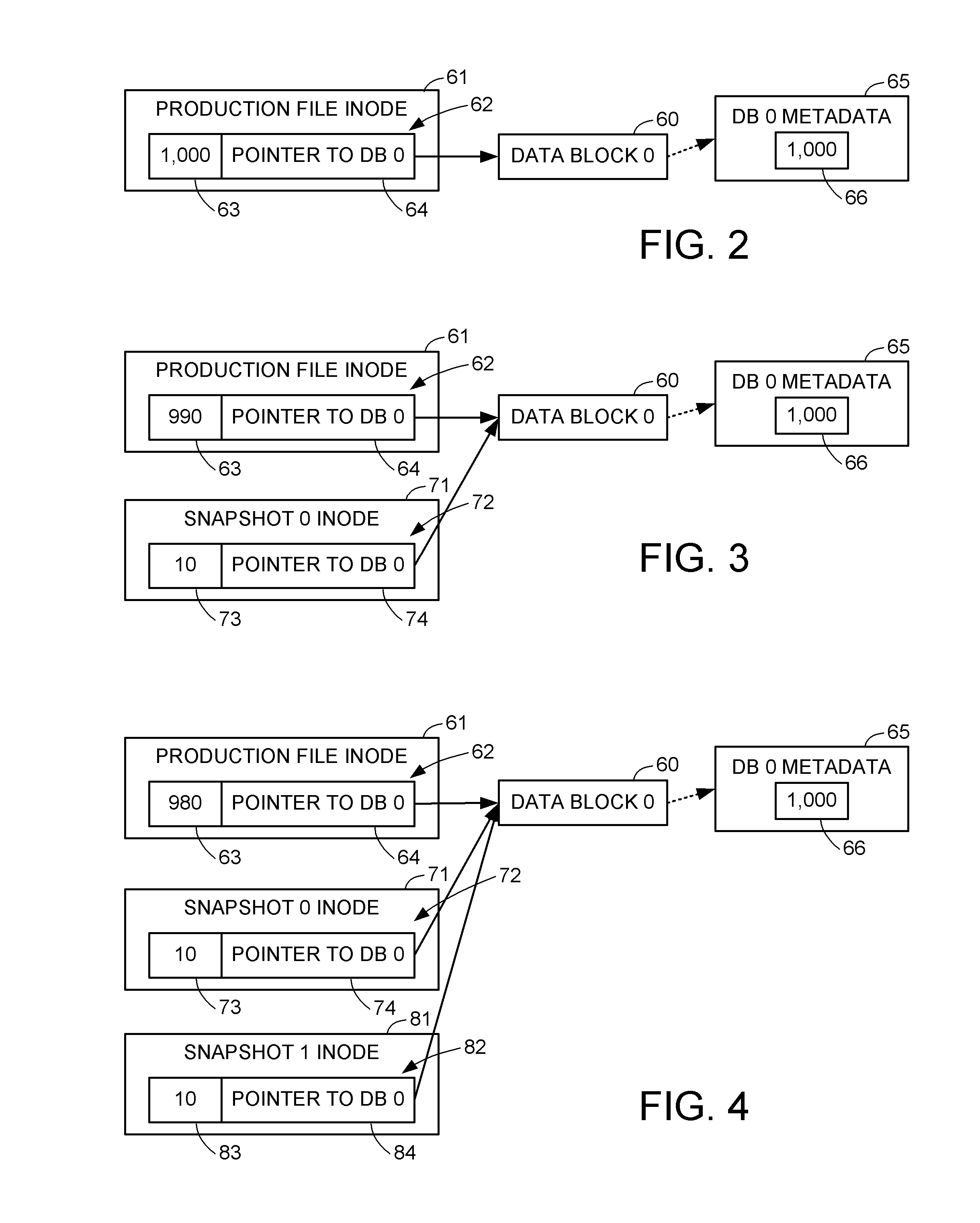

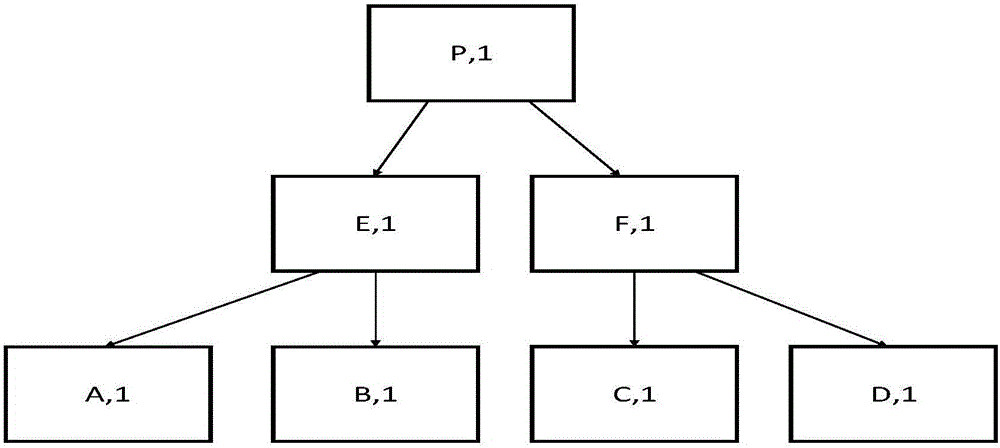

Delegated reference count base file versioning

Status: Active | Publication: US8412688B1 | Tags: Improve performance, Control performance, Digital data information retrieval, Digital data processing details, Metadata, Data deduplication

A snapshot copy facility maintains information indicating ownership and sharing of child nodes in the hierarchy of a file between successive versions by delegating reference counts to the parent-child relationships between the nodes, as indicated by pointers in the parent nodes. When a child node becomes shared between a parent node of the production file and a parent node of a snapshot copy, the delegated reference count is split among the parent nodes. This method is compatible with a conventional data de-duplication facility, and avoids a need to update reference counts in metadata of child nodes of a shared intermediate node upon splitting the shared intermediate node when writing to a production file.

Owner:EMC IP HLDG CO LLC
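
Delegating counts to parent-child pointers is closely related to weighted reference counting, which the sketch below uses as a stand-in: each pointer carries a share of the child's total weight, and sharing a child halves the pointer's share without writing the child's metadata. `FULL_WEIGHT` and all function names are hypothetical, and the patent's actual on-disk encoding may differ.

```python
FULL_WEIGHT = 1 << 10   # hypothetical initial weight given to a new child

class Child:
    def __init__(self, name):
        self.name = name
        self.total_weight = FULL_WEIGHT   # stored once in the child's metadata

class Pointer:
    """Parent-to-child pointer carrying a delegated share of the child's weight."""
    def __init__(self, child, weight):
        self.child, self.weight = child, weight

def new_child(name):
    return Pointer(Child(name), FULL_WEIGHT)

def share(ptr):
    """Split the delegated weight between the original parent and a new one
    (e.g. production file and snapshot) without touching child metadata."""
    if ptr.weight < 2:
        raise ValueError("weight exhausted; a real system would re-inflate it")
    half = ptr.weight // 2
    ptr.weight -= half
    return Pointer(ptr.child, half)

def is_shared(ptr):
    # The child is exclusively owned only if this pointer holds all its weight.
    return ptr.weight != ptr.child.total_weight

def drop(ptr):
    """Return the pointer's weight to the child; True when the child can be freed."""
    ptr.child.total_weight -= ptr.weight
    ptr.weight = 0
    return ptr.child.total_weight == 0
```

Only `drop` writes the child's metadata; taking a snapshot (`share`) touches the parent pointers alone.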

System and method for computer automatic memory management

Status: Active | Publication: US7584232B2 | Tags: Eliminates all suspension, Ensure correct execution, Data processing applications, Special data processing applications, Immediate release, Waste collection

The present invention is a method and system of automatic memory management (garbage collection). An application automatically marks objects referenced from the “extended root set”. At garbage collection time, the system starts its traversal from the marked objects. It can perform accurate garbage collection in a language without built-in garbage collection, such as C++. It also provides deterministic reclamation: an object and its resources are released immediately when the last reference to it is dropped. Application code automatically becomes entirely GC-safe and interruptible. A concurrent collector can be pauseless, with a predictable worst-case latency on the order of microseconds. Memory usage is efficient, and the cost of reference counting is significantly reduced.

Owner:GUO MINGNAN

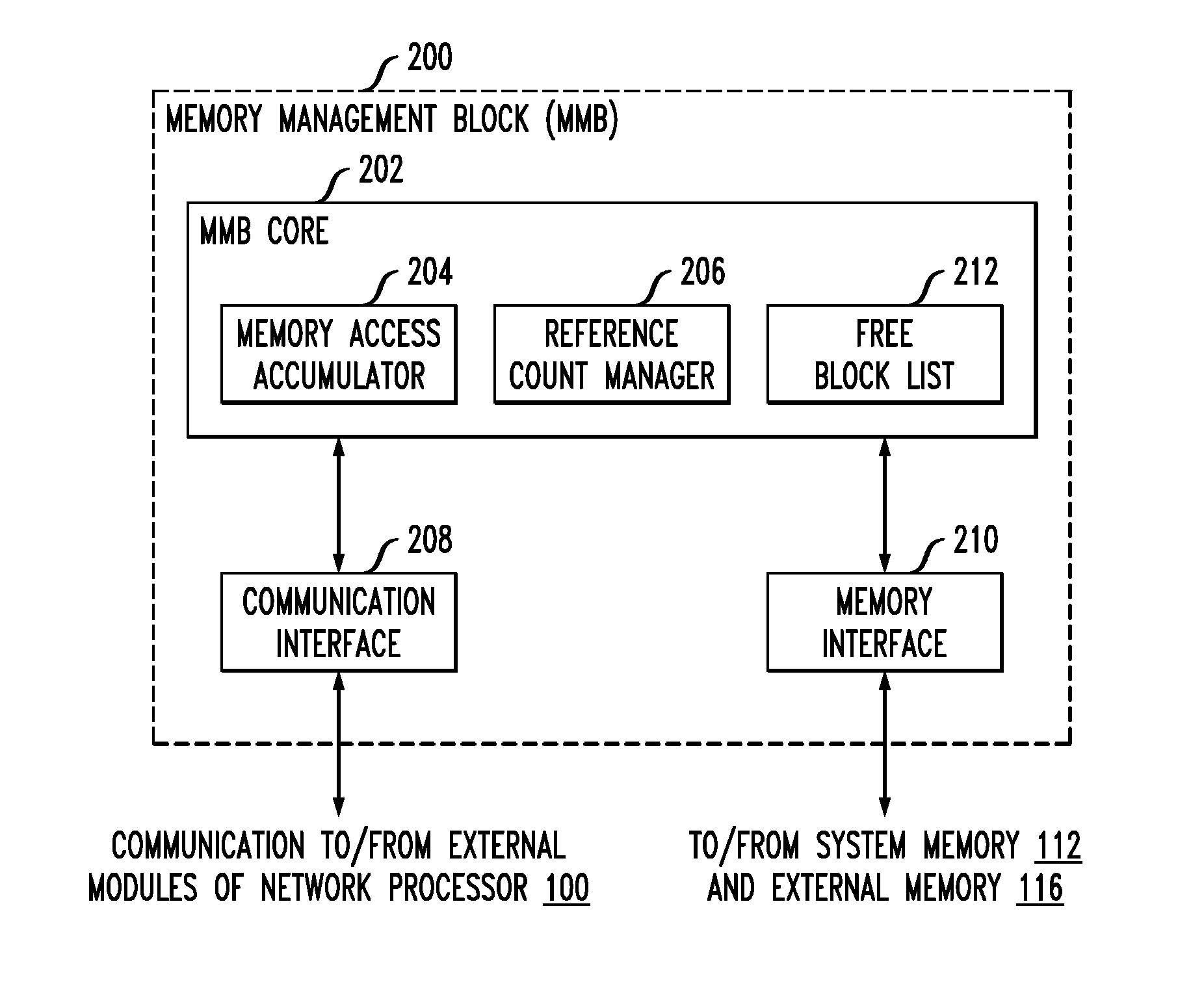

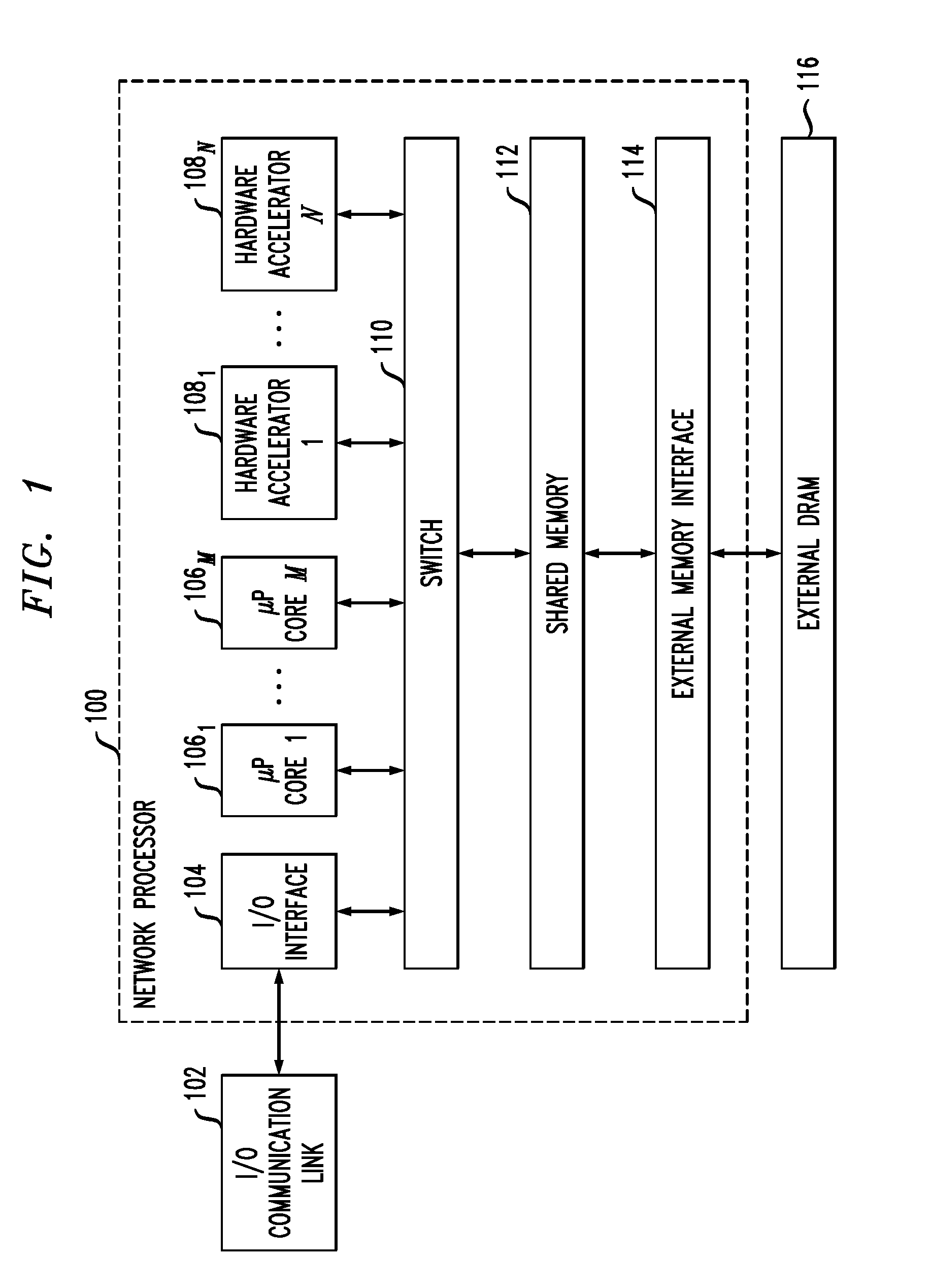

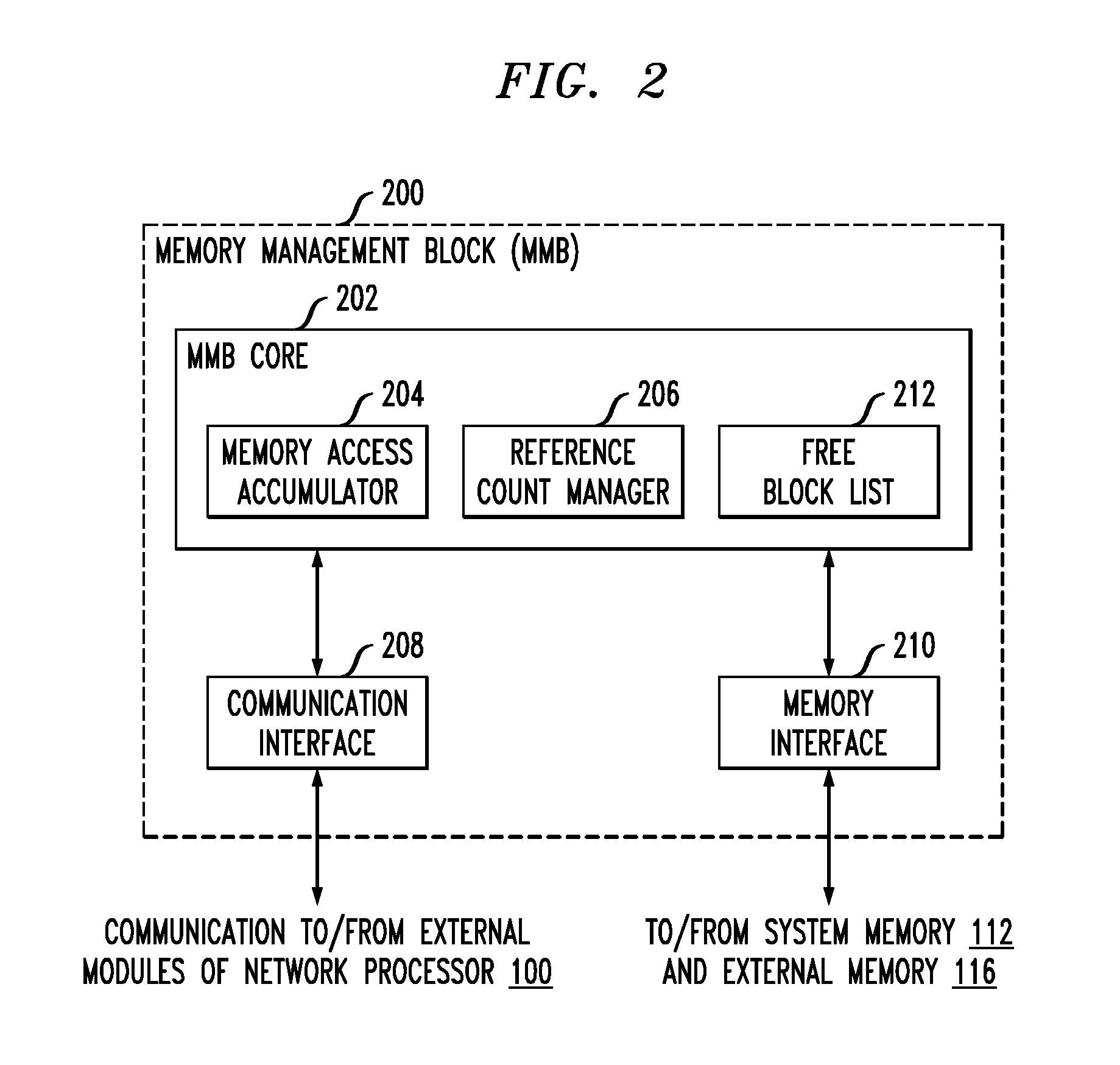

Memory manager for a network communications processor architecture

Described embodiments provide a memory manager for a network processor having a plurality of processing modules and a shared memory. The memory manager allocates blocks of the shared memory to requesting ones of the plurality of processing modules. A free block list tracks availability of memory blocks of the shared memory. A reference counter maintains, for each allocated memory block, a reference count indicating a number of access requests to the memory block by ones of the plurality of processing modules. The reference count is located with data at the allocated memory block. For subsequent access requests to a given memory block concurrent with processing of a prior access request to the memory block, a memory access accumulator (i) accumulates an incremental value corresponding to the subsequent access requests, (ii) updates the reference count associated with the memory block, and (iii) updates the memory block with the accumulated result.

Owner:INTEL CORP
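
The free-block list, per-block reference count, and access accumulator can be modeled sequentially as below. `SharedMemoryManager` and its methods are hypothetical names; in the described hardware, updates arriving while an earlier one is in flight are merged by the accumulator, which here is simply a pending delta that `flush` applies in one step.

```python
from collections import deque

class SharedMemoryManager:
    """Free-block list plus per-block reference counts with accumulated updates."""

    def __init__(self, num_blocks):
        self.free = deque(range(num_blocks))  # free block list
        self.refcount = {}   # in hardware this is stored with the block's data
        self.accum = {}      # block -> accumulated delta not yet applied

    def allocate(self, refs=1):
        block = self.free.popleft()
        self.refcount[block] = refs
        return block

    def request(self, block, delta):
        """Accumulate a reference update that arrives while another is in flight."""
        self.accum[block] = self.accum.get(block, 0) + delta

    def flush(self, block):
        """Apply the accumulated delta with a single read-modify-write."""
        self.refcount[block] += self.accum.pop(block, 0)
        if self.refcount[block] == 0:
            del self.refcount[block]
            self.free.append(block)   # block returns to the free list
```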

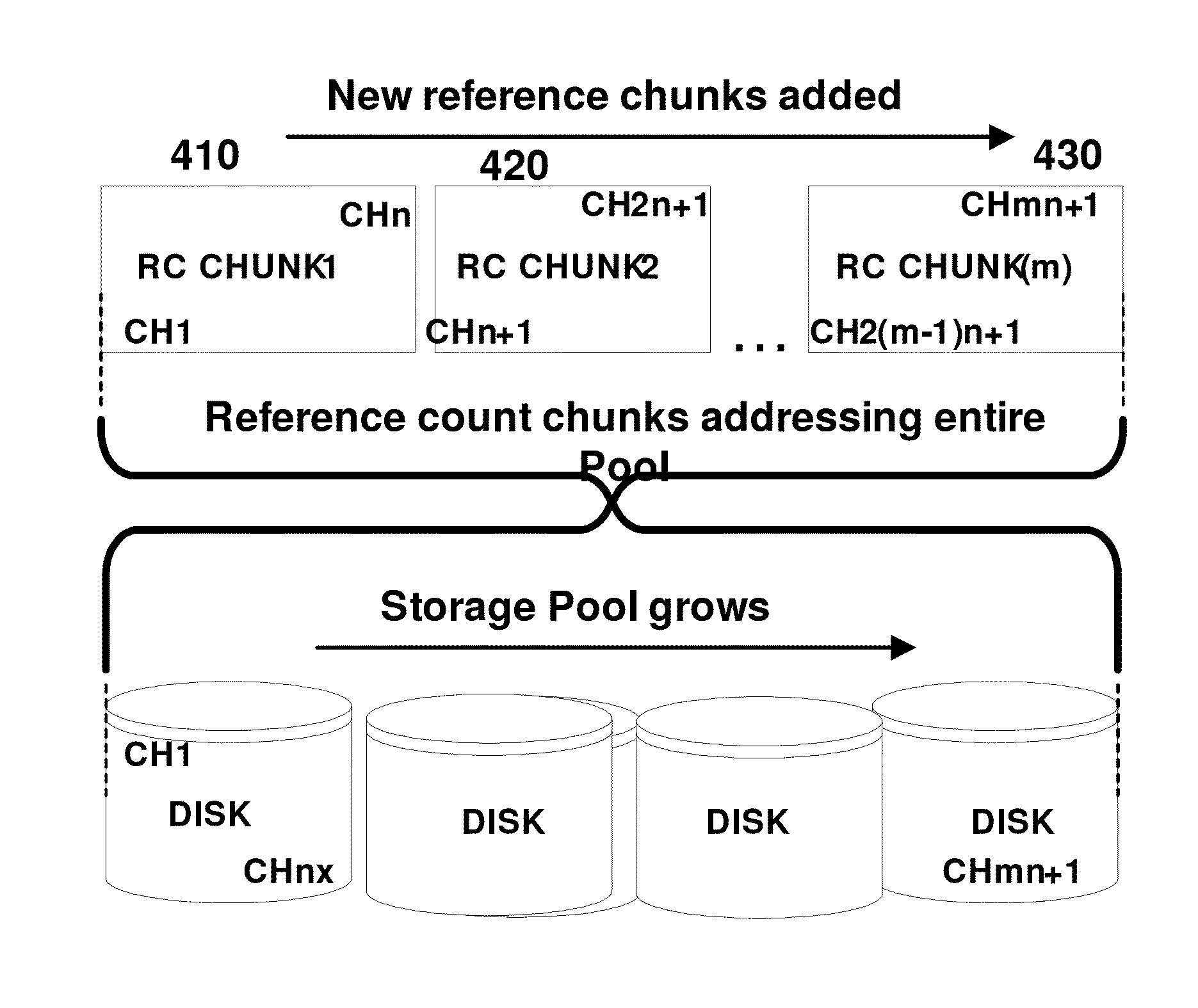

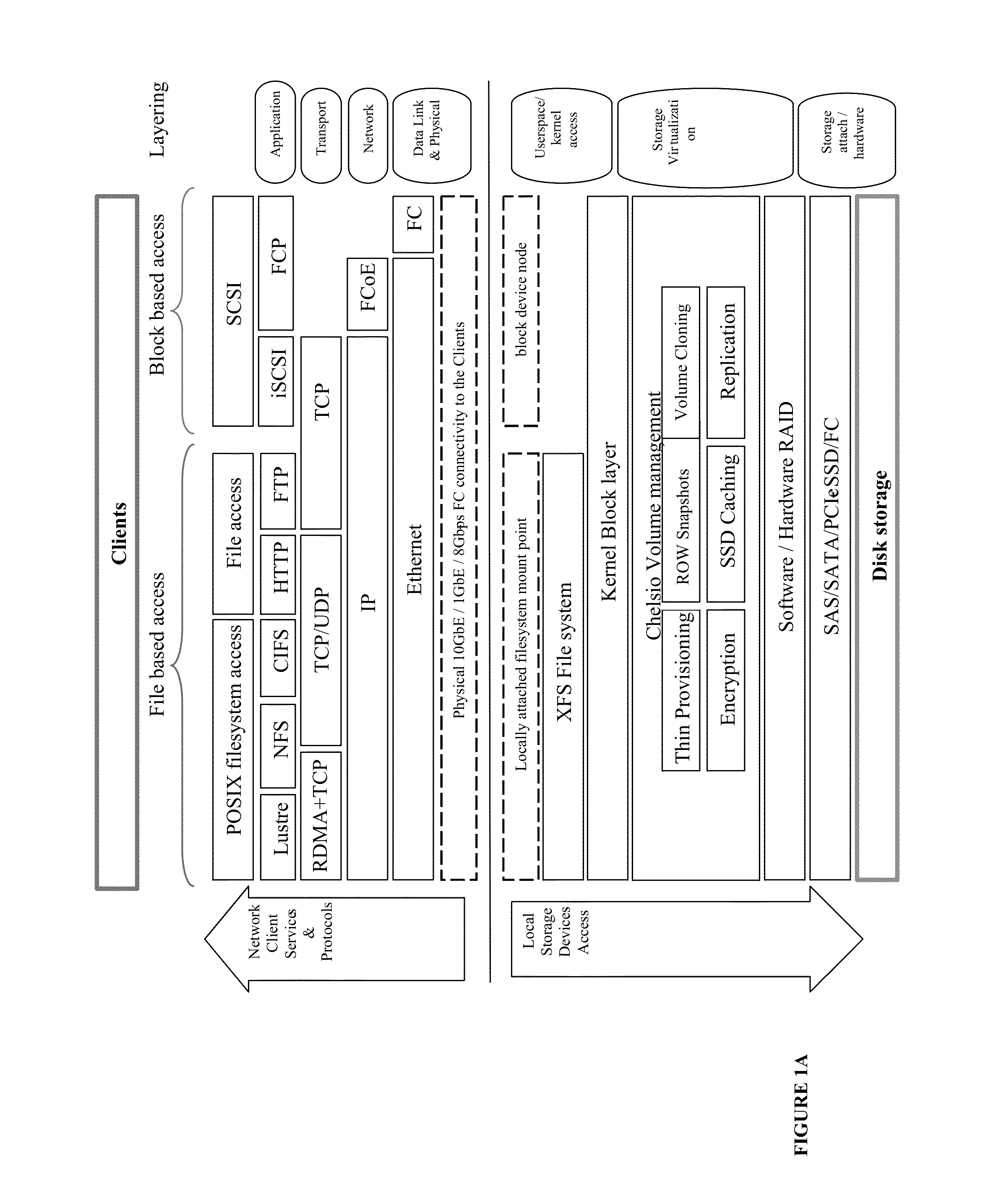

Thin provisioning row snapshot with reference count map

Status: Active | Publication: US8806154B1 | Tags: Reduce redundant storage, Increase the number of, Input/output to record carriers, Error detection/correction, Thin provisioning, Storage pool

Owner:CHELSIO COMMUNICATIONS

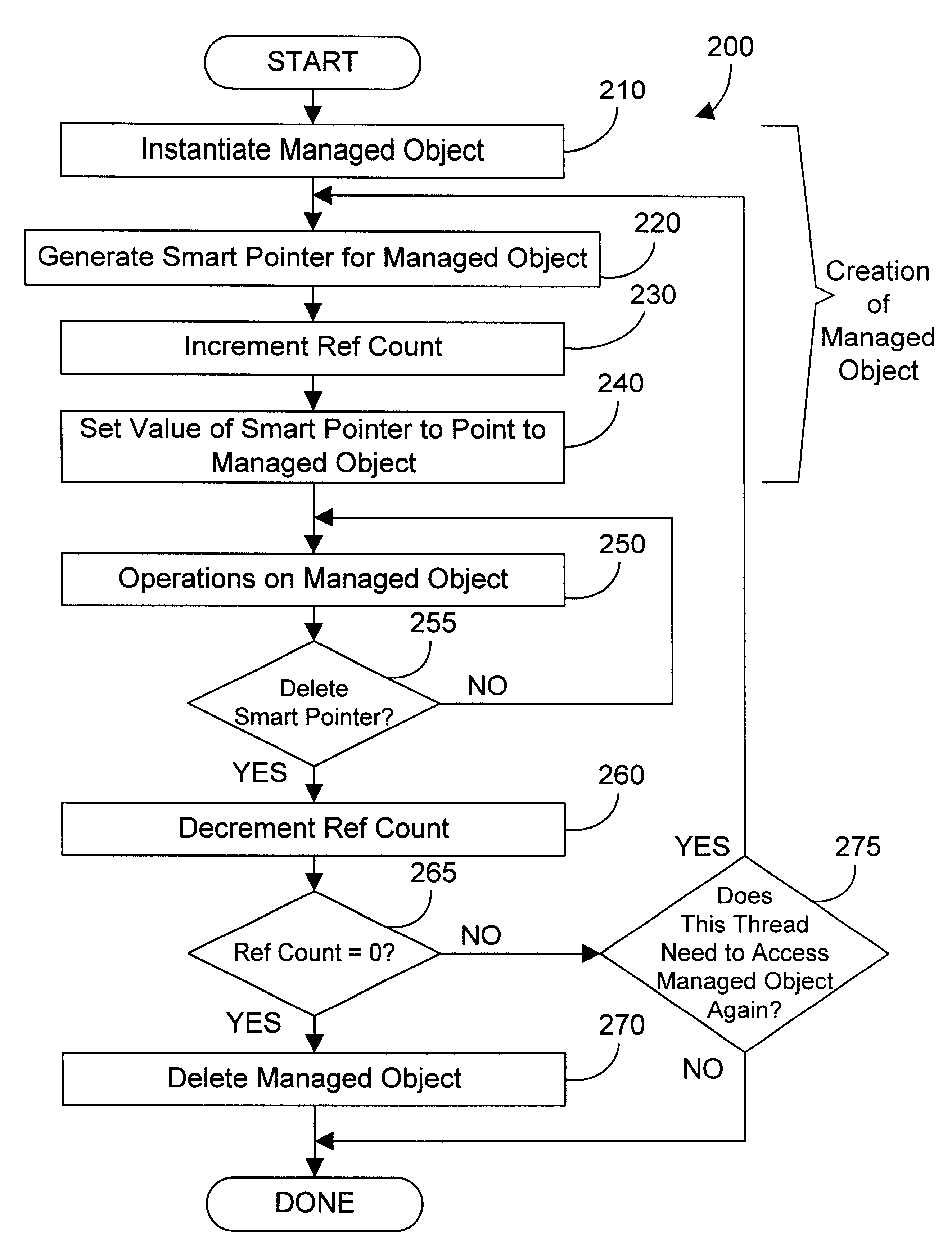

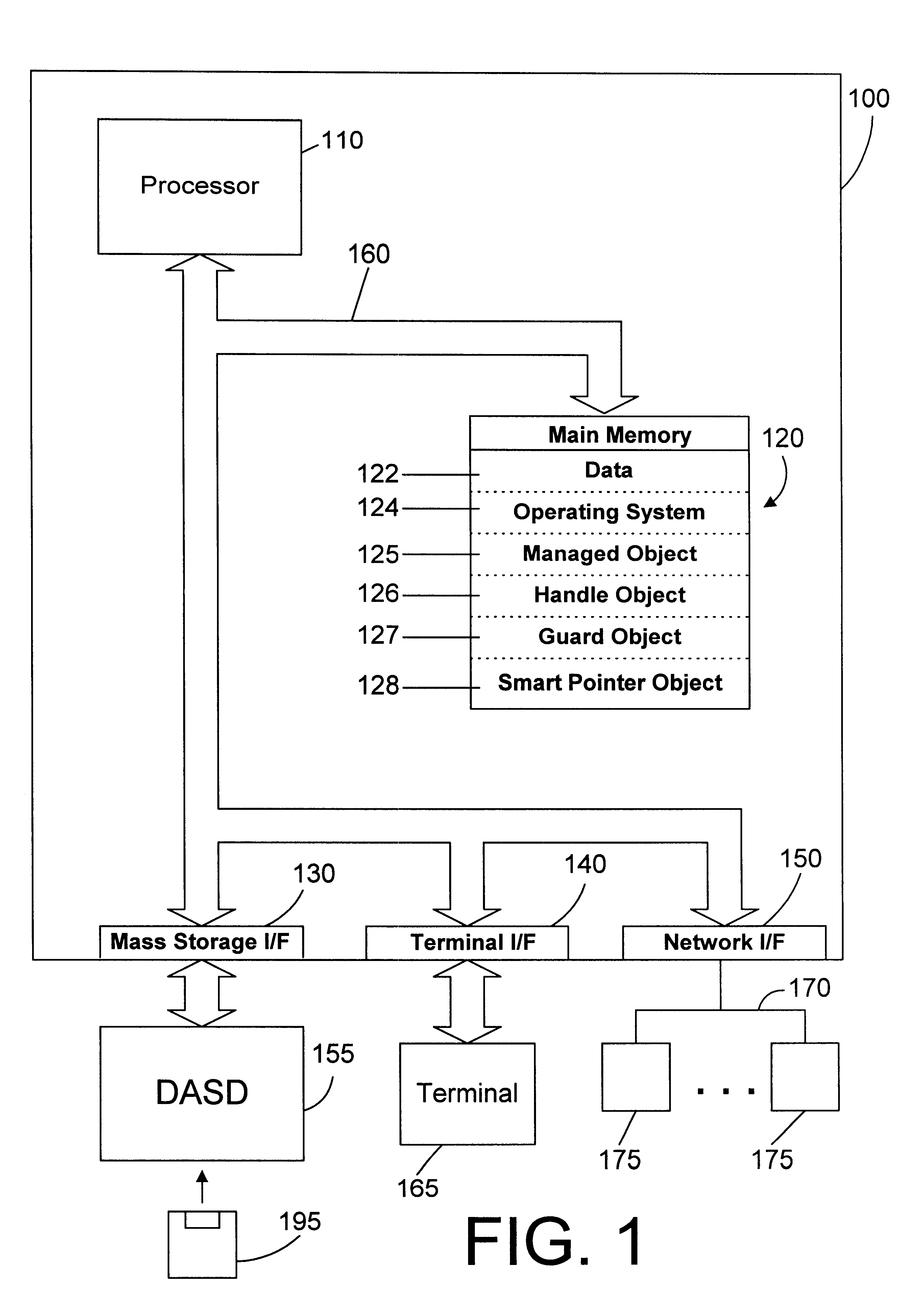

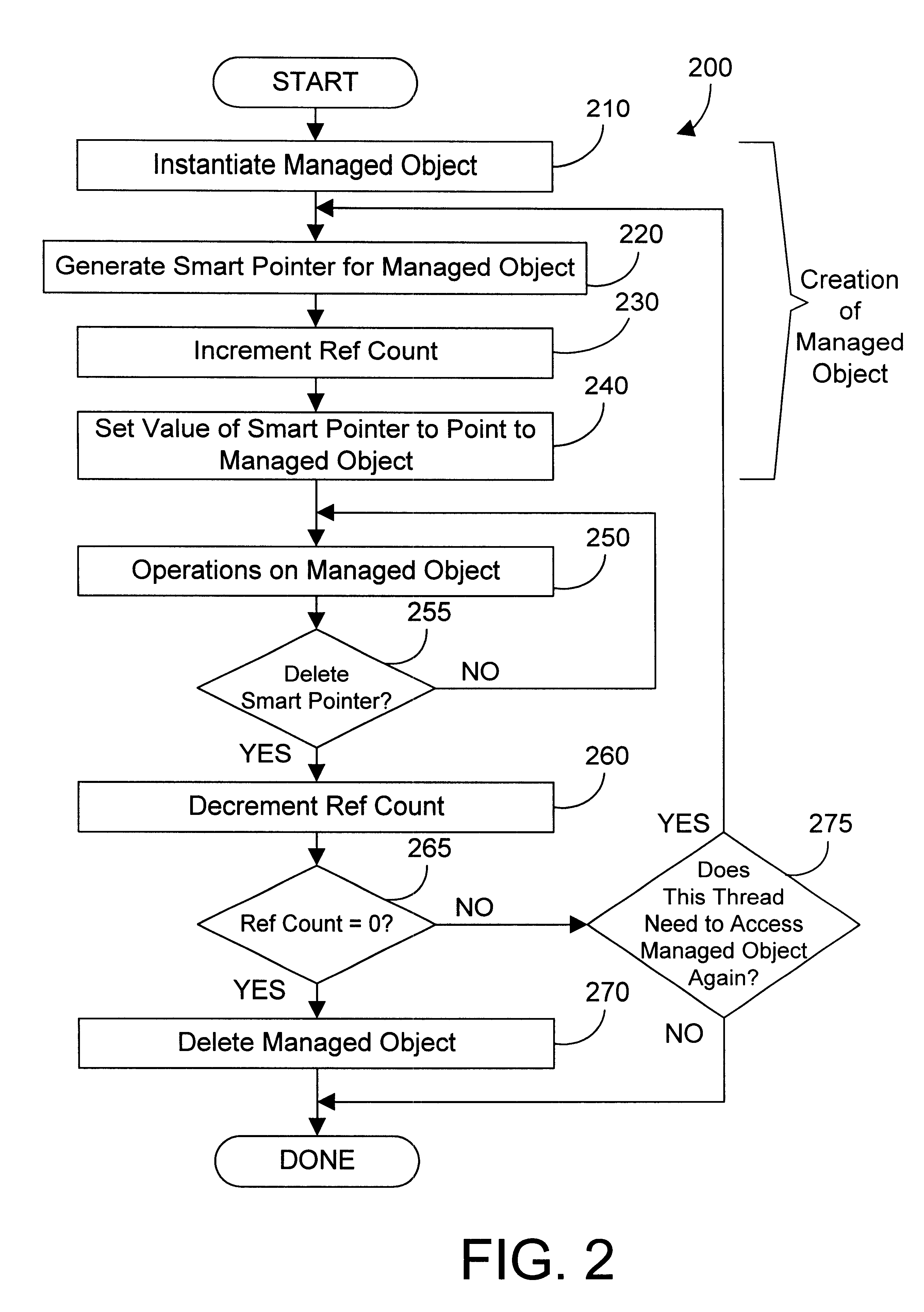

Apparatus and method for accessing an object oriented object using a smart passive reference

Status: Inactive | Publication: US6366932B1 | Tags: Improve reusability, Easy to use, Data processing applications, Memory addressing/allocation/relocation, Computer hardware, Engineering

A smart passive reference to an object oriented object provides control over creation and deletion of the object it references. A reference count is incremented when an active reference to an object is created, and is decremented when an active reference to the object is deleted. The smart passive reference allows suspending the activity of a thread until no threads have active references to the object. In addition, the smart passive reference can be used to invalidate the smart passive references in other threads, thereby allowing a thread to obtain exclusive access to an object. The smart passive reference also provides an interface to cause the managed object to be deleted when the reference count goes to zero.

Owner:IBM CORP
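
The behaviour of waiting until no thread holds an active reference can be sketched with a condition variable from Python's `threading` module. `ManagedObject` and its method names are assumptions, and the sketch covers only counting, waiting, and deletion at zero, not the invalidation of other threads' passive references.

```python
import threading

class ManagedObject:
    """Counts active references; other threads can wait for the count to drain."""

    def __init__(self, value):
        self.value = value
        self.deleted = False
        self._active = 0
        self._cond = threading.Condition()

    def acquire_active(self):
        with self._cond:
            if self.deleted:
                raise RuntimeError("object already deleted")
            self._active += 1
            return self.value

    def release_active(self):
        with self._cond:
            self._active -= 1
            if self._active == 0:
                self._cond.notify_all()   # wake threads waiting for zero

    def wait_until_idle(self, timeout=None):
        """Suspend the caller until no thread holds an active reference."""
        with self._cond:
            return self._cond.wait_for(lambda: self._active == 0, timeout)

    def delete_when_idle(self):
        """Wait for the active count to reach zero, then delete the object."""
        with self._cond:
            self._cond.wait_for(lambda: self._active == 0)
            self.deleted = True
            self.value = None
```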

Method and system for a guest physical address virtualization in a virtual machine environment

Status: Inactive | Publication: US7334076B2 | Tags: Memory architecture accessing/allocation, Resource allocation, Virtualization, Physical address

Owner:MICROSOFT TECH LICENSING LLC

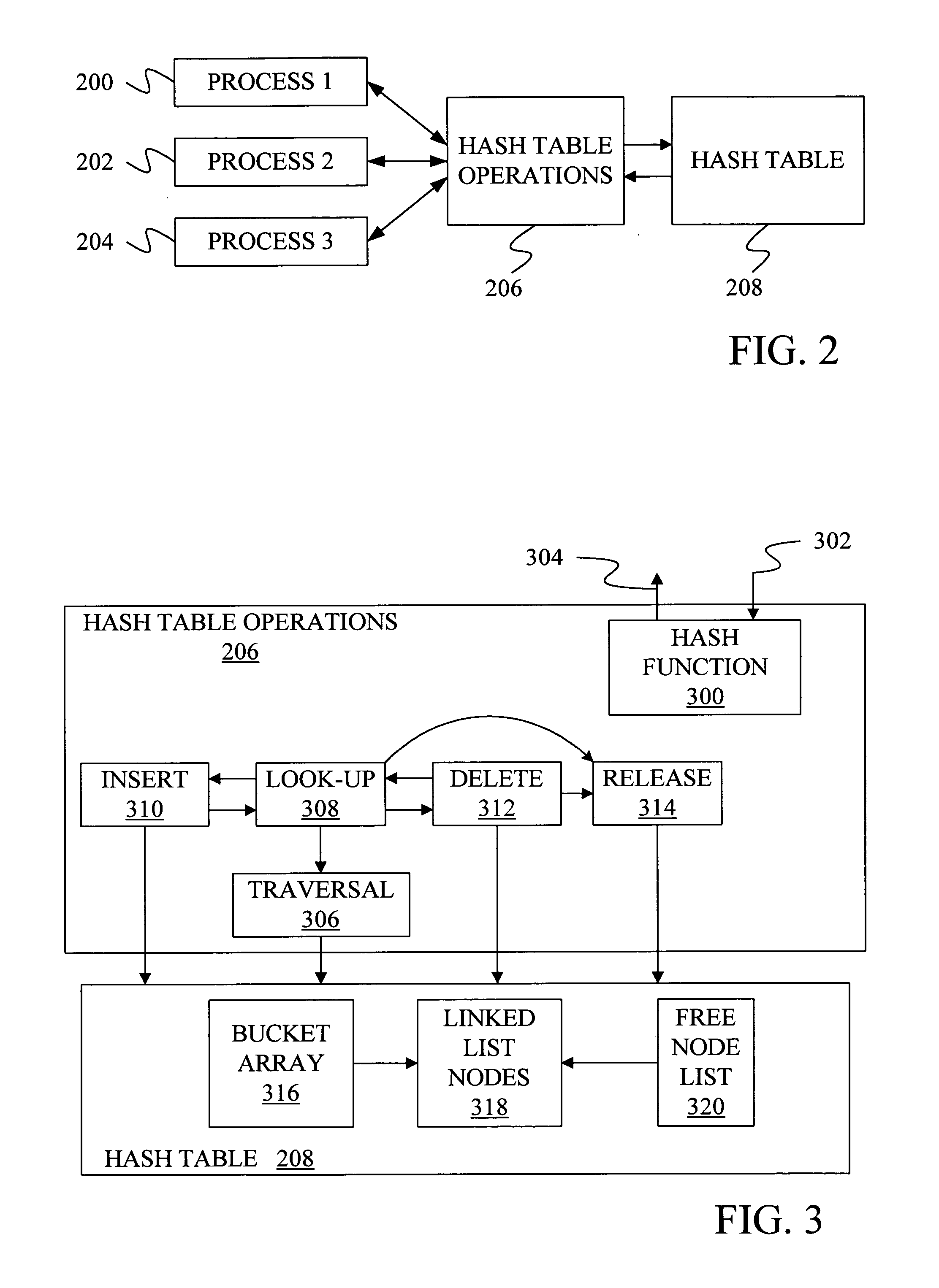

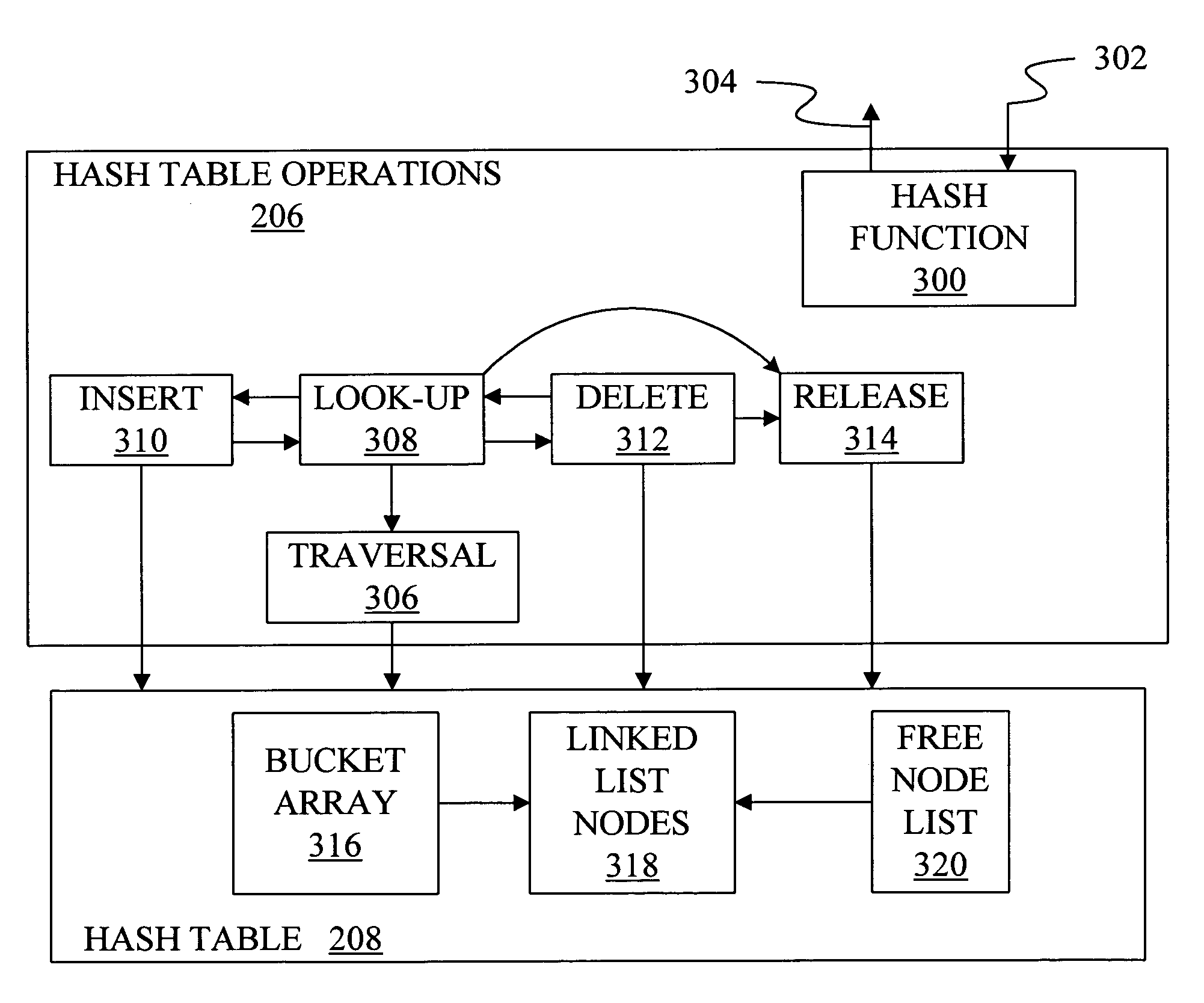

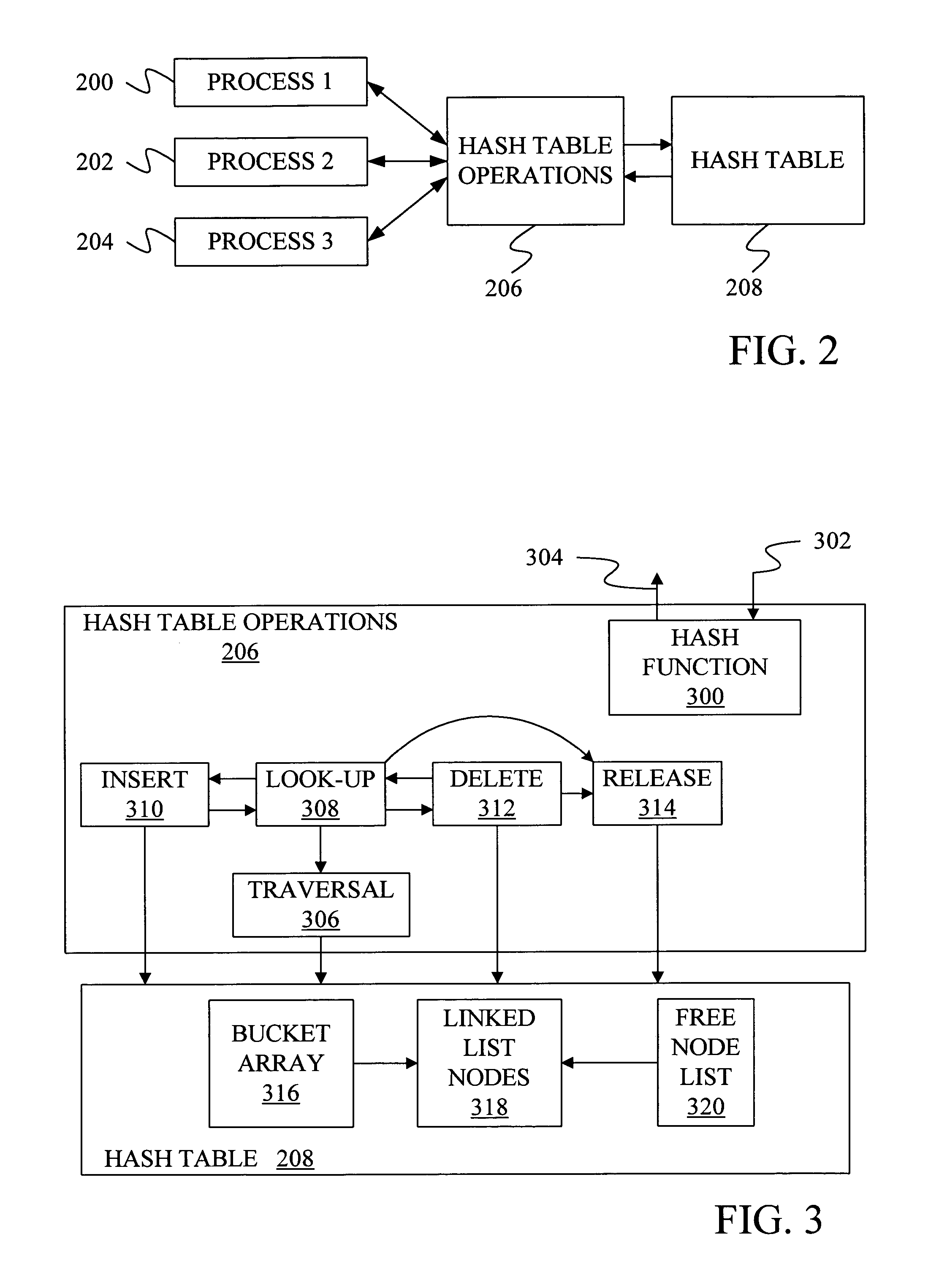

Method and apparatus for lock-free, non-blocking hash table

Status: Inactive | Publication: US20050071335A1 | Tags: Speed up hash list traversal, Speed, Data processing applications, Digital data information retrieval, Theoretical computer science, Hash table

A method and apparatus are provided for an efficient lock-free, non-blocking hash table. Under one aspect, a linked list of nodes is formed in the hash table, where each node includes a protected pointer to the next node in the list and a reference counter indicating the number of references currently made to the node. Before a node can be destroyed, its reference counter must be zero and none of the protected pointers in the linked list may point at it. In another aspect of the invention, searching the hash table for a node with a particular key involves examining the hash signatures of nodes along a linked list and comparing a node's key with the search key only if the node's hash signature matches the search hash signature.

Owner:MICROSOFT TECH LICENSING LLC
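
The two aspects of this abstract, a per-node reference counter that must be zero before destruction and a cheap hash-signature check before the full key comparison, can be shown in a single-threaded sketch. The lock-free parts (protected pointers, compare-and-swap) are deliberately omitted, and `Node`, `signature`, `find`, and `can_destroy` are hypothetical names.

```python
class Node:
    def __init__(self, key, value, sig, next_node=None):
        self.key, self.value = key, value
        self.sig = sig          # cached hash signature of the key
        self.next = next_node
        self.refs = 0           # readers currently holding this node

def signature(key):
    return hash(key) & 0xFFFFFFFF

def find(head, key):
    """Walk one bucket's list; compare full keys only when signatures match."""
    sig = signature(key)
    node, key_compares = head, 0
    while node is not None:
        if node.sig == sig:
            key_compares += 1
            if node.key == key:
                return node, key_compares
        node = node.next
    return None, key_compares

def can_destroy(node, bucket_heads):
    """A node may be freed only when no reader holds it (refs == 0) and no
    list pointer still leads to it."""
    if node.refs != 0:
        return False
    for head in bucket_heads:
        current = head
        while current is not None:
            if current is node:   # still reachable through some pointer
                return False
            current = current.next
    return True
```

The signature check matters when keys are expensive to compare (long strings, composite keys): most non-matching nodes are skipped after one integer comparison.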

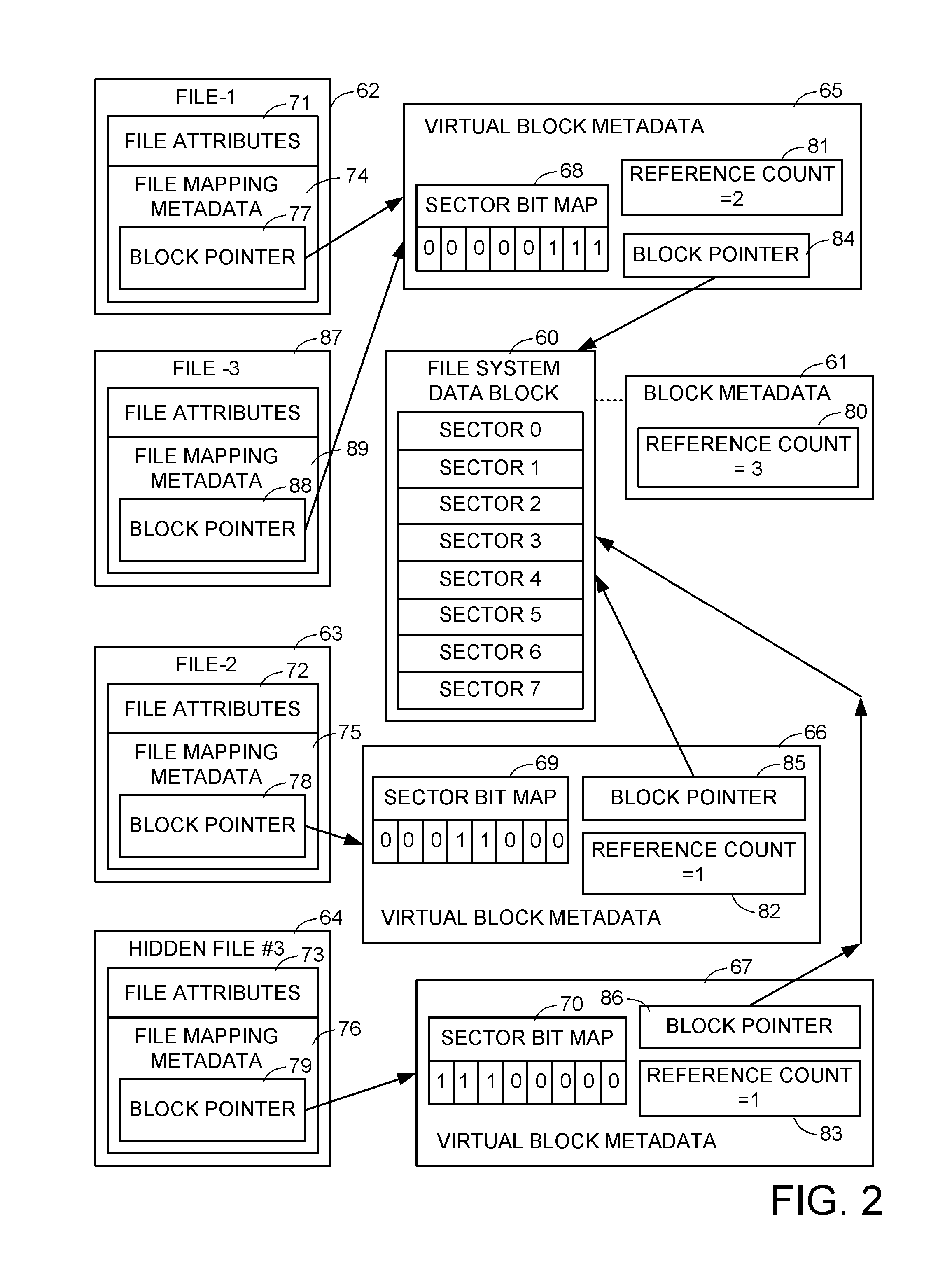

Delegated reference count base file versioning

Status: Active | Publication: US8032498B1 | Tags: Improve performance, Control performance, Digital data information retrieval, Digital data processing details, Parallel computing, Inode

A snapshot copy facility maintains information indicating block ownership and sharing between successive versions by delegating block reference counts to parent-child relationships between the file system blocks, as indicated by block pointers in inodes and indirect blocks. When a child block becomes shared between a parent block of the production file and a parent block of a snapshot copy, the delegated reference count is split among the parent blocks. This method is compatible with a conventional data de-duplication facility, and avoids a need to update block reference counts in block metadata of child blocks of a shared indirect block upon splitting the shared indirect block when writing to a production file.

Owner:EMC IP HLDG CO LLC

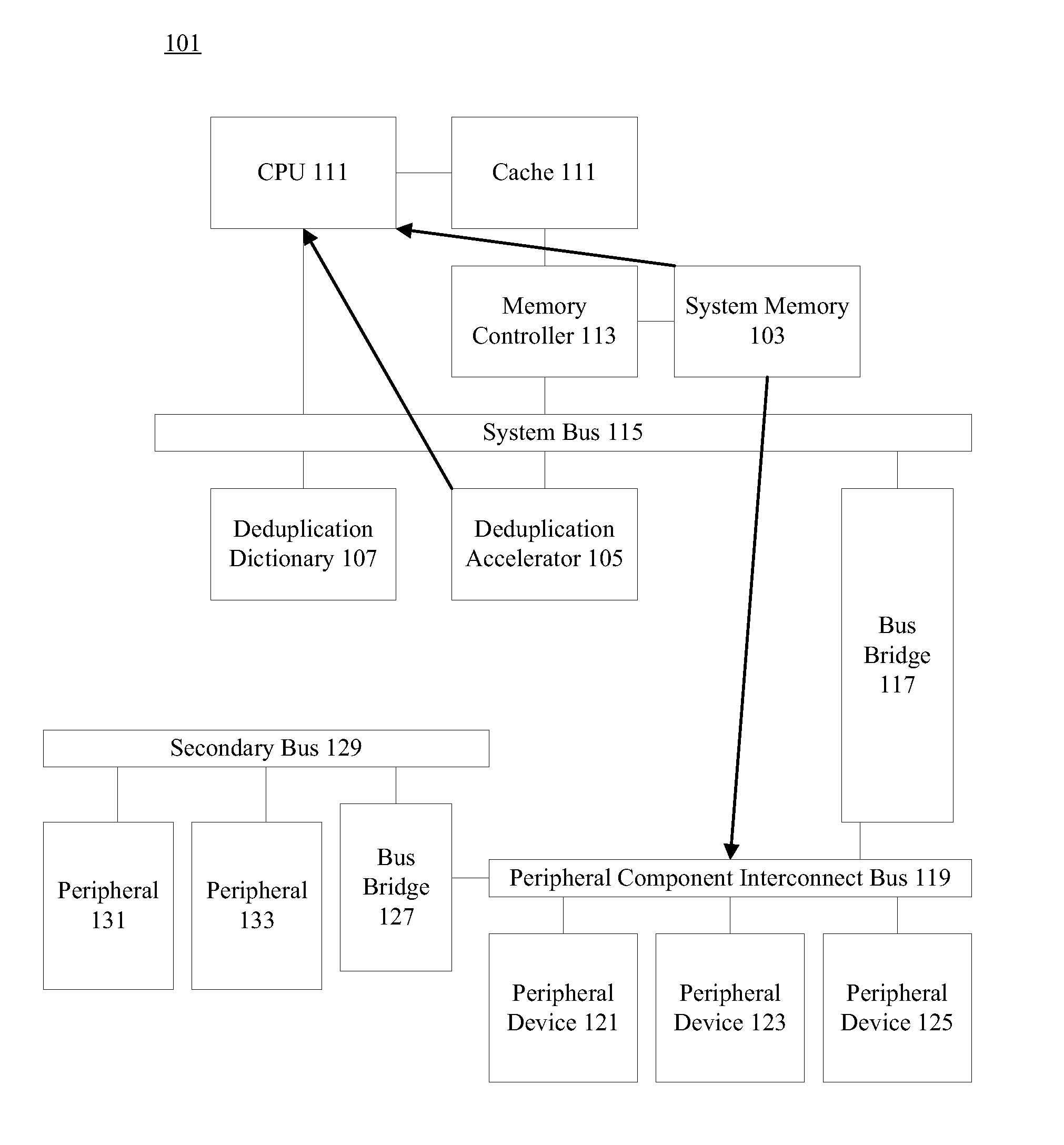

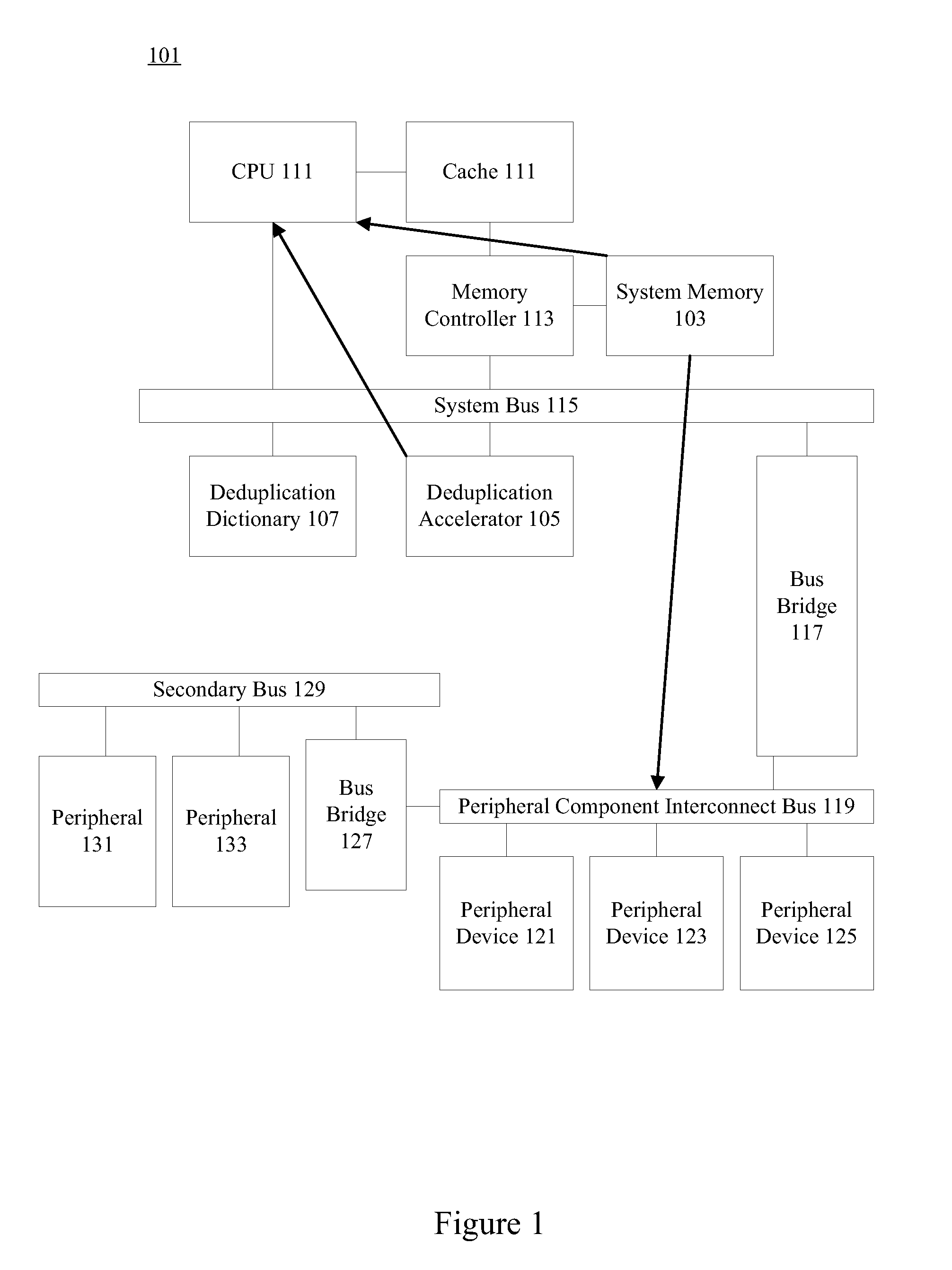

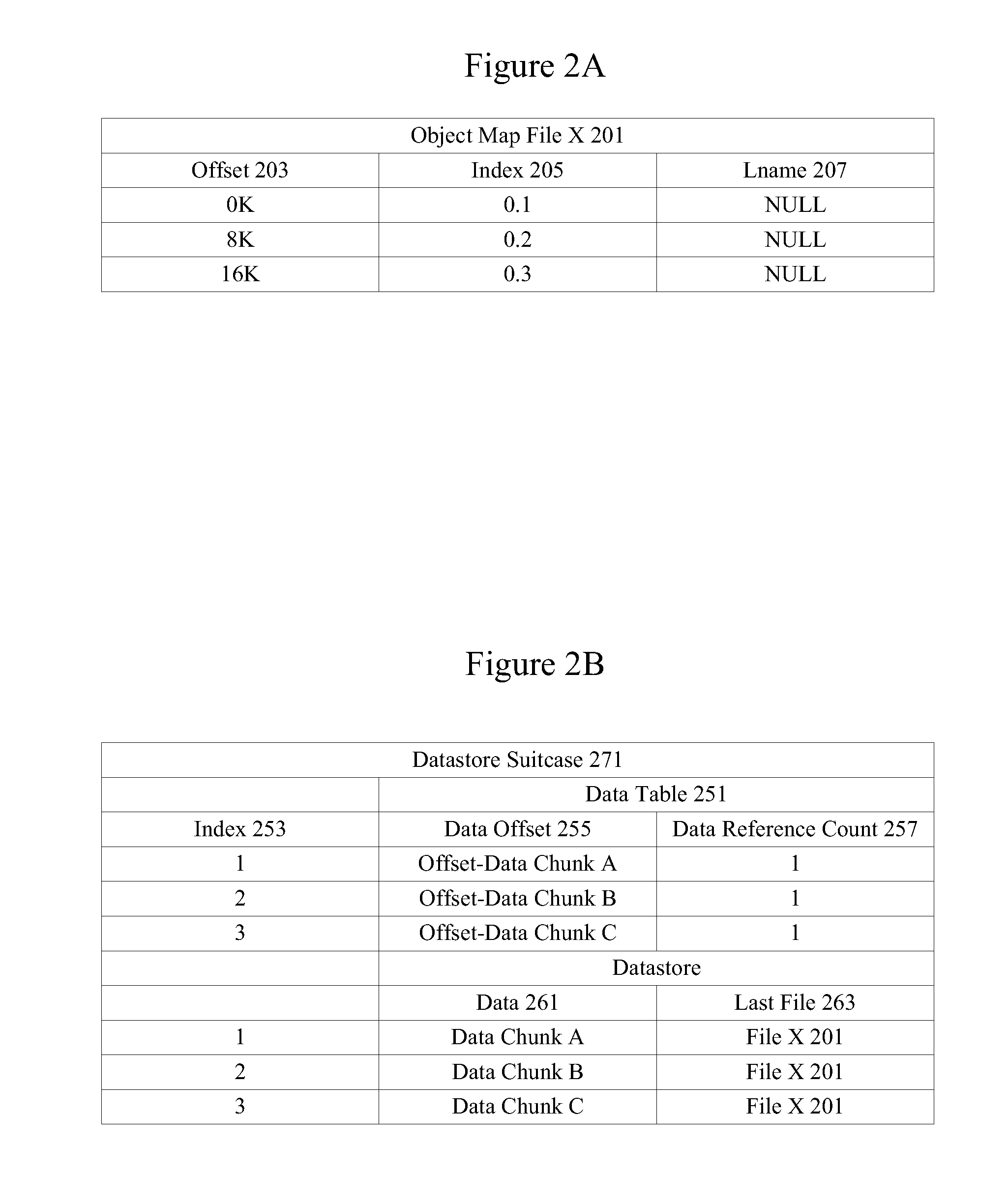

Accelerated deduplication

Status: Active | Publication: US20130018853A1 | Tags: Digital data information retrieval, Digital data processing details, Direct memory access, Data stream

Mechanisms are provided for accelerated data deduplication. A data stream is received at an input interface and maintained in memory. Chunk boundaries are detected and chunk fingerprints are calculated using a deduplication accelerator while a processor maintains a state machine. A deduplication dictionary is accessed using a chunk fingerprint to determine whether the associated data chunk has previously been written to persistent memory. If the data chunk has previously been written, reference counts may be updated, but the data chunk need not be stored again. Otherwise, datastore suitcases, filemaps, and the deduplication dictionary may be updated to reflect storage of the data chunk. Direct memory access (DMA) addresses are provided to transfer a chunk directly to an output interface as needed.

Owner:QUEST SOFTWARE INC

Method and apparatus for lock-free, non-blocking hash table

Status: Inactive | Publication: US6988180B2 | Tags: Speed, Data processing applications, Digital data information retrieval, Theoretical computer science, Hash table

A method and apparatus are provided for an efficient lock-free, non-blocking hash table. Under one aspect, a linked list of nodes is formed in the hash table, where each node includes a protected pointer to the next node in the list and a reference counter indicating the number of references currently made to the node. Before a node can be destroyed, its reference counter must be zero and none of the protected pointers in the linked list may point at it. In another aspect of the invention, searching the hash table for a node with a particular key involves examining the hash signatures of nodes along a linked list and comparing a node's key with the search key only if the node's hash signature matches the search hash signature.

Owner:MICROSOFT TECH LICENSING LLC

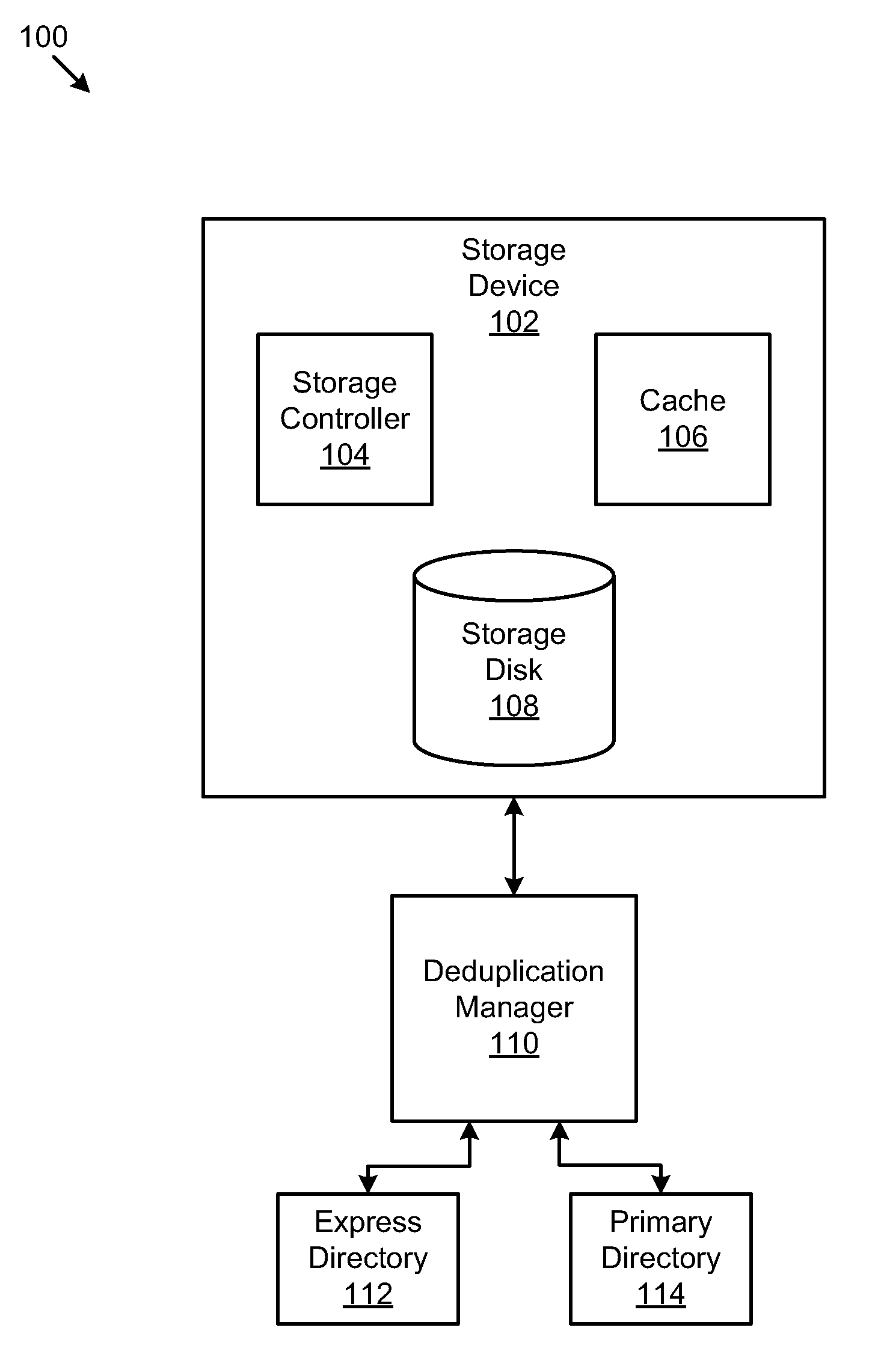

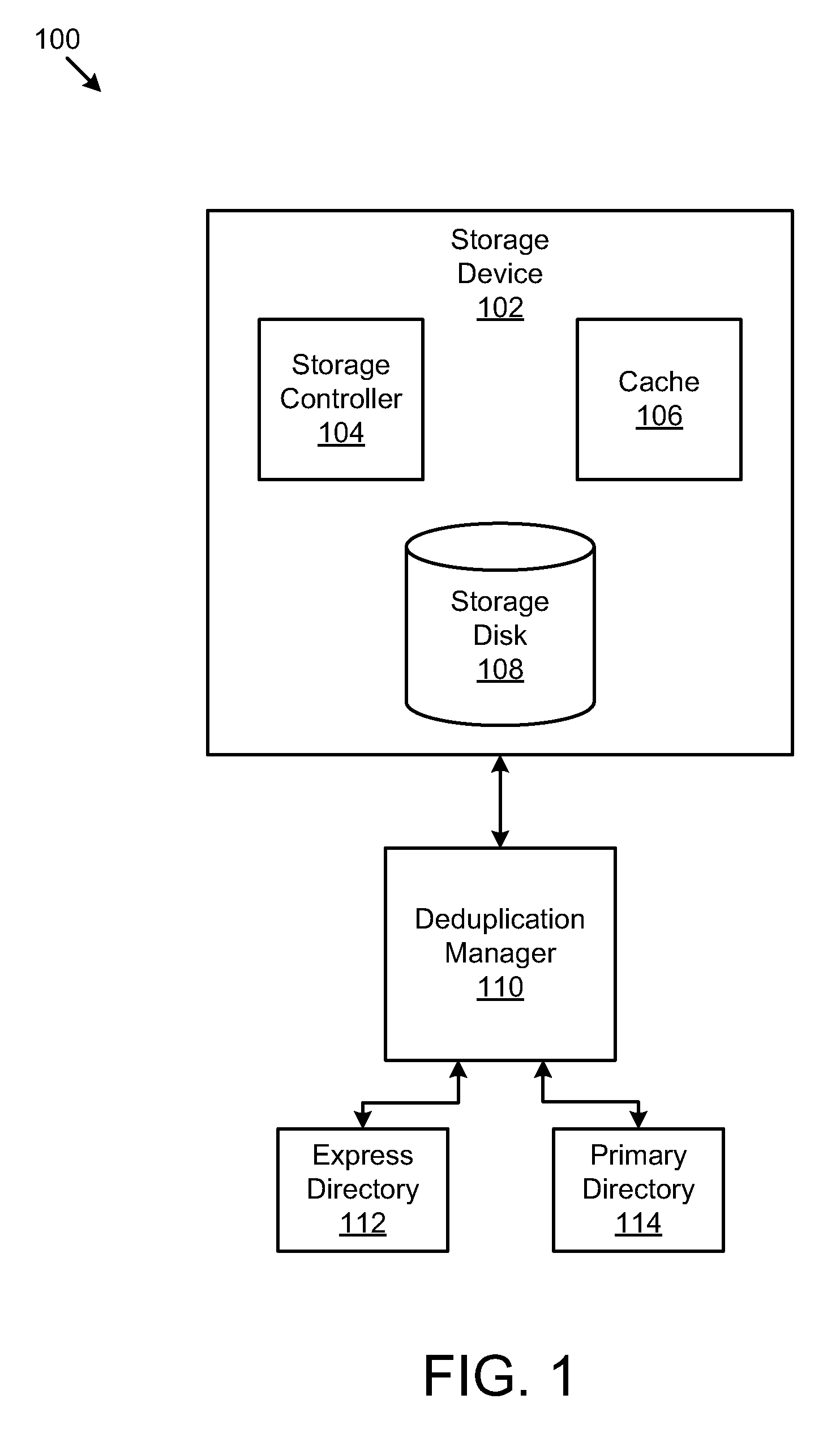

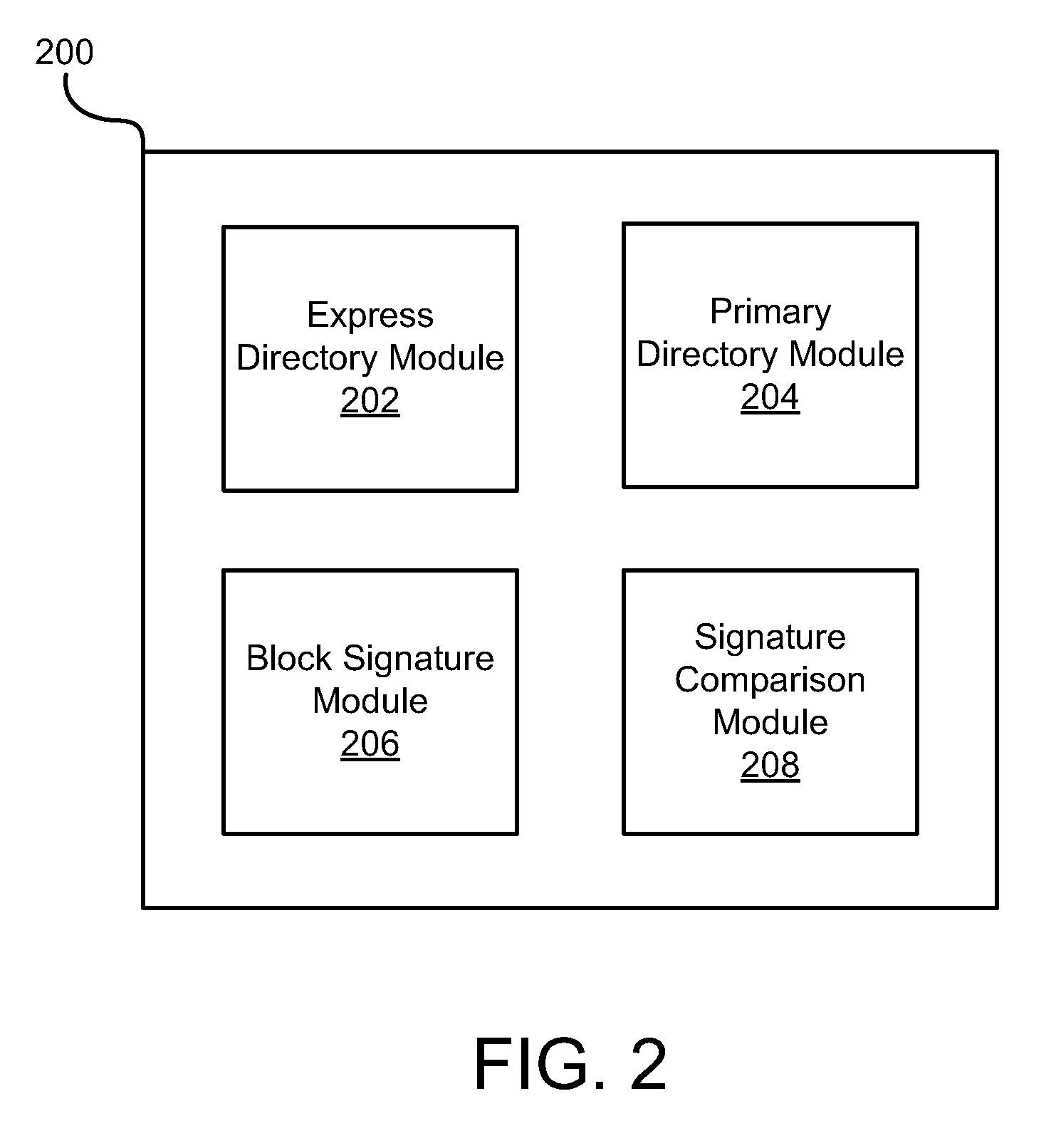

Apparatus, System, and Method for Enhanced Block-Level Deduplication

Status: Active | Publication: US20110029497A1 | Tags: Special data processing applications, Input/output processes for data processing, Parallel computing, Block match

An apparatus, system, and method are disclosed for enhanced block-level deduplication. A computer program product stores one or more express block signatures in an express directory that meet a reference count requirement. The computer program product also stores one or more primary block signatures and one or more reference counts for the primary block signatures in a primary directory. Each primary block signature has a corresponding reference count. The computer program product determines whether a block signature for a data block matches one of the one or more express block signatures stored in the express directory.

Owner:DAEDALUS BLUE LLC
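
The express directory can be pictured as a fast lookup set that a signature enters once its reference count meets the requirement. `EXPRESS_THRESHOLD`, `BlockIndex`, and the promotion rule are assumptions for illustration; the abstract does not specify the requirement or the data structures.

```python
EXPRESS_THRESHOLD = 3   # hypothetical "reference count requirement"

class BlockIndex:
    def __init__(self):
        self.primary = {}     # primary directory: signature -> reference count
        self.express = set()  # express directory: frequently referenced signatures

    def add_block(self, sig):
        """Record one more reference to `sig`; return True if it was a duplicate."""
        if sig in self.express:           # fast path: checked first
            self.primary[sig] += 1
            return True
        duplicate = sig in self.primary
        self.primary[sig] = self.primary.get(sig, 0) + 1
        if self.primary[sig] >= EXPRESS_THRESHOLD:
            self.express.add(sig)         # promote a hot signature
        return duplicate
```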

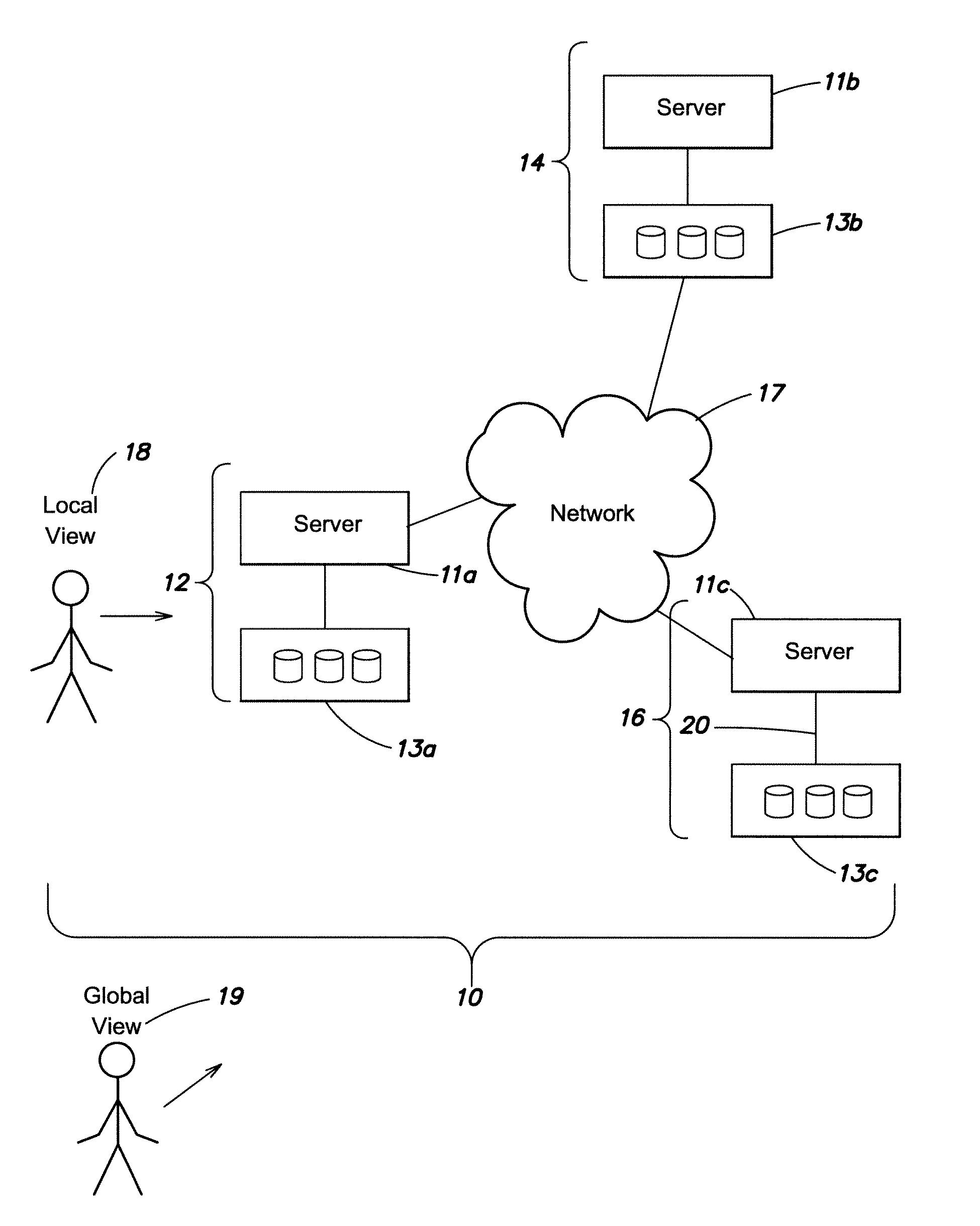

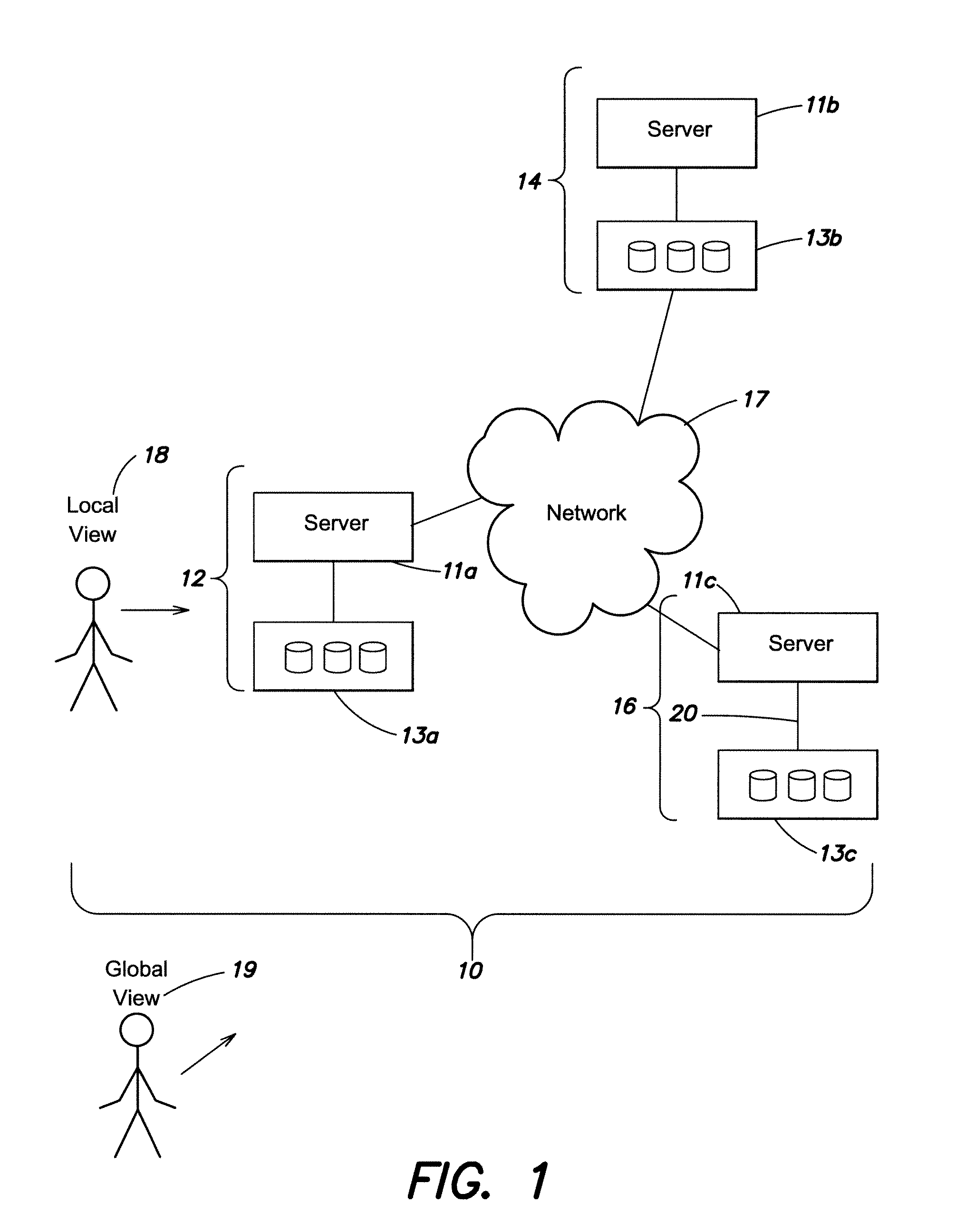

Reference count propagation

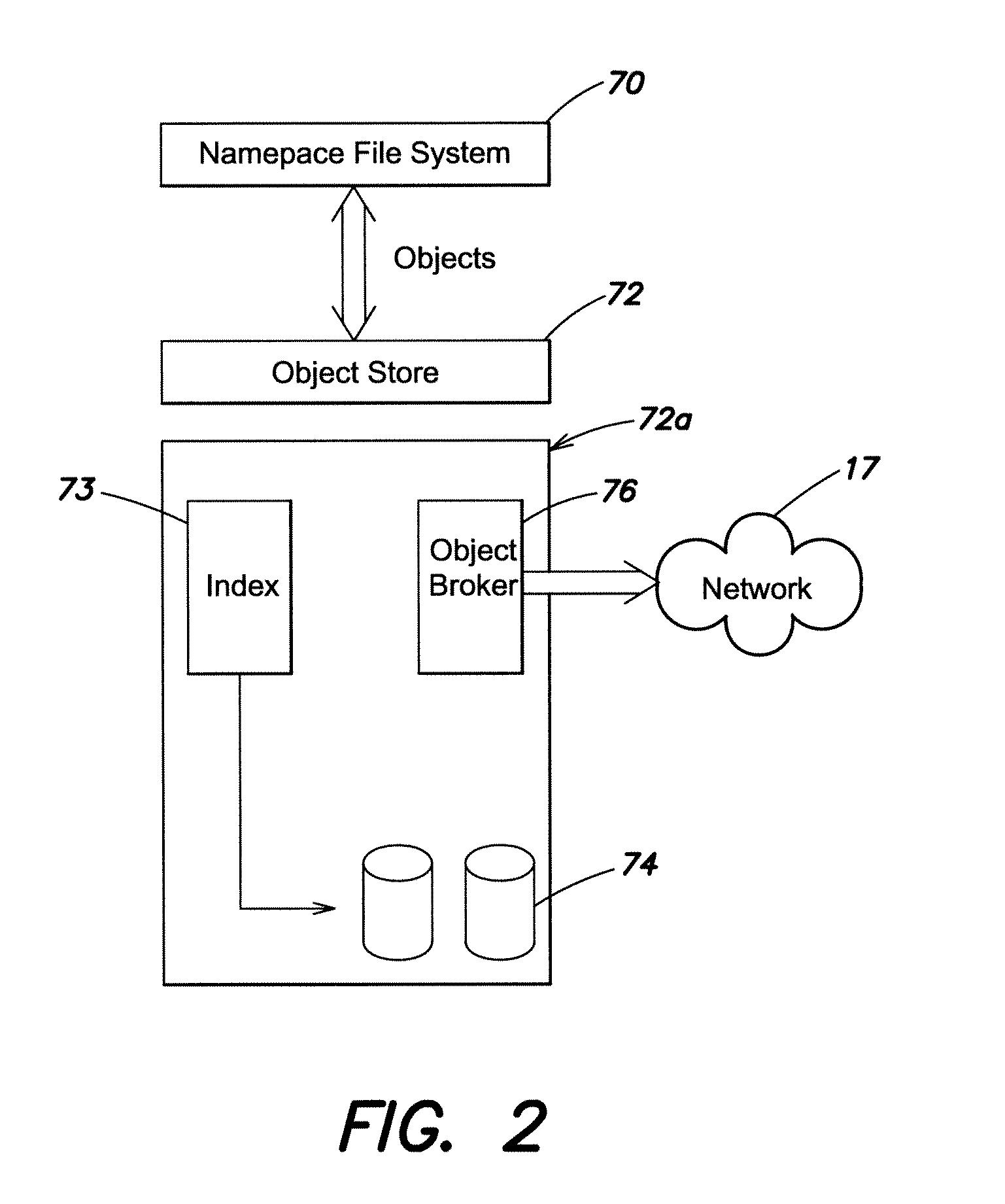

Status: Active | Publication: US20120290629A1 | Tags: Safely de-allocated, Efficient and robust, Digital data information retrieval, Digital computer details, File system, Object store

Methods and systems are provided for tracking object instances stored on a plurality of network nodes, which tracking enables a global determination of when an object has no references across the networked nodes and can be safely de-allocated. According to one aspect of the invention, each node has a local object store for tracking and optionally storing objects on the node, and the local object stores collectively share the locally stored instances of the objects across the network. One or more applications, e.g., a file system and / or a storage system, use the local object stores for storing all persistent data of the application as objects.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

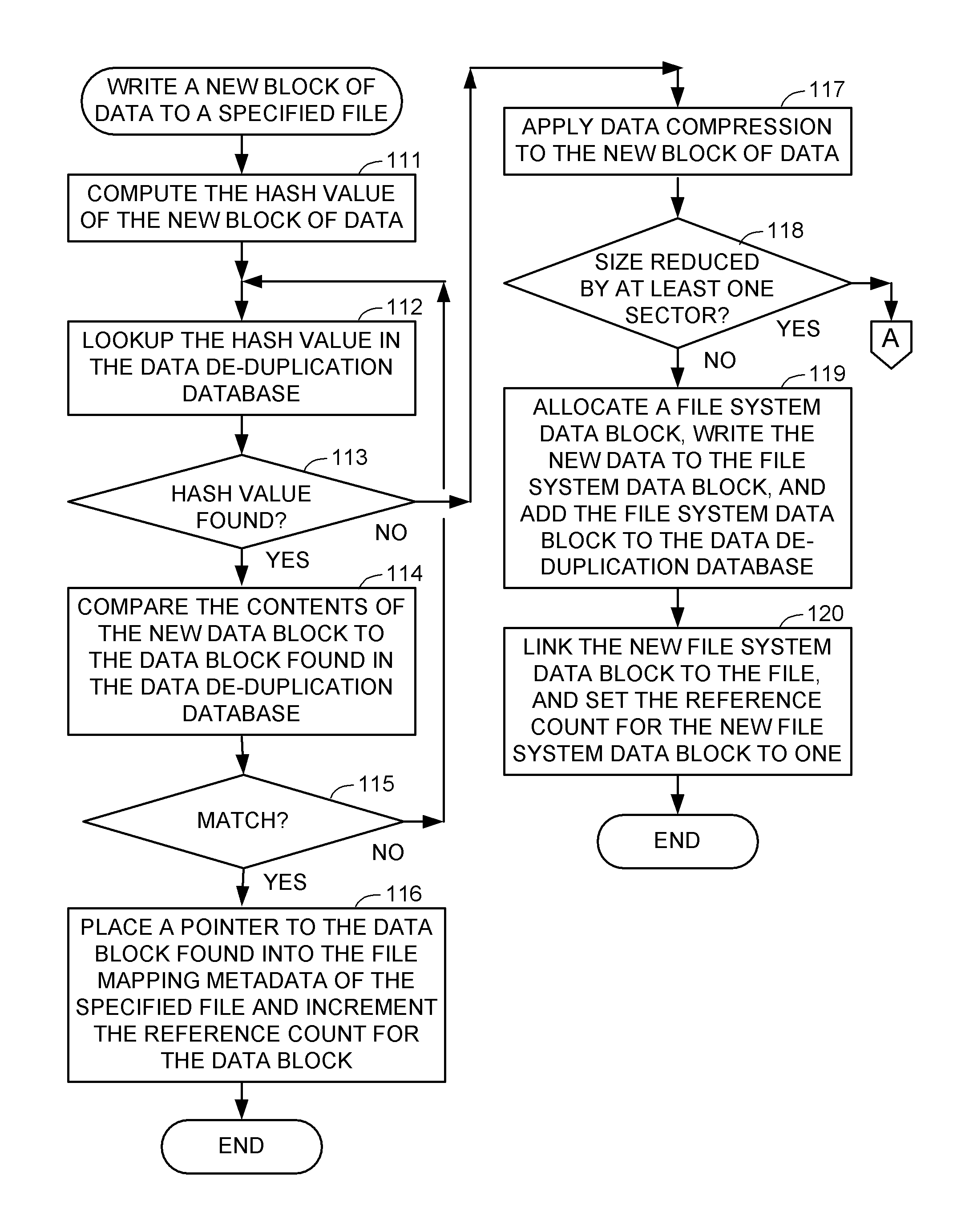

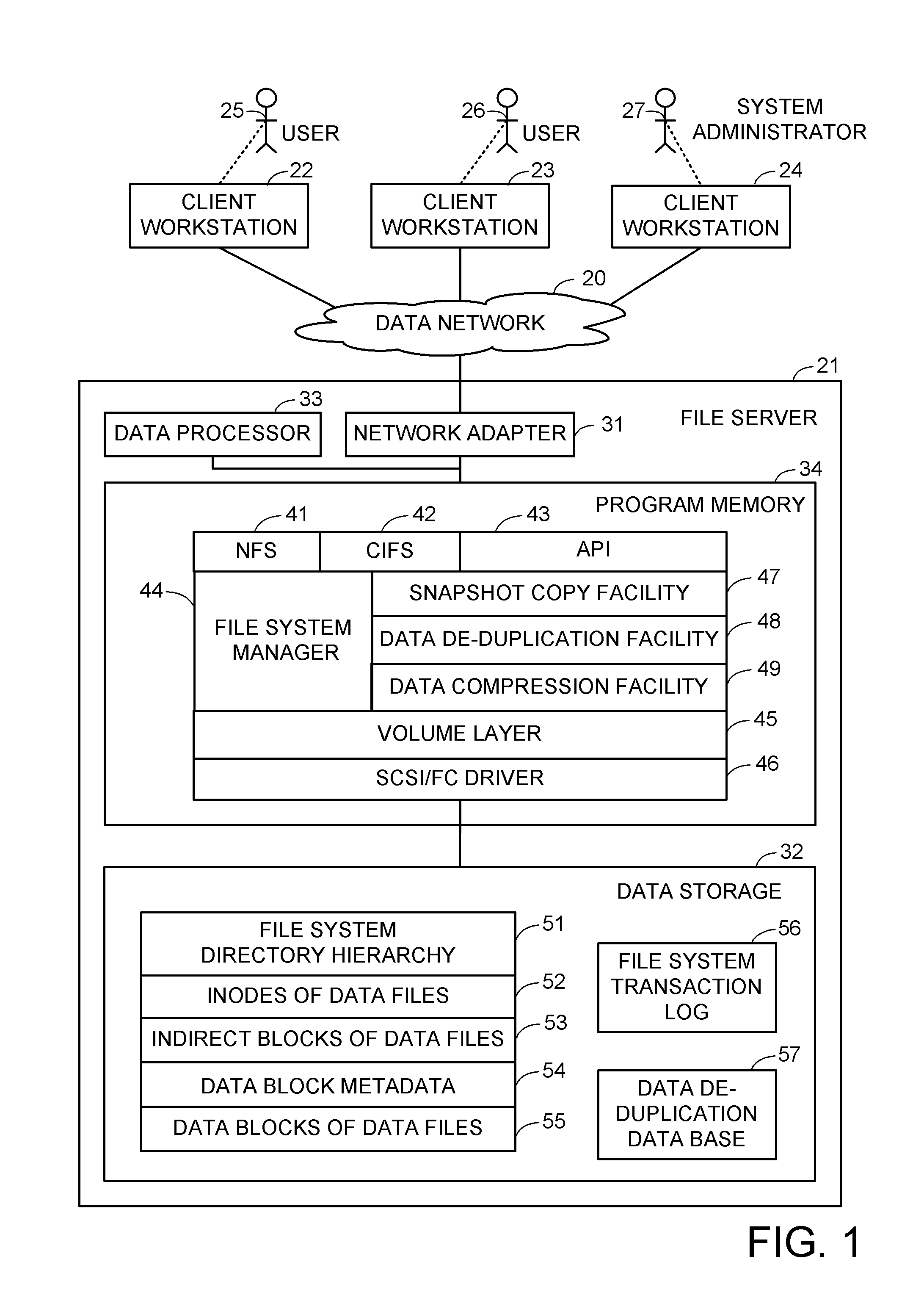

Partial block allocation for file system block compression using virtual block metadata

Status: Active | Publication: US8615500B1 | Tags: Digital data information retrieval, Digital data processing details, Data compression, File system

A file server has a data compression facility and also a snapshot copy facility or a data de-duplication facility that shares data blocks among files. Compression of the file data on a file system block basis leads to a problem of partially used file system data blocks when the data blocks are shared among files. This problem is solved by partial block allocation so that file system data blocks are shared among files that do not share identical data. Block pointers in the file mapping metadata point to virtual blocks representing the compressed data blocks, and associated virtual block metadata identifies portions of file system data blocks that store the compressed data. For example, a portion of a file system data block is identified by a sector bitmap, and the virtual block metadata also includes a reference count to indicate sharing of a compressed data block among files.

Owner:EMC IP HLDG CO LLC

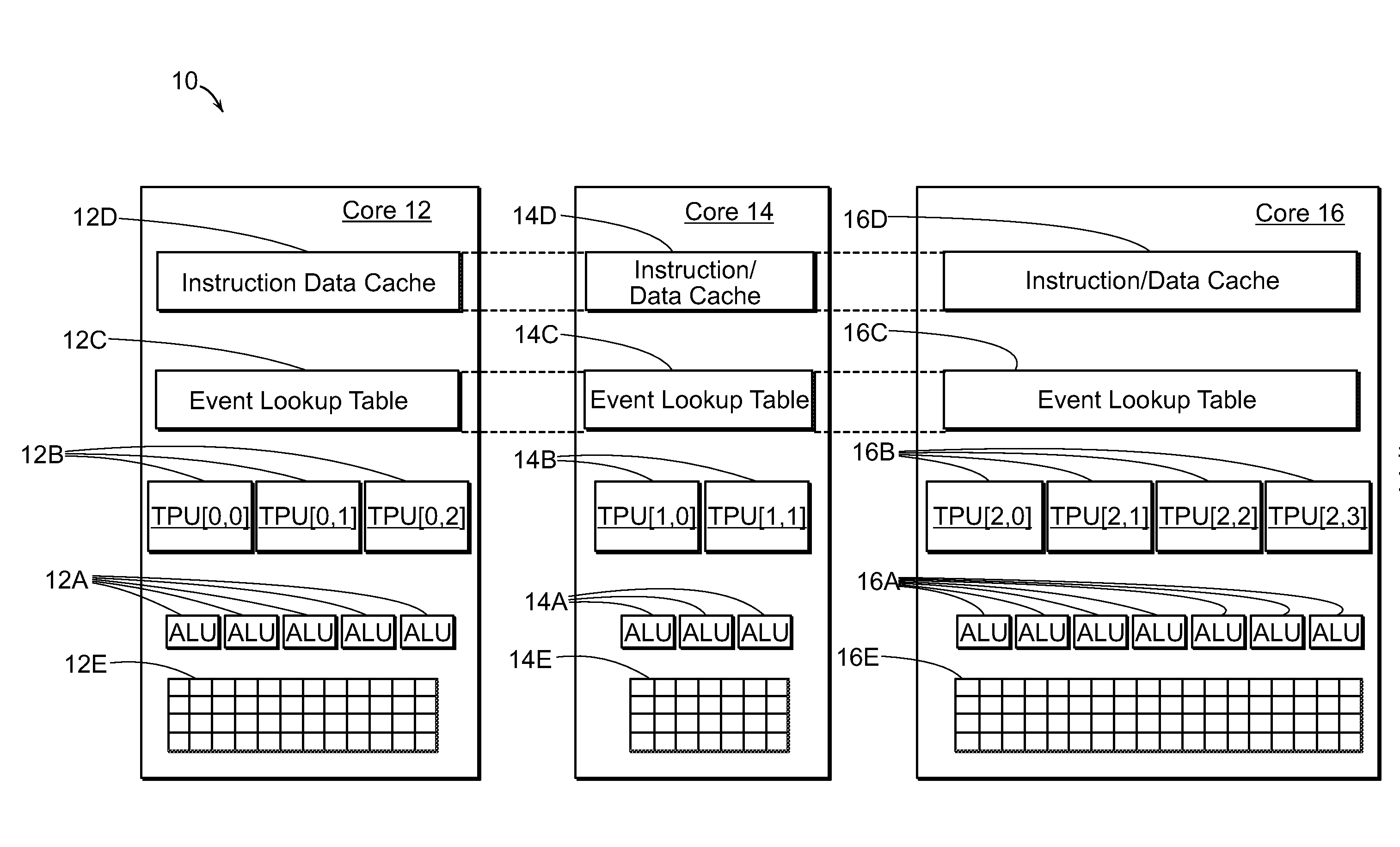

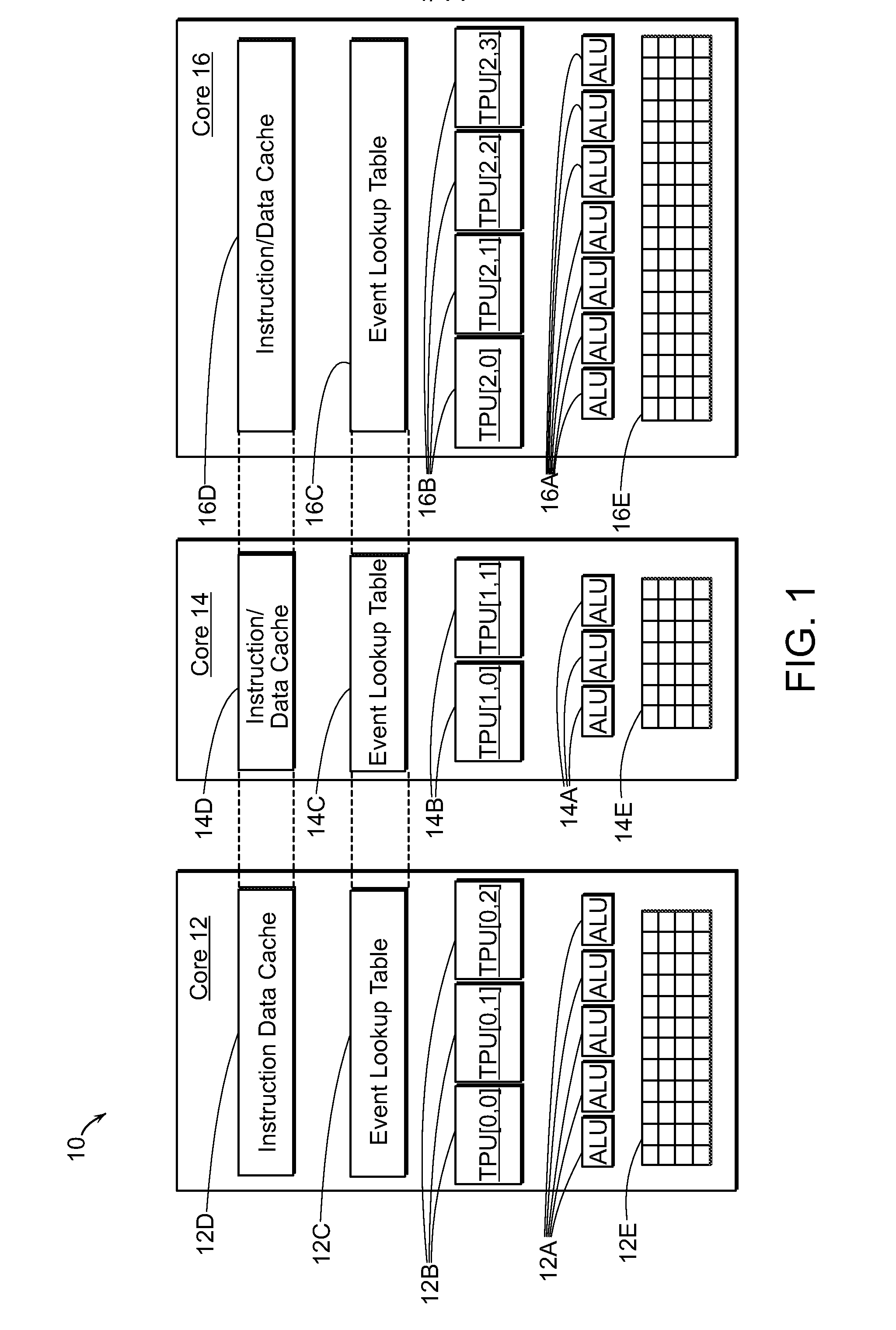

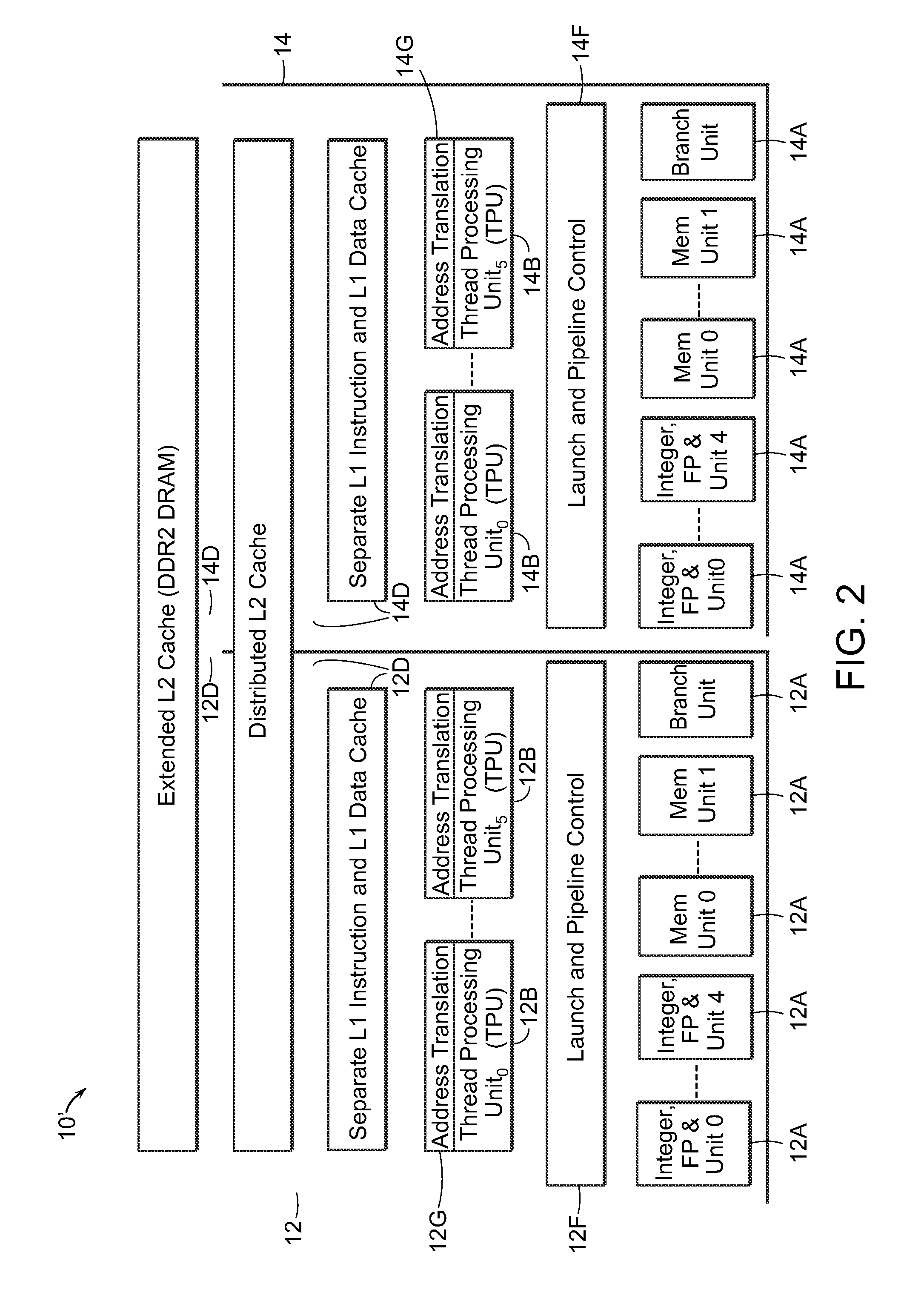

General Purpose Digital Data Processor, Systems and Methods

Status: Inactive | Publication: US20130086328A1 | Tags: Efficient memory utilization, Improve performance, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Digital data, General purpose

The invention provides improved data processing apparatus, systems and methods that include one or more nodes, e.g., processor modules or otherwise, that include or are otherwise coupled to cache, physical or other memory (e.g., attached flash drives or other mounted storage devices) collectively, “system memory.” At least one of the nodes includes a cache memory system that stores data (and / or instructions) recently accessed (and / or expected to be accessed) by the respective node, along with tags specifying addresses and statuses (e.g., modified, reference count, etc.) for the respective data (and / or instructions). The tags facilitate translating system addresses to physical addresses, e.g., for purposes of moving data (and / or instructions) between system memory (and, specifically, for example, physical memory—such as attached drives or other mounted storage) and the cache memory system.

Owner:PANEVE

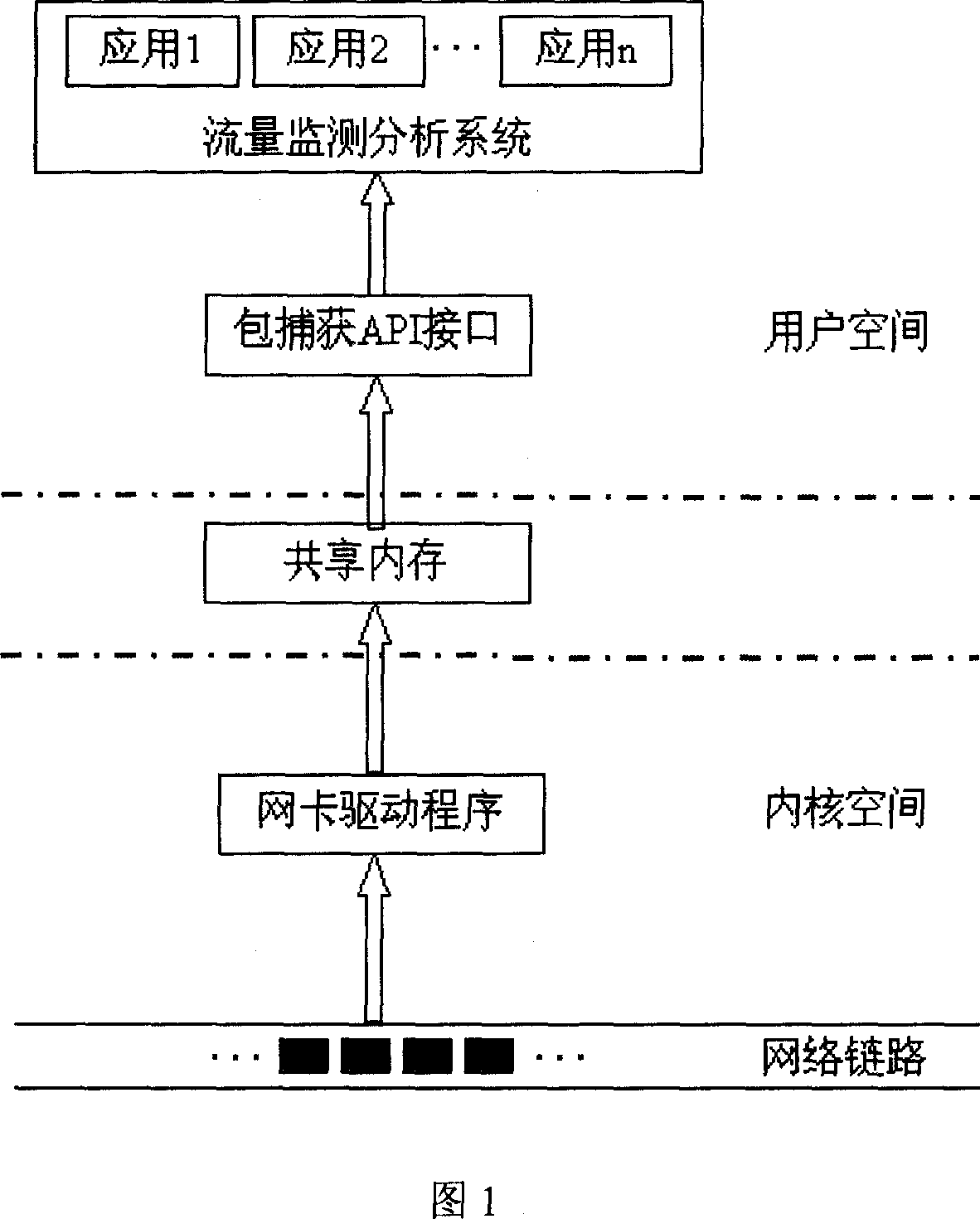

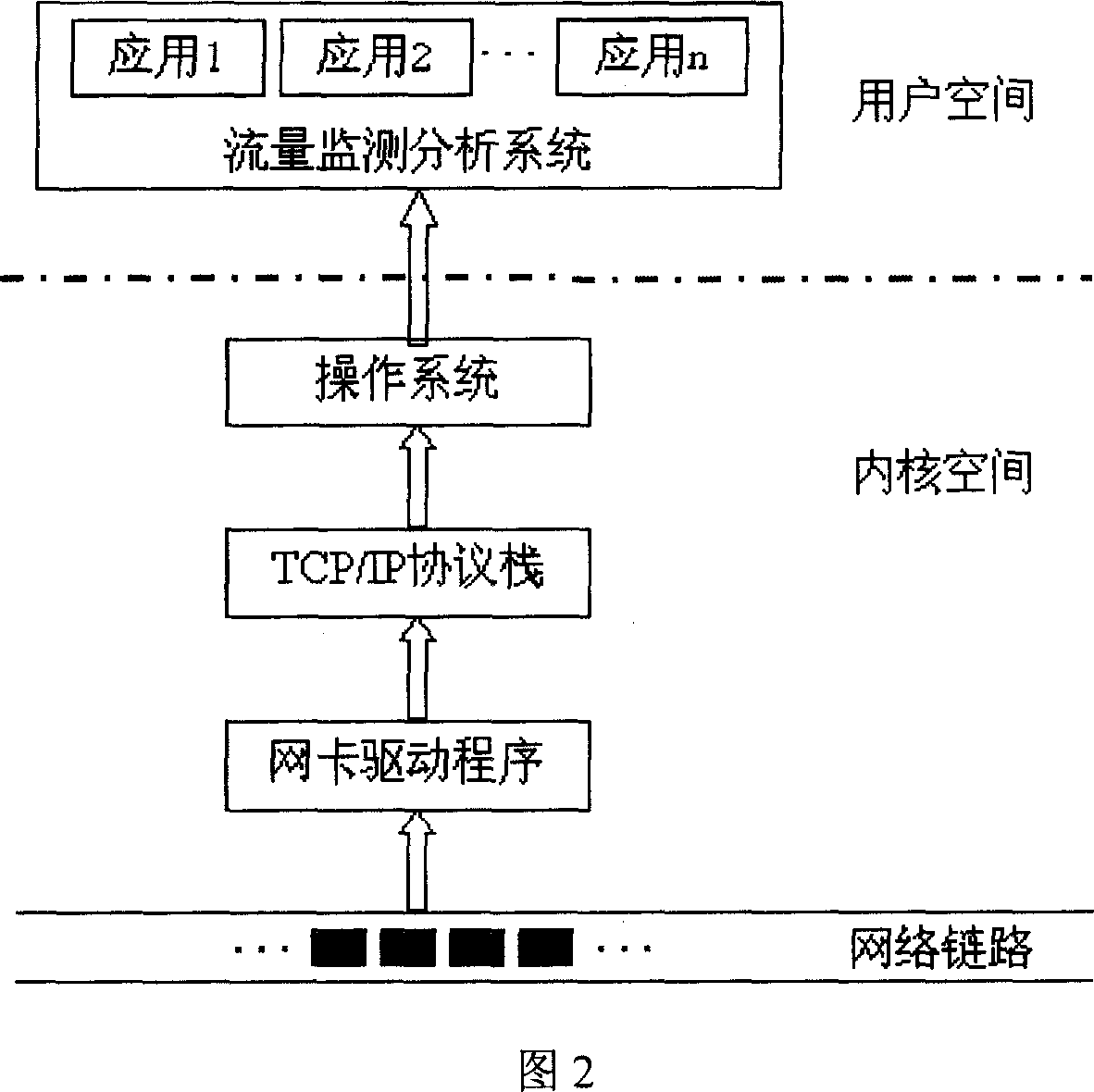

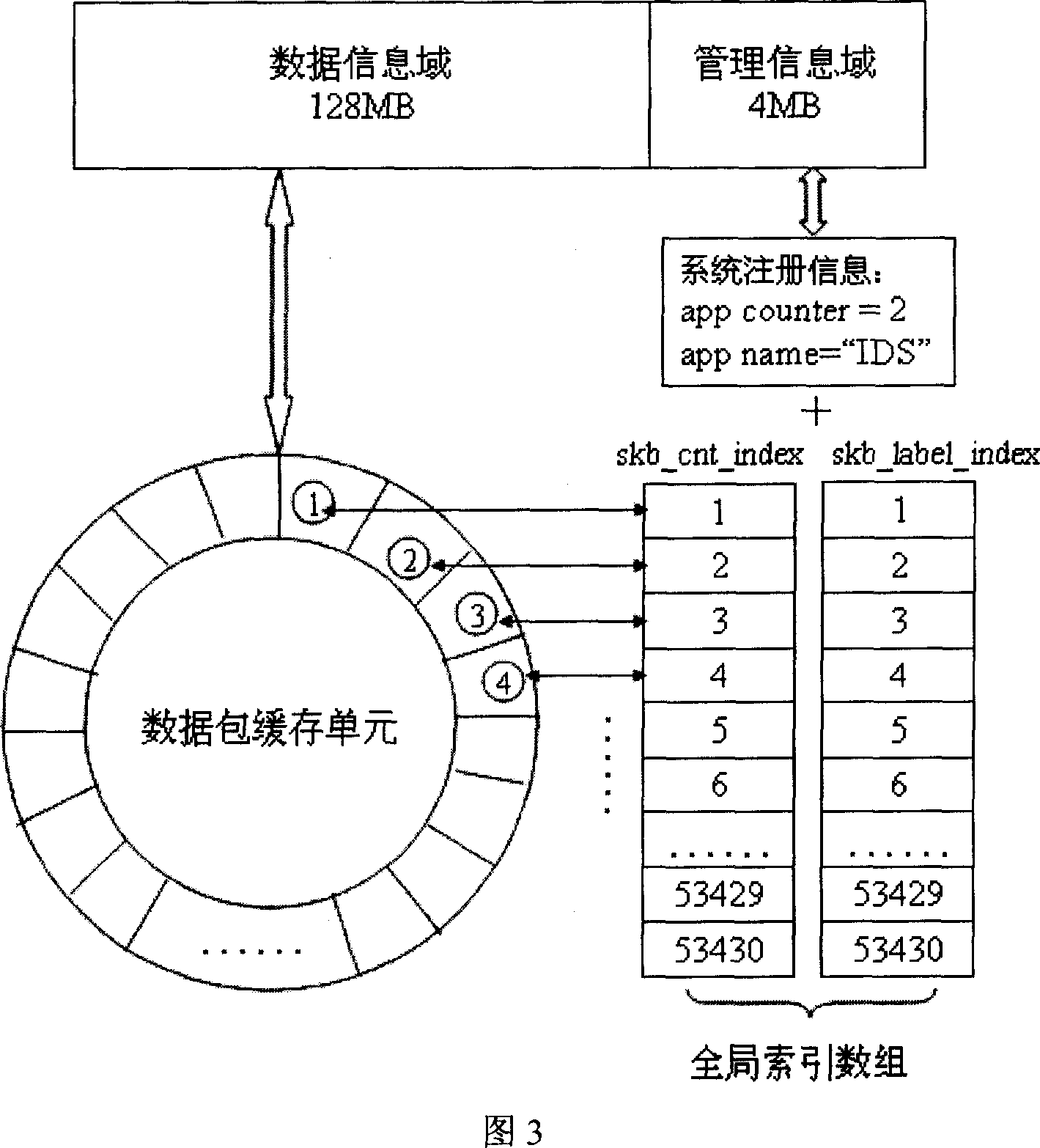

Method for realizing data packet capture based on shared memory

Status: Inactive | Publication: CN1925465A | Tags: Improve efficiency, Improve performance, Data switching networks, Internal memory, Traffic capacity

This invention discloses a packet capture method based on shared memory, comprising the following steps: loading and initializing the network card driver; creating a character device; setting the monitoring network card to promiscuous mode; receiving packets and determining the receiving end; determining whether each packet goes into the data information area, and discarding it if not; initializing the management information unit; running the traffic monitoring and analysis program; opening the character device to obtain a pointer; extracting packets, setting their reference-count flag field, and then executing the next step; and running the monitoring and analysis program, which updates the total index data and sets the access flag field to mark each packet as processed.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

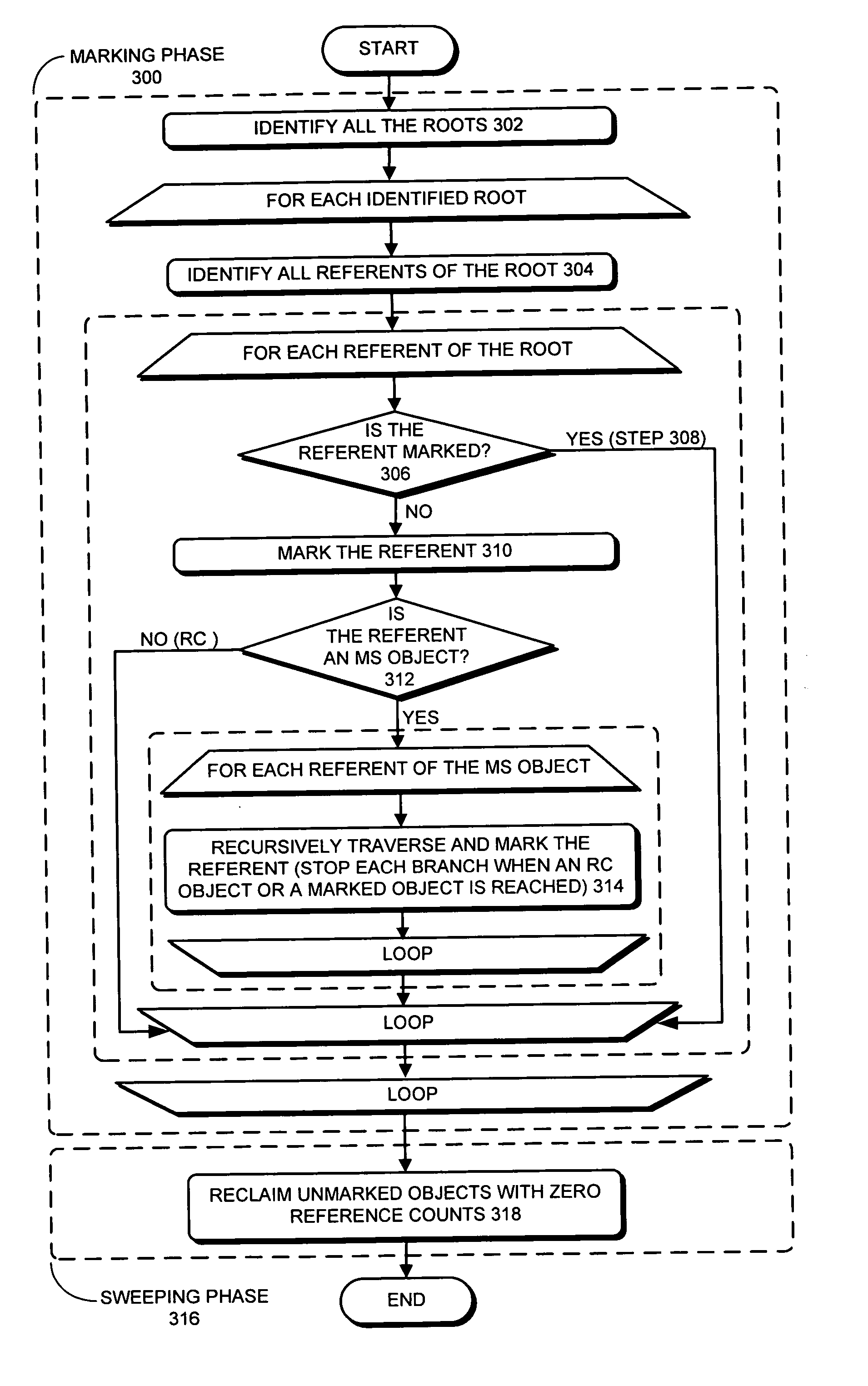

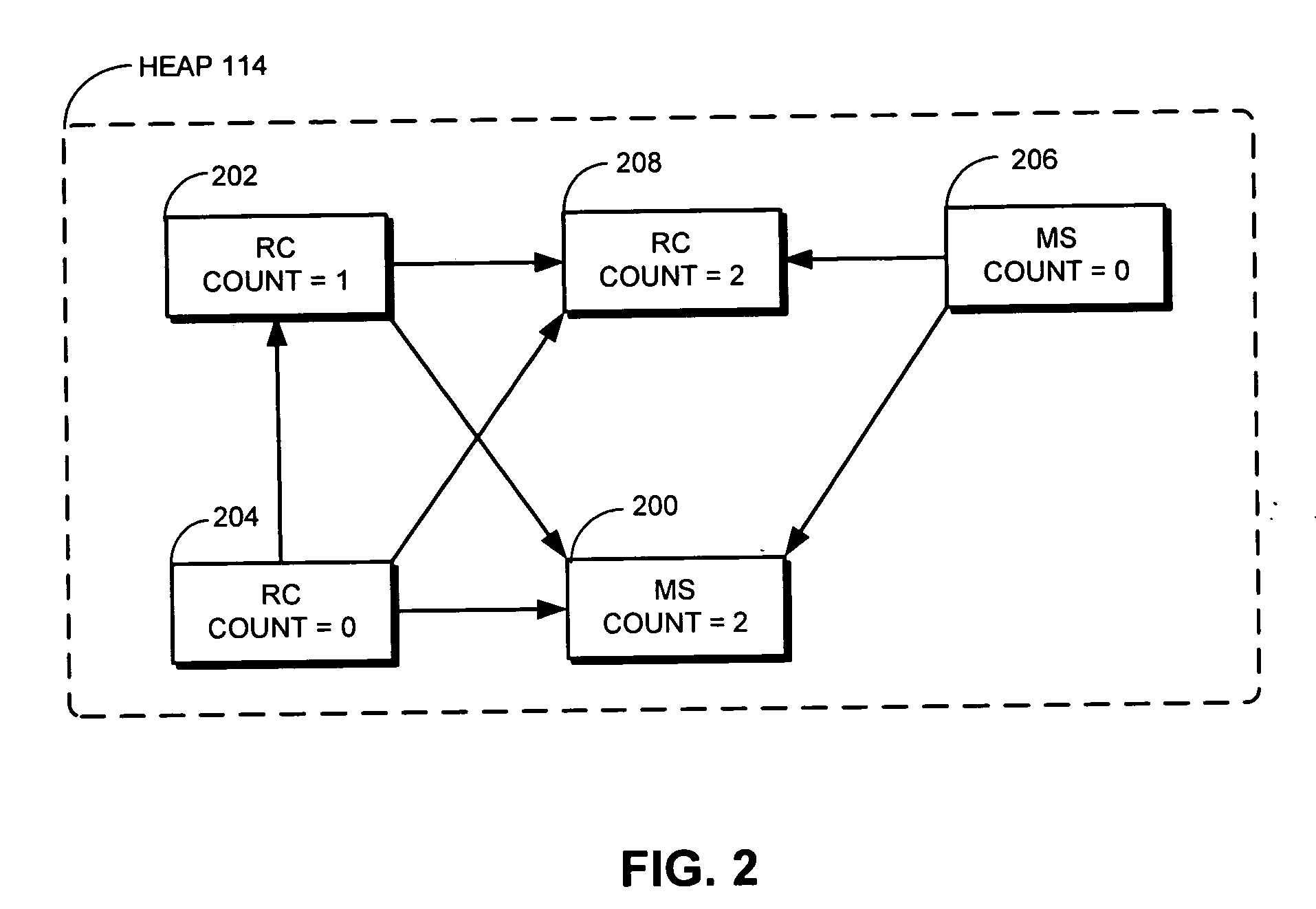

Method and apparatus for facilitating mark-sweep garbage collection with reference counting

Status: Active | Publication: US20070162527A1 | Tags: Ease of garbage collection, Special data processing applications, Memory systems, Computer science, Memory management

One embodiment of the present invention provides a system that facilitates garbage collection (GC) in a memory-management system that supports both mark-sweep (MS) objects and reference-counted (RC) objects, wherein both MS objects and RC objects can be marked and have a reference count. During a marking phase of a GC operation, the system first identifies roots for the GC operation. Next, the system marks referents of the roots. The system then recursively traverses referents of the roots which are MS objects and while doing so, marks referents of the traversed MS objects. During a subsequent sweeping phase of the GC operation, the system reclaims objects that are unmarked and have a zero reference count.

Owner:ORACLE INT CORP
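
The marking and sweeping phases described here can be sketched over a tiny in-memory object graph. `Obj` and the `kind` field are hypothetical; the key points are that RC objects are marked but not traversed, and that the sweep reclaims only objects that are both unmarked and have a zero reference count.

```python
class Obj:
    def __init__(self, name, kind):
        self.name = name
        self.kind = kind      # "MS" (mark-sweep) or "RC" (reference-counted)
        self.refs = []        # outgoing references
        self.rc = 0           # reference count; both kinds carry one
        self.marked = False

def mark(root_referents):
    """Mark the roots' referents, then traverse onward only through MS objects."""
    stack = []
    for obj in root_referents:
        if not obj.marked:
            obj.marked = True
            stack.append(obj)
    while stack:
        obj = stack.pop()
        if obj.kind != "MS":
            continue          # RC objects are marked but not traversed
        for child in obj.refs:
            if not child.marked:
                child.marked = True
                stack.append(child)

def sweep(heap):
    """Reclaim objects that are unmarked and have a zero reference count."""
    survivors, reclaimed = [], []
    for obj in heap:
        if obj.marked or obj.rc > 0:
            survivors.append(obj)
        else:
            reclaimed.append(obj)
        obj.marked = False    # clear marks for the next GC cycle
    return survivors, reclaimed
```

For example, an MS object reachable only through an RC object is not marked, but it survives the sweep because the RC object's reference keeps its count above zero.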

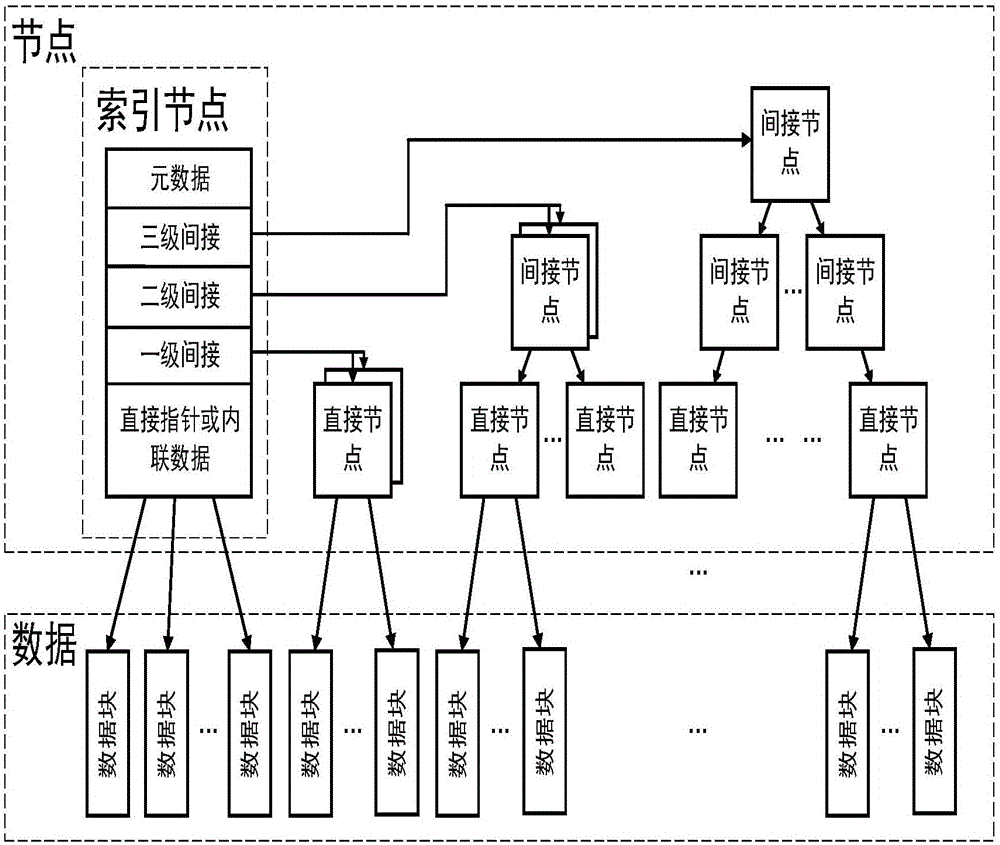

Multi-version control method of memory file system

Status: Inactive | Publication: CN105868396A | Tags: Reduce time and space overhead, Improve performance, Special data processing applications, Network File System, Journaling file system

The invention provides a multi-version control method for a memory file system based on HMFS (hybrid memory file system). The method includes the following steps. Data modification and updating: multi-version backups of the file system's node address tree are made in copy-on-write mode, and nodes of the address tree are reused when data is modified and updated. Data sharing: level reference counting is used to share metadata among the file system versions, where metadata is the data that describes data and is recorded to realize multiple file system versions. Unmodified files are used to restore the file system to its state before files were modified, and files are shared among the file system versions through level reference counting, so not all files of the original file system need to be backed up when a snapshot is taken. This reduces the time and space cost of file system snapshots and improves file system performance.

Owner:SHANGHAI JIAO TONG UNIV

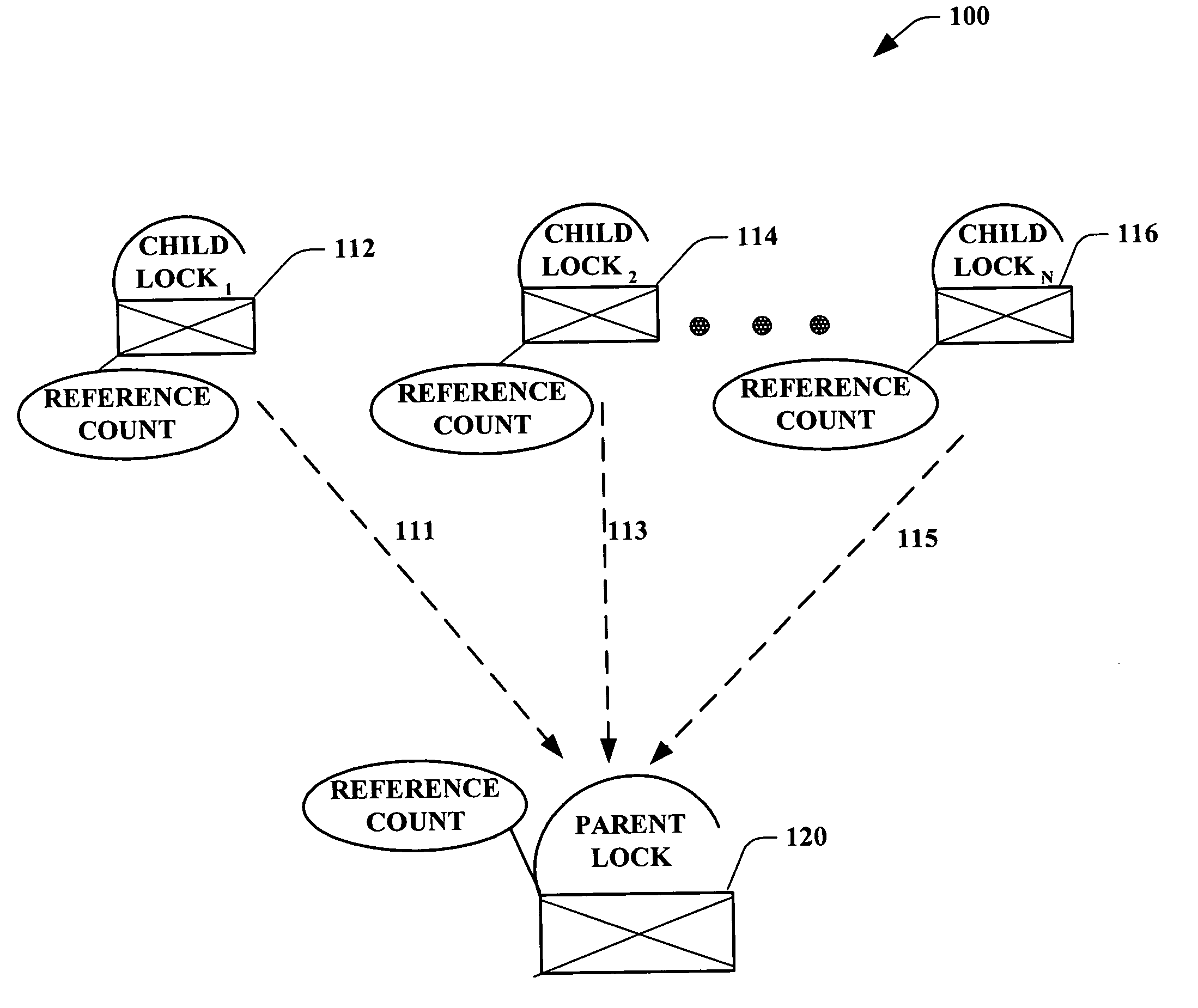

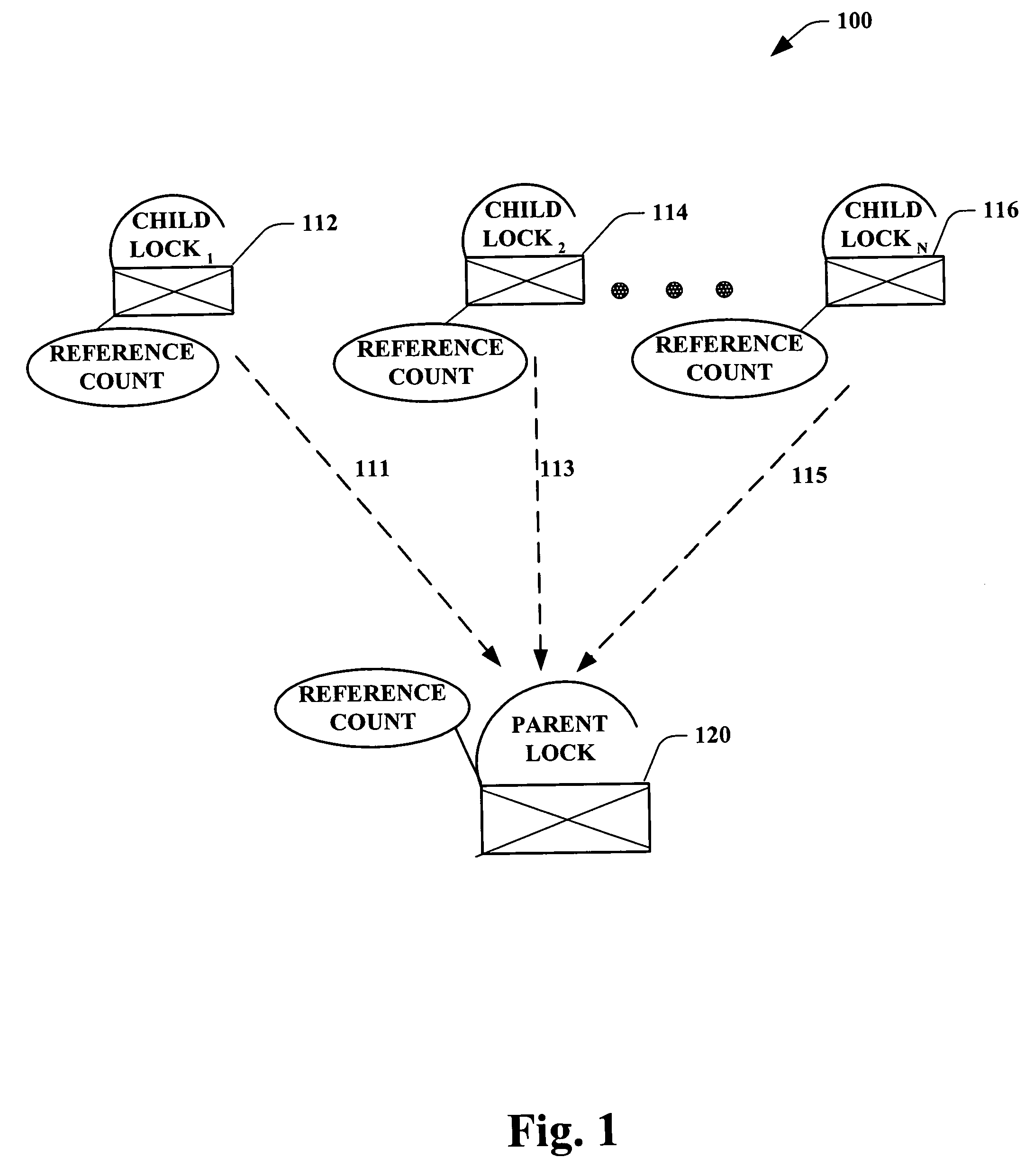

System and method for database lock with reference counting

Status: Active | Publication: US20050234989A1 | Tags: High degree, Mitigates an over locking thereof, Digital data information retrieval, Digital data processing details, Life time, World Wide Web

Systems and methodologies are provided in a lock hierarchy arrangement wherein, upon release of all child locks associated with a parent lock, the parent lock is also released. The present invention supplies each lock with sufficient information to determine its own lifetime. This framework enables a higher degree of transaction concurrency in a database and mitigates over-locking, thus conserving system resources.

Owner:MICROSOFT TECH LICENSING LLC
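
Releasing a parent lock when its last child lock is released is itself a form of reference counting on the parent. The `Lock` class below is a hypothetical sketch that assumes a parent is held only on behalf of its children; real lock managers also track lock modes and owners.

```python
class Lock:
    """A parent lock stays held while any child lock is held and is released
    automatically when its last child is released."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.children = 0      # number of currently held child locks
        self.held = True
        if parent is not None:
            parent.children += 1

    def release(self):
        if self.children:
            raise RuntimeError(f"{self.name} still has {self.children} child locks")
        self.held = False
        if self.parent is not None:
            self.parent.children -= 1
            if self.parent.children == 0:
                self.parent.release()   # last child gone: release the parent too
```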

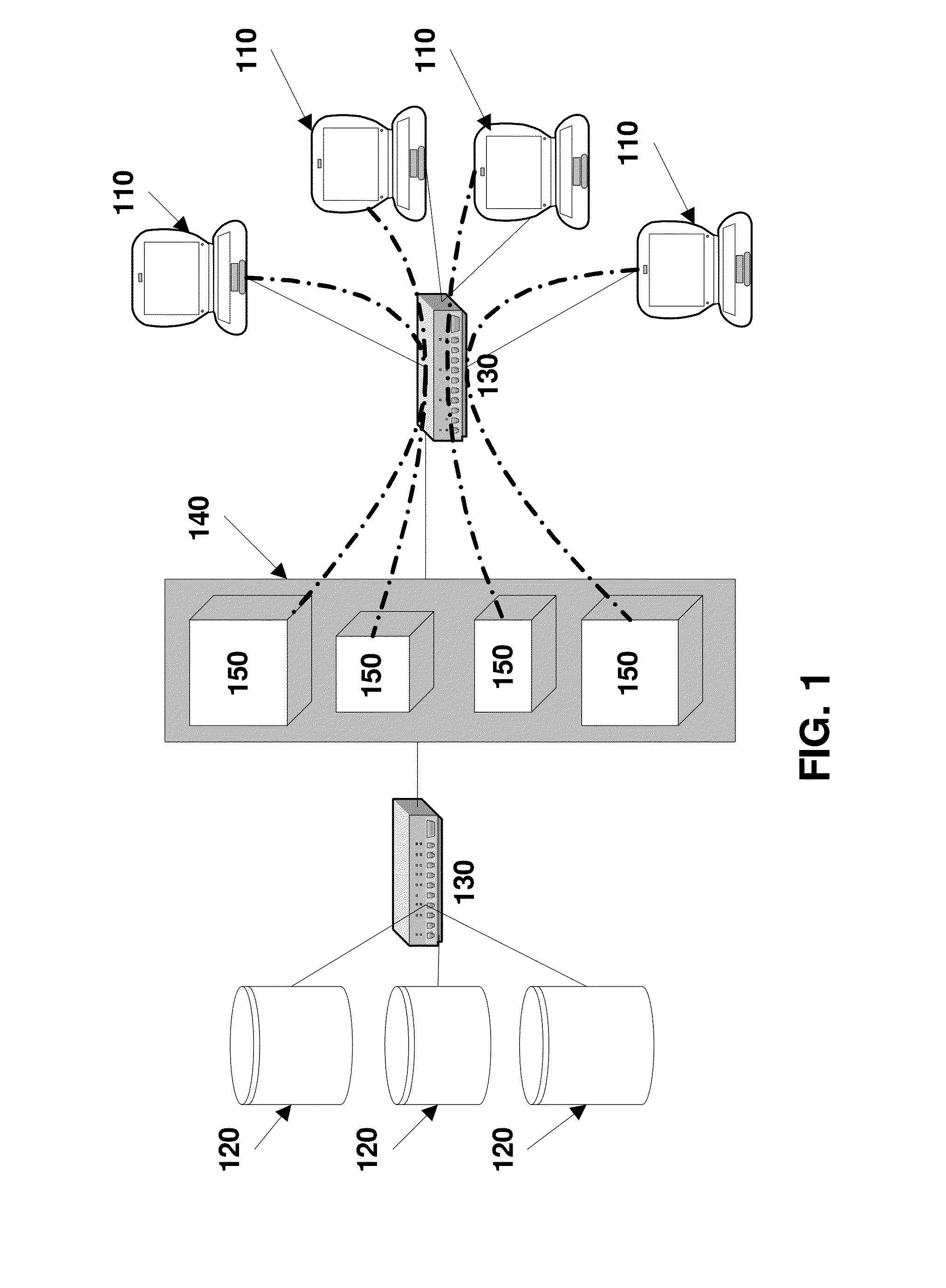

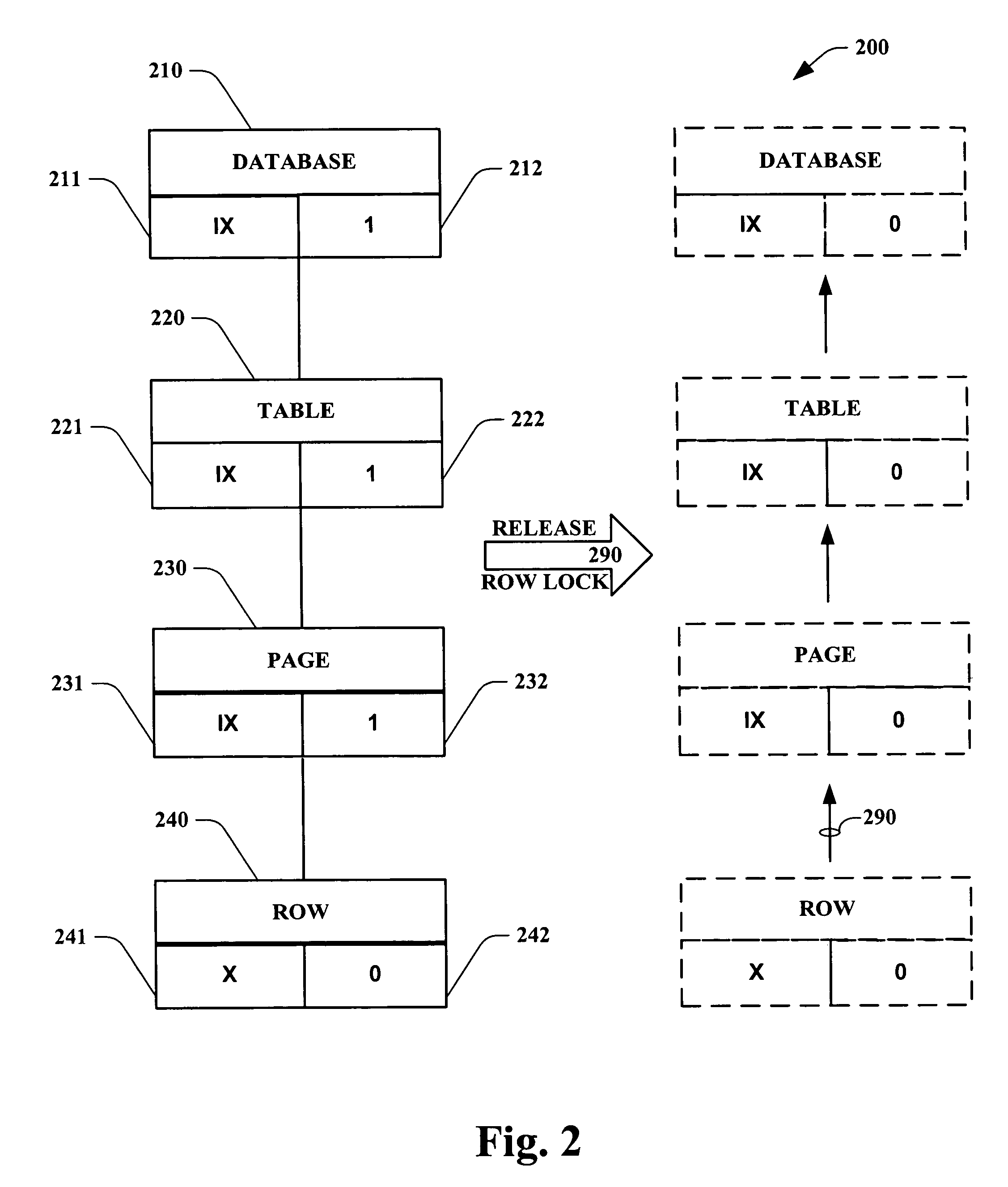

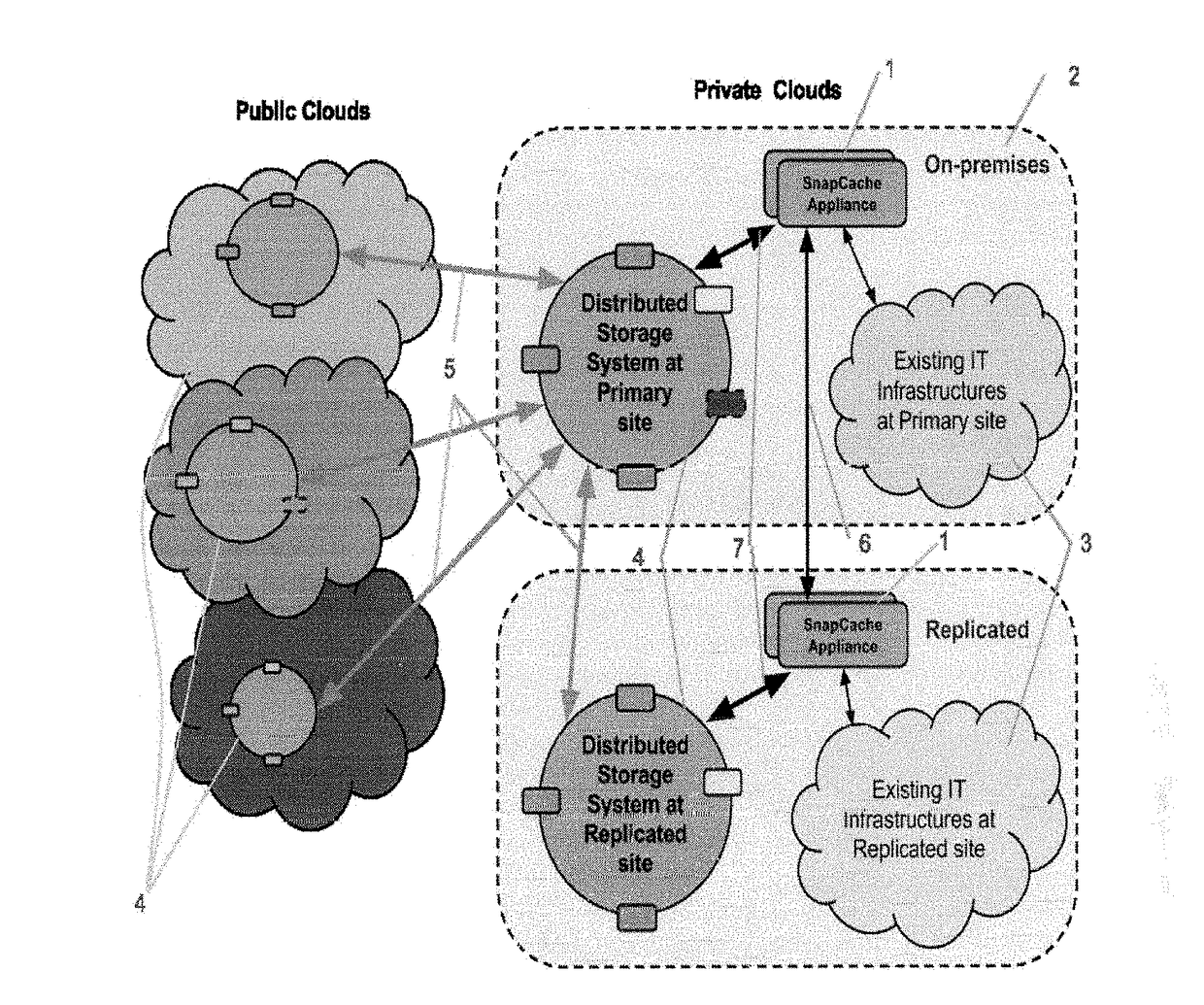

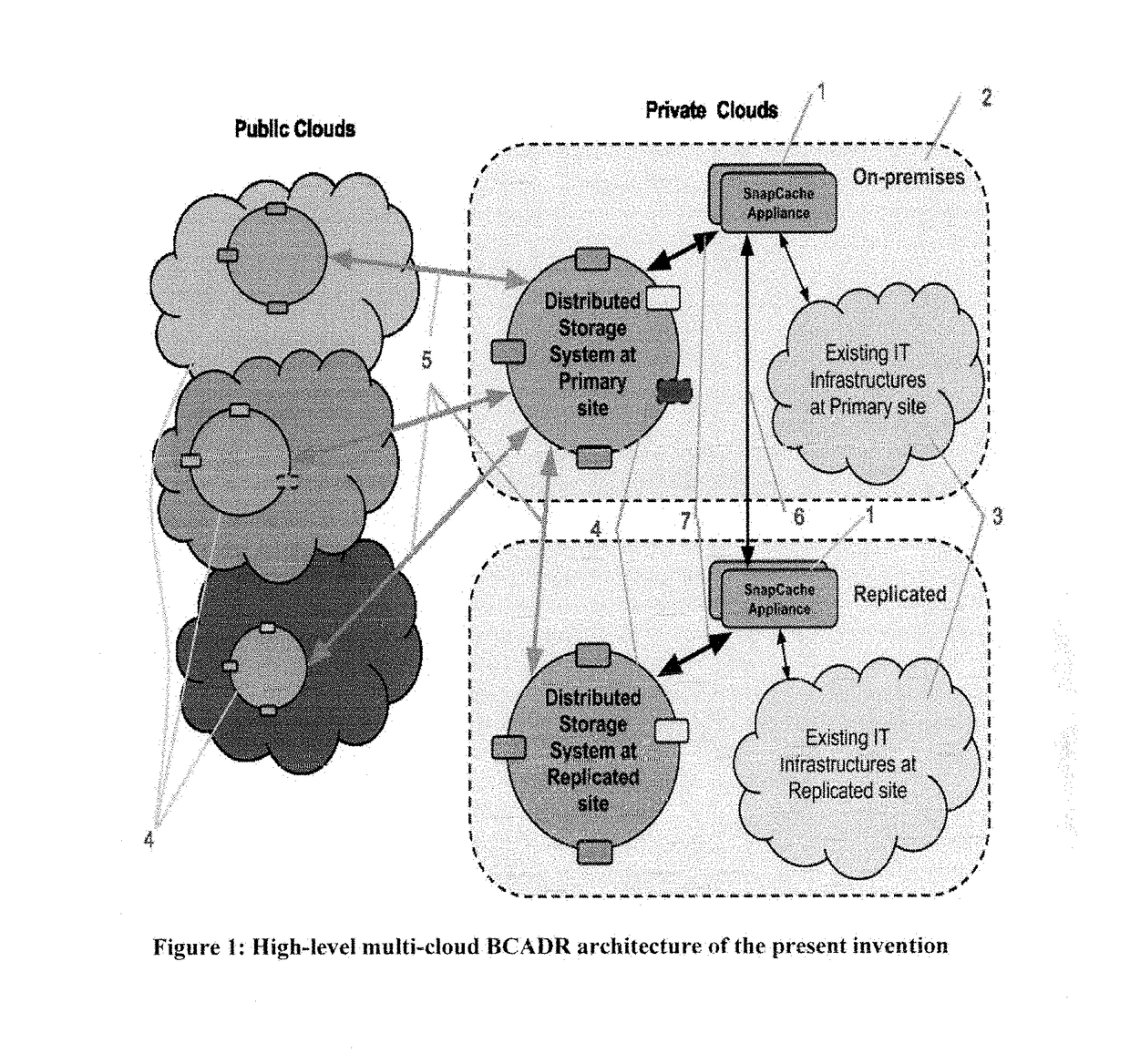

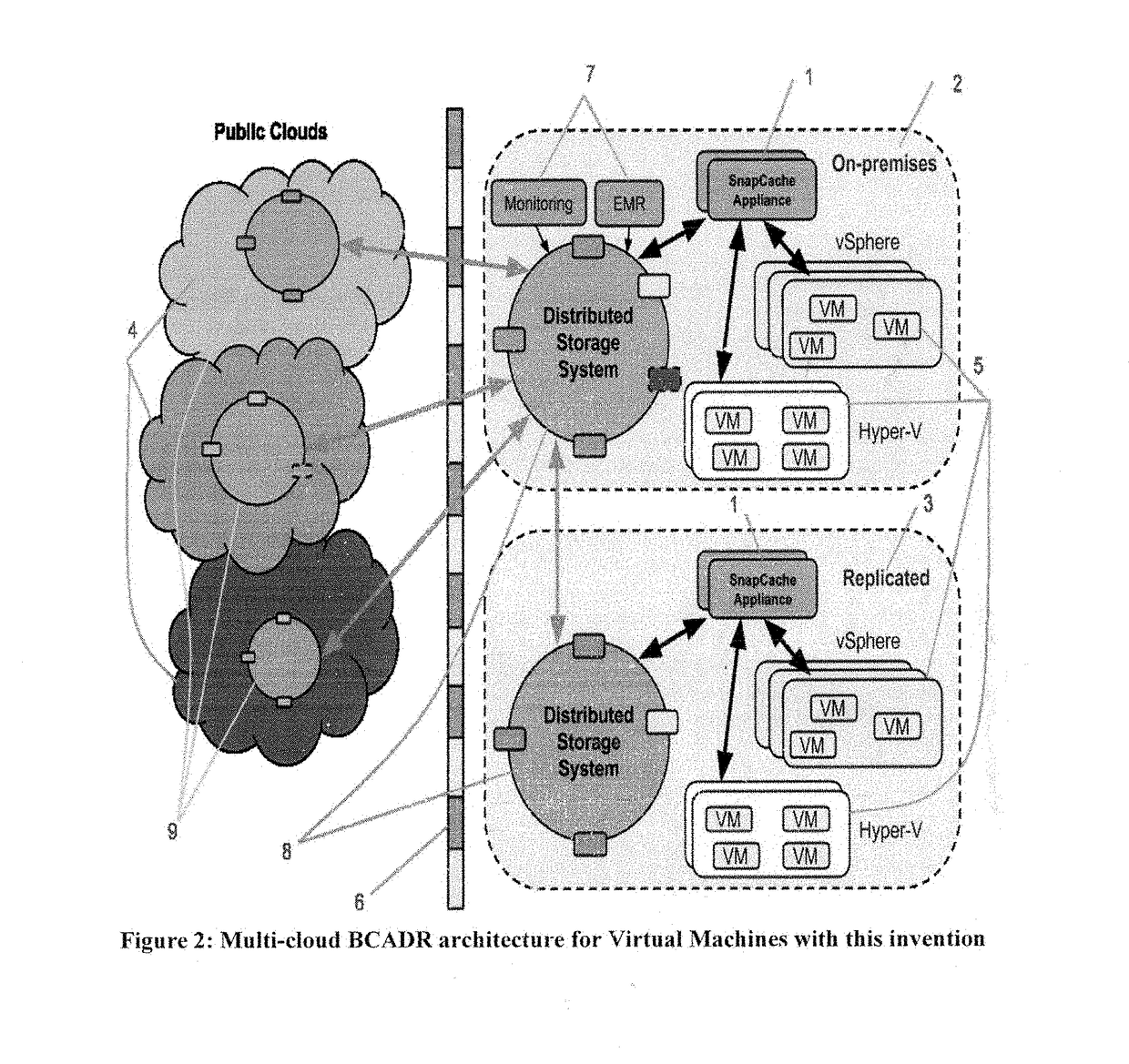

Backup, Archive and Disaster Recovery Solution with Distributed Storage over Multiple Clouds

Status: Inactive | Publication: US20170262345A1 | Tags: Fast data, Reduce risk, Transmission, Redundant operation error correction, High availability, Application software

This invention is a software application that uses distributed storage systems to provide backup, archive and disaster recovery (BCADR) functionality across multiple clouds. The multi-cloud-aware BCADR application and the distributed storage systems are used together to prevent data loss and to provide high availability in disaster incidents. Data deduplication reduces the storage required for many backups. Reference counting is used to assist in garbage collection of stale data chunks after stale backups are removed.

Owner:WANG JENLONG +1

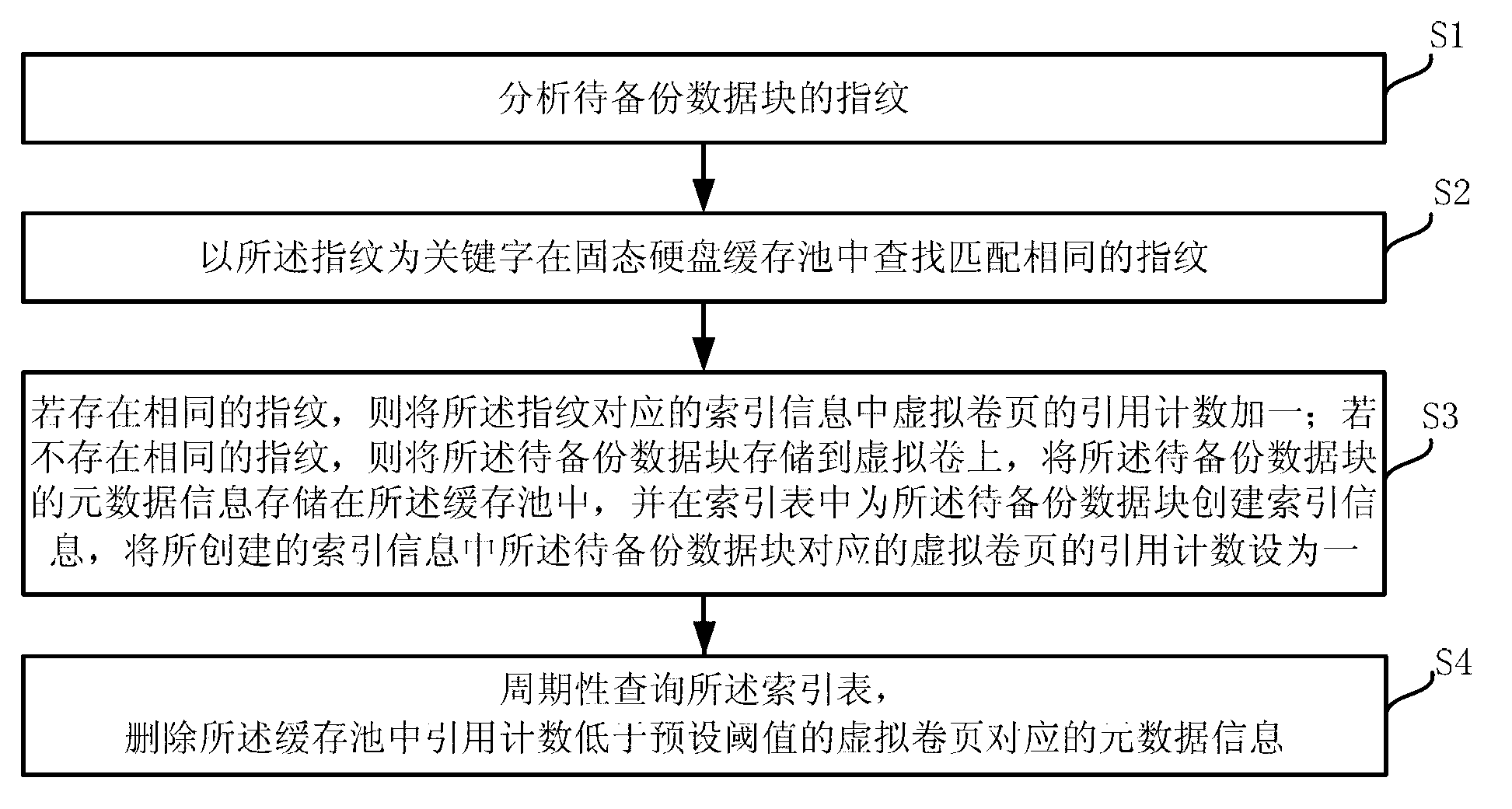

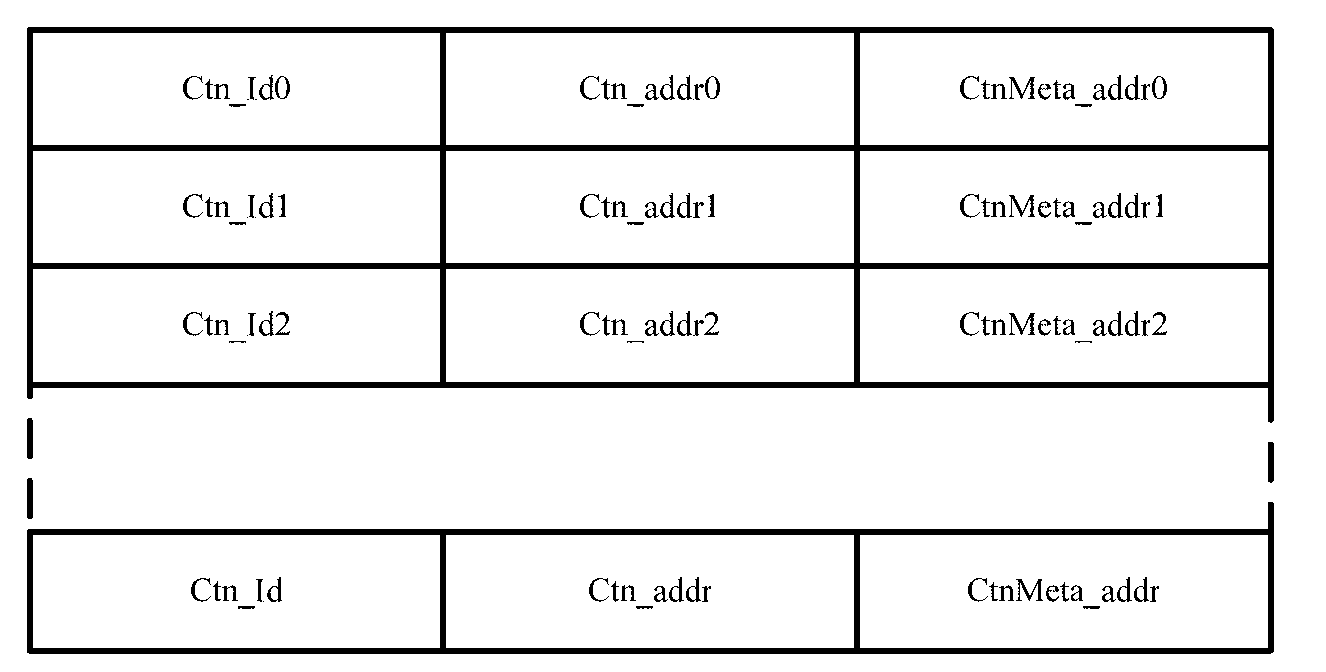

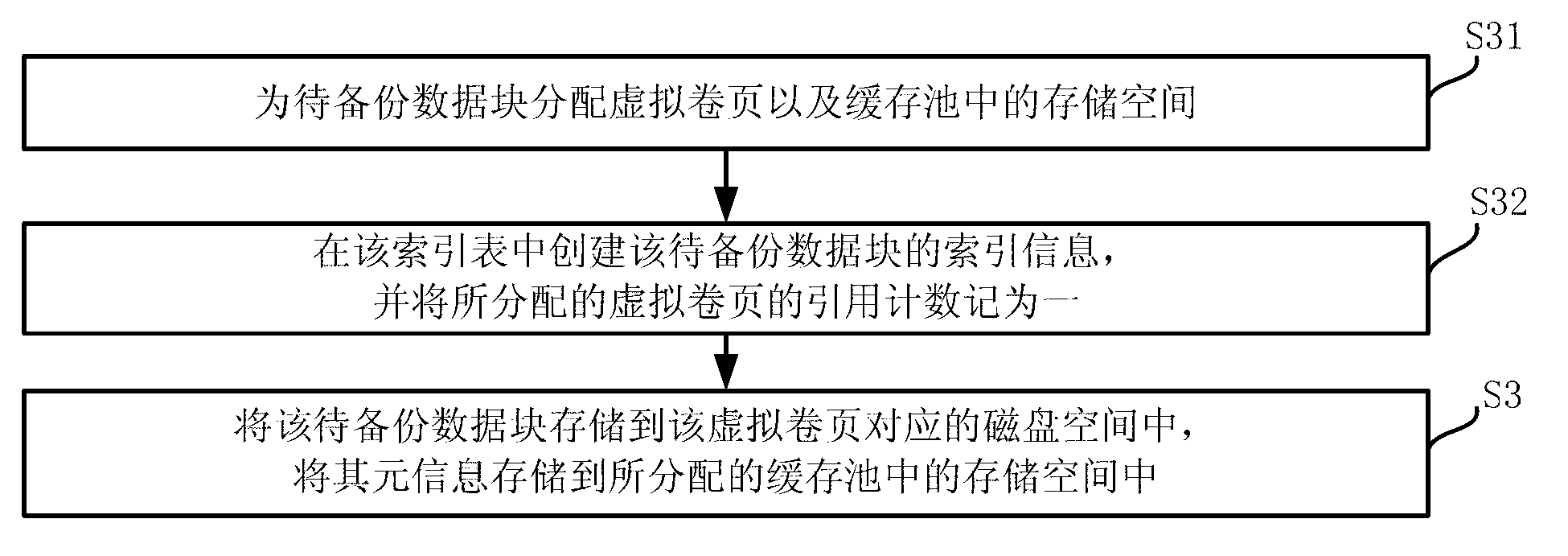

Data backup method and device

Status: Active | Publication: CN103019887A | Tags: Guaranteed enough, Performance is not affected, Redundant operation error correction, Special data processing applications, Computer science, Metadata

The invention discloses a data backup method and device in the technical field of data backup. The method comprises: computing a fingerprint of a data block to be backed up; searching a buffer pool for the same fingerprint, using the fingerprint as the key; if the fingerprint exists in the buffer pool, adding 1 to the reference count of the virtual volume page in the index information corresponding to the fingerprint; if it does not, storing the data block to a virtual volume, storing its metadata in the buffer pool, establishing index information for it in an index table, and adding 1 to the reference count of the corresponding virtual volume page in that index information; and periodically querying the index table and deleting the metadata corresponding to virtual volume pages whose reference count is below a predetermined threshold. According to the embodiments of the invention, deduplication performance can be improved without affecting system performance.

Owner:HUAWEI TECH CO LTD
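
The buffer-pool lookup, reference counting, and periodic threshold sweep can be sketched as follows. `DedupBackup`, the SHA-1 fingerprint, and the threshold of 2 are assumptions; following the abstract, the sweep drops only the cached fingerprint metadata, not the stored block, so a later copy of an evicted block would simply be stored again.

```python
import hashlib

class DedupBackup:
    """Fingerprint lookup in a buffer pool, per-page reference counts, and a
    periodic sweep of rarely referenced entries."""

    def __init__(self, threshold=2):
        self.pool = {}        # buffer pool: fingerprint -> virtual volume page
        self.refcount = {}    # virtual volume page -> reference count
        self.volume = []      # virtual volume pages (stored blocks)
        self.threshold = threshold

    def backup_block(self, block):
        fp = hashlib.sha1(block).hexdigest()
        page = self.pool.get(fp)
        if page is None:                  # new data: store and index it
            page = len(self.volume)
            self.volume.append(block)
            self.pool[fp] = page
            self.refcount[page] = 0
        self.refcount[page] += 1
        return page

    def sweep(self):
        """Drop pool metadata for pages referenced fewer than `threshold` times."""
        for fp, page in list(self.pool.items()):
            if self.refcount[page] < self.threshold:
                del self.pool[fp]
```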

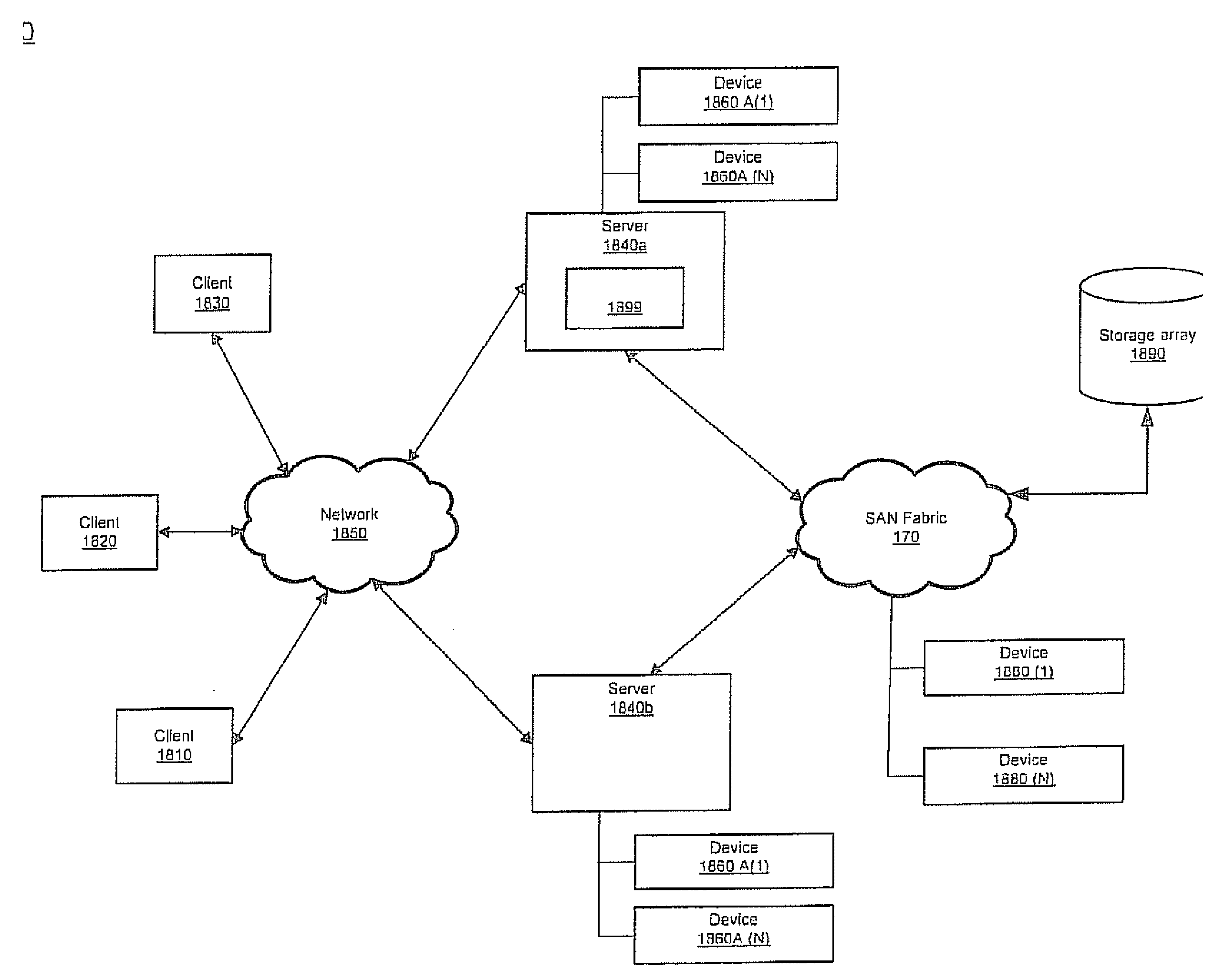

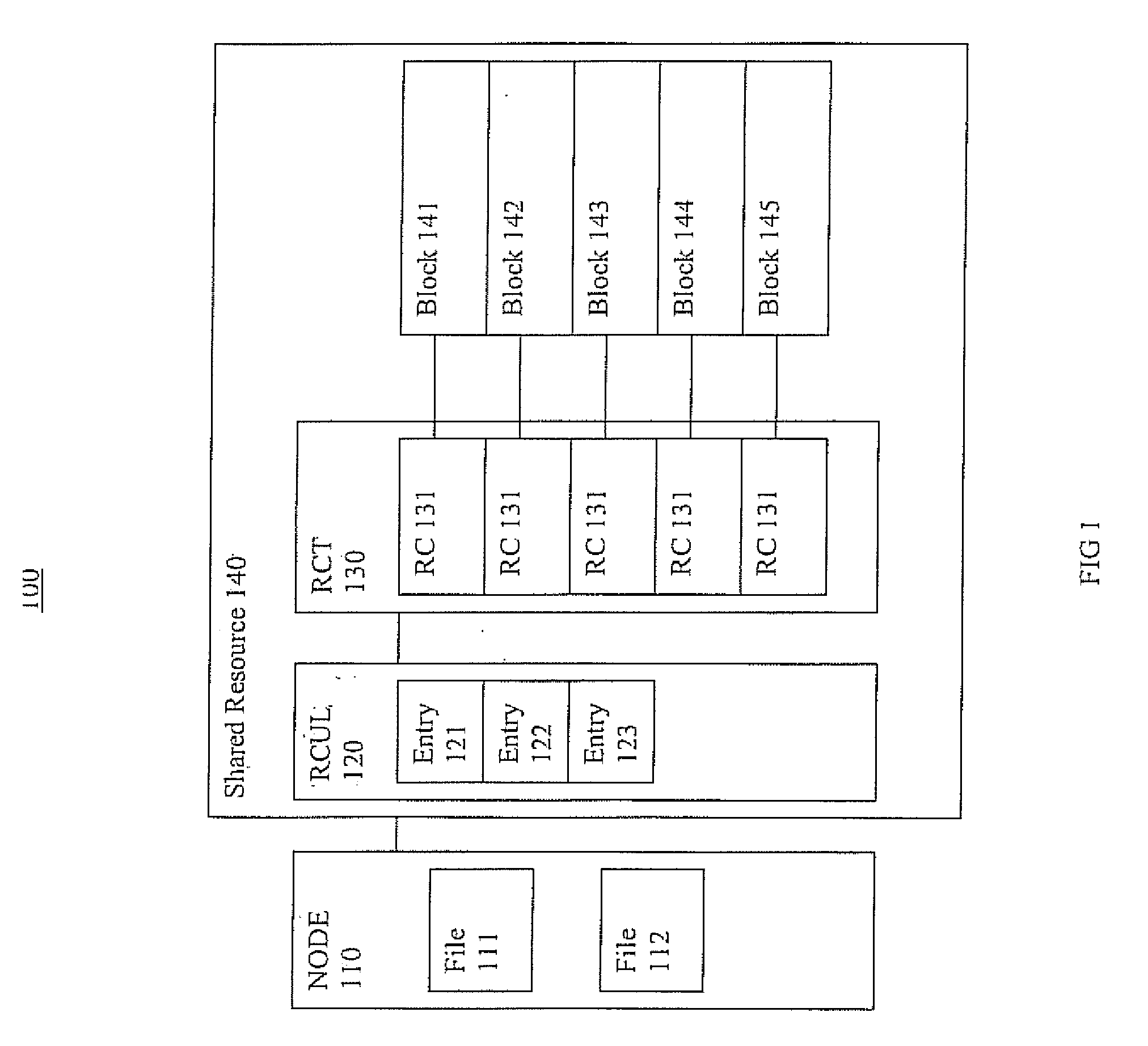

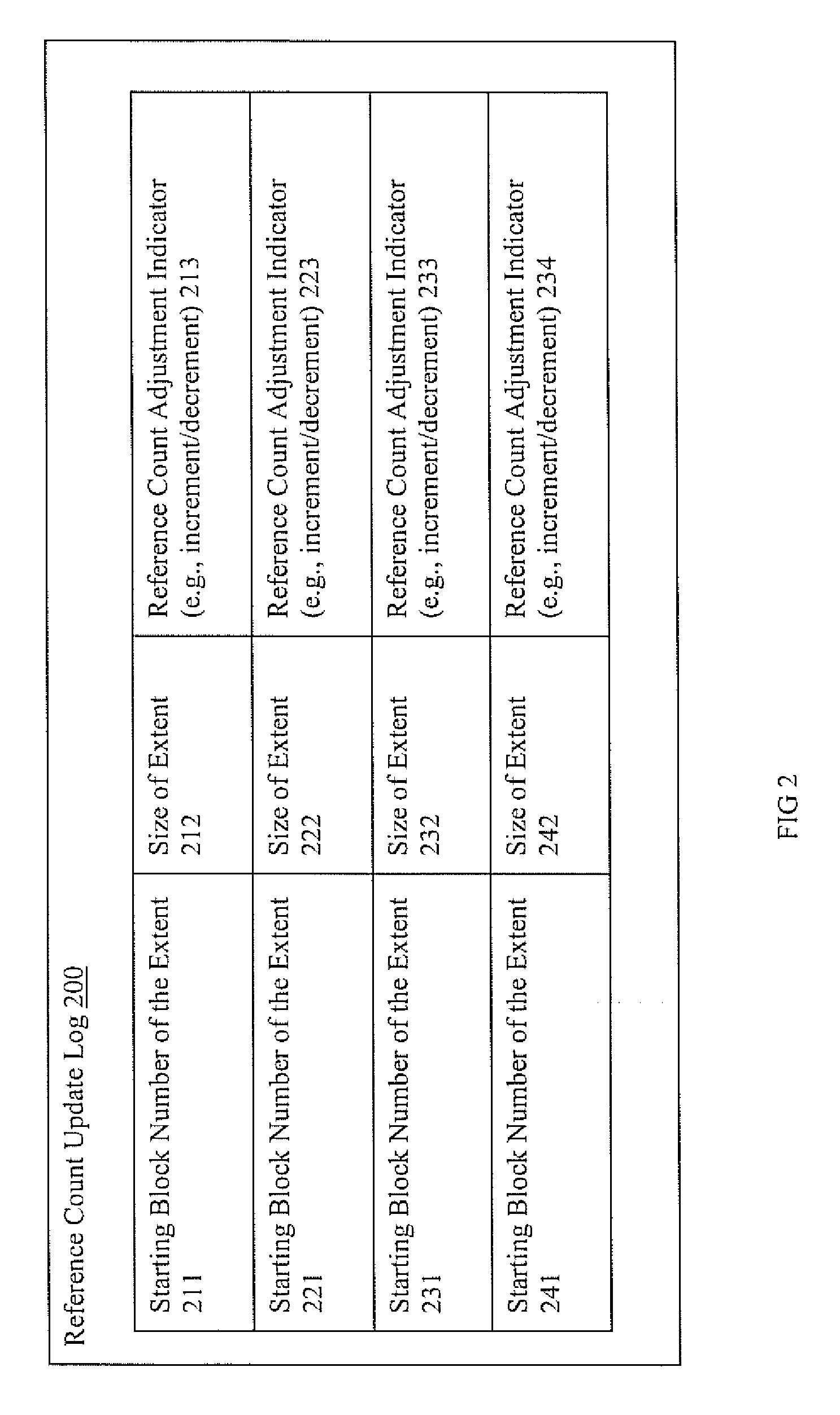

Extent reference count update system and method

Status: Active | Publication: US20120047115A1 | Tags: Digital data information retrieval, Digital data processing details, Background process, Data mining

Systems and methods for extent reference count updates are presented. In one embodiment, a reference count update method includes: receiving an indication of a new reference association with an extent of a shared storage component; generating reference count update log information for a reference count update log to indicate the new reference association, wherein the logging occurs inline; forwarding a successful data update indicator to the initiator of the activity that triggered the new reference association; and updating a reference count table in accordance with the information in the reference count update log, wherein the updating is performed in a background process.

Owner:VERITAS TECH
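
The split between a cheap inline log append and a deferred table update can be sketched as below. `ExtentRefCounts`, the `(extent, delta)` log records, and the `remove_reference` method are assumptions for illustration; the abstract describes only new references and does not specify the log format.

```python
from collections import Counter

class ExtentRefCounts:
    """Log reference-count changes inline; apply them to the table later."""

    def __init__(self):
        self.table = Counter()   # extent id -> reference count
        self.log = []            # pending (extent id, delta) records

    def add_reference(self, extent):
        # Cheap inline step: the initiator can be acknowledged right after this.
        self.log.append((extent, +1))

    def remove_reference(self, extent):
        self.log.append((extent, -1))

    def apply_log(self):
        """Background step: fold the logged deltas into the table."""
        pending, self.log = self.log, []
        for extent, delta in pending:
            self.table[extent] += delta
            if self.table[extent] == 0:
                del self.table[extent]
```

Deferring the table update keeps the write path short; the trade-off is that the table lags the log until the background pass runs.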

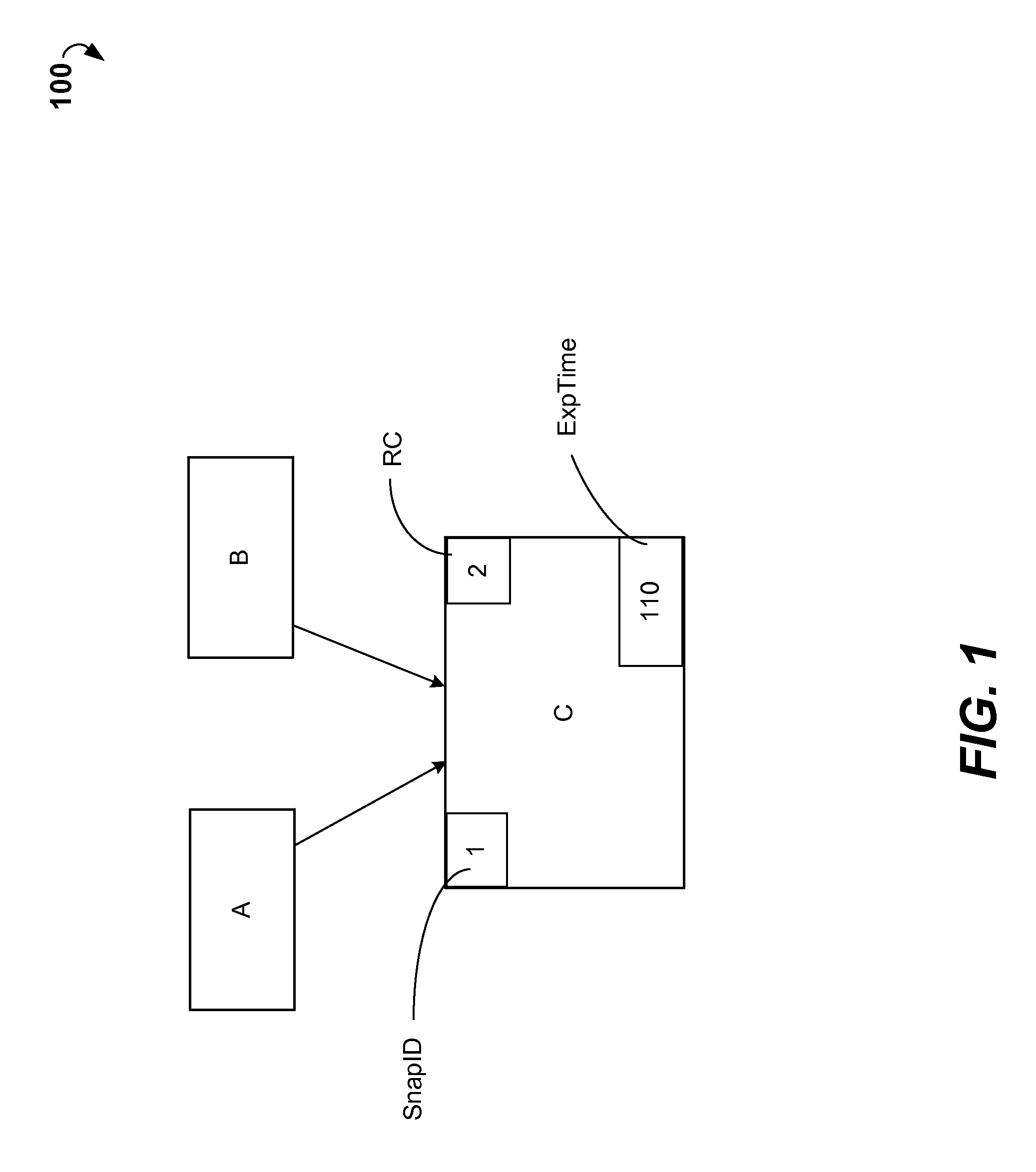

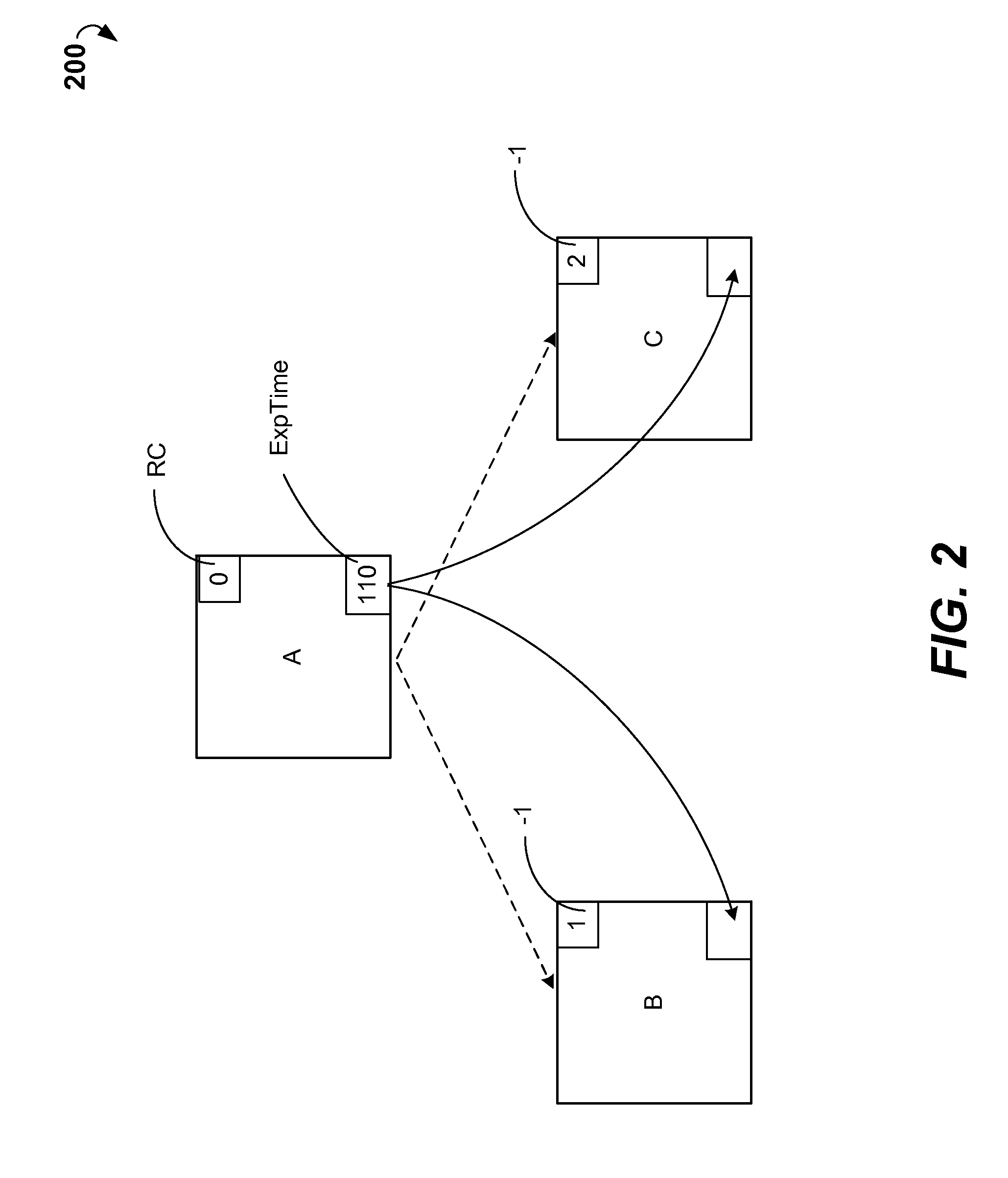

Hybrid garbage collection

Status: Active | Publication: US20140372490A1 | Tags: Memory addressing/allocation/relocation, Object oriented databases, Expiration Time, File system

Disclosed is a method for hybrid garbage collection of objects in a file system. An example method includes associating a reference counter, an expiration time, and a version identifier with each object in the file system. The object can be kept in the file system while its reference counter is non-zero. After determining that the reference counter of the object is zero, the object can be kept in the file system up to its expiration time. When a reference to the object is deleted, the expiration time of the object is updated to the later of the expiration times of the object and the reference. Furthermore, the object can be kept in the file system while its version identifier is larger than a predetermined version number.

Owner:EXABLOX
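
The three retention rules can be written as a single predicate. The field names, the use of plain numbers for time, and the `min_version` parameter standing in for the "predetermined version number" are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class StoredObject:
    refs: int = 0          # reference counter
    expires: float = 0.0   # expiration time
    version: int = 0       # version identifier

def drop_reference(obj, ref_expires):
    """Deleting a reference extends the object's expiry to the later of the two."""
    obj.refs -= 1
    obj.expires = max(obj.expires, ref_expires)

def must_keep(obj, now, min_version):
    """Keep while referenced, up to the expiration time, or while the version
    identifier is larger than the given version number."""
    return obj.refs > 0 or now <= obj.expires or obj.version > min_version
```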

© 2025 PatSnap. All rights reserved.