223 results about "Manual memory management" patented technology

In computer science, manual memory management refers to the usage of manual instructions by the programmer to identify and deallocate unused objects, or garbage. Up until the mid-1990s, the majority of programming languages used in industry supported manual memory management, though garbage collection has existed since 1959, when it was introduced with Lisp. Today, however, languages with garbage collection such as Java are increasingly popular and the languages Objective-C and Swift provide similar functionality through Automatic Reference Counting. The main manually managed languages still in widespread use today are C and C++ – see C dynamic memory allocation.

Flash memory management system and method utilizing multiple block list windows

Inactive | US6895464B2 | Improve performance | Improve reliability | Input/output to record carriers | Memory loss protection | Rapid access | Block detection

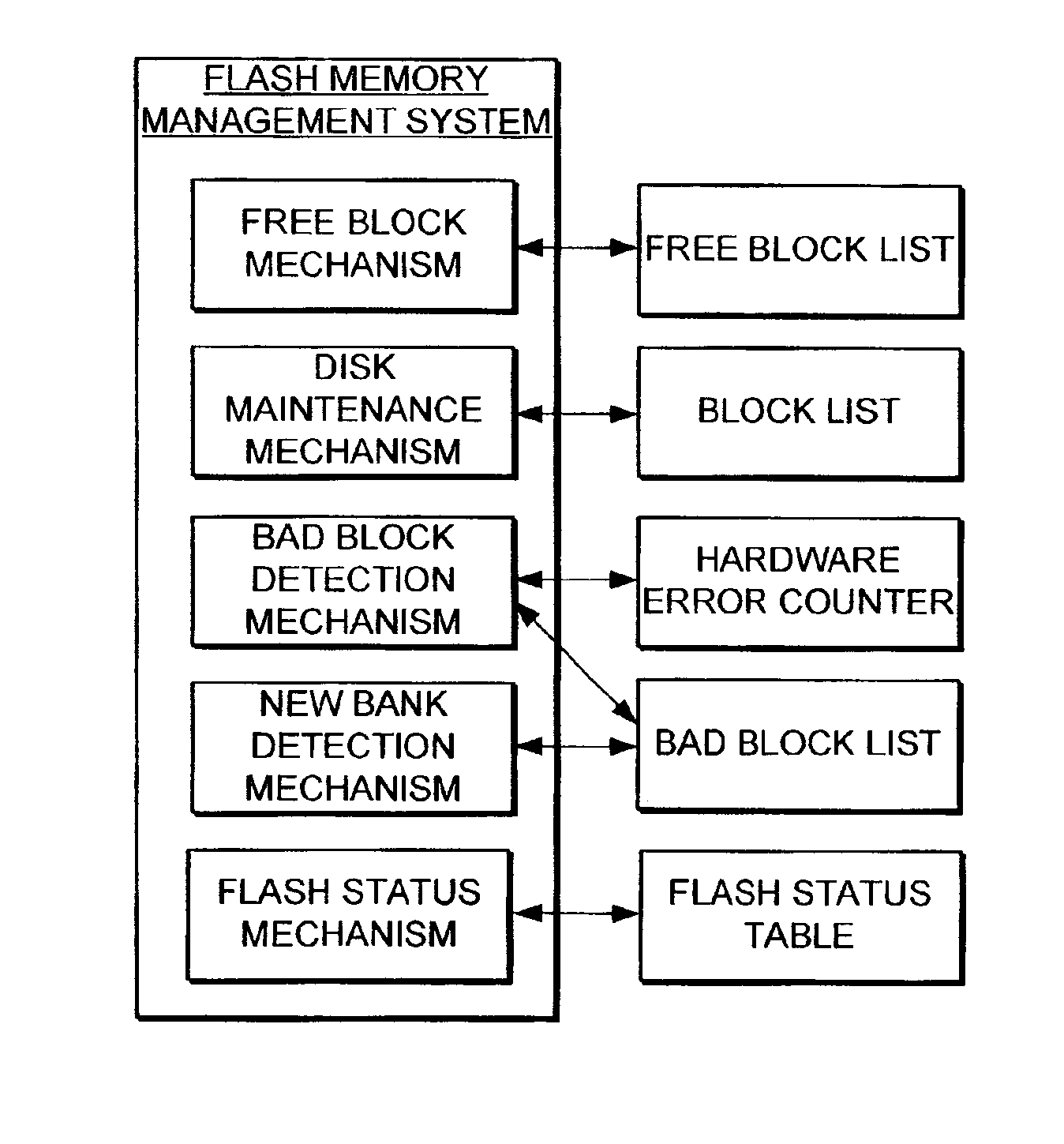

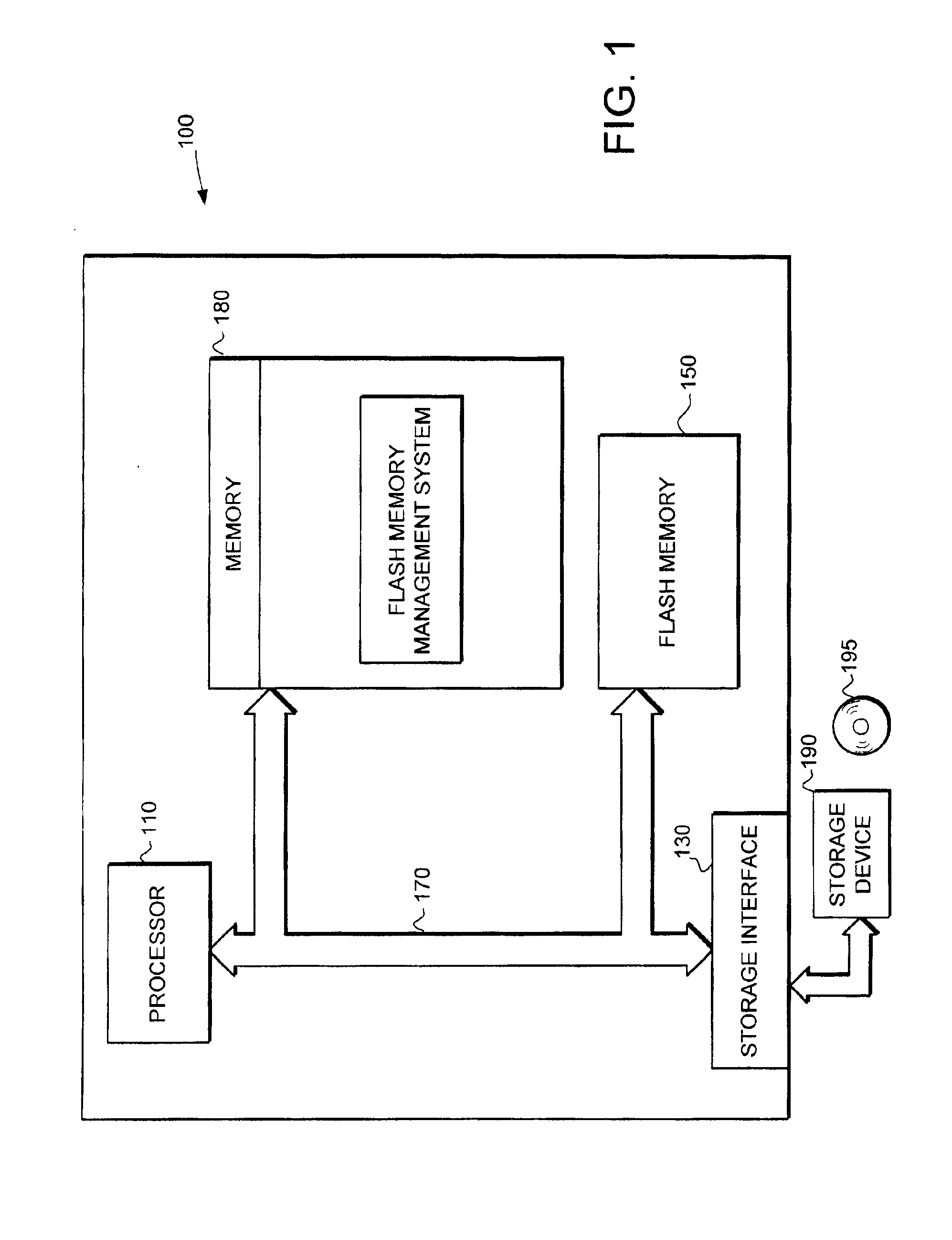

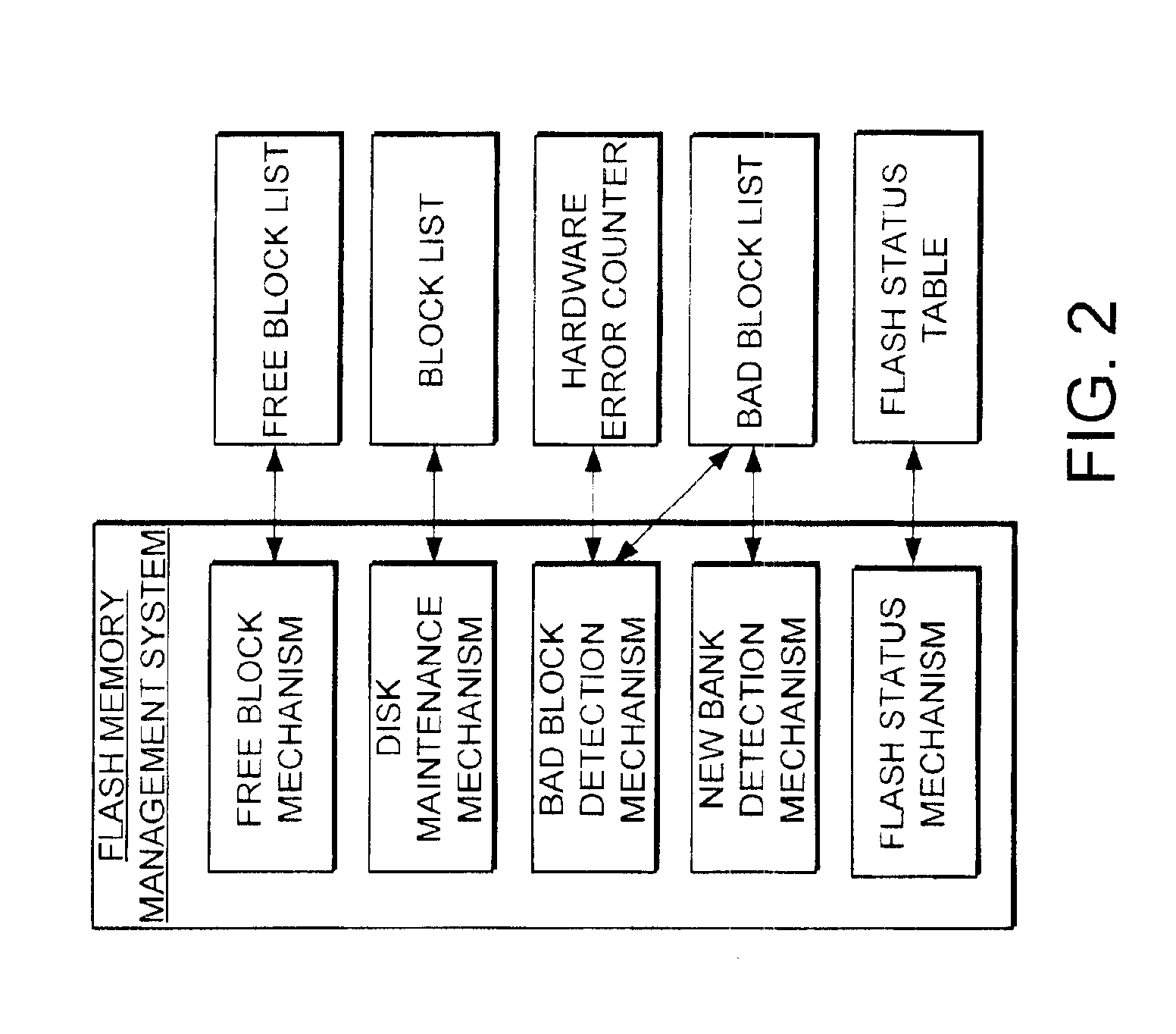

The present invention provides a flash memory management system and method with increased performance. The flash memory management system provides the ability to efficiently manage and allocate flash memory use in a way that improves reliability and longevity, while maintaining good performance levels. The flash memory management system includes a free block mechanism, a disk maintenance mechanism, and a bad block detection mechanism. The free block mechanism provides efficient sorting of free blocks to facilitate selecting low use blocks for writing. The disk maintenance mechanism provides for the ability to efficiently clean flash memory blocks during processor idle times. The bad block detection mechanism provides the ability to better detect when a block of flash memory is likely to go bad. The flash status mechanism stores information in fast access memory that describes the content and status of the data in the flash disk. The new bank detection mechanism provides the ability to automatically detect when new banks of flash memory are added to the system. Together, these mechanisms provide a flash memory management system that can improve the operational efficiency of systems that utilize flash memory.

Owner:III HLDG 12 LLC
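
The free block mechanism in the abstract above sorts free blocks so that low-use blocks are chosen for writing. A minimal sketch of that idea follows, assuming that "use" is measured by a per-block erase count and that a binary heap is an acceptable ordering structure; the class and method names are hypothetical and are not taken from the patent.

```python
import heapq


class FreeBlockList:
    """Free flash blocks ordered by erase count (hypothetical structure)."""

    def __init__(self):
        # Heap entries are (erase_count, block_id), so the least-used block is on top.
        self._heap = []

    def release(self, block_id, erase_count):
        """Return an erased block to the free list."""
        heapq.heappush(self._heap, (erase_count, block_id))

    def allocate(self):
        """Hand out the free block with the lowest erase count."""
        if not self._heap:
            raise RuntimeError("no free blocks")
        erase_count, block_id = heapq.heappop(self._heap)
        return block_id, erase_count


free_blocks = FreeBlockList()
for block_id, erase_count in [(0, 120), (1, 15), (2, 64)]:
    free_blocks.release(block_id, erase_count)

first = free_blocks.allocate()   # least-worn block
second = free_blocks.allocate()  # next least-worn block
```

Always writing to the least-erased free block spreads wear across the device, which is one common way to pursue the reliability and longevity goals the abstract mentions.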

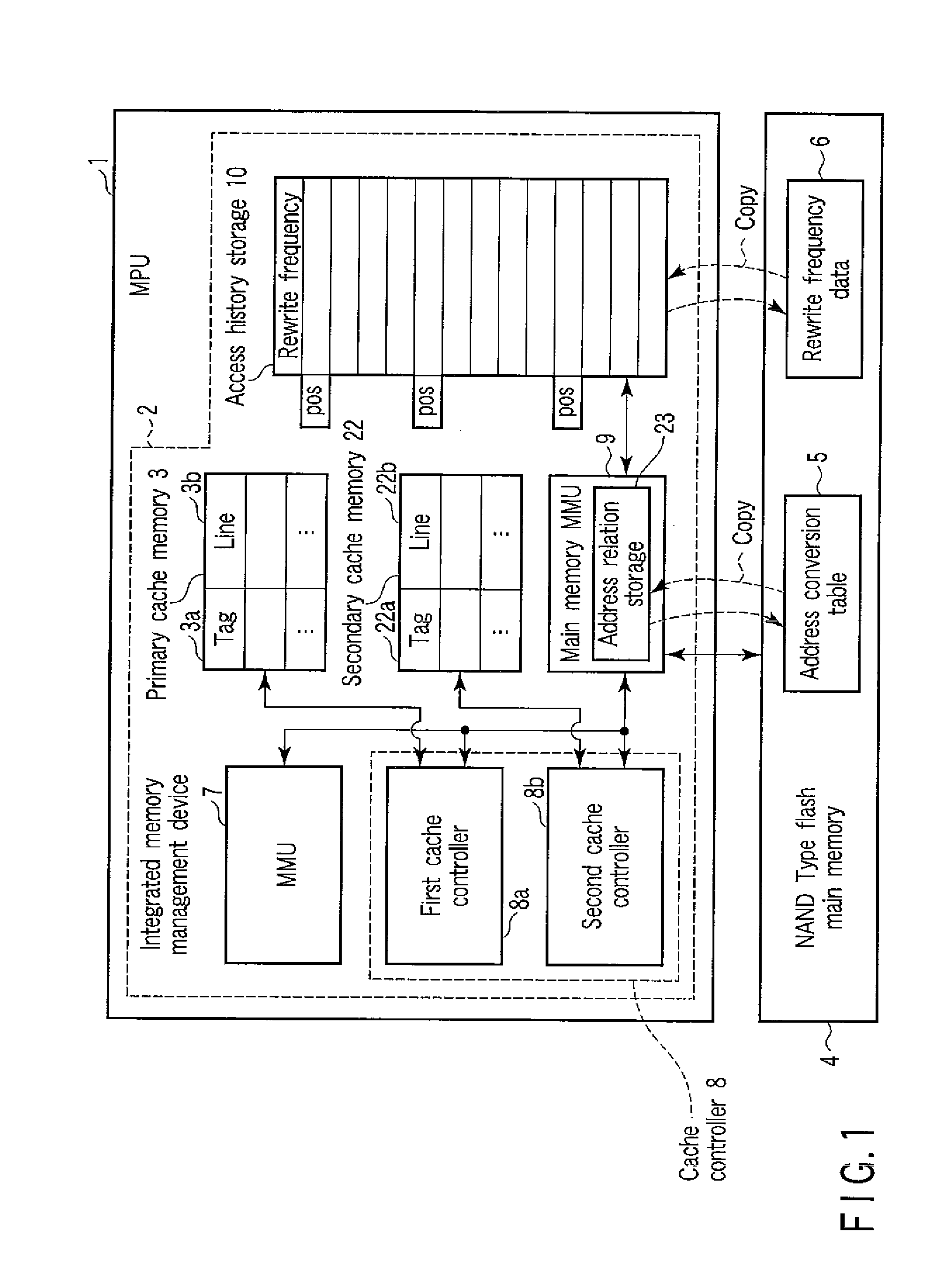

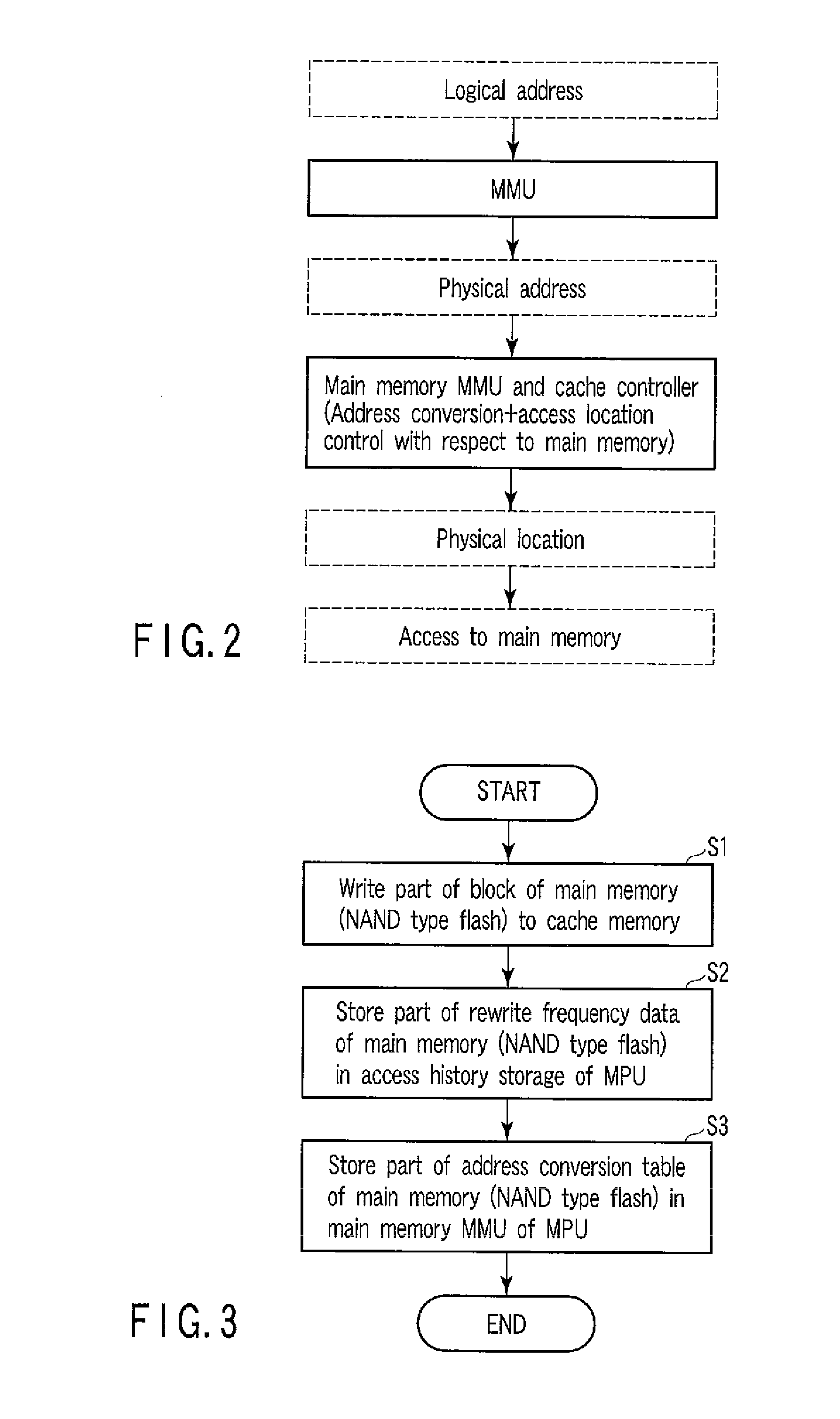

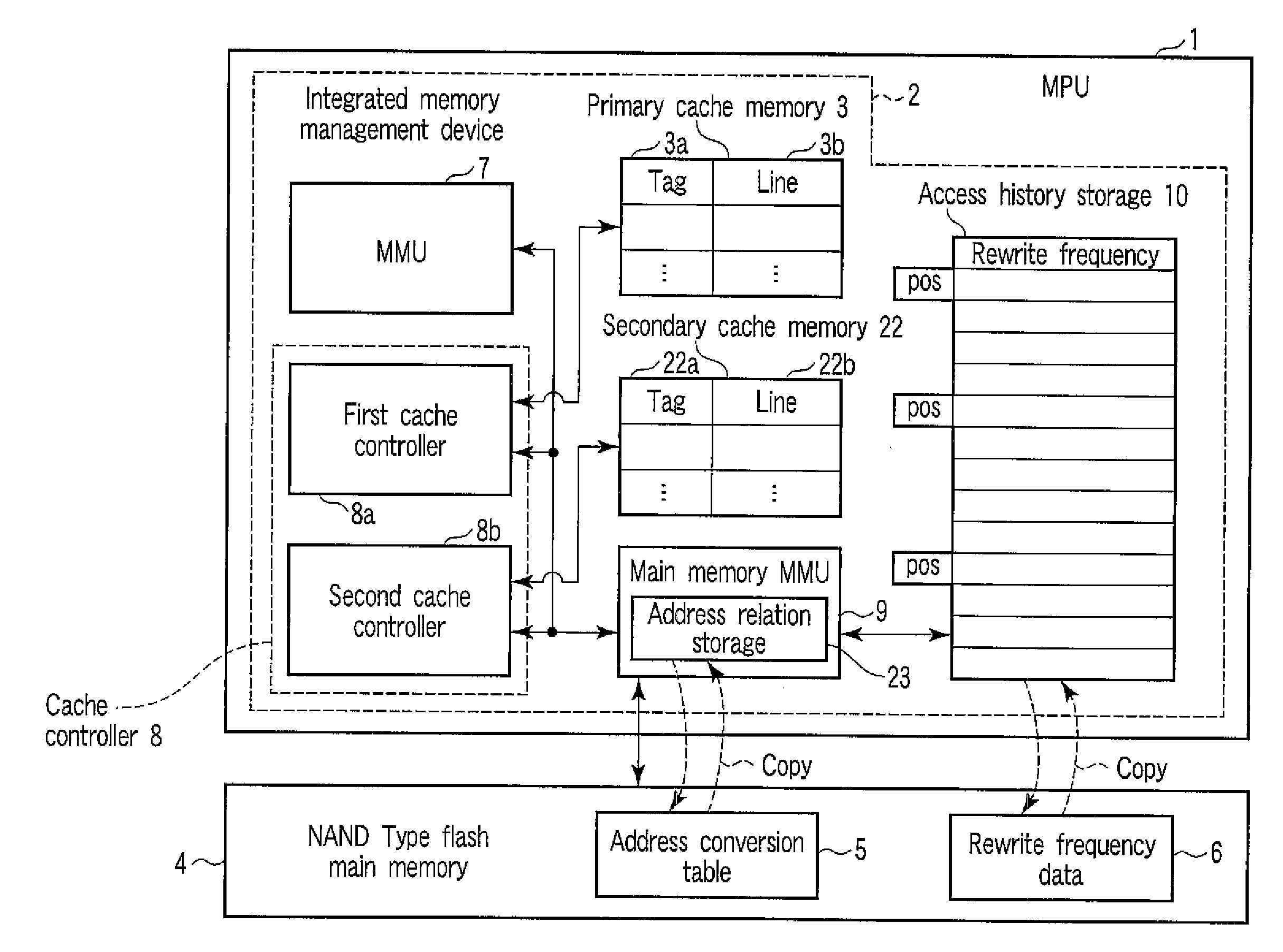

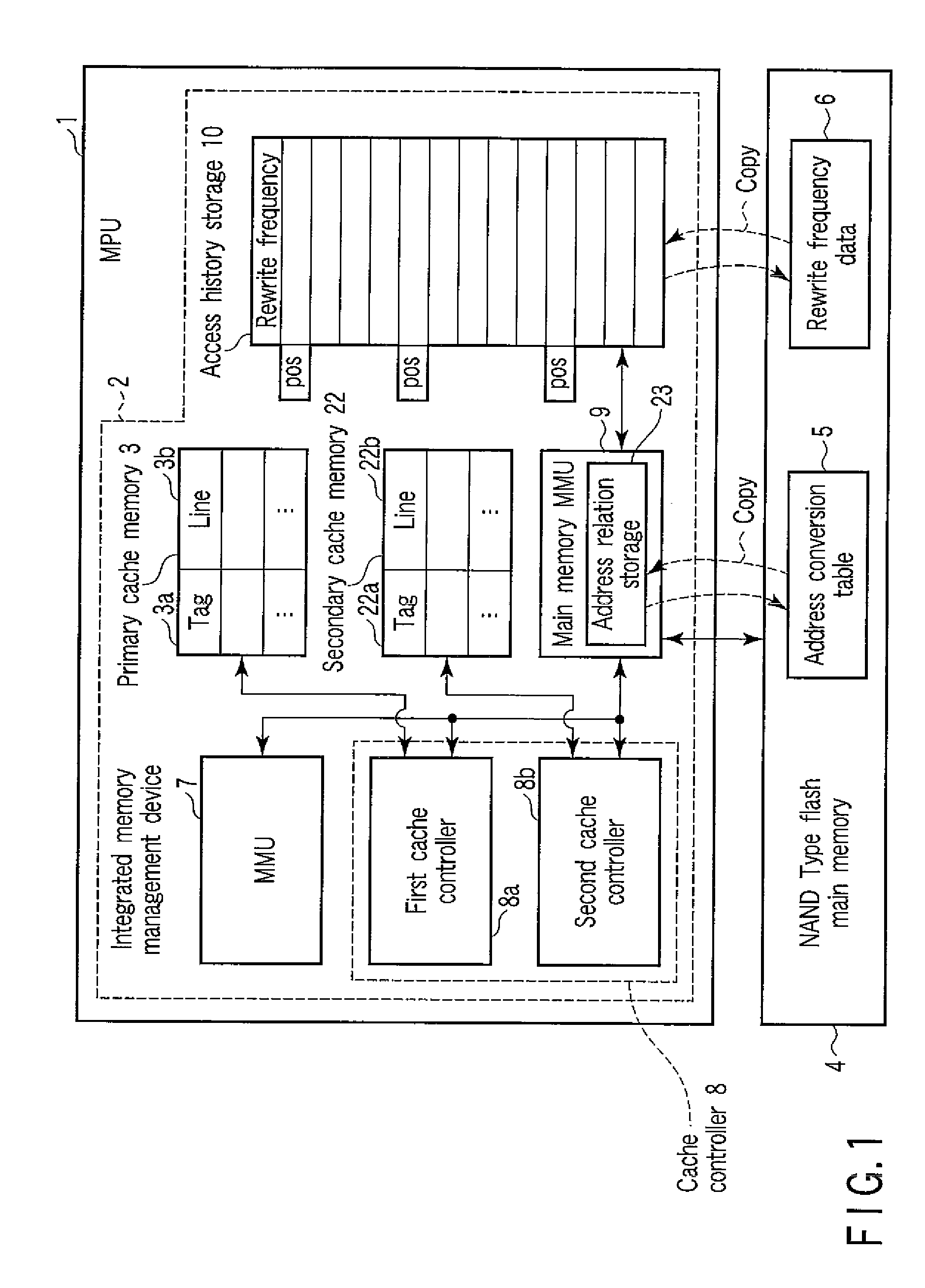

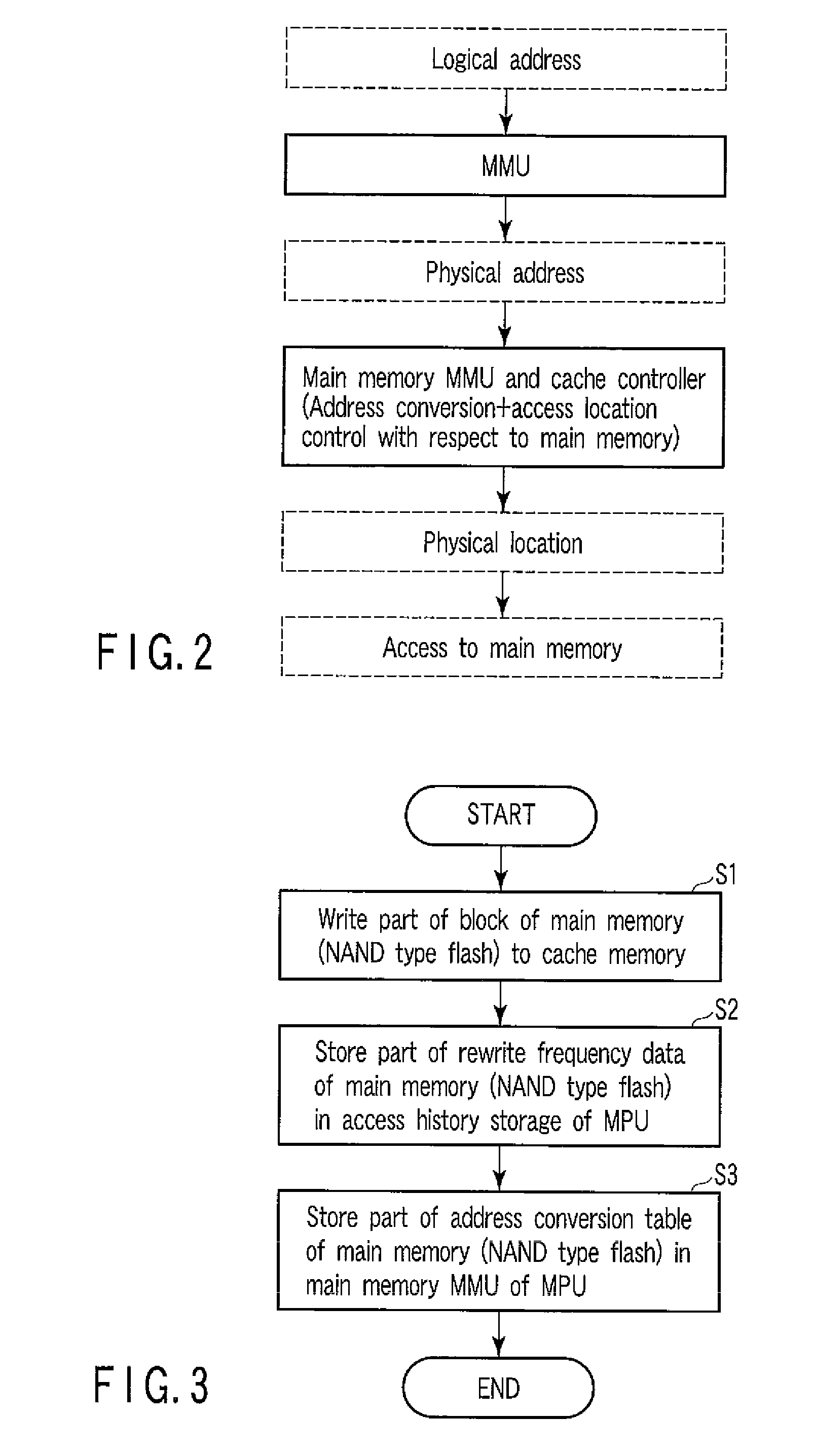

Integrated memory management and memory management method

Active | US20090083478A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data transmission | Memory management unit

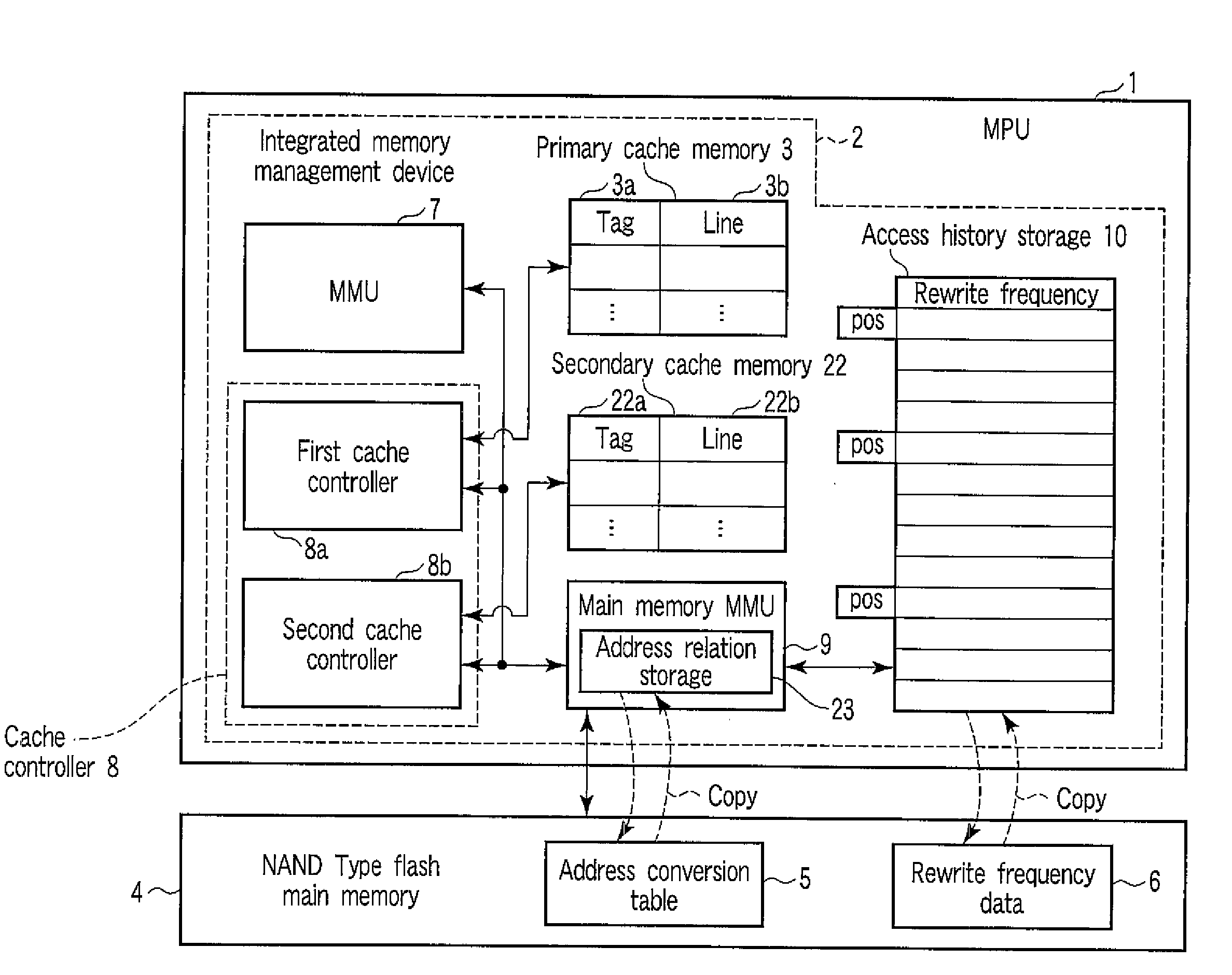

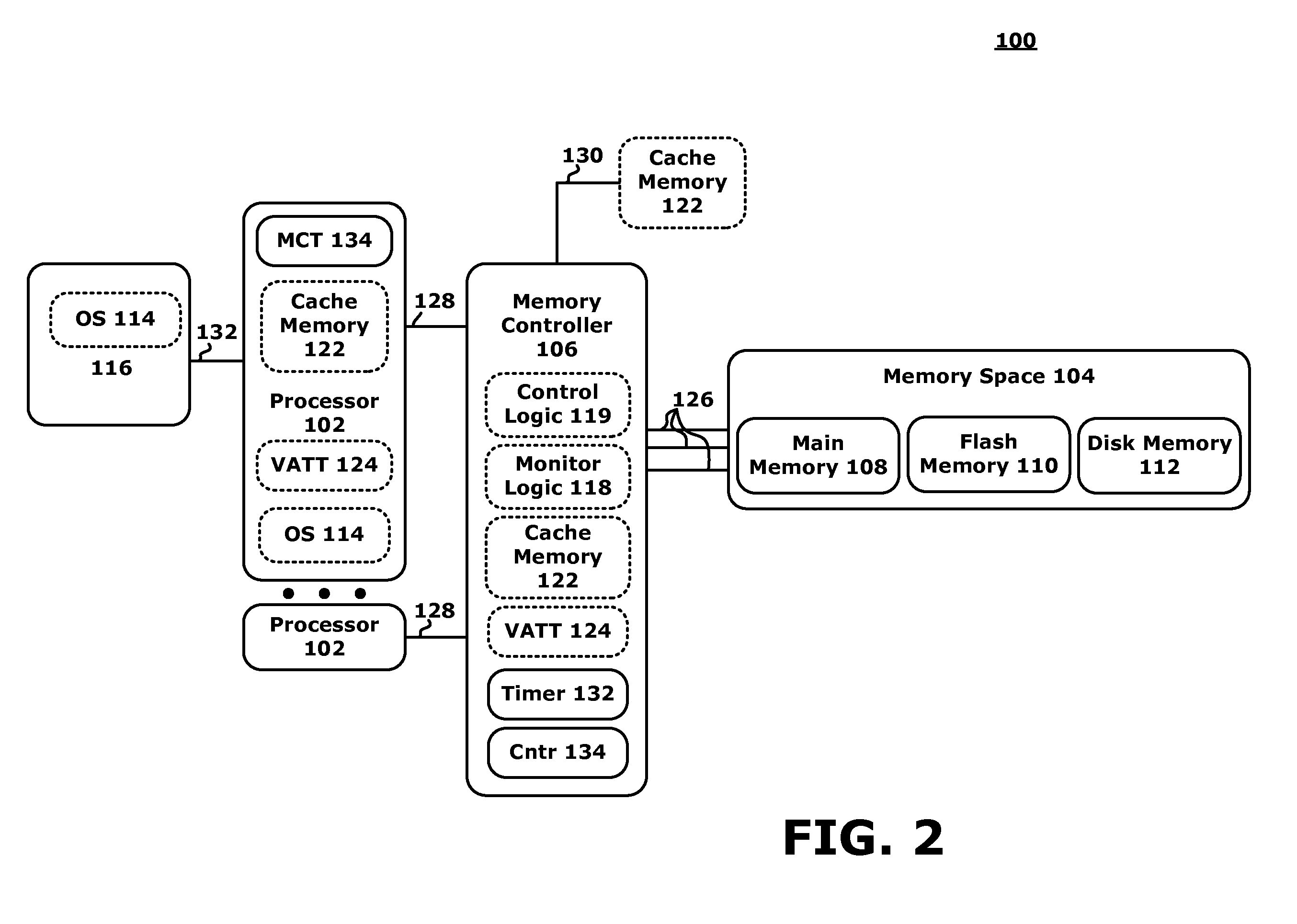

An integrated memory management device according to an example of the invention comprises: an acquiring unit that acquires a read destination logical address from a processor; an address conversion unit that converts the read destination logical address into a read destination physical address of a non-volatile main memory; an access unit that reads, from the non-volatile main memory, data that corresponds to the read destination physical address and has a size equal to a block size or an integer multiple of the page size of the non-volatile main memory; and a transmission unit that transfers the read data to a cache memory of the processor, the cache memory having a cache size that depends on the block size or the integer multiple of the page size of the non-volatile main memory.

Owner:KIOXIA CORP

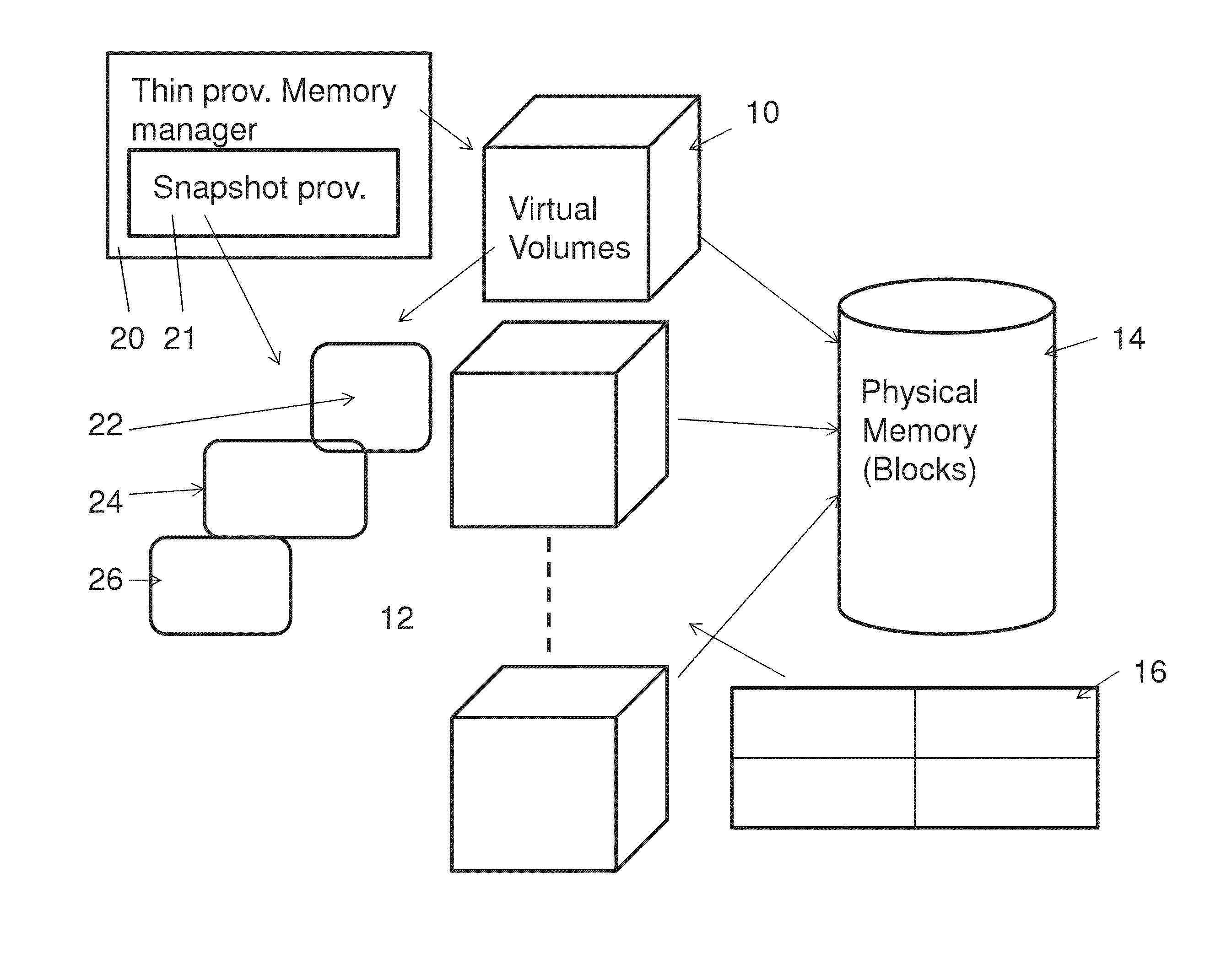

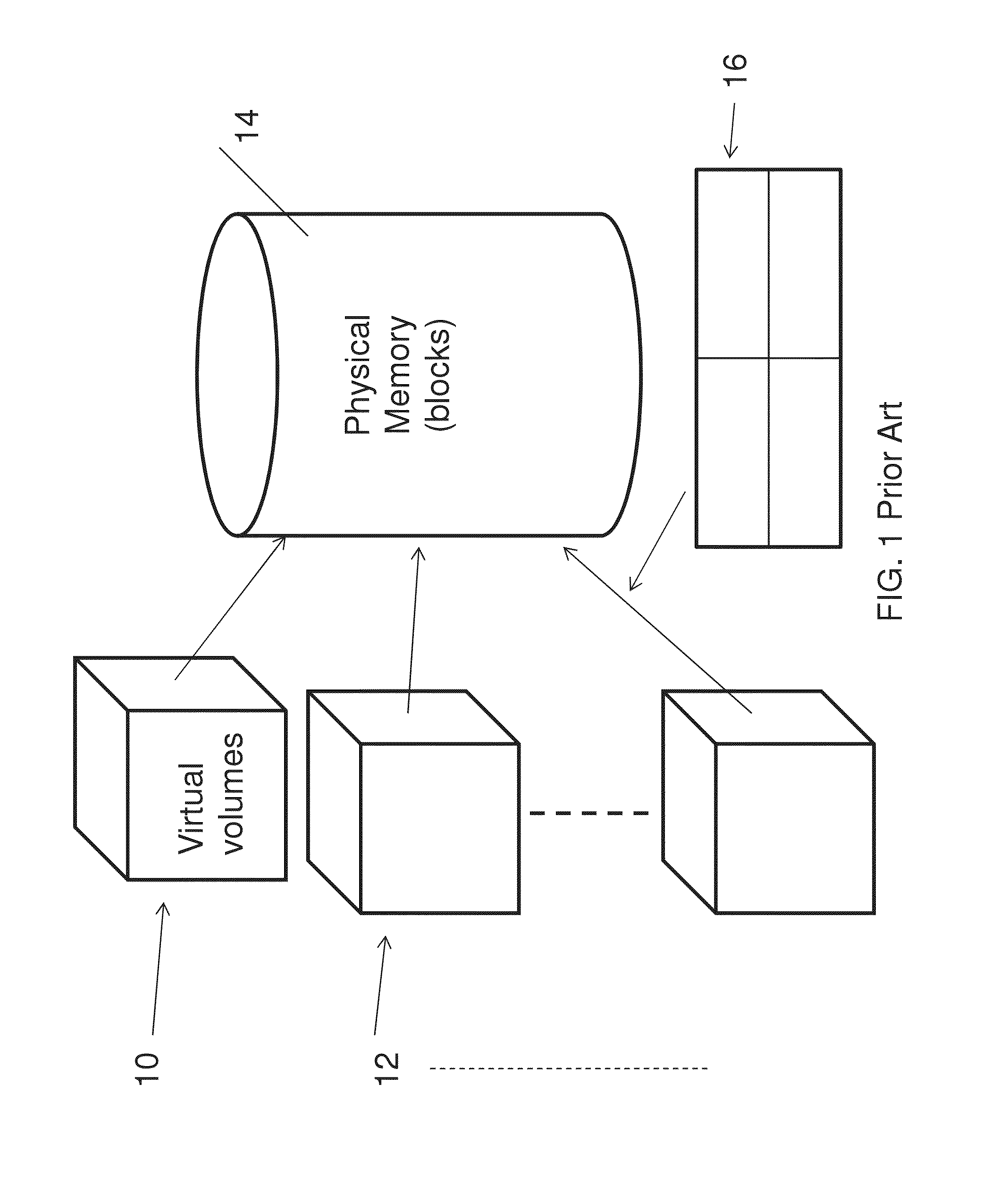

Snapshot mechanism

A memory management system for a thinly provisioned memory volume in which a relatively larger virtual address range of virtual address blocks is mapped to a relatively smaller physical memory comprising physical memory blocks via a mapping table containing entries only for addresses of the physical memory blocks containing data. The memory management system comprises a snapshot provision unit to take a given snapshot of the memory volume at a given time, the snapshot comprising a mapping table and memory values of the volume, the mapping table and memory values comprising entries only for addresses of the physical memory containing data. The snapshot is managed on the same thin provisioning basis as the volume itself, and the system is particularly suitable for RAM type memory disks.

Owner:EMC IP HLDG CO LLC
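
The thin-provisioning scheme in the abstract above keeps mapping entries only for blocks that actually hold data, and a snapshot is managed on the same basis. The sketch below illustrates this under stated assumptions: writes are redirected to fresh physical blocks so that a snapshot can share the physical store instead of copying values, and every name (`ThinVolume`, `snapshot`, and so on) is hypothetical.

```python
class ThinVolume:
    """Thinly provisioned volume: a large virtual range, sparse mapping table."""

    def __init__(self, virtual_blocks):
        self.virtual_blocks = virtual_blocks
        self.mapping = {}   # virtual block -> physical index, written blocks only
        self.physical = []  # physical blocks, append-only in this sketch

    def write(self, vblock, data):
        if not 0 <= vblock < self.virtual_blocks:
            raise IndexError(vblock)
        # Assumption: redirect-on-write. New data goes to a fresh physical block,
        # so older snapshots that point at the previous block stay valid.
        self.physical.append(data)
        self.mapping[vblock] = len(self.physical) - 1

    def read(self, vblock, mapping=None):
        """Read through the live mapping, or through a snapshot's mapping."""
        table = self.mapping if mapping is None else mapping
        index = table.get(vblock)
        return None if index is None else self.physical[index]

    def snapshot(self):
        # The snapshot holds entries only for blocks that contained data.
        return dict(self.mapping)


vol = ThinVolume(virtual_blocks=1_000_000)
vol.write(7, b"v1")
snap = vol.snapshot()
vol.write(7, b"v2")
vol.write(42, b"new")
```

The snapshot here costs one mapping entry per written block, regardless of how large the virtual address range is.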

Systems and method for flash memory management

Active | US20100293321A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Computer science | Non-volatile memory

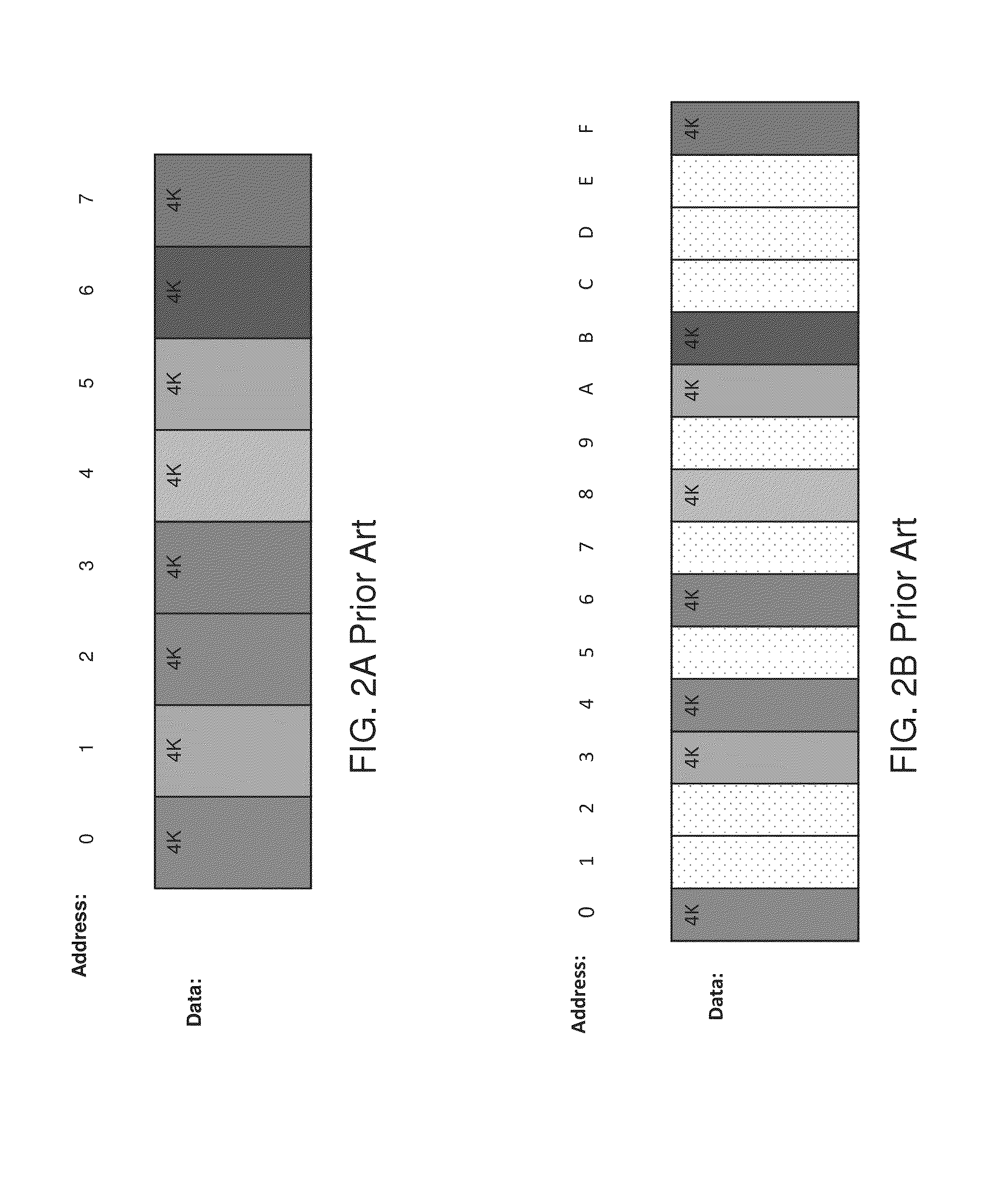

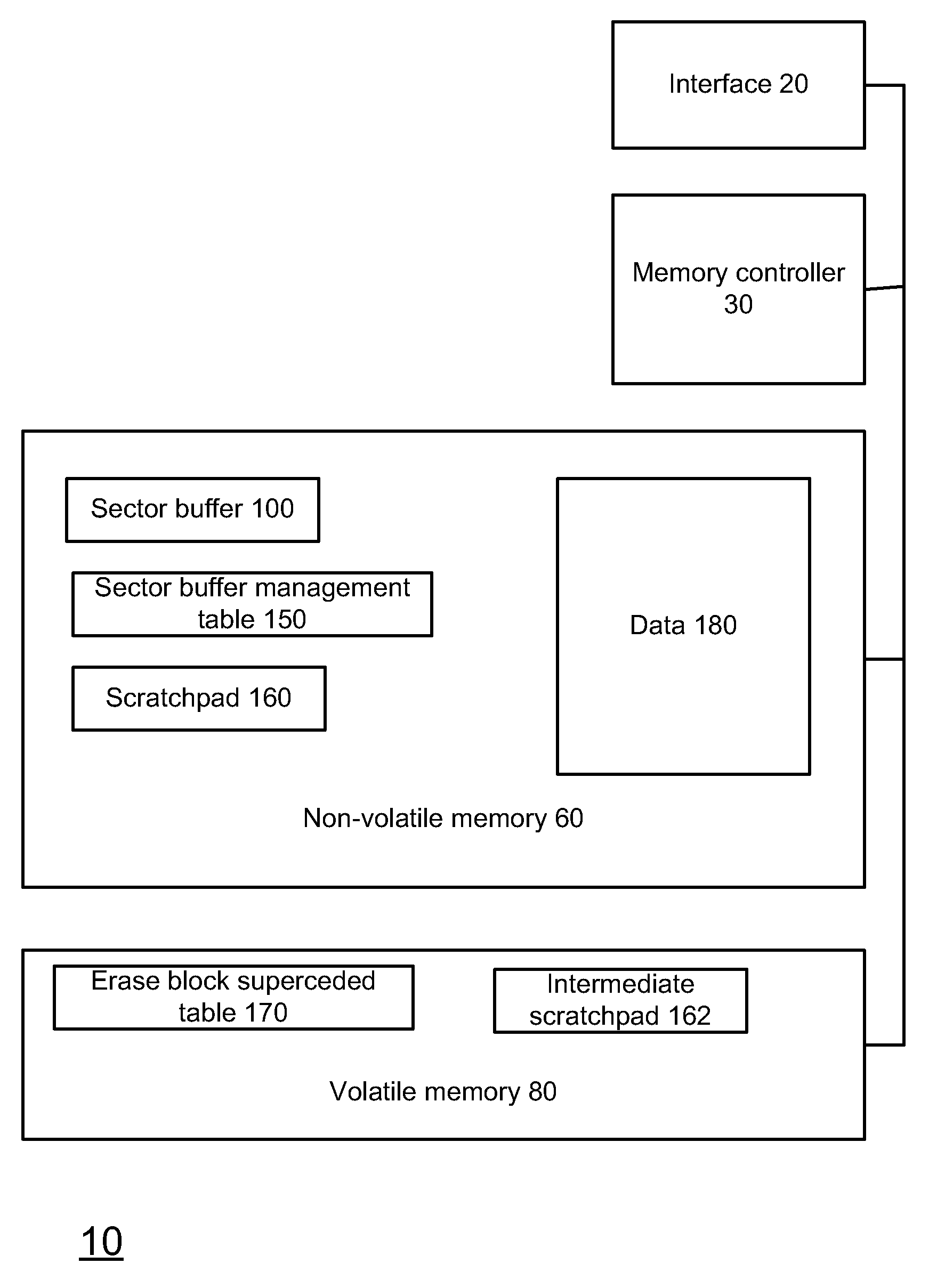

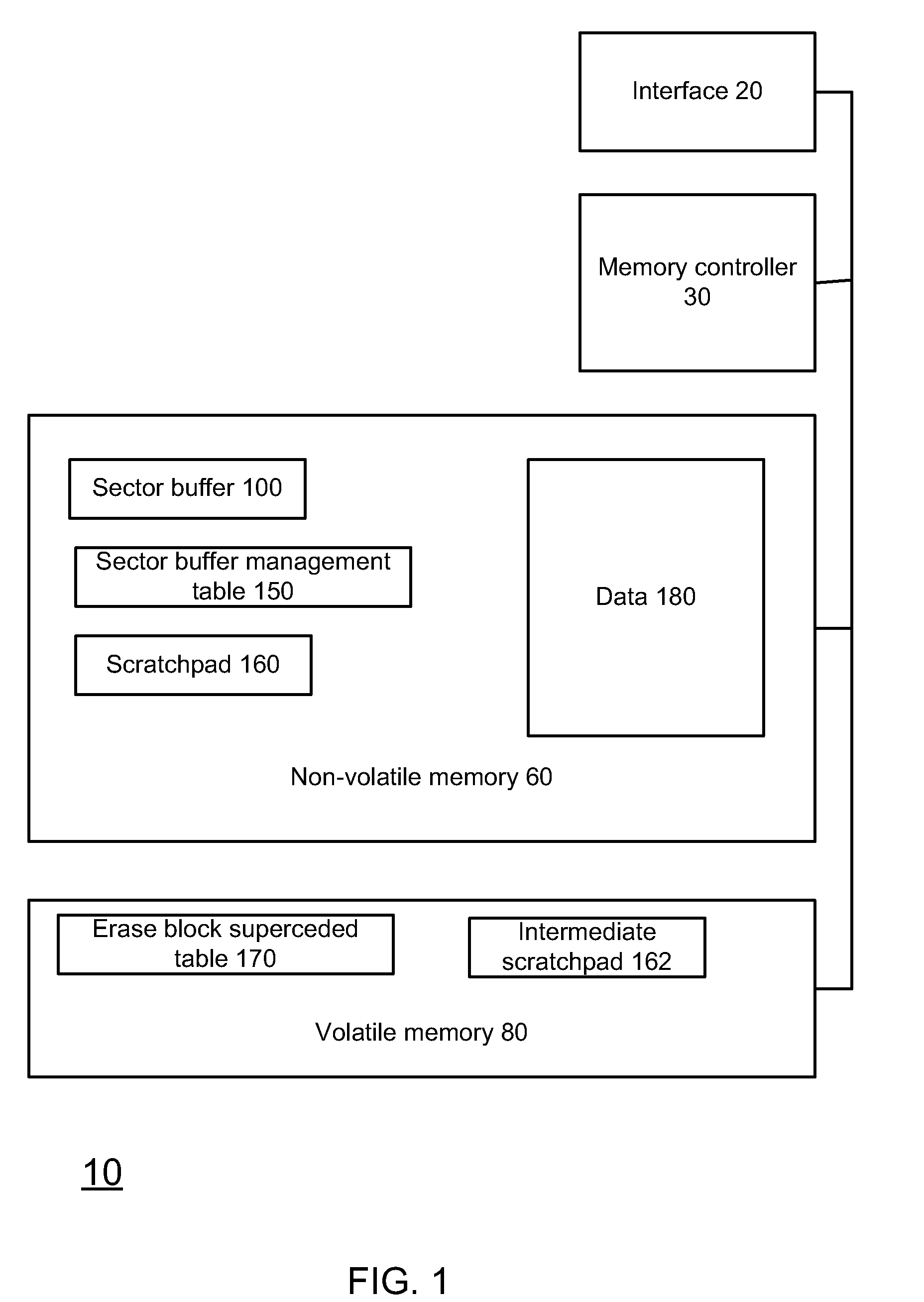

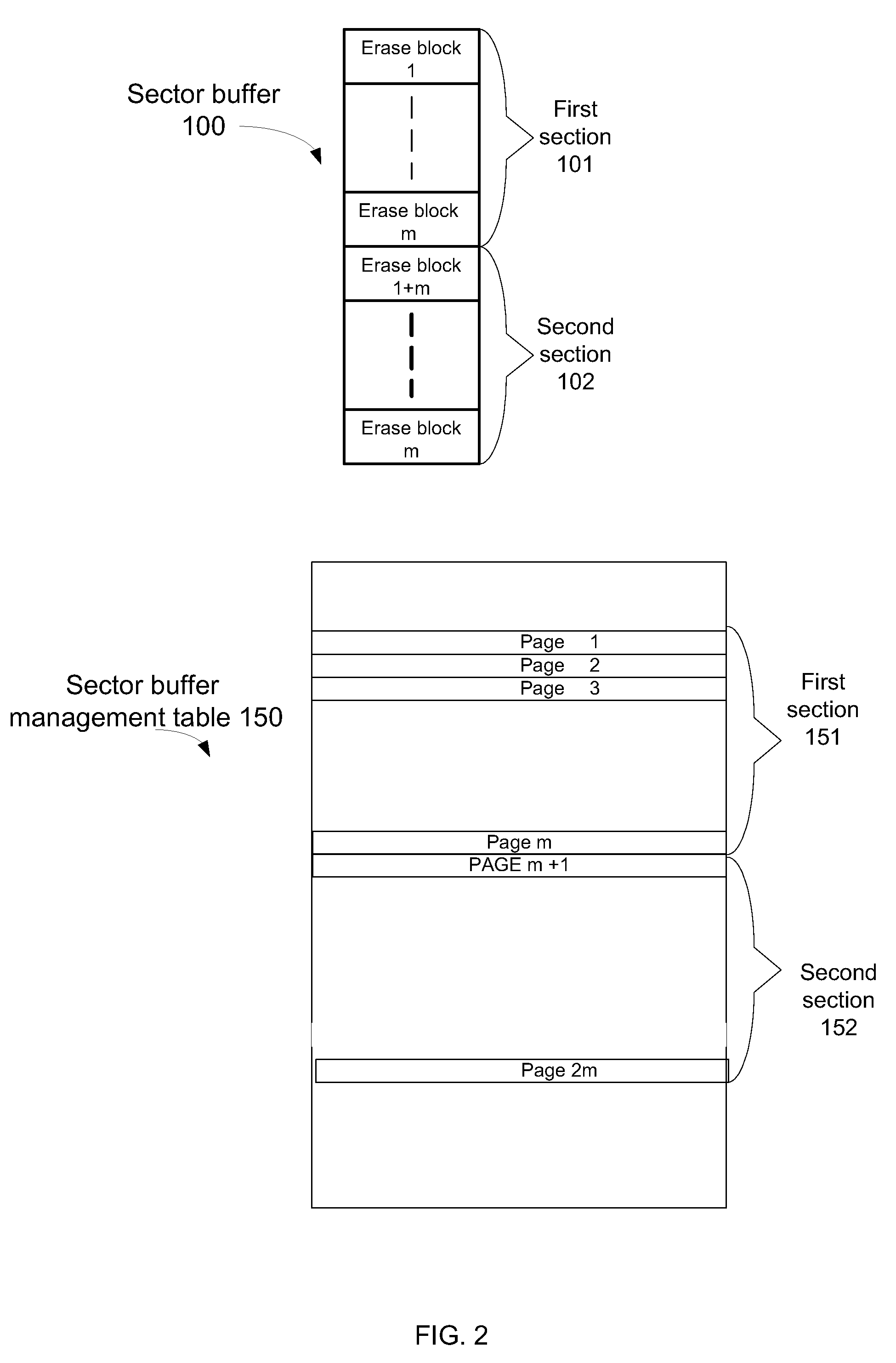

A system and method for merging sectors of a flash memory module. The method includes: receiving multiple sectors, each received sector being associated with a current erase block out of multiple (L) erase blocks; accumulating the received sectors in a sector buffer that is stored in a non-volatile memory module; maintaining a merged sector map indicative of sectors of the sector buffer that have been merged and sectors of the sector buffer waiting to be merged; finding a first sector waiting to be merged according to the merged sector map; merging the first sector and other sectors that belong to the same erase block as the first sector; and updating the merged sector map to indicate that the first sector and the other sectors that belong to the same erase block were merged.

Owner:AVAGO TECH INT SALES PTE LTD
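
The merge steps listed in the abstract above can be sketched as a single pass over the sector buffer. This is an illustration only: it assumes a sector's erase block is found by integer division of its logical sector number, it represents the merged sector map as a list of booleans, and the function name `merge_pass` is hypothetical.

```python
def merge_pass(sector_buffer, merged_map, sectors_per_erase_block):
    """Perform one merge step.

    sector_buffer: list of (logical_sector, data) in arrival order.
    merged_map:    list of bool, True once the buffered sector has been merged.
    Returns (erase_block, merged_sectors), or None if nothing is waiting.
    """
    # Find the first sector still waiting to be merged.
    try:
        first = merged_map.index(False)
    except ValueError:
        return None

    target_block = sector_buffer[first][0] // sectors_per_erase_block

    # Collect every waiting sector that belongs to the same erase block.
    group = [
        i
        for i, (sector, _) in enumerate(sector_buffer)
        if not merged_map[i] and sector // sectors_per_erase_block == target_block
    ]

    # Update the merged sector map for the whole group.
    for i in group:
        merged_map[i] = True
    return target_block, [sector_buffer[i] for i in group]


buffer = [(5, b"a"), (130, b"b"), (7, b"c"), (129, b"d")]
merged = [False] * len(buffer)
step1 = merge_pass(buffer, merged, sectors_per_erase_block=64)
step2 = merge_pass(buffer, merged, sectors_per_erase_block=64)
step3 = merge_pass(buffer, merged, sectors_per_erase_block=64)
```

Grouping by erase block means each block is rewritten once per pass rather than once per buffered sector.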

Integrated memory management and memory management method

Active | US8135900B2 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Term memory | Memory management unit

An integrated memory management device according to an example of the invention comprises: an acquiring unit that acquires a read destination logical address from a processor; an address conversion unit that converts the read destination logical address into a read destination physical address of a non-volatile main memory; an access unit that reads, from the non-volatile main memory, data that corresponds to the read destination physical address and has a size equal to a block size or an integer multiple of the page size of the non-volatile main memory; and a transmission unit that transfers the read data to a cache memory of the processor, the cache memory having a cache size that depends on the block size or the integer multiple of the page size of the non-volatile main memory.

Owner:KIOXIA CORP

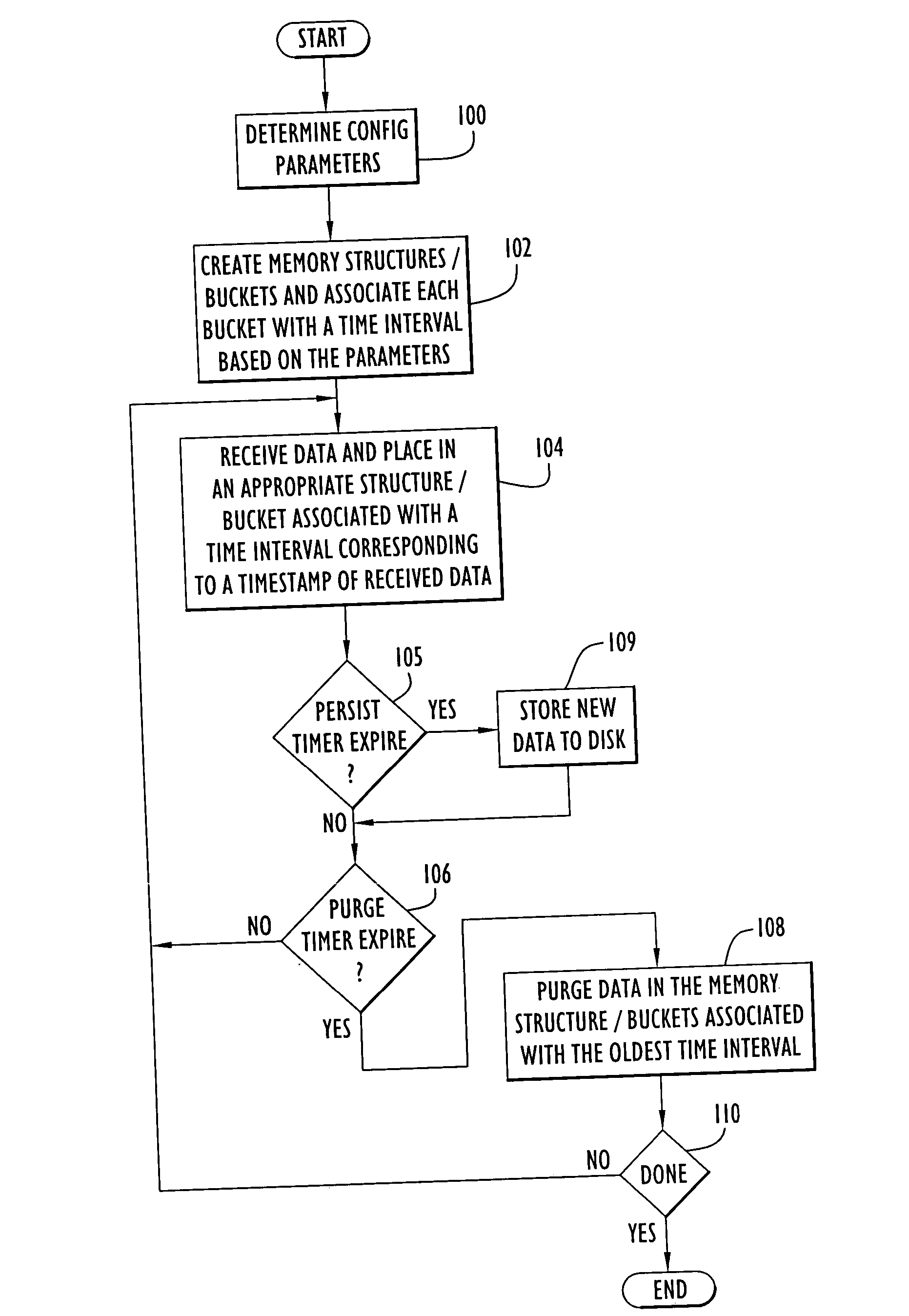

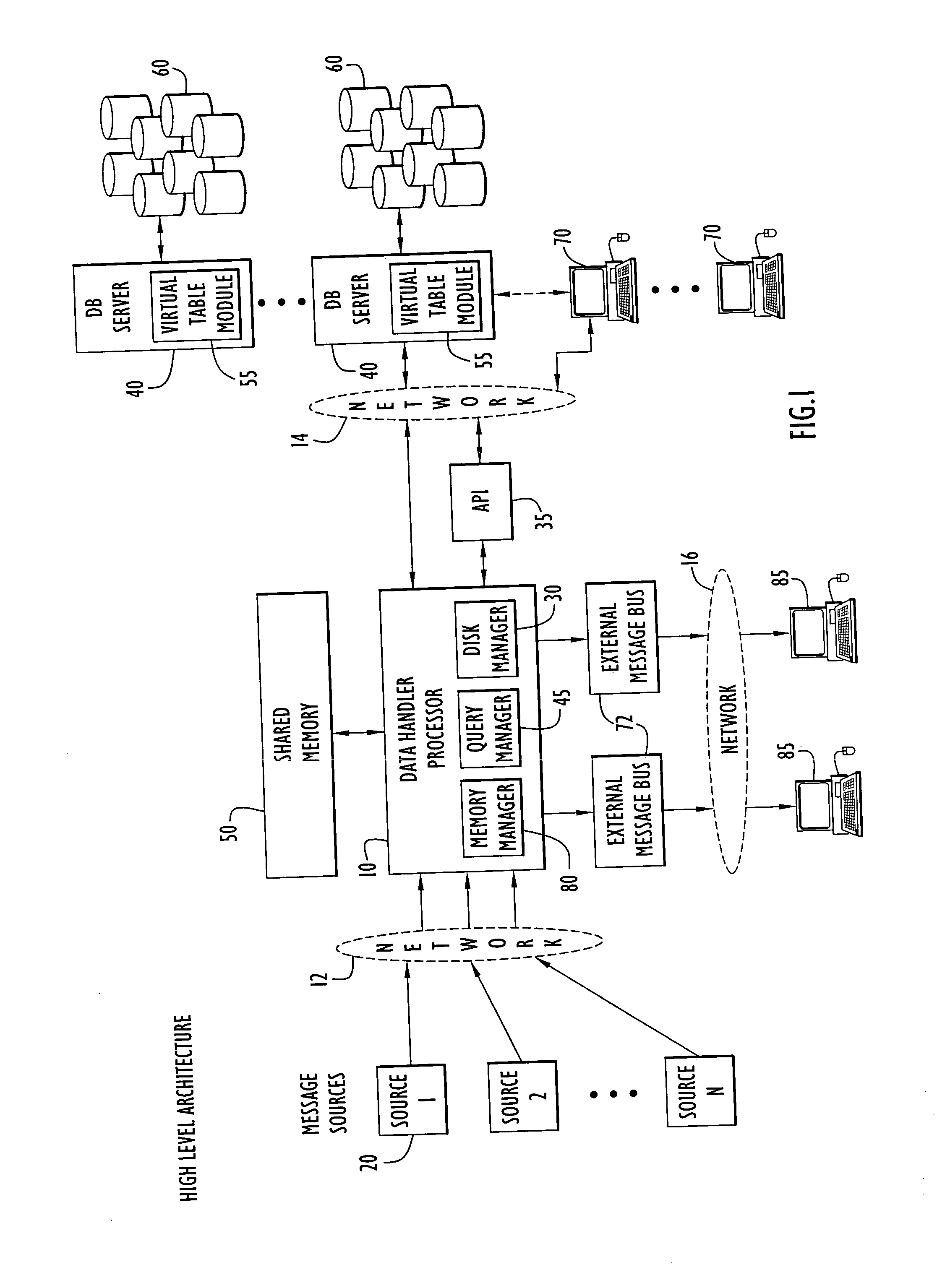

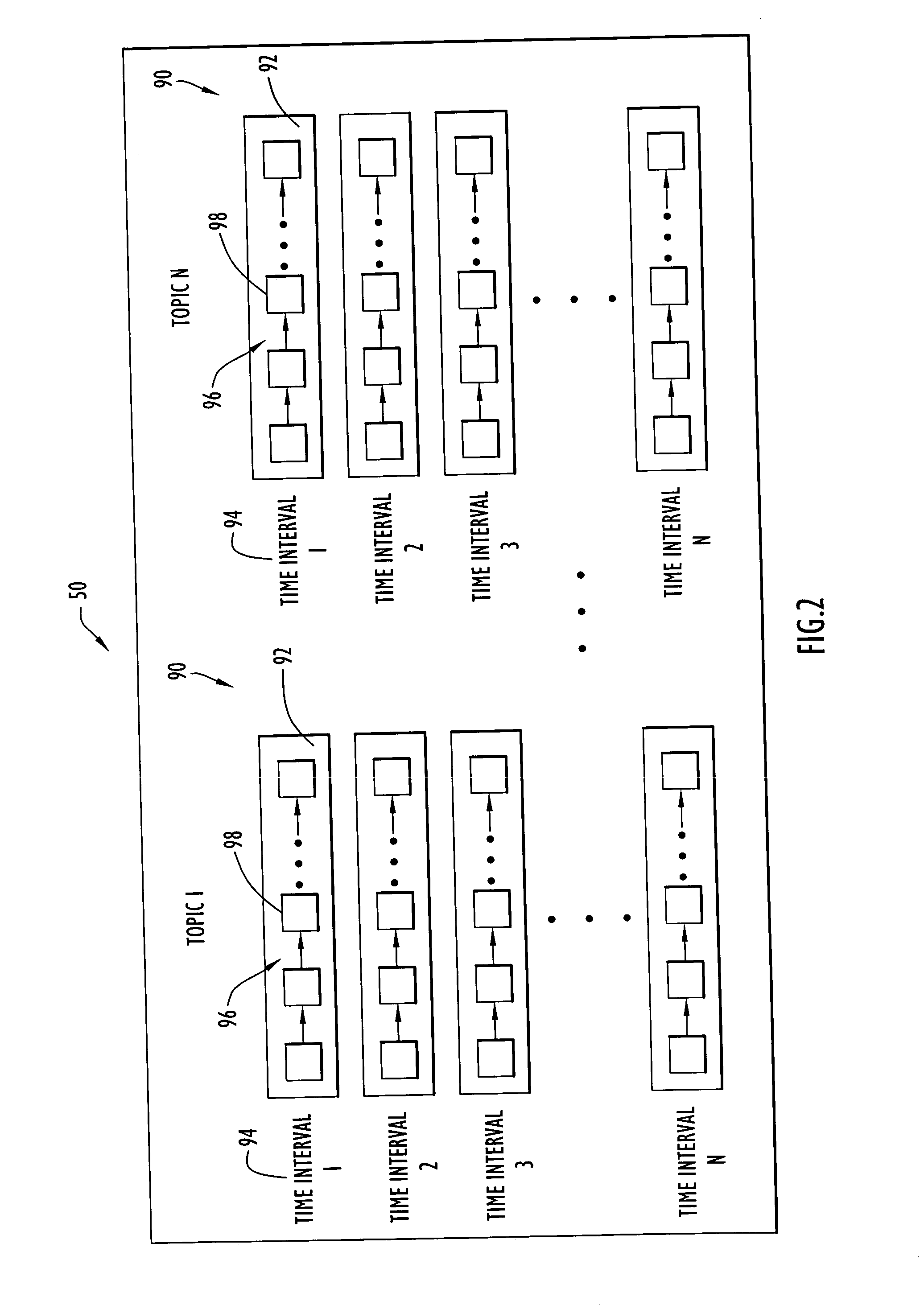

Memory management system and method for storing and retrieving messages

Embodiments of the present invention provide an efficient manner to systematically remove data from a memory that has been transferred or copied to disk storage, thereby facilitating faster querying of data residing in the memory. In particular, memory containing data received from data sources is partitioned into a fixed quantity of buckets each associated with a respective time interval. The buckets represent contiguous intervals of time, where each interval is preferably of the same duration. When data arrives, the data is associated with a timestamp and placed in the appropriate bucket associated with a time interval corresponding to that timestamp. If a timestamp falls outside the range of time intervals associated with the buckets, the data corresponding to that timestamp is placed in an additional bucket. Data within the oldest bucket in memory is periodically removed to provide storage capacity for new incoming information.

Owner:IBM CORP
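
The time-bucket scheme described in the abstract above can be sketched as follows, assuming numeric timestamps, equal-width intervals, and a sliding window that advances by one interval whenever the oldest bucket is dropped. The class `BucketStore` and its methods are hypothetical names.

```python
class BucketStore:
    """Fixed number of contiguous, equal-width time buckets plus one overflow bucket."""

    def __init__(self, start, interval, count):
        self.start = start          # start time of the oldest bucket
        self.interval = interval    # width of every bucket
        self.buckets = [[] for _ in range(count)]
        self.overflow = []          # data whose timestamp is outside the window

    def insert(self, timestamp, item):
        index = int((timestamp - self.start) // self.interval)
        if 0 <= index < len(self.buckets):
            self.buckets[index].append(item)
        else:
            self.overflow.append((timestamp, item))

    def expire_oldest(self):
        """Drop the oldest bucket and open a fresh newest one."""
        dropped = self.buckets.pop(0)
        self.buckets.append([])
        self.start += self.interval
        return dropped


store = BucketStore(start=0, interval=60, count=3)
store.insert(10, "a")
store.insert(70, "b")
store.insert(500, "late")    # outside the window: goes to the overflow bucket
store.insert(-5, "early")    # also outside the window
dropped = store.expire_oldest()
store.insert(200, "c")       # now falls in the newest bucket
```

Removing a whole bucket at once avoids scanning individual records for expiry, which fits the abstract's goal of systematic removal and faster in-memory queries.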

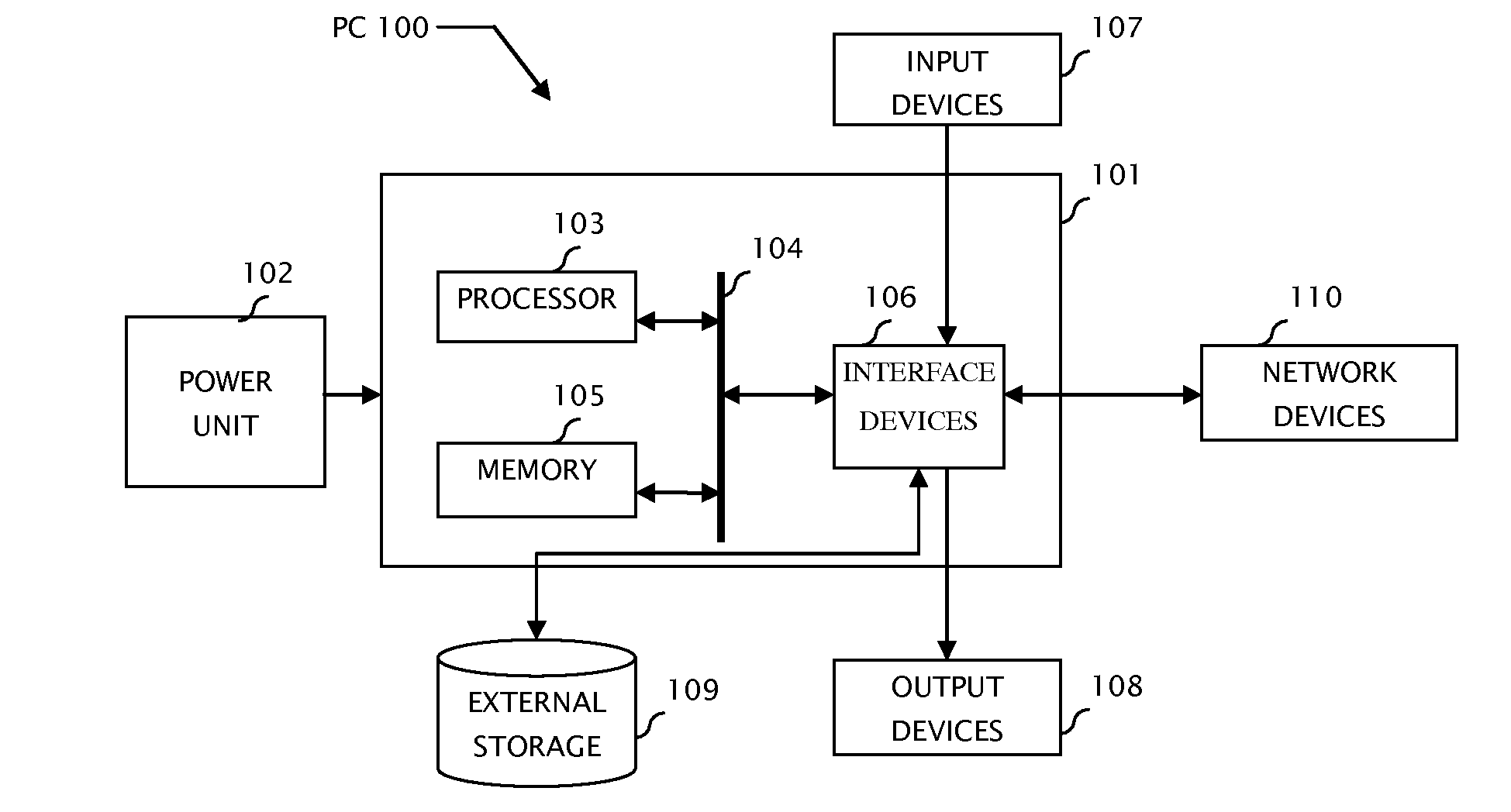

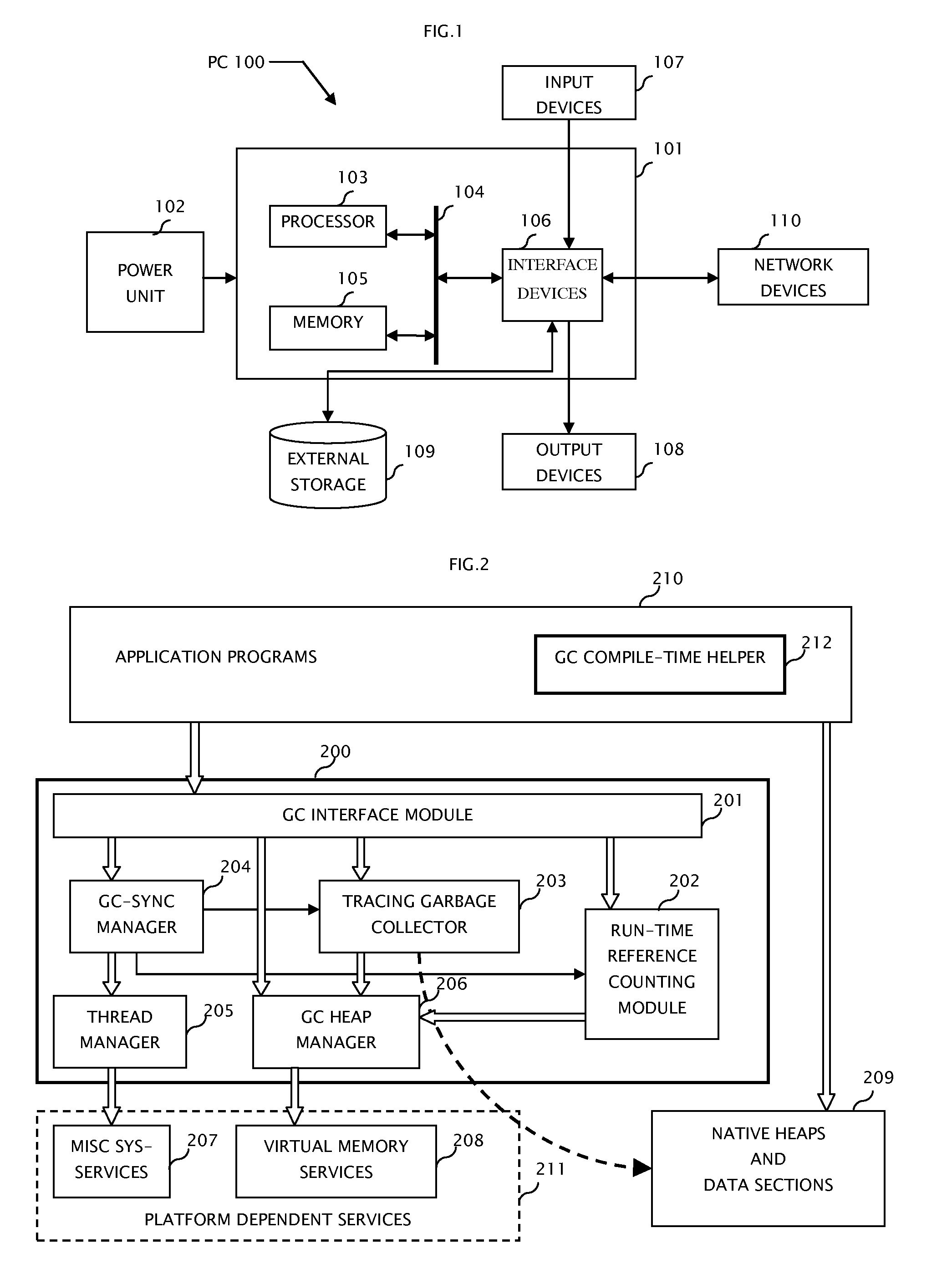

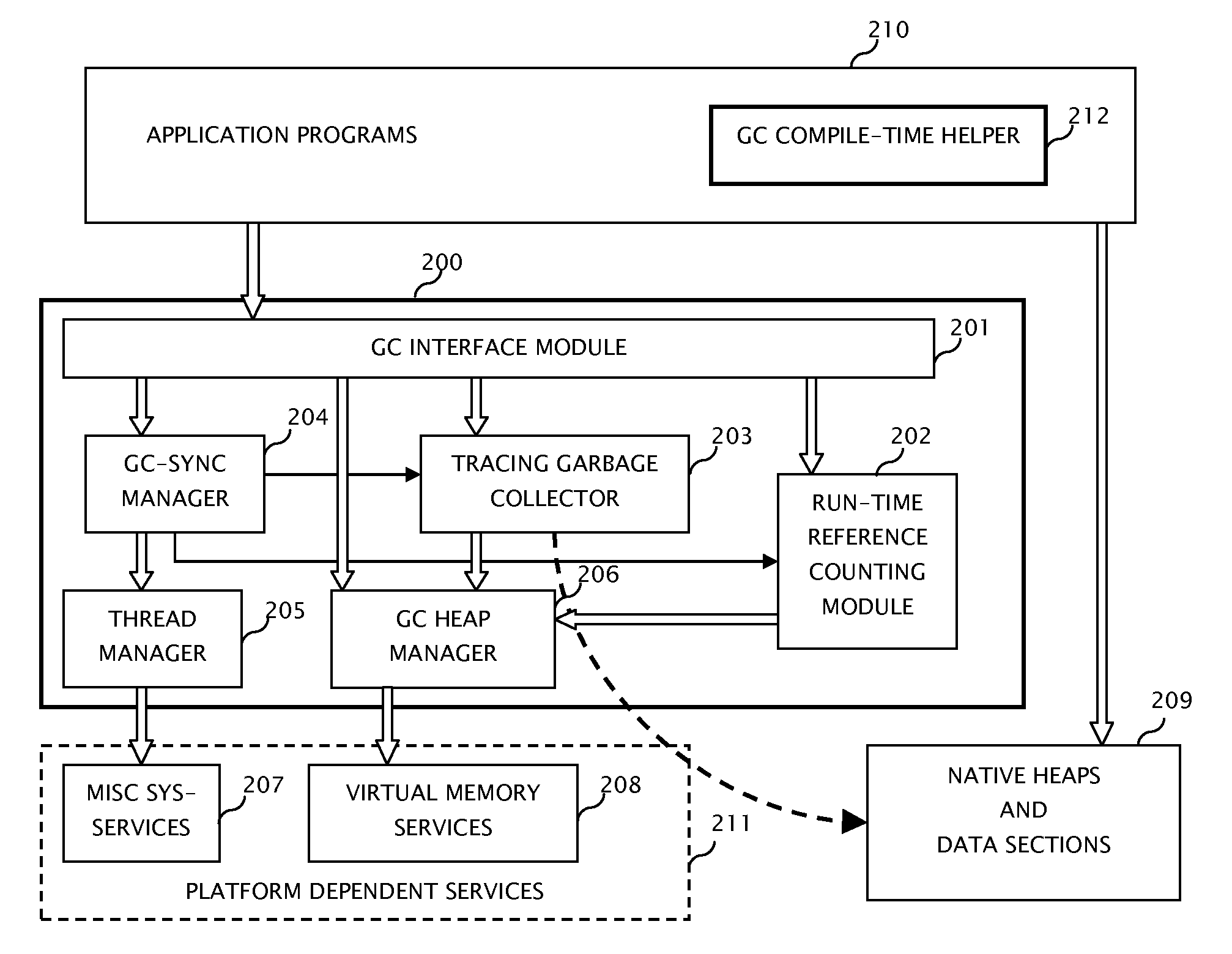

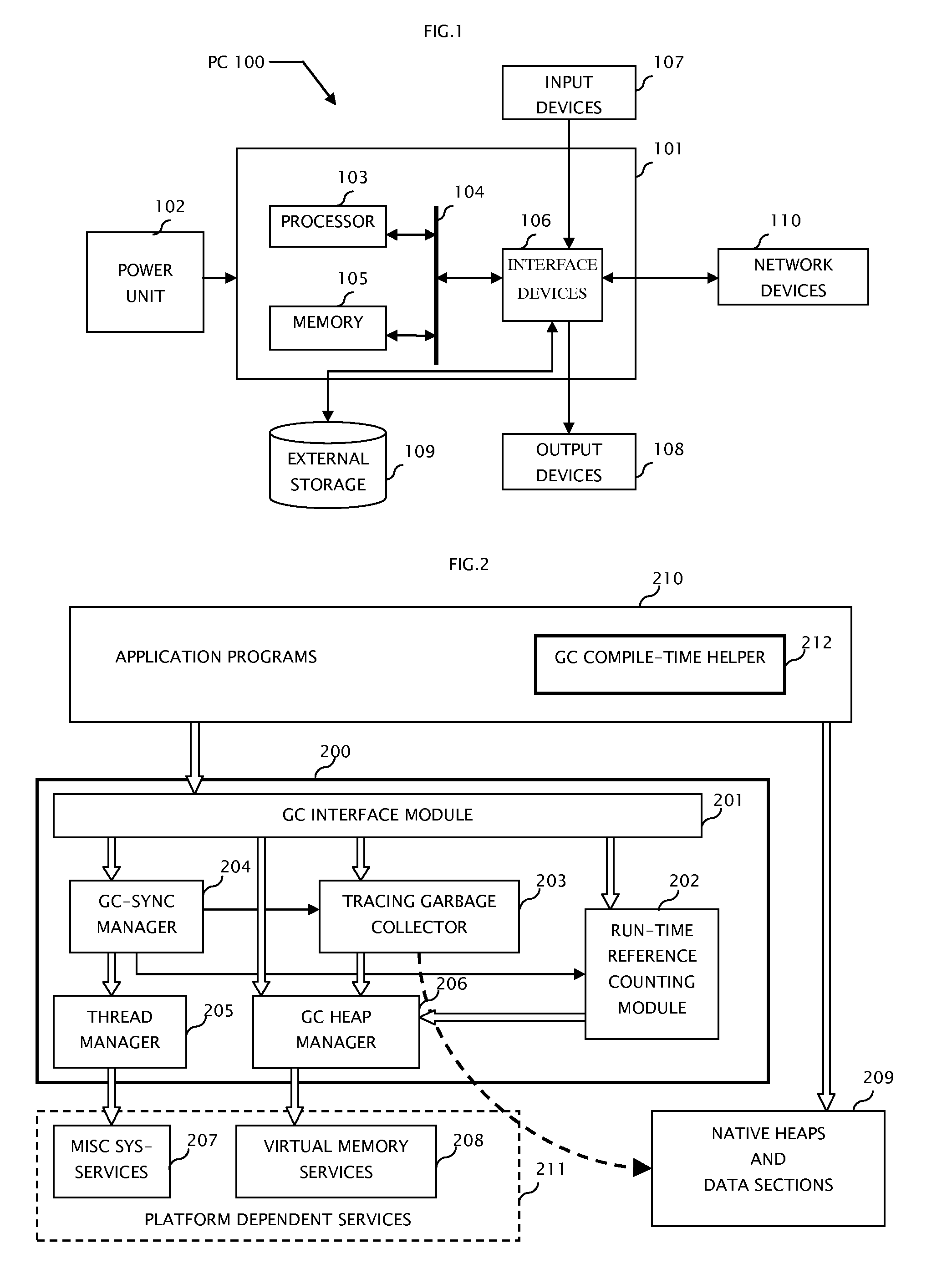

System and method for computer automatic memory management

Active | US20070203960A1 | Ensure correct execution | Efficient memory usage | Data processing applications | Special data processing applications | Immediate release | Waste collection

The present invention is a method and system of automatic memory management (garbage collection). An application automatically marks up objects referenced from the “extended root set”. At garbage collection, the system starts traversal from the marked-up objects. It can conduct accurate garbage collection in a non-GC language, such as C++. It provides a deterministic reclamation feature: an object and its resources are released immediately when the last reference is dropped. Application code automatically becomes entirely GC-safe and interruptible. A concurrent collector can be pause-less, with predictable worst-case latency at the microsecond level. Memory usage is efficient and the cost of reference counting is significantly reduced.

Owner:GUO MINGNAN

Automatic memory management (AMM)

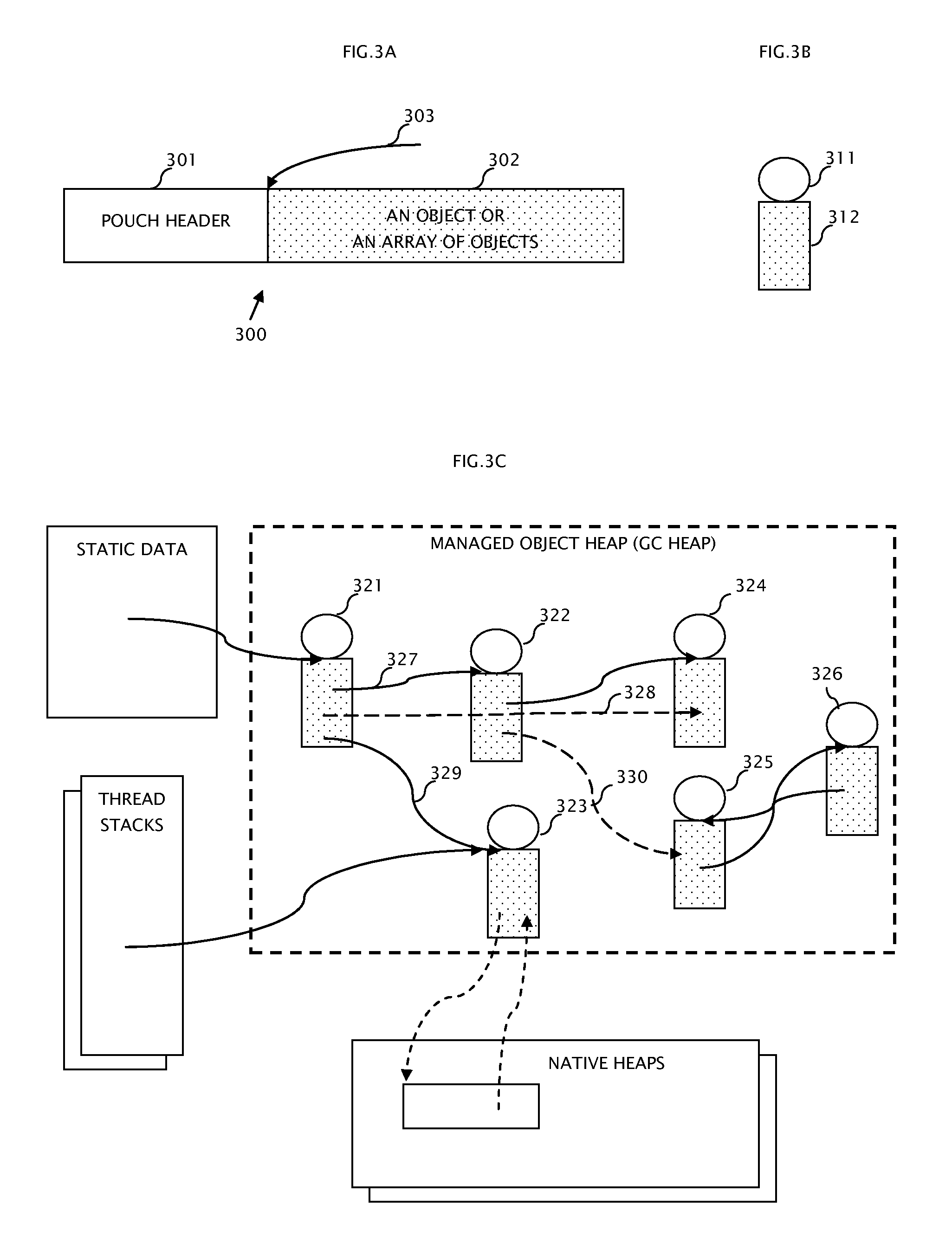

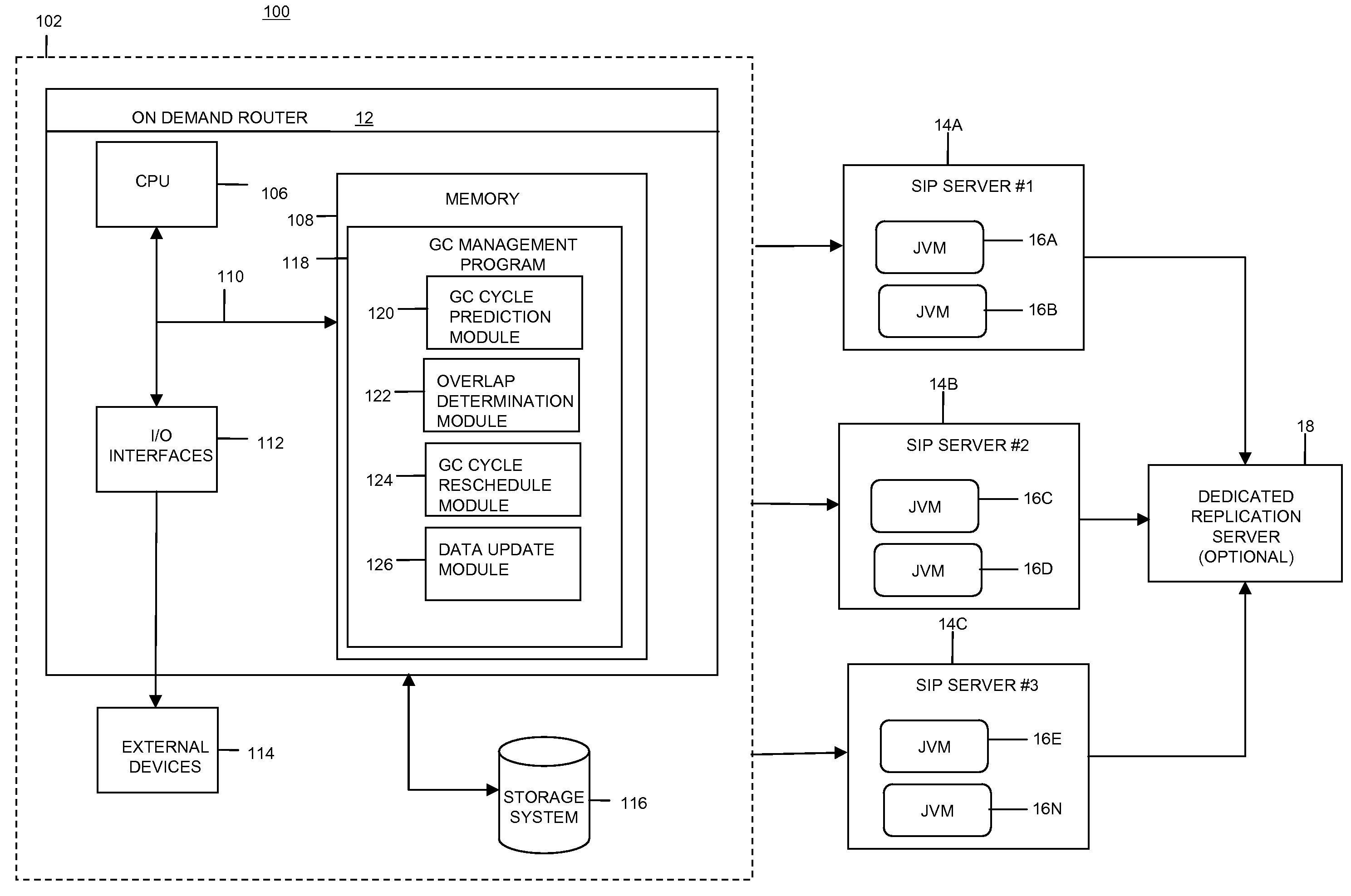

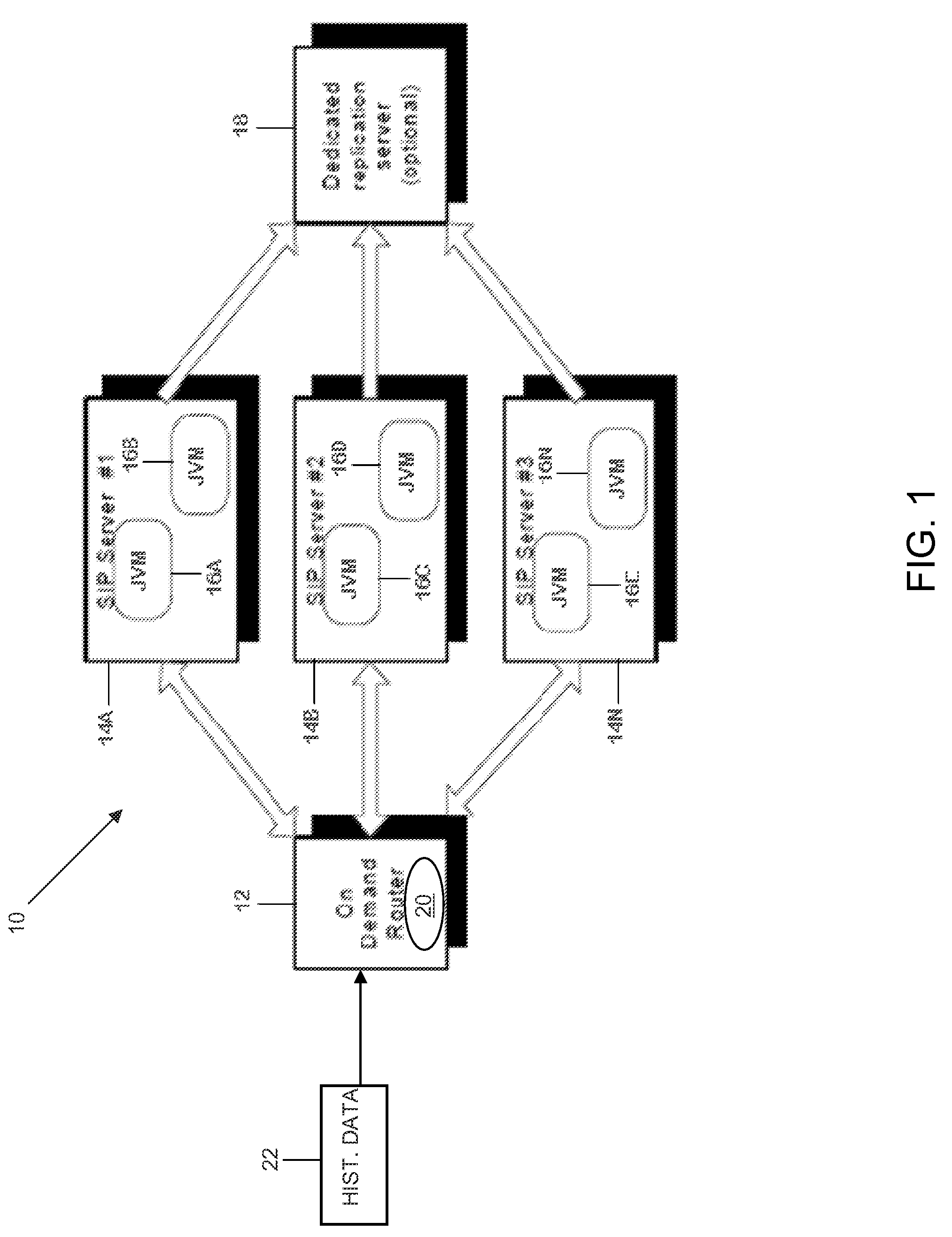

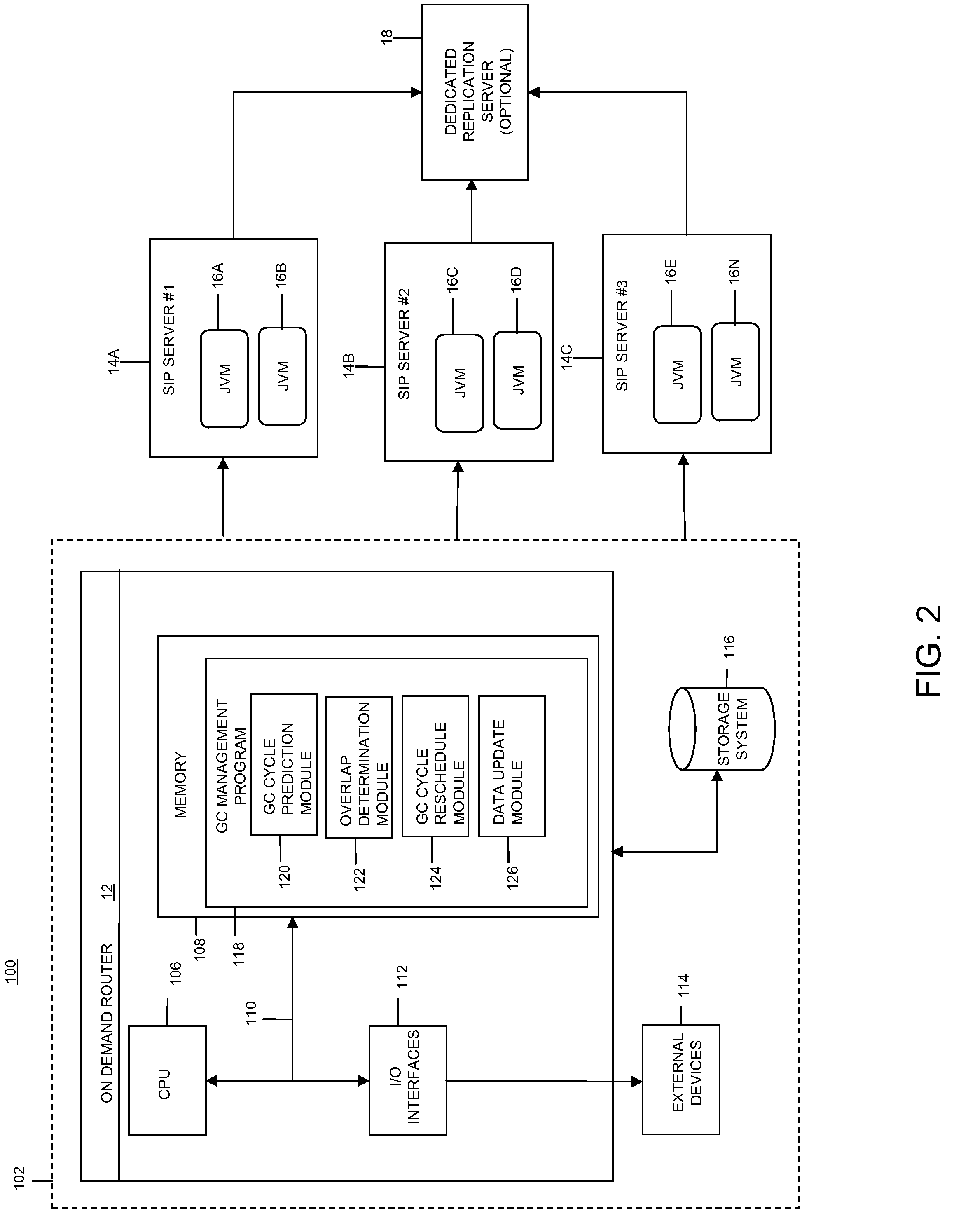

Inactive | US8051266B2 | Reduce and eliminate any overlap | Maintaining latency | Special data processing applications | Memory systems | Computer science | Virtual machine

Owner:INT BUSINESS MASCH CORP

Memory management system and method providing increased memory access security

Owner:GLOBALFOUNDRIES INC

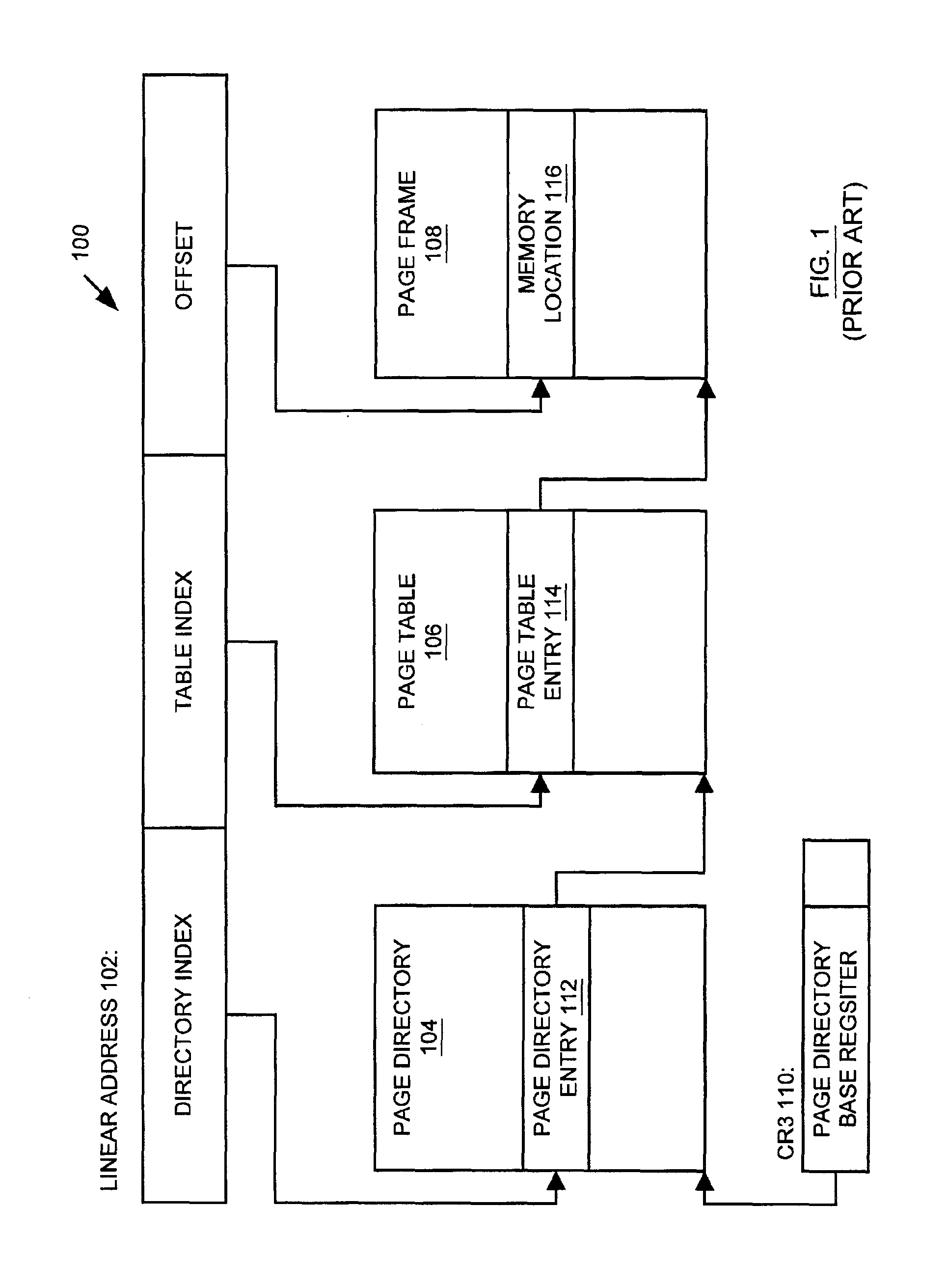

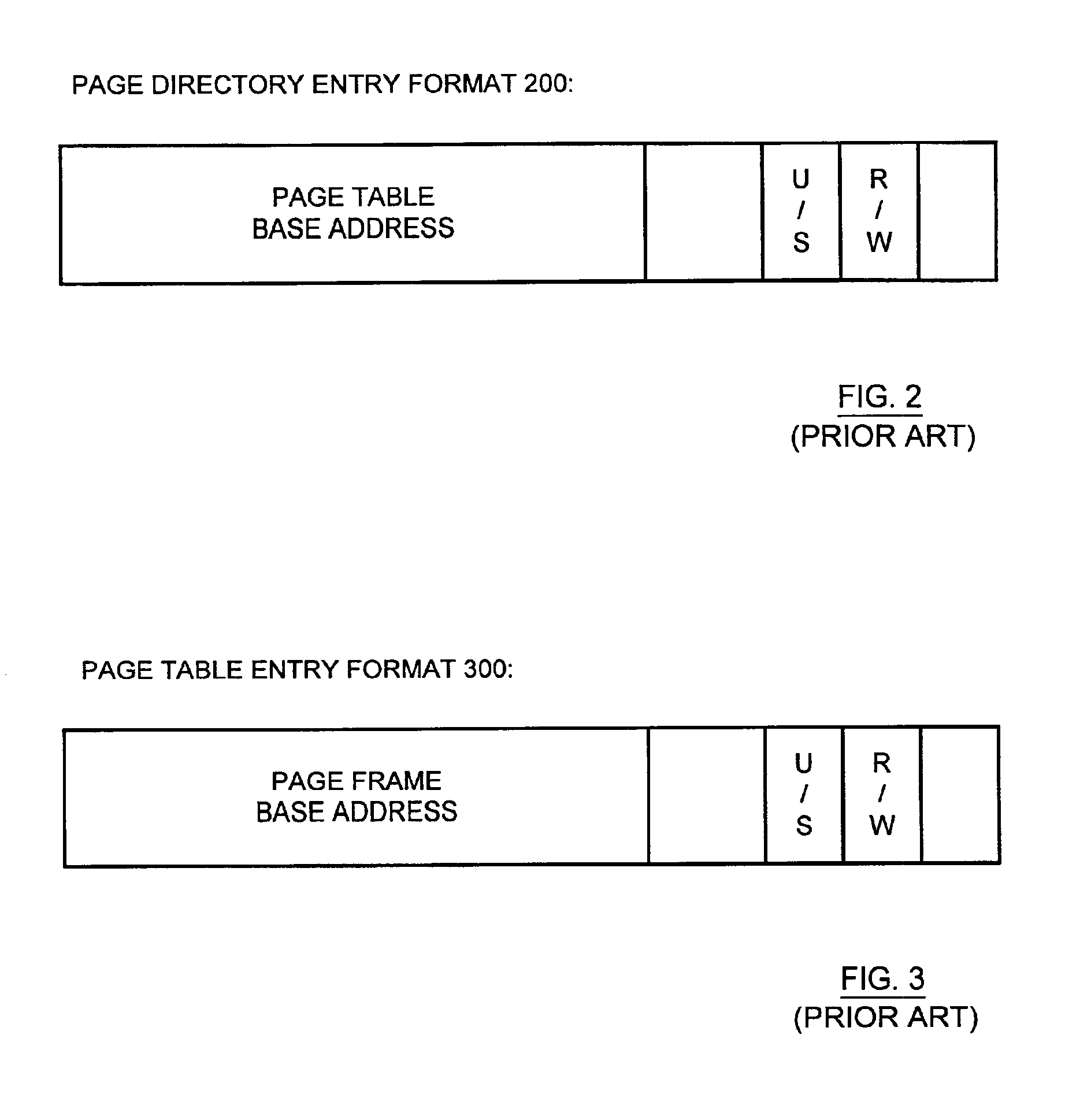

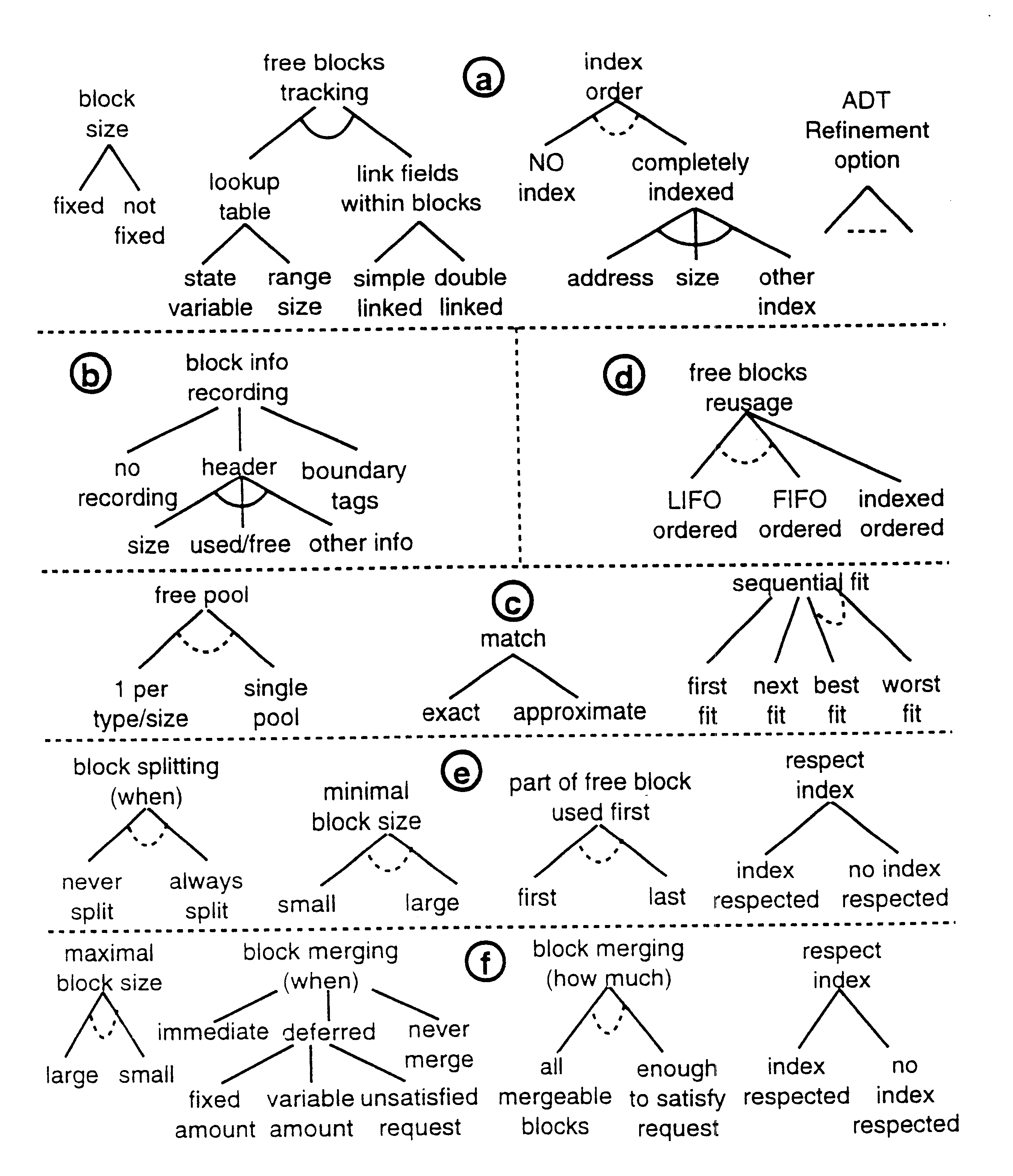

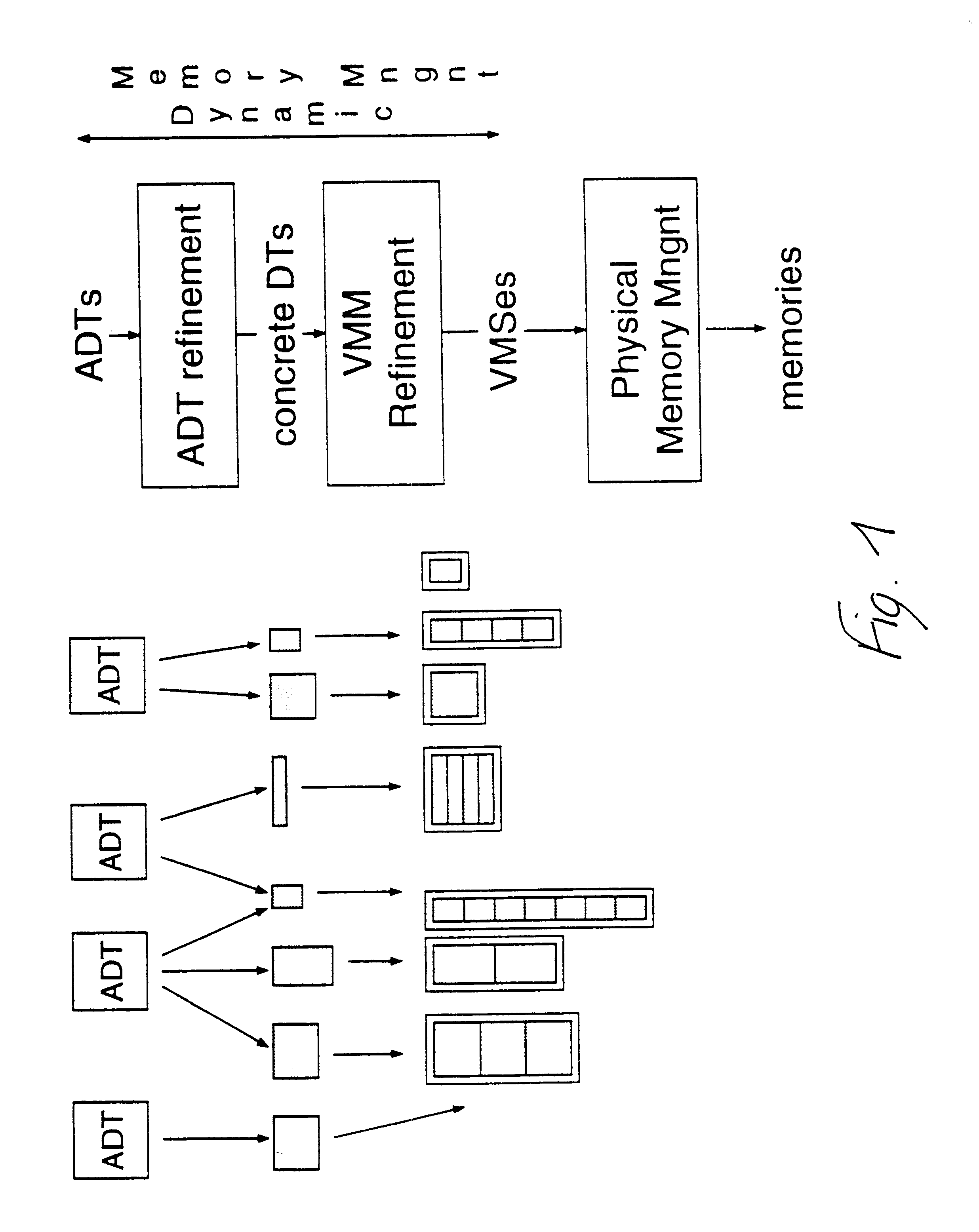

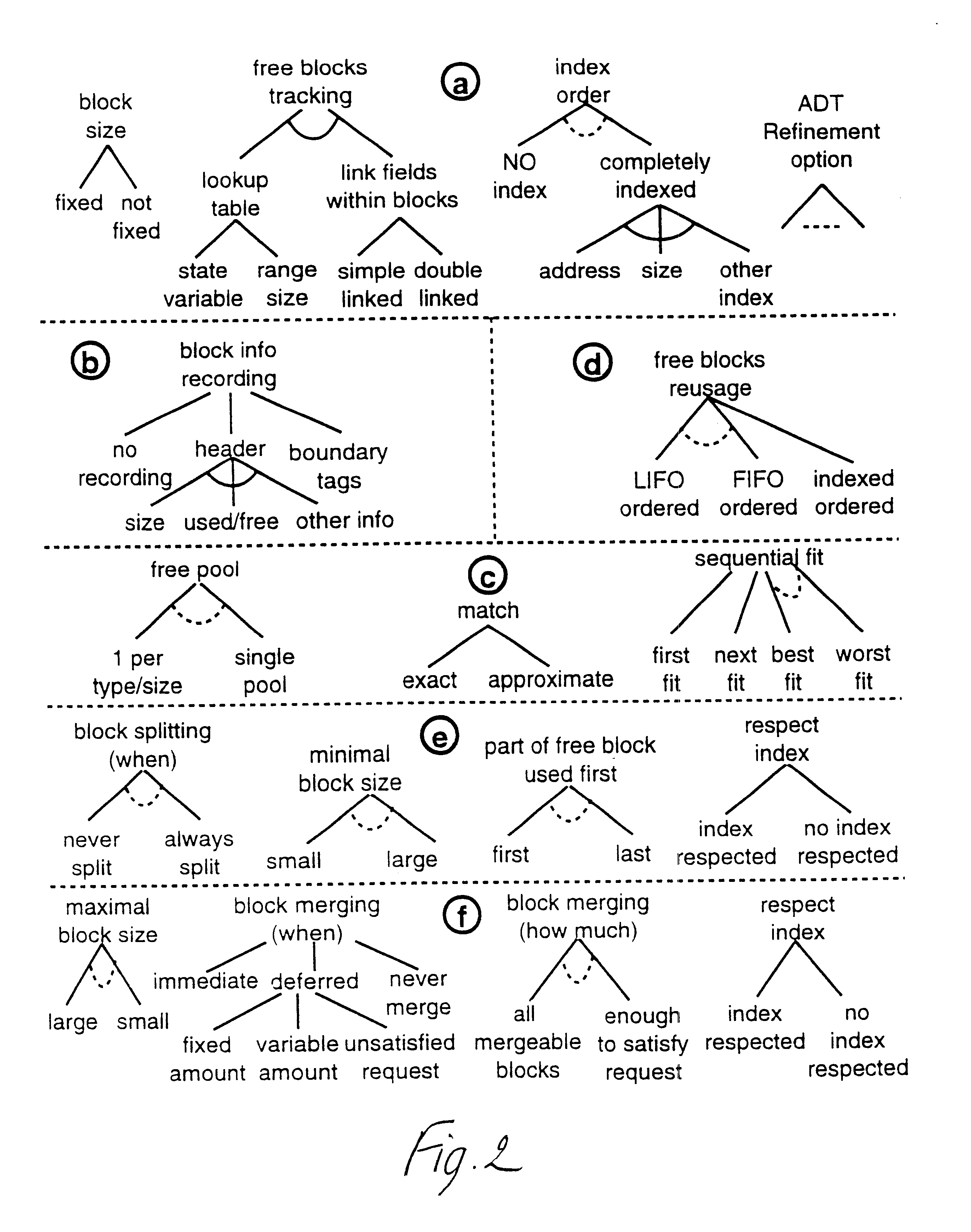

Optimized virtual memory management for dynamic data types

Inactive | US6578129B1 | Memory addressing/allocation/relocation | CAD circuit design | Effective solution | Parallel computing

The present invention proposes effective solutions for the design of Virtual Memory Management for applications with dynamic data types in an embedded (HW or SW) processor context. A structured search space for VMM mechanisms with orthogonal decision trees is presented. Based on said representation a systematic power exploration methodology is proposed that takes into account characteristics of the applications to prune the search space and guide the choices of a VMM for data dominated applications. A parameterizable model, called Flexible Pools, is proposed. This model limits the exploration of the Virtual Memory organization considerably without limiting the optimization possibilities.

Owner:INTERUNIVERSITAIR MICRO ELECTRONICS CENT (IMEC VZW)

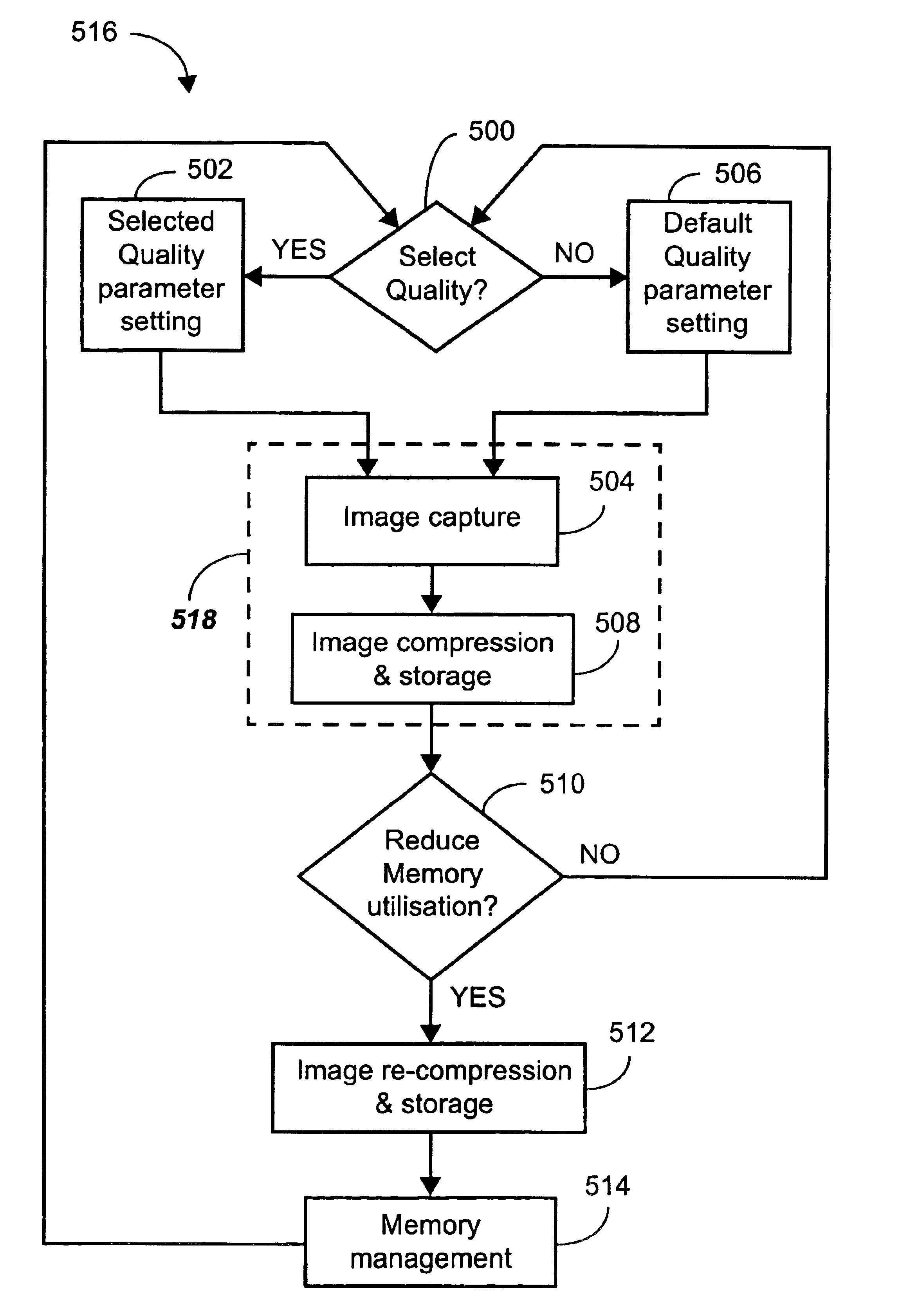

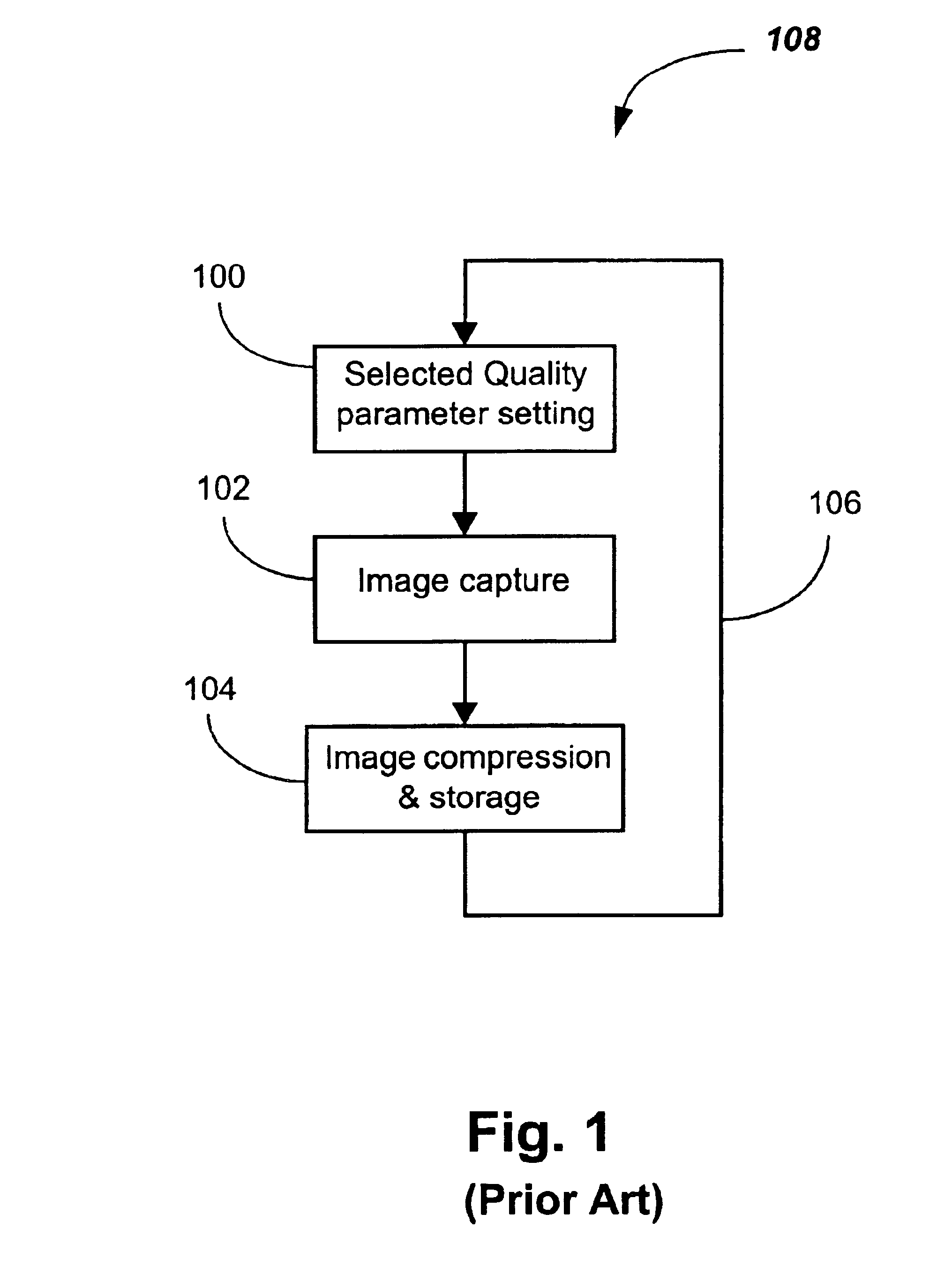

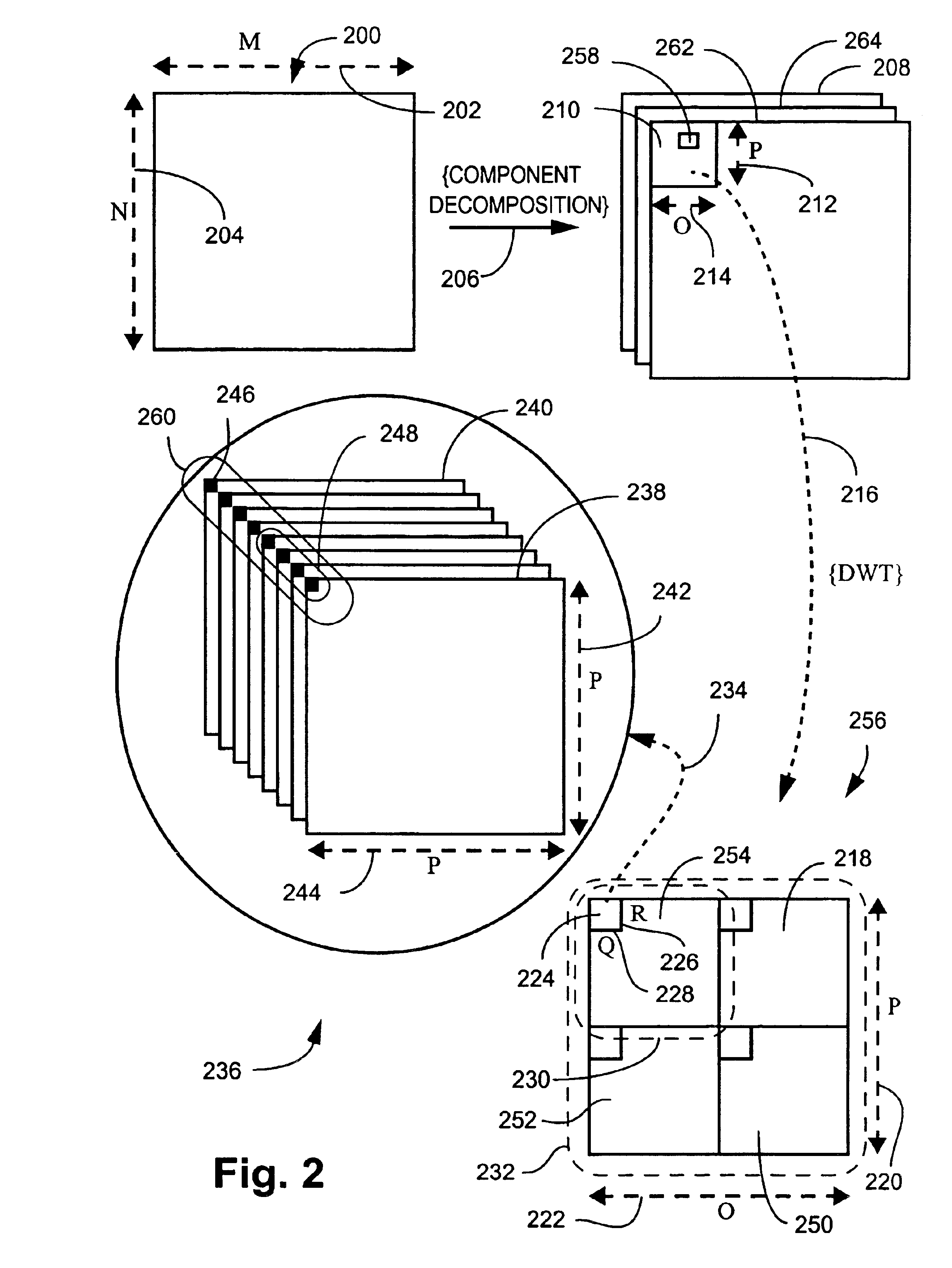

Memory management of compressed image data

Inactive | US6999626B2 | Recovering memory capacity | Digital data processing details | Picture reproducers using cathode ray tubes | Reduction factor | Computer science

A method is disclosed for recovering image memory capacity, in relation to an image which has been encoded using a linear transform according to a layer progressive mode into L layers, L being an integer value greater than unity, the L layers being stored in an image memory having a limited capacity. The method comprises defining a Quality Reduction Factor (700), being a positive integer value, identifying at least one of the L layers corresponding to the Quality Reduction Factor, and discarding (702) said at least one of the L layers in progressive order in accordance with the Quality Reduction Factor, thereby recovering said memory capacity.

Owner:CANON KK
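
The layer-discarding idea in the abstract above can be illustrated with a short sketch. It rests on two assumptions that the abstract does not state: the Quality Reduction Factor is read as the number of least significant layers to drop, and at least one layer is always kept. The function name `discard_layers` is hypothetical.

```python
def discard_layers(layers, quality_reduction_factor):
    """Split layers into (kept, discarded).

    layers is ordered from the most significant layer (index 0) to the
    least significant refinement layer.
    """
    if quality_reduction_factor < 1:
        raise ValueError("quality reduction factor must be a positive integer")
    # Assumption: never discard the most significant layer.
    keep = max(1, len(layers) - quality_reduction_factor)
    return layers[:keep], layers[keep:]


# Four encoded layers of increasing size (placeholder bytes, not real image data).
layers = [bytes(100), bytes(200), bytes(400), bytes(800)]
kept, dropped = discard_layers(layers, quality_reduction_factor=2)
recovered = sum(len(layer) for layer in dropped)
```

Because the image is encoded in a layer progressive mode, the remaining layers still decode to a complete image at lower quality, and `recovered` bytes of image memory become available.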

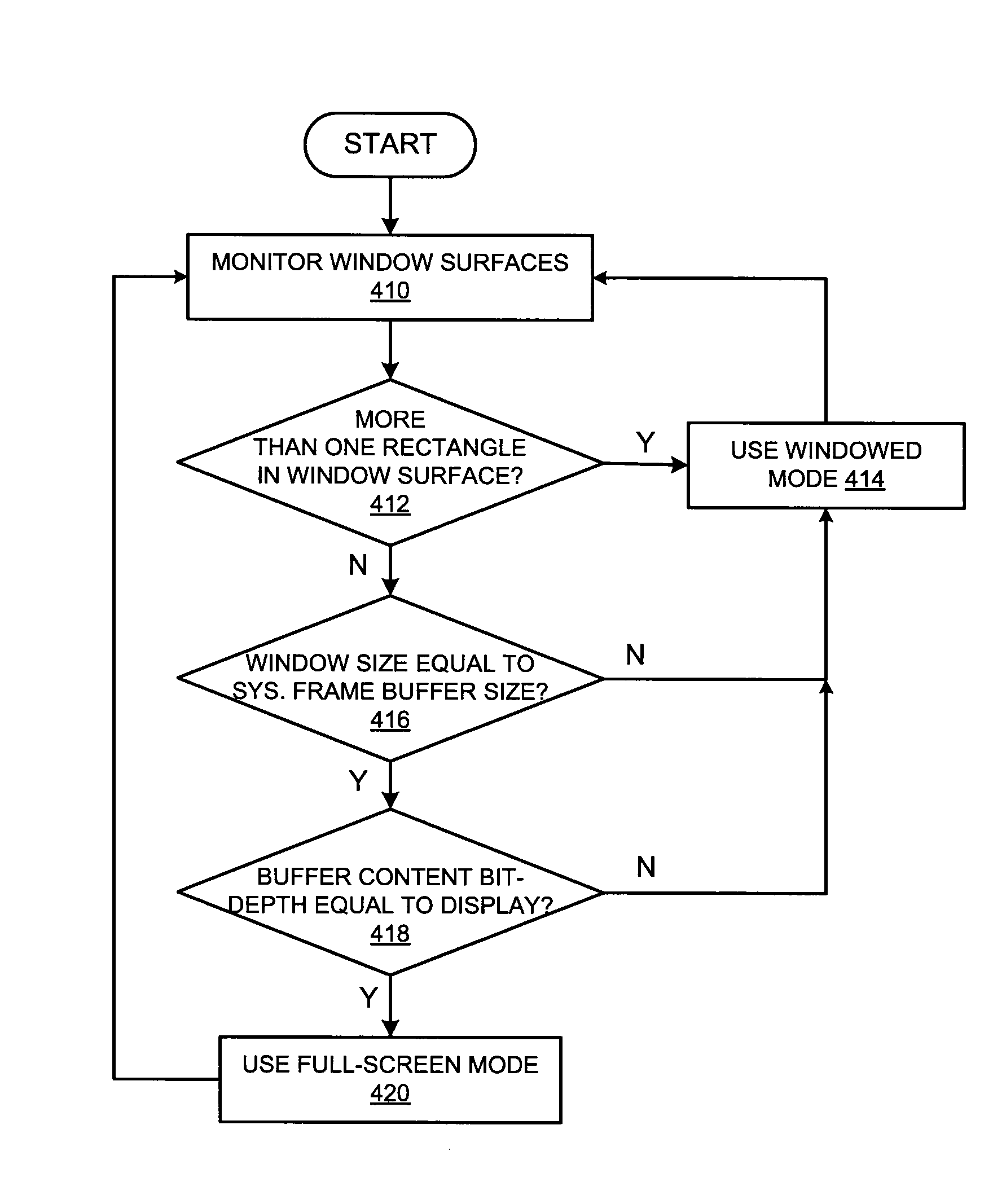

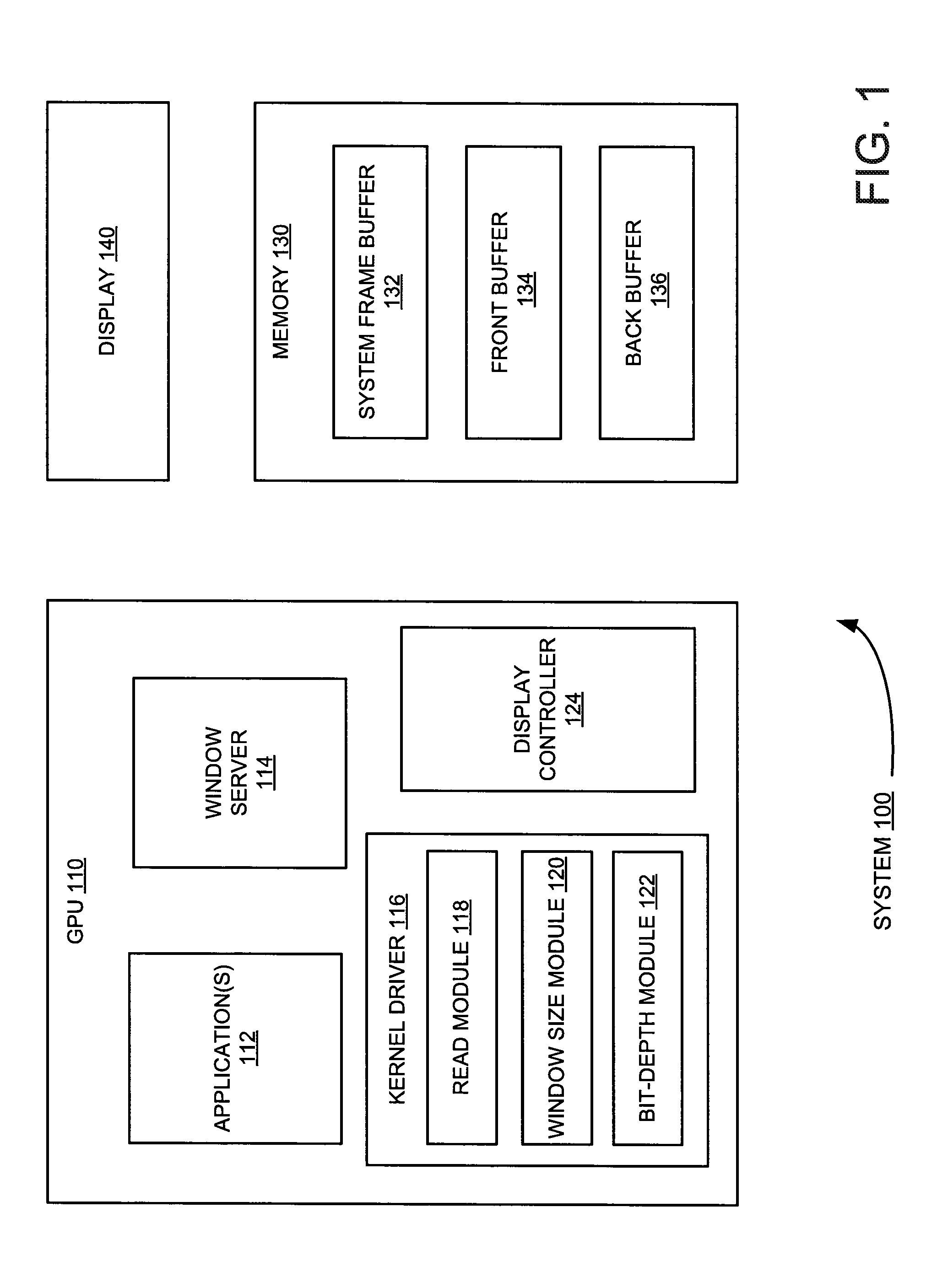

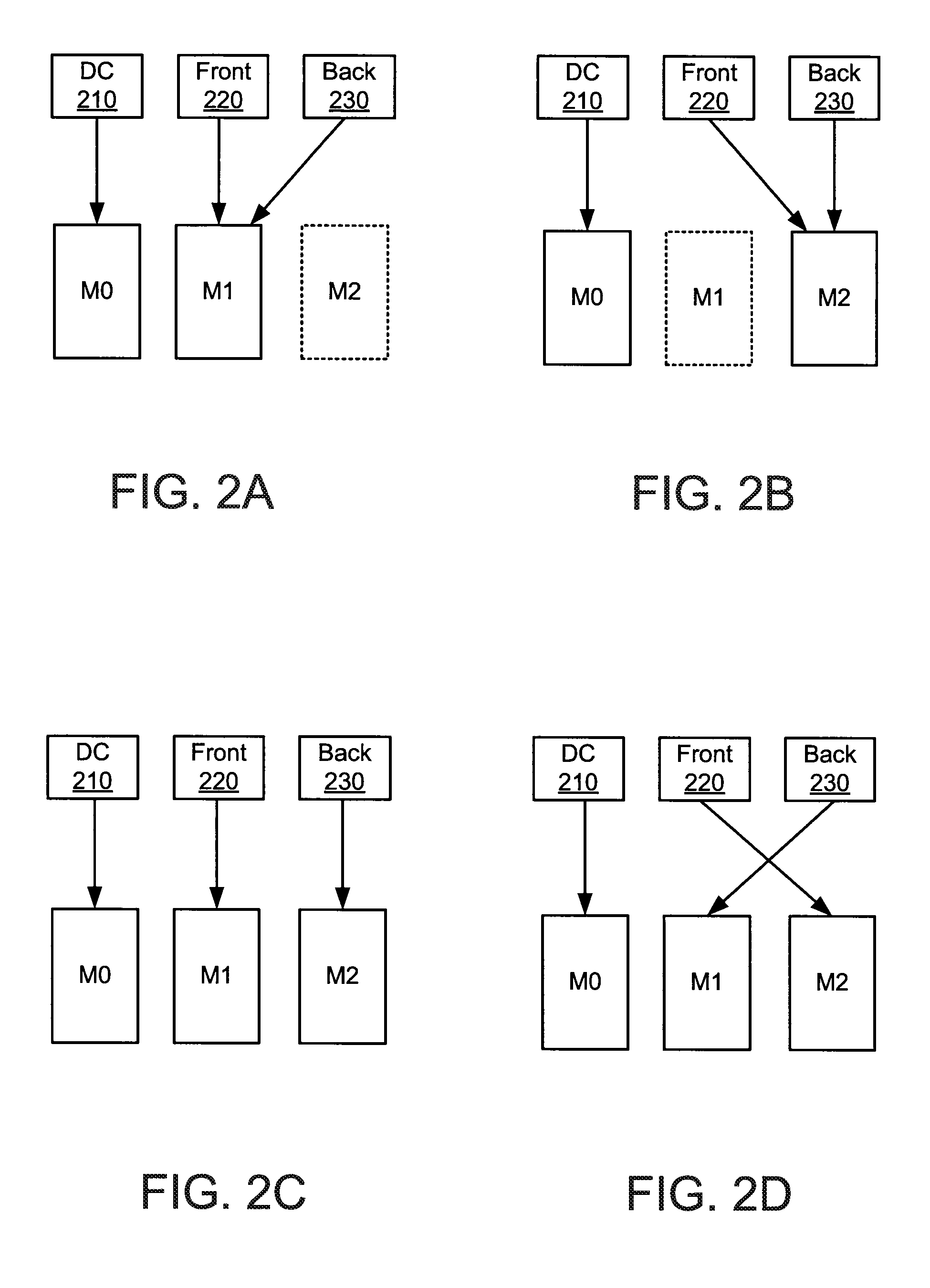

Memory management based on automatic full-screen detection

Active | US20100289806A1 | Cathode-ray tube indicators | Electric digital data processing | Graphics | Display device

A window surface associated with a first application is automatically detected as an exclusive window surface for a display. In response, the system automatically transitions to a full-screen mode in which a graphics processor flushes content to the display. The full-screen mode includes flipping between a front surface buffer and a back surface buffer associated with the first application. It is subsequently detected that the window surface associated with the first application is not an exclusive window surface for the display. In response, the system automatically transitions to a windowed mode in which the graphics processor flushes content to the display. In windowed mode, the system frame buffer is flushed to the display. The transition to windowed mode includes a minimum number of buffer content copy operations between the front surface buffer, the back surface buffer and the system frame buffer.

Owner:APPLE INC

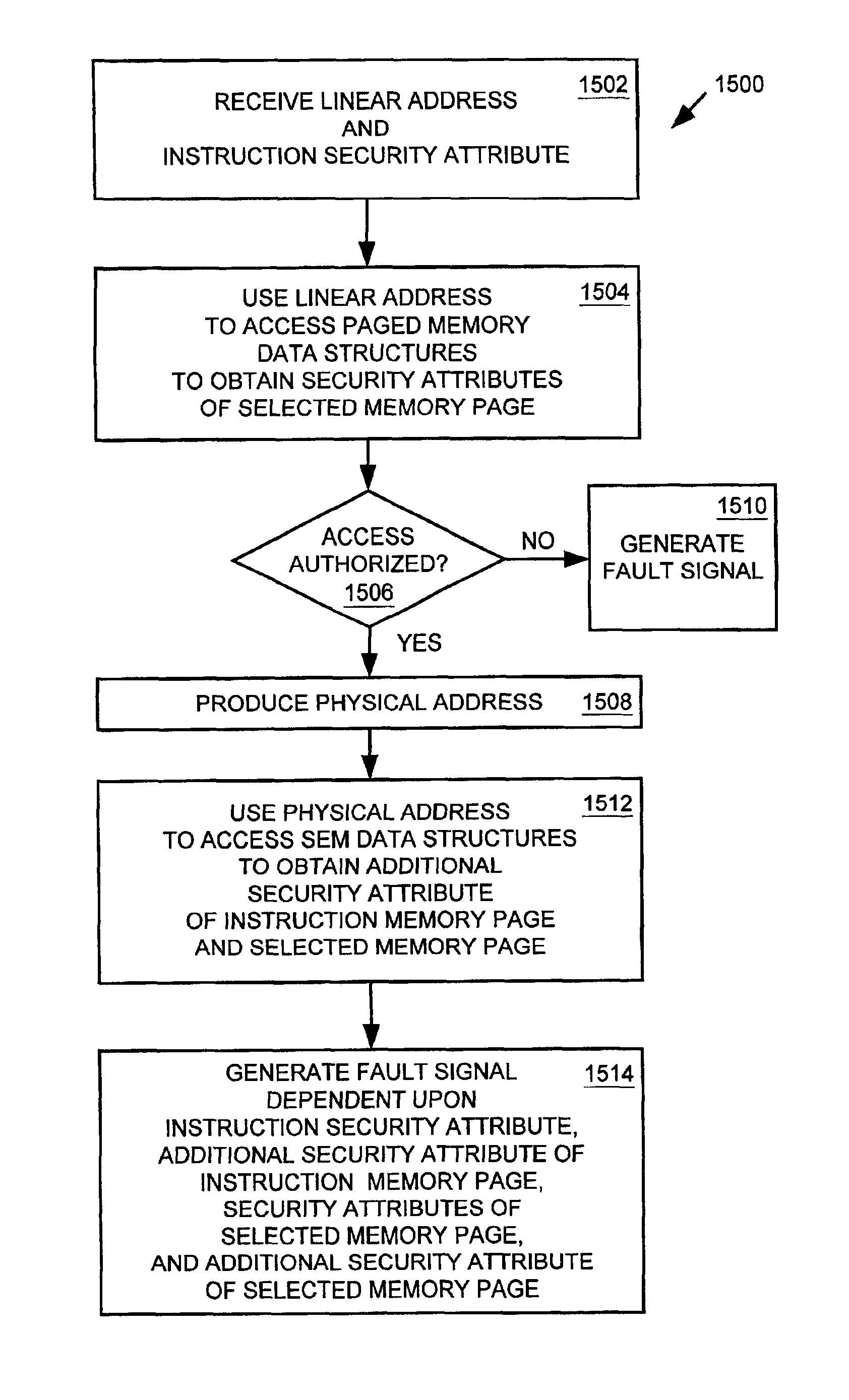

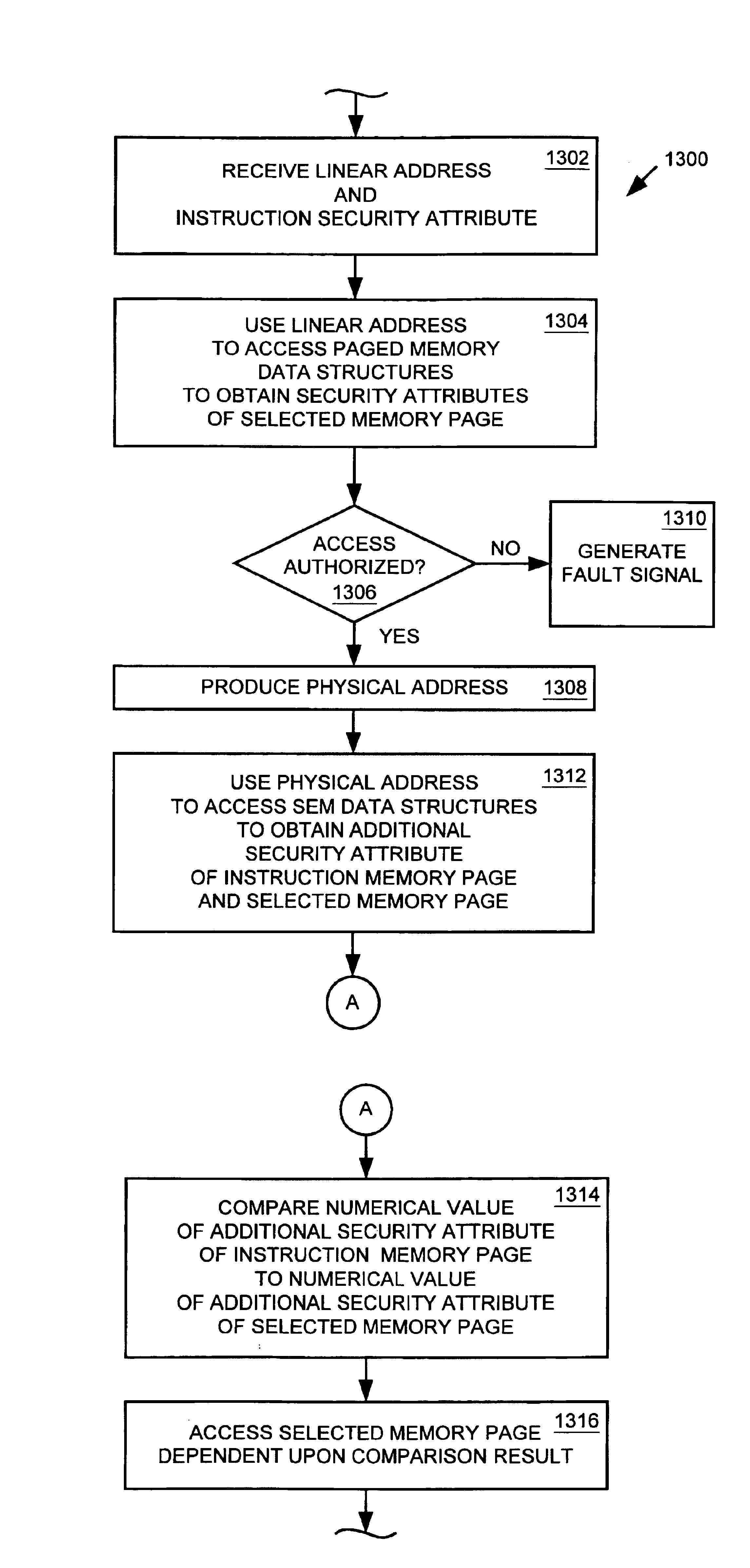

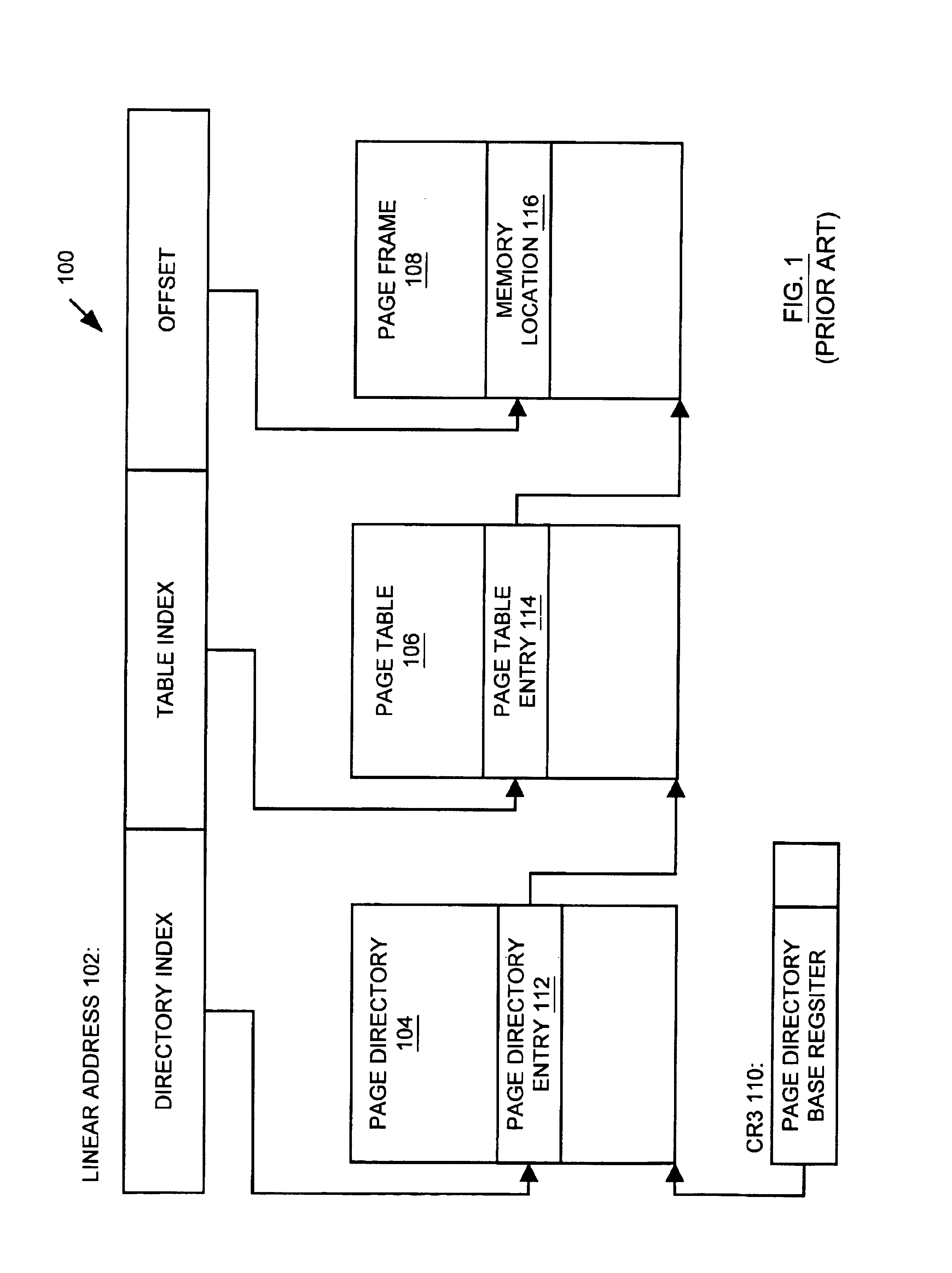

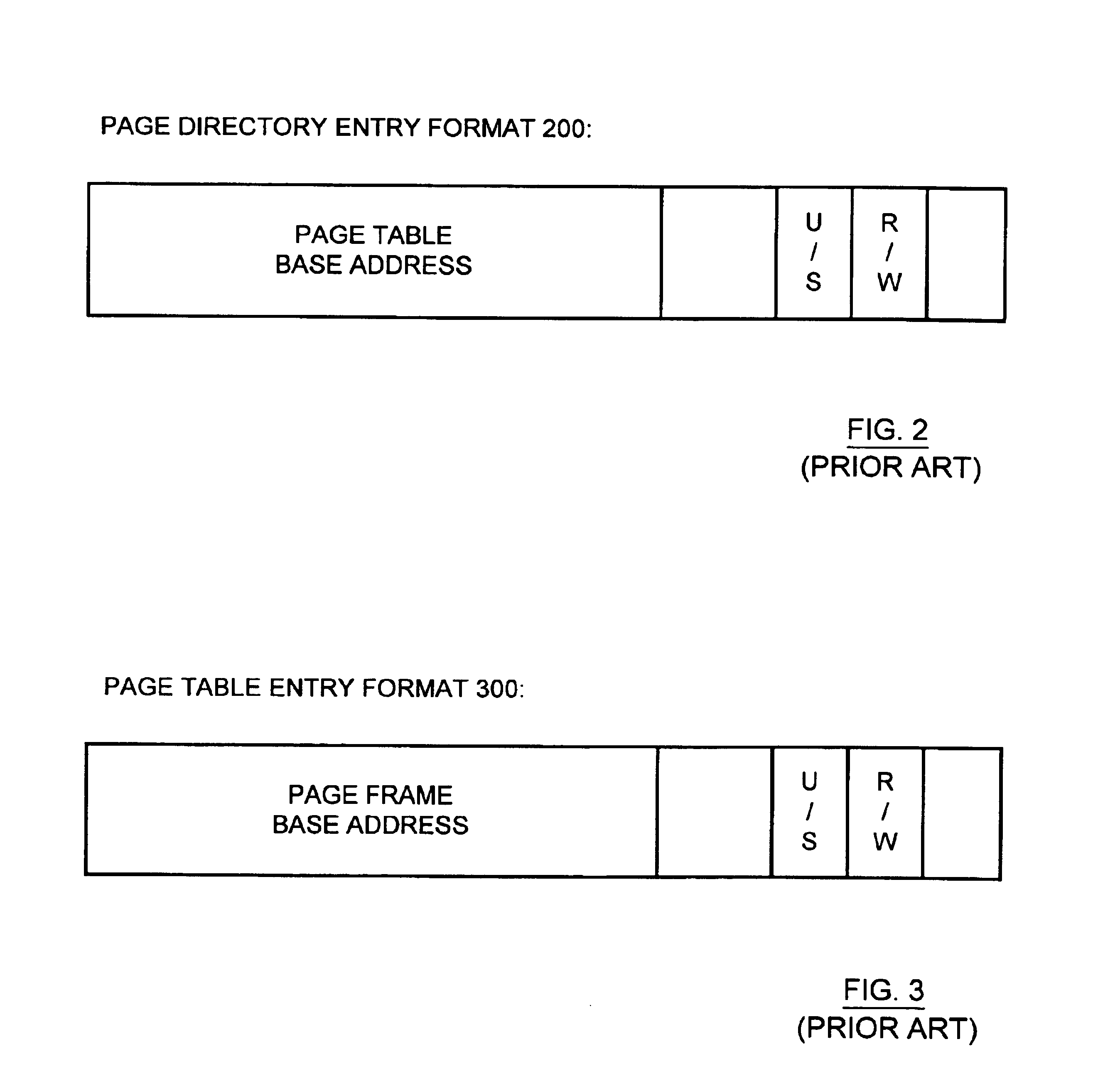

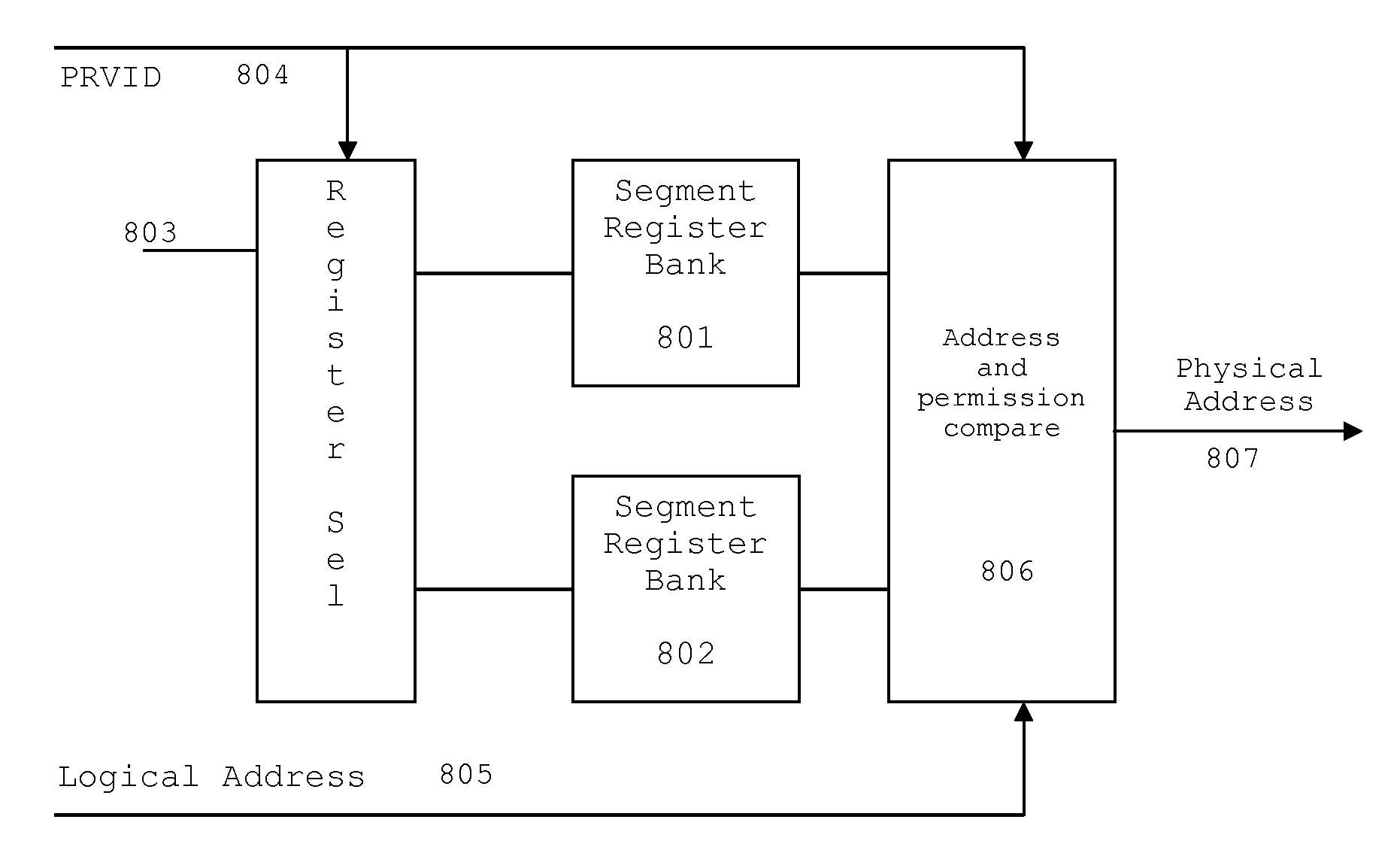

Memory management system and method for providing physical address based memory access security

Inactive | US6823433B1 | Memory addressing/allocation/relocation | Unauthorized memory use protection | Management unit | Computerized system

A memory management unit (MMU) is disclosed for managing a memory storing data arranged within a plurality of memory pages. The MMU includes a security check unit (SCU) receiving a physical address generated during execution of a current instruction. The physical address resides within a selected memory page. The SCU uses the physical address to access one or more security attribute data structures located in the memory to obtain a security attribute of the selected memory page, compares a numerical value conveyed by a security attribute of the current instruction to a numerical value conveyed by the security attribute of the selected memory page, and produces an output signal dependent upon a result of the comparison. The MMU accesses the selected memory page dependent upon the output signal. The security attribute of the selected memory page may include a security context identification (SCID) value indicating a security context level of the selected memory page. The security attribute of the current instruction may include an SCID value indicating a security context level of a memory page containing the current instruction. A central processing unit (CPU) is described including an execution unit and the MMU. A computer system is described including the memory, the CPU, and the MMU. A method is described for providing access security for a memory used to store data arranged within a plurality of memory pages. The method may be embodied within the MMU.

Owner:GLOBALFOUNDRIES US INC +1

System and method for computer automatic memory management

Active | US7584232B2 | Eliminates all suspension | Ensure correct execution | Data processing applications | Special data processing applications | Immediate release | Waste collection

The present invention is a method and system of automatic memory management (garbage collection). An application automatically marks up objects referenced from the “extended root set”. At garbage collection, the system starts traversal from the marked-up objects. It can conduct accurate garbage collection in a non-GC language, such as C++. It provides a deterministic reclamation feature: an object and its resources are released immediately when the last reference is dropped. Application code automatically becomes entirely GC-safe and interruptible. A concurrent collector can be pause-less, with predictable worst-case latency at the microsecond level. Memory usage is efficient and the cost of reference counting is significantly reduced.

Owner:GUO MINGNAN

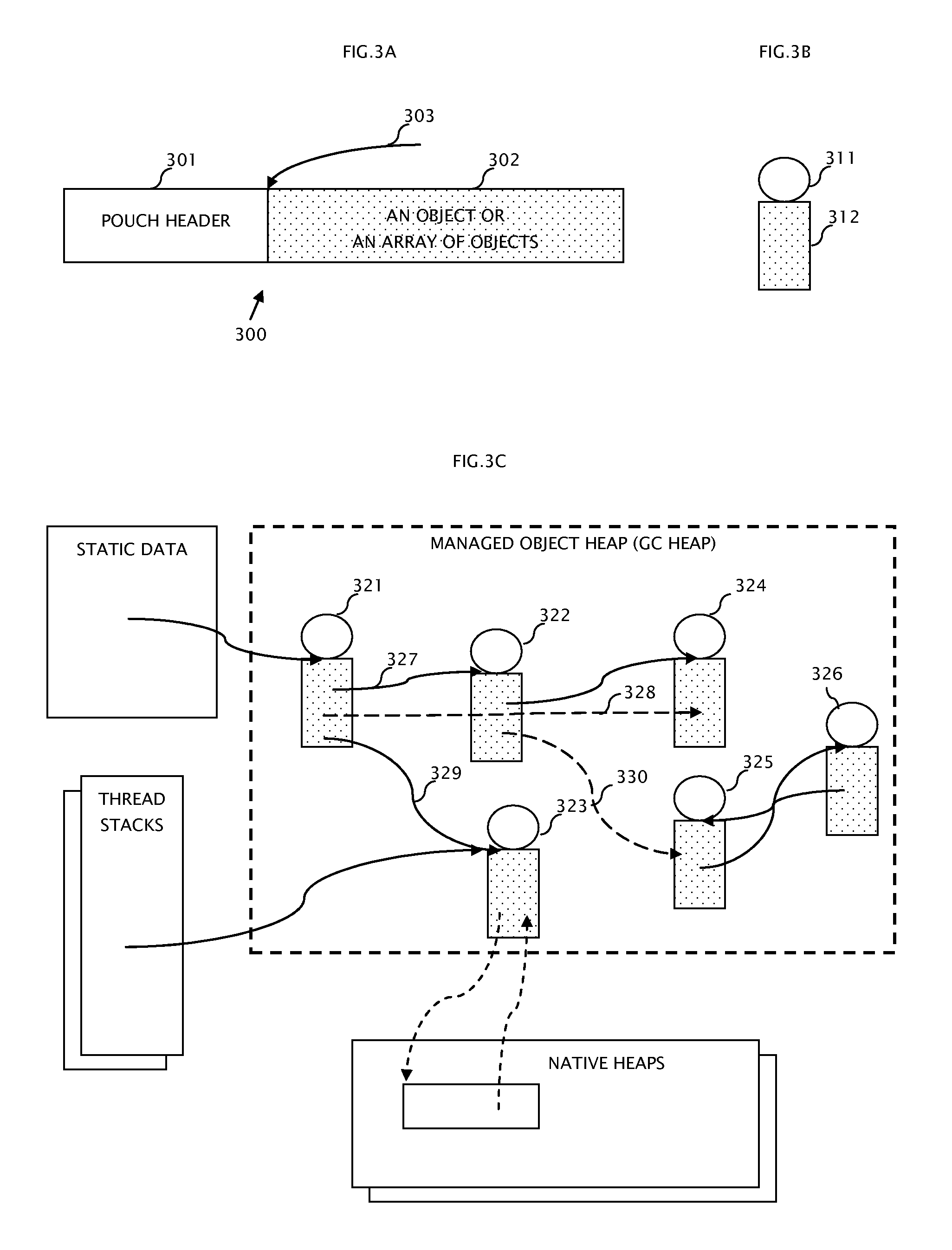

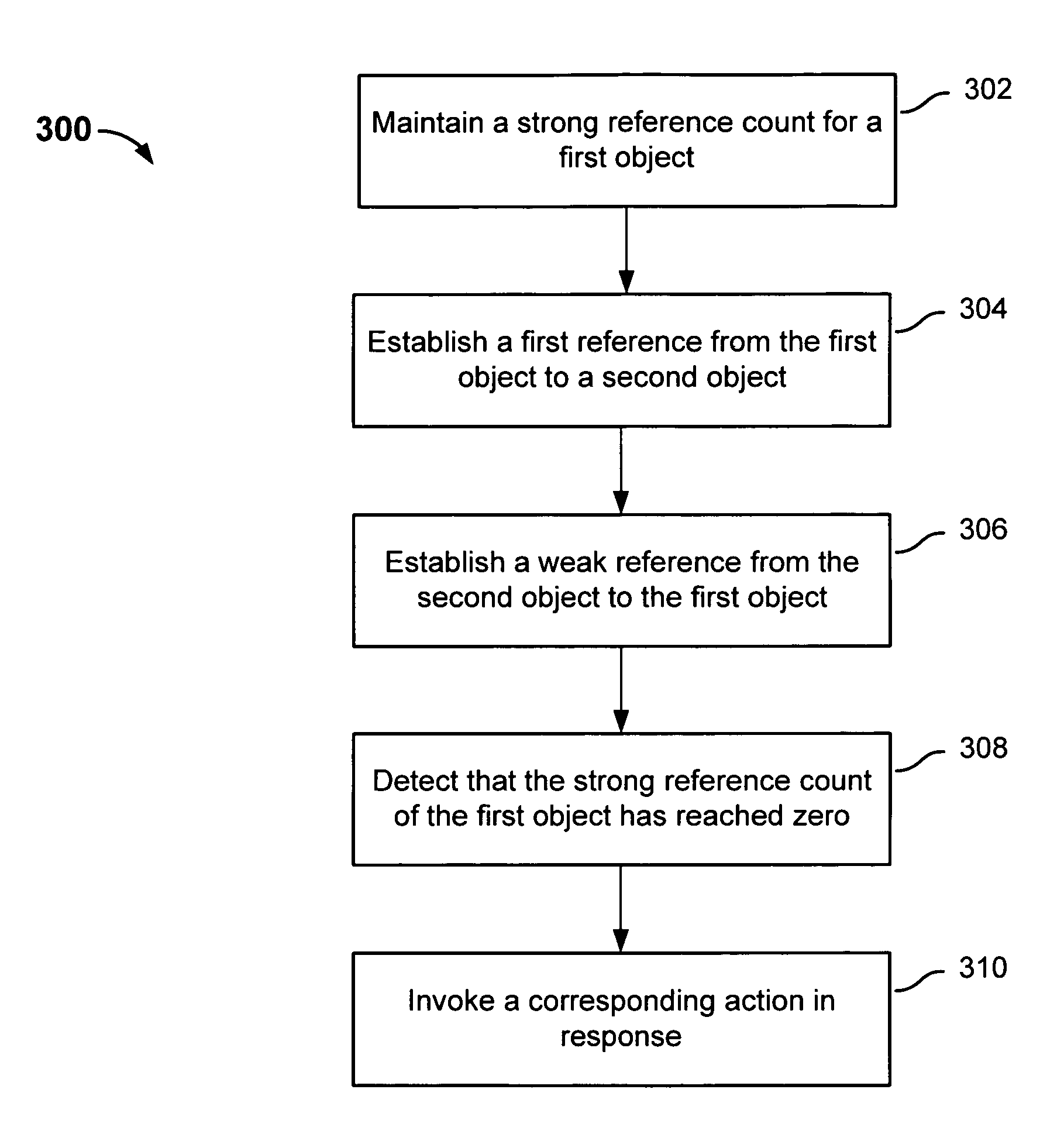

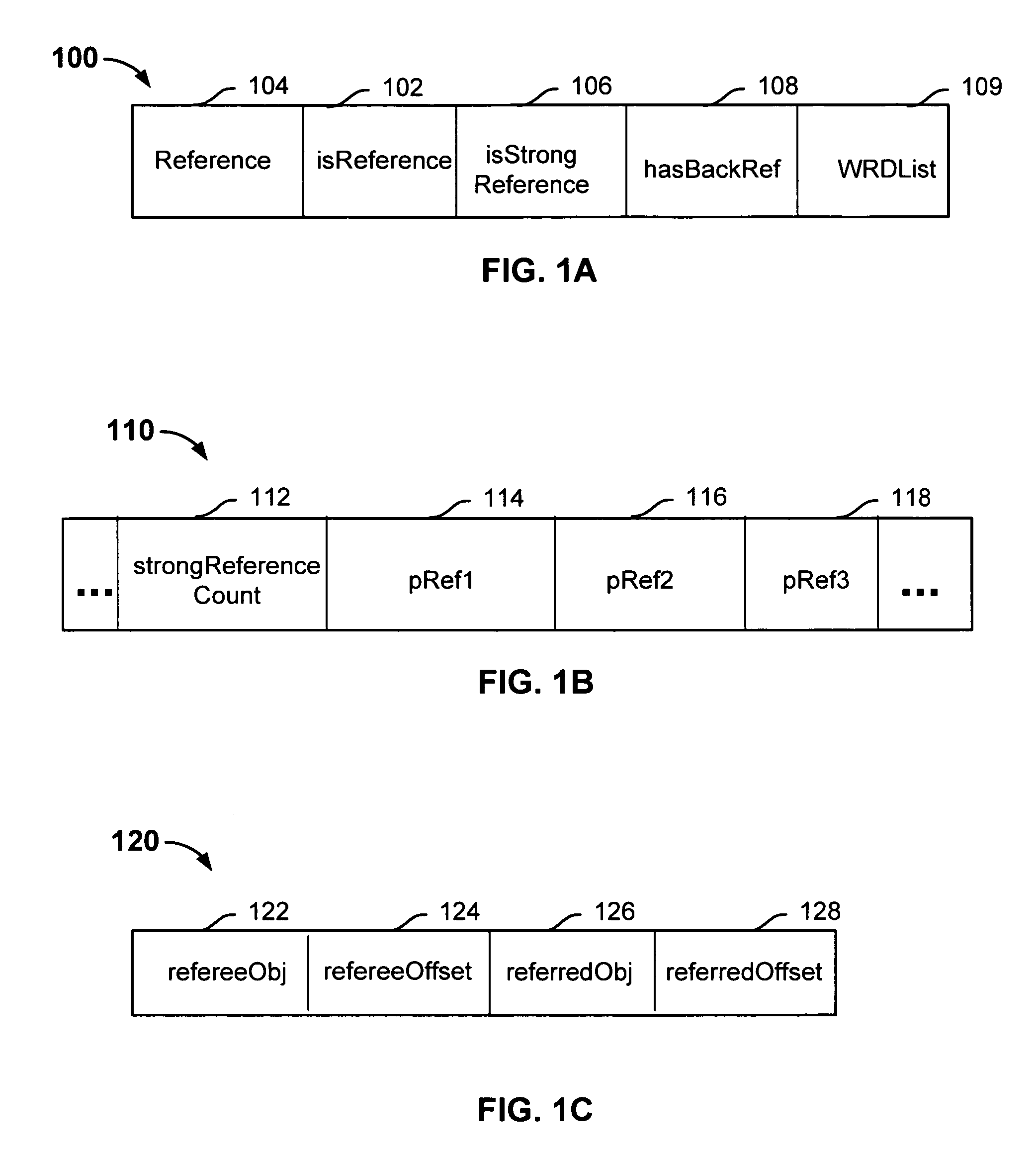

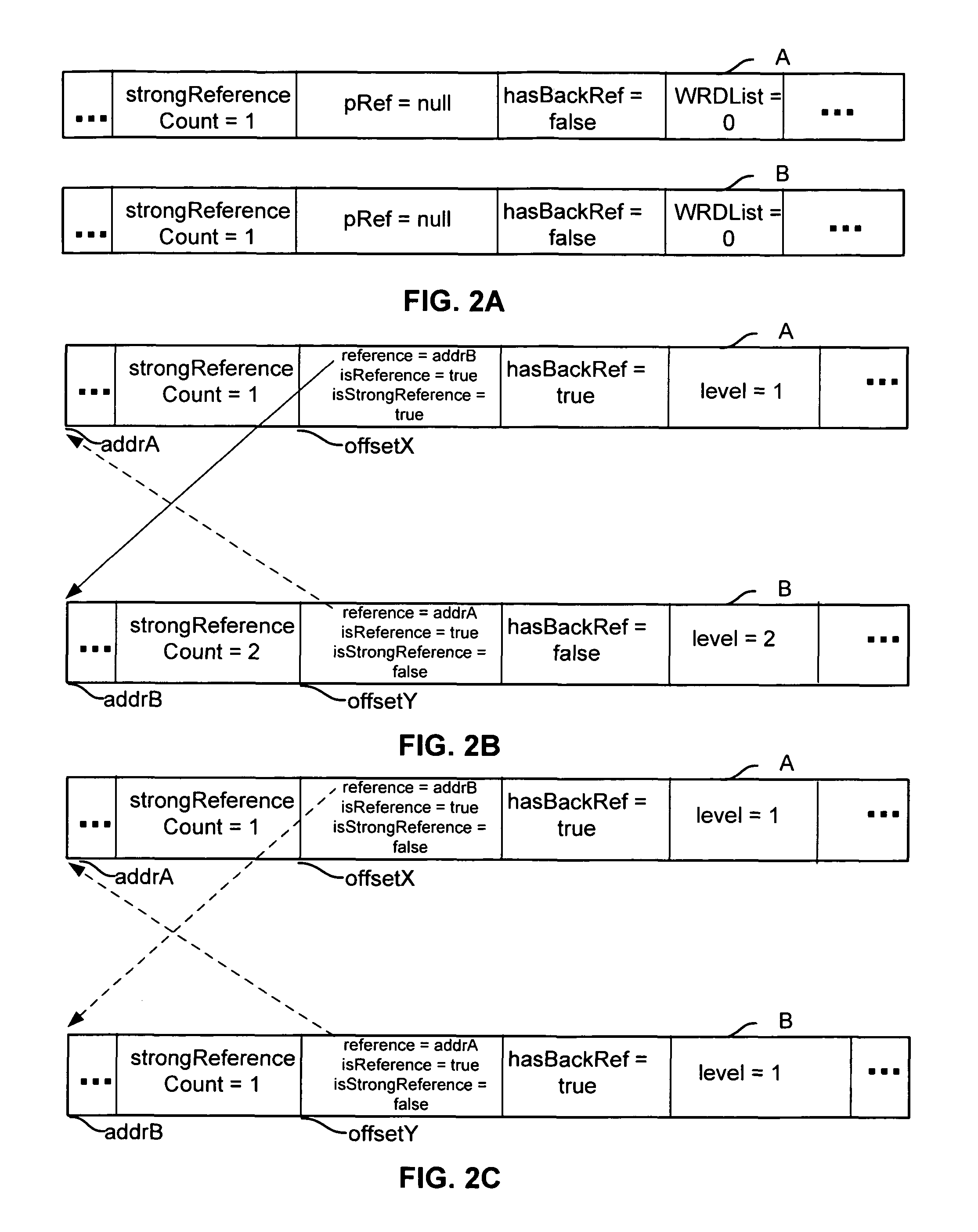

Hardware-protected reference count-based memory management using weak references

Active | US8838656B1 | Memory architecture accessing/allocation | Digital data information retrieval | Strong reference | Memory management unit

A method for managing memory, comprising: maintaining a strong reference count for a first object; establishing a first reference from the first object to a second object; establishing a second reference from the second object to the first object, wherein the second reference is a weak reference that does not increase the strong reference count of the first object; detecting that the strong reference count of the first object has reached zero; in response to detecting that the strong reference count has reached zero, invoking a corresponding action.

Owner:INTEL CORP
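
The strong/weak reference pattern in the abstract above can be demonstrated in software with Python's standard `weakref` module. This is only an analogy: it relies on CPython's reference counting to run finalizers as soon as the strong count reaches zero, it says nothing about the hardware protection in the patent title, and the `Node` class is hypothetical.

```python
import weakref


class Node:
    def __init__(self, name, log):
        self.name = name
        self.log = log
        self.child = None    # strong reference to another node
        self.parent = None   # weak reference back (a weakref.ref), never strong

    def __del__(self):
        # Stands in for "invoking a corresponding action" at strong count zero.
        self.log.append(self.name)


log = []
first = Node("first", log)
second = Node("second", log)

first.child = second                  # first -> second (strong)
second.parent = weakref.ref(first)    # second -> first (weak, count unchanged)

del second                            # still alive through first.child
log_after_del_second = list(log)

parent_ref = first.child.parent       # keep the weak reference for inspection
del first                             # strong count of "first" reaches zero
```

Because the back-reference is weak, the two objects do not keep each other alive, so dropping the last strong reference to `first` releases both without a cycle collector.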

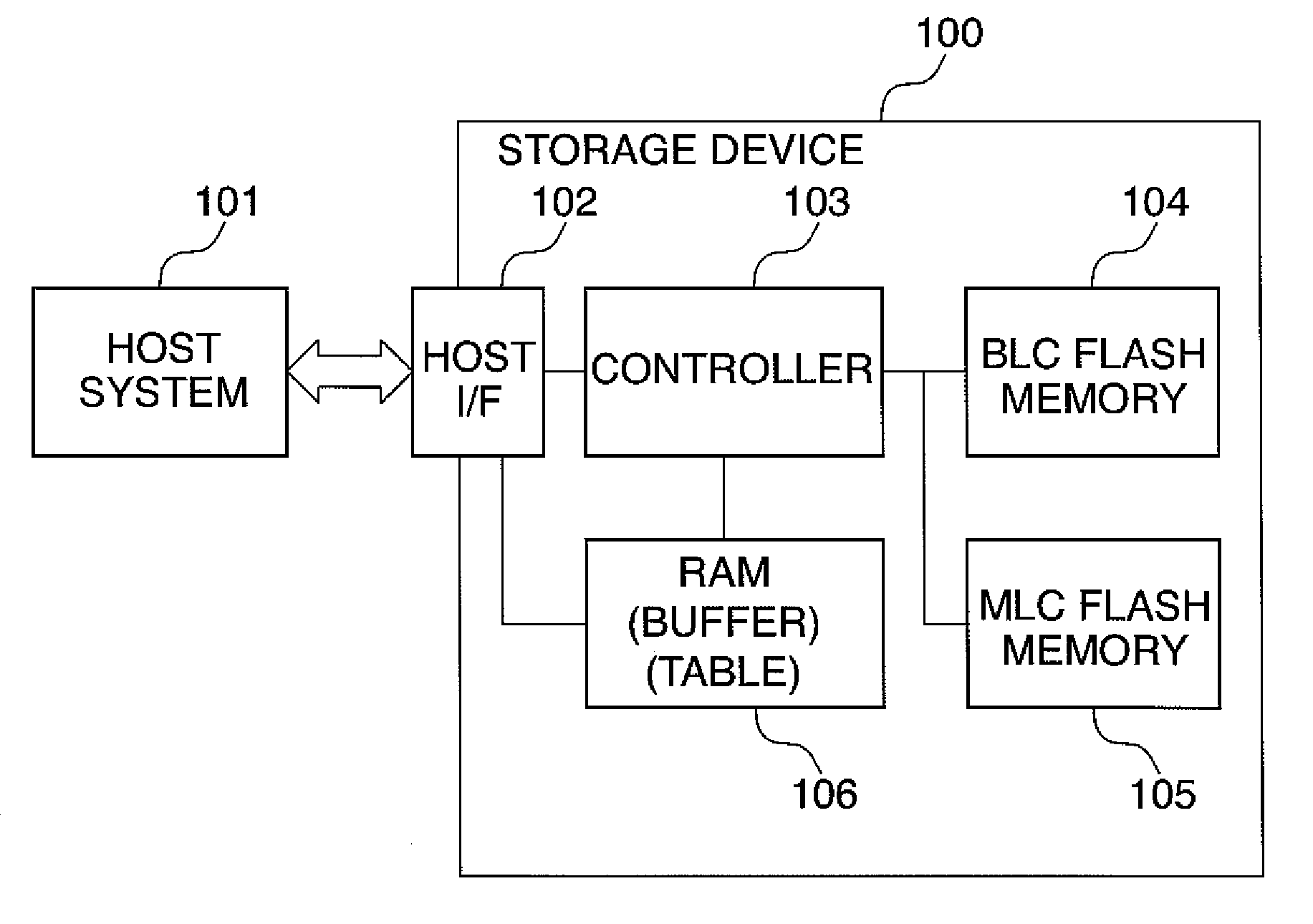

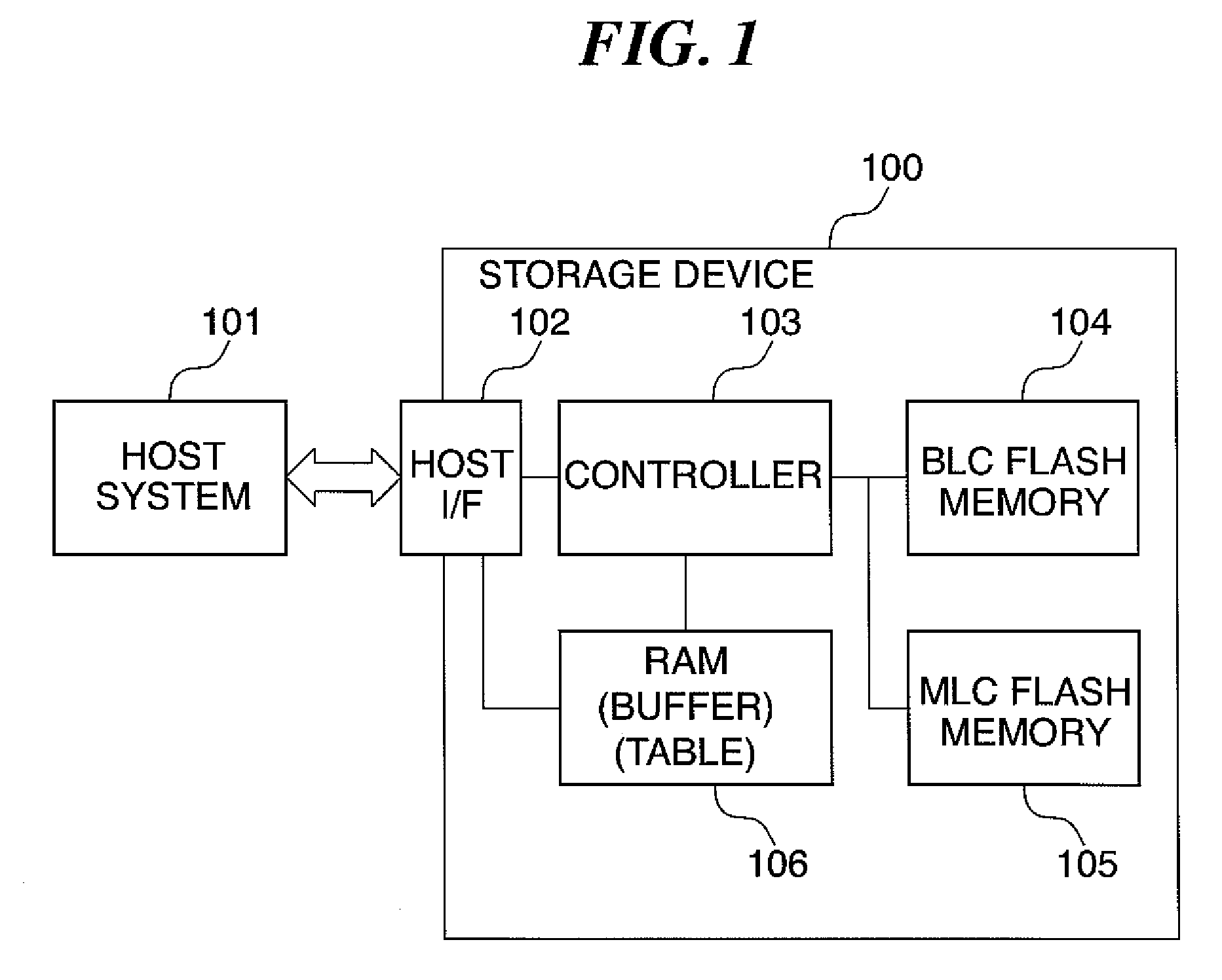

Memory management device and method, program, and memory management system

Inactive | US20080235467A1 | Increase speed | Memory architecture accessing/allocation | Input/output to record carriers | Memory management unit | Management system

A memory management device capable of allocating a memory unit accessible at a higher speed to data stored in a storage device that has memory units differing in access speed, without being limited to a particular storage area. The storage device comprises a BLC flash memory accessible at a predetermined access speed, an MLC flash memory accessible at a lower access speed than the predetermined access speed, a controller, and a RAM. The controller manages the BLC flash memory and the MLC flash memory in units each formed by a plurality of physical pages and writes data to the physical pages, and the RAM holds page allocation information in which the logical pages designated when writing data are associated with the physical pages.

Owner:CANON KK

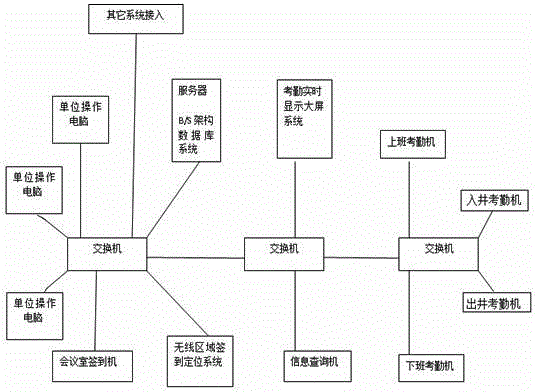

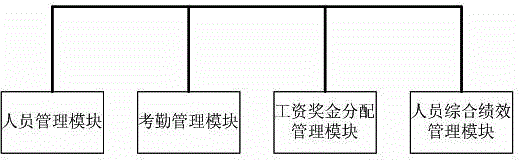

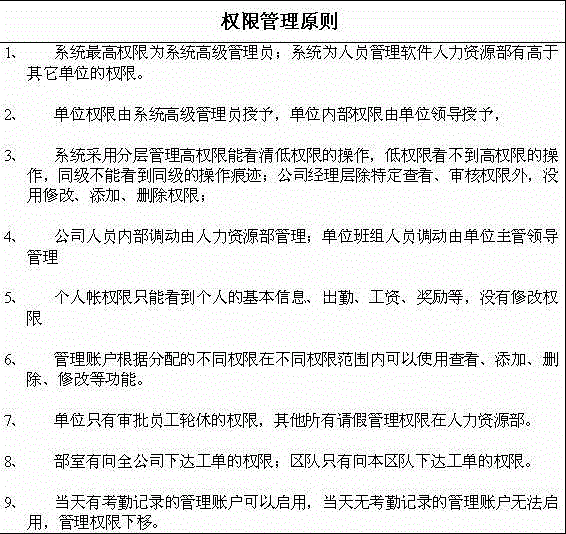

Personnel information comprehensive management system

Inactive | CN104636889A | Guarantee justice | Equal protection | Resources | Total quality management | Management environment

The invention discloses a comprehensive personnel information management system. The system comprises a personnel management module, an attendance management module, a salary and bonus distribution management module, and a comprehensive personnel performance management module. Rules are the foundation of an enterprise, and the ability to execute them is what guarantees its development. Enterprises do not lack rules; they lack enforcement of them. The system quantifies the rules by means of information technology, measures their execution through tracked supervision, and ties the measured results to personal interests, making it a means of enforcing the rules effectively. The system enables management of all personnel factors. By combining the rigidity of computer management with the flexibility of manual management, it makes enterprise management both firm and flexible: national laws and regulations and the enterprise's own rules are effectively implemented, the power of managers is supervised, and personnel work in a good management environment. The stated result is healthy, harmonious, and positive development of the enterprise, with improved employee benefits, higher enterprise efficiency, and national stability.

Owner:刘升 +1

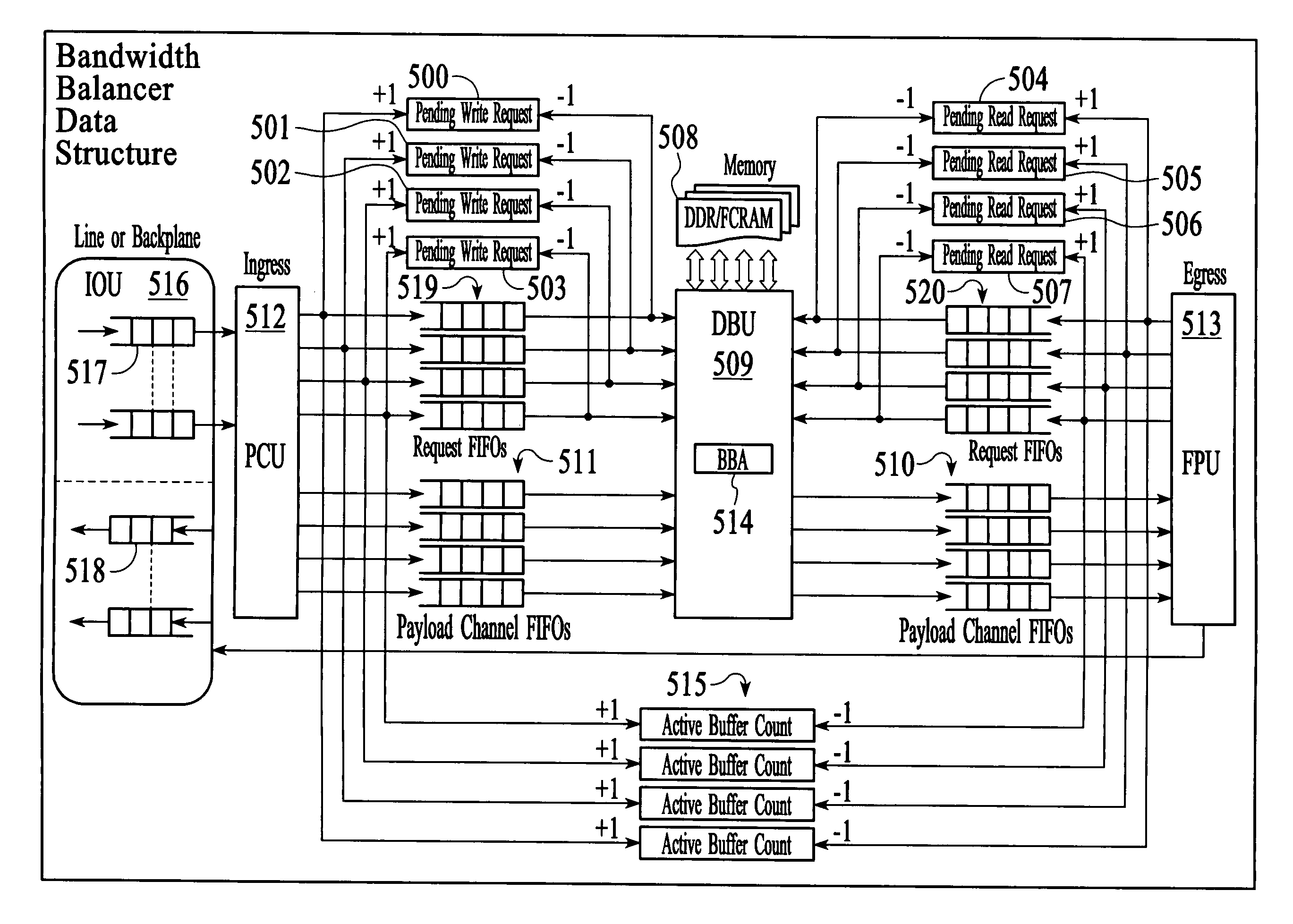

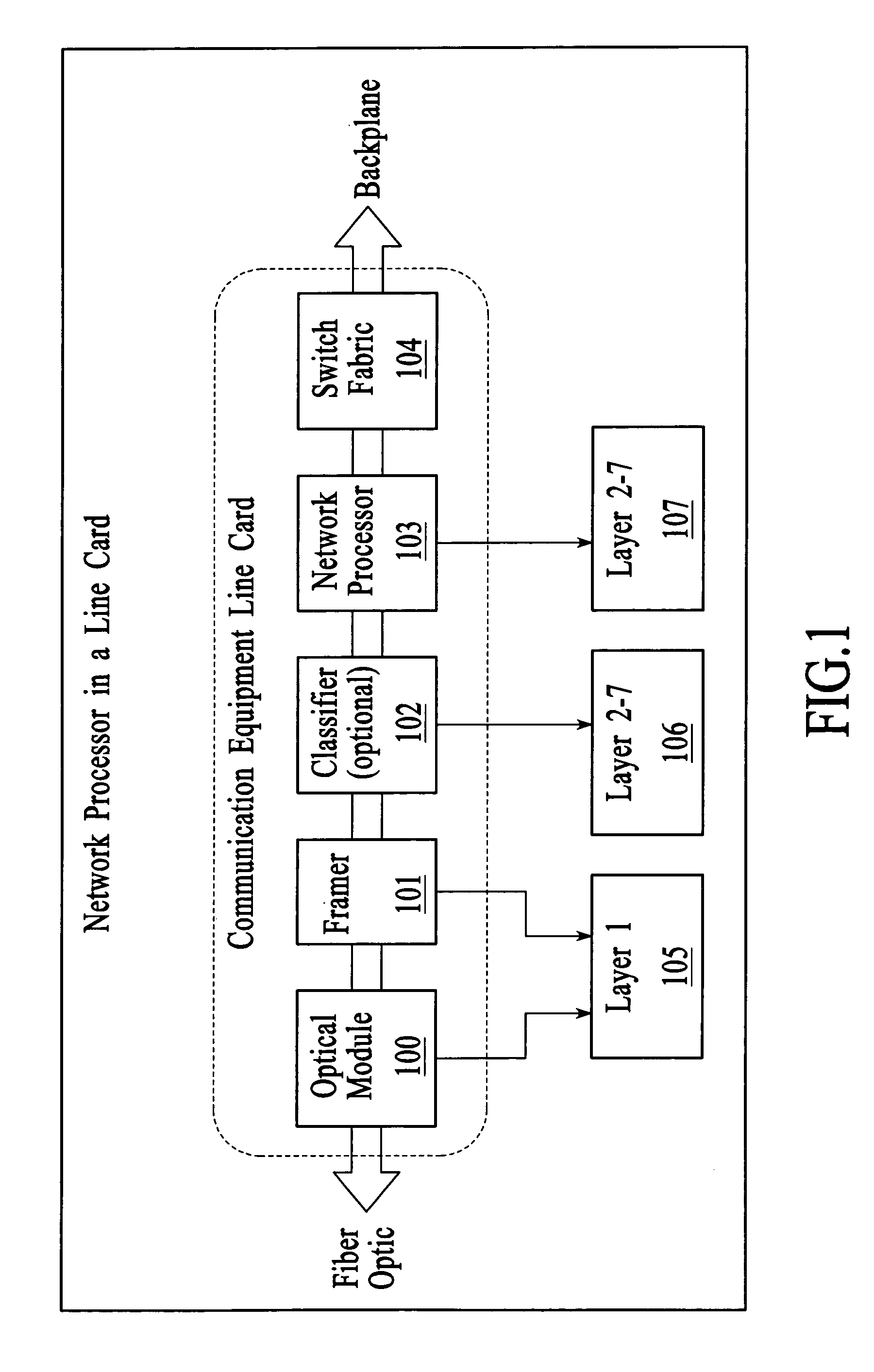

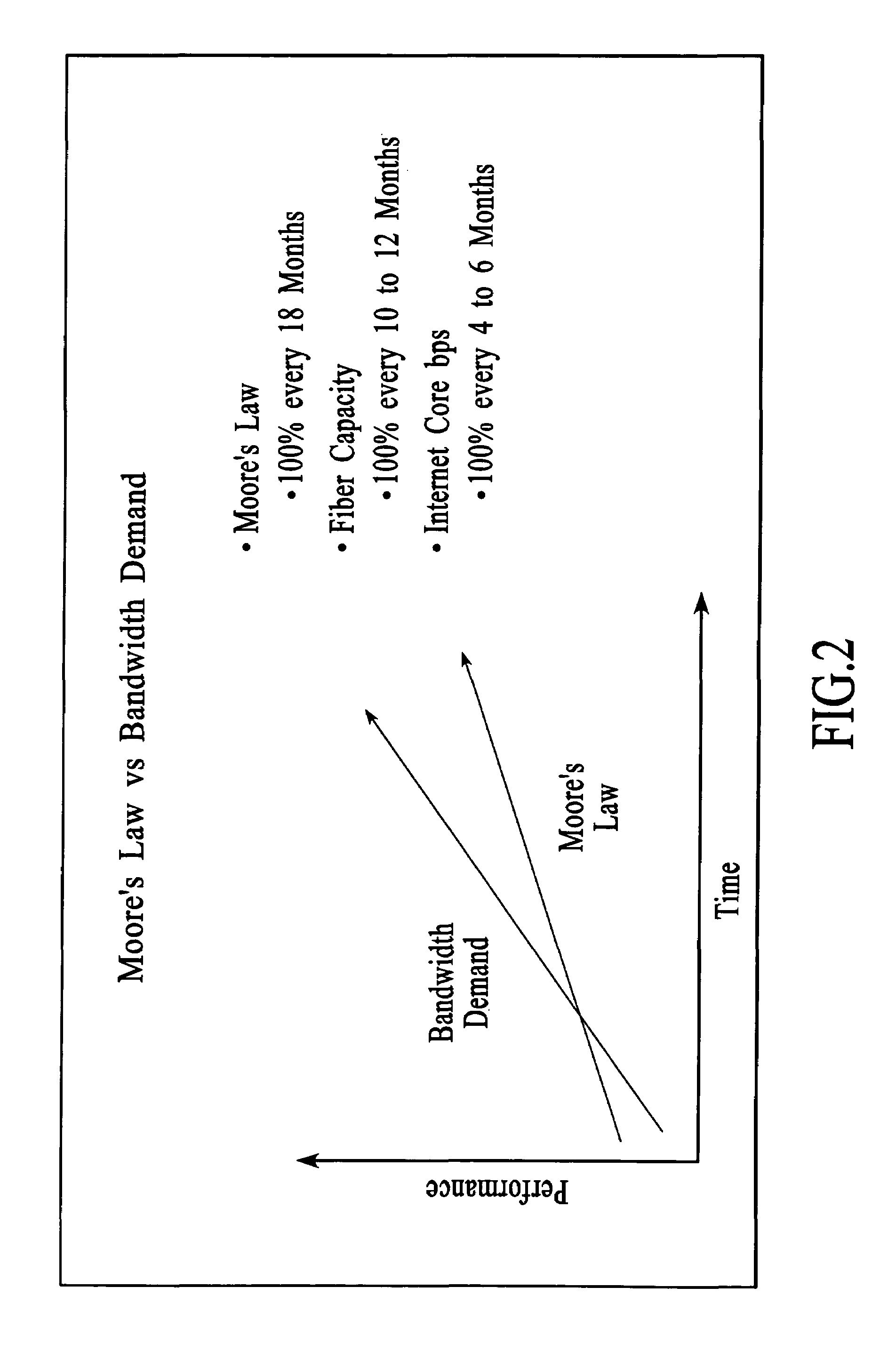

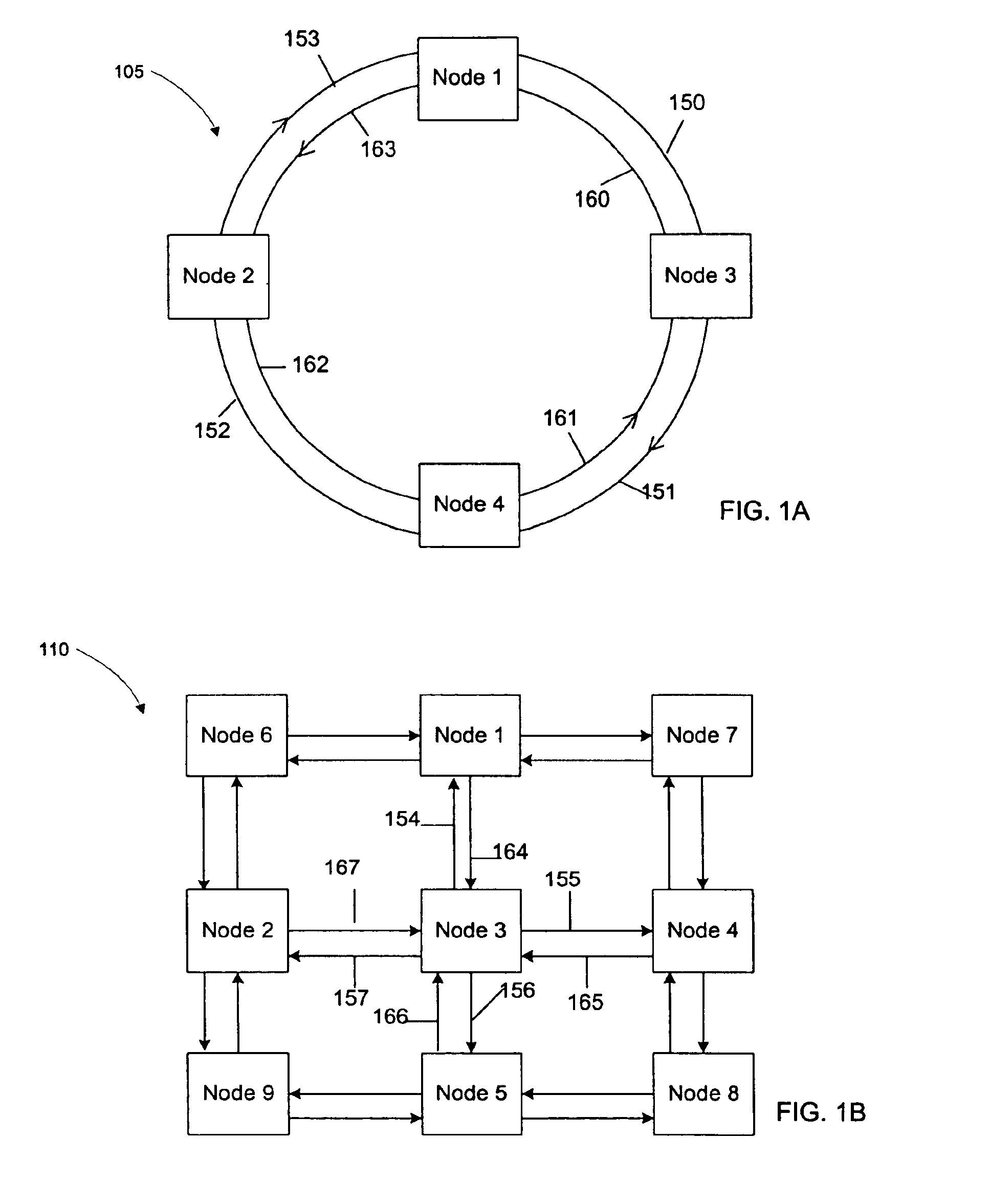

Memory management system and algorithm for network processor architecture

Inactive | US7006505B1 | Low cost | Maximize memory bandwidth | Data switching by path configuration | Memory systems | Line rate | Parallel computing

An embodiment of this invention pertains to a system and method for balancing memory accesses to a low cost memory unit in order to sustain and guarantee a desired line rate regardless of the incoming traffic pattern. The memory unit may include, for example, a group of dynamic random access memory units. The memory unit is divided into memory channels, and each of the memory channels is further divided into memory lines; each memory line includes one or more buffers that correspond to the memory channels. The choice of the buffer within a memory line in which an incoming information element is stored is based on factors such as the number of buffers pending to be read within each of the memory channels, the number of buffers pending to be written within each of the memory channels, and the number of buffers within each of the memory channels that have data written to them and are waiting to be read.

Owner:BAY MICROSYSTEMS INC

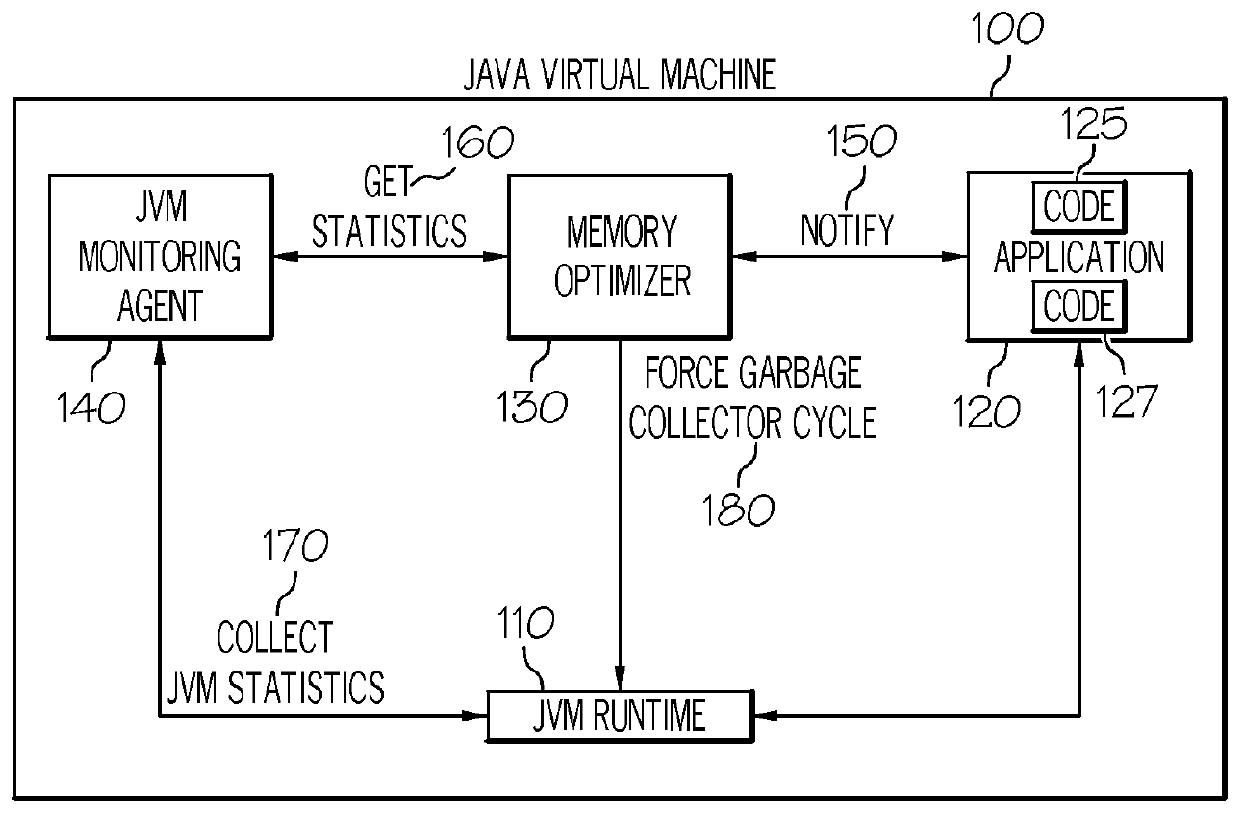

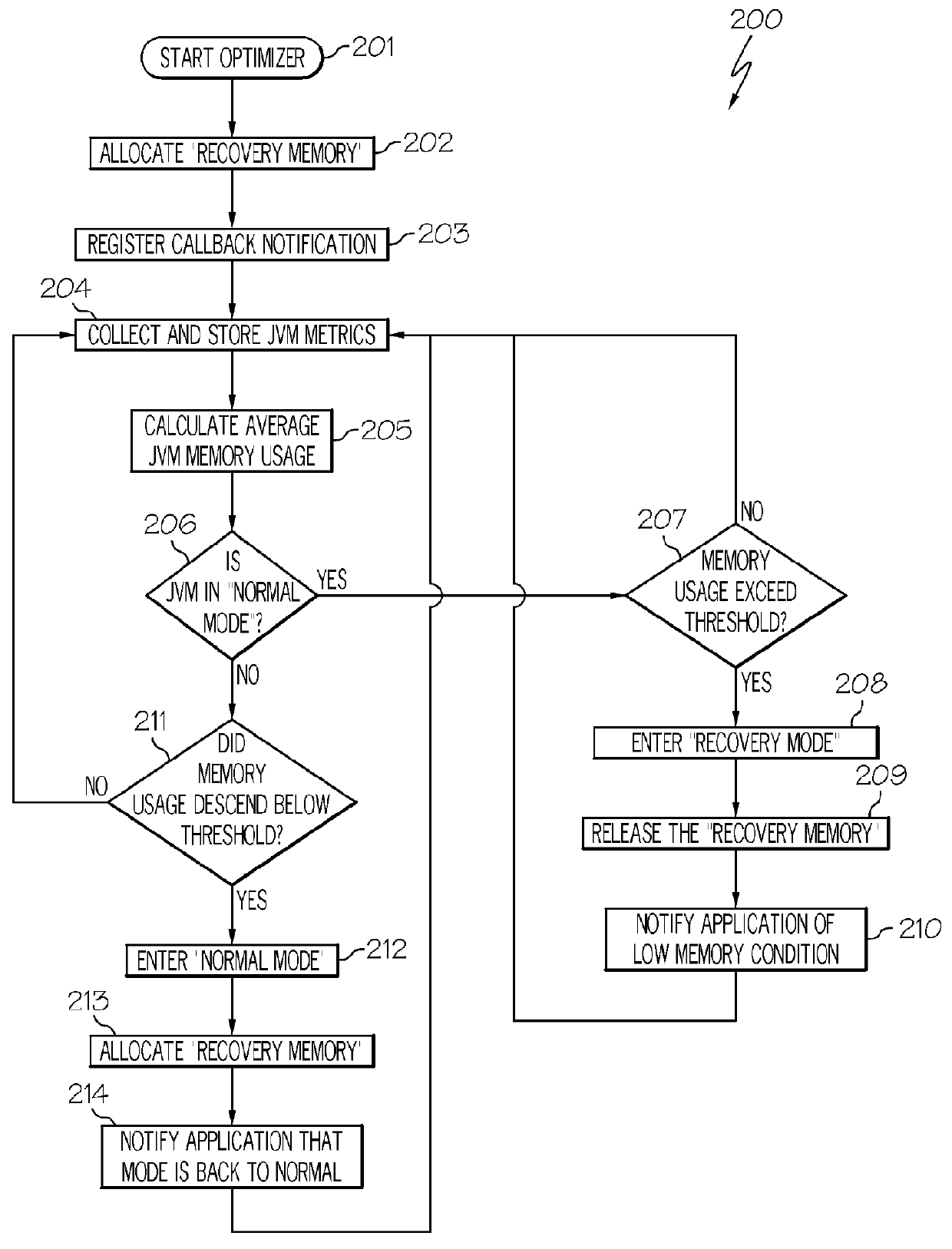

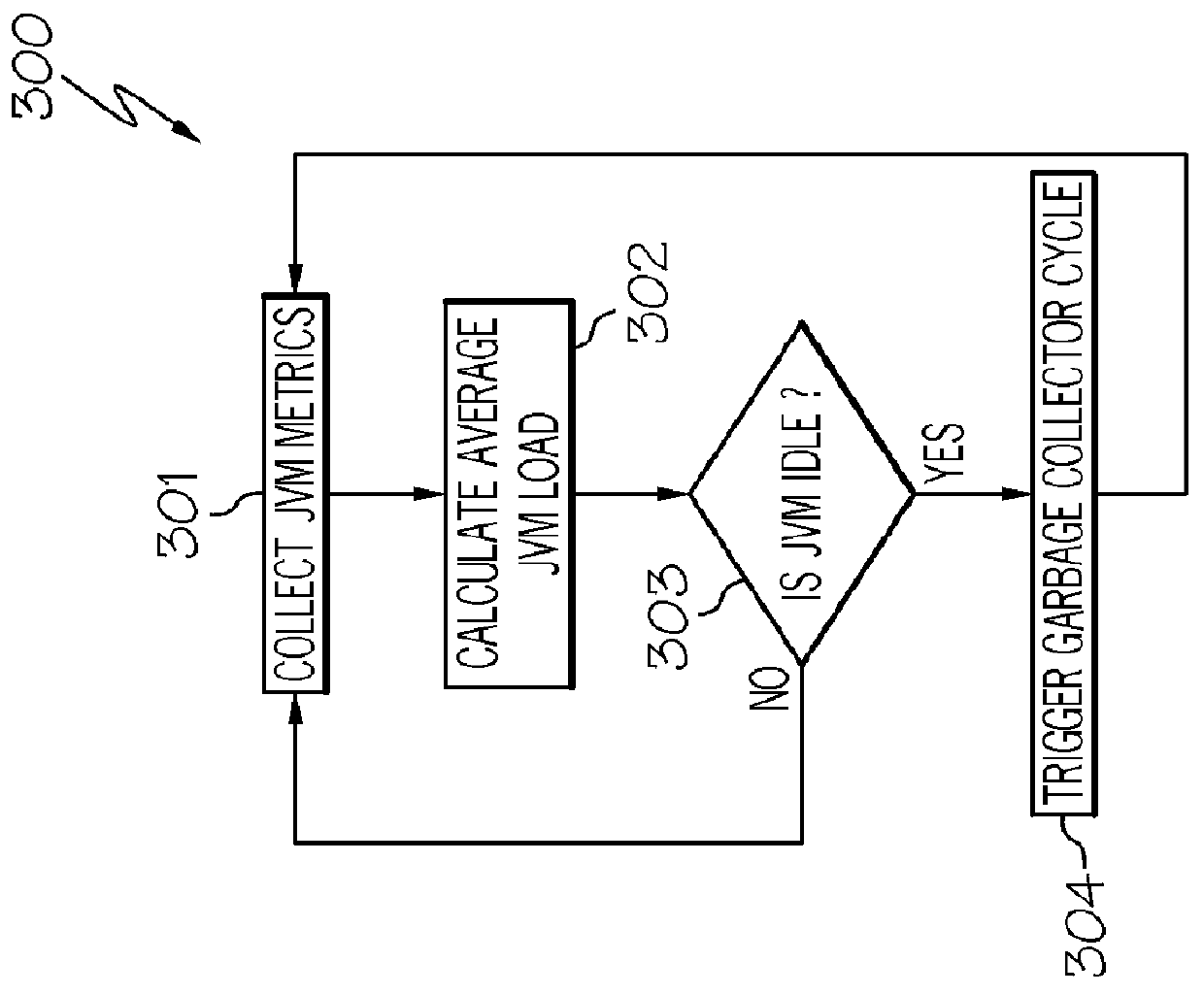

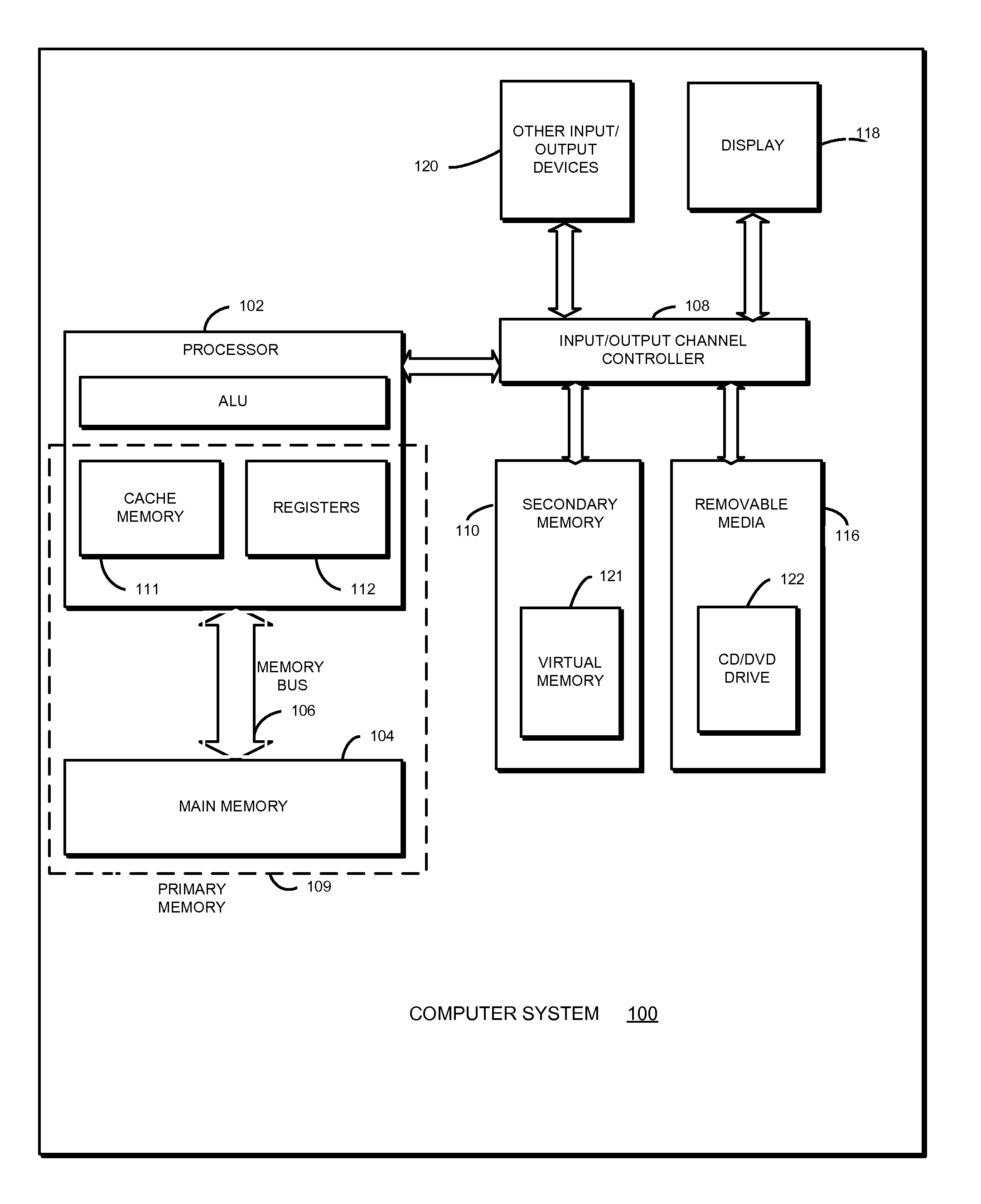

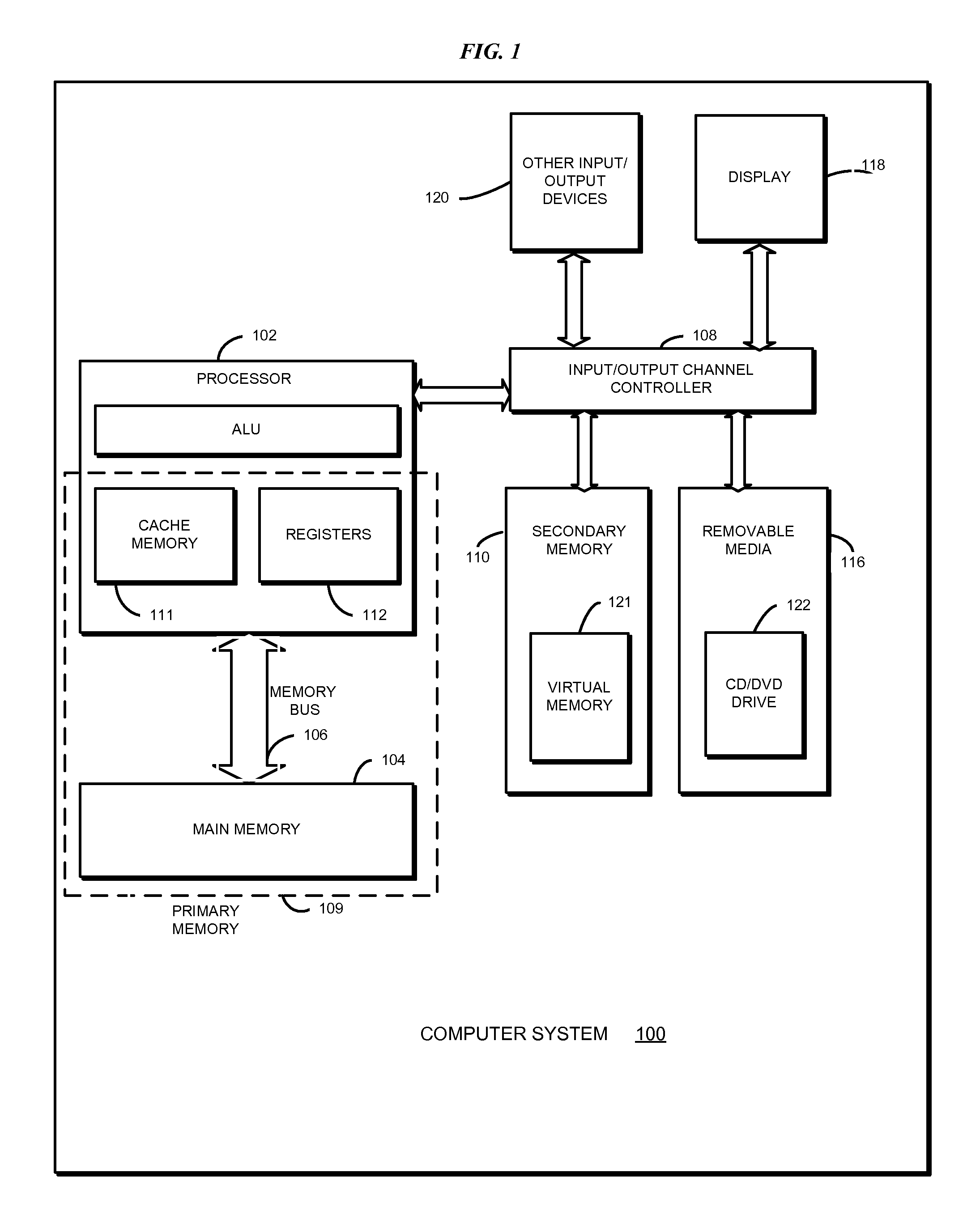

Optimizing memory management of an application running on a virtual machine

Active | US20120137101A1 | Optimize memory usage | Reduce disadvantages | Memory addressing/allocation/relocation | Program control | Normal mode | Application software

A method, system and computer program product for optimizing memory usage of an application running on a virtual machine. A virtual machine memory block is pre-allocated and the average memory usage of the virtual machine is periodically computed using statistics collected from the virtual machine through an API. If the memory usage average becomes higher than a maximum threshold, then a recovery mode is entered by releasing the virtual machine memory block and forcing the running application to reduce its processing activity; optionally, a garbage collector cycle can be forced. If the computed memory usage average becomes lower than a minimum threshold value, which is lower than the maximum threshold value, then a normal mode is entered by re-allocating the virtual machine memory block and forcing the running application to resume its normal processing activity. Optionally, when the virtual machine is idle, a deep garbage collection is forced.

Owner:IBM CORP
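
The two-threshold switching between normal and recovery mode in the abstract above is a form of hysteresis. A minimal sketch follows, assuming usage is expressed as a fraction between 0 and 1 and modelling the pre-allocated memory block as a simple flag; the class name and attributes are hypothetical.

```python
class MemoryModeController:
    """Switch between normal and recovery mode using two thresholds."""

    def __init__(self, minimum, maximum):
        if not minimum < maximum:
            raise ValueError("minimum threshold must be below maximum threshold")
        self.minimum = minimum
        self.maximum = maximum
        self.mode = "normal"
        self.reserve_held = True  # stands in for the pre-allocated memory block

    def update(self, average_usage):
        if self.mode == "normal" and average_usage > self.maximum:
            # Enter recovery: release the reserve; the application would
            # also be told to reduce its processing activity here.
            self.mode = "recovery"
            self.reserve_held = False
        elif self.mode == "recovery" and average_usage < self.minimum:
            # Return to normal: re-allocate the reserve.
            self.mode = "normal"
            self.reserve_held = True
        return self.mode


ctrl = MemoryModeController(minimum=0.5, maximum=0.8)
modes = [ctrl.update(u) for u in [0.6, 0.85, 0.7, 0.45, 0.6]]
```

Keeping the minimum threshold below the maximum prevents the controller from flapping between modes when usage hovers near a single limit: at 0.7 the system stays in recovery even though usage is already back under the maximum.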

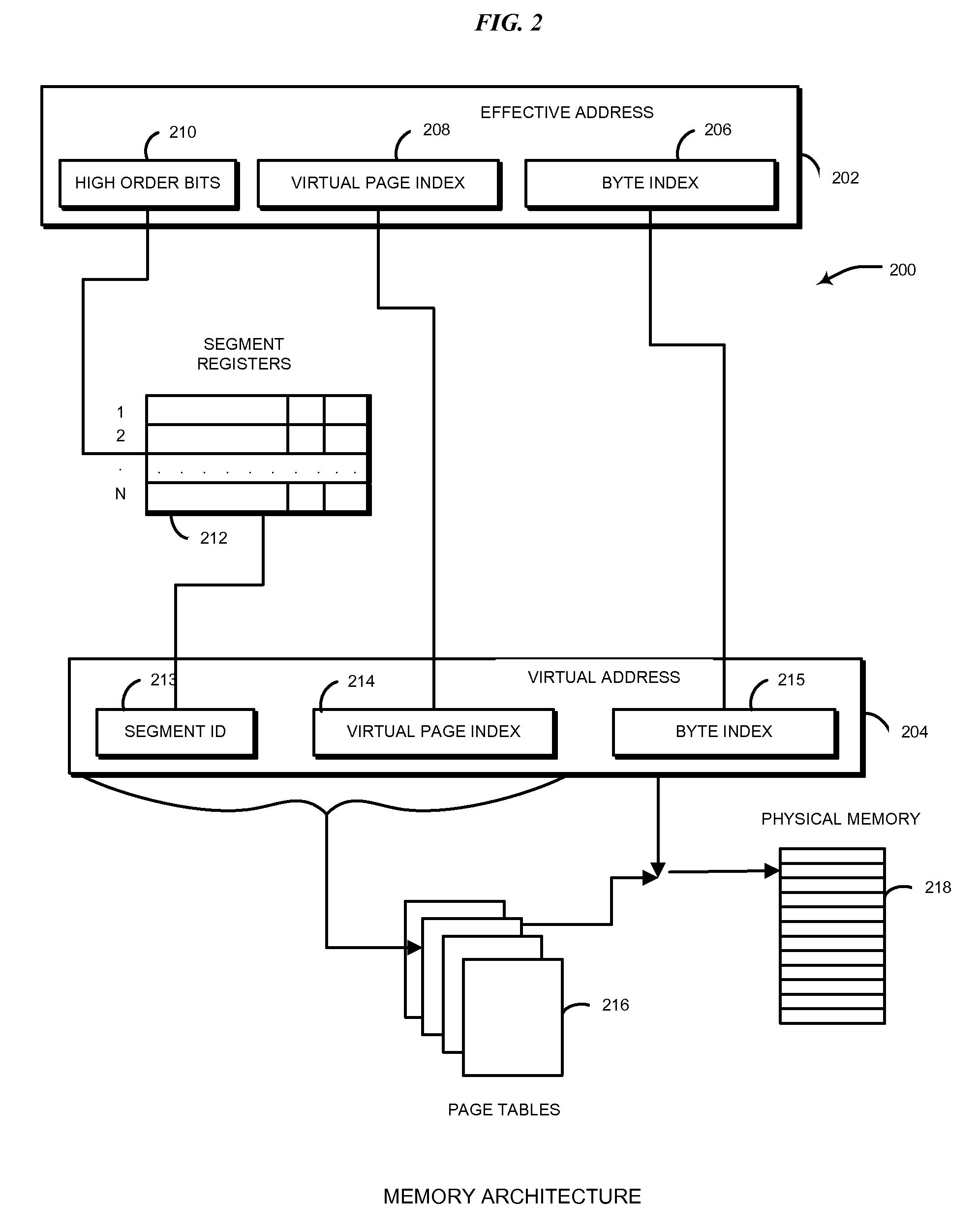

Virtual memory management

Inactive | US7930515B2 | Memory architecture accessing/allocation | Memory systems | Physical address | Virtual memory management

A method for managing a virtual memory system configured to allow multiple page sizes is described. Each page size has at least one table associated with it. The method involves maintaining entries in the tables to keep track of the page size for which the effective address is mapped. When a new effective address to physical address mapping needs to be made for a page size, the method accesses the appropriate tables to identify prior mappings for another page size in the same segment. If no such conflicting mapping exists, it creates a new mapping in the appropriate table. A formula is used to generate an index to access a mapping in a table.

Owner:INT BUSINESS MASCH CORP

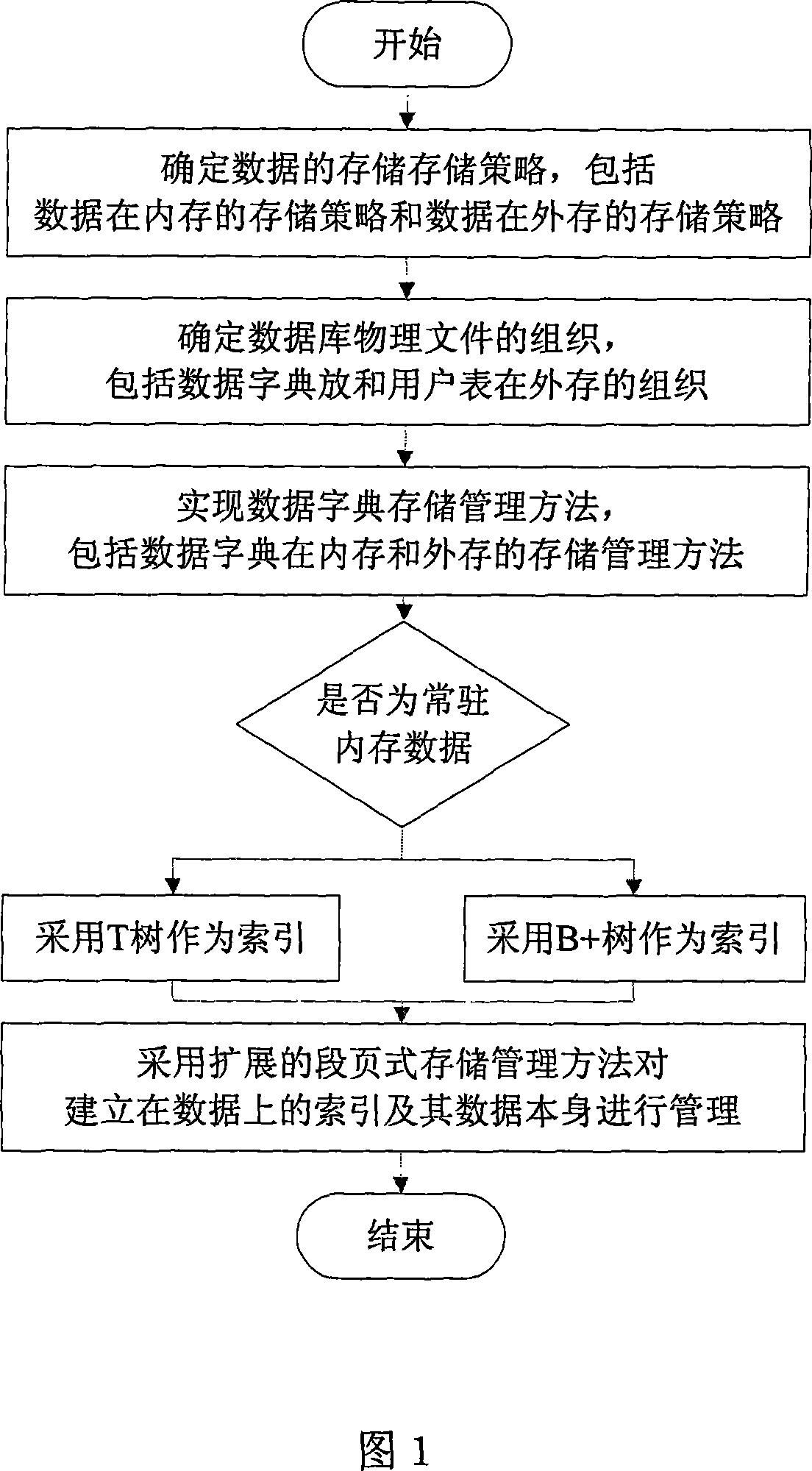

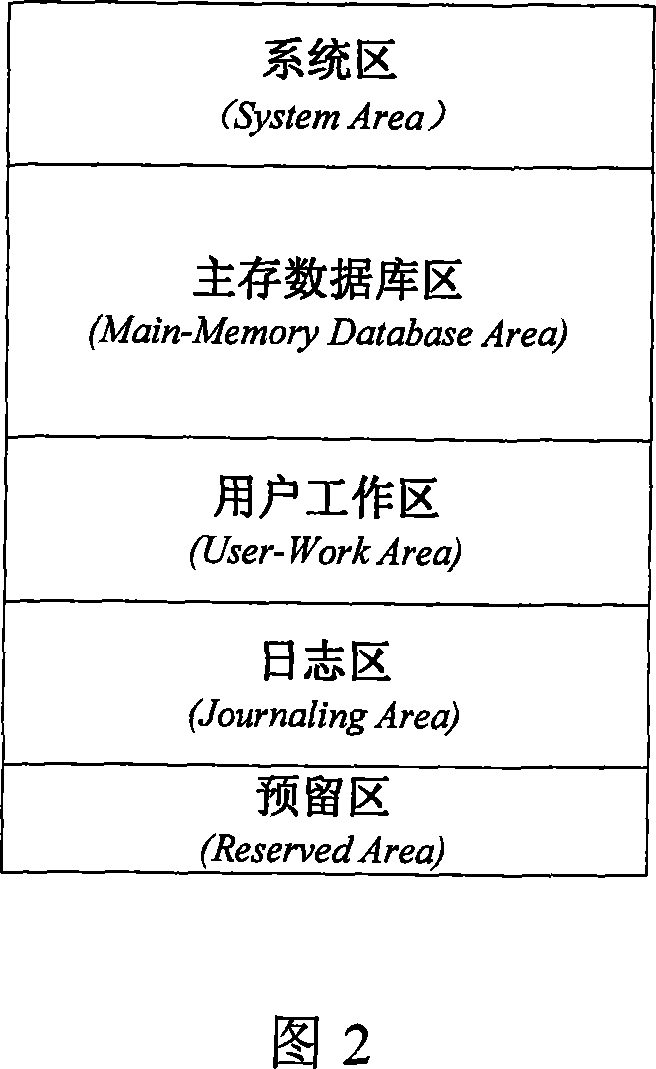

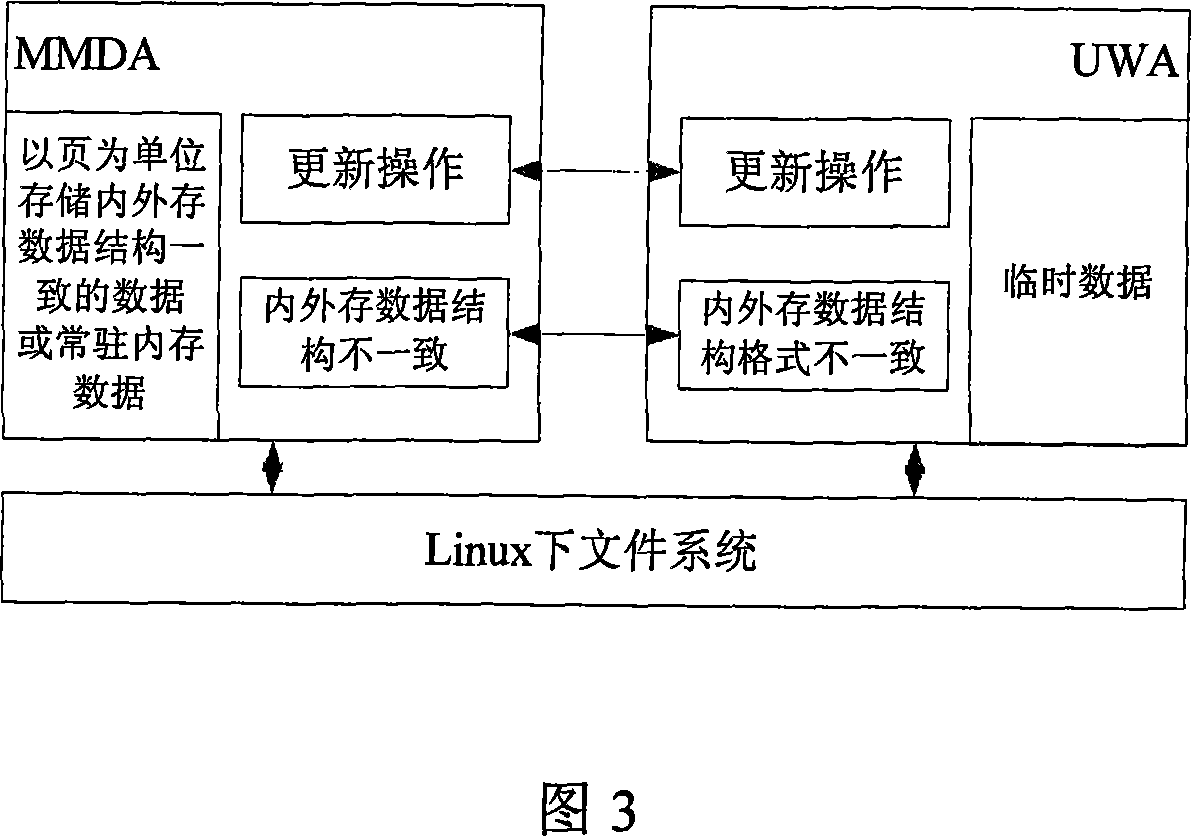

Embedded database storage management method

Inactive | CN101055589A | Guaranteed compactness | Improve loading speed | Special data processing applications | Embedded database | Data access

A storage management method for an embedded database, comprising: (1) the in-memory storage strategy, in which the logical structure matches the physical structure and is divided into a system area, a main storage database area, a user working area, a log area, and a reserved area; and the external-memory storage strategy, in which the logical structure is divided into database tables, segments, and database blocks, and the physical structure comprises physical files and physical blocks; (2) the physical file organization of the database, in which the data dictionary is placed at the head, followed by the user tables; (3) storage management of the data dictionary in external memory, in which the descriptive information of the physical file is stored in the file header, and the denotative definition class and the attribute definition class use a three-section storage format; in memory, the data dictionary uses a paged memory management method; (4) permanent in-memory data is stored using a T-tree and permanent external-memory data is stored using a B+ tree; data and its indexes are managed using an extended segment memory management method. The invention improves the utilization of memory space and speeds up data access.

Owner:BEIHANG UNIV

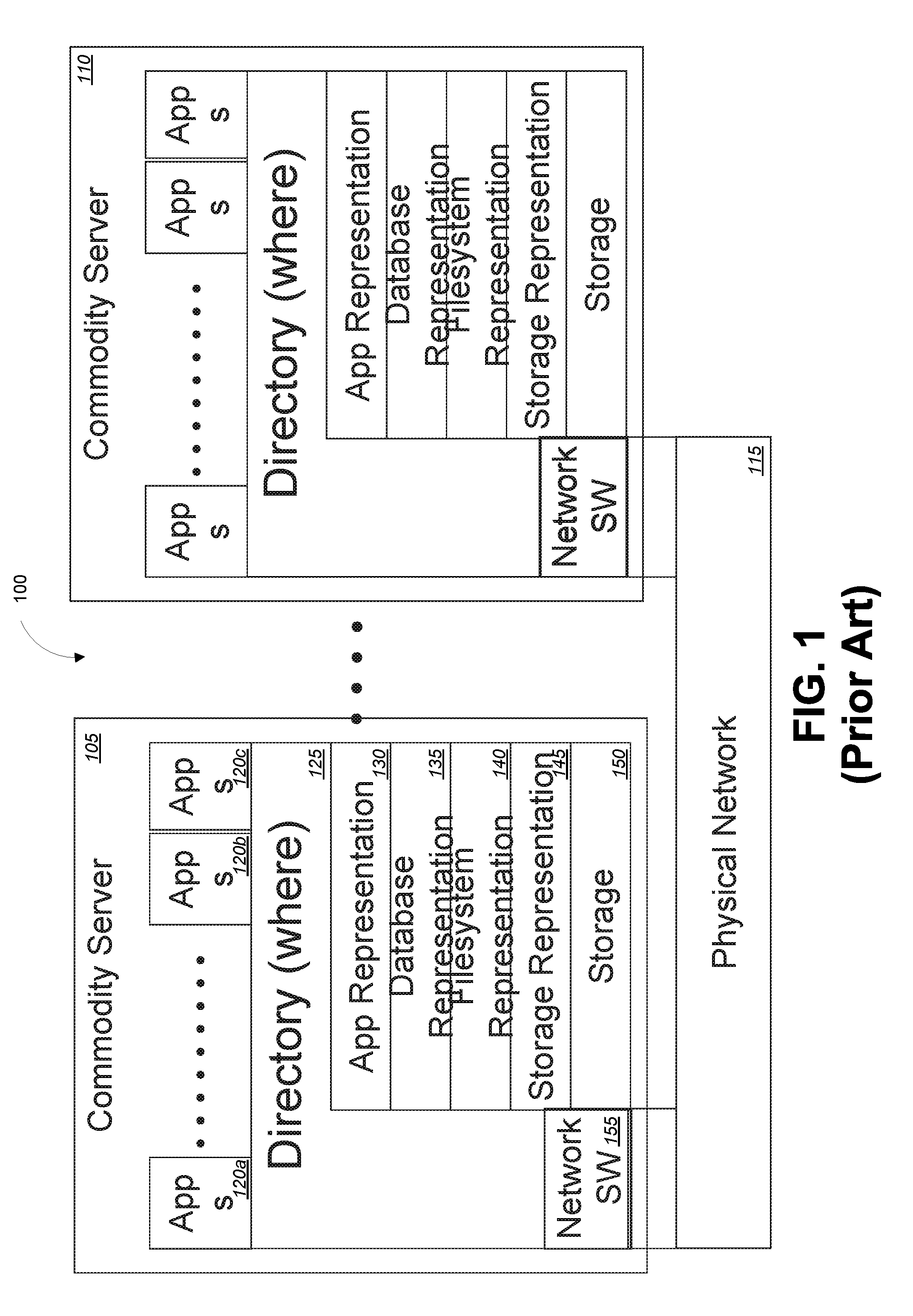

Object memory fabric performance acceleration

Inactive | US20160210079A1 | Improve efficiency | Improve performance | Input/output to record carriers | Memory addressing/allocation/relocation | Parallel computing | Memory object

Embodiments of the invention provide systems and methods for managing processing, memory, storage, network, and cloud computing to significantly improve the efficiency and performance of processing nodes. Embodiments described herein can provide transparent and dynamic performance acceleration, especially with big data or other memory intensive applications, by reducing or eliminating overhead typically associated with memory management, storage management, networking, and data directories. Rather, embodiments manage memory objects at the memory level which can significantly shorten the pathways between storage and memory and between memory and processing, thereby eliminating the associated overhead between each.

Owner:ULTRATA LLC

Apparatus and method for supporting memory management in an offload of network protocol processing

Active | US7930422B2 | Firmly connected | Number of needed | Multiple digital computer combinations | Memory systems | Protocol processing | Zero-copy

A number of improvements in network adapters that offload protocol processing from the host processor are provided. Specifically, mechanisms for handling memory management and optimization within a system utilizing an offload network adapter are provided. The memory management mechanism permits both buffered sending and receiving of data as well as zero-copy sending and receiving of data. In addition, the memory management mechanism permits grouping of DMA buffers that can be shared among specified connections based on any number of attributes. The memory management mechanism further permits partial send and receive buffer operation, delaying of DMA requests so that they may be communicated to the host system in bulk, and expedited transfer of data to the host system.

Owner:INT BUSINESS MASCH CORP

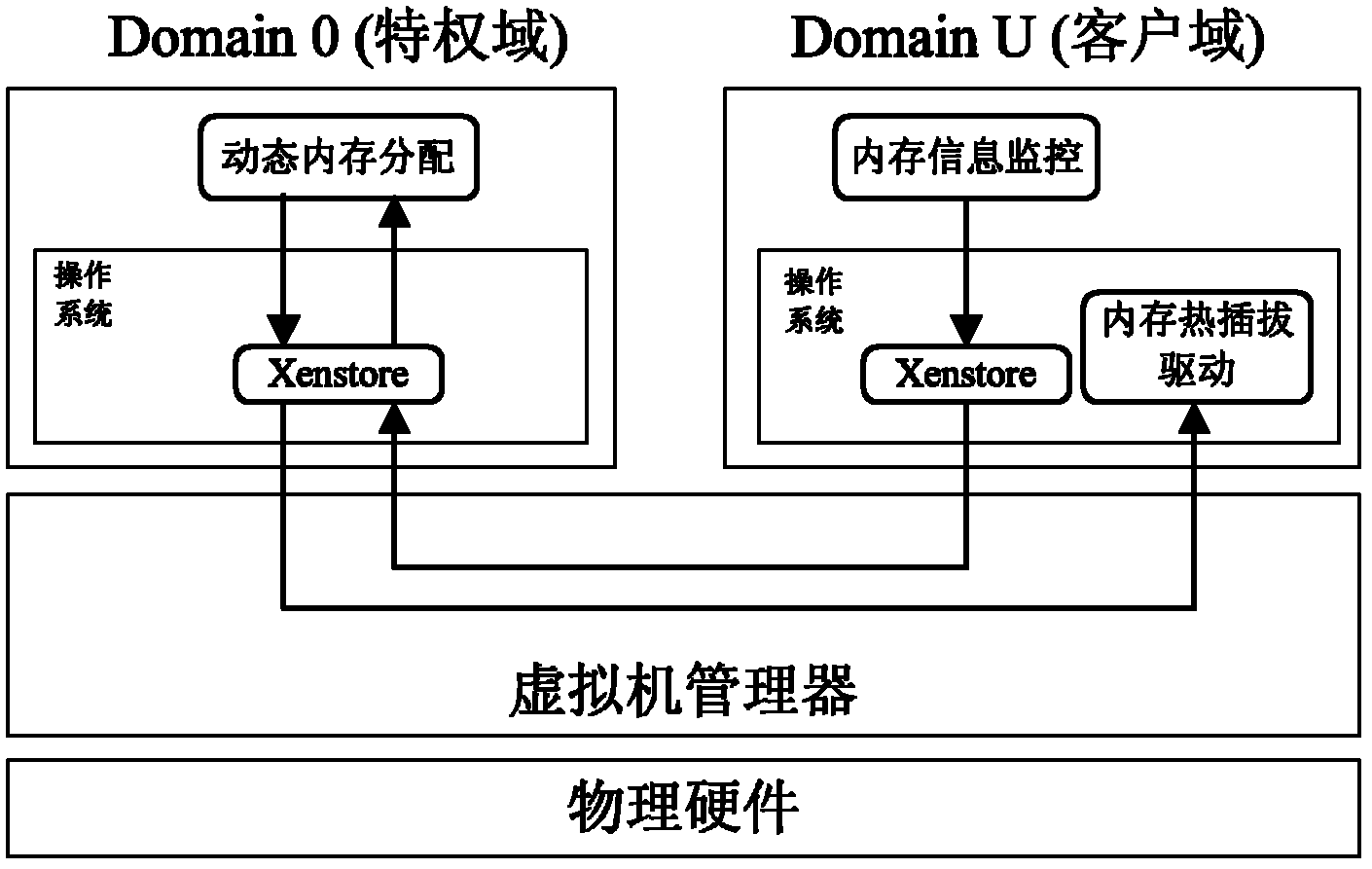

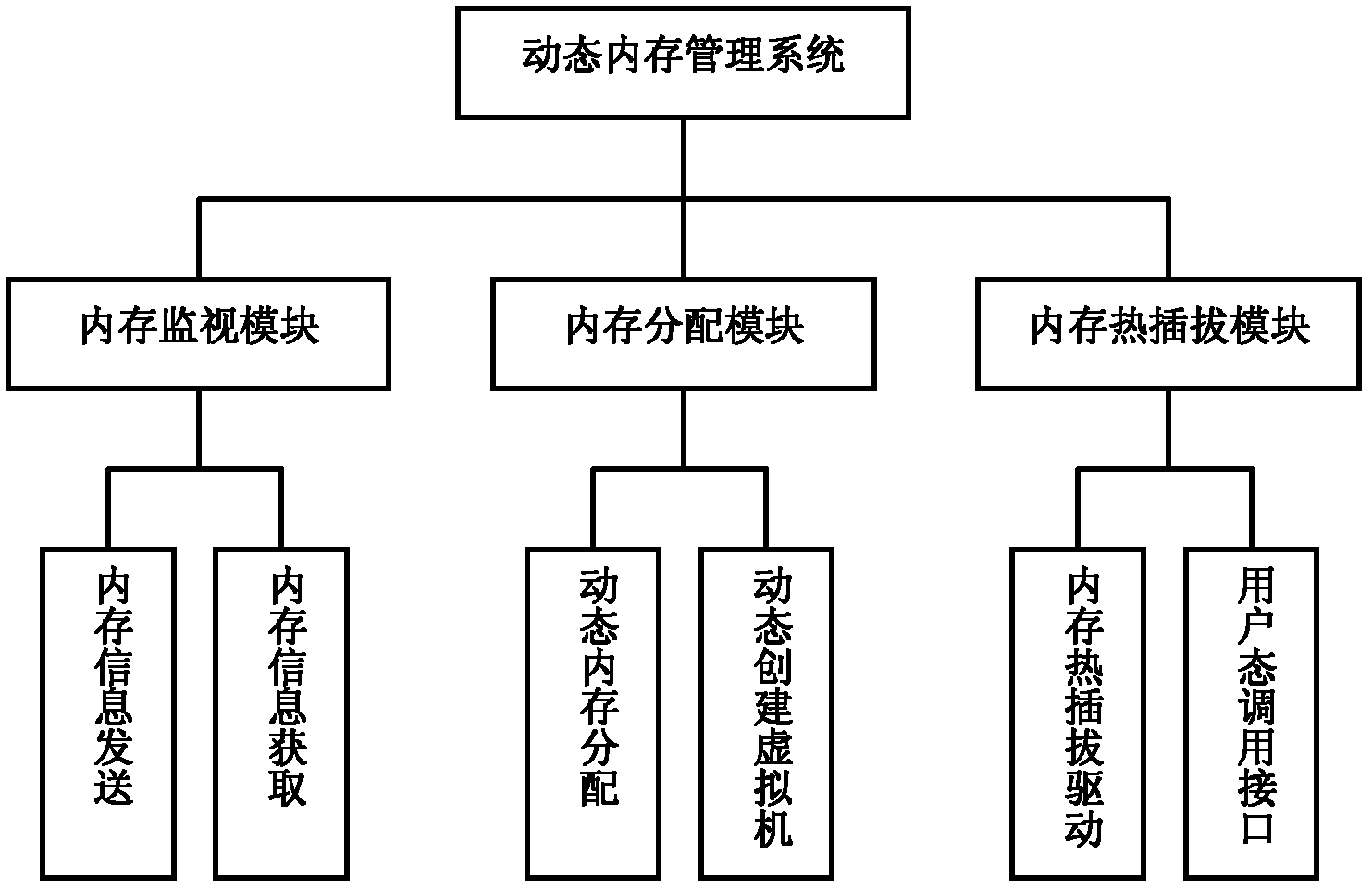

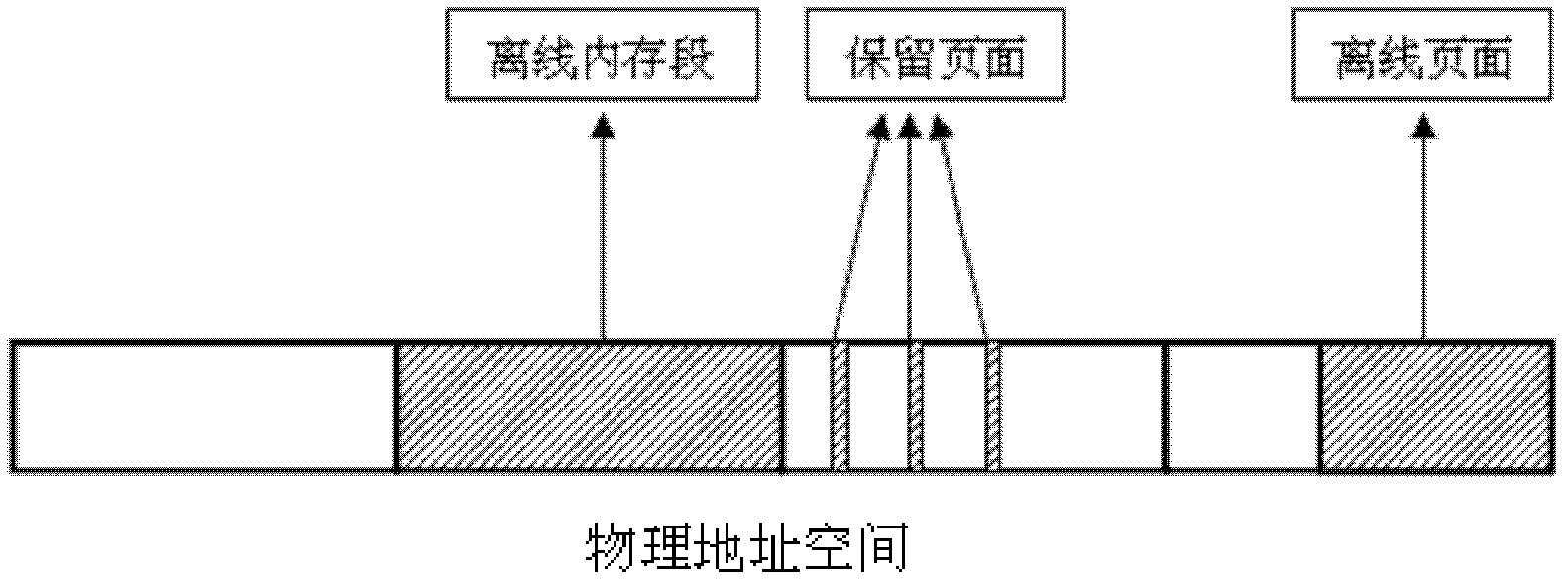

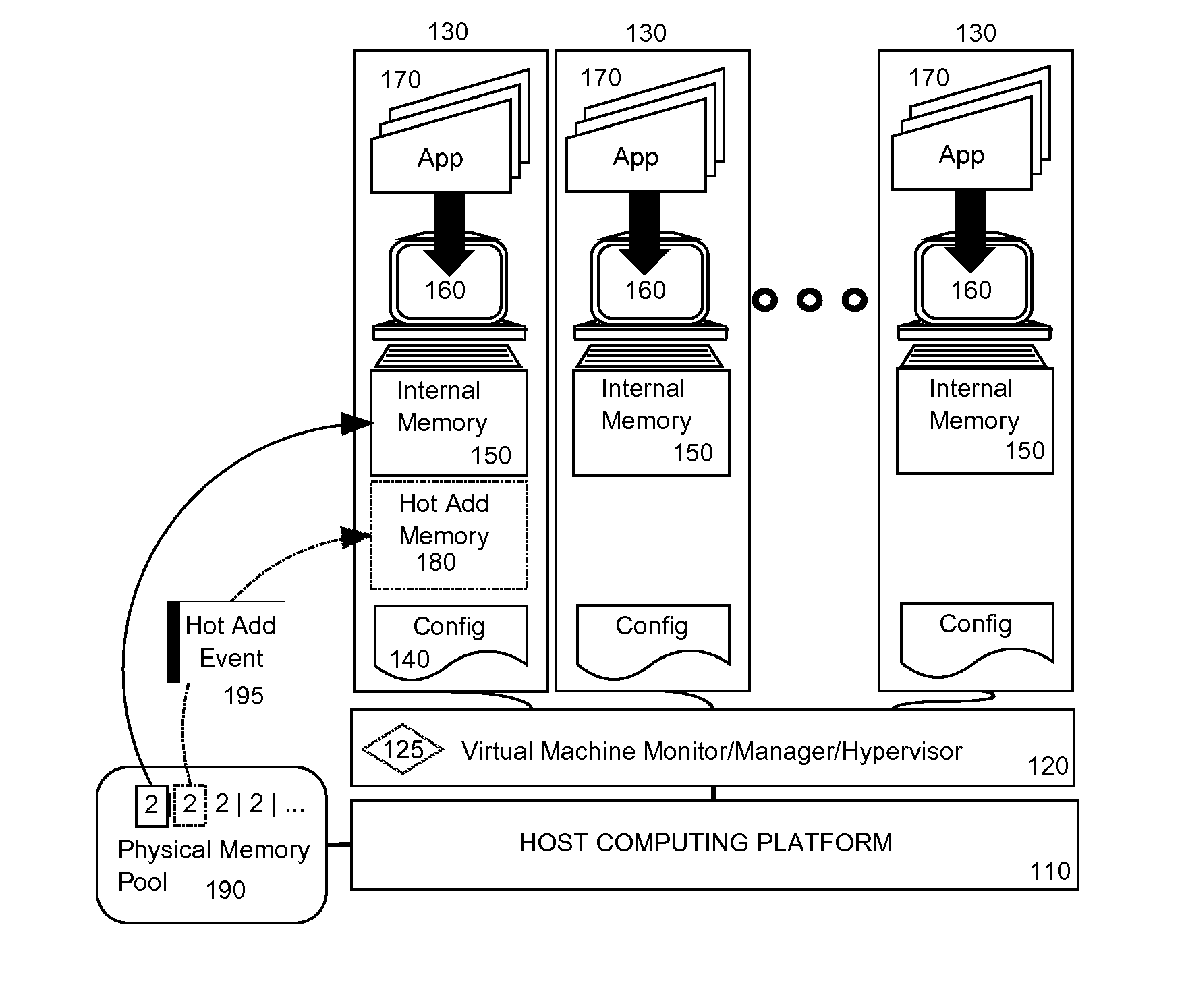

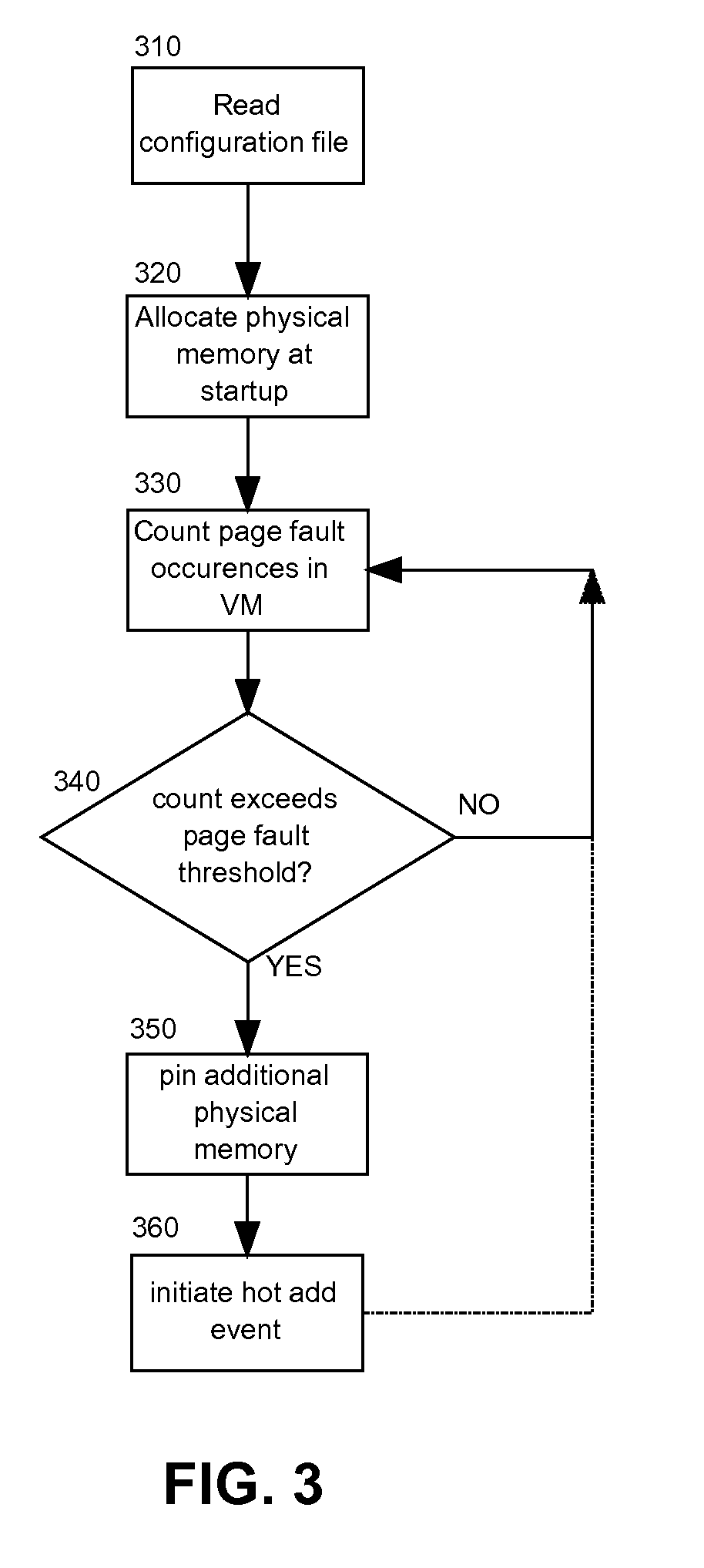

Dynamic memory management system based on memory hot plug for virtual machine

Inactive | CN102222014A | Memory Pressure Prediction | Memory pressure balance | Memory addressing/allocation/relocation | Software simulation/interpretation/emulation | Out of memory | GNU/Linux

The invention discloses a dynamic memory management system for virtual machines based on memory hot plug, comprising a memory monitoring module, a memory distributing module, and a memory hot plug module. The memory hot plug module uses the Linux memory hot plug mechanism to implement memory hot plug on a paravirtualized Linux virtual machine, thereby breaking through the virtual machine's initial upper memory limit and improving its memory scalability by allowing memory to be added and removed as needed. The memory distributing module dynamically predicts the memory requirements of the virtual machines and balances memory pressure among them, which satisfies their memory requirements while improving the memory utilization of the physical machine. In addition, when the physical machine is short of memory, the memory distributing module can create a new virtual machine by reasonably reducing the memory of existing virtual machines, thereby achieving memory overcommitment and further improving the memory utilization of the physical machine.

Owner:HUAZHONG UNIV OF SCI & TECH

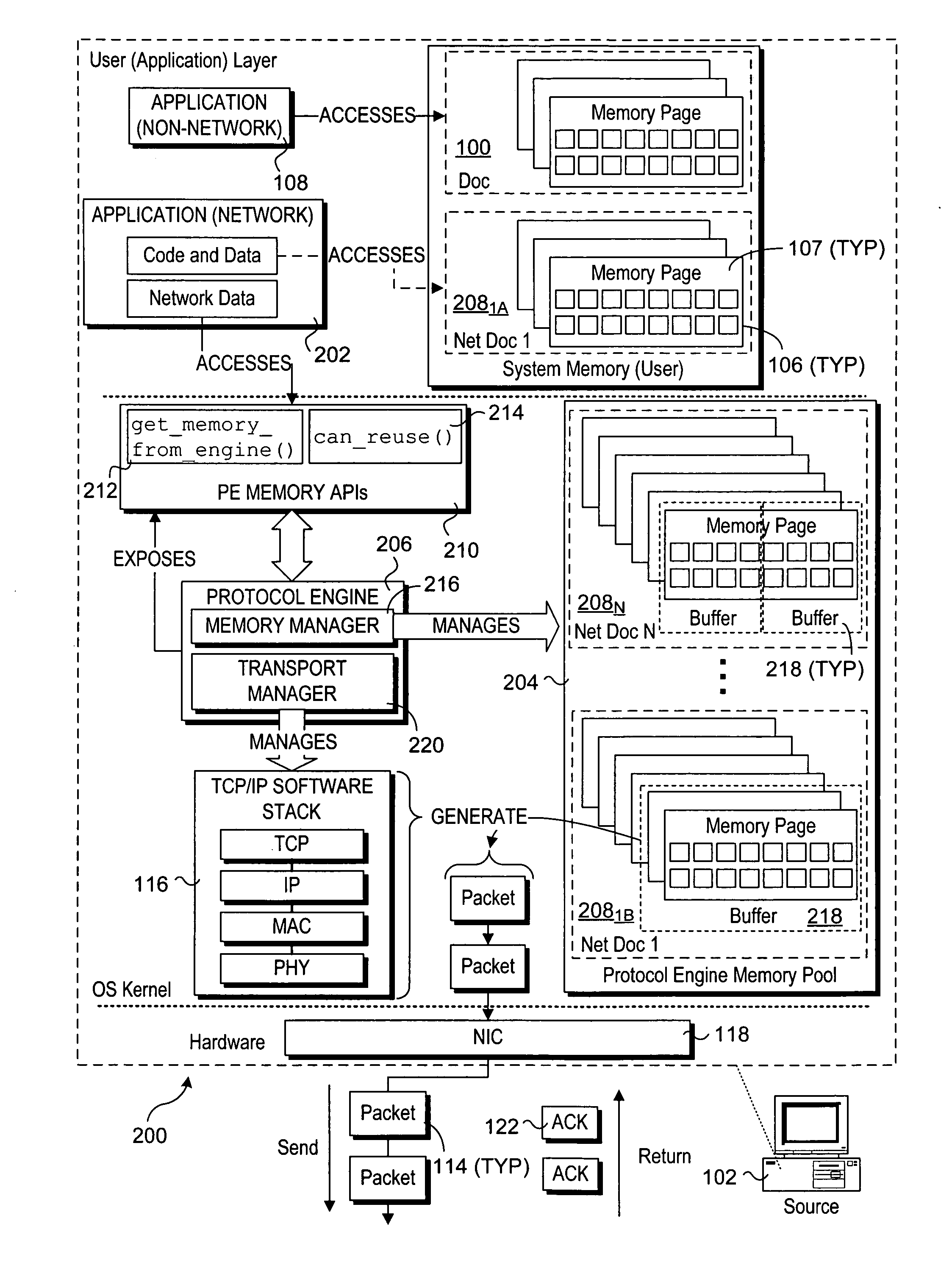

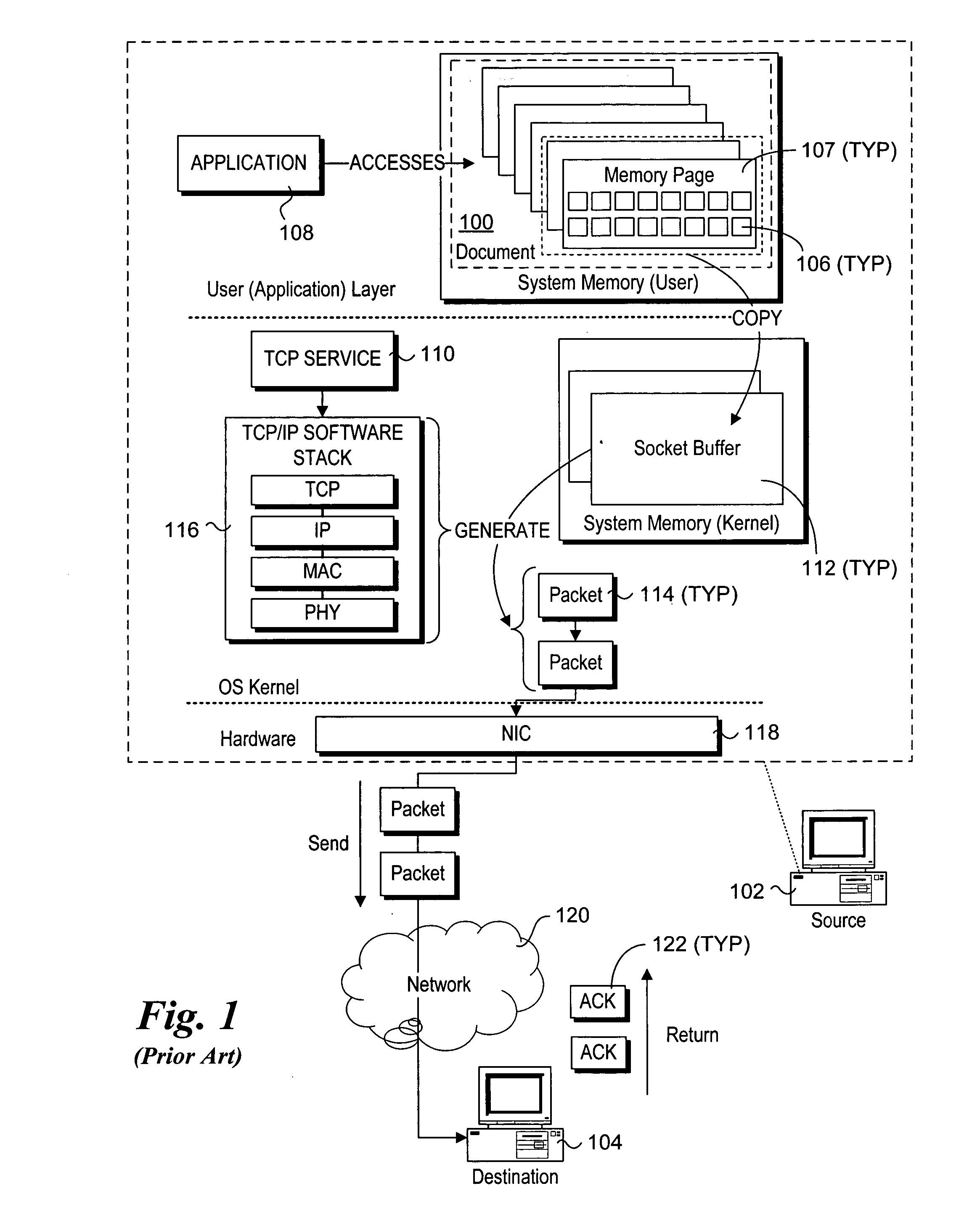

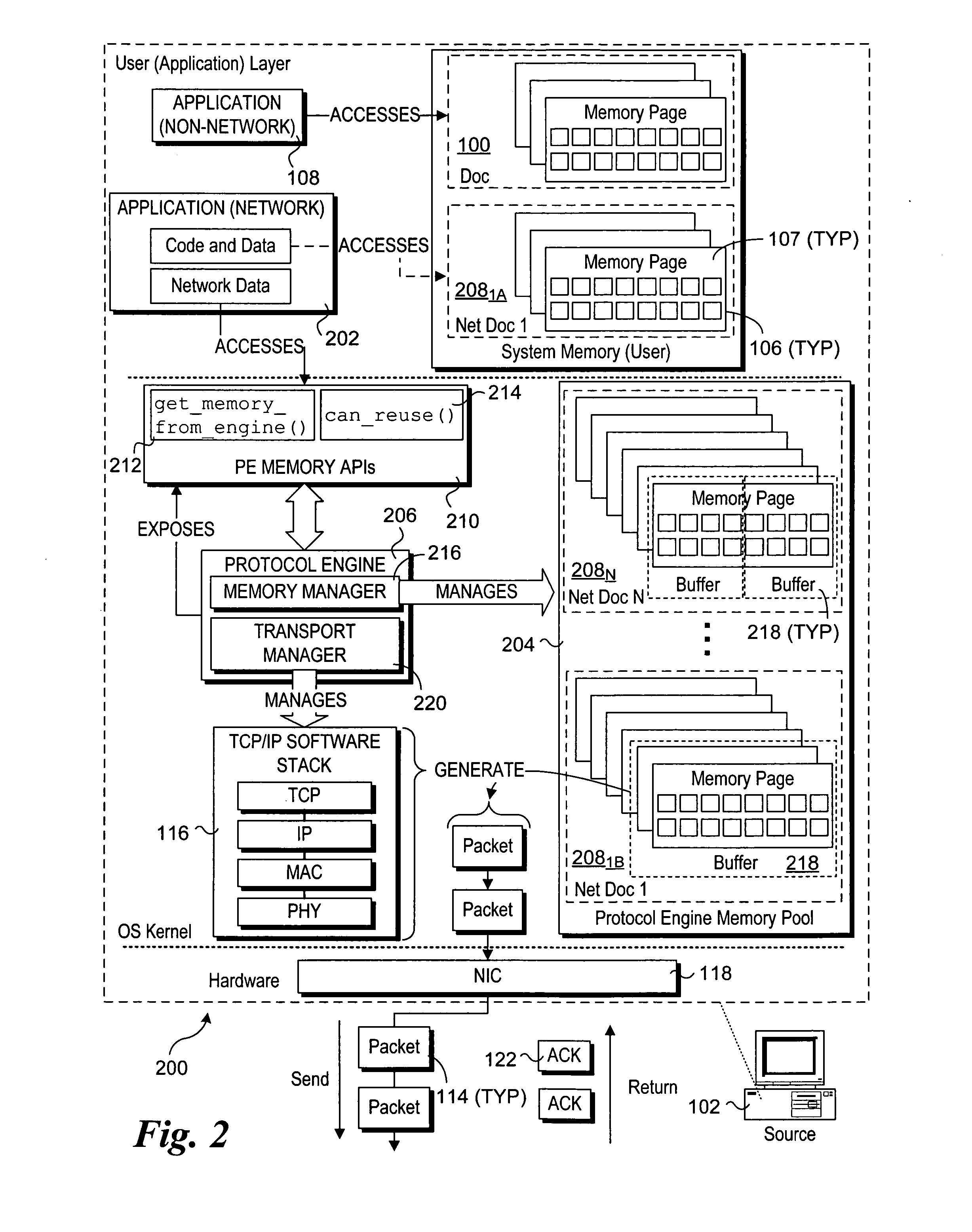

Mechanisms to implement memory management to enable protocol-aware asynchronous, zero-copy transmits

Inactive | US20070011358A1 | Multiple digital computer combinations | Transmission | Zero-copy | Operational system

Mechanisms to implement memory management to enable protocol-aware asynchronous, zero-copy transmits. A transport protocol engine exposes interfaces via which memory buffers from a memory pool in operating system (OS) kernel space may be allocated to applications running in an OS user layer. The memory buffers may be used to store data that is to be transferred to a network destination using a zero-copy transmit mechanism, wherein the data is directly transmitted from the memory buffers to the network via a network interface controller. The transport protocol engine also exposes a buffer reuse API to the user layer to enable applications to obtain buffer availability information maintained by the protocol engine. In view of the buffer availability information, the application may adjust its data transfer rate.

Owner:INTEL CORP

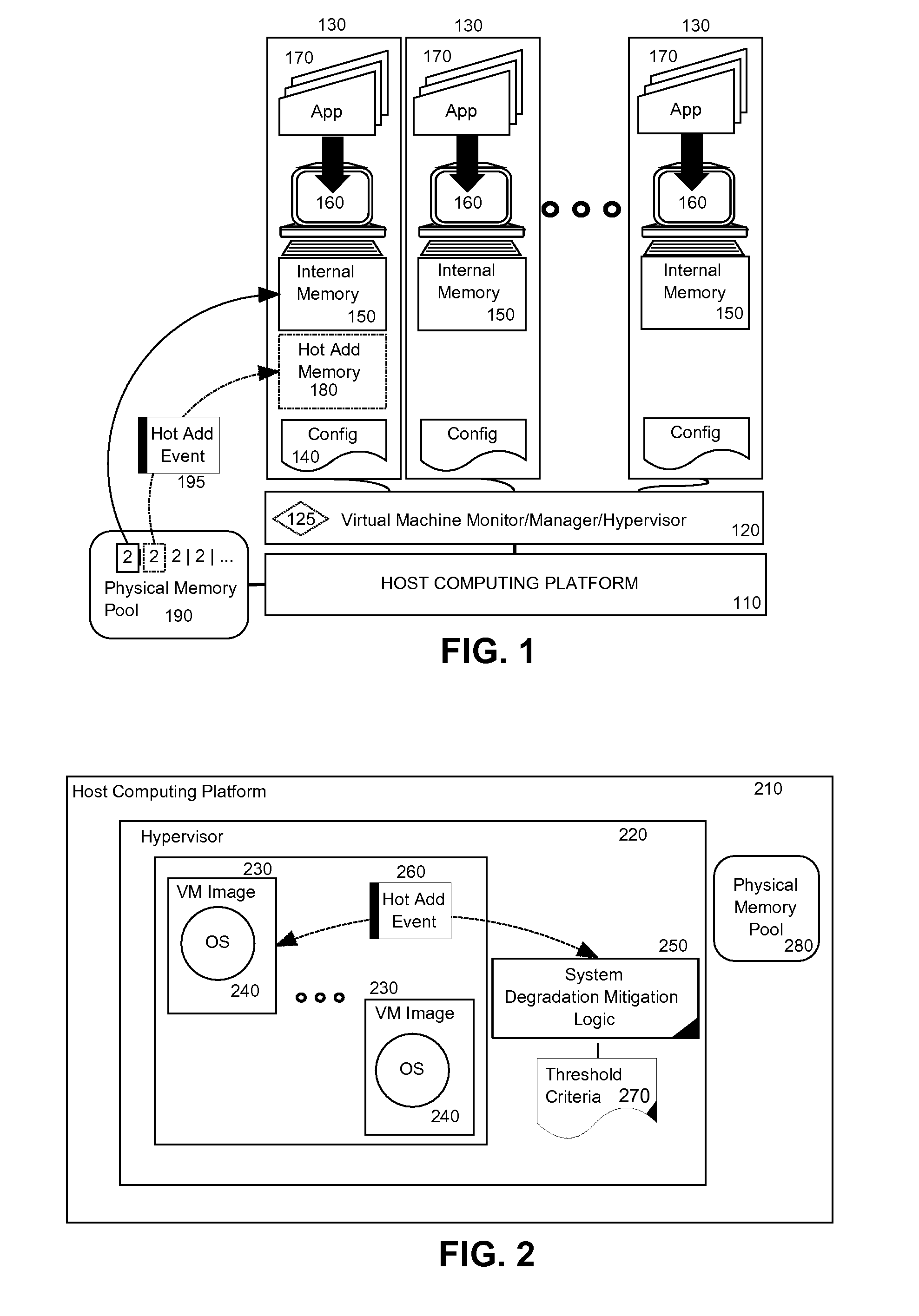

Memory management in a virtual machine based on page fault performance workload criteria

Inactive | US20100077128A1 | Memory addressing/allocation/relocation | Computer security arrangements | Virtualization | Operational system

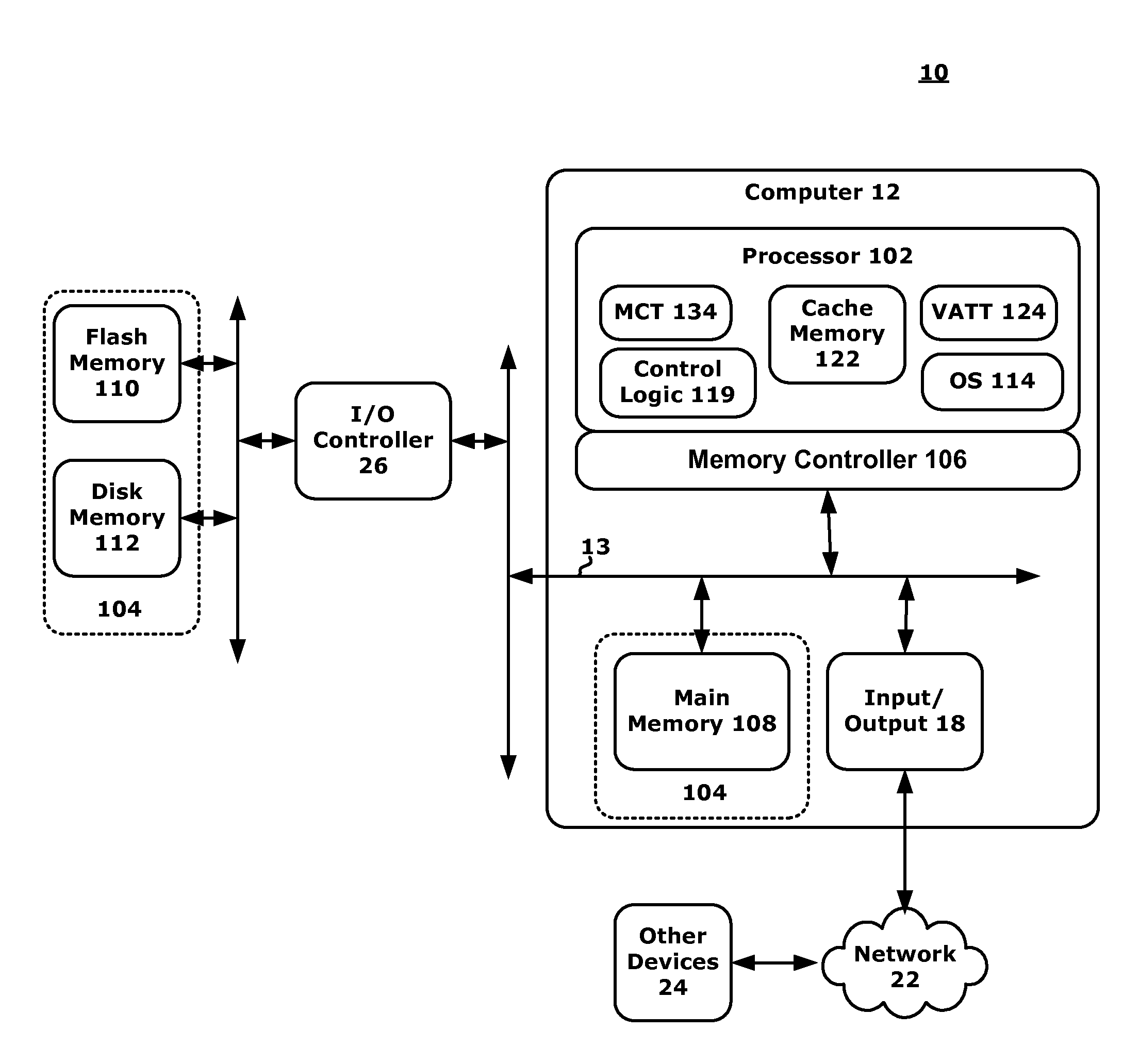

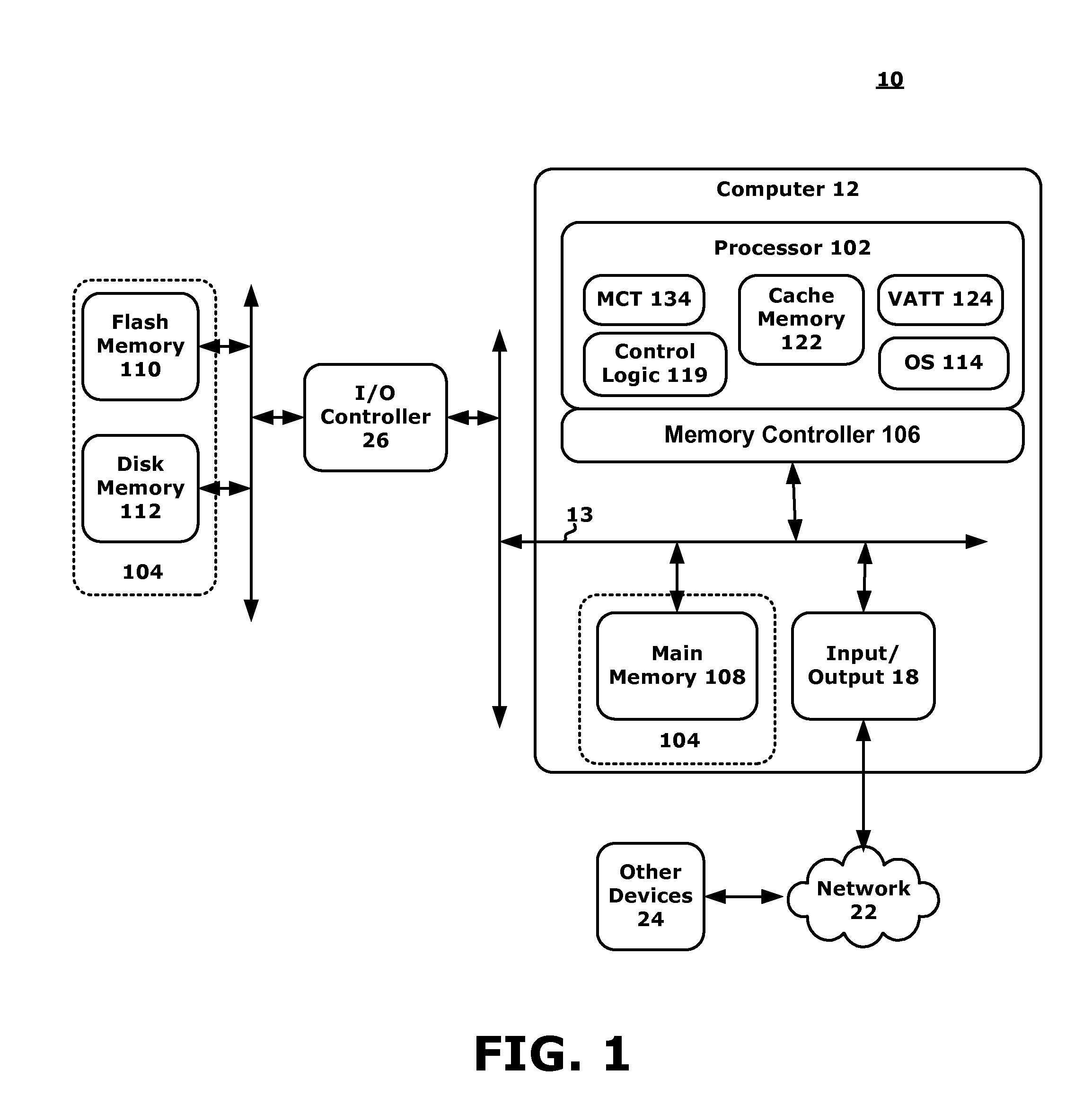

Embodiments of the present invention address deficiencies of the art in respect to virtualization and provide a novel and non-obvious method, system and computer program product for monitoring and managing memory used in a virtualized computing environment. In this regard, a method for monitoring and managing memory used by a virtual machine in a virtualized computing environment can include counting page fault occurrences in a guest operating system (OS) executing in the VM, pinning additional physical memory to the VM along with initiating a hot-add event to the guest OS executing in the VM, responsive to exceeding a page fault allowance threshold in order to mitigate system degradation in the VM based on page fault occurrences.

Owner:IBM CORP
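
The page-fault-driven policy in the abstract above can be sketched as a counter with a threshold. The sketch assumes a fixed memory increment per hot-add event and a counter that resets after each event; neither detail comes from the abstract, and the class and attribute names are hypothetical.

```python
class PageFaultMonitor:
    """Count guest page faults and grow pinned memory past an allowance."""

    def __init__(self, allowance, pinned_mb, increment_mb):
        self.allowance = allowance        # page fault allowance threshold
        self.pinned_mb = pinned_mb        # physical memory pinned to the VM
        self.increment_mb = increment_mb  # assumed size of each hot-add
        self.fault_count = 0
        self.hot_add_events = []          # record of hot-add events sent to the guest

    def on_page_fault(self):
        self.fault_count += 1
        if self.fault_count > self.allowance:
            # Pin more memory and notify the guest OS with a hot-add event.
            self.pinned_mb += self.increment_mb
            self.hot_add_events.append(self.increment_mb)
            self.fault_count = 0          # assumption: start a new counting window


monitor = PageFaultMonitor(allowance=3, pinned_mb=1024, increment_mb=256)
for _ in range(4):
    monitor.on_page_fault()
```

A real monitor would likely count faults per time window rather than cumulatively, so that a slow trickle of faults does not eventually trigger a hot-add.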

Memory Architecture with Policy Based Data Storage

Inactive | US20120066473A1 | Efficient storage | Energy efficient ICT | Memory addressing/allocation/relocation | Memory type | Memory controller

A computing system and methods for memory management are presented. A memory or an I/O controller receives a write request in which the data to be written is associated with an address. Hint information may be associated with the address and may relate to memory characteristics such as historical, O/S direction, data priority, job priority, job importance, job category, memory type, I/O sender ID, latency, power, write cost, or read cost components. The memory controller may interrogate the hint information to determine where (e.g., what memory type or class) to store the associated data. Data is therefore efficiently stored within the system. The hint information may also be used to track post-write information and may be interrogated to determine if a data migration should occur and to which new memory type or class the data should be moved.

Owner:IBM CORP

Device Security Features Supporting a Distributed Shared Memory System

Active | US20120191933A1 | Memory architecture accessing/allocation | Energy efficient ICT | Chain of trust | Protection system

A memory management and protection system that incorporates device security features that support a distributed, shared memory system. The concept of secure regions of memory and secure code execution is supported, and a mechanism is provided to extend a chain of trust from a known, fixed secure boot ROM to the actual secure code execution. Furthermore, the system keeps a secure address threshold that is only programmable by a secure supervisor, and will only allow secure access requests that are above this threshold.

Owner:TEXAS INSTR INC

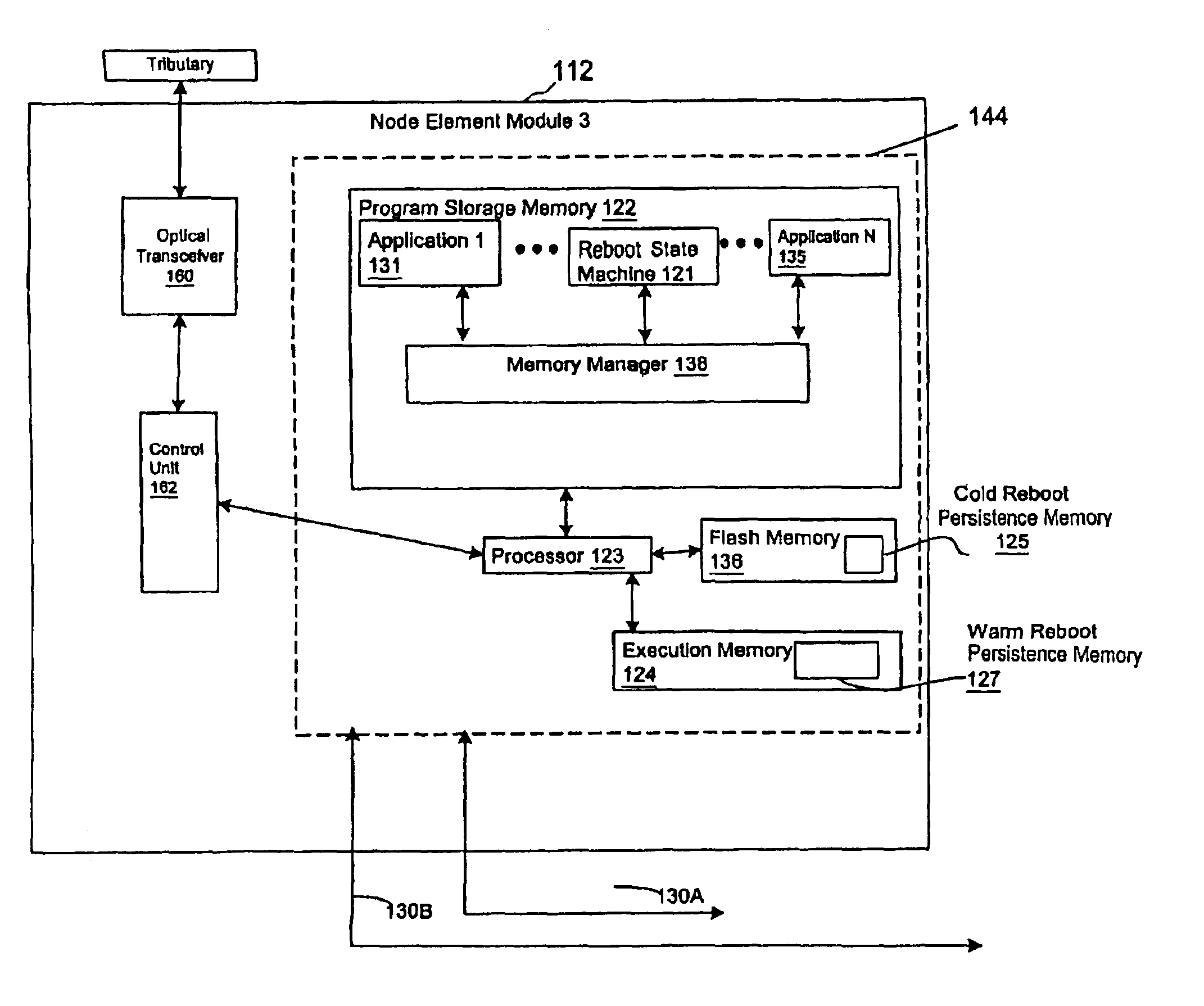

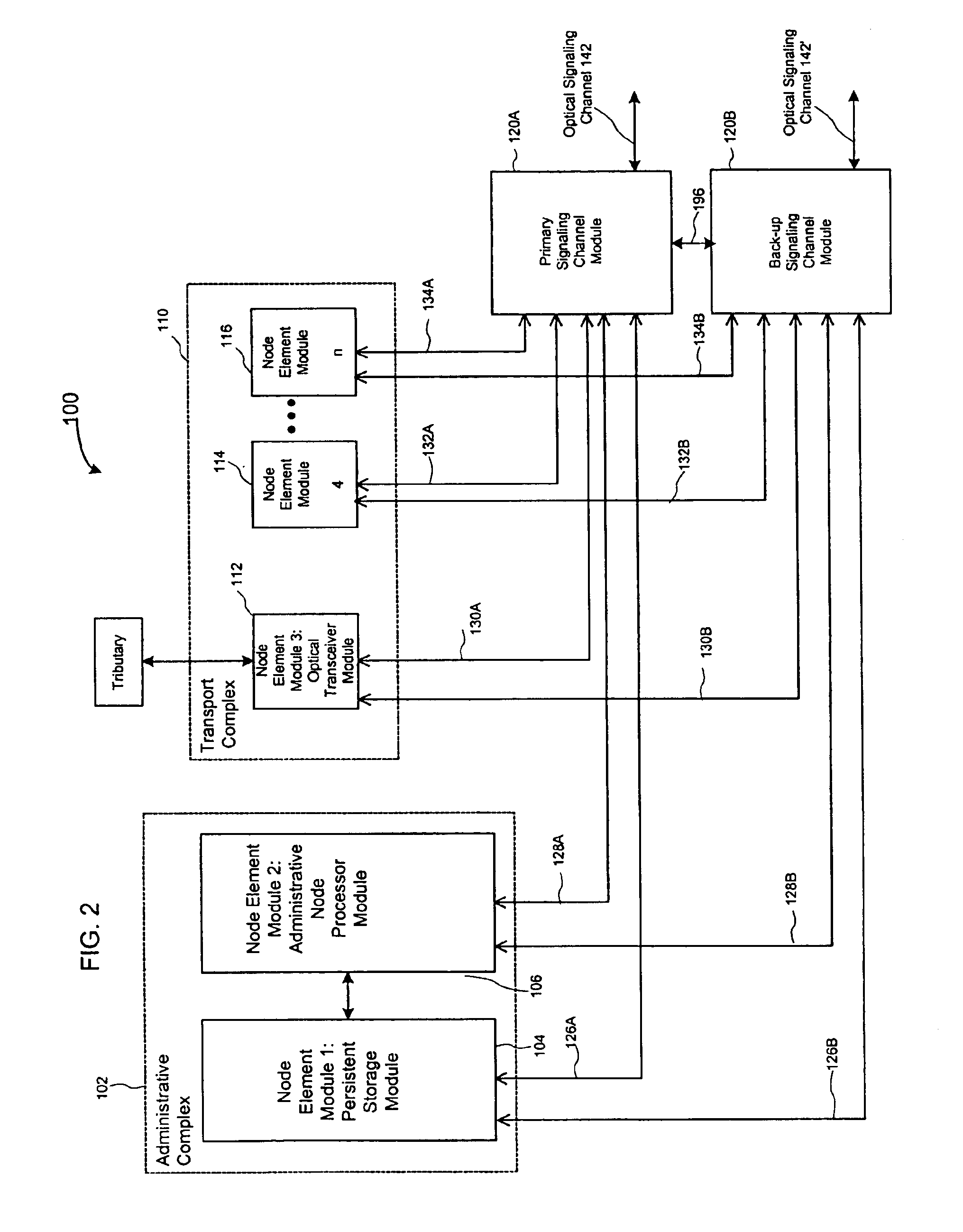

System and method of memory management for providing data storage across a reboot

Inactive | US6854054B1 | Digital computer details | Program loading/initiating | Application software | Memory management unit

A memory management system, method and computer readable medium for use in a circuit pack or circuit card such as in a node in an optical network are described. The memory management system includes memory that provides persistent storage over a reboot and a memory manager for directly controlling access to the reboot persistent memory. The memory manager processes requests received from one or more applications executing on the circuit card for storage of a set of data. The request indicates during which type of reboot, cold or warm, the data is to be received and may also indicate a particular state during a reboot in which the data is to be received. The manager responds to a reboot of the circuit card by providing a set of data from the reboot persistent memory to the application during the reboot.

Owner:CIENA

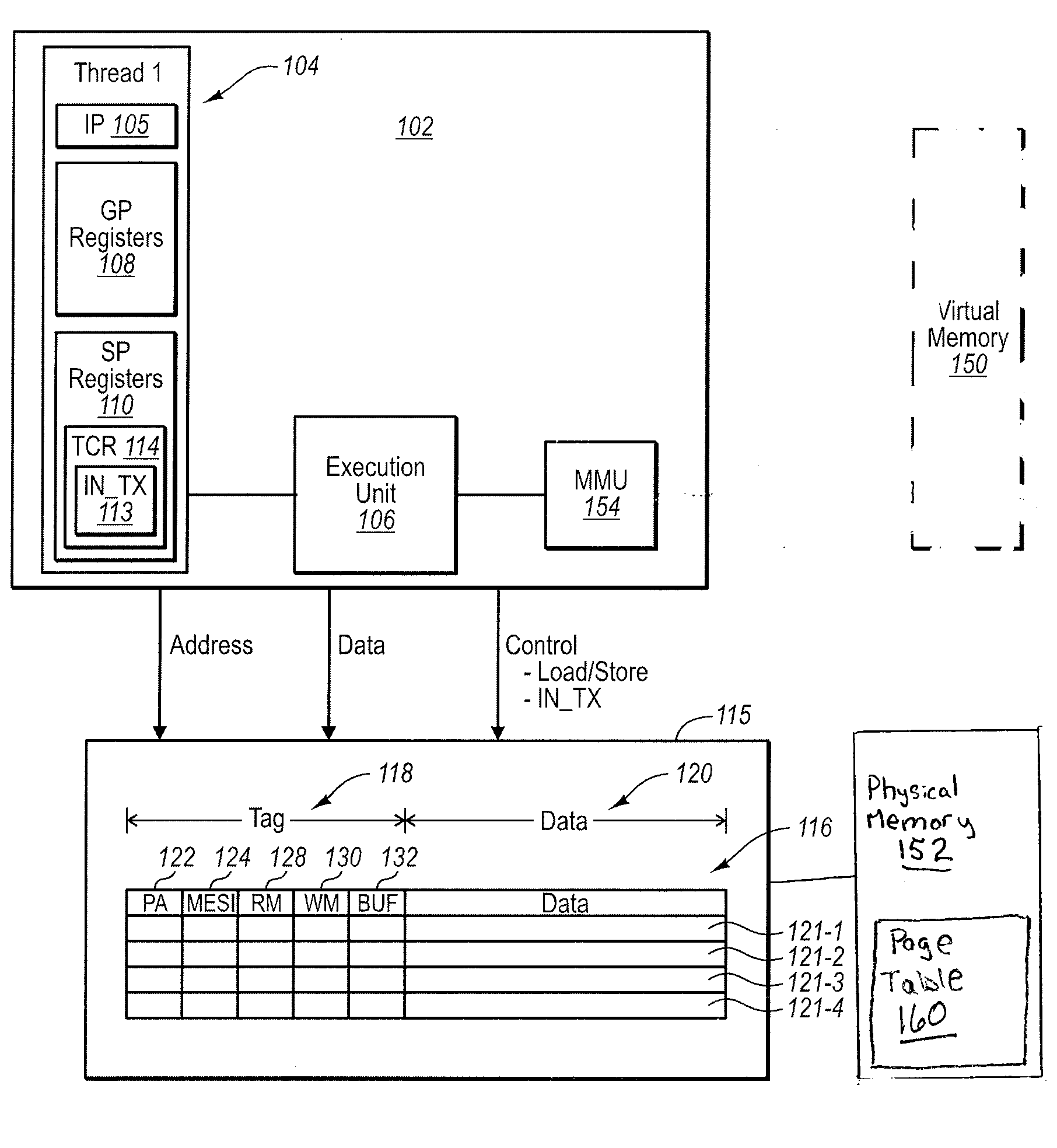

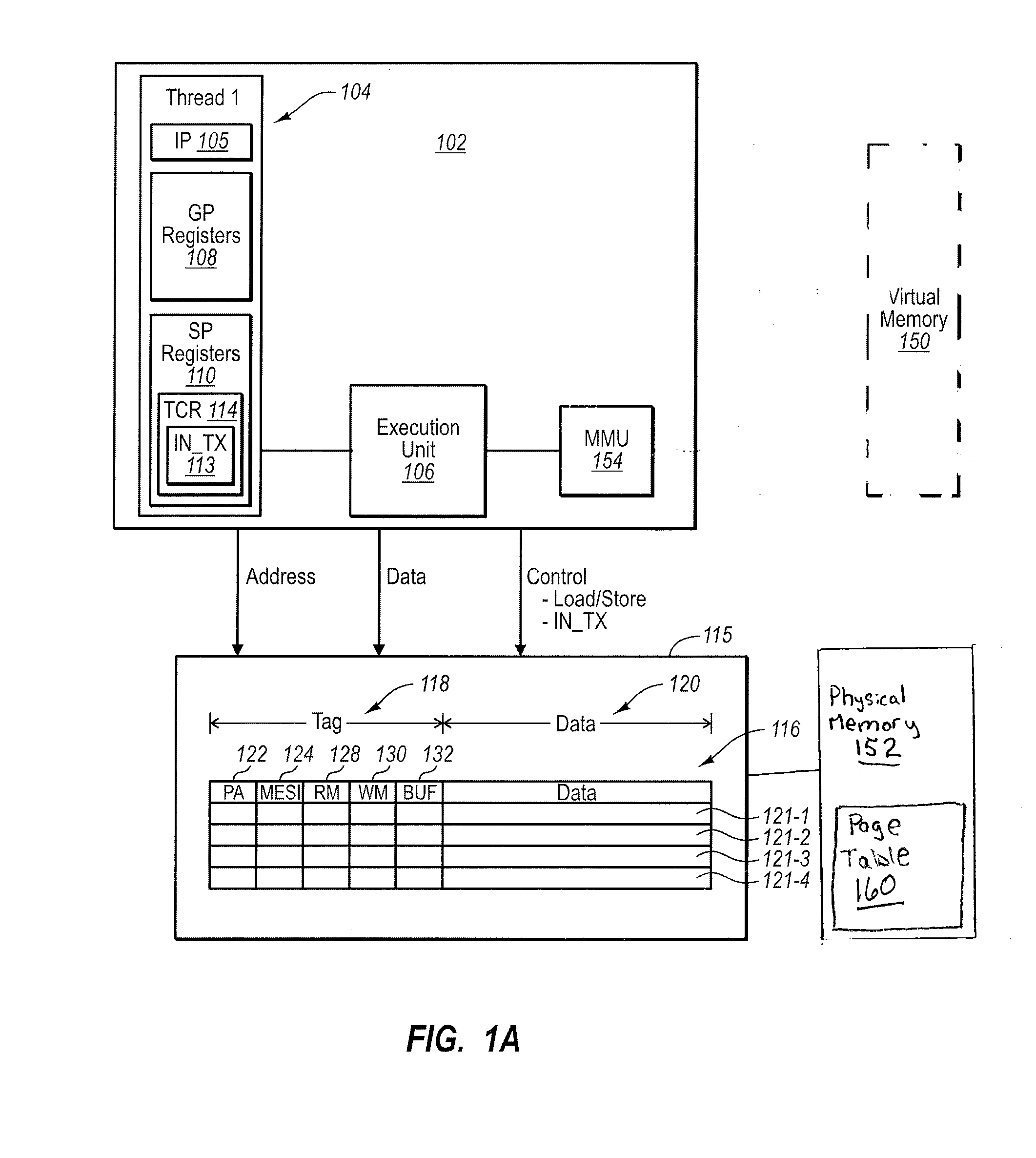

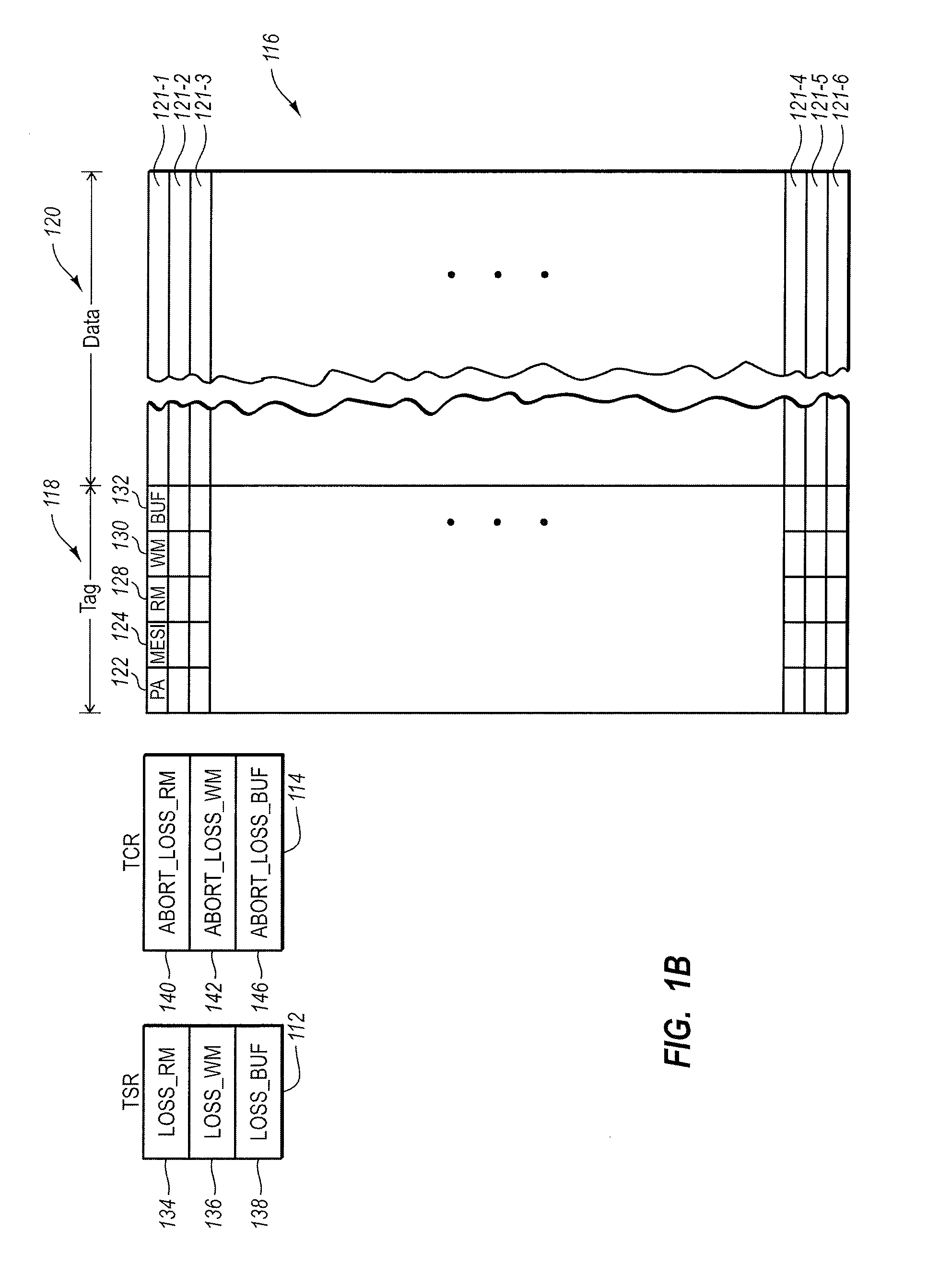

Operating system virtual memory management for hardware transactional memory

Active | US20100332721A1 | Memory addressing/allocation/relocation | Multiprogramming arrangements | Hardware thread | Operational system

Operating system virtual memory management for hardware transactional memory. A method may be performed in a computing environment where an application running on a first hardware thread has been in a hardware transaction, with transactional memory hardware state in cache entries correlated by memory hardware when data is read from or written to data cache entries. The data cache entries are correlated to physical addresses in a first physical page mapped from a first virtual page in a virtual memory page table. The method includes an operating system deciding to unmap the first virtual page. As a result, the operating system removes the mapping of the first virtual page to the first physical page from the virtual memory page table. As a result, the operating system performs an action to discard transactional memory hardware state for at least the first physical page. Embodiments may further suspend hardware transactions in kernel mode. Embodiments may further perform soft page fault handling without aborting a hardware transaction, resuming the hardware transaction upon return to user mode, and even successfully committing the hardware transaction.

Owner:MICROSOFT TECH LICENSING LLC
