39 results about "Semantic analyzer" patented technology

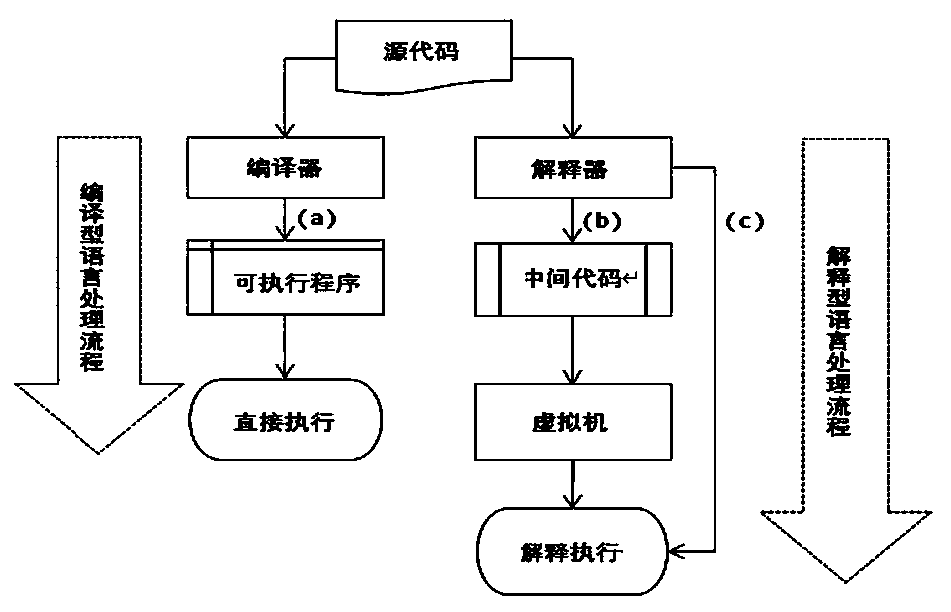

A semantic analyzer for a subset of the Java programming language. From Wikipedia: Semantic analysis, also called context-sensitive analysis, is a process in compiler construction, usually performed after parsing, that gathers the necessary semantic information from the source code.
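As a rough illustration of the kind of semantic information gathered after parsing, the sketch below checks a flat list of already-parsed statements against a symbol table. The `Declare` and `Assign` node types and the `analyze` function are hypothetical names chosen for this example; they are not taken from any of the listed patents.

```python
from dataclasses import dataclass


@dataclass
class Declare:
    name: str
    type_name: str


@dataclass
class Assign:
    name: str
    value_type: str  # type of the right-hand side, assumed already inferred


def analyze(statements):
    """Return a list of semantic error messages for a flat statement list."""
    symbols = {}  # symbol table: variable name -> declared type
    errors = []
    for stmt in statements:
        if isinstance(stmt, Declare):
            if stmt.name in symbols:
                errors.append(f"'{stmt.name}' is already declared")
            else:
                symbols[stmt.name] = stmt.type_name
        elif isinstance(stmt, Assign):
            declared = symbols.get(stmt.name)
            if declared is None:
                errors.append(f"'{stmt.name}' is not declared")
            elif declared != stmt.value_type:
                errors.append(
                    f"cannot assign {stmt.value_type} to {declared} '{stmt.name}'"
                )
    return errors
```

A parser would accept all three statements in `[Declare("x", "int"), Assign("x", "boolean"), Assign("y", "int")]` as syntactically valid; the checks above are what flag the type mismatch and the undeclared variable.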

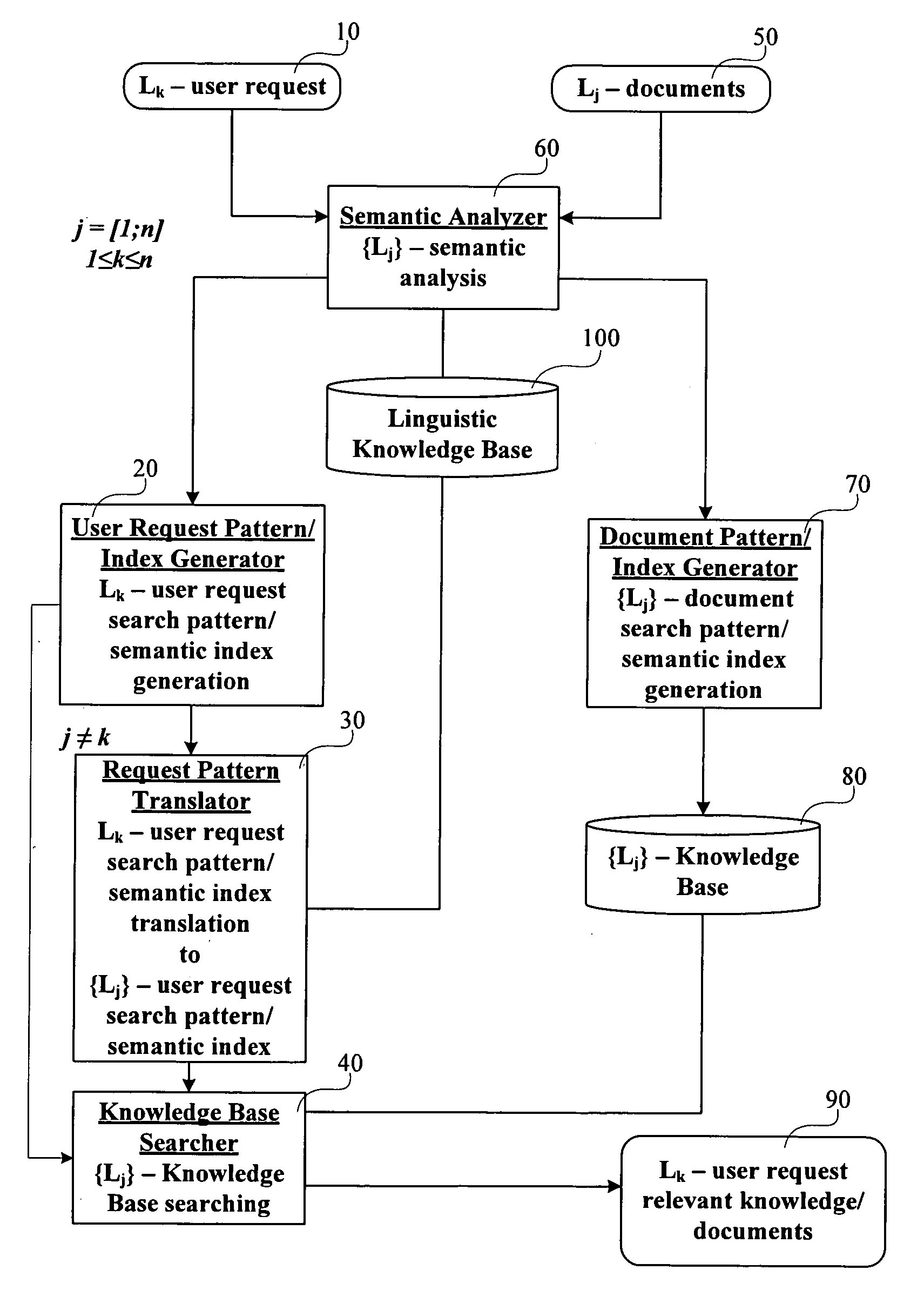

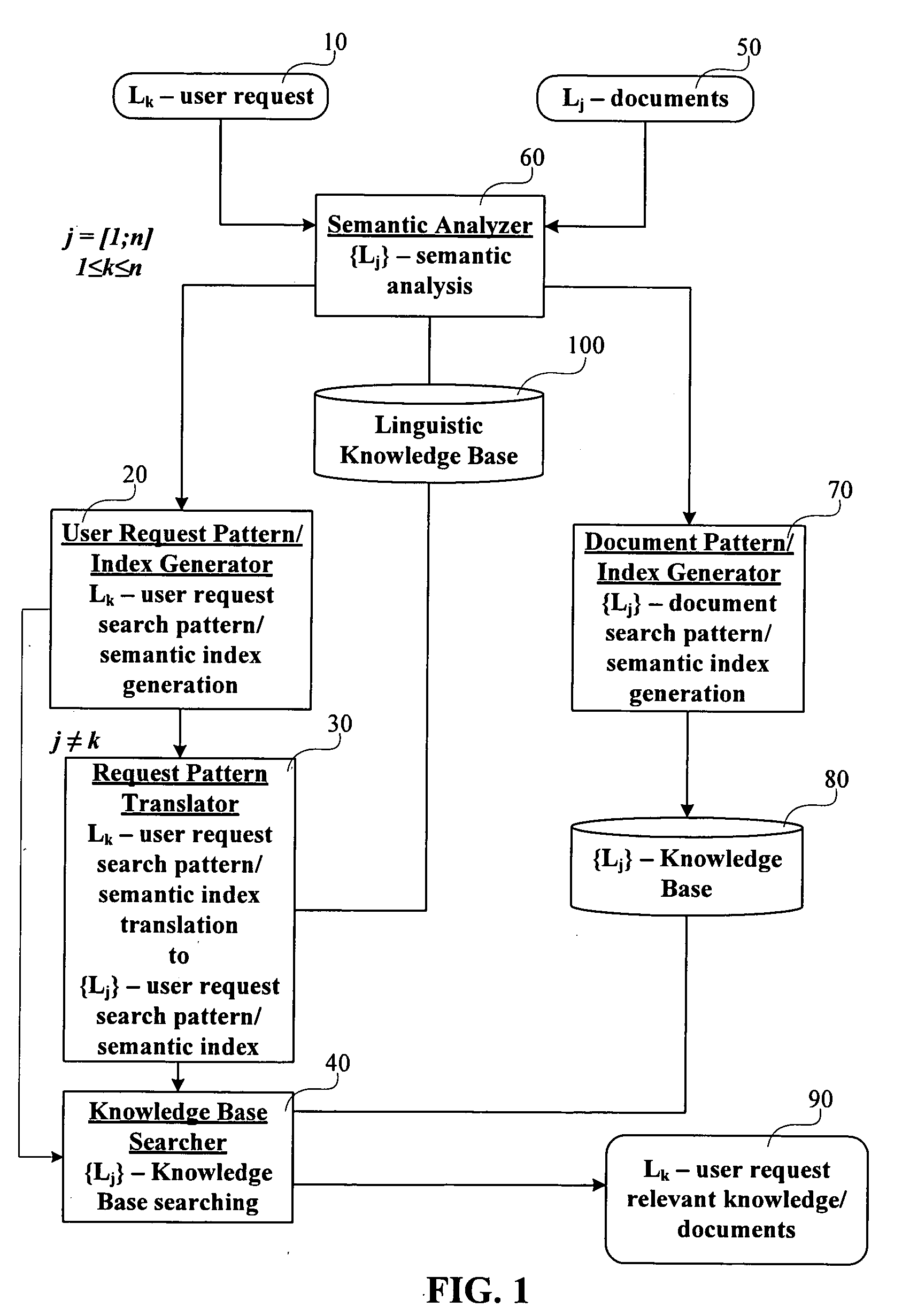

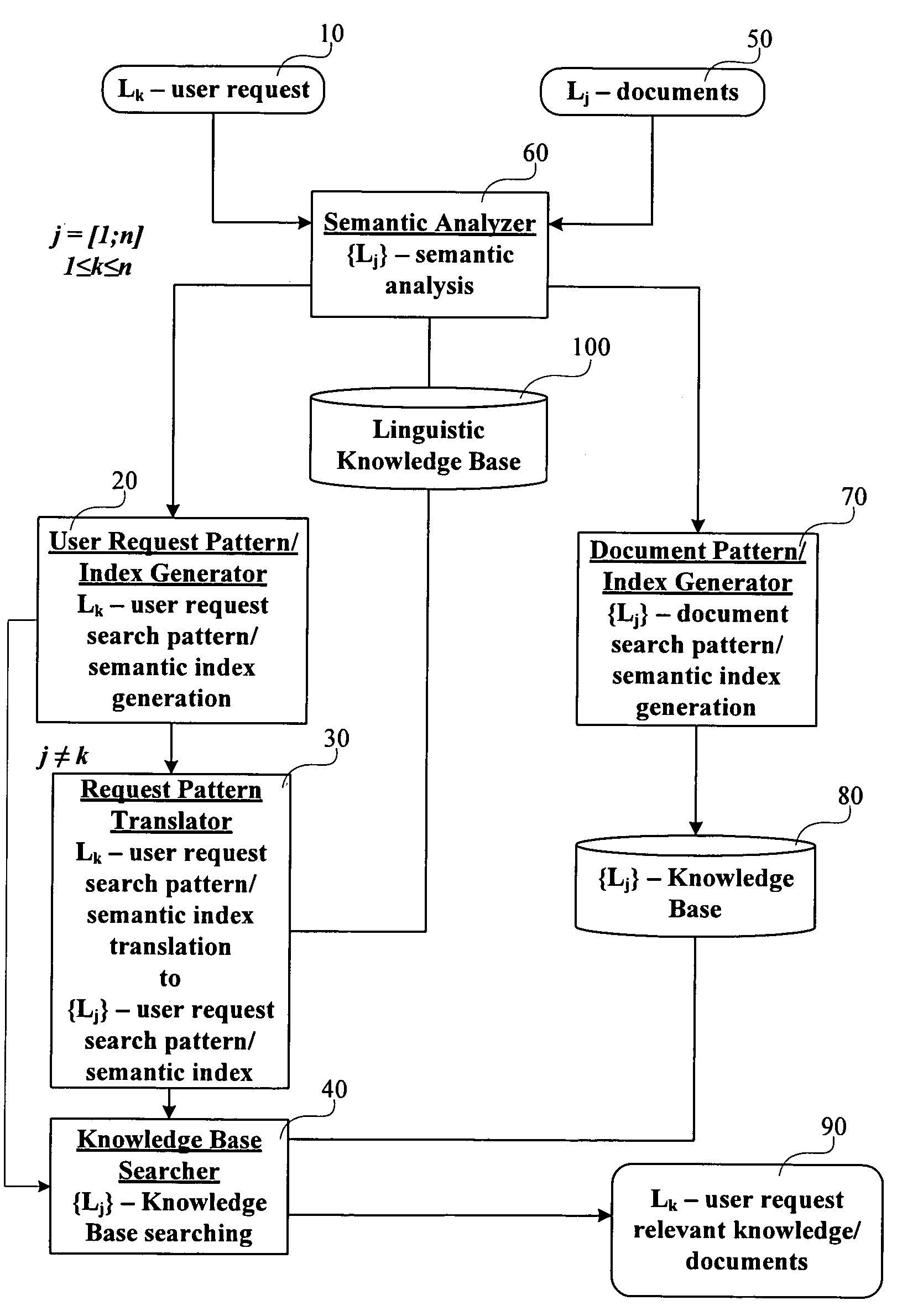

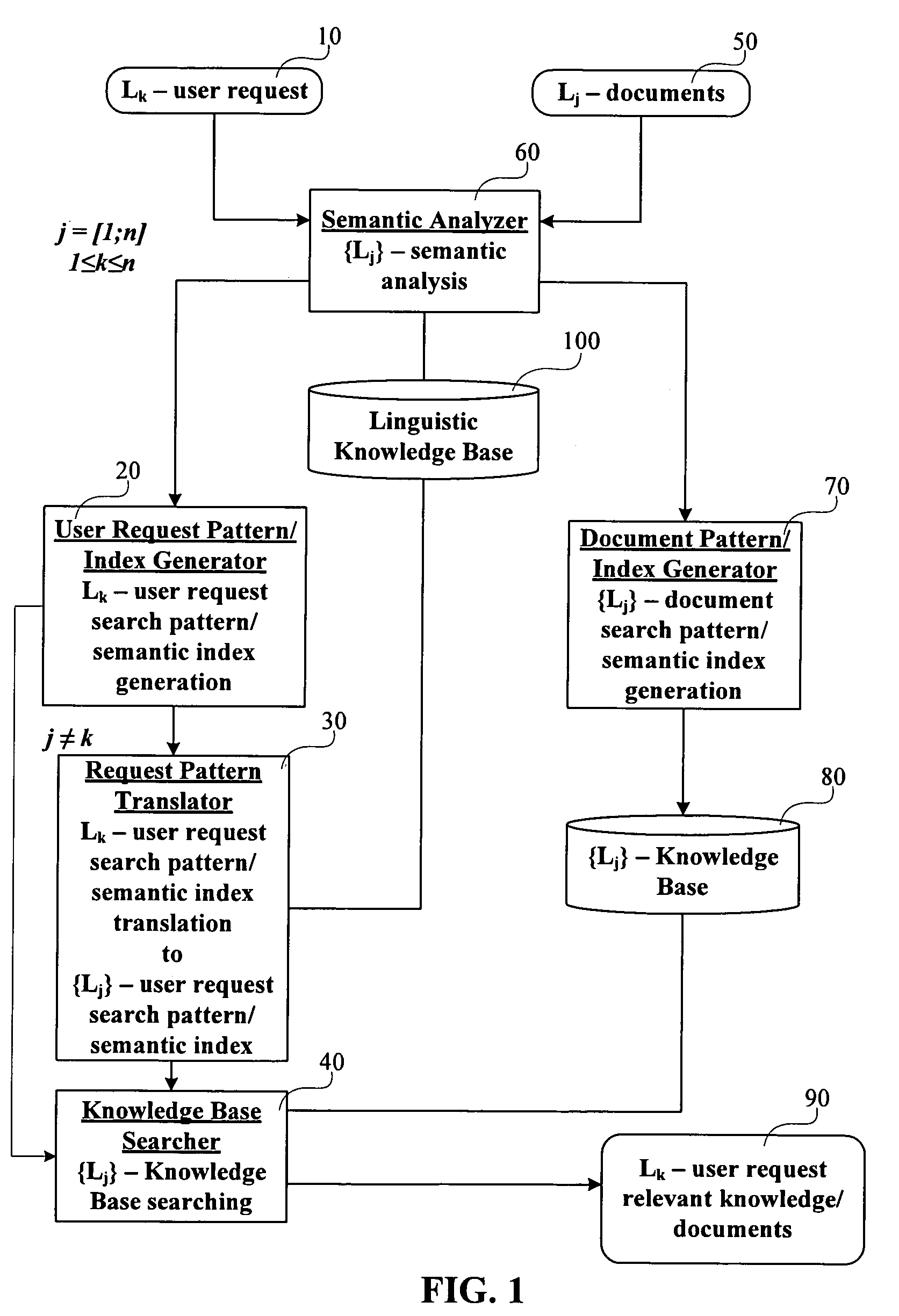

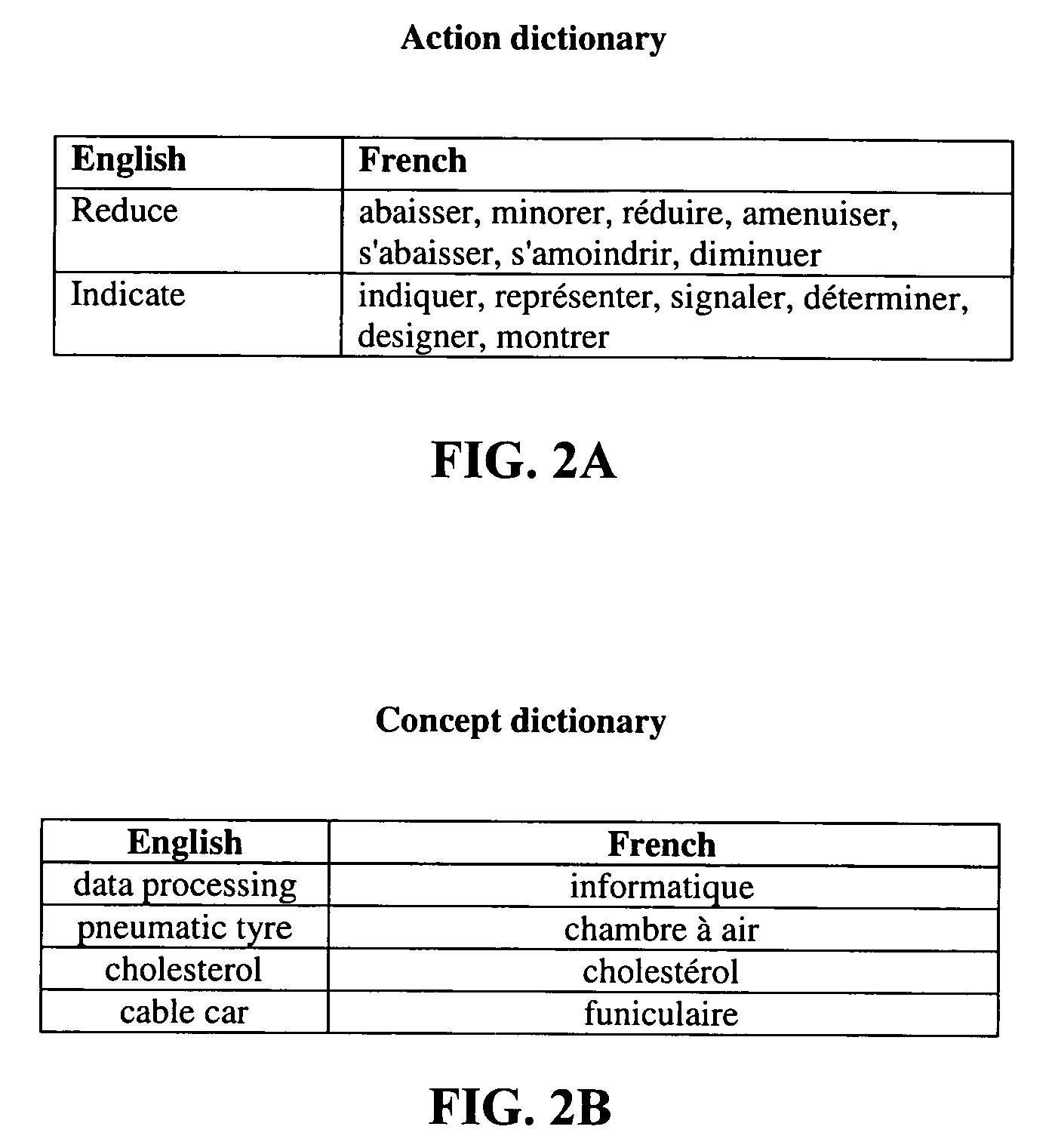

System and method for cross-language knowledge searching

Active · US20070094006A1 · Easy construction · Natural language translation · Digital data information retrieval · Semantic analyzer · Knowledge extraction

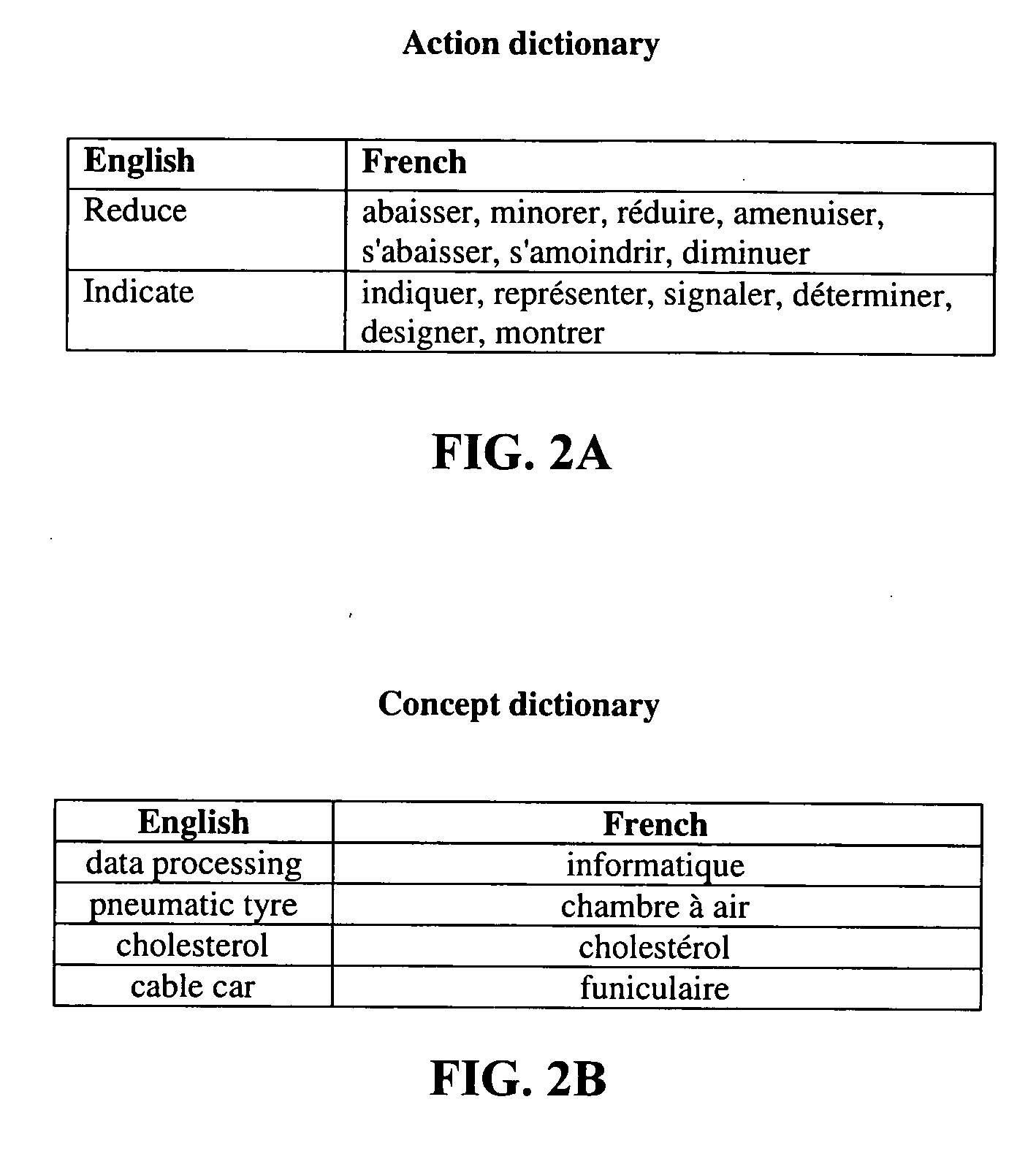

A system and method for cross-language knowledge searching. The system has a Semantic Analyzer, a natural language user request / document search pattern / semantic index Generator, a user request search pattern Translator and a Knowledge Base Searcher. The system also provides automatic semantic analysis and semantic indexing of natural language user requests and documents for knowledge recognition, together with cross-language extraction and searching of knowledge relevant to the user request. System functionality is ensured by a Linguistic Knowledge Base as well as by a number of unique bilingual dictionaries of concepts / objects and actions.

Owner:ALLIUM US HLDG LLC

System and method for cross-language knowledge searching

Active · US7672831B2 · Easy construction · Natural language translation · Digital data information retrieval · Knowledge extraction · Bilingual dictionary

A system and method for cross-language knowledge searching. The system has a Semantic Analyzer, a natural language user request / document search pattern / semantic index Generator, a user request search pattern Translator and a Knowledge Base Searcher. The system also provides automatic semantic analysis and semantic indexing of natural language user requests and documents for knowledge recognition, together with cross-language extraction and searching of knowledge relevant to the user request. System functionality is ensured by a Linguistic Knowledge Base as well as by a number of unique bilingual dictionaries of concepts / objects and actions.

Owner:ALLIUM US HLDG LLC

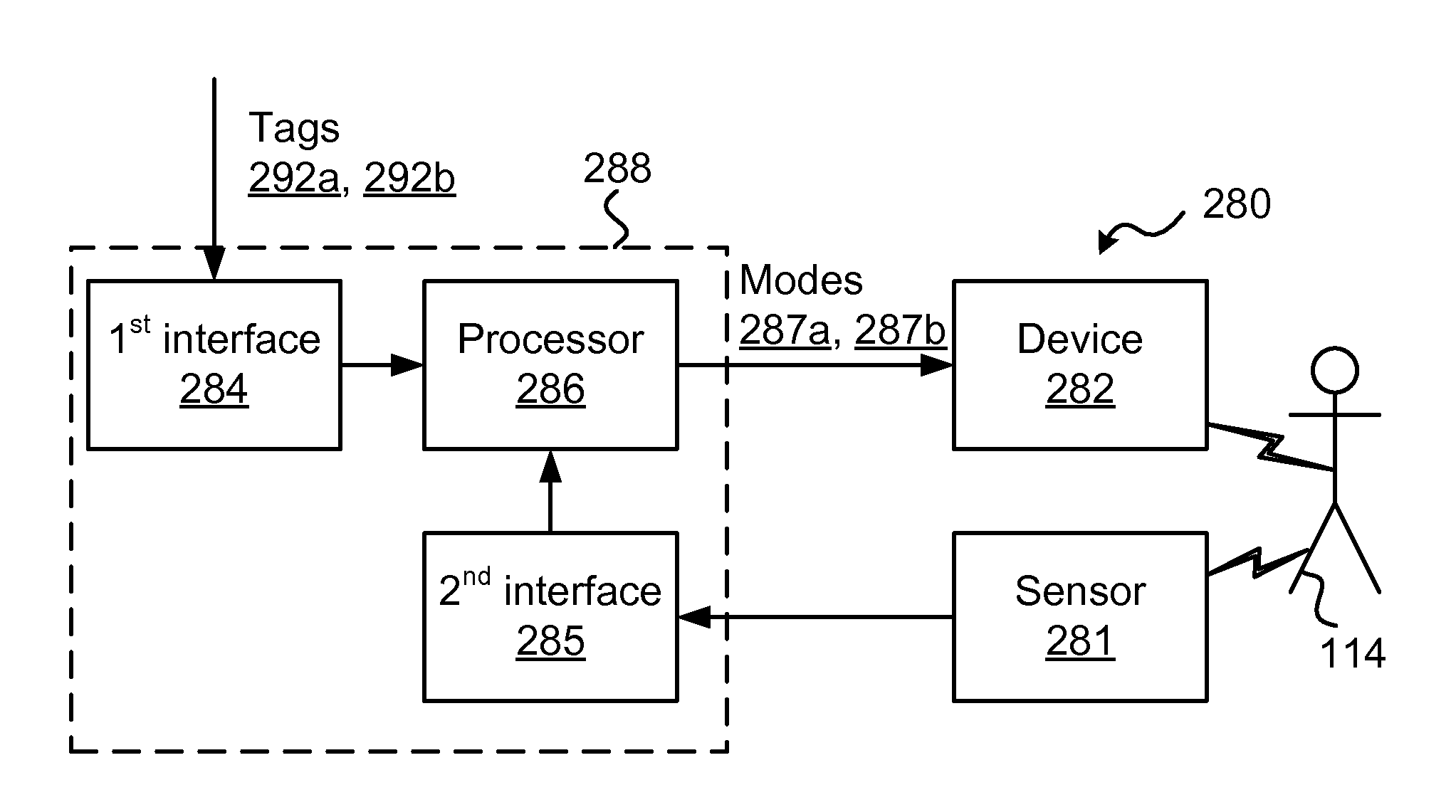

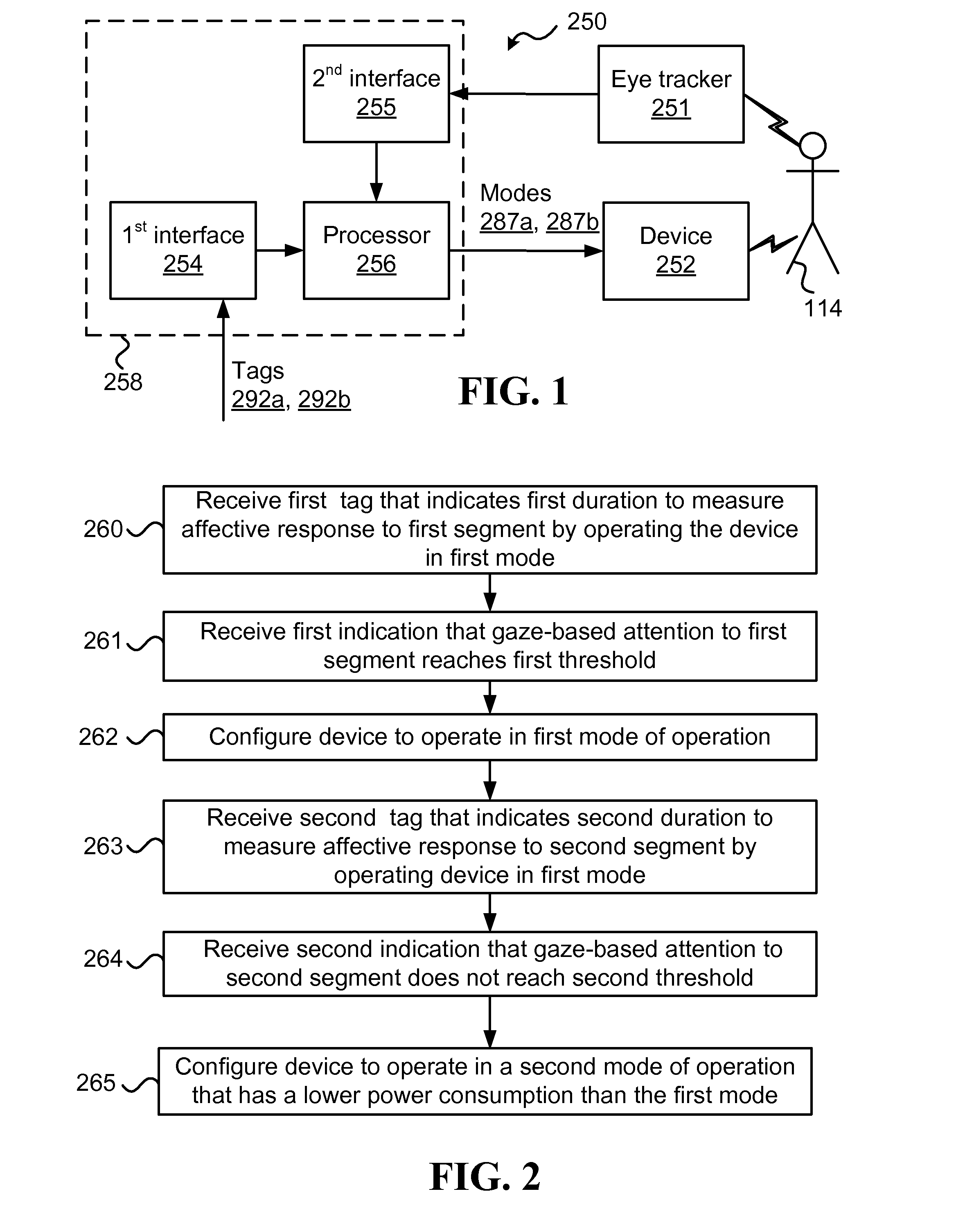

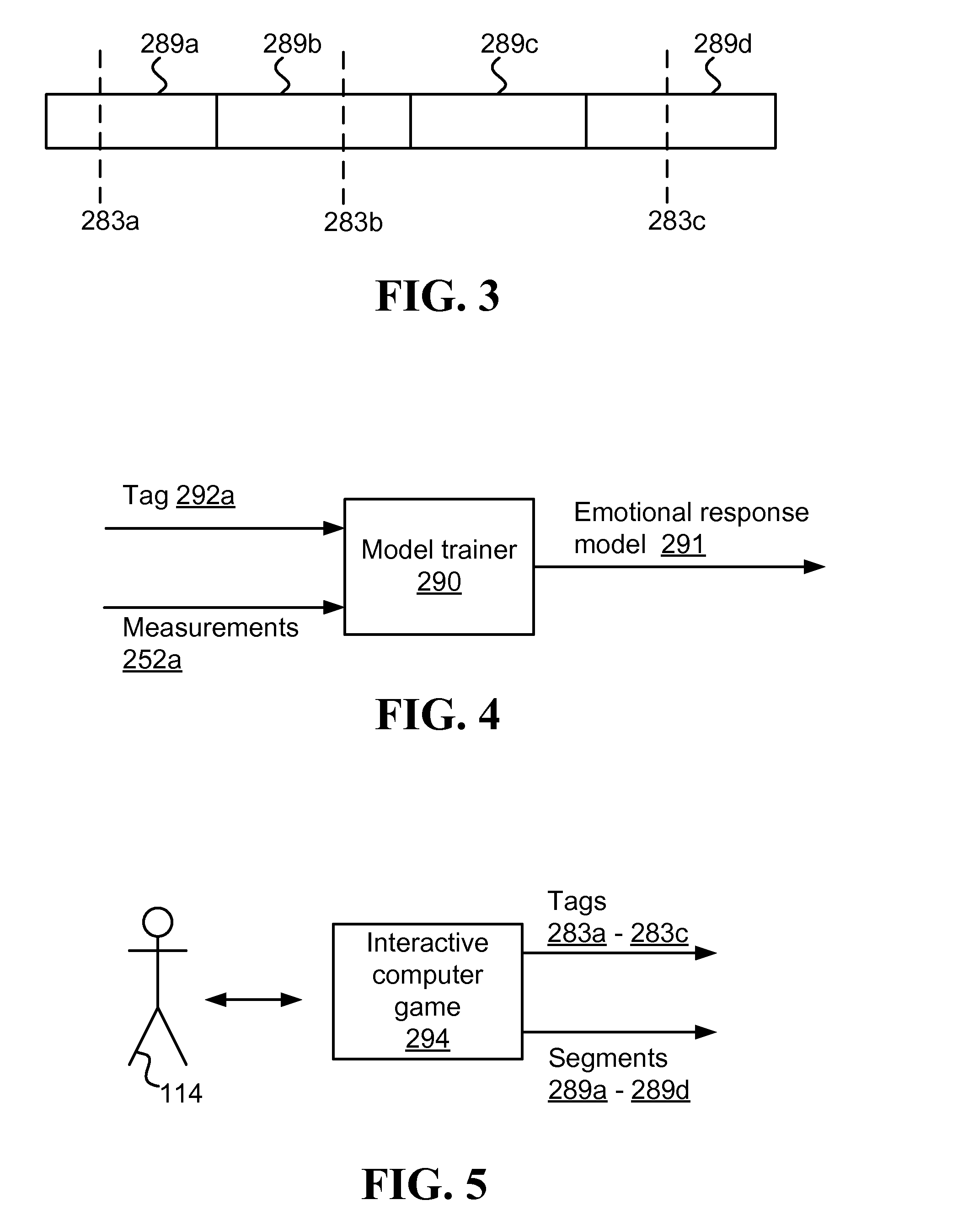

Utilizing semantic analysis to determine how to measure affective response

Active · US20140195221A1 · Improve user experience · Affecting response · Natural language translation · Mathematical models · Semantic analyzer · Algorithm

A semantic analyzer receives a segment of content, analyzes it utilizing semantic analysis, and outputs an indication regarding whether a value related to a predicted emotional response to the segment reaches a predetermined threshold. Based on the indication, a controller selects a measuring rate, from amongst at least first and second measuring rates, at which a device is to take measurements of affective response of a user to the segment. The first rate may be selected when the value does not reach the predetermined threshold, while the second rate may be selected when the value does reach it. The device takes significantly fewer measurements while operating at the first measuring rate than it takes while operating at the second measuring rate.

Owner:AFFECTOMATICS
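The threshold logic described in the abstract above can be sketched in a few lines. The function name and the two rate constants are assumptions made for illustration; the abstract does not give concrete rates or state how the predicted value is computed.

```python
LOW_RATE_HZ = 1.0    # hypothetical first (sparser) measuring rate
HIGH_RATE_HZ = 30.0  # hypothetical second (denser) measuring rate


def select_measuring_rate(predicted_value: float, threshold: float) -> float:
    """Pick the denser rate only when the predicted value reaches the threshold."""
    if predicted_value >= threshold:
        return HIGH_RATE_HZ
    return LOW_RATE_HZ
```

The point of such a controller is that the measuring device spends most of its time at the sparser rate and samples densely only for segments predicted to provoke a notable response.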

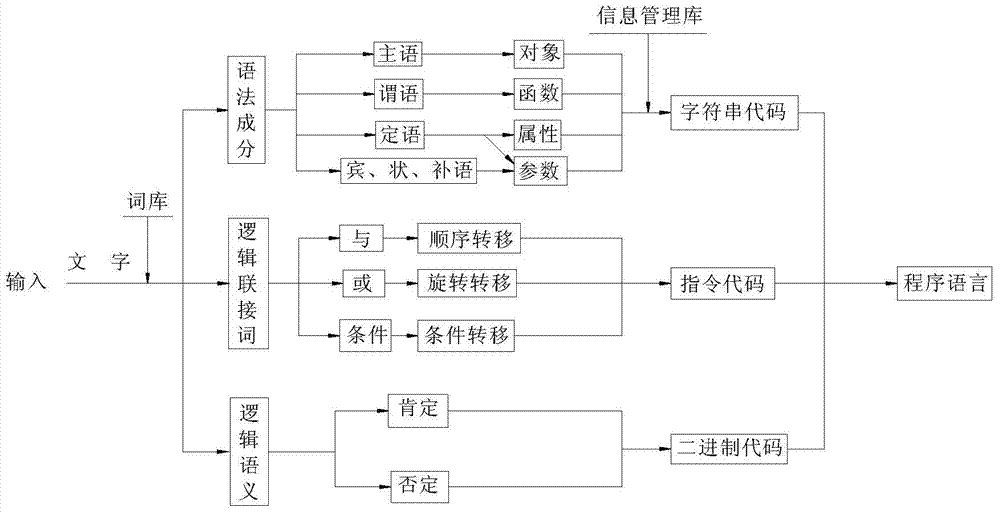

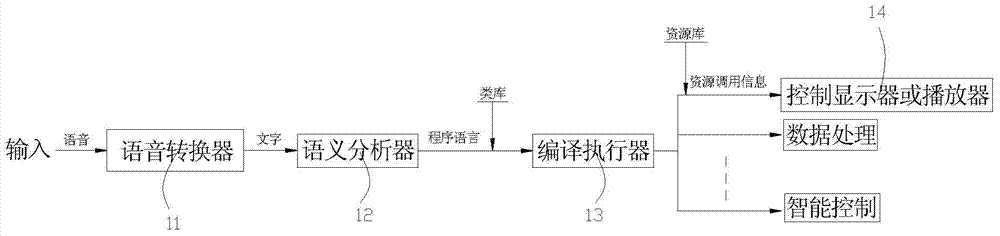

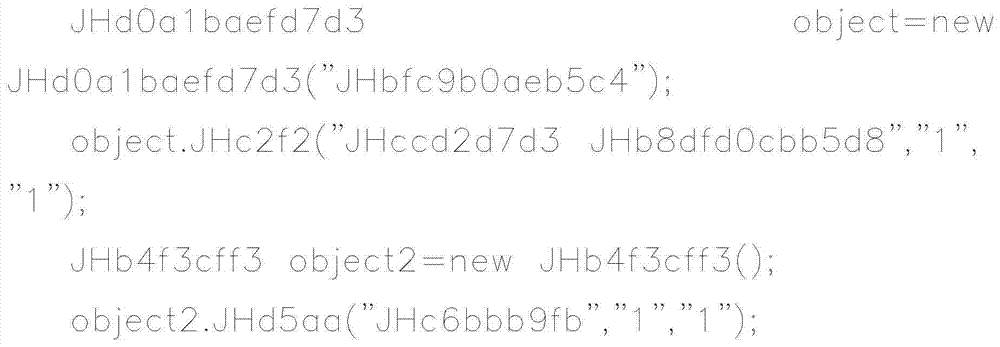

Method for translating natural languages into computer language, semantic analyzer and human-machine conversation system

Active · CN103617159A · Simplify computer hardware system · Simple hardware system · Natural language translation · Special data processing applications · Semantic analyzer · Natural language understanding

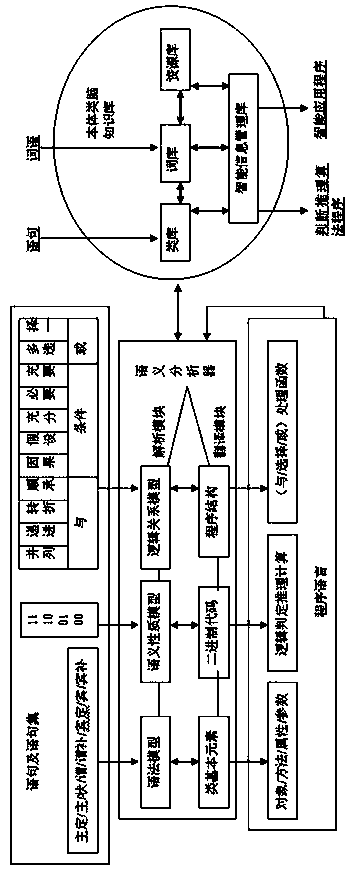

The invention relates to the computer field, in particular to a method for translating natural languages into a computer language, a semantic analyzer and a human-machine conversation system. The method comprises the steps of inputting the natural languages into a computer; extracting grammatical items, logic connection words and logical semantics through the computer; translating the grammatical items, the logic connection words and the logical semantics into character string codes, instruction codes and binary codes respectively; and splicing the codes into a computer-recognizable programmable language. The grammatical items are processed according to a mapping and splicing method of a computer program. Therefore, any natural language can be translated into the computer-recognizable programmable language, the computer can understand natural languages, a technological basis is established for developing deep intelligent electronic products, and dialogue and conversation between human and machine can be achieved.

Owner:万继华

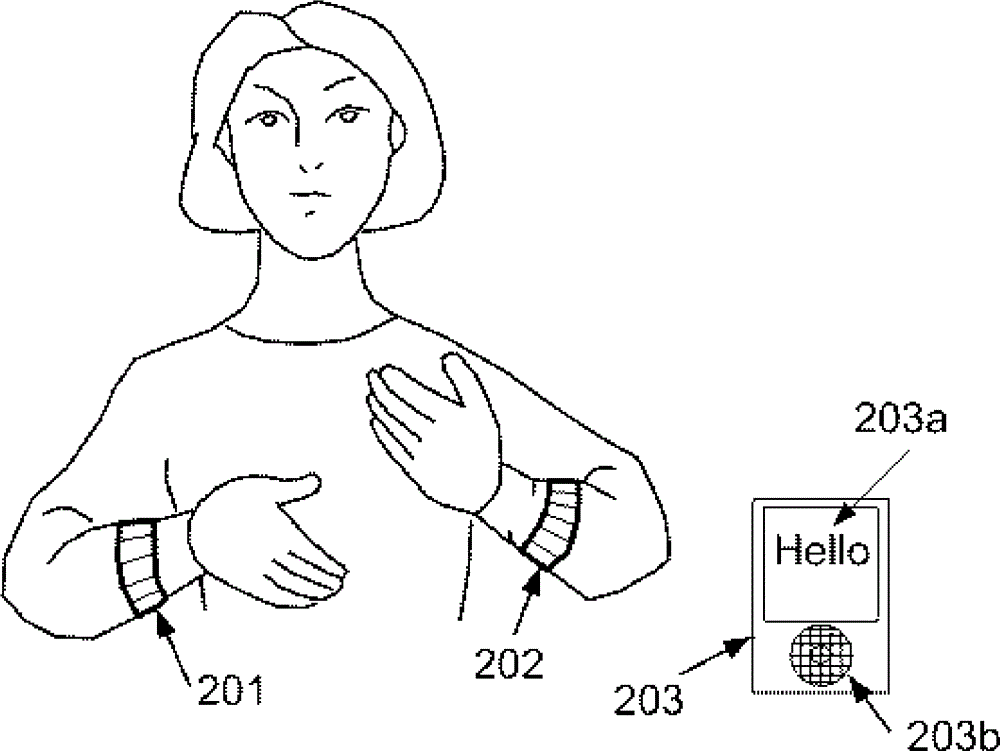

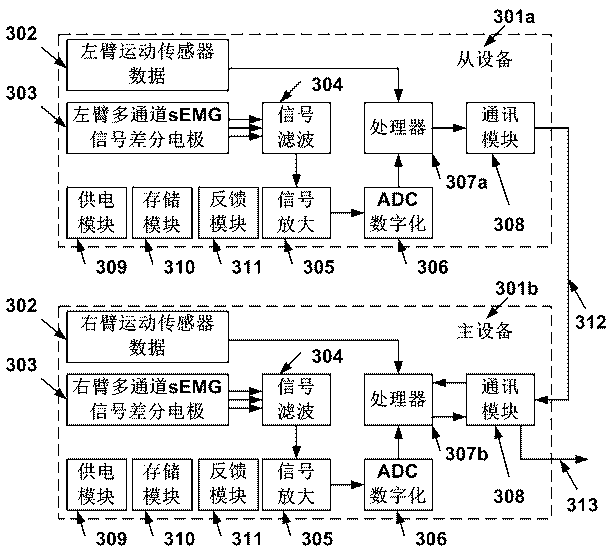

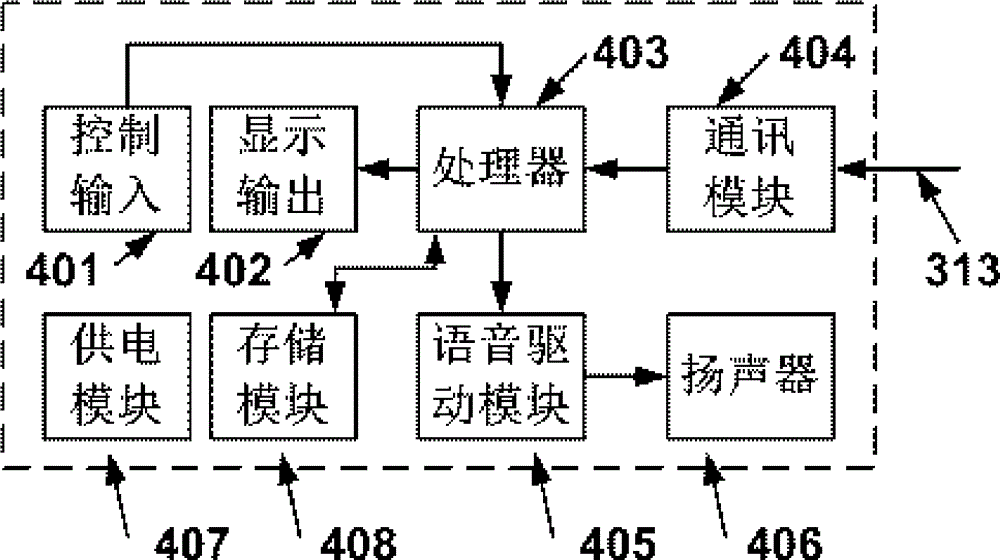

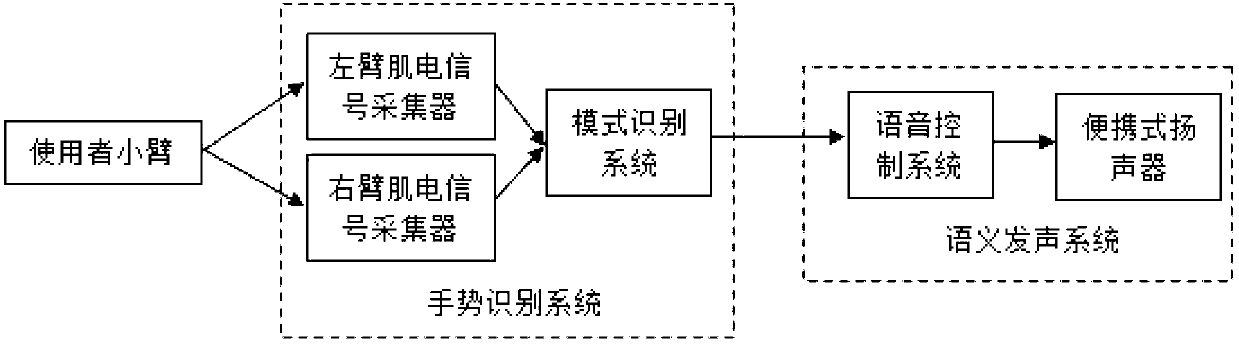

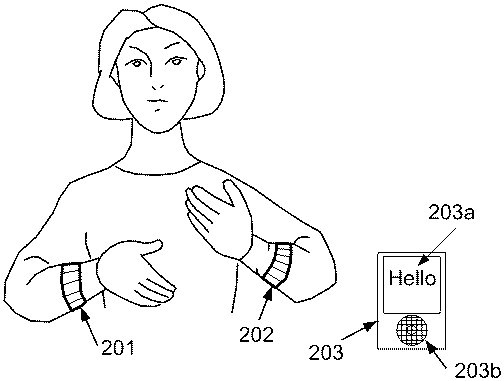

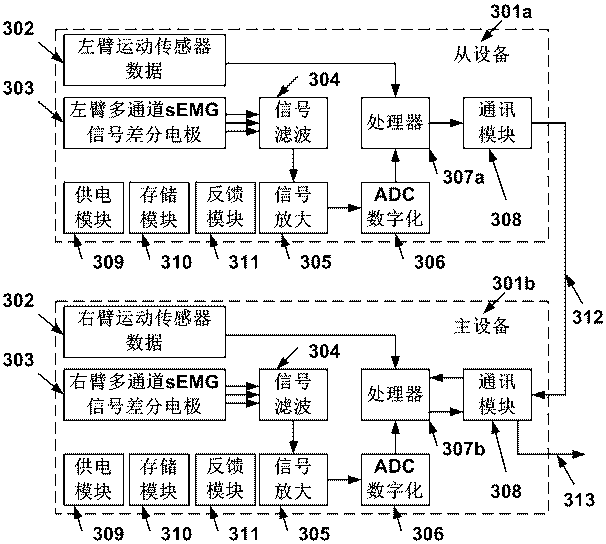

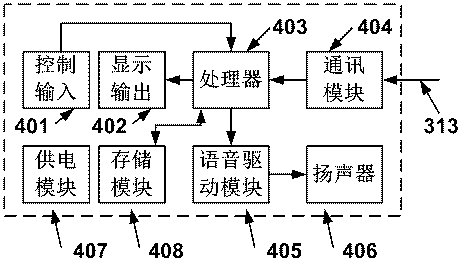

Sign language interpreting, displaying and sound producing system based on electromyographic signals and motion sensors

Active · CN104134060A · The result of the exact distinction · Improve translation · Input/output for user-computer interaction · Character and pattern recognition · Crowds · Direct communication

The invention relates to a sign language interpreting, displaying and sound producing system based on electromyographic signals and motion sensors. The sign language interpreting, displaying and sound producing system comprises a gesture recognition subsystem and a semantic displaying and sound producing subsystem. The gesture recognition subsystem comprises multi-axial motion sensors and a multi-channel muscle current acquisition and analysis module. The gesture recognition subsystem is worn on the left arm and the right arm of a user, and obtains the original surface electromyogram signals of the user and motion information of the arms of the user; gestures are differentiated by processing the electromyogram signals and data of the motion sensors. The displaying and sound producing subsystem comprises a semantic analyzer, a voice control module, a loudspeaker, a displaying module, a storage module, a communication module and the like. By adopting pattern recognition technology based on the electromyographic signals of both arms and the data of the motion sensors, the system increases the gesture recognition accuracy rate; through the combination with the semantic displaying and sound producing subsystem, interpreting from commonly-used sign language to voice or text is achieved, and the efficiency of direct communication between people with language disorders and normal people is improved.

Owner:SHANGHAI OYMOTION INFORMATION TECH

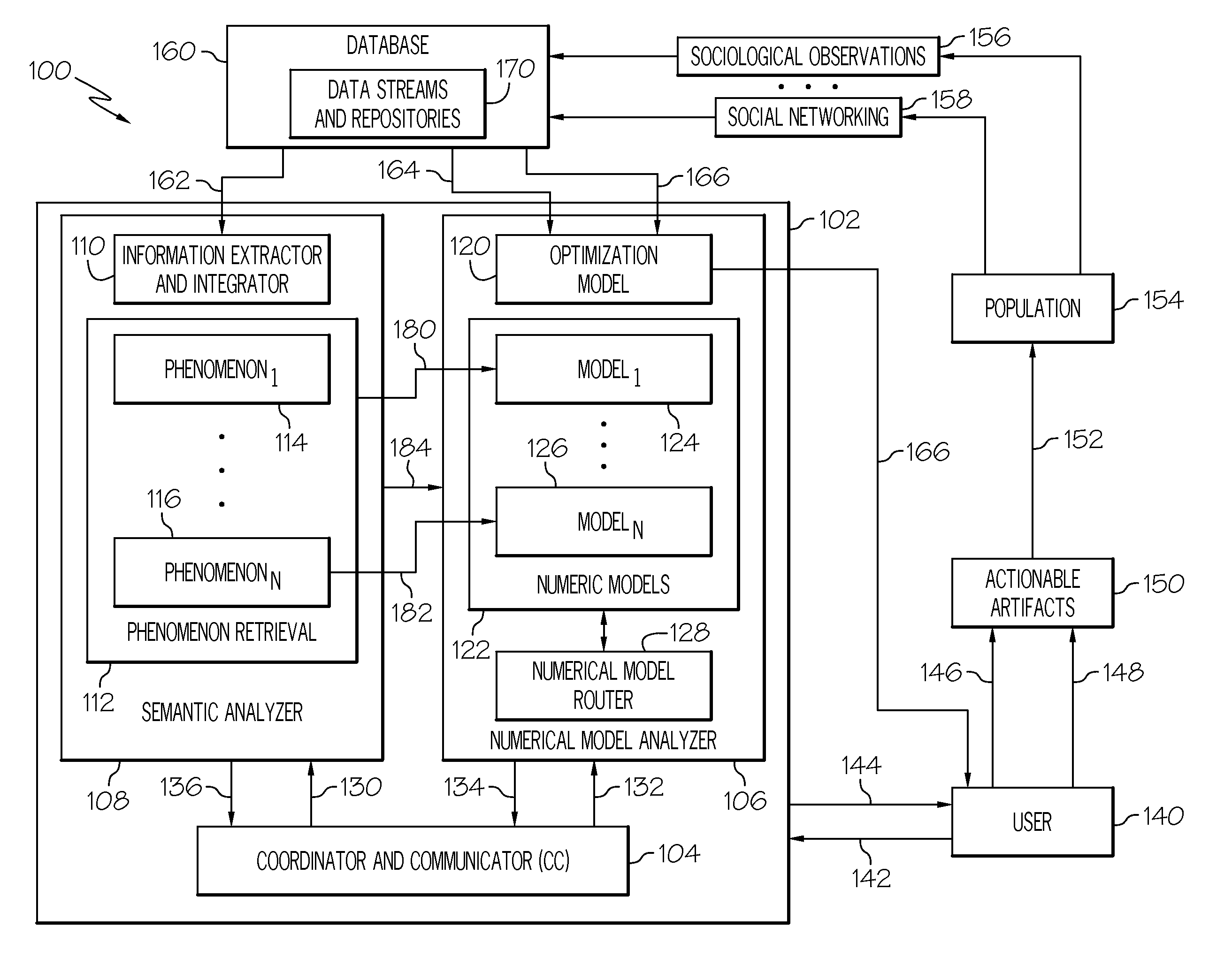

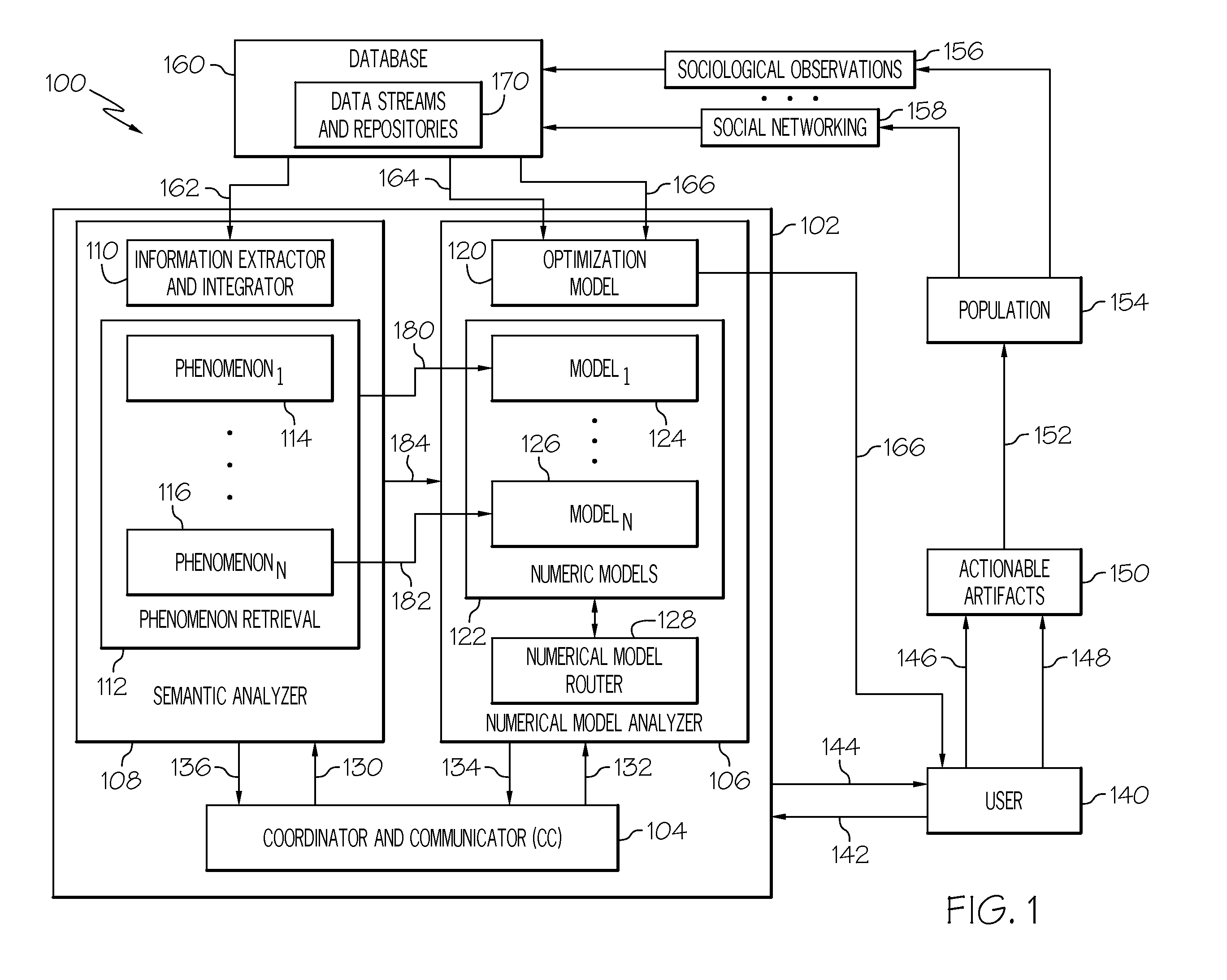

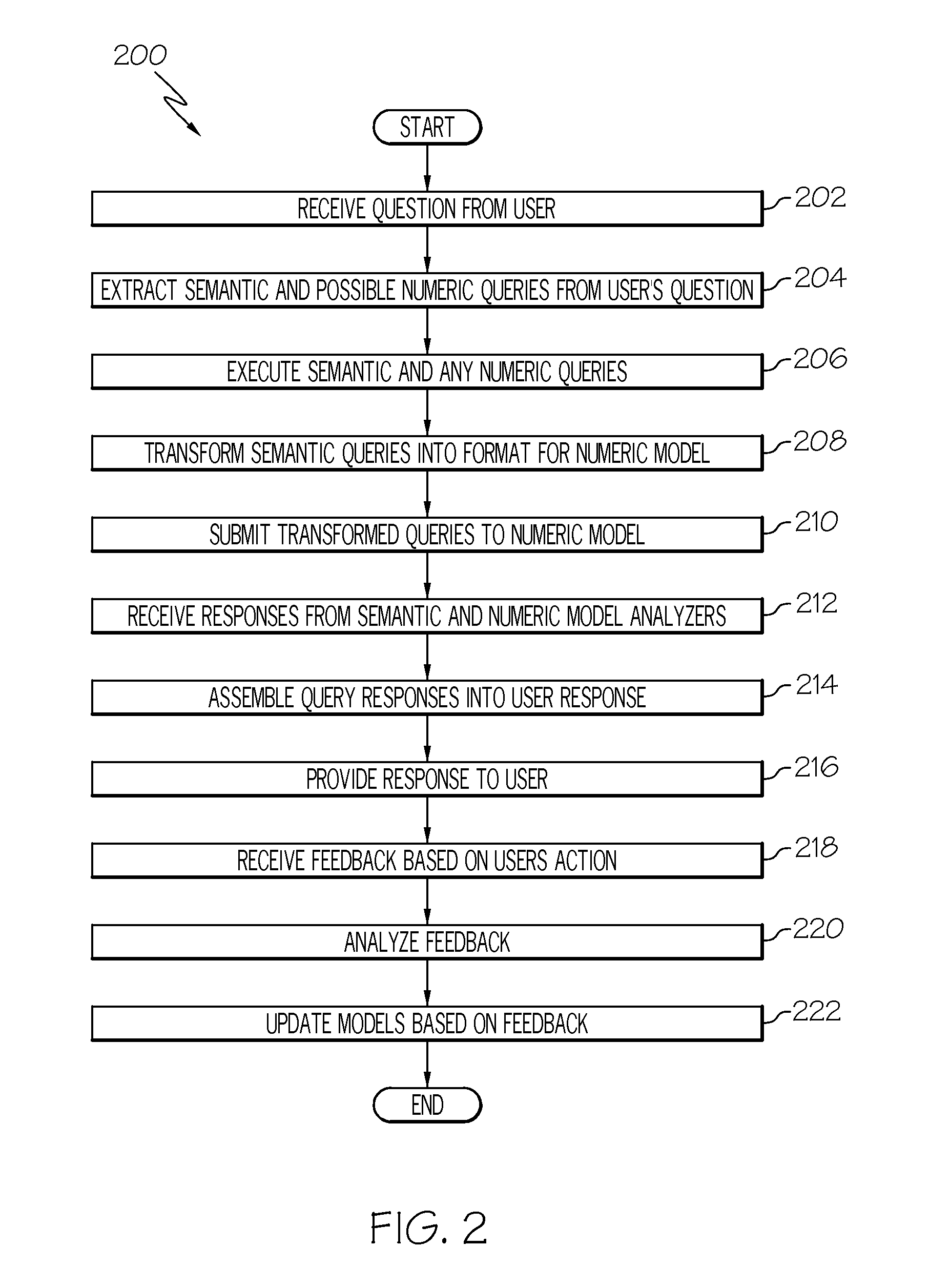

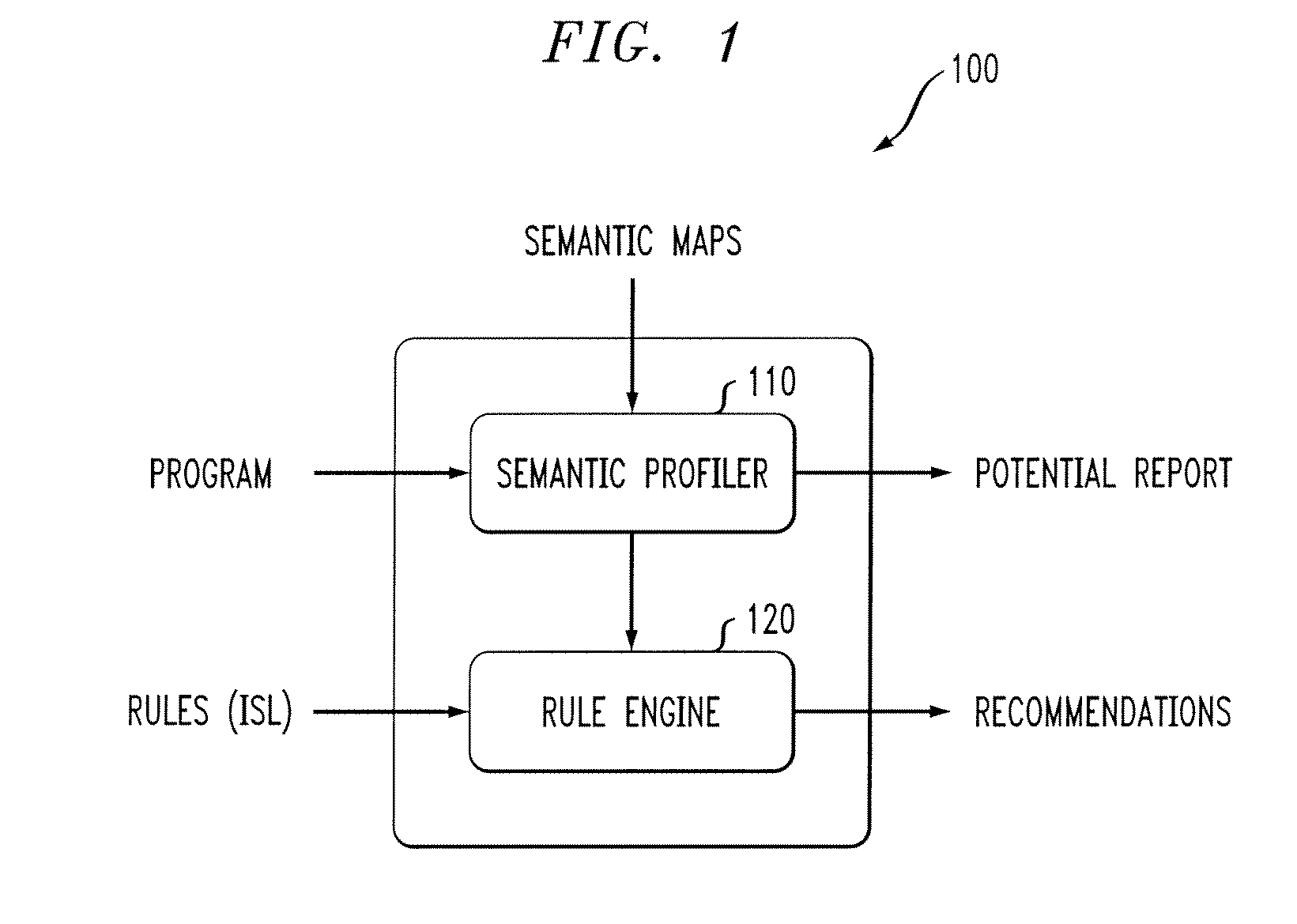

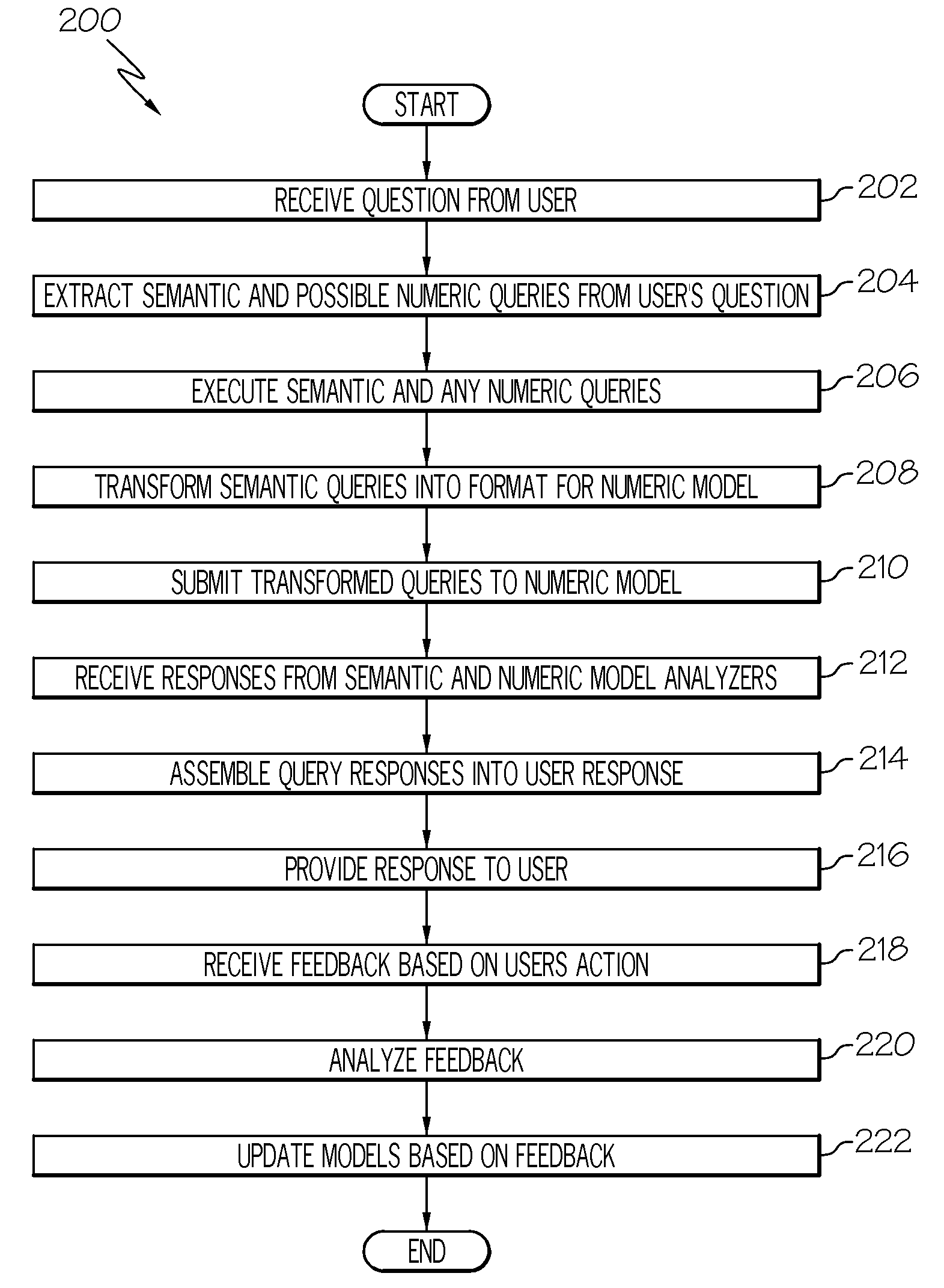

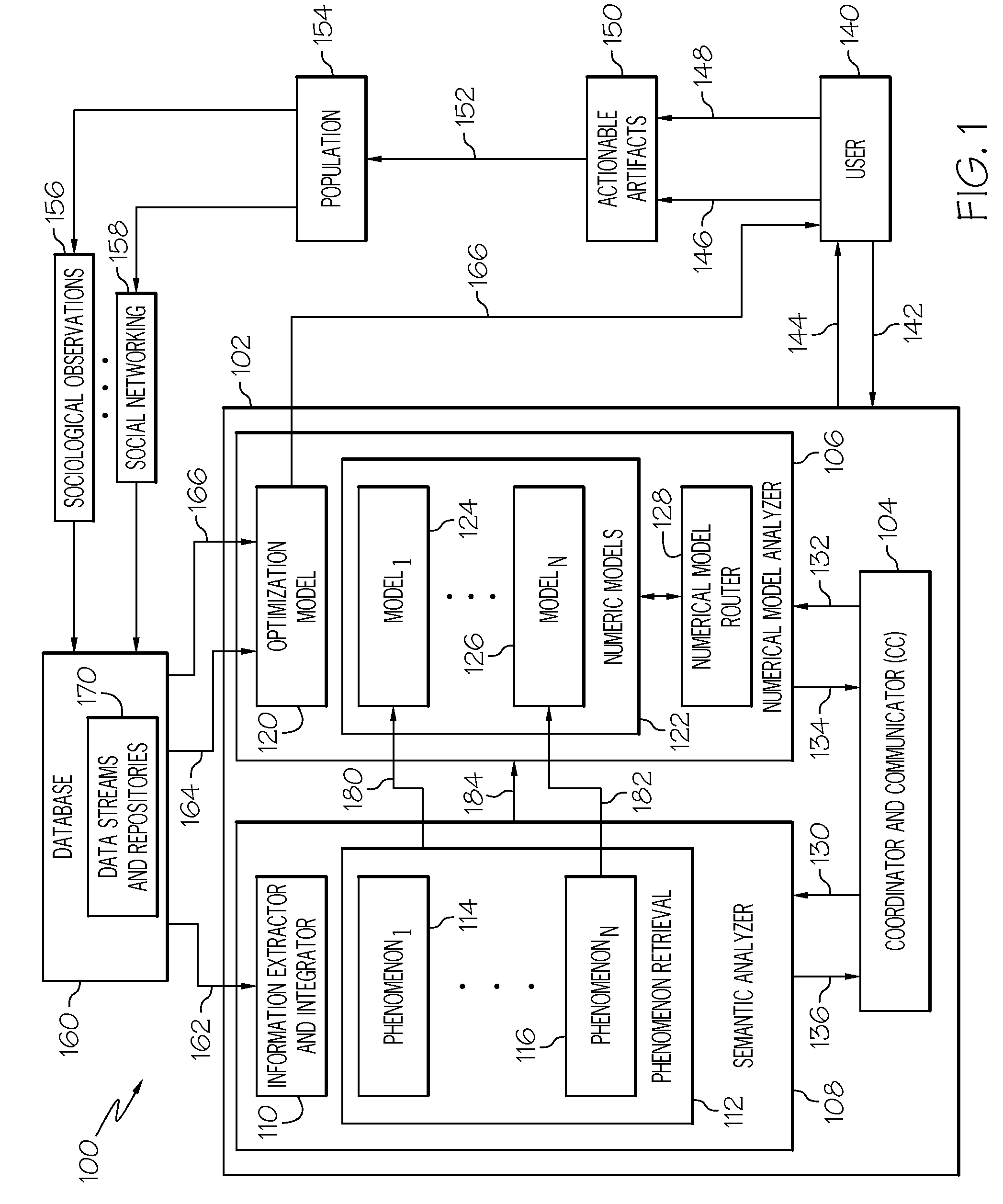

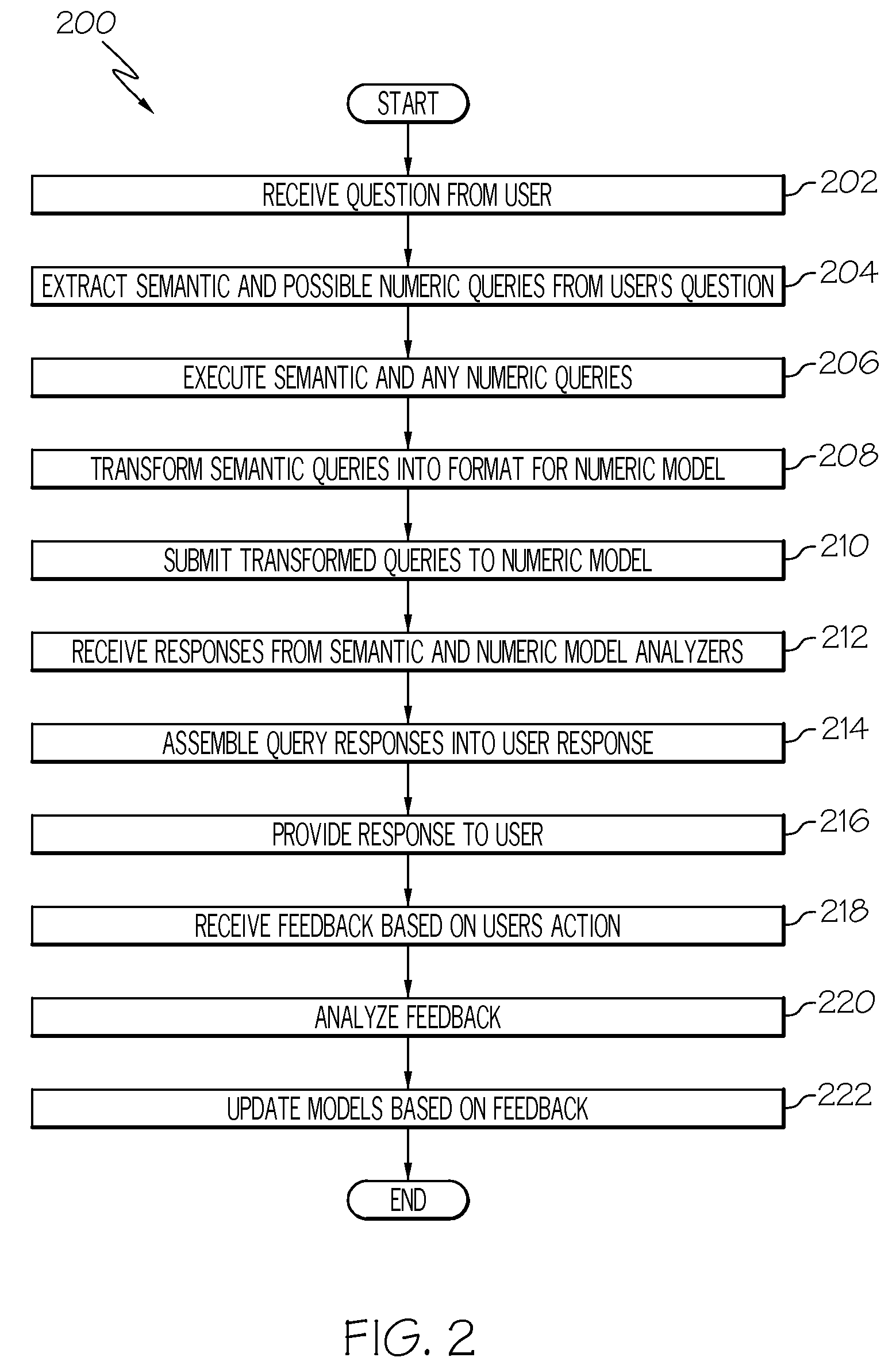

Unified numerical and semantic analytics system for decision support

Active · US20120011139A1 · Digital data information retrieval · Digital data processing details · Semantic analyzer · Semantic query

A system and method for responding to a query. An original query is received. A first semantic query and a second semantic query are extracted from the information of the original query. The first semantic query is transformed, based upon semantic analysis, into a numeric model query. The second semantic query is submitted to a semantic analyzer and the numeric model query is submitted to a numeric model analyzer. A response for the second semantic query and a response for the numeric model query are integrated into an answer for the original query.

Owner:IBM CORP

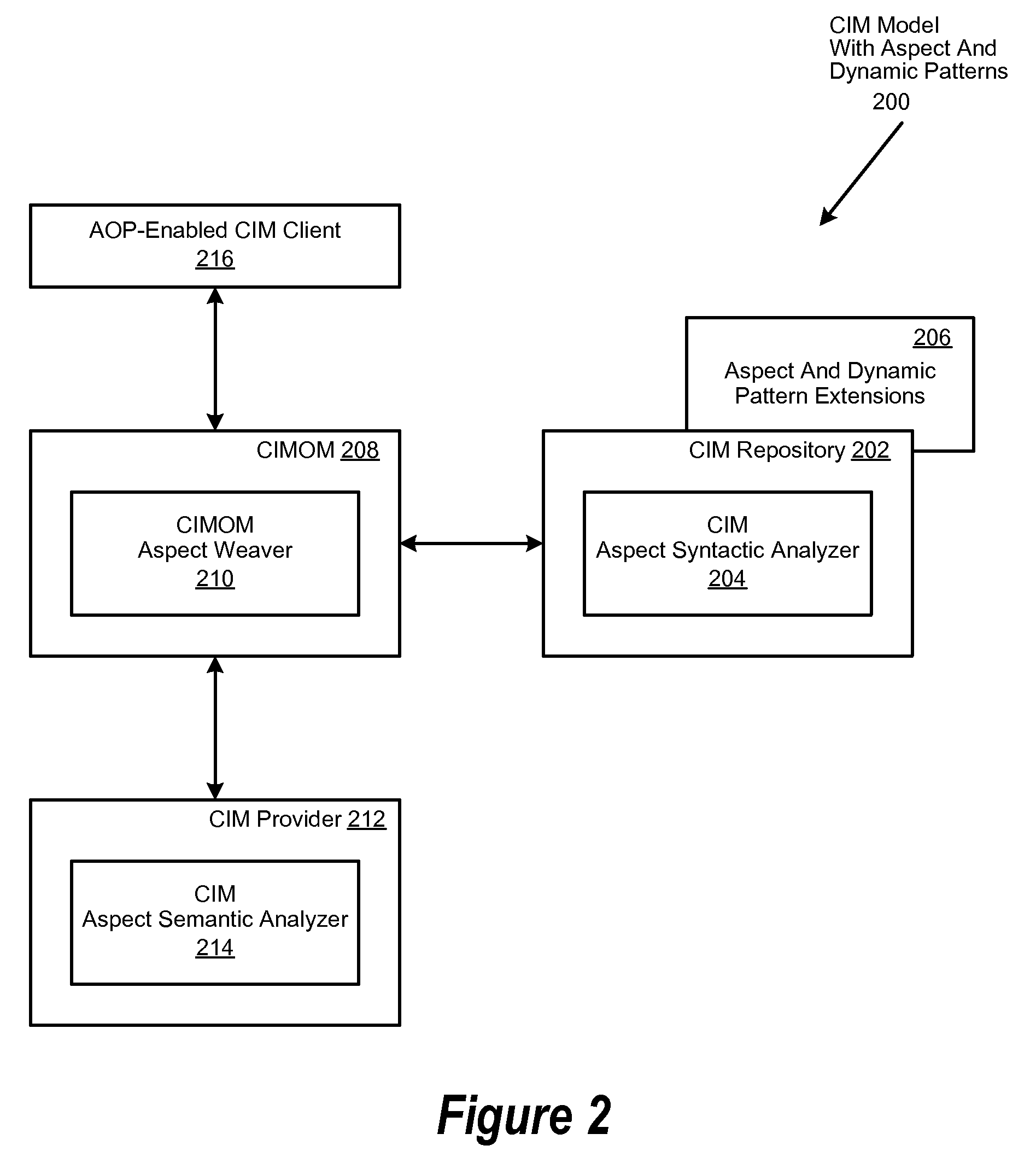

Common Information Model for Web Service for Management with Aspect and Dynamic Patterns for Real-Time System Management

Inactive · US20080033972A1 · Reduce the impact · Reduce impact · Digital data processing details · Transmission · Quality of service · Web service

A system and method is disclosed for reducing the effects of cross-profile crosscutting concerns to enable just-in-time configuration updates and real-time adaptation in the Common Information Model (CIM). The CIM object model is thereby allowed to adapt to dynamic role, resource, or service changes such as logging, debugging, security or quality of service (QOS). An aspect syntactic analyzer is implemented to extend a CIM Managed Object Format (MOF) to implement aspect and dynamic pattern extensions. CIM MOF extensions comprise an Aspect Oriented Programming (AOP) join point. The join point can be implemented as an association class referencing two classes or as a method call of a first class to a property of a second class. The two classes may reside in the same or different CIM profiles. A CIM repository is accessed by a CIM Object Manager (CIMOM) comprising an aspect weaver implemented to enable AOP operations between CIM clients and data providers. The CIM providers comprise an Aspect Semantic Analyzer to similarly enable AOP operations comprising CIM MOF aspect and dynamic pattern extensions. As a result, cross-profile crosscutting concerns are reduced, thereby allowing dynamic changes in the CIM model and enabling just-in-time configuration changes and real-time environment adaptation.

Owner:DELL PROD LP

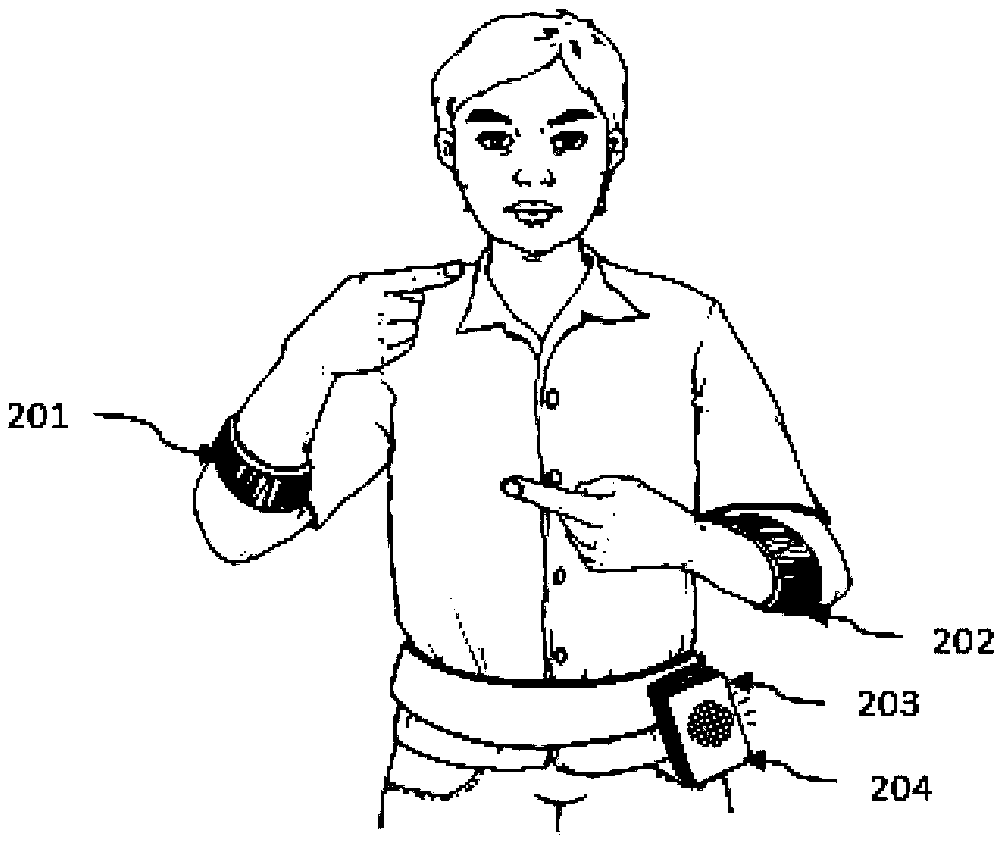

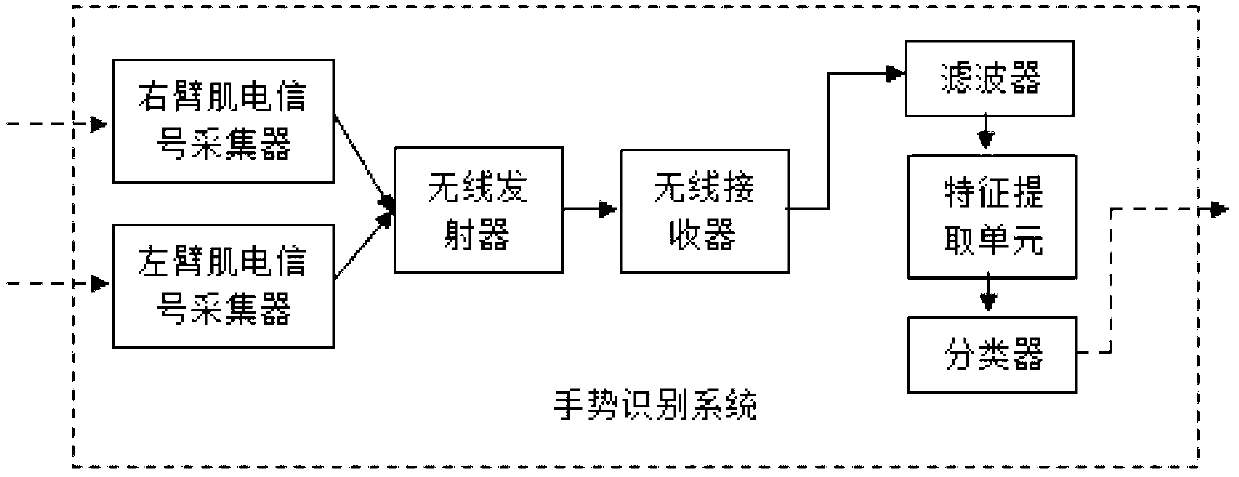

Novel intelligent sign language translation and man-machine interaction system and use method thereof

Inactive · CN103279734A · Does not interfere with normal daily life · Accurate judgment · Input/output for user-computer interaction · Character and pattern recognition · Body shape · Wireless transceiver

The invention provides a novel intelligent sign language translation and man-machine interaction system which comprises a gesture identifying system and a semantic vocalizing system. The gesture identifying system is connected with a user with language disorder to transmit an original electromyographic signal; the gesture identifying system is connected with the semantic vocalizing system to transmit basic semantic information; and the semantic vocalizing system transmits voice which is consistent with the semantics of sign language. The gesture identifying system comprises an electromyographic signal acquisition device, a wireless transceiver, a filter, a characteristic extraction unit and a classifier. The semantic vocalizing system comprises a semantic analyzer, a voice controller and a portable speaker. According to the novel intelligent sign language translation and man-machine interaction system and the use method thereof, a pattern recognition technology based on the electromyographic signal is adopted, the gesture identification precision is improved, and the efficiency of communication between the user with language disorder and a healthy person is improved by combining a voice system. After simple adjustment, the system and the method can be used by users with language disorder with different body shapes. The electromyographic signal acquisition device and the semantic vocalizing system are respectively worn through a wrist band and a waist band, so that the normal life of the user with language disorder is not affected.

Owner:SHANGHAI JIAO TONG UNIV

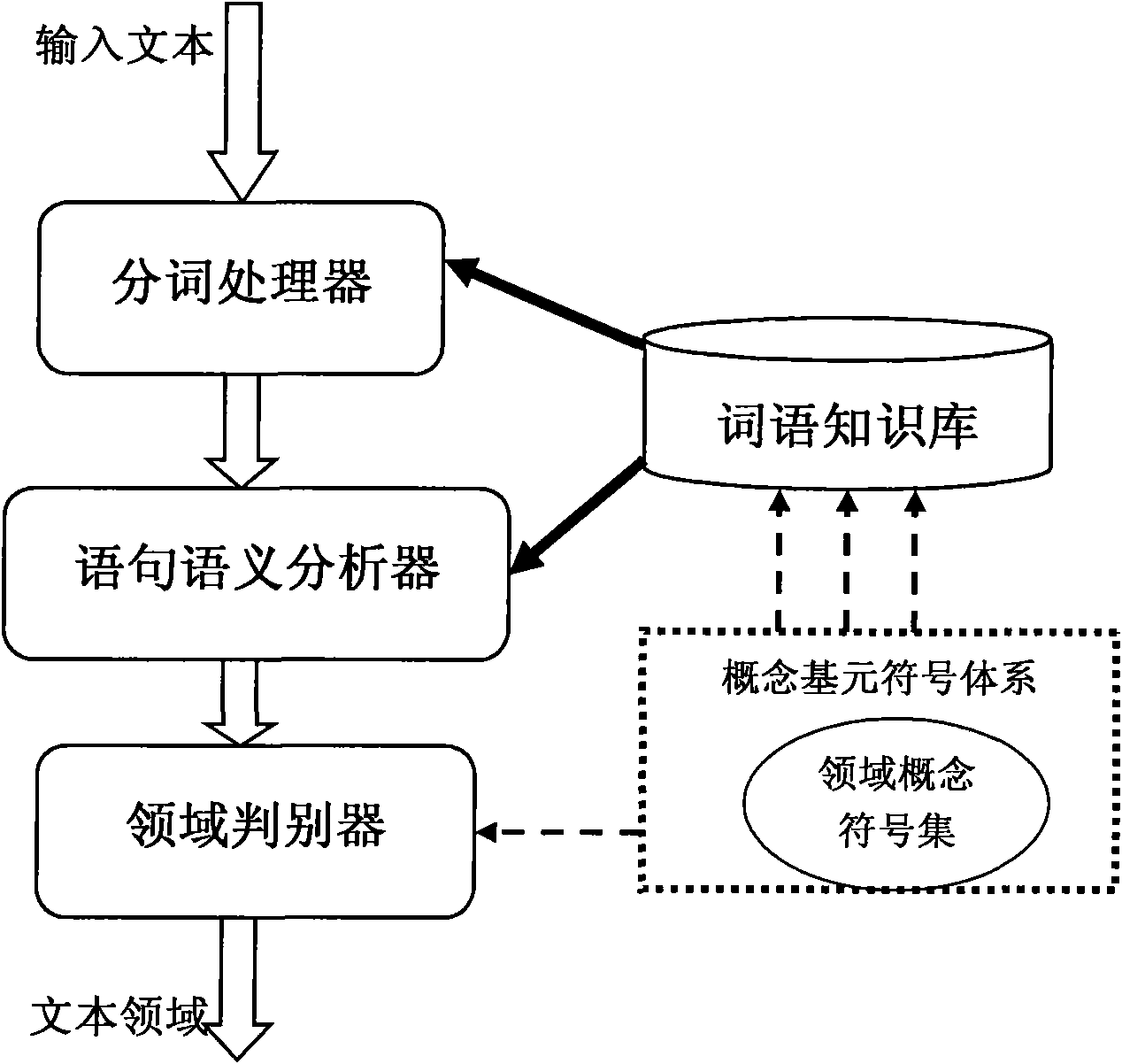

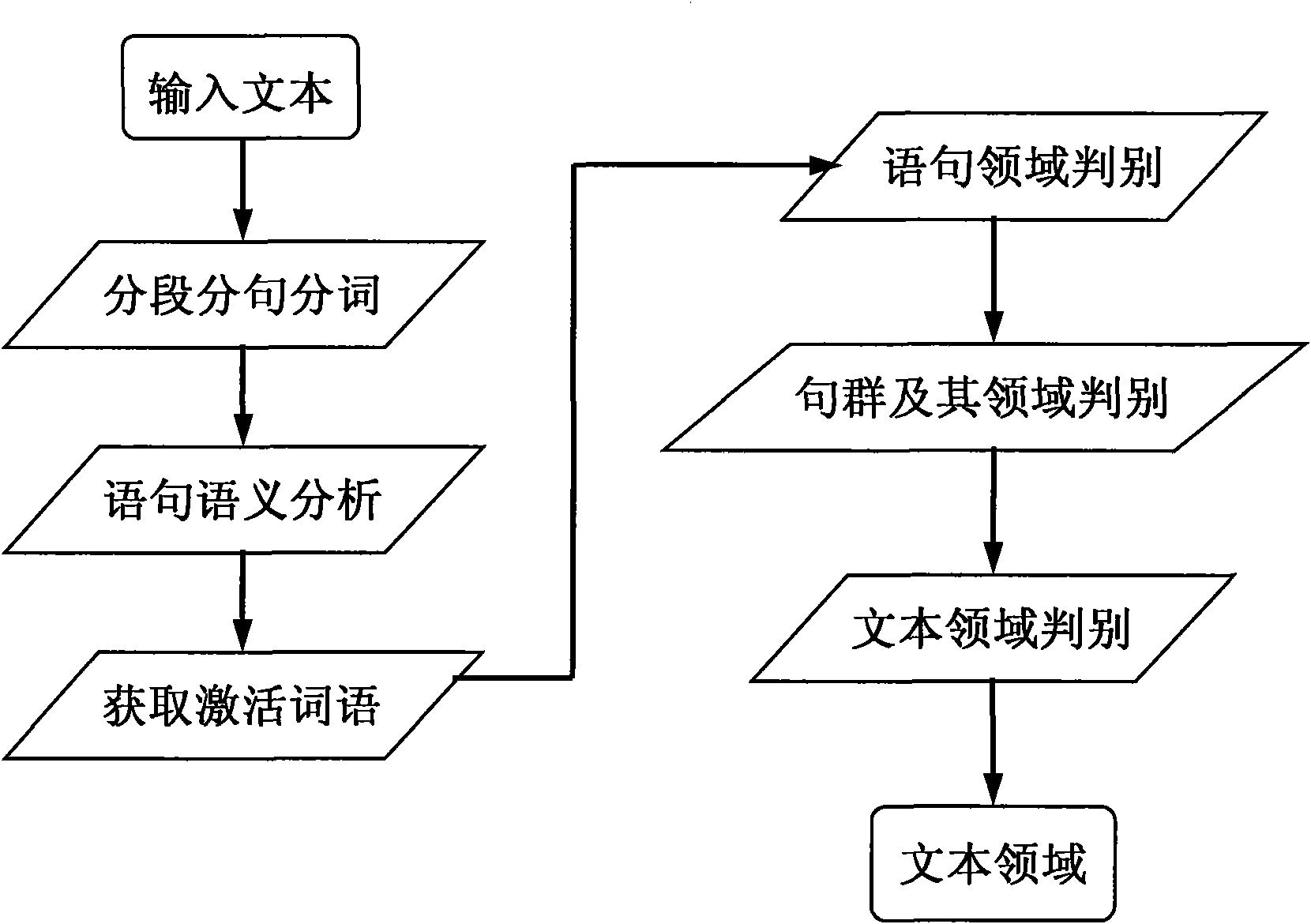

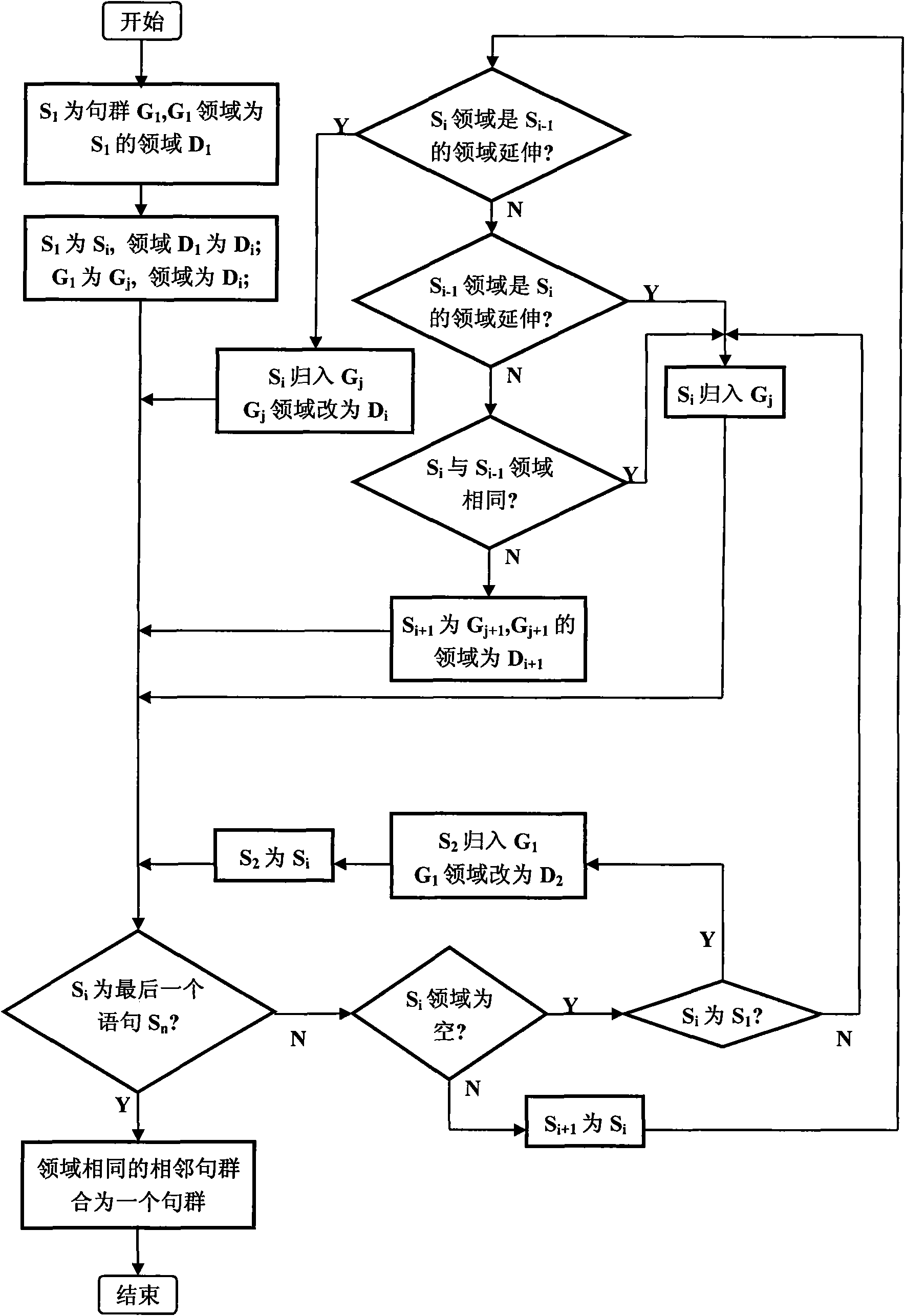

Acquisition system and method of text field based on concept symbols

Inactive · CN101645083A · Hierarchical · Suitable for handling · Special data processing applications · Natural language processing · Semantic analyzer

The invention discloses an acquisition system and a method of the text field based on concept symbols. The system comprises a concept symbol set for expressing word concepts and field categories, a word knowledge base for storing word and concept symbols, a word segmentation processor, a statement semantic analyzer and a field arbiter. The method comprises the following steps: (1) segmenting an input text into paragraphs, statements and words; (2) carrying out semantic analysis on the statements for obtaining concept categories and semantic blocks of the statements; (3) obtaining activating words in the statements according to semantic concept symbols in the field concept symbol set and the word knowledge base; (4) carrying out comprehensive scoring on field concept symbols of the activating words and obtaining the field concept symbol with the highest score as the field of the statements; (5) merging the statements in the paragraphs according to the field concept symbols for obtaining a statement group and the field thereof; and (6) obtaining the field of the text according to a title of the text and the frequency of occurrence and the position of the statement group in the statement group.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI
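Steps (3) and (4) of the abstract above, activating words and then scoring field concept symbols, might look roughly like the sketch below. The additive scoring, the `word_fields` mapping and the function name are assumptions for illustration only; the abstract does not say how the "comprehensive scoring" is computed.

```python
from collections import Counter


def sentence_field(words, word_fields):
    """Return the highest-scoring field for one sentence, or None.

    word_fields maps an activating word to {field symbol: weight}
    (a hypothetical stand-in for the word knowledge base).
    """
    scores = Counter()
    for word in words:
        # Words missing from the knowledge base activate nothing.
        for field, weight in word_fields.get(word, {}).items():
            scores[field] += weight
    if not scores:
        return None
    return scores.most_common(1)[0][0]
```

For example, with weights `{"bank": {"finance": 1.0, "geography": 0.5}, "loan": {"finance": 1.0}, "river": {"geography": 1.0}}`, the words `["bank", "loan", "river"]` score 2.0 for `finance` and 1.5 for `geography`, so the sentence is assigned to `finance`.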

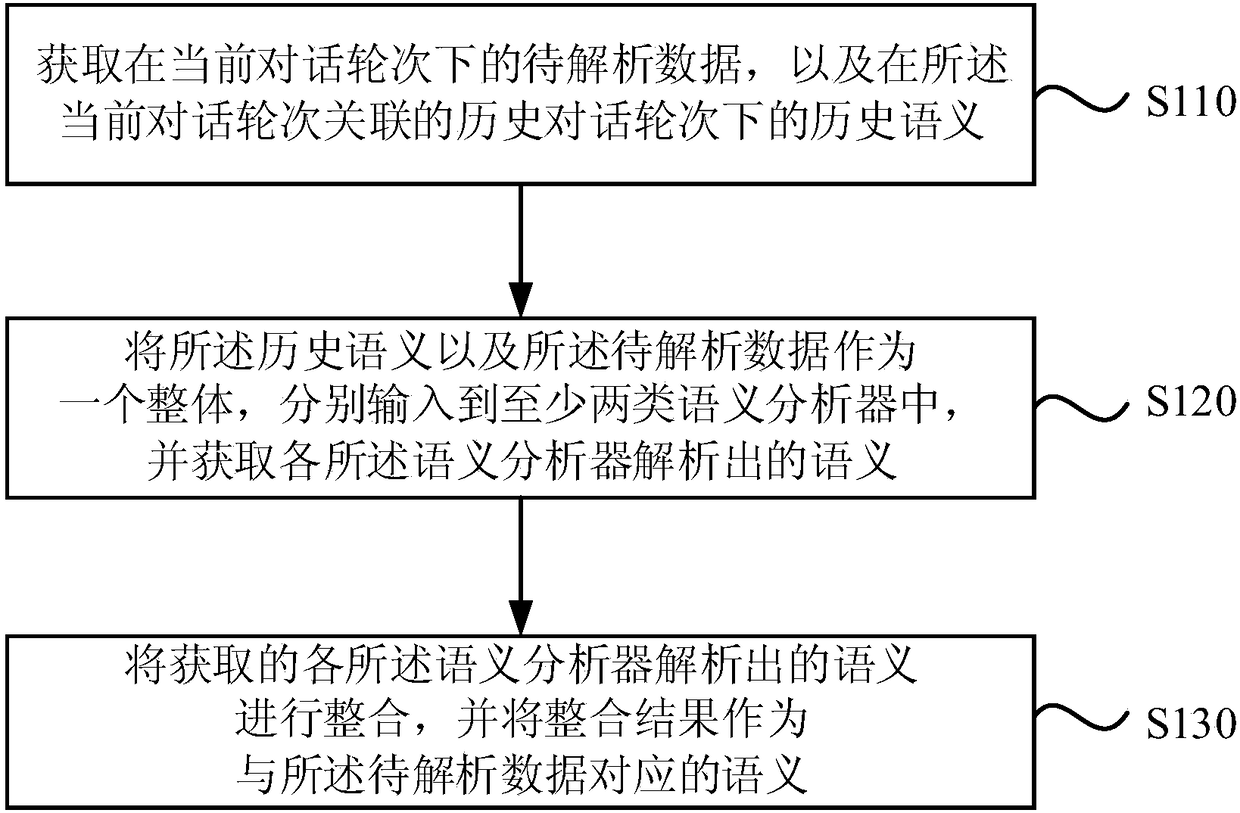

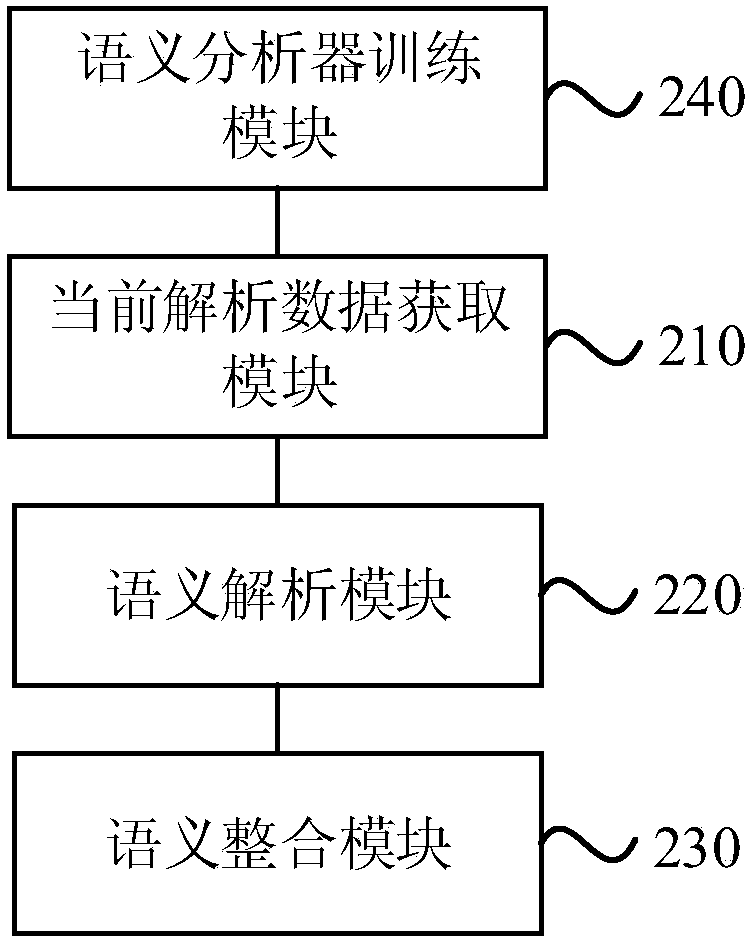

Method and device for parsing semantic meaning, apparatus and storage medium

Active · CN108388638A · Increase diversity · Increase flexibility · Semantic analysis · Special data processing applications · Natural language processing · Semantic analyzer

The invention discloses a method and a device for parsing a semantic meaning, an apparatus and a storage medium. The method comprises the following steps of obtaining to-be-parsed data in a current dialogue turn and a historical semantic meaning in a historical dialogue turn associated with the current dialogue turn; by using the historical semantic meaning and the to-be-parsed data as a whole, inputting into at least two semantic meaning analyzers respectively, and obtaining the semantic meaning parsed by each semantic meaning analyzer; integrating the obtained semantic meanings parsed out by the semantic meaning analyzers, and using an integration result as the semantic meaning corresponding to the to-be-parsed data. According to the method provided by the embodiment of the invention, an existing method for parsing the semantic meaning in a dialogue system is optimized; the diversity of the parsed out semantic meaning is increased; the interaction flexibility of a dialogue is improved, and the experience of a user is improved.

Owner:出门问问创新科技有限公司 +1
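A minimal sketch of the flow in the abstract above: the current utterance and the historical semantics are passed together to several analyzers, and their outputs are integrated. The slot-dictionary representation, the "first analyzer wins" merge rule and both toy analyzers are assumptions made for this example; the abstract does not specify the integration strategy.

```python
def parse_with_history(utterance, history, analyzers):
    """Feed utterance plus history to every analyzer and merge their slots."""
    combined = {"history": history, "utterance": utterance}
    merged = {}
    for analyzer in analyzers:
        for slot, value in analyzer(combined).items():
            merged.setdefault(slot, value)  # earlier analyzers win on conflicts
    return merged


def rule_analyzer(data):
    # Hypothetical analyzer: detects a weather intent from a keyword.
    return {"intent": "weather"} if "weather" in data["utterance"] else {}


def history_analyzer(data):
    # Hypothetical analyzer: carries the city over from the previous turn.
    city = data["history"].get("city")
    return {"city": city} if city else {}
```

Calling `parse_with_history("and the weather tomorrow?", {"city": "Beijing"}, [rule_analyzer, history_analyzer])` combines the intent found in the current turn with the city remembered from the earlier turn.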

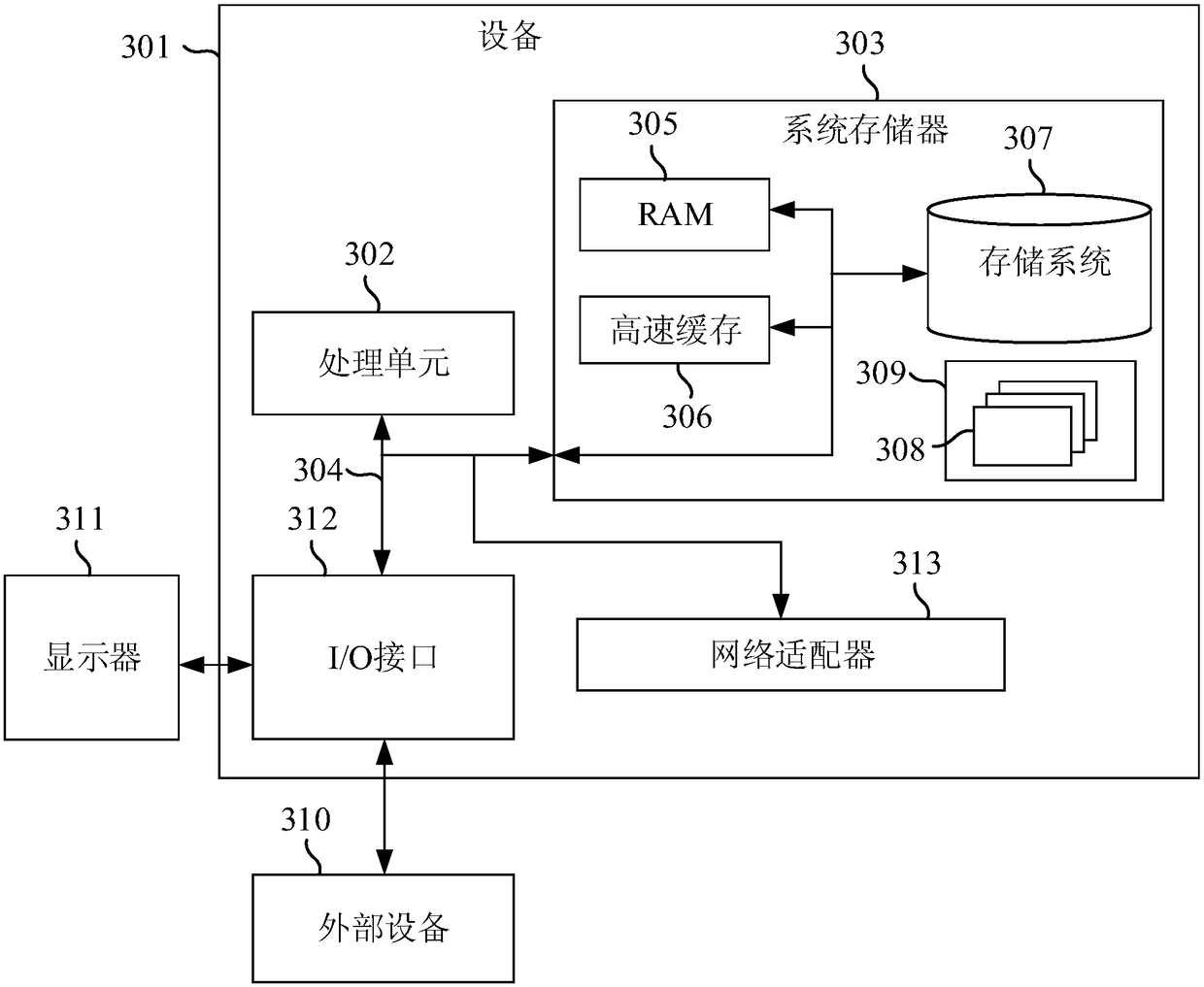

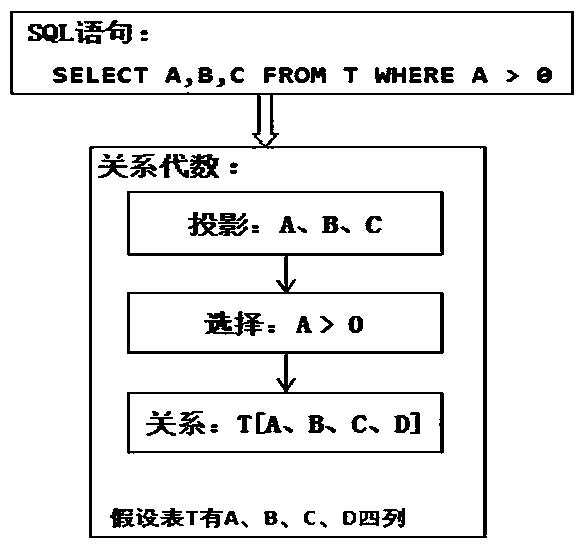

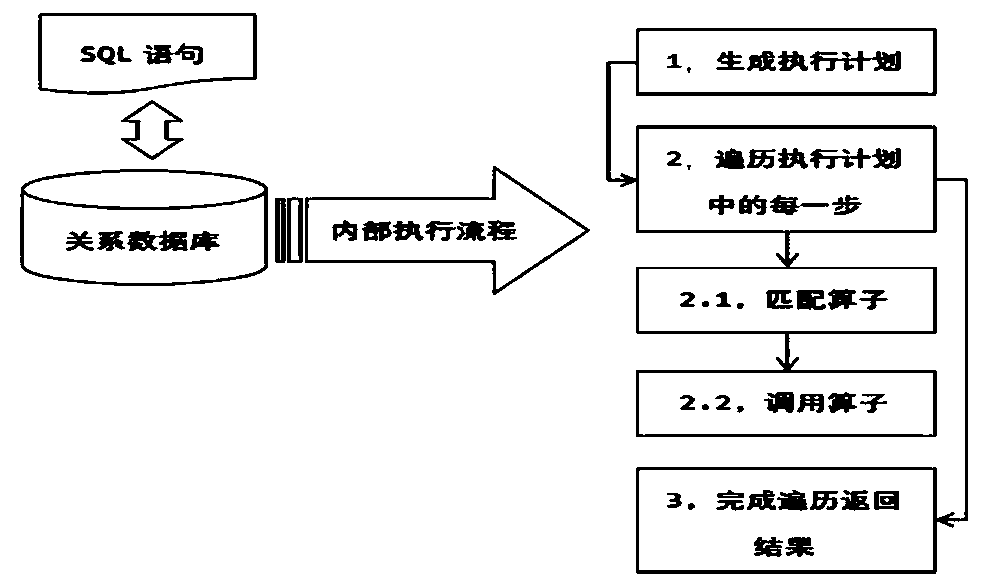

SQL interpreter of HBase and optimization method

Active · CN111309757A · Improve execution efficiency · Improve execution performance · Database distribution/replication · Special data processing applications · Semantic analyzer · Lexical analysis

The invention discloses an SQL interpreter of HBase and an optimization method. A combination of a lexical analyzer, a syntactic analyzer, a semantic analyzer and an actuator is arranged; according to the method, the logic operator in the SQL statement can be analyzed, the logic operator can be converted into the physical operator directly executed in the HBase database, and therefore the use difficulty of the HBase database is lowered for a large number of SQL language users.

Owner:深圳市赢时胜信息技术股份有限公司

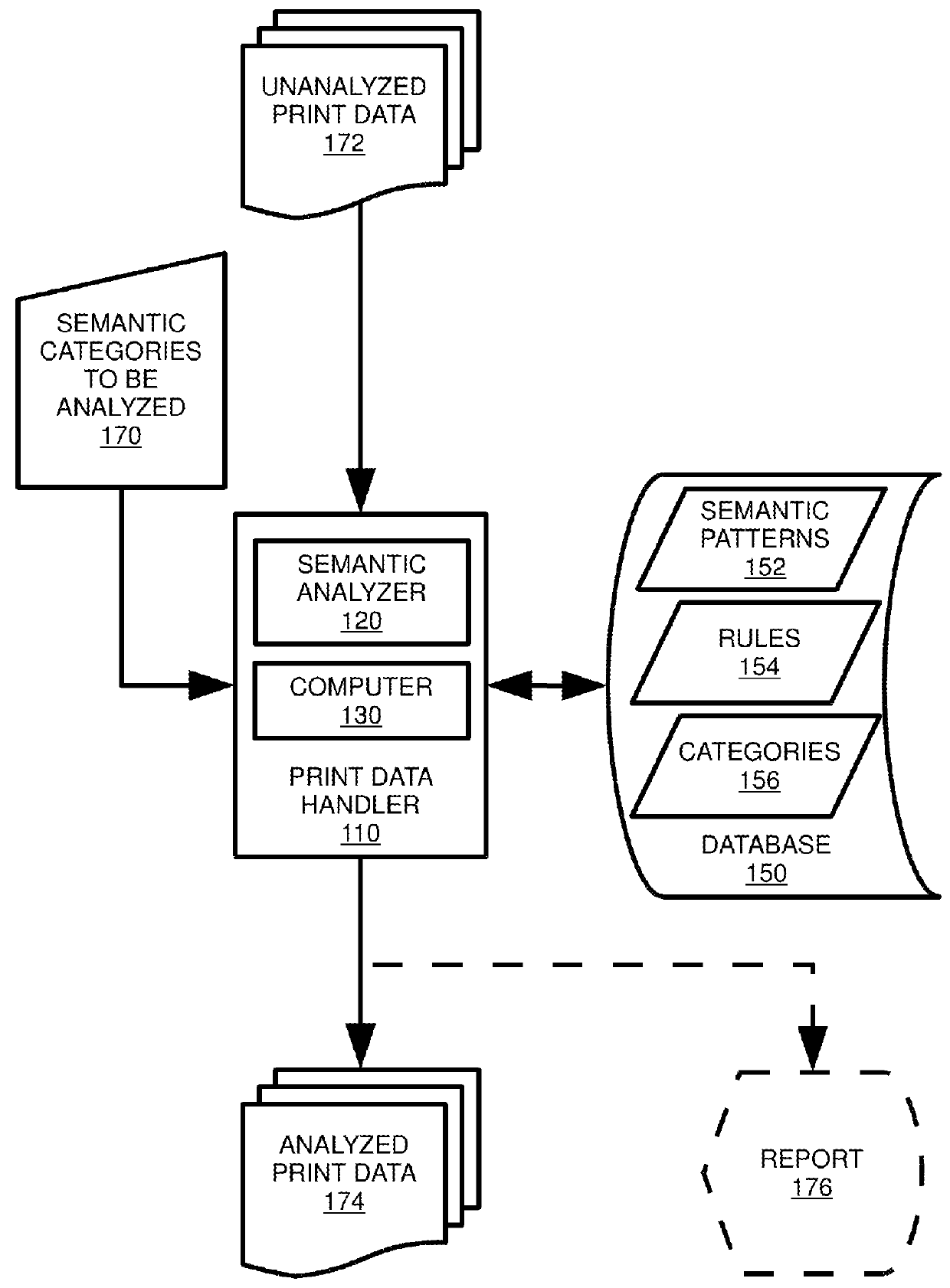

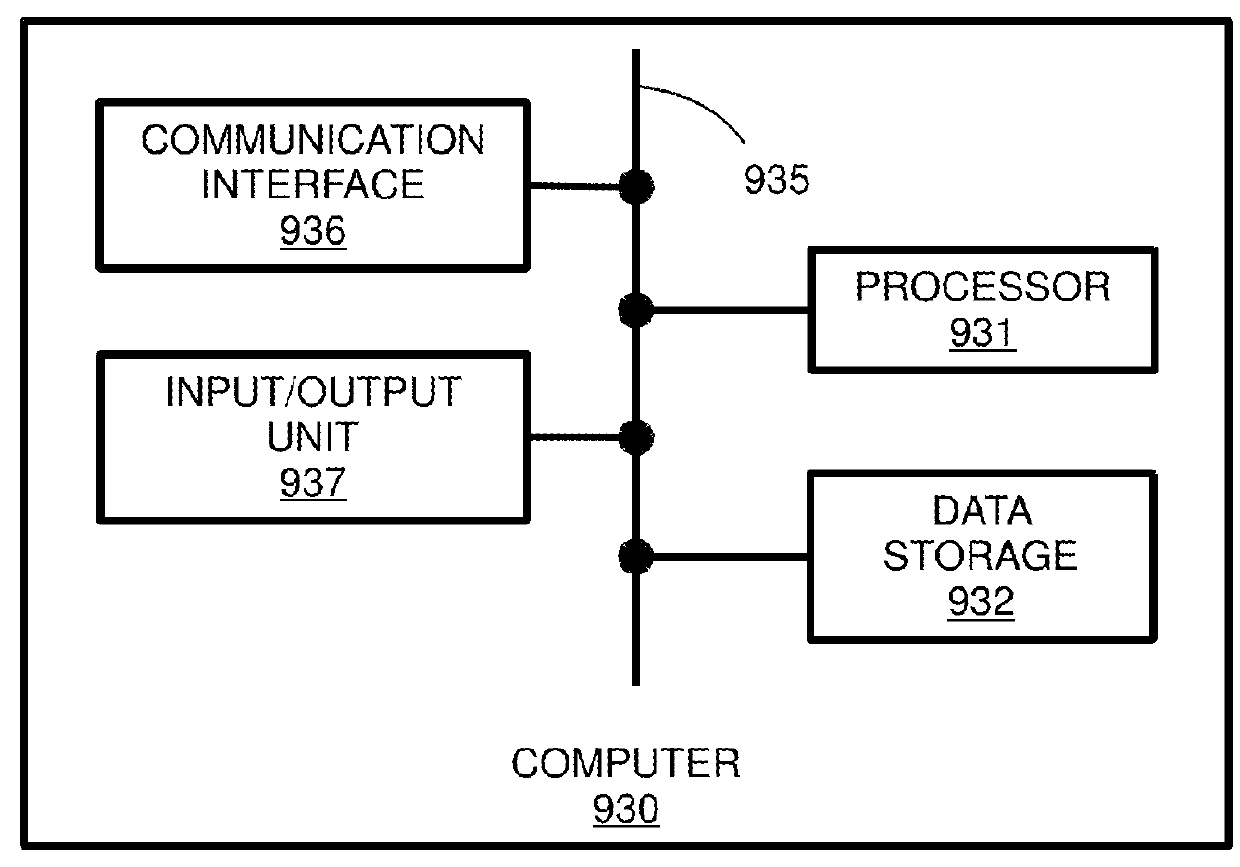

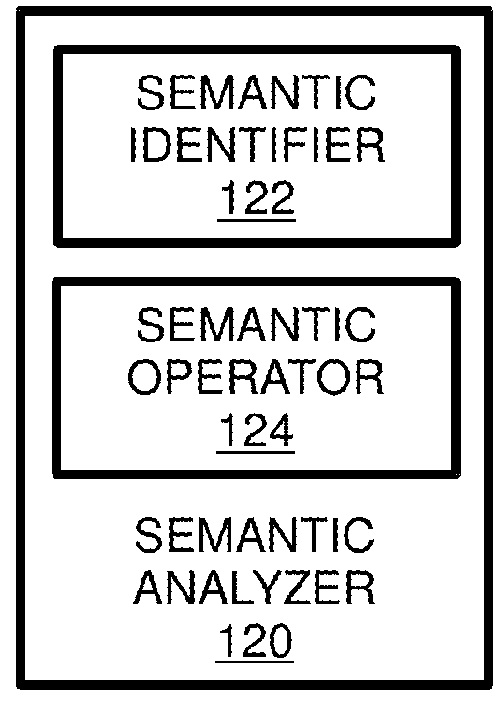

Print Data Semantic Analyzer

Inactive · US20180096201A1 · Conveniently and efficiently carry-out · Efficient analysis · Character and pattern recognition · Pictorial communication · Page description language · Print processor

A print data semantic analysis system may comprise a print data handler and a database. The print data handler may include a semantic analyzer and a computer. The semantic analyzer may include a semantic identifier and a semantic operator. Semantic pattern(s), rule(s), and / or semantic category or categories may be stored in mutually associated fashion at the database. The print data handler may be a printer driver having a page description language (PDL) generator. The print data handler may be a raster image processor (RIP), a print server, or a printer having a PDL interpreter. The database may take the form of source code, function(s), lookup or other such table(s), and / or any other suitable format(s). The database may be a function table containing function(s) incorporating the semantic pattern(s), rule(s), and / or semantic category or categories.

Owner:KYOCERA DOCUMENT SOLUTIONS INC

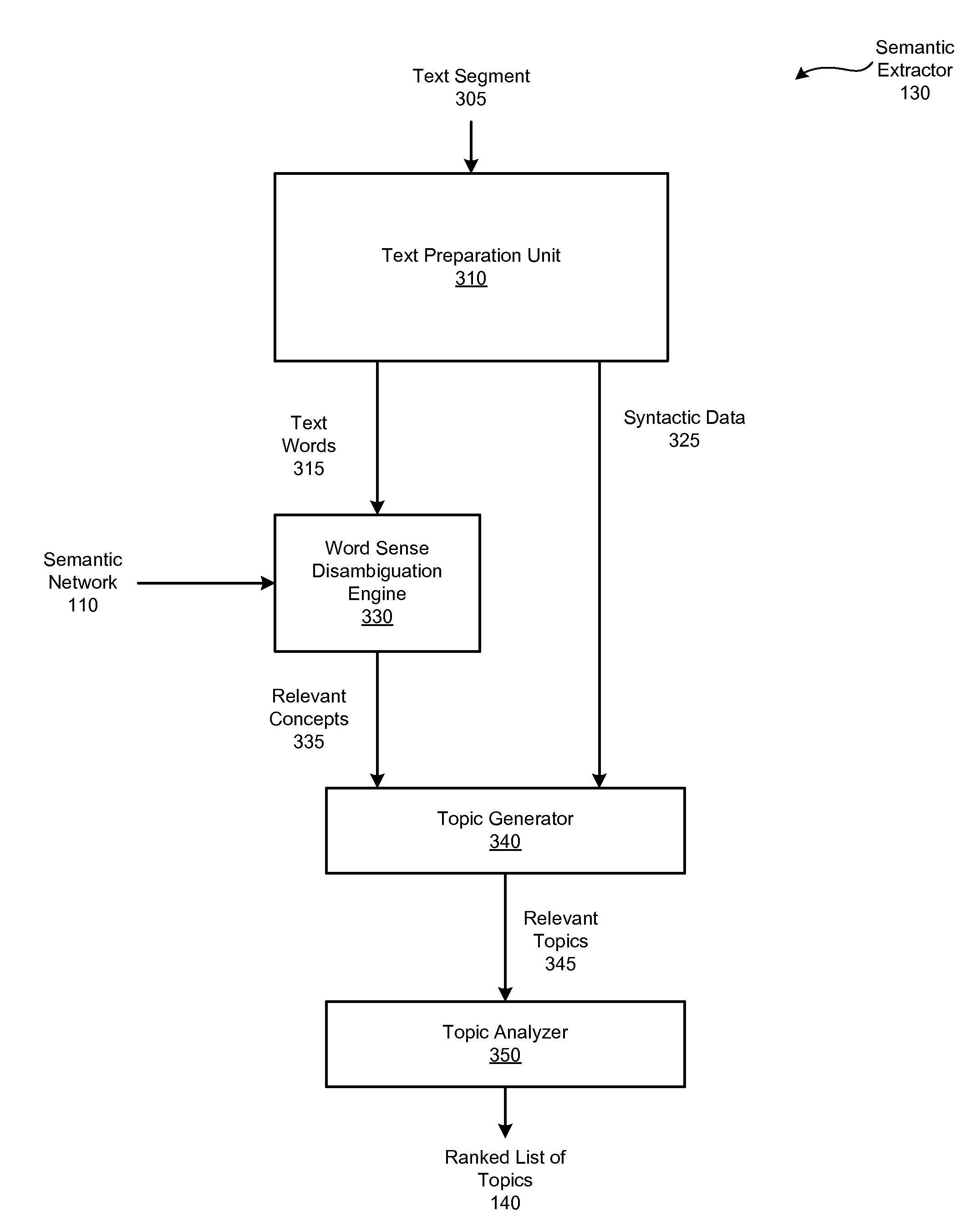

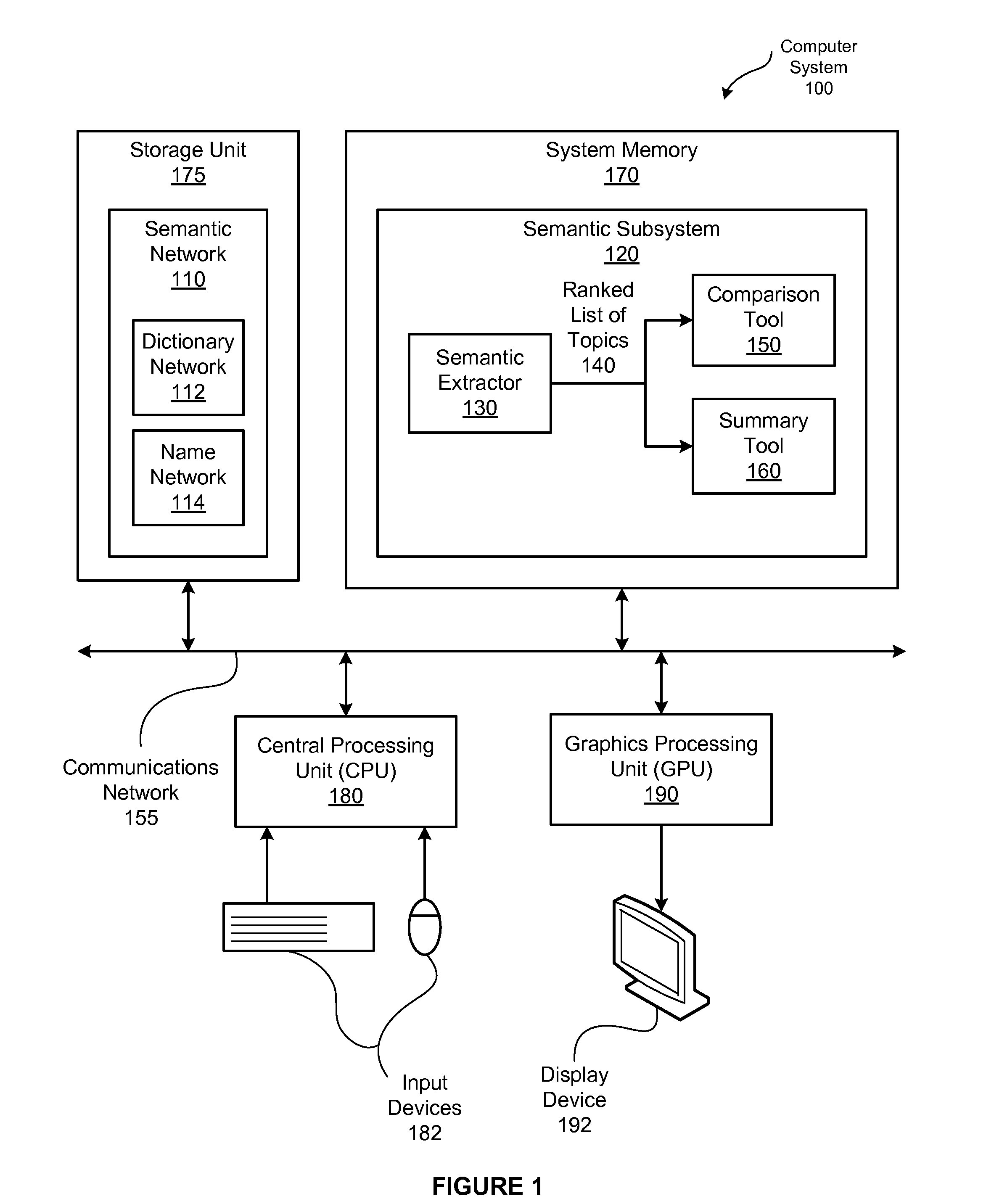

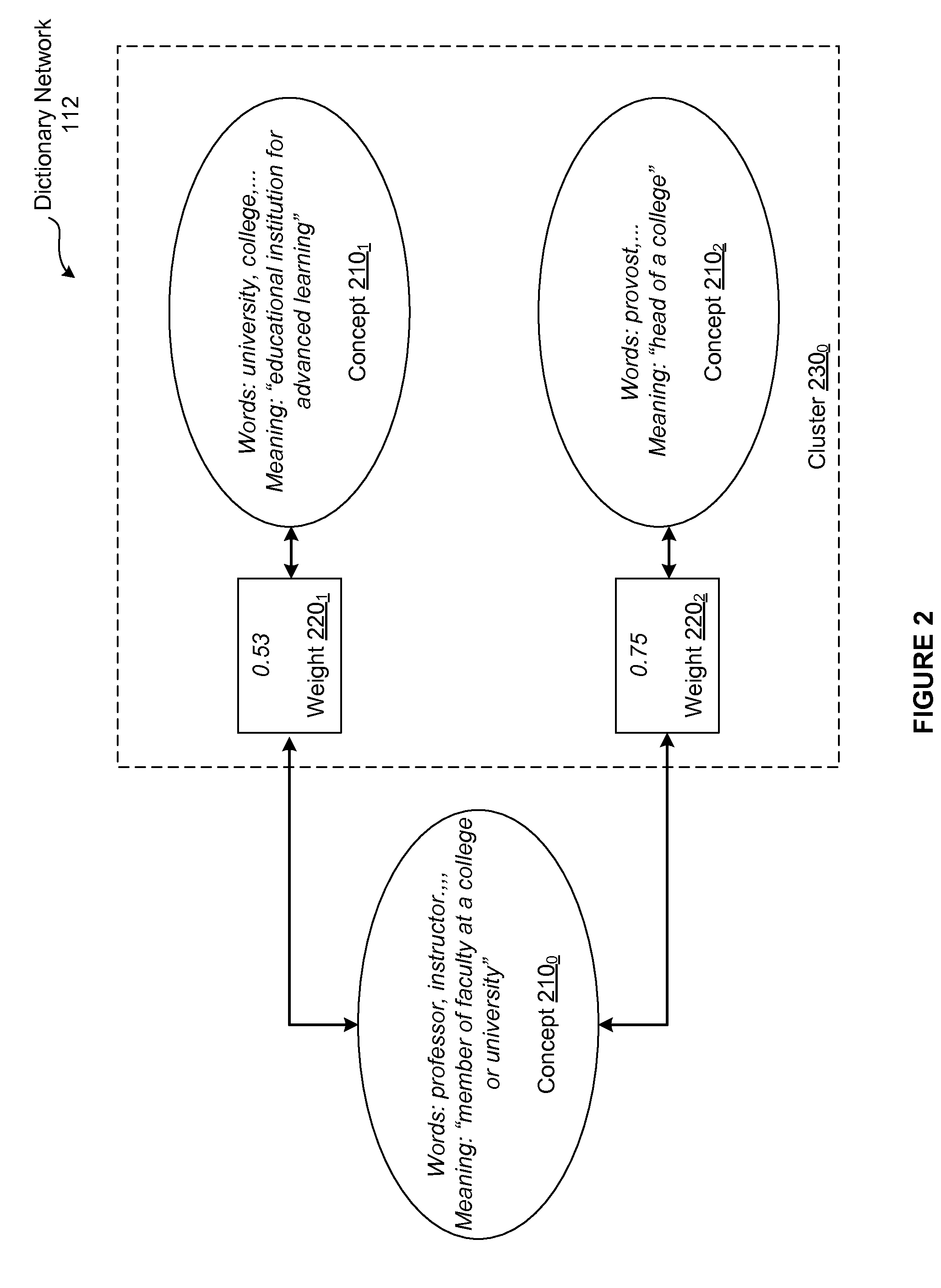

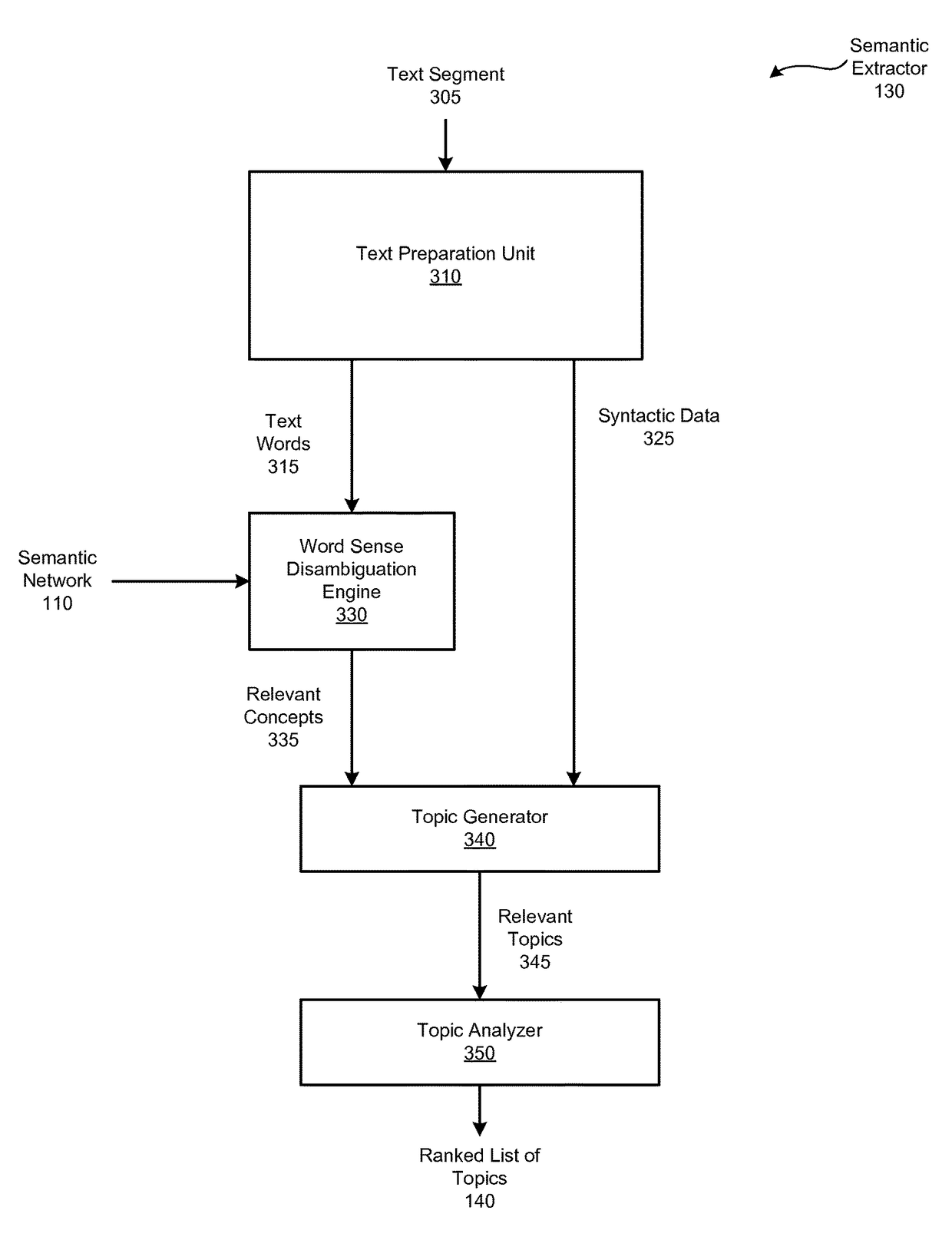

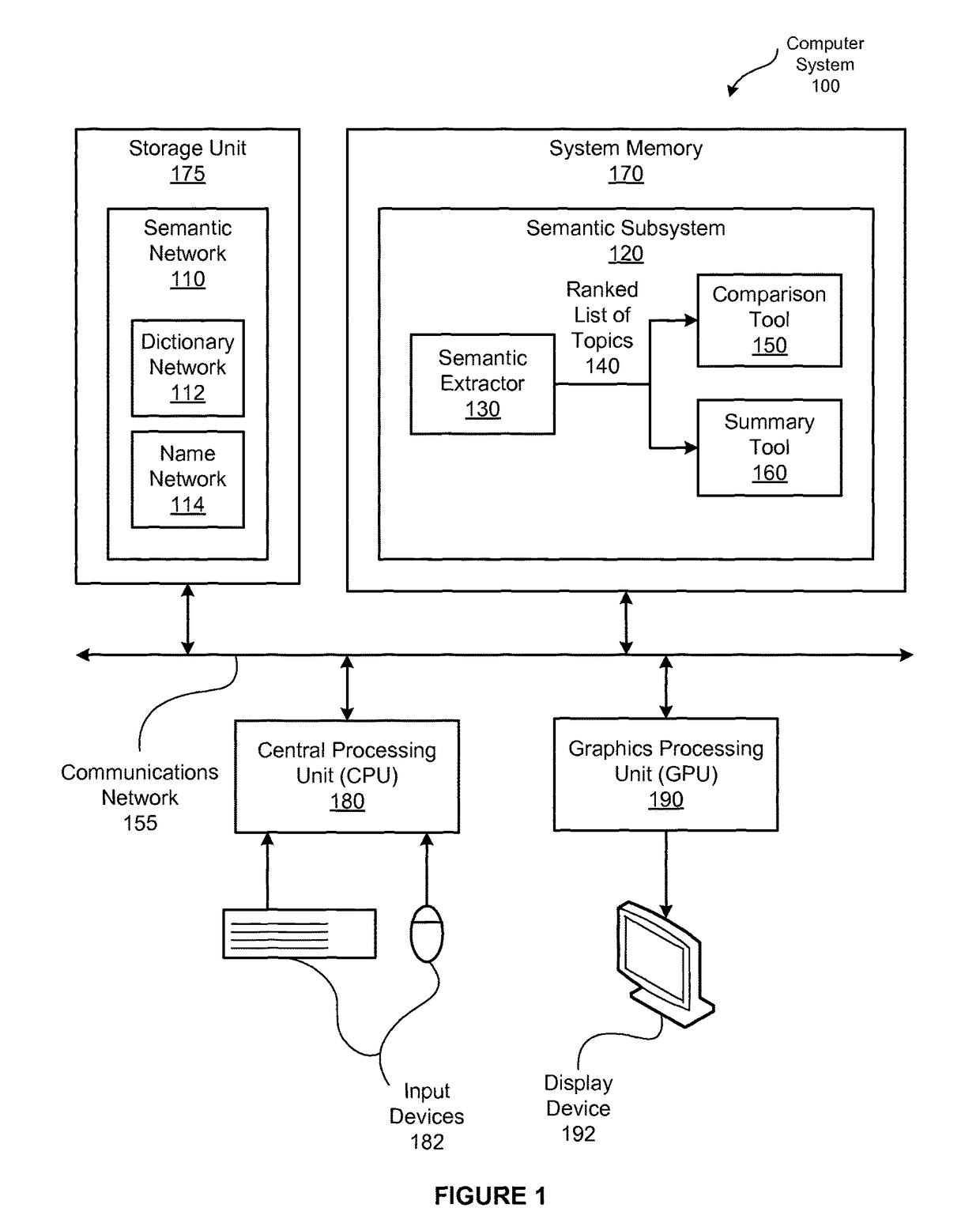

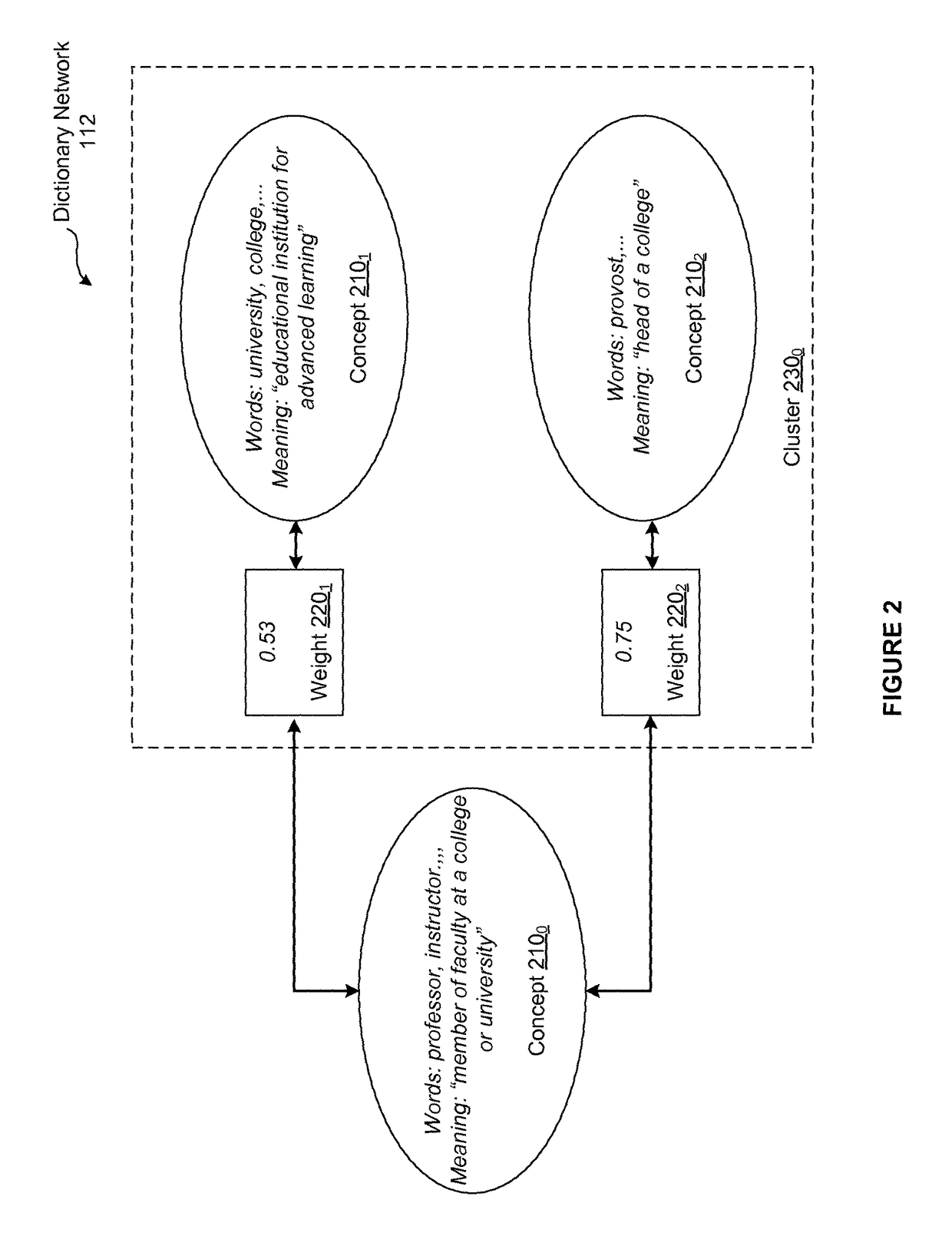

Techniques for understanding the aboutness of text based on semantic analysis

Active · US20160292145A1 · High result · Facilitate meaningful text interpretation · Semantic analysis · Special data processing applications · Semantic analyzer · Topic analysis

In one embodiment of the present invention, a semantic analyzer translates a text segment into a structured representation that conveys the meaning of the text segment. Notably, the semantic analyzer leverages a semantic network to perform word sense disambiguation operations that map text words included in the text segment into concepts (word senses with a single, specific meaning) that are interconnected with relevance ratings. A topic generator then creates topics on-the-fly that include one or more mapped concepts that are related within the context of the text segment. In this fashion, the topic generator tailors the semantic network to the text segment. A topic analyzer processes this tailored semantic network, generating a relevance-ranked list of topics as a meaningful proxy for the text segment. Advantageously, operating at the level of concepts and topics reduces the misinterpretations attributable to key word and statistical analysis methods.

Owner:KLANGOO INC

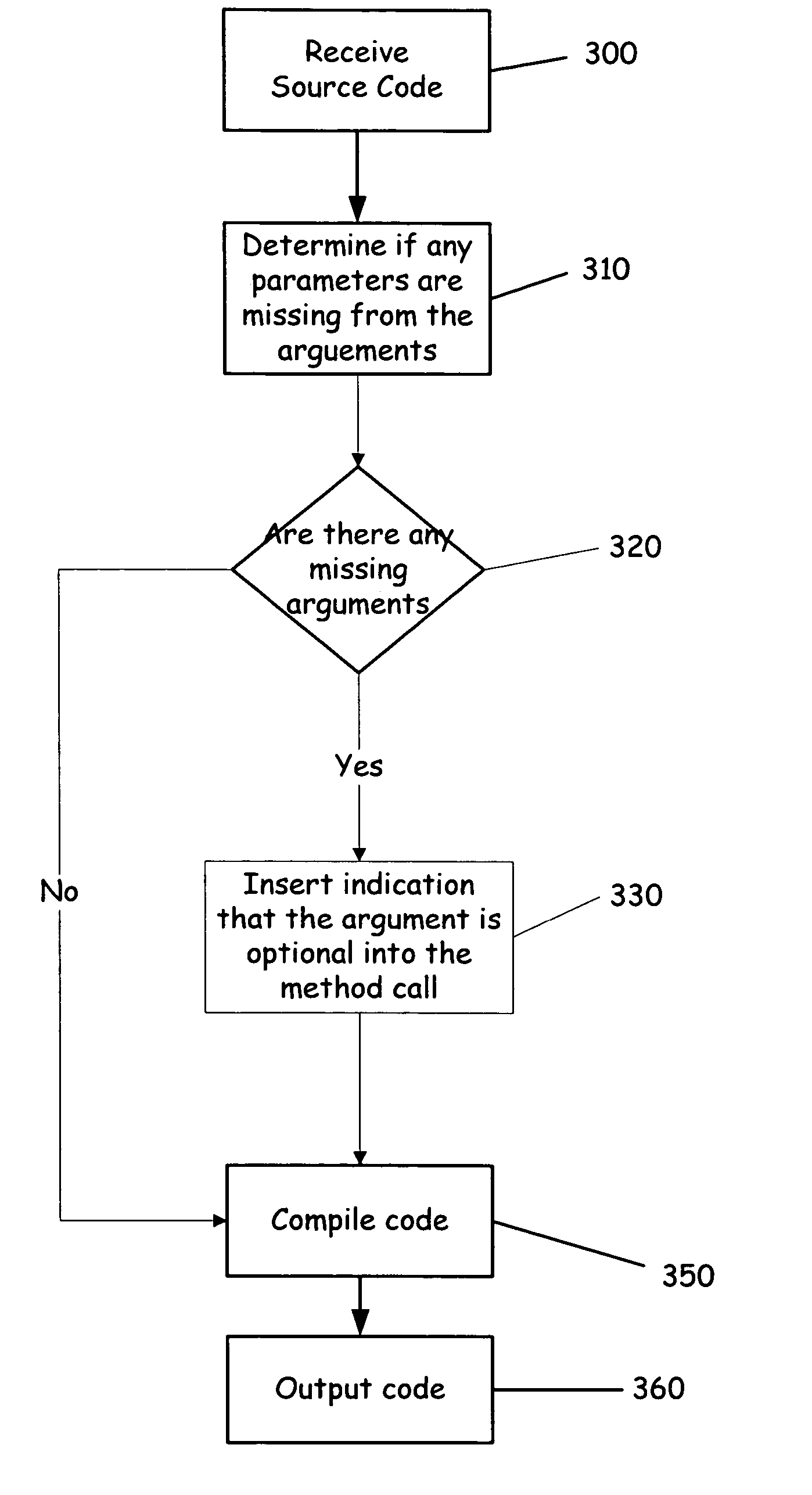

Specifying optional and default values for method parameters

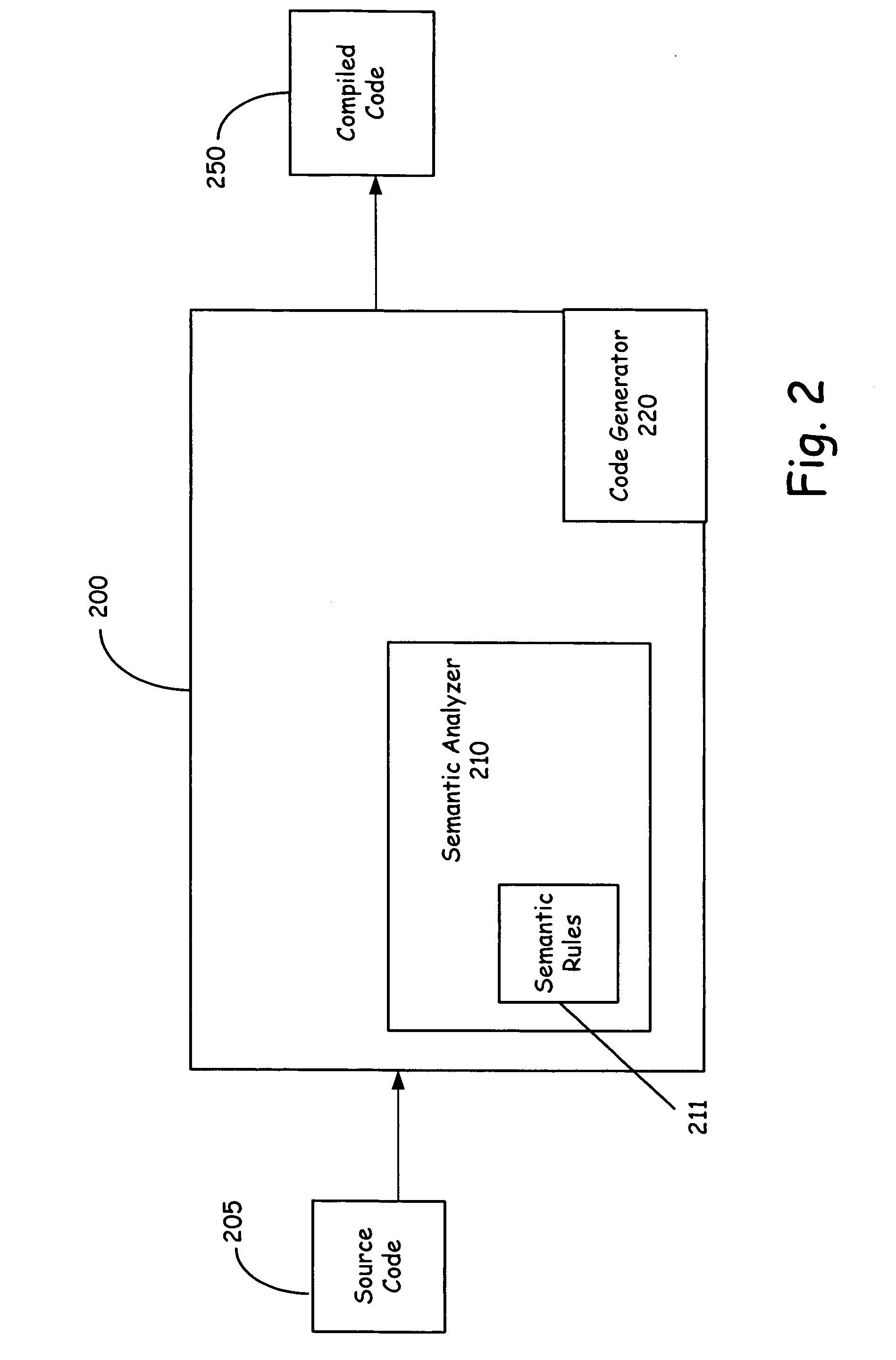

Inactive · US20070142929A1 · Well formed · Electric controllers · Ignition automatic control · Semantic analyzer · Source code

An embodiment provides a way to handle default parameters in a method call that are not constant values. During the compiling process of a source code method, a compiler generates code before the method body for every optional parameter. The generated code checks if each optional parameter has a valid value. If a known tag is found, then the generated code evaluates the default expression and assigns the return value to the corresponding parameter. During the compiling process of a source code method call, a compiler, or a semantic analyzer, identifies the defined arguments in the method call. If any arguments are missing, the process uses a known tag for the missing argument. Once all parameters have valid values, passed as arguments or returned from the default expression evaluation, the original method body is executed.

Owner:MICROSOFT TECH LICENSING LLC
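The "known tag" mechanism in the abstract above resembles the sentinel idiom, which can be written by hand in Python. The sketch below only imitates what the described compiler would generate; the `connect` function, its parameters and its default expressions are hypothetical.

```python
_MISSING = object()  # the "known tag" standing in for an omitted argument


def connect(host, port=_MISSING, timeout=_MISSING):
    # Generated-style prologue: evaluate each default expression only if the
    # caller omitted the argument. Defaults may depend on earlier parameters.
    if port is _MISSING:
        port = 443 if host.startswith("secure.") else 80
    if timeout is _MISSING:
        timeout = 2.0 * (1 if port == 80 else 2)
    # The original method body runs once every parameter has a valid value.
    return host, port, timeout
```

Because the defaults are evaluated inside the prologue rather than fixed at definition time, they can be arbitrary non-constant expressions, which is the case the abstract is concerned with.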

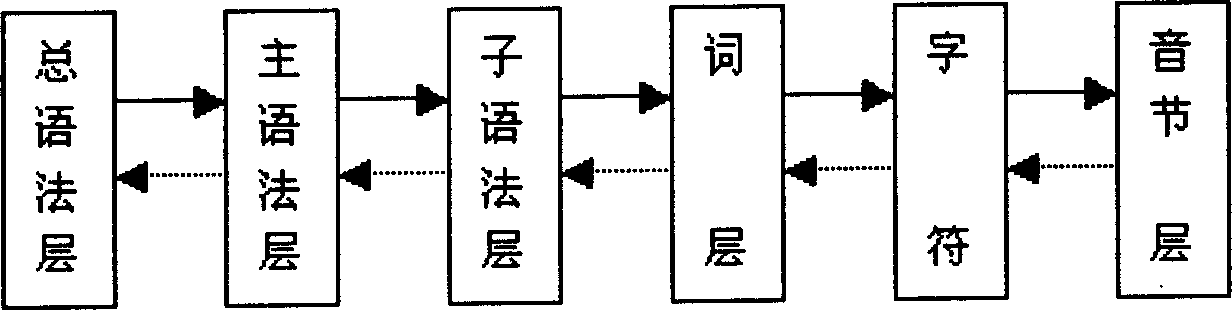

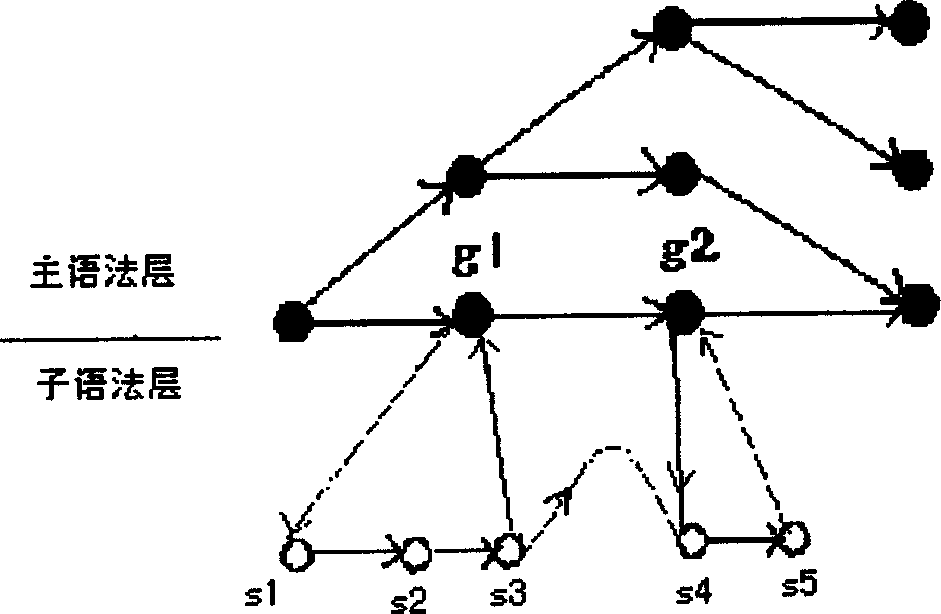

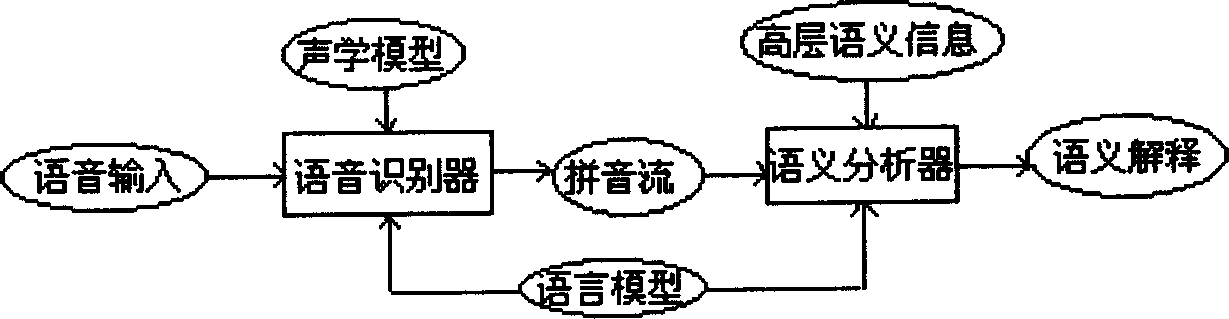

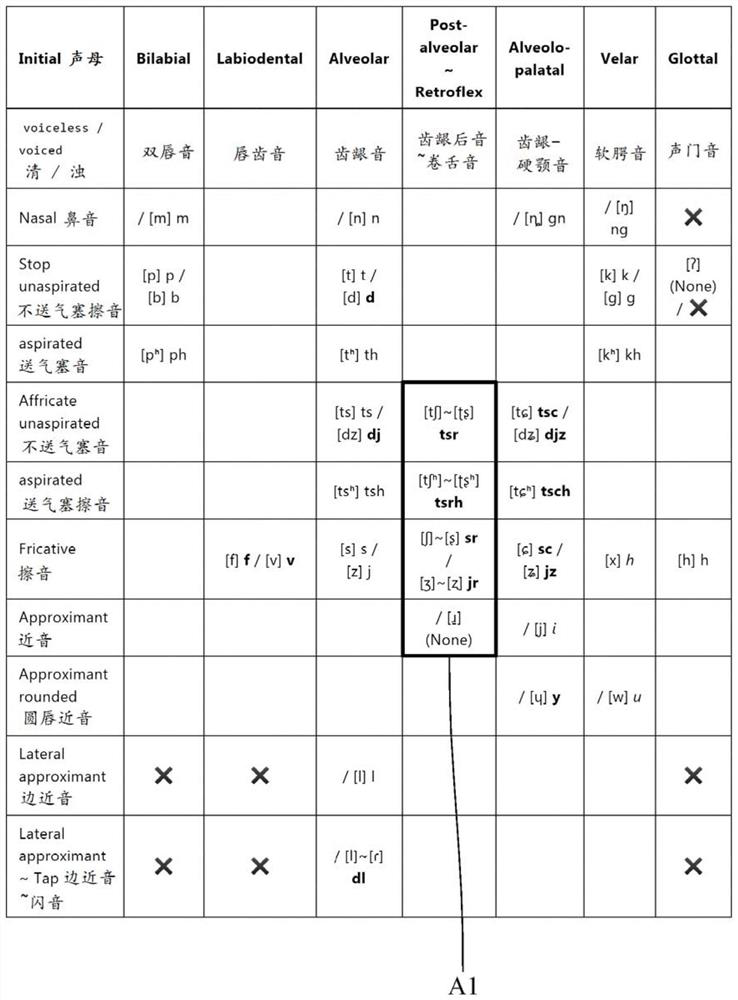

Method for semantic analyzer based on grammar model

Inactive · CN1588537A · Easy to analyze · Improve operational efficiency · Speech recognition · Special data processing applications · Semantic analyzer · Analyser

The invention is a method of establishing semantic analyzer based on syntactic model in the field of intelligent information processing, using high-layer semantic information of a telephone dial system to establish a syntactic model, and applying the syntactic model to semantic analysis, automatically splitting phonetic stream, organically combining phonetic-Chinese character conversion with voice analysis, including two aspects of syntactic model establishment and semantic analysis. It advances a method of using high-layer semantic information in a syntactic model to split the phonetic stream. It is a semantic analyzer able to eliminate ambiguity and split sentences. It can perfectly analyze the semantic information of the sentences in or out of the grammatical rule.

Owner:SHANGHAI JIAO TONG UNIV

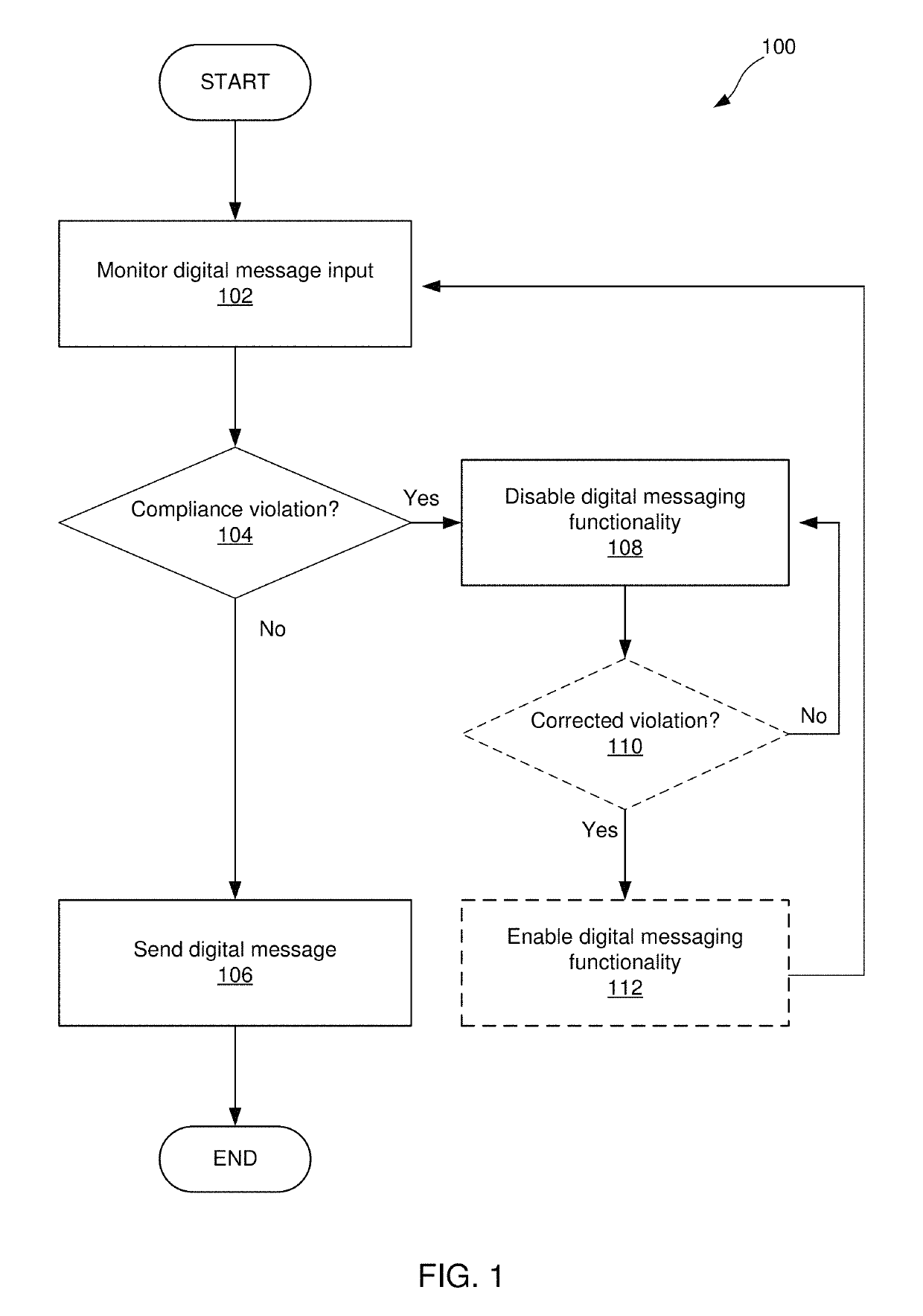

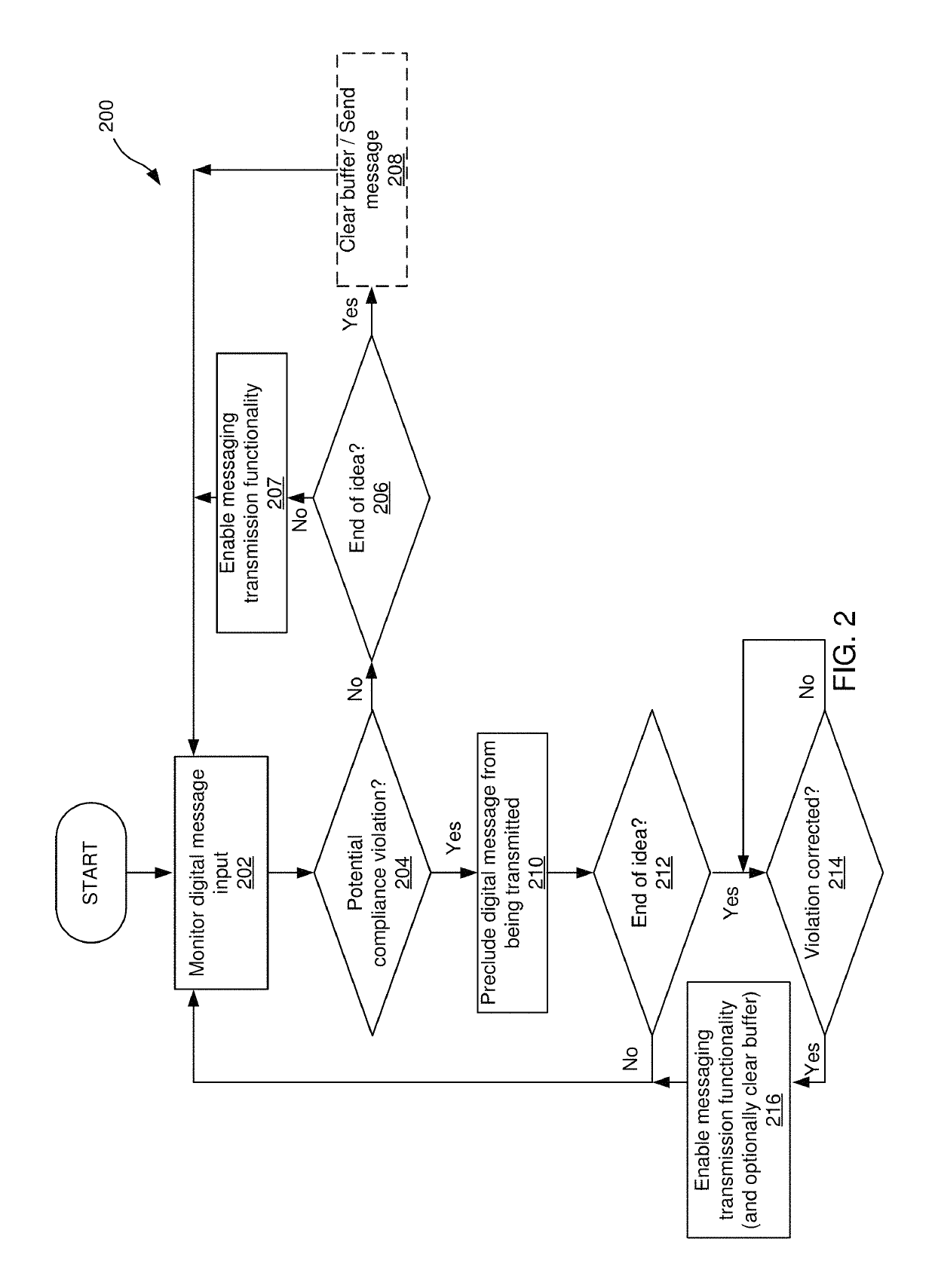

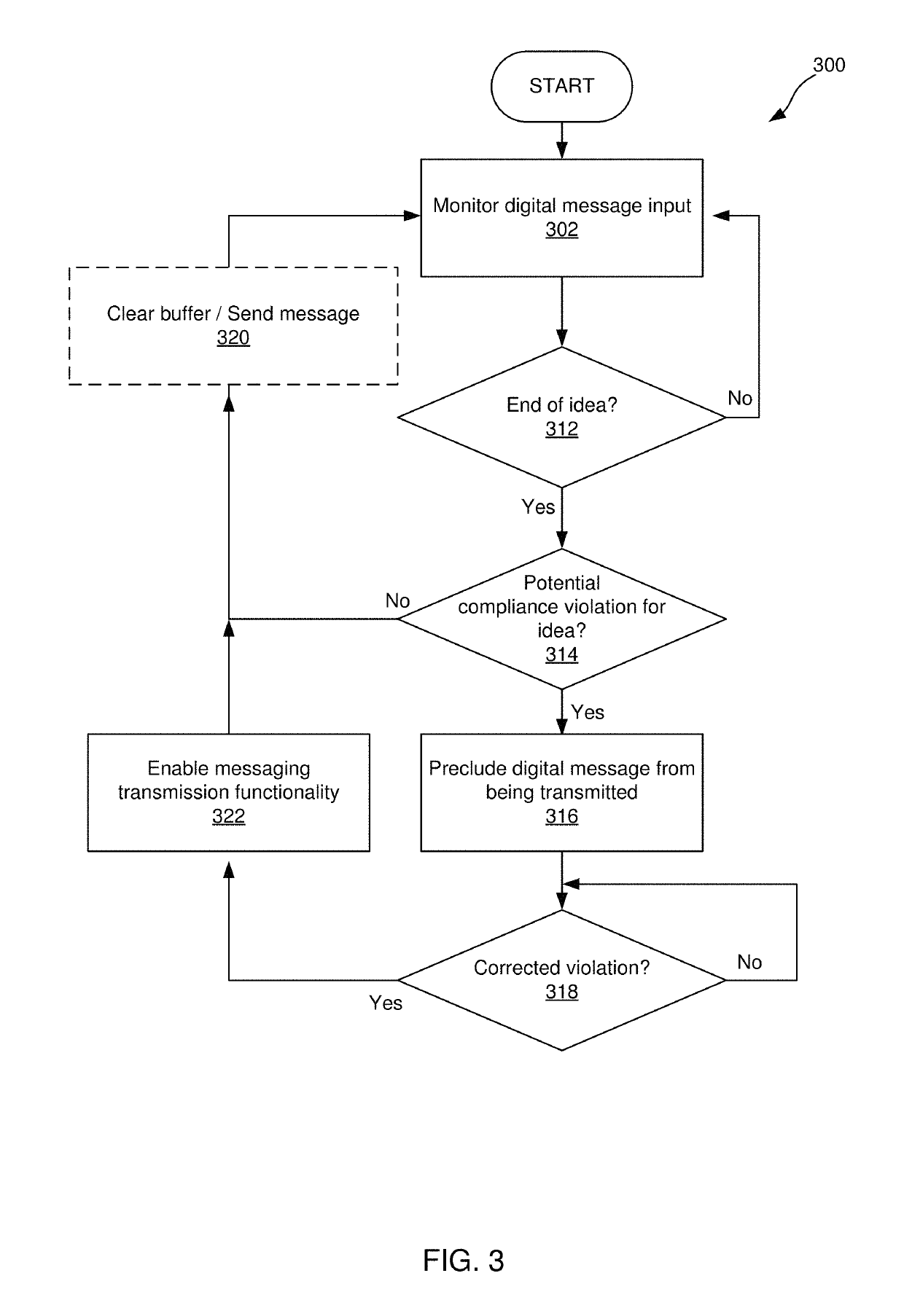

Semantic analyzer for training a policy engine

Owner:FAIRWORDS INC

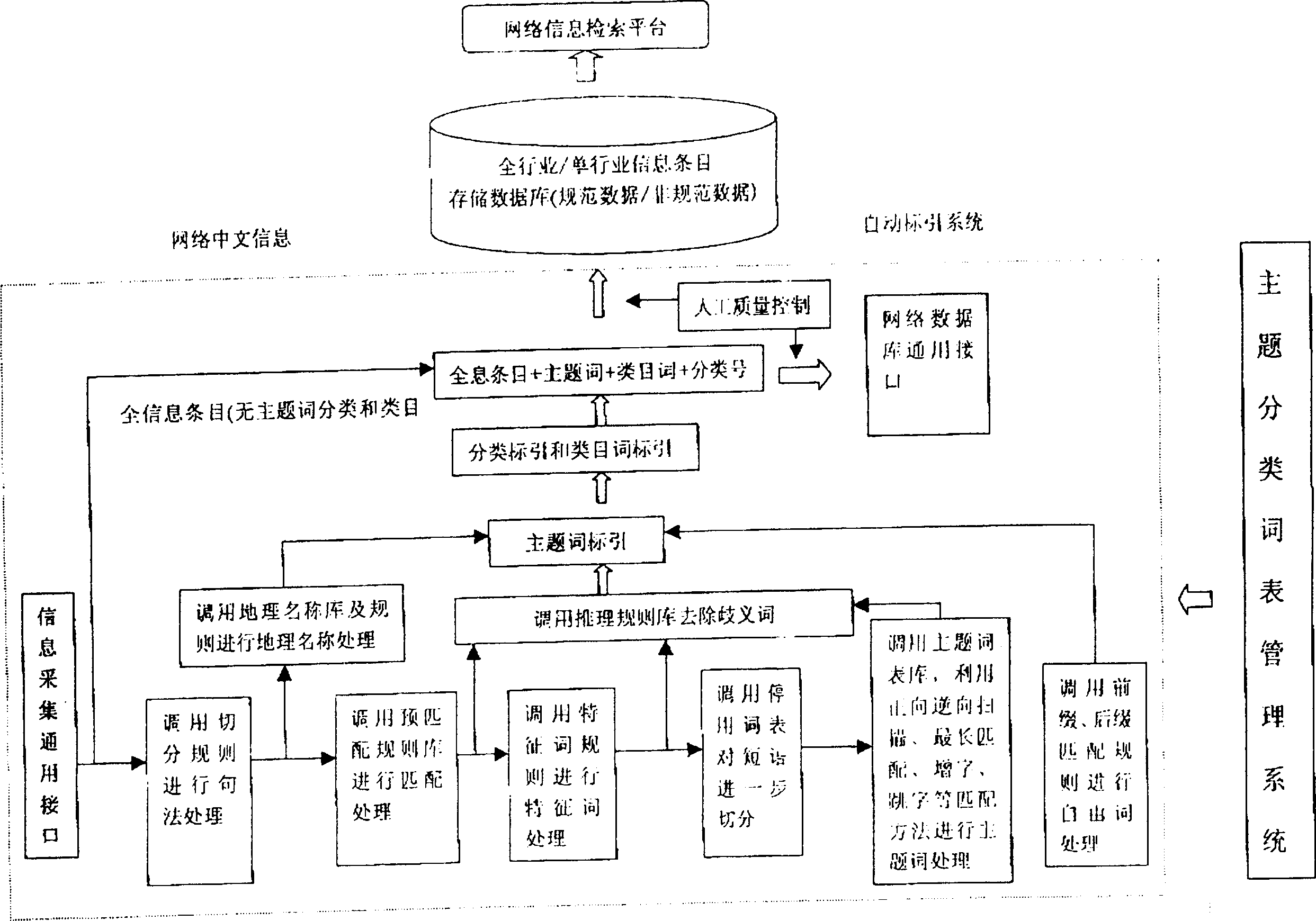

Chinese information automatic indexing system based on network environment

Inactive · CN1430163A · Increase resources · High degree of automation · Special data processing applications · Semantic analyzer · Automatic indexing

An automatic Chinese information index system based on network environment is composed of a subject table for the whole profession, a universal Chinese splitting rule library, special splitting rule libraries for each profession, a universal obsolete character library, special obsolete character libraries for each profession, a Chinese geographic name library, a geographic name splitting rule library, an index inference rule library, and special index inference rule libraries for each profession.

Owner:北京标杆网络技术有限公司

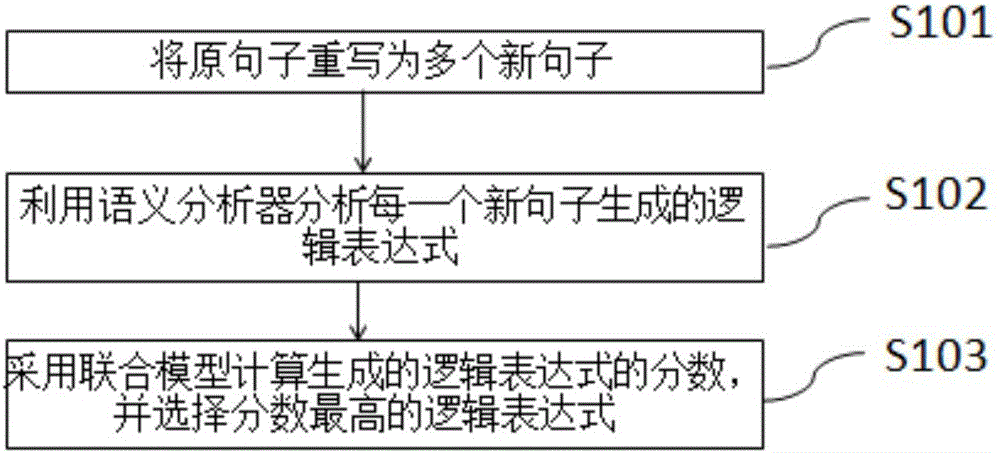

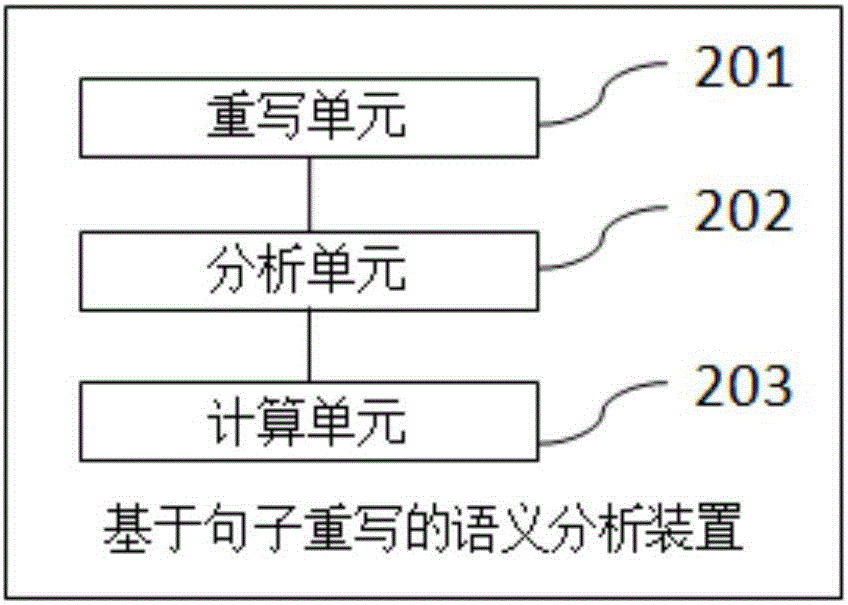

Semantic analysis method and device based on sentence rewriting

Inactive · CN106528537A · Improve accuracy · Improve robustness · Semantic analysis · Special data processing applications · Semantic analyzer · Analysis method

The invention provides a semantic analysis method and device based on sentence rewriting. The method includes rewriting an original sentence to obtain multiple fresh sentences; analyzing the logical expression for generating each fresh sentence through a semantic analysis device; computing grades of logical expressions through a joint model, and selecting the logical expression of the largest grade. According to the method, the sentences can be reanalyzed by sentence writing, semantic analysis can be further performed through the logical expressions matching with natural language sentences, and the accuracy and the robustness are improved.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI
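The rewrite, parse, score and select loop in the abstract above can be sketched as follows. The `rewrite`, `parse` and `score` callables are placeholders supplied by the caller (the abstract's rewriting step, semantic analysis device and joint model are not specified in enough detail to reproduce), and `rewrite` is assumed to return at least one sentence.

```python
def best_logical_form(sentence, rewrite, parse, score):
    """Parse every rewriting of the sentence and keep the top-scoring form."""
    candidates = []
    for rewritten in rewrite(sentence):
        logical_form = parse(rewritten)
        candidates.append((score(sentence, rewritten, logical_form), logical_form))
    # Select the logical expression with the largest score.
    return max(candidates, key=lambda pair: pair[0])[1]


# Toy stand-ins, purely illustrative.
def toy_rewrite(sentence):
    return [sentence, sentence.replace("biggest", "largest")]


def toy_parse(sentence):
    return "argmax(city, population)" if "largest" in sentence else "unknown"


def toy_score(original, rewritten, logical_form):
    return 0.0 if logical_form == "unknown" else 1.0
```

In the toy setup, the parser only understands "largest", so the original phrasing "biggest city" fails to parse while its rewriting succeeds, which is the robustness gain the abstract claims for rewriting.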

Method for computer to simulate human brain in learning knowledge, logic theory machine and brain-like artificial intelligence service platform

Active · CN108874380A · Realize intellectual function learning knowledge · Semantic analysis · Software design · Single sentence · Knowledge element

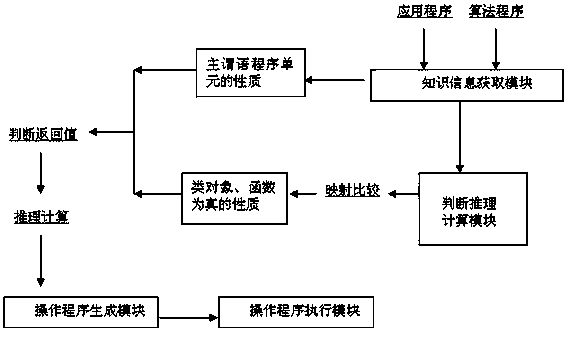

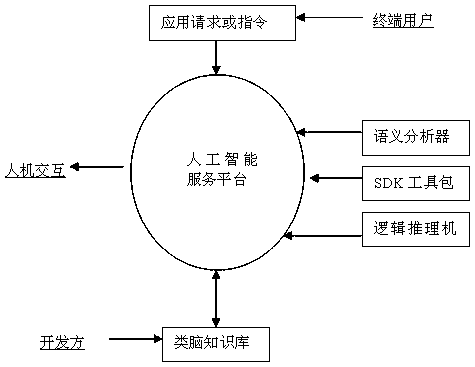

The invention relates to the field of computers, in particular to a method for a computer to simulate human brain in learning knowledge, a logic theory machine and a brain-like artificial intelligence service platform. The method for the computer to simulate human brain in learning knowledge comprises the following steps: establishing a computer brain-like knowledge base, including a lexicon, a class library, a resource library and an intelligent information management library; making the computer call a semantic analyzer to create class basic elements and semantic properties generated by a single sentence with natural language statements by a class method, and store the class basic elements and semantic properties in the class library; and making the computer call the semantic analyzer to generate an intelligent application specific to an intelligent application demand based on intelligent knowledge elements in the class library, and store the intelligent application in the intelligent information management library. A cognitive model for the human brain to recognize objective things by intelligent calculation and judgment and the intelligent mechanism of logical reasoning based on the cognitive model are simulated to a computer system by an artificial method, thereby realizing the intelligent function of a machine to simulate human brain to study and work, and forming a brain-like artificial intelligence service platform.

Owner:HUNAN BENTI INFORMATION TECH RES CO LTD

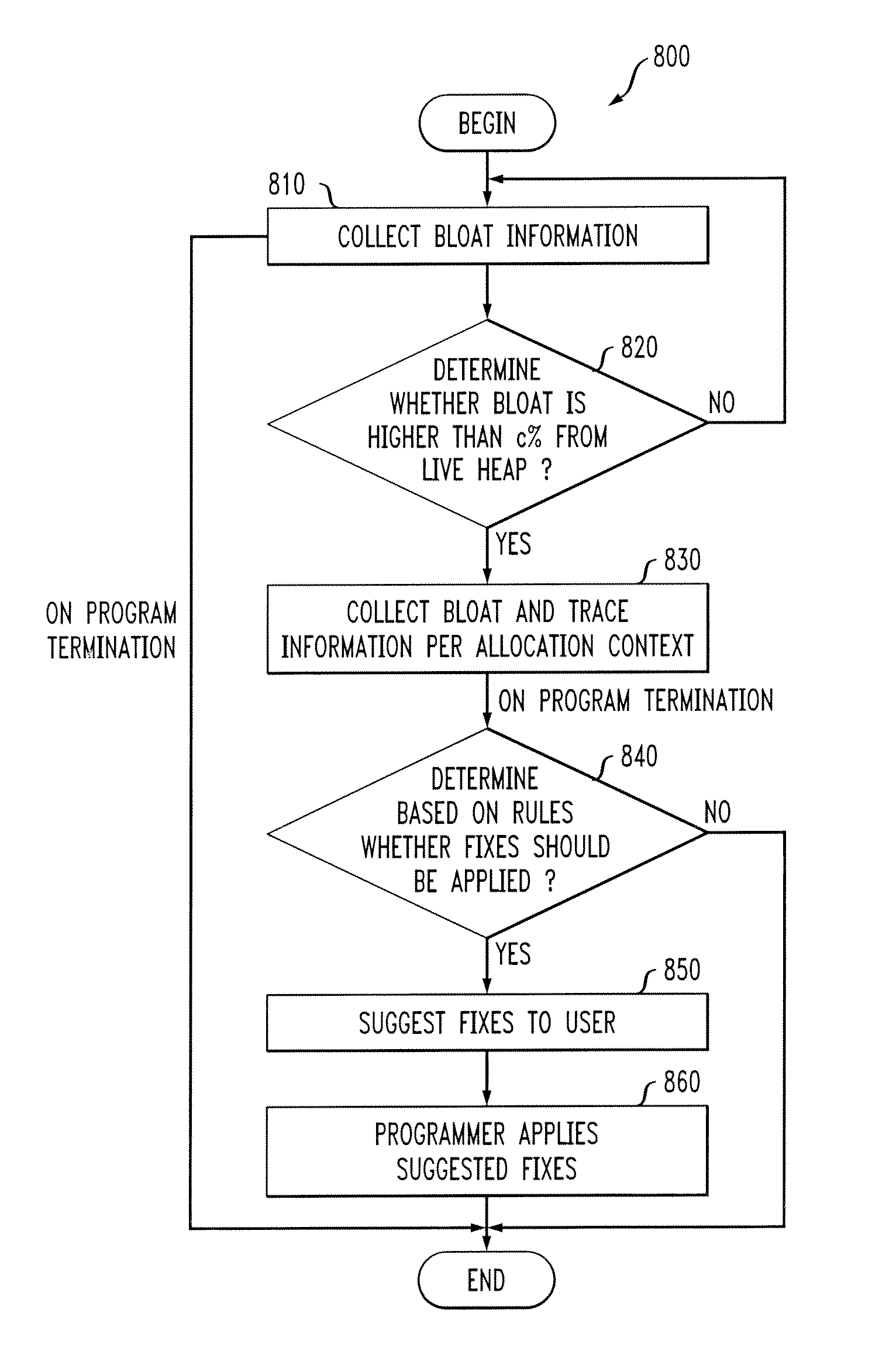

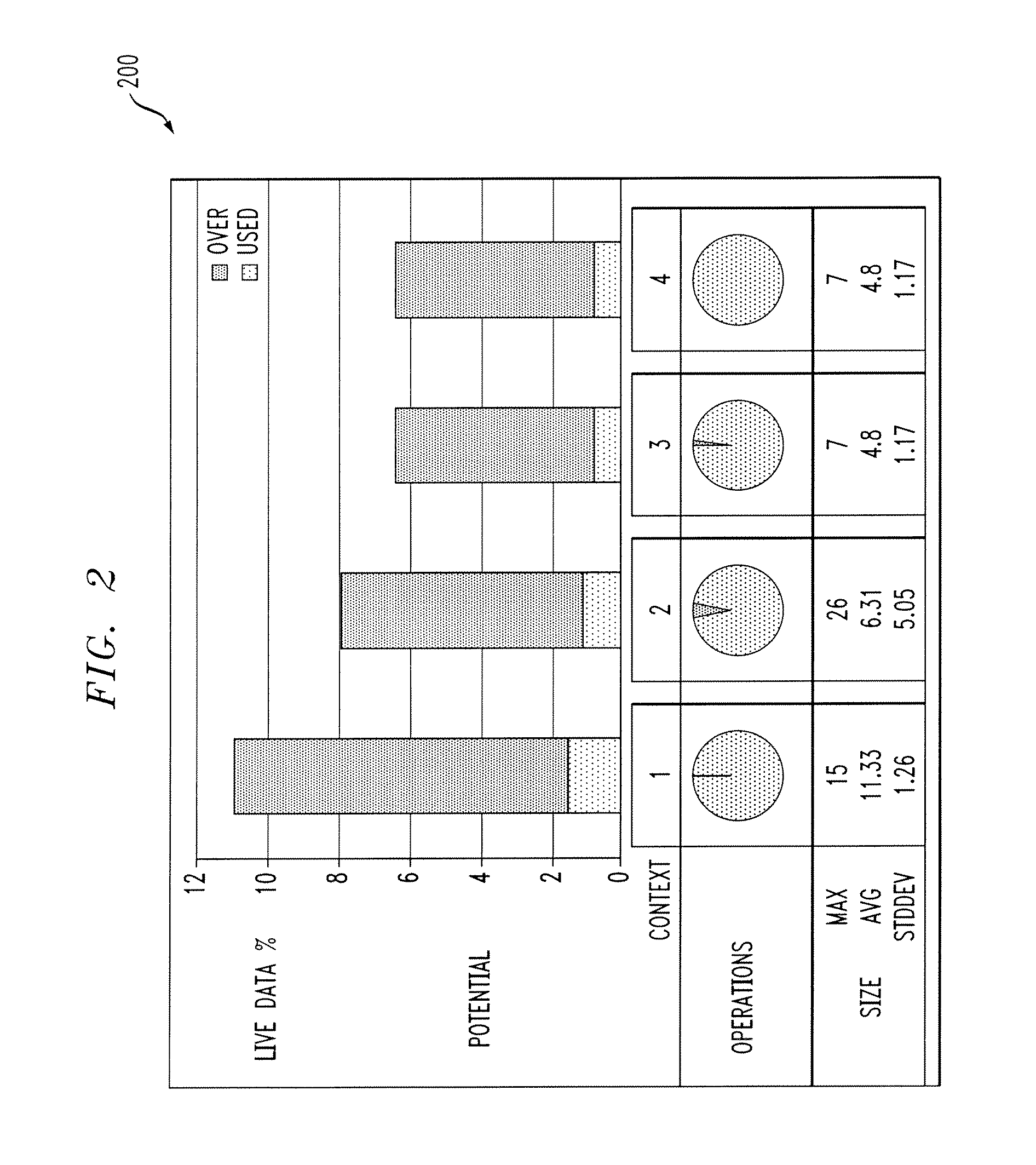

Context-sensitive dynamic bloat detection system that uses a semantic profiler to collect usage statistics

Active · US8374978B2 · Easy to collect · Minimize time · Digital computer details · Fuzzy logic based systems · Programming language · Semantic analyzer

Methods and apparatus are provided for a context-sensitive dynamic bloat detection system. A profiling tool is disclosed that selects an appropriate collection implementation for a given application. The disclosed profiling tool uses semantic profiling together with a set of collection selection rules to make an informed choice. A collection implementation, such as an abstract data entity, is selected for a given program by obtaining collection usage statistics from the program. The collection implementation is selected based on the collection usage statistics using a set of collection selection rules. The collection implementation is one of a plurality of interchangeable collection implementations having a substantially similar logical behavior for substantially all collection types. The collection usage statistics indicate how the collection implementation is used in the given program. One or more suggestions can be generated for improving the collection allocated at a particular allocation context.

Owner:INT BUSINESS MASCH CORP

Unified numerical and semantic analytics system for decision support

Active · US8538915B2 · Digital data information retrieval · Digital data processing details · Semantic analyzer · Semantic query

A system and method for responding to a query. An original query is received. A first semantic query and a second semantic query are extracted from the information of the original query. The first semantic query is transformed, based upon semantic analysis, into a numeric model query. The second semantic query is submitted to a semantic analyzer and the numeric model query is submitted to a numeric model analyzer. A response for the second semantic query and a response for the numeric model query are integrated into an answer for the original query.

Owner:INT BUSINESS MASCH CORP

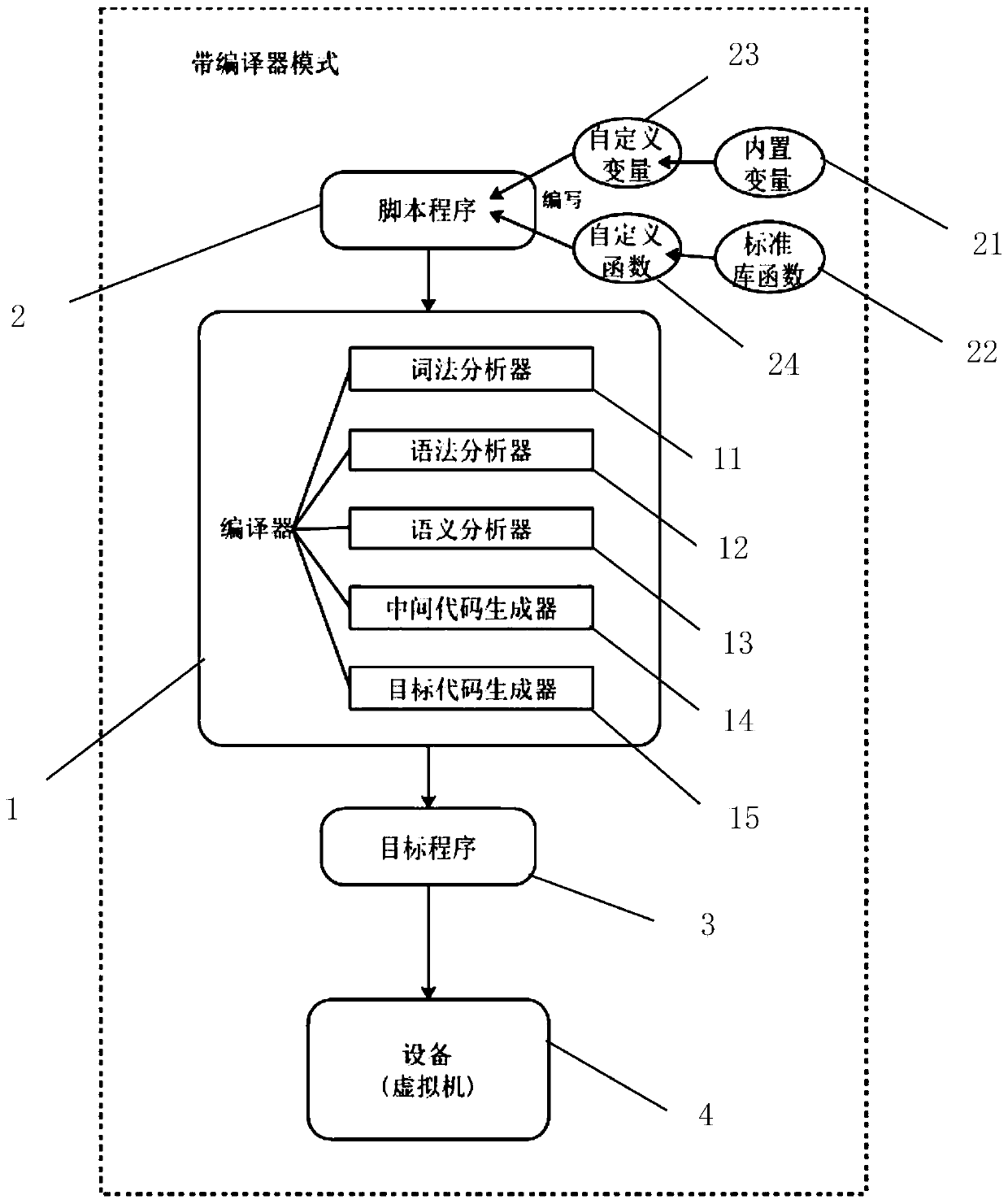

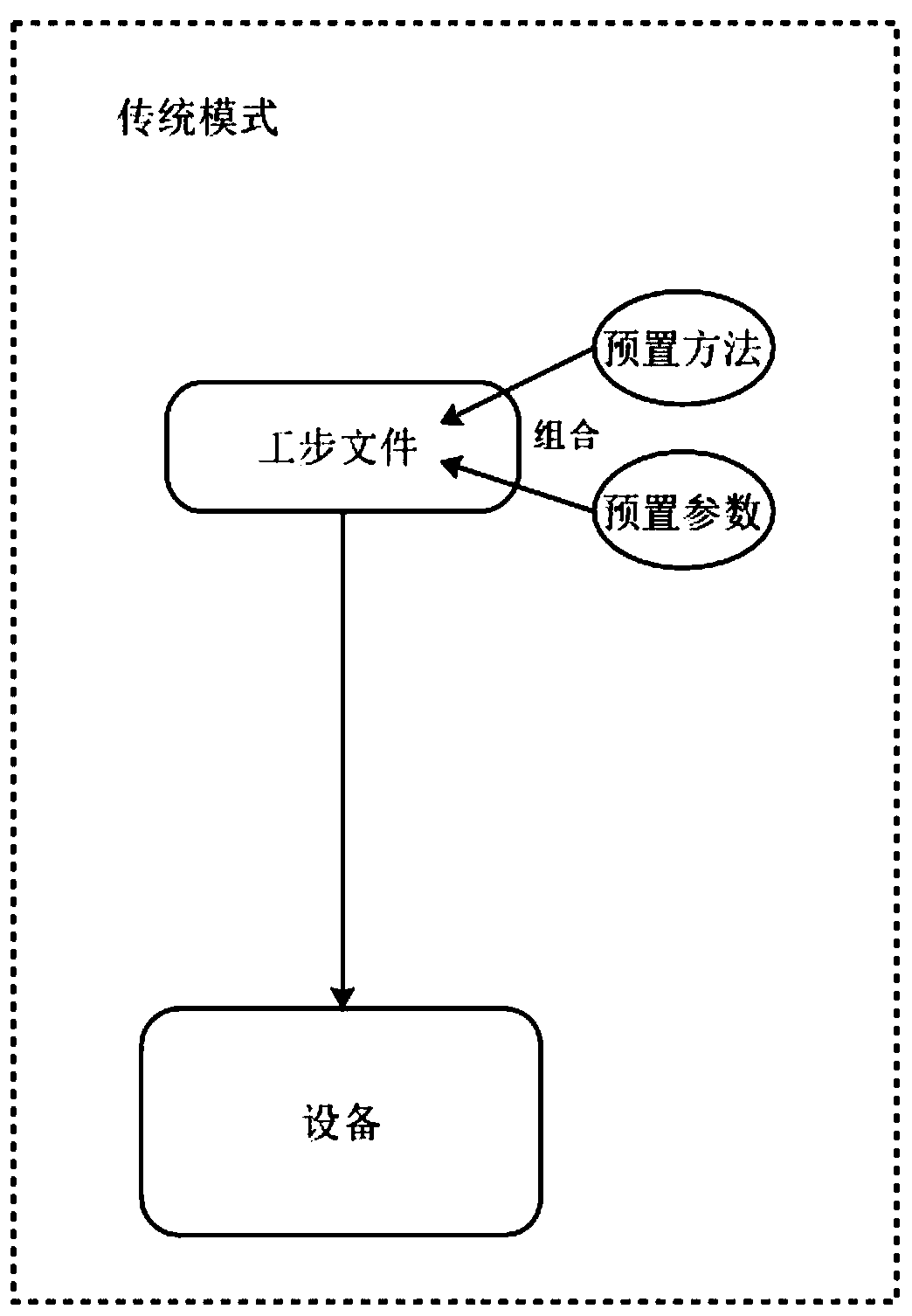

Compiler for compiling script program of battery detection system

Pending · CN110908668A · Increase flexibility · Protection technology · Software simulation/interpretation/emulation · Code compilation · Semantic analyzer · Lexical analysis

The invention provides a compiler for compiling a script program of a battery detection system. The compiler comprises a lexical analyzer, a syntactic analyzer, a semantic analyzer, an intermediate code generator and a target code generator. The beneficial effects of the invention are that: battery testing methods and parameters are self-defined by writing script programs, the script programs are compiled by the compiler into target programs capable of being executed by equipment, then the target programs are loaded into the equipment to run, and testing is completed, so that the flexibility of a battery testing system is improved, and more and more complex functions are achieved. Meanwhile, a manufacturer does not need to modify the system when the testing method is modified or added, and a large amount of time and maintenance cost is saved; and technologies, processes, parameters and the like of users are protected.

Owner:深圳市新威尔电子有限公司

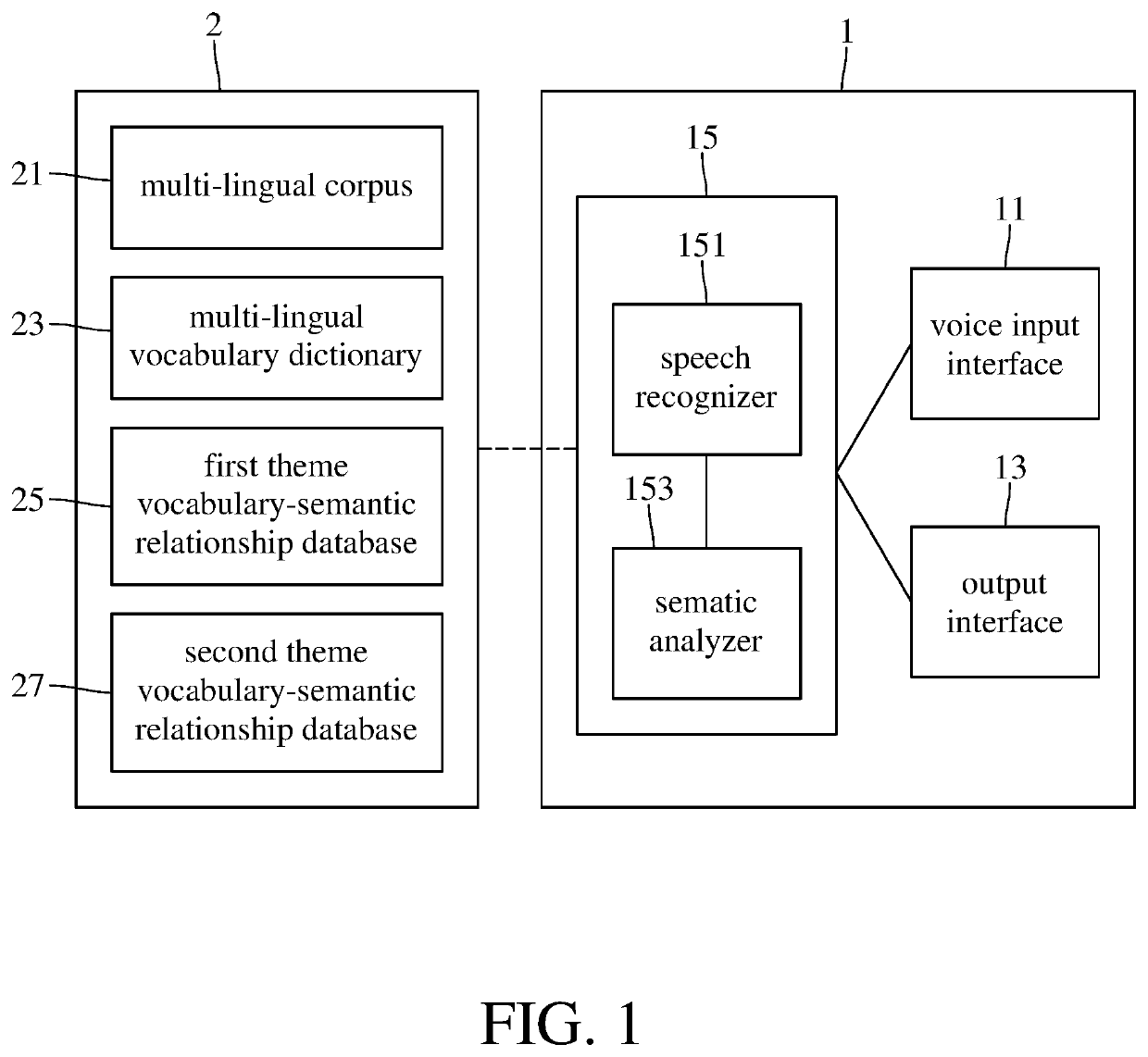

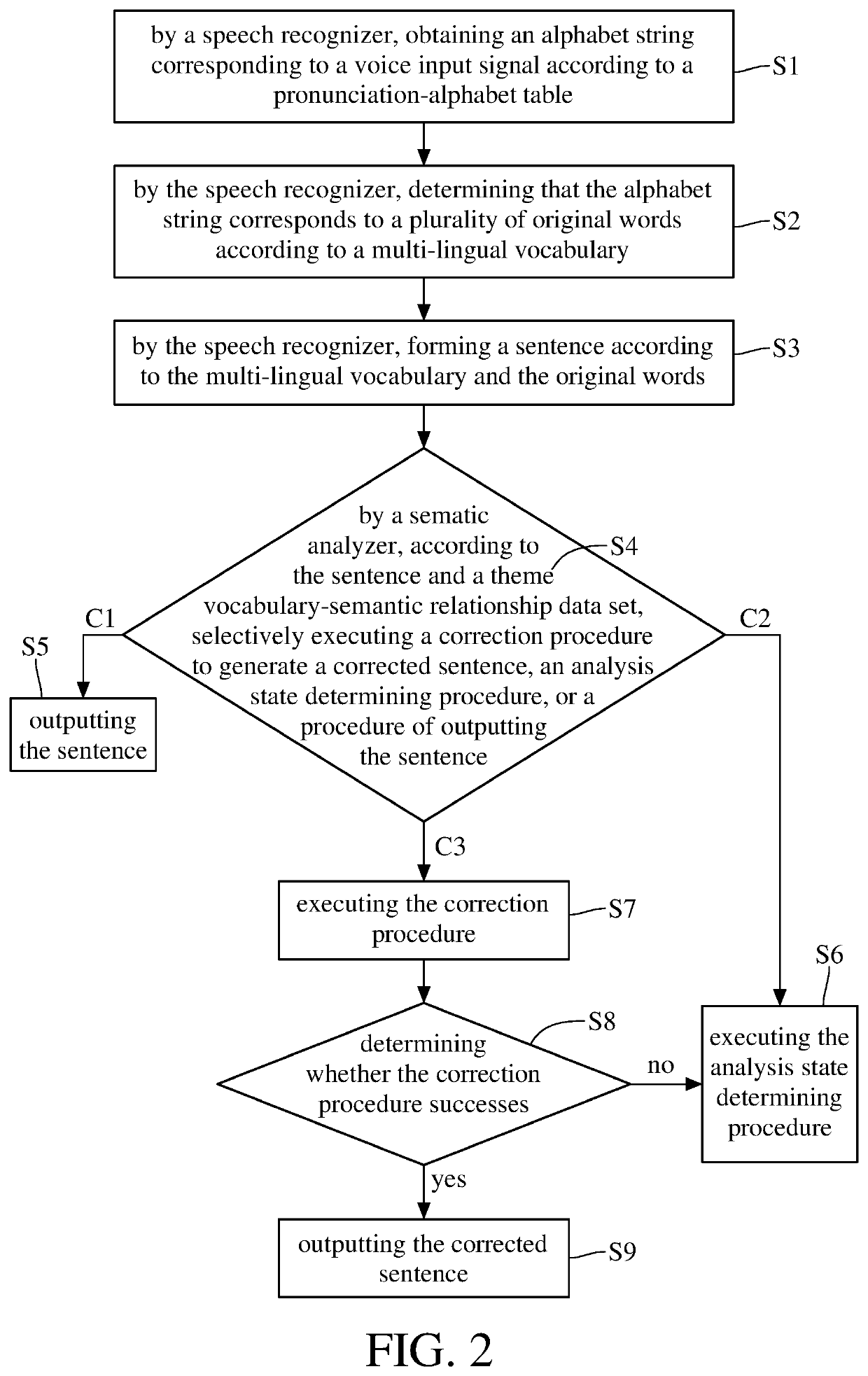

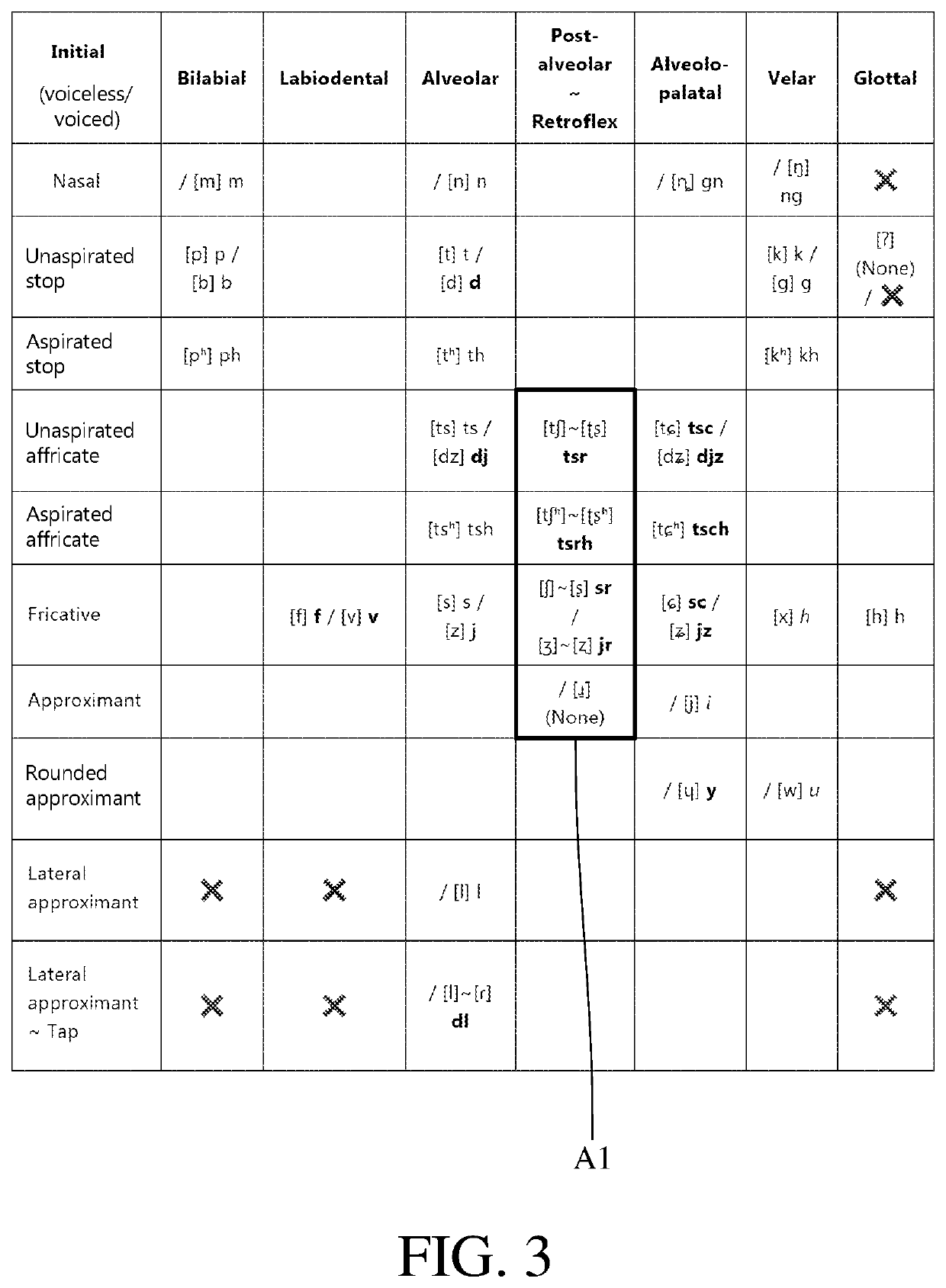

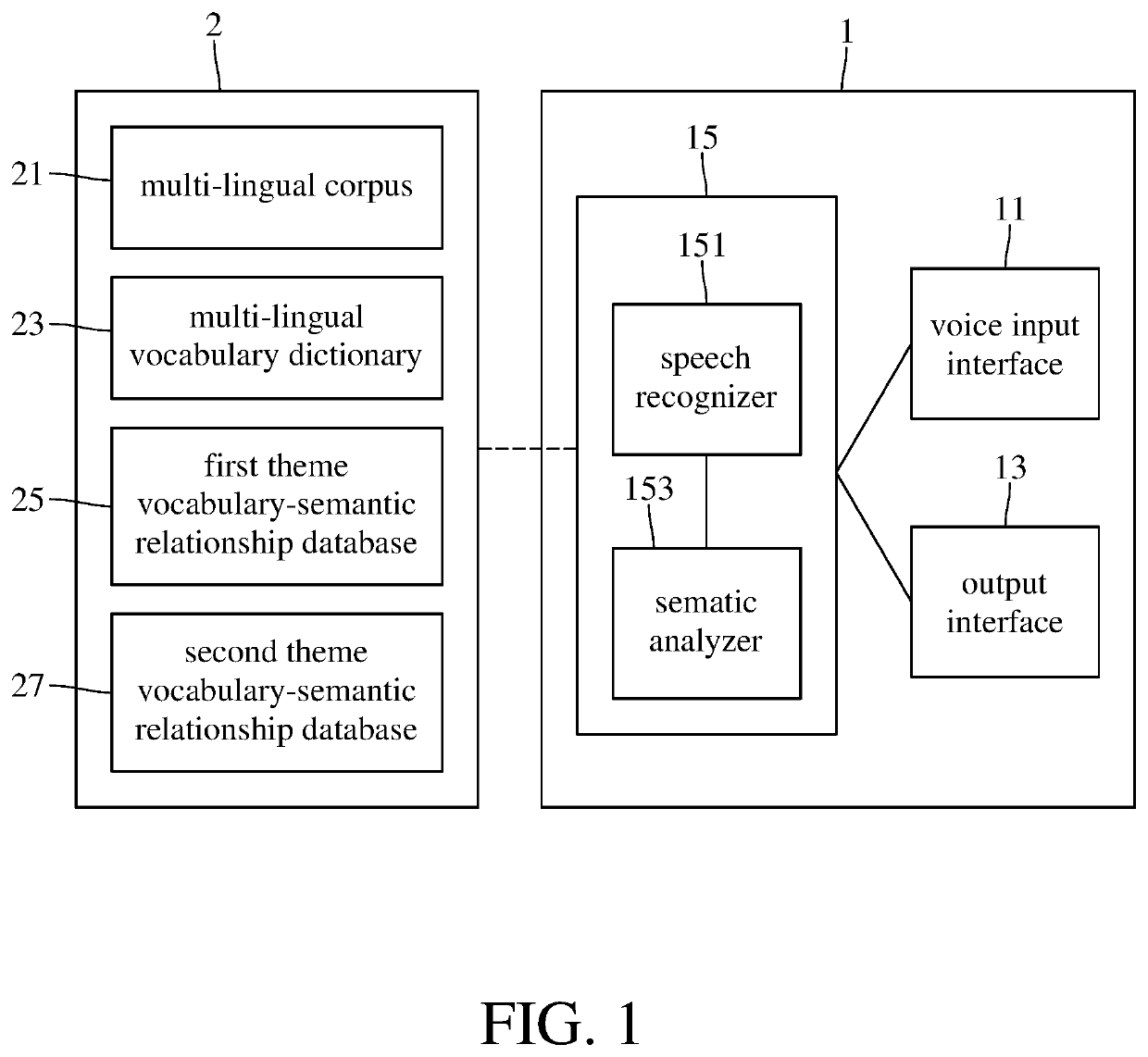

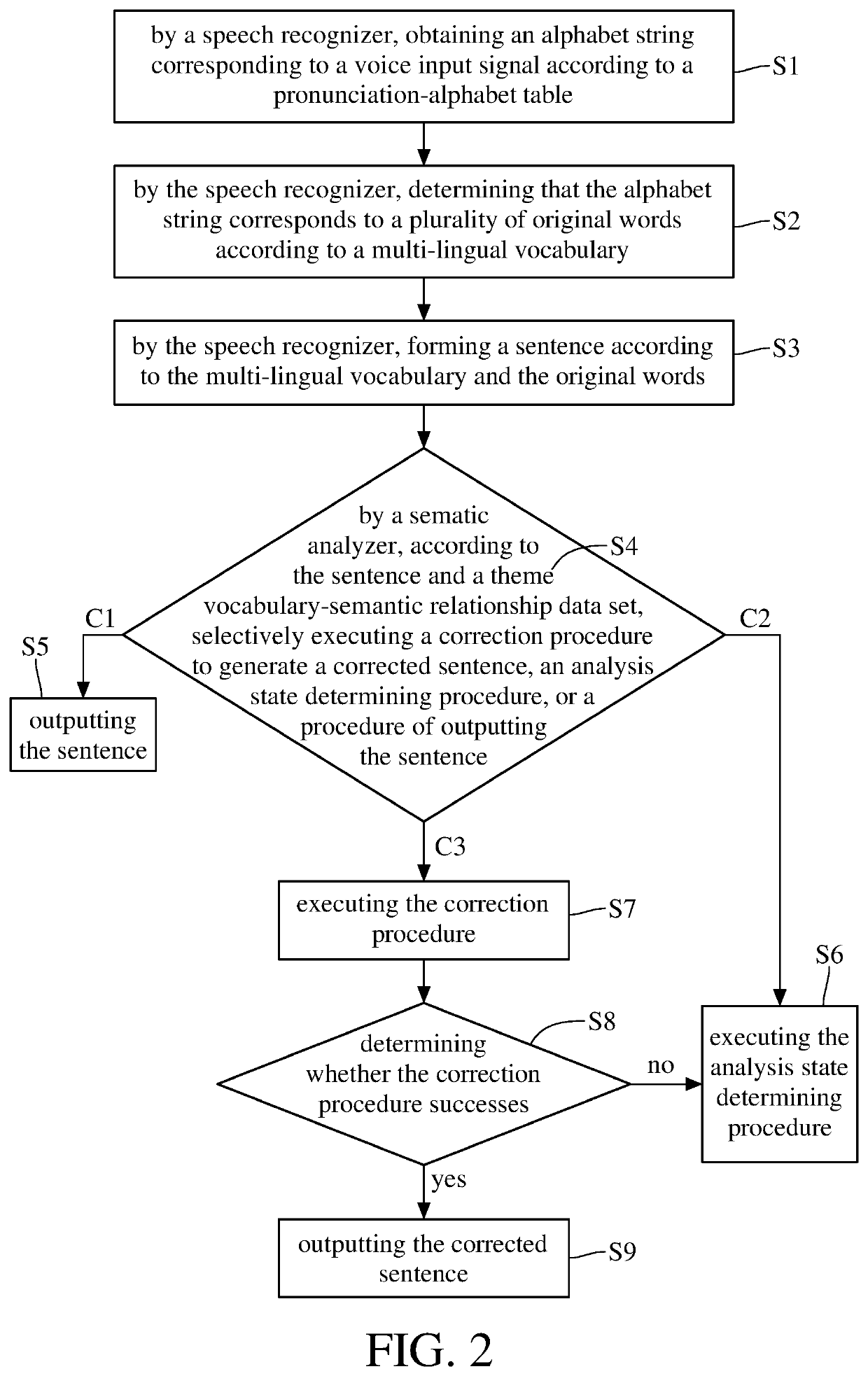

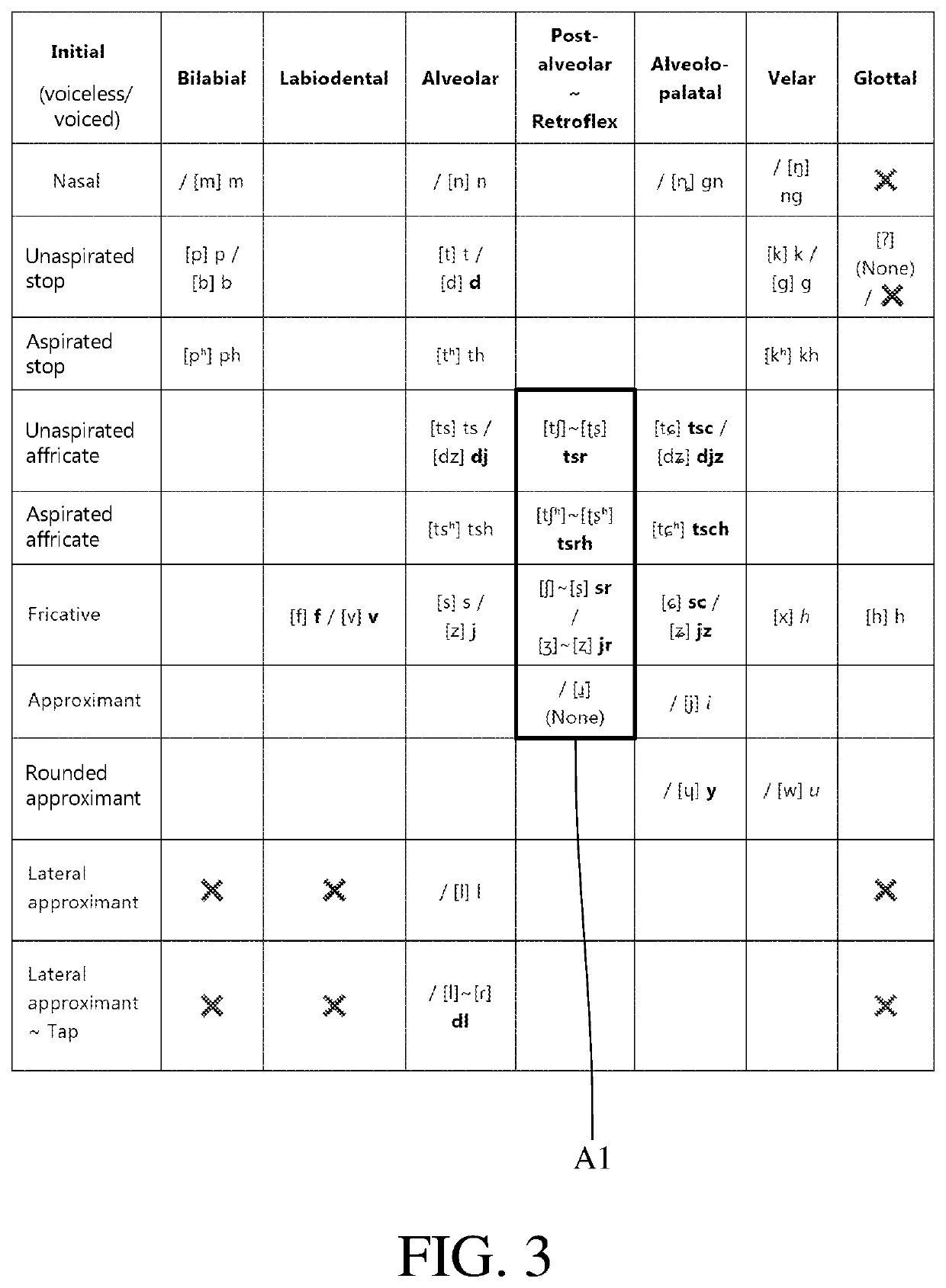

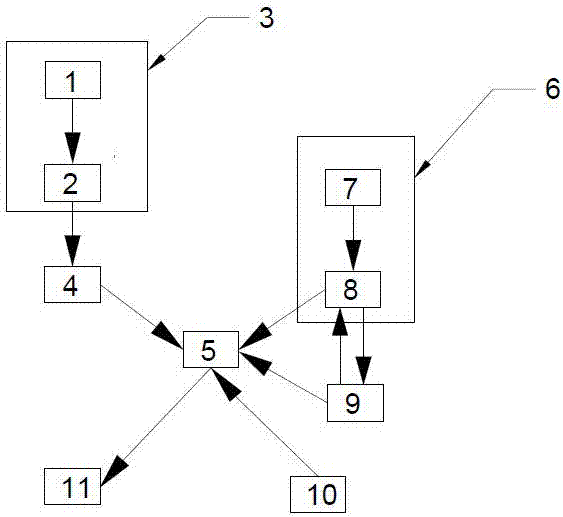

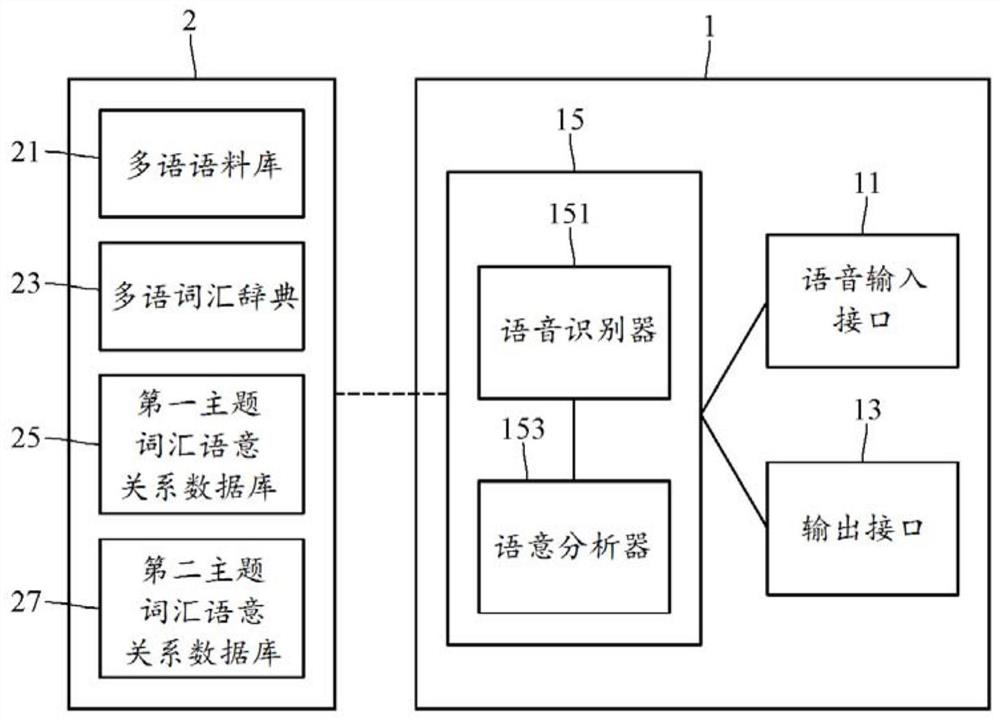

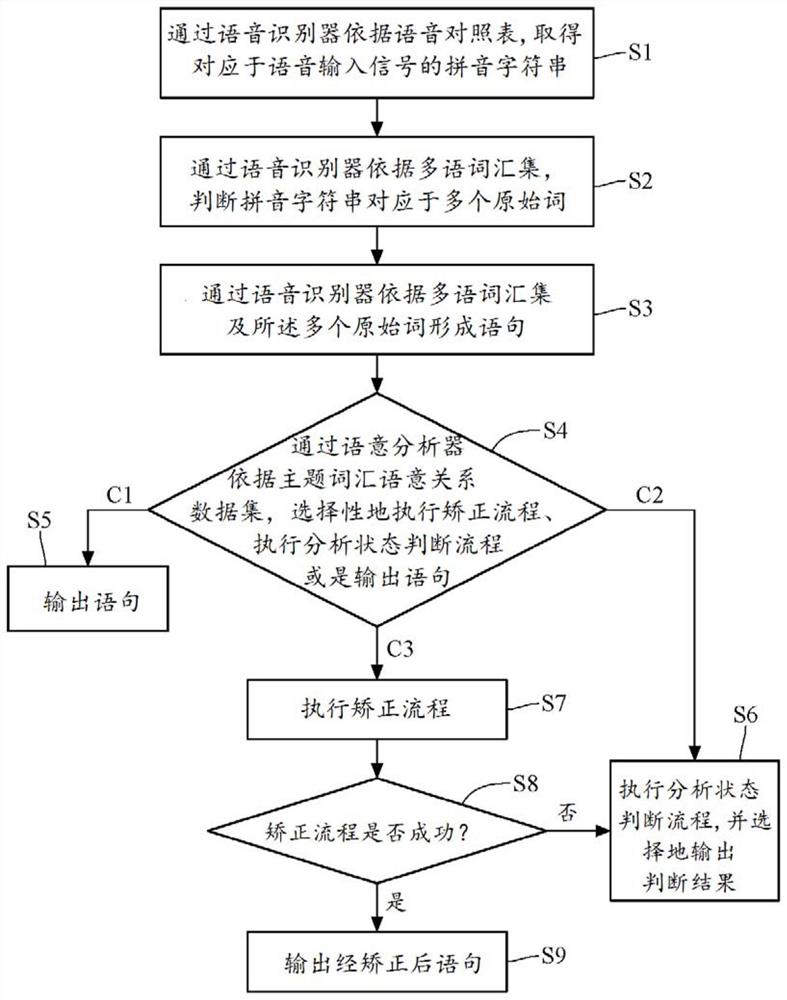

Multi-lingual speech recognition and theme-semanteme analysis method and device

A multi-lingual speech recognition and theme-semanteme analysis method comprises steps executed by a speech recognizer: obtaining an alphabet string corresponding to a voice input signal according to a pronunciation-alphabet table, determining that the alphabet string corresponds to original words according to a multi-lingual vocabulary, and forming a sentence according to the multi-lingual vocabulary and the original words, and comprises steps executed by a semantic analyzer: according to the sentence and a theme vocabulary-semantic relationship data set, selectively executing a correction procedure to generate a corrected sentence, an analysis state determining procedure or a procedure of outputting the sentence, outputting the corrected sentence when the correction procedure succeeds, and executing the analysis state determining procedure to selectively output a determined result when the correction procedure fails.

Owner:NAT CHENG KUNG UNIV

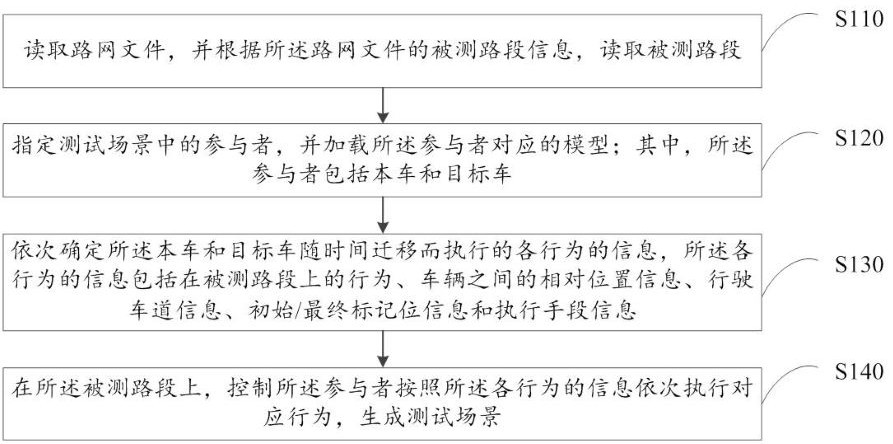

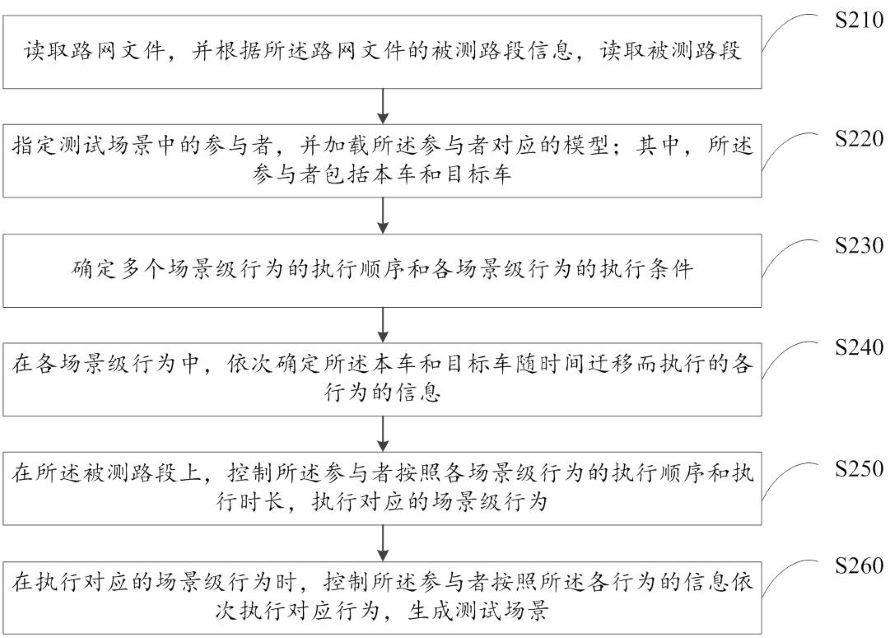

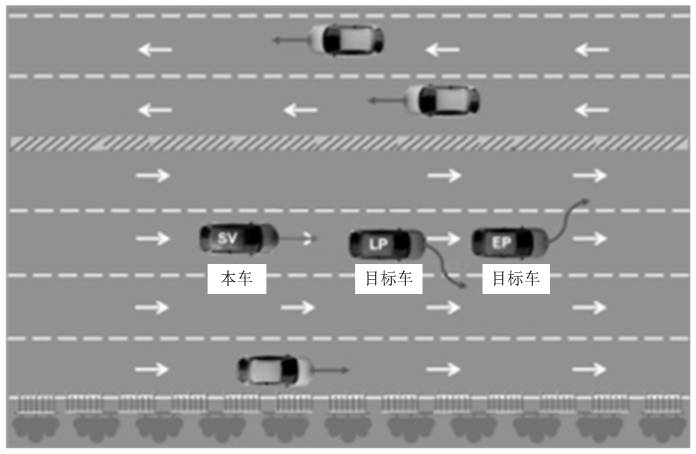

Compiler of test scene generation source code and test scene generation system

Active · CN112799653A · Improve production efficiency · Improve accuracy · Software testing/debugging · Design optimisation/simulation · Semantic analyzer · Algorithm

The invention discloses a compiler for generating a source code in a test scene and a test scene generation system, and relates to an automatic driving technology. The compiler comprises an integrated development environment used for obtaining a source code; a lexical analyzer used for analyzing the source code to obtain a regular set; a grammar analyzer used for analyzing the regular set according to grammar rules to obtain grammar units; a semantic analyzer used for adding an attribute grammar on the basis of a grammar unit to obtain a semantic data structure; during execution, the semantic data structure is used for reading a measured road section according to measured road section information of a road network file; assigning participants in the test scene, and loading models corresponding to the participants; sequentially determining information of behaviors executed by the vehicle and the target vehicle along with time migration; and on the tested road section, controlling the participants to sequentially execute corresponding behaviors according to the information of each behavior, and generating the test scene. According to the method, the test scene is generated by adopting the source code which is convenient to edit and high in readability, and the corresponding compiler and system are developed.

Owner:BEIJING CATARC DATA TECH CENT +2

Sign Language Interpretation and Display Voice System Based on Myoelectric Signal and Motion Sensor

Active · CN104134060B · Easy to identify · Improve recognition rate · Input/output for user-computer interaction · Character and pattern recognition · Crowds · Direct communication

The invention relates to a sign language interpreting, displaying and sound producing system based on electromyographic signals and motion sensors. The sign language interpreting, displaying and sound producing system comprises a gesture recognition subsystem and a semantic displaying and sound producing subsystem. The gesture recognition subsystem comprises multi-axial motion sensors and a multi-channel muscle current acquisition and analysis module. The gesture recognition subsystem is worn on the left arm and the right arm of a user, and obtains the original surface electromyogram signals of the user and motion information of the arms of the user; gestures are differentiated by processing the electromyogram signals and data of the motion sensors. The displaying and sound producing subsystem comprises a semantic analyzer, a voice control module, a loudspeaker, a displaying module, a storage module, a communication module and the like. By adopting pattern recognition technology based on the electromyographic signals of both arms and the data of the motion sensors, the system increases the gesture recognition accuracy rate; through the combination with the semantic displaying and sound producing subsystem, interpreting from commonly-used sign language to voice or text is achieved, and the efficiency of direct communication between people with language disorders and normal people is improved.

Owner:SHANGHAI OYMOTION INFORMATION TECH

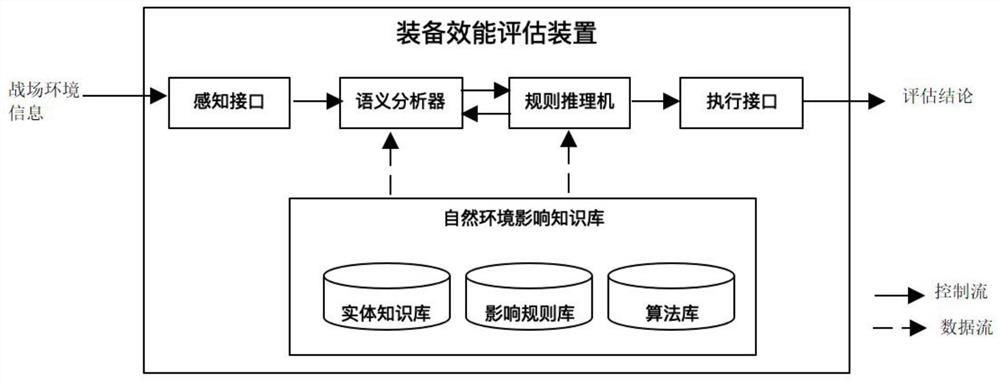

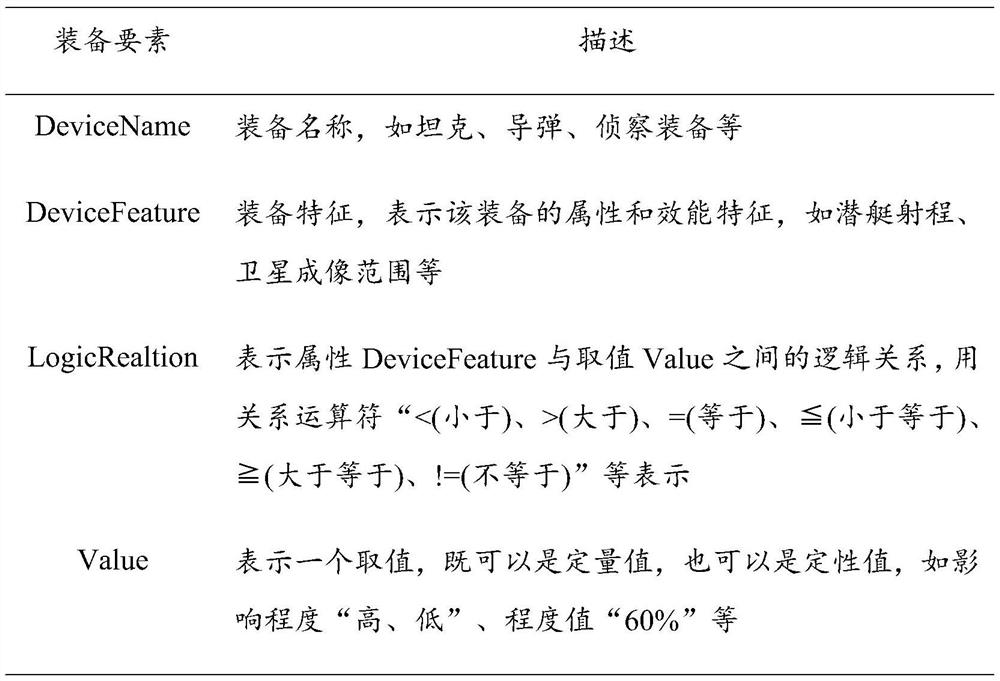

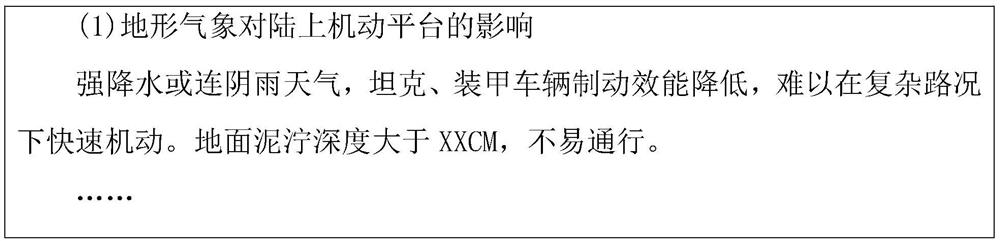

Equipment efficiency evaluation method and device based on knowledge base rule reasoning

Active | CN113627024A | Efficiently infer complex influence relationships | Improve the level of intelligence | Design optimisation/simulation | Special data processing applications | Theoretical computer science | Causal reasoning

The invention discloses an equipment efficiency evaluation device based on knowledge base rule reasoning. The device comprises a sensing interface, a semantic analyzer, a rule reasoning machine, a natural environment influence knowledge base and an execution interface. The natural environment influence knowledge base comprises an entity knowledge base, an influence rule base and an algorithm base. The rule reasoning machine reasons over rule information using deductive reasoning, inductive reasoning, causal reasoning, condition-missing speculation, equipment analogy speculation and decision table judgment. The semantic analyzer reads the natural environment, equipment information and the like to obtain a semantic extension set. The invention also discloses an equipment efficiency evaluation method based on knowledge base rule reasoning. The accuracy and completeness of the evaluation conclusion depend on the knowledge base and the constraint rules; by continuously improving the concept elements and constraint rules of the knowledge base, the method can adapt to various types of combat actions, natural environments and the like, and the overall level of intelligence of efficiency evaluation is improved.

Owner:中国人民解放军32021部队
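As a rough illustration of the deductive part of the rule reasoning described in the abstract above, the following minimal sketch applies forward chaining over a small rule base. The `Rule` class, the `forward_chain` function and every fact and rule name are hypothetical; the abstract does not list concrete rules, and the other reasoning methods it names are not covered here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """If every fact in `conditions` is known, `conclusion` is inferred."""
    conditions: frozenset
    conclusion: str


def forward_chain(facts, rules):
    """Apply the rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                changed = True
    return known


# Hypothetical influence rules, invented purely for illustration.
RULES = [
    Rule(frozenset({"heavy_rain"}), "low_visibility"),
    Rule(frozenset({"low_visibility", "optical_sensor"}), "reduced_detection_range"),
]

# Facts such as these would come from the semantic analyzer in the described device.
derived = forward_chain({"heavy_rain", "optical_sensor"}, RULES)
print(sorted(derived))
```

The second rule only fires after the first has added `low_visibility`, which is why the loop repeats until a full pass derives nothing new.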

Multi-lingual speech recognition and theme-semanteme analysis method and device

A multi-lingual speech recognition and theme-semanteme analysis method comprises steps executed by a speech recognizer and steps executed by a semantic analyzer. The speech recognizer obtains an alphabet string corresponding to a voice input signal according to a pronunciation-alphabet table, determines that the alphabet string corresponds to original words according to a multi-lingual vocabulary, and forms a sentence according to the multi-lingual vocabulary and the original words. The semantic analyzer, according to the sentence and a theme vocabulary-semantic relationship data set, selectively executes a correction procedure to generate a corrected sentence, an analysis state determining procedure, or a procedure of outputting the sentence; it outputs the corrected sentence when the correction procedure succeeds, and executes the analysis state determining procedure to selectively output a determined result when the correction procedure fails.

Owner:NAT CHENG KUNG UNIV
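The branching performed by the semantic analyzer in the abstract above can be sketched as follows. This is only an illustration under stated assumptions: the vocabulary, the use of `difflib` string similarity as the correction procedure, the 0.7 cutoff and the string returned by `determine_state` are all hypothetical stand-ins, since the abstract does not say how correction or state determination is carried out.

```python
import difflib
from typing import Optional

# Hypothetical stand-in for the theme vocabulary-semantic relationship data set.
THEME_VOCABULARY = ["what", "is", "the", "weather", "forecast", "today"]


def correct(sentence: str) -> Optional[str]:
    """Replace unknown words with a close vocabulary match; None means failure."""
    fixed = []
    for word in sentence.split():
        if word in THEME_VOCABULARY:
            fixed.append(word)
            continue
        matches = difflib.get_close_matches(word, THEME_VOCABULARY, n=1, cutoff=0.7)
        if not matches:
            return None  # correction procedure fails
        fixed.append(matches[0])
    return " ".join(fixed)


def determine_state(sentence: str) -> str:
    """Placeholder for the analysis state determining procedure."""
    return f"unresolved: {sentence}"


def analyze(sentence: str) -> str:
    # Output the sentence unchanged when every word is already in the vocabulary.
    if all(word in THEME_VOCABULARY for word in sentence.split()):
        return sentence
    corrected = correct(sentence)
    if corrected is not None:
        return corrected  # correction succeeded: output the corrected sentence
    return determine_state(sentence)  # correction failed: fall back


print(analyze("what is the wether"))
print(analyze("what is the xyzzy"))
```

Returning `None` from `correct` keeps the success and failure paths explicit, mirroring the two outcomes the abstract distinguishes.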

Artificial intelligence deep learning neural network embedded control system

Inactive | CN108011789A | Run fast | Reduce power consumption | Non-electrical signal transmission systems | Speech recognition | Semantic analyzer | Internal memory

The invention discloses an artificial intelligence deep learning neural network embedded control system, comprising a control center and a smart external device. The control center comprises a microprocessor and a voice recognition unit. The voice recognition unit comprises a voice collector and a semantic analyzer. An output end of the voice collector is electrically connected with an input end of the semantic analyzer. The output end of the semantic analyzer is electrically connected with the input end of a command word library. The output end of the command word library is electrically connected with the input end of the microprocessor. The control center also comprises an infrared remote control unit. The infrared remote control unit comprises an infrared signal receiver and an infrared signal code library. The output end of the infrared signal receiver is electrically connected with the input end of the infrared signal code library. The infrared signal code library communicates with an internal memory unit through a dedicated channel. The output end of the infrared signal code library is electrically connected with the input end of the microprocessor. According to the system, an offline voice command word library can be stored in the internal or external memory unit, thereby realizing offline voice recognition.

Owner:四川声达创新科技有限公司
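The signal path in the abstract above (voice collector, then semantic analyzer, then command word library, then microprocessor) can be pictured with a small offline lookup. This is a hedged sketch only: the phrases, command codes, normalization step and function names are hypothetical, and the abstract does not describe how the semantic analyzer actually processes speech.

```python
from typing import Optional

# Hypothetical offline command word library stored in local memory.
COMMAND_WORDS = {
    "turn on the light": "LIGHT_ON",
    "turn off the light": "LIGHT_OFF",
    "raise the volume": "VOLUME_UP",
}


def semantic_analyzer(transcript: str) -> str:
    """Normalize recognized text: lowercase and collapse whitespace."""
    return " ".join(transcript.lower().split())


def lookup_command(transcript: str) -> Optional[str]:
    """Return the command code passed on to the microprocessor, or None."""
    return COMMAND_WORDS.get(semantic_analyzer(transcript))


print(lookup_command("  Turn ON the   light "))
print(lookup_command("sing a song"))
```

Because the library is a local dictionary, the lookup needs no network access, which is the property the abstract refers to as offline voice recognition.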

Techniques for understanding the aboutness of text based on semantic analysis

Active | US9632999B2 | Facilitate meaningful text interpretation | High result | Semantic analysis | Special data processing applications | Semantic analyzer | Topic analysis

Owner:KLANGOO INC

Multilingual speech recognition and theme semantic analysis method and device

Pending | CN112988955A | Improve accuracy | Explain the scope of the claims | Natural language data processing | Speech recognition | Semantic analyzer | Speech input

The invention relates to a multilingual speech recognition and theme semantic analysis method. A speech recognizer acquires a pinyin character string corresponding to a speech input signal according to a speech comparison table, determines that the pinyin character string corresponds to a plurality of original words according to a multilingual word collection, and forms a statement according to the multilingual word collection and the plurality of original words. A semantic analyzer then, according to the statement and a subject vocabulary semantic relationship data set, selectively executes a correction process, executes an analysis state judgment process, or outputs the statement; it outputs the corrected statement when the correction process is judged to have succeeded, and when the correction process is judged to have failed, it executes the analysis state judgment process to selectively output a judgment result.

Owner:卢文祥


© 2025 PatSnap. All rights reserved.