455 results about "Anime" patented technology

Animation is a method in which pictures are manipulated to appear as moving images. In traditional animation, images are drawn or painted by hand on transparent celluloid sheets to be photographed and exhibited on film. Today, most animations are made with computer-generated imagery (CGI). Computer animation can be very detailed 3D animation, while 2D computer animation can be used for stylistic reasons, low bandwidth or faster real-time renderings. Other common animation methods apply a stop motion technique to two and three-dimensional objects like paper cutouts, puppets or clay figures.

Generating animation data

An apparatus and method are provided for generating animation data, including storage means comprising at least one character defined as a hierarchy of parent and children nodes and animation data defined as the position in three-dimensions of said nodes over a period of time, memory means comprising animation instructions, wherein said processing means are configured by said animation instructions to perform the steps of animating said character with first animation data; selecting nodes within said first animation data when receiving user input specifying second animation data in real-time; respectively matching said nodes with corresponding nodes within said second animation data; respectively interpolating between said nodes and said matching nodes; and animating said character with second animation data having blended a portion of said first animation data with said second animation data.

Owner:KAYDARA

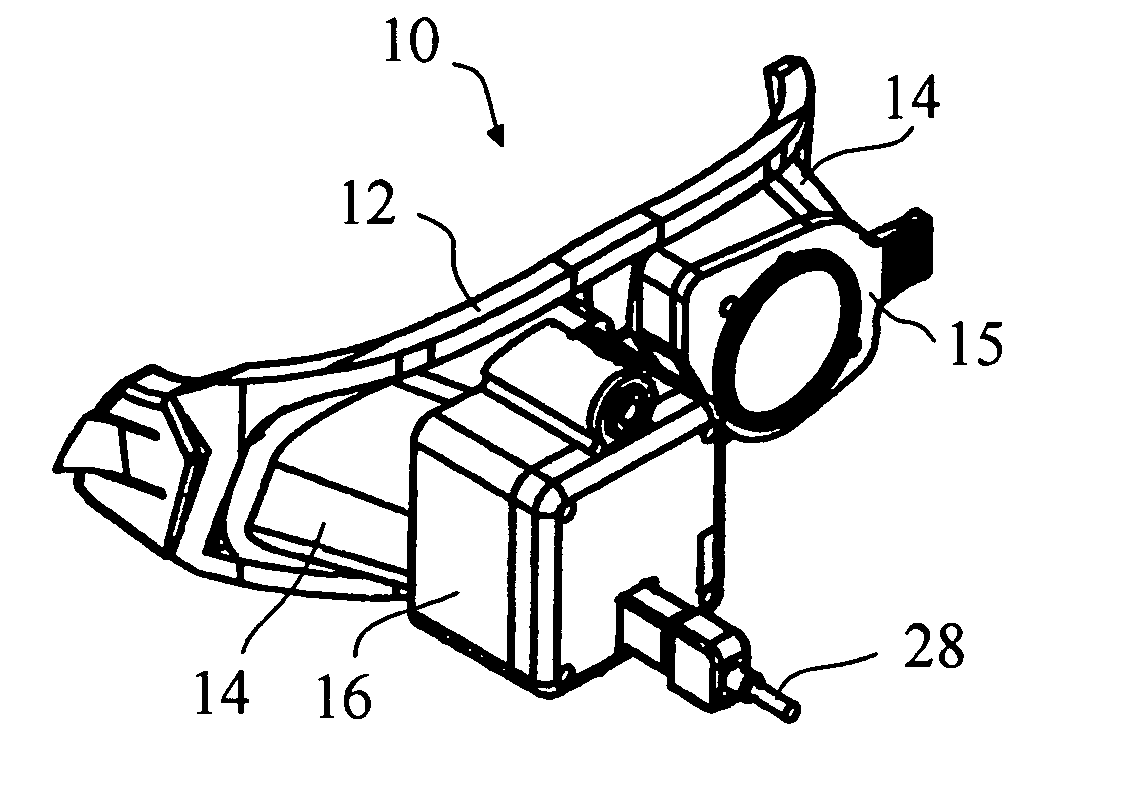

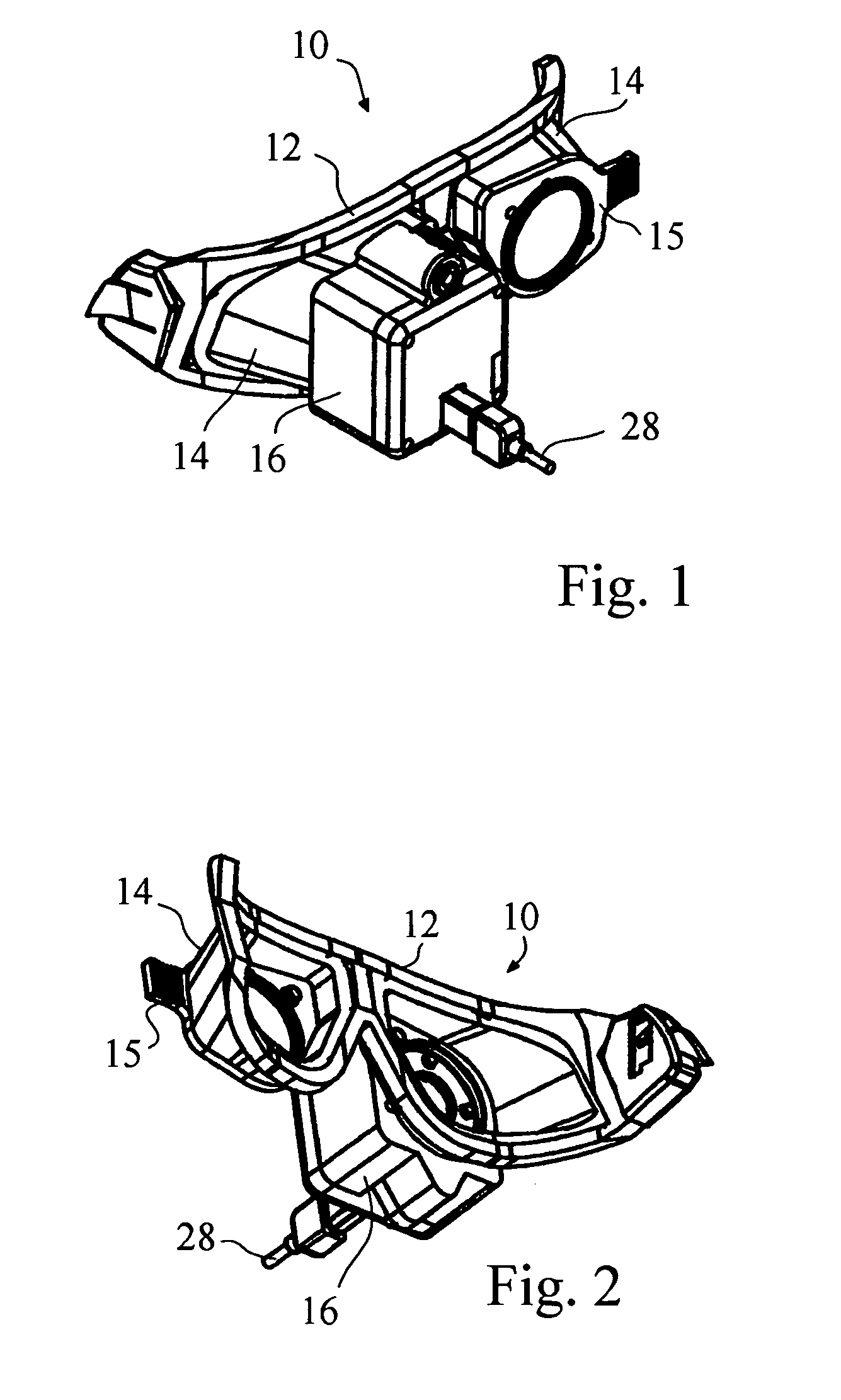

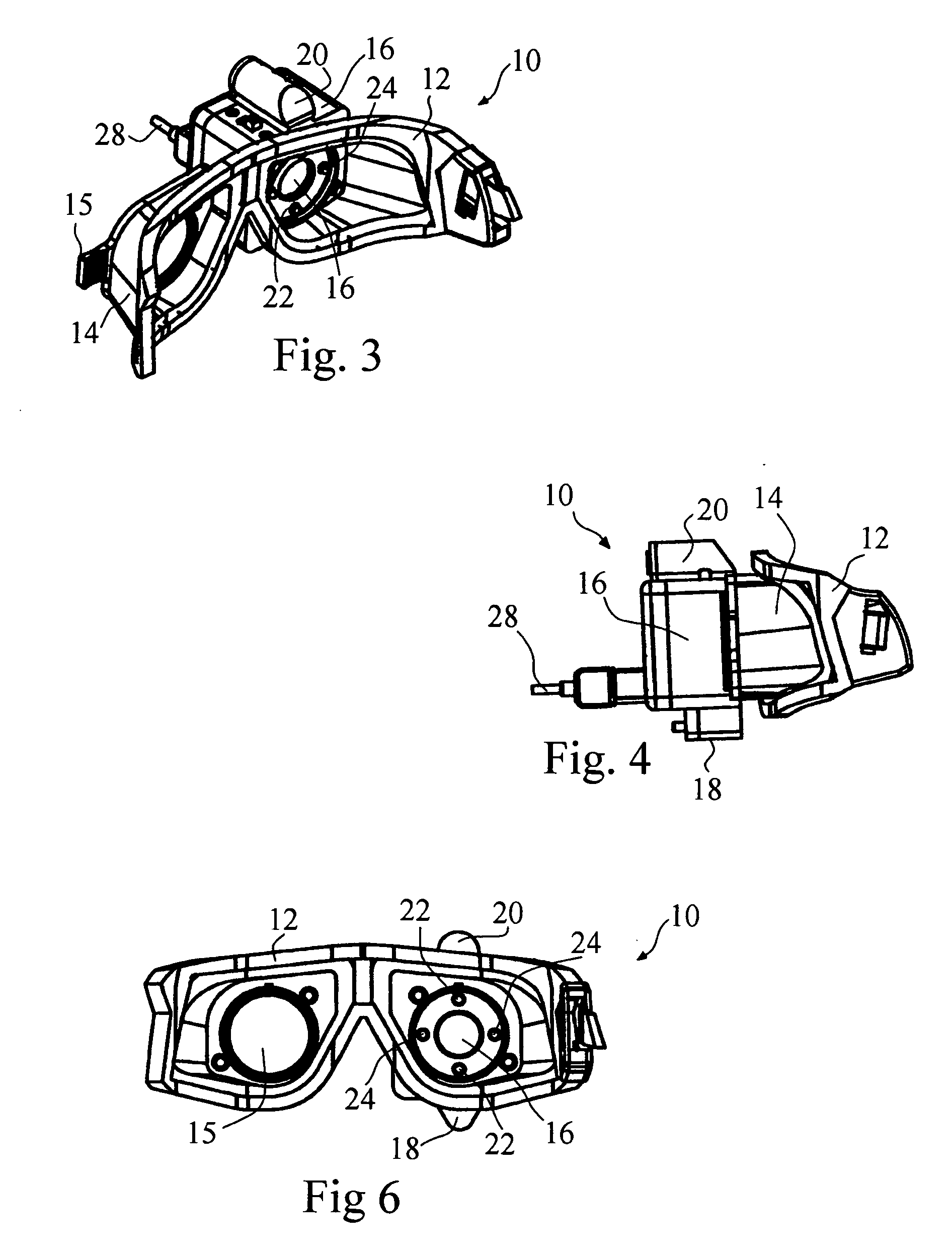
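
The blending step in the "Generating animation data" abstract above can be pictured with a minimal sketch. It assumes a pose is a mapping from node name to 3D position and that blending is plain linear interpolation; the abstract does not state the interpolation scheme, and the names `lerp` and `blend_pose` are hypothetical.

```python
from typing import Dict, Set, Tuple

Vec3 = Tuple[float, float, float]
Pose = Dict[str, Vec3]  # node name -> 3D position (assumed representation)


def lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Linearly interpolate between two 3D points (t=0 gives a, t=1 gives b)."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def blend_pose(first: Pose, second: Pose, selected: Set[str], t: float) -> Pose:
    """Blend the selected nodes of `first` toward the matching nodes of `second`.

    Nodes that are not selected, or that have no match in `second`,
    keep their position from the first animation.
    """
    out = dict(first)
    for name in selected:
        if name in first and name in second:
            out[name] = lerp(first[name], second[name], t)
    return out
```

Calling `blend_pose` once per frame with `t` rising from 0 to 1 would give a gradual transition of the selected nodes from the first animation to the second.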

Portable video oculography system

A goggle based light-weight VOG system includes at least one digital camera connected to and powered by a laptop computer through a firewire connection. The digital camera may digitally center the pupil in both the X and Y directions. A calibration mechanism may be incorporated onto the goggle base. An EOG system may also be incorporated directly into the goggle. The VOG system may track and record 3-D movement of the eye, track pupil dilation, head position and goggle slippage. An animated eye display provides data in a more meaningful fashion. The VOG system is a modular design whereby the same goggle frame or base is used to build a variety of digital camera VOG systems.

Owner:128 GAMMA LIQUIDATING TRUST +1

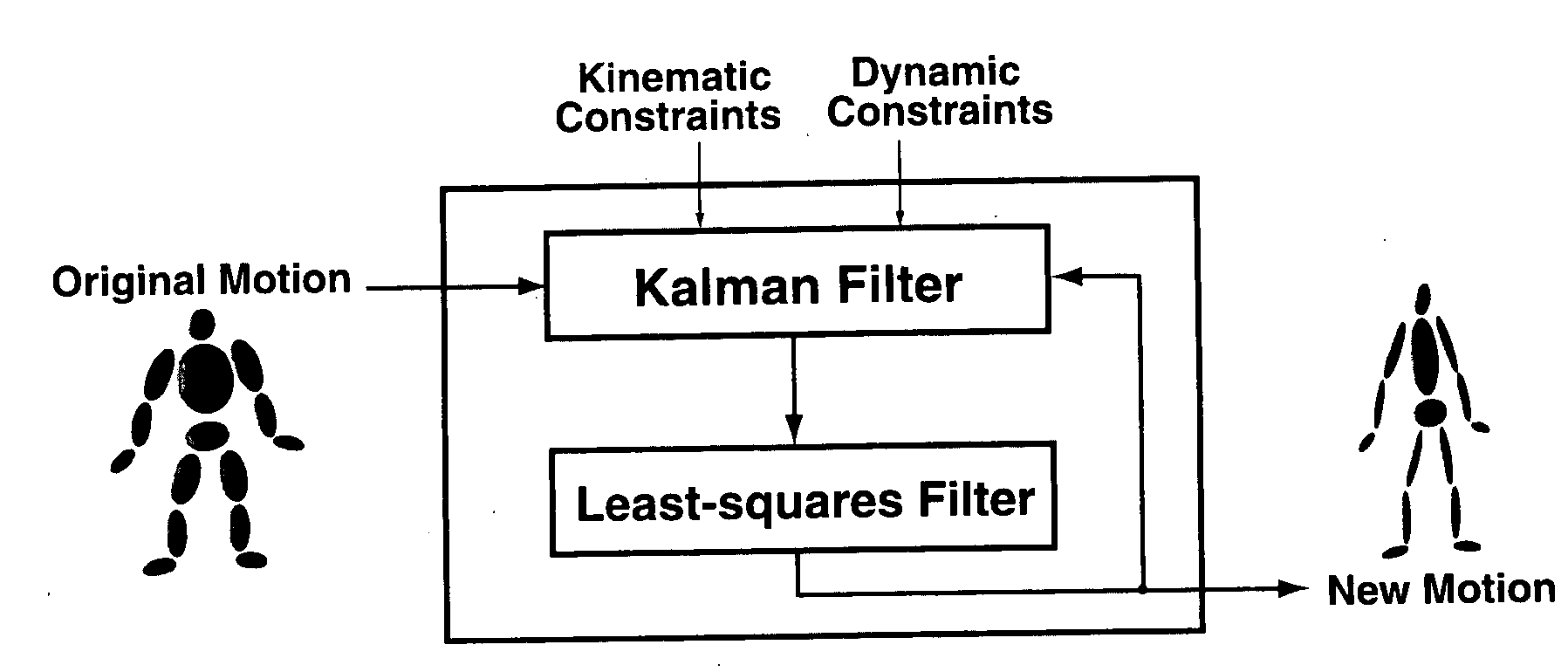

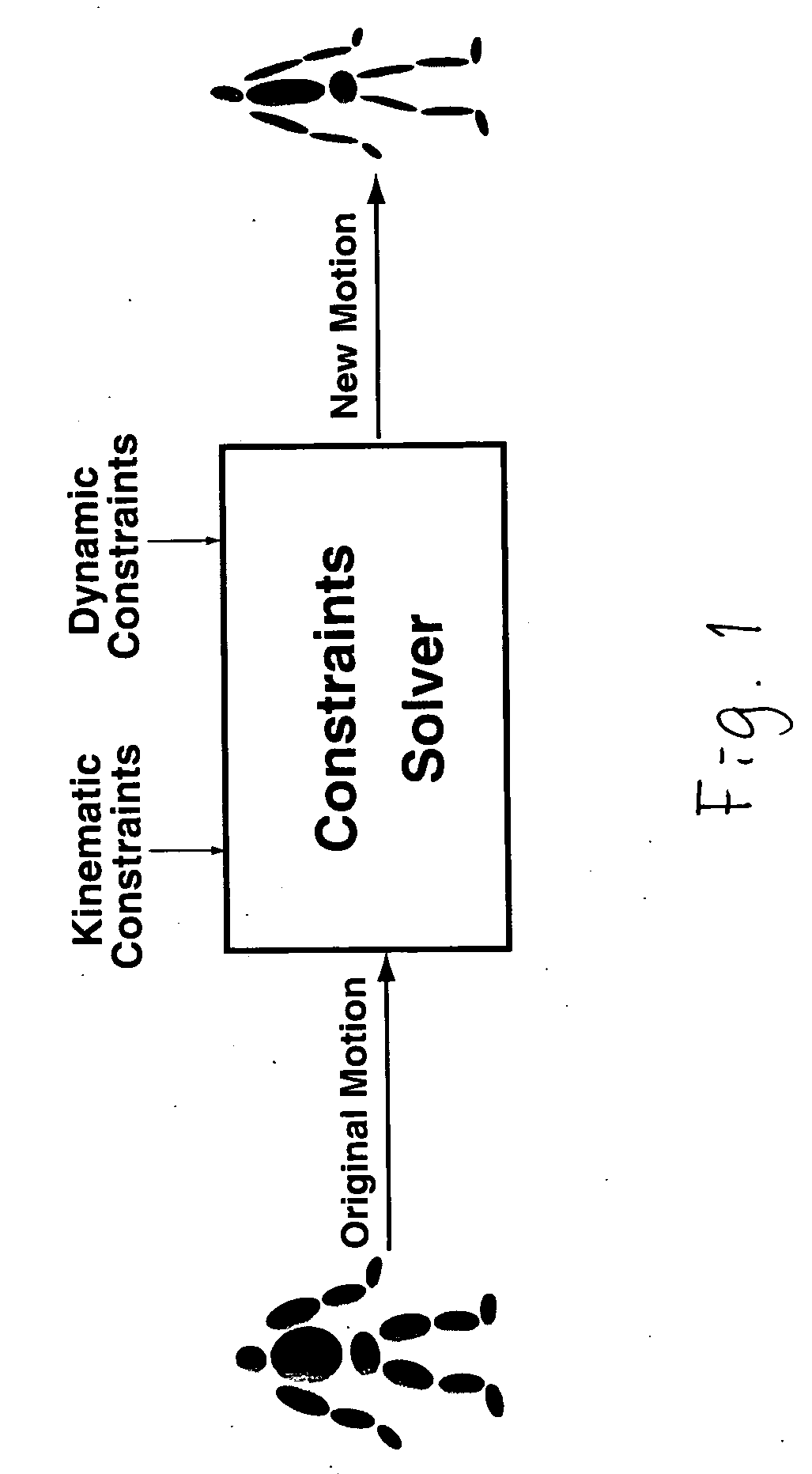

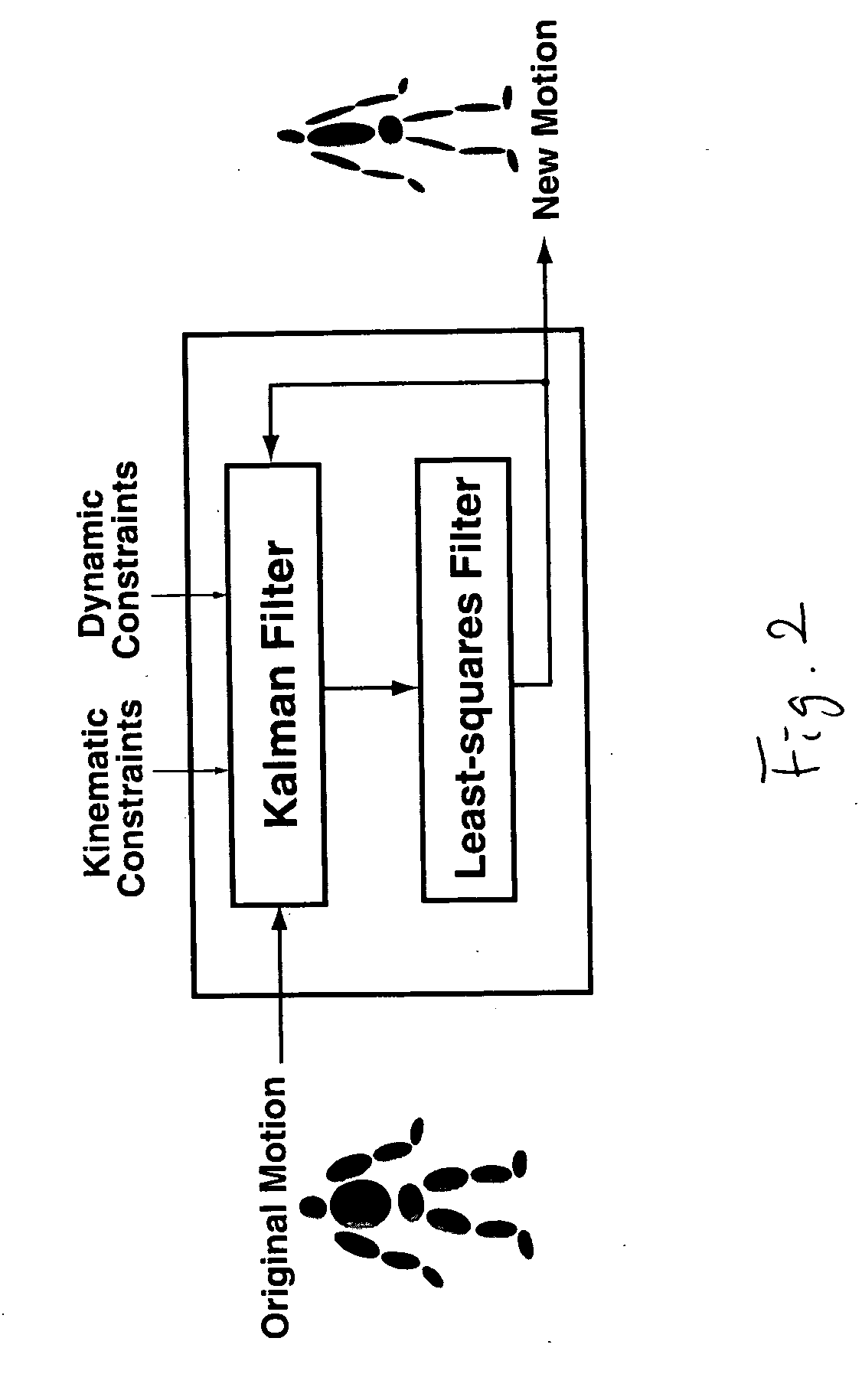

Physically based motion retargeting filter

Inactive | US20060139355A1 | Stable interactive rate | Stable rate | Animation | 3D-image rendering | Kalman filter | Kinematics

A method for editing motion of a character includes steps of a) providing an input motion of the character sequentially along with a set of kinematic and dynamic constraints, wherein the input motion is provided by a captured or animated motion; b) applying a series of unscented Kalman filters for solving the constraints; c) processing the output from the unscented Kalman filters with a least-squares filter for rectifying the output; and d) producing a stream of output motion frames at a stable interactive rate. The steps are applied to each frame of the input motion. The method may further include a step of controlling the behavior of the filters by tuning parameters and a step of providing a rough sketch for the filters to produce a desired motion. The Kalman filter includes a per-frame Kalman filter. The least-squares filter is applied only to recently processed frames.

Owner:SEOUL NAT UNIV R&DB FOUND

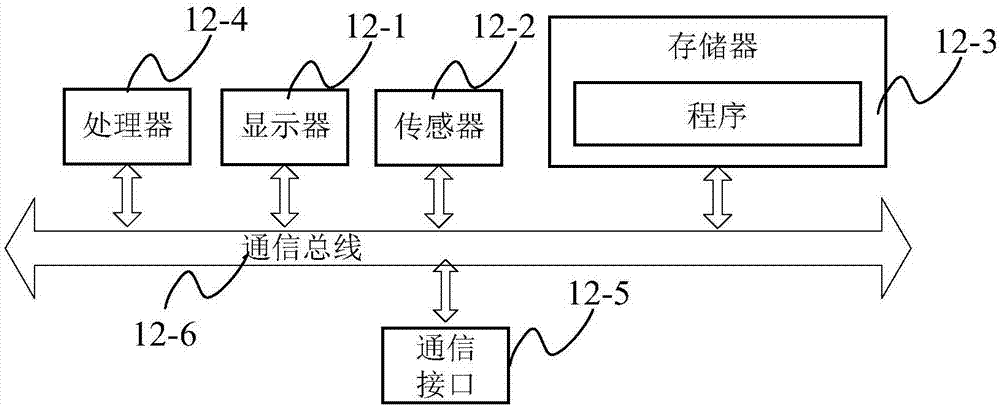

Social application animation forming method, device, system and terminal

Active | CN107294838A | Reduce the amount of resources | Guaranteed playback fluency | Animation | Data switching networks | Object motion | Computer graphics (images)

The embodiment of the invention provides a social application animation forming method, device, system and terminal. The terminal detects input information directed at a social application operation interface, generates a corresponding animation forming instruction, determines an object motion identifier of a virtual object in the social application, obtains target animation resources corresponding to the object motion identifier, renders the skeletal animation of the virtual object, and plays that skeletal animation in the social application operation interface. Complex animation effects can therefore be realized quickly without obtaining a series of frame images of the virtual object performing a given motion, which reduces the amount of resources the terminal must obtain, reduces the storage space occupied on the terminal, and keeps animation playback smooth.

Owner:TENCENT TECH (SHENZHEN) CO LTD

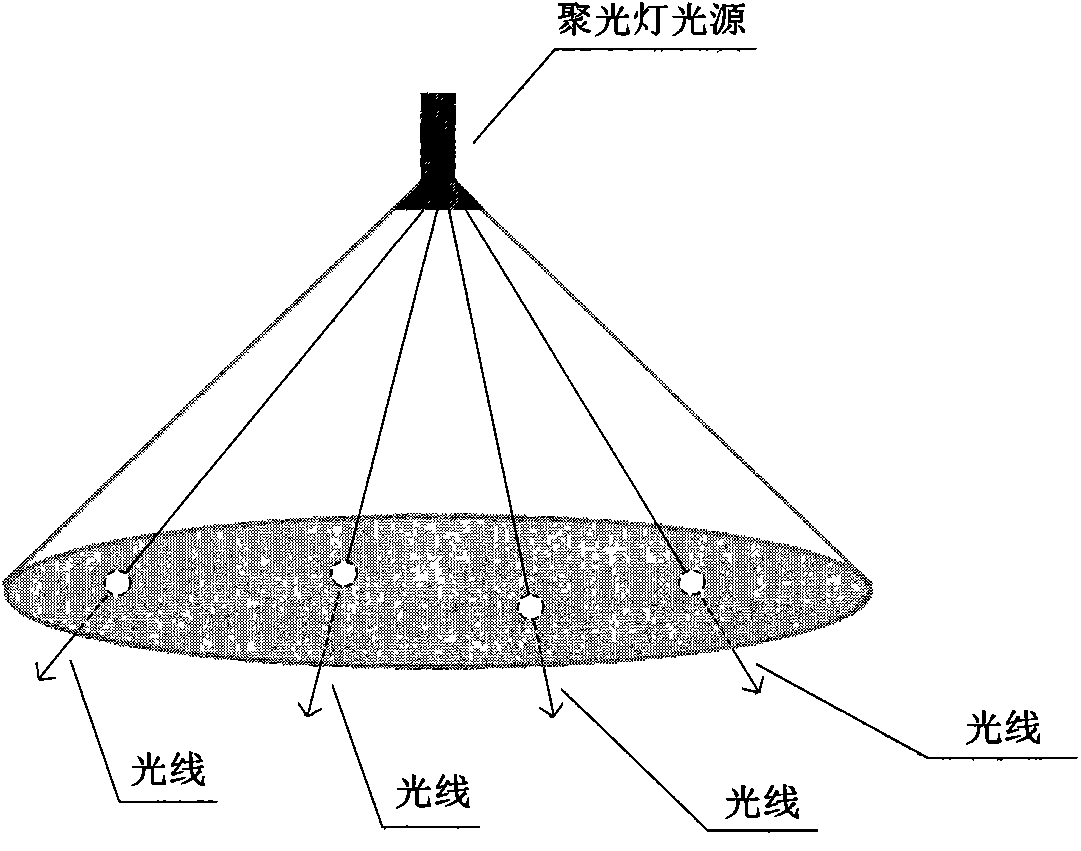

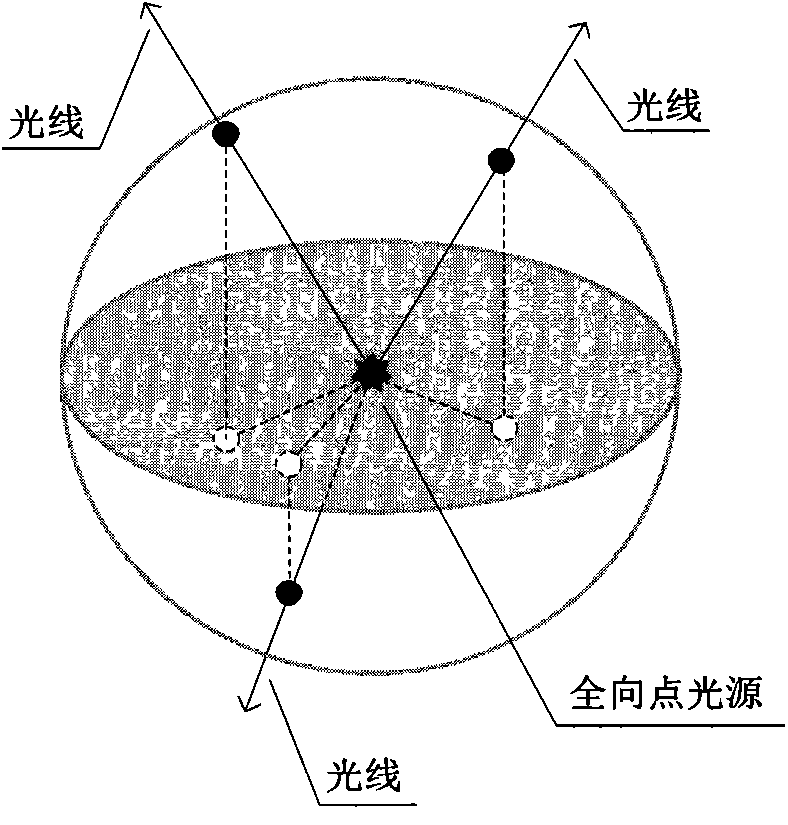

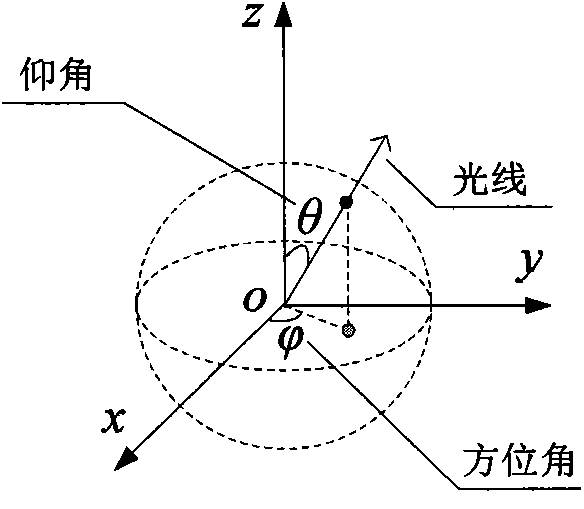

Method for achieving global illumination drawing of animation three-dimensional scene with virtual point light sources

Active | CN104008563A | Achieve reuse | Improve light time correlation | Animation | 3D-image rendering | Viewpoints | Animation

The invention discloses a method for achieving global illumination drawing of an animation three-dimensional scene with virtual point light sources, and belongs to the technical field of three-dimensional animation drawing. According to the method, geometric objects in the scene are divided into the static geometric objects and the dynamic geometric objects, and organization and management are respectively carried out with different scene graphs. On the basis that light sampling is carried out on illumination emitting space of the light sources, intersection points of light and the geometric objects in the scene are solved, the virtual point light sources are created at the positions of the intersection points to illuminate the animation three-dimensional scene, and indirect illumination drawing of the animation three-dimensional scene is further achieved. By means of the method, the large number of virtual point light sources can be reused between continuous frames, and global illumination drawing of the animation three-dimensional scene with viewpoints and geometric object movement can be supported at the same time. The method is used for producing three-dimensional animations, and the visual quality of the three-dimensional animations can be improved.

Owner:CHANGCHUN UNIV OF SCI & TECH

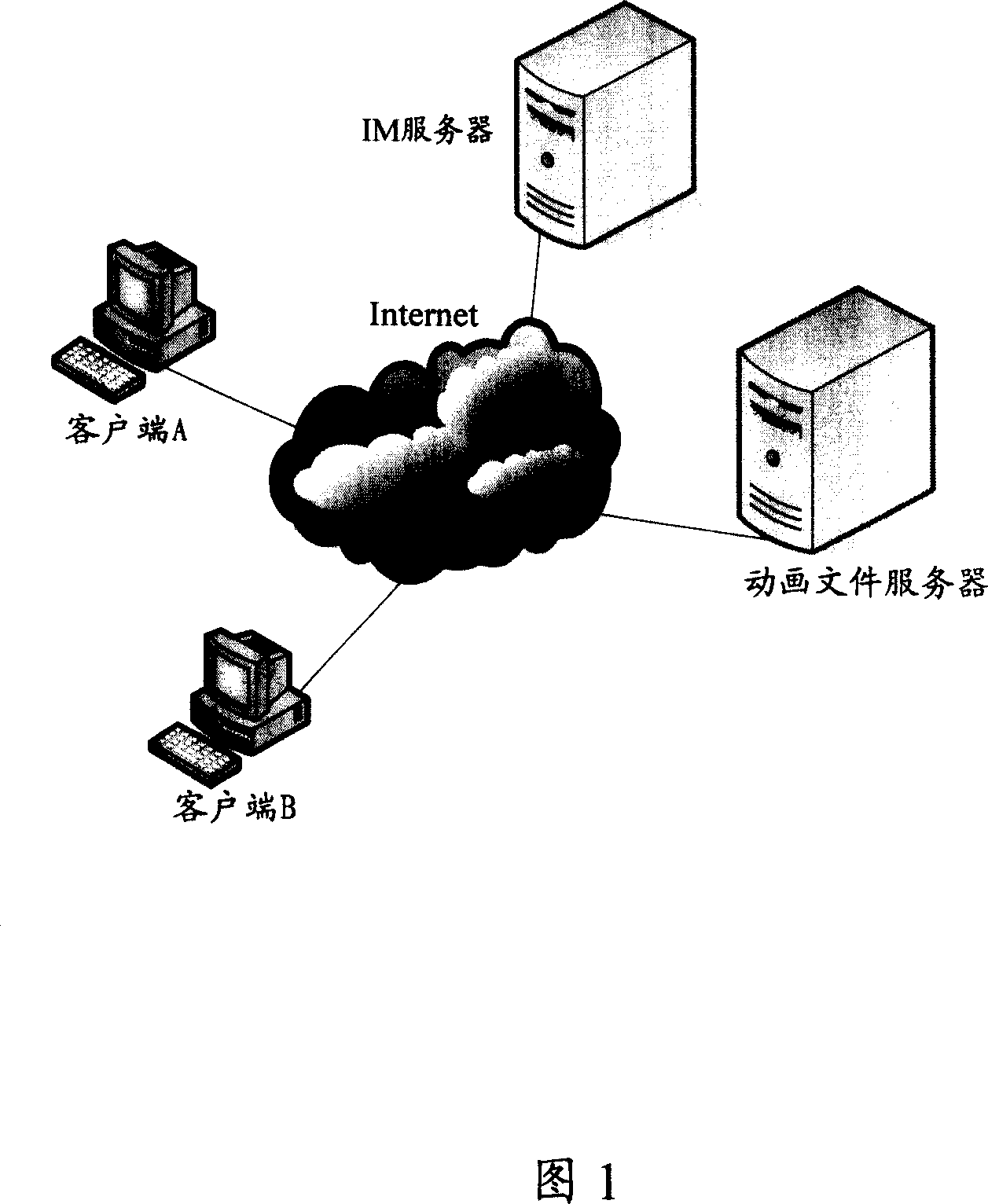

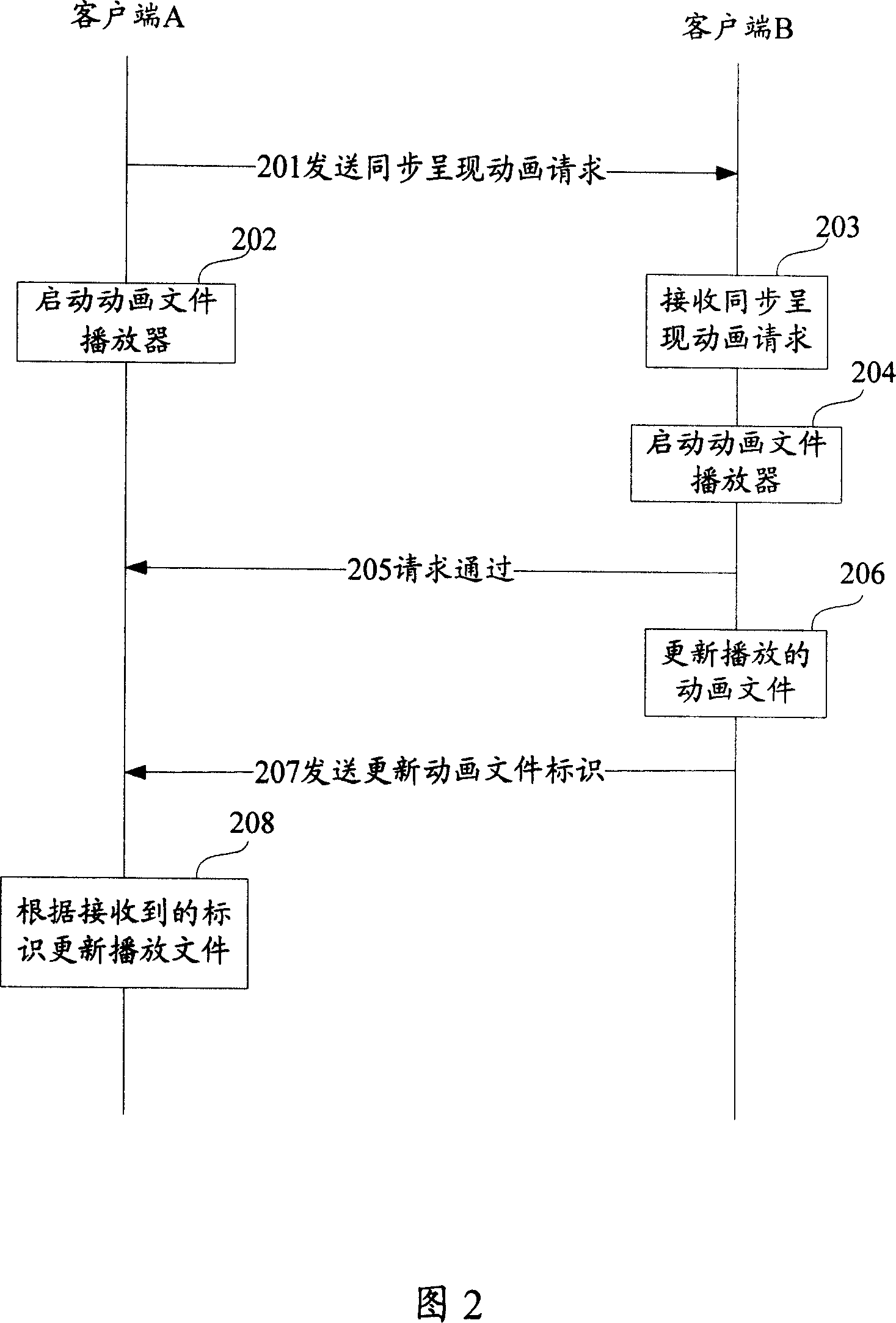

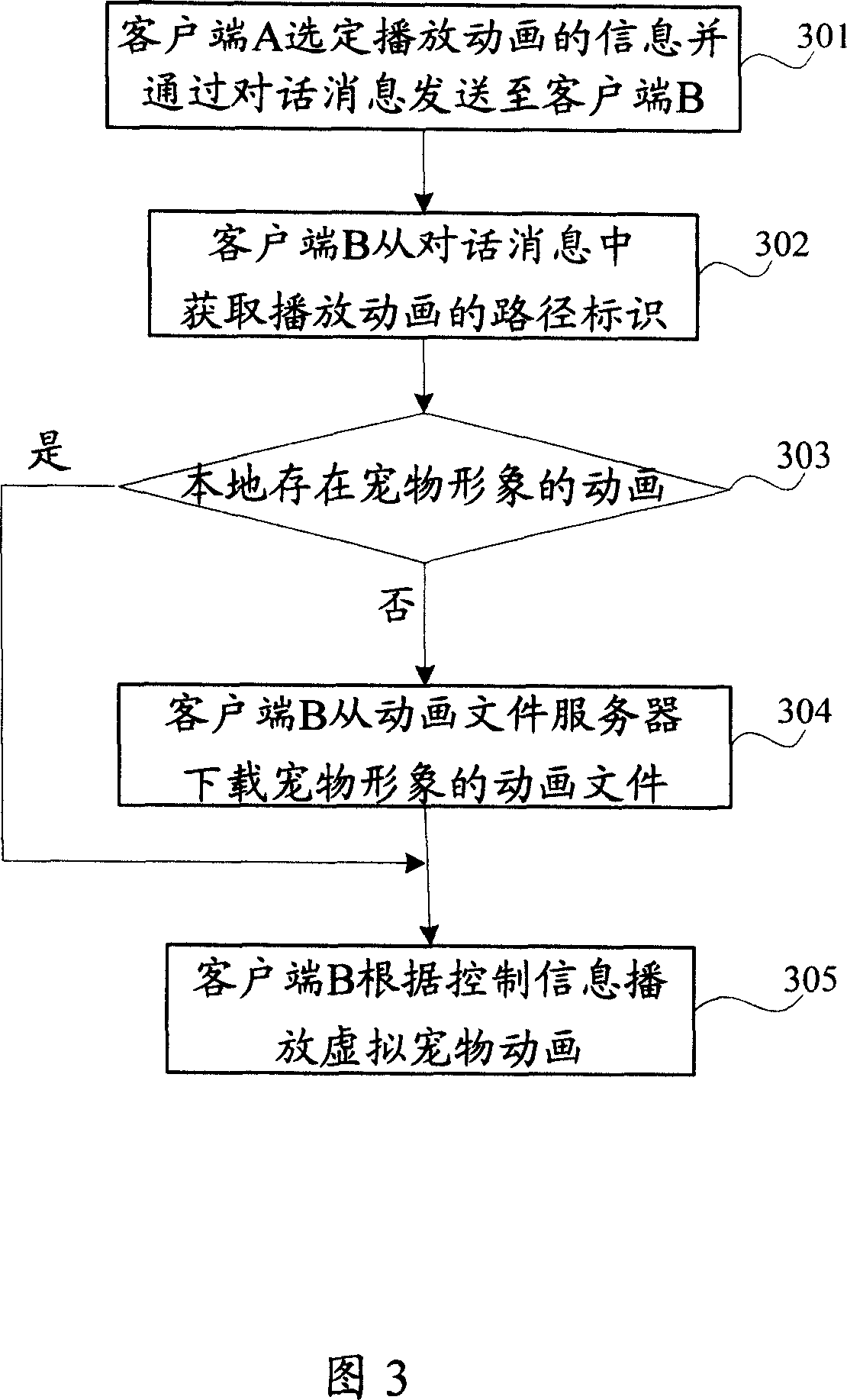

Method for presenting animation synchronously in instant communication

Active | CN101064693A | Improve experience | Solve the problem of not being able to interact with animations | Data switching networks | Client-side | Animation

The invention discloses a method of presenting animation synchronously in instant messaging, addressing the problem that virtual pet animations cannot be played continuously between instant messaging users. The method includes: sending information about the animation file being played by a first client to a second client, the information including at least an identifier of the animation file; the client that receives the information plays the animation continuously based on it; when either the first client or the second client updates the animation, the updated animation is sent to the other client, and the client that receives the update information plays the animation continuously based on that update information.

Owner:TENCENT TECH (SHENZHEN) CO LTD

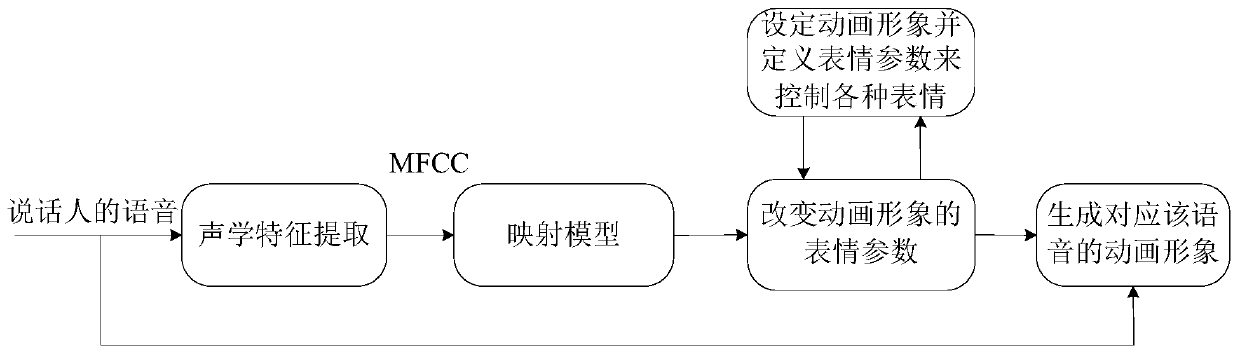

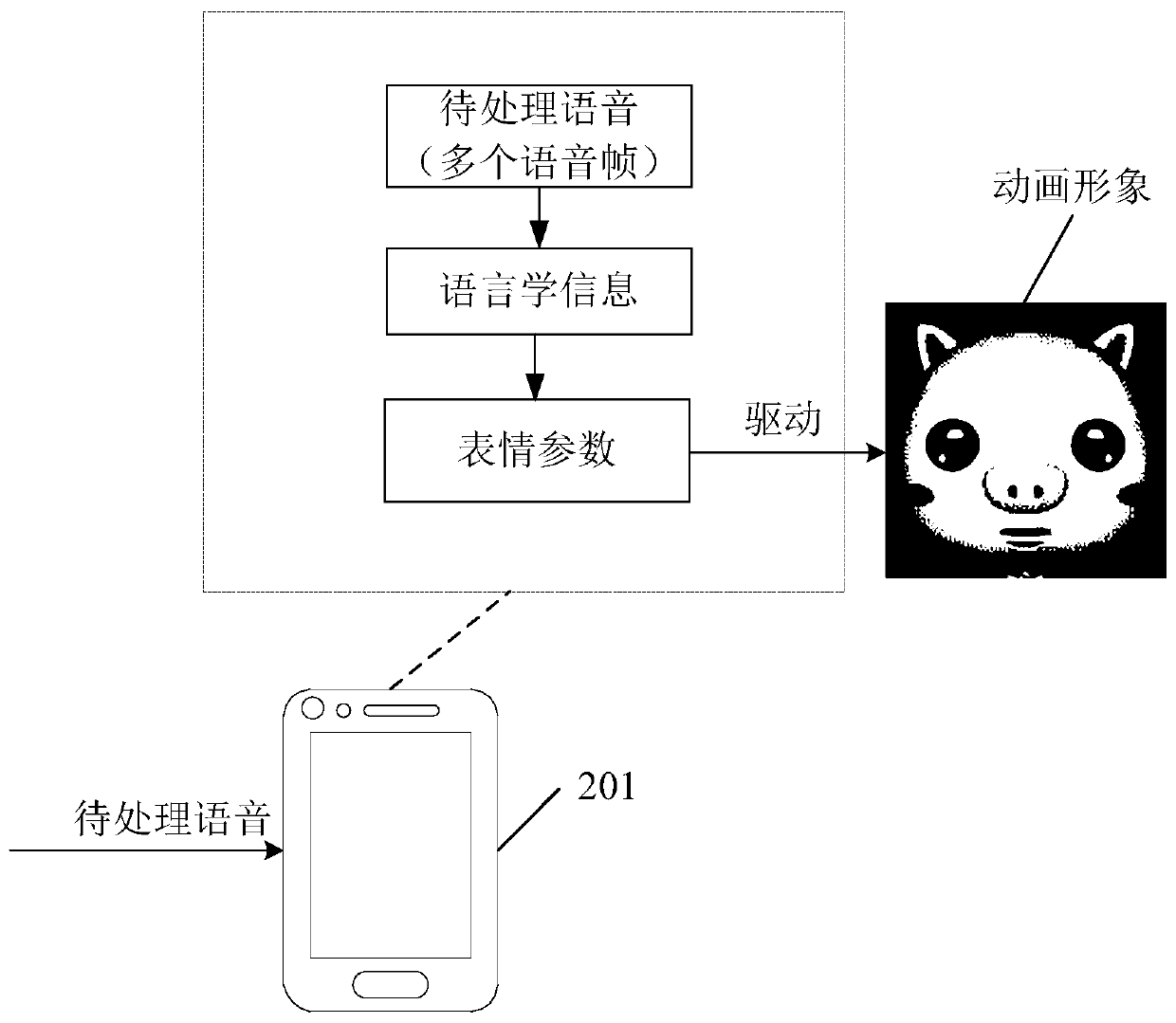

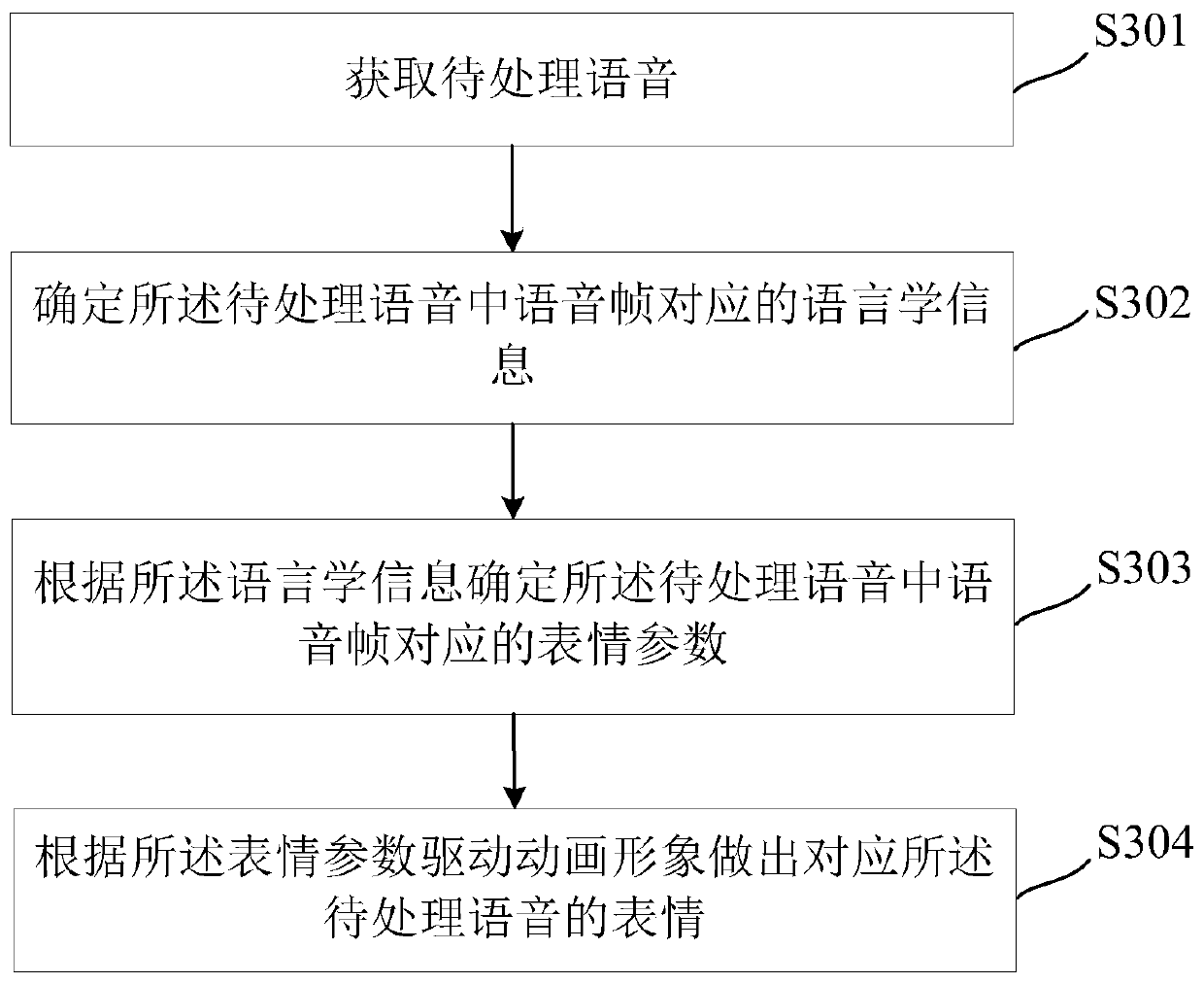

Voice-driven animation method and device based on artificial intelligence

Pending | CN110503942A | Improve interactive experience | Biological models | Animation | Pattern recognition | Algorithm

An embodiment of the invention discloses a voice-driven animation method based on artificial intelligence. When a to-be-processed voice comprising a plurality of voice frames is obtained, linguistic information corresponding to the voice frames in the to-be-processed voice can be determined, wherein each piece of linguistic information identifies the probability distribution over the phonemes to which the corresponding voice frame belongs, that is, it reflects how likely the content of the voice frame is to belong to each phoneme. The information carried by the linguistic information is independent of the actual speaker of the to-be-processed voice, so the influence of different speakers' pronunciation habits on the subsequent determination of expression parameters can be counteracted. According to the expression parameters determined from the linguistic information, an animated character can be accurately driven to make an expression corresponding to the to-be-processed voice, such as a mouth shape, so that to-be-processed voice from any speaker can be effectively supported and the interactive experience is improved.

Owner:TENCENT TECH (SHENZHEN) CO LTD

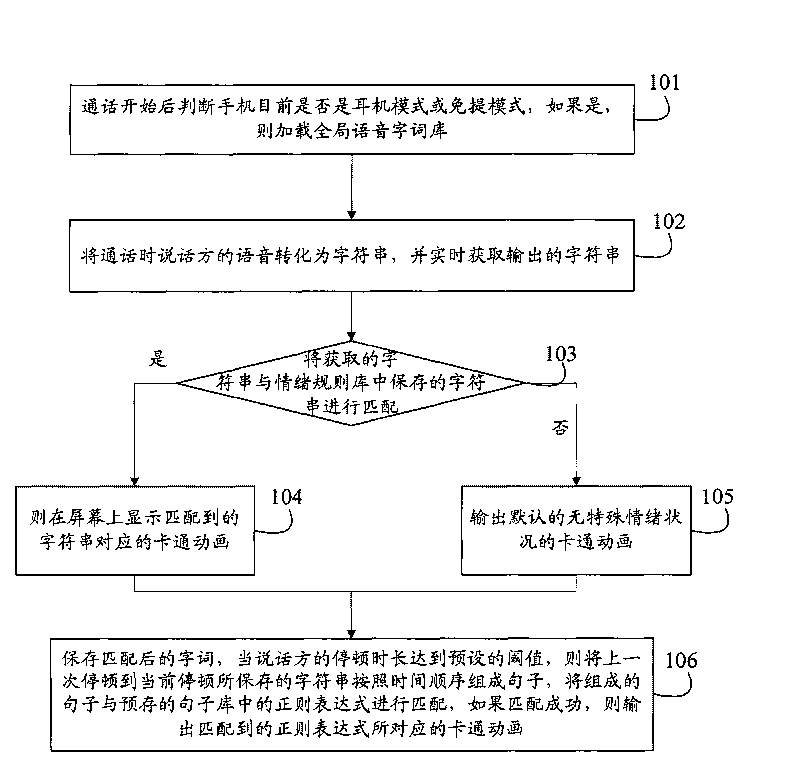

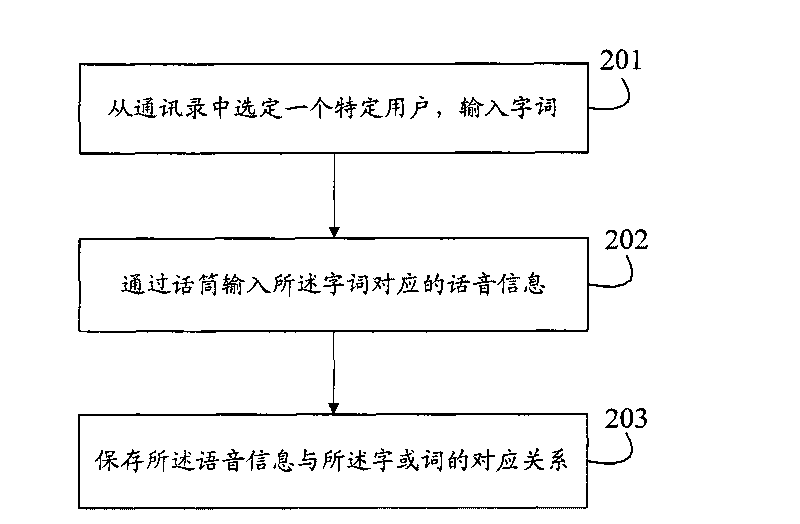

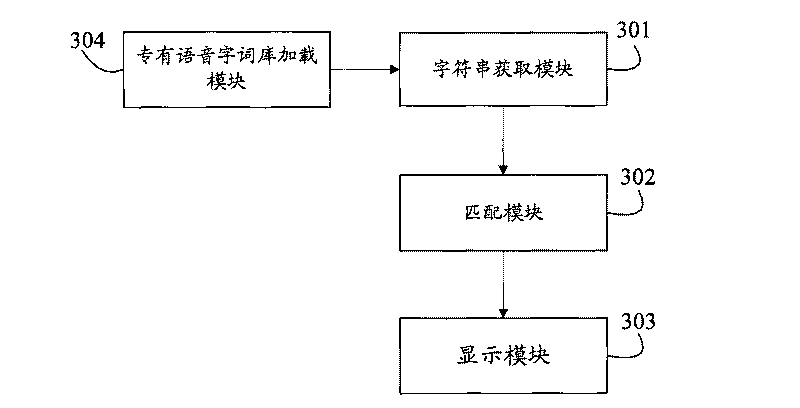

Method and equipment to display the speech information by application of cartoons

Inactive | CN101741953A | Enhance the other person's emotional feelings | Feel good | Telephone sets with user guidance/features | Speech sound | Animation

Owner:ZTE CORP

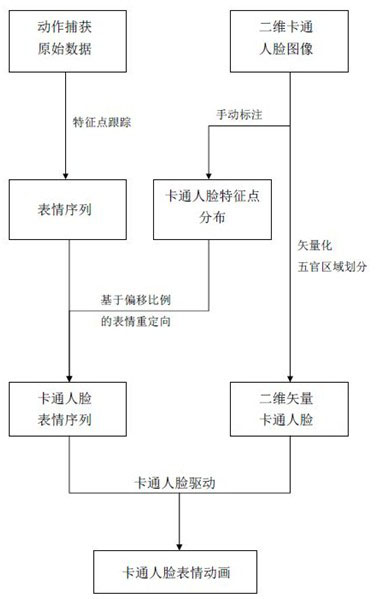

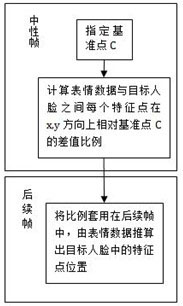

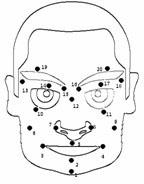

Action-capture-data-driving-based two-dimensional cartoon expression animation production method

The invention discloses an action-capture-data-driving-based two-dimensional cartoon expression animation production method, which comprises the following steps of: first capturing the expression data of an actor by using action capturing equipment, and marking characteristic points forming a topology structure which is the same as that formed by the data of the actor on a target cartoon face by a user at the same time; then aligning the first frame of the expression data of the actor with the characteristic points of the target cartoon face by using a shifting-ratio-based reorientation method, and mapping the expression data of the actor onto the target cartoon face according to an alignment ratio; and vectorizing the target cartoon face, and driving the deformation of the target cartoon face in a vector image form by using reoriented action capture data to achieve an animation effect. By a vectorization technology and a shifting-ratio-based reorientation technology, a cartoon expression animation with rich expressions and a high restoration degree can be obtained in the field of texture-detail-irrespective animation of unreal cartoon faces.

Owner:杭州碧游信息技术有限公司

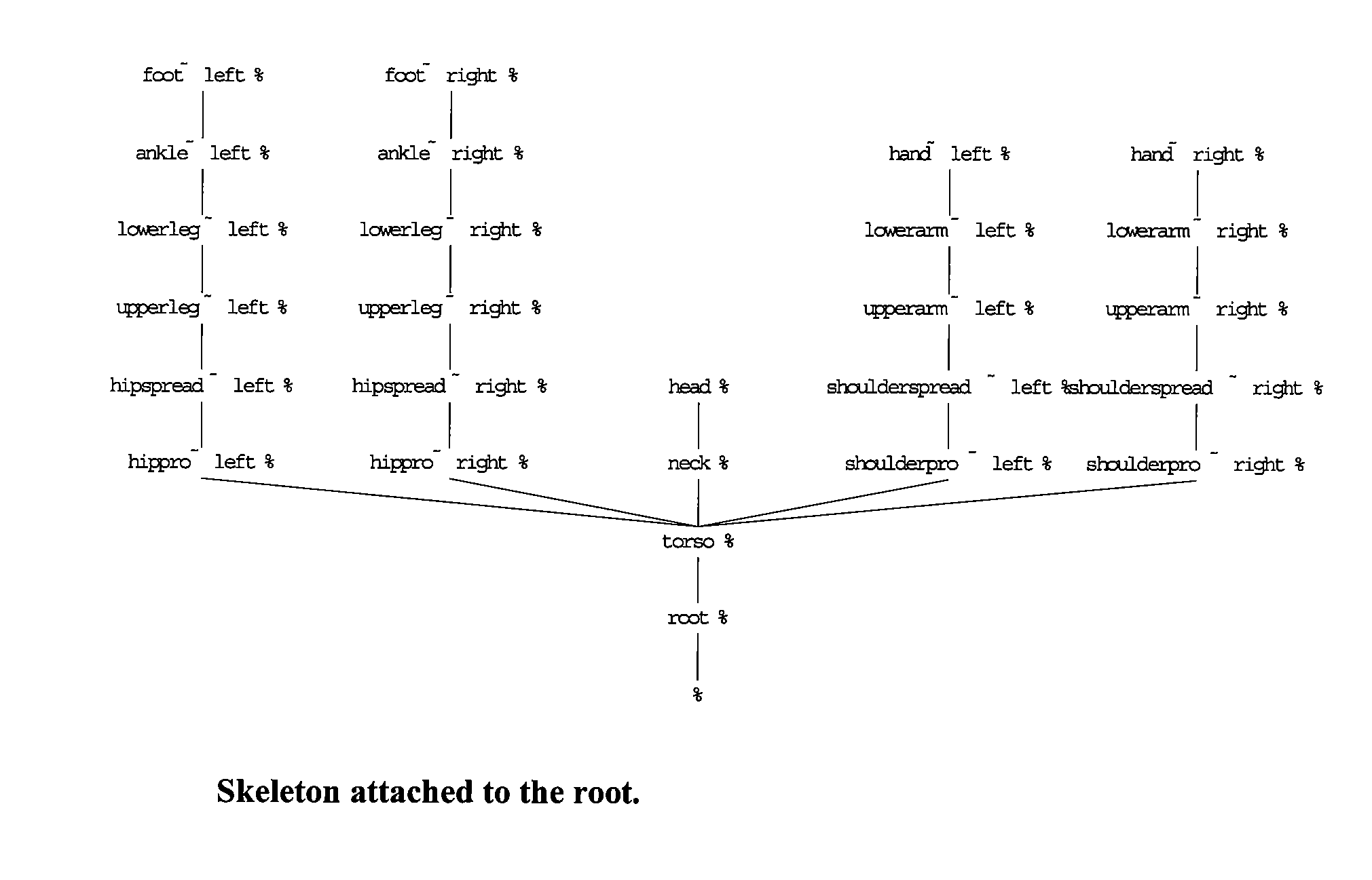

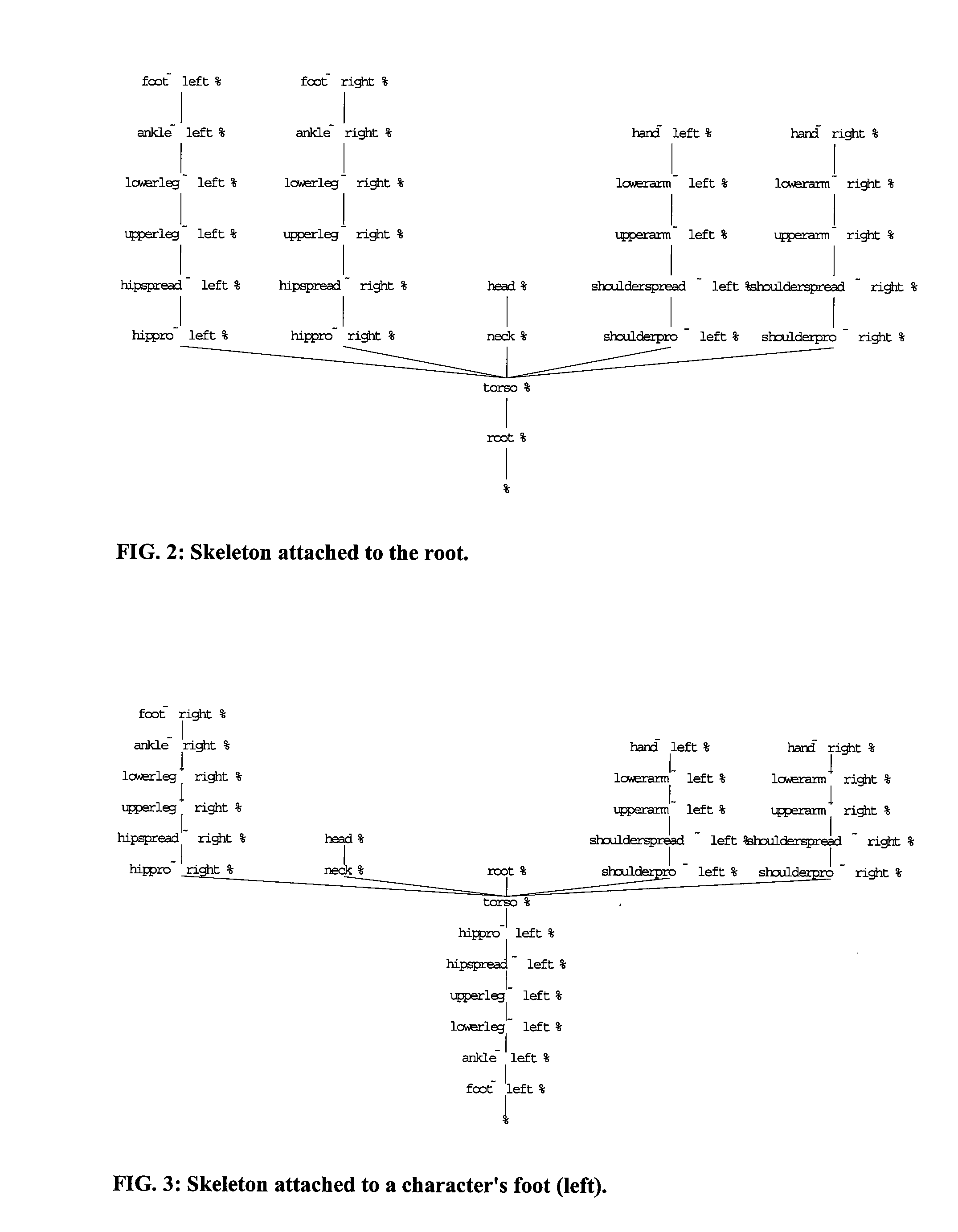

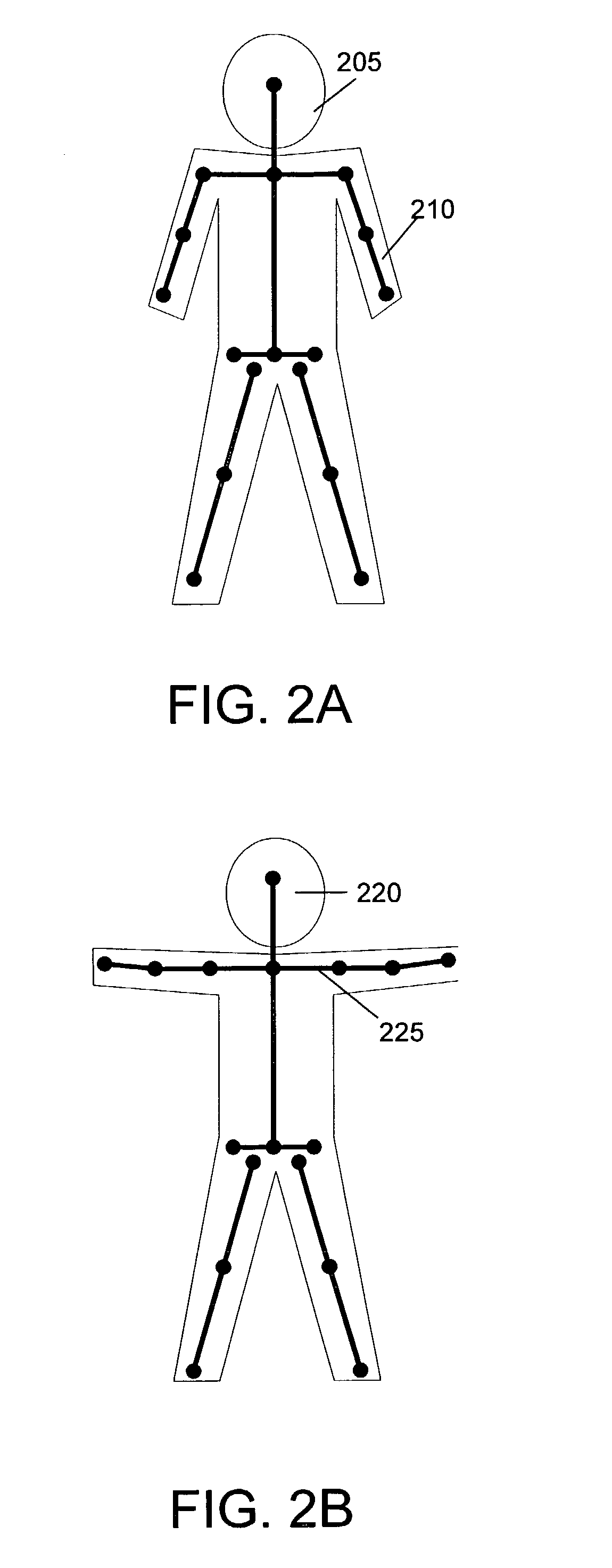

Adaptive contact based skeleton for animation of characters in video games

Equilibrium forces and momentum are calculated using character skeleton node graphs having variable root nodes determined in real time based on contacts with fixed objects or other constraints that apply external forces to the character. The skeleton node graph can be traveled backwards, and in so doing it is possible to determine a physically possible reaction force. The tree can be traveled one way to apply angles and positions and then traveled back the other way to calculate forces and moments. Unless the root is correct, however, reaction forces cannot be properly determined. The adaptive skeleton framework enables calculations of reactive forces on certain contact nodes to be made. Calculation of reactive forces enables more realistic displayed motion of a simulated character.

Owner:ELECTRONICS ARTS INC

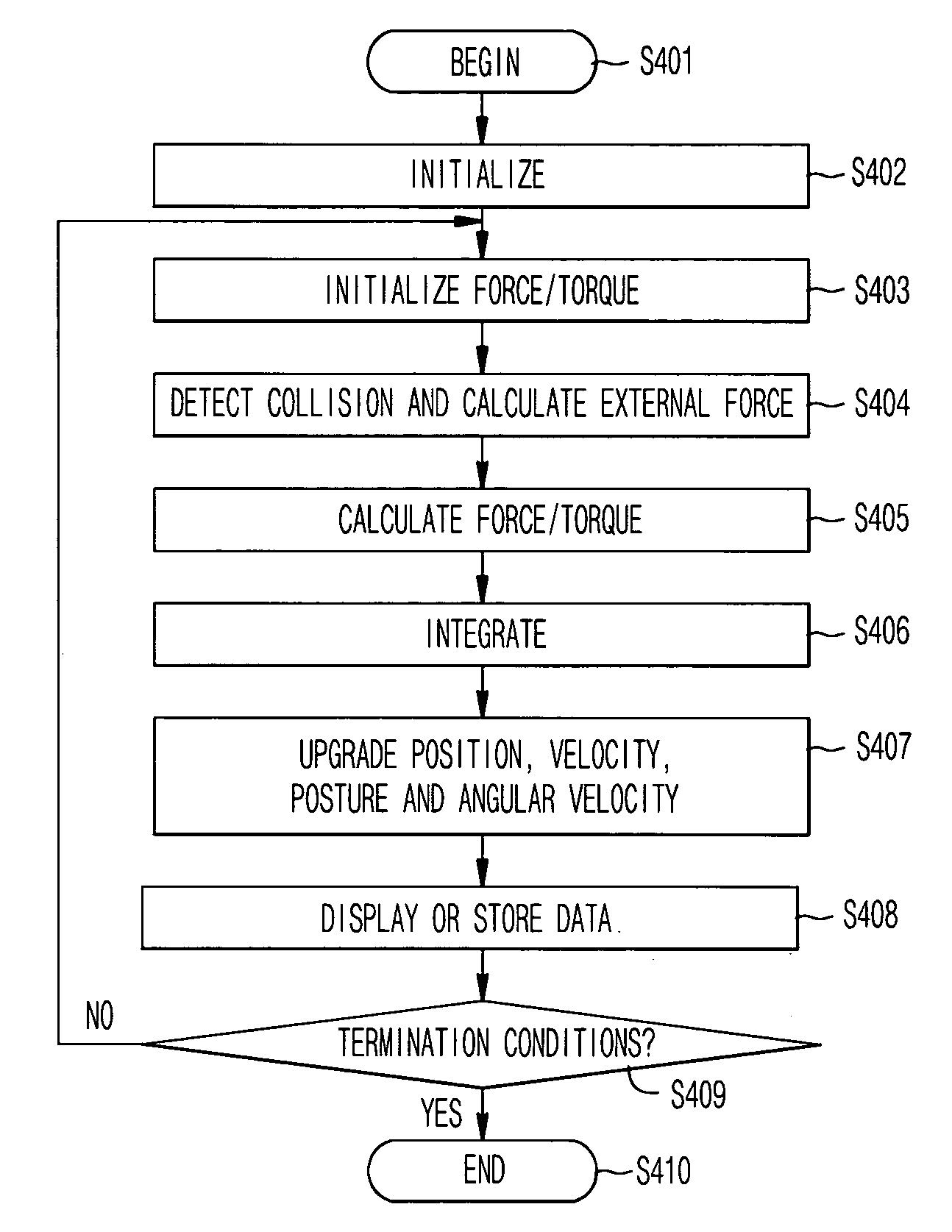

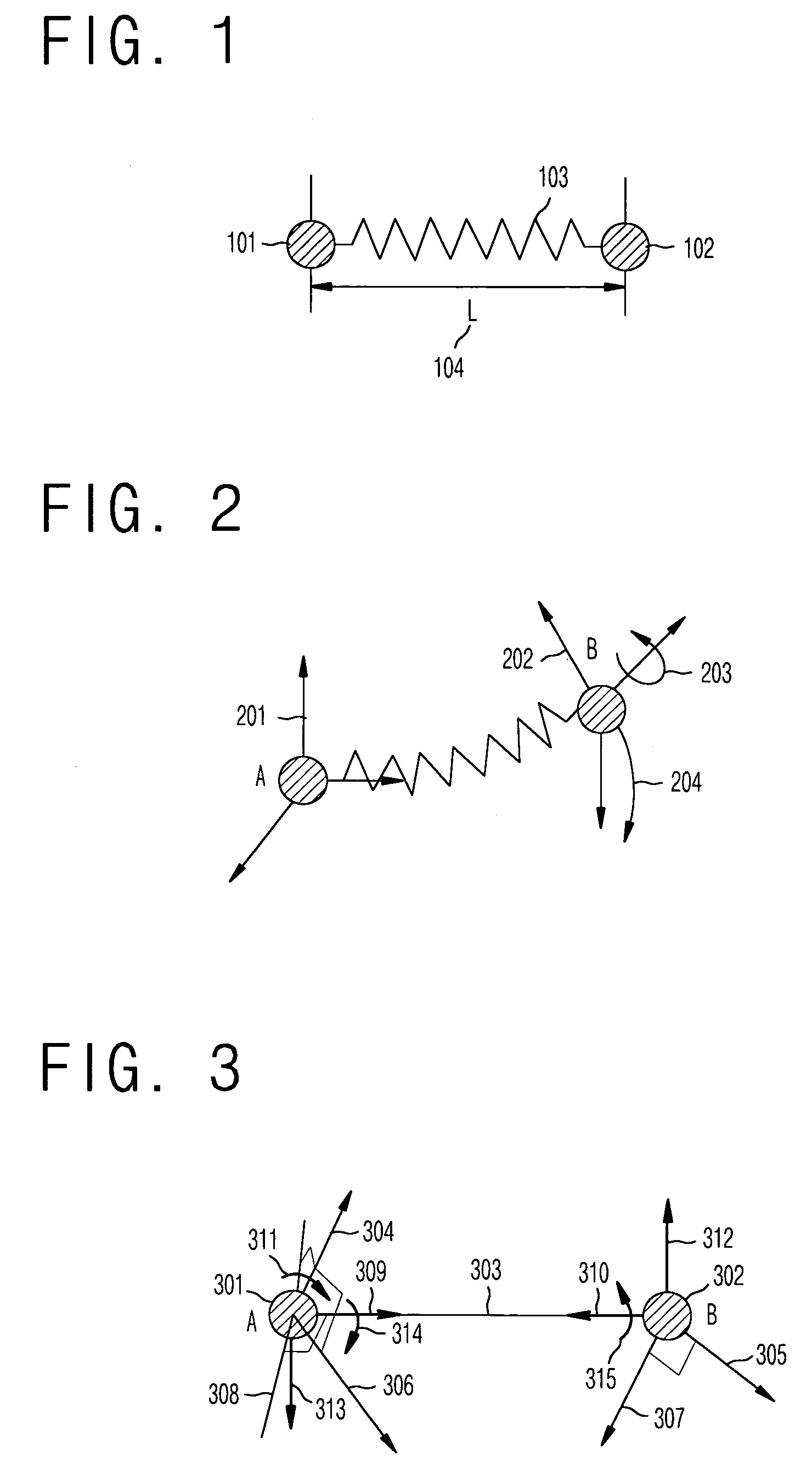
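
The idea of a "variable root node" in the adaptive skeleton abstract above can be illustrated by re-rooting a skeleton tree at a contact node. This is only a sketch under assumptions: the skeleton is taken to be a child-to-parent map, and `reroot` is a hypothetical helper, not something named in the patent.

```python
from typing import Dict, Optional


def reroot(parent: Dict[str, Optional[str]], new_root: str) -> Dict[str, Optional[str]]:
    """Return a parent map for the same skeleton with `new_root` as the root.

    Only the links on the path from `new_root` up to the old root are
    reversed; every other node keeps its original parent.
    """
    result = dict(parent)
    node: Optional[str] = new_root
    prev: Optional[str] = None
    while node is not None:
        nxt = parent[node]   # original parent, read before overwriting
        result[node] = prev  # reversed link (None for the new root)
        prev, node = node, nxt
    return result
```

With a skeleton rooted at the pelvis, `reroot(parent, "foot")` yields a tree rooted at a planted foot, so a traversal that starts at the root now starts at the contact point.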

Animation method of deformable objects using an oriented material point and generalized spring model

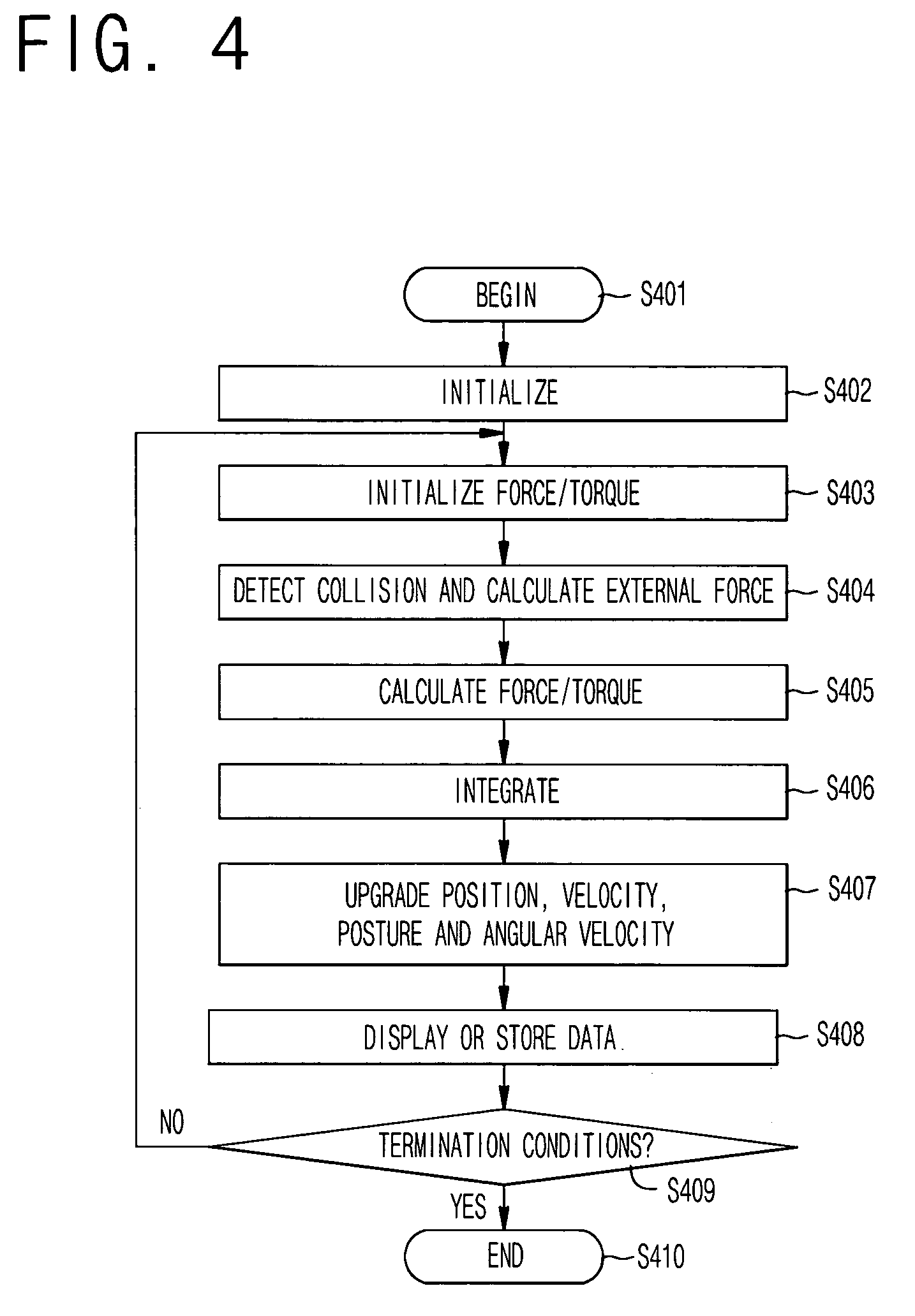

Disclosed is an animation method of deformable objects using an oriented material point and generalized spring model. The animation method comprises the following steps: modeling the structure of a deformable object as oriented material points and generalized springs; initializing the forces and torques acting on the material points; calculating the forces acting on the material points owing to collisions of the material points and gravity; calculating the spring forces and torques acting on the material points; obtaining new positions and postures of the material points; updating the positions, velocities, postures and angular velocities of the material points based upon physics; and displaying and storing the updated results. The oriented material point and generalized spring model of the invention contains the principle of the conventional mass-spring model, but can animate deformable objects or express their structures in a more intuitive manner than the conventional mass-spring model. Also, the material point and generalized spring model of the invention can express elongated deformable objects such as hair and wrinkled cloth, which cannot be expressed in the prior art, so as to animate features of various objects.

Owner:ELECTRONICS & TELECOMM RES INST

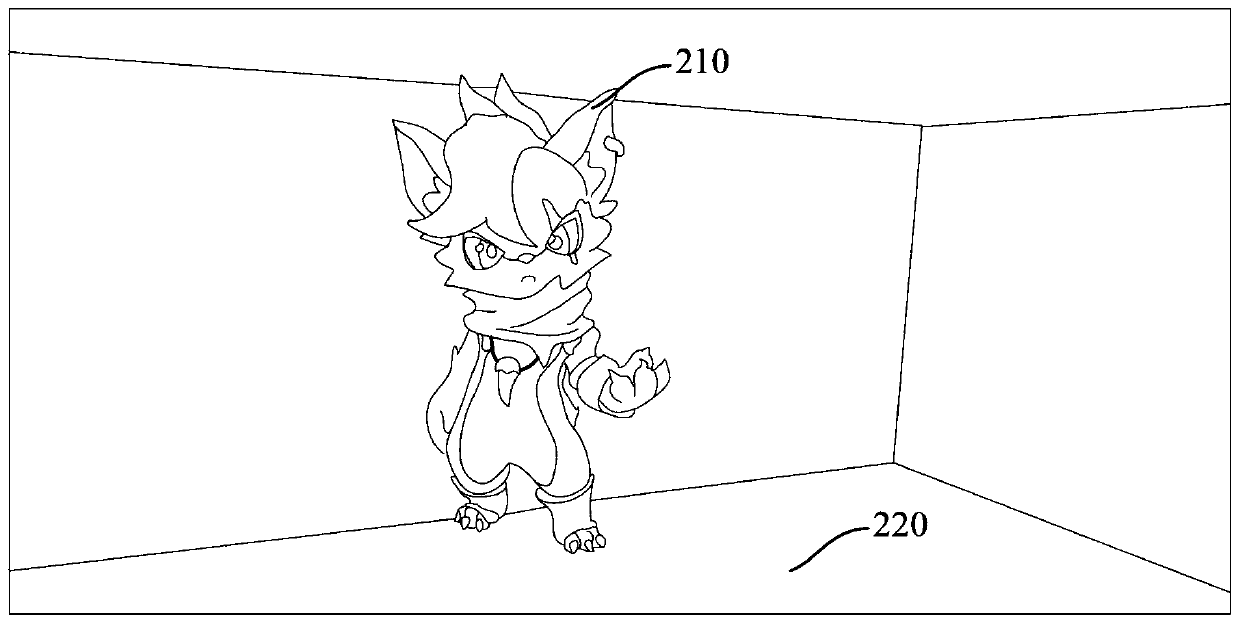

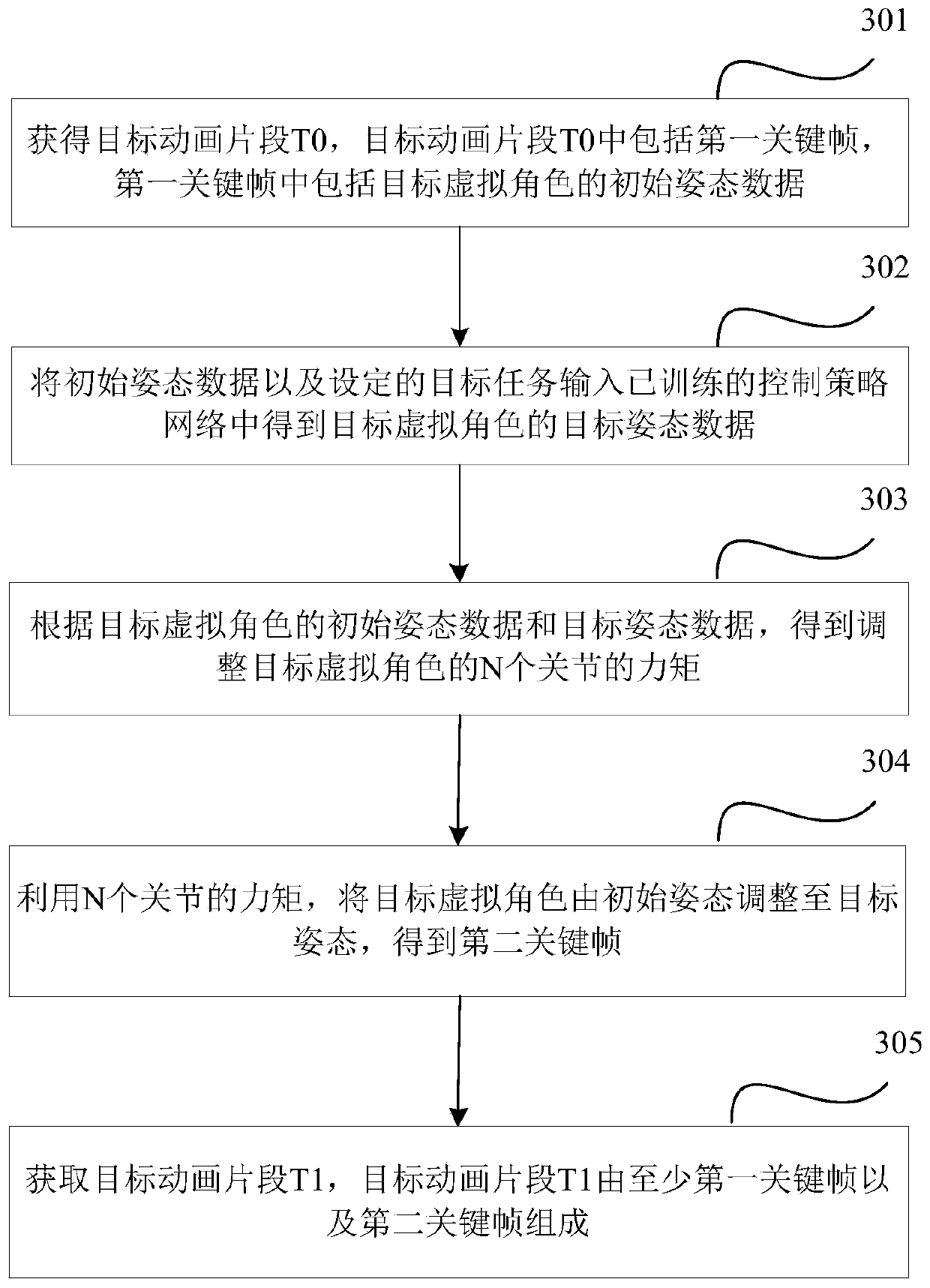
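
For orientation, here is a minimal sketch of the conventional mass-spring update that the abstract above says the invention builds on. It is not the oriented material point model itself: it has no orientations, torques or collisions, works in one vertical dimension, and uses semi-implicit Euler integration as an assumed scheme. `Point`, `Spring` and `step` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Point:
    y: float              # vertical position
    v: float = 0.0        # vertical velocity
    mass: float = 1.0
    pinned: bool = False  # pinned points never move


Spring = Tuple[int, int, float, float]  # (index a, index b, rest length, stiffness)


def step(points: List[Point], springs: List[Spring], dt: float, g: float = -9.81) -> None:
    """Advance the system by one semi-implicit Euler step, in place."""
    # 1. Initialise the force on every point with gravity.
    forces = [p.mass * g for p in points]
    # 2. Accumulate spring forces (Hooke's law along the vertical axis).
    for a, b, rest, k in springs:
        delta = points[b].y - points[a].y
        length = abs(delta)
        if length == 0.0:
            continue  # direction undefined for coincident points
        f = k * (length - rest) * (delta / length)  # pulls a toward b when stretched
        forces[a] += f
        forces[b] -= f
    # 3. Update velocities first, then positions with the new velocities.
    for p, f in zip(points, forces):
        if p.pinned:
            continue
        p.v += (f / p.mass) * dt
        p.y += p.v * dt
```

Calling `step` repeatedly with a small `dt` animates the chain; the patent's model would additionally track a posture and angular velocity per point.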

Animation implementation method and device, electronic equipment and storage medium

The invention provides an animation implementation method and device, electronic equipment and a storage medium, belongs to the technical field of computers, and relates to artificial intelligence and computer vision technologies. The animation implementation method comprises the steps of acquiring a target animation segment T0, wherein the target animation segment T0 comprises a first key frame, and the first key frame comprises initial attitude data of a target virtual character; inputting the initial attitude data and a set target task into a trained control strategy network to obtain target attitude data of the target virtual character; according to the initial attitude data and the target attitude data of the target virtual character, adjusting moments of N joints of the target virtual character, wherein N is a positive integer greater than or equal to 1; adjusting the target virtual character from the initial posture to the target posture by utilizing the moments of the N joints to obtain a second key frame; and obtaining a target animation segment T1, wherein the target animation segment T1 is composed of at least a first key frame and a second key frame.

Owner:TENCENT TECH (SHENZHEN) CO LTD

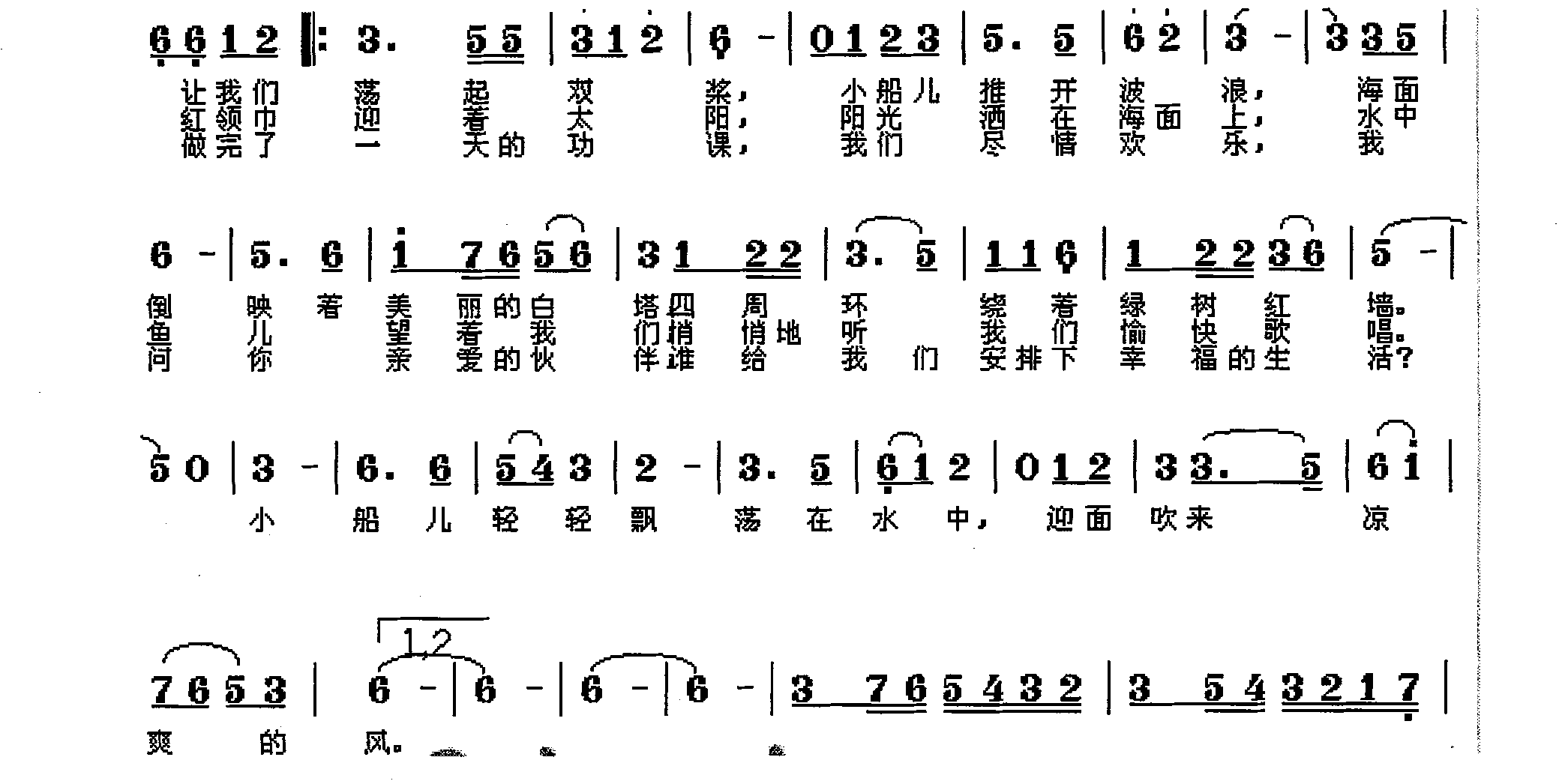

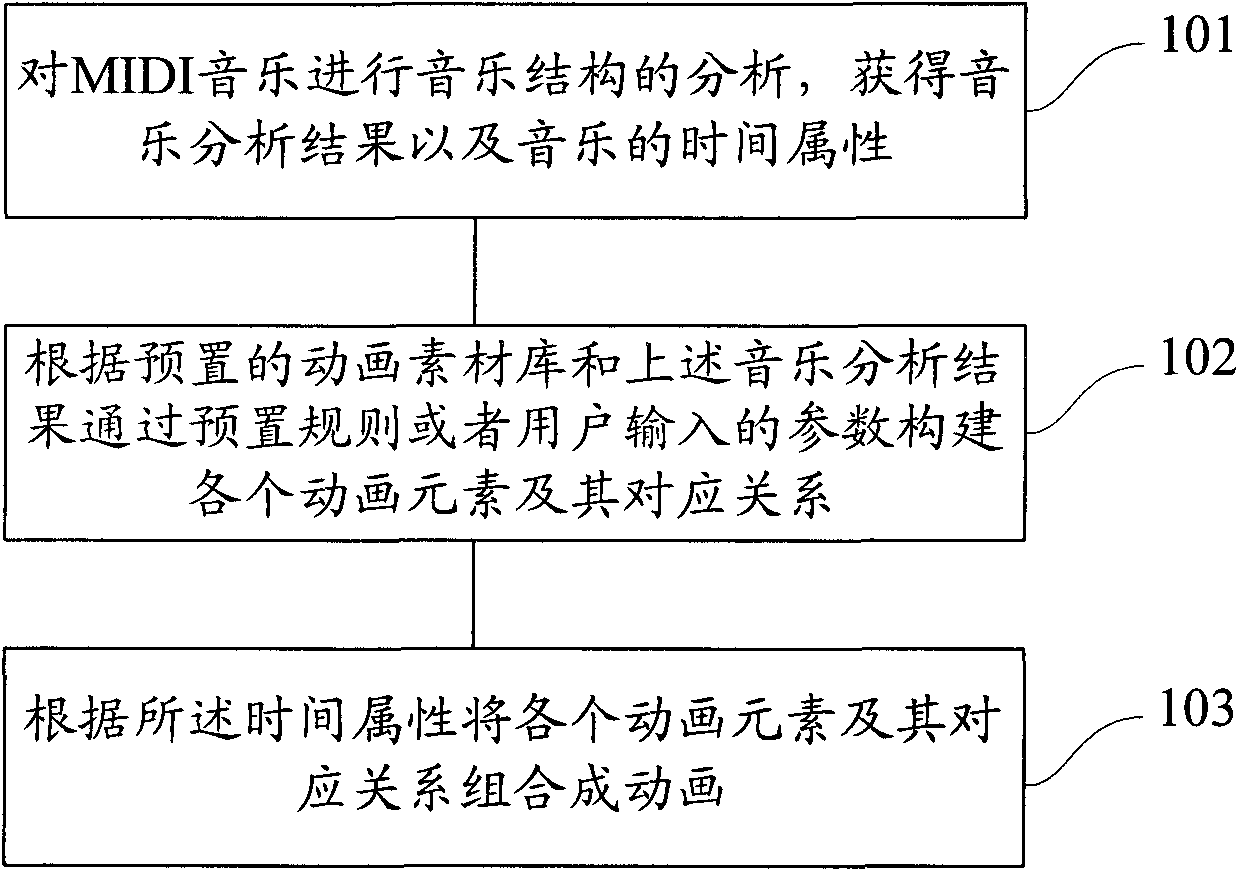

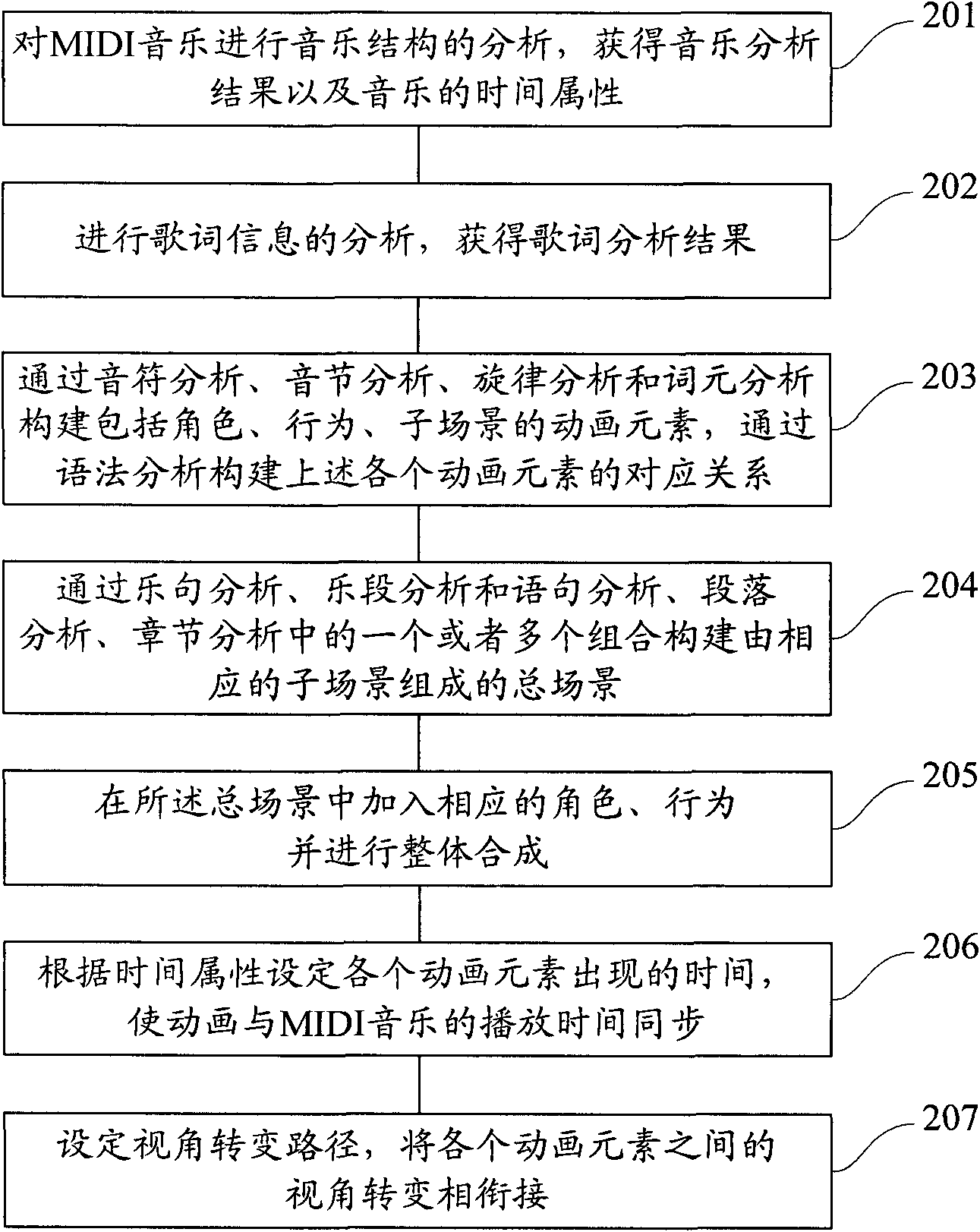

Method and system for transforming MIDI music into cartoon

The invention provides a method and a system for transforming MIDI music into cartoons. The method comprises the following steps: analyzing the musical structure of MIDI music to obtain musical analysis results and the time attribute of the music; constructing each animation element and corresponding relationships thereof based on preset rules or parameters input by the user in accordance with a preset animation material library and the musical analysis results; and combining the animation elements and the corresponding relationships thereof to form cartoons according to the time attribute. The invention can realize the transformation of the MIDI music into the visual information with rich contents related to the contents expressed in the music, thereby achieving the visual and audio binding of the cartoons and music, and satisfying needs of simultaneous and unified visual and audio enjoyment.

Owner:GUANGDONG VIMICRO

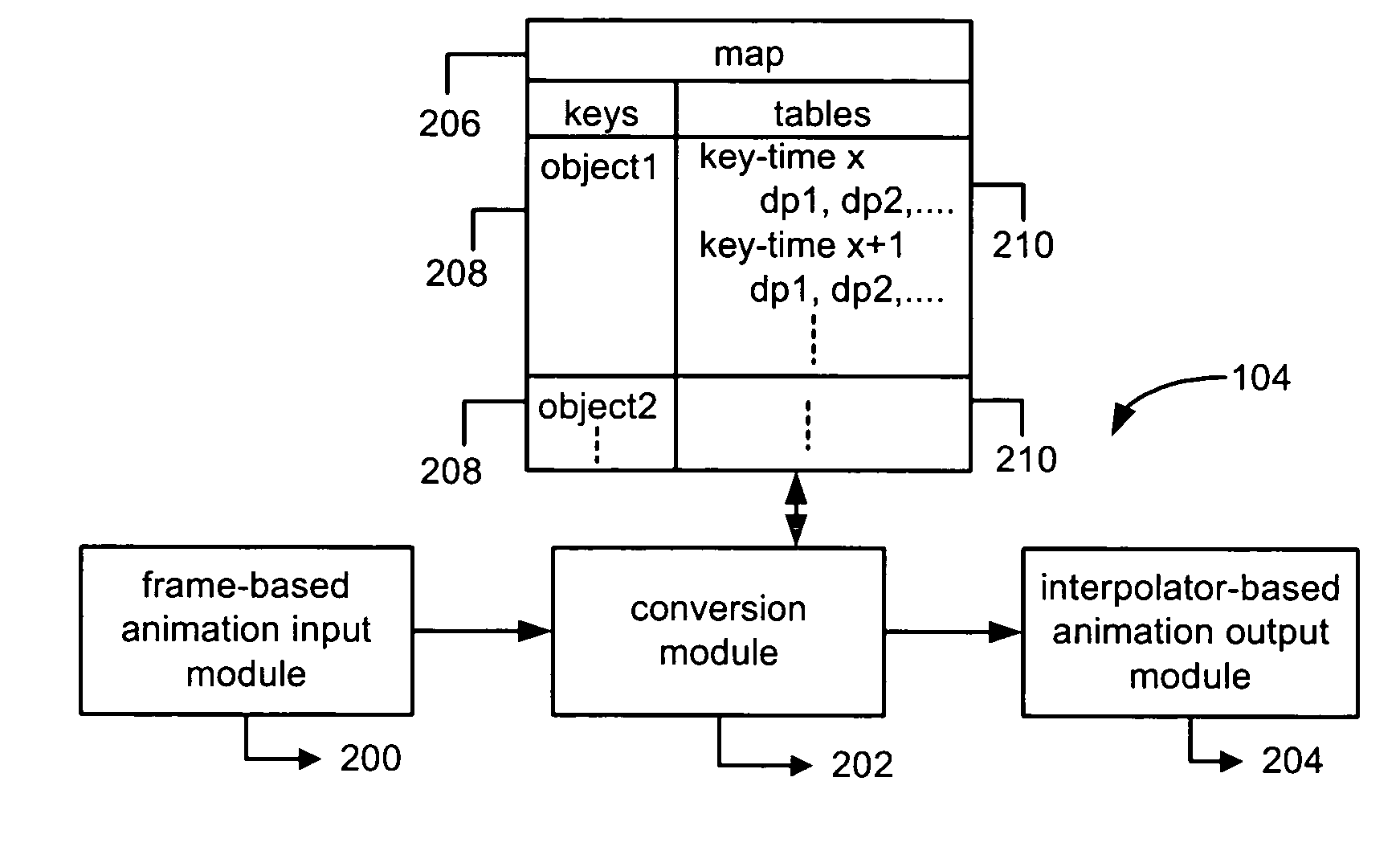

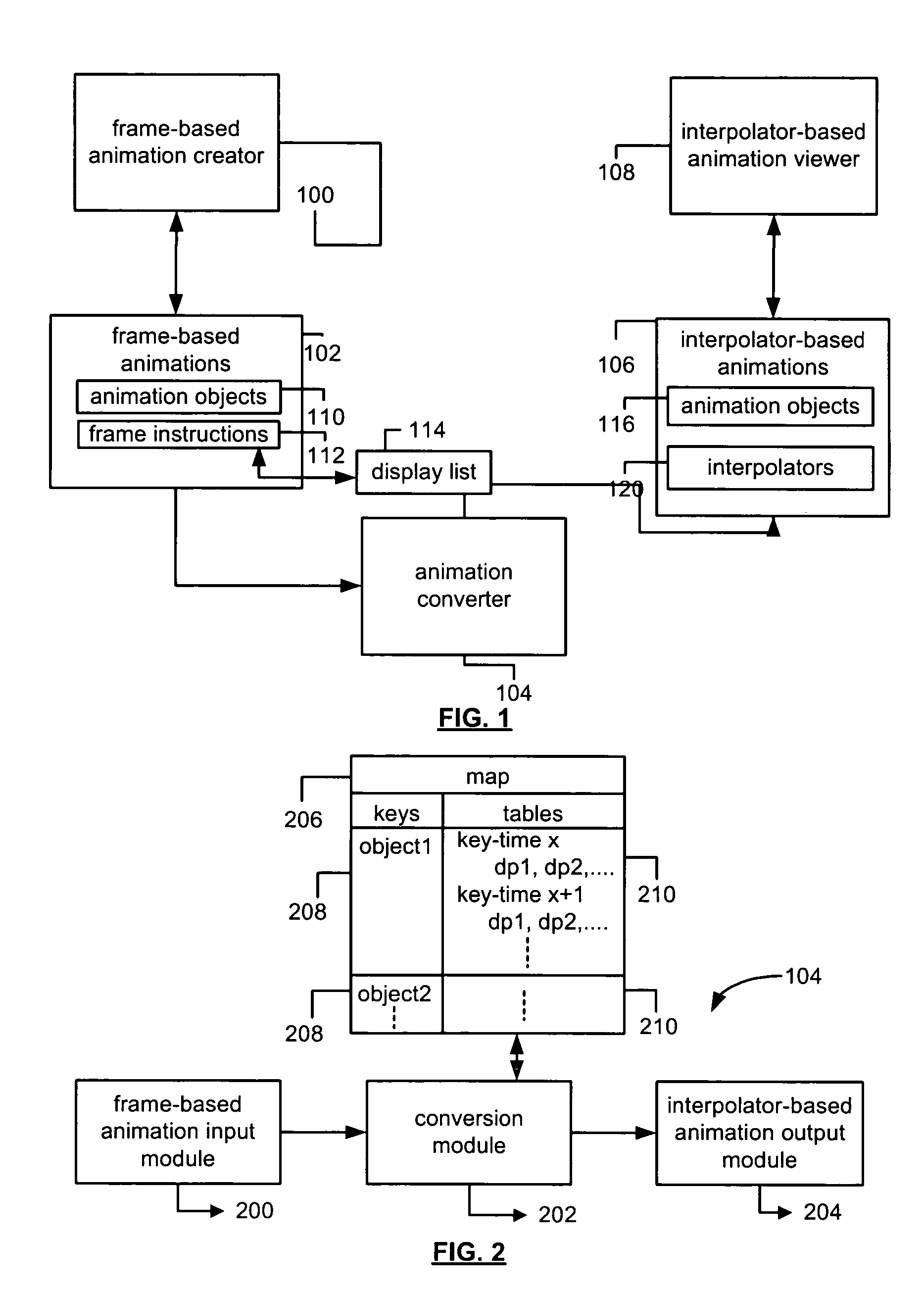

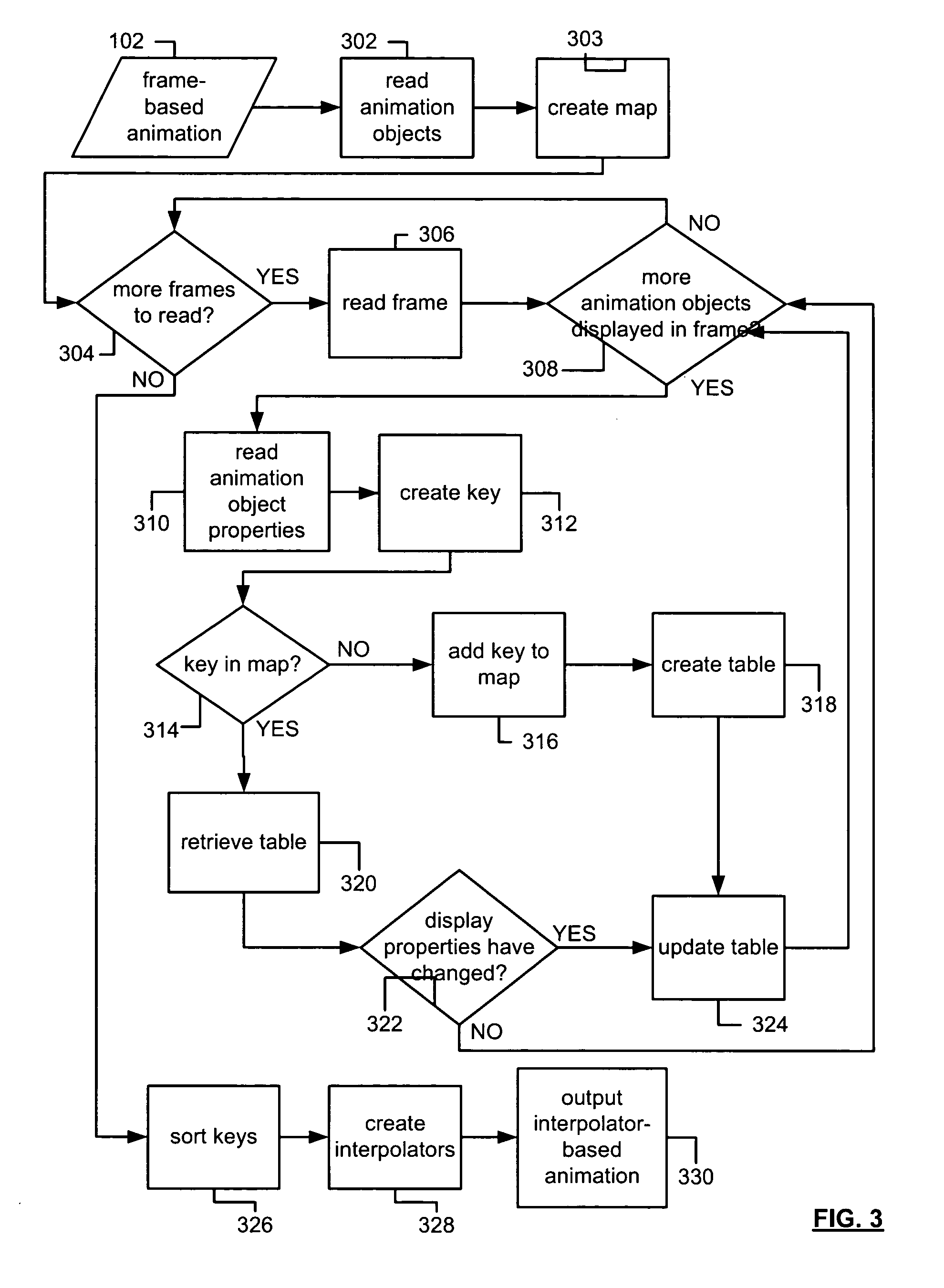

System and method of converting frame-based animations into interpolator-based animations

Active | US7034835B2 | Digital data information retrieval | Animation | Computational science | Computer graphics (images)

A system and method of converting frame-based animation into interpolator-based animation is provided. The system and method includes a) identifying each unique combination of animation object and associated depth identified in frame instructions for the plurality of frames of the frame-based animation; b) for each identified unique combination, identifying the display properties associated with the animation object of the combination through the successive frames; and c) for each identified display property for each identified unique combination, creating an interpolator that specifies the animation object of the combination and any changes that occur in the display property for the specified animation object throughout the plurality of frames.

Owner:MALIKIE INNOVATIONS LTD

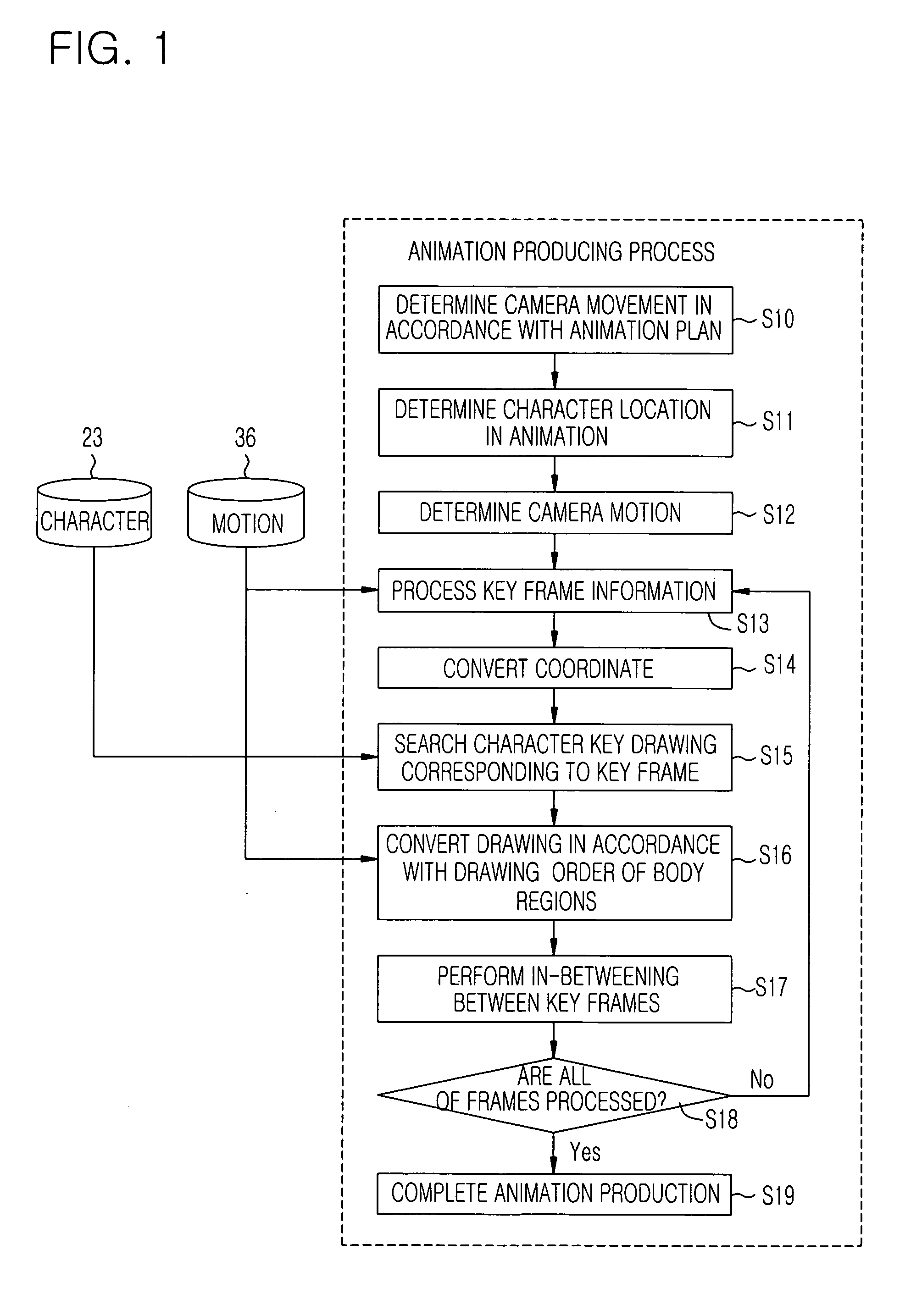

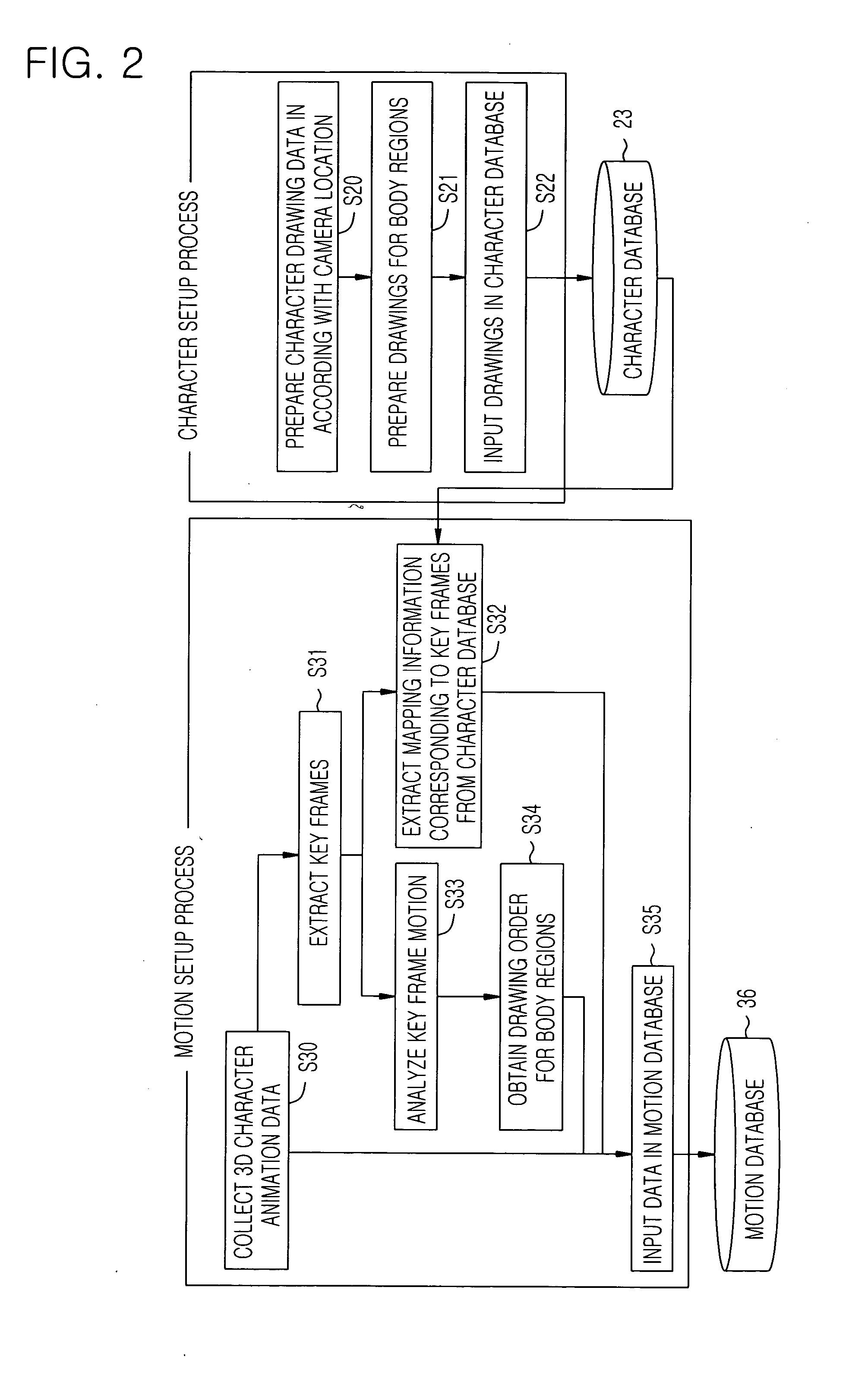

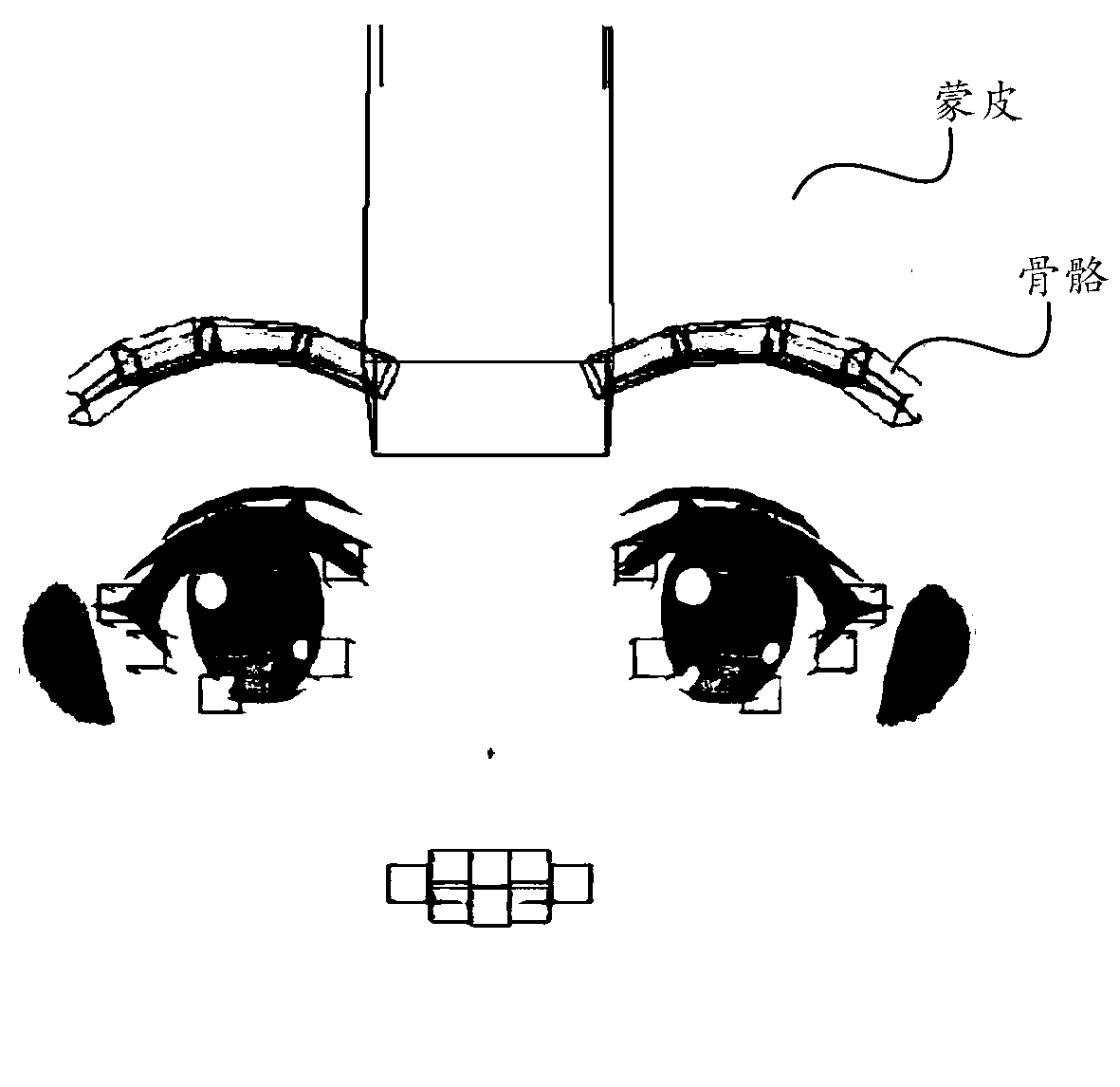
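
Steps a) to c) of the frame-to-interpolator abstract above can be sketched as a grouping pass over the frames. The instruction layout (a dict with `object`, `depth` and arbitrary display properties) and the function name `to_interpolators` are assumptions made for illustration. The resulting key lists are meant to be read as step (hold) keyframes: a value stays in effect until the next recorded change.

```python
from typing import Any, Dict, List, Tuple

# Assumed layout: each frame is a list of instructions such as
# {"object": "ball", "depth": 1, "x": 0, "visible": True}.
Frame = List[Dict[str, Any]]
Key = Tuple[str, int, str]  # (object, depth, property name)


def to_interpolators(frames: List[Frame]) -> Dict[Key, List[Tuple[int, Any]]]:
    """Collapse per-frame instructions into per-property keyframe lists."""
    interpolators: Dict[Key, List[Tuple[int, Any]]] = {}
    for index, frame in enumerate(frames):
        for instr in frame:
            for prop, value in instr.items():
                if prop in ("object", "depth"):
                    continue  # these identify the combination, not a display property
                keys = interpolators.setdefault((instr["object"], instr["depth"], prop), [])
                # Record a keyframe only when the value actually changes.
                if not keys or keys[-1][1] != value:
                    keys.append((index, value))
    return interpolators
```

One interpolator is produced per display property of each unique object and depth combination, which mirrors the structure the abstract describes.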

Method of representing and animating two-dimensional humanoid character in three-dimensional space

Inactive | US20070132765A1 | Efficient production | Drawback can be solved | Visual presentation | Animation | Computer graphics (images) | Three-dimensional space

There is provided a method of representing and animating a 2D (Two-Dimensional) character in a 3D (Three-Dimensional) space for a character animation. The method includes performing a pre-processing operation in which data of a character that is required to represent and animate the 2D character like a 3D character is prepared and stored and producing the character animation using the stored data.

Owner:ELECTRONICS & TELECOMM RES INST

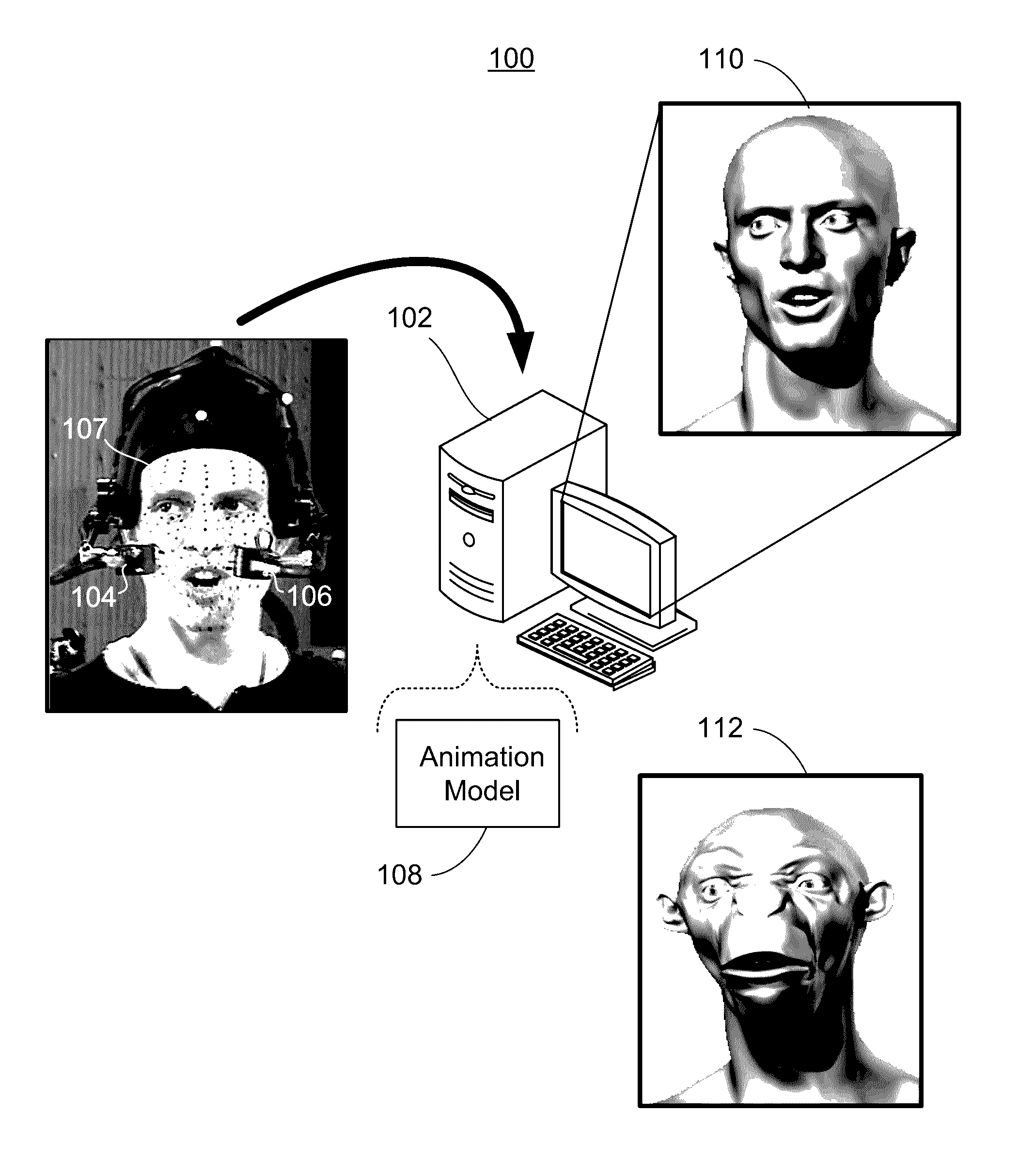

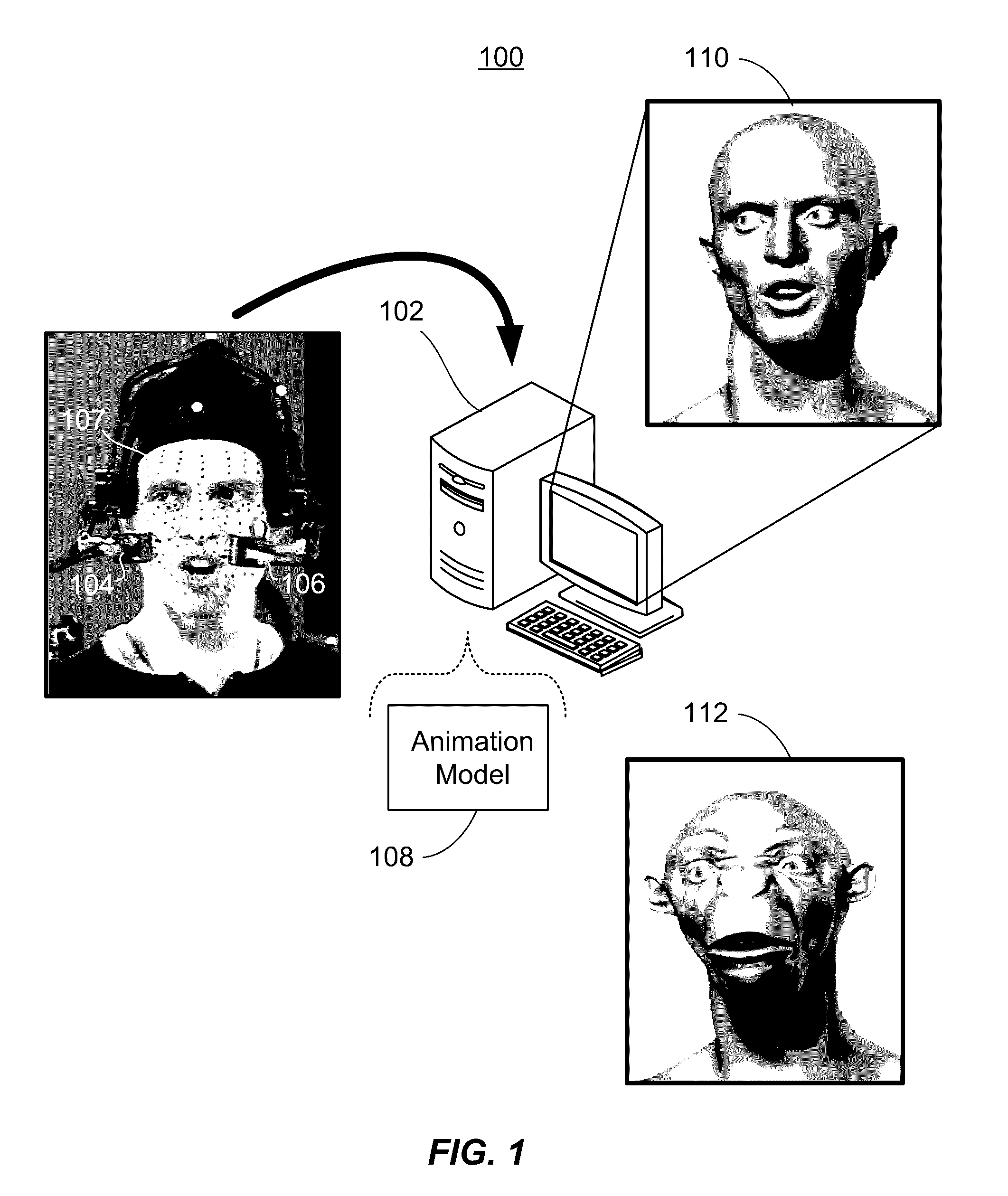

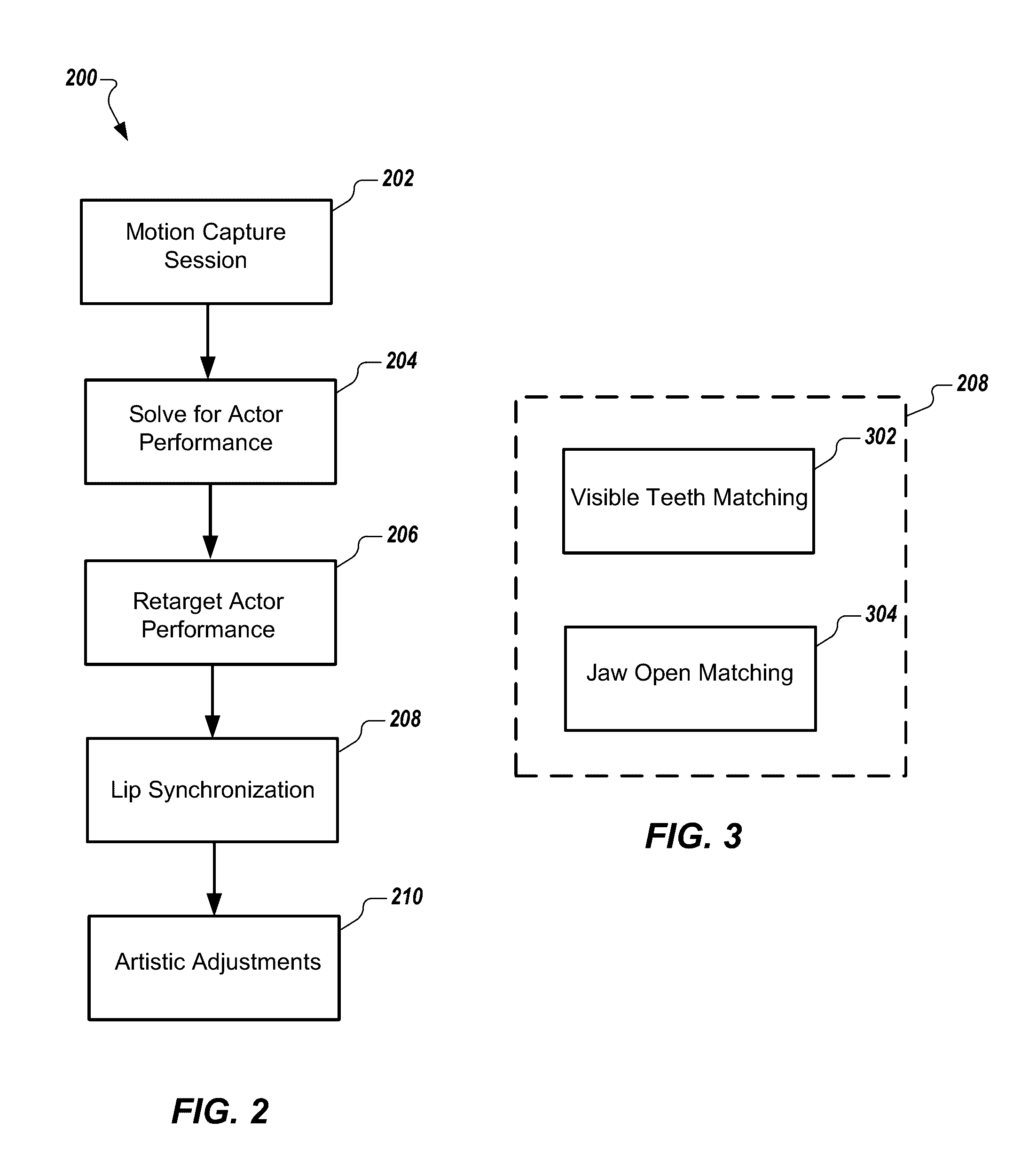

Lip synchronization between rigs

Active | US20170053663A1 | Believable and robust and editable animation | Good synchronization | Image enhancement | Image analysis | Medicine | Computer graphics (images)

In some embodiments, a method of transferring facial expressions from a subject to a computer-generated character is provided, where the method includes receiving positional information from a motion capture session of the subject representing a performance having facial expressions to be transferred to the computer-generated character, receiving a first animation model that represents the subject, and receiving a second animation model that represents the computer-generated character. Each of the first and second animation models can include a plurality of adjustable controls that define geometries of the model and that can be adjusted to present different facial expressions on the model, and the first and second animation models are designed so that setting the same values for the same set of adjustable controls in each model generates similar facial poses on the models. The method further includes determining a solution, including values for at least some of the plurality of controls, that matches the first animation model to the positional information to reproduce the facial expressions from the performance on the first animation model; retargeting the facial expressions from the performance to the second animation model using the solution; and thereafter, synchronizing lip movement of the second animation model with lip movement from the first animation model.

Owner:LUCASFILM ENTERTAINMENT

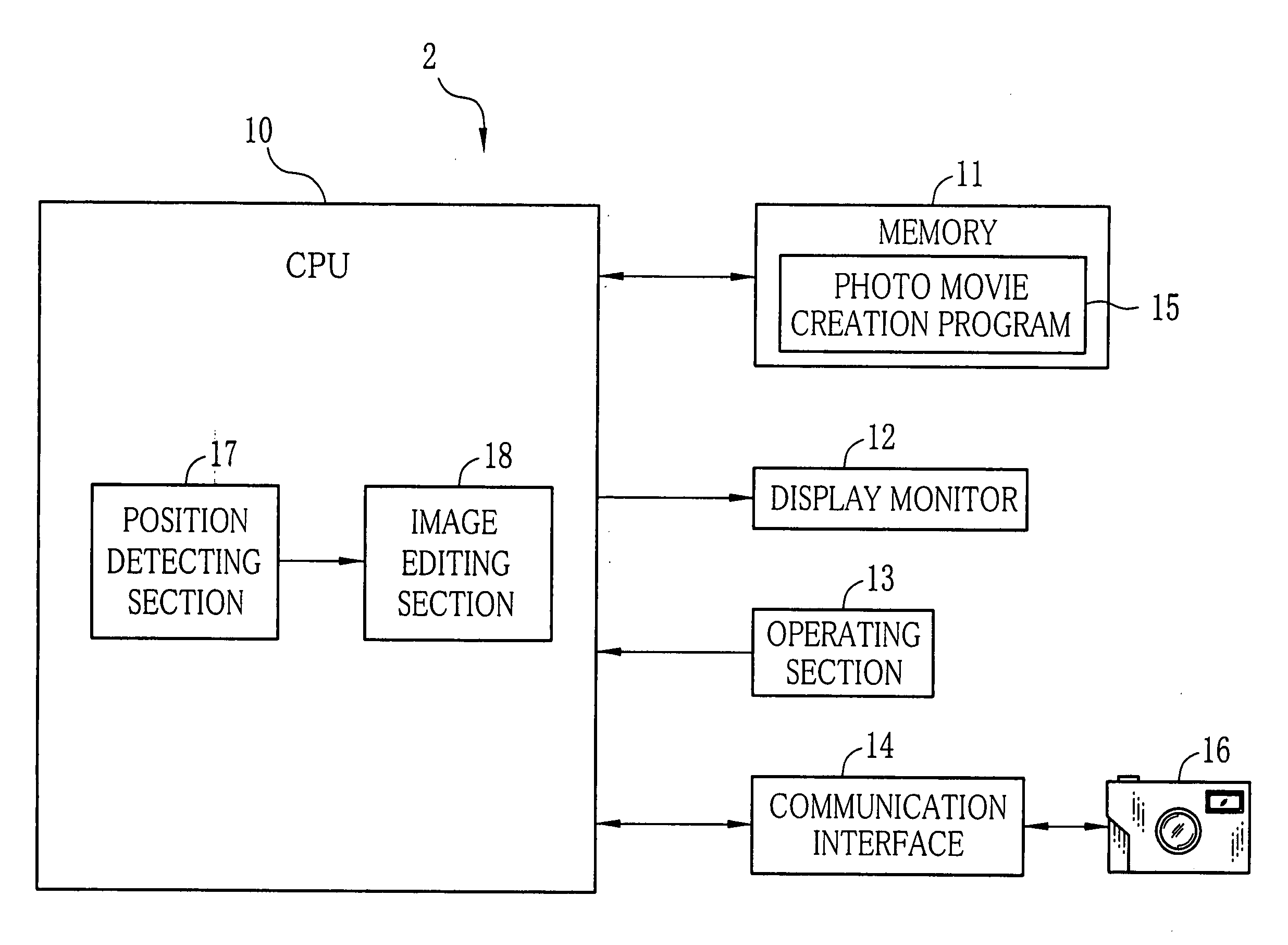

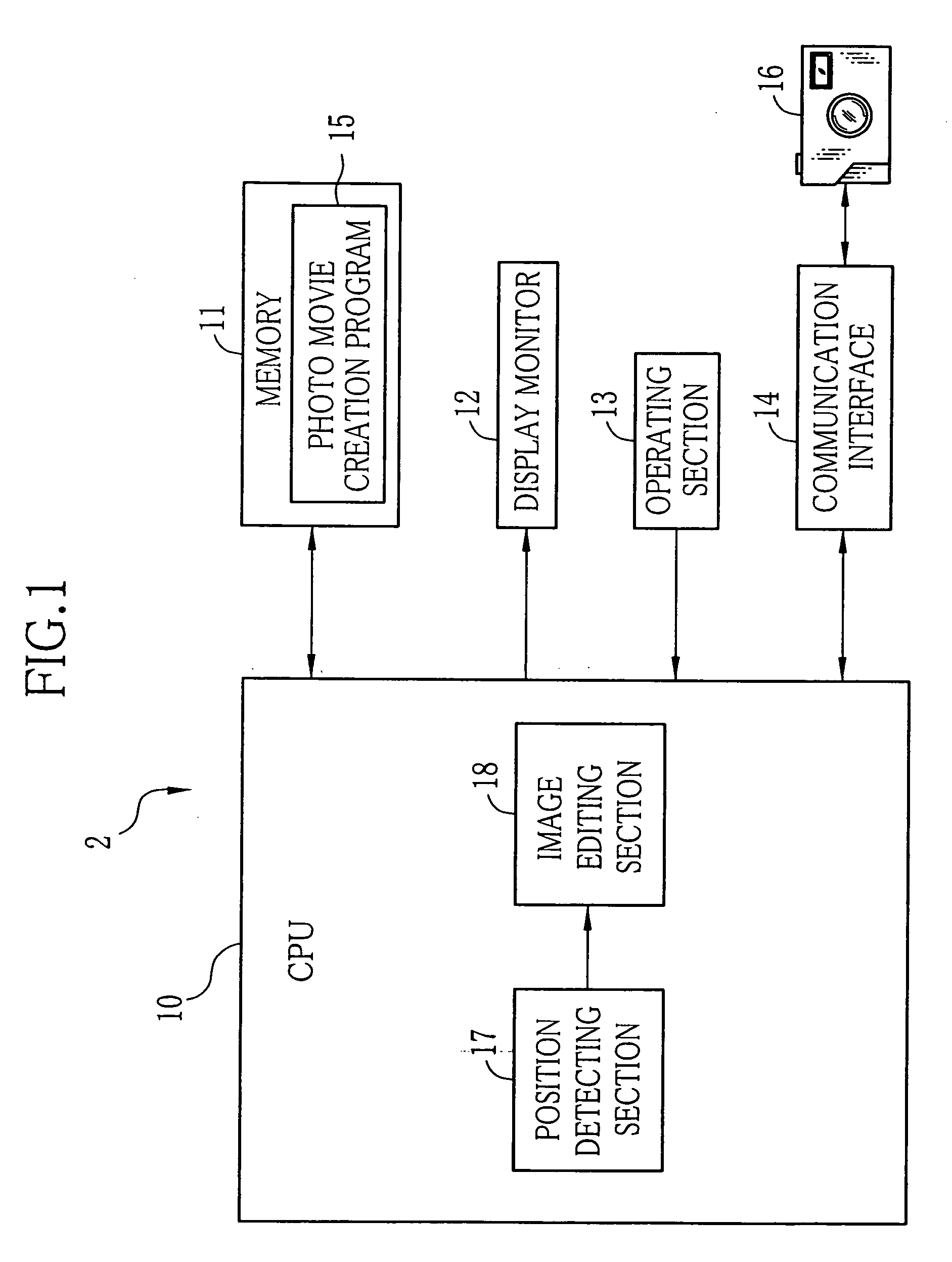

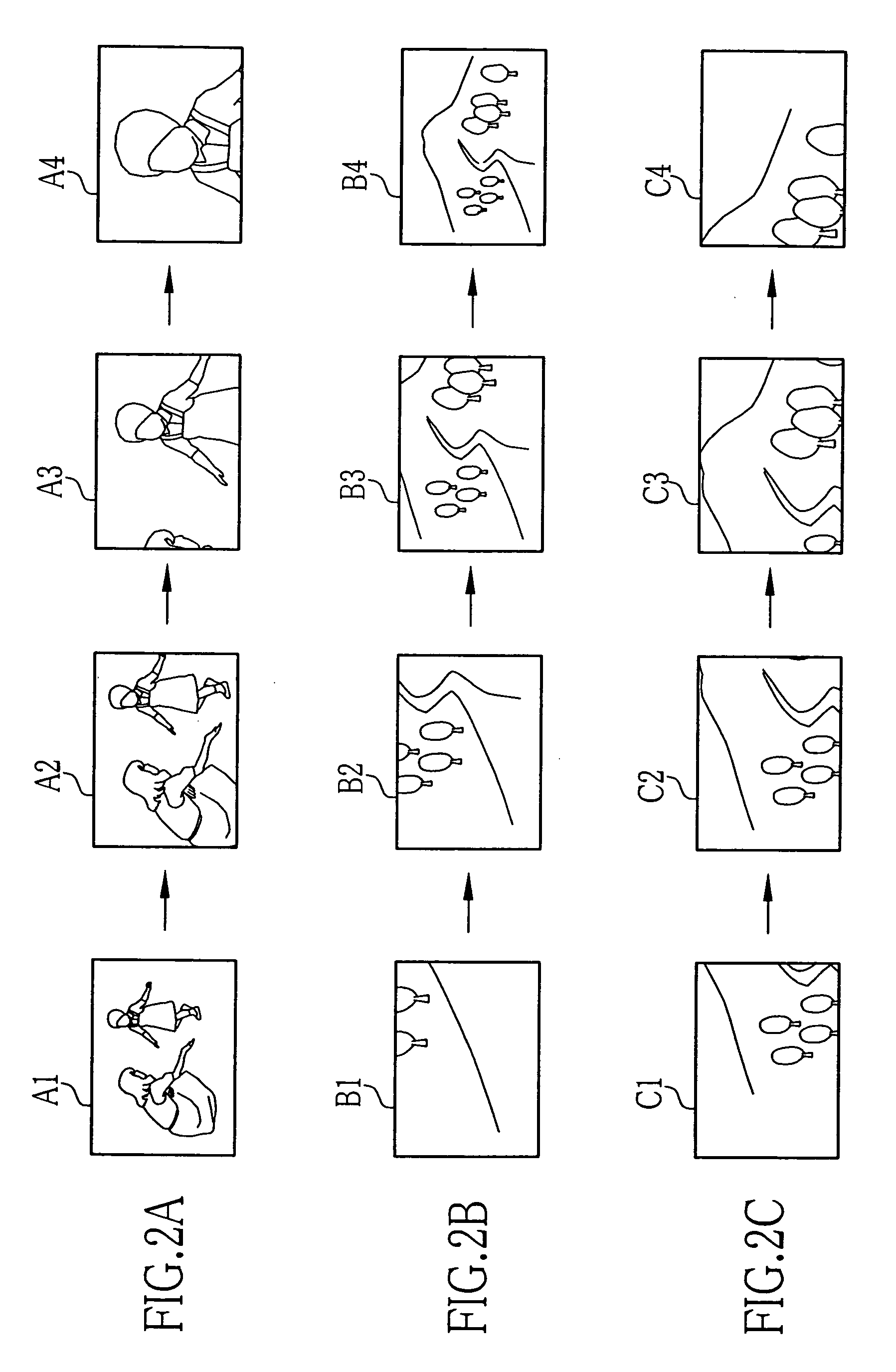

Photo movie creating method, apparatus, and program

Inactive | US20060115922A1 | Television system details | Electronic editing digitised analogue information signals | Imaging data | Anime

A photo movie creating apparatus stores a photo movie creating program. When the photo movie creating program is started, a position detecting section and an image editing section are generated in the CPU. The position detecting section detects a distance L1 from the center to the edge of a cropping frame for cropping a target subject out of a still image, and a distance L2 from the center of the target subject to the edge of the still image on the side of that cropping frame edge. The image editing section compares the distance L1 with the distance L2, then controls at least one of the size and the position of the cropping frame so that the cropping frame does not protrude outside the still image, where no image data exists.

Owner:FUJIFILM CORP +1

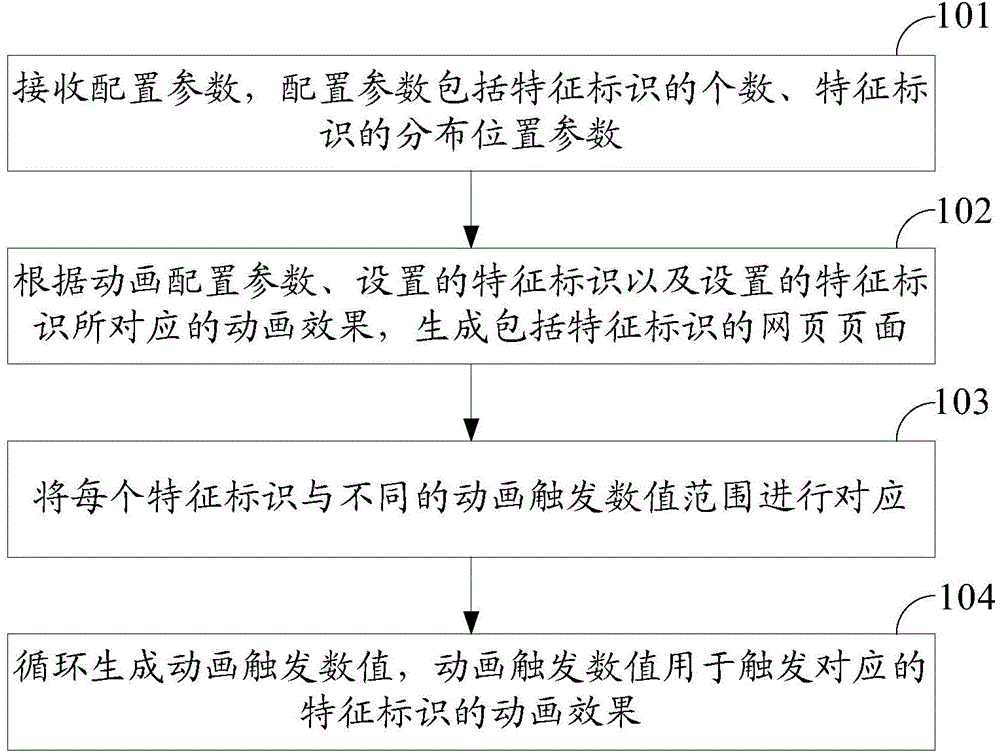
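
The L1 versus L2 comparison in the photo movie abstract above can be sketched along a single axis. Both helpers are hypothetical; the abstract says only that the size or position of the cropping frame is controlled, so the `min` and clamp rules below are assumptions about how that might be done.

```python
def fit_half_size(l1: float, l2: float) -> float:
    """Size control: shrink the crop half-extent L1 when it exceeds the
    distance L2 from the subject centre to the image edge."""
    return min(l1, l2)


def shift_center(center: float, half: float, image_size: float) -> float:
    """Position control: move the crop centre along one axis so that
    [center - half, center + half] stays inside [0, image_size]."""
    if 2 * half > image_size:
        raise ValueError("crop frame is larger than the image on this axis")
    return min(max(center, half), image_size - half)
```

Applying either helper to the horizontal and vertical axes in turn keeps the cropping frame inside the still image; shrinking keeps the subject centred, while shifting preserves the frame size.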

Method, device and system for achieving animation effects in webpages

Active | CN104461486A | Reduce workload | Shorten the development cycle | Animation | Specific program execution arrangements | Algorithm | Engineering

An embodiment of the invention discloses a method for achieving animation effects in webpages, aiming to reduce development volume of the webpages with the animation effects and shorten development cycle. The method includes receiving configuration parameters including the number of feature identifications and distribution position parameters of the feature identifications, generating the webpages with the feature identifications according to the animation configuration parameters, the set feature identifications and the animation effects corresponding to the set feature identifications, matching each feature identification with different animation triggering numerical ranges, and circularly generating animation triggering numerical values used for triggering the animation effects of the corresponding feature identifications. The embodiment of the invention further discloses a device and a system for achieving the animation effects in the webpages.

Owner:TENCENT TECH (SHENZHEN) CO LTD

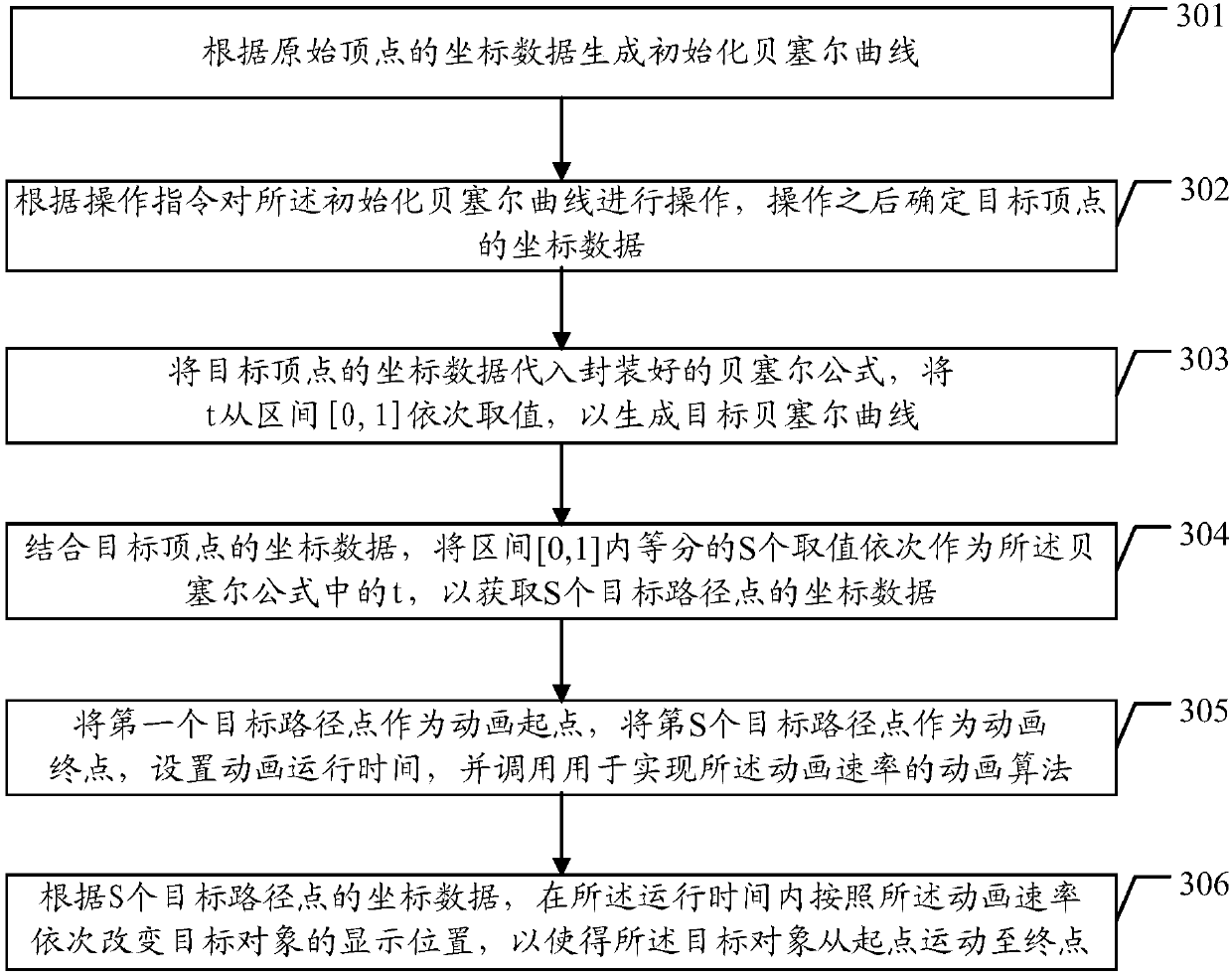

Animation effect implementation method and device and storage equipment

Pending | CN107945253A | Reduce development costs | Reduce the difficulty of debugging | Animation | Computer graphics (images) | Simulation

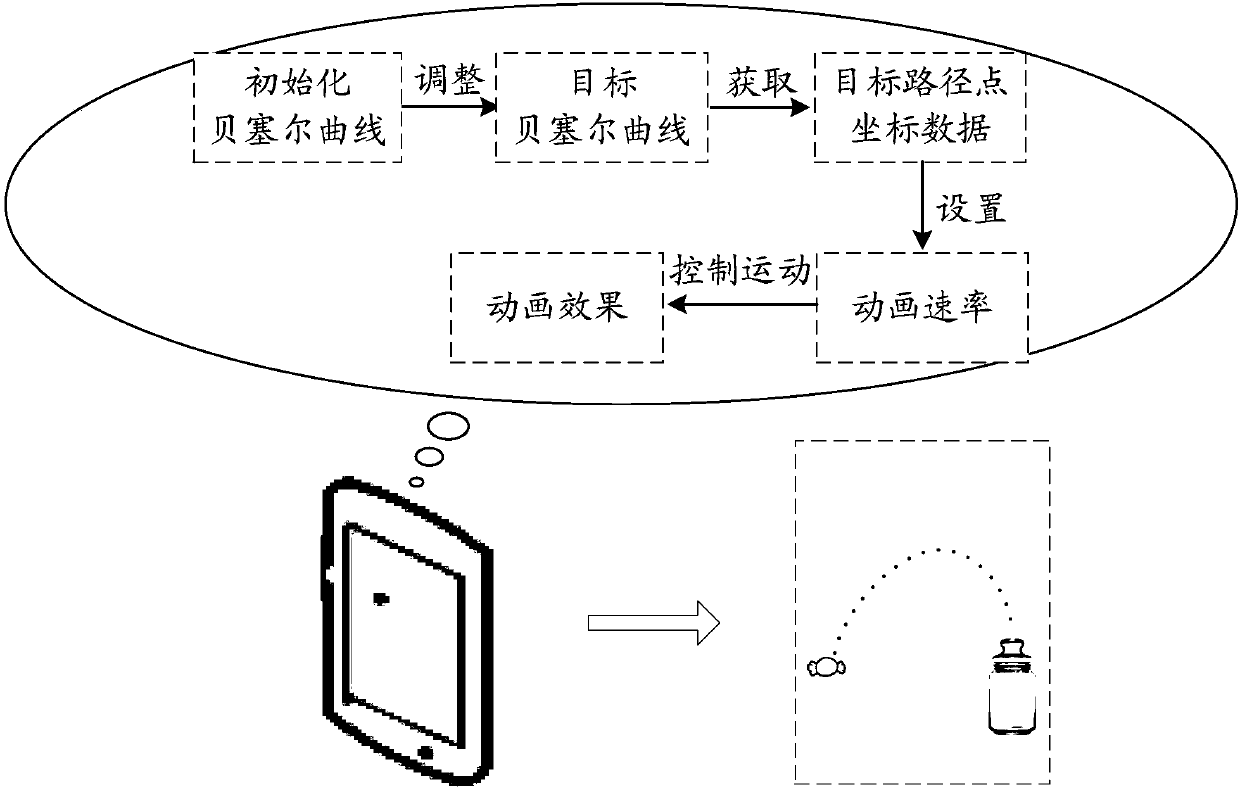

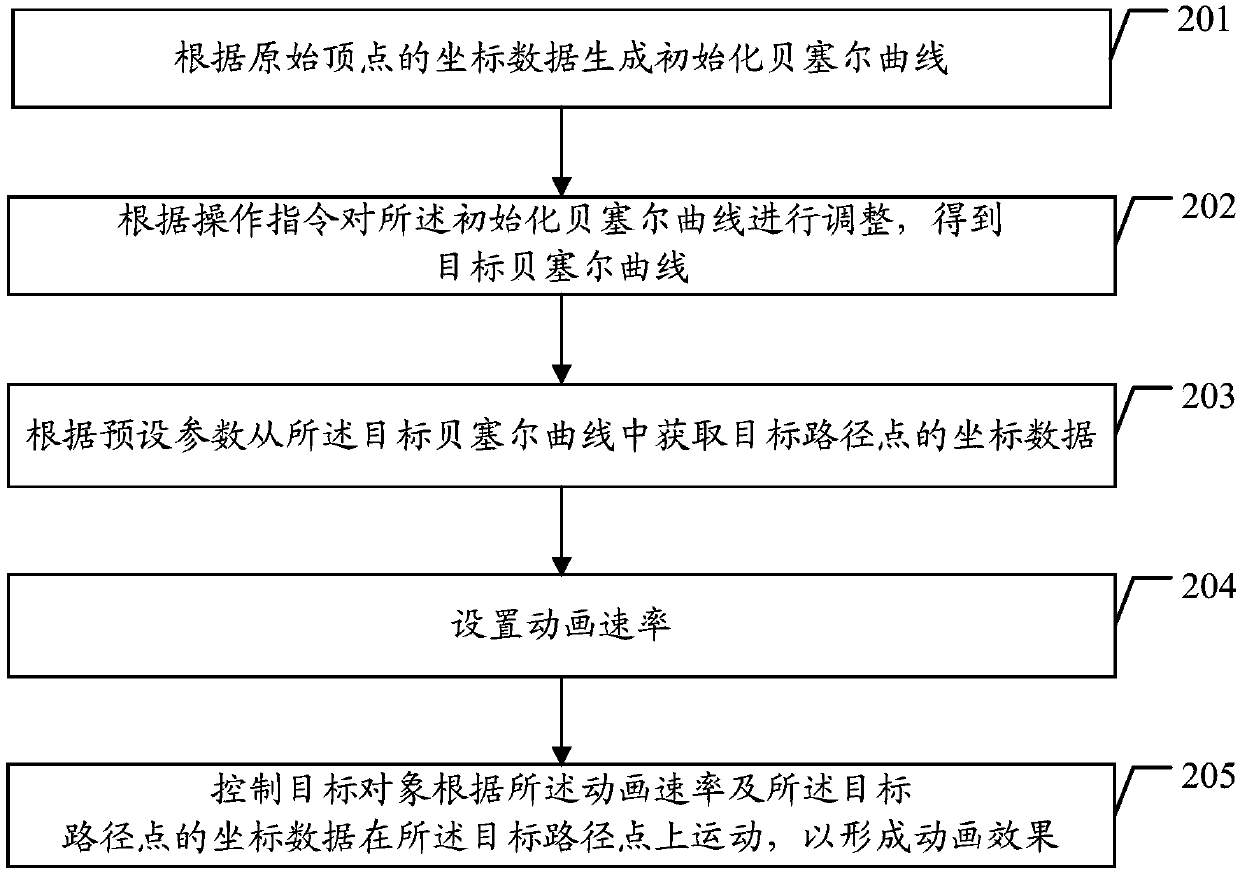

The embodiment of the invention discloses an animation effect implementation method and device and storage equipment. The animation effect implementation method comprises the steps that an initialized Bezier curve is generated according to the coordinate data of the original vertex; the initialized Bezier curve is adjusted according to an operation instruction so as to obtain a target Bezier curve; the coordinate data of target path points are acquired from the target Bezier curve according to the preset parameters; the animation rate is set; and the target object is controlled to move on the target path points according to the animation rate and the coordinate data of target path points so as to form the animation effect. The complex animation effect can be realized, the development cost can be reduced and the debugging difficulty can be reduced.

Owner:TENCENT DIGITAL TIANJIN

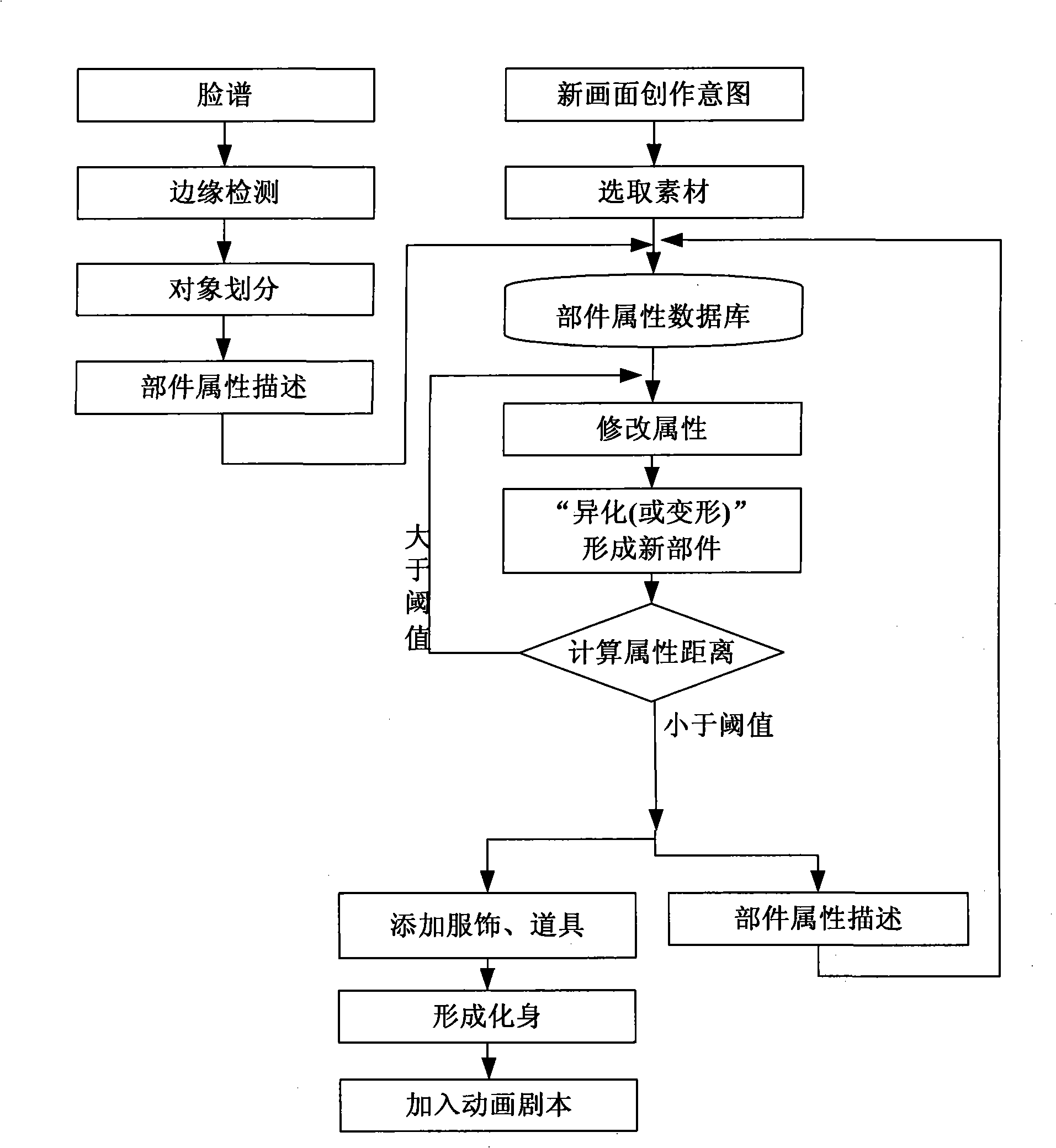

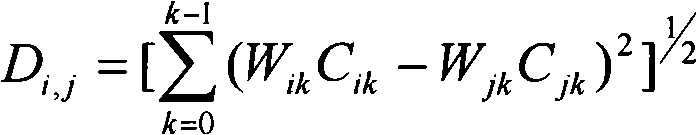
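
Acquiring path points from a Bezier curve, as in the abstract above, can be sketched as follows. The abstract does not state the degree of the curve or how the preset parameters are chosen; this sketch assumes a cubic curve sampled at evenly spaced parameter values, and `cubic_bezier` and `sample_path` are hypothetical names.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def cubic_bezier(p0: Point, p1: Point, p2: Point, p3: Point, t: float) -> Point:
    """Evaluate a cubic Bezier curve at parameter t in [0, 1]."""
    u = 1.0 - t
    # Bernstein basis weights for the four control points.
    w0, w1, w2, w3 = u * u * u, 3 * u * u * t, 3 * u * t * t, t * t * t
    x = w0 * p0[0] + w1 * p1[0] + w2 * p2[0] + w3 * p3[0]
    y = w0 * p0[1] + w1 * p1[1] + w2 * p2[1] + w3 * p3[1]
    return (x, y)


def sample_path(p0: Point, p1: Point, p2: Point, p3: Point, count: int) -> List[Point]:
    """Return `count` path points at evenly spaced parameter values."""
    if count < 2:
        raise ValueError("count must be at least 2")
    return [cubic_bezier(p0, p1, p2, p3, i / (count - 1)) for i in range(count)]
```

Moving the target object to one path point per tick, with the tick interval derived from the animation rate, would then produce the motion along the curve.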

Method for interfusing audience individual facial makeup into cartoon roles

Inactive | CN101281653A | Enrich cultural life | Increase motivation | Animation | 3D-image rendering | Animation | Image processing

The invention discloses a method for fusing an audience member's individual facial image into an animation, characterized in that the audience member transmits a photographed facial image and an individual exaggerated-design requirement to the animation server, which uses image processing to turn the facial image into an exaggerated portrait according to the animation plot and the audience member's exaggerated-design requirement, and matches it with corresponding costume and props, so that a personal embodiment of the audience member appears in the animation. The invention makes the animation program more interesting and increases the audience's enthusiasm for following and taking part in the animation.

Owner:HUNAN TALKWEB INFORMATION SYST

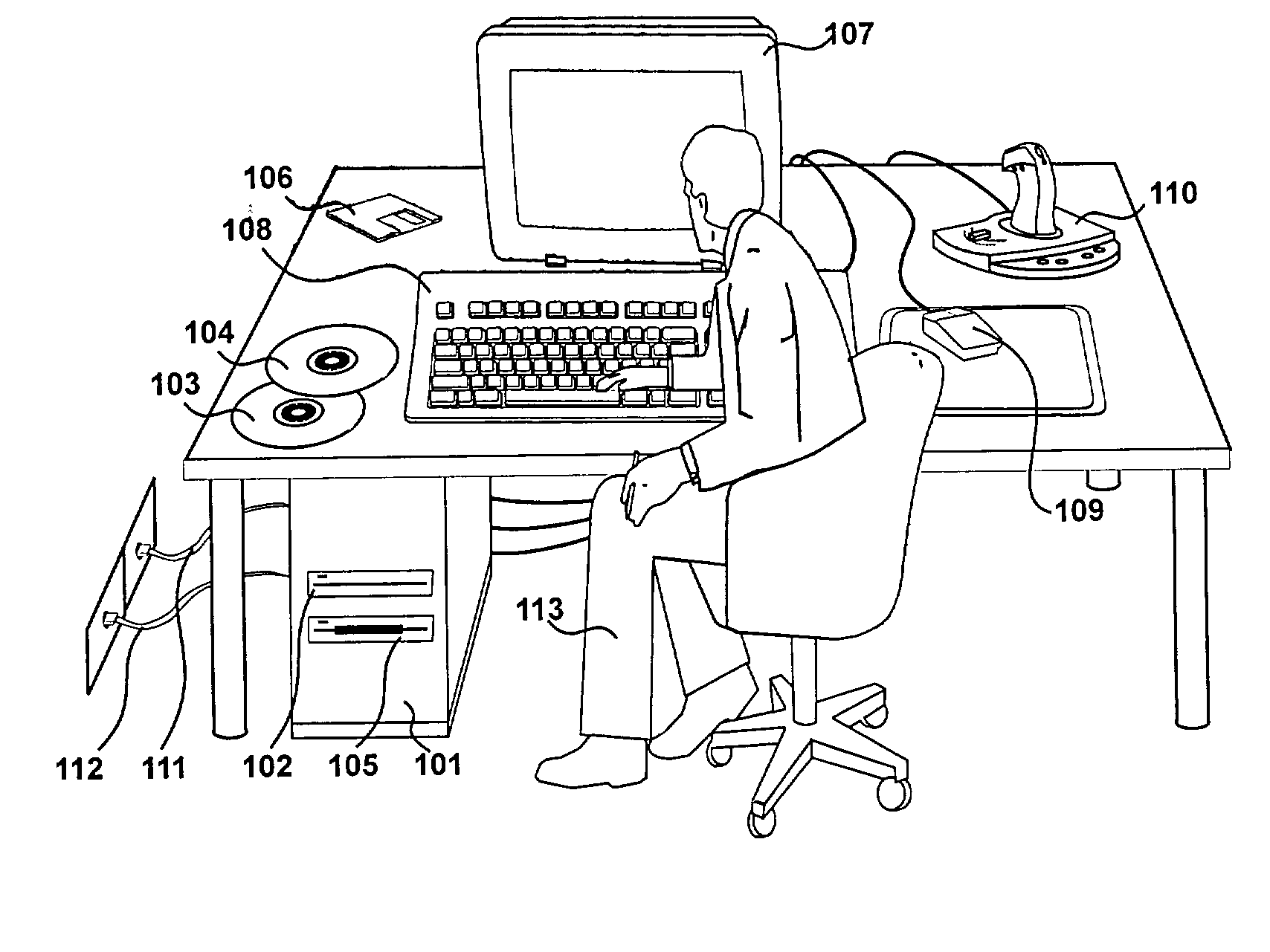

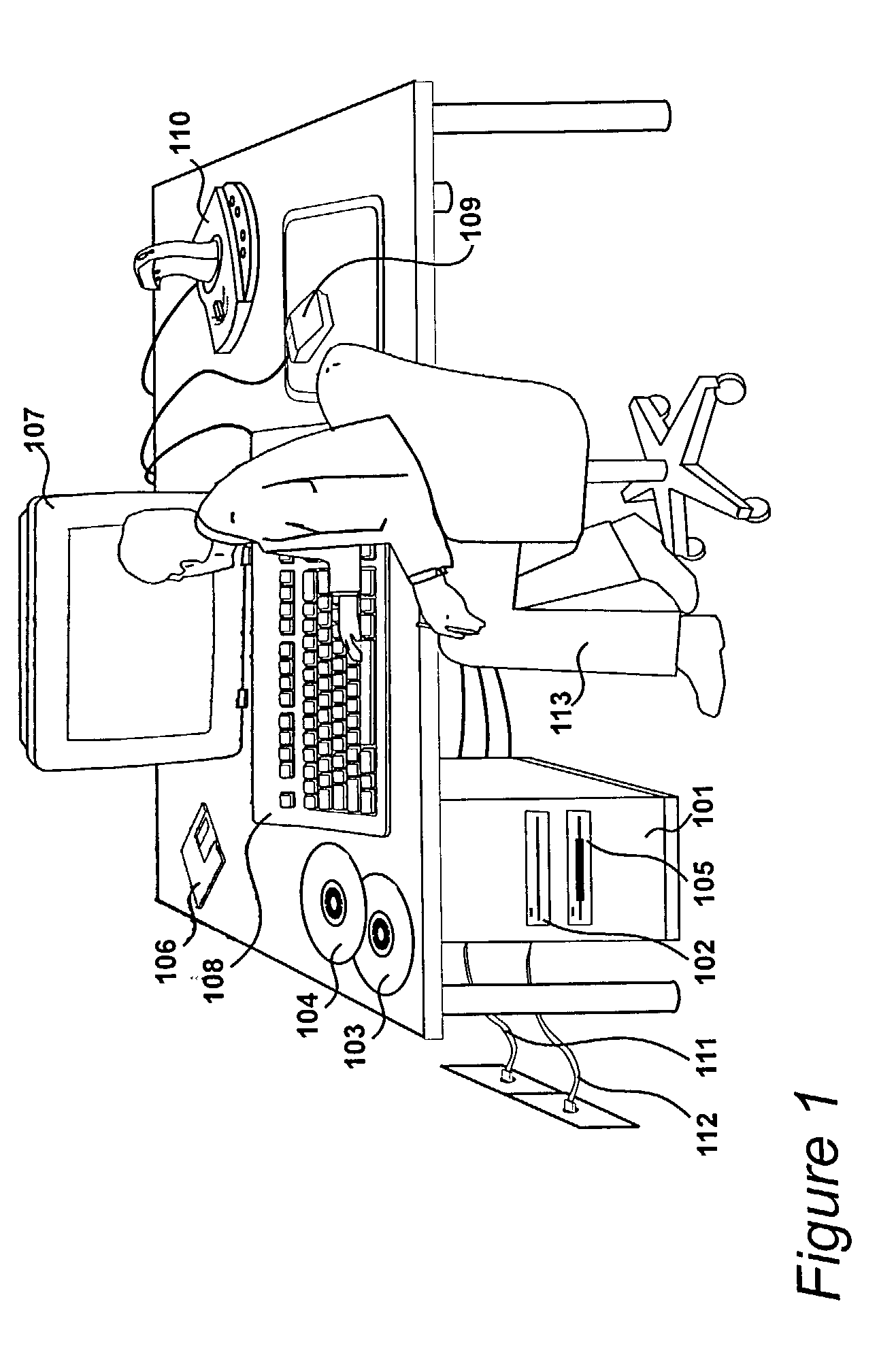

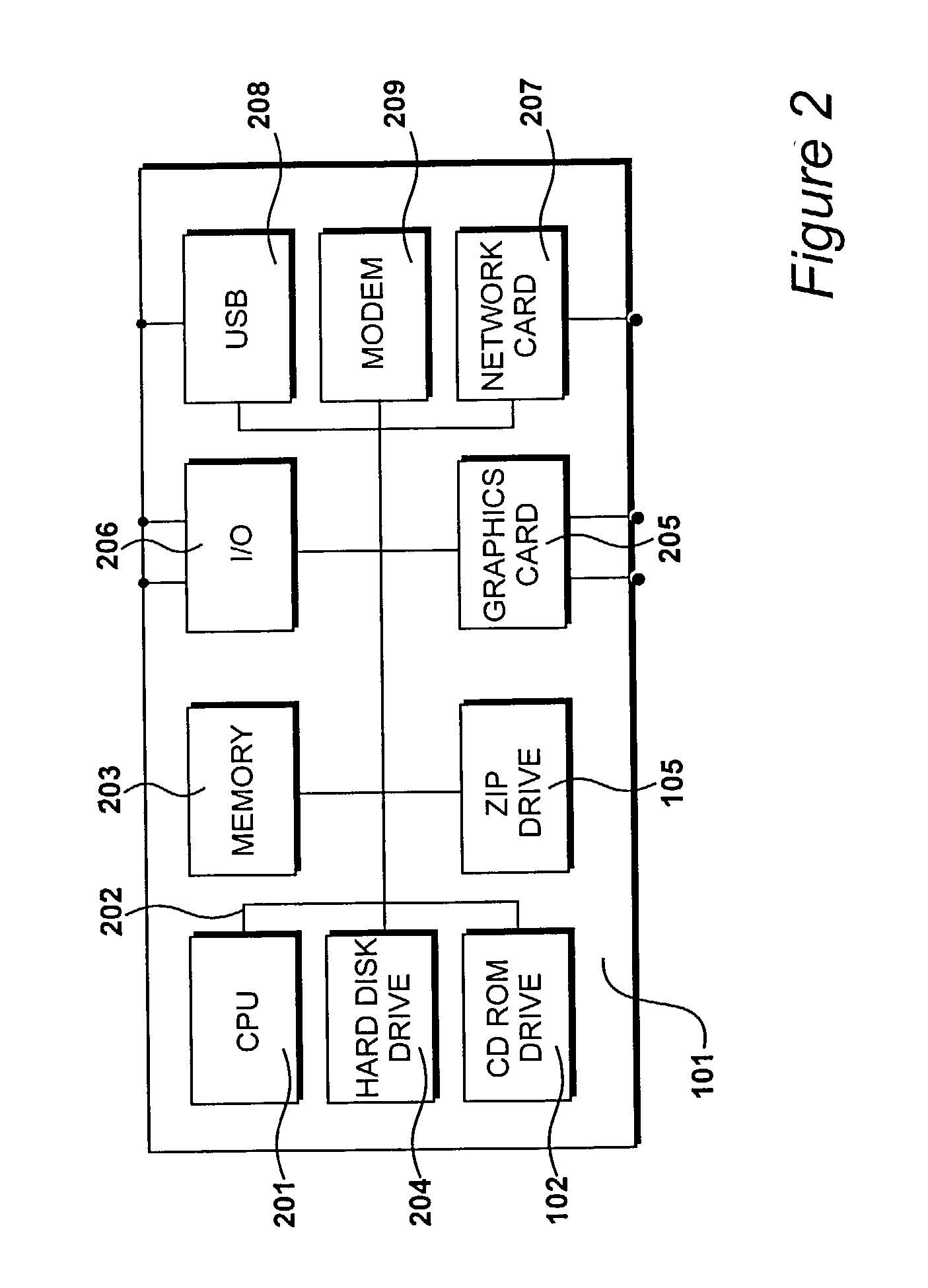

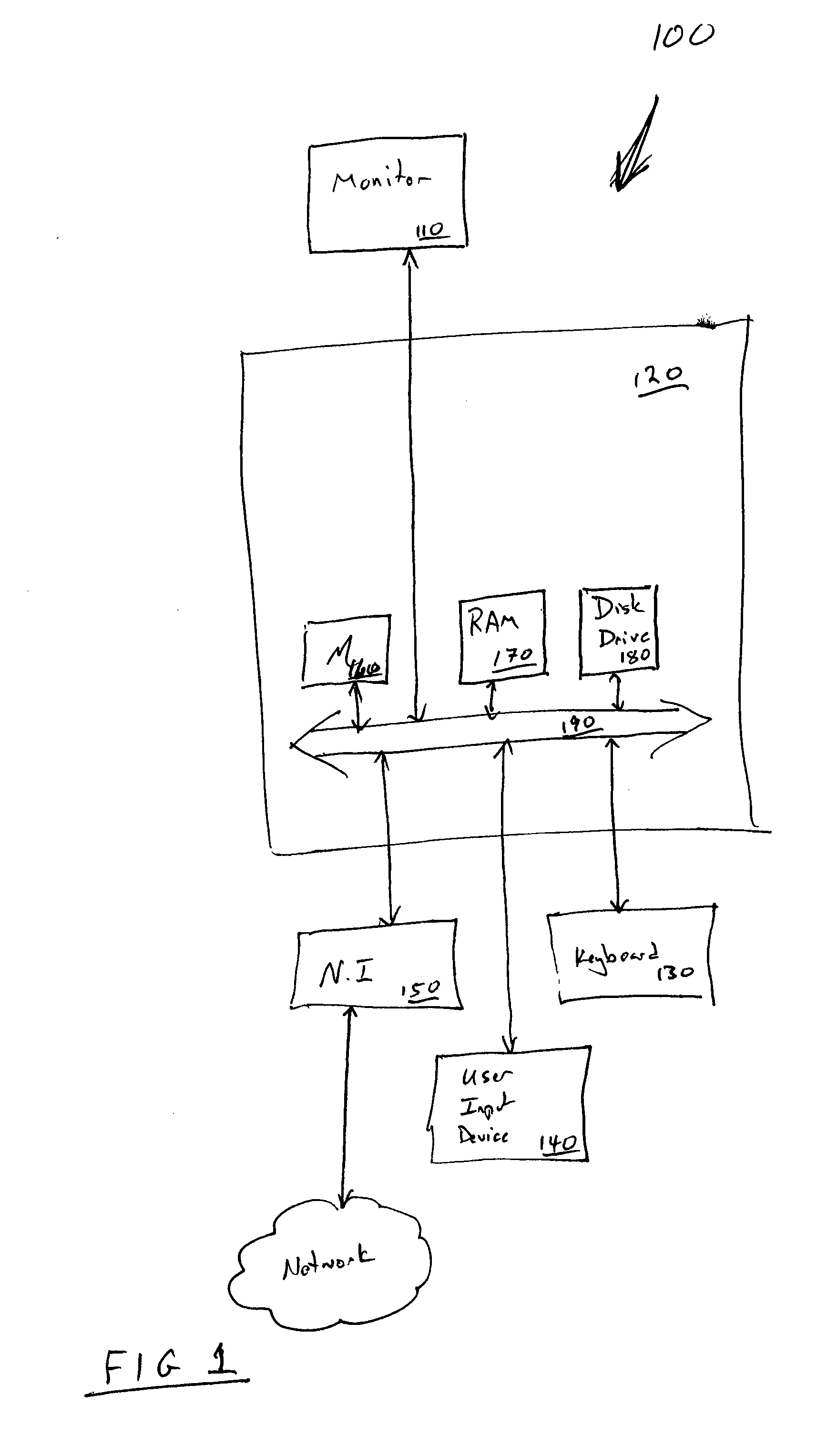

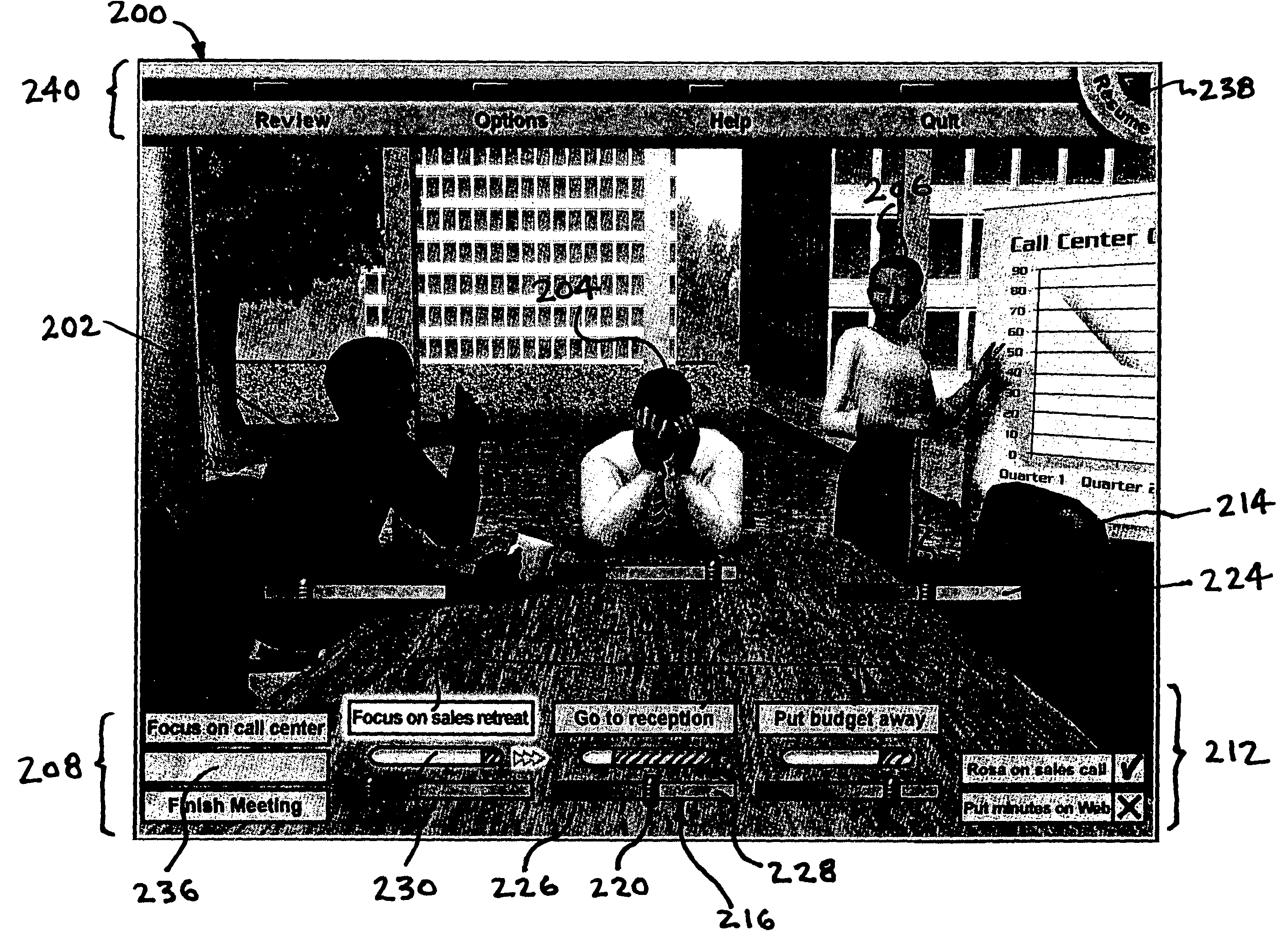

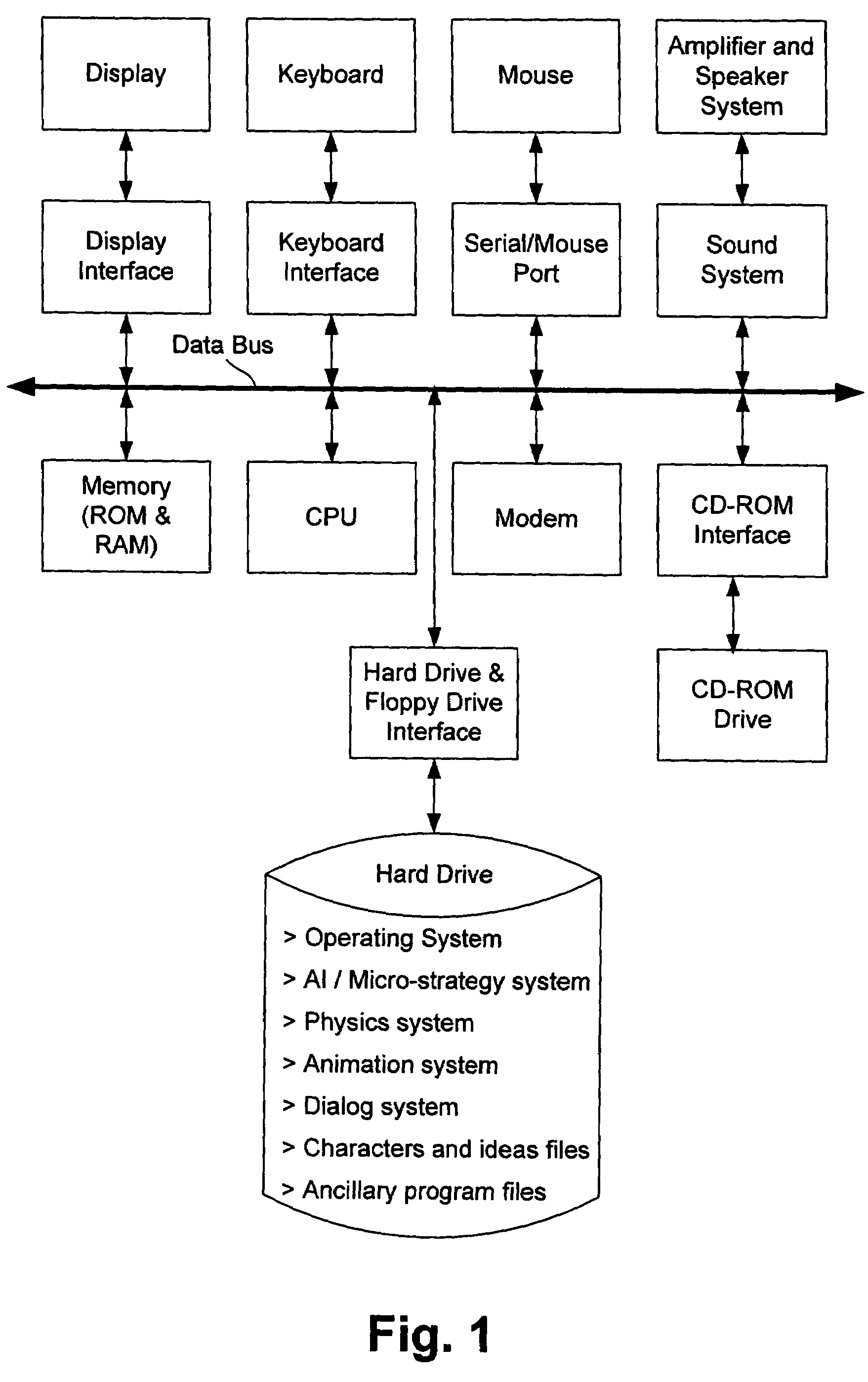

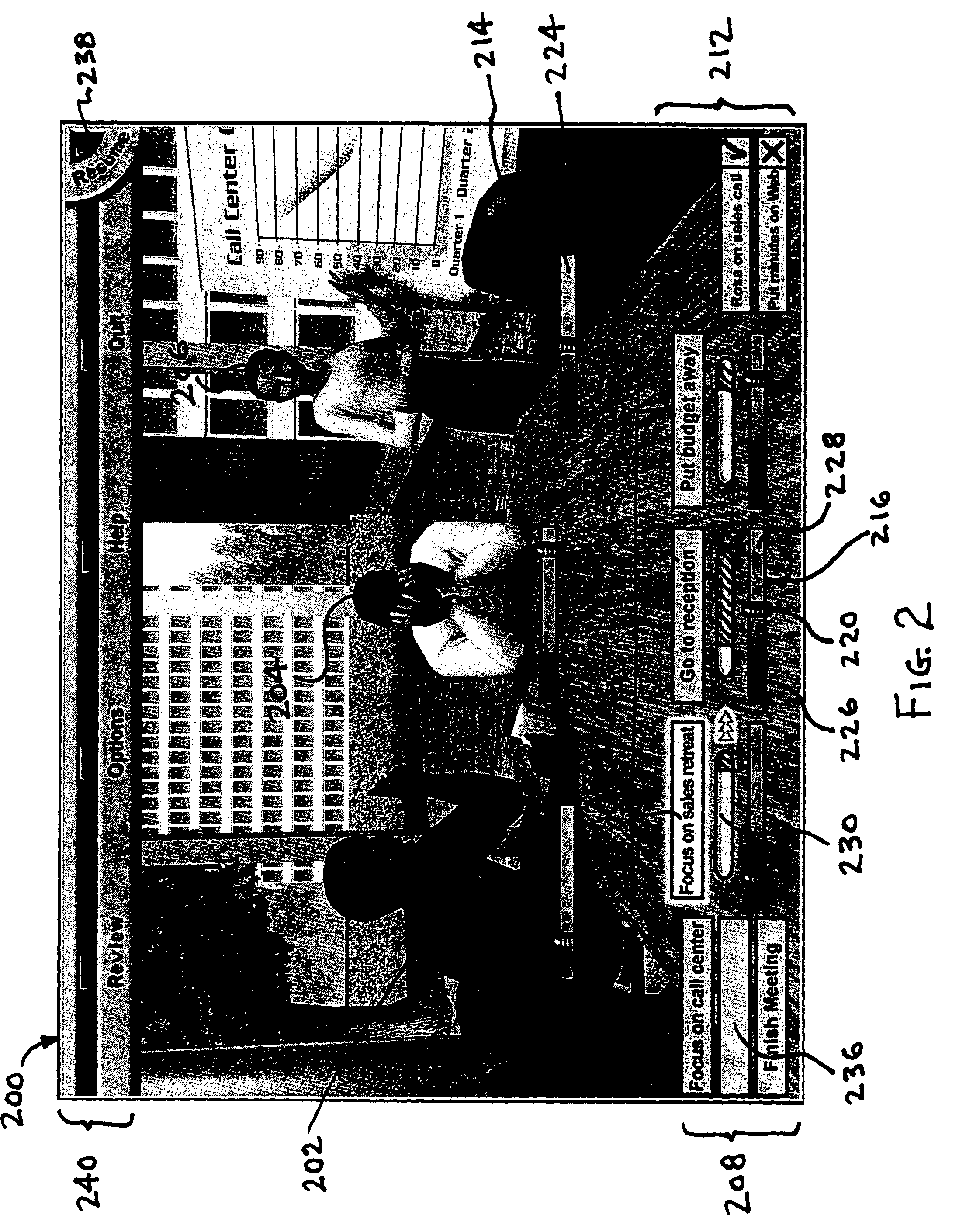

Computer-based learning system

Inactive | US7401295B2 | Special service provision for substation | Multiplex system selection arrangements | Continuation | Virtual business

The present invention enables a user to develop his/her leadership skills by participating in virtual business meetings with animated characters. The animated characters are managers and/or employees who work for the user in a fictitious corporation. Utilizing real-time animation and an advanced artificial intelligence and physics system, the software program enables the user to introduce, support, and oppose ideas and characters during virtual meetings. The logic of the program is built around a novel three-to-one leadership methodology. The user may participate in a number of meetings, each meeting configured to build upon the prior meeting. The user's progress is measured by metrics that graph various aspects of the user's and the animated characters' activity during each meeting based upon the three-to-one leadership methodology and provide a story-line continuation based upon the outcome of each meeting.

Owner:SIMULEARN

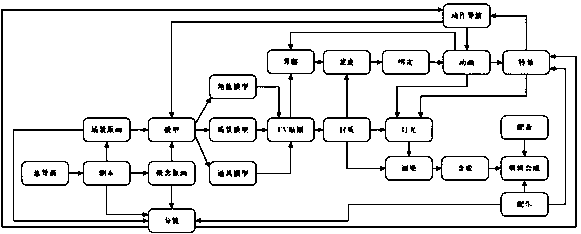

Manufacturing method of hot-line work technical training distributing line part 3D (three-dimensional) teaching video

Active | CN103544723A | Meet needs | Guaranteed uptime | Animation | 3D-image rendering | Electric power system | Software engineering

The invention relates to a manufacturing method of a hot-line work technical training distributing line part 3D (three-dimensional) teaching video. The method includes: building scene, equipment, character, tool and management document material models, and building a management system which comprises model material library management software, dynamic control engine software, video recording software, video teaching editing software, a 3D animation management subsystem, a window GUI (graphical user interface) and a GUI generating tool; using 3D animation technology to produce simulation animation courseware, using 3D animation production and physics engine technology to produce an electric power animation material video, and using the audio and video editing functions of the editing system to turn standard operations, safety technical training and the like of an electric system into the 3D animation teaching video. The method has the advantages that the resulting teaching video is visual and vivid, usable over the long term, effective for teaching and low in training cost.

Owner:STATE GRID CORP OF CHINA +1

Statistical dynamic collisions method and apparatus utilizing skin collision points to create a skin collision response

Active | US7307633B2 | Efficiently fine-tune | Accurately preview | Animation | Image generation | Statistical dynamics | Animation

A method for animating soft body characters has a preparation phase followed by an animation phase. The preparation phase determines the skin deformation of a character model at skin contact points in response to impulse collisions. The skin deformation from impulse collisions is compactly represented in terms of the set of basis poses. In the animation phase, the skin impulse responses are used to create a final posed character. Regardless of the type of collision or the shape of the colliding object, the collision animation phase uses the same set of skin impulse responses. A subset of a set of skin points is selected as a set of skin collision points. A final collision response is determined from the skin collision points. The final collision response is then extended to the complete set of skin points.

Owner:PIXAR ANIMATION

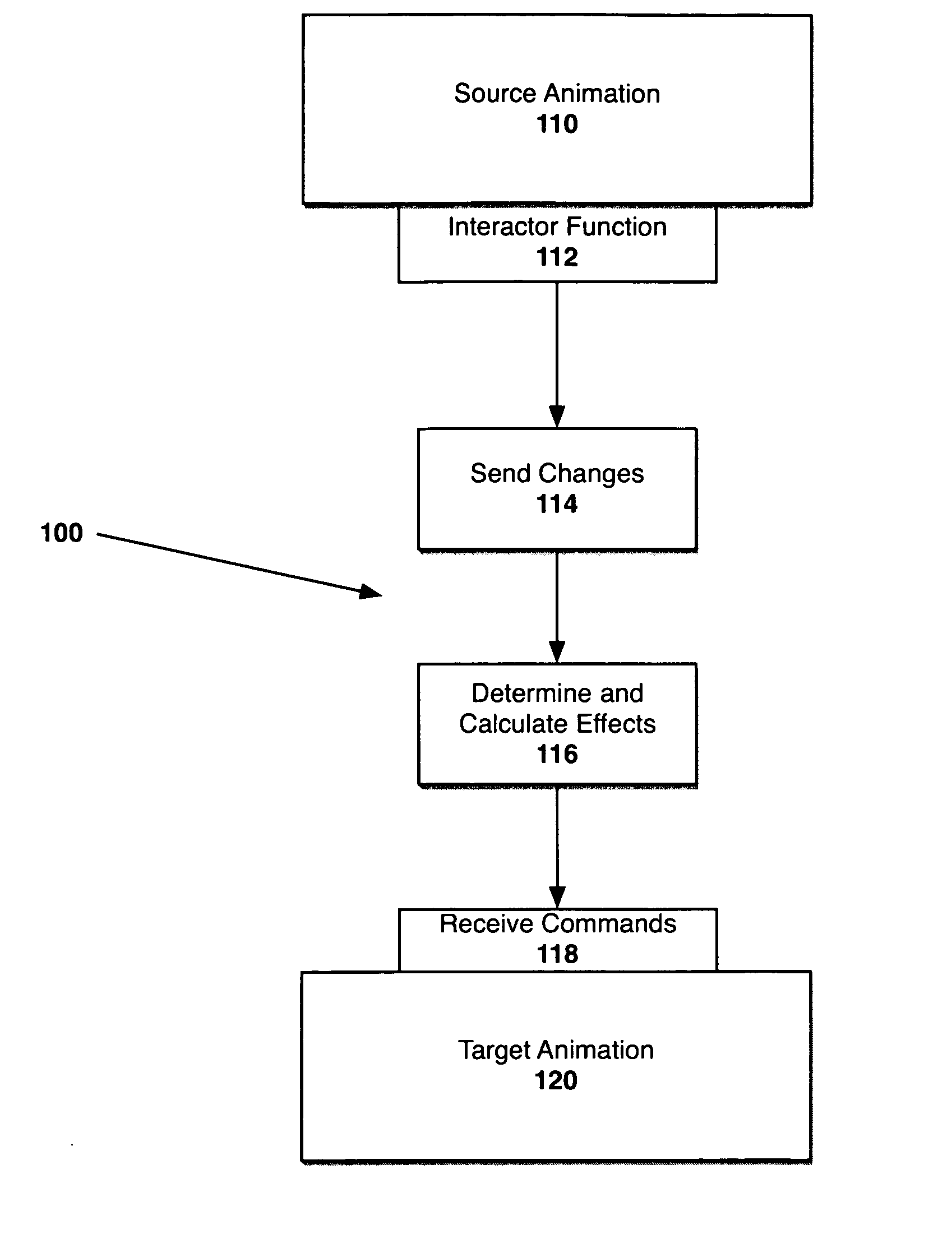

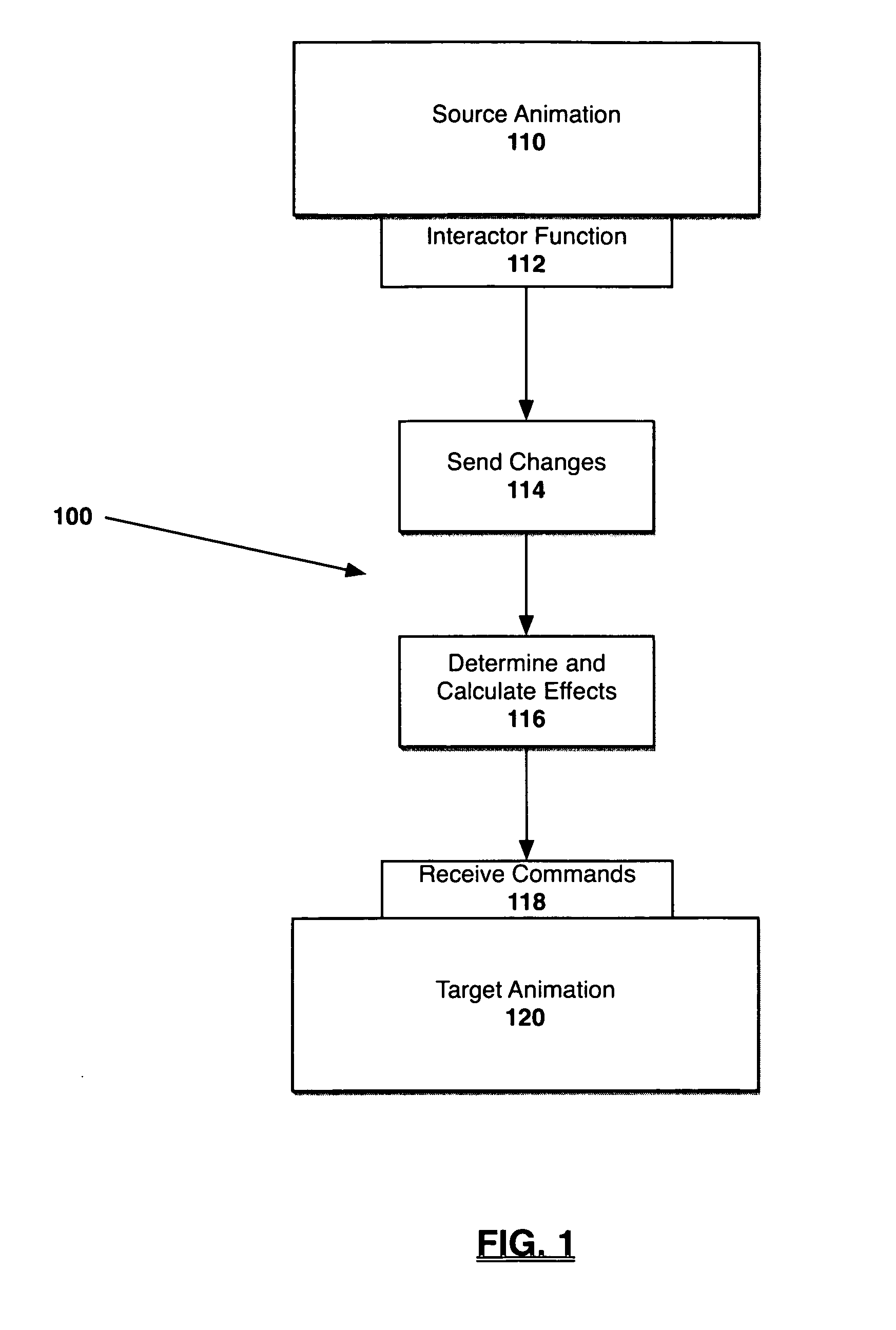

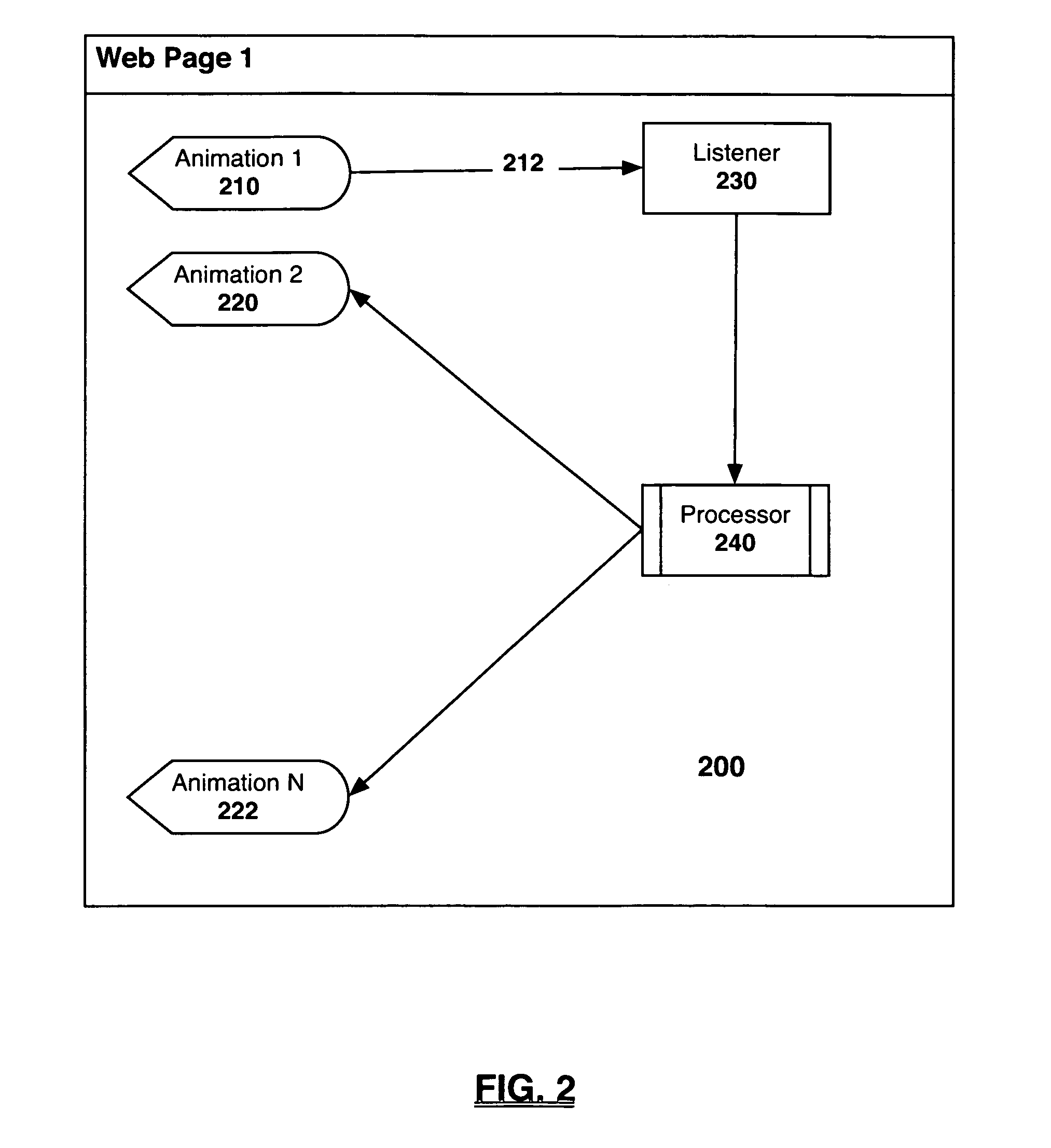

Synchronization and coordination of animations

A method of synchronizing and controlling a source animation with a target animation is provided. In another embodiment of the present invention a method of synchronizing and controlling a source animation with a plurality of target animations with all the animations on the same web page is provided. In yet another embodiment of the present invention a method of synchronizing and controlling a source animation in association with a parent web page with a target animation in association with a child web page where the source animation is in operative association with the target animation is provided. The synchronization and coordination of the target animation with the source animation accurately reflects the change or changes in the source animation in the target animation and thereby enhances the proficiency and experience of the user.

Owner:MAGNIFI GRP INC

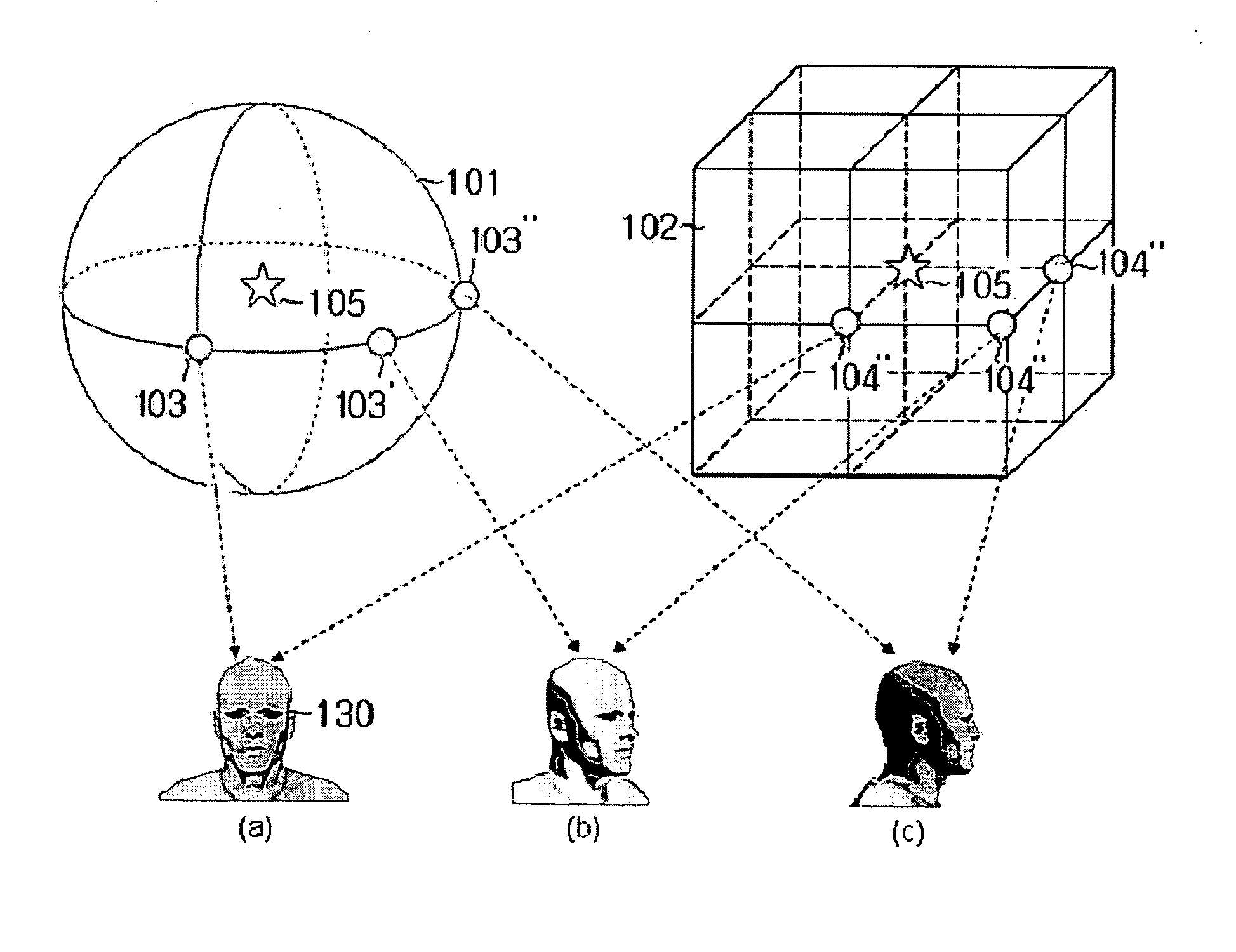

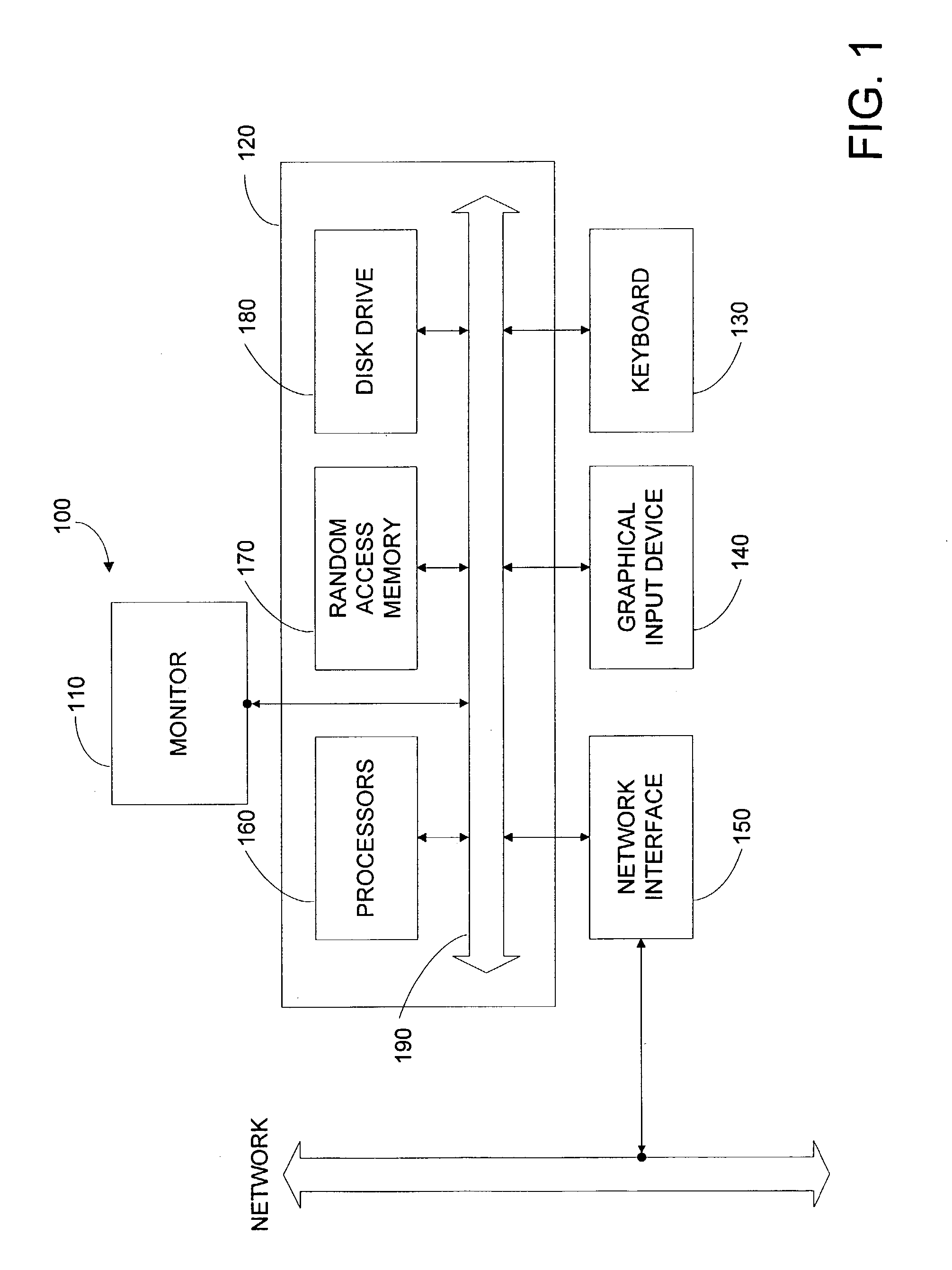

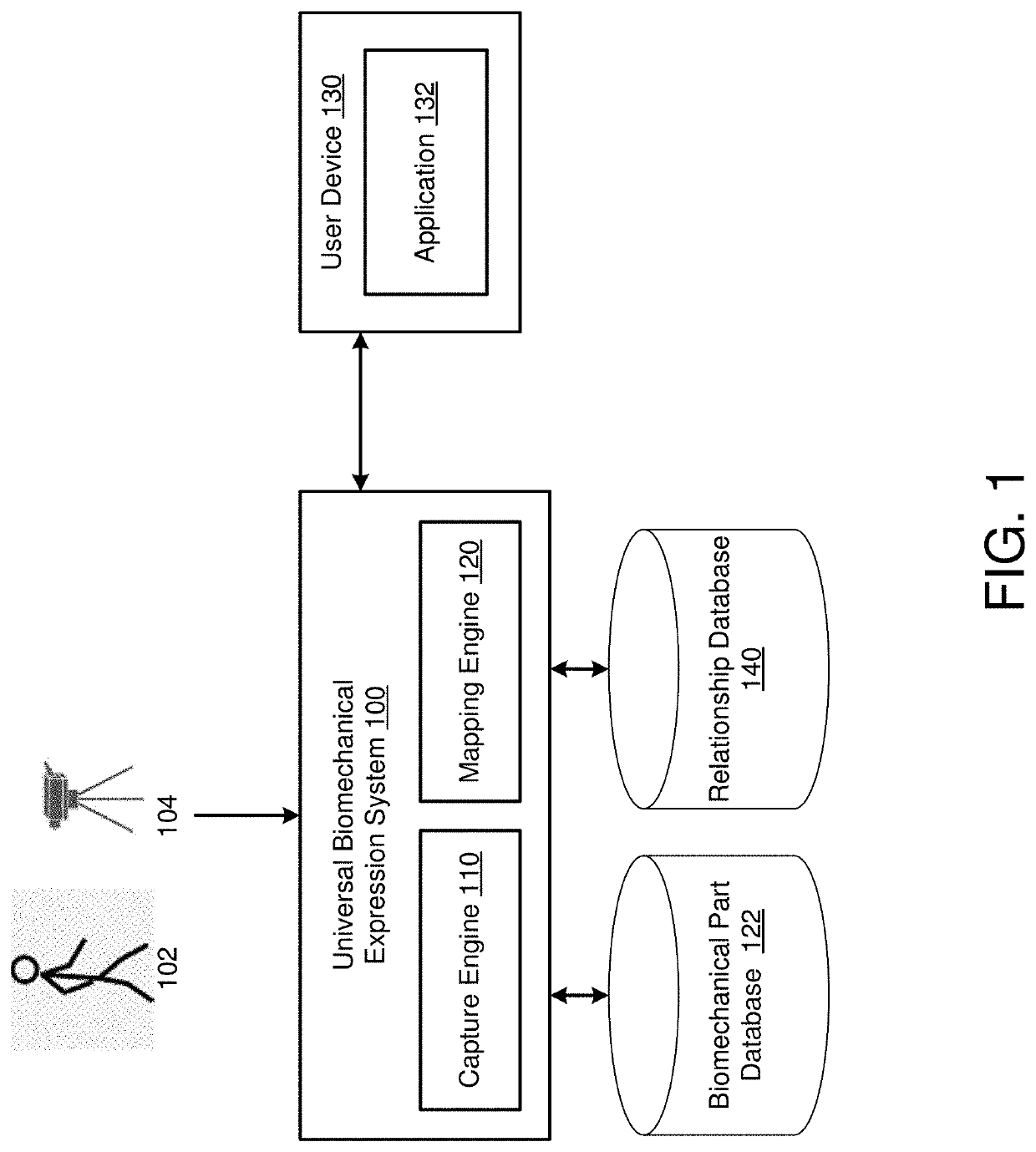

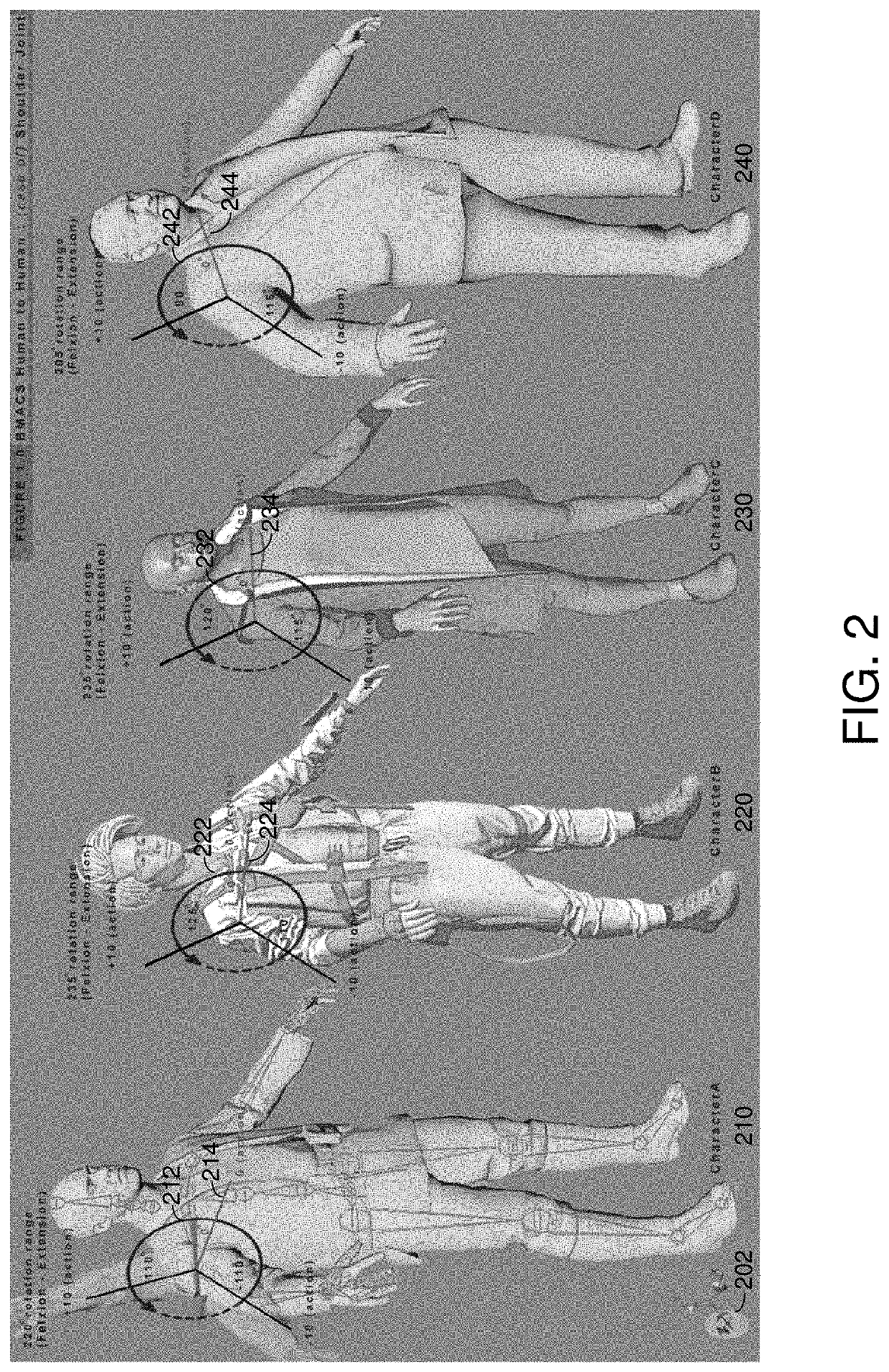

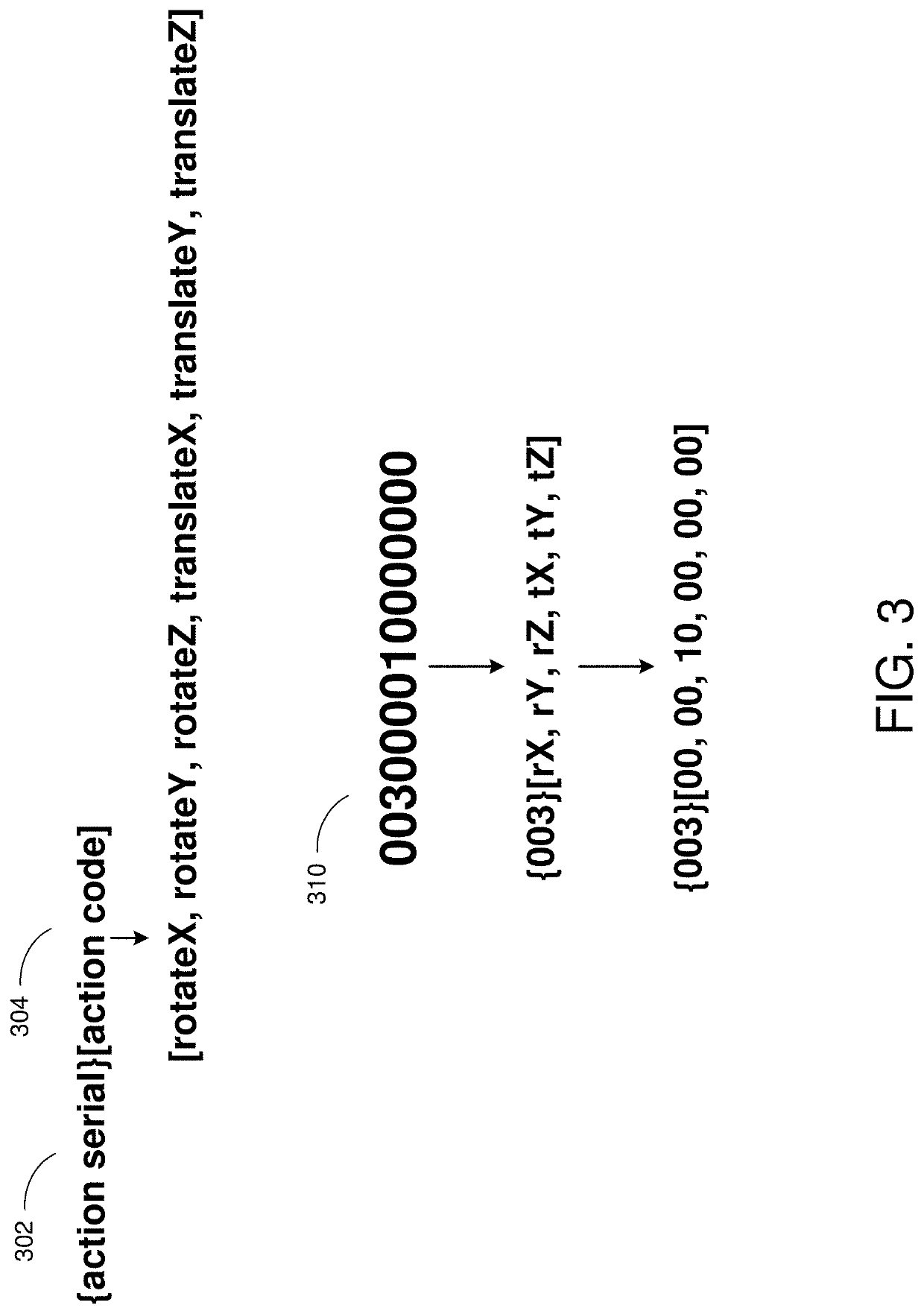

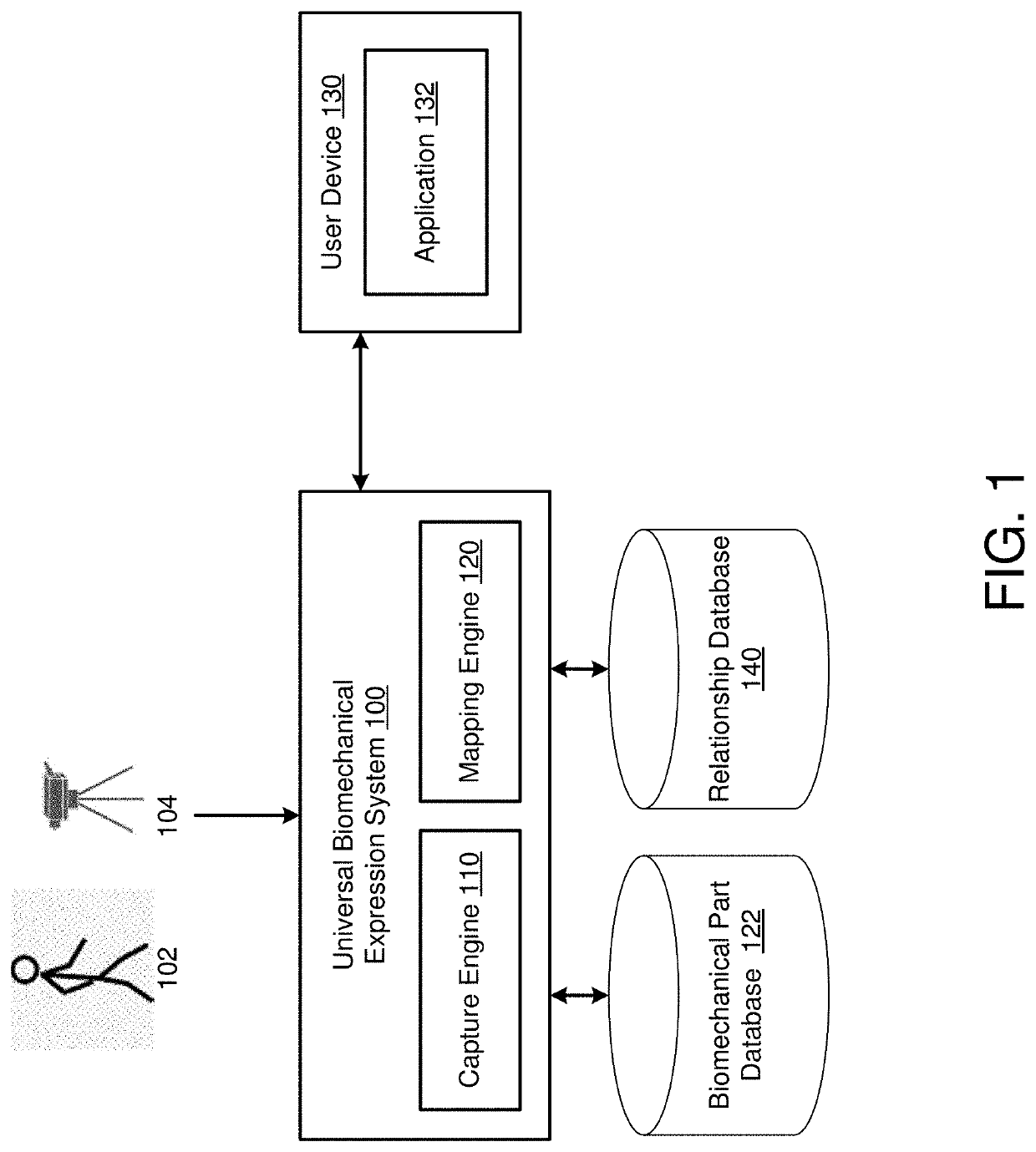

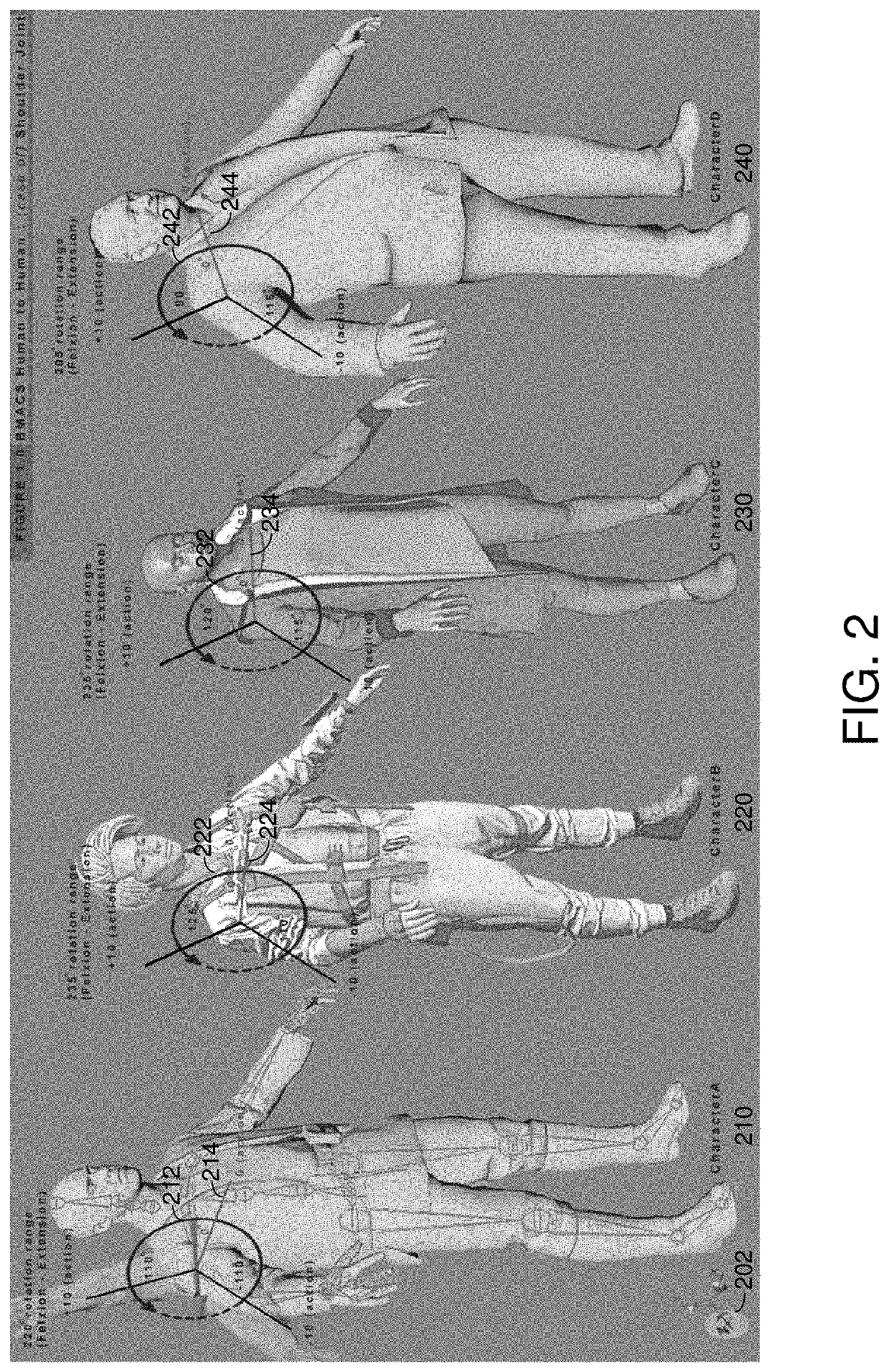

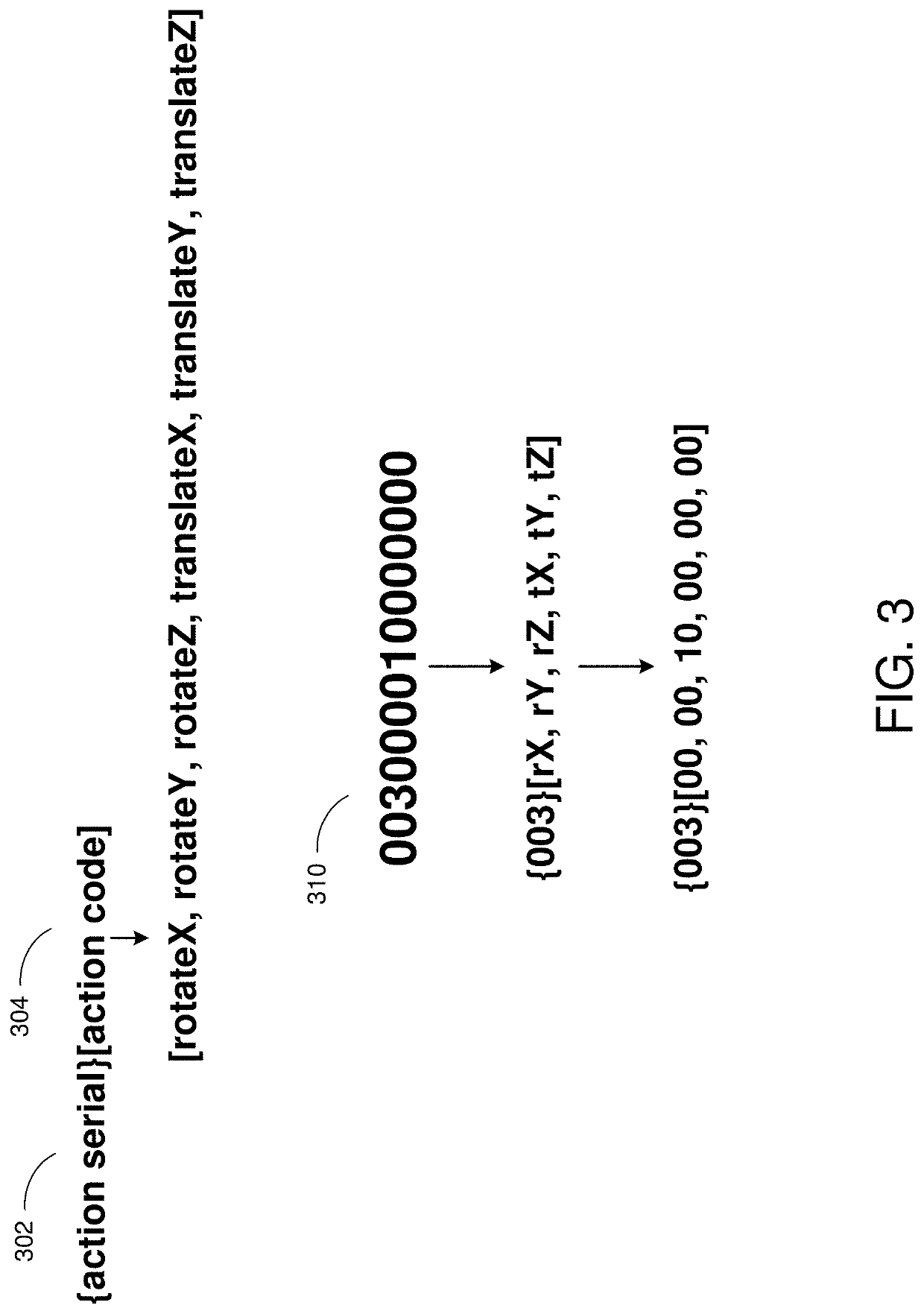

Universal body movement translation and character rendering system

Active | US20210217184A1 | Promote generation | Image analysis | Character and pattern recognition | Pattern recognition | Computer graphics (images)

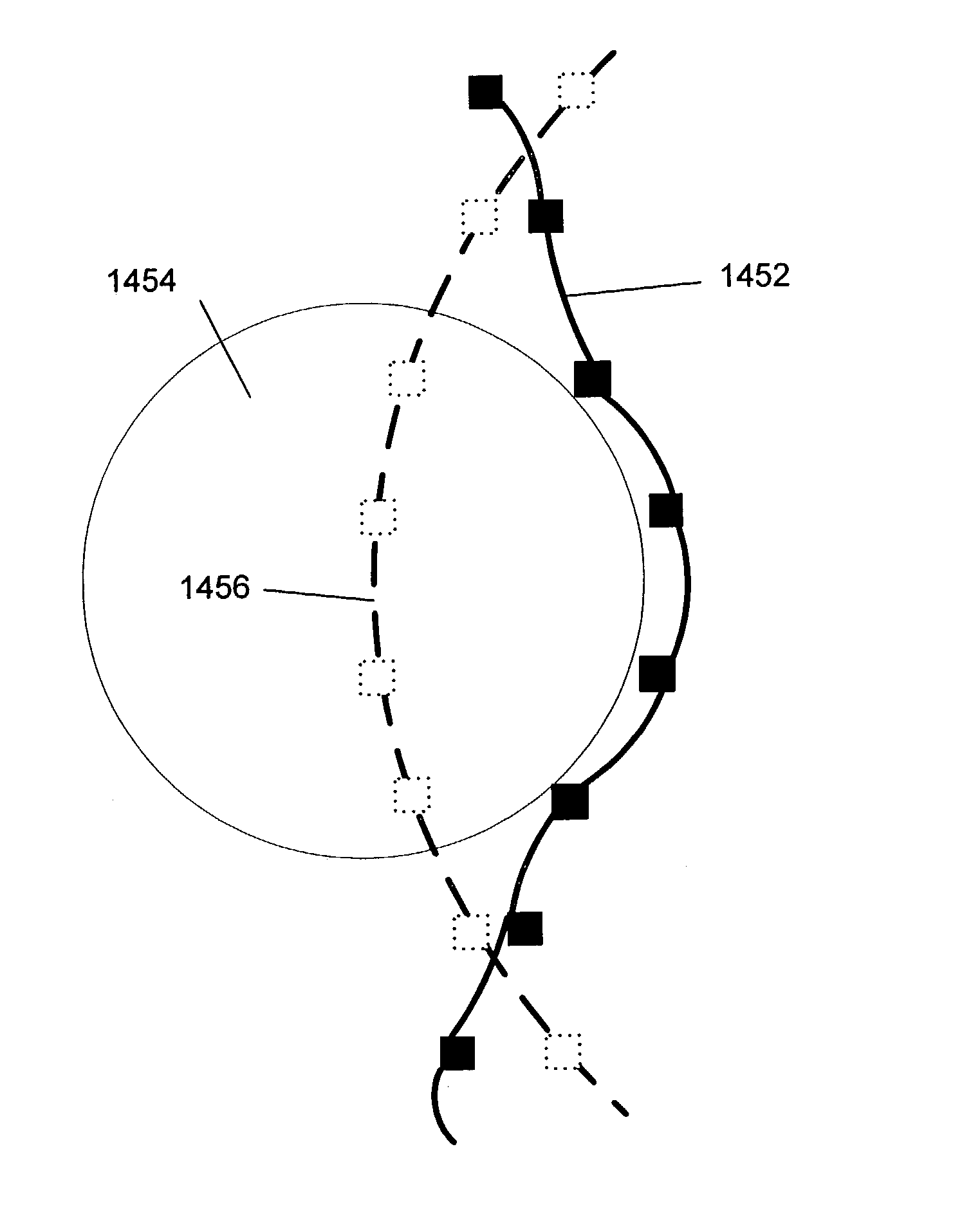

Systems and methods are disclosed for universal body movement translation and character rendering. Motion data from a source character can be translated and used to direct movement of a target character model in a way that respects the anatomical differences between the two characters. The movement of biomechanical parts in the source character can be converted into normalized values based on defined constraints associated with the source character, and those normalized values can be used to inform the animation of movement of biomechanical parts in a target character based on defined constraints associated with the target character.

Owner:ELECTRONICS ARTS INC

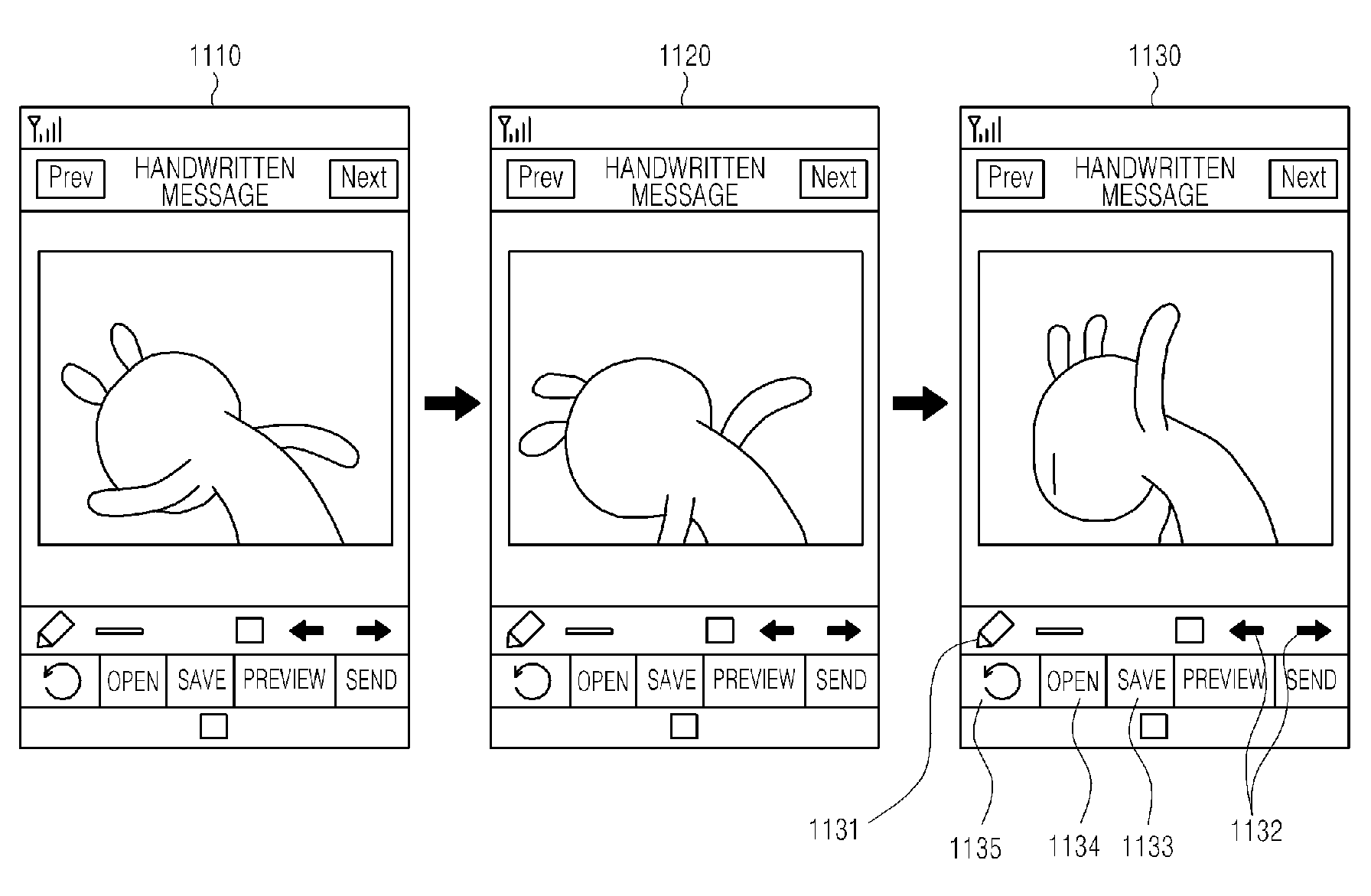

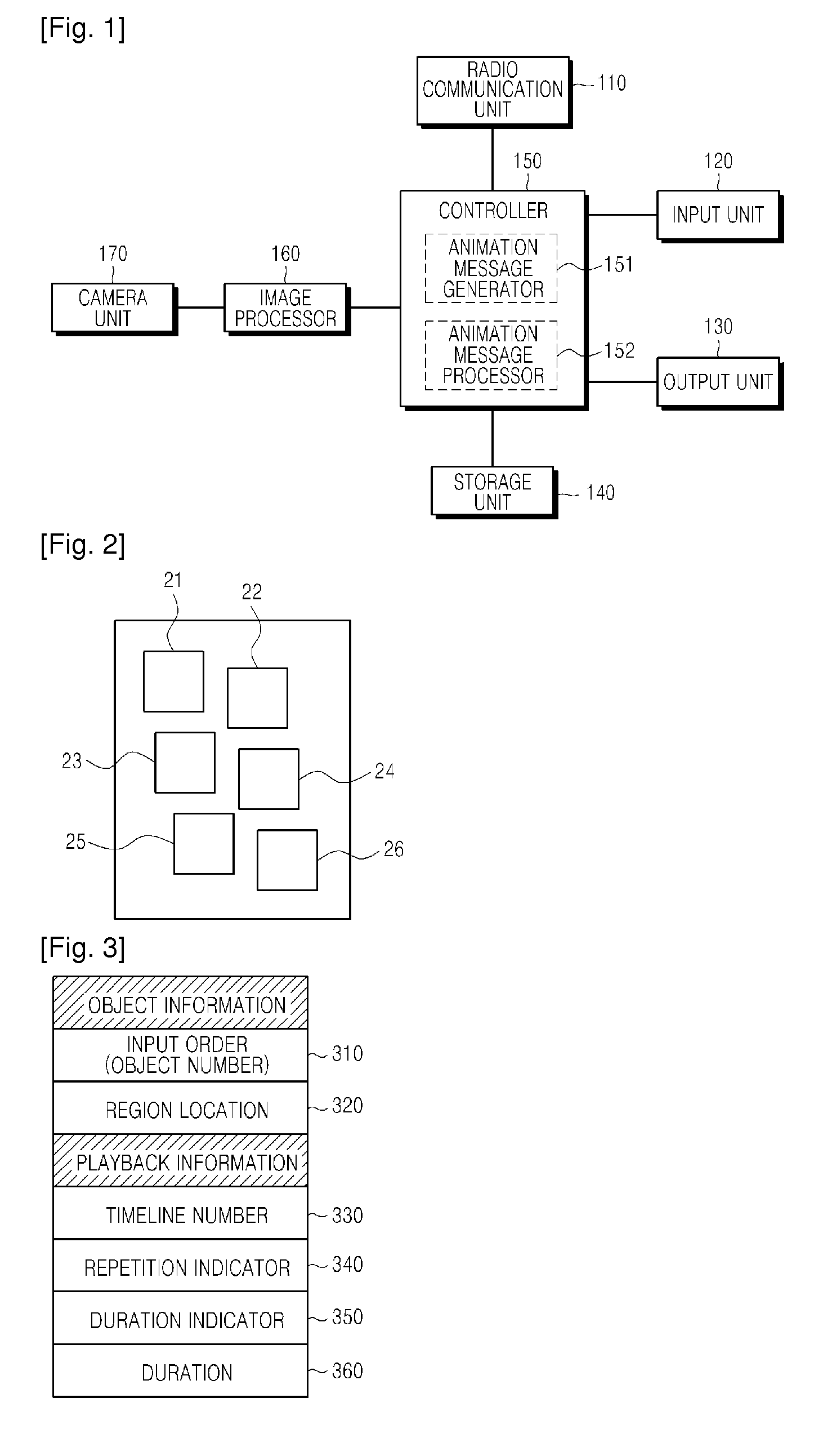

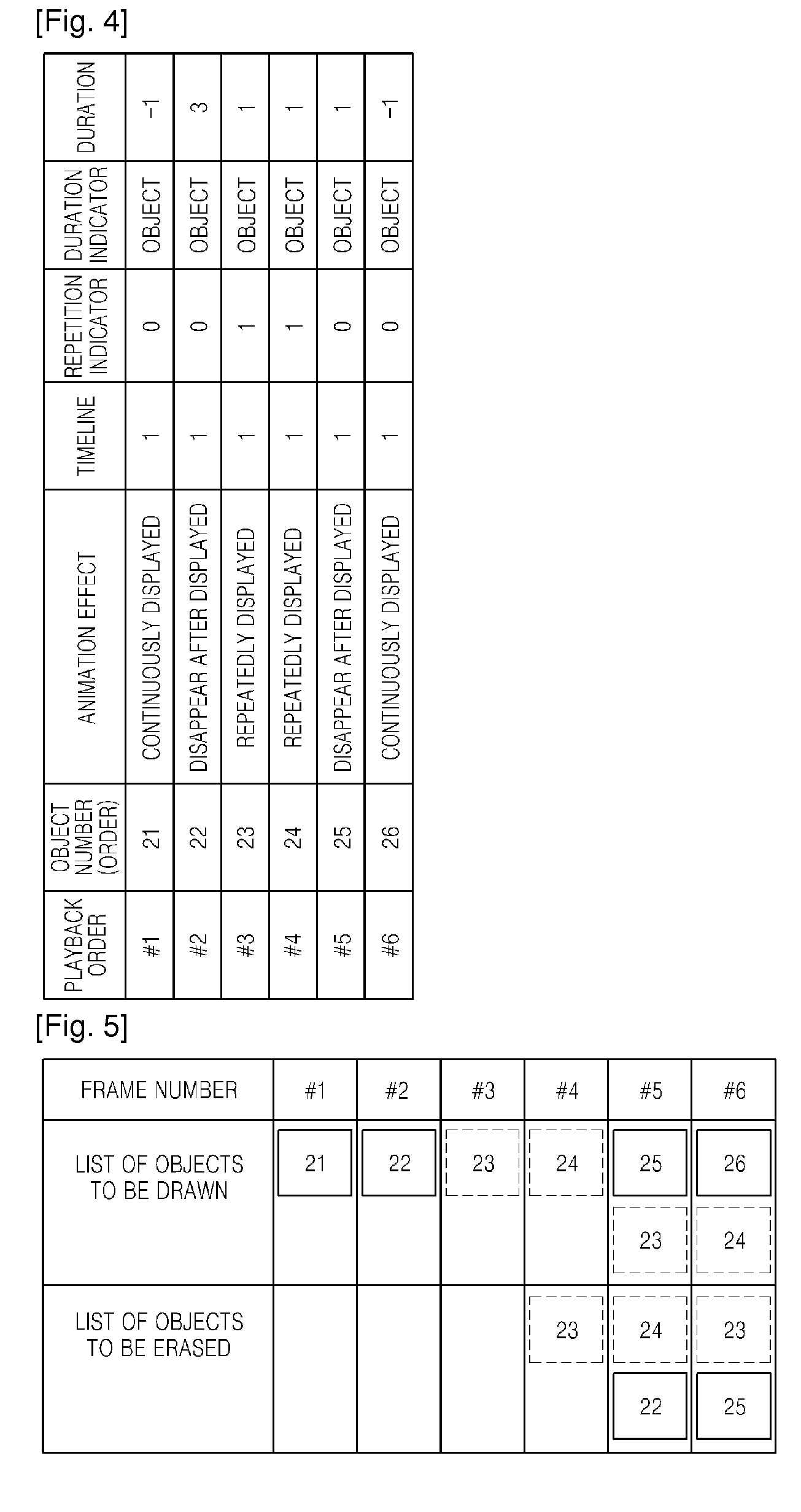
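
The normalization idea in the body movement translation abstract above can be sketched with per-joint angle limits standing in for the "defined constraints". That reading, the linear mapping and the names `normalize`, `denormalize` and `retarget` are all assumptions; the abstract does not say how the constraints are represented.

```python
from typing import Dict, Tuple

Limits = Dict[str, Tuple[float, float]]  # joint name -> (min angle, max angle)


def normalize(value: float, lo: float, hi: float) -> float:
    """Map a value in [lo, hi] to the range [0, 1]."""
    if hi == lo:
        raise ValueError("constraint range must be non-empty")
    return (value - lo) / (hi - lo)


def denormalize(n: float, lo: float, hi: float) -> float:
    """Map a normalized value in [0, 1] back into [lo, hi]."""
    return lo + n * (hi - lo)


def retarget(source_angles: Dict[str, float], source_limits: Limits,
             target_limits: Limits) -> Dict[str, float]:
    """Translate joint angles from a source character to a target character."""
    out: Dict[str, float] = {}
    for joint, angle in source_angles.items():
        if joint not in source_limits or joint not in target_limits:
            continue  # no shared constraint defined for this joint
        n = normalize(angle, *source_limits[joint])
        n = min(max(n, 0.0), 1.0)  # keep the pose within the target's range
        out[joint] = denormalize(n, *target_limits[joint])
    return out
```

A source elbow bent halfway through its range thus becomes a target elbow bent halfway through its own, possibly much smaller, range.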

Method and apparatus for generating and playing animated message

Methods and apparatus are provided for generating an animated message. Input objects in an image of the animated message are recognized, and input information, including information about an input time and input coordinates for the input objects, is extracted. Playback information, including information about a playback order of the input objects, is set. The image is displayed in a predetermined handwriting region of the animated message. An encoding region, which is allocated in a predetermined portion of the animated message and in which the input information and the playback information are stored, is divided into blocks having a predetermined size. Display information of the encoding region is generated by mapping the input information and the playback information to the blocks in the encoding region. An animated message including the predetermined handwriting region and the encoding region is generated. The generated animated message is transmitted.

Owner:SAMSUNG ELECTRONICS CO LTD

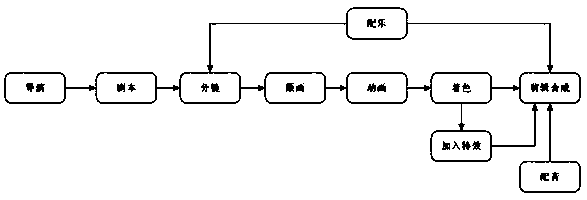

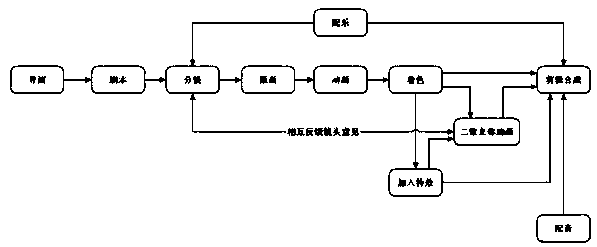

Two-dimensional stereoscopic animation making method

Active | CN103544725A | Shorten production time | Reduce manufacturing cost | Animation | Computational science | Computer graphics (images)

The invention discloses a two-dimensional stereoscopic animation making method. The method includes the following steps that A, two-dimensional animations are made; B, layering is carried out, namely, storyboard original paintings of the two-dimensional animations suitable for being converted to three-dimensional animations are layered according to the far and near relations between angles of view of people, and materials located on different animation layers are separated to different layers; C, moving is carried out, namely, the layers are moved to form layers after moving; D, merging is carried out, namely, the moved layers are merged on the same layer, and frames of the original two-dimensional animations and the layers formed after moving and merging serve as frames for presentation to left eyes and frames for presentation to right eyes respectively according to the principle that images, observed by people from different angles, of objects differ. In the method, only the step of plane merging of the three-dimensional animations is added on the basis of the original two-dimensional animations, the two-dimensional animations can be converted to the two-dimensional stereoscopic animations without the complex flow of three-dimensional animations, and therefore animation making time and cost are reduced, the brand new expressive force of the two-dimensional animations is increased, and the traditional two-dimensional animations can better adapt to stereoscopic play markets in the future.

Owner:马宁
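Steps B to D of the stereoscopic method above (layering, moving, merging) can be sketched roughly as follows. This is only an illustration under stated assumptions: layers are small 2D lists with `None` marking transparent pixels, movement is a purely horizontal shift, and the per-layer shift amounts are chosen by hand. None of these details come from the patent text, and all names are hypothetical.

```python
def shift_layer(layer, dx):
    """Shift a layer horizontally by dx pixels; vacated pixels become transparent."""
    width = len(layer[0])
    shifted = []
    for row in layer:
        new_row = [None] * width
        for x, px in enumerate(row):
            nx = x + dx
            if px is not None and 0 <= nx < width:
                new_row[nx] = px
        shifted.append(new_row)
    return shifted


def merge_layers(layers, background=0):
    """Composite layers ordered far to near; nearer opaque pixels win."""
    height, width = len(layers[0]), len(layers[0][0])
    frame = [[background] * width for _ in range(height)]
    for layer in layers:
        for y in range(height):
            for x in range(width):
                if layer[y][x] is not None:
                    frame[y][x] = layer[y][x]
    return frame


def stereo_pair(layers, shifts):
    """Return (left, right): the original frame and the moved-and-merged frame."""
    left = merge_layers(layers)
    moved = [shift_layer(layer, dx) for layer, dx in zip(layers, shifts)]
    right = merge_layers(moved)
    return left, right


# Example: a far background layer stays put, a near object shifts by one pixel.
far = [[1, 1, 1, 1]]
near = [[None, 9, None, None]]
left, right = stereo_pair([far, near], shifts=[0, 1])
```

Giving nearer layers a larger shift than farther ones is what produces the difference between the two eye views in this sketch.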

Universal body movement translation and character rendering system

Active | US10902618B2 | Promote generation | Image analysis | Character and pattern recognition | Pattern recognition | Computer graphics (images)

Systems and methods are disclosed for universal body movement translation and character rendering. Motion data from a source character can be translated and used to direct movement of a target character model in a way that respects the anatomical differences between the two characters. The movement of biomechanical parts in the source character can be converted into normalized values based on defined constraints associated with the source character, and those normalized values can be used to inform the animation of movement of biomechanical parts in a target character based on defined constraints associated with the target character.

Owner:ELECTRONIC ARTS INC

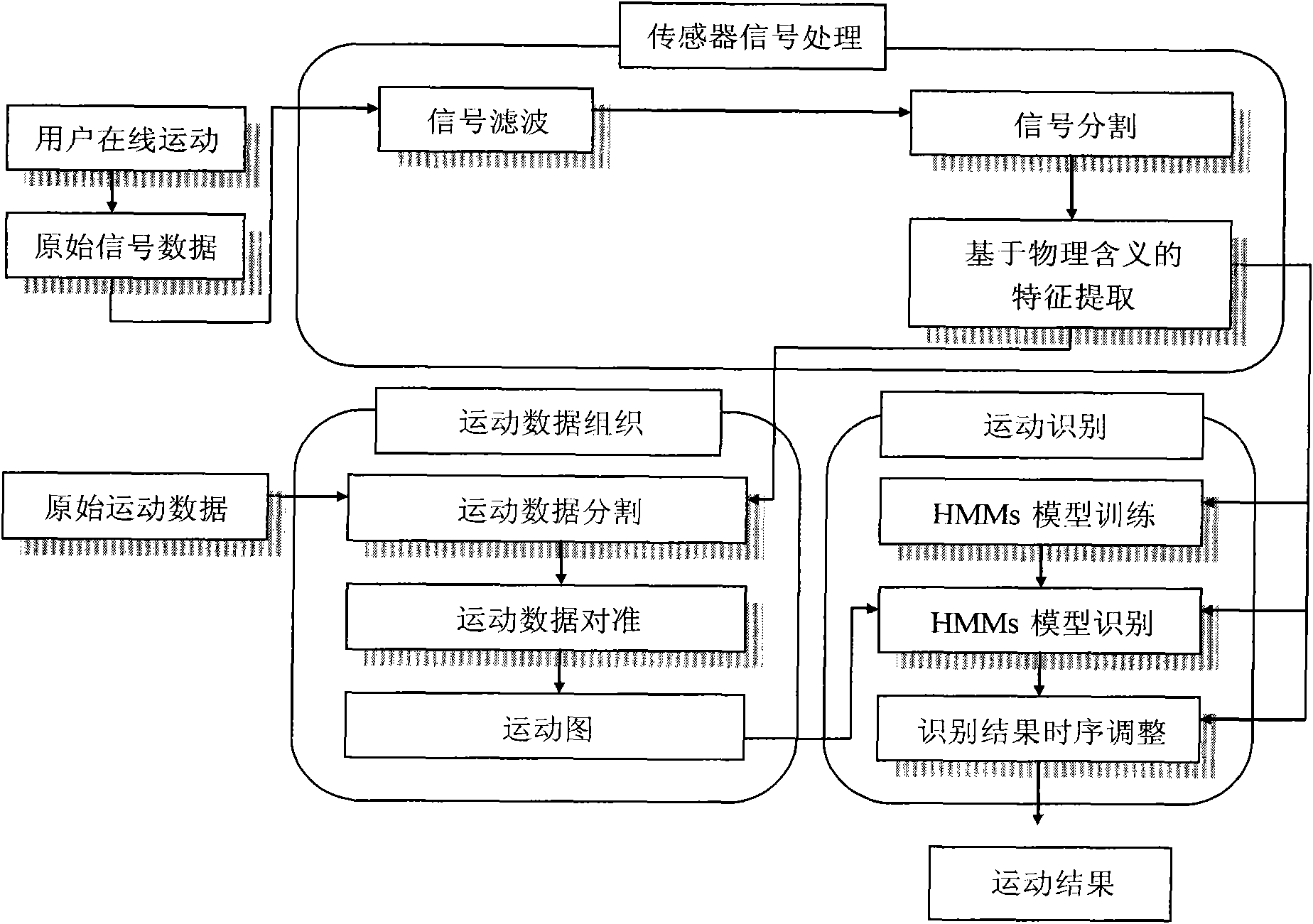

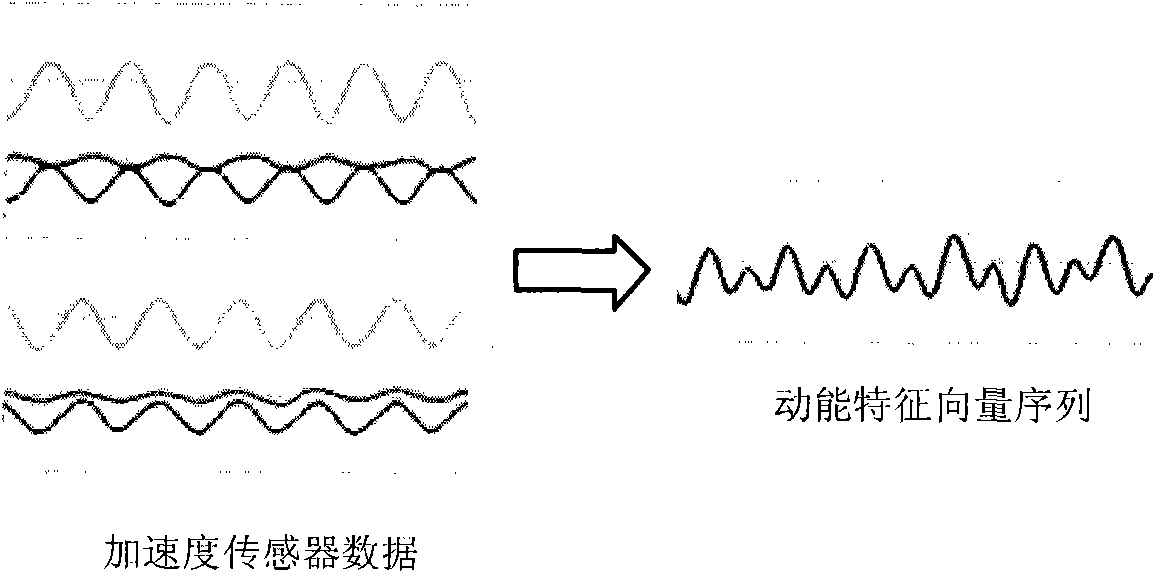

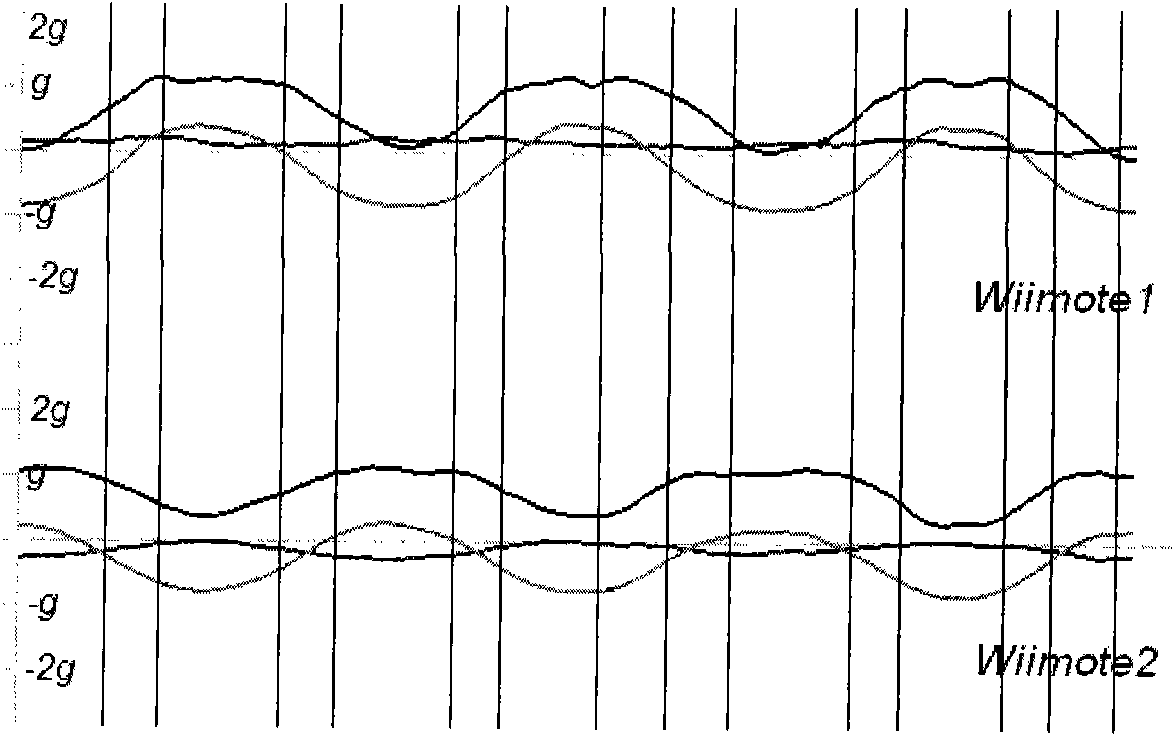
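The normalization idea in the body movement translation abstract above can be sketched in a few lines. This is a simplified illustration, not the patented system: it assumes each biomechanical part is a single joint angle, that the "defined constraints" are a minimum and maximum angle per joint, and that normalization is linear. `JointRange` and `retarget` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class JointRange:
    """Hypothetical constraint: allowed angle range of one joint, in degrees."""
    lo: float
    hi: float


def normalize(angle, rng):
    """Map an angle to [0, 1] within its range, clamping out-of-range input."""
    clamped = min(max(angle, rng.lo), rng.hi)
    return (clamped - rng.lo) / (rng.hi - rng.lo)


def denormalize(value, rng):
    """Map a normalized value back to an angle within the given range."""
    return rng.lo + value * (rng.hi - rng.lo)


def retarget(source_pose, source_limits, target_limits):
    """Translate a source pose to a target character via normalized values."""
    return {
        joint: denormalize(normalize(angle, source_limits[joint]),
                           target_limits[joint])
        for joint, angle in source_pose.items()
        if joint in target_limits
    }


# Example: the source elbow bends up to 150 degrees, the target only up to 90.
source_limits = {"elbow": JointRange(0.0, 150.0)}
target_limits = {"elbow": JointRange(0.0, 90.0)}
pose = retarget({"elbow": 75.0}, source_limits, target_limits)
```

Because the target receives a fraction of its own range rather than a raw angle, a half-bent source elbow maps to a half-bent target elbow even though the two characters have different anatomical limits.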

Motion control and animation generation method based on acceleration transducer

Inactive | CN101976451A | Improve experience | Improve motion recognition rate | Animation | Computer animation | Whole body

The invention relates to a motion control and animation generation method based on an acceleration transducer. It belongs to the technical field of computer virtual reality, and in particular to motion control and animation generation in computer animation. The method comprises the following steps: first, a motion to be recognized is analyzed to obtain key joint point information during the motion; then, features with physical meaning are extracted from the transducer data and the motion data at the key joint points, and motion classification and motion recognition are carried out. Signals are segmented and a motion recognition classifier is created according to the feature sequence of the acceleration transducer signal data, and features based on kinetic energy are used as the central features for matching against bone motion data. To improve user experience, the time sequence of the recognized motion result is adjusted to conform to the timing of the user's online performance. The invention realizes real-time interactive control of a virtual human's whole-body motion using only a few transducers.

Owner:BEIHANG UNIV

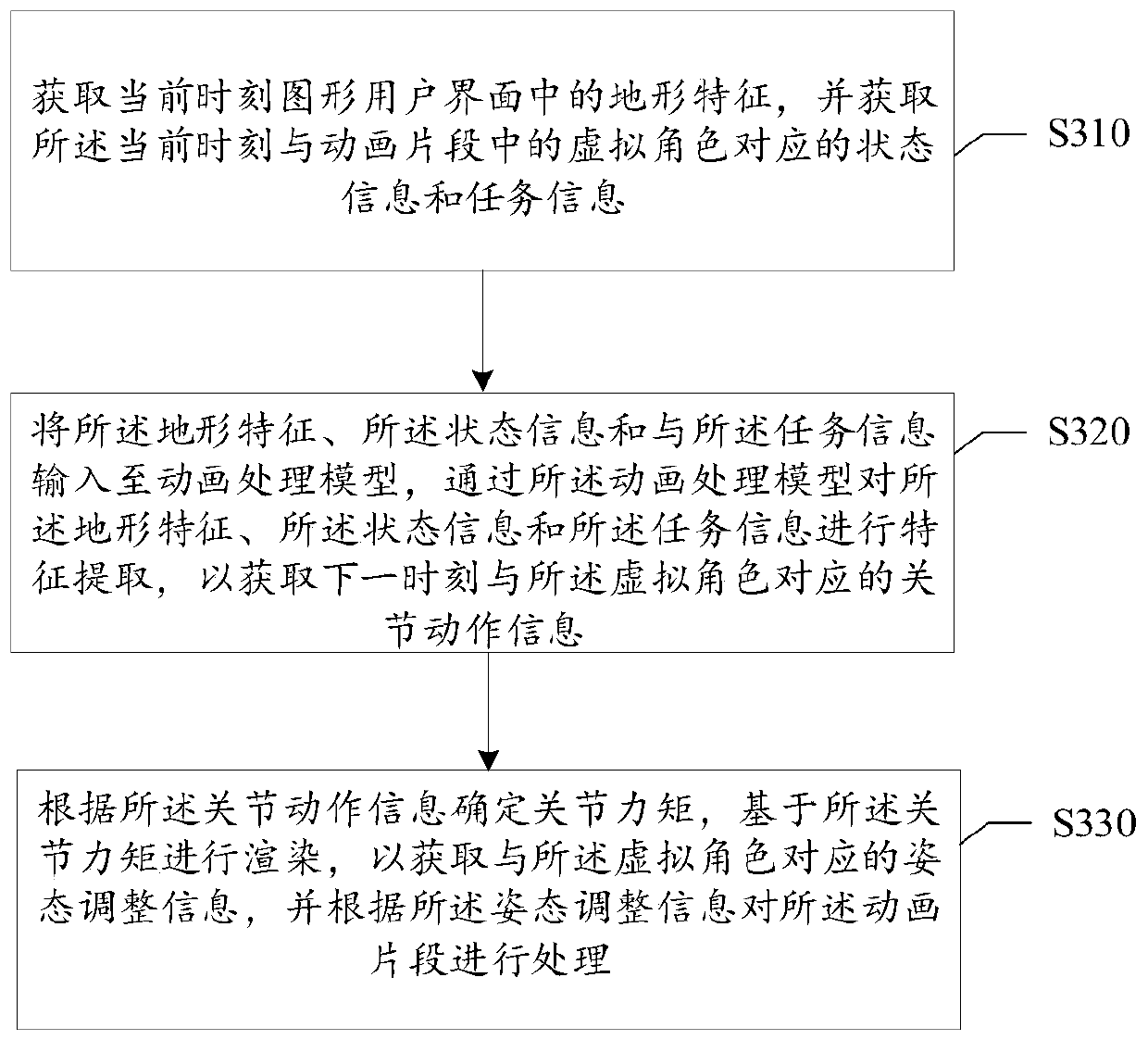
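The signal segmentation and energy-based features mentioned in the acceleration transducer abstract above can be approximated with a small sketch. The abstract does not give formulas, so everything here is an assumption: squared acceleration magnitude stands in for a kinetic-energy feature, windows do not overlap, gravity is assumed to be already removed, and a fixed threshold separates motion from rest. All names are hypothetical.

```python
def energy(sample):
    """Energy proxy for one (ax, ay, az) sample: squared magnitude (assumption)."""
    ax, ay, az = sample
    return ax * ax + ay * ay + az * az


def window_energy(samples, size):
    """Mean energy over consecutive non-overlapping windows of `size` samples."""
    return [
        sum(energy(s) for s in samples[i:i + size]) / size
        for i in range(0, len(samples) - size + 1, size)
    ]


def segment(energies, threshold):
    """Return (start, end) window index pairs, end exclusive, above threshold."""
    segments, start = [], None
    for i, e in enumerate(energies):
        if e > threshold and start is None:
            start = i                      # motion begins
        elif e <= threshold and start is not None:
            segments.append((start, i))    # motion ends
            start = None
    if start is not None:                  # motion runs to the end of the signal
        segments.append((start, len(energies)))
    return segments


# Example: rest, a burst of motion, then rest again.
samples = [(0, 0, 0)] * 4 + [(1, 2, 2)] * 4 + [(0, 0, 0)] * 2
energies = window_energy(samples, size=2)
motions = segment(energies, threshold=1.0)
```

Each detected segment could then be passed to a classifier; the per-window energies are one candidate feature sequence for that step.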

Animation processing method and device, computer storage medium and electronic equipment

Active | CN111292401A | Improve realism | Improve adaptability | Image enhancement | Image analysis | Graphical user interface | Feature extraction

The invention provides an animation processing method and device, and relates to the field of artificial intelligence. The method comprises the steps of acquiring topographic features in a graphical user interface at the current moment, and state information and task information corresponding to virtual characters in animation segments at the current moment; inputting the topographic features, the state information and the task information into an animation processing model, and performing feature extraction on the topographic features, the state information and the task information through the animation processing model to obtain joint action information corresponding to the virtual character at the next moment; and determining a joint moment according to the joint action information, performing rendering based on the joint moment to obtain posture adjustment information corresponding to the virtual character at the current moment, and processing the animation segment according to the posture adjustment information. When the animation segment is simulated, the action posture of the virtual character is adjusted according to different topographic features and task information, so that the animation fidelity is improved, the interaction between the user and the virtual character is realized, and the self-adaptability of the virtual character is improved.

Owner:TENCENT TECH (SHENZHEN) CO LTD
