39 results for "Global transformation" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

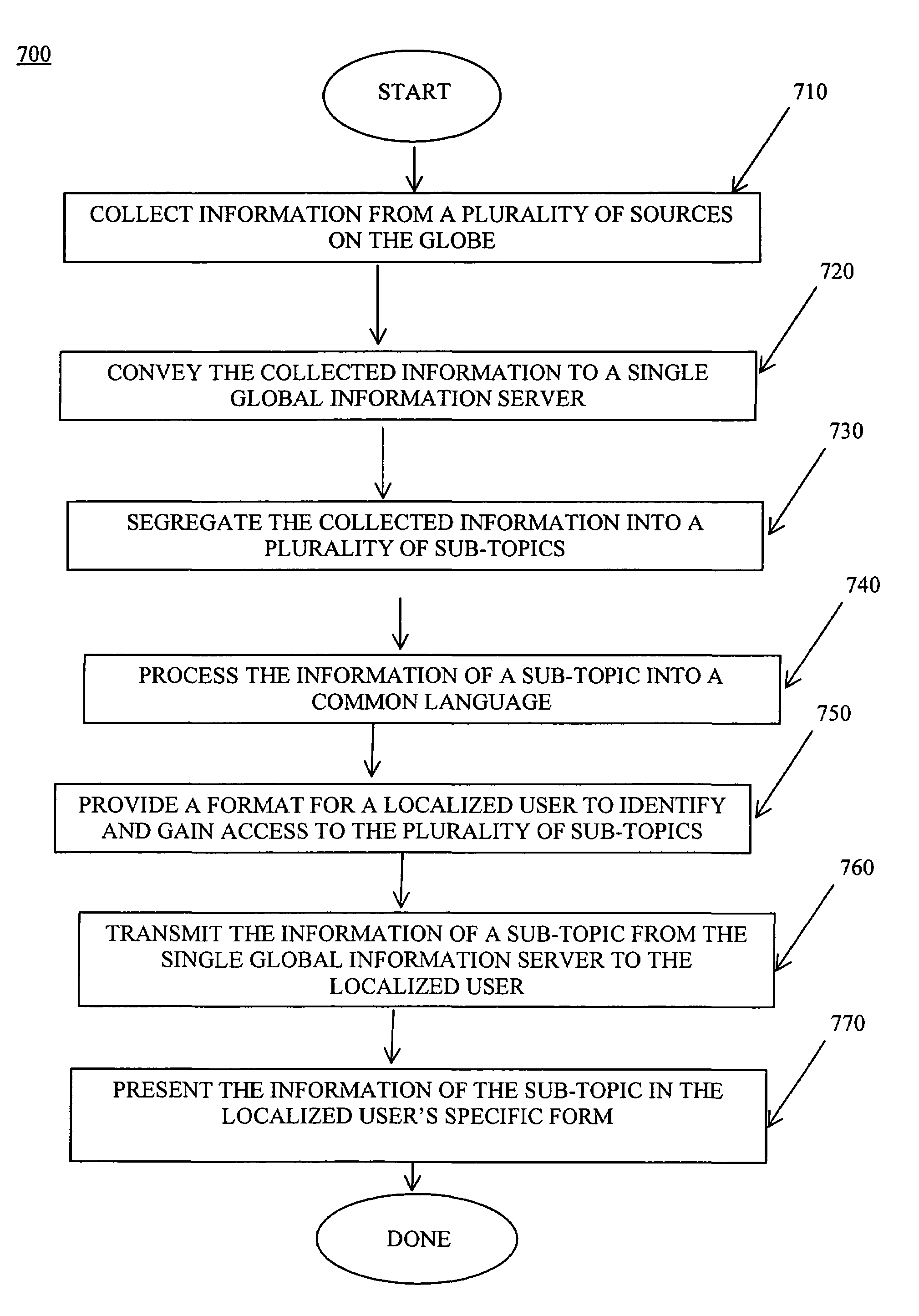

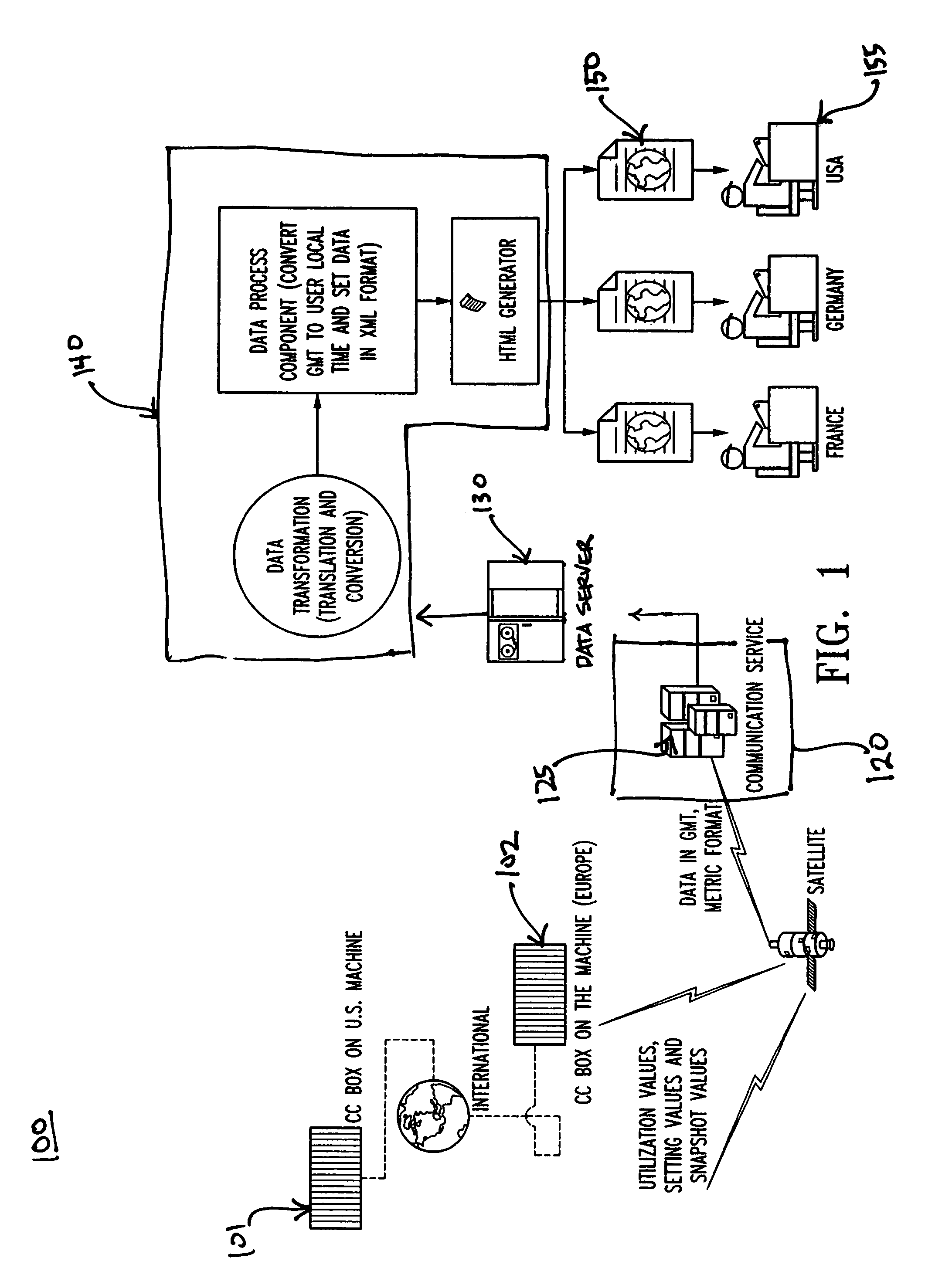

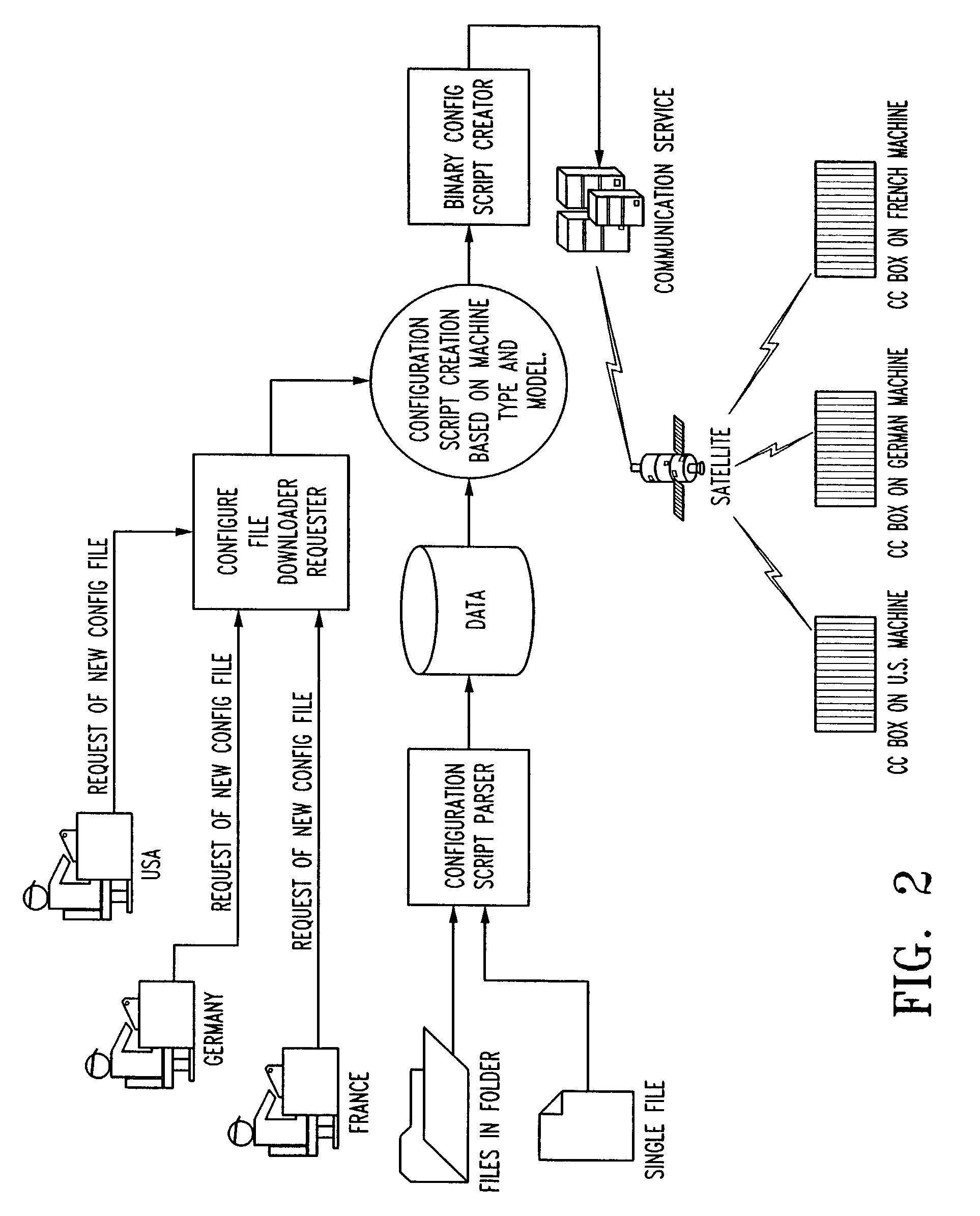

Method of providing localized information from a single global transformation source

Inactive | US7194413B2 | Tags: Digital data information retrieval; Road vehicles traffic control; Relevant information; Global transformation

A method of providing localized information from a single global transformation source comprises collecting a plurality of information on a plurality of subjects and conveying this information to a single information server located at the single global transformation source. The collected information is segregated into a plurality of sub-topics so that related information from a plurality of sources will be present in a single sub-topic. The information of each sub-topic is transformed into a single common language, including conversion of words, expressions, and technical and financial data. Each sub-topic is identified by a topic identifier so that a localized user anywhere on the globe can identify the sub-topic germane to that user's needs and interests. The information of a sub-topic is then transmitted from the single information server to a local user who requests it.

Owner:DEERE & CO

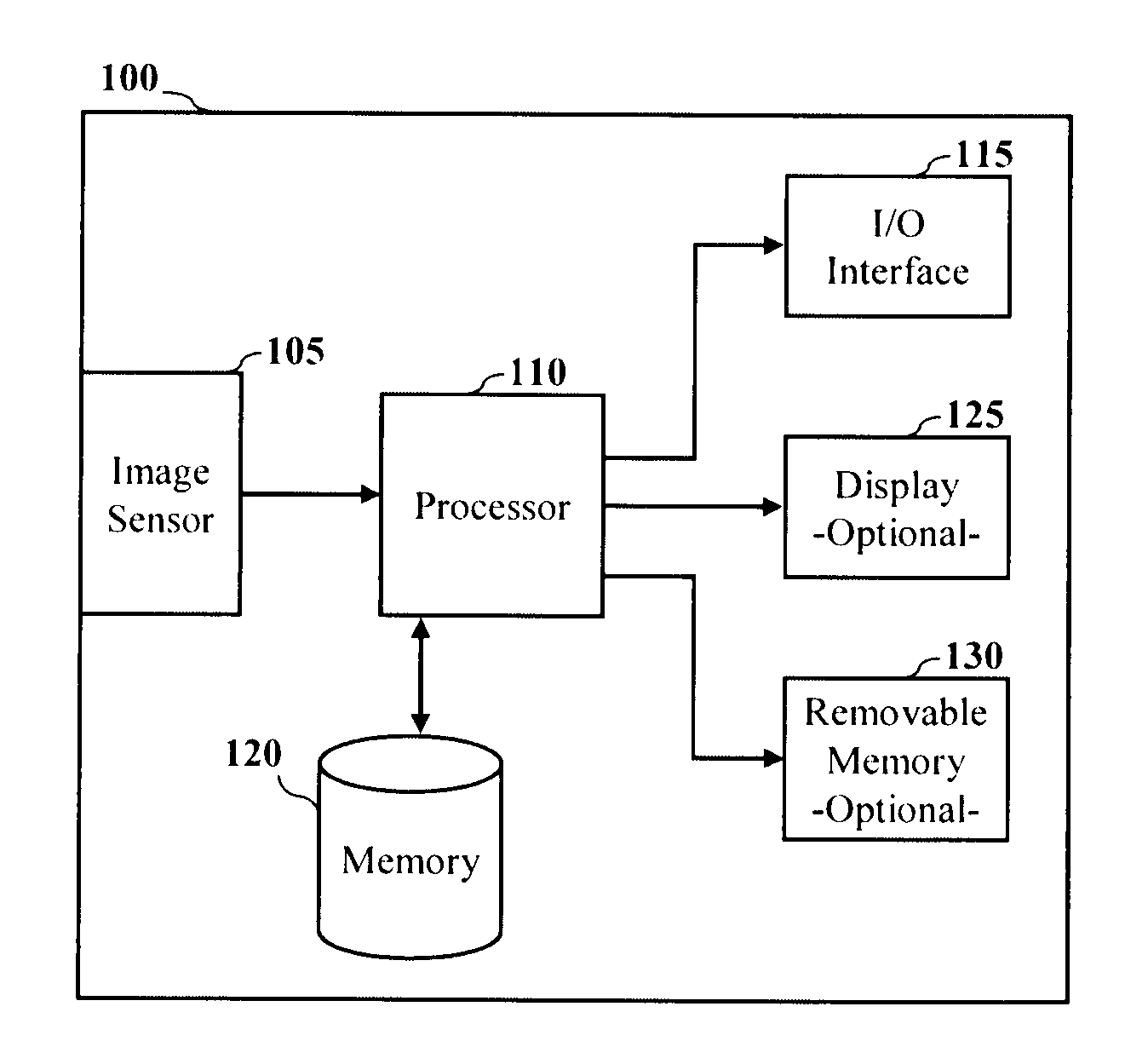

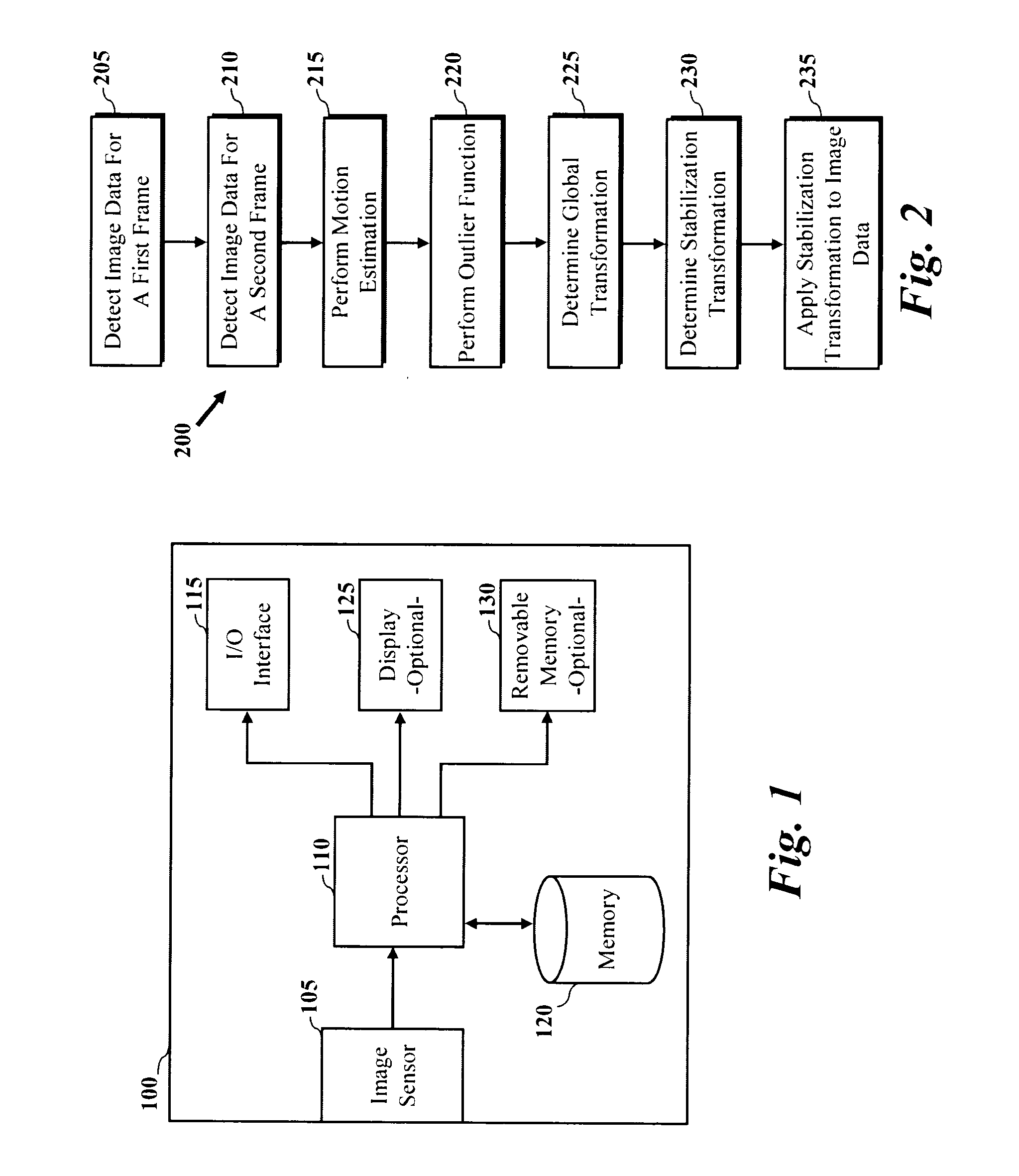

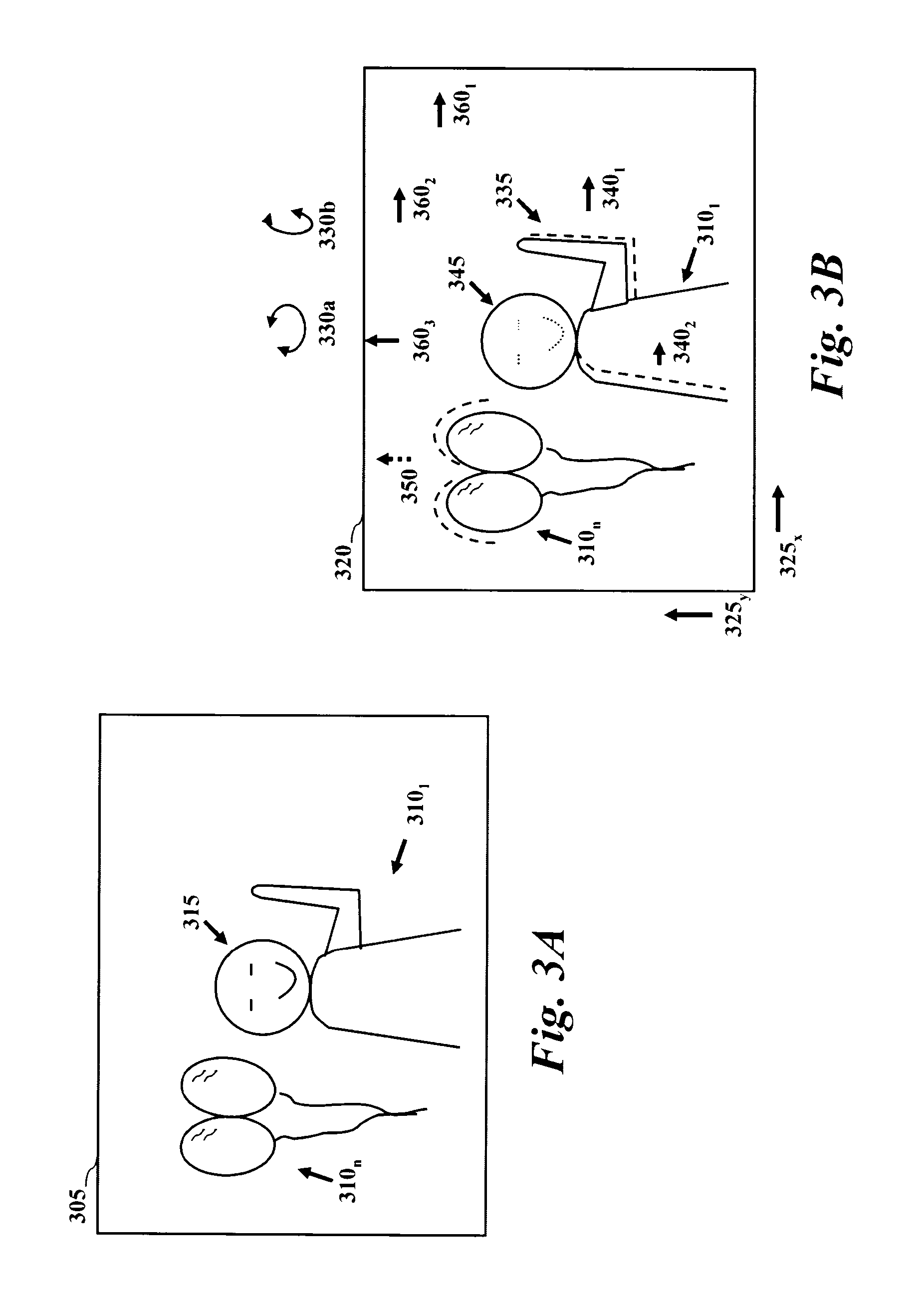

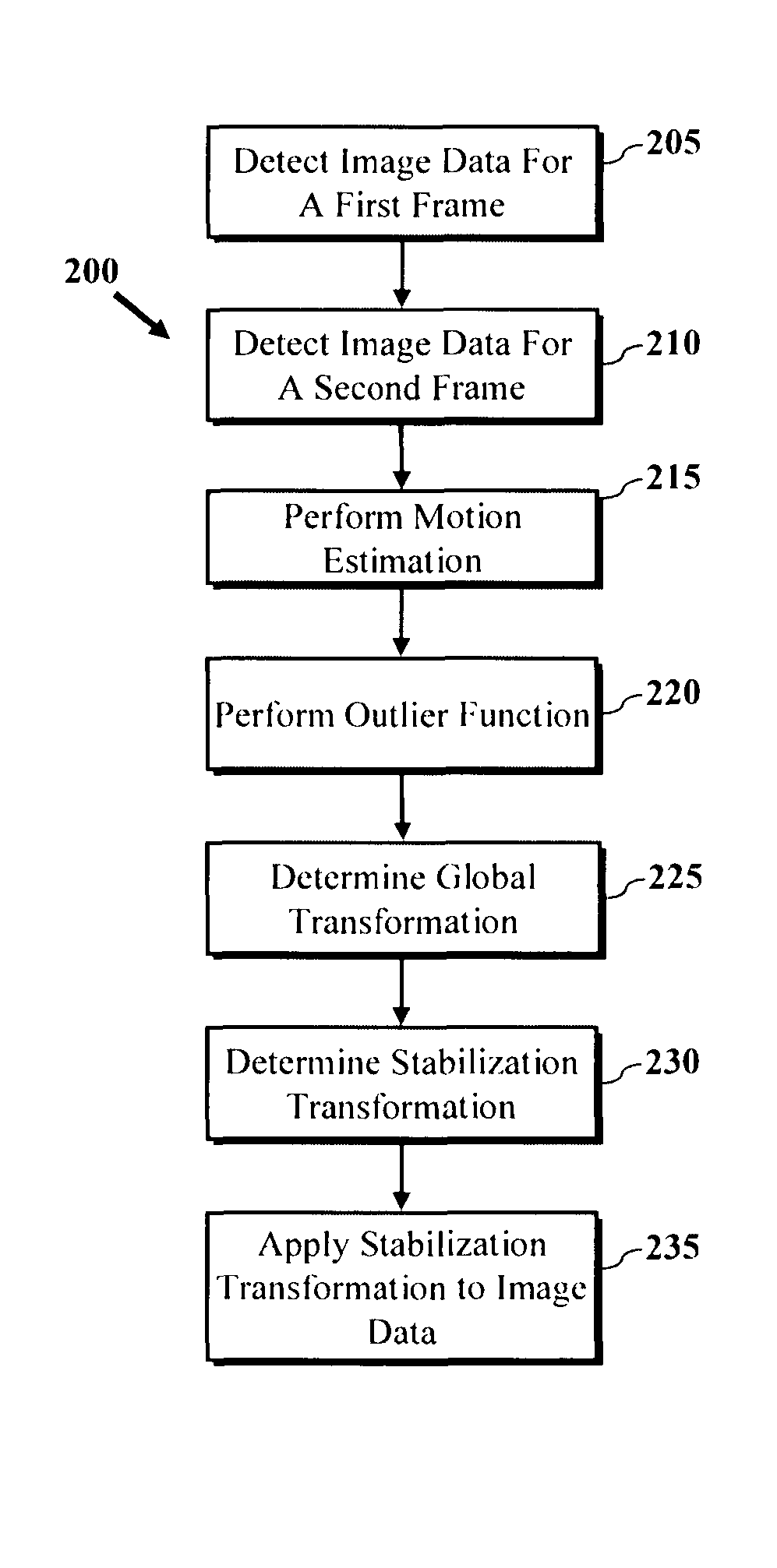

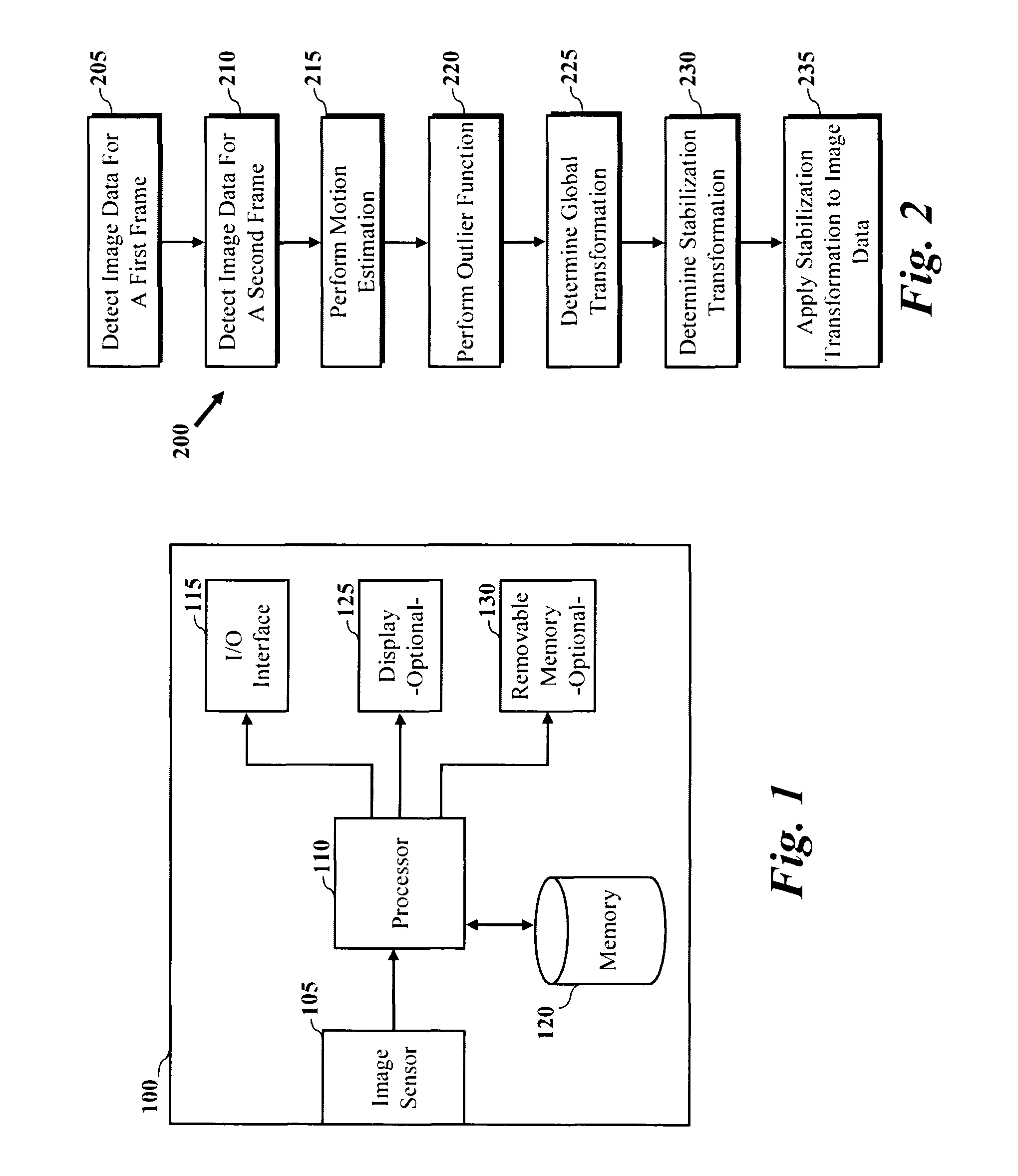

Method and apparatus for image stabilization

Inactive | US20110085049A1 | Tags: Reduce motion errors; Television system details; Color television details; Frame based; Motion vector

A method and device are provided for stabilizing image data captured by an imaging device. In one embodiment, a method includes detecting image data for a first frame and a second frame; performing motion estimation to determine one or more motion vectors associated with global frame motion for image data of the first frame; performing an outlier rejection function to select at least one of the one or more motion vectors; and determining a global transformation for image data of the first frame based, at least in part, on the motion vectors selected by the outlier rejection function. The method may further include determining a stabilization transformation for image data of the first frame by refining the global transformation to correct for unintentional motion, and applying the stabilization transformation to image data of the first frame to stabilize it.

Owner:QUALCOMM INC
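
The abstract does not say which motion model or outlier test is used. As a minimal sketch, assuming a translation-only global model and a median-distance outlier test (both hypothetical choices, as are the names `estimate_global_translation` and `max_dev`), a global motion estimate can be obtained from per-block motion vectors like this:

```python
from statistics import median


def estimate_global_translation(vectors, max_dev=2.0):
    """Estimate a translation-only global motion from per-block motion vectors.

    Hypothetical outlier rejection: keep only vectors within max_dev pixels of
    the component-wise median, then average the survivors.
    Returns ((tx, ty), inliers).
    """
    if not vectors:
        raise ValueError("need at least one motion vector")
    mx = median(v[0] for v in vectors)
    my = median(v[1] for v in vectors)
    inliers = [v for v in vectors
               if ((v[0] - mx) ** 2 + (v[1] - my) ** 2) ** 0.5 <= max_dev]
    if not inliers:
        # Nothing lies close to the median: fall back to the median itself.
        return (mx, my), []
    tx = sum(v[0] for v in inliers) / len(inliers)
    ty = sum(v[1] for v in inliers) / len(inliers)
    return (tx, ty), inliers


# Three blocks follow the camera shake; one block tracks a moving object.
shift, kept = estimate_global_translation(
    [(2.0, 1.0), (2.2, 0.9), (1.9, 1.1), (15.0, -8.0)])
```

Here the moving-object vector is rejected, and `shift` is about (2.03, 1.0). A stabilization step would then remove only the unintentional part of this motion (for example, the deviation from a temporally smoothed path); that refinement is not shown.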

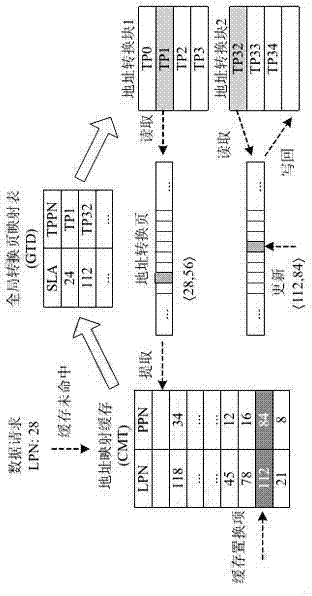

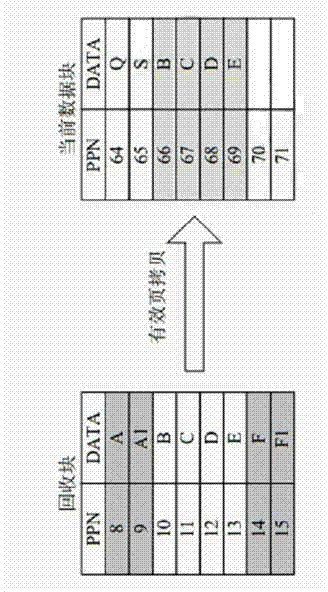

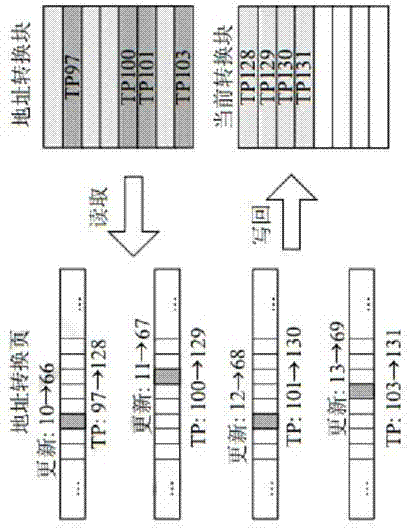

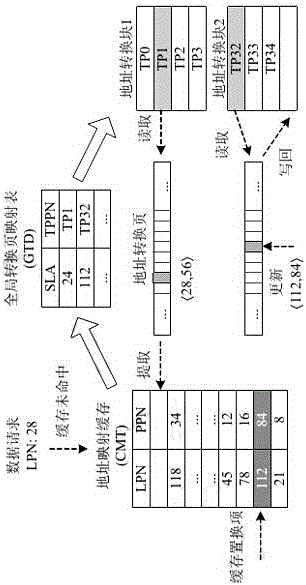

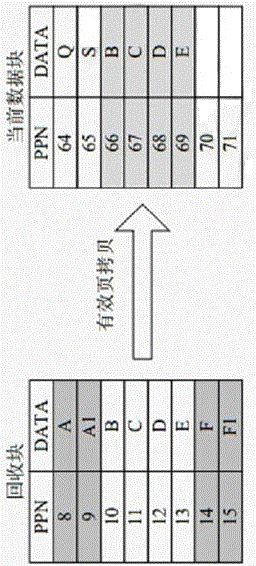

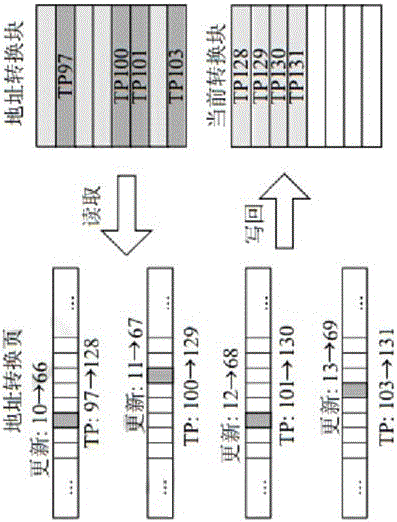

Optimized flash memory address mapping method

Active | CN104268094A | Tags: Increase profit; Simplify the access process; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Global transformation; Granularity

The invention discloses an optimized flash memory address mapping method. Using the demand-based page-level address mapping method DFTL, a global transformation page mapping table (GTD) is maintained in memory, and an address mapping cache (CMT) in memory caches the address mapping entries of frequently accessed translation pages, where the cached data unit is a whole address translation page. The method unifies the granularity of address mapping information in the flash memory and in the cache, and fully exploits the temporal and spatial locality of data. When local data is accessed frequently within a short time, only the page-level address mapping cache needs to be accessed, not the flash memory; and when a translation page must be evicted from the cache, all of its updated address mapping information can be written back to the flash memory together, which improves the utilization of the address mapping information.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
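
To illustrate why caching whole translation pages helps, here is a minimal sketch of a page-granular mapping cache with LRU eviction and whole-page write-back. The class name, the dict standing in for on-flash translation pages, and the LRU policy are assumptions for illustration, not details taken from the patent.

```python
from collections import OrderedDict


class PageGranularCMT:
    """Hypothetical cached mapping table that caches whole translation pages.

    flash_pages maps translation-page number -> list of physical page numbers;
    it stands in for the translation pages on flash located via the GTD.
    """

    def __init__(self, flash_pages, entries_per_page, capacity):
        self.flash = flash_pages
        self.epp = entries_per_page
        self.capacity = capacity
        self.cache = OrderedDict()  # tpage -> [entries, dirty], kept in LRU order
        self.flash_reads = 0
        self.flash_writes = 0

    def _load(self, tpage):
        if tpage in self.cache:
            self.cache.move_to_end(tpage)  # mark as most recently used
            return self.cache[tpage]
        if len(self.cache) >= self.capacity:
            victim, (entries, dirty) = self.cache.popitem(last=False)
            if dirty:
                # All updated entries of the evicted page go back in one flash write.
                self.flash[victim] = list(entries)
                self.flash_writes += 1
        self.flash_reads += 1
        slot = [list(self.flash[tpage]), False]
        self.cache[tpage] = slot
        return slot

    def lookup(self, lpn):
        tpage, offset = divmod(lpn, self.epp)
        return self._load(tpage)[0][offset]

    def update(self, lpn, ppn):
        tpage, offset = divmod(lpn, self.epp)
        slot = self._load(tpage)
        slot[0][offset] = ppn
        slot[1] = True  # the cached page now differs from its copy on flash
```

For example, with four entries per translation page, lookups of logical pages 1 and 2 cost a single flash read, because both mappings arrive with the same cached page; and several updates to one page are written back to flash together when that page is evicted.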

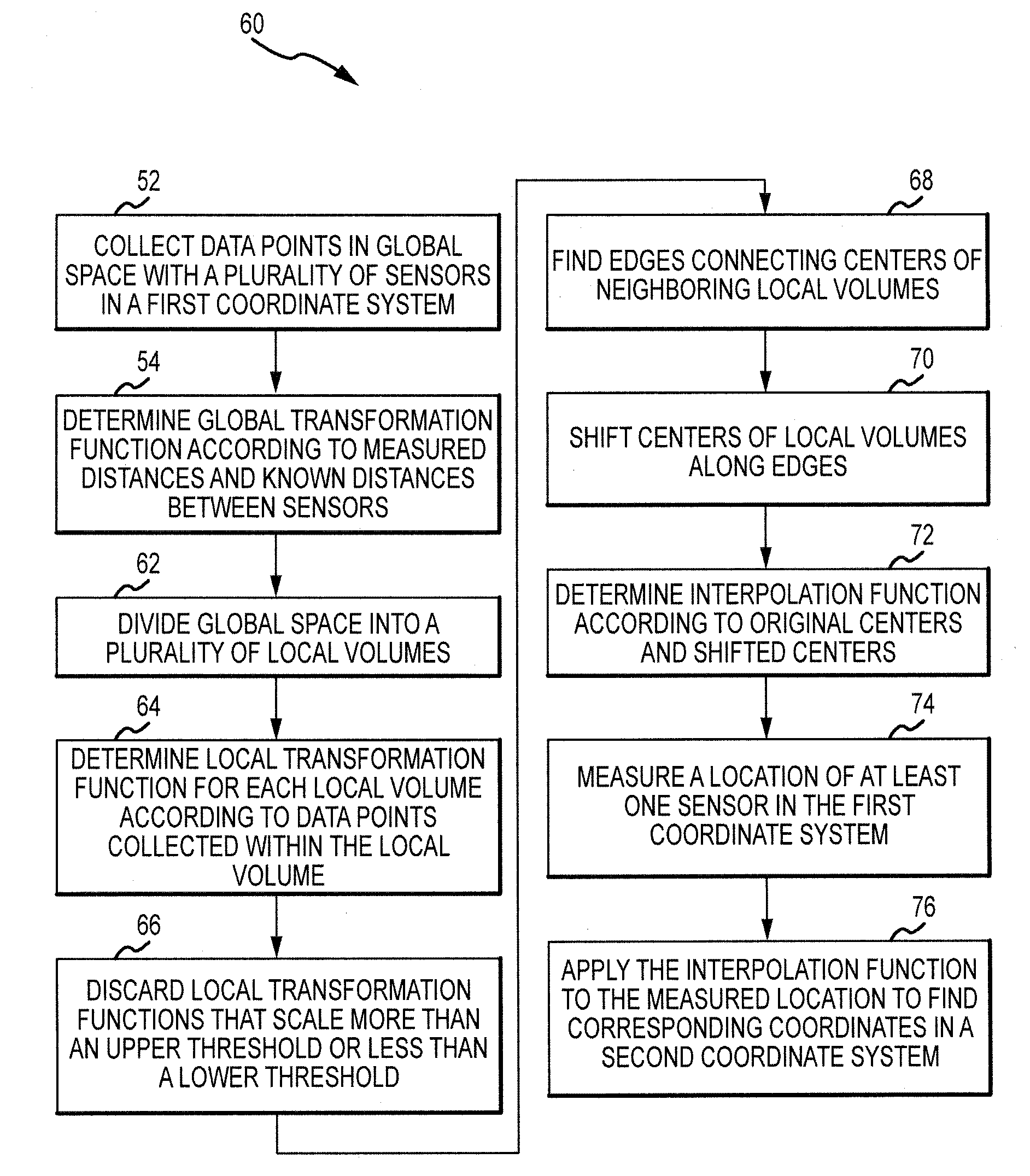

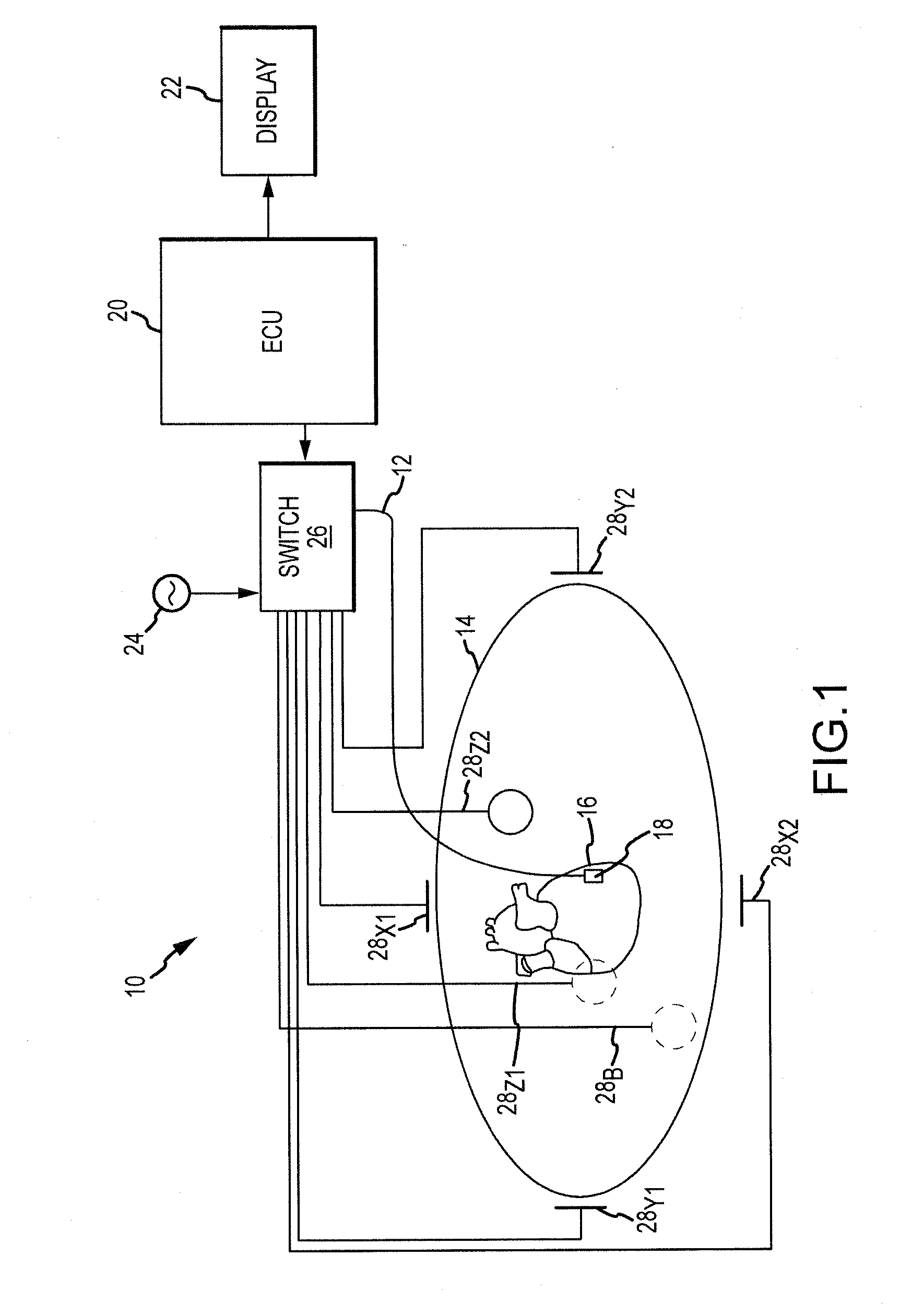

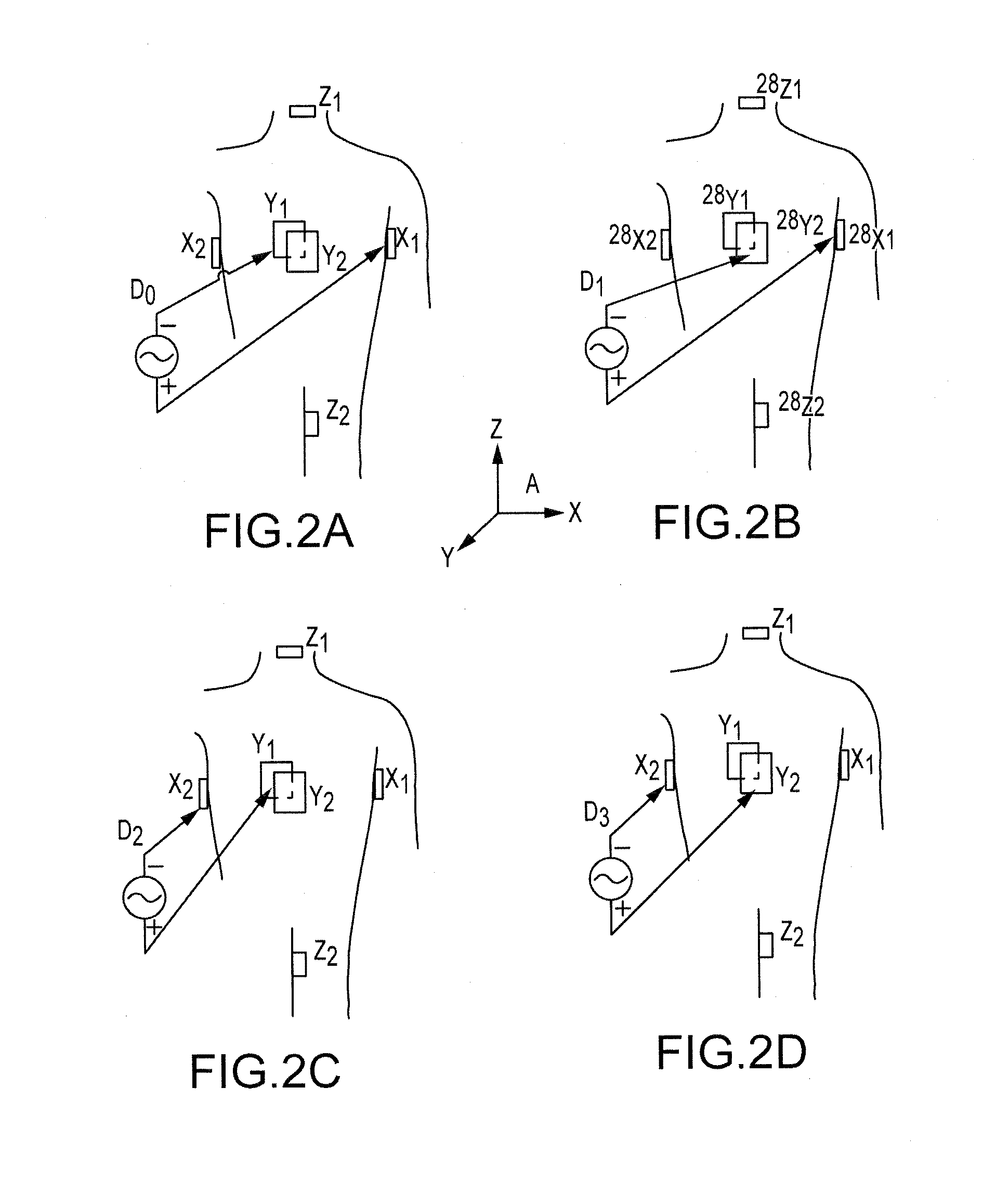

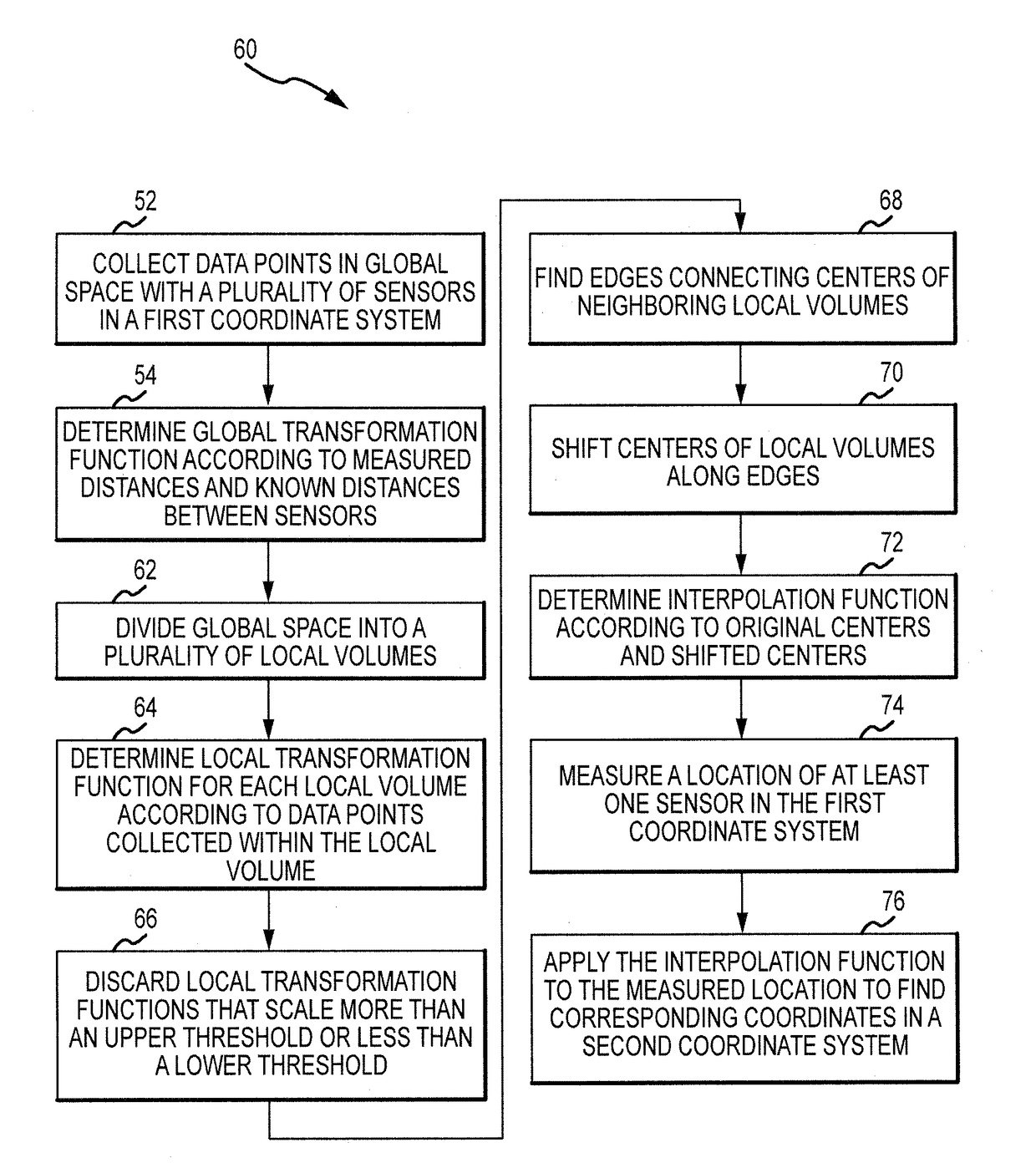

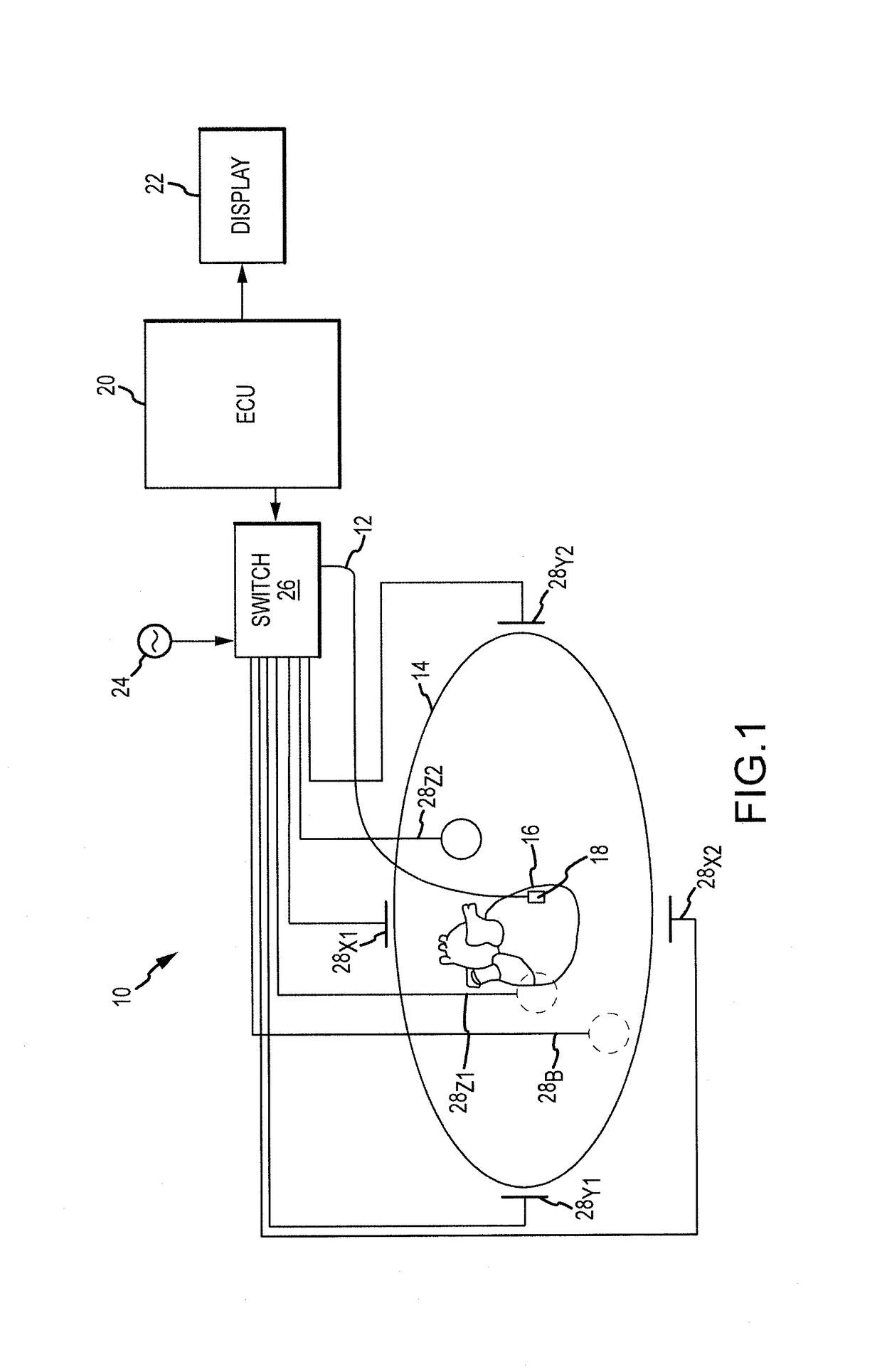

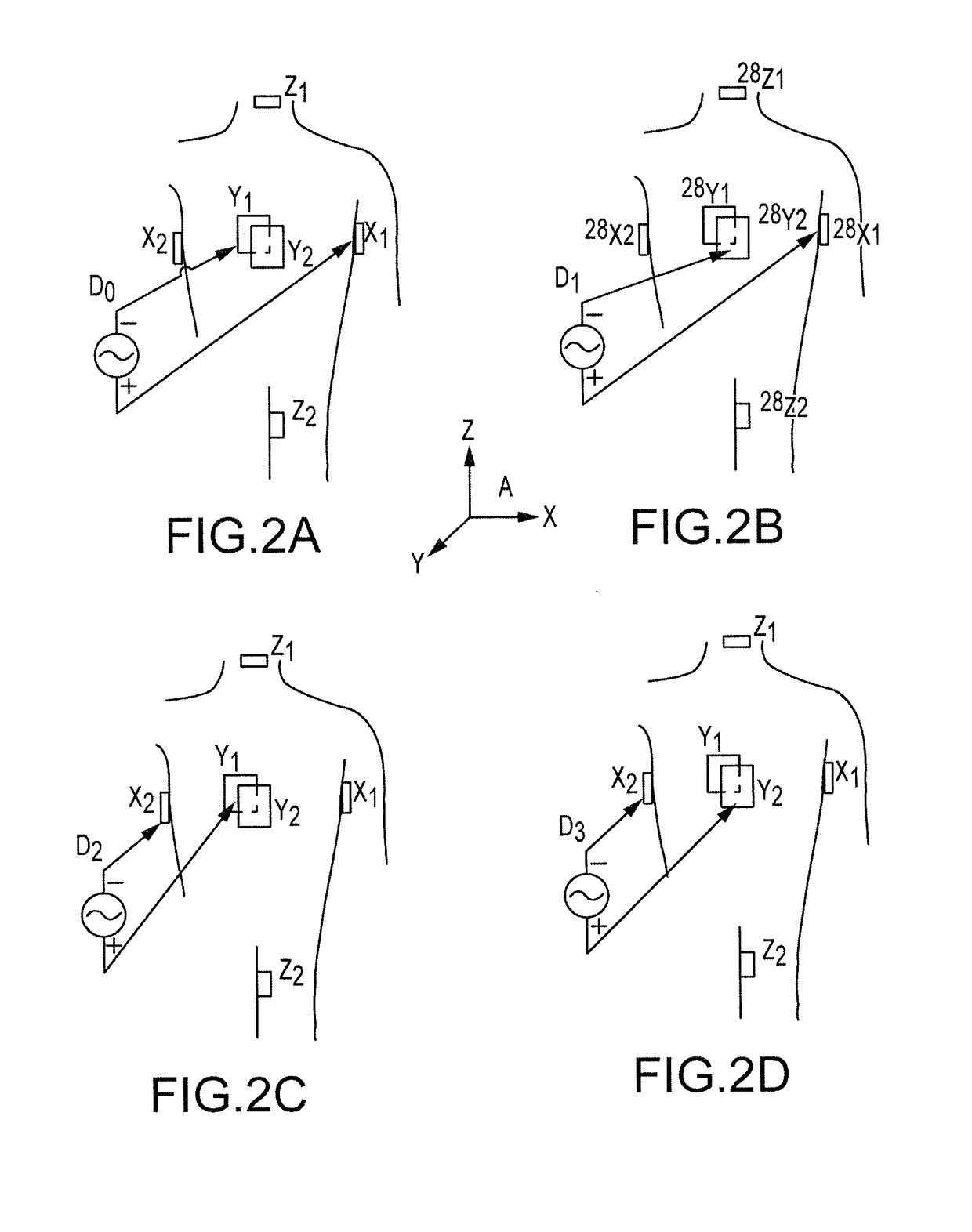

Scaling of electrical impedance-based navigation space using inter-electrode spacing

Active | US20140095105A1 | Tags: Digital computer details; Surgical instrument details; Power flow; Global transformation

An algorithm to correct and/or scale an electrical-current-based coordinate system can include determining one or more global transformation or interpolation functions and/or one or more local transformation functions. The global and local transformation functions can be determined by calculating a global metric tensor and a number of local metric tensors. The metric tensors can be calculated from predetermined and measured distances between closely spaced sensors on a catheter.

Owner:ST JUDE MEDICAL ATRIAL FIBRILLATION DIV
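
The abstract does not give the fitting procedure. One way to read "calculating a global metric tensor from predetermined and measured distances" is as a least-squares fit of a symmetric tensor G such that known² ≈ Δᵀ G Δ for each measured electrode displacement Δ. The sketch below does this in 2D for brevity; the 2D restriction, the least-squares formulation, and all function names are assumptions.

```python
import math


def _solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-12:
            raise ValueError("singular system")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def fit_metric_tensor(displacements, known_distances):
    """Least-squares fit of G = [[a, b], [b, c]] with d^2 = a dx^2 + 2b dx dy + c dy^2."""
    rows = [(dx * dx, 2 * dx * dy, dy * dy) for dx, dy in displacements]
    targets = [d * d for d in known_distances]
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    Atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(3)]
    a, b, c = _solve(AtA, Atb)
    return [[a, b], [b, c]]


def corrected_distance(G, displacement):
    """Length of a measured displacement under the fitted metric G."""
    dx, dy = displacement
    return math.sqrt(G[0][0] * dx * dx + 2 * G[0][1] * dx * dy + G[1][1] * dy * dy)
```

If the impedance field compresses the x axis by half, pairs measured at (1, 0), (0, 1), (1, 1), and (2, 1) with known spacings 2, 1, √5, and √17 give G ≈ [[4, 0], [0, 1]]. Local tensors could be fitted the same way on subsets of nearby electrode pairs.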

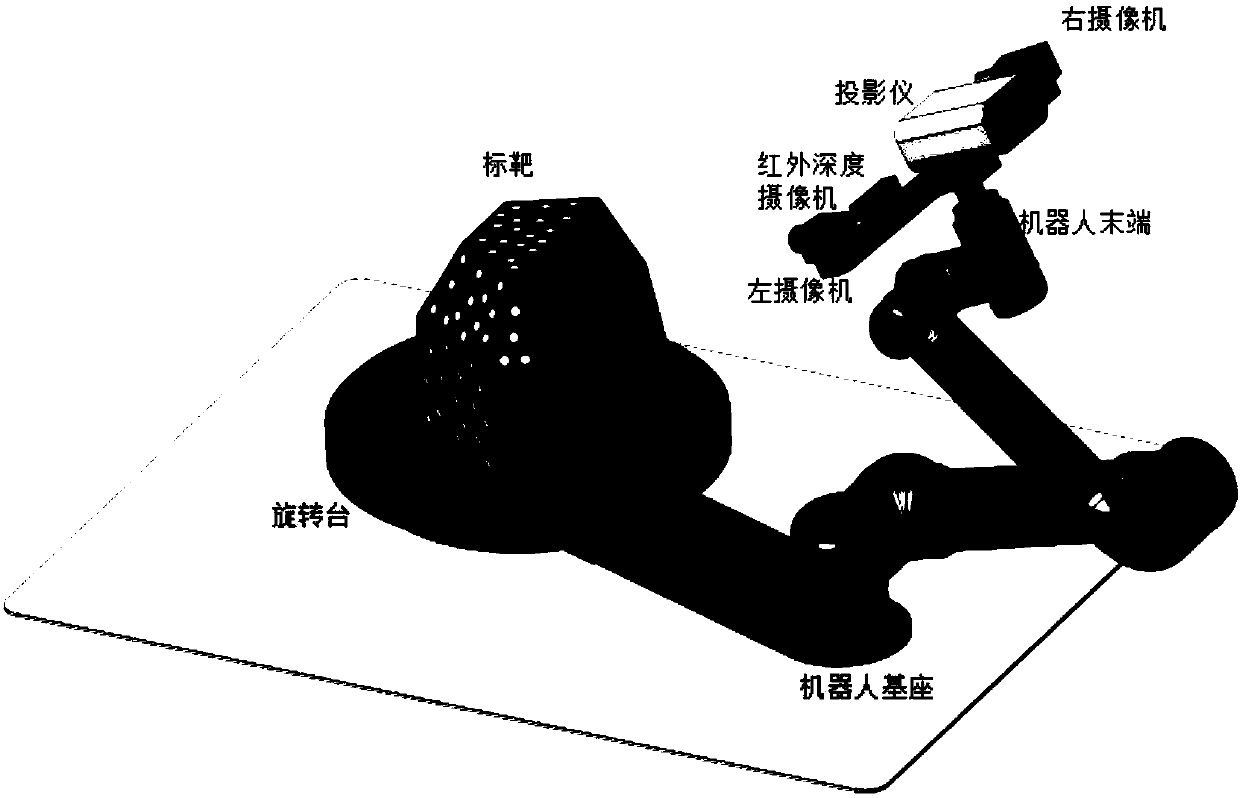

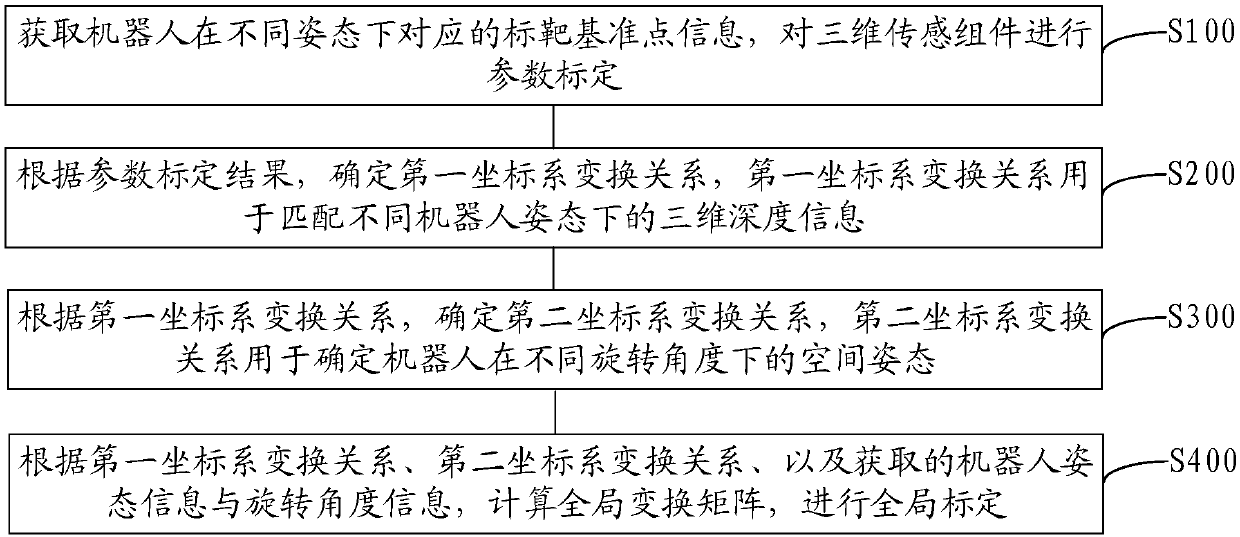

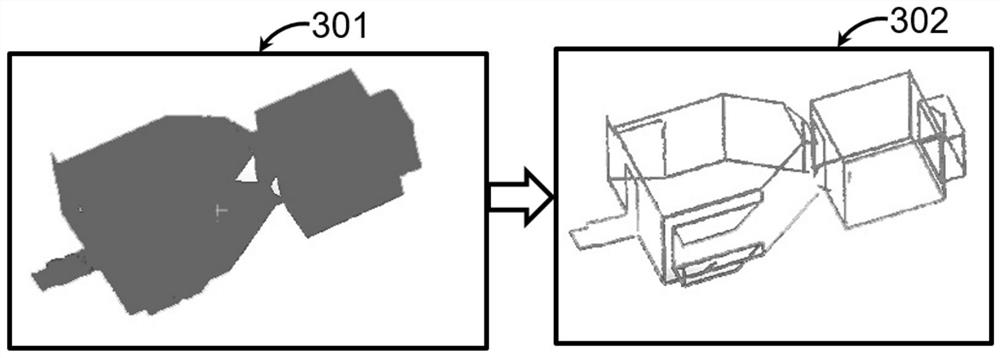

Method and device for jointly calibrating robot and three-dimensional sensing component

Active | CN108346165A | Tags: Achieve integration; Improve matching accuracy; Programme-controlled manipulator; Image enhancement; Data information; Global transformation

The invention relates to a method and device for jointly calibrating a robot and a three-dimensional sensing component, as well as a computer device and a storage medium. The method comprises: acquiring target reference point information corresponding to the robot in different attitudes; calibrating the parameters of the three-dimensional sensing component; determining a first coordinate system transformation relation that matches the three-dimensional depth information under different robot attitudes; determining a second coordinate system transformation relation of the spatial attitudes of the robot under different rotation angles; and calculating, from these transformation relations together with the acquired robot attitude and rotation angle information, a global transformation matrix for global calibration and optimization. By calibrating the parameters and obtaining the transformation relations between multiple coordinate systems, the method performs multi-view three-dimensional reconstruction of an object within a limited field of view, obtains the field-of-view information at different robot rotation angles, calculates the global transformation matrix, and performs global calibration, so that multi-view depth data are fully fused and matching accuracy is improved.

Owner:SHENZHEN ESUN DISPLAY +1
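
The global transformation matrix in this abstract comes from chaining coordinate-system transformations. The minimal sketch below makes several hypothetical simplifications: 4×4 homogeneous matrices, a robot that rotates only about its z axis, and a hand-eye offset already known from calibration. It shows how points seen at different robot rotation angles land in one world frame.

```python
import math


def matmul4(A, B):
    """Product of two 4x4 homogeneous matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def rot_z(theta, t=(0.0, 0.0, 0.0)):
    """Rotation by theta (radians) about z, combined with translation t."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0, t[0]],
            [s, c, 0.0, t[1]],
            [0.0, 0.0, 1.0, t[2]],
            [0.0, 0.0, 0.0, 1.0]]


def transform_point(T, p):
    x, y, z = p
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))


def sensor_to_world(points, T_base_flange, T_flange_sensor):
    """Map sensor-frame points to the base frame via the chained (global) transform."""
    T_global = matmul4(T_base_flange, T_flange_sensor)
    return [transform_point(T_global, p) for p in points]


# Hypothetical hand-eye offset: the sensor sits 0.5 units along the flange x axis.
T_FLANGE_SENSOR = rot_z(0.0, t=(0.5, 0.0, 0.0))
```

A world point at (1, 0, 0) is seen as (0.5, 0, 0) at robot angle 0 and as (-0.5, -1, 0) at 90°. Both observations map back to (1, 0, 0), which is what makes multi-view depth fusion in a common frame possible.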

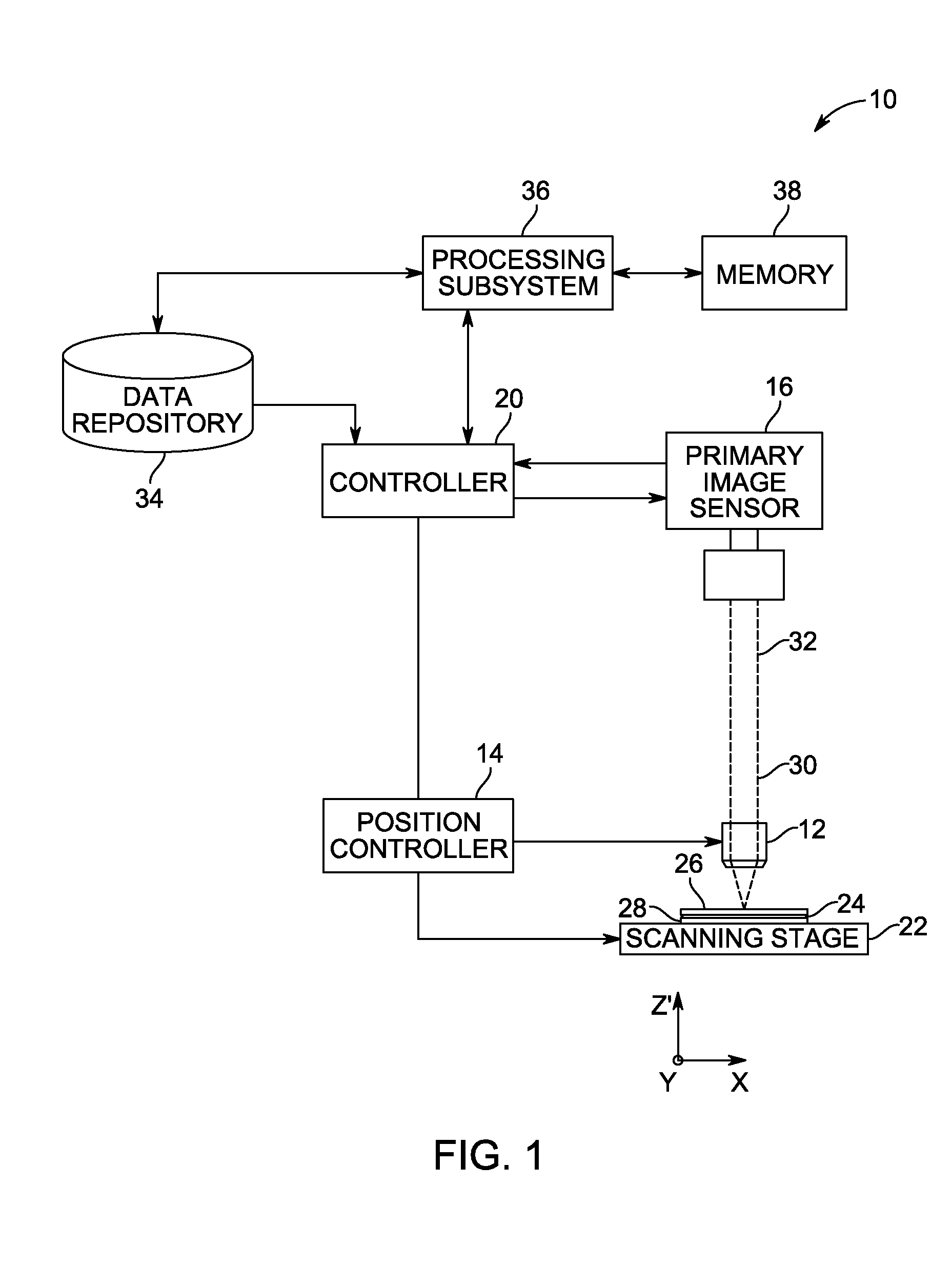

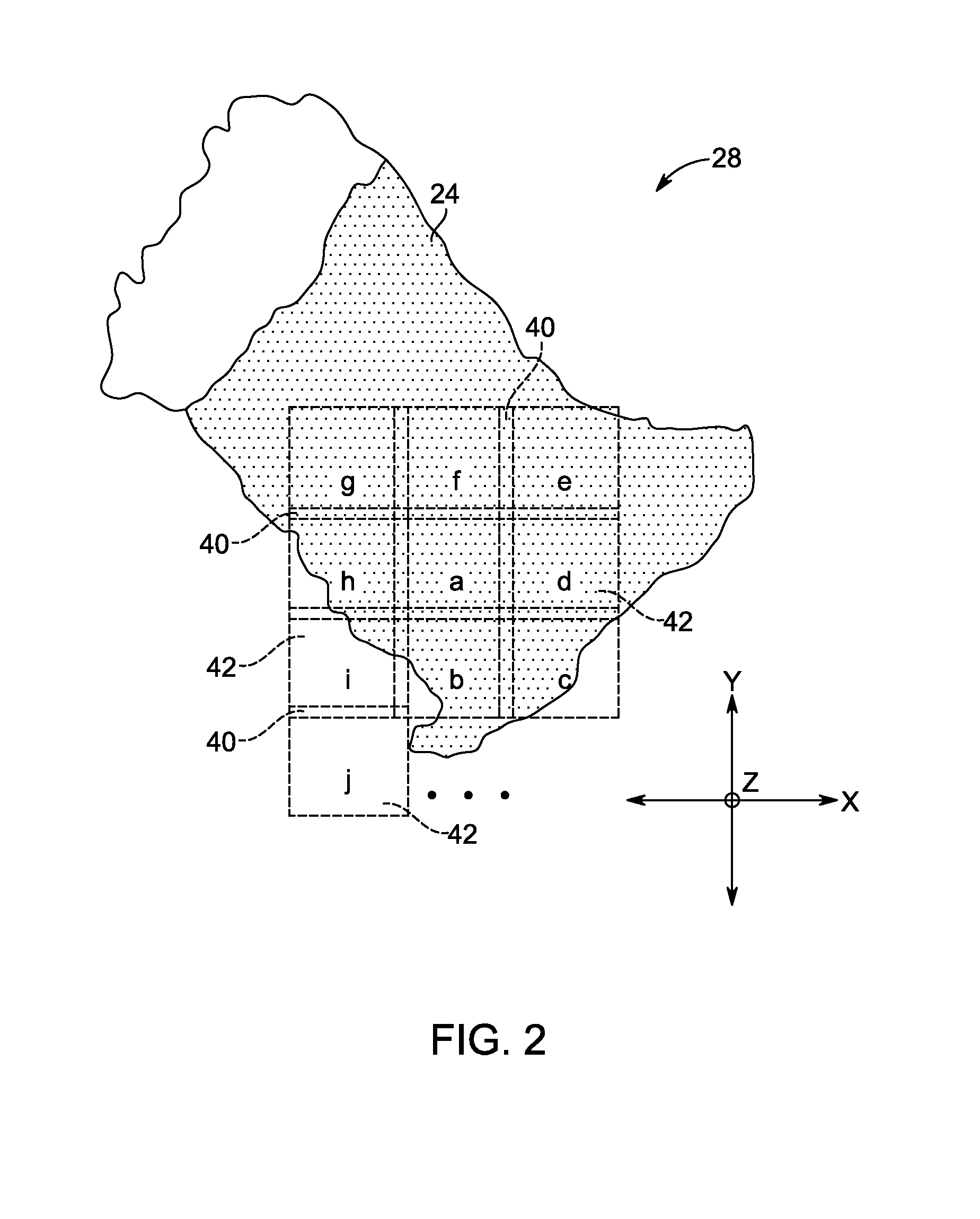

Referencing in multi-acquisition slide imaging

Active | US20140267671A1 | Tags: Image analysis; Geometric image transformation; Global transformation; Computer science

Referencing of images acquired in multiple rounds of imaging is disclosed. In certain implementations, a baseline round of images is acquired and the images are registered to one another to establish a global transformation matrix. In a subsequent round of image acquisition, a limited number of field-of-view images are initially acquired and registered to the corresponding baseline images to solve for translation, rotation, and scale. The full set of baseline images is then acquired for the subsequent round, and each image is pre-rotated and pre-scaled based on the transform determined for the subset of images. The pre-rotated, pre-scaled images are then registered using a translation-only transform.

Owner:LEICA MICROSYSTEMS CMS GMBH
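
The two-stage referencing above can be sketched with matched point features: apply the rotation and scale solved on the small subset to every image of the round, then estimate only a translation. Using point matches rather than image intensities, and the helper names below, are assumptions for illustration.

```python
import math


def pre_rotate_scale(points, theta, scale):
    """Apply the rotation (radians) and scale solved on the subset of fields."""
    c, s = math.cos(theta), math.sin(theta)
    return [(scale * (c * x - s * y), scale * (s * x + c * y)) for x, y in points]


def translation_only(moving, fixed):
    """Least-squares translation between matched points: the mean offset."""
    n = len(moving)
    if n == 0 or n != len(fixed):
        raise ValueError("need equally many matched points")
    tx = sum(f[0] - m[0] for m, f in zip(moving, fixed)) / n
    ty = sum(f[1] - m[1] for m, f in zip(moving, fixed)) / n
    return tx, ty
```

Because rotation and scale are removed up front, each remaining image needs only a two-parameter search, which is the practical payoff of pre-rotating and pre-scaling.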

Method and apparatus for image stabilization

A method and device are provided for method for stabilization of image data by an imaging device. In one embodiment, a method includes detecting image data for a first frame and a second frame, performing motion estimation to determine one or more motion vectors associated with global frame motion for image data of the first frame, performing an outlier rejection function to select at least one of the one or more motion vectors, and determining a global transformation for image data of the first frame based, at least in part, on motion vectors selected by the outlier rejection function. The method may further include determining a stabilization transformation for image data of the first frame by refining the global transformation to correct for unintentional motion and applying the stabilization transformation to image data of the first frame to stabilize the image data of the first frame.

Owner:QUALCOMM INC

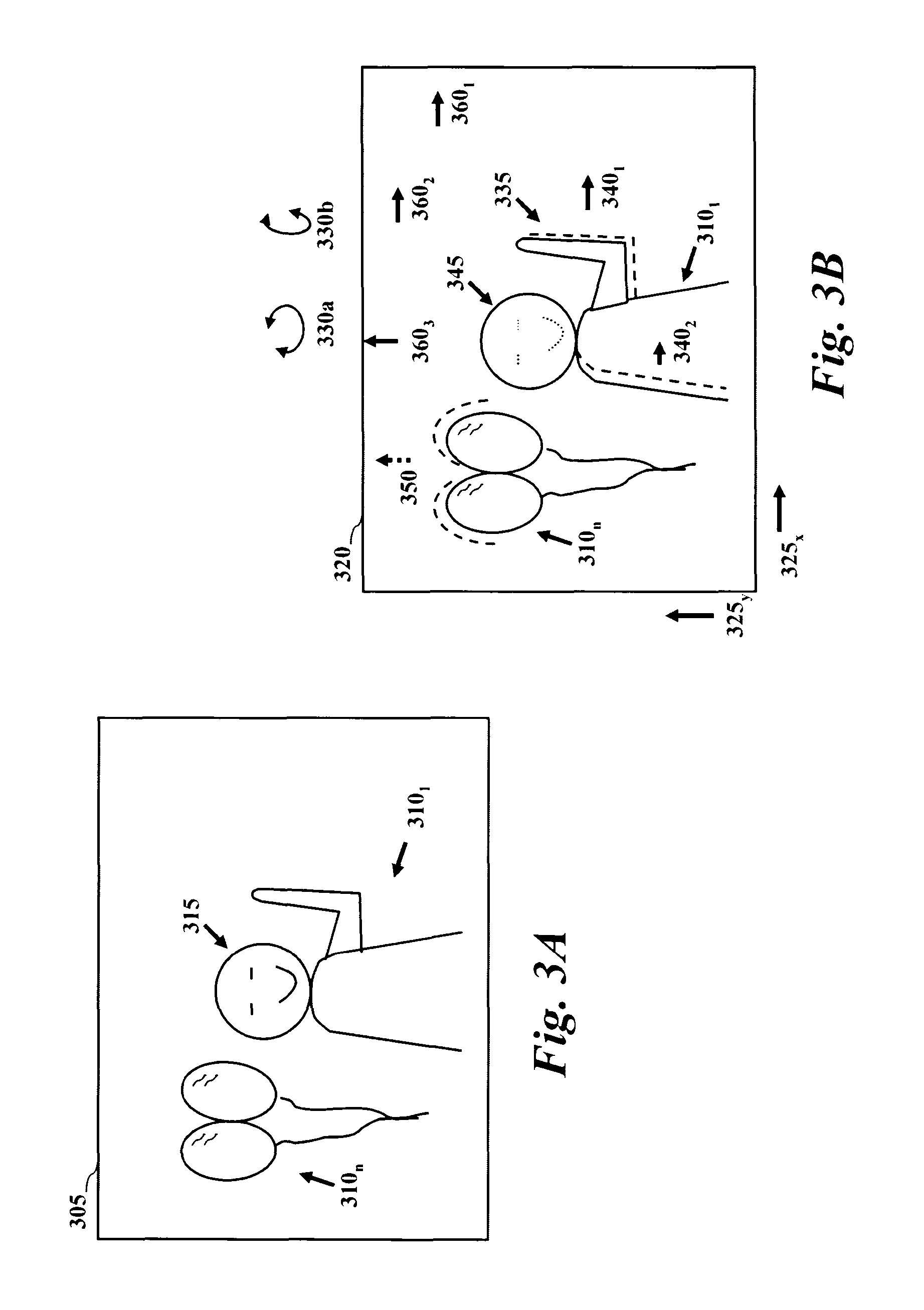

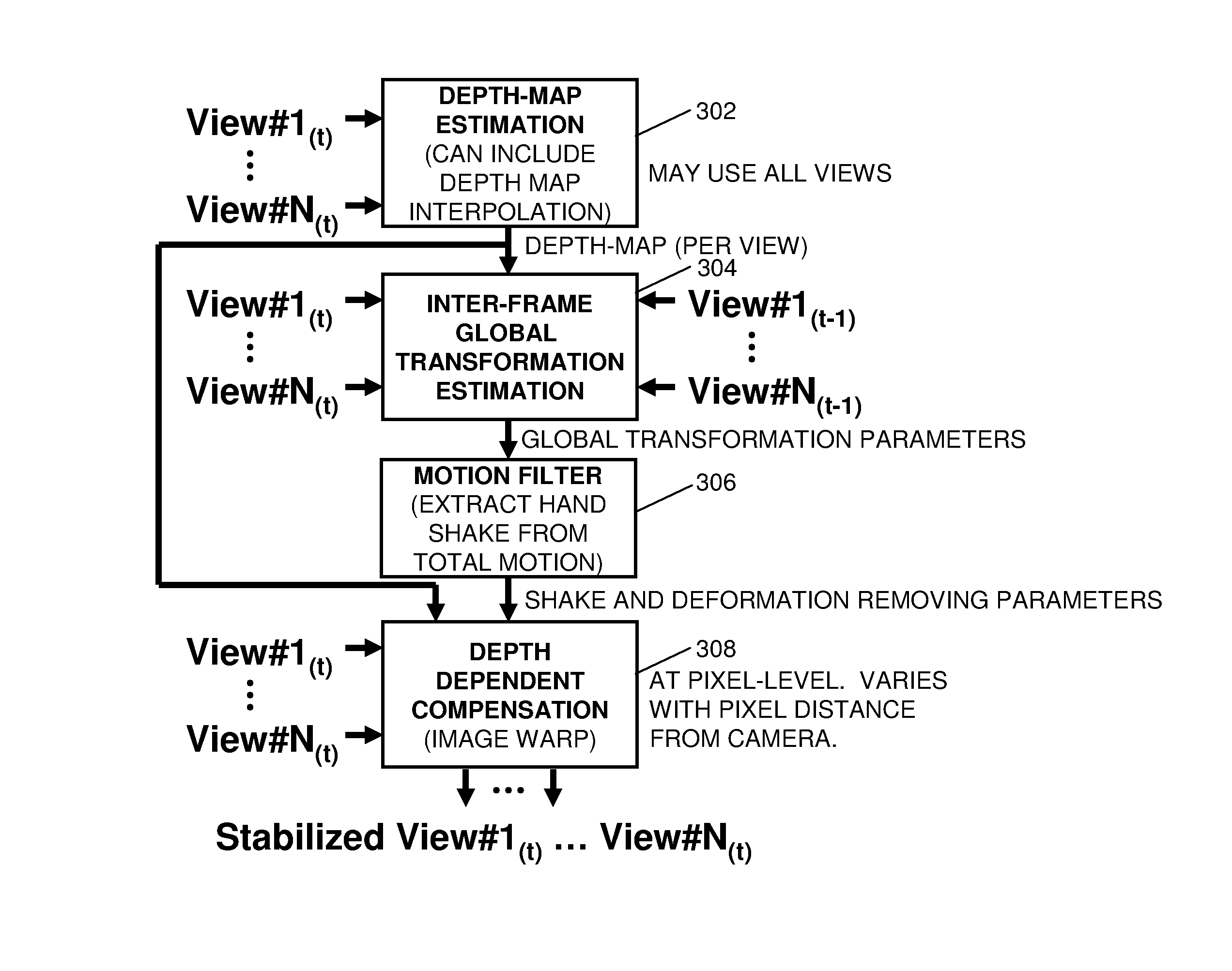

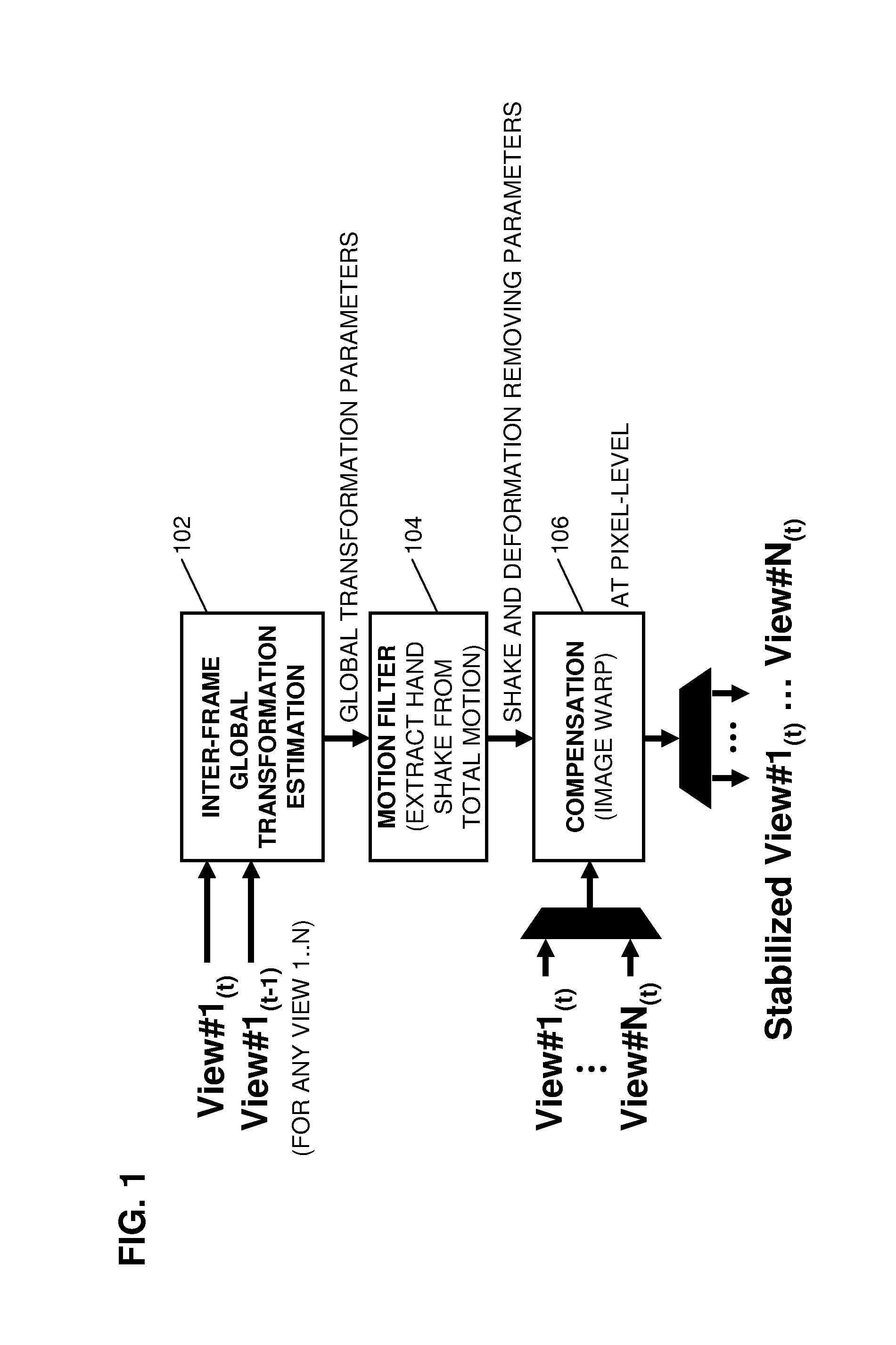

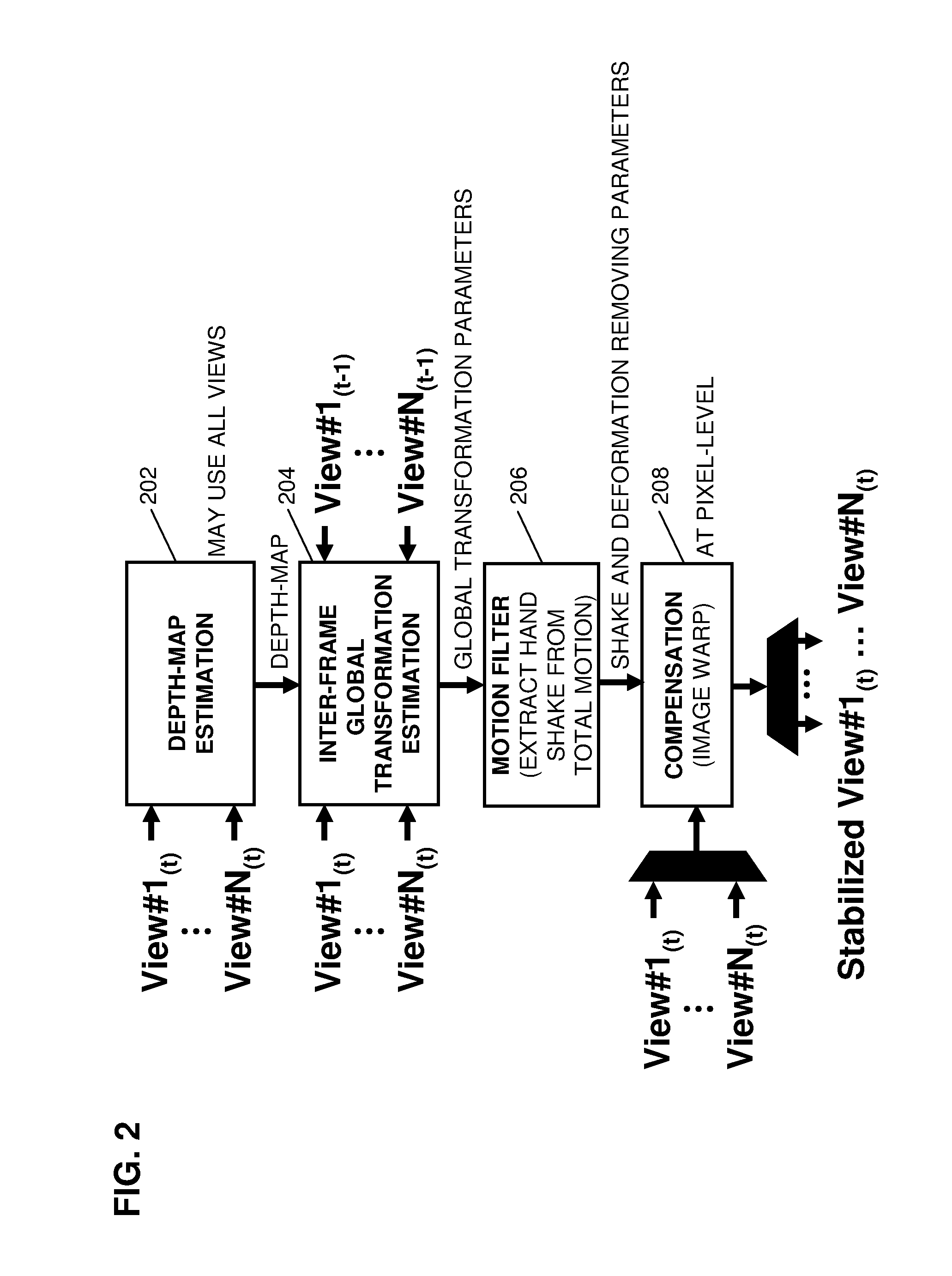

Digital video stabilization for multi-view systems

A system, method, and computer program product for digital stabilization of video data from cameras producing multiple simultaneous views, typically from rolling shutter type sensors, and without requiring a motion sensor. A first embodiment performs an estimation of the global transformation on a single view and uses this transformation for correcting other views. A second embodiment selects a distance at which a maximal number of scene points is located and considers only the motion vectors from these image areas for the global transformation. The global transformation estimate is improved by averaging images from several views and reducing stabilization when image conditions may cause incorrect stabilization. Intentional motion is identified confidently in multiple views. Local object distortion may be corrected using depth information. A third embodiment analyzes the depth of the scene and uses the depth information to perform stabilization for each of multiple depth layers separately.

Owner:QUALCOMM INC

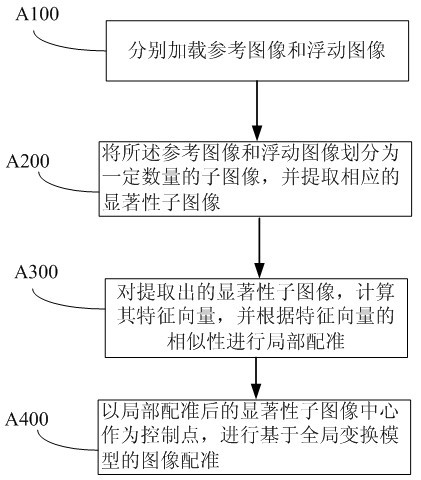

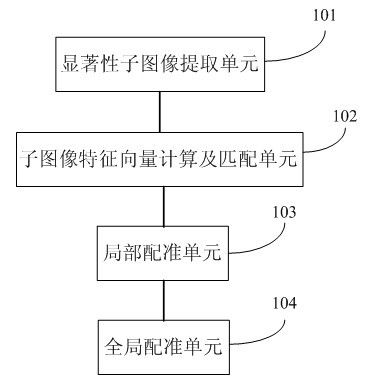

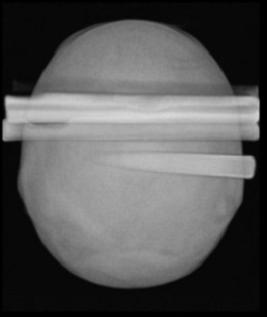

Method and system for three-dimensional image registration

Inactive | CN102663738A | Tags: Automatic registration is fast; Automatic registration is accurate; Image analysis; Feature vector; Global transformation

The invention discloses a method and a system for three-dimensional image registration, used to register a reference image and a floating image. The method comprises the following steps: first, loading a reference image and a floating image; second, dividing the reference image and the floating image into a number of sub-images and extracting the corresponding salient sub-images; third, calculating feature vectors of the extracted salient sub-images and performing local registration according to the similarity of the feature vectors; and finally, performing image registration based on a global transformation model, using the centers of the locally registered salient sub-images as control points. The method removes the burden that manual and interactive semi-automatic registration place on operators, and its running time is greatly reduced compared with mutual-information-based registration. In addition, it is more robust to gray-value differences between images and can register three-dimensional images automatically, quickly, and accurately.

Owner:SUZHOU INST OF BIOMEDICAL ENG & TECH

Scaling of electrical impedance-based navigation space using inter-electrode spacing

An algorithm to correct and / or scale an electrical current-based coordinate system can include the determination of one or more global transformation or interpolation functions and / or one or more local transformation functions. The global and local transformation functions can be determined by calculating a global metric tensor and a number of local metric tensors. The metric tensors can be calculated based on pre-determined and measured distances between closely-spaced sensors on a catheter.

Owner:ST JUDE MEDICAL ATRIAL FIBRILLATION DIV

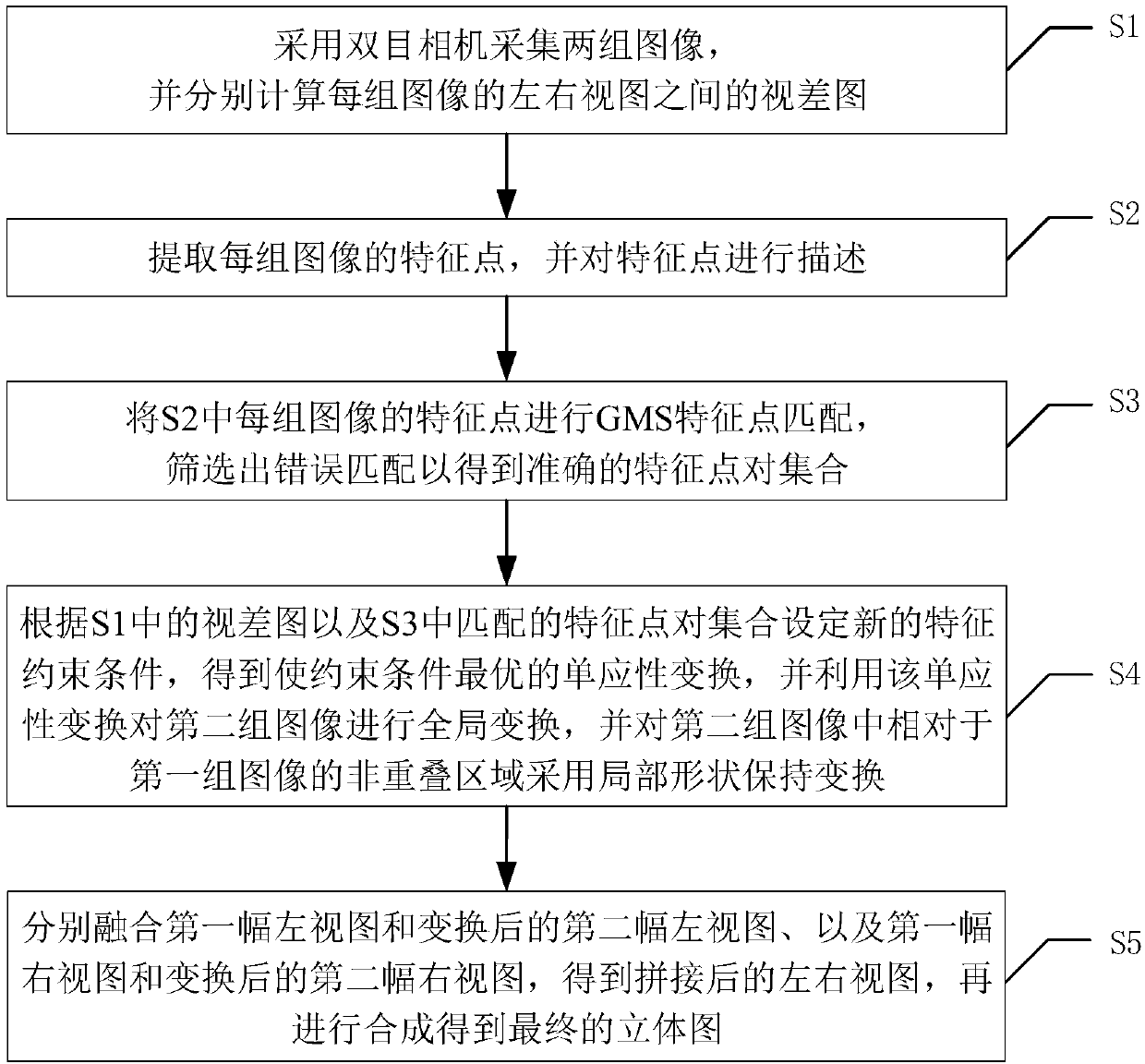

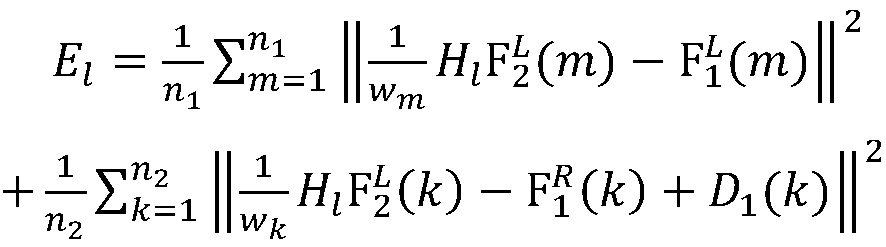

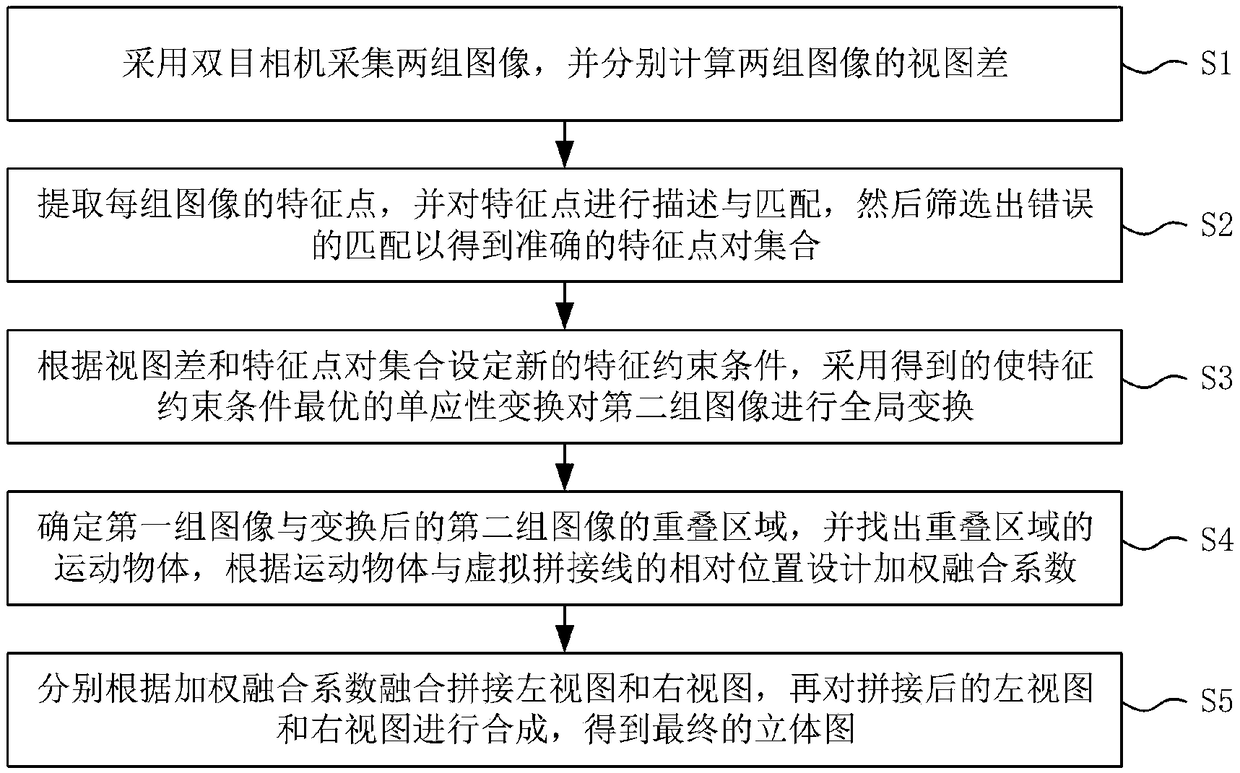

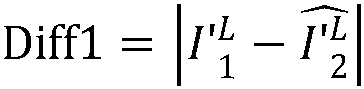

Robust binocular stereo image stitching method

Active | CN108470324A | Tags: Realize seamless splicing; Reduce ghosting; Image enhancement; Image analysis; Parallax; Global transformation

The invention discloses a robust binocular stereo image stitching method. The method comprises: collecting two groups of images with a binocular camera and calculating the disparity map between the left and right views of each group; extracting and describing feature points in each group of images; performing GMS feature point matching on each group and screening out mismatches to obtain an accurate set of feature point pairs; setting a new feature constraint condition from the disparity maps and the feature point pair set, obtaining the homography transformation that optimizes this constraint, applying it as a global transformation to the second group of images, and applying a local shape-preserving transformation to the region of the second group that does not overlap the first group; and fusing the transformed left views and right views separately to obtain a stitched left view and a stitched right view, which are then synthesized into the final stereo image. Seamless stitching is thereby achieved, and the algorithm has a degree of robustness.

Owner:SHENZHEN INST OF FUTURE MEDIA TECH +1

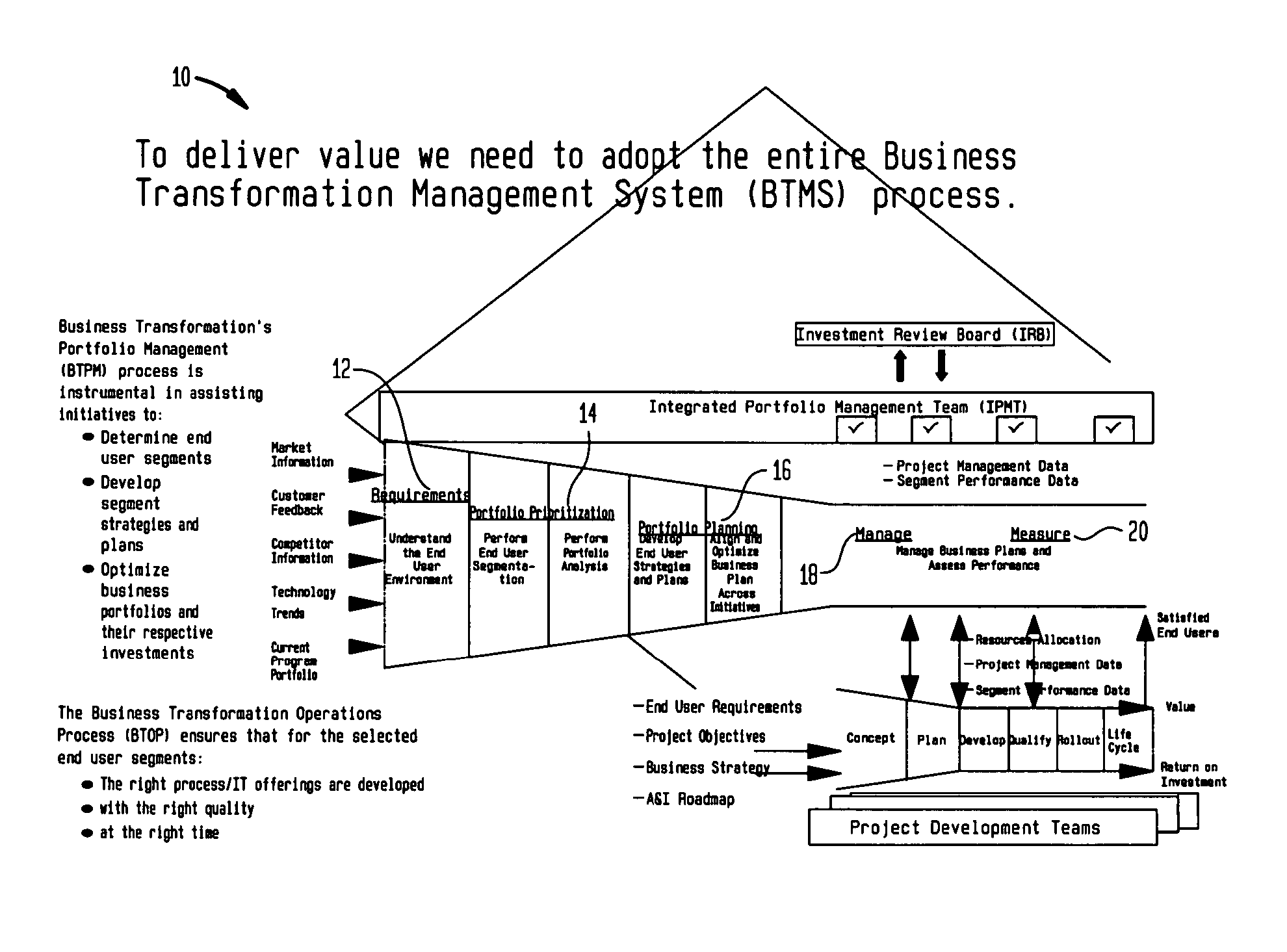

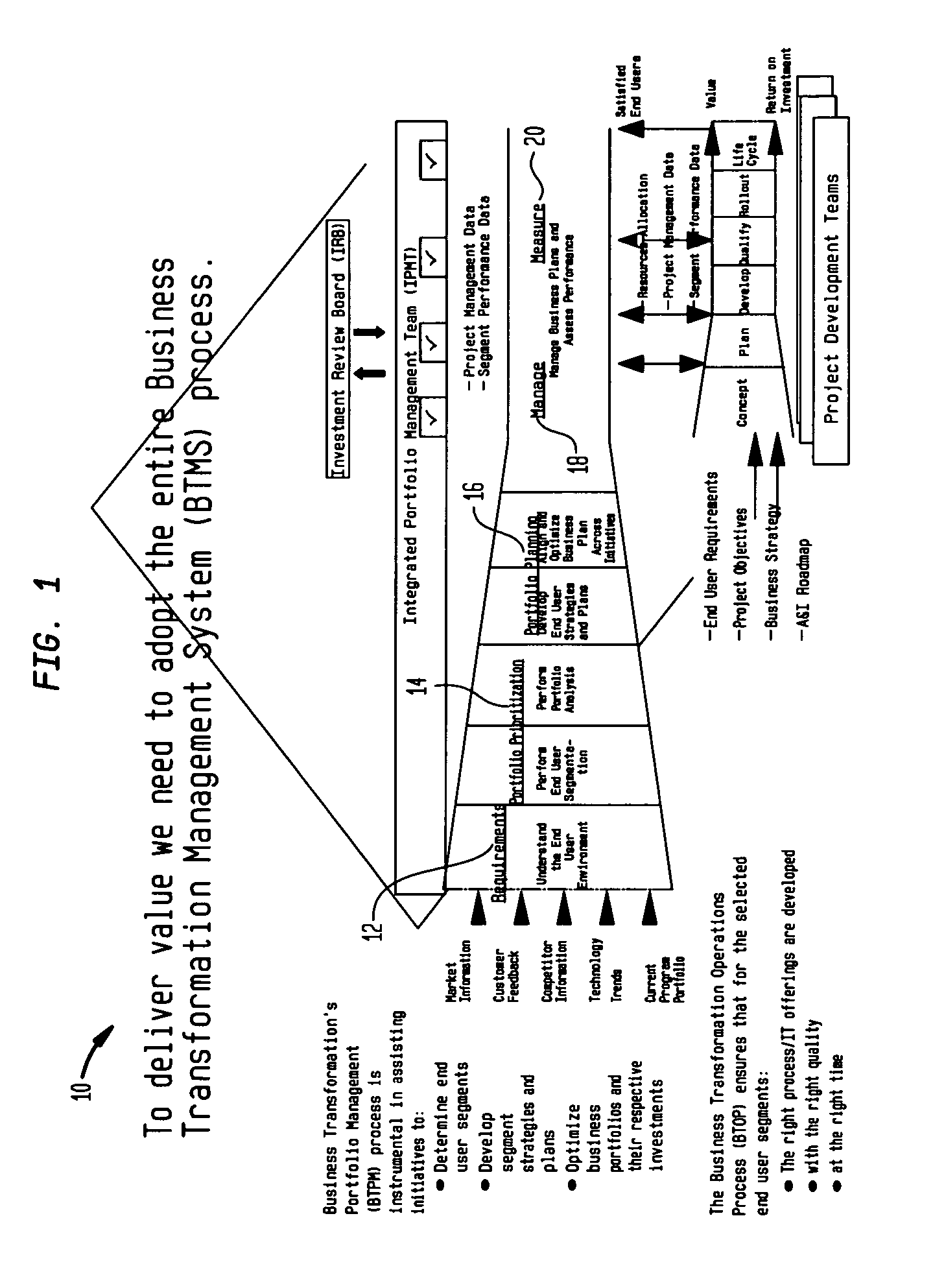

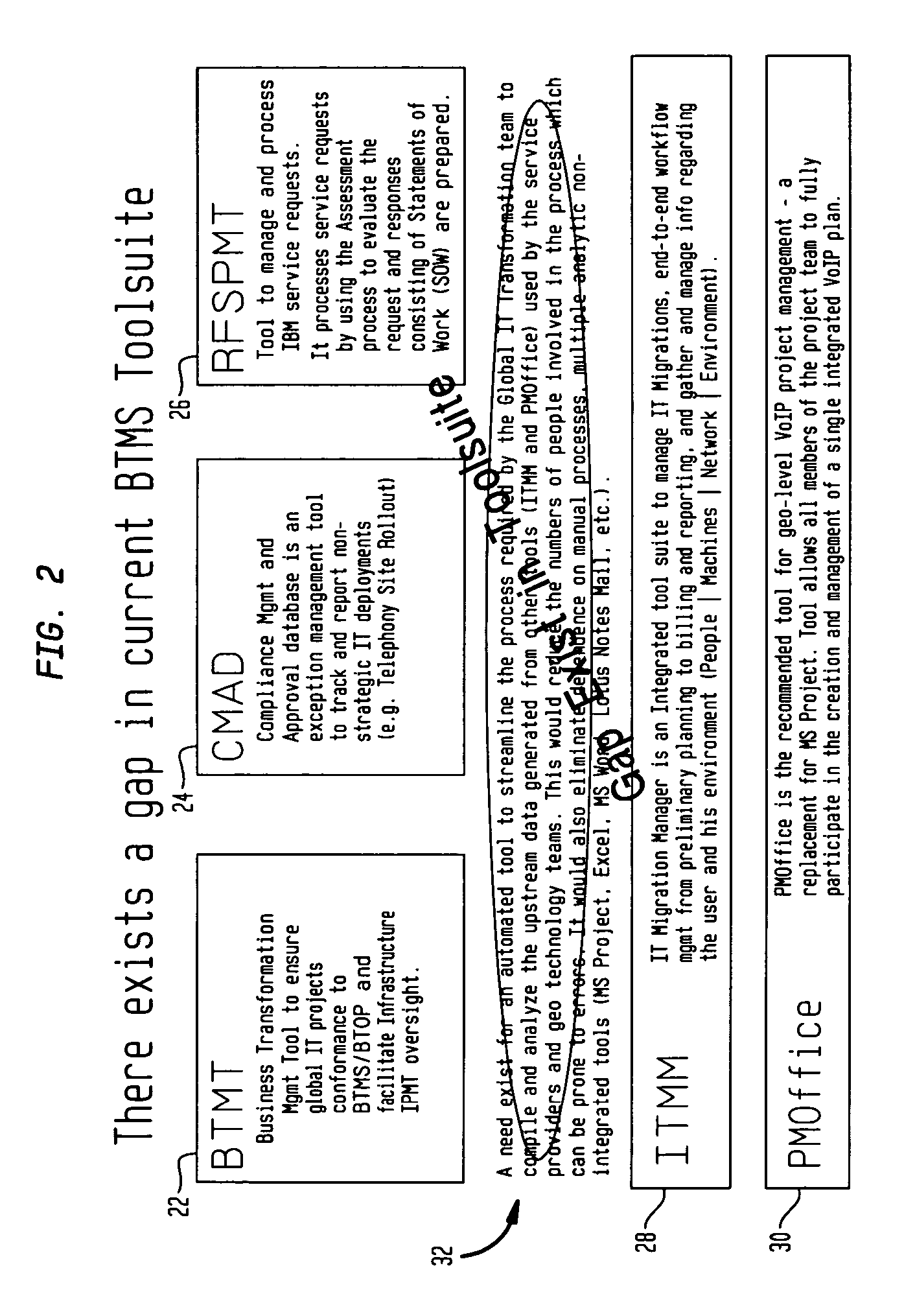

Global IT transformation

Active | US20070250368A1 | Tags: Efficient management; Effective information; Multiprogramming arrangements; Payment architecture; Visibility; IT investment

Disclosed are a method of and system for providing a view of a transformation program. The method comprises providing an integrated, end-to-end set of processes, analytic tools, and reports that give an information technology team a comprehensive view of an information technology transformation, and using that set of processes, analytic tools, and reports to provide visibility for making objective business decisions about issues including technology, activity, and resource allocation. The preferred embodiment may be used in a number of specific situations; for example, in implementing IT investments carried out in the course of an Annual Plan.

Owner:KYNDRYL INC

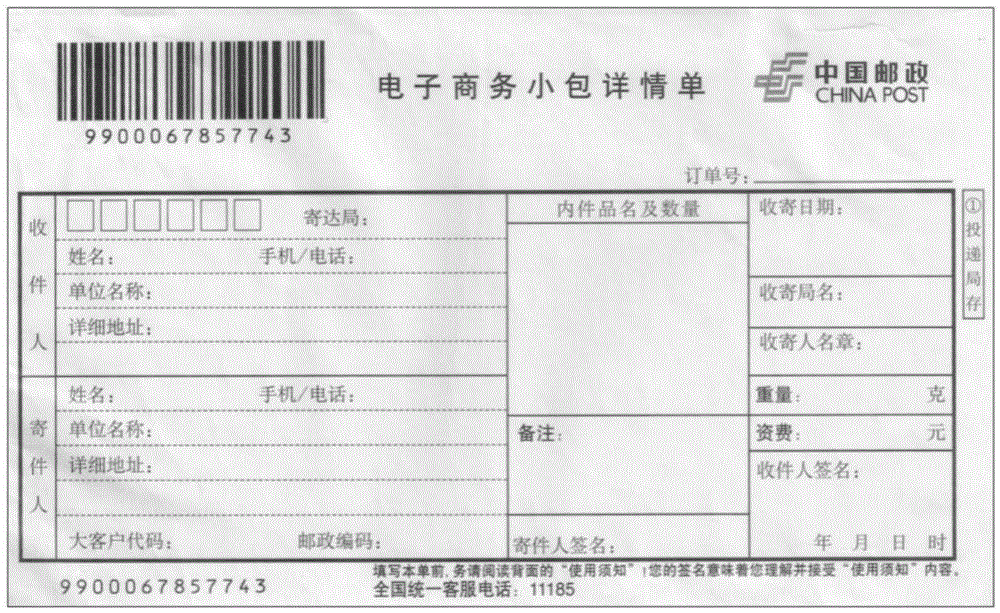

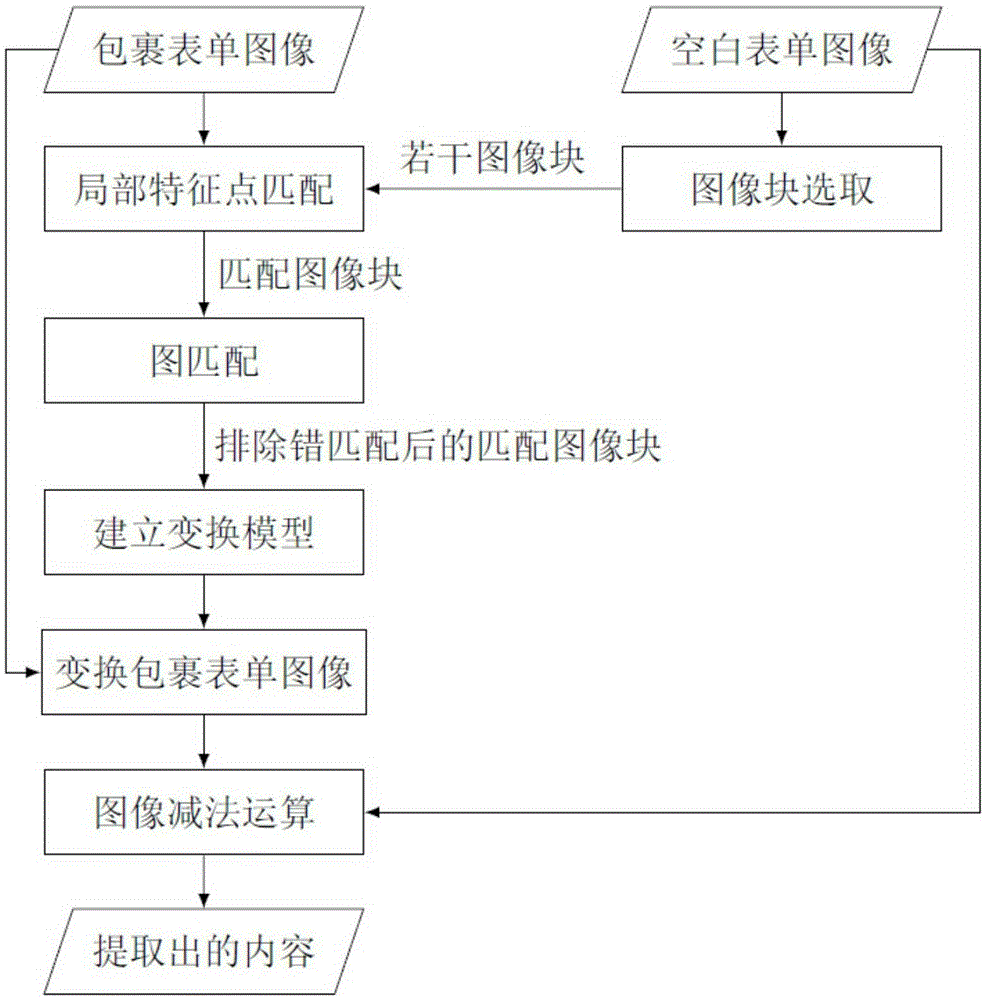

Form content extracting method based on registration of local feature points of image

The present invention proposes a form content extraction method based on registration of local image feature points. The method comprises the following steps: extracting SIFT feature points from an input image; finding the set of matched feature point pairs between each local image block of the input image and the corresponding reference image; calculating a transformation matrix for each local image block of the reference image from the matched feature point pairs; obtaining each corresponding local image block of the input image from the transformation matrices; building a graph model with the local image blocks as nodes and the geometric relationships between them as edges; matching the local image blocks of the input image to those of the reference image by graph matching to obtain a set of matched image blocks; obtaining a global transformation matrix from the matched feature point pairs in the set of matched image blocks; transforming the image with the global transformation matrix; subtracting the reference image from the transformed input image to obtain pixel-wise differences; and binarizing the difference image to obtain the form content.

Owner:EAST CHINA NORMAL UNIV
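
Once the global transformation matrix is known, the final steps (warp, subtract, binarize) are straightforward. The minimal sketch below works on grayscale images stored as nested lists. It assumes a 2×3 affine matrix that maps reference coordinates to input coordinates, nearest-neighbour sampling, and a fixed threshold; all of these are hypothetical choices.

```python
def warp_nearest(image, A, out_h, out_w, fill=255):
    """Resample `image` into the reference frame.

    A is a 2x3 affine matrix mapping reference (x, y) to input (x, y);
    pixels that fall outside the input are set to `fill` (white paper).
    """
    h, w = len(image), len(image[0])
    out = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            sx = A[0][0] * x + A[0][1] * y + A[0][2]
            sy = A[1][0] * x + A[1][1] * y + A[1][2]
            ix, iy = round(sx), round(sy)
            row.append(image[iy][ix] if 0 <= ix < w and 0 <= iy < h else fill)
        out.append(row)
    return out


def extract_form_content(image, reference, A, threshold=40):
    """Warp the filled-in form onto the blank reference, subtract, and binarize."""
    aligned = warp_nearest(image, A, len(reference), len(reference[0]))
    return [[1 if abs(a - r) > threshold else 0 for a, r in zip(ra, rr)]
            for ra, rr in zip(aligned, reference)]
```

A single handwritten pixel in a form that was scanned one column to the right ends up at its reference position in the output mask, and the blank background subtracts away.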

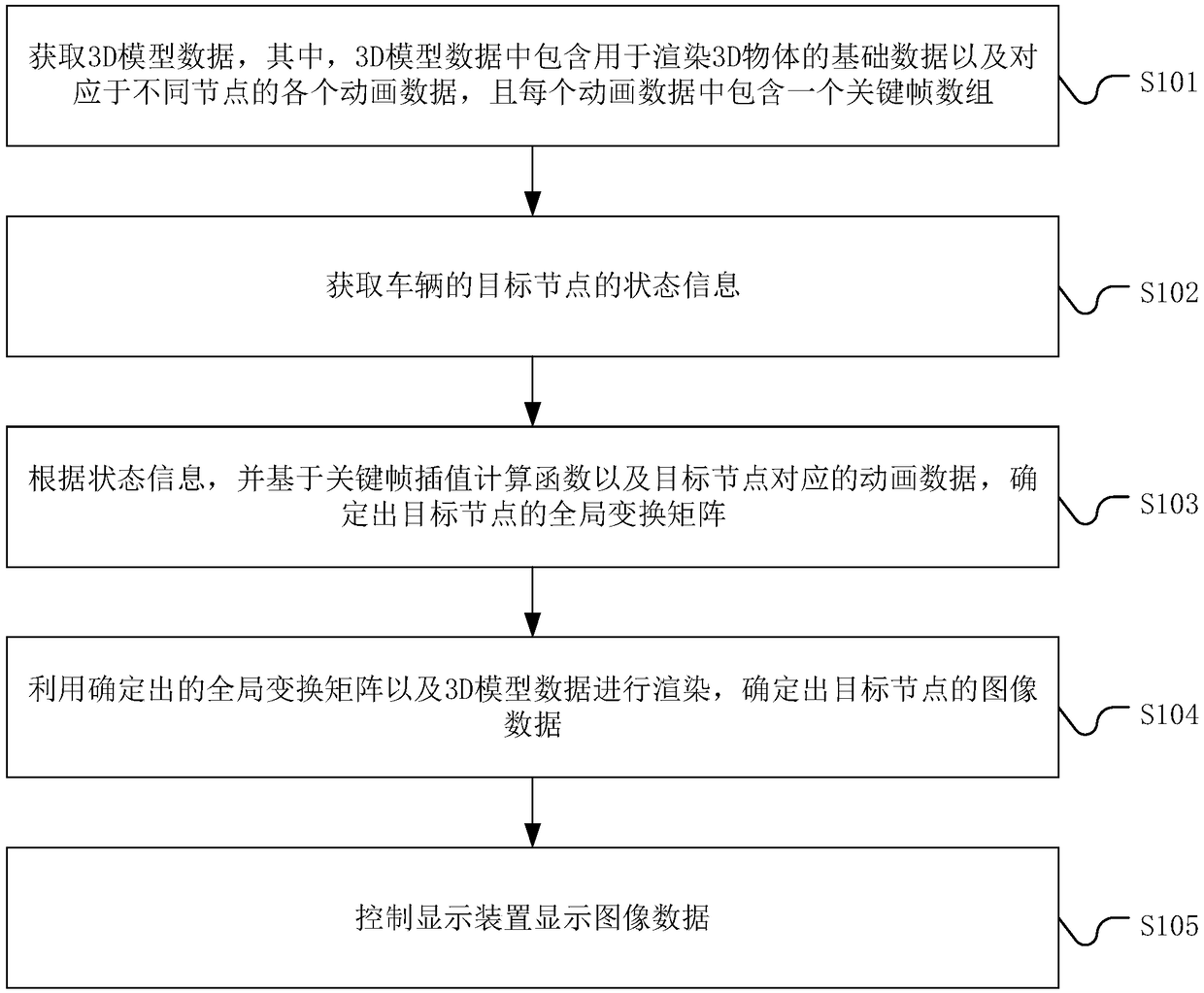

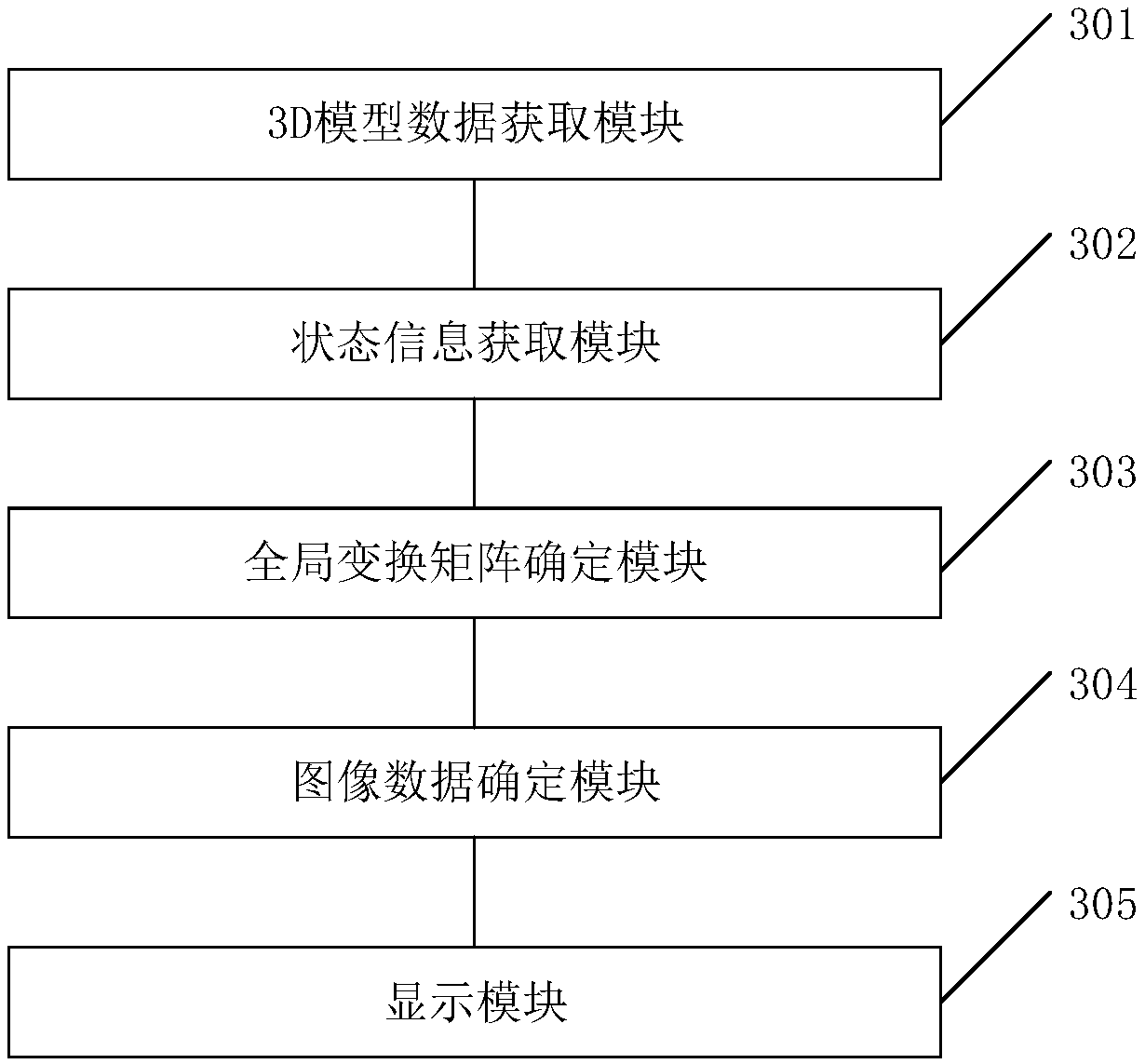

Vehicle-mounted navigator, vehicle state dynamic image display method and system thereof as well as storage medium

Active | CN109405847A | Tags: All-round; Helps to view; Instruments for road network navigation; Animation; Global transformation

The invention discloses a vehicle state dynamic image display method applied to a vehicle-mounted navigator. The method comprises: obtaining 3D model data, which comprises base data for rendering 3D objects and dynamic image data corresponding to different nodes, each dynamic image data item comprising a keyframe data array; obtaining state information for a target node of the vehicle; determining a global transformation matrix of the target node from the state information, using a keyframe interpolation function and the dynamic data corresponding to the target node; rendering with the determined global transformation matrix and the 3D model data to determine the image data of the target node; and controlling a display device to display the image data. The method helps the user view the vehicle state from all directions and throughout the whole process, and produces smoother, more continuous dynamic images. The invention further discloses a vehicle-mounted navigator, a vehicle state dynamic image display system, and a storage medium with corresponding effects.

Owner:SHENZHEN ROADROVER TECH
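
The abstract computes a node's global transformation matrix from keyframe data. In a typical scene graph, that means interpolating the node's local transform between keyframes and multiplying by its parent's global matrix. The sketch below assumes 2D homogeneous matrices, linear interpolation of angle and translation, and a hypothetical node table; none of these details come from the patent.

```python
import math
from bisect import bisect_right


def interpolate(keys, t):
    """keys: time-sorted (time, angle, tx, ty) tuples; clamps outside the range."""
    times = [k[0] for k in keys]
    if t <= times[0]:
        return keys[0][1:]
    if t >= times[-1]:
        return keys[-1][1:]
    i = bisect_right(times, t)
    (t0, *a), (t1, *b) = keys[i - 1], keys[i]
    u = (t - t0) / (t1 - t0)
    return tuple(p + u * (q - p) for p, q in zip(a, b))


def local_matrix(angle, tx, ty):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, tx], [s, c, ty], [0.0, 0.0, 1.0]]


def matmul3(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def global_matrix(nodes, name, t):
    """nodes: name -> (parent name or None, keyframes). Global = parent global @ local."""
    parent, keys = nodes[name]
    local = local_matrix(*interpolate(keys, t))
    return local if parent is None else matmul3(global_matrix(nodes, parent, t), local)


# Hypothetical model: a door hinged 1 unit from the body origin opens 90 degrees over 1 s.
NODES = {
    "body": (None, [(0.0, 0.0, 10.0, 0.0)]),
    "door": ("body", [(0.0, 0.0, 1.0, 0.0), (1.0, math.pi / 2, 1.0, 0.0)]),
}
```

At t = 0.5 the door's local angle interpolates to 45°, and its global matrix combines that rotation with the body's translation. The renderer would then apply this matrix to the door's mesh.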

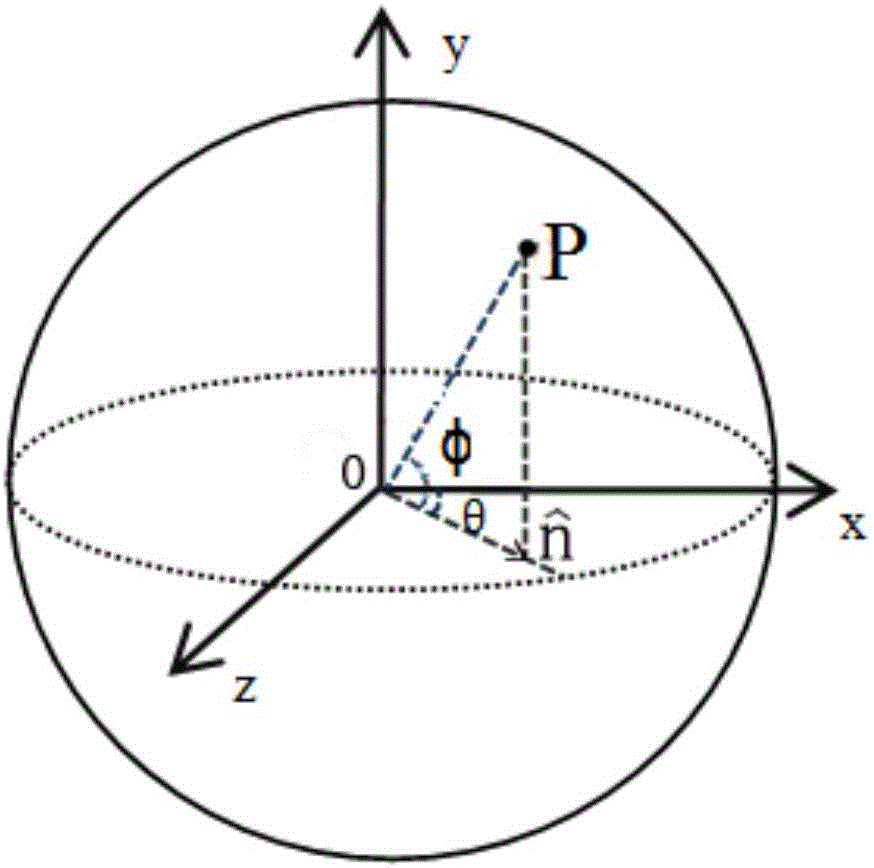

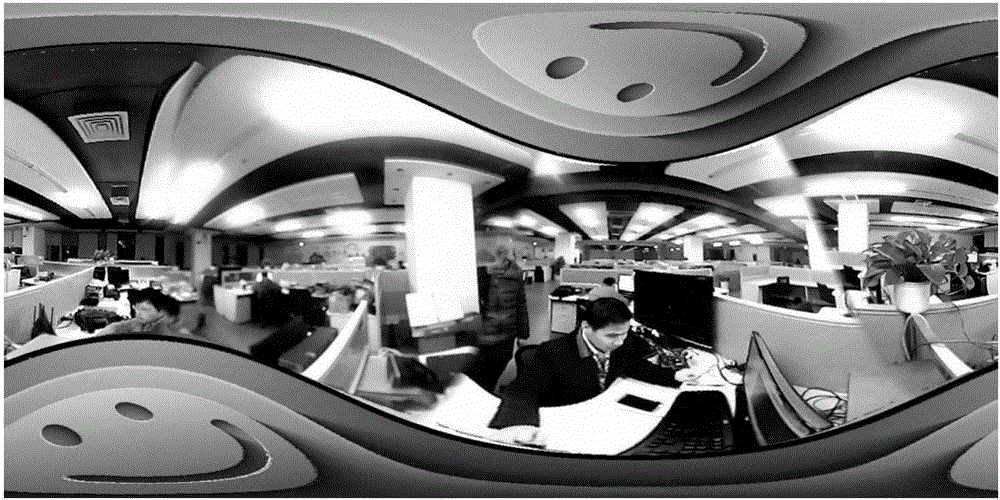

Method for automatically stabilizing view angle of panoramic camera

Inactive | CN106384367A | Tags: Improve viewing effect; Improve the correction effect; Image analysis; Geometric image transformation; Global transformation; Mapping algorithm

The invention provides a method for automatically stabilizing the shooting view angle of a panoramic camera. Based on data from an inclination sensor, a global transformation mapping algorithm is applied to the captured panoramic image, which is automatically corrected in software into an image with an upright view angle. As a result, panoramic video or images with a good viewing effect can still be provided even when the shooting posture is unstable.

Owner:深圳拍乐科技有限公司

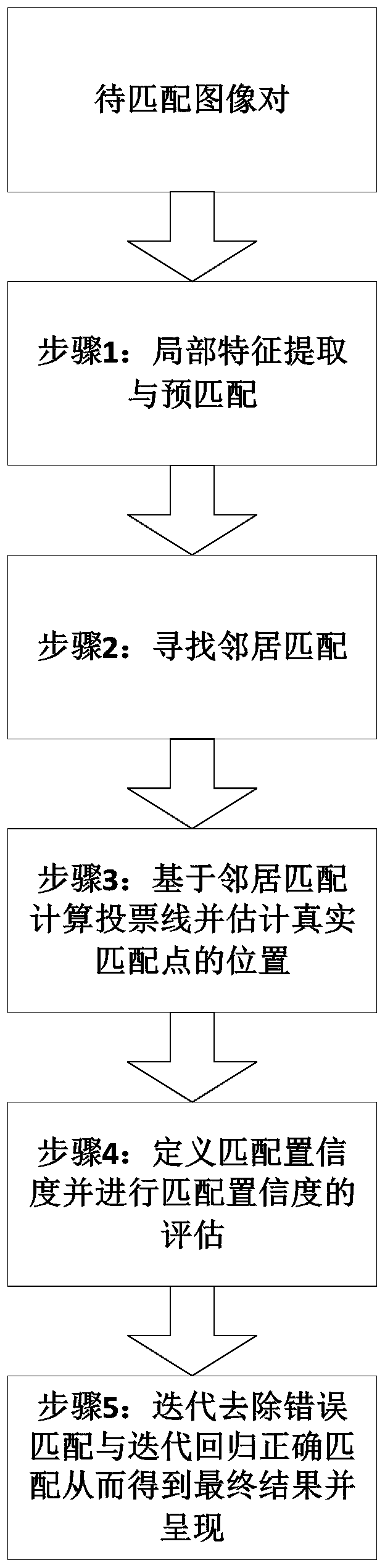

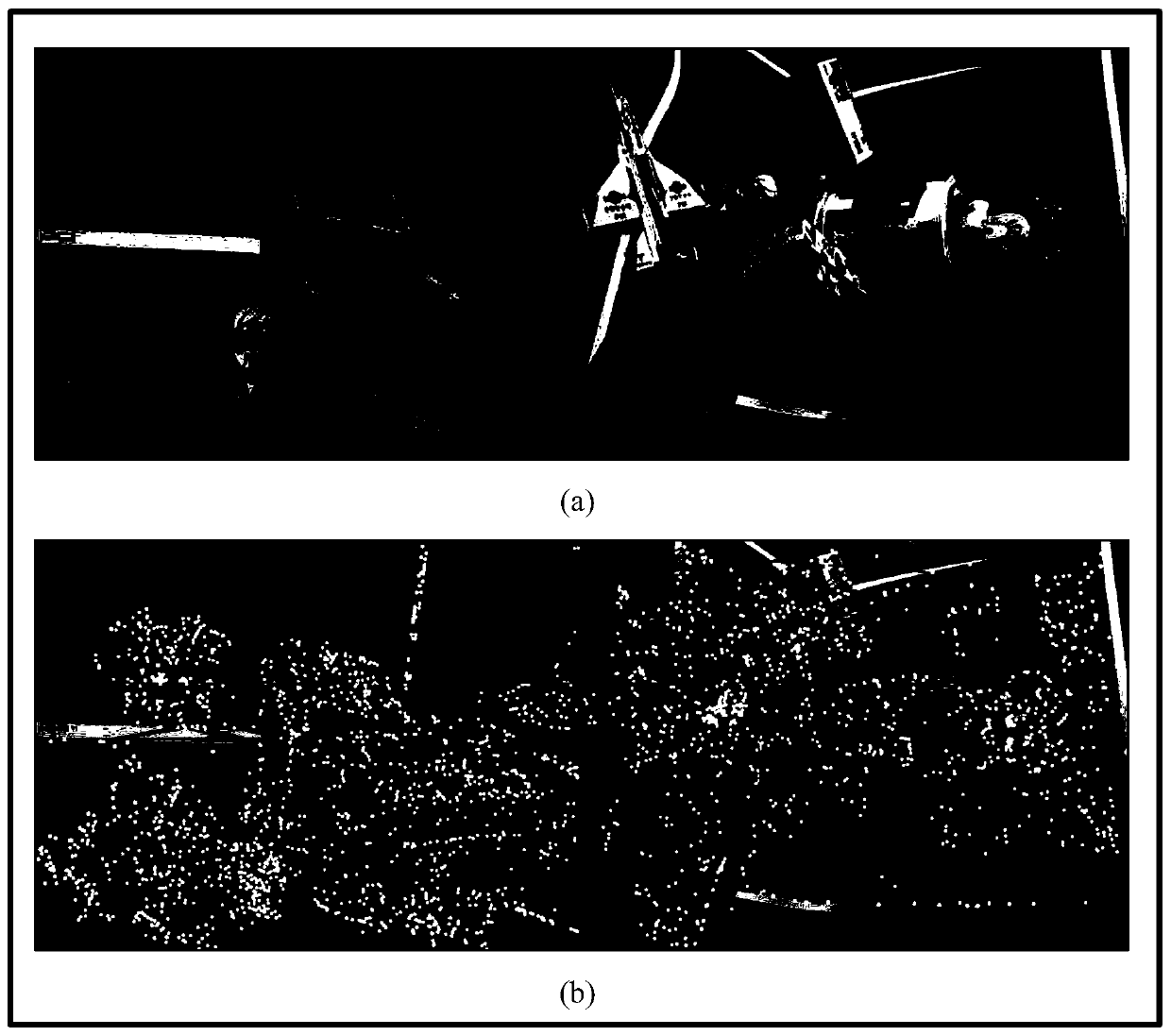

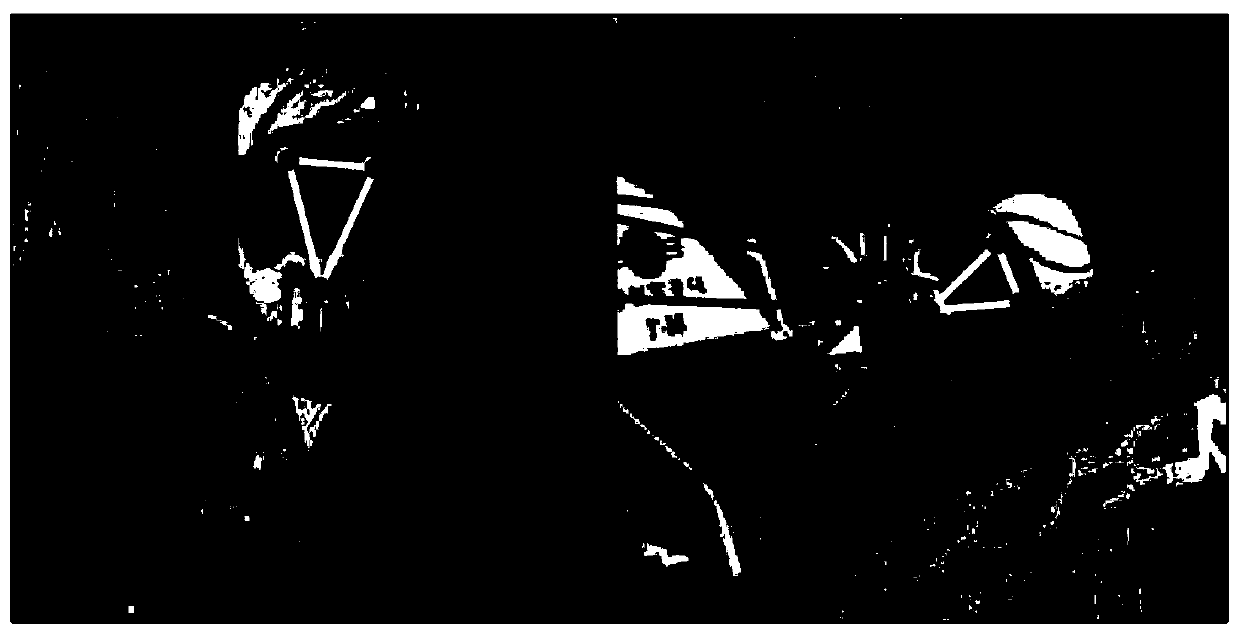

An image matching method based on locality sensitive confidence evaluation

Active | CN109840529A | Tags: Good matching efficiency; Proof of validity; Character and pattern recognition; Feature extraction; Global transformation

The invention discloses an image matching method based on locality-sensitive confidence evaluation. The method comprises the following steps: step 1, local feature extraction and pre-matching; step 2, searching for neighboring matching pairs; step 3, calculating voting lines based on the neighboring matches and estimating the position of the true matching point; step 4, defining and evaluating a matching confidence coefficient; and step 5, iteratively removing false matches and recovering correct matches to obtain and display the final result. Existing methods that estimate a global transformation between the input images, or that obtain a matching solution through global optimization, are prone to outliers and interference. The method addresses these problems and effectively improves the efficiency and accuracy of image matching.

Owner:ANHUI UNIVERSITY
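
The voting-line construction is not detailed in the abstract, so the sketch below swaps in a simpler, commonly used idea to show the shape of steps 2, 4, and 5: a match is trusted when its k nearest neighbouring matches move in a similar direction, and low-confidence matches are removed iteratively. The scoring rule, `k`, `tol`, and `min_conf` are assumptions, not the patented method.

```python
import math


def match_confidences(matches, k=3, tol=2.0):
    """Confidence of each (p, q) match = share of its k nearest neighbour matches
    (by position p) whose displacement q - p lies within tol of its own."""
    scores = []
    for i, (p, q) in enumerate(matches):
        d = (q[0] - p[0], q[1] - p[1])
        neighbours = sorted((j for j in range(len(matches)) if j != i),
                            key=lambda j: math.dist(p, matches[j][0]))[:k]
        agree = 0
        for j in neighbours:
            pj, qj = matches[j]
            if math.dist(d, (qj[0] - pj[0], qj[1] - pj[1])) <= tol:
                agree += 1
        scores.append(agree / len(neighbours) if neighbours else 0.0)
    return scores


def filter_matches(matches, k=3, tol=2.0, min_conf=0.5, max_iter=10):
    """Iteratively drop matches whose confidence falls below min_conf."""
    current = list(matches)
    for _ in range(max_iter):
        scores = match_confidences(current, k, tol)
        kept = [m for m, s in zip(current, scores) if s >= min_conf]
        if len(kept) == len(current):
            break
        current = kept
    return current
```

Unlike a single global transformation fitted to all matches, this score depends only on each match's neighbourhood, so it keeps working when different image regions move differently.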

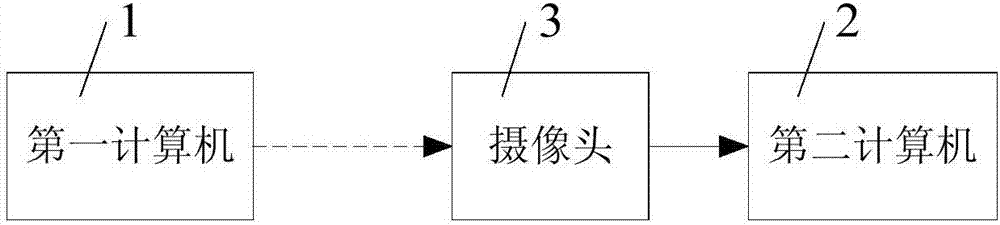

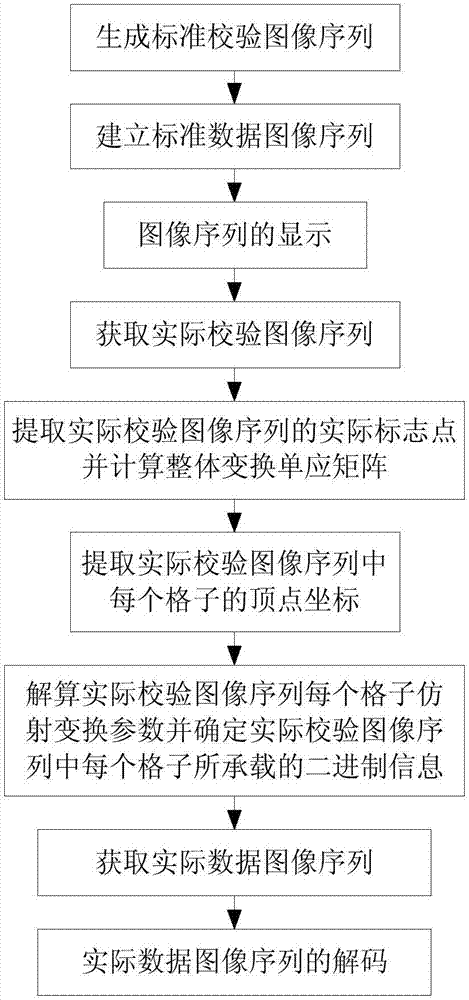

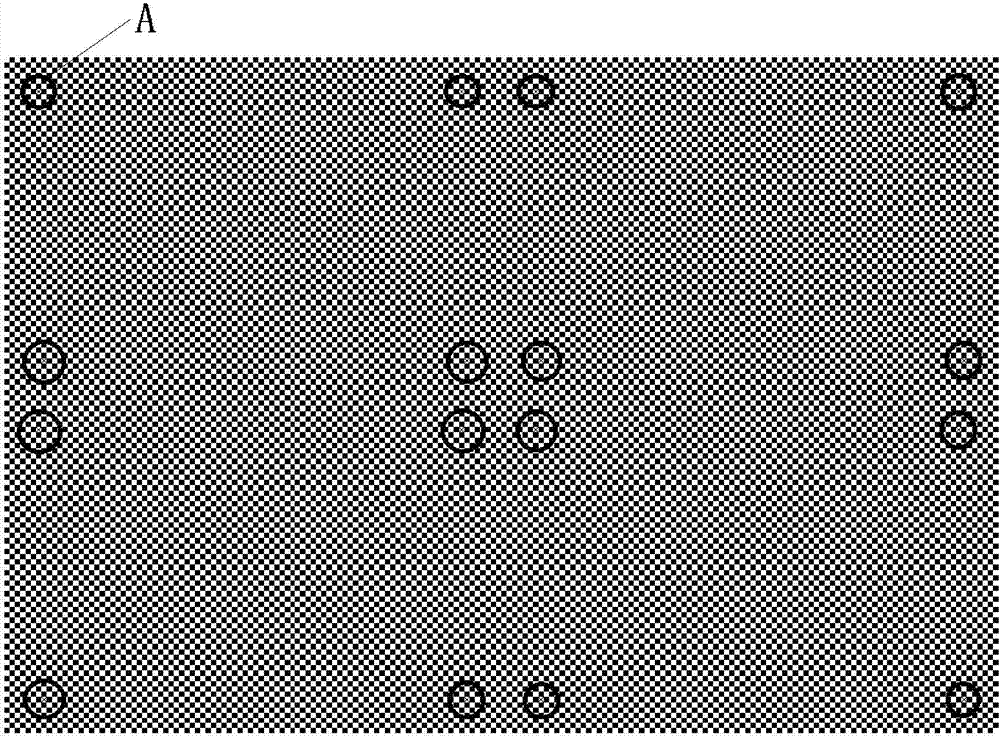

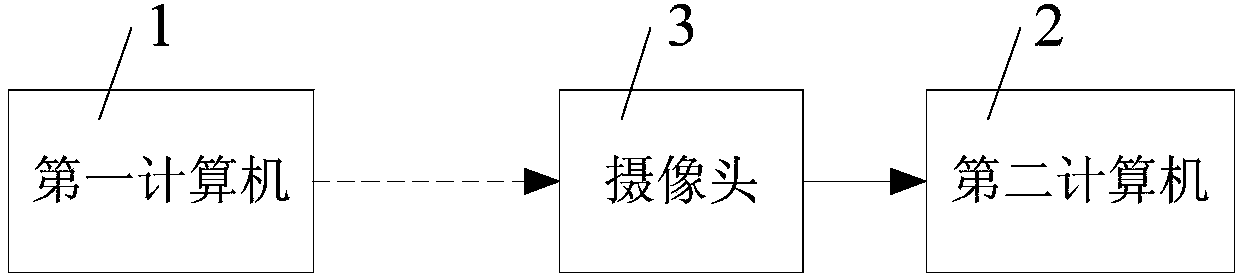

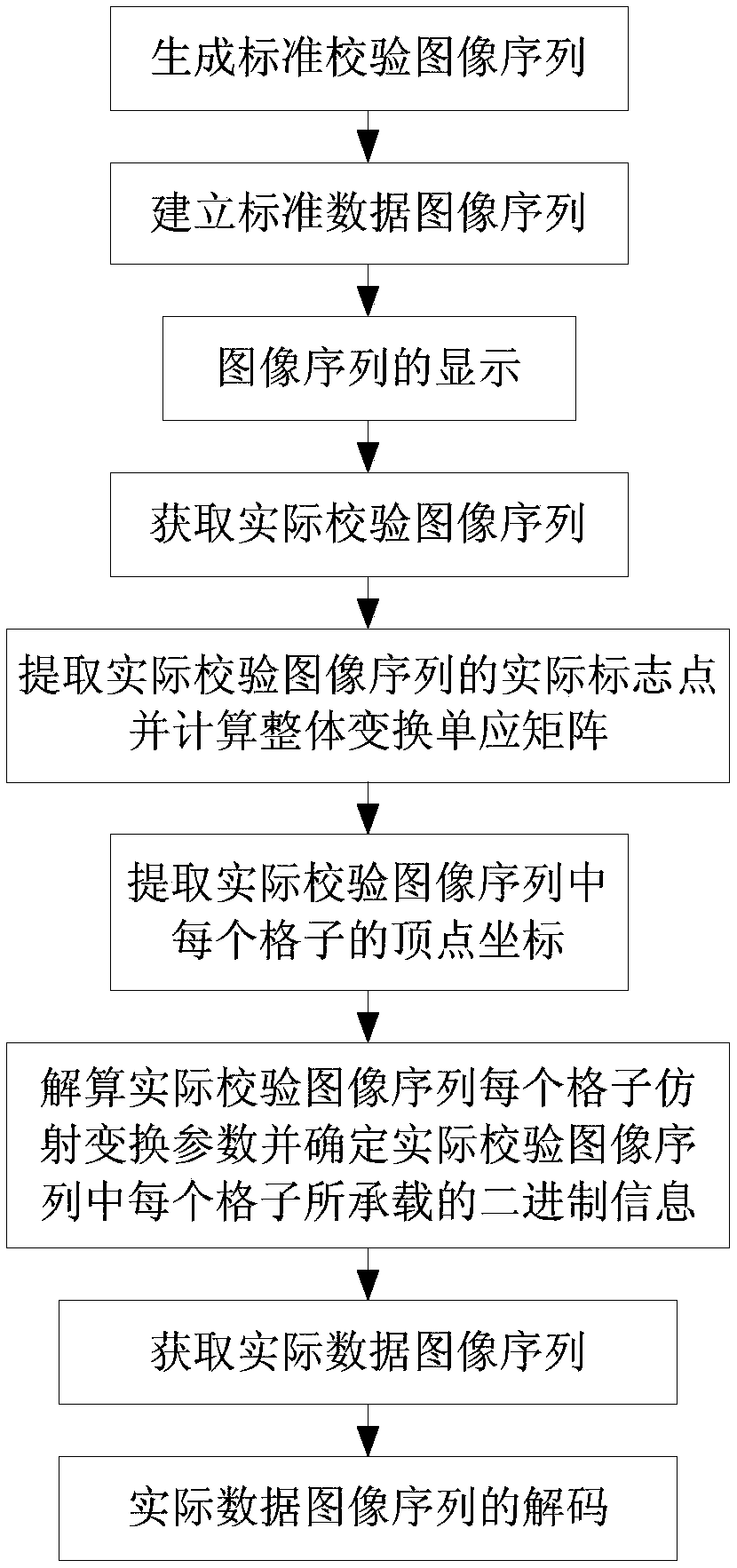

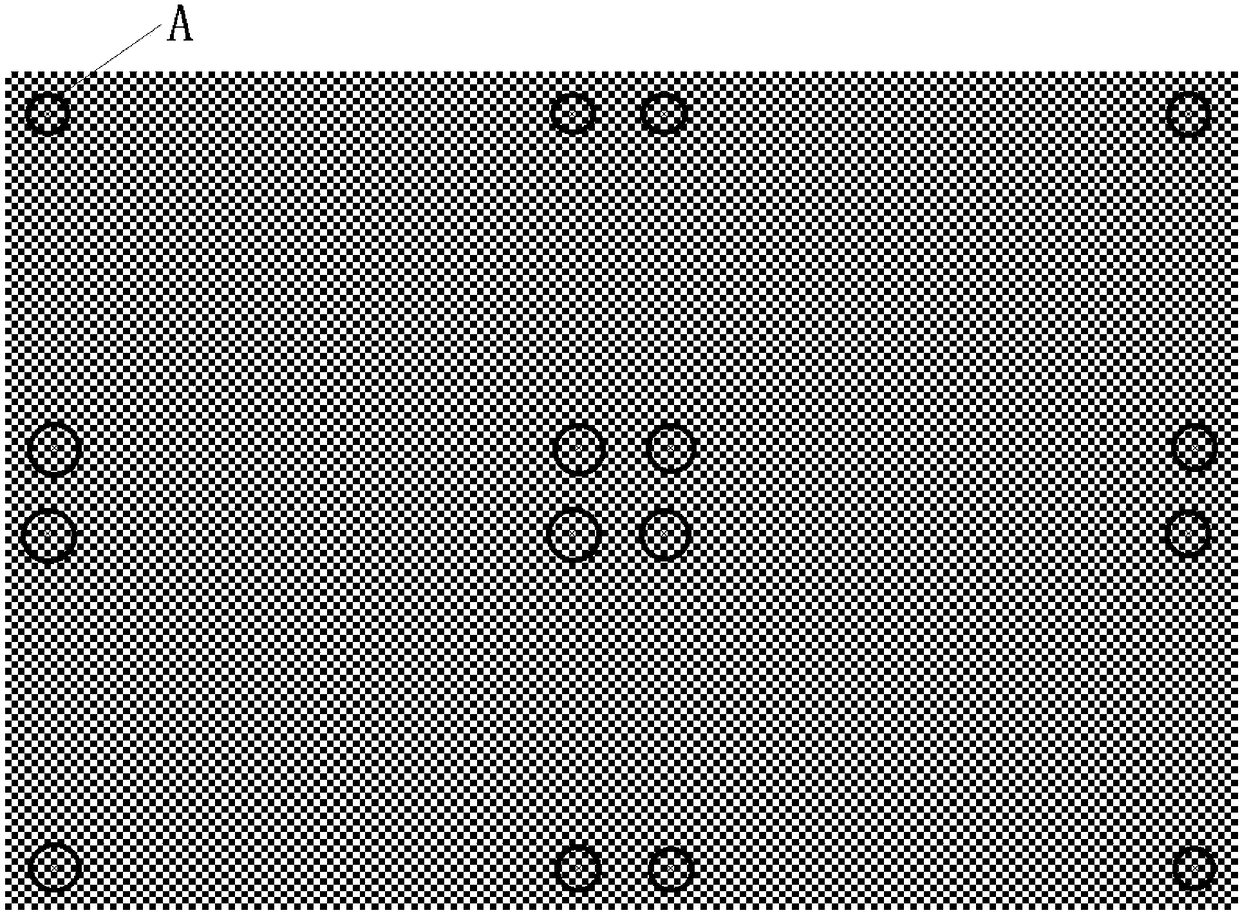

Computer vision based non-contact type data transmission method

Inactive | CN107172386A | Tags: Guaranteed confidentiality; Guaranteed one-way; Color signal processing circuits; Image coding; Camera lens; Global transformation

The invention discloses a computer-vision-based non-contact data transmission method comprising: 1, encoding and displaying an image sequence, specifically 101, generating a standard check image sequence, 102, building a standard data image sequence, and 103, displaying the image sequence; and 2, decoding the image sequence, specifically 201, obtaining an actual check image sequence, 202, extracting the actual mark points of the actual check image sequence and calculating a global transformation homography matrix, 203, extracting the vertex coordinates of each grid cell of the actual check image sequence, 204, solving the affine transformation parameters of each grid cell of the actual check image sequence and determining the binary information carried by each cell, 205, obtaining an actual data image sequence, and 206, decoding the actual data image sequence. Because a camera lens is used to acquire the computer image information, one-way transmission of information between a computer intranet and extranet is achieved, enabling efficient communication of computer information between a classified network and a non-classified network.

Owner:XIAN UNIV OF SCI & TECH
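
Step 202 computes a global homography from the detected mark points. The abstract does not give the solver. Assuming exactly four marks in general position and the normalization h33 = 1, the direct linear transform reduces to an 8×8 linear system; the function names are hypothetical.

```python
def _solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def homography_from_4(src, dst):
    """3x3 homography H (h33 = 1) mapping four src points onto four dst points."""
    if len(src) != 4 or len(dst) != 4:
        raise ValueError("exactly four correspondences expected")
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        A.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = _solve(A, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_homography(H, point):
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)
```

With more than four marks, a least-squares (normalized) DLT would be the more robust choice. The grid vertices of step 203 can then be mapped through `apply_homography` before the per-cell affine step.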

Three-dimensional image splicing method for eliminating motion ghosting

Pending | CN109493282A | Tags: Eliminate motion ghosting; Eliminate ghosting; Image enhancement; Image analysis; Global transformation; Stereo image

The invention discloses a three-dimensional image stitching method that eliminates motion ghosting, comprising the following steps: acquiring two groups of images with a binocular camera and calculating the disparity of each group; extracting, describing, and matching the feature points of each group of images, then screening out mismatches to obtain an accurate set of feature point pairs; setting a new feature constraint condition from the disparity and the feature point pair set, and applying a global transformation to the second group of images using the homography transformation that optimizes the feature constraint condition; determining the overlapping region of the first group of images and the transformed second group, detecting moving objects in the overlapping region, and designing a weighted fusion coefficient according to the position of each moving object relative to the virtual stitching line; and fusing and stitching the left views and the right views separately, then synthesizing the stitched left and right views into the final stereo image. The method achieves high-quality stitching of stereo images that contain moving objects.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV

A non-contact data transmission method based on computer vision

Inactive | CN107172386B | Tags: Guaranteed confidentiality; Guaranteed one-way; Color signal processing circuits; Image coding; Camera lens; Global transformation

Owner:XIAN UNIV OF SCI & TECH

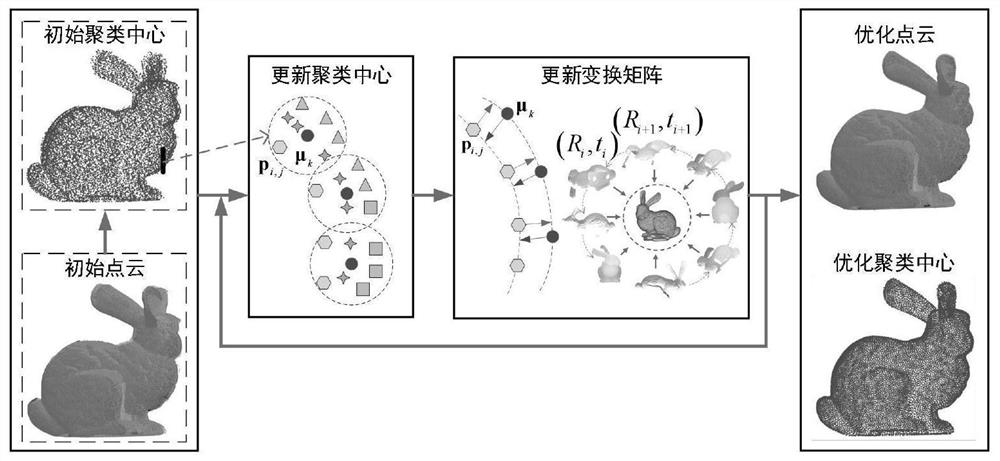

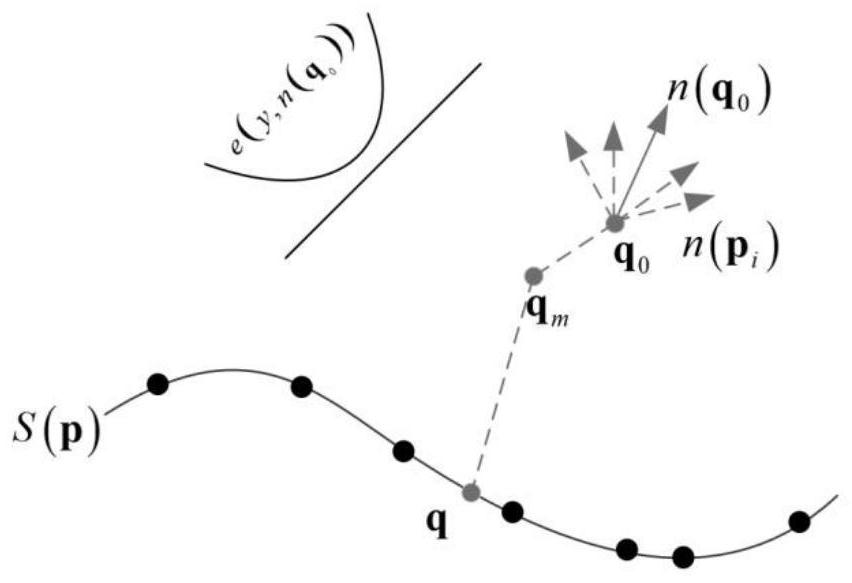

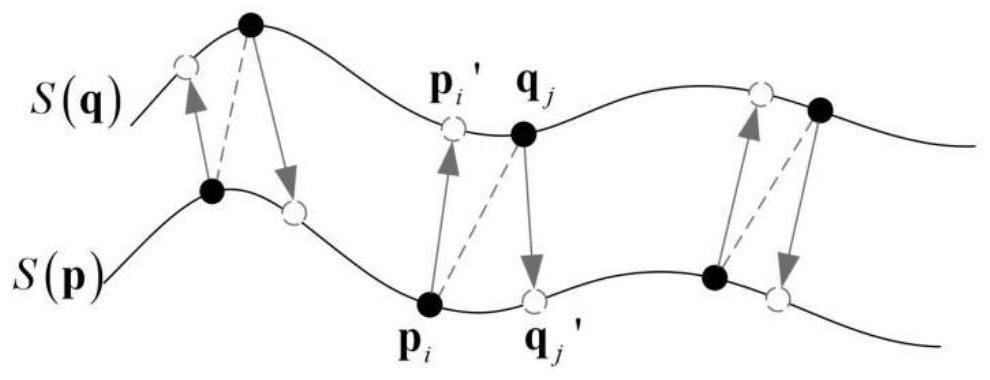

Multi-view point cloud registration method based on K-means clustering center local curved surface projection

Active | CN113610903A | Tags: Reduced cloud resolution drop issues; Improve the accuracy of multi-view registration; Image enhancement; Image analysis; Point cloud; Global transformation

The invention discloses a multi-view point cloud registration method based on local surface projection at K-means cluster centers. The method includes: giving an initial global transformation matrix; calculating a multi-scale feature descriptor and a normal vector for each frame of point cloud; determining the cluster assignment of each point in the complete point cloud; calculating multi-scale feature descriptors and normal vectors to obtain the registration correspondence of each original point in the complete point cloud; performing bidirectional interpolated projection onto the local MLS surface and, if the rigid-body transformation consistency constraint is not met, eliminating the point pair, thereby obtaining the final matching point set of the single-frame point cloud; if the constraint is met, taking the projection point and its corresponding point as a correct corresponding point pair; registering the N view point clouds in sequence; and performing global optimization. The invention addresses the loss of resolution and the low registration precision caused by down-sampling laser point clouds that are already sparse, that is, the sampling sparsity of three-dimensional laser point clouds.

Owner:HARBIN INST OF TECH
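
The rigid-body transformation consistency constraint rests on the fact that a rigid transformation preserves the distances between points. Below is a minimal sketch of such a check. It assumes the constraint is tested against a few already-accepted anchor pairs with an absolute tolerance; the anchor scheme, names, and tolerance are all hypothetical.

```python
import math


def rigid_consistent(candidate, anchors, tol=0.01):
    """True if the candidate pair (p, q) preserves its distance to every anchor pair."""
    p, q = candidate
    return all(abs(math.dist(p, pa) - math.dist(q, qa)) <= tol for pa, qa in anchors)


def filter_pairs(candidates, anchors, tol=0.01):
    """Keep only candidate correspondences that satisfy the distance constraint."""
    return [c for c in candidates if rigid_consistent(c, anchors, tol)]
```

A pair produced by the true rotation-plus-translation passes, while a pair whose target is displaced changes at least one inter-point distance and is eliminated.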

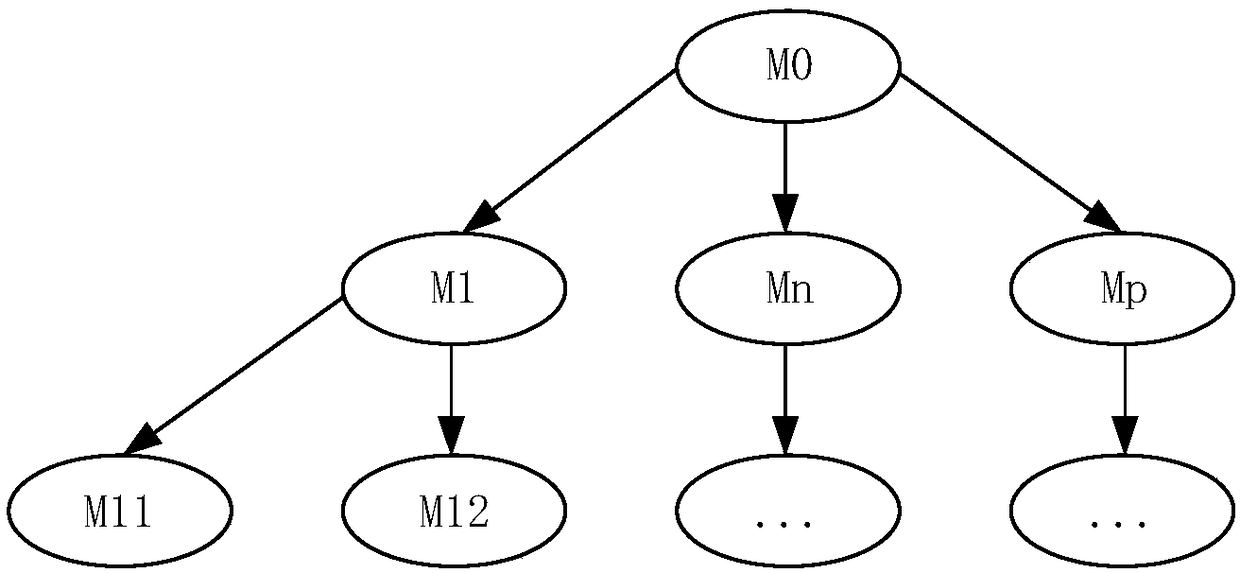

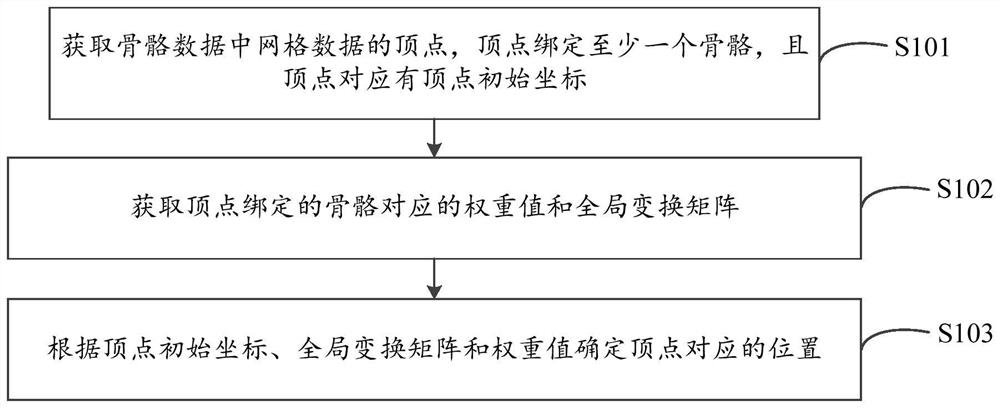

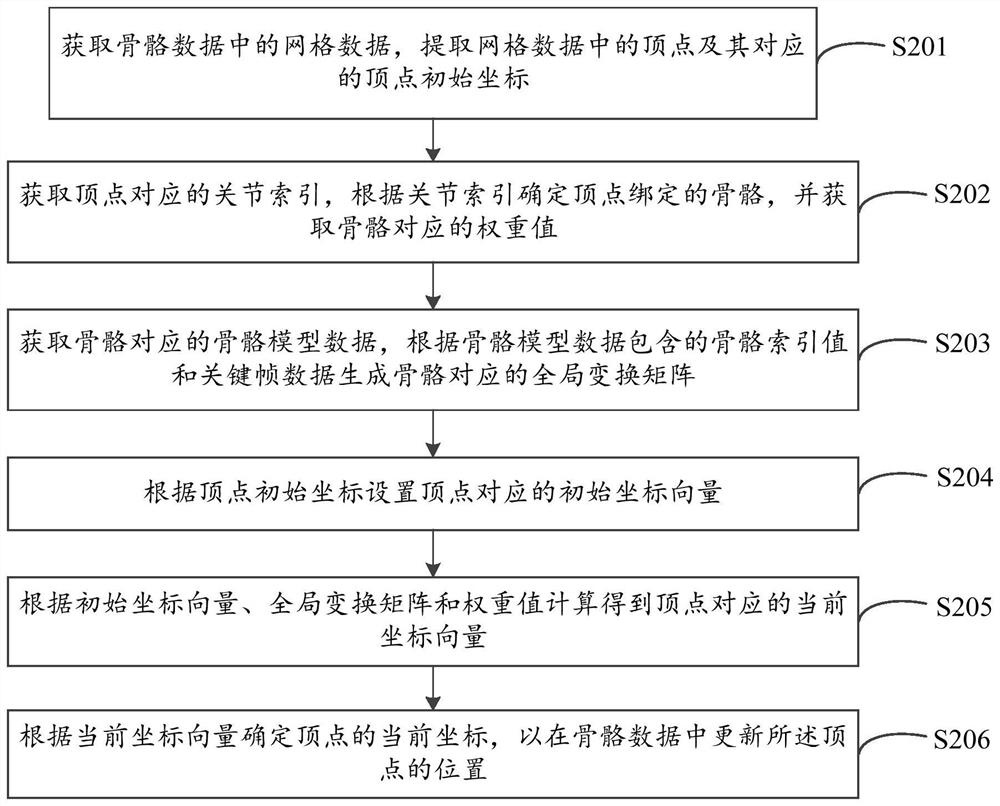

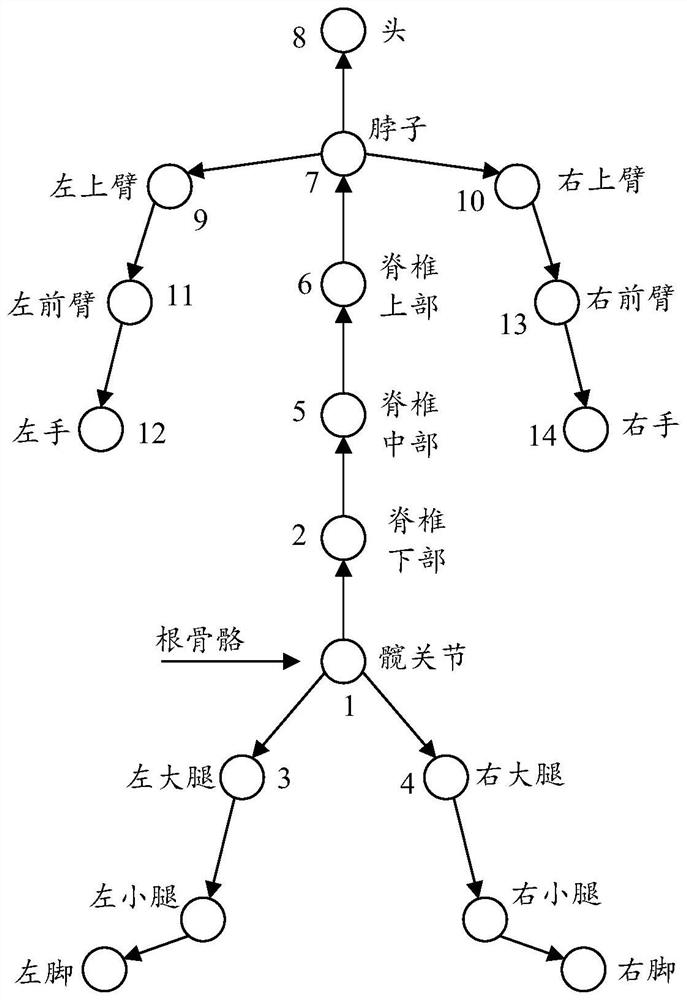

Skeletal data modeling method, computer equipment and storage medium

Pending | CN114359447A | Tags: Achieving dynamism; Avoid storage; Animation; 3D modelling; Algorithm; Global transformation

The invention discloses a skeleton data modeling method, a computer device, and a storage medium. The method comprises: obtaining the vertices of the mesh data in the skeleton data, binding each vertex to at least one bone, and associating each vertex with its initial coordinates; obtaining the weight value and the global transformation matrix corresponding to each bone bound to the vertex; and determining the position of each vertex from its initial coordinates, the global transformation matrices, and the weight values. In this way, the real-time position of a vertex can be updated from its initial position together with the global transformation matrices and weights of its bound bones. This achieves a dynamic skeletal-modeling effect while reducing both the data size of the skeleton model file and the memory needed for data operations.

Owner:SHENZHEN TATFOOK NETWORK TECH
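
The vertex update described above matches standard linear blend skinning, v′ = Σᵢ wᵢ Mᵢ v. This minimal sketch rests on two assumptions the abstract does not state: that each bone's 4×4 global transformation matrix already includes the inverse bind pose, and that the weights sum to 1.

```python
def skin_vertex(rest_position, influences):
    """Linear blend skinning for one vertex.

    rest_position: (x, y, z) initial coordinates of the vertex.
    influences: list of (weight, M) with M a 4x4 row-major global bone matrix.
    """
    x, y, z = rest_position
    out = [0.0, 0.0, 0.0]
    for weight, M in influences:
        for i in range(3):
            out[i] += weight * (M[i][0] * x + M[i][1] * y + M[i][2] * z + M[i][3])
    return tuple(out)


IDENTITY = [[1.0, 0.0, 0.0, 0.0],
            [0.0, 1.0, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]
```

Only the rest positions, weights, and per-frame bone matrices need to be stored; deformed vertex positions are recomputed on demand. This is consistent with the storage savings the abstract claims.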

An Optimized Flash Memory Address Mapping Method

Active | CN104268094B | Tags: Increase profit; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Global transformation; Granularity

The invention discloses an optimized flash memory address mapping method. In the demand-based page-level address mapping method DFTL, a global conversion page mapping table (GTD) is maintained in memory, and an address mapping cache (CMT) in memory caches the frequently accessed address mapping entries of translation pages, where the cached data unit is an entire address translation page. The invention unifies the granularity of address mapping information in the flash memory and the cache and fully exploits the temporal and spatial locality of data. When local data is accessed frequently within a short period, only the page-level address mapping cache needs to be accessed, not the flash memory; and when a translation page must be evicted from the cache, all of its updated address mapping information can be written to flash memory at once, which improves the utilization of the mapping information.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

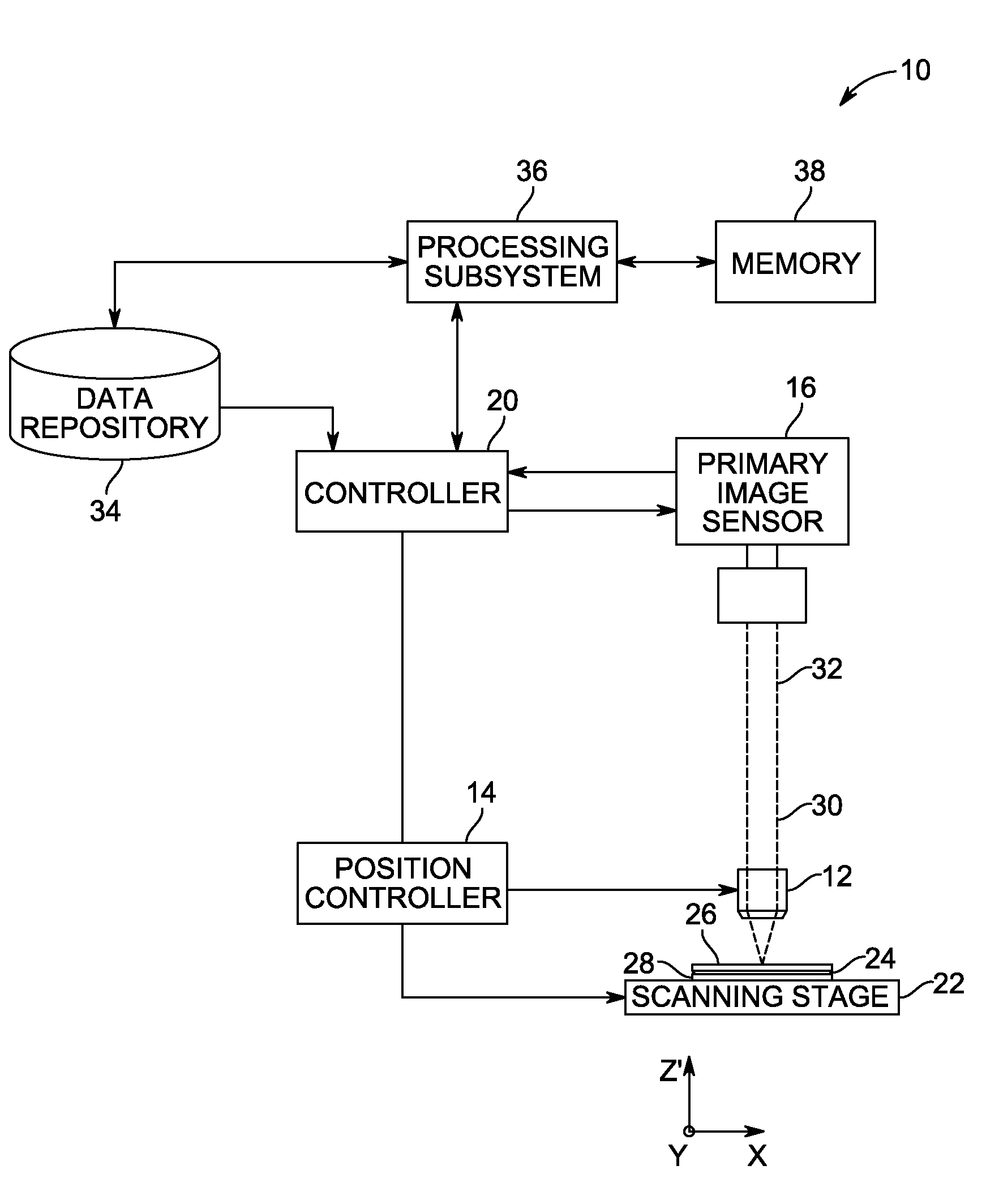

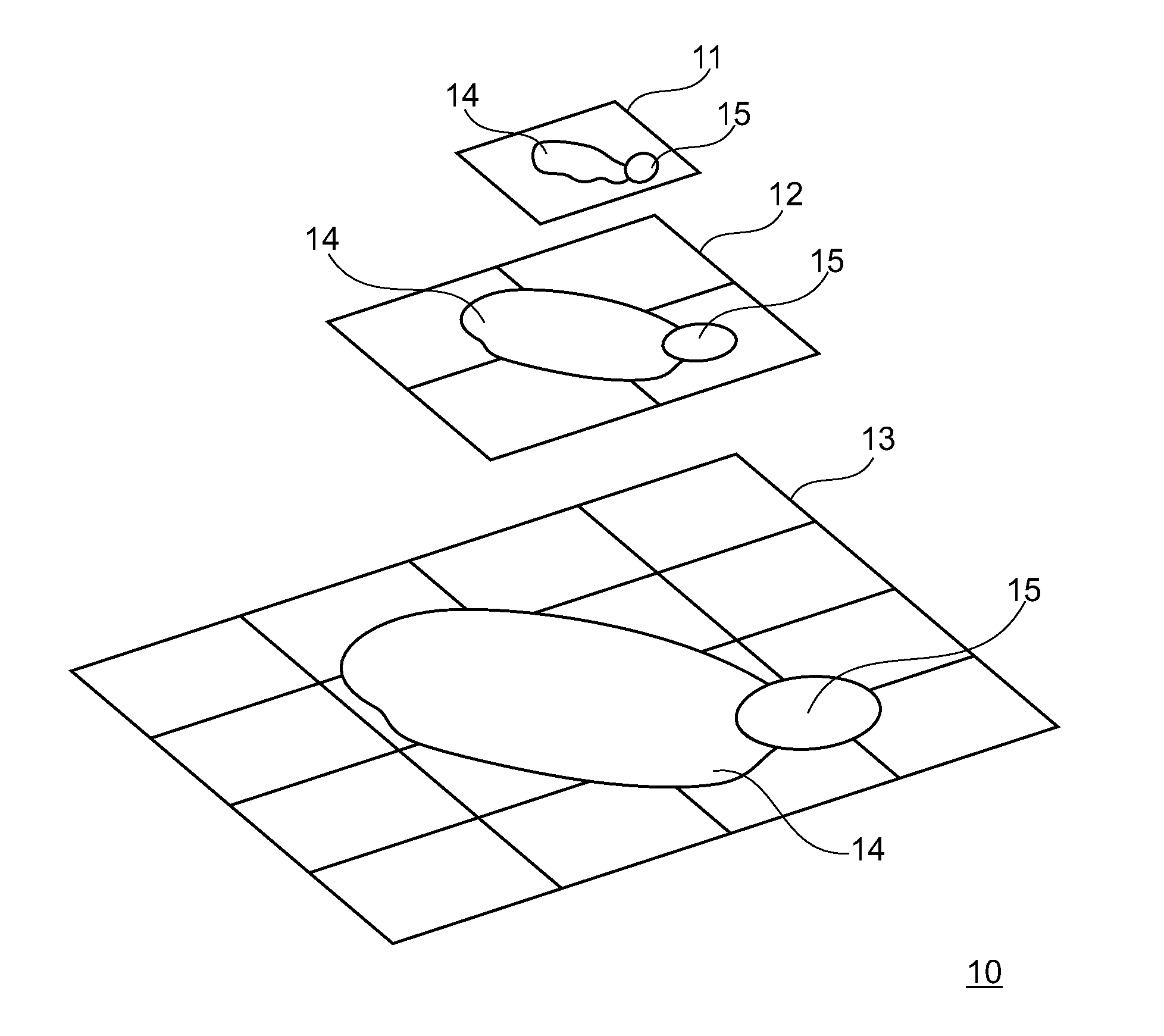

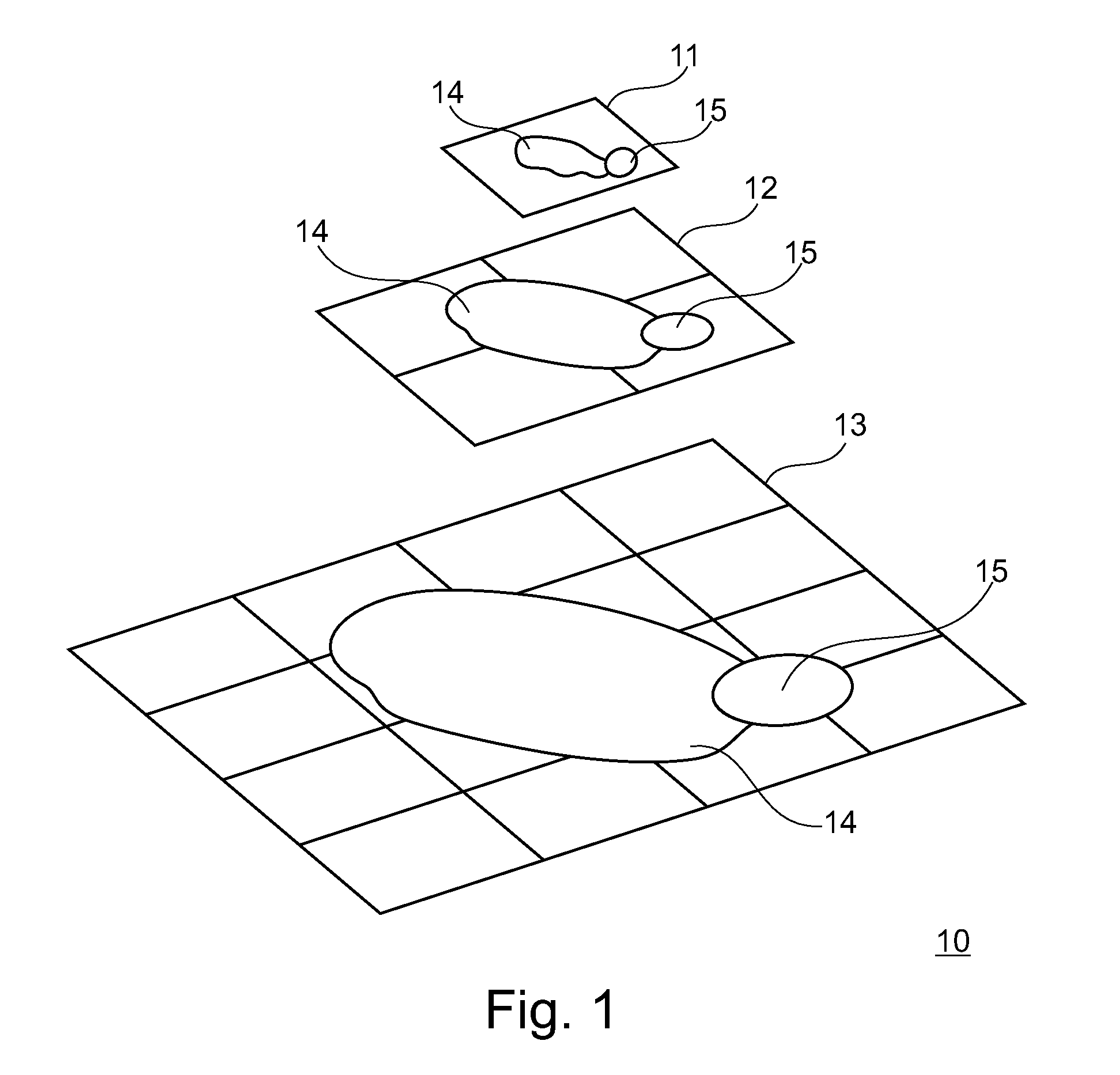

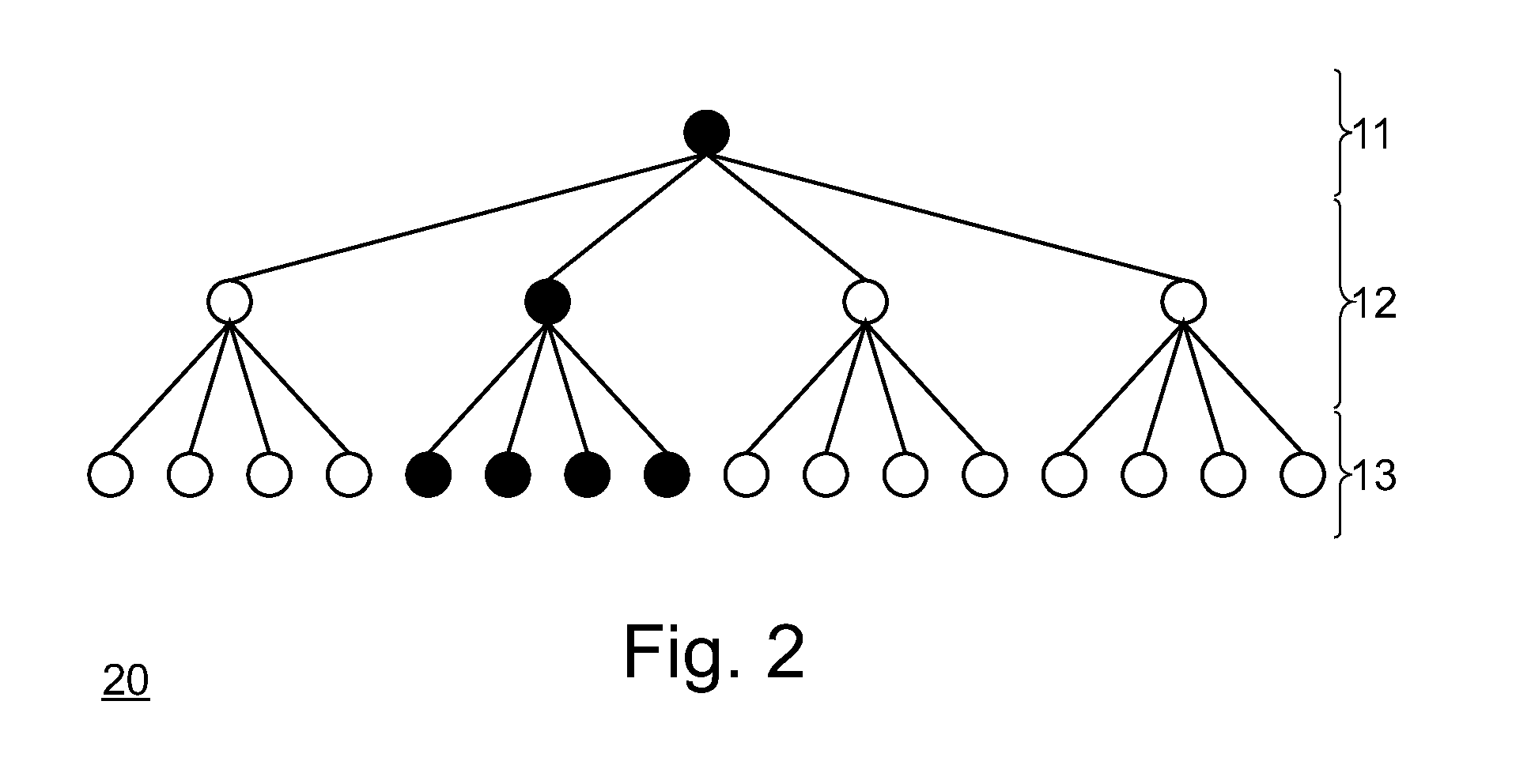

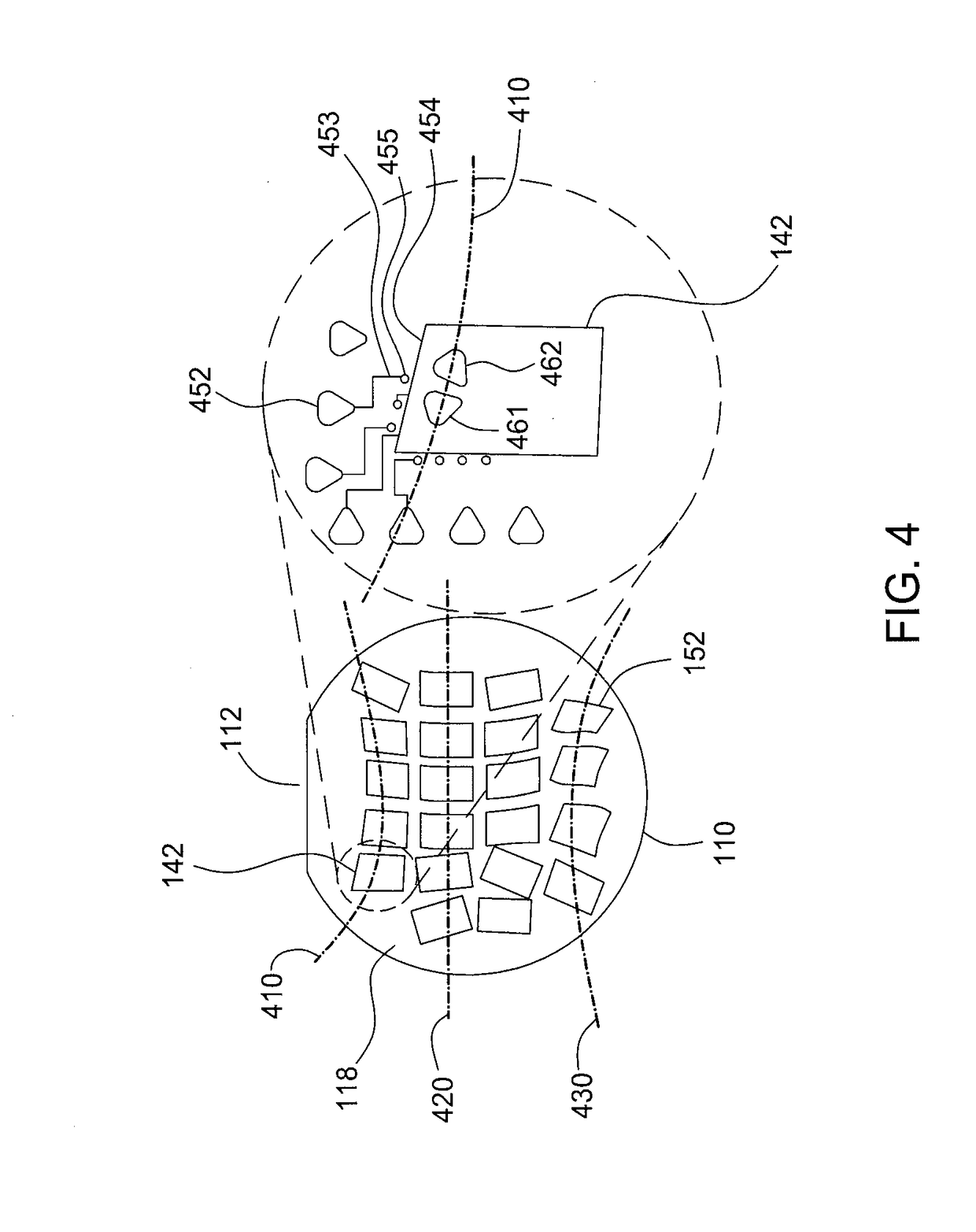

Method for image registration

Active | US9317894B2 | Tags: Reduce necessity; Minimize time; Image enhancement; Image analysis; Data set; Global transformation

Owner:MICRODIMENSIONS

Method for Image Registration

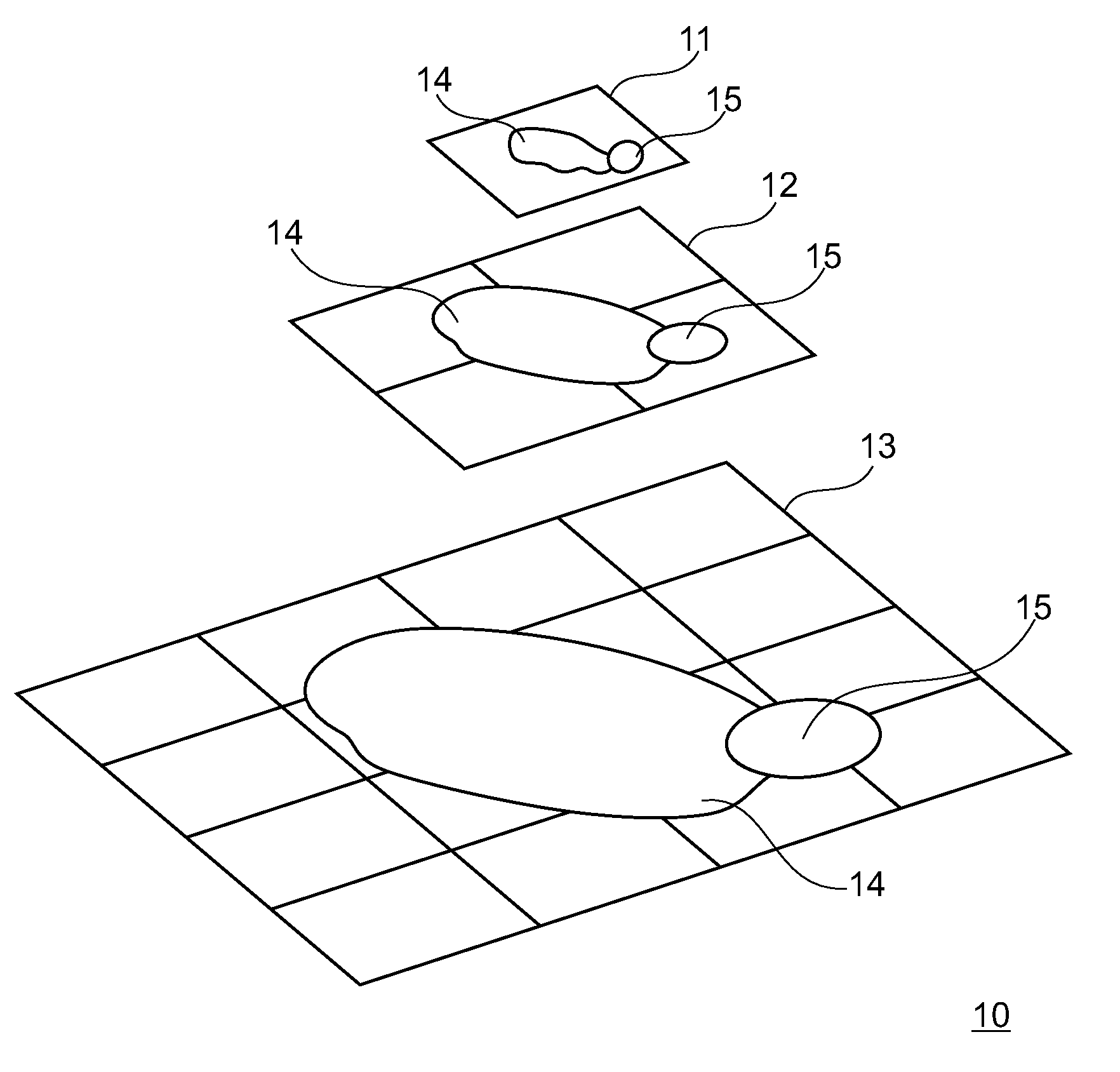

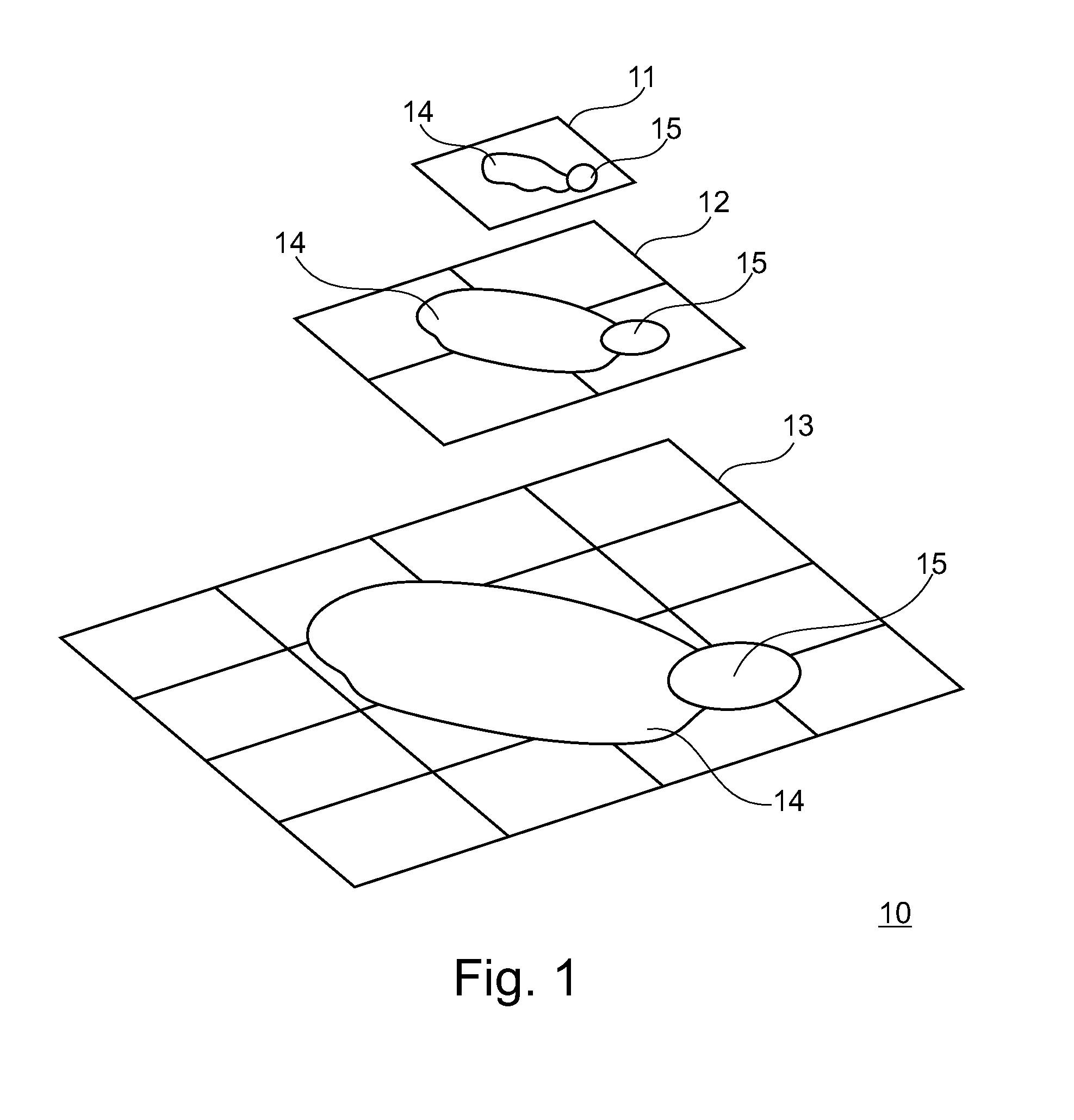

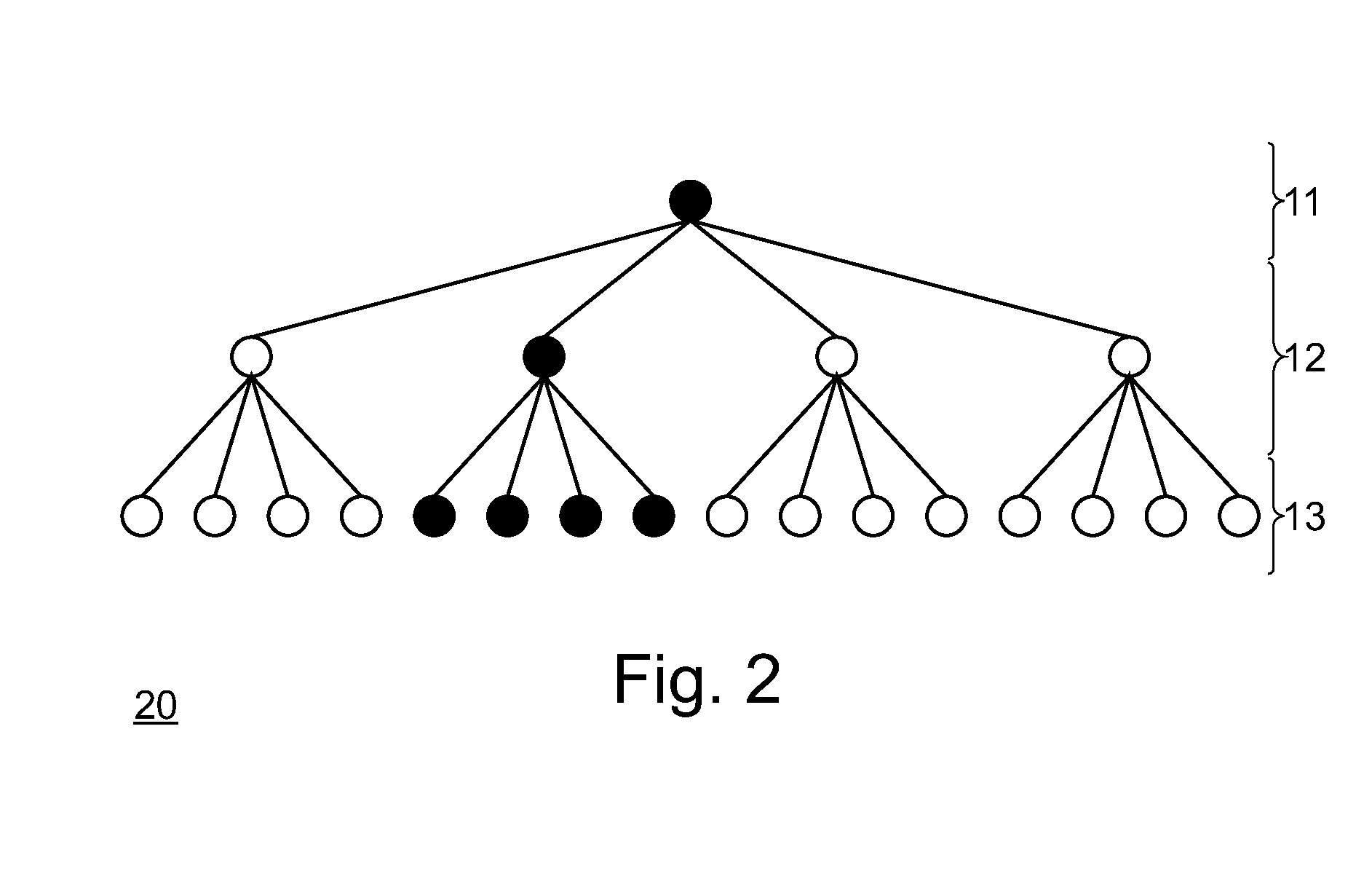

Active | US20150016703A1 | Tags: Reduce necessity; Minimize time; Image enhancement; Image analysis; Image resolution; Global transformation

A method (30) for image registration of sections, in particular histological sections, is described. The method comprises reading in (32) a data set for each of at least two sections, wherein each data set comprises m images of the section in m resolution levels (11, 12, 13), each image of resolution levels 1 to m−1 is divided into at least two cells, and each image has a different image resolution, the image with the highest resolution being associated with resolution level 1 and the image with the lowest resolution with resolution level m; registering (33) the two m-th images of the two sections at resolution level m and determining a global transformation for resolution level m; aligning (34) the two images of resolution level m−1 using the global transformation of resolution level m; and registering (35) a subgroup of cells from all cells of the two images of resolution level m−1.

Owner:MICRODIMENSIONS
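
Step (34) reuses the level-m global transformation to pre-align level m−1. Suppose each level doubles the resolution of the next coarser one (an assumption; the abstract does not fix the ratio) and pixel-centre offsets are ignored. Then a 2×3 affine transform carries over with its linear part unchanged and its translation scaled: from y_c = L x_c + t and x_f = f x_c it follows that y_f = f y_c = L x_f + f t. A minimal sketch with hypothetical names:

```python
def propagate_to_finer_level(A, factor=2.0):
    """Convert a 2x3 affine transform from a coarse level to the next finer level.

    Assumes finer-level pixel coordinates = factor * coarser-level coordinates,
    so only the translation column scales.
    """
    return [[A[0][0], A[0][1], factor * A[0][2]],
            [A[1][0], A[1][1], factor * A[1][2]]]


def map_point(A, point):
    x, y = point
    return (A[0][0] * x + A[0][1] * y + A[0][2],
            A[1][0] * x + A[1][1] * y + A[1][2])
```

Repeating the propagation level by level gives each finer level a starting alignment, so only the selected subgroup of cells needs a local refinement.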

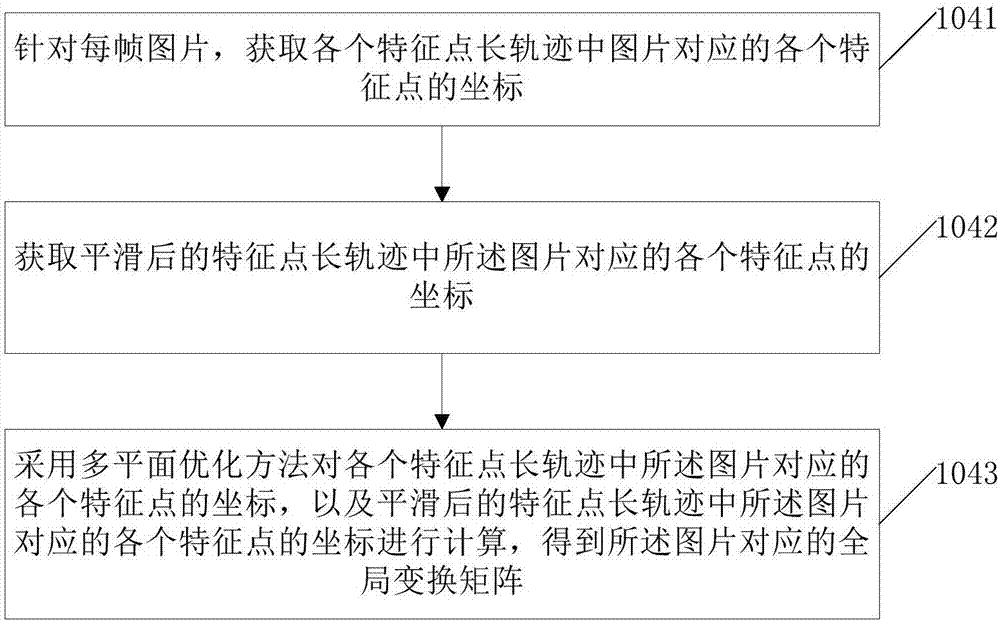

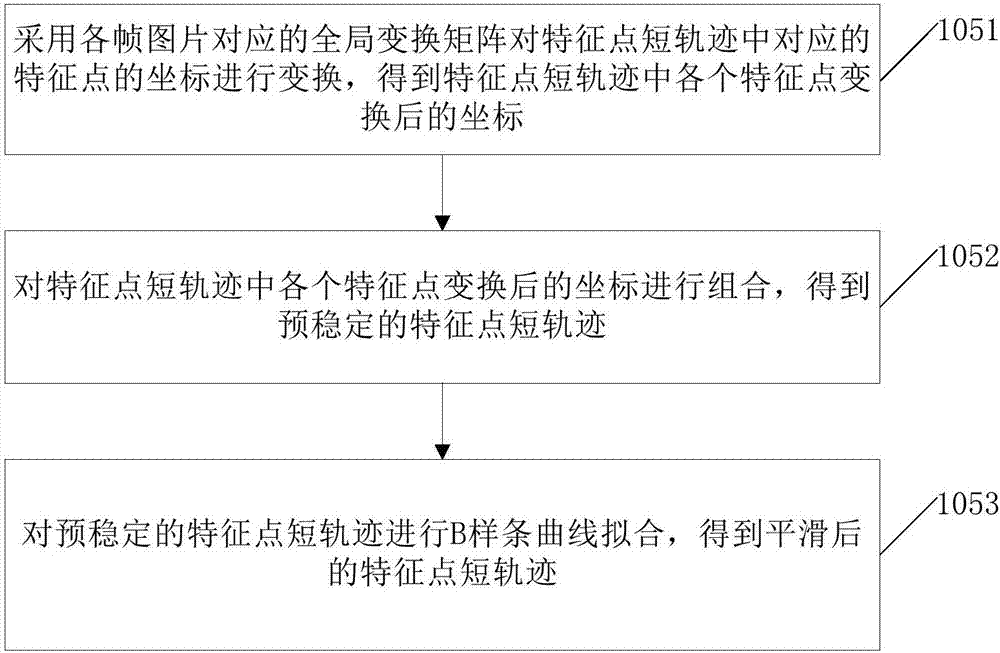

Unmanned aerial vehicle video stabilization method and apparatus in low-altitude flight scene

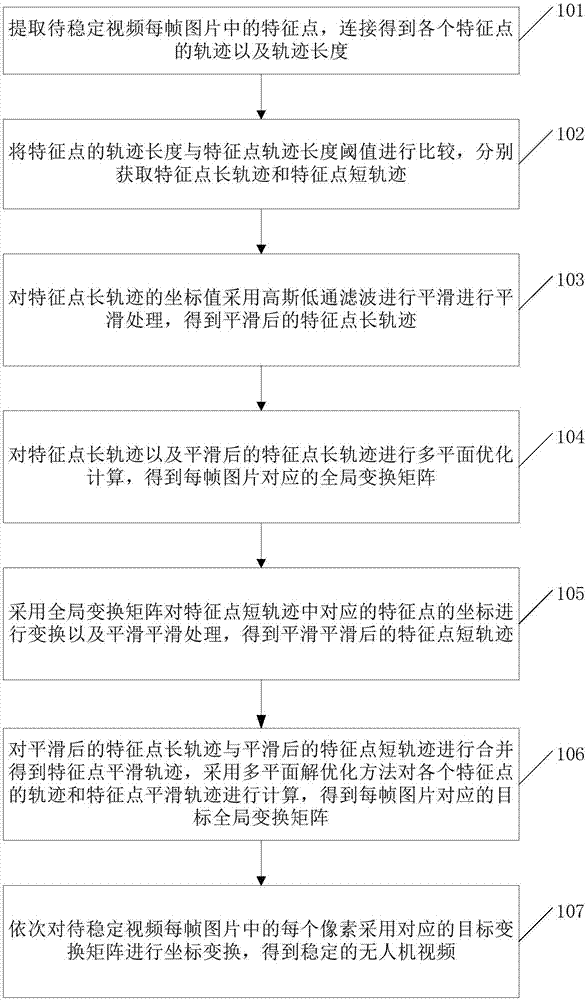

Active | CN107135331A | Tags: Solve the rolling shutter effect; Improve stability; Television system details; Color television details; Low-pass filter; Global transformation

The invention provides an unmanned aerial vehicle (UAV) video stabilization method and apparatus for low-altitude flight scenes. The method comprises: obtaining a UAV video to be stabilized; extracting feature points from each frame and linking them into feature point tracks; dividing the tracks into long and short tracks according to thresholds; calculating a global transformation matrix for each frame from the long tracks and the smoothed long tracks; obtaining smoothed short tracks by combining each frame's global transformation matrix, the short tracks, and a low-pass filter; and finally applying a multi-plane optimization method to the long and short tracks to obtain a stabilized UAV video. Because the feature point tracks are classified, areas with insufficient feature points are also stabilized while the stabilization of areas with sufficient feature points is preserved. This mitigates, to a certain extent, the unstable edges that the multi-plane optimization method produces in low-altitude flight scenes, and thus improves the stabilization of the UAV video.

Owner:BEIHANG UNIV
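Two of the steps above, splitting tracks by a length threshold and low-pass filtering a track, can be sketched as follows. This is a minimal sketch under assumptions: a track is taken to be a list of per-frame (x, y) positions, the threshold is a single minimum length, and the low-pass filter is a centered moving average. The function names and parameters are hypothetical; the per-frame global transformation matrix and the multi-plane optimization are not shown.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]   # (x, y) position of a feature in one frame
Track = List[Point]           # positions of one feature over consecutive frames


def split_tracks(tracks: Dict[int, Track], min_len: int) -> Tuple[Dict[int, Track], Dict[int, Track]]:
    """Split tracks into long (at least min_len frames) and short ones."""
    long_tracks = {k: t for k, t in tracks.items() if len(t) >= min_len}
    short_tracks = {k: t for k, t in tracks.items() if len(t) < min_len}
    return long_tracks, short_tracks


def low_pass(track: Track, radius: int) -> Track:
    """Centered moving average: a simple low-pass filter that removes frame-to-frame jitter.

    The window shrinks near the ends so the output has the same length as the input.
    """
    n = len(track)
    smoothed = []
    for i in range(n):
        window = track[max(0, i - radius):min(n, i + radius + 1)]
        smoothed.append((sum(p[0] for p in window) / len(window),
                         sum(p[1] for p in window) / len(window)))
    return smoothed


def corrections(track: Track, smoothed: Track) -> List[Point]:
    """Per-frame offsets that move the observed positions onto the smoothed path."""
    return [(s[0] - p[0], s[1] - p[1]) for p, s in zip(track, smoothed)]
```

A moving average is only one choice of low-pass filter; a Gaussian kernel would weight nearby frames more heavily at the same cost.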

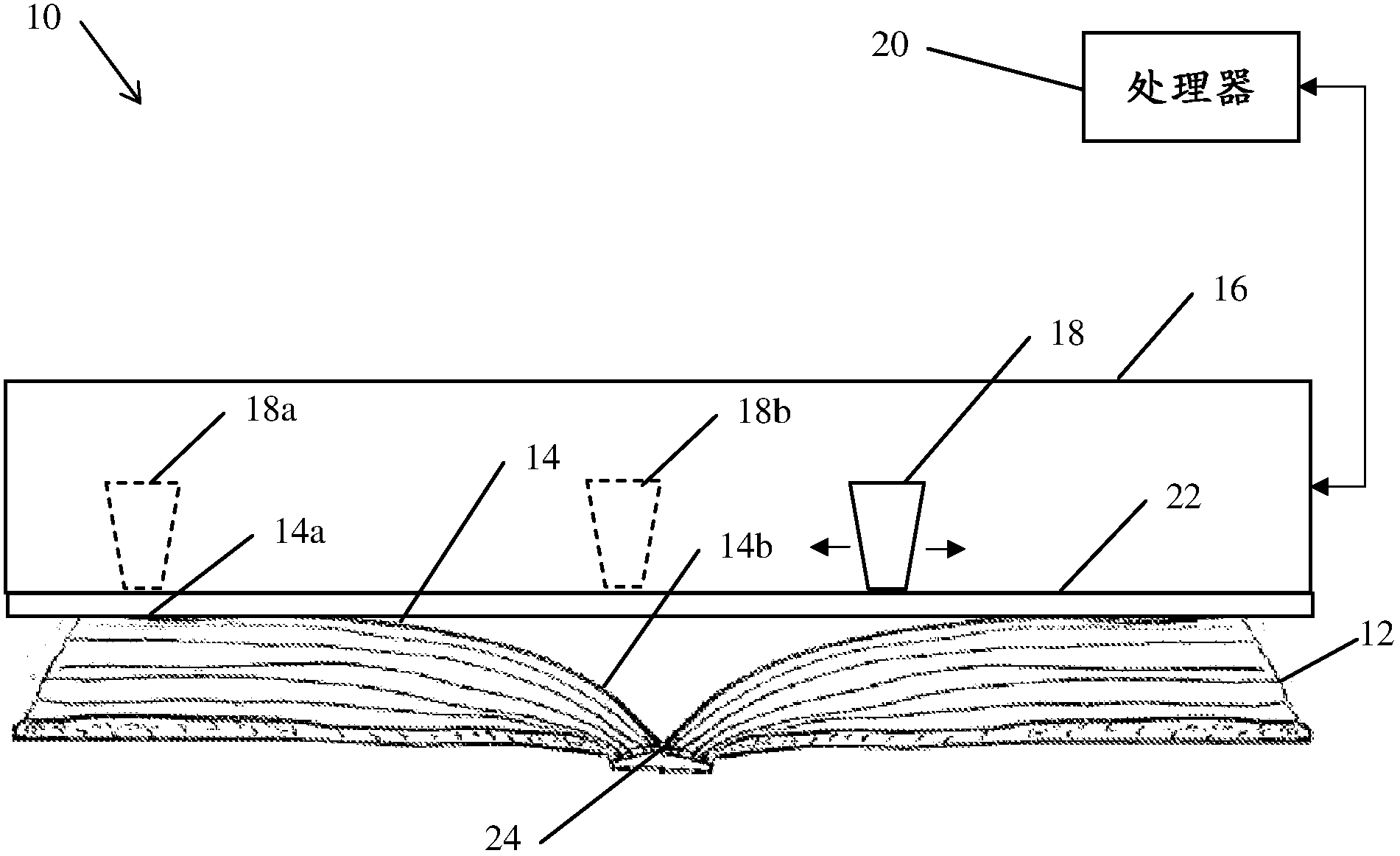

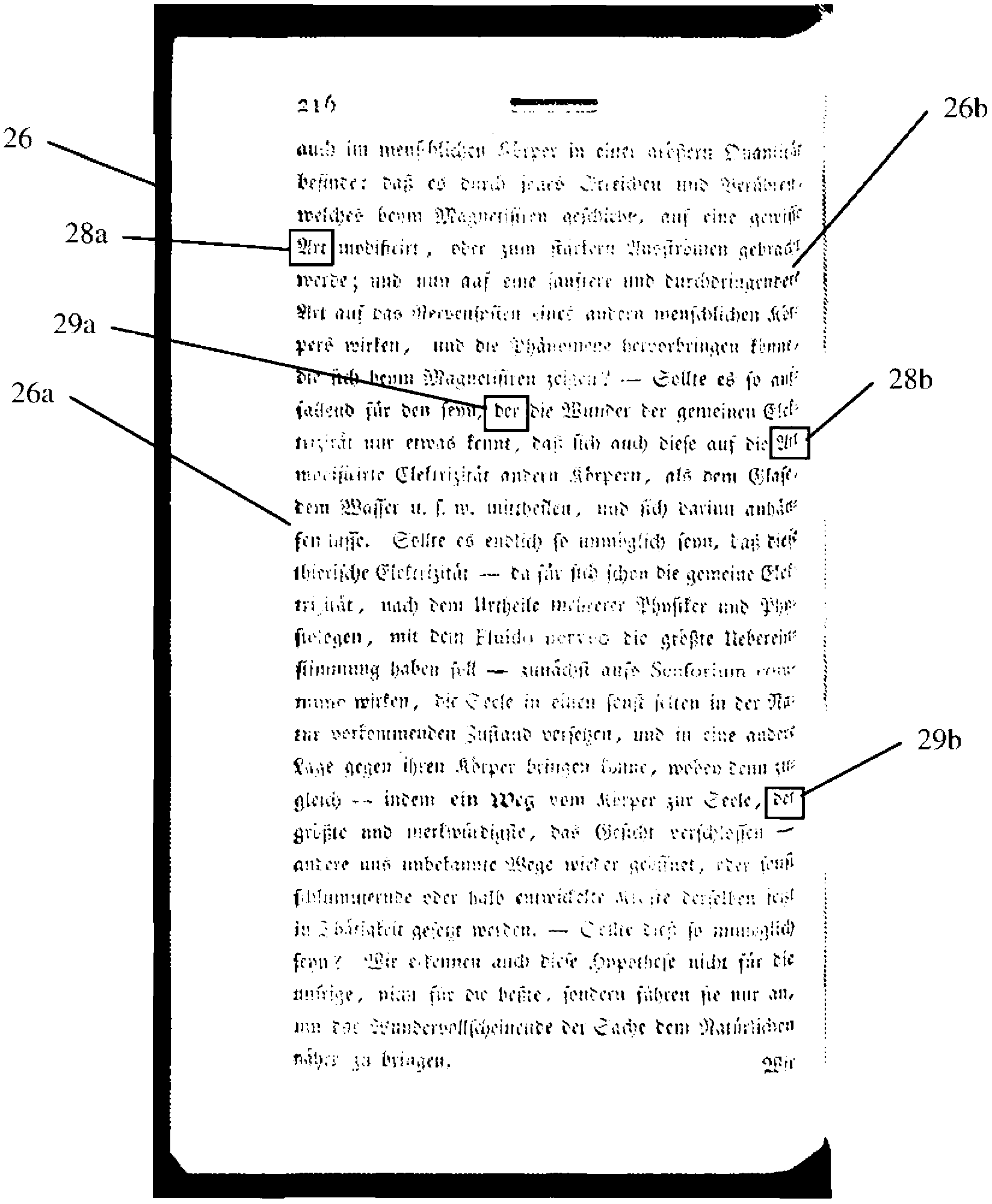

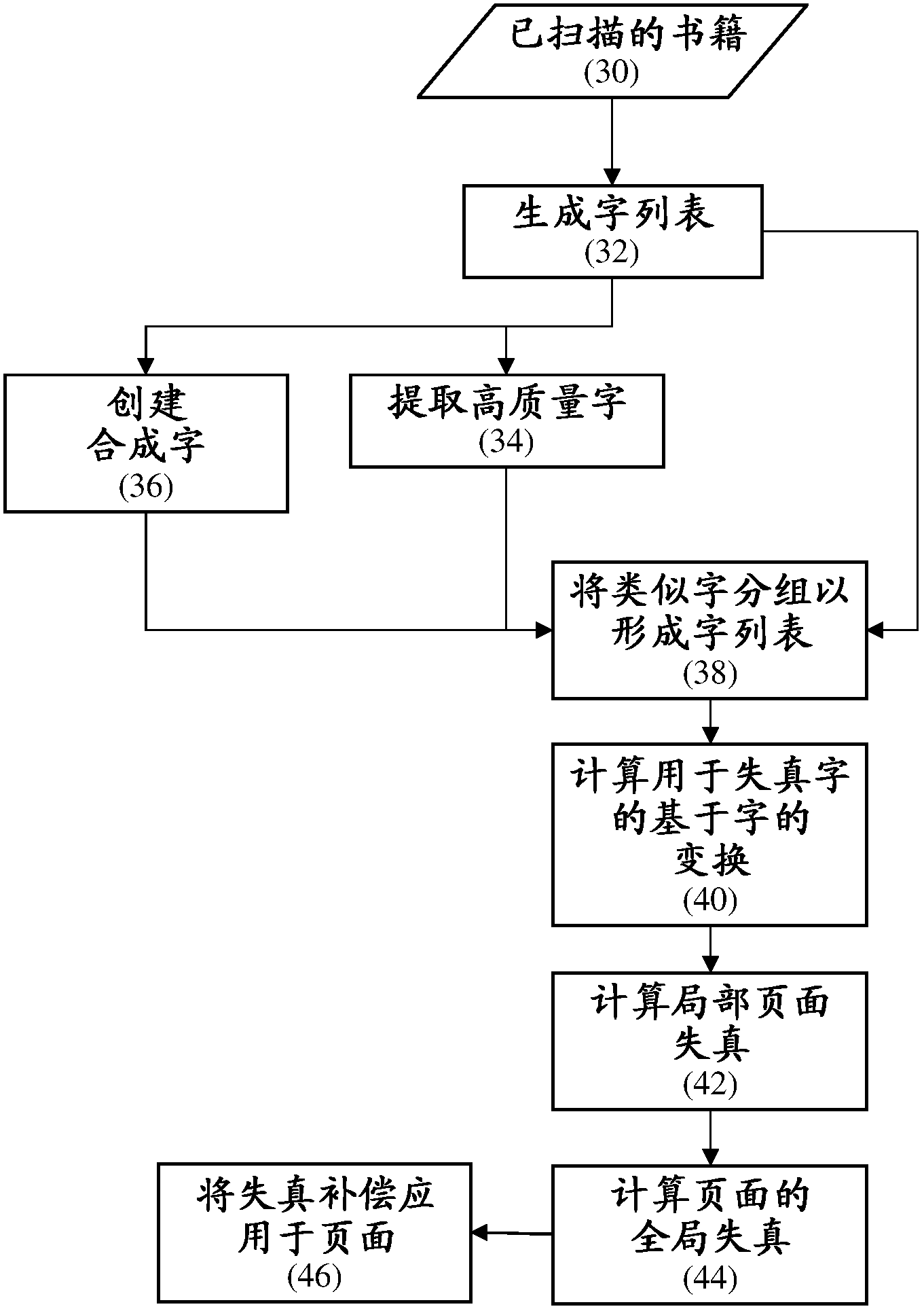

Correcting page curl in scanned books

Inactive | CN102918548A | Character and pattern recognition | Pictorial communication | Global transformation | Distortion

The invention relates to correcting page curl in scanned books. A computer-implemented method for correcting distortion in an image of a page includes identifying a set of high-quality (HQ) words in undistorted regions of one or more images of pages whose content is related to the content of the page. At least one distorted word in the image of the page is identified such that each distorted word corresponds to a high-quality word of the set. A global transformation function is generated for application to the image of the page so as to transform the distorted word into its corresponding high-quality word. The global transformation function is then applied to the pixels of the image of the page.

Owner:IBM CORP
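One way to fit a single global transformation function from word correspondences is a least-squares polynomial warp. The sketch below assumes matched point pairs (for example, bounding-box corners of each distorted word and of its high-quality counterpart) and a second-order polynomial model; both the model and the function names are assumptions for illustration, since the abstract does not specify the form of the function.

```python
import numpy as np


def poly_terms(pts):
    """Design matrix [1, x, y, x^2, xy, y^2] for an (N, 2) array of points."""
    x, y = pts[:, 0], pts[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x * x, x * y, y * y])


def fit_global_transform(distorted_pts, hq_pts):
    """Least-squares 2nd-order polynomial map from distorted to high-quality word points.

    Needs at least 6 non-degenerate correspondences; returns a (6, 2) coefficient array.
    """
    coeffs, *_ = np.linalg.lstsq(poly_terms(distorted_pts), hq_pts, rcond=None)
    return coeffs


def apply_global_transform(coeffs, pts):
    """Map (N, 2) pixel coordinates through the fitted polynomial transform."""
    return poly_terms(pts) @ coeffs
```

This maps coordinates only; producing the corrected page image would additionally require resampling pixel values, typically by evaluating an inverse map at each output pixel.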

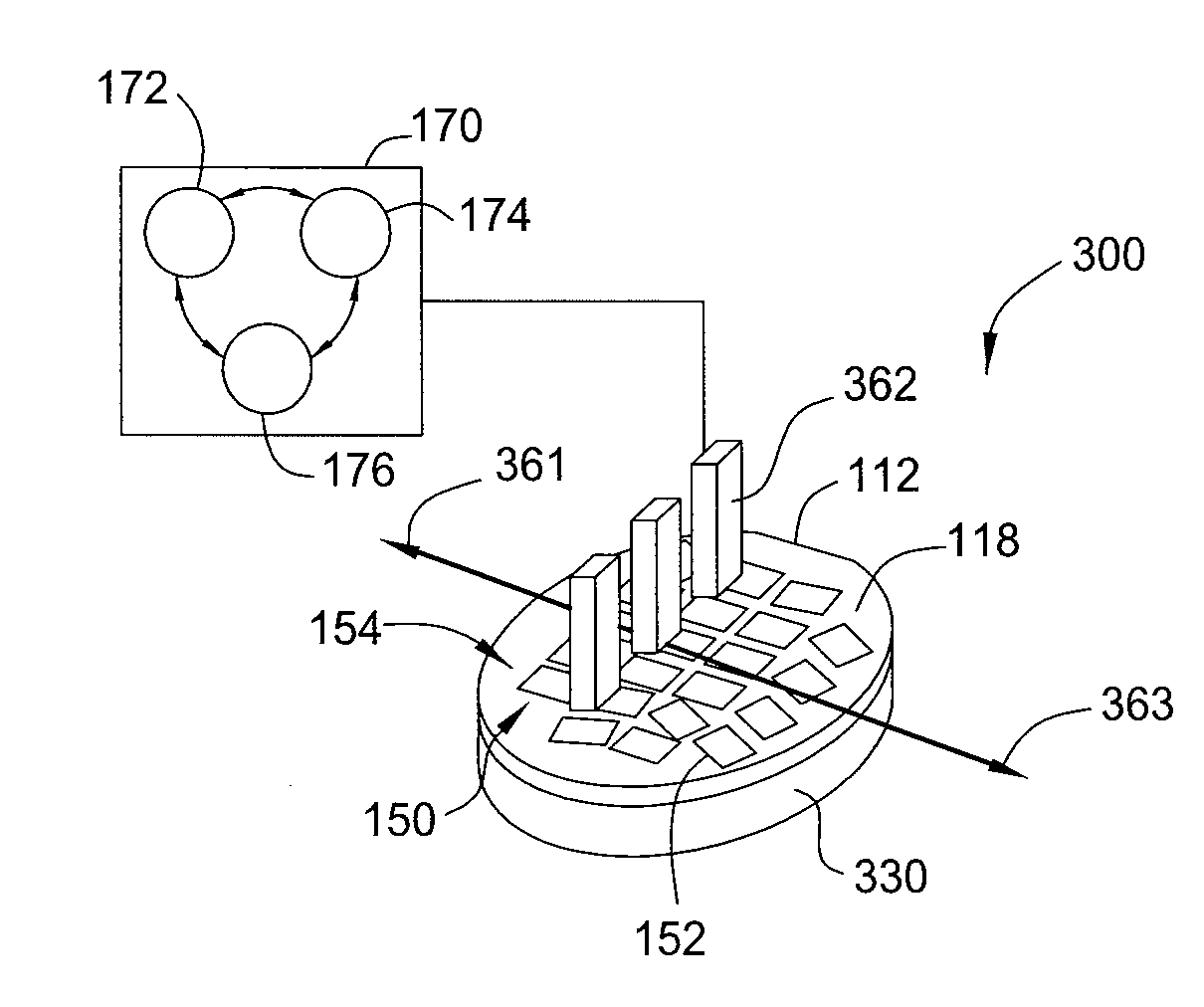

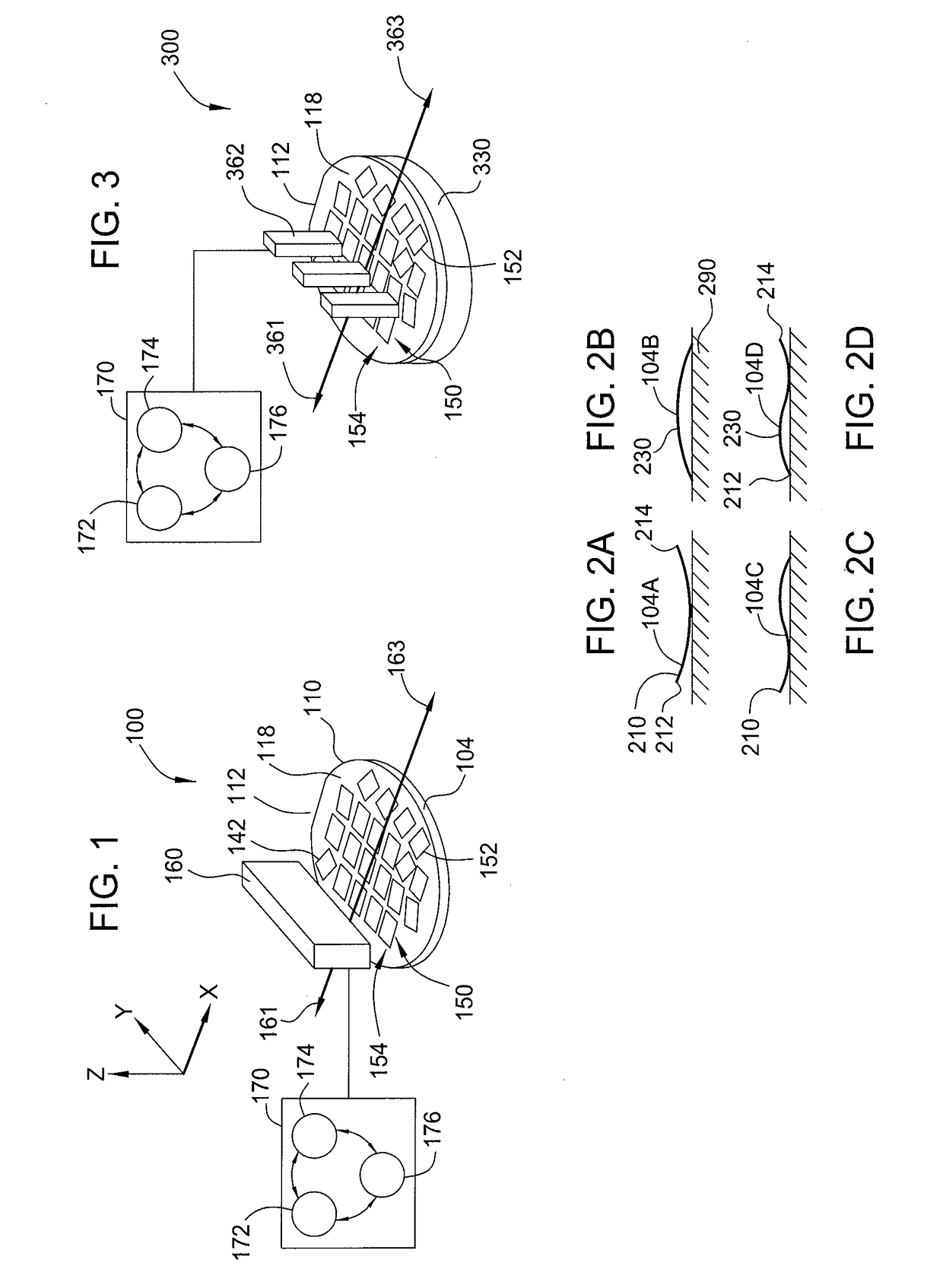

Method of pattern placement correction

Active | US20180226369A1 | Semiconductor/solid-state device testing/measurement | Semiconductor/solid-state device details | Global transformation | Computer vision

In one embodiment of the invention, a method for correcting pattern placement on a substrate is disclosed. The method begins by detecting three reference points for a substrate. A plurality of sets of three die location points are detected, each set indicating the orientation of a die structure; the sets include a first set associated with a first die and a second set associated with a second die. A local transformation is calculated for the orientation of the first die and the second die on the substrate. Three orientation points are selected from the sets of die location points such that the orientation points are not members of the same die's set. A first global orientation of the substrate is calculated from the three selected points, and the first global transformation and the local transformation for the substrate are stored.

Owner:APPLIED MATERIALS INC
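Three non-collinear points are exactly enough to determine a 2D affine transformation, which is why the method works with sets of three points. The sketch below solves for that transform and reads off its rotation angle; it assumes an affine model, and the rule of taking one point from each of three different dies is a hypothetical reading of the selection step, not the patented procedure.

```python
import math

import numpy as np


def affine_from_three_points(src, dst):
    """Exact 2D affine transform (2x3 matrix M) with dst_i = M @ [x_i, y_i, 1]."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    A = np.column_stack([src, np.ones(3)])    # one row [x, y, 1] per reference point
    if abs(np.linalg.det(A)) < 1e-12:
        raise ValueError("the three points are collinear; the transform is not unique")
    return np.linalg.solve(A, dst).T           # solves A @ M.T = dst


def rotation_deg(M):
    """Rotation angle of the linear part, in degrees (meaningful for rigid/similarity transforms)."""
    return math.degrees(math.atan2(M[1, 0], M[0, 0]))


def pick_orientation_points(die_points):
    """Hypothetical selection rule: one location point from each of three different dies."""
    if len(die_points) < 3:
        raise ValueError("need location points from at least three dies")
    return [die_points[d][0] for d in sorted(die_points)[:3]]
```

Spreading the orientation points across different dies keeps them far apart, which makes the solved transform less sensitive to small detection errors than three points clustered on one die.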

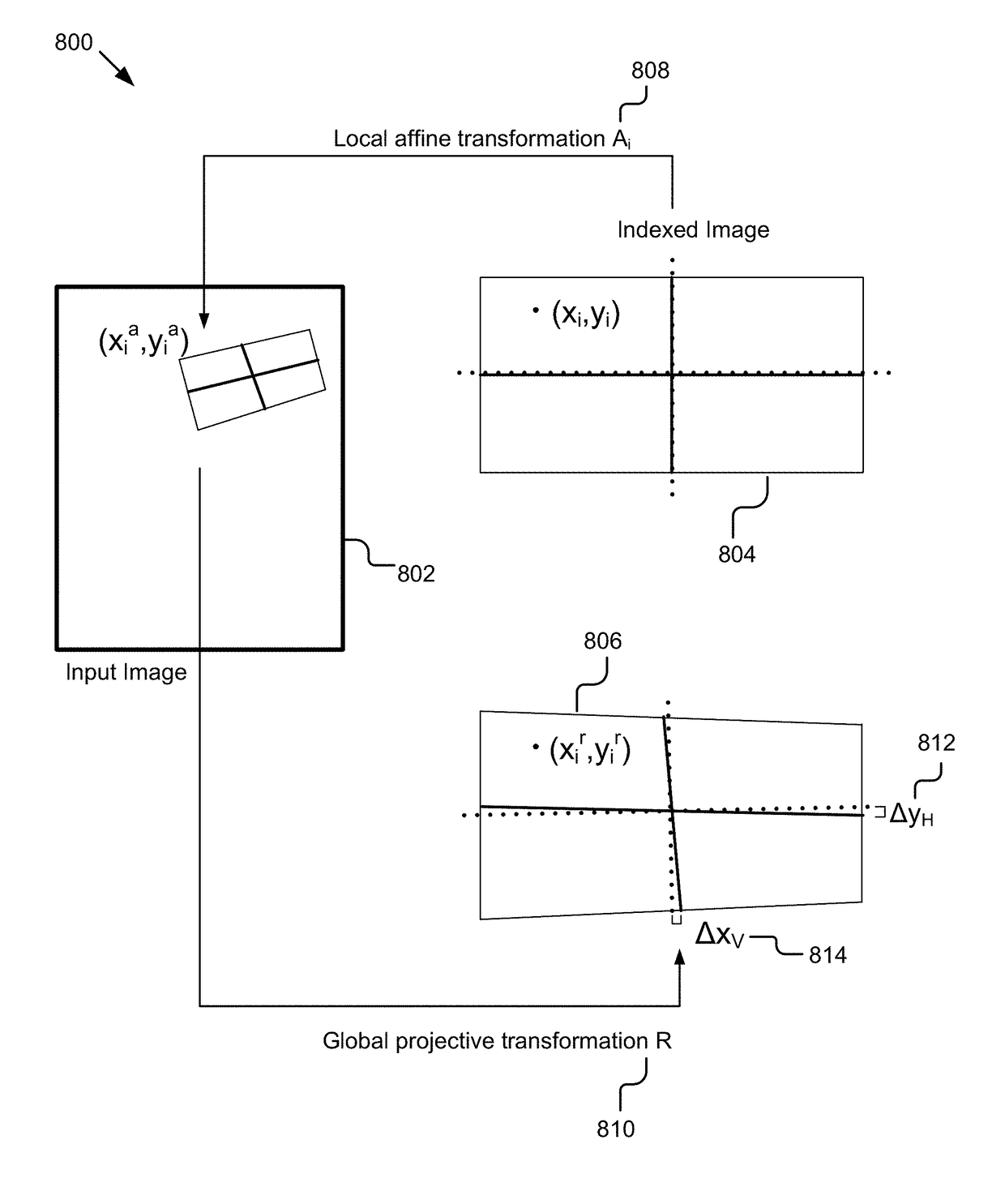

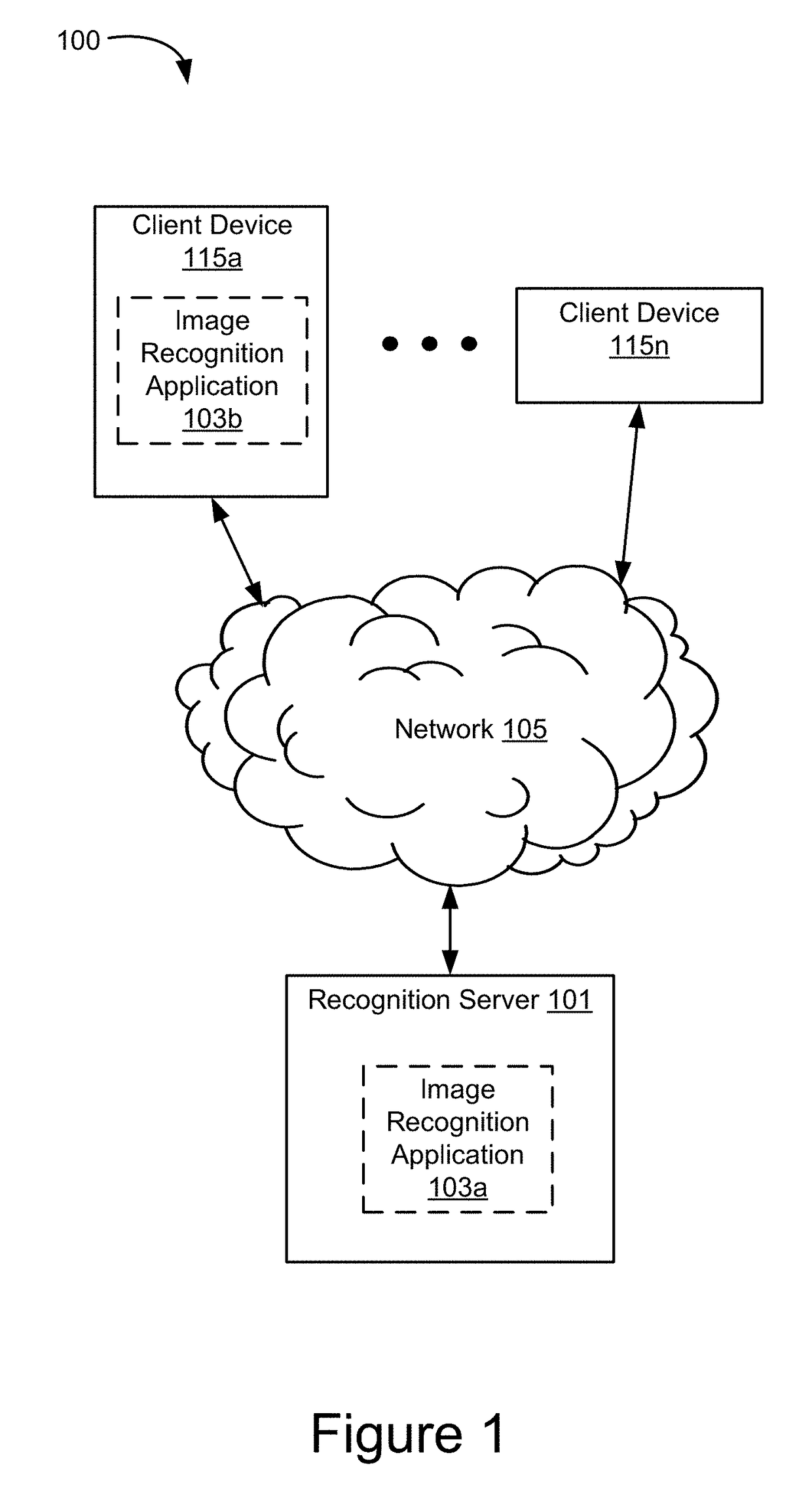

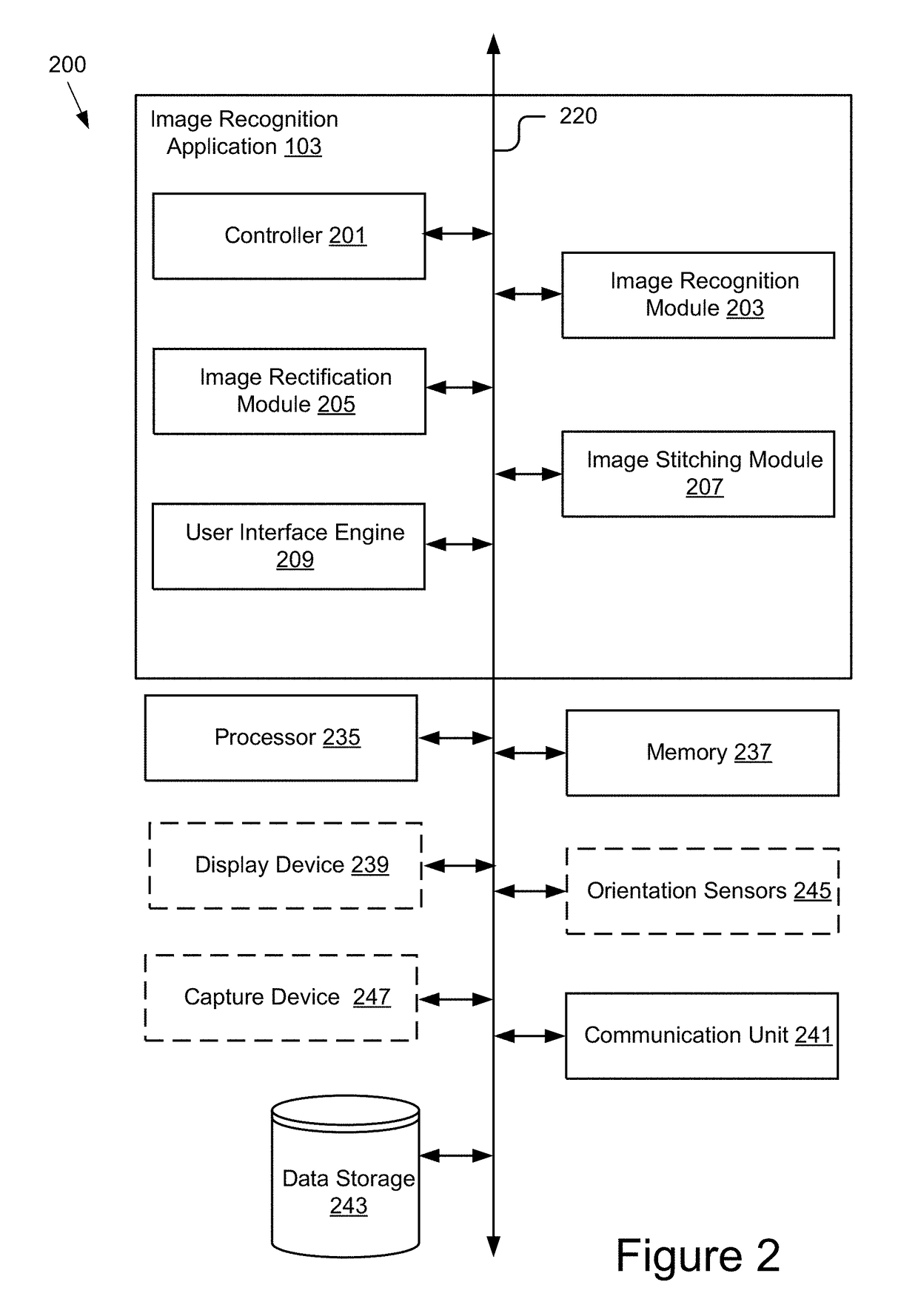

Single image rectification

The disclosure includes a system and method for performing image rectification using a single image and information identified from the single image. An image recognition application receives an input image, identifies a plurality of objects in the input image, estimates rectification parameters for the plurality of objects, identifies a plurality of candidate rectification parameters using a voting procedure on the rectification parameters for the plurality of objects, estimates final rectification parameters based on the plurality of candidate rectification parameters, computes a global transformation matrix using the final rectification parameters, and performs image rectification on the input image using the global transformation matrix.

Owner:RICOH KK
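The voting step can be sketched by binning per-object parameter estimates and taking the most-voted bin as the final parameter. This sketch assumes, for simplicity, that the rectification parameter is a single in-plane rotation angle per object (the disclosure's parameters are likely more general, e.g. perspective), and that the global transformation matrix is a 3x3 rotation about the image center. All names, the bin width and the candidate count are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def candidate_angles(angles_deg: Sequence[float], bin_width: float = 2.0,
                     n_candidates: int = 3) -> List[Tuple[float, int]]:
    """Histogram voting over per-object angle estimates.

    Returns up to n_candidates (mean angle of the bin, vote count), most votes first.
    """
    bins = [math.floor(a / bin_width) for a in angles_deg]
    result = []
    for b, votes in Counter(bins).most_common(n_candidates):
        members = [a for a, ab in zip(angles_deg, bins) if ab == b]
        result.append((sum(members) / len(members), votes))
    return result


def rectifying_matrix(angle_deg: float, cx: float, cy: float) -> List[List[float]]:
    """3x3 global transformation rotating by -angle_deg about (cx, cy) to undo the tilt."""
    t = math.radians(-angle_deg)
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, cx - c * cx + s * cy],
            [s, c, cy - s * cx - c * cy],
            [0.0, 0.0, 1.0]]


def apply(H: List[List[float]], x: float, y: float) -> Tuple[float, float]:
    """Apply a 3x3 homogeneous transform to one point."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w
```

Voting makes the estimate robust to objects whose individual estimates are outliers: in a list such as `[5.1, 4.9, 5.3, 30.0, -12.0]`, the three consistent estimates outvote the two stray ones.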

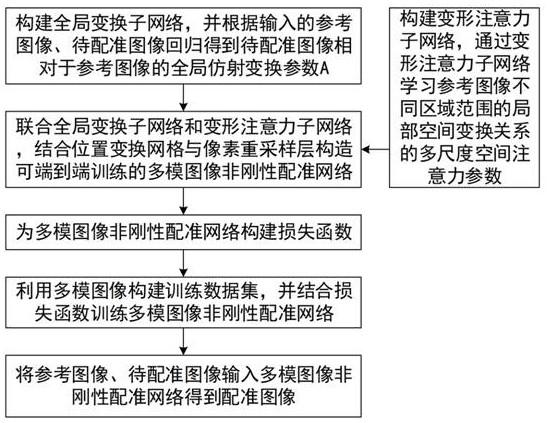

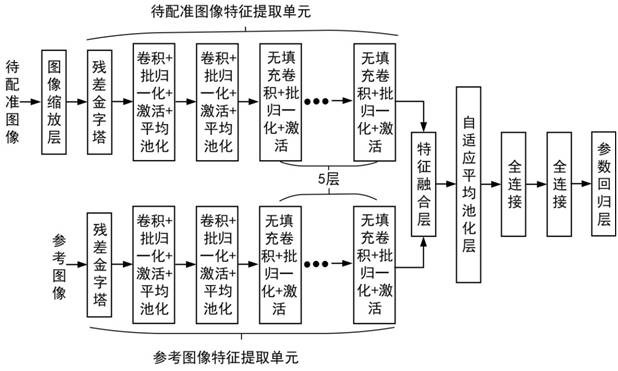

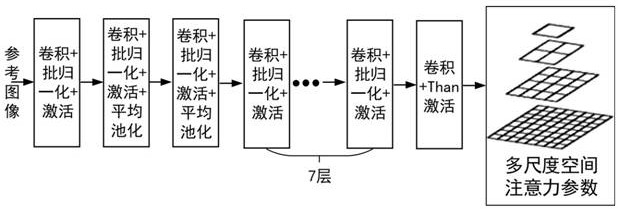

Non-rigid registration method and system for maximum moment and space consistency of multimode image

Active | CN114693755A | Non-rigid registration is accurate | Resolve distortion | Image enhancement | Image analysis | Morphing | Data set

The invention discloses a non-rigid registration method and system for multimode images based on maximum moment and spatial consistency. The method comprises: constructing a global transformation sub-network and a deformation attention sub-network, and combining them with a position transformation grid and a pixel resampling layer to build an end-to-end trainable non-rigid registration network for multimode images; constructing a loss function for this network; and building a training data set from multimode images and training the network with that data set and the loss function. The method can register distorted images directly, without prior geometric correction, handles local distortion in multimode images well, and achieves accurate multimode registration. It can provide reliable support for accurate image fusion and accurate target detection, and can be applied to natural disaster monitoring, resource investigation and exploration, precision target strikes and similar fields, giving it broad application prospects. The system is applicable to intelligent manufacturing, rescue and relief work, remote sensing monitoring and similar areas, and has a wide application range.

Owner:HUNAN UNIV
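The interplay of a global transformation, a position transformation grid and a pixel resampling layer can be sketched without any learning. In this minimal sketch the affine matrix and the dense displacement field, which the two sub-networks would predict in the described network, are simply given as inputs; the sampling-grid convention (each output pixel reads from the warped position in the moving image) and the function name are assumptions, and the loss function is not shown.

```python
import numpy as np


def warp(moving, affine, displacement):
    """Resample `moving` at affine(grid) + displacement with bilinear interpolation.

    affine: 2x3 matrix acting on pixel coordinates (x, y, 1) (global transformation).
    displacement: array (H, W, 2) of local (dx, dy) offsets in pixels (deformation).
    """
    h, w = moving.shape
    ys, xs = np.meshgrid(np.arange(h, dtype=float), np.arange(w, dtype=float), indexing="ij")
    grid = np.stack([xs, ys, np.ones_like(xs)], axis=-1)             # (H, W, 3)
    coords = grid @ np.asarray(affine, dtype=float).T                # (H, W, 2)
    # Position transformation grid: global affine plus local displacement
    sx = np.clip(coords[..., 0] + displacement[..., 0], 0, w - 1)    # clamp at borders
    sy = np.clip(coords[..., 1] + displacement[..., 1], 0, h - 1)
    # Pixel resampling: bilinear interpolation between the four neighbours
    x0 = np.floor(sx).astype(int)
    y0 = np.floor(sy).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    fx, fy = sx - x0, sy - y0
    top = moving[y0, x0] * (1 - fx) + moving[y0, x1] * fx
    bottom = moving[y1, x0] * (1 - fx) + moving[y1, x1] * fx
    return top * (1 - fy) + bottom * fy
```

Because bilinear interpolation is differentiable with respect to the sampling coordinates almost everywhere, this kind of resampling step is what allows a network of the described type to be trained end to end.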

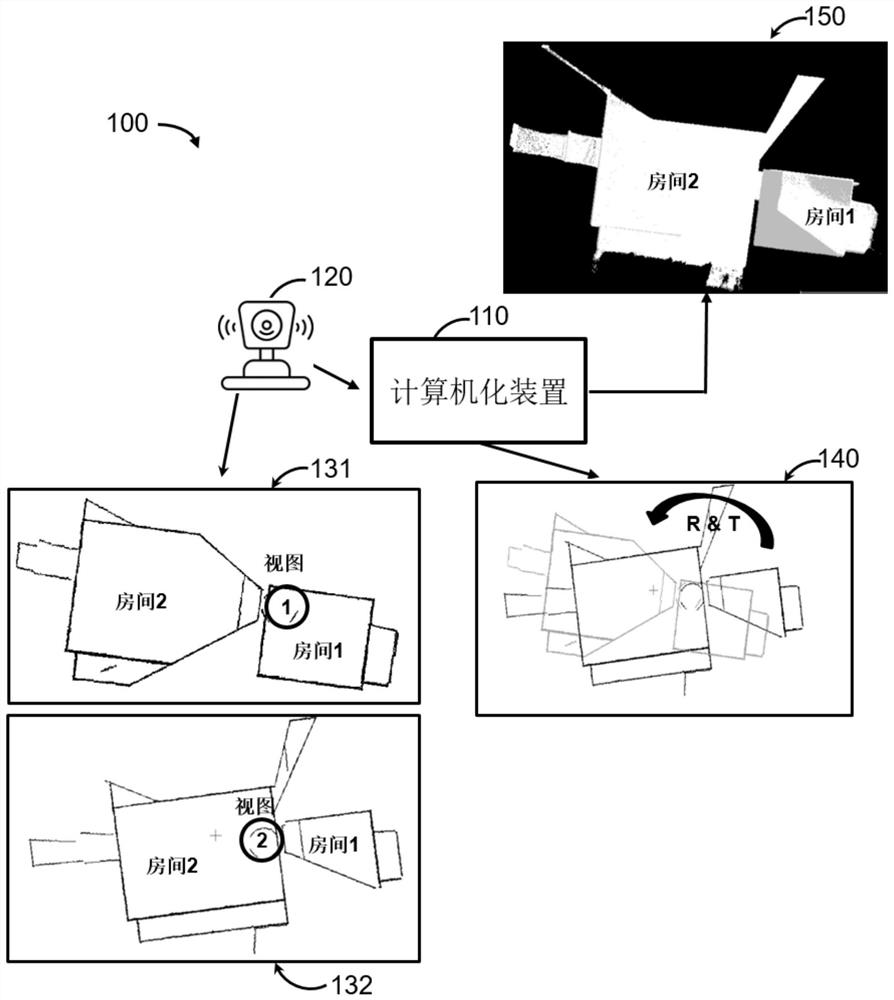

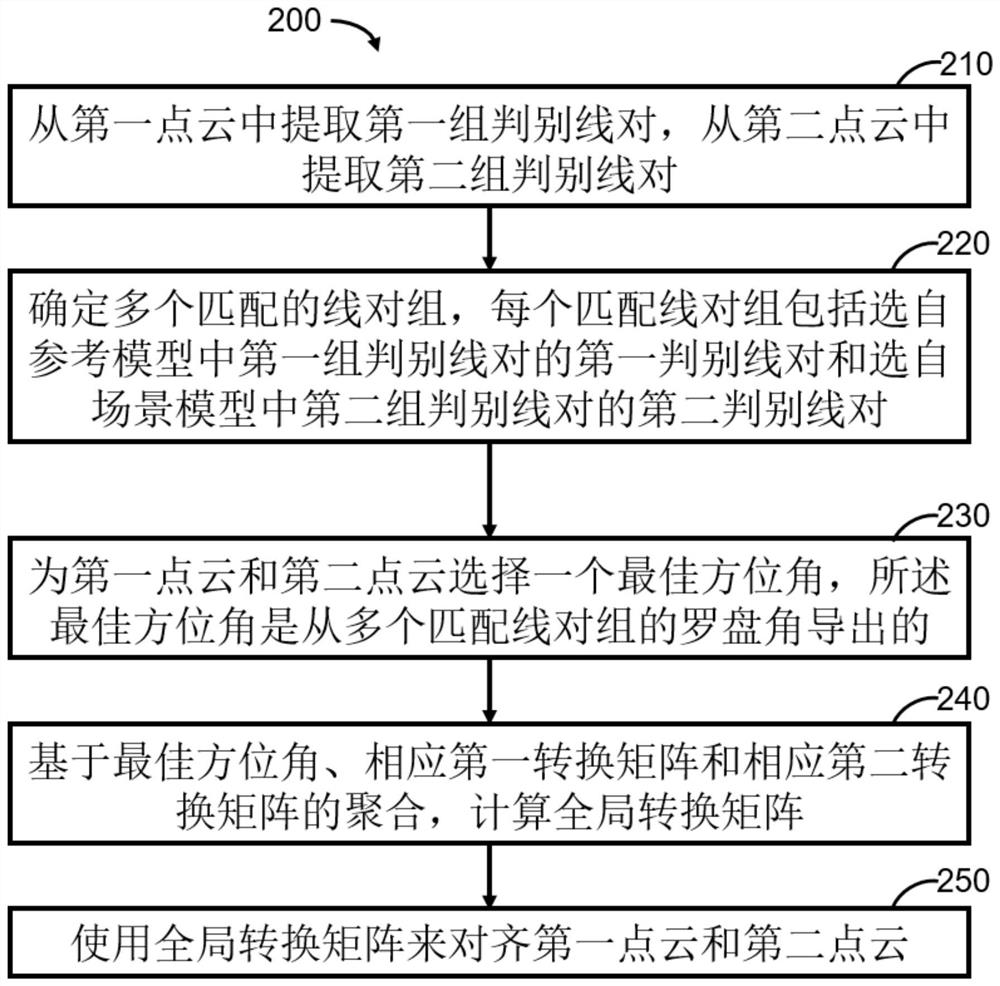

Method and system for global registration between 3D scans

Pending | CN113853632A | Precise alignment | Robust | Image enhancement | Image analysis | Point cloud | Global transformation

Owner:HONG KONG APPLIED SCI & TECH RES INST


© 2025 PatSnap. All rights reserved.