49 results for "Accurate depth information" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

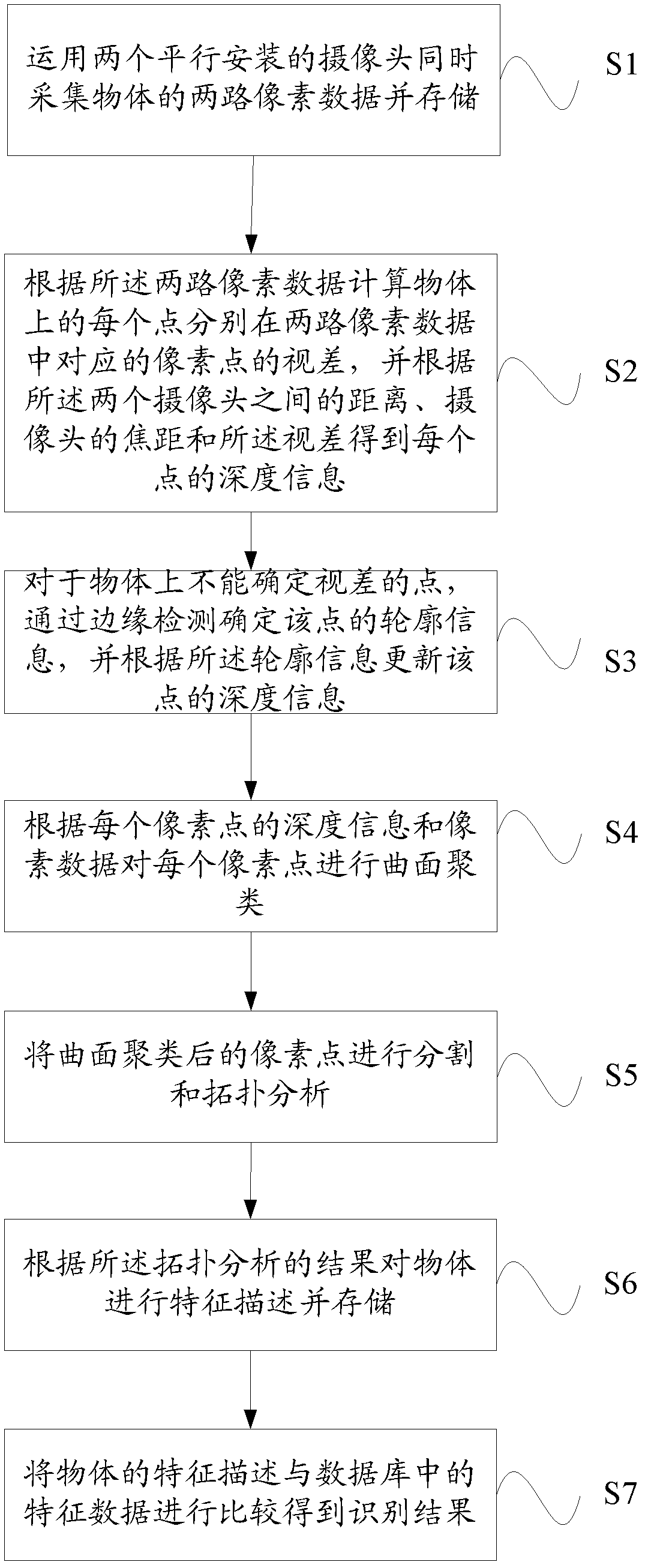

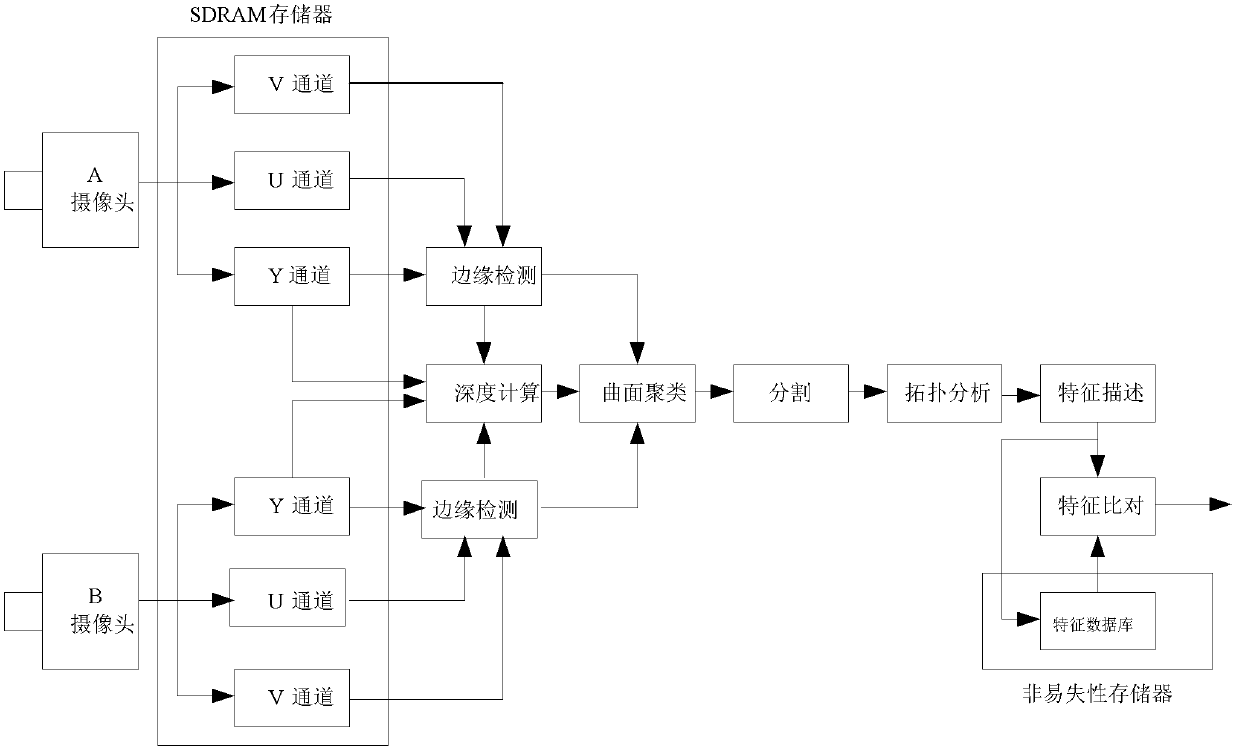

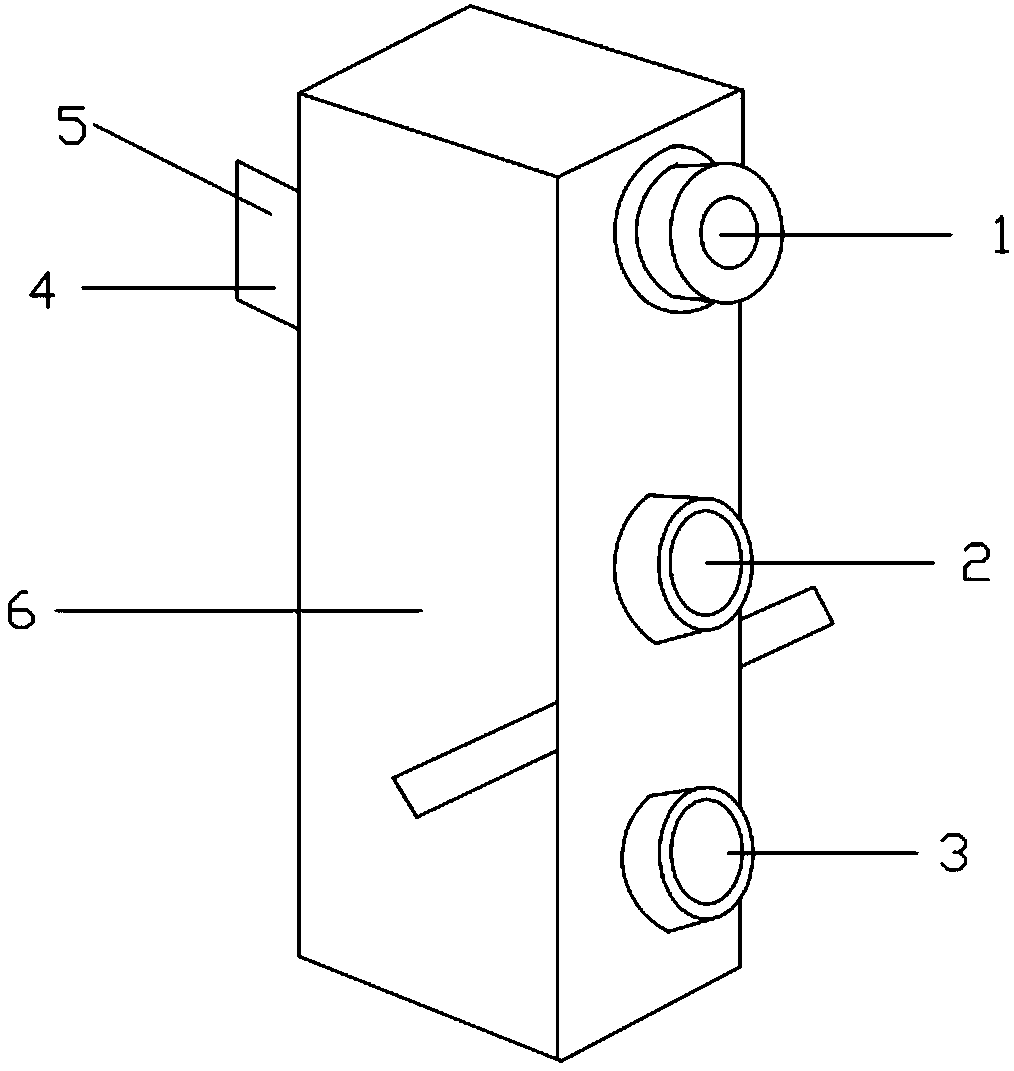

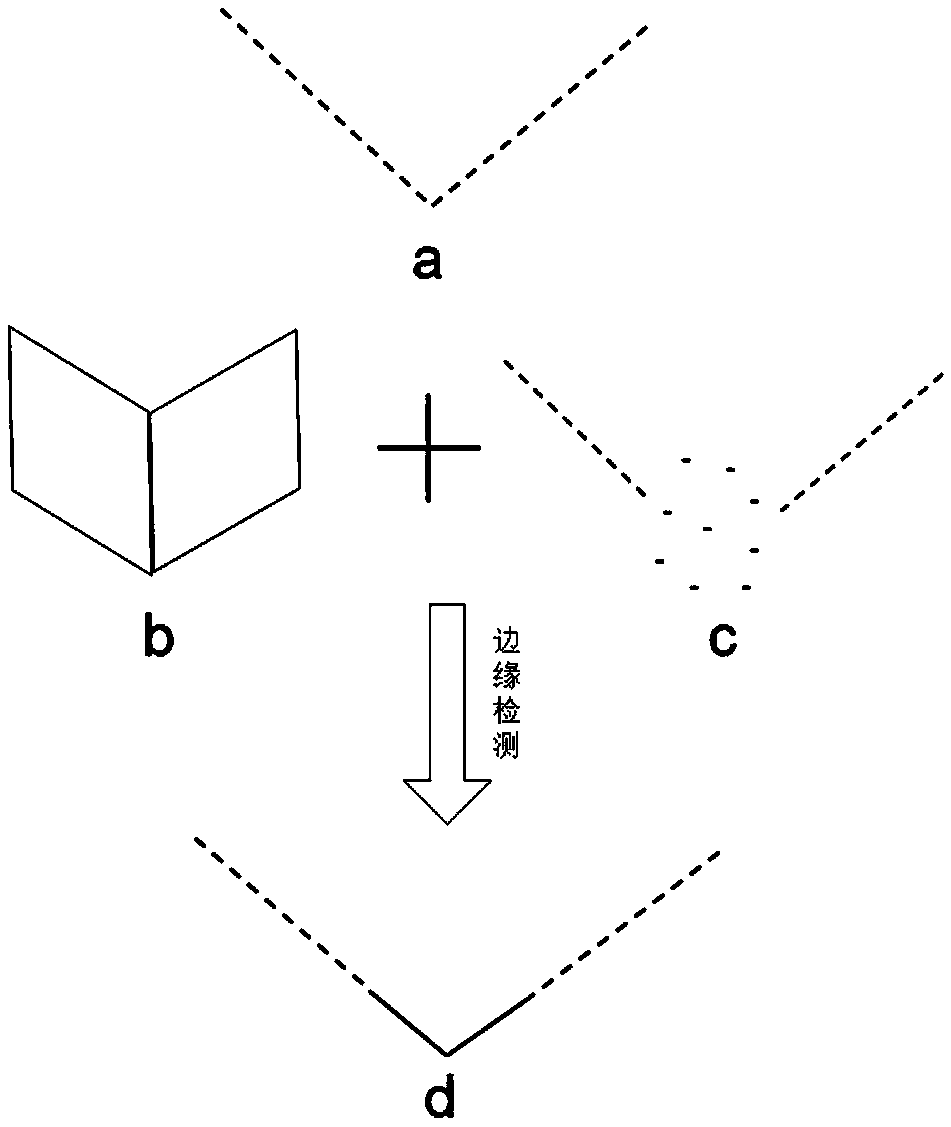

Three-dimensional object identification method and system

Active | CN102592117A | Accurate depth information | Avoid misanalysis | Character and pattern recognition | Stereoscopic systems | Edge detection | Data library

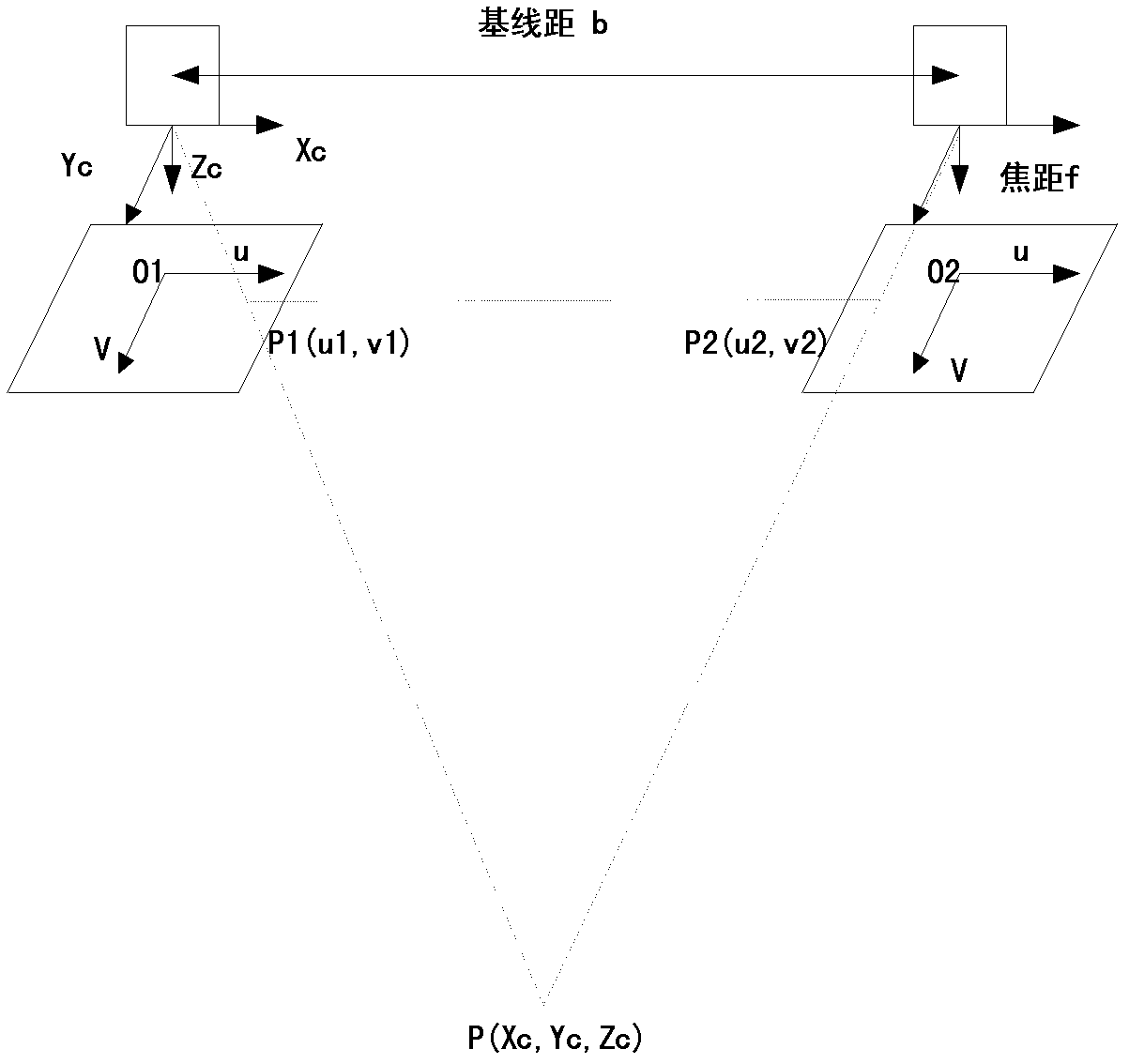

The invention relates to a three-dimensional object identification method and a three-dimensional object identification system. The method comprises the following steps of: simultaneously acquiring two paths of pixel data of an object by using two cameras which are arranged in parallel, and storing the pixel data; calculating the parallax of pixels of each point on the object in the two paths of pixel data according to the two paths of pixel data respectively, and obtaining the depth information of each point according to a distance between the two cameras, focal lengths of the cameras and the parallaxes; for a point of which the parallax cannot be determined, determining the contour information of the point by using edge detection, and updating the depth information of the point according to the contour information; performing curved surface clustering on each pixel according to the depth information of each pixel and the pixel data; performing division and topology analysis on the curved surface clustered pixels; performing characteristic description on the object according to a topology analysis result, and storing characteristic descriptions; and comparing the characteristic descriptions of the object with characteristic data in a database to obtain an identification result. By the method and the system, any object can be accurately identified.

Owner:HANGZHOU SILAN MICROELECTRONICS
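
The depth step in the abstract above (depth from the camera spacing, the focal length and the parallax) matches the standard parallel-stereo relation Z = f * B / d. The following is a minimal sketch of that relation only, not the patented method; the function names, the pixel and metre units, and the use of `None` for points whose parallax cannot be determined are assumptions made for illustration.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth of one point from Z = f * B / d for two parallel cameras.

    Returns None when the disparity is missing or non-positive, i.e. a point
    whose parallax cannot be determined (the abstract handles such points
    separately, using edge detection).
    """
    if disparity_px is None or disparity_px <= 0:
        return None
    return focal_length_px * baseline_m / disparity_px


def depth_map(disparities, focal_length_px, baseline_m):
    """Apply depth_from_disparity to every entry of a 2D list of disparities."""
    return [
        [depth_from_disparity(d, focal_length_px, baseline_m) for d in row]
        for row in disparities
    ]
```

Entries left as `None` mark the points that would need the contour-based update described in the abstract.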

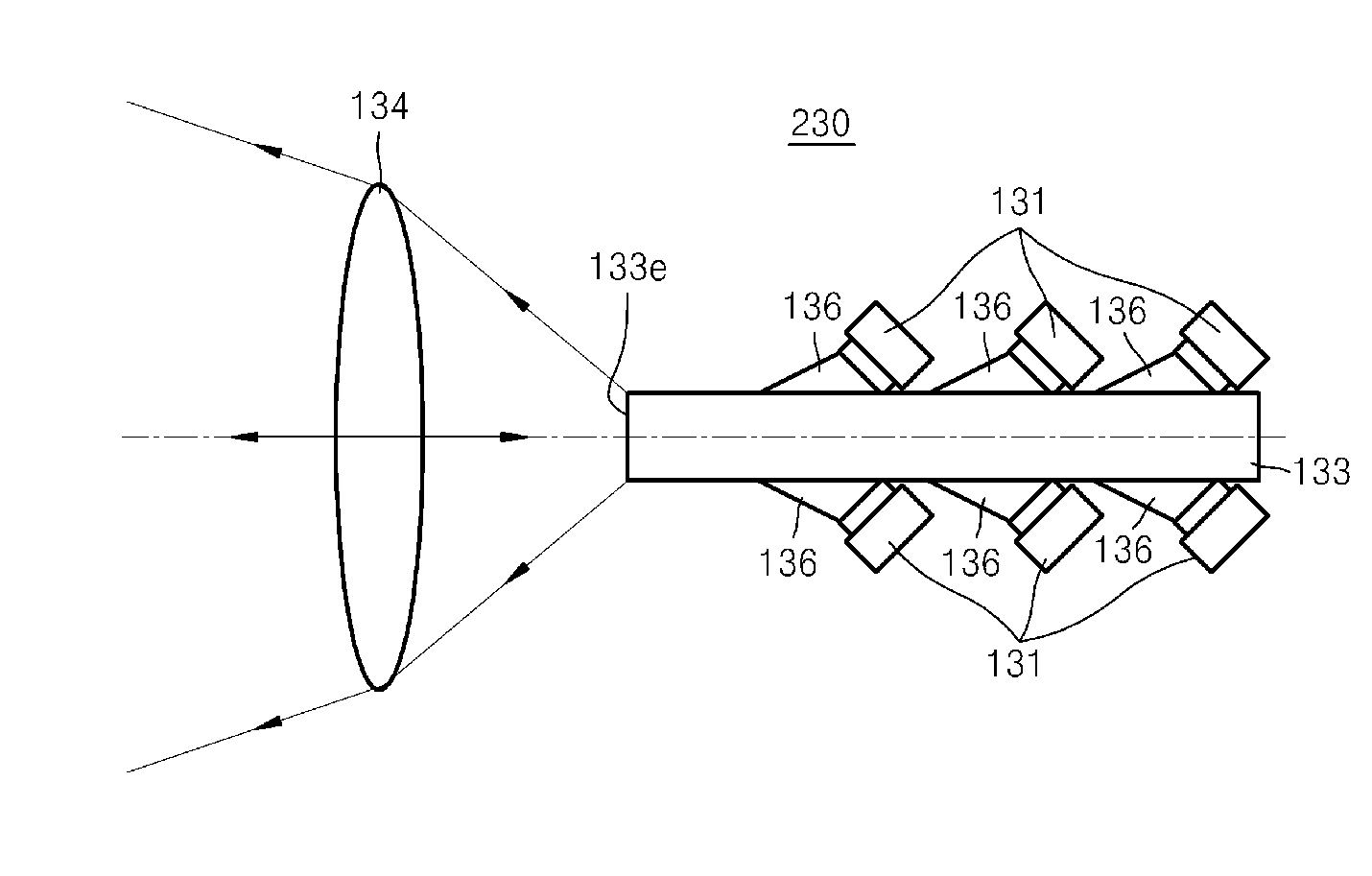

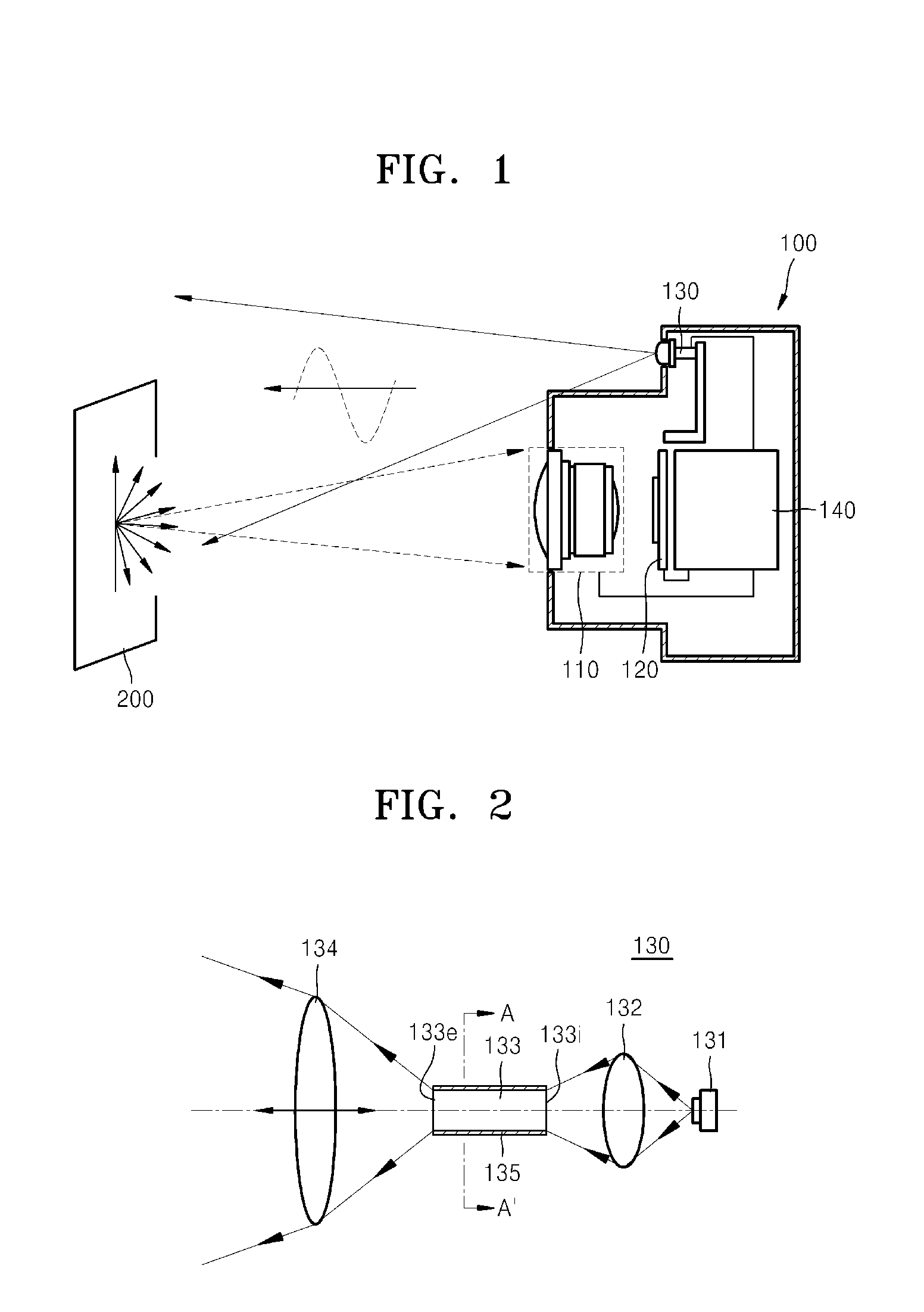

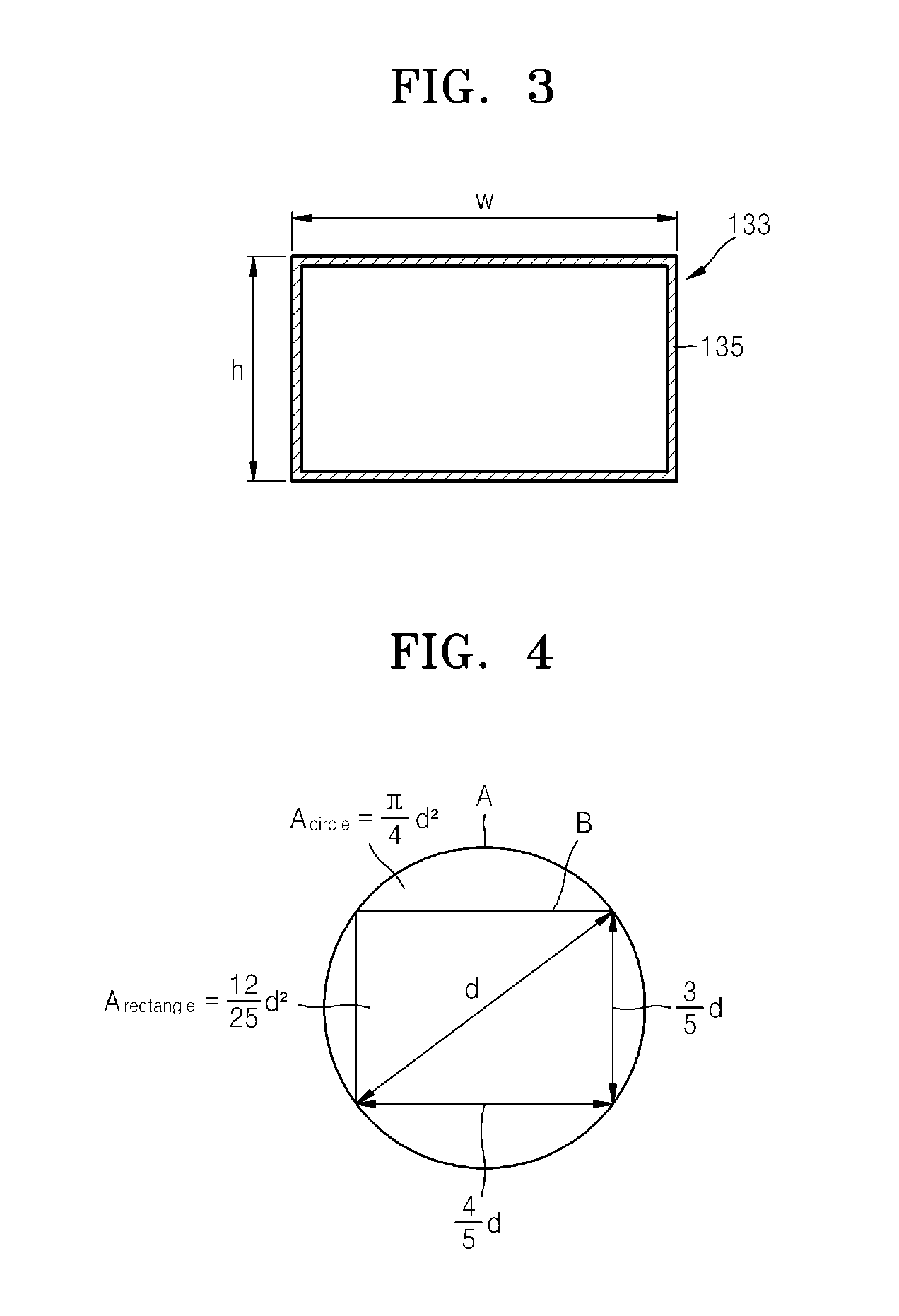

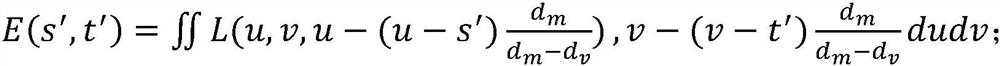

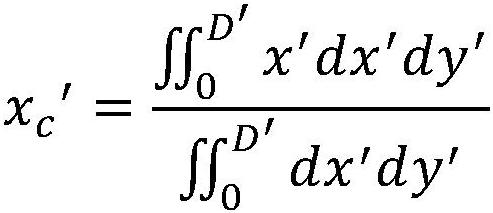

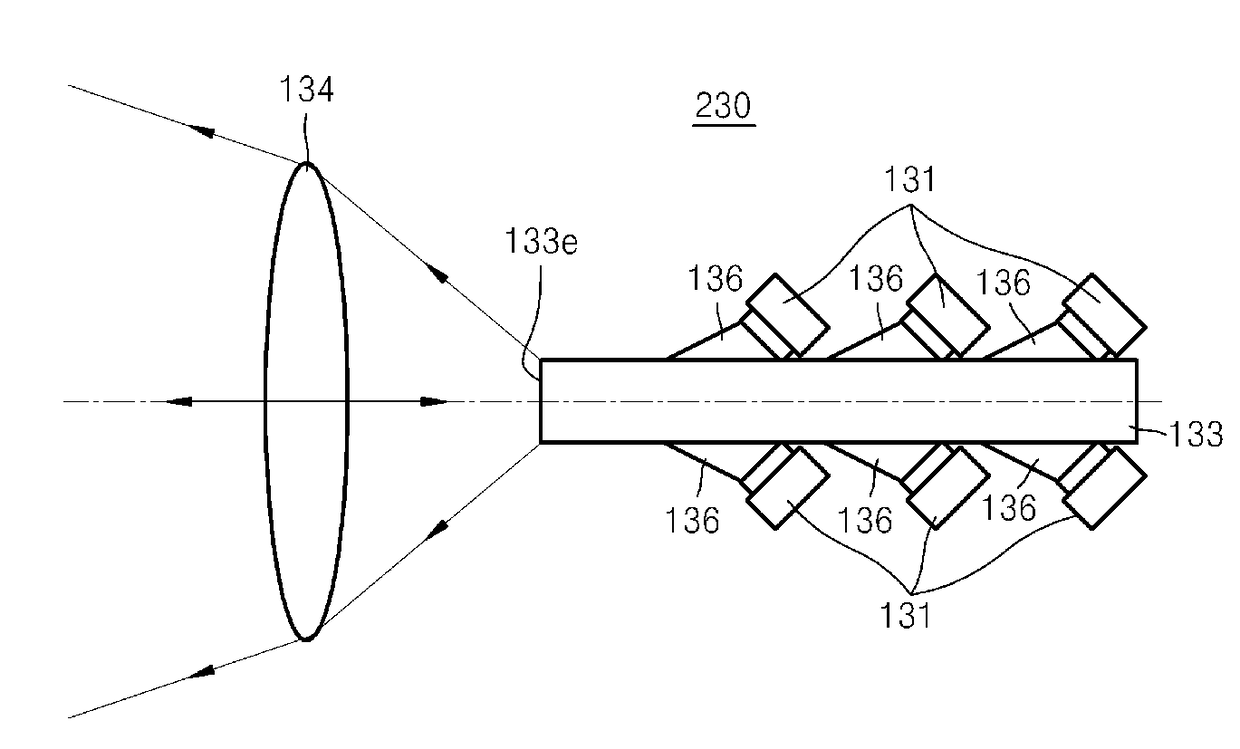

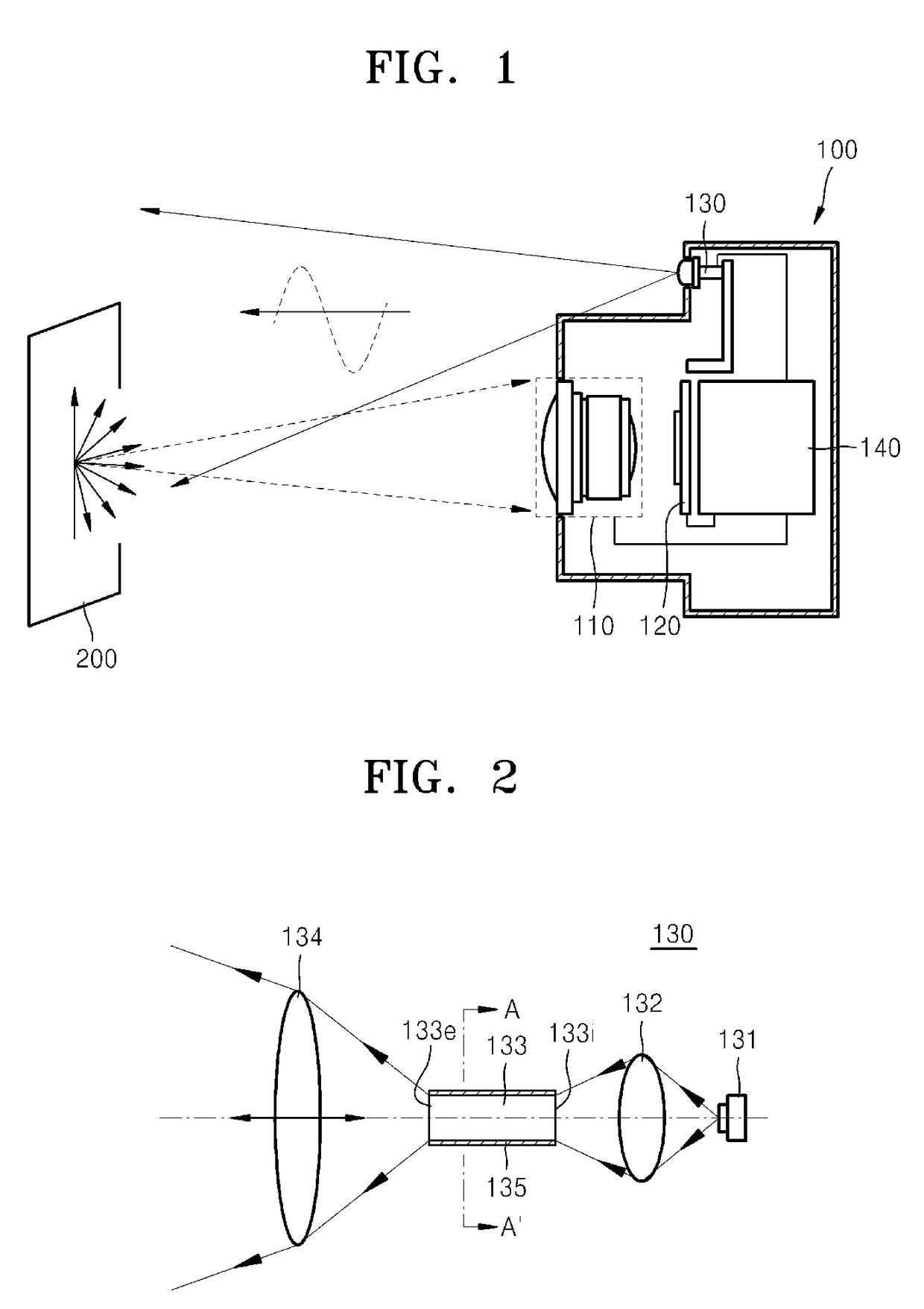

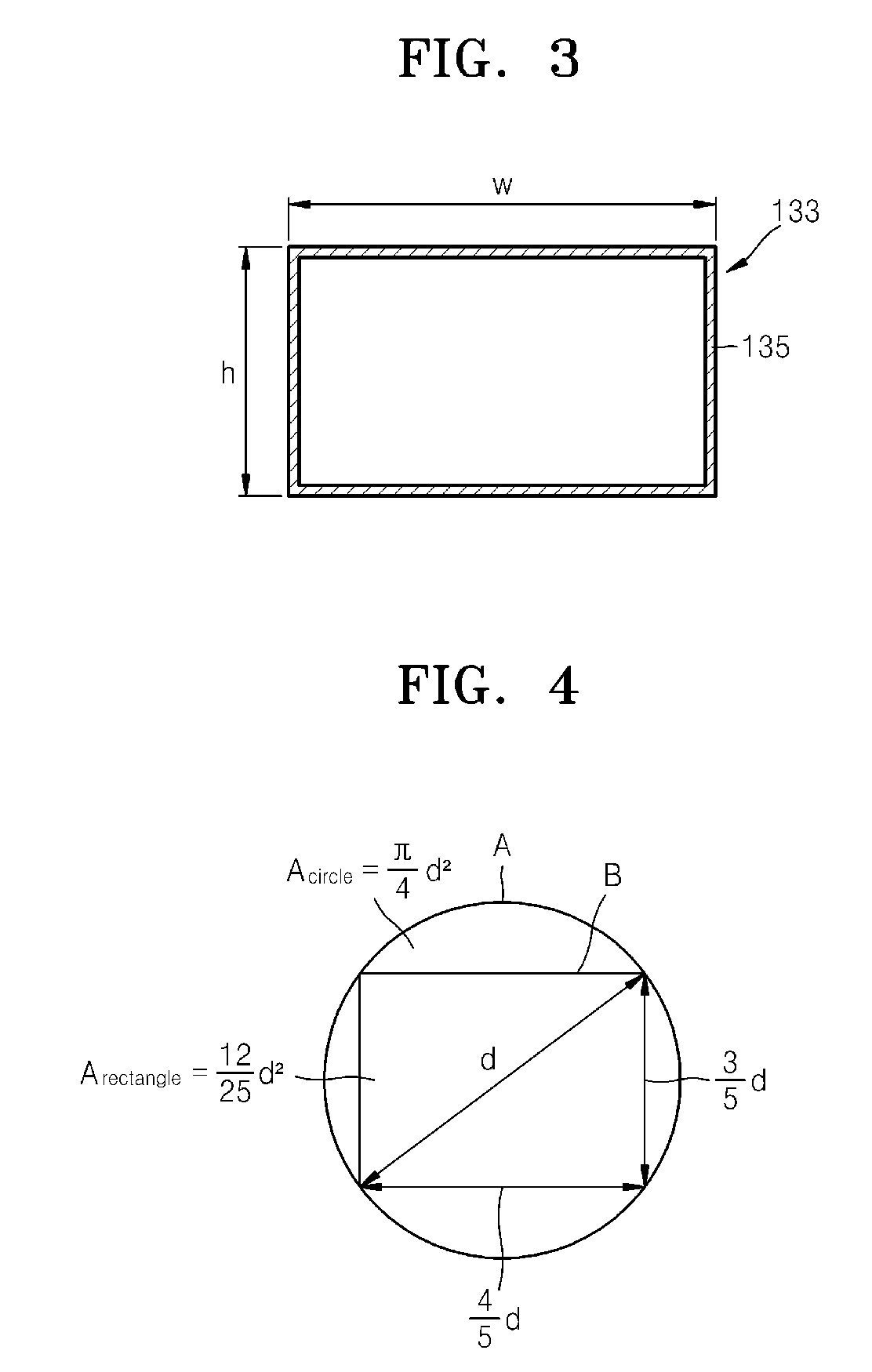

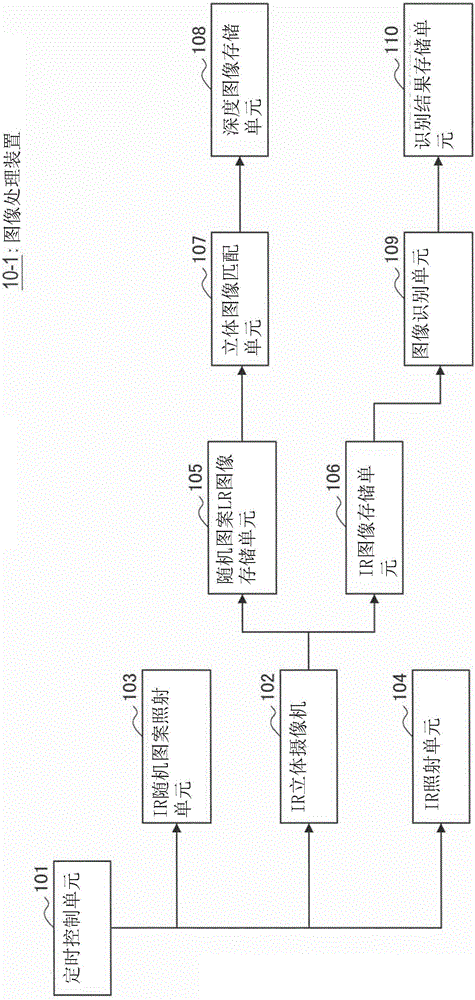

Illumination optical system and 3D image acquisition apparatus including the same

Active | US20120147147A1 | Efficiently inhibit speckle noise | Accurate depth information | Electromagnetic wave reradiation | Stereoscopic systems | Acquisition apparatus | 3d image

An illumination optical system of a 3-dimensional (3D) image acquisition apparatus and a 3D image acquisition apparatus including the illumination optical system. The illumination optical system of a 3D image acquisition apparatus includes a beam shaping element which outputs light having a predetermined cross-sectional shape which is proportional to a field of view of the 3D image acquisition apparatus. The beam shaping element may adjust a shape of illumination light according to its cross-sectional shape. The beam shaping element may uniformly homogenize the illumination light without causing light scattering or absorption.

Owner:SAMSUNG ELECTRONICS CO LTD

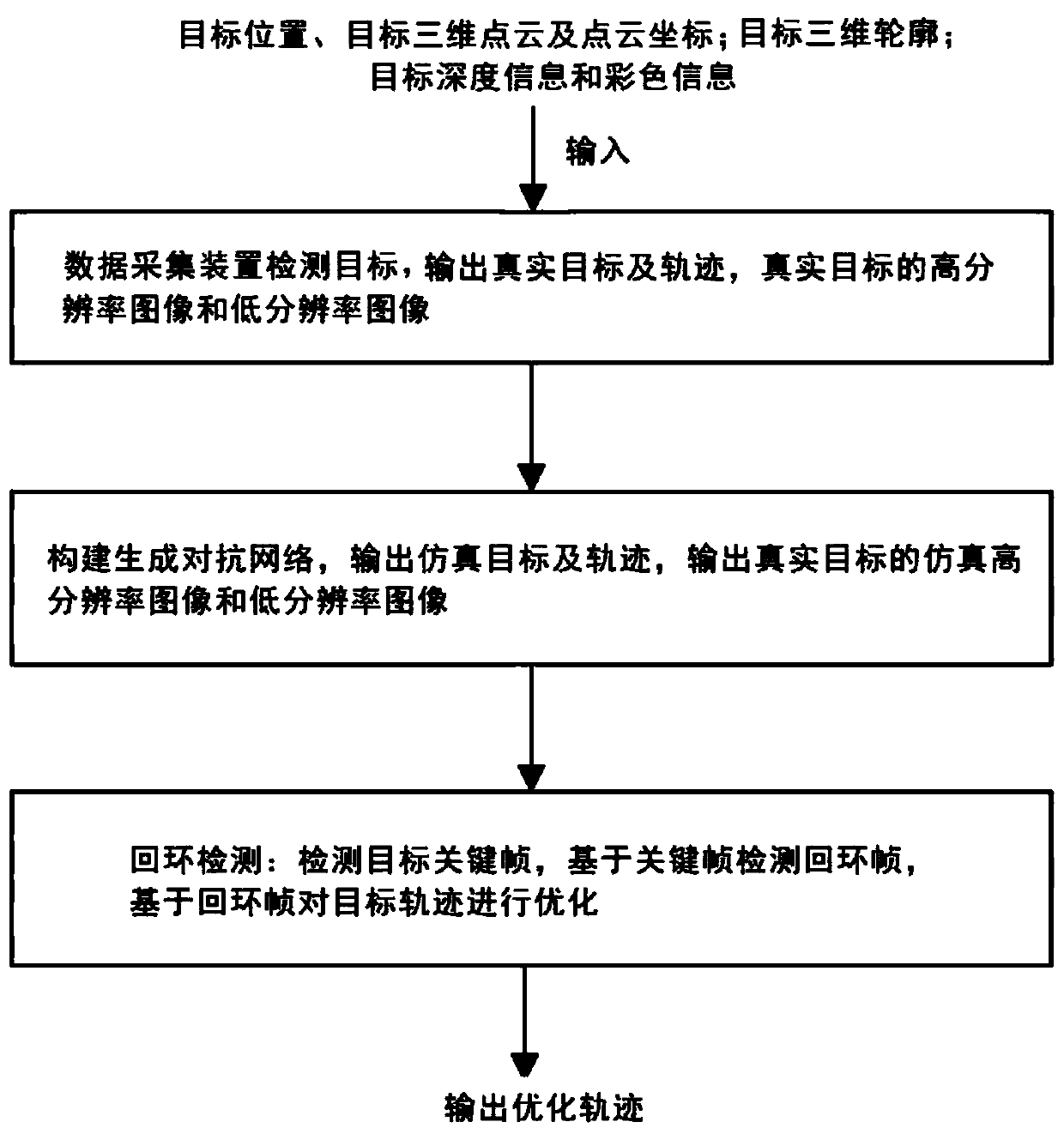

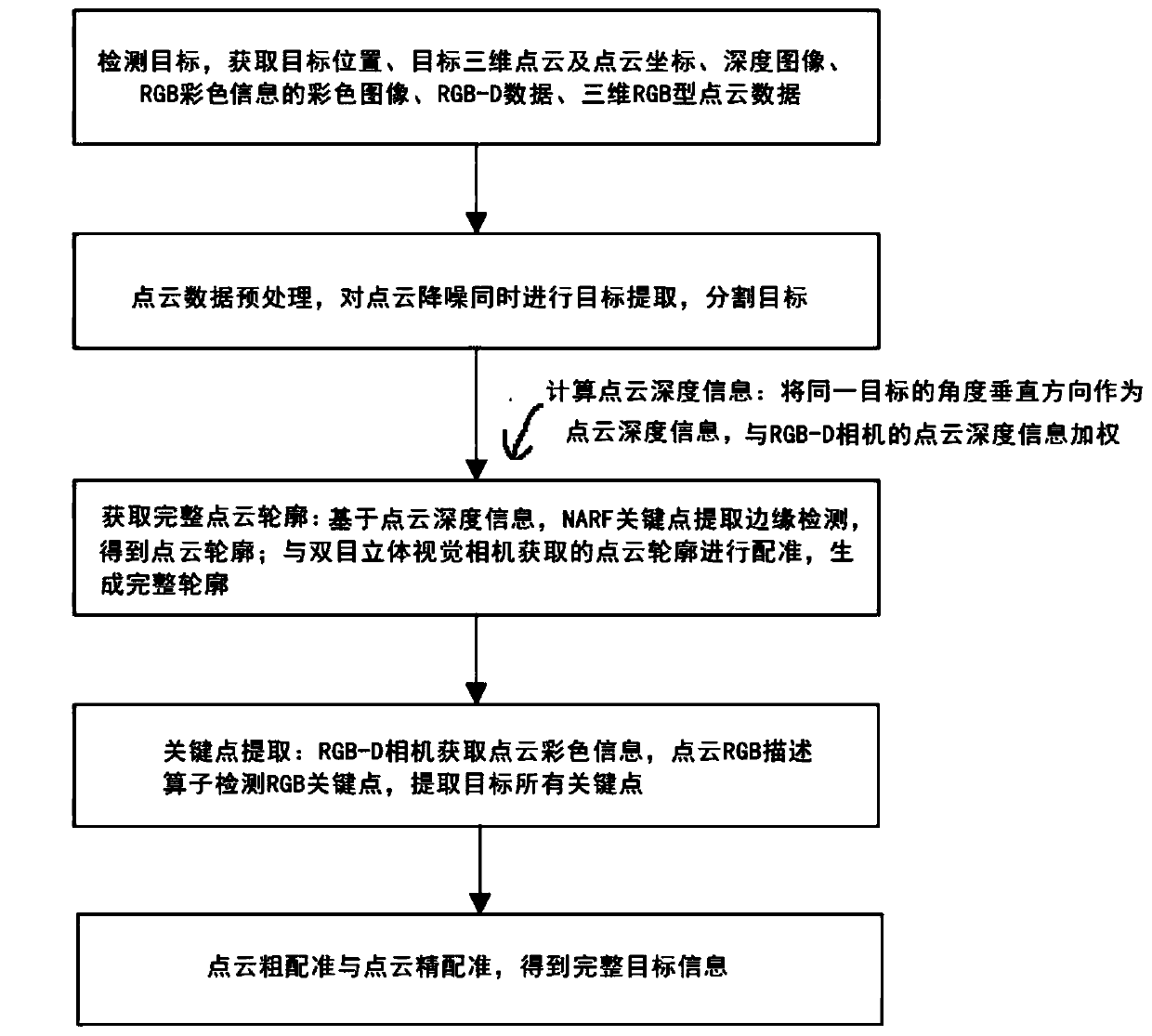

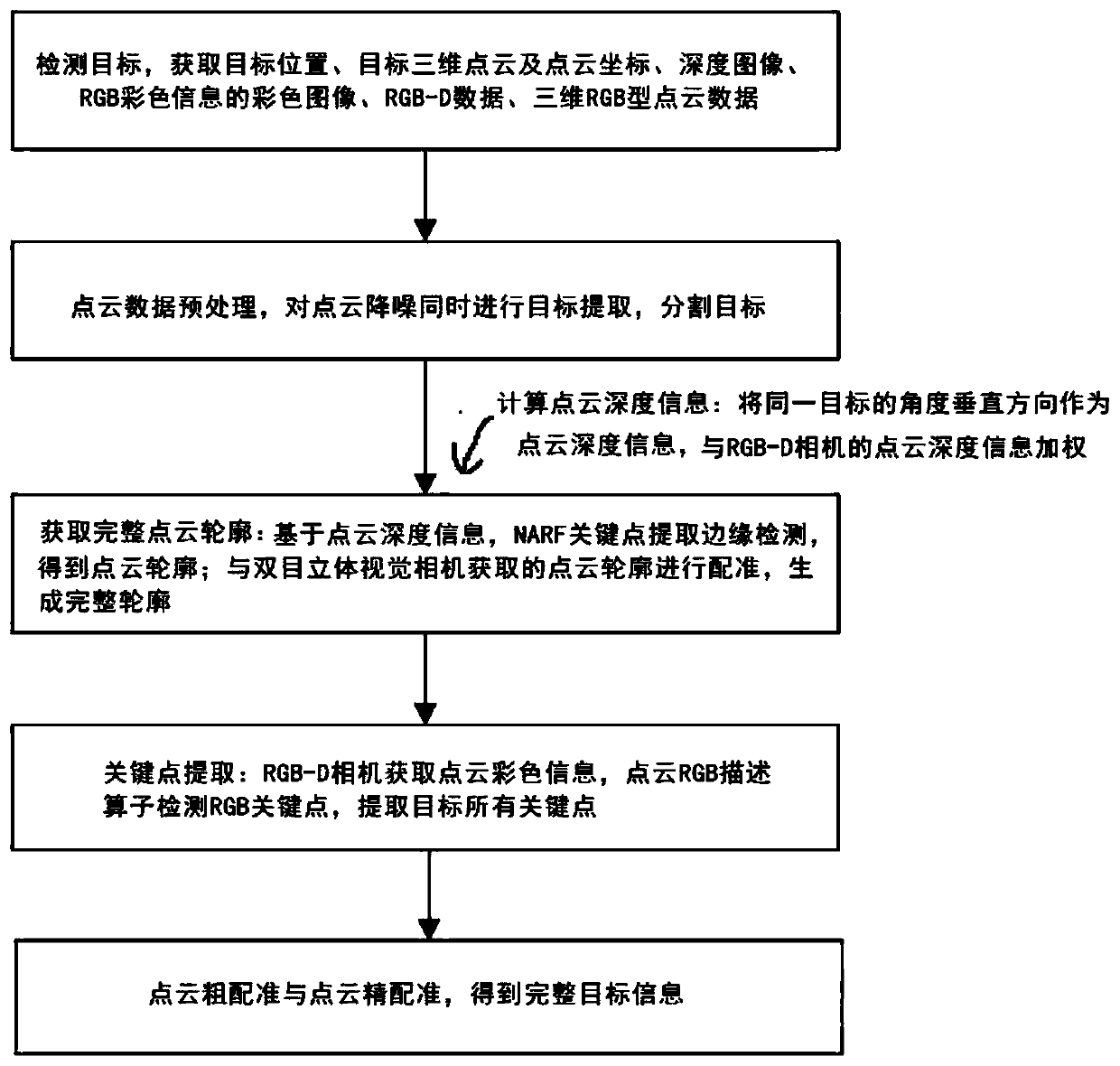

Trajectory loopback detection optimization method based on generative adversarial network

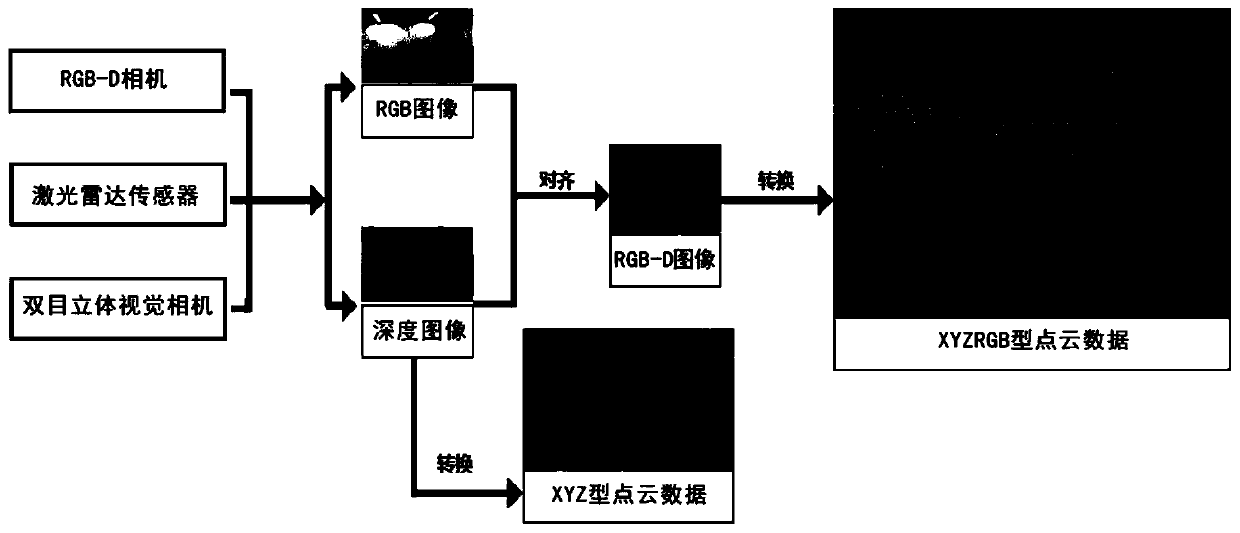

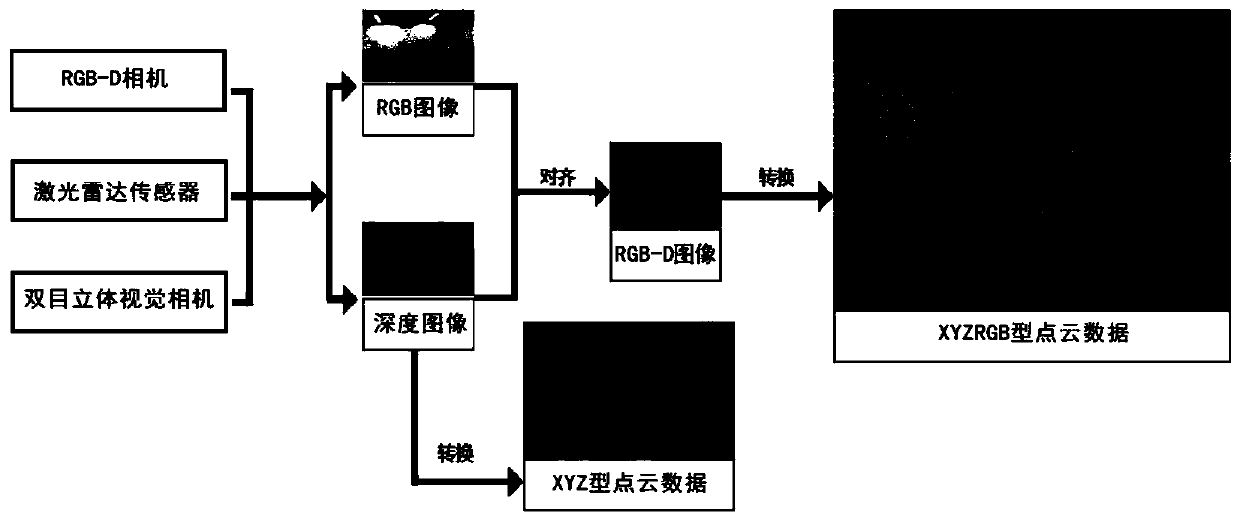

Inactive | CN110689562A | Avoid detection | Avoid instability | Image enhancement | Image analysis | Point cloud | Image resolution

The invention provides a generative adversarial network-based trajectory loopback detection optimization method, which comprises the following steps: a data collection device acquires multi-target information and outputs a target coordinate position, a posture, a trajectory and a high-low resolution image set; constructing a generative adversarial network to reconstruct a simulation target, the network comprising a first generator, a second generator, three local discriminators and a global discriminator, and outputting a simulation high-low resolution image set of a coordinate position, a posture, a track and a real target of the simulation target; loop detection is carried out to correct a target trajectory: a key frame is detected, a feature repetition rate is calculated, and a dictionary is constructed for the same target based on a two-dimensional high-low resolution image set, a low resolution image set and a three-dimensional RGB point cloud to judge a loop; and optimizing the target trajectory based on the loopback frame, and outputting an optimized trajectory. The optimal trajectory is found while the trajectory is updated in real time, and accurate information is provided for unmanned control, target detection and recognition, feasible region detection, path planning and the like.

Owner:SHENZHEN WEITESHI TECH

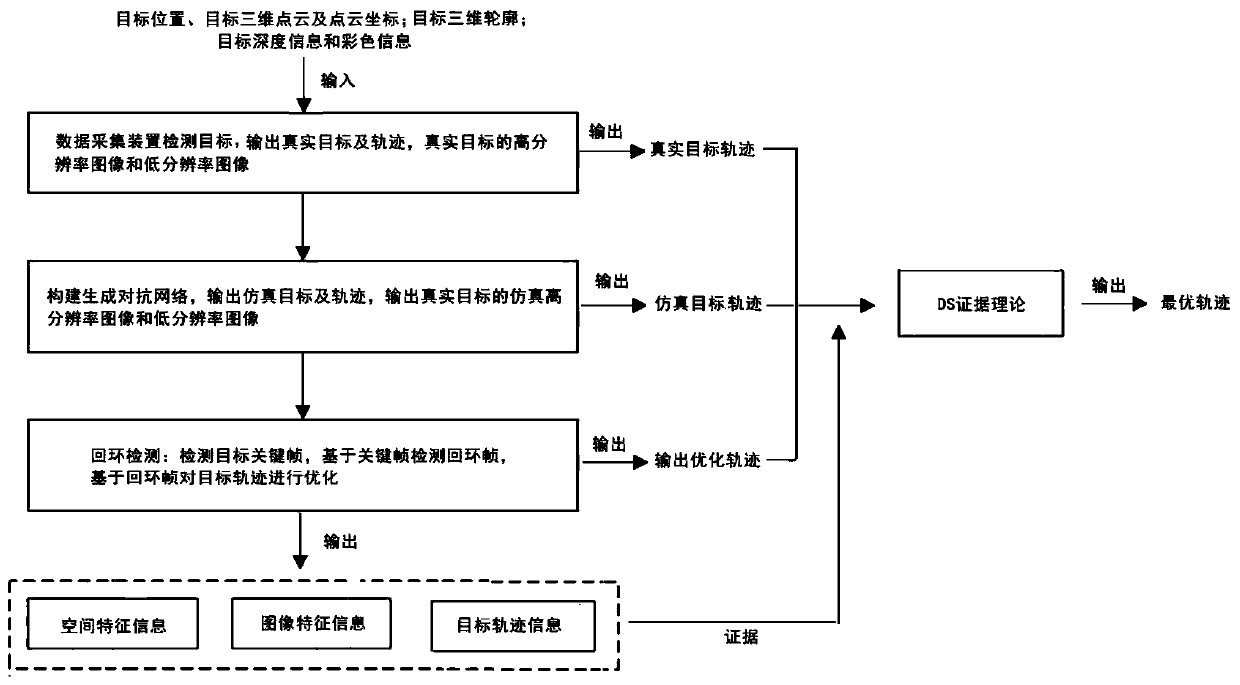

Target trajectory optimization method based on DS evidence theory

Active | CN110675418A | Avoid detection | Avoid instability | Image analysis | Internal combustion piston engines | Point cloud | Image resolution

The invention provides a target trajectory optimization method based on a DS evidence theory, and the method comprises: a data collection device obtaining multi-target information, and outputting a target coordinate position, a posture, a trajectory and a high-low resolution image set; constructing a generative adversarial network to reconstruct a simulation target, and outputting a simulation high-low resolution image set of the coordinate position, posture and track of the simulation target and a real target; detecting key frames and calculating a feature repetition rate, constructing a dictionary to judge loops based on the two-dimensional high-low resolution image set and the three-dimensional target RGB point cloud, and realizing trajectory optimization; establishing a sample matrix to represent an output trajectory, including a real target trajectory, a generative adversarial network output simulation target trajectory, and an optimized trajectory after loopback detection; and performing correctness judgment on the trajectory based on the spatial features, the image features and the trajectory information by utilizing a DS evidence theory, and outputting an optimal trajectory. The optimal trajectory is output, and accurate information is provided for unmanned control, target recognition and detection, region detection, path planning and the like.

Owner:SHENZHEN WEITESHI TECH

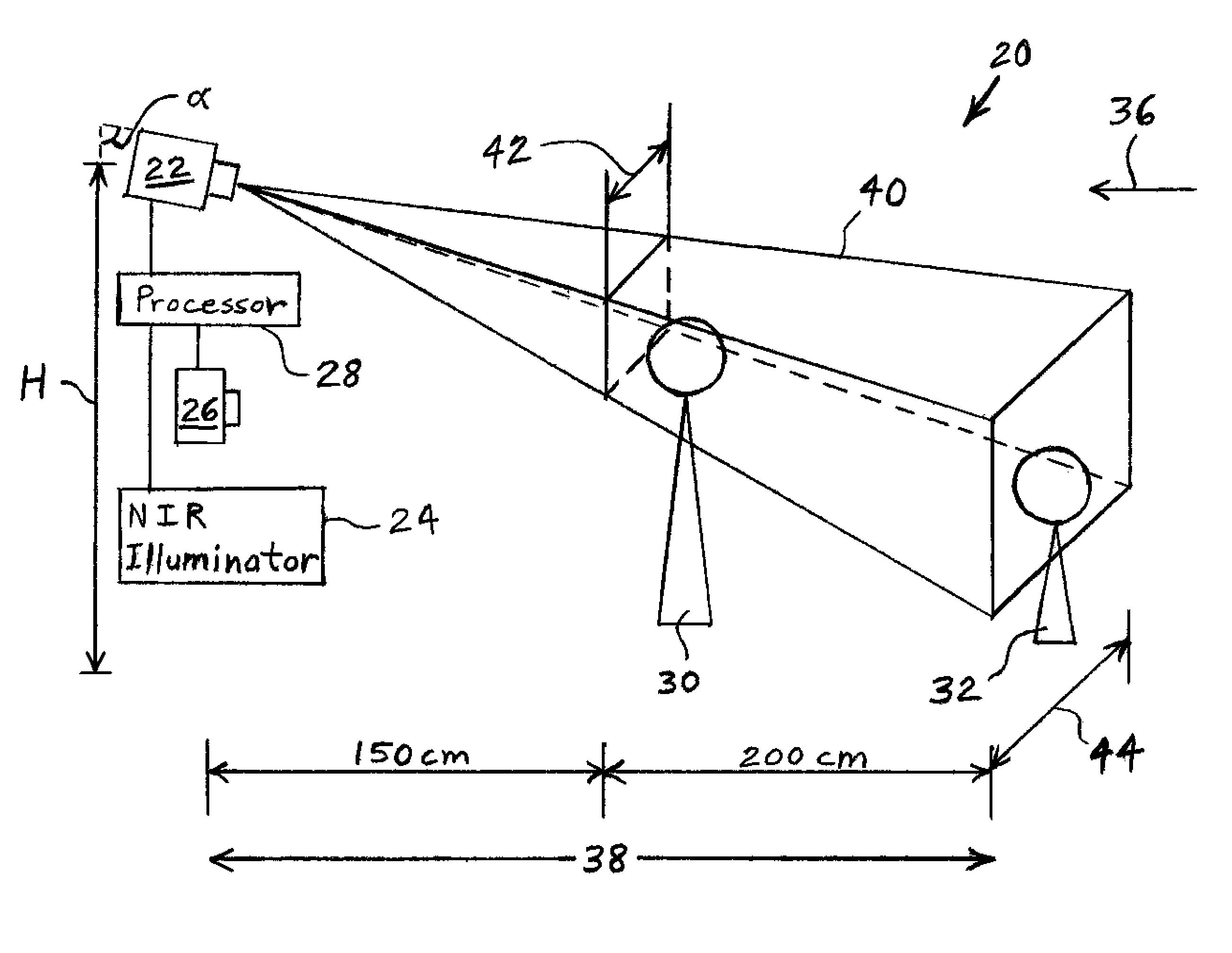

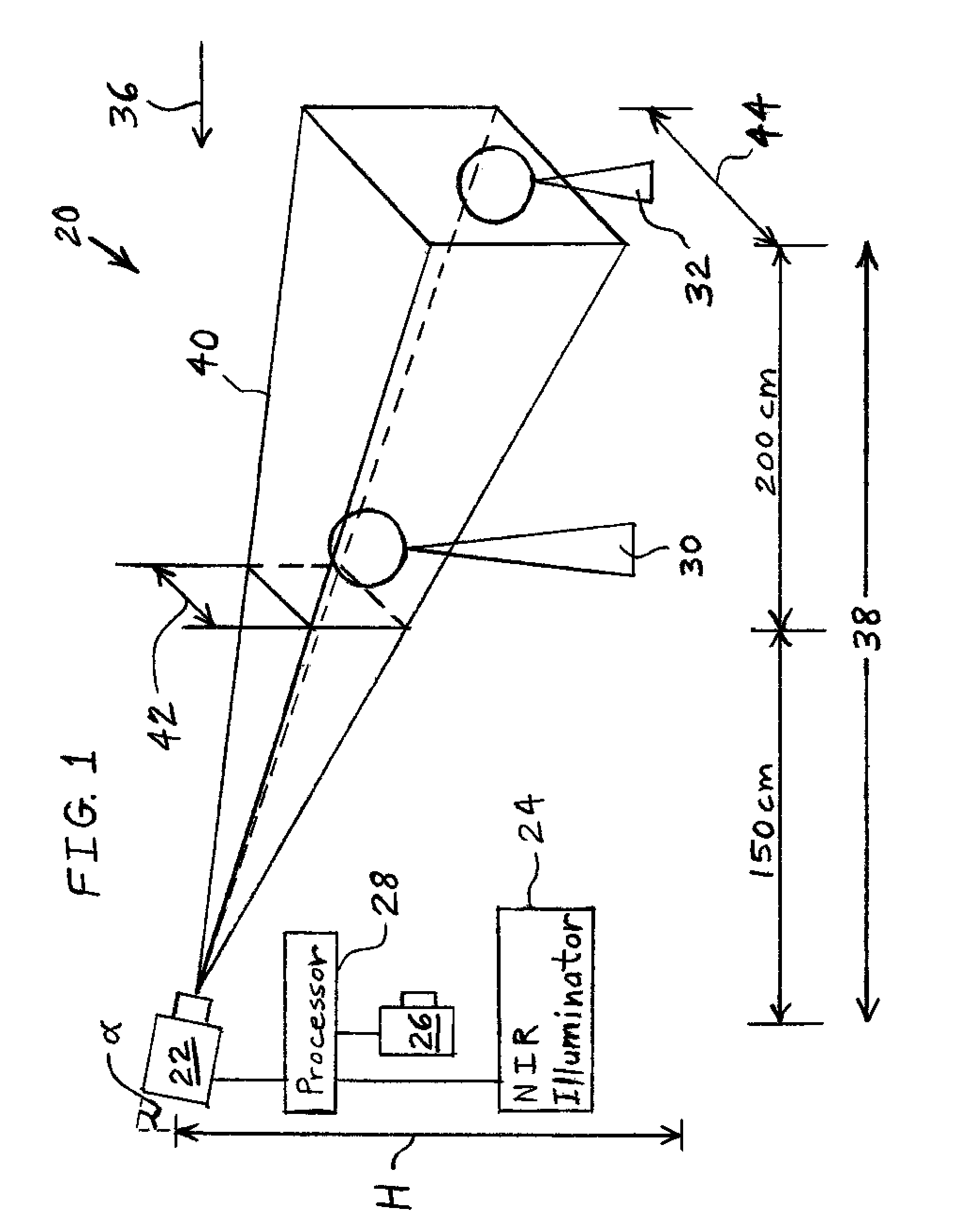

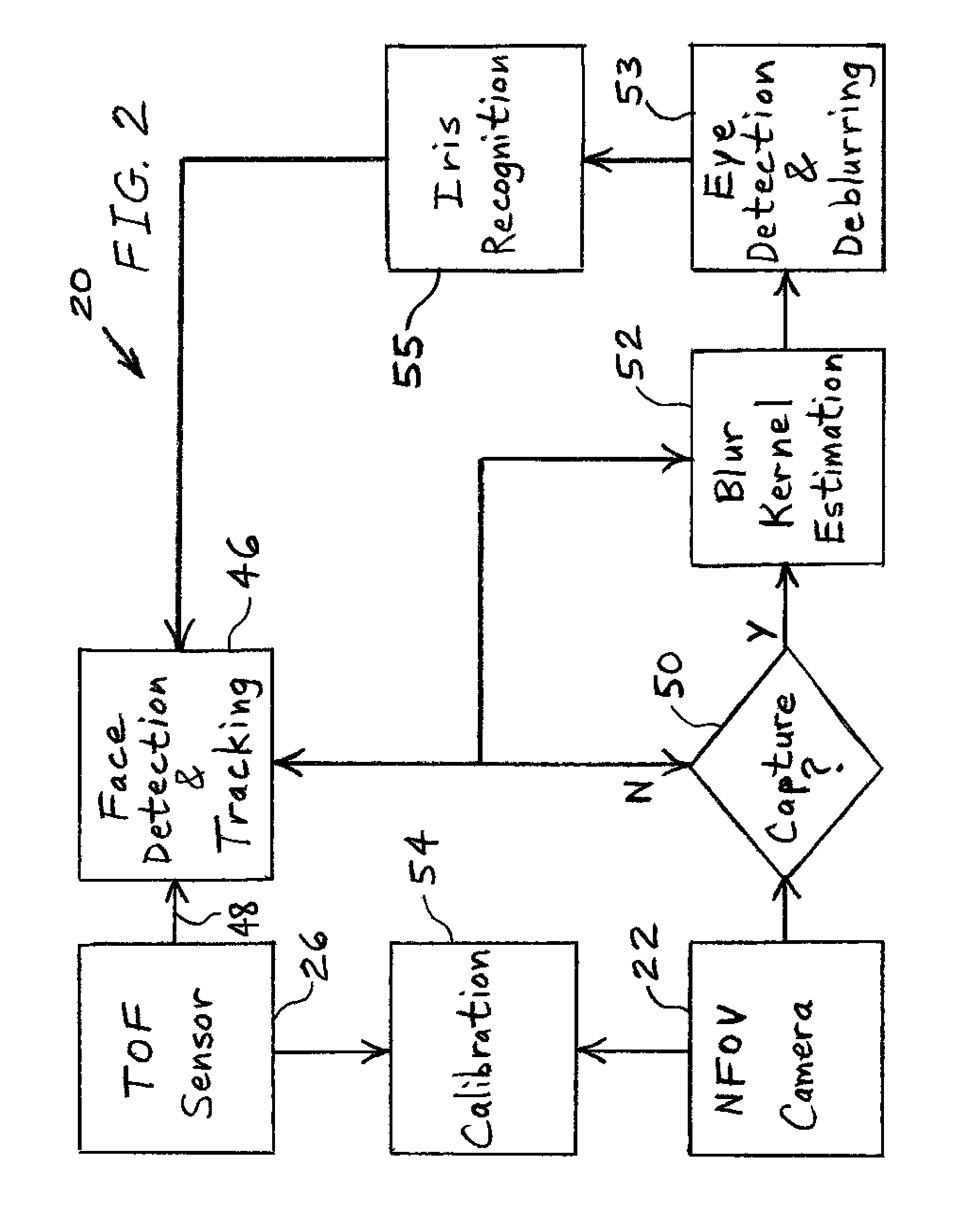

Time-of-flight sensor-assisted iris capture system and method

Active | US7912252B2 | Add depth | Increase volume | Television system details | Acquiring/recognising eyes | Time of flight sensor | Computer vision

Owner:ROBERT BOSCH GMBH

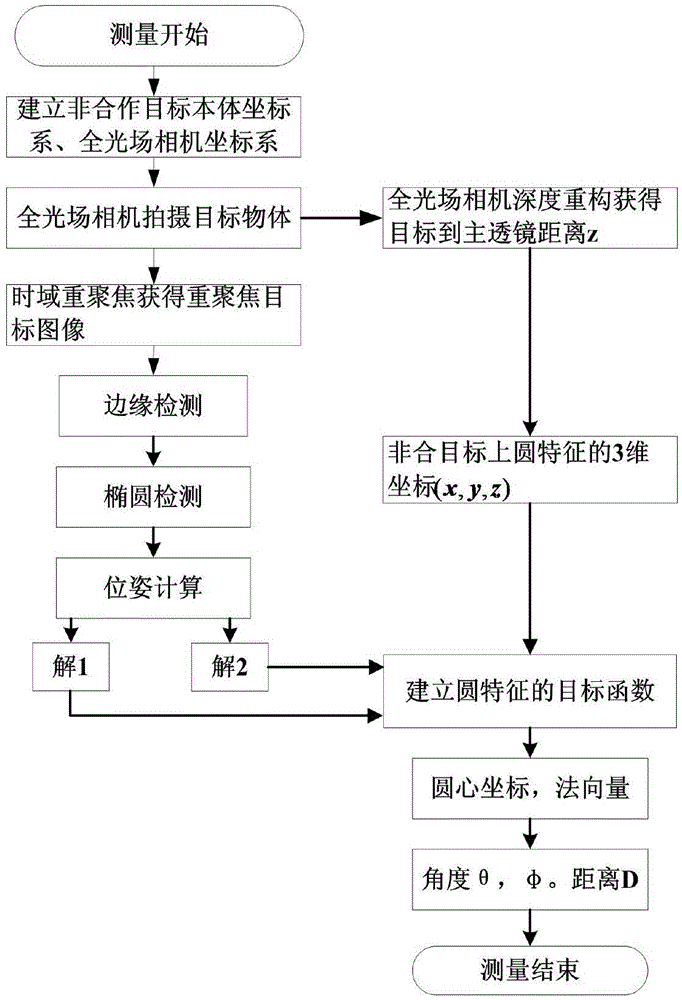

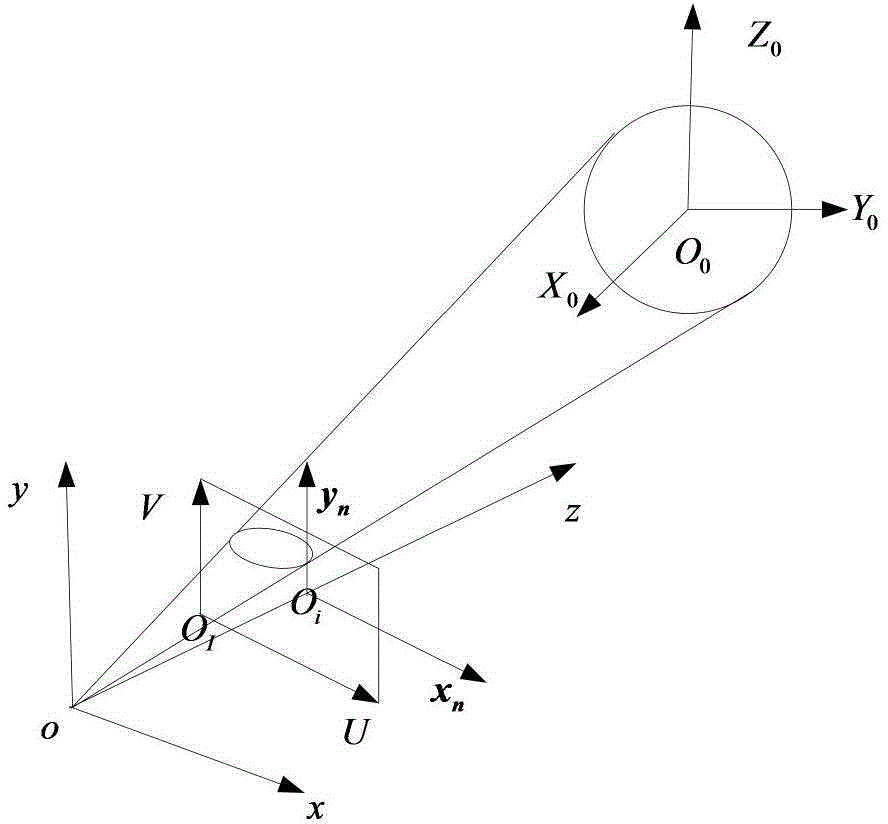

Method used for measuring pose of non-cooperative target based on complete light field camera

Inactive | CN104101331A | Accurate removal | Accurate calculation | Photogrammetry/videogrammetry | Navigation instruments | Light-field camera | Visual perception

The invention discloses a method used for measuring the pose of a non-cooperative target based on a complete light field camera. The method is applied to a non-cooperative target containing a satellite-rocket docking ring, the docking ring on the non-cooperative target being a circle. The method comprises: step 1, the light field image of the non-cooperative target with circular features is obtained by adopting the complete light field camera; step 2, the surface normal vectors and the coordinates of the center of the circular features are calculated in the main lens coordinate system of the complete light field camera; step 3, false solutions are removed; step 4, the pose of the non-cooperative target is acquired. According to the invention, the false solutions arising in circle-based monocular vision pose estimation can be effectively removed, so that a pose of the non-cooperative target which meets accuracy requirements can be obtained.

Owner:HEFEI UNIV OF TECH

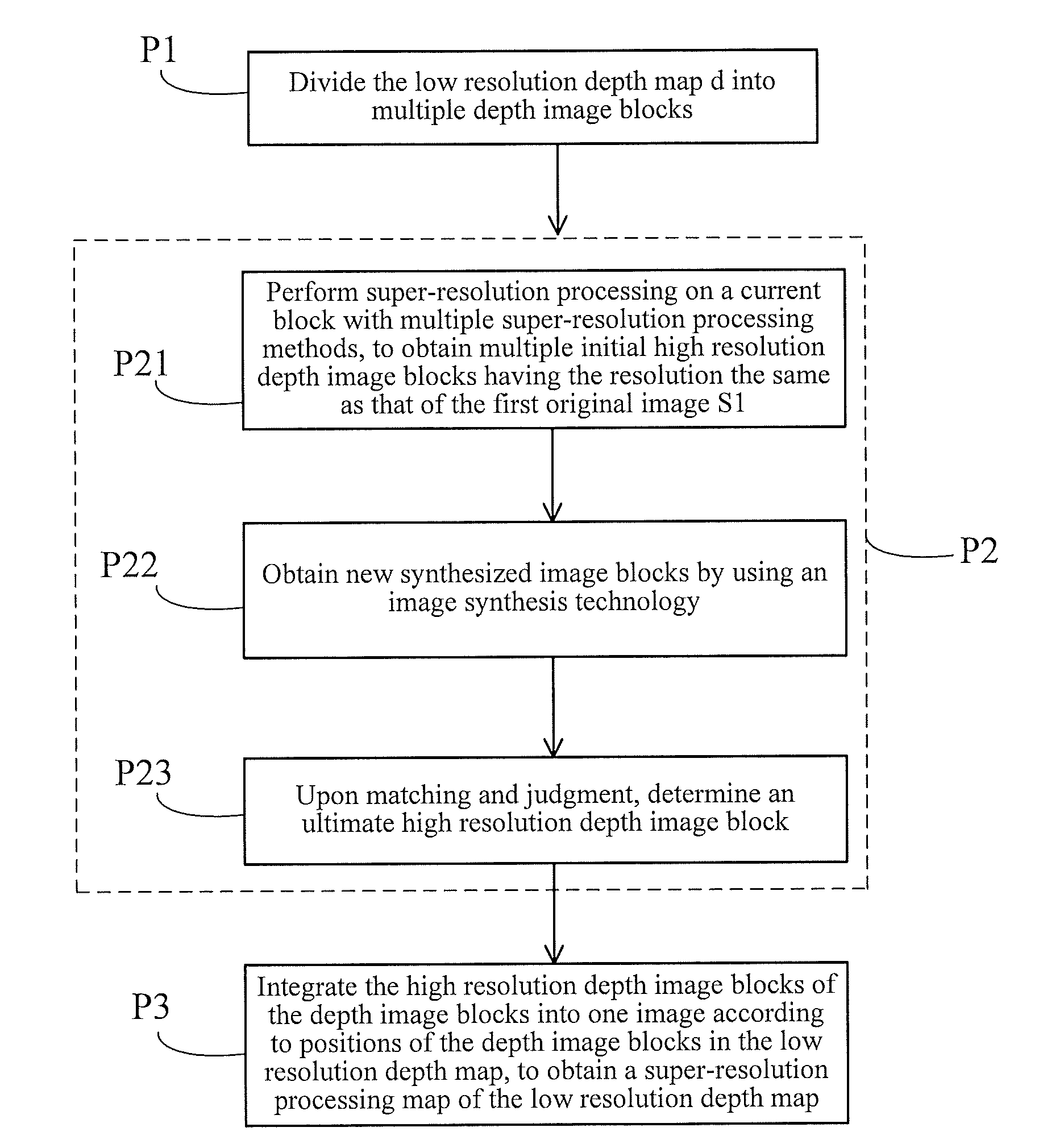

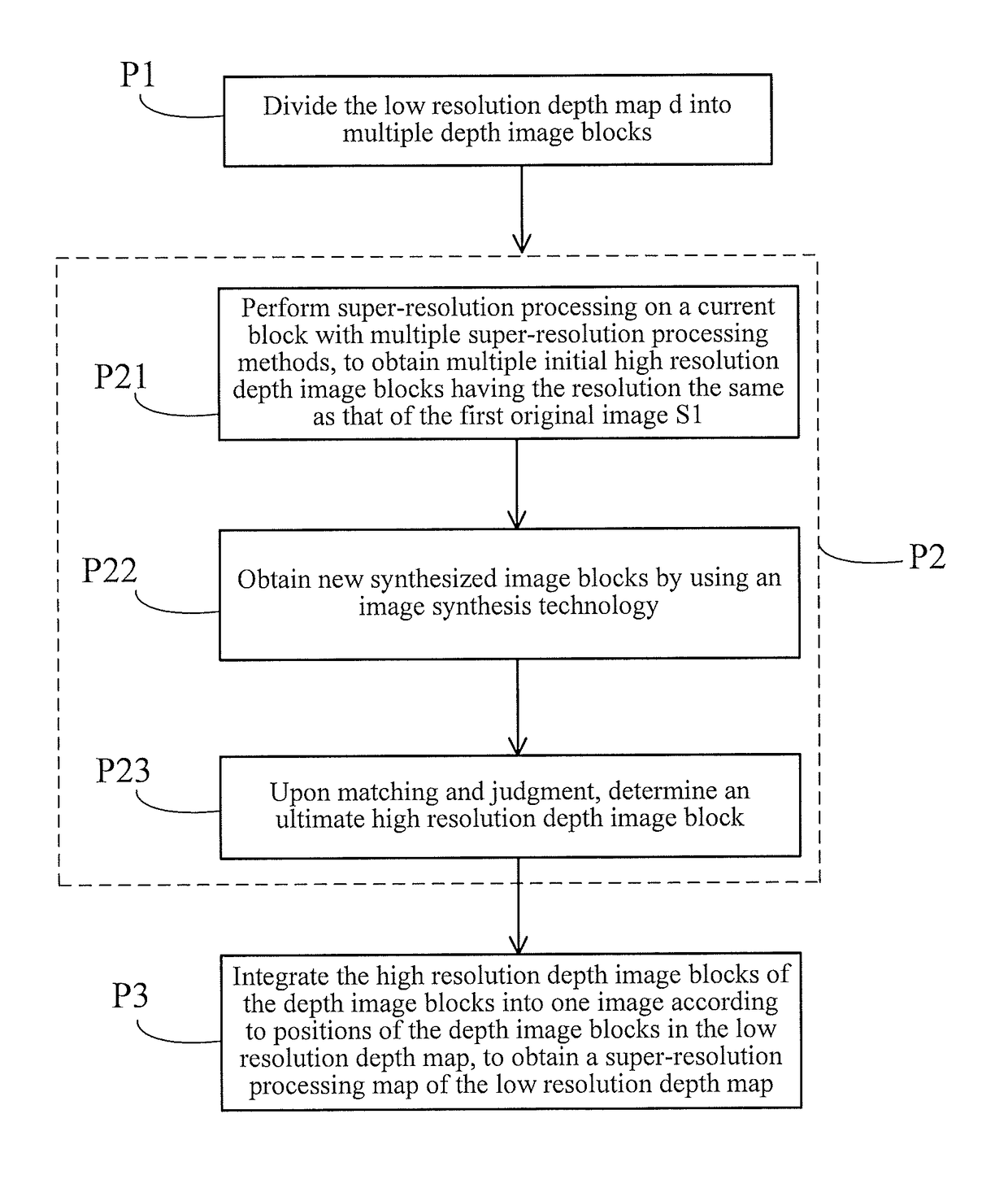

Depth map super-resolution processing method

Active | US20160328828A1 | Solve the low matching degree | Accurate depth information | Image enhancement | Image analysis | Image resolution | Radiology

The present invention discloses a depth map super-resolution processing method, including: firstly, respectively acquiring a first original image (S1) and a second original image (S2) and a low resolution depth map (d) of the first original image (S1); secondly, 1) dividing the low resolution depth map (d) into multiple depth image blocks; 2) respectively performing the following processing on the depth image blocks obtained in step 1); 21) performing super-resolution processing on a current block with multiple super-resolution processing methods, to obtain multiple high resolution depth image blocks; 22) obtaining new synthesized image blocks by using an image synthesis technology; 23) upon matching and judgment, determining an ultimate high resolution depth image block; and 3) integrating the high resolution depth image blocks of the depth image blocks into one image according to positions of the depth image blocks in the low resolution depth map (d). Through the depth map super-resolution processing method of the present invention, depth information of the obtained high resolution depth maps is more accurate.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV

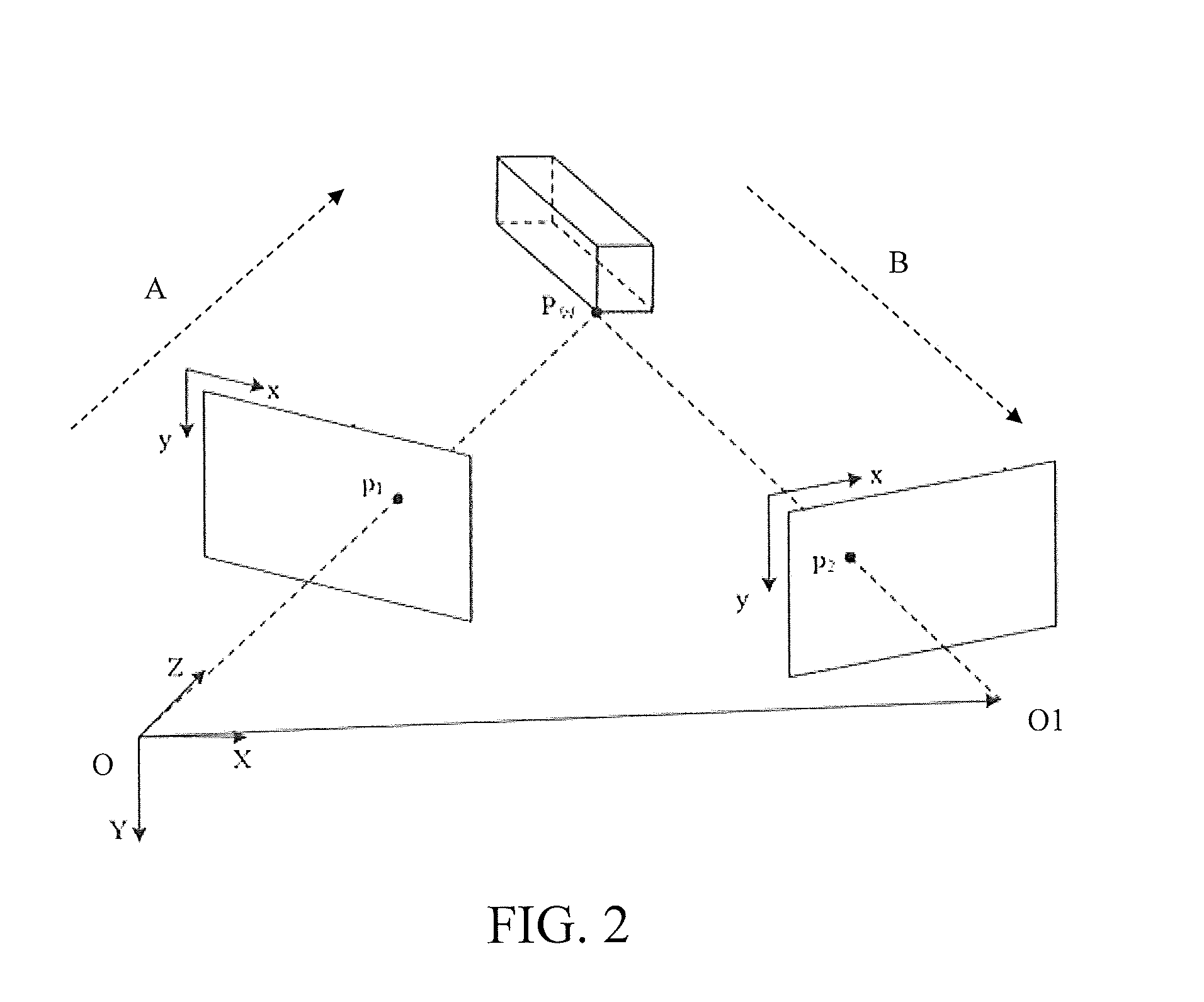

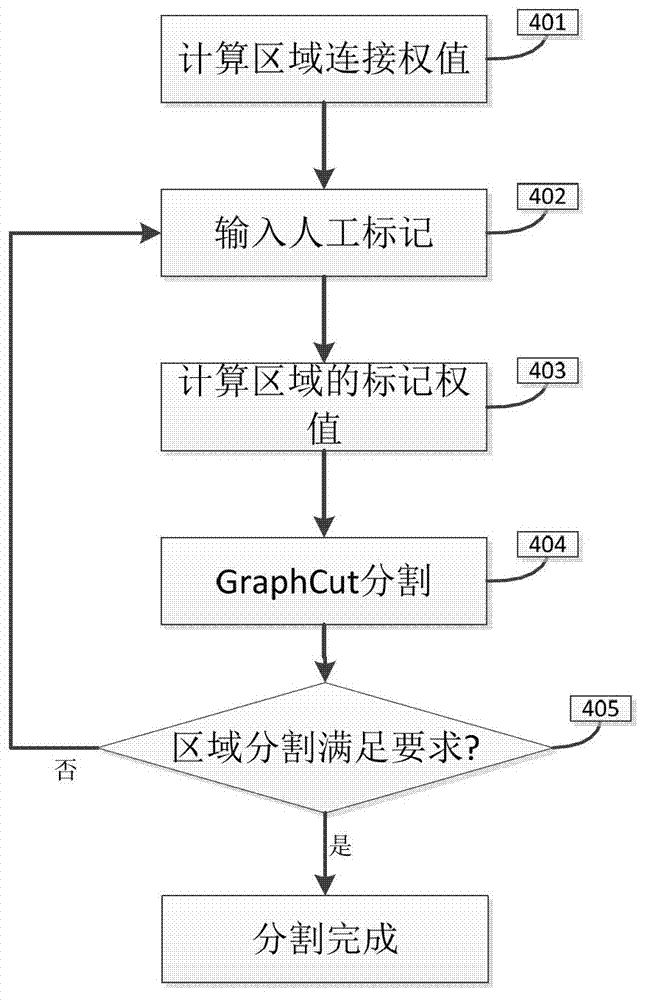

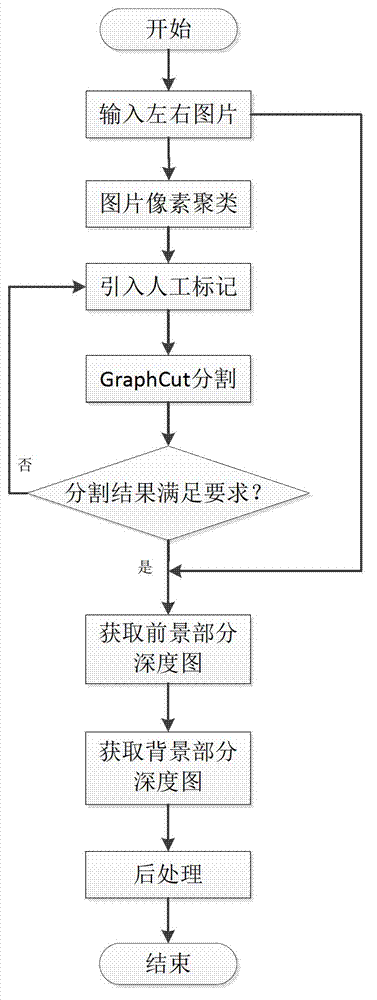

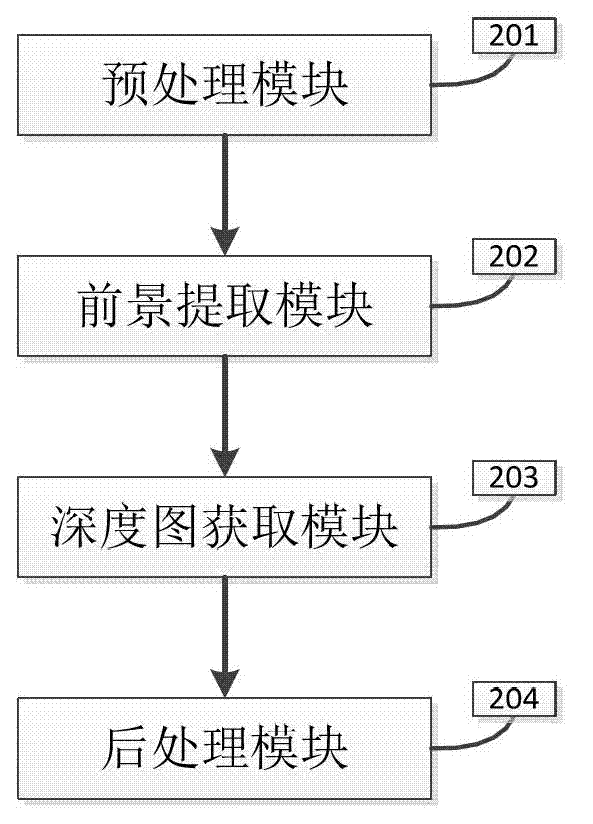

Method and system for acquiring depth map of binocular stereo video sequence

Active | CN103248906A | Reduce complexity | Depth value is accurate | Stereoscopic systems | Parallax | Stereo matching

The invention discloses a method and a system for acquiring a depth map of a binocular stereo video sequence. The method comprises the following steps: performing clustering on a first image of two images, adopting a region growing manner to transform all clusters into connected regions, and recording the average five-dimensional coordinates of all regions and the adjacency information between regions; receiving manual marks, input by an operator, that label the foreground and background of the first image, and calculating the connection weight and mark weight of the regions; taking the connection weight and mark weight of the regions as input, and calling the Graph Cut algorithm to partition the foreground and the background of the first image; and taking the second image of the two images as a reference image and, according to the partition result, calculating a disparity map of the foreground based on a local adaptive-weight stereo matching algorithm, calculating a disparity map of the background based on a Rank transform stereo matching algorithm, and then transforming the disparity maps into depth maps. The system executes the method. The method and the system offer accurate results and low complexity.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV

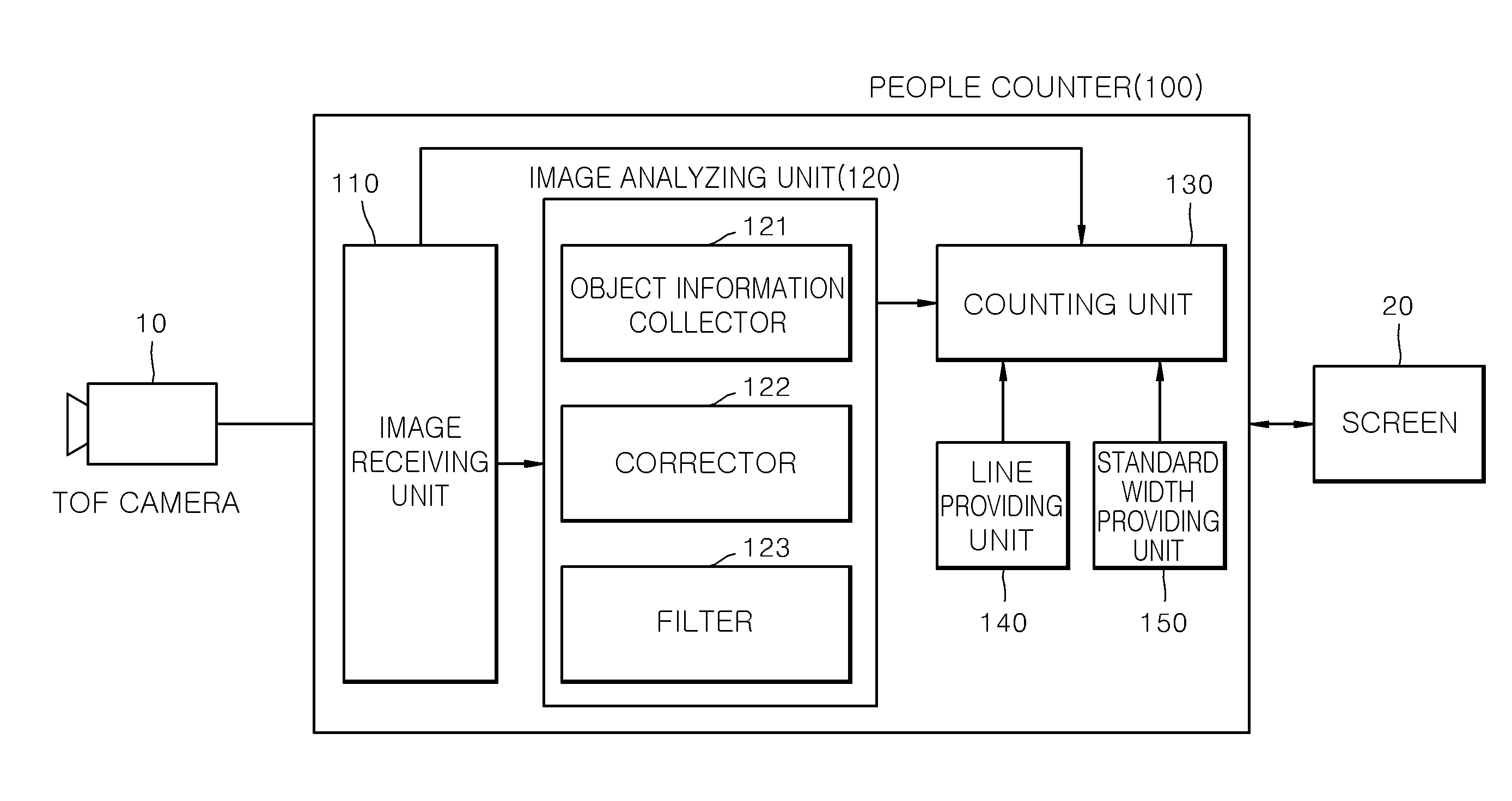

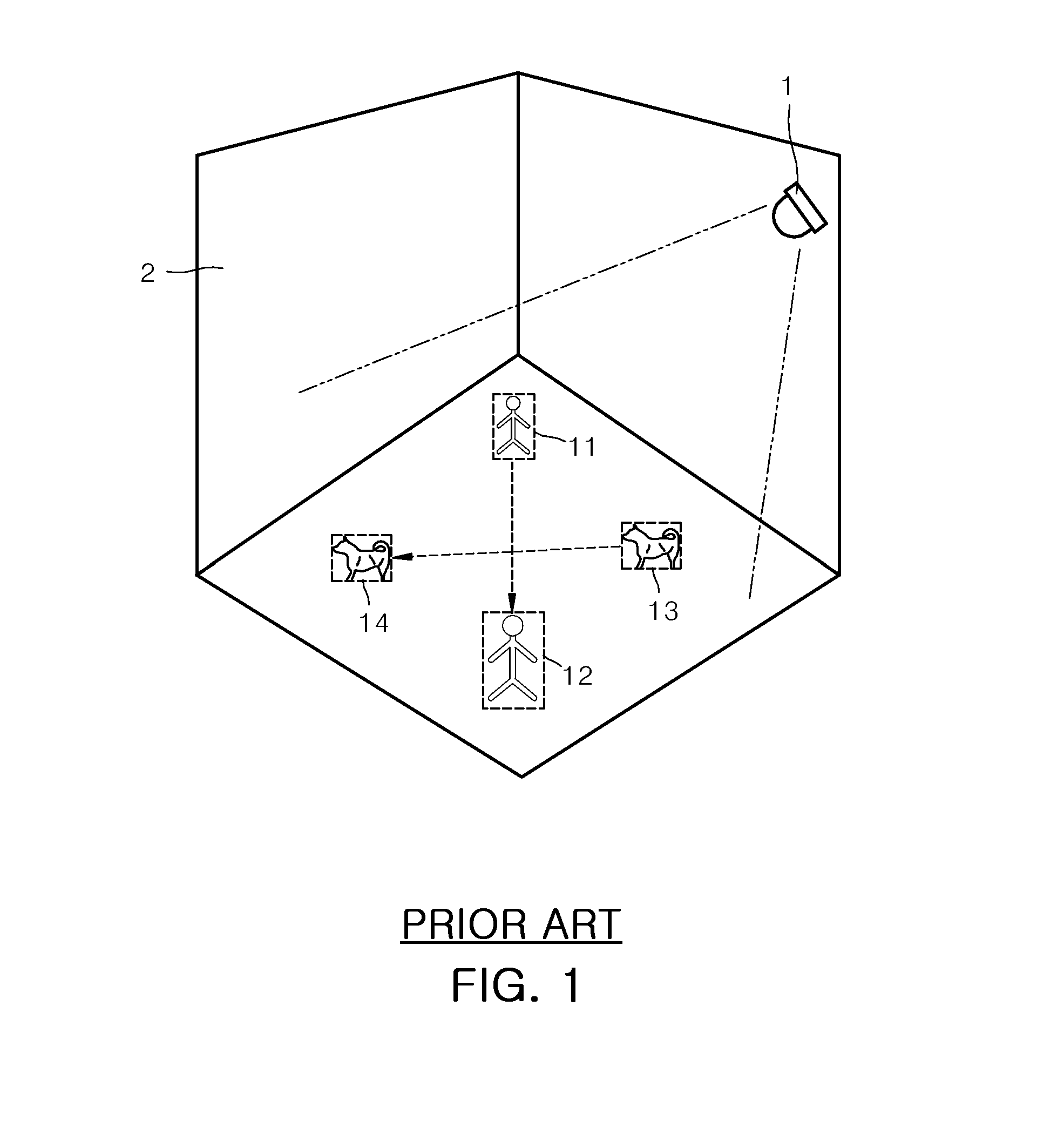

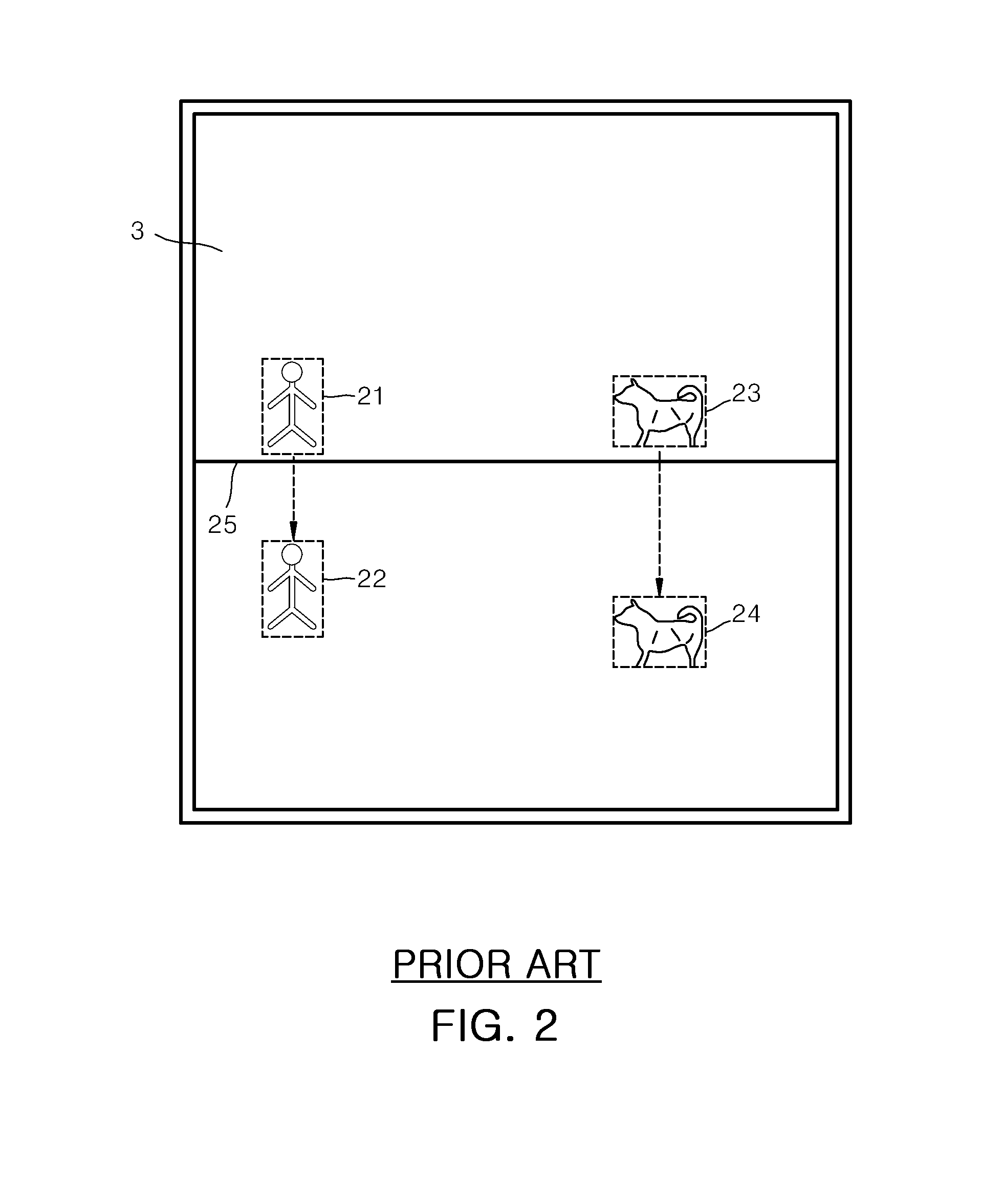

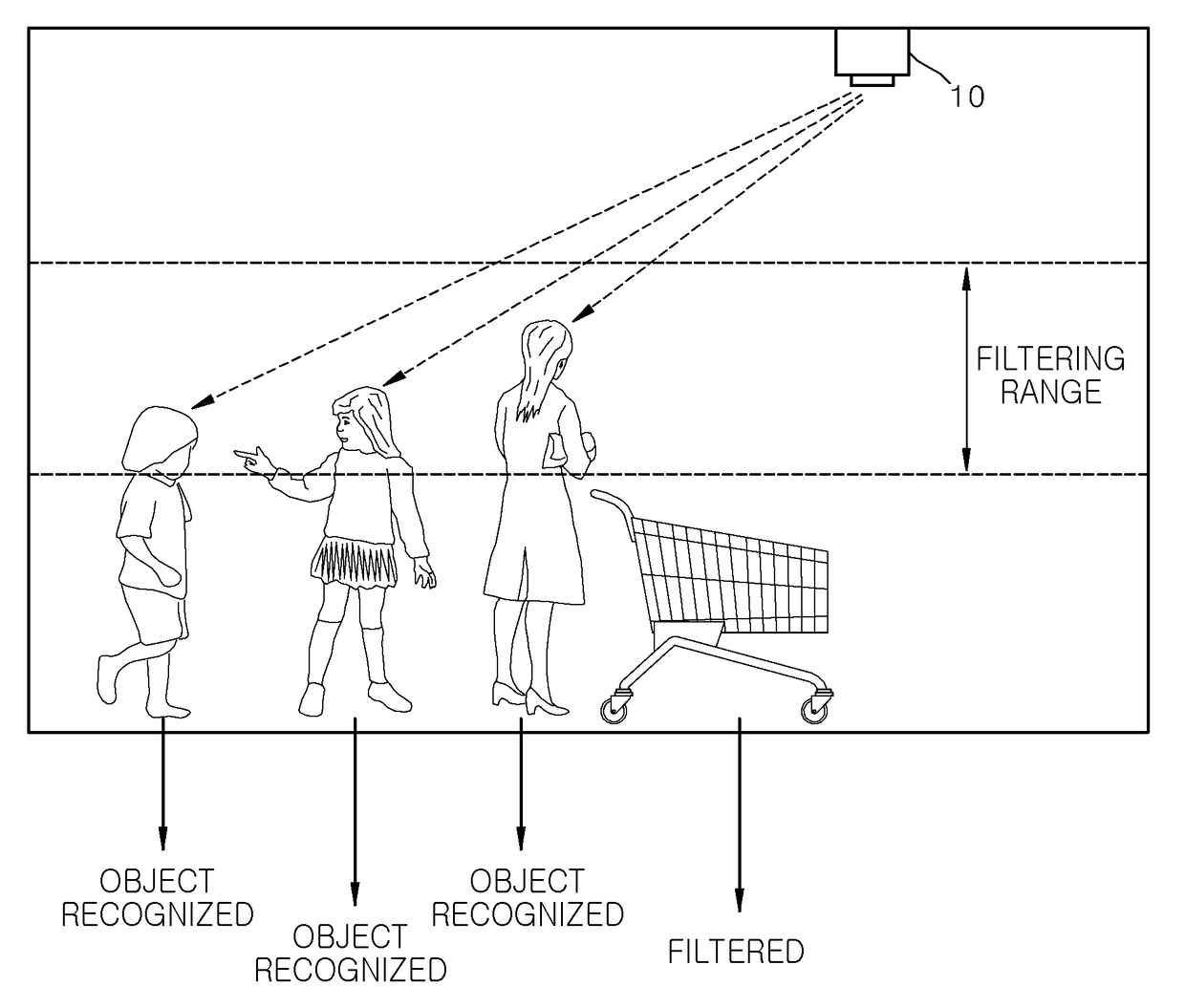

People counter using tof camera and counting method thereof

Active | US20160224829A1 | Ensure reliability | Easily depth information | Image enhancement | Image analysis | People counter | Video camera

Owner:UDP TECH

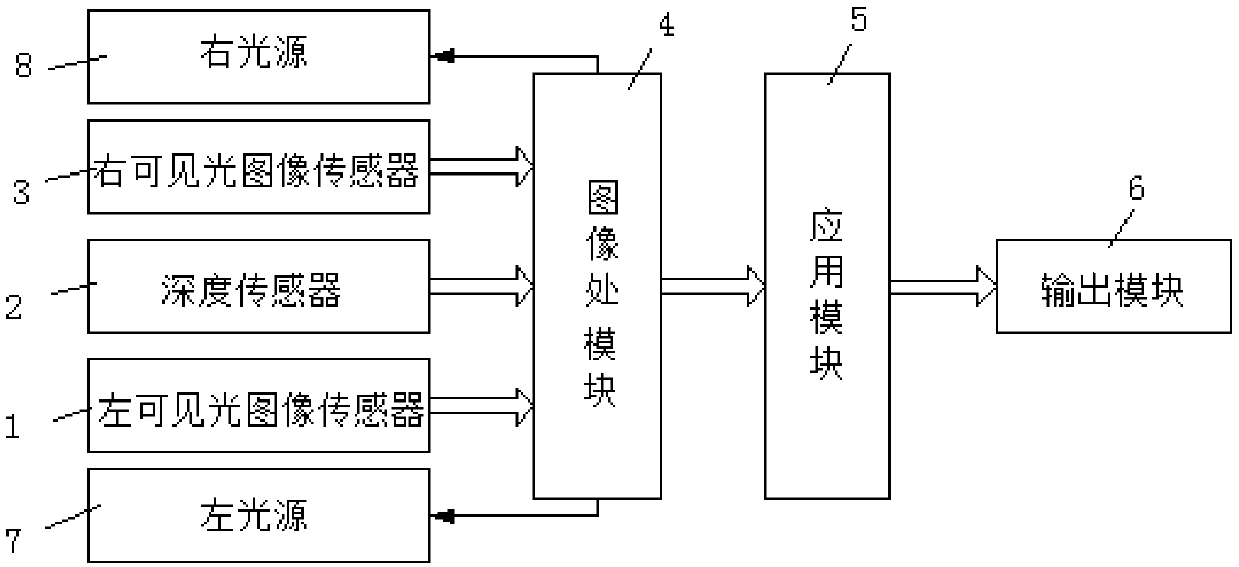

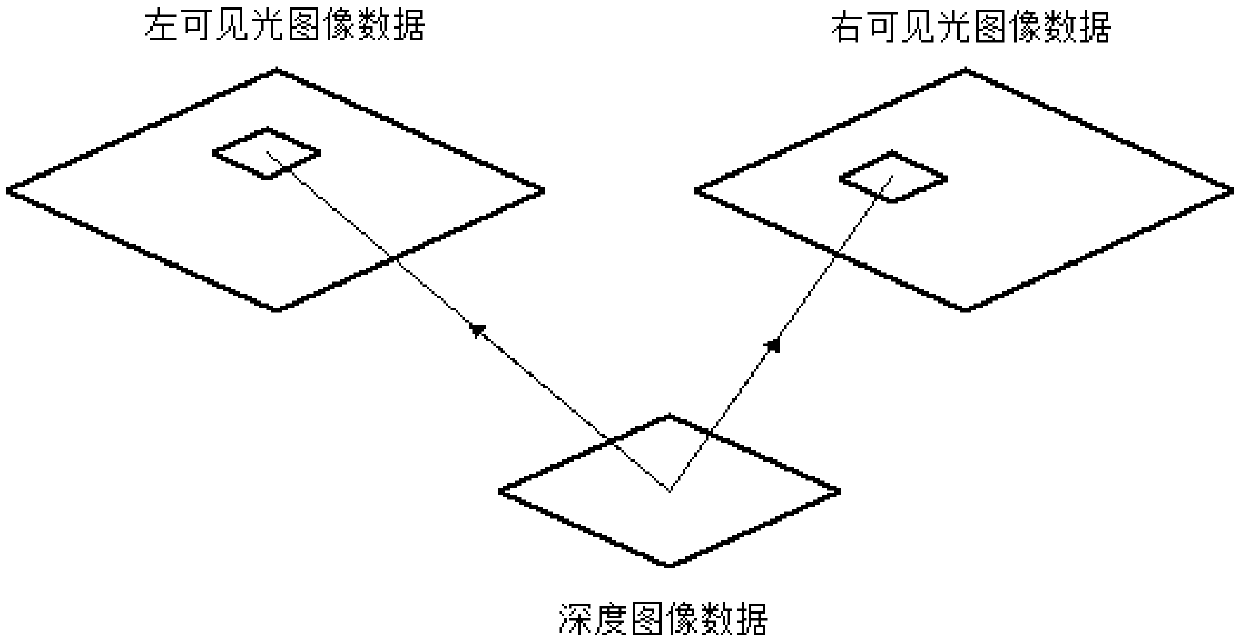

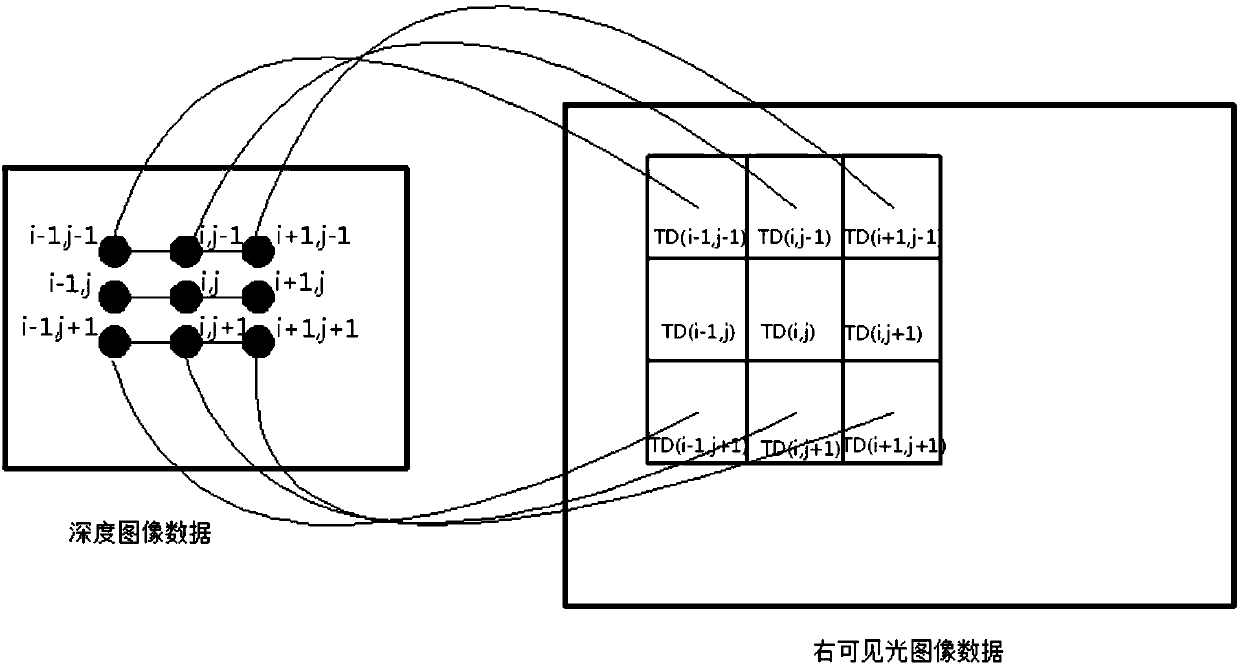

Three-dimensional three-mesh camera device and depth fusion method

Active | CN107635129A | High resolution | Accurate depth information | Stereoscopic systems | Imaging processing | Imaging data

The invention provides a three-dimensional three-mesh camera device and a depth fusion method. The device comprises left and right visible light image sensors used for collecting two left and right paths of visible light image data for a shooting object; a depth sensor used for collecting a path of depth image data for the shooting object, wherein in view of the shooting object, the pixel areas of the two left and right paths of visible light image data and the pixel area of the path of depth image data for the shooting object have a predetermined corresponding relation; and an image processing module connected with the left and right visible light image sensors and the depth sensor and used for performing depth fusion on the two left and right paths of visible light image data and the path of depth image data to obtain fused depth information of the pixel areas corresponding to the left path of visible light image data or right path of visible light image data, and thus a three-dimensional image can be determined according to the fused depth information, the left path of visible light image data or right path of visible light image data. High quality 3D images can be obtained.

Owner:SHANGHAI ANVIZ TECH CO LTD

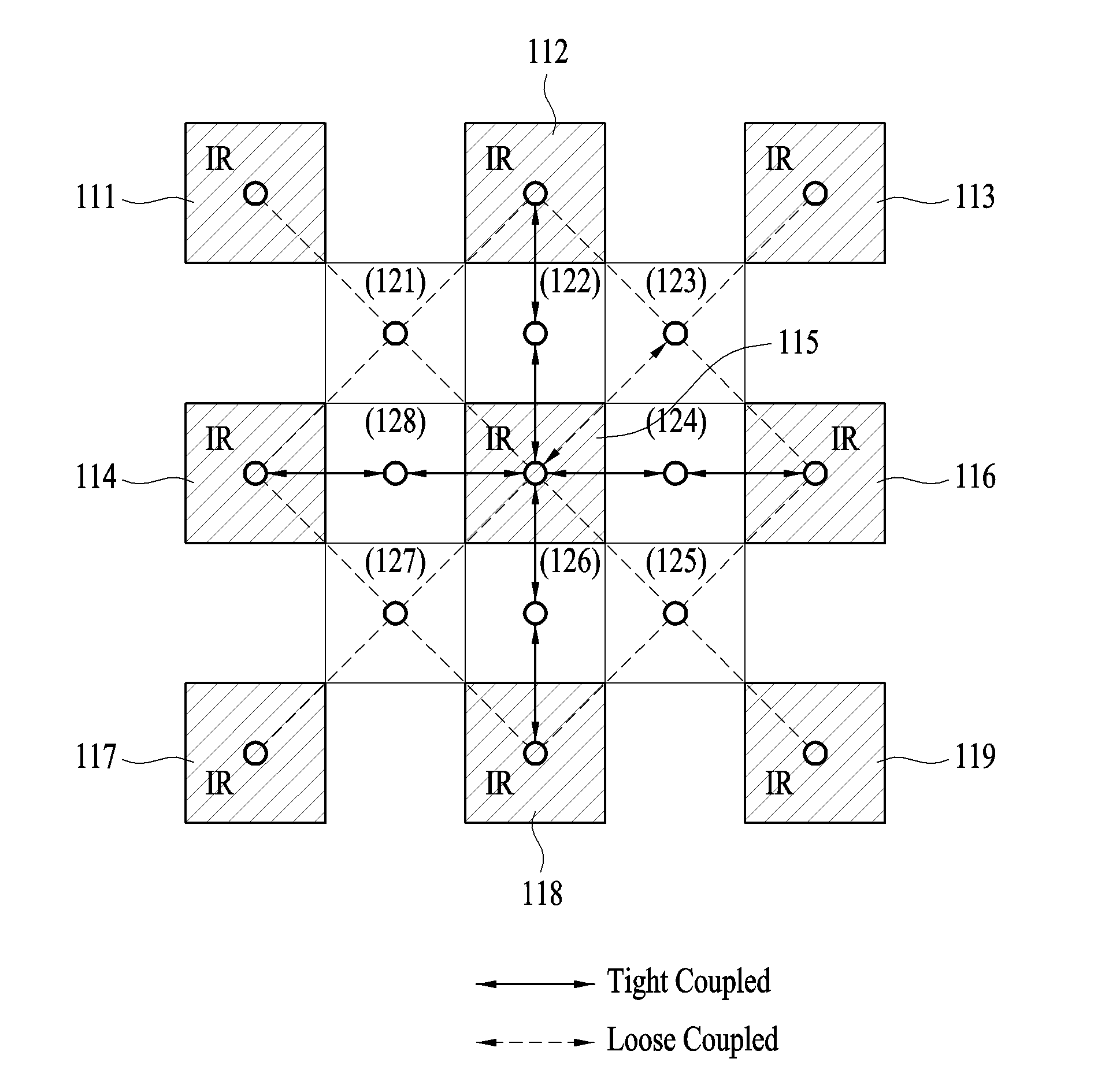

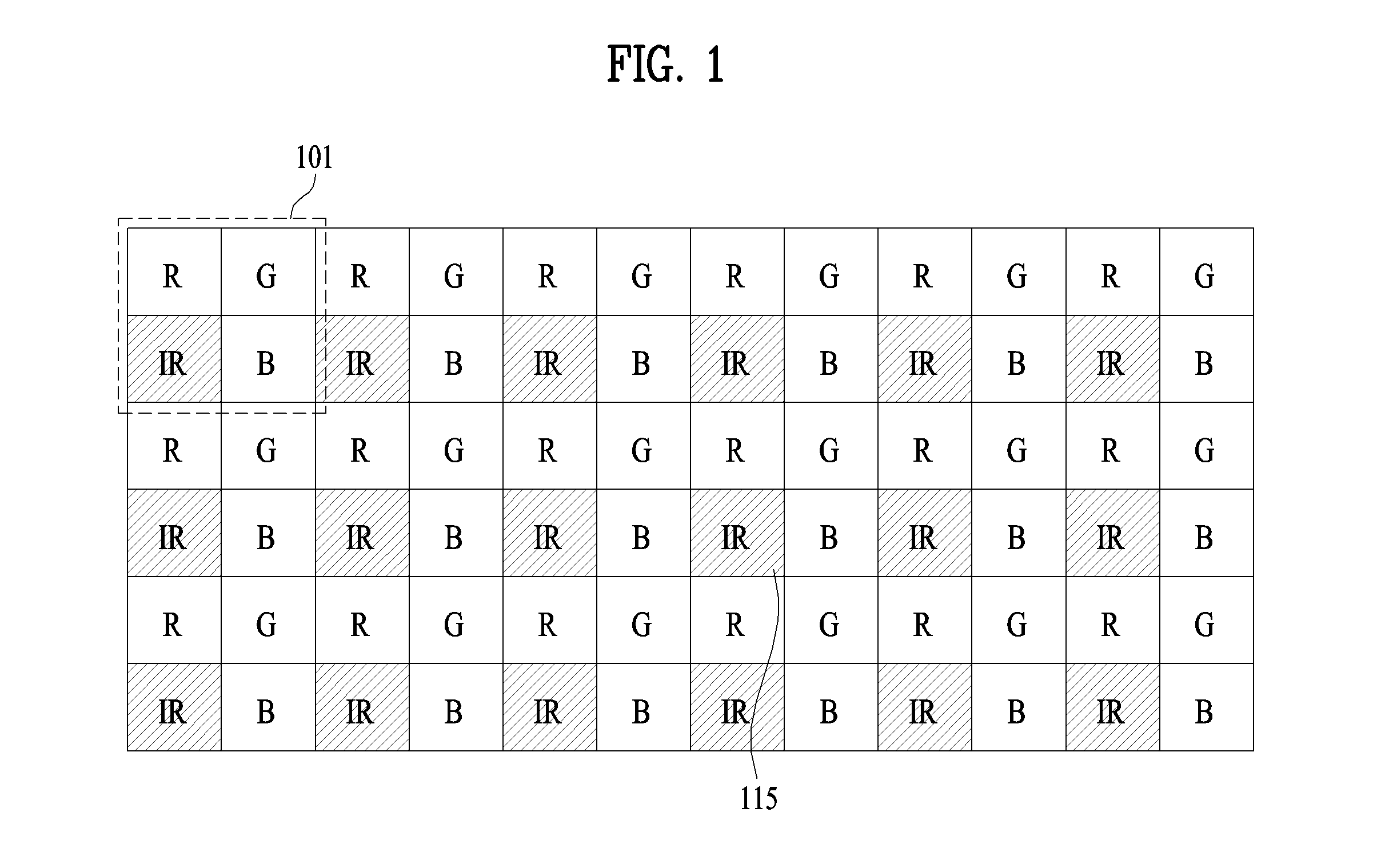

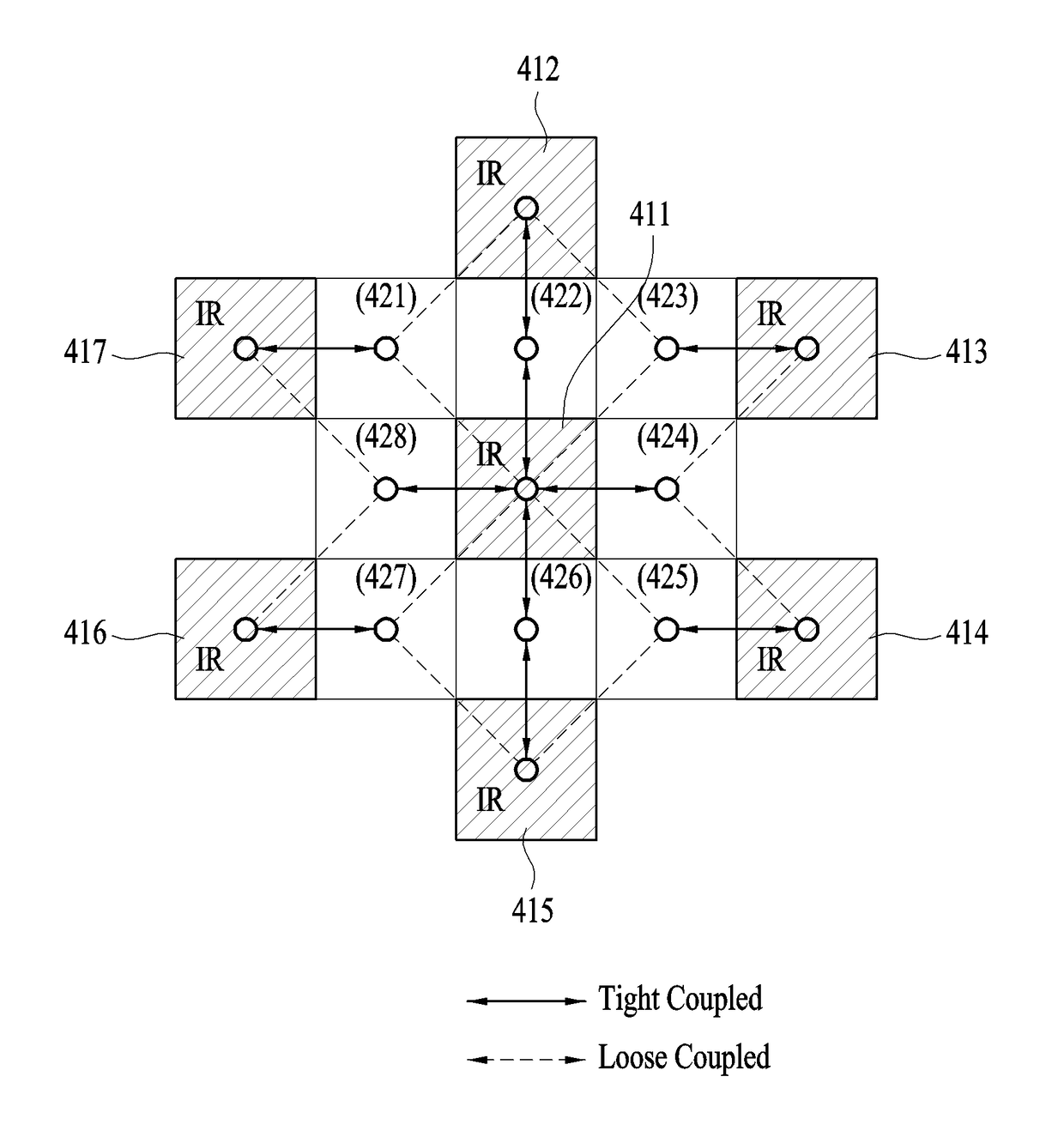

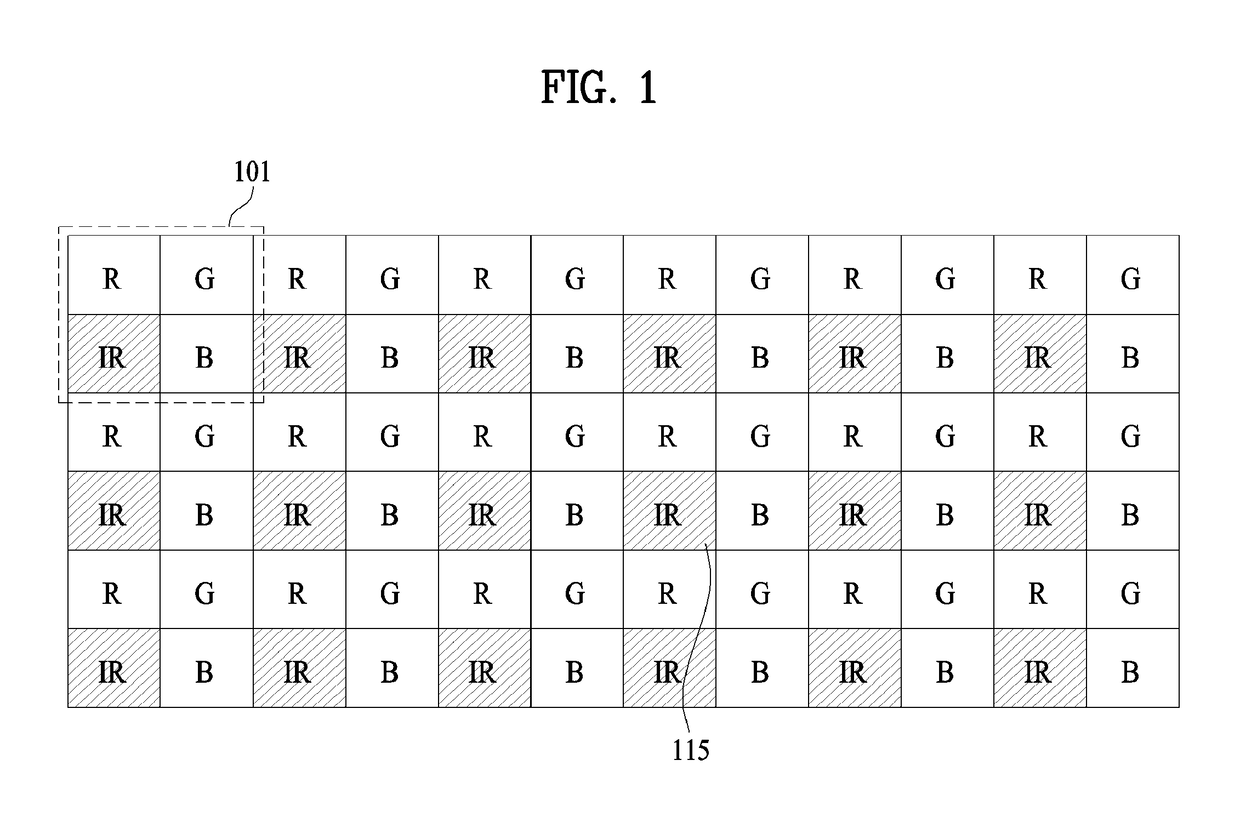

Rgb-ir sensor, and method and apparatus for obtaining 3D image by using same

Active | US20150312556A1 | None is suitable for purpose | Accurate depth information | Television system details | Image analysis | 3d image | Computer science

Owner:LG ELECTRONICS INC

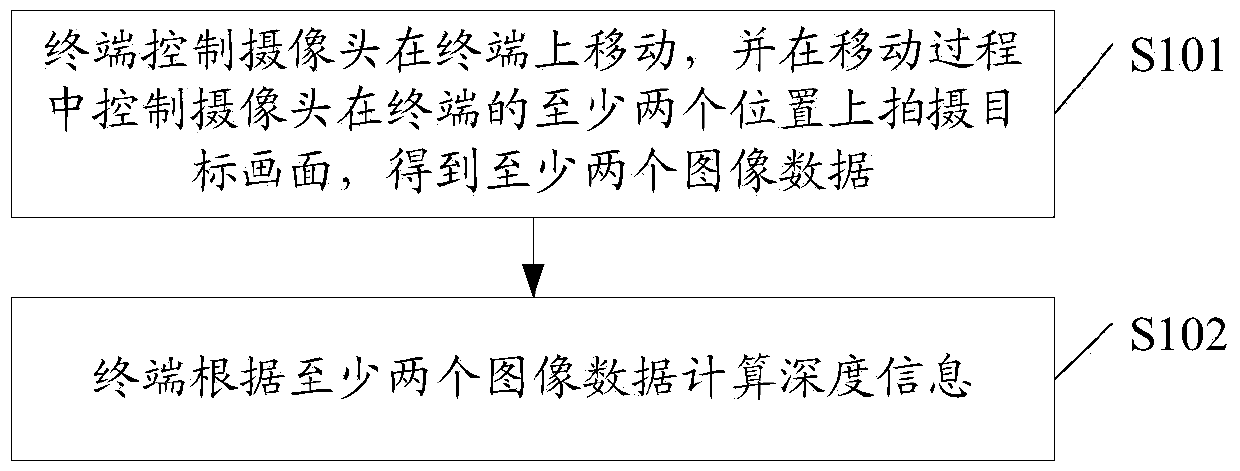

Image processing method and terminal

Inactive | CN105376484A | Low cost | Accurate depth information | Television system details | Color television details | Imaging processing | Imaging data

The embodiment of the invention discloses an image processing method and a terminal. The method comprises: the terminal controlling a camera to move at the terminal, and controlling the camera to shoot a target at two or more positions of the terminal in a moving process to obtain at least two pieces of image data; and calculating depth information according to the at least two pieces of image data. The image processing method can calculate depth information according to the image data collected by the camera, thereby reducing material costs.

Owner:SHENZHEN GIONEE COMM EQUIP

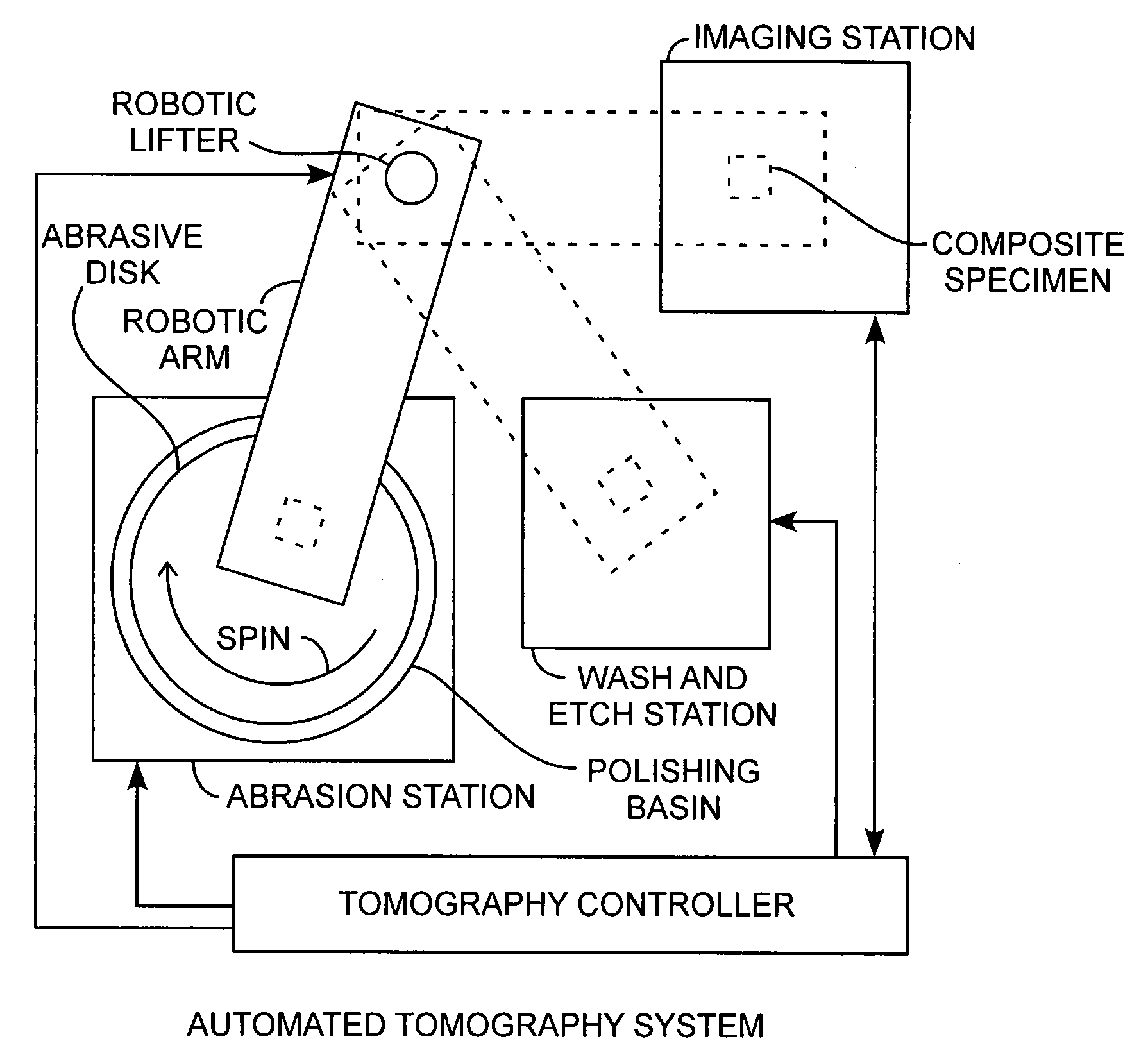

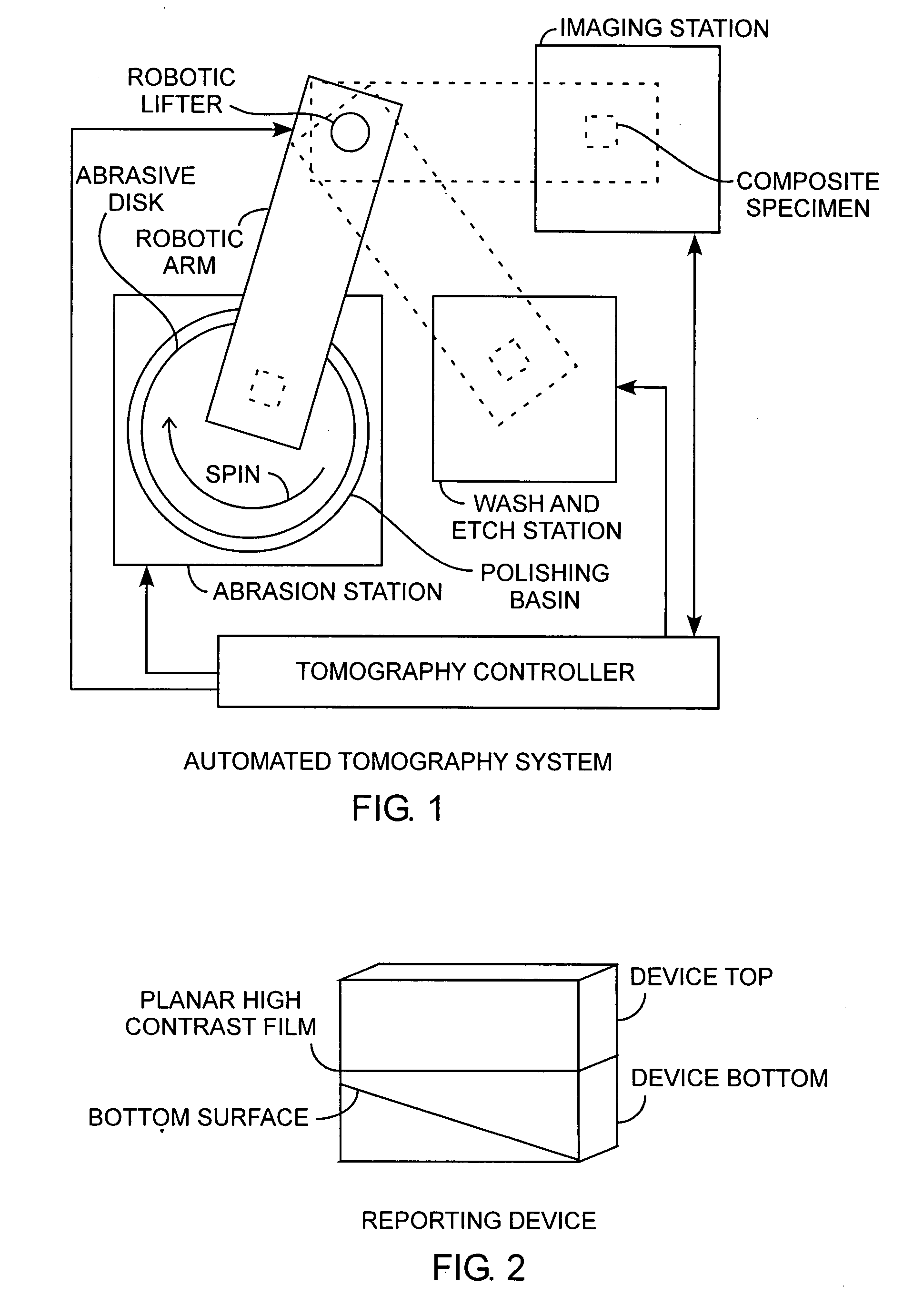

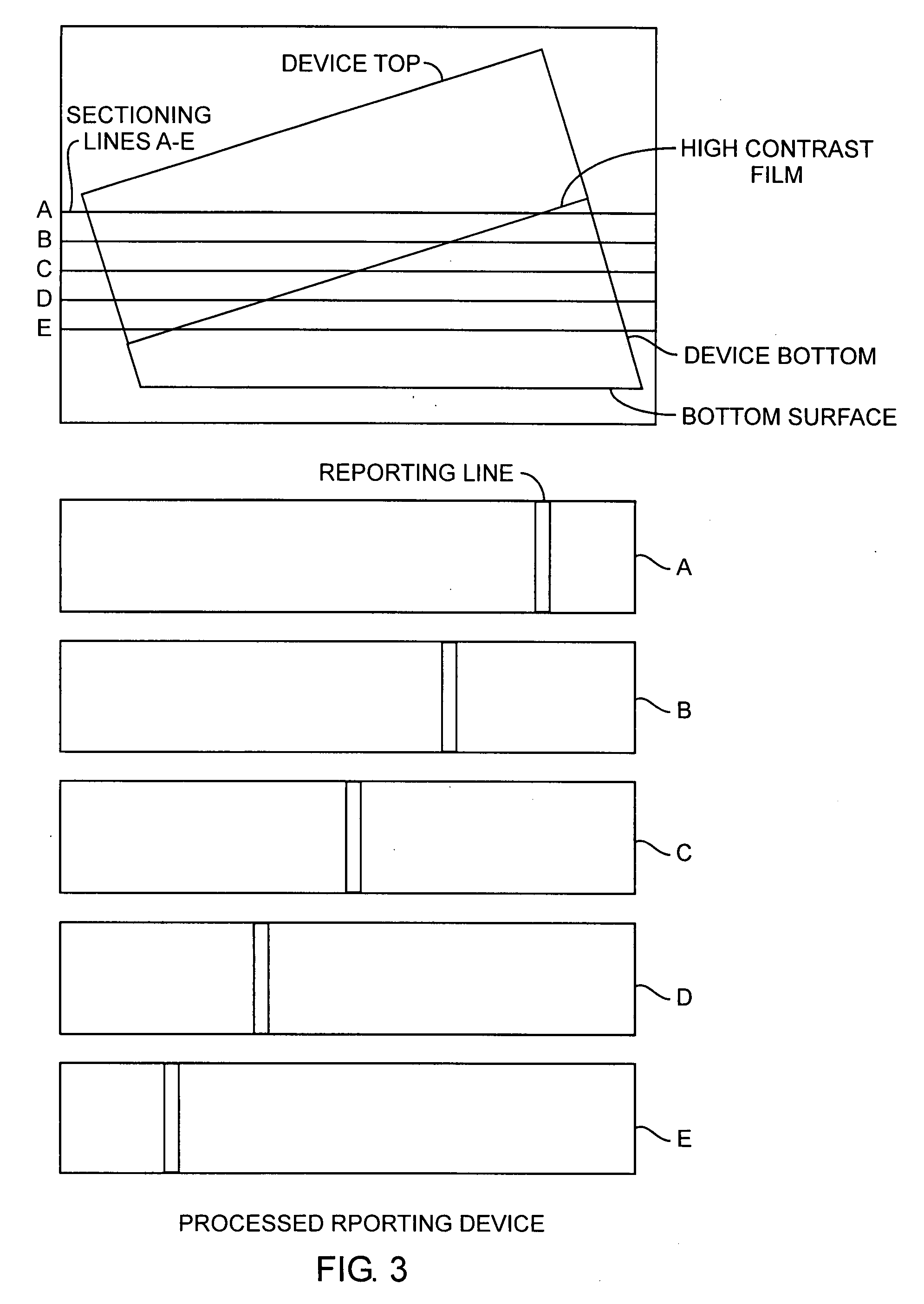

Automated sectioning tomographic measurement system

Active | US20090088047A1 | Controlled accurate grinding and sectioning | Depth accurate | Edge grinding machines | Sampling | Fractography | Tomography

A tomographic system includes a reporting device colocated and juxtaposed an object so that both are ground through grinding to various sectioning depths as the reporting device is ground down exposing a reporting marker along a length of the reporting device for indicating the depth of sectioning for accurate precise depth of grinding well suited for precise sectioned tomographic imaging.

Owner:THE AEROSPACE CORPORATION

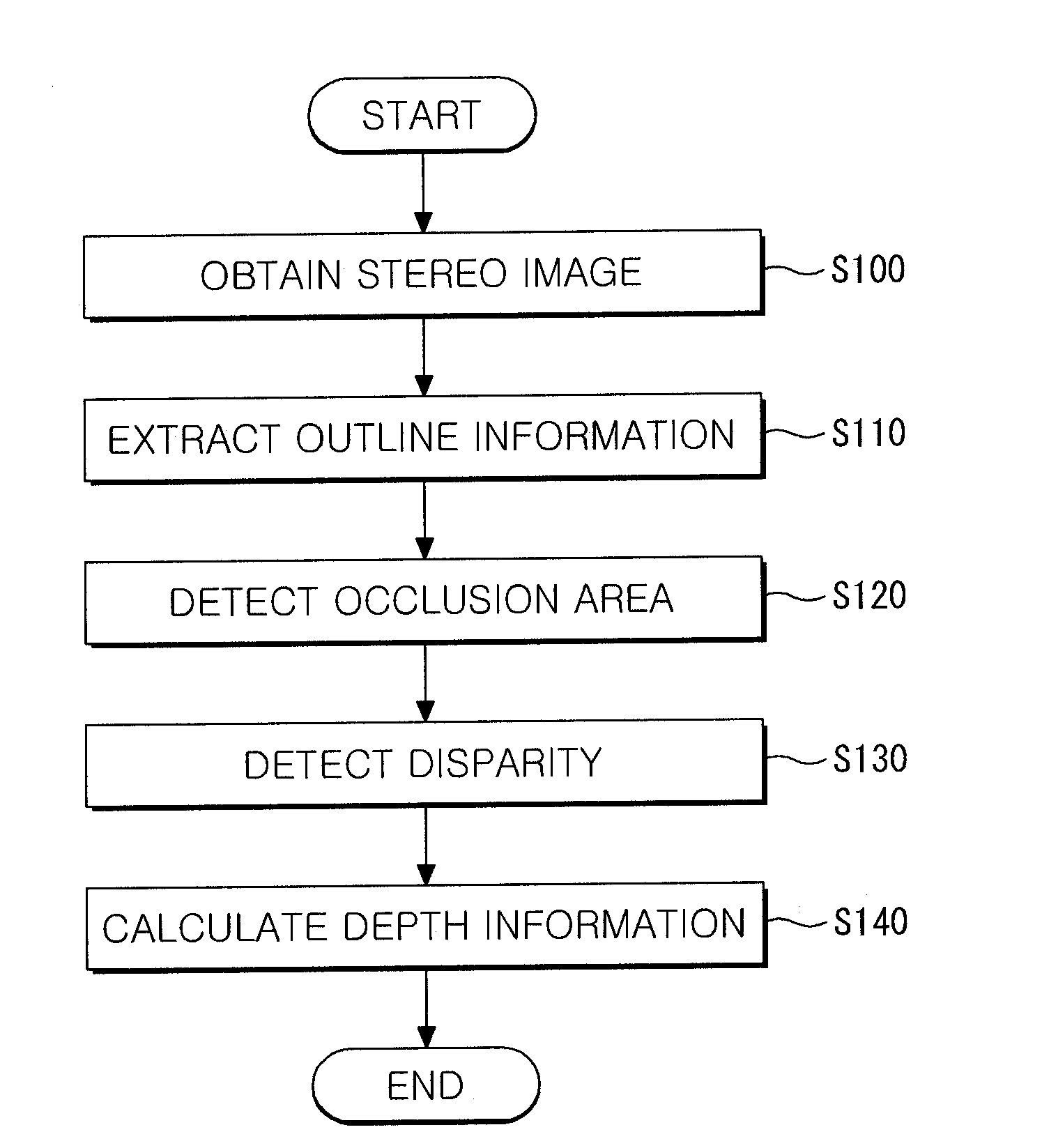

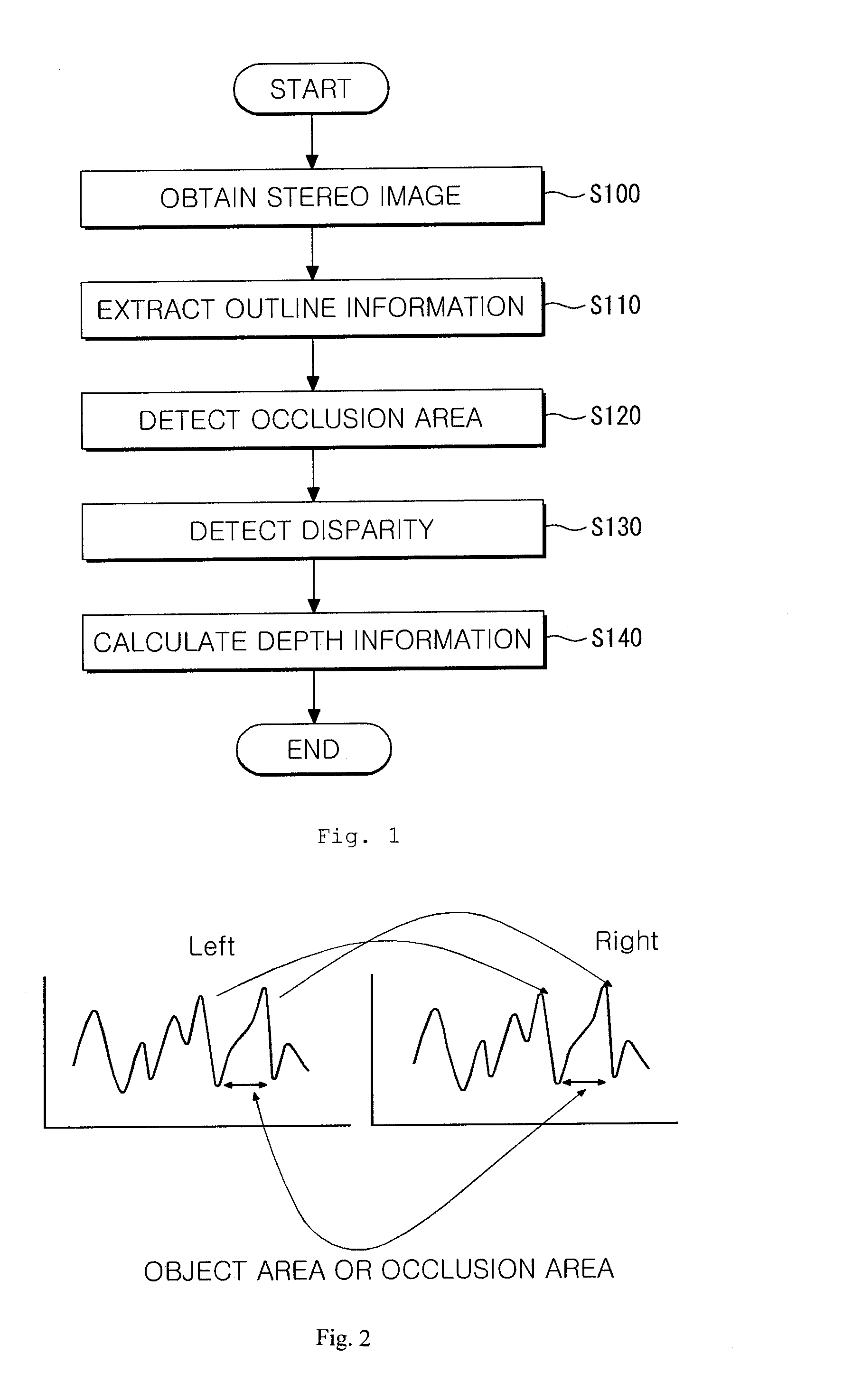

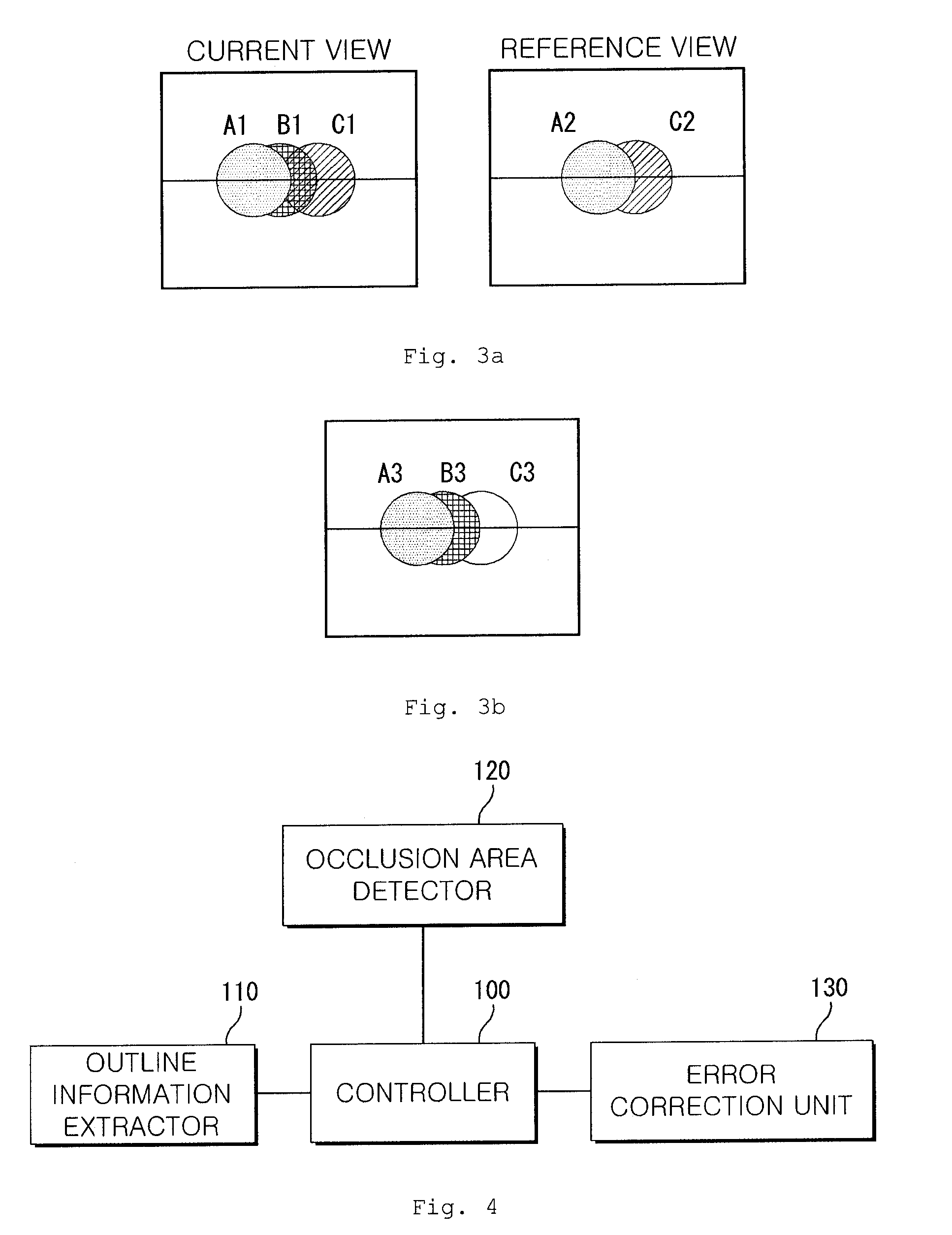

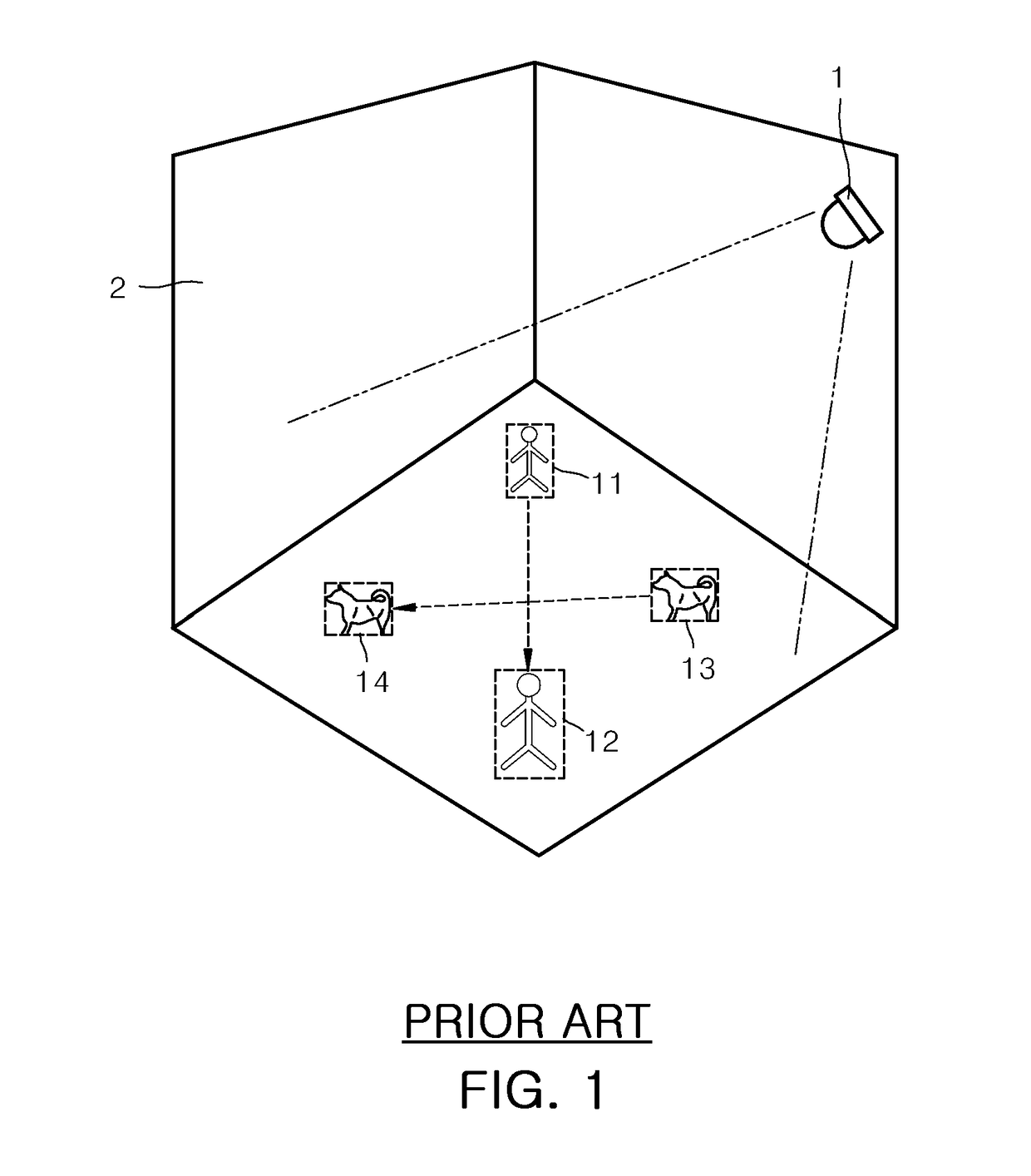

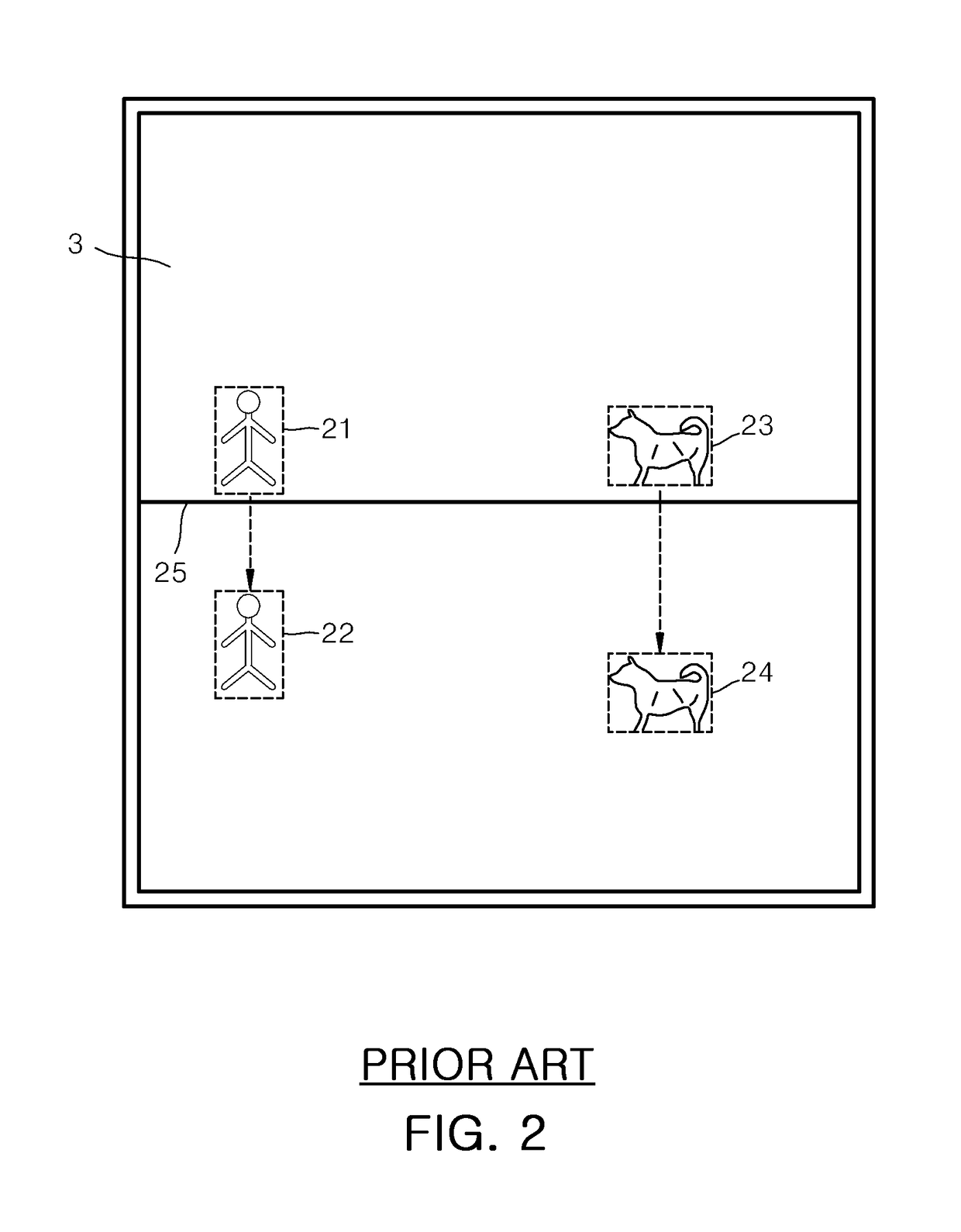

Method and System For Calculating Depth Information of Object in Image

Inactive | US20080226159A1 | Accurate depth information | Image enhancement | Image analysis | Computer vision | Computer graphics (images)

A method and a system for calculating depth information of objects in an image are disclosed. In accordance with the method and the system, an area occupied by two or more objects in the image is classified into an object area and an occlusion area using outline information to obtain accurate depth information of each of the objects.

Owner:KOREA ELECTRONICS TECH INST

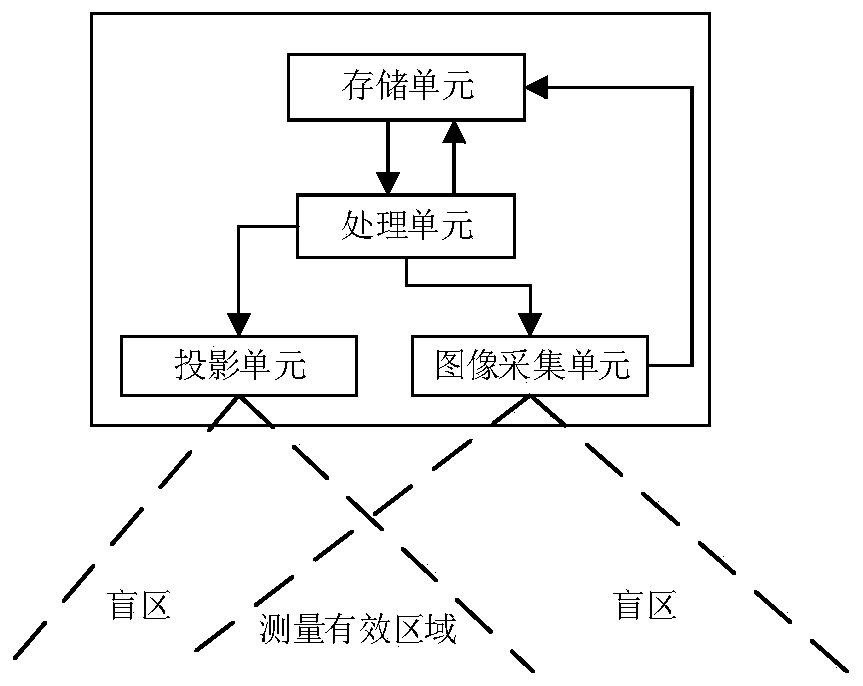

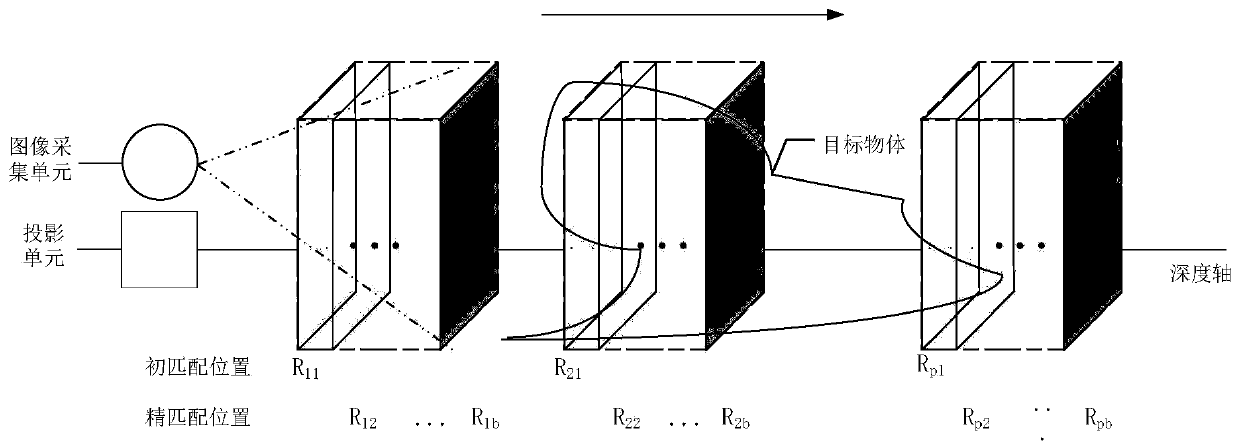

Depth information detection method and device and electronic equipment

Active | CN110009673A | Accurate depth information | Image analysis | Character and pattern recognition | Pattern recognition | Imaging processing

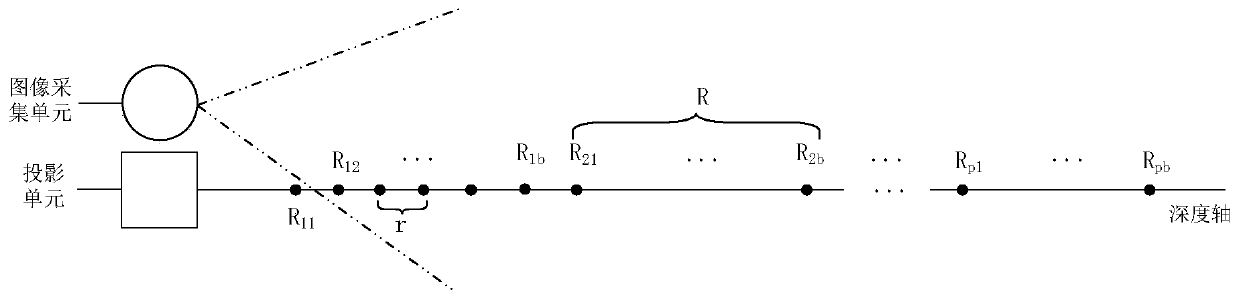

The invention discloses a depth information detection method and device and electronic equipment, and relates to the technical field of image processing. The method comprises the following steps: obtaining a target speckle image group formed by projecting k different reference speckle patterns to a target object; and respectively matching the m coarse matching template groups with all or part of the target speckle image group, and obtaining the coarse matching template group with the highest similarity as a primary matching template group, wherein the interval between every two adjacent coarse matching template groups is R, the interval between every two adjacent fine matching template groups is r, and R is greater than r; selecting fine matching template groups within a preset range before and after the primary matching template group, respectively matching the fine matching template groups with all or part of the target speckle image group, and obtaining the fine matching template group with the highest similarity as a secondary matching template group; and determining depth information of the target speckle image according to the depth information of the secondary matching template group.

Owner:SICHUAN VISENSING TECH CO LTD
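
The coarse-then-fine search in the abstract above can be sketched as a two-stage lookup over a depth-ordered template list. This is a simplified illustration under stated assumptions: each template is a single flat list of intensities rather than a group of k speckle images, the similarity measure (negative sum of absolute differences) is a stand-in because the abstract does not name one, and `coarse_step` and `search_radius` are hypothetical parameters playing the roles of R/r and the preset range.

```python
def similarity(a, b):
    """Assumed metric: negative sum of absolute differences (higher is better)."""
    return -sum(abs(x - y) for x, y in zip(a, b))


def coarse_to_fine_depth(target, templates, coarse_step, search_radius):
    """Return the depth of the best-matching template.

    templates: list of (depth, pattern) pairs sorted by depth at the fine
    interval; every coarse_step-th entry serves as a coarse template.
    """
    # Stage 1: compare only the coarse templates and keep the best (primary).
    coarse_indices = range(0, len(templates), coarse_step)
    primary = max(coarse_indices, key=lambda i: similarity(target, templates[i][1]))

    # Stage 2: compare the fine templates within the preset range around it.
    lo = max(0, primary - search_radius)
    hi = min(len(templates), primary + search_radius + 1)
    secondary = max(range(lo, hi), key=lambda i: similarity(target, templates[i][1]))

    return templates[secondary][0]
```

With 20 fine templates, a coarse step of 5 and a radius of 4, this sketch evaluates at most 4 + 9 similarities instead of 20, which is the saving the two-stage design aims for.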

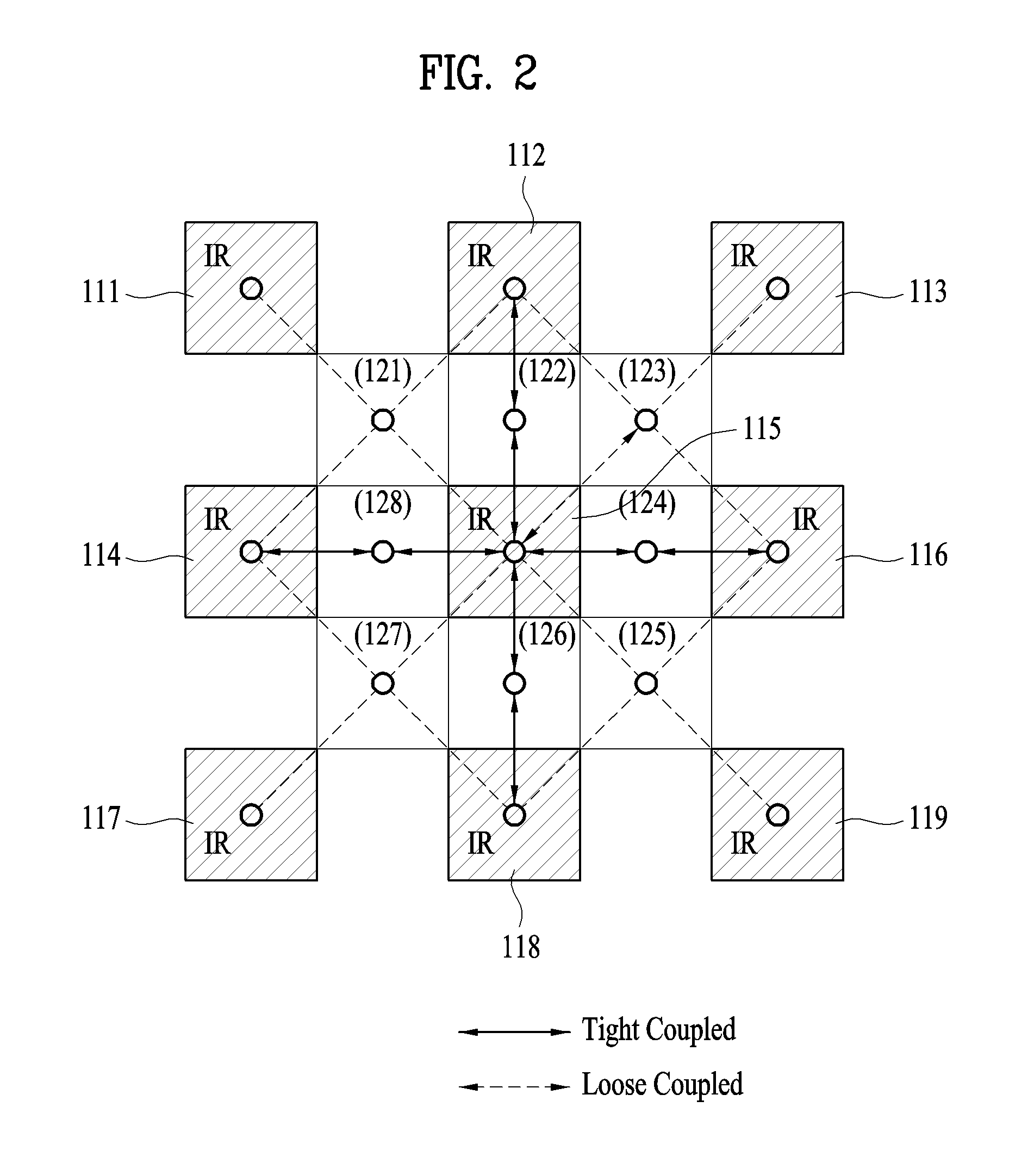

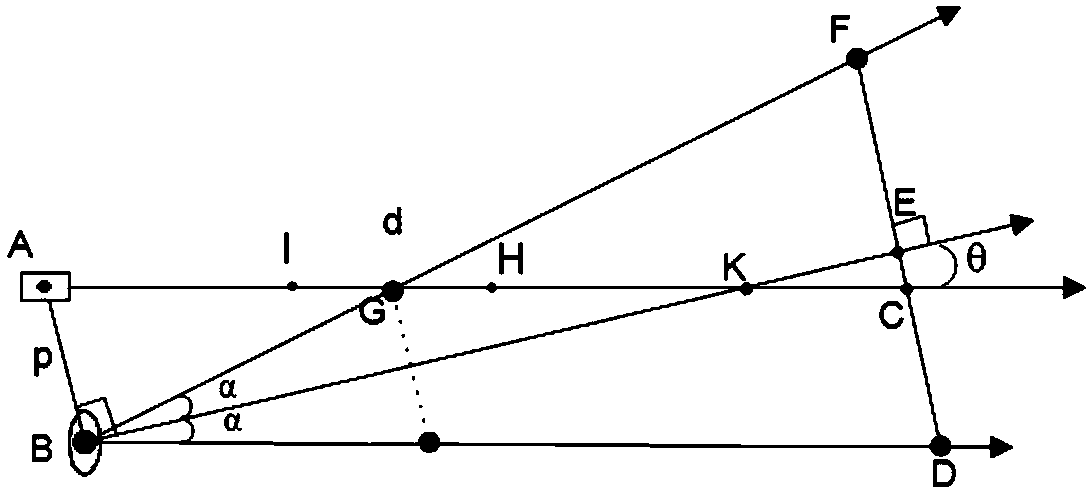

Multi-lens stereoscopic vision parallax calculating method

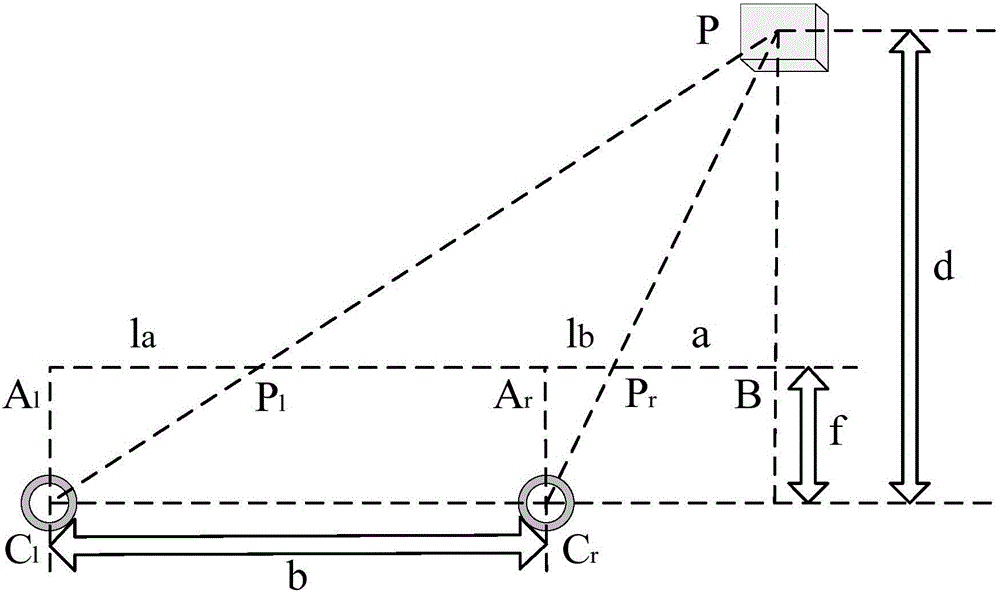

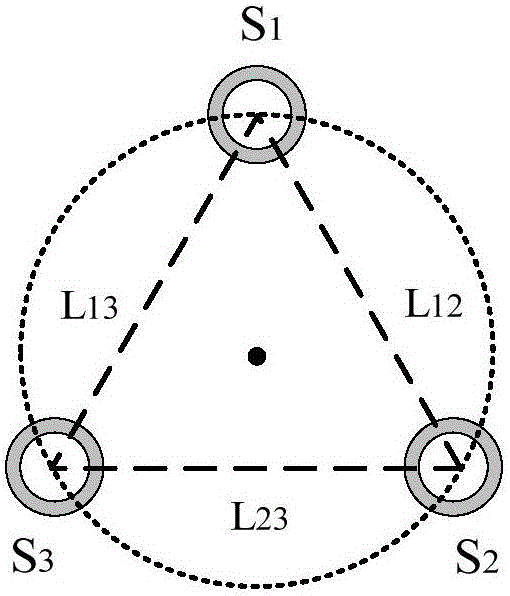

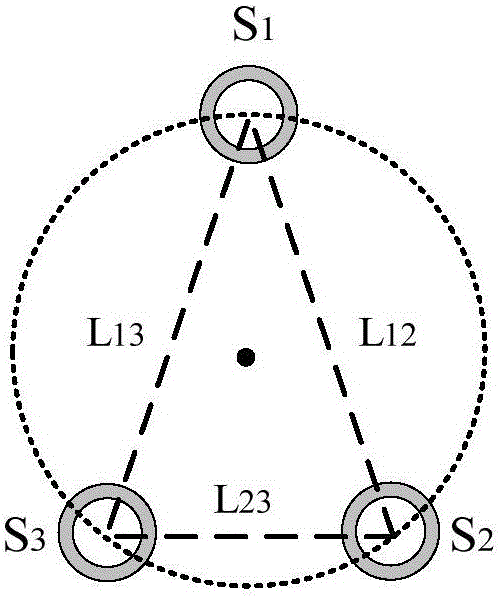

Active | CN105869157A | Precise point depth information | Accurate depth information | Image enhancement | Image analysis | Parallax | Viewpoints

The invention discloses a multi-lens stereoscopic vision parallax calculating method, and belongs to the field of computer vision. The method comprises four steps: initialization, the obtaining of all binocular parallaxes for range finding, the obtaining of a multi-lens parallax for range finding, and ending. A weighted averaging method is employed for the calculation of a final parallax, and can eliminate a range finding error better. A scene is observed from a plurality of viewpoints, so as to obtain perception images at different visual angles. The position deviation among pixels of the images is calculated through a triangular measurement principle, so as to obtain the three-dimensional information of the scene. The multi-lens stereoscopic vision parallax method is given on the basis of a stereoscopic parallel vision model. A parallax calculation method of the stereoscopic parallel vision model is employed for the mutual parallax calculation of a plurality of lenses, thereby obtaining a more precise imaging parallax, and enabling the depth information of object points in three-dimensional reconstruction to be more precise. The plurality of lenses can rotate around a circle center in each distribution mode at a proportion with the unchanged relative distance, or can be arranged in a turnover manner along the axis of a line in a plane.

Owner:XIAMEN UNIV
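
The abstract above states that the final parallax is a weighted average of the pairwise binocular parallaxes but does not say how the weights are chosen. The sketch below therefore takes the weights as caller-supplied inputs; the function name `fuse_parallax` and the assumption that all pairwise parallaxes are already expressed on a common scale are illustrative only.

```python
def fuse_parallax(parallaxes, weights):
    """Weighted average of pairwise parallax estimates for one scene point.

    parallaxes: parallax values from each camera pair (assumed comparable).
    weights:    non-negative weights, one per pair (choice left to the caller).
    """
    if not parallaxes or len(parallaxes) != len(weights):
        raise ValueError("need one weight per parallax estimate")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(p * w for p, w in zip(parallaxes, weights)) / total
```

For example, `fuse_parallax([10.0, 12.0, 11.0], [1.0, 1.0, 2.0])` gives the third pair twice the influence of the other two.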

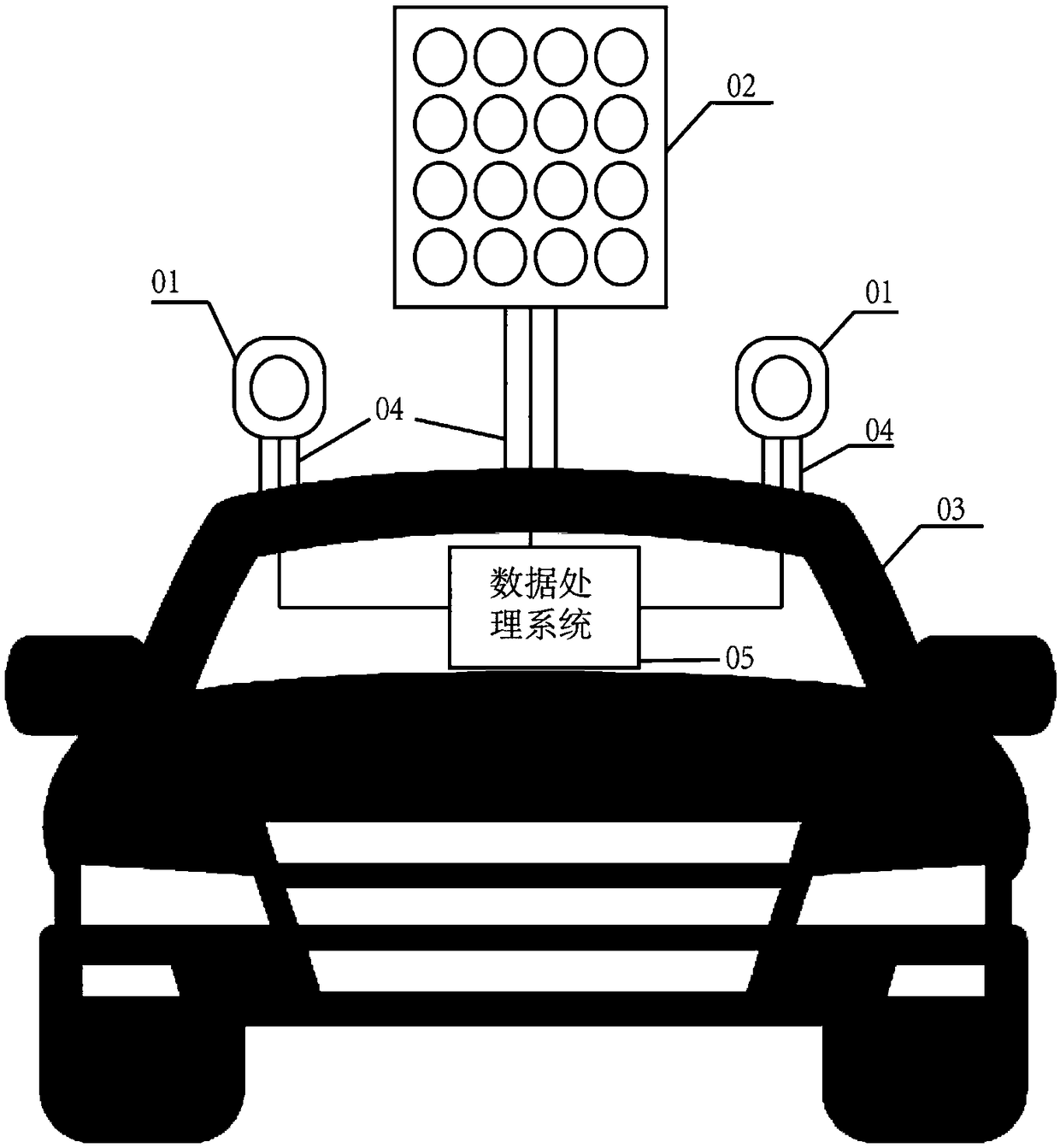

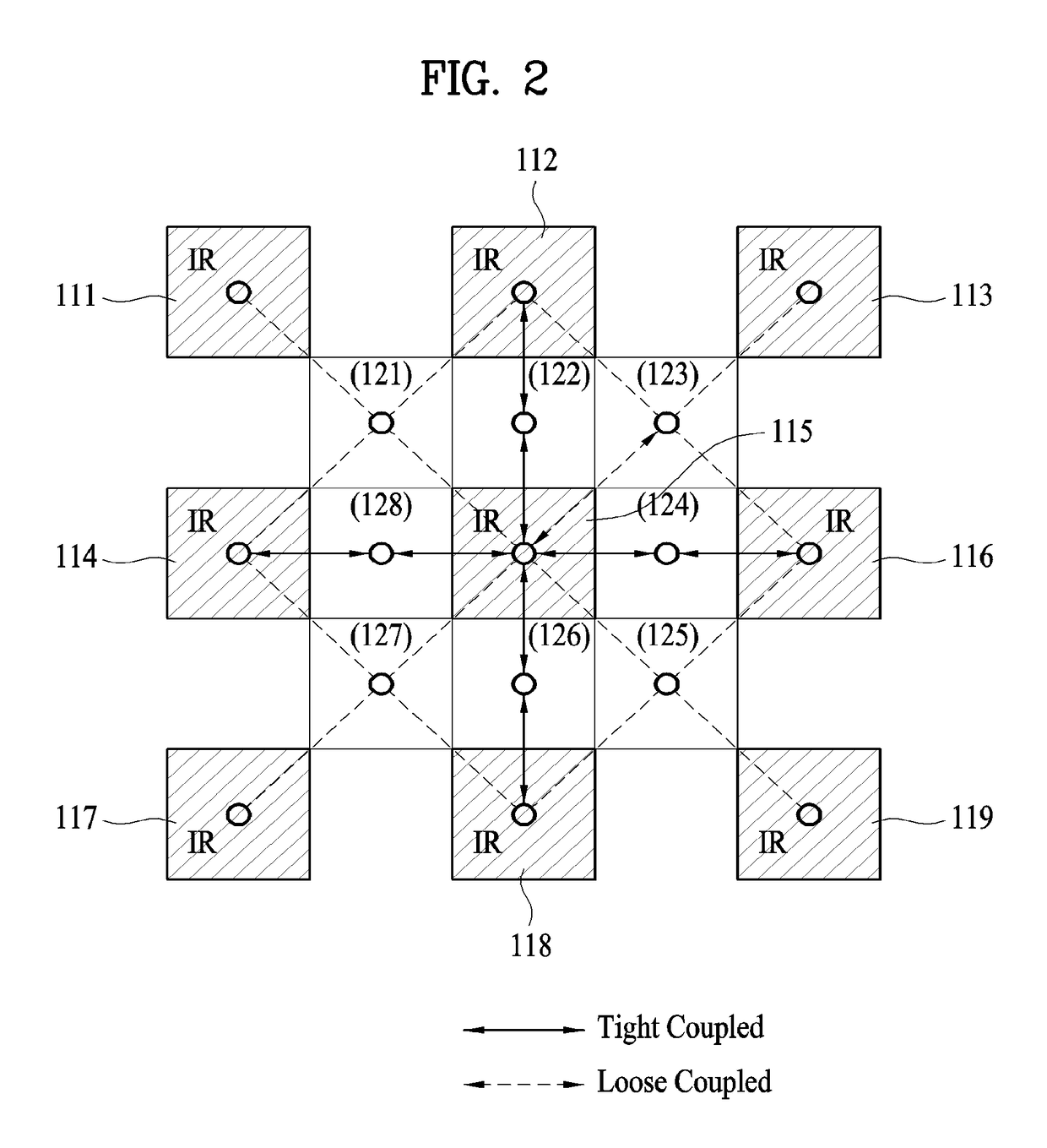

Unmanned vehicle camera system

Active | CN109151334A | Accurate depth information | Achieve occlusion | Television system details | Color television details | Data processing system | Parallax

The invention discloses an unmanned vehicle camera system. The system comprises monocular cameras, a camera array, pan heads, a data processing system and an unmanned vehicle. The pan heads are installed on the two sides and the middle of the unmanned vehicle and used for adjusting the shooting direction of the corresponding camera and improving the stability of the camera. The monocular cameras are installed on the pan heads located on the two sides to form the wide-baseline camera array. The camera array is installed on the middle pan head and used for obtaining the image information of the surrounding environment of the unmanned vehicle and the tracking target. The data processing system is installed in the unmanned vehicle and connected with the monocular cameras, the camera array and the pan heads and used for controlling the pan heads and obtaining image information captured by the cameras and then processing the image information. The system has the combination of the short-baseline camera array and the wide-baseline camera array to generate multiple parallax and depth maps so as to obtain the accurate depth information of the surrounding environment of the unmanned vehicle. The short-baseline camera array is additionally arranged for realizing synthetic aperture imaging for tracking the occluded object, i.e. de-occlusion is realized, and the high-resolution and high-dynamic fusion image can be obtained.

Owner:CHINA JILIANG UNIV

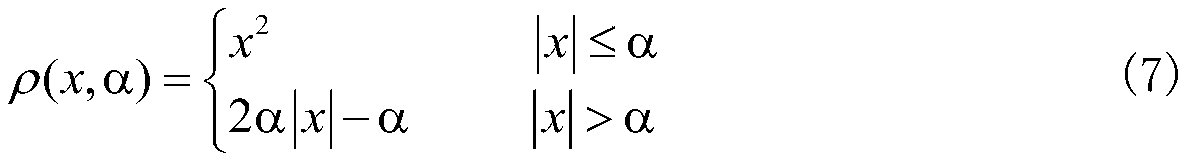

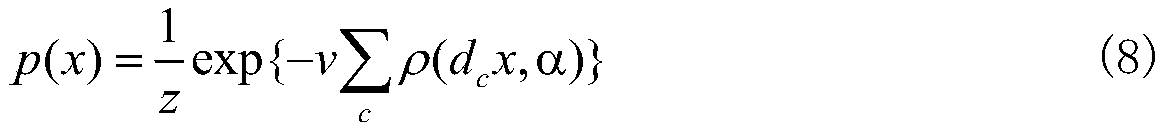

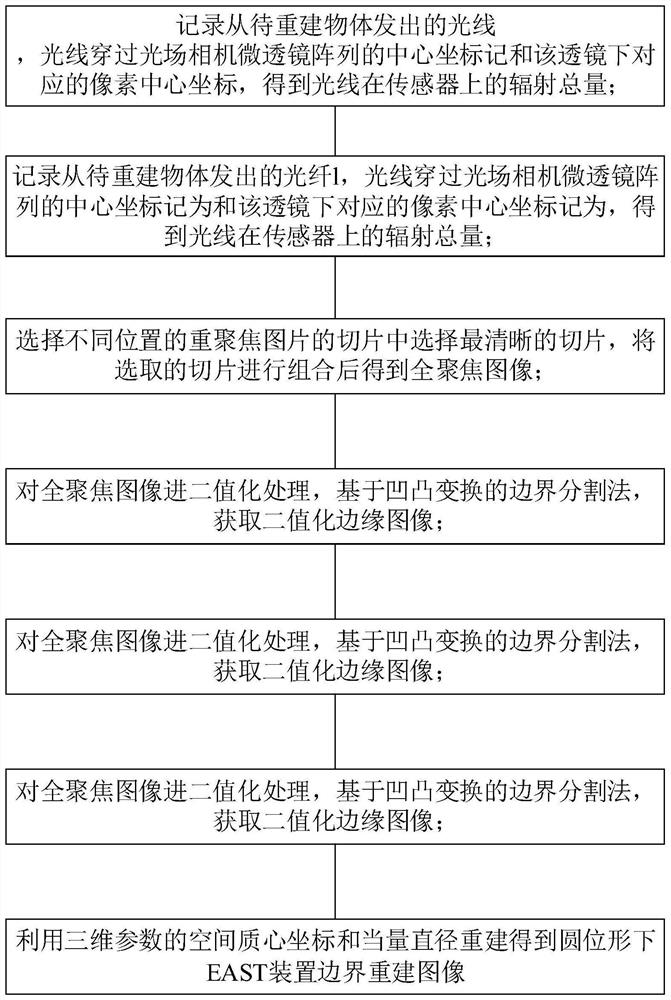

Reconstruction method based on light field camera micro-nano correlated imaging sensing

Inactive | CN112465952A | Achieve dimension upgrade | High precision | Image enhancement | Image analysis | Edge maps | Radiology

The invention relates to the field of EAST device nuclear fusion and the field of three-dimensional reconstruction, in particular to a reconstruction method based on light field camera micro-nano correlated imaging sensing, which comprises the following steps: obtaining a refocusing image according to the total radiation amount of light emitted from a to-be-reconstructed object obtained by a sensor; selecting the clearest slice from the slices of the refocusing picture at different positions to obtain a full focusing image and obtain a binarized edge image of the full focusing image; acquiring depth information and magnification according to the refocused image, the binarized edge image and the EAST device depth image; determining a two-dimensional centroid coordinate, an equivalent diameter, depth information and magnification according to the image after binary filling, and raising the dimension of a two-dimensional parameter into a three-dimensional parameter; and reconstructing to obtain a boundary reconstruction image of the EAST device under the circular configuration by utilizing the space centroid coordinates and the equivalent diameter of the three-dimensional parameters. According to the method, the calculation complexity is reduced, system resource occupation is low, efficient implementation is facilitated, and the method has important market value.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

Illumination optical system and 3D image acquisition apparatus including the same

Active | US9874637B2 | Accurate depth information | Efficiently inhibit speckle noise | Television system details | Color television details | Acquisition apparatus | 3d image

An illumination optical system of a 3-dimensional (3D) image acquisition apparatus and a 3D image acquisition apparatus including the illumination optical system. The illumination optical system of a 3D image acquisition apparatus includes a beam shaping element which outputs light having a predetermined cross-sectional shape which is proportional to a field of view of the 3D image acquisition apparatus. The beam shaping element may adjust a shape of illumination light according to its cross-sectional shape. The beam shaping element may uniformly homogenize the illumination light without causing light scattering or absorption.

Owner:SAMSUNG ELECTRONICS CO LTD

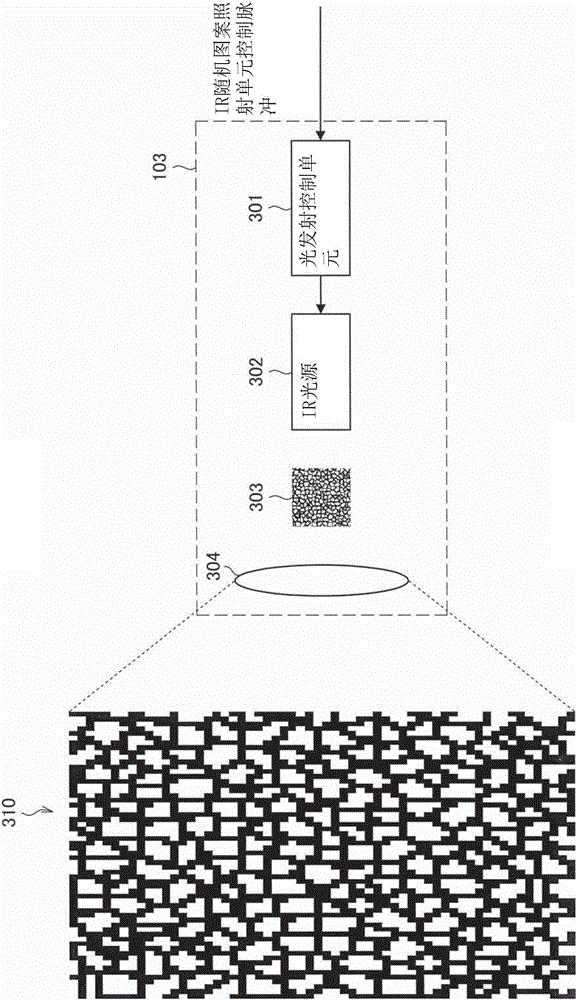

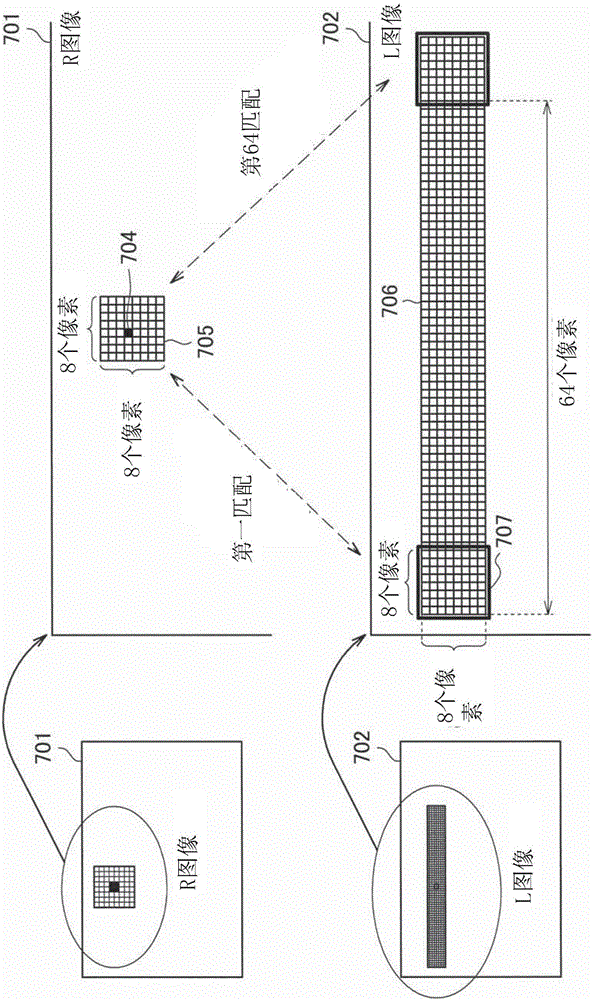

Image processing device and image processing method

Active | CN105829829A | Accurate depth information | Television system details | Image enhancement | Imaging processing | Irradiation

To provide an image processing device and image processing method capable of controlling an infrared pattern irradiation timing so as to obtain a pattern-irradiated infrared image for obtaining more accurate depth information and a non-pattern-irradiated infrared image. An image processing device provided with a pattern irradiation unit for irradiating an infrared pattern onto the surface of an object and an infrared imaging unit for obtaining infrared images, wherein the pattern irradiation unit performs irradiation at a prescribed timing corresponding to the imaging timing of the infrared imaging unit and the infrared imaging unit obtains a pattern projection infrared image in which the pattern irradiated by the pattern irradiation unit is projected onto the object and a patternless infrared image in which the pattern is not projected onto the object.

Owner:SONY CORP

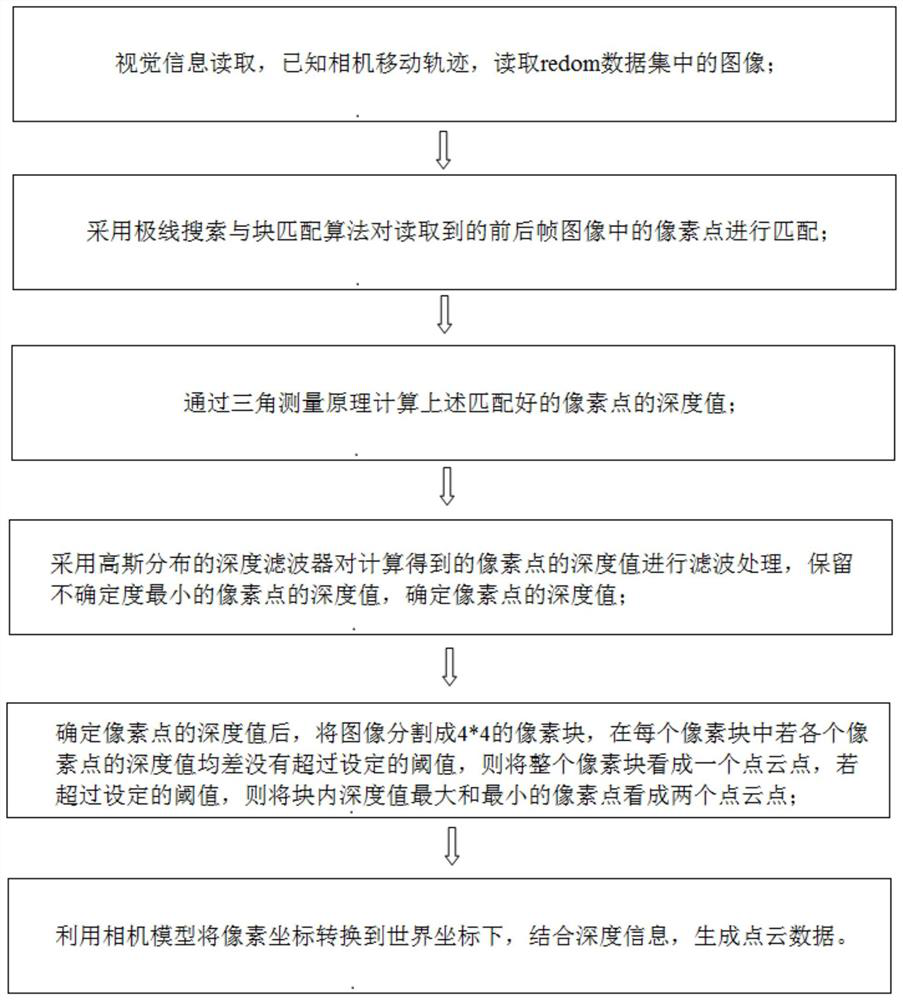

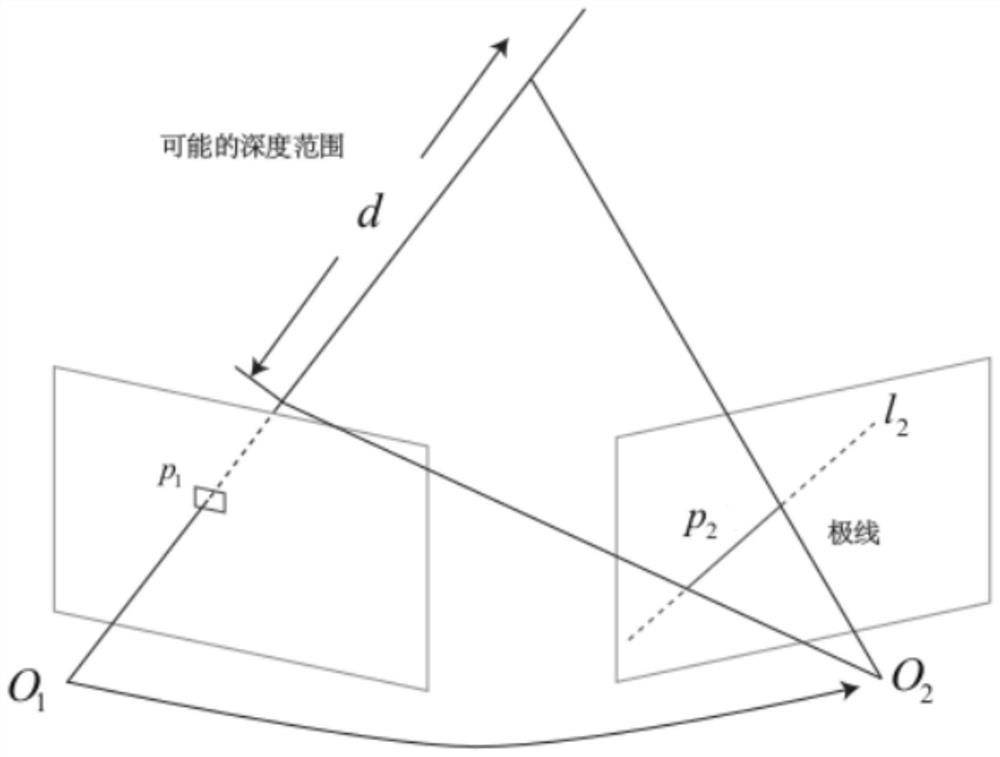

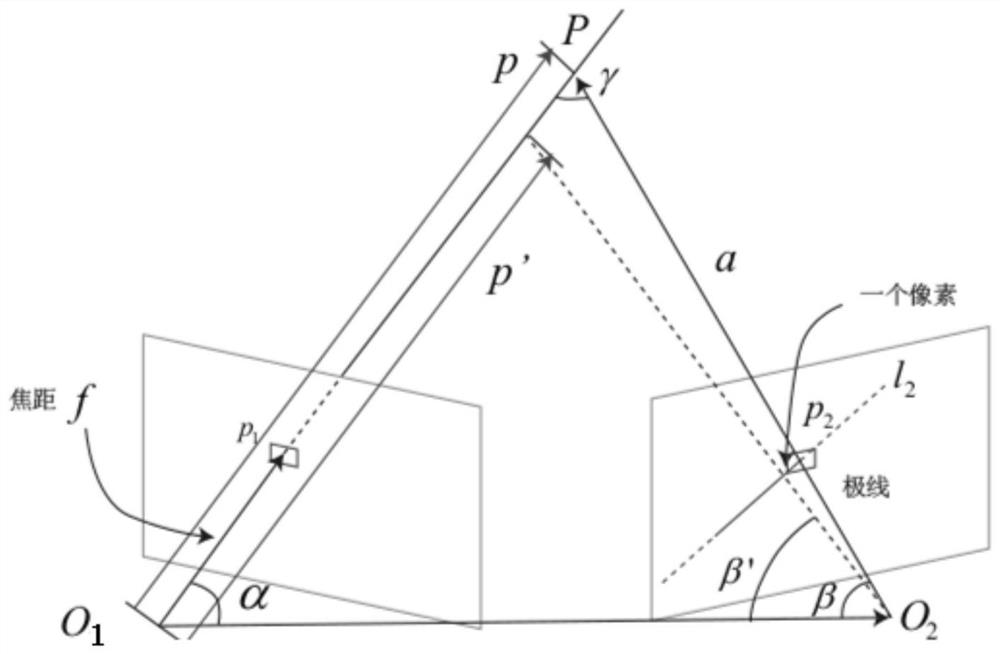

Monocular vision-based dense point cloud reconstruction method and system for triangulation measurement depth

Pending | CN111798505A | Realize the creation | Depth value error is small | Image analysis | 3D modelling | Point cloud | Data set

The invention provides a monocular vision-based dense point cloud reconstruction method and system for triangulation depth measurement. The monocular vision-based dense point cloud reconstruction method comprises the steps of reading an image in a data set; matching the pixel points in the read front and back frame images by adopting an epipolar search and block matching algorithm; calculating the depth value of the matched pixel point through a triangulation principle; performing filtering processing on the depth values of the pixel points by adopting a depth filter in Gaussian distribution, and keeping the depth value of the pixel point with the minimum uncertainty; segmenting the image into 4 * 4 pixel blocks; if the depth value difference of each pixel point in each pixel block does not exceed a set threshold value, regarding the whole pixel block as a point cloud point; and if the depth value difference of each pixel point in each pixel block exceeds the set threshold value, regarding the pixel points with the maximum depth value and the minimum depth value in the block as two point cloud points; converting the pixel coordinates into world coordinates by using a camera model, and generating point cloud data in combination with the depth information. The problem that conventional point cloud map creation needs multiple sensors or expensive sensors is solved.

Owner:DALIAN UNIV OF TECH
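
The block-reduction rule in the abstract above (4 * 4 pixel blocks, one point per uniform block, two points per non-uniform block) can be sketched as follows. This is a hedged illustration, not the patented implementation: representing a uniform block by its centre pixel coordinates and mean depth is an assumption, as are the function name `block_points`, the default threshold, and the use of the max-minus-min spread as the "depth value difference". The conversion from pixel to world coordinates via the camera model is left out.

```python
def block_points(depth, block=4, threshold=0.1):
    """Reduce a dense depth map (2D list) to sparse (row, col, depth) points."""
    points = []
    rows, cols = len(depth), len(depth[0])
    for r0 in range(0, rows, block):
        for c0 in range(0, cols, block):
            r1, c1 = min(r0 + block, rows), min(c0 + block, cols)
            # Collect (depth, row, col) so min/max compare by depth first.
            cells = [(depth[r][c], r, c)
                     for r in range(r0, r1) for c in range(c0, c1)]
            d_min, d_max = min(cells), max(cells)
            if d_max[0] - d_min[0] <= threshold:
                # Uniform block: one point (assumed: block centre, mean depth).
                mean = sum(d for d, _, _ in cells) / len(cells)
                points.append(((r0 + r1 - 1) / 2, (c0 + c1 - 1) / 2, mean))
            else:
                # Non-uniform block: keep the nearest and farthest pixels.
                points.append((d_min[1], d_min[2], d_min[0]))
                points.append((d_max[1], d_max[2], d_max[0]))
    return points
```

A 4 x 8 map with one flat block and one block containing a depth step yields three points in this sketch: one for the flat block and two for the other.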

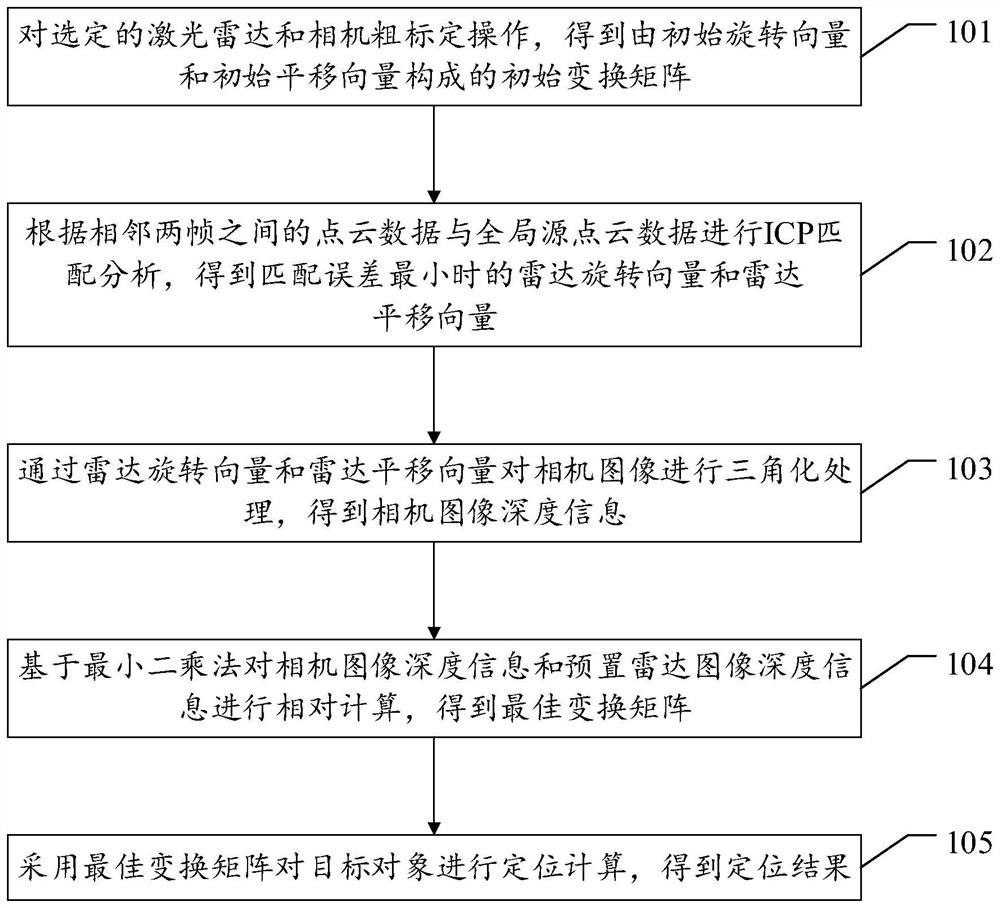

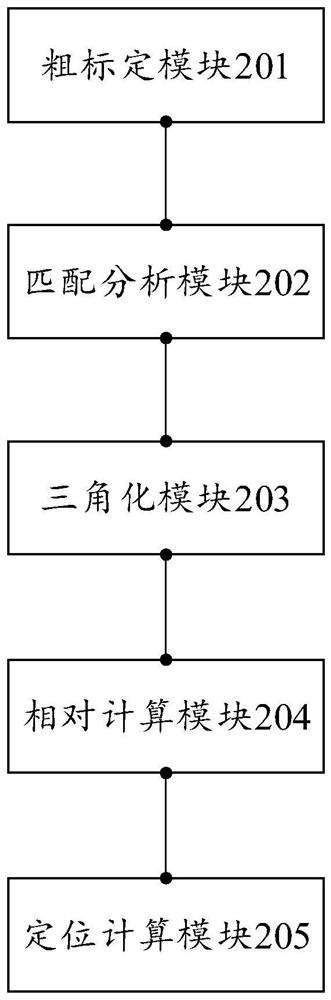

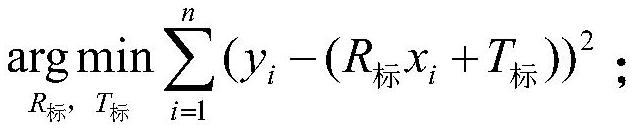

Target positioning method fusing laser radar and camera and related device

Pending | CN114022552A | Good transformation matrix | Accurate depth information | Image enhancement | Image analysis | Camera image | Point cloud

The invention discloses a target positioning method fusing a laser radar and a camera and a related device, and the method comprises the steps: carrying out the rough calibration operation of the selected laser radar and camera, and obtaining an initial transformation matrix composed of an initial rotation vector and an initial translation vector; carrying out the ICP matching analysis according to the point cloud data between two adjacent frames and the global source point cloud data, and obtaining a radar rotation vector and a radar translation vector when the matching error is minimum; performing triangularization processing on the camera image through the radar rotation vector and the radar translation vector to obtain camera image depth information; performing relative calculation on the camera image depth information and the preset radar image depth information based on a least square method to obtain an optimal transformation matrix; and carrying out positioning calculation on the target object by adopting the optimal transformation matrix to obtain a positioning result. The technical problem that the accuracy of a positioning result is low due to limitation of different degrees in the prior art is solved.

Owner:GUANGDONG POWER GRID CO LTD +1

RGB-IR sensor, and method and apparatus for obtaining 3D image by using same

Active | US10085002B2 | Efficiently obtained | Improve efficiency | Television system details | Image analysis | 3d image

Owner:LG ELECTRONICS INC

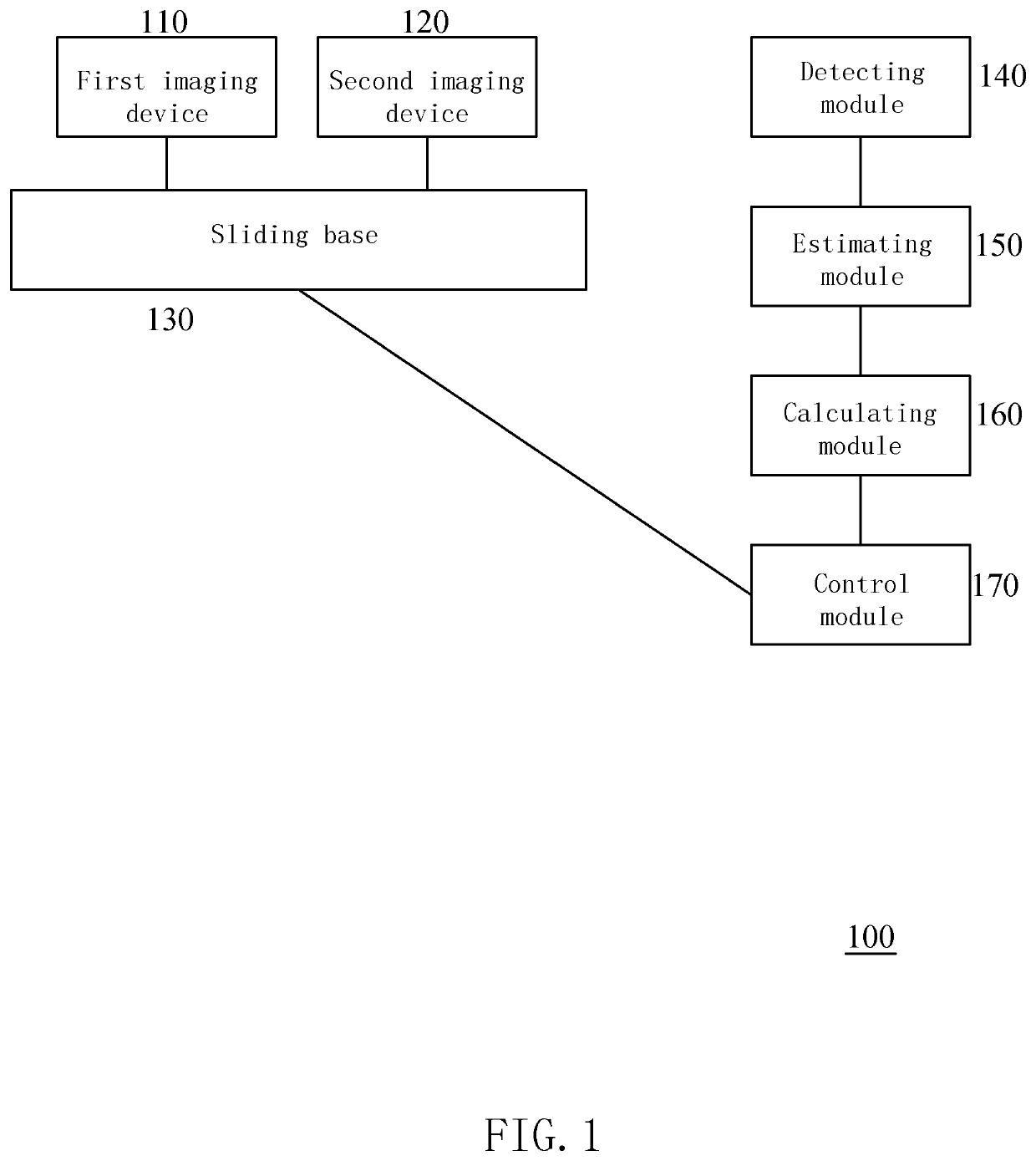

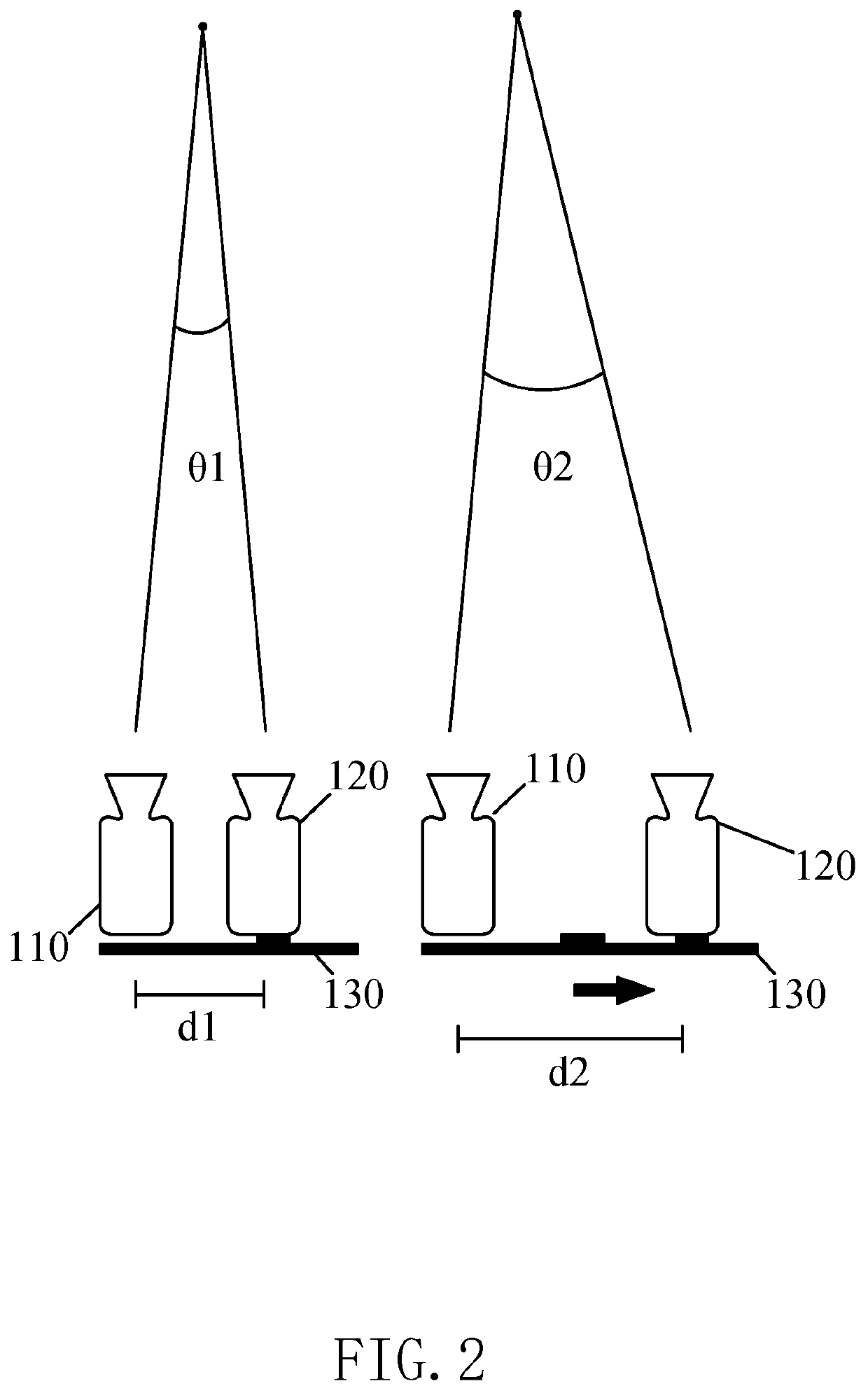

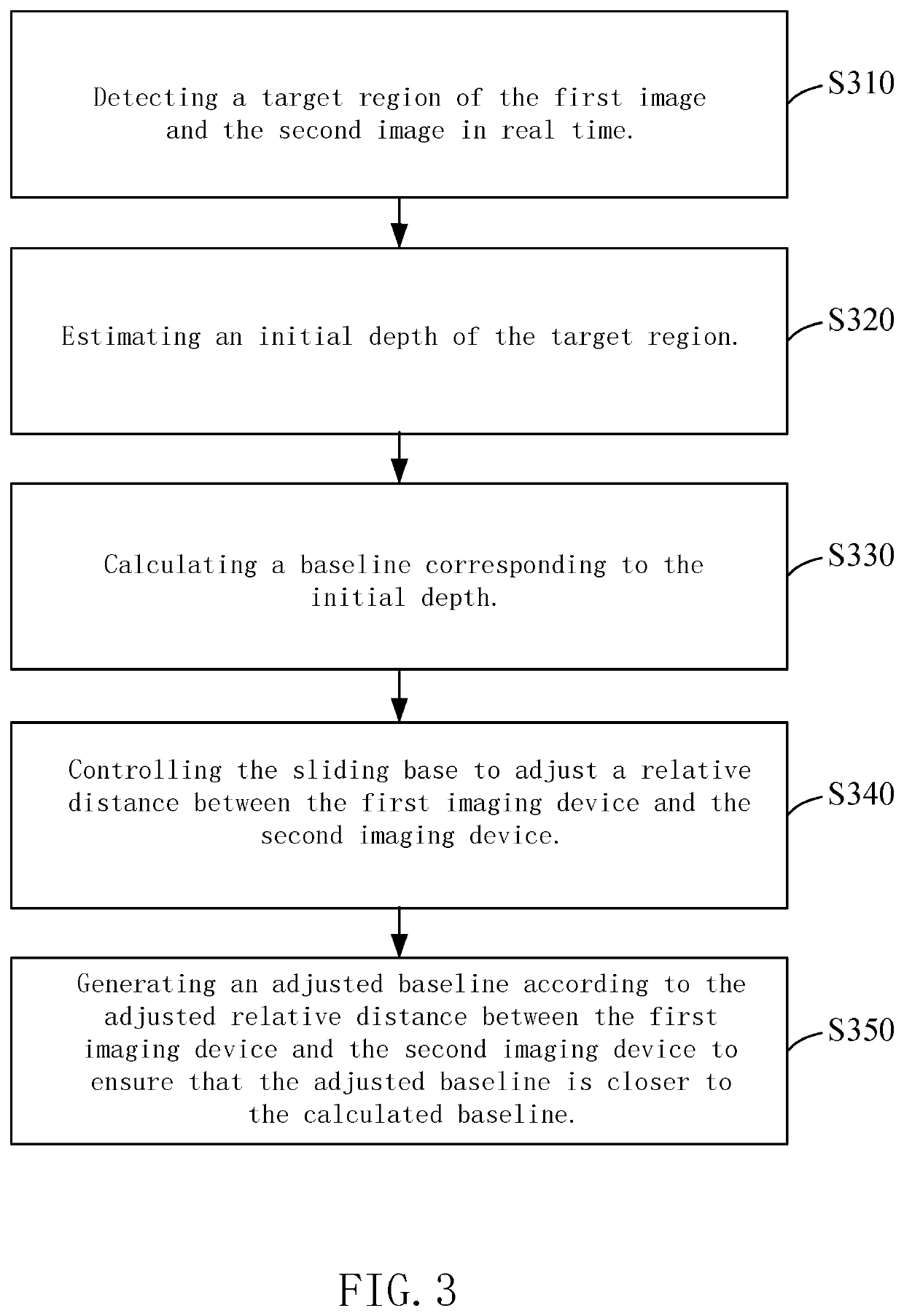

Depth imaging system and method for controlling depth imaging system thereof

Active | US10715787B1 | Accurate depth information | Television system details | Color television details | Depth imaging | Radiology

A depth imaging system and a control method thereof are provided. The depth imaging system includes a first imaging device, a second imaging device, a sliding base, a detecting module, an estimating module, a calculating module, and a control module. The first imaging device and the second imaging device are mounted on the sliding base. The detecting module detects a target region of the first image and the second image. The estimating module estimates an initial depth of the target region. The calculating module calculates a baseline corresponding to the initial depth. The control module controls the sliding base to adjust a relative distance between the first imaging device and the second imaging device. The calculating module generates an adjusted baseline according to the adjusted relative distance between the first imaging device and the second imaging device, such that the adjusted baseline is closer to the calculated baseline.

Owner:ULSEE

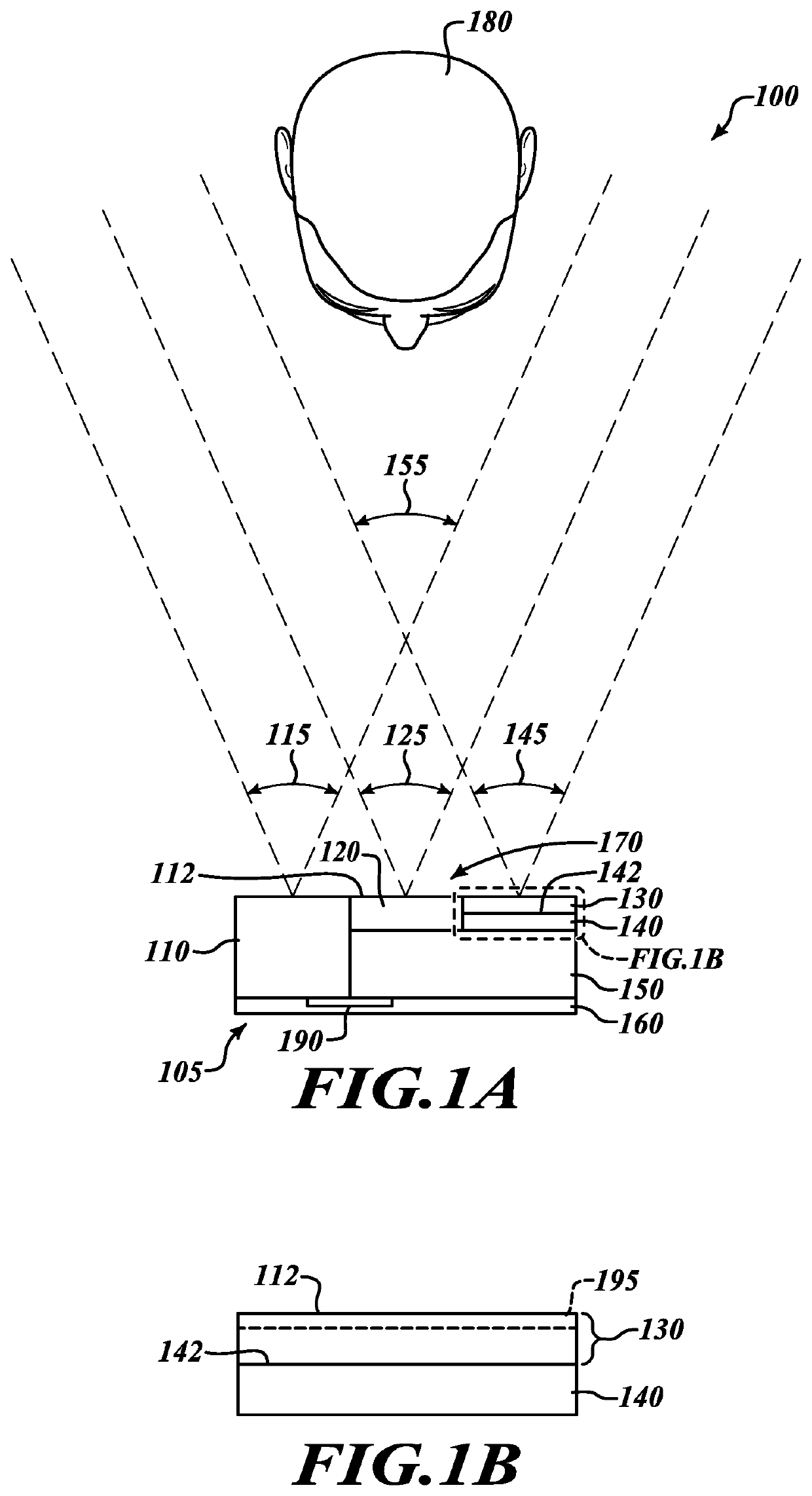

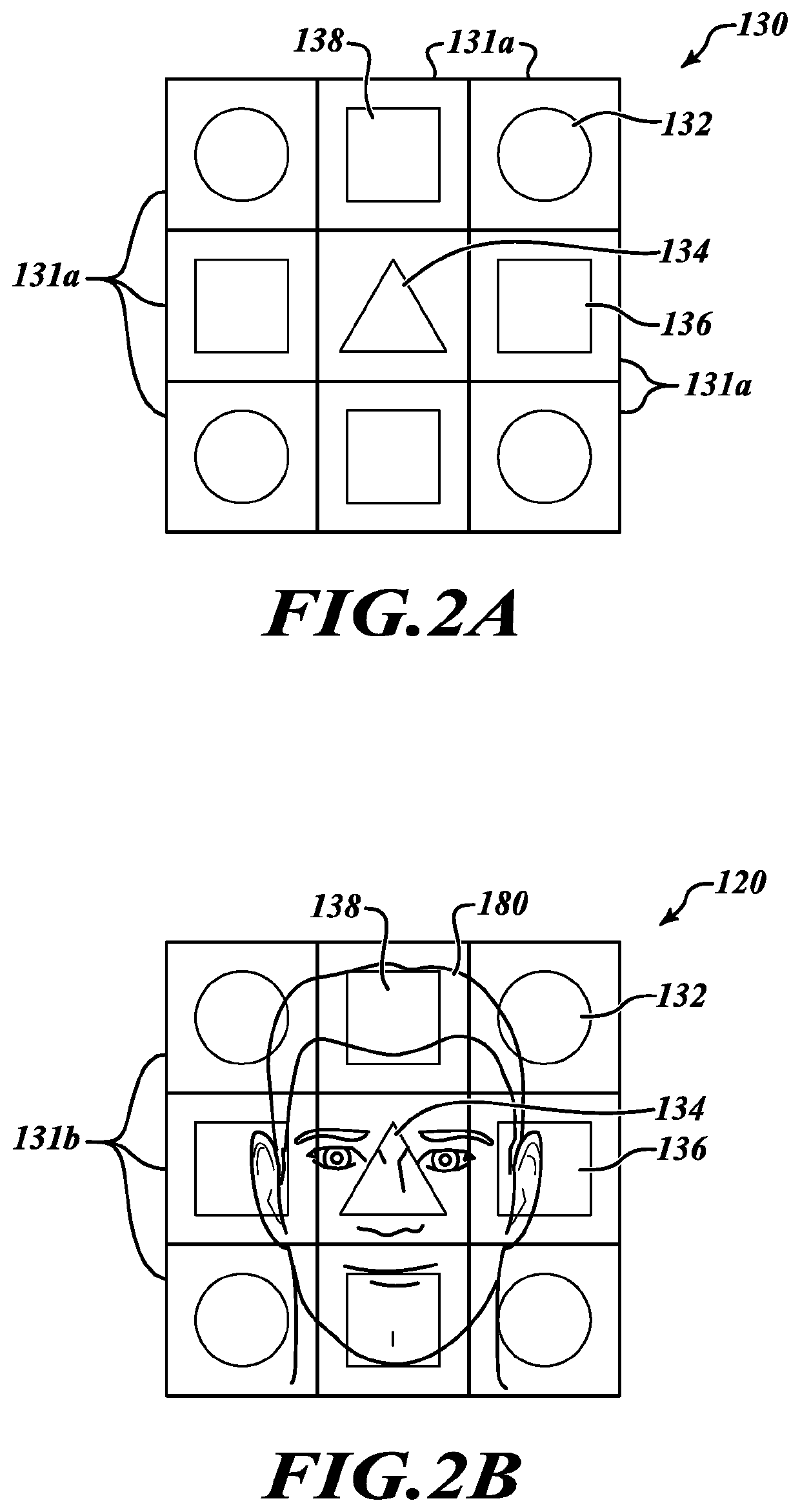

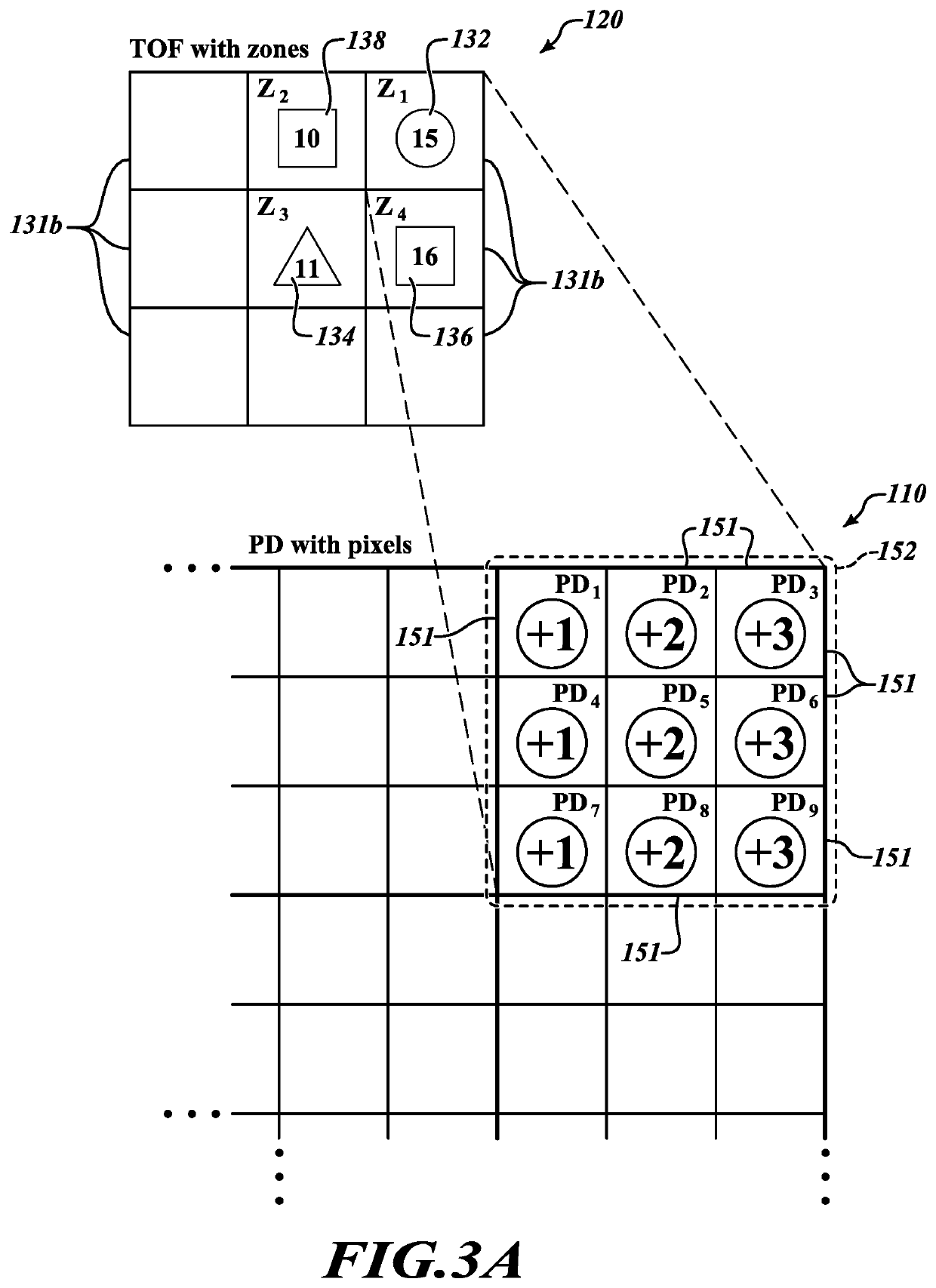

Depth sensing with a ranging sensor and an image sensor

Active | US11373322B2 | Accurate detection distance | High resolution | Image enhancement | Image analysis | High resolution image | Depth map

Owner:STMICROELECTRONICS SRL

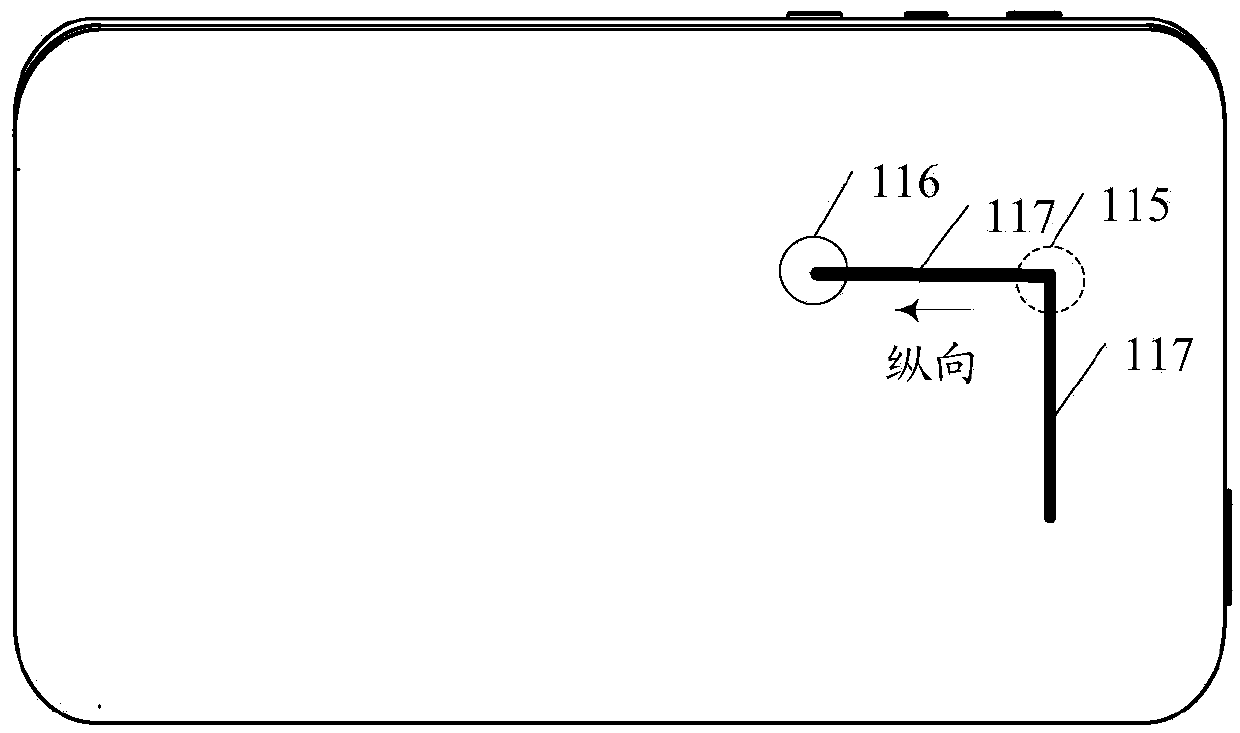

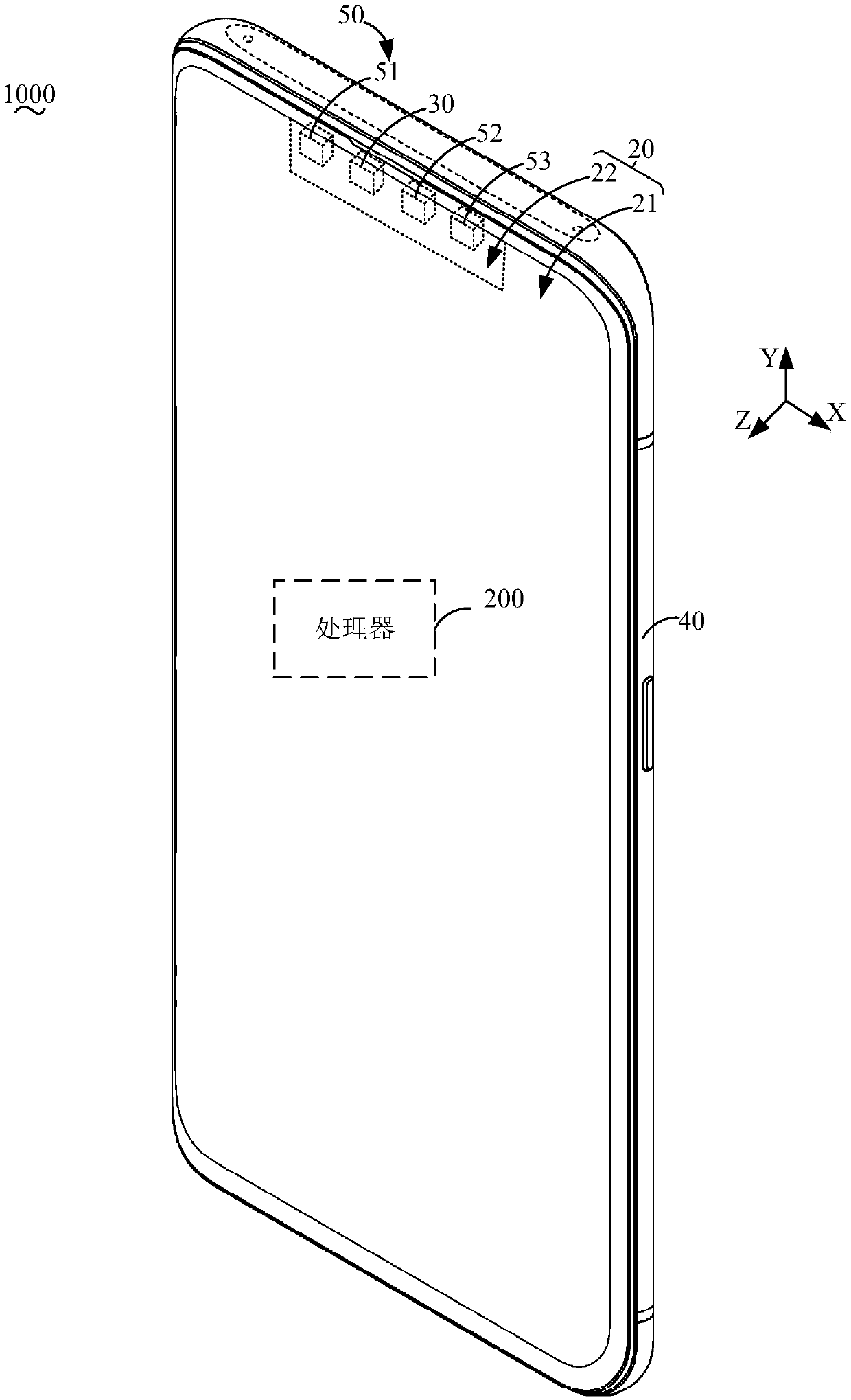

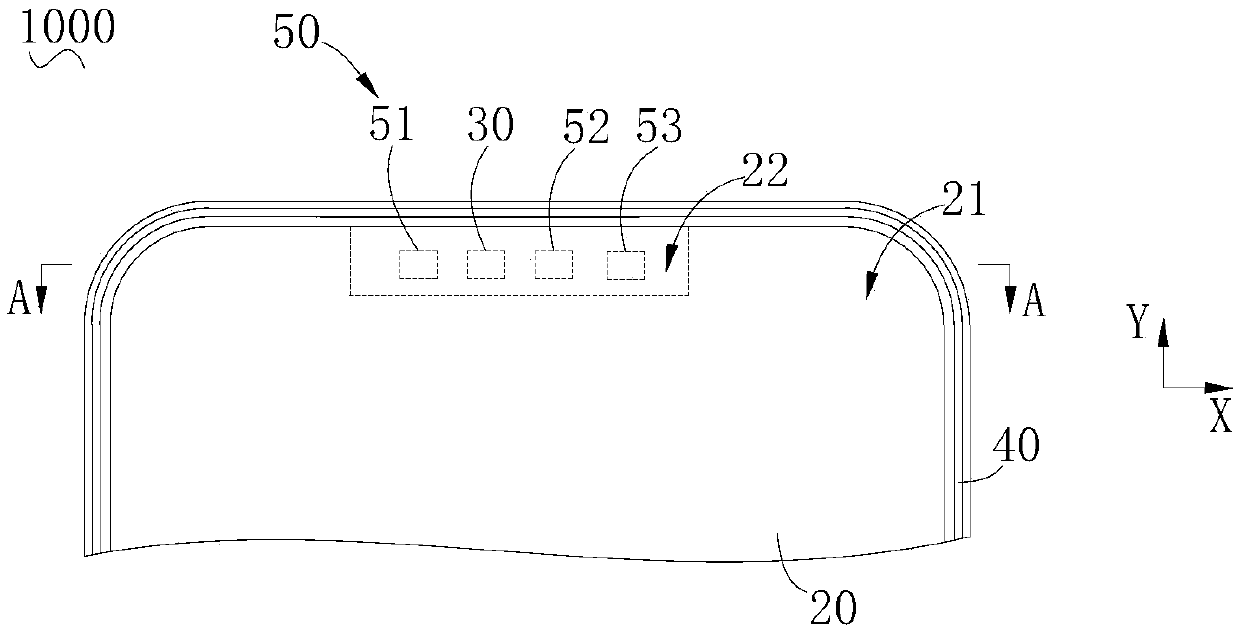

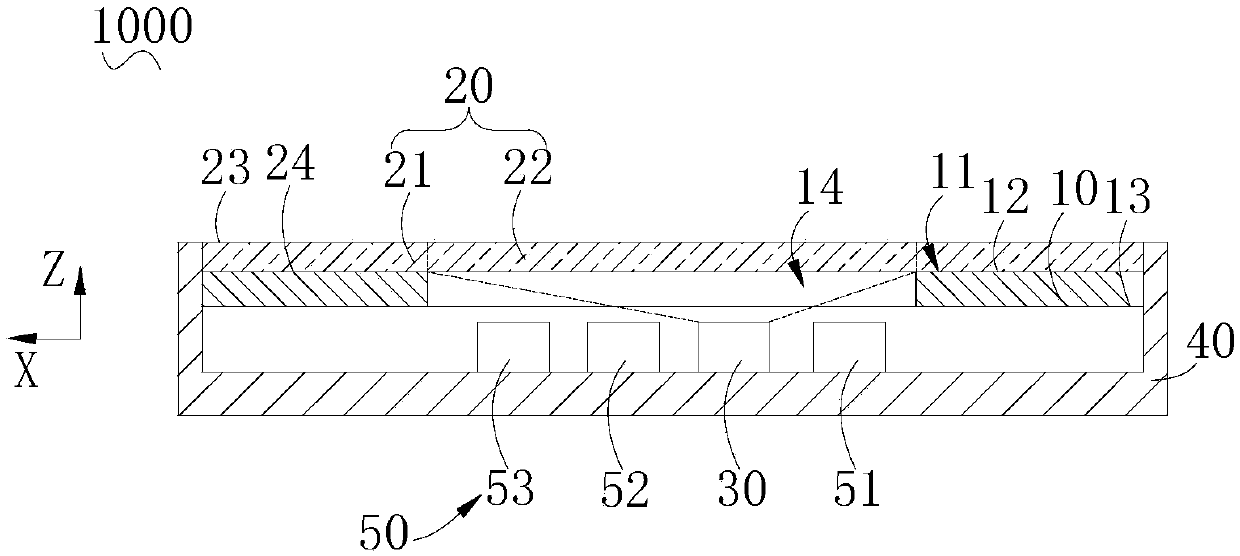

Terminal and control method and device thereof

Active | CN109587295A | Avoid interference | Increase the area | Telephonic subscriber details | Telephone set constructions | Computer terminal | Computer science

The invention provides a terminal and a control method and device thereof. The terminal comprises a display screen, a cover plate, a projection module and a flight time assembly. The display screen forms a display area for displaying a first image; the cover plate forms first and second subareas, and the first subarea corresponds to the display area. The projection module projects a second image to the second subarea, and the display screen, the projection module and the flight time assembly are positioned on the same side of the cover plate. The flight time assembly is arranged corresponding to the second subarea, and comprises a light emitter and a light receiver; laser emitted by the light emitter penetrates the second subarea to reach a target object, and the light receiver receives the laser which is reflected by the target object and penetrates the second subarea. The projection module does not shield the first image displayed by the display screen, and can project a second image, so the area of the display image of the terminal is increased; and the flight time assembly avoids interference from the display screen during laser emission and reception.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

Depth map super-resolution processing method

Active | US10115182B2 | Solve the low matching degree | Accurate depth information | Image enhancement | Television system details | Image resolution | Depth map

The present invention discloses a depth map super-resolution processing method, including: firstly, respectively acquiring a first original image (S1) and a second original image (S2) and a low resolution depth map (d) of the first original image (S1); secondly, 1) dividing the low resolution depth map (d) into multiple depth image blocks; 2) respectively performing the following processing on the depth image blocks obtained in step 1); 21) performing super-resolution processing on a current block with multiple super-resolution processing methods, to obtain multiple high resolution depth image blocks; 22) obtaining new synthesized image blocks by using an image synthesis technology; 23) upon matching and judgment, determining an ultimate high resolution depth image block; and 3) integrating the high resolution depth image blocks of the depth image blocks into one image according to positions of the depth image blocks in the low resolution depth map (d). Through the depth map super-resolution processing method of the present invention, depth information of the obtained high resolution depth maps is more accurate.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV

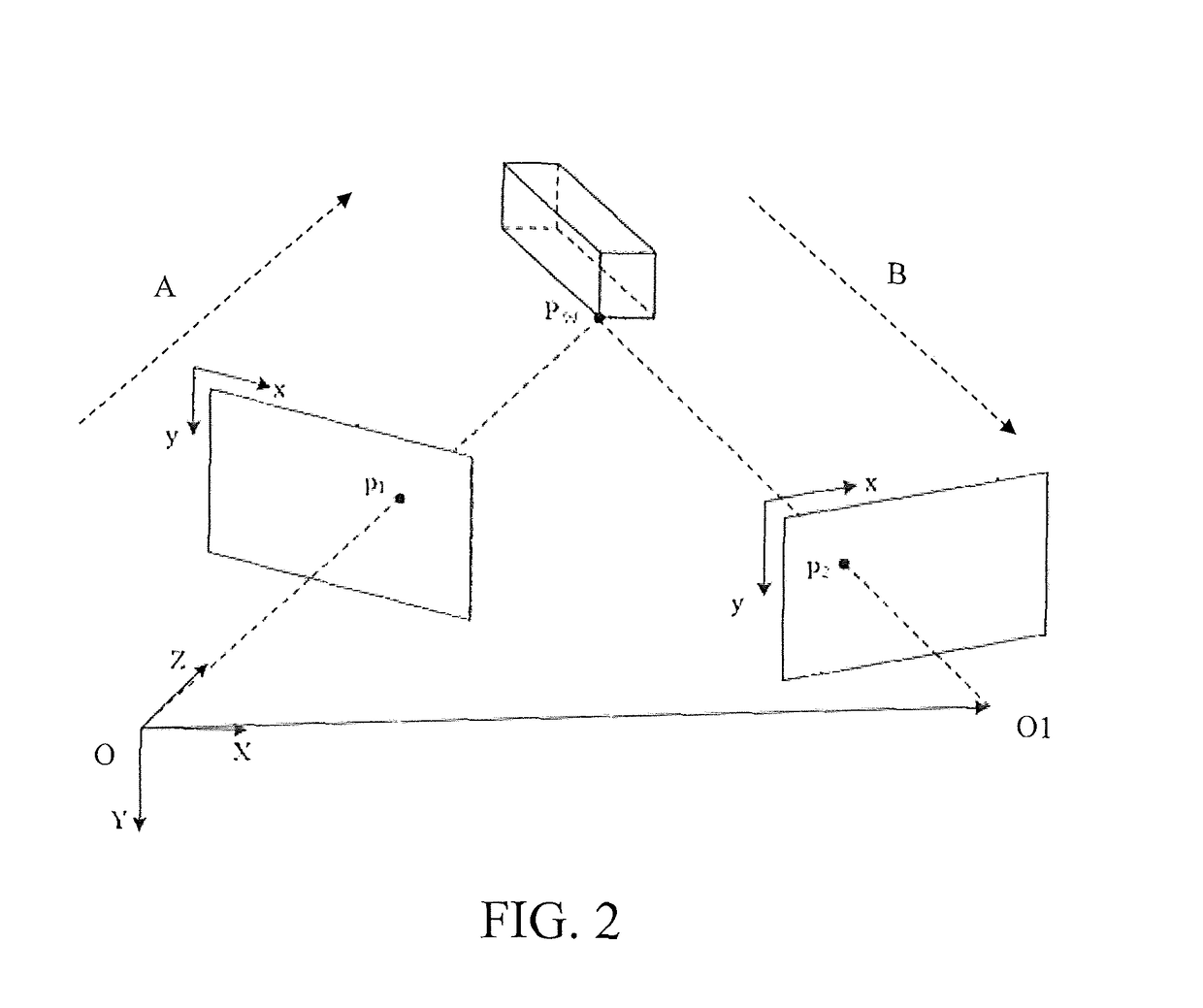

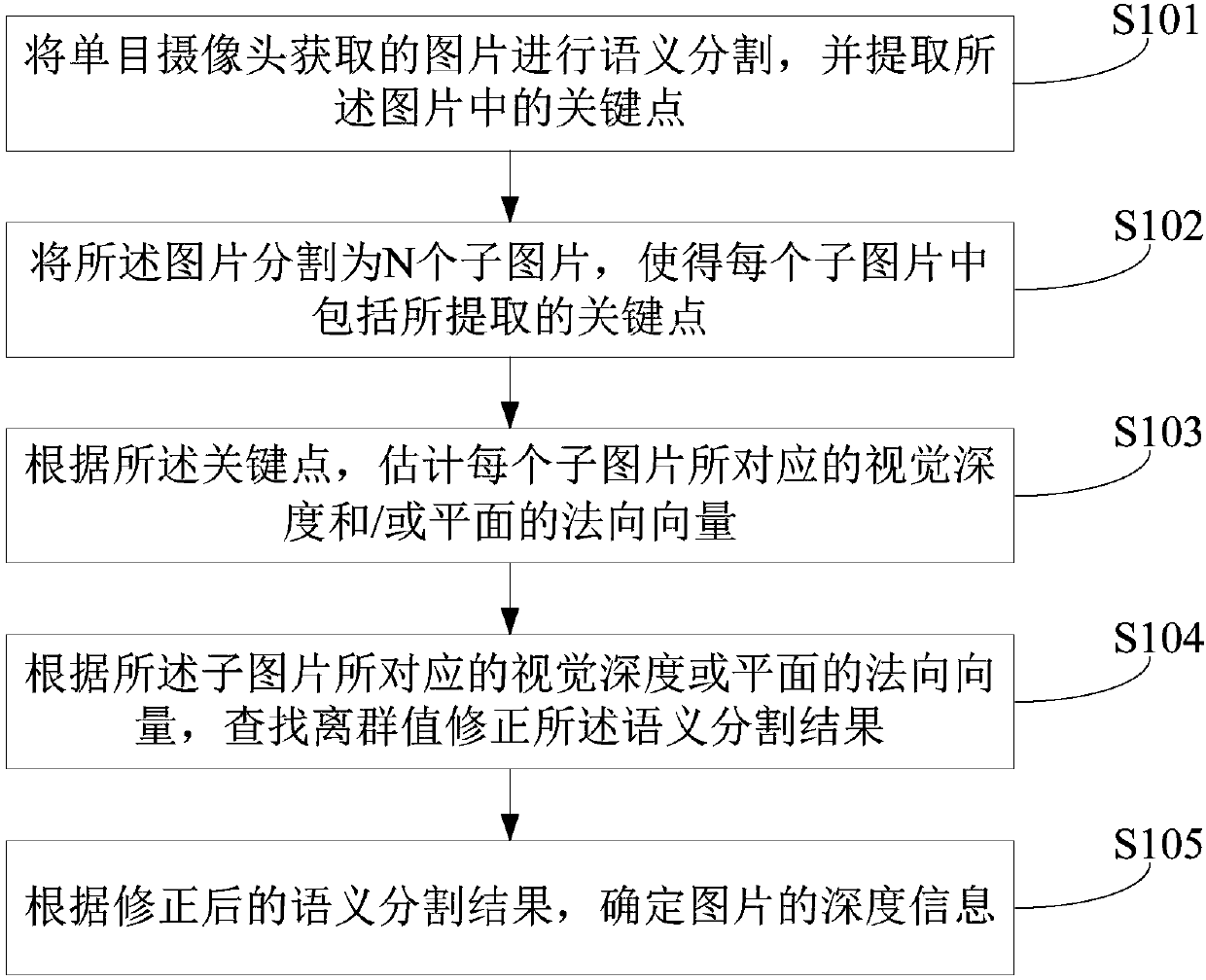

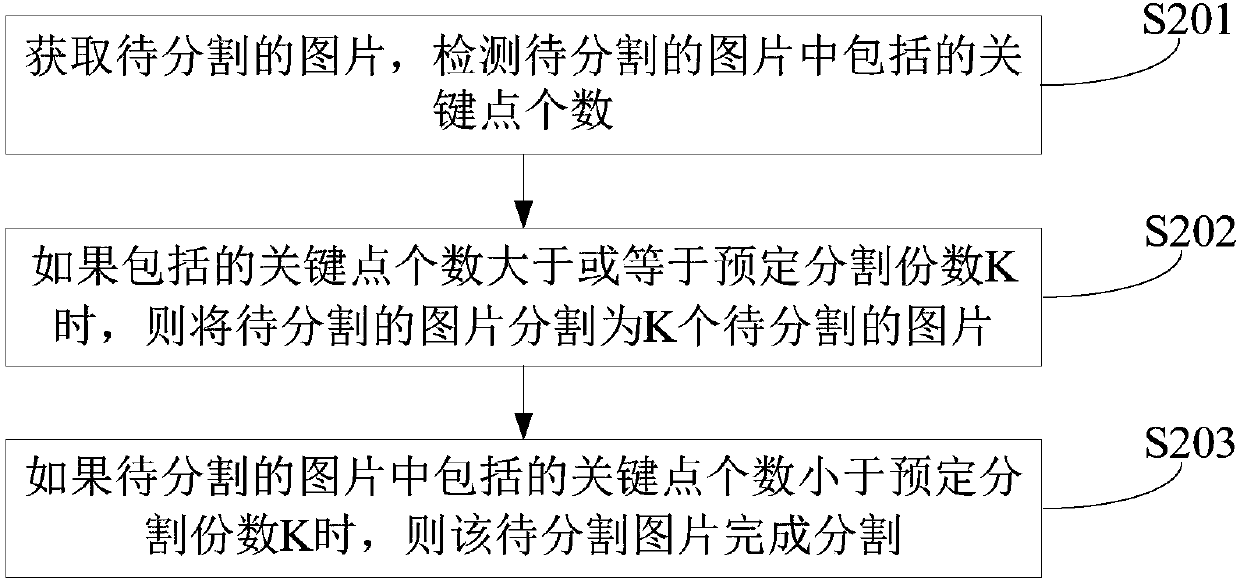

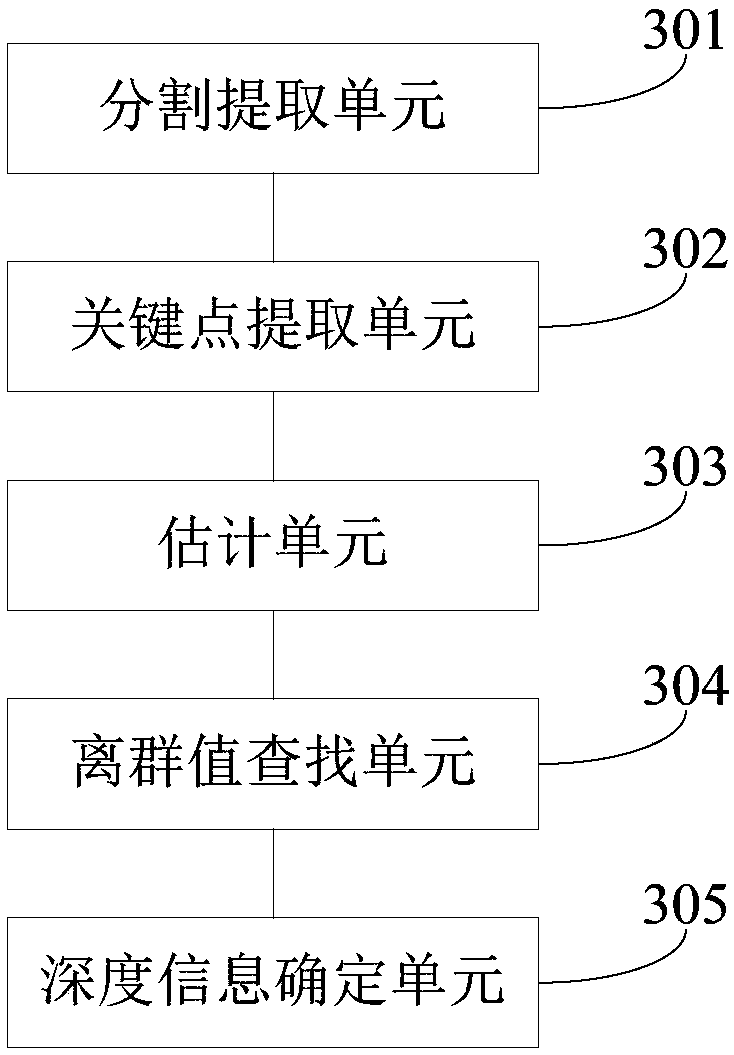

Depth estimation method, device and equipment based on monocular camera

Active | CN109816709A | Reduce the impact | Accurate depth information | Image enhancement | Image analysis | Monocular camera | Estimation methods

A depth estimation method based on a monocular camera comprises the following steps: carrying out semantic segmentation on a picture acquired by a monocular camera; extracting key points from the picture and segmenting the picture into N sub-pictures, so that each sub-picture comprises the extracted key points; estimating the visual depth and/or the normal vector of a plane corresponding to each sub-picture; searching for outliers according to the visual depth and/or the normal vector of the plane corresponding to each sub-picture; correcting the semantic segmentation result according to the outliers found; and determining the depth information of the picture according to the corrected semantic segmentation result. The depth information determined in this way is more accurate.

Owner:UBTECH ROBOTICS CORP LTD

Distance measuring device based on structured light and double image sensors and distance measuring method of distance measuring device

Inactive | CN109270546A | Play a role in mutual correction | Accurate depth information | Electromagnetic wave reradiation | Color image | Measured depth

The invention provides a distance measuring device based on structured light and double image sensors and a distance measuring method of the distance measuring device. The distance measuring device comprises a structured light emitting module, a first image sensor, a second image sensor and a data processing module, wherein the processing module is a DSP circuit and comprises a power supply port and at least one output port. When distance measuring is performed, a light source emitted by the structured light emitting module illuminates a target; the first image sensor receives the color image data of the target; and the second image sensor receives the infrared-related image data of the target; and the data processing module performs mutual correction by using the infrared-related image data and the color image data of the target so as to generate depth values with higher reliability. With the distance measuring device and the distance measuring method thereof of the present invention adopted, the depth values of a plurality of points within a wide angle range of a 2D range can be measured at a time; and the measured depth values of the plurality of points can be transmitted to other devices, so that the devices can use the depth values.

Owner:郑州雷动智能技术有限公司

People counter using TOF camera and counting method thereof

Active | US9818026B2 | Accurate depth information | Reliable counting | Image enhancement | Image analysis | People counter | Computer graphics (images)

Owner:UDP TECH
